Abstract

Background

Many nations require child‐serving professionals to report known or suspected cases of significant child abuse and neglect to statutory child protection or safeguarding authorities. Considered globally, there are millions of professionals who fulfil these roles, and many more who will do so in future. Ensuring they are trained in reporting child abuse and neglect is a key priority for nations and organisations if efforts to address violence against children are to succeed.

Objectives

To assess the effectiveness of training aimed at improving reporting of child abuse and neglect by professionals and to investigate possible components of effective training interventions.

Search methods

We searched CENTRAL, MEDLINE, Embase, 18 other databases, and one trials register up to 4 June 2021. We also handsearched reference lists, selected journals, and websites, and circulated a request for studies to researchers via an email discussion list.

Selection criteria

All randomised controlled trials (RCTs), quasi‐RCTs, and controlled before‐and‐after studies examining the effects of training interventions for qualified professionals (e.g. teachers, childcare professionals, doctors, nurses, and mental health professionals) to improve reporting of child abuse and neglect, compared with no training, waitlist control, or alternative training (not related to child abuse and neglect).

Data collection and analysis

We used methodological procedures described in the Cochrane Handbook for Systematic Reviews of Interventions. We synthesised training effects in meta‐analysis where possible and summarised findings for primary outcomes (number of reported cases of child abuse and neglect, quality of reported cases, adverse events) and secondary outcomes (knowledge, skills, and attitudes towards the reporting duty). We used the GRADE approach to rate the certainty of the evidence.

Main results

We included 11 trials (1484 participants), using data from 9 of the 11 trials in quantitative synthesis. All trials were conducted with qualified professionals in high‐income countries (the USA, Canada, and the Netherlands). In 8 of the 11 trials, interventions were delivered in face‐to‐face workshops or seminars, and in 3 trials they were delivered as self‐paced e‐learning modules. Interventions were developed by experts and delivered by specialist facilitators, content area experts, or interdisciplinary teams. Only 3 of the 11 included studies were conducted in the past 10 years.

Primary outcomes

Three studies measured the number of cases of child abuse and neglect via participants’ self‐report of actual cases reported, three months after training. The results of one study (42 participants) favoured the intervention over waitlist, but the evidence is very uncertain (standardised mean difference (SMD) 0.81, 95% confidence interval (CI) 0.18 to 1.43; very low‐certainty evidence).
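Standardised mean differences such as this one can be sanity-checked against their confidence intervals. The sketch below is a minimal illustration that assumes the common large-sample variance approximation for Hedges' g and a hypothetical equal 21/21 split of the 42 participants (the actual group sizes are not given in this section); the function name `smd_confidence_interval` is illustrative only.

```python
import math


def smd_confidence_interval(g: float, n1: int, n2: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% CI for a standardised mean difference (Hedges' g).

    Uses the large-sample approximation
    var(g) = (n1 + n2) / (n1 * n2) + g**2 / (2 * (n1 + n2)).
    """
    variance = (n1 + n2) / (n1 * n2) + g ** 2 / (2 * (n1 + n2))
    se = math.sqrt(variance)
    return g - z * se, g + z * se


# Hypothetical split: the 42 participants are assumed to be divided 21/21.
low, high = smd_confidence_interval(0.81, 21, 21)
print(f"{low:.2f} to {high:.2f}")  # prints "0.18 to 1.44"
```

Under these assumptions the interval is roughly 0.18 to 1.44, close to the reported 0.18 to 1.43; the small difference is consistent with rounding of the published SMD and with software that uses slightly different small-sample formulas.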

Three studies measured the number of cases of child abuse and neglect via participants’ responses to hypothetical case vignettes immediately after training. A meta‐analysis of two studies (87 participants) favoured training over no training or waitlist for training, but the evidence is very uncertain (SMD 1.81, 95% CI 1.30 to 2.32; very low‐certainty evidence).

We identified no studies that measured the number of cases of child abuse and neglect via official records of reports made to child protection authorities, the quality of reported cases, or adverse effects of training.

Secondary outcomes

Four studies measured professionals’ knowledge of reporting duty, processes, and procedures postintervention. The results of one study (744 participants) may favour the intervention over waitlist for training (SMD 1.06, 95% CI 0.90 to 1.21; low‐certainty evidence).

Four studies measured professionals' knowledge of core concepts in all forms of child abuse and neglect postintervention. A meta‐analysis of two studies (154 participants) favoured training over no training, but the evidence is very uncertain (SMD 0.68, 95% CI 0.35 to 1.01; very low‐certainty evidence).

Three studies measured professionals' knowledge of core concepts in child sexual abuse postintervention. A meta‐analysis of these three studies (238 participants) favoured training over no training or waitlist for training, but the evidence is very uncertain (SMD 1.44, 95% CI 0.43 to 2.45; very low‐certainty evidence).
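The meta-analyses summarised here pool SMDs across studies. This section does not state which pooling model was used, so, as an assumption, the sketch below shows the DerSimonian-Laird random-effects method, one widely used inverse-variance approach, together with the I² statistic that quantifies between-study heterogeneity (the review notes significant heterogeneity for child sexual abuse knowledge). The study-level inputs are invented for illustration and are not data from the included trials.

```python
import math


def dersimonian_laird(effects: list[float], variances: list[float]) -> dict[str, float]:
    """Random-effects pooled SMD (DerSimonian-Laird) with 95% CI, tau^2, and I^2."""
    k = len(effects)
    w = [1.0 / v for v in variances]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # % variability from heterogeneity
    w_star = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return {
        "pooled": pooled,
        "ci_low": pooled - 1.96 * se,
        "ci_high": pooled + 1.96 * se,
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical study-level SMDs and variances (NOT the review's data).
result = dersimonian_laird([0.5, 1.4, 2.6], [0.04, 0.05, 0.06])
print(result)
```

When study effects diverge, tau² grows and the random-effects CI widens, which is one reason a pooled estimate from few heterogeneous studies carries a broad interval.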

One study (25 participants) measured professionals' skill in distinguishing reportable and non‐reportable cases postintervention. The results favoured the intervention over no training, but the evidence is very uncertain (SMD 0.94, 95% CI 0.11 to 1.77; very low‐certainty evidence).

Two studies measured professionals' attitudes towards the duty to report child abuse and neglect postintervention. The results of one study (741 participants) favoured the intervention over waitlist, but the evidence is very uncertain (SMD 0.61, 95% CI 0.47 to 0.76; very low‐certainty evidence).

Authors' conclusions

The studies included in this review suggest there may be evidence of improvements in training outcomes for professionals exposed to training compared with those who are not exposed. However, the evidence is very uncertain. We rated the certainty of evidence as low to very low, downgrading due to study design and reporting limitations. Our findings rest on a small number of largely older studies, confined to single professional groups. Whether similar effects would be seen for a wider range of professionals remains unknown. Considering the many professional groups with reporting duties, we strongly recommend further research to assess the effectiveness of training interventions, with a wider range of child‐serving professionals. There is a need for larger trials that use appropriate methods for group allocation, and statistical methods to account for the delivery of training to professionals in workplace groups.
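When training is delivered to professionals in workplace groups, outcomes within a group tend to be correlated, so analysing individuals as if they were independent overstates precision. A common correction, described in the Cochrane Handbook for cluster-randomised trials, divides the sample size by the design effect 1 + (m − 1) × ICC, where m is the average cluster size and ICC is the intracluster correlation coefficient. The sketch below uses hypothetical values (600 professionals, groups of about 20, ICC of 0.05) that are not drawn from any included study.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Design effect for cluster allocation: 1 + (m - 1) * ICC."""
    return 1.0 + (mean_cluster_size - 1.0) * icc


def effective_sample_size(n: int, mean_cluster_size: float, icc: float) -> float:
    """Sample size after deflating by the design effect."""
    return n / design_effect(mean_cluster_size, icc)


# Hypothetical: 600 professionals trained in workplace groups of ~20, ICC 0.05.
deff = design_effect(20, 0.05)  # 1 + 19 * 0.05 = 1.95
n_eff = effective_sample_size(600, 20, 0.05)
print(f"design effect {deff:.2f}, effective n {n_eff:.0f}")  # design effect 1.95, effective n 308
```

Even a small ICC roughly halves the effective sample size here, which is why larger trials with cluster-aware analyses are needed.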

Keywords: Child, Humans, Child Abuse, Child Abuse/diagnosis, Child Abuse/prevention & control, Family, Health Personnel, Mandatory Reporting, Systematic Reviews as Topic

Plain language summary

Child protection training for professionals to improve reporting of child abuse and neglect

Key messages

‐ Due to a lack of strong evidence, it is unclear whether child protection training is better than no training or alternative training (e.g. cultural sensitivity training) at improving professionals’ reporting of child abuse and neglect.

‐ Larger, well‐designed studies are needed to assess the effects of training with a wider range of professional groups.

‐ Future research should compare face‐to‐face with e‐learning interventions.

Why do we need to improve the reporting of child abuse and neglect?

Child abuse and neglect results in significant harm to children, families, and communities. The most serious consequence is child fatality, but other consequences include physical injuries, mental health problems, alcohol and drug misuse, and problems at school and in employment. Many professional groups, such as teachers, nurses, doctors, and the police, are required by law or organisational policy to report known or suspected cases of child abuse and neglect to statutory child protection authorities. To prepare them for reporting, various training interventions have been developed and used. These can vary in content, duration, format, and delivery method. For example, they may aim to increase knowledge and awareness of the indicators of child abuse and neglect and of the nature of the reporting duty and procedures, or to improve attitudes towards the reporting duty. Such training is usually undertaken postqualification as a form of continuing professional development; however, little is known about whether training works, either in improving reporting of child abuse and neglect generally, for different types of professionals, or for different types of abuse.

What did we want to find out?

We wanted to find out:

‐ if child protection training improves professionals' reporting of child abuse and neglect;

‐ what components of effective training help professionals to report child abuse and neglect; and

‐ if the training causes any unwanted effects.

What did we do?

We searched for studies that compared:

‐ child protection training with no training or with a waitlist control (those placed on a waiting list to receive the training at a later date); and

‐ child protection training with alternative training (not related to child abuse and neglect, e.g. cultural sensitivity training).

We compared and summarised study results and rated our confidence in the evidence based on factors such as study methods and size.

What did we find?

We found 11 studies that involved 1484 people. The studies ranged in size from 30 to 765 participants. Nine studies were conducted in the USA, one in Canada, and one in the Netherlands. A number of different types of training interventions were tested in the studies. Some were face‐to‐face workshops, ranging in duration from a single two‐hour workshop to six 90‐minute seminars conducted over one month; and some were self‐paced e‐learning interventions. The training was developed by experts and delivered by specialist facilitators, content area experts, or interdisciplinary teams. Nine studies received external funding: five from federal government agencies, two from a university and philanthropic organisation, one from the philanthropic arm of an international technology company, and one from a non‐government organisation (a training intervention developer).

Main results

It is unclear if child protection training has an effect on:

‐ the number of reported cases of child abuse and neglect (one study, 42 participants); or

‐ the number of reported cases based on hypothetical cases of child abuse and neglect (two studies, 87 participants).

Based on the available information, we were unable to answer our questions about whether training has an effect on the number of official cases recorded by child protection authorities or the quality of those reports, or whether training has any unwanted effects.

Child protection training may increase professionals' knowledge of reporting duty, processes, and procedures (one study, 744 participants). However, it is unclear if this training has an effect on:

‐ professionals’ knowledge of core concepts in child abuse and neglect generally (two studies, 154 participants);

‐ professionals’ knowledge of core concepts in child sexual abuse specifically (three studies, 238 participants);

‐ professionals’ skill in distinguishing between reportable and non‐reportable cases (one study, 25 participants); or

‐ professionals’ attitudes towards the duty to report (one study, 741 participants).

What are the limitations of the evidence?

We have low to very low confidence in the evidence. This is because the results were based on a small number of studies, some of which were old and which had methodological problems. For example, the people involved in the studies were aware of which treatment they were getting, and not all of the studies provided data for all our outcomes of interest. In addition, our analyses sometimes only included one professional group, limiting the applicability of our findings to other professional groups.

How up‐to‐date is this evidence?

The evidence is current to 4 June 2021.

Summary of findings

Summary of findings 1. Child protection training for professionals to improve reporting of child abuse and neglect compared with no training, waitlist control, or alternative training not related to child abuse and neglect (primary outcomes).

Setting: professionals' workplaces or online e‐learning, mainly in the USA

Patient or population: postqualified professionals, including elementary and high school teachers, childcare professionals, medical practitioners, nurses, and mental health professionals

Intervention: face‐to‐face or online training, with a range of teaching strategies (e.g. didactic presentations, role‐plays, video, experiential exercises), ranging from 2 hours to 6 x 90‐minute sessions over a 1‐month period

Comparator: no training, waitlist for training, alternative training (not related to child abuse and neglect)

| Outcomes | Anticipated absolute effects* (95% CI): risk with control conditions | Anticipated absolute effects* (95% CI): risk with training interventions | Relative effect (95% CI) | No. of participants (studies) | Certainty of the evidence (GRADE) | Comments |
|---|---|---|---|---|---|---|
| Number of reported cases of child abuse and neglect (professionals' self‐report, actual cases). Time of outcome assessment: short term (3 months postintervention) | ‐ | The mean number of cases reported in the training group was, on average, 0.81 standard deviations higher (0.18 higher to 1.43 higher). | ‐ | 42 (1 RCT) | ⨁◯◯◯ Very low (a, b, c) | SMD of 0.81 represents a large effect size (Cohen 1988). Outcome measured by professionals' self‐report of cases they had reported to child protection authorities. |
| Number of reported cases of child abuse and neglect (professionals' self‐report, hypothetical vignette cases). Time of outcome assessment: short term (postintervention) | ‐ | The mean number of cases reported in the training group was, on average, 1.81 standard deviations higher (1.30 higher to 2.32 higher). | ‐ | 87 (2 RCTs) | ⨁◯◯◯ Very low (a, b, c) | SMD of 1.81 represents a large effect size (Cohen 1988). Outcome measured by professionals' responses to hypothetical case vignettes. |
| Number of reported cases of child abuse and neglect (official records of reports made to child protection authorities) | ‐ | Unknown | ‐ | 0 (0 studies) | ‐ | No studies were identified that measured numbers of official reports made to child protection authorities. |
| Quality of reported cases of child abuse and neglect (official records of reports made to child protection authorities) | ‐ | Unknown | ‐ | 0 (0 studies) | ‐ | No studies were identified that measured the quality of official reports made to child protection authorities. |
| Adverse events | ‐ | Unknown | ‐ | 0 (0 studies) | ‐ | No studies were identified that measured adverse effects. |

*The risk in the intervention group (and its 95% CI) is based on the assumed risk in the comparison group and the relative effect of the intervention (and its 95% CI). CI: confidence interval; RCT: randomised controlled trial; SMD: standardised mean difference

GRADE Working Group grades of evidence

High certainty: we are very confident that the true effect lies close to that of the estimate of the effect.

Moderate certainty: we are moderately confident in the effect estimate: the true effect is likely to be close to the estimate of the effect, but there is a possibility that it is substantially different.

Low certainty: our confidence in the effect estimate is limited: the true effect may be substantially different from the estimate of the effect.

Very low certainty: we have very little confidence in the effect estimate: the true effect is likely to be substantially different from the estimate of effect.

(a) Downgraded by one level due to high risk of bias for multiple risk of bias domains.

(b) Downgraded by one level due to imprecision (CI includes small‐sized effect or small sample size, or both).

(c) Downgraded by one level due to indirectness (single or limited number of studies, thereby restricting the evidence in terms of intervention, population, and comparators).
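The Comments column labels each SMD using Cohen's (1988) conventional benchmarks of 0.2 (small), 0.5 (medium), and 0.8 (large). A minimal sketch of that mapping follows; the "negligible" label for values below 0.2 is an assumption added for completeness and does not appear in the tables.

```python
def cohen_label(smd: float) -> str:
    """Label |SMD| with Cohen's (1988) benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    magnitude = abs(smd)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"  # assumption: below Cohen's 'small' benchmark


# Point estimates from the table above.
for estimate in (0.81, 1.81):
    print(estimate, cohen_label(estimate))
```

These labels describe only the point estimate; they say nothing about certainty, which is why large effects here still carry very low certainty ratings.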

Summary of findings 2. Child protection training for professionals to improve reporting of child abuse and neglect compared with no training, waitlist control, or alternative training not related to child abuse and neglect (secondary outcomes).

Setting: professionals' workplaces or online e‐learning, mainly in the USA

Patient or population: postqualified professionals, including elementary and high school teachers, childcare professionals, medical practitioners, nurses, and mental health professionals

Intervention: face‐to‐face or online training, with a range of teaching strategies (e.g. didactic presentations, role‐plays, video, experiential exercises), ranging from 2 hours to 6 x 90‐minute sessions over a 1‐month period

Comparator: no training, waitlist for training, alternative training (not related to child abuse and neglect)

| Outcomes | Anticipated absolute effects* (95% CI): risk with control conditions | Anticipated absolute effects* (95% CI): risk with training interventions | Relative effect (95% CI) | No. of participants (studies) | Certainty of the evidence (GRADE) | Comments |
|---|---|---|---|---|---|---|
| Knowledge of reporting duty, processes, and procedures. Measured by: professionals' self‐reported knowledge of jurisdictional or institutional reporting duties, or both. Time of outcome assessment: short term (postintervention) | ‐ | The mean knowledge score in the training group was, on average, 1.06 standard deviations higher (0.90 higher to 1.21 higher). | ‐ | 744 (1 RCT) | ⨁⨁◯◯ Low (a, b) | SMD of 1.06 represents a large effect size (Cohen 1988). |
| Knowledge of core concepts in child abuse and neglect (all forms). Measured by: professionals' self‐reported knowledge of all forms of child abuse and neglect (general measure). Time of outcome assessment: short term (postintervention) | ‐ | The mean knowledge score in the training group was, on average, 0.68 standard deviations higher (0.35 higher to 1.01 higher). | ‐ | 154 (2 RCTs) | ⨁◯◯◯ Very low (a, b, c) | SMD of 0.68 represents a medium effect size (Cohen 1988). |
| Knowledge of core concepts in child abuse and neglect (child sexual abuse only). Measured by: professionals' self‐reported knowledge of child sexual abuse (specific measure). Time of outcome assessment: short term (postintervention) | ‐ | The mean knowledge score in the training group was, on average, 1.44 standard deviations higher (0.43 higher to 2.45 higher). | ‐ | 238 (3 RCTs) | ⨁◯◯◯ Very low (a, b, c, d) | SMD of 1.44 represents a large effect size (Cohen 1988). |
| Skill in distinguishing between reportable and non‐reportable child abuse and neglect cases. Measured by: professionals' performance on simulated cases scored by a trained and blinded expert panel. Time of outcome assessment: short term (postintervention) | ‐ | The mean skill score in the training group was, on average, 0.94 standard deviations higher (0.11 higher to 1.77 higher). | ‐ | 25 (1 RCT) | ⨁◯◯◯ Very low (a, b, c) | SMD of 0.94 represents a large effect size (Cohen 1988). |
| Attitudes toward the duty to report child abuse and neglect. Measured by: professionals' self‐reported attitudes towards the duty to report child abuse and neglect. Time of outcome assessment: short term (postintervention) | ‐ | The mean attitude score in the training group was, on average, 0.61 standard deviations higher (0.47 higher to 0.76 higher). | ‐ | 741 (1 RCT) | ⨁◯◯◯ Very low (a, b, c) | SMD of 0.61 represents a medium effect size (Cohen 1988). |

*The risk in the intervention group (and its 95% CI) is based on the assumed risk in the comparison group and the relative effect of the intervention (and its 95% CI). CI: confidence interval; RCT: randomised controlled trial; SMD: standardised mean difference

GRADE Working Group grades of evidence

High certainty: we are very confident that the true effect lies close to that of the estimate of the effect.

Moderate certainty: we are moderately confident in the effect estimate: the true effect is likely to be close to the estimate of the effect, but there is a possibility that it is substantially different.

Low certainty: our confidence in the effect estimate is limited: the true effect may be substantially different from the estimate of the effect.

Very low certainty: we have very little confidence in the effect estimate: the true effect is likely to be substantially different from the estimate of effect.

(a) Downgraded by one level due to high risk of bias for multiple risk of bias domains.

(b) Downgraded by one level due to indirectness (one or both of the following reasons: (1) single or limited number of studies, thereby restricting the evidence in terms of intervention, population, and comparators; (2) outcome not a direct measure of reporting behaviour by professionals).

(c) Downgraded by one level due to imprecision (one or both of the following reasons: (1) CI includes small‐sized effect; (2) small sample size).

(d) Although the certainty of evidence can be downgraded by at most three levels, it is important to note that there was significant heterogeneity of the effect for this outcome (i.e. inconsistency), which also affects the certainty of the evidence.
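The certainty ratings above follow GRADE's level arithmetic: evidence from randomised trials starts at high certainty, each serious concern (risk of bias, indirectness, imprecision, inconsistency) lowers it by one level (very serious concerns can lower it by two), and very low is the floor. The sketch below assumes one level per concern and no upgrading; `grade_certainty` is a hypothetical helper, not part of any GRADE tool.

```python
LEVELS = ("very low", "low", "moderate", "high")


def grade_certainty(downgrades: int, start: str = "high") -> str:
    """Certainty after subtracting downgrade levels, floored at 'very low'.

    Assumes randomised evidence starts at 'high' and ignores upgrading.
    """
    index = LEVELS.index(start) - downgrades
    return LEVELS[max(index, 0)]


print(grade_certainty(2))  # two concerns, as for knowledge of reporting duty: "low"
print(grade_certainty(4))  # a fourth concern cannot go below "very low"
```

This reproduces the pattern in the table: two downgrades give low certainty, three give very low, and additional concerns such as heterogeneity cannot lower the rating further.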

Background

Description of the condition

Child abuse and neglect

Child abuse and neglect is a broad construct including physical abuse, sexual abuse, psychological or emotional abuse, and neglect. Exposure to domestic violence is increasingly considered to be a fifth domain (Kimber 2018). Most child abuse and neglect occurs in private, is inflicted or caused by parents and caregivers, and does not become known to government authorities or helping agencies. Except for sexual abuse, younger children (aged one year and under) are the most vulnerable of all children to be abused and neglected (US DHHS 2021). Whilst its true extent is unknown, child abuse and neglect is a well‐established problem worldwide (Hillis 2016; Pinheiro 2006). Numerous prevalence studies have established that the various forms of child abuse and neglect are very widespread, although some forms of abuse and neglect are more common than others (Almuneef 2018; Chiang 2016; Cuartas 2019; Finkelhor 2010; Lev‐Weisel 2018; Nguyen 2019; Nikolaidis 2018; Radford 2012; Stoltenborgh 2011; Stoltenborgh 2012; Stoltenborgh 2015; Ward 2018).

The adverse effects of child abuse and neglect are significant and can endure throughout a person's life. The most serious consequence is child fatality, with an estimated 155,000 deaths globally per annum (WHO 2006). Other effects include: physical injuries; failure to thrive; impaired social, emotional, and behavioural development; reduced reading ability and perceptual reasoning; depression; anxiety; post‐traumatic stress disorder; low self‐image; alcohol and drug use; aggression; delinquency; long‐term deficits in educational achievement; and adverse effects on employment and economic status (Bellis 2019; Egeland 2009; Gilbert 2009; Hildyard 2002; Hughes 2017; Landsford 2002; Maguire‐Jack 2015; Norman 2012; Paolucci 2001; Taillieu 2016). Coping mechanisms used to deal with the trauma, such as alcohol and drug use, can compound adverse health outcomes, and chronic stress can cause coronary artery disease and inflammation (Danese 2009; Danese 2012). There is some evidence suggesting that child abuse and neglect affects brain development and produces epigenetic neurobiological changes (Moffitt 2013; Nelson 2020; Shalev 2013; Tiecher 2016). For society, effects include lost productivity and cost to child welfare systems (Currie 2010; Fang 2012; Fang 2015), and intergenerational victimisation (Draper 2008). In the USA, the total lifetime economic burden of one year's new cases has been estimated at USD 124 billion, based on an average lifetime cost per non‐fatal case of USD 210,012 (Fang 2012).

Although perceptions of what does and does not constitute child abuse and neglect vary somewhat across cultures (Finkelhor 1988; Korbin 1979), a consensus about its parameters has emerged in recent decades, especially for child sexual abuse (Mathews 2019), physical abuse (WHO 2006), emotional abuse (Glaser 2011), and neglect (Dubowitz 2007). This is reflected in criminal prohibitions on this conduct across low‐, middle‐, and high‐income countries, and scholarly research addressing the contribution of structural inequalities in societies to child maltreatment (Bywaters 2019; Finkelhor 1988). Global legal and policy norms recognise the main domains of child abuse and neglect and require substantial efforts to identify and respond to them. The Convention on the Rights of the Child has been almost universally ratified, and article 19 embeds children's right to be free from abuse and neglect (United Nations 1989). It requires States Parties to take all appropriate legislative, administrative, social, and educational measures to protect the child from all forms of maltreatment, and to include effective procedures for the identification and reporting of maltreatment. Similarly, the universal Sustainable Development Goals urge all nations to eradicate child maltreatment, with Target 16.2 aiming to end child abuse and requiring governments to report their efforts (United Nations 2015).

Professionals' reporting of child abuse and neglect

To identify child abuse and neglect, and to enable early intervention to assist children and their families, many nations' governments require members of specified professional groups to report known or suspected cases of significant child abuse and neglect (Mathews 2008a). The duty to report is usually conferred on professionals who encounter children frequently in their daily work, such as teachers, nurses, doctors, and law enforcement (Mathews 2008b). In some jurisdictions and for some categories of professionals, reporting duties have been enacted in child protection legislation (called 'mandatory reporting laws'), but in others, reporting duties are ascribed solely in organisational policies. Although differences exist across jurisdictions and professions with respect to some features of reporting duties (e.g. in stating which types of abuse and neglect must be reported), there is also consistency in the essential nature of reporting duties (e.g. in always requiring reports of child sexual abuse; and in activating the reporting duty when the reporter has a reasonable suspicion the abuse has occurred, rather than requiring knowledge or evidence) (Mathews 2008a). These differences and similarities also determine key dimensions of child protection training for professionals in different contexts.

Studies have found that professionals who are required to report child abuse and neglect consider that they have not had sufficient training to fulfil their role (Abrahams 1992; Christian 2008; Hawkins 2001; Kenny 2001; Kenny 2004; Mathews 2011; Reiniger 1995; Starling 2009; Walsh 2008). Research has also found low levels of knowledge about both the nature of the reporting duty (Beck 1994; Mathews 2009) and indicators of abuse and neglect (Hinson 2000), and that professionals may hold attitudes which are not conducive to reporting (Feng 2005; Jones 2008; Kalichman 1993; Mathews 2009; Zellman 1990). Effective reporting is thought to be influenced by several factors, including higher levels of knowledge of the reporting duty (Crenshaw 1995; Kenny 2004), ability to recognise abuse (Crenshaw 1995; Goebbels 2008; Hawkins 2001), and positive attitudes towards the duty (Fraser 2010; Goebbels 2008; Hawkins 2001).

Improved reporting offers the prospect of enhanced detection of child abuse and neglect (Mathews 2016), provision of interventions and redress for victims (Kohl 2009), and engagement with parents and caregivers to establish supportive measures (Drake 1996; Drake 2007). In this way, improved reporting is an essential part of a public health response to child abuse and neglect, which requires both tertiary and secondary prevention as well as primary prevention, and the full participation of communities and organisations (McMahon 1999). Improved reporting by professionals should also diminish clearly unnecessary reports and avoid the wasting of scarce government resources and unwarranted distress to families (Ainsworth 2006; Calheiros 2016). In addition, effective child protection training for professionals should help them develop a greater understanding of the legal protections conferred on professional reporters themselves, and avoid potential legal liability and professional discipline for non‐compliance. At its best, child protection training could also enhance professional ethical identities and contribute to broader workforce professionalisation.

Description of the intervention

In this review, child protection training for professionals is defined as education or training undertaken postqualification, after initial professional qualifications have been awarded, as a form of continuing or ongoing professional education or development. Child protection training interventions that are the subject of this review aim to improve reporting of child abuse and neglect to statutory child protection authorities by professionals who are required by law or policy to do so. Improving reporting is conceptualised as increasing the reporting of cases where abuse or neglect exists or can reasonably be thought to exist; and decreasing the reporting of cases where there are insufficient grounds upon which to make a report and where reporting is unnecessary or unwarranted.

Different approaches may be taken in training professionals to improve reporting of child abuse and neglect. Child protection training may focus on increasing knowledge and awareness of the indicators of each type of abuse and neglect, the nature of the reporting duty, and reporting procedures. Training may also focus on enhancing reporters' attitudes towards the reporting duty or to child protection generally. Training may vary in duration (Donohue 2002; Hazzard 1983), be implemented in a range of different formats (e.g. single sessions through to extended multisession courses), and target different skill levels (e.g. basic through to advanced) (Walsh 2019). Different delivery methods may be adopted, for example online, face‐to‐face, or blended learning modes (Kenny 2001; McGrath 1987).

How the intervention might work

Viewed as an application of adult learning (Knowles 2011), child protection training for professionals is an educational intervention through which professionals develop knowledge, skills, attitudes, and behaviours. By raising awareness, providing information and resources, developing skills and strategies, and fostering dispositions, training may change professionals' ability and willingness to engage in decision‐making processes that lead to improved reporting. There is some evidence to suggest that, for some categories of professionals and for some types of abuse, exposure to training is associated with effective reporting (Fraser 2010; Walsh 2012a), self‐reported preparedness to report (Fraser 2010), confidence identifying abuse (Hawkins 2001), and awareness of reporting responsibilities (Hawkins 2001). Some studies have indicated that lack of adequate training is associated with low awareness of the reporting duty (Hawkins 2001), low preparedness to report (Kenny 2001), low self‐reported confidence identifying child abuse (Hawkins 2001; Mathews 2008b; Mathews 2011), and low knowledge of indicators of abuse (Mathews 2011). However, the literature has not been synthesised, and the specific components of training that are responsible for improving reporting are not yet known.

Why it is important to do this review

Child abuse and neglect results in significant costs for children, families, and communities. As a core public health strategy, many professional groups are required by law and policy in many jurisdictions to report suspected cases. Numerous different training initiatives appear to have been developed and implemented for professionals, but there is little evidence regarding their effectiveness in improving reporting of child abuse and neglect, whether generally, for specific professions, or for distinct types of child abuse and neglect. To enhance reporting practice, designers of training programmes require detailed information about what programme features will offer the greatest benefit. A systematic review that identifies the effectiveness of different training approaches will advance the evidence base and develop a clearer understanding of optimal training content and methods. In addition, it will provide policymakers with a means by which to assess whether current training interventions are congruent with what is likely to be effective.

Objectives

To assess the effectiveness of training aimed at improving reporting of child abuse and neglect by professionals and to investigate possible components of effective training interventions.

Methods

Criteria for considering studies for this review

Types of studies

Randomised controlled trials (RCTs), quasi‐RCTs (i.e. studies in which participants are assigned to intervention or comparison or control groups using a quasi‐randomised method such as allocation by date of birth, or similar methods), and controlled before‐and‐after (CBA) studies (i.e. studies where participants are allocated to intervention and control groups by means other than randomisation). We included CBA studies because studies of educational interventions are often conducted in settings where truly randomised trials may not be feasible, for example in the course of a training series where enrolment decisions are based on group availability or logistics.

When deciding on included studies we used explicit study design features rather than study design labels. We followed the guidance on how to assess and report on non‐randomised studies in the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2022a; Reeves 2022; Sterne 2022).

Types of participants

Studies that involved qualified professionals who are typically required by law or organisational policy to report child abuse and neglect (e.g. teachers, nurses, doctors, and police/law enforcement).

Types of interventions

Included

Child protection training interventions aimed explicitly at improving reporting of child abuse and neglect by qualified professionals, irrespective of programme type, mode, content, duration, intensity, and delivery context. These interventions were compared with no training, waitlist control, or alternative training not related to child abuse and neglect (e.g. cultural sensitivity training).

Excluded

We excluded training interventions in which improving professionals' reporting of child abuse and neglect was a minor training focus, such as brief professional induction or orientation programmes targeting a broad range of employment responsibilities in which it would not be possible to isolate the specific intervention effects for a child protection training component (e.g. training for interagency working). We excluded child protection training conducted before professional qualifications were awarded (e.g. as part of undergraduate college or university professional preparation programmes in initial teacher education, pre‐service education for nurses, or entry‐level medical education).

Types of outcome measures

We included studies assessing the primary and secondary outcomes listed below. We excluded studies that did not set out to measure any of these outcomes.

Primary outcomes

1. Number of reported cases of child abuse and neglect:

a. as measured subjectively by participant self‐reports of actual cases reported;

b. as measured subjectively by participant responses to vignettes; and

c. as measured objectively in official records of reports made to child protection authorities.

2. Quality of reported cases of child abuse and neglect, as measured via coding of the actual contents of reports made to child protection authorities (i.e. in government records or archives).

3. Adverse events, such as:

a. increase in failure to report cases of child abuse and neglect that warrant a report as measured subjectively by participant self‐reports (i.e. in questionnaires); and

b. increase in reporting of cases that do not warrant a report as measured subjectively by participant self‐reports (i.e. in questionnaires).

We note that findings from studies using official records (i.e. primary outcome 1c), such as the number of reports made and the number of reports substantiated after investigation, as indicators of training outcomes must be interpreted with caution. Although objective, official records cannot capture all types of reporting behaviour; for example, they do not record non‐reporting, in which a professional fails to report a case that should have been reported. Official records must also be interpreted within the context and purpose of training, for example if training was introduced in response to recommendations from a public inquiry, or if training was used to encourage or discourage specific types of reports, or both.

Secondary outcomes

Knowledge of the reporting duty, processes, and procedures.

Knowledge of core concepts in child abuse and neglect such as the nature, extent, and indicators of the different types of abuse and neglect.

Skill in distinguishing cases that should be reported from those that should not.

Attitudes towards the duty to report child abuse and neglect.

Timing of outcome assessment

We classified primary and secondary outcomes using three time periods: short‐term outcomes (assessed immediately after the training intervention and up to 12 months after); medium‐term outcomes (assessed between one and three years after the training intervention); and long‐term outcomes (assessed more than three years after the training intervention).

Search methods for identification of studies

We used the MEDLINE strategy from our protocol and adapted it for other databases (Mathews 2015). The first round of searches for the review was conducted in December 2016, with search updates in January 2017 and December 2018. When we came to update the searches in 2020, we noticed that errors had been made in earlier searches. We corrected the errors and re‐ran all searches in all databases up to June 2021. We de‐duplicated these records against records from previous searches and removed records that had already been screened. We did not apply any date or language restrictions, and sought translation for papers published in languages other than English.

We recorded data for each search in a Microsoft Excel spreadsheet (Microsoft Corporation 2018), including: date of the search, database and platform, exact search syntax, number of search results, and any modifications to search strategies to accommodate variations in search functionalities for specific databases. The results for each search were exported as RIS files and stored in EndNote X8.0.1 (EndNote 2018), with a folder for each searched database. Search strategies and specific search dates are shown in Appendix 1. Changes to the planned search methods in our review protocol, Mathews 2015, are detailed in the Differences between protocol and review section.

Electronic searches

We searched the following databases.

Cochrane Central Register of Controlled Trials (CENTRAL; 2021, Issue 6), in the Cochrane Library (searched 11 June 2021).

Cochrane Database of Systematic Reviews (CDSR; 2021, Issue 6), in the Cochrane Library (searched 11 June 2021).

Ovid MEDLINE (1946 to 4 June 2021).

Embase.com Elsevier (1966 to 11 June 2021).

CINAHL (Cumulative Index to Nursing and Allied Health Literature) EBSCOhost (1981 to 4 June 2021).

ERIC EBSCOhost (1966 to 4 June 2021).

PsycINFO EBSCOhost (1966 to 4 June 2021).

Social Services Abstracts via ProQuest Research Library (1966 to 18 June 2021).

Science Direct Elsevier (1966 to 4 June 2021).

Sociological Abstracts via ProQuest Research Library (1952 to 18 June 2021).

ProQuest Psychology Journals via ProQuest Research Library (1966 to 11 June 2021).

ProQuest Social Science via ProQuest Research Library (1966 to 23 July 2021).

ProQuest Dissertations and Theses via ProQuest Research Library (1997 to 23 July 2021).

LexisNexis Lexis.com (1980 to 19 December 2018).

LegalTrac GALE (1980 to 19 December 2018).

Westlaw International Thomson Reuters (1980 to 19 December 2018).

Conference Proceedings Citation Index – Social Science & Humanities (Web of Science; Clarivate) (1990 to 11 June 2021).

Violence and Abuse Abstracts (EBSCOhost) (1971 to 4 June 2021).

EducationSource (EBSCOhost) (1880 to 4 June 2021).

LILACS (Latin American and Caribbean Health Science Information database) (lilacs.bvsalud.org/en/) (2003 to 11 June 2021).

World Health Organization International Clinical Trials Registry Platform (WHO ICTRP; trialsearch.who.int; searched 2000 to 11 June 2021).

OpenGrey (opengrey.eu/; searched 27 May 2019).

Searching other resources

We carried out additional searches to identify studies not captured by searching the databases listed above. We handsearched the following journals.

Child Maltreatment (2 July 2021).

Child Abuse and Neglect (2 July 2021).

Children and Youth Services Review (2 July 2021).

Trauma, Violence and Abuse (2 July 2021).

Child Abuse Review (2 July 2021).

We also searched the following key websites for additional studies.

International Society for Prevention of Child Abuse and Neglect via ispcan.org/ (2 July 2021).

US Department of Health and Human Services Children’s Bureau, Child Welfare Information Gateway via childwelfare.gov/ (2 July 2021).

Promising Practices Network operated by the RAND Corporation via promisingpractices.net/ (21 March 2019).

National Resource Center for Community‐Based Child Abuse Prevention (CBCAPP) via friendsnrc.org/ (2 July 2021).

California Evidence‐Based Clearinghouse for Child Welfare (CEBC) via cebc4cw.org/ (2 July 2021).

Coalition for Evidence‐Based Policy via coalition4evidence.org/ (21 March 2019).

Institute of Education Sciences What Works Clearinghouse via ies.ed.gov/ncee/wwc/ (2 July 2021).

National Institute for Health and Care Excellence (NICE) UK via nice.org.uk/ (9 July 2021).

Finally, we harvested the reference lists of included studies to identify further potential studies. Although our review protocol prescribed contacting key researchers in the field for unpublished studies, we did not do so. Instead, we circulated requests for relevant studies via email to the Child‐Maltreatment‐Research‐Listserv, a moderated electronic mailing list with over 1500 subscribers, as this offered the possibility of reaching a far larger number of researchers (Walsh 2018 [pers comm]).

Data collection and analysis

We conducted data collection and analysis following our published protocol (Mathews 2015), and in accordance with the guidance in the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011; Higgins 2022a). In the following sections, we have reported only those methods that were used in this review. Preplanned but unused methods are reported in Appendix 2.

Selection of studies

We used SysReview review management software for title and abstract and full‐text screening (Higginson 2014). Search results were imported from EndNote into SysReview and duplicates removed prior to title and abstract screening. Each title and abstract was screened by at least two review authors working independently to determine eligibility according to the inclusion and exclusion criteria. During title and abstract screening, screeners (KW, EE, LH, BM, NA, ED, EP) assessed whether each record was: (i) an eligible document type (e.g. not a book review); (ii) a unique document (i.e. not an undetected duplicate); (iii) about child protection training; and (iv) a study conducted with professionals. A third screener (KW or EE) resolved any conflicts in screening decisions. Titles and abstracts published in languages other than English were translated into English using Google Translate.

Three review authors (KW, EE, LH) working independently screened the full texts of potentially eligible studies against the inclusion criteria as described in Criteria for considering studies for this review. Any discrepancies were resolved by discussion with a third review author who had not previously screened the record (BM, MK, EE, KW) until consensus was reached. As authors of potentially included studies, BM and MK were excluded from decisions on studies for which they were authors.

We documented the primary reasons for exclusion of each excluded record. To determine eligibility for studies published in languages other than English, we translated studies into English using Google Translate. We contacted study authors to request missing information if there was insufficient information to determine eligibility.

We identified and linked together multiple reports on the same study so that each study, rather than each report, was the principal unit of interest (e.g. Hazzard 1984; Palusci 1995). We listed studies that were close to meeting the eligibility criteria but were excluded at the full‐text screening or data extraction stages, along with the primary reasons for their exclusion, in the Characteristics of excluded studies table. We recorded our study selection decisions in a PRISMA flow diagram (Moher 2009).

Data extraction and management

We used SysReview review management software for data extraction and management (Higginson 2014). We developed and pilot‐tested a data extraction template based on the checklist of items in the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011, Table 7.3a; Li 2022, Table 5.3a), the PRISMA minimum standards (Liberati 2009), and the Template for Intervention Description and Replication (TIDieR) checklist and guide (Hoffmann 2014). We extracted data from study reports concerning details of:

study general information: title identifier, full citation, study name, document type, how located, country, ethical approval, funding;

study design and methods: research design, comparison condition, unit of allocation, randomisation (and details on how this was implemented), baseline assessment (including whether intervention and comparison conditions were equivalent at baseline), unit of analysis, adjustment for clustering;

participant characteristics: participants, recruitment, eligibility criteria, number randomised, number consented, number began (intervention and control groups), number completed (intervention and control groups), number T1, T2, T3 (etc.), age (mean, standard deviation (SD), range) (baseline, intervention and control groups), gender (% female) (baseline, intervention and control groups), ethnicity (intervention and control groups), socio‐economic status (intervention and control groups), years of experience, previous child protection training, previous experience with child maltreatment reporting, any other information;

intervention characteristics: name of intervention, setting, delivery mode, contents and topics, methods and processes, duration, intensity, trainers and qualifications, integrity monitoring, fidelity issues; and

outcome measures: primary outcomes, secondary outcomes, other outcomes.

As authors of potentially included studies, BM and MK were not involved in data extraction. Data were extracted from each study and entered into SysReview by at least two review authors (EE, LH, KW) working independently. A third review author (KW) also extracted data on intervention and outcome characteristics and prepared the Characteristics of included studies tables. Any discrepancies between review authors were resolved through discussion.

Assessment of risk of bias in included studies

Our study protocol, Mathews 2015, was designed prior to the introduction of the Risk Of Bias In Non‐randomized Studies of Interventions (ROBINS‐I) tool (Sterne 2016), and predated new guidance for assessing risk of bias in non‐randomised studies provided in Chapter 25 of the Cochrane Handbook for Systematic Reviews of Interventions (Sterne 2022). As planned in our protocol (Mathews 2015), we used the original Cochrane risk of bias tool (Higgins 2011, Table 8.5a), which has seven domains: (i) sequence generation; (ii) allocation concealment; (iii) blinding of participants and personnel; (iv) blinding of outcome assessment; (v) incomplete outcome data; (vi) selective reporting; and (vii) other sources of bias. In our protocol, we added three domains: (viii) reliability of outcome measures, as we anticipated that some studies may have used custom‐made instruments and scales; (ix) group comparability; and (x) contamination. This approach corresponds with the 'Suggested risk of bias criteria for EPOC reviews' from Cochrane Effective Practice and Organisation of Care (EPOC 2017).

One review author (EE) incorporated the above 10 domains into a module within SysReview. Three review authors (KW, EE, LH), working independently, assessed risk of bias of the included studies. Assessors were not blinded to the names of the authors, institutions, journals, or study results. Where possible, we extracted verbatim text from the study reports as support for risk of bias judgements, resolving any disagreements by discussion. For studies where essential information to assess risk of bias was not available, we planned to contact study authors with a request for missing information, but this was not needed. We entered the information first into SysReview and then into Review Manager 5 (Review Manager 2020), and summarised findings in the risk of bias tables for each included study. We generated two summary figures: a risk of bias summary showing the judgement for each domain in each included study, and a risk of bias graph showing the proportion of studies at each level of risk of bias for each domain. We planned to conduct sensitivity analyses for each outcome to determine how results might be affected by our inclusion or exclusion of studies at high risk of bias; however, this was not possible owing to the small number of studies with data available for meta‐analyses.

For each included study, we scored the relevant risk of bias domains as 'low', 'high', or 'unclear' risk of bias. We made judgements by answering 'yes' (scored as low risk of bias), 'no' (scored as high risk of bias), or 'unclear' (scored as unclear risk of bias) to a prespecified question for each domain as detailed in Appendix 3, with reference to the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011, Table 8.5b) and the 'Suggested risk of bias criteria for EPOC reviews' (EPOC 2017).

Measures of treatment effect

We calculated intervention effects using Cochrane software RevMan Web (RevMan Web 2021).

Continuous data

All eligible outcomes in all of the included studies were measured on continuous scales, most of which were slightly different from each other. For continuous outcomes, we extracted postintervention means and SDs and summarised study effects using standardised mean differences (SMDs) and 95% confidence intervals (CI), to account for scale differences in the meta‐analyses.
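For illustration, an SMD and its 95% CI can be computed from postintervention means, SDs, and group sizes as sketched below. This is a minimal sketch, not the RevMan Web implementation; it assumes the Hedges' adjusted g form of the SMD and its approximate standard error as described in the Cochrane Handbook, and the function names and example values are hypothetical.

```python
import math


def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Return (g, se) for intervention (group 1) versus control (group 2).

    Sketch of Hedges' adjusted g using postintervention summary statistics.
    """
    n_total = n1 + n2
    # Pooled within-group standard deviation across both arms
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n_total - 2))
    # Small-sample correction factor applied to the raw standardised difference
    correction = 1 - 3 / (4 * n_total - 9)
    g = (mean1 - mean2) / pooled_sd * correction
    # Approximate standard error of g
    se = math.sqrt(n_total / (n1 * n2) + g**2 / (2 * (n_total - 3.94)))
    return g, se


def ci95(estimate, se):
    """Normal-approximation 95% confidence interval."""
    return estimate - 1.96 * se, estimate + 1.96 * se
```

With hypothetical values, `hedges_g(10.0, 2.0, 20, 8.0, 2.0, 20)` gives g of about 0.98 with a standard error of about 0.34. Because the outcome is divided by the pooled SD, studies using different knowledge or attitude scales are placed on a common, unit‐free metric.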

Unit of analysis issues

Cluster‐randomised trials

Cluster‐randomised trials are widespread in the evaluation of healthcare and educational interventions (Donner 2002), but are often poorly reported (Campbell 2004). Adjusting for clustering in analyses is important because ignoring it risks overestimating the treatment effect or underestimating its variance (or both), and thereby overestimating the weight given to the study in meta‐analyses (Hedges 2015; Higgins 2022b).

Congruent with our protocol (Mathews 2015), we planned that for included studies with incorrectly analysed data that did not account for clustering, we would use procedures for adjusting study sample sizes outlined in Section 16.3.4 of the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011). None of the included studies reported an intracluster correlation coefficient (ICC), nor were these available from the study authors. No published ICC for child protection training interventions for professionals could be found, so we imputed a conservative ICC of 0.20 based on reviews of ICCs for professional development interventions with teachers (ICC range 0.15 to 0.21) (Kelcey 2013), and primary care providers (ICC range 0.01 to 0.16) (Eccles 2003).

We planned to test the robustness of these assumptions in sensitivity analysis, in which we would use two extreme ICC values reported in the literature for each professional subgroup to assess the extent to which different ICC values affected the weights assigned to the included trials. We also planned to investigate whether results were similar or different for cluster and non‐cluster trials. However, due to the small number of studies included for each outcome (one to three studies), we deemed these analyses to be inappropriate. Rather, where a study with clustering was included for a given outcome, we have presented two results: one without an adjustment for clustering, and one with an adjustment for clustering (using an ICC of 0.2).
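The sample size adjustment for clustering can be sketched with the usual design effect, 1 + (M − 1) × ICC, where M is the average cluster size. This is a minimal sketch assuming that standard formula and the imputed ICC of 0.20; the cluster size and group size in the example are hypothetical and not taken from any included study.

```python
def design_effect(mean_cluster_size, icc=0.20):
    """Design effect 1 + (M - 1) * ICC; ICC defaults to the imputed value of 0.20."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n, mean_cluster_size, icc=0.20):
    """Divide a group's sample size by the design effect (continuous outcomes).

    Means and SDs are left unchanged; only the sample size shrinks, which
    widens the CI and reduces the study's weight in a meta-analysis.
    """
    return n / design_effect(mean_cluster_size, icc)


# Hypothetical trial arm: 60 teachers in schools averaging 11 teachers each
print(design_effect(11))              # 3.0
print(effective_sample_size(60, 11))  # 20.0
```

Presenting results both without this adjustment and with it (ICC 0.2) shows how sensitive a clustered study's contribution is to the assumed ICC.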

Dealing with missing data

Missing data can be in the form of missing studies, missing outcomes, missing outcome data, missing summary data, or missing participants. We did not anticipate missing studies, as our search strategy was comprehensive, and we took all reasonable steps to locate the full texts of eligible studies. Where possible, we identified missing outcomes by cross‐referencing study reports with trial registrations. For studies with missing or incomplete outcome data, or missing summary data required for effect size calculation, we contacted first‐named study authors via email to request the missing information (e.g. intervention and control group participant totals, means, SDs, ICCs).

If the data to calculate effect sizes with Review Manager Web or the RevMan Web calculator (or both) were not available in study reports or from study authors (RevMan Web 2021), we used David B Wilson's suite of effect size calculators to calculate an effect size (Wilson 2001). This was then entered directly into RevMan Web, and meta‐analyses were conducted using the generic inverse‐variance method in RevMan Web (Deeks 2022).
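In the generic inverse‐variance method, each study's effect estimate is weighted by the reciprocal of its squared standard error. The sketch below shows fixed‐effect pooling under that assumption; it is illustrative only, not RevMan Web's code, and the function name and inputs are hypothetical.

```python
import math


def fixed_effect_iv(estimates, std_errors):
    """Generic inverse-variance fixed-effect pooling.

    Each study is weighted by 1 / SE^2; returns (pooled estimate, pooled SE, 95% CI).
    """
    weights = [1 / se**2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci
```

Because only an estimate and its standard error are needed, effect sizes computed outside RevMan Web (for example, with an external calculator) can be pooled alongside those computed from means and SDs.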

Assessment of heterogeneity

We used RevMan Web to conduct our analyses according to the guidance in Section 10.3 of the Cochrane Handbook (Deeks 2022). To pool effect estimates, this software uses the inverse‐variance method for fixed‐effect meta‐analysis and, for random‐effects meta‐analysis, the DerSimonian and Laird method to estimate the between‐study variance (Deeks 2022). We used standard default options in RevMan Web to calculate the 95% CI for the overall effect sizes.

To assess the extent of variation between studies, we initially examined the distributions of relevant participant (e.g. professional discipline), delivery (e.g. classroom), and trial (e.g. type and duration of intervention) variables. Using forest plots produced in RevMan Web (RevMan Web 2021), we visually examined the CIs of individual study results, paying particular attention to poor overlap, which can be used as an informal indicator of statistical heterogeneity (Deeks 2022; Higgins 2011). Using output provided by RevMan Web (RevMan Web 2021), we examined three estimates that assess different aspects of heterogeneity, as recommended by Borenstein 2009. Firstly, as a test of the statistical significance of heterogeneity, we examined the Q statistic (Chi²) and its P value. For any observed Chi², a low P value was deemed to provide evidence of heterogeneity of intervention effects (i.e. that studies do not share a common effect size) (Deeks 2022; Higgins 2011). Secondly, we examined Tau² to provide an estimate of the magnitude of variation between studies. Thirdly, we examined the I² statistic, which describes the proportion of variability in effect estimates due to heterogeneity rather than to chance (Deeks 2022; Higgins 2011). Together, these three quantities (Chi², Tau², and the I² statistic) provide a comprehensive summary of the presence and degree of heterogeneity amongst studies and are viewed as complementary rather than mutually exclusive.

Rather than defaulting to interpretations of heterogeneity based on rules of thumb (i.e. that an I² statistic value of 30% to 60% represents moderate heterogeneity, 50% to 90% substantial heterogeneity, and 75% to 100% considerable heterogeneity), we used all three measures of heterogeneity (Chi², Tau², and the I² statistic) to fully assess and describe the aspects of variability in the data as detailed in Borenstein 2009. For example, we used Tau² or the I² statistic (or both) to assess the magnitude of true variation, and the P value for Chi² as an indicator of uncertainty regarding the genuineness of the heterogeneity (P < 0.05).
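The three heterogeneity quantities can be computed from per‐study effect estimates and standard errors as sketched below. This is an illustrative sketch, not RevMan Web's code; it assumes the standard Cochran's Q, DerSimonian and Laird Tau², and I² formulas, computes the Chi² P value from a closed‐form series so that no statistics library is needed, and uses hypothetical function names and inputs.

```python
import math


def chi2_sf(x, df):
    """Upper-tail P value of a chi-squared statistic with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{r=0}^{df/2-1} (x/2)^r / r!
        term = total = 1.0
        for r in range(1, df // 2):
            term *= (x / 2) / r
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + 2*phi(chi) * sum_{r=1}^{(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1))
    chi = math.sqrt(x)
    phi = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    term, total = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return math.erfc(chi / math.sqrt(2)) + 2 * phi * total


def heterogeneity(estimates, std_errors):
    """Return Q (Chi²), its df and P value, DerSimonian-Laird Tau², and I² (%)."""
    if len(estimates) < 2:
        raise ValueError("heterogeneity needs at least two studies")
    w = [1 / se**2 for se in std_errors]
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    # Weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)  # magnitude of between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # share beyond chance
    return q, df, chi2_sf(q, df), tau2, i2
```

For two hypothetical studies with SMDs of 0.0 and 1.0, each with a standard error of 0.5, this gives Q = 2.0 on 1 df (P of about 0.16), Tau² = 0.25, and I² = 50%, which illustrates why the three quantities are read together: a moderate I² can accompany a non‐significant Chi² when only a few studies contribute.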

Assessment of reporting biases

We assessed reporting bias in the form of selective outcome reporting as one of the domains within the risk of bias assessment.

Data synthesis

We calculated effect sizes for single studies and quantitatively synthesised multiple studies using RevMan Web (RevMan Web 2021). We first assessed the appropriateness of combining data from studies based on sufficient similarity with respect to training interventions delivered, study population characteristics, measurement tools or scales used, and summary points (i.e. outcomes measured within comparable time frames pre‐ and postintervention). We combined data for comparable professional groups (e.g. elementary and high school teachers), similar outcome measures (e.g. knowledge measures, attitude measures), and training types (i.e. online and face‐to‐face).

If studies reported means, SDs, and the number of participants by group, we entered these data directly into RevMan Web (RevMan Web 2021). If these data were not reported and could not be obtained from the study authors, we consulted David B Wilson's suite of effect size calculators to ascertain whether an effect size could be calculated (e.g. Randolph 1994 for primary outcome 1a). Where we needed to compute an effect size outside RevMan Web (RevMan Web 2021) that then had to be combined with other studies via meta‐analysis, we used the generic inverse‐variance method in RevMan Web to conduct the meta‐analysis (e.g. Dubowitz 1991 and analysis for secondary outcome 2a) (RevMan Web 2021).

If only one study had data available to calculate an effect size for a given outcome, we reported a single SMD with its 95% CI. We acknowledge that this is not standard practice and that the mean difference would normally be reported, but we adopted this strategy to maintain consistency and comparability in the presentation of the results for readers.

If there were at least two comparable studies with available data to calculate effect sizes, we performed meta‐analysis to compute pooled estimates of intervention effects for a given outcome. We reported the results of the meta‐analyses using SMDs and 95% CIs. Where we judged that studies were estimating the same underlying treatment effect, we used fixed‐effect models to combine studies. Fixed‐effect models ignore heterogeneity, but are generally interpreted as being the best estimate of the intervention effect (Deeks 2022). However, where the intervention effects are unlikely to be identical (e.g. due to slightly varying intervention models), random‐effects models can provide a more conservative estimate of effect because they do not assume that included studies estimate precisely the same intervention effect (Deeks 2022). We thus used a random‐effects meta‐analysis to combine studies where we judged that studies may not be estimating an identical treatment effect (e.g. different training curriculum).
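The practical difference between the two models can be sketched as follows: the random‐effects version adds an estimated between‐study variance (Tau², here from the DerSimonian and Laird method) to each study's variance before weighting, which evens out study weights and widens the CI. This is a minimal sketch with hypothetical inputs and a hypothetical function name, not RevMan Web's implementation.

```python
import math


def pool(estimates, std_errors, random_effects=True):
    """Inverse-variance pooling; random effects use a DerSimonian-Laird Tau².

    Returns (pooled estimate, pooled SE, Tau²); Tau² is 0 for fixed effect.
    """
    w = [1 / se**2 for se in std_errors]
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    tau2 = 0.0
    if random_effects and len(estimates) > 1:
        q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
        c = sum(w) - sum(wi**2 for wi in w) / sum(w)
        tau2 = max(0.0, (q - (len(estimates) - 1)) / c)
    # Adding Tau² to each within-study variance spreads weight more evenly
    w_star = [1 / (se**2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    pooled_se = math.sqrt(1 / sum(w_star))
    return pooled, pooled_se, tau2
```

When the studies are consistent (Tau² estimated as 0), both models give the same answer; when they disagree, the random‐effects CI is wider, which is why it is the more conservative choice where training curricula differ between studies.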

We had planned to develop a training intervention programme typology by independently coding and categorising intervention components (e.g. contents and methods) and then attempting to link specific intervention components to intervention effectiveness (Mathews 2015). However, we were unable to statistically test these proposals in subgroup analyses because there were too few studies. Instead, we provided a detailed narrative summary in the Characteristics of included studies tables.

Subgroup analysis and investigation of heterogeneity

An insufficient number of studies precluded our planned subgroup analyses. However, in future review updates these methods may be required (Appendix 2).

Sensitivity analysis

We planned several sensitivity analyses; however, these were precluded by an insufficient number of included studies. Planned methods are provided in Appendix 2.

Summary of findings and assessment of the certainty of the evidence

To provide a balanced summary of the review findings, we have presented all review findings in two summary of findings tables, one that summarises primary outcomes and adverse effects (Table 1), and one that summarises secondary outcomes (Table 2). We chose this approach as both sets of outcomes have utility for practice and research. Each table summarises the evidence for RCT and quasi‐RCT studies that compare child protection training to no training, waitlist control, or alternative training (not related to child protection). None of the studies included long‐term follow‐up, and therefore the tables present findings only for outcomes that were measured in the short term, that is immediately postintervention or within three months after the intervention. Although the review includes CBA studies, we created the summary of findings tables only for RCTs and quasi‐RCTs, and rated the certainty of the evidence only for these studies.

At least two review authors (KW, EE, LH) rated the certainty of the evidence for all primary and secondary outcomes, with no disagreements to resolve. We rated the certainty of the evidence using the GRADE approach (Guyatt 2008; Guyatt 2011; Schünemann 2013; Schünemann 2022). The GRADE system classifies the certainty of evidence into one of four categories, as follows.

High certainty: we are very confident that the true effect lies close to that of the estimate of the effect.

Moderate certainty: we are moderately confident in the effect estimate: the true effect is likely to be close to the estimate of the effect, but there is a possibility that it is substantially different.

Low certainty: our confidence in the effect estimate is limited: the true effect may be substantially different from the estimate of the effect.

Very low certainty: we have very little confidence in the effect estimate: the true effect is likely to be substantially different from the estimate of effect.

We considered the following factors when grading the certainty of evidence: study design, risk of bias, precision of effect estimates, consistency of results, directness of evidence, and magnitude of effect (Schünemann 2022). We based our decisions about whether to downgrade the certainty of the evidence on the guidance in the Cochrane Handbook (Schünemann 2022), and entered the data for each factor in the GRADEpro GDT tool to obtain the overall rating of certainty (GRADEpro GDT). We recorded the process and rationale for downgrading the certainty of the evidence in footnotes to Table 1 and Table 2.

All studies used to estimate treatment effects were RCTs or quasi‐RCTs. Each outcome began with an overall rating of high certainty and was downgraded by two or three levels. We downgraded the certainty of the evidence for all outcomes by one level due to high risk of bias and a further level due to indirectness of the evidence. We considered all findings to have concerns related to indirectness, either because the effect was estimated by a single study, thereby restricting the evidence in terms of intervention, population, and comparators; or because the outcome was not a direct measure of reporting behaviour (i.e. the primary outcome of clinical relevance). Some outcomes were downgraded a further level due to inconsistency in the results (i.e. significant heterogeneity) or imprecision (i.e. CIs that included the possibility of a small effect, or a small sample size), or both.
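The downgrading arithmetic behind these ratings can be sketched as simple bookkeeping. This sketch is an assumption‐laden illustration: it covers only downgrading (not the GRADE criteria for upgrading), it does not replace the judgements recorded in GRADEpro GDT, and the function name and domain labels are hypothetical.

```python
# Ordered GRADE certainty levels, lowest first
LEVELS = ["very low", "low", "moderate", "high"]


def grade_certainty(downgrades, start="high"):
    """Step down from the starting level by the total number of downgrades.

    `downgrades` maps a domain label to the number of levels (0, 1, or 2)
    it was downgraded; the rating cannot fall below 'very low'.
    """
    steps = sum(downgrades.values())
    return LEVELS[max(0, LEVELS.index(start) - steps)]


# Risk of bias and indirectness applied to every outcome
print(grade_certainty({"risk of bias": 1, "indirectness": 1}))  # low
# A further level for imprecision on some outcomes
print(grade_certainty({"risk of bias": 1, "indirectness": 1, "imprecision": 1}))  # very low
```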

Results

Description of studies

Results of the search

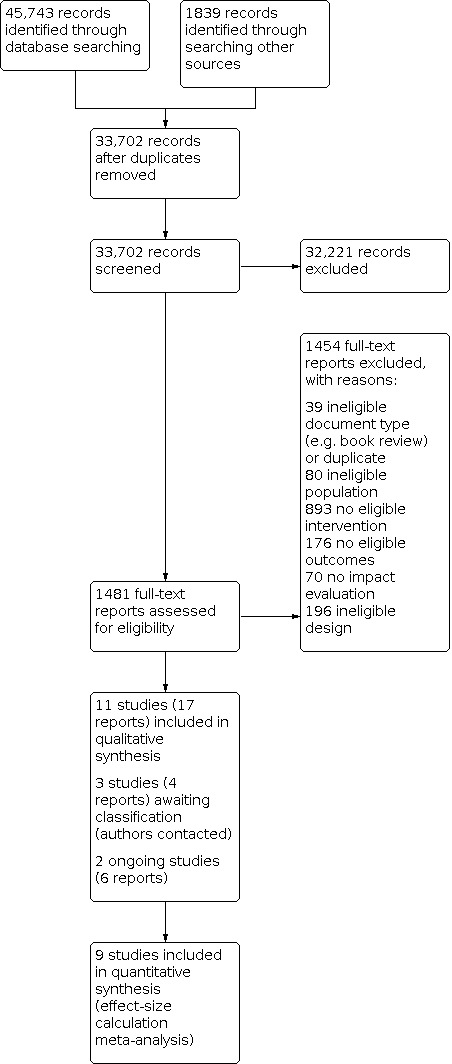

In total, we identified 45,743 records through database searching, and a further 1839 records from other sources. After duplicates were removed, we screened the titles and abstracts of 33,702 records, excluding 32,221 as irrelevant. We assessed 1481 full‐text reports against our inclusion criteria, as detailed in Criteria for considering studies for this review. We excluded 1454 of these reports with reasons, as shown in Figure 1, with 'near misses' detailed in the Characteristics of excluded studies tables. We identified two ongoing studies (Ongoing studies) and three studies awaiting classification (Studies awaiting classification).

Figure 1. Study flow diagram.

Included studies

We included 11 unique studies reported in 17 papers, as shown in the study flow diagram (Figure 1). Details for each of the 11 included studies are summarised in the Characteristics of included studies tables.

Study design

Of the 11 included studies, five were RCTs (Kleemeier 1988; Mathews 2017; McGrath 1987; Randolph 1994; Smeekens 2011). Of these, two RCTs were conducted with individual participants (Mathews 2017; Smeekens 2011), and three were conducted with participants in groups (Kleemeier 1988; McGrath 1987; Randolph 1994). Four studies were quasi‐RCTs (Alvarez 2010; Dubowitz 1991; Hazzard 1984; Kim 2019). Of these, two were conducted at the individual level (Alvarez 2010; Dubowitz 1991), and two were conducted at the group level (Hazzard 1984; Kim 2019). The remaining two studies used a CBA design (Jacobsen 1993; Palusci 1995), with one apiece conducted with individuals, Palusci 1995, and groups, Jacobsen 1993.

Location

One study was conducted in Canada (McGrath 1987), one in the Netherlands (Smeekens 2011), and the remaining nine studies were conducted in the USA.

Sample sizes

The number of participants per study ranged from 30, in Palusci 1995, to 765, in Mathews 2017. Only one study reported using a sample size calculation (Mathews 2017).

Settings

The training interventions were delivered in settings aligned with participants' workplaces. Reported settings included an urban public hospital (Palusci 1995), a university clinic (Dubowitz 1991), rural school districts (Jacobsen 1993; Randolph 1994), and a suburban school district (Kleemeier 1988). In three studies, interventions were delivered online as e‐learning modules (Kim 2019; Mathews 2017; Smeekens 2011). Specific settings were not reported in three studies (Alvarez 2010; Hazzard 1984; McGrath 1987).

Participants

Profession

The 11 studies included a total of 1484 participants, drawn from a small number of professional groups that have contact with children in their everyday work. In six studies, participants were elementary and high school teachers (Hazzard 1984; Jacobsen 1993; Kim 2019; Kleemeier 1988; McGrath 1987; Randolph 1994). In two studies, participants were doctors: paediatric residents (Dubowitz 1991) and physicians (Palusci 1995). One study each was conducted with mental health professionals (Alvarez 2010), childcare professionals (Mathews 2017), and nurses (Smeekens 2011).

One study included both professional and student participants, but did not separate outcome data by group (Alvarez 2010).

Demographic data contextually relevant to child protection training were inconsistently reported: years of professional work experience were reported in seven studies (Jacobsen 1993; Kim 2019; Kleemeier 1988; Mathews 2017; McGrath 1987; Randolph 1994; Smeekens 2011); previous experience with child maltreatment reporting in three studies (Alvarez 2010; Dubowitz 1991; Hazzard 1984); and previous child protection training in three studies (Dubowitz 1991; Hazzard 1984; Mathews 2017). Where reported, participants were relatively experienced in their professions, with mean experience ranging from 9 years, in Smeekens 2011, to 15.4 years, in McGrath 1987.

Age, gender, and ethnicity

Participants' demographic details at baseline were inconsistently reported. The authors of four studies reported the mean age of participants at baseline separately for intervention and control groups (Alvarez 2010; Jacobsen 1993; Randolph 1994; Smeekens 2011). One study reported age bracket data for intervention, control, and total participants (Mathews 2017). Four studies reported statistical assessments of baseline differences in age (Alvarez 2010; Mathews 2017; Randolph 1994; Smeekens 2011). One study reported an age range of 18 to 55+ years for all participants (Kim 2019), and another reported a median age bracket of 31 to 35 years (Hazzard 1984). Two studies reported mean ages without standard deviations (SDs), for doctors (Dubowitz 1991: 27 years) and teachers (Kleemeier 1988: 41 years). One study did not report any data on participant age (McGrath 1987).

The proportion of female participants in the included studies ranged from 44%, for doctors in Dubowitz 1991, to 97.7%, for childcare professionals in Mathews 2017. Seven studies did not report gender‐specific proportions (Alvarez 2010; Dubowitz 1991; Hazzard 1984; McGrath 1987; Palusci 1995; Randolph 1994; Smeekens 2011).

Ethnicity data were reported in only four studies, in which most participants were identified as 'White' or 'Caucasian': 70% (Jacobsen 1993), 75% (Kleemeier 1988), 84.2% (Mathews 2017), and 97.5% (Kim 2019). A minority of participants were Hispanic, African‐American, or Asian.

Interventions

Intervention conditions

The 11 trials examined the effectiveness of 11 distinct but comparable interventions: child maltreatment reporting workshop (Alvarez 2010); child maltreatment course (Dubowitz 1991); one‐day training workshop on child abuse (Hazzard 1984); teacher training workshop (Kleemeier 1988); three‐hour inservice training on child sexual abuse adapted from Kleemeier 1988 (Jacobsen 1993); teacher awareness programme (McGrath 1987); child sexual abuse prevention teacher training workshop (Randolph 1994); interdisciplinary team‐based training (Palusci 1995); the Next Page (Smeekens 2011); iLookOut for Child Abuse (Mathews 2017); and Committee for Children Second Step Child Protection Unit (Kim 2019). All were education and training interventions aimed at building the capacity of postqualifying child‐serving professionals to protect children from harm by exposing them to a series of intentional learning experiences.

In eight of the 11 trials, interventions were delivered in face‐to‐face workshops or seminars, whilst in the remaining three trials, interventions were delivered as self‐paced e‐learning modules (Kim 2019; Mathews 2017; Smeekens 2011).

Contents or topics covered

All trials reported contents or topics covered in the training interventions. The most common topics included: indicators of child abuse and neglect; definitions and types of child abuse and neglect; reporting laws, policies, and ethics; how to make a report; incidence or prevalence, or both; and concerns, fears, myths, and misconceptions. Fewer interventions addressed aetiology (Dubowitz 1991; Kleemeier 1988), effects (Jacobsen 1993; Kleemeier 1988), responding to disclosures (Jacobsen 1993; Kim 2019; Randolph 1994), or community resources and referrals (Kleemeier 1988).

Training interventions for teachers were more likely to cover primary prevention, that is, strategies for preventing child abuse and neglect before it occurs or preventing its recurrence (Jacobsen 1993; Kim 2019; Kleemeier 1988). Training for doctors, nurses, and mental health professionals tended to emphasise evaluation and diagnosis, communicating with children, and interviewing caregivers (Alvarez 2010; Dubowitz 1991; Palusci 1995; Smeekens 2011).

In three studies, all of which evaluated e‐learning interventions, the study authors explained elements of the underlying programme theory. In iLookOut, training was conceptualised as having two key dimensions designed to enhance participants' cognitive and affective attributes for reporting child maltreatment (Mathews 2017). In the Next Page, content was built around three dimensions: recognition, responding (acting), and communicating (Smeekens 2011). In Second Step Child Protection Unit, the staff training component was part of a broader 'whole school' approach to preventing child sexual abuse, which addressed multiple features of the school ecology: school policies and procedures, staff training, student lessons, and family education (Kim 2019).

Teaching methods, strategies, or processes

Teaching methods, strategies, or processes used in intervention delivery were reported in nine of the 11 trials; two trials provided no information (Kim 2019; McGrath 1987). The most common methods were films or videos, modelling via observation of clinicians, experiential exercises, and role‐plays. The two more recent e‐learning interventions also used case simulations (Mathews 2017; Smeekens 2011). These methods aimed to provide insight into real‐life situations in which child abuse and neglect would be encountered, along with opportunities to observe experienced practitioners at work, to practise, and to receive feedback. Some interventions also included question‐and‐answer sessions with experts (Hazzard 1984; Kleemeier 1988; Randolph 1994). Didactic presentations with group discussion were common; reading tasks, Dubowitz 1991, and written activities, Randolph 1994, were less so. E‐learning modules offered opportunities for interactive elements, including animations, Smeekens 2011, and filmmaking techniques designed to activate empathy for victims, Mathews 2017. For example, iLookOut's e‐learning modules have an “interactive, video‐based storyline with films shot in point‐of‐view (i.e. the camera functioning as the learner’s eyes) ... as key events unfold through interactions involving children, parents, and co‐workers (all played by actors), the learner had to decide how to best respond” (Mathews 2017, p 19).

The duration and intensity of interventions in the included trials ranged from a single two‐hour workshop (Alvarez 2010; McGrath 1987) to six 90‐minute seminars conducted over one month (Dubowitz 1991). A six‐hour workshop for teachers, first reported in Hazzard 1984, was also used in Kleemeier 1988 and was then adapted into a three‐hour workshop by Jacobsen 1993. Randolph 1994 spread similar content over three two‐hour sessions. The e‐learning interventions used in three studies offered the advantage of self‐paced learning within a specified window of availability, but this made training length difficult to specify (Kim 2019; Mathews 2017; Smeekens 2011).

The interventions were developed and delivered by specialist facilitators (Alvarez 2010; Jacobsen 1993; McGrath 1987), content area experts (Hazzard 1984; Kim 2019; Kleemeier 1988; Mathews 2017; Randolph 1994), and interdisciplinary teams (Dubowitz 1991; Palusci 1995; Smeekens 2011).

Control conditions

In one trial, the comparison condition was an alternative training (a cultural sensitivity workshop), which the study authors explained was chosen for its appeal in recruiting participants seeking continuing education credits (Alvarez 2010, p 213). In four studies, the training intervention group was compared with a waitlist control group (Kim 2019; Mathews 2017; McGrath 1987; Randolph 1994), and in five studies the comparison condition was no training (Dubowitz 1991; Hazzard 1984; Kleemeier 1988; Palusci 1995; Smeekens 2011). One study did not report the comparison condition (Jacobsen 1993).

Unit of analysis issues

In many of the included studies, individuals were allocated to intervention or control conditions by workplace group (e.g. all teachers in entire schools, all paediatricians on clinic rotations), thus forming clusters. None of the studies conducted at group level were labelled as clustered studies by their authors, and none analysed data using statistical methods that account for similarities amongst participants in the same cluster. In some studies, all participants in a cluster (e.g. a school) were allocated to one condition (e.g. Hazzard 1984; Kim 2019). In other studies, clustered data arose from allocating several participants from the same workplace to one condition (e.g. Alvarez 2010; Kleemeier 1988). We addressed these unit of analysis issues in our reporting in the Effects of interventions section.
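Because none of the group‐level studies adjusted for clustering, it may help to see the arithmetic behind one common correction described in the Cochrane Handbook: dividing each arm's sample size by the design effect, 1 + (M − 1) × ICC, where M is the mean cluster size and ICC is the intracluster correlation coefficient. The Python sketch below illustrates that calculation only; it is not necessarily the method used in this review, the function names are hypothetical, and the cluster size and ICC in the example are assumed values, not data from the included studies.

```python
import math


def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Variance inflation from allocating clusters: 1 + (M - 1) * ICC."""
    if mean_cluster_size < 1:
        raise ValueError("mean cluster size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("ICC must lie between 0 and 1")
    return 1.0 + (mean_cluster_size - 1.0) * icc


def effective_sample_size(n: int, mean_cluster_size: float, icc: float) -> int:
    """Divide one arm's sample size by the design effect.

    Rounding down is a conservative choice made for this sketch only.
    """
    return math.floor(n / design_effect(mean_cluster_size, icc))


# Hypothetical arm: 120 teachers allocated in schools of 20, assumed ICC of 0.05.
print(design_effect(20, 0.05))               # about 1.95
print(effective_sample_size(120, 20, 0.05))  # -> 61
```

With these assumed values, an arm of 120 clustered participants carries roughly the statistical information of 61 independently allocated participants; when the ICC is 0 or each cluster contains one person, the design effect is 1 and no correction is applied.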

Missing data

We identified two types of missing data in the included studies: missing outcome data required for effect size calculation, and missing participant data due to attrition (Alvarez 2010; Dubowitz 1991; Hazzard 1984; Kim 2019; Kleemeier 1988; Mathews 2017; McGrath 1987; Smeekens 2011). For details, see Characteristics of included studies tables. The approaches we used for dealing with missing data and data synthesis for each of these studies are detailed in Appendix 4.

Funding sources

All but two of the 11 included studies reported receiving external funding. Studies were funded by federal government agencies in the USA (Alvarez 2010; Dubowitz 1991; Kleemeier 1988; Randolph 1994) and Canada (McGrath 1987), and by a combination of university and philanthropic funding in the USA (Hazzard 1984; Mathews 2017). One study was funded by the philanthropic arm of an international technology company, which also hosted the online training platform used in the study (Smeekens 2011). One study was funded by the developer of the evaluated training intervention, a non‐government organisation in the USA (Kim 2019).

Outcomes

In this section, we have summarised the primary and secondary outcomes of interest that were investigated in the 11 included studies. For details by individual study, see Characteristics of included studies.

Primary outcomes

1. Number of reported cases of child abuse and neglect

As shown in Table 1, three of the 11 included studies measured changes in the number of reported cases of child abuse and neglect via participants' self‐reports of actual cases reported (i.e. primary outcome 1a) (Hazzard 1984; Kleemeier 1988; Randolph 1994). Although differently named, the instruments were almost identical, comprising batteries of seven items (Hazzard 1984) or five items (Kleemeier 1988; Randolph 1994) that assessed self‐reported actions taken in relation to child abuse and neglect (i.e. a behavioural measure). One item common to the batteries, 'reporting a case of suspected abuse' to a protective services agency, was classified as a primary outcome 1b measure. Follow‐up data were collected at six weeks (Kleemeier 1988), three months (Randolph 1994), and six months (Hazzard 1984).