Abstract

We present Low Distortion Local Eigenmaps (LDLE), a manifold learning technique which constructs a set of low distortion local views of a data set in lower dimension and registers them to obtain a global embedding. The local views are constructed using the global eigenvectors of the graph Laplacian and are registered using Procrustes analysis. The choice of these eigenvectors may vary across the regions. In contrast to existing techniques, LDLE can embed closed and non-orientable manifolds into their intrinsic dimension by tearing them apart. It also provides gluing instruction on the boundary of the torn embedding to help identify the topology of the original manifold. Our experimental results will show that LDLE largely preserved distances up to a constant scale while other techniques produced higher distortion. We also demonstrate that LDLE produces high quality embeddings even when the data is noisy or sparse.

Keywords: manifold learning, graph laplacian, local parameterization, procrustes analysis, closed manifold, non-orientable manifold

1. Introduction

Manifold learning techniques such as Local Linear Embedding (Roweis and Saul, 2000), Diffusion maps (Coifman and Lafon, 2006), Laplacian eigenmaps (Belkin and Niyogi, 2003), t-SNE (Maaten and Hinton, 2008) and UMAP (McInnes et al., 2018), aim at preserving local information as they map a manifold embedded in higher dimension into lower (possibly intrinsic) dimension. In particular, UMAP and t-SNE follow a top-down approach as they start with an initial low-dimensional global embedding and then refine it by minimizing a local distortion measure on it. In contrast, similar to LTSA (Zhang and Zha, 2003) and (Singer and Wu, 2011), a bottom-up approach for manifold learning can be conceptualized to consist of two steps, first obtaining low distortion local views of the manifold in lower dimension and then registering them to obtain a global embedding of the manifold. In this paper, we take this bottom-up perspective to embed a manifold in low dimension, where the local views are obtained by constructing coordinate charts for the manifold which incur low distortion.

1.1. Local Distortion

Let be a d-dimensional Riemannian manifold with finite volume. By definition, for every xk in , there exists a coordinate chart such that , and Φk maps into . One can envision to be a local view of in the ambient space. Using rigid transformations, these local views can be registered to recover . Similarly, can be viewed to be a local view of in the d-dimensional embedding space . Again, using rigid transformations, these local views can be registered to obtain the d-dimensional embedding of .

As there may exist multiple mappings which map into , a natural strategy would be to choose a mapping with low distortion. Multiple measures of distortion exist in literature (Vankadara and Luxburg, 2018). The measure of distortion used in this work is as follows. Let dg(x,y) denote the shortest geodesic distance between . The distortion of Φk on as defined in (Jones et al., 2007) is given by

| (1) |

where is the Lipschitz norm of Φk given by

| (2) |

and similarly,

| (3) |

Note that Distortion is always greater than or equal to 1. If Distortion , then Φk is said to have no distortion on . This is achieved when the mapping Φk preserves distances between points in up to a constant scale, that is, when Φk is a similarity on . It is not always possible to obtain a mapping with no distortion. For example, there does not exist a similarity which maps a locally curved region on a surface into a Euclidean plane. This follows from the fact that the sign of the Gaussian curvature is preserved under simil arity transformation which in turn follows from the Gauss’s Theorema Egregium.

1.2. Our Contributions

This paper takes motivation from the work in (Jones et al., 2007) where the authors provide guarantees on the distortion of the coordinate charts of the manifold constructed using carefully chosen eigenfunctions of the Laplacian. However, this only applies to the charts for small neighborhoods on the manifold and does not provide a global embedding. In this paper, we present an approach to realize their work in the discrete setting and obtain low-dimensional low distortion local views of the given data set using the eigenvectors of the graph Laplacian. Moreover, we piece together these local views to obtain a global embedding of the manifold. The main contributions of our work are as follows:

We present an algorithmic realization of the construction procedure in (Jones et al., 2007) that applies to the discrete setting and yields low-dimensional low distortion views of small metric balls on the given discretized manifold (See Section 2 for a summary of their procedure).

We present an algorithm to obtain a global embedding of the manifold by registering its local views. The algorithm is designed so as to embed closed as well as non-orientable manifolds into their intrinsic dimension by tearing them apart. It also provides gluing instructions for the boundary of the embedding by coloring it such that the points on the boundary which are adjacent on the manifold have the same color (see Figure 2).

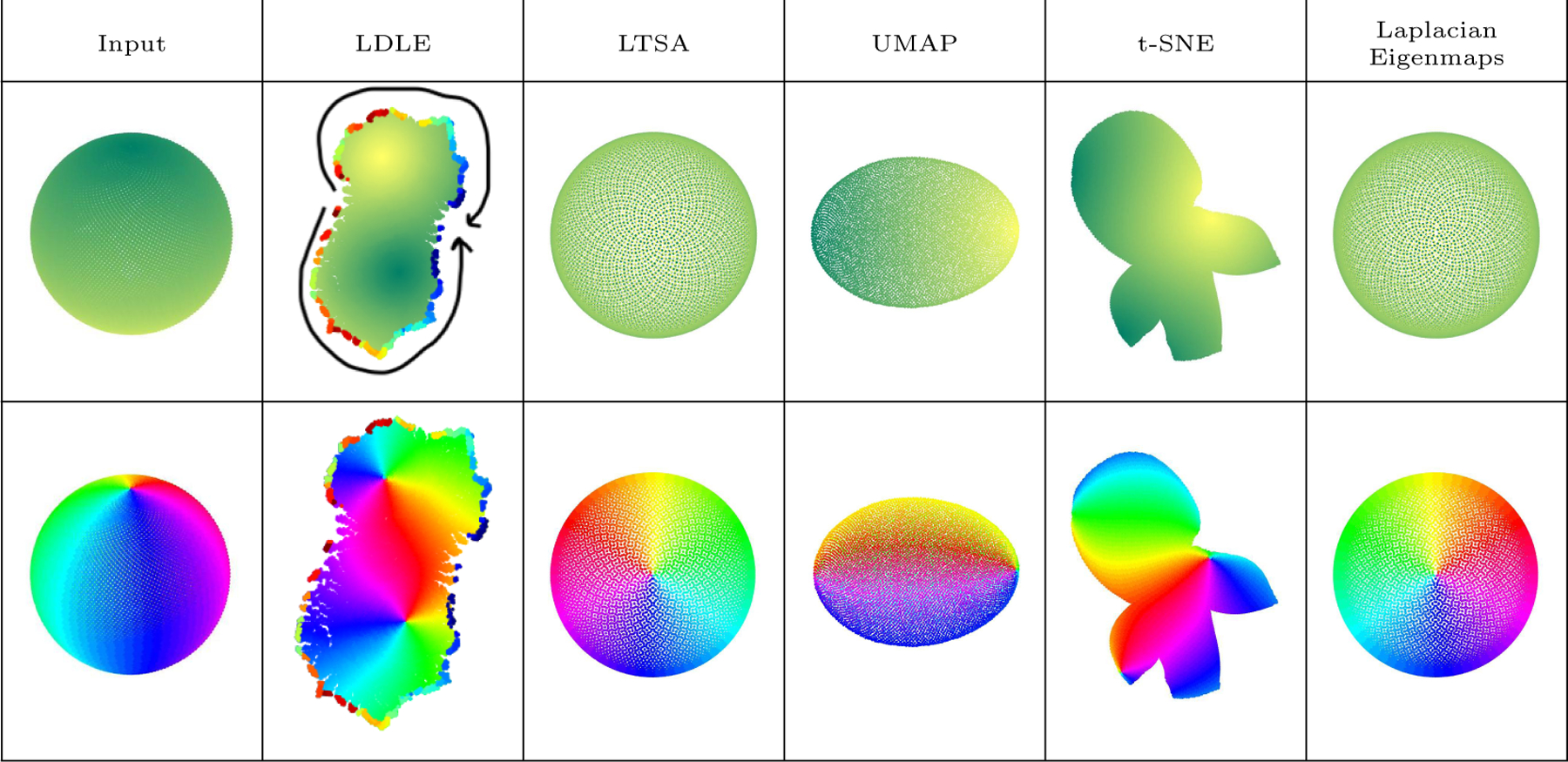

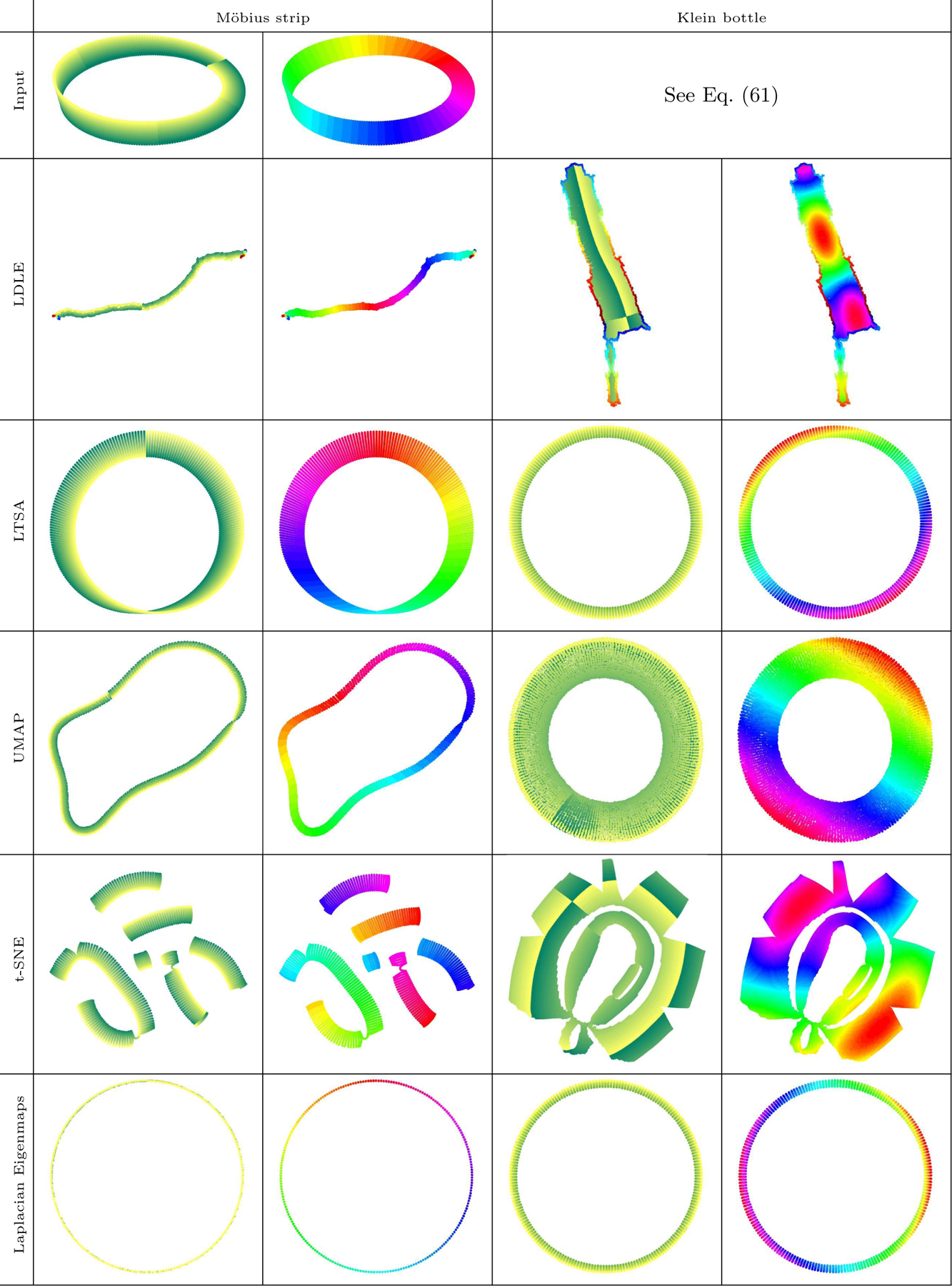

Figure 2:

Embeddings of a sphere in into . The top and bottom row contain the same plots colored by the height and the azimuthal angle of the sphere (0 − 2π), respectively. LDLE automatically colors the boundary so that the points on the boundary which are adjacent on the sphere have the same color. The arrows are manually drawn to help the reader identify the two pieces of the boundary which are to be stitched together to recover the original sphere. LTSA, UMAP and Laplacian eigenmaps squeezed the sphere into different viewpoints of (side or top view of the sphere). t-SNE also tore apart the sphere but the embedding lacks interpretability as it is “unaware” of the boundary.

LDLE consists of three main steps. In the first step, we estimate the inner product of the Laplacian eigenfunctions’ gradients using the local correlation between them. These estimates are used to choose eigenfunctions which are in turn used to construct low-dimensional low distortion parameterizations Φk of the small balls Uk on the manifold. The choice of the eigenfunctions depend on the underlying ball. A natural next step is to align these local views Φk(Uk) in the embedding space, to obtain a global embedding. One way to align them is to use Generalized Procrustes Analysis (GPA) (Crosilla and Beinat, 2002; Gower, 1975; Ten Berge, 1977). However, we empirically observed that GPA is less efficient and prone to errors due to large number of local views with small overlaps between them. Therefore, motivated from our experimental observations and computational necessity, in the second step, we develop a clustering algorithm to obtain a small number of intermediate views with low distortion, from the large number of smaller local views Φk(Uk). This makes the subsequent GPA based registration procedure faster and less prone to errors.

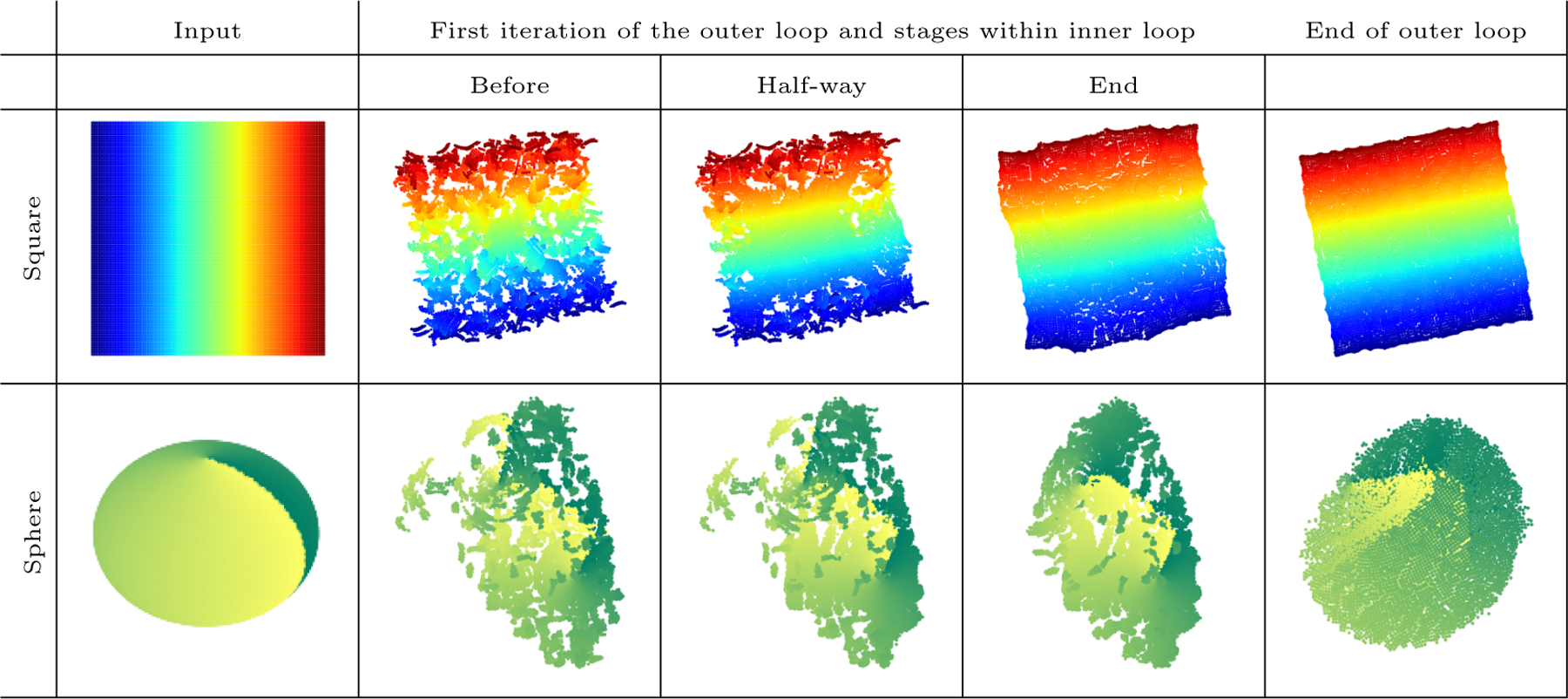

Finally, in the third step, we register intermediate views using an adaptation of GPA which enables tearing of closed and non-orientable manifolds so as to embed them into their intrinsic dimension. The results on a 2D rectangular strip and a 3D sphere are presented in Figures 1 and 2, to motivate our approach.

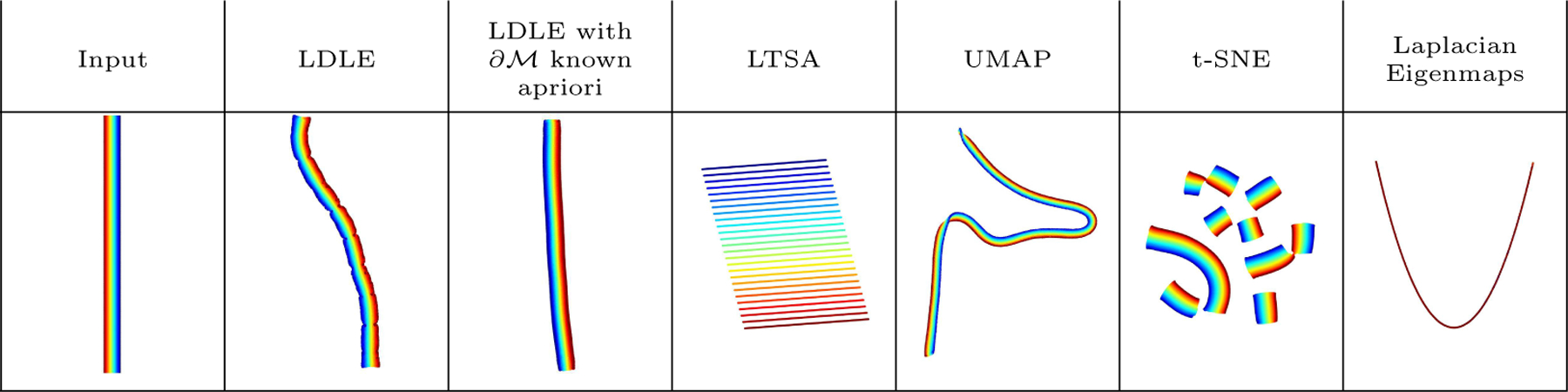

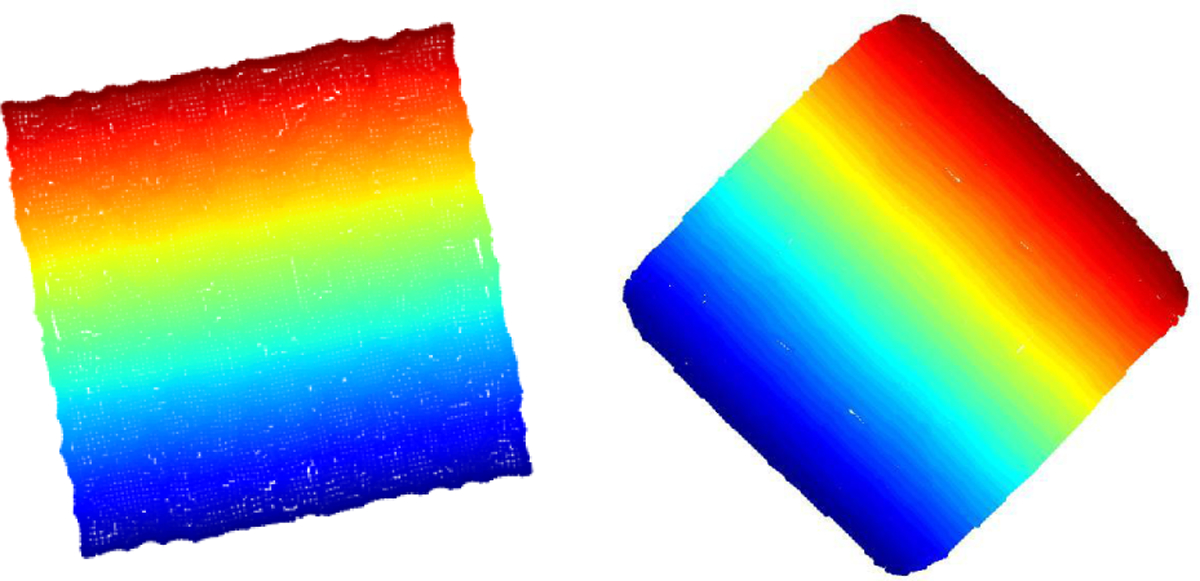

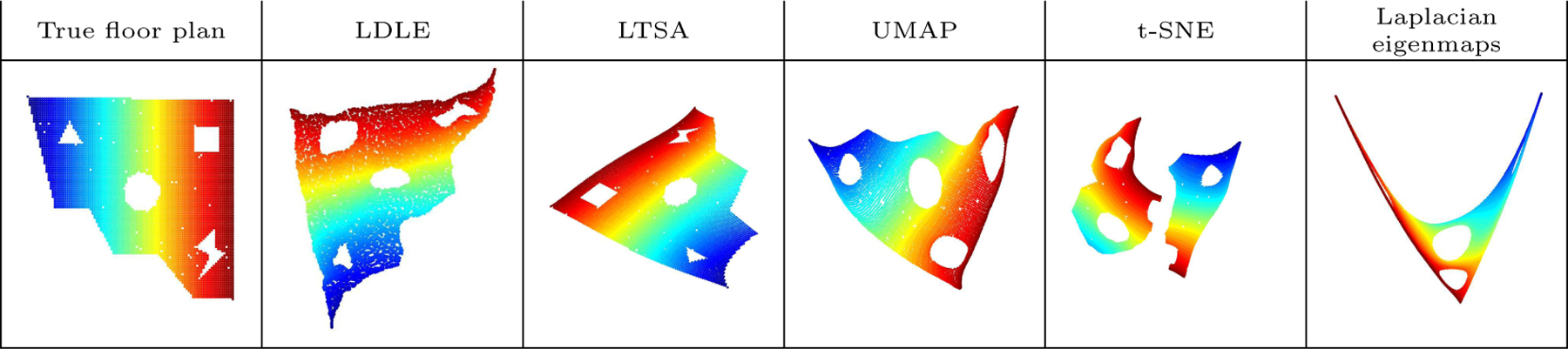

Figure 1:

Embeddings of a rectangle (4 × 0.25) with high aspect ratio in into .

The paper organization is as follows. Section 2 provides relevant background and motivation. In Section 3 we present the construction of low-dimensional low distortion local parameterizations. Section 4 presents our clustering algorithm to obtain intermediate views. Section 5 registers the intermediate views to a global embedding. In Section 6 we compare the embeddings produced by our algorithm with existing techniques on multiple data sets. Section 7 concludes our work and discusses future directions.

1.3. Related Work

Laplacian eigenfunctions are ubiquitous in manifold learning. A large proportion of the existing manifold learning techniques rely on a fixed set of Laplacian eigenfunctions, specifically, on the first few non-trivial low frequency eigenfunctions, to construct a low-dimensional embedding of a manifold in high dimensional ambient space. These low frequency eigenfunctions not only carry information about the global structure of the manifold but they also exhibit robustness to the noise in the data (Coifman and Lafon, 2006). Laplacian eigenmaps (Belkin and Niyogi, 2003), Diffusion maps (Coifman and Lafon, 2006) and UMAP (McInnes et al., 2018) are examples of such top-down manifold learning techniques. While there are limited bottom-up manifold learning techniques in the literature, to the best of our knowledge, none of them makes use of Laplacian eigenfunctions to construct local views of the manifold in lower dimension.

LTSA is an example of a bottom-up approach for manifold learning whose local mappings project local neighborhoods onto the respective tangent spaces. A local mapping in LTSA is a linear transformation whose columns are the principal directions obtained by applying PCA on the underlying neighborhood. These directions form an estimate of the basis for the tangent space. Having constructed low-dimensional local views for each neighborhood, LTSA then aligns all the local views to obtain a global embedding. As discussed in their work and as we will show in our experimental results, LTSA lacks robustness to the noise in the data. This further motivates our approach of using robust low-frequency Laplacian eigenfunctions for the construction of local views. Moreover, due to the specific constraints used in their alignment, LTSA embeddings fail to capture the aspect ratio of the underlying manifold (see Appendix F for details).

Laplacian eigenmaps uses the eigenvectors corresponding to the d smallest eigenvalues (excluding zero) of the normalized graph Laplacian to embed the manifold in . It can also be perceived as a top-down approach which directly obtains a global embedding that minimizes Dirichlet energy under some constraints. For manifolds with high aspect ratio, in the context of Section 1.1, the distortion of the local parameterizations based on the restriction of these eigenvectors on local neighborhoods, could become extremely high. For example, as shown in Figure 1, the Laplacian eigenmaps embedding of a rectangle with an aspect ratio of 16 looks like a parabola. This issue is explained in detail in (Saito, 2018; Chen and Meila, 2019; Dsilva et al., 2018; Blau and Michaeli, 2017).

UMAP, to a large extent, resolves this issue by first computing an embedding based on the d non-trivial low-frequency eigenvectors of a symmetric normalized Laplacian and then “sprinkling” white noise in it. It then refines the noisy embedding by minimizing a local distortion measure based on fuzzy set cross entropy. Although UMAP embeddings seem to be topologically correct, they occasionally tend to have twists and sharp turns which may be unwanted (see Figure 1).

t-SNE takes a different approach of randomly initializing the global embedding, defining a local t-distribution in the embedding space and local Gaussian distribution in the high dimensional ambient space, and finally refining the embedding by minimizing the Kullback–Leibler divergence between the two sets of distributions. As shown in Figure 1, t-SNE tends to output a dissected embedding even when the manifold is connected. Note that the recent work by Kobak and Linderman (2021) showed that t-SNE with spectral initialization results in a similar embedding as that of UMAP. Therefore, in this work, we display the output of the classic t-SNE construction, with random initialization only.

A missing feature in existing manifold learning techniques is their ability to embed closed manifolds into their intrinsic dimensions. For example, a sphere in is a 2-dimensional manifold which can be represented by a connected domain in with boundary gluing instructions provided in the form of colors. We solve this issue in this paper (see Figure 2).

2. Background and Motivation

Due to their global nature and robustness to noise, in our bottom-up approach for manifold learning, we propose to construct low distortion (see Eq. (1)) local mappings using low frequency Laplacian eigenfunctions. A natural way to achieve this is to restrict the eigenfunctions on local neighborhoods. Unfortunately, the common trend of using first d non-trivial low frequency eigenfunctions to construct these local mappings fails to produce low distortion on all neighborhoods. This directly follows from the Laplacian Eigenmaps embedding of a high aspect-ratio rectangle shown in Figure 1. The following example explains that even in case of unit aspect-ratio, a local mapping based on the same set of eigenfunctions would not incur low distortion on each neighborhood, while mappings based on different sets of eigenfunctions may achieve that.

Consider a unit square [0,1]×[0,1] such that for every point xk in the square, is the disc of radius 0.01 centered at xk. Consider a mapping based on the first two non-trivial eigenfunctions cos(πx) and cos(πy) of the Laplace-Beltrami operator on the square with Neumann boundary conditions, that is,

| (4) |

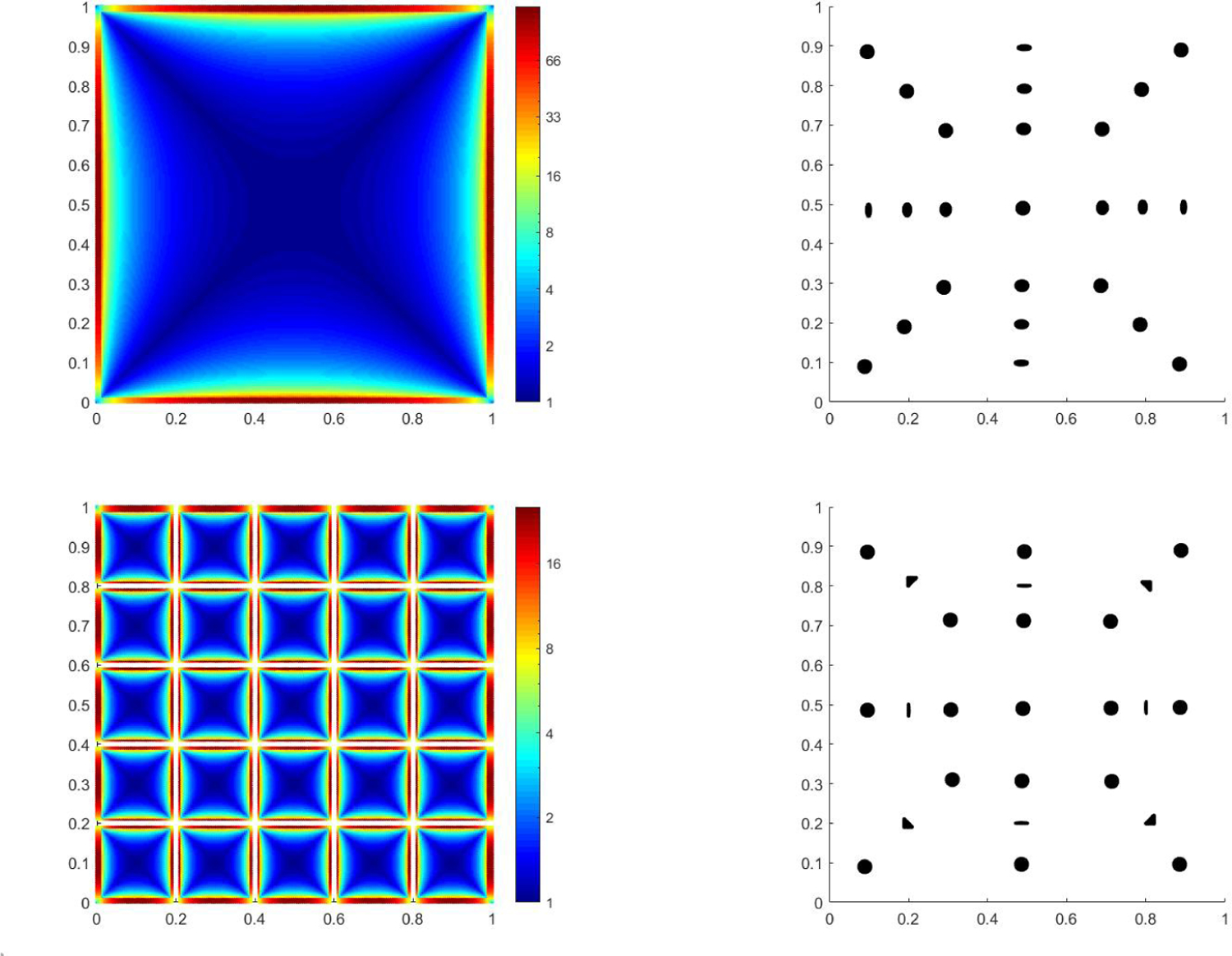

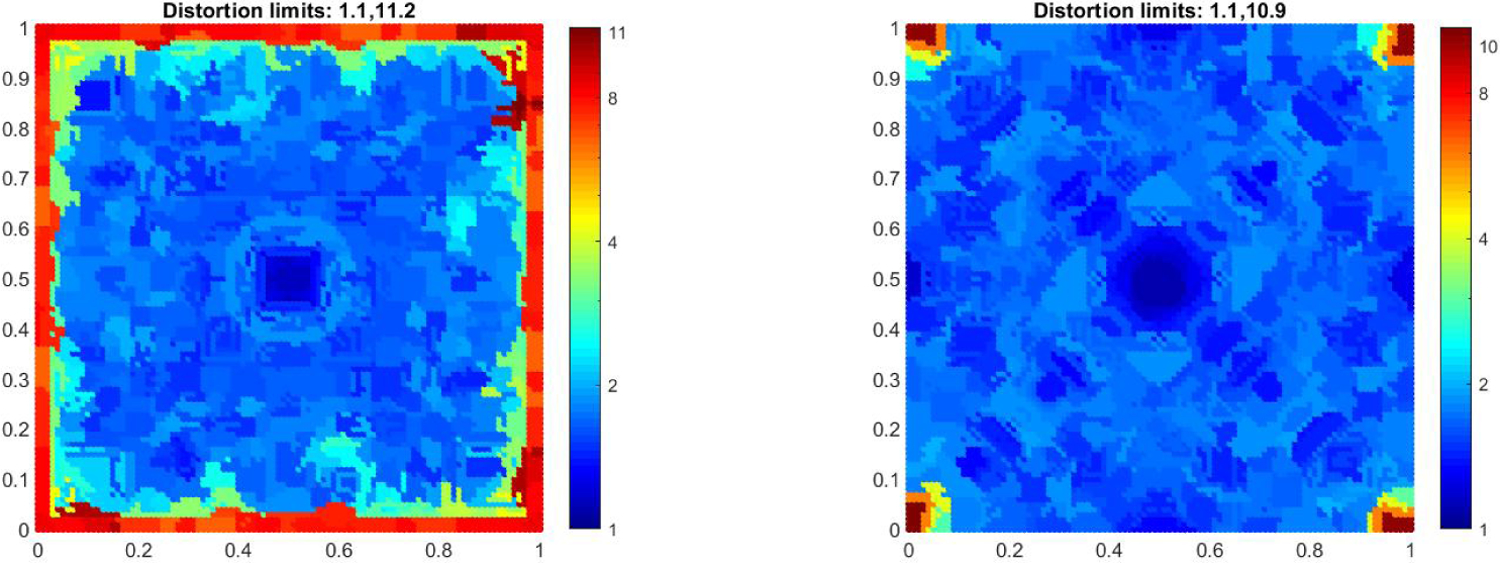

As shown in Figure 3, maps the discs along the diagonals to other discs. The discs along the horizontal and vertical lines through the center are mapped to ellipses. The skewness of these ellipses increases as we move closer to the middle of the edges of the unit square. Thus, the distortion of is low on the discs along the diagonals and high on the discs close to the middle of the edges of the square.

Figure 3:

(Left) Distortion of (top) and (bottom) on discs of radius 0.01 centered at (x,y) for all x, y ∈ [0, 1] × [0, 1]. produces close to infinite distortion on the discs located in the white region. (Right) Mapping of the discs at various locations in the square using (top) and (bottom).

Now, consider a different mapping based on another set of eigenfunctions,

| (5) |

Compared to , produces almost no distortion on the discs of radius 0.01 centered at (0.1, 0.5) and (0.9, 0.5) (see Figure 3). Therefore, in order to achieve low distortion, it seem to make sense to construct local mappings for different regions based on different sets of eigenfunctions.

The following result from (Jones et al., 2007) manifests the above claim as it shows that, for a given small neighborhood on a Riemannian manifold, there always exist a subset of Laplacian eigenfunctions such that a local parameterization based on this subset is bilipschitz and has bounded distortion. A more precise statement follows.

Theorem 1 ((Jones et al., 2007), Theorem 2.2.1). Let be a d-dimensional Riemannian manifold. Let ∆g be the Laplace-Beltrami operator on it with Dirichlet or Neumann boundary conditions and let ϕi be an eigenfunction of ∆g with eigenvalue λi. Assume that where is the volume of and the uniform ellipticity conditions for ∆g are satisfied. Let and rk be less than the injectivity radius at xk (the maximum radius where the the exponential map is a diffeomorphism). Then, there exists a constant κ > 1 which depends on d and the metric tensor g such that the following hold. Let ρ ≤ rk and where

| (6) |

Then there exist i1,i2,...,id such that, if we let

| (7) |

then the map

| (8) |

is bilipschitz such that for any y1,y2 ∈ Bk it satisfies

| (9) |

where the associated eigenvalues satisfy

| (10) |

and the distortion is bounded from above by κ2 i.e.

| (11) |

Motivated by the above result, we adopt the form of local paramterizations Φk in Eq. (8) as local mappings in our work. The main challenge then is to identify the set of eigenfunctions for a given neighborhood such that the resulting parameterization produces low distortion on it. The existence proof of the above theorem by Jones et al. (2007) suggests a procedure to identify this set in the continuous setting. Below, we provide a sketch of their procedure and in Section 3 we describe our discrete realization of it.

2.1. Eigenfunction Selection in the Continuous Setting

Before describing the procedure used in (Jones et al., 2007) to choose the eigenfunctions, we first provide some intuition about the desired properties for the chosen eigenfunctions so that the resulting parameterization Φk has low distortion on Bk.

Consider the simple case of Bk representing a small open ball of radius κ−1ρ around xk in equipped with the standard Euclidean metric. Then the first-order Taylor approximation of Φk(x), x ∈ Bk, about xk is given by

| (12) |

Note that are positive scalars constant with respect to x. Now, Distortion(Φk,Bk) = 1 if and only if Φk preserves distances between points in Bk up to a constant scale (see Eq. (1)). That is,

| (13) |

Using the first-order approximation of Φk we get,

| (14) |

Therefore, for low distortion Φk, J must approximately behave like a similarity transformation and therefore, J needs to be approximately orthogonal up to a constant scale. In other words, the chosen eigenfunctions should be such that are close to being orthogonal and have similar lengths. The same intuition holds in the manifold setting too. The construction procedure described in (Jones et al., 2007) aims to choose eigenfunctions such that

they are close to being locally orthogonal, that is, are approximately orthogonal, and

that their local scaling factors are close to each other.

Note. Throughout this paper, we use the convention where is the exponential map at xk. Therefore, ∇ϕi(xk) can be represented by a d-dimensional vector in a given d-dimensional orthonormal basis of . Even though the representation of these vectors depend on the choice of the orthonormal basis, the value of the canonical inner product between these vectors, and therefore the 2-norm of the vectors, are the same across different basis. This follows from the fact that an orthogonal transformation preserves the inner product.

Remark 1. Based on the above first order approximation, one may take our local mappings Φk to also be projections onto the tangent spaces. However, unlike LTSA (Zhang and Zha, 2003) where the basis of the tangent space is estimated by the local principal directions, in our case it is estimated by the locally orthogonal gradients of the global eigenfunctions of the Laplacian. Therefore, LTSA relies only on the local structure to estimate the tangent space while, in a sense, our method makes use of both local and global structure of the manifold.

A high level overview of the procedure presented in (Jones et al., 2007) to choose eigenfunctions which satisfy the properties in (a) and (b) follows.

A set Sk of the indices of candidate eigenfunctions is chosen such that i ∈ Sk if the length of γki∇ϕi(xk) is bounded from above by a constant, say C.

A direction is selected at random.

Subsequently i1 ∈ Sk is selected so that is sufficiently large. This motivates to be approximately in the same direction as p1 and the length of it to be close to the upper bound C.

Then, a recursive strategy follows. To find the s-th eigenfunction for s ∈ {2,...,d}, a direction is chosen such that it is orthogonal to .

Subsequently, is ∈ Sk is chosen so that is sufficiently large. Again, this motivates to be approximately in the same direction as ps and the length of it to be close to the upper bound C.

Since ps is orthogonal to and the direction of is approximately the same as ps, therefore (a) is satisfied. Since for all , has a length close to the upper bound C, therefore (b) is also satisfied. The core of their work lies in proving that these always exist under the assumptions of the theorem such that the resulting parameterization Φk has bounded distortion (see Eq. (11)). This bound depends on the intrinsic dimension d and the natural geometric properties of the manifold. The main challenge in practically realizing the above procedure lies in the estimation of . In Section 3, we overcome this challenge.

3. Low-Dimensional Low Distortion Local Parameterization

In the procedure to choose to construct Φk as described above, the selection of the first eigenfunction ϕi1 relies on the derivative of the eigenfunctions at along an arbitrary direction , that is, on . In our algorithmic realization of the construction procedure, we take p1 to be the gradient of an eigenfunction at xk itself (say ∇ϕj(xk)). We relax the unit norm constraint on p1; note that this will neither affect the math nor the output of our algorithm. Then the selection of ϕi1 would depend on the inner products . The value of this inner product does not depend on the choice of the orthonormal basis for . We discuss several ways to obtain a numerical estimate of this inner product by making use of the local correlation between the eigenfunctions (Steinerberger, 2017; Cloninger and Steinerberger, 2018). These estimates are used to select the subsequent eigenfunctions too.

In Section 3.1, we first review the local correlation between the eigenfunctions of the Laplacian. In Theorem 2 we show that the limiting value of the scaled local correlation between two eigenfunctions equals the inner product of their gradients. We provide two proofs of the theorem where each proof leads to a numerical procedure described in Section 3.2, followed by examples to empirically compare the estimates. Finally, in Section 3.3, we use these estimates to obtain low distortion local parameterizations of the underlying manifold.

3.1. Inner Product of Eigenfunction Gradients using Local Correlation

Let be a d-dimensional Riemannian manifold with or without boundary, rescaled so that . Denote the volume element at y by ωg(y). Let ϕi and ϕj be the eigenfunctions of the Laplacian operator ∆g (see statement of Theorem 1) with eigenvalues λi and λj. Let and define

| (15) |

Then the local correlation between the two eigenfunctions ϕi and ϕj at the point xk at scale as defined in (Steinerberger, 2017; Cloninger and Steinerberger, 2018) is given by

| (16) |

where p(t,x,y) is the fundamental solution of the heat equation on . As noted in (Steinerberger, 2017), for fixed, we have

| (17) |

Therefore, p(tk,xk,·) acts as a local probability measure centered at xk with scale (see Eq. (67) in Appendix A for a precise form of p). We define the scaled local correlation to be the ratio of the local correlation Akij and a factor of 2tk.

Table 1:

Default values of LDLE hyperparameters.

| Hyperparameter | Description | Default value |

|---|---|---|

| k nn | No. of nearest neighbors used to construct the graph Laplacian | 49 |

| k tune | The nearest neighbor, distance to which is used as a local scaling factor in the construction of graph Laplacian | 7 |

| N | No. of nontrivial low frequency Laplacian eigenvectors to consider for the construction of local views in the embedding space | 100 |

| d | Intrinsic dimension of the underlying manifold | 2 |

| p | Probability mass for computing the bandwidth tk of the heat kernel | 0.99 |

| k lv | The nearest neighbor, distance to which is used to construct local views in the ambient space | 25 |

| Percentiles used to restrict the choice of candidate eigenfunctions | 50 | |

| Fractions used to restrict the choice of candidate eigenfunctions | 0.9 | |

| η min | Desired minimum number of points in a cluster | 5 |

| to_tear | A boolean for whether to tear the manifold or not | True |

| ν | A relaxation factor to compute the neighborhood graph of the intermediate views in the embedding space | 3 |

| N r | No. of iterations to refine the global embedding | 100 |

Theorem 2. Denote the limiting value of the scaled local correlation by ,

| (18) |

Then equals the inner product of the gradients of the eigenfunctions ϕi and ϕj at xk, that is,

| (19) |

Two proofs are provided in Appendix A and B. A brief summary is provided below.

Proof 1. In the first proof we choose a sufficiently small ϵk and show that

| (20) |

where Bϵ(x) is defined in Eq. (6) and

| (21) |

Then, by using the properties of the exponential map at xk and applying basic techniques in calculus, we show that evaluates to ∇ϕi(xk)T ∇ϕj(xk).

Proof 2. In the second proof, as in (Steinerberger, 2014, 2017), we used the Feynman-Kac formula,

| (22) |

and note that

| (23) |

Then, by applying the formula of the Laplacian of the product of two functions, we show that the above equation equals ∇ϕi(xk)T ∇ϕj(xk).

3.2. Estimate of in the Discrete Setting

To apply Theorems 1 and 2 in practice on data, we need an estimate of in the discrete setting. There are several ways to obtain this estimate. A generic way is by using the algorithms (Cheng and Wu, 2013; Aswani et al., 2011) based on Local Linear Regression (LLR) to estimate the gradient vector ∇ϕi(xk) itself from the values of ϕi in a neighbor of xk. An alternative approach is to use a finite sum approximation of Eq. (20) combined with Eq. (18). A third approach is based on the Feynman-Kac formula where we make use of Eq. (23) in the discrete setting. In the following we explain the latter two approaches.

3.2.1. Finite Sum Approximation

Let be uniformly distributed points on . Let be the distance between xk and xk′. The accuracy with which can be estimated mainly depends on the accuracy of de(· , ·) to the local geodesic distances. For simplicity, we use to be the Euclidean distance . A more accurate estimate of the local geodesic distances can be computed using the method described in (Li and Dunson, 2019).

We construct a sparse unnormalized graph Laplacian L using Algo. 1, where the weight matrix K of the graph edges is defined using the Gaussian kernel. The bandwidth of the Gaussian kernel is set using the local scale of the neighborhoods around each point as in self-tuning spectral clustering (Zelnik-Manor and Perona, 2005). Let ϕi be the ith non-trivial eigenvector of L and denote ϕi(xj) by ϕij.

We estimate by evaluating the scaled local correlation Akij/2tk at a small value of tk. The limiting value of Akij is estimated by substituting a small tk in the finite sum approximation of the integral in Eq. (20). The sum is taken on a discrete ball of a small radius ϵk around xk and is divided by 2tk to obtain an estimate of .

We start by choosing ϵk to be the distance of klvth nearest neighbor of xk where klv is a hyperparameter with a small integral value (subscript lv stands for local view). Thus,

| (24) |

Then the limiting value of tk is given by

| (25) |

where chi2inv is the inverse cdf of the chi-squared distribution with d degrees of freedom evaluated at p. We take p to be 0.99 in our experiments. The rationale behind the above choice of tk is described in Appendix C.

Now define the discrete ball around xk as

| (26) |

Let Uk denote the kth local view of the data in the high dimensional ambient space. For convenience, denote the estimate of by where G is as in Eq. (21). Then

| (27) |

Finally, the estimate of is given by

| (28) |

where Gk is a column vector containing the kth row of the matrix G and ⊙ represents the Hadamard product.

3.2.2. Estimation Based on Feynman-Kac Formula

This approach to estimate is simply the discrete analog of Eq. (23),

| (29) |

where Lk is a column vector containing the kth row of L. A variant of this approach which results in better estimates in the noisy case uses a low rank approximation of L using its first few eigenvectors (see Appendix H).

Remark 2. It is not a coincidence that Eq. (28) and Eq. (29) look quite similar. In fact, if we take T to be a diagonal matrix with as the diagonal, then the matrix T−1(I −G) approximates ∆g in the limit of tending to zero. Replacing L with T−1(I − G) and therefore Lk with (ek − Gk)/tk reduces Eq. (29) to Eq. (28). Here ek is a column vector with kth entry as 1 and rest zeros. Therefore the two approaches are the same in the limit.

Remark 3. The above two approaches can also be generalized to compute the ∇fi(xk)T ∇fj(xk) for arbitrary mappings fi and fj from to as per our convention). To achieve this, simply replace ϕi and ϕj with fi and fj in Eq. (28) and Eq. (29).

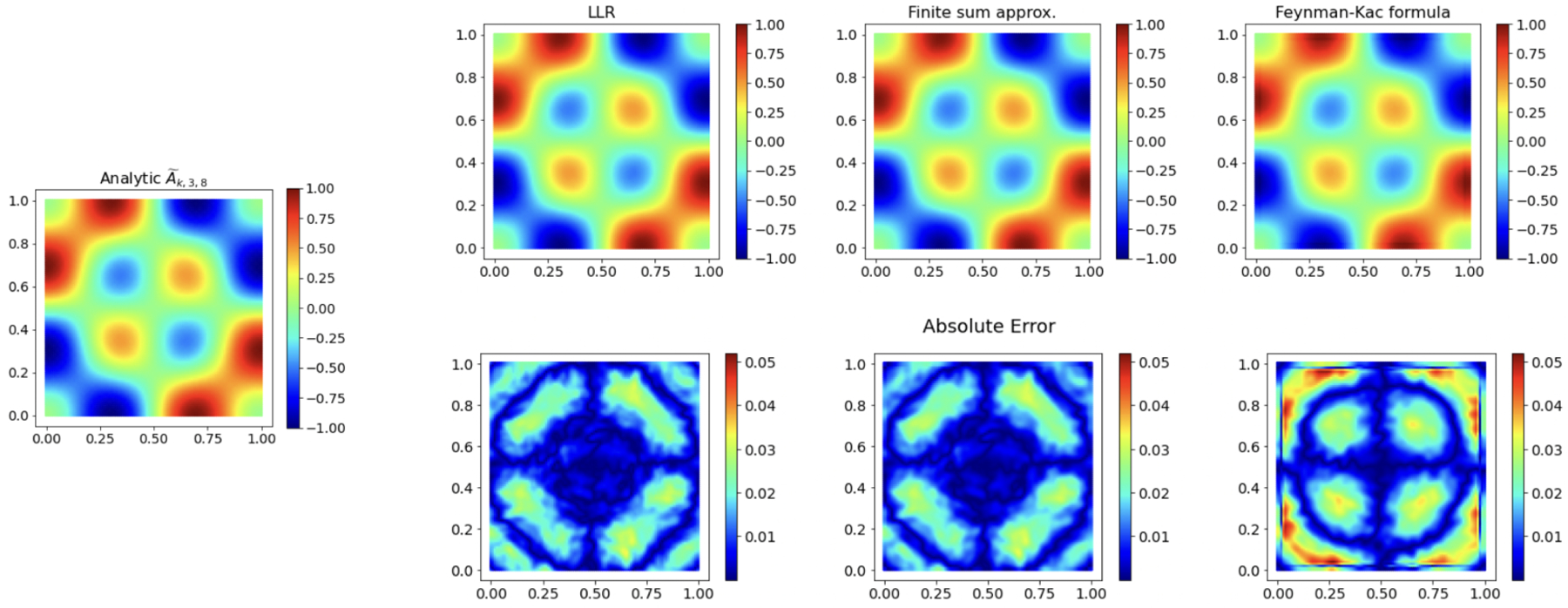

Example. This example will follow us throughout the paper. Consider a square grid [0, 1] × [0, 1] with a spacing of 0.01 in both x and y direction. With knn = 49, ktune = 7 and as input to the Algo. 1, we construct the graph Laplacian L. Using klv = 25, d = 2 and p = 0.99, we obtain the discrete balls Uk and tk. The 3rd and 8th eigenvectors of L and the corresponding analytical eigenfunctions are then obtained. The analytical value of is displayed in Figure 4, followed by its estimate using LLR (Cheng and Wu, 2013), finite sum approximation and Feynman-Kac formula based approaches. The analytical and the estimated values are normalized by to bring them to the same scale. The absolute error due to these approaches are shown below the estimates.

Figure 4:

Comparison of different approaches to estimate in the discrete setting.

Even though, in this example, the Feynman-Kac formulation seem to have a larger error, in our experiments, no single approach seem to be a clear winner across all the examples. This becomes clear in Appendix H where we provided a comparison of these approaches on a noiseless and a noisy Swiss Roll. The results shown in this paper are based on finite sum approximation to estimate .

3.3. Low Distortion Local Parameterization from Laplacian Eigenvectors

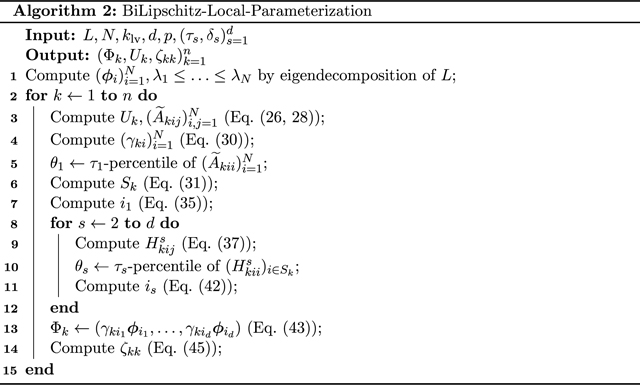

We use ∇ϕi ≡ ∇ϕi(xk) for brevity. Using the estimates of , we now present an algorithmic construction of low distortion local parameterization Φk which maps Uk into . The pseudocode is provided below followed by a full explanation of the steps and a note on the hyperparameters. Before moving forward, it would be helpful for the reader to review the construction procedure in the continuous setting in Section 2.1.

An estimate of γki is obtained by the discrete analog of Eq. (7) and is given by

| (30) |

Step 1. Compute a set Sk of candidate eigenvectors for Φk.

Based on the construction procedure following Theorem 1, we start by computing a set Sk of candidate eigenvectors to construct Φk of Uk. There is no easy way to retrieve the set Sk in the discrete setting as in the procedure. Therefore, we make the natural choice of using the first N nontrivial eigenvectors of L corresponding to the N smallest eigenvalues , with sufficiently large gradient at xk, as the set Sk. The large gradient constraint is required for the numerical stability of our algorithm. Therefore, we set Sk to be,

| (31) |

where θ1 is -percentile of the set and the second equality follows from Eq. (19). Here N and are hyperparameters.

Step 2. Choose a direction .

The unit norm constraint on p1 is relaxed. This will neither affect the math nor the output of our algorithm. Since p1 can be arbitrary we take p1 to be the gradient of an eigenvector r1, that is . The choice of r1 will determine . To obtain a low frequency eigenvector, r1 is chosen so that the eigenvalue is minimal, therefore

| (32) |

Step 3. Find i1 ∈ Sk such that is sufficiently large.

Since , using Eq. (19), the formula for becomes

| (33) |

Then we obtain the eigenvector so that is larger than a certain threshold. We do not know what the value of this threshold would be in the discrete setting. Therefore, we first define the maximum possible value of using Eq. (33) as

| (34) |

Then we take the threshold to be δ1α1 where δ1 ∈ (0,1] is a hyperparameter. Finally, to obtain a low frequency eigenvector , we choose i1 such that

| (35) |

After obtaining , we use a recursive procedure to obtain the s-th eigenvector where s ∈ {2,...,d} in order.

Step 4. Choose a direction orthogonal to .

Again the unit norm constraint will be relaxed with no change in the output. We are going to take ps to be the component of orthogonal to for a carefully chosen rs. For convenience, denote by Vs the matrix with as columns and let be the range of Vs. Let be an eigenvector such that . To find such an rs, we define

| (36) |

| (37) |

Note that is the squared norm of the projection of ∇ϕi onto the vector space orthogonal to . Clearly if and only if . To obtain a low frequency eigenvector such that we choose

| (38) |

where θs is the -percentile of the set and is a hyperparameter. Then we take ps to be the component of which is orthogonal to ,

| (39) |

Step 5. Find is ∈ Sk such that is sufficiently large.

Using Eq. (36, 39), we note that

| (40) |

To obtain such that is greater than a certain threshold, as in step 3, we first define the maximum possible value of using Eq. (40) as,

| (41) |

Then we take the threshold to be δsαs where δs ∈ [0,1] is a hyperparameter. Finally, to obtain a low frequency eigenvector we choose is such that

| (42) |

In the end we obtain a d-dimensional parameterization Φk of Uk given by

| (43) |

We call Φk(Uk) the kth local view of the data in the d-dimensonal embedding space. It is a matrix with |Uk| rows and d columns. Denote the distortion of on Uk by . Using Eq. (1) we obtain

| (44) |

| (45) |

Postprocessing.

The obtained local parameterizations are post-processed so as to remove the anomalous parameterizations having unusually high distortion. We replace the local parameterization Φk of Uk by that of a neighbor, where , if the distortion produced by on Uk is smaller than the distortion ζkk produced by Φk on Uk. If for multiple k′ then we choose the parameterization which produces the least distortion on Uk. This procedure is repeated until no replacement is possible. The pseudocode is provided below.

A note on hyperparameters N,.

Generally, N should be small so that the low frequency eigenvectors form the set of candidate eigenvectors. In almost all of our experiments we take N to be 100. The set of is reduced to two hyperparameters, one for all ‘s and one for all δs’s. As explained above, enforces certain vectors to be non-zero and δs enforces certain directional derivatives to be large enough. Therefore, a small value of in (0, 100) and a large value of δs in (0, 1] is suitable. In most of our experiments, we used a value of 50 for all and a value of 0.9 for all δs. Our algorithm is not too sensitive to the values of these hyperparameters. Other values of N, and δs would also result in the embeddings with high visual quality.

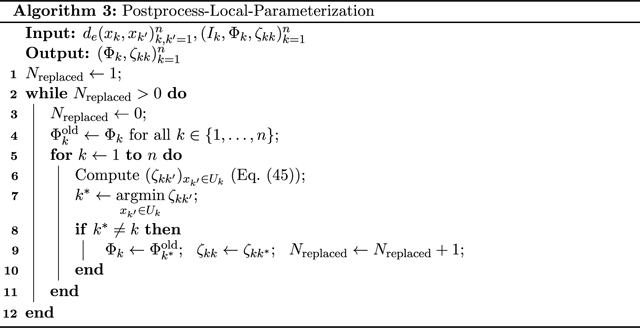

Example. We now build upon the example of the square grid at the end of Section 3.2. The values of the additional inputs are N = 100, and δs = 0.9 for all s ∈ {1,...,d}. Using Algo. 2 and 3 we obtain 104 local views Uk and Φk(Uk) where |Uk| = 25 for all k. In the left image of Figure 5, we colored each point xk with the distortion ζkk of the local parameterization Φk on Uk. The mapped discrete balls Φk(Uk) for some values of k are also shown in Figure 30 in the Appendix H.

Figure 5:

Distortion of the obtained local parameterizations when the points on the boundary are not known (left) versus when they are known apriori (right). Each point xk is colored by ζkk (see Eq. (45)).

Remark 4. Note that the parameterizations of the discrete balls close to the boundary have higher distortion. This is because the injectivity radius at the points close to the boundary is low and precisely zero at the points on the boundary. As a result, the size of the balls around these points exceeds the limit beyond which Theorem 1 is applicable.

At this point we note the following remark in (Jones et al., 2007).

Remark 5. As was noted by L. Guibas, when M has a boundary, in the case of Neumann boundary values, one may consider the “doubled” manifold, and may apply the result in Theorem 1 for a possibly larger rk.

Due to the above remark, assuming that the points on the boundary are known, we computed the distance matrix for the doubled manifold using the method described in (Lafon, 2004). Then we recomputed the local parameterizations Φk keeping all other hyperparameters the same as before. In the right image of Figure 5, we colored each point xk with the distortion of the updated parameterization Φk on Uk. Note the reduction in the distortion of the paramaterizations for the neighborhoods close to the boundary. The distortion is still high near the corners.

3.4. Time Complexity

The combined worst case time complexity of Algo. 1, 2 and 3 is where Npost is the number of iterations it takes to converge in Algo. 3 which was observed to be less than 50 for all the examples in this paper. It took about a minute1 to construct the local views in the above example as well as in all the examples in Section 6.

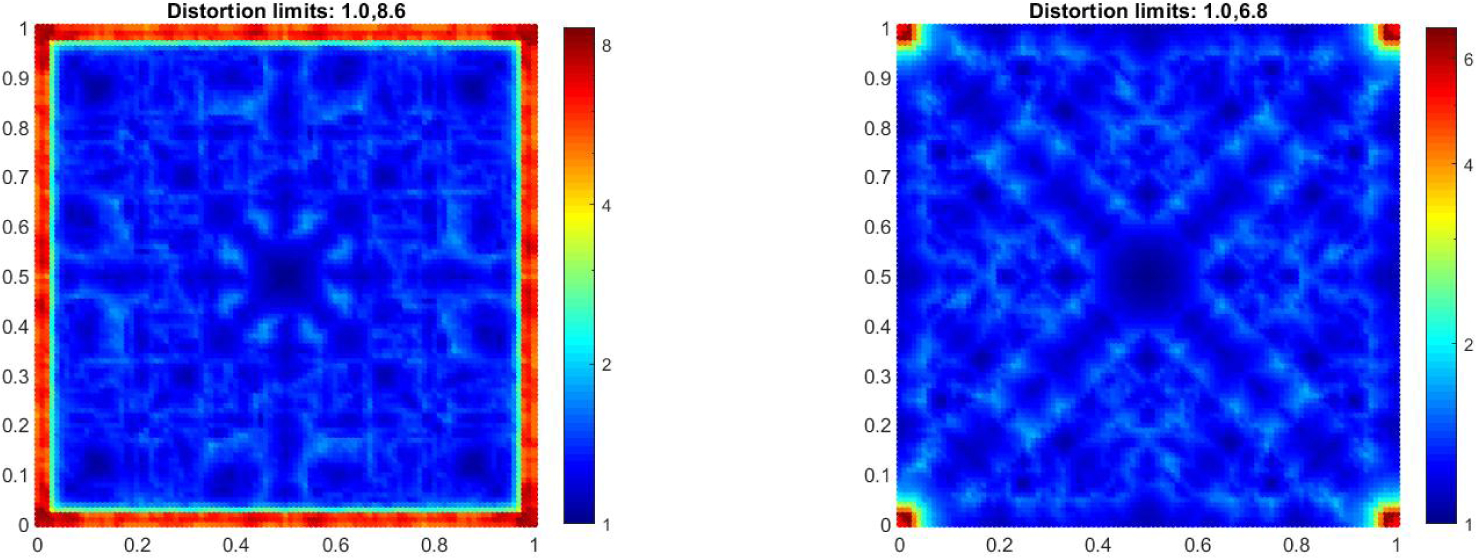

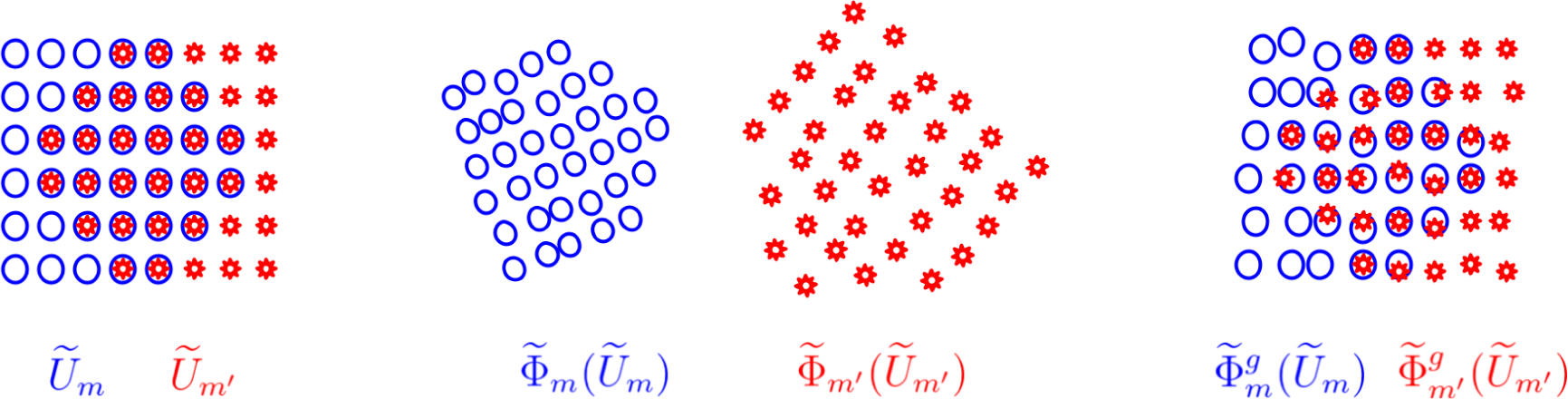

4. Clustering for Intermediate Views

Recall that the discrete balls Uk are the local views of the data in the high dimensional ambient space. In the previous section, we obtained the mappings Φk to construct the local views Φk(Uk) of the data in the d-dimensional embedding space. As discussed in Section 1.2, one can use the GPA (Crosilla and Beinat, 2002; Gower, 1975; Ten Berge, 1977) to register these local views to recover a global embedding. In practice, too many small local views (high n and small |Uk|) result in extremely high computational complexity. Moreover, small overlaps between the local views makes their registration susceptible to errors. Therefore, we perform clustering to obtain M ≪ n intermediate views, and , of the data in the ambient space and the embedding space, respectively. This reduces the time complexity and increases the overlaps between the views, leading to their quick and robust registration.

4.1. Notation

Our clustering algorithm is designed so as to ensure low distortion of the parameterizations on . We first describe the notation used and then present the pseudocode followed by a full explanation of the steps. Let ck be the index of the cluster xk belongs to. Then the set of points which belong to cluster m is given by

| (46) |

Denote by the set of indices of the neighboring clusters of xk. The neighboring points of xk lie in these clusters, that is,

| (47) |

We say that a point xk lies in the vicinity of a cluster m if . Let denote the mth intermediate view of the data in the ambient space. This constitutes the union of the local views associated with all the points belonging to cluster m, that is,

| (48) |

Clearly, a larger cluster means a larger intermediate view. In particular, addition of xk to grows the intermediate view to ,

| (49) |

Let be the d-dimensional parameterization associated with the mth cluster. This parameterization maps to , the mth intermediate view of the data in the embedding space. Note that a point xk generates the local view Uk (see Eq. (26)) which acts as the domain of the parameterization Φk. Similarly, a cluster obtained through our procedure, generates an intermediate view (see Eq. (48)) which acts as the domain of the parameterization. Overall, our clustering procedure replaces the notion of a local view per an individual point by an intermediate view per a cluster of points.

4.2. Low Distortion Clustering

Initially, we start with n singleton clusters where the point xk belongs to the kth cluster and the parameterization associated with the kth cluster is Φk. Thus, ck = k, and for all k,m ∈ {1,...,n}. This automatically implies that initially . The parameterizations associated with the clusters remain the same throughout the procedure. During the procedure, each cluster is perceived as an entity which wants to grow the domain of the associated parameterization by growing itself (see Eq. 49), while simultaneously keeping the distortion of on low (see Eq. 45). To achieve that, each cluster places a careful bid for each point xk. The global maximum bid is identified and the underlying point xk is relabelled to the bidding cluster, hence updating ck. With this relabelling, the bidding cluster grows and the source cluster shrinks. This procedure of shrinking and growing clusters is repeated until all non-empty clusters are large enough, i.e. have a size at least ηmin, a hyperparameter. In our experiments, we choose ηmin from {5,10,15,20,25}. We iterate over η which varies from 2 to ηmin.

In the η-th iteration, we say that the mth cluster is small if it is non-empty and has a size less than η, that is, when . During the iteration, the clusters either shrink or grow until no small clusters remain. Therefore, at the end of the η-th iteration the non-empty clusters are of size at least η. After the last (ηminth) iteration, each non-empty cluster will have at least ηmin points and the empty clusters are pruned away.

Bid by cluster m for xk.

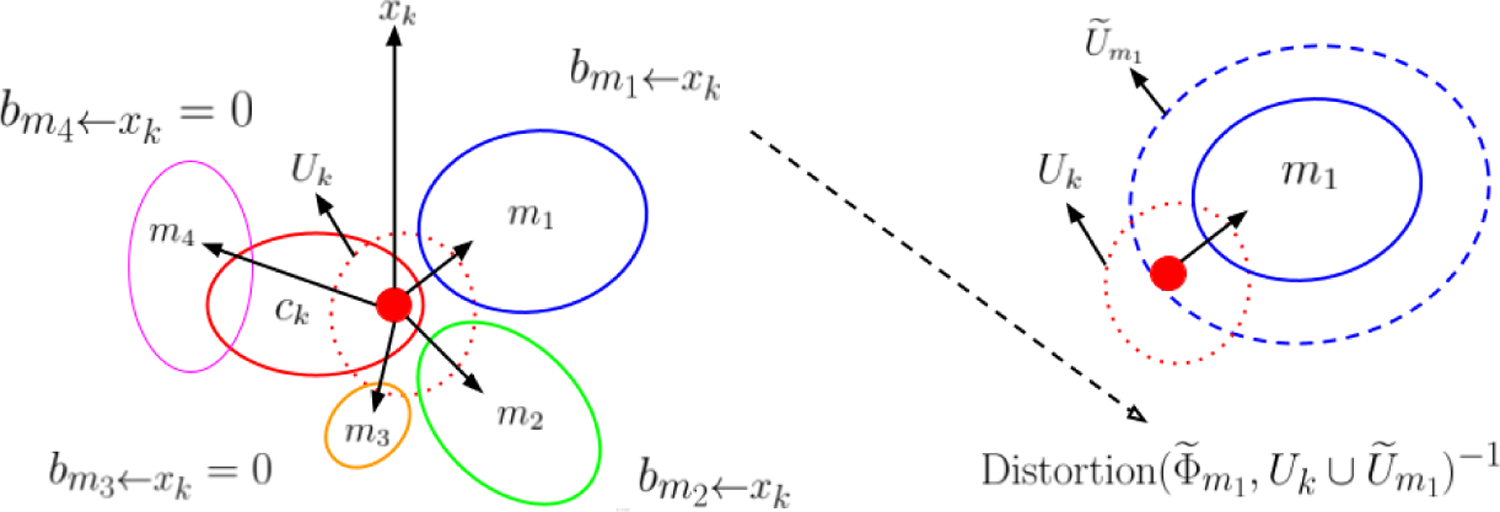

In the η-th iteration, we start by computing the bid by each cluster m for each point xk. The bid function is designed so as to satisfy the following conditions. The first two conditions are there to halt the procedure while the last two conditions follow naturally. These conditions are also depicted in Figure 6.

Figure 6:

Computation of the bid for a point in a small cluster by the neighboring clusters in η-th iteration. (left) xk is a point represented by a small red disc, in a small cluster ck enclosed by solid red line. The dashed red line enclose Uk. Assume that the cluster ck is small so that . Clusters m1, m2, m3 and m4 are enclosed by solid colored lines too. Note that m1, m2 and m3 lie in (the nonempty overlap between these clusters and Uk indicate that), while . Thus, the bid by m4 for xk is zero. Since the size of cluster m3 is less than the size of cluster ck i.e., the bid by m3 for xk is also zero. Since clusters m1 and m2 satisfy all the conditions, the bids by m1 and m2 for xk are to be computed. (right) The bid , is given by the inverse of the distortion of on , where the dashed blue line enclose . If the bid is greater (less) than the bid , then the clustering procedure would favor relabelling of xk to m1 (m2).

No cluster bids for the points in large clusters. Since xk belongs to cluster ck therefore, if then the is zero for all m.

No cluster bids for a point in another cluster whose size is bigger than its own size. Therefore, if then again is zero.

A cluster bids for the points in its own vicinity. Therefore, if (see Eq. 47) then is zero.

Recall that a cluster m aims to grow while keeping the distortion of associated parameterization low on its domain . If the mth cluster acquires the point xk, grows due to the addition of Uk to it (see Eq. (48)), and so does the distortion of on it. Therefore, to ensure low distortion, the natural bid by for the point xk, , is Distortion (see Eq. 45).

Combining the above conditions, we can write the bid by cluster m for the point xk as,

| (50) |

In the practical implementation of above equation, and are computed on the fly using Eq. (47, 48).

Greedy procedure to grow and shrink clusters.

Given the bids by all the clusters for all the points, we grow and shrink the clusters so that at the end of the current iteration η, each non-empty cluster has a size at least η. We start by picking the global maximum bid, say . Let xk be in the cluster s (note that ck, the cluster of xk, is s before xk is relabelled). We relabel ck to m, and update the set of points in clusters s and m, and , using Eq. (46). This implicitly shrinks and grows (see Eq. 48) and affects the bids by clusters m and s or the bids for the points in these clusters. Denote the set of pairs of the indices of all such clusters and the points by

| (51) |

Then the bids are recomputed for all . It is easy to verify that for all other pairs, neither the conditions nor the distortion in Eq. (50) are affected. After this computation, we again pick the global maximum bid and repeat the procedure until the maximum bid becomes zero indicating that no non-empty small cluster remains. This marks the end of the η-th iteration.

Final intermediate views in the ambient and the embedding space.

At the end of the last iteration, all non-empty clusters have at least ηmin points. Let M be the number of non-empty clusters. Using the pigeonhole principle one can show that M would be less than or equal to n/ηmin. We prune away the empty clusters and relabel the non-empty ones from 1 to M while updating ck accordingly. With this, we obtain the clusters with associated parameterizations . Finally, using Eq. (48), we obtain the M intermediate views of the data in the ambient space. Then, the intermediate views of the data in the embedding space are given by . Note that is a matrix with rows and d columns (see Eq. (43)).

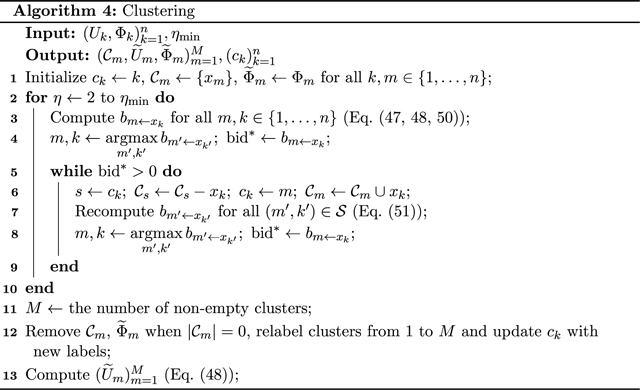

Example. We continue with our example of the square grid which originally contained about 104 points. Therefore, before clustering we had about 104 small local views Uk and Φk(Uk), each containing 25 points. After clustering with ηmin = 10, we obtained 635 clusters and therefore that many intermediate views and with an average size of 79. When the points on the boundary are known then we obtained 562 intermediate views with an average size of 90. Note that there is a trade-off between the size of the intermediate views and the distortion of the parameterizations used to obtain them. For convenience, define to be the distortion of on using Eq. (45). Then, as the size of the views are increased (by increasing ηmin), the value of would also increase. In Figure 7 we colored the points in cluster m, , with . In other words, xk is colored by . Note the increased distortion in comparison to Figure 5.

Figure 7:

Each point xk colored by when the points on the boundary of the square grid are unknown (left) versus when they are known apriori (right).

4.3. Time Complexity

Our practical implementation of Algo. 4 uses memoization for speed up. It took about a minute to construct intermediate views using in the above example with n = 104, klv = 25, d = 2 and ηmin = 10, and it took less than 2 minutes for all the examples in Section 6. It was empirically observed that the time for clustering is linear in n, ηmin and d while it is cubic in klv.

5. Global Embedding using Procrustes Analysis

In this section, we present an algorithm based on Procrustes analysis to align the intermediate views and obtain a global embedding. The M views are transformed by an orthogonal matrix Tm of size d × d, a d-dimensional translation vector vm and a positive scalar bm as a scaling component. The transformed views are given by such that

| (52) |

First we state a general approach to estimate these parameters, and its limitations in Section 5.1. Then we present an algorithm in Section 5.2 which computes these parameters and a global embedding of the data while addressing the limitations of the general procedure. In Section 5.3 we describe a simple modification to our algorithm to tear apart closed manifolds. In Appendix F, we contrast our global alignment procedure with that of LTSA.

5.1. General Approach for Alignment

In general, the parameters are estimated so that for all m and m′, the two transformed views of the overlap between and , obtained using the parameterizations and , align with each other. To be more precise, define the overlap between the mth and the m′th intermediate views in the ambient space as the set of points which lie in both the views,

| (53) |

In the ambient space, the mth and the m′th views are neighbors if is non-empty. As shown in Figure 8 (left), these neighboring views trivially align on the overlap between them. It is natural to ask for a low distortion global embedding of the data. Therefore, we must ensure that the embeddings of due to the mth and the m′th view in the embedding space, also align with each other. Thus, the parameters are estimated so that aligns with for all m and m′. However, due to the distortion of the parameterizations it is usually not possible to perfectly align the two embeddings (see Figure 8). We can represent both embeddings of the overlap as matrices with rows and d columns. Then we choose the measure of the alignment error to be the squared Frobenius norm of the difference of the two matrices. The error is trivially zero if is empty. Overall, the parameters are estimated so as to minimize the following alignment error

| (54) |

In theory, one can start with a trivial initialization of Tm, vm and bm as Id, 0 and 1, and directly use GPA (Crosilla and Beinat, 2002; Gower, 1975; Ten Berge, 1977) to obtain a local minimum of the above alignment error. This approach has two issues.

Figure 8:

(left) The intermediate views and of a 2d manifold in a possibly high dimensional ambient space. These views trivially align with each other. The red star in blue circles represent their overlap . (middle) The mth and m′th intermediate views in the 2d embedding space. (right) Transformed views after aligning with .

Like most optimization algorithms, the rate of convergence to a local minimum and the quality of it depends on the initialization of the parameters. We empirically observed that with a trivial initialization of the parameters, GPA may take a great amount of time to converge and may also converge to an inferior local minimum.

Using GPA to align a view with all of its adjacent views would prevent us from tearing apart closed manifolds; as an example see Figure 11.

Figure 11:

2d embeddings of a square and a sphere at different stages of Algo. 5. For illustration purpose, in the plots in the 2nd and 3rd columns the translation parameter vm was manually set for those views which do not lie in the set . Note that the embedding of the sphere is fallacious. The reason and the resolution is provided in Section 5.3.

These issues are addressed in subsequent Sections 5.2 and 5.3, respectively.

5.2. GPA Adaptation for Global Alignment

First we look for a better than trivial initialization of the parameters so that the views are approximately aligned. The idea is to build a rooted tree where nodes represent the intermediate views. This tree is then traversed in a breadth first order starting from the root. As we traverse the tree, the intermediate view associated with a node is aligned with the intermediate view associated with its parent node (and with a few more views), thus giving a better initialization of the parameters. Subsequently, we refine these parameters using a similar procedure involving random order traversal over the intermediate views.

Initialization (Iter = 1, to tear = False).

In the first outer loop of Algo. 5, we start with Tm = Id, vm as the zero vector and compute bm so as to bring the intermediate views to the same scale as their counterpart in the ambient space. In turn this brings all the views to similar scale (see Figure 9 (c)). We compute the scaling component bm to be the ratio of the median distance between unique points in and in , that is,

| (55) |

Then we transform the the views in a sequence . This sequence corresponds to the breadth first ordering of a tree starting from its root node (which represents s1th view). Let the th view be the parent of the smth view. Here lies in {s1,...,sm−1} and it is a neighboring view of the smth view in the ambient space, i.e. is non-empty. Details about the computation of these sequences is provided in Appendix D. Note that is not defined and consequently, the first view in the sequence (s1th view) is not transformed, therefore and are not updated. We also define , initialized with s1, to keep track of visited nodes which also represent the already transformed views. Then we iterate over m which varies from 2 to M. For convenience, denote the current (mth) node sm by s and its parent by p. The following procedure updates Ts and vs (refer to Figure 9 and 10 for an illustration of this procedure).

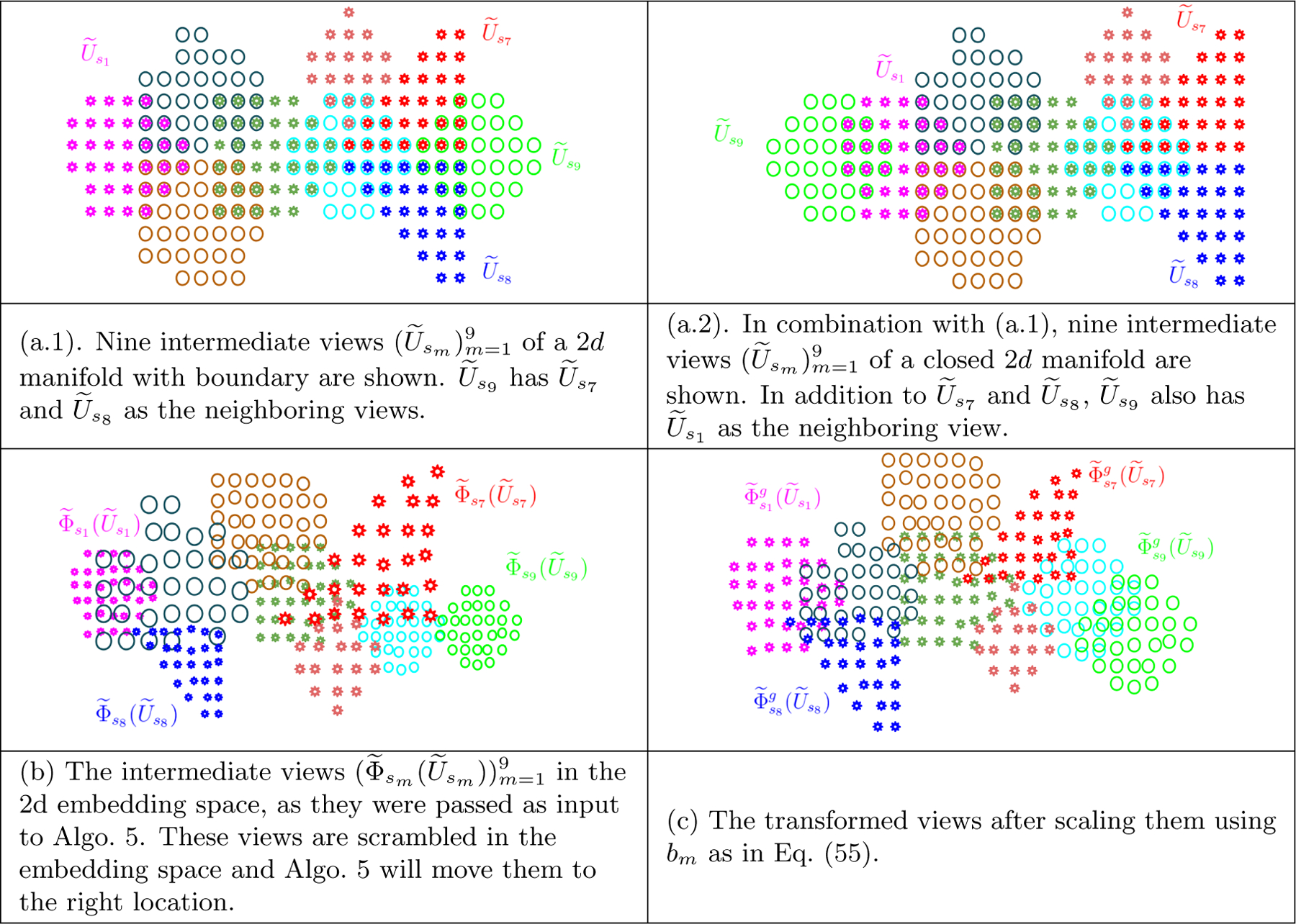

Figure 9:

An illustration of the intermediate views in the ambient and the embedding space as they are passed as input to Algo. 5 and are scaled using Eq. (55).

Figure 10:

An illustration of steps R1 to R4 in Algo. 5, in continuation of Figure 9.

Step R1. We compute a temporary value of Ts and vs by aligning the views and of the overlap , using Procrustes analysis (Gower et al., 2004) without modifying bs.

Step R2. Then we identify more views to align the sth view with. We compute a subset of the set of already visited nodes such that if the sth view and the m′th view are neighbors in the ambient space. Note that, at this stage, is the same as the set {s1,...,sm−1}, the indices of the first m − 1 views. Therefore,

| (56) |

Step R3. We then compute the centroid µs of the views . Here µs is a matrix with d columns and the number of rows given by the size of the set . A point in this set can have multiple embeddings due to multiple parameterizations depending on the overlaps it lies in. The mean of these embeddings forms a row in µs.

Step R4. Finally, we update Ts and vs by aligning the view with for all . This alignment is based on the approach in (Crosilla and Beinat, 2002; Gower, 1975) where, using the Procrustes analysis (Gower et al., 2004; MATLAB, 2018), the view is aligned with the centroid µs, without modifying bs.

Step R5. After the sth view is transformed, we add it to the set of transformed views .

Parameter Refinement (Iter ≥ 2, to tear = False).

At the end of the first iteration of the outer loop in Algo. 5, we have an initialization of such that transformed intermediate views are approximately aligned. To further refine these parameters, we iterate over in random order and perform the same five step procedure as above, Nr times. Besides the random-order traversal, the other difference in a refinement iteration is that the set of already visited nodes , contains all the nodes instead of just the first m − 1 nodes. This affects the computation of (see Eq. (56)) in step R2 so that the sth intermediate view is now aligned with all those views which are its neighbors in the ambient space. Note that the step R5 is redundant during refinement.

In the end, we compute the global embedding yk of xk by mapping xk using the transformed parameterization associated with the cluster ck it belongs to,

| (57) |

An illustration of the global embedding at various stages of Algo. 5 is provided in Figure 11.

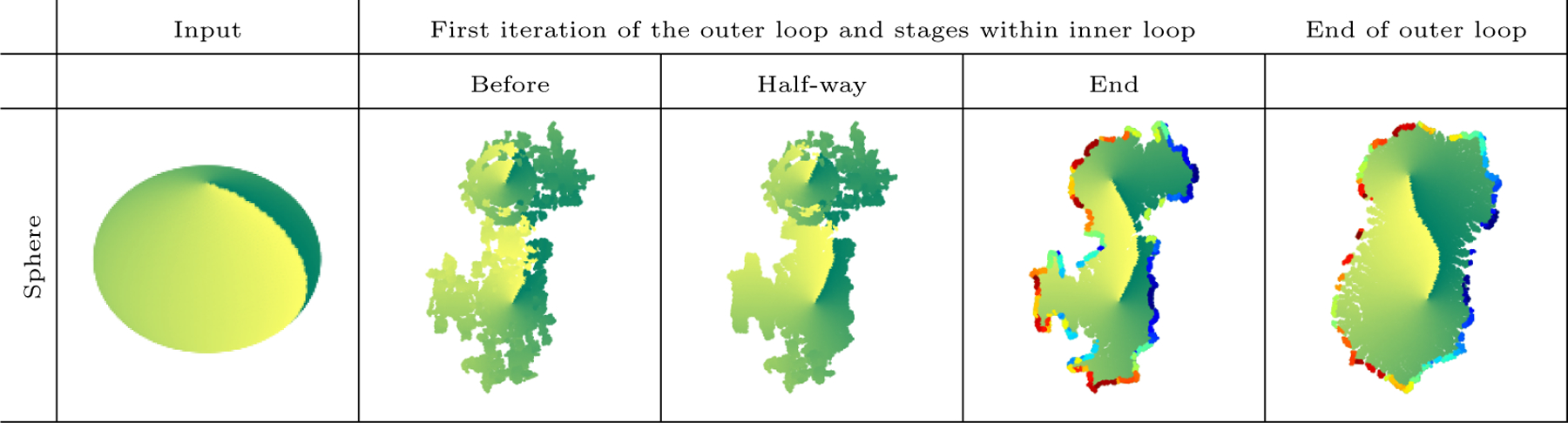

5.3. Tearing Closed Manifolds

When the manifold has no boundary, then the step R2 in above section may result in a set containing the indices of the views which are neighbors of the sth view in the ambient space but are far apart from the transformed sth view in the embedding space, obtained right after step R1. For example, as shown in Figure 10 (f.2), because the s9th view and the s1th view are neighbors in the ambient space (see Figure 9 (a.1, a.2)) but in the embedding space, they are far apart. Due to such indices in , the step R3 results in a centroid, which when used in step R4, results in a fallacious estimation of the parameters Ts and vs, giving rise to a high distortion embedding. By trying to align with all its neighbors in the ambient space, the s9th view is misaligned with respect to all of them (see Figure 10 (g.2)).

Resolution (to_tear = True).

We modify the step R2 so as to introduce a discontinuity by including the indices of only those views in the set which are neighbors of the sth view in both the ambient space as well as in the embedding space. We denote the overlap between the mth and m′th view in the embedding space by . There may be multiple heuristics for computing which could work. In the Appendix E, we describe a simple approach based on the already developed machinery in this paper, which uses the hyperparameter ν provided as input to Algo. 5. Having obtained , we say that the mth and the m′th intermediate views in the embedding space are neighbors if is non-empty.

Step R2. Finally, we compute as,

| (58) |

Note that if it is known apriori that the manifold can be embedded in lower dimension without tearing it apart then we do not require the above modification. In all of our experiments except the one in Section 6.5, we do not assume that this information is available.

With this modification, the set in Figure 10 (f.2) will not include s1 and therefore the resulting centroid in the step R3 would be the same as the one in Figure 10 (f.1). Subsequently, the transformed s9th view would be the one in Figure 10 (g.1) rather than Figure 10 (g.2).

Gluing instruction for the boundary of the embedding.

Having knowingly torn the manifold apart, we provide at the output, information on the points belonging to the tear and their neighboring points in the ambient space. To encode the “gluing” instructions along the tear in the form of colors at the output of our algorithm, we recompute . If is non-empty but is empty, then this means that the mth and m′th views are neighbors in the ambient space but are torn apart in the embedding space. Therefore, we color the global embedding of the points on the overlap which belong to clusters and with the same color to indicate that although these points are separated in the embedding space, they are adjacent in the ambient space (see Figures 19, 20 and 31).

Figure 19:

Embeddings of 2d manifolds without boundary into . For each manifold, the left and right columns contain the same plots colored by the two parameters of the manifold. A proof of the mathematical correctness of the LDLE embeddings is provided in Figure 31.

Figure 20:

Embeddings of 2d non-orientable manifolds into . For each manifold, the left and right columns contain the same plots colored by the two parameters of the manifold. A proof of the mathematical correctness of the LDLE embeddings is provided in Figure 31.

An illustration of the global embedding at various stages of Algo. 5 with modified step R2, is provided in Figure 12.

Figure 12:

2d embedding of a sphere at different stages of Algo. 5. For illustration purpose, in the plots in the 2nd and 3rd columns the translation parameter vm was manually set for those views which do not lie in the set .

Example. The obtained global embeddings of our square grid with to_tear = True and ν = 3, are shown in Figure 13. Note that the boundary of the obtained embedding is more distorted when the points on the boundary are unknown than when they are known apriori. This is because the intermediate views near the boundary have higher distortion in the former case than in the latter case (see Figure 7).

Figure 13:

Global embedding of the square grid when the points on the boundary are unknown (left) versus when they are known apriori (right).

5.4. Time Complexity

The worst case time complexity of Algo. 5 is when to tear is false. It costs an additional time of when to tear is true. In practice, one refinement step took about 15 seconds in the above example and between 15–20 seconds for all the examples in Section 6.

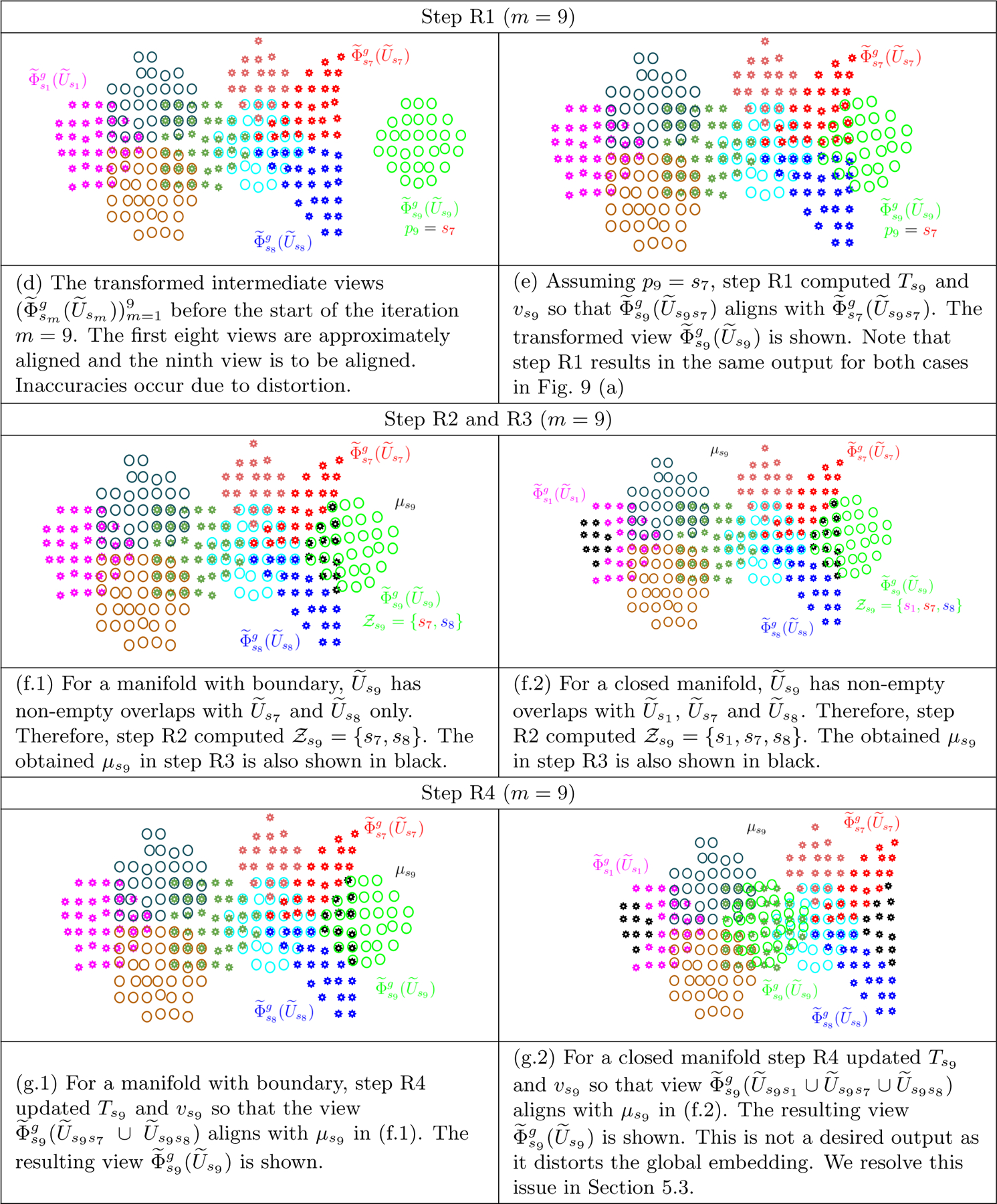

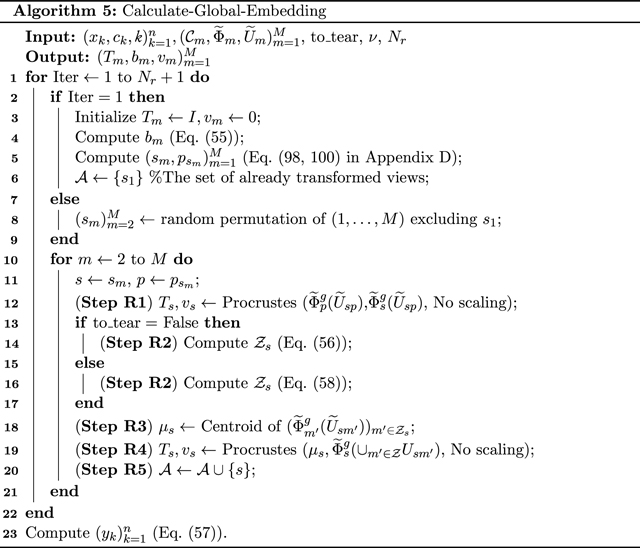

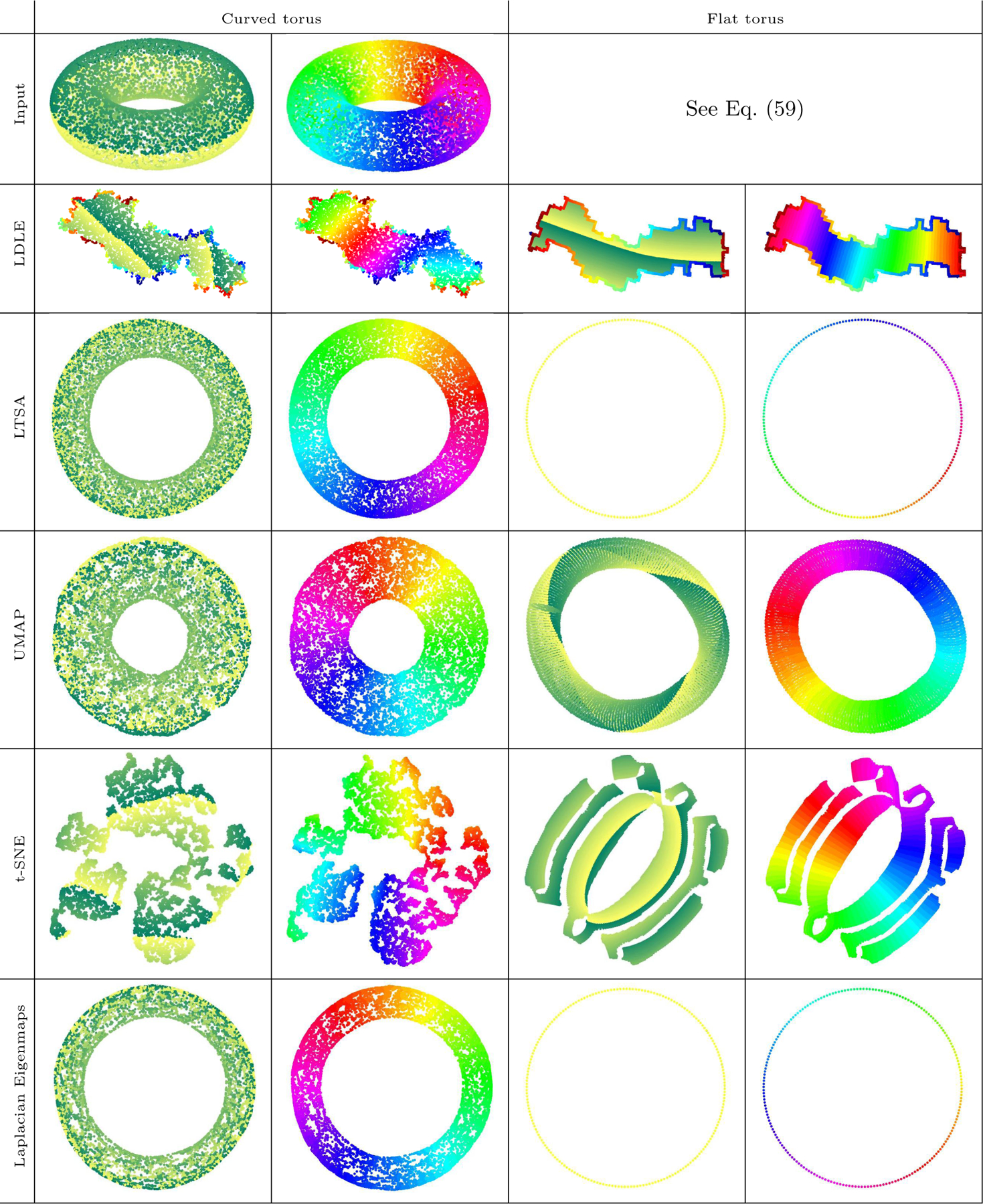

6. Experimental Results

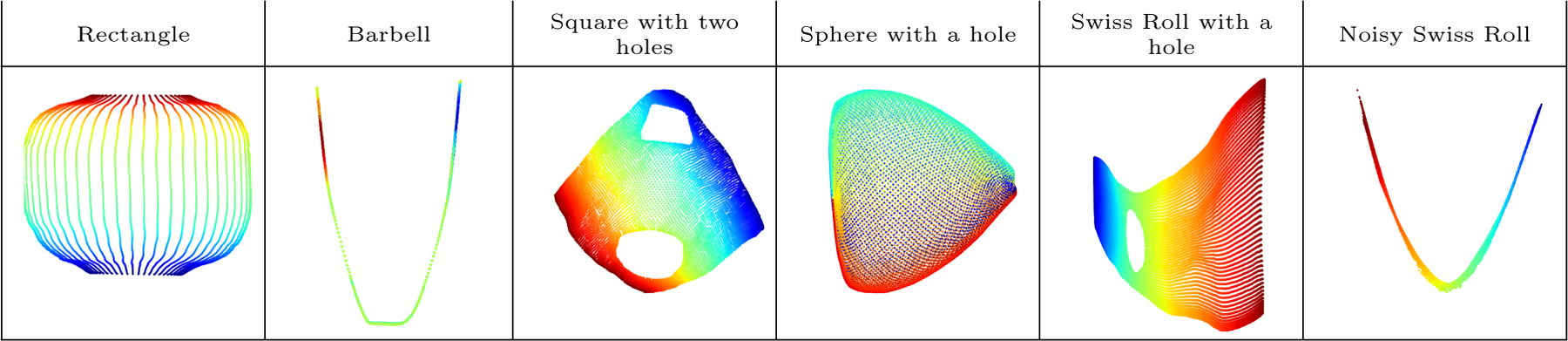

We present experiments to compare LDLE2 with LTSA (Zhang and Zha, 2003), UMAP (McInnes et al., 2018), t-SNE (Maaten and Hinton, 2008) and Laplacian eigenmaps (Belkin and Niyogi, 2003) on several data sets. First, we compare the embeddings of discretized 2d manifolds embedded in , or , containing about 104 points. These manifolds are grouped based on the presence of the boundary and their orientability as in Sections 6.2, 6.3 and 6.4. The inputs are shown in the figures themselves except for the flat torus and the Klein bottle, as their 4D parameterizations cannot be plotted. Therefore, we describe their construction below. A quantitative comparison of the algorithms is provided in Section 6.2.1. In Section 6.2.2 we assess the robustness of these algorithms to the noise in the data. In Section 6.2.3 we assess the performance of these algorithms on sparse data. Finally, in Section 6.5 we compare the embeddings of some high dimensional data sets.

Flat Torus. A flat torus is a parallelogram whose opposite sides are identified. In our case, we construct a discrete flat torus using a rectangle with sides 2 and 0.5 and embed it in four dimensions as follows,

| (59) |

where θi = 0.01iπ, ϕj = 0.04jπ, i ∈ {0,...,199} and j ∈ {0,...,49}.

Klein bottle. A Klein bottle is a non-orientable two dimensional manifold without boundary. We construct a discrete Klein bottle using its 4D Möbius tube representation as follows,

| (60) |

| (61) |

where θi = iπ/100, ϕj = jπ/25, i ∈ {0,...,199} and j ∈ {0,...,49}.

6.1. Hyperparameters

To embed using LDLE, we use the Euclidean metric and the default values of the hyperparameters and their description are provided in Table 1. Only the value of ηmin is tuned across all the examples in Sections 6.2, 6.3 and 6.4 (except for Section 6.2.3), and is provided in Appendix G. For high dimensional data sets in Section 6.5, values of the hyperaparameters which differ from the default values are again provided in Appendix G.

For UMAP, LTSA, t-SNE and Laplacian eigenmaps, we use the Euclidean metric and select the hyperparameters by grid search, choosing the values which result in best visualization quality. For LTSA, we search for optimal n neighbors in {5,10,25,50,75,100}. For UMAP, we use 500 epochs and search for optimal n neighbors in {25,50,100,200} and min dist in {0.01,0.1,0.25,0.5}. For t-SNE, we use 1000 iterations and search for optimal perplexity in {30,40,50,60} and early exaggeration in {2,4,6}. For Laplacian eigenmaps, we search for knn in {16,25,36,49} and ktune in {3,7,11}. The chosen values of the hyperparameters are provided in Appendix G. We note that the Laplacian eigenmaps fails to correctly embed most of the examples regardless of the choice of the hyperparameters.

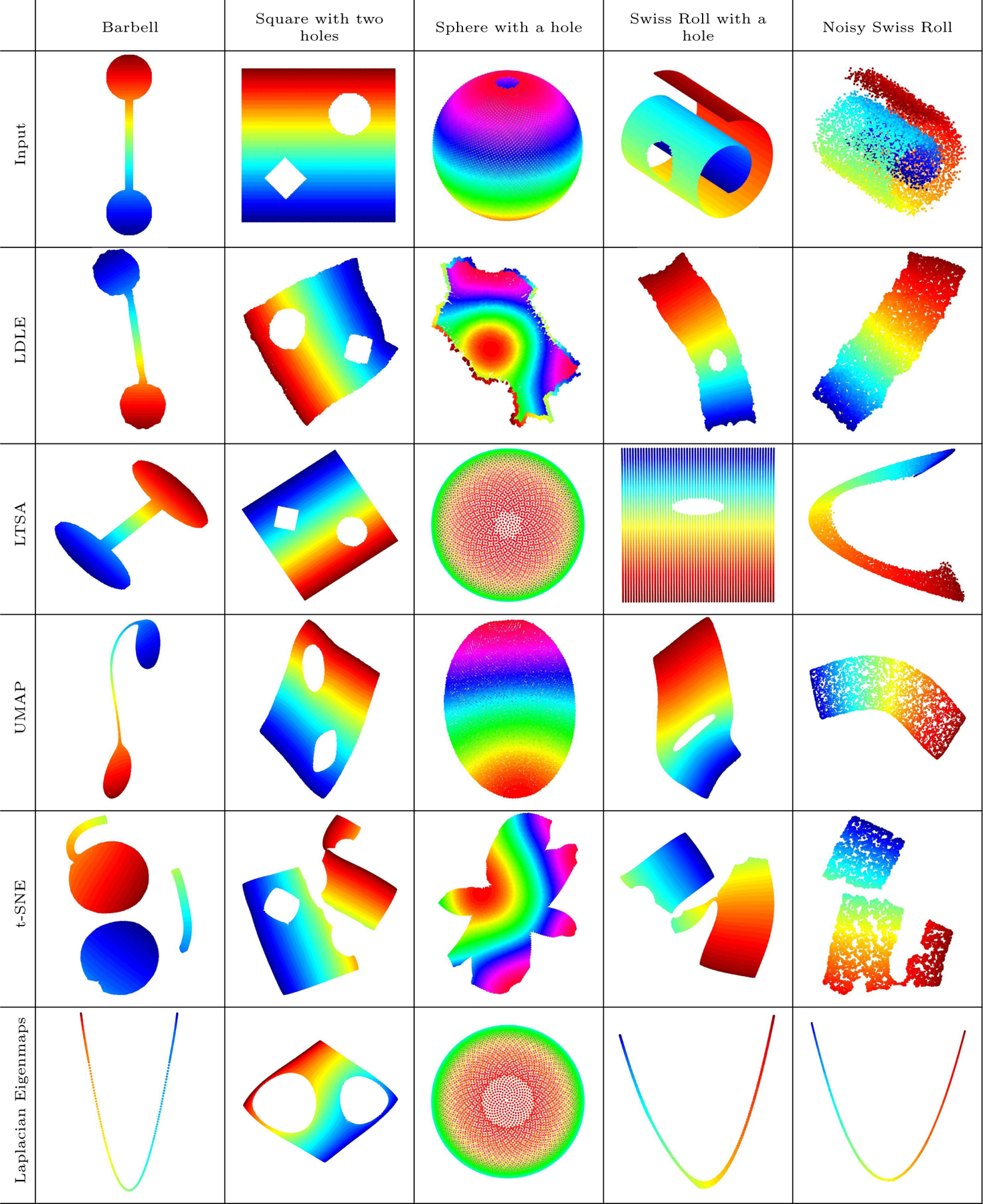

6.2. Manifolds with Boundary

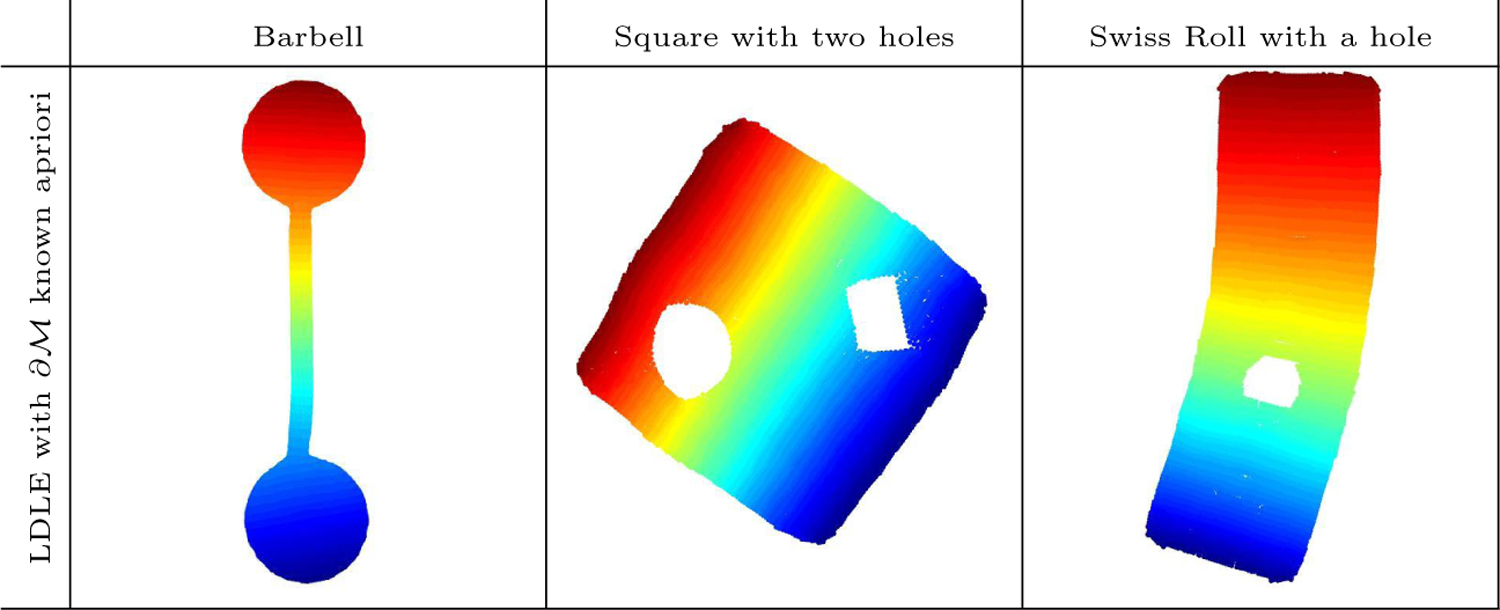

In Figure 14, we show the 2d embeddings of 2d manifolds with boundary, in or , three of which have holes. To a large extent, LDLE preserved the shape of the holes. LTSA perfectly preserved the shape of the holes in the square but deforms it in the Swiss Roll. This is because LTSA embedding does not capture the aspect ratio of the underlying manifold as discussed in Section F. UMAP and Laplacian eigenmaps distorted the shape of the holes and the region around them, while t-SNE produced dissected embeddings. For the sphere with a hole which is a curved 2d manifold with boundary, LTSA, UMAP and Laplacian eigenmaps squeezed it into while LDLE and t-SNE tore it apart. The correctness of the LDLE embedding is proved in Figure 31. In the case of noisy swiss roll, LDLE and UMAP produced visually better embeddings in comparison to the other methods.

Figure 14:

Embeddings of 2d manifolds with boundary into . The noisy Swiss Roll is constructed by adding uniform noise in all three dimensions, with support on [0,0.05].

We note that the boundaries of the LDLE embeddings in Figure 14 are usually distorted. The cause of this is explained in Remark 4. When the points in the input which lie on the boundary are known apriori then the distortion near the boundary can be reduced using the double manifold as discussed in Remark 5 and shown in Figure 4. The obtained LDLE embeddings when the points on the boundary are known, are shown in Figure 15.

Figure 15:

LDLE embeddings when the points on the boundary are known apriori.

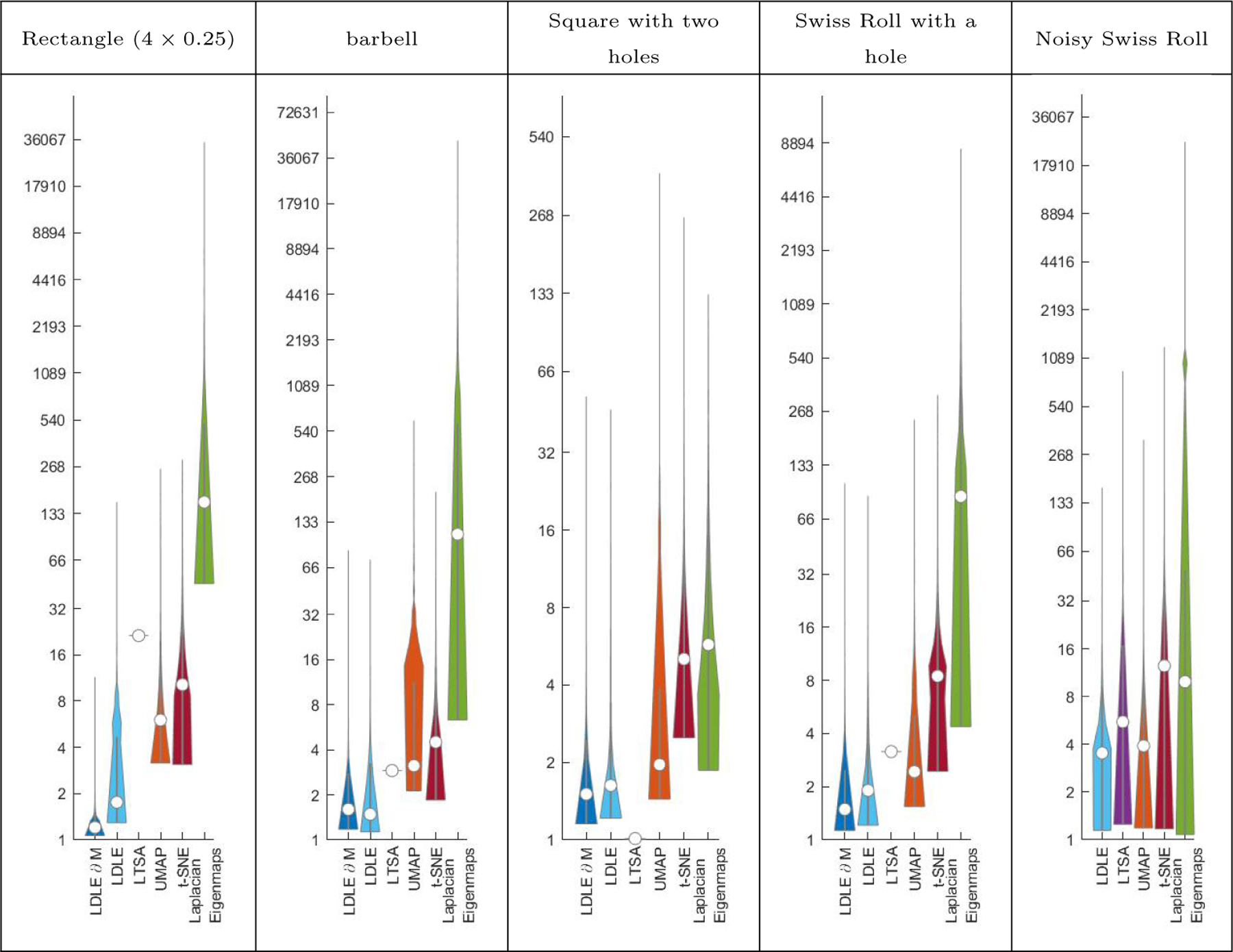

6.2.1. Quantitative Comparison

To compare LDLE with other techniques in a quantitative manner, we compute the distortion of the embeddings of the geodesics originating from xk and then plot the distribution of (see Figure 16). The procedure to compute follows. In the discrete setting, we first define the geodesic between two given points as the shortest path between them which in turn is computed by running Dijkstra algorithm on the graph of 5 nearest neighbors. Here, the distances are measured using the Euclidean metric de. Denote the number of nodes on the geodesic between xk and xk′ by nkk′ and the sequence of nodes by where x1 = xk and . Denote the embedding of xk by yk. Then the length of the geodesic in the latent space between xk and xk′, and the length of the embedding of the geodesic between yk and yk′ are given by

| (62) |

| (63) |

Finally, the distortion of the embeddings of the geodesics originating from xk is given by the ratio of maximum expansion and minimum contraction, that is,

| (64) |

Figure 16:

Violin plots (Hintze and Nelson, 1998; Bechtold et al., 2021) for the distribution of (See Eq. (64)). LDLE ∂M means LDLE with boundary known apriori. The white point inside the violin represents the median. The straight line above the end of the violin represents the outliers.

A value of 1 for means the geodesics originating from xk have the same length in the input and in the embedding space. If for all k then the embedding is geometrically, and therefore topologically as well, the same as the input up to scale. Figure 16 shows the distribution of due to LDLE and other algorithms for various examples. Except for the noisy Swiss Roll, LTSA produced the least maximum distortion. Specifically, for the square with two holes, LTSA produced a distortion of 1 suggesting its strength on manifolds with unit aspect ratio. In all other examples, LDLE produced the least distortion except for a few outliers. When the boundary is unknown, the points which result in high are the ones which lie on and near the boundary. When the boundary is known, these are the points which lie on or near the corners (see Figures 4 and 5). We aim to fix this issue in future work.

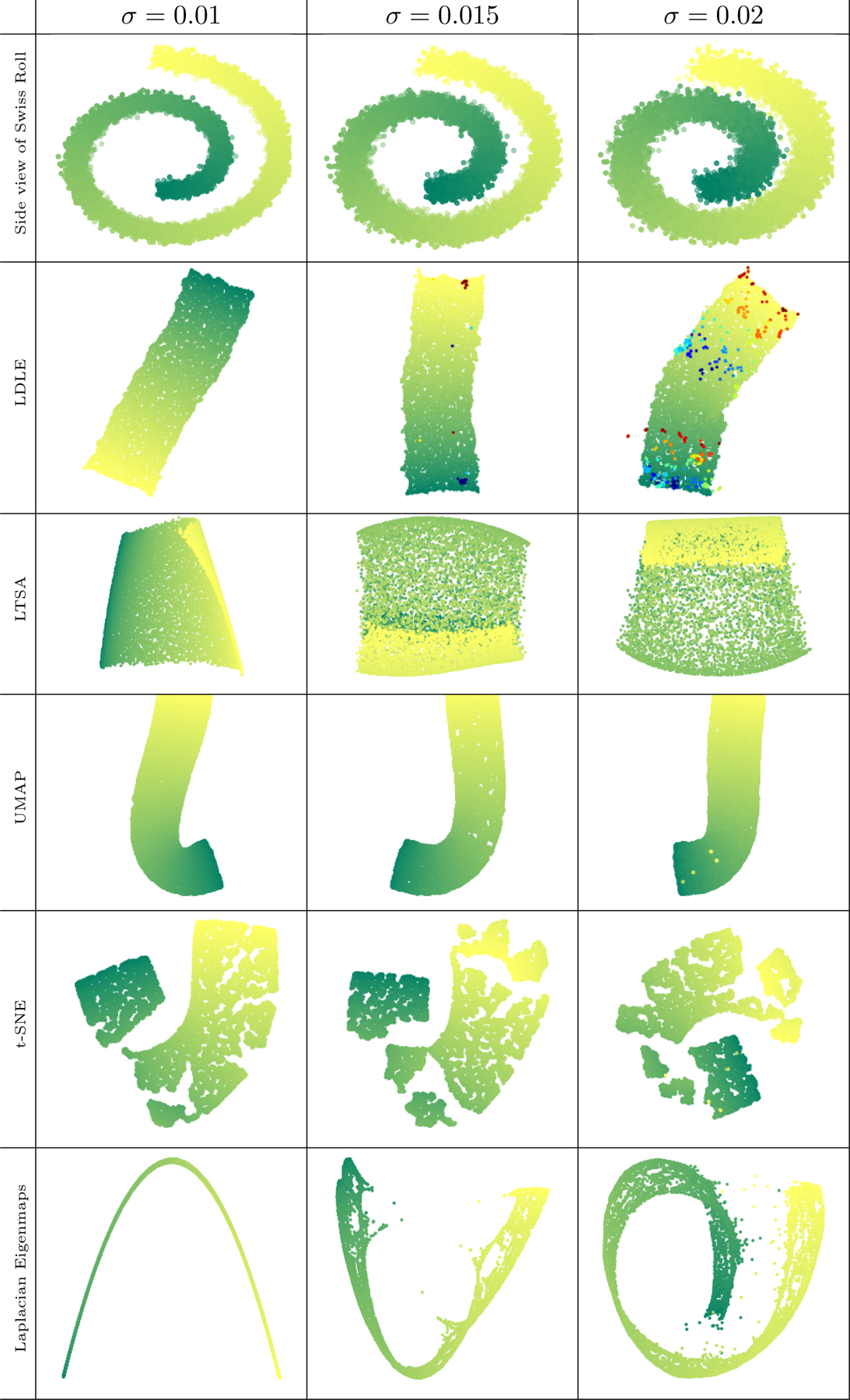

6.2.2. Robustness to Noise

To further analyze the robustness of LDLE under noise we compare the embeddings of the Swiss Roll with Gaussian noise of increasing variance. The resulting embeddings are shown in Figure 17. Note that certain points on LDLE embeddings have a different colormap than the one used for the input. As explained in Section 5.3, the points which have the same color under this colormap are adjacent on the manifold but away in the embedding. To be precise, these points lie close to the middle of the gap in the Swiss Roll, creating a bridge between those points which would otherwise be far away on a noiseless Swiss Roll. In a sense, these points cause maximum corruption to the geometry of the underlying noiseless manifold. One can say that these points are have adversarial noise, and LDLE embedding can automatically recognize such points. We will further explore this in future work. LTSA, t-SNE and Laplacian Eigenmaps fail to produce correct embeddings while UMAP embeddings also exhibit high quality.

Figure 17:

Embeddings of the Swiss Roll with additive noise sampled from the Gaussian distribution of zero mean and a variance of σ2 (see Section 6.2.2 for details).

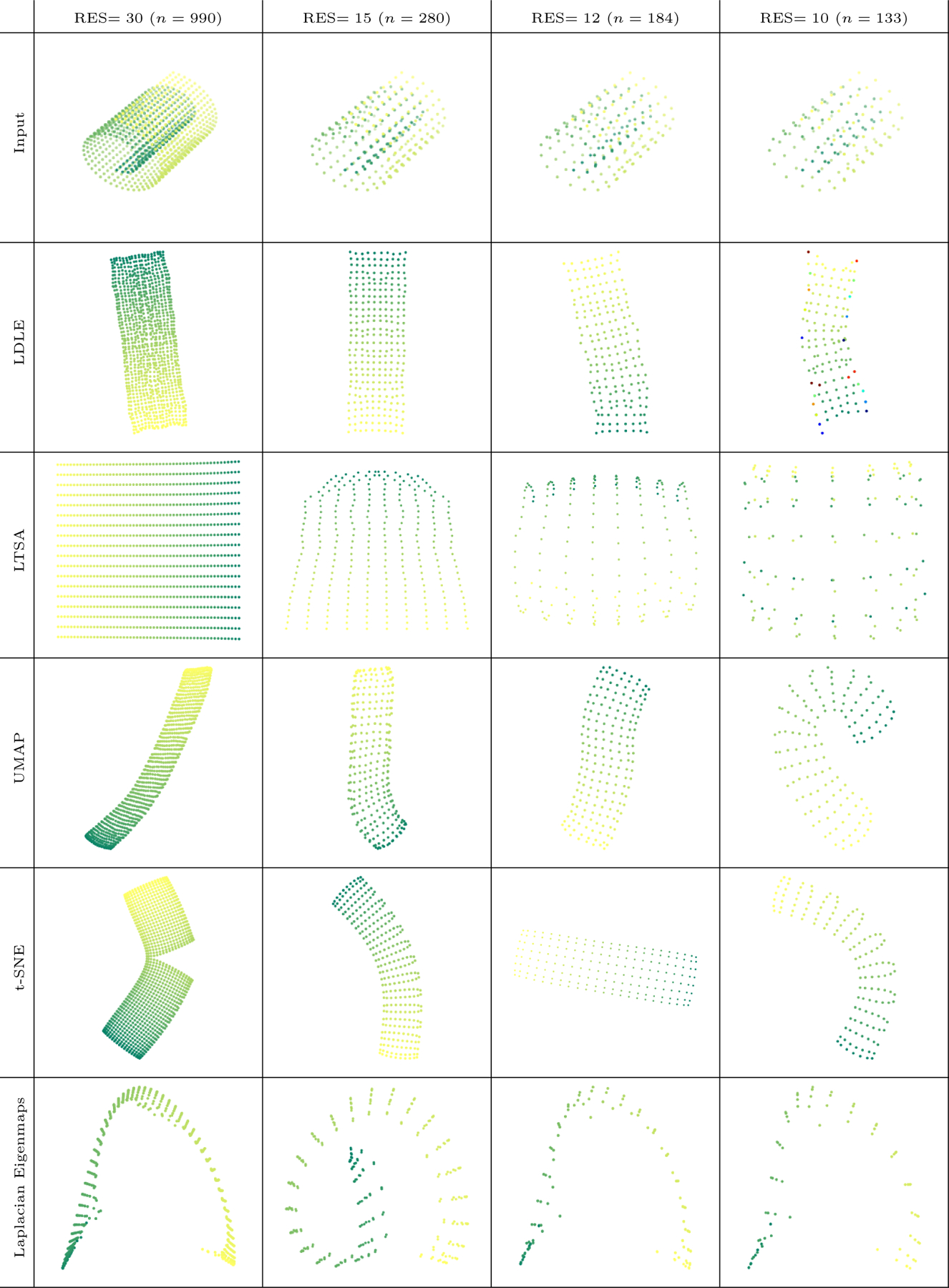

6.2.3. Sparsity

A comparison of the embeddings of the Swiss Roll with decreasing resolution and increasing sparsity is provided in Figure 18. Unlike LTSA and Laplacian Eigenmaps, the embeddings produced by LDLE, UMAP and t-SNE are of high quality. Note that when the resolution is 10, LDLE embedding of some points have a different colormap. Due to sparsity, certain points on the opposite sides of the gap between the Swiss Roll are neighbors in the ambient space as shown in Figure 32 in Appendix H. LDLE automatically tore apart these erroneous connections and marked them at the output using a different colormap. A discussion on sample size requirement for LDLE follows.

Figure 18:

Embeddings of the Swiss Roll with decreasing resolution and increasing sparsity (see Section 6.2.3 for details). Note that when RES= 7 (n = 70) none of the above method produced a correct embedding.

The distortion of LDLE embeddings directly depend on the distortion of the constructed local parameterizations, which in turn depends on reliable estimates of the graph Laplacian and its eigenvectors. The work in (Belkin and Niyogi, 2008; Hein et al., 2007; Trillos et al., 2020; Cheng and Wu, 2021) provided conditions on the sample size and the hyperparameters such as the kernel bandwidth, under which the graph Laplacian and its eigenvectors would converge to their continuous counterparts. A similar analysis in the setting of self-tuned kernels used in our approach (see Algo. 1) is also provided in (Cheng and Wu, 2020). These imply that, for a faithful estimation of graph Laplacian and its eigenvectors, the hyperparameter ktune (see Table 1) should be small enough so that the local scaling factors σk (see Algo. 1) are also small, while the size of the data n should be large enough so that is sufficiently large for all k ∈ {1,...,n}. This suggests that n needs to be exponential in d and inversely related to σk. However, in practice, the data is usually given and therefore n is fixed. So the above mainly states that to obtain accurate estimates, the hyperparameter ktune must be decreased. This indeed holds as we had to decrease ktune from 7 to 2 (see Appendix G) to produce LDLE embeddings of high quality for increasingly sparse Swiss Roll in Figure 18.

6.3. Closed Manifolds

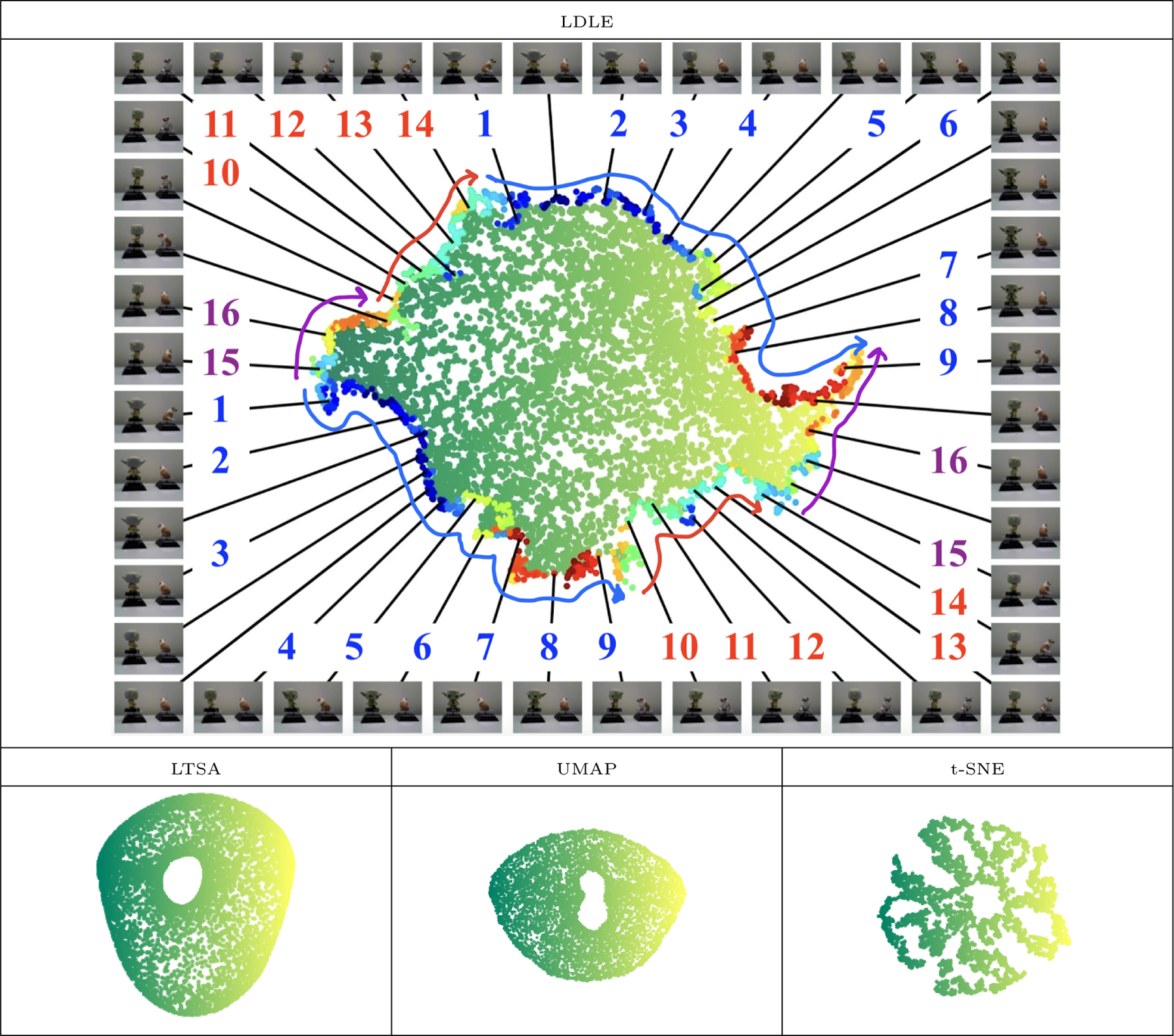

In Figure 19, we show the 2d embeddings of 2d manifolds without a boundary, a curved torus in and a flat torus in . LDLE produced similar representation for both the inputs. None of the other methods do that. The main difference in the LDLE embedding of the two inputs is based on the boundary of the embedding. It is composed of many small line segments for the flat torus, and many small curved segments for the curved torus. This is clearly because of the difference in the curvature of the two inputs, zero everywhere for the flat torus and non-zero almost everywhere on the curved torus. The mathematical correctness of the LDLE embeddings using the cut and paste argument is shown in Figure 31. LTSA, UMAP and Laplacian eignemaps squeezed both the manifolds into while the t-SNE embedding is non-interpretable.

6.4. Non-Orientable Manifolds

In Figure 20, we show the 2d embeddings of non-orientatble 2d manifolds, a Möbius strip in and a Klein bottle in . Laplacian eigenmaps produced incorrect embeddings, t-SNE produced dissected and non-interpretable embeddings and LTSA and UMAP squeezed the inputs into . LDLE produced mathematically correct embeddings by tearing apart both inputs to embed them into (see Figure 31).

6.5. High Dimensional Data

6.5.1. Synthetic Sensor Data

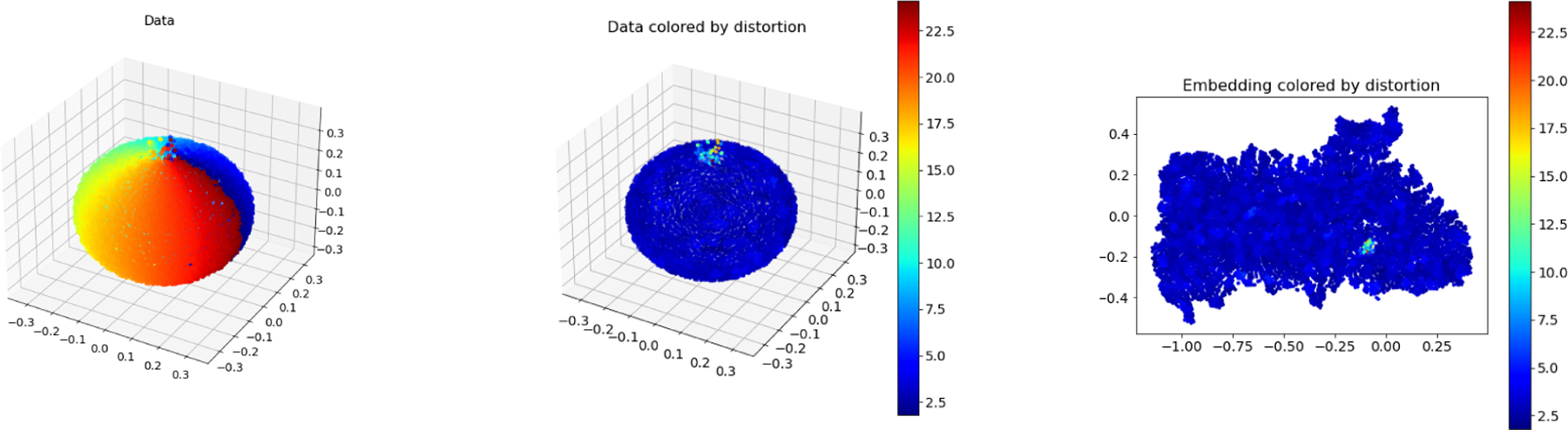

In Figure 21, motivated from (Peterfreund et al., 2020), we embed a 42 dimensional synthetic data set representing the signal strength of 42 transmitters at about n = 6000 receiving locations on a toy floor plan. The transmitters and the receivers are distributed uniformly across the floor. Let be the transmitter locations and ri be the ith receiver location. Then the ith data point xi is given by . The resulting data set is embedded using and other algorithms into . The hyperparameters resulting in the most visually appealing embeddings were identified for each algorithm and are provided in Table 2. The obtained embeddings are shown in Figure 21. The shapes of the holes are best preserved by LTSA, then LDLE followed by the other algorithms. The corners of the LDLE embedding are more distorted. The reason for distorted corners is given in Remark 4.

Figure 21:

Embedding of the synthetic sensor data into (see Section 6.5 for details).

6.5.2. Face Image Data

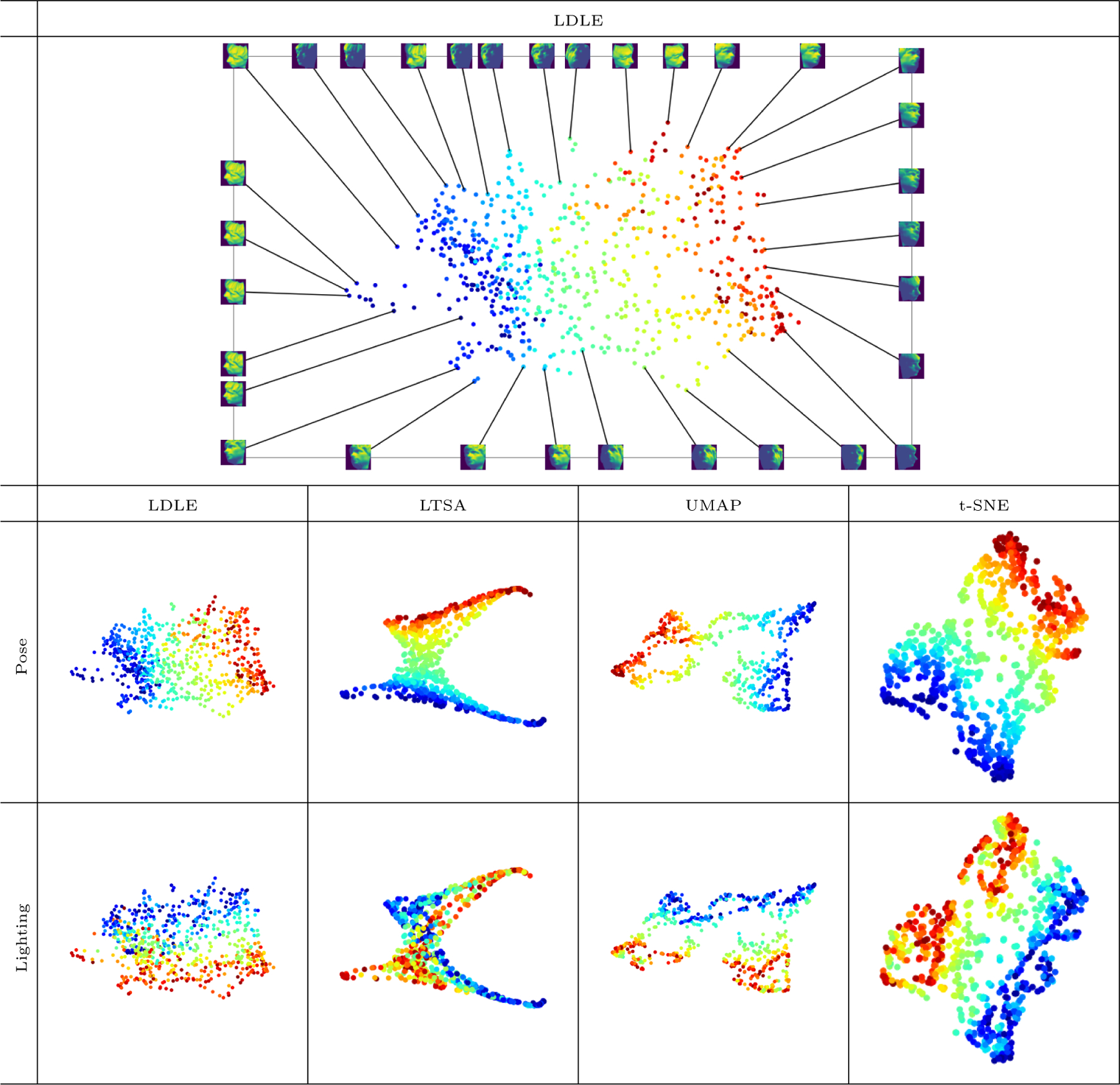

In Figure 22, we show the embedding obtained by applying LDLE on the face image data (Tenenbaum et al., 2000) which consists of a sequence of 698 64-by-64 pixel images of a face rendered under various pose and lighting conditions. These images are converted to 4096 dimensional vectors, then projected to 100 dimensions through PCA while retaining about 98% of the variance. These are then embedded using LDLE and other algorithms into . The hyperparameters resulting in the most visually appealing embeddings were identified for each algorithm and are provided in Table 5. The resulting embeddings are shown in Figure 23 colored by the pose and lighting of the face. Note that values of the pose and lighting variables for all the images are provided in the data set itself. We have displayed face images corresponding to few points of the LDLE embeddings as well. Embeddings due to all the techniques except LTSA reasonably capture both the pose and lighting conditions.

Figure 22:

Embedding of the face image data set (Tenenbaum et al., 2000) into colored by the pose and lighting conditions (see Section 6.5 for details).

Figure 23:

Embeddings of snapshots of a platform with two objects, Yoda and a bull dog, each rotating at a different frequency, such that the underlying topology is a torus (see Section 6.5 for details).

6.5.3. Rotating Yoda-Bulldog Data Set

In Figure 23, we show the 2d embeddings of the rotating figures data set presented in (Lederman and Talmon, 2018). It consists of 8100 snapshots taken by a camera of a platform with two objects, Yoda and a bull dog, rotating at different frequencies. Therefore, the underlying 2d parameterization of the data should render a torus. The original images have a dimension of 320 × 240 × 3. In our experiment, we first resize the images to half the original size and then project them to 100 dimensions through PCA (Jolliffe and Cadima, 2016) while retaining about 98% variance. These are then embedded using LDLE and other algorithms into . The hyperparameters resulting in the most visually appealing embeddings were identified for each algorithm and are provided in Table 5. The resulting embeddings are shown in Figure 23 colored by the first dimension of the embedding itself. LTSA and UMAP resulted in a squeezed torus. LDLE tore apart the underlying torus and automatically colored the boundary of the embedding to suggest the gluing instructions. By tracing the color on the boundary we have manually drawn the arrows. Putting these arrows on a piece of paper and using cut and past argument one can establish that the embedding represents a torus (see Figure 31). The images corresponding to a few points on the boundary are shown. Pairs of images with the same labels represent the two sides of the curve along which LDLE tore apart the torus, and as is evident these pairs are similar.

7. Conclusion and Future Work

We have presented a new bottom-up approach (LDLE) for manifold learning which constructs low-dimensional low distortion local views of the data using the low frequency global eigenvectors of the graph Laplacian, and registers them to obtain a global embedding. Through various examples we demonstrated that LDLE competes with the other methods in terms of visualization quality. In particular, the embeddings produced by LDLE preserved distances upto a constant scale better than those produced by UMAP, t-SNE, Laplacian Eigenmaps and for the most part LTSA too. We also demonstrated that LDLE is robust to the noise in the data and produces fine embeddings even when the data is sparse. We also showed that LDLE can embed closed as well as non-orientable manifolds into their intrinsic dimension, a feature that is missing from the existing techniques. Some of the future directions of our work are as follows.

It is only natural to expect real world data sets to have boundary and to have many corners. As observed in the experimental results, when the boundary of the manifold is unknown, then the LDLE embedding tends to have distorted boundary. Even when the boundary is known, the embedding has distorted corners. This is caused by high distortion views near the boundary (see Figures 4 and 5). We aim to fix this issue in our future work. One possible resolution could be based on (Berry and Sauer, 2017) which presented a method to approximately calculate the distance of the points from the boundary.

When the data represents a mixture of manifolds, for example, a pair of possibly intersecting spheres or even manifolds of different intrinsic dimensions, it is also natural to expect a manifold learning technique to recover a separate parameterization for each manifold and provide gluing instructions at the output. One way is to perform manifold factorization (Zhang et al., 2021) or multi-manifold clustering (Trillos et al., 2021) on the data to recover sets of points representing individual manifolds and then use manifold learning on these separately. We aim to adapt LDLE to achieve this.

The spectrum of the Laplacian has been used in prior work for anomaly detection (Cloninger and Czaja, 2015; Mishne and Cohen, 2013; Cheng et al., 2018; Cheng and Mishne, 2020; Mishne et al., 2019). Similar to our approach of using a subset of Laplacian eigenvectors to construct low distortion local views in lower dimension, in (Mishne et al., 2018; Cheng and Mishne, 2020), subsets of Laplacian eigenvectors were identified so as to separate small clusters from a large background component. As shown in Figures 4 and 5, LDLE produced high distortion local views near the boundary and the corners, though these are not outliers. However, if we consider a sphere with outliers (say, a sphere with noise only at the north pole as in Figure 24), then the distortion of the local views containing the outliers is higher than the rest of the views. Therefore, the distortion of the local views can help find anomalies in the data. We aim to further investigate this direction to develop an anomaly detection technique.

Figure 24:

Local views containing outliers exhibit high distortion. (left) Input data . (middle) xk colored by the distortion ζkk of Φk on Uk. (right) yk colored by ζkk.

Similar to the approach of denoising a signal by retaining low frequency components, our approach uses low frequency Laplacian eigenvectors to estimate local views. These eigenvectors implicitly capture the global structure of the manifold. Therefore, to construct local views, unlike LTSA which directly relies on the local configuration of data which may be noisy, LDLE relies on the local elements of low frequency global eigenvectors of the Laplacian which are supposed to be robust to the noise. Practical implication of this is shown in Figure 17 to some extent while we aim to further investigate the theoretical implications.

Supplementary Material

Acknowledgments

This work was supported by funding from the NIH grant no. R01 EB026936 to DK and GM. AC was supported by funding from NSF DMS 1819222, 2012266, Russell Sage Foundation grant 2196, and Intel Research.

A. First Proof of Theorem 2

Choose ϵ > 0 so that the exponential map is a well defined diffeomorphism on where is the tangent space to at x, expx(0) = x and

| (65) |

Then using (Canzani, 2013, lem. 48, prop. 50, th. 51), for all such that

| (66) |