Abstract

People use cognitive control across many contexts in daily life, yet it remains unclear how cognitive control operates in contexts involving language. Distinguishing language-specific cognitive control components may be critical to understanding aphasia, which can co-occur with cognitive control deficits. For example, deficits in control of semantic representations (i.e., semantic control) are thought to contribute to semantic deficits in aphasia. By contrast, little is known about control of phonological representations (i.e., phonological control) in aphasia. We developed a switching task to investigate semantic and phonological control in 32 left hemisphere stroke survivors with aphasia and 37 matched controls. We found that phonological and semantic control were related but dissociated in the presence of switching demands. People with aphasia exhibited a group-level impairment in phonological control, although individual impairments were subtle except in one case. Several individuals with aphasia exhibited frank semantic control impairments, and these individuals had relative deficits on other semantic tasks. The present findings distinguish semantic control from phonological control and confirm that semantic control impairments contribute to semantic deficits in aphasia.

Keywords: Semantic Control, Phonological Control, Aphasia, Task Switching, Domain General, Language Specific

1. INTRODUCTION

Cognitive control is the flexible adaptation of behavior that allows us to achieve our goals despite our constantly changing environments. Accordingly, we use cognitive control across many settings in our daily life. In settings involving language, such as speaking, listening, reading, and writing, it is unclear to what extent cognitive control relies on distinct mechanisms. While some researchers propose that there are specialized cognitive control mechanisms specific to speech and language processing, others propose that nonspecific domain-general cognitive control mechanisms are applied in language settings (Kompa & Mueller, 2020).

1.1. Switching Control, Semantic Control, and Phonological Control

Cognitive control is often used in settings that require shifting between targets or goals, a behavior known as task switching (Kiesel et al., 2010). The present paper uses the term switching control to refer to the cognitive control ability that underlies success in task switching, although this ability also goes by other names, including switching, shifting, task shifting, and set shifting. Although contemporary theoretical frameworks differ in their labels, a cognitive control ability that allows for task switching is described across several frameworks (C. Gratton et al., 2018). Notably, it is one of the three domain-general processes defined in the widely accepted Unity/Diversity Framework, alongside updating and inhibition (Miyake et al., 2000; Miyake & Friedman, 2012). Switching control is considered to rely at least in part on domain-general cognitive control mechanisms. However, evidence suggests that language-specific mechanisms may also support switching control in a linguistic context.

Specifically, switching control can be supplemented using inner speech cueing, a language-based self-cueing strategy (Alderson-Day & Fernyhough, 2015; Emerson & Miyake, 2003; Miyake et al., 2004). Inner speech cueing is often examined using articulatory suppression, in which the participant speaks aloud during task switching (Baddeley et al., 2001). Articulatory suppression interferes with switching performance to a greater extent than non-articulatory tasks such as foot tapping (Emerson & Miyake, 2003; Kray et al., 2004). Furthermore, effects of articulatory suppression are reduced by the presence of explicit cues for the participant to switch (Emerson & Miyake, 2003). Together, these two findings indicate that participants use inner speech to cue themselves to the upcoming task goal or target. Although inner speech in general can rely on other language-based representations, such as semantic and syntactic representations (Alderson-Day & Fernyhough, 2015), inner speech cueing is often assumed to be composed solely of phonological representations. We tested this assumption in the present study by examining the role of phonological and semantic representations in inner speech cueing.

Cognitive control is also often used in settings involving language-related distractors. For example, speech production and comprehension are achieved despite semantically related and phonologically related distractors, both externally in the environment and internally in the form of competing neighbors (Cervera-Crespo & González-Álvarez, 2019; Chen & Mirman, 2012). In the Unity/Diversity Framework, the inhibition component contributes to control of interference from such distractors (Friedman & Miyake, 2004). Similarly, much neurocognitive and psycholinguistic work suggests that domain-general cognitive control contributes to control of language interference (Fedorenko, 2014; Novick et al., 2010; Nozari & Novick, 2017). However, the contribution of domain-general control components does not preclude the contribution of language-specific cognitive control components (Jacquemot & Bachoud-Lévi, 2021a). The architecture of such language-specific control components is unclear. Here, we propose separate components that contribute to controlling semantic interference (i.e., semantic control) versus controlling phonological interference (i.e., phonological control). We hypothesized that phonological control and semantic control rely on dissociable cognitive control components, in addition to domain-general control components.

The distinction between phonological and semantic control is hinted at by the effects of semantic versus phonological interference in picture naming paradigms, since such paradigms have been proposed to recruit cognitive control (Nozari et al., 2016). In some studies of picture naming, the presentation of phonological distractors causes a deleterious interference effect (Nozari et al., 2016), but in many others it paradoxically improves performance (i.e., a priming effect) (Meyer & Damian, 2007; Morsella & Miozzo, 2002; Navarrete & Costa, 2005). By contrast, semantic distractors during picture naming tend to have the opposite effect: their presentation often yields an interference effect (Damian & Bowers, 2003; Navarrete & Costa, 2005; Roelofs & Piai, 2017; but see Damian & Spalek, 2014 about semantic distractor manipulations to create priming effects). The dissociation of performance in the face of phonological and semantic interference suggests that the mechanisms underlying phonological control and semantic control may differ. However, it is hard to draw conclusions about distinctions between control processes from picture naming interference paradigms, because these paradigms do not consistently evoke either interference effects or priming effects. Here, we set out to distinguish phonological control from semantic control using a task that reliably evokes interference effects. Additionally, since semantic control and phonological control theoretically tax language-specific control, we measured each in relation to the domain-general cognitive control ability of switching control.

1.2. Cognitive Control Impairments Following LH Stroke

Strokes to the left hemisphere (LH) of the brain are known to cause language impairments, i.e., aphasia. Although aphasia is traditionally thought of as a language-specific disorder, recent evidence has suggested that LH strokes that cause aphasia can also impair cognitive control (e.g., Kuzmina & Weekes, 2017). Cognitive control impairments could contribute to language impairments in aphasia. However, this potential contribution is unclear because the characterization of specific cognitive control impairments following LH stroke is still emerging (Brownsett et al., 2014; Schumacher et al., 2019).

Recent work on cognitive control impairments after LH stroke has focused on semantic control, which is described by the Controlled Semantic Cognition framework (CSC) (Lambon Ralph et al., 2017). CSC is a neurocognitive model that describes two networks: one that supports semantic representations and another that supports semantic control of those representations. CSC describes the network for semantic representation as a hub and spoke model, with the hub localized to the anterior temporal lobe and connected to widely distributed spokes containing modality-specific information (Lambon Ralph, 2014). On the other hand, CSC describes the network for semantic control as an executive control network that controls semantic representations. The executive machinery underlying semantic control is thought to include domain-general control regions in the prefrontal cortex in addition to language-specific control regions in the temporoparietal junction.

Researchers have hypothesized that deficits in lexical retrieval (i.e., anomia) in aphasia can result from an impairment profile in which semantic representations remain intact, but semantic control is impaired (Calabria et al., 2019; Rogers et al., 2015). Accordingly, semantic control deficits are thought to account for impairments in comprehension as well as semantic paraphasias (Jefferies et al., 2008; Jefferies & Lambon Ralph, 2006; Rogers et al., 2015). The identification of semantic control impairments in aphasia has been supported by studies of individuals with stroke aphasia performing semantic tasks (Lambon Ralph et al., 2017). For example, when performing the Camel and Cactus Test, some individuals with stroke aphasia exhibit difficulty identifying semantic associations and rejecting distractors (Jefferies & Lambon Ralph, 2006). The intactness of semantic representations in such individuals is supported by the effectiveness of phonemic cues during picture naming (Jefferies & Lambon Ralph, 2006; Noonan et al., 2010). Another body of evidence suggesting semantic control deficits in stroke aphasia arises from comparisons with semantic dementia, a neurodegenerative disorder that is thought to reflect degraded semantic representations (Hoffman et al., 2011; Jefferies & Lambon Ralph, 2006; but see Chapman et al., 2020 for a dispute of representation-control distinctions between semantic dementia and stroke aphasia).

Although semantic deficits in stroke aphasia are often attributed to deficits in control of semantic representations, phonological deficits in aphasia are not typically attributed to deficits in control of phonological representations. Phonological deficits are an important aspect of aphasic deficits, especially in the context of anomia with phonological paraphasias (Madden et al., 2017; Nadeau, 2001). To our knowledge, no study has examined whether impairments in phonological control contribute to phonological paraphasias in anomia or to other language deficits in aphasia. Work on phonological control impairments after LH stroke is sparser than work on semantic control impairments. One study found that several participants with LH stroke were more susceptible to phonological interference than controls during a short-term memory task (Barde et al., 2010). In addition, picture-word interference studies generally find a priming effect of phonological distractors for both healthy controls and LH stroke survivors (Hashimoto & Thompson, 2010; Lee & Thompson, 2015). Phonological control has also not been established within its own cognitive theory in the way that semantic control has been within CSC (but note that broader domain-general theories of language control necessarily encompass both phonological and semantic control, e.g., Novick et al., 2010; Nozari & Novick, 2017). Here, we attempted to apply principles from CSC (Lambon Ralph et al., 2017) toward conceptualizing phonological control. In conceptualizing phonological control, we predicted (1) that phonological control and semantic control are distinct abilities that both partially rely on domain-general cognitive control processes, (2) that LH strokes can cause impairments in phonological control and/or semantic control, and (3) that such impairments contribute to aphasic deficits.

1.3. Present Study

In the present study, we investigated the relationship between semantic control and phonological control during task switching in neurotypical older adults. We then examined whether these cognitive control processes are impaired following LH stroke. To characterize semantic control and phonological control, we developed a novel task paradigm that measures cognitive control of phonological and semantic interference without relying on overt speech production or comprehension. We hypothesized that phonological control and semantic control are dissociable processes that partially rely on domain-general control mechanisms such as switching control. We also hypothesized that both phonological control and semantic control relate to inner speech cueing during task switching. We then investigated whether LH strokes can impair both semantic control and phonological control by comparing LH stroke survivors to matched neurotypical controls. Finally, we examined whether such impairments in LH stroke survivors relate to other aphasic deficits. The overall goal was to gain insight into the architecture of semantic control and phonological control, and to determine whether they contribute to language deficits in aphasia.

2. MATERIAL AND METHODS

2.1. Participants

Participants were 69 adults from an ongoing cross-sectional investigation on LH stroke, including 32 LH stroke survivors and 37 controls matched on age, sex, and years of education (Table 1). Stroke survivors were tested at least 6 months post-stroke. This study was approved by Georgetown University’s Institutional Review Board, and all participants provided written informed consent.

Table 1.

Demographics of LH stroke survivors and matched control participants

| Group | Controls | LH stroke survivors |
|---|---|---|
| Total | 37 | 32 |
| Sex | 19 Male, 18 Female | 19 Male, 13 Female |
| Age (years) | M=60.5, SD=12.8, Range=31–82 | M=61.8, SD=10.0, Range=43–81 |
| Education (years based on degree) | M=16.8, SD=2.4, Range=12–21 | M=16.6, SD=2.5, Range=12–21 |
| Handedness (Edinburgh Handedness Inventory Laterality Index) (Oldfield, 1971) | M=73.8, SD=36.6, Range=−100 to 100 | M=72.5, SD=46.7, Range=−100 to 100 |
| Western Aphasia Battery (Kertesz, 2007) Aphasia Quotient | NA | M=77.1, SD=23.3, Range=21.3–99.6 |
| Time since stroke (months) | NA | M=48.2, SD=57.4, Range=6.9–213.8 |
| Western Aphasia Battery Diagnosis | NA | 16 Anomic, 6 Broca, 3 Conduction, 5 Transcortical Motor, 2 No Aphasia |

M = mean, SD = standard deviation.

2.2. Behavioral Tests

2.2.1. Phonological and Semantic Cognitive Control Task

A four-alternative forced choice task paradigm, titled Antelopes and Cantaloupes¹ (A&C), was developed in-house using E-Prime 3 [https://pstnet.com/products/e-prime/]. Participants select the picture of a target object in a 2×2 array of four pictures on a touch screen as many times as possible within 20-second blocks. The locations of the target object and the three foil objects change after each correct target selection. When an incorrect object is touched, the screen remains unchanged until the correct target is touched. Task blocks differ by sequence length to create three task conditions: (S1) selecting a single target repeatedly, (S2) selecting a sequence of two alternating targets, or (S3) selecting a sequence of three successive targets in a pre-specified order. At all times, a cue is presented in the upper right-hand corner of the screen reminding the participant of the targets (Supplementary Figure S1). S2 and S3 are thought to recruit switching control because in these conditions the participant must switch to a new target after each correct target selection.
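The advance-on-correct rule described above can be illustrated with a small replay function. This is a hypothetical sketch, not the E-Prime implementation: the name `replay_block` and its arguments are our own, block timing is ignored, and touches are recorded as object names so that the reshuffling of the 2×2 array after each correct selection is abstracted away.

```python
from typing import Sequence, Tuple


def replay_block(targets: Sequence[str], touches: Sequence[str]) -> Tuple[int, int]:
    """Replay a participant's touches against an A&C-style target sequence.

    targets: ordered targets for the block, e.g. ["cow"] (S1),
             ["cow", "goat"] (S2) or ["cow", "goat", "pig"] (S3).
    touches: objects the participant touched, in order.
    Returns (correct selections, incorrect touches).
    """
    position = 0  # index of the current target within the cycling sequence
    correct = errors = 0
    for touched in touches:
        if touched == targets[position % len(targets)]:
            correct += 1
            position += 1  # advance; in the real task the array is also reshuffled here
        else:
            errors += 1  # screen unchanged: the same target remains current
    return correct, errors


# Hypothetical S2 block with alternating targets "cow" and "goat".
print(replay_block(["cow", "goat"], ["cow", "sheep", "goat", "cow", "cow"]))  # (3, 2)
```

Because only correct selections advance the sequence, the count of correct selections in a fixed-duration block reflects both speed and accuracy.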

Blocks are presented in the order S1 S2 S3 S1 S3 S2 S1, so that each sequence length is immediately preceded by each of the other sequence lengths, and each block type has a mean serial position of 4 (e.g., S1 blocks occupy positions 1, 4, and 7). Each block is preceded by a practice round of the same sequence length in which the participant must successfully select 6 targets. Instructions are presented with visuals on the computer screen to support comprehension, and feedback is given during the practice rounds to ensure the participant understands the task.
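The two balancing properties of the block order can be checked mechanically. The sketch below reads "preceded by" as "immediately preceded by"; the function names are our own and purely illustrative.

```python
from collections import defaultdict

ORDER = ["S1", "S2", "S3", "S1", "S3", "S2", "S1"]


def mean_positions(order):
    """Mean 1-based serial position of each block type."""
    positions = defaultdict(list)
    for pos, block in enumerate(order, start=1):
        positions[block].append(pos)
    return {block: sum(p) / len(p) for block, p in positions.items()}


def predecessors(order):
    """Set of block types that immediately precede each block type."""
    prev = defaultdict(set)
    for earlier, later in zip(order, order[1:]):
        prev[later].add(earlier)
    return dict(prev)


print(mean_positions(ORDER))  # {'S1': 4.0, 'S2': 4.0, 'S3': 4.0}
for block, before in sorted(predecessors(ORDER).items()):
    print(block, "is preceded by", sorted(before))
```

Each sequence length is immediately preceded by both of the other lengths, and every block type sits at mean position 4, which is why seven blocks suffice without a full Latin square.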

Each of the seven blocks was 20 seconds long, for a total of 140 seconds per task version, not counting time spent on instruction and practice. Including instruction and practice for each block, task administration lasted an average of 5 minutes and 41 seconds. The choices to fix the duration of task blocks rather than the number of trials, and to present seven blocks rather than a full Latin Square design, were made with an eye toward feasibility in clinical populations based on pilot data.

This task was performed using five different task versions, each using different picture stimuli: (1) In the semantic version, the four objects in the array including the target are semantically related (cow, goat, sheep, pig); (2) In the phonological version, the four objects in the array including the target are phonologically related (can, cone, coin, corn); (3) In the unrelated version, the four objects are neither semantically nor phonologically related (bread, rope, duck, shoe) (Supplementary Figure S1). These first three task versions are referred to as the main task versions because they measure semantic control and phonological control, the topic of the present paper. (4) In the standard version, the four objects are simple common shapes (circle, square, triangle, star); and (5) In the nonverbal version, the objects are simple abstract shapes that have no canonical name. These last two task versions were designed to manipulate the availability of inner speech cues during task switching. For example, the nonverbal version was designed to reduce the availability of inner speech cues since nonverbal version items do not have obvious names. On the other hand, the standard version was designed to permit inner speech cueing since the items in the standard version are canonical shapes with obvious names (“circle”, “square”, “star”, “triangle”). The main task versions were performed in a testing session on one day, and the other two task versions were performed in a testing session on another day.

Except for the standard and nonverbal versions, which used one exemplar picture per object, each task version employed ten different random exemplars of each object (e.g., ten different images of goats). Semantically similar objects tend to be perceived as visually similar (de Groot et al., 2016; Huettig & McQueen, 2007), so stimulus images were selected by the authors to have equivalent visual similarity across the phonological, unrelated, and semantic test versions. Screenshots of stimulus images are provided in the supplement (Supplementary Figures S1 and S2). To confirm that visual similarity was equivalent across test versions, all objects’ exemplars within each test version were classified by a machine learning algorithm. As there are four objects in each version, an error-correcting output codes approach was used to reduce the four-way classification to multiple binary classifiers. Then, support vector machine learning was used to classify exemplars within each version (MATLAB function fitcecoc). For instance, in the phonological version, an exemplar of a coin was classified according to whether it belonged to the group of exemplars of “corn”, “can”, “cone”, or “coin”. For each version, the classifier was given 40 images (4 objects × 10 exemplars per object) in RGB format. The difficulty of learning to classify each exemplar as one of the four objects is expressed as a loss value. Loss values were initially calculated via a 10-fold resubstitution cross-validation approach, with mean squared error as the loss function (MATLAB function crossval). Loss values were then repeatedly calculated using 5-fold cross-validation, and the resulting loss values were compared pairwise across versions using a 5×2 paired F test (MATLAB function testckfold).
Loss values were not significantly different when comparing the phonological version loss (0.75) to the semantic version loss (0.8) [F(10,2)=1.6; p=0.44], when comparing the phonological version loss (0.75) to the unrelated version loss (0.725) [F(10,2)=1; p=0.59], or when comparing the semantic version loss (0.8) to the unrelated version loss (0.725) [F(10,2)=1.5; p=0.47]. Since the loss values in image classification were not significantly different across task versions, we concluded that the visual similarity was matched across task versions.
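For readers unfamiliar with the 5×2 paired F test, the sketch below computes the statistic under the assumption that it follows Alpaydin's combined 5×2 cross-validated F test: five replications of 2-fold cross-validation, each yielding two differences in loss between the compared conditions. The function name and input layout are hypothetical, and the sketch does not reproduce the classifier training or MATLAB's testckfold internals.

```python
from typing import Sequence


def five_by_two_cv_f(diffs: Sequence[Sequence[float]]) -> float:
    """Combined 5x2 cross-validated F statistic (assumed formulation).

    diffs[i][j]: difference in loss between the two compared conditions
    on fold j (0 or 1) of replication i (0..4) of 2-fold cross-validation.
    """
    if len(diffs) != 5 or any(len(row) != 2 for row in diffs):
        raise ValueError("expected a 5x2 array of loss differences")
    # Numerator: sum of all ten squared differences.
    numerator = sum(d * d for row in diffs for d in row)
    # Denominator: twice the summed within-replication variance estimates.
    variance_sum = 0.0
    for d1, d2 in diffs:
        mean_i = (d1 + d2) / 2
        variance_sum += (d1 - mean_i) ** 2 + (d2 - mean_i) ** 2
    return numerator / (2 * variance_sum)


# Hypothetical loss differences, identical across replications.
print(five_by_two_cv_f([[0.2, 0.0]] * 5))  # ≈ 1.0
```

The statistic is undefined when every replication has identical fold differences (zero variance), so real inputs should be checked for that case.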

To confirm the manipulated differences in semantic relatedness, the lexical database WordNet (Pedersen et al., 2004; accessed at https://ws4jdemo.appspot.com/) was used to calculate semantic similarity values for pairs of words, referred to as jcn values (Jiang & Conrath, 1997). These jcn values were calculated for word pairs within each version of the task and then compared across pairs of task versions using two-sample t-tests (e.g., comparing the semantic version’s jcn values to the phonological version’s jcn values). These t-tests revealed that semantic foils were more semantically similar to each other than phonological foils were to each other [t(10) = −9.23, p < 0.0001]. Semantic foils were also more semantically similar to each other than unrelated foils were to each other [t(10) = −11.46, p < 0.0001]. Phonological foils and unrelated foils did not significantly differ in how semantically similar they were to each other [t(10) = −0.54, p = 0.60].
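The reported degrees of freedom follow from the design: four objects per version give C(4, 2) = 6 word pairs, so a pooled-variance two-sample t-test comparing two versions has 6 + 6 − 2 = 10 degrees of freedom. The sketch below assumes a standard Student's (pooled-variance) t-test; the function name and the placeholder scores are hypothetical, and real jcn values would come from WordNet::Similarity.

```python
import math
from itertools import combinations
from statistics import mean, variance


def pooled_t(x, y):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, df


# Four objects per task version yield six unordered word pairs.
pairs = list(combinations(["cow", "goat", "sheep", "pig"], 2))
print(len(pairs))  # 6

# Placeholder similarity scores (six pairs per version), not real jcn values.
version_a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
version_b = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
print(pooled_t(version_a, version_b))
```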

The task was administered on a 17-inch Dell 2-in-1 laptop computer set up in tent mode to stabilize the monitor. The total number of successful target selections for each block was recorded by the computer. Importantly, since the task does not advance when the wrong target is selected, the total successful target selection count for each block integrates performance measures of speed and accuracy.

Behavioral indices were calculated as follows (Table 2). Time per target selection (TTS) was obtained by dividing the allotted block time (i.e., 20 seconds) by the average number of correct selections completed in blocks of the respective condition (S1, S2, S3). Because only correct selections achieved within the allotted block time are counted, TTS integrates both speed and accuracy. TTS values are right-skewed, so a natural log transform was applied to normalize the distributions. Phonological cost was calculated by subtracting log-transformed TTS in the unrelated condition from log-transformed TTS in the phonological condition. Semantic cost was calculated by subtracting log-transformed TTS in the unrelated condition from log-transformed TTS in the semantic condition. Cost was calculated separately for S1, S2, and S3 blocks to investigate whether phonological and semantic cost differ by sequence length. For instance, semantic cost at S1 was calculated as semantic-condition TTS at S1 minus unrelated-condition TTS at S1; semantic cost at S2 and at S3 was calculated in the same way from the corresponding S2 and S3 scores. Switching cost within a task version was calculated by subtracting the TTS in the non-switching condition (i.e., S1) from the mean TTS in the switching conditions (i.e., S2 and S3). The effect of increased sequence length on phonological and semantic cost was summarized by averaging the cost at S2 and S3 and subtracting the cost at S1 from that average, yielding two values termed the Phonological Switch Effect and the Semantic Switch Effect.

Table 2.

Formulae for calculating behavioral indices in the A&C paradigm.

| Behavioral Index | Formula |
|---|---|
| Time Per Target Selection (TTS) | ln (Block Time ÷ Mean Number of Correct Target Selections) |
| Phonological Cost | Phonological TTS - Unrelated TTS |
| Semantic Cost | Semantic TTS - Unrelated TTS |
| Switching Cost | (S2 TTS + S3 TTS) ÷ 2 - S1 TTS |
| Phonological Switch Effect | (S2 Phonological Cost + S3 Phonological Cost) ÷ 2 - S1 Phonological Cost |
| Semantic Switch Effect | (S2 Semantic Cost + S3 Semantic Cost) ÷ 2 - S1 Semantic Cost |
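The formulae in Table 2 can be expressed as a short computation. This is a minimal sketch under stated assumptions: `behavioral_indices` and its nested-dictionary input are hypothetical names, counts are correct selections per 20-second block, and the example counts are invented for illustration rather than taken from participant data.

```python
import math
from statistics import mean

BLOCK_SECONDS = 20.0
SEQUENCES = ("S1", "S2", "S3")


def log_tts(block_counts):
    """ln(block time / mean correct selections across a condition's blocks)."""
    return math.log(BLOCK_SECONDS / mean(block_counts))


def behavioral_indices(counts):
    """counts[version][sequence] -> list of correct selections per block.

    version in {"unrelated", "phonological", "semantic"}; sequence in SEQUENCES.
    """
    tts = {v: {s: log_tts(counts[v][s]) for s in SEQUENCES} for v in counts}
    result = {
        # Switching cost: mean TTS of switching blocks minus S1 TTS, per version.
        "switching_cost": {
            v: (t["S2"] + t["S3"]) / 2 - t["S1"] for v, t in tts.items()
        }
    }
    for kind in ("phonological", "semantic"):
        # Interference cost relative to the unrelated version, per sequence length.
        cost = {s: tts[kind][s] - tts["unrelated"][s] for s in SEQUENCES}
        result[f"{kind}_cost"] = cost
        result[f"{kind}_switch_effect"] = (cost["S2"] + cost["S3"]) / 2 - cost["S1"]
    return result


# Hypothetical counts: three S1 blocks and two S2/S3 blocks per version.
counts = {
    "unrelated": {"S1": [20, 20, 20], "S2": [10, 10], "S3": [10, 10]},
    "phonological": {"S1": [20, 20, 20], "S2": [10, 10], "S3": [10, 10]},
    "semantic": {"S1": [10, 10, 10], "S2": [10, 10], "S3": [10, 10]},
}
indices = behavioral_indices(counts)
print(indices["switching_cost"]["unrelated"])  # ln 2, about 0.693
print(indices["semantic_switch_effect"])  # -ln 2, about -0.693
```

Working on the log scale means each cost is a log ratio of TTS values, so a cost of ln 2 corresponds to selections taking twice as long as in the reference condition.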

On average, participants completed 13.7 correct selections per block, although as intended, the exact number of correct selections per block varied systematically based on sequence length (S1, S2, S3), task version (semantic, phonological, unrelated, standard, nonverbal), and group (LH stroke survivors, neurotypical participants).

2.3. Measures of Aphasic Deficits

To determine whether phonological or semantic control impairments contribute to aphasic deficits, six speech and language tasks were selected. Five of the six tasks were selected because they produce measures of semantic abilities and/or phonological abilities. The sixth task, the Western Aphasia Battery, was selected to assess aphasia severity.

TALSA Picture Category Judgement (60 items): Participants are presented with two pictures in succession and judge whether the pictures belong to the same category (Martin et al., 2018). Participants respond via two-alternative forced choice button press (yes vs no). Performance is scored as percent accuracy.

Pyramids and Palm Trees (49 items): Participants are presented with three pictures: a stimulus picture and two alternative answers. Participants must select the alternative that is closest in meaning to the stimulus picture (Howard & Patterson, 1992). Performance is scored as percent accuracy.

Word-picture matching (48 items): Participants are presented with six pictures, including one target object and five semantically related alternatives. The participants hear an auditory word then must select the target picture that the word represents. Performance is scored as percent accuracy.

Picture naming (120 items): Adapted from the Philadelphia Naming Test (Roach et al., 1996), this task includes 60 items from the Philadelphia Naming Test and another 60 items selected in-house (Fama et al., 2019). Participants say aloud the best one-word name for each picture. Errors are coded orthogonally for semantic and phonological relatedness to the picture; that is, semantic and phonological relatedness were not treated as mutually exclusive (e.g., a semantically related error could also be phonologically related to the picture).

Auditory rhyme judgement (40 items): Participants are presented with two auditory words and decide whether the words rhyme (Fama et al., 2019). Participants respond via two-alternative forced choice button press (yes vs no). Performance is scored as percent accuracy.

Western Aphasia Battery Aphasia Quotient (AQ): AQ is a summary score calculated from the Western Aphasia Battery-Revised (Kertesz, 2007). AQ subtests measure spontaneous speech, repetition, naming, and auditory-verbal comprehension.

2.4. Data Analysis

All statistical analyses were performed in MATLAB version R2018a on a Windows PC, with the exception of the repeated-measure ANOVAs, which were run on IBM SPSS Statistics version 25.

2.4.1. Paradigm Validation.

To demonstrate that all participants were able to perform the task with high accuracy, means and standard deviations of accuracy were calculated separately for the 3 main task versions at all 3 sequence lengths. First, group differences between LH stroke survivors and neurotypical controls were investigated as interaction terms in a 2×3×3 repeated measures ANOVA, with group (LH stroke survivors vs matched controls) as a between-subjects factor and sequence length (S1, S2, S3) and task version (semantic, phonological, unrelated) as within-subjects factors. Then, accuracy was entered into a 3×3 repeated measures ANOVA to test the effects of increasing sequence length (S1, S2, S3) across the main task versions (semantic, phonological, and unrelated). This 3×3 repeated measures ANOVA was run separately for LH stroke survivors and matched neurotypical controls.

To demonstrate that the task had internal consistency, split-half Pearson correlations and Spearman-Brown reliability coefficients were calculated on block-wise TTS scores for each sequence length within a version. For example, each version had only two S3 blocks, so the first and second S3 blocks were compared to investigate internal consistency. The same analysis was run for S2, which also had only two blocks. Since each version had three S1 blocks, the first two S1 blocks were compared.
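The split-half procedure correlates participants' TTS on one block with their TTS on a second block of the same condition, then applies the Spearman-Brown prophecy formula, 2r / (1 + r), to estimate reliability at full test length. A minimal sketch, with hypothetical function names and no claim to match the original MATLAB code:

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_brown(r):
    """Step a split-half correlation up to full-test length: 2r / (1 + r)."""
    return 2 * r / (1 + r)


# Hypothetical per-participant TTS (log seconds) on the first and second S3 blocks.
first_block = [0.42, 0.55, 0.61, 0.38, 0.70]
second_block = [0.45, 0.50, 0.66, 0.41, 0.68]
r = pearson_r(first_block, second_block)
print(r, spearman_brown(r))
```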

To demonstrate that the task reliably evoked the intended performance costs across participants, TTS values were used to derive semantic cost and phonological cost, as well as switching cost in all 5 task versions (standard, nonverbal, semantic, phonological, and unrelated). Then, the proportion of participants with costs above zero was calculated for each cost type, separately for LH stroke survivors and controls.

2.4.2. Relationship between phonological control and semantic control:

Pearson’s correlations were computed between phonological cost and semantic cost at each sequence length (S1, S2, S3) for matched controls and LH stroke survivors separately. P values from the resulting 6 correlation coefficients were assessed using a Bonferroni-adjusted alpha level of 0.0083 (0.05/6).
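The Bonferroni adjustment divides the family-wise alpha by the number of tests m and compares each p value against that threshold; with m = 6 this gives 0.05/6 ≈ 0.0083, and the same rule with m = 4 gives the 0.0125 threshold used in Section 2.4.4. A minimal sketch with a hypothetical function name and placeholder p values:

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Return the Bonferroni threshold (alpha / m) and a significance flag per p value."""
    threshold = alpha / len(p_values)
    return threshold, [p < threshold for p in p_values]


# Placeholder p values for six correlations (not results from this study).
threshold, flags = bonferroni_significant([0.001, 0.01, 0.2, 0.005, 0.0083, 0.5])
print(round(threshold, 4), flags)
```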

2.4.3. Effect of switching demands on phonological control and semantic control:

A 3×2×2 repeated measures ANOVA was used to investigate whether the cost of interference was affected by increasing sequence length (S1 to S2 to S3), interference type (phonological vs semantic), and group (LH stroke survivors vs matched controls).

2.4.4. Influence of phonological control and semantic control on inner speech cueing:

Correlations were run between the Phonological and Semantic Switch Effects (Table 2) and the switching cost in the standard and unrelated conditions. P values from the resulting 4 correlation coefficients were assessed using a Bonferroni-adjusted alpha level of 0.0125 (0.05/4).

2.4.5. Group impairments in semantic control and phonological control:

Two separate 3×2 repeated measures ANOVAs were run to examine the effect of LH stroke on: 1) phonological cost across sequence lengths (S1 to S2 to S3) and 2) semantic cost across sequence lengths (S1 to S2 to S3).

2.4.6. Individual impairments in semantic control and phonological control:

Individual LH stroke survivors were identified as having a frank impairment in semantic control or phonological control using a non-parametric approach: an individual was classified as impaired if they performed worse than the lowest-ranked neurotypical participant. Supplementary Table 1 indicates each participant’s impairment status in semantic control and phonological control under this non-parametric approach. For reference, Supplementary Table 1 also includes the parametric Crawford & Howell t-scores for identifying impairments (Crawford & Howell, 1998).
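Both impairment criteria can be stated compactly. The sketch below assumes that a higher interference cost means worse performance and that "worse than the lowest-ranked control" means strictly greater than the maximum control cost; the Crawford & Howell (1998) statistic compares a single case to a small control sample as t = (x − mean) / (s · √((n + 1)/n)) with n − 1 degrees of freedom. Function names and example values are hypothetical.

```python
import math
from statistics import mean, stdev


def crawford_howell_t(score, controls):
    """Crawford & Howell (1998) t for one case vs a control sample (df = n - 1)."""
    n = len(controls)
    return (score - mean(controls)) / (stdev(controls) * math.sqrt((n + 1) / n))


def frank_impairment(cost, control_costs):
    """Non-parametric rule (assumed): impaired if cost exceeds every control's cost."""
    return cost > max(control_costs)


# Placeholder semantic costs for a small control sample and one case.
control_costs = [0.05, 0.10, 0.12, 0.08, 0.15]
case_cost = 0.30
print(frank_impairment(case_cost, control_costs))  # True
print(crawford_howell_t(case_cost, control_costs))
```

The non-parametric rule makes no distributional assumption about control costs, whereas the Crawford & Howell t assumes approximately normal control scores but corrects for the small size of the control sample.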

ANOVAs and post-hoc Tukey tests were used to compare language task performance between individuals with frank semantic control impairment and other LH stroke survivors. A corresponding analysis was planned for phonological control, but was not possible because only one individual was identified to have frank impairment in phonological control.

3. RESULTS

3.1. Paradigm Validation

We first aimed to validate the novel switching paradigm. We consider the paradigm valid if: (1) the task can be performed accurately by most people with and without aphasia, (2) the task has internal consistency, (3) the paradigm reliably produces semantic and phonological interference effects, and (4) the paradigm reliably induces a cost of switching.

Both groups performed the main versions of the task accurately, with mean accuracy of 88.6% or greater in every condition (Table 3). We first tested whether the effects of sequence length and task version differed by group (i.e., controls versus LH stroke survivors) by examining interaction terms in a 3×3×2 ANOVA (sequence length × task version × group). Group interacted significantly with both sequence length [F(1.9,124.6)=4.3, p=0.018, Greenhouse-Geisser corrected] and task version [F(1.8,121.1)=6.1, p=0.004, Greenhouse-Geisser corrected]. However, the three-way interaction between group, sequence length, and task version was not significant [F(3.1,204.8)=0.346, p=0.796, Greenhouse-Geisser corrected].

Table 3.

Average first-attempt accuracy of participants in the unrelated, semantic, and phonological versions, by sequence length. Values are mean (SD).

| Group | Unrelated S1 | Unrelated S2 | Unrelated S3 | Semantic S1 | Semantic S2 | Semantic S3 | Phonological S1 | Phonological S2 | Phonological S3 |
|---|---|---|---|---|---|---|---|---|---|
| LH Stroke (n=32) | 99.5% (1.26%) | 95.4% (7.48%) | 96.5% (4.8%) | 93% (10.7%) | 90.9% (10.2%) | 88.6% (16.7%) | 96.1% (6.25%) | 92.4% (12.2%) | 89.9% (13.3%) |
| Controls (n=37) | 99.8% (0.68%) | 98.9% (3.32%) | 99% (1.66%) | 98.8% (1.82%) | 98.7% (2.29%) | 98.4% (2.61%) | 99% (1.65%) | 97.8% (3.03%) | 95.5% (5.28%) |

Two separate 3×3 repeated measures ANOVAs (sequence length × task version), one in LH stroke survivors and one in matched controls, tested the hypothesis that accuracy decreases as sequence length increases (S1, S2, S3) across the main task versions (semantic, phonological, and unrelated). As predicted, a main effect of sequence length was found in neurotypical controls [F(2,72)=8.4; p=0.001] as well as in LH stroke survivors [F(2,62)=9.0; p<0.001], confirming that accuracy decreased with increasing sequence length (Table 3). Accuracy also differed across task versions in both groups [matched controls: F(2,72)=13.7; p<0.001; LH stroke survivors: F(2,62)=7.0; p=0.002]. Task version significantly interacted with sequence length in controls [F(2.9,105)=5.4; p=0.002, Greenhouse-Geisser corrected], but not in LH stroke survivors [F(2.9,99.0)=0.7; p=0.55, Greenhouse-Geisser corrected].

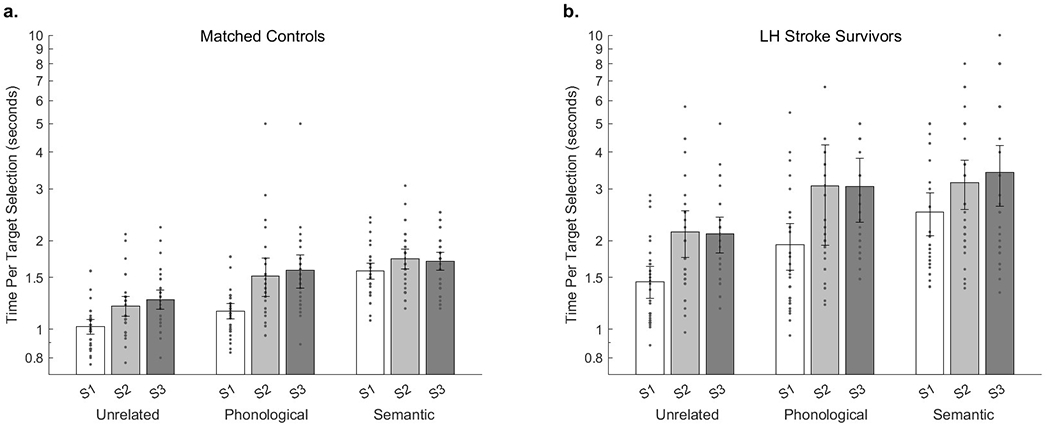

As described in the Methods section, the TTS values used to derive cost inherently integrate speed and accuracy, because the task does not advance until the correct target is selected (Figure 1). These TTS values demonstrated high internal consistency across blocks (Table 4). Spearman-Brown coefficients were 0.81 or greater for every task version and sequence length, exceeding the commonly cited minimum acceptable value of 0.70 (e.g., de Vet et al., 2017).

Figure 1.

(a) TTS across versions and sequence lengths in matched controls. (b) TTS across versions and sequence lengths in LH stroke survivors. Sequence lengths: (S1) selecting a single target repeatedly, (S2) selecting a sequence of two alternating targets, or (S3) selecting a sequence of three successive targets in a pre-specified order.

Table 4.

Split-half reliability coefficients for TTS scores in each task version and sequence length

| Coefficient | Unrelated S1 | Unrelated S2 | Unrelated S3 | Standard S1 | Standard S2 | Standard S3 | Nonverbal S1 | Nonverbal S2 | Nonverbal S3 | Semantic S1 | Semantic S2 | Semantic S3 | Phonological S1 | Phonological S2 | Phonological S3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Pearson | 0.82 | 0.88 | 0.85 | 0.79 | 0.78 | 0.75 | 0.81 | 0.71 | 0.79 | 0.68 | 0.84 | 0.76 | 0.78 | 0.82 | 0.80 |
| Spearman-Brown | 0.90 | 0.94 | 0.92 | 0.88 | 0.88 | 0.86 | 0.90 | 0.83 | 0.88 | 0.81 | 0.91 | 0.86 | 0.88 | 0.90 | 0.89 |
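The Spearman-Brown row of Table 4 is consistent with the standard prophecy formula for doubling a split half, r_SB = 2r / (1 + r). A minimal Python check, assuming that formula (the helper name is hypothetical), reproduces every tabled Spearman-Brown value from the Pearson row:

```python
def spearman_brown(r_half, length_factor=2.0):
    """Spearman-Brown prophecy formula: reliability of a test lengthened by length_factor."""
    return length_factor * r_half / (1 + (length_factor - 1) * r_half)

# Pearson split-half correlations and Spearman-Brown values, in Table 4 column order
pearson_row = [0.82, 0.88, 0.85, 0.79, 0.78, 0.75, 0.81, 0.71,
               0.79, 0.68, 0.84, 0.76, 0.78, 0.82, 0.80]
reported_sb = [0.90, 0.94, 0.92, 0.88, 0.88, 0.86, 0.90, 0.83,
               0.88, 0.81, 0.91, 0.86, 0.88, 0.90, 0.89]

corrected = [round(spearman_brown(r), 2) for r in pearson_row]
print(corrected == reported_sb)  # True
print(min(corrected))            # 0.81
```

The correction is needed because each half contains only half of the trials, so the raw split-half correlation underestimates the reliability of the full-length score.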

Semantic and phonological costs were calculated at each sequence length based on the difference between TTS on the semantic (or phonological) task version and TTS on the unrelated task version (Table 2). All participants showed semantic and phonological costs greater than zero when averaged across sequence lengths. Most participants (84% or more in each group) also showed positive costs at each individual sequence length (Table 5). Participants likewise exhibited reliable switching costs in the standard, nonverbal, and unrelated conditions (Table 6).
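The cost and proportion calculations above can be sketched in a few lines of Python. This assumes that each cost is a simple difference of a participant's mean TTS between versions, as the wording suggests; the exact definition is given in Table 2, and the helper names and participant values here are hypothetical.

```python
from statistics import mean

SEQUENCE_LENGTHS = ("S1", "S2", "S3")

def interference_cost(tts_interference, tts_unrelated):
    """Hypothetical helper: per-length cost = TTS(interference version) - TTS(unrelated version)."""
    return {s: tts_interference[s] - tts_unrelated[s] for s in SEQUENCE_LENGTHS}

def proportion_positive(costs):
    """Share of participants whose cost is greater than zero."""
    return sum(1 for c in costs if c > 0) / len(costs)

# Hypothetical mean TTS values for three participants (semantic and unrelated versions)
participants = [
    {"semantic": {"S1": 1.60, "S2": 1.70, "S3": 1.90}, "unrelated": {"S1": 1.00, "S2": 1.30, "S3": 1.50}},
    {"semantic": {"S1": 1.40, "S2": 1.20, "S3": 1.60}, "unrelated": {"S1": 1.00, "S2": 1.25, "S3": 1.40}},
    {"semantic": {"S1": 1.50, "S2": 1.60, "S3": 1.70}, "unrelated": {"S1": 1.10, "S2": 1.40, "S3": 1.55}},
]

costs = [interference_cost(p["semantic"], p["unrelated"]) for p in participants]
# Proportion with cost > 0 at each sequence length, and for the average over S1, S2, S3
prop_by_length = {s: proportion_positive([c[s] for c in costs]) for s in SEQUENCE_LENGTHS}
prop_average = proportion_positive([mean(list(c.values())) for c in costs])
print(prop_by_length, prop_average)
```

In this toy example the second participant has a negative cost at S2 but a positive average cost, which is the same kind of pattern that lets the averaged column in Table 5 reach 100% while individual sequence lengths fall below it.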

Table 5.

Proportion of participants with semantic and phonological cost greater than zero, by sequence length.

| Group | Semantic Cost, Average (S1, S2, S3) | Semantic Cost, S1 | Semantic Cost, S2 | Semantic Cost, S3 | Phonological Cost, Average (S1, S2, S3) | Phonological Cost, S1 | Phonological Cost, S2 | Phonological Cost, S3 |
|---|---|---|---|---|---|---|---|---|
| LH Stroke (n=32) | 100% | 100% | 84% | 88% | 100% | 97% | 84% | 100% |
| Controls (n=37) | 100% | 100% | 100% | 100% | 100% | 95% | 97% | 89% |

Table 6.

Proportion of participants with switching cost greater than zero, by task version.

| Group | Unrelated | Standard | Nonverbal | Semantic | Phonological |
|---|---|---|---|---|---|
| LH Stroke (n=32) | 100% | 97% | 92% | 81% | 100% |
| Controls (n=37) | 100% | 100% | 100% | 91% | 97% |

Overall, these findings provide strong validation of the novel A&C task: it can be performed accurately by most people with and without aphasia, its TTS measures are internally consistent, and it reliably induces semantic and phonological interference effects as well as a cost of switching.

3.2. Relationship Between Phonological Control and Semantic Control

To specify the relationship between phonological control and semantic control, we correlated phonological cost and semantic cost at each sequence length. The resulting six correlations (3 sequence lengths × 2 participant groups) were corrected for multiple comparisons using a Bonferroni-adjusted alpha level of 0.0083 (0.05/6).

We predicted that if phonological and semantic control were non-dissociable, then phonological and semantic cost would correlate at all sequence lengths. Alternatively, if phonological control and semantic control were partially dissociable during task switching, then phonological and semantic cost would correlate at sequence length 1, where there are no switching demands, but not at sequence length 2 or 3, where there are switching demands. Finally, if phonological control and semantic control were completely dissociable, then phonological and semantic cost would not correlate at any sequence length.

3.2.1. Control group.

In the control group, phonological cost and semantic cost were correlated at sequence length 1, where there are no switching demands [r=0.44, p=0.0068]. However, they were not correlated at sequence length 2 or 3, where there are switching demands [sequence length 2: r=−0.06, p=0.74; sequence length 3: r=0.28, p=0.090].

3.2.2. LH stroke survivors.

In LH stroke survivors, phonological cost and semantic cost were not significantly correlated at any sequence length [sequence length 1: r=0.32, p=0.075; sequence length 2: r=0.34, p=0.060; sequence length 3: r=0.25, p=0.16].
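The Bonferroni rule used in Sections 2.4.4 and 3.2 can be made concrete by checking the six p values reported above, and the four Switch Effect correlations reported in Section 3.3, against their adjusted alphas. A minimal Python sketch; the helper name and labels are hypothetical, and the p values are copied from the text.

```python
ALPHA = 0.05

def bonferroni_significant(p_values, alpha=ALPHA):
    """Return the adjusted alpha and the labels whose p value falls below it."""
    threshold = alpha / len(p_values)
    return threshold, [label for label, p in p_values.items() if p < threshold]

# Phonological vs. semantic cost correlations (Sections 3.2.1 and 3.2.2)
cost_correlations = {
    "controls S1": 0.0068, "controls S2": 0.74, "controls S3": 0.090,
    "LH stroke S1": 0.075, "LH stroke S2": 0.060, "LH stroke S3": 0.16,
}
# Switch Effect vs. switching cost correlations in controls (Section 3.3)
switch_correlations = {
    "phonological x standard": 0.0009, "phonological x nonverbal": 0.52,
    "semantic x standard": 0.49, "semantic x nonverbal": 0.36,
}

alpha6, sig6 = bonferroni_significant(cost_correlations)
alpha4, sig4 = bonferroni_significant(switch_correlations)
print(round(alpha6, 4), sig6)  # 0.0083 ['controls S1']
print(round(alpha4, 4), sig4)  # 0.0125 ['phonological x standard']
```

Only the control-group correlation at sequence length 1 and the Phonological Switch Effect × standard version correlation survive correction, matching the interpretation given in the text.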

3.3. Effects of Switching Demands on Phonological and Semantic Control

To determine the effect of switching demands on phonological control and semantic control, we examined effects of sequence length on phonological cost and semantic cost. We hypothesized that if phonological control and semantic control are dissociated by switching demands, then cost values would depend on the interaction between sequence length (S1, S2, S3) and interference type (phonological vs. semantic).

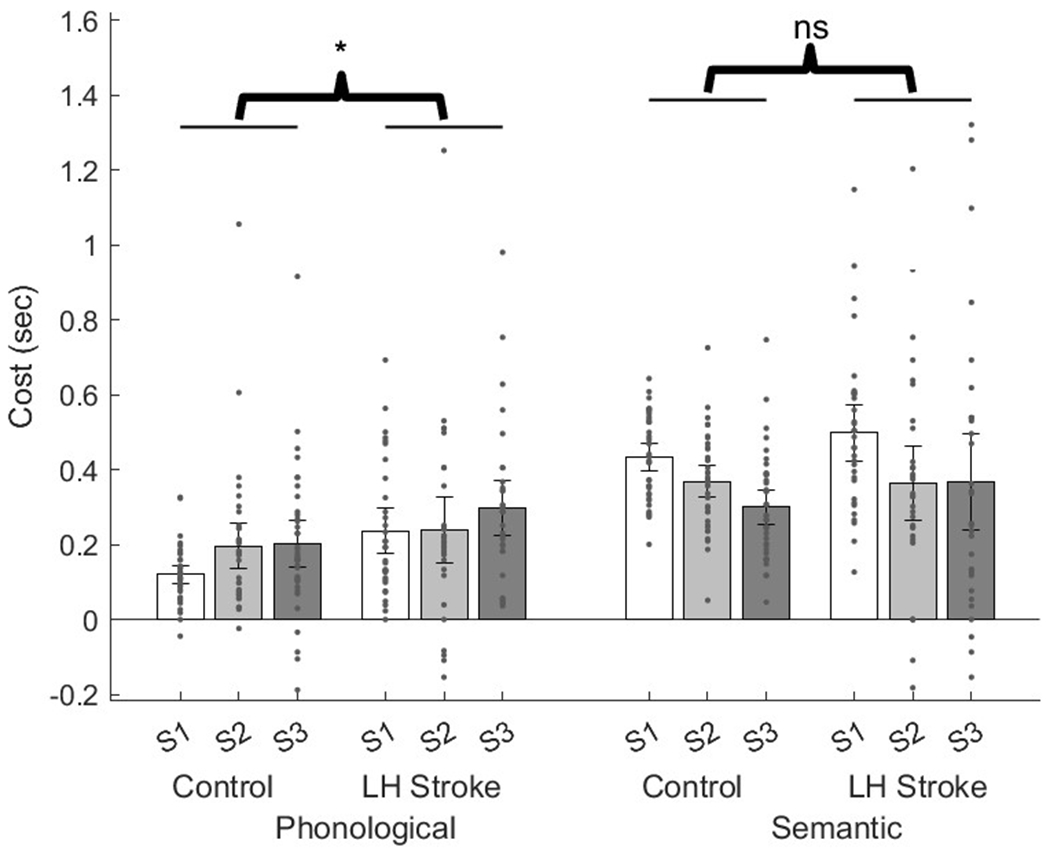

The effect of switching demands was quantified using a 3×2×2 repeated measures ANOVA (sequence length × interference type × group), which revealed main effects of interference type [semantic>phonological, F(1,67)=47.8, p<.0001] and group [LH stroke survivors>controls, F(1,67)=4.2, p=.045]. Importantly, this ANOVA also revealed a significant interaction between interference type and sequence length [F(2,134)=22.7, p<.0001].

The 3×2×2 ANOVA does not establish whether each group independently shows this interaction between sequence length and interference type. We therefore tested the interaction separately in each group using 3×2 repeated measures ANOVAs, which replicated it in both controls [F(2,72)=17.4; p<0.001] and LH stroke survivors [F(2,62)=7.5; p=0.001]. In both groups, longer sequence lengths (i.e., greater switching demands) monotonically increased phonological cost but paradoxically decreased semantic cost (Figure 2).

Figure 2.

Phonological and semantic cost in controls vs. LH stroke survivors at each sequence length. The asterisk indicates p<0.05 for the main effect of group (LH stroke survivors vs. controls) in the 3×2×2 repeated measures ANOVA.

We then investigated whether phonological control and semantic control interact with switching demands by influencing inner speech cueing. We tested this question only in the neurotypical control group. Although many people with aphasia show some preservation of inner speech relative to their overt speech abilities, many also report frequent failures of inner speech, a report supported by objective evidence (Fama et al., 2017, 2019; Fama & Turkeltaub, 2020; Hayward et al., 2016); we therefore did not test this question in LH stroke survivors. We summarized the effect of switching demands on phonological control by averaging the phonological cost at S2 and S3 and subtracting that average from the phonological cost at S1, and labelled the resulting value the Phonological Switch Effect (Table 2). We summarized the effect of switching demands on semantic control in the same way using semantic cost, and labelled the resulting value the Semantic Switch Effect (Table 2).
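The Switch Effect summary described above reduces each participant's three costs to one number. A minimal Python sketch, assuming per-participant costs are already available for S1, S2, and S3 and following the subtraction order as worded above (cost at S1 minus the mean of S2 and S3); the function name and example values are hypothetical, and Table 2 remains the authoritative definition.

```python
from statistics import mean

def switch_effect(cost):
    """Hypothetical helper: cost at S1 minus the mean cost at S2 and S3.

    `cost` maps sequence-length labels ("S1", "S2", "S3") to one participant's
    phonological or semantic cost.
    """
    return cost["S1"] - mean([cost["S2"], cost["S3"]])

# Hypothetical cost values for one control participant
phonological_cost = {"S1": 0.10, "S2": 0.20, "S3": 0.30}  # rises with switching demands
semantic_cost = {"S1": 0.60, "S2": 0.40, "S3": 0.30}      # falls with switching demands

phonological_switch_effect = switch_effect(phonological_cost)
semantic_switch_effect = switch_effect(semantic_cost)
print(round(phonological_switch_effect, 2), round(semantic_switch_effect, 2))  # -0.15 0.25
```

With this subtraction order, a cost that grows with sequence length yields a negative value and a cost that shrinks yields a positive one; reversing the order only flips the sign, which matters when reading the direction of the correlations reported below.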

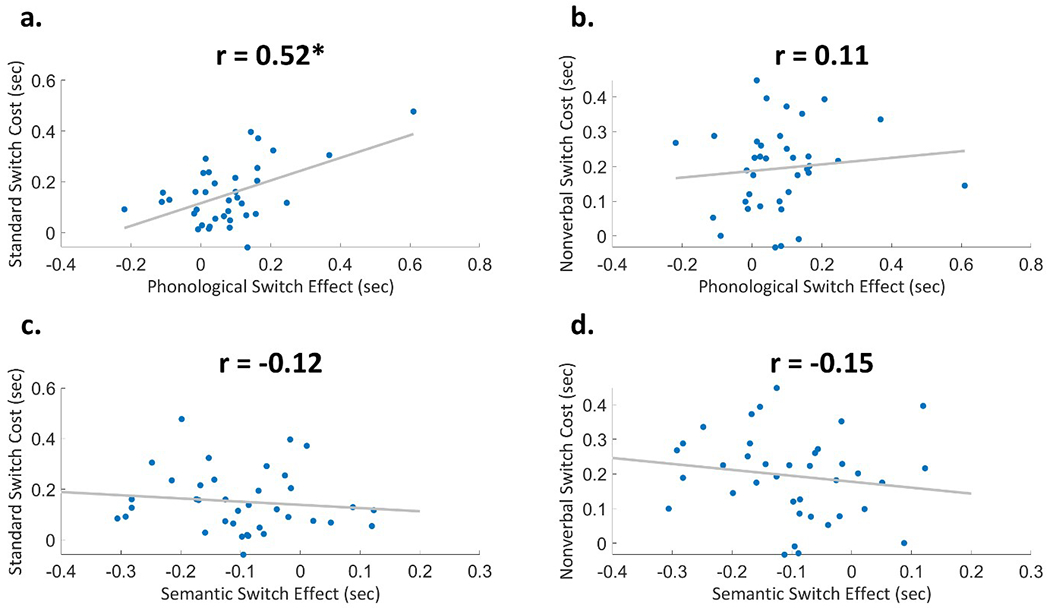

We then correlated the Phonological and Semantic Switch Effects with switching cost in the nonverbal version and the standard version of the task. The resulting four correlations (2 task versions × 2 Switch Effects) were corrected for multiple comparisons using a Bonferroni-adjusted alpha level of 0.0125 (0.05/4).

As discussed in the Methods, the nonverbal version was designed to benefit less from inner speech cueing, and the standard version was designed to benefit more. Thus, we interpret differences between the relationships with the nonverbal and standard versions as reflecting the role of inner speech cueing in switching control. Because we expected inner speech cues to be composed of both phonological and semantic content, we expected the Phonological and Semantic Switch Effects to relate to switching cost on the standard version, but not the nonverbal version.

As expected, the Phonological Switch Effect was significantly correlated with switching cost on the standard version, which benefits from inner speech cueing [r(35)=0.52; p=0.0009] (Figure 3a). Furthermore, the Phonological Switch Effect was not significantly correlated with switching cost on the nonverbal version which does not benefit from inner speech cueing [r(35)=0.11; p=0.52] (Figure 3b).

Figure 3.

Correlations between (a) the Phonological Switch Effect and standard switching cost; (b) the Phonological Switch Effect and nonverbal switching cost; (c) the Semantic Switch Effect and standard switching cost; (d) the Semantic Switch Effect and nonverbal switching cost. Asterisks (*) indicate significance at p<0.05.

Contrary to our expectation, the Semantic Switch Effect did not correlate with switching cost on either the standard version or the nonverbal version [standard: r(35)=−0.12; p=0.49; nonverbal: r(35)=−0.15; p=0.36] (Figure 3c,d). This pattern suggests that the interaction of switching with phonological interference is related to inner speech cueing, whereas the interaction of switching with semantic interference is not.

3.4. Semantic and Phonological Control Deficits in Stroke Survivors

3.4.1. Group-wide Impairments.

The effect of stroke on each control ability (phonological control, semantic control) was examined using two separate 3×2 repeated measures ANOVAs. A 3×2 ANOVA comparing phonological cost at all three sequence lengths between LH stroke survivors and controls found that phonological control was significantly worse in the stroke group [F(1,67)=5.7; p=0.020]. A corresponding 3×2 ANOVA on semantic cost found that semantic control was not significantly worse in the stroke group [F(1,67)=0.9; p=0.340].

3.4.2. Individual Impairments.

Although the ANOVA identified a group-level impairment of phonological control, only one LH stroke survivor had greater phonological cost than all of the matched controls. This individual had severe, broad impairments (WAB AQ = 28.5), correctly named only 5% of the pictures on picture naming, and performed near chance on two-alternative forced choice tasks that tax phonology, such as rhyme judgement (48% accuracy), and semantics, such as category judgement (61% accuracy). Notably, this individual had normal semantic control, with an average semantic cost of 0.47 (control range: 0.17 to 0.62).

Although there was no significant group-level impairment of semantic control in LH stroke survivors, five LH stroke survivors had greater semantic interference scores (i.e., impaired semantic control) than all of the matched controls (Table 7, Greater Semantic Interference (GSI) group). Unexpectedly, another five LH stroke survivors had lower semantic interference scores than all of the matched controls (Table 7, Less Semantic Interference (LSI) group), leaving 22 stroke survivors with semantic interference in the control range (Table 7, Normal Semantic Interference (NSI) group).
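The three-way grouping above amounts to comparing each stroke survivor's semantic cost with the minimum and maximum control values. A minimal Python sketch, assuming a score exactly equal to a control extreme counts as within the control range; the function name and all values are hypothetical.

```python
def classify_semantic_interference(case_cost, control_costs):
    """Hypothetical helper: label a case GSI, LSI, or NSI relative to the control range."""
    lowest, highest = min(control_costs), max(control_costs)
    if case_cost > highest:
        return "GSI"  # greater semantic interference than every control
    if case_cost < lowest:
        return "LSI"  # less semantic interference than every control
    return "NSI"      # within the control range (ties count as within range)

# Hypothetical control and stroke-survivor semantic costs
controls = [0.20, 0.35, 0.50, 0.60]
cases = {"P01": 0.80, "P02": 0.10, "P03": 0.40, "P04": 0.60}
labels = {code: classify_semantic_interference(c, controls) for code, c in cases.items()}
print(labels)  # {'P01': 'GSI', 'P02': 'LSI', 'P03': 'NSI', 'P04': 'NSI'}
```

Because the rule depends only on the control extremes, a single unusually high or low control can shift the boundaries; this is the usual trade-off of rank-based criteria in small samples.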

Table 7.

Individual LH stroke survivors with extreme semantic control scores.

| Group | Subject Code | Age | Gender | Semantic Interference Across S1, S2, S3 | Phonological Interference Across S1, S2, S3 | Category Judgement Accuracy | Pyramids and Palm Trees Accuracy | Word-Picture Matching Accuracy | Rhyme Judgement Accuracy | Picture Naming Accuracy | Picture Naming Semantic Errors ÷ All Errors | Picture Naming Phonological Errors ÷ All Errors | WAB Aphasia Quotient | WAB Aphasia Subtype |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GSI | AKH | 54 | M | 0.91 | 0.31 | 0.88 | 0.64 | 0.74 | 0.85 | 0.77 | 0.61 | 0.36 | 77.3 | Anomic |
| GSI | KNK | 64 | M | 1.21 | 0.20 | 0.86 | 0.96 | 0.84 | 0.85 | 0.88 | 0.71 | 0.43 | 96.7 | Anomic |
| GSI | SJK | 70 | M | 0.63 | 0.21 | 0.78 | 0.90 | 0.94 | 0.85 | 0.94 | 0.86 | 0.29 | 94.3 | Anomic |
| GSI | WXW | 72 | F | 0.67 | 0.44 | 0.78 | 0.86 | 0.98 | 0.83 | 0.81 | 0.43 | 0.48 | 97.3 | Anomic |
| GSI | ZOK | 67 | F | 0.73 | 0.38 | 0.72 | 0.71 | 0.74 | 0.88 | 0.35 | 0.46 | 0.26 | 68.7 | Transcortical Motor |
| NSI (n=22) | --- | M 61.23 (SD 10.45) | 14M, 8F | M 0.39 (SD 0.11) | M 0.26 (SD 0.2) | M 0.91 (SD 0.08) | M 0.88 (SD 0.15) | M 0.88 (SD 0.18) | M 0.85 (SD 0.17) | M 0.7 (SD 0.3) | M 0.42 (SD 0.27) | M 0.52 (SD 0.22) | M 78.28 (SD 23.89) | 11 Anomic, 4 Broca, 3 Conduction, 2 Transcortical Motor, 2 No Aphasia |
| LSI | DZY | 65 | M | 0.07 | 0.13 | 0.95 | 0.96 | 1 | 0.98 | 0.58 | 0.10 | 0.94 | 91.4 | Anomic |
| LSI | IWZ | 54 | F | 0.15 | 0.24 | 0.92 | 0.94 | 0.75 | 0.6 | 0.13 | 0.04 | 0.68 | 32.2 | Broca |
| LSI | MPV | 68 | M | 0.07 | 0.11 | 0.95 | 0.98 | 1 | 0.85 | 0.33 | 0.04 | 0.16 | 76.0 | Transcortical Motor |
| LSI | TAO | 71 | F | 0.03 | 0.19 | 0.93 | 0.78 | 0.69 | 0.88 | 0.66 | 0.10 | 0.24 | 71.1 | Transcortical Motor |
| LSI | YLZ | 44 | F | 0.17 | 0.25 | 0.90 | 0.87 | 0.82 | 0.68 | 0.14 | 0.47 | 0.17 | 39.8 | Broca |

GSI: LH stroke survivors with greater semantic interference than controls, NSI: LH stroke survivors with semantic interference scores within control bounds, LSI: LH stroke survivors with less semantic interference than controls.

A series of one-way ANOVAs comparing the three groups (GSI, LSI, and NSI) on measures of semantics, phonology, anomia, and aphasia severity found significant group differences on two of the four semantic measures, namely category judgement and the proportion of semantically-related naming errors [category judgement: F(2,29)=4.51, p=0.020; semantically-related naming errors: F(2,28)=4.39, p=0.022]. A post-hoc Tukey test on category judgement revealed that the GSI group performed worse than both the LSI group and the NSI group [GSI vs. LSI: 95% CI (−0.24, −0.0085), p=0.034; GSI vs. NSI: 95% CI (−0.20, −0.012), p=0.025]. The LSI and NSI groups did not differ [95% CI (−0.070, 0.11), p=0.82]. A post-hoc Tukey test on semantically-related naming errors revealed that the GSI group committed a significantly higher proportion of semantically-related naming errors than the LSI group [95% CI (0.072, 0.85), p=0.017]. The GSI group did not differ from the NSI group [95% CI (−0.12, 0.50), p=0.29]. The LSI and NSI groups did not significantly differ in semantically-related naming errors, although the difference trended toward significance [95% CI (−0.58, 0.034), p=0.08].

The remaining one-way ANOVAs comparing the GSI, LSI, and NSI groups on the other language measures were not significant [Pyramids and Palm Trees: F(2,29)=0.591, p=0.56; word-picture matching: F(2,28)=0.14, p=0.87; phonological control: F(2,29)=0.646, p=0.53; rhyme judgement: F(2,29)=0.262, p=0.77; phonologically-related naming errors: F(2,28)=0.971, p=0.39; WAB Aphasia Quotient: F(2,29)=1.56, p=0.23], although the ANOVA for naming accuracy trended toward significance [naming accuracy: F(2,29)=2.99, p=0.066].

These ANOVAs were likely underpowered given the small sample size of each group. Visual inspection of the data suggests that the GSI group may differ from the other two groups by having greater picture naming accuracy and higher (i.e., less severe) WAB Aphasia Quotient scores (Table 7). There also appears to be a qualitative difference between the GSI and LSI groups in WAB aphasia subtype: four of the five individuals in the GSI group were classified as Anomic, whereas four of the five individuals in the LSI group had nonfluent classifications (Broca or Transcortical Motor). The NSI group had a mix of WAB aphasia subtypes (Table 7).

4. DISCUSSION

4.1. Summary

We developed a novel switching control paradigm, Antelopes and Cantaloupes (A&C), that reliably elicits switching costs as well as phonological and semantic costs in both LH stroke survivors and controls. Using this paradigm, we found that phonological control and semantic control are related but distinct abilities. Phonological control and semantic control were related when there were no switching demands (significantly in controls, and at a trend level in LH stroke survivors), but unrelated in the presence of switching demands. Increased switching demands monotonically increased phonological cost, but paradoxically decreased semantic cost. The interaction between switching and phonological control was related to inner speech cueing, but the interaction between switching and semantic control was not. Examining effects of stroke, we found that LH stroke survivors as a group are impaired at phonological control, although these deficits appear relatively subtle at the individual level, as all but one stroke survivor fell within the range of control values. In contrast, effects of LH stroke on semantic control appear more variable: there was no group-wise difference from controls, but several individuals appeared to have superior semantic control and several had frank semantic control impairments. Those with frank impairments had relative deficits on some other semantic tasks compared with stroke survivors with preserved semantic control, which is consistent with the notion that semantic control impairments contribute to semantic deficits in some individuals with aphasia.

4.2. Phonological Control and Semantic Control are Dissociable

The present study found that phonological control and semantic control are related in some contexts but dissociate in others. Specifically, in controls, phonological control was related to semantic control during target selection when there were no switching demands (i.e., at sequence length 1). This relationship suggests that phonological control and semantic control share some of the mechanisms required to ignore distractors during target selection, which in the Miyake framework corresponds to response-distractor inhibition, a subprocess of the inhibition ability (Friedman & Miyake, 2004). Notably, phonological control and semantic control during target selection were not significantly related in LH stroke survivors, although the relationship trended toward significance. This nonsignificant relationship could be attributed to low power in a limited sample. It is also broadly consistent with the proposition that phonological control and semantic control rely on distinct machinery that is differentially damaged by LH stroke. Indeed, we identified a double dissociation in these abilities: several LH stroke survivors in our study demonstrated normal phonological control and large impairments in semantic control, and one LH stroke survivor demonstrated the reverse.

Furthermore, we found that phonological control and semantic control dissociate in the presence of switching demands: there was no significant relationship between them at sequence lengths 2 and 3 in either controls or LH stroke survivors. The evidence reviewed in the next two sections suggests two possible causes of this dissociation: 1) phonological control, but not semantic control, contributes to inner speech cueing during task switching, and 2) semantic control shares common processes with the domain-general ability of switching control. These proposed explanations constrain the linguistic content of inner speech cues and support the contribution of domain-general mechanisms to semantic control.

This dissociation between phonological and semantic control may inform the active debate surrounding the language-specificity of cognitive control. As mentioned in the Introduction, a large body of research supports the importance of domain-general cognitive control abilities in language, including studies finding that poor performance on nonverbal cognitive control tasks relates to speech and language deficits (e.g., Murray, 2017). It is plausible that language-specific cognitive control abilities coexist with domain-general control abilities. Evidence for language-specific control comes from case studies of LH stroke survivors with impaired performance on verbal cognitive control tasks but intact performance on nonverbal cognitive control tasks (Hamilton & Martin, 2005; Jacquemot & Bachoud-Lévi, 2021a). Along those lines, the present study distinguished language-specific control abilities by demonstrating dissociations between impairments in semantic control and impairments in phonological control. Future work is warranted to distinguish abilities supporting cognitive control of other language representations, such as morphology and syntax (Jacquemot & Bachoud-Lévi, 2021b).

4.3. Phonological Control, but not Semantic Control, Supports Inner Speech Cueing

Participants employ inner speech cueing strategies during task switching to support their performance by providing a cue for the upcoming target (Miyake et al., 2004). We found that phonological control, but not semantic control, is important for maintaining inner speech cues during task switching. Specifically, the change in phonological cost with switching, called the Phonological Switch Effect, was related to switching cost in the standard version, where the targets are easily nameable shapes, but not in the nonverbal version, where the targets are non-canonical shapes without obvious names. In contrast, the Semantic Switch Effect did not relate to switching cost in either version. These correlations suggest that phonological control helps maintain inner-speech-based self-cues in working memory. Because correlations alone do not establish causality, one might alternatively propose the reverse relationship: that more robust use of inner speech cueing during switching exacerbates phonological interference. We consider this reverse relationship unlikely because it would require substantial systematic variability across individuals in the robustness of inner speech cues. Yet this relationship was investigated in our controls, whom we expect to have ceiling-level ability to internally generate the names of the standard version items, which are easily nameable canonical shapes (“circle”, “square”, “star”, “triangle”). By contrast, we did expect our matched controls to vary in phonological control, which in turn supports the maintenance of the generated inner speech cues in working memory.

Another explanation is that inner speech cueing is selectively employed to compensate for poor switching ability, such that the Phonological Switch Effect reveals poor switching ability when inner speech is disrupted. This account is unlikely because the Phonological Switch Effect was not related to switching in the nonverbal version, which was designed to measure switching ability without the aid of inner speech. Furthermore, the group-level Phonological Switch Effect suggests that inner speech cueing is widely employed across the group and not only by select individuals. We therefore conclude that the role of phonological control in maintaining inner speech cues explains the increase in phonological cost with increasing switching demands (Figure 2).

Furthermore, the dissociation between phonological and semantic control in the context of inner speech cueing specifies the composition of inner-speech-based cues. Prior work has indicated that inner speech cueing relies on phonological representations (Miyake et al., 2004), and the correlation between the Phonological Switch Effect and switching when inner speech is available (A&C standard version) supports this notion. Inner speech can also draw on semantic and/or syntactic representations (Alderson-Day & Fernyhough, 2015). However, to our knowledge, no prior work has determined whether inner speech cueing relies on semantic representations during task switching. Here we found no evidence that it does, since the Semantic Switch Effect was not related to switching in the context that promotes inner speech cueing (A&C standard version). Thus, it appears that inner speech cues generated during switching are composed of phonological representations (e.g., auditory and/or motor imagery of speech), and perhaps not of semantic representations. This conclusion is consistent with the common assumption that inner speech cueing reflects solely phonological representations, and it could constrain the language-related elements that contribute to inner speech cueing during task switching and perhaps in other settings that require cognitive control. Of course, caution is warranted when drawing conclusions from a negative result. An alternative interpretation arises from the fact that the standard switching task used simple shapes as targets (i.e., “circle”, “square”, “star”, “triangle”). If these targets are semantically impoverished, then one would expect them to elicit semantically impoverished inner speech. We therefore cannot exclude that a switching task containing semantically rich targets could elicit semantically rich inner speech cues. Future studies that manipulate the semantic richness of targets could further elucidate the semantic properties of inner speech cues in switching.

4.4. Semantic Control Shares Processes with Switching Control

In both neurotypical controls and LH stroke survivors, we found a significant monotonic effect whereby increasing switching demands (i.e., from S1 to S2 to S3) paradoxically decreased semantic cost. This monotonic effect is another way of describing the Semantic Switch Effect. One might posit that semantic distractors, while interfering with target selection through activation of neighboring semantic representations, simultaneously act as semantic primes for inner speech cueing. While we acknowledge the possibility of such a semantic priming account, we posit instead that the Semantic Switch Effect suggests that the processes recruited for semantic control also aid switching control, and vice versa.

Online recruitment of cognitive control in the presence of distractor-based interference is predicted by conflict monitoring theory and is evidenced behaviorally by the Gratton effect, in which control of task conflict improves following trials with task conflict (Blais et al., 2014; G. Gratton et al., 1992). Although the classic Gratton effect describes the recruitment of control within a single task, the mechanism we propose involves the recruitment of control across task types. Such cross-task adaptation is rarely found in cognitive control paradigms, except when the task types are very similar (Schuch et al., 2019). If the Semantic Switch Effect reflects cross-task adaptation, it may therefore suggest a similarity between the two types of control. In other words, switching control in the presence of semantic interference (i.e., suppression of the previous target and retrieval of the present target) may rely on the same control mechanisms as semantic control at S1 (i.e., finding the target object amongst distractors). Recruiting semantic control may thus bolster switching control in the context of semantic distractors, resulting in a reduced cost of switching.

4.5. Frank Semantic Control Impairments Can Contribute to Semantic Deficits in Aphasia

The CSC framework suggests that semantic control impairments contribute to semantic deficits in aphasia (Jefferies et al., 2008; Jefferies & Lambon Ralph, 2006; Rogers et al., 2015), but this notion has recently been called into question (Chapman et al., 2020). While we do not contend that all aphasic semantic deficits are due exclusively to semantic control impairments, we did confirm that people with frank impairments in semantic control performed worse on some semantic tasks, including poorer accuracy on category judgement and a higher proportion of semantically-related errors during picture naming (Table 7). These behavioral relationships are consistent with the CSC model proposed by Lambon Ralph and colleagues. Importantly, we identified these relationships using a semantic control measure that was independent of other semantic abilities, namely semantic access and representation. The measure is independent of these abilities because the semantic and unrelated versions of the A&C task place similar demands on semantic access and representation, and the effects of these demands are subtracted out in the calculation of semantic control. Moreover, we expect minimal semantic access demands in each version because the same four common objects are accessed repeatedly throughout each version. Therefore, our findings suggest that impaired semantic control contributes to poor semantic task performance independently of abilities in semantic access or representation.

Notably, we identified frank semantic control impairments in only five of the 32 LH stroke survivors, which could indicate that semantic control impairments are not common in LH stroke. Additionally, some individuals performed poorly on certain semantic tasks but did not have a frank semantic control impairment as measured by the A&C task. These individuals may have deficits in semantic representation, or deficits in aspects of semantic access that do not rely on cognitive control (see Mirman & Britt, 2014). It is also possible that semantic control is itself multidimensional, and that these individuals had deficits in aspects of semantic control that the A&C paradigm did not measure. More research is needed to determine the extent to which semantic control is composed of divisible subdomains (e.g., semantic distractor inhibition, controlled semantic access), as well as the extent to which LH stroke causes semantic deficits that are independent of semantic control.

As mentioned above, we found that individuals with semantic control impairments did not perform poorly on all semantic tasks. For instance, these individuals performed no worse than other LH stroke survivors on word-picture matching. One possible explanation is that word-picture matching may rely less on semantic control than on comprehension and semantic access. Indeed, Thompson et al. (2015) noted that stroke survivors with semantic control impairments perform better on word-picture matching than people with Wernicke’s aphasia, a disorder characterized by deficits in comprehension and semantic access.

4.6. The Curious Case of Weaker Semantic Interference: Exceptional Control Abilities in LH Stroke Survivors with Severe Aphasia

Although all participants exhibited the expected semantic interference effects, a subset of LH stroke survivors (i.e., the LSI group) exhibited less semantic interference than all controls. It is unclear why the LSI group had reduced semantic interference. One possible explanation is that LH stroke weakened (i.e., degraded) these individuals’ semantic representations, so that semantic interference registered less strongly. However, the LSI group did not perform worse than other LH stroke survivors on the semantic tasks (i.e., category judgement, Pyramids and Palm Trees, word-picture matching). In fact, some performed numerically above average on category judgement and Pyramids and Palm Trees, despite severe aphasia based on the WAB AQ. Another explanation is that these individuals actually have superior semantic control ability. Indeed, the LSI group committed significantly fewer semantically-related picture naming errors than stroke survivors in the GSI group and numerically (but not significantly) fewer than those in the NSI group, as would be expected with greater semantic control. It is unlikely that these five individuals had better premorbid semantic control than every control participant. Therefore, the LSI group’s enhanced semantic control might result from post-stroke compensation, which could have been adopted in response to certain types of acquired language deficits and/or gained through speech-language therapy. The potential for a post-stroke compensatory increase in semantic control warrants further investigation.

It is important to acknowledge an inherent limitation in measuring semantic control in individuals who may have semantic representation deficits. The magnitude of an interference effect is often assumed to scale with differences in control, with larger interference effects indicating poorer control. However, even before semantic control is recruited, sensitivity to semantic interference might vary across different types of semantic deficits. A semantic deficit that weakens individual semantic representations could reduce sensitivity to semantic interference, as discussed above. Conversely, a semantic deficit that blurs the boundaries between semantic representations could increase susceptibility to semantic interference, because the representations overlap more. Thus, semantic deficits involving weak or ‘blurry’ semantic representations, or a combination of the two, can potentially confound any measure of semantic control. This limitation could motivate an alternative interpretation of the present finding that individuals with greater semantic interference had poor scores on some semantic tasks: these individuals may have had a semantic deficit that altered their sensitivity to semantic interference. Nonetheless, we found evidence that all individuals registered semantic interference in our semantic control measure, since all participants, including those with semantic deficits, demonstrated a semantic cost.

4.7. Phonological Control Impairments in LH Stroke are Subtle and Appear on the Group Level

The first step towards determining the role of phonological control impairments in aphasic deficits is verifying that phonological control impairments occur in aphasia. Indeed, we found that LH stroke survivors as a group demonstrated poorer phonological control than controls. This impairment in controlling phonological interference is consistent with the greater phonological interference effect reported by Barde et al. (2010). A phonological control impairment might negatively impact speech and language performance in stroke survivors. However, although stroke survivors were impaired at phonological control at the group level, we found little evidence of individual impairments in phonological control. Only one individual in our sample had poorer phonological control than every control. This individual had broadly severe aphasia with floor or near-floor performance across most speech and language tasks. Intriguingly, this individual had normal semantic control, suggesting a dissociation between phonological control and semantic control. Several individuals displayed the reverse pattern: normal phonological control with frank impairments in semantic control. The relationship between phonological control impairments and specific aphasic deficits could not be examined further because all other LH stroke survivors performed within the control range for phonological control. Because we could not distinguish premorbid phonological control ability from impairments in phonological control, we did not examine the relationship of phonological control scores to performance on speech and language tasks.
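The individual-level criterion stated in this paragraph (poorer control than every control participant, i.e., falling outside the control range) amounts to a simple range check. A minimal sketch follows, assuming hypothetically that each participant has one scalar interference score in which larger values mean poorer control; the participant IDs, scores, and function name are invented for illustration.

```python
def poorer_than_all_controls(patient_scores, control_scores):
    """Return IDs whose score lies beyond the worst (largest) control score.

    Assumes a larger interference score means poorer control.
    """
    worst_control = max(control_scores)
    return [pid for pid, score in patient_scores.items() if score > worst_control]

# Hypothetical interference scores (e.g., ms of interference cost).
controls = [40, 55, 62, 70, 48]
patients = {"P01": 65, "P02": 120, "P03": 30, "P04": 71}

flagged = poorer_than_all_controls(patients, controls)
print(flagged)  # ['P02', 'P04']

# Mirror-image check, analogous to the LSI grouping in Section 4.6:
# less interference than every control participant.
less_than_all = [pid for pid, score in patients.items() if score < min(controls)]
print(less_than_all)  # ['P03']
```

Because the check depends only on the most extreme control score, it is conservative with respect to flagging impairments, which is consistent with finding only one individual outside the control range.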

The relative lack of individual phonological control impairments in our LH stroke survivors is intriguing. It is possible that more participants had phonological control impairments but were not identified because the phonological interference was too easily overcome. The present stimuli were designed to evoke phonological interference by overlapping the initial and final consonants of the target object names (can, cone, coin, corn). Future stimulus sets could evoke stronger phonological interference by overlapping a greater proportion of the phonetic features in target alternatives (e.g., minimal pair contrasts as in pole, bowl, coal, goal) or by overlapping all of the phonemes but in different positions (e.g., stop, tops, spot, pots). Examining which manipulations of phonological similarity produce the greatest interference will help elucidate the representations relevant to phonological control.
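To make the contrast between these stimulus manipulations concrete, the sketch below scores phonological overlap between two words in two ways: phonemes shared in the same serial position, and phonemes shared regardless of position. The transcriptions are approximate, hand-coded General American forms, and both overlap measures are illustrative assumptions rather than the metric used to construct the A&C stimuli.

```python
from collections import Counter

# Approximate General American phoneme transcriptions (hand-coded for
# illustration; a real stimulus set would use a pronouncing dictionary).
PHONEMES = {
    "can": ("k", "æ", "n"),
    "cone": ("k", "oʊ", "n"),
    "stop": ("s", "t", "ɑ", "p"),
    "tops": ("t", "ɑ", "p", "s"),
    "spot": ("s", "p", "ɑ", "t"),
}

def positional_overlap(a, b):
    """Number of phonemes shared in the same serial position."""
    return sum(x == y for x, y in zip(PHONEMES[a], PHONEMES[b]))

def shared_phonemes(a, b):
    """Number of phonemes shared regardless of position (multiset overlap)."""
    return sum((Counter(PHONEMES[a]) & Counter(PHONEMES[b])).values())

for pair in [("can", "cone"), ("stop", "tops"), ("stop", "spot")]:
    print(pair, positional_overlap(*pair), shared_phonemes(*pair))
```

Under these measures, can/cone shares two phonemes, both in matching positions, whereas stop/tops shares all four phonemes but none in matching positions. An anagram set would therefore test whether interference depends on positional or on position-independent phonological overlap.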

4.8. A Reliable and Flexible Paradigm for Measuring Cognitive Control

We have demonstrated that the novel A&C paradigm reliably produces switching costs, phonological interference, and semantic interference in both controls and LH stroke survivors. Importantly, it measures cognitive control without requiring reading, auditory comprehension, or overt speech, which makes it well suited to people with speech, language, or reading deficits. Moreover, while the present study investigated phonological and semantic interference, the A&C paradigm can be adapted to probe a wide range of interference effects: the stimulus set can be swapped out to target specific interference types while the task structure stays the same. For example, we discussed alternative stimulus sets for probing phonological control above. One could also design stimuli that evoke interference along other linguistic dimensions, such as orthography and morphology, or along non-linguistic dimensions, such as spatial location, color, or local versus global features. Its reliability and versatility may make the A&C paradigm an important new tool for investigating cognitive control.

4.9. Conclusions

Phonological control and semantic control are strongly related during response-distractor inhibition, but unrelated in the presence of switching demands. Specifically, semantic and phonological control interact differently with switching. These differences may stem from the role of phonological control in inner speech and from mechanisms shared by semantic control and switching control. Semantic control impairments explain some cases of semantic impairment in aphasia, but they do not account for poor semantic task performance in all cases. Phonological control deficits are evident at the group level in people with aphasia but are subtle in individuals. Further investigation is needed to fully understand semantic and phonological control and their importance for explaining language deficits.

Supplementary Material

Funding:

This work is supported by the National Institute on Deafness and Other Communication Disorders (NIDCD grants R01DC014960 to PET, F30DC019024 to JDM, F30DC018215 to JVD), the National Center for Advancing Translational Sciences (KL2TR000102 to PET), the National Institutes of Health StrokeNet (Grant U10NS086513 to ATD), and the National Center for Medical Rehabilitation Research (K12HD093427 to ATD).

Footnotes

DECLARATION OF COMPETING INTEREST

The authors have no competing interests to declare.

This name derives from a hypothetical version of the task in which participants switch between selecting pictures of antelopes and cantaloupes.

References:

- Alderson-Day B, & Fernyhough C (2015). Inner Speech: Development, Cognitive Functions, Phenomenology, and Neurobiology. Psychological Bulletin, 141(5), 931–965. 10.1037/bul0000021

- Baddeley A, Chincotta D, & Adlam A (2001). Working memory and the control of action: Evidence from task switching. Journal of Experimental Psychology: General, 130(4), 641–657.

- Barde LHF, Schwartz MF, Chrysikou EG, & Thompson-Schill SL (2010). Reduced short-term memory span in aphasia and susceptibility to interference: Contribution of material-specific maintenance deficits. Neuropsychologia, 48(4), 909–920. 10.1016/j.neuropsychologia.2009.11.010

- Blais C, Stefanidi A, & Brewer GA (2014). The Gratton effect remains after controlling for contingencies and stimulus repetitions. Frontiers in Psychology, 5, 1207. 10.3389/fpsyg.2014.01207

- Brownsett SLE, Warren JE, Geranmayeh F, Woodhead Z, Leech R, & Wise RJS (2014). Cognitive control and its impact on recovery from aphasic stroke. Brain: A Journal of Neurology, 137(Pt 1), 242–254. 10.1093/brain/awt289

- Calabria M, Grunden N, Serra M, García-Sánchez C, & Costa A (2019). Semantic Processing in Bilingual Aphasia: Evidence of Language Dependency. Frontiers in Human Neuroscience, 13, 205. 10.3389/fnhum.2019.00205

- Cervera-Crespo T, & González-Álvarez J (2019). Speech Perception: Phonological Neighborhood Effects on Word Recognition Persist Despite Semantic Sentence Context. Perceptual and Motor Skills, 126(6), 1047–1057. 10.1177/0031512519870032

- Chapman CA, Hasan O, Schulz PE, & Martin RC (2020). Evaluating the distinction between semantic knowledge and semantic access: Evidence from semantic dementia and comprehension-impaired stroke aphasia. Psychonomic Bulletin & Review. 10.3758/s13423-019-01706-6

- Chen Q, & Mirman D (2012). Competition and cooperation among similar representations: Toward a unified account of facilitative and inhibitory effects of lexical neighbors. Psychological Review, 119(2), 417–430. 10.1037/a0027175

- Crawford JR, & Howell DC (1998). Comparing an Individual’s Test Score Against Norms Derived from Small Samples. The Clinical Neuropsychologist, 12(4), 482–486. 10.1076/clin.12.4.482.7241

- Damian MF, & Bowers JS (2003). Locus of semantic interference in picture-word interference tasks. Psychonomic Bulletin & Review, 10(1), 111–117. 10.3758/BF03196474

- Damian MF, & Spalek K (2014). Processing different kinds of semantic relations in picture-word interference with non-masked and masked distractors. Frontiers in Psychology, 5. 10.3389/fpsyg.2014.01183

- de Groot F, Koelewijn T, Huettig F, & Olivers CNL (2016). A stimulus set of words and pictures matched for visual and semantic similarity. Journal of Cognitive Psychology, 28(1), 1–15. 10.1080/20445911.2015.1101119

- de Vet HCW, Mokkink LB, Mosmuller DG, & Terwee CB (2017). Spearman–Brown prophecy formula and Cronbach’s alpha: Different faces of reliability and opportunities for new applications. Journal of Clinical Epidemiology, 85, 45–49. 10.1016/j.jclinepi.2017.01.013

- Emerson MJ, & Miyake A (2003). The role of inner speech in task switching: A dual-task investigation. Journal of Memory and Language, 48(1), 148–168. 10.1016/S0749-596X(02)00511-9

- Fama ME, Hayward W, Snider SF, Friedman RB, & Turkeltaub PE (2017). Subjective experience of inner speech in aphasia: Preliminary behavioral relationships and neural correlates. Brain and Language, 164, 32–42. 10.1016/j.bandl.2016.09.009