Abstract

The Internet has undoubtedly democratized access to medical information, with an estimated 70% of the American population using it as a resource, particularly for cancer-related information. Such unfettered access has also led to an increase in health misinformation. Fortunately, the data indicate that health care professionals remain among the most trusted information resources. Understanding how the Internet has changed engagement with health information and facilitated the spread of misinformation is therefore an important task and challenge for cancer clinicians. In this review, we perform a meta-synthesis of qualitative data and highlight empirical evidence that characterizes misinformation in medicine, specifically in oncology. We present this as a call to action for all clinicians to become more active in ongoing efforts to combat misinformation in oncology.

INTRODUCTION

Approximately 72% of the US population engages in at least one type of social media.1 The 2018 Health Information National Trends Survey found that 70% of US adults have accessed health information online,2 with cancer among the most frequently searched health terms.3,4 However, distinguishing reliable from unreliable information remains a significant challenge.5 Amid this uncertainty, trust in health care professionals has remained stable, with 94% of Americans trusting clinicians compared with 64% who trust information found on the Internet.6

The Internet has democratized access to medical information, and consumers with varying degrees of health and scientific literacy turn to a wide range of sources, platforms, and social media. Unfortunately, this has led to the rise of health misinformation, defined as any health-related claim of fact that is false on the basis of current scientific consensus, which can have harmful consequences.7 Understanding how the Internet has changed engagement with health information and facilitated the spread of misinformation is an important task and challenge for cancer clinicians. This review presents a meta-synthesis of qualitative data that addresses the origin, spread, and scope of misinformation in oncology.

HEALTH MISINFORMATION ON THE INTERNET: WHAT IS IT?

In addition to the above definition, the US Surgeon General's Advisory on Building a Healthy Information Environment defines misinformation as information that is false, inaccurate, or misleading according to the best available evidence at the time.8 Misinformation can include information that is no longer current or information derived from an unreliable or irrelevant source.9

Misinformation is not the same as disinformation, which is defined as a coordinated or deliberate effort to knowingly circulate misinformation to gain power, money, or reputation.9 Simply put, disinformation is intentional misinformation for secondary gain. Whereas the notion that sugar causes hyperactivity in children is an example of misinformation, a pharmaceutical company's deliberate effort to hide the addictive nature of opioids is an example of disinformation.10 These distinctions are not static, because medical knowledge evolves as new data are reported. This is especially true in oncology, where knowledge and information change constantly.

CHALLENGES WITH EVALUATION AND VALIDATION OF ONLINE INFORMATION

Social media users are more likely to believe that information is correct if it is posted by a credible source,9 which, in the case of medical information, is typically someone with health care–related credentials. However, social media accounts are not subject to credential verification, and anyone can participate in social media without providing credentials or by reporting false ones. In addition, social media companies have little incentive to limit the spread of misinformation.

Another challenge is fake scientific news, defined here as false or misleading information presented as scientifically believable and valid. Because fake news often has some basis in reality, it can be difficult for a layperson to distinguish it from information that is scientifically valid and informed by current evidence. The most frequently cited example is a 1998 study published in The Lancet linking the measles, mumps, and rubella (MMR) vaccine to autism.11 Although this study has been used to support the belief that preventive vaccines cause serious and chronic health conditions, the article was ultimately retracted in 2010, and its lead author was stripped of his medical license.12,13 The false association persists despite the retraction, promoted in part by celebrities and others with large social media followings who cite personal experiences as proof.

Oncology is not immune to this type of misinformation. Haber et al14 found that 58% of the media articles covering the 50 most shared academic articles of 2015 inaccurately reported the study's question, results, methodology, or population. They also found a large disparity between the strength of the causal language presented to consumers in the media and the underlying strength of causal inference. For example, media coverage of a study evaluating coffee consumption and melanoma risk used stronger language than the scientific article itself.14,15

Boutron et al evaluated the impact of spin in abstracts reporting the results of randomized controlled clinical trials in oncology. Clinicians who assessed an abstract with spin rated the experimental treatment as more beneficial than did those who assessed an abstract without spin.16 Although this study was conducted within the scientific community, its results have implications for how oncology research is presented and interpreted in the media and by the public.

The burden of evaluating content falls on the user, yet there are no clear methods or guidelines for verifying the validity of information posted on social media. Those with limited health literacy and/or experience may be unable to distinguish legitimate sources from questionable ones. Trivedi et al conducted a study in which 53 social media users viewed 16 target posts about human papillomavirus (HPV) vaccination or sunscreen safety. Users with adequate health literacy correctly rated evidence-based posts as more believable than non–evidence-based posts, whereas users with limited health literacy did not. Users with limited health literacy also spent more time on the source of the message than those with adequate health literacy, suggesting that they may be more likely to believe false news if it comes from what they consider a trusted source on social media.17

WHAT ACCOUNTS FOR THE SPREAD OF MISINFORMATION

The psychology of why misinformation spreads is beyond the scope of this paper, and we refer the reader to excellent resources on the topic.18-20 For the purpose of this discussion, we focus on cognitive biases that influence thinking and lead to errors in processing information. Two examples prevalent on social media are confirmation bias and the echo chamber effect. Confirmation bias refers to seeking information that supports one's own hypothesis while ignoring information that contradicts it.21-23 It is common on closed social networks, most notably Facebook, and is associated with the amplification of misinformation.20,24,25 An echo chamber refers to a situation in which users interact with others who share their viewpoints and avoid those with whom they disagree.26 For example, a study of retweeting behavior in breast cancer networks on Twitter showed that messages from users with more followers, greater personal influence over the interaction, and closer relationships and similarities with other users were more likely to be retweeted.27 These dynamics can foster confirmation bias when users are repeatedly exposed to messages from similar others. Similarly, in a study of online HPV vaccine content, users who were more often exposed to antivaccine messages were more likely to express negative opinions about the HPV vaccine in subsequent tweets.28 Susceptibility to social influence, combined with easy access to information that confirms one's biases, makes users less likely to question the credibility of sources.24

The role of confirmation bias and the echo chamber effect has been clearly demonstrated in discussions of vaccine hesitancy in general, which predate the COVID-19 pandemic (issues specific to COVID-19 vaccines are outside the scope of this paper). Schmidt et al29 performed a quantitative analysis of more than 2.6 million Facebook users engaging with vaccine-related content and found that users consumed information either in favor of or against vaccination, but not both, which over time resulted in highly polarized communities. Another study showed that social media content from users characterized as vaccine-hesitant was rarely shared with users in the mainstream community.30

It is important to understand who is most susceptible to online misinformation. In one study of 923 Facebook users, participants rated the accuracy of true and false social media posts about statin medications, cancer treatment, and the HPV vaccine.31 People who believed misinformation about the HPV vaccine were also likely to believe misinformation about statins and cancer treatment. Individuals with less education and health literacy, less trust in the health care system, and more positive attitudes toward alternative medicine were more likely to believe health misinformation. By contrast, health conditions that made the information more personally relevant, such as cancer or high cholesterol, did not predict belief in misinformation.

However, when medical science does not have all the answers and the expectation of a clinical benefit from evidence-based therapies is low, it may be easier to see the appeal of an unproven treatment, even if it defies logical reasoning.32 Fear and doubt can further increase susceptibility to misinformation.33

In the case of people living with cancer, it may well be the existential threat of death and/or the occurrence or fear of severe side effects of standard treatment that drives people to search for any intervention that provides a source of hope and empowerment. Although data evaluating this possibility are lacking, the use of complementary and alternative medicines (CAM) in people with cancer has been noted. For example, in one survey of Polish patients with gynecologic cancer, CAM use was significantly associated with educational status and recurrent disease.34

ONLINE HEALTH MISINFORMATION IN ONCOLOGY

Oncology-related health misinformation on social media is a pressing concern. Loeb et al35 reported a significant negative correlation between scientific quality and viewer engagement among prostate cancer informational videos on YouTube: users were more likely to view poor-quality or biased videos than higher-quality ones. This suggests that medical misinformation can spread rapidly (or go viral), particularly because most social media platform algorithms promote content with more views or engagement.36 In a separate study, Loeb et al37 reported that almost 70% of bladder cancer content on YouTube was judged to be of moderate to poor quality.

Johnson et al16 reviewed the 50 most popular social media articles on each of the four most common cancers (breast, prostate, colorectal, and lung) posted on Facebook, Reddit, Twitter, or Pinterest between January 2018 and December 2019. Nearly one third of these articles contained misinformation, and 76.9% of those containing misinformation also contained harmful information that could lead to adverse consequences such as treatment delays, toxicity of recommended tests or procedures, and/or adverse interactions with the current standard of care.

The survey of people with gynecologic cancer cited above suggests how alternative information sources may intersect with the standard of care: 26% of respondents received information about CAM from the Internet and 52% read other sources, whereas < 3% discussed its use with their doctors.34 Notably, CAM use was 2.3-fold higher among those being treated with standard chemotherapy (v other therapies). Although this study did not specifically address misinformation, it highlights the role of alternative information sources among people with cancer and suggests that those who use CAM may still undergo standard treatment.

Taken together, these findings show that online cancer information is inconsistent and sometimes at odds with published data and expert opinion. It is no wonder that patients may become confused and unsure where to turn and whom to trust.16

THE ROLE OF HEALTH CARE PROFESSIONALS ON SOCIAL MEDIA AND A CALL TO ACTION

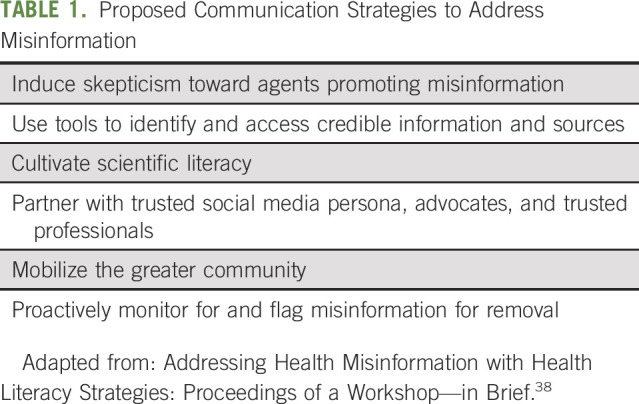

We are only beginning to understand how to respond to misinformation, especially in oncology.9 Nonetheless, steps to address it were proposed by the National Academies of Sciences, Engineering, and Medicine in a 2020 roundtable on health literacy (Table 1).38

TABLE 1. Proposed Communication Strategies to Address Misinformation

Calling out those who spread misinformation for secondary gain (eg, financial profit or Internet fame) is one way to induce skepticism toward those who create or spread it. An illustrative example is a 2005 study that exposed the extent of tobacco industry involvement in a review of sudden infant death syndrome and secondhand smoke.39 Its authors noted that the review's main author had financial conflicts of interest and that tobacco industry executives were directly involved in preparing the review.

There are many credible sources of cancer-related news and information, including patient advocacy groups, other nonprofit organizations, and university- and hospital-based websites. Social media users are well positioned to counter misinformation by directing their followers to these sources, so that individuals searching for information can access more credible data.

Because limited scientific literacy both contributes to the spread of misinformation and is a significant problem in its own right in the United States, health care providers should view the Internet as a collaborative tool that can help patients and care partners better manage their illness, especially when we use it to speak in plain language to our own constituents and make complicated studies more accessible. In turn, health care organizations need to prioritize the dissemination of scientifically vetted, practical health information by providing health care professionals with resources and training in health communication and social media use.40-42

We cannot fight misinformation alone, especially given the rapid global flow of information; doing so will require collaboration with everyone affected by its spread. Stakeholder engagement is critical, both for presenting unified messages that are accessible to as wide an audience as possible and for disseminating accurate information across multiple platforms. We should not forget that the majority of the public trusts science and medicine. This has been evident even during the COVID-19 pandemic: despite the havoc caused by vaccine misinformation, the majority of the public has accepted vaccination.43

Our role on social media is to collectively monitor and flag misinformation when we encounter it, regardless of the platform. Cancer professionals should proactively raise awareness of low-quality (or egregiously false) information and educate by sharing high-quality information. Clinician engagement can support accurate information provision and dissemination, as shown by the #BCSM (breast cancer social media) community, the first cancer support community established on Twitter, founded in 2011 by two breast cancer survivors.44,45 We must be careful not to devalue or judge patient experiences and beliefs, both in person and online.

In the current social media landscape, the responsibility for distinguishing accurate information from misinformation falls on the user consuming it. On a larger scale, we encourage social media platforms to focus on vetting accounts, fact-checking, and supporting verification efforts for health information. This would help users who cannot distinguish accurate from misleading information on the basis of message or post content alone. At the time of writing, a blue verified badge on Twitter denoted that a public interest account was active, authentic, and notable.46 Verified accounts tend to be perceived as more credible and tend to be shared and promoted more widely. Setting verification criteria specific to health and science information accounts is imperative to begin combating misinformation and to help users better differentiate accurate from misleading information.

In conclusion, misinformation has a pervasive impact on oncology. We anticipate that the rapid evolution of communication technologies and social media will continue to raise new challenges and dilemmas while also offering new opportunities for social connection and access to information. Health care professionals need to engage in research to better understand misinformation, determine how to combat it, and reach the populations most affected by online health misinformation. Interventional research in this area is in its infancy; however, some data suggest that interventions can be effective. Indeed, a 2021 meta-analysis concluded that social media interventions to correct health misinformation can have a significant positive impact, with effectiveness tied to stakeholder involvement and dissemination by news organizations and experts.47 These data suggest that our traditional methods of conducting scientific research require greater collaboration, from design to implementation to analysis. Together, we can find new approaches to reduce the abundance of misinformation about cancer.


SUPPORT

This paper represents themes presented at the Inaugural Collaboration for Outcomes Using Social Media in Oncology (COSMO) Conference, funded in part by the National Cancer Institute 1R13CA239613-01A1, the Rhode Island Department of Health, Stanford Cancer Institute, Lifespan Cancer Institute, and The Warren Alpert Medical School of Brown University.

AUTHOR CONTRIBUTIONS

Conception and design: Eleonora Teplinsky, Emily K. Drake, G.J. van Londen, Deanna Teoh, Michael Thompson, Lidia Schapira

Collection and assembly of data: Eleonora Teplinsky, Sara Beltrán Ponce, Ann Meredith Garcia, Michael Thompson, Lidia Schapira

Data analysis and interpretation: Eleonora Teplinsky, Sara Beltrán Ponce, Stacy Loeb, G.J. van Londen, Deanna Teoh, Michael Thompson, Lidia Schapira

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Online Medical Misinformation in Cancer: Distinguishing Fact From Fiction

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/op/authors/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Eleonora Teplinsky

Consulting or Advisory Role: GlaxoSmithKline, Eisai, Tesaro

Emily K. Drake

Other Relationship: Emily Drake (EmilyDrake.ca)

Ann Meredith Garcia

Honoraria: Pfizer, MIMS

Consulting or Advisory Role: Docquity, Lilly

Speakers' Bureau: AstraZeneca, Boehringer Ingelheim, MSD, Roche, Unilab

Travel, Accommodations, Expenses: Sun Pharma, Fresenius Kabi, Goodfellow Pharma, Orient EuroPharma

Stacy Loeb

Stock and Other Ownership Interests: Gilead Sciences

G.J. van Londen

Employment: CancerSurvivorMD, LLC, GvanLondenMD, LLC

Leadership: GvanLondenMD, LLC, CancerSurvivorMD, LLC

Stock and Other Ownership Interests: CancerSurvivorMD, LLC, GvanLondenMD, LLC

Consulting or Advisory Role: bioTheranostics

Open Payments Link: https://openpaymentsdata.cms.gov/physician/1218078

Deanna Teoh

Research Funding: Tesaro, Moderna Therapeutics (Inst)

Michael Thompson

Stock and Other Ownership Interests: Doximity

Consulting or Advisory Role: Celgene, VIA Oncology, Takeda, GlaxoSmithKline, Syapse, Adaptive Biotechnologies, AbbVie, GRAIL, Epizyme, Janssen Oncology, Sanofi

Research Funding: Takeda (Inst), Bristol Myers Squibb (Inst), TG Therapeutics (Inst), Cancer Research and Biostatistics (Inst), AbbVie (Inst), PrECOG (Inst), Strata Oncology (Inst), Lynx Biosciences (Inst), Denovo Biopharma (Inst), ARMO BioSciences (Inst), GlaxoSmithKline (Inst), Amgen (Inst)

Patents, Royalties, Other Intellectual Property: UpToDate, Peer Review for Plasma Cell Dyscrasias (Editor: Robert Kyle)

Travel, Accommodations, Expenses: Takeda, GlaxoSmithKline, Syapse

Other Relationship: Doximity

Uncompensated Relationships: Strata Oncology

Open Payments Link: https://openpaymentsdata.cms.gov/physician/192826/summary

Lidia Schapira

Consulting or Advisory Role: Rubedo Life Sciences, Blue Note Therapeutics

No other potential conflicts of interest were reported.

REFERENCES

- 1. Pew Research Center. Social Media Fact Sheet. 2021. https://www.pewresearch.org/internet/fact-sheet/social-media/

- 2. Ratcliff CL, Krakow M, Greenberg-Worisek A, et al. Digital health engagement in the US population: Insights from the 2018 Health Information National Trends Survey. Am J Public Health. 2021;111:1348–1351. doi: 10.2105/AJPH.2021.306282.

- 3. Foroughi F, Lam AK, Lim MSC, et al. “Googling” for cancer: An infodemiological assessment of online search interests in Australia, Canada, New Zealand, the United Kingdom, and the United States. JMIR Cancer. 2016;2:e5. doi: 10.2196/cancer.5212.

- 4. Hesse BW, Moser RP, Rutten LJ, et al. The Health Information National Trends Survey: Research from the baseline. J Health Commun. 2006;11(suppl 1):vii–xvi. doi: 10.1080/10810730600692553.

- 5. American Society of Clinical Oncology. ASCO 2019 Cancer Opinions Survey. 2019. https://www.asco.org/sites/new-www.asco.org/files/content-files/blog-release/pdf/2019-ASCO-Cancer-Opinion-Survey-Final-Report.pdf

- 6. HINTS Brief Number 39. Trust in Health Information Sources Among American Adults. 2019. https://hints.cancer.gov/docs/Briefs/HINTS_Brief_39.pdf?msclkid=4e3cbbcfa53f11ec9b162216c2d3c7c5

- 7. Sylvia Chou WY, Gaysynsky A, Cappella JN. Where we go from here: Health misinformation on social media. Am J Public Health. 2020;110(suppl 3):S273–S275. doi: 10.2105/AJPH.2020.305905.

- 8. Murthy VH. Confronting Health Misinformation: The U.S. Surgeon General’s Advisory on Building a Healthy Information Environment. 2021. https://www.hhs.gov/sites/default/files/surgeon-general-misinformation-advisory.pdf

- 9. Swire-Thompson B, Lazer D. Public health and online misinformation: Challenges and recommendations. Annu Rev Public Health. 2020;41:433–451. doi: 10.1146/annurev-publhealth-040119-094127.

- 10. Keefe PR. The Family That Built an Empire of Pain. The New Yorker; 2017. https://www.newyorker.com/magazine/2017/10/30/the-family-that-built-an-empire-of-pain

- 11. Wakefield AJ, Murch SH, Anthony A, et al. Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Lancet. 1998;351:637–641. doi: 10.1016/s0140-6736(97)11096-0.

- 12. Eggertson L. Lancet retracts 12-year-old article linking autism to MMR vaccines. CMAJ. 2010;182:E199–E200. doi: 10.1503/cmaj.109-3179.

- 13. Park A. Doctor behind Vaccine-Autism Link Loses License. TIME; 2010. https://healthland.time.com/2010/05/24/doctor-behind-vaccine-autism-link-loses-license/

- 14. Haber N, Smith ER, Moscoe E, et al. Causal language and strength of inference in academic and media articles shared in social media (CLAIMS): A systematic review. PLoS One. 2018;13:e0196346. doi: 10.1371/journal.pone.0196346.

- 15. Loftfield E, Freedman ND, Graubard BI, et al. Coffee drinking and cutaneous melanoma risk in the NIH-AARP diet and health study. J Natl Cancer Inst. 2015;107:dju421. doi: 10.1093/jnci/dju421.

- 16. Johnson SB, Parsons M, Dorff T, et al. Cancer misinformation and harmful information on Facebook and other social media: A brief report. J Natl Cancer Inst. 2022;114:1036–1039. doi: 10.1093/jnci/djab141.

- 17. Trivedi N, Lowry M, Gaysynsky A, et al. Factors associated with cancer message believability: A mixed methods study on simulated Facebook posts. J Cancer Educ. Epub ahead of print June 19, 2021. doi: 10.1007/s13187-021-02054-7.

- 18. Scheufele DA, Krause NM. Science audiences, misinformation, and fake news. Proc Natl Acad Sci USA. 2019;116:7662–7669. doi: 10.1073/pnas.1805871115.

- 19. Pennycook G, Rand DG. The psychology of fake news. Trends Cogn Sci. 2021;25:388–402. doi: 10.1016/j.tics.2021.02.007.

- 20. Ciampaglia GL. Biases Make People Vulnerable to Misinformation Spread by Social Media. Scientific American; 2018. https://www.scientificamerican.com/article/biases-make-people-vulnerable-to-misinformation-spread-by-social-media/

- 21. Chapman GB, Elstein AS. Cognitive processes and biases in medical decision making. In: Chapman GB, Sonnenberg FA, eds. Decision Making in Health Care: Theory, Psychology, and Application. Cambridge, UK: Cambridge University Press; 2000:183–210.

- 22. Zhao H, Fu S, Chen X. Promoting users' intention to share online health articles on social media: The role of confirmation bias. Inf Process Manag. 2020;57:102354. doi: 10.1016/j.ipm.2020.102354.

- 23. Keselman A, Browne AC, Kaufman DR. Consumer health information seeking as hypothesis testing. J Am Med Inform Assoc. 2008;15:484–495. doi: 10.1197/jamia.M2449.

- 24. Thornhill C, Meeus Q, Peperkamp J, et al. A digital nudge to counter confirmation bias. Front Big Data. 2019;2:11. doi: 10.3389/fdata.2019.00011.

- 25. Zimmer F, Scheibe K, Stock M, et al. Fake news in social media: Bad algorithms or biased users? J Inf Sci Theor Pract. 2019;7:40–53.

- 26. Fung IC, Blankenship EB, Ahweyevu JO, et al. Public health implications of image-based social media: A systematic review of Instagram, Pinterest, Tumblr, and Flickr. Perm J. 2020;24:18.307. doi: 10.7812/TPP/18.307.

- 27. Kim E, Hou J, Han JY, et al. Predicting retweeting behavior on breast cancer social networks: Network and content characteristics. J Health Commun. 2016;21:479–486. doi: 10.1080/10810730.2015.1103326.

- 28. Dunn AG, Leask J, Zhou X, et al. Associations between exposure to and expression of negative opinions about human papillomavirus vaccines on social media: An observational study. J Med Internet Res. 2015;17:e144. doi: 10.2196/jmir.4343.

- 29. Schmidt AL, Zollo F, Scala A, et al. Polarization of the vaccination debate on Facebook. Vaccine. 2018;36:3606–3612. doi: 10.1016/j.vaccine.2018.05.040.

- 30. Getman R, Helmi M, Roberts H, et al. Vaccine hesitancy and online information: The influence of digital networks. Health Educ Behav. 2018;45:599–606. doi: 10.1177/1090198117739673.

- 31. Scherer LD, McPhetres J, Pennycook G, et al. Who is susceptible to online health misinformation? A test of four psychosocial hypotheses. Health Psychol. 2021;40:274–284. doi: 10.1037/hea0000978.

- 32. Bernard VW. Why people become the victims of medical quackery. Am J Public Health Nations Health. 1965;55:1142–1147. doi: 10.2105/ajph.55.8.1142.

- 33. Wang Y, McKee M, Torbica A, et al. Systematic literature review on the spread of health-related misinformation on social media. Soc Sci Med. 2019;240:112552. doi: 10.1016/j.socscimed.2019.112552.

- 34. Michalczyk K, Pawlik J, Czekawy I, et al. Complementary methods in cancer treatment-cure or curse? Int J Environ Res Public Health. 2021;18:356. doi: 10.3390/ijerph18010356.

- 35. Loeb S, Sengupta S, Butaney M, et al. Dissemination of misinformative and biased information about prostate cancer on YouTube. Eur Urol. 2019;75:564–567. doi: 10.1016/j.eururo.2018.10.056.

- 36. Kite J, Foley BC, Grunseit AC, et al. Please like me: Facebook and public health communication. PLoS One. 2016;11:e0162765. doi: 10.1371/journal.pone.0162765.

- 37. Loeb S, Reines K, Abu-Salha Y, et al. Quality of bladder cancer information on YouTube. Eur Urol. 2021;79:56–59. doi: 10.1016/j.eururo.2020.09.014.

- 38. National Academies of Sciences, Engineering, and Medicine; Health and Medicine Division; Board on Population Health and Public Health Practice; Roundtable on Health Literacy. Addressing Health Misinformation With Health Literacy Strategies: Proceedings of a Workshop—In Brief. Washington, DC: National Academies Press; 2020.

- 39. Tong EK, England L, Glantz SA. Changing conclusions on secondhand smoke in a sudden infant death syndrome review funded by the tobacco industry. Pediatrics. 2005;115:e356–e366. doi: 10.1542/peds.2004-1922.

- 40. Gunn CM, Paasche-Orlow MK, Bak S, et al. Health literacy, language, and cancer-related needs in the first 6 months after a breast cancer diagnosis. JCO Oncol Pract. 2020;16:e741–e750. doi: 10.1200/JOP.19.00526.

- 41. Housten AJ, Gunn CM, Paasche-Orlow MK, et al. Health literacy interventions in cancer: A systematic review. J Cancer Educ. 2021;36:240–252. doi: 10.1007/s13187-020-01915-x.

- 42. Huhta AM, Hirvonen N, Huotari ML. Health literacy in web-based health information environments: Systematic review of concepts, definitions, and operationalization for measurement. J Med Internet Res. 2018;20:e10273. doi: 10.2196/10273.

- 43. Mathieu E, Ritchie H, Ortiz-Ospina E, et al. A global database of COVID-19 vaccinations. Nat Hum Behav. 2021;5:947–953. doi: 10.1038/s41562-021-01122-8.

- 44. Platt JR, Brady RR. BCSM and #breastcancer: Contemporary cancer-specific online social media communities. Breast J. 2020;26:729–733. doi: 10.1111/tbj.13576.

- 45. Katz MS, Staley AC, Attai DJ. A history of #BCSM and insights for patient-centered online interaction and engagement. J Patient Cent Res Rev. 2020;7:304–312. doi: 10.17294/2330-0698.1753.

- 46. Twitter. About Verified Accounts. Twitter; 2022. https://help.twitter.com/en/managing-your-account/about-twitter-verified-accounts

- 47. Walter N, Brooks JJ, Saucier CJ, et al. Evaluating the impact of attempts to correct health misinformation on social media: A meta-analysis. Health Commun. 2021;36:1776–1784. doi: 10.1080/10410236.2020.1794553.