Abstract

The “core language network” consists of left frontal and temporal regions that are selectively engaged in linguistic processing. Whereas functional differences among these regions have long been debated, many accounts propose distinctions in terms of representational grain size (e.g., words vs. phrases/sentences) or processing timescale, i.e., operating on local linguistic features vs. larger spans of input. Indeed, the topography of language regions appears to overlap with a cortical hierarchy reported by Lerner et al. (2011), wherein mid-posterior temporal regions are sensitive to low-level features of speech, surrounding areas to word-level information, and inferior frontal areas to sentence-level information and beyond. However, the correspondence between the language network and this hierarchy of “temporal receptive windows” (TRWs) is difficult to establish because the precise anatomical locations of language regions vary across individuals. To directly test this correspondence, we first identified language regions in each participant with a well-validated task-based localizer, which affords higher functional resolution than the stereotactic coordinates traditionally used to study TRWs; then, we characterized regional TRWs with the naturalistic story listening paradigm of Lerner et al. (2011), which augments task-based characterizations of the language network by more closely resembling comprehension “in the wild”. We find no region-by-TRW interactions across temporal and inferior frontal regions, which are all sensitive to both word-level and sentence-level information. Therefore, the language network as a whole constitutes a unique stage of information integration within a broader cortical hierarchy.

1. Introduction

Language comprehension engages a cortical network of frontal and temporal brain regions, primarily in the left hemisphere (Binder et al., 1997; Bates et al., 2003; Fedorenko et al., 2010; Menenti et al., 2011). There is ample evidence that this “core language network” is language-selective and is not recruited by other mental processes (Fedorenko and Varley, 2016; see also Pritchett et al., 2018; Ivanova et al., 2019; Jouravlev et al., 2019), indicating that it employs cognitively unique representational formats and/or implements algorithms distinct from those recruited in other cognitive domains. Nonetheless, the functional architecture of this network (i.e., the division of linguistic labor among its constituent regions) remains highly debated. On the one hand, some neuroimaging studies have suggested that different linguistic processes are localized to distinct, and sometimes focal, subsets of this network (e.g., Stowe et al., 1998; Vandenberghe et al., 2002; Bornkessel et al., 2005; Humphries et al., 2006; Caplan et al., 2008; Snijders et al., 2009; Meltzer et al., 2010; Pallier et al., 2011; Brennan et al., 2012; Goucha and Friederici, 2015; Zhang and Pylkkänen, 2015; Kandylaki et al., 2016; Frank and Willems, 2017; Wilson et al., 2018; Bhattasali et al., 2019). On the other hand, other studies have reported that different linguistic processes (e.g., both lexical and combinatorial) are widely distributed across the network and are spatially overlapping (e.g., Keller et al., 2001; Vigneau et al., 2006; Fedorenko et al., 2012b; Bautista and Wilson, 2016; Blank et al., 2016; Fedorenko et al., 2020; Siegelman et al., 2019). Similar conundrums regarding the mapping of linguistic representations and processes onto distinct vs. shared circuits characterize the neuropsychological (patient) literature (e.g., Caplan et al., 1996; Dick et al., 2001; Bates et al., 2003; Wilson and Saygın, 2004; Grodzinsky and Santi, 2008; Tyler et al., 2011; Duffau et al., 2014; Mesulam et al., 2015; Mirman et al., 2015; Fridriksson et al., 2018; Matchin and Hickok, 2019).

Proposals for the functional architecture of the core language network vary substantially from one another in the theoretical constructs posited and the mapping of those constructs onto brain regions (for examples, see Friederici, 2002; Hickok and Poeppel, 2004; Ullman, 2004; Grodzinsky and Friederici, 2006; Hickok and Poeppel, 2007; Bornkessel-Schlesewsky and Schlesewsky, 2009; Friederici, 2011, 2012; Poeppel et al., 2012; Price, 2012; Bornkessel-Schlesewsky and Schlesewsky, 2013; Hagoort, 2013). Such differences notwithstanding, the majority of accounts share a common, fundamental hypothesis: different language regions integrate incoming linguistic input at distinct timescales. This hypothesis may take different forms: in some accounts, the processing of linguistic representations of different grain size (e.g., phonemes, morphemes/syllables, words, phrases/clauses, and sentences) is respectively mapped onto distinct regions; in other accounts, some region(s) function as mental lexicons (“memory”) that store smaller combinable linguistic units, whereas other regions combine these units into larger structural and meaning representations (“online processing”/“unification”/“composition”). Yet all forms of this hypothesis, while varying considerably in critical details, make the same general prediction: that a functional dissociation among language regions would manifest as differences in their respective timescales for processing and integration.

A brain region’s integration timescale constrains the amount of preceding context that influences the processing of current input. A relatively short integration timescale entails that the incoming signal is integrated with its local context, with more global context exerting little or no influence, whereas a longer integration timescale entails sensitivity to broader contexts extending farther into the past. The amount of context that a brain region is sensitive to governs how closely that region “tracks” input that deviates from well-formedness (e.g., Hasson et al., 2008). For example, a brain region with a short integration timescale (e.g., on the order of syllables or morphemes) should reliably track any locally well-formed input even in the face of coarser, global disorder (morphemes/syllables can be extracted even from ungrammatical sequences of unrelated words); but a region with a longer integration timescale (e.g., on the order of phrases or clauses) could not reliably track such locally intact but globally incoherent input (phrases/clauses would be difficult or impossible to identify in such sequences). Therefore, a straightforward prediction that follows from the general “different processing timescales” hypothesis is that different language regions should exhibit distinct patterns of tracking for input scrambled at different grain levels (i.e., coarser, more global disruptions that preserve local information vs. finer, more local violations, as described in the examples above).

Indeed, such a pattern of regional response profiles consistent with distinct integration timescales has been reported in a set of left temporal and frontal areas, whose topography appears to overlap with the core language network (Lerner et al., 2011). Specifically, Lerner and colleagues presented participants with a naturalistic spoken story (“intact story”) along with several increasingly scrambled versions of it: a list of unordered paragraphs (“paragraph list”), a list of unordered sentences (“sentence list”), a list of unordered words (“word list”), and the audio recording played in reverse (“reverse audio”). As participants listened to each of these stimuli, fluctuations in the fMRI BOLD signal were recorded, and the reliability of voxel-wise input tracking was then evaluated. Following Hasson et al. (2004, 2008), Lerner and colleagues reasoned that if neurons in a given voxel could reliably track a certain stimulus, then the resulting signal fluctuations would be stimulus-locked and, thus, similar across individuals; in contrast, untrackable input would elicit fluctuations that would not be reliably related to the stimulus and, thus, would differ across individuals. Therefore, the authors computed for each voxel and stimulus an inter-subject correlation (ISC; Hasson et al., 2004) of BOLD signal fluctuations. Their novel approach revealed a hierarchy of integration timescales (or “temporal receptive windows”; TRWs) extending from mid-temporal regions both anteriorly and posteriorly along the temporal lobe and on to frontal regions.

Mid-temporal regions early in the hierarchy reliably tracked all stimuli including the reverse audio and the word list conditions, which indicated a very short TRW (~phoneme or below). A little more posteriorly and anteriorly, temporal regions tracked all stimuli except for the reverse audio, indicative of sensitivity to sub-word (e.g., morpheme/syllable) or word-level information. Further posterior and anterior temporal regions could only track lists of sentences or paragraphs (but not word lists), indicative of sensitivity to phrase/clause- or sentence-level information. And, finally, some frontal regions exhibited this same pattern of sensitivity to phrase/clause/sentence information, with yet others reliably tracking only paragraph (but not sentence) lists, indicative of sensitivity to information above the sentence level (a very long TRW).
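The logic of inter-subject correlation can be illustrated with a minimal sketch of one common variant, in which each participant's time course is correlated with the mean time course of all other participants. Whether this exact variant matches the one used in the original studies is an assumption here, and the function name `isc_leave_one_out` and the synthetic data are hypothetical. The sketch shows that a shared, stimulus-locked component yields high ISC, whereas purely idiosyncratic fluctuations yield ISC near zero.

```python
import numpy as np

def isc_leave_one_out(data: np.ndarray) -> np.ndarray:
    """Correlate each subject's time course with the mean of all other subjects.

    data: array of shape (n_subjects, n_timepoints) for one voxel/region and one condition.
    Returns one Pearson r per subject.
    """
    n_subj = data.shape[0]
    r = np.empty(n_subj)
    for s in range(n_subj):
        others = np.delete(data, s, axis=0).mean(axis=0)  # average of the remaining subjects
        r[s] = np.corrcoef(data[s], others)[0, 1]
    return r

# Synthetic example: a shared stimulus-locked signal plus subject-specific noise
rng = np.random.default_rng(0)
n_subj, n_tr = 19, 200
shared = rng.standard_normal(n_tr)
tracked = shared + 0.5 * rng.standard_normal((n_subj, n_tr))  # input that is reliably tracked
untracked = rng.standard_normal((n_subj, n_tr))               # no stimulus-locked component

print(isc_leave_one_out(tracked).mean(), isc_leave_one_out(untracked).mean())
```

In this toy setting, the mean ISC for the tracked condition is high, while the untracked condition hovers around zero, which is the contrast that the scrambling conditions are designed to probe.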

A hierarchy of integration timescales is an appealing organizing principle of the language network (DeWitt and Rauschecker, 2012; Bornkessel-Schlesewsky et al., 2015; Hasson et al., 2015; Chen et al., 2016; Baldassano et al., 2017; Yeshurun et al., 2017a; Sheng et al., 2018). Nevertheless, there are several reasons to question the putative correspondence between this hierarchy and the set of language-selective cortical regions. The first issue is neurobiological: the process of TRW characterization described above is carried out on a voxel-by-voxel basis and, hence, crucially relies on the assumption that a given voxel houses the same functional circuits across individuals, but this assumption is demonstrably invalid. Significant inter-individual variability characterizes the mapping of function onto macro-anatomy (Duffau, 2017; Vázquez-Rodríguez et al., 2019; Frost and Goebel, 2012; Tahmasebi et al., 2012), and this variability is especially problematic when functionally distinct regions lie in close proximity to one another, as is the case in both the temporal and frontal lobes (Jones and Powell, 1970; Gloor, 1997; Wise et al., 2001; Chein et al., 2002; Fedorenko et al., 2012a; Deen et al., 2015; Braga et al., 2019; for a related review, see: Fedorenko and Blank, 2020). In these areas, the same stereotactic coordinate may be part of the core language network in one brain but part of a functionally distinct network in another, which severely complicates the interpretation of voxel-based inter-subject correlations in BOLD signal fluctuations as markers of input tracking by a specific functional circuit.

The second issue is statistical. Even if different language regions showed evidence of differing integration timescales at the descriptive level, direct statistical comparisons across their response profiles would be required in order to establish that they are indeed functionally distinct. For instance, when the tracking of the word list condition is significant in one region but not in another, the difference between these two regions might itself still be non-significant (Nieuwenhuis et al., 2011). In other words, a region-by-condition interaction test is a crucial piece of statistical evidence in support of different integration timescales among the regions of the core language network, but such a test has hitherto been missing.
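The statistical point can be made concrete with toy numbers (hypothetical, not data from this study). Below, one region's tracking estimate is "significant" and the other's is not, yet a direct test of their difference is far from significant. For simplicity the sketch treats the two estimates as independent; because the same participants contribute to every region here, the appropriate test in practice is a within-participant region-by-condition interaction.

```python
from math import sqrt
from scipy.stats import norm

def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard-normal test statistic."""
    return 2.0 * norm.sf(abs(z))

# Hypothetical estimates of word-list tracking in two regions (illustrative only)
effect_a, se_a = 0.25, 0.10
effect_b, se_b = 0.10, 0.10

p_a = two_sided_p(effect_a / se_a)  # z = 2.5, p ~ 0.012: "significant"
p_b = two_sided_p(effect_b / se_b)  # z = 1.0, p ~ 0.32: "not significant"

# The comparison that actually bears on a functional dissociation: the difference
se_diff = sqrt(se_a ** 2 + se_b ** 2)                   # assumes independent estimates
p_diff = two_sided_p((effect_a - effect_b) / se_diff)   # z ~ 1.06, p ~ 0.29

print(round(p_a, 3), round(p_b, 3), round(p_diff, 3))
```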

The third issue pertains to psycholinguistic theory and data. Although there is little doubt that comprehension proceeds via a cascaded integration of input along increasingly longer timescales (constructing larger meaningful units out of smaller ones; see, e.g., Christiansen and Chater, 2016), different stages of this process need not rely on qualitatively distinct mental structures or memory stores. Instead, language processing appears to operate over a continuum of merely “quantitatively” different representations that straddle the traditionally postulated boundaries between sounds and words (Farmer et al., 2006; Bradlow and Bent, 2008; Maye et al., 2008; Trude and Brown-Schmidt, 2012; Schmidtke et al., 2014) and between words and larger constructions and combinatorial rules (Clifton et al., 1984; MacDonald et al., 1994; Trueswell et al., 1994; Garnsey et al., 1997; Traxler et al., 2002; Reali and Christiansen, 2007; Gennari and MacDonald, 2008) (see also Joshi et al., 1975; Schabes et al., 1988; Goldberg, 1995; Bybee, 1998; Jackendoff, 2002; Culicover and Jackendoff, 2005; Wray, 2005; Bybee, 2010; Snider and Arnon, 2012; Jackendoff, 2007; Langacker, 2008; Christiansen and Arnon, 2017). By extension from cognition to its neural implementation, linguistic representations of different grain sizes, and their processing, need not be spatially segregated in the cortex across distinct regions.

Therefore, the current study directly tested for a functional dissociation among core language regions in terms of their temporal receptive windows. To this end, and to address the methodological issues discussed above, we synergistically combined two neuroimaging paradigms with complementary strengths: a traditional, task-based design and a naturalistic, task-free design. First, we used a well-validated localizer task to identify regions of the core language network individually in each participant (Fedorenko et al., 2010). This approach allowed us to establish correspondence across brains based on functional response profiles (Saxe et al., 2006) rather than stereotaxic coordinates in a common space, thus augmenting the common voxel-based methodologies for studying temporal receptive windows (Hasson et al., 2008). Then, we characterized the temporal receptive window of each language region by using the naturalistic story and its scrambled versions from Lerner et al. (2011). This paradigm broadly samples the space of representations and computations engaged during comprehension and, thus, tests the “different processing timescales” hypothesis in its most general formulation, decoupled from more detailed theoretical commitments. It therefore augments task-based paradigms, which rely on materials and tasks that isolate particular mental processes tied to specific theoretical constructs. Finally, we computed inter-subject correlations for each condition in each functionally localized language region, and tested for a region-by-condition interaction to directly compare the resulting temporal receptive window profiles across the network. 
In sum, our combined approach both (i) enjoys the increased ecological validity of naturalistic, “task-free” neuroimaging paradigms that mimic comprehension “in the wild” (Maguire, 2012; Sonkusare et al., 2019); and (ii) ensures the functional interpretability of the studied regions by harnessing a participant-specific localizer task instead of relying on precarious “reverse inference” from anatomy back to function (Poldrack, 2006, 2011).

2. Materials and methods

2.1. Participants

Twenty participants (12 females) between the ages of 18 and 47 (median = 22), recruited from the MIT student body and the surrounding community, were paid for participation. All participants were native English speakers, had normal hearing, and gave informed consent in accordance with the requirements of MIT’s Committee on the Use of Humans as Experimental Subjects (COUHES). One participant was excluded from analysis due to poor performance on a post-scan comprehension assessment and poor neuroimaging data quality; the results below include the remaining 19 participants.

All participants but one had a left-lateralized language network, as determined based on visual inspection of their language localizer data (see section 2.2.1). The remaining participant was left-handed (Willems et al., 2014) and had a right-lateralized network; therefore, for this participant only, language fROIs were defined in the right hemisphere.

2.2. Design, materials and procedure

Each participant performed the language localizer task (Fedorenko et al., 2010) and, for the critical experiment, listened to all five versions of a narrated story (cf. Lerner et al., 2011, where different subsets of the sample listened to different subsets of the stimulus set). The localizer and critical experiment were run either in the same scanning session (13 participants) or in two separate sessions (6 participants, who had previously performed the localizer task while participating in other studies; see Mahowald and Fedorenko, 2016 for evidence of high stability of language localizer activations over time; see also Braga et al., 2019). In each session, participants performed a few other, unrelated tasks, with scanning sessions lasting 90–120 min.

2.2.1. Language localizer task

Regions in the core language network were localized using a passive reading task that contrasted sentences (e.g., DIANE VISITED HER MOTHER IN EUROPE BUT COULD NOT STAY FOR LONG) and lists of unconnected, pronounceable nonwords (e.g., LAS TUPING CUSARISTS FICK PRELL PRONT CRE POME VILLPA OLP WORNETIST CHO) (Fedorenko et al., 2010). Each stimulus consisted of 12 words/nonwords, presented at the center of the screen one word/nonword at a time at a rate of 450ms per word/nonword. Each trial began with 100ms of fixation and ended with an icon instructing participants to press a button, presented for 400ms and followed by 100ms of fixation, for a total trial duration of 6s. The button-press task was included to help participants remain alert and focused throughout the run. Trials were presented in a standard blocked design with a counterbalanced order across two runs. Each block, consisting of 3 trials, lasted 18s. Fixation blocks were evenly distributed throughout the run and lasted 14s. Each run consisted of 8 blocks per condition and 5 fixation blocks, lasting a total of 358s. (A version of this localizer is available for download from https://evlab.mit.edu/funcloc/download-paradigms.)
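The timing parameters above are internally consistent, as the following short arithmetic check shows. The constants are taken directly from the text; the variable names are illustrative.

```python
# Localizer timing parameters as described in the text (times in ms unless noted)
N_ITEMS = 12              # words/nonwords per trial
ITEM_MS = 450             # presentation time per word/nonword
PRE_FIX_MS = 100          # fixation at trial onset
ICON_MS = 400             # button-press icon
POST_FIX_MS = 100         # fixation after the icon
TRIALS_PER_BLOCK = 3
BLOCKS_PER_CONDITION = 8
N_CONDITIONS = 2          # sentences, nonwords
FIX_BLOCKS = 5
FIX_BLOCK_S = 14          # seconds

trial_ms = PRE_FIX_MS + N_ITEMS * ITEM_MS + ICON_MS + POST_FIX_MS
block_s = TRIALS_PER_BLOCK * trial_ms / 1000
run_s = N_CONDITIONS * BLOCKS_PER_CONDITION * block_s + FIX_BLOCKS * FIX_BLOCK_S

print(trial_ms, block_s, run_s)  # 6000 18.0 358.0
```

With a 2-s repetition time (section 2.3.1), a 358-s run corresponds to 179 volumes.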

The sentences > nonwords contrast targets high-level aspects of language, to the exclusion of perceptual (speech/reading) and motor-articulatory processes (for a discussion, see Fedorenko and Thompson-Schill, 2014; Fedorenko, in press). We chose to use this particular localizer contrast for compatibility with other past and ongoing experiments in the Fedorenko lab and other labs using similar localizer contrasts. For the current study, the main requirements from the localizer contrast were that it neither be under-inclusive (i.e., fail to identify some regions of the core language network) nor over-inclusive (i.e., identify some regions that lie outside of, and are functionally distinct from, the core language network). Below, we address each requirement in turn.

First, to avoid under-inclusiveness, the contrast should identify regions engaged in a variety of high-level linguistic processes, from ones that might depend on relatively local information integration (e.g., single-word processing) to those that might depend on more global integration (e.g., processing of multi-word constructions, online composition). Because sentences differ from nonword lists in requiring processing at both the word level and the phrase/clause/sentence level, the contrast’s content validity is appropriate in terms of capturing linguistic processes across multiple scales. Moreover, this localizer contrast has been extensively validated over the past decade, and shown to identify regions that all exhibit sensitivity to word-, phrase/clause-, and sentence-level semantic and syntactic processing (Fedorenko et al., 2010, 2012b, 2020; Blank et al., 2016; Mollica et al., 2018), which are the levels we focus on as described in the Results section. For instance, the regions identified with this localizer all exhibit reliable effects for narrower contrasts, like sentences vs. word lists; sentences vs. “Jabberwocky” sentences (where content words have been replaced with nonwords); word lists vs. nonword lists; and “Jabberwocky” sentences vs. nonword lists (similar patterns obtain in electrocorticographic data with high temporal resolution: Fedorenko et al., 2016). In addition, contrasts that are broader than sentences > nonwords and that do not subtract out phonology and/or discourse-level processes (e.g., a contrast between natural spoken paragraphs and their acoustically degraded versions: Scott et al., 2016; Ayyash et al., in prep.) identify the same network. Moreover, activations to the sentences > nonwords contrast exhibit extremely tight overlap with a fronto-temporal network identified solely based on resting-state data (Braga et al., 2019; Branco et al., 2020).
Therefore, if we do not observe functional dissociations among language regions in their respective TRWs, it would not be simply because the language localizer subsamples a functionally homogeneous subset of regions out of a larger network.

Second, to avoid over-inclusiveness, the contrast should not identify functional networks that are distinct from the core language network and might be recruited during online comprehension for other reasons (e.g., task demands, attention, episodic encoding, non-verbal knowledge retrieval, or mentalizing). Whereas there are many potential differences between the processing of sentences vs. nonwords that might engage such non-linguistic processes, the identified regions exhibit robust language selectivity in their responses, showing little or no response to non-linguistic tasks (Fedorenko et al., 2011; Fedorenko et al., 2012a; Pritchett et al., 2018; Ivanova et al., 2019; Jouravlev et al., 2019; for a review, see Fedorenko and Varley, 2016; Scott, 2020). Moreover, whereas these regions are synchronized with one another during naturalistic cognition, they are strongly dissociated from other brain networks (Blank et al., 2014; Paunov et al., 2019; for evidence from inter-individual differences, see Mineroff et al., 2018). Therefore, if we observe functional dissociations among regions in their respective TRWs, it would not be simply because the localizer oversamples a functionally heterogeneous set of regions that extend beyond the core language network. In sum, the contrast we use appears to identify a network that is a “natural kind”.

The evidence reviewed above provides strong support for both convergent and discriminant validity of the language localizer. In addition, this localizer generalizes across materials, tasks (passive reading vs. a memory probe task), and modality of presentation (visual and auditory: Fedorenko et al., 2010; Braze et al., 2011; Vagharchakian et al., 2012; Deniz et al., 2019). Presentation modality is particularly important because the main task of the current study relied on spoken language comprehension.

2.2.2. Critical experiment

Participants listened to the same materials that were originally used to characterize the cortical hierarchy of temporal receptive windows. These materials were based on an audio recording of a narrated story (“Pie-Man”, told by Jim O’Grady at an event of “The Moth” group, NYC). The conditions included (i) the intact audio; (ii) three “scrambled” versions of the story that differed in the temporal scale of incoherence, namely, lists of randomly ordered paragraphs, sentences, or words, respectively; and (iii) a reverse audio version. The last condition served as a low-level control, because reverse speech is acoustically similar to speech and is similarly processed (by lower-level auditory regions; Lerner et al., 2011), but does not carry linguistic information beyond the phonetic level (Kimura and Folb, 1968; Koeda et al., 2006; but see Norman-Haignere et al., 2015). We note that these conditions map only onto vague notions of “words”, “sentences”, and “paragraphs”; their mapping onto psycholinguistic constructs such as “phonemes”, “syllables”, “morphemes”, “phrases”, or “clauses” remains under-determined (for instance, the word-list condition differs from the reverse audio condition in the presence of many phonemes, as well as syllables, morphemes, and word-level lexical entries).

To render these materials suitable for our existing scanning protocol, which used a repetition time of 2 s (see section 2.3.1), the silence and/or music periods preceding and following each stimulus were each extended from 15 s to 16 s so that they spanned an integer number of scans. These periods were not included in the analyses reported below. In addition, the two longest paragraphs in the paragraph-list stimulus were each split into two sections, and one section was randomly repositioned in the stream of shuffled paragraphs. No other edits were made to the original materials.

Participants listened to the materials (one stimulus per run) over scanner-safe headphones (Sensimetrics, Malden, MA), in one of two orders: for 10 participants, the intact story was played first and was followed by increasingly finer levels of scrambling (from paragraphs to sentences to words). For the remaining 9 participants, the word-list stimulus was played first and was followed by decreasing levels of scrambling (from sentences to paragraphs to the intact story). The reverse story was positioned either in the middle of the scanning session or at the end, except for one participant for whom we could not fit this condition in the scanning session.

At the end of the scanning session, participants answered 8 multiple-choice questions concerning characters, places, and events from particular points in the narrative, with foils describing information presented elsewhere in the story. All participants demonstrated good comprehension of the story (17 of them answered all questions correctly, and the remaining two each made only one error; the 20th participant, excluded from analysis, had 50% accuracy).

2.3. Data acquisition and preprocessing

2.3.1. Data acquisition

Structural and functional data were collected on a whole-body 3 Tesla Siemens Trio scanner with a 32-channel head coil at the Athinoula A. Martinos Imaging Center at the McGovern Institute for Brain Research at MIT. T1-weighted structural images were collected in 176 axial slices with 1 mm isotropic voxels (repetition time (TR) = 2,530 ms; echo time (TE) = 3.48 ms). Functional, blood oxygenation level-dependent (BOLD) data were acquired using an EPI sequence with a 90° flip angle and using GRAPPA with an acceleration factor of 2; the following parameters were used: thirty-one 4.4 mm thick near-axial slices acquired in an interleaved order (with 10% distance factor), with an in-plane resolution of 2.1 mm × 2.1 mm, FoV in the phase encoding (A ≫ P) direction 200 mm and matrix size 96 × 96, TR = 2,000 ms and TE = 30 ms. The first 10 s of each run were excluded to allow for steady-state magnetization.
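Several of these acquisition parameters are related by simple arithmetic, sketched below. Two points are assumptions rather than statements from the text: that the FoV is square (so in-plane resolution is FoV divided by matrix size), and that the Siemens "distance factor" denotes the inter-slice gap as a fraction of slice thickness. The slab coverage is therefore a derived estimate, not a reported value.

```python
# Acquisition parameters as reported in the text
FOV_MM = 200.0        # field of view in the phase-encoding direction (assumed square)
MATRIX = 96           # in-plane matrix size (voxels per side)
SLICE_MM = 4.4        # slice thickness
N_SLICES = 31
DIST_FACTOR = 0.10    # assumed: inter-slice gap as a fraction of slice thickness
TR_S = 2.0
DISCARD_S = 10.0      # initial period excluded for steady-state magnetization

in_plane_mm = FOV_MM / MATRIX                                # ~2.08 mm, reported as 2.1 mm
gap_mm = DIST_FACTOR * SLICE_MM                              # 0.44 mm gap between slices
coverage_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * gap_mm  # ~149.6 mm slab extent (derived)
n_discarded = round(DISCARD_S / TR_S)                        # 5 volumes dropped per run

print(f"{in_plane_mm:.2f} mm in-plane, {coverage_mm:.1f} mm coverage, {n_discarded} volumes discarded")
```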

2.3.2. Data preprocessing

Spatial preprocessing was performed using SPM5 and custom MATLAB scripts. (Note that SPM was only used for preprocessing and basic first-level modeling, aspects that have not changed much in later versions; we used an older version of SPM because data for this study are used across other projects spanning many years and hundreds of participants, and we wanted to keep the SPM version the same across all the participants.) Anatomical data were normalized into a common space (the Montreal Neurological Institute (MNI) template), resampled into 2 mm isotropic voxels, and segmented into probabilistic maps of the gray matter, white matter (WM) and cerebrospinal fluid (CSF). Functional data were motion corrected, resampled into 2 mm isotropic voxels, and high-pass filtered at 200 s. Data from the localizer runs were additionally smoothed with a 4 mm FWHM Gaussian filter, but data from the critical experiment runs were not, in order to avoid blurring together the functional profiles of nearby regions with distinct TRWs (we obtained the same results following spatial smoothing in a supplementary analysis).

Additional temporal preprocessing of data from the critical experiment runs was performed using the SPM-based CONN toolbox (Whitfield-Gabrieli and Nieto-Castanon, 2012) with default parameters, unless specified otherwise. Five temporal principal components of the BOLD signal time-courses extracted from the WM were regressed out of each voxel’s time-course; signal originating in the CSF was similarly regressed out. Six principal components of the six motion parameters estimated during offline motion correction were also regressed out, as well as their first temporal derivatives. Next, the residual signal was bandpass filtered (0.008–0.09 Hz) to preserve relatively low-frequency signal fluctuations, because higher frequencies might be contaminated by fluctuations originating from non-neural sources (Cordes et al., 2001).
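The two temporal steps can be sketched as follows: ordinary least-squares regression of confound time courses, followed by an ideal FFT-based band-pass that zeroes all frequency bins outside 0.008–0.09 Hz. This is a minimal illustration under stated assumptions, not CONN's implementation, whose exact filtering method and ordering of steps may differ. The function names and all data below are hypothetical stand-ins for the WM, CSF, and motion confounds listed in the text.

```python
import numpy as np

def regress_out(y: np.ndarray, confounds: np.ndarray) -> np.ndarray:
    """Residuals of y (n_tr, n_vox) after OLS on confounds (n_tr, k) plus an intercept."""
    X = np.column_stack([np.ones(confounds.shape[0]), confounds])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def fft_bandpass(y: np.ndarray, tr: float, low: float = 0.008, high: float = 0.09) -> np.ndarray:
    """Ideal band-pass along time (axis 0): zero every frequency bin outside [low, high] Hz."""
    n = y.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr)
    spectrum = np.fft.rfft(y, axis=0)
    spectrum[(freqs < low) | (freqs > high)] = 0
    return np.fft.irfft(spectrum, n=n, axis=0)

# Synthetic stand-ins for the confounds described in the text (hypothetical data)
rng = np.random.default_rng(1)
n_tr, n_vox = 300, 10
wm_pcs = rng.standard_normal((n_tr, 5))   # 5 white-matter principal components
csf = rng.standard_normal((n_tr, 1))      # CSF signal
motion = rng.standard_normal((n_tr, 6))   # 6 motion components
motion_deriv = np.vstack([np.zeros((1, 6)), np.diff(motion, axis=0)])  # first temporal derivatives
confounds = np.hstack([wm_pcs, csf, motion, motion_deriv])

bold = rng.standard_normal((n_tr, n_vox)) + confounds @ rng.standard_normal((confounds.shape[1], n_vox))
residuals = regress_out(bold, confounds)
clean = fft_bandpass(residuals, tr=2.0)
```

After regression, the residuals are orthogonal to every confound column; after filtering, only frequency components inside the pass band remain.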

We note that bandpass filtering was not used by Lerner et al. (2011). In another supplementary analysis, without filtering, we obtained the same pattern of results reported below. However, the unfiltered time-courses exhibited overall lower reliability across participants. We therefore chose to report the analyses of the filtered data.

2.4. Data analysis

All analyses were performed in MATLAB (The MathWorks, Natick, MA) unless specified otherwise.

2.4.1. Functionally defining language regions in individual participants

Data from the language localizer task were analyzed using a General Linear Model that estimated the voxel-wise effect size of each condition (sentences, nonwords) in each run of the task (the two runs were included in the same GLM, but all regressors were defined per run, i.e., the design matrix was block-diagonal). These effects were each modeled with a boxcar function (representing entire blocks) convolved with the canonical Hemodynamic Response Function (HRF). The model also included first-order temporal derivatives of these effects, as well as nuisance regressors representing entire experimental runs and offline-estimated motion parameters. The obtained beta weights were then used to compute the voxel-wise sentences > nonwords contrast, and these contrasts were converted to t-values. The resulting t-maps were restricted to include only gray matter voxels, excluding voxels that were more likely to belong to either the WM or the CSF based on the probabilistic segmentation of the participant’s structural data.
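A single-run version of this GLM can be sketched as follows: boxcar regressors for each condition are convolved with a double-gamma HRF, fit by ordinary least squares, and the sentences > nonwords contrast is converted to a t-value per voxel. This is a simplified sketch, not SPM's implementation. The HRF uses gamma shapes 6 and 16 with a 1/6 undershoot ratio to approximate SPM's default canonical HRF, while temporal derivatives and motion regressors are omitted. The block order and voxel data are hypothetical.

```python
import numpy as np
from scipy.stats import gamma

TR = 2.0

def double_gamma_hrf(tr: float, duration: float = 32.0) -> np.ndarray:
    """Double-gamma approximation: response peaking near 5 s minus a 1/6-scaled undershoot."""
    t = np.arange(0.0, duration, tr)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()

def boxcar(n_scans: int, onsets_s, dur_s: float, tr: float) -> np.ndarray:
    """1 during each block (onset to onset + duration), 0 elsewhere, sampled once per TR."""
    x = np.zeros(n_scans)
    for on in onsets_s:
        x[int(on // tr):int((on + dur_s) // tr)] = 1.0
    return x

def contrast_t(y: np.ndarray, X: np.ndarray, c: np.ndarray) -> np.ndarray:
    """Per-voxel OLS fit; t = c'b / sqrt(sigma^2 * c'(X'X)^+ c)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof
    var_c = c @ np.linalg.pinv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(sigma2 * var_c)

# Hypothetical block order for one 358-s run (179 scans): a 14-s fixation, then four 18-s task
# blocks alternating sentences (S) and nonwords (N); repeated 4 times, then a final fixation.
n_scans = 179
onsets = {"S": [], "N": []}
t = 0.0
for group in range(4):
    t += 14.0
    for b in range(4):
        onsets["S" if (group + b) % 2 == 0 else "N"].append(t)
        t += 18.0

hrf = double_gamma_hrf(TR)
regs = [np.convolve(boxcar(n_scans, onsets[k], 18.0, TR), hrf)[:n_scans] for k in ("S", "N")]
X = np.column_stack(regs + [np.ones(n_scans)])  # sentences, nonwords, run constant

# Two synthetic voxels: one prefers sentences, one responds equally to both conditions
rng = np.random.default_rng(2)
y = np.column_stack([2.0 * X[:, 0] + X[:, 1], X[:, 0] + X[:, 1]])
y = y + 0.2 * rng.standard_normal(y.shape)
t_vals = contrast_t(y, X, np.array([1.0, -1.0, 0.0]))  # sentences > nonwords
print(t_vals)
```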

Functional regions of interest (fROIs) in the language network were then defined using group-constrained, participant-specific localization (Fedorenko et al., 2010). For each participant, the t-map of the sentences > nonwords contrast (pooled across the two runs) was intersected with binary masks that constrained the participant-specific language regions to fall within areas where activations for this contrast are relatively likely across the population. These five masks, covering areas of the left temporal and frontal lobes, were derived from a group-level probabilistic representation of the localizer contrast in an independent set of 220 participants (available for download from: https://evlab.mit.edu/funcloc/download-parcels). In order to increase functional resolution in the temporal cortex, where a gradient of multiple distinct TRWs was originally reported by Lerner et al. (2011), the two temporal masks were each further divided in two, approximately along the posterior-anterior axis. The border locations marking these divisions were determined based on an earlier version of the group-level representation of the localizer contrast, obtained from a smaller sample (Fedorenko et al., 2010). In total, seven masks were used (Fig. 1), in the posterior, mid-posterior, mid-anterior, and anterior temporal cortex, the inferior frontal gyrus, its orbital part, and the middle frontal gyrus. (Unlike previous reports from our group that had used an additional mask in the angular gyrus, we decided to exclude this region going forward because it does not appear to be a part of the core language network in either its task-based responses or its signal fluctuations during naturalistic cognition. See, e.g., Blank et al., 2014; Blank et al., 2016; Pritchett et al., 2018; Ivanova et al., 2019; Jouravlev et al., 2019; Paunov et al., 2019).

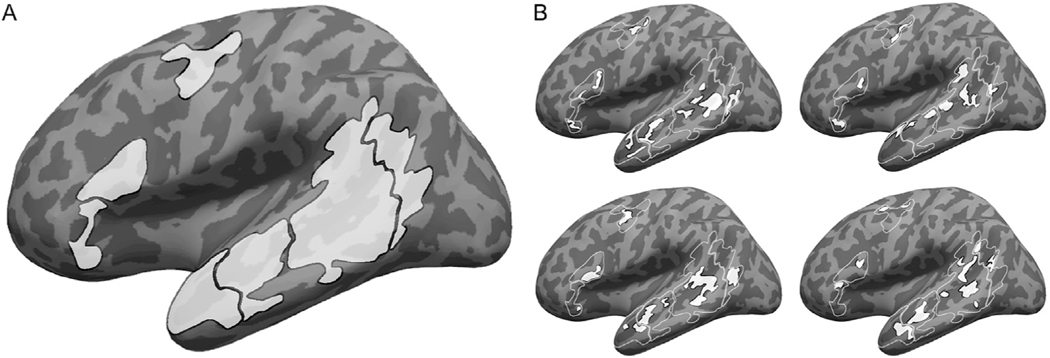

Fig. 1.

Defining participant-specific fROIs in the core language network. All images show approximate projections from functional volumes onto the surface of an inflated average brain in common (MNI) space. (A) Group-based masks used to constrain the location of fROIs. These masks were derived from a probabilistic group-level representation of the sentences > nonwords localizer contrast in a separate sample, following Fedorenko et al. (2010). Contours of these masks are depicted in white in B. (B) Example fROIs of four participants. Note that, because data were analyzed in volume (not surface) form, some parts of a given fROI that appear discontinuous in the figure (e.g., separated by a sulcus) are contiguous in volumetric space.

In each of these masks, a participant-specific fROI was defined as the top 10% of voxels with the highest t-values for the sentences > nonwords contrast. fROIs ranged in size from 37 voxels (within the smallest mask) to 237 voxels (within the largest mask) (Fig. 1). This top-n% approach ensures that fROIs can be defined in every participant and that their sizes are the same across participants, allowing for generalizable results (Nieto-Castañón and Fedorenko, 2012). In line with much prior work, these language fROIs showed highly replicable sentences > nonwords effects, estimated using independent portions of the data for fROI definition and response estimation (for all regions, t(18) > 5.97, p < 10⁻⁴, corrected for multiple comparisons using false-discovery rate (FDR) correction (Benjamini and Yekutieli, 2001); Cohen’s d > 1.24, where this effect size, unlike the preceding statistics, is based on a conservative, independent-samples t-test).

We additionally defined a few alternative sets of fROIs for control analyses. First, to ensure that language fROIs were each functionally homogeneous and did not group together sub-regions with distinct TRWs, we defined alternative, smaller fROIs based on the top 4% of voxels with the highest localizer contrast effects in each mask (these were 15–95 voxels in size). Second, we were interested in whether TRWs in the core language network differed from those in neighboring regions exhibiting weaker localizer contrast effects. Therefore, we defined fROIs based on the “second-best” 4% of voxels within each mask (i.e., those whose effect sizes were between the 92nd and 96th percentiles), as well as based on the “third-best” (88th–92nd percentiles), “fourth-best” (84th–88th percentiles), and “fifth-best” (80th–84th percentiles) sets.
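Both the top-n% fROIs and the percentile bands can be expressed as a rank-based selection within each mask. This is an illustrative sketch, not the study's code: `froi_by_rank` and `BANDS` are hypothetical names, ranks are counted from the highest t-value, and ties are broken by voxel order.

```python
import numpy as np

def froi_by_rank(t_map, mask, start_frac, stop_frac):
    """Boolean fROI of the in-mask voxels ranked between start_frac and stop_frac
    of the mask's voxels, where rank 0 is the highest t-value."""
    t_in = t_map[mask]
    n = t_in.size
    order = np.argsort(-t_in, kind="stable")           # descending t-values
    start, stop = int(round(start_frac * n)), int(round(stop_frac * n))
    keep = np.zeros(n, dtype=bool)
    keep[order[start:stop]] = True
    froi = np.zeros(mask.shape, dtype=bool)
    froi[mask] = keep                                  # scatter back into the volume
    return froi

# Rank ranges (fractions from the top) for the fROI sets described in the text.
BANDS = {
    "top 10%": (0.00, 0.10),
    "top 4%": (0.00, 0.04),
    "second-best 4%": (0.04, 0.08),   # 92nd-96th percentiles
    "third-best 4%": (0.08, 0.12),    # 88th-92nd percentiles
    "fourth-best 4%": (0.12, 0.16),   # 84th-88th percentiles
    "fifth-best 4%": (0.16, 0.20),    # 80th-84th percentiles
}
```

Ranking, rather than thresholding at a fixed t-value, is what guarantees that every participant's fROI within a given mask has the same number of voxels.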

2.4.2. Main analysis of temporal receptive windows in language fROIs

For each of the five conditions in the critical experiment (intact story, paragraph list, sentence list, word list, and reverse audio), in each of the seven fROIs and for each participant, BOLD signal time-series were extracted from each voxel and then averaged across voxels to obtain a single time-series per fROI, participant, and condition. When extracting these signals, we skipped the first 6 s (3 volumes) following stimulus onset, in order to exclude a potential initial rise of the hemodynamic response relative to fixation; such a rise would be a trivially reliable component of the BOLD signal that might blur differences among conditions and fROIs. In addition, we included 6 s of data following stimulus offset, in order to account for the hemodynamic lag (we obtained the same pattern of results in a supplementary analysis in which we skipped the first 10 s and did not include any data after stimulus offset).
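The extraction window can be written out explicitly. The sketch below assumes a repetition time of 2 s (implied by 6 s corresponding to 3 volumes) and treats `offset_vol` as the index of the first volume after the stimulus ends; both helper names are hypothetical.

```python
import numpy as np

TR = 2.0  # seconds per volume (assumed; 6 s = 3 volumes)

def froi_timeseries(bold, froi):
    """Average a (n_vols, n_voxels) BOLD matrix over the voxels in boolean `froi`."""
    return bold[:, froi].mean(axis=1)

def stimulus_window(ts, onset_vol, offset_vol, skip_s=6.0, post_s=6.0, tr=TR):
    """Slice of ts for one stimulus: drop skip_s seconds after onset (initial rise)
    and append post_s seconds after offset (hemodynamic lag).
    offset_vol is exclusive (first volume after the stimulus)."""
    start = onset_vol + int(round(skip_s / tr))
    stop = offset_vol + int(round(post_s / tr))
    return ts[start:stop]
```

Calling `stimulus_window(ts, onset, offset, skip_s=10.0, post_s=0.0)` gives the window used in the supplementary analysis.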

To compute ISCs per fROI and condition, we temporally z-scored the time-series of all participants but one, averaged them, and computed Pearson’s product-moment correlation coefficient between the resulting group-averaged time-series and the corresponding time-series of the left-out participant. This procedure was iterated over all partitions of the participant pool (i.e., leaving out each participant in turn), producing 19 ISCs per fROI and condition. These ISCs were Fisher-transformed to improve the normality of their distribution (Silver and Dunlap, 1987).
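A minimal leave-one-out ISC computation for one fROI and condition might look as follows. It is a sketch assuming complete data arranged as participants × time points; `loo_isc` is a hypothetical name.

```python
import numpy as np

def zscore(x, axis=-1):
    """Temporal z-scoring along `axis`."""
    return (x - x.mean(axis=axis, keepdims=True)) / x.std(axis=axis, keepdims=True)

def loo_isc(data):
    """data: (n_participants, n_timepoints) for one fROI and condition.
    Returns Fisher-transformed ISCs, one per left-out participant."""
    z = zscore(np.asarray(data, dtype=float))
    n = z.shape[0]
    iscs = np.empty(n)
    for i in range(n):
        others = np.delete(z, i, axis=0).mean(axis=0)  # average of all but one
        iscs[i] = np.corrcoef(others, z[i])[0, 1]      # Pearson r with left-out
    return np.arctanh(iscs)                            # Fisher z-transform
```

With 19 participants this returns 19 Fisher-transformed values, one per left-out participant, matching the count given above.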

To reiterate the logic detailed in the introduction, the resulting regional ISCs quantify the similarity of regional BOLD signal fluctuations across participants, with high values indicative of regional activity that reliably tracks the incoming input (correlations across participants mirror correlations within a single participant across stimulus presentations; Golland et al., 2007; Hasson et al., 2009; Blank and Fedorenko, 2017). Further, ISCs across the five conditions constitute a functional profile characterizing a region’s TRW. Namely, reliable input tracking (i.e., high ISCs) is expected only for stimuli that are well-formed at the timescale over which a given region integrates information; weaker tracking (i.e., low ISCs) is expected for stimuli that are scrambled at that scale and, thus, cannot be reliably integrated.

For descriptive purposes, we first labeled the TRW of each fROI based on the most scrambled condition for which tracking was still statistically indistinguishable from tracking of the intact story. For example, if ISCs in a certain region were uniformly high for the intact story, paragraph list, and sentence list, but were significantly lower for the word list, that region’s TRW was labeled as “sentence-level” (because input tracking incurred a cost when well-formedness at that level was violated). Similarly, if ISCs in another region were uniformly high for the intact story, paragraph list, sentence list, and word list, but dropped for the reverse audio, that region’s TRW was labeled as “word-level”. To assign these labels, for each fROI we compared ISCs between the intact story and every other condition using dependent-samples t-tests (α = 0.05, here and in all tests below). The resulting p-values were corrected for multiple comparisons using FDR correction (Benjamini and Yekutieli, 2001) across all pairwise comparisons and fROIs.
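The labeling rule can be sketched as below, assuming complete data (no missing conditions) arranged as participants × conditions × fROIs, with conditions ordered from intact to most scrambled. The Benjamini-Yekutieli adjustment is written out by hand. The label names other than “sentence-level” and “word-level” are hypothetical, and taking the most scrambled non-significant condition literally, even if a less scrambled condition differs, is an assumption about how non-contiguous patterns would be handled.

```python
import numpy as np
from scipy import stats

CONDITIONS = ["intact", "paragraph list", "sentence list", "word list", "reverse audio"]
# Label = grain preserved by the most scrambled condition still tracked like the
# intact story (first and last names are hypothetical).
LABELS = ["story-level", "paragraph-level", "sentence-level", "word-level", "sub-word"]

def fdr_by(pvals):
    """Benjamini-Yekutieli adjusted p-values (valid under arbitrary dependence)."""
    p = np.asarray(pvals, dtype=float).ravel()
    m = p.size
    c_m = np.sum(1.0 / np.arange(1, m + 1))
    order = np.argsort(p)
    scaled = p[order] * m * c_m / np.arange(1, m + 1)
    adj = np.minimum.accumulate(scaled[::-1])[::-1]   # enforce monotonicity
    out = np.empty(m)
    out[order] = np.minimum(adj, 1.0)
    return out.reshape(np.shape(pvals))

def label_trws(isc, alpha=0.05):
    """isc: (n_participants, 5, n_frois) Fisher-z ISCs. Paired t-tests of the intact
    story vs. each other condition, BY-corrected across all tests and fROIs."""
    intact = np.repeat(isc[:, :1, :], isc.shape[1] - 1, axis=1)
    pvals = stats.ttest_rel(intact, isc[:, 1:, :], axis=0).pvalue   # (4, n_frois)
    same = fdr_by(pvals) >= alpha        # True = indistinguishable from intact
    labels = []
    for f in range(isc.shape[2]):
        idx = np.flatnonzero(same[:, f])
        labels.append(LABELS[idx.max() + 1] if idx.size else LABELS[0])
    return labels
```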

For our main analysis, we directly compared the pattern of ISCs to the five conditions across the seven language fROIs via a two-way, repeated-measures analysis of variance (ANOVA) with fROI (7 levels) and condition (5 levels) as within-participant factors. The critical test was for a fROI-by-condition interaction. To further interpret our findings, we conducted follow-up analyses as detailed in the Results section. In addition to the parametric ANOVA, we ran empirical permutation tests of reduced residuals (Anderson and Braak, 2003), which are less sensitive to violations of the parametric test’s assumptions, and obtained virtually identical results. We chose ANOVA over mixed-effects linear regression because the latter is more conservative due to estimator shrinkage, and we wanted to give any fROI-by-condition interaction, should one be present, the strongest chance of revealing itself. Nonetheless, inferences remained unchanged when tests were run via linear mixed-effects regressions (using the lme4 package in R) with varying intercepts by fROI, condition, and participant (Gelman, 2005).
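For reference, the interaction F-test of a two-way, fully within-participant ANOVA can be computed directly from cell and marginal means. This is a textbook sketch (complete data, no sphericity correction), not the software used in the study; `rm_anova_interaction` is a hypothetical name.

```python
import numpy as np
from scipy import stats

def rm_anova_interaction(y):
    """y: (n_participants, n_a, n_b) with both factors within-participant.
    Returns F, df1, df2, p, and partial eta squared for the A x B interaction."""
    n, a, b = y.shape
    grand = y.mean()
    m_ab = y.mean(axis=0)          # (a, b) cell means
    m_a = y.mean(axis=(0, 2))      # (a,) marginal means of factor A
    m_b = y.mean(axis=(0, 1))      # (b,) marginal means of factor B
    m_sa = y.mean(axis=2)          # (n, a) participant x A means
    m_sb = y.mean(axis=1)          # (n, b) participant x B means
    m_s = y.mean(axis=(1, 2))      # (n,) participant means
    inter = m_ab - m_a[:, None] - m_b[None, :] + grand
    ss_ab = n * np.sum(inter ** 2)
    # Error term: the A x B x participant residual.
    resid = (y - m_sa[:, :, None] - m_sb[:, None, :] - m_ab[None, :, :]
             + m_s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - grand)
    ss_err = np.sum(resid ** 2)
    df1 = (a - 1) * (b - 1)
    df2 = df1 * (n - 1)
    F = (ss_ab / df1) / (ss_err / df2)
    p = stats.f.sf(F, df1, df2)
    eta_p = ss_ab / (ss_ab + ss_err)
    return F, df1, df2, p, eta_p
```

With 18 complete participants, 7 fROIs, and 5 conditions, the degrees of freedom are (24, 408), as in the interaction tests reported in the Results; leave-one-fROI-out tests correspond to deleting one slice along the fROI axis before calling the function.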

2.4.3. Controlling for baseline differences in input tracking across fROIs

When comparing functional responses across brain regions, it is critical to take into account regional differences in baseline responsiveness, because these might mask fROI-by-condition interactions (or explain them away; Nieuwenhuis et al., 2011). For instance, whereas an ANOVA might conclude that a difference between an ISC of 0.5 for the intact story and an ISC of 0.4 for the reverse audio in one region is statistically indistinguishable from a difference between ISCs of 0.2 and 0.1 in another, the former difference constitutes only a 20% decrease whereas the latter constitutes a 50% decrease. Therefore, we corrected for such baseline differences and re-tested for a fROI-by-condition interaction.

To this end, we used regional ISCs for the intact story as a “ceiling” against which to normalize ISCs for the other four conditions. First, all ISCs were converted back to the [−1, 1] range using the inverse Fisher transform. Then, we squared all ISCs, thus transforming them from correlations to proportions of explained variance (i.e., the fraction of variance in BOLD signal fluctuations from one participant that is explained by the corresponding BOLD signal fluctuations from other participants). Next, we divided the squared ISCs for each of the paragraph list, sentence list, word list, and reverse audio conditions by the corresponding squared ISCs for the intact story (separately for each participant and fROI). This division acted as a normalization procedure, whereby the proportion of explained variance in each condition was compared against its ceiling value from the intact condition. Finally, we took the square root of the resulting values in order to transform them back into (normalized) correlations. Whenever the resulting normalized correlation was greater than 1, it was capped at 1. We tested these normalized ISCs for a fROI-by-condition interaction, as described in section 2.4.2.
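The procedure amounts to r_norm = min(1, sqrt(r_c² / r_intact²)), i.e., min(1, |r_c| / |r_intact|). A minimal sketch, assuming Fisher-z inputs of matching shape and a nonzero intact-story ISC (the division is undefined otherwise); `normalize_iscs` is a hypothetical name.

```python
import numpy as np

def normalize_iscs(fisher_intact, fisher_other):
    """Normalize a scrambled condition's ISCs by the intact-story 'ceiling'.
    Inputs are Fisher-z ISCs of the same shape (e.g., participants x fROIs)."""
    r_intact = np.tanh(fisher_intact)          # inverse Fisher transform
    r_other = np.tanh(fisher_other)
    ratio = r_other ** 2 / r_intact ** 2       # explained variance relative to ceiling
    return np.minimum(np.sqrt(ratio), 1.0)     # back to correlation scale, capped at 1
```

The two example cases given above, (0.5, 0.4) and (0.2, 0.1), normalize to 0.8 and 0.5, making the 20% versus 50% decrease explicit.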

2.4.4. Additional, voxel-based analyses

Whereas our main analyses examined ISCs in functionally defined, participant-specific fROIs, we also conducted two control analyses for which ISCs were defined using the common, voxel-based approach. Although we believe this approach is disadvantageous and suffers from interpretational limitations (see Introduction), we performed these analyses in order to provide a more comprehensive investigation of TRWs in the core language network.

Our first goal was to replicate the original findings from Lerner et al. (2011) so as to ensure that any differences between our main analyses and this previous study do not result from inconsistencies in the data. For this analysis, following Lerner et al. (2011), we smoothed the (temporally preprocessed) functional scans with a 6 mm FWHM Gaussian kernel. Then, for each of the five conditions in the main experiment, we computed voxel-wise ISCs for the subset of left-hemispheric voxels that met the following three criteria: (i) were more likely to be gray matter than either WM or CSF in at least 2/3 (n = 13) of the participants, based on the probabilistic segmentation of their individual, structural data; (ii) were part of the frontal, temporal, or parietal lobes as defined by the AAL2 atlas (Tzourio-Mazoyer et al., 2002; Rolls et al., 2015); and (iii) fell in the cortical mask used for the cortical parcellation in Yeo et al. (2011). We then labeled the TRW of each voxel following the approach of Lerner et al. (2011), namely, based on the most scrambled stimulus that, across participants, was still tracked significantly above chance (evaluated against a Gaussian fit to an empirical null distribution of ISCs that was generated from surrogate signal time-series; see Theiler et al., 1992). Tests were FDR-corrected for multiple comparisons across conditions and voxels. The resulting map of TRWs was projected onto Freesurfer’s average cortical surface in MNI space. No further quantitative tests were performed, as we were only interested in obtaining a visually similar gradient of TRWs to the one previously reported.
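The chance-level test can be approximated with phase-randomized surrogates (Theiler et al., 1992). The sketch below is an assumption-laden illustration for a single left-out participant: the text does not specify how surrogates were generated or how many were used, so phase-randomizing the left-out time-series, the default of 1000 surrogates, and the one-sided Gaussian tail are all hypothetical choices, and both function names are illustrative.

```python
import math
import numpy as np

def phase_randomize(x, rng):
    """Surrogate with x's amplitude spectrum but random Fourier phases."""
    spec = np.fft.rfft(x)
    phases = rng.uniform(0.0, 2.0 * np.pi, spec.size)
    phases[0] = 0.0                      # leave the mean (DC) term untouched
    if x.size % 2 == 0:
        phases[-1] = 0.0                 # the Nyquist term must stay real
    return np.fft.irfft(spec * np.exp(1j * phases), n=x.size)

def isc_p_above_chance(left_out, group_mean, n_surrogates=1000, seed=0):
    """One-sided p-value of corr(left_out, group_mean) under a Gaussian fitted to
    correlations obtained after phase-randomizing the left-out series."""
    rng = np.random.default_rng(seed)
    observed = np.corrcoef(left_out, group_mean)[0, 1]
    null = np.array([np.corrcoef(phase_randomize(left_out, rng), group_mean)[0, 1]
                     for _ in range(n_surrogates)])
    z = (observed - null.mean()) / null.std(ddof=1)
    return 0.5 * math.erfc(z / math.sqrt(2.0))   # upper-tail Gaussian probability
```

Phase randomization preserves each series' amplitude spectrum, and thus its autocorrelation, so the null distribution reflects the smoothness of the BOLD signal rather than assuming independent samples.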

Our second goal was an alternative definition of fROIs that relied less heavily on the task-based functional localizer, in order to alleviate any remaining concerns regarding its use (see section 2.2.1.). Here, rather than defining fROIs that maximized the localizer contrast effect and subsequently characterizing their TRWs, we aimed at defining fROIs that directly maximized the ISC profiles consistent with certain TRWs. To avoid circularity from the use of the same data to define fROIs and to estimate their response profiles (Vul and Kanwisher, 2010; Kriegeskorte et al., 2009), we first created two independent sets of ISCs by splitting each BOLD signal time-series and computing ISCs for each half of the data. We then used data from the second half of each stimulus to define fROIs, and data from the first half to compare TRWs across the resulting fROIs. (We used the first half of each stimulus for the critical test because we suspected input tracking would be overall lower in the second half due to participants losing focus, especially for scrambled stimuli without coherent meaning; however, the pattern of results did not depend on which data half was used for fROI definition and which was used for the critical test.)

This analysis proceeded as follows. In each mask (the same masks used in the main analyses), we first labeled the TRW of each voxel as in our main analysis (section 2.4.2), i.e., based on the most scrambled condition for which tracking was statistically indistinguishable from tracking of the intact story (the alternative labeling scheme described in the second paragraph of this section, based on the most scrambled condition that was still tracked significantly above chance, yielded similar results). We then chose the voxels whose TRW label matched the label of the localizer-based fROI from the main analysis (see the penultimate paragraph of section 2.4.2, and an example below). We ranked these voxels by the significance of the difference between their tracking of the intact story and their tracking of the least scrambled condition that was tracked less reliably, and chose the 27 voxels with the smallest (most significant) p-values for that comparison (27 voxels correspond to a 3 × 3 × 3 cubic neighborhood, but we did not constrain these voxels to be contiguous). For example, if the TRW of interest was “sentence-level”, we focused on voxels (i) whose tracking of the sentence list did not statistically differ from their tracking of the intact story, but (ii) whose tracking of the word list was significantly less reliable; a “sentence-level” fROI was then chosen as the 27 voxels showing the most significant differences between ISCs for the intact story and the word list condition (in a dependent-samples t-test across participants). Once a fROI was defined this way in each mask, we averaged the ISCs for the first half of each stimulus across its voxels, and compared the resulting ISC profiles across fROIs using two-way, repeated-measures ANOVAs with the factors fROI (7 levels) and condition (5 levels), as in our main analyses (section 2.4.2).
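The selection step can be sketched as follows, assuming that per-voxel TRW labels and per-voxel p-values for the critical comparison have already been computed from the defining half of the data. `select_trw_froi` and `froi_profile` are hypothetical names; the default of 27 voxels follows the text.

```python
import numpy as np

def select_trw_froi(voxel_labels, voxel_pvals, target_label, k=27):
    """Indices of the k voxels whose TRW label (from the defining half) equals
    target_label and whose critical-comparison p-value is smallest."""
    labels = np.asarray(voxel_labels)
    pvals = np.asarray(voxel_pvals, dtype=float)
    candidates = np.flatnonzero(labels == target_label)    # label must match
    order = np.argsort(pvals[candidates], kind="stable")   # most significant first
    return candidates[order[:k]]                           # contiguity not enforced

def froi_profile(isc_test_half, voxel_idx):
    """Average held-out ISCs (participants x conditions x voxels) over the fROI."""
    return isc_test_half[:, :, voxel_idx].mean(axis=2)
```

Because the labels and p-values come from one half of each stimulus and the profile from the other, the subsequent ANOVA on `froi_profile` outputs avoids the circularity mentioned above.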

2.4.5. Comparing TRWs between the language network and other functional regions

To situate data from the core language network in a broader context, we also computed ISCs in other cortical regions. First, we computed ISCs based on BOLD signal time-series averaged across voxels from an anatomically defined mask of lower-level auditory cortex in the anterolateral section of Heschl’s gyrus in the left hemisphere (Tzourio-Mazoyer et al., 2002). We did not use a functional localizer for this region because lower-level sensory regions map onto macro-anatomy better than higher-level association regions do (Frost and Goebel, 2012; Tahmasebi et al., 2012; Vázquez-Rodríguez et al., 2019). This region has a short TRW (e.g., Lerner et al., 2011; Honey et al., 2012a) and was therefore expected to track all stimuli equally reliably.

Second, we extracted ISCs from regions of the “episodic” (or “default mode”) network (Gusnard and Raichle, 2001; Raichle et al., 2001; Buckner et al., 2008; Andrews-Hanna et al., 2010; Humphreys et al., 2015), which is engaged in processing episodic information. This network, recruited when we process events as part of a narrative, is expected to integrate input over longer timescales compared to the core language network (Regev et al., 2013; Chen et al., 2016; Margulies et al., 2016; Simony et al., 2016; Yeshurun et al., 2017a, 2017b; Zadbood et al., 2017; Nguyen et al., 2019). To identify fROIs in this network we relied on its profile of deactivation during tasks that tax executive functions for the processing of external stimuli, and used a visuo-spatial working-memory localizer that included a “hard” condition requiring the memorization of 8 locations on a 3 × 4 grid (Fedorenko et al., 2011, 2013). We defined the contrast hard < fixation and, following the approach outlined above (section 2.4.1.), chose the top 10% of voxels showing the strongest t-values for this contrast in two left-hemispheric masks, located in the posterior cingulate cortex and temporo-parietal junction (these masks were generated based on a group-level probabilistic representation of the localizer task data from 197 participants).

To compare TRWs between the core language network and each of these two other systems, we averaged ISCs for each condition across fROIs in each system and conducted two-way, repeated-measures ANOVAs with system (2 levels: language and auditory/language and episodic) and condition (5 levels) as within-participant factors. The critical test was for a system-by-condition interaction.

3. Results

3.1. No evidence for a region-by-condition interaction among language fROIs in the left inferior frontal and temporal cortices

3.1.1. Main analysis

The main results of the current study are presented in Fig. 2A. We computed inter-subject correlations for each of the five conditions in each of the seven core language fROIs, and directly compared the resulting regional profiles of input tracking via a two-way (fROI × condition), repeated-measures ANOVA. As expected, there was a main effect of condition (F(4,68) = 32.7, ηp² = 0.66, p < 10⁻¹⁴). Follow-up ANOVAs (FDR-corrected for multiple comparisons) contrasting the intact story to each other condition revealed no overall differences between tracking of the intact story and of the paragraph list (F(1,18) = 0, ηp² = 0, p = 1) or the sentence list (F(1,18) = 0.02, ηp² = 10⁻³, p = 1), but weaker tracking of the word list (F(1,18) = 27.27, ηp² = 0.6, p < 10⁻³) and the reverse audio condition (F(1,18) = 169.90, ηp² = 0.92, p < 10⁻⁷). Furthermore, the sentence list was tracked more reliably than the word list (F(1,18) = 9.76, ηp² = 0.35, p = 0.04), which was, in turn, tracked more reliably than the reverse audio condition (F(1,18) = 48.56, ηp² = 0.74, p < 10⁻⁴). In addition, there was a main effect of fROI (F(6,102) = 19.7, ηp² = 0.54, p < 10⁻¹⁴), indicating that some fROIs tracked stimuli more reliably overall than others, a finding we return to in section 3.1.2.

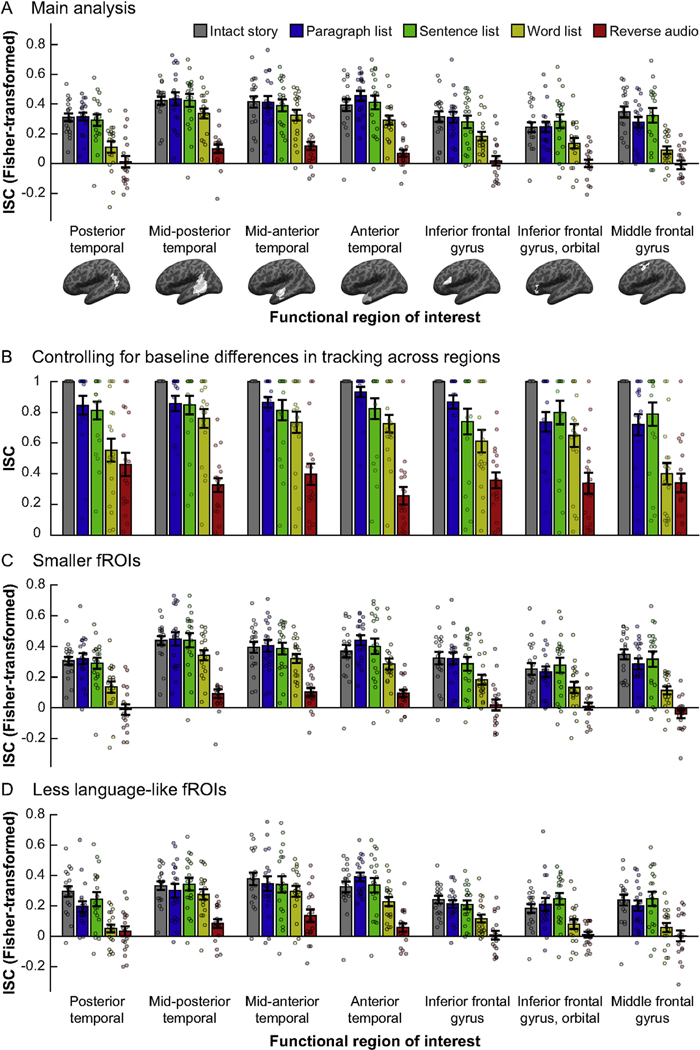

Fig. 2.

Comparing temporal receptive windows (TRWs) across core language regions. In each panel, inter-subject correlations (ISCs, a proxy for input tracking reliability; y-axis) are shown for each condition (bar colors) in each fROI (x-axis; brain images depict whole masks, but data are for participant-specific fROIs defined within those masks, as elaborated in Methods). Dots show individual data points, bars show means across the sample, and error bars show standard errors of the mean. (A) Main analysis. (B) ISCs normalized relative to a “baseline” measure of tracking per fROI, i.e., the intact story condition. (C) ISCs in smaller fROIs, defined as the top 4% of voxels in each mask that showed the biggest sentences > nonwords effect in the localizer task, rather than the top 10% as in (A). (D) fROIs defined as the “fifth-best” 4% of voxels in each mask, i.e., those whose effect size for the localizer contrast was in the 80–84 percentile range. ISCs in all panels except panel B were transformed from the original Pearson’s correlation scale to the Fisher-transformed scale.

As an initial characterization of the region-wise TRWs, we ran dependent-samples t-tests in each fROI to compare ISCs for the intact story and for each of the other conditions. We then identified the most scrambled condition whose tracking was still statistically indistinguishable from tracking of the intact story (correcting for multiple tests across pairwise comparisons and fROIs). These tests indicated that the mid-posterior, mid-anterior, and anterior temporal fROIs each exhibited “word-level” TRWs, with input tracking reliability not incurring a cost when words were randomly ordered, but becoming significantly weaker for the reverse audio condition (for all three regions: t(17) > 6.54, Cohen’s d > 1.58, p < 10⁻⁴). The fROIs in the posterior temporal cortex, inferior frontal gyrus, its orbital part, and middle frontal gyrus each exhibited “sentence-level” TRWs, with input tracking reliability not incurring a cost for the sentence list condition, but becoming significantly weaker for the word list condition (for all four regions: t(18) > 2.8, d > 0.66, p < 0.04). Prior to multiple comparison correction, all seven fROIs exhibited “sentence-level” TRWs. Based on these findings, in several of our analyses below we report tests focusing on the sentence list and word list conditions, which appear to be the locus of potential functional differences across fROIs.

Critically, whereas the ANOVA revealed a significant fROI-by-condition interaction in ISCs (F(24,408) = 1.78, ηp² = 0.10, p = 0.014), this interaction was explained by the middle frontal gyrus (MFG) fROI: follow-up analyses testing for an interaction across all regions but one (uncorrected for multiple comparisons, so as to be anti-conservative) failed to reach significance when the MFG fROI was removed (F(20,340) = 1.32, ηp² = 0.07, p = 0.16), but remained significant when each of the other fROIs was removed (for all tests, F(20,340) > 1.71, ηp² > 0.09, p < 0.03). The same results were obtained when testing only the sentence list and word list conditions (all seven fROIs: F(6,108) = 4.32, ηp² = 0.19, p < 10⁻³; MFG fROI excluded: F(5,90) = 2.24, ηp² = 0.11, p = 0.057; any other fROI excluded: F(5,90) > 4.11, ηp² > 0.18, p < 0.002). Furthermore, the word list condition appeared to drive the interaction across the seven fROIs: when this condition was excluded from analysis, the interaction was not significant (F(18,306) = 1.13, ηp² = 0.06, p = 0.32), but it remained significant when each of the other conditions was removed (reverse audio excluded: F(18,324) = 2.56, ηp² = 0.13, p < 10⁻³; any other condition excluded: F(18,306) > 1.82, ηp² = 0.10, p < 0.03).

Below, we report several additional analyses exploring the fROI-by-condition interaction (or lack thereof). Taken together, these analyses find no evidence that inferior frontal and temporal fROIs have functionally distinct temporal receptive windows.

3.1.2. Controlling for baseline differences in input tracking across fROIs

Language fROIs differed from one another in their “baseline” input tracking: a one-way ANOVA performed on the intact-story ISCs with fROI (7 levels) as a within-participant factor revealed a significant main effect (F(6,108) = 5.13, ηp² = 0.22, p = 10⁻⁴). To control for these differences, we used the ISCs for the intact story as a “ceiling” against which to normalize the ISCs for the other four conditions (Fig. 2B). Then, we re-ran the ANOVA testing for a fROI-by-condition interaction on the normalized values. Following the patterns observed above, we limited this analysis to the sentence list and word list conditions, which in our main analyses captured the characteristics of the full dataset well. (Due to noise in the data, many ISCs for scrambled stimuli exceeded the corresponding ISC for the intact condition and thus resulted in a normalized ISC value of 1. These values biased the distribution of normalized ISCs and were difficult to interpret; the dataset limited to the sentence list and word list conditions appeared to lend itself more readily to analysis.) As in our main analysis, this test revealed a fROI-by-condition interaction (F(6,108) = 3.39, ηp² = 0.16, p = 0.004) that was accounted for by the MFG fROI (MFG fROI excluded: F(5,90) = 1.22, ηp² = 0.06, p = 0.30; any other region excluded: F(5,90) > 3.32, ηp² > 0.15, p < 0.008). Therefore, it is unlikely that evidence for distinct TRWs across language fROIs was “masked” by regional differences in baseline input tracking.

3.1.3. Testing smaller fROIs

The same results as in the main analysis were obtained when we tested smaller fROIs, defined as the top 4% (rather than 10%) of voxels showing the strongest localizer contrast effects within each mask (Fig. 2C): when all seven fROIs and five conditions were included, there was a fROI-by-condition interaction (F(24,408) = 1.62, ηp² = 0.09, p = 0.03), which was no longer significant once the MFG fROI was excluded (F(20,340) = 1.20, ηp² = 0.07, p = 0.25). Similarly, when we tested only the sentence list and word list conditions, there was a significant fROI-by-condition interaction across the seven fROIs (F(6,108) = 2.80, ηp² = 0.13, p < 0.02) but not across the six fROIs excluding the MFG fROI (F(5,90) = 1.48, ηp² = 0.08, p = 0.21). Thus, the lack of evidence for a fROI-by-condition interaction in our main analysis is unlikely to result from using regions that were too large and grouped together several functionally distinct sub-regions.

3.1.4. Testing less language-like fROIs

The lack of evidence for a fROI-by-condition interaction among core language regions might have resulted from a lack of power to detect such interactions. To examine this possibility, we conducted the same analysis on each of several sets of alternative fROIs that, instead of showing the strongest sentences > nonwords effects, consisted of voxels that showed weaker localizer contrast effects. Specifically, the localizer contrast effects in these voxels were in the 92nd–96th, 88th–92nd, 84th–88th, or 80th–84th percentiles of their respective masks (the “second-”, “third-”, “fourth-”, and “fifth-best” 4%, respectively). We reasoned that such voxels, which showed less language-like responses, could either be more peripheral members of the language network or belong to other functional networks that lie in close proximity to language regions (Chein et al., 2002; Fedorenko et al., 2012a; Deen et al., 2015). As such, these regions might differ from one another, and from the core language fROIs, in their integration timescales.

In each of these alternative sets of seven fROIs, a two-way, repeated-measures ANOVA revealed a fROI-by-condition interaction, indicating that these regions differed from one another in their TRWs (second-best set: F(24,408) = 1.87, ηp² = 0.09, p = 0.007; third-best set: F(24,408) = 1.95, ηp² = 0.10, p = 0.005; fourth-best set: F(24,408) = 1.92, ηp² = 0.10, p = 0.006; fifth-best set: F(24,408) = 1.95, ηp² = 0.10, p = 0.006). For the fROIs consisting of second-best voxels (which, being within the top 10%, were also part of the fROIs used for the main analysis), this interaction was driven by the MFG fROI (fROI-by-condition interaction with the MFG fROI excluded: F(20,340) = 1.50, ηp² = 0.08, p = 0.08). In contrast, for the other sets of fROIs, the interaction remained significant even with the MFG fROI removed, and its effect size descriptively grew as less language-like fROIs were tested (fROI-by-condition interaction with MFG excluded, third-best set: F(20,340) = 1.78, ηp² = 0.09, p = 0.02; fourth-best set: F(20,340) = 1.96, ηp² = 0.10, p = 0.008; fifth-best set: F(20,340) = 2.35, ηp² = 0.12, p = 10⁻³) (Fig. 2D). These findings demonstrate that our study had sufficient power to detect fROI-by-condition interactions when they exist (e.g., when voxels from nearby, functionally distinct networks are examined); such an interaction is simply not evident in the core language network, whose regions show indistinguishable TRWs.

3.2. Additional, voxel-based analyses support the main finding

To demonstrate that the lack of evidence for a fROI-by-condition interaction in the core language network was not trivially caused by our choice of the localizer task, we re-computed ISCs using the common, voxel-based approach. First, we observed that the resulting ISCs qualitatively replicate the overall topography of the TRWs reported by Lerner et al. (2011) (Fig. 3A). Namely, large portions of the superior temporal cortex exhibit reliable tracking of all five stimuli, including the reverse audio condition, indicative of a short TRW; middle temporal regions reliably track word lists (as well as less scrambled stimuli) but not the reverse audio, i.e., are sensitive to well-formedness on the timescale of morphemes/syllables or words; extending further in inferior, posterior, and anterior directions, some temporal and parietal regions exhibit longer TRWs and reliably track only stimuli whose structure is well-formed at the level of phrases/clauses or sentences; and in the frontal lobe, voxels exhibit sensitivity to coherence at either the word-, sentence-, or paragraph-level. The broad consistency between this pattern and the previously established pattern of integration timescales indicates that the lack of functional dissociations across core language regions cannot be attributed to fundamental inconsistencies between the current data and those of Lerner et al. (2011).

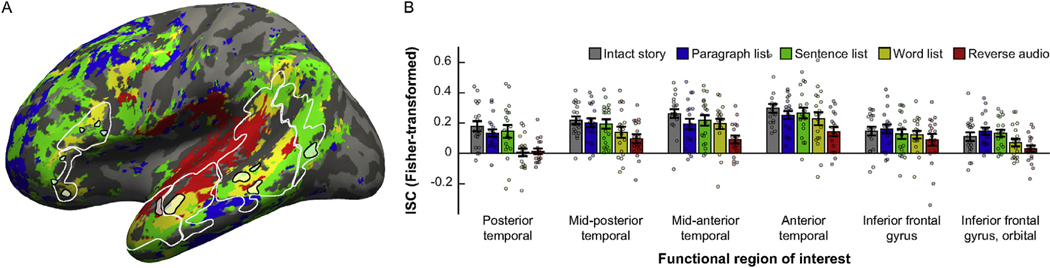

Fig. 3.

Voxel-based analyses. (A) Voxel-based ISCs in the frontal, temporal, and parietal lobes of the left hemisphere, projected from a functional volume onto the surface of an inflated average brain in common (MNI) space. Each voxel is colored according to its TRW, defined as the most scrambled stimulus that, across participants, was still tracked significantly above chance (following the method of Lerner et al., 2011; see legend in (B)). Note the progression from a short TRW (significant tracking of all stimuli, in red) around the mid-posterior and mid-anterior temporal lobe, towards longer TRWs (yellow or green) in surrounding temporal areas, and finally to the frontal lobe (yellow, green, or blue). White contours mark the masks that were used for the main analysis (see Fig. 1), excluding the MFG. Small black contours surrounding faint colors mark a set of fROIs that was defined as an alternative to the main fROIs, by choosing the top 27 voxels in each mask showing the strongest pattern consistent with that mask’s TRW as identified in the main analysis (see Fig. 2A). These voxels were chosen by contrasting ISCs for the intact story with ISCs for each of the other stimuli, using data from only the second half of each stimulus (unlike the coloring scheme in this panel, which is based on data from the entire time-series of each stimulus and compares the ISCs for each stimulus against chance). (B) Cross-validated TRWs of the fROIs marked in (A), based on ISCs for data from only the first half of each stimulus. Conventions are the same as in Fig. 2.

Next, as a final attempt at uncovering distinct integration timescales across inferior frontal and temporal language regions, we defined an alternative set of fROIs by directly searching for certain TRWs. Recall that, in our descriptive labeling of fROIs in the main analysis (section 3.1.1), two functional profiles were observed. On the one hand, fROIs in the mid-posterior, mid-anterior, and anterior temporal areas exhibited sensitivity to morpheme/syllable- or word-level information, with input tracking incurring a significant cost only for the reverse audio. On the other hand, fROIs in the posterior temporal, inferior frontal, and orbital frontal areas exhibited sensitivity to phrase/clause/sentence-level information, with input tracking incurring a cost not only for the reverse audio but also for the word list. Nonetheless, these two profiles did not reliably differ from one another in the main analysis. Here, we used a different criterion for defining language regions, designed to maximize the difference between these two profiles. For this purpose, we defined the following fROIs: (i) within each of the former three masks, among those voxels whose ISCs did not significantly differ between the intact story and word list, we selected the 27 voxels with the biggest difference between ISCs for the intact story and the reverse audio; (ii) within each of the latter three masks, among those voxels whose ISCs did not significantly differ between the intact story and sentence list, we selected the 27 voxels with the biggest difference between ISCs for the intact story and the word list.

These six fROIs were defined based on data from the second half of each stimulus; we then conducted a two-way, repeated-measures ANOVA to test for an fROI-by-condition interaction in ISCs from the first half of each stimulus (Fig. 3B). The interaction was not significant (F(20,340) = 1.26, η²p = 0.07, p = 0.21). When testing only the sentence list and word list stimuli, the interaction was very weak (F(5,90) = 2.35, η²p = 0.12, p = 0.047), especially considering that the choice of which TRW to define in each mask (based on the main analysis) relied on the same functional data tested here (even though, within the current test itself, fROI definition and response estimation were performed on two independent halves of those data). Similar results were obtained when fROIs were defined based on data from the first half of each stimulus and the ANOVA was run on ISCs from the second half (sentence list and word list only: F(5,90) = 1.54, η²p = 0.08, p = 0.19).
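The split-half logic used here (define fROIs on one half of each stimulus, estimate ISCs on the other) can be sketched as below. The sketch assumes a leave-one-out ISC, in which each participant's time-series is correlated with the time-point-wise mean of all remaining participants; this is one common ISC variant and is an assumption, not a detail stated in this section. All function names and the toy time-series are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def loo_isc(timeseries: List[List[float]]) -> List[float]:
    """Leave-one-out ISC: correlate each participant's time-series with the
    time-point-wise mean of all other participants (one value per participant)."""
    iscs = []
    for i, own in enumerate(timeseries):
        others = [ts for j, ts in enumerate(timeseries) if j != i]
        mean_others = [statistics.fmean(vals) for vals in zip(*others)]
        iscs.append(pearson(own, mean_others))
    return iscs


def split_halves(timeseries: List[List[float]]) -> Tuple[List[List[float]], List[List[float]]]:
    """Split every participant's time-series into its first and second halves."""
    half = len(timeseries[0]) // 2
    return [ts[:half] for ts in timeseries], [ts[half:] for ts in timeseries]


# Define fROIs on `second`; estimate the ISCs reported for those fROIs on `first`.
first, second = split_halves([[1, 3, 2, 5, 4, 6], [2, 3, 1, 6, 4, 7], [1, 2, 2, 5, 5, 6]])
print(loo_isc(first), loo_isc(second))
```

Keeping the two halves disjoint is what prevents the voxel selection from inflating the estimated ISCs (double dipping).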

3.3. The core language network as a unified whole occupies a unique stage within a broader cortical hierarchy of integration timescales

The finding that core language regions in inferior frontal and temporal areas show indistinguishable TRWs does not challenge the hypothesis of a broader hierarchy of integration timescales throughout the cortex. Rather, it indicates that core language regions do not occupy multiple, distinct stages within this hierarchy. Yet other functional regions plausibly occupy other stages, some with shorter TRWs than those of the language network (e.g., low-level auditory cortex or speech-perception areas) and others with longer TRWs (e.g., regions engaged in episodic cognition).

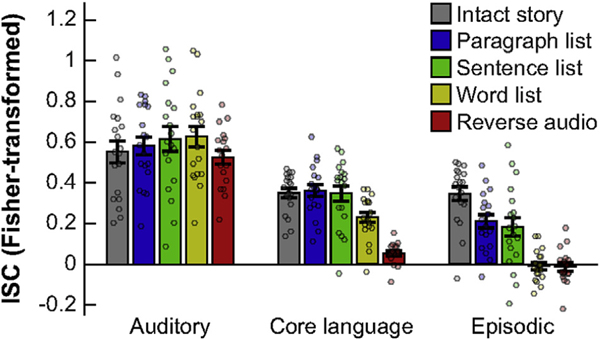

To demonstrate this, we first compared the ISCs for the five stimuli, averaged across six language fROIs (excluding the MFG fROI), to ISCs from the auditory cortex. A two-way, repeated-measures ANOVA yielded a system (language vs. auditory) by condition interaction (F(4,68) = 10.53, η²p = 0.38, p < 10⁻⁵) (Fig. 4). We followed up on this result with system-by-condition interaction tests that each included only the intact story and one other condition. These tests (FDR-corrected for multiple comparisons) revealed that, compared to the core language network, ISCs in the auditory region differed less between the intact story and the word list (F(1,18) = 9.75, η²p = 0.35, p = 0.025), as well as between the intact story and the reverse audio (F(1,17) = 34.11, η²p = 0.67, p < 10⁻³). In other words, input tracking in the auditory region incurred lower costs for fine-grained scrambling, indicating a shorter TRW than that of the core language network.
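Each follow-up test above is a 2 (system) × 2 (condition) within-subject interaction. For such a design, the interaction F(1, n − 1) equals the square of a one-sample t statistic computed on each participant's difference of differences, so a minimal sketch needs only the standard library. The Benjamini-Hochberg procedure is assumed here as the FDR correction (this section does not name the procedure), and converting F to a p-value, which requires an F-distribution CDF, is left out. Function names and toy inputs are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def interaction_F(sys_a_intact: Sequence[float], sys_a_other: Sequence[float],
                  sys_b_intact: Sequence[float], sys_b_other: Sequence[float]
                  ) -> Tuple[float, Tuple[int, int]]:
    """F and dfs for a 2 (system) x 2 (condition) within-subject interaction,
    computed as the squared one-sample t of per-participant difference of differences."""
    d = [(ai - ao) - (bi - bo)
         for ai, ao, bi, bo in zip(sys_a_intact, sys_a_other, sys_b_intact, sys_b_other)]
    n = len(d)
    t = statistics.fmean(d) / (statistics.stdev(d) / n ** 0.5)
    return t ** 2, (1, n - 1)


def fdr_bh(pvals: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values (step-up, monotone, capped at 1)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices, ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity of adjusted values
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


print(interaction_F([1, 2, 3], [0, 0, 0], [1, 1, 1], [1, 1, 1]))  # F is approximately 12.0, df (1, 2)
print(fdr_bh([0.01, 0.04, 0.03]))
```

The difference-of-differences form makes explicit what each follow-up test asks: whether the intact-minus-scrambled ISC cost is larger in one system than in the other, participant by participant.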

Fig. 4.

Demonstrating a hierarchy of TRWs across functionally distinct brain regions. ISCs are shown for a low-level auditory region (around the anterolateral portion of Heschl’s gyrus; left), the core language network (averaged across fROIs, excluding the MFG fROI; middle), and a subset of the episodic network (averaged across fROIs in the left posterior cingulate cortex and temporo-parietal junction; right). Conventions are the same as in Fig. 2.

Next, we similarly compared ISCs in the language network to those in the episodic network (averaged across left-hemispheric posterior cingulate and temporo-parietal fROIs). Again, we found a network-by-condition interaction (F(4,68) = 10.82, η²p = 0.69, p < 10⁻⁶) (Fig. 4). Follow-up interaction tests revealed that, compared to the core language network, ISCs in the episodic network differed more between the intact story and the paragraph list (F(1,18) = 21.30, η²p = 0.54, p = 10⁻³), the sentence list (F(1,18) = 15.95, η²p = 0.47, p = 0.005), and the word list (F(1,18) = 43.68, η²p = 0.71, p < 10⁻⁴). Input tracking in the episodic network thus incurred higher costs for coarse violations of well-formedness on the timescale of paragraphs and sentences and, further, showed no reliable tracking of word lists. This functional profile indicates a longer TRW than that of the core language network.
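Partial eta squared can be recovered from any reported F statistic and its degrees of freedom via η²p = F·df_effect / (F·df_effect + df_error), which offers a quick consistency check on the follow-up statistics above. A minimal sketch (the function name is hypothetical):

```python
def partial_eta_squared(F: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return F * df_effect / (F * df_effect + df_error)


# Follow-up interaction tests reported above (episodic vs. language network)
follow_ups = {
    "paragraph_list": (21.30, 1, 18),
    "sentence_list": (15.95, 1, 18),
    "word_list": (43.68, 1, 18),
}
for cond, (F, df1, df2) in follow_ups.items():
    print(cond, round(partial_eta_squared(F, df1, df2), 2))
# paragraph_list 0.54
# sentence_list 0.47
# word_list 0.71
```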

These findings situate the core language network within a cortical hierarchy of integration timescales (Himberger et al., 2018). The functional profile shared by the inferior frontal and temporal language regions occupies a particular stage within this broader hierarchy, located, as expected, downstream of auditory regions and upstream of the episodic network.

4. Discussion

The current study examined how reliably different regions in the core language network track linguistic stimuli that violate well-formedness at various representational grain sizes. To this end, we recorded regional time-series of BOLD signal fluctuations elicited by increasingly scrambled versions of a narrated story, and measured the reliability of these fluctuations across individuals to quantify the extent to which they were stimulus-locked (e.g., Hasson et al., 2008). We found that left inferior frontal and temporal language regions all exhibited statistically indistinguishable profiles of sensitivity to linguistic structure at different timescales. Namely, these regions all tracked paragraph lists and sentence lists as reliably as they tracked the intact story, tracked word lists less reliably, and tracked the reverse audio only weakly or not at all. These findings suggest that language regions integrate information over a common timescale, which is (i) sensitive to structure at the word level or below (e.g., morpheme/syllable), given the increased tracking of the word list compared to the reverse audio; (ii) also sensitive to structure at the phrase/clause or sentence level, given the further increase in tracking of the sentence list compared to the word list; but (iii) not sensitive to information above the sentence level, given no further boost in tracking of the paragraph list compared to the sentence list. This common profile of information integration provides a novel functional signature of perisylvian, high-level language regions.
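The TRW label used in the voxel-based analysis (the most scrambled stimulus still tracked significantly above chance, following Lerner et al., 2011) can be sketched as below. The condition names and their ordering are illustrative; the sketch assumes per-condition significance has already been decided upstream, and it reads the rule literally, so it does not require every less-scrambled condition to be significant as well. The example profile is made up, not data from this study.

```python
from typing import Dict, Optional

# Stimuli ordered from least to most scrambled (labels are illustrative).
CONDITIONS = ["intact", "paragraph_list", "sentence_list", "word_list", "reverse_audio"]


def label_trw(significant: Dict[str, bool]) -> Optional[str]:
    """Return the most scrambled condition whose ISC was significantly above
    chance, or None if no condition was tracked. A later entry in CONDITIONS
    (more scrambling still tracked) means a shorter TRW."""
    trw = None
    for cond in CONDITIONS:
        if significant.get(cond, False):
            trw = cond  # keep overwriting: the last hit is the most scrambled
    return trw


# Made-up profile: word lists still tracked above chance, reverse audio not.
print(label_trw({"intact": True, "paragraph_list": True, "sentence_list": True,
                 "word_list": True, "reverse_audio": False}))  # word_list
```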

We emphasize that our main null finding, the absence of a region-by-condition interaction, constitutes a lack of evidence for functional dissociations across the core language network, not evidence against such dissociations. Nevertheless, we extensively tested and rejected alternative explanations for these null results: they are unlikely to be accounted for by baseline differences in input tracking across regions, which could have masked differences in TRWs (section 3.1.2); by fROIs being large enough to include, and average across, multiple functionally distinct sub-regions (section 3.1.3); by a lack of power to detect region-by-condition interactions in the general cortical areas we focused on (section 3.1.4); or by our reliance on a task-based functional localizer to identify participant-specific regions of interest (section 3.2). We therefore conclude that no compelling evidence has been found for a functional dissociation among the regions of the core language network in terms of their integration timescales.

The current results are therefore inconsistent with a division of linguistic labor across the core language network that is topographically organized by integration timescales (cf. Lerner et al., 2011; DeWitt and Rauschecker, 2012; Bornkessel-Schlesewsky et al., 2015; Hasson et al., 2015; Chen et al., 2016; Baldassano et al., 2017; Yeshurun et al., 2017a; Sheng et al., 2018). Instead, they support the hypothesis that inferior frontal and temporal core language regions form a unified whole that occupies a unique stage within a broader cortical hierarchy of temporal integration. In this cortical hierarchy, core language regions follow lower-level auditory regions (examined here) and speech-perception regions (Mesgarani et al., 2014; Overath et al., 2015; Poeppel, 2003; Vagharchakian et al., 2012), and precede higher-level associative regions that integrate information over paragraphs and full narratives (Regev et al., 2013; Chen et al., 2016; Margulies et al., 2016; Simony et al., 2016; Yeshurun et al., 2017a; Yeshurun et al., 2017b; Zadbood et al., 2017; Nguyen et al., 2019; Ferstl and von Cramon, 2002; Jacoby and Fedorenko, 2018; Ferstl et al., 2008; Kuperberg et al., 2006; Maguire et al., 1999; Mar, 2011; Yarkoni et al., 2008). The only region within the core language network that might occupy a different stage in this hierarchy is the one in the middle frontal gyrus (MFG), which has a somewhat longer integration timescale than the rest of the core language network.
Given that many of the existing proposals regarding the functional architecture of the language network (for examples, see Friederici, 2002; Hickok and Poeppel, 2004; Ullman, 2004; Grodzinsky and Friederici, 2006; Hickok and Poeppel, 2007; Bornkessel-Schlesewsky and Schlesewsky, 2009; Friederici, 2011, 2012; Poeppel et al., 2012; Price, 2012; Bornkessel-Schlesewsky and Schlesewsky, 2013; Hagoort, 2013) focus on the inferior frontal and temporal regions, to the exclusion of the MFG, we leave further investigation into the relationship between the MFG and the rest of the core language network to future work.

4.1. Evidence for a distributed cognitive architecture of language processing

The finding of a common integration timescale shared across inferior frontal and temporal core language regions constrains comprehension models, challenging the notion of a functional dissociation between processes that operate on different timescales and/or construct linguistic representations of different grain sizes. Instead, it suggests that linguistic processes at multiple timescales, from the syllable/morpheme or word level to the phrase/clause or sentence level, are implemented in neural circuits that are distributed rather than focal and, moreover, overlap with one another and are thus cognitively inseparable.

Nonetheless, our data do not exclude an alternative functional architecture. Recall that the conditions used in our experiment do not map neatly onto traditional psycholinguistic constructs, which are therefore confounded with one another: for instance, the sentence-list condition differs from the word-list condition in the presence of pairwise dependencies between words, multi-word units, phrases, and clauses. Thus, even though the sentence list is tracked more reliably than the word list throughout the core language network, different regions might show this pattern for functionally distinct reasons (e.g., one region might be sensitive to multi-word units, whereas another might be sensitive to complete phrases). More broadly, inferior frontal and temporal core language regions, which all show the same functional profile in terms of their integration timescale, might each implement a distinct process or set of processes relevant to such integration (see, e.g., Hagoort and Indefrey, 2014; Brennan et al., 2016; but see Shain et al., 2020, for recent evidence against such a pattern).

This alternative view, which favors functional distinctions, is inconsistent with much linguistic theorizing (Joshi et al., 1975; Schabes et al., 1988; Goldberg, 1995; Bybee, 1998, 2010; Jackendoff, 2002, 2007; Culicover and Jackendoff, 2005; Wray, 2005; Snider and Arnon, 2012; Langacker, 2008; Christiansen and Arnon, 2017) and empirical behavioral evidence (Clifton et al., 1984; MacDonald et al., 1994; Trueswell et al., 1994; Garnsey et al., 1997; Traxler et al., 2002; Farmer et al., 2006; Reali and Christiansen, 2007; Bradlow and Bent, 2008; Gennari and MacDonald, 2008; Maye et al., 2008; Trude and Brown-Schmidt, 2012; Schmidtke et al., 2014) in favor of a representational continuum that does away with traditionally posited boundaries and extends from phonemes, to morphemes, to words with their syntactic and semantic attributes, to phrase-level constructions and their meanings. A continuous gradient, rather than a strict hierarchy of distinct stages, is supported by the observation that different language regions exhibited TRWs that, while not statistically distinguishable, nonetheless differed somewhat descriptively.

In addition, our finding of a functional signature distributed across the language network adds to prior neuroimaging studies reporting overlapping and distributed activations across diverse linguistic manipulations (Gernsbacher and Kaschak, 2003; Démonet et al., 2005; Vigneau et al., 2006; Price, 2012). These include manipulations of phonological (Scott and Wise, 2004; Hickok and Poeppel, 2007; Turkeltaub and Coslett, 2010), lexical (Paulesu et al., 1993; Indefrey and Levelt, 2004; Blumstein, 2009; Anderson et al., 2018), syntactic (Caplan, 2007; Bautista and Wilson, 2016; Blank et al., 2016; Pallier et al., 2011), and semantic (Bookheimer, 2002; Thompson-Schill, 2003; Patterson et al., 2007; Binder et al., 2009; Fedorenko et al., 2016, 2020; Mollica et al., 2018; Siegelman et al., 2019) processing. Unlike these traditional, task-based studies, which used controlled manipulations designed to isolate particular aspects of linguistic processing, the current study employed an alternative approach (Hasson et al., 2004) based on richly structured stimuli in a naturalistic listening paradigm. It therefore complements the prior evidence for a distributed architecture for language processing, within which the very same neural circuits support the processing of linguistic units of varying grain size.

4.2. Why functional dissociations among language regions might go undetected

Although we interpret our findings as supporting the distributed implementation of language processing in overlapping neural circuits, they are not inconsistent with some forms of functional dissociation across distinct linguistic mechanisms. Indeed, neuropsychological findings, despite their many inconsistencies, indicate that at least some language regions or pathways may support some linguistic processes and not others, because some individuals with aphasia following brain lesions or degeneration drastically differ from one another in their behavioral symptoms (Caramazza and Coltheart, 2006; Gorno-Tempini et al., 2011). It is thus possible that some fMRI evidence for distributed linguistic processing underestimates the complexity of the functional architecture within the language network.