Abstract

Air pollution has emerged as a global problem in recent years. In particular, particulate matter with a diameter of less than 2.5 μm (PM2.5) can remain suspended in the air and carry hazardous compounds into the lungs during breathing, causing major health problems. This research proposes a large-scale, low-cost solution for detecting air pollution by combining hyperspectral imaging (HSI) technology with deep learning techniques. By modeling visible-light HSI for an aerial camera, the images acquired by a drone camera are endowed with hyperspectral information. Two dimensionality reduction methods, a 3D convolutional neural network autoencoder (3D-CAE) and principal component analysis (PCA), are each paired with VGG-16 (Visual Geometry Group) to find the optical properties of air pollution. The images are classified as good, moderate, or severe based on the concentration of PM2.5 particles. The results suggest that PCA + VGG-16 has the highest average classification accuracy of 85.93%.

Keywords: hyperspectral imaging technology, air pollution, PM2.5, deep neural network, 3D convolutional neural network, auto encoding

1. Introduction

Many contemporary studies have shown that air pollution contributes to a wide range of diseases in humans [1,2,3]. Air pollution is a predominant cause of cardiovascular and respiratory diseases and can impair lung function [4,5,6]. Most previous studies have inferred that particulate matter 2.5 (PM2.5) has a direct effect on the mortality rate. Because the diameter of PM2.5 is less than 2.5 μm, it can penetrate the lungs, enter the blood vessels, and spread through the human body [7,8,9]. Knowing the concentration of PM2.5 in the environment is therefore necessary for taking basic precautions.

Most current state-of-the-art methods rely on spot testing, in which air is sampled from the surroundings and analyzed for the gases present in it. Open-path Fourier-transform infrared (OP-FTIR) spectroscopy has been used extensively to detect suspended particles in the atmosphere [10,11,12,13,14]. Chang et al. [15] combined OP-FTIR with principal component analysis (PCA) to determine the source of volatile organic compounds (VOCs) in an industrial complex. However, the signal-to-noise ratio of most OP-FTIR systems is very low because of their poor collimation ability, which affects the results [16]. Ebner et al. [17] proposed another method based on a quantum cascade laser (QCL) open-path system, which overcame the disadvantages of OP-FTIR. Yin et al. [18] also utilized a QCL to measure SO2 at the parts-per-billion (ppb) level in the mid-IR range. Zheng et al. [19] designed a tunable laser absorption spectroscope using a QCL to determine the amount of NO. However, QCLs are expensive and are therefore not a popular option for detecting air pollution. In recent years, machine learning and deep learning models have been used to detect air pollution. Ma et al. [20] used six models, based on the MLR, kNN, SVR, RT, RF, and BPNN algorithms, to retrieve the PM2.5 concentration. However, the real measurement data at a resolution of 30 m that are required to validate the retrieval results were not available from the measurement equipment. Zhang et al. [21] used a CNN to forecast the hourly PM2.5 concentration. Although the model improved the prediction performance, the study did not consider the effect of regulatory policy for air control.

One method that could overcome the aforementioned disadvantages is hyperspectral imaging (HSI). Among its various applications, HSI has also been used to detect air pollution in recent years [22,23,24,25,26,27]. Chan et al. [28] set up multi-axis differential optical absorption spectroscopy to verify the measurements of the oxygen measurement instruments of NO2 and HCHO over Nanjing. Jeon et al. [29] used hyperspectral sensors to estimate the concentration of NO2 emitted by industrial sources. However, most of these methods rely on sensors and other instruments that are expensive and bulky. Most hyperspectral applications have remained at the laboratory level [30].

Therefore, this study combines HSI technology and deep learning techniques to propose a large-scale, low-cost air pollution detection method. Three models have been developed: images compressed by a 3D convolutional neural network autoencoder, images reduced by principal component analysis (PCA), and the original red, green, blue (RGB) images are each combined with VGG-16 (Visual Geometry Group) to classify the images into three categories (good, moderate, and severe) based on the concentration of PM2.5. Finally, the accuracy of each method was compared to determine the optimal model.

2. Materials and Methods

2.1. Dataset

As no suitable PM2.5 image dataset exists, this study created a dataset using a DJI MAVIC MINI aerial camera. Images were captured every day from 9:00 a.m. to 5:00 p.m. at one-hour intervals, at a height of 100 m on the Innovation Building of National Chung Cheng University and at an angle of 90°. The images were rescaled to 224 × 224 pixels to match the input size of the first layer of VGG-16. PM2.5 data from the Environmental Protection Agency’s Pakzi Monitoring Station were used as a reference to classify the images into three categories (good, moderate, and severe) according to the severity of air pollution. The dataset contains 3340 images in total, which were divided into training, validation, and test sets at a ratio of 6:2:2.
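The 6:2:2 division can be illustrated with a short sketch. The paper does not say whether the images were shuffled or stratified by category before splitting, so the seeded random shuffle and the function name `split_dataset` below are assumptions made for illustration only.

```python
import random


def split_dataset(items, ratios=(6, 2, 2), seed=0):
    """Shuffle the items and split them into train/validation/test subsets.

    The shuffle and the fixed seed are assumptions; the paper only states
    the 6:2:2 ratio.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Image indices stand in for the 3340 drone images.
train, val, test = split_dataset(range(3340))
print(len(train), len(val), len(test))
```

With 3340 images, this ratio yields 2004 training, 668 validation, and 668 test images.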

2.2. HSI Algorithm

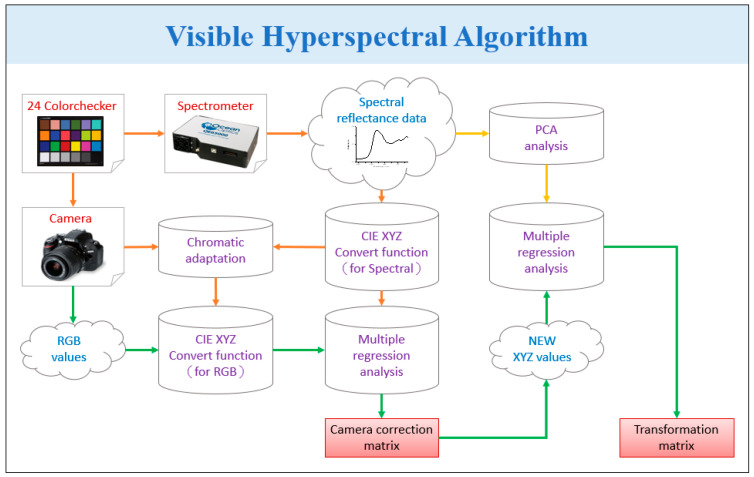

HSI has recently been used in many fields, including agriculture [31], astronomy [32], military [33], biosensors [34,35,36], remote sensing [37], dental imaging [38], environment monitoring [39], satellite photography [40], cancer detection [27,41], forestry monitoring [42], food security [43], natural resources surveying [44], vegetation observation [45], and geological mapping [46]. The visible-light hyperspectral imaging (VIS-HSI) algorithm developed in this study converts an image from an aerial camera (DJI MAVIC MINI) into a hyperspectral image covering the visible wavelength range of 380–780 nm at a spectral resolution of 1 nm. To construct the VIS-HSI algorithm, the relationship matrix between the camera and the spectrometer has to be found, as shown in Figure 1.

Figure 1. Visible hyperspectral imaging (VIS-HSI) algorithm.

The camera (DJI MAVIC MINI) and the spectrometer (Ocean Optics, QE65000) must be given multiple common targets as analysis benchmarks, which greatly improves the accuracy. The standard 24-color checker (x-rite classic, 24-color checker) was selected as the target because it contains the most important and common colors. Because the camera may be affected by inaccurate white balance, the standard 24-color card is measured with both the camera and the spectrometer to obtain 24-color patch images (sRGB, 8 bit) and 24-color patch reflection spectrum data, respectively. Both are then converted to the CIE 1931 XYZ color space (refer to Supplementary S1 for the individual conversion formulas). On the camera side, the image (JPEG, 8 bit) is stored according to the sRGB color-space specification. Before the image is converted from the sRGB color space to the XYZ color space, the R, G, and B values (0–255) must be rescaled to the range 0–1. The gamma function then converts the sRGB values into linear RGB values, and a conversion matrix finally maps the linear RGB values to XYZ values. On the spectrometer side, converting the reflection spectrum data (380–780 nm, 1 nm) to the XYZ color space requires the XYZ color matching functions and the light-source spectrum S(λ). Brightness is calculated from the Y value of the XYZ color space because the two are proportional. The brightness value is normalized to the range 0–100 to obtain the luminance ratio k, and finally, the reflection spectrum data are converted to XYZ values (XYZSpectrum).
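The two conversions described above can be sketched as follows. The exact formulas used in the study are given in Supplementary S1 and may differ, for example by including a chromatic adaptation step. This sketch assumes the standard sRGB (D65) gamma function and matrix, and the function names `srgb_to_xyz` and `spectrum_to_xyz` are illustrative. The color matching functions and the illuminant must be supplied by the caller as arrays sampled at the same wavelengths as the reflectance.

```python
import numpy as np

# Standard linear-sRGB-to-XYZ matrix for the D65 white point.
SRGB_TO_XYZ = np.array([
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
])


def srgb_to_xyz(rgb8):
    """Convert 8-bit sRGB values (..., 3) to XYZ scaled so that white has Y = 100."""
    rgb = np.asarray(rgb8, dtype=np.float64) / 255.0  # rescale 0-255 to 0-1
    # Inverse sRGB gamma: linear segment near black, power law elsewhere.
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    return 100.0 * linear @ SRGB_TO_XYZ.T


def spectrum_to_xyz(reflectance, illuminant, cmf):
    """Convert reflectance spectra (..., bands) to XYZ.

    illuminant: light-source spectrum S(lambda), shape (bands,)
    cmf: XYZ color matching functions, shape (bands, 3)
    """
    # Luminance ratio k normalizes Y to 100 for a perfect reflector.
    k = 100.0 / np.sum(illuminant * cmf[:, 1])
    return k * (reflectance * illuminant) @ cmf


print(srgb_to_xyz([255, 255, 255]))
```

Both functions accept a single color or a whole array of colors, so the 24 patches can be converted in one call.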

The variable matrix V is obtained by analyzing the factors that may cause errors in the camera, such as its nonlinear response, its dark current, inaccurate color separation of the color filter, and color shift. Regression analysis is performed on V to obtain the correction coefficient matrix C, which is used to correct the camera errors, as shown in Equation (1). The average root-mean-square error (RMSE) between the corrected values XYZCorrect and XYZSpectrum was found to be only 0.5355. Once the calibration process is completed, the obtained XYZCorrect is compared with the reflection spectrum data of the 24 color patches (RSpectrum) measured by the spectrometer. The objective is to obtain the conversion matrix M by finding the important principal components of RSpectrum through PCA and multiple regression analysis, as shown in Equation (2). In the multivariate regression analysis of XYZCorrect and Score, the variable VColor is selected because it lists all possible combinations of X, Y, and Z. The transformation matrix M is obtained through Equation (3), and XYZCorrect is used to calculate the simulated spectrum (SSpectrum) through Equation (4).

| (1) |

| (2) |

| (3) |

| (4) |

Finally, the simulated spectrum of the 24 color blocks (SSpectrum) is compared with their measured reflection spectrum (RSpectrum). The RMSE of each color block is calculated, and the average error is 0.0532. The difference between SSpectrum and RSpectrum can also be expressed as a color difference. The VIS-HSI algorithm established through the above process can simulate the reflection spectrum from the RGB values captured by the camera.
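The general idea of the procedure above (PCA on the measured spectra, regression of the PCA scores on terms built from X, Y, and Z, then reconstruction and an RMSE check) can be sketched as follows. This is not the authors' implementation. The regressor terms in `expand_xyz`, the use of a pseudo-inverse for the least-squares fit, the choice of six components, and the synthetic data are all assumptions made so that the example is self-contained.

```python
import numpy as np


def expand_xyz(xyz):
    """Hypothetical regressor matrix: a constant, X, Y, Z, and their products."""
    x, y, z = xyz[:, 0], xyz[:, 1], xyz[:, 2]
    return np.column_stack([np.ones_like(x), x, y, z, x * y, y * z, x * z, x * y * z])


def fit_spectrum_model(xyz, spectra, n_components=6):
    """Fit PCA on the spectra, then regress the PCA scores on the XYZ terms."""
    mean = spectra.mean(axis=0)
    centered = spectra - mean
    # PCA via SVD: the rows of vt are the principal axes (eigenvectors).
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    ev = vt[:n_components]                       # (components, bands)
    score = centered @ ev.T                      # (patches, components)
    # Least-squares regression through the pseudo-inverse.
    m = np.linalg.pinv(expand_xyz(xyz)) @ score  # (terms, components)
    return mean, ev, m


def simulate_spectrum(xyz, mean, ev, m):
    """Reconstruct spectra from XYZ values with the fitted model."""
    return mean + expand_xyz(xyz) @ m @ ev


def rmse(a, b):
    """Root-mean-square error between two arrays of the same shape."""
    return float(np.sqrt(np.mean((a - b) ** 2)))


# Synthetic stand-in data: 24 patches, 401 bands (380-780 nm at 1 nm).
rng = np.random.default_rng(0)
xyz = rng.uniform(0.0, 1.0, size=(24, 3))
basis = rng.uniform(0.0, 1.0, size=(3, 401))
r_spectrum = xyz @ basis + 0.01 * rng.normal(size=(24, 401))

mean, ev, m = fit_spectrum_model(xyz, r_spectrum)
s_spectrum = simulate_spectrum(xyz, mean, ev, m)
print("average RMSE:", rmse(s_spectrum, r_spectrum))
```

Because the synthetic spectra are generated from the XYZ values themselves, the error printed here says nothing about the accuracy reported in the study; it only shows the data flow.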

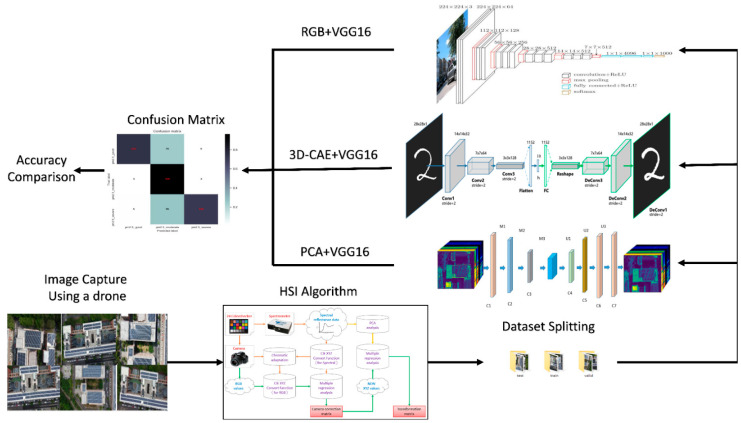

2.3. Three-Dimensional-Convolution Auto Encoder

The hyperspectral image converted from the original RGB image can be represented as X with dimensions h × w × b, where h × w is the size of the input image and b is the number of input channels (spectral bands). The first layer L1 receives the input image X, whereas the last layer LN is the output layer. The intermediate layers include seven convolutional layers, three pooling layers, and one fully connected layer. In the proposed model, a 3D-CNN is used for compression because, unlike a 2D-CNN, its three-dimensional convolution kernel can learn both spatial and spectral features. After training, the compressed data are used as the input of VGG-16 for further training. The problem of data sparsity in high dimensions is commonly addressed by feature-dimension reduction or band selection. This study uses two dimensionality reduction methods, PCA and the 3D convolutional autoencoder (3D-CAE), to preprocess the data and reduce the number of bands; the dimensionally reduced images are then sent to VGG-16 for training. The input data can be expressed as (n, X), where n is the number of samples. For PCA, the data are organized in the form (n × h × w × b), and the dimension of the spectral features b is reduced. The whole methodology developed in this study is shown in Figure 2.

Figure 2. The methodology used in this study.
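The PCA branch of this pipeline can be sketched with NumPy. The sketch assumes that every pixel of every image is treated as one sample with b spectral features, which is one reading of the (n × h × w × b) organization described above. The function name `pca_reduce_bands` and the small synthetic cube are illustrative only.

```python
import numpy as np


def pca_reduce_bands(cubes, n_components=3):
    """Reduce the spectral axis of hyperspectral cubes (n, h, w, b) with PCA.

    Returns the reduced cubes (n, h, w, n_components) and the fraction of
    variance explained by the kept components.
    """
    n, h, w, b = cubes.shape
    pixels = cubes.reshape(-1, b).astype(np.float64)  # one row per pixel
    centered = pixels - pixels.mean(axis=0)
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)            # ascending eigenvalues
    order = np.argsort(eigvals)[::-1]                 # sort descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    reduced = centered @ eigvecs[:, :n_components]
    explained = eigvals[:n_components].sum() / eigvals.sum()
    return reduced.reshape(n, h, w, n_components), float(explained)


# Small synthetic example: 2 images of 8 x 8 pixels with 20 bands.
rng = np.random.default_rng(0)
cubes = rng.uniform(size=(2, 8, 8, 3)) @ rng.uniform(size=(3, 20))
reduced, explained = pca_reduce_bands(cubes)
print(reduced.shape, explained)
```

Three components give a three-channel image, which matches the channel count VGG-16 expects. For the full dataset with 401 bands at 224 × 224 pixels, the covariance matrix would need to be accumulated in batches rather than from a single in-memory array.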

3. Results and Discussion

This study uses PCA, a machine learning method, and the 3D-CAE model, a deep learning method, for feature dimensionality reduction. The results show that only three principal components are needed to represent 99.87% of the data, retaining most of the information in the original data. The 3D-CAE model proposed in this study can jointly learn 2D spatial features and 1D spectral features from HSI data and produce compressed hyperspectral images. Such images greatly reduce the data size and avoid the problem of insufficient memory. The loss and accuracy curves show that the loss has largely converged by the fourth training epoch. An epoch is one complete pass of the training data forward and backward through the neural network. As the number of epochs increases, the number of weight updates in the neural network also increases (see Supplementary S1 for the training accuracy of all three models). The classifier assigns each image to one of three categories (good, moderate, or severe) according to the severity of air pollution.

Four indicators were used to judge the performance of the three models. Precision, also known as the positive predictive value, is the number of true positive cases out of all predicted positive cases, as shown in Equation (5). Recall, also known as sensitivity, is the number of correctly predicted positive cases out of all actual positive cases, as shown in Equation (6). The F1 score is the harmonic mean of precision and recall, as shown in Equation (7), and is particularly useful on an imbalanced dataset. Accuracy is calculated by dividing the number of correct predictions by the total number of samples, as shown in Equation (8).

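$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{6}$$

$$\mathrm{F1\ score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{7}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

Here, TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively (see Table S2).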
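For a three-class problem, the four indicators can be computed per class directly from a confusion matrix, as in the sketch below. It assumes that rows are true classes and columns are predicted classes; the matrix values are hypothetical and are not taken from Figure 3.

```python
import numpy as np


def classification_report(cm):
    """Per-class precision, recall, and F1, plus overall accuracy.

    cm[i, j] is the number of samples of true class i predicted as class j.
    A class that is never predicted would give a division by zero here.
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)  # column sums are TP + FP
    recall = tp / cm.sum(axis=1)     # row sums are TP + FN
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()   # correct predictions over all samples
    return precision, recall, f1, accuracy


# Hypothetical confusion matrix for the classes good, moderate, severe.
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
precision, recall, f1, accuracy = classification_report(cm)
print(precision, recall, f1, accuracy)
```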

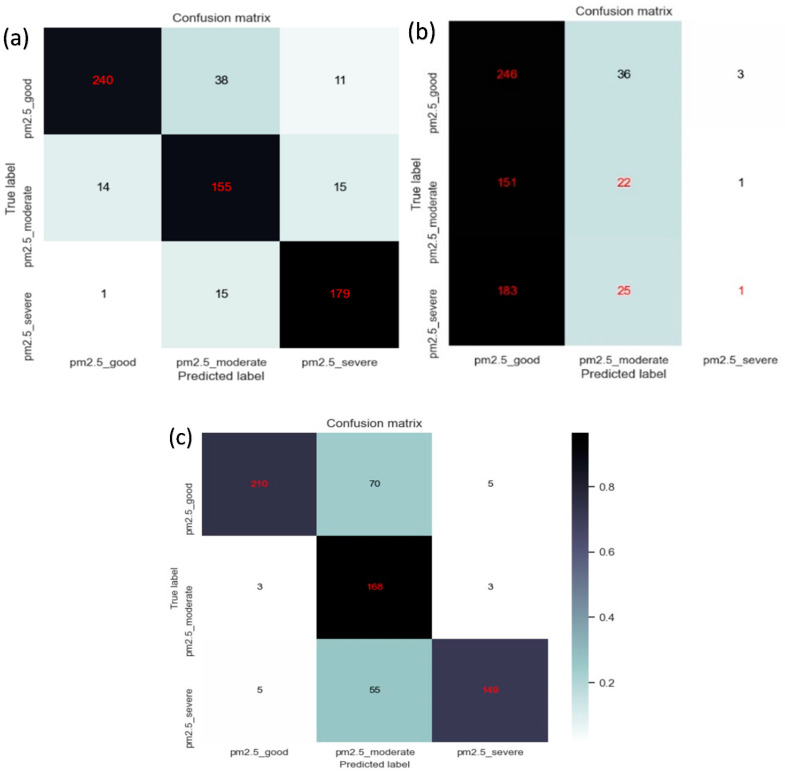

Figure 3 shows the confusion matrices of the three methods. With the RGB images, 210 good, 168 moderate, and 149 severe images were classified correctly. With the PCA + VGG-16 method, 240 good, 155 moderate, and 179 severe images were classified correctly, whereas with the 3D-CAE images, 246 good, 22 moderate, and 1 severe image were classified correctly. Table 1 lists the precision, recall, and F1 score of every method. The F1 score of the moderate category is lower because this category was more difficult to judge. The results show that the PCA + VGG-16 model has the best classification accuracy, followed by RGB + VGG-16 and finally 3D-CAE + VGG-16, as shown in Table 2. The 3D-CAE + VGG-16 model performs poorly because the compressed features do not necessarily retain the optical properties of PM2.5.

Figure 3. Confusion matrices of the developed methods. Panels (a–c) show the PCA method, the 3D-CAE method, and the RGB method, respectively.

Table 1. Precision, recall, and F1 score of the three models.

| Method | Category | Precision | Recall | F1 Score |
|---|---|---|---|---|
| RGB | Good | 96.33% | 73.68% | 83.50% |
| RGB | Moderate | 57.34% | 96.55% | 71.95% |
| RGB | Severe | 94.90% | 71.29% | 81.42% |
| PCA | Good | 94.12% | 83.04% | 88.24% |
| PCA | Moderate | 74.52% | 84.24% | 79.08% |
| PCA | Severe | 87.32% | 91.79% | 89.50% |
| 3D-CAE | Good | 42.41% | 86.32% | 56.88% |
| 3D-CAE | Moderate | 26.54% | 12.64% | 17.12% |
| 3D-CAE | Severe | 20.00% | 0.48% | 0.93% |

Table 2. Classification accuracy of the three methods proposed in the study.

| Method | Classification Accuracy (%) |
|---|---|
| PCA | 85.93 |
| RGB | 78.89 |
| 3D-CAE | 40.27 |
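As a consistency check, the accuracies in Table 2 can be recomputed from the numbers of correctly classified images reported in the text. The sketch assumes that the test set is exactly 20% of the 3340 images (668 images), which follows from the 6:2:2 split but is not stated explicitly in the paper.

```python
def accuracy_percent(correct_counts, total):
    """Overall accuracy in percent from per-class correct counts."""
    return round(100 * sum(correct_counts) / total, 2)


# Correctly classified good, moderate, and severe images, as reported in the text.
correct = {
    "PCA": (240, 155, 179),
    "RGB": (210, 168, 149),
    "3D-CAE": (246, 22, 1),
}
test_size = 3340 * 2 // 10  # 668 images, assuming an exact 20% test split

for name, counts in correct.items():
    print(name, accuracy_percent(counts, test_size))
```

Under this assumption, the recomputed values agree with Table 2 to two decimal places.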

The main practical applicability of this study lies in its future scope, in which a smartphone camera could be endowed with hyperspectral imaging technology. A mobile application could be developed to inform the user of the PM2.5 concentration in a specific image. In this way, the detection of air pollution can be made mobile, reducing the number of apparatuses required and the complexity of measurement. The method could also be extended to measure the PM10 concentration in the environment.

4. Conclusions

In this study, a new VIS-HSI technology has been combined with artificial intelligence to estimate the PM2.5 concentration in images captured from a drone. Three models have been developed: images reduced by PCA, images compressed by 3D-CAE, and the original RGB images are each classified by a VGG-16 neural network into three categories based on the PM2.5 concentration. In terms of experimental accuracy, PCA dimensionality reduction is the most effective, followed by the RGB method and finally the 3D-CAE method. The accuracy of the methods could be improved by enlarging the dataset of images with known PM2.5 concentrations or by fine-tuning the models. Integrating band selection with the CNN model may also improve the accuracy of the prediction models. Other weather features, such as wind speed, humidity, and temperature, could be considered when predicting air pollution. Apart from PM2.5 concentrations, the prediction models could also be designed to predict PM10 concentrations. In the future, the same technology could be integrated with smartphone cameras to detect air pollution on the spot in real time.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s22166231/s1, Figure S1. Confusion matrix after training all three models. Figure S2. Convergence of loss and accuracy during training of the three models. Figure S3. The difference between the reflectance of the original 24 color blocks and the simulated color. Figure S4. The difference between the measured 24 color blocks and the simulated 24 color blocks using the developed HSI algorithm. Figure S5. Cumulative explained variance vs. the number of principal components. Table S1. Classification training results of the three models. Table S2. Definitions of the terms in the confusion matrix.

Author Contributions

Conceptualization, H.-C.W. and C.-C.H.; data curation, C.-C.H.; formal analysis, C.-C.H. and A.M.; funding acquisition, F.-C.L. and H.-C.W.; investigation, C.-C.H. and A.M.; methodology, C.-C.H., H.-C.W. and A.M.; project administration, T.-C.M. and H.-C.W.; resources, T.-C.M. and H.-C.W.; software, T.-C.M. and A.M.; supervision, T.-C.M. and H.-C.W.; validation, T.-C.M. and A.M.; writing—original draft, A.M.; writing—review and editing, A.M. and H.-C.W. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Written informed consent was waived in this study because of the retrospective, anonymized nature of the study design.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was supported by the National Science and Technology Council, The Republic of China, under the grants NSTC 110-2634-F-194-006, and 111-2221-E-194-007. This work was financially/partially supported by the Advanced Institute of Manufacturing with High-tech Innovations (AIM-HI) and the Center for Innovative Research on Aging Society (CIRAS) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE), and Kaohsiung Armed Forces General Hospital research project 106-018 in Taiwan.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Kampa M., Castanas E. Human health effects of air pollution. Environ. Pollut. 2008;151:362–367. doi: 10.1016/j.envpol.2007.06.012.

- 2. Lee K.K., Bing R., Kiang J., Bashir S., Spath N., Stelzle D., Mortimer K., Bularga A., Doudesis D., Joshi S.S. Adverse health effects associated with household air pollution: A systematic review, meta-analysis, and burden estimation study. Lancet Glob. Health. 2020;8:e1427–e1434. doi: 10.1016/S2214-109X(20)30343-0.

- 3. Liu W., Xu Z., Yang T. Health effects of air pollution in China. Int. J. Environ. Res. Public Health. 2018;15:1471. doi: 10.3390/ijerph15071471.

- 4. Schraufnagel D.E., Balmes J.R., Cowl C.T., De Matteis S., Jung S.-H., Mortimer K., Perez-Padilla R., Rice M.B., Riojas-Rodriguez H., Sood A. Air pollution and noncommunicable diseases: A review by the Forum of International Respiratory Societies’ Environmental Committee, Part 2: Air pollution and organ systems. Chest. 2019;155:417–426. doi: 10.1016/j.chest.2018.10.041.

- 5. Lelieveld J., Klingmüller K., Pozzer A., Pöschl U., Fnais M., Daiber A., Münzel T. Cardiovascular disease burden from ambient air pollution in Europe reassessed using novel hazard ratio functions. Eur. Heart J. 2019;40:1590–1596. doi: 10.1093/eurheartj/ehz135.

- 6. Liu C., Chen R., Sera F., Vicedo-Cabrera A.M., Guo Y., Tong S., Coelho M.S., Saldiva P.H., Lavigne E., Matus P. Ambient particulate air pollution and daily mortality in 652 cities. N. Engl. J. Med. 2019;381:705–715. doi: 10.1056/NEJMoa1817364.

- 7. Miller M.R. Oxidative stress and the cardiovascular effects of air pollution. Free Radic. Biol. Med. 2020;151:69–87. doi: 10.1016/j.freeradbiomed.2020.01.004.

- 8. Wang N., Mengersen K., Tong S., Kimlin M., Zhou M., Wang L., Yin P., Xu Z., Cheng J., Zhang Y. Short-term association between ambient air pollution and lung cancer mortality. Environ. Res. 2019;179:108748. doi: 10.1016/j.envres.2019.108748.

- 9. Pang Y., Huang W., Luo X.-S., Chen Q., Zhao Z., Tang M., Hong Y., Chen J., Li H. In-vitro human lung cell injuries induced by urban PM2.5 during a severe air pollution episode: Variations associated with particle components. Ecotoxicol. Environ. Saf. 2020;206:111406. doi: 10.1016/j.ecoenv.2020.111406.

- 10. Herget W.F., Brasher J.D. Remote Fourier transform infrared air pollution studies. Opt. Eng. 1980;19:508–514. doi: 10.1117/12.7972551.

- 11. Gosz J.R., Dahm C.N., Risser P.G. Long-path FTIR measurement of atmospheric trace gas concentrations. Ecology. 1988;69:1326–1330. doi: 10.2307/1941630.

- 12. Russwurm G.M., Kagann R.H., Simpson O.A., McClenny W.A., Herget W.F. Long-path FTIR measurements of volatile organic compounds in an industrial setting. J. Air Waste Manag. Assoc. 1991;41:1062–1066. doi: 10.1080/10473289.1991.10466900.

- 13. Bacsik Z., Komlósi V., Ollár T., Mink J. Comparison of open path and extractive long-path FTIR techniques in detection of air pollutants. Appl. Spectrosc. Rev. 2006;41:77–97. doi: 10.1080/05704920500385494.

- 14. Briz S., de Castro A.J., Díez S., López F., Schäfer K. Remote sensing by open-path FTIR spectroscopy. Comparison of different analysis techniques applied to ozone and carbon monoxide detection. J. Quant. Spectrosc. Radiat. Transf. 2007;103:314–330. doi: 10.1016/j.jqsrt.2006.02.058.

- 15. Chang P.-E.P., Yang J.-C.R., Den W., Wu C.-F. Characterizing and locating air pollution sources in a complex industrial district using optical remote sensing technology and multivariate statistical modeling. Environ. Sci. Pollut. Res. 2014;21:10852–10866. doi: 10.1007/s11356-014-2962-0.

- 16. Chen C.-W., Tseng Y.-S., Mukundan A., Wang H.-C. Air Pollution: Sensitive Detection of PM2.5 and PM10 Concentration Using Hyperspectral Imaging. Appl. Sci. 2021;11:4543. doi: 10.3390/app11104543.

- 17. Ebner A., Zimmerleiter R., Cobet C., Hingerl K., Brandstetter M., Kilgus J. Sub-second quantum cascade laser based infrared spectroscopic ellipsometry. Opt. Lett. 2019;44:3426–3429. doi: 10.1364/OL.44.003426.

- 18. Yin X., Wu H., Dong L., Li B., Ma W., Zhang L., Yin W., Xiao L., Jia S., Tittel F.K. ppb-Level SO2 Photoacoustic Sensors with a Suppressed Absorption–Desorption Effect by Using a 7.41 μm External-Cavity Quantum Cascade Laser. ACS Sens. 2020;5:549–556. doi: 10.1021/acssensors.9b02448.

- 19. Zheng F., Qiu X., Shao L., Feng S., Cheng T., He X., He Q., Li C., Kan R., Fittschen C. Measurement of nitric oxide from cigarette burning using TDLAS based on quantum cascade laser. Opt. Laser Technol. 2020;124:105963. doi: 10.1016/j.optlastec.2019.105963.

- 20. Ma P., Tao F., Gao L., Leng S., Yang K., Zhou T. Retrieval of Fine-Grained PM2.5 Spatiotemporal Resolution Based on Multiple Machine Learning Models. Remote Sens. 2022;14:599. doi: 10.3390/rs14030599.

- 21. Zhang L., Na J., Zhu J., Shi Z., Zou C., Yang L. Spatiotemporal causal convolutional network for forecasting hourly PM2.5 concentrations in Beijing, China. Comput. Geosci. 2021;155:104869. doi: 10.1016/j.cageo.2021.104869.

- 22. Liu C., Xing C., Hu Q., Wang S., Zhao S., Gao M. Stereoscopic hyperspectral remote sensing of the atmospheric environment: Innovation and prospects. Earth-Sci. Rev. 2022;226:103958. doi: 10.1016/j.earscirev.2022.103958.

- 23. Meléndez J., Guarnizo G. Fast quantification of air pollutants by mid-infrared hyperspectral imaging and principal component analysis. Sensors. 2021;21:2092. doi: 10.3390/s21062092.

- 24. Liu C., Hu Q., Zhang C., Xia C., Yin H., Su W., Wang X., Xu Y., Zhang Z. First Chinese ultraviolet–visible hyperspectral satellite instrument implicating global air quality during the COVID-19 pandemic in early 2020. Light Sci. Appl. 2022;11:28. doi: 10.1038/s41377-022-00722-x.

- 25. Nicks D., Baker B., Lasnik J., Delker T., Howell J., Chance K., Liu X., Flittner D., Kim J. Hyperspectral remote sensing of air pollution from geosynchronous orbit with GEMS and TEMPO; Proceedings of the Earth Observing Missions and Sensors: Development, Implementation, and Characterization V; Honolulu, HI, USA. 25–26 September 2018; pp. 118–124.

- 26. Liu C., Xing C., Hu Q., Li Q., Liu H., Hong Q., Tan W., Ji X., Lin H., Lu C. Ground-based hyperspectral stereoscopic remote sensing network: A promising strategy to learn coordinated control of O3 and PM2.5 over China. Engineering. 2021;7:1–11. doi: 10.1016/j.eng.2021.02.019.

- 27. Tsai C.-L., Mukundan A., Chung C.-S., Chen Y.-H., Wang Y.-K., Chen T.-H., Tseng Y.-S., Huang C.-W., Wu I.-C., Wang H.-C. Hyperspectral Imaging Combined with Artificial Intelligence in the Early Detection of Esophageal Cancer. Cancers. 2021;13:4593. doi: 10.3390/cancers13184593.

- 28. Chan K.L., Wang Z., Heue K.-P. Hyperspectral ground based and satellite measurements of tropospheric NO2 and HCHO over Eastern China; Proceedings of the Optical Sensors and Sensing Congress (ES, FTS, HISE, Sensors); San Jose, CA, USA. 25 June 2019; p. HTh1B.3.

- 29. Jeon E.-I., Park J.-W., Lim S.-H., Kim D.-W., Yu J.-J., Son S.-W., Jeon H.-J., Yoon J.-H. Study on the Concentration Estimation Equation of Nitrogen Dioxide using Hyperspectral Sensor. J. Korea Acad.-Ind. Coop. Soc. 2019;20:19–25.

- 30. Schneider A., Feussner H. Biomedical Engineering in Gastrointestinal Surgery. Academic Press; Cambridge, MA, USA: 2017.

- 31. Lu B., Dao P.D., Liu J., He Y., Shang J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020;12:2659. doi: 10.3390/rs12162659.

- 32. Mukundan A., Patel A., Saraswat K.D., Tomar A., Kuhn T. Kalam Rover; Proceedings of the AIAA SCITECH 2022 Forum; San Diego, CA, USA. 3–7 January 2022; p. 1047.

- 33. Gross W., Queck F., Vögtli M., Schreiner S., Kuester J., Böhler J., Mispelhorn J., Kneubühler M., Middelmann W. A multi-temporal hyperspectral target detection experiment: Evaluation of military setups; Proceedings of the Target and Background Signatures VII; Online. 13–17 September 2021; pp. 38–48.

- 34. Mukundan A., Feng S.-W., Weng Y.-H., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Lin Y.-S., Huang Y.-C., Wang H.-C. Optical and Material Characteristics of MoS2/Cu2O Sensor for Detection of Lung Cancer Cell Types in Hydroplegia. Int. J. Mol. Sci. 2022;23:4745. doi: 10.3390/ijms23094745.

- 35. Hsiao Y.-P., Mukundan A., Chen W.-C., Wu M.-T., Hsieh S.-C., Wang H.-C. Design of a Lab-On-Chip for Cancer Cell Detection through Impedance and Photoelectrochemical Response Analysis. Biosensors. 2022;12:405. doi: 10.3390/bios12060405.

- 36. Mukundan A., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Wang H.-C. Growth Mechanism of Periodic-Structured MoS2 by Transmission Electron Microscopy. Nanomaterials. 2021;12:135. doi: 10.3390/nano12010135.

- 37. Gerhards M., Schlerf M., Mallick K., Udelhoven T. Challenges and future perspectives of multi-/Hyperspectral thermal infrared remote sensing for crop water-stress detection: A review. Remote Sens. 2019;11:1240. doi: 10.3390/rs11101240.

- 38. Lee C.-H., Mukundan A., Chang S.-C., Wang Y.-L., Lu S.-H., Huang Y.-C., Wang H.-C. Comparative Analysis of Stress and Deformation between One-Fenced and Three-Fenced Dental Implants Using Finite Element Analysis. J. Clin. Med. 2021;10:3986. doi: 10.3390/jcm10173986.

- 39. Stuart M.B., McGonigle A.J., Willmott J.R. Hyperspectral imaging in environmental monitoring: A review of recent developments and technological advances in compact field deployable systems. Sensors. 2019;19:3071. doi: 10.3390/s19143071.

- 40. Mukundan A., Wang H.-C. Simplified Approach to Detect Satellite Maneuvers Using TLE Data and Simplified Perturbation Model Utilizing Orbital Element Variation. Appl. Sci. 2021;11:10181. doi: 10.3390/app112110181.

- 41. Fang Y.-J., Mukundan A., Tsao Y.-M., Huang C.-W., Wang H.-C. Identification of Early Esophageal Cancer by Semantic Segmentation. J. Pers. Med. 2022;12:1204. doi: 10.3390/jpm12081204.

- 42. Vangi E., D’Amico G., Francini S., Giannetti F., Lasserre B., Marchetti M., Chirici G. The new hyperspectral satellite PRISMA: Imagery for forest types discrimination. Sensors. 2021;21:1182. doi: 10.3390/s21041182.

- 43. Zhang X., Han L., Dong Y., Shi Y., Huang W., Han L., González-Moreno P., Ma H., Ye H., Sobeih T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019;11:1554. doi: 10.3390/rs11131554.

- 44. Hennessy A., Clarke K., Lewis M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020;12:113. doi: 10.3390/rs12010113.

- 45. Terentev A., Dolzhenko V., Fedotov A., Eremenko D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors. 2022;22:757. doi: 10.3390/s22030757.

- 46. De La Rosa R., Tolosana-Delgado R., Kirsch M., Gloaguen R. Automated Multi-Scale and Multivariate Geological Logging from Drill-Core Hyperspectral Data. Remote Sens. 2022;14:2676. doi: 10.3390/rs14112676.
