Abstract

Background

Clinical dashboards used as audit and feedback (A&F) or clinical decision support systems (CDSS) are increasingly adopted in healthcare. However, their effectiveness in changing the behavior of clinicians or patients is still unclear. This systematic review aims to investigate the effectiveness of clinical dashboards used as CDSS or A&F tools (as a standalone intervention or part of a multifaceted intervention) in primary care or hospital settings on medication prescription/adherence and test ordering.

Methods

Seven major databases were searched for relevant studies, from inception to August 2021. Two authors independently extracted data, assessed the risk of bias using the Cochrane RoB 2 tool, and evaluated the certainty of evidence using GRADE. Data on trial characteristics and intervention effect sizes were extracted. A narrative synthesis was performed to summarize the findings of the included trials.

Results

Eleven randomized trials were included. Eight trials evaluated clinical dashboards as standalone interventions and provided conflicting evidence on changes in antibiotic prescribing and no effects on statin prescribing compared to usual care. Dashboards increased medication adherence in patients with inflammatory arthritis but not in kidney transplant recipients. Three trials investigated dashboards as part of multicomponent interventions revealing decreased use of opioids for low back pain, increased proportion of patients receiving cardiovascular risk screening, and reduced antibiotic prescribing for upper respiratory tract infections.

Conclusion

There is limited evidence that dashboards integrated into electronic medical record systems and used as feedback or decision support tools may be associated with improvements in medication use and test ordering.

Keywords: dashboard, audit and feedback, clinical decision support, review

INTRODUCTION

With the widespread uptake of electronic medical records, massive health-related datasets have been generated and they continue to grow at unprecedented rates.1,2 Despite the potential impact of using these datasets to improve patient care, clinicians are often overwhelmed with the complexity of processing electronic medical record data.3,4 To better utilize these routinely collected data, clinical dashboards have been developed and integrated into electronic medical record systems to help clinicians make informed decisions and ensure the quality and safety of the care delivered.1,2

Clinical dashboards are interactive data visualization tools that provide a visual summary of decision-related clinical information displayed in graphs, charts, or interactive tables.5 They are commonly used in healthcare as clinical decision support systems (CDSS) or audit and feedback (A&F) tools to help clinicians make informed decisions and to provide feedback on variations in care. Clinical dashboards integrated into electronic medical record systems can display critical indicators to clinicians, allowing them to recognize suboptimal care, which has been used to motivate better performance.6,7 Suboptimal care related to medication prescription and test ordering has been extensively reported in the literature. Examples of this include overuse of medications (such as antibiotics and opioid analgesics)8 and unnecessary referrals for diagnostic imaging or laboratory tests,9 despite numerous guidelines endorsing rational use of these interventions. Optimizing medication prescription and test ordering is central to high-quality healthcare, and clinical dashboards are therefore promising tools for enabling clinicians to reflect on their practice and identify areas to change.

Traditional methods of CDSS and A&F without the use of clinical dashboards have been shown to improve healthcare delivery. For instance, a recent systematic review revealed that CDSS integrated into electronic medical record systems increased the proportion of patients receiving desired care by 5.8% compared with usual care.10 A Cochrane review showed that A&F interventions resulted in a 4.3% absolute increase in healthcare professionals’ compliance with the desired practice.11 Neither of these reviews, however, considered clinical dashboards as CDSS and A&F mechanisms, despite their increasing use in the last decade. A previous narrative review of 11 studies on the effects of clinical dashboards included only 1 randomized controlled trial, which showed no effect on antibiotic prescribing for acute respiratory infection in primary care.5 That review was published in 2015 and neither conducted systematic searches nor assessed the risk of bias. A more recent systematic review12 focused on critical care units and included a wide range of data visualization techniques, not only clinical dashboards.

Despite their increasing popularity in healthcare, there is limited knowledge on the effectiveness of clinical dashboards in changing clinicians’ or patients’ behavior. In this systematic review, we aimed to assess the effectiveness of clinical dashboards used as CDSS or A&F tools (as a standalone intervention or part of a multifaceted intervention) in primary care or hospital settings on medication prescription, adherence, and test ordering.

METHODS

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) recommendations.13 The protocol has been published in the Open Science Framework.14

Searches

Electronic literature searches were conducted in the following databases: MEDLINE via Ovid, EMBASE via Ovid, CINAHL via EBSCO, CENTRAL via Cochrane Library, INSPEC, ACM Digital Library, and IEEE Xplore, from inception to August 2021. We combined the following terms and their variations to construct the search strategies: dashboard, decision support, electronic health record, and quality indicators. The reference lists of included studies and relevant systematic reviews were screened for additional relevant citations. We did not restrict our searches to any language or date of publication. The search strategies used for the selected databases are outlined in Supplementary File S1.

Titles and abstracts of records retrieved from our electronic searches were screened independently by 2 reviewers. Full texts of potentially eligible articles were screened independently by 2 reviewers according to the eligibility criteria, and disagreements were resolved by consensus or in consultation with a third reviewer.

Eligibility criteria

Types of studies

Eligible studies had to be randomized controlled trials published in peer-reviewed journals. In our protocol, we stated we would consider observational studies (eg, prospective or retrospective cohorts) but we later decided to include only randomized controlled trials, which allowed us to focus on the highest level of evidence to investigate the effects of dashboard interventions. Conference abstracts and study protocols were excluded.

Types of participants and settings

Studies including clinicians or patients as participants, investigating any health condition in primary care or hospital settings (eg, emergency departments, hospital wards, and outpatient clinics) were considered. Studies including healthy populations or healthcare students were excluded.

Types of interventions and comparators

We considered clinical dashboard interventions used as CDSS or A&F. Clinical dashboards included those involving graphical user interfaces containing measures of clinical performance or clinical indicators to enable decision-making. We also considered clinical dashboards that provided a visual summary of decision-related information displayed in graphs, charts, or interactive tables. We also included studies with multifaceted/multicomponent interventions including a clinical dashboard as a core component. Studies comparing the effectiveness of clinical dashboard interventions with any type of control were considered, including usual care, no intervention, and a similar intervention without the dashboard component.

Types of outcome measures

The 2 outcomes of interest for this review were: (1) medication use, including the rate of medication prescribed/administered and medication intake adherence; and (2) test ordering, such as the rate of imaging referrals and the count of routine laboratory test orders. We focused on these outcomes (rather than clinical or patient-reported outcomes) as we aimed to evaluate whether clinical dashboards with CDSS or A&F features achieve their main objective: changing clinicians’ or patients’ behavior.

Data extraction

A standardized spreadsheet was developed, and 2 reviewers independently extracted the data from the included studies. The extracted data from the included studies were: study design, sample size, sample characteristics (source, health condition, age, sex), healthcare setting, country, type of dashboard, dashboard features, intervention characteristics, outcome measures, and time points. The effect sizes (eg, mean difference, risk ratio, and odds ratio) and their 95% confidence intervals (CIs) were also extracted.

Risk of bias assessment

The Cochrane Risk of Bias (RoB) 2.0 tool15 was used to assess the risk of bias of included randomized controlled studies. Each domain was judged as high risk of bias, low risk of bias, or some concerns, and an overall risk of bias judgment was also provided for each included study. Studies were considered as having an overall low risk of bias when all domains were judged as low risk, whereas studies were considered as having an overall high risk of bias when at least 1 bias domain was judged as high risk.15 Studies with some concerns in at least 1 domain and no domain at high risk were recorded as having some concerns overall.
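The overall judgment rule described above can be written as a small function. The following is a minimal sketch only: the function name, the label strings, and the example domain keys (D1 to D5, borrowed from the Figure 2 legend) are illustrative assumptions, and the sketch encodes only the rule as stated in this section, not the full RoB 2 guidance.

```python
from typing import Mapping

# Label strings are illustrative assumptions, not part of the RoB 2 tool itself.
LOW = "low"
SOME_CONCERNS = "some concerns"
HIGH = "high"
VALID_JUDGMENTS = {LOW, SOME_CONCERNS, HIGH}


def overall_risk_of_bias(domain_judgments: Mapping[str, str]) -> str:
    """Derive an overall judgment from per-domain judgments.

    Rule as stated in the text:
    - any domain at high risk        -> overall high risk
    - otherwise, any "some concerns" -> overall some concerns
    - all domains at low risk        -> overall low risk
    """
    if not domain_judgments:
        raise ValueError("at least one domain judgment is required")
    judgments = set(domain_judgments.values())
    unknown = judgments - VALID_JUDGMENTS
    if unknown:
        raise ValueError(f"unknown judgment(s): {sorted(unknown)}")
    if HIGH in judgments:
        return HIGH
    if SOME_CONCERNS in judgments:
        return SOME_CONCERNS
    return LOW


# Hypothetical trial: one domain with some concerns, none at high risk.
example = {"D1": LOW, "D2": SOME_CONCERNS, "D3": LOW, "D4": LOW, "D5": LOW}
print(overall_risk_of_bias(example))
```

Checking for a high-risk domain first means that a trial with both high-risk and some-concerns domains is classified as high risk, which matches the precedence implied by the rule.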

Certainty of evidence

The Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach16 was used to assess the quality of the body of evidence for the primary outcomes of included studies. The GRADE ratings were summarized as either high, moderate, low, or very low across 5 domains: study limitations, inconsistency of results, indirectness of evidence, imprecision of estimates, and publication bias.16 Since we did not conduct a meta-analysis, assessment against inconsistency and publication bias was not applicable in this review.

Data synthesis

Since high heterogeneity exists in health conditions and outcome measures, we were unable to perform a meta-analysis. Therefore, a narrative synthesis of the findings was conducted. We descriptively reported the effect sizes for both primary and secondary outcomes related to medication use and test ordering, the intervention time frame, and the number of participants in each trial. Relevant data were grouped and assessed based on the types of interventions (ie, CDSS, A&F, standalone, or multifaceted interventions) and types of comparators (eg, usual care/no intervention). Results are presented in the summary of findings tables along with the GRADE assessment.

RESULTS

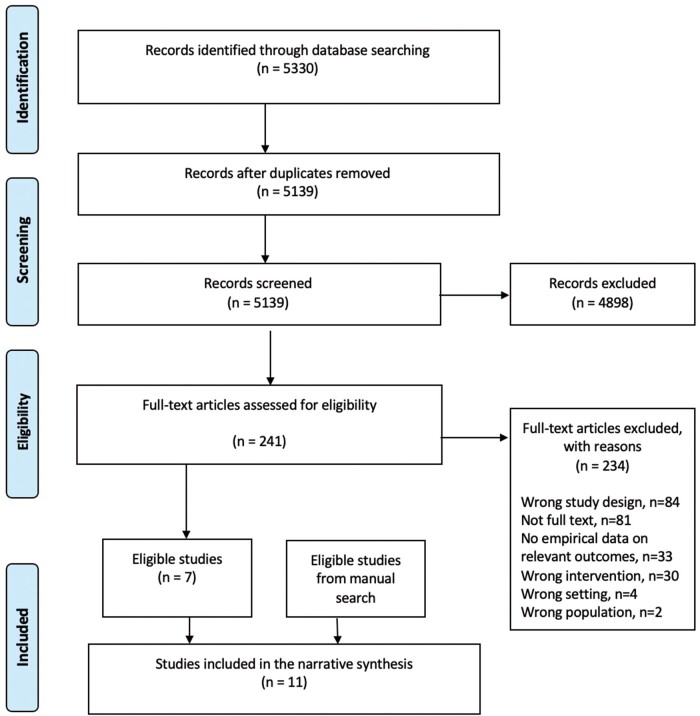

After the removal of duplicates, 5139 studies were screened by title and abstract, of which 4898 were deemed not to be relevant, leaving 241 studies for full-text screening. Of those full-text studies, 7 were eligible randomized controlled trials. Four additional randomized controlled trials were found via reference screening of included studies. We therefore included 11 trials in this systematic review. The PRISMA flowchart is shown in Figure 1.

Figure 1.

PRISMA flow diagram. The PRISMA flow diagram presents the systematic search and selection process in this review, detailing the number of records included and excluded at different stages and showing the final number of included studies.
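The counts reported for the selection process can be checked with simple arithmetic. The sketch below is illustrative: the class and field names are assumptions, and the number of full texts excluded is derived here by subtraction rather than reported in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningFlow:
    """Counts from the study selection process (field names are illustrative)."""

    screened: int                    # records screened by title and abstract
    excluded_title_abstract: int     # records judged not relevant at that stage
    eligible_from_full_text: int     # eligible trials among the full texts
    added_from_reference_lists: int  # trials found via reference screening

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_title_abstract

    @property
    def excluded_full_text(self) -> int:
        # Derived value: assumes every full text not judged eligible was excluded.
        return self.full_text_assessed - self.eligible_from_full_text

    @property
    def included(self) -> int:
        return self.eligible_from_full_text + self.added_from_reference_lists


flow = ScreeningFlow(
    screened=5139,
    excluded_title_abstract=4898,
    eligible_from_full_text=7,
    added_from_reference_lists=4,
)
print(flow.full_text_assessed, flow.excluded_full_text, flow.included)
```

With the figures reported above, the derived totals agree with the 241 full texts and 11 included trials stated in the text.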

Study characteristics

Table 1 provides a full description of the study characteristics (including key dashboard types and features). Of the 11 randomized controlled trials, 7 were cluster randomized trials,17,18,20–22,25,27 while 4 trials were 2-arm parallel randomized trials.19,23,24,26 Four trials were conducted in the United States,17,18,20,23 while another 4 studies were from Asia-Pacific countries (Australia, China, and South Korea),21,22,24,25 and 3 studies were from European countries (United Kingdom, Switzerland).19,26,27 Six studies were conducted in primary care17,18,23,25–27 while 5 studies were done in hospital settings.19–22,24 The dashboard interventions targeted mainly clinician participants (n = 9) and 2 studies used the dashboard interventions in patients.19,24

Table 1.

Characteristics of the included studies

| Study | Design | Setting | Dashboard types | Dashboard features | Outcomes |
|---|---|---|---|---|---|
| | Cluster randomized controlled trial | 27 primary care practices | | | |
| | Cluster randomized controlled trial | 32 primary care clinics at the University of Pennsylvania Health System | | | Primary outcome: statin prescribing rates for atherosclerotic cardiovascular disease in the dashboard only group and the dashboard with peer comparison group. |
| | Randomized controlled trial | Not reported | | | Primary outcome: the change in the patients’ adherence to medications. |
| | Cluster randomized controlled trial | Hospital of the University of Pennsylvania | | | Primary outcome: the count of routine laboratory test orders placed by a physician per patient-day. |
| | Stepped-wedge, cluster-randomized trial | 4 emergency departments in New South Wales, Australia | | | |
| | Cluster randomized crossover open controlled trial | 31 township public hospitals | | | Primary outcome: 10-d antibiotic prescription rate of physicians (defined as the number of antibiotic prescriptions divided by the total number of prescriptions in each 10-d time period). |
| | Randomized controlled trial | A telemedicine practice | | | Primary outcome: antibiotic prescription rates for each of the 4 diagnostic categories: upper respiratory infection, bronchitis, sinusitis, and pharyngitis. |
| | Randomized controlled trial | A university hospital | | The ICT-based centralized monitoring system alerted both patients and medical staff with texts and pill box alarms. | Primary outcome: medication adherence among kidney transplant recipients. |
| | Cluster randomized trial | 60 primary healthcare centers | | | |
| | Randomized controlled trial | Nationwide primary care practices | | Dashboard displayed a quarterly updated, single-page graphical overview (bar chart) showing the individual number of antibiotic prescriptions per 100 consultations in the preceding months and the adjusted average among peer physicians nationwide. | Primary outcome: the prescribed defined daily doses (DDD) of any type of antibiotics to any patient per 100 consultations in the first and second year. |
| | Cluster randomized trial | 795 antibiotic prescribing NHS general dental practices in Scotland | | | |

Dashboards were used as standalone interventions (A&F tools) in 8 studies,17–20,22,24,26,27 while 3 studies evaluated dashboards as a core component of a multifaceted intervention.21,23,25 In terms of features, all clinical dashboards employed a color-coded system and graphically displayed clinical/patient information (eg, bar chart, line chart, traffic light). Most included studies (n = 10) assessed the effectiveness of dashboards integrated into electronic health/medical record systems.17–25,27 In 2 studies, the dashboards had clinical reminder and alert functions24,25 and 4 used peer comparison by displaying feedback on clinicians’ performance.17,18,22,27 The dashboard interventions were compared with usual care or no intervention in most studies (n = 10),17–22,24–27 while one study23 compared the dashboard with a similar intervention without the dashboard component.

Outcomes

Six studies evaluated the changes in medication prescription as the primary outcome,17,18,22,23,26,27 with one study recording it as the secondary outcome.21 Two studies19,24 assessed medication adherence as the primary outcome, while 2 trials focused on test ordering.20,21 One study25 had coprimary outcomes on both medication prescription and test ordering.

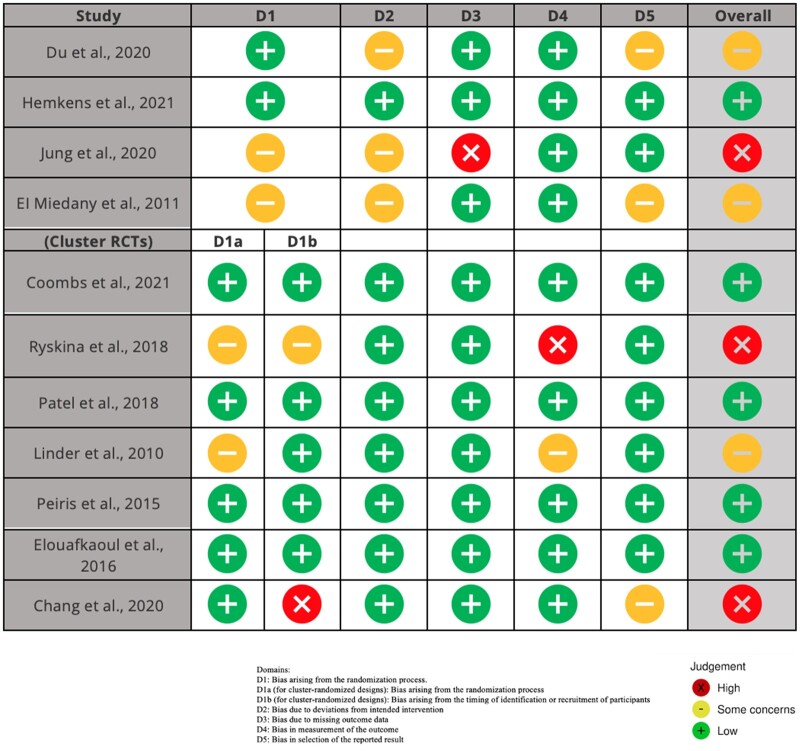

Risk of bias

Overall, 4 trials had the risk of bias judged as “some concerns,”17,19,23 5 trials had a “low” risk of bias,18,21,25–27 and 2 trials were considered at “high” risk of bias.20,22,24 One issue leading to a judgment of an overall “high” risk of bias was a lack of reporting on missing outcome data, and no analysis methods for correcting this bias. In cluster randomized trials, the main issue was the lack of concealment of the cluster allocation, which is likely to lead to selection bias. The risk of bias for individual trials is summarized in Figure 2.

Figure 2.

The risk of bias for individual trials. D1: Bias arising from the randomization process. D1a (for cluster-randomized designs): Bias arising from the randomization process. D1b (for cluster-randomized designs): Bias arising from the timing of identification or recruitment of participants. D2: Bias due to deviations from intended intervention. D3: Bias due to missing outcome data. D4: Bias in measurement of the outcome. D5: Bias in selection of the reported result. (X) high risk of bias; (−) some concerns; (+) low risk of bias.

Quality of the evidence (GRADE)

The quality of evidence was assessed against risk of bias, indirectness, and imprecision. Five trials17,20,23,25,27 had a moderate quality of evidence; the common reason for downgrading was some concerns within the risk of bias. Three trials18,21,26 had a high quality of evidence, 1 trial19 had a low quality of evidence, and 2 trials22,24 had a very low quality of evidence. The main issue leading to the judgment of low quality was a high risk of bias and uncertainty about imprecision of the estimates due to a lack of effect size reporting.

Effects of interventions

Table 2 provides the summary of findings for all included studies, including effect sizes (if reported) for both primary and secondary outcomes, the intervention time frame, and the number of participants in each trial. The effects of interventions were classified for dashboards as standalone interventions and dashboards as a core component of a multifaceted intervention, respectively.

Table 2.

Summary of findings

| Study | Time frame | Trial groups: Intervention (I), Control (C) | Medication prescription/adherence | Test ordering | Quality of the evidence (GRADE) |
|---|---|---|---|---|---|
| Computerized feedback dashboard compared with usual care/no intervention (n = 8) | | | | | |
| | 9-mo intervention period | | | Not reported | Moderate |
| | 2-mo intervention period | | | Not reported | High |
| | | | Medication adherence for disease-modifying antirheumatic drug therapy: I: 87% of patients vs. C: 43% of patients, P < .01 (no point estimates and 95% CI reported) | Not reported | Low |
| | 6-mo intervention period | | | Count of routine laboratory test orders: −0.14; 95% CI −0.56 to 0.27 | Moderate |
| | 6-mo intervention period | | 10-d antibiotic prescription rate: coef. −0.04, 95% CI −0.07 to −0.01 | Not reported | Very low |
| | 24-wk intervention period | | Medication adherence among kidney transplant recipients: no significant between-group difference (no point estimates and 95% CI reported) | Not reported | Very low |
| | 2-y follow-up | | | Not reported | High |
| | 12-mo intervention period | | | Not reported | Moderate |
| Multifaceted interventions incorporating a dashboard component compared with usual care/no intervention (n = 2) | | | | | |
| | 4-mo intervention period | | | Lumbar imaging referral rate: OR 0.77; 95% CI 0.47–1.26 | High |
| | | | Appropriate medication prescription rate: RR 1.11; 95% CI 0.97–1.27 | Appropriate CVD risk screening rate: RR 1.25; 95% CI 1.04–1.50 | Moderate |
| Multifaceted interventions incorporating a dashboard component compared with similar system without dashboard component (n = 1) | | | | | |
| | 11-mo intervention period | | | Not reported | Moderate |

Clinical dashboards as standalone interventions

Four trials investigated changes in antibiotic prescribing,17,22,26,27 with 2 trials having a moderate quality of evidence,17,27 one26 having a high quality of evidence and another22 having a very low quality of evidence. One trial including 2900 primary care physicians26 found a feedback dashboard did not lower nationwide antibiotic prescribing in primary care over 2 years (between-group difference −1.73%, 95% CI −5.07% to 1.72%). Another trial with 573 clinicians also found no effects on antibiotic prescribing for acute respiratory infection (odds ratio 0.97, 95% CI 0.70–1.40).17 One trial including 2566 dentists27 reported a significant reduction in antibiotic prescribing across all NHS general dental practices (between-group difference −5.7%, 95% CI −10.2% to −1.1%). Another crossover trial22 with 163 physicians revealed that a feedback dashboard reduced antibiotic prescribing in primary care by an average of 4% per 10-day period (coef. −0.04, 95% CI −0.07 to −0.01).

One trial18 providing a high quality of evidence investigated the effects of a feedback dashboard on statin prescribing for patients with atherosclerotic cardiovascular disease. The trial included 96 primary care physicians and showed no significant difference between the dashboard only intervention arm and the control arm (adjusted difference in percentage points 4.1%, 95% CI −0.8% to 13.1%). However, a significant increase in statin prescribing was seen in the dashboard with peer comparison group (adjusted difference in percentage points 5.8%, 95% CI 0.9% to 13.5%), when compared to the control group.

Patient adherence to prescribed medication was reported in 2 trials, one with low19 and one with very low24 quality of evidence. One trial with 111 patients19 reported a higher proportion of patients diagnosed with inflammatory arthritis adhering to their prescribed disease-modifying antirheumatic drug therapy in the dashboard group compared to the control group (87% vs 43%, P < .01). In another trial24 with 114 South Korean kidney transplant recipients, there was no significant between-group difference in adherence to immunosuppressive medications (no effect size provided).

One trial with moderate evidence quality involving 114 medical interns20 found no significant difference between the dashboard intervention group and the control group in the count of routine laboratory test orders placed by a physician per patient-day (between-group difference −0.14 laboratory tests per patient-day, 95% CI −0.56 to 0.27).

Clinical dashboards as a core component of a multifaceted intervention

One trial providing a high quality of evidence investigated a feedback dashboard as a component of a multifaceted intervention involving staff training and provision of education materials to support guideline-endorsed care of low back pain in emergency departments.21 This trial involved 269 clinicians and 4625 patients and found no effects on lumbar imaging referrals (odds ratio 0.77, 95% CI 0.47–1.26), but revealed a significant reduction in opioid administration (odds ratio 0.57, 95% CI 0.38–0.85).

Peiris and colleagues25 assessed a multicomponent cardiovascular disease intervention across 60 general practices with 38 725 patients; the quality of evidence was considered moderate. The intervention consisted of computerized decision support, A&F tools, and staff training, where the dashboard was the major component. There was a higher proportion of patients receiving appropriate screening of cardiovascular disease risk factors in the dashboard group versus the control group (risk ratio 1.25, 95% CI 1.04–1.50). This trial25 reported no difference in the proportion of patients at high cardiovascular disease risk receiving recommended medication prescription (risk ratio 1.11, 95% CI 0.97–1.27).

One trial with 45 primary care clinicians23 found that education plus a feedback dashboard significantly decreased antibiotic prescription rates for upper respiratory tract infections (interaction term ratio 0.60, 95% CI 0.47–0.77) and bronchitis (interaction term ratio 0.42, 95% CI 0.32–0.55), but not for sinusitis (interaction term ratio 1.05, 95% CI 0.91–1.21) and pharyngitis (interaction term ratio 0.91, 95% CI 0.76–1.09), compared to a control group that received education only (moderate quality of evidence).
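The effect estimates above can be read against their null values. The sketch below assumes the common convention that an estimate is statistically significant at the 5% level when its 95% CI excludes the null value (1 for odds ratios, risk ratios, and other ratio measures; 0 for differences and regression coefficients). The function and dictionary names are illustrative and do not come from the included trials.

```python
from typing import Literal

# Null value by effect measure type (assumed convention, see lead-in).
NULL_VALUE = {"ratio": 1.0, "difference": 0.0}


def ci_excludes_null(
    lower: float, upper: float, kind: Literal["ratio", "difference"]
) -> bool:
    """Return True when the interval [lower, upper] does not contain the null value."""
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    null = NULL_VALUE[kind]
    return not (lower <= null <= upper)


# Intervals taken from the results reported in this section.
print(ci_excludes_null(0.47, 1.26, "ratio"))        # lumbar imaging referrals
print(ci_excludes_null(0.38, 0.85, "ratio"))        # opioid administration
print(ci_excludes_null(1.04, 1.50, "ratio"))        # CVD risk screening
print(ci_excludes_null(-0.56, 0.27, "difference"))  # laboratory test orders
```

Under this convention, the intervals for opioid administration and cardiovascular risk screening exclude the null value, whereas those for lumbar imaging referrals and laboratory test orders do not, in line with the narrative description of each result.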

DISCUSSION

Summary of main results

This systematic review assessed the effects of clinical dashboards used as A&F or CDSS on medication prescription, adherence, and test ordering. In standalone interventions, there was conflicting evidence on the effects of dashboards on prescription of antibiotics and statins: 3 trials found no effects on antibiotic or statin prescribing, while 2 trials detected a significant reduction in antibiotic prescribing. Dashboards improved medication adherence in patients with inflammatory arthritis but did not increase the adherence to immunosuppressive medicines in kidney transplant recipients. In multicomponent interventions, dashboards reduced opioid use for low back pain and antibiotic prescribing for upper respiratory tract infections. For test ordering, dashboards increased the proportion of patients receiving appropriate cardiovascular risk screening but had no effect on the rate of imaging referrals for low back pain.

Comparison with existing studies

A previous narrative systematic review investigating the effects of dashboards was conducted by Dowding and colleagues in 2015.5 Eleven studies were identified with empirical evaluations of dashboards; however, the majority of the studies were nonrandomized pre-post designs with only 1 randomized controlled trial included. In contrast, our review focused on the effects of dashboards on medication use and test ordering through a comprehensive review of randomized controlled studies and included 11 relevant trials. Another systematic review on the effectiveness of information display interventions on patient care outcomes was published in 2019.12 Of 22 eligible studies included, only 5 were randomized controlled studies. The findings suggested that there was limited evidence that dashboards significantly improve patient outcomes. However, this systematic review was conducted solely in critical care settings and included various information display interventions, such as physiologic and laboratory monitoring, expert systems, and multipatient dashboards. Notably, a recent review on patient safety dashboards was published in late 2021.28 It analyzed 33 time-series studies and case studies and concluded that there was limited evidence for dashboards directly or indirectly impacting patient safety.

Clinical dashboards are not only used as standalone interventions but are also frequently used as part of multifaceted interventions for improving healthcare performance and processes. Our review found the effects vary greatly in terms of medication prescription, medication adherence, and test ordering. This high level of heterogeneity aligns with findings from other systematic reviews evaluating the impacts of electronic A&F29 and CDSS30,31 without the dashboard component. The heterogeneity of our findings might result from dashboards being adopted using different formats, using different technologies, in different health settings, for different end-users. In the included studies of our review, intervention effects were assessed either solely on the dashboard itself or by incorporating dashboards into multicomponent interventions. Therefore, it is difficult to arrive at a definitive conclusion about why some dashboard interventions contribute to improvements in healthcare performance while others do not.

Explanations and implications for future research

One issue leading to a lack of significant intervention effects might be related to data quality. This is suggested by one trial26 that failed to reduce the antibiotic prescription rate, where the authors discussed that incompleteness of the routine health data may have hindered comprehensive feedback on prescribing rates to clinicians. The issues of accuracy, completeness, interoperability, and reliability that are associated with routine health data have been widely acknowledged and yet no perfect solutions have been brought forward. Therefore, given that the digital dashboard, by its nature, is a data visualization and analytics tool, it would inevitably suffer from the deficiencies of healthcare data. This may result in the inability of digital health dashboards to accurately display a full picture of patient information or healthcare use, which might impede effective feedback and decision-making.

Another possible reason for the nonsignificant effects might be a lack of dashboard use by clinicians or patients.32 For effective implementation of dashboards into routine care there is a need for a thorough inspection of the organizational environment preimplementation. When implementing health informatics tools such as clinical dashboards, healthcare organizations should take multilevel factors into account, such as people, process, technology, and their interactions,33 to identify factors that might impede the health interventions from achieving their full potential.34 That is, to implement a health technology, not only should evaluation efforts focus on the intervention itself, but they should also consider the underlying infrastructure that supports the devices, and the human factors such as clinicians’ readiness and digital literacy, as well as the healthcare organizational environment and resources for properly implementing the technology.

Behavior change theories are encouraged to be used when designing interventions aiming to change clinicians’ practice, including clinical dashboards.11,35 Recently, Dowding and colleagues36 proposed a theory to guide the design of clinical dashboards, including 3 domains: the cues of the intervention message; the nature of the task or behavior to be performed; and situational/personality variables. Cues of the intervention message focus on providing specific tasks and performance goals as opposed to more generalized feedback.36 One trial in our review27 designed an A&F dashboard for dentists using a similar behavior change technique involving “instructions on how to perform the behavior” and “provision of information about health consequences of performing the behavior.” The authors found that this dashboard led to a significant reduction in antibiotic prescribing.27 The nature of the task to be performed concerns cognitive resources—the more cognitively demanding a task is, the less effective the intervention would be.36 Another trial in our review17 developed a quality dashboard for acute respiratory infections, which included data unrelated to study outcomes (eg, distribution of patient visits, billing information) displayed with 10 other reports unrelated to the trial’s activities. The high cognitive demand tasks associated with this dashboard could explain the lack of effects of the intervention on antibiotic prescribing for acute respiratory infections.17 Situational/personality factors relate to baseline performance.36 In one of our included trials,24 the authors claimed that the nonsignificant improvement in medication adherence was in part due to already high baseline adherence. Another trial26 also explained that the feedback dashboard was not associated with reduced prescribing rates possibly because Switzerland has the lowest antibiotic prescription rates in Europe. 
The same factor was observed in the SHaPED trial,21 which had low preintervention lumbar imaging rates. Thus, given the already high baseline performance, it would be more challenging for a dashboard intervention to make a difference. None of the other trials included in this review explicitly stated that they had used theory to design dashboard components.

With the capability of integrating and sharing real-time health data, digital health dashboards are considered an important building block for developing a learning health system.37,38 A learning health system learns from routine health data and feeds the evidence back into practice to create cycles of continuous improvement.37 Within this process, the dashboard has moved from a one-way linear data output model (health data input, then dashboard, then analyzed data presentation) to a cyclical data output model (data input from the electronic health record, then dashboard and other health technologies, then integrated data presentation, then updated data returned to the electronic health record). By capturing real-time health data and integrating data from various sources, digital health dashboards can be a critical enabler in accelerating the uptake of evidence into practice, thereby improving healthcare performance, patient safety, and quality of care. This significance has been increasingly recognized, especially during the COVID-19 pandemic.39 Given the urgent need for timely access to patients’ demographics, COVID-19 severity, risk factors, and test results, population health dashboards and national dashboards have been developed to monitor the pandemic and assist in making clinical decisions and public health policies.40 The evidence generated from disease diagnosis and management is then updated in the routine healthcare databases and shown in the dashboards to better inform practice. The learning health system therefore has a unique role in enabling rapid adaptation to public health emergencies, and the dashboard is a key enabler of it.

Strengths and limitations

The strengths of this study include the adoption of a robust methodology for systematically searching, screening, extracting, and summarizing the existing evidence. In addition, we included only randomized controlled trials, which helped to reduce the heterogeneity of included studies. Nevertheless, there was still a high level of heterogeneity in the study populations, health conditions, and outcome measures of the included trials, which is the main limitation of this review. Second, we deviated from the study protocol,14 in which we initially planned to consider a wide range of study designs because the previous review on this topic included only 1 randomized trial. However, our searches identified 11 eligible randomized trials investigating the effects of dashboards, which allowed us to focus on the highest level of evidence. We also narrowed the outcome measures to medication use and test ordering, since these outcomes are relevant to the quality and safety of healthcare and are more likely to be influenced by dashboard interventions. We believe that we did not miss any relevant trials, given our sensitive search strategy and our manual screening of potentially eligible studies cited in the included studies. Finally, 2 dashboard types were assessed in the review, and for multifaceted interventions the specific effects of the dashboard component could not be clearly isolated.

CONCLUSION

There is limited evidence that introducing clinical dashboards into routine practice has a positive impact on medication use and test ordering. Dashboards seem to have become an integral component of healthcare organizations on the prior assumption that they are useful, but the evidence from our review contradicts this assumption to some extent. When designing and implementing dashboards in healthcare, important aspects such as design theories, data quality, healthcare processes, human factors, and available resources warrant further attention, as they might influence the effects of dashboards on healthcare performance and quality of care.

AUTHOR CONTRIBUTIONS

CX, GCM, and CGM conceived the study. QC, LH, and CH supported the data extraction. All authors contributed to the planning of the study and the manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

PATIENT AND PUBLIC INVOLVEMENT

There was no involvement from patients or the public in the design, conduct, or outcome of this work.

CONFLICT OF INTEREST STATEMENT

None declared.

Contributor Information

Charis Xuan Xie, Wolfson Institute of Population Health, Barts and The London School of Medicine and Dentistry, Queen Mary University of London, London, UK.

Qiuzhe Chen, Institute for Musculoskeletal Health, Sydney, NSW, Australia; Sydney School of Public Health, Faculty of Medicine and Health, The University of Sydney, Sydney, NSW, Australia.

Cesar A Hincapié, Department of Chiropractic Medicine, Faculty of Medicine, University of Zurich and Balgrist University Hospital, Zurich, Switzerland; Epidemiology, Biostatistics and Prevention Institute, University of Zurich, Zurich, Switzerland.

Léonie Hofstetter, Department of Chiropractic Medicine, Faculty of Medicine, University of Zurich and Balgrist University Hospital, Zurich, Switzerland.

Chris G Maher, Institute for Musculoskeletal Health, Sydney, NSW, Australia; Sydney School of Public Health, Faculty of Medicine and Health, The University of Sydney, Sydney, NSW, Australia.

Gustavo C Machado, Institute for Musculoskeletal Health, Sydney, NSW, Australia; Sydney School of Public Health, Faculty of Medicine and Health, The University of Sydney, Sydney, NSW, Australia.

REFERENCES

1. Caban JJ, Gotz D. Visual analytics in healthcare – opportunities and research challenges. J Am Med Inform Assoc 2015; 22 (2): 260–2.

2. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA 2013; 309 (13): 1351–2.

3. Stadler JG, Donlon K, Siewert JD, et al. Improving the efficiency and ease of healthcare analysis through use of data visualization dashboards. Big Data 2016; 4 (2): 129–35.

4. West VL, Borland D, Hammond WE. Innovative information visualization of electronic health record data: a systematic review. J Am Med Inform Assoc 2015; 22 (2): 330–9.

5. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform 2015; 84 (2): 87–100.

6. Ivers NM, Sales A, Colquhoun H, et al. No more ‘business as usual’ with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci 2014; 9 (14): 14.

7. Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci 2011; 6 (42): 42.

8. Casati A, Sedefov R, Pfeiffer-Gerschel T. Misuse of medicines in the European Union: a systematic review of the literature. Eur Addict Res 2012; 18 (5): 228–45.

9. Blackmore CC, Mecklenburg RS, Kaplan GS. Effectiveness of clinical decision support in controlling inappropriate imaging. J Am Coll Radiol 2011; 8 (1): 19–25.

10. Kwan JL, Lo L, Ferguson J, et al. Computerised clinical decision support systems and absolute improvements in care: meta-analysis of controlled clinical trials. BMJ 2020; 370: m3216.

11. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012; (6): CD000259.

12. Waller RG, Wright MC, Segall N, et al. Novel displays of patient information in critical care settings: a systematic review. J Am Med Inform Assoc 2019; 26 (5): 479–89.

13. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med 2009; 151 (4): 264.

14. Xie CX, Maher C, Machado GC. Digital Health Dashboards for Improving Health Systems, Healthcare Delivery and Patient Outcomes: A Systematic Review Protocol. OSF Preprints Oxford University Press; 2020.

15. Sterne JAC, Savović J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ 2019; 366: l4898.

16. Guyatt GH, Oxman AD, Vist GE, GRADE Working Group, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008; 336 (7650): 924–6.

17. Linder JA, Schnipper JL, Tsurikova R, et al. Electronic health record feedback to improve antibiotic prescribing for acute respiratory infections. Am J Manag Care 2010; 16 (12 Suppl HIT): e311–9.

18. Patel MS, Kurtzman GW, Kannan S, et al. Effect of an automated patient dashboard using active choice and peer comparison performance feedback to physicians on statin prescribing. JAMA Netw Open 2018; 1 (3): e180818.

19. El Miedany Y, El Gaafary M, Palmer D. Assessment of the utility of visual feedback in the treatment of early rheumatoid arthritis patients: a pilot study. Rheumatol Int 2012; 32 (10): 3061–8.

20. Ryskina K, Jessica Dine C, Gitelman Y, et al. Effect of social comparison feedback on laboratory test ordering for hospitalized patients: a randomized controlled trial. J Gen Intern Med 2018; 33 (10): 1639–45.

21. Coombs DM, Machado GC, Richards B, et al. Effectiveness of a multifaceted intervention to improve emergency department care of low back pain: a stepped-wedge, cluster-randomised trial. BMJ Qual Saf 2021; 30 (10): 825–35.

22. Chang Y, Sangthong R, McNeil EB, et al. Effect of a computer network-based feedback program on antibiotic prescription rates of primary care physicians: a cluster randomized crossover-controlled trial. J Infect Public Health 2020; 13 (9): 1297–303.

23. Du Yan L, Dean K, Park D, et al. Education vs clinician feedback on antibiotic prescriptions for acute respiratory infections in telemedicine: a randomized controlled trial. J Gen Intern Med 2021; 36 (2): 305–12.

24. Jung H-Y, Jeon Y, Seong SJ, et al. ICT-based adherence monitoring in kidney transplant recipients: a randomized controlled trial. BMC Med Inform Decis Mak 2020; 20 (1): 105.

25. Peiris D, Usherwood T, Panaretto K, et al. Effect of a computer-guided, quality improvement program for cardiovascular disease risk management in primary health care. Circ Cardiovasc Qual Outcomes 2015; 8 (1): 87–95.

26. Hemkens LG, Saccilotto R, Reyes SL, et al. Personalized prescription feedback using routinely collected data to reduce antibiotic use in primary care. JAMA Intern Med 2017; 177 (2): 176–83.

27. Elouafkaoui P, Young L, Newlands R, Translation Research in a Dental Setting (TRiaDS) Research Methodology Group, et al. An audit and feedback intervention for reducing antibiotic prescribing in general dental practice: the RAPiD cluster randomised controlled trial. PLoS Med 2016; 13 (8): e1002115.

28. Murphy DR, Savoy A, Satterly T, et al. Dashboards for visual display of patient safety data: a systematic review. BMJ Health Care Inform 2021; 28 (1): e100437.

29. Tuti T, Nzinga J, Njoroge M, et al. A systematic review of electronic audit and feedback: intervention effectiveness and use of behaviour change theory. Implement Sci 2017; 12 (1): 61.

30. Groenhof TKJ, Asselbergs FW, Groenwold RHH, on behalf of the UCC-SMART Study Group, et al. The effect of computerized decision support systems on cardiovascular risk factors: a systematic review and meta-analysis. BMC Med Inform Decis Mak 2019; 19 (1): 108.

31. Haynes RB, Wilczynski NL; the Computerized Clinical Decision Support System (CCDSS) Systematic Review Team. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: methods of a decision-maker-researcher partnership systematic review. Implement Sci 2010; 5 (1): 12.

32. Semler MW, Weavind L, Hooper MH, et al. An electronic tool for the evaluation and treatment of sepsis in the ICU. Crit Care Med 2015; 43 (8): 1595–602.

33. Thraen I, Bair B, Mullin S, et al. Characterizing “information transfer” by using a Joint Cognitive Systems model to improve continuity of care in the aged. Int J Med Inform 2012; 81 (7): 435–41.

34. Tariq A, Georgiou A, Westbrook J. Medication errors in residential aged care facilities: a distributed cognition analysis of the information exchange process. Int J Med Inform 2013; 82 (5): 299–312.

35. Landis-Lewis Z, Brehaut JC, Hochheiser H, et al. Computer-supported feedback message tailoring: theory-informed adaptation of clinical audit and feedback for learning and behavior change. Implement Sci 2015; 10 (12): 12.

36. Dowding D, Merrill J, Russell D. Using feedback intervention theory to guide clinical dashboard design. AMIA Annu Symp Proc 2018; 2018: 395–403.

37. Friedman C, Rubin J, Brown J, et al. Toward a science of learning systems: a research agenda for the high-functioning learning health system. J Am Med Inform Assoc 2015; 22 (1): 43–50.

38. Jeffries M, Keers RN, Phipps DL, et al. Developing a learning health system: insights from a qualitative process evaluation of a pharmacist-led electronic audit and feedback intervention to improve medication safety in primary care. PLoS One 2018; 13 (10): e0205419.

39. Douthit BJ. The influence of the learning health system to address the COVID-19 pandemic: an examination of early literature. Int J Health Plann Manag 2021; 36 (2): 244–51.

40. Tracking – Johns Hopkins Coronavirus Resource Center [Internet]. Johns Hopkins Coronavirus Resource Center. 2021. https://coronavirus.jhu.edu/data. Accessed December 25, 2021.
