Abstract

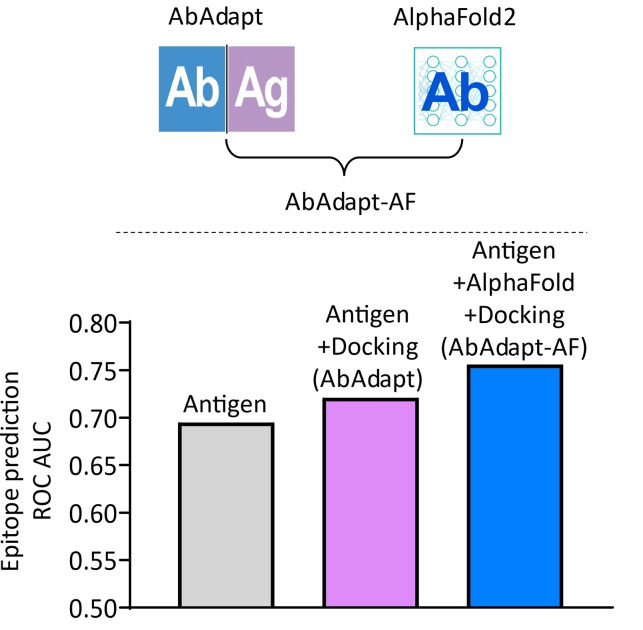

Antibodies recognize their cognate antigens with high affinity and specificity, but the prediction of binding sites on the antigen (epitope) corresponding to a specific antibody remains a challenging problem. To address this problem, we developed AbAdapt, a pipeline that integrates antibody and antigen structural modeling with rigid docking in order to derive antibody‐antigen specific features for epitope prediction. In this study, we systematically assessed the impact of integrating the state‐of‐the‐art protein modeling method AlphaFold with the AbAdapt pipeline. Incorporating more accurate antibody models improved docking, paratope prediction, and antibody‐specific epitope prediction. We further applied AbAdapt‐AF to an anti‐receptor binding domain (RBD) antibody complex benchmark and found that AbAdapt‐AF outperformed three alternative docking methods. AbAdapt‐AF also demonstrated higher epitope prediction accuracy than the other tested epitope prediction tools in the anti‐RBD antibody complex benchmark. We anticipate that AbAdapt‐AF will facilitate prediction of antigen‐antibody interactions in a wide range of applications.

Keywords: AlphaFold, antibody-antigen docking, antibody-specific epitope prediction, receptor binding domain, SARS-CoV-2

Integration of AlphaFold2 with AbAdapt (AbAdapt‐AF) resulted in improved docking, paratope and epitope prediction compared with AbAdapt alone.

Introduction

Highly specific antibody‐antigen interactions are a defining feature of adaptive immune responses to pathogens or other sources of non‐self molecules. [1] This adaptive molecular recognition has been exploited to engineer antibodies for various purposes, including laboratory assays and highly specific protein therapeutics. [2] Despite their widespread use, experimental identification of antibody‐antigen complex structures, or of their interacting residues, is still a laborious process. Several computational methods have been developed for predicting complex models[3–6] or interface residues on the antibody (paratope) or antigen (epitope),[7–10] but integrating these methods to achieve a robust and coherent solution remains challenging. With the recent breakthroughs in protein structure modeling by deep learning,[11–13] we revisit this important problem and assess the impact of state‐of‐the‐art protein modeling on antibody‐antigen docking and binding site prediction.

AbAdapt is a pipeline that combines antibody and antigen modeling with rigid docking and re‐scoring in order to derive antibody‐antigen specific features for epitope prediction. [6] As reported by others, rigid docking and scoring are sensitive to the quality of the input models.[14,15] By default, AbAdapt accepts sequences as input and uses Repertoire Builder, [16] a high‐throughput template‐based method, for antibody modeling. However, AbAdapt can also accept structures as input for antibodies, antigens, or both. Here, we assessed the effect of using AlphaFold2 antibody models in the AbAdapt pipeline in a large‐scale benchmark using leave‐one‐out cross validation (LOOCV) and a large, diverse Holdout set. In addition, the improved AbAdapt‐AlphaFold2 (AbAdapt‐AF) pipeline was tested on a set of recently determined antibodies that target various epitopes on a common antigen: the SARS‐CoV‐2 spike receptor binding domain (RBD). We found that the use of AlphaFold2 significantly improved the performance of AbAdapt, both at the level of protein structure and of predicted binding sites.

Results and Discussion

Improvement in antibody modeling using AlphaFold2

The CDRs constitute the greatest source of sequence and structural variability in antibodies and largely overlap with the paratope. Here, we systematically evaluated antibody variable region models built by Repertoire Builder and AlphaFold2 using the LOOCV and Holdout datasets. AlphaFold2 significantly improved modeling accuracy in both the LOOCV (Figure S1A) and Holdout (Figure S1B) sets. The improvement was particularly apparent for the most challenging CDR loop, CDR‐H3: in the LOOCV set, the average CDR‐H3 RMSD dropped from 4.38 Å (Repertoire Builder) to 3.44 Å (AlphaFold2), a 21.50 % improvement (Table S1). Similar results were obtained for the Holdout set (4.44 Å to 3.62 Å, an 18.43 % improvement). We note that 58 % of the 720 queries were released to the PDB before 30 April 2018, which means that these PDB entries could have been used to train AlphaFold2.
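The percentages quoted above are relative reductions in mean RMSD, with Repertoire Builder as the reference. The short Python sketch below shows that arithmetic; the function name is ours, for illustration only. Recomputing from the rounded two‐decimal means gives about 21.46 % and 18.47 %, slightly different from the reported 21.50 % and 18.43 %, which were presumably derived from unrounded values.

```python
def percent_improvement(baseline: float, new: float) -> float:
    """Relative reduction of an error metric such as RMSD, in percent.

    A positive result means `new` is lower (better) than `baseline`.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - new) / baseline


# Rounded mean CDR-H3 RMSDs (Å) quoted in the text: Repertoire Builder -> AlphaFold2.
loocv_h3 = percent_improvement(4.38, 3.44)
holdout_h3 = percent_improvement(4.44, 3.62)
print(f"LOOCV CDR-H3: {loocv_h3:.2f} %, Holdout CDR-H3: {holdout_h3:.2f} %")
```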

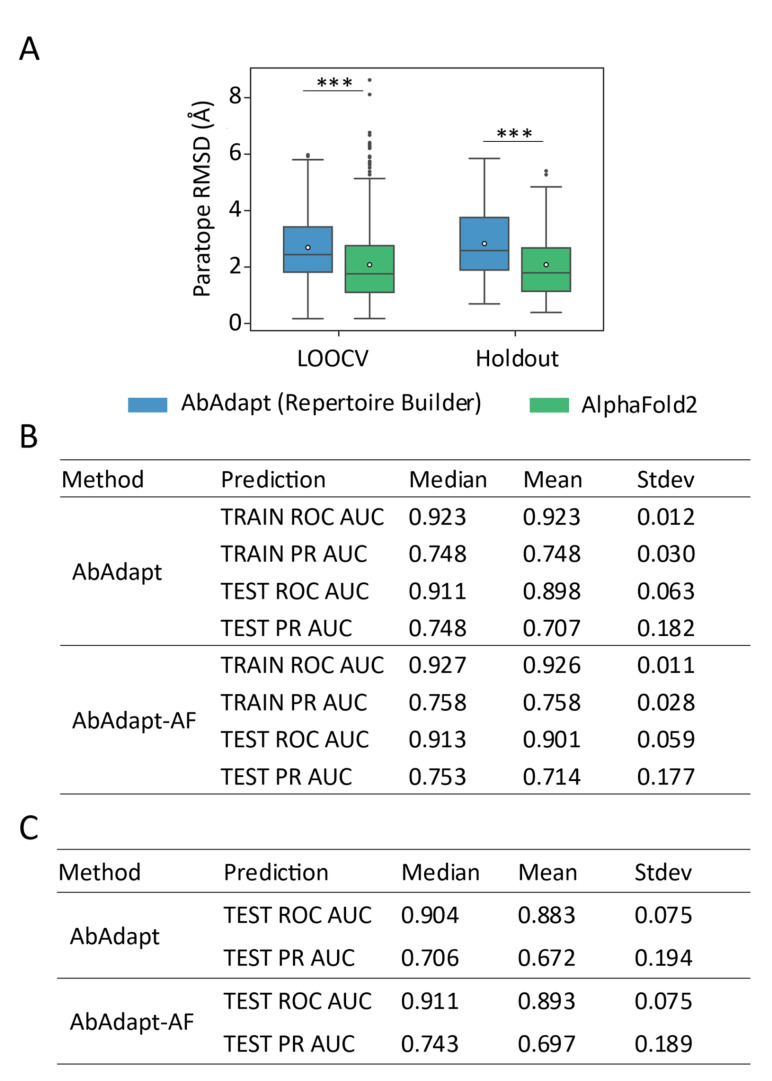

As expected, the improvement in CDR loop modeling by AlphaFold2 resulted in improved paratope modeling: the paratope RMSD dropped from 2.69 Å to 2.08 Å (a 22.73 % improvement) in the LOOCV set and from 2.83 Å to 2.12 Å (a 25.26 % improvement) in the Holdout set (Figure 1A and Table S1). Using a paratope RMSD >4 Å to define low‐quality models, the fraction of low‐quality models dropped from 14.35 % (Repertoire Builder) to 7.74 % (AlphaFold2) in the LOOCV set and from 21.0 % to 11.0 % in the Holdout set, approximately a 2‐fold decrease. These improvements matter for antibody‐antigen docking because we previously observed that AbAdapt docking performance was sensitive to errors in paratope modeling. [6] We also observed that, in both the LOOCV and Holdout sets, rank 1 AlphaFold2 models had the lowest CDR‐H3 and paratope RMSDs, while rank 5 models had the highest (Table S4). This result justifies the use of rank 1 models in the following analysis.
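A minimal sketch of the low‐quality criterion follows, assuming per‐query paratope RMSDs are already available as a list of floats; the helper names are hypothetical. Applied to the reported fractions, the fold decrease is about 1.85 (LOOCV) and 1.91 (Holdout), consistent with the approximately 2‐fold figure.

```python
from typing import Sequence


def low_quality_fraction(paratope_rmsds: Sequence[float], cutoff: float = 4.0) -> float:
    """Fraction of models whose paratope RMSD (Å) is strictly above `cutoff`."""
    if len(paratope_rmsds) == 0:
        raise ValueError("need at least one model")
    n_low_quality = sum(1 for rmsd in paratope_rmsds if rmsd > cutoff)
    return n_low_quality / len(paratope_rmsds)


def fold_decrease(before: float, after: float) -> float:
    """How many times smaller `after` is than `before`."""
    return before / after


# Low-quality percentages reported in the text (Repertoire Builder -> AlphaFold2).
print(round(fold_decrease(14.35, 7.74), 2))  # LOOCV
print(round(fold_decrease(21.0, 11.0), 2))   # Holdout
```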

Figure 1.

Comparison of paratope prediction performance between AbAdapt and AbAdapt‐AF. (A) Paratope RMSD of antibody models in the LOOCV training set and the Holdout set, built by Repertoire Builder (AbAdapt) or AlphaFold2 (AbAdapt‐AF). A Wilcoxon matched‐pairs signed rank test was performed to compare AbAdapt and AbAdapt‐AF (***P ≤ 0.001). The empty circle in each box indicates the mean. Paratope prediction performance of AbAdapt and AbAdapt‐AF in the LOOCV set (B) and the Holdout set (C).

Improvement of AbAdapt pipeline using AlphaFold2 antibody models

After including the more accurate AlphaFold2 antibody models in the AbAdapt pipeline, we evaluated the influence on each of the main steps: paratope prediction, initial epitope prediction, docking, scoring Piper‐Hex clusters and antibody‐specific epitope prediction.

Paratope prediction

The areas under the receiver operating characteristic (ROC) and precision‐recall (PR) curves (AUCs) for paratope prediction in the LOOCV dataset were calculated for AbAdapt and AbAdapt‐AF (Figure 1B). AbAdapt‐AF yielded similar median ROC AUCs for training (0.927) and testing (0.913), a slight improvement over AbAdapt (0.923 for training and 0.911 for testing). The median test PR AUC improved from 0.748 (AbAdapt) to 0.753 (AbAdapt‐AF). In the Holdout set, the median ROC AUC for paratope prediction by AbAdapt‐AF was 0.911, close to that of the LOOCV set (0.913) (Figures 1B and 1C). A larger improvement in median PR AUC was observed in the Holdout set: from 0.706 (AbAdapt) to 0.743 (AbAdapt‐AF) (Figure 1C). Thus, introducing more accurate antibody models from AlphaFold2 improved paratope prediction.
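For readers who want to reproduce the threshold‐independent metrics, the sketch below computes ROC AUC through its rank (Mann–Whitney) interpretation, i.e. the probability that a randomly chosen positive residue scores higher than a randomly chosen negative one, and PR AUC as average precision. It is a dependency‐free illustration, not the AbAdapt code: the authors used Scikit‐learn, whose `roc_auc_score` and `average_precision_score` compute these same quantities, and the text does not state which PR AUC estimator was used.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win (Mann-Whitney formulation). O(P*N), which is fine
    for per-protein residue counts.
    """
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative residue")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """PR AUC estimated as average precision: sum of (recall gain * precision)."""
    n_pos = sum(1 for y in labels if y)
    if n_pos == 0:
        raise ValueError("need at least one positive residue")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = 0.0
    ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # All residues tied at this score enter together as one threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


labels = [1, 0, 1, 0]          # 1 = paratope residue (toy data)
scores = [0.9, 0.8, 0.7, 0.1]  # predicted probabilities
print(roc_auc(labels, scores), average_precision(labels, scores))
```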

Initial epitope prediction

For initial epitope prediction, AbAdapt‐AF exhibited a median test ROC AUC of 0.694 in the LOOCV set and 0.695 in the Holdout set, identical to AbAdapt. This is expected, since both pipelines used the same antigen models from Spanner [17] for training. However, these values were far lower than the median training ROC AUC (0.863; Table S2). This gap between training and testing suggests that the initial epitope predictor does not generalize well to unseen data and highlights the inherent limitation of epitope prediction without reference to a specific antibody. This issue is addressed in section “Antibody‐specific epitope predictions” by performing epitope prediction with specific antibody‐derived features.

Hex and Piper docking

We first analyzed the effect of AlphaFold2 models on the frequency of “true” poses produced by Hex [18] or Piper [19] in the LOOCV set. As in our previous work, [6] a “true” pose was defined by a relatively loose interface RMSD (IRMSD) cutoff of 15 Å, together with epitope and paratope accuracies of at least 50 %. To compare docking performance between AbAdapt and AbAdapt‐AF systematically, two stricter IRMSD cutoffs (10 Å and 7 Å) were also used. The success ratio was defined as the fraction of queries with at least one “true” pose among all poses (on average, 562 poses were generated by AbAdapt‐AF). Using an IRMSD cutoff of 15 Å, the median Hex true pose ratio increased modestly from 1.50 % to 1.53 % (Figure S2C), a difference that was not statistically significant (Figure S2A). This is somewhat expected, since Hex was used for local docking guided by the initial epitope predictions, which themselves were not significantly affected by the use of AlphaFold2 antibody models. In contrast, the median Piper true pose ratio improved significantly with AlphaFold2 antibody models, from 1.43 % to 1.57 % (Figures S2A and S2C). For the Holdout set, neither the Hex nor the Piper median true pose ratio improved significantly with AlphaFold2 (Figures S3A and S3C). These results indicate that simply supplying better models does not guarantee improved docking. A similar observation was reported in a recent study using AlphaFold2 models in the ClusPro web server. [20]
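The “true” pose criterion combines three conditions. A minimal sketch follows, assuming the per‐pose IRMSD and epitope/paratope accuracies have already been computed (how the accuracies are derived follows the original AbAdapt work and is not reproduced here) and assuming inclusive comparisons, which the text does not specify. The `Pose` class and helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Pose:
    irmsd: float         # interface RMSD to the native complex (Å)
    epitope_acc: float   # epitope accuracy of the pose, 0-1 (assumed precomputed)
    paratope_acc: float  # paratope accuracy of the pose, 0-1 (assumed precomputed)


def is_true_pose(pose: Pose, irmsd_cut: float = 15.0, min_acc: float = 0.5) -> bool:
    # Inclusive comparisons are an assumption; the text does not specify them.
    return (pose.irmsd <= irmsd_cut
            and pose.epitope_acc >= min_acc
            and pose.paratope_acc >= min_acc)


def true_pose_ratio(poses: Sequence[Pose], irmsd_cut: float = 15.0) -> float:
    """Fraction of one query's poses that are 'true'."""
    return sum(is_true_pose(p, irmsd_cut) for p in poses) / len(poses)


def success_ratio(queries: Sequence[Sequence[Pose]], irmsd_cut: float = 15.0) -> float:
    """Fraction of queries with at least one 'true' pose among all poses."""
    hits = sum(any(is_true_pose(p, irmsd_cut) for p in poses) for poses in queries)
    return hits / len(queries)


# Toy poses, evaluated at the three cutoffs used in the text.
q1 = [Pose(5.0, 0.6, 0.6), Pose(20.0, 0.9, 0.9)]
q2 = [Pose(12.0, 0.6, 0.4)]  # fails the paratope-accuracy condition
q3 = [Pose(8.0, 0.7, 0.7)]
for cut in (15.0, 10.0, 7.0):
    print(cut, success_ratio([q1, q2, q3], cut))
```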

Combined Piper‐Hex clustering and scoring

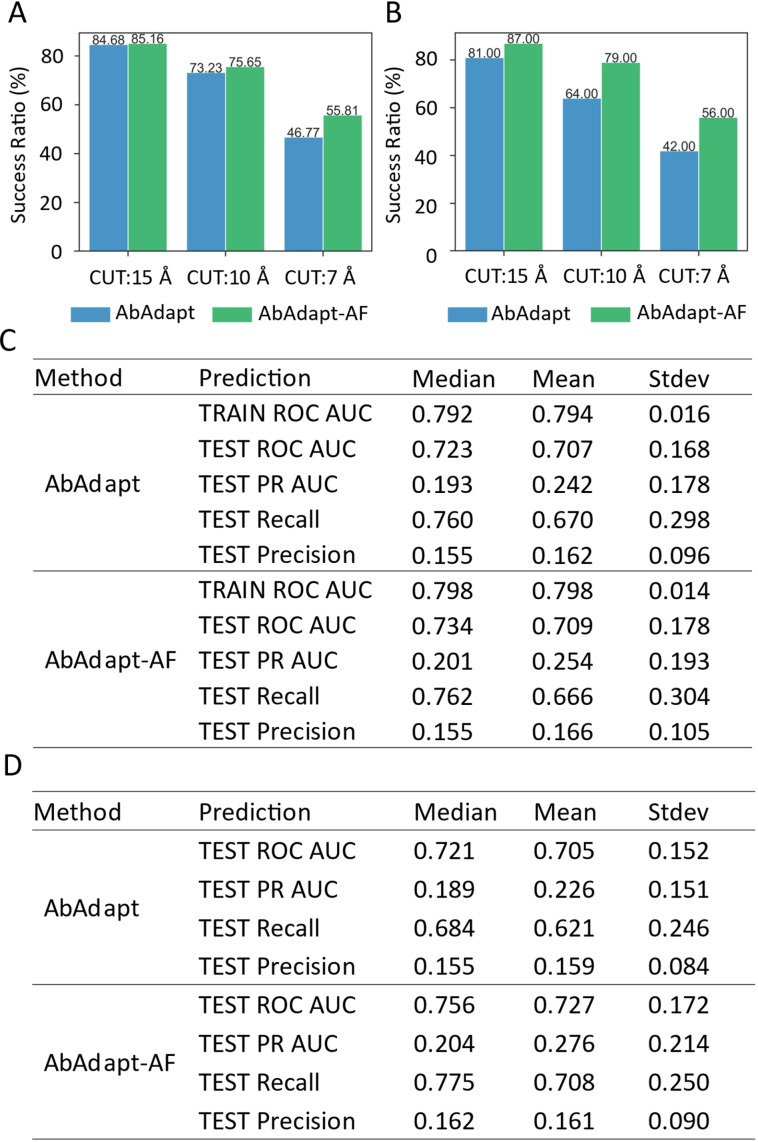

The top Piper and Hex poses from the docking steps were co‐clustered and re‐scored. At this step, AbAdapt‐AF improved on AbAdapt. In the LOOCV set, the median clustered true pose ratio of Hex improved from 1.16 % to 1.31 % and that of Piper from 2.32 % to 2.78 %, resulting in a significantly improved median combined Hex‐Piper true pose ratio (2.42 % for AbAdapt versus 2.83 % for AbAdapt‐AF) (Figures S2B and S2C). The median rank of the best true pose (1 being perfect) dropped significantly from 17 to 13.5, a 20.59 % improvement (Figure S2D). Even under a stricter IRMSD cutoff of 7 Å, the median rank of the best true pose dropped from 54.5 to 43, a 21.10 % improvement (Figure S2D). This improvement was also reflected in the fraction of queries with true poses under the 7 Å IRMSD cutoff (success ratio) in the LOOCV set: 55.81 % for AbAdapt‐AF compared with 46.67 % for AbAdapt (Figure 2A).
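The rank‐based statistic can be sketched as follows, assuming each query's poses are already sorted by AbAdapt score and labeled true or false. How queries with no true pose enter the median is not stated in the text; this sketch simply excludes them, which is an assumption. Function names are illustrative.

```python
from statistics import median
from typing import List, Optional, Sequence


def best_true_rank(is_true_ranked: Sequence[bool]) -> Optional[int]:
    """1-based rank of the first true pose in a score-ordered list, or None."""
    for rank, is_true in enumerate(is_true_ranked, start=1):
        if is_true:
            return rank
    return None


def median_best_true_rank(queries: Sequence[Sequence[bool]]) -> float:
    """Median best-true-pose rank; queries without a true pose are skipped (assumption)."""
    ranks: List[int] = [r for r in (best_true_rank(q) for q in queries) if r is not None]
    if not ranks:
        raise ValueError("no query has a true pose")
    return median(ranks)


toy = [[True], [False, True], [False, False, False, True], [False]]
print(median_best_true_rank(toy))
# Relative improvement of the reported medians (15 Å cutoff): 17 -> 13.5.
print(round(100 * (17 - 13.5) / 17, 2))
```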

Figure 2.

Comparison of pose clustering and epitope prediction performance between AbAdapt and AbAdapt‐AF. Success ratio after clustering and combining the poses from Piper and Hex in the LOOCV set (A) and the Holdout set (B). For interface RMSD cutoffs (RCUT) of 15, 10, and 7 Å, the corresponding success ratio (fraction of queries with at least one true pose among all poses) is shown above each bar. Final epitope prediction performance in the LOOCV set (C) and the Holdout set (D) after introducing antibody‐specific post‐docking features.

For the unseen Holdout set, the median clustered true pose ratio for Hex improved, but not significantly: 0.56 ± 8.15 % (AbAdapt) versus 1.25 ± 6.69 % (AbAdapt‐AF) (Figures S3B and S3C). In contrast, the median clustered true pose ratio for Piper improved significantly: 2.18 ± 3.97 % (AbAdapt) versus 2.66 ± 3.72 % (AbAdapt‐AF), resulting in a significantly improved median combined Hex‐Piper true pose ratio (2.18 ± 4.12 % versus 2.7 ± 3.9 %) for AbAdapt versus AbAdapt‐AF. AbAdapt‐AF outperformed AbAdapt at all three IRMSD cutoffs, and its success ratio was very close to that of the LOOCV set (Figures 2A and 2B). Taken together, scoring and clustering of Hex and Piper poses improved significantly with AbAdapt‐AF.

Antibody‐specific epitope predictions

After the epitope predictor was retrained with docking features, antibody‐specific epitope prediction improved significantly. In the LOOCV set, the median test ROC AUC of AbAdapt‐AF increased from 0.694 (initial epitope prediction) to 0.734 (antibody‐specific epitope prediction), compared with an increase from 0.694 to 0.723 for AbAdapt (Figure 2C and Table S2). An even greater improvement was observed in the Holdout set: for AbAdapt‐AF, the median test ROC AUC improved from 0.695 (initial epitope prediction) to 0.756 (antibody‐specific epitope prediction), whereas for AbAdapt the corresponding values were 0.695 and 0.721 (Figure 2D and Table S2). Relatively few antigen residues make up the epitope, so epitope and non‐epitope residues are strongly imbalanced. The PR AUC baseline is given by the ratio Ntrue/Nfalse (number of epitope residues/number of non‐epitope residues). By this definition, the median PR AUC baseline in the Holdout set was 0.094 (Figure S4). In the Holdout set, the median PR AUC of antibody‐specific epitope prediction by AbAdapt‐AF reached 0.204 (a 117.02 % improvement over the baseline), while the corresponding value for AbAdapt was 0.189, a smaller (101.06 %) improvement over the baseline (Figure 2D). This result supports the conclusion that the inclusion of AlphaFold2 significantly improved the final epitope predictions, even for inputs that had never been seen by the ML models.
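The baseline comparison is simple arithmetic. The sketch below implements the Ntrue/Nfalse ratio used in the text and the percentage by which a PR AUC exceeds it, reproducing the 117.02 % and 101.06 % figures. For reference it also includes the positive‐class prevalence Ntrue/(Ntrue + Nfalse), the expected PR AUC of a random ranking, which is another common choice of PR baseline; for strongly imbalanced epitope data the two values are close. Function names and the toy antigen are illustrative.

```python
def baseline_ratio(n_true: int, n_false: int) -> float:
    """PR AUC baseline as defined in the text: epitope / non-epitope residues."""
    return n_true / n_false


def prevalence(n_true: int, n_false: int) -> float:
    """Positive-class fraction: the expected PR AUC of a random ranking."""
    return n_true / (n_true + n_false)


def improvement_over_baseline(pr_auc: float, baseline: float) -> float:
    """Percent by which a PR AUC exceeds the baseline."""
    return 100.0 * (pr_auc / baseline - 1.0)


# Median Holdout values from the text.
print(round(improvement_over_baseline(0.204, 0.094), 2))  # AbAdapt-AF
print(round(improvement_over_baseline(0.189, 0.094), 2))  # AbAdapt
# A hypothetical antigen with 20 epitope and 180 non-epitope residues:
print(round(baseline_ratio(20, 180), 3), prevalence(20, 180))
```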

We note that, in the current AbAdapt‐AF pipeline, antibody models were built by AlphaFold2, whereas antigen models were built by the template‐based modeling engine Spanner. [17] To investigate the sensitivity of performance to the antigen models, we repeated the antigen modeling using AlphaFold2. In this setting, the median test ROC AUC of antibody‐specific epitope prediction in the Holdout set was 0.745 (0.721 for AbAdapt) and the median test PR AUC was 0.195 (0.189 for AbAdapt) (Table S3). Thus, compared with the median test ROC AUC of AbAdapt (0.721), AbAdapt‐AF performed better with either Spanner antigen models (0.756) or AlphaFold2 antigen models (0.745) as input. These results suggest that the main improvement in AbAdapt performance came from the use of AlphaFold2 for antibody modeling.

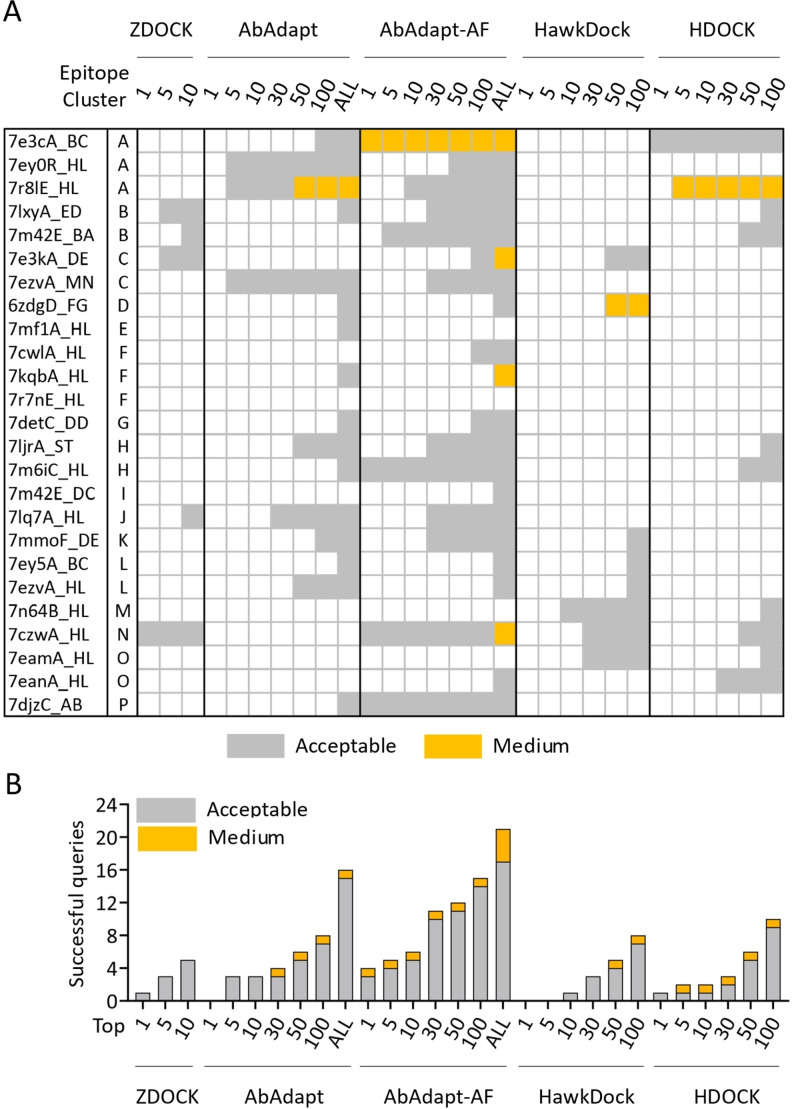

Anti‐RBD docking performance

To assess AbAdapt on a realistic test case, we prepared 25 non‐redundant SARS‐CoV‐2 anti‐RBD antibodies that were not among the training data used by AbAdapt or AlphaFold. These antibodies targeted 16 epitope clusters in the RBD (Figure S5). We then assessed the performance of AbAdapt‐AF along with ZDOCK, [3] HawkDock, [4] HDOCK, [5] and AbAdapt, [6] as shown in Figures 3A and 3B. Among rank 1 models, AbAdapt‐AF produced a total of 4 (16 %) acceptable or better models, compared with ZDOCK (1), AbAdapt (0), HawkDock (0), and HDOCK (1). Similarly, among the top 100 ranked models, AbAdapt‐AF produced acceptable or better models for 15 queries (60 %), compared with AbAdapt (8), HawkDock (8), and HDOCK (10). AbAdapt‐AF outperformed AbAdapt to a degree similar to that observed in the LOOCV and Holdout assessments. Considering all cluster representatives (on average 539 per query), the AbAdapt‐AF success ratio by CAPRI criteria [21] was 84 % (21 queries), close to the 75.65 % in the LOOCV set and 79 % in the Holdout set (Figures 2A, 2B, and 3B).
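Success at a given cutoff N counts the queries whose top N models include at least one acceptable or better model. A minimal sketch follows, assuming each query is represented by the CAPRI classes of its models in rank order; this data layout and the helper names are hypothetical.

```python
from typing import Optional, Sequence

# CAPRI quality classes ordered from worst to best.
CAPRI_RANK = {"incorrect": 0, "acceptable": 1, "medium": 2, "high": 3}


def is_success(ranked_classes: Sequence[str], top_n: Optional[int] = None) -> bool:
    """True if any of the top_n models (all models if None) is acceptable or better."""
    considered = ranked_classes if top_n is None else ranked_classes[:top_n]
    return any(CAPRI_RANK[c] >= CAPRI_RANK["acceptable"] for c in considered)


def count_successes(queries: Sequence[Sequence[str]], top_n: Optional[int] = None) -> int:
    """Number of queries that succeed at the given top-N cutoff."""
    return sum(is_success(q, top_n) for q in queries)


queries = [
    ["incorrect", "acceptable"],
    ["medium"],
    ["incorrect"] * 5,
]
for n in (1, 2, None):
    print(n, count_successes(queries, n))
```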

Figure 3.

Docking performance for 25 anti‐RBD antibody complexes. (A) CAPRI quality of the best model among the top 1/5/10 ranked models by ZDOCK, the top 1/5/10/30/50/100/all ranked models by AbAdapt and AbAdapt‐AF, and the top 1/5/10/30/50/100 ranked models by HawkDock and HDOCK. Cell color indicates CAPRI quality: acceptable (grey) or medium (orange). (B) Number of successful queries by ZDOCK, AbAdapt, AbAdapt‐AF, HawkDock, and HDOCK.

Antibody‐specific RBD epitope predictions

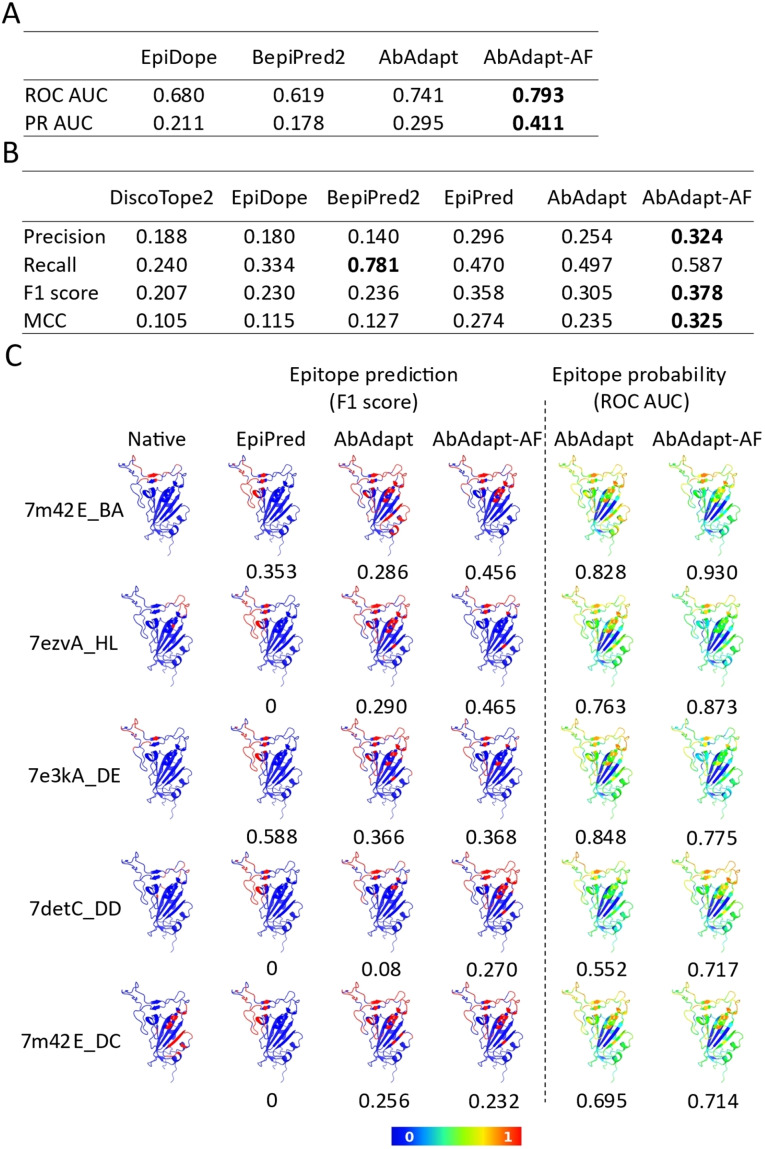

We next analyzed epitope prediction in the RBD benchmark. In addition to AbAdapt and AbAdapt‐AF, we evaluated epitope prediction tools that are not based on docking: BepiPred2 [8] for linear epitope prediction, DiscoTope2 [7] for structural epitope prediction, EpiPred [10] for antibody‐specific epitope prediction, and EpiDope, [9] which uses a deep neural network based on antigen sequence features. Using the antibody‐specific epitope prediction probabilities, AbAdapt‐AF achieved the highest ROC AUC (0.793) and PR AUC (0.411) (Figure 4A). The average AbAdapt‐AF ROC AUC in the RBD benchmark (0.793) was comparable to the values in the LOOCV (0.709) and Holdout (0.727) runs (Figures 2C and 2D). The improvement in average ROC AUC by AbAdapt‐AF in the RBD benchmark (7.02 %) was similar to that observed in the Holdout set (4.85 %) (Figures 2D and 4A), again demonstrating the robustness of antibody‐specific epitope prediction using AbAdapt‐AF. In addition, with a fixed epitope probability threshold, as described in section “RBD epitope prediction”, AbAdapt‐AF achieved the highest precision (0.324), F1 score (0.378), and MCC (0.325) among the six tested methods (Figure 4B).

Figure 4.

Epitope prediction for 25 anti‐RBD antibody complexes. Comparison of epitope prediction performance using predicted probabilities (A) and a threshold for epitope classification (B). Performance indices were calculated as described in section “Performance measures” and averaged. Bold values indicate the highest value for each index. (C) Epitope map visualization of representative queries. The native epitope (column 1) and the epitopes predicted by EpiPred (column 2), AbAdapt (column 3), and AbAdapt‐AF (column 4) are colored red on the RBD surface. The prediction probabilities of AbAdapt and AbAdapt‐AF are shown in columns 5 and 6. The values below each prediction are the F1 score (left) and ROC AUC (right).

The prediction performance of DiscoTope2, EpiDope, and BepiPred2, which do not take the antibody into consideration, was systematically lower than that of the other methods. BepiPred2 achieved the highest recall (0.781), but only at a precision of 0.140. Although EpiPred considers antibody information, its results were not very sensitive to the antibodies in this set: all of its epitope predictions fell in the same region of the RBD (Figure 4C).

Conclusion

In this study, we demonstrated that introducing more accurate antibody models into the AbAdapt antibody‐specific epitope prediction pipeline had a significant positive effect at several levels. Nevertheless, there is still much room for improvement. In the RBD benchmark, we evaluated six epitope prediction methods on the SARS‐CoV‐2 spike RBD, an antigen that can be targeted by antibodies on almost its entire molecular surface. [22] AbAdapt‐AF showed higher epitope prediction accuracy than the other tested methods in this benchmark. However, the ROC AUC (0.793) and precision (0.324) values imply that many false‐positive epitope predictions are to be expected. A broader benchmark containing diverse antigens will be needed to compare the epitope prediction performance of AbAdapt‐AF and other tools fairly and systematically. At present, the combination of AlphaFold2 and AbAdapt represents a robust but incremental way of improving antibody‐specific epitope predictions. Newly released antibody modeling tools such as ABlooper [12] and DeepAb [13] showed better performance than AlphaFold2 on the Rosetta Antibody Benchmark, [12] and these methods should also be considered when running AbAdapt with structural models as input.

Experimental Section

Datasets: The preparation of data for the LOOCV and Holdout tests was described previously. [6] In brief, crystal structures of antibody‐antigen complexes were gathered from the Protein Data Bank (downloaded May 24, 2021). [23] We filtered the complexes using the following criteria: complete heavy (H) and light (L) variable chains, antigen length of at least 60 amino acids, and resolution better than 4 Å. This filtering resulted in 1862 Ab‐Ag complexes. During antigen and antibody modeling by the AbAdapt pipeline, 290 Ab‐Ag complexes were discarded due to poor modeling quality, defined as a root‐mean‐square deviation (RMSD) of either the paratope or the epitope higher than 6 Å. Antigens that were themselves antibodies were also removed (5 queries). Antibody complementarity‐determining region (CDR) sequences were annotated with the AHo numbering scheme [24] using the ANARCI program. [25] Next, sequence redundancy was removed as follows: a pseudo‐sequence composed of the heavy and light variable domains of each antibody was constructed, and these pseudo‐sequences were clustered using CD‐HIT [26] at an 85 % identity threshold. Queries that caused failures in third‐party software in subsequent steps were also removed (3 queries). This filtering resulted in 720 non‐redundant antibody‐antigen model pairs. For training, LOOCV was performed using 620 randomly chosen antibody‐antigen queries. The remaining 100 queries served as an independent Holdout set for testing. We also collected anti‐SARS‐CoV‐2 RBD antibodies from the PDB on Sept. 5, 2021. [23] Antibody pseudo‐sequences were constructed as described above and clustered with the LOOCV training dataset using CD‐HIT at an 85 % sequence identity threshold. After superimposing the common epitopes of all pairs of antibodies, the C‐alpha RMSD was used to cluster the antibodies by single‐linkage hierarchical clustering with a 10.0 Å cutoff.
We previously used average‐linkage hierarchical clustering to classify antibodies that target overlapping epitopes based on structural alignments. [27] Here, the antigens in the anti‐SARS‐CoV‐2 RBD antibody dataset could be aligned well using sequence information and appeared to form discrete clusters; we therefore used single‐linkage hierarchical clustering in this case. Within each cluster, the PDB entry with the most recent release date was chosen as the representative. This procedure resulted in the identification of 25 novel anti‐RBD antibody‐antigen complexes.
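Single‐linkage clustering with a distance cutoff is equivalent to taking the connected components of the graph that links every pair of antibodies whose epitope RMSD is within the cutoff. The sketch below assumes a precomputed, symmetric pairwise C‐alpha RMSD matrix (after epitope superposition), treats the 10.0 Å cutoff as inclusive, and then picks the most recently released entry in each cluster as its representative. It uses a small union‐find structure rather than any specific clustering library; the function names and toy data are hypothetical.

```python
from datetime import date
from typing import Dict, List, Sequence


def single_linkage_clusters(dist: Sequence[Sequence[float]], cutoff: float = 10.0) -> List[List[int]]:
    """Single-linkage clusters = connected components of the 'dist <= cutoff' graph."""
    n = len(dist)
    parent = list(range(n))

    def find(i: int) -> int:
        # Follow parent links to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if dist[i][j] <= cutoff:
                root_i, root_j = find(i), find(j)
                if root_i != root_j:
                    parent[root_j] = root_i

    clusters: Dict[int, List[int]] = {}
    for i in range(n):
        clusters.setdefault(find(i), []).append(i)
    return list(clusters.values())


def pick_representatives(clusters: Sequence[Sequence[int]], release_dates: Sequence[date]) -> List[int]:
    """Index of the most recently released entry in each cluster."""
    return [max(cluster, key=lambda i: release_dates[i]) for cluster in clusters]


# Toy epitope RMSD matrix (Å): 0-1 and 1-2 are close, so 0, 1, 2 chain into one cluster.
rmsd = [
    [0.0, 5.0, 14.0, 30.0],
    [5.0, 0.0, 8.0, 30.0],
    [14.0, 8.0, 0.0, 30.0],
    [30.0, 30.0, 30.0, 0.0],
]
dates = [date(2021, 1, 1), date(2021, 6, 1), date(2021, 3, 1), date(2020, 1, 1)]
clusters = single_linkage_clusters(rmsd, cutoff=10.0)
print(clusters, pick_representatives(clusters, dates))
```

The toy matrix shows the characteristic chaining of single linkage: entries 0 and 2 are 14 Å apart but still end up in one cluster through entry 1.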

Epitope and paratope definition: Epitope (paratope) residues were defined as any residue with at least one heavy atom within 5 Å of the antibody (antigen), as measured by ProDy 2.0. [28]
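The contact criterion can be sketched without external libraries as below. This is a brute‐force illustration, not the ProDy code used by the authors. It assumes atoms are given as hypothetical (residue ID, element, x, y, z) tuples, treats any non‐hydrogen atom as heavy on both sides of the interface, and uses an inclusive 5 Å cutoff; for real structures, ProDy or a spatial index would be much faster.

```python
import math
from typing import Sequence, Set, Tuple

# (residue_id, element, x, y, z); a hypothetical, minimal atom representation.
Atom = Tuple[str, str, float, float, float]


def contact_residues(chain: Sequence[Atom], partner: Sequence[Atom], cutoff: float = 5.0) -> Set[str]:
    """Residues of `chain` with at least one heavy atom within `cutoff` Å of a partner heavy atom."""
    partner_heavy = [(x, y, z) for _, element, x, y, z in partner if element.upper() != "H"]
    hits: Set[str] = set()
    for res_id, element, x, y, z in chain:
        if element.upper() == "H" or res_id in hits:
            continue  # skip hydrogens and residues already found in contact
        if any(math.dist((x, y, z), p) <= cutoff for p in partner_heavy):
            hits.add(res_id)
    return hits


antigen = [("A1", "C", 0.0, 0.0, 0.0), ("A2", "N", 10.0, 0.0, 0.0), ("A3", "H", 0.0, 0.0, 1.0)]
antibody = [("H1", "C", 3.0, 0.0, 0.0), ("H2", "O", 20.0, 0.0, 0.0)]
epitope = contact_residues(antigen, antibody)   # antigen residues near the antibody
paratope = contact_residues(antibody, antigen)  # antibody residues near the antigen
print(sorted(epitope), sorted(paratope))
```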

Antibody modeling by AlphaFold2: Antibodies from the LOOCV and Holdout sets were modeled independently of their antigens using the full AlphaFold2 pipeline with default parameters. [11] The sequences of the H and L chains in the Fab region were concatenated via a poly‐glycine linker (32G). After the linker was cleaved, the rank‐one model was used in all subsequent calculations. Antibody CDRs were annotated as described in section “Datasets”. To evaluate the modeling accuracy of the CDRs, we first superimposed the ±4 flanking residues of each CDR in the predicted models onto the native antibody structures. We then calculated the CDR RMSD using ProDy 2.0. [28] The paratope RMSD was computed similarly after superimposing the paratope of the model onto the native structure.
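Two steps in this paragraph are easy to get wrong: splitting the single‐chain AlphaFold2 model back into H and L chains, and computing the CDR RMSD after superposing only the flanking residues, so that the CDR itself is never fitted. The NumPy sketch below illustrates both under stated assumptions: model and native coordinates are index‐aligned per residue (one C‐alpha per residue), the CDR is given as a half‐open index range with at least four residues on each side, and superposition uses the Kabsch algorithm. The function names are hypothetical; the authors' calculation used ProDy.

```python
import numpy as np

LINKER_LEN = 32  # poly-glycine linker length stated in the text


def fab_query(heavy: str, light: str, linker_len: int = LINKER_LEN) -> str:
    """Single-chain query: H chain + poly-G linker + L chain."""
    return heavy + "G" * linker_len + light


def cleave_linker(per_residue: np.ndarray, n_heavy: int, linker_len: int = LINKER_LEN):
    """Split per-residue model arrays back into (heavy, light), dropping the linker."""
    return per_residue[:n_heavy], per_residue[n_heavy + linker_len:]


def kabsch(mobile: np.ndarray, target: np.ndarray):
    """Rotation R and translation t minimising ||mobile @ R.T + t - target||."""
    mobile_c, target_c = mobile.mean(axis=0), target.mean(axis=0)
    h = (mobile - mobile_c).T @ (target - target_c)  # 3x3 covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))           # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = target_c - mobile_c @ r.T
    return r, t


def cdr_rmsd(model_ca: np.ndarray, native_ca: np.ndarray, start: int, end: int, flank: int = 4) -> float:
    """RMSD over CDR residues [start, end) after fitting only the +/-flank residues."""
    flank_idx = list(range(start - flank, start)) + list(range(end, end + flank))
    r, t = kabsch(model_ca[flank_idx], native_ca[flank_idx])
    moved = model_ca[start:end] @ r.T + t
    return float(np.sqrt(((moved - native_ca[start:end]) ** 2).sum(axis=1).mean()))


# Toy check: a rigidly moved copy gives ~0 Å; moving only the "CDR" by 1 Å gives 1 Å.
rng = np.random.default_rng(0)
native = rng.normal(size=(30, 3))
theta = 0.7
rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta), np.cos(theta), 0.0],
                [0.0, 0.0, 1.0]])
model = native @ rot.T + np.array([1.0, -2.0, 3.0])
shifted = native.copy()
shifted[10:18] += np.array([1.0, 0.0, 0.0])
print(cdr_rmsd(model, native, 10, 18), cdr_rmsd(shifted, native, 10, 18))
```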

AbAdapt and AbAdapt‐AF pipelines: AbAdapt was run using default parameters, as described previously. [6] In brief, antibodies were modeled using Repertoire Builder [16] and antigens were modeled using Spanner. [17] Any templates with bound antibodies overlapping the true epitope were excluded. Two docking engines (Hex [18] and Piper [19]) were used to sample rigid docking degrees of freedom. Machine learning (ML) models were used to predict initial epitopes and paratopes, score Hex poses, score Piper poses, score clusters of Hex and Piper poses, and predict antibody‐specific epitope residues. These ML models are summarized in Table S5. For LOOCV calculations, each ML model was re‐trained each time an antibody‐antigen pair was used as a query. For the Holdout dataset, training was performed on the entire LOOCV dataset. The only procedural difference in the AbAdapt‐AF pipeline was that AlphaFold2 was used for antibody modeling instead of the default Repertoire Builder method; all ML models were re‐trained specifically for this use case.
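The key property of LOOCV here is that every ML model is re‐trained without the query it is later applied to. A generic sketch of the split logic follows; the mean predictor is only a stand‐in for the AbAdapt ML models, which are not reproduced, and the helper names are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def loocv_splits(queries: Sequence[T]) -> Iterator[Tuple[List[T], T]]:
    """Yield (training set, held-out query) pairs, one per query."""
    for i, query in enumerate(queries):
        yield list(queries[:i]) + list(queries[i + 1:]), query


def loocv_abs_errors(values: Sequence[float]) -> List[float]:
    """Toy LOOCV: 're-train' a mean predictor per fold and score the held-out value."""
    errors = []
    for train, held_out in loocv_splits(values):
        prediction = sum(train) / len(train)  # stand-in for re-training the ML models
        errors.append(abs(prediction - held_out))
    return errors


print(loocv_abs_errors([1.0, 2.0, 3.0]))
# For the Holdout set, the models would instead be trained once on all LOOCV queries.
```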

RBD‐antibody complex prediction: A model of the SARS‐CoV‐2 RBD (residues 329–532) was built using Spanner [17] and used as the antigen in all subsequent docking steps. The 25 anti‐RBD antibodies were modeled using AlphaFold2 on the ColabFold platform (v1.2) with the AlphaFold2‐ptm model type, templates, and Amber relaxation. [29] The rank‐one antibody model was used in the downstream docking and complex modeling workflow. We used three third‐party docking methods to benchmark AbAdapt and AbAdapt‐AF on RBD‐antibody docking. The global protein‐protein docking program ZDOCK (https://zdock.umassmed.edu/, v3.0.2) [3] was run with default parameters, and the top 10 models were retained for analysis. The HawkDock server (http://cadd.zju.edu.cn/hawkdock) was run with default parameters. HawkDock integrates the ATTRACT docking algorithm, the HawkRank scoring function, and Molecular Mechanics/Generalized Born Surface Area free energy decomposition for protein‐protein interface analysis. [4] The top 100 HawkDock models were retained for analysis. HDOCK (http://hdock.phys.hust.edu.cn) [5] was run in template‐free mode, and the top 100 models were retained for analysis. To evaluate the accuracy of all RBD‐antibody poses, DockQ (https://github.com/bjornwallner/DockQ) [30] was used with CAPRI criteria. [21]
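DockQ combines the fraction of native contacts (Fnat), interface RMSD (iRMS), and ligand RMSD (LRMS) into a single score between 0 and 1, using scaling distances of 1.5 Å for iRMS and 8.5 Å for LRMS. The sketch below takes those three quantities as inputs (the DockQ program computes them from the structures) and maps the score onto the CAPRI‐like quality classes proposed with DockQ. Whether the authors used these score thresholds or the original CAPRI rules on Fnat, iRMS, and LRMS is not specified, so treat the mapping as an assumption; the function names are ours.

```python
def scaled_rms(rms: float, d: float) -> float:
    """DockQ scaling: 1 at rms = 0, 0.5 at rms = d, tending to 0 for large rms."""
    return 1.0 / (1.0 + (rms / d) ** 2)


def dockq(fnat: float, irms: float, lrms: float) -> float:
    """DockQ score from Fnat, interface RMSD (Å), and ligand RMSD (Å)."""
    return (fnat + scaled_rms(irms, 1.5) + scaled_rms(lrms, 8.5)) / 3.0


def capri_class(score: float) -> str:
    """Map a DockQ score to the CAPRI-like quality classes proposed with DockQ."""
    if score >= 0.80:
        return "high"
    if score >= 0.49:
        return "medium"
    if score >= 0.23:
        return "acceptable"
    return "incorrect"


score = dockq(fnat=0.5, irms=1.5, lrms=8.5)
print(round(score, 3), capri_class(score))
```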

RBD epitope prediction: We used the following third‐party methods for epitope prediction: DiscoTope2, which predicts discontinuous B‐cell epitopes (https://services.healthtech.dtu.dk/service.php?DiscoTope-2.0); [7] BepiPred2, which uses a random forest regression algorithm (https://services.healthtech.dtu.dk/service.php?BepiPred-2.0); [8] and EpiDope (https://github.com/flomock/EpiDope), which uses a deep neural network to predict linear B‐cell epitopes based on the antigen amino acid sequence. [9] For binary labeling of the predicted epitopes, the default thresholds of 0.5 for BepiPred2 [8] and 0.818 for EpiDope [9] were used. To predict structural epitopes specific to a given antibody, EpiPred (http://opig.stats.ox.ac.uk/webapps/newsabdab/sabpred/epipred) [10] was used with the 25 anti‐RBD antibody models from AlphaFold2 and the RBD model from Spanner. The rank‐one epitope prediction from EpiPred was used in all subsequent analyses. For AbAdapt‐AF and AbAdapt, a threshold of 0.5 was used for final epitope prediction. [6]

Statistical analysis and visualization: Wilcoxon matched‐pairs signed rank tests and descriptive analyses were performed using GraphPad Prism 8 (GraphPad Software, San Diego, CA). Graphs were made with the Python packages Seaborn (v0.11.0) and Matplotlib (v3.3.1). RBD‐antibody complexes and the RBD structure with its corresponding epitope maps were visualized using PyMOL (The PyMOL Molecular Graphics System, v2.3.3, Schrödinger, LLC).

Performance measures: To evaluate epitope and paratope predictions with threshold‐dependent measures, four performance indices (Precision [Eq. 1], Recall (Sensitivity) [Eq. 2], F1 score [Eq. 3], and Matthews correlation coefficient (MCC) [Eq. 4]) were calculated using the following equations:

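$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3}$$

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{4}$$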

where TP, FN, TN, and FP denote true positives, false negatives, true negatives, and false positives, respectively. For threshold‐independent evaluation, the areas under the receiver operating characteristic (ROC) and precision‐recall (PR) curves (AUCs) for paratope and epitope prediction were also calculated from the predicted probabilities. The performance indices of epitope and paratope prediction were calculated using the Python package Scikit‐learn (v0.23.2). [31]
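Equations (1) to (4) translate directly into code. The dependency‐free sketch below first binarizes predicted probabilities at a threshold (0.5 here, as used for AbAdapt and AbAdapt‐AF; 0.818 would be passed for EpiDope) and then computes the four indices. Returning 0 when a denominator is zero mirrors the values Scikit‐learn returns by default, and treating a score equal to the threshold as positive is an assumption; the function names are illustrative.

```python
import math
from typing import Dict, Sequence, Tuple


def confusion(labels: Sequence[int], probs: Sequence[float], threshold: float = 0.5) -> Tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) after calling residues with prob >= threshold positive."""
    tp = fp = tn = fn = 0
    for y, p in zip(labels, probs):
        predicted = p >= threshold
        if predicted and y:
            tp += 1
        elif predicted:
            fp += 1
        elif y:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def threshold_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Equations (1)-(4); a zero denominator yields 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}


labels = [1, 1, 0, 0, 1]           # 1 = native epitope residue (toy data)
probs = [0.9, 0.4, 0.6, 0.1, 0.7]  # predicted epitope probabilities
counts = confusion(labels, probs, threshold=0.5)
print(counts, threshold_metrics(*counts))
```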

Conflict of interest

The authors declare no competing interests.


Supporting information

As a service to our authors and readers, this journal provides supporting information supplied by the authors. Such materials are peer reviewed and may be re‐organized for online delivery, but are not copy‐edited or typeset. Technical support issues arising from supporting information (other than missing files) should be addressed to the authors.


Acknowledgements

We would like to thank all members of the Systems Immunology Lab for very helpful discussions. The computations were performed at the Research Center for Computational Science, Okazaki, Japan (Project: 21‐IMS‐C116 and 22‐IMS‐C136). This work was supported by the Japan Agency for Medical Research and Development (AMED) Platform Project for Supporting Drug Discovery and Life Science Research (Basis for Supporting Innovative Drug Discovery and Life Science Research) under Grant Number 22ama121025j0001 and by a Grant‐in‐Aid for Scientific Research from the Japan Society for the Promotion of Science (JSPS) under Grant Number JP20K06610.

Z. Xu, A. Davila, J. Wilamowski, S. Teraguchi, D. M. Standley, ChemBioChem 2022, 23, e202200303.

A previous version of this manuscript has been deposited on a preprint server (https://doi.org/10.1101/2022.05.21.492907).

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- 1. Murphy K., Weaver C., Janeway's Immunobiology, 12th ed., Garland Science, New York, 2017.

- 2. Maynard J., Georgiou G., Annu. Rev. Biomed. Eng. 2000, 2, 339–376.

- 3. Pierce B. G., Wiehe K., Hwang H., Kim B. H., Vreven T., Weng Z., Bioinformatics 2014, 30, 1771–1773.

- 4. Weng G., Wang E., Wang Z., Liu H., Zhu F., Li D., Hou T., Nucleic Acids Res. 2019, 47, W322–W330.

- 5. Yan Y., Tao H., He J., Huang S. Y., Nat. Protoc. 2020, 15, 1829–1852.

- 6. Davila A., Xu Z., Li S., Rozewicki J., Wilamowski J., Kotelnikov S., Kozakov D., Teraguchi S., Standley D. M., Bioinformatics 2022, vbac015.

- 7. Kringelum J. V., Lundegaard C., Lund O., Nielsen M., PLoS Comput. Biol. 2012, 8, e1002829.

- 8. Jespersen M. C., Peters B., Nielsen M., Marcatili P., Nucleic Acids Res. 2017, 45, W24–W29.

- 9. Collatz M., Mock F., Barth E., Holzer M., Sachse K., Marz M., Bioinformatics 2021, 37, 448–455.

- 10. Dunbar J., Krawczyk K., Leem J., Marks C., Nowak J., Regep C., Georges G., Kelm S., Popovic B., Deane C. M., Nucleic Acids Res. 2016, 44, W474–W478.

- 11. Jumper J., Evans R., Pritzel A., Green T., Figurnov M., Ronneberger O., Tunyasuvunakool K., Bates R., Zidek A., Potapenko A., Bridgland A., Meyer C., Kohl S. A. A., Ballard A. J., Cowie A., Romera-Paredes B., Nikolov S., Jain R., Adler J., Back T., Petersen S., Reiman D., Clancy E., Zielinski M., Steinegger M., Pacholska M., Berghammer T., Bodenstein S., Silver D., Vinyals O., Senior A. W., Kavukcuoglu K., Kohli P., Hassabis D., Nature 2021, 596, 583–589.

- 12. Abanades B., Georges G., Bujotzek A., Deane C. M., Bioinformatics 2022, btac016.

- 13. Ruffolo J. A., Sulam J., Gray J. J., Patterns 2022, 3, 100406.

- 14. Anishchenko I., Kundrotas P. J., Vakser I. A., Proteins 2017, 85, 470–478.

- 15. Kundrotas P. J., Anishchenko I., Badal V. D., Das M., Dauzhenka T., Vakser I. A., Proteins 2018, 86 Suppl. 1, 302–310.

- 16. Schritt D., Li S., Rozewicki J., Katoh K., Yamashita K., Volkmuth W., Cavet G., Standley D. M., Mol. Syst. Des. Eng. 2019, 4, 761–768.

- 17. Lis M., Kim T., Sarmiento J. J., Kuroda D., Dinh H. V., Kinjo A. R., Amada K., Devadas S., Nakamura H., Standley D. M., Immunome Res. 2011, 7, 1–8.

- 18. Macindoe G., Mavridis L., Venkatraman V., Devignes M. D., Ritchie D. W., Nucleic Acids Res. 2010, 38, W445–W449.

- 19. Kozakov D., Brenke R., Comeau S. R., Vajda S., Proteins 2006, 65, 392–406.

- 20. Ghani U., Desta I., Jindal A., Khan O., Jones G., Kotelnikov S., Padhorny D., Vajda S., Kozakov D., bioRxiv 2021, DOI: 10.1101/2021.09.07.459290.

- 21. Lensink M. F., Wodak S. J., Proteins 2013, 81, 2082–2095.

- 22. Yuan M., Liu H., Wu N. C., Wilson I. A., Biochem. Biophys. Res. Commun. 2021, 538, 192–203.

- 23. Berman H. M., Westbrook J., Feng Z., Gilliland G., Bhat T. N., Weissig H., Shindyalov I. N., Bourne P. E., Nucleic Acids Res. 2000, 28, 235–242.

- 24. Honegger A., Plückthun A., J. Mol. Biol. 2001, 309, 657–670.

- 25. Dunbar J., Deane C. M., Bioinformatics 2016, 32, 298–300.

- 26. Huang Y., Niu B., Gao Y., Fu L., Li W., Bioinformatics 2010, 26, 680–682.

- 27. Xu Z., Li S., Rozewicki J., Yamashita K., Teraguchi S., Inoue T., Shinnakasu R., Leach S., Kurosaki T., Standley D. M., Mol. Syst. Des. Eng. 2019, 4, 769–778.

- 28. Zhang S., Krieger J. M., Zhang Y., Kaya C., Kaynak B., Mikulska-Ruminska K., Doruker P., Li H., Bahar I., Bioinformatics 2021, 37, 3657–3659.

- 29. Mirdita M., Schütze K., Moriwaki Y., Heo L., Ovchinnikov S., Steinegger M., Nat. Methods 2022, 19, 679–682.

- 30. Basu S., Wallner B., PLoS One 2016, 11, e0161879.

- 31. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., Vanderplas J., Passos A., Cournapeau D., J. Machine Learning Res. 2011, 12, 2825–2830.
