Abstract

Purpose:

Despite significant interest in improving behavioral health therapists’ implementation of measurement-based care (MBC)—and widespread acknowledgment of the potential importance of organization-level determinants—little is known about the extent to which therapists’ use of, and attitudes toward, MBC vary across and within provider organizations or the multilevel factors that predict this variation.

Methods:

Data were collected from 177 therapists delivering psychotherapy to youth in 21 specialty outpatient clinics in the USA. Primary outcomes were use of MBC for progress monitoring and treatment modification, measured by the nationally normed Current Assessment Practice Evaluation-Revised (CAPE-R). Secondary outcomes were therapist attitudes toward MBC. Linear multilevel regression models tested the association of theory-informed clinic and therapist characteristics with these outcomes.

Results:

Use of MBC varied significantly across clinics, with means on progress monitoring ranging from values at the 25th to 93rd percentiles and means on treatment modification ranging from the 18th to 71st percentiles. At the clinic level, the most robust predictor of both outcomes was clinic climate for evidence-based practice implementation; at the therapist level, the most robust predictors were: attitudes regarding practicality, exposure to MBC in graduate training, and prior experience with MBC. Attitudes were most consistently related to clinic climate for evidence-based practice implementation, exposure to MBC in graduate training, and prior experience with MBC.

Conclusions:

There is important variation in therapists’ attitudes toward and use of MBC across clinics. Implementation strategies that target clinic climate for evidence-based practice implementation, graduate training, and practicality may enhance MBC implementation in behavioral health.

Keywords: measurement-based care, implementation, adherence, organizational climate, attitudes, workforce development

Introduction

Measurement-based care (MBC), which involves the administration of standardized rating scales prior to sessions and use of the results to guide treatment for individual clients, has been shown to improve the outcomes of behavioral health services across client ages, diagnoses, and treatment settings in more than 29 randomized controlled trials (de Jong et al., 2021; Fortney et al., 2017; Lambert, 2015; Shimokawa et al., 2010). Sometimes referred to as routine outcome monitoring (McAleavey & Moltu, 2021) or systematic client feedback (Bovendeerd et al., 2021), and sometimes implemented through digital technologies known as measurement-feedback systems (Landes et al., 2015), MBC provides a basis for therapists and systems to improve their effectiveness and to demonstrate the value of services (Bickman, 2008; Connors et al., 2021; Jensen-Doss et al., 2020; Lewis et al., 2019). Nonetheless, only 10–20% of community behavioral health therapists use MBC (Hatfield & Ogles, 2007; Jensen-Doss et al., 2018; Zimmerman & McGlinchey, 2008) and efforts to implement this practice are often unsuccessful (Bickman et al., 2016; Garland et al., 2003). Even when collection of measures is mandated and results are provided directly to therapists, less than half of therapists review the feedback and use it to inform treatment decisions (Bickman et al., 2016; de Jong et al., 2012; Garland et al., 2003). These implementation deficits are important because when therapists rely exclusively on clinical judgment and do not use feedback from standardized assessments, they are much less accurate in predicting client progress or risk of deterioration (Hannan et al., 2005; Hatfield et al., 2010; Henke et al., 2009; Lutz et al., 2021). Furthermore, there is a dose-response relationship between the extent to which therapists use measurement-based feedback and improvement in client well-being (Bickman et al., 2016; de Jong et al., 2012; Sale et al., 2021). 
There is also evidence that enhanced implementation of MBC, reflected in sharing measurement feedback directly with clients or using clinical tools to guide responses to feedback, generates even greater client benefit (Harmon et al., 2007; Krägeloh et al., 2015; Lambert et al., 2018). Identifying factors that explain not only why some therapists use MBC and others do not, but also variation in therapists’ quality of MBC implementation, is an important step toward developing strategies that improve the integration of this transdiagnostic evidence-based practice into community settings.

Prior research on MBC implementation in behavioral health is dominated by in-depth qualitative and mixed methods case studies (Gleacher et al., 2016; Kotte et al., 2016; Marty et al., 2008) as well as large-scale surveys that sample individual practitioners (Hatfield & Ogles, 2007; Jensen-Doss et al., 2018). Despite their differing methodologies, a notable point of convergence in these studies’ results is the repeated implication of organizational factors as potentially important. Qualitative studies have repeatedly identified organizational factors such as leadership championing and support, conducive policies and procedures, supportive culture and climate, fit with organizationally-defined role demands, and organizationally-mediated resources (e.g., information technology and administrative support) as potentially important barriers and facilitators of MBC implementation (Bickman et al., 2016; Kotte et al., 2016; Marty et al., 2008; Meehan et al., 2006). Large-scale surveys that sample individual practitioners have similarly highlighted the potential importance of therapists’ workplaces, albeit in the absence of organizationally-linked data. For example, summarizing their national survey of practicing therapists in the USA, Jensen-Doss et al. (2018) concluded “the most consistent predictors of both attitudes and use [of MBC] related to work setting” (p. 58). Recognizing the need for multilevel studies that incorporate organizationally-linked data and theory-informed organizational- and individual-level predictors, these investigators called for more research to “explicate the organizational factors that might support or hinder use of monitoring and feedback” (p. 58).

Results of these studies are concordant with models and frameworks that seek to explain innovation implementation in healthcare, such as the Consolidated Framework for Implementation Research (Damschroder et al., 2009), the Exploration, Preparation, Implementation, and Sustainment model (Aarons et al., 2011), and the PARIHS framework (Rycroft-Malone, 2010), among others (Nilsen, 2015). A shared tenet of these implementation models is that determinants of implementation success reside at multiple levels, with special emphasis on the levels of individual provider (i.e., those who deliver care) and organizational context (Tabak et al., 2012). Drawing on decades of research from the organizational sciences (see Schneider et al., 2013) and healthcare quality (West et al., 2014), these implementation models contend that practitioners’ implementation behavior cannot be understood outside of the organizational context within which it occurs. Emerging implementation research in behavioral health settings offers preliminary support for this assertion (Williams & Beidas, 2019), with some studies showing that organizational factors explain more of the variance in therapists’ implementation behavior than individual factors (Beidas et al., 2015). Research has also documented statistically and practically significant variation in client outcomes across behavioral health provider organizations (Ogles et al., 2008) which is believed to be driven in part by organization-level variability in therapists’ implementation of evidence-based practices (Garland et al., 2013).

Organizational climate theory, which seeks to explain how contextual characteristics of the work environment influence employees’ behavior (Ehrhart et al., 2012), has been especially influential in the study of implementation determinants in healthcare settings (Damschroder et al., 2009). According to organizational climate researchers, a variety of focused or strategic climates can emerge in an organization, depending on the shared meanings employees generate from their experiences with the organization’s policies, procedures, and practices (Ehrhart et al., 2012). Specific types of focused climate studied in the literature include: service climate (Schneider et al., 1998), safety climate (Zohar, 1980), innovation and flexibility climate (Patterson et al., 2005), and evidence-based practice implementation climate (Ehrhart et al., 2014), among others (see Ehrhart et al., 2012). Research on innovation implementation in behavioral health has focused on innovation climate (Aarons & Sommerfeld, 2012) and evidence-based practice implementation climate (Ehrhart et al., 2014), both of which have been shown to predict therapists’ attitudes toward evidence-based practices (Aarons & Sommerfeld, 2012; Powell et al., 2017). Evidence-based practice implementation climate has also been linked to increased use of, and fidelity to, evidence-based psychosocial interventions in both schools (Williams, Hugh et al., 2022) and outpatient behavioral health clinics (Williams et al., 2018; Williams et al., 2020; Williams, Becker-Haimes et al., 2022). We are unaware of any studies examining organizational climate as an antecedent to MBC implementation, a gap we fill in this study.

Despite growing evidence of the potential importance of therapists’ workplaces for MBC implementation and the prominence of organizational factors in implementation frameworks and theories, data are lacking on the extent to which therapists’ use of, and attitudes toward, MBC vary across (and within) behavioral health organizations or the organizational- and individual-level factors associated with this variation. Filling these gaps is essential to designing implementation strategies that address the full range of factors associated with MBC implementation in a cost-effective manner.

Drawing on a sample of outpatient mental health clinics serving youth, this study aimed to fill these gaps by addressing the following research questions: (1) to what extent do therapists’ use of, and attitudes toward, MBC vary across and within specialty outpatient clinics serving youth, and (2) what theory-informed clinic and therapist factors are associated with this variation? Following prior research (Bickman et al., 2016; Lewis et al., 2019; Sale et al., 2021), we conceptualized MBC implementation as a multi-step process that includes both the routine administration of measures to monitor progress (i.e., progress monitoring) and subsequent use of the results and associated feedback to inform treatment (i.e., treatment modification); these were examined as distinct primary outcomes. Secondary outcomes were therapist attitudes toward MBC.

Method

Participants

Data were collected as part of a larger project focused on understanding how to support the implementation of evidence-based practices in behavioral health settings serving youth. Clinics that provided outpatient psychotherapy to youth and their families in three western states in the USA were targeted for enrollment; the study included 21 clinics from 17 agencies. Clinics in this sample were below the US national average on number of youths served in the previous year (M = 387 vs. 902) and above average in percentage of revenue derived from Medicaid (M = 57.7% vs. 44.4%) (Schoenwald et al., 2008). All clinics were privately held and 38% were non-profits. Within each clinic, all therapists who delivered psychotherapy to youth on a 50% or greater full-time equivalent basis were eligible to participate. The average number of eligible therapists per clinic was 9.2 (SD = 4.7). Of the 193 eligible therapists within participating clinics, 177 participated in the study by completing a survey, a 92% response rate (mean participating therapists per clinic = 8.4, SD = 4.5).

Procedure

All study procedures were reviewed and approved by the affiliated Institutional Review Board. Once clinic leaders agreed their site would participate in the research, therapists were notified about the study via in-person or web-based meetings in which the research team described the study purpose and procedures. Following the meeting, therapists received an email from the research team inviting them to participate in a confidential web-based survey. Therapists were assured that their individual responses would not be shared with clinic leadership and that they were free to decline participation if they wished. Therapists provided electronic informed consent before responding. Surveys were fielded from October 2019 to November 2019. Participants received a $30 gift card to a national retailer.

Measures

Dependent variables

Therapist use of MBC for progress monitoring was assessed using the 3-item Standardized Assessment subscale of the nationally-normed Current Assessment Practice Evaluation Revised (CAPE-R) (Lyon et al., 2019). United States national norms for the CAPE-R (including the Standardized Assessment and Treatment Modification subscales) were generated from a random sample of licensed therapists (mental health counselors, social workers, and marriage and family therapists) in the USA (Jensen-Doss et al., 2018; Lyon et al., 2019). Items assess the frequency with which therapists use MBC to monitor client progress as reflected in the administration of standardized assessments at intake and on a weekly basis throughout treatment. Responses are made on a 4-point scale reflecting the percentage of the therapist’s caseload to which the statement applies. Values range from 1 (“None – 0%”) to 4 (“Most – 61–100%”). Scores on the CAPE-R demonstrated evidence of structural, convergent, and discriminant validity in a US national sample (Lyon et al., 2019), and have demonstrated sensitivity to change (Lyon et al., 2015). Coefficient alpha in this sample was 0.80.

Therapist use of MBC for treatment modification was assessed using the 2-item Treatment Modification subscale of the CAPE-R (Lyon et al., 2019). These items assess the frequency with which therapists modify the treatment plan or their plan for specific sessions based on standardized assessment results. Similar to the Standardized Assessment subscale, responses are made on a 4-point scale describing the percentage of therapists’ caseloads with whom these activities are completed weekly. Values range from 1 (“None – 0%”) to 4 (“Most – 61–100%”). Coefficient alpha in this sample was 0.68.

Therapist attitudes toward MBC were measured using three subscales from the 18-item Attitudes toward Standardized Assessment – Monitoring and Feedback scale (ASA-MF) (Jensen-Doss et al., 2018). Subscales on the ASA-MF assess therapists’ attitudes regarding the practicality, clinical utility, and treatment planning benefit of MBC. Example items include: “Standardized progress measures can efficiently gather information” (practicality), “Clinical problems are too complex to be captured by a standardized progress measure” (clinical utility – reverse-scored), and “Standardized progress measures help identify when treatment is not going well” (treatment planning benefit). Response options range from 1 (“strongly disagree”) to 5 (“strongly agree”); items are averaged to produce subscale scores. Evidence from prior research supports the reliability and validity of scores on the ASA-MF (Jensen-Doss et al., 2018; Lyon et al., 2019) including evidence they predict use of MBC (Patel et al., 2021). Coefficient alphas in this sample were 0.78 (practicality), 0.79 (clinical utility), and 0.74 (treatment planning benefit).

Clinic-level predictors

Each clinic’s evidence-based practice implementation climate was measured using the 18-item Implementation Climate Scale (ICS) (Ehrhart et al., 2014). The ICS assesses the extent to which an organization’s policies, procedures, and practices are aligned with the goal of evidence-based practice implementation and therapists perceive that they are expected, supported, and rewarded to use evidence-based practices in their clinical work. The unit referent for the ICS in this study was the therapists’ clinic site. In alignment with the original validation studies, items referred to ‘evidence-based practice’ rather than a specific clinical intervention. An example item is: “One of this clinic’s main goals is to use evidence-based practices effectively.” Response options range from 0 (“not at all”) to 4 (“a very great extent”). Items are averaged to produce a total score. Prior research confirms the reliability and construct validity of scores on the ICS with regard to other dimensions of organizational climate (Ehrhart et al., 2014), therapists’ attitudes toward evidence-based practice (Powell et al., 2017), and therapists’ use of evidence-based psychotherapy techniques (Beidas et al., 2017; Beidas et al., 2015; Williams et al., 2018; Williams, Hugh et al., 2022). There is also evidence scores on the ICS are sensitive to change (Williams et al., 2020). Coefficient alpha in this sample was 0.93.

On the basis of theory and empirical research indicating that organizational climate operates as a shared, higher-level construct (Chan, 1998; Ehrhart et al., 2014), therapists’ individual ratings of climate on the ICS were aggregated (averaged) to the clinic level for analysis. Following best practices, the construct validity of these clinic-level climate scores was tested by examining interrater agreement amongst therapists within the same clinic using the rwg(j) index based on a null distribution (James et al., 1993). Values of rwg(j) range from 0 to 1 and values ≥ 0.7 provide strong evidence of agreement (LeBreton & Senter, 2008). In this sample, all values of rwg(j) for evidence-based practice implementation climate were ≥ 0.7 (M = 0.92, SD = 0.07).
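To make the agreement check concrete, the following is a minimal sketch of the multi-item rwg(j) index for one clinic. It assumes a uniform (rectangular) null distribution, which is the most common choice; the text above says only "a null distribution", so that choice is an assumption. The function name `rwg_j` and the toy ratings are hypothetical and are not taken from the study.

```python
from statistics import mean, variance


def rwg_j(ratings, n_options):
    """Multi-item interrater agreement index for one clinic.

    ratings   -- one list per therapist, each holding that therapist's
                 scores on the same J items (at least two therapists)
    n_options -- number of response options per item (e.g., 5 for a 0-4 scale)
    """
    n_items = len(ratings[0])
    # Observed variance across therapists, computed separately for each item.
    item_vars = [variance([r[j] for r in ratings]) for j in range(n_items)]
    mean_var = mean(item_vars)
    # Variance expected if therapists answered at random (ASSUMED uniform null).
    expected_var = (n_options ** 2 - 1) / 12
    ratio = mean_var / expected_var
    if ratio >= 1:
        # Observed variance at or above the null: conventionally reset to 0.
        return 0.0
    agreement = n_items * (1 - ratio)
    return agreement / (agreement + ratio)


# Hypothetical clinic: three therapists rating two items on a 5-option scale.
toy_clinic = [[3, 3], [3, 4], [4, 4]]
print(round(rwg_j(toy_clinic, n_options=5), 2))  # 0.91
```

Under this sketch, a clinic would meet the ≥ 0.7 threshold cited above when the average within-clinic item variance is small relative to the variance expected from random responding.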

Clinic innovation and flexibility climate was assessed using the 5-item subscale from the Organizational Climate Measure (OCM) (Patterson et al., 2005). This subscale assesses staff perceptions that they are expected to support new ideas and be positively oriented toward change in their role behaviors as a priority in their work (Patterson et al., 2004). Prior research supports the predictive validity of scores on this subscale with regard to organizational innovation (Patterson et al., 2004). An example item is “People in this organization are always searching for new ways of looking at problems.” Item responses range from 1 (“definitely false”) to 4 (“definitely true”). Coefficient alpha in this sample was 0.90. In accordance with our theoretical model, therapists’ ratings of clinic innovation and flexibility climate were aggregated to the clinic-level. Values of rwg(j) for this scale supported aggregation (M = 0.89, SD = 0.09).

Clinic size was measured as the number of youths served in the prior year as reported by executives. This variable was divided by 100 for analysis.

Therapist-level predictors

Therapist level of experience using MBC was assessed by providing a definition of MBC and asking therapists to indicate: “How would you describe your level of experience using measurement-based care with your clients?” Measurement-based care was defined as “the systematic evaluation of client symptoms using a standardized measure before or during each clinical encounter - or almost every clinical encounter - in order to inform treatment decisions.” Responses were made on a 5-point Likert-type scale ranging from 0 (“No experience”) to 4 (“Great deal of experience”).

Therapist level of exposure to MBC in graduate training was assessed by asking: “To what extent did your education and training in clinical practice emphasize measuring and monitoring clients’ treatment progress by having clients complete standardized assessments every 1 to 2 treatment sessions?” Responses were made on a 4-point scale from 0 (“Not at all”) to 3 (“To a great extent”).

Therapist professional and demographic characteristics.

Therapists self-reported their years of experience as a mental health therapist, years tenure in their present organization, status as a contractor employee versus salaried, full-time (30 or more hours per week) versus part-time status, highest level of education completed, age, and sex. Therapists self-reported their race by selecting all applicable categories from: American Indian or Alaska Native, Asian, Black or African American, Native Hawaiian or Other Pacific Islander, or White. Therapists self-reported their ethnicity by indicating whether they identified as Hispanic or Latino (no/yes).

Statistical Analyses

The research questions were addressed using two-level linear mixed-effects regression models with random clinic intercepts to address the nesting of therapists within clinics. Indicator variables were used to address the nesting of clinics within states. Analyses were conducted using the TYPE=TWOLEVEL command in Mplus, which employs robust maximum likelihood estimation (Muthén & Muthén, 2017). Missing data were less than 3% on all variables and were addressed using Bayesian multiple imputation with n=10 datasets as implemented in Mplus version 8 (Muthén & Muthén, 2017). Unadjusted models estimated the bivariate relationship between each predictor and outcome. Adjusted models estimated the partial relationship between each clinic or therapist predictor and the focal outcome while holding the other variables in the model constant. Adjusted models included the clinic-level variables of evidence-based practice implementation climate, innovation and flexibility climate, and clinic size, and the therapist-level variables of attitudes regarding MBC practicality, attitudes regarding MBC clinical utility, attitudes regarding MBC treatment planning benefit, level of exposure to MBC in graduate training, level of prior experience with MBC, years of clinical experience, years tenure in the clinic, full-time vs. part-time status, and contractor vs. salaried status. To facilitate model interpretation, all continuous predictor variables (with the exception of those measured in years) were standardized prior to model estimation so that a one-unit change in the predictor was equivalent to a one standard deviation increase. Following model estimation, residual plots and variance inflation factor values were examined to confirm the tenability of model assumptions.
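For readers who prefer an equation, the two-level random-intercept specification described above can be written generically as follows. This is an illustrative sketch only: the symbols are introduced here for exposition and do not come from the study's Mplus input. Here i indexes therapists, j indexes clinics, W denotes clinic-level predictors, X denotes therapist-level predictors, and S denotes the state indicator variables.

```latex
\[
\begin{aligned}
Y_{ij} &= \gamma_{00}
        + \sum_{q} \gamma_{0q} W_{qj}   % clinic-level predictors
        + \sum_{p} \gamma_{p0} X_{pij}  % therapist-level predictors
        + \sum_{s} \delta_{s} S_{sj}    % state indicator variables
        + u_{0j} + e_{ij}, \\
u_{0j} &\sim N(0, \tau^{2}), \qquad e_{ij} \sim N(0, \sigma^{2}).
\end{aligned}
\]
```

In this notation, an unadjusted model would retain a single W or X term alongside the state indicators, whereas an adjusted model would include all of them at once. The random intercept u_{0j} captures between-clinic differences not explained by the predictors.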

Effect sizes were expressed as the standardized marginal mean difference in the outcome between two groups (i.e., an analogue of Cohen’s d) (Cohen, 1988). For binary variables, d was calculated as the standardized marginal mean difference between the two groups; for continuous variables, d was calculated as the standardized marginal mean difference between respondents 1.5 standard deviations above and 1.5 standard deviations below the sample mean of the predictor. Cohen (1988) suggested values of d could be interpreted as small (0.2), medium (0.5), and large (0.8).
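The arithmetic behind these effect sizes can be sketched as follows. In a linear model, the marginal mean difference between respondents 1.5 standard deviations above and below the mean of a standardized predictor is three times the slope. The sketch assumes this difference is divided by the outcome's sample standard deviation; the text does not state the exact standardizer, so that is an assumption, and the function name `effect_size_d` is hypothetical.

```python
def effect_size_d(coef, sd_outcome, contrast=3.0):
    """Approximate standardized mean difference implied by a regression slope.

    coef       -- unstandardized slope (outcome units per predictor unit)
    sd_outcome -- standard deviation of the outcome (ASSUMED standardizer)
    contrast   -- predictor units separating the two groups being compared:
                  3.0 for a standardized predictor (+1.5 SD vs. -1.5 SD),
                  1.0 for a binary indicator
    """
    return coef * contrast / sd_outcome


# Unadjusted slope for EBP implementation climate on progress monitoring
# (0.22 per SD of climate, Table 2) and the outcome SD (0.86, Table 1).
d_climate = effect_size_d(0.22, 0.86)
print(round(d_climate, 2))  # 0.77, close to the reported d = 0.76
```

Small discrepancies from the reported values are to be expected, because the published coefficients and standard deviations are rounded and the reported effect sizes are based on model-estimated marginal means.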

Results

Table 1 presents characteristics of the study sample. There were significant differences across clinics on therapists’ average tenure in the organization, full-time vs. part-time status, and contractor vs. salaried status (p<0.001), all of which were included in the adjusted analyses.

Table 1.

Characteristics of the study sample

| Variable | M | SD | Min. | Max. | Missing (%) |
|---|---|---|---|---|---|
| Clinics (K = 21) | | | | | |
| EBP implementation climate (0 – 4) | 1.93 | .37 | 1.32 | 2.64 | 0 |
| Innovation & flexibility climate (1 – 4) | 3.02 | .37 | 2.35 | 3.63 | 0 |
| N youth served in prior year | 387.43 | 243.50 | 95 | 1000 | 0 |
| Therapists (N = 177) | | | | | |
| Use of MBC to monitor client progress (1 – 4) | 2.17 | .86 | 1.00 | 4.00 | 0 |
| Use of MBC to modify treatment (1 – 4) | 1.44 | .50 | 1.00 | 3.00 | 0 |
| MBC attitudes: Practicality (1 – 5) | 3.43 | .68 | 1.60 | 5.00 | 0 |
| MBC attitudes: Clinical utility (1 – 5) | 3.25 | .55 | 1.71 | 4.86 | <1 |
| MBC attitudes: Treatment planning benefit (1 – 5) | 3.68 | .52 | 1.40 | 5.00 | 0 |
| Graduate training in MBC (0 – 3) | 1.33 | .93 | 0 | 3 | <1 |
| Experience with MBC (0 – 4) | 1.58 | .97 | 0 | 4 | 1 |
| Years of clinical experience | 6.63 | 6.48 | 0 | 37 | 2 |
| Years tenure in clinic | 3.36 | 3.71 | 0 | 19 | 2 |
| Age (in years) | 39.06 | 9.98 | 24 | 65 | 3 |

| Variable | n | % | Missing (%) |
|---|---|---|---|
| Employment model | | | 1 |
| Salaried | 68 | 38.9 | |
| Contractor | 107 | 61.1 | |
| Employment status | | | 2 |
| Part-time employee | 43 | 24.3 | |
| Full-time employee | 130 | 73.4 | |
| Race | | | 8 |
| Asian | 4 | 2.3 | |
| Black or African American | 2 | 1.1 | |
| More than one race | 2 | 1.1 | |
| Native Hawaiian or Other Pacific Islander | 2 | 1.1 | |
| Other | 8 | 4.5 | |
| White | 145 | 81.9 | |
| Ethnicity | | | 2 |
| Identify as Hispanic/Latino | 19 | 10.7 | |
| Do not identify as Hispanic/Latino | 154 | 87.0 | |
| Gender | | | 2 |
| Male | 30 | 16.9 | |
| Female | 139 | 78.5 | |
| Other gender identity | 5 | 2.8 | |
| Education | | | <1 |
| Doctoral degree | 7 | 4.0 | |
| Non-doctoral degree | 169 | 95.5 | |

Note: EBP = evidence-based practice; MBC = measurement-based care.

Variation in therapist use of measurement-based care

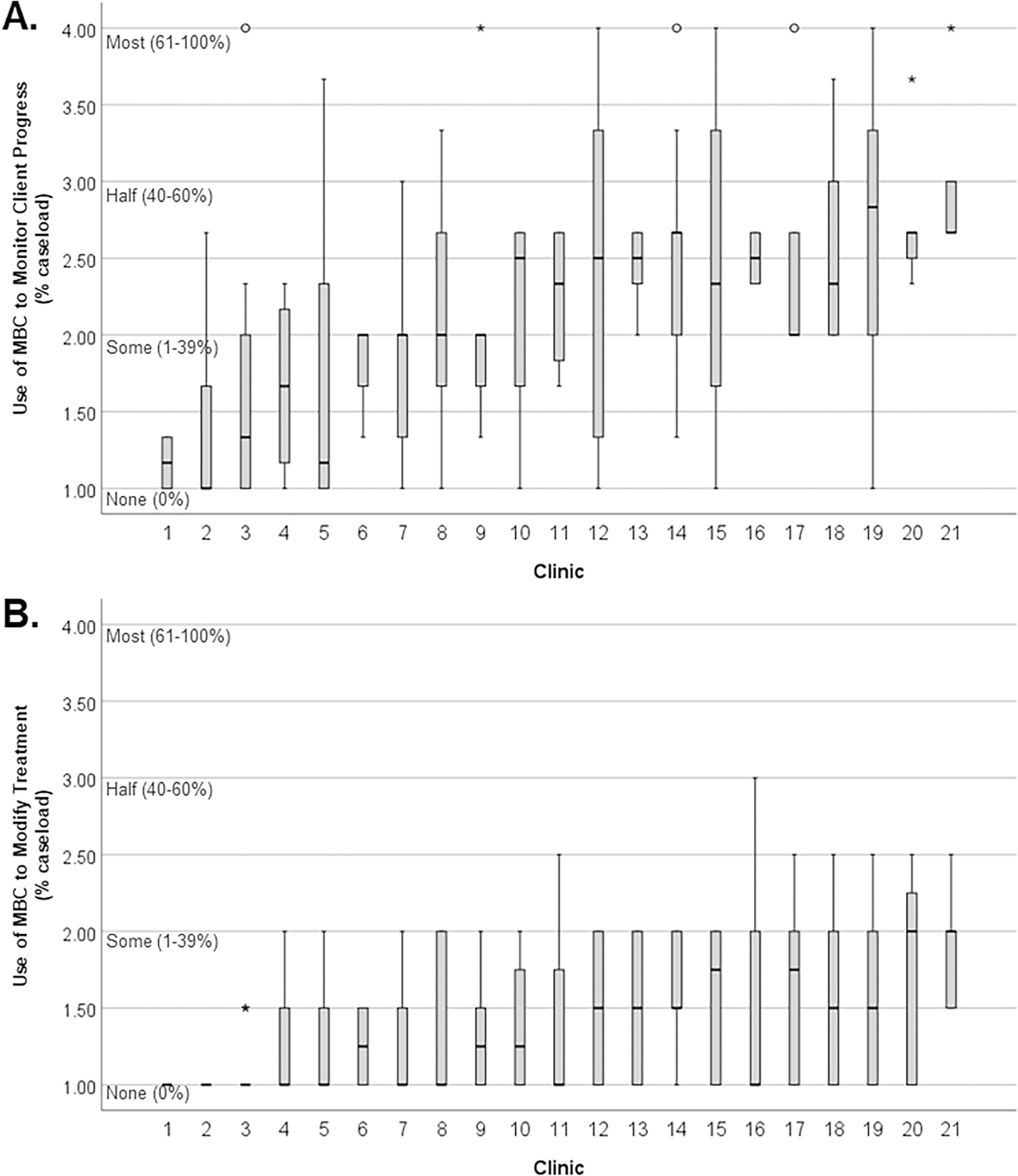

Figure 1 shows box plots of therapists’ use of MBC for progress monitoring (Figure 1A) and treatment modification (Figure 1B) by clinic. In the full sample, the mean use of MBC for progress monitoring corresponded to a rating of “Some (1–39%)” use with clients (M = 2.17, SD = .86). This value represents low use of MBC to monitor client progress but is above average (69th percentile) compared to US national norms provided by Lyon et al. (2019). However, there was significant variation across clinics in the mean use of MBC for progress monitoring (ICC(1) = .14, χ2 = 48.24, df = 20, p < .001). In the clinic with the lowest mean, average use of MBC to monitor client progress corresponded to a value at the 25th percentile, whereas in the clinic with the highest mean, the average corresponded to a value at the 93rd percentile.

Figure 1.

Variation in use of MBC for monitoring progress and modifying treatment by clinic.

Note: N = 177. Box plots of therapist scores by clinic on (A) the CAPE-R Standardized Assessment subscale, and (B) the CAPE-R Treatment Modification subscale. Scores range from 1 to 4 and indicate the percent (%) of caseload with whom the therapist uses the practice on a routine (monthly or weekly) basis, ranging from use with no clients (“None (0%)”) to “Most (61–100%)” clients.

Use of MBC for treatment modification was less frequent (M = 1.44, SD = .50), corresponding to a value in-between “None (0%)” and “Some (1–39%).” This represents minimal use of MBC to modify treatment with clients and is below the national average (42nd percentile) (Lyon et al., 2019). However, there was also significant variation across clinics in mean use of MBC for treatment modification (ICC(1) = .06, χ2 = 32.09, df = 20, p = .042). The clinic with the lowest mean had a value corresponding to an 18th percentile score whereas the clinic with the highest mean had a value corresponding to a 71st percentile score.
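ICC(1) can be read as the share of total variance in therapist scores that lies between clinics. The values reported above were estimated from the mixed-effects models. As a rough illustration only, the sketch below substitutes the classical one-way ANOVA estimator, which is a different technique from the one used in the study. The function name `icc1_anova` and the toy scores are hypothetical.

```python
from statistics import mean


def icc1_anova(groups):
    """ICC(1) from a one-way ANOVA; `groups` holds one list of scores per clinic."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-clinic and within-clinic sums of squares.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    # Effective clinic size, which adjusts for unequal numbers of therapists.
    n0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (k - 1)
    return (ms_between - ms_within) / (ms_between + (n0 - 1) * ms_within)


# Hypothetical scores for two clinics with two therapists each.
toy_scores = [[1, 2], [3, 4]]
print(round(icc1_anova(toy_scores), 2))  # 0.78
```

On this reading, an ICC(1) of .14 for progress monitoring means that roughly 14% of the variance in therapists' scores is attributable to the clinic in which they work, compared with about 6% for treatment modification.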

Predictors of therapist use of measurement-based care

Use of MBC for Progress Monitoring

Clinic-level factors.

Table 2 presents unadjusted and adjusted parameter estimates from the linear mixed effects models predicting therapists’ use of MBC for progress monitoring. In unadjusted models, therapists used MBC to monitor client progress more frequently when they worked in clinics with higher levels of evidence-based practice implementation climate (d=0.76), whereas there was no evidence that innovation and flexibility climate or clinic size were related to use of MBC for progress monitoring (see Table 2). In adjusted models, the positive association between evidence-based practice implementation climate and therapist use of MBC for progress monitoring held, resulting in a medium effect size of d=0.55 when comparing therapists working in clinics with high versus low levels of implementation climate (see Table 2).

Table 2.

Predictors of therapists’ use of measurement-based care (MBC)

| Antecedents | Progress monitoring, unadjusted: Coeff | SE | Progress monitoring, adjusted: Coeff | SE | Treatment modification, unadjusted: Coeff | SE | Treatment modification, adjusted: Coeff | SE |
|---|---|---|---|---|---|---|---|---|
| Clinic-Level | | | | | | | | |
| EBP Implementation Climate^a | 0.22* | .09 | 0.16* | .08 | 0.12* | .05 | 0.09* | .05 |
| Innovation & Flexibility Climate^a | 0.15 | .12 | 0.11 | .07 | 0.02 | .06 | 0.02 | .05 |
| N Youth Served (/100) | −0.03 | .03 | −0.03 | .03 | 0.01 | .02 | −0.01 | .02 |
| Therapist-Level | | | | | | | | |
| Attitudes: Practicality^a | 0.25*** | .07 | 0.25*** | .05 | 0.08* | .04 | 0.04 | .05 |
| Attitudes: Clinical Utility^a | 0.11 | .08 | −0.04 | .09 | 0.06 | .04 | −0.02 | .05 |
| Attitudes: Treatment Planning Benefit^a | 0.08 | .07 | −0.04 | .05 | 0.07** | .03 | 0.05 | .04 |
| Experience with MBC^a | 0.26*** | .07 | 0.26** | .09 | 0.14*** | .03 | 0.16*** | .04 |
| Graduate Training in MBC^a | 0.14* | .06 | −0.07 | .09 | 0.05 | .03 | −0.08 | .04 |
| Years of Clinical Experience | −0.02* | .01 | −0.02** | .01 | −0.00 | .01 | −0.00 | .01 |
| Years Tenure in Clinic | −0.01 | .02 | 0.00 | .02 | 0.01 | .01 | 0.01 | .01 |
| Full-time Employee (ref=Part-time) | 0.09 | .13 | 0.00 | .15 | 0.08 | .09 | 0.05 | .10 |
| Contractor Employee (ref=Salary) | −0.02 | .23 | 0.07 | .18 | −0.10 | .11 | −0.09 | .10 |
| ICC(1) | 0.14 | | | | 0.06 | | | |

Note: N=177 clinicians in K = 21 clinics. Coefficients were estimated using 2-level linear mixed effects models with random intercepts for clinics. Missing data were addressed using Bayesian multiple imputation. All models controlled for State. EBP = evidence-based practice; ICC(1) = intraclass correlation coefficient; MBC = measurement-based care. Unadjusted models indicate the bivariate relationships between the antecedents and outcomes; adjusted models indicate the partial relationships between antecedents and outcomes controlling for all other variables in the model.

^a Variable is scaled so that a one-unit change is equal to one standard deviation.

Significance: * p<.05; ** p<.01; *** p<.001.

Therapist-level factors.

In unadjusted models, four therapist factors were related to therapists’ use of MBC for progress monitoring (see Table 2). Therapists used MBC to monitor client progress more frequently when they had more positive attitudes regarding the practicality of MBC (d=0.88), more experience using MBC (d=0.90), and greater exposure to MBC in graduate training (d=0.49); they used MBC for progress monitoring less often when they had more years of clinical experience (d=−0.46). Results were similar in the adjusted model (see Table 2): therapists with more positive attitudes regarding practicality (d=0.87) and more experience using MBC (d=0.93) used it to monitor client progress more frequently, whereas those with more years of clinical experience used it to monitor progress less frequently (d=−0.59).

Use of MBC for Treatment Modification

Clinic-level factors.

Therapists used MBC to modify treatment more frequently when they worked in clinics with higher levels of evidence-based practice implementation climate, resulting in an unadjusted effect size of d=0.70 when comparing therapists working in clinics with high versus low levels of implementation climate (see Table 2). This effect size was reduced to d=0.56 after controlling for all other variables in the adjusted model. No other clinic factors were related to therapists’ use of MBC to modify treatment.

Therapist-level factors.

Three therapist factors were related to use of MBC to modify treatment in the unadjusted models (see Table 2). Therapists used MBC to modify treatment more frequently when they had more positive attitudes regarding the practicality of MBC (d=0.49), more positive attitudes regarding the treatment planning benefit of MBC (d=0.44), and greater experience with MBC (d=0.82). In the adjusted model, therapists used MBC for treatment modification more frequently when they had greater experience with MBC (d=0.96, see Table 2).

Predictors of therapist attitudes towards measurement-based care

Practicality

Clinic-level factors.

Table 3 presents unadjusted and adjusted parameter estimates from the models predicting therapists’ attitudes toward MBC. In unadjusted models, therapists had more positive attitudes regarding the practicality of MBC when they worked in clinics with higher levels of evidence-based practice implementation climate (d=0.55). There was no evidence that innovation and flexibility climate or clinic size were related to attitudes regarding MBC practicality (see Table 3). In the adjusted model, the positive association between evidence-based practice implementation climate and clinician attitudes regarding MBC practicality remained significant, resulting in a medium effect size of d=0.65 (see Table 3). There was also evidence from the adjusted model that clinician attitudes regarding MBC practicality were worse in larger clinics (d=−0.52).

Table 3.

Predictors of therapist attitudes toward measurement-based care (MBC)

| Antecedents | Practicality, unadjusted: Coeff | SE | Practicality, adjusted: Coeff | SE | Clinical utility, unadjusted: Coeff | SE | Clinical utility, adjusted: Coeff | SE | Treatment planning benefit, unadjusted: Coeff | SE | Treatment planning benefit, adjusted: Coeff | SE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Clinic-Level | | | | | | | | | | | | |
| EBP Implementation Climateᵃ | 0.12* | .06 | 0.15** | .05 | 0.04 | .06 | 0.01 | .05 | 0.02 | .06 | 0.02 | .06 |
| Innovation & Flexibility Climateᵃ | −0.11 | .07 | −0.14 | .07 | −0.13 | .07 | −0.07 | .09 | −0.11 | .08 | −0.10 | .10 |
| N Youth Served (/100) | −0.03 | .04 | −0.05* | .02 | 0.02 | .02 | 0.02 | .02 | 0.02 | .02 | 0.00 | .02 |
| Therapist-Level | | | | | | | | | | | | |
| Experience with MBCᵃ | 0.16** | .06 | 0.07 | .08 | 0.12** | .04 | 0.04 | .05 | 0.12** | .04 | 0.06 | .05 |
| Graduate Training in MBCᵃ | 0.20*** | .05 | 0.14 | .07 | 0.15*** | .04 | 0.12* | .06 | 0.13** | .04 | 0.09 | .05 |
| Years of Clinical Experience | 0.00 | .01 | 0.00 | .01 | 0.01 | .01 | 0.01 | .01 | 0.01 | .01 | 0.00 | .01 |
| Years Tenure in Clinic | 0.01 | .02 | −0.00 | .02 | 0.02 | .01 | 0.00 | .01 | 0.02 | .01 | 0.01 | .01 |
| Full-time Employee (ref=Part-time) | −0.07 | .13 | −0.18 | .14 | −0.01 | .09 | −0.12 | .09 | 0.01 | .09 | −0.04 | .09 |
| Contractor Employee (ref=Salary) | −0.48*** | .11 | −0.43*** | .09 | −0.28* | .11 | −0.23* | .11 | 0.03 | .13 | 0.10 | .13 |

ICC(1): practicality = 0.09; clinical utility = 0.05; treatment planning benefit = 0.06.

Note: N=177 clinicians in K = 21 clinics. Coefficients were estimated using 2-level linear mixed effects models with random intercepts for clinics. Missing data were addressed using Bayesian multiple imputation. All models controlled for State. EBP = evidence-based practice; ICC(1) = intraclass correlation coefficient; MBC = measurement-based care. Unadjusted models indicate the bivariate relationships between the antecedents and outcomes; adjusted models indicate the partial relationships between antecedents and outcomes controlling for all other variables in the model.

ᵃ Variable is scaled so that a one-unit change is equal to one standard deviation. *p<.05; **p<.01; ***p<.001.
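The ICC(1) values reported with Table 3 describe the share of variance in each attitude score that lies between clinics rather than between therapists within a clinic. As a rough illustration only, the sketch below computes ICC(1) from one-way ANOVA mean squares on made-up scores. This is a simpler, swapped-in estimator and may differ from how the study estimated ICC(1); the function name `icc1` and the toy data are hypothetical.

```python
from statistics import mean


def icc1(groups):
    """ICC(1) from one-way ANOVA mean squares.

    groups: list of lists, one inner list of therapist scores per clinic.
    Illustrative sketch; not the estimator used in the study.
    """
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    n_groups = len(groups)

    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # Between-clinic and within-clinic sums of squares.
    ss_between = sum(n * (m - grand_mean) ** 2
                     for n, m in zip(sizes, group_means))
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)

    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)

    # Effective clinic size; equals the common size when clinics are balanced.
    k = (n_total - sum(n * n for n in sizes) / n_total) / (n_groups - 1)

    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


if __name__ == "__main__":
    # Hypothetical attitude scores for therapists in three clinics.
    toy_scores = [[3.1, 3.4, 2.9, 3.3], [3.8, 4.0, 3.6], [2.5, 2.9, 2.7, 3.0, 2.6]]
    print(round(icc1(toy_scores), 2))
```

Values near zero indicate that clinic membership explains little of the variation in scores, whereas larger values indicate that therapists within the same clinic respond more similarly to one another than to therapists elsewhere.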

Therapist-level factors.

Three therapist factors were related to therapists’ attitudes toward the practicality of MBC in unadjusted models (see Table 3): exposure to MBC in graduate training (d=0.88) and prior experience using MBC (d=0.69) predicted more positive attitudes regarding practicality, whereas employment as a contractor (vs. salaried) predicted more negative attitudes (d=−0.70). In the adjusted model, the negative association of therapist contractor status (vs. salaried) with attitudes toward practicality remained statistically significant (d=−0.64); however, the associations with exposure to MBC in graduate training (p = .051) and prior experience using MBC (p = .345) did not reach statistical significance.

Clinical Utility

Clinic-level factors.

None of the clinic-level predictors explained variation in therapists’ attitudes regarding the clinical utility of MBC in either adjusted or unadjusted models (see Table 3).

Therapist-level factors.

The three therapist-level factors that predicted therapists’ attitudes regarding practicality of MBC were also significant predictors of therapists’ attitudes regarding the clinical utility of MBC in the unadjusted models (see Table 3): status as a contractor (vs. salaried) employee (d=−0.51), level of exposure to MBC in graduate training (d=0.84), and level of experience with MBC (d=0.65). In the adjusted model, therapist attitudes regarding the clinical utility of MBC remained positively related to their level of graduate training in MBC (d=0.67) and negatively related to contractor status (d=−0.43).

Treatment Planning Benefit

Clinic-level factors.

None of the clinic-level predictors explained variation in therapists’ attitudes regarding the treatment planning benefit of MBC in either unadjusted or adjusted models.

Therapist-level factors.

As with the other attitudinal outcomes, in unadjusted models therapists had more positive attitudes regarding the treatment planning benefit of MBC when they had greater exposure to MBC in graduate training (d=0.72) and greater experience with MBC (d=0.70). However, there were no significant predictors of therapists’ attitudes regarding treatment planning benefit of MBC in the adjusted model.

Discussion

Improving the implementation of MBC in behavioral health is a top priority as stakeholders seek to optimize the clinical outcomes of health systems and move toward value-based care (Axelson & Brent, 2020; Fortney et al., 2017; Harding et al., 2011; Zandi et al., 2020). This study fills an important gap in understanding the extent to which therapists’ use of, and attitudes toward, MBC vary across provider organizations, and the theory-informed organizational- and individual-level factors associated with this variation.

An important finding of this research was that the overall low use of MBC in the full sample masked statistically significant and practically important differences in the average use of MBC across clinics. These differences were reflected in the clinic means, which ranged from values at the 25th to the 93rd percentiles on progress monitoring and from the 18th to the 71st percentiles on treatment modification. The finding of significant clinic-level variation in therapists’ use of MBC is in concordance with a growing number of studies demonstrating important variation in implementation and clinical outcomes (Proctor et al., 2011) across provider organizations (Beidas et al., 2015; Garland et al., 2013; Ogles et al., 2008) and has implications for the development of implementation strategies to improve MBC use. Given that higher fidelity to MBC predicts improved clinical benefit from services (Fortney et al., 2017; Lambert, 2015; Lewis et al., 2019; Shimokawa et al., 2010), this finding also has implications for behavioral healthcare participants: the extent to which their care incorporates evidence-based measurement and feedback may depend in part on which clinic they choose or have access to.

The most consistent and robust predictor of clinic-level variation in therapists’ use of MBC for progress monitoring and treatment modification was clinic climate for evidence-based practice implementation. Results of this study indicate therapists who work in clinics with higher levels of evidence-based practice implementation climate use MBC for progress monitoring and treatment modification significantly more often than their peers in clinics with low levels of evidence-based practice implementation climate, even after controlling for a wide range of clinic and therapist characteristics, including organizational climate for innovation and flexibility and therapist attitudes (d=0.55 and d=0.54, respectively). This is a novel and important finding given the extensive theoretical and empirical development of the implementation climate construct in the organizational and implementation sciences (Ehrhart et al., 2014; Williams et al., 2022) and the absence of this variable from prior research on MBC implementation. Given emerging research which demonstrates that within-organization improvement in evidence-based practice implementation climate predicts within-organization improvement in therapists’ aggregate implementation of effective practices (Williams et al., 2020), this finding suggests that clinics and systems may be able to improve MBC implementation by generating clinic climates that support evidence-based practice implementation.

Importantly, theory suggests therapists’ shared perceptions of evidence-based practice implementation climate do not arise from a single, specific set of organizational policies, procedures, and practices, but rather develop from the overall pattern of these elements as therapists actively interpret and make sense of their work environment (Klein & Sorra, 1996; Zohar & Hofmann, 2012). Consequently, as long as leaders ensure organizational policies, procedures, and practices clearly communicate the priority on implementing evidence-based practices (relative to competing organizational goals), are internally consistent, and are consistently enacted even when trade-offs are required, a climate for evidence-based practice implementation will develop. This implies that a wide range of leadership interventions or strategic changes to organizational policies and procedures may contribute to generating an evidence-based practice implementation climate. Examples include: leadership communicating the priority placed on evidence-based practice through vision and mission statements, monitoring of services, and promotion of staff; providing resources and training for evidence-based practices such as MBC; hiring and promoting therapists based on their development of expertise in relevant evidence-based treatment models and tools (such as MBC); and, aligning resources and reward systems to support high-fidelity delivery of evidence-based practices (including MBC; Aarons, Ehrhart, Farahnak, & Sklar, 2014; Stetler et al., 2014). A growing body of research highlights specific leadership behaviors that foster evidence-based practice implementation climates in behavioral health care settings and readers are referred to those sources for examples (see Aarons, Ehrhart, & Farahnak, 2014). Clinical trials are also underway testing strategies that help leaders and organizations develop climates that support the effective implementation of evidence-based practices (Aarons et al., 2017).

Surveys that sample individual practitioners have repeatedly shown attitudes regarding practicality are one of the most consistent and strongest predictors of successful MBC implementation (Jensen-Doss et al., 2018; Kwan et al., 2021), a finding replicated in this study. However, results from this study also identify two novel therapist-level antecedents to attitudes which have implications for policy and implementation strategies. The most consistent individual-level predictors of therapists’ attitudes toward MBC across all models were exposure to MBC in graduate training and contract employee status. We are not aware of any prior research that assessed the association between therapists’ MBC attitudes and their exposure to MBC in graduate training; however, results of this study indicate these variables are consistently related. This is not surprising given therapists often rely on treatment approaches learned in graduate school later in their careers (Cook et al., 2009; Stein & Lambert, 1995). Incorporating a strong focus on MBC in graduate and professional training, including both classroom and fieldwork, is an attractive target for intervention. Competency in MBC is relevant to multiple disciplines that deliver behavioral health services including counseling, marriage and family therapy, psychiatry, psychology, social work, and allied health professions. However, very little research has examined the extent to which MBC is addressed in these graduate and professional training programs and studies are needed to better understand the nature of the gap and strategies for closing it (Becker-Haimes et al., 2019; Lewis et al., 2019). First steps may include assessing the relevance and alignment of MBC with competency standards of behavioral health professions, evaluating the extent to which MBC content is already infused into professional training curriculums and field placements across disciplines, and identifying the most important leverage points for increasing the capacity of programs to incorporate MBC competency development into training programs (e.g., Becker et al., 2021).

Behavioral health systems across the world are increasingly relying on independent contractors versus salaried employees to offset financial challenges (Stewart et al., 2016). Prior research has shown contractors may have less up-to-date knowledge of evidence-based practices, possibly due to less investment by employing organizations in their training (Beidas et al., 2016). Our results indicate that contractors also have poorer attitudes toward MBC, a finding which may also be driven by lower levels of organizational support or other factors such as less paid time to enter and review data from measures. Some providers express concerns that MBC data may be used inappropriately to evaluate staff rather than to guide treatment (Meehan et al., 2006) and these concerns may be elevated for independent contractors who lack strong integration within the organization. New models of compensating and supporting therapists may be needed in order to balance conflicting demands for resource efficiency and the highest standards of evidence-based, quality care.

Perhaps unsurprisingly, prior experience using MBC was one of the strongest predictors of therapists’ current use of MBC for progress monitoring and treatment modification; importantly, the direction of this relationship was positive, and therapists with greater experience using MBC also had more positive attitudes toward MBC in all three unadjusted models. While definitive conclusions cannot be drawn from these cross-sectional data, the pattern of findings suggests therapists who use MBC do not grow to dislike it but rather have improved attitudes toward it, which appear to contribute to sustained use over time. These preliminary findings need to be replicated in longitudinal data and examined in more depth, but they are promising nonetheless because they suggest greater exposure to MBC may lead to more positive attitudes and greater usage rather than the opposite.

The inclusion of clinic evidence-based practice implementation climate and therapist attitudes in the adjusted models predicting therapists’ use of MBC deserves special mention because theory suggests these variables may be linked in a causal chain such that evidence-based practice implementation climate (X) influences therapist attitudes (M) which subsequently influence therapist MBC use (Y) (i.e., X→M→Y) (Kuenzi & Schminke, 2009). While formal tests of mediation were not possible in this study due to the cross-sectional design, it is noteworthy that the pattern of results appears consistent with a partial mediation hypothesis. Variation in evidence-based practice implementation climate explained variation in therapists’ attitudes regarding MBC practicality which in turn explained variation in therapists’ self-reported behavior. However, the association of evidence-based practice implementation climate with MBC use remained statistically significant even after controlling for attitudes regarding practicality. This suggests that, at most, attitudes explain only part of the relationship between evidence-based practice implementation climate and MBC use. Other variables that may link implementation climate and MBC use include therapist self-efficacy and skill (Ehrhart et al., 2014). Future research may fruitfully explore this mediation chain to better understand the optimal points of intervention to improve and support therapists’ effective use of MBC (Williams, 2016).
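The hypothesized chain can be written out as a pair of regression equations. The notation below is an illustrative assumption (therapist i in clinic j, with random clinic intercepts u), not a specification estimated in this study, which did not test mediation.

```latex
\begin{aligned}
M_{ij} &= \gamma^{M}_{00} + a\,X_{j} + u^{M}_{0j} + e^{M}_{ij} \\
Y_{ij} &= \gamma^{Y}_{00} + c'\,X_{j} + b\,M_{ij} + u^{Y}_{0j} + e^{Y}_{ij} \\
\text{indirect effect} &= a\,b
\end{aligned}
```

Under this sketch, partial mediation corresponds to a nonzero indirect effect ab together with a nonzero direct effect c'. An association between climate (X) and MBC use (Y) that persists after adjusting for attitudes (M), as described above, is the pattern a nonzero c' would produce.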

Results of this study highlight multiple points along the workforce development pathway that may serve as fruitful targets of intervention. The finding that increased exposure to MBC in graduate training predicted improved MBC attitudes and increased use of MBC highlights the potential value of implementation strategies that intervene on graduate training to improve MBC training in curriculum and clinical practicums. Introducing therapists to MBC as part of their graduate training will start them on a path toward increased experience with MBC, which was also shown in this study to predict more positive attitudes and increased frequency of MBC use. Once therapists enter the workforce, a high level of clinic climate for evidence-based practice implementation will further support and reinforce the use of MBC and aid therapists in integrating MBC into clinical care. Hybrid Type III effectiveness-implementation trials are needed to test implementation strategies that target one or more of these points and to determine the comparative effectiveness and cost-effectiveness of various approaches (Curran et al., 2012). Research evaluating moderators of these strategies’ effects will also be valuable for helping determine which strategies should be applied for which clinical interventions, in which settings, and with what populations.

Results of this study should be interpreted within the context of its limitations. Self-report measures of practitioner behavior do not always correspond with observed behavior (Hurlburt et al., 2010); however, self-report measures are more accurate when they assess general rather than highly granular behaviors, which was the case in this study (Chapman et al., 2013; Hogue et al., 2014). Studies are needed to replicate these findings with observed therapist adherence to MBC. This study is cross-sectional and therefore does not support causal inferences. The study design also prevented us from examining potential mediation paths suggested by theory such as attitudes mediating the relationship between clinic climate and therapist behavior. Although our sample size of 21 clinics from 3 states was relatively large for studies in behavioral health settings, future research should incorporate even more clinics to produce more precise estimates of the extent to which MBC use varies at the clinic and therapist levels. The extent to which results from this sample of clinics in the western USA generalize to other clinics is unknown; however, the high response rates within clinics increase confidence that findings generalize to clinics of similar size and scope. In conclusion, results of this study highlight the extensive variation in MBC use across clinics and identify new, actionable targets for improving the integration of MBC into outpatient behavioral health clinics.

Funding and competing interests:

Research reported in this publication was supported by the National Institute of Mental Health of the U.S. National Institutes of Health under Award Number R01MH119127. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors have no conflicts of interest to disclose.

Footnotes

Guidelines: Reporting of this research is completed in compliance with the STROBE guidelines for observational studies.

Ethics Approval: Approval was obtained from the institutional review board of Boise State University (Protocol Number 041-SB19–081). The procedures used in this study adhere to the tenets of the Declaration of Helsinki.

Consent to Participate: Informed consent was obtained from all individual participants included in the study.

References

- Aarons GA, Ehrhart MG, Farahnak LR, & Sklar M (2014). Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annual Review of Public Health, 35, 255–274. 10.1146/annurev-publhealth-032013-182447

- Aarons GA, Ehrhart MG, & Farahnak LR (2014). The implementation leadership scale (ILS): development of a brief measure of unit level implementation leadership. Implementation Science, 9(45). 10.1186/1748-5908-9-45

- Aarons GA, Ehrhart MG, Moullin JC, Torres EM, & Green AE (2017). Testing the leadership and organizational change for implementation (LOCI) intervention in substance abuse treatment: a cluster randomized trial study protocol. Implementation Science, 12(29). 10.1186/s13012-017-0562-3

- Aarons GA, Hurlburt M, & Horwitz SM (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23. 10.1007/s10488-010-0327-7

- Aarons GA, & Sommerfeld DH (2012). Leadership, innovation climate, and attitudes toward evidence-based practice during a statewide implementation. Journal of the American Academy of Child & Adolescent Psychiatry, 51(4), 423–431. 10.1016/j.jaac.2012.01.018

- Axelson A, & Brent D (2020). Regulators, Payors, and the Impact of Measurement-Based Care on Value-Based Care in Psychiatry. Child and Adolescent Psychiatric Clinics of North America, 29(4), 743–754. 10.1016/j.chc.2020.05.001

- Becker KD, Daleiden EL, Kataoka SH, Edwards SM, Best KM, Donohue A, & Chorpita BF (2021). Pilot study of the MAP curriculum for psychotherapy competencies in child and adolescent psychiatry. American Journal of Psychotherapy. 10.1176/appi.psychotherapy.20210010

- Becker-Haimes EM, Okamura KH, Baldwin CD, Wahesh E, Schmidt C, & Beidas RS (2019). Understanding the Landscape of Behavioral Health Pre-service Training to Inform Evidence-Based Intervention Implementation. Psychiatric Services, 70, 68–70. 10.1176/appi.ps.201800220

- Beidas R, Skriner L, Adams D, Wolk CB, Stewart RE, Becker-Haimes E, Williams N, Maddox B, Rubin R, Weaver S, Evans A, Mandell D, & Marcus SC (2017). The relationship between consumer, clinician, and organizational characteristics and use of evidence-based and non-evidence-based therapy strategies in a public mental health system. Behaviour Research and Therapy, 99, 1–10. 10.1016/j.brat.2017.08.011

- Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, Hurford MO, Hadley T, Barg FK, & Walsh LM (2015). Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatrics, 169(4), 374–382. 10.1001/jamapediatrics.2014.3736

- Beidas RS, Stewart RE, Benjamin Wolk C, Adams DR, Marcus SC, Evans AC Jr., Jackson K, Neimark G, Hurford MO, Erney J, Rubin R, Hadley TR, Barg FK, & Mandell DS (2016). Independent Contractors in Public Mental Health Clinics: Implications for Use of Evidence-Based Practices. Psychiatric Services, 67(7), 710–717. 10.1176/appi.ps.201500234

- Bickman L (2008). A measurement feedback system (MFS) is necessary to improve mental health outcomes. Journal of the American Academy of Child and Adolescent Psychiatry, 47(10), 1114. 10.1097/CHI.0b013e3181825af8

- Bickman L, Douglas SR, De Andrade ARV, Tomlinson M, Gleacher A, Olin S, & Hoagwood K (2016). Implementing a measurement feedback system: A tale of two sites. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 410–425. 10.1097/CHI.0b013e3181825af8

- Bovendeerd B, de Jong K, de Groot E, Moerbeek M & de Keijser J (2021). Enhancing the effect of psychotherapy through systematic client feedback in outpatient mental healthcare: A cluster randomized trial. Psychotherapy Research. 10.1080/10503307.2021.2015637

- Chan D (1998). Functional relations among constructs in the same content domain at different levels of analysis: A typology of composition models. Journal of Applied Psychology, 83(2), 234–246. 10.1037/0021-9010.83.2.234

- Chapman JE, McCart MR, Letourneau EJ, & Sheidow AJ (2013). Comparison of youth, caregiver, therapist, trained, and treatment expert raters of therapist adherence to a substance abuse treatment protocol. Journal of Consulting and Clinical Psychology, 81(4), 674–680. 10.1037/a0033021

- Cohen J (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates.

- Connors EH, Douglas S, Jensen-Doss A, Landes SJ, Lewis CC, McLeod BD, Stanick C, & Lyon AR (2021). What gets measured gets done: How mental health agencies can leverage measurement-based care for better patient care, clinician supports, and organizational goals. Administration and Policy in Mental Health and Mental Health Services Research, 48(2), 250–265. 10.1007/s10488-020-01063-w

- Cook JM, Schnurr PP, Biyanova T, & Coyne JC (2009). Apples don’t fall far from the tree: influences on psychotherapists’ adoption and sustained use of new therapies. Psychiatric Services, 60(5), 671–676. 10.1176/appi.ps.60.5.671

- Curran GM, Bauer M, Mittman B, Pyne JM, & Stetler C (2012). Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical care, 50(3), 217–226. 10.1097/MLR.0b013e3182408812

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science, 4(50). 10.1186/1748-5908-4-50

- de Jong K, Conijn JM, Gallagher RA, Reshetnikova AS, Heij M, & Lutz MC (2021). Using progress feedback to improve outcomes and reduce drop-out, treatment duration, and deterioration: A multilevel meta-analysis. Clinical Psychology Review, 85, 102002. 10.1016/j.cpr.2021.102002

- de Jong K, van Sluis P, Nugter MA, Heiser WJ, & Spinhoven P (2012). Understanding the differential impact of outcome monitoring: Therapist variables that moderate feedback effects in a randomized clinical trial. Psychotherapy Research, 22(4), 464–474. 10.1080/10503307.2012.673023

- Ehrhart MG, Aarons GA, & Farahnak LR (2014). Assessing the organizational context for EBP implementation: The development and validity testing of the Implementation Climate Scale (ICS). Implementation Science, 9(157). 10.1186/s13012-014-0157-1

- Ehrhart MG, Schneider B, & Macey WH (2013). Organizational climate and culture: An introduction to theory, research, and practice. Routledge. 10.4324/9781315857664

- Fortney JC, Unützer J, Wrenn G, Pyne JM, Smith GR, Schoenbaum M, & Harbin HT (2017). A Tipping Point for Measurement-Based Care. Psychiatric Services, 68(2), 179–188. 10.1176/appi.ps.201500439

- Garland AF, Haine-Schlagel R, Brookman-Frazee L, Baker-Ericzen M, Trask E, & Fawley-King K (2013). Improving community-based mental health care for children: Translating knowledge into action. Administration and Policy in Mental Health and Mental Health Services Research, 40(1), 6–22. 10.1007/s10488-012-0450-8

- Garland AF, Kruse M, & Aarons GA (2003). Clinicians and outcome measurement: what’s the use? The Journal of Behavioral Health Services & Research, 30(4), 393–405. 10.1007/bf02287427

- Gleacher AA, Olin SS, Nadeem E, Pollock M, Ringle V, Bickman L, Douglas S, & Hoagwood K (2016). Implementing a measurement feedback system in community mental health clinics: A case study of multilevel barriers and facilitators. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 426–440. 10.1007/s10488-015-0642-0

- Hannan C, Lambert MJ, Harmon C, Nielsen SL, Smart DW, Shimokawa K, & Sutton SW (2005). A lab test and algorithms for identifying clients at risk for treatment failure. Journal of clinical psychology, 61(2), 155–163. 10.1002/jclp.20108

- Harding KJ, Rush AJ, Arbuckle M, Trivedi MH, & Pincus HA (2011). Measurement-based care in psychiatric practice: a policy framework for implementation. Journal of Clinical Psychiatry, 72(8), 1136–1143. 10.4088/JCP.10r06282whi

- Harmon SC, Lambert MJ, Smart DM, Hawkins E, Nielsen SL, Slade K, & Lutz W (2007). Enhancing outcome for potential treatment failures: Therapist–client feedback and clinical support tools. Psychotherapy Research, 17(4), 379–392. 10.1080/10503300600702331

- Hatfield D, McCullough L, Frantz SH, & Krieger K (2010). Do we know when our clients get worse? An investigation of therapists’ ability to detect negative client change. Clinical Psychology & Psychotherapy: An International Journal of Theory & Practice, 17(1), 25–32. 10.1002/cpp.656

- Hatfield DR, & Ogles BM (2007). Why some clinicians use outcome measures and others do not. Administration and Policy in Mental Health and Mental Health Services Research, 34(3), 283–291. 10.1007/s10488-006-0110-y

- Henke RM, Zaslavsky AM, McGuire TG, Ayanian JZ, & Rubenstein LV (2009). Clinical inertia in depression treatment. Medical care, 47(9), 959–967. 10.1097/MLR.0b013e31819a5da0

- Hogue A, Dauber S, Henderson CE, & Liddle HA (2014). Reliability of therapist self-report on treatment targets and focus in family-based intervention. Administration and Policy in Mental Health and Mental Health Services Research, 41(5), 697–705. 10.1007/s10488-013-0520-6

- Hurlburt MS, Garland AF, Nguyen K, & Brookman-Frazee L (2010). Child and family therapy process: Concordance of therapist and observational perspectives. Administration and Policy in Mental Health and Mental Health Services Research, 37(3), 230–244. 10.1007/s10488-009-0251-x

- James LR, Demaree RG, & Wolf G (1993). rwg: An assessment of within-group interrater agreement. Journal of Applied Psychology, 78(2), 306.

- Jensen-Doss A, Douglas S, Phillips DA, Gencdur O, Zalman A, & Gomez NE (2020). Measurement-based care as a practice improvement tool: Clinical and organizational applications in youth mental health. Evidence-based Practice in Child and Adolescent Mental Health, 5(3), 233–250. 10.1080/23794925.2020.1784062

- Jensen-Doss A, Haimes EMB, Smith AM, Lyon AR, Lewis CC, Stanick CF, & Hawley KM (2018). Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Administration and Policy in Mental Health and Mental Health Services Research, 45(1), 48–61. 10.1007/s10488-016-0763-0

- Klein KJ, & Sorra JS (1996). The challenge of innovation implementation. Academy of Management Review, 21(4), 1055–1080. 10.2307/259164

- Kotte A, Hill KA, Mah AC, Korathu-Larson PA, Au JR, Izmirian S, Keir SS, Nakamura BJ, & Higa-McMillan CK (2016). Facilitators and barriers of implementing a measurement feedback system in public youth mental health. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 861–878. 10.1007/s10488-016-0729-2

- Krägeloh CU, Czuba KJ, Billington DR, Kersten P, & Siegert RJ (2015). Using feedback from patient-reported outcome measures in mental health services: a scoping study and typology. Psychiatric Services, 66(3), 224–241. 10.1176/appi.ps.201400141

- Kuenzi M, & Schminke M (2009). Assembling Fragments Into a Lens: A Review, Critique, and Proposed Research Agenda for the Organizational Work Climate Literature. Journal of Management, 35(3), 634–717. 10.1177/0149206308330559

- Kwan B, Rickwood DJ, & Brown PM (2021). Factors affecting the implementation of an outcome measurement feedback system in youth mental health settings. Psychotherapy Research, 31(2), 171–183. 10.1080/10503307.2020.1829738

- Lambert MJ (2015). Progress feedback and the OQ-system: The past and the future. Psychotherapy, 52(4), 381–390. 10.1037/pst0000027

- Lambert MJ, Whipple JL, & Kleinstäuber M (2018). Collecting and delivering progress feedback: A meta-analysis of routine outcome monitoring. Psychotherapy, 55(4), 520–537. 10.1037/pst0000167

- Landes SJ, Carlson EB, Ruzek JI, Wang D, Hugo E, DeGaetano N, Chambers JG, & Lindley SE (2015). Provider-driven development of a measurement feedback system to enhance measurement-based care in VA mental health. Cognitive and Behavioral Practice, 22(1), 87–100. 10.1016/j.cbpra.2014.06.004

- Lewis CC, Boyd M, Puspitasari A, Navarro E, Howard J, Kassab H, Hoffman M, Scott K, Lyon A, Douglas S, Simon G, & Kroenke K (2019). Implementing measurement-based care in behavioral health: A review. JAMA Psychiatry, 76(3), 324–335. 10.1001/jamapsychiatry.2018.3329

- Lutz W, de Jong K, Rubel JA, & Delgadillo J (2021). Measuring, predicting, and tracking change in psychotherapy. In Barkham M, Lutz W, & Castonguay LG (Eds.), Bergin and Garfield’s Handbook of Psychotherapy and Behavior Change (7th ed.) (pp. 89–134). Wiley.

- Lyon AR, Dorsey S, Pullmann M, Silbaugh-Cowdin J, & Berliner L (2015). Clinician use of standardized assessments following a common elements psychotherapy training and consultation program. Administration and Policy in Mental Health and Mental Health Services Research, 42(1), 47–60. 10.1007/s10488-014-0543-7

- Lyon AR, Pullmann MD, Dorsey S, Martin P, Grigore AA, Becker EM, & Jensen-Doss A (2019). Reliability, validity, and factor structure of the Current Assessment Practice Evaluation-Revised (CAPER) in a national sample. The Journal of Behavioral Health Services & Research, 46(1), 43–63. 10.1007/s11414-018-9621-z

- Marty D, Rapp C, McHugo G, & Whitley R (2008). Factors influencing consumer outcome monitoring in implementation of evidence-based practices: Results from the National EBP Implementation Project. Administration and Policy in Mental Health and Mental Health Services Research, 35(3), 204–211. 10.1007/s10488-007-0157-4

- McAleavey AA, & Moltu C (2021). Understanding routine outcome monitoring and clinical feedback in context: introduction to the special section. Psychotherapy Research, 31(2), 142–144. 10.1080/10503307.2020.1866786

- Meehan T, McCombes S, Hatzipetrou L, & Catchpoole R (2006). Introduction of routine outcome measures: staff reactions and issues for consideration. Journal of Psychiatric and Mental Health Nursing, 13(5), 581–587. 10.1111/j.1365-2850.2006.00985.x

- Muthén LKM, & Muthén BO (2017). Statistical analysis with latent variables (8th ed.). Muthén & Muthén.

- Nilsen P (2015). Making sense of implementation theories, models and frameworks. Implementation Science, 10(53). 10.1186/s13012-015-0242-0

- Ogles BM, Carlson B, Hatfield D, & Karpenko V (2008). Models of case mix adjustment for Ohio mental health consumer outcomes among children and adolescents. Administration and Policy in Mental Health and Mental Health Services Research, 35(4), 295–304. 10.1007/s10488-008-0171-1

- Patel ZS, Jensen-Doss A, & Lewis CC (2022). MFA and ASA-MF: A psychometric analysis of attitudes towards measurement-based care. Administration and Policy in Mental Health and Mental Health Services Research, 49, 13–28. 10.1007/s10488-021-01138-2

- Patterson M, Warr P, & West M (2004). Organizational Climate and Company Productivity: The Role of Employee Affect and Employee Level. Journal of Occupational and Organizational Psychology, 77, 193–216. 10.1348/096317904774202144

- Patterson MG, West MA, Shackleton VJ, Dawson JF, Lawthom R, Maitlis S, Robinson DL, & Wallace AM (2005). Validating the organizational climate measure: Links to managerial practices, productivity and innovation. Journal of Organizational Behavior, 26(4), 379–408. 10.1002/job.312

- Powell BJ, Mandell DS, Hadley TR, Rubin RM, Evans AC, Hurford MO, & Beidas RS (2017). Are general and strategic measures of organizational context and leadership associated with knowledge and attitudes toward evidence-based practices in public behavioral health settings? A cross-sectional observational study. Implementation Science, 12(64). 10.1186/s13012-017-0593-9

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, & Hensley M (2011). Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76. 10.1007/s10488-010-0319-7

- Rycroft-Malone J (2010). Promoting action on research implementation in health services (PARIHS). In Rycroft-Malone J & Bucknall T (Eds.), Models and frameworks for implementing evidence-based practice: linking evidence to action (pp. 109–135). Wiley-Blackwell.

- Sale R, Bearman SK, Woo R, & Baker N (2021). Introducing a Measurement Feedback System for Youth Mental Health: Predictors and Impact of Implementation in a Community Agency. Administration and Policy in Mental Health and Mental Health Services Research, 48(2), 327–342. 10.1007/s10488-020-01076-5

- Schneider B, White SS, & Paul MC (1998). Linking service climate and customer perceptions of service quality: Tests of a causal model. Journal of Applied Psychology, 83(2), 150–163. 10.1037/0021-9010.83.2.150

- Schoenwald SK, Chapman JE, Kelleher K, Hoagwood KE, Landsverk J, Stevens J, Glisson C, & Rolls-Reutz J (2008). A survey of the infrastructure for children’s mental health services: Implications for the implementation of empirically supported treatments (ESTs). Administration and Policy in Mental Health and Mental Health Services Research, 35(1), 84–97. 10.1007/s10488-007-0147-6

- Shimokawa K, Lambert MJ, & Smart DW (2010). Enhancing treatment outcome of patients at risk of treatment failure: meta-analytic and mega-analytic review of a psychotherapy quality assurance system. Journal of Consulting and Clinical Psychology, 78(3), 298–311. 10.1037/a0019247

- Stein DM, & Lambert MJ (1995). Graduate training in psychotherapy: Are therapy outcomes enhanced? Journal of Consulting and Clinical Psychology, 63(2), 182–196. 10.1037/0022-006X.63.2.182

- Stetler CB, Ritchie JA, Rycroft-Malone J, & Charns MP (2014). Leadership for evidence-based practice: strategic and functional behaviors for institutionalizing EBP. Worldviews on Evidence-Based Nursing, 11(4), 219–226. 10.1111/wvn.12044

- Stewart RE, Adams DR, Mandell DS, Hadley TR, Evans AC, Rubin R, Erney J, Neimark G, Hurford MO, & Beidas RS (2016). The perfect storm: Collision of the business of mental health and the implementation of evidence-based practices. Psychiatric Services, 67(2), 159–161. 10.1176/appi.ps.201500392

- Tabak RG, Khoong EC, Chambers DA, & Brownson RC (2012). Bridging research and practice: models for dissemination and implementation research. American Journal of Preventive Medicine, 43(3), 337–350. 10.1016/j.amepre.2012.05.024

- West MA, Topakas A, & Dawson JF (2014). Climate and culture for health care performance. In Schneider B & Barbera KM (Eds.), The Oxford handbook of organizational climate and culture (pp. 335–359). Oxford University Press. 10.1093/oxfordhb/9780199860715.013.0018

- Williams NJ (2016). Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Administration and Policy in Mental Health and Mental Health Services Research, 43(5), 783–798. 10.1007/s10488-015-0693-2

- Williams NJ, Becker-Haimes EM, Schriger SH, & Beidas RS (2022). Linking organizational climate for evidence-based practice implementation to observed clinician behavior in patient encounters: a lagged analysis. Implementation Science Communications. 10.1186/s43058-022-00309-y

- Williams NJ, & Beidas RS (2019). Annual research review: The state of implementation science in child psychology and psychiatry: A review and suggestions to advance the field. Journal of Child Psychology and Psychiatry, 60(4), 430–450. 10.1111/jcpp.12960

- Williams NJ, Ehrhart MG, Aarons GA, Marcus SC, & Beidas RS (2018). Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: cross-sectional and lagged analyses from a 2-year observational study. Implementation Science, 13(85). 10.1186/s13012-018-0781-2

- Williams NJ, Hugh ML, Cooney DJ, Worley JA, & Locke J (2022). Testing a theory of implementation leadership and climate across autism evidence-based interventions of varying complexity. Behavior Therapy. 10.1016/j.beth.2022.03.001

- Williams NJ, Wolk CB, Becker-Haimes EM, & Beidas RS (2020). Testing a theory of strategic implementation leadership, implementation climate, and clinicians’ use of evidence-based practice: a 5-year panel analysis. Implementation Science, 15(10). 10.1186/s13012-020-0970-7

- Zandi PP, Wang YH, Patel PD, Katzelnick D, Turvey CL, Wright JH, Ajilore O, Coryell W, Schneck CD, Guille C, Saunders EFH, Lazarus SA, Cuellar VA, Selvaraj S, Dill Rinvelt P, Greden JF, & DePaulo JR (2020). Development of the National Network of Depression Centers Mood Outcomes Program: A Multisite Platform for Measurement-Based Care. Psychiatric Services, 71(5), 456–464. 10.1176/appi.ps.201900481

- Zimmerman M, & McGlinchey JB (2008). Why don’t psychiatrists use scales to measure outcome when treating depressed patients? The Journal of Clinical Psychiatry, 69(12), 1916–1919. 10.4088/jcp.v69n1209

- Zohar D (1980). Safety climate in industrial organizations: Theoretical and applied implications. Journal of Applied Psychology, 65(1), 96–102. 10.1037/0021-9010.65.1.96

- Zohar D, & Hofmann DA (2012). Organizational culture and climate. In Kozlowski SWJ (Ed.), The Oxford handbook of organizational psychology (Vol 1, pp. 643–666). Oxford University Press.