Abstract

Recurrent neural networks (RNNs) are powerful dynamical models, widely used in machine learning (ML) and neuroscience. Prior theoretical work has focused on RNNs with additive interactions. However gating i.e., multiplicative interactions are ubiquitous in real neurons and also the central feature of the best-performing RNNs in ML. Here, we show that gating offers flexible control of two salient features of the collective dynamics: (i) timescales and (ii) dimensionality. The gate controlling timescales leads to a novel marginally stable state, where the network functions as a flexible integrator. Unlike previous approaches, gating permits this important function without parameter fine-tuning or special symmetries. Gates also provide a flexible, context-dependent mechanism to reset the memory trace, thus complementing the memory function. The gate modulating the dimensionality can induce a novel, discontinuous chaotic transition, where inputs push a stable system to strong chaotic activity, in contrast to the typically stabilizing effect of inputs. At this transition, unlike additive RNNs, the proliferation of critical points (topological complexity) is decoupled from the appearance of chaotic dynamics (dynamical complexity). The rich dynamics are summarized in phase diagrams, thus providing a map for principled parameter initialization choices to ML practitioners.

Subject Areas: Interdisciplinary Physics Nonlinear Dynamics, Statistical Physics

I. INTRODUCTION

Recurrent neural networks (RNNs) are powerful dynamical systems that can represent a rich repertoire of trajectories and are popular models in neuroscience and machine learning. In modern machine learning, RNNs are used to learn complex dynamics from data with rich sequential or temporal structure such as speech [1,2], turbulent flows [3–5], or text sequences [6]. RNNs are also influential in neuroscience as models to study the collective behavior of a large network of neurons [7] (and references therein). For instance, they have been used to explain the dynamics and temporally irregular fluctuations observed in cortical networks [8,9] and how the motor-cortex network generates movement sequences [10,11].

Classical RNN models typically involve units that interact with each other in an additive fashion—i.e., each unit integrates a weighted sum of the output of the rest of the network. However, researchers in machine learning have empirically found that RNNs with gating—a form of multiplicative interaction—can be trained to perform significantly more complex tasks than classical RNNs [6,12]. Gating interactions are also ubiquitous in real neurons due to mechanisms such as shunting inhibition [13]. Moreover, when single-neuron models are endowed with more realistic conductance dynamics, the effective interactions at the network level have gating effects, which confer robustness to time-warped inputs [14]. Thus, RNNs with gating interactions not only have superior information processing capabilities, but they also embody a prominent feature found in real neurons.

Prior theoretical work on understanding the dynamics and functional capabilities of RNNs has mostly focused on RNNs with additive interactions. The original work by Sompolinsky, Crisanti, and Sommers [15] identifies a phase transition in the autonomous dynamics of randomly connected RNNs from stability to chaos. Subsequent work extends this analysis to cases where the random connectivity additionally has correlations [16], a low-rank structured component [17,18], strong self-interaction [19], and heterogeneous variance across blocks [20]. The role of sparse connectivity and the single-neuron nonlinearity is studied in Ref. [9]. The effect of a Gaussian noise input is analyzed in Ref. [21].

In this work, we study the consequences of gating interactions on the dynamics of RNNs. We introduce a gated RNN model that naturally extends a classical RNN by augmenting it with two kinds of gating interactions: (i) an update gate that acts like an adaptive time constant and (ii) an output gate which modulates the output of a neuron. The choice of these forms for gates are motivated by biophysical considerations (e.g., Refs. [14,22]) and retain the most functionally important aspects of the gated RNNs in machine learning. Our gated RNN reduces to the classical RNN [15,23] when the gates are open and is closely related to the state-of-the-art gated RNNs in machine learning when the dynamics are discretized [24]. We further elaborate on this connection in Sec. VIII.

We develop a theory for the gated RNN based on non-Hermitian random matrix techniques [25,26] and the Martin–Siggia–Rose–De Dominicis-Janssen (MSRDJ) formalism [21,27–32] and use the theory to map out, in a phase diagram, the rich, functionally significant dynamical phenomena produced by gating.

We show that the update gate produces slow modes and a marginally stable critical state. Marginally stable systems are of special interest in the context of biological information processing (e.g., Ref. [33]). Moreover, the network in this marginally stable state can function as a robust integrator—a function that is critical for memory capabilities in biological systems [34–37] but has been hard to achieve without parameter fine-tuning and handcrafted symmetries [38]. Gating permits the network to serve this function without any symmetries or fine-tuning. For a detailed discussion of these issues, we refer the reader to Ref. [39] (pp. 329–350) and Refs. [38,40]. Integratorlike dynamics are also empirically observed in gated machine learning (ML) RNNs successfully trained on complex sequential tasks [41]; our theory shows how gates allow for this robustly.

The output gate allows fine control over the dimensionality of the network activity; control of the dimensionality can be useful during learning tasks [42]. In certain regimes, this gate can mediate an input-driven chaotic transition, where static inputs can push a stable system abruptly to a chaotic state. This behavior with gating is in stark contrast to the typically stabilizing effect of inputs in high-dimensional systems [21,43,44]. The output gate also leads to a novel, discontinuous chaotic transition, where the proliferation of critical points (a static property) is decoupled from the appearance of chaotic transients (a dynamical property); this is in contrast to the tight link between the two properties in additive RNNs as shown by Wainrib and Touboul [45]. This transition is also characterized by a nontrivial state where a stable fixed point coexists with long chaotic transients. Gates also provide a flexible, context-dependent way to reset the state, thus providing a way to selectively erase the memory trace of past inputs.

We summarize these functionally significant phenomena in phase diagrams, which are also practically useful for ML practitioners—indeed, the choice of parameter initialization is known to be one of the most important factors deciding the success of training [46], with best outcomes occurring near critical lines [10,47–49]. Phase diagrams, thus, allow a principled and exhaustive exploration of dynamically distinct initializations.

II. A RECURRENT NEURAL NETWORK MODEL TO STUDY GATING

We study an extension of a classical RNN [15,23] by augmenting it with multiplicative gating interactions. Specifically, we consider two gates: (i) an update (or z) gate which controls the rate of integration and (ii) an output (or r) gate which modulates the strength of the output. The equations describing the gated RNN are given by

| (1) |

where hi represents the internal state of the ith unit and σ(·)(x) = [1 + exp(−α(·)x + β(·))]−1 are sigmoidal gating functions. The recurrent input to a neuron is , where are the coupling strengths between the units and ϕ(x) = tanh(ghx + βh) is the activation function. ϕ and σz,r are parametrized by gain parameters (gh, αz,r) and biases (βh,z,r), which constitute the parameters of the gated RNN. Finally, Ih represents external input to the network. The gating variables zi(t) and ri(t) evolve according to dynamics driven by the output ϕ[h(t)] of the network:

| (2) |

where x ∈ {z, r}. Note that the coupling matrices Jz,r for z, r are distinct from Jh. We also allow for different inputs Ir and Iz being fed to the gates. For instance, they might be zero, or they might be equal up to a scaling factor to Ih.

The value of σz(zi) can be viewed as a dynamical time constant for the ith unit, while the output gate σr(ri) modulates the output strength of unit i. In the presence of external input, the r gate can control the relative strengths of the internal (recurrent) activity and the external input Ih. In the limit σz, σr → 1, we recover the dynamics of the classical RNN.

We choose the coupling weights from a Gaussian distribution with variance scaled such that the input to each unit remains O(1). Specifically, . This choice of couplings is a popular initialization scheme for RNNs in machine learning [6,46] and also in models of cortical neural circuits [15,20]. If the gating variables are purely internal, then (Jz,r) is diagonal; however, we do not consider this case below. In the rest of the paper, we analyze the various dynamical regimes the gated RNN exhibits and their functional significance.

III. HOW THE GATES SHAPE THE LINEARIZED DYNAMICS

We first study the linearized dynamics of the gated RNN through the lens of the instantaneous Jacobian and describe how these dynamics are shaped by the gates. The instantaneous Jacobian describes the linearized dynamics about an operating point, and the eigenvalues of the Jacobian inform us about the timescales of growth and decay of perturbations and the local stability of the dynamics. As we show below, the spectral density of the Jacobian depends on equal-time correlation functions, which are the order parameters in the mean-field picture of the dynamics, developed in the Appendix C. We study how the gates shape the support and the density of Jacobian eigenvalues in the steady state, through their influence on the correlation functions.

The linearized dynamics in the tangent space at an operating point x = (h, z, r) is given by

| (3) |

where 𝒟 is the 3N × 3N-dimensional instantaneous Jacobian of the full network equations. Linearization of Eqs. (1) and (2) yields

| (4) |

where [x] denotes a diagonal matrix with the diagonal entries given by the vector x. The term arises when we linearize about a time-varying state and is zero for fixed points. We introduce the additional shorthand ϕ′(t) = ϕ′(h(t)) and .

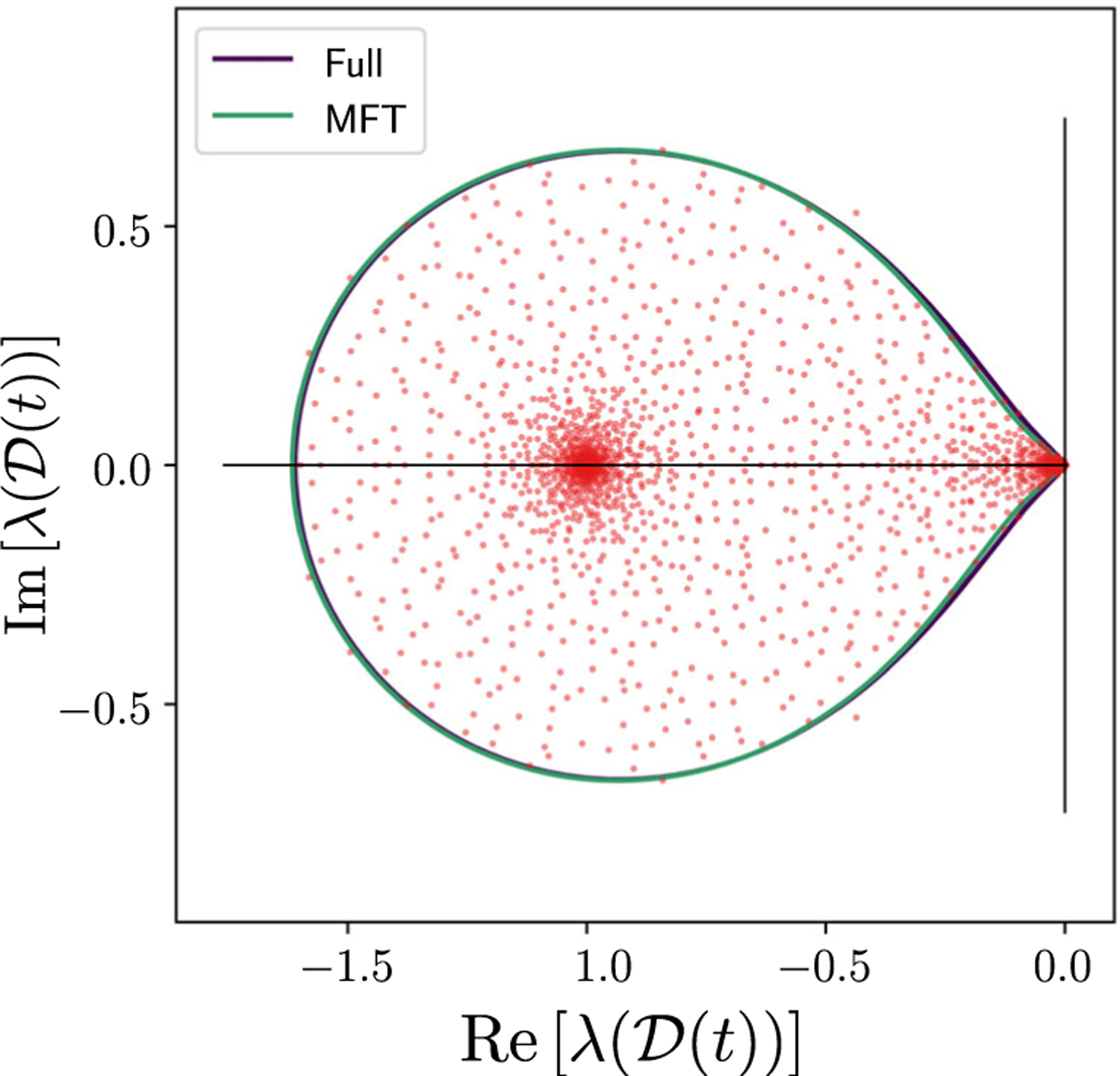

The Jacobian is a block-structured matrix involving random elements (Jz,h,r) and functions of various state variables. We need additional tools from non-Hermitian random matrix theory (RMT) [26] and dynamical mean-field theory (DMFT) [15] to analyze the spectrum of the Jacobian 𝒟. We provide a detailed, self-contained derivation of the calculations in Appendix C (DMFT) and Appendix A (RMT). Here, we state only the main results derived from these formalisms.

One of the main results is an analytical expression for the spectral curve, which describes the boundary of the Jacobian spectrum, in terms of the moments of the state variables. The most general expression for the spectral curve [Appendix A, Eq. (A34)] involves empirical averages over the 3N-dimensional state variables. However, for large N, we can appeal to a concentration of measure argument to replace these discrete sums with averages over the steady-state distribution from the DMFT (cf. Appendix C)—i.e., we can replace empirical averages of any function of the state variables (1/N) Σi F(hi, zi, ri) with 〈F[h(t), z(t), r(t)]〉, where the brackets indicate average over the steady-state distribution. The DMFT + RMT prediction for the spectral curve for a generic steady-state point is given in Appendix A, Eq. (A35). Strictly speaking, the analysis of the DMFT around a generic time-dependent steady state is complicated by the fact that the distribution for h is not Gaussian (while r and z are Gaussian). For fixed points, however, the distributions of h, z, and r are all Gaussian, and the expression for the spectral curve reduces simplifies. It is given by the set of which satisfy

| (5) |

Here, the averages are taken over the Gaussian fixed-point distributions (h, z, r) ~ 𝒩(0, Δh,z,r) which follow from the MFT [Eq. (C26)]. For example, .

We make two comments on the Jacobian of a time-varying state: (i) One might wonder if any useful information can be gleaned by studying the Jacobian at a time-varying state where the Hartman-Grobman theorem is not valid. Indeed, as we see below, the limiting form of the Jacobian in steady state crucially informs us about the suppression of unstable directions and the emergence of slow dynamics due to pinching and marginal stability in certain parameter regimes (also see Ref. [50]). In other words, the instantaneous Jacobian charts the approach to marginal stability and provides a quantitative justification for the approximate integrator functionality exhibited in Sec. IV B. (ii) Interestingly, the spectral curve calculated using the MFT [Eq. (5)] for a time-varying steady state not deep in the chaotic regime is a very good approximation for the true spectral curve (see Fig. 8 in Appendix A).

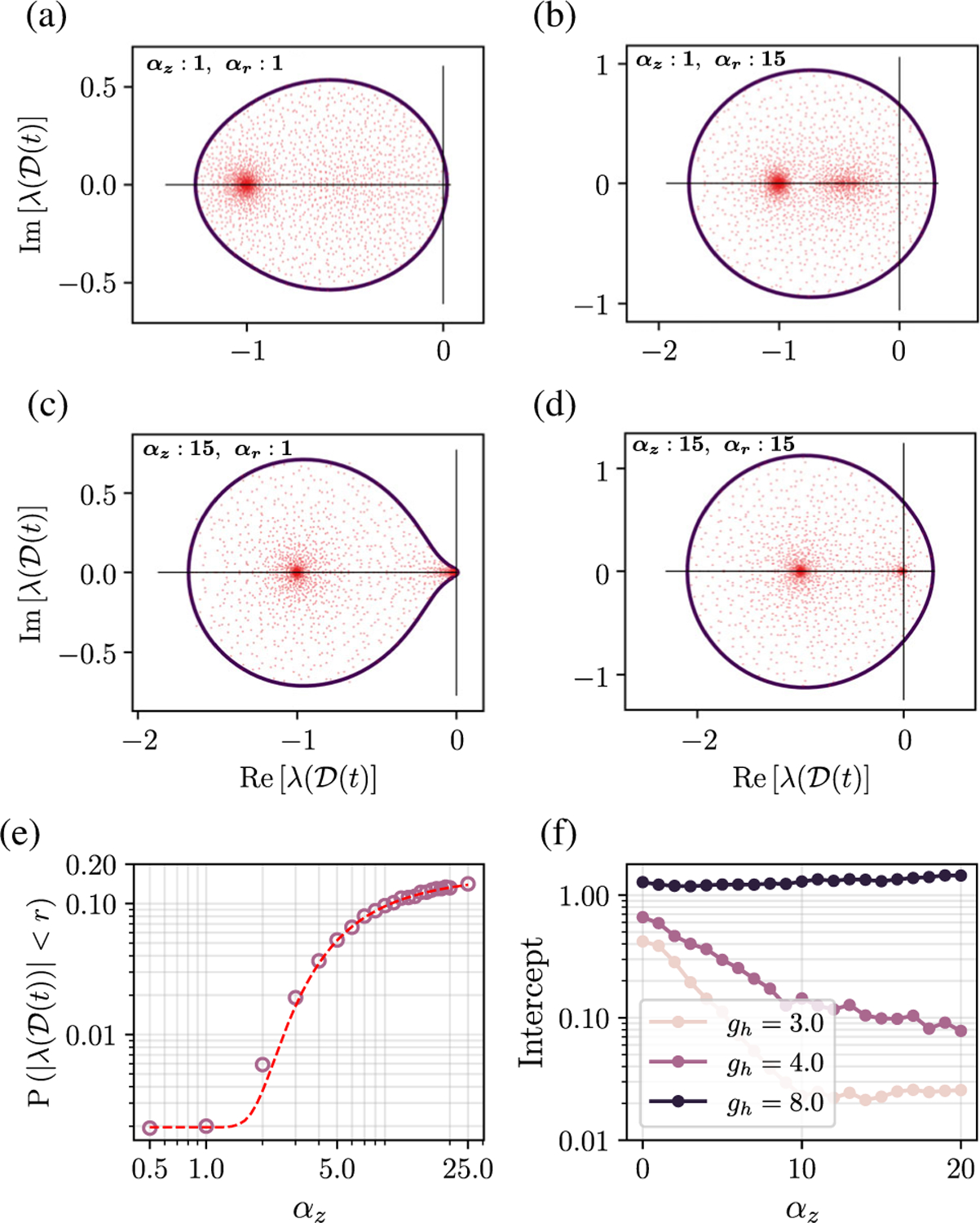

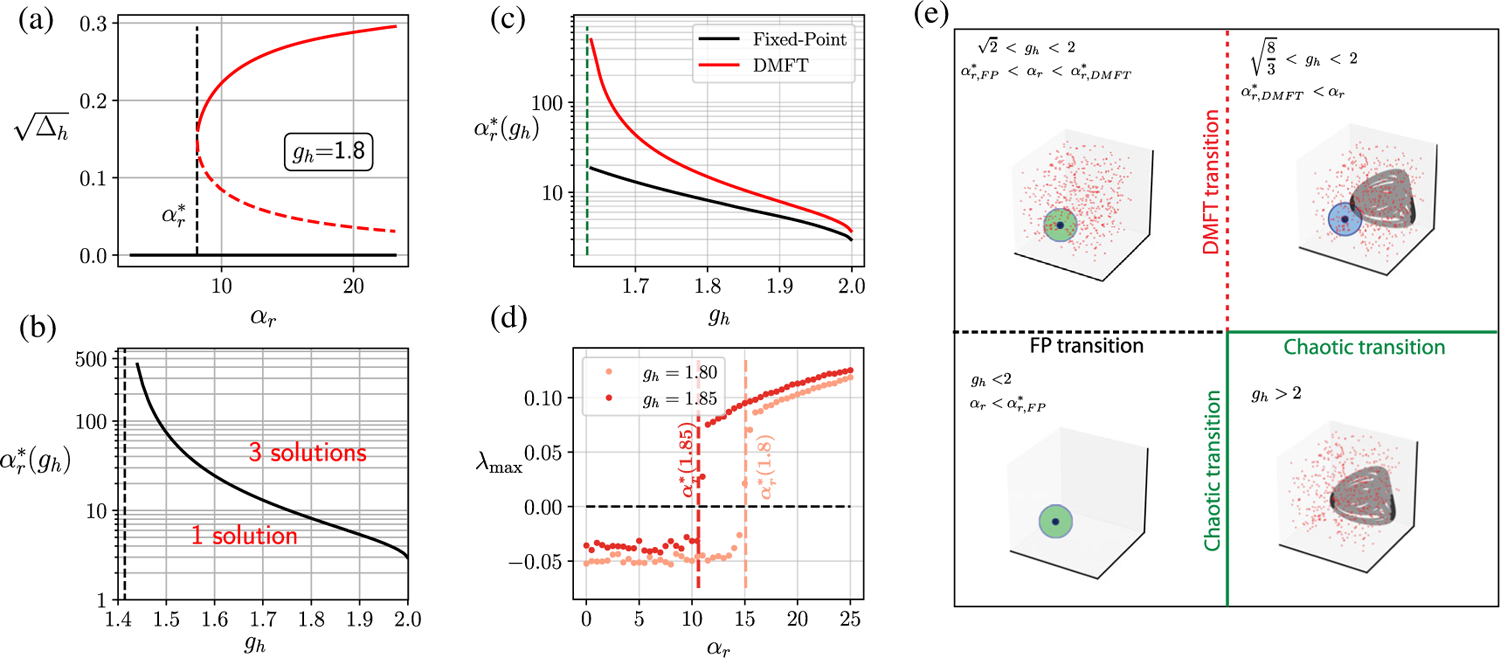

Figures 1(a)–1(d) show that the RMT prediction of the spectral support (dark outline) agrees well with the numerically calculated spectrum (red dots) in different dynamical regimes. As a consequence of Eq. (5), we get a condition for the stability of the zero fixed point. The leading edge of the spectral curve for the zero fixed point (FP) crosses the origin when . So, in the absence of biases, gh > 2 makes the zero FP unstable. More generally, the leading edge of the spectrum crossing the origin gives us the condition for the FP to become unstable:

| (6) |

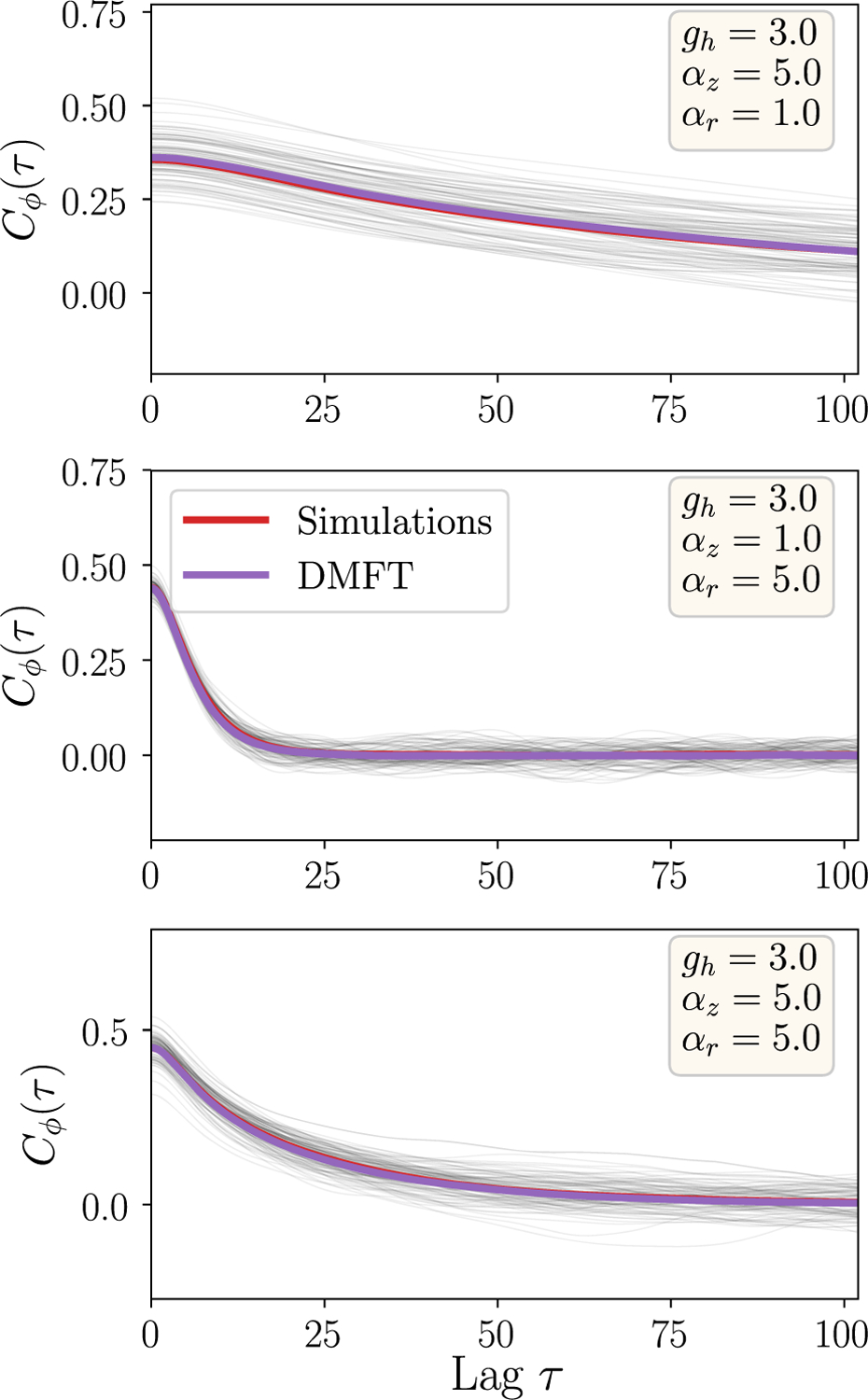

FIG. 1.

How gates shape the Jacobian spectrum. (a)–(d) Jacobian eigenvalues (red dots) of the gated RNN in (time-varying) steady state. The dark outline is the spectral support curve predicted by Eq. (5). The bottom row corresponds to larger αz, and the right column corresponds to large αr. (e) Cumulative distribution function of Jacobian eigenvalues in a disk of radius r = 0.05 centered at the origin plotted against αz. Circles are numerical density calculated from the true network Jacobian (averaged over ten instances), and the dashed line is a fit from Eq. (7). (f) Intercept of the spectral curve on the imaginary axis, plotted against αz for three different values of gh (αr = 0). For network simulations, N = 2000, gh = 3, and τr = τz = 1 unless otherwise stated, and all biases are zero.

We see later on that the time-varying state corresponding to this regime is chaotic. We now proceed to analyze how the two gates shape the Jacobian spectrum via the equation for the spectral curve.

A. Update gate facilitates slow modes and output gate causes instability

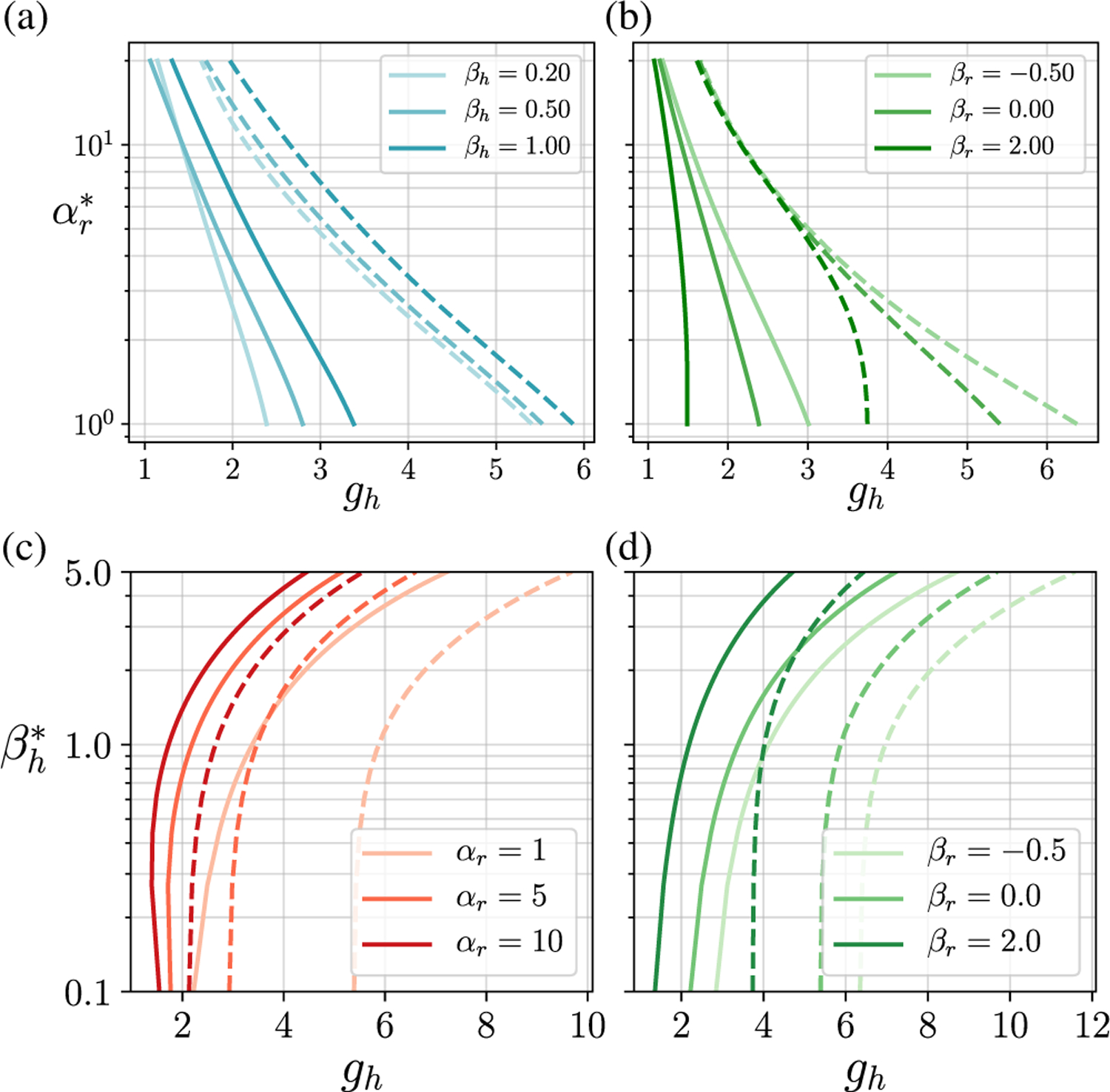

To understand how each gate shapes the local dynamics, we study their effect on the density of Jacobian eigenvalues and the shape of the spectral support curve—the eigenvalues tell us about the rate of growth or decay of small perturbations and, thus, timescales in the local dynamics, and the spectral curve informs us about stability. For ease of exposition, we consider the case without biases in the main text (βr,z,h = 0); we discuss the role of biases in Appendix H.

Figure 1 shows how the gain parameters of the update and output gates—αz and αr, respectively—shape the Jacobian spectrum. In Figs. 1(a)–1(d), we see that αz has two salient effects on the spectrum: Increasing αz leads to (i) an accumulation of eigenvalues near zero and (ii) a pinching of the spectral curve for certain values of gh wherein the intercept on the imaginary axis gets smaller [Fig. 1(f); also see Sec. IVA]. In Figs. 1(a)–1(d), we also see that increasing the value of αr leads to an increase in the spectral radius, thus pushing the leading edge (max Reλi) to the right and thereby increasing the local dimensionality of the unstable manifold. This behavior of the linearized dynamics is also reflected in the nonlinear dynamics, where, as we show in Sec. V, αr has the effect of controlling the dimensionality of full phase-space dynamics.

The accumulation of eigenvalues near zero with increasing αz suggests the emergence of a wide spectrum of timescales in the local dynamics. To understand this accumulation quantitatively, it is helpful to consider the scenario where αz is large and we replace the tanh activation functions with a piecewise linear approximation. In this limit, the density of eigenvalues within a radius δ of the origin is well approximated by the following functional form (details in Appendix B):

| (7) |

where c0 and c1 are constants that, in general, depend on ar, δ, and gh. Figure 1(e) shows this scaling for a specific value of δ: The dashed line shows the predicted curve, and the circles indicate the actual eigenvalue density calculated using the full Jacobian. In the limit of αz → ∞, we get an extensive number of eigenvalues at zero, and the eigenvalue density converges to (see Appendix B)

where fz = 〈σz(z)〉 is the fraction of update gates which are nonzero and fh is the fraction of unsaturated activation functions ϕ(h). For other choices of saturating nonlinearities, the extensive number of eigenvalues at zero remains; however, the expressions are more complicated. Analogous phenomena are observed for discrete-time gated RNNs in Ref. [51], using a similar combination of analytical and numerical techniques [52].

In Sec. VA, we show that the slow modes, as seen from linearization, persist asymptotically (i.e., in the nonlinear regime). This can be seen from the Lyapunov spectrum in Fig. 3(a), which for large αz exhibits an analogous accumulation of Lyapunov exponents near zero.

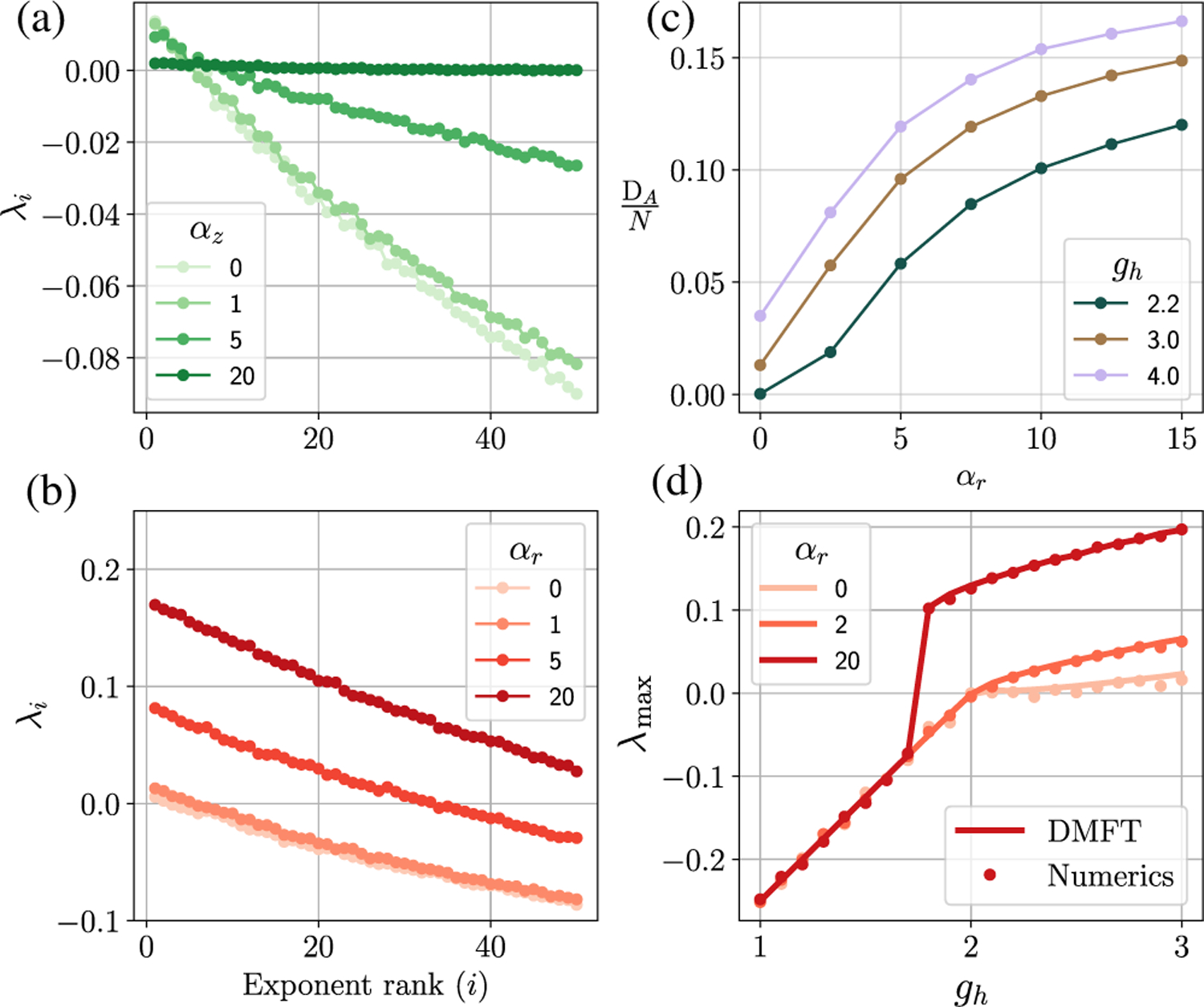

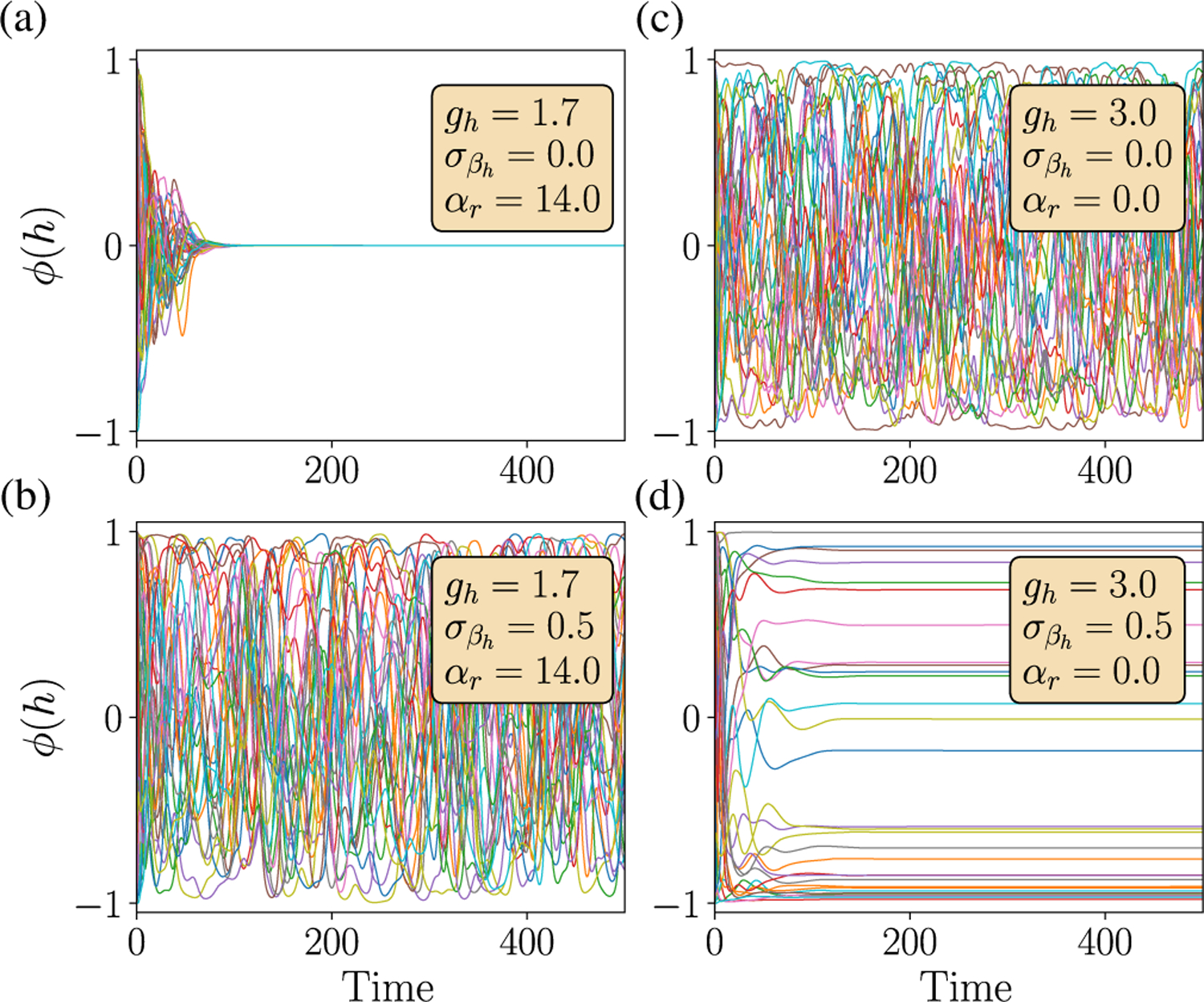

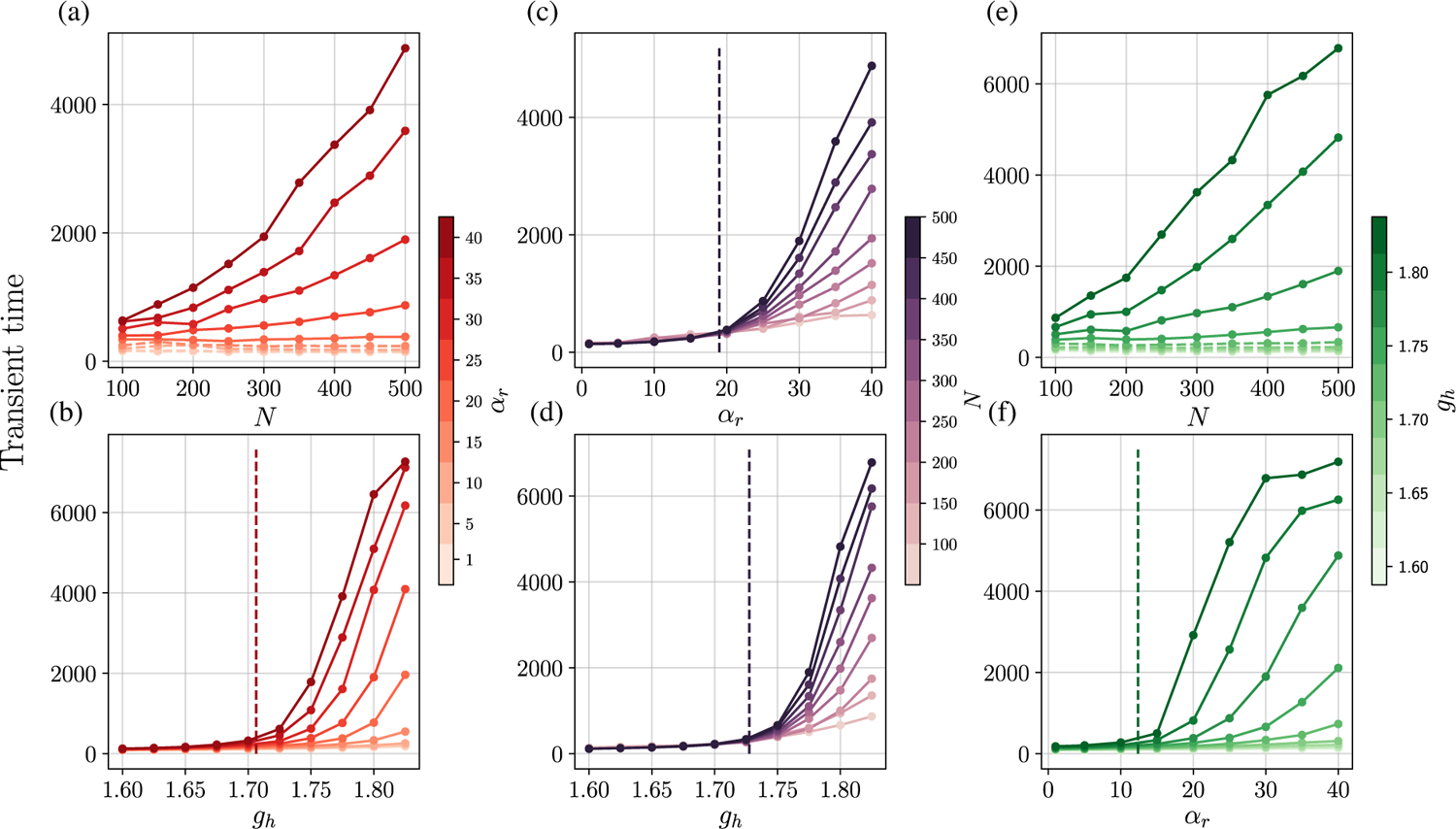

FIG. 3.

Lyapunov spectra and dimensionality of the gated RNN. (a),(b) The first 50 ordered Lyapunov exponents for a gated RNN (N = 2000) as a function of varying (a) αz and (b) αr. The Lyapunov spectrum is calculated as described in Appendix D. (c) The Kaplan-Yorke dimensionality of the dynamics as a function of αr. (d) The maximal Lyapunov exponent λmax predicted by the DMFT [solving Eqs. (10) and (11); solid line] and obtained numerically using the QR method (circles; N = 2000 and αz = 0). Note that the transition for αr = 20 is sharp; also cf. Fig. 5(c). τz = τt = 2.0 here.

In the next section, we study the profound functional consequences of the combination of spectral pinching and accumulation of eigenvalues near zero.

IV. MARGINAL STABILITY AND ITS CONSEQUENCES

As the update gate becomes more switchlike (higher αz), we see an accumulation of slow modes and a pinching of the spectral curve which drastically suppresses the unstable directions. In the limit αz → ∞, this can make previously unstable points marginally stable by pinning the leading edge of the spectral curve exactly at zero. Marginally stable systems are of significant interest because of the potential benefits in information processing—for instance, they can generate long timescales in their collective modes [33,39]. Moreover, achieving marginal stability often requires fine-tuning parameters close to a bifurcation point. As we see, gating allows us to achieve a marginally stable critical state over a wide range of parameters; this has been typically highly nontrivial to achieve (e.g., Ref. [39], pp. 329–350, and Ref. [33]). We first investigate the conditions under which marginal stability arises, and then we touch on one of its important functional consequences: the appearance of “line attractors” which allow the system to be used as a robust integrator.

A. Condition for marginal stability

Marginal stability is a consequence of pinching of the spectral curve with increasing αz, wherein the (positive) leading edge of the spectrum and the intercept of the spectral curve on the imaginary axis both shrink with αz [e.g., Fig. 1(f) and compare Figs. 1(a) and 1(c)]. However, we see in Fig. 1(f) (via the intercept) that pinching does not happen if gh is sufficiently large (even as αz → ∞). Here, we provide the conditions when pinching can occur and, thus, marginal stability can emerge. For simplicity, let us consider the case where τr = 1 and there are no biases.

Marginal stability strictly exists only for αz = ∞. We first examine the conditions under which the system can become marginally stable in this limit, and then we explain the route to marginal stability for large but finite αz, i.e., how a time-varying state ends up as a marginally stable fixed point. For αz = ∞, the spectral density has an extensive number N[1 − 〈σz(z)〉] of zero eigenvalues, and the remaining eigenvalues are distributed in a disk centered at λ = −1 with radius ρ. If ρ < 1, then the spectral density has two topologically disconnected configurations (the disk and the zero modes) and the system is marginally stable. If ρ > 1, the zero modes get absorbed in the interior of the disk and the system is unstable with fast, chaotic dynamics. The radius ρ is given by , where and . This follows from Eq. (5) by evaluating the z-expectation value assuming σz is a binary variable. Thus, the system is marginally stable in the limit αz = ∞ as long as

| (8) |

The crucial difference between this expression and Eq. (6) is that the rhs now has a factor of 〈σz〉−1 which can be greater than unity, thus pushing the transition to chaos further out along the gh and αr directions, as depicted in the phase diagram (Fig. 7). For concreteness, we report here how the transition changes at αr = 0. In this setting, the transition to chaos moves from gh = 2 to gh ⪅ 6.2, and the system is marginally stable for 2 < gh ⪅ 6.2.

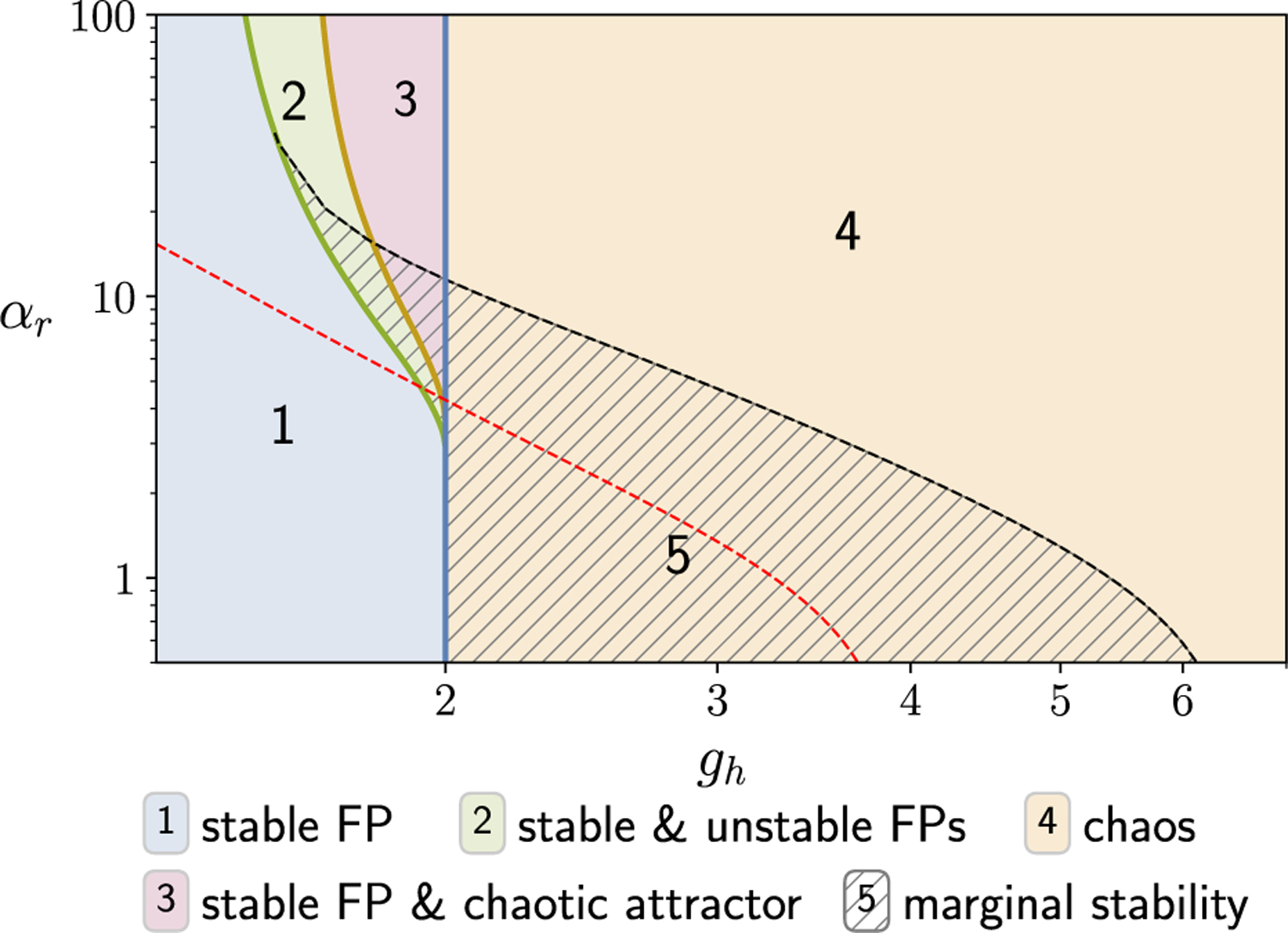

FIG. 7.

Phase diagram for the gated RNN. (a) (no biases) In regions 1 and 2, the zero FP is the global attractor of dynamics; however, in region 2, there is a proliferation of unstable FPs without any asymptotic dynamical signatures. In region 3, the (stable) zero FP coexists with chaotic dynamics. Note that the plotted curve separating regions 2 and 3 is computed for αz = 0 and remains valid for sufficiently small values of αz. In region 4, the zero FP is unstable, and dynamics are chaotic. For all parameter values in region 5, a previously unstable or chaotic state can be made marginally stable when αz = ∞. For any given parameter values in region 5, there are infinitely many marginally stable points in the phase space to which the dynamics converge. The red dashed line indicates the critical transition between a stable fixed point (below the line) and chaos (above the line) in the presence of static random input (to the h variable) with standard deviation σh = 0.5. Note that, while chaos is suppressed for small αr along the gh axis, for larger αr there are regions of stable FPs that become chaotic with finite input. This leads to the phenomenon of input-induced chaos.

Having identified the region in the phase diagram that can be made marginally stable for αz = ∞, we can now discuss the route to marginal stability for large but finite αz. In other words, how does an unstable chaotic state become marginally stable with increasing αz? Since the marginally stable region is characterized by a disconnected spectral density, evidently increasing αz must lead to singular behavior in the spectral curve. This takes the form of a pinching at the origin. We show that, for values of gh supporting marginal stability, the leading edge λe of the spectrum for the time-varying state gets pinched exponentially fast with αz as (see Appendix B). This accounts for the fact that, already for αz = 15, we observe the pinching in Fig. 1(c). In contrast, the parameters in Fig. 1(d) lie outside the marginally stable region, and, thus, there is no pinching, since the zero modes are asymptotically (in αz) buried in the bulk of the spectrum.

In summary, as αz → ∞ the Jacobian spectrum undergoes a topological transition from a single simply connected domain to two domains, both containing an extensive number of eigenvalues. A finite fraction of eigenvalues end up sitting exactly at zero, while the rest occupy a finite circular region. If the leading edge of the circular region crosses zero in this limit, then the state remains unstable; otherwise, the state becomes marginally stable. The latter case is achieved through a gradual pinching of the spectrum near zero; there is no pinching in the former case.

We emphasize that marginal stability requires more than just an accumulation of eigenvalues near zero. Indeed, this happens even when gh is outside the range supporting marginal stability as αz → ∞, but there is no pinching and the system remains unstable [e.g., see Fig. 1(d)]. We return to this when we describe the phase diagram for the gated RNN (Sec. VII). There, we see that the marginally stable region occupies a macroscopic volume in the parameter space adjoining the critical lines on one side.

B. Functional consequences of marginal stability

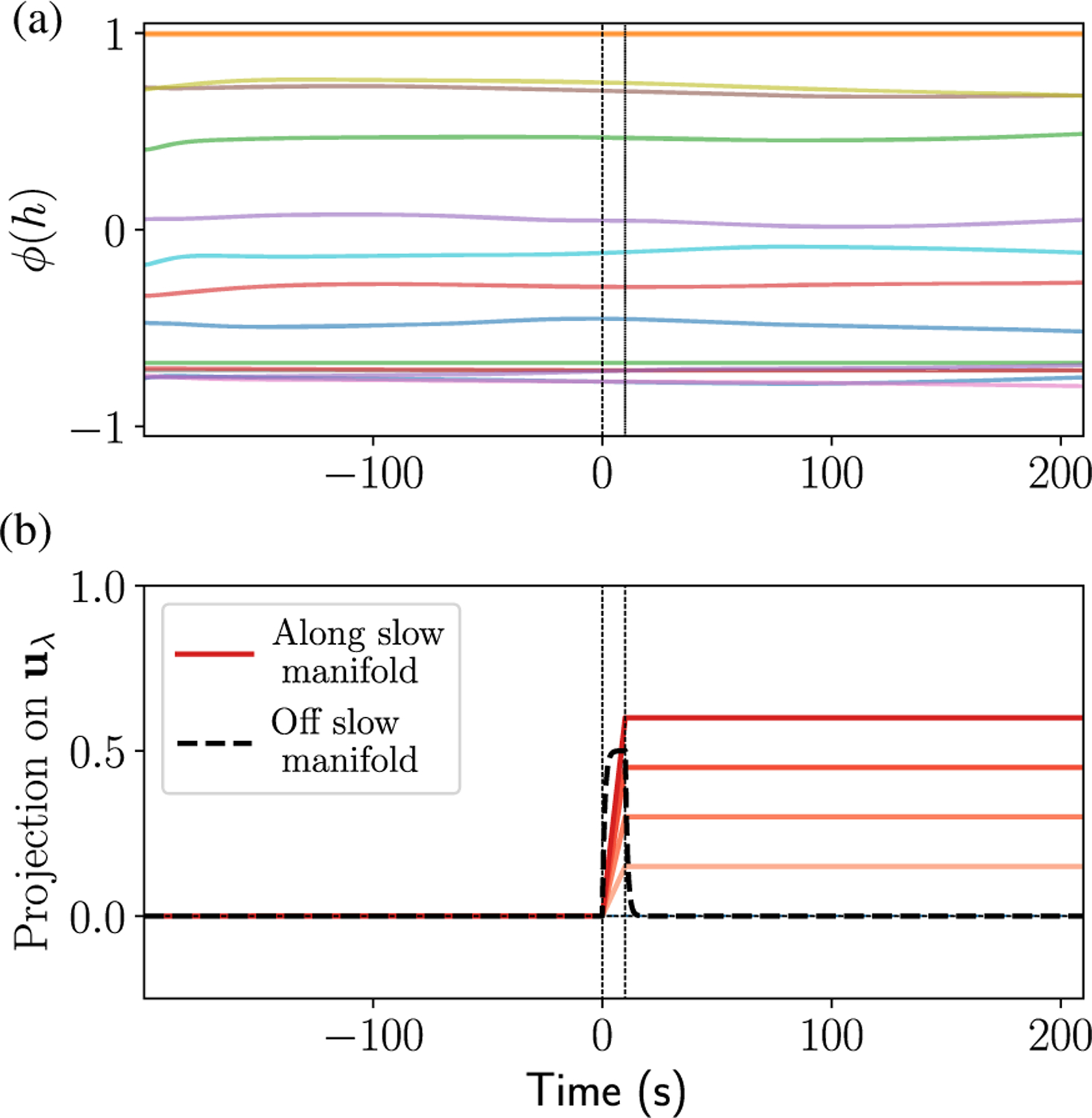

The marginally stable critical state produced by gating can subserve the function of a robust integrator. This integratorlike function is crucial for a variety of computational functions such as motor control [34–36], decision making [37], and auditory processing [53]. However, achieving this function has typically required fine-tuning or special handcrafted architectures [38], but gating permits the integrator function over a range of parameters and without any specific symmetries in Jh,z,r. Specifically, for large αz, any perturbation in the span of the eigenvectors corresponding to the eigenvalues with a magnitude close to zero is integrated by the network, and, once the input perturbation ceases, the memory trace of the input is retained for a duration much longer than the intrinsic time constant of the neurons; perturbations along other directions, however, relax with a spectrum of timescales dictated by the inverse of (the real part of) their eigenvalues. Thus, the manifold of slow directions forms an approximate continuous attractor on which input can effortlessly move the state vector around. These approximate continuous attractor dynamics are illustrated in Fig. 2. At time t = 0, an input Ih (with Ir = Iz = 0) is applied till t = 10 (between dashed vertical lines) along an eigenvector of the Jacobian with an eigenvalue close to zero. Inputs along this slow manifold with varying strengths (different shades of red) are integrated by the network as evidenced by the excess projection of the network activity on the left eigenvector uλ corresponding to the slow mode; on the other hand, inputs not aligned with the slow modes decay away quickly (dashed black line). Recall that the intrinsic time constant of the neurons here is set to one unit. The exponentially fast (in αz) pinching of the spectral curve (discussed above in Sec. IVA) suggests this slow-manifold behavior should also hold for moderately large αz (as in Fig. 2). Therefore, even though the state is technically unstable, the local structure of the Jacobian is responsible for giving rise to extremely long timescales and allows the network to operate as an approximate integrator within relatively long windows of time, as demonstrated in Fig. 2.

FIG. 2.

Network in the marginally stable state functions as an integrator. (a) Sample traces from a network with switchlike update gates (αz = 30, gh = 3) show slow evolution (time on x axis is relative to τh). (b) An input is applied to the same network in (a) from t = 0 till t = 10, either aligned with a slow eigenvector uλ (red traces) or unaligned with slow modes (black dashed trace). The plot shows the excess projection of the network state on the left eigenvector uλ. Different shades of red correspond to different input strengths. If the input is along the slow manifold, the trace of the input is retained for a long time after the cessation of input. [The traces in (a) are for the network with an input along the manifold.].

Of course, after sufficiently long times, the instability causes the state to evolve and the memory is lost. Exactly how long the memory lasts depends on the asymptotic stability of the network, which is revealed by the Lyapunov spectrum, discussed below in Sec. VA.

V. OUTPUT GATE CONTROLS DIMENSIONALITY AND LEADS TO A NOVEL CHAOTIC TRANSITION

We thus far use insights from local dynamics to study the functional consequences of the gates. To study the salient features of the output gate, it is useful to analyze the effect of inputs and the long-time behavior of the network through the lens of Lyapunov spectra. We see that the output gate controls the dimensionality of the dynamics in the phase space; dimensionality is a salient aspect of the dynamics for task function [42]. The output gate also gives rise to a novel discontinuous chaotic transition, near which inputs (even static ones) can abruptly push a stable system into strongly chaotic behavior—contrary to the typically stabilizing effect of inputs. Below, we begin with the Lyapunov analyses of the dynamics and then proceed to study the chaotic transition.

A. Long-time behavior of the network

We study the asymptotic behavior of the network and the nature of the time-varying state through the lens of its Lyapunov spectra. In this section, where we study the effects of αz, our results are numerical except in cases where αz = 0 [e.g., in Fig. 3(d)]. Lyapunov exponents specify how infinitesimal perturbations δx(t) grow or shrink along the trajectories of the dynamics—in particular, if the growth or decay is exponentially fast, then the rate is dictated by the maximal Lyapunov exponent defined as [54] λmax ≔ limT→∞ T−1 lim‖δx(0)‖→0 ln[‖δx(T)‖/‖δx(0)‖]. More generally, the set of all Lyapunov exponents—the Lyapunov spectrum—yields the rates at which perturbations along different directions shrink or diverge and, thus, provide a fuller characterization of asymptotic behavior. We first numerically study how the gates shape the full Lyapunov spectrum (details in Appendix D) and derive an analytical prediction for the maximum Lyapunov exponent using the DMFT (Sec. VA1) [55].

Figures 3(a) and 3(b) show how the update (z) and output (r) gates shape the Lyapunov spectrum. We see that, as the update gets more sensitive (larger αz), the Lyapunov spectrum flattens, pushing more exponents closer to zero, generating long timescales. As the output gate becomes more sensitive (larger αr), all Lyapunov exponents increase, thus increasing the rate of growth in unstable directions.

We can estimate the dimensionality of the activity in the chaotic state by calculating an upper bound DA on the dimension according to a conjecture by Kaplan and Yorke [54]. The Kaplan-Yorke upper bound for the attractor dimension DA is given by

| (9) |

where λi are the rank-ordered Lyapunov exponents. We see in Fig. 3(c) that the sensitivity of the output gate (αr) can shape the dimensionality of the dynamics—a more sensitive output gate leads to higher dimensionality. As we see below, this effect of the output gate is different from how the gain gh shapes dimensionality and can lead to a novel chaotic transition. Even more directly, if the r gate for neurons i1…iK is set to zero, then the activity is constrained to evolve in an N − K-dimensional subspace; however, the r gate allows the possibility—i.e., the “inductive bias”—of doing this dynamically.

1. DMFT prediction for λmax

We would also like to study the chaotic nature of the time-varying phase by means of the maximal Lyapunov exponent and characterize when the transition to chaos occurs. We extend the DMFT for the gated RNN to calculate the maximum Lyapunov exponent, and, to do this, we make use of a technique suggested by Refs. [56,57] and clearly elucidated in Ref. [21]. The details are provided in Appendix E, and the end result of the calculation is the DMFT prediction for λmax as the solution to a generalized eigenvalue problem for κ involving the correlation functions of the state variables:

| (10) |

| (11) |

where we denote the two-time correlation function Cx(t, t′) ≡ 〈x(t)x(t′)〉 for different (functions of) state variables x(t) [see Eq. (C25) for more context]. The largest eigenvalue solution to this problem is the required maximal Lyapunov exponent [58]. Note that this is the analog of the Schrodinger equation for the maximal Lyapunov exponent in the vanilla RNN. When αz = 0 (or small), the h field is Gaussian, and we can use Price’s theorem for Gaussian integrals to replace the variational derivatives on the rhs of Eqs. (10) and (11) by simple correlation functions, for instance, ∂Cϕ(τ)/∂Ch(τ) = Cϕ′(τ). In this limit, we see good agreement between the numerically calculated maximal Lyapunov exponent [Fig. 3(c), dots] compared to the DMFT prediction [Fig. 3(c), solid line] obtained by solving the eigenvalue problem [Eqs. (10) and (11)]. For large values of αz, we see quantitative deviations between the DMFT prediction and the true λmax. Indeed, for large αz, the distribution of h is strongly non-Gaussian, and there is no reason to expect that variational formulas given by Price’s theorem are even approximately correct. For more on this point, see the discussion toward the end of Appendix C.

2. Condition for continuous transition to chaos

The value of αz affects the precise value of the maximal Lyapunov exponent λmax; however, numerics suggest that, across a continuous transition to chaos, the point at which λmax becomes positive is not dependent on αz (data not shown). We can see this more clearly by calculating the transition to chaos when the leading edge of the spectral curve (for a FP) crosses zero. This condition is given by Eq. (6), and we see that it has no dependence on αz or the update gate. We stress that this condition [Eq. (6)] for the transition to chaos—when the stable fixed point becomes unstable—is valid when the chaotic attractor emerges continuously from the fixed point [Fig. 3(c), αr = 0, 2]. However, in the gated RNN, there is another discontinuous transition to chaos [Fig. 3(c), αr = 20]: For sufficiently large αr, the transition to chaos is discontinuous and occurs at a value of gh where the zero FP is still stable (gh < 2 with no biases). To our knowledge, this is a novel type of transition which is not present in the vanilla RNN and not visible from an analysis that considers only the stability of fixed points. We characterize this phenomenon in detail below.

B. Output gate induces a novel chaotic transition

Here, we describe a novel phase, characterized by a proliferation of unstable fixed points and the coexistence of a stable fixed point with chaotic dynamics. It is the appearance of this state that gives rise to the discontinuous transition observed in Fig. 3(c). The appearance of this state is mediated by the output gate becoming more switchlike (i.e., increasing αr) in the quiescent region for gh. To our knowledge, no such comparable phenomenon exists in RNNs with additive interactions. The full details of the calculations for this transition are provided in Appendix G. Here, we simply state and describe the salient features. For ease of presentation, the rest of the section assumes that all biases are zero. The results in this section are strictly valid only for αz = 0. In Appendix G3, we argue that they should also hold for moderate αz.

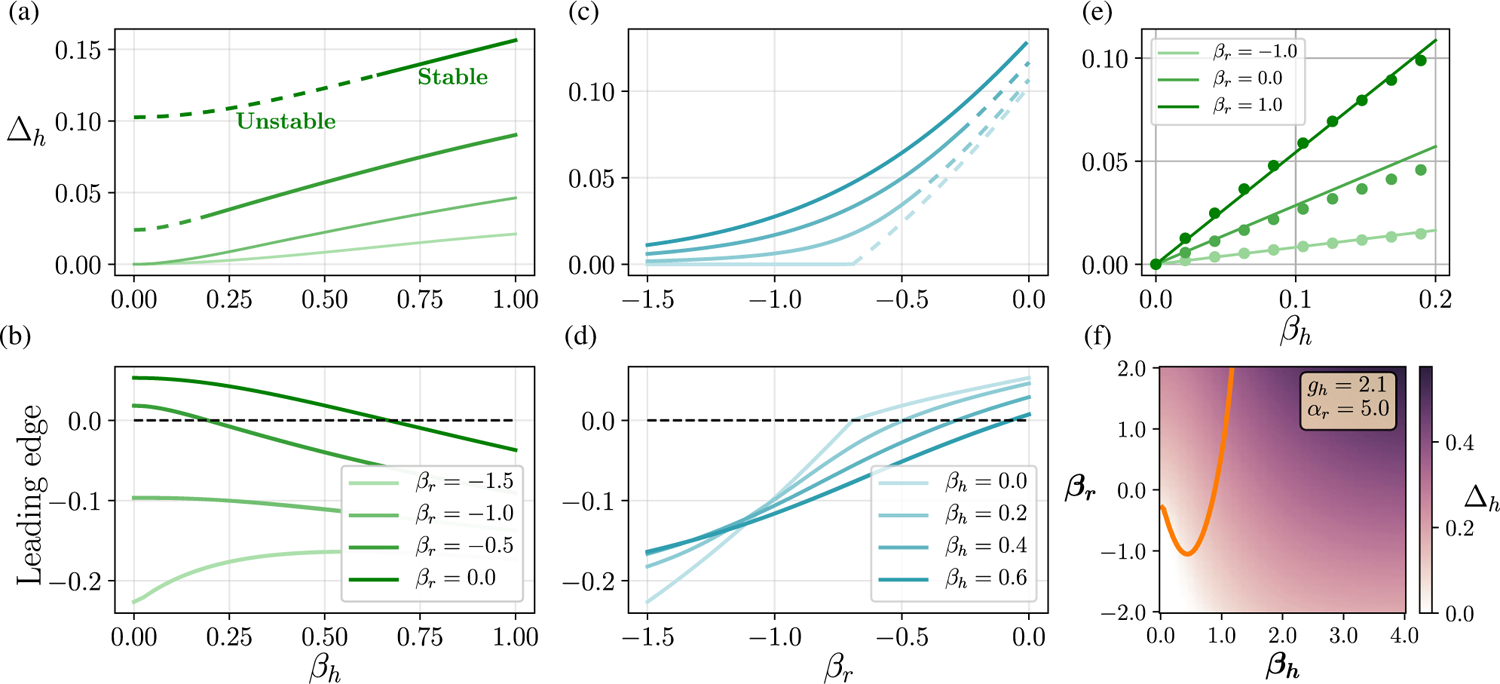

This discontinuous transition is characterized by a few noteworthy features.

1. Spontaneous emergence of fixed points

When gh < 2.0, the zero fixed point is stable. Moreover, for , when αr crosses a threshold value , unstable fixed points spontaneously appear in the phase space. The only dynamical signature of these unstable FPs are short-lived transients which do not scale with system size (see Fig. 11). Thus, we have a condition for fixed-point transition:

| (12) |

These unstable fixed points correspond to the emergence of nontrivial solutions to the time-independent MFT. Figure 4(a) shows the appearance of fixed-point MFT solutions for a fixed gh, and Fig. 4(b) shows the critical as a function of gh. As gh → 2−, we see that .

FIG. 4.

The discontinuous dynamical transition. (a) Spontaneous appearance of nonzero solutions (dashed and solid red lines) to the FP equations once αr crosses a critical value at fixed gh. (b) The critical as a function of gh. The vertical dashed line represents left critical value , below which a bifurcation is not possible. (c) The critical DMFT transition curve (red curve) calculated using Eqs. (G8) and (G9). The FP transition curve from (b) is shown in black. The green dashed line corresponds to , below which the dynamical transition is not possible. (d) Numerically calculated maximum Lyapunov exponent λmax as a function of αr for two different values of gh. The dashed lines correspond to the DMFT prediction for the discontinuous transition from (c). (e) Schematic of the bifurcation transition: For gh < 2 and , the zero FP is the only (stable) solution (bottom left box); for and , the zero FP is still stable, but there is a proliferation of unstable FPs without any obvious dynamical signature (top left); for and , chaotic dynamics coexist with the stable FP and this transition is discontinuous (top right); finally, for gh > 2.0, the stable FP becomes unstable, and only the chaotic attractor remains; this transition is continuous (bottom right).

These spontaneous MFT fixed-point solutions are unstable according to the criterion Eq. (6) derived from RMT. Moreover, in Appendix J, using a Kac-Rice analysis, we show that in this region the full 3N-dimensional system does indeed have a number of unstable fixed points that grows exponentially fast with N. Thus, this transition line represents a topological trivialization transition as conceived by, e.g., Refs. [59,60]. Our analysis shows that instability is intimately connected to the proliferation of fixed points. Remarkably, however, a time-dependent solution to the DMFT does not emerge across this transition, and the microscopic dynamics are insensitive to the transition in topological complexity, bringing us to the next point.

2. A delayed dynamical transition that shows a decoupling between topological and dynamical complexity

On increasing αr beyond , there is a second transition when αr crosses a critical value . This happens when we satisfy the condition for dynamical transition:

| (13) |

derived in Appendix G2. Figure 4(c) shows how varies with gh. As gh → 2−, we see that . Across this transition, a dynamical state spontaneously emerges, and the maximum Lyapunov exponent jumps from a negative value to a positive value [Fig. 4(d)]. This state exhibits chaotic dynamics that coexist with the stable zero fixed point. The presence of the stable FP means that the dynamical state is not strictly a chaotic attractor but rather a spontaneously appearing “chaotic set.” On increasing gh beyond 2.0, for large but fixed αr, the stable fixed point disappears, and the state smoothly transitions into a full chaotic attractor that is characterized above. This picture is summarized in the schematic in Fig. 4(e). This gap between the proliferation of unstable fixed points and the appearance of the chaotic dynamics differs from the result of Wainrib and Touboul [45] for purely additive RNNs, where the proliferation (topological complexity) is tightly linked to the chaotic dynamics (dynamical complexity). Thus, for gated RNNs, there appears to be another distinct mechanism for the transition to chaos, and the accompanying transition is a discontinuous one.

3. Long chaotic transients

For finite systems, across the transition the dynamics eventually flow into the zero FP after chaotic transients. Moreover, we expect this transient time to scale with the system size, and, in the infinite system size limit, the transient time should diverge in spite of the fact that the stable fixed point still exists. This is because the relative volume of the basin of attraction of the fixed point vanishes as N → ∞. In Appendix G [Figs. 11(c) and 11(d)], we do indeed see that the transient time for a fixed gh scales with system size [Fig. 11(c)] once αr is above the second transition (dashed line) and not otherwise [see Figs. 11(a) and 11(e), dashed lines].

4. An input-induced chaotic transition

The discontinuous chaotic transition has a functional consequence: Near the transition, static inputs can push a stable system to strong chaotic activity. This is in contrast to the typically stabilizing effects of inputs on the activity of random additive RNNs [21,43,44]. In Figs. 5(a) and 5(b), we see that, when static input with variance is applied to a stable system (a) near the discontinuous chaotic transition (in region 2 in Fig. 7), it induces chaotic activity (b); however, for the same input when applied to the system in the chaotic state [Fig. 5(c)], the dynamics are stabilized (d) as reported before.

FIG. 5.

Input-driven chaos. (a),(b) Near the discontinuous chaotic transition (in region 2 in Fig. 7), static input Ih (with Ir = Iz = 0) can push a stable system (a) to chaotic activity (b). (c),(d) In the purely chaotic state [(c), gh = 3.0], input has the familiar effect of stabilizing the dynamics (d). The elements of the input vector Ih are random Gaussian variables with zero mean and variance .

This phenomenon for static inputs can be understood using the phase diagram with nonzero biases, discussed in Sec. VII. There, we see how the transition curves move when a random bias βh is included. Near the classic chaotic transition (αr = 0), the bias moves the curve toward larger gh, thus suppressing chaos. Near the discontinuous chaotic transition , the bias pulls the curve toward smaller values of αr, thus promoting chaos. Thus, inputs can have opposite effects of inducing or stabilizing chaos within the same model in different parameter regimes. This phenomenon could, in principle, be leveraged for shaping the interaction between inputs and internal dynamics.

VI. GATES PROVIDE A FLEXIBLE RESET MECHANISM

Here, we discuss how the gates provide another critical function—a mechanism to flexibly reset the memory trace depending on external input or the internal state. This function complements the memory function; a memory that cannot be erased when needed is not very useful. To build intuition, let us consider a linear network ḣ = −h + Jh, where the matrix has a few eigenvalues that are zero, while the rest have a negative real part. The slow modes are good for memory function; however, that fact also makes it hard to forget memory traces along the slow modes. This trade-off is pointed out in Ref. [61]. To be functionally useful, it is critical that the memory trace can be erased flexibly in a context-dependent manner. The r gate allows this function naturally. Consider the same net, but now augmented with an r gate: ḣ = −h + Jh ⊙ σr. If the gate is turned off (σr = 0) for a short duration, the state h is reset to zero. One can actually be more specific: We may choose a with σr = σ[Jr(ϕh)], such that the r gate turns off whenever ϕ(h) gets aligned with u, thus providing an internal-context-dependent reset.

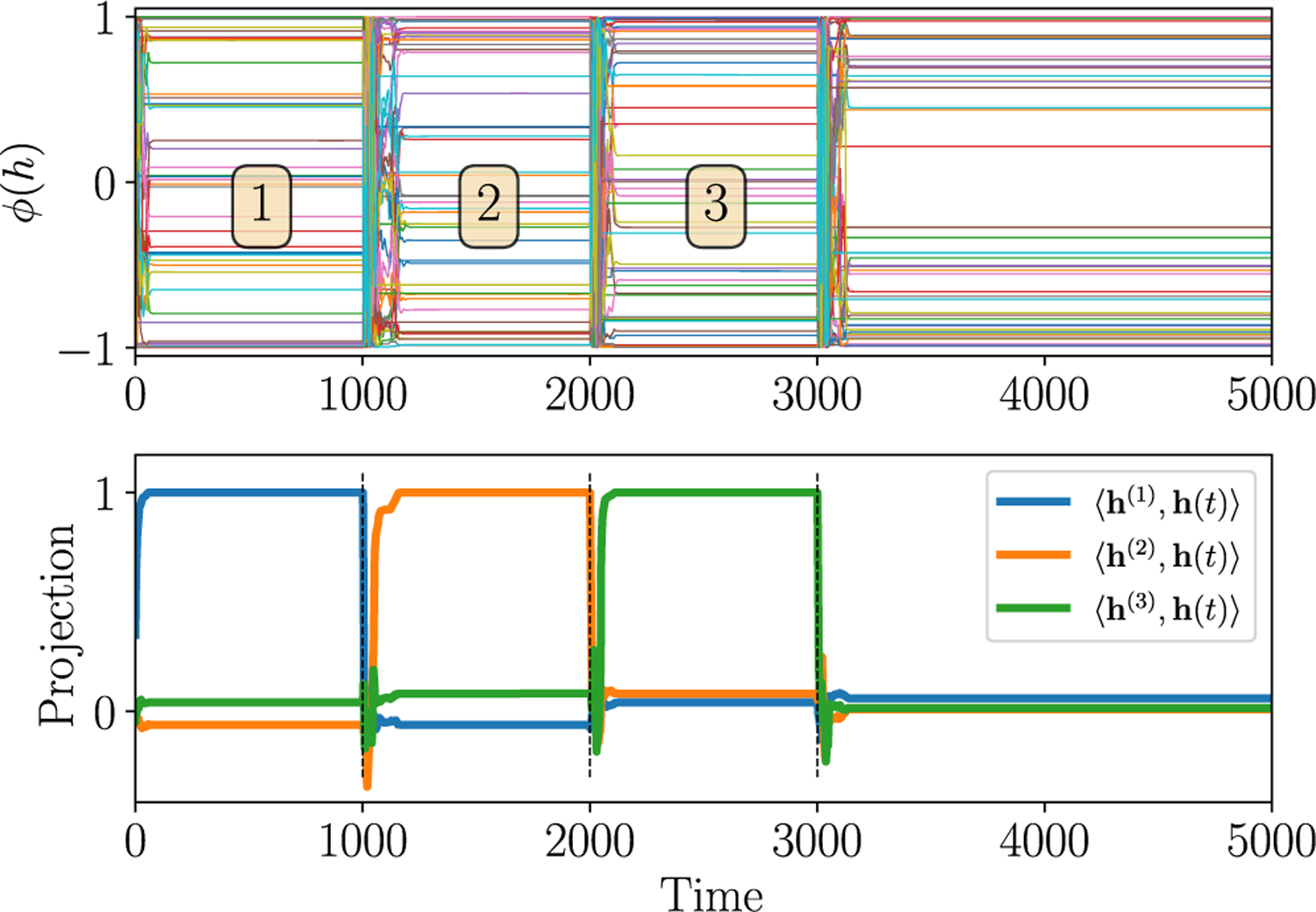

Apart from resetting to zero, the z gate also allows the possibility of rapidly scrambling the state to a random value by means of the input-induced chaos. This phenomenon is illustrated in Fig. 6, where the network in the marginally stable state normally functions as a memory (retains traces for long times, as in Fig. 2), but positive inputs Iz (with Ih = Ir = 0) to the z gate above a threshold strength even for a short duration can induce chaos, thereby scrambling the state and erasing the previous memory state (Fig. 6, bottom panel). The mechanism for this scrambling can be understood by appealing to Eq. (8). A finite input Iz with nonzero mean is able to change 〈σ(z)〉 and, thus, push the critical line for marginal stability in one way or the other. For instance, if 〈Iz〉 > 0, 〈σ(z)〉 > 1/2, which (for αr = 0) moves the transition to marginal stability to a smaller value of gh. This implies that a marginally stable state can be made chaotic in the presence of Iz with finite mean. This mechanism for input-induced chaos actually appears to be different from that explored in the previous section, which occurs across the discontinuous chaotic transition. We discuss this more in Sec. VII.

FIG. 6.

Gates provide a reset mechanism. Positive static inputs are applied to the z gate when the RNN is in the marginally stable state (gh = 3.0, αr = 2.5, and αz = ∞) for 20 time units at times indicated by dashed lines. The input induces chaos which rapidly scrambles the network state, thus erasing the trace of the previous memory; the bottom panel shows the normalized projection of the state h(t) on the directions h(1,2,3) aligned with the state in regions 1, 2, and 3.

In summary, gating imbues the RNN with the capacity to flexibly reset memory traces, providing an “inductive bias” for context-dependent reset. The specific method of reset depends on the task or function, and this can be selected, e.g., by gradient-based training. This inductive bias for resetting is found to be critical for performance in ML tasks [62].

VII. PHASE DIAGRAMS FOR THE GATED NETWORK

Here, we summarize the rich dynamical phases of the gated RNN and the critical lines separating them. The key parameters determining the critical lines and the phase diagram are the activation and output-gate gains and the associated biases: (gh, βh, αr, βr). The update gate does not play a role in determining continuous or critical chaotic transitions. On the other hand, it influences the discontinuous transition to chaos for sufficiently large values of αz (see Sec. G3 for discussion). Furthermore, the update gate has a strong effect on the dynamical aspects of the states near the critical lines. There are macroscopic regions of the parameter space adjacent to the critical lines where the states can be made marginally stable in the limit of αz → ∞. The shape of this marginal stability region is influenced by βz and Iz.

Figure 7(a) shows the dynamical phases for the network with no biases in the (gh, αr) plane. When gh is below 2.0 and , the zero fixed point is the only solution (region 1). As discussed in Sec. VB, on crossing the fixed-point bifurcation line [green line, Fig. 7(a)], there is a spontaneous proliferation of unstable fixed points in the phase space (region 2). This can occur only when . The proliferation of fixed points is not accompanied by any obvious dynamical signatures. However, if , we can increase αr further to cross a second discontinuous transition where a dynamical state spontaneously appears featuring the coexistence of chaotic activity and a stable fixed point (region 3). When gh is increased beyond the critical value of 2.0, the stable zero fixed point becomes unstable for all αr, and we get a chaotic attractor (region 4). All the critical lines are determined by gh and αr, and αz has no explicit role; however, for large αz there is a large region of the parameter space on the chaotic side of the chaotic transition that can be made marginally stable [thatched region 5 in Fig. 7(a)].

A. Role of biases and static inputs

Biases have the effect of generating nontrivial fixed points and controlling stability by moving the edge of the spectral curve. Another key feature of biases is the suppression of the discontinuous bifurcation transition observed without biases. This is explained in detail in Appendix H. A particularly illuminating illustration of the effects of a bias can be inferred from the critical line (red dashed) for finite bias shown in Fig. 7. This curve, computed using the FP stability criterion (6) combined with the MFT equations [(C28)–(C30)], represents the transition between stability and chaos for finite bias with zero mean and nonzero variance. Equivalently, we may think of this as the critical line for a network with static input (with Ir = Iz = 0). Along the gh axis, we can observe the well-documented phenomena whereby an input suppresses chaos. This corresponds to the region gh > 2 which lies to the left of the red dashed critical line, which is chaotic in the absence of input and flows to a stable fixed point in the presence of input. However, this behavior is reversed for gh < 2. Here, we see a significant swath of phase space which is stable in the absence of input but which becomes chaotic when input is present. Thus, the stability-to-chaos phase boundary in the presence of biases (or inputs) reveals that the output (r) gate can facilitate an input-induced transition to chaos.

VIII. DISCUSSION

Gating is a form of multiplicative interaction that is a central feature of the best-performing RNNs in machine learning, and it is also a prominent feature of biological neurons. Prior theoretical work on RNNs has considered only RNNs with additive interactions. Here, we present the first detailed study on the consequences of gating for RNNs and show that gating can produce dramatically richer behavior that have significant functional benefits.

The continuous-time gated RNN (gRNN) we study resembles a popular model used in machine learning applications, the gated recurrent unit (GRU) [see the note below Eq. (C27)]. Previous work [51] looks at the instantaneous Jacobian spectrum for the discrete-time GRU using RMT methods similar to those presented in Appendix A; however, this work does not go beyond time-independent MFT and presents a phase diagram showing only boundaries across which the MFT fixed points become unstable [63]. In the present manuscript, we illuminate the full dynamical phase diagram for our gated RNN, uncovering much richer structure. Both the GRU and our gRNN have a gating function which dynamically scales the time constant, which in both cases leads to a marginally stable phase in the limit of a binary gate. However, the dynamical phase diagram presented in Fig. 7 reveals a novel discontinuous transition to chaos. We conjecture that such a phase transition should also be present in the GRU. Also, Ref. [51] lacks any discussion of the influence of inputs or biases. The present paper includes discussion of the functional significance of the gates in the presence of inputs. We believe these results, combined with the refined dynamical phase diagram, can shed some light on the role of analogous gates in the GRU and other gated ML architectures. We review the significance of the gates in more detail below.

A. Significance of the update gate

The update gate modulates the rate of integration. In single-neuron models, such a modulation is shown to make the neuron’s responses robust to time-warped inputs [14]. Furthermore, normative approaches, requiring time reparametrization invariance in ML RNNs, naturally imply the existence of a mechanism that modulates the integration rate [64]. We show that, for a wide range of parameters, a more sensitive (or switchlike) update gate leads to marginal stability. Marginally stable models of biological function have long been of interest with regard to their benefits for information processing (cf. Ref. [33] and references therein). In the gated RNN, a functional consequence of the marginally stable state is the use of the network as a robust integrator—such integratorlike function is shown to be beneficial for a variety of computational functions such as motor control [34–36], decision making [37], and auditory processing [53]. However, previous models of these integrators often require handcrafted symmetries and fine-tuning [38]. We show that gating allows this function without fine-tuning. Signatures of integratorlike behavior are also empirically observed in successfully trained gated ML RNNs on complex tasks [41]. We provide a theoretical basis for how gating produces these. The update gate facilitates accumulation of slow modes and a pinching of the spectral curve which leads to a suppression of unstable directions and overall slowing of the dynamics over a range of parameters. This is a manifestly self-organized slowing down. Other mechanisms for slowing down dynamics have been proposed where the slow timescales of the network dynamics are inherited from other slow internal processes such as synaptic filtering [65,66]; however, such mechanisms differ from the slowing due to gating; they do not seem to display the pinching and clumping, and they also do not rely on self-organized behavior.

B. Significance of the output gate

The output gate dynamically modulates the outputs of individual neurons. Similar shunting mechanisms are widely observed in real neurons and are crucial for performance in ML tasks [62]. We show that the output gate offers fine control over the dimensionality of the dynamics in phase space, and this ability is implicated in task performance in ML RNNs [42]. This gate also gives rise to a novel discontinuous chaotic transition where inputs can abruptly push stable systems to strongly chaotic activity; this is in contrast to the typically stabilizing role of inputs in additive RNNs. In this transition, there is a decoupling between topological and dynamical complexity. The chaotic state across this transition is also characterized by the coexistence of a stable fixed point with chaotic dynamics—in finite size systems, this manifests as long transients that scale with the system size. We note that there are other systems displaying either a discontinuous chaotic transition or the existence of fixed points overlapping with chaotic (pseudo)attractors [19] or apparent chaotic attractors with finite alignment with particular directions [67]; however, we are not aware of a transition in large RNNs where static inputs can induce strong chaos or the topological and dynamical complexity are decoupled across the transition. In this regard, the chaotic transition mediated by the output gated seems to be fundamentally different. More generally, the output gate is likely to have a significant role in controlling the influence of external inputs on the intrinsic dynamics.

We also show how the gates complement the memory function of the update gate by providing an important, context- and input-dependent reset mechanism. The ability to erase a memory trace flexibly is critical for function [62]. Gates also provide a mechanism to avoid the accuracy-flexibility trade-off noted for purely additive networks—where the stability of a memory comes at the cost of the ease with which it is rewritten [61].

We summarize the rich behavior of the gated RNN via phase diagrams indicating the critical surfaces and regions of marginal stability. From a practical perspective, the phase diagram is useful in light of the observation that it is often easier to train RNNs initialized in the chaotic regime but close to the critical points. This is often referred to as the “edge of chaos” hypothesis [68–70]. Thus, the phase diagrams provide ML practitioners with a map for principled parameter initialization—one of the most critical choices deciding training success.

ACKNOWLEDGMENTS

K. K. is supported by a C. V. Starr fellowship and a CPBF fellowship (through NSF PHY-1734030). T. C. is supported by a grant from the Simons Foundation (891851, TC). D. J. S. was supported by the NSF through the CPBF (PHY-1734030) and by a Simons Foundation fellowship for the MMLS. This work was partially supported by the NIH under Grant No. R01EB026943. K. K. and D.J.S. thank the Simons Institute for the Theory of Computing at U. C. Berkeley, where part of the research was conducted. T. C. gratefully acknowledges the support of the Initiative for the Theoretical Sciences at the Graduate Center, CUNY, where most of this work was completed. We are most grateful to William Bialek, Jonathan Cohen, Andrea Crisanti, Rainer Engelken, Moritz Helias, Jonathan Kadmon, Jimmy Kim, Itamar Landau, Wave Ngampruetikorn, Katherine Quinn, Friedrich Schuessler, Julia Steinberg, and Merav Stern for fruitful discussions.

APPENDIX A: DETAILS OF RANDOM MATRIX THEORY FOR SPECTRUM OF THE JACOBIAN

In this section, we provide details of the calculation of the bounding curve for the Jacobian spectrum for both fixed points and time-varying states. Our approach to the problem utilizes the method of Hermitian reduction [25,26] to deal with non-Hermitian random matrices.The analysis here resembles that in Ref. [51], which also considers Jacobians that are highly structured random matrices arising from discrete-time gated RNNs.

The Jacobian 𝒟 is a block-structured matrix constructed from the random coupling matrices Jh,z,r and diagonal matrices of the state variables. In the limit of large N, we expect the spectrum to be self-averaging—i.e., the distribution of eigenvalues for a random instance of the network approaches the ensemble-averaged distribution. We can, thus, gain insight about typical dynamical behavior by studying the ensemble- (or disorder-) averaged spectrum of the Jacobian. Our starting point is the disorder-averaged spectral density μ(λ) defined as

| (A1) |

where the λi are the eigenvalues of 𝒟 for a given realization of Jh,z,r and the expectation is taken over the distribution of real Ginibre random matrices from which Jh,z,r are drawn. Using an alternate representation for the Dirac delta function in the complex plane , we can write the average spectral density as

| (A2) |

where is the 3N-dimensional identity matrix. 𝒟 is in general non-Hermitian, so the support of the spectrum is not limited to the real line, and the standard procedure of studying the Green’s function by analytic continuation is not applicable, since it is nonholomorphic on the support. Instead, we use the method of Hermitization [25,26] to analyze the resolvent for an expanded 6N × 6N Hermitian matrix H:

| (A3) |

| (A4) |

and the Green’s function for the original problem is obtained by considering the lower-left block of 𝒢:

| (A5) |

To make this problem tractable, we invoke an ansatz called the local chaos hypothesis [57,71], which posits that, for large random networks in steady state, the state variables are statistically independent of the random coupling matrices Jz,h,r (also see Ref. [72]). This implies that the Jacobian [Eq. (4)] has an explicit linear dependence only on Jh,z,r, and the state variables are governed by their steady-state distribution from the disorder-averaged DMFT (Appendix C). These assumptions make the random matrix problem tractable, and we can evaluate the Green’s function by using the self-consistent Born approximation, which is exact as N → ∞. We detail this procedure below.

The Jacobian itself can be decomposed into structured (A, L, R) and random parts (𝒥):

| (A6) |

At this point, we must make a crucial assumption: The structured matrices A, L, and R are independent of the random matrices appearing 𝒥. This implies that the dynamics is self-averaging and that the state variables reach a steady-state distribution determined by the DMFT and insensitive to the particular quenched disorder 𝒥. This self-averaging assumption leads to theoretical predictions which are in very good agreement with simulations of large networks, as presented in Fig. 1.

This independence assumption renders 𝒟 a linear function of the random matrix 𝒥, whose entries are Gaussian random variables. The next steps are to develop an asymptotic series in the random components of H, compute the resulting moments, and perform a resummation of the series. This is conveniently accomplished by the self-consistent Born approximation (SCBA). The output of the SCBA is a self-consistently determined self-energy functional Σ[𝒢] which succinctly encapsulates the resummation of moments. With this, the Dyson equation for 𝒢 is given by

| (A7) |

where the matrices on the right are defined in terms of 3N × 3N blocks:

| (A8) |

| (A9) |

and Q is a superoperator which acts on its argument as follows:

| (A10) |

Here, we express the self-energy using the 3N × 3N subblocks of the Green’s function 𝒢:

| (A11) |

At this point, we have presented all of the necessary ingredients for computing the Green’s function and, thus, determining the spectral properties of the Jacobian. These are the Dyson equation (A7), along with the free Green’s function (A8) and the self-energy (A9). Most of what is left is complicated linear algebra. However, in the interest of completeness, we proceed to unpack these equations and give a detailed derivation of the main equation of interest, the bounding curve of the spectral density.

To proceed further, it is useful to define the following transformed Green’s functions, which can be written in terms of N × N subblocks:

| (A12) |

| (A13) |

Denote also the mean trace of these subblocks as

| (A14) |

Then the self-energy matrix in Eq. (A9) is block diagonal, i.e., Σ[𝒢] = bdiag(Σ11, Σ22), with

| (A15) |

| (A16) |

With the self-energy in this form, we are able to solve the Dyson equation for the full Green’s function 𝒢 by direct matrix inversion:

| (A17) |

which can be carried out easily by symbolic manipulation software. The rhs of Eq. (A17) is a function of , whereas the lhs is a function of the Green’s function before the transformations (A12) and (A13). Thus, to get a set of equations we can solve, we apply these same transformations to both sides of Eq. (A17) after solving the Dyson equation. The final step is to take the limit η → 0, recovering the problem we originally wished to solve.

The result of these manipulations is a set of six equations for the mean traces of the transformed Green’s function defined in Eq. (A14). In order to write these down, we introduce some additional notation. The self-consistent equations are of the form

| (A18) |

where we denote 〈M〉 ≡ N−1TrM for shorthand and i runs from 1 to 6. Denote the state-variable-dependent diagonal matrices as

| (A19) |

and, because they appear frequently in the resulting equations, define

| (A20) |

| (A21) |

| (A22) |

The denominator in Eq. (A18) is then given by

| (A23) |

and the numerators Γi are given by

| (A24) |

| (A25) |

| (A26) |

| (A27) |

| (A28) |

The numerators and denominator are all diagonal matrices with real entries, which is why we use the simple notation of a ratio when referring to matrix inversion.

Solving these equations gives us the as implicit functions of λ. They are, in general, complicated and resist exact solution. However, the situation simplifies considerably when we are looking for the spectral curve. In this case, we are looking for all that satisfy the self-consistent equations with .

We must take this limit carefully, since the ratio of these functions can remain constant. For this reason, it is necessary to define

| (A29) |

We may do the same for , , and , but it turns out that x2 and x3 are sufficient to compute the spectral curve. Next, divide by and send all , keeping the ratios fixed. Applying this to the equation for results in

| (A30) |

Similarly, for and , we get

| (A31) |

| (A32) |

where the coefficients γi, which are functions of λ, are given by

The linear system of equations (A30)–(A32) is consistent iff

| (A33) |

In other words, γi must satisfy Eq. (A33) when . This expression depends on λ and implicitly defines a curve in , which is the boundary of the support of the spectral density.

Plugging in the explicit expression for γi, we get the implicit equation for the spectral curve as all that satisfy

| (A34) |

For large systems, we can replace the empirical traces of the state variable by their averages given by the DMFT variances. Then, the equation for the curve for a general steady state is given by

| (A35) |

FIG. 8.

Jacobian spectrum at a time-varying state. Red dots are the Jacobian eigenvalues for the full network in a (time-varying) steady state, and the spectral curve of the Jacobian is calculated using moments from (i) the full state vectors (blue curve) or using the variances from the fixed-point MFT (green). Surprisingly, the agreement is reasonably good. For network simulations, N = 1000, gh = 2.5, αr = 1, αz = 15, and all biases are zero.

For fixed points, we have , which makes γ3 = γ4 = 0. The equation for the spectral curve simplifies to that which is quoted in the main text [Eq. (5)]:

| (A36) |

1. Jacobian spectrum for the case αr = 0

In the case when αr = 0, it is possible to express the Green’s function [Eq. (A5)] in a simpler form. Recall that

| (A37) |

Let . Then, the Green’s function is given by

| (A38) |

| (A39) |

| (A40) |

where is defined implicitly to satisfy the equation

| (A41) |

The function acts as a sort of order parameter for the spectral density, indicating the transition on the complex plane between zero and finite density μ. Outside the spectral support, λ ∈ Σc, this order parameter vanishes, ξ = 0, and the Green’s function is holomorphic:

| (A42) |

which, of course, indicates that the density is zero since . Inside the support λ ∈ Σ, the order parameter ξ ≠ 0, and the Green’s function consequently picks up nonanalytic contributions, proportional to . Since the Green’s function is continuous on the complex plane, it must be continuous across the boundary of the spectral support. This must then occur precisely when the holomorphic solution meets the nonanalytic solution, at ξ = 0. This is the condition used to find the boundary curve above.

APPENDIX B: SPECTRAL CLUMPING AND PINCHING IN THE LIMIT αz → ∞

In this section, we provide details on the accumulation of eigenvalues near zero and the pinching of the leading spectral curve (for certain values of gh) as the update gate becomes switchlike (αz → ∞). To focus on the key aspects of these phenomena, we consider the case when the reset gate is off and there are no biases (αr = 0 and βr,h,z = 0). Moreover, we consider a piecewise linear approximation—sometimes called “hard” tanh—to the tanh function given by

| (B1) |

This approximation does not qualitatively change the nature of the clumping.

In the limit αz → ∞, the update gate σz becomes binary with a distribution given by

| (B2) |

where fz = 〈σz〉 is the fraction of update gates that are open (i.e., equal to one). Using this, along with the assumption that —which is valid in this regime—we can simplify the expression for the Green’s function [Eqs. (A38)–(A42)] to yield

| (B3) |

where fh is the fraction of hard tanh activations that are not saturated. In the limit of small τz and βr = 0, we get the expression for the density given in the text:

| (B4) |

Thus, we see an extensive number of eigenvalues at zero.

Now, let us study the regime where αz is large but not infinite. We would like to get the scaling behavior of the leading edge of the spectrum and the density of eigenvalues contained in a radius δ around the origin. We make an ansatz for the spectral edge close to zero , where c is a positive constant. With this ansatz, the equation for the spectral curve reads

| (B5) |

In the limit of large αz and βr = 0, this implies

| (B6) |

If this has a positive solution for c, then the scaling of the spectral edge as holds. Moreover, whenever there is a positive solution for c, we also expect pinching of the spectral curve, and in the limit αz → ∞ we have marginal stability.

Under the same approximation, we can approximate the eigenvalue density in a radius δ around zero as

| (B7) |

where we choose the contour along for θ ∈ [0, 2π) and . In the limit of large αz (thus, δ ≪ 1), we get the scaling form described in the main text:

| (B8) |

APPENDIX C: DETAILS OF THE DYNAMICAL MEAN-FIELD THEORY

The DMFT is a powerful analytical framework used to study the dynamics of disordered systems, and it traces its origins to the study of dynamical aspects of spin glasses [73,74] and has been later applied to the study of random neural networks [9,15,21,75]. In our case, the DMFT reduces the description of the full 3N-dimensional (deterministic) ordinary differential equations (ODEs) describing (h, z, r) to a set of three coupled stochastic differential equations for scalar variables (h, z, r).

Here, we provide a detailed, self-contained description of the dynamical mean-field theory for the gated RNN using the Martin–Siggia–Rose–De Dominicis–Janssen formalism. The starting point is a generating functional—akin to the generating function of a random variable—which takes an expectation over the paths generated by the dynamics. The generating functional is defined as

| (C1) |

where xj(t) ≡ [hj(t), zj(t), rj(t)] is the trajectory and is the argument of the generating functional. We also include external fields , which are used to calculate the response functions. The measure in the expectation is a path integral over the dynamics. The generating functional is then used to calculate correlation and response functions using the appropriate (variational) derivatives. For instance, the two-point function for the h field is given by

| (C2) |

Up until this point, things are quite general and do not rely on the specific form of the dynamics. However, for large random networks, we expect certain quantities such as the population averaged correlation function Ch ≡ N−1 Σi〈hi(t)hi(t′)〉 to be self-averaging and, thus, not vary much across realizations. Thus, we can study the disorder averaged (over 𝒥), the generating functional , and approximate with its value evaluated at the saddle point of the action. This approximation gives us the single-site DMFT picture of dynamics described in Eqs. (C19) and (C20).

To see how this all works, we start with the equations of motion (in vector form)

| (C3) |

| (C4) |

| (C5) |

where ⊙ stands for elementwise multiplication.

To write down the MSRDJ generating functional, let us discretize the dynamics (in the Itô convention). Note that in this convention the Jacobian is unity.

where we introduce external fields in the dynamics , , and . The generating functional is given by

| (C6) |

where , , and xj(t) ≡ [hj(t), zj(t), rj(t)]; also, the expectation is over the dynamics generated by the network. Writing this out explicitly, with δ functions enforcing the dynamics, we get the following integral for the generating functional:

| (C7) |

Now, let us introduce the Fourier representation for the δ function; this introduces an auxiliary field variable, which as we see allows us to calculate the response function in the MSRDJ formalism. The generating functional can then be expressed as

| (C8) |

where the functions fh,z,r summarize the gated RNN dynamics

Let us now take the continuum limit δt → 0 and formally define the measures 𝒟hi = limδt→0 ∏t dhi(t). We can then write the generating functional as a path integral:

| (C9) |

where , x = (hi, zi, ri), , and the action S which gives weights to the paths is given by

| (C10) |

The functional is properly normalized, so Z𝒥[0, b] = l. We can calculate correlation functions and response functions by taking appropriate variational derivatives of the generating functional Z, but first we address the role of the random couplings.

1. Disorder averaging

We are interested in the typical behavior of ensembles of the networks, so we work with the disorder-averaged generating functional ; Z𝒥 is properly normalized, so we are allowed to do this averaging on Z𝒥. Averaging over involves the following integral:

which evaluates to

and similarly for Jz and Jr we get terms

The disorder-averaged generating functional is then given by

| (C11) |

where the disorder-averaged action is given by

| (C12) |

With some foresight, we see the action is extensive in the system size, and we can try to reduce it to a single-site description. However, the issue now is that we have nonlocal terms (e.g., involving both i and j), and we can introduce the following auxiliary fields to decouple these nonlocal terms:

| (C13) |

To make the C’s free fields that we integrate over, we enforce these relations using the Fourier representation of δ functions with additional auxiliary fields:

This allows us to make the following transformations to decouple the nonlocal terms in the action :

We see clearly that the and Cϕ auxiliary fields which represent the (population-averaged) ϕσr − ϕσr and ϕ − ϕ correlation functions decouple the sites by summarizing all the information present in the rest of the network in terms of two-point functions; two different sites interact only by means of the correlation functions. The disorder-averaged generating functional can now be written as

| (C14) |

where C = (Ch, Cz, Cr) and Ĉ = (Ĉh, Ĉz, Ĉr). The sitewise decoupled action Sd contains only terms involving a single site (and the C fields). So, for a given value of Ĉ and C, the different sites are decoupled and driven by the sitewise action

| (C15) |

where

2. Saddle-point approximation for N → ∞

So far, we do not make any use of the fact that we are considering large networks. However, noting that N appears in the exponent in the expression for the disorder-averaged generating functional, we can approximate it using a saddle-point approximation:

We approximate the action ℒ in Eq. (C14) by its saddle-point value plus a Hessian term: ℒ ≃ ℒ0 + ℒ2 and the Q and Q̂ fields represent Gaussian fluctuations about the saddle-point values C0 and Ĉ0, respectively. At the saddle-point the action is stationary with respect to variations; thus, the saddle-point values of C fields satisfy

| (C16) |

In evaluating the saddle-point correlation function in the second line, we use the fact that equal-time response functions in the Itô convention are zero [29]. This is perhaps the first significant point of departure from previous studies of disordered neural networks and forces us to confront the multiplicative nature of the z gate. Here, 〈⋯〉0 denotes averages with respect to paths generated by the saddle-point action; thus, these equations are a self-consistency constraint. With the correlation fields fixed at their saddle-point values, if we neglect the contribution of the fluctuations (i.e., ignore ℒ2), then the generating functional is given by a product of identical sitewise generating functionals:

| (C17) |

where the sitewise functionals are given by

| (C18) |

where .

The sitewise decoupled action is now quadratic with the correlation functions taking on their saddle-point values. This corresponds to an action for each site containing three scalar variables driven by Gaussian processes. This can be seen explicitly by using a Hubbard-Stratonovich transform which makes the action linear at the cost of introducing three auxiliary Gaussian fields ηh, ηz, and ηr with correlation functions , , and , respectively. With this transformation, the action for each site corresponds to stochastic dynamics for three scalar variables given by

| (C19) |

| (C20) |

| (C21) |

where the Gaussian noise processes ηh, ηz, and ηr have correlation functions that must be determined self-consistently:

The intuitive picture of the saddle-point approximation is as follows: The sites of the full network become decoupled, and they are each driven by a Gaussian processes whose correlation functions summarize the activity of the rest of the network “felt” by each site. It is possible to argue about the final result heuristically, but one does not have access to the systematic corrections that a field theory formulation affords.

We comment here on the unique difficulty that gating presents to an analysis of the DMFT. While r(t) and z(t) are both described by Gaussian processes in the DMFT, the multiplicative σz(z) interaction in Eq. (C19) spoils the Gaussianity of h(t). Note that r(t) is always Gaussian and uncorrelated to h(t). In order to try solving for the correlation functions, we need to make a factorization assumption, justified numerically in Fig. 10. The story simplifies at a fixed point, where h = ηh (since σz > 0), and is, thus, Gaussian and independent of r.

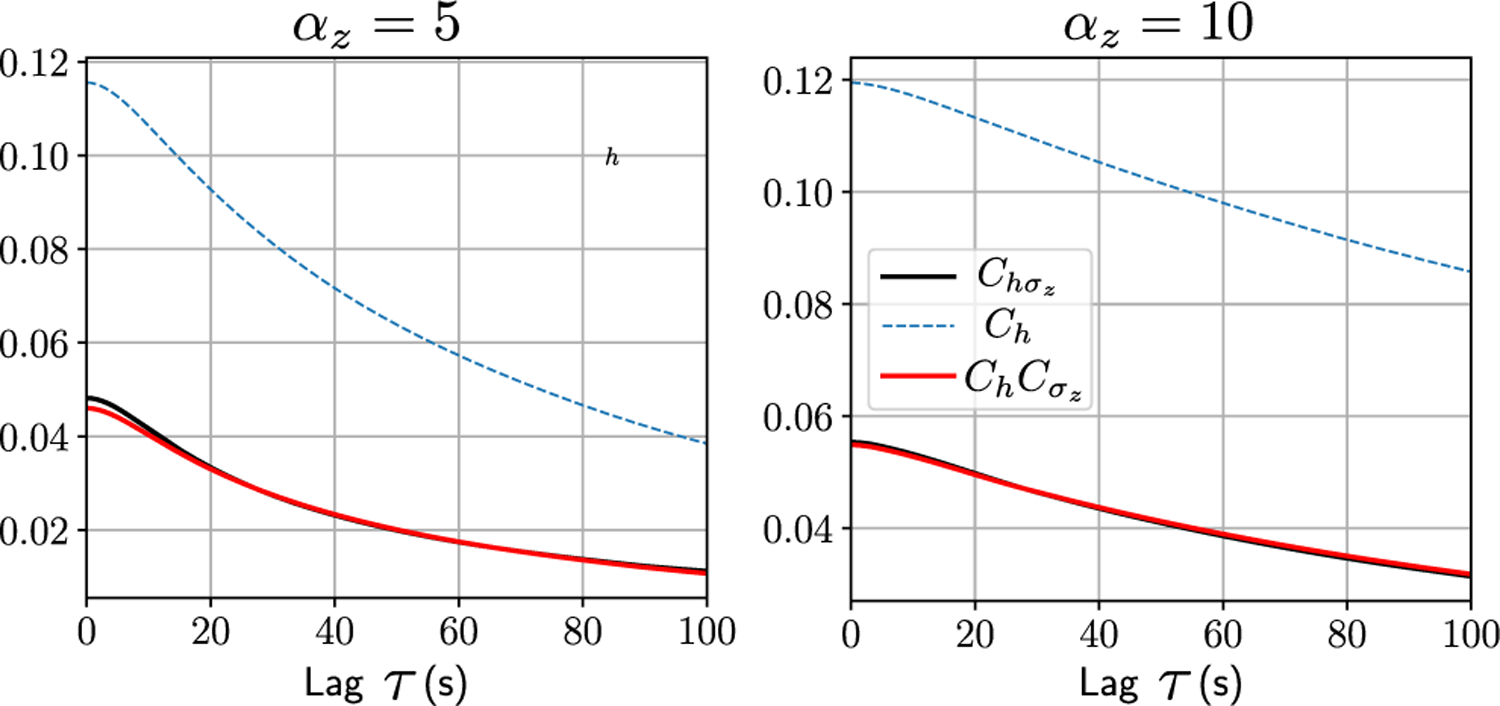

In order to solve the DMFT equations, we use a numerical method described in Ref. [76]. Specifically, we generate noise paths ηh,z,r starting with an initial guess for the correlation functions and then iteratively update the correlation functions using the mean-field equations till convergence. The classical method of solving the DMFT by mapping the DMFT equations to a second-order ODE describing the motion of a particle in a potential cannot be used in the presence of multiplicative gates. In Fig. 9, we see that the solution to the mean-field equations agrees well with the true population-averaged correlation function; Fig. 9 also shows the scale of fluctuations around the mean-field solutions (Fig. 9, thin black lines).

The correlation functions in the DMFT picture such as Ch(t, t′) = 〈h(t)h(t′)〉 are the order parameters and correspond to the population-averaged correlation functions in the full network. These turn out to useful in our analysis of the RNN dynamics in some analyses. Qualitative changes in the correlation functions correspond to transitions between dynamical regimes of the RNN.

In general, the non-Gaussian nature of h makes it impossible to get equations governing the correlation functions. However, when αz is not too large, Eqs. (C19) and (C20) can be extended to get equations of motions for the correlation functions Ch, Cz, and Cr, which proves useful later on. This requires a separation assumption between the h and σz correlators, which seems reasonable for moderate αz (see Fig. 10). “Squaring” Eqs. (C19) and (C20), we get

| (C22) |

| (C23) |

| (C24) |

where we use the shorthand σz(t) ≡ σz[z(t)], ϕ(t) ≡ ϕ[h(t)], and denote the two-time correlation functions as

| (C25) |

where x ∈ {h, z, r, σz, σr, ϕ} and the expectation here is over the random Gaussian fields in Eqs. (C19)–(C21). We assume that the network reaches steady state, so that the correlation functions are only a function of the time difference τ = t − t′. The role of the z gate as an adaptive time constant is evident in Eq. (C22).

FIG. 9.