Abstract

We present a unified conceptual framework and an associated software package, BNP-FRET, for analyzing single-photon arrivals in single-molecule Förster resonance energy transfer (smFRET) experiments using Bayesian nonparametrics. This unified framework addresses the following key physical complexities of a single-photon smFRET experiment: 1) fluorophore photophysics; 2) continuous-time kinetics of the labeled system, with large timescale separations between photophysical phenomena, such as excited photophysical state lifetimes, and events such as transitions between system states; 3) unavoidable detector artefacts; 4) background emissions; 5) an unknown number of system states; and 6) both continuous and pulsed illumination. These physical features necessarily demand a novel framework that extends beyond existing tools. In particular, items 1, 2, and 5 on the list naturally lead us to a hidden Markov model with a second-order structure and to Bayesian nonparametrics. In the second and third companion articles, we discuss the direct effects of these key complexities on the inference of parameters for continuous and pulsed illumination, respectively.

Why it matters

smFRET is a widely used technique for studying kinetics of molecular complexes. However, until now, smFRET data analysis methods have required specifying a priori the dimensionality of the underlying physical model (the exact number of kinetic parameters). Such approaches are inherently limiting given the typically unknown number of physical configurations a molecular complex may assume. The methods presented here eliminate this requirement and allow the physical model itself to be estimated along with kinetic parameters, while incorporating all sources of noise in the data.

Introduction

Förster resonance energy transfer (FRET) has served as a spectroscopic ruler to study motion at the nanometer scale (1,2,3,4), and has revealed insight into intra- and intermolecular dynamics of proteins (5,6,7,8,9,10,11), nucleic acids (12), and their interactions (13,14). In particular, single-molecule FRET (smFRET) experiments have been used to determine the pore size and opening mechanism of ion channels sensitive to mechanical stress in the membrane (15), the intermediate stages of protein folding (16,17), and the chromatin interactions modulated by the helper protein HP1, which is involved in allowing genetic transcription of tightly packed chromatin (18).

A typical FRET experiment involves labeling molecules of interest with donor and acceptor dyes such that the donor may transfer energy to the acceptor via dipole-dipole interaction when separated by distances of 2–10 nm (19). This interaction weakens rapidly with increasing donor-acceptor separation $r$, scaling as $1/r^6$ (20,21).

To induce FRET during experiments, the donor is illuminated by a continuous or pulsed light source for the desired time period or until the dyes photobleach. Upon excitation, the donor may emit a photon itself or transfer its energy nonradiatively to the acceptor, which eventually relaxes to emit a photon of a different color (20,21). As such, the data collected consist of photon arrival times (for single-photon experiments) or, otherwise, brightness values, in addition to the colors of photons collected in different detection channels.

The distance dependence in the rate of energy transfer between donor and acceptor is key in using smFRET as a molecular ruler. Furthermore, this distance dependence directly manifests itself as a higher fraction of photons detected in the acceptor channel when the dyes are closer together (as demonstrated in Fig. 1). This fraction is commonly referred to as the FRET efficiency,

$$\epsilon_{FRET} = \frac{I_A}{I_D + I_A} = \frac{1}{1 + (r/R_0)^6},$$

where $I_D$ and $I_A$ are the numbers of donor and acceptor photons detected in a given time period, respectively. In addition, $R_0$ is the characteristic separation that corresponds to a FRET efficiency of 0.5, that is, 50% of the emitted photons emanating from the acceptor.
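A minimal sketch of the two expressions for the FRET efficiency above. It assumes idealized photon counts with no background, crosstalk, or detector corrections. The function names are illustrative and are not part of BNP-FRET.

```python
def fret_efficiency_from_counts(n_donor: int, n_acceptor: int) -> float:
    """Apparent FRET efficiency: fraction of detected photons in the acceptor channel."""
    total = n_donor + n_acceptor
    if total == 0:
        raise ValueError("no photons detected in the time period")
    return n_acceptor / total


def fret_efficiency_from_distance(r: float, r0: float) -> float:
    """Ideal Förster dependence on donor-acceptor separation r (same units as r0)."""
    return 1.0 / (1.0 + (r / r0) ** 6)


print(fret_efficiency_from_counts(20, 80))      # 0.8
print(fret_efficiency_from_distance(5.0, 5.0))  # 0.5, since r equals R0
```

Because of the sixth-power dependence, halving the separation from $R_0$ already pushes the efficiency above 0.98.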

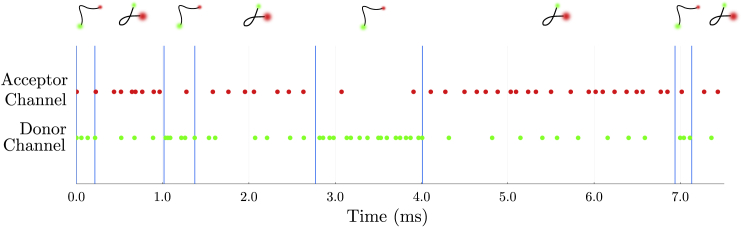

Figure 1.

A cartoon figure illustrating smFRET data. For the experiments considered here, the kinetics along the reaction coordinate defined along the donor-acceptor distance are monitored using single-photon arrival data. In the figure above, green dots represent photons arriving into the donor channel and red dots represent photons arriving into the acceptor channel. For the case where donor and acceptor label one molecule, the molecule's transitions between system states (coinciding with conformations) are reflected in the distance between the labels, which is measured through variations in detected photon arrival times and colors.

Now, the aim of smFRET is to capture on-the-fly changes in donor-acceptor distance. However, this is often confounded by several sources of stochasticity, which unavoidably obscure direct interpretation. These include: 1) the stochasticity inherent to photon arrival times; 2) a detector’s probabilistic response to an incoming photon (22); 3) background emissions (2); and 4) fluorescent labels’ stochastic photophysical properties (2). Taken together, these problems necessarily contribute to uncertainty in the number of distinct system states visited by a labeled system over an experiment’s course (23,24,25).

Here, we examine items 2 and 4 in greater detail. In particular, item 2 pertains to questions of crosstalk, detector efficiency, dead time, dark current, and the instrument response function (IRF), which introduces uncertainty into excited photophysical state lifetime assessments (22,26,27).

Item 4 refers to a collection of effects including limited quantum yield and variable brightness due to blinking of dyes caused by nonradiative pathways (28,29), photobleaching or permanent deactivation of the dyes (2,28,29), spectral overlap between the donor and acceptor dyes, which may result in direct excitation of the acceptors or leaking of photons into the incorrect channel (2,26), or a donor-acceptor pair’s relative misalignment or positioning resulting in false signals and inaccurate characterization of the separation between labeled molecules (2,30).

Although the goal has always remained to analyze the rawest form of data, the reality of these noise properties has traditionally led to the development of approximate binned photon analyses even when data are collected at the level of single photons across two detectors. Binning is either achieved by directly summing photon arrivals over a time period when using single-photon detectors (23,31) or by integrating intensity over a few pixels when using widefield detectors (32).

Binned data analyses can be used to determine the number and connectivity of system states (33) by computing average FRET efficiencies over bin time windows and using them in turn to construct FRET efficiency histograms (23,25,31,34,35,36). However, they come at the cost of averaging over kinetics that occur faster than the bin time and are otherwise not easily accessible (32,37,38). They also eliminate information afforded by, say, the excited photophysical state lifetime in the case of pulsed illumination.

While histogram analyses are suited to infer static molecular properties, kinetics over binned time traces have also been extracted by supplementing these techniques with a hidden Markov model (HMM) treatment (23,25,34,35,36,39).

Using HMMs, binned analysis techniques immediately face the difficulty of an unknown number of system states visited. Therefore, they require the number of system states as an input to deduce the putative kinetics between the candidate system states.

What is more, the binned analysis’ accuracy is determined by the bin sizes where large bins may result in averaging of the kinetics. Moreover, increasing bin size may lead to estimation of an excess number of system states. This artifact arises when a system appears to artificially spend more time in the system states below the bin size (38). To address these challenges, we must infer continuous time trajectories below the bin size through, for example, the use of Markov jump processes (32), while retaining a binned, i.e., discrete measurement model.

When single-photon data are available, we may avoid the binning issues inherent to binned HMM analyses (32,40,41). Doing so also allows us to directly leverage the noise properties of detectors for single-photon arrivals (e.g., the IRF), which are well calibrated at the single-photon level. Moreover, when using pulsed illumination, we can also incorporate information from photophysical state lifetimes that binning would otherwise eliminate. Incorporating all of this additional information naturally comes with an added computational cost (37), whose burden a successful method should mitigate.

Often, to help reduce computational costs, further approximations on the system kinetics are invoked, such as assuming system kinetics to be much slower than FRET label excitation and relaxation rates. This approximation helps decouple photophysical and system (molecular) kinetics (16,37,42,43).

What is more, to help reduce computational cost, existing direct photon arrival analysis methods further compromise their rigor by treating detector features and background as preprocessing steps (16,37,42,43). In doing so, simultaneous and self-consistent inference of kinetics and other molecular features becomes unattainable. Finally, all methods, whether relying on the analysis of binned photons or single-photon arrivals, suffer from the “model selection problem,” that is, the problem of identifying the number of system states warranted by the data. More precisely, it is the problem of propagating the uncertainty introduced by items 1–4 into a probability over the models (i.e., system states). Existing methods for system state identification provide only partial reprieve.

For example, while FRET histograms identify peaks to intuit the number of system states, these peaks may provide unreliable estimates for a number of reasons: 1) fast transitions between system states may result in a blurring of otherwise distinct peaks (1) or, counter-intuitively, introduce more peaks (25,38); 2) system states may differ primarily in kinetics but not FRET efficiency (40); 3) detector properties and background may introduce additional features in the histograms.

To address the model selection problem, overfitting penalization criteria (such as the Bayesian information criterion or BIC) (23,44) or variational Bayesian (24) approaches have been employed.

Often, these model selection methods assume implicit properties of the system. For example, the BIC requires the assumption of weak independence between measurements (i.e., ideally independent identically distributed measurements and thus no Markov kinetics in state space) and a unique likelihood maximum, both of which are violated in smFRET data (24). Furthermore, BIC and other such methods provide point estimates rather than full probabilities over system states ignoring uncertainty from items 1–4 propagated over models (45).

As such, we need to learn distributions over system states and kinetics warranted by the data, with their breadth dictated by the sources of uncertainty discussed above. More specifically, to address model selection and build joint distributions over system states and their kinetics, we treat the number of system states as a random variable, just as the current community treats smFRET kinetic rates as random variables (25,40,41). Our objective is therefore to obtain distributions over all unknowns (including system states and kinetics) while accounting for items 1–4. Furthermore, this must be achieved in a computationally efficient way, avoiding altogether the draconian assumptions of existing single-photon analysis methods. In other words, we want to do more (by learning joint distributions over the number of system states alongside everything else) and we want it to cost less.

If we insist on learning distributions over unknowns, then it is convenient to operate within a Bayesian paradigm. Also, if the model (i.e., the number of system states) is unknown, then we must further generalize to the Bayesian nonparametric (BNP) paradigm (25,41,46,47,48,49,50,51,52,53). BNPs address the model selection problem directly, concurrently and self-consistently with learning the associated model's parameters, and they output full distributions over the number of system states and the other parameters.

In this series of three companion articles, we present a complete description of single-photon smFRET analysis within the BNP paradigm addressing noise sources discussed above (items 1–4). In addition, we develop specialized computational schemes for both continuous and pulsed illumination for it to “cost less.”

Indeed, mitigating computational cost becomes critical, especially with the added complexity of working within the BNP paradigm. This, in itself, warrants a detailed treatment of continuous and pulsed illumination analyses in two companion articles.

To complement this theoretical framework, we also provide to the community a suite of programs called BNP-FRET written in the compiled language Julia for high performance. These freely available programs allow for comprehensive analysis of single-photon smFRET time traces on immobilized molecules obtained with a wide variety of experimental setups.

In what follows, we first present a forward model. Next, we build an inverse strategy to learn full posteriors within the BNP paradigm. Finally, multiple examples are presented by applying the method to simulated data sets across different parameter regimes. Experimental data are treated in the two subsequent companion articles (54,55).

Forward model

Conventions

To be consistent throughout our three-part article, we precisely define some terms as follows.

1. a macromolecular complex under study is always referred to as a system,
2. the configurations through which a system transitions are termed system states, typically labeled using $\sigma$,
3. FRET dyes undergo quantum mechanical transitions between photophysical states, typically labeled using $\psi$,
4. a system-FRET combination is always referred to as a composite,
5. a composite undergoes transitions among its superstates, typically labeled using $\phi$,
6. all transition rates are typically labeled using $\lambda$,
7. the symbol $N$ is generally used to represent the total number of discretized time windows, typically labeled with $n$, and
8. the symbol $\mathbf{w}_n$ is generally used to represent the observations in the $n$-th time window.

smFRET data

Here, we briefly describe the data collected from typical smFRET experiments analyzed by BNP-FRET. In such experiments, donor and acceptor dyes labeling a system can be excited using either continuous illumination or pulsed illumination, where short laser pulses arrive at regular time intervals. Moreover, acceptors can also be excited by nonradiative transfer of energy from an excited donor to a nearby acceptor. Upon relaxation, both donor and acceptor can emit photons collected by single-photon detectors. These detectors record the set of photon arrival times and detection channels. We denote the arrival times by

$$\mathbf{T} = \{T_{start}, T_1, T_2, \ldots, T_K, T_{end}\}$$

and detection channels with

$$\mathbf{c} = \{c_1, c_2, \ldots, c_K\}$$

for a total number of $K$ photons. In the equations above, $T_{start}$ and $T_{end}$ are the experiment's start and end times. Further, we emphasize here that the strategy used to index the detected photons above is independent of the illumination setup used.

Throughout the experiment, photon detection rates from the donor and acceptor dyes vary as the distance between them changes, due to the system kinetics. In cases where the distances form an approximately finite set, we treat the system as exploring a discrete system state space. The acquired FRET traces can then be analyzed to estimate the transition rates between these system states assuming a known model (i.e., known number of system states). We will lift this assumption of knowing the model a priori in the section “nonparametrics: predicting the number of system states.”

Cases where the system state space is continuous fall outside the scope of the current work and require extensions of (56) and (57) currently in progress.

In the following subsections, we present a physical model (forward model) describing the evolution of an immobilized system labeled with a FRET pair. We use this model to derive, step-by-step, the collected data’s likelihood given a choice of model parameters. Furthermore, given the mathematical nature of what is to follow, we will accompany major parts of our derivations with a pedagogical example of a molecule labeled with a FRET pair undergoing transitions between just two system states to demonstrate each new concept in example boxes.

Likelihood

To derive the likelihood, we begin by considering the stochastic evolution of an idealized system transitioning through a discrete set of $M_\sigma$ total system states, $\{\sigma_1, \ldots, \sigma_{M_\sigma}\}$. The system is labeled with a FRET pair having $M_\psi$ discrete photophysical states, $\{\psi_1, \ldots, \psi_{M_\psi}\}$, which represent the fluorophores in their ground, excited, triplet, blinking, photobleached, or other quantum mechanical states. The combined system-FRET composite now undergoes transitions between $M$ superstates, $\{\phi_1, \ldots, \phi_M\}$, corresponding to all possible ordered pairs of the system and photophysical states. To be precise, we define $\phi_{(i-1)M_\psi + j} = (\sigma_i, \psi_j)$, where $M = M_\sigma M_\psi$.
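The superstate ordering defined above can be made concrete with a small index map. This is a plain-Python sketch that uses 1-based indices as in the text. The function names are illustrative.

```python
def superstate_index(i: int, j: int, m_psi: int) -> int:
    """1-based index k of superstate (sigma_i, psi_j): k = (i - 1) * M_psi + j."""
    return (i - 1) * m_psi + j


def superstate_pair(k: int, m_psi: int) -> tuple[int, int]:
    """Inverse map: recover the 1-based pair (i, j) from the superstate index k."""
    i0, j0 = divmod(k - 1, m_psi)
    return i0 + 1, j0 + 1


m_sigma, m_psi = 2, 3
superstates = [superstate_pair(k, m_psi) for k in range(1, m_sigma * m_psi + 1)]
print(superstates)  # [(1, 1), (1, 2), (1, 3), (2, 1), (2, 2), (2, 3)]
```

With this ordering, the photophysical index varies fastest. This is what produces the block structure of the generator matrix discussed next.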

Assuming Markovianity (memorylessness) of transitions among superstates, the probability of finding the composite in a specific superstate at a given instant evolves according to the master equation (40).

$$\frac{d\boldsymbol{\rho}(t)}{dt} = \boldsymbol{\rho}(t)\,\mathbf{G} \tag{1}$$

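where the row vector $\boldsymbol{\rho}(t)$ of length $M$ has elements coinciding with probabilities for finding the system-FRET composite in a given superstate at time $t$. More explicitly, defining the photophysical portion of the probability vector corresponding to system state $\sigma_i$ as

$$\boldsymbol{\rho}_{\sigma_i}(t) = \left[\rho_{(\sigma_i,\psi_1)}(t), \rho_{(\sigma_i,\psi_2)}(t), \ldots, \rho_{(\sigma_i,\psi_{M_\psi})}(t)\right],$$

we can write $\boldsymbol{\rho}(t)$ as

$$\boldsymbol{\rho}(t) = \left[\boldsymbol{\rho}_{\sigma_1}(t), \boldsymbol{\rho}_{\sigma_2}(t), \ldots, \boldsymbol{\rho}_{\sigma_{M_\sigma}}(t)\right].$$

Furthermore, in the master equation above, $\mathbf{G}$ is the generator matrix of size $M \times M$ populated by all transition rates between superstates.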

Each diagonal element of the generator matrix corresponds to a self-transition and is equal to the negative sum of the remaining transition rates within the corresponding row. That is, $\lambda_{\phi_i \to \phi_i} = -\sum_{j \neq i} \lambda_{\phi_i \to \phi_j}$. This results in zero row sums, ensuring that $\boldsymbol{\rho}(t)$ remains normalized at all times, as described later in more detail (see Eq. 5). Furthermore, for simplicity, we assume no simultaneous transitions among system states and photophysical states, as such events are rare (although these events may be incorporated into the model by expanding the superstate space). This assumption results in $\lambda_{(\sigma_i,\psi_j)\to(\sigma_l,\psi_m)} = 0$ for simultaneous $i \neq l$ and $j \neq m$, which allows us to simplify the notation further. That is, $\lambda_{(\sigma_i,\psi_j)\to(\sigma_i,\psi_m)} = \lambda^{\sigma_i}_{\psi_j\to\psi_m}$ (for any $i$) and $\lambda_{(\sigma_i,\psi_j)\to(\sigma_l,\psi_j)} = \lambda_{\sigma_i\to\sigma_l}$ (for any $j$). This leads to the following form for the generator matrix, containing blocks of exclusively photophysical and exclusively system transition rates, respectively:

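$$\mathbf{G} = \begin{bmatrix} \mathbf{G}_\psi^{\sigma_1} + \lambda_{\sigma_1\to\sigma_1}\mathbf{I} & \lambda_{\sigma_1\to\sigma_2}\mathbf{I} & \cdots & \lambda_{\sigma_1\to\sigma_{M_\sigma}}\mathbf{I} \\ \lambda_{\sigma_2\to\sigma_1}\mathbf{I} & \mathbf{G}_\psi^{\sigma_2} + \lambda_{\sigma_2\to\sigma_2}\mathbf{I} & \cdots & \lambda_{\sigma_2\to\sigma_{M_\sigma}}\mathbf{I} \\ \vdots & \vdots & \ddots & \vdots \\ \lambda_{\sigma_{M_\sigma}\to\sigma_1}\mathbf{I} & \lambda_{\sigma_{M_\sigma}\to\sigma_2}\mathbf{I} & \cdots & \mathbf{G}_\psi^{\sigma_{M_\sigma}} + \lambda_{\sigma_{M_\sigma}\to\sigma_{M_\sigma}}\mathbf{I} \end{bmatrix} \tag{2}$$

where the matrices $\mathbf{G}_\psi^{\sigma_i}$ on the diagonal are the photophysical parts of the generator matrix for a system found in the $i$-th system state. In addition, $\mathbf{I}$ is the identity matrix of size $M_\psi \times M_\psi$.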

For later convenience, we also organize the system transition rates in Eq. 2 as a matrix

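$$\mathbf{G}_\sigma = \begin{bmatrix} \lambda_{\sigma_1\to\sigma_1} & \lambda_{\sigma_1\to\sigma_2} & \cdots & \lambda_{\sigma_1\to\sigma_{M_\sigma}} \\ \lambda_{\sigma_2\to\sigma_1} & \lambda_{\sigma_2\to\sigma_2} & \cdots & \lambda_{\sigma_2\to\sigma_{M_\sigma}} \\ \vdots & \vdots & \ddots & \vdots \\ \lambda_{\sigma_{M_\sigma}\to\sigma_1} & \lambda_{\sigma_{M_\sigma}\to\sigma_2} & \cdots & \lambda_{\sigma_{M_\sigma}\to\sigma_{M_\sigma}} \end{bmatrix} \tag{3}$$

which we call the system generator matrix.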
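System transitions leave the photophysical state unchanged. Therefore, Eq. 2 can be assembled as a block-diagonal matrix of photophysical generators plus the Kronecker product of the system generator matrix (Eq. 3) with the $M_\psi \times M_\psi$ identity. The following NumPy sketch shows this construction. It assumes superstates are ordered as $(\sigma_i, \psi_j)$ with $\psi$ varying fastest. `assemble_generator` is a hypothetical helper name, and the toy rates are arbitrary.

```python
import numpy as np


def assemble_generator(photophysical_blocks, system_generator):
    """Assemble the superstate generator matrix G of Eq. 2.

    photophysical_blocks: sequence of M_sigma arrays G_psi^{sigma_i}, each M_psi x M_psi
        with zero row sums (their diagonals hold the photophysical self-transition terms).
    system_generator: M_sigma x M_sigma array G_sigma (Eq. 3) with zero row sums.
    """
    blocks = [np.asarray(b, dtype=float) for b in photophysical_blocks]
    g_sigma = np.asarray(system_generator, dtype=float)
    m_sigma, m_psi = g_sigma.shape[0], blocks[0].shape[0]
    g = np.zeros((m_sigma * m_psi, m_sigma * m_psi))
    # Diagonal blocks: photophysical transitions within each system state.
    for i, block in enumerate(blocks):
        g[i * m_psi:(i + 1) * m_psi, i * m_psi:(i + 1) * m_psi] = block
    # System transitions keep psi fixed: lambda_{sigma_i -> sigma_l} * I (including i == l).
    return g + np.kron(g_sigma, np.eye(m_psi))


# Toy usage with arbitrary illustrative rates (not taken from any experiment).
g_psi_1 = np.array([[-1.0, 1.0], [2.0, -2.0]])
g_psi_2 = np.array([[-1.0, 1.0], [5.0, -5.0]])
g_sigma = np.array([[-0.1, 0.1], [0.3, -0.3]])
G = assemble_generator([g_psi_1, g_psi_2], g_sigma)
print(np.allclose(G.sum(axis=1), 0.0))  # True: every row of G sums to zero
```

Entries that would correspond to simultaneous system and photophysical transitions come out as zero automatically, since the off-diagonal blocks are multiples of the identity.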

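Moreover, the explicit forms of $\mathbf{G}_\psi^{\sigma_i}$ in Eq. 2 depend on the photophysical transitions allowed in the model. For instance, if the FRET pair is allowed to go from its ground photophysical state to the excited donor or excited acceptor states only, the matrix is given as

$$\mathbf{G}_\psi^{\sigma_i} = \begin{bmatrix} * & \lambda_{ex} & \lambda_{direct} \\ \lambda_d & * & \lambda_{FRET}^{\sigma_i} \\ \lambda_a & 0 & * \end{bmatrix} \tag{4}$$

Here, rows and columns are ordered as the ground, excited donor, and excited acceptor states, and the $*$ along the diagonal represents the negative row sum of the remaining elements. The other symbols are as follows: $\lambda_{ex}$ is the excitation rate, $\lambda_d$ and $\lambda_a$ are the donor and acceptor relaxation rates, respectively, $\lambda_{direct}$ is the rate of direct excitation of the acceptor by the laser, and $\lambda_{FRET}^{\sigma_i}$ is the donor-to-acceptor FRET transition rate when the system is in its $i$-th system state. We note that only FRET transitions depend on the system states (identified by dye-dye separations) and correspond to FRET efficiencies given by

$$\epsilon_{FRET}^{\sigma_i} = \frac{\lambda_{FRET}^{\sigma_i}}{\lambda_{FRET}^{\sigma_i} + \lambda_d},$$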
where the ratio on the right hand side represents the fraction of FRET transitions among all competing transitions out of an excited donor, that is, the fraction of emitted acceptor photons among total emitted photons.
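The following NumPy sketch builds the photophysical block of Eq. 4 and evaluates the FRET efficiency expression above. Function and argument names are illustrative. The rates in the usage lines are placeholders in ns⁻¹, and the excitation rate in particular is arbitrary.

```python
import numpy as np


def photophysical_generator(lam_ex, lam_d, lam_a, lam_direct, lam_fret):
    """Eq. 4 with states ordered as (ground, excited donor, excited acceptor)."""
    g = np.array([
        [0.0, lam_ex, lam_direct],  # ground -> excited donor / excited acceptor
        [lam_d, 0.0, lam_fret],     # excited donor -> ground (relaxation) / acceptor (FRET)
        [lam_a, 0.0, 0.0],          # excited acceptor -> ground (relaxation)
    ])
    # The diagonal (the * entries) holds the negative sum of each row's off-diagonal rates.
    np.fill_diagonal(g, -g.sum(axis=1))
    return g


def fret_efficiency(lam_fret, lam_d):
    """Fraction of excited-donor decays that proceed by FRET rather than donor relaxation."""
    return lam_fret / (lam_fret + lam_d)


g = photophysical_generator(lam_ex=1.0, lam_d=0.28, lam_a=0.29, lam_direct=0.0, lam_fret=2.5)
print(np.allclose(g.sum(axis=1), 0.0))                      # True
print(round(fret_efficiency(lam_fret=0.25, lam_d=0.25), 3))  # 0.5
```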

With the generator matrix at hand, we now look for solutions to the master equation of Eq. 1. Due to its linearity, the master equation accommodates the following analytical solution:

$$\boldsymbol{\rho}(t) = \boldsymbol{\rho}_{start}\exp\left[(t - t_{start})\mathbf{G}\right] = \boldsymbol{\rho}_{start}\,\mathbf{Q}(t - t_{start}) \tag{5}$$

This illustrates how the probability vector $\boldsymbol{\rho}(t)$ arises from propagating the initial probability vector $\boldsymbol{\rho}_{start}$ at time $t_{start}$ by the exponential of the generator matrix (the propagator matrix $\mathbf{Q}$). The exponential maps the transition rates in the generator matrix to their corresponding transition probabilities populating the propagator matrix. The zero row sums of the generator matrix guarantee that the resulting propagator matrix is stochastic, i.e., its rows are probabilities that sum to unity, $\sum_j Q_{ij} = 1$.
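Eq. 5 can be checked numerically on a toy case. The sketch below uses a simple scaling-and-squaring Taylor approximation of the matrix exponential. `expm_taylor` is a hypothetical helper; in practice one would typically call `scipy.linalg.expm`. The sketch applies it to an arbitrary two-state generator and compares the result with the closed-form two-state solution $\rho_1(t) = \tfrac{b}{a+b} + \tfrac{a}{a+b}e^{-(a+b)t}$ for rates $a = 2$ and $b = 1$.

```python
import numpy as np


def expm_taylor(a, n_terms=30):
    """Matrix exponential by scaling and squaring with a truncated Taylor series.

    A simple stand-in for scipy.linalg.expm, adequate for small, well-scaled
    matrices; not a production-grade implementation.
    """
    a = np.asarray(a, dtype=float)
    norm = np.linalg.norm(a, ord=1)
    # Choose s so that ||a / 2**s|| <= 0.5, where the Taylor series converges quickly.
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    b = a / 2.0**s
    result = np.eye(a.shape[0])
    term = np.eye(a.shape[0])
    for k in range(1, n_terms):
        term = term @ b / k
        result = result + term
    # Undo the scaling: exp(a) = exp(b) ** (2 ** s).
    for _ in range(s):
        result = result @ result
    return result


G = np.array([[-2.0, 2.0], [1.0, -1.0]])  # toy two-state generator (rates in 1/time)
rho_start = np.array([1.0, 0.0])
Q = expm_taylor(0.7 * G)                  # propagator over a duration of 0.7
rho_t = rho_start @ Q                     # Eq. 5
print(np.allclose(Q.sum(axis=1), 1.0))    # True: Q is row-stochastic
```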

Example I: State space and generator matrix.

For a molecule undergoing transitions between its two conformations, we have $M_\sigma = 2$ system states given as $\sigma \in \{\sigma_1, \sigma_2\}$. The photophysical states of the FRET pair labeling this molecule are defined according to whether the donor or acceptor is excited. Denoting the ground state by $\text{G}$ and the excited state by $\text{E}$, we can write all photophysical states of the FRET pair as $\psi \in \{\psi_1, \psi_2, \psi_3\} = \{(\text{G}, \text{G}), (\text{E}, \text{G}), (\text{G}, \text{E})\}$, where the first element in each ordered pair represents the donor state. Furthermore, here, we assume no simultaneous excitation of the donor and acceptor owing to its rarity.

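Next, we construct the superstate space with ordered pairs $\phi \in \{\phi_1, \ldots, \phi_6\} = \{(\sigma_1, \psi_1), (\sigma_1, \psi_2), (\sigma_1, \psi_3), (\sigma_2, \psi_1), (\sigma_2, \psi_2), (\sigma_2, \psi_3)\}$. Finally, the full generator matrix for this setup reads

$$\mathbf{G} = \begin{bmatrix} * & \lambda_{ex} & \lambda_{direct} & \lambda_{\sigma_1\to\sigma_2} & 0 & 0 \\ \lambda_d & * & \lambda_{FRET}^{\sigma_1} & 0 & \lambda_{\sigma_1\to\sigma_2} & 0 \\ \lambda_a & 0 & * & 0 & 0 & \lambda_{\sigma_1\to\sigma_2} \\ \lambda_{\sigma_2\to\sigma_1} & 0 & 0 & * & \lambda_{ex} & \lambda_{direct} \\ 0 & \lambda_{\sigma_2\to\sigma_1} & 0 & \lambda_d & * & \lambda_{FRET}^{\sigma_2} \\ 0 & 0 & \lambda_{\sigma_2\to\sigma_1} & \lambda_a & 0 & * \end{bmatrix}$$

where each $*$ again denotes the negative sum of the remaining elements in its row.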
Both here and in similar example boxes that follow, we choose values for rates commonly encountered in experiments (17). We consider a laser exciting a donor at rate $\lambda_{ex}$. Next, we suppose that the molecule switches between system states $\sigma_1$ and $\sigma_2$ at rates $\lambda_{\sigma_1\to\sigma_2}$ and $\lambda_{\sigma_2\to\sigma_1}$.

Furthermore, assuming typical lifetimes of 3.6 and 3.5 ns for the donor and acceptor dyes (17), their relaxation rates are, respectively, $\lambda_d = 1/3.6 \approx 0.28$ ns−1 and $\lambda_a = 1/3.5 \approx 0.29$ ns−1. We also assume that there is no direct excitation of the acceptor and thus $\lambda_{direct} = 0$. Next, we choose FRET efficiencies of 0.2 and 0.9 for the two system states, resulting in $\lambda_{FRET}^{\sigma_1} \approx 0.07$ ns−1 and $\lambda_{FRET}^{\sigma_2} = 2.5$ ns−1.
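The FRET rates above follow from inverting the FRET efficiency expression given after Eq. 4. As a worked check using only the lifetimes and efficiencies stated in this example:

$$\begin{aligned}
\epsilon_{FRET} = \frac{\lambda_{FRET}}{\lambda_{FRET} + \lambda_d} \quad&\Longrightarrow\quad \lambda_{FRET} = \frac{\epsilon_{FRET}}{1 - \epsilon_{FRET}}\,\lambda_d, \qquad \lambda_d = \frac{1}{3.6\ \text{ns}},\\
\lambda_{FRET}^{\sigma_1} &= \frac{0.2}{0.8}\times\frac{1}{3.6\ \text{ns}} \approx 0.069\ \text{ns}^{-1} \approx 0.07\ \text{ns}^{-1},\\
\lambda_{FRET}^{\sigma_2} &= \frac{0.9}{0.1}\times\frac{1}{3.6\ \text{ns}} = 2.5\ \text{ns}^{-1}.
\end{aligned}$$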

Finally, these values lead to the following generator matrix (in units)

After describing the generator matrix and deriving the solution to the master equation, we continue by explaining how to incorporate observations into a likelihood.

In the absence of observations, transitions among the set of superstates are unconstrained. However, when monitoring the system using suitable detectors, observations rule out specific transitions at the observation time. For example, ignoring background for now, the detection of a photon from a FRET label identifies a transition from an excited photophysical state to a lower energy photophysical state of that label. On the other hand, the absence of a detected photon during a time period indicates either that no radiative transitions occurred or that the detectors failed to register them. Consequently, even periods without photon detections are informative in the presence of a detector. In other words, observations from a single-photon smFRET experiment are continuous in that they are defined at every point in time.

In addition, since smFRET traces report radiative transitions of the FRET labels at photon arrival times, uncertainty remains about the occurrences of unmonitored transitions (e.g., between system states). Put differently, smFRET traces (observations) only partially specify superstates at any given time.

Now, to compute the likelihood for such smFRET traces, we must sum the probabilities of all trajectories across superstates (superstate trajectories) consistent with a given set of observations, which we collectively denote by $\mathbf{w}$. Assuming the system ends in superstate $\phi_{end}$ at $T_{end}$, this sum over all possible trajectories is, in general, given by the element of the propagated vector $\boldsymbol{\rho}_{end}$ corresponding to superstate $\phi_{end}$. Therefore, a general likelihood may be written as

$$\mathcal{L} = p(\mathbf{w}, \phi_{end} | \boldsymbol{\rho}_{start}, \mathbf{G}) = \rho_{end}(\phi_{end}) \tag{6}$$

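However, as the final superstate at time $T_{end}$ is usually unknown, we must marginalize (sum) over the final superstate to obtain the following likelihood

$$\mathcal{L} = p(\mathbf{w} | \boldsymbol{\rho}_{start}, \mathbf{G}) = \sum_{\phi_{end}} \rho_{end}(\phi_{end}) = \boldsymbol{\rho}_{end}\mathbf{1}^\top \tag{7}$$

where all elements of the row vector $\mathbf{1}$ are set to 1 so that the product sums the probabilities in the vector $\boldsymbol{\rho}_{end}$. In the following sections, we describe how to obtain concrete forms for these general likelihoods.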

Absence of observations

For pedagogical reasons, it is helpful to first look at the trivial case where a system-FRET composite evolves but no observations are made (due, say, to a lack of detection channels). In this case, all allowed superstate trajectories are possible between the start time of the experiment, $T_{start}$, and its end, $T_{end}$. This is because, without observations, the superstate cannot be specified or otherwise restricted at any given time. Consequently, the probability vector remains normalized throughout the experiment, as no superstate trajectory is excluded. As such, the likelihood is given by summing over probabilities associated with the entire set of trajectories, that is,

$$\mathcal{L} = p(T_{start}, T_{end} | \boldsymbol{\rho}_{start}, \mathbf{G}) = \boldsymbol{\rho}_{start}\exp\left[(T_{end} - T_{start})\mathbf{G}\right]\mathbf{1}^\top = 1 \tag{8}$$

where $T_1, T_2, \ldots$, the emission times of all emitted photons, are not recorded due to the lack of detection channels and thus do not appear in the expression.

In what follows, we describe how the probability vector does not remain normalized as it evolves from $\boldsymbol{\rho}_{start}$ to $\boldsymbol{\rho}_{end}$ when detectors partially collapse knowledge of the occupied superstate during the experiment. This results in a likelihood smaller than one. For now, we do so for the conceptually simpler case of continuous illumination.

Introducing observations

To compute the likelihood when single-photon detectors are present, we start by defining a measurement model where the observation at a given time is dictated by ongoing transitions and detector features (e.g., crosstalk, detector efficiency). As we will see in more detail later, suppose we describe the evolution of a system by defining its states at discrete time points, and these states are not directly observed (and are thus hidden). Then this measurement model adopts the form of an HMM. Here, Markovianity arises when a given hidden state depends only on its immediately preceding hidden state. In such HMMs, an observation at a given time is directly derived from the concurrent hidden state.

As an example of an HMM, for binned smFRET traces, an observation is often approximated to depend only on the current hidden state. However, contrary to such a naive HMM, an observation in a single-photon setup in a given time period depends on the current superstate and the immediate previous superstate. This naturally enforces a second-order structure on the HMM where each observed random variable depends on two superstates, as we demonstrate shortly. A similar HMM structure was noted previously to model a fluorophore’s photo-switching behavior in (58).

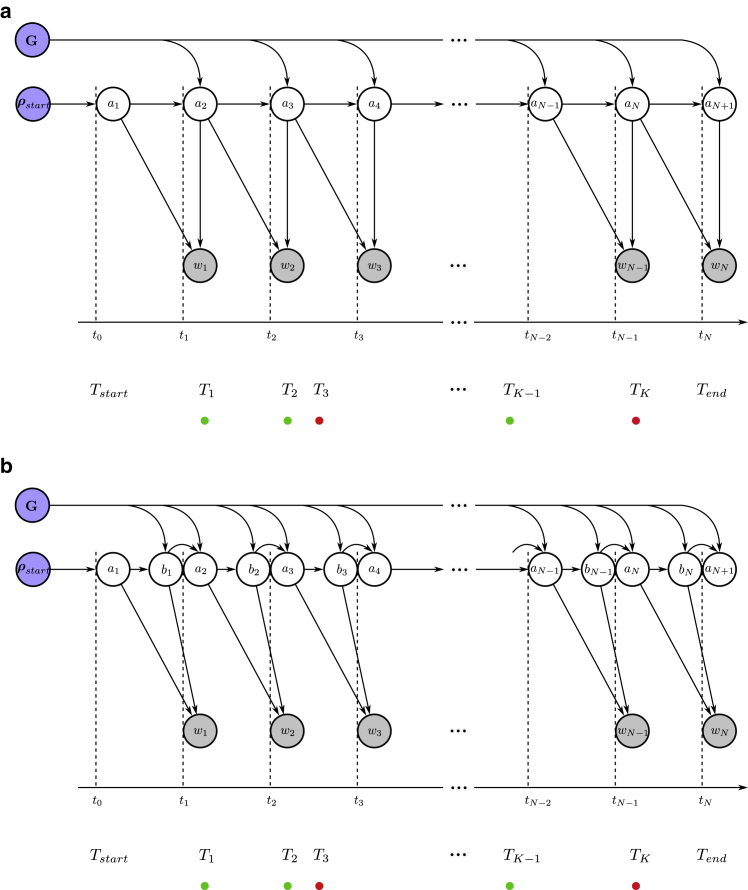

Now, to address this observation model, we first divide the experiment's time duration into $N$ windows of equal size, $\epsilon = (T_{end} - T_{start})/N$. We will eventually take the continuum limit to recover the original system as described by the master equation. We also sum over all possible transitions between superstates within each window. These windows are marked by the times (see Fig. 2 a)

$$\{t_0, t_1, t_2, \ldots, t_N\},$$

where the $n$-th window is given by $[t_{n-1}, t_n]$ with $t_0 = T_{start}$ and $t_N = T_{end}$. Corresponding to each time window, we have observations

$$\mathbf{w} = \{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_N\},$$

where $\mathbf{w}_n = \varnothing$ if no photons are detected and $\mathbf{w}_n = \{(c_{n1}, T_{n1}), (c_{n2}, T_{n2}), \ldots\}$ otherwise, with the $j$-th photon in a window being recorded by the channel $c_{nj}$ at time $T_{nj}$. Note here that, because each time window spans a continuous interval, the observations in a window allow for multiple photon arrivals or none at all.
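The partitioning of photon arrivals into the observations of each window can be sketched in plain Python as follows. The function name and the tie-breaking rule are assumptions of this sketch. Under that rule, a photon exactly on an interior boundary goes to the later window, and a photon at the end time goes to the last window.

```python
from bisect import bisect_right


def split_into_windows(arrival_times, channels, t_start, t_end, n_windows):
    """Group photons into N equal windows as lists of (channel, arrival time) pairs.

    An empty list corresponds to a window in which no photons were detected.
    """
    eps = (t_end - t_start) / n_windows
    edges = [t_start + n * eps for n in range(n_windows + 1)]
    windows = [[] for _ in range(n_windows)]
    for t, c in zip(arrival_times, channels):
        if not (t_start <= t <= t_end):
            raise ValueError(f"arrival time {t} outside [{t_start}, {t_end}]")
        # Window whose left edge is the last edge <= t; clamp t == t_end into the last window.
        n = min(bisect_right(edges, t) - 1, n_windows - 1)
        windows[n].append((c, t))
    return windows


windows = split_into_windows([0.5, 1.2, 1.3, 3.9], ["D", "A", "A", "D"], 0.0, 4.0, 4)
print(windows)  # [[('D', 0.5)], [('A', 1.2), ('A', 1.3)], [], [('D', 3.9)]]
```

The third window is empty, illustrating that windows without detections still carry an observation: the absence of photons.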

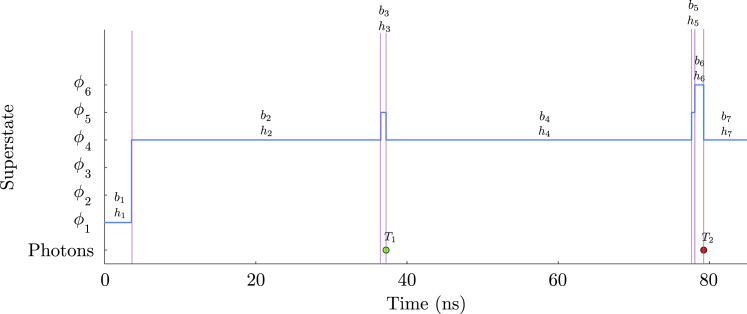

Figure 2.

Graphical models depicting the random variables and parameters involved in the generation of photon arrival data for smFRET experiments. Circles shaded in blue represent parameters of interest we wish to deduce, namely transition rates and probabilities. The circles shaded in gray correspond to observations. The unshaded circles represent the superstates. The arrows reflect conditional dependence among these variables and colored dots represent photon arrivals. Going from (a) to (b), we convert the original HMM with a second-order structure to a naive HMM where each observation only depends on one state.

As mentioned earlier, each of these observations originates from the evolution of the superstate. Therefore, we define the superstates occupied at the beginning of each window as

$$\mathbf{s} = \{s_1, s_2, \ldots, s_N, s_{N+1}\},$$

where $s_n \in \{\phi_1, \ldots, \phi_M\}$ is the superstate at the beginning of the $n$-th time window, as shown in Fig. 2 a, and $s_{N+1}$ is the superstate at $T_{end}$. The framework described here can be employed to compute the likelihood. However, the second-order structure of the HMM leads to complications in these calculations. In the rest of this section, we first illustrate this complication using a simple example and then describe a solution.

Example II: Naive likelihood computation.

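Here, we calculate the likelihood for our two-state system described earlier. For simplicity alone, we attempt the likelihood calculation for a time period spanning the first two time windows in Fig. 2 a. Within this period, the system-FRET composite evolves from superstate $s_1$ to $s_3$, giving rise to observations $\{\mathbf{w}_1, \mathbf{w}_2\}$. The likelihood for such a setup is typically obtained recursively by marginalizing over superstates (summing over all possible superstate trajectories):

$$\begin{aligned}
\mathcal{L} &= p(\mathbf{w}_1, \mathbf{w}_2 | \mathbf{G}, \boldsymbol{\rho}_{start}) = \sum_{s_1, s_2, s_3} p(\mathbf{w}_1, \mathbf{w}_2, s_1, s_2, s_3 | \mathbf{G}, \boldsymbol{\rho}_{start}) \\
&= \sum_{s_1, s_2, s_3} p(\mathbf{w}_2 | \mathbf{w}_1, s_1, s_2, s_3, \mathbf{G}, \boldsymbol{\rho}_{start})\, p(\mathbf{w}_1 | s_1, s_2, s_3, \mathbf{G}, \boldsymbol{\rho}_{start})\, p(s_3 | s_1, s_2, \mathbf{G}, \boldsymbol{\rho}_{start})\, p(s_2 | s_1, \mathbf{G}, \boldsymbol{\rho}_{start})\, p(s_1 | \mathbf{G}, \boldsymbol{\rho}_{start}) \\
&= \sum_{s_1, s_2, s_3} p(\mathbf{w}_2 | s_2, s_3, \mathbf{G})\, p(\mathbf{w}_1 | s_1, s_2, \mathbf{G})\, p(s_3 | s_2, \mathbf{G})\, p(s_2 | s_1, \mathbf{G})\, p(s_1 | \boldsymbol{\rho}_{start})
\end{aligned}$$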
Here, we have applied the chain rule of probabilities in each step. Moreover, in the last step, we have only retained the parameters that are directly connected to the random variable on the left in each term, as shown by arrows in Fig. 2 a.

Now, for our two system state example, each of $s_1$, $s_2$, and $s_3$ can be any of the six superstates given earlier. As such, the sum above contains $6^3 = 216$ terms even for this simple example. For a large number of time windows $N$, the number of terms grows as $M^{N+1}$, and computing this sum becomes prohibitively expensive. Therefore, it is common to use a recursive approach to find the likelihood, which requires only $O(NM^2)$ operations, as we describe in the next section. However, due to our HMM's second-order structure, the first two terms (involving observations) in the above sum are both conditioned on the shared superstate $s_2$, which forbids such recursive calculations.
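To make this scaling concrete, the following back-of-the-envelope comparison uses the $M = 6$ superstates of the two-state example. Recursive costs are counted as roughly $NM^2$ elementary multiply-adds (one vector-matrix product per window, constant factors ignored). The choice of $N = 1000$ windows is an arbitrary illustration:

$$\begin{aligned}
N = 2:&\quad M^{N+1} = 6^3 = 216\ \text{terms}, &\qquad NM^2 &= 2 \times 36 = 72,\\
N = 1000:&\quad M^{N+1} = 6^{1001} \approx 8.5 \times 10^{778}\ \text{terms}, &\qquad NM^2 &= 1000 \times 36 = 3.6 \times 10^{4}.
\end{aligned}$$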

Having described the issue that the second-order structure of our HMM creates for computing the likelihood, we now describe a solution. To simplify the likelihood calculation, we temporarily introduce superstates $s_n^{end}$ at the end of the $n$-th window. Each $s_n^{end}$ is separated from the superstate $s_{n+1}$ at the beginning of the $(n+1)$-th window by a short time $\tau$, during which no observations are recorded (inactive detectors), as shown in Fig. 2 b. This procedure allows us to conveniently remove the dependency of consecutive observations on a mutual superstate. That is, consecutive observations $\mathbf{w}_n$ and $\mathbf{w}_{n+1}$ no longer depend on a common superstate $s_{n+1}$, but rather on the separated pairs $(s_n, s_n^{end})$ and $(s_{n+1}, s_{n+1}^{end})$. The sequence of superstates now looks like (see Fig. 2 b)

$$\{s_1, s_1^{end}, s_2, s_2^{end}, \ldots, s_N, s_N^{end}\} \tag{9}$$

which now permits a recursive strategy for likelihood calculation, as described in the next section. Furthermore, we will eventually take the limit $\tau \to 0$ to obtain the likelihood of the original HMM with the second-order structure.

Recursion formulas

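We now have the means to compute the terminal probability vector $\boldsymbol{\rho}_{end}$ by evolving the initial vector $\boldsymbol{\rho}_{start}$. This is most conveniently achieved by recursively marginalizing (summing) over all superstates in Eq. 9 backward in time, starting from the last superstate, as follows

$$\mathcal{L} = p(\mathbf{w} | \boldsymbol{\rho}_{start}, \mathbf{G}) = \sum_{s_N^{end}} p(\mathbf{w}_{1:N}, s_N^{end} | \boldsymbol{\rho}_{start}, \mathbf{G}) = \sum_{s_N^{end}} \rho_N^{end}(s_N^{end}) \tag{10}$$

where $\rho_N^{end}(s_N^{end})$ are elements of the vector $\boldsymbol{\rho}_N^{end}$ (equal to $\boldsymbol{\rho}_{end}$) of length $M$, commonly known as a filter (59). More generally, we denote by $\boldsymbol{\rho}_n$ and $\boldsymbol{\rho}_n^{end}$ the filters at the beginning and end of the $n$-th window. Their elements are $\rho_n(s_n) = p(\mathbf{w}_{1:n-1}, s_n | \boldsymbol{\rho}_{start}, \mathbf{G})$ and $\rho_n^{end}(s_n^{end}) = p(\mathbf{w}_{1:n}, s_n^{end} | \boldsymbol{\rho}_{start}, \mathbf{G})$, where $\mathbf{w}_{1:n} = \{\mathbf{w}_1, \ldots, \mathbf{w}_n\}$. Moving backward in time, the filter at the beginning of the $(n+1)$-th time window, $\boldsymbol{\rho}_{n+1}$, is related to the filter at the end of the $n$-th window, $\boldsymbol{\rho}_n^{end}$, due to Markovianity, as follows

$$\rho_{n+1}(s_{n+1}) = \sum_{s_n^{end}} \rho_n^{end}(s_n^{end})\, p(s_{n+1} | s_n^{end}, \mathbf{G}) \tag{11}$$

or in matrix notation as

$$\boldsymbol{\rho}_{n+1} = \boldsymbol{\rho}_n^{end}\,\mathbf{Q}_\tau,$$

where $p(s_{n+1} | s_n^{end}, \mathbf{G})$ are the elements of the transition probability matrix $\mathbf{Q}_\tau$ described in the next section. Again due to Markovianity, the filter at the end of the $n$-th window, $\boldsymbol{\rho}_n^{end}$, is related to the filter at the beginning of the same time window, $\boldsymbol{\rho}_n$, as

$$\rho_n^{end}(s_n^{end}) = \sum_{s_n} \rho_n(s_n)\, p(\mathbf{w}_n, s_n^{end} | s_n, \mathbf{G}) \tag{12}$$

or in matrix notation as

$$\boldsymbol{\rho}_n^{end} = \boldsymbol{\rho}_n\,\mathbf{Q}_n^{r},$$

where the terms $p(\mathbf{w}_n, s_n^{end} | s_n, \mathbf{G})$ populate the transition probability matrix $\mathbf{Q}_n^{r}$ described in the next section. Here, we use the superscript $r$ to denote that elements of this matrix include observation probabilities. We note here that the last filter in the recursion formula, $\boldsymbol{\rho}_1$, is equal to the starting probability vector $\boldsymbol{\rho}_{start}$ itself.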
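The recursion in Eqs. 11 and 12 can be written as a simple loop over windows in increasing order, which is equivalent to evaluating the nested sums from the innermost outward. The following NumPy sketch takes the matrices described in the next section as given inputs. `terminal_filter` is a hypothetical name, and the numbers in the usage lines are arbitrary placeholders.

```python
import numpy as np


def terminal_filter(rho_start, q_tau, reduced_propagators):
    """Forward loop over Eqs. 11-12 (hypothetical helper, illustrative only).

    rho_start: length-M starting probability vector (the filter rho_1).
    q_tau: M x M propagator over the observation-free gap of duration tau.
    reduced_propagators: sequence of N matrices whose elements are
        p(w_n, s_n^end | s_n), i.e., transition probabilities including observations.
    Returns rho_N^end; summing its elements gives the likelihood of Eq. 10.
    """
    rho = np.asarray(rho_start, dtype=float)
    q_tau = np.asarray(q_tau, dtype=float)
    n_windows = len(reduced_propagators)
    for n, q_r in enumerate(reduced_propagators):
        rho = rho @ np.asarray(q_r, dtype=float)  # Eq. 12: window n with its observations
        if n < n_windows - 1:
            rho = rho @ q_tau                      # Eq. 11: gap before the next window
    return rho


# Placeholder two-superstate example: rows of q_r sum to less than one because
# observation probabilities are below one; an identity gap propagator mimics tau -> 0.
q_r = np.array([[0.5, 0.2], [0.1, 0.3]])
rho_end = terminal_filter([1.0, 0.0], np.eye(2), [q_r, q_r])
print(rho_end, rho_end.sum())  # terminal filter and the resulting likelihood
```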

Reduced propagators

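To derive the different terms in the recursive filter formulas, we first note that the transition probabilities $p(\phi_{n+1}^{\text{start}} \mid \phi_n^{\text{end}})$ and $p(\phi_n^{\text{end}} \mid \phi_n^{\text{start}})$ do not involve observations. As such, we can use the full propagator as follows:

$$p(\phi_{n+1}^{\text{start}} \mid \phi_n^{\text{end}}) = \left(\Pi_\tau\right)_{\phi_n^{\text{end}}\phi_{n+1}^{\text{start}}} = \left(\exp(G\tau)\right)_{\phi_n^{\text{end}}\phi_{n+1}^{\text{start}}}$$

and

$$p(\phi_n^{\text{end}} \mid \phi_n^{\text{start}}) = \left(\Pi_\epsilon\right)_{\phi_n^{\text{start}}\phi_n^{\text{end}}} = \left(\exp(G\epsilon)\right)_{\phi_n^{\text{start}}\phi_n^{\text{end}}},$$

respectively, where $G$ is the generator matrix and $\epsilon$ is the window size. On the other hand, the term $p(w_n \mid \phi_n^{\text{start}}, \phi_n^{\text{end}})$ includes observations, which modify the propagator by ruling out a subset of transitions. For instance, the observation of a photon momentarily eliminates all nonradiative transitions. The required modifications can be organized into a matrix $D_n$ of the same size as the propagator, with elements $(D_n)_{\phi_n^{\text{start}}\phi_n^{\text{end}}} = p(w_n \mid \phi_n^{\text{start}}, \phi_n^{\text{end}})$. We term all such matrices detection matrices. The product in Eq. 12 can now be written as

$$p(w_n, \phi_n^{\text{end}} \mid \phi_n^{\text{start}}) = \left(\Pi_\epsilon\right)_{\phi_n^{\text{start}}\phi_n^{\text{end}}} \left(D_n\right)_{\phi_n^{\text{start}}\phi_n^{\text{end}}} = \left(\Pi^{w}_n\right)_{\phi_n^{\text{start}}\phi_n^{\text{end}}},$$

which relates the modified propagator in the presence of observations (termed the reduced propagator and denoted by the superscript $w$ hereafter) to the full propagator (no observations). Plugging the matrices introduced above into the recursive filter formulas (Eqs. 11 and 12), we obtain, in matrix notation,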

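$$u_{n+1}^{\text{start}} = u_n^{\text{end}}\,\Pi_\tau, \qquad u_n^{\text{end}} = u_n^{\text{start}}\left(\Pi_\epsilon \odot D_n\right) = u_n^{\text{start}}\,\Pi^{w}_n, \qquad (13)$$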

where the symbol $\odot$ represents the element-by-element product of matrices. Here, however, the detection matrices cannot yet be computed analytically, as the observations allow for an arbitrary number of transitions within the finite time window $\epsilon$. They become manageable in the limit that the time windows become vanishingly small, as we demonstrate later.

Likelihood for the HMM with second-order structure

Now, inserting the matrix expressions for the filters of Eq. 13 into the likelihood of Eq. 10, we arrive at

$$L = \sum_{\phi_N^{\text{end}}} u_N^{\text{end}}(\phi_N^{\text{end}}) = u_N^{\text{end}}\,\mathbf{1}^T = \rho_{\text{start}}\,\Pi^{w}_1\,\Pi_\tau\,\Pi^{w}_2\,\Pi_\tau \cdots \Pi_\tau\,\Pi^{w}_N\,\mathbf{1}^T,$$

where, in the second step, we added a row vector of ones, $\mathbf{1}$, at the end to sum over all elements. Here, the superscript $T$ denotes the matrix transpose. The structure of the likelihood above thus amounts to propagating the initial probability vector to the final probability vector via multiple propagators corresponding to the time windows.

Now, in the limit $\tau \rightarrow 0$, we have

$$\Pi_\tau = \exp(G\tau) \rightarrow I,$$

where $I$ is the identity matrix. In this limit, we recover the likelihood for the HMM with a second-order structure as

$$L = \rho_{\text{start}}\,\Pi^{w}_1\,\Pi^{w}_2 \cdots \Pi^{w}_N\,\mathbf{1}^T. \qquad (14)$$

We note that the final probability vector, $\rho_{\text{end}} = \rho_{\text{start}}\,\Pi^{w}_1 \cdots \Pi^{w}_N$, is not normalized to one after propagation, owing to the reduced propagators that encode the observations. More precisely, the reduced propagators restrict the evolution of the superstates over a time window to only the subset of trajectories consistent with the observation over that window. This, in turn, yields a probability vector whose elements sum to less than one. That is,

$$L = \rho_{\text{end}}\,\mathbf{1}^T = \sum_{\phi} \rho_{\text{end}}(\phi) < 1. \qquad (15)$$
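The recursion of Eqs. 13-15 maps directly onto a forward pass over windows. The Python sketch below is illustrative only: it assumes the reduced propagators are already available as NumPy arrays, and the function name `log_likelihood` and the per-window rescaling are our choices rather than part of BNP-FRET. Rescaling after every window avoids numerical underflow, and the product of the normalization constants reproduces the (sub-unit) sum in Eq. 15.

```python
import numpy as np

def log_likelihood(rho_start, reduced_propagators):
    """log(rho_start Pi^w_1 ... Pi^w_N 1^T), following Eqs. 13-14 (sketch).

    The filter is renormalized after every window to avoid underflow; the
    log of each normalization constant is accumulated instead.
    """
    u = np.asarray(rho_start, dtype=float)   # u_1^start = rho_start
    log_l = 0.0
    for pi_w in reduced_propagators:
        u = u @ pi_w        # u_n^end = u_n^start Pi^w_n (Pi_tau -> I as tau -> 0)
        s = u.sum()         # probability mass consistent with observation w_n
        log_l += np.log(s)
        u = u / s           # rescaled filter carried into the next window
    return log_l
```

Because each reduced propagator removes probability mass, every `s` is at most one, so the returned log-likelihood is nonpositive, in line with Eq. 15.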

Continuum limit

Until now, the finite size $\epsilon$ of the time window allowed for an arbitrary number of transitions per window, which prevents computing an exact form for the detection matrices. Here, we take the continuum limit, in which the time windows become vanishingly small (that is, $\epsilon \rightarrow 0$). Thus, no more than one transition is permitted per window. This allows us to fully specify the detection matrices $D_n$.

To derive the detection matrices, we first assume ideal detectors with 100% efficiency and include detector effects in subsequent sections (see the “detection effects” section). In this case, the absence of photon detections during a time window, while detectors are active, indicates that only nonradiative transitions took place. Thus, only nonradiative transitions have nonzero probabilities in the detection matrices. As such, for evolution from superstate $\phi_i$ to $\phi_j$, the elements of the nonradiative detection matrix, $D^{\text{non}}$, are given by

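$$D^{\text{non}}_{\phi_i\phi_j} = \begin{cases} 1 & \text{if } \phi_i \rightarrow \phi_j \text{ is nonradiative (including self-transitions)},\\ 0 & \text{otherwise}. \end{cases} \qquad (16)$$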

On the other hand, when the $k$-th photon is recorded in a time window, only the elements corresponding to radiative transitions are nonzero in the detection matrix, denoted by $D^{\text{rad}}_k$, as

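$$D^{\text{rad}}_{k,\,\phi_i\phi_j} = \begin{cases} 1 & \text{if } \phi_i \rightarrow \phi_j \text{ is radiative, emitting into the channel that recorded the } k\text{-th photon},\\ 0 & \text{otherwise}. \end{cases} \qquad (17)$$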

Here, we note that the radiative detection matrices have zeros along their diagonals, since self-transitions are nonradiative.
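The ideal detection matrices of Eqs. 16 and 17 are simply 0/1 masks over the generator matrix. The sketch below builds them for a hypothetical three-state FRET pair (GG, EG, GE) under a single system state; the state ordering, the `RADIATIVE` dictionary, and the channel labels are assumptions made for illustration. Note that "nonradiative" here means "emits no detectable photon", so excitation and FRET transfer both count as such.

```python
import numpy as np

# Hypothetical ordering of photophysical states for one system state:
# 0 = GG (both dyes in ground state), 1 = EG (donor excited), 2 = GE (acceptor excited).
# Radiative transitions mapped to the channel their photon reaches (ideal detectors).
RADIATIVE = {(1, 0): "D",    # donor emission
             (2, 0): "A"}    # acceptor emission

def ideal_detection_matrices(n_states, radiative, channels=("D", "A")):
    """0/1 masks in the spirit of Eqs. 16 (nonradiative) and 17 (radiative)."""
    d_non = np.ones((n_states, n_states))          # self-transitions stay allowed
    d_rad = {c: np.zeros((n_states, n_states)) for c in channels}
    for (i, j), c in radiative.items():
        d_non[i, j] = 0.0      # a silent window rules out radiative transitions
        d_rad[c][i, j] = 1.0   # a photon in channel c allows only these transitions
    return d_non, d_rad

d_non, d_rad = ideal_detection_matrices(3, RADIATIVE)
```

The diagonals of the radiative masks are zero, matching the remark above that self-transitions are nonradiative.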

We can now define the reduced propagators corresponding to the nonradiative and radiative detection matrices, $\Pi^{\text{non}}$ and $\Pi^{\text{rad}}_k$, using the Taylor approximation as

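$$\Pi^{\text{non}} = \exp(G\epsilon)\odot D^{\text{non}} \approx (I + G\epsilon)\odot D^{\text{non}} = I + G^{\text{non}}\epsilon \approx \exp\left(G^{\text{non}}\epsilon\right), \qquad (18)$$

$$\Pi^{\text{rad}}_k = \exp(G\epsilon)\odot D^{\text{rad}}_k \approx (I + G\epsilon)\odot D^{\text{rad}}_k = G^{\text{rad}}_k\,\epsilon. \qquad (19)$$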

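In the equations above, $G^{\text{non}} = G\odot D^{\text{non}}$ and $G^{\text{rad}}_k = G\odot D^{\text{rad}}_k$, where the symbol $\odot$ again represents the element-by-element product of matrices. In the nonradiative propagator, $I\odot D^{\text{non}} = I$ because self-transitions are nonradiative. Furthermore, the product between the identity matrix and $D^{\text{rad}}_k$ vanishes in the radiative propagator because $D^{\text{rad}}_k$ has zeros along its diagonal.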
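As a numerical sanity check on Eqs. 18 and 19, the sketch below compares the exact masked propagators with their first-order forms over a very small window. The three-state model and all rates (in 1/ns) are hypothetical; `scipy.linalg.expm` computes the matrix exponential.

```python
import numpy as np
from scipy.linalg import expm

# Hypothetical rates in 1/ns; states: 0 = GG, 1 = EG (donor excited), 2 = GE (acceptor excited).
lam_ex, lam_d, lam_fret, lam_a = 0.01, 0.25, 0.5, 0.3
G = np.array([[0.0, lam_ex, 0.0],
              [lam_d, 0.0, lam_fret],
              [lam_a, 0.0, 0.0]])
np.fill_diagonal(G, -G.sum(axis=1))   # generator rows sum to zero

d_non = np.ones((3, 3))
d_non[1, 0] = d_non[2, 0] = 0.0       # donor and acceptor emissions are radiative
d_rad_D = np.zeros((3, 3))
d_rad_D[1, 0] = 1.0                   # donor emission registered in the donor channel

G_non = G * d_non                     # G ⊙ D^non
G_rad_D = G * d_rad_D                 # G ⊙ D^rad

eps = 1e-4                            # window size in ns (vanishingly small)
Pi_full = expm(G * eps)
err_non = np.abs(Pi_full * d_non - expm(G_non * eps)).max()   # Eq. 18 residual
err_rad = np.abs(Pi_full * d_rad_D - G_rad_D * eps).max()     # Eq. 19 residual
# Both residuals scale as eps**2, far below the O(eps) off-diagonal entries.
```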

Example III: Detection matrices.

For our two-system-state example described earlier, the detection matrices of Eqs. 16 and 17 take simple forms. The radiative detection matrices have the same size as the generator matrix, with nonzero elements wherever a rate is associated with a radiative transition

where the subscripts D and A denote photon detection in the donor and acceptor channels, respectively. Similarly, the nonradiative detection matrix is obtained by setting all elements of the generator matrix related to radiative transitions to zero and the remaining elements to one, as

Final likelihood

With the asymptotic forms of the reduced propagators in Eq. 14 now defined in the last subsection, we have all the ingredients needed to arrive at the final form of the likelihood.

To do so, we begin by considering the period right after the detection of the $k$-th photon, at time $T_k$, until the detection of the $(k+1)$-th photon, at time $T_{k+1}$. For this period, the nonradiative propagators in Eq. 14 can be merged into a single propagator, $\exp\left(G^{\text{non}}(T_{k+1} - T_k)\right)$, since their identical (and hence commuting) arguments can simply be added. Furthermore, at the end of this interphoton period, the radiative propagator $G^{\text{rad}}_{k+1}\epsilon$ marks the arrival of the $(k+1)$-th photon. The product of these two propagators

$$\Pi_k = \exp\left(G^{\text{non}}(T_{k+1} - T_k)\right) G^{\text{rad}}_{k+1}\,\epsilon \qquad (20)$$

now governs the stochastic evolution of the system-FRET composite during that interphoton period.

Inserting Eq. 20 for each interphoton period into the likelihood for the HMM with second-order structure in Eq. 14, we finally arrive at our desired likelihood

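$$L = \rho_{\text{start}}\,\Pi_0\,\Pi_1 \cdots \Pi_{K-1}\exp\left(G^{\text{non}}(T_{\text{end}} - T_K)\right)\mathbf{1}^T, \qquad (21)$$

where $K$ is the number of detected photons, and $T_0$ and $T_{\text{end}}$ mark the beginning and end of the trace, so that $\Pi_0$ covers the period before the first photon.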

This likelihood has the same structure as the one derived by Gopich and Szabo (40).
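A minimal sketch of Eqs. 20 and 21 follows. It assumes the generator and the ideal detection masks are given as NumPy arrays, uses `scipy.linalg.expm`, and drops the constant factor $\epsilon^K$, which does not depend on any rate and therefore does not affect parameter estimation. The function name and its arguments are hypothetical; the filter is rescaled after each photon to avoid underflow in long traces.

```python
import numpy as np
from scipy.linalg import expm

def photon_log_likelihood(G, d_non, d_rad, rho_start, times, channels,
                          t0=0.0, t_end=None):
    """Log-likelihood of photon arrivals following Eqs. 20-21, up to log(eps**K).

    d_rad maps a channel label to its radiative detection matrix.
    """
    G_non = G * d_non                      # nonradiative generator, G ⊙ D^non
    u = np.asarray(rho_start, dtype=float)
    log_l, t_prev = 0.0, t0
    for t, c in zip(times, channels):
        # photon-free evolution, then the radiative jump marking this photon
        u = u @ expm(G_non * (t - t_prev)) @ (G * d_rad[c])
        s = u.sum()
        log_l += np.log(s)
        u, t_prev = u / s, t               # rescale to avoid underflow
    if t_end is not None:                  # no photons between the last one and t_end
        log_l += np.log((u @ expm(G_non * (t_end - t_prev))).sum())
    return log_l
```

Passing `t_end` accounts for the photon-free stretch after the last detection; omitting it ends the trace at the last photon.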

Example IV: Propagator and likelihood.

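Here, we consider a simple FRET trace in which two photons are detected at times 0.05 and 0.15 ms in the donor and acceptor channels, respectively. To demonstrate the ideas developed so far, we calculate the likelihood of these observations as (see Eq. 21, with the trace starting at time zero and ending at the second detection)

$$L = \rho_{\text{start}}\exp\left(G^{\text{non}}\times 0.05\ \text{ms}\right) G^{\text{rad}}_{\text{D}}\,\epsilon\,\exp\left(G^{\text{non}}\times 0.1\ \text{ms}\right) G^{\text{rad}}_{\text{A}}\,\epsilon\,\mathbf{1}^T.$$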

To do so, we first need to calculate using the nonradiative detection and generator matrices found in the previous example boxes

and similarly

Next, we proceed to calculate and . Remembering that the first photon was detected in the donor channel, we have (in units)

Similarly, since the second photon was detected in the acceptor channel, we can write (in units)

We also assume that the system is initially in the superstate giving . Finally, putting everything together, we can find the likelihood as where is a constant and does not contribute to parameter estimations, as we show later.

Effect of binning single-photon smFRET data

When considering binned FRET data, the duration of an experiment is typically divided into a finite number of equally sized time windows (bins), and the photon counts (intensities) in each bin are recorded in the detection channels. This contrasts with single-photon analysis, where individual photon arrival times are recorded. To arrive at the likelihood for such binned data, we start with the single-photon likelihood derived in Eq. 15, where the window size $\epsilon$ is no longer infinitesimally small, that is,

$$L = \rho_{\text{start}}\,\Pi^{w}_1\,\Pi^{w}_2 \cdots \Pi^{w}_N\,\mathbf{1}^T, \qquad (22)$$

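where

$$\left(\Pi^{w}_n\right)_{\phi_i\phi_j} = \left(\exp(G\epsilon)\right)_{\phi_i\phi_j}\left(D_n\right)_{\phi_i\phi_j},$$

or, in matrix notation,

$$\Pi^{w}_n = \exp(G\epsilon)\odot D_n, \qquad (23)$$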

where $D_n$ is the detection matrix introduced in the “reduced propagators” section and $\epsilon$ now denotes the (finite) bin size.

Next, we must sum over all superstate trajectories that may give rise to the recorded photon counts (observations) in each bin. However, such a sum is challenging to compute analytically; it has been attempted in (38). Here, we only show the likelihood computation under approximations/assumptions commonly applied when analyzing binned smFRET data: 1) the bin size is much smaller than the typical time spent in a system state, that is, for a system transition rate $\lambda$, we have $\lambda\epsilon \ll 1$; and 2) the excitation rate is much slower than the dye relaxation and FRET rates or, in other words, interphoton periods are much longer than the excited-state lifetimes.

The first assumption reflects realistic situations in which system kinetics (on the seconds timescale) are many orders of magnitude slower than photophysical transitions (on the nanoseconds timescale). This timescale separation allows us to simplify the propagator calculation in Eq. 23. To see this, we first separate the system transition rates from the photophysical transition rates in the generator matrix as

$$G = G_\sigma \otimes I_\psi + G_\psi, \qquad (24)$$

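where $\otimes$ denotes a tensor (Kronecker) product, $I_\psi$ is the identity matrix of size $M_\psi$ (the number of photophysical states), $G_\sigma$ is the generator matrix containing only system transition rates, previously defined in Eq. 3, so that $G_\sigma \otimes I_\psi$ is the corresponding portion of the full generator matrix, and $G_\psi$ is the portion containing only photophysical transition rates, that is,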

| (25) |

and

| (26) |

where $G_\psi^{\sigma_i}$ is the photophysical generator matrix corresponding to system state $\sigma_i$, given in Eq. 4.

Now, plugging Eq. 24 into the full propagator $\exp(G\epsilon)$ and applying the Zassenhaus formula for matrix exponentials, we get

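$$\exp(G\epsilon) = \exp(G_\psi\epsilon)\,\exp\left((G_\sigma\otimes I_\psi)\epsilon\right)\,\exp\left(\frac{\epsilon^2}{2}\left[G_\sigma\otimes I_\psi,\, G_\psi\right]\right)\cdots, \qquad (27)$$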

where the square brackets denote the commutator of the enclosed matrices and the ellipsis represents the remaining exponentials involving higher-order commutators. Furthermore, the commutator is a very sparse matrix, given block-wise by

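$$\left[G_\sigma\otimes I_\psi,\, G_\psi\right]_{[\sigma_i,\sigma_j]} = \left(G_\sigma\right)_{\sigma_i\sigma_j}\left(G_\psi^{\sigma_j} - G_\psi^{\sigma_i}\right), \qquad (28)$$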

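where $[\sigma_i,\sigma_j]$ labels the $M_\psi \times M_\psi$ block connecting superstates with system state $\sigma_i$ to those with system state $\sigma_j$. The diagonal blocks vanish, and, since system states differ in their FRET rates, each difference $G_\psi^{\sigma_j} - G_\psi^{\sigma_i}$ is nonzero only in the few elements involving FRET rates.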

Now, the propagator calculation in Eq. 27 simplifies if the commutator term is negligible, implying that either the bin size is very small, such that $\lambda\epsilon \ll 1$ (our first assumption), or that the FRET rates/efficiencies of different system states are almost indistinguishable ($\lambda_{\text{FRET}}^{\sigma_i} \approx \lambda_{\text{FRET}}^{\sigma_j}$). Under such conditions, the system state can be assumed to stay constant during a bin, with system transitions occurring only at the ends of bins. Furthermore, the full propagator in Eq. 27 can now be approximated as

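$$\exp(G\epsilon) \approx \exp(G_\psi\epsilon)\,\exp\left((G_\sigma\otimes I_\psi)\epsilon\right) = \Pi_\psi\left(\Pi_\sigma\otimes I_\psi\right), \qquad (29)$$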

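where the last equality follows from the block-diagonal form of $G_\psi$ given in Eq. 25, together with $\exp\left((G_\sigma\otimes I_\psi)\epsilon\right) = \exp(G_\sigma\epsilon)\otimes I_\psi$, and $\Pi_\sigma$ is the system transition probability matrix (propagator), given as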

$$\Pi_\sigma = \exp(G_\sigma\epsilon). \qquad (30)$$

Moreover, $\Pi_\psi$ is the photophysical transition probability matrix (propagator), given as

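$$\Pi_\psi = \exp(G_\psi\epsilon) = \begin{pmatrix} \Pi_\psi^{\sigma_1} & & \\ & \ddots & \\ & & \Pi_\psi^{\sigma_{M_\sigma}} \end{pmatrix}, \qquad (31)$$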

where the diagonal blocks are given as $\Pi_\psi^{\sigma_i} = \exp(G_\psi^{\sigma_i}\epsilon)$. Furthermore, because of the block-diagonal structure of $\Pi_\psi$, the matrix multiplication in Eq. 29 results in

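$$\left[\exp(G\epsilon)\right]_{[\sigma_i,\sigma_j]} \approx \left(\Pi_\sigma\right)_{\sigma_i\sigma_j}\,\Pi_\psi^{\sigma_i}, \qquad (32)$$

where $[\sigma_i,\sigma_j]$ labels the $M_\psi \times M_\psi$ block connecting superstates with system state $\sigma_i$ to those with system state $\sigma_j$.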
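The block structure behind Eqs. 24 and 27-32 can be checked numerically. The sketch below assumes two system states that differ only in a hypothetical FRET rate, with all rates in 1/ns chosen for illustration; it builds $G_\sigma\otimes I_\psi$ with `np.kron` and the block-diagonal photophysical part with `scipy.linalg.block_diag`, then compares the commutator with the block formula of Eq. 28.

```python
import numpy as np
from scipy.linalg import block_diag, expm

def photophysical_generator(lam_ex, lam_d, lam_fret, lam_a):
    """Hypothetical 3-state FRET-pair generator (GG, EG, GE); rates in 1/ns."""
    G = np.array([[0.0, lam_ex, 0.0],
                  [lam_d, 0.0, lam_fret],
                  [lam_a, 0.0, 0.0]])
    np.fill_diagonal(G, -G.sum(axis=1))
    return G

M_psi = 3
G_sigma = np.array([[-1e-9, 1e-9],
                    [2e-9, -2e-9]])              # slow system switching (1/ns)
G_psi_blocks = [photophysical_generator(0.01, 0.25, 0.5, 0.3),   # high-FRET state
                photophysical_generator(0.01, 0.25, 0.1, 0.3)]   # low-FRET state

A = np.kron(G_sigma, np.eye(M_psi))   # system part, G_sigma ⊗ I_psi
B = block_diag(*G_psi_blocks)         # photophysical part, block diagonal
G = A + B                             # Eq. 24

# Eq. 28: block [i, j] of the commutator is G_sigma[i, j] * (G_psi^j - G_psi^i).
comm = A @ B - B @ A
expected = np.block([[G_sigma[i, j] * (G_psi_blocks[j] - G_psi_blocks[i])
                      for j in range(2)] for i in range(2)])
```

When both system states share identical photophysics, the two parts commute exactly and the factorization of Eq. 29 becomes exact; otherwise it is an approximation controlled by the commutator.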

Having derived the full propagator for a time period (bin) of size $\epsilon$ under our first assumption, we now incorporate the observations during this period via detection matrices to compute the reduced propagator of Eq. 23. To do so, we apply our second assumption of a relatively slow excitation rate, $\lambda_{\text{ex}}$. This assumption implies that interphoton periods are dominated by the time spent in the ground state of the FRET pair and are distributed according to a single exponential distribution, $\text{Exponential}(\lambda_{\text{ex}})$. Consequently, the total photon counts per bin follow a Poisson distribution, $\text{Poisson}(\lambda_{\text{ex}}\epsilon)$, independent of the photophysical portion of the superstate trajectory taken from $\phi_i$ to $\phi_j$.

Now, the first and second assumptions imply that the observation $w_n$ during the $n$-th bin depends only on the system state $\sigma$ (or the associated FRET rate $\lambda_{\text{FRET}}^{\sigma}$). As such, we can approximate the detection matrix elements as

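$$\left(D_n\right)_{\phi_i\phi_j} \approx p(w_n \mid \sigma_{\phi_i}), \qquad (33)$$

where $\sigma_{\phi_i}$ denotes the system state of superstate $\phi_i$.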

Using these approximations, the reduced propagator in Eq. 23 can now be written as

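$$\left[\Pi^{w}_n\right]_{[\sigma_i,\sigma_j]} \approx \left(\Pi_\sigma\right)_{\sigma_i\sigma_j}\,p(w_n \mid \sigma_i)\,\Pi_\psi^{\sigma_i}. \qquad (34)$$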

Next, to compute the likelihood for the $n$-th bin, we sum over all possible superstate trajectories within this bin as

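$$L_n = \rho_n\,\Pi^{w}_n\,\mathbf{1}^T = \sum_{i,j} \rho_n^{\sigma_i}\left(\Pi_\sigma\right)_{\sigma_i\sigma_j}\,p(w_n \mid \sigma_i)\,\Pi_\psi^{\sigma_i}\,\mathbf{1}_\psi^T, \qquad (35)$$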

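where $\rho_n$ is a normalized row vector populated by the probabilities of finding the system-FRET composite in each of the possible superstates at the beginning of the $n$-th bin, and $\mathbf{1}_\psi$ is a row vector of $M_\psi$ ones. Furthermore, we have written the portion of $\rho_n$ corresponding to system state $\sigma_i$ as $\rho_n^{\sigma_i}$. To be more explicit, we have

$$\rho_n = \left(\rho_n^{\sigma_1}, \rho_n^{\sigma_2}, \ldots, \rho_n^{\sigma_{M_\sigma}}\right),$$

following the convention in the “likelihood” section, with the subscript $n$ now indexing bins in time.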

Moreover, since each row of $\Pi_\psi^{\sigma_i}$ sums to one, we have $\Pi_\psi^{\sigma_i}\mathbf{1}_\psi^T = \mathbf{1}_\psi^T$, which simplifies the bin likelihood of Eq. 35 to

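$$L_n = \sum_{i,j} P_n(\sigma_i)\,p(w_n \mid \sigma_i)\left(\Pi_\sigma\right)_{\sigma_i\sigma_j}, \qquad (36)$$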

where we have defined $P_n(\sigma_i) = \rho_n^{\sigma_i}\mathbf{1}_\psi^T$ as the probability of the system occupying system state $\sigma_i$. We can also write the previous equation in matrix form as

$$L_n = p_n\left(\Pi_\sigma \odot D^{\sigma}_n\right)\mathbf{1}_\sigma^T, \qquad (37)$$

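where $p_n$ is a row vector of length $M_\sigma$ (the number of system states) populated by $P_n(\sigma_i)$ for each system state, $\mathbf{1}_\sigma$ is a row vector of $M_\sigma$ ones, and $D^{\sigma}_n$, in the same spirit as $D_n$, is a detection matrix of dimensions $M_\sigma \times M_\sigma$ populated by the observation probability $p(w_n \mid \sigma_i)$ in each row corresponding to system state $\sigma_i$. Furthermore, defining $\Pi^{w,\sigma}_n = \Pi_\sigma \odot D^{\sigma}_n$, we note that $\Pi^{w,\sigma}_n$ propagates probabilities during the $n$-th bin in a manner similar to the reduced propagators of Eq. 23.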

Therefore, we can now multiply these new propagators for each bin to approximate the likelihood of Eq. 22 as

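$$L \approx p_{\text{start}}\,\Pi^{w,\sigma}_1\,\Pi^{w,\sigma}_2 \cdots \Pi^{w,\sigma}_N\,\mathbf{1}_\sigma^T, \qquad (38)$$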

where $p_{\text{start}}$ is a row vector, similar to $\rho_{\text{start}}$, populated by the probabilities of being in each system state at the beginning of the experiment.
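Eq. 38 is a standard forward algorithm over bins on the reduced $M_\sigma$-dimensional space. The sketch below assumes, purely for illustration, that donor and acceptor counts in a bin are independent Poisson variables with means $\lambda\epsilon(1-E_\sigma)$ and $\lambda\epsilon E_\sigma$, where $E_\sigma$ is the FRET efficiency of system state $\sigma$ and $\lambda$ a common detected photon rate; this emission model and all names are hypothetical. Because $(\Pi_\sigma\odot D^\sigma_n)_{ij} = (\Pi_\sigma)_{ij}\,p(w_n\mid\sigma_i)$, each step scales the filter by the observation probabilities and then applies $\Pi_\sigma$.

```python
import numpy as np
from math import lgamma, log
from scipy.linalg import expm

def poisson_logpmf(k, mu):
    """log of Poisson(k; mu) for integer k >= 0 and mu > 0."""
    return k * log(mu) - mu - lgamma(k + 1)

def binned_log_likelihood(G_sigma, eps, p_start, counts, fret_eff, rate):
    """Sketch of Eq. 38 with per-bin rescaling.

    counts:   sequence of (donor, acceptor) photon counts, one pair per bin.
    fret_eff: FRET efficiency of each system state (hypothetical emission model).
    rate:     total detected photon rate, assumed equal for all system states.
    """
    Pi_sigma = expm(G_sigma * eps)                    # Eq. 30
    p = np.asarray(p_start, dtype=float)
    log_l = 0.0
    for n_d, n_a in counts:
        # p(w_n | sigma_i): donor and acceptor counts as independent Poissons
        obs = np.exp([poisson_logpmf(n_d, rate * eps * (1 - E)) +
                      poisson_logpmf(n_a, rate * eps * E) for E in fret_eff])
        p = (p * obs) @ Pi_sigma      # p_n (Pi_sigma ⊙ D^sigma_n)
        s = p.sum()
        log_l += np.log(s)
        p = p / s                     # rescale to avoid underflow
    return log_l
```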

To conclude, our two assumptions regarding system kinetics and excitation rate allow us to significantly reduce the dimensions of the propagators, which, in turn, substantially lowers the cost of likelihood computation. However, cheaper computation comes at the price of requiring a large number of photon detections (that is, high excitation rates) per bin to accurately determine FRET efficiencies (identify system states), since we marginalize over photophysics in each bin. Such high excitation rates lead to faster photobleaching and increased phototoxicity, and thereby much shorter experiments. As we will see in the “pulsed illumination” section, this problem can be mitigated by pulsed illumination, where the likelihood takes a form similar to Eq. 38 but FRET efficiencies can be accurately estimated from the measured microtimes.

Detection effects

In the previous section, we assumed idealized detectors to illustrate basic ideas on detection matrices. However, realistic FRET experiments must typically account for detector nonidealities. For instance, many emitted photons may simply go undetected when the detection efficiency of single-photon detectors, i.e., the probability of an incident photon being successfully registered, is less than one due to inherent nonlinearities associated with the electronics (22) or the use of filters in cases of polarized fluorescent emission (60,61). In addition, donor photons may be detected in the channel reserved for acceptor photons or vice-versa due to emission spectrum overlap (62). This phenomenon, commonly known as crosstalk, crossover, or bleedthrough, can significantly affect the determination of quantities, such as transition rates and FRET efficiencies, as we demonstrate later in our results. Other effects adding noise to fluorescence signals include dark current (false signal in the absence of incident photons), dead time (the time a detector takes to relax back into its active mode after a photon detection), and timing jitter or IRF (stochastic delay in the output signal after a detector receives a photon) (22). In this section, we describe the incorporation of all such effects into our model except dark current and background emissions, which require more careful treatment and will be discussed in the “background emissions” section.

Crosstalk and detection efficiency

Noise sources such as crosstalk and imperfect detection efficiency necessarily turn photon detection into a stochastic process. Both effects can be included in the propagators by replacing the zeros and ones appearing in the ideal radiative and nonradiative detection matrices (Eqs. 16 and 17) with probabilities between zero and one. The propagators obtained from these detection matrices then incorporate the effects of crosstalk and detection efficiency into the likelihood.

Here, for clarity in the presence of crosstalk, we add a superscript $c$ to the radiative detection matrix of Eq. 17 for the $k$-th photon, $D^{\text{rad},c}_k$, where $c$ is the channel in which the photon is registered. For a radiative transition $\phi_i \rightarrow \phi_j$ emitting a photon intended for channel $l$, the elements of this detection matrix read

$$\left(D^{\text{rad},c}_k\right)_{\phi_i\phi_j} = \chi_{lc},$$

where $\chi_{lc}$ is the probability that a photon intended for channel $l$ is registered in channel $c$ (upon the transition from $\phi_i$ to $\phi_j$); all other elements are zero. Further, detector efficiencies can also be absorbed into these probabilities to represent the combined effects of crosstalk, arising from spectral overlap, and the failure of any detection channel to register the photon. When we do so, we have $\sum_c \chi_{lc} \le 1$ (where $l$ and $c$ may be the same or different), since not all emitted photons are accounted for by the detection channels.

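This new detection matrix results in the following modification to the radiative propagator of Eq. 19 for the $k$-th photon:

$$\Pi^{\text{rad},c}_k \approx (I + G\epsilon)\odot D^{\text{rad},c}_k = \left(G\odot D^{\text{rad},c}_k\right)\epsilon = G^{\text{rad},c}_k\,\epsilon.$$

The second equality above follows from recognizing that the identity matrix multiplied, element-wise, by $D^{\text{rad},c}_k$ is zero. By definition, $G^{\text{rad},c}_k = G\odot D^{\text{rad},c}_k$ is the remaining nonzero product.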

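On the other hand, for time periods when no photons are detected, the nonradiative detection matrix of Eq. 16 becomes

$$\left(D^{\text{non}}\right)_{\phi_i\phi_j} = \begin{cases} 1 - \sum_c \chi_{lc} & \text{if } \phi_i \rightarrow \phi_j \text{ is radiative, with the photon intended for channel } l,\\ 1 & \text{otherwise,} \end{cases}$$

where the sum $\sum_c \chi_{lc}$ gives the probability that a photon intended for channel $l$ is registered in any channel. The nonradiative propagator of Eq. 18 for an infinitesimal period of size $\epsilon$ in the presence of crosstalk and inefficient detectors is now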

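$$\Pi^{\text{non}} \approx I + G^{\text{non}}\epsilon \approx \exp\left(G^{\text{non}}\epsilon\right), \qquad (39)$$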

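where $G^{\text{non}} = G\odot D^{\text{non}}$ is now built from the crosstalk-modified nonradiative detection matrix. With the propagators incorporating crosstalk and detection efficiency defined, the evolution during the interphoton period of size $T_{k+1} - T_k$ between the $k$-th photon and the $(k+1)$-th photon, the latter registered in channel $c_{k+1}$, is now governed by the product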

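$$\Pi_k = \exp\left(G^{\text{non}}(T_{k+1} - T_k)\right) G^{\text{rad},c_{k+1}}_{k+1}\,\epsilon, \qquad (40)$$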

where the nonradiative propagators in Eq. 39 have now been merged into a single propagator following the same procedure as Eq. 20.
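Building the crosstalk-modified detection matrices amounts to replacing the 0/1 entries of the ideal masks with calibrated probabilities. The sketch below reuses the hypothetical three-state FRET pair (GG, EG, GE); the function name, the `radiative` dictionary, and the numerical crosstalk factors (chosen to loosely follow the rounded percentages quoted in Example V) are illustrative assumptions.

```python
import numpy as np

def crosstalk_detection_matrices(n_states, radiative, chi, channels=("D", "A")):
    """Detection matrices with crosstalk and finite efficiency (sketch).

    radiative: dict mapping a radiative transition (i, j) to the channel l its
               photon is intended for.
    chi:       array with chi[l, c] = probability that a photon intended for
               channel l is registered in channel c (rows may sum to < 1).
    """
    idx = {c: n for n, c in enumerate(channels)}
    d_non = np.ones((n_states, n_states))
    d_rad = {c: np.zeros((n_states, n_states)) for c in channels}
    for (i, j), l in radiative.items():
        row = np.asarray(chi)[idx[l]]
        d_non[i, j] = 1.0 - row.sum()      # photon emitted but registered nowhere
        for c in channels:
            d_rad[c][i, j] = row[idx[c]]   # photon intended for l seen in c
    return d_non, d_rad

chi = np.array([[0.82, 0.18],              # hypothetical crosstalk factors
                [0.00, 0.84]])
d_non, d_rad = crosstalk_detection_matrices(3, {(1, 0): "D", (2, 0): "A"}, chi)
```

For every radiative transition, the nonradiative entry and the radiative entries across channels sum to one, so each emitted photon is either registered somewhere or lost.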

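Finally, inserting Eq. 40 for each interphoton period into the likelihood of Eq. 14, we arrive at the final likelihood incorporating crosstalk and detection efficiency, which has the same form as Eq. 21:

$$L = \rho_{\text{start}}\,\Pi_0\,\Pi_1 \cdots \Pi_{K-1}\exp\left(G^{\text{non}}(T_{\text{end}} - T_K)\right)\mathbf{1}^T.$$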

Having incorporated crosstalk and detector efficiencies into our model, we briefly explain the calibration of the crosstalk probabilities/detection efficiencies $\chi_{lc}$. To calibrate these parameters, two samples, one containing only donor dyes and another containing only acceptor dyes, are individually excited by a laser at the same power to determine the number of donor photons, $N_{\text{D}}^{c}$, and the number of acceptor photons, $N_{\text{A}}^{c}$, detected in channel $c$.

From the photon counts recorded for the donor-only sample, assuming ideal detectors with 100% efficiency, we can compute the crosstalk probabilities for donor photons going to channel $c$, $\chi_{\text{D}c}$, from the photon count ratios as $\chi_{\text{D}c} = N_{\text{D}}^{c}/N_{\text{D}}$, where $N_{\text{D}}$ is the absolute number of emitted donor photons. Similarly, the crosstalk probabilities for acceptor photons going to channel $c$, $\chi_{\text{A}c}$, can be estimated as $\chi_{\text{A}c} = N_{\text{A}}^{c}/N_{\text{A}}$, where $N_{\text{A}}$ is the absolute number of emitted acceptor photons. In matrix form, these crosstalk factors for a two-detector setup can be written as

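$$\boldsymbol{\chi} = \begin{pmatrix} \chi_{\text{DD}} & \chi_{\text{DA}} \\ \chi_{\text{AD}} & \chi_{\text{AA}} \end{pmatrix}. \qquad (41)$$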

Using this matrix, for the donor-only sample, we can now write

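$$\begin{pmatrix} N_{\text{D}}^{\text{D}} & N_{\text{D}}^{\text{A}} \end{pmatrix} = \begin{pmatrix} N_{\text{D}} & 0 \end{pmatrix}\boldsymbol{\chi}, \qquad (42)$$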

and, similarly, for the acceptor-only sample

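$$\begin{pmatrix} N_{\text{A}}^{\text{D}} & N_{\text{A}}^{\text{A}} \end{pmatrix} = \begin{pmatrix} 0 & N_{\text{A}} \end{pmatrix}\boldsymbol{\chi}. \qquad (43)$$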

However, the absolute numbers of emitted photons, $N_{\text{D}}$ and $N_{\text{A}}$, are difficult to estimate experimentally; therefore, the crosstalk factors in $\boldsymbol{\chi}$ can only be determined up to the multiplicative factors $1/N_{\text{D}}$ and $1/N_{\text{A}}$.

Since scaling the photon counts in an smFRET trace by an overall constant affects neither the FRET efficiency estimates (determined by photon count ratios) nor the escape rates (determined by changes in FRET efficiency), we only require the crosstalk factors up to an overall constant.

For this reason, one possible way to determine the matrix elements of $\boldsymbol{\chi}$ up to a single multiplicative constant is to first tune the dye concentrations such that $N_{\text{D}}/N_{\text{A}} = 1$, which can be accomplished experimentally. This allows us to write the crosstalk factors in matrix form, up to a constant, as follows

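$$\boldsymbol{\chi} = \frac{1}{N_{\text{D}}}\begin{pmatrix} N_{\text{D}}^{\text{D}} & N_{\text{D}}^{\text{A}} \\ N_{\text{A}}^{\text{D}} & N_{\text{A}}^{\text{A}} \end{pmatrix} \propto \begin{pmatrix} N_{\text{D}}^{\text{D}} & N_{\text{D}}^{\text{A}} \\ N_{\text{A}}^{\text{D}} & N_{\text{A}}^{\text{A}} \end{pmatrix}. \qquad (44)$$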

It is common to fix the multiplicative factor in Eq. 44 using the total photon count of the donor-only sample, $N_{\text{D}}^{\text{D}} + N_{\text{D}}^{\text{A}}$, to give

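$$\boldsymbol{\chi} = \frac{1}{N_{\text{D}}^{\text{D}} + N_{\text{D}}^{\text{A}}}\begin{pmatrix} N_{\text{D}}^{\text{D}} & N_{\text{D}}^{\text{A}} \\ N_{\text{A}}^{\text{D}} & N_{\text{A}}^{\text{A}} \end{pmatrix}. \qquad (45)$$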

We note that, with the convention adopted here, $\chi_{\text{DD}} + \chi_{\text{DA}} = 1$.

Furthermore, when realistic detectors affect the raw counts, the matrix elements of $\boldsymbol{\chi}$ computed above automatically incorporate the effects of detector inefficiencies, including the fact that not every emitted photon is registered.

In addition, the matrix can be further generalized to account for more than two detectors by appropriately expanding the size of the matrix dimensions to coincide with the number of detectors. Calibration of the matrix elements then follows the same procedure as above.

In performing single-photon FRET analysis, we use the elements of $\boldsymbol{\chi}$ directly in constructing our detection (measurement) matrices. However, it is also common to compute the matrix elements of $\boldsymbol{\chi}$ from the route correction matrix (RCM) (63), typically used in binned photon analysis. The RCM is defined as the inverse of $\boldsymbol{\chi}$ and yields the corrected counts, $I_{\text{D}}^{\text{corr}}$ and $I_{\text{A}}^{\text{corr}}$, up to a proportionality constant, from the raw counts $I_{\text{D}}$ and $I_{\text{A}}$ as

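$$\begin{pmatrix} I_{\text{D}}^{\text{corr}} & I_{\text{A}}^{\text{corr}} \end{pmatrix} \propto \begin{pmatrix} I_{\text{D}} & I_{\text{A}} \end{pmatrix}\text{RCM}, \qquad \text{RCM} = \boldsymbol{\chi}^{-1}. \qquad (46)$$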
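The calibration of Eqs. 44 and 45 and the RCM of Eq. 46 reduce to a normalization and a 2 × 2 matrix inverse. The sketch below assumes equal numbers of emitted donor and acceptor photons in the two calibration samples; all counts are hypothetical, and the row-vector convention matches Eqs. 42 and 43 (counts multiply $\boldsymbol{\chi}$ from the left).

```python
import numpy as np

def crosstalk_matrix(donor_only, acceptor_only):
    """Eq. 45-style calibration (sketch), assuming N_D = N_A emitted photons.

    donor_only    = (N_D^D, N_D^A): donor-only photons detected in (D, A) channels.
    acceptor_only = (N_A^D, N_A^A): acceptor-only photons detected in (D, A) channels.
    """
    counts = np.array([donor_only, acceptor_only], dtype=float)
    return counts / counts[0].sum()          # normalize so chi_DD + chi_DA = 1

chi = crosstalk_matrix((8200, 1800), (0, 8400))   # hypothetical calibration counts
rcm = np.linalg.inv(chi)                          # route correction matrix, Eq. 46

true_counts = np.array([1000.0, 500.0])           # hypothetical emitted (D, A) photons
raw = true_counts @ chi                           # registered counts, as in Eqs. 42-43
corrected = raw @ rcm                             # equals true_counts in this toy setup
```

In a real experiment $\boldsymbol{\chi}$ is known only up to an overall constant, so the corrected counts are recovered only up to that same constant.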

Example V: Detection matrices with crosstalk and detector efficiencies.

For our two-system-state example, we showed earlier the detection matrices for ideal detectors. Here, we incorporate crosstalk and detector efficiencies into these matrices. To do so, we assume a realistic RCM (64), given as

Following the convention of Eq. 45, we scale the provided matrix by the sum of the absolute values of its first-row elements, namely 1.22, leading to effective crosstalk factors given as

These values imply approximately 18% crosstalk from the donor to the acceptor channel and 84% detection efficiency for the acceptor channel, without any crosstalk, under the convention adopted in Eq. 45. We now modify the ideal radiative detection matrices by replacing their nonzero elements with the calibrated values above

Similarly, we modify the ideal nonradiative detection matrix by replacing the zero elements with $1 - \chi_{\text{DD}} - \chi_{\text{DA}}$ and $1 - \chi_{\text{AD}} - \chi_{\text{AA}}$, as follows

Effects of detector dead time

Typically, a detection channel becomes inactive (dead) after the detection of a photon for a period as specified by the manufacturer. Consequently, radiative transitions associated with that channel cannot be monitored during that period.

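To incorporate this detector dead period into our likelihood model, we break the interphoton period between the $k$-th and $(k+1)$-th photons into two intervals: the first with an inactive detector and the second with an active detector. Assuming that the $k$-th photon is detected in channel $c$, the first interval is $\delta_c$ long, where $\delta_c$ is the dead time of that channel. As such, assuming ideal detectors, we can define the detection matrix for this interval as

$$\left(D^{\text{dead}}_c\right)_{\phi_i\phi_j} = \begin{cases} 0 & \text{if } \phi_i \rightarrow \phi_j \text{ is radiative and emits into an active channel other than } c,\\ 1 & \text{otherwise.} \end{cases}$$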

Next, corresponding to this detection matrix, we have the propagator

$$\exp\left(G^{\text{dead}}_c\,\delta_c\right), \qquad G^{\text{dead}}_c = G\odot D^{\text{dead}}_c,$$

which evolves the superstate during the detector dead time. This propagator can now be used to incorporate the detector dead time into Eq. 20 to represent the evolution during the period between the $k$-th and $(k+1)$-th photons as

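$$\Pi_k = \exp\left(G^{\text{dead}}_{c_k}\,\delta_{c_k}\right)\exp\left(G^{\text{non}}(T_{k+1} - T_k - \delta_{c_k})\right) G^{\text{rad}}_{k+1}\,\epsilon, \qquad (47)$$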

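where $c_k$ is the channel that registered the $k$-th photon and $\exp\left(G^{\text{non}}(T_{k+1} - T_k - \delta_{c_k})\right)$ describes the evolution when the detector is active.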

Finally, inserting Eq. 47 for each interphoton period into the likelihood for the HMM with a second-order structure in Eq. 14, we arrive at the following likelihood that includes detector dead time

| (48) |

To provide an explicit example of the effect of the detector dead time on the likelihood, we take a pedagogical detour. We consider a very simple case of one detection channel (dead time $\delta$) observing a fluorophore with two photophysical states, ground $\psi_{\text{G}}$ and excited $\psi_{\text{E}}$, illuminated by a laser. The data in this case contain only the photon arrival times $T_1, T_2, \ldots, T_K$.

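The generator matrix containing the photophysical transition rates for this setup is

$$G = \begin{pmatrix} \ast & \lambda_{\text{ex}} \\ \lambda_{\text{d}} & \ast \end{pmatrix},$$

where $\ast$ along the diagonal represents the negative row sum of the remaining elements, $\lambda_{\text{ex}}$ is the excitation rate, and $\lambda_{\text{d}}$ is the donor relaxation rate.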

Here, all transitions are possible during detector dead times, as there are no observations. As such, the dead-time propagators in the likelihood (Eq. 48) are simply exponentials of the full generator matrix, $\exp(G\delta)$, which leave the normalization of the propagated probability vector unchanged, that is, $\rho\exp(G\delta)\mathbf{1}^T = \rho\,\mathbf{1}^T$, just as we saw in Eq. 8.
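The single-channel example can be made concrete with a short sketch. The rates and dead time below are hypothetical (rates in 1/ns, times in ns), the trace is assumed to start at $t = 0$ and end at the last photon, consecutive photons are assumed to be separated by more than the dead time, and the constant $\epsilon^K$ is dropped. The dead-time factor `expm(G * dead)` is row-stochastic, so it carries no information by itself; it only shortens the window over which the absence of photons is informative.

```python
import numpy as np
from scipy.linalg import expm

lam_ex, lam_d, dead = 0.05, 0.25, 20.0          # hypothetical: 1/ns, 1/ns, ns
G = np.array([[-lam_ex, lam_ex],
              [lam_d, -lam_d]])                  # states: ground, excited
d_non = np.array([[1.0, 1.0],
                  [0.0, 1.0]])                   # excited -> ground emits a photon
G_non = G * d_non
G_rad = G * (1.0 - d_non)

def dead_time_log_likelihood(times, rho_start=(1.0, 0.0)):
    """Sketch of Eq. 48 for one channel, dropping the constant eps**K.

    Assumes the trace starts at t = 0, ends at the last photon, and that
    consecutive photons are separated by more than the dead time.
    """
    u = np.asarray(rho_start, dtype=float)
    log_l, t_prev = 0.0, None
    for t in times:
        if t_prev is None:       # detector active from the start of the trace
            step = expm(G_non * t)
        else:                    # dead interval (no information), then active interval
            step = expm(G * dead) @ expm(G_non * (t - t_prev - dead))
        u = u @ step @ G_rad
        s = u.sum()
        log_l += np.log(s)
        u, t_prev = u / s, t
    return log_l
```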

As we will see, dead times, like detector inefficiencies, simply increase our uncertainty over the parameters we wish to learn, such as kinetics, by providing less information. By contrast, background emissions and crosstalk provide false information. The net effect, however, is the same: all noise sources increase uncertainty.

Adding the detection IRF

Due to various sources of noise affecting the detection timing electronics (known as jitter), the time elapsed between photon arrival and detection is itself a hidden (latent) random variable (22). Under continuous illumination, we say that this stochastic delay, $\delta t$, is sampled from a probability density, $f(\delta t)$, termed the detection IRF. To incorporate the detection IRF into the likelihood of Eq. 48, we convolve the propagators with $f(\delta t)$ as follows

| (50) |

where we have used dead-time propagators to account for detector inactivity during the period between photon reception and detector reactivation. Moreover, we have $G^{\text{non}} = G\odot D^{\text{non}}$, as described in Eq. 18.

To facilitate the computation of this likelihood, we use the fact that typical acquisition devices record at discrete (but very small) time intervals. For instance, a setup with a smallest acquisition time of 16 ps and a detection IRF approximately 100 ps wide will have the IRF spread over roughly six acquisition periods. This allows each convolution integral to be discretized over these six acquisition intervals and computed in parallel, so that the convolution adds no computational time beyond the overhead associated with parallelization.
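The discretization described above replaces each IRF convolution by a short weighted sum over acquisition bins. The sketch below is a simplified illustration, not Eq. 50 itself: it assumes a hypothetical Gaussian IRF with a 100 ps full width at half maximum sampled on a 16 ps grid, ignores dead time, and convolves only the interphoton jump $\exp\left(G^{\text{non}}(\Delta t - \delta t)\right)G^{\text{rad}}$ for a single photon. Each term in the sum is independent, which is what makes the parallel evaluation possible.

```python
import numpy as np
from scipy.linalg import expm

def discretized_irf(width_ps=100.0, dt_ps=16.0):
    """Hypothetical Gaussian detection IRF (FWHM = width_ps) on the acquisition grid."""
    n = int(round(width_ps / dt_ps))                 # about six bins for 100 ps / 16 ps
    delays = np.arange(n) * dt_ps                    # nonnegative delays on the grid
    sigma = width_ps / 2.355                         # FWHM -> standard deviation
    w = np.exp(-0.5 * ((delays - delays.mean()) / sigma) ** 2)
    return delays, w / w.sum()                       # normalized discrete weights

def irf_convolved_jump(G_non, G_rad, gap_ps, delays, weights):
    """Discretized convolution: sum_j w_j expm(G_non (gap - delay_j)) @ G_rad.

    The terms are independent of each other and could be evaluated in parallel.
    """
    terms = [w * (expm(G_non * (gap_ps - d)) @ G_rad) for d, w in zip(delays, weights)]
    return np.sum(terms, axis=0)
```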

Illumination features

Having discussed detector effects, we now consider different illumination features. For simplicity, our likelihood computation has so far assumed continuous illumination with uniform intensity. More precisely, the excitation rate $\lambda_{\text{ex}}$ in the generator matrix of Eq. 4 was assumed to be time independent. Here, we generalize our formulation and show how other illumination setups (such as pulsed illumination and alternating laser excitation, ALEX (65)) can be incorporated into the likelihood by simply assigning a time dependence to the excitation rate, $\lambda_{\text{ex}}(t)$.

Pulsed illumination

Here, we consider an smFRET experiment in which the FRET pair is illuminated by a laser for a very short period of time (a pulse) at regular intervals; see Fig. 3 a. As in the case of continuous illumination with constant intensity, the likelihood for a set of observations acquired using pulsed illumination takes a form similar to Eq. 21, involving products of matrices, as follows

$$L = \rho_{\text{start}}\,\Pi_1\,\Pi_2 \cdots \Pi_N\,\mathbf{1}^T, \qquad (51)$$

where $\Pi_i$, with $i = 1, \ldots, N$, denotes the propagator evolving the superstate during the $i$-th interpulse period, between the $i$-th and the $(i+1)$-th pulses.

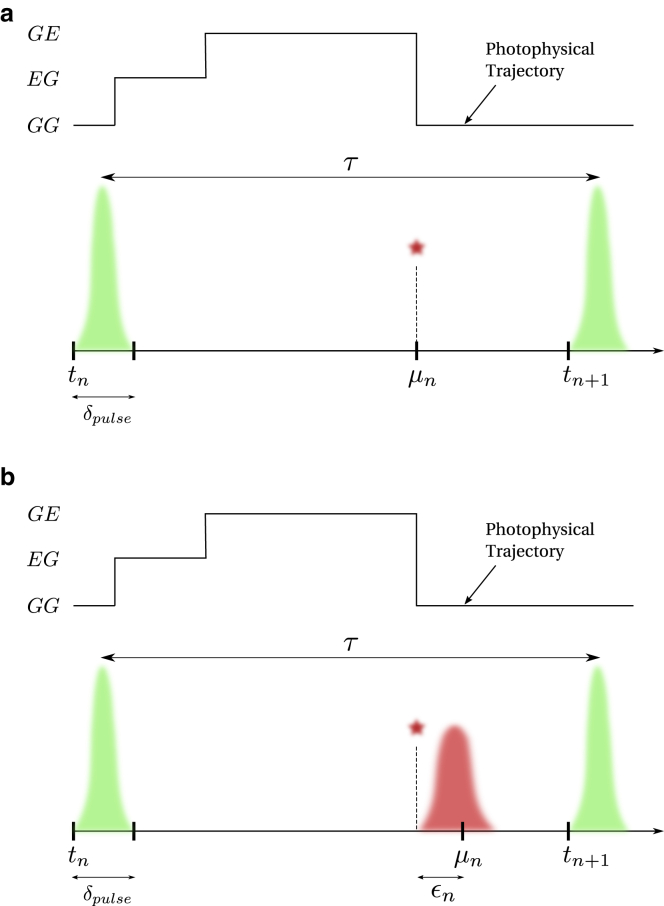

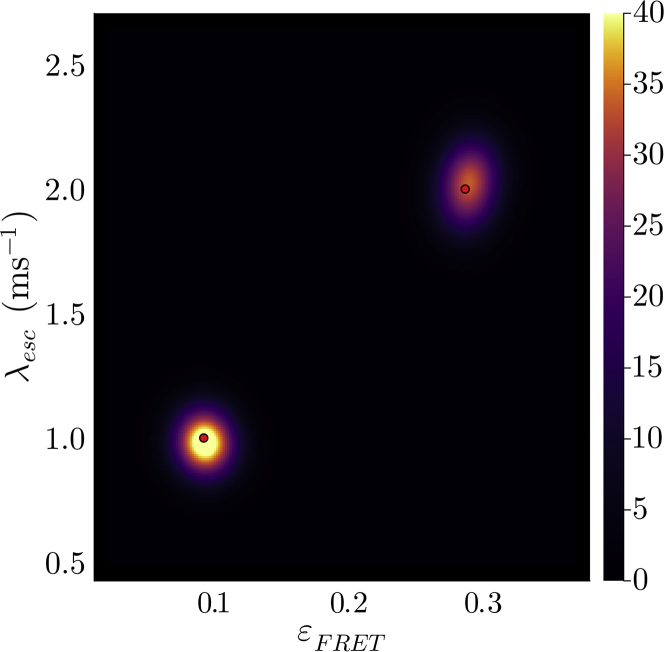

Figure 3.

Events during one interpulse window of a pulsed illumination experiment. The FRET labels, originally in state GG (donor and acceptor, respectively, in their ground states), are excited by a high-intensity burst (green) to state EG (only the donor excited) for a very short time. If FRET occurs, the donor transfers its energy to the acceptor and returns to the ground state, leaving the FRET labels in state GE (only the acceptor excited). The acceptor then emits a photon, registered by the detector at a microtime measured from the beginning of the interpulse window. With ideal detectors, the microtime equals the photon emission time, as shown in (a). When the timing hardware has jitter (red), a small delay is added to the microtime, as shown in (b).

To derive the structure of $\Pi_i$ during the $i$-th interpulse period, we break it into two portions: 1) the pulse, with nonzero laser intensity, during which the evolution of the FRET pair is described by a pulse propagator introduced shortly; and 2) the dark period, with zero illumination intensity, during which the evolution of the FRET pair is described by a dark-period propagator introduced shortly. Furthermore, these two propagators take different forms depending on whether or not a photon is detected over the $i$-th interpulse period.

First, when no photons are detected, we have

| (52) |

| (53) |

where the integration over the pulse period now involves a time-dependent generator matrix, owing to temporal variations in the excitation rate $\lambda_{\text{ex}}(t)$. The integral in Eq. 52 is sometimes termed the excitation IRF, although we will not use this convention here; when we say IRF, we mean the detection IRF alone. In addition, the generator matrix of the dark period is the same as the full generator matrix, except that the excitation rate is now set to zero owing to the lack of illumination. Finally, the propagator for an interpulse period with no photon detection can now be written as

| (54) |
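Because the generator changes during the pulse, the pulse propagator is a time-ordered exponential. A minimal sketch, assuming a Gaussian pulse profile truncated at ±4 widths, a two-state fluorophore with hypothetical rates (1/ns), ideal detectors, and a simple midpoint discretization (none of which are prescribed by the text), approximates it as a product of short-step exponentials of the nonradiative generator and then appends the dark-period propagator with the excitation rate switched off, in the spirit of Eqs. 52-54 for an interpulse period without detections.

```python
import numpy as np
from scipy.linalg import expm

def generator(lam_ex, lam_d=0.25):
    """Two-state fluorophore (ground, excited); hypothetical rates in 1/ns."""
    return np.array([[-lam_ex, lam_ex], [lam_d, -lam_d]])

D_NON = np.array([[1.0, 1.0], [0.0, 1.0]])   # excited -> ground emission is radiative

def pulse_propagator(peak_ex, width, n_steps=200):
    """Time-ordered product of short-step exponentials over a Gaussian pulse."""
    t = np.linspace(-4 * width, 4 * width, n_steps + 1)
    mid, dt = 0.5 * (t[1:] + t[:-1]), t[1] - t[0]
    P = np.eye(2)
    for tm in mid:                                   # earlier times multiply first
        lam_ex = peak_ex * np.exp(-0.5 * (tm / width) ** 2)
        P = P @ expm(generator(lam_ex) * D_NON * dt)
    return P, 8 * width                              # propagator and pulse duration

def no_photon_interpulse(peak_ex, width, period):
    """Pulse propagator followed by the dark period with excitation switched off."""
    P, duration = pulse_propagator(peak_ex, width)
    dark = expm(generator(0.0) * D_NON * (period - duration))
    return P @ dark
```

Row sums of the resulting matrix are below one, reflecting the probability of an (undetected-in-this-case, hence excluded) emission during the period.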

On the other hand, if a photon is detected sometime after a pulse (as in Fig. 3 a), the pulse propagator remains as in Eq. 52. However, the dark-period propagator must now be modified to include a radiative generator matrix, similarly to Eq. 20

| (55) |

where the microtime is the photon arrival time measured with respect to the $i$-th pulse, as shown in Fig. 3 a. Here, the two exponential terms describe the evolution of the superstate before and after the photon detection during the dark period.

Similarly, we can construct the propagators for situations in which a photon is detected during the pulse itself. Here, the dark-period propagator remains the same as in Eq. 53, but the pulse propagator must now be modified to include the radiative generator matrix as

| (56) |

The propagators derived so far in this section assume ideal detectors. We now describe how to incorporate the IRF into this formulation, which is especially important for accurately estimating fluorophore lifetimes, as is commonly done in pulsed illumination smFRET experiments. To incorporate the IRF, we follow the same procedure as in the “adding the detection IRF” section and convolve the IRF with those propagators above that involve photon detections. That is, when a photon is detected during the dark period, we modify the dark-period propagator as

| (57) |

while the pulse propagator stays the same as in Eq. 52. Here, the convolution runs over the stochastic delay in photon detection resulting from the IRF, as shown in Fig. 3 b.

Similarly, when a photon is detected during a pulse, the pulse propagator of Eq. 56 can be modified in the same fashion to accommodate the IRF, while the dark-period propagator remains as in Eq. 53.

The propagators presented in this section involve integrals over large generator matrices that are analytically intractable and computationally expensive for large numbers of pulses. Therefore, to approximate these propagators, we follow a strategy similar to the one used for the binned likelihood in the “effect of binning single-photon smFRET data” section.

To reduce the complexity of the calculations, we start with realistic approximations. Given the timescale separation between the interpulse period (typically tens of nanoseconds) and the system kinetics (typically on the seconds timescale) in a pulsed illumination experiment, it is possible to approximate the system state as constant during an interpulse period. In essence, rather than treating the system state trajectory as a continuous-time process, we discretize it such that system transitions occur only at the beginning of each interpulse period. This allows us to separate the photophysical part of the generator matrix in Eq. 4 from the portion describing the evolution of the system under study, given in Eq. 3. In contrast to the likelihood shown earlier in this “pulsed illumination” section, we can now compute the photophysical and system portions of the likelihood independently, as described below.

To derive the likelihood, we begin by writing the system state propagator during an interpulse period as

| (58) |

Furthermore, we must incorporate observations into these propagators by multiplying each system transition probability in the system propagator of Eq. 58 by the probability of the observation, had that transition occurred. We organize these observation probabilities into newly defined detection matrices, similar to those of the “continuum limit” section, and write the modified propagators as

| (59) |

where $\odot$ again represents the element-by-element product. Here, the elements of the detection matrices depend on the photophysical portion of the generator matrix; their detailed derivations are shown in the third companion article (54). We note that these propagators now have dimensions $M_\sigma \times M_\sigma$, making them computationally less expensive than in the continuous illumination case. Finally, the likelihood for pulsed illumination smFRET data with these new propagators reads

| (60) |

which, similar to the case of binned likelihood under continuous illumination (see the “effect of binning single-photon smFRET data” section), sums over all possible system state trajectories.

We will later use this likelihood to put forward an inverse model to learn the system transition probabilities (the elements of the propagator $\boldsymbol{\Pi}_{\sigma}$ in Eq. 58) and the photophysical transition rates on which the detection matrices depend.

Background emissions

Here, we consider background photons registered by detectors from sources other than the labeled system under study (2). Most background photons are ambient photons, photons from the illumination laser entering the detectors, and dark current (false photon counts registered by the detectors themselves) (22).

Due to the uniform laser intensity in the continuous illumination case considered in this section, we may model all background photons using a single distribution from which waiting times are drawn. Such distributions are often assumed (or verified) to be exponential with a fixed rate for each detection channel (66,67). Here, following this most common choice, we model the waiting time distribution for background photons from all of these sources as a single exponential. In the pulsed illumination case, however, background from the laser and from the two other sources requires different treatments because the laser intensity is not uniform in time. That is, ambient photons and dark current are still modeled by an Exponential distribution, which is often further approximated by a Uniform distribution within each interpulse period, since the interpulse period is much shorter than the average background waiting time. The full formulation describing all background sources under pulsed illumination is provided in the third companion article (54).
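To see why the Uniform approximation for ambient and dark-current background is accurate under pulsed illumination, the following sketch compares the conditional density of a background arrival within one interpulse period with a Uniform density. The 1 kHz background rate and the 12.5 ns interpulse period are hypothetical values.

```python
import numpy as np


def truncated_exponential_pdf(t, rate, period):
    """Density of a background arrival time t in [0, period), given one arrival in that period."""
    return rate * np.exp(-rate * t) / (-np.expm1(-rate * period))


rate = 1e-6    # hypothetical ambient + dark-count rate in 1/ns (i.e. 1 kHz)
period = 12.5  # hypothetical interpulse period in ns

# Probability of a background photon in one period, versus its first-order approximation.
p_bg = -np.expm1(-rate * period)
print(p_bg, rate * period)

# Largest relative deviation of the conditional density from the Uniform density 1/period.
t = np.linspace(0.0, period, 101)
max_rel_dev = np.max(np.abs(truncated_exponential_pdf(t, rate, period) * period - 1.0))
print(max_rel_dev)  # roughly rate * period / 2
```

The deviation scales with the product of the background rate and the interpulse period, so it is negligible whenever the interpulse period is much shorter than the average background waiting time.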

We now proceed to incorporate background into the likelihood under continuous illumination. As mentioned earlier, we do so by assuming an Exponential waiting time distribution for the background, which effectively introduces new photophysical transitions into the model. These transitions may therefore be incorporated by expanding the full generator matrix (described in the “likelihood” section) appearing in the likelihood, leaving the structure of the likelihood itself intact (cf. Eq. 21).

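To be clear, in constructing the new generator matrix, we treat background in each detection channel as if it originated from a fictitious independent emitter with a constant emission rate (i.e., exponentially distributed waiting times). Furthermore, we assume that the emitter corresponding to channel $i$ is a two-state system with photophysical states $\psi^{\text{bg}_i}_{1}$ and $\psi^{\text{bg}_i}_{2}$.

Here, each transition to the other state coincides with a photon emission, occurring with rate $\lambda^{\text{bg}}_{i}$. As such, the corresponding background generator matrix for channel $i$ can now be written as

$$\mathbf{G}^{\text{bg}}_{i} = \begin{pmatrix} -\lambda^{\text{bg}}_{i} & \lambda^{\text{bg}}_{i} \\ \lambda^{\text{bg}}_{i} & -\lambda^{\text{bg}}_{i} \end{pmatrix}.$$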

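Since the background emitters for each channel are independent of each other, the expanded generator matrix for the combined setup (system-FRET composite plus background) can now be computed by combining the system-FRET composite state space with the background state spaces of all $C$ detection channels using Kronecker sums (68) as

$$\mathbf{G} = \mathbf{G}^{\text{bg-free}} \oplus \mathbf{G}^{\text{bg}}_{1} \oplus \mathbf{G}^{\text{bg}}_{2} \oplus \cdots \oplus \mathbf{G}^{\text{bg}}_{C},$$

where the symbol $\oplus$ denotes the matrix Kronecker sum, $\mathbf{G}^{\text{bg}}_{i}$ is the background generator matrix for channel $i$ given above, and $\mathbf{G}^{\text{bg-free}}$ represents the previously shown generator matrices without any background transition rates.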

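The propagators needed to compute the likelihood over a time interval $\Delta t$ can now be obtained by exponentiating the expanded generator matrix above, in which the background-free factor $\mathbf{G}^{\text{bg-free}}$ and the channel background generators $\mathbf{G}^{\text{bg}}_{i}$ can be exponentiated separately:

$$\exp\left(\Delta t\, \mathbf{G}\right) = \exp\left(\Delta t\, \mathbf{G}^{\text{bg-free}}\right) \otimes \exp\left(\Delta t\, \mathbf{G}^{\text{bg}}_{1}\right) \otimes \cdots \otimes \exp\left(\Delta t\, \mathbf{G}^{\text{bg}}_{C}\right),$$

where the symbol $\otimes$ denotes the matrix Kronecker product (tensor product) (68).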
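The construction of the expanded generator matrix and its propagator can be sketched numerically as follows. The background-free generator below is a hypothetical 3 × 3 matrix and the background rates are illustrative. `kron_sum` implements A ⊕ B = A ⊗ I + I ⊗ B, and the final comparison checks that exponentiating the Kronecker sum equals the Kronecker product of the individual exponentials.

```python
from functools import reduce

import numpy as np


def expm(A, terms=30):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    norm = np.linalg.norm(A, ord=1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    B = A / 2.0**s
    result, term = np.eye(A.shape[0]), np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ B / k
        result = result + term
    for _ in range(s):
        result = result @ result
    return result


def background_generator(rate):
    """Fictitious two-state background emitter; each transition emits a photon at `rate`."""
    return np.array([[-rate, rate],
                     [rate, -rate]])


def kron_sum(A, B):
    """Matrix Kronecker sum: A ⊕ B = A ⊗ I_B + I_A ⊗ B."""
    return np.kron(A, np.eye(B.shape[0])) + np.kron(np.eye(A.shape[0]), B)


# Hypothetical background-free generator with 3 superstates (illustrative rates).
G_free = np.array([[-1.0, 1.0, 0.0],
                   [0.5, -2.0, 1.5],
                   [2.0, 0.0, -2.0]])
G_bg = [background_generator(r) for r in (0.3, 0.2)]  # hypothetical rates, channels 1 and 2

G_expanded = reduce(kron_sum, G_bg, G_free)  # G_free ⊕ G_bg1 ⊕ G_bg2, shape (12, 12)

dt = 0.7  # hypothetical propagation interval
Pi_joint = expm(dt * G_expanded)
Pi_factored = reduce(np.kron, [expm(dt * G) for G in G_bg], expm(dt * G_free))
print(np.max(np.abs(Pi_joint - Pi_factored)))  # agreement up to round-off
```

The identity holds because the two terms of a Kronecker sum commute. In practice, the factored form can be used to exponentiate several small matrices instead of one large one.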

Furthermore, the same detection matrices defined earlier to include only nonradiative transitions or only radiative transitions, and their generalization with crosstalk and detection efficiency, can be used to obtain nonradiative and radiative propagators, as shown in the “continuum limit” section.

Consequently, as mentioned earlier and in contrast to incorporating the effects of dead time or the IRF, adding background sources does not entail any changes in the basic structure (arrangement of propagators) of the likelihood appearing in Eq. 21.

Example VI: Background.

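To provide a concrete example for background, we again return to our FRET pair with two system states. The background-free full generator matrix for this system-FRET composite, $\mathbf{G}^{\text{bg-free}}$, was provided in the example box in the “likelihood” section.

Here, we expand this generator matrix to incorporate background photons entering the two channels at rates $\lambda^{\text{bg}}_{1}$ and $\lambda^{\text{bg}}_{2}$ (in ms−1). We do so by performing a Kronecker sum of $\mathbf{G}^{\text{bg-free}}$ with the following generator matrix for the background

$$\mathbf{G}^{\text{bg}} = \mathbf{G}^{\text{bg}}_{1} \oplus \mathbf{G}^{\text{bg}}_{2} = \begin{pmatrix} -(\lambda^{\text{bg}}_{1}+\lambda^{\text{bg}}_{2}) & \lambda^{\text{bg}}_{2} & \lambda^{\text{bg}}_{1} & 0 \\ \lambda^{\text{bg}}_{2} & -(\lambda^{\text{bg}}_{1}+\lambda^{\text{bg}}_{2}) & 0 & \lambda^{\text{bg}}_{1} \\ \lambda^{\text{bg}}_{1} & 0 & -(\lambda^{\text{bg}}_{1}+\lambda^{\text{bg}}_{2}) & \lambda^{\text{bg}}_{2} \\ 0 & \lambda^{\text{bg}}_{1} & \lambda^{\text{bg}}_{2} & -(\lambda^{\text{bg}}_{1}+\lambda^{\text{bg}}_{2}) \end{pmatrix},$$

resulting in

$$\mathbf{G} = \mathbf{G}^{\text{bg-free}} \oplus \mathbf{G}^{\text{bg}}.$$

Here, $\mathbf{G}$ is a $24 \times 24$ matrix (6 system-FRET superstates times 4 background states), and we do not include its explicit form.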
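For Example VI, the following sketch assembles a system-FRET composite generator with two system states and three photophysical states, and then adds two background channels with Kronecker sums, reproducing the 24 × 24 size. All rates are hypothetical placeholders rather than the values used in the example. The superstate ordering (system state major, photophysical state minor) is also an assumption.

```python
from functools import reduce

import numpy as np


def kron_sum(A, B):
    """Matrix Kronecker sum: A ⊕ B = A ⊗ I_B + I_A ⊗ B."""
    return np.kron(A, np.eye(B.shape[0])) + np.kron(np.eye(A.shape[0]), B)


def photophysical_generator(exc, donor_relax, fret, acceptor_relax):
    """States: 0 = both labels in ground state, 1 = excited donor, 2 = excited acceptor."""
    G = np.array([[0.0, exc, 0.0],
                  [donor_relax, 0.0, fret],
                  [acceptor_relax, 0.0, 0.0]])
    np.fill_diagonal(G, -G.sum(axis=1))  # diagonal = minus the escape rate
    return G


# Hypothetical rates in 1/ms, chosen for illustration (not the values of Example VI).
G_sys = np.array([[-0.01, 0.01],
                  [0.02, -0.02]])
fret_rates = (1.0e5, 5.0e5)  # FRET rate in system states 1 and 2

# Superstate index = 3 * (system state) + (photophysical state).
G_composite = np.kron(G_sys, np.eye(3))  # system switching keeps the photophysical state
for i, fret in enumerate(fret_rates):
    block = photophysical_generator(10.0, 2.6e5, fret, 3.0e5)
    G_composite[3 * i:3 * i + 3, 3 * i:3 * i + 3] += block

G_bg = [np.array([[-r, r], [r, -r]]) for r in (0.3, 0.4)]  # hypothetical background rates
G_full = reduce(kron_sum, G_bg, G_composite)
print(G_composite.shape, G_full.shape)  # (6, 6) (24, 24)
```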

Fluorophore characteristics: Quantum yield, blinking, photobleaching, and direct acceptor excitation

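As demonstrated for background in the previous section, to incorporate new photophysical transitions, such as fluorophore blinking and photobleaching, into the likelihood we must modify the full generator matrix. This can again be accomplished by adding extra photophysical states, relaxing nonradiatively, to the fluorophore model. These photophysical states can have long or short lifetimes depending on the specific photophysical phenomenon at hand. For example, donor photobleaching can be included by introducing a third donor photophysical state into the matrix of Eq. 4 without any escape transitions as follows

$$\mathbf{G}_{\psi} = \begin{pmatrix} -\lambda_{\text{ex}} & \lambda_{\text{ex}} & 0 & 0 \\ \lambda_{d} & -(\lambda_{d} + \lambda_{\text{FRET}_i} + \lambda_{\text{bleach}}) & \lambda_{\text{FRET}_i} & \lambda_{\text{bleach}} \\ \lambda_{a} & 0 & -\lambda_{a} & 0 \\ 0 & 0 & 0 & 0 \end{pmatrix},$$

where rows and columns are ordered as $\psi_1, \psi_2, \psi_3, \psi_4$: $\psi_1$ is the lowest energy combined photophysical state for the FRET labels, $\psi_2$ represents the excited donor, $\psi_3$ represents the excited acceptor, and $\psi_4$ represents a photobleached donor. In addition, $\lambda_{\text{ex}}$ denotes the donor excitation rate, $\lambda_{d}$ and $\lambda_{a}$ denote donor and acceptor relaxation rates, respectively, $\lambda_{\text{bleach}}$ represents permanent loss of emission from the donor (photobleaching), and $\lambda_{\text{FRET}_i}$ represents FRET transitions when the system is in its $i$-th system state.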
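The effect of an absorbing photobleached state can be illustrated with a small sketch using hypothetical rates (in ns⁻¹). Because the bleached state has no escape transitions, the mean time to photobleaching from each emitting state follows from the standard absorbing Markov chain result: it solves $\mathbf{G}_T \mathbf{m} = -\mathbf{1}$, where $\mathbf{G}_T$ is the generator restricted to the non-absorbing states.

```python
import numpy as np

# Hypothetical rates in 1/ns, illustrative only.
exc, donor_relax, fret, acceptor_relax, bleach = 0.01, 0.25, 0.5, 0.3, 1e-5

# Photophysical states: 0 = ground, 1 = excited donor, 2 = excited acceptor, 3 = bleached donor.
G = np.zeros((4, 4))
G[0, 1] = exc
G[1, 0] = donor_relax
G[1, 2] = fret
G[1, 3] = bleach  # photobleaching; state 3 has no escape transitions
G[2, 0] = acceptor_relax
np.fill_diagonal(G, -G.sum(axis=1))

# Mean time to photobleaching m from each non-absorbing state solves G_T m = -1,
# where G_T is the generator restricted to states 0-2.
G_T = G[:3, :3]
mean_time_to_bleach = np.linalg.solve(G_T, -np.ones(3))
print(mean_time_to_bleach[0] * 1e-6, "ms, starting from the ground state")
```

For this simple scheme, the result can be checked by hand: the expected number of donor excitations before bleaching equals the total escape rate out of the excited donor divided by the bleaching rate.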

Fluorophore blinking can be implemented similarly, except with a nonzero escape rate out of the new photophysical state, allowing the fluorophore to resume emission after some time (52,69). Here, we assume that the fluorophore cannot transition into the blinking photophysical state directly from the donor ground state, so the corresponding element of the generator matrix is zero.

So far, we have ignored direct excitation of acceptor dyes in the likelihood model. This effect can also be incorporated into the likelihood by assigning a nonzero value to the transition rate from the lowest energy combined photophysical state directly to the excited acceptor state.
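Blinking and direct acceptor excitation only add or switch on entries of the photophysical generator matrix, as the sketch below illustrates. It assumes a hypothetical four-state scheme in which the dark state is entered from the excited donor (never from the ground state) and returns to the ground state. The rates are illustrative, and the stationary distribution is computed only to show that the dark state now acquires a nonzero occupancy.

```python
import numpy as np

STATES = ("ground", "excited donor", "excited acceptor", "dark donor")


def photophysical_generator(exc, donor_relax, fret, acceptor_relax,
                            blink_on, blink_off, direct_acceptor_exc=0.0):
    """Photophysical generator over STATES; all rates are hypothetical inputs (1/ns)."""
    G = np.zeros((4, 4))
    G[0, 1] = exc                  # donor excitation
    G[0, 2] = direct_acceptor_exc  # direct acceptor excitation (zero when neglected)
    G[1, 0] = donor_relax
    G[1, 2] = fret
    G[1, 3] = blink_on             # dark state entered from the excited donor, never from ground
    G[2, 0] = acceptor_relax
    G[3, 0] = blink_off            # nonzero escape rate: the donor resumes emission
    np.fill_diagonal(G, -G.sum(axis=1))
    return G


def stationary_distribution(G):
    """Solve p G = 0 with sum(p) = 1 via least squares on the stacked linear system."""
    n = G.shape[0]
    A = np.vstack([G.T, np.ones(n)])
    b = np.append(np.zeros(n), 1.0)
    return np.linalg.lstsq(A, b, rcond=None)[0]


G = photophysical_generator(0.01, 0.25, 0.5, 0.3, blink_on=1e-3, blink_off=1e-4,
                            direct_acceptor_exc=1e-3)
p = stationary_distribution(G)
for name, prob in zip(STATES, p):
    print(f"{name:>16}: {prob:.4f}")
```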

Other photophysical phenomena can also be incorporated into our likelihood by following the same procedure as above. Finally, just as when adding background, the structure of the likelihood (arrangement of the propagators) when treating photophysics (including adding the effect of direct acceptor excitation) stays the same as in Eq. 21.

Synthetic data generation

In the previous subsections, we described how to compute the likelihood, which is the sum of probabilities over all possible superstate trajectories that could give rise to the observations made by a detector, as demonstrated in the “forward model” section. Here, we demonstrate how one such superstate trajectory can be simulated to produce synthetic photon arrival data using the Gillespie algorithm (70), as described in the next section, followed by the addition of detector artefacts. We then use the generated data to test our BNP-FRET sampler.

Gillespie and detector artefacts

The Gillespie algorithm generates two sets of random variables. The first set consists of the times at which the superstate changes, indexed sequentially; these times can fall anywhere in continuous time rather than on a grid. The second set consists of the superstates occupied immediately before each of these change times.

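We designate the sequence of superstates as

$$\phi_1, \phi_2, \ldots, \phi_N,$$

where each $\phi_n$ is one of the possible superstates of the system-FRET composite. Here, unlike earlier in the “likelihood” section, the index $n$ on superstates does not correspond to a regular temporal grid.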

Now, to generate the superstate sequence above, we first randomly draw the first superstate, $\phi_1$, from the set of possible superstates according to their initial probabilities. Next, we draw the second superstate, $\phi_2$, using the set of transition rates out of $\phi_1$, with self-transitions excluded by construction. After choosing $\phi_2$, we generate the holding time (the time spent in $\phi_1$) from an Exponential distribution whose rate constant is the escape rate of $\phi_1$, that is, the sum of the rates of all transitions out of $\phi_1$. Finally, we repeat the two previous steps to sequentially generate the full sequence of superstates along with the corresponding holding times.

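More formally, we generate a trajectory by first sampling the initial superstate as

$$\phi_1 \sim \mathrm{Categorical}\left(\boldsymbol{\rho}_{\text{start}}\right),$$

where $\boldsymbol{\rho}_{\text{start}}$ is the initial probability vector and the Categorical distribution is the generalization of the Bernoulli distribution to more than two possible outcomes. The remaining superstates can now be sampled as

$$\phi_{n+1} \mid \phi_n, \mathbf{G} \sim \mathrm{Categorical}\left(\frac{G_{\phi_n,1}}{\lambda_{\phi_n}}, \frac{G_{\phi_n,2}}{\lambda_{\phi_n}}, \ldots, \frac{G_{\phi_n,M}}{\lambda_{\phi_n}}\right),$$

where $G_{\phi_n,j}$ is the rate of the transition from $\phi_n$ to the $j$-th of the $M$ possible superstates, $\lambda_{\phi_n} = \sum_{j \neq \phi_n} G_{\phi_n,j}$ is the escape rate for the superstate $\phi_n$, and rates for self-transitions are set to zero. The above equation reads as follows: “the superstate $\phi_{n+1}$ is drawn (sampled) from a Categorical distribution given the superstate $\phi_n$ and the generator matrix $\mathbf{G}$.”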

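Once the $n$-th superstate $\phi_n$ is chosen, the holding time $h_n$ (the time spent in $\phi_n$) is sampled as follows

$$h_n \mid \phi_n, \mathbf{G} \sim \mathrm{Exponential}\left(\lambda_{\phi_n}\right),$$

where $\lambda_{\phi_n}$ is the escape rate of $\phi_n$.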

Finally, with ideal detectors, the detection channel is assigned deterministically to the $k$-th photon according to the radiative transition (donor or acceptor emission) that produced it. The photon's emission time $t_k$ is computed by summing all the holding times preceding the corresponding radiative transition.
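The Gillespie procedure above can be sketched as follows for a hypothetical system-FRET composite with two system states and three photophysical states, in the spirit of Fig. 4. The rates, the superstate ordering, and the assumption of unit quantum yields (every donor or acceptor relaxation emits a photon, recorded by an ideal detector in channel 1 or 2, respectively) are illustrative choices. Since the holding time and the next superstate are independent given the current superstate, the order in which they are drawn does not matter.

```python
import numpy as np


def composite_generator(k12, k21, exc, donor_relax, acceptor_relax, fret_rates):
    """Hypothetical 6 x 6 system-FRET generator; superstate = 3 * system + photophysical."""
    G = np.kron(np.array([[-k12, k12], [k21, -k21]]), np.eye(3))
    for i, fret in enumerate(fret_rates):
        block = np.array([[0.0, exc, 0.0],
                          [donor_relax, 0.0, fret],
                          [acceptor_relax, 0.0, 0.0]])
        np.fill_diagonal(block, -block.sum(axis=1))
        G[3 * i:3 * i + 3, 3 * i:3 * i + 3] += block
    return G


def gillespie(G, rho_start, t_max, radiative, rng):
    """Simulate superstates, holding times, and ideal-detector photons up to t_max.

    `radiative` maps (from, to) superstate pairs to the channel recording that photon.
    """
    n = G.shape[0]
    state = int(rng.choice(n, p=rho_start))  # initial superstate ~ Categorical(rho_start)
    t = 0.0
    states, holds, photon_times, channels = [], [], [], []
    while True:
        escape = -G[state, state]  # escape rate of the current superstate
        states.append(state)
        if escape == 0.0:  # absorbing superstate: stay until t_max
            holds.append(t_max - t)
            break
        hold = rng.exponential(1.0 / escape)  # holding time ~ Exponential(escape rate)
        if t + hold >= t_max:  # truncate the final holding time at t_max
            holds.append(t_max - t)
            break
        holds.append(hold)
        t += hold
        probs = G[state].copy()
        probs[state] = 0.0  # self-transitions excluded by construction
        nxt = int(rng.choice(n, p=probs / escape))
        if (state, nxt) in radiative:
            photon_times.append(t)  # emission time = sum of preceding holding times
            channels.append(radiative[(state, nxt)])
        state = nxt
    return states, holds, photon_times, channels


# Hypothetical rates in 1/ns; very fast system switching, as in Fig. 4, for illustration only.
G = composite_generator(k12=0.01, k21=0.02, exc=0.05, donor_relax=0.25,
                        acceptor_relax=0.3, fret_rates=(0.1, 0.5))
# Assume unit quantum yields: donor relaxation -> channel 1, acceptor relaxation -> channel 2.
radiative = {}
for i in range(2):
    radiative[(3 * i + 1, 3 * i)] = 1
    radiative[(3 * i + 2, 3 * i)] = 2

rng = np.random.default_rng(0)
rho_start = np.array([0.5, 0.0, 0.0, 0.5, 0.0, 0.0])  # start in a ground photophysical state
states, holds, photon_times, channels = gillespie(G, rho_start, 1000.0, radiative, rng)
print(len(states), len(photon_times))
```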

Furthermore, in the presence of detection effects, such as crosstalk, detection efficiency, and the IRF, we must add another layer of stochasticity, originating from the measurement model, to the stochastic output of the Gillespie simulation. That is, we stochastically assign a detection channel and a detection time to each emitted photon, as described below.

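In the presence of crosstalk and inefficient detectors, we choose the detection channel $c_k$ for the $k$-th photon emitted upon a radiative transition as

$$c_k \sim \mathrm{Categorical}\left(p_0, p_1, p_2\right),$$

where $p_0$, $p_1$, and $p_2$, respectively, denote the probabilities of the photon going undetected, being detected in channel 1, and being detected in channel 2. These probabilities depend on which fluorophore emitted the photon.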
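Assigning detection channels under crosstalk and finite detection efficiency amounts to one Categorical draw per emitted photon, as in the following sketch. The probabilities below are hypothetical; in a real analysis they would come from detector calibration.

```python
import numpy as np

# Hypothetical detection probabilities [undetected, channel 1, channel 2] for each emitter,
# combining detection efficiency and crosstalk (values are illustrative assumptions).
DETECTION_PROBS = {
    "donor": np.array([0.30, 0.63, 0.07]),
    "acceptor": np.array([0.35, 0.02, 0.63]),
}


def assign_channel(emitter, rng):
    """Return 0 (photon lost), 1, or 2 for one photon emitted by `emitter`."""
    return int(rng.choice(3, p=DETECTION_PROBS[emitter]))


rng = np.random.default_rng(1)
draws = np.array([assign_channel("donor", rng) for _ in range(20_000)])
freq = np.bincount(draws, minlength=3) / draws.size
print(freq)  # close to DETECTION_PROBS["donor"]
```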

Moreover, in the presence of the IRF, we add a stochastic delay $\delta_k$, sampled from the IRF (the probability distribution of detection delays), to the absolute photon emission time $t_k$. This results in the detection time $t_k + \delta_k$ registered by the timing hardware.
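The IRF step can be sketched by adding a sampled delay to each emission time. A Gaussian IRF with a fixed offset is assumed here purely for illustration; measured IRFs are often asymmetric, in which case one would sample delays from the measured IRF instead.

```python
import numpy as np


def apply_irf(emission_times, rng, offset=0.5, width=0.1):
    """Detection time = emission time + a stochastic IRF delay.

    The IRF is assumed Gaussian (offset and width in ns) purely for illustration.
    """
    emission_times = np.asarray(emission_times, dtype=float)
    return emission_times + rng.normal(offset, width, size=emission_times.shape)


rng = np.random.default_rng(2)
emission = np.array([1.0, 5.2, 9.7])  # hypothetical emission times in ns
detection = apply_irf(emission, rng)
print(detection)
```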

In addition, when photophysical effects (such as blinking and photobleaching) and background are present, we can generate a superstate trajectory following the same procedure as above using the generator matrices incorporating these effects as described in the previous sections.

Finally, we obtain our desired smFRET trace (see Fig. 4), consisting of the arrival times and detection channels of all detected photons.

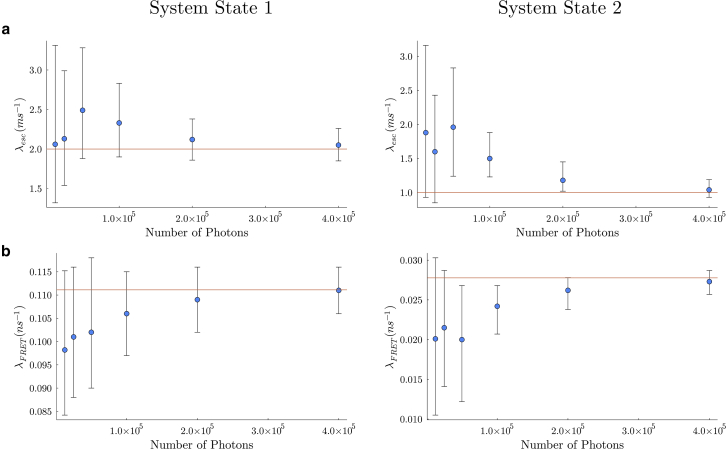

Figure 4.

Simulated data. Here, we show a superstate trajectory (in blue) generated using the Gillespie algorithm for a system-FRET composite with two system states and three photophysical states. Collectively, the first three superstates correspond to the three photophysical states when the system resides in the first system state, and the last three superstates correspond to the same photophysical states when the system resides in the second system state. The pink vertical lines mark the time points where transitions between the superstates occur. The labels between each pair of vertical lines denote the superstates and their associated holding times. The green and red dots show the photon detections in channels 1 and 2, respectively. Each photon is detected upon a radiative transition, which can occur with the system in either system state. For this plot, we have used very fast system transition rates (in ns−1) for demonstrative purposes only.

Inverse strategy

Now, armed with the likelihood for different experimental setups and a means by which to generate synthetic data (or having experimental data at hand), we proceed to learn the parameters of interest. Assuming precalibrated detector parameters, these include the transition rates that enter the generator matrix and the initial superstate probabilities. However, accurate estimation of the unknowns requires an inverse strategy capable of dealing with all existing sources of uncertainty in the problem, such as the stochasticity of photon emission and detector noise. This naturally leads us to adopt a Bayesian inference framework in which we employ Monte Carlo methods to learn distributions over the parameters.

We begin by defining the distribution of interest over the unknown parameters we wish to learn, termed the posterior. Using Bayes’ rule, the posterior is proportional to the product of the likelihood and the prior distribution as follows

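$$p\left(\boldsymbol{\rho}_{\text{start}}, \mathbf{G} \mid \text{data}\right) \propto p\left(\text{data} \mid \boldsymbol{\rho}_{\text{start}}, \mathbf{G}\right)\, p\left(\boldsymbol{\rho}_{\text{start}}, \mathbf{G}\right), \qquad (62)$$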

where the last term is the joint prior distribution over the initial probabilities and the transition rates, defined over the same domains as the parameters. The prior is often selected on the basis of computational convenience, and its influence on the posterior diminishes as more data are incorporated through the likelihood. Furthermore, the constant of proportionality is the inverse of the marginal probability of the collected data (the evidence) and can be safely ignored, as generating Monte Carlo samples only involves ratios of posterior distributions or likelihoods.
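To illustrate why the evidence can be ignored, the sketch below runs a Metropolis-Hastings sampler for a single exponential rate with a Gamma prior, using only the unnormalized log posterior. This toy model is not the BNP-FRET sampler; it is chosen because it is conjugate, so the exact posterior mean is available to check the Monte Carlo estimate. The data and prior hyperparameters are hypothetical.

```python
import numpy as np


def log_unnormalized_posterior(rate, waits, shape, scale):
    """log[likelihood x prior] for exponential waiting times and a Gamma(shape, scale) prior.

    The evidence p(data) is deliberately omitted: it cancels in the acceptance ratio.
    """
    if rate <= 0.0:
        return -np.inf
    log_lik = waits.size * np.log(rate) - rate * waits.sum()
    log_prior = (shape - 1.0) * np.log(rate) - rate / scale
    return log_lik + log_prior


def metropolis_hastings(waits, shape, scale, n_iter, rng, step=0.1, rate0=1.0):
    rate = rate0
    current = log_unnormalized_posterior(rate, waits, shape, scale)
    samples = np.empty(n_iter)
    for i in range(n_iter):
        proposal = rate * np.exp(step * rng.normal())  # multiplicative random walk
        proposed = log_unnormalized_posterior(proposal, waits, shape, scale)
        # Only a ratio of posteriors enters; log(proposal / rate) is the Hastings
        # correction for this asymmetric (log-normal) proposal.
        log_accept = proposed - current + np.log(proposal / rate)
        if np.log(rng.uniform()) < log_accept:
            rate, current = proposal, proposed
        samples[i] = rate
    return samples


rng = np.random.default_rng(5)
waits = rng.exponential(scale=0.5, size=500)  # synthetic data, true rate 2 (hypothetical units)
shape, scale = 2.0, 10.0                      # broad, hypothetical Gamma prior
samples = metropolis_hastings(waits, shape, scale, n_iter=20_000, rng=rng)

# This toy model is conjugate, so the exact posterior mean is available for comparison.
exact_mean = (shape + waits.size) / (1.0 / scale + waits.sum())
print(samples[2_000:].mean(), exact_mean)
```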

In addition, the parameter-independent factor in the likelihood first derived in Eq. 21 can be absorbed into the proportionality constant, as it does not depend on any of the parameters of interest. This results in the following expression for the posterior (in the absence of detector dead time and IRF, for simplicity)

| (63) |

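Next, assuming a priori that the transition rates are independent of each other and of the initial probabilities, we can simplify the prior as follows

$$p\left(\boldsymbol{\rho}_{\text{start}}, \mathbf{G}\right) = p\left(\boldsymbol{\rho}_{\text{start}}\right) \prod_{i} p\left(\lambda_i\right), \qquad (64)$$

where the product runs over all transition rates $\lambda_i$ entering the generator matrix $\mathbf{G}$. We select the Dirichlet prior distribution over the initial probabilities, as this prior is conveniently defined over a domain where the probability vectors drawn from it sum to unity. That is,

$$\boldsymbol{\rho}_{\text{start}} \sim \mathrm{Dirichlet}\left(\boldsymbol{\alpha}\right), \qquad (65)$$

where the Dirichlet distribution is a multivariate generalization of the Beta distribution and $\boldsymbol{\alpha}$ is a vector of the same size as the superstate space. Parameters of a prior are typically termed hyperparameters; as such, $\boldsymbol{\alpha}$ collects as many hyperparameters as its size.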
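Drawing initial probability vectors from a Dirichlet prior is straightforward with NumPy's `Generator.dirichlet`. The sketch below assumes a flat prior (all hyperparameters equal to one) over six superstates, as in a composite with two system states and three photophysical states, and confirms that each draw sums to unity.

```python
import numpy as np

n_superstates = 6               # e.g. 2 system states x 3 photophysical states
alpha = np.ones(n_superstates)  # hypothetical flat hyperparameters

rng = np.random.default_rng(3)
rho_start_samples = rng.dirichlet(alpha, size=10_000)

print(rho_start_samples.sum(axis=1)[:3])  # every draw is a probability vector
print(rho_start_samples.mean(axis=0))     # approaches alpha / alpha.sum()
```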

In addition, we select Gamma prior distributions for individual rates. That is,

| (66) |

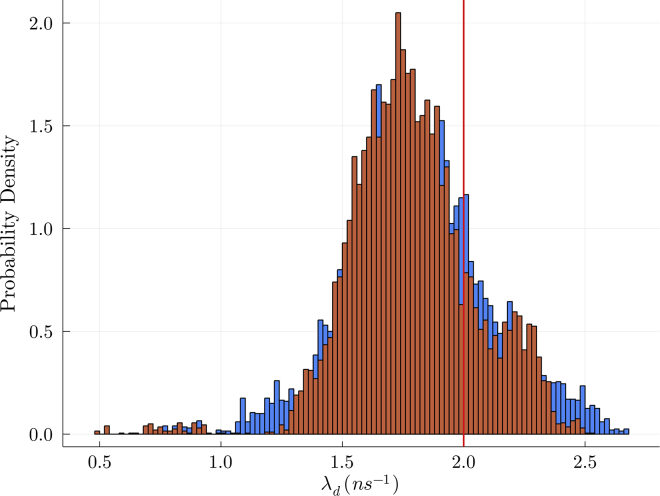

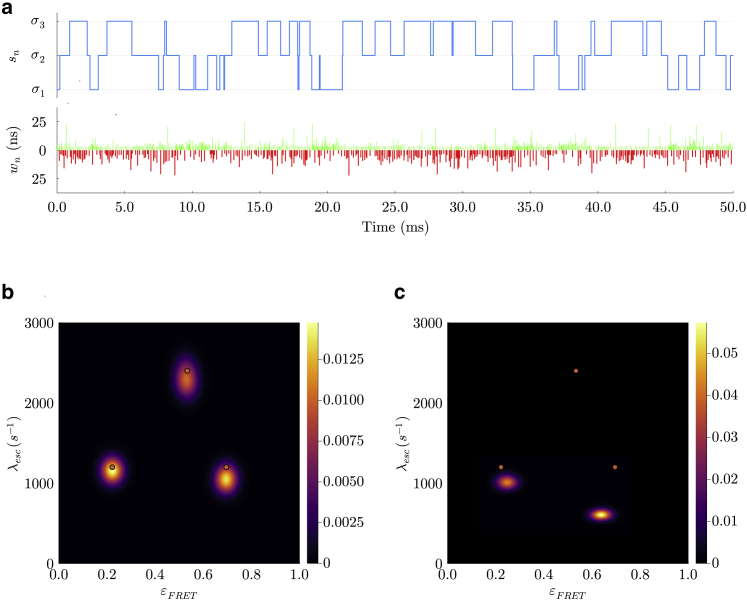

guaranteeing positive values. Here, the shape parameter $\alpha_{\lambda}$ and $\lambda_{\text{ref}}$ (a reference rate parameter) are hyperparameters of the Gamma prior. For simplicity, these hyperparameters are usually chosen (with appropriate units) such that the prior distributions are very broad, minimizing their influence on the posterior.
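The following sketch evaluates a Gamma log prior and the factorized log prior over independent rates (as in Eq. 64). It assumes a shape/scale parameterization with scale equal to the reference rate divided by the shape, so that the prior mean equals the reference rate and a small shape makes the prior broad. This parameterization and the numerical values are assumptions for illustration.

```python
from math import lgamma

import numpy as np


def log_gamma_prior(rate, shape, ref_rate):
    """log Gamma(rate; shape, scale = ref_rate / shape); the prior mean equals ref_rate."""
    scale = ref_rate / shape
    return (shape - 1.0) * np.log(rate) - rate / scale - lgamma(shape) - shape * np.log(scale)


def log_prior_rates(rates, shape, ref_rates):
    """Independent priors as in Eq. 64: the joint log prior over rates is a sum."""
    return sum(log_gamma_prior(r, shape, ref) for r, ref in zip(rates, ref_rates))


shape = 1.0     # hypothetical; a small shape gives a broad prior
ref_rate = 2.0  # hypothetical reference rate (e.g. in 1/ms)

rng = np.random.default_rng(4)
draws = rng.gamma(shape, ref_rate / shape, size=100_000)
print(draws.min() > 0.0, draws.mean())  # strictly positive draws with mean near ref_rate
print(log_prior_rates([1.5, 0.5], shape, [ref_rate, ref_rate]))
```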