Abstract

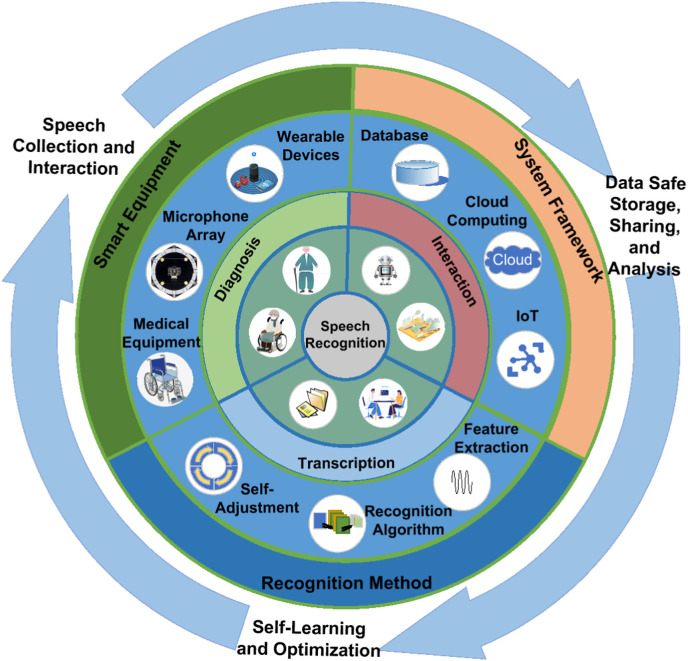

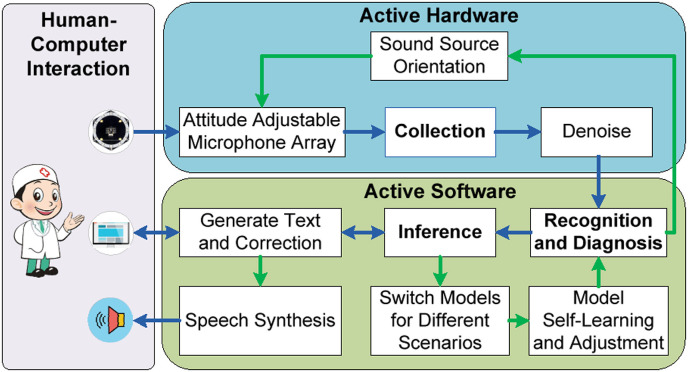

The growth and aging of the world population have led to a shortage of medical resources in recent years, especially during the COVID-19 pandemic. Fortunately, the rapid development of robotics and artificial intelligence technologies is helping the healthcare field adapt to these challenges. Among them, intelligent speech technology (IST) has served doctors and patients by improving the efficiency of medical work and alleviating the medical burden. However, problems such as noise interference in complex medical scenarios and pronunciation differences between patients and healthy people hamper the broad application of IST in hospitals. In recent years, technologies such as machine learning have rapidly advanced intelligent speech recognition and are expected to solve these problems. This paper first introduces IST's procedure and system architecture and analyzes its application in medical scenarios. Second, we review existing IST applications in smart hospitals in detail, including electronic medical documentation, disease diagnosis and evaluation, and human-medical equipment interaction. In addition, we elaborate on an application case of IST in the early recognition, diagnosis, rehabilitation training, evaluation, and daily care of stroke patients. Finally, we discuss IST's limitations, challenges, and future directions in the medical field. Furthermore, we propose a novel medical voice analysis system architecture that employs active hardware, active software, and human-computer interaction to realize intelligent and evolvable speech recognition. This comprehensive review and the proposed architecture offer directions for future studies on IST and its applications in smart hospitals.

Keywords: Automatic speech recognition, Smart hospital, Machine learning, Transcription, Diagnosis, Human-computer interaction

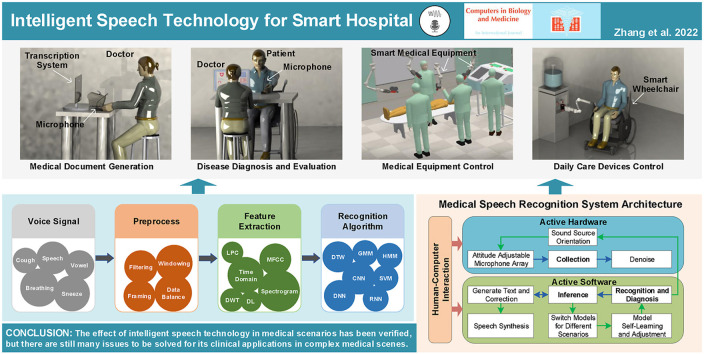

Graphical abstract

1. Introduction

The average human lifespan is increasing with the improvement of living standards and medical technology, leading to a rapidly aging population. The proportion of the world's population aged 60 and over is expected to rise to 22% by 2050 [1], which poses numerous challenges to the healthcare system [2]. Population aging has increased healthcare costs and caused shortages in human and material resources. In addition, because medical resources are unevenly distributed worldwide and underdeveloped areas lack advanced medical technology and equipment, some sudden-onset diseases are not treated in a timely and effective manner [3]. Moreover, some early symptoms are often imperceptible, so the disease worsens and the best window for treatment is missed.

With the development of robotics and artificial intelligence (AI) technologies, machines can in some cases diagnose and assess diseases more efficiently and accurately and can take over some nursing tasks in assisting patients with daily living, which alleviates the shortage of medical resources. For example, intelligent image processing methods based on deep learning (DL) have been applied to X-ray, CT, ultrasound, and facial images for tasks such as COVID-19 detection [4–6], paralysis assessment [7,8], and autism screening [9]. In addition, intelligent speech technology (IST) plays a critical role in smart hospitals because language is the most natural means of communication between doctors and patients and carries much information, such as patients’ identity, age, emotion, and even symptoms of diseases [10].

IST refers to the use of machine learning (ML) methods to process human vocal signals in order to obtain information and realize human-machine communication. In recent years, speech signal research has developed rapidly with ML. IST covers many research areas, such as Automatic Speech Recognition (ASR) [11], Voiceprint Recognition, and Speech Synthesis. After years of development, IST has made significant progress and has gradually been applied in social life. For example, Apple's Siri, the speech-based search services of Google and Baidu, and smart speakers [12] have all entered people's lives and made them more convenient.

There are many review articles on speech technologies in medical applications, such as medical reporting [13], clinical documentation [14], speech impairment assessment [15], speech therapy [16], and healthcare [10,17]. However, a review of state-of-the-art IST applications in smart hospitals is still lacking. The smart hospital is the key to significantly improving the efficiency of medical work, alleviating the medical burden, and strengthening the robustness of the medical system in response to public health events such as the COVID-19 pandemic. Therefore, the application of IST in smart hospitals and smart healthcare needs to be reviewed to guide further development.

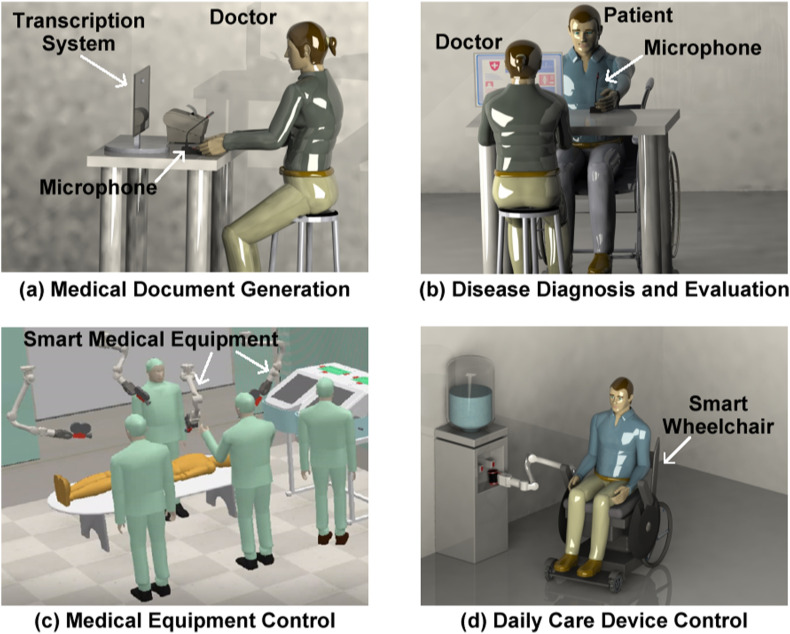

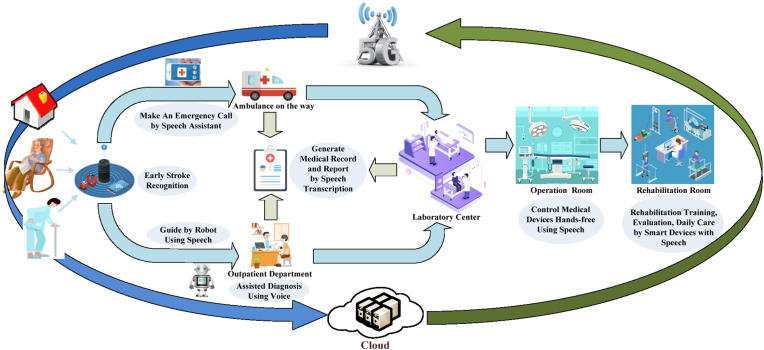

As shown in Fig. 1, in addition to its use in daily life, IST is a crucial part of smart hospitals, where it processes vocal signals produced by healthy people and patients. It is gradually being applied in medical and rehabilitation scenarios [17,18]. For example, IST can be used as a transcription tool to help doctors record patient information such as personal details and chief complaints. It can also interactively guide patients in seeking medical services. Moreover, IST can be an auxiliary tool for doctors in the preliminary diagnosis of diseases. At the same time, IST can identify patients’ emotional states from their speech to help doctors communicate better with them. Furthermore, IST combined with robotics, Internet of Things (IoT) technology, and 5G communication technology can support identifying and monitoring early symptoms of diseases, healthcare for the elderly, telemedicine, etc.

Fig. 1.

Examples of the applications of intelligent speech technology (IST) in smart hospitals.

This paper mainly introduces the latest research progress and applications of IST in the healthcare field, summarizes and analyzes the existing research from the perspective of technical realization, and discusses the current challenges and future development directions. The rest of the paper is organized as follows. Section 2 gives the search methodology. Section 3 introduces the typical flow of intelligent speech signal processing, the system architecture of ASR, and an overview of IST applications in medical scenarios. The applications of IST in electronic medical documentation, disease diagnosis and evaluation, and human-medical equipment interaction are reviewed in Sections 4, 5, and 6, respectively. Section 7 presents a case study of IST in stroke patients’ early recognition, diagnosis, rehabilitation training, evaluation, and daily care. The limitations of current speech technologies in smart hospital applications and future directions are discussed in Section 8. Finally, we conclude this work in Section 9.

2. Search methodology

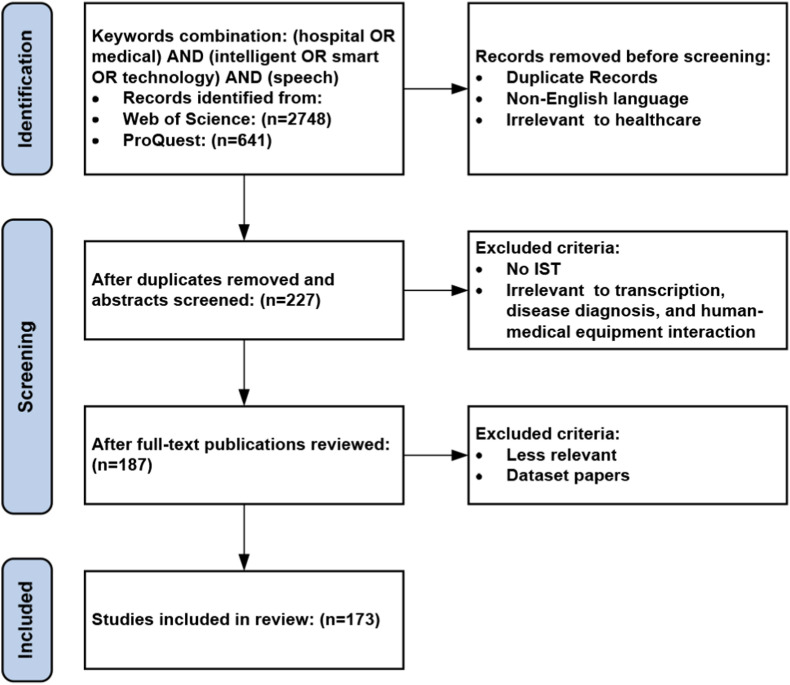

We performed the literature search on Web of Science and ProQuest. To ensure the quality of this review, the search included all available English-language articles published in peer-reviewed journals up to July 2022. Moreover, to target only papers related to IST and healthcare, the following keyword combination was searched in titles and abstracts: (hospital OR medical) AND (intelligent OR smart OR technology) AND (speech). Only review articles and research articles were included.

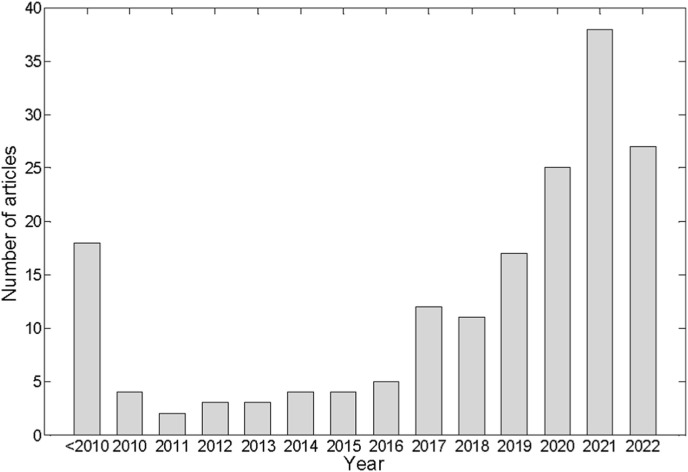

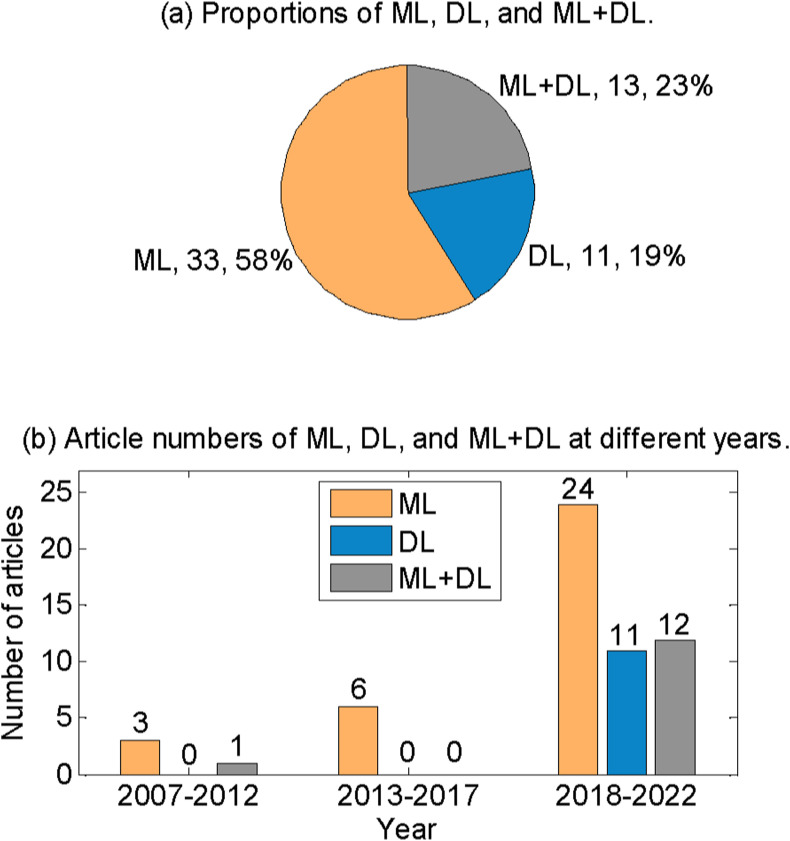

Fig. 2 illustrates the article selection process. The initial search returned 3389 articles. After screening the titles and abstracts to remove duplicates, non-English articles, and articles irrelevant to healthcare, 227 articles were retained. Then, 187 articles were retained after screening the full text and excluding the studies irrelevant to IST, transcription, disease diagnosis, and human-medical equipment interaction. Finally, we included 173 articles after removing the less relevant articles and dataset papers. The 173 articles are classified by year of publication, as shown in Fig. 3. We also included 28 articles about the methods and algorithms of IST and 15 web pages of medical equipment using IST.

Fig. 2.

Article selection process of the systematic review.

Fig. 3.

The number of studies included in this review by the year of publication and its trend.

3. Overview of intelligent speech technologies

Speech technology generally includes collecting, coding, transmitting, and processing speech signals. However, the speech signals of doctors and patients collected in a hospital's public areas contain background noise. Moreover, some patients cannot speak or pronounce words clearly because of illness or dialect. These issues make speech signals harder to acquire and process. To counter noise interference, the acquisition equipment can be upgraded, for example by using a microphone array to suppress noise and acquire speech signals directionally [19,20]. Beyond noise suppression and the collection of high-quality speech signals, current research mainly focuses on processing these signals with state-of-the-art AI algorithms.

As shown in Fig. 4, speech signal processing mainly includes pre-processing, feature extraction, and recognition [21]. Among them, feature extraction and recognition are the critical steps of IST, and the latest AI technologies are mainly used to improve their performance. Therefore, this section first introduces the general flow of intelligent speech processing, then presents the architecture of an ASR system, and finally summarizes the application of IST in the medical field.

Fig. 4.

Typical processing flow of a speech system.

3.1. Procedure of intelligent speech processing

3.1.1. Pre-processing

The pre-processing of speech signals is the first step in IST. Speech signals are generally real-time audio streams, that is, time sequences. They may contain many invalid and silent segments, which are detected and filtered out by a voice activity detection algorithm so that only the valid speech segments are retained for subsequent processing [22]. The retained segments are then usually processed by pre-emphasis, framing, and windowing.

To emphasize the high-frequency part of the speech signals and improve its resolution, the signals are usually pre-emphasized using a first-order Finite Impulse Response high-pass digital filter [23]. Speech signals are time-varying. However, because the human articulatory muscles move slowly during speaking, a speech signal is approximately stationary over a short interval and can be treated there as a steady-state signal. Therefore, the speech signals need to be divided into short frames of equal length before processing. Adjacent frames are set to overlap during framing to ensure the short-term reliability of the speech signal features and avoid abrupt feature changes between adjacent frames.

Windowing is usually performed on each frame to reduce the error between each speech segment and the original signal caused by truncation. The commonly used window functions include the Rectangular window, Hanning window, and Hamming window [24]. Processing each frame with one of these window functions, which have low-pass characteristics, yields the speech signal required for feature extraction.
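The three pre-processing steps can be sketched as follows. This is a minimal illustration that assumes NumPy; the function name `preprocess`, the pre-emphasis coefficient 0.97, and the 25 ms frame with 10 ms hop at 16 kHz are common but assumed choices, not values taken from the reviewed studies.

```python
import numpy as np

def preprocess(signal, frame_len=400, hop=160, alpha=0.97):
    """Pre-emphasize, split into overlapping frames, and apply a Hamming window."""
    signal = np.asarray(signal, dtype=float)
    # First-order FIR high-pass filter: y[n] = x[n] - alpha * x[n-1]
    emphasized = np.append(signal[0], signal[1:] - alpha * signal[:-1])
    # Number of complete frames (assumes len(signal) >= frame_len)
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    # Row i holds the sample indices of frame i; consecutive rows overlap
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return emphasized[idx] * np.hamming(frame_len)

# One second of a synthetic 440 Hz tone sampled at 16 kHz
t = np.arange(16000) / 16000.0
frames = preprocess(np.sin(2 * np.pi * 440 * t))   # shape (98, 400)
```

Each row of `frames` is one windowed frame; with a hop shorter than the frame length, adjacent frames share samples, which is the overlap described above.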

3.1.2. Feature extraction

The second step of IST is feature extraction, which is also crucial in determining the performance of the intelligent voice processing system. The feature extraction of speech signals aims to convert them into time-varying feature vector sequences through feature value extraction algorithms. The features of speech signals include time domain features, frequency domain features, and other transform domain features [26].

a) Time domain features

The common time domain features of speech signals include short-term amplitude, short-term energy, pitch period, pitch frequency, pitch, and zero-crossing rate. The short-term amplitude M(i) is:

$$M(i) = \sum_{n=0}^{L-1} \lvert y_i(n) \rvert, \quad 1 \le i \le N \tag{1}$$

The short-term energy E(i) is:

$$E(i) = \sum_{n=0}^{L-1} y_i^2(n), \quad 1 \le i \le N \tag{2}$$

where $y_i(n)$ refers to the amplitude of the n-th sample in the i-th frame of the speech signals, N is the total number of frames after framing, and L is the frame length. M(i) and E(i) are mainly used to distinguish the unvoiced and voiced segments in speech pronunciation. The difference between M(i) and E(i) is that the former has fewer fluctuations than the latter.

The pitch period is the vibration period of the vocal folds when a person produces a voiced sound and is the reciprocal of the fundamental frequency $F_0$ [48], which can be estimated from the speech signal using pitch detection algorithms. Pitch represents how high or low the sound frequency is and can be expressed in terms of $F_0$ as

| (3) |

The zero-crossing rate Z(i) refers to the number of changes of the sign of the sampled value in each frame of the speech signal:

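$$Z(i) = \frac{1}{2} \sum_{n=1}^{L-1} \bigl\lvert \operatorname{sgn}[y_i(n)] - \operatorname{sgn}[y_i(n-1)] \bigr\rvert \tag{4}$$

where the sign function sgn[x] is:

$$\operatorname{sgn}[x] = \begin{cases} 1, & x \ge 0 \\ -1, & x < 0 \end{cases} \tag{5}$$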

Z(i) is also used to distinguish between unvoiced and voiced segments and is often combined with E(i) for endpoint detection, that is, for locating the boundaries between non-speech and speech segments. Z(i) is more effective than E(i) when there is considerable background noise.
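As a minimal sketch of the time domain features, the short-term amplitude, short-term energy, and zero-crossing rate can be computed per frame with NumPy. The code assumes the standard definitions (sum of absolute values, sum of squares, and half the summed absolute differences of the sample signs); the function name `short_time_features` is illustrative.

```python
import numpy as np

def short_time_features(frames):
    """Return M(i), E(i), and Z(i) for an array of frames with shape (N, L)."""
    frames = np.asarray(frames, dtype=float)
    amplitude = np.abs(frames).sum(axis=1)        # short-term amplitude M(i)
    energy = (frames ** 2).sum(axis=1)            # short-term energy E(i)
    signs = np.where(frames >= 0, 1, -1)          # sgn[x]: +1 for x >= 0, else -1
    # Each sign change contributes |(+1) - (-1)| = 2, hence the factor 1/2
    zcr = 0.5 * np.abs(np.diff(signs, axis=1)).sum(axis=1)
    return amplitude, energy, zcr

frames = np.array([[1.0, -1.0, 1.0, -1.0],    # rapidly alternating frame
                   [0.5, 0.5, 0.5, 0.5]])     # constant frame
M, E, Z = short_time_features(frames)
# M -> [4.0, 2.0], E -> [4.0, 1.0], Z -> [3.0, 0.0]
```

The alternating frame has three sign changes and the constant frame has none, which is the contrast that makes Z(i) useful for separating noise-like unvoiced segments from voiced ones.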

b) Frequency domain features

The spectrum of the speech signal can be obtained by converting each frame of a time-domain speech signal to the frequency domain using the Fast Fourier Transform (FFT). The spectrum contains the frequency and amplitude information of the speech signal. The spectrum can only show the feature of one frame of the speech signal. Therefore, we can combine the spectrum of all speech frames to form a spectrogram to observe the frequency domain features of the whole speech signal. The spectrogram contains three kinds of information: frequency, time, and energy.
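The construction of a spectrogram from per-frame spectra can be sketched as below. This is an illustration under assumed settings (NumPy, a 256-sample Hamming-windowed frame, a hop of 128 samples); the function name `spectrogram` is hypothetical.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Power spectrogram with shape (time frames, frequency bins)."""
    signal = np.asarray(signal, dtype=float)
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # rfft keeps the non-negative frequencies of each real-valued frame
    return np.abs(np.fft.rfft(frames, axis=1)) ** 2

fs = 8000                                    # sampling rate in Hz
t = np.arange(fs) / fs
S = spectrogram(np.sin(2 * np.pi * 1000 * t))
# Frequency bin k corresponds to k * fs / frame_len Hz
peak_hz = S.mean(axis=0).argmax() * fs / 256  # 1000.0 for this test tone
```

Each row of `S` is the spectrum of one frame, so the array carries the three kinds of information named above: the row index is time, the column index is frequency, and the value is energy.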

c) Other transform domain features

In addition to the characteristic parameters of speech signals commonly used in the time and frequency domains, researchers also use other characteristic parameters in the transform domain to improve the performance of the recognition. For example, the parameters in the transform domain can reflect the characteristics of people's vocal organs and auditory organs as speech features. Therefore, these feature parameters have a significant effect on speech signal recognition. Other domain features commonly used for speech signals include Mel Frequency Cepstral Coefficients (MFCC) [49,50], Discrete Wavelet Transform (DWT), Linear Prediction Coefficients (LPC), Linear Prediction Cepstral Coefficients, Perceptual Linear Prediction [51], and Line Spectral Frequency [26].

The above are common feature extraction methods in IST. However, in specific scenarios, we need to adjust the feature extraction method according to the type and characteristics of the collected signals and the performance of the speech recognition system. The extracted speech features are the input of speech recognition.

3.1.3. Recognition

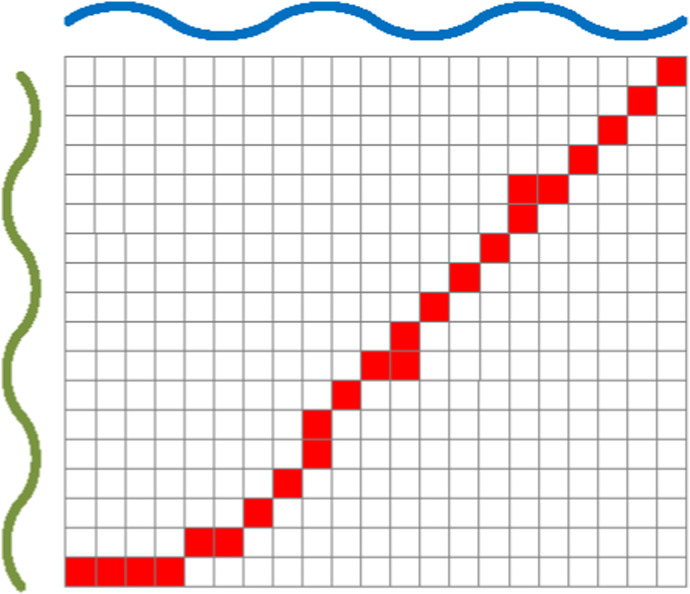

Recognition based on the digital features of the speech signals is the final step in intelligent speech processing. There are many recognition algorithms. For example, Dynamic Time Warping (DTW) is a method for calculating the similarity of two temporal sequences. The similarity between a speech signal sample and a standard speech signal is obtained by comparing their feature sequences [52]. As shown in Fig. 5, DTW borrows the idea of dynamic programming: the minimum accumulated distance D(i, j) between the two sequences up to times i and j is

$$D(i, j) = \mathrm{Dist}(i, j) + \min\{D(i-1, j),\ D(i, j-1),\ D(i-1, j-1)\} \tag{6}$$

where Dist(i, j) is the distance between the two speech signals at times i and j, respectively, generally the Euclidean distance. DTW requires little data and no pre-training, is easy to implement and apply, and plays a vital role in small-sample scenarios.

Fig. 5.

Schematic diagram of the shortest path of dynamic time warping (DTW) algorithm.
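A minimal sketch of the DTW recursion is given below, assuming NumPy, the Euclidean local distance, and the standard three-neighbor step pattern; the function name `dtw_distance` and the toy sequences are illustrative only.

```python
import numpy as np

def dtw_distance(a, b):
    """Accumulated DTW cost between two feature sequences of shape (T, d)."""
    a = np.asarray(a, dtype=float).reshape(len(a), -1)
    b = np.asarray(b, dtype=float).reshape(len(b), -1)
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)   # D[i, j]: best cost aligning a[:i] with b[:j]
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dist = np.linalg.norm(a[i - 1] - b[j - 1])   # Euclidean Dist(i, j)
            D[i, j] = dist + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m]

template = [0, 1, 2, 3, 2, 1, 0]
slow = [0, 0, 1, 1, 2, 3, 3, 2, 1, 0]     # same contour, stretched in time
other = [3, 3, 3, 3, 3, 3, 3]
print(dtw_distance(template, slow))        # 0.0: warping absorbs the time stretch
print(dtw_distance(template, other))       # 12.0
```

The stretched sequence matches the template at zero cost even though the two differ in length, which is why DTW suits template matching when speaking rates vary and training data are scarce.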

ML algorithms are the mainstream approach in current intelligent speech recognition. They use probability and statistics together with a dataset to train a model that captures the mapping between input and output, thereby realizing the feature recognition of speech signals. Table 1 shows the commonly used ML algorithms in medical speech signal processing. The traditional ML algorithms include Gaussian Mixture Model (GMM), Hidden Markov Model (HMM), Support Vector Machine (SVM), etc. The DL algorithms include Deep Neural Network (DNN), Convolutional Neural Network (CNN), and the Long Short-Term Memory (LSTM) network, a type of Recurrent Neural Network (RNN), etc. Some of the algorithms are briefly introduced as follows.

Table 1.

Common machine learning algorithms for medical speech signal processing.

| Algorithm | Characteristics | Ref. |
|---|---|---|
| GMM | Models the probability density function of observed data samples with a multivariate Gaussian mixture density. | [27–29] |
| HMM | A doubly stochastic process in which an unobservable Markov chain is defined by a state transition matrix. Each state of the chain is associated with a discrete or a continuous output probability distribution. | [30–33] |
| SVM | A binary classifier with advantages in small-sample classification, such as pathological voice detection. | [34–37] |
| DNN | Consists of fully connected layers and is popular for learning a hierarchy of invariant and discriminative features. Features learned by DNNs generalize better than traditional hand-crafted features. | [38–40] |
| CNN | The convolutional layer is the main building block. Designed for image recognition but also extended to speech technology, where it classifies speech signals from their spectrograms. | [41–44] |
| LSTM | A type of recurrent neural network (RNN) architecture that is well suited to classifying, processing, and predicting time series when there are very long time lags of unknown size between important events. | [45–47] |

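A Gaussian model is the Gaussian distribution of a one-dimensional variable x with mean μ and variance σ²:

$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \tag{7}$$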

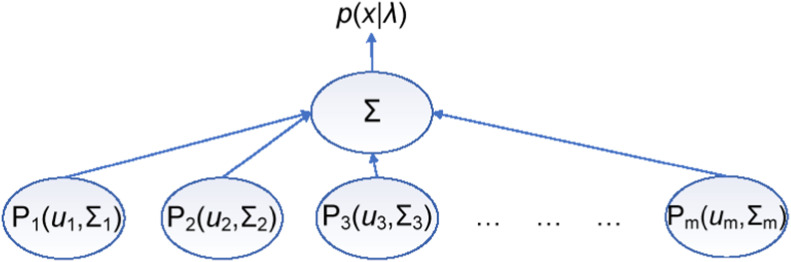

As shown in Fig. 6, GMM refers to the superposition of multiple Gaussian models, and its variables are multi-dimensional vectors [53]. Each Gaussian component p(x) of the mixture is represented by the mean and covariance matrix of the variables

$$p(\mathbf{x}) = \frac{1}{(2\pi)^{D/2} \lvert \Sigma \rvert^{1/2}} \exp\!\left(-\frac{1}{2} (\mathbf{x}-\mu)^{T} \Sigma^{-1} (\mathbf{x}-\mu)\right) \tag{8}$$

where the multidimensional variable is $\mathbf{x} = (x_1, x_2, x_3, \ldots, x_D)$, the covariance matrix is $\Sigma = E[(\mathbf{x}-\mu)(\mathbf{x}-\mu)^{T}]$, and the mean is $\mu = E(\mathbf{x})$. This model is usually trained using the Expectation-Maximization algorithm, which iteratively increases the likelihood of the training set.

Fig. 6.

Schematic diagram of Gaussian Mixture Model (GMM).
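How a GMM assigns a density to a feature vector can be sketched as follows. The sketch assumes NumPy and the usual form of a mixture as a weighted sum of multivariate Gaussian components; the mixture weights, which are not defined in the text above, and the function names are assumptions made for illustration. Training with Expectation-Maximization is not shown.

```python
import numpy as np

def gaussian_pdf(x, mean, cov):
    """Density of a D-dimensional Gaussian with the given mean and covariance at x."""
    x, mean, cov = (np.asarray(v, dtype=float) for v in (x, mean, cov))
    diff = x - mean
    norm = np.sqrt((2 * np.pi) ** x.size * np.linalg.det(cov))
    # solve(cov, diff) computes cov^{-1} diff without forming the inverse
    return np.exp(-0.5 * diff @ np.linalg.solve(cov, diff)) / norm

def gmm_pdf(x, weights, means, covs):
    """Weighted sum of Gaussian components; weights are assumed to sum to 1."""
    return sum(w * gaussian_pdf(x, m, c) for w, m, c in zip(weights, means, covs))

# Hypothetical two-component mixture in two dimensions
weights = [0.5, 0.5]
means = [[0.0, 0.0], [4.0, 4.0]]
covs = [np.eye(2), np.eye(2)]
p_center = gmm_pdf([0.0, 0.0], weights, means, covs)   # at a component mean
p_between = gmm_pdf([2.0, 2.0], weights, means, covs)  # midway between the means
```

In a classifier, one such mixture is typically fitted per class, and a feature vector is assigned to the class whose mixture gives it the highest density.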

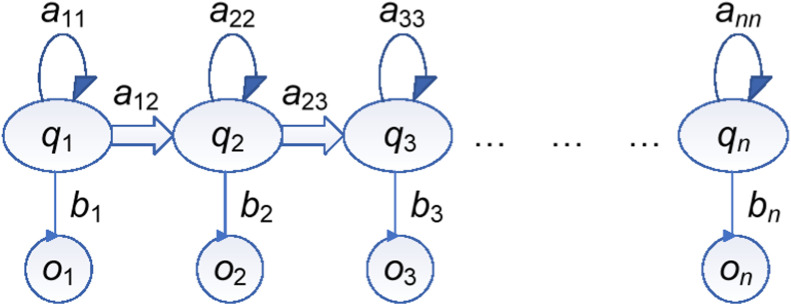

Markov chains represent the transition relationship of states. As shown in Fig. 7, HMM adds a mapping between states and observations on top of a Markov chain [54]. Writing $q_t$ for the hidden state and $O_t$ for the observed value at time t, $a_{ij}$ is the probability of transitioning from the current state i to the next state j

$$a_{ij} = P(q_{t+1} = j \mid q_t = i) \tag{9}$$

and $b_t$ is the probability that the current state maps to the observed value

$$b_t = P(O_t \mid q_t) \tag{10}$$

Fig. 7.

Schematic diagram of Hidden Markov Model (HMM).

We can use this model to establish the mapping relationship between the observation sequence and the actual state sequence. Then, with the input speech features as the observations, the hidden state sequence with the highest probability can be found as the model's output.
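Finding the most probable hidden state sequence is commonly done with the Viterbi algorithm, which the text above does not name explicitly. The following is a minimal sketch for a discrete-observation HMM, assuming NumPy and strictly positive probabilities; the toy parameters are invented for illustration.

```python
import numpy as np

def viterbi(pi, A, B, obs):
    """Most probable hidden-state path.
    pi[i]: initial probability, A[i, j]: transition i -> j, B[i, k]: P(symbol k | state i)."""
    pi, A, B = (np.asarray(m, dtype=float) for m in (pi, A, B))
    T, n = len(obs), len(pi)
    logp = np.log(pi) + np.log(B[:, obs[0]])      # best log-probability per state at t = 0
    back = np.zeros((T, n), dtype=int)            # back[t, j]: best predecessor of state j
    for t in range(1, T):
        scores = logp[:, None] + np.log(A)        # scores[i, j]: best path into i, then i -> j
        back[t] = scores.argmax(axis=0)
        logp = scores.max(axis=0) + np.log(B[:, obs[t]])
    path = [int(logp.argmax())]                   # start from the best final state
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))       # follow the stored predecessors
    return path[::-1]

pi = [0.5, 0.5]
A = [[0.9, 0.1], [0.1, 0.9]]       # states tend to persist
B = [[0.9, 0.1], [0.2, 0.8]]       # state 0 mostly emits symbol 0, state 1 symbol 1
print(viterbi(pi, A, B, [0, 0, 1, 1, 1]))   # [0, 0, 1, 1, 1]
```

Working in log-probabilities avoids numerical underflow on long observation sequences such as frame-level speech features.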

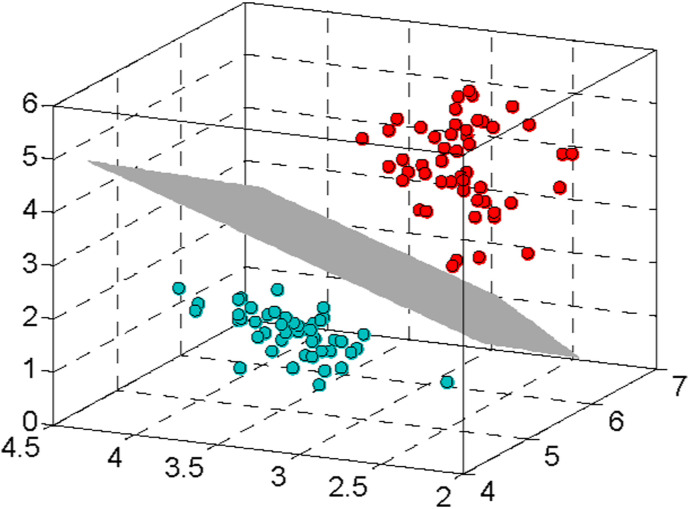

As illustrated in Fig. 8, the basic idea of SVM is to find an optimal hyperplane in a high-dimensional space that separates the two classes of a binary classification problem. The hyperplane should ensure the minimum error rate of the classification [55]. The hyperplane in the high-dimensional space can be expressed as

$$W^{T} x + b = 0 \tag{11}$$

Fig. 8.

Diagram of the hyperplane-based classification of Support Vector Machine (SVM).

The training process of SVM searches for the parameters W and b with which the hyperplane best separates the different categories.
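As an illustration of fitting W and b, the sketch below trains a linear SVM by sub-gradient descent on the regularized hinge loss. This is one simple training scheme chosen for brevity and is an assumption; practical SVM solvers usually rely on quadratic programming, and the function names, learning rate, and toy data are hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lr=0.1, reg=0.01, epochs=200):
    """Fit W and b of the hyperplane W.x + b = 0; labels y must be +1 or -1."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    W, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        margins = y * (X @ W + b)
        viol = margins < 1                 # samples inside the margin or misclassified
        # Sub-gradient of reg/2 * ||W||^2 + mean(max(0, 1 - margin))
        grad_W = reg * W - (y[viol, None] * X[viol]).sum(axis=0) / len(X)
        grad_b = -y[viol].sum() / len(X)
        W -= lr * grad_W
        b -= lr * grad_b
    return W, b

def predict(X, W, b):
    """Classify by the side of the hyperplane on which each sample falls."""
    return np.where(np.asarray(X, dtype=float) @ W + b >= 0, 1, -1)

# Toy, linearly separable data standing in for two classes of voice features
X = np.array([[2.0, 2.0], [3.0, 3.0], [2.5, 3.5],
              [-2.0, -2.0], [-3.0, -1.0], [-1.5, -3.0]])
y = np.array([1, 1, 1, -1, -1, -1])
W, b = train_linear_svm(X, y)
labels = predict(X, W, b)
```

Only samples that violate the margin contribute to the update, which reflects the role of support vectors in determining the hyperplane.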

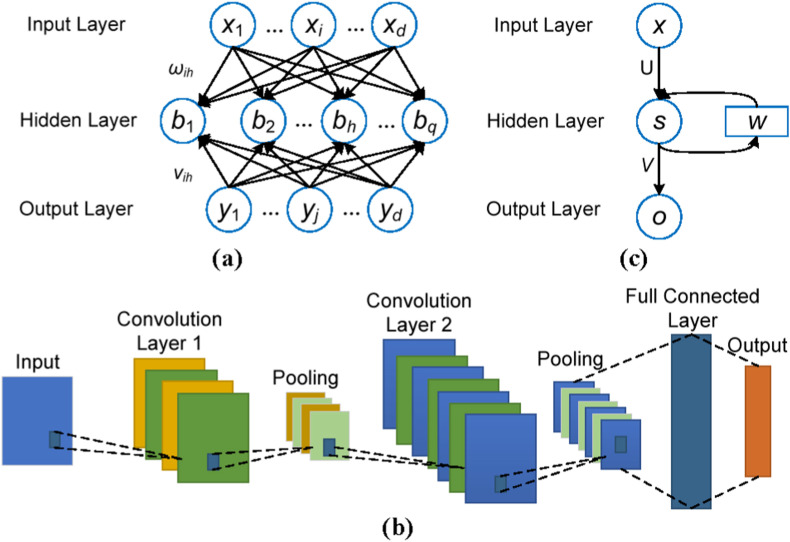

In recent years, DL has considerably improved the performance of intelligent speech processing. As shown in Fig. 9(a), the basic unit of a neural network is a neuron. In addition to an input layer and an output layer, a DNN has multiple hidden layers. Each layer contains numerous neurons, which are fully connected to those of the adjacent layers to form a network [56]. The output vector $v^{l}$ of layer l is

$$v^{l} = f\left(W^{l} v^{l-1} + b^{l}\right) \tag{12}$$

which is also the input vector of the next layer, where f(·) is the activation function and $W^{l}$ and $b^{l}$ are the weight matrix and the bias vector of layer l, respectively. Here $v^{l} \in \mathbb{R}^{N_l \times 1}$, $W^{l} \in \mathbb{R}^{N_l \times N_{l-1}}$, $b^{l} \in \mathbb{R}^{N_l \times 1}$, and $N_l$ is the number of neurons in layer l. Therefore, by adjusting $W^{l}$ and $b^{l}$ of the model using the training data, we can establish connections among neurons in the current and previous layers and finally obtain the mapping relationship between the input and output.

Fig. 9.

Schematic diagram of several classic neural network models. (a) Deep Neural Network (DNN). (b) Convolutional Neural Network (CNN). (c) Recurrent Neural Network (RNN).
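The layer-by-layer computation of a DNN can be sketched as a forward pass in NumPy. The ReLU hidden activation, the softmax output, the layer sizes, and the random weights are assumptions for illustration; in practice the weights and biases are learned from training data.

```python
import numpy as np

def dnn_forward(x, weights, biases):
    """Propagate x through fully connected layers: v_l = f(W_l v_{l-1} + b_l)."""
    v = np.asarray(x, dtype=float)
    for l, (W, b) in enumerate(zip(weights, biases)):
        z = W @ v + b                              # W has shape (N_l, N_{l-1})
        is_output = l == len(weights) - 1
        v = z if is_output else np.maximum(z, 0.0) # ReLU on hidden layers (assumed f)
    e = np.exp(v - v.max())                        # numerically stable softmax
    return e / e.sum()

rng = np.random.default_rng(0)
sizes = [13, 32, 16, 2]   # hypothetical: 13 input features, two hidden layers, 2 classes
weights = [rng.normal(0, 0.1, (n_out, n_in)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]
probs = dnn_forward(rng.normal(size=13), weights, biases)   # class probabilities
```

The output of each layer feeds the next, so the weight shapes chain as (32, 13), (16, 32), and (2, 16) for the sizes chosen here.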

As shown in Fig. 9(b), a CNN mainly consists of two components. One is the convolutional layer, composed of filters that calculate local feature maps. $(h_k)_{ij}$ refers to the k-th output feature obtained from the input feature unit at position (i, j)

$$(h_k)_{ij} = (W_k * q)_{ij} + b_k \tag{13}$$

where q represents the input feature unit, * denotes the convolution operation, and $W_k$ and $b_k$ represent the k-th filter and bias, respectively, obtained from the training data. The other component of the CNN is the pooling layer, which reduces the dimensionality of each feature map and retains only the more critical features. Finally, the last layer of the CNN is usually a fully connected layer, which is used to implement regression or classification tasks [57].
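The two CNN components can be sketched with a single filter and a max-pooling step in NumPy. As in most deep learning frameworks, the "convolution" here is implemented as a cross-correlation without filter flipping; the filter values, input patch, and function names are hypothetical.

```python
import numpy as np

def conv2d(q, W, b):
    """Valid 2-D cross-correlation of input q with one filter W, plus bias b."""
    kh, kw = W.shape
    out = np.empty((q.shape[0] - kh + 1, q.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(W * q[i:i + kh, j:j + kw]) + b
    return out

def max_pool(h, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    rows, cols = h.shape[0] // size, h.shape[1] // size
    blocks = h[:rows * size, :cols * size].reshape(rows, size, cols, size)
    return blocks.max(axis=(1, 3))

q = np.arange(25, dtype=float).reshape(5, 5)   # stand-in for a small spectrogram patch
W = np.array([[1.0, 0.0], [0.0, -1.0]])        # hypothetical 2x2 filter
h = conv2d(q, W, b=0.5)                        # feature map, shape (4, 4)
pooled = max_pool(h)                           # reduced map, shape (2, 2)
```

The filter slides over the input to produce a local feature map, and pooling then halves each dimension while keeping the strongest response in every window.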

As illustrated in Fig. 9(c), the characteristic of the RNN is that its processing of the current input is affected by the previous inputs, which makes it better suited to processing time sequences [58]. The state transition and output of the hidden layer are:

$$s_t = f(U x_t + W s_{t-1}), \qquad o_t = g(V s_t) \tag{14}$$

where $x_t$ is the input at time t, $s_t$ and $s_{t-1}$ are the states of the hidden layer at time t and time t−1, respectively, $o_t$ is the output of the network, f and g are activation functions, W is the weight matrix converting state t−1 to the input of state t, and U and V are the weight matrices of input and output, respectively.
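The recurrence can be sketched as a loop over the input sequence. The sketch assumes NumPy, a tanh hidden activation, and a linear output; these choices, the matrix sizes, and the random weights are illustrative assumptions rather than settings from the reviewed studies.

```python
import numpy as np

def rnn_forward(xs, U, W, V):
    """Run a simple RNN over a sequence xs of shape (T, input_dim).
    s_t = tanh(U x_t + W s_{t-1}),  o_t = V s_t."""
    s = np.zeros(W.shape[0])              # initial hidden state
    outputs = []
    for x in xs:
        s = np.tanh(U @ x + W @ s)        # new state depends on input and previous state
        outputs.append(V @ s)
    return np.array(outputs), s

rng = np.random.default_rng(0)
U = rng.normal(0, 0.5, (8, 3))            # input -> hidden
W = rng.normal(0, 0.5, (8, 8))            # hidden -> hidden
V = rng.normal(0, 0.5, (2, 8))            # hidden -> output
xs = rng.normal(size=(5, 3))              # 5 time steps of 3-dimensional features
outputs, final_state = rnn_forward(xs, U, W, V)
```

Because the state is carried from one step to the next, changing an early input changes every later output, which is the dependence on previous inputs described above.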

A typical RNN suffers from the vanishing gradient problem, and LSTM networks were proposed to solve it [59]. In addition to these recognition algorithms, researchers have proposed others, such as Generative Adversarial Networks and Variational Autoencoders, which are less related to this paper and are not discussed here.

The performance of DL-based speech recognition algorithms is far better than that of algorithms based on traditional ML. In particular, end-to-end algorithms based on attention and the Transformer have dramatically improved speech recognition performance in recent years. However, because pathological speech data are scarce, traditional ML algorithms are still primarily used in pathological speech recognition.

3.2. Automatic speech recognition system architecture

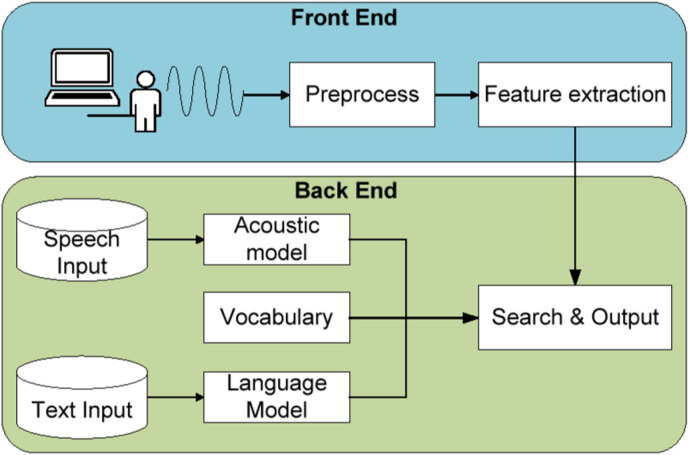

As one of the representative ISTs, speech recognition plays a vital role in healthcare. As shown in Fig. 10 , speech recognition has developed over a long time, from the initial DTW algorithm to the later GMM-HMM algorithm and then to the algorithm combining DNN with GMM in recent years. They all have a process model. Pathological speech recognition is mainly based on traditional front-end and back-end architectures. The conventional architecture of a speech recognition system is briefly introduced as follows.

Fig. 10.

Development process of the main technologies of speech recognition.

As shown in Fig. 11, a speech recognition system is generally divided into a front end and a back end. The front end mainly completes the acquisition, pre-processing, and feature extraction of speech signals. The back end recognizes the obtained speech feature sequences and produces the final recognition result. Unlike traditional architectures, the latest end-to-end speech recognition algorithms can directly convert speech signals into text or classification results, significantly improving speech recognition performance. The applications of these novel algorithms in medical speech recognition are attracting much attention. The state-of-the-art methods will be introduced in the following sections.

Fig. 11.

Schematic diagram of the typical framework of speech recognition system with the front end and back end.

3.3. Intelligent speech technology in medical scenarios

Healthcare services and treatment are indispensable in human society. The health system's capacity is essential to people's life and health. However, a hospital can only treat a limited number of patients daily due to its capacity, a problem that is more severe in densely populated and underdeveloped areas. We can utilize medical resources more efficiently if the non-medical workload of doctors is reduced and their work efficiency is improved [60]. As the population ages, timely treatment, rehabilitation, and daily care are essential for patients' health. Many studies are trying to apply IST in different medical scenarios, such as speech-based assistants, telemedicine, and health monitoring [61], to change the way medical staff work and improve the efficiency of the medical system.

This paper reviews the applications of IST in smart hospitals, mainly from three aspects. (1) IST is used to recognize doctors' voices and reduce the time they spend on non-medical work, an application that researchers have studied from an early stage [62,63]. (2) IST is utilized to process patients’ speech signals to assist doctors in diagnosing and evaluating diseases [16]. This application has made significant breakthroughs in recent years with the development of ML and is a hotspot of current research [64]. (3) IST is applied to medical equipment control to help doctors work efficiently [65,66]. These three aspects are reviewed and summarized in the following three sections.

4. Speech recognition for electronic medical documentation

This section introduces the application of IST in electronic medical documentation, mainly including electronic medical record (EMR) transcription and electronic report generation. Then, we discuss some common issues of the existing medical transcription systems and typical solutions recently proposed. Finally, we present the critical indicators for evaluating the application effect of the transcription systems and their future directions.

Transcription refers to converting a speech signal into text using IST. The application of transcription in medical scenarios mainly refers to the generation of EMRs and reports; that is, doctors' speech during diagnosis and pathological examination and the dialogue between doctors and patients are all converted into text records. Transcription can reduce doctors’ burden of manual document editing, allowing them to focus on medical work and thereby improving their work efficiency [67].

In addition, transcription significantly affects many aspects, such as doctors’ enthusiasm for diagnosis, hospital treatment costs, and the treatment process [68]. Transcription also has commercial value, and many companies have already developed products. For example, Nuance designed an integrated healthcare system that could generate clinical records based on doctor-patient conversations [69]. A user survey found that the system allowed physicians to devote more time to patients and their lives. Media Interface developed the Digital Patientendokumente product, which stored patient-related medical documents, nursing documents, and wills. This product allowed medical staff to review and sign patient documents quickly [70]. Unisound [71] and iFLYTEK [72] launched medical document entry systems, which effectively improved the work efficiency of medical staff. For instance, the entry system of iFLYTEK played an essential role in the fight against COVID-19.

4.1. Related studies and challenges

Using EMR transcription technology in medical scenarios has demonstrated clear benefits. Table 2 shows that many researchers have used transcription technology to generate medical documents and investigated its application effects. They analyzed the accuracy, medical efficiency, and hospital cost of documentation by IST, identified problems, and proposed improvement methods.

Table 2.

Examples of speech recognition technology in the application of electronic medical documentation.

| Institute | Application scenario | Technical description | Application effect | Ref. |
|---|---|---|---|---|
| Zhejiang Provincial People's Hospital | Generation and extraction of pathological examination reports; 52 h of labeled pathological report recordings | ASR system with adaptive technology | Recognition rate = 77.87%; reduces labor costs; improves work efficiency and service quality | [81] |
| Western Paraná State University | Audio collected from 30 volunteers | Google API and Microsoft API integrated with the web | Reduces the time to elaborate reports in radiology | [89] |
| University Hospital Mannheim | Lab test: 22 volunteers; field test: 2 male emergency physicians | IBM's Via-Voice Millennium Edition version 7.0 | Overall recognition rate of about 85%; about 75% in emergency medical missions | [77] |
| Kerman University of Medical Sciences | Notes on hospitalized patients from 2 groups of 35 nurses | Offline SR (Nevisa); online SR (Speechtexter) | Users' technological literacy; possibility of error report: handwritten < offline SR < online SR | [74] |
| University of North Carolina School of Medicine | 6 radiologists dictated using speech-recognition software | PowerScribe 360 v4.0-SP2 reporting software | Near-significant increase in the rate of dictation errors; most errors are minor single incorrect words | [79] |
| King Saud University | CENSREC-1 database: 422 utterances spoken by 110 speakers | Interlaced derivative pattern | Accuracies of 99.78% and 97.30% using speech recorded by microphone and smartphone, respectively | [18] |
| KPR Institute of Engineering and Technology | 6660 medical speech transcription audio files and 1440 audio files from the RAVDESS dataset | Hybrid Speech Enhancement Algorithm | Minimum word error rates of 9.5% for medical speech and 7.6% for RAVDESS speech | [80] |
| Simon Fraser University | Co-occurrence statistics for 2700 anonymized magnetic resonance imaging reports | Dragon Naturally Speaking speech-recognition system; Bayes' theorem | Error detection rate as high as 96% in some cases | [83] |
| Graz University of Technology | 239 clinical reports | Semantic and phonetic automatic reconstruction | Relative word error rate reduction of 7.74% | [25] |
| Zhejiang University | Radiology Information System records | Synthetic method | About 3% superior to the traditional MAP + MLLR | [49] |
| Brigham and Women's Hospital | Records of 10 physicians who had used SR for at least 6 months | Morae usability software | Dictated notes have higher mean quality considering uncorrected errors and document time | [75] |
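Several entries in Table 2 report a word error rate (WER). As a minimal sketch of how this metric is commonly computed, the function below takes the word-level edit distance between a reference transcript and a recognized transcript and divides it by the number of reference words. The function name and the example sentences are hypothetical, and a non-empty reference is assumed.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))          # edit distances against an empty reference
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion of a reference word
                           cur[j - 1] + 1,            # insertion of an extra word
                           prev[j - 1] + (r != h)))   # substitution, or match at no cost
        prev = cur
    return prev[-1] / len(ref)

ref = "patient denies chest pain or shortness of breath"
hyp = "patient denies chest pain shortness of breadth"
print(word_error_rate(ref, hyp))   # 0.25: one deletion and one substitution in 8 words
```

Because insertions are counted, WER can exceed 1 for very poor transcripts, which should be kept in mind when comparing values across studies.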

Previous work has shown the effects and problems of transcription technology in medical document generation. For example, Ajami et al. surveyed previous medical transcription studies according to usage scenario. Their results showed that document generation performed poorly when the same vocabulary was used for different purposes. In addition, they found that although speech recognition saved much time in radiology report generation, the strict error checking required at a later stage by the high accuracy requirements of the reports increased the overall turnaround time [73]. Peivandi et al. [74] and Poder et al. [13] similarly pointed out that speech recognition was less accurate than manual transcription. Although speech recognition has dramatically shortened the turnaround time of reports, doctors need to spend more time on dictation and correction due to the higher error rate of transcription [13].

Moreover, the advantages of electronic report generation are offset by the doctor's burden of verification and the risk of extra errors in the report. At the same time, previous studies have found considerable differences in the efficiency improvement of using transcription technology in different departments. By studying the previous work, Blackley et al. obtained some valuable and novel insights. For example, they found significant differences in the types and frequencies of words used when dictating and typing documents [75]. These differences may affect the quality of the documentation. They also found a lack of a unified and effective method for evaluating the impact of IST in medical scenarios [17].

The effects of transcription technology in medical scenarios include positive and negative aspects. The main advantages include reducing the turnaround time of most texts and quickly uploading the texts to the patient's electronic health record. Transcription also ensures the correctness of electronic documents in some scenarios that require multiple transcriptions and copies. In addition, transcription frees the doctors' eyes and hands, improves work efficiency in some scenarios, and brings them positive emotions [76]. Furthermore, in emergency medical missions, transcription technology can better meet the requirements for accurate time recordings of resuscitation than traditional methods [77]. Moreover, medical documents produced by transcription systems are more concise, standardized, and maintainable.

On the negative side, potential recognition errors in the documents mean that the turnaround time is not shortened as expected in scenarios with high accuracy requirements [78]. In addition, delays in speech signal processing make doctors and patients lose patience with IST. Moreover, background noise in public areas of hospitals, non-standard pronunciation, interruptions during speaking, and the wearing of surgical masks [79] decrease recognition accuracy, which affects the mood of doctors and patients and their acceptance of IST [73].

4.2. Solutions for performance improvements

The core problem of medical transcription technology is continuous speech recognition. Current continuous speech recognition technology achieves high accuracy in most scenarios. However, improvements can still be made at different processing stages of speech recognition to ensure accuracy and overcome the problems of IST in medical scenarios, and several studies have proposed such improvement schemes.

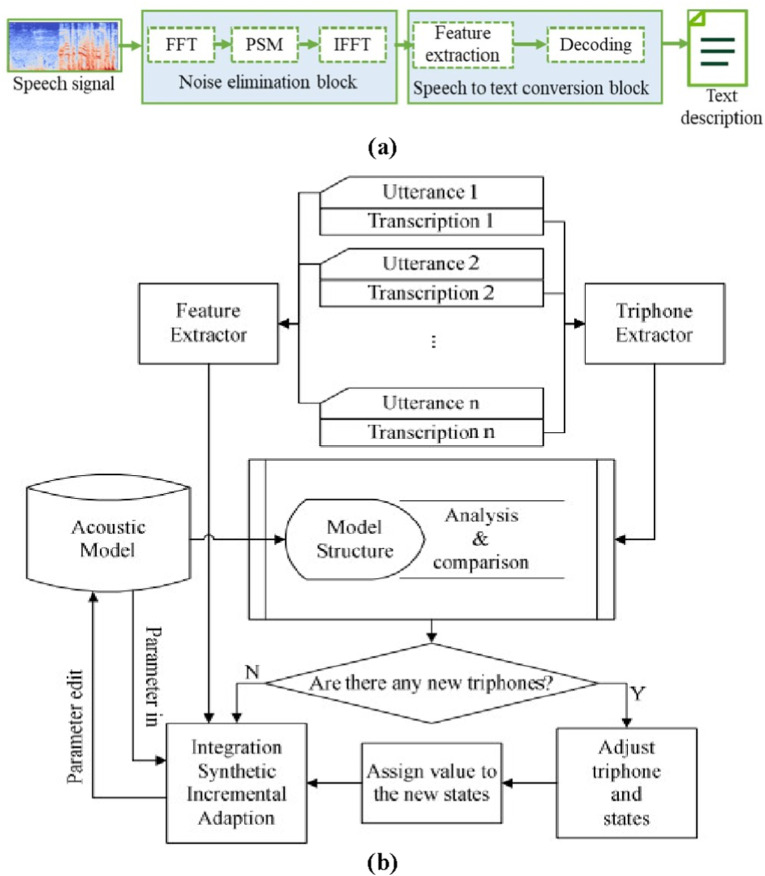

There are some methods to improve the adaptability of transcription systems. For the background noise problem, the microphone array combined with noise reduction algorithms can reduce the impact of the noise [19]. As shown in Fig. 12 (a), Gnanamanickam et al. proposed a cascaded speech enhancement algorithm using HMM to optimize the algorithm of nonlinear spectral subtraction, which improved the effect of medical speech recognition [80]. For different department scenarios, Duan et al. added the noise of the corresponding department when training the acoustic model [81]. They combined the knowledge transfer technique to improve the adaptability of the acoustic model and its recognition performance in specific application scenarios.

Fig. 12.

Diagram of typical improvement scheme of transcription systems. (a) A hybrid enhancement algorithm for speech signal [80]. (b) An adaptation strategy for acoustic model [49].
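The spectral subtraction step mentioned above can be illustrated with a minimal sketch. It shows only basic magnitude spectral subtraction with over-subtraction and a spectral floor, not the HMM-optimized hybrid algorithm of [80]. It assumes that per-frame magnitude spectra have already been computed (for example by a short-time Fourier transform, not shown); the function names and the default values of `alpha` and `beta` are hypothetical.

```python
def estimate_noise(noise_frames):
    """Average the magnitude spectra of frames assumed to contain only noise."""
    n_bins = len(noise_frames[0])
    return [sum(frame[k] for frame in noise_frames) / len(noise_frames)
            for k in range(n_bins)]


def spectral_subtract(noisy_mag, noise_mag, alpha=2.0, beta=0.01):
    """Subtract a scaled noise estimate from one frame of magnitudes.

    noisy_mag: magnitude per frequency bin of the noisy frame
    noise_mag: estimated noise magnitude per frequency bin
    alpha:     over-subtraction factor (hypothetical default)
    beta:      spectral floor as a fraction of the noisy magnitude
    """
    if len(noisy_mag) != len(noise_mag):
        raise ValueError("spectra must have the same number of bins")
    # The floor keeps every bin positive when the noise estimate is too large.
    return [max(y - alpha * n, beta * y) for y, n in zip(noisy_mag, noise_mag)]


# Toy example with three frequency bins.
noise = estimate_noise([[1.0, 1.0, 1.0], [1.0, 3.0, 1.0]])
enhanced = spectral_subtract([10.0, 3.0, 1.5], noise)
print(noise, enhanced)
```

In this toy example the first bin is dominated by speech and is only attenuated, while the other two bins fall back to the spectral floor because the scaled noise estimate exceeds the observed magnitude.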

Regarding acoustic models, Muhammad et al. proposed a feature extraction technique less affected by noise, the interlaced derivative pattern, which achieved higher accuracy and shorter recognition time in a cloud computing-based speech medical framework [18]. In terms of language models, according to the different types of generated medical documents and the various probabilities of lexical occurrences, training the corresponding language models in a targeted manner is a method to improve recognition accuracy. As shown in Fig. 12(b), to make the model more adaptable in different departments, Wu et al. introduced a simplified Maximum Likelihood Linear Regression (MLLR) into the incremental Maximum A Posteriori (MAP) process to enable the parameters to be continuously adjusted according to the speech and text [49]. Speech transcription technology has also been applied to some products. For example, Unisound developed a pathology entry system for the radiology department [71]. The system can free the doctors' hands and allow them to enter the examination report while observing the image of the lesion. iFLYTEK also designed a medical document generation system for the dental department [82]. By wearing a small microphone, dentists can record information about the patient's condition during oral diagnosis.
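To make the MAP adaptation idea mentioned above more concrete, the following sketch shows the standard MAP update of a single Gaussian mean, in which the adapted mean is a weighted average of the prior mean and the newly observed frames. It is an illustration under stated assumptions only: the MLLR transformation and the incremental scheme of [49] are not reproduced, and the function name and the relevance factor `tau` are hypothetical.

```python
def map_adapt_mean(prior_mean, frames, posteriors, tau=10.0):
    """MAP update of one Gaussian mean.

    prior_mean: mean vector of the speaker-independent model
    frames:     feature vectors observed from the new speaker or department
    posteriors: occupation probability of this Gaussian for each frame
    tau:        relevance factor; larger values trust the prior more
    """
    dim = len(prior_mean)
    occupancy = sum(posteriors)
    weighted_sum = [0.0] * dim
    for frame, gamma in zip(frames, posteriors):
        for d in range(dim):
            weighted_sum[d] += gamma * frame[d]
    # With no adaptation data the prior mean is returned unchanged.
    return [(tau * prior_mean[d] + weighted_sum[d]) / (tau + occupancy)
            for d in range(dim)]


# Ten frames at [2.0, 4.0] pull a zero prior mean halfway when tau equals 10.
adapted = map_adapt_mean([0.0, 0.0], [[2.0, 4.0]] * 10, [1.0] * 10, tau=10.0)
print(adapted)
```

As more adaptation data accumulate, the occupancy term grows and the adapted mean moves further from the prior, which is the behavior that makes MAP suitable for continuous adjustment.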

Researchers also proposed methods to improve the quality of reports generated by transcription systems. For example, correcting reports in electronic documents usually wastes substantial resources [62]. To address this problem, Voll et al. proposed a post-processing text error correction scheme for different medical documents [83]. After the radiology report was generated, the frequency with which different words appeared in the context was used to correct the report and mark the keywords, which made manual proofreading more convenient and shortened the document generation time [83]. In addition, Klann et al. proposed that using the Key-Val method to structure the report could reduce errors and improve its quality [84].
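The context-based error marking described above can be sketched as follows. This is a deliberately simplified illustration, not the scheme of [83] (which combines co-occurrence statistics with Bayes' theorem): a word is flagged for manual proofreading when it has never co-occurred with any of its neighbors in a corpus of earlier reports. The toy corpus, the window size, and the function names are assumptions.

```python
from collections import Counter


def neighbors(words, i, window):
    """Return the words within `window` positions of index i, excluding i."""
    lo, hi = max(0, i - window), min(len(words), i + window + 1)
    return [words[j] for j in range(lo, hi) if j != i]


def train_cooccurrence(reports, window=2):
    """Count how often each ordered word pair appears within the window."""
    pair_counts = Counter()
    for report in reports:
        words = report.lower().split()
        for i, word in enumerate(words):
            for other in neighbors(words, i, window):
                pair_counts[(word, other)] += 1
    return pair_counts


def flag_suspect_words(report, pair_counts, window=2):
    """Flag words that never co-occurred with any neighbor in training."""
    words = report.lower().split()
    suspects = []
    for i, word in enumerate(words):
        context = neighbors(words, i, window)
        if context and all(pair_counts[(word, c)] == 0 for c in context):
            suspects.append(word)
    return suspects


corpus = ["no acute fracture seen",
          "no acute infarct seen",
          "small acute infarct noted"]
counts = train_cooccurrence(corpus)
print(flag_suspect_words("no acute fracture scene", counts))
```

Here the homophone error "scene" is flagged because it never appeared near "acute" or "fracture" in the training reports. A practical system would need a much larger corpus and a probabilistic threshold rather than a zero-count rule.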

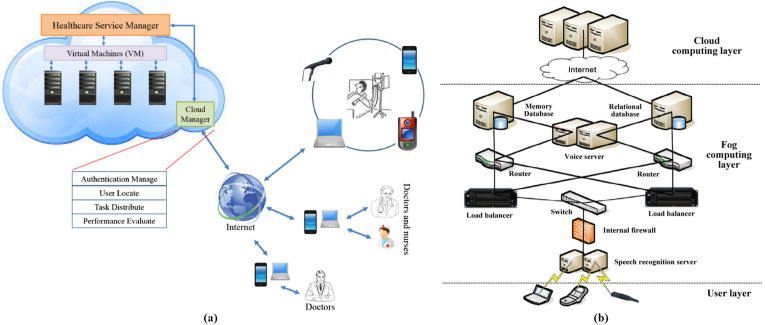

Sharing and security of electronic medical documents are also important issues. As shown in Fig. 13 (a), Muhammad et al. proposed an Internet-based cloud service architecture, which can realize unified management of electronic medical documents and facilitate communication between doctors and patients whenever and wherever possible. However, some scenarios have time delays and data security problems [18]. As shown in Fig. 13(b), Qin et al. proposed a hospital intelligence framework based on cloud computing and fog computing to alleviate the delay problem. The service nodes are deployed in the hospital, which can improve the quality of the voice transcription service [85] and ensure the security of the data. Singh et al. also presented an architecture similar to the one shown in Fig. 13(b). In addition, they proposed adding an IoT layer as a data source [86] so that guardians could obtain a real-time alert on students’ overall emotions in response to their stressful situations.

Fig. 13.

Typical cloud computing-based voice medical frameworks. (a) A cloud-based framework for speech-enabled healthcare [18]. (b) A medical big data fog computing system [85].

4.3. Summary and discussion

Accuracy is a significant indicator for electronic document and report generation systems used in medical scenarios [87]. We also should pay more attention to the efficiency improvement of hospitals after using these systems [88]. Therefore, four key evaluation indicators shown in Table 3 can be referred to when we evaluate these systems. The four indicators reflect the primary concerns of doctors and patients in actual medical scenarios.

a) Report average turnaround time can measure the improvement of medical efficiency. Reducing this time is the primary purpose of applying transcription technology in medical scenarios.

b) The average number of critical errors in the generated medical documents can measure the reliability of the transcription system. Healthcare is related to the patient's health, so an error-prone transcription system is unacceptable.

c) The average word error rate of the generated documents determines how much time medical staff must spend correcting errors and affects the patient experience. We can quantitatively evaluate the above three indicators through the generated medical documents.

d) Questionnaires and other methods need to be adopted to assess the user experience of the medical staff and patients in different departments and scenarios to serve as a benchmark for improving the transcription system.

Table 3.

Key evaluation metrics for transcription systems.

| Indicators | Definition | Meaning | Ref. |
|---|---|---|---|
| Report average turnaround time | Average time from the start of report generation to patient accessibility | Turnaround time reduction reflects medical efficiency improvement brought by the transcription system. | [13] |
| Average number of critical errors | Number of medically misleading errors in generated documents | Reflects the reliability of the transcription system. | [87] |
| Average word error rate | Proportion of erroneous words (typos) in generated documents | Reflects the quality of the document and influences the satisfaction of doctors. | [13] [87] |
| User experience of doctors and patients | Satisfaction of doctors and patients with all aspects of the generated documents | Improving work efficiency and user experience and reducing medical burden are goals of transcription systems. | [90] [91] |
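As an illustration of how the word error rate indicator can be computed, the following sketch uses the common definition: the number of word substitutions, deletions, and insertions needed to turn the transcription into the reference, divided by the number of reference words. The example sentences are invented, and the function name is hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


dictated = "the patient has no history of chest pain or fever"
transcribed = "the patient has no history of chess pain fever"
wer = word_error_rate(dictated, transcribed)
print(wer)
```

In this example one word is substituted ("chest" becomes "chess") and one is deleted ("or"), giving two errors over ten reference words. Counting critical errors, by contrast, requires clinical judgment about which errors are medically misleading and cannot be derived from the edit distance alone.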

The interaction between the system and doctors should be considered a priority in the future development of medical transcription systems. Firstly, a more reasonable transcription process can be designed for each department so that medical staff can use transcription tools efficiently after training. Secondly, new speech recognition solutions from other fields need to be applied to medical scenarios to enhance the reliability of the electronic medical documentation system. Thirdly, improvements can also start from the post-processing stage, strengthening the system's error correction capability and its adaptability in generating different types of documents to make the system more convenient for doctors [25].

5. Pathological voice recognition for diagnosis and evaluation

This section introduces the application of IST in disease diagnosis (disease unknown) and evaluation (disease known) using pathological voice. Then, we discuss data types, features, and recognition algorithms of pathological voices from a technical perspective. Finally, we present IST's future directions and trends in medical diagnosis. Since diseases can affect the patient's normal speech, cause them to cough and sneeze, and even make their breathing voice abnormal, we have investigated speech signals and other voice types in this section for disease diagnosis and evaluation.

5.1. Related studies and voice signal types

People express their feelings and thoughts by speaking. Speaking is accomplished through coordinated movements of the head, neck, and abdomen muscles. Individuals who cannot correctly coordinate these muscles will produce pathological speech [156]. Pathological speech-based disease diagnosis uses speech signal processing technologies to judge whether the patient suffers from certain diseases or to evaluate the patient's condition.

As shown in Table 4, many studies use speech technology to diagnose diseases that cause voice problems [157]. The diseases include Voice disorder [99], Acute decompensated heart failure [100], Alzheimer's Disease (AD) [104], Dysphonia [118], Parkinson's Disease (PD) [122,125,126,127,128], Stroke [125,224], COVID-19 [130,132,135], Chronic Obstructive Pulmonary Disease [142,143], Aphasia [169,170,181], Tuberculosis (TB) [147,148,149], and organ lesions such as oral cancer [158], head and neck cancer [159], nodules, polyps, and Reinke's edema [95]. These studies are divided into four categories by department (otorhinolaryngology, neurology, respiratory, and others) and are shown in the four sub-tables, respectively.

Table 4.

Application of speech technology in pathological voice recognition and evaluation (otorhinolaryngology department).

| Disease | Data sources | Voice type | Voice feature | Classifier | Effect | Ref. |
|---|---|---|---|---|---|---|
| Vocal Fold disorders | 41 HP, 111 Ps | SV /a/ | Jitter, RAP, Shimmer, APQ, MFCC, Harmonic to Noise Ratio (HNR), SPI | ANN, GMM, HMM, SVM | Average classification rate in GMM reaches 95.2% | [92] |
| | KAY database: 53 HP, 94 Ps | SV /a/ | Wavelet-packet coefficients, energy, and entropy, selected by algorithms | SVM, KNN | Best accuracy = 91% | [93] |
| | MEEI: 53 HP, 657 Ps | SV /a/ | Features based on the phenomena of critical bandwidths | GMM | Best accuracy = 99.72% | [94] |
| Benign Vocal Fold Lesions | MEEI: 53 HP, 63 Ps; SVD: 869 HP, 108 Ps; Hospital Universitario Príncipe de Asturias (HUPA): 239 HP, 85 Ps; UEX-Voice: 30 HP, 84 Ps | SV /a/ and SS | MFCC, HNR, Energy, Normalized Noise Energy | Random-Forest (RF) and Multi-condition Training | Accuracies: about 95% in MEEI, 78% in HUPA, and 74% in SVD | [95] |
| Voice disorder | MEEI: 53 HP, 372 Ps; SVD: 685 HP, 685 Ps; VOICED: 58 HP, 150 Ps | SV /a/ | Fundamental Frequency (F0), jitter, shimmer, HNR | Boosted Trees (BT), KNN, SVM, Decision Tree (DT), Naive Bayes (NB) | Best performance achieved by BT (AUC = 0.91) | [96] |
| | KAY: 213 Ps | SV /a/ | Features are extracted through an adaptive wavelet filterbank | SVM | Sort six types of disorders successfully | [97] |
| | KAY: 57 HP, 653 Ps; samples from Persian native speakers: 10 HP, 19 Ps | SV /a/ | Same as above | SVM | Accuracy = 100% on both databases | [98] |
| | 30 HP, 30 Ps | SV /a/ | Daubechies' DWT, LPC | Least squares SVM | Accuracy >90% | [97] |
| | MEEI: 53 HP, 173 Ps | SV /a/ and SS | Linear Prediction Coefficients | GMM | Accuracy = 99.94% (voice disorder), Accuracy = 99.75% (running speech) | [101] |
| Dysphonia | Corpus Gesproken Nederlands corpus; EST speech database: 16 Ps; CHASING01 speech database: 5 Ps; Flemish COPAS pathological speech corpus: 122 HP, 197 Ps | SV /a/ and SS | Gammatone filterbank features and bottleneck feature | Time-frequency CNN | Accuracy ≈89% | [144] |
| | TORGO Dataset: 8 HP, 7 Ps | SS | Mel-spectrogram | Transfer learning based CNN model | Accuracy = 97.73% | [145] |
| | UA-Speech: 13 HP, 15 Ps | SS | Time- and frequency-domain glottal features and PCA-based glottal features | Multiclass-SVM | Best accuracy ≈ 69% | [146] |
| Pathological Voice | SVD: approximately 400 native Germans | SV /a/ | Co-Occurrence Matrix | GMM | Accuracy reaches 99% only by voice | [102] |
| | MEEI: 53 HP; SVD: 1500 Ps | SV /a/ | Local binary pattern, MFCC | GMM, extreme learning machine | Best accuracy = 98.1% | [103] |
| | SVD | SV /a/, /i/, /u/ | Multi-center and multi-threshold based ternary patterns and Features selected by Neighborhood Component Analysis | NB, KNN, DT, SVM, bagged tree, linear discriminant | Accuracy = 100% | [108] |
| | SVD: samples of speakers aged 15–60 years | SV /a/ | Feature extracted from spectrograms by CNN | CNN, LSTM | Accuracy reaches 95.65% | [109] |
| Cyst, Polyp, Paralysis | SVD: 262 HP, 244 Ps; MEEI: 53 HP, 95 Ps | SV /a/ | Spectrogram | CNN (VGG16 Net and Caffe-Net), SVM | Accuracy = 98.77% on SVD | [105] |
| | SVD: 686 HP, 1342 Ps | SV /a/, /i/, /u/ and SS | Spectro-temporal representation of the signal | Parallel CNN | Accuracy = 95.5% | [106] |
| Acute decompensated heart failure | 1484 recordings from 40 patients | SS | Time, frequency resolution, and linear versus perceptual (ear) mode | Similarity calculation and Cluster algorithm | 94% of cases are tagged as different from the baseline | [100] |
| Common vocal diseases | FEMH data: 588 HP; Phonotrauma data: 366 HP | SV /a/ | MFCC and medical record features | GMM and DNN, two stages DNN | Best accuracy = 87.26% | [107] |

Application of speech technology in pathological voice recognition and evaluation (neurology department).

| Disease | Data sources | Voice type | Voice feature | Classifier | Effect | Ref. |
|---|---|---|---|---|---|---|
| Parkinson's Disease (PD) | UCI Machine Learning repository: 8 HP, 23 Ps | SV | Features selected by the Relief algorithm | SVM and bacterial foraging algorithm | Best accuracy = 97.42% | [119] |
| | 98 S | SV /a/, SS | OpenSMILE features, MPEG-7 features, etc. | RF | Best accuracy ≈80% | [120] |
| | UCI Machine Learning repository; Training: 20 HP, 20 Ps; Testing: 28 S | SV and SS | Wavelet Packet Transforms, MFCC, and the fusion | HMM, SVM | Best accuracy = 95.16% | [121] |
| | Group 1: 28 PD Ps; Group 2: 40 PD Ps | SS | Diadochokinetic sequences with repeated [pa], [ta], and [ka] syllables | Ordinal regression models | The [ka] model achieves agreements with human raters' perception | [122] |
| | Istanbul acoustic dataset (IAD) [123]: 74 HP, 188 Ps; Spanish acoustic dataset (SAD) [124]: 80 HP, 40 Ps | SV /a/ | MFCC, Wavelet and Tunable Q-Factor wavelet transform, Jitter, Shimmer, etc. | Three DTs | Best accuracy = 94.12% on IAD and 95% on SAD | [125] |
| | Training: 392 HP, 106 Ps; Testing: 80 HP, 40 Ps | SS | MFCC, Bark-band Energies (BBE) and F0, etc. | RF, SVM, LR, Multiple Instance Learning | The best model yielded 0.69/0.68/0.63/0.8 AUC for four languages | [126] |
| | Istanbul acoustic dataset: 74 HP, 188 Ps | SV /a/ | MFCC, Deep Auto Encoder (DAE), SVM | LR, SVM, KNN, RF, GB, Stochastic Gradient Descent | Accuracy = 95.49% | [127] |
| | PC-GITA: 50 HP, 50 Ps; SVD: 687 HP, 1355 Ps; Vowels dataset: 1676 S | SV | Spectrogram | CNN | Best accuracy = 99% | [128] |
| Alzheimer's disease (AD) | 50 HP, 20 Ps | SS | Fractal dimension and some features selected by algorithms | MLP, KNN | Best accuracy = 92.43% on AD | [104] |
| PD, Huntington's disease (HD), or dementia | 8 HP, 7 Ps | SS | Pitch, Gammatone cepstral coefficients, MFCC, wavelet scattering transform | Bi-LSTM | Accuracy = 94.29% | [110] |
| Dementia | Two corpora recorded at the Hospital's memory clinic in Sheffield, UK; corpus 1: 30 Ps; corpus 2: 12 Ps, 24 S | SS | 44 features (20 conversation analysis based, 12 acoustic, and 12 lexical) | SVM | Accuracy = 90.9% | [111] |
| | DementiaBank Pitt Corpus [112]: 98 HP, 169 Ps; PROMPT Database [113]: 72 HP, 91 Ps | SS | Combined Low-Level Descriptors (LLD) features extracted by openSMILE [114] | Gated CNN | Accuracy = 73.1% on Pitt Corpus and 74.1% on PROMPT | [115] |
| Dysarthria | UA-Speech: 12 HP, 15 CP Ps; MoSpeeDi: 20 HP, 20 Ps; PC-GITA database [116]: 45 HP, 45 PD Ps | SS | Spectro-temporal subspace, MFCC, the frequency-dependent shape parameter | Grassmann Discriminant Analysis | Best accuracy = 96.3% on UA-Speech | [117] |
| | 65 HP, 65 MS-positive Ps | SS | Seven features including Speech duration, vowel-to-recording ratio, etc. | SVM, RF, KNN, MLP, etc. | Accuracy = 82% | [118] |
| Distinguishing two kinds of dysarthria | 174 HP, 76 Ps | SV and SS | Cepstral peak prominence | Classification and regression tree; RF; Gradient Boosting Machine (GBM); XGBoost | Accuracy = 83% | [155] |

Application of speech technology in pathological voice recognition and evaluation (respiratory department).

| Disease | Data sources | Voice type | Voice feature | Classifier | Effect | Ref. |
|---|---|---|---|---|---|---|
| COVID-19 | 130 HP, 69 Ps | SV /a/ and cough | Feature sets extracted with the open-source software openSMILE and with Deep CNN, respectively | SVM and RF | Accuracy ≈80% | [129] |
| | Sonda Health COVID-19 2020 (SHC) dataset [130]: 44 HP, 22 Ps | SV and SS | Features (glottal, spectral, prosodic) extracted by COVAREP speech toolkit | DT | Feature-task combinations accuracy >80% | [131] |
| | Coswara: 490 HP, 54 Ps | SV /a/, /i/, /o/ | Fundamental Frequency (F0), MFCC, jitter, shimmer, HNR | SVM | Accuracy ≈ 97% | [132] |
| | DiCOVA Challenge dataset and COUGHVID: Training: 772 HP, 50 Ps; Validation: 193 HP, 25 Ps; Testing: 233 S | Cough | MFCC, Teager Energy Cepstral Coefficients (TECC) | Light GBM | The best result is 76.31% | [133] |
| | MSC-COVID-19 database: 260 S | SS | Mel spectrogram | SVM & Resnet | Assess patient status by sound is effective | [134] |
| | Integrated Portable Medical Assistant collected: 36 S | Cough and speech | Mel spectrogram, Local Ternary Pattern | SVM | Accuracy = 100% | [135] |
| | COUGHVID: more than 20,000 S; Cambridge Dataset [136]: 660 HP, 204 Ps; Coswara: 1785 HP, 346 Ps | Cough | MFCC, spectral features, chroma features | Resnet and DNN | Sensitivity = 93%, specificity = 94% | [137] |
| | COUGHVID: 1010 Ps; Coswara: 400 Ps; Covid19-Cough: 682 Ps | Cough, breathing cycles, and SS | Mel-spectrograms and cochlea-grams, etc. | DCNN, Light GBM | AUC reaches 0.8 | [138] |
| | Cambridge dataset: 330 HP, 195 Ps; Coswara: 1134 HP, 185 Ps; Virufy: 73 HP, 48 Ps; NoCoCODa: 73 Ps | Cough | Audio features, including MFCC, Mel-Scaled Spectrogram, etc. | Extremely Randomized Trees, SVM, RF, MLP, KNN, etc. | AUC reaches 0.95 | [139] |
| | Coswara: 1079 HP, 92 Ps; Sarcos: 26 HP, 18 Ps | Cough | MFCC | LR, KNN, SVM, MLP, CNN, LSTM, Resnet50 | AUC reaches 0.98 | [140] |
| | Coswara, ComParE dataset, Sarcos dataset | Cough, breathing, sneeze, speech | Bottleneck feature | LR, SVM, KNN, MLP | AUC reaches 0.98 | [141] |
| Chronic Obstructive Pulmonary Disease | 25 HP, 30 Ps | Respiratory sound signals | MFCC, LPC, etc. | SVM, KNN, LR, DT, etc. | Accuracies of SVM and LR are 100% | [142] |
| | 429 respiratory sound samples | Respiratory sound signals | MFCC; Hilbert-Huang Transform (HHT)-MFCC; HHT-MFCC-Energy | SVM | Accuracy = 97.8% by HHT-MFCC-Energy | [143] |
| Tuberculosis (TB) | 21 HP, 17 Ps, cough recordings: 748 | Cough | MFCC, Log spectral energy | LR | AUC reaches 0.95 | [148] |
| | 35 HP, 16 Ps, cough recordings: 1358 | Cough | MFCC, Log-filterbank energies, zero-crossing-rate, Kurtosis | LR, KNN, SVM, MLP, CNN | LR outperforms the other four classifiers, achieving an AUC of 0.86 | [147] |
| | TASK, Sarcos, Brooklyn datasets: 21 HP, 17 Ps; Wallacedene dataset: 16 Ps; Coswara: 1079 HP, 92 Ps; ComParE: 398 HP, 199 Ps | Cough | MFCC | CNN, LSTM, Resnet50 | Resnet50 AUC: 91.90%; CNN AUC: 88.95%; LSTM AUC: 88.84% | [149] |

Application of speech technology in pathological voice recognition and evaluation (others).

| Disease | Data sources | Voice type | Voice feature | Classifier | Effect | Ref. |
|---|---|---|---|---|---|---|
| Juvenile Idiopathic Arthritis | 5 HP, 3 Ps | Knee Acoustical | Spectral, MFCC, or band power feature | Gradient Boosted Trees, neural network | Accuracy = 92.3% using GBT, Accuracy = 72.9% using neural network | [150] |
| Stress | 6 categories of emotions, namely: Surprise, Fear, Neutral, Anger, Sad, and Happy | SS (facial expressions, content of speech) | Mel scaled spectrogram | Multinomial Naïve Bayes, Bi-LSTM, CNN | Assess students' stress by facial expressions and speech is effective | [86] |
| Depression and Other Psychiatric Conditions | Group 1: depression (DP) 27 S; Group 2: other psychiatric conditions (OP) 12 S; Group 3: normal controls (NC) 27 S | SS | Features extracted by openSMILE and Weka program [151] | Five multiclass classifier schemes of scikit-learn | Accuracy = 83.33%, sensitivity = 83.33%, and specificity = 91.67% | [152] |
| Depression | AVEC 2014 dataset: 84 S; TIMIT dataset | SS | TEO-CB-Auto-Env, Cepstral, Prosodic, Spectral, and Glottal, MFCC | Cosine similarity | Accuracy = 90% | [154] |

SV = Sustained vowel, SS = Spontaneous speech, Ps = Patients, HP = Healthy People, S = Subjects.

Most of the speech data used in these studies come from existing or small private datasets collected from medical institutions. For example, the frequently adopted pathological speech datasets include Parkinson's Telemonitoring Dataset [160], Saarbrucken Voice database (SVD) [161], Massachusetts Eye & Ear Infirmary (MEEI), TORGO [162], VOICED [163], University of California Irvine (UCI) Machine Learning repository [164], Universal Access Speech Database (UA-Speech) [165], Coswara database [166], the COUGHVID corpus [167], and Computational Paralinguistics ChallengE (ComParE) [190]. The above datasets contain pathological voices of many diseases, which provide convenience for IST-based diagnosis system research.

We can see from Table 4 that the accuracies of most studies are over 90% (sensitivity and specificity are not shown), which supports the feasibility of diagnosis through speech signals. Meanwhile, even when the same dataset is used to diagnose the same disease, the results of different studies differ significantly. The main reasons are differences between identification methods and the implicit problem that different studies select different subsets of the same dataset. Therefore, directly comparing the recognition results of different methods is not very meaningful. Nevertheless, the trends and proposed methods can inspire further research.
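Because Table 4 mostly reports accuracy, it is worth recalling how accuracy relates to sensitivity and specificity when the classes are imbalanced. The sketch below computes the three measures from confusion-matrix counts. The example classifier is hypothetical and is not taken from any cited study; only the class sizes (490 HP, 54 Ps) mirror one Coswara subset listed in Table 4.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp: patients correctly detected      fn: patients missed
    tn: healthy correctly rejected       fp: healthy wrongly flagged
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Hypothetical classifier that labels every subject as healthy
# on a split of 490 healthy people and 54 patients.
always_healthy = diagnostic_metrics(tp=0, fn=54, tn=490, fp=0)
print(always_healthy)
```

Such a trivial classifier reaches an accuracy of about 90% while detecting no patients at all, which is one more reason to read the accuracies in Table 4 with caution when sensitivity and specificity are not reported.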

The studies surveyed use one or more recognition algorithms for processing pathological voices. As the statistical analysis in Fig. 14 shows, about 81% of the articles try ML methods, achieving satisfactory accuracy in disease diagnosis. In recent years, the proportion of DL methods has increased (42%), but ML methods are still the primary ones. A crucial reason is that the available data can hardly meet the needs of state-of-the-art end-to-end recognition methods. Therefore, some studies have tried solutions such as data augmentation [139,140,149,171] and transfer learning [141,145,149] to solve this problem. The details of the diagnosis systems in these studies, including data sources, voice type, voice feature, classifier, and effect, can be found in Table 4.

Fig. 14.

Statistical analysis of traditional machine learning (ML), deep learning (DL), and ML + DL methods used in disease diagnosis.

For feature extraction, in addition to the common time-domain and frequency-domain features, some studies also try rare features [94,103,104,117,121,127,133,137,144] or use existing feature sets, such as OpenSMILE features [114,115,120,129,152], features extracted by the Weka program [152], features from the COVAREP speech toolkit [131], and MPEG-7 features [120]. Moreover, some studies extract features by DL algorithms, dimension reduction algorithms [108,111,119,146], or heuristic algorithms [93,97,98].

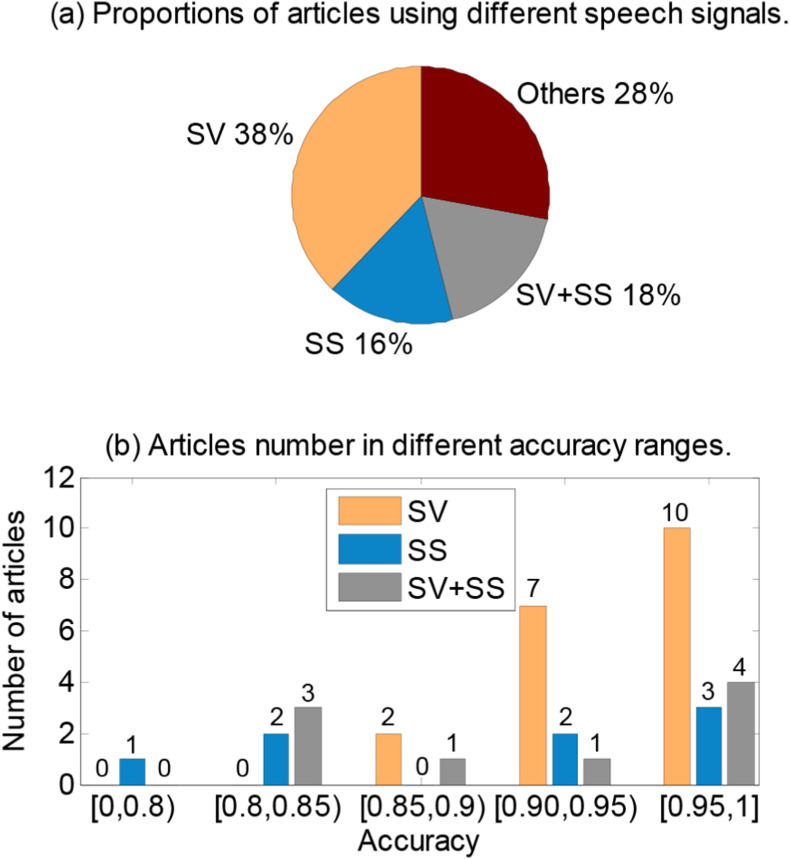

The main types of voice data are sustained vowels (SV), spontaneous speech (SS) sentences, coughs, and breathing sounds. SV signals are generally processed by collecting the SV articulations of patients [92,96,101,106]. SS-based methods collect patients' speech or their continuous pronunciation of a given text as experimental data and use sentence-level features [110]. Because the voice types of speech signals differ, the corresponding studies also differ clearly in feature extraction and recognition methods. In addition, some studies directly use general speech transcription systems to evaluate the condition of patients. Fig. 15 shows the statistical analysis results. 38% of articles adopted SV as the speech signal, which also obtained the highest average accuracy. 18% of articles used both SV and SS as the speech signal. Although more data types are utilized, there is no significant performance improvement, which shows that how to extract information from different types of voices and combine it effectively remains an open issue. Other types of voice signals account for 28% because coughing, breathing, and sneezing are the main diagnostic signals in diagnosing respiratory-related diseases.

Fig. 15.

Statistical analysis of articles using different voice signals of sustained vowels (SV), spontaneous speech (SS), SV + SS, and others.

5.2. Conventional methods

Research on SV data generally starts from the voice quality and frequency-domain characteristic parameters of pathological voice signals. Wang et al. combined MFCC with six speech quality features (jitter, shimmer, harmonic-to-noise ratio (HNR), soft phonation index (SPI), amplitude perturbation quotient (APQ), and relative average perturbation (RAP)) of the SV pronunciation /a/ to recognize the pathological voice. They used HMM, GMM, SVM, and Artificial Neural Networks (ANN) to conduct two-class comparison experiments and found that the GMM method has the best classification accuracy, with an accuracy rate of 95.2% [92]. Similar research work was done by Verde et al. [96]. The difference between them is that Verde et al. extracted features that included the fundamental frequency F0 of the speech signal. In addition, they used a boosted tree algorithm as a classifier to conduct an experimental study on data selected from three different databases. Ali et al. also adopted the patient's SV pronunciation /a/ as the research object. They proposed features based on the phenomena of critical bandwidths and combined them with HMM to detect vocal cord disorders, with an accuracy rate of more than 95% [94]. Baird et al. extracted features such as pitch, intensity, and HNR from the SV in the Dusseldorf Anxiety Corpus to assess the anxiety of patients [172]. Their results verified the effectiveness of using speech-based features to predict anxiety and showed better recognition performance of higher-level anxiety.
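Two of the voice quality features named above, jitter and shimmer, can be illustrated with a short sketch. It uses the common "local" definitions: the mean absolute difference between consecutive glottal periods (jitter) or peak amplitudes (shimmer), divided by the mean value. It assumes that the periods and amplitudes have already been extracted from a sustained /a/ by a pitch tracker (not shown); the cited studies may use different variants such as RAP or APQ, and the function names are hypothetical.

```python
def relative_perturbation(values):
    """Mean absolute consecutive difference divided by the mean value."""
    if len(values) < 2:
        raise ValueError("at least two cycles are required")
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


def local_jitter(periods):
    """Cycle-to-cycle variation of the glottal period."""
    return relative_perturbation(periods)


def local_shimmer(peak_amplitudes):
    """Cycle-to-cycle variation of the peak amplitude."""
    return relative_perturbation(peak_amplitudes)


# Toy cycles: periods in milliseconds and peak amplitudes in arbitrary units.
jitter = local_jitter([10.0, 11.0, 10.0, 9.0])
shimmer = local_shimmer([1.0, 1.0, 1.0, 1.0])
print(jitter, shimmer)
```

A perfectly regular voice gives values of zero for both measures, while irregular vocal fold vibration raises them, which is why such features are useful inputs for the classifiers discussed in this section.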

For research based on sentence-level speech data, in addition to the quality and time-frequency domain characteristics, the prosodic characteristics of sentence data are also an effective entry point. Kim et al. adopted the speech signal parameters of phonemes, prosody, and speech quality as the features. They predicted the intelligibility of aphasia speech in the Korean database Quality-of-Life Technology using Support Vector Regression (SVR) [173]. They also proposed a structured sparse linear model containing phonological knowledge to predict the speech intelligibility of patients with dysarthria [174]. Martínez et al. assessed dysarthria intelligibility using i-vectors extracted by factor analysis from the supervector of universal GMM [175]. After being evaluated by SVR and Linear Prediction, the speech samples in the Wall Street Journal 1 and UA-Speech databases were divided into four levels: very low, low, mid, and high. Kadi et al. also used a set of prosodic features selected by linear discriminant analysis combined with SVM and GMM, respectively, to classify dysarthria speech of the Nemours database into four severity levels and got the best classification rate of 93% [176]. Kim et al. classified pathological voice using the features of abnormal changes in prosody, phonological quality, and pronunciation at the sentence level. The pathological speech samples of the NKI CCRT Speech Corpus and the TORGO databases were classified into two categories (intelligible and incomprehensible), and posterior smoothing was performed after classification [177]. These studies all make use of the characteristics of prosody. However, prosody differs between languages, which means that compared with models obtained from SVs, models trained by this method have low generalization ability.

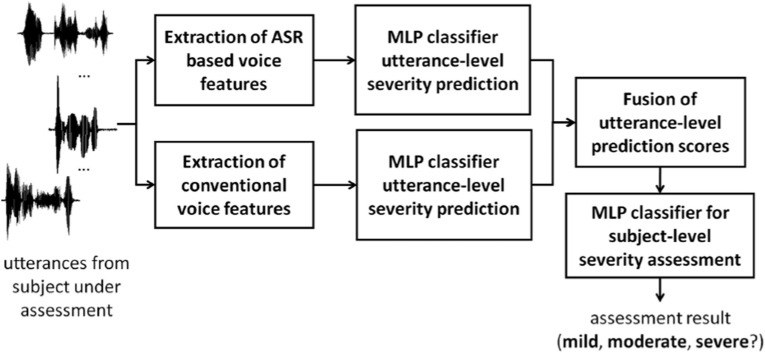

There are many other studies based on speech recognition technology [178]. As shown in Fig. 16, Liu et al. used speech recognition to extract features and then integrated traditional acoustic feature classification to assess the severity of the voice disorder [168]. Bhat et al. utilized a bidirectional LSTM network for binary classification of the speech intelligibility of dysarthria in the TORGO dataset [179]. They also compared the classification performances when using the features of MFCC, log filter banks, and i-vector. In addition, Dimauro et al. adopted Google's speech recognition system to convert patients' speech into text [180]. Their result showed that the PD group's recognition error rate was almost always higher than that of the normal group.

Fig. 16.

Three-category voice disorder evaluation system based on Automatic speech recognition (ASR) [168].

5.3. State-of-the-art methods

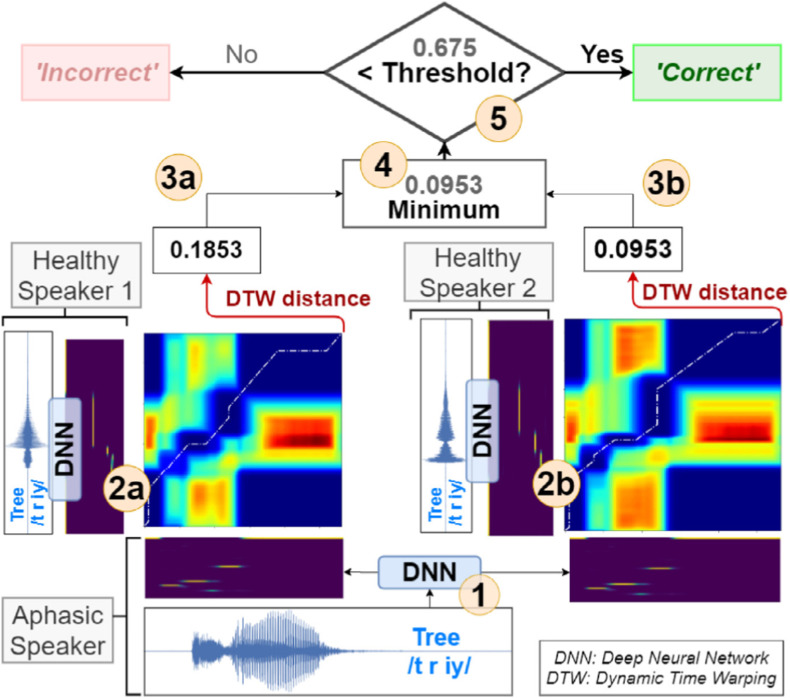

In addition to the traditional identification methods, some new methods have also been designed in recent years. As shown in Fig. 17, Barbera et al. obtained the posterior probability of the patient's speech according to the acoustic model trained by a DNN, compared it with the posterior probability of normal speech, and used the DTW algorithm to calculate the distance for classification [169,170]. The combination of DNN and vector matching method achieved a good result on the speech of word naming tests, which inspires us to integrate traditional methods with recent ones. For example, Lee et al. analyzed the distribution of frame-level posteriors produced by the DNN-HMM acoustic models [182]. They proposed an effective method for continuous speech utterances to extract dysphonia features from a specific set of discriminative phones with an ASR system.

Fig. 17.

NUVA: An utterance verification system for word naming of aphasic patients based on DNN and DTW [170].
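The idea of comparing posterior sequences with DTW can be sketched in a few lines. This is a minimal illustration, assuming each utterance is already represented as a list of frame-level posterior vectors and that Euclidean distance is used between frames; the threshold, the length normalization, and the function names are hypothetical choices, not details of the cited system.

```python
import math


def frame_distance(p, q):
    """Euclidean distance between two posterior vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def dtw_distance(seq_a, seq_b):
    """Accumulated cost of the best monotonic alignment of two sequences."""
    n, m = len(seq_a), len(seq_b)
    if n == 0 or m == 0:
        raise ValueError("both sequences must contain at least one frame")
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(seq_a[i - 1], seq_b[j - 1])
            # Extend the cheapest of the three allowed predecessor cells.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def is_accepted(patient, reference, threshold):
    """Accept the utterance if the length-normalized DTW cost is small."""
    normalized = dtw_distance(patient, reference) / (len(patient) + len(reference))
    return normalized <= threshold


# Toy two-class posteriors (hypothetical values).
reference = [[0.9, 0.1], [0.1, 0.9]]
slow_but_correct = [[0.9, 0.1], [0.9, 0.1], [0.1, 0.9]]
wrong_word = [[0.1, 0.9], [0.9, 0.1]]
print(is_accepted(slow_but_correct, reference, threshold=0.1))
print(is_accepted(wrong_word, reference, threshold=0.1))
```

Because DTW tolerates differences in speaking rate, a slowly spoken but correct word aligns at low cost, while a different word accumulates a large distance.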

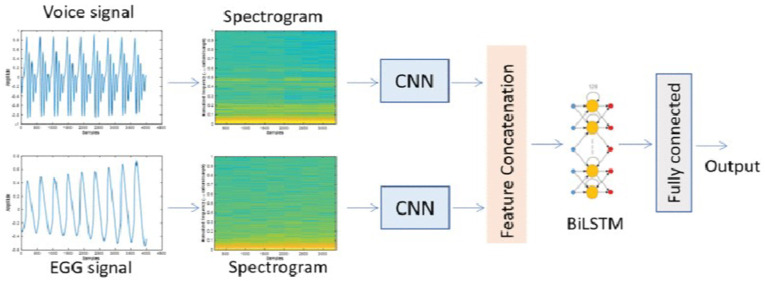

Many studies have also transformed the features of one-dimensional speech signals into two-dimensional features and applied image recognition algorithms to disease diagnosis. For example, Alhussein et al. converted pathological speech signals into spectrograms and then adopted CNNs for classification [105,106]. Qin et al. conducted a similar study, except that the input was a posterior probability map [181]. Muhammad et al. proposed using the co-occurrence matrix feature combined with the GMM algorithm to classify pathological voices in the SVD database [102]. As shown in Fig. 18, in their recent study, Muhammad et al. utilized the LSTM algorithm to complete the recognition task [109]. They achieved an accuracy of 95% by using a CNN to fuse the spectrogram features of the voice and electroglottograph (EGG) signals. Turning speech signal recognition into image recognition makes it possible to borrow solutions from the image recognition field. However, care is needed in handling the strict data alignment this requires and the increase in computation.

Fig. 18.

A system architecture based on spectrograms [109].

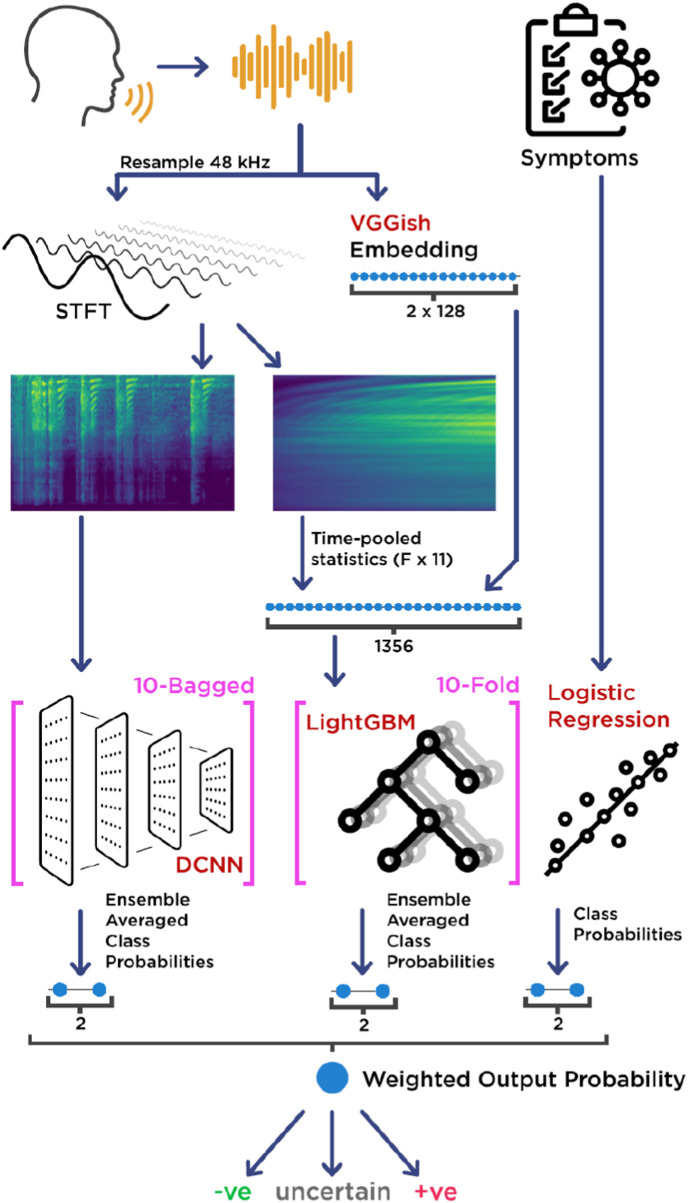

As shown in Fig. 18, information fusion using multimodal data from different systems is also one of the main strategies in speech-based disease diagnosis [102,109]. Fig. 19 shows the identification flow chart of the COVID-19 detection system [138] designed by Ponomarchuk et al. The system takes the patients' voice signals and symptom information as input. First, the speech signal is processed by subsystem 1, based on a deep CNN and spectrograms, and by subsystem 2, based on LightGBM and VGGish features, to obtain the ensemble average class probabilities. Next, the symptom information is processed by a Logistic Regression (LR) algorithm in subsystem 3 to obtain its class probabilities. Finally, the results of the three subsystems are fused into a weighted output probability. Botha et al. proposed a fused system that uses LR to combine a classifier based on objective clinical measurements with a classifier based on cough audio, which improved sensitivity, specificity, and accuracy [148].

Fig. 19.

Diagram of a COVID-19 detection system by fusion processing of speech and other signals [138].
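The late-fusion step, in which class probabilities from several subsystems are combined into one weighted output, can be sketched as follows. This is only an illustration of weighted probability fusion in general: the weights, probability values, and function name are hypothetical and do not reproduce the settings of the cited system.

```python
def weighted_fusion(prob_list, weights):
    """Weighted average of per-subsystem class probability vectors."""
    if len(prob_list) != len(weights):
        raise ValueError("one weight is required per subsystem")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_classes = len(prob_list[0])
    if any(len(p) != n_classes for p in prob_list):
        raise ValueError("all subsystems must output the same classes")
    return [
        sum(w * p[c] for p, w in zip(prob_list, weights)) / total
        for c in range(n_classes)
    ]


# Hypothetical outputs as [P(negative), P(positive)].
spectrogram_cnn = [0.2, 0.8]    # audio subsystem 1
embedding_gbm = [0.4, 0.6]      # audio subsystem 2
symptom_logreg = [0.6, 0.4]     # symptom subsystem 3

fused = weighted_fusion(
    [spectrogram_cnn, embedding_gbm, symptom_logreg],
    weights=[1.0, 1.0, 2.0],    # assumed weights, e.g. tuned on validation data
)
predicted_class = max(range(len(fused)), key=fused.__getitem__)
print(fused, predicted_class)
```

Dividing by the weight total keeps the fused vector a valid probability distribution whenever each input is one, so the weights can be chosen freely.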

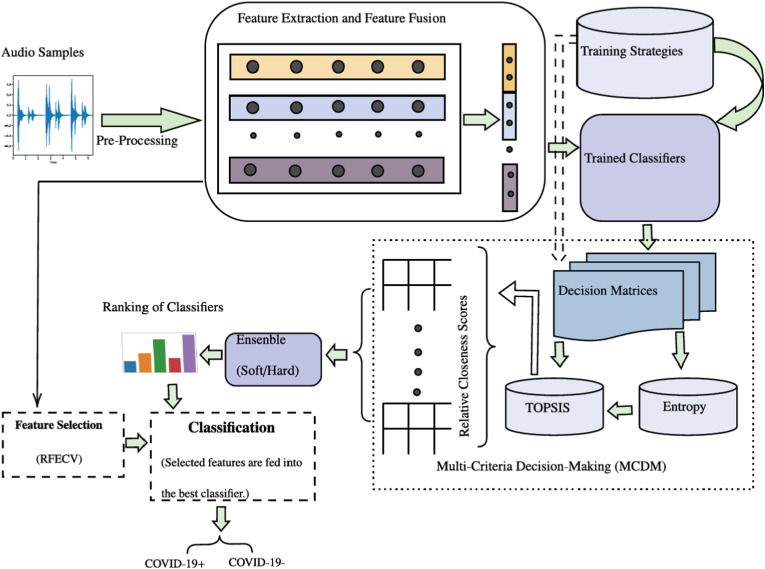

Similarly, Lauraitis et al. used information from three modalities (sound, finger tapping, and self-administered cognitive testing) for symptom diagnosis [110]. The authors in Refs. [107,129,152,184] conducted similar studies with multimodal data, and their systems achieved higher recognition performance than with only one type of data. The COVID-19 detection system [131] designed by Stasak et al. used speech signals as its only input modality. However, its classification performance was effectively improved by adding a second-stage classifier to fuse the results of multiple first-stage classifiers. As the number of data modalities increases, the amount of information, computation, and cost also increases, and the requirements on processing methods become higher. To achieve excellent performance, selecting the few most effective modalities according to doctors' experience may be a precondition for progress. As shown in Fig. 20, Chowdhury et al. also designed a complex ensemble-based system to detect COVID-19 [139]. The trained classifier layer is composed of 10 ML classifiers, which are ranked by the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) and entropy in the Multi-Criteria Decision-Making blocks. Finally, the features selected by Recursive Feature Elimination with Cross-Validation are fed into the best classifier. This method improves the diagnostic accuracy and adaptability of the whole system.

Fig. 20.

An overview of an ensemble-based COVID-19 detection system [139].
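Ranking classifiers by several criteria with the technique for order preference by similarity to ideal solution (commonly abbreviated TOPSIS) and entropy-derived weights can be sketched as below. This is a generic textbook formulation under stated assumptions: every criterion is a positive "higher is better" score, and the classifier names and scores are invented for illustration. It is not the cited authors' implementation.

```python
import math


def entropy_weights(matrix):
    """Give more weight to criteria whose values differ more across rows."""
    m, n = len(matrix), len(matrix[0])
    diversities = []
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        entropy = -sum((x / total) * math.log(x / total) for x in column if x > 0)
        entropy /= math.log(m)  # scale to [0, 1]; requires m >= 2
        diversities.append(max(0.0, 1.0 - entropy))
    s = sum(diversities)
    if s < 1e-12:  # all criteria uninformative: fall back to equal weights
        return [1.0 / n] * n
    return [d / s for d in diversities]


def topsis_scores(matrix, weights):
    """Closeness of each row to the ideal solution (1 = best, 0 = worst)."""
    n = len(matrix[0])
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    best = [max(row[j] for row in weighted) for j in range(n)]
    worst = [min(row[j] for row in weighted) for j in range(n)]
    scores = []
    for row in weighted:
        d_best = math.dist(row, best)
        d_worst = math.dist(row, worst)
        denom = d_best + d_worst
        scores.append(d_worst / denom if denom > 0 else 0.5)
    return scores


# Hypothetical validation scores: [accuracy, sensitivity] per classifier.
names = ["clf_a", "clf_b", "clf_c"]
matrix = [[0.95, 0.93], [0.80, 0.70], [0.90, 0.92]]
scores = topsis_scores(matrix, entropy_weights(matrix))
ranking = [name for _, name in sorted(zip(scores, names), reverse=True)]
print(ranking)
```

Criteria for which lower values are better (for example, inference time) would need the ideal and anti-ideal values swapped for that column; the sketch omits this case.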

In addition to the above methods, some new attempts at pathological voice recognition exist.

Pahar et al. adopted speech, cough, and breath signals and a rare bottleneck feature for pathological voice recognition [141]. In addition, they utilized transfer learning, training the model on the cough sounds of patients without COVID-19. The recognition system was then tested with multiple pathological voice datasets and multiple classifiers, which verified the feasibility of this scheme [141]. Later, they adopted three DL classifiers (ResNet50, CNN, and LSTM) to classify coughs as TB, COVID-19, or healthy. Finally, to make the DL-based approaches perform well and robustly, they adopted a synthetic minority over-sampling technique and transfer learning to address the class imbalance and the insufficiency of their dataset, respectively [149].
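The synthetic minority over-sampling technique mentioned above creates new minority-class samples by interpolating between existing ones. The sketch below shows the core idea on small feature vectors, assuming Euclidean nearest neighbours; the function name, parameters, and data are hypothetical, and a maintained library implementation would normally be preferred in practice.

```python
import math
import random


def smote(minority, n_synthetic, k=3, seed=0):
    """Create synthetic samples on segments between minority neighbours."""
    if len(minority) < 2:
        raise ValueError("at least two minority samples are required")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest other minority samples.
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(base, minority[j]),
        )[:k]
        neighbour = minority[rng.choice(neighbours)]
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (nb - b) for b, nb in zip(base, neighbour)])
    return synthetic


# Hypothetical 2-D features of an under-represented class.
minority_features = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_samples = smote(minority_features, n_synthetic=6, k=2)
print(len(new_samples))
```

Synthetic samples should be generated from the training split only, so that no interpolated copy of a test recording leaks into training.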

Moreover, Harimoorthy et al. proposed an adaptive linear kernel SVM algorithm with higher prediction accuracy than traditional ML algorithms such as KNN, Random Forest (RF), Adaptive Weighted Probabilistic, and other k-SVMs [185]. Kambhampati et al. also proposed a fundamental heart sound segmentation algorithm based on sparse signal decomposition. They tested the algorithm's performance using various ML algorithms (hidden semi-Markov model, multilayer perceptron (MLP), SVM, and KNN) on real-time phonocardiogram (PCG) and PCG in a standard database. The results showed that their algorithm outperformed traditional heart sound segmentation algorithms [186].

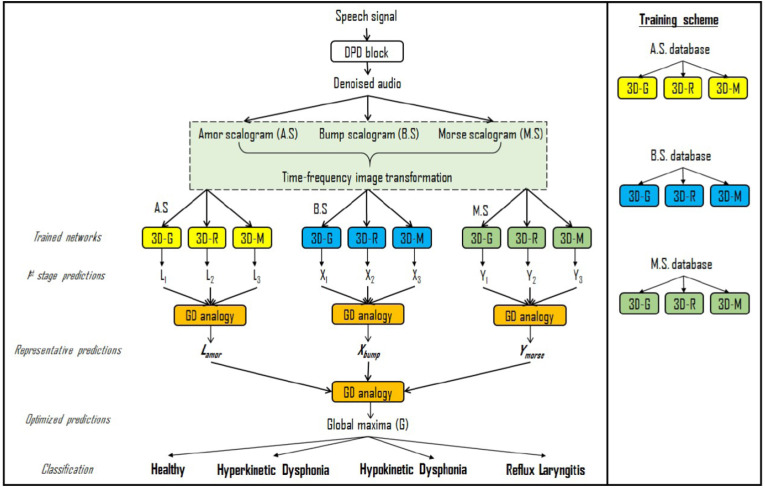

Furthermore, Saeedi et al. used a genetic algorithm to find the filter bank parameters for feature extraction. They achieved an accuracy of 100% in classifying normal and pathological voices in tests on two databases [97,98]. Qian et al. [134] and Huang et al. [187] applied popular end-to-end speech recognition models and Transformer-based models to pathological speech signal processing. Their recognition results were highly consistent with the patients' evaluation scales. Fig. 21 shows the framework for pathological speech-based diagnosis designed by Wahengbam et al. [183]. First, a deep pathological denoiser (DPD) block is obtained by training a CNN on silence and noise features; it includes an inverse STFT operation that reverts the spectrum of the voice signal to the time domain. The DPD block is the first step of the group decision analogy. Then, three kinds of features of the denoised pathological speech, obtained from wavelet transforms with the Amor, Bump, and Morse wavelets, are sent to three decision-making subsystems, respectively. Each subsystem uses multiple 3D convolutional network models for prediction. Finally, fusion and decision-making are performed using the proposed group decision analogy strategy, which increased the accuracy from 80.59% to 97.7% [183].

Fig. 21.

Graphical workflow of group decision analogy showing the multiclass pathology identification framework [183].

The studies mentioned above have introduced innovations in the procedures of speech technology and offer many useful ideas. Innovations in data include using different types of voice signals; integrating data of multiple modalities such as SVs, continuous speech, cough, breath, finger tapping, EGG, and disease symptoms; and utilizing transfer learning to train models when data are insufficient. For feature selection, these studies try genetic algorithms, DL algorithms, recursive feature elimination, the fractal dimension approach, and so on. For classifiers, in addition to improving ML algorithms, these studies also try group decision strategies, end-to-end models, and so on.

5.4. Summary and discussion

SV and SS are the two main types of speech signals used for pathological speech-based disease diagnosis and evaluation. In addition, cough is considered indispensable in diagnosing respiratory diseases and is usually treated as an SV. The SV method mainly uses abnormalities in the patients' pronunciation as the basis for judgment, which makes experiments and applications relatively simple. However, the SV method ignores that the patient's speech differs from that of healthy people. On the other hand, SS uses the entire sentence as the basis for judgment and can more accurately identify obviously abnormal speech. However, training the algorithm model and carrying out this method are relatively complicated.

In addition, Bhosale et al. [188] and Casanova et al. [189] also used cough sounds to diagnose COVID-19. Moreover, Gosztolya et al. utilized SS to distinguish schizophrenia from bipolar disorder [190]. No matter what kind of speech data is adopted, researchers try to find more effective speech signal features based on different speech signals for disease diagnosis and evaluation and choose a more suitable recognition algorithm according to the actual effect [15].

Using voice technology for disease diagnosis and assessment can effectively reduce the burden on doctors and improve the efficiency of medical resources. Traditional diagnosis methods rely on medical instruments combined with the doctors' experience. In contrast, speech technology depends only on the patient's speech and a pre-trained algorithm model incorporating medical experience, which is more objective than the traditional methods [192]. In addition, combining pathological voice recognition technology with the IoT [103], telemedicine technology, and other technologies [193] allows patients to be diagnosed anytime and anywhere, reducing medical costs dramatically. Pathological voice recognition functions can also be integrated into wearable devices to monitor patients' health during daily activities [194,195], so that a disease can be diagnosed early and its deterioration prevented [196].

In the future, to achieve better diagnosis and evaluation results, in addition to exploring more effective features and recognition algorithms, it is crucial to design multimodal data fusion methods [115,184,191,197,198] and build richer pathological voice datasets.

6. Speech recognition for human-medical equipment interaction

Doctors need to operate various equipment in their work. In addition, patients often require equipment to assist in treatment and rehabilitation. Integrating voice technology into medical equipment can bring great convenience to doctors and patients in many medical scenarios [199]. For example, smart medicine boxes remind patients to take medicines on time, intelligent ward round systems help doctors collect patient information [200], and voice systems perform automatic postoperative follow-up visits [201,202]. This section discusses related studies on medical device control using IST and how they can help doctors and patients in different scenarios. Finally, we discuss the requirements and future directions for the application of voice technology in smart medical equipment and devices.

6.1. Doctor and patient assist

6.1.1. Doctor assist

With the rapid development of medical speech technology, many studies have attempted to use it to assist doctors in operating equipment. For example, intelligent minimally invasive surgical systems have been put into clinical use, and doctors can control the robotic arm to perform precise operations through voice [208]. Ren et al. tried to embed speech recognition in the laparoscopic holder [209]. The holder with the speech command recognition function can replace the assistant and give corresponding feedback according to the instructions of the chief surgeon [209]. In addition, Tao et al. proposed an intelligent interactive operating room to solve the problem that the attending doctor must be in a sterile and non-contact environment and cannot view the lesion image in time during the operation [210]. The doctor can remotely control the display instrument using speech commands to locate and observe the image of the lesion quickly.

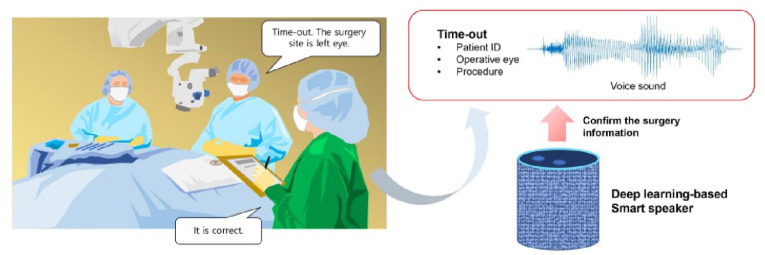

Furthermore, as shown in Fig. 22, Yoo et al. presented an intelligent voice assistant to address the problem that the surgeon continuously needs an assistant to check information during surgery [211]. The voice assistant can recognize the attending doctor's proofreading speech and compare it with the pre-input surgical information to ensure the smooth progress of the operation. It can also remind the attending doctor of the length of the operation.

Fig. 22.

A smart speaker to confirm surgical information in ophthalmic surgery [211].

All these studies use IST to reduce the burden of inefficient labor on doctors and make the medical process more standardized and efficient.

6.1.2. Patient assist

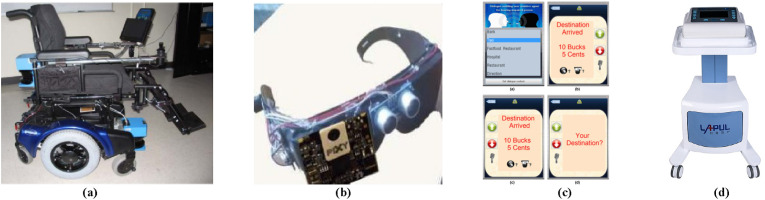

In addition to assisting doctors, many studies embed voice technology in assistive devices to help patients achieve a better quality of life. For example, intelligent wheelchairs integrated with voice technology have been studied extensively. Li et al. designed a voice-controlled intelligent wheelchair that determines specific commands by comparing the appropriate distance of characteristic parameters [212]. As shown in Fig. 23(a), Atrash et al. added a computer, a display, a laser rangefinder, and an odometer to the wheelchair to realize an intelligent wheelchair that can navigate autonomously according to voice commands [203]. Al-Rousan et al. controlled the movement direction of an electric wheelchair using voice command recognition based on wavelets and neural networks [213]. Wang et al. developed an intelligent wheelchair for mentally ill patients with dysarthria that uses a brain-computer interface and speech recognition for coordinated control [214].

Fig. 23.