Abstract

The current important limitations to the implementation of Evidence-Based Practice (EBP) in the rehabilitation field are related to the validation process of interventions. Indeed, most of the strict guidelines that have been developed for the validation of new drugs (i.e., double or triple blinded, strict control of the doses and intensity) cannot—or can only partially—be applied in rehabilitation. Well-powered, high-quality randomized controlled trials are more difficult to organize in rehabilitation (e.g., longer duration of the intervention in rehabilitation, more difficult to standardize the intervention compared to drug validation studies, limited funding since not sponsored by big pharma companies), which reduces the possibility of conducting systematic reviews and meta-analyses, as currently high levels of evidence are sparse. The current limitations of EBP in rehabilitation are presented in this narrative review, and innovative solutions are suggested, such as technology-supported rehabilitation systems, continuous assessment, pragmatic trials, rehabilitation treatment specification systems, and advanced statistical methods, to tackle the current limitations. The development and implementation of new technologies can increase the quality of research and the level of evidence supporting rehabilitation, provided some adaptations are made to our research methodology.

Keywords: rehabilitation, new technology, validation, study design, methods

1. Introduction

For centuries, the practice of medicine was based on clinical judgment and doctors’ intuition rather than on scientific evidence. At that time, medicine was referred to as an art (“the art of Medicine”) rather than a science. In the 1960s, as modern research developed and the amount of available data grew, some researchers, led by Alvan Feinstein, started to challenge the way medicine was practiced, drawing attention to the risk of bias that could affect clinical judgment [1]. A few years later, Archie Cochrane highlighted the lack of scientific evidence supporting commonly used practices and treatments [2]. He called for an international register of randomized controlled trials, and for explicit quality criteria for appraising published research. “Evidence-based medicine” (EBM) slowly started to emerge, and practice began to evolve quickly. EBM was defined as “a systemic approach to analyse published research as the basis of clinical decision making” [3]. Although awareness of the importance of basing clinical decisions on strong scientific evidence began years before, EBM only came into use in clinics in the 1990s. The development of EBM, and the resulting changes in patient management, cannot be detached from the development of modern and clinical epidemiology [4].

The cornerstones of EBM are still—currently—randomized controlled trials (RCT), which are studies with the highest level of evidence [5]. However, these studies have some limitations: they are expensive and time-consuming, since many patients need to be included to reach enough statistical power and lower the risk of bias [6].

On the other hand, over the last few years, there have been significant advances in modern epidemiology, both in medicine (e.g., genetics and immunology) and in rehabilitation, where the growing development of new technologies (e.g., sensor-based assessment, robotics, virtual reality, brain stimulation) allows for objective measurements and technology-supported rehabilitation [7].

RCTs are currently not fully adapted to the development of precision therapy (i.e., personalized rehabilitation programs), and are difficult to put in place quickly in the context of emerging infectious diseases (e.g., the COVID-19 pandemic) [8]. EBM has become the benchmark for medical intervention, and today it is increasingly the benchmark for other healthcare professions. This is why we now prefer to use the term Evidence-Based Practice (EBP). For a healthcare provider, regardless of their field of activity, EBP is the combination of three elements [9]: (1) the provider’s own clinical expertise, (2) scientific evidence, usually in the form of practice guidelines, and (3) the preferences and values of each individual patient. However, to date, the concept of EBP has only been partially transferred to physical rehabilitation, and most rehabilitation interventions are only corroborated by a low level of evidence [10].

Therefore, the aim of this narrative review is to summarize the different study designs currently available in rehabilitation research and discuss their limitations. Then, the current limitations and future perspectives of EBP are discussed in the context of current rehabilitation interventions.

2. Current Situation and Limitations of the Research and Its Translation to the Care

2.1. Study Design and Level of Evidence

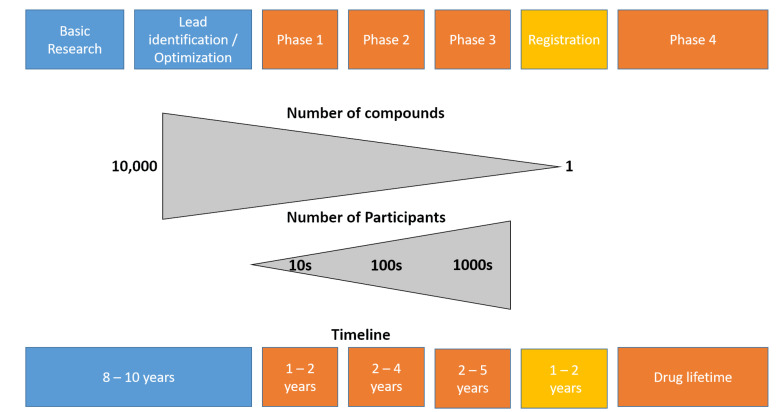

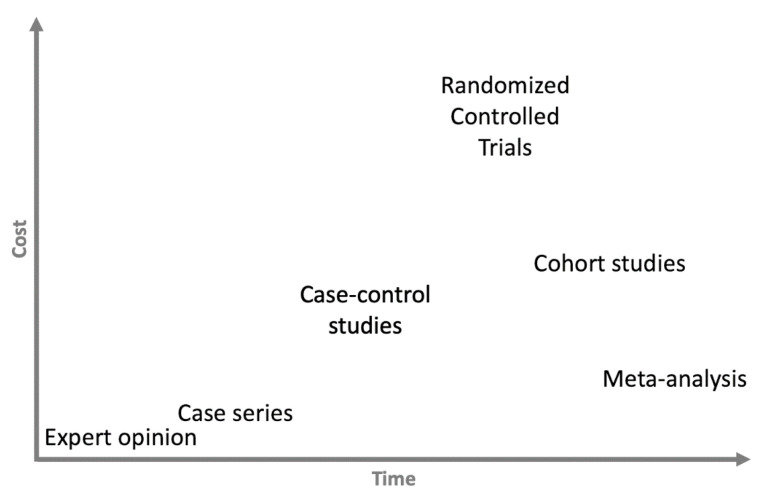

The traditional flow of development and the different validation steps of a new treatment are presented in Figure 1 (adapted from the drug development pipeline since these numbers are not available for the development of new interventions or of technology in rehabilitation) [11]. It is interesting to note the very large number of participants (healthy subjects and then patients) required to carry out the various stages of clinical validation, as well as the length of time between the development of a product and its launch on the market. The different study designs and their levels of evidence are presented in Figure 2. Some authors question this pyramid, highlighting the fact that the most significant constraint of every pyramid is that the top levels gradually build on those below them—lower-quality studies can only result in poorer-quality systematic reviews and guidelines [12]; however, the different levels of evidence on which EBP is based are generally well accepted in research and in clinical practice.

Figure 1.

The rocket model, adapted from Verweij et al., 2019 [11]. Note that this model and its numbers relate to the development of new drugs, as no data are available for new interventions in rehabilitation sciences. Blue indicates the steps of discovery, development, and preclinical research; orange represents clinical development. The steps of clinical development are as follows. Phase 1 (healthy volunteer study): this is the first time the drug is tested in people; fewer than 100 volunteers are usually involved, and the pharmacokinetics, absorption, metabolism, and excretion of the drug, its effects on the body, and any adverse effects associated with safe dose ranges are determined. Phase 2 (small sample size study in patient population): evaluates the safety and effectiveness of the medicine in an additional 100–500 patients, who may receive a placebo or a previously utilized standard of care. The analysis of the ideal dosage strength aids in the development of schedules, while adverse events and dangers are documented. Phase 3 (large-scale clinical study): typically enrolls 1000–5000 patients, allowing medication labeling and adequate drug usage instructions. Phase 3 studies need substantial cooperation, planning, and coordination, as well as oversight by an Independent Ethics Committee (IEC) or an Institutional Review Board (IRB), in preparation for full-scale manufacturing after medication approval.

Figure 2.

Study design and levels of evidence [16]. This pyramid could be more detailed, but the general idea is to differentiate the four kinds of studies. Meta-analyses of published studies (e.g., systematic review and meta-analysis) are at the top, as level I evidence. Next come the experimental studies: fully controlled studies (RCT) and pseudo-RCT or quasi-experimental designs (i.e., prospective studies), as level II. Large-scale observational studies (cohort studies and case–control studies) are level III, and case reports or case series are at the base of the pyramid, with a low level of evidence (level IV). Finally, expert opinion, not based on any scientific data or evidence, represents the lowest level of evidence (level V).

Concerning drug validation, RCTs are requested by the authorities before starting discussions on the marketing of a new molecule. In the past, a new treatment had to be more efficient (superiority trial) than a placebo (i.e., sham treatment without an active substance). However, when treatment is already available (gold-standard), it is unethical to deprive half of the participants of a study of this treatment [13]. Therefore, currently, in the presence of a gold standard, the common practice is to perform equivalence or non-inferiority trials to compare the efficacy of the new drug against this gold-standard [14]. From a statistical point of view, there are some differences, but methodologically the critical point is the randomization. The randomization allows one to get rid of some potential confounding factors, but having randomization does not mean that a study is not biased. In the next part, we will discuss the main limitations of RCTs and the sources of error in (para)medical research [15], and thus of the meta-analyses that derive from it [16].
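To make the logic of a non-inferiority comparison concrete, the following minimal Python sketch uses the confidence-interval approach. It is an illustration under stated assumptions rather than a prescribed analysis: the function name, the margin, and the example values are hypothetical, a higher outcome is assumed to be better, and a normal approximation is assumed for the difference between arms.

```python
from statistics import NormalDist


def is_non_inferior(diff, se, margin, one_sided_alpha=0.025):
    """Return (verdict, lower_bound) for a non-inferiority comparison.

    diff   : observed mean difference, new treatment minus gold standard
    se     : standard error of that difference
    margin : largest loss of efficacy still judged acceptable (positive)

    Hypothetical helper: assumes higher scores are better and that the
    difference is approximately normally distributed.
    """
    if margin <= 0 or se <= 0:
        raise ValueError("margin and se must be positive")
    z = NormalDist().inv_cdf(1 - one_sided_alpha)
    lower_bound = diff - z * se
    # Non-inferiority is concluded when the lower confidence bound of the
    # difference stays above the negative margin.
    return lower_bound > -margin, lower_bound


# Hypothetical example: the new treatment scores 0.5 points lower on average.
verdict, bound = is_non_inferior(diff=-0.5, se=1.0, margin=3.0)
print(f"non-inferior: {verdict}, lower bound: {bound:.2f}")
```

The choice of the margin is a clinical decision, not a statistical one, and should be fixed before the data are collected.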

2.2. Challenges in the Validation of New Intervention and Suggestions for Reconsideration of the Choice of the Outcomes

The complexity and challenges related to the validation of a new intervention can be summarized in two constraints—time and cost, which will lead to various challenges, such as small sample sizes and low statistical power, bias, and low external validity in the studies.

Most of the time and financial resources are deployed during Phase 3 of clinical development (see Figure 1 for the different steps, phases, and their definitions). We present the different study designs according to the time and money needed to perform them in Figure 3. Because they require strict control, regular testing (e.g., biological testing, imaging, functional assessment) and many patients to have sufficient statistical power, RCTs are by far the most expensive studies. As an example (again taken from the pharmaceutical world, since these numbers are not known in the rehabilitation sciences), in the USA, the average cost of a Phase 1 study (healthy volunteers study) ranges from USD 1.4 million (pain and anesthesia) to USD 6.6 million (immunomodulation), including estimated site overheads and monitoring costs of the sponsoring organization. A Phase 2 study (small sample size study in patient population) costs from USD 7.0 million (cardiovascular) to USD 19.6 million (hematology), whereas a Phase 3 study (large-scale clinical study) ranges in cost from USD 11.5 million (dermatology) to USD 52.9 million (pain and anesthesia), on average [17]. Therefore, such studies cannot be conducted without sponsors, mainly pharmaceutical companies, and this can lead to a conflict of interest [18]. In the wake of numerous scandals that have marred the scientific community [19], clinical trial governance frameworks have been developed for pharmaceutical industry-funded clinical trials [20]. However, the recent retractions of studies focused on COVID-19 treatments have highlighted the existing relationships between researchers, private companies, and scientific journals, leading to public mistrust in the independence of research [21]. Furthermore, several studies have found a significant association between funding sources and pro-industry conclusions [22].

Figure 3.

Relationship between time and cost of the different study designs.

Another issue is the sample size needed to complete these studies. In the case of rare, or very rare, diseases, it is sometimes impossible to recruit enough patients in an RCT to reach the required statistical power [23]. It has, for example, been clearly shown that a lack of statistical power is one of the main limitations of current research in neuroscience [24], but the situation is exactly the same in the field of rehabilitation [25]. In addition to the small number of subjects included in the studies, intention-to-treat analysis is not always straightforward in rehabilitation (e.g., loss to follow-up, change of rehabilitation strategies according to the evolution of the patients through the rehabilitation process and their specific needs) [26]. This point may weaken the power of individual studies and RCTs. While RCTs in all (para)medical specialties are subject to loss to follow-up, rehabilitation trials have a poor track record of both reporting loss to follow-up and attaining adequate follow-up. As a result, minimizing loss to follow-up should become a critical methodological priority in rehabilitation research.
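The link between effect size and the number of participants needed can be illustrated with a short calculation. The sketch below uses the standard normal approximation for a two-sided comparison of two group means, n = 2((z_{1-α/2} + z_{power}) / d)² per arm, where d is the standardized mean difference. The function name and the example effect sizes are hypothetical, and the formula ignores dropout, so real trials would need to inflate the result.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per arm for a two-sided, two-arm comparison.

    effect_size is the standardized mean difference (Cohen's d). Uses the
    normal approximation and does not account for loss to follow-up.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Hypothetical large, medium, and small standardized effects.
for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {n_per_group(d)} participants per arm")
```

With these assumptions, a large effect (d = 0.8) needs about 25 participants per arm, whereas a small effect (d = 0.2) needs about 393, which shows why underpowered studies are so common when the expected change is small or the disease is rare.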

RCTs are the interventional studies with the highest level of evidence. However, their results are not always reliable and unbiased [27]. Strict guidelines have been developed to increase the quality of the studies and decrease the risk of bias [28]. The protocol must be strictly followed, the allocation, the treatment, and the assessment should be performed blind, and ideally, the blind statistical analysis should be carried out by an external team. Adhering to these measures ensures that the results of the study can be trusted (internal validity). These parameters are, however, rather difficult to implement in rehabilitation research. In rehabilitation research, the blinding of the patients or the therapists is not always possible. Other problems such as low participant numbers due to the low incidence of the studied diseases, high heterogeneity, and a lack of consensus on how to demonstrate a treatment’s effect (choice of the best outcomes) are also common. Additionally, one of the specificities of rehabilitation is to propose a personalized treatment adapted as much as possible to the specificities and needs of the patient; therefore, in daily clinic the treatment is very often not fixed in time. For these reasons, treatment is often referred to as the “black box of rehabilitation” [29,30]. This lack of precise definitions, standardization and specifications of the interventions used in the studies [31] is responsible for the lack of replicability in RCTs in rehabilitation [32], and this has limited the establishment and synthesis of evidence-based practice in rehabilitation [33]. Note that efforts are being made to improve reporting, such as the establishment of CERT guidelines on exercise reporting from the equator network [34].

Furthermore, there are also some discrepancies between the results of RCTs and real-life results [35]. Two main factors explain this (thus affecting external validity): treatment adherence is lower in real life than in the strict and controlled RCT environment [36], and selection bias (or representative bias) limits the representativeness of the participants [37]. It is well known that patients participating in clinical trials are not representative of the total patient population [38]. For example, in asthma and chronic obstructive pulmonary disease, RCTs often represent only a minority (5 to 10%) of the routine care population in whom licensed interventions will be applied [39].

A last important limitation of research on rehabilitation, which is particularly relevant to the validation of new technologies, is the very rapid pace at which these technologies develop. We have seen that the validation process is time-consuming; therefore, some validation studies may only become available when the device is already outdated, due to limited sustainability (e.g., mobile applications).

Combining the results of different studies in a meta-analysis helps to address, partially, the external validity issues (multiple clinical centers involved, different populations, different teams of clinicians, etc.), but above all it increases the statistical power by increasing the number of participants [40]. It is worth mentioning that one should distinguish between statistically significant differences (that can be achieved with the inclusion of more patients) and the clinical relevance of the observed difference (independent of the sample size) [41]. Unfortunately, although they offer the highest level of evidence, it does not mean that the conclusions of meta-analyses cannot be biased [27]. There are two main sources of bias that could influence the results of a meta-analysis. The first one is selection bias [38]: if not all the published studies are analyzed, the final results will not represent the real situation, and this is determined by the quality of the systematic review performed. The second issue is publication bias or reporting bias. This describes a phenomenon in which certain data from trials are not published, and so remain inaccessible [42]. It is well known that studies with statistically significant results have an increased likelihood of being published, and publication bias is commonly associated with inflated treatment effects, which lower the certainty of decision-makers regarding the evidence [43]. We will discuss in the next part how to deal with this phenomenon and evaluate the direction and magnitude of this bias.
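The gain in statistical power obtained by pooling studies, and the distinction between statistical significance and clinical relevance, can be sketched with an inverse-variance fixed-effect pooling. This is a simplified illustration: the three trials, their effects, and the minimal clinically important difference (MCID) are hypothetical values, and a real meta-analysis would also examine heterogeneity and usually consider a random-effects model.

```python
import math
from statistics import NormalDist


def pool_fixed_effect(effects, std_errors):
    """Inverse-variance fixed-effect pooling.

    Returns (pooled_estimate, pooled_se, z, two_sided_p).
    """
    weights = [1 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total
    se = math.sqrt(1 / total)
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return estimate, se, z, p


# Hypothetical data: three small trials, each non-significant on its own.
effects = [0.3, 0.3, 0.3]
std_errors = [0.2, 0.2, 0.2]
estimate, se, z, p = pool_fixed_effect(effects, std_errors)

MCID = 0.5  # hypothetical minimal clinically important difference
print(f"pooled effect = {estimate:.2f}, p = {p:.4f}")
print(f"statistically significant: {p < 0.05}")
print(f"clinically relevant (effect >= MCID): {estimate >= MCID}")
```

In this hypothetical case each trial alone has a p-value of about 0.13, while the pooled p-value falls below 0.01; the pooled effect nevertheless stays below the assumed MCID, so the result is statistically significant without being clinically relevant.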

3. New Developments Allow for Innovative Clinical Trials

In the first part, we inventoried the challenges inherent in RCTs and meta-analyses. In this section, we aim to discuss new developments and techniques that can be used to speed up research by decreasing the number of participants required, reduce the time of studies if necessary, and allow the better allocation of human and financial resources [44], while additionally reducing uncertainty.

3.1. Innovative Technologies (Including Sensors) to Facilitate High-Quality Clinical Trials

The development, implementation and use of technology in rehabilitation is not new. We might mention here the development of the passive system of pulley therapy to assist physiotherapists, and of course the development of continuous passive motion devices, which have revolutionized the care of patients after total knee prostheses [45]. However, over the last few decades, the technology has evolved a lot, opening up new perspectives in the management and evaluation of patients.

Rehabilitation may be aided by one or more existing technologies, such as robotics, muscle and brain stimulation, sensors-based exergames, or virtual reality [46,47].

Recent years have seen an increase in the use of robot-mediated treatment in rehabilitation to enable highly adaptable, repetitive, rigorous, and quantified physical exercise [48]. Therefore, the development of technology-supported innovative rehabilitation solutions appears to be a promising way to solve the above-mentioned limitations of current research in rehabilitation, where clinical outcomes are not sensitive enough to small changes and continuous assessment and evaluation seem impossible. The technology that is, or can be, used in rehabilitation can be divided into three categories: (i) high-tech devices whose price and complexity limit their use to specialized centers (e.g., robotic treadmills); (ii) devices that can be used by clinicians in their daily practice (e.g., serious game exercises with virtual reality headsets); and (iii) systems and solutions that can be used by patients alone at home (e.g., sensor-based systems, mobile health applications).

Technology-supported rehabilitation has gained appeal due to its ability to give an objective and, if necessary, blinded assessment, which can be automated (time saver) and allows for the measurable evaluation of motor function by taking into account the characteristics of the patients [49] (e.g., kinematics, activity level, intensity, muscle activity, co-contraction, posture, smoothness of the motion during the rehabilitation exercises, heart rate, stress level, etc.), as well as their therapy adherence [48]. Today, affordable and portable systems using wearable sensors are becoming more and more popular in the rehabilitation field, and such systems can, for instance, be used in daily clinical practice with stroke patients to assess upper limb motion [50], hand function [51], gait [52] or balance [53]. Indeed, most of the devices allow for the continuous recording of motions performed by the patients during rehabilitation exercises [54,55]. These analyses could be used later on to adapt the dose and intensity at an earlier stage of the research, or to decide to stop an intervention if it turns out that the patient will not benefit from it [56].

Mobile health technologies (wearable, portable, body-fixed sensors, or home-integrated devices) that measure mobility in unsupervised, everyday living situations are indeed gaining traction as forms of adjunctive clinical evaluations. Because the data collected in these ecologically valid and patient-relevant environments can capture varied and unexpected events, they may be able to overcome the limitations of routine clinical tests [57]. Remote health monitoring, based on non-invasive and wearable sensors, actuators, and modern communication and information technologies, provides an efficient and cost-effective solution that enables the patients to remain safer at home [58]. Besides the safety effect, another salient aspect of the use of new technology in rehabilitation is the remote monitoring of patients between sessions during the activities of daily living. This continuous data collection helps to detect much smaller changes in the status of the patients (i.e., improvement or deterioration) [59], and should be used in research to provide more accurate and sensitive outcomes (digital biomarkers) [60]. A digital biomarker can objectively and continuously measure and collect biological, physiological, and anatomical data through digital biosensors [61]. In addition, continuous, objective monitoring can reveal disease characteristics not observed in the clinic [62]. Different sensors can be used to perform this longitudinal data collection and assessment; for example, single- [63] or multiple-accelerometer/inertial sensor systems [64], smartwatches [65], connected insoles [66], etc., or other types of technologies (e.g., markerless camera, smartmat), can be used to assess and monitor patients at home. Because smartphones are so widely available, another important field of development is the use of smartphone-based digital biomarkers, both for assessment and to drive the rehabilitation process [67].
There are two different types of digital biomarkers: active (supervised) ones, whereby the participants have to perform specific tasks such as cognitive tasks [68], and passive (unsupervised) ones, whereby the outcomes are derived from the natural use of a smartphone, a technique known as digital phenotyping [69]. Digital phenotyping can be used to monitor age-sensitive cognitive and behavioral processes [70], but also the evolution and fluctuation of chronic diseases, such as Parkinson’s disease (PD) [71]. In the latter study, the authors created a single score based on a combination of supervised and unsupervised assessments (speeded tapping, gait/balance, phonation, and memory). This score was predictive of self-reported PD status and correlated with the clinical evaluation of disease severity [71].

Interestingly, this monitoring can be coupled with direct feedback and better communication with the care team, which may result in better goal setting. Studies show that continuous follow-up is not only useful for monitoring the evolution of patients and gathering data, but also has a direct effect on the progress of the patients, and leads to a significant reduction in rehospitalizations among those receiving the continuous monitoring [72].

Remote health assessments that collect real-world data outside of clinic settings necessitate a thorough understanding of appropriate data collection, quality evaluation, analysis, and interpretation techniques. Therefore, future development must focus on the integration of information from the rehabilitation and unsupervised assessments to better evaluate the efficacy of rehabilitation interventions.

3.2. Adaptive Trials

In the past, patient allocation in RCTs was done equally between the different groups, and the study went on until the end (i.e., the inclusion and follow-up of the required number of patients). This poses two major problems: the study cannot be stopped if side effects occur more often in the treated group, nor if the treated group evolves more favorably, in which case it is unethical to continue giving a non-effective treatment to half of the subjects included in the study. Adaptive trial designs permit planned data-driven modifications during the trial program, which may reduce costs, accelerate study timelines, address challenges with recruitment and heterogeneity, and mitigate inefficiencies associated with non-responders. The use of existing information to model estimated outcomes, explore statistical methods, and model the effects of adaptations to the operational elements of the trial without compromising the trial’s integrity and validity, is essential to such designs [73,74]. Interim analysis may also be used to adapt the required sample size based on the current results, or to stop the study if the revised sample size is deemed to be inappropriate [75]. Finally, the adaptive trial design has been proposed as a means to increase the efficiency of RCTs [76], as it is more flexible than interim analysis. It has multiple advantages for both the patients (increasing the odds of benefiting from the treatment) and the researchers (reducing the cost and increasing the speed), while increasing the likelihood of finding a real benefit [77]. Different adaptive trial designs have been developed, suited to both Phase 2 (e.g., effective doses and dose-response modeling) and Phase 3 trials [78,79].
The adaptive nomenclature refers to making prospectively planned changes to the future course of an ongoing trial based on the analysis of accumulating data from the trial itself, in a fully blinded or unblinded manner, without undermining the statistical validity of the conclusions [76].
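One of the simplest prospectively planned adaptations is a single interim analysis with early stopping. The sketch below simulates a two-arm trial with one interim look at half of the planned sample, using approximate two-look O'Brien-Fleming z boundaries (2.797 at the interim, 1.977 at the final analysis, for an overall two-sided alpha of 0.05). It is a toy illustration, not a description of the designs cited above: outcomes are simulated as normal with a known standard deviation of 1, the function names are hypothetical, and futility stopping is not modeled.

```python
import math
import random

# Approximate two-look O'Brien-Fleming boundaries (interim, final).
OBF_BOUNDS = (2.797, 1.977)


def z_stat(treated, control):
    """z statistic for equal-sized arms, outcome SD assumed known (= 1)."""
    n = len(treated)
    diff = sum(treated) / n - sum(control) / n
    return diff / math.sqrt(2 / n)


def run_trial(true_effect, n_per_arm, rng):
    """Simulate one trial with a single interim look at half the sample."""
    half = n_per_arm // 2
    treated = [rng.gauss(true_effect, 1) for _ in range(half)]
    control = [rng.gauss(0, 1) for _ in range(half)]
    if abs(z_stat(treated, control)) >= OBF_BOUNDS[0]:
        # Boundary crossed at the interim look: stop early.
        return {"stopped_early": True, "rejected": True, "n_per_arm": half}
    # Otherwise recruit the second half and analyze all data together.
    treated += [rng.gauss(true_effect, 1) for _ in range(n_per_arm - half)]
    control += [rng.gauss(0, 1) for _ in range(n_per_arm - half)]
    rejected = abs(z_stat(treated, control)) >= OBF_BOUNDS[1]
    return {"stopped_early": False, "rejected": rejected, "n_per_arm": n_per_arm}


rng = random.Random(42)
results = [run_trial(true_effect=0.5, n_per_arm=100, rng=rng) for _ in range(1000)]
early = sum(r["stopped_early"] for r in results) / len(results)
mean_n = sum(r["n_per_arm"] for r in results) / len(results)
print(f"stopped early in {early:.0%} of simulated trials")
print(f"average participants per arm: {mean_n:.1f}")
```

Because the boundaries are fixed in advance, the overall type I error stays close to the nominal level while the average number of participants decreases whenever the treatment effect is large.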

In rehabilitation, to the authors’ best knowledge, this type of study design is not yet being implemented, but it would be particularly well adapted to studies on rare pathologies (e.g., recruitment difficulties for research on amyotrophic lateral sclerosis) and/or on expensive and long treatments (e.g., exoskeletons, robotics). Some of the many factors implicated in the failure of conventional RCTs may be mitigated through the use of more adaptable, flexible methods to make trials “smarter”. For rehabilitation, given the limited research resources in some facilities and the relatively small population, it may be impossible to conduct additional studies concurrently. Therefore, reducing the length of time required to complete a study could encourage more research and the expedited development of novel methods [80].

3.3. Advanced Statistical Methods to Increase the Efficiency of the Research

Adaptive trials rely on the development of robust intermediate or continuous statistical analyses. New methods, such as Bayesian adaptive designs [81] and deep learning [82], are also being developed, and will help to improve follow-up and allow for the quicker modification of trial designs, in order to speed up the process and allow a maximum number of patients to benefit from the best treatment, while guaranteeing the power necessary for the study. Describing these different methods is beyond the scope of this article, but we could not discuss adaptive trials without at least mentioning the development of statistical methods.
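Although a full description is beyond the scope of this article, the core idea of a Bayesian adaptive design can be sketched in a few lines. The example below assumes a binary outcome (responder or non-responder), a uniform Beta(1, 1) prior on each arm's response rate, and a Monte Carlo estimate of the posterior probability that the new treatment is better. The function names, the counts, and the allocation floor are hypothetical, and this is only one of many possible response-adaptive rules.

```python
import random


def prob_treatment_better(succ_t, n_t, succ_c, n_c, draws=20000, rng=None):
    """Posterior probability that the treatment response rate exceeds control.

    Assumes independent Beta(1, 1) priors and binomial outcomes, so each
    posterior is Beta(1 + successes, 1 + failures).
    """
    rng = rng or random.Random(0)
    wins = 0
    for _ in range(draws):
        p_t = rng.betavariate(1 + succ_t, 1 + n_t - succ_t)
        p_c = rng.betavariate(1 + succ_c, 1 + n_c - succ_c)
        wins += p_t > p_c
    return wins / draws


def allocation_to_treatment(prob_better, floor=0.1):
    """Probability of assigning the next patient to the treatment arm.

    The floor (hypothetical value) keeps some patients in each arm.
    """
    return min(1 - floor, max(floor, prob_better))


# Hypothetical interim data: 14/20 responders with treatment, 8/20 with control.
p_better = prob_treatment_better(14, 20, 8, 20)
print(f"P(treatment better) = {p_better:.2f}")
print(f"next allocation to treatment = {allocation_to_treatment(p_better):.2f}")
```

As data accumulate, more patients are allocated to the arm that appears to perform better, which is how such designs aim to let a maximum number of patients benefit from the best treatment.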

Indeed, changes in the design of the studies are made after analyses are carried out by the statisticians (most of the time, external companies analyze the results blindly). The development of data sciences and the implementation of new techniques in the field of rehabilitation also open up new perspectives for the agile adaptation of the treatment, ultimately leading to precision treatment [56]. However, it must be noted that for deep learning methods, and even more so for artificial intelligence techniques, a huge amount of data is required to train the systems in order to ensure high-quality results. It is not possible for clinicians to collect this type of data manually, and it is therefore essential to use new technologies (see Section 3.1), including smartphones and mHealth, to collect a large quantity of data, but also and above all to ensure the quality of these data [83].

We have previously seen that personalized treatment conflicts with the strict protocols for RCTs [29,84,85]. If the personalization uses the same algorithms for all participants, protocolization is ensured, and there is no more conflict with the RCT set up.
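A protocolized personalization rule of this kind can be as simple as a deterministic function applied identically to every participant. The sketch below adapts exercise difficulty from the success rate of the previous session; the thresholds (80% and 50%), the level range, and the function name are hypothetical and would need to be defined and justified in the trial protocol.

```python
def next_difficulty(current_level, success_rate, min_level=1, max_level=10):
    """Return the difficulty level for the next session.

    The same rule is applied to all participants, so the treatment is
    personalized while remaining fully specified by the protocol.
    Thresholds are hypothetical illustration values.
    """
    if not 0 <= success_rate <= 1:
        raise ValueError("success_rate must be between 0 and 1")
    if success_rate >= 0.8:
        step = 1       # task too easy: progress
    elif success_rate < 0.5:
        step = -1      # task too hard: regress
    else:
        step = 0       # appropriate challenge: keep level
    return max(min_level, min(max_level, current_level + step))


# Two hypothetical patients starting at the same level diverge over sessions.
level_a = level_b = 3
for rate_a, rate_b in [(0.9, 0.4), (0.85, 0.6), (0.7, 0.3)]:
    level_a = next_difficulty(level_a, rate_a)
    level_b = next_difficulty(level_b, rate_b)
print(level_a, level_b)
```

Each patient receives a different treatment trajectory, yet the intervention can be reported and replicated exactly because the decision rule itself is what the protocol specifies.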

Concerning meta-analyses, the two main biases are selection bias and publication bias. As regards selection bias, researchers are working on automated methods (e.g., text mining) that could improve and speed up the selection of studies, but these methods are still under development [86]. Concerning publication bias, statistical methods (e.g., the trim-and-fill method) have been developed to estimate the size of the effect and to correct it for this bias [87]. Bayesian methods can also be used to minimize this bias [88].
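A full trim-and-fill implementation is too long to show here, so the sketch below illustrates a related and simpler diagnostic instead, Egger's regression, which looks for funnel plot asymmetry. Each study's standardized effect (effect divided by its standard error) is regressed on its precision (one divided by the standard error); an intercept far from zero suggests small-study effects such as publication bias. The function name and the data are hypothetical, and the significance test of the intercept is omitted for brevity.

```python
def egger_intercept(effects, std_errors):
    """Least-squares intercept of (effect / SE) regressed on (1 / SE).

    This is Egger's regression, not trim and fill. An intercept close to
    zero is compatible with a symmetric funnel plot.
    """
    if len(effects) != len(std_errors) or len(effects) < 3:
        raise ValueError("need at least three studies with matching inputs")
    x = [1 / se for se in std_errors]
    y = [e / se for e, se in zip(effects, std_errors)]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    return mean_y - slope * mean_x


std_errors = [0.1, 0.2, 0.3, 0.4]
# Hypothetical unbiased literature: same effect whatever the study size.
symmetric = [0.3, 0.3, 0.3, 0.3]
# Hypothetical biased literature: smaller studies report larger effects.
asymmetric = [0.3 + 1.5 * se for se in std_errors]

print(f"intercept, symmetric data:  {egger_intercept(symmetric, std_errors):.2f}")
print(f"intercept, asymmetric data: {egger_intercept(asymmetric, std_errors):.2f}")
```

With so few studies the diagnostic has little power in practice; the example only shows the direction of the reasoning.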

4. Perspectives on the Development of Scientific Evidence in Rehabilitation

The goal of clinical research is to improve the health and quality of life of the patients. For this, EBP has been developed to guide practitioners in their daily practice and to ensure that they integrate the latest research optimally into their treatments. In this last part, we are going to discuss how to ease and increase the translation between research and clinical practice, focusing on pragmatic trials and the development of the rehabilitation treatment specification system.

4.1. Pragmatic Trials

As stated above, one of the most significant limitations of RCTs is the weak translation between research results and clinical reality [35]. The two main limitations of the translation are the treatment adherence, which is much lower in real clinical conditions compared to RCTs, and the representativeness of the population participating in the RCTs.

Improving treatment adherence may have a more significant influence on the health of our population than the discovery of any new therapy [89]. Although the factors favoring or, on the contrary, hindering treatment adherence have been well identified, it is estimated that patients are nonadherent to their treatment 50% of the time [89]. In rehabilitation, this problem occurs only in relation to the exercises that the patients need to perform at home in addition to the sessions under the supervision of the physiotherapist [90]. Solutions being developed to increase adherence include decreasing the frequency of face-to-face sessions [91], motivational interviewing [92], and smartphone applications that support treatment instruction, track adherence, and provide patients with education and feedback on their performance [93]. To be closer to the clinical reality, some studies can be performed under real-life conditions. Although this type of approach is closer to reality, it risks losing the well-controlled aspect that is specific to RCTs. Another limitation is that, due to the larger heterogeneity in participants or interventions, larger sample sizes are often needed than in well-controlled RCTs [94]. To combine the positive aspects of RCTs with real-world data, some authors have suggested using a hybrid approach comprising randomization coupled with the use of pragmatic outcomes [95].

Thanks to the development of technologies, home-based assessment and monitoring are becoming popular in research and practice [96], and smart homes can be used to collect medical data that can be used for follow-up and monitoring in RCTs [97]. In addition to allowing a quick response in the case of the detection of abnormal values, this kind of approach also allows us to collect a huge amount of data that could be used in machine learning to find more sensitive outcomes.

The second limitation, the lack of representativeness of the patients included in the studies, is trickier to handle. Most of the research is performed in university hospitals, representing only a specific part of the population [98]. The problem of access to care is highly multidimensional, concerning not only financial resources but also health literacy, social support, the representation of the disease, etc. [99].

4.2. Rehabilitation Treatment Specification System

Although significant advances have been made in measuring the outcomes of rehabilitation interventions, comparatively less progress has been made in measuring the treatment processes that lead to improved outcomes [100]. The field of rehabilitation still suffers from the black box problem: the inability to characterize treatments systematically across diagnoses, settings, and disciplines so as to identify and disseminate the active aspects of those treatments [101]. A rigorous, theory-supported definition of rehabilitation treatments has been proposed in the framework of the Rehabilitation Treatment Specification System (RTSS) [100]. In the future, accurate measurements of dose and intensity should be integrated within the RTSS to make it more representative of clinical reality and thus improve reporting, as these parameters are crucial in rehabilitation [102].
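To make the idea of a structured treatment description more concrete, the sketch below shows one possible way to record a treatment component together with its dose. The RTSS describes treatments in terms of targets, ingredients, and mechanisms of action; the class names, field names, dose descriptors, and example values here are hypothetical and are not part of the RTSS itself.

```python
from dataclasses import dataclass


@dataclass
class Dose:
    """Hypothetical dose descriptors; the RTSS does not prescribe these fields."""
    sessions_per_week: float
    minutes_per_session: float
    weeks: float

    def total_minutes(self) -> float:
        # Total planned treatment time over the whole program.
        return self.sessions_per_week * self.minutes_per_session * self.weeks


@dataclass
class TreatmentComponent:
    """One treatment component: what is targeted, what is delivered, and why."""
    target: str
    ingredients: list[str]
    mechanism_of_action: str
    dose: Dose


# Illustrative example only; the values are invented.
component = TreatmentComponent(
    target="knee extensor strength",
    ingredients=["progressive resistance exercise", "verbal feedback on form"],
    mechanism_of_action="muscle overload leading to adaptation",
    dose=Dose(sessions_per_week=3, minutes_per_session=30, weeks=8),
)
print(component.dose.total_minutes())
```

Recording components in a fixed structure of this kind is what would later allow them to be compared across studies or analyzed individually.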

5. Call for Action: The Development of Evidence-Based Technology-Supported Rehabilitation

Although EBP is the current trend in healthcare practice, some clinicians and physicians focus above all on the limitations of this approach (i.e., overall reductionism). According to them, EBP does not represent a scientific approach to health and care; it is only a restrictive interpretation of the scientific approach to clinical practice [103]. Another major limitation pointed out by some authors is the dehumanization of patients, who are reduced to a set of numbers without taking social and human aspects into account [104]. The development and implementation of the International Classification of Functioning, Disability and Health (ICF) in rehabilitation, at least in research, is no longer in question [105]. However, while clinicians in practice try to integrate the different components into their treatment and to adapt treatments from session to session, research is still miles away from the concept of personalized or precision rehabilitation [106]. The concept of personalized rehabilitation is closely associated with black box rehabilitation [85], which, as we have seen, remains a limitation in the replication of rehabilitation studies. However, the latest and most advanced statistical methods open new perspectives for the management of black box rehabilitation. One prerequisite of this kind of analysis is to describe the interventions and techniques used as precisely as possible, for example with an RTSS framework that incorporates dose and intensity, together with outcomes derived from the ICF. The different components of the black box could then be analyzed individually to assess their specific effects. These approaches will also allow us to identify which patients are most likely to benefit from a given intervention at a given moment of the rehabilitation process.

Currently, most physiotherapists perceive EBP as useful and necessary, but there is a gap between their perceived and actual knowledge of EBP [107]. We think that if EBP is improved by integrating its different components, clinicians will be more likely to support it and use it in their practice. It is also important to teach clinicians about the importance of this process [108].

6. Conclusions

Although RCTs have been, and still are, considered the most robust form of clinical study, we have seen that they, like meta-analyses, carry a risk of bias and are sometimes difficult to apply in the rehabilitation field. To address some of the weaknesses of RCTs, mainly the length of the studies and the number of patients needed, adaptive trials have been developed and are increasingly used in medical research, but they are not yet applied (or only to a very limited extent) in the field of rehabilitation. The development of technology-supported rehabilitation offers unique perspectives for monitoring the progress of patients during the rehabilitation process. The data collected should be used to increase the quality of trials by allowing the blinding of assessors and automated, standardized data collection.

Author Contributions

B.B. conceived this paper and wrote the first draft. All the authors made substantial contributions to the conception and ideas reported in this perspective paper, participated in drafting the article or revising it critically for important intellectual content, gave final approval of the version to be published, and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

References

- 1. Feinstein A.R. Clinical Judgment. Williams & Wilkins; Philadelphia, PA, USA: 1967.

- 2. Cochrane A.L. Effectiveness & Efficiency: Random Reflections on Health Services. RSM Books; London, UK: 1999. New.

- 3. Claridge J.A., Fabian T.C. History and Development of Evidence-Based Medicine. World J. Surg. 2005;29:547–553. doi: 10.1007/s00268-005-7910-1.

- 4. Fletcher R.H. Clinical Medicine Meets Modern Epidemiology—And Both Profit. Ann. Epidemiol. 1992;2:325–333. doi: 10.1016/1047-2797(92)90065-X.

- 5. Jenicek M. Epidemiology, Evidenced-Based Medicine, and Evidence-Based Public Health. J. Epidemiol. 1997;7:187–197. doi: 10.2188/jea.7.187.

- 6. Reveiz L., Chapman E., Asial S., Munoz S., Bonfill X., Alonso-Coello P. Risk of Bias of Randomized Trials over Time. J. Clin. Epidemiol. 2015;68:1036–1045. doi: 10.1016/j.jclinepi.2014.06.001.

- 7. Kuroda Y., Young M., Shoman H., Punnoose A., Norrish A.R., Khanduja V. Advanced Rehabilitation Technology in Orthopaedics—A Narrative Review. Int. Orthop. 2021;45:1933–1940. doi: 10.1007/s00264-020-04814-4.

- 8. Sanders J.M., Monogue M.L., Jodlowski T.Z., Cutrell J.B. Pharmacologic Treatments for Coronavirus Disease 2019 (COVID-19): A Review. JAMA. 2020;323:1824–1836. doi: 10.1001/jama.2020.6019.

- 9. Lehane E., Leahy-Warren P., O’Riordan C., Savage E., Drennan J., O’Tuathaigh C., O’Connor M., Corrigan M., Burke F., Hayes M., et al. Evidence-Based Practice Education for Healthcare Professions: An Expert View. BMJ Evid.-Based Med. 2019;24:103–108. doi: 10.1136/bmjebm-2018-111019.

- 10. Howick J., Koletsi D., Ioannidis J.P.A., Madigan C., Pandis N., Loef M., Walach H., Sauer S., Kleijnen J., Seehra J., et al. Most Healthcare Interventions Tested in Cochrane Reviews Are Not Effective According to High Quality Evidence: A Systematic Review and Meta-Analysis. J. Clin. Epidemiol. 2022;148:160–169. doi: 10.1016/j.jclinepi.2022.04.017.

- 11. Verweij J., Hendriks H.R., Zwierzina H., on behalf of the Cancer Drug Development Forum. Innovation in Oncology Clinical Trial Design. Cancer Treat. Rev. 2019;74:15–20. doi: 10.1016/j.ctrv.2019.01.001.

- 12. Shaneyfelt T. Pyramids Are Guides Not Rules: The Evolution of the Evidence Pyramid. Evid. Based Med. 2016;21:121–122. doi: 10.1136/ebmed-2016-110498.

- 13. Miller F.G., Colloca L. The Placebo Phenomenon and Medical Ethics: Rethinking the Relationship between Informed Consent and Risk–Benefit Assessment. Theor. Med. Bioeth. 2011;32:229–243. doi: 10.1007/s11017-011-9179-8.

- 14. Lesaffre E. Superiority, Equivalence, and Non-Inferiority Trials. Bull. NYU Hosp. Jt. Dis. 2008;66:150–154.

- 15. Kacha A.K., Nizamuddin S.L., Nizamuddin J., Ramakrishna H., Shahul S.S. Clinical Study Designs and Sources of Error in Medical Research. J. Cardiothorac. Vasc. Anesth. 2018;32:2789–2801. doi: 10.1053/j.jvca.2018.02.009.

- 16. Whiting P., Savović J., Higgins J.P.T., Caldwell D.M., Reeves B.C., Shea B., Davies P., Kleijnen J., Churchill R., ROBIS Group. ROBIS: A New Tool to Assess Risk of Bias in Systematic Reviews Was Developed. J. Clin. Epidemiol. 2016;69:225–234. doi: 10.1016/j.jclinepi.2015.06.005.

- 17. Sertkaya A., Wong H.-H., Jessup A., Beleche T. Key Cost Drivers of Pharmaceutical Clinical Trials in the United States. Clin. Trials Lond. Engl. 2016;13:117–126. doi: 10.1177/1740774515625964.

- 18. Waldstreicher J., Johns M.E. Managing Conflicts of Interest in Industry-Sponsored Clinical Research: More Physician Engagement Is Required. JAMA. 2017;317:1751–1752. doi: 10.1001/jama.2017.4160.

- 19. Han B., Wang S., Wan Y., Liu J., Zhao T., Cui J., Zhuang H., Cui F. Has the Public Lost Confidence in Vaccines Because of a Vaccine Scandal in China. Vaccine. 2019;37:5270–5275. doi: 10.1016/j.vaccine.2019.07.052.

- 20. Nair S.C., AlGhafli S., AlJaberi A. Developing a Clinical Trial Governance Framework for Pharmaceutical Industry-Funded Clinical Trials. Account. Res. 2018;25:373–386. doi: 10.1080/08989621.2018.1527222.

- 21. Pew Research Center. Trust and Mistrust in Americans’ Views of Scientific Experts. Pew Research Center; Washington, DC, USA: 2019.

- 22. Gluud L.L. Bias in Clinical Intervention Research. Am. J. Epidemiol. 2006;163:493–501. doi: 10.1093/aje/kwj069.

- 23. Kempf L., Goldsmith J.C., Temple R. Challenges of Developing and Conducting Clinical Trials in Rare Disorders. Am. J. Med. Genet. Part A. 2018;176:773–783. doi: 10.1002/ajmg.a.38413.

- 24. Button K.S., Ioannidis J.P.A., Mokrysz C., Nosek B.A., Flint J., Robinson E.S.J., Munafò M.R. Power Failure: Why Small Sample Size Undermines the Reliability of Neuroscience. Nat. Rev. Neurosci. 2013;14:365–376. doi: 10.1038/nrn3475.

- 25. Kinney A.R., Eakman A.M., Graham J.E. Novel Effect Size Interpretation Guidelines and an Evaluation of Statistical Power in Rehabilitation Research. Arch. Phys. Med. Rehabil. 2020;101:2219–2226. doi: 10.1016/j.apmr.2020.02.017.

- 26. Bai A.D., Komorowski A.S., Lo C.K.L., Tandon P., Li X.X., Mokashi V., Cvetkovic A., Findlater A., Liang L., Tomlinson G., et al. Intention-to-Treat Analysis May Be More Conservative than per Protocol Analysis in Antibiotic Non-Inferiority Trials: A Systematic Review. BMC Med. Res. Methodol. 2021;21:75. doi: 10.1186/s12874-021-01260-7.

- 27. Krauss A. Why All Randomised Controlled Trials Produce Biased Results. Ann. Med. 2018;50:312–322. doi: 10.1080/07853890.2018.1453233.

- 28. Moher D., Hopewell S., Schulz K.F., Montori V., Gøtzsche P.C., Devereaux P.J., Elbourne D., Egger M., Altman D.G., Consolidated Standards of Reporting Trials Group. CONSORT 2010 Explanation and Elaboration: Updated Guidelines for Reporting Parallel Group Randomised Trials. J. Clin. Epidemiol. 2010;63:E1–E37. doi: 10.1016/j.jclinepi.2010.03.004.

- 29. Dijkers M.P. An End to the Black Box of Rehabilitation? Arch. Phys. Med. Rehabil. 2019;100:144–145. doi: 10.1016/j.apmr.2018.09.108.

- 30. Whyte J., Hart T. It’s More than a Black Box; It’s a Russian Doll: Defining Rehabilitation Treatments. Am. J. Phys. Med. Rehabil. 2003;82:639–652. doi: 10.1097/01.PHM.0000078200.61840.2D.

- 31. Zanca J.M., Turkstra L.S., Chen C., Packel A., Ferraro M., Hart T., Van Stan J.H., Whyte J., Dijkers M.P. Advancing Rehabilitation Practice through Improved Specification of Interventions. Arch. Phys. Med. Rehabil. 2019;100:164–171. doi: 10.1016/j.apmr.2018.09.110.

- 32. Negrini S., Arienti C., Pollet J., Engkasan J.P., Francisco G.E., Frontera W.R., Galeri S., Gworys K., Kujawa J., Mazlan M., et al. Clinical Replicability of Rehabilitation Interventions in Randomized Controlled Trials Reported in Main Journals Is Inadequate. J. Clin. Epidemiol. 2019;114:108–117. doi: 10.1016/j.jclinepi.2019.06.008.

- 33. Van Stan J.H., Dijkers M.P., Whyte J., Hart T., Turkstra L.S., Zanca J.M., Chen C. The Rehabilitation Treatment Specification System: Implications for Improvements in Research Design, Reporting, Replication, and Synthesis. Arch. Phys. Med. Rehabil. 2019;100:146–155. doi: 10.1016/j.apmr.2018.09.112.

- 34. Slade S.C., Dionne C.E., Underwood M., Buchbinder R., Beck B., Bennell K., Brosseau L., Costa L., Cramp F., Cup E., et al. Consensus on Exercise Reporting Template (CERT): Modified Delphi Study. Phys. Ther. 2016;96:1514–1524. doi: 10.2522/ptj.20150668.

- 35. Zarbin M. Real Life Outcomes vs. Clinical Trial Results. J. Ophthalmic Vis. Res. 2019;14:88–92. doi: 10.4103/jovr.jovr_279_18.

- 36. Nieuwlaat R., Wilczynski N., Navarro T., Hobson N., Jeffery R., Keepanasseril A., Agoritsas T., Mistry N., Iorio A., Jack S., et al. Interventions for Enhancing Medication Adherence. Cochrane Database Syst. Rev. 2014:CD000011. doi: 10.1002/14651858.CD000011.pub4.

- 37. Infante-Rivard C., Cusson A. Reflection on Modern Methods: Selection Bias—A Review of Recent Developments. Int. J. Epidemiol. 2018;47:1714–1722. doi: 10.1093/ije/dyy138.

- 38. Page M.J., McKenzie J.E., Kirkham J., Dwan K., Kramer S., Green S., Forbes A. Bias Due to Selective Inclusion and Reporting of Outcomes and Analyses in Systematic Reviews of Randomised Trials of Healthcare Interventions. Cochrane Database Syst. Rev. 2014:MR000035. doi: 10.1002/14651858.MR000035.pub2.

- 39. Wong G.W.K., Miravitlles M., Chisholm A., Krishnan J.A., Krishnan J. Respiratory Guidelines—Which Real World? Ann. Am. Thorac. Soc. 2014;11(Suppl. 2):S85–S91. doi: 10.1513/AnnalsATS.201309-298RM.

- 40. Charles P., Giraudeau B., Dechartres A., Baron G., Ravaud P. Reporting of Sample Size Calculation in Randomised Controlled Trials: Review. BMJ. 2009;338:b1732. doi: 10.1136/bmj.b1732.

- 41. Gianola S., Castellini G., Corbetta D., Moja L. Rehabilitation Interventions in Randomized Controlled Trials for Low Back Pain: Proof of Statistical Significance Often Is Not Relevant. Health Qual. Life Outcomes. 2019;17:127. doi: 10.1186/s12955-019-1196-8.

- 42. Brassington I. The Ethics of Reporting All the Results of Clinical Trials. Br. Med. Bull. 2017;121:19–29. doi: 10.1093/bmb/ldw058.

- 43. Murad M.H., Chu H., Lin L., Wang Z. The Effect of Publication Bias Magnitude and Direction on the Certainty in Evidence. BMJ Evid.-Based Med. 2018;23:84–86. doi: 10.1136/bmjebm-2018-110891.

- 44. Castellini G., Gianola S., Bonovas S., Moja L. Improving Power and Sample Size Calculation in Rehabilitation Trial Reports: A Methodological Assessment. Arch. Phys. Med. Rehabil. 2016;97:1195–1201. doi: 10.1016/j.apmr.2016.02.013.

- 45. Schulz M., Krohne B., Röder W., Sander K. Randomized, Prospective, Monocentric Study to Compare the Outcome of Continuous Passive Motion and Controlled Active Motion after Total Knee Arthroplasty. Technol. Health Care Off. J. Eur. Soc. Eng. Med. 2018;26:499–506. doi: 10.3233/THC-170850.

- 46. Feys P., Straudi S. Beyond Therapists: Technology-Aided Physical MS Rehabilitation Delivery. Mult. Scler. Houndmills Basingstoke Engl. 2019;25:1387–1393. doi: 10.1177/1352458519848968.

- 47. Bonnechère B. Serious Games in Physical Rehabilitation. Springer; Cham, Switzerland: 2017.

- 48. Garro F., Chiappalone M., Buccelli S., De Michieli L., Semprini M. Neuromechanical Biomarkers for Robotic Neurorehabilitation. Front. Neurorobot. 2021;15:742163. doi: 10.3389/fnbot.2021.742163.

- 49. Bonnechère B., Sholukha V., Omelina L., Van Vooren M., Jansen B., Van Sint Jan S. Suitability of Functional Evaluation Embedded in Serious Game Rehabilitation Exercises to Assess Motor Development across Lifespan. Gait Posture. 2017;57:35–39. doi: 10.1016/j.gaitpost.2017.05.025.

- 50. Werner C., Schönhammer J.G., Steitz M.K., Lambercy O., Luft A.R., Demkó L., Easthope C.A. Using Wearable Inertial Sensors to Estimate Clinical Scores of Upper Limb Movement Quality in Stroke. Front. Physiol. 2022;13:877563. doi: 10.3389/fphys.2022.877563.

- 51. Song X., van de Ven S.S., Chen S., Kang P., Gao Q., Jia J., Shull P.B. Proposal of a Wearable Multimodal Sensing-Based Serious Games Approach for Hand Movement Training After Stroke. Front. Physiol. 2022;13:811950. doi: 10.3389/fphys.2022.811950.

- 52. Gavrilović M.M., Janković M.M. Temporal Synergies Detection in Gait Cyclograms Using Wearable Technology. Sensors. 2022;22:2728. doi: 10.3390/s22072728.

- 53. Bonnechère B., Jansen B., Omelina L., Sholukha V., Van Sint Jan S. Validation of the Balance Board for Clinical Evaluation of Balance During Serious Gaming Rehabilitation Exercises. Telemed. e-Health. 2016;22:709–717. doi: 10.1089/tmj.2015.0230.

- 54. Bonnechère B., Jansen B., Haack I., Omelina L., Feipel V., Van Sint Jan S., Pandolfo M. Automated Functional Upper Limb Evaluation of Patients with Friedreich Ataxia Using Serious Games Rehabilitation Exercises. J. Neuroeng. Rehabil. 2018;15:87. doi: 10.1186/s12984-018-0430-7.

- 55. Bonnechère B., Klass M., Langley C., Sahakian B.J. Brain Training Using Cognitive Apps Can Improve Cognitive Performance and Processing Speed in Older Adults. Sci. Rep. 2021;11:12313. doi: 10.1038/s41598-021-91867-z.

- 56. Adans-Dester C., Hankov N., O’Brien A., Vergara-Diaz G., Black-Schaffer R., Zafonte R., Dy J., Lee S.I., Bonato P. Enabling Precision Rehabilitation Interventions Using Wearable Sensors and Machine Learning to Track Motor Recovery. NPJ Digit. Med. 2020;3:121. doi: 10.1038/s41746-020-00328-w.

- 57. Warmerdam E., Hausdorff J.M., Atrsaei A., Zhou Y., Mirelman A., Aminian K., Espay A.J., Hansen C., Evers L.J.W., Keller A., et al. Long-Term Unsupervised Mobility Assessment in Movement Disorders. Lancet Neurol. 2020;19:462–470. doi: 10.1016/S1474-4422(19)30397-7.

- 58. Majumder S., Mondal T., Deen M.J. Wearable Sensors for Remote Health Monitoring. Sensors. 2017;17:E130. doi: 10.3390/s17010130.

- 59. Joshi M., Ashrafian H., Aufegger L., Khan S., Arora S., Cooke G., Darzi A. Wearable Sensors to Improve Detection of Patient Deterioration. Expert Rev. Med. Devices. 2019;16:145–154. doi: 10.1080/17434440.2019.1563480.

- 60. Dillenseger A., Weidemann M.L., Trentzsch K., Inojosa H., Haase R., Schriefer D., Voigt I., Scholz M., Akgün K., Ziemssen T. Digital Biomarkers in Multiple Sclerosis. Brain Sci. 2021;11:1519. doi: 10.3390/brainsci11111519.

- 61. Dorsey E.R., Papapetropoulos S., Xiong M., Kieburtz K. The First Frontier: Digital Biomarkers for Neurodegenerative Disorders. Digit. Biomark. 2017;1:6–13. doi: 10.1159/000477383.

- 62. Adams J.L., Dinesh K., Xiong M., Tarolli C.G., Sharma S., Sheth N., Aranyosi A.J., Zhu W., Goldenthal S., Biglan K.M., et al. Multiple Wearable Sensors in Parkinson and Huntington Disease Individuals: A Pilot Study in Clinic and at Home. Digit. Biomark. 2017;1:52–63. doi: 10.1159/000479018.

- 63. Yang Y., Schumann M., Le S., Cheng S. Reliability and Validity of a New Accelerometer-Based Device for Detecting Physical Activities and Energy Expenditure. PeerJ. 2018;6:e5775. doi: 10.7717/peerj.5775.

- 64. Dinesh K., Snyder C.W., Xiong M., Tarolli C.G., Sharma S., Dorsey E.R., Sharma G., Adams J.L. A Longitudinal Wearable Sensor Study in Huntington’s Disease. J. Huntingt. Dis. 2020;9:69–81. doi: 10.3233/JHD-190375.

- 65. Lipsmeier F., Simillion C., Bamdadian A., Tortelli R., Byrne L.M., Zhang Y.-P., Wolf D., Smith A.V., Czech C., Gossens C., et al. A Remote Digital Monitoring Platform to Assess Cognitive and Motor Symptoms in Huntington Disease: Cross-Sectional Validation Study. J. Med. Internet Res. 2022;24:e32997. doi: 10.2196/32997.

- 66. Jacobs D., Farid L., Ferré S., Herraez K., Gracies J.-M., Hutin E. Evaluation of the Validity and Reliability of Connected Insoles to Measure Gait Parameters in Healthy Adults. Sensors. 2021;21:6543. doi: 10.3390/s21196543.

- 67. Torous J., Rodriguez J., Powell A. The New Digital Divide for Digital BioMarkers. Digit. Biomark. 2017;1:87–91. doi: 10.1159/000477382.

- 68. Dagum P. Digital Biomarkers of Cognitive Function. NPJ Digit. Med. 2018;1:10. doi: 10.1038/s41746-018-0018-4.

- 69. Jacobson N.C., Summers B., Wilhelm S. Digital Biomarkers of Social Anxiety Severity: Digital Phenotyping Using Passive Smartphone Sensors. J. Med. Internet Res. 2020;22:e16875. doi: 10.2196/16875.

- 70. Ceolini E., Kock R., Band G.P.H., Stoet G., Ghosh A. Temporal Clusters of Age-Related Behavioral Alterations Captured in Smartphone Touchscreen Interactions. iScience. 2022;25:104791. doi: 10.1016/j.isci.2022.104791.

- 71. Omberg L., Chaibub Neto E., Perumal T.M., Pratap A., Tediarjo A., Adams J., Bloem B.R., Bot B.M., Elson M., Goldman S.M., et al. Remote Smartphone Monitoring of Parkinson’s Disease and Individual Response to Therapy. Nat. Biotechnol. 2022;40:480–487. doi: 10.1038/s41587-021-00974-9.

- 72. Mehta S.J., Hume E., Troxel A.B., Reitz C., Norton L., Lacko H., McDonald C., Freeman J., Marcus N., Volpp K.G., et al. Effect of Remote Monitoring on Discharge to Home, Return to Activity, and Rehospitalization After Hip and Knee Arthroplasty: A Randomized Clinical Trial. JAMA Netw. Open. 2020;3:e2028328. doi: 10.1001/jamanetworkopen.2020.28328.

- 73. Berry D.A. Emerging Innovations in Clinical Trial Design. Clin. Pharmacol. Ther. 2016;99:82–91. doi: 10.1002/cpt.285.

- 74. Stegert M., Kasenda B., von Elm E., You J.J., Blümle A., Tomonaga Y., Saccilotto R., Amstutz A., Bengough T., Briel M., et al. An Analysis of Protocols and Publications Suggested that Most Discontinuations of Clinical Trials Were Not Based on Preplanned Interim Analyses or Stopping Rules. J. Clin. Epidemiol. 2016;69:152–160. doi: 10.1016/j.jclinepi.2015.05.023.

- 75. WOMAN Trial Collaborators. Effect of Early Tranexamic Acid Administration on Mortality, Hysterectomy, and Other Morbidities in Women with Post-Partum Haemorrhage (WOMAN): An International, Randomised, Double-Blind, Placebo-Controlled Trial. Lancet Lond. Engl. 2017;389:2105–2116. doi: 10.1016/S0140-6736(17)30638-4.

- 76. Bhatt D.L., Mehta C. Adaptive Designs for Clinical Trials. N. Engl. J. Med. 2016;375:65–74. doi: 10.1056/NEJMra1510061.

- 77. Bauer P., Bretz F., Dragalin V., König F., Wassmer G. Twenty-Five Years of Confirmatory Adaptive Designs: Opportunities and Pitfalls. Stat. Med. 2016;35:325–347. doi: 10.1002/sim.6472.

- 78. Fraiman J., Erviti J., Jones M., Greenland S., Whelan P., Kaplan R.M., Doshi P. Serious Adverse Events of Special Interest following mRNA COVID-19 Vaccination in Randomized Trials in Adults. Vaccine. 2022;40:5798–5805. doi: 10.1016/j.vaccine.2022.08.036.

- 79. Sato A., Shimura M., Gosho M. Practical Characteristics of Adaptive Design in Phase 2 and 3 Clinical Trials. J. Clin. Pharm. Ther. 2018;43:170–180. doi: 10.1111/jcpt.12617.

- 80. Mulcahey M.J., Jones L.A.T., Rockhold F., Rupp R., Kramer J.L.K., Kirshblum S., Blight A., Lammertse D., Guest J.D., Steeves J.D. Adaptive Trial Designs for Spinal Cord Injury Clinical Trials Directed to the Central Nervous System. Spinal Cord. 2020;58:1235–1248. doi: 10.1038/s41393-020-00547-8.

- 81. Wason J.M.S., Abraham J.E., Baird R.D., Gournaris I., Vallier A.-L., Brenton J.D., Earl H.M., Mander A.P. A Bayesian Adaptive Design for Biomarker Trials with Linked Treatments. Br. J. Cancer. 2015;113:699–705. doi: 10.1038/bjc.2015.278.

- 82. Korotcov A., Tkachenko V., Russo D.P., Ekins S. Comparison of Deep Learning with Multiple Machine Learning Methods and Metrics Using Diverse Drug Discovery Data Sets. Mol. Pharm. 2017;14:4462–4475. doi: 10.1021/acs.molpharmaceut.7b00578.

- 83. Bui Q., Kaufman K.J., Munsell E.G., Lenze E.J., Lee J.-M., Mohr D.C., Fong M.W., Metts C.L., Tomazin S.E., Pham V., et al. Smartphone Assessment Uncovers Real-Time Relationships between Depressed Mood and Daily Functional Behaviors after Stroke. J. Telemed. Telecare. 2022:1357633X221100061. doi: 10.1177/1357633X221100061.

- 84. Horn S.D., DeJong G., Ryser D.K., Veazie P.J., Teraoka J. Another Look at Observational Studies in Rehabilitation Research: Going beyond the Holy Grail of the Randomized Controlled Trial. Arch. Phys. Med. Rehabil. 2005;86:S8–S15. doi: 10.1016/j.apmr.2005.08.116.

- 85. Jette A.M. Opening the Black Box of Rehabilitation Interventions. Phys. Ther. 2020;100:883–884. doi: 10.1093/ptj/pzaa078.

- 86. Pham B., Jovanovic J., Bagheri E., Antony J., Ashoor H., Nguyen T.T., Rios P., Robson R., Thomas S.M., Watt J., et al. Text Mining to Support Abstract Screening for Knowledge Syntheses: A Semi-Automated Workflow. Syst. Rev. 2021;10:156. doi: 10.1186/s13643-021-01700-x.

- 87. Shi L., Lin L. The Trim-and-Fill Method for Publication Bias: Practical Guidelines and Recommendations Based on a Large Database of Meta-Analyses. Medicine. 2019;98:e15987. doi: 10.1097/MD.0000000000015987.

- 88. Du H., Liu F., Wang L. A Bayesian “Fill-in” Method for Correcting for Publication Bias in Meta-Analysis. Psychol. Methods. 2017;22:799–817. doi: 10.1037/met0000164.

- 89. Brown M.T., Bussell J., Dutta S., Davis K., Strong S., Mathew S. Medication Adherence: Truth and Consequences. Am. J. Med. Sci. 2016;351:387–399. doi: 10.1016/j.amjms.2016.01.010.

- 90. Bonnechère B., Van Vooren M., Jansen B., Van Sint Jan S., Rahmoun M., Fourtassi M. Patients’ Acceptance of the Use of Serious Games in Physical Rehabilitation in Morocco. Games Health J. 2017;6:290–294. doi: 10.1089/g4h.2017.0008.

- 91. Coleman C.I., Limone B., Sobieraj D.M., Lee S., Roberts M.S., Kaur R., Alam T. Dosing Frequency and Medication Adherence in Chronic Disease. J. Manag. Care Pharm. 2012;18:527–539. doi: 10.18553/jmcp.2012.18.7.527.

- 92. Palacio A., Garay D., Langer B., Taylor J., Wood B.A., Tamariz L. Motivational Interviewing Improves Medication Adherence: A Systematic Review and Meta-Analysis. J. Gen. Intern. Med. 2016;31:929–940. doi: 10.1007/s11606-016-3685-3.

- 93. Morawski K., Ghazinouri R., Krumme A., Lauffenburger J.C., Lu Z., Durfee E., Oley L., Lee J., Mohta N., Haff N., et al. Association of a Smartphone Application with Medication Adherence and Blood Pressure Control: The MedISAFE-BP Randomized Clinical Trial. JAMA Intern. Med. 2018;178:802–809. doi: 10.1001/jamainternmed.2018.0447.

- 94. Pickler R.H., Kearney M.H. Publishing Pragmatic Trials. Nurs. Outlook. 2018;66:464–469. doi: 10.1016/j.outlook.2018.04.002.

- 95. Baumfeld Andre E., Reynolds R., Caubel P., Azoulay L., Dreyer N.A. Trial Designs Using Real-World Data: The Changing Landscape of the Regulatory Approval Process. Pharmacoepidemiol. Drug Saf. 2020;29:1201–1212. doi: 10.1002/pds.4932.

- 96. Steinhubl S.R., Waalen J., Edwards A.M., Ariniello L.M., Mehta R.R., Ebner G.S., Carter C., Baca-Motes K., Felicione E., Sarich T., et al. Effect of a Home-Based Wearable Continuous ECG Monitoring Patch on Detection of Undiagnosed Atrial Fibrillation: The mSToPS Randomized Clinical Trial. JAMA. 2018;320:146–155. doi: 10.1001/jama.2018.8102.

- 97. Liu L., Stroulia E., Nikolaidis I., Miguel-Cruz A., Rios Rincon A. Smart Homes and Home Health Monitoring Technologies for Older Adults: A Systematic Review. Int. J. Med. Inf. 2016;91:44–59. doi: 10.1016/j.ijmedinf.2016.04.007.

- 98. Anderson A., Borfitz D., Getz K. Global Public Attitudes about Clinical Research and Patient Experiences with Clinical Trials. JAMA Netw. Open. 2018;1:e182969. doi: 10.1001/jamanetworkopen.2018.2969.

- 99. Hayes S.L., Riley P., Radley D.C., McCarthy D. Reducing Racial and Ethnic Disparities in Access to Care: Has the Affordable Care Act Made a Difference? Issue Brief Commonw. Fund. 2017;2017:1–14.

- 100. Van Stan J.H., Whyte J., Duffy J.R., Barkmeier-Kraemer J.M., Doyle P.B., Gherson S., Kelchner L., Muise J., Petty B., Roy N., et al. Rehabilitation Treatment Specification System: Methodology to Identify and Describe Unique Targets and Ingredients. Arch. Phys. Med. Rehabil. 2021;102:521–531. doi: 10.1016/j.apmr.2020.09.383.

- 101. Hart T., Dijkers M.P., Whyte J., Turkstra L.S., Zanca J.M., Packel A., Van Stan J.H., Ferraro M., Chen C. A Theory-Driven System for the Specification of Rehabilitation Treatments. Arch. Phys. Med. Rehabil. 2019;100:172–180. doi: 10.1016/j.apmr.2018.09.109.

- 102. Pierce J.E., O’Halloran R., Menahemi-Falkov M., Togher L., Rose M.L. Comparing Higher and Lower Weekly Treatment Intensity for Chronic Aphasia: A Systematic Review and Meta-Analysis. Neuropsychol. Rehabil. 2021;31:1289–1313. doi: 10.1080/09602011.2020.1768127.

- 103. Fava G.A. Evidence-Based Medicine Was Bound to Fail: A Report to Alvan Feinstein. J. Clin. Epidemiol. 2017;84:3–7. doi: 10.1016/j.jclinepi.2017.01.012.

- 104. Duffau H. Paradoxes of Evidence-Based Medicine in Lower-Grade Glioma: To Treat the Tumor or the Patient? Neurology. 2018;91:657–662. doi: 10.1212/WNL.0000000000006288.

- 105. Angeli J.M., Schwab S.M., Huijs L., Sheehan A., Harpster K. ICF-Inspired Goal-Setting in Developmental Rehabilitation: An Innovative Framework for Pediatric Therapists. Physiother. Theory Pract. 2019;37:1167–1176. doi: 10.1080/09593985.2019.1692392.

- 106. Nonnekes J., Nieuwboer A. Towards Personalized Rehabilitation for Gait Impairments in Parkinson’s Disease. J. Park. Dis. 2018;8:S101–S106. doi: 10.3233/JPD-181464.

- 107. Castellini G., Corbetta D., Cecchetto S., Gianola S. Twenty-Five Years after the Introduction of Evidence-Based Medicine: Knowledge, Use, Attitudes and Barriers among Physiotherapists in Italy—A Cross-Sectional Study. BMJ Open. 2020;10:e037133. doi: 10.1136/bmjopen-2020-037133.

- 108. Benfield A., Krueger R.B. Making Decision-Making Visible-Teaching the Process of Evaluating Interventions. Int. J. Environ. Res. Public. Health. 2021;18:3635. doi: 10.3390/ijerph18073635.

Data Availability Statement

Data sharing not applicable.