Abstract

Technological advancements in health sciences have led to enormous developments in artificial intelligence (AI) models designed for application in health sectors. This article aimed to report on the application and performance of AI models that have been designed for application in endodontics. Renowned online databases, primarily PubMed, Scopus, Web of Science, Embase, and Cochrane and secondarily Google Scholar and the Saudi Digital Library, were accessed for articles relevant to the research question that were published from 1 January 2000 to 30 November 2022. In the last 5 years, there has been a significant increase in the number of articles reporting on AI models applied for endodontics. AI models have been developed for determining working length, vertical root fractures, root canal failures, root morphology, and thrust force and torque in canal preparation; detecting pulpal diseases; detecting and diagnosing periapical lesions; predicting postoperative pain, curative effect after treatment, and case difficulty; and segmenting pulp cavities. Most of the included studies (n = 21) were developed using convolutional neural networks. Among the included studies, the datasets used were mostly cone-beam computed tomography images, followed by periapical radiographs and panoramic radiographs. Thirty-seven original research articles that fulfilled the eligibility criteria were critically assessed in accordance with QUADAS-2 guidelines, which revealed a low risk of bias in the patient selection domain in most of the studies (risk of bias: 90%; applicability: 70%). The certainty of the evidence was assessed using the GRADE approach. These models can be used as supplementary tools in clinical practice in order to expedite the clinical decision-making process and enhance the treatment modality and clinical operation.

Keywords: machine learning, deep learning, artificial neural network, convolutional neural network, root canal treatment, apical lesions, diagnosis, detection, prediction

1. Introduction

The specialty of endodontics deals with the diseases and conditions that affect the root canal complex and are developed due to untreated or incompletely treated dental carious lesions [1,2]. Diseases related to the pulp and periapical tissues are most commonly managed by nonsurgical root canal treatment (RCT). The basis of endodontic diagnosis and treatment planning relies on an adequate and accurate understanding of the diseases related to the pulp and periapical tissues. Inaccurate diagnosis may result in unanticipated pain, which may have a negative impact on the therapeutic plan and eventually result in unpleasant experiences among patients [3]. Preoperative assessment of the tooth, before initiating RCT, is a very crucial step in determining the success of the endodontic treatment.

Intraoral periapical radiographs, orthopantomograms, and cone-beam computed tomography (CBCT) imaging are the most frequently adopted radiographic techniques for diagnosing diseases related to pulp and periapical areas [2]. Periapical and panoramic radiographs generate two-dimensional (2D) images of the maxillofacial structures, with lower radiation exposure than CBCT imaging [4,5]. CBCT imaging is widely used among dentists because it provides three-dimensional images that allow more precise radiological analysis [6]. The accuracy in detecting periapical lesions is significantly higher with CBCT imaging than with periapical radiography [7,8]. However, considering its high cost and radiation dose, the use of CBCT imaging is restricted to special clinical circumstances. In such cases, the benefits obtained from the imaging should outweigh any potential risks resulting from the radiographic exposure associated with this technology.

Rapid technological advancements have resulted in enormous development in diagnostic models for medical imaging and diagnosis [9]. Advancements in computer-assisted diagnosis have resulted in the development of AI models designed for application in health sectors. AI technology, which is mainly based on mimicking the functioning of the human brain, is a breakthrough in the technological world. Machine learning algorithms were the first AI algorithms developed; their performance depends on the characteristics and number of datasets used for training. These algorithms learn the intrinsic statistical patterns and structures in the data and are later used to make predictions on unseen data [10]. Deep learning (DL) models such as convolutional neural networks (CNNs) are developed to mimic the functioning of the human brain; they process their input by passing it through a series of convolutional filters and are trained on a large number of datasets [11]. These advanced neural networks are applied for processing large and complex images, where they have demonstrated superior achievements in recognizing objects, faces, and activity [12,13]. AI models have been widely applied in medical imaging for systemic diseases such as cardiovascular diseases and respiratory diseases and have displayed exceptional performances that are similar to those of experienced specialists [14,15,16]. Additionally, in dentistry, AI models are designed for diagnosing oral diseases such as dental caries, periodontal diseases, and oral cancer as well as for treatment planning for orthognathic surgeries and predicting treatment outcomes [17,18,19]. These models have demonstrated excellent performances, a major advantage being improved diagnostic efficiency with a reduced image interpretation time [20].

Nagendrababu et al. [21] reported on AI models designed for application in endodontics for performing tasks such as studying root canal anatomy, detecting and diagnosing periapical lesions and root fractures, and determining the working length for planning root canal treatment. The authors concluded that these AI models can aid clinicians with precise diagnosis and treatment planning, ultimately resulting in better treatment outcomes. Umer et al. [22] also reported on AI models designed for application in endodontic diagnosis and treatment planning. The authors concluded that these models demonstrated an accuracy greater than 90% in performing the tasks. However, the authors also stated that the reporting of AI-related research is irregular. Hence, this systematic review aimed to report on the application and performance of AI models designed for application in endodontics.

2. Materials and Methods

Ethical clearance was obtained from King Abdullah International Medical Research Center (Institutional Review Board Approval No. 2439-22, 6 November 2022) before the literature search process was initiated for this systematic review.

The updated Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) guidelines were considered for preparing this systematic review [23]. A search of the literature was conducted systematically in various renowned electronic databases, primarily Scopus, Web of Science, Embase, PubMed, and Cochrane and secondarily the Saudi Digital Library and Google Scholar, for studies relevant to the research topic that were published from 1 January 2000 to 30 November 2022.

2.1. Search Strategy

The article search was performed based on the research question, which was developed in accordance with the PICO elements (P: problem/patient/population, I: intervention/indicator, C: comparison, and O: outcome).

Research Question: What are the developments, applications, and performances of AI models in endodontics?

Population: Patients who underwent investigation for endodontic diagnosis (dental radiographs such as intraoral periapical, bitewing, occlusal, and panoramic radiographs; cephalograms; cone-beam computed tomography (CBCT); digital photographs; 3D CBCT images).

Intervention: Artificial intelligence applications that were designed for the detection, diagnosis, and prediction of endodontic lesions.

Comparison: Various reference standards, testing models, expert/specialist interpretations

Outcome: Predictable or measurable outcomes such as accuracy, specificity, sensitivity, receiver operating characteristic curve (ROC), area under the curve (AUC), area under the receiver operating characteristic (AUROC), intersection over union (IOU), intraclass correlation coefficient (ICC), statistical significance, F1 scores, volumetric Dice similarity coefficient (vDSC), surface Dice similarity coefficient (sDSC), positive predictive value (PPV), negative predictive value (NPV), and Dice coefficient.
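As an illustration of how several of the outcome measures listed above relate to one another, the following minimal Python sketch derives sensitivity, specificity, PPV, NPV, accuracy, and the F1 score from a binary confusion matrix, and the Dice coefficient and IOU from two segmentations. The class name, function name, and all counts are hypothetical and are not taken from any of the included studies; the individual studies may have computed their metrics differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from comparing a model's binary calls with a reference standard."""
    tp: int  # condition present, model says present
    fp: int  # condition absent, model says present
    fn: int  # condition present, model says absent
    tn: int  # condition absent, model says absent

    def sensitivity(self) -> float:  # also called recall
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:  # positive predictive value, also called precision
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:  # negative predictive value
        return self.tn / (self.tn + self.fn)

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def f1(self) -> float:  # harmonic mean of precision and recall
        p, r = self.ppv(), self.sensitivity()
        return 2 * p * r / (p + r)


def dice_and_iou(pred: set, truth: set) -> tuple[float, float]:
    """Overlap of two segmentations given as sets of pixel or voxel indices."""
    inter = len(pred & truth)
    dice = 2 * inter / (len(pred) + len(truth))
    iou = inter / len(pred | truth)
    return dice, iou


# Hypothetical counts, for illustration only.
cm = ConfusionMatrix(tp=45, fp=5, fn=5, tn=45)
print(f"sensitivity={cm.sensitivity():.2f} specificity={cm.specificity():.2f}")

# Hypothetical segmentations sharing two of four distinct pixels.
dice, iou = dice_and_iou({1, 2, 3}, {2, 3, 4})
print(f"Dice={dice:.2f} IOU={iou:.2f}")
```

The sketch does not guard against empty denominators (for example, a test set with no positive cases), which a real evaluation would need to handle.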

Medical Subject Headings (MeSH) included artificial intelligence, automatic learning, supervised learning, unsupervised learning, deep learning, machine learning, neural networks, convolutional neural network, computer-assisted diagnosis, endodontic dentistry, root canal treatment, apical lesions, periapical lesions, periapical pathology, periapical diseases, deep caries detection, tooth segmentation, pulp cavity segmentation, root segmentation, root morphology, canal shape, cracked tooth, tooth fractures, root fractures, accuracy, prediction, and diagnosis. The Boolean operators AND and OR were also used in the advanced stage of the search for combining these MeSH terms, with a predetermined publication time range and language as filters (Table 1).

Table 1.

Structured search strategy carried out in electronic databases.

| Search/Filters | Topic and Terms |
|---|---|
| “English” Language | “artificial intelligence” OR “automatic learning” OR “supervised learning” OR “unsupervised learning” OR “deep learning” OR “machine learning” OR “neural networks” OR “convolutional neural network” OR “computer assisted diagnosis” “endodontic dentistry” OR “root canal treatment” OR “apical lesions” OR “periapical lesions” OR “periapical pathology” OR “periapical diseases” OR “deep caries detection” OR “tooth segmentation” OR “pulp cavity segmentation” OR “root segmentation” OR “root morphology” OR “canal shape” OR “cracked tooth” OR “tooth fractures” OR “root fractures” OR “accuracy” OR “prediction” OR “diagnosis” OR “expert systems” OR “fuzzy networks” OR “AI networks” OR “AI models” |
| “English” Language | “artificial intelligence” AND “automatic learning” AND “supervised learning” AND “unsupervised learning” AND “deep learning” AND “machine learning” AND “neural networks” AND “convolutional neural network” AND “computer assisted diagnosis” “endodontic dentistry” AND “root canal treatment” AND “apical lesions” AND “periapical lesions” AND “periapical pathology” AND “periapical diseases” AND “deep caries detection” AND “tooth segmentation” AND “pulp cavity segmentation” AND “root segmentation” AND “root morphology” AND “canal shape” AND “cracked tooth” AND “tooth fractures” AND “root fractures” AND “accuracy” AND “prediction” AND “diagnosis” AND “expert systems” AND “fuzzy networks” AND “AI networks” AND “AI models” |
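A search string of this kind can also be assembled programmatically, which makes it easier to reuse the same term lists across databases. The sketch below is only illustrative: the helper `or_group` is hypothetical, the term lists are abbreviated, and intersecting an AI-related group with an endodontics-related group using AND is an assumption about a common way of structuring such queries, not a statement of the exact strings submitted for this review.

```python
def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


# Abbreviated, illustrative term lists; the full lists appear in Table 1.
ai_terms = ["artificial intelligence", "deep learning", "machine learning"]
endo_terms = ["root canal treatment", "periapical lesions", "root fractures"]

# ASSUMPTION: the two concept groups are intersected with AND.
query = " AND ".join([or_group(ai_terms), or_group(endo_terms)])
print(query)
```

Database-specific field tags, date limits, and language filters would still need to be added in each database's own syntax.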

Simultaneously, a manual search for articles was also performed by cross-referencing and screening the bibliography list of the selected articles.

2.2. Study Selection

The article selection process was carried out in two phases. In the first phase, the articles that were related to the research question were selected based on their title and abstract. In this phase, two experienced authors (S.B.K. and A.O.J.) simultaneously carried out the search process and 264 articles were selected. After screening, 124 articles were eliminated due to duplication, and the remaining 140 articles were assessed against the eligibility criteria.

2.3. Eligibility Criteria

The inclusion criteria were the following: (a) original research articles with a clear statement on AI applications designed for endodontics; (b) articles published between 1 January 2000 and 30 November 2022 in a scholarly peer-reviewed journal; (c) articles with a clear mention of the type of study modality used for developing, training, validating, and testing an AI model; (d) articles with a clear mention of quantifiable outcome measures for assessing the performance of the AI model; (e) articles on AI models applied for determining working length, vertical root fractures, or root morphology; for detecting and diagnosing pulpal diseases or periapical lesions; or for predicting prognosis, postoperative pain, or case difficulty. The study design was not limited and hence did not affect the articles’ inclusion.

The determined exclusion criteria were as follows: (a) non-full-text articles with only abstracts; (b) non-peer-reviewed publications (such as conference papers and unpublished thesis projects); (c) review articles, letters to editors, and commentaries.

2.4. Data Extraction

After the preliminary evaluation of the selected papers based on the title and abstract and the elimination of the duplicates, the authors further analyzed the full text of these articles and assessed their eligibility, following which the total number of articles included in this systematic review decreased to 38. Following that, in the second phase, the identifiers of the journal and author details were removed, and the articles were distributed for critical evaluation by two independent authors who did not contribute to the initial search (M.A. and K.A.). The data from these included articles were further extracted and entered into a Microsoft Excel sheet. This data comprised details of the authors; year of publication; objective of the study; type of algorithm used for developing the AI model; data used for training, validating, and testing the model; results; conclusions; and suggestions.

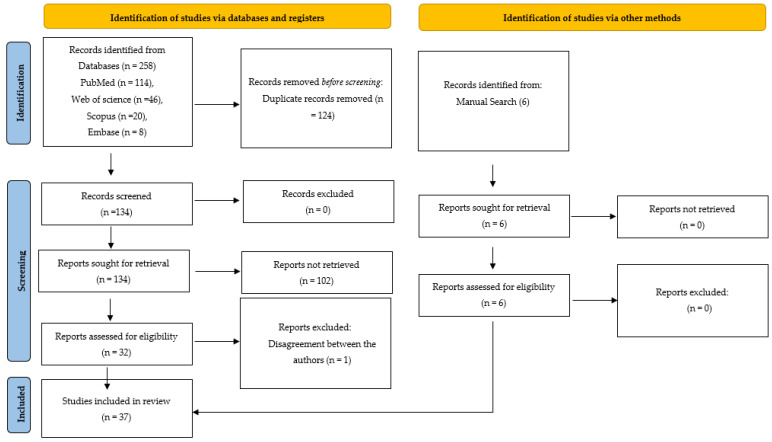

The quality assessment of the articles was conducted utilizing the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) guidelines [24]. This tool was developed to assess the quality of studies that have reported on diagnostic tools. The assessment is based on four domains (patient selection, index test, reference standard, and flow and timing), each of which is evaluated for risk of bias and applicability. The inter-rater reliability between the two authors was assessed on a sample of articles, where Cohen’s kappa showed 86% agreement. The authors disagreed regarding the inclusion of one article since its quantifiable outcome measures of performance were not clearly mentioned. This was resolved by obtaining a third opinion (A.F.), after which the article was excluded. Thirty-seven articles finally underwent qualitative synthesis (Figure 1).

Figure 1.

PRISMA 2020 flow diagram for new systematic reviews which included searches of databases, registers, and other sources.
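For readers unfamiliar with the inter-rater statistic mentioned in the quality assessment, Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance. The following minimal Python sketch shows the calculation; the function name and the ratings are hypothetical and do not reproduce the reviewers' actual assessments.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical risk-of-bias judgements for ten articles, for illustration only.
rater_1 = ["low", "low", "high", "low", "high", "low", "low", "high", "low", "low"]
rater_2 = ["low", "low", "high", "low", "low", "low", "low", "high", "low", "high"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the raters agree on 8 of 10 items (80% raw agreement), yet kappa is lower because part of that agreement would be expected by chance alone.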

3. Results

The qualitative data synthesis was performed on the 37 articles [25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61] that fulfilled the inclusion criteria. The research trend shows there has been a gradual increase in the number of research publications that have reported on the application of AI in endodontics.

3.1. Qualitative Synthesis of the Included Studies

In endodontics, AI models have been applied for determining working length (n = 3) [25,26,38], determining vertical root fractures (VRFs) (n = 6) [27,29,30,32,41,60], detecting pulpal diseases (n = 2) [28,42], detecting and diagnosing periapical lesions (n = 13) [31,35,36,37,40,43,44,49,50,52,54,55,61], determining root morphology (n = 4) [33,39,45,56], predicting postoperative pain (n = 1) [48], determining root canal failures (n = 4) [51,57,58], predicting case difficulty (n = 1) [34], determining thrust force and torque in canal preparation (n = 1) [46], segmenting pulp cavities (n = 1) [47], and predicting curative effect after treatment (n = 1) [53,59].

The data from these included articles were extracted. However, due to the heterogeneity in the data extracted from these articles, performing a meta-analysis was not possible. The heterogeneity was mainly with respect to the different types of data samples applied for assessing the performance of AI models. Hence, in this systematic review, only the descriptive data of the included studies are presented (Table 2).

Table 2.

Details of the studies that have reported on the application of AI-based models in endodontics.

| Serial No. | Authors | Year of Publication | Study Design | Algorithm Architecture | Objective of the Study | No. of Patients/Images/Photographs for Testing | Study Factor | Modality | Comparison If Any | Evaluation Accuracy/Average Accuracy/Statistical Significance | Results: (+) Effective, (−) Non-Effective, (N) Neutral |

Outcomes | Authors’ Suggestions/Conclusions |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Saghiri et al. [25] | 2011 | Comparative study | ANNs | AI-based model for locating the minor apical foramen | 50 samples | Apical foramen | Intraoral radiographs | Two experienced endodontists | For 93% of the samples, the model determined the location of the apical foramen correctly | (+) Effective | ANN-based model demonstrated good accuracy in detecting the apical foramen | The AI model can be useful for secondary opinion in order to achieve better clinical decision-making |

| 2 | Saghiri et al. [26] | 2012 | Comparative study | ANNs | AI-based model for determining the working length | 50 samples | Working length | Intraoral radiographs | Dentist | AI model demonstrated 96% accuracy in comparison with the experienced endodontists whose accuracy was 76% | (+) Effective | AI model demonstrated more accuracy in determining the working length in comparison with experienced endodontists | This model was efficient in determining the working length |

| 3 | Kositbowornchai et al. [27] | 2013 | Comparative study | ANNs | AI-based model for determining vertical root fracture (VRFs) | 200 samples (80 for training, 120 for testing) |

Vertical root fracture | Digital radiographs | Between groups | Sensitivity (98%), specificity (90.5%), and accuracy (95.7%) | (+) Effective | This AI model displayed sufficient sensitivity, specificity, and accuracy | This model make can be useful for making correct interpretations of root fractures |

| 4 | Tumbelaka et al. [28] | 2014 | Observational study | PNNs | AI-based model for identifying pulpitis | 20 samples | Pulpitis | Periapical radiographs | None | Mean square error around 0.0003 | (+) Effective | This model precisely diagnosed reversible and irreversible pulpitis | In order to obtain a better diagnosis, radiographs have to be digitalized |

| 5 | Johari et al. [29] | 2017 | Comparative study | Probabilistic neural networks (PNNs) | AI-based model for diagnosing VRFs in intact and endodontically treated teeth | 240 samples | Vertical root fracture | CBCT images | Other state-of-the-art approaches | Accuracy of 96.6%, sensitivity of 93.3%, and specificity of 100% | (+) Effective | This model is efficient in diagnosing VRFs using CBCT images | Additional training of AI-based models is required before clinical use |

| 6 | Shah et al. [30] | 2018 | Comparative study | CNNs | AI model for automatically detecting cracks in teeth | 6 samples | Cracked teeth | CBCT images | Frangi’s vessel enhancement algorithm | Mean ROC was 0.97 | (+) Effective | This model was efficient in detecting cracked teeth | The model can detect cracked teeth in earlier stages and prevent pain and suffering associated with them |

| 7 | Ekert et al. [31] | 2019 | Comparative study | CNNs | AI model for detecting apical lesions | 85 samples | Apical lesions | Panoramic radiographs | 6 independent examiners | AUC was 0.85 (0.04). Sensitivity was 0.65 (0.12) and specificity was 0.87 (0.04) | (+) Effective | This model showed a satisfying ability to detect apical lesions | Sensitivity of the model needs to be improved before application in clinics |

| 8 | Fukuda et al. [32] | 2019 | Comparative study | CNNs | AI model for detecting vertical root fractures (VRFs) |

300 samples (240 for training and 60 for testing) | Vertical root fracture | Panoramic radiographs | 2 radiologists and 1 endodontist | Recall was 0.75, precision was 0.93, and F measure was 0.83 | (+) Effective | This model showed promising results in detecting VRFs | This model has to be trained and applied on datasets from other hospitals |

| 9 | Hiraiwa et al. [33] | 2019 | Comparative study | ANNs | AI model for assessing the root morphology of the mandibular first molar | 760 samples | Root morphology | Panoramic and CBCT images | Radiologist | Accuracy of 86.9% | (+) Effective | The model displayed high accuracy in diagnosing a single or extra root in the distal roots of mandibular first molars | This model displayed a high level of diagnostic ability |

| 10 | Mallishery et al. [34] | 2019 | Comparative study | ML | AI-based ML model for predicting the difficulty level of the case | 500 samples | Case difficulty | Datasets | 2 endodontists | Sensitivity of 94.96% | (+) Effective | This model displayed an excellent prediction of case difficulty | This model displayed excellent prediction ability which can increase the speed of decision-making and referrals |

| 11 | Setzer et al. [35] | 2020 | Comparative study | CNNs | A deep learning model for automated segmentation of CBCT images and detecting periapical lesions | 20 CBCT images (16 CBCT images for training, 4 CBCT images for validation) |

Apical lesions | CBCT images | 1 radiologist, 1 endodontist, and 1 senior graduate | Accuracy of 0.93 and specificity of 0.88 | (+) Effective | With a limited CBCT training, this model displayed excellent results in detecting the lesion | This model can aid clinicians with automated lesion detection |

| 12 | Orhan et al. [36] | 2020 | Comparative study | ANNs | AI model for detecting periapical pathosis | 153 samples | Periapical lesions | CBCT images | 1 maxillofacial radiologist | Reliability of 92.8% in correctly detecting periapical lesions | (+) Effective | There was no difference in the accuracy of humans and AI model in detecting apical lesions | This model will be useful for detecting periapical pathosis in clinical scenarios |

| 13 | Endres et al. [37] | 2020 | Comparative study | CNNs | Deep learning model for detecting periapical disease |

197 samples (95 images for training and 102 images for testing) | Periapical disease | Panoramic radiographs | 24 oral and maxillofacial (OMF) surgeons |

Average precision of 0.60 and an F1 score of 0.58 | (+) Effective | This deep learning algorithm achieved a better performance than 14 of 24 OMF surgeons | The deep learning model has potential to assist OMF surgeons in detecting periapical lucencies |

| 14 | Qiao et al. [38] | 2021 | Comparative study | CNNs | Deep learning models for root canal length measurement |

21 samples | Root canal length | Tooth | Dual-frequency impedance ratio method | Accuracy of 95% | (+) Effective | This model demonstrated better accuracy in comparison with other models | The performance of this model can be enhanced by increasing the number of samples |

| 15 | Sherwood et al. [39] | 2021 | Comparative study | CNNs | Deep learning model for classifying C-shaped canal anatomy in mandibular second molars |

135 samples (100 images for training and 35 images for testing) | Canal shapes | CBCT images | U-Net, residual U-Net, and Xception U-Net architectures | The mean Dice coefficients were 0.768 ± 0.0349 for Xception U-Net, 0.736 ± 0.0297 for residual U-Net, and 0.660 ± 0.0354 for U-Net on the test dataset | (+) Effective | Both Xception U-Net and residual U-Net performed significantly better than U-Net | Deep learning models can aid clinicians in detecting and classifying C-shaped canal anatomy |

| 16 | Li et al. [40] | 2021 | Comparative study | CNNs | Deep learning model for detecting apical lesions | 460 samples (322 images for training and 138 images for testing) | Apical lesions | Periapical radiographs | 3 experienced dentists | Diagnostic accuracy of the model was 92.5% | (+) Effective | Deep neural models demonstrated excellent accuracy in detecting the periapical lesions | This automated model allows dentists to make the diagnosis process shorter and more efficient |

| 17 | Vicory et al. [41] | 2021 | Comparative study | ML | AI model for detecting tooth microfractures | 36 samples | Tooth microfractures | High-resolution (hr) CBCT and micro-CT scans |

Direction–projection–permutation | Significant separation result | (+) Effective | The data suggest that this approach can be applied to hr-CBCT (clinically) when the images are not over-processed | Early detection of microfractures can help in planning appropriate treatment |

| 18 | Zheng et al. [42] | 2021 | Comparative study | CNNs | Deep learning model for detecting deep caries and pulpitis | 844 samples (717 images for training and 127 images for testing) | Deep caries and pulpitis | Periapical radiographs | VGG19, Inception V3, ResNet18, 5 experienced dentists | Accuracy of 0.86, precision of 0.85, sensitivity of 0.86, specificity of 0.86, and AUC of 0.94 | (+) Effective | ResNet18 demonstrated the best performance also in comparison with experienced dentists | The promising potential of this model can be applied for clinical diagnosis |

| 19 | Moidu et al. [42] | 2021 | Comparative study | CNNs | Deep learning model for categorization of endodontic lesions | 1950 samples |

Periapical lesions | Periapical radiographs | 3 endodontists | Sensitivity/recall of 92.1%, 76% specificity, 86.4% positive predictive value, and 86.1% negative predictive value | (+) Effective | The model exhibited excellent sensitivity, positive predictive value, and negative predictive value | This AI model can be beneficial for clinicians and researchers |

| 20 | Pauwels et al. [44] | 2021 | Comparative study | CNNs | Deep learning model for detecting periapical lesions | 10 samples |

Periapical lesions | Periapical radiographs | 3 oral radiologists | Mean sensitivity of 0.87, specificity of 0.98, and ROC-AUC of 0.93 | (+) Effective | This CNN model displayed perfect accuracy for the validation data | This model showed promising results in detecting periapical lesions |

| 21 | Jeon et al. [45] | 2021 | Comparative study | CNNs | Deep learning model for predicting C-shaped canals in mandibular second molars | 2040 samples (1632 images for training and 408 images for testing) |

C-shaped canals | Panoramic radiographs | 1 experienced radiologist and 1 experienced endodontist | Accuracy of 95.1, sensitivity of 92.7, specificity of 97.0, and precision of 95.9% | (+) Effective | This CNN model displayed significant accuracy in predicting C-shaped canals |

This model can assist clinicians with dental image interpretation |

| 22 | Guo et al. [46] | 2021 | Comparative study | ANNs | Radial basis function neural network (RBFN)-based AI model for predicting thrust force and torque for root canal preparation | 2 samples |

Thrust force and torque | CT scans | Comparative ANN model | Prediction error less than 14% | (+) Effective | This model displayed an excellent prediction of thrust force and torque in canal preparation | Can be useful for instructing dentists during root canal preparations and also for improving the geometrical design of nickel titanium files |

| 23 | Lin et al. [47] | 2021 | Comparative study | ANNs | AI model for automatic and accurate segmentation of the pulp cavity and tooth | 30 samples (25 sets for training and 5 sets for testing) | Segmentation of the pulp cavity | CBCT images | 1 experienced endodontist | Dice similarity coefficient of 96.20% ± 0.58%, precision rate of 97.31% ± 0.38%, recall rate of 95.11% ± 0.97%, average symmetric surface distance of 0.09 ± 0.01 mm, and Hausdorff distance of 1.54 ± 0.51 mm in the tooth and Dice similarity coefficient of 86.75% ± 2.42%, precision rate of 84.45% ± 7.77%, recall rate of 89.94% ± 4.56%, average symmetric surface distance of 0.08 ± 0.02 mm, and Hausdorff distance 1.99 ± 0.67 mm in the pulp cavity |

(+) Effective | The analysis performed by the model was better than that of the experienced endodontist | This model demonstrated excellent accuracy and hence can be applied in research and clinical tasks in order to achieve better endodontic diagnosis and therapy |

| 24 | Gao et al. [48] | 2021 | Observational study | ANNs | Backpropagation (BP) AI model for predicting postoperative pain following root canal treatment | 300 samples (210 for training, 45 for validating, and 45 for testing) | Postoperative pain | Datasets | None | Accuracy of prediction was 95.60% | (+) Effective | This model displayed an excellent prediction of postoperative pain following RCT | The results displayed by this model have shown clinical feasibility and clinical application value |

| 25 | Ngoc et al. [49] | 2021 | Comparative study | CNNs | AI-based model for diagnosis of periapical lesions | 130 samples | Periapical lesions | Bitewing images | Endodontists | Sensitivity of 89.5, specificity of 97.9, and accuracy of 95.6% | (+) Effective | This model displayed excellent performance and can be used as a support tool in the diagnosis of periapical lesions | This model can be used in teledentistry for the diagnosis of periapical diseases where there is a lack of dentists |

| 26 | Kirnbauer et al. [50] | 2022 | Observational study | CNNs | AI model for the automated detection of periapical lesions | 144 samples | Periapical lesions | CBCT images | None | Sensitivity of 97.1% and specificity of 88.0% for lesion detection | (+) Effective | This AI model displayed excellent results compared with related literature | This model can be applied for testing under clinical conditions |

| 27 | Herbst et al. [51] | 2022 | Comparative study | ML | AI-based ML model for predicting failure of root canal treatment | 591 samples | Root canal failure | Datasets | Random forest, gradient boosting machine, extreme gradient boosting, predictive modeling |

logR 0.63, gradient boosting machine (GBM) 0.59, random forest (RF) 0.59, extreme gradient boosting (XGB) 0.60 | (N) Neutral | This study found tooth-level factors to be associated with failure | With this AI model, predicting failure was only limitedly possible |

| 28 | Bayrakdar et al. [52] | 2022 | Observational study | CNNs | AI-based deep convolutional neural network (D-CNN) model for the segmentation of apical lesions | 470 samples | Apical lesions | Panoramic radiographs | None | Sensitivity of 0.92, precision of 0.84, and F1-score of 0.88 | (+) Effective | This AI model was efficient in evaluating periapical pathology |

This AI model may facilitate clinicians in the assessment of periapical pathology |

| 29 | Zhao et al. [53] | 2022 | Comparative study | CNNs | AI model for evaluating the curative effect after treatment of dental pulp disease (DPD) | 120 samples | Dental pulp disease | Radiographs and CBCT images | Control group with healthy teeth | Segmentation accuracy was 85.5%; diagnostic rate of X-ray was 43.7% and diagnostic rate of CBCT was 100% | (+) Effective | CBCT evaluation using an AI model can be an effective method for evaluating the curative effect of dental pulp disease treatment during and after the surgery | This model has a higher application prospect in the diagnosis and treatment of DPD |

| 30 | Hamdan et al. [54] | 2022 | Comparative study | CNNs | AI model for detecting apical radiolucencies | 68 samples | Apical radiolucencies | Periapical radiographs | Eight experienced specialists | Alternative free-response receiver operating characteristic (AFROC) of 0.892, specificity of 0.931, and sensitivity of 0.733 | (+) Effective | This model has the potential to improve the diagnostic efficacy of clinicians | This AI model enhances clinicians’ abilities to detect apical radiolucencies |

| 31 | Calazans et al. [55] | 2022 | Comparative study | CNNs | AI models for classifying periapical lesions | 1000 samples (training 60%, validation 20%, testing 20%) | Periapical lesions | CBCT scans | Experienced oral and maxillofacial radiologist | Accuracy of 70%, specificity of 92.39% | (+) Effective | DenseNet-121 network was superior to VGG-16 and human experts | The proposed models displayed a satisfactory classification performance |

| 32 | Yang et al. [56] | 2022 | Comparative study | CNNs | AI-based deep learning model for classifying C-shaped canals in mandibular second molars | 1000 samples | C-shaped canals | Periapical and panoramic radiographs | Specialist and general clinician | AUC of 0.98 on periapical and AUC of 0.95 on panoramic | (+) Effective | This model displayed high accuracy in predicting the C-shaped canal in both periapical and panoramic images and was similar to the performance of a specialist and better than a general dentist | This model was effective in diagnosing C-shaped canals and therefore can be a valuable aid for clinicians and also in dental education |

| 33 | Xu et al. [57] | 2022 | Comparative study | ML | AI-based models for identifying the history of root canal therapy | 920 samples (736 for training and 184 for testing) | Root canal therapy | Datasets | VGG16, VGG19, and ResNet50 | Accuracies were above 95% and AUC was 0.99 | (+) Effective | This model displayed excellent accuracy and can aid in clinical auxiliary diagnosis based on image display | This AI-assisted diagnosis of oral medical images can be effectively promoted for clinical practice |

| 34 | Qu et al. [58] | 2022 | Comparative study | ML | AI-based machine learning models for predicting prognosis of endodontic microsurgery | 234 samples (80% for the training set and 20% for the test set) | Predicting prognosis | Datasets | Gradient boosting machine (GBM) and random forest (RF) models | Accuracy of 0.80, sensitivity of 0.92, specificity of 0.71, positive predictive value (PPV) of 0.71, negative predictive value (NPV) of 0.92, F1 score of 0.80, and area under the curve (AUC) of 0.88 | (+) Effective | The GBM model outperformed the RF model slightly on the dataset | The models can improve efficiency and assist clinicians in decision-making |

| 35 | Li et al. [59] | 2022 | Comparative study | ANNs | AI-based anatomy-guided multibranch transformer (AGMB-Transformer) network for assessing the result of root canal therapy | 245 samples | Root canal therapy evaluation | Datasets | 2 experienced specialists and other models (ResNet50, ResNeXt50, GCNet50, BoTNet50) | Accuracy ranged from 57.96% to 90.20%, AUC of 95.63%, sensitivity of 91.39%, specificity of 95.09%, F1 score of 90.48% | (+) Effective | This model achieved a highly accurate evaluation | The performance of this model has important clinical value in reducing the workload of endodontists |

| 36 | Hu et al. [60] | 2022 | Comparative study | CNNs | AI-based deep learning models for diagnosing vertical root fracture | 276 samples | Vertical root fracture | CBCT images | 2 experienced radiologists, ResNet50, VGG19, and DenseNet169 | The accuracy, sensitivity, specificity, and AUC were 97.8%, 97.0%, 98.5%, and 0.99 | (+) Effective | ResNet50 presented the highest accuracy and sensitivity for diagnosing VRF teeth | ResNet50 presented the highest diagnostic efficiency in comparison with other models. Hence, this model can be used as an auxiliary diagnostic technique to screen for VRF teeth |

| 37 | Vasdev et al. [61] | 2022 | Comparative study | CNNs | AI-based deep learning model for detecting healthy and non-healthy periapical images | 16,000 samples | Periapical lesions | Periapical radiographs | ResNet-18, ResNet-34, and AlexNet | Accuracy of 0.852, precision and F1 score of 0.850 | (+) Effective | This AlexNet model outperformed the other models | This model generalizes effectively to previously unseen data and can aid clinicians in diagnosing a variety of dental diseases |

Footnotes: ML = machine learning, ANNs = artificial neural networks, CNNs = convolutional neural networks, DCNNs = deep convolutional neural networks, c-index = concordance index, CT = computed tomography, CBCT = cone-beam computed tomography, OCT = optical coherence tomography.

3.2. Study Characteristics

The characteristics extracted from each included study comprised the authors; year of publication; objective of the study; type of algorithm used for developing the AI model; data used for training, validating, and testing the model; results; conclusions; and suggestions.

3.3. Outcome Measures

The outcome was measured in terms of task performance efficiency. The outcome measures were reported in terms of accuracy, sensitivity, specificity, receiver operating characteristic curve (ROC), area under the curve (AUC), area under the receiver operating characteristic curve (AUROC), intraclass correlation coefficient (ICC), intersection over union (IOU), precision–recall curve (PRC), statistical significance, F1 scores, volumetric Dice similarity coefficient (vDSC), surface Dice similarity coefficient (sDSC), positive predictive value (PPV), negative predictive value (NPV), mean decreased Gini (MDG) coefficient, mean decreased accuracy (MDA) coefficient, and Dice coefficient.
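Most of the classification measures listed above derive from the same four confusion-matrix counts, and the overlap measures (Dice coefficient, IOU) derive from the agreement between a predicted and a reference segmentation. The following is a minimal sketch of those definitions, not code from any included study; the names `ConfusionCounts`, `classification_metrics`, and `dice_and_iou` and all counts are hypothetical, and the sketch assumes that no denominator is zero.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts obtained by comparing model output with the reference standard."""
    tp: int  # diseased and flagged by the model
    fp: int  # healthy but flagged by the model
    tn: int  # healthy and not flagged
    fn: int  # diseased but missed by the model


def classification_metrics(c: ConfusionCounts) -> dict:
    """Return the standard diagnostic accuracy measures for one 2x2 table."""
    sensitivity = c.tp / (c.tp + c.fn)  # recall: share of diseased cases found
    specificity = c.tn / (c.tn + c.fp)  # share of healthy cases cleared
    ppv = c.tp / (c.tp + c.fp)          # precision
    npv = c.tn / (c.tn + c.fn)
    accuracy = (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)
    f1 = 2 * ppv * sensitivity / (ppv + sensitivity)  # harmonic mean
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "accuracy": accuracy,
        "f1": f1,
    }


def dice_and_iou(pred: set, truth: set) -> tuple:
    """Overlap measures for two segmentations given as sets of pixel indices."""
    overlap = len(pred & truth)
    dice = 2 * overlap / (len(pred) + len(truth))
    iou = overlap / len(pred | truth)
    return dice, iou


# Hypothetical test set of 100 teeth, 50 of them with a lesion.
metrics = classification_metrics(ConfusionCounts(tp=40, fp=5, tn=45, fn=10))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")

# Hypothetical masks that share 2 of their 4 pixels each.
print(dice_and_iou({1, 2, 3, 4}, {3, 4, 5, 6}))
```

As a consistency check on a reported result, the PPV of 0.71 and sensitivity of 0.92 listed for Qu et al. [58] in the table of included studies give F1 = 2 × 0.71 × 0.92 / (0.71 + 0.92) ≈ 0.80, which matches the reported F1 score.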

3.4. Risk of Bias Assessment and Applicability Concerns

Assessment of the quality of the included studies through the risk of bias is essential in order to understand and report the selection of the samples, reference standards, and methods applied for validating and testing the models.

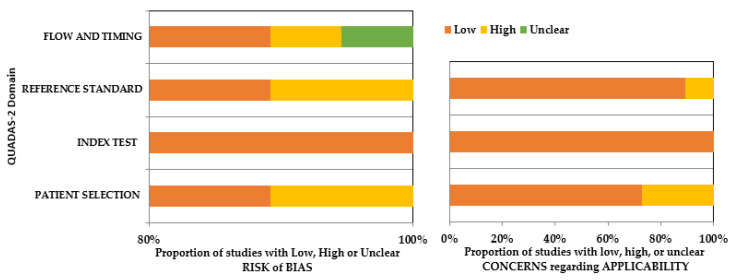

A low risk of bias was observed in the patient selection domain in most of the studies (risk of bias: 90%; applicability: 70%). However, cadaver samples (Saghiri et al. [25]), extracted teeth (Saghiri et al. [26], Kositbowornchai et al. [27], Johari et al. [29], Qiao et al. [38]), and bone samples (Guo et al. [46]) had been utilized in six studies. Therefore, the patient selection domain of the applicability arm of the tool for these above-mentioned studies was reported to have a high risk of bias. Index tests were regarded as low risk in both the arms of QUADAS-2 since all the studies had made use of a highly standardized system of AI for training purposes. There was no clear mention of the reference standard for interpreting index test results in four of the included studies, which raised concerns regarding bias related to patient selection, reference standard, flow, and timing of these studies in both arms. Overall, there was a low risk of bias in both arms, considering all the categories across the included studies. Details about the risk of bias assessment using QUADAS-2 are mentioned in the Supplementary Materials (Table S1) and Figure 2.

Figure 2.

QUADAS-2 assessment of the individual risk of bias domains and applicability concerns.

3.5. Assessment of Strength of Evidence

The certainty of the selected studies in the systematic review was assessed using the Grading of Recommendations Assessment Development and Evaluation (GRADE) approach [62]. Risk of bias, inconsistency, indirectness, imprecision, and publication bias are major domains under which the certainty of the evidence is rated and categorized as very low, low, moderate, or high. Overall, the studies included in this systematic review showed moderate evidence (Table 3).

Table 3.

Assessment of Strength of Evidence.

| Outcome | Inconsistency | Indirectness | Imprecision | Risk of Bias | Publication Bias | Strength of Evidence |
|---|---|---|---|---|---|---|
| Application of AI for determining working length [25,26,38] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for determining vertical root fracture [27,29,30,32,41,60] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for detecting pulpal diseases [28,42] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for detecting and diagnosing periapical lesions [31,35,36,37,40,43,44,49,50,52,54,55,61] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for determining root morphology [33,39,45,56] | Not Present | Not Present | Not Present | Not Present | Not Present | ⨁⨁⨁⨁ |
| Application of AI for determining root canal failures [51,57,58] | Not Present | Not Present | Not Present | Not Present | Not Present | ⨁⨁⨁⨁ |
| Application of AI for predicting postoperative pain [42] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for predicting case difficulty [34] | Not Present | Not Present | Not Present | Not Present | Not Present | ⨁⨁⨁⨁ |
| Application of AI for determining thrust force and torque in canal preparation [46] | Not Present | Not Present | Not Present | Present | Not Present | ⨁⨁⨁◯ |
| Application of AI for segmenting pulp cavities [47] | Not Present | Not Present | Not Present | Not Present | Not Present | ⨁⨁⨁⨁ |
| Application of AI in curative effect after treatment [53,59] | Not Present | Not Present | Not Present | Not Present | Not Present | ⨁⨁⨁⨁ |

⨁⨁⨁⨁ = high evidence; ⨁⨁⨁◯ = moderate evidence.
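The ratings in Table 3 follow a simple pattern: an outcome with no concern in any domain is rated high, and an outcome with a concern in one domain (here, risk of bias) is rated moderate. The sketch below reproduces that pattern under two stated assumptions: every outcome starts at "high", and each domain marked "Present" lowers the rating by exactly one level. The full GRADE approach relies on reviewer judgment and also allows a two-level downgrade for a very serious concern, so this is an illustration of the table, not an implementation of GRADE. The names `LEVELS`, `DOMAINS`, and `grade_certainty` are hypothetical.

```python
# Certainty levels in ascending order, as named in Section 3.5.
LEVELS = ["very low", "low", "moderate", "high"]

# The five GRADE domains used as columns in Table 3.
DOMAINS = (
    "inconsistency",
    "indirectness",
    "imprecision",
    "risk_of_bias",
    "publication_bias",
)


def grade_certainty(concerns: dict) -> str:
    """Start at 'high' and step down one level per domain marked present.

    Simplifying assumption: one level per domain, floor at 'very low'.
    """
    downgrades = sum(1 for domain in DOMAINS if concerns.get(domain, False))
    return LEVELS[max(0, len(LEVELS) - 1 - downgrades)]


# Two rows of Table 3 expressed as domain flags.
working_length = {"risk_of_bias": True}  # only risk of bias is "Present"
root_morphology = {}                     # every domain is "Not Present"

print(grade_certainty(working_length))   # moderate
print(grade_certainty(root_morphology))  # high
```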

4. Discussion

Technological advancements in health sciences have led to enormous developments in the AI models that have been designed for application in health sectors. In recent developments, CNN-based AI models have demonstrated excellent efficiency in diagnosing diseases in comparison with experienced specialists [63,64].

AI has been applied in endodontics for detecting pulpal diseases. Tumbelaka et al. [28] published details of an AI model for identifying pulpitis. This model was very efficient in precisely diagnosing reversible and irreversible pulpitis. However, the authors suggested using digital radiographs in order to achieve better validation. Zheng et al. [44] investigated a DL model designed for detecting deep caries and pulpitis, and the model demonstrated excellent performance. The ResNet18 model displayed outstanding performance when compared with reference models and experienced clinicians. However, this study focused only on teeth with single carious lesions and not on multiple carious lesions. Hence, further clinical validation is required before application in clinical practice.

Untreated dental caries progresses into periapical diseases, which are a result of the inflammatory lesions affecting the pulpal and periapical tissues, 90% of which are classified as apical granulomas, apical cysts, or abscesses [65]. The prevalence of apical periodontitis ranges between 34 and 61%, followed by periapical cysts and granulomas which range from 6 to 55% and from 46 to 94%, respectively [66,67,68]. Periapical pathosis can be detected radiographically as periapical radiolucencies, which are also termed apical lesions. Detecting apical lesions using radiographs is a daily task of clinicians; however, regardless of their discriminatory ability, radiographic examinations are influenced by inter- and intra-examiner reliability [69,70]. Ekert et al. [31] described the application of an AI model for detecting apical lesions; this model displayed satisfactory ability in detecting apical lesions, with an AUC of 0.85 and a sensitivity of 0.65. However, the sensitivity of the model was limited and needs to be improved by using a larger number of datasets to avoid the under-detection of the lesions before the model can be applied in clinics. Setzer et al. [35] described an AI model designed for segmenting CBCT images and detecting periapical lesions. The model displayed excellent accuracy and specificity. However, the limitation of this study was the comparison of the performance of the CNN model with clinicians’ segmentation, which can be subject to human error. Another limitation was the lower Dice index ratios for segmentation of the label lesions, which need to be addressed by increasing the training size. Orhan et al. [36] described an AI model designed for detecting periapical pathosis; the model displayed outstanding reliability in correctly detecting periapical lesions, which was equivalent to the performance of human experts. However, the presence of endo-perio lesions and periodontal defects can alter the performance of the model. 
In addition, anatomical structures such as the mental foramen and nasal fossa need segmentation, which can impact the analysis of the models’ measurements, and therefore, further programming will be required to address these issues. Endres et al. [37] reported the performance of an AI model designed for detecting periapical disease which displayed an acceptable precision and F1 score. The model achieved a better performance than experienced specialists. However, the model was trained using datasets labeled by the surgeons, which can introduce human bias and degrade model performance. Another limitation was that the data used for training and evaluating the model were from a single center. Hence, further tests may be required with data from multiple centers to demonstrate generalizability. Li et al. [40] studied the performance of a DL model designed for detecting apical lesions. The model demonstrated an excellent diagnostic accuracy of 92.5%. This model displayed a performance superior to that of a previous model [36]. However, the datasets were obtained from a single hospital, which limits this model. Again, in order to demonstrate the generalizability of these results, further research is required with data from multiple sources [40].

Pauwels et al. [44] described the performance of a DL model designed for detecting periapical lesions. The results of this study were very promising, with a mean sensitivity of 0.87, specificity of 0.98, and ROC-AUC of 0.93. This model outperformed experienced oral radiologists. However, because this study used bovine ribs and simulated lesions, the model needs to be trained and validated on larger samples of clinical radiographs before implementation in clinical scenarios. Ngoc et al. [49] detailed the performance of an AI model for diagnosing periapical lesions. This model displayed exceptional performance in comparison with endodontists’ diagnoses. However, this model was developed with a limited number of datasets using periapical radiographs.
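The AUC and sensitivity values quoted in this discussion measure different things, which is why a model can combine a good AUC with a limited sensitivity. The AUC summarizes how well the model ranks diseased above healthy cases across all thresholds, whereas sensitivity and specificity depend on the single threshold chosen for reporting. The sketch below illustrates this with hypothetical scores; the function names are illustrative, and the pairwise AUC computation is a standard textbook formulation, not the method of any included study.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as the probability that a random diseased case scores higher
    than a random healthy case (ties count as half a win)."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def sensitivity_specificity(scores_pos, scores_neg, threshold):
    """Operating point when scores at or above the threshold are called diseased."""
    sensitivity = sum(s >= threshold for s in scores_pos) / len(scores_pos)
    specificity = sum(s < threshold for s in scores_neg) / len(scores_neg)
    return sensitivity, specificity


# Hypothetical model scores for four teeth with lesions and four without.
lesion_scores = [0.9, 0.8, 0.6, 0.4]
healthy_scores = [0.7, 0.3, 0.2, 0.1]

print(roc_auc(lesion_scores, healthy_scores))                        # 0.875
print(sensitivity_specificity(lesion_scores, healthy_scores, 0.50))  # (0.75, 0.75)
print(sensitivity_specificity(lesion_scores, healthy_scores, 0.75))  # (0.5, 1.0)
```

With the same scores and the same AUC, raising the threshold from 0.50 to 0.75 trades sensitivity for specificity, so a stricter threshold leads to more missed lesions even though the ranking quality is unchanged.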

Kirnbauer et al. [50] described the performance of an AI model for automatically detecting periapical lesions; the model displayed a sensitivity of 97.1% and a specificity of 88.0% for lesion detection. Bayrakdar et al. [52] reported on an AI model designed for segmenting apical lesions. This model was efficient in evaluating the periapical pathology and displayed a remarkable performance. However, the radiographic data used for this study had limitations: they were obtained from a single piece of equipment, and the number of samples was very limited. Calazans et al. [55] reported on AI models for classifying periapical lesions and compared their performance with that of experienced oral and maxillofacial radiologists. The DenseNet-121 model displayed an accuracy of 70% and a specificity of 92.39%, which were superior to those of the VGG-16 model and human experts.

Determining the working length is one of the crucial clinical steps that influence the outcome of root canal treatment. An accurate working length reduces the chances of insufficient cleaning of the canal and helps confine the root canal filling material to the canal without invading the periapical tissues, ultimately resulting in a successful treatment outcome [70]. Saghiri et al. [25] described the performance of an AI-based model for locating the minor apical foramen. This model demonstrated good accuracy in detecting the apical foramen. Saghiri et al. [26] also described the performance of an AI model for determining the working length. The AI model demonstrated 96% accuracy in comparison with experienced endodontists. However, the quality of patient selection in these studies was low since the samples used were extracted teeth and cadavers. Qiao et al. [38] described the performance of an AI model designed for root canal length measurement. The accuracy of the model was exceptional and was better than the accuracy of the dual-frequency impedance ratio method, which demonstrated an accuracy of 85%. However, very limited samples were used, and increasing the sample size in future studies can further enhance the performance.

VRFs are crack types that can be complete or incomplete fractures of the root in the longitudinal plane and can be seen in teeth that are either endodontically treated or untreated [71,72]. These fractures are often unnoticed by clinicians and in most cases are only thought of when significant periapical changes occur, ultimately resulting in a delay in diagnosis and treatment [73]. To increase the diagnostic efficiency of clinicians, AI models have been applied for assisting clinicians in the early diagnosis of tooth cracks and fractures. Kositbowornchai et al. [27] described the performance of an AI model designed for detecting VRFs, and the model displayed an outstanding performance. However, the limitation of this study was with the samples, since they only used single-rooted premolar teeth; thus, these results cannot be generalized unless applied to different tooth types. Johari et al. [29] described the performance of an AI model for determining VRFs; the model displayed exceptional performance. However, in this study, only single-rooted premolar teeth were used. These findings were similar to the findings of the study conducted by Fukuda et al. [32] in which the AI model displayed a precision of 0.93 and an F measure of 0.83. However, the limitation of this study was with the datasets used, which were only from a single center, and only the radiographs with clear VRF lines were included [32]. Hu et al. [60] described the performance of AI models for diagnosing VRFs; the ResNet50 model presented the highest accuracy and sensitivity for diagnosing VRF teeth. Shah et al. [30] described the application of an AI model for automatically detecting cracks in teeth; this model displayed a mean ROC of 0.97 in detecting cracked teeth.

Assessing the shape of the roots and canals of a tooth can be very important in successfully treating a carious tooth. However, the variations in the root canal morphology pose a difficulty in canal preparation, irrigation, and obturation. C-shaped canals are the most difficult variation to manage during root canal treatment [74,75]. Hiraiwa et al. [33] described the application of an AI model designed for assessing the root morphology of the mandibular first molar; this model displayed an accuracy of 86.9%. Sherwood et al. [39] also described the performance of a DL model for classifying C-shaped canal anatomy in mandibular second molars. Both Xception U-Net and residual U-Net performed significantly better than the U-Net model. However, the limited sample used in this study and the focus on only C-shaped root canal anatomy were limitations of the study. Jeon et al. [45] reported on a DL model designed for predicting C-shaped canals in mandibular second molars; the model displayed outstanding performance in predicting C-shaped canals. Yang et al. [56] described the performance of a DL model for classifying C-shaped canals in mandibular second molars; the model displayed excellent performance in predicting C-shaped canals in both periapical and panoramic images. However, in this study, the number of samples used was insufficient, and the samples were from a single center.

AI has also been applied in predicting the prognosis of RCT. Herbst et al. [51] reported on an AI model for predicting factors associated with the failure of root canal treatments. This model was efficient in predicting tooth-level factors. Qu et al. [58] described the application of machine learning models for the prognosis prediction of endodontic microsurgery. The gradient boosting machine (GBM) model displayed excellent performance. These findings were similar to the finding of the study conducted by Li et al. [59] in which the model displayed an accuracy of 57.96–90.20%, an AUC of 95.63%, and a sensitivity of 91.39%. These automated models can be of great value to clinicians by assisting them in decision-making, providing quick and accurate results, overcoming the requirement of high-level clinical experience, and avoiding inter-observer variability.

The findings of this systematic review show that the majority of the AI models are designed for automated digital diagnosis and treatment planning. These findings are in accordance with the systematic reviews that have previously reported on various disciplines of dentistry. Mohammad-Rahimi et al. [76] reported on the performance of deep learning models in periodontology and oral implantology, where the authors concluded that the performance of the models is generally high. Albalawi et al. [77] reported on a wide range of AI models applied in orthodontics and concluded that these AI models are reliable and can automatically complete tasks with an enhanced speed and an efficiency equivalent to that of experienced specialists. Junaid et al. [78] reported on the application and performance of AI models designed for cephalometric landmark identification. The authors concluded that these models are of great benefit to orthodontists as they can perform tasks very efficiently. Carrillo-Perez et al. [79] reported on the application of AI models in dentistry; the authors concluded that the AI models display outstanding performance in performing the tasks. Thurzo et al. [80] reported on a wide range of AI models that have been designed for application in dentistry and noted that their development has grown extraordinarily in the last few years.

This systematic review might have a few limitations. Even though we performed a comprehensive search for articles that have reported on the application of AI models in endodontics, we might have missed a few. Another limitation could be with the assessment of the risk of bias, which might vary between subjective judgments. However, considering the potential of AI applications in improving the diagnosis and treatment outcomes in endodontics, regulatory bodies should expedite the process of policy-making, approval, and marketing of these products for application in clinical scenarios.

5. Conclusions

In endodontics, AI models have been applied for determining working length, vertical root fractures, and root morphology; detecting and diagnosing pulpal diseases and periapical lesions; and predicting prognosis, postoperative pain, and case difficulties. Most of the included studies (n = 21) were developed using convolutional neural networks. Among the included studies, datasets that were used were mostly cone-beam computed tomography images, followed by periapical radiographs and panoramic radiographs. QUADAS-2, used to assess the quality of the included articles, revealed a low risk of bias in the patient selection domain in most of the studies (risk of bias: 90%; applicability: 70%). These models can be used as supplementary tools in clinical practice in order to expedite the clinical decision-making process and enhance the treatment modality and clinical operation. However, in most of the studies, the models were developed using a limited number of datasets for training and evaluation. The data samples collected were from a single clinic/center and from a single radiographic instrument. Hence, the results obtained from these studies cannot be generalized due to the lack of heterogeneity in the samples. In order to overcome these limitations, future studies should focus on considering a large number of datasets for training and testing the models. Samples need to be collected from multiple centers and from different radiographic instruments.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics13030414/s1, Table S1: Assessment of risk of bias domains and applicability concerns.

Author Contributions

Conceptualization, S.B.K. and K.A.; methodology, A.A. (Ali Alaqla); software, M.A.; validation, A.A. (Abdulmohsen Alfadley), A.A. (Ali Alaqla), and A.J.; formal analysis, M.A.; investigation, S.B.K.; resources, K.A.; data curation, S.B.K.; writing—original draft preparation, S.B.K.; writing—review and editing, A.J. and A.A. (Abdulmohsen Alfadley); visualization, A.A. (Ali Alaqla); supervision, K.A.; project administration, A.A. (Abdulmohsen Alfadley). All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Segura-Egea J.J., Martín-González J., Castellanos-Cosano L. Endodontic Medicine: Connections between Apical Periodontitis and Systemic Diseases. Int. Endod. J. 2015;48:933–951. doi: 10.1111/iej.12507.

- 2. Velvart P., Hecker H., Tillinger G. Detection of the Apical Lesion and the Mandibular Canal in Conventional Radiography and Computed Tomography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontology. 2001;92:682–688. doi: 10.1067/moe.2001.118904.

- 3. Law A.S., Nixdorf D.R., Aguirre A.M., Reams G.J., Tortomasi A.J., Manne B.D., Harris D.R. Predicting Severe Pain after Root Canal Therapy in the National Dental PBRN. J. Dent. Res. 2014;94:37S–43S. doi: 10.1177/0022034514555144.

- 4. Ridao-Sacie C., Segura-Egea J.J., Fernández-Palacín A., Bullón-Fernández P., Ríos-Santos J.V. Radiological Assessment of Periapical Status Using the Periapical Index: Comparison of Periapical Radiography and Digital Panoramic Radiography. Int. Endod. J. 2007;40:433–440. doi: 10.1111/j.1365-2591.2007.01233.x.

- 5. Dutra K.L., Haas L., Porporatti A.L., Flores-Mir C., Santos J.N., Mezzomo L.A., Corrêa M., De Luca Canto G. Diagnostic Accuracy of Cone-Beam Computed Tomography and Conventional Radiography on Apical Periodontitis: A Systematic Review and Meta-Analysis. J. Endod. 2016;42:356–364. doi: 10.1016/j.joen.2015.12.015.

- 6. Ludlow J.B., Ivanovic M. Comparative Dosimetry of Dental CBCT Devices and 64-Slice CT for Oral and Maxillofacial Radiology. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontology. 2008;106:106–114. doi: 10.1016/j.tripleo.2008.03.018.

- 7. Hansen S.L., Huumonen S., Gröndahl K., Gröndahl H.G. Limited Cone-Beam CT and Intraoral Radiography for the Diagnosis of Periapical Pathology. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontology. 2007;103:114–119. doi: 10.1016/j.tripleo.2006.01.001.

- 8. Estrela C., Bueno M.R., Azevedo B.C., Azevedo J.R., Pécora J.D. A New Periapical Index Based on Cone Beam Computed Tomography. J. Endod. 2008;34:1325–1331. doi: 10.1016/j.joen.2008.08.013.

- 9. Allen B., Seltzer S.E., Langlotz C.P., Dreyer K.P., Summers R.M., Petrick N., Marinac-Dabic D., Cruz M., Alkasab T.K., Hanisch R.J., et al. A Road Map for Translational Research on Artificial Intelligence in Medical Imaging: From the 2018 National Institutes of Health/RSNA/ACR/the Academy Workshop. J. Am. Coll. Radiol. 2019;16:1179–1189. doi: 10.1016/j.jacr.2019.04.014.

- 10. Schwendicke F., Samek W., Krois J. Artificial Intelligence in Dentistry: Chances and Challenges. J. Dent. Res. 2020;99:769–774. doi: 10.1177/0022034520915714.

- 11. Burt J.R., Torosdagli N., Khosravan N., RaviPrakash H., Mortazi A., Tissavirasingham F., Hussein S., Bagci U. Deep Learning beyond Cats and Dogs: Recent Advances in Diagnosing Breast Cancer with Deep Neural Networks. Br. J. Radiol. 2018;91:20170545. doi: 10.1259/bjr.20170545.

- 12. Hwang J.-J., Jung Y.-H., Cho B.-H., Heo M.-S. An Overview of Deep Learning in the Field of Dentistry. Imaging Sci. Dent. 2019;49:1. doi: 10.5624/isd.2019.49.1.1.

- 13. Sklan J.E.S., Plassard A.J., Fabbri D., Landman B.A. Toward Content Based Image Retrieval with Deep Convolutional Neural Networks; Proceedings of the SPIE the International Society for Optical Engineering; Orlando, FL, USA. 21–26 February 2015; p. 94172C.

- 14. Kumar Y., Koul A., Singla R., Ijaz M.F. Artificial Intelligence in Disease Diagnosis: A Systematic Literature Review, Synthesizing Framework and Future Research Agenda. J. Ambient. Intell. Humaniz. Comput. 2022:1–28. doi: 10.1007/s12652-021-03612-z.

- 15. Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation and Diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2020;14:4–15. doi: 10.1109/RBME.2020.2987975.

- 16. Thomas B. Artificial Intelligence: Review of Current and Future Applications in Medicine. Fed. Pract. 2021;38:527–538. doi: 10.12788/fp.0174.

- 17. Putra R.H., Doi C., Yoda N., Astuti E.R., Sasaki K. Current Applications and Development of Artificial Intelligence for Digital Dental Radiography. Dentomaxillofac. Radiol. 2021;15:20210197. doi: 10.1259/dmfr.20210197.

- 18. Tanikawa C., Yamashiro T. Development of Novel Artificial Intelligence Systems to Predict Facial Morphology after Orthognathic Surgery and Orthodontic Treatment in Japanese Patients. Sci. Rep. 2021;11:15853. doi: 10.1038/s41598-021-95002-w.

- 19. García-Pola M., Pons-Fuster E., Suárez-Fernández C., Seoane-Romero J., Romero-Méndez A., López-Jornet P. Role of Artificial Intelligence in the Early Diagnosis of Oral Cancer. A Scoping Review. Cancers. 2021;13:4600. doi: 10.3390/cancers13184600.

- 20. Pauwels R. A Brief Introduction to Concepts and Applications of Artificial Intelligence in Dental Imaging. Oral Radiol. 2021;37:153–160. doi: 10.1007/s11282-020-00468-5.

- 21. Nagendrababu V., Aminoshariae A., Kulild J. Artificial Intelligence in Endodontics: Current Applications and Future Directions. J. Endod. 2021;47:1352–1357. doi: 10.1016/j.joen.2021.06.003.

- 22. Umer F., Habib S. Critical Analysis of Artificial Intelligence in Endodontics: A Scoping Review. J. Endod. 2021;48:152–160. doi: 10.1016/j.joen.2021.11.007.

- 23. Page M.J., McKenzie J.E., Bossuyt P.M., Boutron I., Hoffmann T.C., Mulrow C.D., Shamseer L., Tetzlaff J.M., Akl E.A., Brennan S.E., et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Br. Med. J. 2021;372:n71. doi: 10.1136/bmj.n71.

- 24. Whiting P.F., Rutjes A.W.S., Westwood M.E., Mallett S., Deeks J.J., Reitsma J.B., Leeflang M.M.G., Sterne J.A.C., Bossuyt P.M.M., QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011;155:529–536. doi: 10.7326/0003-4819-155-8-201110180-00009.

- 25. Saghiri M.A., Asgar K., Boukani K.K., Lotfi M., Aghili H., Delvarani A., Karamifar K., Saghiri A.M., Mehrvarzfar P., Garcia-Godoy F. A New Approach for Locating the Minor Apical Foramen Using an Artificial Neural Network. Int. Endod. J. 2012;45:257–265. doi: 10.1111/j.1365-2591.2011.01970.x.

- 26. Saghiri M.A., Garcia-Godoy F., Gutmann J.L., Lotfi M., Asgar K. The Reliability of Artificial Neural Network in Locating Minor Apical Foramen: A Cadaver Study. J. Endod. 2012;38:1130–1134. doi: 10.1016/j.joen.2012.05.004.

- 27. Kositbowornchai S., Plermkamon S., Tangkosol T. Performance of an Artificial Neural Network for Vertical Root Fracture Detection: An Ex Vivo Study. Dent. Traumatol. 2013;29:151–155. doi: 10.1111/j.1600-9657.2012.01148.x.

- 28. Tumbelaka B., Baihaki F., Oscandar F., Rukmo M., Sitam S. Identification of Pulpitis at Dental X-Ray Periapical Radiography Based on Edge Detection, Texture Description and Artificial Neural Networks. Saudi Endod. J. 2014;4:115. doi: 10.4103/1658-5984.138139.

- 29. Johari M., Esmaeili F., Andalib A., Garjani S., Saberkari H. Detection of Vertical Root Fractures in Intact and Endodontically Treated Premolar Teeth by Designing a Probabilistic Neural Network: An Ex Vivo Study. Dentomaxillofacial Radiol. 2017;46:20160107. doi: 10.1259/dmfr.20160107.

- 30. Shah H., Paniagua B., Hernandez-Cerdan P., Budin F., Chittajallu D., Walter R., Mol A., Khan A., Vimort J.-B. Automatic Quantification Framework to Detect Cracks in Teeth; Proceedings of the Medical Imaging 2018: Biomedical Applications in Molecular, Structural, and Functional Imaging; Houston, TX, USA. 10–15 February 2018.

- 31. Ekert T., Krois J., Meinhold L., Elhennawy K., Emara R., Golla T., Schwendicke F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019;45:917–922.e5. doi: 10.1016/j.joen.2019.03.016.

- 32.Fukuda M., Inamoto K., Shibata N., Ariji Y., Yanashita Y., Kutsuna S., Nakata K., Katsumata A., Fujita H., Ariji E. Evaluation of an Artificial Intelligence System for Detecting Vertical Root Fracture on Panoramic Radiography. Oral Radiol. 2019;36:337–343. doi: 10.1007/s11282-019-00409-x. [DOI] [PubMed] [Google Scholar]

- 33.Hiraiwa T., Ariji Y., Fukuda M., Kise Y., Nakata K., Katsumata A., Fujita H., Ariji E. A Deep-Learning Artificial Intelligence System for Assessment of Root Morphology of the Mandibular First Molar on Panoramic Radiography. Dentomaxillofacial Radiol. 2019;48:20180218. doi: 10.1259/dmfr.20180218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Mallishery S., Chhatpar P., Banga K.S., Shah T., Gupta P. The Precision of Case Difficulty and Referral Decisions: An Innovative Automated Approach. Clin. Oral Investig. 2019;24:1909–1915. doi: 10.1007/s00784-019-03050-4. [DOI] [PubMed] [Google Scholar]

- 35.Setzer F.C., Shi K.J., Zhang Z., Yan H., Yoon H., Mupparapu M., Li J. Artificial Intelligence for the Computer-Aided Detection of Periapical Lesions in Cone-Beam Computed Tomographic Images. J. Endod. 2020;46:987–993. doi: 10.1016/j.joen.2020.03.025. [DOI] [PubMed] [Google Scholar]

- 36.Orhan K., Bayrakdar I.S., Ezhov M., Kravtsov A., Özyürek T. Evaluation of Artificial Intelligence for Detecting Periapical Pathosis on Cone-Beam Computed Tomography Scans. Int. Endod. J. 2020;53:680–689. doi: 10.1111/iej.13265. [DOI] [PubMed] [Google Scholar]

- 37.Endres M.G., Hillen F., Salloumis M., Sedaghat A.R., Niehues S.M., Quatela O., Hanken H., Smeets R., Beck-Broichsitter B., Rendenbach C., et al. Development of a Deep Learning Algorithm for Periapical Disease Detection in Dental Radiographs. Diagnostics. 2020;10:430. doi: 10.3390/diagnostics10060430. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Qiao X., Zhang Z., Chen X. Multifrequency Impedance Method Based on Neural Network for Root Canal Length Measurement. Appl. Sci. 2020;10:7430. doi: 10.3390/app10217430. [DOI] [Google Scholar]

- 39.Sherwood A.A., Sherwood A.I., Setzer F.C., Shamili J.V., John C., Schwendicke F. A Deep Learning Approach to Segment and Classify C-Shaped Canal Morphologies in Mandibular Second Molars Using Cone-Beam Computed Tomography. J. Endod. 2021;47:1907–1916. doi: 10.1016/j.joen.2021.09.009. [DOI] [PubMed] [Google Scholar]

- 40.Li C.-W., Lin S.-Y., Chou H.-S., Chen T.-Y., Chen Y.-A., Liu S.-Y., Liu Y.-L., Chen C.-A., Huang Y.-C., Chen S.-L., et al. Detection of Dental Apical Lesions Using CNNs on Periapical Radiograph. Sensors. 2021;21:7049. doi: 10.3390/s21217049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Vicory J., Chandradevan R., Hernandez-Cerdan P., Huang W.A., Fox D., Abu Qdais L., McCormick M., Mol A., Walter R., Marron J.S., et al. Dental Microfracture Detection Using Wavelet Features and Machine Learning; Proceedings of the Medical Imaging 2021: Image Processing; Online. 15–20 February 2021; [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Zheng L., Wang H., Mei L., Chen Q., Zhang Y., Zhang H. Artificial Intelligence in Digital Cariology: A New Tool for the Diagnosis of Deep Caries and Pulpitis Using Convolutional Neural Networks. Ann. Transl. Med. 2021;9:763. doi: 10.21037/atm-21-119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Moidu N.P., Sharma S., Chawla A., Kumar V., Logani A. Deep Learning for Categorization of Endodontic Lesion Based on Radiographic Periapical Index Scoring System. Clin. Oral Investig. 2021;26:651–658. doi: 10.1007/s00784-021-04043-y. [DOI] [PubMed] [Google Scholar]

- 44.Pauwels R., Brasil D.M., Yamasaki M.C., Jacobs R., Bosmans H., Freitas D.Q., Haiter-Neto F. Artificial Intelligence for Detection of Periapical Lesions on Intraoral Radiographs: Comparison between Convolutional Neural Networks and Human Observers. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2021;131:610–616. doi: 10.1016/j.oooo.2021.01.018. [DOI] [PubMed] [Google Scholar]

- 45.Jeon S.-J., Yun J.-P., Yeom H.-G., Shin W.-S., Lee J.-H., Jeong S.-H., Seo M.-S. Deep-Learning for Predicting C-Shaped Canals in Mandibular Second Molars on Panoramic Radiographs. Dentomaxillofacial Radiol. 2021;50:20200513. doi: 10.1259/dmfr.20200513. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Guo W., Wang L., Li J., Li W., Li F., Gu Y. Prediction of Thrust Force and Torque in Canal Preparation Process Using Taguchi Method and Artificial Neural Network. Adv. Mech. Eng. 2021;13:168781402110524. doi: 10.1177/16878140211052459. [DOI] [Google Scholar]

- 47.Lin X., Fu Y., Ren G., Yang X., Duan W., Chen Y., Zhang Q. Micro–Computed Tomography-Guided Artificial Intelligence for Pulp Cavity and Tooth Segmentation on Cone-Beam Computed Tomography. J. Endod. 2021;47:1933–1941. doi: 10.1016/j.joen.2021.09.001. [DOI] [PubMed] [Google Scholar]

- 48.Gao X., Xin X., Li Z., Zhang W. Predicting Postoperative Pain Following Root Canal Treatment by Using Artificial Neural Network Evaluation. Sci. Rep. 2021;11:17243. doi: 10.1038/s41598-021-96777-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Ngoc V.T., Viet D.H., Anh L.K., Minh D.Q., Nghia L.L., Loan H.K., Tuan T.M., Ngan T.T., Tra N.T. Periapical Lesion Diagnosis Support System Based on X-Ray Images Using Machine Learning Technique. World J. Dent. 2021;12:189–193. doi: 10.5005/jp-journals-10015-1820. [DOI] [Google Scholar]

- 50.Kirnbauer B., Hadzic A., Jakse N., Bischof H., Stern D. Automatic Detection of Periapical Osteolytic Lesions on Cone-Beam Computed Tomography Using Deep Convolutional Neuronal Networks. J. Endod. 2022;48:1434–1440. doi: 10.1016/j.joen.2022.07.013. [DOI] [PubMed] [Google Scholar]

- 51.Herbst C.S., Schwendicke F., Krois J., Herbst S.R. Association between Patient-, Tooth- and Treatment-Level Factors and Root Canal Treatment Failure: A Retrospective Longitudinal and Machine Learning Study. J. Dent. 2022;117:103937. doi: 10.1016/j.jdent.2021.103937. [DOI] [PubMed] [Google Scholar]

- 52.Bayrakdar I.S., Orhan K., Çelik Ö., Bilgir E., Sağlam H., Kaplan F.A., Görür S.A., Odabaş A., Aslan A.F., Różyło-Kalinowska I. A U-Net Approach to Apical Lesion Segmentation on Panoramic Radiographs. BioMed Res. Int. 2022;2022:7035367. doi: 10.1155/2022/7035367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Zhao L., Pan J., Xu L. Cone-Beam Computed Tomography Image Features under Intelligent Three-Dimensional Reconstruction Algorithm in the Evaluation of Intraoperative and Postoperative Curative Effect of Dental Pulp Disease Using Root Canal Therapy. Sci. Program. 2022;2022:3119471. doi: 10.1155/2022/3119471. [DOI] [Google Scholar]

- 54.Hamdan M.H., Tuzova L., Mol A., Tawil P.Z., Tuzoff D., Tyndall D.A. The Effect of a Deep-Learning Tool on Dentists’ Performances in Detecting Apical Radiolucencies on Periapical Radiographs. Dentomaxillofacial Radiol. 2022;51 doi: 10.1259/dmfr.20220122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Calazans M.A.A., Ferreira F.A.B.S., Alcoforado M.d.L.M.G., Santos A.d., Pontual A.d.A., Madeiro F. Automatic Classification System for Periapical Lesions in Cone-Beam Computed Tomography. Sensors. 2022;22:6481. doi: 10.3390/s22176481. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Yang S., Lee H., Jang B., Kim K.-D., Kim J., Kim H., Park W. Development and Validation of a Visually Explainable Deep Learning Model for Classification of C-Shaped Canals of the Mandibular Second Molars in Periapical and Panoramic Dental Radiographs. J. Endod. 2022;48:914–921. doi: 10.1016/j.joen.2022.04.007. [DOI] [PubMed] [Google Scholar]

- 57.Xu T., Zhu Y., Peng L., Cao Y., Zhao X., Meng F., Ding J., Liang S. Artificial Intelligence Assisted Identification of Therapy History from Periapical Films for Dental Root Canal. Displays. 2022;71:102119. doi: 10.1016/j.displa.2021.102119. [DOI] [Google Scholar]

- 58.Qu Y., Lin Z., Yang Z., Lin H., Huang X., Gu L. Machine Learning Models for Prognosis Prediction in Endodontic Microsurgery. J. Dent. 2022;118:103947. doi: 10.1016/j.jdent.2022.103947. [DOI] [PubMed] [Google Scholar]

- 59.Li Y., Zeng G., Zhang Y., Wang J., Jin Q., Sun L., Zhang Q., Lian Q., Qian G., Xia N., et al. AGMB-Transformer: Anatomy-Guided Multi-Branch Transformer Network for Automated Evaluation of Root Canal Therapy. IEEE J. Biomed. Health Inform. 2022;26:1684–1695. doi: 10.1109/JBHI.2021.3129245. [DOI] [PubMed] [Google Scholar]

- 60.Hu Z., Cao D., Hu Y., Wang B., Zhang Y., Tang R., Zhuang J., Gao A., Chen Y., Lin Z. Diagnosis of in Vivo Vertical Root Fracture Using Deep Learning on Cone-Beam CT Images. BMC Oral Health. 2022;22:382. doi: 10.1186/s12903-022-02422-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Vasdev D., Gupta V., Shubham S., Chaudhary A., Jain N., Salimi M., Ahmadian A. Periapical Dental X-Ray Image Classification Using Deep Neural Networks. Ann. Oper. Res. 2022:1–29. doi: 10.1007/s10479-022-04961-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Granholm A., Alhazzani W., Møller M.H. Use of the GRADE Approach in Systematic Reviews and Guidelines. Br. J. Anaesth. 2019;123:554–559. doi: 10.1016/j.bja.2019.08.015. [DOI] [PubMed] [Google Scholar]

- 63.LeCun Y., Bengio Y., Hinton G. Deep Learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 64.Srinidhi C.L., Ciga O., Martel A.L. Deep Neural Network Models for Computational Histopathology: A Survey. Med. Image Anal. 2021;67:101813. doi: 10.1016/j.media.2020.101813. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Koivisto T., Bowles W.R., Rohrer M. Frequency and Distribution of Radiolucent Jaw Lesions: A Retrospective Analysis of 9,723 Cases. J. Endod. 2012;38:729–732. doi: 10.1016/j.joen.2012.02.028. [DOI] [PubMed] [Google Scholar]

- 66.Braz-Silva P.H., Bergamini M.L., Mardegan A.P., De Rosa C.S., Hasseus B., Jonasson P. Inflammatory Profile of Chronic Apical Periodontitis: A Literature Review. Acta Odontol. Scand. 2018;77:173–180. doi: 10.1080/00016357.2018.1521005. [DOI] [PubMed] [Google Scholar]

- 67.Natkin E., Oswald R.J., Carnes L.I. The Relationship of Lesion Size to Diagnosis, Incidence, and Treatment of Periapical Cysts and Granulomas. Oral Surg. Oral Med. Oral Pathol. 1984;57:82–94. doi: 10.1016/0030-4220(84)90267-6. [DOI] [PubMed] [Google Scholar]

- 68.Shrout M.K., Hall J.M., Hildebolt C.E. Differentiation of Periapical Granulomas and Radicular Cysts by Digital Radiometric Analysis. Oral Surg. Oral Med. Oral Pathol. 1993;76:356–361. doi: 10.1016/0030-4220(93)90268-9. [DOI] [PubMed] [Google Scholar]

- 69.Nardi C., Calistri L., Grazzini G., Desideri I., Lorini C., Occhipinti M., Mungai F., Colagrande S. Is Panoramic Radiography an Accurate Imaging Technique for the Detection of Endodontically Treated Asymptomatic Apical Periodontitis? J. Endod. 2018;44:1500–1508. doi: 10.1016/j.joen.2018.07.003. [DOI] [PubMed] [Google Scholar]

- 70.Nardi C., Calistri L., Pradella S., Desideri I., Lorini C., Colagrande S. Accuracy of Orthopantomography for Apical Periodontitis without Endodontic Treatment. J. Endod. 2017;43:1640–1646. doi: 10.1016/j.joen.2017.06.020. [DOI] [PubMed] [Google Scholar]

- 71.Schaeffer M.A., White R.R., Walton R.E. Determining the Optimal Obturation Length: A Meta-Analysis of Literature. J. Endod. 2005;31:271–274. doi: 10.1097/01.don.0000140585.52178.78. [DOI] [PubMed] [Google Scholar]

- 72.Khasnis S., Kidiyoor K., Patil A., Kenganal S. Vertical Root Fractures and Their Management. J. Conserv. Dent. 2014;17:103. doi: 10.4103/0972-0707.128034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Liao W.-C., Chen C.-H., Pan Y.-H., Chang M.-C., Jeng J.-H. Vertical Root Fracture in Non-Endodontically and Endodontically Treated Teeth: Current Understanding and Future Challenge. J. Pers. Med. 2021;11:1375. doi: 10.3390/jpm11121375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Kim Y., Lee D., Kim D.-V., Kim S.-Y. Analysis of Cause of Endodontic Failure of C-Shaped Root Canals. Scanning. 2018;2018:2516832. doi: 10.1155/2018/2516832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Jung H.-J., Lee S.-S., Huh K.-H., Yi W.-J., Heo M.-S., Choi S.-C. Predicting the Configuration of a C-Shaped Canal System from Panoramic Radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontology. 2010;109:e37–e41. doi: 10.1016/j.tripleo.2009.08.024. [DOI] [PubMed] [Google Scholar]