Abstract

Background: Facilitation is an effective strategy to implement evidence-based practices, often involving external facilitators (EFs) bringing content expertise to implementation sites. Estimating time spent on multifaceted EF activities is complex. Furthermore, collecting continuous time–motion data for facilitation tasks is challenging. However, organizations need this information to allocate implementation resources to sites. Thus, our objectives were to conduct a time–motion analysis of external facilitation, and compare continuous versus noncontinuous approaches to collecting time–motion data.

Methods: We analyzed EF time–motion data from six VA mental health clinics implementing the evidence-based Collaborative Chronic Care Model (CCM). We documented EF activities during pre-implementation (4–6 weeks) and implementation (12 months) phases. We collected continuous data during the pre-implementation phase, followed by data collection over a 2-week period (henceforth, “a two-week interval”) at each of three time points (beginning/middle/end) during the implementation phase. As a validity check, we assessed how closely interval data represented continuous data collected throughout implementation for two of the sites.

Results: EFs spent 21.8 ± 4.5 h/site during pre-implementation off-site, then 27.5 ± 4.6 h/site site-visiting to initiate implementation. Based on the 2-week interval data, EFs spent 2.5 ± 0.8, 1.4 ± 0.6, and 1.2 ± 0.6 h/week toward the implementation’s beginning, middle, and end, respectively. Prevalent activities were preparation/planning, process monitoring, program adaptation, problem identification, and problem-solving. Across all activities, 73.6% of EF time involved email, phone, or video communication. For the two continuous data sites, computed weekly time averages toward the implementation’s beginning, middle, and end differed from the interval data’s averages by 1.0, 0.1, and 0.2 h, respectively. Activities inconsistently captured in the interval data included irregular assessment, stakeholder engagement, and network development.

Conclusions: Time–motion analysis of CCM implementation showed initial higher-intensity EF involvement that tapered. The 2-week interval data collection approach, if accounting for its potential underestimation of irregular activities, may be promising/efficient for implementation studies collecting time–motion data.

Keywords: implementation facilitation, implementation strategy, external facilitator, time–motion analysis, data collection, mental health, Collaborative Chronic Care Model, evidence-based practice

Implementation facilitation is a multifaceted process that incorporates a bundle of implementation strategies (Ritchie et al., 2020a), and is used by a growing number of efforts to implement innovations into practice (Carter et al., 2021; Ruud et al., 2021). Implementation facilitation consists of various forms of support (e.g., task management, accountability checks, process monitoring, and relationship building) that are provided to personnel at a site implementing an evidence-based practice (Kitson et al., 1998; Olmos Ochoa et al., 2021). A common feature of implementation facilitation is the use of an external facilitator (EF), who may bring a range of expertise, such as content and process improvement expertise, to an implementation site (Sullivan et al., 2021). Recent years have seen a noticeable increase in the use of external facilitation in implementation efforts. For instance, as of mid-October 2021, searching the online database PubMed for publications on implementation-related external facilitation yielded 5 articles prior to 2010, 14 articles from 2010 to 2015, and 58 articles from 2016 to 2021. Common implementation facilitation activities include implementation planning, engaging stakeholders, monitoring progress, and problem-solving (Smith et al., 2019). How much time EFs spend on implementation facilitation activities is of great interest to entities planning or funding implementation efforts. This information is critical for allocating appropriate EF resources to a site.

Measuring who spends how much time, on what activities and when, can be accomplished through a time–motion study. Time–motion studies conduct such measurement by documenting both the time spent on a work task (e.g., in minutes) and what the task entailed (e.g., the task’s date, purpose, and individuals involved) (Bradley et al., 2021; Lim et al., 2022). Time–motion studies are widely used in fields such as industrial engineering to measure the amount of time and movements that employees require to complete work tasks (Burri Jr. & Helander, 1991; Magu et al., 2015). Time–motion studies are increasingly used in health care settings to assess and improve clinic workflow (Kato et al., 2021; Solomon et al., 2021). Examples include comparing nurses’ time spent in face-to-face versus care management activities in the Collaborative Chronic Care Model (CCM) (Glick et al., 2004), comparing physicians’ time spent working in the electronic health record versus face-to-face time with patients in clinic visits (Young et al., 2018), and improving patient wait times by assessing and restructuring clinical practice patterns to eliminate inefficiencies (Racine & Davidson, 2002).

Time–motion methodology is beginning to be more widely used for implementation science. Especially as economic evaluation of implementation is receiving increased attention within the field (since implementation cost can influence whether and how to implement an evidence-based intervention) (Eisman et al., 2020; Yoon, 2020), time–motion methodology is being embraced as an approach for collecting and analyzing data that inform implementation cost (Matthieu et al., 2020; Lamper et al., 2020; Pittman et al., 2021). Notably, Ritchie et al. (2020b) conducted a by-week time–motion study of implementation facilitation activities for the EFs, internal facilitators, and participating staff across all levels of the health care systems in which implementation of integrated mental health services within primary care settings was being carried out. They used a structured spreadsheet to document time and activities, based on the weekly collection of these data from the facilitators throughout the 28-month study. In reporting their study, they noted the challenges of collecting these data, especially given the burden of weekly documentation.

Building on Ritchie et al. (2020b)’s work, we adapted their structured spreadsheet to conduct a time–motion analysis of EF efforts in a randomized trial. To minimize the burden of time–motion data documentation, we used a less intensive data collection approach—namely, collection over a 2-week period (henceforth, “a two-week interval”) near each of the beginning, middle, and end of the active implementation phase. Hence, our primary objective was to understand how much, and how, EF time is spent on a multi-site randomized implementation trial. Our secondary objective was to examine the extent to which the less intensive data collection approach could potentially substitute for data collection throughout the entire active implementation phase.

Methods

Time–motion data for this study were from a multi-site randomized stepped wedge implementation trial conducted from February 2016 to February 2018 at the U.S. Department of Veterans Affairs (VA), in close partnership with VA’s national Office of Mental Health and Suicide Prevention (OMHSP) (Bauer et al., 2019). As a hybrid type II trial (Curran et al., 2012), it examined the effectiveness of implementation facilitation in establishing the evidence-based CCM to structure the clinical care delivered by interdisciplinary outpatient mental health provider teams. The CCM organizes health care delivery to be anticipatory, coordinated, and patient-centered (Von Korff et al., 1997; Wagner et al., 1996; Coleman et al., 2009). It comprises six core elements: work role redesign, patient self-management support, provider decision support, clinical information systems, linkages to community resources, and organizational/leadership support. The CCM has demonstrated effectiveness for improving outcomes in medical conditions (Bodenheimer et al., 2002a; Bodenheimer et al., 2002b) and mental health conditions (Badamgarav et al., 2003; Woltmann et al., 2012; Miller et al., 2013). The trial also assessed the impact of CCM implementation on health outcomes of team-treated individuals. Published articles provide additional information regarding the stepped wedge design used (Bauer et al., 2016; Bauer et al., 2019), the implemented evidence-based CCM (Miller et al., 2019; Sullivan et al., 2021), and the EFs who worked with the sites (Connolly et al., 2020). Research procedures were approved by the VA Central Institutional Review Board (IRB). Implementation efforts and related measures were exempt from IRB review, considered to be program evaluation in support of OMHSP’s endeavors to enhance VA’s mental health care delivery.

Implementation Facilitation

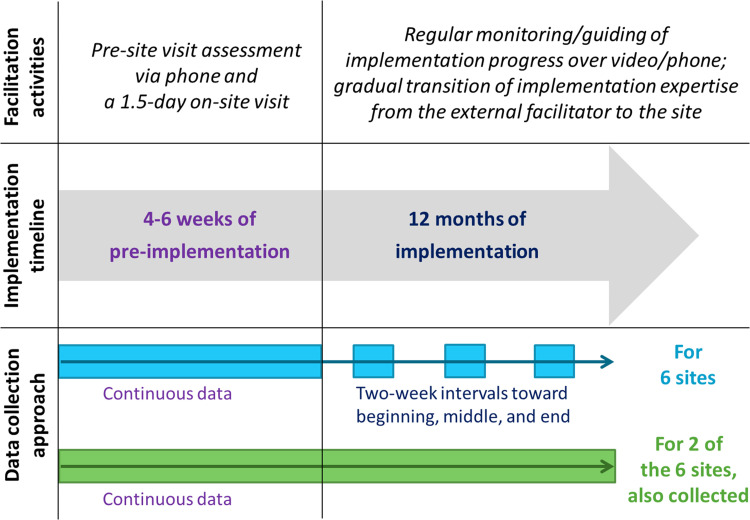

For each site, EF activities consisted of approximately 4–6 weeks (5.3 ± 0.8 weeks across six sites) of a pre-implementation phase and a kick-off site visit followed by 12 months of an active implementation phase. During the pre-implementation phase, the EF held semi-structured conversations with site personnel to begin to understand the context under which the CCM implementation was to be carried out (henceforth referred to as the “pre-site visit assessment”). In addition to the time spent on these conversations with site personnel, the pre-implementation phase’s data also included time spent on scheduling the conversations, preparing for the site visit, and beginning to correspond with the internal facilitator to collaboratively prepare for implementation. A 1.5-day on-site visit by the EF continued these conversations, informed stakeholders about the implementation that was about to commence, and served as the point in time after which the active implementation phase began. During the active implementation phase, the EF monitored and guided the site’s implementation progress, regularly coordinating with an on-site internal facilitator and joining the site’s implementation team meetings over video or by phone.

Time–Motion Tracking

For the six implementation sites involved in this study, we used an adaptation of Ritchie et al. (2020b)’s structured spreadsheet to collect the following information on EF time and activities:

Facilitation activity (e.g., stakeholder engagement, process monitoring).

Date of the activity.

Type of activity (e.g., preparatory time for an implementation task, one-on-one or group-facing).

Mode of communication used for the activity (e.g., email, phone).

Interaction target—that is, with whom the activity involved interacting (e.g., internal facilitator, clinic leadership).

Duration of the activity (in increments of 15 min).
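The fields listed above could be represented as one record per logged activity. The following is a minimal sketch, not the study's spreadsheet: the class name `LogEntry`, the field names, and the example values are all hypothetical, and the only rule it enforces from the text is that durations are logged in 15-minute increments.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LogEntry:
    """One logged EF activity; field names are illustrative, not the study's."""
    activity: str            # e.g., "stakeholder engagement"
    activity_date: date
    activity_type: str       # e.g., "preparatory", "one-on-one", "group-facing"
    mode: str                # e.g., "email", "phone"
    interaction_target: str  # e.g., "internal facilitator"
    minutes: int             # duration, logged in 15-minute increments

    def __post_init__(self) -> None:
        # Reject durations that are not positive multiples of 15 minutes.
        if self.minutes <= 0 or self.minutes % 15 != 0:
            raise ValueError("duration must be a positive multiple of 15 minutes")

    @property
    def hours(self) -> float:
        return self.minutes / 60


# Hypothetical example entry (not study data).
entry = LogEntry(
    activity="process monitoring",
    activity_date=date(2016, 3, 1),
    activity_type="one-on-one",
    mode="phone",
    interaction_target="internal facilitator",
    minutes=45,
)
print(entry.hours)  # 0.75
```

Validating the increment at entry time is one way to keep logs from different EFs comparable; the study itself relied on weekly EF meetings to resolve logging questions.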

The study had three EFs, each facilitating implementation at two of the six sites. Each of the three EFs was allocated one site to work with per wave of the stepped wedge trial. The data for each site were logged on the spreadsheet by the EF working with the site. Whenever an EF came across a question regarding the logging procedure (e.g., whether to log a facilitation activity as being primarily stakeholder engagement or education), ongoing weekly meetings among the EFs were used to discuss the procedure in question and reach a consensus on how to log the data.

The implementation facilitation approach used by the trial (Bauer et al., 2016), as shown in Figure 1, had the EF gradually transition implementation expertise to the site over the course of the active implementation phase. We thus expected the amount of EF time spent on implementation facilitation activities to similarly decrease between the beginning and the end of the 12-month active implementation phase. Accordingly, after collecting continuous time–motion data through the 4–6-week pre-implementation phase (i.e., for the pre-site visit assessment and the site visit), we selected one 2-week interval (deemed typical by the EF; e.g., not overlapping with holidays or irregular work schedules) from each of months 1–4 (weeks 1–17), months 5–8 (weeks 18–34), and months 9–12 (weeks 35–51) of the active implementation phase over which to collect time–motion data. For two of these sites (which worked with one EF, were located in two different regions, and were one in each of the two stepped wedge waves), we also collected continuous data throughout the active implementation phase. We then assessed the extent to which the selected interval data could be used to estimate the weekly EF time spent on implementation facilitation activities.
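The week ranges above can be expressed as a small lookup. This is an illustrative sketch only: the function names are hypothetical, and the check that a sampled interval consists of consecutive weeks within a single period is an assumption, since the text says only that one 2-week interval was selected from each period.

```python
def phase_period(week: int) -> str:
    """Map a week of the active implementation phase (1-51) to its period."""
    if not 1 <= week <= 51:
        raise ValueError("week must be between 1 and 51")
    if week <= 17:
        return "beginning"
    if week <= 34:
        return "middle"
    return "end"


def interval_is_valid(start_week: int, length: int = 2) -> bool:
    """Check that an interval of consecutive weeks stays within one period.

    Assumption for illustration: intervals are consecutive weeks and may not
    straddle two periods or run past week 51.
    """
    last_week = start_week + length - 1
    if last_week > 51:
        return False
    return phase_period(start_week) == phase_period(last_week)


print(phase_period(20))       # middle
print(interval_is_valid(17))  # False: weeks 17-18 straddle two periods
```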

Figure 1.

Implementation facilitation activities, implementation timeline, and time–motion data collection approach.

Analysis Plan

Our analyses proceeded in three stages. First, we assessed how much EF time was spent per site, both overall and for each of (i) the pre-site visit assessment, (ii) the site visit, and (iii) the beginning, middle, and end of the 12-month active implementation phase (i.e., the first, second, and third 17-week-long periods, respectively, in a year). Second, we examined the activities and interaction targets across which the EF time spent was allocated, and calculated the percent EF time across activities and across interaction targets. Third, we compared the time estimates from 2-week intervals versus continuous data collection, and examined the changes in our results when comparing the 2-week intervals to intervals of different lengths (derived from the continuous data) ranging from 2 to 8 weeks. We used 8 weeks as the longest interval length for comparison, since longer intervals would mean collecting data for more than half of the 17 weeks in each of the beginning, middle, and end of the 12-month active implementation phase.
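The second stage (percent EF time across activities and across interaction targets) amounts to summing logged minutes per category and dividing by the total. A minimal sketch follows, using hypothetical log entries and hypothetical key names (`activity`, `target`, `minutes`) rather than the study's data or spreadsheet columns.

```python
from collections import defaultdict


def percent_time_by(entries, key):
    """Return {category: percent of total minutes} for the given field name."""
    totals = defaultdict(int)
    for entry in entries:
        totals[entry[key]] += entry["minutes"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: 100 * minutes / grand_total
            for category, minutes in totals.items()}


# Hypothetical log entries (not study data).
log = [
    {"activity": "preparation/planning", "target": "internal facilitator", "minutes": 60},
    {"activity": "process monitoring", "target": "implementation team", "minutes": 30},
    {"activity": "problem-solving", "target": "internal facilitator", "minutes": 30},
]

by_activity = percent_time_by(log, "activity")
by_target = percent_time_by(log, "target")
print(by_activity)
print(by_target)
```

The same function applied to a communication-mode field would give the share of time spent on email, phone, or video.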

For each analytical stage, means and SD were calculated for the time estimates, and comparisons of the means were conducted using t-tests or analyses of variance with Tukey’s method for post hoc tests. Specifically, using 2-week interval data, we compared the mean total time spent per site by EF, site (region), and calendar time (wave of stepped wedge). We also compared mean time spent per week with a site between the beginning, middle, and end of the active implementation phase, accounting for the EF, site, and calendar time through stratified analyses. For the two sites for which we had time estimates from both 2-week intervals and continuous data collection, we compared the mean time spent with a site between the interval and continuous data, both total and per week for the beginning, middle, and end of the active implementation phase. We also compared the mean time spent per week with a site during the beginning, middle, and end of the active implementation phase, between the interval lengths of 2 and 8 weeks.
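As a rough illustration of the analysis of variance step, the sketch below computes a one-way F statistic with the Python standard library only. It is not the authors' analysis code: the function name and the input values are hypothetical, and it stops at the F statistic. Obtaining the p-value and running Tukey's post hoc comparisons would typically be done with a statistics package.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way analysis of variance.

    Assumes at least two groups and nonzero within-group variation.
    """
    all_values = [value for group in groups for value in group]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((value - mean(g)) ** 2 for g in groups for value in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical total hours per site for three EFs with two sites each
# (not study data).
hours_by_ef = [[110.0, 130.0], [120.0, 140.0], [130.0, 150.0]]
f_stat, df_b, df_w = one_way_anova_f(hours_by_ef)
print(f_stat, df_b, df_w)
```

With two sites per group, the within-group degrees of freedom are small (3 in this example), which is one reason comparisons of this kind have limited power.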

Results

The three EFs, facilitating two sites each, were health services researchers (e.g., psychiatrist, clinical psychologist, and health systems engineer). The six sites were distributed across the southern, midwestern, and northeastern United States (two sites per region). CCM implementation commenced at these sites in two waves (different calendar times) of the stepped wedge (three sites per wave).

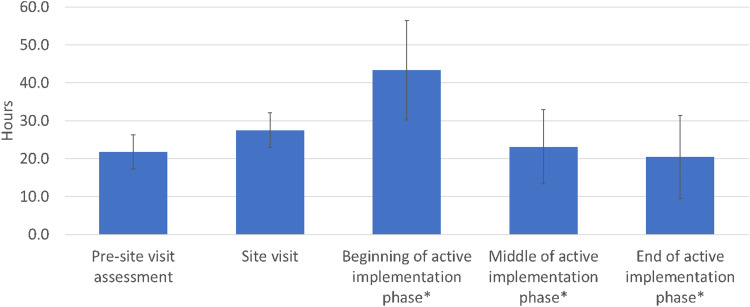

Time Spent on External Facilitation

Table 1 shows our results regarding the time spent on external facilitation. Across both the pre-implementation phase and the active implementation phase, EFs spent 136.2 ± 27.6 (mean ± SD) hours per site for implementation over the course of 55–57 weeks, based on 2-week interval data. Figure 2 shows the average number of hours spent per site, for each of (i) the pre-site visit assessment, (ii) the site visit, and (iii) the beginning, middle, and end of the active implementation phase.

Table 1.

Time spent on external facilitation.

| | Total (hours per site) | Pre-site visit assessment (hours per site) | Site visit (hours per site) | Beginning (hours per week per site) | Middle (hours per week per site) | End (hours per week per site) | Difference in means (p-value): beginning–middle, middle–end, beginning–end |
|---|---|---|---|---|---|---|---|
| Mean ± SD | 136.2 ± 27.6 | 21.8 ± 4.5 | 27.5 ± 4.6 | 2.5 ± 0.8 | 1.4 ± 0.6 | 1.2 ± 0.6 | .0200*, .9108, .0088** |
| Difference in means (p-value) | | | | | | | |
| Between three EFs (X, Y, Z), two sites each: X-Y, Y-Z, X-Z | .9020, .8740, .9976 | .8966, .8002, .9768 | .9952, .1122, .1207 | .9190, .8536, .9872 | .7727, .0148*, .0204* | .9411, .8631, .6949 | n/a |
| Between three regions (A, B, C), two sites each: A-B, B-C, A-C | .7165, .2989, .1471 | .9866, .7663, .8442 | .0308*, .0900, .3338 | .9462, .5097, .3833 | .0089**, .0045**, .2488 | .0337*, .9001, .0266* | n/a |
| Between two waves of stepped wedge, three sites each | .1388 | .2948 | .8802 | .4252 | .6895 | .0627 | n/a |

Note: The pre-site visit assessment and site visit columns belong to the pre-implementation phase (continuous data, six sites). The beginning, middle, end, and difference in means columns belong to the active implementation phase (2-week interval data, six sites). *p < .05, **p < .01.

Figure 2.

Average external facilitator (EF) time spent across six implementation sites.

Note: *Data were collected over a 2-week interval, as described in the text; shown is the collected data divided by 2 (for the weekly average) then multiplied by 17, as an estimate of the number of hours spent over 17 weeks—that is, the duration of each of the beginning, middle, and end of the 12-month active implementation phase.

For the 4–6 weeks of the pre-implementation phase, the EFs spent 21.8 ± 4.5 h per site for the pre-site visit assessment, orienting the site and assessing its contextual factors. The EFs spent 27.5 ± 4.6 h per site for the 1.5-day on-site visit (which was scheduled for one full 8-h work day plus another half work day of 4 h) including travel time to and from the site. For the 12-month active implementation phase, based on 2-week interval data, the EFs spent 2.5 ± 0.8 h per week per site toward the beginning of the active implementation phase, 1.4 ± 0.6 h per week per site around the middle of the active implementation phase, and 1.2 ± 0.6 h per week per site toward the end of the active implementation phase, or a total of 86.9 ± 24.1 h per site (an overall average of 1.7 ± 0.5 h per week per site). The time spent along this transition did not always monotonically decrease; for example, for two of our six sites, time spent was higher at the end of the active implementation phase than at the middle. Differences in the mean time spent with a site were significant (p < .05) between the beginning and the middle of the active implementation phase, and also between the beginning and the end of the active implementation phase. Differences in the mean total time spent per site between the three EFs were not significant (all pairwise p ≥ .87; Table 1).
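The extrapolation described in the Figure 2 note (hours logged in a 2-week interval, divided by 2, then multiplied by 17) can be checked against the reported means. The sketch below is illustrative and uses hypothetical names. It works from the rounded site-averaged values in Table 1, so its totals (about 86.7 h and 136.0 h) differ slightly from the reported 86.9 h and 136.2 h; that gap is presumably rounding, on the assumption that the reported totals were computed from unrounded site-level data.

```python
WEEKS_PER_PERIOD = 17  # each of the beginning, middle, and end periods


def period_hours_from_interval(interval_hours, interval_weeks=2):
    """Scale hours logged in a sampled interval to a full 17-week period."""
    weekly_average = interval_hours / interval_weeks
    return weekly_average * WEEKS_PER_PERIOD


# Reported site-averaged weekly means (hours per week per site), Table 1.
weekly_means = {"beginning": 2.5, "middle": 1.4, "end": 1.2}

# Active implementation phase: three 17-week periods.
active_total = sum(h * WEEKS_PER_PERIOD for h in weekly_means.values())

# Add the reported pre-site visit assessment (21.8 h) and site visit (27.5 h).
overall_total = 21.8 + 27.5 + active_total

print(round(active_total, 1))   # 86.7
print(round(overall_total, 1))  # 136.0
print(round(active_total / (3 * WEEKS_PER_PERIOD), 1))  # 1.7
```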

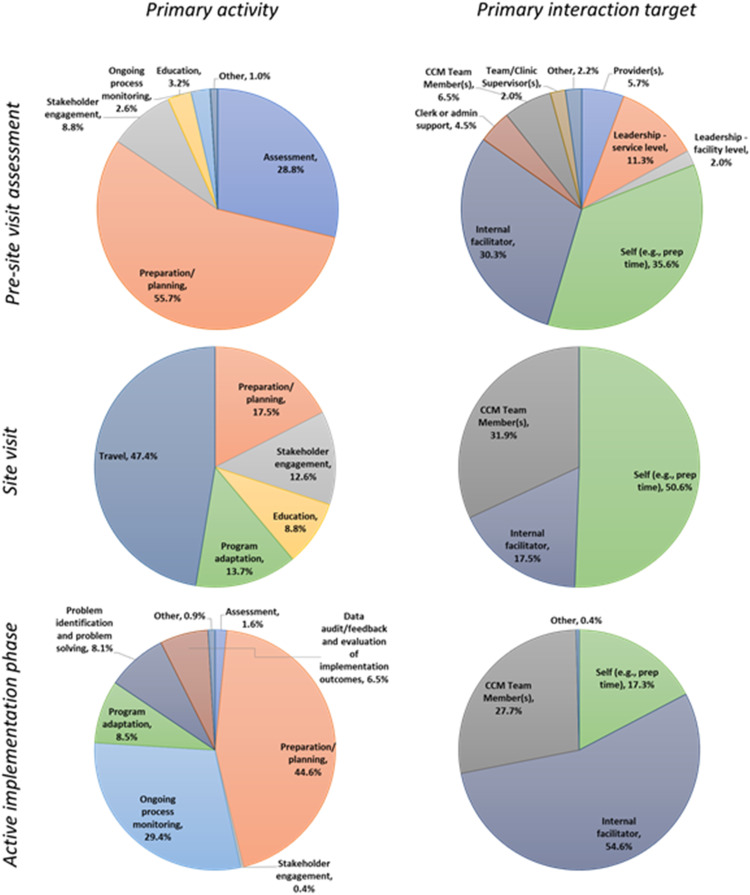

Figure 3 shows a breakdown of the activities and interaction targets across which the EF time was allocated, for each of (i) the pre-site visit assessment, (ii) the site visit, and (iii) the active implementation phase. The most prevalent activities were preparation/planning, ongoing process monitoring, program adaptation, problem identification, and problem-solving. The activities that were found to be prevalent were those that are considered to be core activities for implementation facilitation (Smith et al., 2019), which by definition is a bundle of strategies including implementation planning, engaging stakeholders, monitoring progress, and problem-solving (Ritchie et al., 2020a). Across all of these and other activities, 73.6% of EF time involved communication via email, phone, or video.

Figure 3.

Breakdown of the primary activities and interaction targets across which the external facilitator (EF) time spent was allocated.

Note: CCM: Collaborative Chronic Care Model.

Comparison of Time Estimates From 2-week Intervals Versus Continuous Data Collection

Comparing these averages based on 2-week interval data to those based on continuous data collected throughout the active implementation phase for two of the sites (Table 2), we found that the interval-based data underestimated the averages by 1.0, 0.1, and 0.2 h per week per site for the beginning, middle, and end of the active implementation phase, respectively. This represents an 18.1% difference in terms of total time spent on facilitation. To better understand the source of this discrepancy, we manually reviewed logged EF activities for the weeks in the continuous data that measured a higher amount of time spent than the time estimate based on the 2-week interval data. We noticed that the continuous data more consistently accounted for irregular time spent on assessing how implementation was progressing, engaging stakeholders, and continuously developing the network of needed personnel and resources for implementation. Using the continuous data available for two of the sites, we examined increasing the interval length from 2 weeks to 8 weeks, in 1-week steps, for each of the beginning, middle, and end of the active implementation phase. (Using such 8-week intervals would mean collecting data for 8 × 3 = 24 weeks total, i.e., almost half of the 12-month active implementation phase.) There was a small percentage difference in the average estimate of weekly EF time spent between using 2- versus 8-week intervals for each of the beginning, middle, and end of the active implementation phase (3.0 ± 0.3%, 2.3 ± 1.9%, and 6.7 ± 2.8%, respectively; in hours per week, these correspond to 0.05 ± 0.01, 0.02 ± 0.02, and 0.07 ± 0.03, respectively). Thus, there appears to be little advantage to extending data collection intervals from 2 to 8 weeks.
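The comparison in this section can be sketched as taking the mean of a sampled window of weeks and setting it against the mean of the full continuous series for the same period. The code below is a hypothetical illustration, not the study's procedure or data: the function name, the weekly values, and the window positions are all made up to show how a window of "typical" weeks can miss irregular high-effort weeks regardless of its length.

```python
from statistics import mean


def interval_vs_continuous(weekly_hours, start, length):
    """Compare the mean of a sampled window with the mean of the whole period.

    weekly_hours: continuous weekly hours for one 17-week period.
    start: zero-based index of the first sampled week.
    length: number of consecutive sampled weeks.
    Returns (interval_mean, continuous_mean, underestimate).
    """
    window = weekly_hours[start:start + length]
    if len(window) != length:
        raise ValueError("sampled window runs past the end of the period")
    interval_mean = mean(window)
    continuous_mean = mean(weekly_hours)
    return interval_mean, continuous_mean, continuous_mean - interval_mean


# Hypothetical period: 1 h in typical weeks, plus two irregular 5-h weeks.
period = [1.0] * 17
period[3] = 5.0
period[12] = 5.0

# Windows chosen to cover only typical weeks, at 2 and 8 weeks long.
for length in (2, 8):
    print(length, interval_vs_continuous(period, start=4, length=length))
```

In this made-up series, both window lengths give the same interval mean because neither window includes an irregular week, which mirrors the pattern reported above in which lengthening the interval changed the estimates very little.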

Table 2.

Comparison of time estimates for 2-week intervals, 8-week intervals, and continuous data collection.

| | Beginning of active implementation phase (hours per week per site) | Middle of active implementation phase (hours per week per site) | End of active implementation phase (hours per week per site) |
|---|---|---|---|
| Two-week interval data, two sites (mean ± SD) | 1.7 ± 0.6 | 1.0 ± 0.1 | 1.1 ± 0.1 |
| Eight-week interval data, two sites (mean ± SD) | 1.7 ± 0.6 | 1.0 ± 0.2 | 1.1 ± 0.1 |
| Continuous data, two sites (mean ± SD) | 2.7 ± 0.6 | 1.1 ± 0.2 | 1.3 ± 0.1 |
| Difference in means (p-value): two-eight, eight-continuous, two-continuous | .9958, .3209, .2967 | .9961, .7723, .8147 | .9295, .0537, .0428* |

Note: *p < .05.

Discussion

This study examined how EF time was spent on a multi-site randomized trial that used implementation facilitation as a strategy to implement the evidence-based CCM in interdisciplinary outpatient mental health provider teams. For each of the six implementation sites, we collected time–motion data continuously over 4–6 weeks of the pre-implementation phase and a kick-off site visit, then over a 2-week interval for each of the beginning, middle, and end of the 12-month active implementation phase. We estimated that EFs spent a total of 136.2 ± 27.6 h per site over the pre-implementation phase, the site visit, and the active implementation phase. EFs spent significantly more time at the beginning of the active implementation phase than at the middle or the end. The most prevalent activities were preparation/planning, ongoing process monitoring, program adaptation, problem identification, and problem-solving. Based on data from two of the sites at which we also collected continuous data throughout the active implementation phase, we found that the interval-based data underestimated the continuous data’s averages by 1.0, 0.1, and 0.2 h per week per site for the beginning, middle, and end of the active implementation phase, respectively, representing an 18.1% difference in terms of total time spent on facilitation.

As implementation facilitation has become increasingly embraced as a strategy for implementing innovations into practice (McCormack et al., 2021; Moore et al., 2020), there is heightened interest in examining the time allocated to implementation facilitation (Edelman et al., 2020). This interest is heavily fueled by growing demands to better estimate the cost of implementation (Yoon, 2020), and to adequately plan for resource allocation that can sufficiently support both the spread and sustainability of implemented practices (Hunter et al., 2020). The burden of measurement and documentation is considerable, however, when it comes to collecting data continuously throughout an implementation effort (Ritchie et al., 2020b), especially when it involves multifaceted strategies such as implementation facilitation. Pragmatic methods for economic evaluations of implementation strategies are beginning to be proposed (Cidav et al., 2020), and as economic analyses of implementation trials (Miller et al., 2020) are actively sought after by the field, this study offers one potentially useful approach to EF time data collection that is less time-intensive than continuous data collection.

Our finding that the EFs spent more time at the beginning of the active implementation phase than at the middle or the end is consistent with the expectation that EFs transition implementation expertise to the site over the course of the implementation phase (Kirchner et al., 2014). We found, however, that the time spent along this transition did not always monotonically decrease—for example, for two of our six sites, time spent was higher at the end of the active implementation phase than at the middle. The trial had deliberately designed the EF’s site-facing efforts to be on an “as needed” basis for the latter months of the active implementation phase, to move away from pre-scheduled weekly meetings with the implementation site while remaining flexible to offer assistance to the site when needed. This flexibility may have contributed to EFs increasing their engagement with sites that underwent contextual changes requiring attention at the end of the implementation phase. To accurately understand reasons for such specific observed patterns in time spent, future studies may consider additionally documenting the reasons for EF activities at the time of data collection, or triangulating with other contemporaneously collected implementation data (e.g., through interviews with EFs [Connolly et al., 2020]).

Close to three-quarters of the EF time spent involved communication via email, phone, or video. EF activities involving email, phone, or video communication are unlikely to be drastically altered even for fully virtual implementation facilitation that involves no in-person contact (Hartmann et al., 2021; Ritchie et al., 2020a). This makes our findings applicable to future implementation efforts that use virtual implementation facilitation, which has always offered potential travel cost savings and is currently being further considered in light of physical distancing guidelines and travel restrictions due to the COVID-19 pandemic (Sacco & De Domenico, 2021). Moreover, the less intense 2-week interval-based data collection approach has the potential to better enable resource-limited implementation efforts to feasibly collect data that they can use for economic evaluation. This can in turn more accurately inform future implementation in such contexts that are often responsible for delivering care to vulnerable populations, and thus help to devise implementation strategies that can serve as countermeasures to existing health disparities (Woodward et al., 2019).

Our study has its limitations. First, the 2-week interval-based data collection approach underestimated the EF time spent on irregular activities. By design, each EF had selected 2-week intervals for their sites that they deemed typical, to avoid random selection of nonrepresentative weeks that overlap with holidays and other irregular work schedules. Losing accurate capture of irregular activities was thus a tradeoff, and our findings point to even 8-week interval-based data not providing large improvements in accuracy. Future studies can potentially mitigate the impact of this limitation by (i) accounting for the underestimation tendency of the interval-based approach in projecting resource allocation needs and (ii) adapting the approach to have the EF document irregular activities that are time-expensive and fall outside their selected data collection weeks. Second, our study did not measure implementation time spent by internal facilitators and other site personnel participating in facilitation efforts. This was a design decision that we made both to limit the burden of implementation-related tasks on site personnel and to focus on our operational partner’s priority regarding what level of external resources needs to be provided to sites to conduct CCM implementation. Future implementation efforts, especially those for which the burden on the implementation site is considered relatively low, can explore the extension of interval-based data collection to site personnel’s implementation activities. Third, the data collection approach has been used only in a CCM implementation trial so far; facilitation of more or less complex interventions may require different amounts of EF effort. Further work is needed to examine the approach’s applicability to implementation efforts across different settings and practices, which is also crucial for rigorously assessing the exact extent to which time estimates based on this approach deviate from those based on continuous data collection across various implementation efforts. Fourth, the two continuous data collection sites had the same EF; this EF had expertise and a strong interest in time–motion analyses, and their activities may have differed from those of the other EFs. To minimize the impact of this limitation, the EFs were trained in facilitation by the same curriculum (Ritchie et al., 2020a), followed a manualized guide to implementing the CCM (available upon request), and held weekly coordination meetings to keep their facilitation activities consistent throughout implementation. Fifth, the number of sites included in this study is small. Especially in light of the aforementioned impact that contextual changes may have had on the results, further work on a larger sample and across multiple projects is needed to enable a more robust examination of external facilitation time-related findings that accounts for potential confounding factors including EF, site, and calendar time.

Conclusions

EFs spent 136.2 ± 27.6 h per site over the course of 55–57 weeks during a study investigating the implementation of the evidence-based CCM in interdisciplinary outpatient mental health provider teams. Nearly three-quarters of their time was spent on communication with the site via email, phone, or video, and they spent more time at the beginning of the active implementation phase than at the middle or the end. The 2-week interval-based data collection approach that we used may be a practical option for future implementation studies collecting time–motion data. This approach is a timely contribution to the field, especially as many implementation efforts are asked to evaluate their associated cost to inform adequate resource allocation that can support sustained and scaled-up implementation.

Footnotes

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by funding from the Department of Veterans Affairs Quality Enhancement Research Initiative (QUERI), grants QUE 15-289 and QUE 20-026. The funding sources had no involvement in the design and conduct of the study; collection, analysis, and interpretation of data; and preparation, review, or approval of the manuscript. The views expressed in this article are those of the authors and do not represent the views of the U.S. Department of Veterans Affairs or the United States Government.

References

- Badamgarav E., Weingarten S. R., Henning J. M., Knight K., Hasselblad V., Gano A., Ofman J. J. (2003). Effectiveness of disease management programs in depression: A systematic review. The American Journal of Psychiatry, 160(12), 2080–2090. 10.1176/appi.ajp.160.12.2080

- Bauer M. S., Miller C. J., Kim B., Lew R., Stolzmann K., Sullivan J., Riendeau R., Pitcock J., Williamson A., Connolly S., Elwy A. R., Weaver K. (2019). Effectiveness of implementing a collaborative chronic care model for clinician teams on patient outcomes and health status in mental health: A randomized clinical trial. JAMA Network Open, 2(3), e190230. 10.1001/jamanetworkopen.2019.0230

- Bauer M. S., Miller C., Kim B., Lew R., Weaver K., Coldwell C., Henderson K., Holmes S., Seibert M. N., Stolzmann K., Elwy A. R., Kirchner J. (2016). Partnering with health system operations leadership to develop a controlled implementation trial. Implementation Science, 11, 22. 10.1186/s13012-016-0385-7

- Bodenheimer T., Wagner E. H., Grumbach K. (2002a). Improving primary care for patients with chronic illness. JAMA, 288(14), 1775–1779. 10.1001/jama.288.14.1775

- Bodenheimer T., Wagner E. H., Grumbach K. (2002b). Improving primary care for patients with chronic illness: The chronic care model, part 2. JAMA, 288(15), 1909–1914. 10.1001/jama.288.15.1909

- Bradley D. F., Romito K., Dockery J., Taylor L., ONeel N., Rodriguez J., Talbot L. A. (2021). Reducing setup and turnover times in the OR with an innovative sterilization container: Implications for the COVID-19 era military medicine. Military Medicine, 186(12 Suppl 2), 35–39. 10.1093/milmed/usab214

- Burri Jr. G. J., Helander M. G. (1991). A field study of productivity improvements in the manufacturing of circuit boards. International Journal of Industrial Ergonomics, 7(3), 207–215. 10.1016/0169-8141(91)90004-6

- Carter H. E., Lee X. J., Farrington A., Shield C., Graves N., Cyarto E. V., Parkinson L., Oprescu F. I., Meyer C., Rowland J., Dwyer T., Harvey G. (2021). A stepped-wedge randomised controlled trial assessing the implementation, effectiveness and cost-consequences of the EDDIE + hospital avoidance program in 12 residential aged care homes: Study protocol. BMC Geriatrics, 21(1), 347. 10.1186/s12877-021-02294-8

- Cidav Z., Mandell D., Pyne J., Beidas R., Curran G., Marcus S. (2020). A pragmatic method for costing implementation strategies using time-driven activity-based costing. Implementation Science, 15(1), 28. 10.1186/s13012-020-00993-1

- Coleman K., Austin B. T., Brach C., Wagner E. H. (2009). Evidence on the chronic care model in the new millennium. Health Affairs, 28(1), 75–85. 10.1377/hlthaff.28.1.75

- Connolly S. L., Sullivan J. L., Ritchie M. J., Kim B., Miller C. J., Bauer M. S. (2020). External facilitators’ perceptions of internal facilitation skills during implementation of collaborative care for mental health teams: A qualitative analysis informed by the i-PARIHS framework. BMC Health Services Research, 20(1), 165. 10.1186/s12913-020-5011-3

- Curran G. M., Bauer M., Mittman B., Pyne J. M., Stetler C. (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50(3), 217–226. 10.1097/MLR.0b013e3182408812

- Edelman E. J., Dziura J., Esserman D., Porter E., Becker W. C., Chan P. A., Cornman D. H., Rebick G., Yager J., Morford K., Muvvala S. B., Fiellin D. A. (2020). Working with HIV clinics to adopt addiction treatment using implementation facilitation (WHAT-IF?): Rationale and design for a hybrid type 3 effectiveness-implementation study. Contemporary Clinical Trials, 98, 106156. 10.1016/j.cct.2020.106156

- Eisman A. B., Kilbourne A. M., Dopp A. R., Saldana L., Eisenberg D. (2020). Economic evaluation in implementation science: Making the business case for implementation strategies. Psychiatry Research, 283, 112433. 10.1016/j.psychres.2019.06.008

- Glick H. A., Kinosian B., McBride L., Williford W. O., Bauer M. S., & CSP #430 Study Team. (2004). Clinical nurse specialist care managers’ time commitments in a disease-management program for bipolar disorder. Bipolar Disorders, 6(6), 452–459. 10.1111/j.1399-5618.2004.00159.x

- Hartmann C. W., Engle R. L., Pimentel C. B., Mills W. L., Clark V. A., Keleher V. C., Nash P., Ott C., Roland T., Sloup S., Frank B., Brady C., Snow A. L. (2021). Virtual external implementation facilitation: Successful methods for remotely engaging groups in quality improvement. Implementation Science Communications, 2(1), 66. 10.1186/s43058-021-00168-z

- Hunter S. C., Kim B., Mudge A., Hall L., Young A., McRae P., Kitson A. L. (2020). Experiences of using the i-PARIHS framework: A co-designed case study of four multi-site implementation projects. BMC Health Services Research, 20(1), 573. 10.1186/s12913-020-05354-8

- Kato K., Yoshimi T., Tsuchimoto S., Mizuguchi N., Aimoto K., Itoh N., Kondo I. (2021). Identification of care tasks for the use of wearable transfer support robots - an observational study at nursing facilities using robots on a daily basis. BMC Health Services Research, 21(1), 652. 10.1186/s12913-021-06639-2

- Kirchner J. E., Ritchie M. J., Pitcock J. A., Parker L. E., Curran G. M., Fortney J. C. (2014). Outcomes of a partnered facilitation strategy to implement primary care-mental health. Journal of General Internal Medicine, 29(Suppl 4), 904–912. 10.1007/s11606-014-3027-2

- Kitson A., Harvey G., McCormack B. (1998). Enabling the implementation of evidence based practice: A conceptual framework. Quality in Health Care, 7(3), 149–158. 10.1136/qshc.7.3.149

- Lamper C., Huijnen I., Goossens M., Winkens B., Ruwaard D., Verbunt J., Kroese M. E. (2020). The (cost-)effectiveness and cost-utility of a novel integrative care initiative for patients with chronic musculoskeletal pain: The pragmatic trial protocol of network pain rehabilitation Limburg. Health and Quality of Life Outcomes, 18(1), 320. 10.1186/s12955-020-01569-9

- Lim C. K., Connolly M., Mirkazemi C. (2022). A budget impact analysis of iron polymaltose and ferric carboxymaltose infusions. International Journal of Clinical Pharmacy, 44(1), 110–117. 10.1007/s11096-021-01320-4

- Magu P., Khanna K., Seetharaman P. (2015). Path process chart – A technique for conducting time and motion study. Procedia Manufacturing, 3, 6475–6482. 10.1016/j.promfg.2015.07.929

- Matthieu M. M., Ounpraseuth S. T., Painter J., Waliski A., Williams J. S., Hu B., Smith R., Garner K. K. (2020). Evaluation of the national implementation of the VA diffusion of excellence initiative on advance care planning via group visits: Protocol for a quality improvement evaluation. Implementation Science Communications, 1, 19. 10.1186/s43058-020-00016-6

- McCormack R. P., Rotrosen J., Gauthier P., D'Onofrio G., Fiellin D. A., Marsch L. A., Novo P., Liu D., Edelman E. J., Farkas S., Matthews A. G., Mulatya C., Salazar D., Wolff J., Knight R., Goodman W., Hawk K. (2021). Implementation facilitation to introduce and support emergency department-initiated buprenorphine for opioid use disorder in high need, low resource settings: Protocol for multi-site implementation-feasibility study. Addiction Science & Clinical Practice, 16(1), 16. 10.1186/s13722-021-00224-y

- Miller C. J., Griffith K. N., Stolzmann K., Kim B., Connolly S. L., Bauer M. S. (2020). An economic analysis of the implementation of team-based collaborative care in outpatient general mental health clinics. Medical Care, 58(10), 874–880. 10.1097/MLR.0000000000001372

- Miller C. J., Grogan-Kaylor A., Perron B. E., Kilbourne A. M., Woltmann E., Bauer M. S. (2013). Collaborative chronic care models for mental health conditions: Cumulative meta-analysis and metaregression to guide future research and implementation. Medical Care, 51(10), 922–930. 10.1097/MLR.0b013e3182a3e4c4

- Miller C. J., Sullivan J. L., Kim B., Elwy A. R., Drummond K. L., Connolly S., Riendeau R. P., Bauer M. S. (2019). Assessing collaborative care in mental health teams: Qualitative analysis to guide future implementation. Administration and Policy in Mental Health, 46(2), 154–166. 10.1007/s10488-018-0901-y

- Moore J. L., Virva R., Henderson C., Lenca L., Butzer J. F., Lovell L., Roth E., Graham I. D., Hornby T. G. (2020). Applying the knowledge-to-action framework to implement gait and balance assessments in inpatient stroke rehabilitation. Archives of Physical Medicine and Rehabilitation, S0003–9993(20), 31255–7. Advance online publication. 10.1016/j.apmr.2020.10.133

- Olmos-Ochoa T. T., Ganz D. A., Barnard J. M., Penney L., Finley E. P., Hamilton A. B., Chawla N. (2021). Sustaining implementation facilitation: A model for facilitator resilience. Implementation Science Communications, 2(1), 65. 10.1186/s43058-021-00171-4

- Pittman J., Lindamer L., Afari N., Depp C., Villodas M., Hamilton A., Kim B., Mor M. K., Almklov E., Gault J., Rabin B. (2021). Implementing eScreening for suicide prevention in VA post-9/11 transition programs using a stepped-wedge, mixed-method, hybrid type-II implementation trial: A study protocol. Implementation Science Communications, 2(1), 46. 10.1186/s43058-021-00142-9

- Racine A. D., Davidson A. G. (2002). Use of a time-flow study to improve patient waiting times at an inner-city academic pediatric practice. Archives of Pediatrics & Adolescent Medicine, 156(12), 1203–1209. 10.1001/archpedi.156.12.1203

- Ritchie M. J., Dollar K. M., Miller C. J., Smith J. L., Oliver K. A., Kim B., Connolly S. L., Woodward E., Ochoa-Olmos T., Day S., Lindsay J. A., Kirchner J. E. (2020a). Using implementation facilitation to improve healthcare (version 3). Veterans Health Administration, Behavioral Health Quality Enhancement Research Initiative (QUERI). https://www.queri.research.va.gov/tools/Facilitation-Manual.pdf

- Ritchie M. J., Kirchner J. E., Townsend J. C., Pitcock J. A., Dollar K. M., Liu C. F. (2020b). Time and organizational cost for facilitating implementation of primary care mental health integration. Journal of General Internal Medicine, 35(4), 1001–1010. 10.1007/s11606-019-05537-y

- Ruud T., Drake R. E., Šaltytė Benth J., Drivenes K., Hartveit M., Heiervang K., Høifødt T. S., Haaland V. Ø., Joa I., Johannessen J. O., Johansen K. J., Stensrud B., Woldsengen Haugom E., Clausen H., Biringer E., Bond G. R. (2021). The effect of intensive implementation support on fidelity for four evidence-based psychosis treatments: A cluster randomized trial. Administration and Policy in Mental Health, 48(5), 909–920. 10.1007/s10488-021-01136-4

- Sacco P. L., De Domenico M. (2021). Public health challenges and opportunities after COVID-19. Bulletin of the World Health Organization, 99(7), 529–535. 10.2471/BLT.20.267757

- Smith J. L., Ritchie M. J., Kim B., Miller C. J., Chinman M., Landes S., Kelly P. A., Kirchner J. E. (2019). Getting to fidelity: Identifying core components of implementation facilitation strategies. Presented at the 2019 VA HSR&D/QUERI National Conference, ‘Innovation to Impact: Research to Advance VA’s Learning Healthcare Community’, Washington DC.

- Solomon J. M., Bhattacharyya S., Ali A. S., Cleary L., Dibari S., Boxer R., Healy B. C., Milligan T. A. (2021). Randomized study of bedside vs hallway rounding: Neurology rounding study. Neurology, 97(9), 434–442. 10.1212/WNL.0000000000012407

- Sullivan J. L., Kim B., Miller C. J., Elwy A. R., Drummond K. L., Connolly S. L., Riendeau R. P., Bauer M. S. (2021). Collaborative chronic care model implementation within outpatient behavioral health care teams: Qualitative results from a multisite trial using implementation facilitation. Implementation Science Communications, 2(1), 33. 10.1186/s43058-021-00133-w

- Von Korff M., Gruman J., Schaefer J., Curry S. J., Wagner E. H. (1997). Collaborative management of chronic illness. Annals of Internal Medicine, 127(12), 1097–1102. 10.7326/0003-4819-127-12-199712150-00008

- Wagner E. H., Austin B. T., Von Korff M. (1996). Organizing care for patients with chronic illness. The Milbank Quarterly, 74(4), 511–544. 10.2307/3350391

- Woltmann E., Grogan-Kaylor A., Perron B., Georges H., Kilbourne A. M., Bauer M. S. (2012). Comparative effectiveness of collaborative chronic care models for mental health conditions across primary, specialty, and behavioral health care settings: Systematic review and meta-analysis. The American Journal of Psychiatry, 169(8), 790–804. 10.1176/appi.ajp.2012.11111616

- Woodward E. N., Matthieu M. M., Uchendu U. S., Rogal S., Kirchner J. E. (2019). The health equity implementation framework: Proposal and preliminary study of hepatitis C virus treatment. Implementation Science, 14(1), 26. 10.1186/s13012-019-0861-y

- Yoon J. (2020). Including economic evaluations in implementation science. Journal of General Internal Medicine, 35(4), 985–987. 10.1007/s11606-020-05649-w

- Young R. A., Burge S. K., Kumar K. A., Wilson J. M., Ortiz D. F. (2018). A time-motion study of primary care physicians’ work in the electronic health record era. Family Medicine, 50(2), 91–99. 10.22454/FamMed.2018.184803