Summary

Gene regulation is a central topic in cell biology. Advances in omics technologies and the accumulation of omics data have provided better opportunities for gene regulation studies than ever before. For this reason, deep learning, as a data-driven predictive modeling approach, has been successfully applied to this field during the past decade. In this article, we aim to give a brief yet comprehensive overview of representative deep-learning methods for gene regulation. Specifically, we discuss and compare the design principles and datasets used by each method, creating a reference for researchers who wish to replicate or improve existing methods. We also discuss the common problems of existing approaches and introduce emerging deep-learning paradigms that could potentially alleviate them. We hope that this article will provide a rich and up-to-date resource and shed light on future research directions in this area.

Keywords: deep learning, neural network, gene regulation, omics

In this review, Li et al. comprehensively discuss deep-learning applications for understanding gene regulation at various omics levels. They point out the problems and challenges of deep learning in such applications and ways to alleviate them. Emerging deep-learning methods and prospective applications are also discussed.

Introduction

Understanding gene regulation is a central topic in cell biology. Gene regulation in eukaryotes takes place at various stages of the central dogma, including the genomic level, transcriptomic level, and proteomic level. During the past two decades, advances in omics technologies, including those in genomics, transcriptomics, and proteomics, have enabled a better systematic understanding of multiple levels of gene regulation than ever before. Developments in microarray, DNA and RNA sequencing, mass spectrometry, and single-cell technologies have provided foundations for experimental techniques to study gene regulation at a greater scale and finer resolution. This includes techniques such as chromatin immunoprecipitation sequencing (ChIP-seq)1 for protein-DNA binding, cross-linking immunoprecipitation sequencing (CLIP-seq)2,3 for protein-RNA binding, DNase I hypersensitive sites sequencing (DNase-seq)4 and assay for transposase-accessible chromatin using sequencing (ATAC-seq)5 for genome-wide chromatin accessibility, high-throughput RNA sequencing (RNA-seq) for gene expression level profiling, and 3′ region extraction and deep sequencing (3′-READS)6 for polyadenylation. In the meantime, large omics projects utilizing such techniques, such as the 1000 Genomes Project,7 Encyclopedia of DNA Elements (ENCODE),8 Roadmap Epigenomics,9 and the Genotype-Tissue Expression (GTEx) project,10 have been launched to decipher biological processes at various levels from genotype to phenotype in various individuals, species, and tissue types.
Concurrently, omics data from individual studies are continuously uploaded to and collected by publicly accessible databases such as the Sequence Read Archive (SRA),11 the European Nucleotide Archive (ENA),12 and the UniProt Archive (UniParc).13 The aforementioned projects and databases have proved to be invaluable resources for omics studies because they not only support discoveries in the original studies but also enable continued analysis by independent researchers.

As a result, the need for analytical algorithms to process, interpret, and discover patterns in omics data is greater than ever before. Statistical learning-based data-mining algorithms, such as logistic regression (LR), support vector machines (SVM), and hidden Markov models, have been extensively applied in omics since its inception.14,15 Such algorithms are sometimes termed “shallow learning” algorithms because they operate on features extracted from an object of interest and run only a few inference steps as specified by a pre-determined statistical model. Although effective, such models rely heavily on how those features are engineered. A feature engineering technique that captures a highly discriminative pattern will yield much better performance than one that overlooks it. In fields where knowledge of such patterns is limited (as is usually the case in omics), machine-learning algorithms built on features engineered from a priori knowledge often miss important aspects that lie beyond our current understanding. Relying on feature engineering can therefore degrade performance and cause new discoveries to be missed. Such an approach may also lead to poor model generalizability, as features that work in one scenario may not be as effective in others. It would therefore be highly desirable for learning algorithms to discover such discriminative features automatically, directly from the data.
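To make the contrast concrete, a typical hand-engineered input for a shallow learner is a vector of k-mer counts. The sketch below (the function name `kmer_counts` is our own, not taken from any cited method) computes such features; a classifier such as LR or an SVM would then be fit on these vectors. Everything the classifier can see is fixed in advance by the choice of k and the alphabet, which is exactly the limitation described above.

```python
from itertools import product


def kmer_counts(seq: str, k: int = 2):
    """Count each DNA k-mer in `seq`, in lexicographic ACGT order.

    This is a fixed, hand-designed feature map: a downstream learner can
    only use patterns that are expressible as k-mer frequencies.
    """
    kmers = ["".join(p) for p in product("ACGT", repeat=k)]
    index = {kmer: i for i, kmer in enumerate(kmers)}
    counts = [0] * len(kmers)
    for i in range(len(seq) - k + 1):
        kmer = seq[i:i + k].upper()
        if kmer in index:  # skip windows containing N or other symbols
            counts[index[kmer]] += 1
    return counts


features = kmer_counts("ACGTACGA", k=2)  # 16 features for k = 2
```

Capturing a spaced or position-dependent motif would require re-engineering this function, whereas a deep model learns its own feature detectors from the data.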

Since 2012, deep learning has achieved remarkable success in various other fields via a data-driven approach.16 Deep learning is a general term for machine-learning algorithms built on deep neural networks (DNNs). DNNs consist of multiple artificial neural network layers, which are biologically inspired data-processing units that serve as non-linear transformation functions of their inputs. As each layer takes as input the output of the previous layer, the overall transformation becomes increasingly complex as the number of layers grows. These functions are learnable in the sense that their parameters are adjusted during the “training” process. Deep-learning models are usually trained by fitting them to the training data through the optimization of an objective function (the “loss function”) using the gradient descent algorithm.16 The trained model is then expected to perform inference tasks on data drawn from the same or a similar statistical distribution as the training data. Although the success of deep learning is due in part to its learning capacity, generalizability, and computational efficiency on dedicated computing hardware, its most important aspect is its representation learning ability. In contrast to “shallow learning” algorithms, deep learning performs inference with a deep, hierarchical DNN architecture. The lower layers in the hierarchy learn “representations,” which are highly discriminative features discovered by the algorithm directly from the data. The higher layers summarize the representations from the lower layers and produce the result of the inference. This makes deep learning especially useful in omics, because it overcomes the limitations of “shallow learning” methods and could discover patterns in biological sequences or measurements that are as yet unknown. This is undoubtedly one of the reasons why many successful deep-learning applications in omics have emerged in the past decade.
In addition, copious omics data take a form that is amenable to being processed by deep-learning algorithms. For example, there is a similarity between biological sequences and natural languages. Certain sequence motifs serve as regulatory codes, and the interactions between such codes serve as the regulatory grammar. This has led to numerous successful biological applications of off-the-shelf deep-learning models.17,18,19 Returning to the topic of gene regulation, it will be of great interest to find out whether deep learning in omics can decipher the regulatory code and grammar, model the regulatory process, help us understand the regulatory mechanism, and assist us in achieving the major goals of omics.

In this survey and perspective, we aim to give a brief yet systematic review of the application of deep learning in gene regulation studies with various kinds of omics data. We will cover applications of deep learning at various omics levels, including the genomic, transcriptomic, and proteomic levels (Figure 1). We will focus on how prediction tasks addressing different biological questions have been formulated for deep learning. We will investigate the model architectures used by the studies and discuss their functionalities and design principles. In particular, we comprehensively list the datasets that are used in each study as a convenient reference for researchers wishing to replicate existing methods or develop new methods in this field. Prospectively, we will discuss the application potential of various emerging deep-learning paradigms, such as self-supervised learning, meta-learning, and large-scale pre-trained models for biological sequences, that could alleviate the problems of existing approaches. We also point out the growing trend of integrating structural information, multi-omics profiles, and single-cell profiles into gene regulation studies. We hope that this article will provide a rich and up-to-date resource and serve as a starting point for new researchers interested in this area.

Figure 1.

Deep-learning applications in gene regulation at various omics levels

Review of deep-learning applications in gene regulation

Types of neural networks used in gene regulation studies

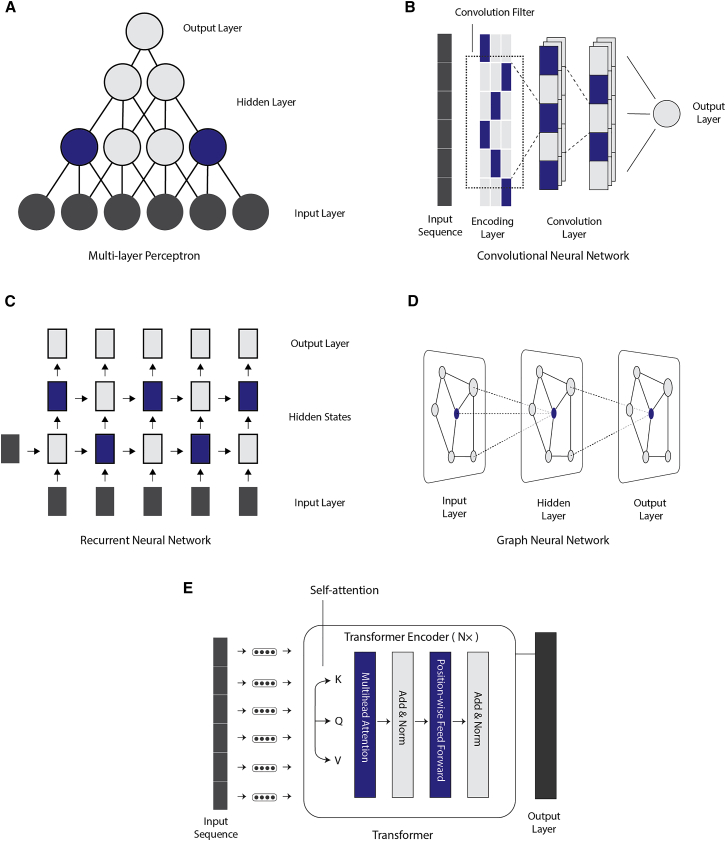

During the past decade, the neural network models used in gene regulation studies have largely followed those developed in computer vision and natural language processing, the fields in which deep learning first achieved its breakthroughs. The most popular types include multi-layer perceptrons (MLPs) (Figure 2A), which were widely used in early applications of deep learning to tabular data. The input layer of an MLP directly takes in the values from the input dataset, which are then processed by one or more hidden layers. Finally, an output layer summarizes the information processed by the hidden layers to produce the final prediction.
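The input, hidden, and output layers of an MLP can be sketched as a plain forward pass. This is a minimal illustration, assuming ReLU hidden activations and a single sigmoid output; the weights are hand-picked, hypothetical values rather than a trained model.

```python
import math


def dense(x, weights, bias):
    """Fully connected layer: y_j = sum_i x_i * W[i][j] + b_j."""
    return [sum(xi * weights[i][j] for i, xi in enumerate(x)) + bias[j]
            for j in range(len(bias))]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def mlp_forward(x, layers):
    """Pass x through the hidden layers (ReLU), then the output layer (sigmoid)."""
    h = x
    for weights, bias in layers[:-1]:
        h = relu(dense(h, weights, bias))
    weights, bias = layers[-1]
    return [sigmoid(a) for a in dense(h, weights, bias)]


# One hidden layer (2 -> 2) and one output layer (2 -> 1), toy weights.
layers = [([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
          ([[1.0], [1.0]], [-2.0])]
prediction = mlp_forward([1.0, 2.0], layers)
```

Training would adjust these weights by gradient descent on a loss, as described in the Introduction; only the inference path is shown here.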

Figure 2.

Common deep-learning architectures used in gene regulation studies

(A) Multi-layer perceptron.

(B) Convolutional neural network.

(C) Recurrent neural network.

(D) Graph neural network.

(E) Transformer.

Following their success in image recognition and text classification, convolutional neural networks (CNNs)20 have proved very useful for handling raw biological sequence data, whether DNA, RNA, or protein sequences (Figure 2B). CNNs employ convolution filters to process sequential or image data in a way that respects the spatial structure of the data. To handle long-range interactions in sequential data, recurrent neural networks (RNNs) such as gated recurrent units (GRUs)21 and long short-term memory (LSTM)22 networks have received particular interest in biological sequence analysis (Figure 2C). RNNs maintain hidden states that carry information from earlier positions in the sequence, which benefits the modeling of long-range interactions. Graph neural networks (GNNs) are designed to handle structured datasets that are represented as a graph (Figure 2D). GNNs also have input, hidden, and output layers in their architectures. In contrast to plain MLPs, which handle individual data points independently, the hidden and output layers of GNNs respect the topological structure of the dataset. In more recent years, inspired by their success in natural language processing and understanding, Transformers19,23,24 have also received much attention for processing biological sequence data (Figure 2E). Transformers are powerful learners of sequential data, partly because they employ the self-attention mechanism, which models the pairwise interdependencies between sequence elements. Transformers are also popular choices for self-supervised learning on biological sequences,18 which we will discuss in subsequent sections.
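How a CNN "reads" raw DNA can be illustrated by one-hot encoding a sequence and sliding a single filter along it. In a trained model the filter weights are learned; here the filter is hypothetical and simply rewards matches to the motif "TATA", so it behaves like a position weight matrix being scanned across the sequence.

```python
BASES = "ACGT"


def one_hot(seq):
    """Encode DNA as a list of 4-dim indicator vectors (A, C, G, T); N -> zeros."""
    return [[1.0 if b == base else 0.0 for base in BASES] for b in seq.upper()]


def conv1d(x, kernel):
    """Valid 1D convolution (cross-correlation, as in deep-learning libraries)
    of an L x 4 input with a k x 4 filter; returns L - k + 1 scores."""
    k = len(kernel)
    return [sum(x[i + j][c] * kernel[j][c] for j in range(k) for c in range(4))
            for i in range(len(x) - k + 1)]


# Hypothetical filter: +1 for each base that matches "TATA".
tata_filter = one_hot("TATA")
scores = conv1d(one_hot("GCTATAAG"), tata_filter)
```

The highest score appears where the motif occurs exactly (position 2), and partial matches score lower; stacking such layers lets a network combine motifs into higher-order patterns.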
According to the nature of the prediction tasks formulated in each study, researchers designed deep-learning architectures utilizing one or more of the aforementioned networks with highly customized configurations to achieve higher performance, greater computational efficiency, and better biological interpretability. In this article, we do not wish to provide a comprehensive introduction to the neural network types used in deep learning. Instead, we refer readers to dedicated hands-on tutorials25,26 and introductory textbooks27 on deep learning.

Genomic-level applications

In this section, we review the most representative research works that applied deep learning to the study of genomic-level regulations. All works that we review have formulated a supervised learning problem and can predict functional genomic features from the genomic sequence. In this way, they aim to decipher the regulatory code and grammar from the genomic sequence and predict how genetic variations will affect a particular regulatory mechanism.

The relevant studies and methods are listed and summarized in Table 1. In particular, we list the functionalities each method achieves, the datasets each method uses, and the deep-learning architectures on which the methods are based. As the regulatory code and grammar of genomic sequences are interpreted differently in different organisms and tissue/cell types, most models have particular ways to provide organism- and tissue-/cell-type-specific predictions. Therefore, we particularly highlight the species and tissue/cell types that are involved in each study.

Table 1.

Genomic-level deep-learning applications

| Method name | Year | Functionalities | Species | Tissue/cell types |
|---|---|---|---|---|
| Functional genomic models: | | | | |
| DeepBind17 | 2015 | ✓ ✓ | human, mouse | multiple |
| DeepSEA30 | 2015 | ✓ ✓ ✓ ✓ | human | multiple |
| Basset34 | 2016 | ✓ ✓ | human | multiple |
| DanQ35 | 2016 | ✓ ✓ ✓ ✓ | human | multiple |
| CpGenie36 | 2017 | ✓ ✓ | human, mouse | multiple lymphoblastoid cell lines |
| De-Fine37 | 2018 | ✓ ✓ | human | K562 and GM12878 |
| Basenji38 | 2018 | ✓ ✓ ✓ | human | multiple |
| Expecto41 | 2018 | ✓ ✓ ✓ ✓ | human | multiple (>200) |
| Xpresso42 | 2020 | ✓ ✓ | human, mouse | multiple |
| DeepMEL43 | 2020 | ✓ ✓ | trained on human, with cross-species generalizability | melanoma samples |
| Enformer46 | 2021 | ✓ ✓ ✓ ✓ | human, mouse | multiple |
| BPNet48 | 2021 | ✓ | mouse | mESC |
| 3D genomic models: | | | | |
| Akita50 | 2020 | ✓ ✓ | human, mouse | multiple (multiple human and mouse cell lines; mouse neuronal tissues) |
| Orca56 | 2022 | ✓ ✓ ✓ ✓ | human | multiple (HFF and H1 hESC cell lines) |
| GraphReg57 | 2022 | ✓ ✓ ✓ ✓ | human, mouse | multiple |

DHS, DNase I hypersensitivity prediction; Histone, histone modification prediction; TF, transcription factor binding prediction; Variant, effect of genetic variants prediction; DNA met, DNA methylation prediction; 3D, 3D genome-folding prediction.

DeepBind17 is a pioneering work dedicated to the prediction of nucleic acid-protein binding. Based on a CNN architecture, it can learn from multiple experimental technologies for profiling DNA-protein binding, including protein binding microarrays60 (PBM), ChIP-seq,1 and HT-SELEX.61 DeepSEA30 is one of the seminal works that applied deep learning to whole-genome functional genomics annotation. DeepSEA uses a CNN-based architecture that takes in 1,000-bp human genomic DNA sequences and performs a multi-task prediction of DNase I hypersensitivity (DHS), transcription factor (TF) binding, and histone modification in multiple cell lines. DeepSEA was trained on 125 DHS profiles (by DNase-seq4) and 690 TF binding profiles (by ChIP-seq1 for 160 distinct TFs) from ENCODE,8 and 104 histone modification profiles (by ChIP-seq) from Roadmap Epigenomics.31 DeepSEA uses a single model to predict the signals of all 919 epigenomic profiles (125 DHS, 690 TF binding, and 104 histone modification profiles). This multi-task design allows it to share the learned genomic grammar across different tasks. Additionally, the authors trained a boosted logistic classifier on the predictions of DeepSEA and showed that it could prioritize functional non-coding regulatory mutations in HGMD32 and expression quantitative trait loci (eQTL) in GRASP.33
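The multi-task design can be sketched as one shared sequence representation feeding one sigmoid output per profile, trained with binary cross-entropy averaged over tasks. This is a generic formulation that assumes a binary label (peak or no peak) per profile; it is not claimed to reproduce DeepSEA's exact loss, weighting, or regularization, and all weights below are made up.

```python
import math


def multitask_logits(h, task_weights, task_biases):
    """One linear output (logit) per task, all reading the same shared
    representation `h` produced by the convolutional trunk."""
    return [sum(w_i * h_i for w_i, h_i in zip(w, h)) + b
            for w, b in zip(task_weights, task_biases)]


def multitask_bce(logits, labels):
    """Mean binary cross-entropy over tasks; each task is an independent
    yes/no label (e.g. a peak in one DHS, TF, or histone profile)."""
    total = 0.0
    for z, y in zip(logits, labels):
        p = 1.0 / (1.0 + math.exp(-z))  # sigmoid, not softmax: labels are not exclusive
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(logits)


# Toy example: a 2-dim shared representation feeding 3 hypothetical tasks.
h = [1.0, -1.0]
logits = multitask_logits(h, [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]], [0.0, 0.0, 0.0])
loss = multitask_bce(logits, [1, 0, 1])
```

Because every task's gradient flows back into the same `h`, motifs useful for one profile can be reused by the others, which is the sharing effect described above.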

Motivated by the success of DeepSEA, follow-up works have improved and extended it in several respects. Basset34 is also a CNN-based model, focused particularly on DHS prediction. It extends DeepSEA’s DHS prediction to a total of 164 cell types (125 cell types from ENCODE8 and 39 cell types from Roadmap Epigenomics9). Basset provides cell-type-specific predictions through a multi-task output layer with one output per cell type. Instead of using a pure CNN architecture, DanQ35 explored the effectiveness of a hybrid architecture combining a CNN and a bidirectional LSTM (BiLSTM). It outperformed DeepSEA when both were trained on exactly the same dataset.
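The "bidirectional" part of a BiLSTM can be illustrated independently of the LSTM cell itself. In the sketch below, a plain tanh RNN with a scalar state stands in for the LSTM cell (a simplification for brevity), and the inputs stand in for per-position activations of a convolutional filter, as in a CNN + BiLSTM hybrid. One pass runs left to right, the other right to left, and their states are paired at every position.

```python
import math


def rnn_pass(xs, w_in, w_rec):
    """Scalar tanh RNN, h_t = tanh(w_in * x_t + w_rec * h_{t-1}), with h_0 = 0."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def bidirectional(xs, w_in=1.0, w_rec=0.5):
    """Pair forward and backward hidden states at every position, so each
    position summarizes context from both its left and its right."""
    fwd = rnn_pass(xs, w_in, w_rec)
    bwd = rnn_pass(xs[::-1], w_in, w_rec)[::-1]
    return list(zip(fwd, bwd))


# Per-position scalar inputs, e.g. one convolutional filter's activations.
states = bidirectional([1.0, 0.0, 0.0])
```

The signal at the first position is still visible in the forward state at the last position, while the backward state there has seen nothing yet; combining both directions gives every position access to the whole sequence.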

The above integrative functional genomic models do not diminish the value of dedicated predictive models. CpGenie36 predicts the DNA methylation status of CpG sites from the surrounding genomic sequence. It was trained on reduced representation bisulfite sequencing (RRBS) and whole-genome bisulfite sequencing (WGBS) profiles from ENCODE. De-Fine37 focused on TF binding prediction and was trained specifically on TF binding profiles from the K562 and GM12878 cell lines from ENCODE. In contrast to the previous studies, De-Fine used cell-line-specific genome sequences instead of the human reference genome sequence, pointing out that cell-line-specific genomic variation may affect model training and prediction. It also experimented with quantitative rather than binary prediction of functional genomic elements.

More recent studies have further improved the network architecture and integrated genomic predictions with transcriptomic predictions, such as promoter activity and gene expression levels. Compared with previous methods, Basenji,38 a successor to Basset, significantly enlarged the length of genomic sequence that a CNN-based model can take in. Using dilated convolution,62 which exponentially increases the receptive field of high-level neurons, the model can process 131-kbp inputs and is therefore able to recognize motifs with long-range dependencies. To make quantitative predictions of each genomic feature, Basenji uses a Poisson negative log-likelihood as its loss function. Expecto,41 a redesign of DeepSEA by its original authors, extends DeepSEA into a three-stage model. The first stage is still CNN based and resembles the original DeepSEA model, but with a larger input (2,000 bp). The second stage is a spatial transformation module that reduces dimensionality and weighs contributions from nearby sites according to their relative distance. The third stage performs a gradient-boosted linear regression of gene expression levels using the genomic features produced by stage 2 and 218 tissue expression profiles from GTEx,10 Roadmap Epigenomics, and ENCODE. Expecto was shown to prioritize mutations related to several immunity-related diseases, such as Crohn’s disease, ulcerative colitis, inflammatory bowel disease, and Behçet’s disease. Like Expecto, Xpresso42 predicts gene expression levels. However, Xpresso was deliberately designed to make such predictions based purely on the genomic sequence surrounding the promoter (∼10.5 kbp). By inspecting the discrepancies between model predictions and ground-truth measurements, the researchers identified genes whose regulation involves mechanisms beyond promoter activity. These included polycomb-mediated transcriptional gene silencing,63 enhancer-mediated transcriptional gene activation, and microRNA-mediated gene repression. Enformer46 was the first to employ a CNN + Transformer hybrid architecture for gene expression level and epigenetic feature prediction. Using the same dataset as Basenji247 (an updated version of Basenji), it achieved substantially higher accuracy than its predecessors. Enformer was trained by alternately feeding in human and mouse genomic sequences, enabling it to perform cross-species inference.
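Two ingredients mentioned for Basenji can be checked with a little arithmetic: how dilation grows the receptive field, and what a Poisson loss rewards. The kernel size of 3, the 10 layers, and the doubling dilation schedule below are illustrative assumptions, not Basenji's actual configuration (which also uses pooling).

```python
import math


def receptive_field(kernel_size, dilations):
    """Input positions seen by one output of stacked stride-1 convolutions;
    a layer with dilation d widens the field by (kernel_size - 1) * d."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def poisson_nll(rate, count):
    """Poisson negative log-likelihood of an observed read count given a
    predicted rate (> 0), omitting the constant log(count!) term."""
    return rate - count * math.log(rate)


plain = receptive_field(3, [1] * 10)                       # 10 ordinary layers
dilated = receptive_field(3, [2 ** i for i in range(10)])  # dilations 1, 2, ..., 512
```

Ten ordinary layers see 21 positions, while ten layers with doubling dilation see 2,047, so the field grows exponentially with depth at the same parameter count. The Poisson loss is minimized when the predicted rate equals the observed count, which makes it a natural fit for count-valued sequencing tracks.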

Recent works have also explored alternative problem formulations and measurements from alternative experimental techniques. DeepMEL43 constructed a model similar to that of DanQ for the prediction of chromatin accessibility in melanoma cell lines. DeepMEL was trained on melanoma omniATAC-seq64 data (an improved version of ATAC-seq), and, instead of predicting binary chromatin accessibility at each location, it predicts 24 sets of co-accessible regions (topics) identified by cisTopic.45 This design better exploits the co-regulation of accessible chromatin regions. BPNet,48 a 10-layer CNN with dilated convolutions and residual connections, was trained on ChIP-nexus49 (a high-resolution improvement of ChIP-seq) data for four pluripotency TFs (Oct4, Sox2, Nanog, and Klf4) and predicts base-resolution binding profiles of all four TFs from genomic sequence in a multi-task fashion. BPNet uncovered cooperativity between TF motifs located within 1,000-bp regions, such as the Oct4-Sox2 and Oct4-Oct4 motifs, as well as a periodic pattern of cooperation between the Nanog motif and AT-rich motifs.
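A base-resolution profile model such as BPNet has to say both where reads fall and how many there are. A minimal sketch of a two-part loss that separates these, assuming a multinomial likelihood for the profile shape and squared error on log total counts, is shown below; the function names are our own, and BPNet's exact loss weighting and implementation may differ.

```python
import math


def log_softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def profile_loss(logits, counts):
    """Multinomial negative log-likelihood of observed per-base read counts
    under predicted per-position probabilities (constant terms dropped)."""
    return -sum(c * lp for c, lp in zip(counts, log_softmax(logits)))


def total_count_loss(pred_log_total, counts):
    """Squared error on log(1 + total reads): 'how much', separate from 'where'."""
    return (pred_log_total - math.log1p(sum(counts))) ** 2


observed = [0, 0, 10, 0]  # toy read counts over a 4-bp window
peaked = profile_loss([0.0, 0.0, 5.0, 0.0], observed)
flat = profile_loss([0.0, 0.0, 0.0, 0.0], observed)
```

A prediction that concentrates probability on the base where the reads pile up scores much better than a flat one, independently of whether the total count is right.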

All of the above methods regard the genome as linear sequences per chromosome. However, the cellular genome does have a three-dimensional (3D) structure. The 3D structure of the genome is under extensive regulation and is able to affect gene expression, DNA replication, and DNA repair. Several approaches have been dedicated to deciphering the regulatory code and grammar of the 3D structure of the genome from genomic sequences. Akita50 is a deep-learning method that can predict genome folding from the genomic sequences. After training on Hi-C65 and Micro-C51 profiling data from five human cell lines, one mouse cell line, and multiple mouse neuronal tissues, Akita is able to infer the genome-folding map of each cell type, which is a two-dimensional (2D) matrix representing pairwise contact between genomic regions. Akita utilizes a Basenji-like architecture as its “trunk” for processing ∼1 Mb genomic sequences. It then uses a “head” to transform the one-dimensional (1D) genomic sequence representations into 2D maps. The mean squared error between the predicted 2D map and experimental Hi-C or Micro-C data is used as the training objective. Orca,56 a very recent improvement on Akita, enables the prediction of the genome-folding map at multiple resolutions. Orca uses a multi-resolution 1D-CNN genomic sequence encoder which can take in 256 Mb, 32 Mb, or 1 Mb inputs and encodes them into 1D sequence representations. Orca then uses a cascading 2D-CNN decoder to decode sequence representations into 2D genome-folding maps. Using only the Micro-C profiles of the two human cell lines (HFF and H1 hESC) that Akita has used, Orca produces genome-folding map predictions at various scales, from 1 Mbp regions each within one chromosome to 256 Mbp regions that cover multiple chromosomes. 
Furthermore, Orca’s sequence encoder is simultaneously trained on the DHS and histone modification profiles of the two cell lines from ENCODE and Roadmap Epigenomics, making it an integrative and multi-purpose model. In contrast to the earlier works that have focused on sequence-based prediction of genome-folding maps, a recent model, GraphReg,57 instead utilized the 3D structure of the genome for better prediction of gene expression levels. GraphReg contains a set of two models, Epi-GraphReg and Seq-GraphReg. Epi-GraphReg infers tissue-agnostic gene expression levels based on epigenetic and 3D genomic profiles, and Seq-GraphReg infers tissue-aware gene expression levels based on genomic sequence and 3D genomic profiles. Both Epi-GraphReg and Seq-GraphReg utilize graph attention networks59 for modeling the spatial interactions between genomic locations.
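The step of turning 1D sequence representations into a 2D genome-folding map can be illustrated with a toy "head": every pair of genomic bins (i, j) is represented by the average of their embeddings and scored by a linear readout, which yields a symmetric n x n map that can be compared with an experimental contact map by mean squared error. Pairwise averaging and the linear readout are simplifying assumptions for the sketch, not Akita's or Orca's exact design; real models add further 2D layers on top.

```python
def pairwise_map(bin_embeddings, weights, bias=0.0):
    """Turn n bin embeddings (1D) into a symmetric n x n map (2D) by scoring
    the element-wise average of every pair of bins with a linear readout."""
    n = len(bin_embeddings)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            avg = [(a + b) / 2 for a, b in zip(bin_embeddings[i], bin_embeddings[j])]
            out[i][j] = sum(w * v for w, v in zip(weights, avg)) + bias
    return out


def mse(pred, target):
    """Mean squared error over all entries of two equally sized 2D maps."""
    diffs = [(p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    return sum(diffs) / len(diffs)


# Three hypothetical bins with 2-dim embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contact_map = pairwise_map(embeddings, [1.0, 1.0])
```

Averaging is symmetric in i and j, so the predicted map is symmetric by construction, matching the symmetry of Hi-C and Micro-C contact maps.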

Transcriptomic-level applications

The transcriptome serves as a central stage for gene regulation. The initiation of transcription requires the recognition of a promoter sequence by an RNA polymerase, binding of transcription factors to enhancers, and determination of a transcriptional start site (TSS). Such a process can be extensively regulated to control the rate of gene expression, and, if a gene has multiple promoters, the utilization of different promoters may produce RNA transcripts with different 5′ UTRs that will potentially have different translational efficiency.66 RNA splicing is also a highly regulated process and is a significant contributor to eukaryotic transcriptome diversity.67 In eukaryotes, the possible usage of multiple polyadenylation sites (PASs) produces mRNAs with different 3′ UTRs that may contain important regulatory elements.68 After the completion of the above process, the mRNA molecule is transported out of the nucleus. RNA subcellular localization controls the spatial distribution of the newly transcribed mRNAs. Post-transcriptional mRNAs may also be selectively targeted by microRNAs (miRNAs), which are able to downregulate the expression of certain genes. The 5′ UTR of mRNA has an important effect on its translational efficiency, which directly controls the rate of protein synthesis.

We summarize research works that use deep learning to model each of the aforementioned processes in Table 2. Although some of the “genomic-level” prediction methods in the previous section may also have some transcriptomic-level predictions, especially for the integrative functional genomic models such as Basenji and Expecto, we focus here on methods that are dedicated to particular aspects of transcriptomic-level regulation.

Table 2.

Transcriptomic-level deep-learning applications

| Method name | Year | Main functionalities | Species | Tissue/cell types |
|---|---|---|---|---|
| Promoter/TSS: | | | | |
| CNNProm69 | 2017 | promoter recognition | human, mouse, Arabidopsis, E. coli, B. subtilis | non-specific |
| DeeReCT-PromID73 | 2019 | promoter recognition on highly imbalanced dataset | human | non-specific |
| DeeReCT-TSS74 | 2021 | promoter recognition guided by RNA-seq | human | multiple (10 cell types) |
| Splicing: | | | | |
| Barash et al.75 | 2010 | splicing prediction of cassette exons | mouse | multiple (27 tissues) |
| Leung et al.77 | 2014 | splicing prediction of cassette exons | mouse | multiple (5 tissues) |
| Xiong et al.79 | 2015 | splicing prediction of cassette exons in exon triplets | human | multiple (16 tissues) |
| DARTS80 | 2019 | splicing prediction of cassette exons guided by RNA-seq | human | K562, HepG2 |
| SpliceAI81 | 2019 | splice site prediction from pre-mRNA | human | non-specific |
| Pangolin83 | 2022 | splice site prediction from pre-mRNA | human, rhesus monkey, mouse, rat | multiple (4 tissues in each species) |
| Polyadenylation: | | | | |
| Leung et al.86 | 2018 | PAS quantification (pairwise comparison) | human | multiple (7 tissue types) |
| DeeReCT-PolyA91 | 2019 | PAS recognition | human, mouse | non-specific |
| APARENT96 | 2019 | PAS quantification | human | non-specific |
| DeeReCT-APA97 | 2021 | PAS quantification | mouse (BL, SP, and BLxSP F1 hybrid) | fibroblast |
| RNA subcellular localization: | | | | |
| RNATracker98 | 2019 | subcellular localization prediction | human | HepG2 and HEK293T cell lines |
| MicroRNA targets: | | | | |
| MiRTDL102 | 2015 | microRNA target prediction | human, mouse, rat | non-specific |
| Translation: | | | | |
| Cuperus et al.104 | 2017 | 5′ UTR translational efficiency prediction | yeast | N/A |
| RNA-protein binding: | | | | |
| DeepBind17 | 2015 | sequence-based RNA-protein binding prediction | multiple (24 eukaryotes) | non-specific |
| NucleicNet106 | 2019 | structure-based RNA-protein binding prediction | multiple | non-specific |

CNNProm69 is an early deep-learning-based method for promoter sequence recognition. The model uses one or two convolutional layers to classify sequences as promoters or non-promoters. Its effectiveness has been demonstrated in both prokaryotes (Escherichia coli and Bacillus subtilis) and eukaryotes (human, mouse, and Arabidopsis). As a successor to CNNProm, DeeReCT-PromID73 enlarged the input to 600 bp and enabled genome-wide scanning of promoters. The authors pointed out that promoter recognition models trained on curated, balanced datasets may not be directly applicable to genome-wide scanning. This is because the vast majority of genomic regions are negative examples (non-promoters); therefore, the tolerable false-positive rate is much lower. DeeReCT-PromID employed a strategy of iteratively selecting hard negative samples to reduce the model’s false-positive rate. DeeReCT-TSS74 further improved on DeeReCT-PromID by inferring promoter usage in different cell lines from both promoter sequences and RNA-seq evidence. It was trained and evaluated on ten FANTOM539 cell lines, using genomic sequence and RNA-seq as input and matched CAGE-seq40 data as ground truth.
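One round of hard negative selection can be sketched as ranking a pool of known non-promoters by the current model's score and keeping the highest-scoring ones, i.e. the sequences most likely to become false positives. The scorer `toy_score` is a hypothetical stand-in for a trained promoter model, and DeeReCT-PromID's exact selection criterion may differ.

```python
def hard_negative_round(score, negative_pool, k):
    """Split a pool of known non-promoters into the k the current model
    scores highest (hard negatives, likely false positives) and the rest."""
    ranked = sorted(negative_pool, key=score, reverse=True)
    return ranked[:k], ranked[k:]


def toy_score(seq):
    # Hypothetical stand-in for a trained promoter model: counts "TA" dinucleotides.
    return seq.count("TA")


pool = ["GGGG", "TATATA", "CCTA", "TATA"]
hard, remaining = hard_negative_round(toy_score, pool, k=2)
```

In an iterative scheme, the hard negatives would be added to the training set, the model retrained, and the round repeated on the remaining pool, so that training concentrates on the non-promoters that most resemble promoters.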

RNA splicing plays a critical role in transcriptomic-level regulation. By producing transcripts with different combinations of exons and introns, it contributes significantly to eukaryotic transcriptomic diversity. Given the complexity of alternative splicing patterns, early works on deep-learning-based splicing prediction focused on one alternative splicing type: cassette exons. For example, Barash et al. developed a one-layer neural network for predicting the differential usage of cassette exons across mouse tissues.75 The network takes in a 1,014-dimensional vector of sequence features flanking the exon of interest and outputs three-class classification scores for “increased usage,” “decreased usage,” or “no change.” The model was trained on microarray profiles of 3,665 cassette exons in 27 mouse tissues.76 Leung et al. further enlarged the dataset to a total of 11,019 mouse cassette exons and increased the number of sequence features to 1,393 for tissue-specific splicing pattern prediction.77 Using the same sequence features as Leung et al., Xiong et al. developed an MLP for predicting the splicing of 10,689 human cassette exons.79 The model was trained on BodyMap 2.0 RNA-seq data (NCBI accession GEO: GSE30611) and used a Bayesian MCMC procedure to reduce overfitting. Using this predictive model, the researchers examined mutations that alter splicing in genes involved in several human genetic diseases. DARTS80 provides an example of integrating deep-learning-processed sequence features and RNA-binding protein (RBP) expression levels with low-coverage RNA-seq evidence for the differential usage analysis of cassette exons between conditions. DARTS integrates MLP-based deep learning with a Bayesian hypothesis test (BHT) by using its deep-learning module to provide a prior distribution for its BHT module. In this way, DARTS enables deep-learning-guided study of alternative splicing even when the experimental RNA-seq data lack sufficient sequencing depth.
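Why a deep-learning prior helps most when sequencing depth is low can be illustrated with a Beta-binomial update of exon inclusion (PSI) for a single exon in a single condition. This is a simplified stand-in, not DARTS's actual model, which performs a hypothesis test on differential usage between conditions; the function name, `prior_strength`, and all values are hypothetical.

```python
def posterior_psi(prior_psi, prior_strength, inclusion_reads, exclusion_reads):
    """Posterior mean exon-inclusion level (PSI) under a Beta prior centred on
    a predicted PSI, updated with inclusion/exclusion read counts.

    prior_strength acts as a pseudo-read count: the larger it is, the more
    reads are needed before the data override the prediction.
    """
    alpha = prior_psi * prior_strength + inclusion_reads
    beta = (1.0 - prior_psi) * prior_strength + exclusion_reads
    return alpha / (alpha + beta)


low_coverage = posterior_psi(0.8, 10, 1, 1)       # stays near the prediction (0.75)
high_coverage = posterior_psi(0.8, 10, 100, 100)  # follows the reads (about 0.51)
```

With two reads the estimate stays close to the predicted 0.8, whereas with 200 reads it moves to roughly the observed 50% inclusion, so the prior matters mainly when the RNA-seq evidence is thin.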

SpliceAI81 is the first deep-learning-based splice site predictor covering all splicing types. SpliceAI mimics the in vivo pre-mRNA processing machinery by predicting splice sites directly from raw pre-mRNA sequences. It takes in long inputs (5,000 nt) to handle large chunks of pre-mRNA sequence and performs three-class classification (no splice site, donor site, or acceptor site) at each pre-mRNA position. SpliceAI also uses dilated convolutions62 and residual blocks108 to enlarge the receptive field of high-level neurons. Pangolin83 further extends SpliceAI in a multi-task fashion to detect splice sites in four tissue types (heart, liver, brain, and testis) from four species (human, rhesus monkey, mouse, and rat).
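Per-position three-class classification can be sketched as a softmax over three logits at every position, followed by thresholding the donor and acceptor probabilities to call sites. The class order follows the text above; that ordering, the 0.5 threshold, and the logits are assumptions for illustration, not SpliceAI's output format.

```python
import math

# Class order follows the text; this ordering is an assumption for the sketch.
LABELS = ("no splice site", "donor site", "acceptor site")


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def call_splice_sites(per_position_logits, threshold=0.5):
    """Apply a per-position 3-class softmax and report positions whose donor
    or acceptor probability exceeds `threshold`."""
    calls = []
    for pos, logits in enumerate(per_position_logits):
        probs = softmax(logits)
        for cls in (1, 2):
            if probs[cls] > threshold:
                calls.append((pos, LABELS[cls]))
    return calls


calls = call_splice_sites([[3.0, 0.0, 0.0], [0.0, 4.0, 0.0],
                           [0.0, 0.0, 4.0], [1.0, 1.0, 1.0]])
```

Because the three probabilities at a position sum to one, a confident "no splice site" call and an uncertain position (all classes near 1/3) both produce no call.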

Termination of Pol II transcription in eukaryotic cells requires cleavage at the 3′ end of the transcript and the addition of a poly(A) tail, a process called polyadenylation. Just as promoters determine TSSs, PASs determine transcription termination sites. A gene may have multiple competing PASs, and cells from different tissue types or conditions may preferentially use different ones. Such alternative polyadenylation (APA) can modify the 3′ UTRs of transcripts, can strongly affect mRNA stability109 and cellular localization,110 and is involved in various human diseases. DeeReCT-PolyA91 is one of the first deep-learning methods for recognizing PASs. Using a CNN with group normalization95 to increase robustness, DeeReCT-PolyA takes 200-nt sequences as input and predicts whether they contain a PAS. The model achieved state-of-the-art performance and substantially outperformed non-deep-learning methods on two human polyadenylation datasets, the Dragon human poly(A) dataset92 and the Omni human poly(A) dataset,93 and on one mouse polyadenylation dataset from Xiao et al.94 Instead of tackling PAS recognition, Leung et al. were the first to address PAS quantification, applying a two-branch CNN for pairwise comparison of the competing PASs of a gene.86 Leung et al. assembled a reference of PASs in the human genome from four reference databases and used 3′-seq data from Lianoglou et al.90 for quantification. Rather than casting PAS quantification as a set of pairwise comparisons, DeeReCT-APA97 handles a variable number of PASs per gene using a combined CNN and BiLSTM architecture. DeeReCT-APA models the interactions between competing PASs and outperforms the model from Leung et al.86 Instead of training models only on endogenous PAS sequences, APARENT96 trained a two-layer CNN on 3 million synthetic APA reporter sequences measured by a massively parallel reporter assay (MPRA).
An MPRA can measure the regulatory activities of hundreds of thousands of synthetic PAS sequences in parallel. APARENT's CNN was trained to predict the measured regulatory activity from the PAS sequence. Using gradient-based optimization of the input sequence, the APARENT model can design PAS sequences with desired levels of regulatory activity.
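The idea of gradient-based sequence design can be sketched with a toy predictor. The sketch below is not APARENT's procedure: it assumes a hypothetical linear scoring model (`toy_activity`) in place of a trained CNN, relaxes the one-hot sequence into per-position softmax probabilities so that gradients exist, performs gradient ascent on the underlying logits, and finally discretizes by taking the most likely base at each position.

```python
import numpy as np

ALPHABET = "ACGT"


def one_hot(seq):
    x = np.zeros((len(seq), len(ALPHABET)))
    for i, base in enumerate(seq):
        x[i, ALPHABET.index(base)] = 1.0
    return x


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def toy_activity(x, weights):
    """Hypothetical stand-in for a trained predictor: a linear score over an (L, 4) input."""
    return float(np.sum(weights * x))


def design_sequence(weights, steps=200, lr=1.0, seed=0):
    """Gradient ascent on softmax-relaxed sequence logits to maximize toy_activity."""
    rng = np.random.default_rng(seed)
    logits = rng.normal(scale=0.1, size=weights.shape)
    for _ in range(steps):
        p = softmax(logits)  # relaxed ("soft") one-hot sequence
        # Chain rule through the per-position softmax:
        # d activity / d logit[i, b] = p[i, b] * (w[i, b] - sum_c p[i, c] * w[i, c])
        expected_w = np.sum(p * weights, axis=1, keepdims=True)
        logits += lr * p * (weights - expected_w)
    # Discretize: take the most likely base at each position.
    return "".join(ALPHABET[i] for i in logits.argmax(axis=1))


# Hypothetical toy model that rewards exactly one sequence.
weights = 2.0 * one_hot("GATTACA")
print(design_sequence(weights))
```

With a real CNN, the gradient would come from automatic differentiation rather than the closed form used here, and the objective could target a specific activity level instead of the maximum.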

Transcriptomic-level regulation can also be carried out through other post-transcriptional mechanisms. mRNA subcellular localization controls gene expression both spatially (by transporting mRNAs to different subcellular structures) and quantitatively (by modulating the accessibility of mRNAs to ribosomes). RNATracker98 is a deep-learning tool that predicts such mRNA localization patterns. Using the mRNA sequence and the RNA secondary structure predicted by RNAplfold,99 RNATracker classifies mRNAs into their plausible subcellular localizations. The version of RNATracker trained on CeFra-Seq data100 classifies mRNA localizations into cytosol, nuclear, membrane, and insoluble fractions, whereas the version trained on APEX-RIP data101 classifies localizations into ER, mitochondrial, cytosol, and nuclear. mRNAs can also be targeted by miRNAs, which can silence their expression. MiRTDL102 is a CNN-based tool that predicts potential miRNA-mRNA interactions. The 5′ UTR of an mRNA strongly affects its translational efficiency and is frequently subject to regulation. With a motivation similar to that of the APARENT model for predicting PAS strength, Cuperus et al. developed a 3-layer CNN to predict 5′ UTR translational efficiency by training it on 489,348 synthetic 5′ UTRs in yeast.104 The translational efficiency of each synthetic 50-nt 5′ UTR was measured by a massively parallel growth selection experiment and used as the CNN's prediction target.

Underlying all these transcriptomic-level regulatory mechanisms are complex interactions between RNA and various other biomolecules. RNA-protein binding is among the most important of these, as most post-transcriptional regulation is mediated by RBPs. Besides predicting DNA-protein binding, DeepBind17 can also predict RNA-protein binding. Trained on RNAcompete assay data,105 it predicts the binding preference of an RNA molecule for 207 distinct RBPs from 24 eukaryotes based on the RNA sequence. NucleicNet106 takes a different path from DeepBind: instead of making sequence-based RNA-protein binding predictions, it makes RBP-centric predictions based on protein structures. Trained on 158 ribonucleoprotein structures from the PDB,107 it predicts an RBP's binding sites for RNA as well as each binding site's preference for each type of RNA constituent. Readers are referred to Wei et al.111 for a detailed survey of deep-learning applications in RNA-protein binding prediction.
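The core of a DeepBind-style sequence model is a convolution that scans the sequence for motif matches, a rectification, and a pooling step that keeps the strongest match. The sketch below uses one hand-set, hypothetical filter for an arbitrary 5-nt motif. The actual DeepBind model learns many filters from data and feeds the pooled outputs into a further neural-network layer.

```python
import numpy as np

ALPHABET = "ACGU"  # RNA alphabet


def one_hot(seq):
    x = np.zeros((len(seq), 4))
    for i, base in enumerate(seq):
        x[i, ALPHABET.index(base)] = 1.0
    return x


def binding_score(seq, motif_filter, bias=0.0):
    """Convolution -> rectification -> global max pooling, for a single filter."""
    x = one_hot(seq)
    width = motif_filter.shape[0]
    # Slide the (width, 4) filter along the sequence ('valid' positions only).
    activations = np.array([
        np.sum(x[i:i + width] * motif_filter) for i in range(len(seq) - width + 1)
    ])
    activations = np.maximum(activations + bias, 0.0)  # ReLU
    return float(activations.max())  # strongest match anywhere in the sequence


# Hypothetical filter: +1 for each base matching "GCAUG", -1 for each mismatch.
motif_filter = 2.0 * one_hot("GCAUG") - 1.0
print(binding_score("AAAGCAUGAAA", motif_filter, bias=-3.0))
print(binding_score("AAAAAAAAAAA", motif_filter, bias=-3.0))
```

Max pooling makes the score insensitive to where the motif occurs, and the negative bias acts as a threshold that suppresses weak partial matches.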

Proteomic-level applications

After a protein is translated from its mRNA template, regulation can take place at the proteomic level through a number of mechanisms. Protein post-translational modifications (PTMs) constitute a family of such regulatory processes, which covalently modify a protein after translation. For example, serine (Ser), threonine (Thr), and tyrosine (Tyr) residues can be modified by phosphorylation, which plays an important role in intracellular signal transduction. Lysine (Lys) residues can be modified through ubiquitination, which can mark a protein for degradation. Protein subcellular localization determines the cellular compartment in which a protein resides, which substantially affects its function and activity.

We summarize these proteomic-level deep-learning applications in Table 3 (upper half). Similarly, we highlight their functionalities, training datasets, and model architectures to facilitate future explorations in this area.

Table 3.

Proteomic- and phenotype-level deep-learning applications

| Method name | Year | Main functionalities | Datasets | Model | Species |
|---|---|---|---|---|---|
| Post-translational modification (PTM): | | | | | |
| DeepPhos112 | 2019 | phosphorylation site prediction (general/residue-specific/kinase-specific) | | | human |
| DeepUbi120 | 2019 | ubiquitination prediction | | | multiple (176 species) |
| MusiteDeep122,123,124 | 2017–2020 | multiple PTM prediction | | | multiple animal species |
| Protein subcellular localization: | | | | | |
| DeepLoc126 | 2017 | subcellular localization prediction | | | multiple eukaryotes |
| Genotype-to-phenotype inference in animal species: | | | | | |
| Zhou et al.128 | 2019 | prediction of the effect of non-coding variants on autism spectrum disorder | | | human |
| DeepWAS129 | 2020 | using a genomic deep-learning model to enhance genome-wide association studies | | | human |
| Genotype-to-phenotype inference in plant species: | | | | | |
| DeepGP133 | 2020 | multiple phenotype prediction in polyploid outcrossing species | | | strawberry, blueberry |
| Shook et al.137 | 2021 | crop yield prediction based on genotype and environmental factors | | | soybean |

Deep-learning models have been developed to predict PTMs from protein sequences. DeepPhos112 is a densely connected CNN architecture119 that predicts phosphorylation sites from protein sequences. DeepPhos formulates three phosphorylation prediction tasks. The general prediction task is to predict whether an amino acid position is a phosphorylation site. The residue-specific prediction task requires a model to predict on which type of amino acid residue phosphorylation occurs. The kinase-specific prediction task requires a model to predict which kinase is responsible for the phosphorylation event. DeepUbi120 is a CNN architecture that predicts ubiquitination sites from protein sequences, achieving an area under the curve (AUC) of 0.9 on data from a total of 176 species. MusiteDeep122,123,124 is a series of works on predicting multiple PTM types. Its latest version uses an ensemble of multi-layer CNNs and capsule networks125 and can handle 13 different PTM types. MusiteDeep updates its predictions for UniProt protein sequences every 3 months, and its prediction results are available at https://www.musite.net.
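Site-level PTM predictors typically frame each candidate residue as a fixed-length sequence window centred on that residue, which a CNN then classifies. The sketch below shows this input preparation under stated assumptions: the window half-width, the padding symbol `X`, and the 21-symbol one-hot encoding are hypothetical choices, not the published settings of DeepPhos, DeepUbi, or MusiteDeep.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
PAD = "X"  # hypothetical padding symbol for windows that run past the sequence ends
VOCAB = AMINO_ACIDS + PAD


def candidate_windows(protein, residues="STY", half_width=7):
    """Return (position, window) pairs centred on every candidate residue."""
    padded = PAD * half_width + protein + PAD * half_width
    windows = []
    for i, aa in enumerate(protein):
        if aa in residues:
            # Residue i sits at padded index i + half_width, i.e. the window centre.
            windows.append((i, padded[i:i + 2 * half_width + 1]))
    return windows


def encode(window):
    """One-hot encode a window as a (len(window), 21) array."""
    x = np.zeros((len(window), len(VOCAB)))
    for i, aa in enumerate(window):
        x[i, VOCAB.index(aa)] = 1.0
    return x


for pos, win in candidate_windows("MSKTYLLG", half_width=3):
    print(pos, win, encode(win).shape)
```

Passing `residues="K"` instead would produce lysine-centred windows of the kind a ubiquitination predictor needs.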

DeepLoc126 is a deep-learning-based tool that infers a protein's subcellular localization from its sequence. DeepLoc combines CNN and LSTM layers and uses attention-based decoding to identify sequence regions with high predictive power. It organizes the ten subcellular locations hierarchically and trains the model with a hierarchical tree classification likelihood. In this way, without using information from homologous sequences, it achieves 78% accuracy over a total of ten subcellular locations.
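A hierarchical tree classification likelihood can be sketched as follows. Each internal node of the tree has a softmax over its children, and the probability of a leaf location is the product of the branch probabilities along its root-to-leaf path. The tree below is hypothetical and deliberately small; it is not DeepLoc's published hierarchy.

```python
import numpy as np


def softmax(v):
    e = np.exp(v - np.max(v))
    return e / e.sum()


# Hypothetical three-level hierarchy (not DeepLoc's published tree).
TREE = {
    "root": ["secretory", "non-secretory"],
    "secretory": ["ER", "Golgi", "extracellular"],
    "non-secretory": ["nucleus", "cytoplasm"],
}


def leaf_probabilities(logits, tree=TREE, node="root", prob=1.0, out=None):
    """P(leaf) = product of softmax branch probabilities along the root-to-leaf path."""
    if out is None:
        out = {}
    children = tree.get(node)
    if not children:  # reached a leaf
        out[node] = prob
        return out
    branch_probs = softmax(np.asarray(logits[node], dtype=float))
    for child, p in zip(children, branch_probs):
        leaf_probabilities(logits, tree, child, prob * p, out)
    return out


def tree_nll(logits, leaf):
    """Negative log-likelihood of the true leaf (a sum of per-branch losses)."""
    return -np.log(leaf_probabilities(logits)[leaf])


# Uninformative logits: each branch point splits its probability mass evenly.
logits = {"root": [0.0, 0.0], "secretory": [0.0, 0.0, 0.0], "non-secretory": [0.0, 0.0]}
print(leaf_probabilities(logits))
```

Because the log of a product is a sum, the loss for a true leaf decomposes into one cross-entropy term per branch point on its path. As a result, a mistake deep in the tree is penalized less than a mistake near the root.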

Phenotypic-level applications in animal and plant species

The deep-learning applications in gene regulation discussed so far have mainly addressed the molecular level of biological processes. However, bridging the gap between genotype and phenotype is one of the ultimate goals of gene regulation studies. In this section, we summarize deep-learning models that have been developed to assist in genotype-to-phenotype and phenotype-to-genotype inference in animal and plant species (Table 3, lower half).

Following the development of DeepSEA, Zhou et al. developed DeepSEA-based models to predict the effect of non-coding variants on autism spectrum disorder (ASD).128 In this work, two DeepSEA-based models were trained to predict transcriptional and post-transcriptional regulatory effects separately. The resulting predictions were summarized into disease impact scores by training an LR model on top of the model predictions using known disease-associated mutations. Using the disease impact scores, the authors were then able to prioritize disease-associated mutations observed in 1,790 ASD-affected families. DeepWAS129 introduces a deep-learning-assisted genome-wide association study (GWAS) pipeline. DeepWAS uses the pre-trained DeepSEA model to produce a list of candidate variants for a GWAS. In this way, it reduces the number of candidates to be tested and increases the statistical power of the GWAS. The authors demonstrated its effectiveness by improving three existing GWASs for multiple sclerosis,130 major depressive disorder,131 and body height.132
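Why fewer candidate variants translate into more statistical power can be seen with a simple multiple-testing correction. The sketch below uses Bonferroni correction as a simplified stand-in; DeepWAS's actual statistical procedure is more involved. The variant counts and the p-value are hypothetical.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold controlling the family-wise error rate at alpha."""
    return alpha / n_tests


def is_significant(p_value, alpha, n_tests):
    return p_value < bonferroni_threshold(alpha, n_tests)


p = 1e-7  # hypothetical p-value of one variant
print(is_significant(p, 0.05, 1_000_000))  # untargeted scan of 1e6 variants: threshold 5e-8
print(is_significant(p, 0.05, 10_000))     # pre-filtered scan of 1e4 variants: threshold 5e-6
```

The same association passes the less stringent threshold only when the model-based pre-filter has shrunk the number of tests. The trade-off is that true associations removed by the filter can no longer be detected.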

In plant species, deep learning has also been applied to multiple phenotype prediction tasks. For example, DeepGP133 applied a CNN-based model for phenotype prediction in two polyploid outcrossing species: strawberry and blueberry. Five fruit quality traits were predicted for strawberry individuals from microarray genotypes, and five fruit quality traits were predicted for blueberry individuals from genotypes obtained by Rapid Genomics Capture-seq.136 Shook et al. studied whether crop yield can be predicted from genotype and environmental factors. Using the Uniform Soybean Tests data,138 which contain soybean yields in the United States and Canada during 2003–2015, the authors built separate LSTM and temporal attention139 models for soybean yield prediction. At each time step of the growth period, the model considers the crop's genotype and seven weather variables, and it forecasts the yield at harvest. Such deep-learning applications in plant phenotype prediction can provide valuable insights for plant breeding.
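A temporal attention layer of the kind mentioned above can be sketched as softmax-weighted pooling over per-time-step representations. This is a generic form and has not been checked against the exact formulation used by Shook et al. The number of time steps, the feature dimension, the random parameters, and the linear read-out are all hypothetical.

```python
import numpy as np


def temporal_attention_pool(hidden, score_vector):
    """Weight per-time-step representations by softmax attention and sum them.

    hidden: (T, d) array, e.g. LSTM outputs for T time steps of the growing season.
    score_vector: (d,) parameters that score each time step (learned in practice).
    """
    scores = hidden @ score_vector
    scores = scores - scores.max()  # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    context = weights @ hidden  # (d,) season-level summary
    return context, weights


rng = np.random.default_rng(0)
hidden = rng.normal(size=(30, 8))  # hypothetical: 30 time steps, 8 features each
score_vector = rng.normal(size=8)
output_weights = rng.normal(size=8)
context, weights = temporal_attention_pool(hidden, score_vector)
predicted_yield = float(context @ output_weights)  # hypothetical linear read-out
print(weights.argmax(), predicted_yield)
```

Besides producing the summary vector, the attention weights indicate which time steps (for example, which weeks of weather) the model relied on most. This makes the model somewhat interpretable.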

Problems and limitations of current deep-learning applications

Challenges in training and interpreting deep-learning models

In this section we discuss challenges that are common in the applications of deep learning, especially those in model training and model interpretation.

Deep-learning models are known to be difficult to train because their high non-linearity makes the objective function hard to optimize. Typically, deep-learning models are trained using stochastic gradient descent (SGD), as it is an efficient first-order optimization algorithm and its stochasticity helps it escape from local minima.27 However, the size of each gradient descent step (the "learning rate") can be difficult to configure. A small learning rate can result in slow training progress and getting stuck in a local minimum; a large learning rate can prevent the algorithm from converging. The direction of an SGD update, i.e., the direction in which the objective function decreases the fastest, can also change too rapidly, making the optimization trajectory oscillate around a local minimum.140
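The learning-rate trade-off can be seen on a one-dimensional quadratic, where each gradient step multiplies the parameter by (1 − lr × curvature). For simplicity, the sketch uses deterministic full-batch gradient descent rather than SGD. The curvature and learning rates are illustrative.

```python
def gradient_descent_quadratic(curvature, lr, x0=1.0, steps=50):
    """Plain (full-batch) gradient descent on f(x) = 0.5 * curvature * x**2."""
    x = x0
    for _ in range(steps):
        grad = curvature * x
        x = x - lr * grad  # each step multiplies x by (1 - lr * curvature)
    return x


for lr in (0.01, 0.5, 1.5, 2.5):
    print(lr, gradient_descent_quadratic(curvature=1.0, lr=lr))
```

With lr = 0.01 the parameter has barely moved after 50 steps. With lr = 0.5 it converges quickly. With lr = 1.5 it flips sign every step (oscillation) while still shrinking, and with lr = 2.5, which is above 2/curvature, it diverges. In a real network the curvature differs across parameters and directions, so no single global learning rate suits all of them.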

Therefore, several improvements to SGD have been proposed to make the algorithm more efficient and stable, as summarized in Table 4. Adagrad141 adaptively scales the learning rate of each model parameter based on the accumulated magnitudes of that parameter's past gradients. RMSprop142 also adapts the learning rates, but it uses an exponential moving average of the squared gradients instead of their running sum. Improvements to the direction of the parameter update are also available, such as momentum and Nesterov momentum.156 Adam140 adds a momentum update to RMSprop-style adaptive learning rates and has been effective in the optimization of large CNNs. More recently, several successors to Adam have become increasingly popular, including NAdam143 (Adam with Nesterov momentum), AdamW144 (Adam with decoupled weight decay), and RAdam145 (Adam with a more stable adaptive learning rate during the warm-up phase). To boost model performance, researchers are encouraged to try these algorithms and their variants in practice.
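The Adam update can be sketched in a few lines of NumPy. It keeps exponential moving averages of the gradients (momentum) and of the squared gradients (per-parameter scaling), with bias correction for their zero initialization. The optional `weight_decay` term follows the decoupled AdamW form in the same way PyTorch's `AdamW` applies it. The hyperparameter defaults are the commonly used ones, and the ill-conditioned quadratic objective is a hypothetical test problem.

```python
import numpy as np


def adam_minimize(grad_fn, x0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8,
                  weight_decay=0.0, steps=2000):
    """Adam; a nonzero weight_decay applies AdamW-style decoupled weight decay."""
    x = np.array(x0, dtype=float)
    m = np.zeros_like(x)  # first moment: moving average of gradients
    v = np.zeros_like(x)  # second moment: moving average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        # Large past gradients shrink the effective step of that parameter.
        x = x - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * x)
    return x


curvatures = np.array([100.0, 1.0])  # hypothetical ill-conditioned quadratic


def loss(x):
    return 0.5 * np.sum(curvatures * x ** 2)


def grad(x):
    return curvatures * x


x0 = np.array([1.0, 1.0])
x_final = adam_minimize(grad, x0)
print(loss(x0), loss(x_final))
```

Because each coordinate is normalized by its own gradient history, the steep and the shallow directions make progress at comparable rates, even though their curvatures differ by a factor of 100.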

Table 4.

Common problems in deep learning and their solutions

| Method name | Year | Features |
|---|---|---|
| Improvements to SGD optimization: | | |
| Adagrad141 | 2011 | adaptive learning rate |
| RMSprop142 | 2013 | adaptive learning rate |
| Adam140 | 2014 | adaptive learning rate, momentum update |
| NAdam143 | 2016 | adaptive learning rate, Nesterov momentum update |
| AdamW144 | 2017 | adaptive learning rate, decoupled weight decay |
| RAdam145 | 2019 | adaptive learning rate, momentum update |
| Tools for hyperparameter tuning: | | |
| Raytune146 | 2018 | supports TensorFlow,147 PyTorch148 |
| KerasTuner149 | 2019 | supports Keras150 |
| Model interpretation: | | |
| Example-based methods | | explain models using data points themselves; application examples: Alipanahi et al., Bogard et al.17,96 |
| Perturbation-based methods | | application examples: Alipanahi et al., Avsec et al.17,48 |
| Attribution-based methods | | application examples: Avsec et al., Janssens et al.48,151 |
| Integrated gradients152 | 2017 | |
| SHAP153 | 2017 | |
| DeepLIFT154 | 2018 | |
| Captum155 | 2020 | |
| Model-based methods | | encourage model interpretability through model design; application examples: Ji et al., Zhou et al.19,41 |

Another challenge in training deep-learning models concerns hyperparameter selection. The choice of hyperparameters can substantially affect training stability and model performance. As the number of hyperparameters grows, the number of possible combinations increases exponentially, making exhaustive grid search impractical. Therefore, heuristic search strategies are needed for hyperparameter tuning. Techniques such as random search,157 coordinate descent, and Bayesian optimization158 are common choices in practice. To facilitate hyperparameter tuning, software libraries with integrated hyperparameter selection algorithms, such as Raytune146 and KerasTuner,149 can be applied to existing projects with minimal modifications to the source code.
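Random search is simple enough to sketch in plain Python. Each trial samples every hyperparameter independently, and the best trial is kept. The sketch does not use the APIs of Raytune or KerasTuner, which provide this and more sophisticated strategies. The search space is hypothetical, and `toy_validation_loss` stands in for training a model and measuring its validation loss.

```python
import math
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameters independently from `space` and keep the best trial."""
    rng = random.Random(seed)
    best_params, best_score = None, math.inf
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = objective(params)  # e.g. validation loss; lower is better
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space: log-uniform learning rate, uniform dropout rate.
space = {
    "lr": lambda rng: 10 ** rng.uniform(-5, -1),
    "dropout": lambda rng: rng.uniform(0.0, 0.5),
}


def toy_validation_loss(params):
    """Hypothetical stand-in for training a model and measuring validation loss."""
    return (math.log10(params["lr"]) + 3) ** 2 + (params["dropout"] - 0.2) ** 2


best, score = random_search(toy_validation_loss, space)
print(best, score)
```

Sampling the learning rate on a log scale reflects the fact that its plausible values span several orders of magnitude. Unlike a grid, random search does not waste trials on repeated values of hyperparameters that barely matter.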

Another challenge of deep learning is the difficulty of interpreting its models. Unlike shallow models such as linear models, decision trees, and SVMs, deep-learning models have complex hierarchical architectures, and their hidden states cannot easily be described in human-understandable terms. Nevertheless, existing deep-learning studies in gene regulation have employed various methods to improve model interpretability. We group these methods into four general categories, which are summarized in Table 4.

Example-based methods

In example-based methods, deep-learning models are explained using training or testing examples from the dataset. To interpret a specific layer or hidden neuron, the examples that most strongly activate it are selected (e.g., the top 5% of all examples), and their commonality is used as an interpretation. For example, the subsequences that strongly activate a specific convolution filter can be aggregated into a position frequency matrix (PFM) and visualized as a sequence logo. In this way, the regulatory motifs that the model is "looking at" can be revealed. This technique is commonly used with sequence-based models, such as those of Alipanahi et al.17 and Bogard et al.96
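The procedure of turning a filter's top-activating subsequences into a PFM can be sketched as follows. The filter is a hand-set, hypothetical stand-in for a learned convolution filter, and the sequences are randomly generated with a hypothetical motif planted in each. The 5% cut-off follows the example in the text.

```python
import numpy as np

ALPHABET = "ACGT"


def one_hot(seq):
    x = np.zeros((len(seq), 4))
    for i, base in enumerate(seq):
        x[i, ALPHABET.index(base)] = 1.0
    return x


def top_activating_pfm(seqs, conv_filter, top_frac=0.05):
    """Build a PFM from the subsequences that most strongly activate one filter."""
    width = conv_filter.shape[0]
    windows, acts = [], []
    for seq in seqs:
        x = one_hot(seq)
        for i in range(len(seq) - width + 1):
            windows.append(seq[i:i + width])
            acts.append(np.sum(x[i:i + width] * conv_filter))
    n_top = max(1, int(len(acts) * top_frac))
    top = np.argsort(acts)[-n_top:]  # indices of the highest activations
    counts = sum(one_hot(windows[j]) for j in top)
    return counts / counts.sum(axis=1, keepdims=True)  # rows: positions; columns: A, C, G, T


def consensus(pfm):
    return "".join(ALPHABET[i] for i in pfm.argmax(axis=1))


# Hypothetical data: random sequences, each with the motif "TATAAT" planted once.
rng = np.random.default_rng(0)
seqs = []
for _ in range(50):
    s = "".join(rng.choice(list(ALPHABET), size=30))
    pos = rng.integers(0, 30 - 6 + 1)
    seqs.append(s[:pos] + "TATAAT" + s[pos + 6:])
conv_filter = 2.0 * one_hot("TATAAT") - 1.0  # stands in for a learned filter
pfm = top_activating_pfm(seqs, conv_filter)
print(consensus(pfm))
```

The resulting matrix can be rendered as a sequence logo with any logo-plotting tool. Near-matches among the top windows show up as minor letters at individual positions.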

Perturbation-based methods

Another way to interpret model predictions is based on perturbation: the model's input is modified ("perturbed"), and the resulting changes in its output are inspected. If the most discriminative part of an input example is perturbed, the model's prediction is expected to change substantially. For example, by performing in silico point mutations on a biological sequence and asking the model to score each mutated sequence, we can produce a so-called mutation map of the sequence. DeepBind systematically used this approach to confirm the putative sequence motifs recognized by its convolution filters.17
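A mutation map is obtained by scoring every single-nucleotide substitution of a sequence and recording the change relative to the reference prediction. In the sketch below, `toy_model`, a single hand-set motif filter with max pooling, is a hypothetical stand-in for a trained network; any function from sequence to score can be passed as `predict`.

```python
import numpy as np

ALPHABET = "ACGT"


def one_hot(seq):
    x = np.zeros((len(seq), 4))
    for i, base in enumerate(seq):
        x[i, ALPHABET.index(base)] = 1.0
    return x


def toy_model(seq, conv_filter):
    """Hypothetical stand-in for a trained model: best filter match anywhere in seq."""
    x = one_hot(seq)
    w = conv_filter.shape[0]
    return max(np.sum(x[i:i + w] * conv_filter) for i in range(len(seq) - w + 1))


def mutation_map(seq, predict):
    """In silico saturation mutagenesis: change in prediction for every point mutation."""
    reference = predict(seq)
    effects = np.zeros((len(seq), len(ALPHABET)))  # stays 0 for the reference base
    for i, ref_base in enumerate(seq):
        for j, alt_base in enumerate(ALPHABET):
            if alt_base != ref_base:
                mutant = seq[:i] + alt_base + seq[i + 1:]
                effects[i, j] = predict(mutant) - reference
    return effects


conv_filter = 2.0 * one_hot("TATAAT") - 1.0
seq = "CCCCTATAATCCCC"
effects = mutation_map(seq, lambda s: toy_model(s, conv_filter))
print(effects.sum(axis=1))  # per-position total effect; negative inside the motif
```

Only substitutions inside the motif lower the score, so the map highlights exactly the positions the model depends on. The approach costs 3 × L model evaluations for a sequence of length L.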

Attribution-based methods

In contrast to example-based and perturbation-based methods, which still treat the deep-learning model as a black box, attribution-based methods open up the black box by attributing the model's output (or an intermediate value in the network) to the model's input. Such methods compute an attribution score for each element of the input: the magnitude of the score indicates the size of its contribution, and the sign indicates whether the contribution is positive or negative. The saliency map159 is one of the simplest attribution-based methods; it is simply the gradient of the model's output with respect to the input. In recent years, approaches with stronger theoretical guarantees have been developed, including Integrated Gradients,152 SHAP,153 and DeepLIFT.154 For instance, BPNet extensively used DeepLIFT to inspect the model's TF binding predictions. Attribution-based methods appear to be more sensitive than example-based methods: in the BPNet paper, the researchers systematically discussed complex sequence motif patterns that could be discovered with DeepLIFT but not with PFMs, such as the helical periodicity patterns of Nanog.48
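The difference between a saliency map and Integrated Gradients can be illustrated with a toy differentiable model whose gradient is known analytically. Integrated Gradients averages the gradient along the straight path from a baseline to the input and multiplies by the input difference. As a result, the attributions sum to the change in model output (the "completeness" property). The model, its weights, the input, and the all-zero baseline are hypothetical.

```python
import numpy as np

w = np.array([0.3, 0.8, -0.2])  # hypothetical weights of a toy model


def model(x):
    return np.tanh(x @ w)


def model_grad(x):
    """Analytic gradient of tanh(w . x) with respect to x."""
    return (1.0 - np.tanh(x @ w) ** 2) * w


def saliency(x):
    return model_grad(x)


def integrated_gradients(x, baseline, steps=200):
    """Average gradient along the straight path baseline -> x, times (x - baseline)."""
    alphas = (np.arange(steps) + 0.5) / steps  # midpoint Riemann sum
    avg_grad = np.mean([model_grad(baseline + a * (x - baseline)) for a in alphas], axis=0)
    return (x - baseline) * avg_grad


x = np.array([1.0, -0.5, 2.0])
baseline = np.zeros_like(x)
ig = integrated_gradients(x, baseline)
print(saliency(x), ig)
# Completeness: attributions sum to model(x) - model(baseline), up to discretization error.
print(ig.sum(), model(x) - model(baseline))
```

For real networks, the gradient would come from automatic differentiation. Libraries such as Captum implement Integrated Gradients, DeepLIFT, and related methods for PyTorch models.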

Model-based methods

Instead of making post hoc interpretations of a trained model, interpretability can be built in during model development. Some building blocks of deep-learning models, such as attention modules,23 are inherently interpretable: attention scores indicate the regions to which the model is paying attention. Dividing a model into stages that each produce interpretable outputs is another common strategy. For example, Expecto predicts gene expression in three stages.41 In the first stage, it transforms genomic sequences into epigenomic features, namely predictions of 2,002 genome-wide histone mark, TF binding, and chromatin accessibility profiles. In the second stage, it aggregates the epigenomic features produced in the first stage according to their spatial proximity to the TSS. In the third stage, it predicts gene expression levels from the aggregated features. In this way, the transparency of each stage provides interpretability for the whole pipeline.
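The second, spatial-aggregation stage can be sketched as a weighted sum of per-bin features, with weights that decay exponentially with distance from the TSS and are computed separately for upstream and downstream bins. This is a simplified sketch in the spirit of the stage described above, not Expecto's exact transformation. The bin layout, feature values, and decay constants are hypothetical.

```python
import numpy as np


def spatial_aggregate(features, distances, decay_rates):
    """Summarize per-bin features with exponentially decaying weights.

    features: (n_bins, n_features) predicted epigenomic features per sequence bin.
    distances: (n_bins,) signed distance of each bin to the TSS (negative = upstream).
    decay_rates: (n_decay,) hypothetical decay constants per base pair.
    Returns a vector of length 2 * n_decay * n_features (upstream, then downstream).
    """
    outputs = []
    for side in (distances < 0, distances >= 0):  # upstream, then downstream
        weights = np.exp(-np.outer(decay_rates, np.abs(distances[side])))  # (n_decay, n_side)
        outputs.append(weights @ features[side])  # (n_decay, n_features)
    return np.concatenate(outputs).ravel()


rng = np.random.default_rng(0)
distances = np.arange(-1000, 1000, 200)  # hypothetical: 10 bins, 200 bp apart
features = rng.random((10, 3))           # hypothetical: 3 features per bin
decay_rates = np.array([0.0, 0.001, 0.01])
agg = spatial_aggregate(features, distances, decay_rates)
print(agg.shape)
```

Each aggregated entry has a direct reading, for example "feature f summed over upstream bins with weights that decay at rate r". This is what keeps the intermediate representation interpretable before the final expression model is applied.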

Limitations of existing deep-learning applications in gene regulation

Apart from the general problems of deep-learning algorithms described above, there are limitations specific to gene regulation that may restrict their applicability.

The problem of overfitting

Deep-learning models are well known to be prone to overfitting. High performance on a benchmark dataset does not always imply successful generalization to unseen examples. This is particularly true for applications in gene regulation, owing to three problems that are not trivial to overcome.

1. The limited volume of data in biological studies may hinder machine-learning model development. Unlike fields such as computer vision and natural language processing, where terabytes or even petabytes of training data can easily be collected from the internet or from crowd-sourcing platforms,160 biological data have to be generated by experiments. If a particular regulatory mechanism cannot be studied with an established experimental technique that generates a sufficiently large number of training examples, it cannot be studied with machine-learning methods. Even when such experimental techniques exist, data may remain unavailable because of financial constraints or privacy concerns.

2. Biological and technical variation across experimental conditions may limit a model's generalization performance. For example, in the Basenji paper, the authors observed an average Pearson correlation of 0.479 between biological replicates,38 even though the replicates came from the same consortium. It is therefore difficult to tell whether a model with a high performance score generalizes to other examples or is simply overfitting to such random variation.

3. Training only on endogenous sequences does not guarantee that a model generalizes to unseen cases. Most models take biological sequences as input, but most of them use only endogenous sequences for training. For example, DeepSEA,30 Basset,34 and Expecto41 were trained solely on the human reference genome GRCh37. It remains unclear how well these models generalize to genetic variants that may follow a different "regulatory grammar" from that of the observed endogenous sequences. Furthermore, the sequences pertinent to a particular regulatory event may comprise only a tiny fraction of the organism's genome, transcriptome, or proteome. For example, the promoter sequences that determine TSSs consist only of genomic sequences near the start of each gene. This further limits the diversity of the training data. As introduced in previous sections, only APARENT96 for polyadenylation and the Cuperus et al.104 model for 5′ UTR translation efficiency used measurements of synthetic exogenous sequences from MPRA data for model training and evaluation.

The limitation of sequence-only models

Most gene regulation results from the concerted action of cis-acting sequence motifs and trans-acting molecules (mainly binding proteins) within a cellular environment. Most of the aforementioned deep-learning models take as input nucleotide or amino acid sequences, which contain only cis-acting information. Some methods model trans-acting effects implicitly by making tissue-specific predictions. For example, DeepSEA, Basset, and Basenji38 perform multi-task prediction across multiple tissue types, and Leung et al.78 trained separate models for each tissue type. Other methods ignore trans-acting effects completely (e.g., CNNProm69 and APARENT96). Models with tissue-specific predictions assume a static trans-acting environment: they always produce the same result for a given tissue type, even though gene regulation changes dynamically with internal and external conditions. When predictions for a new tissue type are needed, the model must be retrained on experimental profiles from that tissue, which may not always be available. Among the models reviewed here, the only one that explicitly models trans-acting effects is DARTS,80 which considers the expression levels of 1,498 RBPs for splicing prediction.

Not enough consideration of interactions among regulatory events

The multiple layers of gene regulation do not act independently. Proteomic-level regulation of one protein may affect the transcriptomic-level regulation of another gene. However, most methods developed so far consider regulatory events in isolation. Even multi-task models that predict multiple genomic features simultaneously do not explicitly model the interactions between the predicted events. DeeReCT-APA97 considers the interactions among multiple PASs; however, interactions with other types of regulatory events, e.g., splicing, are beyond its scope.

New deep-learning methods and perspectives

In the following sections, we discuss several promising new paradigms in deep learning that will potentially overcome the limitations already described (Figure 3). We list related works in Table 5 as examples for each new paradigm and hope that they can shed light on new deep-learning-based gene regulation studies.

Figure 3.

New deep-learning paradigms for gene regulation studies

(A) Self-supervised pre-trained models.

(B) Few-shot and meta-learning models.

(C) Incorporation of structural information.

(D) Multi-omics models.

(E) Single-cell omics models.

Table 5.

New methods and perspectives

| Method name | Year | Datasets | Model | Functionalities |
|---|---|---|---|---|
| Self-supervised pre-trained models: | | | | |
| ESM-1b Transformer18 | 2020 | 250 million protein sequences from UniProt Archive (UniParc)161 collected by UniRef50,129 | Transformer (33 layers, 650M params) | pre-training task: protein masked language modeling on amino acid sequences; downstream tasks: contact map prediction, secondary structure prediction |
| DNABERT19 | 2021 | k-mers of the human genome | BERT-base162 (12 layers, 110M params) | DNA masked language modeling; downstream fine-tuning achieves strong performance on promoter recognition, TF binding site prediction, splice site prediction, functional genetic variant classification, and cross-species transfer learning |
| MSA Transformer24 | 2021 | 26 million MSAs from UniProt collected by UniClust30163 | Transformer adapted to MSAs (12 layers, 100M params) | protein masked language modeling on MSAs; downstream tasks: unsupervised and supervised contact map prediction, 8-class secondary structure prediction |
| Few-shot/meta-learning: | | | | |
| DeeReCT-TSS74 | 2021 | FANTOM539 | Reptile algorithm164 for meta-learning | the Reptile meta-learning algorithm allows fast adaptation to new tissue types |
| MIMML165 | 2022 | starPepDB,166 BIOPEP-UWM167 | Prototypical Network168 for metric-based meta-learning (few-shot learning); mutual information maximization loss | few-shot bioactive peptide function prediction |
| Incorporation of structural information: | | | | |
| NucleicNet106 | 2019 | 483 RNA-protein complexes from the PDB,107 de-duplicated to 158 ribonucleoprotein structures | CNN with ResNet-like architecture | structure-based RNA-protein binding prediction |
| MaSIF169 | 2020 | PDB | geodesic convolutional neural networks170 | protein pocket classification (MaSIF-ligand); protein interface prediction (MaSIF-site); protein-protein interaction (PPI) search (MaSIF-search) |
| dMaSIF171 | 2021 | PDB | quasi-geodesic convolution on point cloud representations of protein surfaces | protein interface prediction; PPI search |
| Multi-omics models: | | | | |
| MOMA172 | 2016 | the study curated the dataset "Ecomics," which integrates transcriptome, proteome, metabolome, fluxome, and phenome data of E. coli under different experimental conditions (available from http://prokaryomics.com) | combination of RNN-based deep learning and LASSO regression | using a layer-by-layer approach, the model predicts multi-omics quantities (transcriptomic, proteomic, metabolomic, fluxomic, and phenomic) |
| DSPN173 | 2018 | the study curated the dataset resource "PsychENCODE," which includes comprehensive functional genomic data (genotype, bulk transcriptome, chromatin, and Hi-C profiles) of the brains of 1,866 individuals | conditional deep Boltzmann machine174 | the model predicts brain phenotypes in an interpretable and generative way and is able to impute intermediate "molecular phenotypes" |
| deepManReg175 | 2022 | Patch-seq176 multi-omics transcriptomic and electrophysiological data for neuron phenotype classification177 | DNN with manifold alignment178 | multi-modal alignment of multi-omics data |
| Chaudhary et al.179 | 2018 | 230 samples from TCGA with RNA-seq data, microRNA-seq data, and DNA methylation profiles | autoencoder-based dimensionality reduction,180 feature selection, and integration of multi-omics data | the multi-omics model clusters patients into different survival groups, and survival-correlated autoencoder features have verified predictive performance on independent datasets |
| Utilizing single-cell profiles: | | | | |
| Pseudobulk level: | | | | |
| Cusanovich et al.181 | 2018 | scATAC-seq of 100,000 cells from 13 tissues of adult mice | Basset | prediction of chromatin accessibility in each of the 13 tissues |
| DeepFlyBrain151 | 2022 | scATAC-seq profiling of 240,919 cells of the Drosophila whole brain | CNN + LSTM model used in DeepMEL | prediction of co-accessible regions in three cell subtypes: Kenyon cells, T neurons, and glia |
| Single-cell level: | | | | |
| DeepCpG182 | 2017 | | CNN + bidirectional GRU | imputation of methylation states at the single-cell level |
| CNNC185 | 2019 | | CNN | TF target gene prediction, disease-related gene prediction, and causality inference between genes |
| SCALE190 | 2019 | | variational autoencoder197 with Gaussian mixture model | clustering, batch effect removal, and imputation of scATAC-seq data |
| scFAN198 | 2020 | | 3-layer CNN | inferring TF binding activity from scATAC-seq using a TF binding model pre-trained on bulk data |
| scGNN199 | 2021 | | GNN-based autoencoder | clustering, scRNA-seq data imputation |
| scBasset200 | 2022 | | 6-layer CNN (1,344 bp input) | sequence-aware scATAC-seq imputation, de-noising, and cell clustering |
| scTenifoldKnk202 | 2022 | | quasi-manifold alignment206 | virtual gene knockout prediction |

Pre-trained self-supervised models could alleviate the problem of data insufficiency

In recent years, pre-trained models have achieved great success in natural language processing and understanding. Pre-trained Transformer models such as BERT162 and GPT207,208,209 perform self-supervised learning on massive corpora, learning to predict randomly masked tokens from their context (the "masked language modeling" task) or the next token given the previous tokens (the "causal language modeling" task). The pre-trained models show strong transfer learning ability: after fine-tuning on a small amount of data from a downstream task, they can achieve state-of-the-art performance (Figure 3A).

Pre-trained models for biological sequences have been developed in parallel. For example, Rives et al.18 developed a protein sequence model, ESM-1b, a 33-layer Transformer with 650 million parameters. ESM-1b performs BERT-like masked language modeling and is trained on 250 million protein sequences from UniRef50,210 which clusters UniProt Archive sequences at 50% sequence identity. Using ESM-1b's network representations as input, downstream classifiers trained on small datasets perform well on protein secondary structure and contact map prediction. DNABERT19 is a DNA sequence model based on a 12-layer BERT-base162 Transformer with 110 million parameters; it is pre-trained with the masked language modeling task on the human genome tokenized into k-mers. The model showed performance similar to or better than that of previous methods on several sequence classification tasks, such as promoter recognition, TF binding site prediction, splice site prediction, and functional genetic variant classification. It also showed cross-species transfer learning ability through the prediction of mouse TF binding sites. Instead of performing the pre-training task on a single amino acid sequence, the MSA Transformer24 extends the Transformer model to handle multiple sequence alignments (MSAs) of amino acid sequences, making better use of contextual information both within sequences and across homologous sequences. The MSA Transformer outperformed ESM-1b on downstream protein secondary structure prediction and protein contact map prediction.
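The data preparation behind k-mer-based masked language modeling can be sketched as follows. The sequence is split into overlapping k-mers, and a random subset of tokens is selected as prediction targets. Following the original BERT recipe, 80% of the selected tokens are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. Whether a given biological model uses the same split is not assumed here. Note that with overlapping k-mers, a masked token can be partially inferred from its neighbours; this sketch ignores that issue. The value of k, the masking rate, and the example sequence are illustrative.

```python
import itertools
import random


def kmer_tokenize(seq, k):
    """Split a DNA sequence into overlapping k-mers (stride 1)."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style masking: returns model inputs and per-token prediction targets."""
    rng = random.Random(seed)
    inputs, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            targets.append(tok)  # the model must recover the original token here
            r = rng.random()
            if r < 0.8:
                inputs.append("[MASK]")
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # random replacement
            else:
                inputs.append(tok)  # left unchanged
        else:
            inputs.append(tok)
            targets.append(None)  # position not scored by the loss
    return inputs, targets


k = 3
vocab = ["".join(p) for p in itertools.product("ACGT", repeat=k)]
tokens = kmer_tokenize("ACGTACGGTCA", k)
inputs, targets = mask_tokens(tokens, vocab, mask_prob=0.3)
print(tokens)
print(inputs)
```

No labels are needed: the genome itself supplies the targets. This is why such pre-training can use far more data than any single supervised gene-regulation task.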

Through a language modeling objective, pre-trained models can exploit massive amounts of unlabeled biological sequence data that are not specific to one species or one prediction task. In this way, they may be able to learn regulatory grammars shared across multiple genomic regions or multiple species. It will be of great interest to see whether such pre-trained models systematically benefit downstream gene regulation prediction tasks, especially when the downstream datasets are too small to train deep-learning models from scratch.

Few-shot and meta-learning mechanisms produce data-efficient deep-learning models

Another trend in the deep-learning community for tackling data insufficiency is to develop models that use data efficiently. In particular, “few-shot learning” aims to solve a prediction task with only a few training examples (Figure 3B). This challenging problem is usually tackled by “meta-learning,” in which a “meta-model” is trained to generalize easily across a set of related tasks. When a specific task is presented, the meta-model can quickly adapt to it using only a few training examples from that task.
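The meta-learning idea can be sketched with Reptile (Nichol et al.), one of the simplest gradient-based meta-learning algorithms. In this toy Python version, the "tasks" are a hypothetical family of 1-D linear regression problems rather than biological prediction tasks, and the inner loop uses plain full-batch gradient descent; it is meant only to show the two-level update, not any published implementation.

```python
import random
from typing import Callable, List, Sequence, Tuple

Data = List[Tuple[float, float]]


def sgd_adapt(params: Sequence[float], data: Data,
              lr: float = 0.05, steps: int = 10) -> List[float]:
    """Inner loop: a few gradient steps on mean squared error for y = w * x + b."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        gb = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return [w, b]


def reptile(sample_task: Callable[[random.Random], Data],
            init: Sequence[float] = (0.0, 0.0), meta_steps: int = 200,
            meta_lr: float = 0.1, seed: int = 0) -> List[float]:
    """Outer loop: adapt to a sampled task, then move the meta-parameters
    a fraction meta_lr of the way toward the adapted parameters."""
    rng = random.Random(seed)
    theta = list(init)
    for _ in range(meta_steps):
        phi = sgd_adapt(theta, sample_task(rng))
        theta = [t + meta_lr * (p - t) for t, p in zip(theta, phi)]
    return theta


def mse(params: Sequence[float], data: Data) -> float:
    w, b = params
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


def toy_task(rng: random.Random) -> Data:
    """Hypothetical task family: lines with slope near 2 and small intercepts."""
    slope, intercept = rng.uniform(1.5, 2.5), rng.uniform(-0.5, 0.5)
    xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
    return [(x, slope * x + intercept) for x in xs]
```

After meta-training, a few inner-loop steps starting from the learned initialization reach a lower loss on a new task than the same number of steps from a naive initialization, which is the "fast adaptation" property described above.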

Such methods have already been applied to the classification of biological sequences. For example, the previously introduced DeeReCT-TSS74 applied a gradient-based meta-learning algorithm, Reptile,164 to rapidly adapt its TSS prediction model to each of ten cell types. The authors found that pre-training a meta-model on ∼20% of the data from each cell type and then adapting it to a specific cell type using the remaining data improved model performance. MIMML165 is a recently proposed meta-learning framework for bioactive peptide function prediction. It is based on the Prototypical Network,168 which performs few-shot classification by measuring the distance from a query example to a prototype computed from a few exemplars of each class. MIMML can perform few-shot prediction for a total of 16 peptide functions. Given these successful applications, we expect meta-learning to have a greater impact, especially on prediction tasks with many related classes but only a few training examples for each.
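The classification rule of a Prototypical Network can be sketched in a few lines of Python. The sketch assumes that examples have already been mapped to embedding vectors (the learned embedding network is omitted), and the class names in the usage test are hypothetical peptide functions, not MIMML's actual label set.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def compute_prototypes(support: Dict[str, List[Vector]]) -> Dict[str, Vector]:
    """Prototype of each class = mean embedding of its few support examples."""
    return {label: [sum(col) / len(col) for col in zip(*vecs)]
            for label, vecs in support.items()}


def classify(query: Sequence[float],
             prototypes: Dict[str, Vector]) -> Tuple[str, Dict[str, float]]:
    """Softmax over negative squared Euclidean distances to each prototype."""
    logits = {c: -sum((q - p) ** 2 for q, p in zip(query, proto))
              for c, proto in prototypes.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {c: math.exp(v - top) for c, v in logits.items()}
    total = sum(exps.values())
    probs = {c: e / total for c, e in exps.items()}
    return max(probs, key=probs.get), probs
```

Because a new class only needs a handful of support examples to define its prototype, the same trained embedding can be reused for classes never seen during training.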

Incorporation of structural information benefits modeling

We have previously pointed out that sequence-only models are limited because they do not explicitly consider trans-acting factors. At the molecular level, such factors act through protein-protein or nucleic acid-protein interactions. Accurate modeling of trans-acting factors in gene regulation therefore requires incorporating structural information about both the cis- and trans-acting counterparts. Recent breakthroughs in de novo protein structure prediction by AlphaFold2 have greatly expanded the available protein structure resources.211 Prediction of the 3D structure of the genome50,56 and of RNA secondary structure has also progressed significantly.212,213 Systematically incorporating structural information into deep-learning models of gene regulation is therefore closer to reality than ever before (Figure 3C).

Recent work on deep learning over protein 3D structures may inspire future efforts to incorporate such information into gene regulation models. MaSIF169 enabled deep learning on protein surfaces. Using a geodesic convolutional neural network,170 MaSIF can predict the binding of common ligands to protein interfaces and search for protein surfaces involved in PPIs. dMaSIF,214 a successor to MaSIF, reduces the computational complexity of MaSIF by replacing geodesic convolution with quasi-geodesic convolution. Both methods produce deep representations of protein surfaces that can easily be reused by other downstream deep-learning predictors. They could serve as a starting point for incorporating structural information into proteomic-level regulation prediction tasks, where protein-protein interactions are abundant. NucleicNet,106 introduced in the section “transcriptomic-level applications,” could serve as an example for transcriptomic-level models that aim to incorporate such structural information. NucleicNet represents the binding protein’s 3D structure as a 3D grid annotated with physicochemical properties. Using a CNN with residual connections,108 it predicts the binding preference for each type of RNA constituent. It will be of great interest to see whether the structural information and RBP binding preferences encoded in NucleicNet’s deep representations can be explicitly used in modeling transcriptomic-level gene regulation, making such predictions more reliable and convincing.
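The idea of representing a 3D structure as a grid of physicochemical properties can be sketched as a simple voxelization in Python. The two channels used in the test (for example, an occupancy value and a partial charge) are hypothetical; NucleicNet's actual feature set and grid construction are not reproduced here. A 3D CNN would then take the resulting channels × n × n × n array as input.

```python
from typing import List, Sequence, Tuple

Atom = Tuple[Tuple[float, float, float], Sequence[float]]


def voxelize(atoms: Sequence[Atom], origin: Tuple[float, float, float],
             n_voxels: int, voxel_size: float, n_channels: int
             ) -> List[List[List[List[float]]]]:
    """Accumulate per-atom property vectors into a channels x n x n x n grid.

    Each atom contributes to the single voxel that contains it; atoms that
    fall outside the cubic box starting at `origin` are ignored.
    """
    grid = [[[[0.0] * n_voxels for _ in range(n_voxels)]
             for _ in range(n_voxels)] for _ in range(n_channels)]
    for coords, props in atoms:
        idx = [int((c - o) // voxel_size) for c, o in zip(coords, origin)]
        if all(0 <= i < n_voxels for i in idx):
            i, j, k = idx
            for ch, value in enumerate(props):
                grid[ch][i][j][k] += value
    return grid
```

Summing contributions per voxel is the simplest choice; smoother alternatives spread each atom over neighboring voxels with a distance-dependent kernel.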

Development of multi-omics models

Biologists usually rely on multiple experimental techniques to confirm a discovery. Likewise, using multiple data sources can provide more evidence for predictive deep-learning models (Figure 3D). Existing methods already integrate multi-omics data from multiple sources for clinical predictive modeling. For example, Chaudhary et al.179 developed a multi-omics deep-learning model to predict patient survival in hepatocellular carcinoma. The model was trained on 230 samples from The Cancer Genome Atlas (TCGA) with DNA methylation profiles, RNA-seq data, and microRNA-seq data. Their strategy for aggregating multi-omics data was to apply autoencoder-based dimensionality reduction180 and feature selection to each data type. The selected features were then concatenated for downstream multi-omics clustering and discriminative prediction.
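The "reduce each omics type, then concatenate" pattern can be sketched in Python. To keep the example short and dependency-free, simple variance-based feature selection followed by z-scoring stands in for the autoencoder used by Chaudhary et al.; this substitution, and all function names, are assumptions for illustration only.

```python
import statistics
from typing import Dict, List

Matrix = List[List[float]]  # samples x features


def top_variance_columns(matrix: Matrix, k: int) -> List[int]:
    """Indices of the k columns with the largest variance across samples."""
    n_cols = len(matrix[0])
    variances = [statistics.pvariance([row[j] for row in matrix]) for j in range(n_cols)]
    return sorted(range(n_cols), key=lambda j: variances[j], reverse=True)[:k]


def zscore_columns(matrix: Matrix, cols: List[int]) -> Matrix:
    """Keep only `cols`, standardizing each to zero mean and unit variance."""
    out: Matrix = [[] for _ in matrix]
    for j in cols:
        col = [row[j] for row in matrix]
        mu = statistics.fmean(col)
        sd = statistics.pstdev(col) or 1.0  # avoid division by zero
        for i, v in enumerate(col):
            out[i].append((v - mu) / sd)
    return out


def reduce_and_concatenate(omics: Dict[str, Matrix], k_per_omics: int) -> Matrix:
    """Reduce each omics matrix separately, then concatenate per sample.

    All matrices must list the same samples in the same order.
    """
    fused: Matrix = []
    for matrix in omics.values():
        reduced = zscore_columns(matrix, top_variance_columns(matrix, k_per_omics))
        fused = reduced if not fused else [a + b for a, b in zip(fused, reduced)]
    return fused
```

Standardizing each omics block before concatenation keeps one data type with large raw values from dominating the downstream clustering.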

The development of multi-omics models can be further inspired by multi-modal machine learning.215 Processing multi-omics data is essentially processing data from multiple modalities, so multi-modal fusion strategies, e.g., early fusion versus late fusion, also apply to multi-omics models. Early fusion, i.e., integrating data at an early processing stage of the network, may favor pairs of omics profiles measured with similar technologies, whereas late fusion, i.e., integrating data at a late processing stage, may favor pairs of omics profiles that measure similar subjects with very different technologies. Producing so-called joint representations215 by mapping data points from multiple omics sources into the same semantic space may also benefit unified downstream processing and analysis. DeepManReg175 is one such example. Designed as a DNN with a manifold alignment objective, it performs multi-modal alignment of transcriptomic and electrophysiological data from a Patch-seq multi-omics experiment176 and has been effective in neuron phenotype classification.
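The difference between early and late fusion can be shown with a small Python sketch. A nearest-centroid classifier stands in for the neural networks discussed in the text (an assumption made to keep the example self-contained). Early fusion concatenates the omics features before fitting one model; late fusion fits one model per omics type and averages their predicted class probabilities.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]
View = List[Vector]  # one omics type: samples x features


def centroids(X: Sequence[Vector], y: Sequence[str]) -> Dict[str, Vector]:
    by_class: Dict[str, List[Vector]] = {}
    for x, label in zip(X, y):
        by_class.setdefault(label, []).append(x)
    return {c: [sum(col) / len(col) for col in zip(*rows)] for c, rows in by_class.items()}


def class_probs(x: Vector, cents: Dict[str, Vector]) -> Dict[str, float]:
    """Softmax over negative squared distances to each class centroid."""
    logits = {c: -sum((a - b) ** 2 for a, b in zip(x, m)) for c, m in cents.items()}
    top = max(logits.values())
    exps = {c: math.exp(v - top) for c, v in logits.items()}
    z = sum(exps.values())
    return {c: e / z for c, e in exps.items()}


def early_fusion_predict(train_views: List[View], y: Sequence[str],
                         test_views: List[View]) -> List[Dict[str, float]]:
    """Concatenate all omics features per sample, then fit a single model."""
    X_train = [sum(rows, []) for rows in zip(*train_views)]
    X_test = [sum(rows, []) for rows in zip(*test_views)]
    cents = centroids(X_train, y)
    return [class_probs(x, cents) for x in X_test]


def late_fusion_predict(train_views: List[View], y: Sequence[str],
                        test_views: List[View]) -> List[Dict[str, float]]:
    """Fit one model per omics type, then average the predicted probabilities."""
    per_view = []
    for X_tr, X_te in zip(train_views, test_views):
        cents = centroids(X_tr, y)
        per_view.append([class_probs(x, cents) for x in X_te])
    return [{c: sum(p[c] for p in preds) / len(preds) for c in preds[0]}
            for preds in zip(*per_view)]  # preds: one dict per view for a sample
```

These are the two extremes of a spectrum; neural models can also fuse at intermediate layers, which is closer to learning a joint representation.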

Although most existing deep-learning methods consider each regulatory event independently, gene regulation itself is a holistic cellular process. Future deep-learning models of gene regulation should not only integrate multi-omics data sources as input but also model the relationships among multi-omics quantities in their output (Figure 3D). The Multi-Omics Model and Analytics (MOMA)172 provides one such example in E. coli. MOMA predicts multi-omics quantities of E. coli under different growth conditions. Using RNN-based deep learning and LASSO regression, MOMA adopts a layer-by-layer approach, predicting transcriptomic, proteomic, metabolomic, fluxomic, and phenomic quantities one after another and explicitly accounting for the effect of quantities from preceding omics layers on each subsequent layer. Similarly, the Deep Structured Phenotype Network (DSPN)173 predicts brain phenotypes from multiple functional genomic data modalities using a hierarchical conditional deep Boltzmann machine (DBM) architecture.174 Its DBMs are likewise arranged layer by layer, first predicting “intermediate molecular phenotypes” and then brain phenotypes. This makes DSPN a generative model that is more interpretable than the discriminative models common in deep learning. These approaches could guide future gene regulation models that aim to simulate the underlying biological processes more realistically.
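The layer-by-layer idea can be sketched as a Python cascade in which each stage receives the input condition together with all previously predicted omics layers. The stages below are hypothetical toy functions standing in for trained per-layer models (MOMA's RNN and LASSO components are not reproduced); only the data flow is illustrated.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
Stage = Callable[[Vector], Vector]


def cascade_predict(condition: Vector,
                    stages: Sequence[Tuple[str, Stage]]) -> Dict[str, Vector]:
    """Predict omics layers in order; each stage sees the input condition
    concatenated with every previously predicted layer."""
    outputs: Dict[str, Vector] = {}
    features = list(condition)
    for name, stage in stages:
        prediction = stage(features)
        outputs[name] = prediction
        features = features + prediction  # downstream layers see upstream predictions
    return outputs


# Hypothetical toy stages standing in for trained per-layer models.
toy_stages: List[Tuple[str, Stage]] = [
    ("transcriptome", lambda f: [2.0 * f[0]]),
    ("proteome", lambda f: [f[1] + 1.0]),
    ("metabolome", lambda f: [f[0] * f[2]]),
]
```

Because each layer is an explicit intermediate output, errors can be traced to the stage where they arise, which is part of what makes such structured models easier to interpret.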

Utilization of single-cell profiles

Nearly all of the aforementioned deep-learning models for gene regulation have been trained on bulk sequencing profiles. In recent years, single-cell omics profiling technologies have improved substantially. These include single-cell RNA-seq (scRNA-seq) for gene expression profiling, single-cell ATAC-seq (scATAC-seq)192,216 for chromatin accessibility profiling, single-cell bisulfite sequencing (scBS-seq)183 and single-cell reduced representation bisulfite sequencing (scRRBS-seq)217 for methylation profiling, single-cell ChIP-seq (scChIP-seq)218 for protein-DNA binding profiling, and Smart-seq219 for full-length transcriptome profiling. As a result, data at single-cell resolution are accumulating rapidly. Single-cell profiles differ from bulk profiles in their high dimensionality, high dropout rate (sparsity), and low sequencing quality and coverage. These properties introduce new challenges not only for data processing, analysis, and interpretation but also for developing gene regulation models that use such data.

Current deep-learning-based gene regulation models generally use single-cell profiles in one of two ways (Figure 3E). The first operates at the pseudobulk level: single-cell measurements within each cell cluster are aggregated into one profile, and the model then uses these pseudobulk profiles in the same way as bulk omics profiles. Although information is lost during aggregation, pseudobulk profiles still have an advantage over real bulk profiles because each represents measurements from a pure cell type without interference from other cell types. As an example, Cusanovich et al. performed scATAC-seq on ∼100,000 somatic cells from adult mice.181 The researchers developed a model based on the Basset architecture to predict chromatin accessibility in each of the 85 identified cell types in a multi-task fashion. The model was trained on the aggregated pseudobulk profiles of each cell cluster. More recently, Janssens et al. developed the DeepFlyBrain model, based on DeepMEL, to predict chromatin co-accessible regions in the Drosophila brain.151 Similarly, the authors trained DeepFlyBrain on the aggregated pseudobulk profiles of three cell types, namely Kenyon cells, T neurons, and glia.
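Pseudobulk aggregation itself is straightforward; a minimal Python sketch is shown below. It assumes a cells × features count matrix (for example, reads per peak) and one cluster label per cell, sums counts within each cluster, and optionally rescales each pseudobulk profile to counts per million. The function name and the normalization choice are illustrative assumptions.

```python
from typing import Dict, List, Sequence


def pseudobulk(counts: Sequence[Sequence[float]], clusters: Sequence[str],
               normalize: bool = True) -> Dict[str, List[float]]:
    """Sum single-cell counts within each cluster into one profile per cluster.

    counts: cells x features (e.g., reads per peak); clusters: one label per cell.
    If normalize is True, each profile is rescaled to counts per million.
    """
    sums: Dict[str, List[float]] = {}
    for row, label in zip(counts, clusters):
        acc = sums.setdefault(label, [0.0] * len(row))
        for j, v in enumerate(row):
            acc[j] += v
    if normalize:
        for label, acc in sums.items():
            total = sum(acc) or 1.0  # guard against an all-zero cluster
            sums[label] = [1e6 * v / total for v in acc]
    return sums
```

For a multi-task accessibility model, each cluster's profile could then be thresholded into accessible or inaccessible labels per region, giving one output task per cell type.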

The second way is to use single-cell profiles at the genuine single-cell level. Because single-cell profiles are well known for their sparsity, much research has been dedicated to applying deep learning to imputation and inference on single-cell profiles. For example, DeepCpG,182 trained on scBS-seq and scRRBS-seq profiles from multiple human and mouse tissues, uses a CNN + bidirectional GRU architecture to impute methylation status in low-coverage single-cell methylation profiles. scGNN199 is a GNN-based autoencoder model for enhancing scRNA-seq data. It combines multiple GNNs and autoencoders to produce relationship-aware cell embeddings. The authors demonstrated that scGNN improved cell clustering and data imputation on four independent, publicly available scRNA-seq datasets. SCALE190 is a deep-learning model, combining a variational autoencoder with a Gaussian mixture model, that imputes low-coverage scATAC-seq profiles. In addition, SCALE’s latent embedding of each cell was shown to be effective for scATAC-seq cell clustering and batch effect removal. scBasset is a recent model for scATAC-seq profile imputation. It improves upon SCALE by guiding imputation with the underlying genomic sequence, which is processed into deep representations by a 6-layer CNN and incorporated at the imputation step. scFAN198 infers single-cell TF binding activity from scATAC-seq profiles. It uses a sequence-based TF binding model trained on bulk TF binding profiles and infers per-cell TF binding activity by using that model to predict TF binding to the chromatin-accessible regions of each cell, as reported by the scATAC-seq profile.
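The final aggregation step of this kind of inference can be sketched in Python: given per-peak binding scores from a bulk-trained model and a binary cell × peak accessibility matrix, each cell is scored by the fraction of its accessible peaks predicted as bound. This is a simplified scoring rule assumed for illustration, not scFAN's exact procedure, and the TF names and 0.5 threshold in the example are hypothetical.

```python
from typing import Dict, List, Sequence


def per_cell_tf_activity(peak_scores: Dict[str, Sequence[float]],
                         accessibility: Sequence[Sequence[int]],
                         threshold: float = 0.5) -> List[Dict[str, float]]:
    """Score each TF in each cell as the fraction of the cell's accessible
    peaks whose predicted binding probability is at least `threshold`.

    peak_scores: TF -> predicted binding probability per peak (bulk-trained model).
    accessibility: cells x peaks binary matrix from scATAC-seq.
    """
    activities = []
    for row in accessibility:
        open_peaks = [j for j, a in enumerate(row) if a]
        cell: Dict[str, float] = {}
        for tf, scores in peak_scores.items():
            if not open_peaks:
                cell[tf] = 0.0  # no accessible peaks observed in this cell
            else:
                bound = sum(1 for j in open_peaks if scores[j] >= threshold)
                cell[tf] = bound / len(open_peaks)
        activities.append(cell)
    return activities


def most_active_tf(cell: Dict[str, float]) -> str:
    """Return the TF with the highest activity score in one cell."""
    return max(cell, key=cell.get)
```

The sequence model is run only once per peak, so the per-cell step reduces to cheap lookups over each cell's accessible regions.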