1. Introduction

Understanding human anatomy is fundamental to research and education in medicine and forensic anthropology [1,2]. Physical specimens such as cadaveric prosections, dissections, or human bone collections are arguably irreplaceable for anatomical teaching [3,4]. However, the use of physical specimens introduces significant ethical considerations [5,6], as well as limitations related to cost and the gradual deterioration of specimen quality over time and with prolonged handling. The advent of medical imaging and its different modalities has provided anatomy-related research fields with the means to examine and visualise internal morphology, such as bone and soft tissues, without the need for dissection or serial sectioning [7,8]. With digitisation techniques becoming increasingly common in the archaeological and anthropological sciences [9], computed tomography (CT) databases are being accessed as repositories of virtual skeletal data [10]. In forensic anthropology, techniques for scanning and processing CT images are used to develop novel methods of identification [11–18]. CT scanning is a non-destructive acquisition method traditionally used in medical applications, and it follows the basic physical principles of x-rays [7]. As x-rays traverse matter, the beam undergoes attenuation: its intensity is reduced as it is absorbed or deflected by the tissue [19]. In CT, a series of x-ray images of the object's cross-sections is captured to construct three-dimensional visualisations with volumetric information [7,20–22]. While the accessibility of CT data (including associated software) has improved in recent years through open-source data repositories [23] and methods [24], dependence on manual thresholding and segmentation continues to limit the number of images, and therefore the sample size, that can feasibly be processed for research.
This has made it difficult to undertake research on modern-day populations at a scale sufficient to address identification challenges.
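The attenuation described above is commonly modelled with the Beer-Lambert law, I = I0 · exp(-Σ μi·xi), where μi is the linear attenuation coefficient and xi the path length through each material. The following is a minimal Python sketch, assuming a monoenergetic beam (clinical CT tubes emit a polychromatic spectrum, so this is a simplification); the function name and the coefficients in the example are hypothetical placeholders, not measured values.

```python
import numpy as np


def transmitted_intensity(i0, mu, thickness):
    """Beer-Lambert attenuation of a monoenergetic x-ray beam.

    i0        -- incident intensity
    mu        -- linear attenuation coefficient of each layer (1/cm)
    thickness -- path length through each layer (cm)
    """
    mu = np.asarray(mu, dtype=float)
    thickness = np.asarray(thickness, dtype=float)
    # Intensity decays exponentially with the summed product mu * x over all layers.
    return i0 * np.exp(-np.sum(mu * thickness))


# Hypothetical two-layer path; the coefficients are placeholders, not measured values.
print(transmitted_intensity(1000.0, mu=[0.2, 0.5], thickness=[3.0, 1.0]))
```

Denser layers (larger μ) or longer paths remove more of the beam, which is what CT reconstruction ultimately maps back to per-voxel attenuation values.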

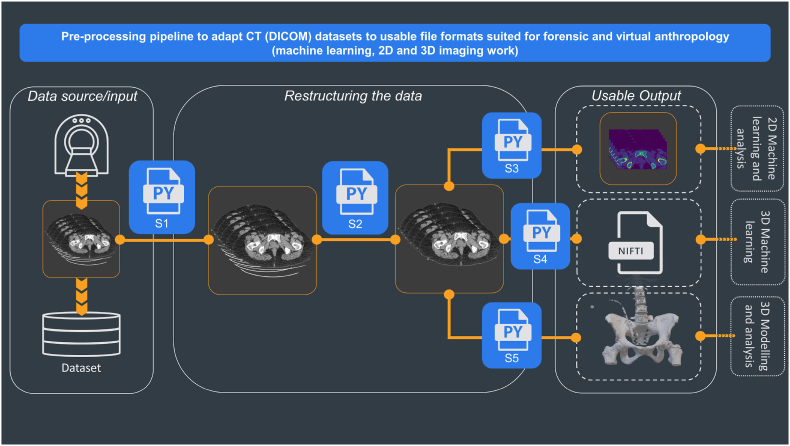

The following is a pre-processing pipeline created to remove irrelevant non-osseous structures (such as fat, lymph nodes, and skin) and noise (such as the sliding table, stents, and medical tubing) from CT scans. It makes it possible to process large numbers of CT scans into large datasets relevant to the assessment of human remains in the context of modern populations. The pipeline produces three distinct outputs: 2D images thresholded for the tissue(s) of interest, stereolithography (STL) files for quantitative mesh analysis and 3D printing, and 3D image files ready for machine learning applications.

2. Background

The increasing application of medical imaging and digital data to the analysis of human remains and anatomy in forensic anthropology (FA) is well documented [16]. This growing trend, now commonly referred to as virtual anthropology, has contributed to the development of quantitative forensic anthropology methodologies [11,25–27]. Medical imaging, specifically CT scanning, can provide high-contrast digital image data in which small differences between tissues can be detected [19]. Structures scanned through CT can be visualised as high-fidelity grayscale images. These images can be further reconstructed as three-dimensional models or thresholded into images with regions or tissues of interest highlighted, enabling the investigation of complex skeletal morphology [28]. These features of CT have aided researchers across disciplines in the interpretation of medical images [7,8,29]. CT images are also used in other established and emerging research fields related to medicine, forensic anthropology, virtual anthropology, and 3D forensic science [10,30–32]. Advances in medical imaging technologies, alongside disciplines such as computer science, have provided new ways of generating 3D visualisations of human anatomy from CT scans for study and research [33]. CT scans, recorded as Digital Imaging and Communications in Medicine (DICOM) data, can be visualised as two-dimensional images in axial, coronal, and sagittal views, and tissues of interest can be differentiated and highlighted using differences in attenuation [34]. Attenuation is the overall decrease in the intensity of the emitted x-ray beam as it passes through the object, due to processes such as absorption and scattering [19]. As a result of attenuation, dense tissue or material appears bright, while less dense tissue or material appears dark [35]. Further, the DICOM images can be stacked and reconstructed as a three-dimensional volume rendering [28].
Through three-dimensional reconstructions and renderings of DICOM data from CT, the size and shape of the scanned object or anatomy of interest can be accurately determined [28,31]. Comparative studies have further shown a high degree of comparability between dry bones and three-dimensional models rendered from CT scans [36–38]. As a result of this accuracy, and of continual efforts to adapt traditional FA methods to the virtual setting enabled by CT, CT scanning has seen increased use, and in some institutes it has become part of routine procedure in medico-legal investigations [26,27,31,39].
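The link between attenuation and displayed brightness is usually expressed on the Hounsfield scale, which rescales the linear attenuation coefficient μ relative to water and air: HU = 1000 × (μ - μ_water) / (μ_water - μ_air). The helper below is an illustrative sketch (its name is hypothetical, not taken from the pipeline); it shows why denser material, with a larger μ, receives a larger HU value and is therefore displayed brighter.

```python
def hounsfield(mu, mu_water, mu_air=0.0):
    """Convert a linear attenuation coefficient to Hounsfield units (HU).

    By construction water maps to 0 HU and air to -1000 HU; materials with a
    larger attenuation coefficient (denser tissue such as bone) get higher HU
    values and are shown brighter on a standard grayscale display.
    """
    return 1000.0 * (mu - mu_water) / (mu_water - mu_air)


# Placeholder coefficient for water (1/cm); illustrative only, energy dependent in practice.
MU_WATER = 0.2
print(hounsfield(MU_WATER, MU_WATER))  # water
print(hounsfield(0.0, MU_WATER))       # air, with mu_air taken as 0
```

Because the scale is anchored to water and air, HU values are broadly comparable between scanners, which is what makes fixed tissue thresholds workable across datasets.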

The growth of virtual anthropology has resulted in an increasing demand for CT data [31,40] that is currently being met by CT databases created from clinical or post-mortem scans of modern populations [9,41]. The New Mexico Decedent Image Database (NMDID) and other open-source data archives (such as The Cancer Imaging Archive (TCIA)) reflect contemporary populations. The virtual nature of these databases not only provides research opportunities for digital preservation, clinical diagnostics, and post-mortem examinations, but also enables non-destructive analysis of contemporary skeletal structures [42]. These sources of data can provide a better representation of modern populations than historical skeletal collections [9,43]. Further, collections of high-resolution post-mortem and clinical CT scans eliminate the need for bone preparation, avoid the deterioration of physical samples over time, and allow easy data sharing and distribution for rapid analysis [28,44]. The virtual nature of CT and its associated imaging modalities allows access where physical examination is impossible [45,46]. Virtual access also gives users the opportunity to engage in transnational research, with virtual reconstructions being analysed by institutions in different locations [27].

The recent increase in the availability of medical imaging data and interest in virtual anthropology has resulted in studies that apply estimation methods based on morphological feature analysis to digital models [11,26,42,47–50]. This is typically done by converting DICOM data into a surface model, such as an STL (stereolithography) file. With the success of machine learning techniques in analysing non-medical images [51], there is currently a rise in the number of studies that apply machine learning (ML) and artificial intelligence to biological profile estimation in FA [14,16,17,46,52–65]. ML studies in medicine and FA have shown that 3D file formats derived from DICOM images, such as Neuroimaging Informatics Technology Initiative (NIfTI) volumes and Wavefront Alias (OBJ) and STL surface meshes, are gaining interest and may be valuable formats for convolutional neural networks processing volumetric data [66].

The limitation on study sample sizes can be seen as a two-fold problem: the lack of available data, and the feasibility of manually processing large quantities of medical images into acceptable file formats and standards. While the former is being addressed by the emergence of databases such as the NMDID and TCIA, and by the use of diagnostic clinical CT scans from hospitals and medical facilities, the latter is often overlooked in the research literature. Forensic science and virtual anthropology studies involving CT scans often overlook or underemphasise the time taken to process medical images for machine learning. As medical images used in virtual anthropology (VA) tend to come from post-mortem and/or clinical settings, the scanned specimen may include irrelevant biological and non-biological structures such as the sliding table (part of the CT scanning apparatus), medical tubing, clothing, fat, skin, or lymph nodes. While noisy structures can be removed in medical imaging programs such as 3D Slicer [67] using published guides and resources [24], these steps are arguably infeasible and too time-consuming if hundreds, if not thousands, of 3D models or individual scans are required for machine learning studies in FA or VA.

Given the current interest in using ML in forensic anthropology and virtual anthropology, large-scale CT databases are being turned to as a viable source of data. However, the manual steps involved in removing noise and unnecessary structures from large quantities of CT images remain a challenge for researchers working within the tight timelines of research project cycles. The purpose of this technical note is therefore to introduce a pre-processing pipeline based on Python 3, which users can adapt to automatically process CT images, remove noise, and generate three discrete file outputs relevant to 3D modelling, machine learning, and image analysis in forensic and virtual anthropology.

3. Pre-processing pipeline

A pre-processing pipeline was designed in Python 3.8 to remove noise and to generate thresholded CT images, 3D models in STL format, and 3D image files in NIfTI format. The pipeline has been developed to run on any operating system that supports Python 3.8 and the packages the pipeline requires. The packages used to construct the pipeline include pydicom, dicom2nifti, scipy, skimage, ITK, and VTK, among others. The code and additional details regarding dependencies are available for review and use at https://github.com/preprocessing_pipeline.
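Before running the scripts, it can help to confirm that the listed packages are importable. The following is a minimal sketch using only the standard library; the module list is our reading of the packages named above (scikit-image is imported as `skimage`), it omits the unnamed "others", and the repository's own dependency list remains the authoritative one.

```python
import importlib.util

# Import names for the packages listed above; scikit-image is imported as
# "skimage". The repository's own dependency list is the authoritative one.
PIPELINE_MODULES = ["pydicom", "dicom2nifti", "scipy", "skimage", "itk", "vtk"]


def missing_modules(names=PIPELINE_MODULES):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("Missing:", ", ".join(missing) if missing else "none")
```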

Fig. 1 provides a visual representation of the framework. The framework consists of three distinct stages, each involving different Python scripts to produce the desired output.

- (1) Data source/input
  - (a) Dataset sorting and extraction script
- (2) Restructuring data
  - (a) Noise removal and segmentation script
- (3) Useable output
  - (a) Thresholding for tissues of interest script
  - (b) Conversion to NIfTI format
  - (c) Conversion to stereolithography format

Fig. 1.

Pre-processing pipeline showing the steps for adapting CT (DICOM) datasets into file formats suitable for 2D and 3D image processing, virtual modelling, and machine learning work in forensic anthropology. The first stage is retrieving the data (Data Source), followed by the operations of data cleaning (Restructuring the data); the last stage is the Useable output, where different scripts prepare the scans for outputs required for the specifics of different types of research.

3.1. Data source/input

The first set of scripts (S1) takes DICOM images as input and checks for corrupted and non-DICOM files; any such files are deleted. It then sorts the remaining files into folders according to their series numbers, so that relevant DICOM files are automatically extracted into a folder for each series of a scan. Each CT study consists of multiple series of DICOM files: each DICOM file is an image of an x-ray slice of the body together with the image metadata, while each series is the collection of images of the scanned body. Series may differ in the presence or absence of contrast agents or in the scanned regions of interest. The metadata contain information such as slice thickness, series number, series date, patient sex, and patient age. These fields, or tags, are each identified by a pair of four-digit hexadecimal numbers (group, element) [68]; for example, (0020,0011) denotes the Series Number tag of a DICOM file, while (0020,000E) denotes the Series Instance UID. While DICOM files are generally extracted and provided in sequential order, the folder for each patient's CT study may contain multiple uncollated series of CT scans (Fig. 2). This first script therefore uses the tags stored in each DICOM file's metadata, read with Pydicom [69], a Python package capable of reading DICOM metadata, to sort and extract the DICOM files into their own ‘series folder’ within each CT study folder (Fig. 3). Finally, the third part of S1 enables users to delete DICOM files based on their metadata.
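The sorting step can be sketched with pydicom as follows. This is an illustration under stated assumptions, not the S1 code from the repository: the helper names and the `series_###` folder naming are hypothetical, files are grouped on the Series Number tag (0020,0011) as described above, and unreadable or non-DICOM files are only reported rather than deleted. Because Series Number is not guaranteed to be unique within a study, grouping on the Series Instance UID (0020,000E) would be a more defensive alternative.

```python
from collections import defaultdict
from pathlib import Path
import shutil


def series_folder_name(series_number):
    """Folder name for one series, zero-padded so folders sort in numeric order."""
    return f"series_{int(series_number):03d}"


def group_by_series(records):
    """Group (path, series_number) pairs into {series_number: [paths]}, keeping input order."""
    groups = defaultdict(list)
    for path, series_number in records:
        groups[series_number].append(path)
    return dict(groups)


def read_series_numbers(study_dir):
    """Yield (path, SeriesNumber) for each readable DICOM file in study_dir.

    Non-DICOM, unreadable, or untagged files are reported and skipped
    (the S1 script described above deletes them instead).
    """
    import pydicom  # imported here so the pure helpers work without pydicom installed
    from pydicom.errors import InvalidDicomError

    for path in sorted(Path(study_dir).iterdir()):
        if not path.is_file():
            continue
        try:
            # Header only: pixel data are not needed to read the tags.
            ds = pydicom.dcmread(str(path), stop_before_pixels=True)
        except (InvalidDicomError, OSError) as err:
            print(f"Skipping {path.name}: {err}")
            continue
        series_number = ds.get("SeriesNumber")  # tag (0020,0011)
        if series_number in (None, ""):
            print(f"Skipping {path.name}: no Series Number")
            continue
        yield path, int(series_number)


def sort_study(study_dir):
    """Move each DICOM file into a per-series subfolder of its study folder."""
    study_dir = Path(study_dir)
    records = list(read_series_numbers(study_dir))  # read all headers before moving anything
    for series_number, paths in group_by_series(records).items():
        target = study_dir / series_folder_name(series_number)
        target.mkdir(exist_ok=True)
        for path in paths:
            shutil.move(str(path), str(target / path.name))
```

Calling `sort_study` on a study folder would, for example, move every file from series 3 into a `series_003` subfolder. Reading all headers before moving anything keeps a half-sorted folder from being produced if a read fails midway.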

Fig. 2.

Uncollated DICOM files within individual CT study folder.

Fig. 3.

DICOM files automatically sorted and extracted into subfolders matching the series number in the metadata of each DICOM file.

3.2. Restructuring the data

The second script (S2) removes undesired structures such as the sliding table, highlighted in Fig. 4. To ensure the script's adaptability to other CT scanning procedures, manufacturers, and protocols, the code in S2 was optimised so that a selection of the patient's tissues is detected and the noisy structures outside this selection are removed. The selected section of the image matrix effectively becomes a mask; any part of the image outside the mask is overwritten with zero, thus deleting the unwanted structures. Because S2 writes its result back into the original file, the metadata of each file are preserved. Several notable Python packages were used to achieve these functions. The DICOM files were first thresholded using a combination of modules within the Pydicom package [69]. Thresholding highlights specific materials based on their radiodensity, measured in Hounsfield units (HU) [70]. The pixel matrix was thresholded to display only structures with the radiodensity of bone tissue.
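Stored DICOM pixel values are not Hounsfield units until the modality rescale recorded in the metadata is applied: HU = stored value × Rescale Slope (0028,1053) + Rescale Intercept (0028,1052). The following minimal numpy sketch shows that conversion followed by a bone threshold. The function names are hypothetical, and the 300 HU bone cut-off and the -1024 intercept in the example are assumptions to be checked against each dataset, not the values used in S2.

```python
import numpy as np


def to_hounsfield(stored, slope=1.0, intercept=0.0):
    """Apply the DICOM modality rescale: HU = stored * RescaleSlope + RescaleIntercept."""
    return np.asarray(stored, dtype=np.float64) * slope + intercept


def threshold_mask(hu, lower, upper=np.inf):
    """Boolean mask of pixels whose HU value lies within [lower, upper]."""
    return (hu >= lower) & (hu <= upper)


# Toy 2x2 "slice" of stored values; intercept -1024 and the 300 HU bone cut-off are assumptions.
stored = np.array([[0, 1000], [1400, 2500]], dtype=np.uint16)
hu = to_hounsfield(stored, slope=1.0, intercept=-1024.0)
bone = threshold_mask(hu, lower=300.0)
print(hu)
print(bone)
```

With pydicom, `ds.pixel_array`, `float(ds.RescaleSlope)` and `float(ds.RescaleIntercept)` would supply the three inputs for a real slice.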

Fig. 4.

DICOM file with sliding table highlighted in red. (For interpretation of the references to colour in this figure legend, the reader is referred to the Web version of this article.)

First, the DICOM file is loaded as an array of pixels and labelled as ‘Original Image’. This array undergoes a series of thresholding functions so that only structures with the radiodensity of bone and surrounding tissue are displayed. If the tissue of interest is bone only, the thresholding values in S2 can be used as a fixed threshold. The selected area of interest is returned as a ‘Mask’, which denotes the parts of the pixel array that should be kept. Using the area identified by the ‘Mask’, the ‘Original Image’ is segmented to produce the ‘Final Image’. The ‘Final Image’ array then overwrites the original DICOM file while maintaining the relevant metadata and the appropriate image matrix size (512 by 512) for further processing. The noise removal function of this script is illustrated in Fig. 5. In addition to noise removal, S2 detects corrupted and non-DICOM files and flags them for the user, so that the script can handle data from other CT scanning procedures, manufacturers, protocols, and sources. By removing incompatible and corrupted files, S2 enables a smooth transition to the next stage of the pre-processing pipeline.
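The mask-and-overwrite step can be sketched as below. This is one plausible approach, not necessarily the one S2 uses: it assumes the patient is the largest connected object above an assumed -500 HU body threshold, so that a disconnected table is discarded, and it fills internal holes so that enclosed air (e.g. lungs) stays inside the mask. All function names and the threshold are hypothetical; scipy's `ndimage.label` and `ndimage.binary_fill_holes` do the region work. Writing zero into the stored values corresponds, after rescaling, to the Rescale Intercept, which is an air-like value for common CT intercepts.

```python
import numpy as np
from scipy import ndimage


def body_mask(hu, body_threshold=-500.0):
    """Mask of the largest connected region above body_threshold, holes filled.

    Assumes the patient is the largest object in the slice, so smaller
    disconnected objects (e.g. the table) are dropped.
    """
    foreground = hu > body_threshold
    labels, n_regions = ndimage.label(foreground)
    if n_regions == 0:
        return np.zeros_like(foreground)
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # label 0 is the background, never the body
    largest = labels == sizes.argmax()
    return ndimage.binary_fill_holes(largest)  # keep enclosed air inside the body


def apply_mask(stored, mask, fill_value=0):
    """Copy of the stored pixel array with everything outside `mask` set to fill_value."""
    cleaned = stored.copy()
    cleaned[~mask] = fill_value
    return cleaned


def overwrite_pixels(path, cleaned):
    """Write cleaned stored values back into the same DICOM file.

    Assumes an uncompressed transfer syntax and unchanged shape and dtype;
    all other data elements (the metadata) are left as they were.
    """
    import pydicom  # only needed for file I/O

    ds = pydicom.dcmread(path)
    ds.PixelData = cleaned.astype(ds.pixel_array.dtype).tobytes()
    ds.save_as(path)
```

A typical sequence would compute HU for a slice, build `body_mask` from it, and pass the result with `ds.pixel_array` to `apply_mask`. Where the table touches the body at the chosen threshold, an additional morphological opening may be needed to separate the two before labelling.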

Fig. 5.

DICOM file labelled ‘Original Image’ undergoes a series of thresholding and masking functions to return an area of interest labelled as ‘Mask’. The returned ‘Final Image’ is written back into the DICOM file, thus removing noisy structures such as the sliding table.

3.3. Useable outputs

With the noise removed, the third script (S3) passes the DICOM files through thresholding functions from Pydicom and converts them into general-purpose 2D image formats. General-purpose image formats such as PNG (Portable Network Graphics) and JPEG (Joint Photographic Experts Group) are widely used standards for image storage and are thus commonly used in image-based machine learning [71]. Both formats store image data in compressed form, PNG losslessly and JPEG lossily but typically without visible reduction in image quality, so the conversion from DICOM to PNG/JPEG reduces the demand on storage and computational resources [72,73]. Depending on the requirements of the user or researcher, S3 can be altered to produce either PNG or JPEG files.
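Converting a HU slice to an 8-bit PNG or JPEG requires choosing a display window, because an 8-bit image holds only 256 grey levels while CT values span thousands of HU. The following is a minimal sketch assuming a linear window. The function names are hypothetical, the level and width in the example are an assumed bone window rather than the S3 settings, and saving uses Pillow, which the text above does not name.

```python
import numpy as np


def window_to_uint8(hu, level, width):
    """Map the HU window [level - width/2, level + width/2] linearly onto 0..255.

    Values below the window become black and values above it white, so
    denser tissue is rendered brighter.
    """
    low = level - width / 2.0
    scaled = (np.asarray(hu, dtype=np.float64) - low) / width
    return np.round(np.clip(scaled, 0.0, 1.0) * 255.0).astype(np.uint8)


def save_png(image_uint8, path):
    """Save an 8-bit grayscale array as PNG (lossless) using Pillow."""
    from PIL import Image  # Pillow is an assumption; it is not named in the text above

    Image.fromarray(image_uint8).save(path)


# Toy slice in HU, shown with an assumed bone window (level 400 HU, width 1800 HU).
slice_hu = np.array([[-1000.0, 400.0], [1200.0, 2000.0]])
img = window_to_uint8(slice_hu, level=400.0, width=1800.0)
# save_png(img, "slice_0001.png")  # hypothetical output file name
```

PNG is the safer choice when exact grey levels matter for later analysis, since it is lossless. Either format discards the HU values outside the chosen window.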

The fourth set of scripts (S4) enables users to compile and convert the processed DICOM files into the NIfTI file format using the dicom2nifti package [74]. This package takes the collection of DICOM files sorted into each series folder and generates a single NIfTI file per series. Because the DICOM slices are stacked, the resulting NIfTI file contains volumetric data, derived from the image data and metadata of the DICOM files, in a form suited to machine learning. Although originally created for neuroimaging, NIfTI is not limited to neurological tissue. Recent studies have demonstrated that NIfTI files can be thresholded by radiodensity to examine other soft tissues, such as lung connective tissue, and hard tissues such as bone [57,75–77]. As clinical and anatomical research has demonstrated the feasibility of using NIfTI to examine bone tissue, the NIfTI format could also be explored in forensic and virtual anthropology. Moreover, the NIfTI format has been noted as the preferred format for 3D machine learning, as a single file holds the information of hundreds or even thousands of DICOM files [78]. Lastly, the NIfTI format is not tied to specific software: it is compatible with many ready-made viewers and image analysis tools, such as 3D Slicer, ImageJ, InVesalius, OsiriX, R, and Python (through the NiBabel package) [67,79–84]. The second part of S4 enables users to move the generated NIfTI files into a new, separate directory.
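The per-series conversion can be sketched with dicom2nifti's `dicom_series_to_nifti` function, writing straight into a separate output directory so that no later move is needed. The folder layout (one subfolder per series inside each study folder, as produced by the sorting step) and the output naming scheme are assumptions made for illustration, and the helper names are hypothetical.

```python
from pathlib import Path


def nifti_output_path(series_dir, out_root):
    """Output file named after its study and series folders, e.g. study01_series_003.nii.gz."""
    series_dir = Path(series_dir)
    return Path(out_root) / f"{series_dir.parent.name}_{series_dir.name}.nii.gz"


def convert_study(study_dir, out_root):
    """Write one compressed NIfTI file per series subfolder into out_root."""
    import dicom2nifti  # imported here so the path helper can be used on its own

    out_root = Path(out_root)
    out_root.mkdir(parents=True, exist_ok=True)
    series_dirs = sorted(p for p in Path(study_dir).iterdir() if p.is_dir())
    for series_dir in series_dirs:
        out_file = nifti_output_path(series_dir, out_root)
        try:
            dicom2nifti.dicom_series_to_nifti(str(series_dir), str(out_file), reorient_nifti=True)
        except Exception as err:  # e.g. scout/localiser series that cannot form a volume
            print(f"Could not convert {series_dir}: {err}")
```

The resulting files can be read back in Python with NiBabel, for example `nibabel.load(path).get_fdata()` for the voxel array.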

The fifth script (S5) passes the processed DICOM files through the Visualization Toolkit (VTK), an open-source toolkit for 3D visualization and image processing with Python bindings [85] that forms the core of many open-source, multi-platform, ready-made programs such as 3D Slicer, InVesalius, and FreeSurfer [67,81,86]. The compiled DICOM files are stacked into arrays and thresholded to highlight the tissues of interest. The volumetric data in these arrays are used to form vertices and triangles, which are tessellated into a manipulable virtual surface model. The model can be saved as an STL file, which can be visualised in virtual 3D viewing platforms or software, or printed as a physical 3D model. Printed models provide tactile surface information about the scanned object [87,88]. 3D printing has since been incorporated into various fields of forensic science, such as FA, crime scene reconstruction, ballistic reconstruction, and forensic medicine [88–92]. Recent research using STL files has demonstrated that they can be used in both virtual and physical environments with an acceptable degree of accuracy and validity [11,93,94]. Through the automation offered by this script, large-scale datasets can be readily converted into STL files for virtual manipulation, for printed 3D structures used in surface analysis, or even as demonstrative evidence in court [94,95]. Examples of the thresholded 2D image files, 3D models, and 3D image files produced by scripts S3–S5 can be found in the examples folder of the GitHub repository provided above.
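The surface-extraction step can also be sketched without VTK. The version below swaps in scikit-image's `measure.marching_cubes` in place of the VTK filters S5 uses: it extracts the iso-surface at an assumed 300 HU level from a HU volume, and a small hand-written ASCII STL writer saves the triangles. It is an illustration, not the S5 code. The function names, the default level, and the unit voxel spacing are assumptions; real spacing should come from the pixel spacing and slice thickness in the metadata.

```python
import numpy as np


def stl_text(vertices, faces, name="model"):
    """Return a triangle mesh as ASCII STL text.

    vertices -- (N, 3) array of coordinates
    faces    -- (M, 3) array of vertex indices, one row per triangle
    """
    tris = np.asarray(vertices, dtype=float)[np.asarray(faces, dtype=int)]  # (M, 3, 3)
    normals = np.cross(tris[:, 1] - tris[:, 0], tris[:, 2] - tris[:, 0])
    lengths = np.linalg.norm(normals, axis=1, keepdims=True)
    # Unit normals; degenerate (zero-area) triangles get a zero normal.
    normals = np.divide(normals, lengths, out=np.zeros_like(normals), where=lengths > 0)

    lines = [f"solid {name}"]
    for n, tri in zip(normals, tris):
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for v in tri:
            lines.append(f"      vertex {v[0]:e} {v[1]:e} {v[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


def volume_to_stl(hu_volume, path, level=300.0, spacing=(1.0, 1.0, 1.0)):
    """Extract the iso-surface at `level` HU and save it as an ASCII STL file."""
    from skimage import measure  # scikit-image, imported here so stl_text works without it

    verts, faces, _normals, _values = measure.marching_cubes(hu_volume, level=level, spacing=spacing)
    with open(path, "w") as fh:
        fh.write(stl_text(verts, faces))
```

ASCII STL is easy to inspect but large. Binary STL, or VTK's own `vtkSTLWriter` as used by S5, would be more compact for large datasets.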

4. Pipeline implementation

The pipeline described above was tested and implemented on a large-scale database of 3575 CT scans retrieved from the Picture Archiving and Communication System (PACS) office at University College London Hospital (UCLH). The scans were anonymised by removing all patient identifiers defined by the NHS Patient Identification Policy and official documentation on Patient Safety Alert [96,97], leaving only minimal demographic information. Ethics approval was granted by the Health Research Authority and Health and Care Research Wales under protocol number 130244. Patient ages ranged from 19 to 97 years for females and from 18 to 99 years for males. All scans in the UCLH database were acquired at 120 kVp with 0.50 mm slice thickness on a single scanner, an Aquilion ONE ViSION 320-detector-row scanner (Toshiba Medical Systems, Otawara, Japan). The pipeline was additionally tested on individual scans obtained from the open-source public repositories NMDID and TCIA. The NMDID scans were acquired at 120 kVp with 0.50 mm slice thickness on a Philips Big Bore CT scanner (Philips Medical Systems, Amsterdam, Netherlands), while the TCIA scans were acquired at 120 kVp with 0.625 mm slice thickness on an unknown CT scanner. Figs. 6 and 7 show that the scripts, particularly the ‘noise removal and segmentation’ script, removed the sliding table from CT scans in the alternative NMDID and TCIA datasets as designed. S3–S5 also produced the discrete outputs as designed. Examples of the thresholded 2D image files, 3D models, and 3D image files from the single case files of the NMDID and TCIA databases can be found in the GitHub repository provided above.

Fig. 6.

Noise removal and segmentation script on CT scan from the NMDID.

Fig. 7.

Noise removal and segmentation script on CT scan from the TCIA.

5. Discussion

This pre-processing pipeline is an effective tool for researchers seeking to process large amounts of DICOM data from hospitals, forensic science institutes, scanned historical collections, or post-mortem databases into any of the outputs offered for ML and 2D and 3D image analysis. The pipeline is flexible: because DICOM is a standardised format, CT scans from other sources can be processed with little or no change to the code provided. The processing thresholds and the noise removal (S2) have been tested against three different sets of scans from different data sources and regions of the body. Consequently, the pipeline provides reliable outputs that can be used for multiple research purposes.

The capabilities shown by this preliminary pre-processing pipeline address both growing demands and problems faced by modern forensic and virtual anthropology. First, the pipeline addresses the demand for modern population data, as it has shown the capacity to transform clinical and post-mortem CT data straightforwardly. Second, the pipeline addresses the limitations imposed by manual segmentation. While previously published studies have noted that noise can be manually deleted using visualization software [93], the task is time-consuming for larger datasets. This manual cleaning ultimately limits the sample size that a research study can feasibly process within the life cycle of the project. Current ML research in FA has shown that medical images can be used to build successful classification models, using techniques such as neural networks and random forests, for age and sex estimation from post-mortem skeletal remains and living skeletal data. These studies use X-ray images [98–102], MRI images [103,104], photography [105], and CT scans [56,102,106–108] of bony anatomy to either cluster or classify individuals by age and biological sex. While the growing body of literature has presented ML algorithms with promising results, study sample sizes are often limited to fewer than 500 individuals or scans [17,64,65,109,110]. Though small datasets may be sufficient for training machine learning algorithms, the generalisability, accuracy, validity, and reliability of ML and AI models can be greatly improved with larger high-quality datasets [111]. The larger sample sizes that the pipeline can produce should help reduce variability in predictive accuracy and increase model robustness [112].
Additionally, the outputs of S2–S4 are in file formats consistent with the inputs used in current ML research in FA. The pipeline therefore not only addresses the time-consuming problem of removing undesirable background noise and structures, but also enables robust future ML research in FA. The outputs produced by the pipeline can also be used beyond ML and image analysis research. For example, as the pipeline can use large DICOM datasets to produce STL models via S5, it serves as a tool for creating virtual or printed 3D models representative of the contemporary population for training, teaching, and development purposes. Another example of the pipeline's flexibility is S3: while the current script thresholds for bone tissue, the threshold values can be adjusted to highlight other tissues of interest, such as muscle. This flexibility allows the pipeline to produce outputs relevant to fields outside FA, e.g., medicine. Although the pipeline has successfully processed different sets of CT data, the STL models produced by S5 could be further improved. Surface differences were observed throughout the models, and current step-by-step guides developed for open-source software allow users to generate models of higher quality. While the scripts in the present pipeline were developed using CT scans, the code processes DICOM files in general. DICOM image files produced by other imaging modalities, such as MRI or plain radiography, could therefore also be processed with some modification to the scripts, for example to the thresholds, since MRI and radiographic intensities are not expressed in Hounsfield units.
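Adjusting S3 to other tissues amounts to swapping the HU range used for thresholding. The following is a hypothetical sketch of such a configurable lookup. The fat (-190 to -30 HU) and skeletal muscle (-29 to +150 HU) ranges are those commonly used in CT body-composition studies, the open-ended bone range starting at 300 HU is an assumption, and none of these values are taken from the pipeline itself.

```python
import numpy as np

# Illustrative HU ranges, not taken from the pipeline. Fat and skeletal muscle
# follow ranges common in CT body-composition work; the bone range is assumed.
TISSUE_HU_RANGES = {
    "fat": (-190.0, -30.0),
    "muscle": (-29.0, 150.0),
    "bone": (300.0, float("inf")),
}


def tissue_mask(hu, tissue, ranges=TISSUE_HU_RANGES):
    """Boolean mask of pixels whose HU value falls in the range configured for `tissue`."""
    lower, upper = ranges[tissue]
    hu = np.asarray(hu, dtype=np.float64)
    return (hu >= lower) & (hu <= upper)


slice_hu = np.array([-100.0, 40.0, 700.0])  # toy values: fat-like, muscle-like, bone-like
for tissue in TISSUE_HU_RANGES:
    print(tissue, tissue_mask(slice_hu, tissue))
```

Keeping the ranges in one dictionary means a new tissue, or a protocol-specific adjustment, is a configuration change rather than a code change.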

The pipeline scripts are publicly available on GitHub for any interested stakeholders or researchers to download, use, and validate in producing impactful work in relevant fields. This commitment to Open Science contributes to the transparency of the databases produced and increases their value as tools in the evaluative interpretations made to assist the court [113,114]. Moreover, as this is, to our knowledge, the first tool published for the aforementioned purposes, hosting it on a platform such as GitHub allows any interested member of the community to modify and improve the code. We therefore welcome computationally advanced users of the pipeline to alter and refine the code provided to generate higher-quality models and other outputs at scale. It is noted that developing a tool to process large quantities of medical image data could affect data privacy [115]. Medical images contain large amounts of personal data, in both the image and the metadata, that could be used to re-identify scanned individuals, which would violate their privacy. However, the published literature notes that medical images stored as DICOM files can be anonymised to reduce the risk of re-identification, and identifying metadata are typically removed when these images are used for research purposes [116,117]. As the present pipeline uses DICOM images as its sole input format, future work using this pipeline should also adhere to the recommendations in the current literature on de-identifying images. The potential for data privacy violations is mitigated by anonymisation processes and ethical safeguards.
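As an illustration of the metadata side of de-identification, the sketch below deletes a short list of identifying attributes and private vendor tags from a pydicom `Dataset`. The keyword list and function name are hypothetical, and the list is deliberately incomplete. A real workflow should follow a recognised profile such as the Basic Application Level Confidentiality Profile in DICOM PS3.15 Annex E, and should also check for identifying text burned into the pixel data, which removing metadata does not address.

```python
# Hypothetical, non-exhaustive list of identifying attributes (DICOM keywords).
IDENTIFYING_KEYWORDS = [
    "PatientName", "PatientID", "PatientBirthDate", "PatientAddress",
    "OtherPatientIDs", "ReferringPhysicianName", "InstitutionName",
    "InstitutionAddress", "AccessionNumber",
]


def strip_identifiers(ds, keywords=IDENTIFYING_KEYWORDS):
    """Delete the listed attributes from a DICOM dataset in place.

    Written against pydicom's attribute-style access (del ds.PatientName);
    any object supporting hasattr/delattr works, which keeps it testable.
    Returns the keywords that were actually removed.
    """
    removed = []
    for keyword in keywords:
        if hasattr(ds, keyword):
            delattr(ds, keyword)
            removed.append(keyword)
    remove_private = getattr(ds, "remove_private_tags", None)
    if callable(remove_private):
        remove_private()  # pydicom Dataset method: drops vendor-specific private elements
    return removed
```

Applied to each file before sorting or conversion, this keeps identifiers out of every downstream output, although the NIfTI, PNG, and STL outputs carry little header metadata in any case.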

6. Conclusion

The pipeline presented here is a set of novel procedures for preparing CT image data, specifically but not limited to clinical data, for forensic and virtual anthropology. The processes automated by the scripts in the pipeline also allow a greater quantity of CT studies and scans to be processed, thus removing manual constraints, such as manual segmentation and labelling, that have limited the sample sizes of current research. With these automated processes in place, the pipeline grants the user the flexibility to access and transform CT datasets from virtually any source and of any size. Finally, the three distinct outputs provided by the pipeline enable, at a minimum, the potentially large-scale, automated production of 2D and 3D image files for machine learning and of 3D models for printing and virtual manipulation. In the forensic anthropology context, where funding, time, and access to modern population data may be restricted, this pipeline provides a process for transforming CT data from a variety of contexts, such as post-mortem scans, living individuals, or virtual preservations of historical collections, into ad hoc datasets with usable and relevant outputs.

Disclosure statement

The authors declare no conflicts of interest were present in this research.

Disclaimers

There was no external funding for this research.

Presentation

This research was presented as a keynote presentation at ANZFSS 2022, Brisbane (Forensics: Designing the Future), 11–15 September 2022.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors wish to thank the data administrators at UCLH for their work in anonymising the data used in the development of the pipeline. Ethical approval was granted by the Health Research Authority and Health and Care Research Wales under protocol number 130244.

References

- 1.Marom A., Tarrasch R. On behalf of tradition: an analysis of medical student and physician beliefs on how anatomy should be taught. Clin. Anat. 2015;28:980–984. doi: 10.1002/ca.22621. [DOI] [PubMed] [Google Scholar]

- 2.Rissech C. The importance of human anatomy in forensic anthropology. Eur. J. Anat. 2021;25:1–18. [Google Scholar]

- 3.Korf H.-W., Wicht H., Snipes R.L., Timmermans J.-P., Paulsen F., Rune G., Baumgart-Vogt E. The dissection course – necessary and indispensable for teaching anatomy to medical students. Ann.Anatomy - AnThe Sci. Ethic. Concern. Legacy Hum. Remain. Hist. Collect.atomischer Anzeiger. 2008;190:16–22. doi: 10.1016/j.aanat.2007.10.001. [DOI] [PubMed] [Google Scholar]

- 4.White T.D., Black M.T., Folkens P.A. In: Human Osteology. third ed. White T.D., Black M.T., Folkens P.A., editors. Academic Press; San Diego: 2012. Chapter 3 - bone biology and variation; pp. 25–42. [DOI] [Google Scholar]

- 5.DeWitte S.N. Bioarchaeology and the ethics of research using human skeletal remains. Hist. Compass. 2015;13:10–19. doi: 10.1111/hic3.12213. [DOI] [Google Scholar]

- 6.Ballestriero R. The science and ethics concerning the legacy of human remains and historical collections: the Gordon Museum of Pathology in London. The Science and Ethics Concerning the Legacy of Human Remains and Historical Collections: The Gordon Museum. Pathol. London. 2021:135–149. [Google Scholar]

- 7.Rowbotham S.K., Blau S. In: Sex Estimation of the Human Skeleton. Klales A.R., editor. Academic Press; 2020. Chapter 22 - the application of medical imaging to the anthropological estimation of sex; pp. 351–369. [DOI] [Google Scholar]

- 8.Spoor F., Jeffery N., Zonneveld F. 2021. Imaging Skeletal Growth and Evolution. [Google Scholar]

- 9. Villa C., Buckberry J., Lynnerup N. Evaluating osteological ageing from digital data. J. Anat. 2019;235:386–395. doi: 10.1111/joa.12544.

- 10. Garvin H.M., Stock M.K. The utility of advanced imaging in forensic anthropology. Acad. Forensic Pathol. 2016;6:499–516. doi: 10.23907/2016.050.

- 11. Robles M., Rando C., Morgan R.M. The utility of three-dimensional models of paranasal sinuses to establish age, sex, and ancestry across three modern populations: a preliminary study. Aust. J. Forensic Sci. 2020:1–20. doi: 10.1080/00450618.2020.1805014.

- 12. Simmons-Ehrhardt T.L., Ehrhardt C.J., Monson K.L. Evaluation of the suitability of cranial measurements obtained from surface-rendered CT scans of living people for estimating sex and ancestry. J. Forensic Radiol. Imag. 2019;19. doi: 10.1016/j.jofri.2019.100338.

- 13. Turner W.D., Brown R.E.B., Kelliher T.P., Tu P.H., Taister M.A., Miller K.W.P. A novel method of automated skull registration for forensic facial approximation. Forensic Sci. Int. 2005;154:149–158. doi: 10.1016/j.forsciint.2004.10.003.

- 14. Hefner J.T., Ousley S.D. Statistical classification methods for estimating ancestry using morphoscopic traits. J. Forensic Sci. 2014;59:883–890. doi: 10.1111/1556-4029.12421.

- 15. Nikita E., Nikitas P. On the use of machine learning algorithms in forensic anthropology. Leg. Med. 2020;47. doi: 10.1016/j.legalmed.2020.101771.

- 16. Spiros M.C., Hefner J.T. Ancestry estimation using cranial and postcranial macromorphoscopic traits. J. Forensic Sci. 2020;65:921–929. doi: 10.1111/1556-4029.14231.

- 17. Toneva D., Nikolova S., Agre G., Zlatareva D., Hadjidekov V., Lazarov N. Machine learning approaches for sex estimation using cranial measurements. Int. J. Leg. Med. 2021;135:951–966. doi: 10.1007/s00414-020-02460-4.

- 18. Imaizumi K., Usui S., Taniguchi K., Ogawa Y., Nagata T., Kaga K., Hayakawa H., Shiotani S. Development of an age estimation method for bones based on machine learning using post-mortem computed tomography images of bones. Forensic Imag. 2021;26. doi: 10.1016/j.fri.2021.200477.

- 19. Mikla V.I., Mikla V.V. In: Medical Imaging Technology. Mikla V.I., Mikla V.V., editors. Elsevier; Oxford: 2014. 2 - computed tomography; pp. 23–38.

- 20. Brogdon B.G. Definitions in forensics and radiology. Crit. Rev. Diagn. Imaging. 2000;41:1–12.

- 21. Spoor F., Jeffery N., Zonneveld F. In: Development, Growth and Evolution: Implications for the Study of the Hominid Skeleton (Linnean Society Symposium), Volume 20. Illustrated edition. O'Higgins P., Cohn M.J., editors. Academic Press; San Diego: 2000. Imaging skeletal growth and evolution.

- 22. Seibert J.A., Boone J.M. X-ray imaging physics for nuclear medicine technologists. Part 2: X-ray interactions and image formation. J. Nucl. Med. Technol. 2005;33:16.

- 23. Edgar H., Daneshvari Berry S., Moes E., Adolphi N., Bridges P., Nolte K. New Mexico Decedent Image Database. Office of the Medical Investigator, University of New Mexico; Albuquerque, NM, USA: 2020.

- 24. Robles M., Carew R.M., Morgan R.M., Rando C. A step-by-step method for producing 3D crania models from CT data. Forensic Imag. 2020;23. doi: 10.1016/j.fri.2020.200404.

- 25. Decker S.J., Davy-Jow S.L., Ford J.M., Hilbelink D.R. Virtual determination of sex: metric and nonmetric traits of the adult pelvis from 3D computed tomography models. J. Forensic Sci. 2011;56:1107–1114. doi: 10.1111/j.1556-4029.2011.01803.x.

- 26. Gillet C., Costa-Mendes L., Rérolle C., Telmon N., Maret D., Savall F. Sex estimation in the cranium and mandible: a multislice computed tomography (MSCT) study using anthropometric and geometric morphometry methods. Int. J. Leg. Med. 2020;134:823–832. doi: 10.1007/s00414-019-02203-0.

- 27. Dedouit F., Savall F., Mokrane F.-Z., Rousseau H., Crubézy E., Rougé D., Telmon N. Virtual anthropology and forensic identification using multidetector CT. Br. J. Radiol. 2014;87. doi: 10.1259/bjr.20130468.

- 28. Franklin D., Swift L., Flavel A. ‘Virtual anthropology’ and radiographic imaging in the forensic medical sciences. Egypt. J. Forensic Sci. 2016;6:31–43. doi: 10.1016/j.ejfs.2016.05.011.

- 29. Stickle R.L., Hathcock J.T. Interpretation of computed tomographic images. Vet. Clin. Small Anim. Pract. 1993;23:417–435. doi: 10.1016/S0195-5616(93)50035-9.

- 30. Carew R.M., French J., Morgan R.M. 3D forensic science: a new field integrating 3D imaging and 3D printing in crime reconstruction. Forensic Sci. Int.: Synergy. 2021;3. doi: 10.1016/j.fsisyn.2021.100205.

- 31. Uldin T. Virtual anthropology – a brief review of the literature and history of computed tomography. Forensic Sci. Res. 2017;2:165–173. doi: 10.1080/20961790.2017.1369621.

- 32. Weber G.W., Schäfer K., Prossinger H., Gunz P., Mitteröcker P., Seidler H. Virtual anthropology: the digital evolution in anthropological sciences. J. Physiol. Anthropol. 2001;20:69–80. doi: 10.2114/jpa.20.69.

- 33. Triepels C.P.R., Smeets C.F.A., Notten K.J.B., Kruitwagen R.F.P.M., Futterer J.J., Vergeldt T.F.M., Van Kuijk S.M.J. Does three-dimensional anatomy improve student understanding? Clin. Anat. 2020;33:25–33. doi: 10.1002/ca.23405.

- 34. Mayo J.R. CT evaluation of diffuse infiltrative lung disease: dose considerations and optimal technique. J. Thorac. Imag. 2009;24:252–259. doi: 10.1097/RTI.0b013e3181c227b2.

- 35. DenOtter T.D., Schubert J. Hounsfield unit. In: StatPearls. StatPearls Publishing; Treasure Island (FL): 2021. http://www.ncbi.nlm.nih.gov/books/NBK547721/

- 36. Franklin D., Cardini A., Flavel A., Kuliukas A., Marks M.K., Hart R., Oxnard C., O'Higgins P. Concordance of traditional osteometric and volume-rendered MSCT interlandmark cranial measurements. Int. J. Leg. Med. 2013;127:505–520. doi: 10.1007/s00414-012-0772-9.

- 37. Colman K.L., van der Merwe A.E., Stull K.E., Dobbe J.G.G., Streekstra G.J., van Rijn R.R., Oostra R.-J., de Boer H.H. The accuracy of 3D virtual bone models of the pelvis for morphological sex estimation. Int. J. Leg. Med. 2019. doi: 10.1007/s00414-019-02002-7.

- 38. Carew R.M., Viner M.D., Conlogue G., Márquez-Grant N., Beckett S. Accuracy of computed radiography in osteometry: a comparison of digital imaging techniques and the effect of magnification. J. Forensic Radiol. Imag. 2019;19. doi: 10.1016/j.jofri.2019.100348.

- 39. Poulsen K., Simonsen J. Computed tomography as routine in connection with medico-legal autopsies. Forensic Sci. Int. 2007;171:190–197. doi: 10.1016/j.forsciint.2006.05.041.

- 40. Kullmer O. Benefits and risks in virtual anthropology. Vol. 3. n.d.

- 41. Franchi A., Valette S., Agier R., Prost R., Kéchichan R., Fanton L. The prospects for application of computational anatomy in forensic anthropology for sex determination. Forensic Sci. Int. 2019;297:156–160. doi: 10.1016/j.forsciint.2019.01.009.

- 42. Christensen A.M., Smith M.A., Gleiber D.S., Cunningham D.L., Wescott D.J. The use of X-ray computed tomography technologies in forensic anthropology. Forensic Anthropol. 2018;1:124–140. doi: 10.5744/fa.2018.0013.

- 43. Dirkmaat D.C., Cabo L.L. A Companion to Forensic Anthropology. John Wiley & Sons, Ltd; 2012. Forensic anthropology: embracing the new paradigm; pp. 1–40.

- 44. Telmon N., Gaston A., Chemla P., Blanc A., Joffre F., Rougé D. Application of the Suchey-Brooks method to three-dimensional imaging of the pubic symphysis. J. Forensic Sci. 2005;50:507–512.

- 45. Dedouit F., Gainza D., Franchitto N., Joffre F., Rousseau H., Rougé D., Telmon N. Radiological, forensic, and anthropological studies of a concrete block containing bones. J. Forensic Sci. 2011;56:1328–1333. doi: 10.1111/j.1556-4029.2011.01742.x.

- 46. Donato L., di Luca A., Vecchiotti C., Cipolloni L. Study of skeletal remains: solving a homicide case with forensic anthropology and review of the literature. J. Forensic Anthropol. 2016. doi: 10.35248/2684-1304.16.1.105.

- 47. Krishan K., Chatterjee P.M., Kanchan T., Kaur S., Baryah N., Singh R.K. A review of sex estimation techniques during examination of skeletal remains in forensic anthropology casework. Forensic Sci. Int. 2016;261:165.e1–165.e8. doi: 10.1016/j.forsciint.2016.02.007.

- 48. Klales A.R., editor. Sex Estimation of the Human Skeleton: History, Methods, and Emerging Techniques. Elsevier Science; 2020.

- 49. García-Donas J.G., Ors S., Inci E., Kranioti E.F., Ekizoglu O., Moghaddam N., Grabherr S. Sex estimation in a Turkish population using Purkait’s triangle: a virtual approach by 3-dimensional computed tomography (3D-CT). Forensic Sci. Res. 2021:1–9. doi: 10.1080/20961790.2021.1905203.

- 50. Carew R.M., Iacoviello F., Rando C., Moss R.M., Speller R., French J., Morgan R.M. A multi-method assessment of 3D printed micromorphological osteological features. Int. J. Leg. Med. 2022. doi: 10.1007/s00414-022-02789-y.

- 51. Langlotz C.P., Allen B., Erickson B.J., Kalpathy-Cramer J., Bigelow K., Cook T.S., Flanders A.E., Lungren M.P., Mendelson D.S., Rudie J.D., Wang G., Kandarpa K. A roadmap for foundational research on artificial intelligence in medical imaging: from the 2018 NIH/RSNA/ACR/the academy workshop. Radiology. 2019;291:781–791. doi: 10.1148/radiol.2019190613.

- 52. Bewes J., Low A., Morphett A., Pate F.D., Henneberg M. Artificial intelligence for sex determination of skeletal remains: application of a deep learning artificial neural network to human skulls. J. Forensic Leg. Med. 2019;62:40–43. doi: 10.1016/j.jflm.2019.01.004.

- 53. Cameriere R., Giuliodori A., Zampi M., Galić I., Cingolani M., Pagliara F., Ferrante L. Age estimation in children and young adolescents for forensic purposes using fourth cervical vertebra (C4). Int. J. Leg. Med. 2015;129:347–355. doi: 10.1007/s00414-014-1112-z.

- 54. der Mauer M.A., Well E.J., Herrmann J., Groth M., Morlock M.M., Maas R., Säring D. Automated age estimation of young individuals based on 3D knee MRI using deep learning. Int. J. Leg. Med. 2021;135:649–663. doi: 10.1007/s00414-020-02465-z.

- 55. Thurzo A., Kosnáčová H.S., Kurilová V., Kosmeľ S., Beňuš R., Moravanský N., Kováč P., Kuracinová K.M., Palkovič M., Varga I. Use of advanced artificial intelligence in forensic medicine, forensic anthropology and clinical anatomy. Healthcare. 2021;9:1545. doi: 10.3390/healthcare9111545.

- 56. Etli Y., Asirdizer M., Hekimoglu Y., Keskin S., Yavuz A. Sex estimation from sacrum and coccyx with discriminant analyses and neural networks in an equally distributed population by age and sex. Forensic Sci. Int. 2019;303. doi: 10.1016/j.forsciint.2019.109955.

- 57. Telfer S., Kleweno C.P., Hughes B., Mellor S., Brunnquell C.L., Linnau K.F., Hebert-Davies J. Changes in scapular bone density vary by region and are associated with age and sex. J. Shoulder Elbow Surg. 2021;30:2839–2844. doi: 10.1016/j.jse.2021.05.011.

- 58. Peña-Solórzano C.A., Albrecht D.W., Bassed R.B., Gillam J., Harris P.C., Dimmock M.R. Semi-supervised labelling of the femur in a whole-body post-mortem CT database using deep learning. Comput. Biol. Med. 2020;122. doi: 10.1016/j.compbiomed.2020.103797.

- 59. Hefner J.T., Pilloud M.A., Black C.J., Anderson B.E. Morphoscopic trait expression in “hispanic” populations. J. Forensic Sci. 2015;60:1135–1139. doi: 10.1111/1556-4029.12826.

- 60. Maier C.A., Zhang K., Manhein M.H., Li X. Palate shape and depth: a shape-matching and machine learning method for estimating ancestry from human skeletal remains. J. Forensic Sci. 2015;60:1129–1134. doi: 10.1111/1556-4029.12812.

- 61. Navega D., Coelho C., Vicente R., Ferreira M.T., Wasterlain S., Cunha E. AncesTrees: ancestry estimation with randomized decision trees. Int. J. Leg. Med. 2015;129:1145–1153. doi: 10.1007/s00414-014-1050-9.

- 62. Santos F., Guyomarc’h P., Bruzek J. Statistical sex determination from craniometrics: comparison of linear discriminant analysis, logistic regression, and support vector machines. Forensic Sci. Int. 2014;245:204.e1–204.e8. doi: 10.1016/j.forsciint.2014.10.010.

- 63. d'Oliveira Coelho J., Curate F. CADOES: an interactive machine-learning approach for sex estimation with the pelvis. Forensic Sci. Int. 2019;302. doi: 10.1016/j.forsciint.2019.109873.

- 64. Bertsatos A., Chovalopoulou M.-E., Brůžek J., Bejdová Š. Advanced procedures for skull sex estimation using sexually dimorphic morphometric features. Int. J. Leg. Med. 2020;134:1927–1937. doi: 10.1007/s00414-020-02334-9.

- 65. Ortiz A., Costa C., Silva R., Biazevic M., Michel-Crosato E. Sex estimation: anatomical references on panoramic radiographs using Machine Learning. Forensic Imag. 2020;20. doi: 10.1016/j.fri.2020.200356.

- 66. Zunair H., Rahman A., Mohammed N., Cohen J.P. In: Predictive Intelligence in Medicine. Rekik I., Adeli E., Park S.H., Valdés Hernández M. del C., editors. Springer International Publishing; Cham: 2020. Uniformizing techniques to process CT scans with 3D CNNs for tuberculosis prediction; pp. 156–168.

- 67. Fedorov A., Beichel R., Kalpathy-Cramer J., Finet J., Fillion-Robin J.-C., Pujol S., Bauer C., Jennings D., Fennessy F., Sonka M., Buatti J., Aylward S., Miller J.V., Pieper S., Kikinis R. 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magn. Reson. Imaging. 2012;30:1323–1341. doi: 10.1016/j.mri.2012.05.001.

- 68. Muschelli J. Recommendations for processing head CT data. Front. Neuroinf. 2019;13:61. doi: 10.3389/fninf.2019.00061.

- 69. Mason D., scaramallion, mrbean-bremen, rhaxton, Suever J., Vanessasaurus, Orfanos D.P., Lemaitre G., Panchal A., Rothberg A., Herrmann M.D., Massich J., Kerns J., van Golen K., Robitaille T., Biggs S., moloney, Bridge C., Shun-Shin M., pawelzajdel, Conrad B., Mattes M., Lyu Y., Morency F.C., Meine H., Wortmann J., Hahn K.S., Wada M., Rachum R., colonelfazackerley. pydicom/pydicom: pydicom 2.2.2. 2021. doi: 10.5281/zenodo.5543955.

- 70. DenOtter T.D., Schubert J. Hounsfield unit. In: StatPearls. StatPearls Publishing; Treasure Island (FL): 2021. http://www.ncbi.nlm.nih.gov/books/NBK547721/

- 71. Brownlee J. How to load and manipulate images for deep learning in Python with PIL/Pillow. Machine Learning Mastery; 2019. https://machinelearningmastery.com/how-to-load-and-manipulate-images-for-deep-learning-in-python-with-pil-pillow/

- 72. Wallace G.K. The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 1992;38:xviii–xxxiv. doi: 10.1109/30.125072.

- 73. Boutell T. PNG (Portable Network Graphics) Specification Version 1.0. RFC Editor; 1997.

- 74. Brys A., Rothberg A., Costa M.E., Leockx D., Cates J., fmljr. icometrix/dicom2nifti. 2021. https://github.com/icometrix/dicom2nifti

- 75. Sander I.M., McGoldrick M.T., Helms M.N., Betts A., van Avermaete A., Owers E., Doney E., Liepert T., Niebur G., Liepert D., Leevy W.M. Three-dimensional printing of X-ray computed tomography datasets with multiple materials using open-source data processing. Anat. Sci. Educ. 2017;10:383–391. doi: 10.1002/ase.1682.

- 76. Zunair H., Rahman A., Mohammed N., Cohen J.P. Uniformizing techniques to process CT scans with 3D CNNs for tuberculosis prediction. arXiv:2007.13224 [cs, eess]. 2020. http://arxiv.org/abs/2007.13224

- 77. Veneziano A., Cazenave M., Alfieri F., Panetta D., Marchi D. Novel strategies for the characterization of cancellous bone morphology: virtual isolation and analysis. Am. J. Phys. Anthropol. 2021;175:920–930. doi: 10.1002/ajpa.24272.

- 78. Roy T., Permanente K. Medical image analysis with deep learning, part 4. KDnuggets; 2017. https://www.kdnuggets.com/medical-image-analysis-with-deep-learning-part-4.html/

- 79. Schneider C.A., Rasband W.S., Eliceiri K.W. NIH Image to ImageJ: 25 years of image analysis. Nat. Methods. 2012;9(7):671–675. doi: 10.1038/nmeth.2089; Rasband W.S. ImageJ. 1997.

- 80. Rosset A., Spadola L., Ratib O. OsiriX: an open-source software for navigating in multidimensional DICOM images. J. Digit. Imag. 2004;17:205–216. doi: 10.1007/s10278-004-1014-6.

- 81. Franco de Moraes T., Amorim P., Azevedo F., Silva J. InVesalius: an open-source imaging application. 2011.

- 82. R Core Team. R: A Language and Environment for Statistical Computing. 2013.

- 83. Larobina M., Murino L. Medical image file formats. J. Digit. Imag. 2014;27:200–206. doi: 10.1007/s10278-013-9657-9.

- 84. Brett M., Markiewicz C.J., Hanke M., Côté M.-A., Cipollini B., McCarthy P., Jarecka D., Cheng C.P., Halchenko Y.O., Cottaar M., Larson E., Ghosh S., Wassermann D., Gerhard S., Lee G.R., Wang H.-T., Kastman E., Kaczmarzyk J., Guidotti R., Duek O., Daniel J., Rokem A., Madison C., Moloney B., Morency F.C., Goncalves M., Markello R., Riddell C., Burns C., Millman J., Gramfort A., Leppäkangas J., Sólon A., van den Bosch J.J.F., Vincent R.D., Braun H., Subramaniam K., Gorgolewski K.J., Raamana P.R., Klug J., Nichols B.N., Baker E.M., Hayashi S., Pinsard B., Haselgrove C., Hymers M., Esteban O., Koudoro S., Pérez-García F., Oosterhof N.N., Amirbekian B., Nimmo-Smith I., Nguyen L., Reddigari S., St-Jean S., Panfilov E., Garyfallidis E., Varoquaux G., Legarreta J.H., Hahn K.S., Hinds O.P., Fauber B., Poline J.-B., Stutters J., Jordan K., Cieslak M., Moreno M.E., Haenel V., Schwartz Y., Baratz Z., Darwin B.C., Thirion B., Gauthier C., Papadopoulos Orfanos D., Solovey I., Gonzalez I., Palasubramaniam J., Lecher J., Leinweber K., Raktivan K., Calábková M., Fischer P., Gervais P., Gadde S., Ballinger T., Roos T., Reddam V.R., freec84. nipy/nibabel: 3.2.1. 2020. doi: 10.5281/zenodo.4295521.

- 85. Schroeder W., Martin K., Lorensen B. VTK: an open-source toolkit for 3D computer graphics, image processing, and visualization. 2021. https://vtk.org

- 86. Fischl B. FreeSurfer. NeuroImage. 2012;62:774–781. doi: 10.1016/j.neuroimage.2012.01.021.

- 87. Mitsouras D., Liacouras P., Imanzadeh A., Giannopoulos A.A., Cai T., Kumamaru K.K., George E., Wake N., Caterson E.J., Pomahac B., Ho V.B., Grant G.T., Rybicki F.J. Medical 3D printing for the radiologist. Radiographics. 2015;35:1965–1988. doi: 10.1148/rg.2015140320.

- 88. Jani G., Johnson A., Marques J., Franco A. Three-dimensional (3D) printing in forensic science: an emerging technology in India. Annals of 3D Printed Medicine. 2021;1. doi: 10.1016/j.stlm.2021.100006.

- 89. Komar D.A., Davy-Jow S., Decker S.J. The use of a 3-D laser scanner to document ephemeral evidence at crime scenes and postmortem examinations. J. Forensic Sci. 2012;57:188–191. doi: 10.1111/j.1556-4029.2011.01915.x.

- 90. Hazeveld A., Huddleston Slater J.J.R., Ren Y. Accuracy and reproducibility of dental replica models reconstructed by different rapid prototyping techniques. Am. J. Orthod. Dentofacial Orthop. 2014;145:108–115. doi: 10.1016/j.ajodo.2013.05.011.

- 91. Biggs M., Marsden P. Dental identification using 3D printed teeth following a mass fatality incident. J. Forensic Radiol. Imag. 2019;18:1–3. doi: 10.1016/j.jofri.2019.07.001.

- 92. Carew R.M., Errickson D. An overview of 3D printing in forensic science: the tangible third-dimension. J. Forensic Sci. 2020;65:1752–1760. doi: 10.1111/1556-4029.14442.

- 93. Robles M., Carew R.M., Morgan R.M., Rando C. A step-by-step method for producing 3D crania models from CT data. Forensic Imag. 2020;23. doi: 10.1016/j.fri.2020.200404.

- 94. Carew R.M., Morgan R.M., Rando C. Experimental assessment of the surface quality of 3D printed bones. Aust. J. Forensic Sci. 2021;53:592–609. doi: 10.1080/00450618.2020.1759684.

- 95. Errickson D., Fawcett H., Thompson T.J.U., Campbell A. The effect of different imaging techniques for the visualisation of evidence in court on jury comprehension. Int. J. Leg. Med. 2020;134:1451–1455. doi: 10.1007/s00414-019-02221-y.

- 96. NHS. Policy for Identification of Patients. 2019. https://www.uhb.nhs.uk/Downloads/pdf/controlled-documents/PatientIDPolicy.pdf

- 97. NHS Improvement. Patient Safety Alert: Safe Temporary Identification Criteria for Unknown or Unidentified Patients. 2018. https://www.england.nhs.uk/wp-content/uploads/2019/12/Patient_Safety_Alert_-_unknown_or_unidentified_patients_FINAL.pdf

- 98. De Tobel J., Radesh P., Vandermeulen D., Thevissen P.W. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: a pilot study. J. Forensic Odontostomatol. 2017;35:42–54.

- 99. Štepanovský M., Ibrová A., Buk Z., Velemínská J. Novel age estimation model based on development of permanent teeth compared with classical approach and other modern data mining methods. Forensic Sci. Int. 2017;279:72–82. doi: 10.1016/j.forsciint.2017.08.005.

- 100. Li Y., Huang Z., Dong X., Liang W., Xue H., Zhang L., Zhang Y., Deng Z. Forensic age estimation for pelvic X-ray images using deep learning. Eur. Radiol. 2019;29:2322–2329. doi: 10.1007/s00330-018-5791-6.

- 101. Guo Y., Han M., Chi Y., Long H., Zhang D., Yang J., Yang Y., Chen T., Du S. Accurate age classification using manual method and deep convolutional neural network based on orthopantomogram images. Int. J. Leg. Med. 2021;135:1589–1597. doi: 10.1007/s00414-021-02542-x.

- 102. Vila-Blanco N., Varas-Quintana P., Aneiros-Ardao Á., Tomás I., Carreira M.J. Automated description of the mandible shape by deep learning. Int. J. CARS. 2021;16:2215–2224. doi: 10.1007/s11548-021-02474-2.

- 103. Štern D., Payer C., Urschler M. Automated age estimation from MRI volumes of the hand. Med. Image Anal. 2019;58. doi: 10.1016/j.media.2019.101538.

- 104. Armanious K., Abdulatif S., Bhaktharaguttu A.R., Küstner T., Hepp T., Gatidis S., Yang B. Organ-based chronological age estimation based on 3D MRI scans. In: 2020 28th European Signal Processing Conference (EUSIPCO). 2021. pp. 1225–1228.

- 105. Ortega R.F., Irurita J., Campo E.J.E., Mesejo P. Analysis of the performance of machine learning and deep learning methods for sex estimation of infant individuals from the analysis of 2D images of the ilium. Int. J. Leg. Med. 2021. doi: 10.1007/s00414-021-02660-6.

- 106. Oner Z., Turan M.K., Oner S., Secgin Y., Sahin B. Sex estimation using sternum part length by means of artificial neural networks. Forensic Sci. Int. 2019;301:6–11. doi: 10.1016/j.forsciint.2019.05.011.

- 107. Chen X., Lian C., Deng H.H., Kuang T., Lin H.-Y., Xiao D., Gateno J., Shen D., Xia J.J., Yap P.-T. Fast and accurate craniomaxillofacial landmark detection via 3D faster R-CNN. IEEE Trans. Med. Imag. 2021;40:3867–3878. doi: 10.1109/TMI.2021.3099509.

- 108. Pham C.V., Lee S.-J., Kim S.-Y., Lee S., Kim S.-H., Kim H.-S. Age estimation based on 3D post-mortem computed tomography images of mandible and femur using convolutional neural networks. PLoS One. 2021;16. doi: 10.1371/journal.pone.0251388.

- 109. Techataweewan N., Hefner J.T., Freas L., Surachotmongkhon N., Benchawattananon R., Tayles N. Metric sexual dimorphism of the skull in Thailand. Forensic Sci. Int.: Report. 2021;4. doi: 10.1016/j.fsir.2021.100236.

- 110. Hefner J.T., Spradley M.K., Anderson B. Ancestry assessment using random forest modeling. J. Forensic Sci. 2014;59:583–589. doi: 10.1111/1556-4029.12402.

- 111. Soffer S., Ben-Cohen A., Shimon O., Amitai M.M., Greenspan H., Klang E. Convolutional neural networks for radiologic images: a radiologist’s guide. Radiology. 2019;290:590–606. doi: 10.1148/radiol.2018180547.

- 112. Wisz M.S., Hijmans R.J., Li J., Peterson A.T., Graham C.H., Guisan A., NCEAS Predicting Species Distributions Working Group. Effects of sample size on the performance of species distribution models. Divers. Distrib. 2008;14:763–773. doi: 10.1111/j.1472-4642.2008.00482.x.

- 113. Tully G. Forensic Science Regulator Annual Report 2018. Forensic Science Regulator; 2019. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/918342/FSRAnnual_Report_2018.pdf

- 114. The House of Lords Science and Technology Select Committee. Forensic Science and the Criminal Justice System: a Blueprint for Change. House of Lords; London, United Kingdom: 2019. https://publications.parliament.uk/pa/ld201719/ldselect/ldsctech/333/333.pdf

- 115. Razzak M.I., Naz S., Zaib A. Deep learning for medical image processing: overview, challenges and future. 2017.

- 116. Natu P., Natu S., Agrawal U. Data Protection and Privacy in Healthcare: Research and Innovations. first ed. CRC Press; 2021. Privacy issues in medical image analysis; p. 14.

- 117. Willemink M.J., Koszek W.A., Hardell C., Wu J., Fleischmann D., Harvey H., Folio L.R., Summers R.M., Rubin D.L., Lungren M.P. Preparing medical imaging data for machine learning. Radiology. 2020;295:4–15. doi: 10.1148/radiol.2020192224.