Significance

Data assimilation is an essential component of numerical models for forecasting and uncertainty quantification of dynamical systems given incomplete knowledge of the state and governing equations. Here, we develop theory and computational methods for data assimilation through a combination of ideas from operator algebras, quantum information, and ergodic theory. Our approach leverages properties of noncommutative operator spaces to design computational schemes that i) preserve the sign of positive quantities such as mass in ways that are not possible with classical commutative methods and ii) are amenable to consistent data-driven approximation using machine learning. Furthermore, our framework provides a route for implementing data assimilation algorithms on quantum computers. We present applications to multiscale chaotic systems and the El Niño Southern Oscillation.

Keywords: data assimilation, operator algebras, quantum information, Koopman operators, kernel methods

Abstract

We develop an algebraic framework for sequential data assimilation of partially observed dynamical systems. In this framework, Bayesian data assimilation is embedded in a nonabelian operator algebra, which provides a representation of observables by multiplication operators and probability densities by density operators (quantum states). In the algebraic approach, the forecast step of data assimilation is represented by a quantum operation induced by the Koopman operator of the dynamical system. Moreover, the analysis step is described by a quantum effect, which generalizes the Bayesian observational update rule. Projecting this formulation to finite-dimensional matrix algebras leads to computational schemes that are i) automatically positivity-preserving and ii) amenable to consistent data-driven approximation using kernel methods for machine learning. Moreover, these methods are natural candidates for implementation on quantum computers. Applications to the Lorenz 96 multiscale system and the El Niño Southern Oscillation in a climate model show promising results in terms of forecast skill and uncertainty quantification.

Since its inception in weather forecasting (1) and object tracking problems (2), sequential data assimilation, also known as filtering, has evolved into an indispensable tool in forecasting and uncertainty quantification of dynamical systems (3, 4). In its essence, data assimilation is a Bayesian inference framework: Knowledge about the state of the system at time t is described by a probability distribution pt. The system dynamics acts on probability distributions, carrying along pt to a time-dependent family pt, τ, which can be used to forecast observables of the system at time t + τ, τ ≥ 0. When an observation is made, at time t + Δt, the forecast distribution pt, Δt is updated in an analysis step using Bayes’ rule to a posterior distribution pt + Δt, and the cycle is repeated.

In real-world applications, the Bayesian theoretical “gold standard” is seldom feasible to employ due to a variety of challenges, including high-dimensional nonlinear dynamics, nonlinear observation modalities, and model error. Weather and climate dynamics (5) represent a classical application domain where these challenges are prevalent due to the extremely large number of active degrees of freedom (which necessitates making dynamical approximations such as subgrid-scale parameterization) and nonlinear equations of motion and observation functions (which prevent direct application of Bayes’ rule). Addressing these issues has stimulated the creation of a broad range of data assimilation techniques, including variational (6), ensemble (7), and particle (8) methods.

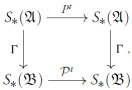

In this paper, we examine Bayesian data assimilation and its representation through finite-dimensional computational methods from an algebraic perspective. Our formulation employs different levels of description, depicted schematically in Fig. 1. We begin by assigning to a measure-preserving dynamical flow Φt: X → X, , an algebra of observables (complex-valued functions of the state) , where X is the state space and μ the invariant measure. This algebra is a commutative, or abelian, von Neumann algebra (9) under pointwise function multiplication. The state space of , denoted as , is the set of continuous linear functionals , satisfying the positivity condition ω(f*f)≥0 for all and the normalization condition ω1 = 1. Here, * denotes the complex conjugation of functions, and 1 is the unit of , 1(x)=1 for all x ∈ X. Every probability density induces a state that acts on as an expectation functional, ωpf = ∫Xfp dμ. Such states ωp constitute the set of normal states of , denoted as .

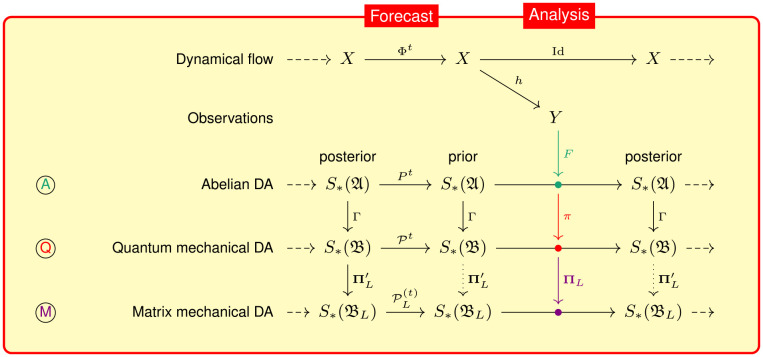

Fig. 1.

Schematic representation of the abelian and nonabelian formulations of sequential data assimilation (DA), showing a forecast–analysis cycle. The Top row of the diagram shows the dynamical flow Φt : X → X. The second row shows the observation map h : X → Y used to update the state of the DA system in the analysis step. The rows labeled  ,

,  , and

, and  show the abelian, infinite-dimensional nonabelian (quantum mechanical), and finite-dimensional nonabelian (matrix mechanical) DA systems, respectively. In

show the abelian, infinite-dimensional nonabelian (quantum mechanical), and finite-dimensional nonabelian (matrix mechanical) DA systems, respectively. In  , the forecast step is carried out by the transfer operator acting on states of the abelian algebra . The analysis step (green dot) is represented by an effect-valued map that updates the state given observations in Y. In

, the forecast step is carried out by the transfer operator acting on states of the abelian algebra . The analysis step (green dot) is represented by an effect-valued map that updates the state given observations in Y. In  , the forecast step is carried out by the transfer operator acting on states of the nonabelian operator algebra . The analysis step (red dot) is carried out by an effect given by the composition of F with the regular representation π of into (red arrow). The state space is embedded into by means of a map Γ, which is compatible with both forecast and analysis; Eqs. 4 and 9. This compatibility is represented by the commutative loops between

, the forecast step is carried out by the transfer operator acting on states of the nonabelian operator algebra . The analysis step (red dot) is carried out by an effect given by the composition of F with the regular representation π of into (red arrow). The state space is embedded into by means of a map Γ, which is compatible with both forecast and analysis; Eqs. 4 and 9. This compatibility is represented by the commutative loops between  and

and  having Γ as a vertical arrow. To arrive at the matrix mechanical DA,

having Γ as a vertical arrow. To arrive at the matrix mechanical DA,  , we project into an L2-dimensional operator algebra using a positivity-preserving projection . The composition of this projection with ℱ leads to an effect employed in the analysis step (purple arrow and dot). Moreover, induces a state space projection and a projected transfer operator employed in the forecast step. Vertical dotted arrows indicate asymptotically commutative relationships that hold as L → ∞.

, we project into an L2-dimensional operator algebra using a positivity-preserving projection . The composition of this projection with ℱ leads to an effect employed in the analysis step (purple arrow and dot). Moreover, induces a state space projection and a projected transfer operator employed in the forecast step. Vertical dotted arrows indicate asymptotically commutative relationships that hold as L → ∞.

Elements of evolve under the Koopman operator , which is the composition operator by the dynamical flow, Utf = f ° Φt. Moreover, algebra states evolve under the transfer operator , which is the adjoint of the Koopman operator, Ptω = ω ⚬ Ut (10–12). The space of normal states is invariant under Pt, and we have Ptωp = ωU−tp for every probability density . In this picture, the evolution pt ↦ pt, τ of the forecast density is represented by dynamics on under the transfer operator, ωpt, τ = Pτωpt. Moreover, Bayesian analysis, pt, Δt ↦ pt + Δt, is represented by projective conditioning of the state. Together, these two steps encapsulate classical data assimilation within an abelian algebraic setting, labeled  in Fig. 1.

in Fig. 1.

The next level of our framework, labeled  in Fig. 1, generalizes data assimilation by embedding it in a nonabelian operator algebra. Operator algebras form the mathematical backbone of quantum mechanics (13)—one of the most successful theories in physics. Quantum information theory and quantum probability provide a unified mathematical framework to characterize the properties of information transfer in both abelian and nonabelian systems through maps (quantum operations) acting on elements of the algebra and the corresponding states (14–16). Our nonabelian formulation

in Fig. 1, generalizes data assimilation by embedding it in a nonabelian operator algebra. Operator algebras form the mathematical backbone of quantum mechanics (13)—one of the most successful theories in physics. Quantum information theory and quantum probability provide a unified mathematical framework to characterize the properties of information transfer in both abelian and nonabelian systems through maps (quantum operations) acting on elements of the algebra and the corresponding states (14–16). Our nonabelian formulation  is based on the von Neumann algebra of bounded linear operators on the Hilbert space H = L2(X, μ), equipped with operator composition as the algebraic product. The state space is defined as the space of continuous, positive, normalized functionals analogously to ; that is, every state satisfies ω(A*A)≥0 and ωI = 1, where * denotes the operator adjoint on B(H) and I is the identity operator. Analogously to , has a subset of normal states, , induced in this case by trace-class operators. Specifically, letting denote the space of trace-class operators in B(H), every positive operator of unit trace induces a state such that ωρA = tr(ρA). Such operators ρ are called density operators and can be thought of as nonabelian analogs of probability densities . As we will see below, analogs of the transfer operator and Bayesian update described above for are naturally defined for .

is based on the von Neumann algebra of bounded linear operators on the Hilbert space H = L2(X, μ), equipped with operator composition as the algebraic product. The state space is defined as the space of continuous, positive, normalized functionals analogously to ; that is, every state satisfies ω(A*A)≥0 and ωI = 1, where * denotes the operator adjoint on B(H) and I is the identity operator. Analogously to , has a subset of normal states, , induced in this case by trace-class operators. Specifically, letting denote the space of trace-class operators in B(H), every positive operator of unit trace induces a state such that ωρA = tr(ρA). Such operators ρ are called density operators and can be thought of as nonabelian analogs of probability densities . As we will see below, analogs of the transfer operator and Bayesian update described above for are naturally defined for .

To arrive at practical data assimilation algorithms, we project (discretize) the infinite-dimensional system on to a system on a finite-dimensional subalgebra , which is concretely represented by an L × L matrix algebra ( in Fig. 1). We show that this approach leads to computational techniques which are well suited for assimilation of high-dimensional observables, while enjoying structure-preservation properties that cannot be obtained from orthogonal projections of abelian function spaces. Moreover, by virtue of being rooted in linear operator theory, these methods are amenable to consistent data-driven approximation using kernel methods for machine learning.

in Fig. 1). We show that this approach leads to computational techniques which are well suited for assimilation of high-dimensional observables, while enjoying structure-preservation properties that cannot be obtained from orthogonal projections of abelian function spaces. Moreover, by virtue of being rooted in linear operator theory, these methods are amenable to consistent data-driven approximation using kernel methods for machine learning.

Previous Work.

Recently, an operator-theoretic framework for data assimilation, called quantum mechanical data assimilation (QMDA) (17), was developed using ideas from Koopman operator theory (10, 12) in conjunction with the Dirac–von Neumann axioms of quantum dynamics and measurement (18). In QMDA, the state of the data assimilation system is a density operator ρt acting on H = L2(X, μ) (rather than a classical probability density pt ∈ L1(X, μ)), and the assimilated observables are multiplication operators in B(H) (rather than functions in L∞(X, μ)). Between observations, ρt evolves under the induced action of the transfer operator, and the forecast distribution of observables is obtained as a quantum mechanical expectation with respect to ρt. During observations, the density operator ρt is updated projectively as a von Neumann measurement, which is the quantum analog of Bayes’ rule. QMDA has a data-driven formulation based on kernel methods for Koopman operator approximation (19–21), which was shown to perform well in low-dimensional applications. Meanwhile, the paper (22) has shown that Koopman operators of systems with pure point spectra can be approximated on quantum computers using shallow quantum circuits, offering an exponential computational advantage over classical deterministic algorithms for Koopman operator approximation.

Contributions.

We provide a general algebraic framework that encompasses classical data assimilation and QMDA as particular instances (abelian and nonabelian, respectively). The principal distinguishing aspects of this framework are as follows:

-

1.

Dynamical consistency. We employ a dynamically consistent embedding of abelian data assimilation

into the nonabelian framework

into the nonabelian framework  . As in ref. (17), observables in are mapped into multiplication operators in , but here, we also employ an embedding that is compatible with the transfer operator (see the commutative loops between

. As in ref. (17), observables in are mapped into multiplication operators in , but here, we also employ an embedding that is compatible with the transfer operator (see the commutative loops between  and

and  in Fig. 1). This allows us to study QMDA in relation to the underlying classical theory and establish the consistency between the two approaches.

in Fig. 1). This allows us to study QMDA in relation to the underlying classical theory and establish the consistency between the two approaches. -

2.

Effect system. In both the abelian and nonabelian settings, the analysis step, given observations in a space Y acquired through an observation map h : X → Y, is carried out using quantum effects (loosely speaking, algebra-valued logical predicates) (14). In the abelian case, the effect is induced by a kernel feature map. In the nonabelian setting, F is promoted to an operator-valued feature map ; see the column in the schematic of Fig. 1 labeled “Analysis.” Our use of feature maps enables assimilation of data of arbitrarily large dimension, overcoming an important limitation of the original QMDA scheme (17) (which becomes prohibitively expensive for high-dimensional observation maps).

-

3.

Positivity-preserving discretization. The discretization procedure leading to the finite-dimensional scheme

has the important property that positive elements of are mapped into positive elements of . Moreover, the transfer operator on is mapped into a completely positive, trace-nonincreasing map, so the finite-dimensional data assimilation system is a quantum operation. We call this system “matrix mechanical.” By virtue of these properties, the sign of sign-definite observables, i.e., observables which are either positive or negative, is preserved. Relevant examples include positive physical quantities such as mass and temperature but also statistical quantities such as probability density or standard deviation, which are useful for uncertainty quantification. We emphasize that the approach of first embedding classical data assimilation in to the nonabelian operator setting of and then projecting to the finite-dimensional system on is important in positivity preservation.

has the important property that positive elements of are mapped into positive elements of . Moreover, the transfer operator on is mapped into a completely positive, trace-nonincreasing map, so the finite-dimensional data assimilation system is a quantum operation. We call this system “matrix mechanical.” By virtue of these properties, the sign of sign-definite observables, i.e., observables which are either positive or negative, is preserved. Relevant examples include positive physical quantities such as mass and temperature but also statistical quantities such as probability density or standard deviation, which are useful for uncertainty quantification. We emphasize that the approach of first embedding classical data assimilation in to the nonabelian operator setting of and then projecting to the finite-dimensional system on is important in positivity preservation. -

4.

Data-driven formulation. The matrix mechanical system

on admits a data-driven approximation in which all operators are represented in a kernel eigenbasis learned from time-ordered training data, without requiring a priori knowledge of the equations of motion. In the limit of large training data, predictions made by the data-driven assimilation system converge to those from

on admits a data-driven approximation in which all operators are represented in a kernel eigenbasis learned from time-ordered training data, without requiring a priori knowledge of the equations of motion. In the limit of large training data, predictions made by the data-driven assimilation system converge to those from  , which in turn converge to those from the infinite-dimensional system

, which in turn converge to those from the infinite-dimensional system  on as L → ∞.

on as L → ∞. -

5.

Route to quantum computing. QMDA is well suited for implementation on quantum computers as we demonstrate here with simulated quantum circuit experiments. Our approach provides a route to quantum algorithms that sequentially alternate between unitary evolution and projective measurement to perform inference and prediction of classical dynamics with quantum computers.

To place this work in the context of our previous work (17, 22), we note that ref. (17) proposed QMDA as an ad hoc data assimilation scheme, without attempting to connect it to the underlying classical Bayesian framework. Moreover, ref. (17) did not investigate the positivity-preserving aspects of QMDA, nor did it employ an operator-valued feature map in the analysis step. Ref. (22) developed a scheme for quantum computational simulation without addressing data assimilation. There, the focus was on representing classical dynamics via quantum circuits of low complexity (depth); to that end, the scheme was limited to systems with pure point spectra, which do not include systems with mixing (chaotic) dynamics that lie in the scope of QMDA.

Embedding Data Assimilation in Operator Algebras

Consider a dynamical flow Φt : X → X, , on a completely metrizable, separable space X with an ergodic, invariant, Borel probability measure μ. The flow induces Koopman operators Ut : f ↦ f ° Φt, which are isomorphisms of the Lp(X, μ) spaces with p ∈ [1, ∞]. The flow also induces transfer operators Pt : Lp(X, μ)* → Lp(X, μ)* on the dual spaces Lp(X, μ)*, given by the adjoint of the Koopman operator, Ptα = α ⚬ Ut. Under the canonical identification of Lp(X, μ)*, p ∈ [1, ∞), with finite, complex Borel measures with densities in Lq(X, μ), , the transfer operator is identified with the inverse Koopman operator; that is, for α ∈ Lp(X, μ)* with density , αt := Ptα has density with 𝜚t = U−t𝜚. In what follows, ∥f∥Lp(X, μ) = (∫X|f|p dμ)1/p for p ∈ [1, ∞) and ∥f∥L∞(X, μ) = limp → ∞∥f∥Lp(X, μ) denote the standard Lp(X, μ) norms.

Among the Lp(X, μ) spaces, H := L2(X, μ) is a Hilbert space, and is an abelian von Neumann algebra with respect to function multiplication and complex conjugation. In particular, for any two elements , we have

| [1] |

making a C*-algebra, and moreover, has a predual (i.e., a Banach space whose dual is ), making it a von Neumann algebra. We let ⟨f, g⟩ = ∫Xf*g dμ denote the inner product on H. On H, the Koopman operator is unitary, Ut* = U−t.

Embedding Observables.

Let be the space of bounded operators on H, equipped with the operator norm, . This space is a nonabelian von Neumann algebra with respect to operator composition and adjoint. That is, for any , we have

which is the nonabelian analog of Eq. 1 making a C*-algebra. Moreover, has a predual, , making it a von Neumann algebra. Here, the space of trace-class operators B1(H)⊆B(H) is equipped with the norm , which can be thought of as a nonabelian analog of L1(X, μ). The unitary group of Koopman operators Ut on H induces a unitary group (i.e., a group of linear maps mapping unitary operators to unitary operators), which acts by conjugation, i.e.,

| [2] |

The abelian algebra embeds isometrically into through the map , such that πf is the multiplication operator by f, (πf)g = fg. This map is injective, and satisfies π(fg) = (πf)(πg), π(f*)=(πf)* for all . Thus, π is a *-representation, preserving the von Neumann algebra structure of . The representation π is also compatible with Koopman evolution, in the sense that 𝒰t ⚬ π = π ⚬ Ut holds for all . Equivalently, we have the commutative diagram

|

which shows that π provides a dynamically consistent representation of observables of the dynamical system in as elements of the nonabelian operator algebra . In Fig. 1, we refer to the level of description involving as quantum mechanical due to the central role that operator algebras play in the algebraic formulation of quantum mechanics (13). In particular, Eq. 2 is mathematically equivalent to the Heisenberg picture for the unitary evolution of quantum observables (here, under the Koopman operator).

Embedding States.

A dual construction to the representation of observables can be carried out for states. Let be a normal state induced by a probability density . Since p is a positive function with , we have that is a real unit vector in H, and thus is a rank-1 orthogonal projection. Every such projection is a density operator inducing a normal state , where

| [3] |

Such states ωρ induced by unit vectors in H are called vector states. In fact, ωρ is a pure state, i.e., it is an extremal point of the state space (which is a convex set). Defining the map such that Γ(ωp)=ωρ, one can readily verify that Γ is compatible with the regular representation π; i.e., for every observable and probability density ,

| [4] |

Next, analogously to the transfer operator , we define as the adjoint of from Eq. 2, 𝒫tω = ω ⚬ 𝒰t. Note that 𝒰t and 𝒫t form a dual pair, i.e., (𝒫tω)A = ω(𝒰tA) for every state and element . Moreover, if is induced by a density operator , then 𝒫tωρ = ωρt, where ρt is the density operator given by ρt = 𝒰−tρ = Ut*ρUt.

In quantum mechanics, the evolution ρ ↦ ρt is known as the Schrödinger picture, and it is the dual of the Heisenberg picture from Eq. 2. In the particular case that ρ = ⟨ξ, ⋅⟩ξ is a vector state induced by ξ ∈ H (which would be called a wavefunction in quantum mechanical language), we have ρt = ⟨ξt, ⋅⟩ξt, where ξt = Ut*ξ. Using this fact and Eq. 3, it follows that Γ is compatible with the evolution on and under the transfer operator; that is, 𝒫t ° Γ = Γ ° Pt. This relation is represented by the commutative diagram

|

[5] |

which also captures the correspondence between the abelian and quantum mechanical forecast steps in Fig. 1.

Probabilistic Forecasting.

In both abelian and nonabelian data assimilation, we can describe probabilistic forecasting of observables of the dynamical system using the formalism of positive operator-valued measures (POVMs) (23). First, we recall that an element a of a C*-algebra is i) self-adjoint if a* = a; ii) positive (denoted as a ≥ 0) if a = b*b for some ; and iii) a projection if a* = a = a2. Supposing that is also a von Neumann algebra, a map on the σ-algebra of a measurable space (Ω, Σ) is said to be a POVM if i) for every set S ∈ Σ, E(S)≥0; ii) E(Ω)=I, where I is the unit of ; and iii) for every countable collection S1, S2, … of disjoint sets in Σ, E(⋃iSi) = ∑iE(Si), where the sum converges in the weak-* topology of (i.e., for every , .) These properties imply that for every , the map given by

| [6] |

is a complex normalized measure. In particular, if γ induces a normal state , then is a probability measure on Ω. We say that the POVM E is a projection-valued measure (PVM) if E(S) is a projection for every S ∈ Σ.

In quantum mechanics, a triple (Ω, Σ, E) where E is a POVM is referred to as an observable. We alert the reader to the fact that in dynamical systems theory, an observable is generally understood as a function f : X → V on state space X taking values in a vector space, V. Thus, in situations where the space of dynamical observables forms an algebra (e.g., the algebra corresponding to ), the term “observable” is overloaded, and its meaning must be understood from the context.

Given a POVM (Ω, Σ, E) as above, and a bounded, measurable function , we define the integral ∫Ωu(ω) dE(ω) as the unique element a of such that for every , . If a is a self-adjoint element of , i.e., a* = a, the spectral theorem states that there exists a unique PVM E : ℬ(ℝ)→𝔚 on the Borel σ-algebra ℬ(ℝ) of the real line such that a = ∫ℝω dE(ω).

In abelian data assimilation, the self-adjoint elements are the real-valued functions f in the von Neumann algebra , and every such f has an associated PVM . Explicitly, we have Ef(S)=χf−1(S), where is the characteristic function of the set f−1(S)⊆X. If, at time t, the data assimilation system is in a state induced by a probability density , then the forecast distribution for f at lead time τ ≥ 0 is , where pt, τ is the probability density associated with the state ωpt, τ = Pτωpt.

The forecast distribution is equivalent to the distribution obtained via classical probability theory. That is, given an observable f ∈ L∞(X, μ), the density pt, τ ∈ L1(X, μ) induces a probability measure on such that Prob(S) = ∫f−1(S)pt, τ dμ is the probability that f takes values in a set . It follows by definition of that .

In the non-abelian setting of , the spectral theorem states that for every self-adjoint operator , there exists a unique PVM , such that . If, at time t, the nonabelian data assimilation system is in a normal state ωρt induced by a density operator , then the forecast distribution for A at lead time τ ≥ 0 is given by ℙA, t, τ ≡ ℙEA, ρt, τ, where ρt, τ is the density operator associated with . This distribution is compatible with the embeddings of states and observables introduced above. That is, for every observable , probability density , and Borel set , we have , where Γ(ωpt, τ)=ωρt, τ.

Representing Observations by Effects.

For a unital C*-algebra , an effect is an element satisfying 0 ≤ e ≤ I. Intuitively, one can think of effects as generalizations of logical truth values, used to model outcomes of measurements or observations (24, 25). In Boolean logic, truth values lie in the set {0, 1}. In fuzzy logic, truth values are real numbers in the interval [0, 1]. In unital C*-algebras, the analogs of truth values are elements e satisfying 0 ≤ e ≤ I (26). We denote the set of effects in a C*-algebra as . It can be shown that is a convex space, whose extremal points are projections. Given a state and an effect , the number ωe ∈ [0, 1] is called the validity of e. Note that every effect induces a binary POVM such that E({1}) = e and E({0}) = I − e.

Suppose now that is a von Neumann algebra, let be a normal state induced by an element , and let be an effect. If the validity ωe is nonzero, we can define the conditional state as the normal state induced by , where

| [7] |

The map ωρ ↦ ωρ|e generalizes the Bayesian conditioning rule employed in the analysis step of classical data assimilation.

As an example, let and χS : X → {0, 1} be the characteristic function of measurable set (event) . According to Bayes’ theorem, if is a probability density and ∫Sp dμ > 0, the conditional density of p given S is

| [8] |

Since χS(x)∈{0, 1} for every x ∈ X, it follows that χS is an effect in , and since ∫XpχS dμ = ωpχS, the Bayesian formula above is a special case of Eq. 7 with p|χS = q. Note that to obtain the second equality in Eq. 8, we made use of the commutativity of function multiplication, which does not hold in a nonabelian algebra.

An important compatibility result between effects in the abelian algebra and effects in the nonabelian algebra is as follows: The regular representation maps the effect space into the effect space . As a result, and by virtue of Eq. 4, for every normal state and effect , the conditioned state ωp|e satisfies

| [9] |

This means that conditioning by effects in consistently embeds to conditioning by effects in .

Next, let Y be a set. In first-order logic, a predicate is a map F : Y → {0, 1} such that F(y)=1 means that the proposition F(y) is true, and F(y)=0 means that it is false. In fuzzy logic, predicates are generalized to maps F : Y → [0, 1]. In quantum logic, predicates are represented by effect-valued maps . Applying Eq. 7 for e = F(y) leads to the update rule p ↦ p|F(y), which represents the conditioning of the normal state associated with p by the truth value of the proposition F(y) associated with y ∈ Y.

In our algebraic data assimilation framework, we use an effect-valued map to carry out the analysis step given observations of the system in a space Y (Fig. 1). Specifically, let h : X → Y be a measurable observation map, such that y = h(x) corresponds to the assimilated data given that the system is in state x ∈ X. Let ψ : Y × Y → [0, 1] be a measurable kernel function on Y, taking values in the unit interval. Every such kernel induces an effect-valued map given by F(y)=ψ(y, h(⋅)). Possible choices for ψ include bump kernels—in such cases, F(y) can be viewed as a relaxation of a characteristic function χS of a set S containing h−1({y}) (SI Appendix, Eq. S25).

If, immediately prior to an observation at time t + Δt, the abelian data assimilation system has state (recall that pt, Δt is the forecast density for lead time Δt initialized at time t), and F(y) has nonzero validity with respect to ωpt, Δt, our analysis step updates ωpt, Δt to the conditional state ωpt, Δt|F(y) ≡ ωpt + Δt using Eq. 7. In the nonabelian setting, we promote F to the operator-valued function with and use again Eq. 7 to update the prior state to ; see the Analysis column of the schematic in Fig. 1. By Eq. 9, the abelian and nonabelian analysis steps are mutually consistent, in the sense that if ωρt, Δt = Γ(ωpt, Δt), then for every observable , we have ωρt + Δt(πf)=ωpt + Δtf.

We should note that the effect-based analysis step introduced above can naturally handle data spaces Y of arbitrarily large dimension, overcoming an important limitation of the QMDA framework proposed in ref. (17). It is also worthwhile pointing out connections between effect-valued maps and feature maps from RKHS theory (27): If ψ is positive-definite, there is an associated RKHS of complex-valued functions on X with w(x, x′) := ψ(h(x),h(x′)) as its reproducing kernel. The map F then takes values in the space and is thus an instance of a feature map. In the nonabelian case, one can think of as an operator-valued feature map.

Positivity-Preserving Discretization.

The abelian and nonabelian formulations of data assimilation described thus far employ the infinite-dimensional algebras and , respectively. To arrive at practical computational algorithms, these algebras must be projected to finite dimensions, carrying along the associated dynamical and observation operators to finite-rank operators. We refer to this process as discretization.

To motivate our approach, we recall the definitions of quantum operations and channels (15): A linear map between two von Neumann algebras and is said to be a quantum operation if i) 𝒯 is completely positive, i.e., for every , the tensor product map is positive, where and are the von Neumann algebras of n × n matrices over and , respectively; ii) is the adjoint of a map such that for every normal state . If, in addition, , is said to be a quantum channel.

In quantum theory, operations and channels characterize the transfer of information in open and closed systems, respectively. Here, the requirement of complete positivity of (as opposed to mere positivity) ensures that is extensible to a state-preserving map between any two systems that include and as subsystems. If is abelian, then positivity and complete positivity of are equivalent notions. If for a Hilbert space H2, Stinespring’s theorem (28) states that is completely positive if and only if there is a Hilbert space H1, a representation , and a bounded linear map V : H2 → H1 such that .

It follows from these considerations that the Koopman operator is a quantum operation (since Ut is positive, the transfer operator preserves normal states, and is abelian), and so is (by Stinespring’s theorem). In fact, Ut and are both quantum channels. It is therefore natural to require that the discretization procedure leads to a quantum operation in both of the abelian and nonabelian cases. A second key requirement is that the discretization procedure is positivity preserving; that is, positive elements of the infinite-dimensional algebra are mapped into positive elements of the finite-dimensional algebra associated with the projected system. This requirement is particularly important when modeling physical systems, where failure to preserve signs of sign-definite quantities may result in loss of physical interpretability and lead to numerical instabilities (29). Our third requirement is that the finite-dimensional approximations converge in an appropriate sense to the original system as the dimension increases. One of the main perspectives put forward in this paper is that the construction of discretization schemes meeting these requirements is considerably facilitated by working in the nonabelian setting of rather than the abelian setting of .

First, as an illustration of the fact that a “naive” projection will fail to meet our requirements, consider the Koopman operator Ut : H → H. Fix an orthonormal basis {ϕ0, ϕ1, …} of H with , and let ΠL : H → H be the orthogonal projection that maps into the L-dimensional subspace HL := span{ϕ0, …, ϕL − 1}. A common approach to Koopman and transfer operator approximation (30, 31) is to orthogonally project elements of H to elements of HL, f ↦ fL := ΠLf and similarly approximate Ut by the finite-rank operator UL(t) := ΠLUtΠL. The rank of UL(t) is at most L, and it is represented in the {ϕl} basis by an L × L matrix U with elements Uij = ⟨ϕi, Utϕj⟩. Note the inclusions and that HL and are invariant subspaces of H under UL(t). Moreover, UL(t) maps f ∈ H to g = UL(t)f ∈ HL such that , where . Letting and with be the L-dimensional column vectors giving the representation of ΠLf and g in the {ϕl} basis of H, respectively, we can express the action of UL(t) on f as the matrix–vector product g = Uf.

Unfortunately, such methods are not positivity preserving; that is, if f is a positive function in , ΠLf need not be positive. A classical example is a tophat function on the real line, which develops oscillations to negative values upon Fourier filtering (the Gibbs phenomenon). Even if f is a positive function in the finite-dimensional subspace HL (so that ΠLf = f), the function g = UL(t)f need not be positive. Thus, standard discretization approaches based on orthogonal projections fail to meet the requirements laid out above.

Next, we turn to positivity-preserving discretizations utilizing the abelian algebra , as opposed to the Hilbert space H. Recalling that the projections in are the characteristic functions of measurable sets, let S be a measurable subset of X, and consider the multiplication operator such that MSf = χSf. The map MS is positive, and the projected Koopman operator, MSUtMS is a quantum operation. However, in order for MS to be a discretization map, we must have that its range is a finite-dimensional algebra. This is equivalent to asking that the restriction of μ to S is supported on a finite number of atoms, i.e., measurable sets that have no measurable subsets of positive measure. This is a highly restrictive condition that fails to hold for broad classes of dynamical systems (e.g., volume-preserving flows on manifolds), so the abelian algebra does not provide an appropriate environment to perform discretizations meeting our requirements.

We now come to discretizations based on the operator algebra . Working with allows us to use both Hilbert space techniques to construct finite-rank operators by orthogonal projection and algebraic techniques to ensure that these projections are positivity preserving. With HL as above, consider the von Neumann algebra . This algebra has dimension L2 and is isomorphic to the algebra of L × L complex matrices. In particular, each element is represented by a matrix with elements Aij = ⟨ϕi, Aϕj⟩. Correspondingly, we refer to data assimilation based on as matrix mechanical; see  in Fig. 1.

in Fig. 1.

Next, note that can be canonically identified with the subalgebra of consisting of all operators A satisfying kerA ⊇ HL⊥ and ranA ⊆ HL. Thus, we can view the projection with as an operator from to . By Stinespring’s theorem, is completely positive. As a result, i) the projection is positivity preserving, and thus, so is the projected representation with ; and ii) the projected Koopman operator with is a quantum operation. Moreover, since {ϕl} is an orthonormal basis of H, for any f ∈ H, we have limL → ∞ΠLf = f. This implies that for every , the operators converge strongly to A, i.e., limL → ∞ALg = Ag, for all g ∈ H. In particular, πLf with converges strongly to πf. Further details on these approximations can be found in SI Appendix, sections 2.D–2.H. Note that, in general, πLf is not a multiplication operator. That is, the act of embedding in the nonabelian algebra using and then projecting to the finite-dimensional subalgebra using is not equivalent to projecting into HL using ΠL and then embedding HL into using π.

Consider now a normal state induced by a probability density , and let ωρ = Γ(ωp) be the associated normal state on obtained via Eq. 3. For L sufficiently large, is nonzero, and thus, is a density operator in inducing a state , which extends to . In Fig. 1, we denote the map ωρ ↦ ωρL as . By construction, the state ωρL satisfies for all . Setting, in particular, A = πf with , it follows from Eq. 4 and the strong convergence of πLf to πf that

| [10] |

SI Appendix, section 2.E. It should be kept in mind that, aside from special cases, ωρL is not the image of a state under Γ for a probability density ; that is, in general, ωρL is a “nonclassical” state. Note also that ωρL is a vector state (Eq. 3) induced by the unit vector , which, as just mentioned, is generally not the square root of a probability density.

Let now with be the projected transfer operator on . Unless HL is a Ut-invariant subspace, is not equal to ; see the dashed arrow in the third column of the schematic in Fig. 1. Nevertheless, we have the asymptotic consistency , which holds for all and ; SI Appendix, section 2.I. Applying this result for A = πf and ωρ = Γ(ωp), with and , it follows that

| [11] |

Eq. 11 implies that the matrix mechanical data assimilation scheme consistently recovers forecasts from data assimilation in the abelian algebra in the limit of infinite dimension L.

In SI Appendix, section 2.F.2, we describe how for any self-adjoint element , the spectral measures of converge to the spectral measure of A. Since is self-adjoint whenever is real, the spectral convergence of πLf to πf implies that the forecast distributions induced by consistently recover the forecast distributions and from the infinite-dimensional quantum mechanical and abelian systems, respectively.

With a similar approach (SI Appendix, section 2.J), one can deduce that the analysis step is also consistently recovered: Defining the effect-valued map with , it follows from Eq. 9 and Eq. 10 that for every and ,

| [12] |

where ωρ = Γ(ωp) and , so the matrix mechanical analysis step is asymptotically consistent with the infinite-dimensional quantum mechanical and abelian analyses.

On the basis of Eqs. 11 and 12, we conclude that as the dimension L increases, the matrix mechanical data assimilation scheme is consistent with the abelian formulation of sequential data assimilation. Moreover, the discretization leading to this scheme is positivity preserving, and the projected Koopman operator is a quantum operation. Thus, matrix mechanical data assimilation provides a nonabelian, finite-dimensional framework that simultaneously meets all of the requirements listed in the beginning of this subsection.

Data-Driven Approximation.

The matrix mechanical data assimilation scheme described above admits a consistent data-driven approximation using kernel methods for machine learning (17, 20, 22). The data-driven scheme employs three, possibly related, types of training data, all acquired along a dynamical trajectory XN = {x0, x1, …, xN − 1}⊂X with xn = Φn Δt(x0), where Δt > 0 is a sampling interval: i) samples yn = h(xn) from the observation map h : X → Y; ii) samples fn = f(xn) from the forecast observable ; iii) samples zn = z(xn) from a map z : X → Z, used as proxies of the dynamical states xn. If the xn are known, we set Z = X and z = Id. Otherwise, we set Z = Y2Q + 1 for a parameter and define z as the delay-coordinate map z(x)=(h(Φ−Q Δt(x)), h(Φ( − Q + 1) Δt(x)), …, ΦQ Δt(x)), giving zn = (yn − Q, yn − Q + 1, …, yQ). By delay-embedding theory (32), for sufficiently large Q and typical observation maps h and sampling intervals Δt, z is an injective map.

The dynamical trajectory xn has an associated sampling measure and a finite-dimensional Hilbert space . By ergodicity, as N increases, the measures μN converge to the invariant measure μ in weak-* sense, so we can interpret as a data-driven analog of the infinite-dimensional Hilbert space H (SI Appendix, section 1). Given the training data z0, z1, …, zN − 1, and without requiring explicit knowledge of the underlying states xn, we use kernel integral operators to build an orthonormal basis {ϕ0, N, …, ϕL − 1, N} of an L-dimensional subspace that plays the role of a data-driven counterpart of HL. More specifically, the basis elements ϕl, N are eigenvectors of a kernel integral operator induced by a kernel function . The operator KN is represented by an N × N kernel matrix KN constructed from the training data zn; SI Appendix, section 2.A. We let be the L2-dimensional algebra of linear maps on HL, N, which, as in the case of , is isomorphic to the matrix algebra .

Every operator employed in the matrix-mechanical scheme described in the previous section has a data-driven counterpart, represented as an L × L matrix with respect to the {ϕl, N} basis. Specifically, the projected Koopman operator UL(t) at time t = q Δt, , is replaced by an operator induced by the shift map on the trajectory xn (30), with a corresponding quantum operation . Moreover, the projected multiplication operator πLf is replaced by , and the effect-valued map by a map . Here, is the restriction of f on . Further details are provided in SI Appendix, sections 2.D–2.J.

The data-driven scheme is positivity preserving and constitutes a quantum operation analogously to the matrix mechanical scheme. Moreover, by results on spectral approximation of kernel integral operators (33) and ergodicity of the dynamics, the kernel matrices KN exhibit spectral convergence in the large-data limit, N → ∞, to a kernel integral operator K : H → H in a suitable sense (SI Appendix, Theorem 1). Correspondingly, all matrix representations of operators, and thus all predictions made by the data-driven scheme, converge to the predictions of the matrix mechanical scheme  in Fig. 1. Overall, we obtain a data-driven, positivity-preserving, and asymptotically consistent data assimilation scheme. The data requirements and computational complexity of this scheme are comparable to standard kernel methods for supervised machine learning (SI Appendix, section 2.K).

in Fig. 1. Overall, we obtain a data-driven, positivity-preserving, and asymptotically consistent data assimilation scheme. The data requirements and computational complexity of this scheme are comparable to standard kernel methods for supervised machine learning (SI Appendix, section 2.K).

Lorenz 96 Multiscale System

As our first numerical example, we apply QMDA to assimilate and predict the slow variables of the Lorenz 96 (L96) multiscale system (34). This system was introduced by Lorenz in 1996 as a low-order model of atmospheric circulation at a constant-latitude circle. The dynamical degrees of freedom include K slow variables x1, …, xK, representing the zonal (west to east) component of the large-scale atmospheric velocity field at K zonally equispaced locations. Each slow variable xk is coupled to J fast variables y1, k, …, yJ, k, representing small-scale processes such as atmospheric convection. The dynamical state space is thus X = ℝJ(K + 1) with .

The governing equations are

| [13] |

where the parameter F represents large-scale forcing (e.g., solar heating), hx and hy control the coupling between the slow and fast variables, and ε is a parameter that controls the timescale separation between the fast and slow variables. The governing equations for xk feature large-scale forcing, F, a quadratic nonlinearity, −xk − 1(xk − 2 − xk + 1), representing advection, a linear damping term, −xk, representing surface drag, and a flux term, , representing forcing from the fast variables. The terms in the yj, k equations have similar physical interpretations. In general, the dynamics becomes more turbulent/chaotic as F increases.

Here, we focus on the chaotic dynamical regime studied in refs. (35) and (36) with K = 9, J = 8, ε = 1/128, F = 10, hx = −0.8, and hy = 1. In this regime, ε is sufficiently small so that the dynamics of the (x1, …, xK) variables is approximately Markovian. We consider that the observation map h : X → Y projects the state vector x ∈ X to the slow variables, i.e., and h(x)=y := (x1, …, xK). Our forecast observable is the first slow variable, f(x)=x1.

Training.

We employ a training dataset consisting of N = 40,000 samples y0, …, yN − 1 ∈ Y and with yn = h(xn), fn = f(xn), and xn = Φn Δt(x0), taken at a sampling interval Δt = 0.05. To assess forecast skill, we use samples with and , taken on an independent dynamical trajectory from the training data. The data z0, z1, …, zN − 1 ∈ Z for computation of the data-driven basis {ϕl, N} of HL, N consist of snapshots of the slow variables, zn = yn. That is, we have with Q = 0 delays and z = Id. This choice is motivated by the fact that the evolution of yn is approximately Markovian for ε ≪ 1, and the forecast observable f(x)=x1 depends on x ∈ X only through y = h(x). Following ref. (21), we compute the ϕl, N using a variable-bandwidth Gaussian kernel (37) with a bistochastic normalization (38). Further details on this kernel and the L96 data are provided in SI Appendix, sections 2.B and 5.A, respectively.

Using the basis vectors, we compute L × L matrix representations of the projected Koopman operators for lead times τj = j Δt with j ∈ {0, 1, …, Jf}, Jf = 150 (SI Appendix, Algorithm S9). Moreover, using the ϕl, N and the training samples fn, we compute the L × L matrix representation AL, N of the operator AL, N := πL, Nf associated with the forecast observable. To evaluate forecast distributions for f, we compute the PVM EAL, N of AL, N, which amounts to computing an eigendecomposition of AL, N (SI Appendix, Algorithm S7). To report forecast probabilities, we evaluate EAL, N on a collection of bins of equal probability mass in the equilibrium distribution of f. As our observation kernel ψ : Y × Y → [0, 1], we use a variable-bandwidth bump function. The corresponding effect-valued map is represented by a matrix-valued function; further details are provided in SI Appendix, section 2.J. In Figs. 2 and 3, we show results for Hilbert space dimension L = 2, 000, though forecast skill does not change appreciably for values of L in the range 500 to 2,000 (SI Appendix, Fig. S1).

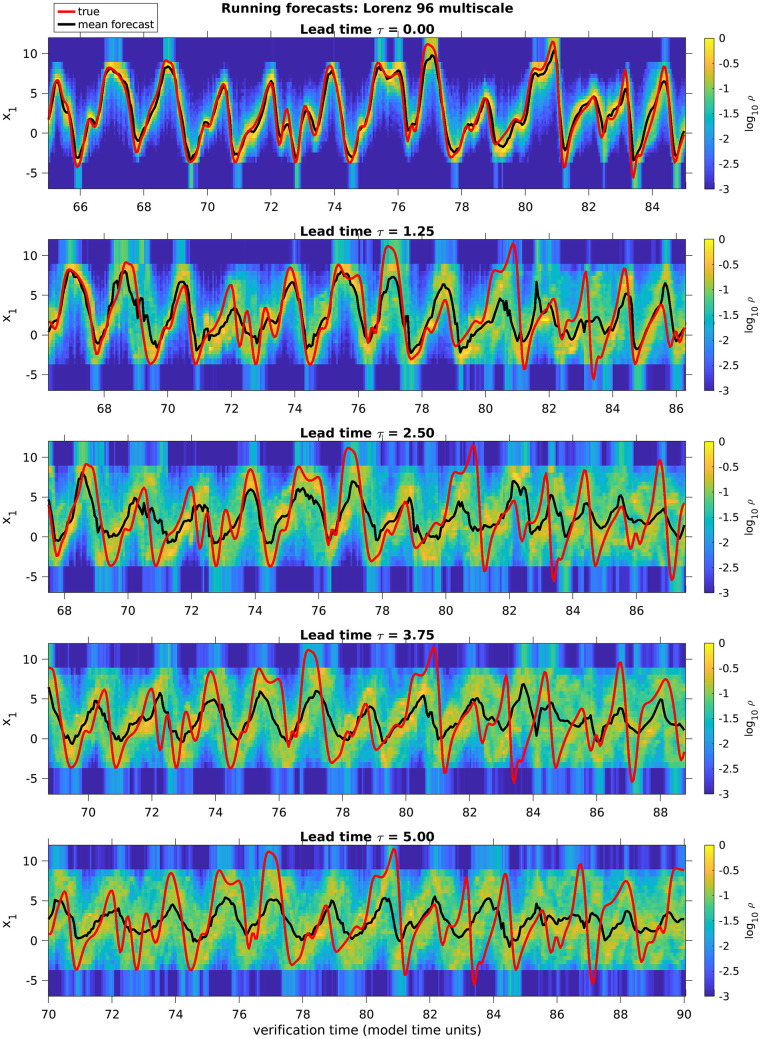

Fig. 2.

Running QMDA forecasts of the x1 variable of the L96 multiscale system in a chaotic regime. The panels show the true x1 evolution (black lines), the logarithm of the discrete forecast probability density 𝜚n, j (colors), and the corresponding forecast mean (red lines) as a function of verification time for lead times in the range 0 to 5 model time units (Top to Bottom). The assimilated observable is the K-dimensional vector (x1, …, xK) of the L96 slow variables.

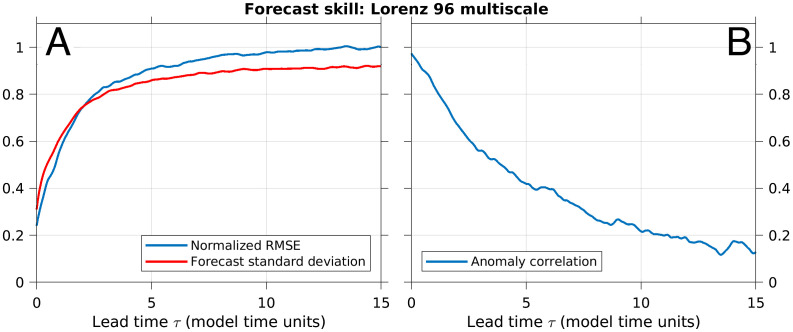

Fig. 3.

NRMSE (A) and AC score (B) of the L96 forecasts from Fig. 2.

Data Assimilation.

We perform data assimilation experiments initialized with the pure state induced by the density operator . We interpret this state as an uninformative equilibrium state, in the sense that i) , where is the empirical mean of f, and ii) ωρ0 is invariant under the action of the transfer operator, i.e., .

Starting from ω0, QMDA produces a sequence of states by repeated application of the forecast–analysis steps, as depicted schematically in Fig. 1 and in pseudocode form in SI Appendix, Algorithm S1. Specifically, for , we compute ωn by first using the transfer operator to compute the state (which is analogous to the prior in classical data assimilation) and then applying the effect map to observation to yield (which is analogous to the classical posterior). For each , we also compute forecast states and associated forecast distributions for the observable f. We evaluate on the bins Sm and normalize the result by the corresponding bin size, sm := length(Sm) to produce discrete probability densities 𝜚n, j = (𝜚n, j, 0, …, 𝜚n, j, M − 1) with . We also compute the forecast mean and standard deviation, and , respectively. We assess forecast skill through the normalized mean square error (NRMSE) and anomaly correlation (AC) scores, computed for each lead time τj by averaging over the samples in the verification dataset (SI Appendix, section S4).

Fig. 2 shows the forecast probability densities 𝜚n, j (colors), forecast means (black lines), and true signal (red lines), plotted as a function of verification time tn + j over intervals spanning 20 time units for representative lead times τj in the range 0 to 5 time units. The corresponding NRMSE and AC scores are displayed in Fig. 3. Given the turbulent nature of the dynamics, we intuitively expect the forecast densities 𝜚n, j to start from being highly concentrated around the true signal for small τj and progressively broaden as τj increases (i.e., going down the panels of Fig. 2), indicating that the forecast uncertainty increases. Correspondingly, we expect to accurately track the true signal for small τj and progressively relax toward the equilibrium mean ∫Xf dμ.

The results in Figs. 2 and 3 are broadly consistent with this behavior: The forecast starts at τj = 0 from a highly concentrated density around the true signal (note that Fig. 2 shows logarithms of 𝜚n, j), which is manifested by low NRSME and large AC values in Fig. 3 of approximately 0.24 and 0.98, respectively. As τj increases, the forecast distribution broadens, and the NRMSE (AC) scores exhibit a near-monotonic increase (decrease). In Fig. 3A, the estimated error based on the forecast variance σn, j is seen to track well the NRMSE score, which indicates that the forecast distribution 𝜚n, j well represents the true forecast uncertainty. It should be noted that errors are present even at time τj = 0, particularly for periods of time where the true signal takes extreme positive or negative values. Such reconstruction errors are expected for a fully data-driven driven method applied to a system with a high-dimensional attractor. Overall, the skill scores in Fig. 3 are comparable with the results obtained in ref. (36) using the kernel analog forecasting (KAF) technique (39).

El Niño Southern Oscillation

The El Niño Southern Oscillation (ENSO) (40) is the dominant mode of interannual (3- to 5-y) variability of the Earth’s climate system. Its primary manifestation is an oscillation between positive sea surface temperature (SST) anomalies over the eastern tropical Pacific Ocean, known as El Niño events, and episodes of negative anomalies known as La Niñas (41). Through atmospheric teleconnections, ENSO drives seasonal weather patterns throughout the globe, affecting the occurrence of extremes such as floods and droughts, among other natural and societal impacts (42). Here, we demonstrate that QMDA successfully predicts ENSO within a comprehensive climate model by assimilating high-dimensional SST data.

Our experimental setup follows closely ref. (43), who performed data-driven ENSO forecasts using KAF. As training and test data, we use a control integration of the Community Climate System Model Version 4 (CCSM4) (44), conducted with fixed preindustrial greenhouse gas forcings. The simulation spans 1,300 y, sampled at an interval Δt = 1 month. Abstractly, the dynamical state space X consists of all degrees of freedom of CCSM4, which is of order 107 and includes variables such as density, velocity, and temperature for the atmosphere, ocean, and sea ice, sampled on discretization meshes over the globe. Since this simulation has no climate change, there is an implicit invariant measure μ sampled by the data, and we can formally define the algebras and associated with the invariant measure as described above.

In our experiments, the observation map h : X → Y returns monthly averaged SST fields on an Indo-Pacific domain; that is, we have , where d is the number of surface ocean gridpoints within the domain. We have d = 44,414, so these experiments test the ability of QMDA to assimilate high-dimensional data. However, note that h is a highly noninvertible map since Indo-Pacific SST comprises only a small subset of CCSM4’s dynamical degrees of freedom. As our forecast observable we choose the Niño 3.4 index—a commonly used index for ENSO monitoring defined as the average SST anomaly over a domain in the tropical Pacific Ocean. Large positive (negative) values of Niño 3.4 represent El Niño (La Niña) conditions, whereas values near zero represent neutral conditions. Additional information on the CCSM4 data is included in SI Appendix, section 5.B.

Following ref. (43), we use the SST and Niño 3.4 samples from the first 1,100 y of the simulation as training data and the corresponding samples for the last 200 y as test data. Thus, with the notation of the previously described L96 experiments, our training data are yn = h(xn) (Indo-Pacific SST) and fn = f(xn) (Niño 3.4) for n ∈ {0, …, N − 1} and N = 1,100 × 12= 13,200, and our test data are and for and 2,400. Here, xn = Φn Δt(x0)∈X is the (unknown) dynamical trajectory of the CCSM4 model underlying our training and test data. Using the SST samples yn, we build the training data zn using delay-coordinate maps with parameter Q = 5; i.e., the data zn used for building the basis of HL, N are SST “videos” that span a total of 2Q + 1 = 11 months and have dimension 11d ≃ 4.9 × 105. We compute the basis {ϕl, N} using a kernel κ that depends on the pairwise Euclidean distances between points in Z as well as Niño 3.4 trajectories evaluated on these points (SI Appendix Eq. S5). This approach improves the ability of the basis vectors to capture covariability between Indo-Pacific SST fields and the Niño 3.4 index, leading to a modest improvement of short-term forecast skill and a more significant improvement of uncertainty quantification over kernels that depend only on SST. Aside from the different kernel κ, the procedure for initializing and running QMDA is identical to the L96 experiments.

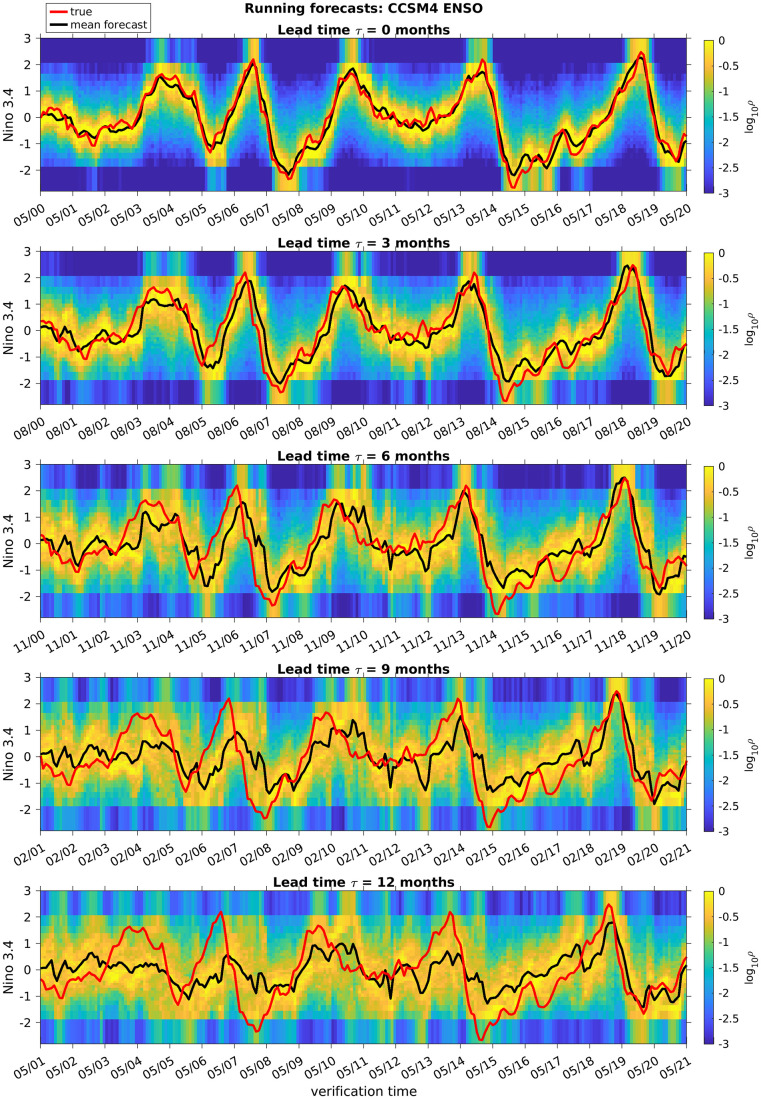

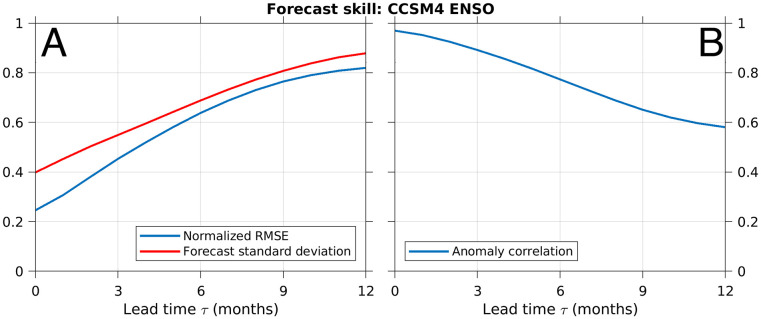

Fig. 4 shows the forecast probability density (𝜚n, j; colors), forecast mean (; black lines), and true signal (; red lines) for the Niño 3.4 index as a function of verification time tn + j over 20-y portions of the test dataset for lead times τj in the range 0 to 12 mo, obtained for Hilbert space dimension L = 1,000. The corresponding NRMSE and AC scores are displayed in Fig. 5. The skill scores do not vary significantly for values of L in the interval 500 to 2,000 (SI Appendix, Fig. S2).

Fig. 4.

As in Fig. 2, but for forecasts of the Niño 3.4 index in CCSM4. The assimilated observable is the vector of Indo-Pacific SST gridpoint values. The forecast lead times are in 3-mo increments in the range 0 to 12 mo. The verification times are shown in mm/yy date format relative to an arbitrary year in the verification interval.

Fig. 5.

NRMSE (A) and AC score (B) of the Niño 3.4 forecasts from Fig. 4.

Qualitatively, the forecast density 𝜚n, j displays a similar behavior as in the L96 experiments; that is, it is concentrated around the true signal on short lead times (τj ≲ 3 months) and gradually broadens as forecast uncertainty grows with increasing lead time τj due to chaotic climate dynamics. In Fig. 5A, the estimated forecast error based on the forecast variance σn, j agrees reasonably well with the actual NRMSE evolution. Adopting AC = 0.6 as a commonly used threshold for ENSO predictability, we see from the AC results in Fig. 5B that QMDA produces useful forecasts out to τj ≃ 12 months. The performance of QMDA in terms of the NRMSE and AC metrics is comparable to that found for KAF in ref. (43), but QMDA has the advantage of producing full forecast probability distributions instead of point estimates. Compared to KAF, QMDA also has the advantage of being positivity preserving. While this property may not be critical for sign-indefinite ENSO indices, there are many climatic variables where sign preservation is particularly important.

Quantum Circuit Implementation

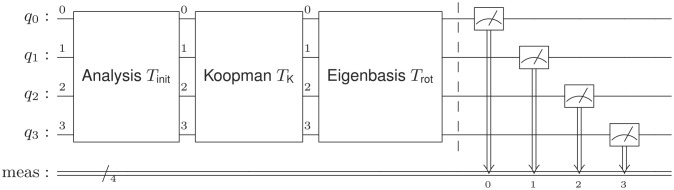

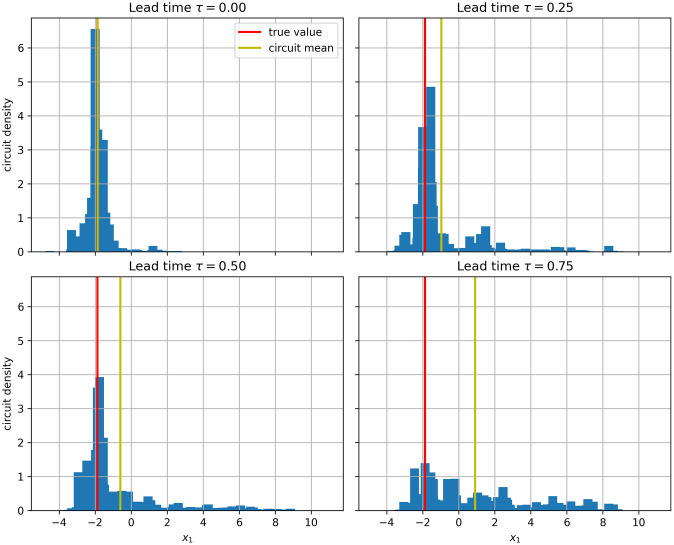

As a demonstration of the potential of QMDA for implementation on quantum computing platforms, we present forecasting results for the L96 multiscale system obtained from quantum circuit simulations performed using the Qiskit Aer Python library (45). Our quantum circuit architecture consists of initialization, Koopman evolution, eigenbasis rotation, and measurement stages, depicted in Fig. 6 for a 4-qubit setup. The initialization and Koopman stages implement the QMDA analysis and forecast steps, respectively, via unitary operations acting on the qubits. The eigenbasis rotation implements a unitary induced by the eigenvectors of the quantum mechanical forecast observable AL, N so that measurement at the output of the circuit samples the desired probability distribution at the given initialization time tn and forecast lead time τj.

Fig. 6.

Four-qubit circuit implementation of an analysis–forecast QMDA cycle.

In more detail, associated with a quantum computational system of qubits is a tensor product Hilbert space of dimension , where is the two-dimensional Hilbert space generated by |0⟩ (“up”) and |1⟩ (“down”) vectors (46). The standard basis of , known as quantum computational basis, has the tensor product form , indexed by binary strings b = (b1, …, b𝔫) of length . A (noise-free) quantum computer is represented as a quantum channel on the -dimensional von Neumann algebra , which is isomorphic to the algebra of matrices. Typically, the channel has the form , where is a unitary map. In the circuit shown in Fig. 6, we express T as the composition T = Trot ⚬ TK(j) ⚬ Tinit(ξ, y), where Tinit(ξ, y) represents the initialization (analysis) step given a prior state vector ξ ∈ HL, N, and an observation y ∈ Y, TK(j) represents Koopman evolution over forecast timesteps, and Trot is the eigenbasis rotation stage of the circuit.

In an actual quantum computational environment, Tinit, TK, and Trot would be implemented by combining quantum logic gates through operations such as compositions and tensor products, the goal being to implement T by a circuit of low depth (longest path from input to output). For instance, circuits whose depth scales as a polynomial in allow simulation of quantum states and observables on the exponentially large-dimensional Hilbert space in a polynomial running time. Here, we do not address the important questions of how to implement Tinit, TK, and Trot efficiently and robustly on an actual quantum computer, so the results presented in this section should be viewed as a proof of concept.

Suppose that the Hilbert space dimension of the matrix mechanical data assimilation system  is for some . Given the data-driven basis {ϕl, N} of HL, N indexed by integers l ∈ {0, …, L − 1}, we define a unitary such that WLϕl, N = |b⟩, where is the binary representation of l, i.e., . Using WL, we can encode any into an operator . In particular, if is a state induced by a density operator , then the transformed state with satisfies ωρ(A)=ωσ(B), so predictions from the matrix mechanical and quantum computational systems are equivalent. If ωρ is a vector state induced by unit vector ξ ∈ HL, N, then ωσ is a vector state induced by .

is for some . Given the data-driven basis {ϕl, N} of HL, N indexed by integers l ∈ {0, …, L − 1}, we define a unitary such that WLϕl, N = |b⟩, where is the binary representation of l, i.e., . Using WL, we can encode any into an operator . In particular, if is a state induced by a density operator , then the transformed state with satisfies ωρ(A)=ωσ(B), so predictions from the matrix mechanical and quantum computational systems are equivalent. If ωρ is a vector state induced by unit vector ξ ∈ HL, N, then ωσ is a vector state induced by .

Given a prior state at time tn with corresponding state vector ξn − 1, 1 ∈ HL, N, an observation , and a forecast lead time τj, the circuit in Fig. 6 operates by acting on the computational basis vector |0⟩ (which is the “default” state vector at the start of a quantum computation) by the transformations , TK(j), and Trot, leading to the state vector . At the end of the computation, a measurement in the quantum computational basis yields a random binary string b with probability . It can be shown that , where l ∈ {0, …, L − 1} is the integer with binary representation b and al is the l-th eigenvalue in the spectrum of AL, N (ordered in increasing order), is a sample from the distribution induced from the spectral measure EAL, N and the quantum state ωn, j. Repeating this procedure over M identically prepared circuits leads to an ensemble of measurements (or “shots”) {al1, …, alM}, which provides a Monte Carlo approximation of the theoretical forecast distribution . Further details are provided in SI Appendix, section 3.

Fig. 7 shows representative forecast distributions of the x1 variable of the L96 multiscale system obtained via this approach using qubits (i.e., L = 210 = 1024). In these experiments, the verification time is held fixed, so there is a fixed true value x1 ≈ −1.86 and the lead time τj = j Δt varies from 0 to 0.75 in increments of 0.25. The panels show histograms of the normalized counts centered on x1 = al, where Mnjl is the number of occurrences of eigenvalue al of the multiplication operator AL, N in the experiment with lead time τj, M = 106 is the number of shots, and sl = (al + 1 − al − 1)/2 is an effective bin size. Also shown are the empirical mean that approximates the forecast expectation and the true value of x1 (yellow and red lines, respectively).

Fig. 7.

Simulated quantum circuit prediction of the x1 variable of the L96 multiscale system using qubits. The histograms show the empirical probability density computed from M = 106 shots for lead times in the range 0 to 3.75 time units. The vertical red lines show the true value of x1 at the verification time, which is held fixed in all experiments. The vertical yellow lines show the empirical mean forecasts.

The time evolution of the histograms illustrates the increase of forecast uncertainty due to chaotic dynamics in conjunction with finite-rank operator approximation. At τj = 0, the empirical density is strongly concentrated around the truth, and the empirical mean has an approximately 5.9% error. As τj increases, the probability density spreads predominantly to larger values of x1, causing the mean forecast to increasingly deviate from the truth. Intriguingly, the peak of the histograms remains collocated with the truth, which suggests that there may be opportunities to increase skill using an estimator based on the mode of the distribution rather than the mean. Overall, the quantum circuit simulation results are qualitatively consistent with the L96 results from deterministic computation presented earlier, which demonstrates the suitability of QMDA for implementation on quantum computers.

Concluding Remarks

We have developed theory and methods for sequential data assimilation of partially observed dynamical systems using techniques from operator algebra, quantum information, and ergodic theory. At the core of this framework, called quantum mechanical data assimilation (QMDA), is the nonabelian algebraic structure of spaces of operators. One of the main advantages that this structure provides is that it naturally enables finite-dimensional discretization schemes that preserve the sign of sign-definite observables in ways that are not possible with classical projection-based approaches.

We build these schemes starting from a generalization of Bayesian data assimilation based on a dynamically consistent embedding into an infinite-dimensional operator algebra acting on the L2 space associated with an invariant measure of the system. Under this embedding, forecasting is represented by a quantum operation induced by the Koopman operator of the dynamical system, and Bayesian analysis is represented by quantum effects. In addition to providing a useful starting point for discretizing data assimilation, this construction draws connections between statistical inference methods for classical dynamical systems with quantum information and quantum probability, which should be of independent interest.

QMDA leverages properties of operator algebras to project the infinite-dimensional framework into the level of a matrix algebra in a manner that positive operators are represented by positive matrices, and the finite-dimensional system is a quantum operation. QMDA also has a data-driven formulation based on kernel methods for machine learning with consistent asymptotic behavior as the amount of training data increases. We have demonstrated the efficacy of QMDA with forecasting experiments of the slow variables of the Lorenz 96 multiscale system in a chaotic regime and the El Niño Southern Oscillation in a climate model. QMDA was shown to perform well in terms of point forecasts from quantum mechanical expectations, while also providing uncertainty quantification by representing entire forecast distributions via quantum states.

This work motivates further application and development of algebraic approaches and quantum information to building models and performing inference of complex dynamical systems. In particular, as we enter the quantum computing era, there is a clear need to lay out the methodological and algorithmic foundations for quantum simulation of complex classical systems. Being firmly rooted in quantum information and operator theory, the QMDA framework presented in this paper is a natural candidate for implementation in quantum computers, which we have demonstrated here by means of simulated quantum circuit experiments. As noted in the opening section of the paper, efforts to simulate classical dynamical systems on quantum computers are being actively pursued (22, 47, 48). Porting data assimilation algorithms such as QMDA to a physical quantum computational environment presents new challenges as the iterative nature of the forecast–analysis cycle will require repeated interaction between the quantum computer and the assimilated classical system, possibly using quantum sensors (49). We believe that addressing these challenges is a fruitful area for future research with both theoretical and applied dimensions.

Supplementary Material

Appendix 01 (PDF)

Acknowledgments

We thank Philipp Pfeffer, Travis Russell, and Jörg Schumacher for stimulating discussions. D.G. acknowledges support from the US National Science Foundation under grants 1842538 and DMS-1854383, the US Office of Naval Research under MURI grant N00014-19-1-242, and the US Department of Defense, Basic Research Office under Vannevar Bush Faculty Fellowship grant N00014-21-1-2946. D.C.F. is supported as a PhD student under the last grant. A.O. was supported by the US Department of Energy, Office of Science, Basic Energy Sciences under award DE-SC0002164 (underlying dynamical techniques), and by the US National Science Foundation under awards STC-1231306 (underlying data analytical techniques) and DBI-2029533 (underlying analytical models). J.S. acknowledges support from NSF EAGER grant 1551489.

Author contributions

D.G., A.O., and J.S. designed research; D.F., D.G., B.M., A.O., and J.S. performed research; D.F., D.G., B.M., and J.S. contributed new reagents/analytic tools; D.G. and J.S. analyzed data; and D.G. wrote the paper.

Competing interests

The authors declare no competing interest.

Footnotes

This article is a PNAS Direct Submission.

Data, Materials, and Software Availability

The CCSM4 data analyzed in this study are available at the Earth System Grid repository under accession code https://www.earthsystemgrid.org/dataset/ucar.cgd.ccsm4.joc.b40.1850.track1.1deg.006.html (accessed January 2023). MATLAB code reproducing the ENSO and L96 results in Figs. 2–5 is available in the repository https://doi.org/10.5281/zenodo.7554628 under directory /pubs/FreemanEtAl23_PNAS. This directory also contains a Python Jupyter notebook that reproduces the quantum circuit simulation results in Fig. 7.

Supporting Information

References

- 1.Cressman G. P., An operational objective analysis system. Mon. Wea. Rev. 87, 367–374 (1959). [Google Scholar]

- 2.Kalman R. E., A new approach to linear filtering and prediction problems. J. Basic Eng. 82, 35–45 (1960). [Google Scholar]

- 3.Majda A. J., Harlim J., Filtering Complex Turbulent Systems (Cambridge University Press, Cambridge, 2012). [Google Scholar]

- 4.Law K., Stuart A., Zygalakis K., Data Assimilation: A Mathematical Introduction, Texts in Applied Mathematics (Springer, New York, 2015), vol. 62. [Google Scholar]

- 5.Kalnay E., Atmospheric Modeling, Data Assimilation, and Predictability (Cambridge University Press, Cambridge, 2003). [Google Scholar]

- 6.Bannister R. N., A review of operational methods of variational and ensemble-variational data assimilation. Quart. J. Roy. Meteorol. Soc. 143, 607–633 (2016). [Google Scholar]

- 7.Karspeck A. R., et al. , A global coupled ensemble data assimilation system using the Community Earth System Model and the Data Assimilation Research Testbed. Quart. J. Roy. Meteor. Soc. 144, 2404–2430 (2018). [Google Scholar]

- 8.van Leuuwen P. J., Künsch H. R., Nerger L., Potthast R., Reich S., Particle filters for high-dimensional geoscience applications: A review. Quart. J. Roy. Meteorol. Soc. (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Takesaki M., Theory of Operator Algebras I, Encyclopaedia of Mathematical Sciences (Springer, Berlin, 2001), vol. 124. [Google Scholar]

- 10.Koopman B. O., Hamiltonian systems and transformation in Hilbert space. Proc. Natl. Acad. Sci. U.S.A. 17, 315–318 (1931). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Baladi V., Positive Transfer Operators and Decay of Correlations, Advanced Series in Nonlinear Dynamics (World Scientific, Singapore, 2000), vol. 16. [Google Scholar]

- 12.Eisner T., Farkas B., Haase M., Nagel R., Operator Theoretic Aspects of Ergodic Theory, Graduate Texts in Mathematics (Springer, Cham, 2015), vol. 272. [Google Scholar]

- 13.Emch G. G., Algebraic Methods in Statistical Mechanics and Quantum Field Theory (Dover Publications, Mineola, 2009). [Google Scholar]

- 14.Alber G., et al. , Quantum Information: An Introduction to Basic Theoretical Concepts and Experiments, Springer Tracts in Modern Physics (Springer-Verlag, Berlin, 2001), vol. 173. [Google Scholar]

- 15.Holevo A. S., Statistical Structure of Quantum Theory, Lecture Notes in Physics Monographs (Springer, Berlin, 2001), vol. 67. [Google Scholar]

- 16.Wilde M. M., Quantum Information Theory (Cambridge University Press, Cambridge, 2013). [Google Scholar]

- 17.Giannakis D., Quantum mechanics and data assimilation. Phys. Rev. E 100, 032207 (2019). [DOI] [PubMed] [Google Scholar]

- 18.Takhtajan L. A., Quantum Mechanics for Mathematicians, Graduate Series in Mathematics (American Mathematical Society, Providence, 2008), vol. 95. [Google Scholar]

- 19.Giannakis D., Slawinska J., Zhao Z., “Spatiotemporal feature extraction with data-driven Koopman operators” in Proceedings of the 1st International Workshop on Feature Extraction: Modern Questions and Challenges at NIPS 2015, Proceedings of Machine Learning Research, Storcheus D., Rostamizadeh A., Kumar S., Eds. (PMLR, Montreal, Canada, 2015), vol. 44, pp. 103–115. [Google Scholar]

- 20.Giannakis D., Data-driven spectral decomposition and forecasting of ergodic dynamical systems. Appl. Comput. Harmon. Anal. 47, 338–396 (2019). [Google Scholar]

- 21.Das S., Giannakis D., Slawinska J., Reproducing kernel Hilbert space compactification of unitary evolution groups. Appl. Comput. Harmon. Anal. 54, 75–136 (2021). [Google Scholar]

- 22.Giannakis D., Ourmazd A., Schumacher J., Slawinska J., Embedding classical dynamics in a quantum computer. Phys. Rev. A 105, 052404 (2022). [Google Scholar]

- 23.Davies E. B., Lewis J. T., An operational approach to quantum probability. Commun. Math. Phys. 17, 239–260 (1970). [Google Scholar]

- 24.Leifer M. S., Spekkens R. W., Towards a formulation of quantum theory as a causeally neutral theory of Bayesian inference. Phys. Rev. A 88, 052130 (2013). [Google Scholar]

- 25.Jacobs B., Zanasi F., A predicate/state transformer semantics for Bayesian learning. Electron. Notes Theor. Comput. Sci. 325, 185–200 (2016). [Google Scholar]

- 26.Gudder S., “Quantum probability” in Handbook of Quantum Logic and Quantum Structures, Engesser K., Gabbary D. M., Lehmann D., Eds. (Elsevier, Amsterdam, 2007), pp. 121–146. [Google Scholar]

- 27.Paulsen V. I., Raghupathi M., An Introduction to the Theory of Reproducing Kernel Hilbert Spaces, Cambridge Studies in Advanced Mathematics (Cambridge University Press, Cambridge, 2016), vol. 152. [Google Scholar]

- 28.Stinespring W. F., Positive functions on C*-algebras. Proc. Amer. Math. Soc. 6, 211–216 (1955). [Google Scholar]

- 29.Yuval J., O’Gormann P. A., Stable machine-learning parameterization of subgrid processes for climate modeling at a range of resolutions. Nat. Commun. 11, 3295 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Berry T., Giannakis D., Harlim J., Nonparametric forecasting of low-dimensional dynamical systems. Phys. Rev. E. 91, 032915 (2015). [DOI] [PubMed] [Google Scholar]

- 31.Klus S., Koltai P., Schütte C., On the numerical approximation of the Perron-Frobenius and Koopman operator. J. Comput. Dyn. 3, 51–79 (2016). [Google Scholar]

- 32.Sauer T., Yorke J. A., Casdagli M., Embedology. J. Stat. Phys. 65, 579–616 (1991). [Google Scholar]

- 33.von Luxburg U., Belkin M., Bousquet O., Consitency of spectral clustering. Ann. Stat. 26, 555–586 (2008). [Google Scholar]

- 34.Lorenz E. N., “Predictability of weather and climate” in Predictability of Weather and Climate, Palmer T., Hagedorn R., Eds. (Cambridge University Press, Cambridge, 1996), pp. 40–58. [Google Scholar]

- 35.Fatkullin I., Vanden-Eijnden E., A computational strategy for multiscale systems with applications to Lorenz 96 model. J. Comput. Phys. 200, 605–638 (2004). [Google Scholar]

- 36.Burov D., Giannakis D., Manohar K., Stuart A., Kernel analog forecasting: Multiscale test problems. Multiscale Model. Simul. 19, 1011–1040 (2021). [Google Scholar]

- 37.Berry T., Harlim J., Variable bandwidth diffusion kernels. Appl. Comput. Harmon. Anal. 40, 68–96 (2016). [Google Scholar]

- 38.Coifman R., Hirn M., Bi-stochastic kernels via asymmetric affinity functions. Appl. Comput. Harmon. Anal. 35, 177–180 (2013). [Google Scholar]

- 39.Alexander R., Giannakis D., Operator-theoretic framework for forecasting nonlinear time series with kernel analog techniques. Phys. D 409, 132520 (2020). [Google Scholar]

- 40.Bjerknes J., Atmospheric teleconnections from the equatorial pacific. Mon. Wea. Rev. 97, 163–172 (1969). [Google Scholar]

- 41.Wang C., et al. , Southern Oscillation (ENSO): A review in Coral Reefs of the Eastern Tropical Pacific: Persistence and Loss in a Dynamic Environment, Coral Reefs of the World, Glynn P. W., Manzello D. P., Enoch I. C., Eds. (Springer Netherlands, Dordrecht, 2017), vol. 8, pp. 85–106. [Google Scholar]

- 42.McPhaden M. J., Zebiak S. E., Glantz M. H., ENSO as an integrating concept in earth system science. Science 314, 1740–1745 (2006). [DOI] [PubMed] [Google Scholar]

- 43.Wang X., Slawinska J., Giannakis D., Extended-range statistical ENSO prediction through operator-theoretic techniques for nonlinear dynamics. Sci. Rep. 10, 2636 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Gent P. R., et al. , The community climate system model version 4. J. Climate 24, 4973–4991 (2011). [Google Scholar]

- 45.Anis M. S., et al. , Qiskit: An open-source framework for quantum computing (2021).

- 46.Nielsen M. A., Chuang I. L., Quantum Computation and Quantum Information (Cambridge University Press, Cambridge, 2010). [Google Scholar]

- 47.Joseph I., Koopman-von Neumann approach to quantum simulation of nonlinear classical dynamics. Phys. Rev. Res. 2, 043102 (2020). [Google Scholar]