Abstract

Background:

Identifying feasible and effective implementation strategies remains a significant challenge. At present, there is a gap between the number of strategies prospectively included in implementation trials, typically four or fewer, and the number of strategies utilized retrospectively, often 20 or more. This gap points to the need for developing a better understanding of the range of implementation strategies that should be considered in implementation science and practice.

Methods:

This study elicited expert recommendations to identify which of 73 discrete implementation strategies were considered essential for implementing three mental health care high priority practices (HPPs) in the US Department of Veterans Affairs: depression outcome monitoring in primary care mental health (n = 20), prolonged exposure therapy for treating posttraumatic stress disorder (n = 22), and metabolic safety monitoring for patients taking antipsychotic medications (n = 20). Participants had expertise in implementation science, the specific HPP, or both. A highly structured recommendation process was used to obtain recommendations for each HPP.

Results:

Majority consensus was identified for 26 or more strategies as absolutely essential; 53 or more strategies were identified as either likely essential or absolutely essential across the three HPPs.

Conclusions:

The large number of strategies identified as essential starkly contrasts with existing research that largely focuses on application of single strategies to support implementation. Systematic investigation and documentation of multi-strategy implementation initiatives is needed.

Plain Language Summary

Most implementation studies focus on the impact of a relatively small number of discrete implementation strategies on the uptake of a practice. However, studies that systematically survey providers find that dozens or more discrete implementation strategies can be identified in the context of the implementation initiative. This study engaged experts in implementation science and clinical practice in a structured recommendation process to identify which of the 73 Expert Recommendations for Implementing Change (ERIC) implementation strategies were considered absolutely essential, likely essential, likely inessential, and absolutely inessential for each of the three distinct mental health care practices: depression outcome monitoring in primary care, prolonged exposure therapy for posttraumatic stress disorder, and metabolic safety monitoring for patients taking antipsychotic medications. The results highlight that experts consider a large number of strategies as absolutely or likely essential for supporting the implementation of mental health care practices. For example, 26 strategies were identified as absolutely essential for all three mental health care practices. Another 27 strategies were identified as either absolutely or likely essential across all three practices. This study points to the need for future studies to document the decision-making process an initiative undergoes to identify which strategies to include and exclude in an implementation effort. In particular, a structured approach to this documentation may be necessary to identify strategies that may be endogenous to a care setting and that may not otherwise be identified as being “deliberately” used to support a practice or intervention.

Keywords: Expert consensus, implementation strategies, mental health, US Department of Veterans Affairs, depression, measurement-based care, posttraumatic stress disorder, prolonged exposure, antipsychotic, metabolic

Background

Key terms within implementation science are often inconsistently applied (McKibbon et al., 2010; Powell et al., 2012) and insufficiently described (Michie et al., 2009; Proctor et al., 2013). This presents challenges to those planning implementation initiatives. Discrete implementation strategies involve one process or action that helps adopt or integrate evidence-based health innovations into usual care (Powell et al., 2012). The typical implementation initiative utilizes many of these strategies, and efforts over the last decade have sought to improve the field’s ability to characterize these discrete strategies more consistently. Such coordination is necessary for scientific replication and coherent meta-analytic efforts (Michie et al., 2009; Mitchell & Chambers, 2017).

Activities from the first aim of the Expert Recommendations for Implementing Change (ERIC) project established a common nomenclature for discrete implementation strategy terms, definitions, and categories (Waltz et al., 2014). The early phases of the project led to the identification and characterization of 73 discrete strategies to support implementation of evidence-based practices (Powell et al., 2015). The establishment of a standardized nomenclature for the primary independent variables in implementation science is an important maturational step for this field. Specifically, improvements in the specification of implementation strategies (i.e., construct validity) facilitate the generation of knowledge that extends beyond the particular parameters of a single study (i.e., external validity; Shadish et al., 2002). It also presents the practical benefit of aiding implementation practitioners in more fully considering the breadth of strategies that may be relevant for a particular initiative. The range of strategies considered for an initiative is not typically documented, and the majority of studies focus on investigating the effects of one or a very limited number of strategies. Rationales are rarely provided for the strategies selected (Hooley et al., 2020), and it is even rarer to see any rationale provided for omitted strategies.

This study presents data from the second aim of the ERIC project (Waltz et al., 2014), which involves understanding which strategies are viewed as most important for implementing three diverse high priority practices (HPPs) for mental health. The study occurs within the context of the national health care system for Veterans, the US Veterans Health Administration, the largest integrated health care system in the United States. A Department of Veterans Affairs (VA) Mental Health Quality Enhancement Research Initiative (QUERI) advisory committee comprising operations and clinical managers as well as patient stakeholders was asked to identify high priority and emerging areas of practice change for VA mental health services. This resulted in the identification of three specific HPPs that were also considered representative of separate categories of mental health care. Outcome monitoring for depression in integrated primary care mental health settings (Depression hereafter) is an example of measurement-based care (an evidence-based assessment framework, see Lewis et al., 2019; Scott & Lewis, 2015) that involves gathering current data on patient symptoms and functioning to support data-based decision-making during care. As approximately 12% of veterans in primary care screen positive for depression (Yano et al., 2012), this HPP has broad relevance to a sizable portion of the patient population served in these health care settings. Prolonged exposure for posttraumatic stress disorder (PTSD hereafter) is a psychotherapy that involves structured training for clinicians before they administer the treatment to clients for 8–15 sessions, delivered once or more weekly. This treatment is arguably one of the more complex psychotherapies implemented in the VA due to its unique scheduling (i.e., 90 min instead of the standard session schedule block of 60 min or less) and the incorporation of technology to support client homework engagement (i.e., audio recordings of sessions; Karlin et al., 2010). The third HPP involves metabolic side-effect monitoring for individuals taking antipsychotics (Safety hereafter) and serves as a quality indicator for psychotropic medication safety monitoring (Mittal et al., 2013). All three of these HPPs have strong evidence bases and accompanying VA organizational policy recommendations (i.e., included in service line handbooks and practice guidelines).

In theory, implementation scientists and practitioners consider the universe of possible strategies when planning an implementation initiative. In practice, this selection process is typically much less thorough and comprehensive. Currently, the selection process is not adequately reported in “Methods” sections of published reports, and the strategies that are selected are not described in sufficient detail to support replication (Michie et al., 2009). This study aims to characterize the breadth of discrete implementation strategies viewed as important to consider for implementing three diverse HPPs.

Methods

This study engaged experts in a two-phase process. They first completed an online survey that captured their key characteristics and their interest in participating. They then completed a structured recommendation task that involved identifying how essential individual discrete implementation strategies would be for implementing specific HPPs across various scenarios involving hypothetical clinics. This approach was taken as meta-analytic findings in implementation research focus on single strategies or the combination of a limited range of strategies, and provide little guidance regarding how essential a particular strategy may be for a specific HPP (Waltz et al., 2014). Since systematic reporting of discrete implementation strategies has not occurred in the relevant literature to date, structured reviews of evidence were not appropriate (e.g., Guyatt et al., 2008).

To obtain recommendations involving the consideration of 73 discrete implementation strategies, a structured rating format was used to decrease the overall cognitive burden of the task on participants. The methodology for recording the recommendations was inspired by the menu-based choice literature (Slywotzky, 2000). Structured choices that are organized by themes can better support contextually based complex decision-making (Orme, 2010). Results from the previous phase of the ERIC project identified that the 73 implementation strategies could be grouped into nine thematic clusters (Waltz et al., 2015) and the rating task was organized by these clusters. These ratings are analyzed descriptively.

A minimum goal of 20 participants for each HPP was established. This was determined to be a practical target for the specialized set of respondents included in this study while being consistent with numbers associated with topic saturation in qualitative research of this type. Specifically, the study can be characterized as having high information power in that the aims (descriptive and relevant to three specific HPPs), expert respondents, specificity of the content (using the ERIC discrete implementation strategy compilation), structure of the reporting (structured ratings), and analysis at the individual HPP level provide the opportunity for rich information to be obtained from a relatively small number of respondents (Malterud et al., 2015).

Participants

Participants were drawn from the larger ERIC study which used snowball reputation-based sampling (Waltz et al., 2014). At least 20 self-identified implementation science and clinical content level experts were obtained for each HPP. Consent was obtained via an online survey platform. Participant nominees self-selected which HPPs they would provide recommendations for (described below) at the time of consent. An optional US$100 honorarium was available for completing ratings for all three scenarios for a specific HPP to compensate for participant time. The honorarium was optional because some employers (e.g., the US VA) prohibit receiving compensation for such activities. Our sampling procedures resulted in the recruitment of 133 experts who received a participation nomination email with a link to the initial survey. From this list, 53 completed the initial survey. Of these 53, six declined but indicated they would be willing to be contacted again if the study had difficulty meeting its recruitment goals, 47 consented to participate in the recommendation task, and 43 completed recommendations for one or more HPPs.

Materials

Initial survey

Demographic information specific to the study was obtained via online survey at the time of consent and involved three questions with yes or no as the available responses. To identify implementation expertise, participants responded to the following question: Implementation experts have knowledge and experience related to changing care practices, procedures, and/or systems of care. Based on the above definition, could someone accuse you of being an implementation expert? To identify individuals with clinical expertise, participants responded to the following question: Do you regularly directly oversee, manage, supervise, or advise frontline clinicians? And finally, to identify individuals affiliated with the VA, participants were asked the following question: Do you have any affiliation with the VA?

At the time of consent, participants also specified their area(s) of expertise for each HPP (e.g., implementation science, the specific HPP, related clinical practice but not the specific HPP, implementation science and clinical practice, and no relevant expertise) and their willingness to participate in providing recommendations (e.g., yes, no, I would consider participating if you have difficulty meeting your recruitment goals). Finally, participants had the opportunity to nominate peers to participate in the project by providing their name, email address, and area of expertise. All nominees received an invitation to participate in the study.

Recommendation task

The recommendation task was one file within an electronic dossier that participants received. This dossier included a specific HPP description document which included a brief synopsis of the HPP, a description of the research evidence supporting the HPP, itemizations of structural requirements necessary to support the HPP (e.g., VA standards for certifying staff in prolonged exposure for PTSD), specific processes necessary for routine delivery of the HPP (e.g., for PTSD, the ability to schedule 90-min therapy sessions), and the specific scenarios described below.

Participants were asked to review three different scenarios for each practice. Each scenario involved a hypothetical clinic. The scenarios were characterized across two domains: evidence and context. Each domain and its factors were characterized as relatively weak (i.e., possible barriers to implementing the HPP) or relatively strong (i.e., features that may facilitate implementing the HPP). These relative strengths and weaknesses were presented both in narrative form and in tabular form, the latter supporting the respondent in systematically contrasting the relatively weak and strong descriptions. The structure used for contrasting these relative strengths and weaknesses across the scenarios can be found in Table 1. The content of the scenarios was constructed using VA handbooks and policies (e.g., Department of Veterans Affairs Veterans Health Administration, 2008, 2012; Dundon et al., 2011; The Management of Post-Traumatic Stress Working Group, 2010) and reviewed by VA Mental Health QUERI stakeholders with practice and research expertise in these practice areas.

Table 1.

Scenario construction template.

| Domain/Factor | Relatively weak | Relatively strong |
|---|---|---|
| Evidence | | |
| Evidence strength and quality | Research supporting the practice change is viewed as poorly applicable to the clinical setting or is not valued as evidence | Research supporting the practice change is viewed as applicable to the clinical setting and is valued as evidence for the practice change |
| Relative advantage | Low relative advantage to existing practice and/or high perceived costs in terms of allocating resources away from other needs | High relative advantage to existing practice and/or low perceived costs in terms of allocating resources away from other needs |
| Practice-based evidence | Low perceived compatibility with existing practice and resources | High perceived compatibility with existing practice and resources |
| Perceived adaptability | Low perceived adaptability to local needs | High perceived adaptability to local needs |
| Context | | |
| Readiness for implementation | Poor/inconsistent resources or other support for the practice change; resources not allocated well; leadership is indifferent to the practice change | Strong/consistent resources or other support for the practice change; resources generally allocated well; leadership is strongly committed to the practice change |
| Structural characteristics | Poorly defined roles; poor organizational structures | Clearly defined roles; effective organizational structures |
| Networks and communications | Lack of consistent communications and working relationships across units; staff/clients held in low regard; lack of consistency in individuals’ roles in relation to the treatment team and leadership | Strong positive communications and working relationships across organizational units; staff/clients valued; consistency of individuals’ roles in relation to the treatment team and leadership |
| Goals and feedback | Absence of feedback; narrow use of performance information sources; productivity measures de-incentivize the practice change | Feedback for individual, team, and system performance; use of multiple sources of information on performance; productivity measures incentivize the practice change |
| Implementation climate | Low priority relative to other initiatives; staff members do not feel valued or an essential part of change | High priority relative to other initiatives; staff members feel valued and essential for change |

Evidence was characterized using four factors from the Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009). Evidence strength and quality characterized how personnel in the clinic perceived the value of the research evidence as it applied to their setting. Relative advantage characterized the perceived benefit in relation to existing practice and the perceived costs of the HPP in the resources that may be drawn away from other needs when the HPP is implemented. Practice-based evidence characterized how compatible the HPP was with existing practices and resources. Finally, the last subfactor of evidence was the perceived adaptability of the HPP to be tailored to local needs and conditions.

Context was characterized using five factors from CFIR. Readiness for implementation characterized the strength and consistency of resources necessary for supporting the HPP, whether these resources were allocated well, and the commitment of leadership to the practice change. Structural characteristics characterized whether staff members had clearly defined roles and whether effective organizational structures were in place for supporting the HPP. Networks and communications characterized the effectiveness of communication patterns and working relationships across organizational units, whether staff and clients are held in high regard, and the consistency of individuals’ roles in relationship to the treatment team and leadership. Goals and feedback characterized the degree to which goals are clearly communicated, acted upon, and fed back to staff in addition to the alignment of that feedback with the goals. Finally, the last subfactor of context is implementation climate which focused on the HPP’s relative priority, given other organizational initiatives and whether staff feel like their actions are an essential part of the change.

The panelists were provided with three scenarios for each practice change. Scenario A characterized a setting with relatively weak perceived evidence and a relatively weak context. Scenario B characterized a setting with relatively strong perceived evidence and a relatively weak context. Scenario C characterized a setting with relatively weak perceived evidence and a relatively strong context. After consulting with senior stakeholders, it was determined that a fourth scenario (i.e., strong perceived evidence, strong context) was unrealistic as the vast majority of settings have at least some challenges present. Furthermore, pilot data suggested it could take respondents 45–120 min per scenario to complete recommendations, and the addition of a fourth scenario with limited applicability was judged to be an unnecessary response burden for the high-level experts donating their time to this project. The HPP-specific support materials are provided in Supplemental Files 1, 2, and 3. The tabular form of each scenario in the Supplemental Files will be useful to readers interested in viewing how the CFIR factors map on to the content in each example.

Data collection

Participant consent, key characteristics (type of expert, VA affiliation), and nomination of additional experts to the project were obtained via an online survey that was embedded in an email invitation. Within 24 hr of completing the survey, participants received an email with a dossier that included the recommendation task described above and an HPP-specific document. Recommendations were obtained using structured worksheets in Microsoft® Excel® on a computer and in a setting of the participant’s choosing. The structured worksheets presented the strategies in thematic groups, allowing similar strategies to be considered proximally (Waltz et al., 2015). Pop-up comment boxes were enabled for the worksheet cells with the strategy labels that provided respondents with the definition for the strategy without having to reference the lengthier Supplemental Materials. Recommendations were provided by selecting one of the four alternatives from drop-down menus for each strategy: absolutely essential, likely essential, likely inessential, and absolutely inessential (see Supplemental File 4 for an example). The rating scale did not have a neutral midpoint such as “neither essential nor inessential” due to concerns that it may not be continuous with the other ratings. In this application, a midpoint may reflect a different underlying dimension (e.g., wanting more information) than the non-midpoint ratings.

The worksheets and support materials were distributed via email by the first author. Respondents were encouraged to complete their rating tasks within 2 weeks and participants with outstanding materials received weekly reminders until they opted out of the study (e.g., expressed regrets due to time constraints) or recruitment concluded on 20 November 2014.

The study’s structured recommendation process encouraged participants to systematically consider which of the 73 strategies were essential for implementing a specific HPP. Separate recommendations were obtained for each implementation phase (pre-implementation, active implementation, and sustainment) for each scenario (Scenario A, B, and C), resulting in nine ratings for each discrete implementation strategy and 657 ratings in total per participant for each HPP.
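The total of 657 follows from the design: three scenarios crossed with three phases give nine assessment points, and each point is applied to all 73 strategies. The short Python sketch below only illustrates that arithmetic; the variable names are assumptions made for this example and are not taken from the study worksheets.

```python
from itertools import product

# Labels taken from the text; variable names are illustrative assumptions.
SCENARIOS = ["A", "B", "C"]
PHASES = ["pre-implementation", "active implementation", "sustainment"]
N_STRATEGIES = 73  # size of the ERIC compilation

# Every scenario is rated at every phase: 3 x 3 = 9 assessment points.
assessment_points = list(product(SCENARIOS, PHASES))

# Each strategy is rated once per assessment point.
ratings_per_hpp = len(assessment_points) * N_STRATEGIES

print(len(assessment_points), ratings_per_hpp)
```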

At the bottom of each column of the rating worksheets was a message that would change from “Missing recommendations in this column” to “Column is complete!” This feedback was designed to protect against accidental omissions of ratings, given the number of strategies being reviewed.

Data analytic approach

The practical yet modest number of expert volunteers recruited for this study limits the quantitative comparisons that can responsibly be made when comparing ratings across the three scenarios presented within each HPP. Non-parametric tests of contingency tables with cells containing fewer than five observations are extremely conservative. Because four response options were available and the number of respondents per HPP ranged from 20 to 22, nearly all strategy-level contingency tables would have cells with fewer than five observations. As a result, two criteria were established to identify practical differences: (a) differences reflecting a change in status from being endorsed by 50% or more of participants and (b) the amount of change being at least 20%.
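As an illustration of how the two criteria could be checked for one strategy rated under two scenarios, consider the following minimal Python sketch. It rests on two assumptions that the text does not settle: the 20% in criterion (b) is read as 20 percentage points, and the two criteria are reported separately rather than combined into one decision. The function name and arguments are hypothetical.

```python
from fractions import Fraction

MAJORITY = Fraction(1, 2)    # criterion (a): endorsement by 50% or more
MIN_CHANGE = Fraction(1, 5)  # criterion (b): 20%, read here as percentage points (assumption)


def practical_difference(endorsed_x, endorsed_y, n):
    """Check both criteria for one strategy under two scenarios.

    endorsed_x, endorsed_y: number of respondents endorsing the strategy
    in each scenario; n: number of respondents (the same panel in both).
    Returns (status_change, large_change) as two booleans.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    # Fractions keep the threshold comparisons exact (no floating-point error).
    share_x = Fraction(endorsed_x, n)
    share_y = Fraction(endorsed_y, n)
    status_change = (share_x >= MAJORITY) != (share_y >= MAJORITY)
    large_change = abs(share_x - share_y) >= MIN_CHANGE
    return status_change, large_change


# 12 of 20 (60%) versus 8 of 20 (40%): majority status flips and the gap is 20 points.
print(practical_difference(12, 8, 20))
# 18 of 20 (90%) versus 14 of 20 (70%): a 20-point gap, but both remain majorities.
print(practical_difference(18, 14, 20))
```

Counts are used instead of precomputed percentages so that a difference of exactly 20 points is not lost to rounding.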

Consistent with “criterion a” above, a recommendation consensus criterion value of 50% or more was established to identify non-minority recommendations. For the summary recommendation of a strategy to be categorized as absolutely essential, 50% or more of respondents needed to give the strategy that rating. If fewer than 50% of respondents rated the strategy as absolutely essential, but the combined share of absolutely essential and likely essential ratings was equal to or greater than 50%, the summary recommendation for the strategy was categorized as likely essential. Participants who elected not to respond to individual items remained in the denominator for the percent consensus calculation. Omissions were not interpreted as accidental because respondents received feedback within the worksheets on whether ratings had been provided for all items.
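The consensus rule described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the analysis code used in the study: the function name, the use of `None` for an omitted item, and the `None` return value for strategies that meet neither rule are assumptions made for the example.

```python
ABSOLUTELY = "absolutely essential"
LIKELY = "likely essential"


def summary_recommendation(ratings, threshold=0.5):
    """Apply the 50% consensus rule to one strategy.

    ratings: one entry per participant; None marks an omitted item,
    which still counts in the denominator.
    Returns ABSOLUTELY, LIKELY, or None when neither rule is met.
    """
    n = len(ratings)
    if n == 0:
        raise ValueError("at least one participant is required")
    absolutely = sum(r == ABSOLUTELY for r in ratings)
    likely = sum(r == LIKELY for r in ratings)
    if absolutely / n >= threshold:
        return ABSOLUTELY
    # Absolutely essential ratings fell short, so pool them with likely essential.
    if (absolutely + likely) / n >= threshold:
        return LIKELY
    return None


# Hypothetical panel of 20: 9 absolutely essential (45%), 3 likely essential,
# 6 likely inessential, and 2 omitted items that stay in the denominator.
panel = [ABSOLUTELY] * 9 + [LIKELY] * 3 + ["likely inessential"] * 6 + [None] * 2
print(summary_recommendation(panel))  # (9 + 3) / 20 = 60%, so likely essential
```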

Results

Participant characteristics

The minimum recruitment goal of 20 participants was achieved for each HPP, with the PTSD group being the largest (n = 22). Considering all three HPPs, 77% had an affiliation with the VA, 33% were implementation science experts, 16% were experts in the HPP, and 51% were experts in both implementation science and the HPP. Table 2 presents a summary of participant characteristics broken down by specific HPP.

Table 2.

Participant characteristics.

| | Depression | PTSD | Safety |
|---|---|---|---|
| Participants | 20 | 22 | 20 |
| VA affiliation | 75% | 91% | 68% |
| IS expertise | 35% | 27% | 40% |
| Clinical expertise | 10% | 14% | 15% |
| IS and clinical expertise | 55% | 59% | 45% |

PTSD: posttraumatic stress disorder; VA: Department of Veterans Affairs; IS: implementation science.

Scenario differences

The data were first examined to determine whether differential recommendations were made across the three scenarios for each of the HPPs. In general, Scenario A (weak context, weak evidence) had the highest number of endorsements for strategies as being absolutely and likely essential. Non-parametric (chi-square) analyses failed to identify significant differences reflecting a change in majority absolutely essential endorsement status for any of the ERIC strategies across scenarios. This null finding was largely a function of the high endorsement rates obtained across scenarios and a lack of power to detect small differences. Secondary criteria for practical significance were explored including (a) differences reflecting a change in status from being endorsed by 50% or more of participants and (b) the difference in ratings across scenarios being at least 20%. Neither of these criteria was met for any of the differences across scenarios.

Average number of strategies receiving each level of rating

As no significant differences were found by scenario, the recommendations participants made were consolidated by looking across all nine assessment points for each ERIC strategy within a practice change. The subsequent analyses reflect each participant’s highest rating for each of the strategies across all assessment points.
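The consolidation step, in which each participant’s highest rating across the nine assessment points is kept for each strategy, can be illustrated as follows. This is a minimal Python sketch under stated assumptions: the dictionary layout keyed by (scenario, phase), the `None` marker for an omitted rating, and the function name are hypothetical and not drawn from the study materials.

```python
# Rank order of the four response options, lowest to highest.
RANK = {
    "absolutely inessential": 0,
    "likely inessential": 1,
    "likely essential": 2,
    "absolutely essential": 3,
}


def highest_rating(ratings_by_point):
    """Return one participant's highest rating for one strategy.

    ratings_by_point maps (scenario, phase) to a rating label, or to None
    when the item was omitted. Returns None if every point was omitted.
    """
    given = [r for r in ratings_by_point.values() if r is not None]
    if not given:
        return None
    return max(given, key=RANK.__getitem__)


# Hypothetical ratings from one participant for one strategy.
example = {
    ("A", "pre-implementation"): "absolutely essential",
    ("A", "active implementation"): "likely essential",
    ("A", "sustainment"): "likely inessential",
    ("B", "pre-implementation"): "likely essential",
    ("B", "active implementation"): "likely essential",
    ("B", "sustainment"): None,
    ("C", "pre-implementation"): "likely essential",
    ("C", "active implementation"): "likely inessential",
    ("C", "sustainment"): "likely inessential",
}
print(highest_rating(example))  # absolutely essential
```

Taking the maximum means a strategy counts at its highest level if the participant rated it that way in any scenario or phase.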

The number of strategies considered absolutely essential by the majority of participants was relatively high and varied somewhat across the HPPs. The average number of strategies endorsed as absolutely essential was 26.1 for Depression, 27.5 for PTSD, and 33.8 for Safety. There was considerable variability in the number of strategies that each participant rated as absolutely essential (SDs > 12.5, range = 5–60 across all three HPPs). At the other end of the rating scale, on average fewer than 14 of the 73 strategies were rated as absolutely inessential across the three HPPs.

Majority endorsement by strategy for each HPP

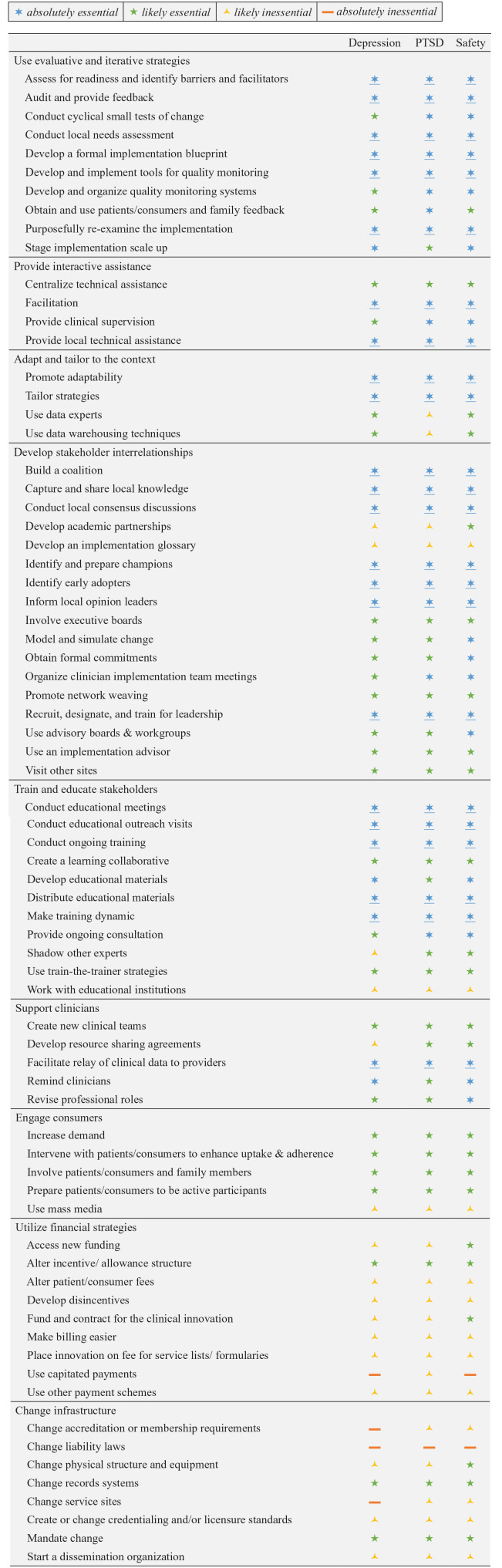

We now shift analytic focus to characterizing participant endorsement that reflects majority consensus for each of the strategies. Figure 1 provides an overview of the composite recommendations for each strategy in relation to each of the three HPPs. Two thirds of the strategies received the same essential rating level for all three HPPs. This includes 23 strategies rated as absolutely essential, 14 strategies rated as likely essential, 10 strategies rated as likely inessential, and one strategy rated as absolutely inessential (i.e., change liability laws). For strategies in which different essential ratings were obtained across the three HPPs (n = 25), the rating categories only differed by one level.

Figure 1.

Summary of recommendations.

Composite ratings are provided for each strategy where the six-pointed blue star reflects ⩾50% endorsements for a strategy as absolutely essential. Some blue stars are underlined to highlight strategies receiving absolutely essential ratings across all three HPPs. The five-pointed green star reflects ⩾50% endorsements for a strategy as likely essential (inclusive of absolutely essential ratings <50%). The three-pointed yellow symbol reflects ⩾50% endorsements for a strategy as likely inessential (inclusive of higher ratings <50%). The orange bar reflects ⩾50% endorsements for a strategy as absolutely inessential. These composite recommendations were derived by aggregating recommendations by taking the highest endorsement each expert provided across the nine assessment points (three scenarios by three phases) for each HPP.

Discussion

The project engaged experts in implementation science and clinical practice in a structured recommendation process to identify essential and non-essential strategies for successfully implementing three mental health care HPPs. In total, 53 strategies across all three HPPs were rated as either absolutely essential or likely essential, even though the participants were instructed to take care “not to overburden the implementation site with unnecessary activities.” These numbers greatly exceed the number of strategies described and tested in a typical implementation study (Grimshaw et al., 2005; Mazza et al., 2013), but they are consistent with the higher number of strategies documented outside most formal implementation trials.

Phase 4 implementation surveillance (Medical Research Council, 2000) or “real world” implementation efforts have documented larger numbers of implementation strategies (BootsMiller et al., 2004; Boyd et al., 2018; Hoagwood et al., 2014; Hysong et al., 2007; Magnabosco, 2006; Powell et al., 2016). For example, Bunger and colleagues (2017) used activity logs completed by project personnel to identify that 45 implementation strategies were utilized to improve children’s access to behavioral health services in a child welfare agency.

Research has failed to find strong and consistent relationships between the number of implementation strategies employed and implementation outcomes (Baker et al., 2015; Grimshaw et al., 2005; Lau et al., 2015; Squires et al., 2014; Wensing & Grol, 2005); however, the research serving as the basis for these inconsistent relationships has not included tools to systematically evaluate the presence of strategies. Rogal and colleagues, for example, demonstrated a relationship between the number of strategies used (median: 27) and the number of treatment starts for hepatitis C medications by having key stakeholders from 80 sites retrospectively report on which ERIC implementation strategies were used (Rogal et al., 2017, 2019; Yakovchenko et al., 2020). This type of retrospective report, together with activity logs (Bunger et al., 2017), may help resolve the inconsistent findings regarding the relationship between implementation strategies, their tailoring, and outcomes.

Another limitation in drawing inferences from existing research failing to find consistent relationships between the number of implementation strategies employed and implementation outcomes is that investigators typically only report strategies they are specifically investigating as part of their trial and ignore those that are endogenous to their care setting. The participants in Rogal and colleagues (2019) were part of a study involving the retrospective report on each of the 73 ERIC implementation strategies that were used during an initiative. These respondents were also asked whether they attributed the use of each strategy to the implementation initiative. The investigators found that respondents typically attributed only half of these strategies to the specific implementation initiative under study even if these strategies were specifically part of the treatment rollout. These results suggest that strategies considered endogenous by key informants may be viewed as a routine component of the practice context. Thus, a more thorough monitoring of implementation strategies and their context may shed important light on determinants and processes related to the uptake of HPPs.

This study has several limitations. The recommendations provided by the participants in this study, including those for strategies rated with majority consensus as absolutely essential, should not be interpreted as compulsory for supporting the HPPs included in this study. Rather, these recommendations reflect the strategies that should be given serious consideration prospectively in both research and practice settings. Similarly, 24 or more strategies were identified as likely essential for each of the three HPPs included in this study. The likely essential rating (vs. absolutely essential) may highlight that many implementation strategies are contingent on local circumstances, and their use should be driven by an assessment of contextual factors, including availability of resources to feasibly support the use of multiple strategies. Similar caution is warranted when considering strategies that received a likely inessential or absolutely inessential rating. These strategies should not be immediately disregarded due to their relatively low rates of endorsement in this study involving three specific HPPs in the context of the VA. Strategy selection should reflect the needs of a particular implementation effort.

The obtained recommendations are limited in that they do not specify the intensity of the activity or resources related to the recommended strategies. This may vary substantially across HPPs and with respect to local conditions. While we did not identify statistically significant or practical differences in recommendations across the three scenarios used in this study, we did not capture important dimensions such as dosage, intensity, and temporality of the strategies (Proctor et al., 2013). Future research may benefit from providing stakeholders opportunities to specify further details regarding how strategies are applied to a particular implementation effort.

A more general limitation of the study is that it used only the ERIC discrete implementation strategy compilation. While this compilation represents the range of implementation strategies identified in the literature and as specified by implementation scientists and practitioners (Powell et al., 2015; Waltz et al., 2015), it should not be considered comprehensive. The range of possible implementation strategies is ever-expanding, and drawing on different literatures or stakeholders may have led to the inclusion of additional strategies that could have been considered. For example, additional strategies may have been identified if experts in care policy or finance had been included, as is illustrated by Dopp and colleagues (2020), who recently identified 23 different strategies for financing the implementation of programs and practices.

The present project used the ERIC strategy compilation for three diverse HPPs; however, specific service sectors or types of practice innovations may benefit from the identification of discrete implementation strategies that are specific to their efforts. For example, Cook and colleagues (2019) took the ERIC compilation through a seven-step adaptation process to tailor the language of the compilation to better fit the school-based service sector. In the process, surface-level changes in the definitions were made to improve the school-based relevance of 52 strategies. An additional five strategies required more extensive modifications to reflect their use in a school context. Five strategies from the original compilation were omitted as irrelevant. Seven new strategies were added based on research from this sector, resulting in a modified compilation of 75 strategies. The strategies in this modified compilation were evaluated in terms of their perceived importance and feasibility by educational sector stakeholders (Lyon et al., 2019). More recently, Graham and colleagues (2020) identified discrete strategies to address barriers to implementing digital mental health interventions in health care settings.
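The counts reported above for the school-based adaptation can be tallied as a quick check. This is a worked tally rather than a figure taken from Cook and colleagues (2019); the number of strategies retained without changes is an inference that assumes the three groups of changed or omitted strategies do not overlap.

```latex
\begin{align*}
\text{strategies in the adapted compilation} &= 73 - 5 + 7 = 75 \\
\text{strategies retained without changes} &= 73 - 52 - 5 - 5 = 11
\end{align*}
```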

The present results have implications for implementation science and practice moving forward. The field of implementation would benefit from documenting the decision-making process regarding which strategies to include in an implementation effort. Current guidelines direct researchers to document the rationale for the strategies they select (Pinnock et al., 2015; Proctor et al., 2013), but provide no guidance regarding strategies not included in the study. At present, we have no way of determining which strategies are actually present in a study and whether strategy omissions were deliberate or unintentional. A thorough review of implementation strategies can help researchers and practitioners identify strategies that need to be added as part of a new implementation effort, existing strategies endogenous to the organization that can be leveraged for an effort, existing strategies that are present as part of organizational capacity but are not considered immediately relevant to an effort, and strategies hypothesized to not be relevant to an effort. This more thorough accounting of new versus existing/endogenous strategies may shed important light on the differential effectiveness of strategies that have or have not been institutionalized prior to the implementation effort (Hoagwood & Kolko, 2009).
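One way such an accounting might be recorded is sketched below. This is a minimal illustration under stated assumptions, not a tool used in this study: the `Status` categories simply mirror the four kinds of strategies listed above, and the names `StrategyRecord`, `summarize`, and `undocumented`, as well as the example entries and rationales, are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    # Hypothetical labels mirroring the four kinds of strategies in the text.
    NEW = "added as part of this implementation effort"
    LEVERAGED = "endogenous to the organization and leveraged for this effort"
    PRESENT_NOT_RELEVANT = "existing capacity, not considered immediately relevant"
    HYPOTHESIZED_NOT_RELEVANT = "hypothesized to not be relevant"


@dataclass(frozen=True)
class StrategyRecord:
    strategy: str   # name of the discrete strategy
    status: Status  # which of the four kinds it falls under
    rationale: str  # why it was classified this way


def summarize(records):
    """Count records per status, including statuses with no records."""
    counts = Counter(r.status for r in records)
    return {s.name: counts.get(s, 0) for s in Status}


def undocumented(records, compilation):
    """Return strategies in the compilation that have no record yet."""
    seen = {r.strategy for r in records}
    return [name for name in compilation if name not in seen]


# Illustrative subset only; a full review would cover the whole compilation.
example_compilation = [
    "Audit and provide feedback",
    "Conduct educational meetings",
    "Identify and prepare champions",
    "Facilitation",
]
example_records = [
    StrategyRecord("Conduct educational meetings", Status.NEW,
                   "hypothetical: no training existed for the practice"),
    StrategyRecord("Audit and provide feedback", Status.LEVERAGED,
                   "hypothetical: routine dashboards already in place"),
    StrategyRecord("Facilitation", Status.HYPOTHESIZED_NOT_RELEVANT,
                   "hypothetical: judged unnecessary for this site"),
]

if __name__ == "__main__":
    print(summarize(example_records))
    print(undocumented(example_records, example_compilation))
```

Listing the strategies that have no record at all makes it possible to tell a deliberate omission (a record with a rationale) from an unintentional one (no record).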

Finally, contemporary efforts to focus on the mechanisms of change affected by implementation strategies may help provide more clarity regarding selection and tailoring of discrete implementation strategies as part of multifaceted strategies of varying complexity employed within an implementation effort (Lewis et al., 2018, 2020; Powell et al., 2019). While this study did not have participants map strategies to mechanisms of change theorized as important for each HPP, doing so may influence the total number of strategies recommended.

Supplemental Material

Supplemental material, sj-docx-1-irp-10.1177_26334895211004607 for Consensus on strategies for implementing high priority mental health care practices within the US Department of Veterans Affairs by Thomas J Waltz, Byron J Powell, Monica M Matthieu, Jeffrey L Smith, Laura J Damschroder, Matthew J Chinman, Enola K Proctor and JoAnn E Kirchner in Implementation Research and Practice

Supplemental material, sj-docx-2-irp-10.1177_26334895211004607 for Consensus on strategies for implementing high priority mental health care practices within the US Department of Veterans Affairs by Thomas J Waltz, Byron J Powell, Monica M Matthieu, Jeffrey L Smith, Laura J Damschroder, Matthew J Chinman, Enola K Proctor and JoAnn E Kirchner in Implementation Research and Practice

Supplemental material, sj-docx-3-irp-10.1177_26334895211004607 for Consensus on strategies for implementing high priority mental health care practices within the US Department of Veterans Affairs by Thomas J Waltz, Byron J Powell, Monica M Matthieu, Jeffrey L Smith, Laura J Damschroder, Matthew J Chinman, Enola K Proctor and JoAnn E Kirchner in Implementation Research and Practice

Supplemental material, sj-xlsx-4-irp-10.1177_26334895211004607 for Consensus on strategies for implementing high priority mental health care practices within the US Department of Veterans Affairs by Thomas J Waltz, Byron J Powell, Monica M Matthieu, Jeffrey L Smith, Laura J Damschroder, Matthew J Chinman, Enola K Proctor and JoAnn E Kirchner in Implementation Research and Practice

Acknowledgments

We would like to acknowledge the assistance of Angela B. Swensen in the development of the scenarios for the expert review and the contributions of each member of the expert panel. Members of the Depression panel are as follows: Mark Bauer, Joe Cerimele, Laura Damschroder, Brad Felker, Hildi Hagedorn, Kathy Henderson, Amy Kilbourne, Sarah Krein, Sara Landes, Cara Lewis, Julie Lowery, Aaron R. Lyon, Steve Marder, Alan McGuire, Princess Osei-Bonsu, Mona Ritchie, Angie Rollins, Carol VanDeusen Lukas, Melissa Van Dyke, and Cynthia Zubritsky. Members of the PTSD panel are as follows: Mark Bauer, Marty Charns, Joan Cook, Laurel Copeland, Torrey Creed, Alison Hamilton, Shannon Kehle-Forbes, Sara Landes, Julie Lowery, Monica Matthieu, Alan McGuire, Danesh Mittal, Sonya Norman, Princess Osei-Bonsu, Samantha Outcalt, Sheila Rauch, Angie Rollins, Craig Rosen, Joe Ruzek, Alex Sox-Harris, Melissa Van Dyke, and Shannon Wiltsey Stirman. Members of the Safety panel are as follows: Mark Bauer, Ian Bennett, Lydia Chwastiak, Joan Cook, Geoff Curran, Brad Felker, Alison Hamilton, Kathy Henderson, Amy Kilbourne, Sarah Krein, Julie Kreyenbuhl, Steve Marder, Alan McGuire, Danesh Mittal, Rick Owen, Anne Sales, Cenk Tek, Melissa Van Dyke, and Dawn Velligan.

Footnotes

Authors’ note: Preliminary analyses of these data were presented at the Eighth Annual Conference on the Science of Dissemination and Implementation, Washington, DC, and the Third Biennial Conference of the Society for Implementation Research Collaboration (SIRC; Damschroder, Waltz, & Powell, 2015; Matthieu et al., 2015; Waltz, Matthieu, et al., 2015; Waltz, Powell, Chinman, et al., 2015).

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Support for the study design, data collection, data analysis, and interpretation for this project is funded through the US Department of Veterans Affairs (VA) Veterans Health Administration Mental Health Quality Enhancement Research Initiative (QUERI) (QLP 55–025) and Diabetes QUERI (DIB 98-001). Dissemination is funded through the VA Health Services Research and Development Center grant for the Center for Mental Healthcare and Outcomes Research (CeMHOR), North Little Rock, Arkansas, USA. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the VA or the US government. In addition, T.J.W., B.J.P., and M.J.C. received funding in the form of salary support that made possible their roles in all aspects of this project. T.J.W. received support from the VA Office of Academic Affiliations Advanced Fellowships Program in Health Services Research and Development at CeMHOR. B.J.P. received support from the National Institute of Mental Health (F31 MH098478; R25MH080916; K01MH113806). M.J.C. received support from the VISN 4 Mental Illness Research, Education, and Clinical Center at the VA Pittsburgh Healthcare System.

ORCID iDs: Thomas J Waltz  https://orcid.org/0000-0001-7976-430X

Byron J Powell  https://orcid.org/0000-0001-5245-1186

Supplemental material: Supplemental material for this article is available online.

References

- Baker R., Camosso-Stefinovic J., Gillies C., Shaw E. J., Cheater F., Flottorp S., Robertson N., Wensing M., Fiander M., Eccles M. P., Godycki-Cwirko M., van Lieshout J., Jager C. (2015). Tailored interventions to address determinants of practice. Cochrane Database of Systematic Reviews, 4(4), CD005470. 10.1002/14651858.CD005470.pub3

- BootsMiller B. J., Yankey J. W., Flach S. D., Ward M. M., Vaughn T. E., Welke K. F., Doebbeling B. N. (2004). Classifying the effectiveness of Veterans Affairs guideline implementation approaches. American Journal of Medical Quality, 19(6), 248–254. 10.1177/106286060401900604

- Boyd M. R., Powell B. J., Endicott D., Lewis C. C. (2018). A method for tracking implementation strategies: An exemplar implementing measurement-based care in community behavioral health clinics. Behavior Therapy, 49, 525–537. 10.1016/j.beth.2017.11.012

- Bunger A. C., Powell B. J., Robertson H. A., MacDowell H., Birken S. A., Shea C. (2017). Tracking implementation strategies: A description of a practical approach and early findings. Health Research Policy and Systems, 15, Article 15. 10.1186/s12961-017-0175-y

- Cook C. R., Lyon A. R., Locke J., Waltz T., Powell B. J. (2019). Adapting a compilation of implementation strategies to advance school-based implementation research and practice. Prevention Science, 20(6), 914–935. 10.1007/s11121-019-01017-1

- Damschroder L. J., Aron D. C., Keith R. E., Kirsh S. R., Alexander J. A., Lowery J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, Article 50. 10.1186/1748-5908-4-50

- Damschroder L. J., Waltz T. J., Powell B. J. (2015, December). Expert recommendations for tailoring strategies to context. In Goldman D. (discussant), Tailoring Implementation Strategies to Context. Symposium conducted at the 8th Annual Conference on the Science of Dissemination and Implementation, Washington, DC. https://academyhealth.confex.com/academyhealth/2015di/meetingapp.cgi/Paper/7809

- Department of Veterans Affairs Veterans Health Administration. (2008). Clozapine patient management protocol (VHA Handbook 1160.02).

- Department of Veterans Affairs Veterans Health Administration. (2012). Local implementation of evidence-based psychotherapies for mental and behavioral health conditions (VHA Handbook 1160.06).

- Dopp A. R., Narcisse M.-R., Mundey P., Silovsky J. F., Smith A. B., Mandell D., Funderburk B. W., Powell B. J., Schmidt S., Edwards D., Luke D., Mendel P. (2020). A scoping review of strategies for financing the implementation of evidence-based practices in behavioral health systems: State of the literature and future directions. Implementation Research and Practice, 1. 10.1177/2633489520939980

- Dundon M., Dollar K., Schohn M., Lantinga L. J. (2011). Primary care-mental health integration co-located, collaborative care: An operations manual. Center for Integrated Healthcare. https://www.mentalhealth.va.gov/coe/cih-visn2/Documents/Clinical/Operations_Policies_Procedures/MH-IPC_CCC_Operations_Manual_Version_2_1.pdf

- Graham A. K., Lattie E. G., Powell B. J., Lyon A. R., Smith J. D., Schueller S. M., Stadnick N. A., Brown C. H., Mohr D. C. (2020). Implementation strategies for digital mental health interventions in health care settings. American Psychologist, 75(8), 1080–1092. 10.1037/amp0000686

- Grimshaw J. M., Thomas R. E., MacLennan G., Fraser C., Ramsay C. R., Vale L., Whitty P., Eccles M. P., Matowe L., Shirran L., Wensing M., Dijkstra R., Donaldson C. (2005). Effectiveness and efficiency of guideline dissemination and implementation strategies. International Journal of Technology Assessment in Health Care, 21(1), 149–149. 10.1017/S0266462305290190

- Guyatt G. H., Oxman A. D., Vist G. E., Kunz R., Falck-Ytter Y., Alonso-Coello P., Schünemann H. J., Group G. W. (2008). GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. British Medical Journal, 336(7650), 924–926. 10.1136/bmj.39489.470347.AD

- Hoagwood K. E., Kolko D. J. (2009). Introduction to the special section on practice contexts: A glimpse into the nether world of public mental health services for children and families. Administration and Policy in Mental Health and Mental Health Services Research, 36, 35–36. 10.1007/s10488-008-0201-z

- Hoagwood K. E., Olin S. S., Horwitz S., McKay M., Cleek A., Gleacher A., Lewandowski E., Nadeem E., Acri M., Chor K. H. B., Kuppinger A., Burton G., Weiss D., Frank S., Finnerty M., Bradbury D. M., Woodlock K. M., Hogan M. (2014). Scaling up evidence-based practices for children and families in New York State: Toward evidence-based policies on implementation for state mental health systems. Journal of Clinical Child and Adolescent Psychology, 43(2), 145–157. 10.1080/15374416.2013.869749

- Hooley C., Amano T., Markovitz L., Yaeger L., Proctor E. (2020). Assessing implementation strategy reporting in the mental health literature: A narrative review. Administration and Policy in Mental Health and Mental Health Services Research, 47(1), 19–35. 10.1007/s10488-019-00965-8

- Hysong S. J., Best R. G., Pugh J. A. (2007). Clinical practice guideline implementation strategy patterns in Veterans Affairs primary care clinics. Health Services Research, 42(1, Pt 1), 84–103. 10.1111/j.1475-6773.2006.00610.x

- Karlin B. E., Ruzek J. I., Chard K. M., Eftekhari A., Monson C. M., Hembree E. A., Resick P. A., Foa E. B. (2010). Dissemination of evidence-based psychological treatments for posttraumatic stress disorder in the Veterans Health Administration. Journal of Traumatic Stress, 23(6), 663–673. 10.1002/jts.20588

- Lau R., Stevenson F., Ong B. N., Dziedzic K., Treweek S., Eldridge S., Everitt H., Kennedy A., Qureshi N., Rogers A., Peacock R., Murray E. (2015). Achieving change in primary care—Effectiveness of strategies for improving implementation of complex interventions: Systematic review of reviews. BMJ Open, 5, Article e009993. 10.1136/bmjopen-2015-009993

- Lewis C. C., Boyd M., Puspitasari A., Navarro E., Howard J., Kassab H., Hoffman M., Scott K., Lyon A., Douglas S., Simon G., Kroenke K. (2019). Implementing measurement-based care in behavioural health: A review. JAMA Psychiatry, 76(3), 324–335. 10.1001/jamapsychiatry.2018.3329

- Lewis C. C., Boyd M. R., Walsh-Bailey C., Lyon A. R., Beidas R., Mittman B., Aarons G. A., Weiner B. J., Chambers D. A. (2020). A systematic review of empirical studies examining mechanisms of implementation in health. Implementation Science, 15, Article 21. 10.1186/s13012-020-00983-3

- Lewis C. C., Klasnja P., Powell B. J., Lyon A. R., Tuzzio L., Jones S., Walsh-Bailey C., Weiner B. (2018). From classification to causality: Advancing understanding of mechanisms of change in implementation science. Frontiers in Public Health, 6, Article 136. 10.3389/fpubh.2018.00136

- Lyon A. R., Cook C. R., Locke J., Davis C., Powell B. J., Waltz T. J. (2019). Importance and feasibility of an adapted set of implementation strategies in schools. Journal of School Psychology, 76, 66–77. 10.1016/j.jsp.2019.07.014

- Magnabosco J. L. (2006). Innovations in mental health services implementation: A report on state-level data from the U.S. evidence-based practices project. Implementation Science, 1, Article 13. 10.1186/1748-5908-1-13

- Malterud K., Siersma V. D., Guassora A. D. (2015). Sample size in qualitative interview studies: Guided by information power. Qualitative Health Research, 26, 1753–1760. 10.1177/1049732315617444

- The Management of Post-Traumatic Stress Working Group. (2010). VA/DoD clinical practice guideline for management of post-traumatic stress (2nd ed.). Department of Veterans Affairs & Department of Defense.

- Matthieu M. M., Rosen C., Waltz T. J., Powell B. J., Chinman M. J., Damschroder L. J., Smith J. L., Proctor E. K., Kirchner J. E. (2015, September). Implementing Prolonged Exposure for PTSD in the VA: Expert recommendations from the ERIC project. Implementation Science, 11: 1. 10.1186/s13012-016-0428-0#Sec95

- Mazza D., Bairstow P., Buchan H., Chakraborty S. P., Van Hecke O., Grech C., Kunnamo I. (2013). Refining a taxonomy for guideline implementation: Results of an exercise in abstract classification. Implementation Science, 8, Article 32. 10.1186/1748-5908-8-32

- McKibbon K. A., Lokker C., Wilczynski N. L., Ciliska D., Dobbins M., Davis D. A., Haynes R. B., Straus S. E. (2010). A cross-sectional study of the number and frequency of terms used to refer to knowledge translation in a body of health literature in 2006: A Tower of Babel? Implementation Science, 5, Article 16. 10.1186/1748-5908-5-16

- Medical Research Council. (2000). A framework for development and evaluation of RCTs for complex interventions to improve health. https://www.mrc.ac.uk/documents/pdf/rcts-for-complex-interventions-to-improve-health/

- Michie S., Fixsen D. L., Grimshaw J. M., Eccles M. P. (2009). Specifying and reporting complex behaviour change interventions: The need for a scientific method. Implementation Science, 4, Article 40. 10.1186/1748-5908-4-40

- Mitchell S. A., Chambers D. A. (2017). Leveraging implementation science to improve cancer care delivery and patient outcomes. Journal of Oncology Practice, 13(8), 523–529. 10.1200/JOP.2017.024729

- Mittal D., Li C., Williams J. S., Viverito K., Landes R. D., Owen R. R. (2013). Monitoring veterans for metabolic side effects when prescribing antipsychotics. Psychiatric Services, 64(1), 28–35. 10.1176/appi.ps.201100445

- Orme B. (2010). Getting started with conjoint analysis: Strategies for product design and pricing research (2nd ed.). Research Publishers.

- Pinnock H., Epiphaniou E., Sheikh A., Griffiths C., Eldridge S., Craig P., Taylor S. J. C. (2015). Developing standards for reporting implementation studies of complex interventions (StaRI): A systematic review and e-Delphi. Implementation Science, 10, Article 42. 10.1186/s13012-015-0235-z

- Powell B. J., Beidas R. S., Rubin R. M., Stewart R. E., Wolk C. B., Matlin S. L., Weaver S., Hurford M. O., Evans A. C., Hadley T. R., Mandell D. S. (2016). Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 909–926. 10.1007/s10488-016-0733-6

- Powell B. J., Fernandez M. E., Williams N. J., Aarons G. A., Beidas R. S., Lewis C. C., McHugh S. M., Weiner B. J. (2019). Enhancing the impact of implementation strategies in healthcare: A research agenda. Frontiers in Public Health, 7, Article 3. 10.3389/fpubh.2019.00003

- Powell B. J., McMillen J. C., Proctor E. K., Carpenter C. R., Griffey R. T., Bunger A. C., Glass J. E., York J. L. (2012). A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review, 69(2), 123–157. 10.1177/1077558711430690

- Powell B. J., Waltz T. J., Chinman M. J., Damschroder L. J., Smith J. L., Matthieu M. M., Proctor E. K., Kirchner J. E. (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10, Article 21. 10.1186/s13012-015-0209-1

- Proctor E. K., Powell B. J., McMillen J. C. (2013). Implementation strategies: Recommendations for specifying and reporting. Implementation Science, 8, Article 139. 10.1186/1748-5908-8-139

- Rogal S. S., Yakovchenko V., Waltz T. J., Powell B. J., Gonzalez R., Park A., Chartier M., Ross D., Morgan T. R., Kirchner J. E., Proctor E. K., Chinman M. J. (2019). Longitudinal assessment of the association between implementation strategy use and the uptake of hepatitis C treatment: Year two. Implementation Science, 14, Article 36. 10.1186/s13012-019-0881-7

- Rogal S. S., Yakovchenko V., Waltz T. J., Powell B. J., Kirchner J. E., Proctor E. K., Gonzalez R., Park A., Ross D., Morgan T. R., Chartier M., Chinman M. J. (2017). The association between implementation strategy use and the uptake of hepatitis C treatment in a national sample. Implementation Science, 12, Article 60. 10.1186/s13012-017-0588-6

- Scott K., Lewis C. C. (2015). Using measurement-based care to enhance any treatment. Cognitive and Behavioral Practice, 22(1), 49–59. 10.1016/j.cbpra.2014.01.010

- Shadish W. R., Cook T. D., Campbell D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Wadsworth.

- Slywotzky A. J. (2000). The age of the choiceboard. Harvard Business Review, 78, 40–41.

- Squires J. E., Sullivan K., Eccles M. P., Worswick J., Grimshaw J. M. (2014). Are multifaceted interventions more effective than single-component interventions in changing health-care professionals’ behaviours? An overview of systematic reviews. Implementation Science, 9, Article 152. 10.1186/s13012-014-0152-6

- Waltz T. J., Matthieu M. M., Powell B. J., Chinman M. J., Smith J. L., Proctor E. K., Damschroder L. J., Kirchner J. E. (2015, December). Using menu-based choice tasks to obtain expert recommendations for implementing three high-priority practices in the VA. Symposium conducted at the 8th Annual Conference on the Science of Dissemination and Implementation, Washington, DC. https://academyhealth.confex.com/academyhealth/2015di/meetingapp.cgi/Paper/7810

- Waltz T. J., Powell B. J., Chinman M. J., Damschroder L. J., Smith J. L., Matthieu M. M., Proctor E. K., Kirchner J. E. (2015, September). Structuring complex recommendations: methods and general findings. Implementation Science, 11(Supplement): 1. 10.1186/s13012-016-0428-0#Sec91

- Waltz T. J., Powell B. J., Chinman M. J., Smith J. L., Matthieu M. M., Proctor E. K., Damschroder L. J., Kirchner J. E. (2014). Expert recommendations for implementing change (ERIC): Protocol for a mixed methods study. Implementation Science, 9, Article 39. 10.1186/1748-5908-9-39

- Waltz T. J., Powell B. J., Matthieu M. M., Damschroder L. J., Chinman M. J., Smith J. L., Proctor E. K., Kirchner J. E. (2015). Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: Results from the Expert Recommendations for Implementing Change (ERIC) study. Implementation Science, 10, Article 109. 10.1186/s13012-015-0295-0

- Wensing M., Grol R. (2005). Multifaceted interventions. In Grol R., Wensing M., Eccles M. (Eds.), Improving patient care: The implementation of change in clinical practice (pp. 197–206). Elsevier.

- Yakovchenko V., Miech E. J., Chinman M. J., Chartier M., Gonzalez R., Kirchner J. E., Morgan T. R., Park A., Powell B. J., Proctor E. K., Ross D., Waltz T. J., Rogal S. S. (2020). Strategy configurations directly linked to higher hepatitis C virus treatment starts: An applied use of configurational comparative methods. Medical Care, 58(5), e31–e38. 10.1097/MLR.0000000000001319

- Yano E. M., Chaney E. F., Campbell D. G., Klap R., Simon B. F., Bonner L. M., Lanto A. B., Rubenstein L. V. (2012). Yield of practice-based depression screening in VA primary care settings. Journal of General Internal Medicine, 27(3), 331–338. 10.1007/s11606-011-1904-5
