Abstract.

Significance

The expansion of functional near-infrared spectroscopy (fNIRS) methodology and analysis tools gives rise to various design and analytical decisions that researchers have to make. Several recent efforts have developed guidelines for preprocessing, analyzing, and reporting practices. For the planning stage of fNIRS studies, similar guidance is desirable. Study preregistration helps researchers transparently document study protocols, including materials, methods, and analyses, before conducting the study, and thus enables others to verify, understand, and reproduce a study. Preregistration can therefore serve as a useful tool for transparent, careful, and comprehensive fNIRS study design.

Aim

We aim to create a guide on the design and analysis steps involved in fNIRS studies and to provide a preregistration template specified for fNIRS studies.

Approach

The presented preregistration guide has a strong focus on fNIRS-specific requirements, and the associated template provides examples based on continuous-wave (CW) fNIRS studies conducted in humans. These can, however, be extended to other types of fNIRS studies.

Results

On a step-by-step basis, we walk the fNIRS user through key methodological and analysis-related aspects central to a comprehensive fNIRS study design. These include items specific to the design of CW, task-based fNIRS studies, but also sections that are of general importance, including an in-depth elaboration on sample size planning.

Conclusions

Our guide introduces these open science tools to the fNIRS community, providing researchers with an overview of key design aspects and specification recommendations for comprehensive study planning. As such, it can be used as a template to preregister fNIRS studies or simply as a tool for transparent fNIRS study design.

Keywords: functional near-infrared spectroscopy, study design, preregistration, guide, template, open science

1. Introduction

The design and analysis of hemodynamic brain-imaging studies encompasses many degrees of freedom in terms of parameter choices for data acquisition, preprocessing, and analysis.1,2 Replicability and reproducibility issues due to undisclosed analytical flexibility have been intensively discussed and demonstrated for several brain imaging techniques, including hemodynamic functional magnetic resonance imaging (fMRI)3–5 and electroencephalography.6,7 Similarly, the impact of different design and analytical choices in functional near-infrared spectroscopy (fNIRS) research has recently come into focus.8,9 When undisclosed, these choices bear the risk of questionable research practices (QRPs) and may imperil the reproducibility and replicability of published results.10 Addressing these pervasive concerns requires adopting transparent study designs, reporting, and other highly encouraged open-research practices that researchers across fields have developed. These include sharing materials, data, and code, releasing openly accessible preprints, and publishing a preregistration protocol.11–14,15 In particular, study preregistration has recently been highlighted as an important best practice tool for functional neuroimaging,3–6,16,17 including fNIRS research.18–20 However, drafting a preregistration protocol can feel daunting, especially for beginners, as it requires formulating a detailed a priori study and analysis plan,14,21 all the more so in a field with frequent methodological and technical advances that make any attempt to standardize procedures challenging.

Preregistration protocols are time-stamped documents in which researchers specify their hypotheses and plans for data collection, preprocessing, and analysis, typically before data collection starts.13,22 This procedure allows for transparency regarding which aspects of the study were decided before (i.e., planned) or after (i.e., post-hoc) data collection and thus for evaluating where the study falls on the spectrum between confirmatory and exploratory research (Fig. 1). Preregistration is expected to effectively prevent undisclosed analytical flexibility, including (unintentional) p-hacking, cherry-picking of results, and certain forms of hypothesizing after the results are known (HARKing).10,24–28 Preregistrations are made publicly available before data collection or can be embargoed for a specific period of time on websites such as the Open Science Framework (OSF; osf.io). An even more compelling variant of preregistration is a Registered Report, which in its essence is a peer-reviewed preregistration with in-principle acceptance. This publishing format is increasingly offered by peer-reviewed journals29 (or independent of journals, e.g., Peer Community In Registered Reports30) and involves an initial stage 1 peer review based on the proposed methodology, hypotheses, analysis, and sampling plan. Successful submissions are accepted-in-principle before the research is conducted and irrespective of the study’s outcome. Registered Reports are published following a final stage 2 peer review to assess whether the research was conducted according to the preregistered research protocol. Thereby, the format aims to mitigate researcher and publication biases.

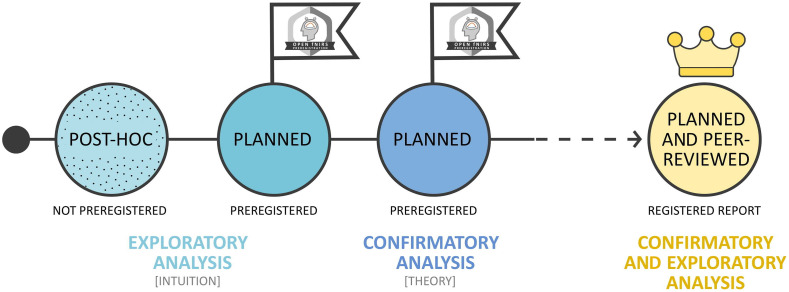

Fig. 1.

Overview of continuum between mostly intuition based (i.e., rather exploratory) and mostly theory based (i.e., rather confirmatory) analyses and their relation to study preregistration and Registered Reports.23

The first meta-analyses of preregistered research showed that reported effect size estimates are indeed substantially smaller,31,32 while the proportion of reported non-significant results14,33 is substantially larger than those reported for non-preregistered research. Taken together, these findings may indicate that the process mitigates publication bias and other QRPs that inflate effect sizes and result in false positive findings. Thus, it has been advocated that preregistration benefits both the scientist directly and the field more broadly.13,14,22,27,34 For example, preregistration can be a planning process to help researchers and their collaborators think through decisions in advance in a structured way as the process involves documenting hypotheses before the data were seen (i.e., a priori) and designing analysis and sampling plans accordingly. It can thereby protect the researcher from implicit biases that may arise during data analysis (e.g., incorrectly recalling that the only significant effect is the one that was originally predicted). In fNIRS research, several recent efforts have been made to develop guidelines for preprocessing and analyzing fNIRS data,9,35,36 as well as for reporting practices.19 Similar guidance would be desirable for the planning stage of an fNIRS experiment. For the field, preregistration is a transparency tool that allows others to verify, understand, and reproduce a study. It can thereby increase the perceived quality of research and may also enhance the trust that researchers have in both their own and others’ research.14,21,27,37,38

To make the task of writing a preregistration protocol more tractable, proponents of open science have developed guides, checklists, and templates to facilitate transparent study design, preregistration, and reporting for various types of research including neuroimaging.7,39–45 For fNIRS research, however, no similar resources exist yet, and they are highly desired.18

In what follows, we share and discuss a comprehensive preregistration guide and template for continuous-wave (CW), task-based fNIRS experiments, which were developed following the 2021 hybrid fNIRS summer school hosted by the University of Tübingen.46 Specifically, this preregistration guide walks the fNIRS user through specific design and analysis considerations. Further, we adapted and extended the previously established psychological research preregistration-quantitative template34 toward fNIRS research-specific needs, which are elaborated in the respective sections. For illustration, we provide examples and link the corresponding text sections below to the items of the adapted preregistration template. We note that the examples may also include technical details, which should not be considered recommendations regarding fNIRS methodology itself but are only included to exemplify the desired level of detail for respective preregistration items. The preregistration template file can be accessed via an OSF project page,55 and readers are welcome to contribute to future extensions.

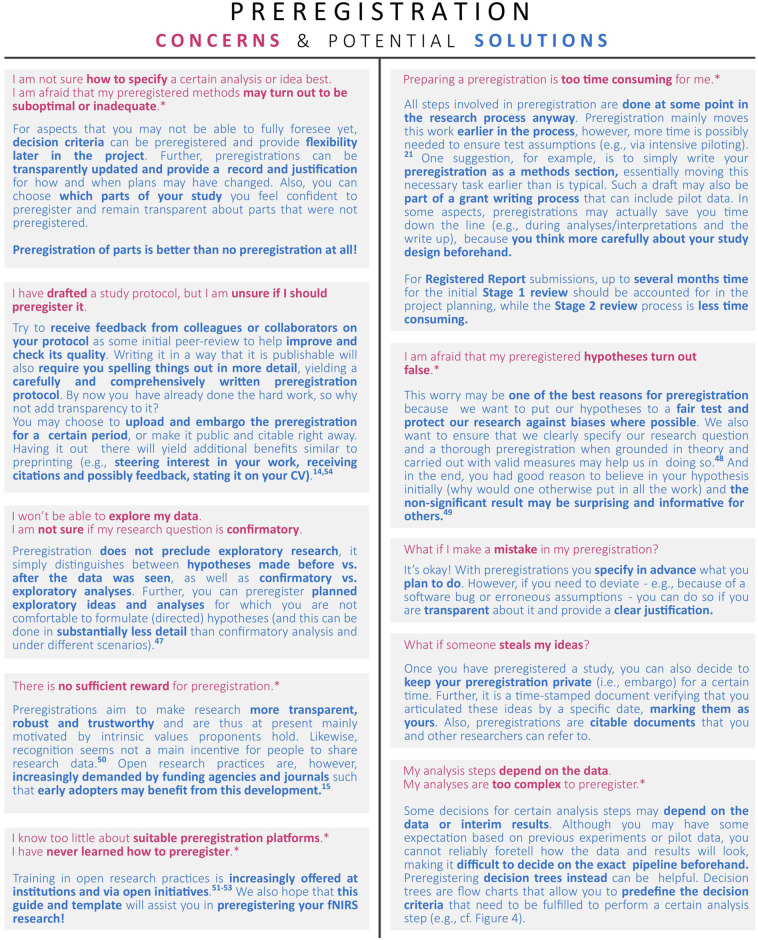

We further wish to highlight that the overall goal of preregistration is to maximize transparency, not to write the perfect and most complete preregistration. For some researchers, especially those who write their first preregistration or who conduct their first fNIRS study, this may mean that they can only fill in details for those sections of the preregistration template that are most addressable for the current study, while stating explicitly for which sections sufficient knowledge about study details is still lacking at the point of conceptualization. Others may choose not to submit a preregistration at all, but instead to use this guide and the template as a tool for designing an fNIRS study. Although we encourage researchers to publish preregistrations of their fNIRS studies whenever possible, we have attempted to make this template useful for those who wish to do either. For researchers who are still hesitant to preregister, we discuss some common concerns and suggestions for potential solutions in Fig. 2. Further, we note that, although this guide and the associated template were created with the aim to assist researchers in designing their fNIRS studies, they can also support the evaluation of such studies (e.g., as part of a literature search, systematic review, or manuscript submission review process).

Fig. 2.

Common preregistration concerns and potential solutions.14,15,47–54 Those indexed by “*” have recently been identified in a survey conducted with researchers working in the field of functional neuroimaging.21

Finally, we note that with recent optical engineering and methodological advances, there is an increasing demand for new analytic methodologies and procedures.56 This raises one particular preregistration concern relevant for fNIRS: with regard to preprocessing and data analysis, approaches considered state-of-the-art at the time of preregistration may seem somewhat outdated at a later study phase. This possibility can be daunting for fNIRS researchers generally and for beginners in the field in particular. However, it can be mitigated when researchers disclose and justify methodological deviations from the preregistered protocol in the final manuscript or report updated analyses in addition to the preregistered analyses.

2. Step-by-Step fNIRS Study Design

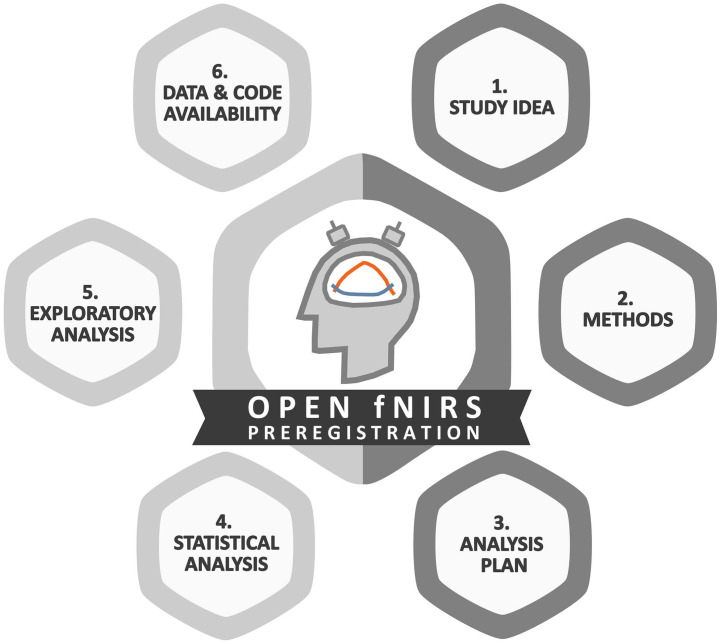

In the following, we provide a comprehensive step-by-step preregistration guide for transparent fNIRS study design, as shown in Fig. 3. The guide covers all steps from generating a study idea (cf., Sec. 2.1) to publishing research outcomes, including open access to data and code (cf., Sec. 2.6), and references the corresponding items of the complementary preregistration template. It aims to help researchers get started with preregistering an fNIRS study.

Fig. 3.

Overview of the fNIRS study design guide structure.

2.1. Study Idea

The idea of a study comes first. To conceptualize a new study, researchers conventionally start with reviewing what is already known, i.e., they build up background knowledge (based on existing literature). This process is ideally guided by genuine interest and allows researchers to identify knowledge gaps. Filling such a gap requires a specific study motivation (“Why is it important to address this problem?”) and a justification (“Why is fNIRS an appropriate method for this study?”). Researchers should consider the possible scope of their study: ideally, the study should tell a specific story; if the scope goes beyond a single story, it will likely require defining a set of experiments or even a whole research program on this topic. Importantly, in addition to original empirical and replication studies, researchers can also preregister methodological studies (e.g., comparison of different motion artifact correction algorithms), exploratory studies,47 or secondary analyses of existing data sets.40,57

The motivation and justification for a study provide its rationale. They lay the groundwork for formulating research questions and generating hypotheses based on theory and previous findings. Thereby, preregistration allows for evaluation of whether a research question and analysis is rather confirmatory (i.e., mostly theory-based) or rather exploratory (i.e., mostly intuition-based) as shown in Fig. 1.26 In confirmatory research, hypotheses are defined in advance to test a specific relation between the variables (e.g., activation in area A is larger in condition C1 than C2). Confirmatory hypotheses are the basis of designing a sampling and analysis plan (whereas post-hoc exploratory analyses can follow confirmatory analyses). In exploratory research, open questions or hypotheses with alternative outcomes under different assumptions can be stated.47 It is worth noting, however, that exploratory analyses can also be planned and preregistered.

Preregistration templates vary in the extent to which the study idea, theoretical background, and previous results need to be articulated. We recommend providing sufficient theoretical information to comprehend the study’s goals and the adequacy of investigating the research question with fNIRS.

Preregistration template item(s): T1, T9, I1/A1, I2/A2, I3, and I4.

2.2. Methods

2.2.1. Study design

The study design includes within-subject factors (e.g., different conditions in a cognitive or motor task) and, where applicable, between-subject factors (e.g., different groups such as patients and healthy controls or different training groups). fNIRS will be measured during a certain task (e.g., task-related activation or intervention-induced activation changes) or during rest (e.g., resting-state activation or functional connectivity). For a given task, the effect of interest should be operationalized as the difference between conditions of interest (see Box 2 in Ref. 58). Additionally, control variables (e.g., tests and questionnaires) and localizer tasks can be considered.

Task-based conditions are usually presented in a block design, an event-related design, or a mixed block/event-related design. The number of blocks/trials, the duration between the blocks/trials, and the order of trials/blocks determine the signal-to-noise ratio (SNR). These parameters can be explicitly stated in a preregistration, for example: “In this study, a block design of 10 blocks per condition was presented in a fixed order with a duration of 15 s and a corresponding jittered inter-block-interval of 20 to 23 s.” or “In this study, an event-related design was realized with 30 trials per condition in a randomized order with a duration of 2 s and a jittered inter-trial-interval of 10 to 15 s.” or “In this study, a rapid event-related design was realized with 15 trials per condition presented in a randomized order with a duration of 5 s and a jittered inter-trial-interval of 2 to 3.5 s.”
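Because these parameters fully determine the stimulus schedule, it can help to generate the schedule reproducibly and archive it with the preregistration. The following is a minimal Python sketch for the first example above (10 blocks per condition, fixed alternating order, 15-s blocks, inter-block interval jittered between 20 and 23 s). The function name, the uniform jitter distribution, and the seed value are assumptions for illustration only; setting `randomize=True` covers the randomized-order event-related examples.

```python
import random


def block_onsets(n_blocks_per_condition, conditions, block_duration_s,
                 ibi_range_s, first_onset_s=0.0, randomize=False, seed=None):
    """Return a list of (onset_s, condition) tuples for a block design.

    Conditions alternate in a fixed order (C1, C2, C1, ...) unless
    randomize=True. Each inter-block interval (rest between the end of one
    block and the onset of the next) is drawn uniformly from ibi_range_s.
    """
    rng = random.Random(seed)  # a fixed seed makes the schedule reproducible
    order = [c for _ in range(n_blocks_per_condition) for c in conditions]
    if randomize:
        rng.shuffle(order)
    schedule = []
    t = first_onset_s
    for condition in order:
        schedule.append((t, condition))
        # Next onset = end of the current block + jittered rest period.
        t += block_duration_s + rng.uniform(*ibi_range_s)
    return schedule


# Example from the text: 10 blocks per condition, fixed order, 15-s blocks,
# inter-block interval jittered between 20 and 23 s (seed is hypothetical).
schedule = block_onsets(10, ["C1", "C2"], 15.0, (20.0, 23.0), seed=2021)
for onset, condition in schedule[:4]:
    print(f"{onset:7.2f} s  {condition}")
```

Reporting either the seed or the generated onset list in the preregistration allows others to reconstruct the exact design.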

Preregistration template item(s): A4, M11, M12, M14, and M15

2.2.2. Sample characteristics

A preregistration specifies the characteristics of the sample. This includes brief information about the type of sample that will be recruited (e.g., healthy population, specific clinical population, specific age group, etc.). These details are relevant as they may affect behavioral as well as fNIRS effects, e.g., due to atrophic processes or changes in hemodynamics that are related to aging or a pathological condition. If the sample is divided into groups, objective criteria or randomization procedures should be defined. Possible confounders inherent to groups and how to address these (e.g., motion artifacts in children versus adolescents or patients versus healthy controls) should be considered. For instance, sample characteristics such as age or clinical status may impact fNIRS data analysis plans.19,59,60

An fNIRS preregistration should further specify study-specific inclusion and exclusion criteria in accordance with the study’s ethical review board. Basic demographic information should be collected and reported, if possible (e.g., age, gender, ethnicity, head size, hair color, absence of hair, and skin pigmentation). Although including health information is likely informative and encouraged, sharing such information should be done with care to prevent harming the privacy and data protection of participants, who must remain anonymous. In cases in which sharing sensitive data is not required or adequate, such personal data may still be relevant for screening exclusion criteria. If sample characteristics are relevant for the purpose of the study, this should be apparent in the hypotheses.

NIR light transmission is affected by optical properties such as skin pigmentation, hair thickness, and cortical thickness. The impact and correction of racial bias in fNIRS and other oxygen-based measurements is an open topic that still needs further evaluation.61–64 Researchers should be aware of this discussion and weigh the possible benefits of inclusive study designs (e.g., better generalizability, the possibility to include moderators in a large sample) against less inclusive designs (e.g., the possibility of a better signal quality) along with ethical considerations.

Preregistration template item(s): AP1-AP3 and M12

2.2.3. Sample size planning

The sample size (i.e., the final number of individuals included in a study) should be determined in the study design phase as it is crucial for answering the research question. Justifying the choice of sample sizes is essential in an adequate study design as it provides transparency about the criterion used to determine when data collection is completed.65,66 Ideally, justifications should account for potential drop-out and data censoring (e.g., due to compromised/missing data or behavioral performance). Data drop-out/censoring may be considerable for fNIRS research, depending on the context (e.g., for clinical or infant studies).

Transparent sample size justifications are pivotal to allow for evaluating the precision and hence robustness of effects that a study is trying to detect. Different justification procedures exist: conducting an a priori power or sensitivity analysis is conventionally recommended as best practice for confirmatory research when employing inferential statistics.3 In reality, however, sampling plans may often be determined by external factors such as resource constraints (e.g., time, funding, or sample characteristics), which reflect another, albeit limited, justification for sample size.65 Often, resource constraints and heuristics result in sample sizes that are too small, leading to lower statistical power and thus to over- or underestimation of true effects due to imprecise outcome estimates. This is why small studies, in particular, are more likely to yield unreliable outcome estimates that should not serve as a basis for future sample size calculations.67 Further, in the presence of publication bias, they can contribute to inflated effect size estimates in the literature.68

Putting a priori hypotheses to a fair confirmatory test requires sample size estimations that are informed by an adequate statistical power analysis based on realistic or relevant effect size assumptions.29 Statistical power describes the probability that a certain effect (size) can be detected, assuming that it exists. All relevant details of sample size estimation (i.e., assumed or targeted effect size, statistical model, assumptions on correlational designs, and inferential parameters) should be reported such that a power analysis can be reproduced. Alternatively, Bayesian sequential sampling plans allow for flexible stopping once a predefined evidence threshold in the form of a parameter estimate or Bayes factor (following a Bayes factor design analysis) is reached (see for example Ref. 67). They should be informed by a Bayesian sensitivity analysis, which also relies on specifying an expected effect under the alternative (and null) hypothesis. Further, they take into account the uncertainty around this point estimate via the use of a prior distribution that covers a range of plausible effect sizes.69,70 Although Bayesian approaches are used far less often, they offer two main advantages: they are more resource efficient, and they allow for sampling until sufficient evidence for the absence of an effect has accumulated.69,70 Bayesian sampling plans should be reported in sufficient detail, including the assumed effect size, prior distribution, and stopping criterion in the form of a posterior interval or Bayes factor for the alternative and null hypotheses.71,72
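To make the logic of a Bayesian sequential sampling plan and its design analysis concrete, the following is a minimal Python sketch under simplifying assumptions that are not part of the original text. Difference scores are assumed to be normal with known unit variance, and the effect under H1 is assumed to follow a normal prior N(0, prior_sd²), which yields a closed-form Bayes factor. Dedicated tools such as BFDA (Table 1) work with Bayesian t-tests instead. All function names and defaults (prior_sd = 0.707, stopping at BF ≥ 6 or ≤ 1/6, n_min = 20, batches of 5, n_max = 100) are hypothetical choices for illustration.

```python
import math
import random


def bf10_known_variance(z, n, prior_sd):
    """BF10 for H1: delta ~ N(0, prior_sd**2) versus H0: delta = 0.

    Assumes difference scores ~ N(delta, 1) with known unit variance. Then
    z = sum(x) / sqrt(n) is N(0, 1) under H0 and, after integrating over the
    prior, N(0, 1 + n * prior_sd**2) under H1.
    """
    v1 = 1.0 + n * prior_sd ** 2
    return math.sqrt(1.0 / v1) * math.exp(0.5 * z ** 2 * (1.0 - 1.0 / v1))


def sequential_design(true_delta, prior_sd=0.707, bf_threshold=6.0,
                      n_min=20, n_step=5, n_max=100, rng=None):
    """Simulate one sequential study; return (final_n, bf10, decision)."""
    if rng is None:
        rng = random.Random()
    data = [rng.gauss(true_delta, 1.0) for _ in range(n_min)]
    while True:
        n = len(data)
        bf = bf10_known_variance(sum(data) / math.sqrt(n), n, prior_sd)
        if bf >= bf_threshold:
            return n, bf, "H1"
        if bf <= 1.0 / bf_threshold:
            return n, bf, "H0"
        if n >= n_max:
            return n, bf, "inconclusive"
        # Add the next batch of participants, never exceeding n_max.
        data.extend(rng.gauss(true_delta, 1.0)
                    for _ in range(min(n_step, n_max - n)))


def design_analysis(true_delta, n_sims=2000, seed=None, **design):
    """Monte Carlo operating characteristics: decision shares and mean final n."""
    rng = random.Random(seed)
    runs = [sequential_design(true_delta, rng=rng, **design)
            for _ in range(n_sims)]
    share = {d: sum(r[2] == d for r in runs) / n_sims
             for d in ("H1", "H0", "inconclusive")}
    return share, sum(r[0] for r in runs) / n_sims


print(design_analysis(0.5, seed=1))  # expected effect present
print(design_analysis(0.0, seed=1))  # effect absent
```

Under these assumptions, BF01 can never exceed sqrt(1 + n·prior_sd²), so with prior_sd = 0.707 a stopping threshold of 6 in favor of H0 only becomes attainable beyond roughly 70 participants. A design analysis of this kind makes such limits visible before data collection, which is why it should inform the choice of n_max.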

Frequentist and Bayesian sample size estimations both require specifying a minimum effect size that the statistical test aims to detect with a specific power or sensitivity, respectively. Several open-source tools are available for power analysis computations (see Table 1). Researchers should provide a rationale for the effect size estimate that is included in their power or sensitivity analysis. Desired power or sensitivity thresholds are commonly set to 80% or 90%, depending on the field and context. Importantly, the relationship between the targeted effect size and its corresponding sample size and statistical power is nonlinear (curve shaped).86 Thus, even small deviations between targeted and observed effect sizes may result in a substantial loss of statistical power. Traditionally, effect size estimates are drawn from previous, comparable research. For example, if a previously published fNIRS study reported a medium effect size of for interpersonal brain synchronization,87 with an acceptable power of and a significance level of 0.05, replication of the effect in a paired-samples t-test of HbO mean amplitude in the predefined region of interest (ROI) would require participants. For more complicated tests that might include interaction terms with other factors such as hemisphere, channels, other ROIs, or conditions, it can be expedient to model the expected pattern of results73; see M3 for an example with annotated R code. However, we advise caution with this approach given the concerns described in the next paragraph. We further note that, in principle, results from fMRI could also inform the range of an anticipated effect size. However, the sensitivity of fNIRS to deeper cortical regions is usually weaker and depends on the probe as well as on other system and design characteristics such as the SNR and experimental design.
We are not aware of current standards for power analysis in fNIRS research and caution that effect sizes will differ for specific populations (adults, children, patient populations), for different setups (e.g., fNIRS versus diffuse optical tomography), and for parameters implied in the experimental design (e.g., different inter-stimulus intervals in event-related design88). If an effect size estimate is available, researchers should specify the dependent variable [e.g., measure in the ROI/channel(s)], clearly report the statistical model and effect of interest for the sample size justification (e.g., the main effect in a mixed design) and the implicated type I and type II error rates, as well as possible correction methods.
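A paired-samples calculation of the kind described above can be sketched in Python under stated assumptions. The values here are illustrative and not taken from Ref. 87: a two-sided paired-samples t-test, a conventional "medium" effect of d_z = 0.5, power of 0.80, and α = 0.05. The sketch uses the normal approximation with Guenther's small-sample correction rather than the exact noncentral t distribution used by tools such as G*Power or pwr (Table 1). The function names are hypothetical, and the second function is a one-tailed normal approximation of power that is slightly optimistic for small samples.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def paired_t_sample_size(d, alpha=0.05, power=0.80):
    """Approximate N for a two-sided paired-samples t-test.

    d is the standardized mean of the paired differences (Cohen's d_z).
    Normal approximation plus Guenther's correction:
    N = ((z_{1-alpha/2} + z_{power}) / d)**2 + z_{1-alpha/2}**2 / 2
    """
    z_a = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_b = _STD_NORMAL.inv_cdf(power)
    return math.ceil(((z_a + z_b) / d) ** 2 + z_a ** 2 / 2)


def approx_power(d, n, alpha=0.05):
    """Normal approximation to two-sided power (ignores the opposite tail)."""
    z_a = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(d * math.sqrt(n) - z_a)


n = paired_t_sample_size(0.5)  # 34 under these assumptions
for true_d in (0.5, 0.4, 0.3):
    # Power of the planned study if the true effect is smaller than targeted.
    print(true_d, round(approx_power(true_d, n), 2))
```

With N fixed at 34, the approximate power drops from about 0.83 to about 0.65 and 0.42 if the true effect is 0.4 or 0.3 instead of 0.5. This illustrates the nonlinear relationship between effect size and power noted above.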

Table 1.

Open-source tools to conduct power and sensitivity analyses.

| Name | Description | Link |
|---|---|---|
| InteractionPoweR | R library for power analysis of interaction effects. | https://github.com/dbaranger/InteractionPoweR |
| ANOVA power shiny app73 | Monte Carlo simulations of factorial experimental designs to estimate power for an ANOVA and follow-up pairwise comparisons. | Ref. 74 |
| Brainpower | List with tools for power analyses, in particular for fMRI studies. | Ref. 75 |
| Bayes factor design analysis69,70 | R library to conduct Bayesian sensitivity analyses and sampling plans. | https://github.com/nicebread/BFDA |
| Superpower | E-book, R library, and Shiny apps for power analysis in factorial experimental designs. | Ref. 76 |
| Jamovi77 | Open-source software to conduct informative power and sensitivity analyses for the t-test family (jpower plugin), including power curve illustrations.68 | Ref. 78 |
| G*Power79,80 | Widely used open-source software to conduct power and sensitivity analyses for commonly used statistical tests and more advanced statistical models. | Ref. 81 |
| pwr | R library for commonly used statistical tests. | Ref. 82 |
| More Power | GUI for commonly used statistical tests and more advanced statistical models. | Ref. 83 |
| SampleSizePlanner84 | R library and Shiny app for different sample size planning strategies. | Ref. 85 |

Further, researchers should take into account that effect sizes reported in non-preregistered studies and meta-analyses, in particular those that report significant findings, are more likely to be affected by publication bias, small sample sizes, and researcher bias, and they are thus more likely to overestimate true effect sizes. For instance, recent meta-research found overestimation by a factor of two to three when compared with preregistered research.31,32,68 It is thus recommended to either adjust for potential inflation in reported effect size estimates89 or refer exclusively to literature that is unlikely to be affected by publication bias and is sufficiently powered (e.g., meta-analyses based on Registered Reports and sufficiently large samples with high precision in reported effect size estimates).
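The mechanism behind such inflation can be illustrated with a toy simulation, which is not a reproduction of the cited meta-research. In the minimal Python sketch below, each simulated study measures n difference scores with a true effect of d = 0.3 and a known unit SD (an assumption that makes the observed effect equal to the sample mean), and only studies that are significant in the predicted direction are "published." The function name and all parameter values are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean


def mean_published_effect(true_d, n, n_studies=5000, alpha=0.05, seed=None):
    """Average observed effect among 'significant' simulated studies.

    Each study draws n difference scores ~ N(true_d, 1). With the SD assumed
    known (= 1), the observed effect is the sample mean, and a study counts
    as significant if z = mean * sqrt(n) exceeds z_{1-alpha/2} in the
    predicted (positive) direction. Returns (mean published d, share significant).
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    significant = []
    for _ in range(n_studies):
        d_obs = fmean(rng.gauss(true_d, 1.0) for _ in range(n))
        if d_obs * math.sqrt(n) > z_crit:
            significant.append(d_obs)
    return fmean(significant), len(significant) / n_studies


for n in (20, 50, 100):
    mean_sig, share_sig = mean_published_effect(0.3, n, seed=1)
    print(f"n = {n:3d}: {share_sig:.0%} significant, "
          f"mean published d = {mean_sig:.2f}")
```

For n = 20, the average "published" effect in this toy model is roughly twice the true effect. The bias shrinks as samples become larger and the significance filter removes fewer studies, which is why sufficiently powered, preregistered literature is the safer basis for effect size assumptions.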

Alternatively, in the absence of prior empirical results, the effect size of interest can be the smallest effect size of interest (SESOI), which expresses the smallest effect that the researchers consider relevant (e.g., in terms of clinical significance or theoretical implications65). Researchers should justify their choice of SESOI and may use anchors specific to their research question. For instance, when powering for group or condition differences, other measures (e.g., clinical or behavioral) can serve as an anchor for a SESOI. To provide an example from clinical research, it has been estimated that improvements in depressive symptoms may need to exceed a standardized mean difference (SMD) of at least 0.24 to be considered a clinically meaningful change. If the goal of fNIRS recordings in the context of a depression trial is to detect accompanying hemodynamic correlates as a potential biomarker that can indicate clinically relevant improvements of depressive symptoms, the SESOI for hypothesized hemodynamic changes assessed by fNIRS should be at least 90 to be sufficiently sensitive and thus informative. An alternative anchor for SESOIs of hemodynamic effects may be effect sizes that are observed for behavioral effects. For instance, rates of anticipated non-responders (e.g., percentages of successful trials) can inform the lower effect size boundary. Sampling plans based on SESOIs make it more likely that meaningful effects are detected. Moreover, in the case of non-significant or inconclusive results, such plans are more likely to yield evidence for the absence of an effect through follow-up equivalence tests (or their Bayesian equivalent) that allow for rejecting the alternative hypothesis.48–92 In the context of hemodynamic brain imaging, such equivalence tests have recently been discussed for fMRI applications.93
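A SESOI-based equivalence test can be sketched as two one-sided tests (TOST). The minimal Python sketch below makes several assumptions. It expects paired difference scores (e.g., post- minus pre-intervention HbO amplitude), a SESOI already expressed in raw units, and a large-sample z approximation instead of the t distribution. The function name, the assumed SD, and the simulated data are hypothetical, and established implementations (e.g., the TOSTER R package) are preferable for actual analyses.

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def tost_paired(diffs, bound, alpha=0.05):
    """Two one-sided tests (TOST) for equivalence of paired differences.

    H0: |mu| >= bound versus H1: -bound < mu < bound, where bound is the
    SESOI in raw units. Uses a normal (z) approximation, so it presumes a
    reasonably large sample. Returns (p_value, equivalence_declared).
    """
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)
    m = fmean(diffs)
    phi = NormalDist().cdf
    p_lower = 1 - phi((m + bound) / se)  # one-sided test of H0: mu <= -bound
    p_upper = phi((m - bound) / se)      # one-sided test of H0: mu >= bound
    p = max(p_lower, p_upper)
    return p, p < alpha


# Hypothetical usage: SESOI of SMD = 0.24 converted to raw units with an
# assumed SD of the difference scores of 1.5; simulated data with no effect.
rng = random.Random(7)
diffs = [rng.gauss(0.0, 1.5) for _ in range(300)]
p, equivalent = tost_paired(diffs, bound=0.24 * 1.5)
print(round(p, 4), equivalent)
```

Equivalence is declared only if both one-sided tests reject, so the reported p value is the larger of the two.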

Sample size planning of any form described above should be done at least for the primary outcome measures used for the critical hypothesis test of a study. In case other tests are also planned (e.g., secondary outcomes and manipulation checks), researchers are free to calculate and report multiple sample size estimations and determine the most conservative sample size estimate.
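Choosing the most conservative estimate and accounting for expected drop-out or data censoring (cf. the sample size considerations above) can be combined in a short calculation. The Python sketch below is a minimal illustration. The function name, the per-test sample sizes, and the 25% drop-out rate are hypothetical, and it assumes drop-out is independent of the outcome.

```python
import math


def recruitment_target(planned_n_by_test, expected_dropout):
    """Return (analysis_n, recruitment_n).

    analysis_n is the most conservative (largest) of the per-test sample
    size estimates; recruitment_n inflates it so that analysis_n complete
    data sets remain after the expected proportion of drop-out/censoring.
    """
    if not 0 <= expected_dropout < 1:
        raise ValueError("expected_dropout must be in [0, 1)")
    analysis_n = max(planned_n_by_test.values())
    recruitment_n = math.ceil(analysis_n / (1 - expected_dropout))
    return analysis_n, recruitment_n


# Hypothetical estimates for a primary outcome, a secondary outcome, and a
# manipulation check, with 25% expected drop-out.
plan = {"primary": 34, "secondary": 44, "manipulation check": 24}
print(recruitment_target(plan, expected_dropout=0.25))  # (44, 59)
```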

To assist researchers in performing adequate sample size planning, we provide an overview of open-source software tools (cf., Table 1).

Preregistration template item(s): M3 and AP8

2.2.4. Instrumentation

In general, a detailed description of the fNIRS device including the name of the system and the manufacturer should be provided. Further, the specific fNIRS optode placement settings are determined by the used fNIRS device and to a lesser extent by several researcher decisions. For instance, different options for attaching the optodes to a head cap (e.g., within an fNIRS cap or a headband, self-printed) exist and should be reported. Moreover, the type and number of sources (i.e., LED versus laser), detectors (i.e., silicon photodiode versus avalanche photodiode), and short-distance detectors, including the corresponding source-detector distances (e.g., for long-separation channels and for short-separation channels for adults94), should be documented. If planned, auxiliary measurements integrated into the head cap (e.g., accelerometer) and peripheral physiological measurements (e.g., blood pressure, electrocardiogram, and plethysmography) should be documented together with a motivation for their recording [e.g., as nuisance regressors in the general linear model (GLM)]. If any additional measurements [e.g., electroencephalography (EEG), eye-tracking, and transcranial electric stimulation] will be taken during the experiment, the preregistration should include how the data across different measurement devices will be synchronized (e.g., via lab-streaming layer).

Preregistration template item(s): M9 and M10

2.2.5. Optode array design and optode placement

fNIRS recordings are limited to superficial cortical layers with a spatial resolution of around 2 to 3 cm and a penetration depth of around 1.5 to 2 cm into the cerebral cortex.36,56,95 Cortical brain activity is measured by fNIRS from the brain area over which the optodes (i.e., sources and detectors) are placed. Of note, the distance between optodes and underlying brain regions may vary, depending on the brain region that is targeted and the amount of extra-cerebral tissue present between the optode and the respective cortical area. In addition, placing optodes over a certain ROI can be challenging due to a lack of anatomical information. Accordingly, designing an optode array (i.e., optode arrangement) in relation to anatomical landmarks and/or standardized locations (e.g., positions of the international 10–20 EEG system96,97) is essential for every fNIRS study to enhance reproducibility and replicability. It is noteworthy, however, that the position of channels relative to the scalp can also vary between setups or even recording sessions.98,99 From a practical point of view, any arrangements for reducing potential discomfort of the participant caused by scalp-optode contact (e.g., different spring holder pressures) and additional materials used during participant preparation (e.g., use of a cotton swab to move the hair away, use of a light-shielding overcap) should be reported.

The most accurate optode array design results from individual anatomical information in combination with individual 3D optode coordinates that are obtained by digitization, photogrammetry-based,100–102 and neuronavigation tools.103–105 The precision of this procedure can be further improved by incorporating functional MRI data of the same participant or based on a probabilistic approach.89 If such procedures are anticipated, the applied tools or the performed custom-made steps for the co-registration should be reported. Alternatively, procedures that allow for the design of an optode array with respect to anatomical landmarks (e.g., nasion, inion, and left and right preauricular points) and to the standard EEG 10–20 positions96,97 are often applied. For this purpose, several software tools have been developed and validated by the fNIRS community [e.g., AtlasViewer,106 Array Designer,107 fNIRS optode location designer (fOLD),108 dev-fOLD,109 modular optode configuration analyzer (MOCA),110 and simple and timely optode registration method for functional near-infrared spectroscopy (STORM-Net)111].

In addition to the software applied, the settings used, such as the brain parcellation atlas (e.g., AAL2112,113 and Brodmann114), the basis of anatomical landmarks (e.g., 10–20, 10–10, and 10–5 positions96,97), and the head model employed (e.g., Colin27115 and SPM12116), should be specified in the preregistration. Moreover, the software-specific input parameters and settings for the probe design should be documented in enough detail to make the design reproducible. For example, fOLD108 lets users set the brain atlas, the basis of anatomical landmarks, probe symmetry, and the level of specificity (%), all of which are necessary to reconstruct the optode array.

Preregistration template item(s): M10 and M13

2.3. Analysis Plan

2.3.1. Data Exclusion Criteria

After data collection and before data analysis, data quality needs to be ensured. To ensure sufficient fNIRS data quality, it is important to avoid including channels with poor signal quality. These often result from light instabilities due to poor optode-scalp coupling,117,118 which can affect subsequent preprocessing steps. Therefore, the preregistration should define criteria for signal quality evaluation and for data exclusion at the trial, channel (so-called pruning), and participant levels. The quality of the fNIRS signal can be evaluated, for example, with specific metrics (e.g., SNR, coefficient of variation,119 contrast-to-noise ratio, and scalp coupling index19,120) computed on a given type of fNIRS data (raw intensity, optical density, or concentration data). Exclusion criteria should specify the critical measure (i.e., a threshold value or a qualitative criterion), the type of exclusion (i.e., listwise versus casewise), and the level of exclusion (i.e., participant, channel, or trial). For example, studies might exclude channels with an SNR below 15 dB,121 participants with too few usable channels,122 participants with missing data or invalid cap placement,123 or trials that were solved incorrectly.124

Defining data exclusion criteria a priori may be challenging and depends heavily on prior knowledge, which makes it particularly difficult for researchers new to fNIRS. This challenge can be overcome by running initial pilot experiments that allow these values to be specified or adjusted. Moreover, exclusion criteria for hemodynamic data can be derived from comparable fNIRS setups (when identical fNIRS systems are used), and exclusion criteria for behavioral data can be taken from basic studies with comparable tasks.
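To make such criteria concrete, the following minimal sketch encodes a channel-level rule and a participant-level rule based on the coefficient of variation (CV), one of the metrics listed above. It assumes raw-intensity data stored per channel as plain Python lists; the CV threshold of 7.5% and the 50% minimum of usable channels are hypothetical placeholders, not recommended values.

```python
import statistics

# Hypothetical thresholds for illustration only; a real preregistration
# should state and justify its own values.
CV_THRESHOLD_PERCENT = 7.5
MIN_FRACTION_USABLE = 0.5


def coefficient_of_variation(samples):
    """Coefficient of variation (%) of one channel's raw-intensity samples."""
    return 100.0 * statistics.stdev(samples) / statistics.fmean(samples)


def prune_channels(raw_intensity, cv_threshold=CV_THRESHOLD_PERCENT):
    """Channel level: keep channels whose CV does not exceed the threshold."""
    return [name for name, samples in raw_intensity.items()
            if coefficient_of_variation(samples) <= cv_threshold]


def include_participant(raw_intensity, cv_threshold=CV_THRESHOLD_PERCENT,
                        min_fraction_usable=MIN_FRACTION_USABLE):
    """Participant level: keep the participant only if enough channels survive pruning."""
    usable = prune_channels(raw_intensity, cv_threshold)
    return len(usable) / len(raw_intensity) >= min_fraction_usable


# Toy data: one stable channel and one unstable (poorly coupled) channel.
data = {"S1-D1": [100, 101, 99, 100], "S1-D2": [100, 140, 60, 100]}
print(prune_channels(data))       # ['S1-D1']
print(include_participant(data))  # True (1 of 2 channels usable = 50%)
```

Writing the rule as code in this way leaves no room for reinterpretation after the data are seen; the same structure extends to SNR or scalp coupling index thresholds.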

Preregistration template item(s): M7

2.3.2. Data Preprocessing

Data preprocessing is an important step in fNIRS studies, because fNIRS measurements are commonly contaminated by different noise components that are related either to motion (e.g., signal quality and motion artifacts117,118,125) or to systemic physiology (e.g., evoked and non-evoked cerebral and extracerebral systemic confounds36,126). These artifacts hinder the main goal of task-related fNIRS research, namely, to recover the hemodynamic response to a given task.

Most preprocessing steps are complex, and different parameter choices and/or different orders of the preprocessing steps might lead to different results and thus to different interpretations of the data.8,127,128 For instance, some parameters might be device-specific (e.g., sampling rate, but also measurement units and scales) and should be interpreted relative to the technical setup. Furthermore, the implementation and parameters of similar preprocessing steps may vary slightly between fNIRS analysis toolboxes; therefore, the toolbox used should be stated in the preregistration protocol. For an overview of existing fNIRS analysis toolboxes, we refer to other resources.36,129–131

As a result, researchers might adapt their preprocessing pipeline until they find the desired effect, which can increase type I error rates (i.e., the risk of false positive findings).7,132 To prevent results-based analytic decisions, data preprocessing decisions should be documented transparently a priori, ideally in a specific, precise, and exhaustive way.7,24 Accordingly, the preregistered data analysis steps should provide all details necessary to reproduce the whole pipeline without seeing any of the code (i.e., specific), so that each step can be interpreted in only one way (i.e., precise) and no options remain open to be changed, for instance, after seeing the collected data (i.e., exhaustive).

However, we agree that data-driven approaches to adjusting, for instance, the parameters of a preprocessing step are important, as these parameters can differ between studies and depend on the data. For instance, parameter tuning for motion artifact detection might differ between participants, instruments, and brain regions.133 Accordingly, running a pilot study on which these decisions can be based is highly recommended.7 A pilot study can help to assess the feasibility of the chosen parameters or algorithms, verify activation in ROIs, and test the pipeline for errors. Alternatively, certain preprocessing steps can be tested on similar data from existing open datasets, which underlines the importance of data and code sharing (see Sec. 2.6).

In the following sections, we focus on the main and, in most cases, absolutely necessary preprocessing steps in CW-fNIRS research. We appreciate that other fNIRS systems (e.g., time-domain and frequency-domain NIRS), experimental setups, and designs might require preprocessing steps that differ from the ones mentioned here. However, by checking whether the documentation of each step is specific, precise, and exhaustive, researchers should find it straightforward to document these steps accurately.

Modified Beer–Lambert law

CW-fNIRS cannot directly measure absolute hemoglobin concentrations because it cannot determine the optical properties of the underlying tissues, for instance, how much light is absorbed and scattered.36 Instead, it uses the modified Beer–Lambert law (mBLL) to calculate changes in hemoglobin concentration. The mBLL combines the linear relationship between the optical density (i.e., how strongly light is attenuated on its path from the source to the detector) and the concentration of the absorbing medium with the assumption that light scattering is constant.

However, the mBLL depends on several parameters that should be determined beforehand, such as the wavelength-dependent differential pathlength factors (DPFs) and the molar extinction coefficients. First, either a fixed or an age-adjusted DPF can be chosen.134,135 Alternatively, a partial pathlength factor (PPF),134,135 which corrects for the effective pathlength through brain tissue instead of the pathlength through all tissues, can be used. If a default DPF/PPF value is used, this should be stated (e.g., DPF = 6 as in the HomER2/HomER3 software136 or PPF = 0.1 as in the NIRS Brain AnalyzIR toolbox137 or HomER2/HomER3136,137). Second, tabulated molar extinction coefficients are typically applied in the mBLL. For more information about the mBLL, see, for instance, Refs. 36, 56, 134, 135, and 137.
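To show where each of these parameters enters, the sketch below solves the mBLL in its common two-chromophore form, ΔOD(λ) = [ε_HbO(λ) ΔHbO + ε_HbR(λ) ΔHbR] · d · DPF(λ), where d is the source-detector distance. Measuring at two wavelengths yields two equations with two unknowns. This is a minimal illustration under stated assumptions, not any toolbox's implementation: the function names are hypothetical, and real analyses must use tabulated extinction coefficients whose units and logarithm base match the optical density definition.

```python
import math


def delta_od(intensity, baseline_intensity):
    """Change in optical density relative to baseline (decadic log, -log10(I/I0))."""
    return -math.log10(intensity / baseline_intensity)


def mbll_two_wavelengths(d_od, ext, distance, dpf):
    """Solve the mBLL for (dHbO, dHbR) from optical density changes at two wavelengths.

    d_od     -- (dOD at wavelength 1, dOD at wavelength 2)
    ext      -- ((eps_HbO_1, eps_HbR_1), (eps_HbO_2, eps_HbR_2)), same log base as d_od
    distance -- source-detector distance (units must match ext)
    dpf      -- (DPF at wavelength 1, DPF at wavelength 2)
    """
    # Divide out the effective pathlength (distance * DPF) at each wavelength.
    y1 = d_od[0] / (distance * dpf[0])
    y2 = d_od[1] / (distance * dpf[1])
    (a, b), (c, e) = ext
    det = a * e - b * c
    if det == 0:
        raise ValueError("extinction coefficient matrix is singular")
    # Cramer's rule for the 2x2 system [[a, b], [c, e]] @ [dHbO, dHbR] = [y1, y2].
    d_hbo = (y1 * e - b * y2) / det
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo, d_hbr
```

For example, with the fixed default DPF = 6 at both wavelengths mentioned above, a call would look like `mbll_two_wavelengths(d_od, ext, 3.0, (6.0, 6.0))`, where the 3.0 (cm) distance is an illustrative value. Every argument of this call is a parameter the preregistration should state.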

Motion artifact correction

Motion artifacts in fNIRS time courses result mostly from optode-scalp decoupling and/or head movement. They typically appear as spikes and baseline shifts in the fNIRS signal133 and, if not properly corrected, can substantially reduce the reliability of the estimated hemodynamic response. Several motion artifact correction algorithms are available,60,133,138,139 and they fall into two categories: (1) algorithms that rely on a preceding motion artifact detection step and (2) algorithms that need no separate detection step. In both cases, however, most algorithms require parameter tuning.

Accordingly, in case (1), the motion artifact detection procedure and all respective parameters and thresholds should be reported (e.g., a motion artifact was defined as present if the signal exceeded a stated multiple of its SD). For both (1) and (2), the correction method, including all parameters and/or corresponding thresholds, should be documented (e.g., a filter based on principal component analysis that accounted for 85% of the variance in the signal was applied). Further, it should be stated whether the thresholds were chosen based on the literature or on pilot data.
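As an illustration of how fully specified such a detection rule has to be, the sketch below flags a sample as a motion artifact when its change from the previous sample exceeds k times the SD of all sample-to-sample changes. This is a deliberately simplified, hypothetical rule, not the algorithm of any particular toolbox (toolbox implementations generally involve additional parameters); the multiplier k is the kind of value that must be stated in the preregistration.

```python
import statistics


def detect_motion(signal, k):
    """Return indices i where |signal[i] - signal[i-1]| exceeds k times the SD
    of all sample-to-sample differences (a simplified, hypothetical rule)."""
    diffs = [signal[i] - signal[i - 1] for i in range(1, len(signal))]
    threshold = k * statistics.stdev(diffs)
    return [i for i, d in enumerate(diffs, start=1) if abs(d) > threshold]


# Toy signal: small fluctuations followed by an abrupt baseline shift at index 40.
signal = [0.0, 0.1] * 20 + [5.0, 5.1] * 5
print(detect_motion(signal, k=5.0))  # [40]
```

Making k a required argument, rather than a silent default, mirrors the point above: the threshold is an analytic decision that has to be reported.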

For possible choices of motion artifact algorithms, see, for example, Refs. 60, 125, 133, and 138–145.

Filtering

A major part of the noise in the fNIRS signal is related to non-evoked or spontaneous physiological processes.36,126 The corresponding frequency components mainly result from heartbeat, respiration, Mayer waves, and very low frequency oscillations (up to about 0.05 Hz).9,36 Depending on the task frequency, i.e., 1/(task period [s] + rest period [s]), some of these components can be removed relatively easily with a conventional temporal filter.9 The preregistration should state whether or not a filter will be used.

As recommended by Pinti et al.,9 it should be documented whether an infinite impulse response (IIR) or a finite impulse response (FIR) filter will be applied, and the filter type itself should be specified (e.g., Butterworth filter or moving-average filter). Moreover, the filter design (e.g., low-pass, high-pass, or band-pass filter), the cut-off frequencies (e.g., a band-pass filter with cut-off frequencies of [0.01, 0.09] Hz), and the filter order (e.g., a second-order Butterworth filter) should be documented. Finally, it is important to specify whether a causal [e.g., MATLAB function filter()] or an acausal, zero-phase filter [e.g., MATLAB function filtfilt()] will be applied.
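The difference between causal and zero-phase filtering can be seen with the simplest FIR filter, a moving average. The sketch below computes the task frequency as defined above and applies a moving average once causally and once forward-backward. Running the filter forward and then backward over the reversed signal is the same idea that MATLAB's filtfilt() uses; the helper names themselves are hypothetical.

```python
def task_frequency(task_s, rest_s):
    """Task frequency in Hz, i.e., 1 / (task period [s] + rest period [s])."""
    return 1.0 / (task_s + rest_s)


def moving_average_causal(x, n):
    """Causal FIR moving average: output i uses sample i and the n-1 samples before it."""
    out = []
    for i in range(len(x)):
        window = x[max(0, i - n + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


def moving_average_zero_phase(x, n):
    """Zero-phase (acausal) version: filter forward, then filter the time-reversed
    result and reverse it back, which cancels the phase shift of the causal filter."""
    forward = moving_average_causal(x, n)
    backward = moving_average_causal(forward[::-1], n)
    return backward[::-1]


print(task_frequency(20, 20))  # 0.025 (20 s task + 20 s rest)
step = [0.0] * 5 + [1.0] * 5
print(moving_average_causal(step, 3)[4:7])      # lags behind the step
print(moving_average_zero_phase(step, 3)[4:6])  # symmetric around the step
```

The causal output only reaches half height after the step, whereas the zero-phase output is centered on it, which is why the choice affects response latencies. The task frequency also constrains the cut-offs: a high-pass cut-off of 0.01 Hz lies below a task frequency of 0.025 Hz, whereas a cut-off above the task frequency would attenuate the task-evoked response itself. In SciPy, the analogous tools are `scipy.signal.butter`, `scipy.signal.lfilter` (causal), and `scipy.signal.filtfilt` (zero-phase).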

Digital filtering is a complex topic that is covered in more depth in, for instance, Refs. 146 and 147. For its specific application to fNIRS data, we highly recommend Ref. 9.

Systemic activity correction

Other signal confounds might result from task-evoked cerebral and extracerebral systemic activity and are thus more difficult to remove. These artifacts mostly result from changes in the partial pressure of arterial carbon dioxide and in blood pressure.36,126 They can mask or mimic hemodynamic activity and hence, if not properly corrected, can produce false negative or false positive results.36,126 Conventional temporal filters fall short of removing these artifacts because the artifact frequencies can overlap with the task frequency. So far, the most accurate way of removing the extracerebral part of such artifacts is a hardware-based solution: short-distance channels (SDCs). SDCs are created by placing a source and a detector at a short distance from each other (see Ref. 94 for the recommended distance in adults) so that they capture hemodynamic activity from extracerebral tissue only.36,126,148–152 SDC signals can then be used to correct the regular-distance channels, for instance, with a regression-based approach. If no SDCs are available, it is recommended to apply an alternative method to reduce extracerebral systemic activity.8,19,150,152

In any case, the correction algorithm should be documented. For instance, for a simple regression, one can use the closest SDC, the SDC with the highest correlation, the average across SDCs, or the first principal components from a principal component analysis of all SDCs. It should further be stated how the correction will be handled if some SDCs have poor signal quality.

If no SDCs are available for correction, the algorithm and any algorithm-specific parameters should be specified. For instance, if a global signal is computed (e.g., as the average of all channels), it can simply be subtracted from each channel or used as a regressor, either in a simple channel-wise regression or within a GLM framework. If a more advanced algorithm is applied, for instance, a principal component analysis of all available channels, the number of principal components removed (or the amount of explained variance) and the removal method (e.g., subtraction, regression, or GLM) should be specified.
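The two global-signal options named above, direct subtraction and channel-wise regression, can give different results on the same data, which is why the choice must be stated. The sketch below implements both under simple assumptions (channels stored as equally long lists, global signal defined as the channel average); function names and data are hypothetical.

```python
def global_signal(channels):
    """Average across all channels at each time point."""
    n = len(channels)
    return [sum(values) / n for values in zip(*channels.values())]


def subtract_global(channels):
    """Option 1: subtract the global signal from every channel."""
    g = global_signal(channels)
    return {name: [x - gi for x, gi in zip(ts, g)] for name, ts in channels.items()}


def regress_global(channels):
    """Option 2: channel-wise least-squares regression on the global signal."""
    g = global_signal(channels)
    mg = sum(g) / len(g)
    var_g = sum((gi - mg) ** 2 for gi in g)
    corrected = {}
    for name, ts in channels.items():
        mt = sum(ts) / len(ts)
        beta = sum((gi - mg) * (x - mt) for gi, x in zip(g, ts)) / var_g
        corrected[name] = [x - beta * (gi - mg) for x, gi in zip(ts, g)]
    return corrected


channels = {"A": [1.0, 2.0, 3.0, 4.0], "B": [3.0, 2.0, 5.0, 6.0]}
print(subtract_global(channels))  # {'A': [-1.0, 0.0, -1.0, -1.0], 'B': [1.0, 0.0, 1.0, 1.0]}
```

Subtraction implicitly assumes that every channel contains the global component with a weight of 1, whereas regression estimates a separate weight per channel.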

Because systemic activity correction is a relatively new and very important topic in fNIRS preprocessing, it has recently been much discussed, and methods have been proposed and validated in Refs. 36, 126, 148, and 150–154.

Preregistration template item(s): AP3 and AP4

2.4. Statistical Analysis

As with data preprocessing, the many options available for fNIRS statistics lead to analytic flexibility; therefore, any statistical test intended for confirmatory analysis should be preregistered before data analysis. Here, we distinguish between first-level (i.e., within-subject) and second-level (i.e., group) analyses. First-level analysis of fNIRS data is typically performed either by block/trial averaging of hemoglobin concentration changes over trials of the same condition or by modeling the signal with a GLM.9,35,155 Compared with block averaging, the GLM offers higher statistical power9 and the possibility of modeling confounding factors along with the expected hemodynamic responses (e.g., by adding SDCs as nuisance regressors to the model).126 The choice of analysis model should be informed by the research context. For instance, if the shape of the expected HRF is unknown, block averaging or a GLM-based deconvolution with multiple Gaussian basis functions is potentially preferable to a GLM with a fixed HRF shape.156–158 For the second-level analysis, certain variables from the first-level analysis are chosen (e.g., the mean of the block average over a certain time period or the GLM estimates for certain conditions), and inferences are made with appropriate frequentist or Bayesian statistical tests (e.g., t-test, ANOVA, and mixed models). All chosen methods and variables should be appropriately reported and justified, and the researcher should clearly specify the measured variable on which the research hypothesis is tested [e.g., oxygenated hemoglobin (HbO), deoxygenated hemoglobin (HbR), total hemoglobin, or hemoglobin difference]. Reporting both HbO and HbR in the paper or its supplementary materials is recommended even if the primary hypotheses refer to only one measure.
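The block-averaging route, and the choices it entails, can be sketched as follows. The sketch assumes one channel's concentration time series as a list, stimulus onsets in seconds, and a pre-onset baseline that is subtracted from each epoch; the epoch limits and the 0 to 3 s averaging window in the example are hypothetical values of exactly the kind that must be preregistered.

```python
def block_average(signal, onsets_s, fs, pre_s, post_s):
    """Baseline-corrected block average.

    Each epoch spans [onset - pre_s, onset + post_s); the mean of the pre-onset
    samples is subtracted as baseline. Epochs that do not fit in the recording are skipped.
    """
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    if pre < 1:
        raise ValueError("a non-empty baseline period is required")
    epochs = []
    for onset in onsets_s:
        i = int(round(onset * fs))
        if i - pre < 0 or i + post > len(signal):
            continue
        epoch = signal[i - pre:i + post]
        baseline = sum(epoch[:pre]) / pre
        epochs.append([v - baseline for v in epoch])
    if not epochs:
        raise ValueError("no complete epochs")
    return [sum(values) / len(epochs) for values in zip(*epochs)]


def window_mean(avg, fs, pre_s, start_s, end_s):
    """Mean of the block average between start_s and end_s (seconds after onset)."""
    a, b = int(round((pre_s + start_s) * fs)), int(round((pre_s + end_s) * fs))
    return sum(avg[a:b]) / (b - a)


# Toy HbO trace sampled at 1 Hz with two 3-s responses starting at 2 s and 8 s.
hbo = [1, 1, 3, 3, 3, 1, 1, 1, 3, 3, 3, 1]
avg = block_average(hbo, onsets_s=[2, 8], fs=1.0, pre_s=2, post_s=3)
print(avg)                             # [0.0, 0.0, 2.0, 2.0, 2.0]
print(window_mean(avg, 1.0, 2, 0, 3))  # 2.0
```

The value returned by `window_mean` is one possible second-level variable; peak, time to peak, slope, or area under the curve would each require their own, equally explicit definition.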

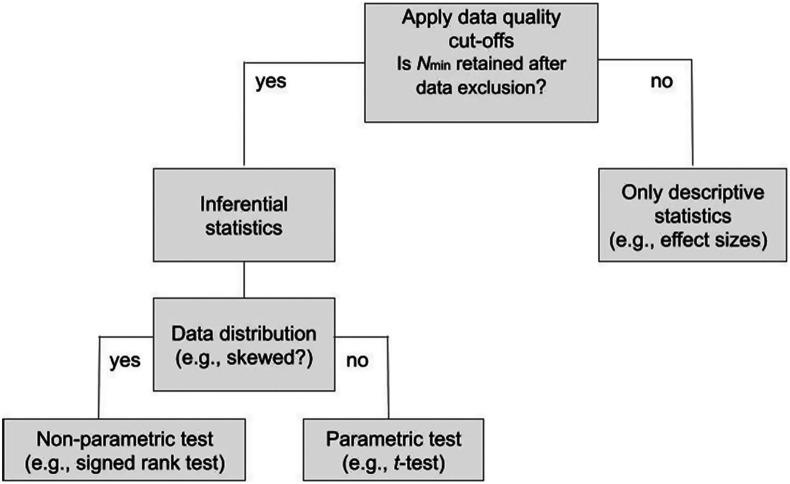

To control for researchers’ degrees of freedom, clear and comprehensible rules for analytic decisions are critical. For complex data with numerous interdependent preprocessing steps, the use of analytic decision trees is encouraged (cf. Fig. 4). These define the sequence of analysis steps and the decision rules that will be applied, depending on the outcome of a previous analysis step.13 For example, an analytic decision tree may start with quality criteria thresholds, continue with assumption checks for statistical tests (e.g., parametric versus non-parametric), and branch into the adequate statistical tests depending on whether the respective test assumptions are met (cf. Fig. 4). Analytic decision trees can also specify which follow-up tests will be carried out, including in the event of inconclusive findings. Results that are initially inconclusive (often erroneously referred to as null findings) may still be informative if followed up with frequentist equivalence tests or Bayesian statistics, which can provide evidence for the absence of an effect.48 In general, the results of all analyses should be reported in the final manuscript irrespective of their outcome.

Fig. 4.

Example decision tree for fundamental test choices that are contingent on the data.
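A decision tree of this kind can also be written down as code and shared with the preregistration, which removes any ambiguity about the branching rules. The sketch below is a hypothetical example for a paired two-condition contrast. It does not reproduce Fig. 4: the minimum sample size, the alpha level, and the choice of a normality-test p-value as the branching criterion are placeholder assumptions.

```python
# Hypothetical thresholds; they are placeholders, not recommendations.
MIN_SAMPLE = 20
ALPHA = 0.05


def planned_analysis(n_included, normality_p, main_p=None):
    """Return the preregistered sequence of analysis steps for a paired contrast."""
    # Branch 1: quality criterion at the participant level.
    if n_included < MIN_SAMPLE:
        return ["stop: fewer participants than the preregistered minimum"]
    # Branch 2: assumption check decides between parametric and non-parametric test.
    steps = ["paired t-test" if normality_p >= ALPHA else "Wilcoxon signed-rank test"]
    # Branch 3: an inconclusive main result triggers the preregistered follow-up.
    if main_p is not None and main_p >= ALPHA:
        steps.append("follow-up: equivalence test or Bayes factor")
    return steps


print(planned_analysis(25, normality_p=0.30, main_p=0.01))  # ['paired t-test']
```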

Regardless of whether a block average or a GLM is chosen, all analytical options related to the method should be specified in the preregistration. For instance, for a block average, the researcher should specify the variable used for the second-level analysis (e.g., average over a certain time period, peak, time to peak, slope, or area under the curve), how this variable is defined, and the time periods applied (e.g., the period used for baseline correction and the period averaged during the task). For a GLM-based analysis, in contrast, the researcher should state not only the variable used for the second-level analysis (e.g., beta values) but also the details of the model: the specific HRF model, including its parameters (e.g., a double gamma function, with the values of all its parameters stated),159 the additional nuisance regressors (e.g., all available HbO and HbR SDCs, accelerometers),150,160 and the method used to solve the GLM (e.g., ordinary least squares or an autoregressive iteratively reweighted least-squares model).161
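The GLM ingredients listed above can be illustrated with a deliberately small example: a double gamma HRF, its convolution with a boxcar stimulus, and an ordinary least-squares fit. This is a sketch under stated assumptions. The default HRF parameters mirror a commonly used SPM-style canonical shape (response gamma with shape 6, undershoot gamma with shape 16, scale 1 s, ratio 6), the parameter names are hypothetical, and the single-regressor fit omits nuisance regressors and serial-correlation handling.

```python
import math


def gamma_pdf(t, shape, scale):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (scale ** shape * math.gamma(shape))


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, scale=1.0, ratio=6.0):
    """Double gamma HRF: a response gamma minus a scaled undershoot gamma.
    Defaults mirror a commonly used SPM-style canonical shape (an assumption here)."""
    return gamma_pdf(t, peak_shape, scale) - gamma_pdf(t, under_shape, scale) / ratio


def convolve(stimulus, kernel):
    """Causal discrete convolution truncated to the stimulus length."""
    out = [0.0] * len(stimulus)
    for i in range(len(stimulus)):
        for j in range(min(i + 1, len(kernel))):
            out[i] += stimulus[i - j] * kernel[j]
    return out


def ols_intercept_slope(y, x):
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1)."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b1 * mx, b1


fs = 1.0  # Hz, toy sampling rate
hrf = [double_gamma_hrf(k / fs) for k in range(30)]
stimulus = [1.0 if 10 <= k < 20 else 0.0 for k in range(60)]  # one 10-s block
regressor = convolve(stimulus, hrf)
y = [2.0 + 0.5 * r for r in regressor]  # synthetic, noise-free HbO
print(ols_intercept_slope(y, regressor))  # approximately (2.0, 0.5)
```

With several regressors (e.g., multiple conditions plus SDC nuisance regressors), the same idea requires a multiple-regression solver such as `numpy.linalg.lstsq`; each regressor and HRF parameter is then an item for the preregistration.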

Further, when multiple fNIRS channels and measures (e.g., HbO and HbR) are tested simultaneously, the risk of false positive results increases. When inferential frequentist statistics are applied, the significance level should be declared (and ideally justified162,163). Furthermore, adequate methods for correcting for multiple comparisons should be stated (e.g., false discovery rate, Holm correction, or Bonferroni correction).35,164–166 To limit the number of statistical tests performed, researchers may first combine signals across multiple neighboring channels, yielding a smaller number of ROIs.167 However, this approach requires careful specification of (1) which channel belongs to which ROI and how this assignment was made (cf. Sec. 2.2.5 Optode array design and optode placement) and (2) how the data of channels belonging to the same ROI are combined (e.g., average, weighted average, the channel with the highest activity, or the channel with the highest sensitivity based on a localizer task). For Bayesian statistical approaches, the prior distributions used should be declared (and ideally justified168). We further encourage researchers to report descriptive statistics and effect sizes and, where possible, to use effect sizes that are easy to interpret.169,170 For fNIRS studies that employ machine-learning methods, including classifiers, we recommend consulting the respective debates about best practices and the role of study preregistration.171–176
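Because the named correction methods can lead to different decisions on the same p-values, the method belongs in the preregistration. The sketch below implements the Bonferroni, Holm (step-down), and Benjamini–Hochberg (false discovery rate) procedures in their standard textbook form; the four p-values stand in for hypothetical channel-wise tests.

```python
def bonferroni(pvals, alpha=0.05):
    """Reject H0 where p <= alpha / m."""
    m = len(pvals)
    return [p <= alpha / m for p in pvals]


def holm(pvals, alpha=0.05):
    """Holm step-down: compare the k-th smallest p (k = 0, 1, ...) with alpha / (m - k)
    and stop at the first non-rejection."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if pvals[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break
    return reject


def benjamini_hochberg(pvals, q=0.05):
    """Benjamini-Hochberg: find the largest rank k with p_(k) <= k * q / m and
    reject the k smallest p-values."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank * q / m:
            k_max = rank
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        reject[i] = rank <= k_max
    return reject


p = [0.04, 0.005, 0.03, 0.014]  # hypothetical p-values of four channels
print(bonferroni(p))          # [False, True, False, False]
print(holm(p))                # [False, True, False, True]
print(benjamini_hochberg(p))  # [True, True, True, True]
```

The same four p-values yield one, two, or four significant channels, depending on the method, which illustrates why this choice cannot be left open until after the data are seen.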

Preregistration template item(s): M13, AP5, AP6, and AP7

2.5. Optional: Exploratory Analyses

During or after data collection and analysis, new research questions and hypotheses may emerge. We refer to analyses of these as post-hoc exploratory analyses, which should be distinguished from planned exploratory analyses. Preregistration of an fNIRS study lends credibility to a researcher’s hypothesis-driven analysis plan and distinguishes planned confirmatory analyses from post-hoc exploratory ones. In contrast to confirmatory hypotheses and analyses, post-hoc exploratory analyses tend to investigate intuitions and ideas that evolve during the research process but did not guide the research design. Such hypotheses therefore have lower prior odds, leading to a higher rate of false positive findings.177 Overall, unplanned, non-preregistered exploratory analyses can inform theories that are not yet specific.178 Reporting unplanned, non-preregistered exploratory results may therefore inspire subsequent confirmatory study designs. Accordingly, such results should be given less weight in the overall conclusions of the study in which they were investigated and should first be replicated in independent, confirmatory tests. Exploratory analyses therefore need to be labeled explicitly and distinguished from confirmatory analyses (although some authors argue for purely confirmatory scientific research26,179). However, researchers may already have exploratory analyses in mind at the planning stage, which they can preregister as planned exploratory analyses (i.e., either as specific analyses or as part of a decision tree covering different analysis options, scenarios, or outcomes; cf. Fig. 4). This lends them more credibility than post-hoc (i.e., non-preregistered) exploratory analyses, which may be subject to undisclosed analytical flexibility and QRPs.
Of note, preregistration does not preclude transparent, exhaustive post-hoc data exploration in the form of multiverse analyses, which can be of particular methodological interest (e.g., artificial groups, moderators, and alternative data processing strategies2,180).

Preregistration template item(s): AP9

2.6. Data and Code Availability

Sharing data and code is an integral part of open science. In general, open data and code improve the verification and reproducibility of study results, increase citation rates, and encourage data reuse for new research ideas; they are therefore increasingly part of the research policies of public funders, ethics committees, and scientific journals.181–183 With regard to preregistration, we specifically encourage data and code sharing as a resource for researchers new to fNIRS when planning their own analysis pipelines.

There are several reasons why data and code sharing should be considered before data collection. First, organizing and labeling data during data collection saves much time later when sharing the data with other researchers in an understandable way. Second, analysis pipelines that work on shareable file formats and data structures simplify verification by later reviewers. Third, sharing analysis code before data collection, or keeping it under version control, can be considered the ultimate form of preregistration, as it offers full transparency about changes in analysis plans. We specifically encourage this for the validation of new analysis methods, as it should prevent overfitting methodological choices to the obtained research data. Finally, when drafting informed consent forms, researchers should consider asking future participants for permission to openly share their personal data in accordance with local regulations and institution-specific data protection rules.184

In general, shared data and code should be compliant with the FAIR principles (i.e., findable, accessible, interoperable, and reusable).185 Here, we provide some practical tips to make fNIRS data and code FAIR.

First, fNIRS data should be converted to .snirf186 (shared near-infrared spectroscopy format, https://github.com/fNIRS/snirf) files and organized and labeled according to the Brain Imaging Data Structure (BIDS).185,187 This standardization can increase interoperability and reusability, i.e., the data contain enough detail to be easily interpreted by humans and machines. Specifically, .snirf is a standardized open-access data format developed by the fNIRS community to facilitate the sharing and analysis of fNIRS data, whereas BIDS is a standard specifying the organization and naming of data and metadata of neuroimaging studies. Notably, BIDS was recently extended (version 1.8.0) to include fNIRS as a newly supported modality.188
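Planning file names before data collection is easier when the naming scheme is generated rather than typed by hand. The sketch below builds BIDS-style paths for an fNIRS recording and some of its sidecar files, following the sub/ses/task/run entity order and the `nirs` data folder as we understand the BIDS naming scheme. The function name and the task label are hypothetical, and real datasets should be checked with the BIDS Validator.

```python
from pathlib import PurePosixPath


def bids_nirs_paths(subject, task, session=None, run=None):
    """Build BIDS-style relative paths for one fNIRS recording and some of its sidecars."""
    entities = [f"sub-{subject}"]
    folder = PurePosixPath(f"sub-{subject}")
    if session is not None:
        entities.append(f"ses-{session}")
        folder = folder / f"ses-{session}"
    entities.append(f"task-{task}")
    if run is not None:
        entities.append(f"run-{run}")
    stem = "_".join(entities)
    folder = folder / "nirs"
    return {
        "data": str(folder / f"{stem}_nirs.snirf"),      # the .snirf recording
        "sidecar": str(folder / f"{stem}_nirs.json"),    # recording metadata
        "channels": str(folder / f"{stem}_channels.tsv"),
        "events": str(folder / f"{stem}_events.tsv"),
    }


print(bids_nirs_paths("01", "arithmetic")["data"])
# sub-01/nirs/sub-01_task-arithmetic_nirs.snirf
```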

Second, several platforms and repositories are available to improve the findability and accessibility of data, code, and preregistrations. Data sharing repositories range from very general (e.g., Zenodo) to neuroimaging-specific (e.g., openneuro and NITRC) or even fNIRS-specific (e.g., openfnirs). For code sharing, platforms with version control, such as GitHub, GitLab, or BitBucket, are preferred. Similarly, multiple options exist for sharing a preregistration (e.g., the OSF; osf.io). Further, the OSF provides a platform for organizing and sharing, under version control, the data, code, and all other research materials of a project,185,187,189 and it offers the option to embargo a preregistration (i.e., to make it publicly available only after a preset period of time). Table 2 provides an overview of available data formats, repositories, and sharing platforms.

Table 2.

Overview of available fNIRS-data standards and data-sharing repositories that aim to improve the FAIR-ness of research.

| Name | Specialized for sharing of | Description | Website |
|---|---|---|---|
| Standards | | | |
| .snirf | Raw data | Open-source file format for sharing fNIRS data. | https://github.com/fNIRS/snirf |
| BIDS | Raw data | Standard specifying the organization and naming of raw data and metadata of neuroimaging studies. | Refs. 190, 191 |
| Repositories | | | |
| Openfnirs | Data | Platform sharing open-access fNIRS datasets. | Ref. 192 |
| Openneuro | Data | Platform for validating and sharing BIDS-compliant neuroimaging datasets. | Ref. 193 |
| NITRC-IR | Data | Image repository sharing neuroimaging datasets. | Ref. 194 |
| brainlife | Data, code | Platform for sharing neuroscience code (apps) and data with an integrated cloud-computing and high-performance computing environment to run the apps. | Ref. 195 |
| Zenodo | Data, code | General-purpose open repository (operated by CERN and developed within the European Commission-funded OpenAIRE project) for sharing data, code, papers, and reports. | https://zenodo.org/ |
| GitHub | Code | Developer-focused platform to share code and software, including version control. | https://github.com/ |
| GitLab | Code | Developer-focused platform to share code and software, including version control. | Ref. 196 |
| BitBucket | Code | Platform to share code and software, including version control (private repositories for up to five users). | Ref. 197 |
| OSF | Preregistration, data, code | Platform to organize and share all research data and materials related to a research project, including version control; different preregistration templates, including open formats, can be used. | Ref. 198 |
| Peer Community in Registered Reports | Registered Reports, preprints | Journal-independent research community platform that performs and publishes peer reviews of preprints as well as stage 1 and stage 2 reviews of Registered Reports. Following a positive evaluation of the complete review process, authors may submit their final Registered Report manuscript to cooperating journals. | Ref. 30 |
| Other useful platforms | | | |
| INCF | | Portfolio with standards and best practices supporting open and FAIR neuroscience. | Ref. 199 |
| FAIRsharing | | Resource platform on data and metadata standards, interlinked with databases and data policies. | Ref. 200 |

Third, researchers who intend to share data with other researchers should carefully balance open science practices against privacy protection regulations,201,202 specifically the General Data Protection Regulation for research conducted in the European Union and the United Kingdom, the Health Insurance Portability and Accountability Act for research conducted in the United States of America, and similar regulations in other countries. As far as we know, the fNIRS time series itself is not identifiable and can therefore, in principle, be shared as anonymous data that do not fall under privacy protection rules.203 However, participant data are rarely collected in isolation; they are often linked to direct or indirect personal identifiers. For example, participants’ names and contact information (i.e., direct identifiers) are usually registered for administrative purposes. Researchers typically remove the direct link between the fNIRS data and these personal identifiers by pseudonymization. In addition, it is often desirable and/or encouraged for scientific purposes to contextualize the data with demographic and clinical information (Sec. 2.2.2). The combination of these pieces of information, none of which is identifying by itself, increases the chance that study participants can be identified by means of a linkage attack (see, for example, Ref. 204); such combined information can therefore be considered indirectly identifiable data.

Data sharing should always comply with local data protection laws and the requirements of institutional review boards. Because common principles apply in many settings, we give a few practical recommendations regarding data from fNIRS studies. First, we recommend explaining to participants why data sharing is intended, what data will be shared, and how they will be shared. Even for anonymous data, it is best practice to always ask for explicit consent, and consent is required for pseudonymized data. Importantly, sharing directly identifiable information (e.g., name, recording date, birthdate, and address) is prohibited under commonly used data protection regulations. Researchers should be aware that participants may use social media to share their whereabouts; researchers are strongly advised to ensure that information shared by participants outside the researchers’ control, such as a social media post stating “I participated in a study at the university this morning,” cannot be inadvertently linked to information that the researcher later makes publicly available through data sharing. Photos and videos can also be directly identifiable. Photogrammetry pictures should therefore not be shared. Instead, we advise sharing only the optode and anatomical landmark positions extracted from the photogrammetry pictures or, if participants are shown in a picture, making their face and appearance unrecognizable (e.g., via blurring or removal). We further recommend not sharing data that are not essential for the research question or follow-up analyses but carry a high disclosure risk (e.g., an unusual finding). Furthermore, the level of detail in metadata should be reduced.205 For example, instead of a table with the exact ages of participants, an age range can be reported.
Finally, many departments and universities employ data stewards or data protection managers that can advise researchers on how to comply with local and national data sharing policies and implement FAIR data sharing principles.
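Two of the measures discussed above, replacing names with random subject codes and coarsening exact ages into ranges, can be sketched in a few lines. This is an illustrative sketch with hypothetical function names, not a complete de-identification procedure. Pseudonymization alone does not make data anonymous: the returned key must be stored separately and never shared with the data, and the bin width of 5 years is a placeholder.

```python
import secrets


def pseudonymize(names, n_bytes=4):
    """Map each distinct participant name to a random subject code.
    The returned key must be stored separately and never shared with the data."""
    key = {}
    for name in names:
        if name in key:
            continue
        code = "sub-" + secrets.token_hex(n_bytes)
        while code in key.values():  # regenerate on the (unlikely) collision
            code = "sub-" + secrets.token_hex(n_bytes)
        key[name] = code
    return key


def age_range(age, width=5):
    """Coarsen an exact age (in whole years) into a range such as '20-24'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


print(age_range(23))  # 20-24
print(age_range(25))  # 25-29
```

Random codes avoid the information leak of codes derived from names or recording order, and coarser age ranges reduce the risk of the linkage attacks described above.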

Preregistration template item(s): T10 and T11

3. Summary

This step-by-step guide aims to assist researchers in both planning the design of and drafting a preregistration protocol for task-related CW-fNIRS studies. In particular, it elaborates on fNIRS-specific design aspects, such as optode placement and adequate sample size planning, as well as on analytical aspects concerning signal quality and data analysis. A further focus is on open research tools that facilitate study planning for hemodynamic studies and data sharing for fNIRS. Altogether, this guide complements recent efforts to provide best-practice guidance for careful fNIRS study planning and addresses the call for tools that support researchers in preregistering their fNIRS studies.18,19 It is accompanied by a comprehensive preregistration template with 48 items that cover key aspects, each illustrated with examples. These tools can particularly help researchers new to the field become acquainted with the relevant steps and decisions of fNIRS study planning, and they can serve as tools for designing fNIRS studies transparently through study preregistration.

Acknowledgments

This guide and template were inspired by a previous similar initiative for EEG research.7,45 We would like to thank the following people (in alphabetical order) for their helpful input on an earlier version of the manuscript: Addison Billing, Thomas Dresler, Gisela Govaart, Robert Oostenveld, Antonio Schettino, Bettina Sorger, Lucas Trambaiolli, and Meryem A. Yücel. The authors of this work were supported by the following funders: DMAM was supported by a Junior Principal Investigator (JPI) fellowship funded by the Excellence Strategy of the Federal Government and the Laender (Grant No. JPI074-21); HC was supported by the Operational Program European Regional Development Fund [OP ERDF; Grant No. PROJ-00872 (PROMPT)]; JEK was supported by the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD; grant reference number: F32 HD103439); and JP was supported by the Portuguese Foundation for Science and Technology (FCT; Grant No. 2020.04899.BD).

Biographies

Philipp A. Schroeder received his PhD for work on transcranial direct current stimulation. He is a researcher and lecturer in psychology at the University of Tübingen, Germany. His current research in clinical psychology and behavioral neuroscience covers implicit processing and cognitive control of cognition and behavior, both in general and in relation to eating disorders.

Christina Artemenko is a postdoctoral researcher at the University of Tübingen and was awarded a Margarete-von-Wrangell-Fellowship. Her research focuses on the neurocognitive foundations of arithmetic and number processing and their development from childhood to old age. To investigate the neural correlates of arithmetic across the lifespan within the field of educational neuroscience, she mainly uses fNIRS alongside behavioral methods. She values open science practices, in particular preregistrations and registered reports.

Jessica E. Kosie is an NIH Postdoctoral Research Fellow at Princeton University, where she works with infants. Broadly, she is interested in how learners find structure in their environment, which she studies using behavioral assessments and neuroimaging. She uses open science practices (e.g., open code, preregistration, and large-scale collaborative research) in her own work and educates researchers in these approaches. She is involved in several open science initiatives (e.g., ManyBabies and meta-research projects).

Helena Cockx received her medical degree from KU Leuven, Belgium, in 2018. She is currently a PhD candidate at the Donders Institute and Radboud University, Nijmegen, The Netherlands. Her research focuses on the interpretation of various (neuro)physiological signals, including fNIRS signals, related to neurological disorders such as Parkinson’s disease. She is a strong advocate of open science practices and contributes to several open science initiatives (including BIDS extension proposals for fNIRS and motion capture systems).

Katharina Stute received her MSc degree in sport sciences from the University of Freiburg, Germany, in 2016. She worked as a researcher and lecturer at Chemnitz University of Technology, Germany, from 2017 to 2022, and is now employed as an application specialist for (f)NIRS at Artinis Medical Systems B.V. Her research interests are centered around motor control and learning, the interaction between motor and cognitive performance, and the neural control of movement.

João Pereira is a PhD candidate in biomedical engineering at the University of Coimbra and conducts his work at the Coimbra Institute for Biomedical Imaging and Translational Research (CIBIT). His research focuses on the development and validation of neurorehabilitative strategies based on multimodal imaging (fMRI and fNIRS).

Franziska Klein is a postdoctoral researcher with a background in math and physics as well as neurocognitive psychology. She is currently working in the Neurocognition and Functional Neurorehabilitation Group at the University of Oldenburg and in the Applied Computational Neuroscience Lab at the University Hospital RWTH Aachen. Her research focuses on the development and use of fNIRS-based real-time applications, such as neurofeedback and brain-computer interfaces (BCIs), and on advancing and validating (real-time) signal processing techniques.

David M. A. Mehler is a physician-scientist and heads the Applied Computational Neuroscience Lab at the University Hospital RWTH Aachen, Germany. His research focuses on noninvasive brain-computer interfaces for clinical use in psychiatry and neurology, machine-learning approaches to classification problems in big (neuroimaging) data, and meta-research on interventions and health technologies for treating brain disorders. The lab is strongly committed to open science research practices.

Disclosures

KS works at the (f)NIRS manufacturer Artinis Medical Systems B.V. DMAM has previously received payments to consult for an fNIRS neurofeedback start-up company. This consultancy was unrelated to this work and did not support it. All other authors declare no conflicts of interest.

Contributor Information

Philipp A. Schroeder, Email: philipp.schroeder@uni-tuebingen.de.

Christina Artemenko, Email: christina.artemenko@uni-tuebingen.de.

Jessica E. Kosie, Email: jkosie@princeton.edu.

Helena Cockx, Email: helena.cockx@donders.ru.nl.

Katharina Stute, Email: katharina.stute@hsw.tu-chemnitz.de.

João Pereira, Email: jpereira@uc.pt.

Franziska Klein, Email: franziska.klein@uni-oldenburg.de.

David M. A. Mehler, Email: mehlerdma@gmail.com.

References

1. Carp J., “On the plurality of (methodological) worlds: estimating the analytic flexibility of FMRI experiments,” Front. Neurosci. 6, 149 (2012). 10.3389/fnins.2012.00149

2. Churchill N. W., et al., “Correction: an automated, adaptive framework for optimizing preprocessing pipelines in task-based functional MRI,” PLoS One 10(12), e0145594 (2015). 10.1371/journal.pone.0145594

3. Poldrack R. A., et al., “Scanning the horizon: towards transparent and reproducible neuroimaging research,” Nat. Rev. Neurosci. 18(2), 115–126 (2017). 10.1038/nrn.2016.167

4. Mehler D., “11 - The replication challenge: is brain imaging next?” in Casting Light on the Dark Side of Brain Imaging, Raz A., Thibault R. T., Eds., pp. 67–71, Academic Press (2019).

5. Botvinik-Nezer R., et al., “Variability in the analysis of a single neuroimaging dataset by many teams,” Nature 582(7810), 84–88 (2020). 10.1038/s41586-020-2314-9

6. Niso G., et al., “Good scientific practice in MEEG research: progress and perspectives,” NeuroImage 257, 119056 (2022). 10.1016/j.neuroimage.2022.119056

7. Paul M., Govaart G. H., Schettino A., “Making ERP research more transparent: guidelines for preregistration,” Int. J. Psychophysiol. 164, 52–63 (2021). 10.1016/j.ijpsycho.2021.02.016

8. Pfeifer M. D., Scholkmann F., Labruyère R., “Signal processing in functional near-infrared spectroscopy (fNIRS): methodological differences lead to different statistical results,” Front. Hum. Neurosci. 11, 641 (2017). 10.3389/fnhum.2017.00641

9. Pinti P., et al., “Current status and issues regarding pre-processing of fNIRS neuroimaging data: an investigation of diverse signal filtering methods within a general linear model framework,” Front. Hum. Neurosci. 12, 505 (2018). 10.3389/fnhum.2018.00505

10. Simmons J. P., Nelson L. D., Simonsohn U., “False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant,” Psychol. Sci. 22(11), 1359–1366 (2011). 10.1177/0956797611417632

11. Niso G., et al., “Open and reproducible neuroimaging: from study inception to publication,” NeuroImage 263, 119623 (2022). 10.1016/j.neuroimage.2022.119623

12. Lowndes J. S. S., et al., “Our path to better science in less time using open data science tools,” Nat. Ecol. Evol. 1(6), 160 (2017). 10.1038/s41559-017-0160

13. Nosek B. A., et al., “The preregistration revolution,” Proc. Natl. Acad. Sci. U. S. A. 115(11), 2600–2606 (2018). 10.1073/pnas.1708274114

14. Allen C., Mehler D. M. A., “Open science challenges, benefits and tips in early career and beyond,” PLoS Biol. 17(5), e3000246 (2019). 10.1371/journal.pbio.3000246

15. Nosek B. A., et al., “Replicability, robustness, and reproducibility in psychological science,” Annu. Rev. Psychol. 73, 719–748 (2022). 10.1146/annurev-psych-020821-114157

16. Gorgolewski K. J., Poldrack R. A., “A practical guide for improving transparency and reproducibility in neuroimaging research,” PLoS Biol. 14(7), e1002506 (2016). 10.1371/journal.pbio.1002506

17. Gentili C., et al., “The case for preregistering all region of interest (ROI) analyses in neuroimaging research,” Eur. J. Neurosci. 53(2), 357–361 (2021). 10.1111/ejn.14954

18. Kelsey C. M., et al., “Shedding light on functional near infrared spectroscopy and open science practices,” https://www.biorxiv.org/content/10.1101/2022.05.13.491838v1 (2022).

19. Yücel M. A., et al., “Best practices for fNIRS publications,” Neurophotonics 8(1), 012101 (2021). 10.1117/1.NPh.8.1.012101

20. Kohl S. H., et al., “The potential of functional near-infrared spectroscopy-based neurofeedback—a systematic review and recommendations for best practice,” Front. Neurosci. 14, 594 (2020). 10.3389/fnins.2020.00594

21. Sarafoglou A., et al., “A survey on how preregistration affects the research workflow: better science but more work,” R. Soc. Open Sci. 9(7), 211997 (2022). 10.1098/rsos.211997

22. Nosek B. A., Lindsay D. S., “Preregistration becoming the norm in psychological science,” APS Obs. 31(3) (2018).

23. Paret C., et al., “Survey on open science practices in functional neuroimaging,” NeuroImage 257, 119306 (2022). 10.1016/j.neuroimage.2022.119306

24. Wicherts J. M., et al., “Degrees of freedom in planning, running, analyzing, and reporting psychological studies: a checklist to avoid p-hacking,” Front. Psychol. 7, 1832 (2016). 10.3389/fpsyg.2016.01832

25. Rubin M., “When does HARKing hurt? Identifying when different types of undisclosed post hoc hypothesizing harm scientific progress,” Rev. Gen. Psychol. 21(4), 308–320 (2017). 10.1037/gpr0000128

26. Wagenmakers E.-J., et al., “An agenda for purely confirmatory research,” Perspect. Psychol. Sci. 7(6), 632–638 (2012). 10.1177/1745691612463078

27. Nosek B. A., et al., “Preregistration is hard, and worthwhile,” Trends Cognit. Sci. 23(10), 815–818 (2019). 10.1016/j.tics.2019.07.009

28. Hardwicke T. E., Wagenmakers E.-J., “Reducing bias, increasing transparency, and calibrating confidence with preregistration,” Nat. Hum. Behav. 7, 15–26 (2023). 10.1038/s41562-022-01497-2

29. Chambers C. D., Tzavella L., “The past, present and future of Registered Reports,” Nat. Hum. Behav. 6(1), 29–42 (2022). 10.1038/s41562-021-01193-7

30. Peer Community In, https://rr.peercommunityin.org/.

31. Kvarven A., Strømland E., Johannesson M., “Author correction: comparing meta-analyses and preregistered multiple-laboratory replication projects,” Nat. Hum. Behav. 4(6), 659–663 (2020). 10.1038/s41562-020-0864-3

32. Schäfer T., Schwarz M. A., “The meaningfulness of effect sizes in psychological research: differences between sub-disciplines and the impact of potential biases,” Front. Psychol. 10, 813 (2019). 10.3389/fpsyg.2019.00813

33. Scheel A. M., Schijen M., “An excess of positive results: comparing the standard psychology literature with registered reports,” Adv. Methods and Practices in Psychological Sci. 4(2) (2021). 10.1177/25152459211007467

34. Bosnjak M., et al., “A template for preregistration of quantitative research in psychology: report of the joint psychological societies preregistration task force,” Am. Psychol. 77, 602–615 (2021). 10.1037/amp0000879

35. Tak S., Ye J. C., “Statistical analysis of fNIRS data: a comprehensive review,” NeuroImage 85, 72–91 (2014). 10.1016/j.neuroimage.2013.06.016

36. Scholkmann F., et al., “A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology,” NeuroImage 85(Pt 1), 6–27 (2014). 10.1016/j.neuroimage.2013.05.004

37. Field S. M., et al., “The effect of preregistration on trust in empirical research findings: results of a registered report,” R. Soc. Open Sci. 7(4), 181351 (2020). 10.1098/rsos.181351

38. Song H., Markowitz D. M., Taylor S. H., “Trusting on the shoulders of open giants? Open science increases trust in science for the public and academics,” J. Commun. 72(4), 497–510 (2021). 10.1093/joc/jqac017

39. Havron N., Bergmann C., Tsuji S., “Preregistration in infant research-a primer,” Infancy 25(5), 734–754 (2020). 10.1111/infa.12353

40. van den Akker O. R., et al., “Preregistration of secondary data analysis: a template and tutorial,” Meta-Psychology 5, 2625 (2021). 10.15626/MP.2020.2625

41. Aczel B., et al., “A consensus-based transparency checklist,” Nat. Hum. Behav. 4(1), 4–6 (2020). 10.1038/s41562-019-0772-6

42. Beyer F., et al., “A fMRI pre-registration template,” PsychArchives (2021).

43. Ros T., et al., “Consensus on the reporting and experimental design of clinical and cognitive-behavioural neurofeedback studies (CRED-nf checklist),” Brain 143(6), 1674–1685 (2020). 10.1093/brain/awaa009

44. Crüwell S., Evans N. J., “Preregistration in diverse contexts: a preregistration template for the application of cognitive models,” R. Soc. Open Sci. 8(10), 210155 (2021). 10.1098/rsos.210155

45. Govaart G. H., et al., “EEG ERP preregistration template,” MetaArXiv (2022), https://osf.io/preprints/metaarxiv/pvrn6.

46. https://uni-tuebingen.de/de/202162.