Abstract

Quantifying individual differences in higher-order cognitive functions is a foundational area of cognitive science that also has profound implications for research on psychopathology. For the last two decades, the dominant approach in these fields has been to attempt to fractionate higher-order functions into hypothesized components (e.g., “inhibition”, “updating”) through a combination of experimental manipulation and factor analysis. However, the putative constructs obtained through this paradigm have recently been met with substantial criticism on both theoretical and empirical grounds. Concurrently, an alternative approach has emerged focusing on parameters of formal computational models of cognition that have been developed in mathematical psychology. These models posit biologically plausible and experimentally validated explanations of the data-generating process for cognitive tasks, allowing them to be used to measure the latent mechanisms that underlie performance. One of the primary insights provided by recent applications of such models is that individual and clinical differences in performance on a wide variety of cognitive tasks, ranging from simple choice tasks to complex executive paradigms, are largely driven by efficiency of evidence accumulation (EEA), a computational mechanism defined by sequential sampling models. This review assembles evidence for the hypothesis that EEA is a central individual difference dimension that explains neurocognitive deficits in multiple clinical disorders and identifies ways in which this insight can advance clinical neuroscience research. We propose that recognition of EEA as a major driver of neurocognitive differences will allow the field to make clearer inferences about cognitive abnormalities in psychopathology and their links to neurobiology.

Keywords: diffusion model, linear ballistic accumulator, mathematical psychology, executive function, cognitive control, transdiagnostic risk

Introduction

The study of individual differences in performance on laboratory cognitive tasks and the neural basis of these differences has been a pillar of biological psychiatry research over the past several decades. This work is driven by the consistent observation that impairments in executive functions and cognitive control (hereafter “higher-order cognition”) occur transdiagnostically across multiple mental disorders, including schizophrenia, externalizing disorders (ADHD, substance use)(1–4), depression, and anxiety(5,6). Links between deficits in higher-order cognition and psychopathology have prompted a surge of clinical neuroscience research aimed at better understanding their psychological and neurobiological basis(7–16). Moreover, this work is heavily emphasized in major funding agency initiatives, such as the Research Domain Criteria project(17) and the Computational Psychiatry Program(18).

Our aim in this review is to offer a critical perspective on the current state of the science; we identify a set of interrelated obstacles that have arisen for current approaches and lay out the case for an alternative framework. Section 1 reviews the dominant “fractionation paradigm”, which aims to use factor analysis to break cognitive functions into constituent elements with selective relations to clinical disorders, and details recent findings that present serious problems for this approach. The next three sections introduce an alternative paradigm based on computational modeling, specifically focusing on efficiency of evidence accumulation (EEA), a central individual difference dimension measured in sequential sampling models of cognition. We review evidence that EEA is a primary driver of individual and clinical differences in cognitive performance across a broad array of ostensibly quite distinct cognitive tasks and exhibits several advantages over metrics derived from the fractionation paradigm. Finally, we highlight key implications of this framework for clinical neuroscience.

1. The Fractionation Paradigm and Recent Challenges

The dominant approach towards studying individual differences, and by extension clinical differences, in higher-order cognition involves fractionation. This framework assumes that cognition consists of multiple component functions and that each constitutes a relatively distinct individual difference dimension. This latter assumption is especially relevant to clinical neuroscience research, where it is common to postulate that disorders involve selective impairments in specific functions.

A primary tool for fractionation involves batteries of carefully constructed experimental tasks that are intended to selectively engage specific functions. For example, in the “incongruent” condition of the Stroop task(19), participants must report the ink color of a word while ignoring the word’s meaning, which names a different color. This discrepancy is thought to engage an inhibition process that suppresses the dominant tendency to provide the (incorrect) word response. In an otherwise similar “congruent” condition, where the ink color and the word’s meaning match, the inhibition process is assumed to be unengaged. Performance differences between the two conditions are thus assumed to index individuals’ inhibition precisely. Tasks like the Stroop are often paired with factor analysis to study patterns of covariance across task batteries. Foundational work by Miyake and colleagues(20) yielded evidence for three core executive dimensions (response inhibition, task switching, and working memory updating), and this framework remains the most influential fractionation taxonomy (e.g.,(21,22)).

A growing body of findings, however, presents serious challenges for the fractionation approach. First, in a systematic review, Karr and colleagues(23) provided evidence that many factor models in this literature were overfit to underpowered samples, and that alternate models that contradict the foundational three-factor structure may be more plausible. A second challenge for this paradigm concerns fundamental psychometric properties of widely used tasks that utilize difference scores to selectively index higher-order functions (e.g., the Stroop). Such measures consistently demonstrate poor test-retest reliability(24–27); that is, the rank order of subjects fails to be preserved across testing occasions, limiting the usefulness of these metrics for individual-differences research(28,29). The same measures also have poor predictive validity; recent well-powered studies show that they have tenuous relationships with relevant criterion variables, such as self-regulation questionnaires(30–33). A third challenge for fractionation is the failure of disorder specificity, the idea that selective executive deficits could help establish boundaries between disorders. Researchers have long sought to selectively link deficits in working memory to schizophrenia(34–36), behavioral inhibition to ADHD(37–39), and inhibition of negative thoughts to anxiety and depression(40–42). However, such selectivity has been elusive. People with psychiatric disorders, including schizophrenia, bipolar disorder and ADHD, typically exhibit diverse cognitive impairments that cut across the higher-order domains fractionation researchers seek to distinguish(3,15,43–49).

None of these challenges to the fractionation paradigm is necessarily decisive, but together they are serious enough that alternative approaches, the subject to which we now turn, deserve greater attention.

2. Mathematical Psychology, Computational Psychiatry and Sequential Sampling Models

Multiple recent commentaries in psychiatry, clinical psychology and the broader behavioral sciences(50–53) have highlighted a critical paradox: these fields have largely eschewed the use of formal mathematical process models, despite the substantial advancements in precision, theory development, and cumulative knowledge that such models have provided for other sciences. One notable exception is the subfield of mathematical psychology, which has a long tradition of using formalisms to specify, and stringently test, theories about the mechanisms behind cognitive processing(54–56). Beyond the general scientific advantages of mathematical modeling, including allowing greater explanatory clarity and stronger empirical tests of theoretical predictions(52,53,55), this approach has recently shown unique promise for identifying links between human cognition and neural functioning(57–59). Furthermore, mathematical psychology’s models are beginning to play a pivotal role in the emerging field of computational psychiatry, where they are used to identify candidate biobehavioral dimensions linked to psychopathology that may have clearer relationships with neurobiological mechanisms than existing cognitive constructs(50,60,61).

Sequential sampling models (SSMs)(62) are a prime example of computational frameworks from mathematical psychology that are now seeing wide application in the neurosciences and psychiatry. Although models in this class were originally developed to explain recognition memory and simple perceptual decisions(62–64), they have been successfully applied to a variety of complex behavioral domains(65–67), including “executive” tasks(68–72). For tasks in which individuals must choose between response options, SSMs assume they gradually accumulate noisy evidence for each option from the environment over time until evidence for one option reaches a critical threshold, which initiates the corresponding response.

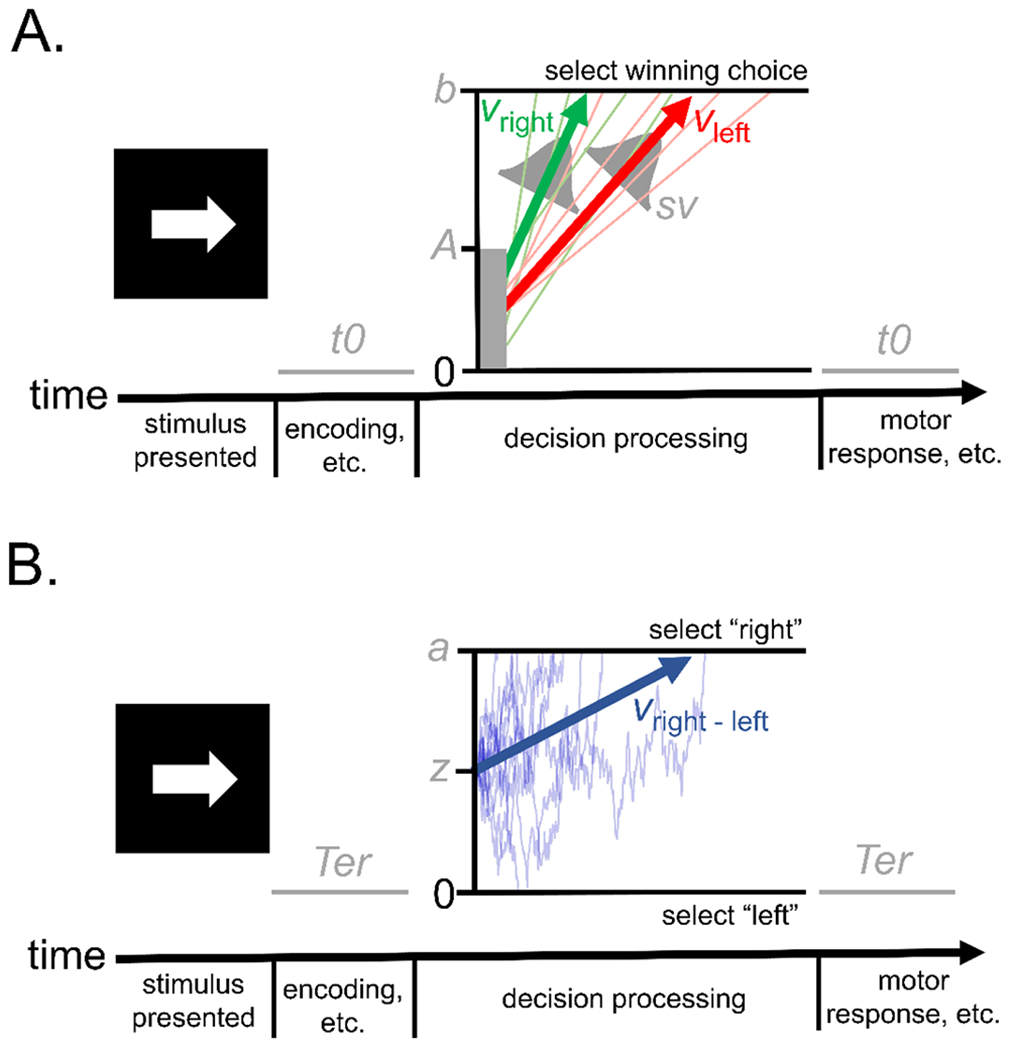

SSMs come in two general variants(62) (Figure 1). In accumulator-type models, such as the linear ballistic accumulator (LBA)(73), evidence is gathered by separate accumulators for each response option that race towards an upper threshold. In random-walk models, such as the diffusion decision model (DDM)(63,74), relative evidence for each choice is represented as a single total that drifts between boundaries representing each option. The DDM and LBA, which are the most widely used models in each class, differ on several major assumptions. Most prominently, the DDM assumes that the rate of evidence accumulation varies stochastically over time within a trial. Conversely, the LBA assumes that evidence accumulates in a linear and deterministic manner and that any variability in accumulation rate occurs between trials. Although the within-trial variability of the DDM may be more biologically plausible, the LBA’s simplified assumptions do not appear to limit its descriptive power, and they make it easier to apply(73).

Figure 1.

Schematics of the (a) linear ballistic accumulator (LBA) and (b) diffusion decision model (DDM), which are commonly applied sequential sampling models in the accumulator-type class and random-walk class, respectively. In both illustrations, the models describe a task in which an individual must decide whether a presented arrow is pointing to the left or the right, similar to the “go” choice task from common stop signal paradigms (e.g.,(78)). The LBA assumes that accumulators for the correct choice (“right” in green) and incorrect choice (“left” in red) start at a level drawn from a uniform distribution between 0 and parameter A and proceed to gather evidence at linear and deterministic rates over time as they race towards an upper response threshold, set at parameter b. The rates of evidence accumulation on individual trials, represented by the light-green and light-red traces, are drawn from normal distributions with a mean of v (represented by the green, vright, and red, vleft, arrows) and a standard deviation of sv. The DDM instead assumes a single decision variable that represents the relative amount of evidence for each of the two possible choices (e.g., evidence for “right” vs. “left”; these models are typically applied to two-choice decisions). This variable begins at parameter z and drifts over time between boundaries for each possible response, set at 0 (for “left”) and parameter a (for “right”). The drift process on individual trials, represented by the light blue traces, is stochastic and moves toward the boundary for the correct choice at an average rate of v (represented by the blue arrow, vright-left). Efficiency of evidence accumulation (EEA), defined as the rate at which an individual is able to gather relevant evidence from the environment to make accurate choices, can be measured in the LBA by subtracting the average accumulation rate for the incorrect choice (vleft) from that of the correct choice (vright). 
EEA is also measured by the DDM’s single average drift rate parameter (vright-left). Individuals’ level of response caution (i.e., speed/accuracy trade-off) can be indexed by parameters that represent the distance evidence accumulators must travel to trigger a response in both the LBA (parameter b) and DDM (parameter a). Both models also include parameters for time taken up by perceptual and motor processes peripheral to the decision: t0 and Ter, respectively.
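The LBA’s generative assumptions, as summarized in the Figure 1 caption, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the authors’ procedure: the helper `simulate_lba` and every parameter value are hypothetical, both accumulators are assumed to share the same sv, and trials on which neither sampled rate is positive (so no accumulator ever reaches b) are simply discarded. Holding b, A, and t0 fixed while shrinking the gap between the two mean rates (i.e., lowering EEA) should lower accuracy.

```python
import numpy as np


def simulate_lba(v_correct, v_error, A=0.5, b=1.0, sv=0.25, t0=0.2,
                 n=20_000, seed=None):
    """Simulate two-accumulator LBA trials (hypothetical helper for illustration).

    Returns response times (s) and a boolean array marking correct responses.
    In this parameterization, EEA corresponds to v_correct - v_error.
    """
    rng = np.random.default_rng(seed)
    # Column 0 = correct accumulator, column 1 = error accumulator.
    start = rng.uniform(0.0, A, size=(n, 2))                   # start points ~ U(0, A)
    drift = rng.normal([v_correct, v_error], sv, size=(n, 2))  # one rate per trial
    # Deterministic linear rise: time to threshold = distance / rate.
    # Accumulators with non-positive rates never finish (time = inf).
    with np.errstate(divide="ignore"):
        finish = np.where(drift > 0, (b - start) / drift, np.inf)
    winner = finish.argmin(axis=1)
    decision_time = finish.min(axis=1)
    valid = np.isfinite(decision_time)                         # drop "no response" trials
    rt = decision_time[valid] + t0
    correct = winner[valid] == 0
    return rt, correct


# Same caution (b), start-point range (A), and t0; only EEA differs.
rt_hi, correct_hi = simulate_lba(v_correct=1.2, v_error=0.6, seed=1)  # EEA = 0.6
rt_lo, correct_lo = simulate_lba(v_correct=1.0, v_error=0.8, seed=1)  # EEA = 0.2
print(f"accuracy, higher EEA: {correct_hi.mean():.3f}")
print(f"accuracy, lower EEA:  {correct_lo.mean():.3f}")
```

Because each trial’s rates are fixed once drawn, all trial-to-trial variability here comes from the start points and the between-trial rate draws, which is the LBA’s key simplification relative to the DDM.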

Despite these differences, parameters from both models can be used to measure three key latent processes: 1) the “drift rate”, or efficiency of evidence accumulation (EEA), 2) the “threshold” or “boundary separation”, which reflects an individual’s level of caution (i.e., speed/accuracy trade-off), and 3) “non-decision time”, which accounts for time spent on peripheral (e.g., motor) operations. Applications of the DDM and LBA to the same empirical data generally suggest similar conclusions about these three key processes(75,76), although process parameterization differs slightly between the models (Figure 1) and they sometimes offer divergent accounts of other constructs (e.g., variability in memory evidence:(77)).

Several considerations are relevant when using these models. First, researchers should seek to ensure that the behavioral tasks analyzed respect SSM assumptions (e.g., number of processing stages, parameter invariance across time, and others detailed in:(65)). That said, recent work on complex paradigms has suggested that inferences from SSMs often remain robust despite violations of certain assumptions(79,80). Second, the parameters that measure processes of interest must be estimable with adequate accuracy from empirical data(81). Small numbers of trials and greater model complexity (i.e., more parameters) impede parameter estimation, which may force investigators to select more parsimonious models. For example, several specialized SSMs have been proposed to explain processing on inhibition (e.g., Stroop) tasks(70,72), but parameters for these complex models are difficult to estimate at trial numbers common in empirical studies(82). Therefore, an alternative approach (e.g.,(25,30)) is to fit a standard DDM to these tasks under the assumption that measurement of the main processes of interest will be robust despite some misspecification in the simpler model.
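To show how far parsimony can be pushed, the sketch below implements the closed-form EZ-diffusion equations (Wagenmakers, van der Maas, and Grasman, 2007), which recover drift rate, boundary separation, and non-decision time for an unbiased DDM from just three summary statistics: proportion correct, and the variance and mean of correct RTs. EZ-diffusion is a swapped-in example of a simple estimator, not one of the approaches cited in this review. The function names are hypothetical, the scaling constant is assumed to be s = 1 (the original paper used 0.1), and the edge correction EZ needs when accuracy is at ceiling or chance is not implemented. The forward helper computes model-implied summaries so that the round trip can be checked.

```python
import math


def ez_diffusion(pc, vrt, mrt, s=1.0):
    """EZ-diffusion estimates (v, a, Ter) from accuracy and correct-RT moments.

    pc  : proportion correct, assumed here to lie strictly between 0.5 and 1
    vrt : variance of correct RTs (s^2)
    mrt : mean of correct RTs (s)
    s   : within-trial noise scaling constant (assumed 1.0 in this sketch)
    """
    if not 0.5 < pc < 1.0:
        raise ValueError("pc must be in (0.5, 1); EZ's edge correction is not implemented")
    L = math.log(pc / (1 - pc))                       # logit(pc)
    x = L * (L * pc**2 - L * pc + pc - 0.5) / vrt
    v = s * x**0.25                                   # drift rate (EEA)
    a = s**2 * L / v                                  # boundary separation (caution)
    y = -v * a / s**2
    mdt = (a / (2 * v)) * (1 - math.exp(y)) / (1 + math.exp(y))  # mean decision time
    return v, a, mrt - mdt                            # Ter = mean RT - decision time


def ez_forward(v, a, ter, s=1.0):
    """Model-implied accuracy, correct-RT variance, and mean RT (unbiased DDM)."""
    y = -v * a / s**2
    pc = 1 / (1 + math.exp(y))
    mdt = (a / (2 * v)) * (1 - math.exp(y)) / (1 + math.exp(y))
    vrt = (a * s**2 / (2 * v**3)) * (
        (2 * y * math.exp(y) - math.exp(2 * y) + 1) / (math.exp(y) + 1) ** 2
    )
    return pc, vrt, mdt + ter


pc, vrt, mrt = ez_forward(v=1.5, a=1.2, ter=0.35)
v_hat, a_hat, ter_hat = ez_diffusion(pc, vrt, mrt)
print(f"recovered v={v_hat:.3f}, a={a_hat:.3f}, Ter={ter_hat:.3f}")
```

With exact model-implied summaries the recovery is exact; with real data the three summaries carry sampling error, which is why estimates of any SSM parameter degrade at low trial counts.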

The use of SSMs to describe and differentiate cognitive mechanisms has several benefits. First, SSMs posit detailed mechanistic accounts that explain how underlying cognitive operations produce observed patterns of behavior. Thus, they make specific, quantitative predictions about behavioral data (e.g., skew of response time distributions, slow vs. fast errors) that are generally well supported in a substantial literature(73,74,83). Second, mechanisms posited in these models have clear links to neurophysiological processes. Neural firing patterns recorded in primates across multiple brain regions during decision making display properties consistent with evidence accumulation(62,84–86), and these patterns have recently been quantitatively linked to SSM parameters in joint neural and behavioral models(87,88). Hence, SSMs display clear evidence of biological plausibility, providing an important bridge between neurophysiology and human behavioral research. Third, SSMs allow selective measurement of latent cognitive mechanisms. Standard metrics derived from laboratory tasks, such as response time (RT) and accuracy, are influenced by confounding factors such as subjects’ preferences to prioritize speed versus accuracy. However, SSMs can recover precise estimates of critical parameters irrespective of subjects’ strategies(65). A recent simulation study suggests that SSMs’ ability to measure latent processes selectively (e.g., indexing cognitive efficiency independent of speed/accuracy preferences) boosts statistical power(89). Finally, as detailed below, SSMs are beginning to provide novel insights into the structure of individual differences in cognition across the spectrum of health and psychopathology.
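The speed/accuracy confound can be made concrete with the closed-form predictions of an unbiased DDM (start point midway between the boundaries, within-trial noise scaled to s = 1). In this hypothetical sketch, participant A is cautious (wide boundaries) with lower EEA, while participant B has twice A’s drift rate but narrow boundaries. Because predicted accuracy depends on the product of drift rate and boundary separation, A ends up the more accurate of the two (roughly .88 versus .83), so ranking people by raw accuracy would put the lower-EEA participant first. The function name and parameter values are illustrative assumptions.

```python
import math


def ddm_predictions(v, a, ter=0.3, s=1.0):
    """Accuracy and mean RT predicted by an unbiased DDM (start point a/2)."""
    pc = 1 / (1 + math.exp(-v * a / s**2))            # depends on v * a only
    mean_rt = ter + (a / (2 * v)) * math.tanh(v * a / (2 * s**2))
    return pc, mean_rt


# Hypothetical participants: A is cautious with lower EEA; B is fast with higher EEA.
pc_a, rt_a = ddm_predictions(v=1.0, a=2.0)
pc_b, rt_b = ddm_predictions(v=2.0, a=0.8)
print(f"A: v=1.0, accuracy={pc_a:.3f}, mean RT={rt_a:.3f} s")
print(f"B: v=2.0, accuracy={pc_b:.3f}, mean RT={rt_b:.3f} s")
```

Neither accuracy nor mean RT alone orders A and B by EEA; fitting the model to both together is what separates drift rate from boundary separation.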

3. Efficiency of Evidence Accumulation as a Foundational Individual Difference Dimension

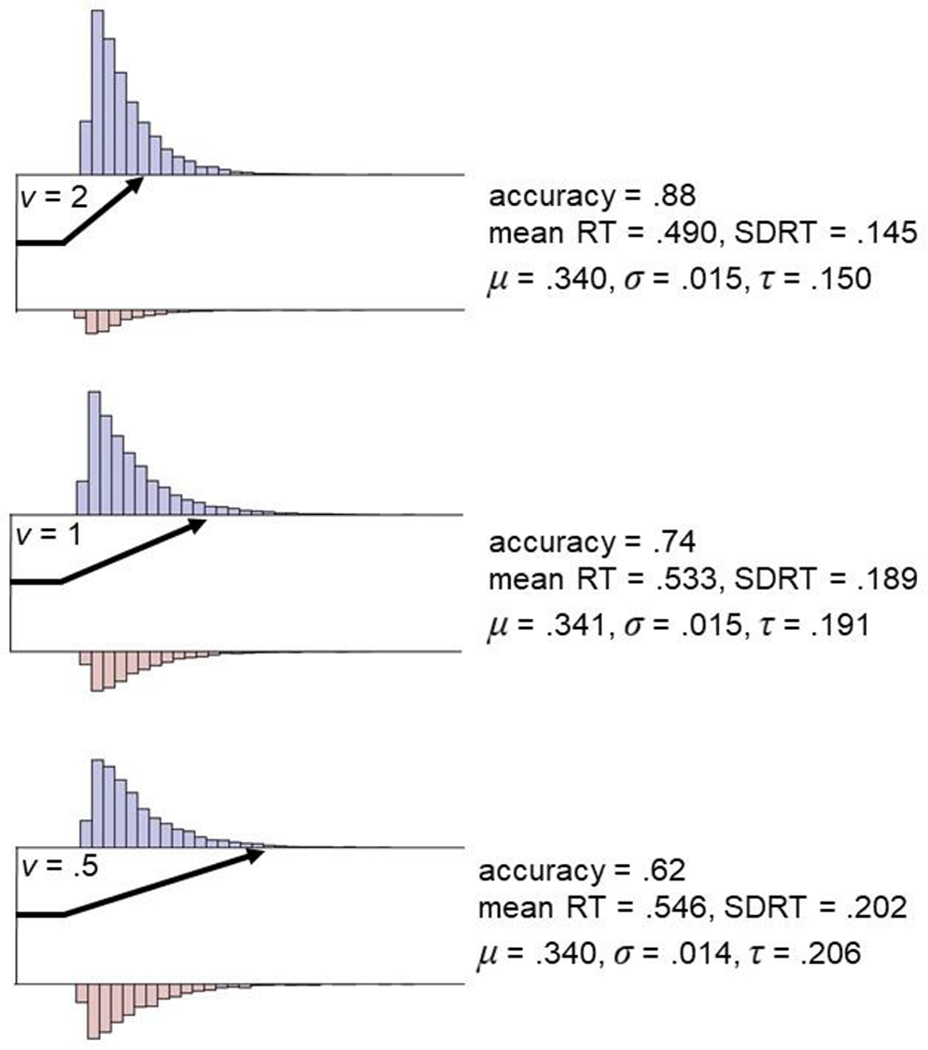

A burgeoning individual differences literature (reviewed in detail by:(90,91)) has begun to demonstrate SSMs’ utility for characterizing fundamental mechanisms of cognition. This work has primarily focused on the DDM’s “drift rate” parameter, which indexes efficiency of evidence accumulation (EEA), or the rate at which an individual gathers relevant evidence from the environment to make accurate choices in the context of background noise. Simulated DDM data in Figure 2 illustrate the behavioral consequences of variation in EEA; lower drift rates lead to lower accuracy and greater RT variability, primarily by increasing the positive skew of RT distributions(92).

Figure 2.

Simulated data that illustrate the behavioral manifestations of differences in efficiency of evidence accumulation (EEA). Response time (RT) data from 10,000 trials were simulated with the diffusion decision model (DDM) implemented in the R package rtdists(93) while varying drift rate (v = 2, 1, .5) and holding other DDM parameters constant (a = 1, z = .5, Ter = .300). Blue histograms represent simulated correct RTs while red histograms represent simulated error RTs. As EEA (v) decreases, accuracy rates are reduced and both the mean and standard deviation (SD) of RT increase. However, analysis of RTs with the ex-Gaussian distribution, a statistical model that allows Gaussian and exponential components of RT distributions to be indexed separately, reveals that the mean (μ) and Gaussian variability (σ) stay relatively constant, while exponential RT variability (τ; positive skew) substantially increases at lower levels of EEA. Therefore, as demonstrated in previous large-scale simulation studies(92), EEA primarily affects the exponential component of RT variability, with larger τ estimates (i.e., greater positive skew) providing a behavioral hallmark of reduced EEA.
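The qualitative pattern in Figure 2 can be approximated without R. The sketch below is a NumPy Euler–Maruyama simulation rather than the rtdists routine used for the figure, so its numbers will not match exactly. It assumes a diffusion coefficient s = 1 and a 1 ms time step, and treats z as an absolute start point (z = .5 with a = 1 is the unbiased midpoint), with the caption’s other settings. The helper name and random seed are hypothetical. Lowering v should reduce accuracy and raise mean RT.

```python
import numpy as np


def simulate_ddm(v, a=1.0, z=0.5, ter=0.3, s=1.0, n=10_000, dt=1e-3,
                 max_t=10.0, seed=None):
    """Euler-Maruyama simulation of a two-boundary diffusion (hypothetical helper).

    z is the absolute start point (z = a/2 means no bias). Returns RTs (s) and a
    boolean array that is True when the upper ("correct") boundary was hit.
    Trials still unresolved after max_t (vanishingly rare here) count as lower hits.
    """
    rng = np.random.default_rng(seed)
    x = np.full(n, z, dtype=float)
    t = np.zeros(n)
    active = np.ones(n, dtype=bool)
    upper = np.zeros(n, dtype=bool)
    noise_sd = s * np.sqrt(dt)
    for _ in range(int(max_t / dt)):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        # Within-trial noise: each step adds the drift plus Gaussian noise.
        x[idx] += v * dt + noise_sd * rng.standard_normal(idx.size)
        t[idx] += dt
        hit_upper = x[idx] >= a
        hit_lower = x[idx] <= 0.0
        upper[idx[hit_upper]] = True
        active[idx[hit_upper | hit_lower]] = False
    return t + ter, upper


for v in (2.0, 1.0, 0.5):
    rt, correct = simulate_ddm(v, seed=42)
    print(f"v={v}: accuracy={correct.mean():.3f}, "
          f"mean RT={rt.mean():.3f}, SD={rt.std():.3f}")
```

For an unbiased start point with s = 1, the model’s exact accuracy is 1 / (1 + exp(-v·a)), so the simulated values can be checked against it; the small residual gap comes from the discrete time step.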

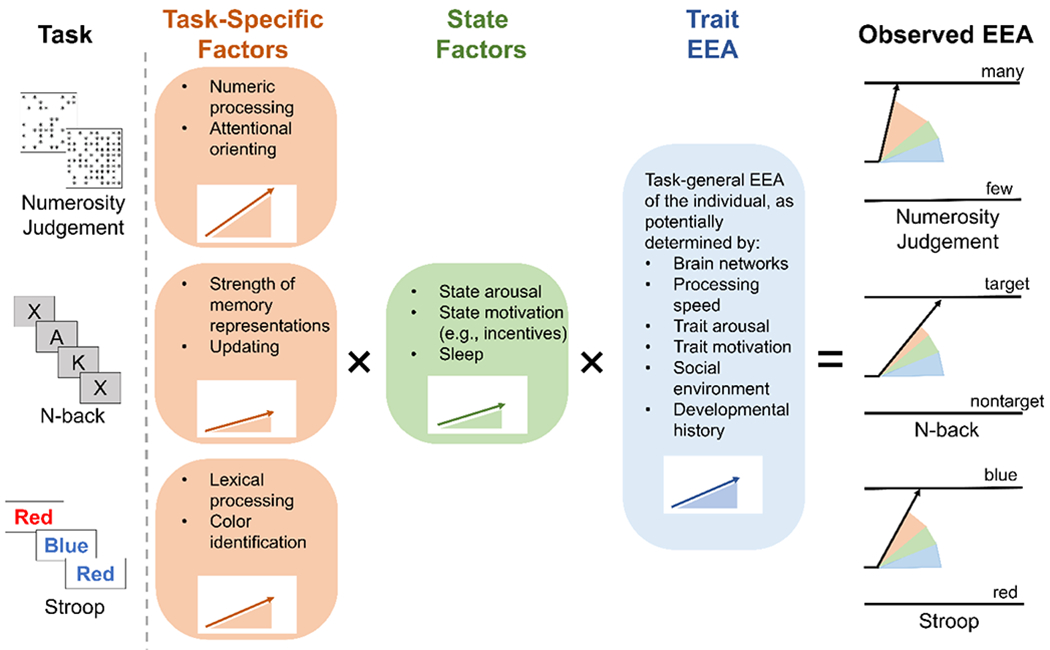

Observed EEA for an individual on a given cognitive task is likely the product of multiple processes (Figure 3). Although task-specific mechanisms (e.g., color identification on the Stroop) and state factors (e.g., motivation(94–97)) may play key roles, a growing body of findings suggests that a large portion of the variance in EEA is explained by a domain-general, trait-like factor. EEA estimates from choice tasks across different cognitive domains show strong correlations with one another, allowing the formation of a domain-general latent variable(98–103), and recent work demonstrates that this general factor remains present even after explicitly accounting for domain-specific variance in EEA(79). EEA estimates are test-retest reliable under ideal measurement conditions (e.g., 200–400 trials:(104)), and work using latent state-trait modeling across an eight-month interval found that state-related variance in EEA measures was statistically indistinguishable from zero, while trait-related variance was close to that found for intelligence tests (44% on average)(98). As EEA measured via relatively simple choice tasks correlates strongly with EEA on more complex paradigms and predicts better working memory ability and intelligence(79,99–103,105–107), trait EEA may be a critical determinant of individual differences in higher-order cognitive abilities. Taken together, this body of work indicates that EEA is a psychometrically robust cognitive individual-difference dimension that appears to be foundational to the performance of a wide variety of tasks. Importantly, the fact that trait EEA is derived from a formal, mechanistic theory of the data-generating process across cognitive measures contrasts with constructs in the fractionation paradigm, which are not linked to a generally applicable, mechanistic theory.
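The logic of a domain-general EEA factor can be illustrated with a toy one-factor simulation: each task’s EEA score is built from a shared trait plus task-specific variance, and a composite formed across tasks tracks the trait better than any single task does. This is a hedged sketch, not the analyses cited above; those studies used confirmatory factor and latent state-trait models, whereas here the first principal component of the task correlation matrix stands in for the latent variable. The helper names, the numbers of tasks and participants, and the trait share of 0.5 are all hypothetical.

```python
import numpy as np


def simulate_task_eea(n_people=500, n_tasks=6, trait_share=0.5, seed=None):
    """Task-level EEA scores generated from one trait plus task-specific noise.

    trait_share is the (hypothetical) proportion of each task's variance
    attributable to trait EEA.
    """
    rng = np.random.default_rng(seed)
    trait = rng.standard_normal(n_people)
    specific = rng.standard_normal((n_people, n_tasks))
    tasks = np.sqrt(trait_share) * trait[:, None] + np.sqrt(1 - trait_share) * specific
    return trait, tasks


def first_component_scores(tasks):
    """Scores on the first principal component of the task correlation matrix."""
    z = (tasks - tasks.mean(axis=0)) / tasks.std(axis=0)
    _, eigvecs = np.linalg.eigh(np.corrcoef(z, rowvar=False))
    weights = eigvecs[:, -1]                 # eigh sorts eigenvalues ascending
    weights = weights * np.sign(weights.sum())  # fix the arbitrary sign
    return z @ weights


trait, tasks = simulate_task_eea(seed=7)
single = [np.corrcoef(trait, tasks[:, j])[0, 1] for j in range(tasks.shape[1])]
general = np.corrcoef(trait, first_component_scores(tasks))[0, 1]
print("single-task correlations with trait:", np.round(single, 2))
print("general-factor correlation with trait:", round(float(general), 2))
```

Under these assumptions each single task correlates about .7 with the trait, while the cross-task composite correlates above .9, which is the intuition behind measuring trait EEA with multi-task latent variables.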

Figure 3.

Hypothesized determinants of efficiency of evidence accumulation (EEA) manifested on specific cognitive tasks for a given individual.

4. Reduced EEA as a Transdiagnostic Neurocognitive Risk Factor for Psychopathology

The behavioral signatures of reduced EEA (variable RTs and less accurate responding) have long been documented in the task performance of individuals with diverse psychiatric diagnoses(97,108–112). Yet SSMs have only recently been applied in the context of clinical research. Because RT variability has been of longstanding interest in ADHD(97), SSMs have been most extensively used to study this disorder. As reviewed by others(97,113,114), and supported by subsequent work(106,115–119), individuals with ADHD consistently display reduced EEA in SSM analyses, and meta-analytic effect size estimates for comparisons with healthy participants are in the moderate to large range (d = 0.75(114); g = 0.63(97)). What is arguably most striking about these effects is the breadth of domains in which EEA reductions are observed, including simple perceptual decision making(107,116,120), sustained attention(114,119,121), inhibition(122–125), pattern learning(118,126), and interval timing(117). Furthermore, stimulant medications for ADHD have been found to improve EEA in both children with the disorder(94) and healthy adults(127), suggesting EEA could partially mediate treatment effects. In the latter study(127), stimulants enhanced EEA similarly in an incongruent task condition (thought to engage executive control) and a congruent task condition (where control is thought to be unengaged). Taken together with the pattern of cross-task effects observed in ADHD, this finding suggests that both ADHD-related deficits and treatment-related improvements in EEA are domain-general, spanning diverse tasks and conditions with varying levels of complexity and executive demands.

Beyond ADHD, reduced EEA has been documented in schizophrenia(128,129), depression(130), and individuals at risk for frequent substance use(131). Extending these findings, our recent work has provided evidence that EEA is a transdiagnostic risk factor for psychopathology(132). In a large sample drawn from the UCLA Consortium for Neuropsychiatric Phenomics(133), we found that a latent EEA factor derived from multiple tasks was substantially reduced in ADHD, schizophrenia, and bipolar disorder relative to healthy participants (d = 0.51, 1.12, and 0.40, respectively) and correlated negatively with the overall severity of individuals’ cross-disorder psychopathology symptoms (r = −.20). Because this study made the simplifying assumption, discussed above, that the standard DDM can provide adequate measures of EEA on inhibition tasks, replication of these results using more complex modeling procedures is warranted.

We now present a hypothesis that seeks to build on this growing array of observations: We posit that lower trait EEA confers broad risk for psychopathology, and that EEA can therefore account for a substantial proportion of performance decrements on tests of neurocognitive abilities that are observed across psychiatric disorders. Moreover, we propose that reductions in EEA similarly impair performance across tasks of varying levels of complexity, rather than selectively impacting “executive” tasks. These claims are rendered plausible by the research reviewed above documenting that: 1) trait EEA displays clear validity as a task-general cognitive individual difference dimension; 2) EEA explains a large portion of the variance in higher-order cognitive functioning; 3) EEA is impaired across multiple psychopathologies; 4) EEA impairments are present across a wide range of cognitive paradigms; and 5) individuals with psychiatric diagnoses linked to neurocognitive decrements, such as ADHD and schizophrenia, have long been found to display such decrements across both complex “executive” tasks and simple choice RT paradigms.

Although we view this evidence as compelling, we note that direct tests of our hypothesis, which have yet to be completed, would require several features. First, these tests would require that large and demographically diverse samples of individuals with and without psychiatric diagnoses complete batteries of tasks that can be used to accurately estimate SSM parameters. Second, as precise measurement of trait EEA requires latent variables informed by performance in multiple domains(79,91), tasks would need to span cognitive processing modalities (e.g., verbal, numeric) and the executive/non-executive continuum. Such data would allow the derivation of latent trait EEA metrics and assessments of EEA’s relations with an array of disorders and psychopathology symptoms.

We also note three important qualifications to our claims. First, the task-generality of trait EEA does not imply that the computational processes involved in the execution of tasks from diverse cognitive domains are identical. Rather, the psychometric work reviewed above indicates that trait EEA is a primary factor driving individual differences (and therefore, we suspect, clinical differences) in task performance. Although different tasks require cognitive operations involving distinct types of evidence (Figure 3), the fact that SSMs provide a highly generalizable account of processing across tasks suggests that task-general mechanisms involved in accumulation of multiple types of evidence could plausibly drive individual differences in EEA. Indeed, estimates of task-specific variance in EEA from state-trait models are strikingly low (≤17%)(98). Second, we do not claim that trait EEA is itself determined by a single underlying process. As we outline below, current evidence suggests EEA is likely influenced by an array of biological and contextual factors. EEA may thus serve as a “watershed node”(134) in a complex matrix of causation. Watersheds are shaped by multitudinous converging water channels, but once formed, they are subsequently relatively unitary drivers of downstream effects. Similarly, we propose that EEA has multifactorial determinants, but serves as a relatively unitary driver of cognitive deficits and clinical symptoms. Third, although we posit that EEA is a prominent contributor to psychopathology-related deficits on tests of cognitive abilities, it is almost certainly the case that a much broader array of factors, beyond EEA and other influences on cognitive test performance, contribute to psychopathology symptoms. Unlike EEA, other contributors to psychopathology are likely difficult to capture on laboratory cognitive tasks and may be better measured with alternative methods (e.g., questionnaires, biomarkers).

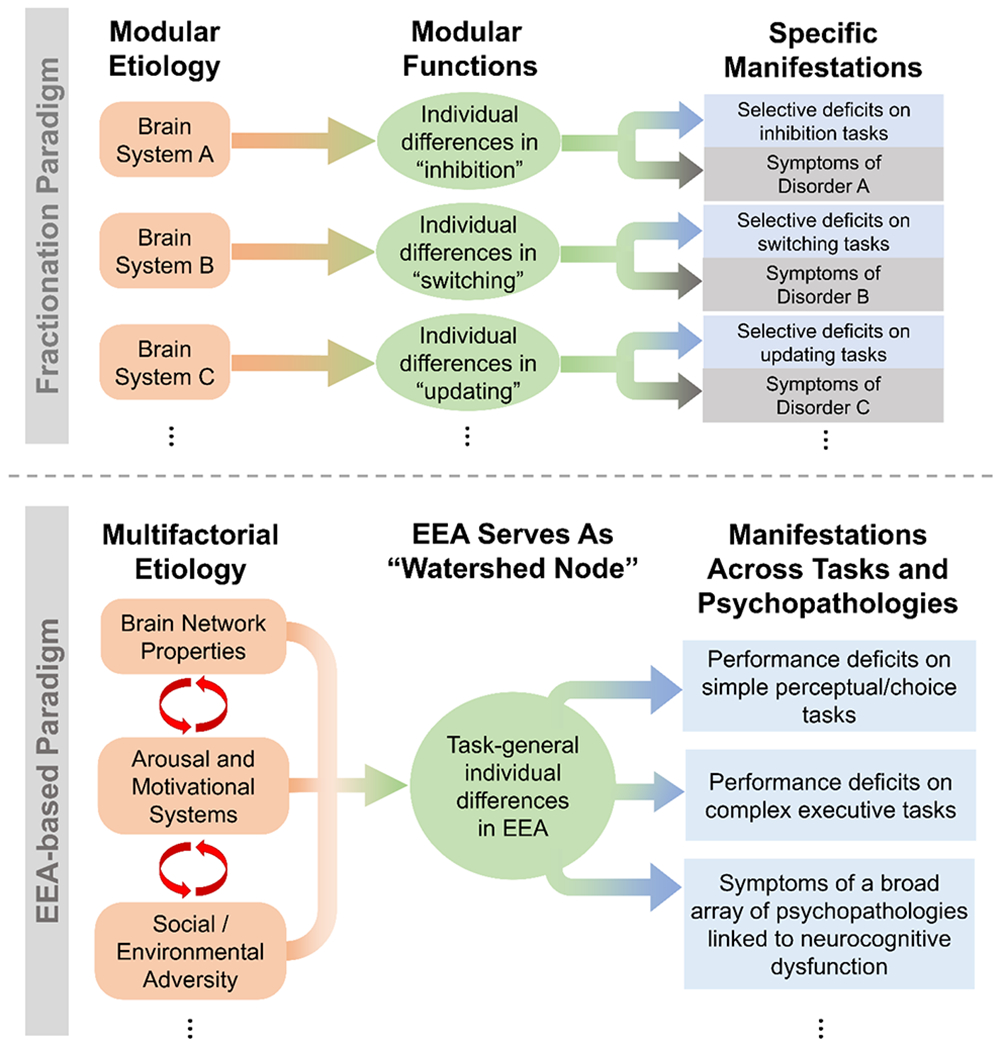

The overall framework we propose is outlined and contrasted with the conventional fractionation framework in Figure 4. We now examine its broader implications.

Figure 4.

Diagrams contrasting the general assumptions of two different approaches to studying neurocognitive contributions to psychopathology: the dominant fractionation paradigm (top) and the alternative EEA-based paradigm we highlight in the current review (bottom).

5. Implications of an EEA-Based Computational Framework for Clinical Neuroscience

A focus on complex executive tasks may be misplaced.

The preoccupation of psychiatric cognitive neuroscience with response inhibition and other “executive” constructs is understandable. There are clearly clinically important individual differences in the ability to resist cravings for an addictive substance or to regulate tendencies to mind-wander during a boring lecture. As tasks such as the Stroop were designed to selectively isolate top-down control, it makes sense that these tasks are seen as key elements of research into regulatory problems in psychopathology. However, the evidence reviewed above suggests that these tasks do not, in fact, selectively isolate executive processes.

The alternative possibility we put forward is that aberrant performance on complex executive tasks in psychopathology largely reflects task-general reductions in EEA. If this view is correct, it follows that the field’s focus on executive functions, and the experimental paradigms thought to measure them, is overly narrow. To better understand the ability to attend to a lecture or resist cravings, it may be more fruitful to investigate the clinical correlates and neural basis of task-general impairments in EEA. At the level of study design, cross-domain batteries of relatively simple cognitive tasks that are optimized for computational modeling (e.g., perceptual choice) may be as good as, or preferable to, complex tasks that attempt to experimentally isolate regulatory processes.

Subtraction in cognitive and neuroimaging measures is counterproductive.

EEA’s potential role in task-general deficits similarly calls into doubt the use of subtraction methods that attempt to isolate individual differences in specific neurocognitive processes (e.g., contrasting behavior or neural activation in conditions that do and do not require inhibition). If EEA is the primary driver of individual differences in performance across task conditions, subtraction likely obscures, rather than enhances, measurement of the clinically relevant process.

Recent findings support this notion. In a large non-clinical sample(30), we found that subtraction-based metrics show negligible relations across tasks and do not predict self-report indices of self-regulation(135). By contrast, EEA estimates across these same tasks, and across executive/non-executive conditions (again obtained under the simplifying assumption that the standard DDM can adequately index EEA from inhibition paradigms), formed a coherent latent factor that was related to self-regulation (r = .18)(135). Similarly, in a neuroimaging study of the go/no-go task(136), EEA estimated from trials that require inhibition (“no-go”) was strongly correlated (r = .73) with EEA on trials that do not (“go”), suggesting that performance across conditions was largely determined by a single dimension(136). At the neural level, activation from the commonly used neuroimaging contrast that subtracts activity during “go” trials from activity during correct “no-go” trials displayed little evidence of relationships with performance metrics (including EEA), questioning the utility of subtraction for neural measures(136). Hence, clinical neuroscientists may be better off focusing on commonalities across cognitive task conditions than on differences between them.
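One reason subtraction fares poorly is captured by the classical test theory formula for the reliability of a difference score, which assumes that the two condition scores have equal variances and uncorrelated measurement errors. When the conditions are highly correlated, their shared (here, plausibly EEA-driven) variance cancels and mostly error remains. In the sketch below, both conditions are given a hypothetical reliability of .80, and the r = .73 go/no-go correlation reported above is borrowed purely as an illustrative value for the between-condition correlation; under those assumptions the reliability of the difference falls to about .26. The function name is hypothetical.

```python
def difference_score_reliability(rel_x, rel_y, r_xy):
    """Classical-test-theory reliability of X - Y, assuming equal variances of X and Y."""
    if r_xy >= 1.0:
        raise ValueError("r_xy must be < 1")
    return (rel_x + rel_y - 2 * r_xy) / (2 - 2 * r_xy)


# Illustrative values: two conditions, each measured with reliability .80.
for r in (0.0, 0.5, 0.73):
    rel_d = difference_score_reliability(0.8, 0.8, r)
    print(f"r_xy = {r:.2f} -> reliability of difference = {rel_d:.2f}")
```

The same condition scores that are individually reliable yield a difference score whose reliability shrinks toward zero as their correlation rises, which mirrors the poor test-retest reliability of subtraction-based metrics discussed in Section 1.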

Findings of disorder-specific deficits in neurocognitive test data may be elusive.

A related implication is that efforts to use neurocognitive test data to identify deficits in specific cognitive functions that differentiate disorders (e.g., inhibition in ADHD) may face significant challenges. Indeed, our hypothesis that EEA is a primary driver of individual differences in performance across commonly-used tasks provides an explanation for the already well-acknowledged failure of such tasks to characterize selective deficits for many disorders(3,15,43–49). Although some may view this conclusion as discouraging, we believe it fits with an emerging view of psychopathology that emphasizes transdiagnostic individual difference dimensions (e.g., Research Domain Criteria and Hierarchical Taxonomy of Psychopathology)(17,137), where positions on multiple such dimensions characterize disorders. Specifically, it is likely that EEA, as measured on neurocognitive tasks, is one of many relevant transdiagnostic dimensions, and must be combined with indices of constructs derived from other measurement domains (e.g., socioemotional, biological) to better characterize variation in, and multifactorial causes of, psychopathology.

EEA can provide a window into the basis of neurocognitive deficits in psychopathology.

A shift in clinical research focus towards EEA is likely to produce novel mechanistic insights and facilitate translation across behavioral, systems, and neurophysiological levels of analysis. As outlined above, a major advantage of using SSMs to guide research is that the evidence accumulation processes they posit are not only biologically plausible but also well supported by extant neurophysiological research in non-human primates(62,84–87,138). Corresponding neural signatures of these processes in humans have also been well characterized with electroencephalography (EEG)(139–141) and functional magnetic resonance imaging (fMRI)(142–145). Although these signatures are distributed throughout multiple cortical areas, there is converging evidence that the frontoparietal network (FPN) and anterior insula play especially important roles(146).
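For readers less familiar with sequential sampling models, the following is a minimal Python sketch of the standard two-boundary diffusion process, showing how the drift rate (the DDM parameter commonly used to index EEA) jointly shapes speed and accuracy. The boundary separation, starting point, non-decision time, within-trial noise (s = 1), and step size are illustrative assumptions chosen for the demonstration, not values from the studies cited here. The sketch uses simple Euler discretization rather than the analytic first-passage distributions implemented in dedicated packages such as rtdists(93).

```python
import math
import random


def simulate_ddm_trial(v, a, z, ter, s=1.0, dt=0.001, rng=random):
    """One trial of a two-boundary Wiener diffusion process (Euler-Maruyama).

    v   : drift rate (index of evidence accumulation efficiency)
    a   : boundary separation (response caution)
    z   : relative starting point in (0, 1); 0.5 = unbiased
    ter : non-decision time in seconds (encoding + motor execution)
    Returns (correct, rt); correct is True if the upper (correct) boundary is reached.
    """
    x = z * a
    t = 0.0
    sd_step = s * math.sqrt(dt)
    while 0.0 < x < a:
        # Deterministic drift plus Gaussian within-trial noise for one time step
        x += v * dt + sd_step * rng.gauss(0.0, 1.0)
        t += dt
    return x >= a, t + ter


def summarize(v, n_trials=1000, a=1.2, z=0.5, ter=0.3, seed=0):
    """Proportion correct and mean RT (seconds) over simulated trials."""
    rng = random.Random(seed)
    trials = [simulate_ddm_trial(v, a, z, ter, rng=rng) for _ in range(n_trials)]
    accuracy = sum(correct for correct, _ in trials) / n_trials
    mean_rt = sum(rt for _, rt in trials) / n_trials
    return accuracy, mean_rt


if __name__ == "__main__":
    for v in (1.0, 2.5):
        acc, rt = summarize(v)
        print(f"drift v={v}: accuracy={acc:.2f}, mean RT={rt:.3f} s")
```

In this simulation, raising only the drift rate produces responses that are both more accurate and faster, which illustrates why a single EEA parameter can account for covariation between accuracy and RT measures.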

Research on the neural basis of trait EEA is sparser. A handful of studies using disparate methodologies have linked between-individual differences in EEA to parietal activation during decision making(147), salience network responses to errors(136), and greater structural and functional connectivity in FPN(148). However, these studies are limited by their measurement of EEA with individual tasks, rather than with the recommended cross-domain latent factors(91). Findings that EEA is enhanced by catecholamine agonists(94,127,149) suggest that EEA may be related to the integrity of dopamine or norepinephrine systems. Incentives also alter EEA(94–96), suggesting that stable traits related to motivational processes (e.g., cognitive effort discounting(150)) could impact how individuals react to these state-related factors during cognitive performance. We do not offer a comprehensive hypothesis about the etiology of individual and clinical differences in EEA because we believe doing so would be premature. However, strong evidence for the existence of a task-general trait EEA factor suggests that broad neurobiological and/or contextual (e.g., poverty) influences could impact cognitive performance through EEA.

The study of individual differences in EEA could usher in a new paradigm for understanding cognitive abnormalities in psychopathology. Rather than attempting to fractionate putative disorder-specific deficits, this paradigm would instead focus on how EEA is determined by neurophysiological processes, neurotransmitter systems, brain networks, and contextual factors such as motivation, stress and social adversity. Doing so would move the study of these influences on disordered cognition into a more mechanistic computational framework.

Conclusion

This review assessed the emerging literature on the application of mathematical process models to the study of individual and clinical differences in neurocognition. We argue that this literature presents a compelling case that trait EEA, a foundational individual difference dimension formally defined in computational models, is likely a primary driver of observed deficits on tests of neurocognitive abilities across clinical disorders. Adopting an EEA-focused research approach has the potential to transition clinical neuroscience away from measures that have poor psychometric properties and constructs that are biologically amorphous. In contrast, EEA is a precisely defined construct that has clear links to psychopathology and is well-positioned to yield richer connections with neurobiology.

Acknowledgements

AW was supported by T32 AA007477 (awarded to Dr. Frederic Blow). CS was supported by R01 MH107741 and the Dana Foundation David Mahoney Neuroimaging Program. A preprint version of this manuscript was previously released on PsyArXiv: psyarxiv.com/q9hge.

Footnotes

Disclosures

The authors have no conflicts of interest to disclose.

References

- 1. Lipszyc J, Schachar R (2010): Inhibitory control and psychopathology: a meta-analysis of studies using the stop signal task. Journal of the International Neuropsychological Society: JINS 16: 1064.

- 2. Smith JL, Mattick RP, Jamadar SD, Iredale JM (2014): Deficits in behavioural inhibition in substance abuse and addiction: a meta-analysis. Drug and Alcohol Dependence 145: 1–33.

- 3. Willcutt EG, Doyle AE, Nigg JT, Faraone SV, Pennington BF (2005): Validity of the executive function theory of attention-deficit/hyperactivity disorder: a meta-analytic review. Biological Psychiatry 57: 1336–1346.

- 4. Barch DM, Ceaser A (2012): Cognition in schizophrenia: core psychological and neural mechanisms. Trends in Cognitive Sciences 16: 27–34.

- 5. Snyder HR (2013): Major depressive disorder is associated with broad impairments on neuropsychological measures of executive function: a meta-analysis and review. Psychological Bulletin 139: 81.

- 6. Bantin T, Stevens S, Gerlach AL, Hermann C (2016): What does the facial dot-probe task tell us about attentional processes in social anxiety? A systematic review. Journal of Behavior Therapy and Experimental Psychiatry 50: 40–51.

- 7. Aron AR (2007): The neural basis of inhibition in cognitive control. The Neuroscientist 13: 214–228.

- 8. Rosenberg MD, Finn ES, Scheinost D, Papademetris X, Shen X, Constable RT, Chun MM (2016): A neuromarker of sustained attention from whole-brain functional connectivity. Nature Neuroscience 19: 165.

- 9. Mill RD, Ito T, Cole MW (2017): From connectome to cognition: The search for mechanism in human functional brain networks. NeuroImage 160: 124–139.

- 10. McTeague LM, Huemer J, Carreon DM, Jiang Y, Eickhoff SB, Etkin A (2017): Identification of common neural circuit disruptions in cognitive control across psychiatric disorders. American Journal of Psychiatry 174: 676–685.

- 11. Chambers CD, Garavan H, Bellgrove MA (2009): Insights into the neural basis of response inhibition from cognitive and clinical neuroscience. Neuroscience & Biobehavioral Reviews 33: 631–646.

- 12. Green MF, Kern RS, Braff DL, Mintz J (2000): Neurocognitive deficits and functional outcome in schizophrenia: are we measuring the “right stuff”? Schizophrenia Bulletin 26: 119–136.

- 13. Green MF, Nuechterlein KH, Gold JM, Barch DM, Cohen J, Essock S, et al. (2004): Approaching a consensus cognitive battery for clinical trials in schizophrenia: the NIMH-MATRICS conference to select cognitive domains and test criteria. Biological Psychiatry 56: 301–307.

- 14. Abi-Dargham A, Mawlawi O, Lombardo I, Gil R, Martinez D, Huang Y, et al. (2002): Prefrontal dopamine D1 receptors and working memory in schizophrenia. Journal of Neuroscience 22: 3708–3719.

- 15. Castellanos FX, Sonuga-Barke EJ, Milham MP, Tannock R (2006): Characterizing cognition in ADHD: beyond executive dysfunction. Trends in Cognitive Sciences 10: 117–123.

- 16. Casey BJ, Jones RM (2010): Neurobiology of the Adolescent Brain and Behavior: Implications for Substance Use Disorders. Journal of the American Academy of Child & Adolescent Psychiatry 49: 1189–1201.

- 17. Cuthbert BN, Insel TR (2013): Toward the future of psychiatric diagnosis: the seven pillars of RDoC. BMC Medicine 11: 126.

- 18. Ferrante M, Redish AD, Oquendo MA, Averbeck BB, Kinnane ME, Gordon JA (2019): Computational psychiatry: a report from the 2017 NIMH workshop on opportunities and challenges. Molecular Psychiatry 24: 479–483.

- 19. Stroop JR (1935): Studies of interference in serial verbal reactions. Journal of Experimental Psychology 18: 643.

- 20. Miyake A, Friedman NP, Emerson MJ, Witzki AH, Howerter A, Wager TD (2000): The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology 41: 49–100.

- 21. Hull R, Martin RC, Beier ME, Lane D, Hamilton AC (2008): Executive function in older adults: a structural equation modeling approach. Neuropsychology 22: 508.

- 22. Lee K, Bull R, Ho RM (2013): Developmental changes in executive functioning. Child Development 84: 1933–1953.

- 23. Karr JE, Areshenkoff CN, Rast P, Hofer SM, Iverson GL, Garcia-Barrera MA (2018): The unity and diversity of executive functions: A systematic review and re-analysis of latent variable studies. Psychological Bulletin 144: 1147.

- 24. Hedge C, Powell G, Sumner P (2018): The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behavior Research Methods 50: 1166–1186.

- 25. Enkavi AZ, Eisenberg IW, Bissett PG, Mazza GL, MacKinnon DP, Marsch LA, Poldrack RA (2019): Large-scale analysis of test–retest reliabilities of self-regulation measures. Proceedings of the National Academy of Sciences 116: 5472–5477.

- 26. Rouder JN, Haaf JM (2019): A psychometrics of individual differences in experimental tasks. Psychonomic Bulletin & Review 26: 452–467.

- 27. Dang J, King KM, Inzlicht M (2020): Why Are Self-Report and Behavioral Measures Weakly Correlated? Trends in Cognitive Sciences 24: 267–269.

- 28. Nunnally JC Jr (1970): Introduction to psychological measurement.

- 29. Draheim C, Mashburn CA, Martin JD, Engle RW (2019): Reaction time in differential and developmental research: A review and commentary on the problems and alternatives. Psychological Bulletin 145: 508.

- 30. Eisenberg IW, Bissett PG, Enkavi AZ, Li J, MacKinnon DP, Marsch LA, Poldrack RA (2019): Uncovering the structure of self-regulation through data-driven ontology discovery. Nature Communications 10: 1–13.

- 31. Toplak ME, West RF, Stanovich KE (2013): Practitioner review: Do performance-based measures and ratings of executive function assess the same construct? Journal of Child Psychology and Psychiatry 54: 131–143.

- 32. Stahl C, Voss A, Schmitz F, Nuszbaum M, Tüscher O, Lieb K, Klauer KC (2014): Behavioral components of impulsivity. Journal of Experimental Psychology: General 143: 850.

- 33. Saunders B, Milyavskaya M, Etz A, Randles D, Inzlicht M (2018): Reported self-control is not meaningfully associated with inhibition-related executive function: A Bayesian analysis. Collabra: Psychology 4.

- 34. Forbes NF, Carrick LA, McIntosh AM, Lawrie SM (2009): Working memory in schizophrenia: a meta-analysis. Psychological Medicine 39: 889.

- 35. Glahn DC, Therman S, Manninen M, Huttunen M, Kaprio J, Lönnqvist J, Cannon TD (2003): Spatial working memory as an endophenotype for schizophrenia. Biological Psychiatry 53: 624–626.

- 36. Park S, Gooding DC (2014): Working memory impairment as an endophenotypic marker of a schizophrenia diathesis. Schizophrenia Research: Cognition 1: 127–136.

- 37. Slaats-Willemse D, Swaab-Barneveld H, De Sonneville L, Van Der Meulen E, Buitelaar JAN (2003): Deficient response inhibition as a cognitive endophenotype of ADHD. Journal of the American Academy of Child & Adolescent Psychiatry 42: 1242–1248.

- 38. Wodka EL, Mark Mahone E, Blankner JG, Gidley Larson JC, Fotedar S, Denckla MB, Mostofsky SH (2007): Evidence that response inhibition is a primary deficit in ADHD. Journal of Clinical and Experimental Neuropsychology 29: 345–356.

- 39. Crosbie J, Arnold P, Paterson A, Swanson J, Dupuis A, Li X, et al. (2013): Response inhibition and ADHD traits: correlates and heritability in a community sample. Journal of Abnormal Child Psychology 41: 497–507.

- 40. Becker ES, Rinck M, Roth WT, Margraf J (1998): Don’t worry and beware of white bears: Thought suppression in anxiety patients. Journal of Anxiety Disorders 12: 39–55.

- 41. Wenzlaff RM, Luxton DD (2003): The role of thought suppression in depressive rumination. Cognitive Therapy and Research 27: 293–308.

- 42. Beevers C, Meyer B (2004): Thought suppression and depression risk. Cognition and Emotion 18: 859–867.

- 43. Heinrichs RW, Zakzanis KK (1998): Neurocognitive deficit in schizophrenia: a quantitative review of the evidence. Neuropsychology 12: 426.

- 44. Mesholam-Gately RI, Giuliano AJ, Goff KP, Faraone SV, Seidman LJ (2009): Neurocognition in first-episode schizophrenia: a meta-analytic review. Neuropsychology 23: 315.

- 45. Bora E, Pantelis C (2015): Meta-analysis of cognitive impairment in first-episode bipolar disorder: comparison with first-episode schizophrenia and healthy controls. Schizophrenia Bulletin 41: 1095–1104.

- 46. Snyder HR, Friedman NP, Hankin BL (2019): Transdiagnostic mechanisms of psychopathology in youth: executive functions, dependent stress, and rumination. Cognitive Therapy and Research 43: 834–851.

- 47. Snyder HR, Kaiser RH, Warren SL, Heller W (2015): Obsessive-compulsive disorder is associated with broad impairments in executive function: A meta-analysis. Clinical Psychological Science 3: 301–330.

- 48. Bloemen AJP, Oldehinkel AJ, Laceulle OM, Ormel J, Rommelse NNJ, Hartman CA (2018): The association between executive functioning and psychopathology: general or specific? Psychological Medicine 48: 1787–1794.

- 49. Nigg JT (2005): Neuropsychologic theory and findings in attention-deficit/hyperactivity disorder: the state of the field and salient challenges for the coming decade. Biological Psychiatry 57: 1424–1435.

- 50. Wiecki TV, Poland J, Frank MJ (2015): Model-based cognitive neuroscience approaches to computational psychiatry: Clustering and classification. Clinical Psychological Science 3: 378–399.

- 51. Sharp PB, Eldar E (2019): Computational models of anxiety: Nascent efforts and future directions. Current Directions in Psychological Science 28: 170–176.

- 52. Haslbeck J, Ryan O, Robinaugh D, Waldorp L, Borsboom D (2019): Modeling Psychopathology: From Data Models to Formal Theories.

- 53. Guest O, Martin AE (2020): How computational modeling can force theory building in psychological science.

- 54. Estes W (1975): Some targets for mathematical psychology. Journal of Mathematical Psychology 12: 263–282.

- 55. Townsend JT (2008): Mathematical psychology: Prospects for the 21st century: A guest editorial. Journal of Mathematical Psychology 52: 269–280.

- 56. Van Zandt T, Townsend JT (2012): Mathematical psychology.

- 57. Forstmann BU, Dutilh G, Brown S, Neumann J, Von Cramon DY, Ridderinkhof KR, Wagenmakers E-J (2008): Striatum and pre-SMA facilitate decision-making under time pressure. Proceedings of the National Academy of Sciences 105: 17538–17542.

- 58. Turner BM, Forstmann BU, Love BC, Palmeri TJ, Van Maanen L (2017): Approaches to analysis in model-based cognitive neuroscience. Journal of Mathematical Psychology 76: 65–79.

- 59. Hawkins G, Mittner M, Boekel W, Heathcote A, Forstmann BU (2015): Toward a model-based cognitive neuroscience of mind wandering. Neuroscience 310: 290–305.

- 60. Maia TV, Huys QJ, Frank MJ (2017): Theory-based computational psychiatry. Biological Psychiatry 82: 382–384.

- 61. Huys QJM, Maia TV, Frank MJ (2016): Computational psychiatry as a bridge from neuroscience to clinical applications. Nature Neuroscience 19: 404–413.

- 62. Smith PL, Ratcliff R (2004): Psychology and neurobiology of simple decisions. Trends in Neurosciences 27: 161–168.

- 63. Ratcliff R (1978): A theory of memory retrieval. Psychological Review 85: 59.

- 64. Usher M, McClelland JL (2001): The time course of perceptual choice: the leaky, competing accumulator model. Psychological Review 108: 550.

- 65. Voss A, Nagler M, Lerche V (2013): Diffusion models in experimental psychology: A practical introduction. Experimental Psychology 60: 385.

- 66. Krajbich I, Lu D, Camerer C, Rangel A (2012): The attentional drift-diffusion model extends to simple purchasing decisions. Frontiers in Psychology 3: 193.

- 67. Johnson EJ, Ratcliff R (2014): Computational and process models of decision making in psychology and behavioral economics. Neuroeconomics. Elsevier, pp 35–47.

- 68. Ratcliff R, Huang-Pollock C, McKoon G (2018): Modeling individual differences in the go/no-go task with a diffusion model. Decision 5: 42.

- 69. Evans NJ, Steyvers M, Brown SD (2018): Modeling the covariance structure of complex datasets using cognitive models: An application to individual differences and the heritability of cognitive ability. Cognitive Science 42: 1925–1944.

- 70. White CN, Ratcliff R, Starns JJ (2011): Diffusion models of the flanker task: Discrete versus gradual attentional selection. Cognitive Psychology 63: 210–238.

- 71. Servant M, Montagnini A, Burle B (2014): Conflict tasks and the diffusion framework: Insight in model constraints based on psychological laws. Cognitive Psychology 72: 162–195.

- 72. Ulrich R, Schröter H, Leuthold H, Birngruber T (2015): Automatic and controlled stimulus processing in conflict tasks: Superimposed diffusion processes and delta functions. Cognitive Psychology 78: 148–174.

- 73. Brown SD, Heathcote A (2008): The simplest complete model of choice response time: Linear ballistic accumulation. Cognitive Psychology 57: 153–178.

- 74. Ratcliff R, Smith PL, Brown SD, McKoon G (2016): Diffusion Decision Model: Current Issues and History. Trends in Cognitive Sciences 20: 260–281.

- 75. Donkin C, Brown S, Heathcote A, Wagenmakers E-J (2011): Diffusion versus linear ballistic accumulation: different models but the same conclusions about psychological processes? Psychonomic Bulletin & Review 18: 61–69.

- 76. Dutilh G, Annis J, Brown SD, Cassey P, Evans NJ, Grasman RP, et al. (2019): The quality of response time data inference: A blinded, collaborative assessment of the validity of cognitive models. Psychonomic Bulletin & Review 26: 1051–1069.

- 77. Osth AF, Bora B, Dennis S, Heathcote A (2017): Diffusion vs. linear ballistic accumulation: Different models, different conclusions about the slope of the zROC in recognition memory. Journal of Memory and Language 96: 36–61.

- 78. Casey B, Cannonier T, Conley MI, Cohen AO, Barch DM, Heitzeg MM, et al. (2018): The adolescent brain cognitive development (ABCD) study: imaging acquisition across 21 sites. Developmental Cognitive Neuroscience 32: 43–54.

- 79. Lerche V, von Krause M, Voss A, Frischkorn G, Schubert A-L, Hagemann D (2020): Diffusion modeling and intelligence: Drift rates show both domain-general and domain-specific relations with intelligence. Journal of Experimental Psychology: General.

- 80. Lerche V, Voss A (2019): Experimental validation of the diffusion model based on a slow response time paradigm. Psychological Research 83: 1194–1209.

- 81. Matzke D, Logan GD, Heathcote A (2020): A Cautionary Note on Evidence-Accumulation Models of Response Inhibition in the Stop-Signal Paradigm. Computational Brain & Behavior, 10.1007/s42113-020-00075-x.

- 82. White CN, Servant M, Logan GD (2018): Testing the validity of conflict drift-diffusion models for use in estimating cognitive processes: A parameter-recovery study. Psychonomic Bulletin & Review 25: 286–301.

- 83. Evans NJ, Wagenmakers E-J (2019): Evidence accumulation models: Current limitations and future directions.

- 84. Gold JI, Shadlen MN (2007): The neural basis of decision making. Annual Review of Neuroscience 30.

- 85. Hanes DP, Schall JD (1996): Neural control of voluntary movement initiation. Science 274: 427–430.

- 86. Kiani R, Hanks TD, Shadlen MN (2008): Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. Journal of Neuroscience 28: 3017–3029.

- 87. Cassey PJ, Gaut G, Steyvers M, Brown SD (2016): A generative joint model for spike trains and saccades during perceptual decision-making. Psychonomic Bulletin & Review 23: 1757–1778.

- 88. Aoi MC, Mante V, Pillow JW (2020): Prefrontal cortex exhibits multidimensional dynamic encoding during decision-making. Nature Neuroscience 1–11.

- 89. Stafford T, Pirrone A, Croucher M, Krystalli A (2020): Quantifying the benefits of using decision models with response time and accuracy data. Behavior Research Methods 1–14.

- 90. Schubert A-L, Frischkorn GT (2020): Neurocognitive Psychometrics of Intelligence: How Measurement Advancements Unveiled the Role of Mental Speed in Intelligence Differences. Current Directions in Psychological Science 0963721419896365.

- 91. Frischkorn GT, Schubert A-L (2018): Cognitive models in intelligence research: advantages and recommendations for their application. Journal of Intelligence 6: 34.

- 92. Matzke D, Wagenmakers E-J (2009): Psychological interpretation of the ex-Gaussian and shifted Wald parameters: A diffusion model analysis. Psychonomic Bulletin & Review 16: 798–817.

- 93. Singmann H, Brown S, Gretton M, Heathcote A (2016): rtdists: Response time distributions. R package version 0.4-9. URL http://CRAN.R-project.org/package=rtdists.

- 94. Fosco WD, White CN, Hawk LW (2017): Acute stimulant treatment and reinforcement increase the speed of information accumulation in children with ADHD. Journal of Abnormal Child Psychology 45: 911–920.

- 95. Spaniol J, Voss A, Bowen HJ, Grady CL (2011): Motivational incentives modulate age differences in visual perception. Psychology and Aging 26: 932.

- 96. Dix A, Li S (2020): Incentive motivation improves numerosity discrimination: insights from pupillometry combined with drift-diffusion modelling. Scientific Reports 10: 1–11.

- 97. Karalunas SL, Geurts HM, Konrad K, Bender S, Nigg JT (2014): Annual research review: Reaction time variability in ADHD and autism spectrum disorders: Measurement and mechanisms of a proposed trans-diagnostic phenotype. Journal of Child Psychology and Psychiatry 55: 685–710.

- 98. Schubert A-L, Frischkorn G, Hagemann D, Voss A (2016): Trait characteristics of diffusion model parameters. Journal of Intelligence 4: 7.

- 99. Schmiedek F, Oberauer K, Wilhelm O, Süß H-M, Wittmann WW (2007): Individual differences in components of reaction time distributions and their relations to working memory and intelligence. Journal of Experimental Psychology: General 136: 414.

- 100. Schmitz F, Wilhelm O (2016): Modeling mental speed: Decomposing response time distributions in elementary cognitive tasks and correlations with working memory capacity and fluid intelligence. Journal of Intelligence 4: 13.

- 101. Schulz-Zhecheva Y, Voelkle MC, Beauducel A, Biscaldi M, Klein C (2016): Predicting fluid intelligence by components of reaction time distributions from simple choice reaction time tasks. Journal of Intelligence 4: 8.

- 102. Ratcliff R, Thapar A, McKoon G (2010): Individual differences, aging, and IQ in two-choice tasks. Cognitive Psychology 60: 127–157.

- 103. Schubert A-L, Nunez MD, Hagemann D, Vandekerckhove J (2019): Individual differences in cortical processing speed predict cognitive abilities: a model-based cognitive neuroscience account. Computational Brain & Behavior 2: 64–84.

- 104. Lerche V, Voss A (2017): Retest reliability of the parameters of the Ratcliff diffusion model. Psychological Research 81: 629–652.

- 105. Ratcliff R, Thapar A, McKoon G (2011): Effects of aging and IQ on item and associative memory. Journal of Experimental Psychology: General 140: 464.

- 106. Weigard A, Huang-Pollock C (2017): The role of speed in ADHD-related working memory deficits: A time-based resource-sharing and diffusion model account. Clinical Psychological Science 5: 195–211.

- 107. Karalunas SL, Huang-Pollock CL (2013): Integrating impairments in reaction time and executive function using a diffusion model framework. Journal of Abnormal Child Psychology 41: 837–850.

- 108. Epstein JN, Langberg JM, Rosen PJ, Graham A, Narad ME, Antonini TN, et al. (2011): Evidence for higher reaction time variability for children with ADHD on a range of cognitive tasks including reward and event rate manipulations. Neuropsychology 25: 427.

- 109. Kofler MJ, Rapport MD, Sarver DE, Raiker JS, Orban SA, Friedman LM, Kolomeyer EG (2013): Reaction time variability in ADHD: a meta-analytic review of 319 studies. Clinical Psychology Review 33: 795–811.

- 110. Kaiser S, Roth A, Rentrop M, Friederich H-C, Bender S, Weisbrod M (2008): Intra-individual reaction time variability in schizophrenia, depression and borderline personality disorder. Brain and Cognition 66: 73–82.

- 111. Schwartz F, Carr AC, Munich RL, Glauber S, Lesser B, Murray J (1989): Reaction time impairment in schizophrenia and affective illness: the role of attention. Biological Psychiatry 25: 540–548.

- 112. Brotman MA, Rooney MH, Skup M, Pine DS, Leibenluft E (2009): Increased intrasubject variability in response time in youths with bipolar disorder and at-risk family members. Journal of the American Academy of Child & Adolescent Psychiatry 48: 628–635.

- 113. Ziegler S, Pedersen ML, Mowinckel AM, Biele G (2016): Modelling ADHD: A review of ADHD theories through their predictions for computational models of decision-making and reinforcement learning. Neuroscience & Biobehavioral Reviews 71: 633–656.

- 114. Huang-Pollock CL, Karalunas SL, Tam H, Moore AN (2012): Evaluating vigilance deficits in ADHD: a meta-analysis of CPT performance. Journal of Abnormal Psychology 121: 360.

- 115. Kofler MJ, Irwin LN, Sarver DE, Fosco WD, Miller CE, Spiegel JA, Becker SP (2019): What cognitive processes are “sluggish” in sluggish cognitive tempo? Journal of Consulting and Clinical Psychology 87: 1030.

- 116. Weigard A, Huang-Pollock C, Brown S, Heathcote A (2018): Testing formal predictions of neuroscientific theories of ADHD with a cognitive model-based approach. Journal of Abnormal Psychology 127: 529–539.

- 117. Shapiro Z, Huang-Pollock C (2019): A diffusion-model analysis of timing deficits among children with ADHD. Neuropsychology.

- 118.Weigard A, Huang-Pollock C, Brown S (2016): Evaluating the consequences of impaired monitoring of learned behavior in attention-deficit/hyperactivity disorder using a Bayesian hierarchical model of choice response time. Neuropsychology 30: 502–515. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 119.Huang-Pollock C, Ratcliff R, McKoon G, Roule A, Warner T, Feldman J, Wise S (2020): A diffusion model analysis of sustained attention in children with attention deficit hyperactivity disorder. Neuropsychology. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 120.Karalunas SL, Huang-Pollock CL, Nigg JT (2012): Decomposing attention-deficit/hyperactivity disorder (ADHD)-related effects in response speed and variability. Neuropsychology 26: 684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 121.Killeen PR, Russell VA, Sergeant JA (2013): A behavioral neuroenergetics theory of ADHD. Neuroscience & Biobehavioral Reviews 37: 625–657. [DOI] [PubMed] [Google Scholar]

- 122.Salum G, Sergeant J, Sonuga-Barke E, Vandekerckhove J, Gadelha A, Pan P, et al. (2014): Specificity of basic information processing and inhibitory control in attention deficit hyperactivity disorder. Psychological Medicine 44: 617–631. [DOI] [PubMed] [Google Scholar]

- 123.Metin B, Roeyers H, Wiersema JR, van der Meere JJ, Thompson M, Sonuga-Barke E (2013): ADHD performance reflects inefficient but not impulsive information processing: A diffusion model analysis. Neuropsychology 27: 193. [DOI] [PubMed] [Google Scholar]

- 124.Huang-Pollock C, Ratcliff R, McKoon G, Shapiro Z, Weigard A, Galloway-Long H (2017): Using the Diffusion Model to Explain Cognitive Deficits in Attention Deficit Hyperactivity Disorder. J Abnorm Child Psychol 45: 57–68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 125. Karalunas SL, Weigard A, Alperin B (2020): Emotion-cognition interactions in ADHD: Increased early attention capture and weakened attentional control in emotional contexts. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging.

- 126. Weigard A, Huang-Pollock C (2014): A diffusion modelling approach to understanding contextual cueing effects in children with ADHD. Journal of Child Psychology and Psychiatry 55: 1336–1344.

- 127. Weigard A, Heathcote A, Sripada C (2019): Modeling the effects of methylphenidate on interference and evidence accumulation processes using the conflict linear ballistic accumulator. Psychopharmacology 236: 2501–2512.

- 128. Heathcote A, Suraev A, Curley S, Gong Q, Love J, Michie PT (2015): Decision processes and the slowing of simple choices in schizophrenia. Journal of Abnormal Psychology 124: 961.

- 129. Fish S, Toumaian M, Pappa E, Davies TJ, Tanti R, Saville CW, et al. (2018): Modelling reaction time distribution of fast decision tasks in schizophrenia: Evidence for novel candidate endophenotypes. Psychiatry Research 269: 212–220.

- 130. Lawlor VM, Webb CA, Wiecki TV, Frank MJ, Trivedi M, Pizzagalli DA, Dillon DG (2019): Dissecting the impact of depression on decision-making. Psychological Medicine 1–10.

- 131. Weigard AS, Brislin SJ, Cope LM, Hardee JE, Martz ME, Ly A, et al. (2020): Evidence accumulation and associated error-related brain activity as computationally informed prospective predictors of substance use in emerging adulthood. bioRxiv.

- 132. Sripada C, Weigard AS (2020): Impaired evidence accumulation as a transdiagnostic vulnerability factor in psychopathology. PsyArXiv.

- 133. Poldrack RA, Congdon E, Triplett W, Gorgolewski K, Karlsgodt K, Mumford J, et al. (2016): A phenome-wide examination of neural and cognitive function. Scientific Data 3: 1–12.

- 134. Keller MC, Miller G (2006): Resolving the paradox of common, harmful, heritable mental disorders: which evolutionary genetic models work best? Behavioral and Brain Sciences 29: 385.

- 135. Weigard A, Clark DA, Sripada C (2020): Cognitive efficiency beats subtraction-based metrics as a reliable individual difference dimension relevant to self-control. PsyArXiv.

- 136. Weigard A, Soules M, Ferris B, Zucker RA, Sripada C, Heitzeg M (2019): Cognitive modeling informs interpretation of go/no-go task-related neural activations and their links to externalizing psychopathology. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging 614420.

- 137. Kotov R, Krueger RF, Watson D, Achenbach TM, Althoff RR, Bagby RM, et al. (2017): The Hierarchical Taxonomy of Psychopathology (HiTOP): a dimensional alternative to traditional nosologies. Journal of Abnormal Psychology 126: 454.

- 138. Ratcliff R, Cherian A, Segraves M (2003): A comparison of macaque behavior and superior colliculus neuronal activity to predictions from models of two-choice decisions. Journal of Neurophysiology 90: 1392–1407.

- 139. O’Connell RG, Dockree PM, Kelly SP (2012): A supramodal accumulation-to-bound signal that determines perceptual decisions in humans. Nature Neuroscience 15: 1729.

- 140. Nunez MD, Vandekerckhove J, Srinivasan R (2017): How attention influences perceptual decision making: Single-trial EEG correlates of drift-diffusion model parameters. Journal of Mathematical Psychology 76: 117–130.

- 141. Loughnane GM, Newman DP, Bellgrove MA, Lalor EC, Kelly SP, O’Connell RG (2016): Target selection signals influence perceptual decisions by modulating the onset and rate of evidence accumulation. Current Biology 26: 496–502.

- 142. Ho TC, Brown S, Serences JT (2009): Domain general mechanisms of perceptual decision making in human cortex. Journal of Neuroscience 29: 8675–8687.

- 143. Liu T, Pleskac TJ (2011): Neural correlates of evidence accumulation in a perceptual decision task. Journal of Neurophysiology 106: 2383–2398.

- 144. Krueger PM, van Vugt MK, Simen P, Nystrom L, Holmes P, Cohen JD (2017): Evidence accumulation detected in BOLD signal using slow perceptual decision making. Journal of Neuroscience Methods 281: 21–32.

- 145. Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG (2004): A general mechanism for perceptual decision-making in the human brain. Nature 431: 859–862.

- 146. Mulder M, Van Maanen L, Forstmann B (2014): Perceptual decision neurosciences: a model-based review. Neuroscience 277: 872–884.

- 147. Kühn S, Schmiedek F, Schott B, Ratcliff R, Heinze H-J, Düzel E, et al. (2011): Brain areas consistently linked to individual differences in perceptual decision-making in younger as well as older adults before and after training. Journal of Cognitive Neuroscience 23: 2147–2158.

- 148. Brosnan MB, Sabaroedin K, Silk T, Genc S, Newman DP, Loughnane GM, et al. (2020): Evidence accumulation during perceptual decisions in humans varies as a function of dorsal frontoparietal organization. Nature Human Behaviour 1–12.

- 149. Loughnane GM, Brosnan MB, Barnes JJ, Dean A, Nandam SL, O’Connell RG, Bellgrove MA (2019): Catecholamine modulation of evidence accumulation during perceptual decision formation: a randomized trial. Journal of Cognitive Neuroscience 31: 1044–1053.

- 150. Westbrook A, van den Bosch R, Määttä J, Hofmans L, Papadopetraki D, Cools R, Frank MJ (2020): Dopamine promotes cognitive effort by biasing the benefits versus costs of cognitive work. Science 367: 1362–1366.