Abstract

Humans readily recognize familiar rhythmic patterns, such as isochrony (equal timing between events), across a wide range of rates. This reflects a facility with perceiving the relative timing of events, not just absolute interval durations. Several lines of evidence suggest this ability is supported by precise temporal predictions arising from forebrain auditory–motor interactions. We have shown previously that male zebra finches, Taeniopygia guttata, which possess specialized auditory–motor networks and communicate with rhythmically patterned sequences, share our ability to flexibly recognize isochrony across rates. To test the hypothesis that flexible rhythm pattern perception is linked to vocal learning, we ask whether female zebra finches, which do not learn to sing, can also recognize global temporal patterns. We find that females can flexibly recognize isochrony across a wide range of rates but perform slightly worse than males on average. These findings are consistent with recent work showing that while females have reduced forebrain song regions, the overall network connectivity of vocal premotor regions is similar to males and may support predictions of upcoming events. Comparative studies of male and female songbirds thus offer an opportunity to study how individual differences in auditory–motor connectivity influence perception of relative timing, a hallmark of human music perception.

Keywords: auditory perception, auditory–motor interaction, comparative cognition, isochrony, sensorimotor circuit, zebra finch

The ability to recognize auditory rhythms is critical for many species (Clemens et al., 2021; Garcia et al., 2020; Mathevon et al., 2017), but the underlying neural mechanisms are only beginning to be understood. One area of progress in elucidating the neural circuits for rhythm perception is understanding how communication signals are recognized based on tempo. For example, female field crickets are attracted by male calling songs composed of trains of short sound pulses when the tempo is ~30 syllables/s. This selectivity is hardwired and mediated by a small network of interneurons that processes instantaneous pulse rate (Schöneich et al., 2015). While this preference is genetically fixed, in other animals, experience can sculpt neural responses to behaviourally salient call rates. For example, in the mouse auditory cortex, excitatory cells are innately sensitive to the most common pup distress call rate (~5 syllables/s), but their tuning can broaden to a wider range of rates following co-housing with pups producing calls across a range of rates (Schiavo et al., 2020).

Much less is known about how the brain recognizes rhythmic patterns independently of rate. While humans can encode and remember the rate of auditory sequences (Levitin & Cook, 1996), we also readily recognize a given rhythmic pattern, e.g. from a favourite song, when it is played at different tempi. The ability to recognize a specific rhythmic pattern whether it is played fast or slow is present in infants (Trehub & Thorpe, 1989) and is based on recognition of the relative timing of intervals more than on their absolute durations. One important rhythmic pattern that humans recognize across a broad range of rates is isochrony, or equal timing between events (Espinoza-Monroy & de Lafuente, 2021). Isochronous patterns at different tempi can differ substantially in absolute timing but are identical in relative timing, so the human facility for recognizing isochrony across a range of tempi is a prime example of the use of relative timing in rhythmic pattern recognition. Isochronous rhythms are common in music around the world (Savage et al., 2015) and are fundamental to the human ability to synchronize movements and sounds with music (Merker et al., 2009; Ravignani & Madison, 2017). In addition, the ability to detect and predict isochrony across a range of rates is central to the positive effects of music-based therapies on a variety of neurological disorders, including normalizing gait in Parkinson’s disease (Dalla Bella et al., 2017; Krotinger & Loui, 2021).

In humans, there is growing evidence that the neural mechanisms underlying perception of relative timing are distinct from those involved in encoding absolute timing (Breska & Ivry, 2018; Grube et al., 2010; Teki et al., 2011). In addition, neuroimaging studies have shown that both auditory and motor regions are active when people listen to rhythms, even in the absence of overt movement. Responses in several motor regions are greater when the stimulus has an underlying isochronous pulse or ‘beat’ (Chen et al., 2008; Grahn & Brett, 2007), and transient manipulation of auditory–motor connections using transcranial magnetic stimulation can disrupt beat perception without affecting single-interval timing (Ross et al., 2018). Based on such findings, we and others have suggested that perception of temporal regularity (independent of rate) depends on the interaction of motor and auditory regions: the motor planning system uses information from the auditory system to make predictions about the timing of upcoming events and communicates these predictions back to auditory regions via reciprocal connections (Cannon & Patel, 2021; Patel & Iversen, 2014). Such predictions could support detection of rhythmic patterns independent of tempo because the relative duration of adjacent intervals remains the same across different rates (e.g. 1:1 for an isochronous pattern).

Given that vocal learning species often communicate using rhythmically patterned sequences (Norton & Scharff, 2016; Roeske et al., 2020) and have evolved specialized motor planning regions that are reciprocally connected to auditory forebrain regions, we have hypothesized that vocal learners are advantaged in flexible auditory rhythm pattern perception (Rouse et al., 2021). Consistent with this hypothesis, prior work has shown that zebra finches, Taeniopygia guttata, and European starlings, Sturnus vulgaris, two species of vocal learning songbirds, can learn to discriminate isochronous from arrhythmic sound sequences and can generalize this ability to stimuli at novel tempi, including rates distant from the training tempi (Hulse et al., 1984; Rouse et al., 2021; but see ten Cate et al., 2016; van der Aa et al., 2015, for evidence of more limited generalization in zebra finches). This ability, also seen in humans (Espinoza-Monroy & de Lafuente, 2021), demonstrates a facility with recognizing a rhythm based on global temporal patterns (relative timing), since absolute durations of intervals differ markedly at distant tempi. These findings contrast with similar research conducted with vocal nonlearning species. For example, pigeons, Columba livia, can learn to discriminate sound sequences based on tempo but cannot learn to discriminate isochronous from arrhythmic sound patterns (Hagmann & Cook, 2010). Norway rats, Rattus norvegicus, can be trained to discriminate isochronous from arrhythmic sound sequences but show limited generalization when tested at novel tempi, suggesting a strong reliance on absolute timing for rhythm perception (Celma-Miralles & Toro, 2020).

Here, we further test the hypothesis that differences in vocal learning abilities correlate with differences in flexible rhythm pattern perception by taking advantage of sex differences in zebra finches (Nottebohm & Arnold, 1976). Although auditory sensitivity is similar across sexes in this species (Yeh et al., 2022), only males learn to imitate song and the neural circuitry subserving vocal learning is greatly reduced in females. Thus, we predicted that male zebra finches would exhibit faster learning rates for discriminating isochronous versus arrhythmic stimuli and/or a greater ability to recognize these categories at novel tempi. By using the same apparatus, stimuli and methods, we can meaningfully compare the flexibility of male versus female rhythmic pattern perception in this species.

Several prior findings, however, support the opposite prediction: either no sex differences in rhythmic pattern perception, or better rhythmic pattern perception in female zebra finches. One reason females may not be disadvantaged in our task is that they analyse male song when choosing a mate, and a previous meta-analysis found that female zebra finches are faster than males at learning to discriminate spectrotemporally complex auditory stimuli (Kriengwatana et al., 2016). In addition, while the volume of vocal motor regions that subserve song performance is substantially greater in male zebra finches, a recent anatomical study found male-typical patterns of connectivity in the vocal premotor region in females, including minimal sex differences in afferent auditory and other inputs (Shaughnessy et al., 2019). Moreover, a recent study of antiphonal calling suggests that female zebra finches may perform better than males on rhythmic pattern processing (Benichov et al., 2016). In that study, both male and female zebra finches could predict the timing of calls of a rhythmically calling vocal partner, allowing them to adjust the timing of their own answers to avoid overlap. This ability to predictively adjust call timing was enhanced in females and was disrupted by lesions of vocal motor forebrain regions, suggesting that brain regions associated with singing in males may subserve auditory perception and/or timing abilities in female zebra finches.

METHODS

Subjects

Subjects were 22 experimentally naïve female zebra finches from our breeding colony (mean ± SD age = 72 ± 8 days posthatch at the start of training; range 61–87 days posthatch). Thirteen females were tested on rhythm discrimination, six females were tested on pitch discrimination and tempo discrimination (see below) and three females did not learn to use the apparatus. Data from 14 age-matched male zebra finches were collected by Rouse et al. (2021), 10 for rhythm discrimination and four for pitch and tempo discrimination. Data from six of the 13 females were collected during the same period as data for the males (March–December 2019); data from the other seven females were collected following a pandemic-related research hiatus (June–December 2020).

Ethical Note

All procedures were approved by the Tufts University Institutional Animal Care and Use Committee under protocols M2016-51 and M2019-21. Birds were housed individually in cages (13 × 9 inches and 10.5 inches high; ~33 × 23 cm and 27 cm high) inside modified coolers for sound isolation, with one cage per box. These coolers had constant airflow through use of aquarium pumps, and humidity and temperature in each box were checked daily. Birds were maintained on a 12:12 h light:dark cycle. Once a week, birds were housed together in a single large cage with access to a water bath, dried fruit, millet and social interaction for several hours.

Testing and training ran from lights-on to lights-off every day except during cage cleaning. Dehydrated seed was available ad libitum. Birds also received cuttlebone, grit and millet weekly and small amounts of burlap as enrichment. During experimentation, all subjects were mildly water-restricted and worked for water rewards (~5–10 μl/drop), routinely performing ~520 trials/day on average. Daily water consumption was monitored per bird to ensure a minimum water intake. Any bird that consumed an average of <1.5 ml over 2 consecutive days was temporarily removed from the experiment and given free access to water. If a bird was not running an experiment, water was provided ad libitum. Water dispensers were cleaned biweekly with a ≥10% ethanol solution and flushed with fresh water. Bird weight was also monitored daily (during shaping) or weekly (during training and testing). When a bird was finished, either by completing the experiment or failing to learn the task, it was returned to group housing or used in a separate experiment.

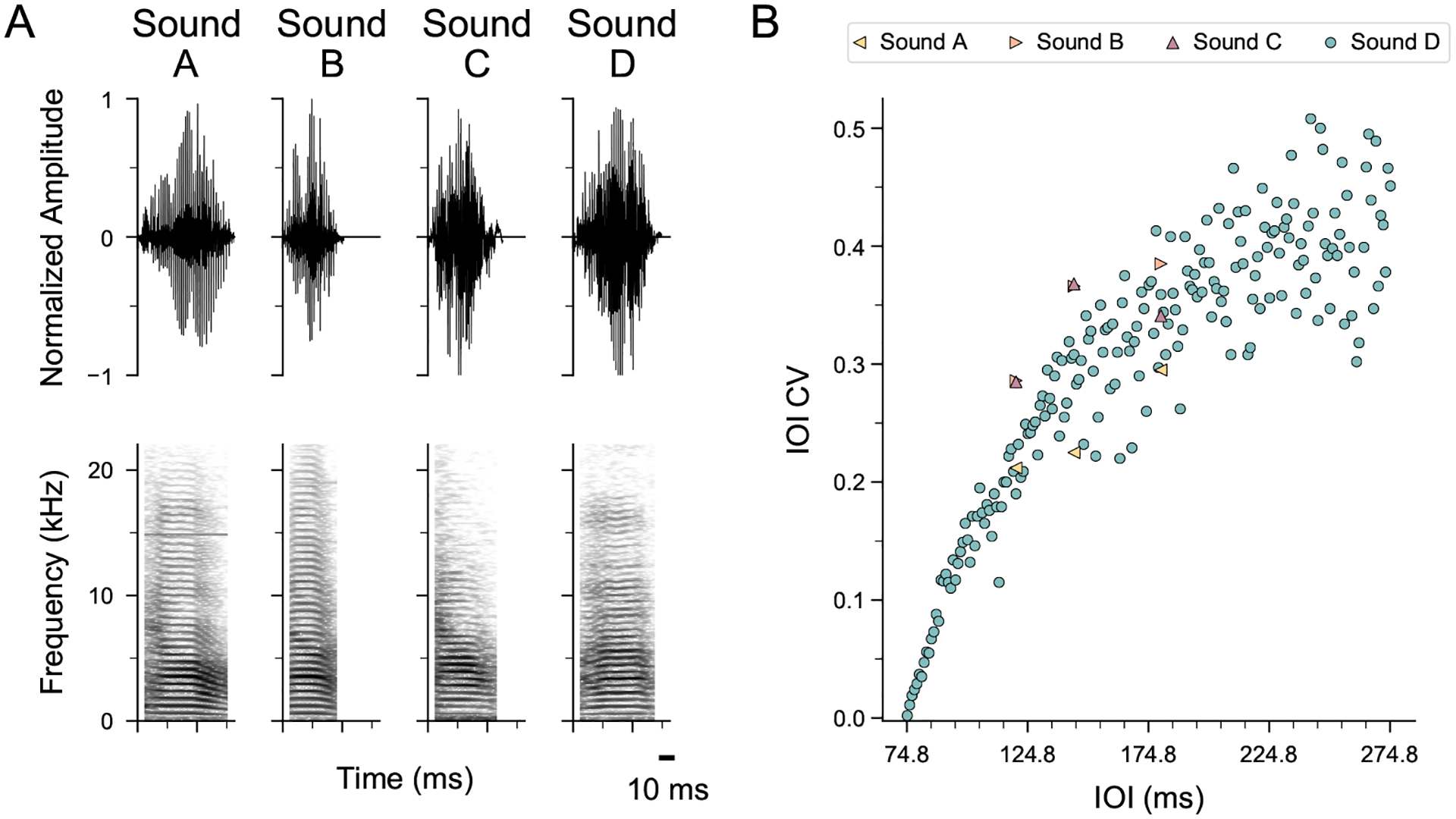

Auditory Stimuli

All stimuli used in this experiment were the same as those described in Rouse et al. (2021). Briefly, we used sequences of natural sounds from unfamiliar male conspecifics: either an introductory element that is typically repeated at the start of a song, or a short harmonic stack (Zann, 1996) (see Appendix, Fig. A1a). For each sound, an isochronous sequence (~2.3 s long) with equal time intervals between event onsets and an arrhythmic sequence with a unique temporal pattern (but the same mean inter-onset interval (IOI)) were generated at two base tempi: 120 ms and 180 ms IOI (Fig. 1a). These tempi were chosen based on the average syllable rate in zebra finch song (~7–9 syllables/s, or 111–142 ms IOI; Zann, 1996).

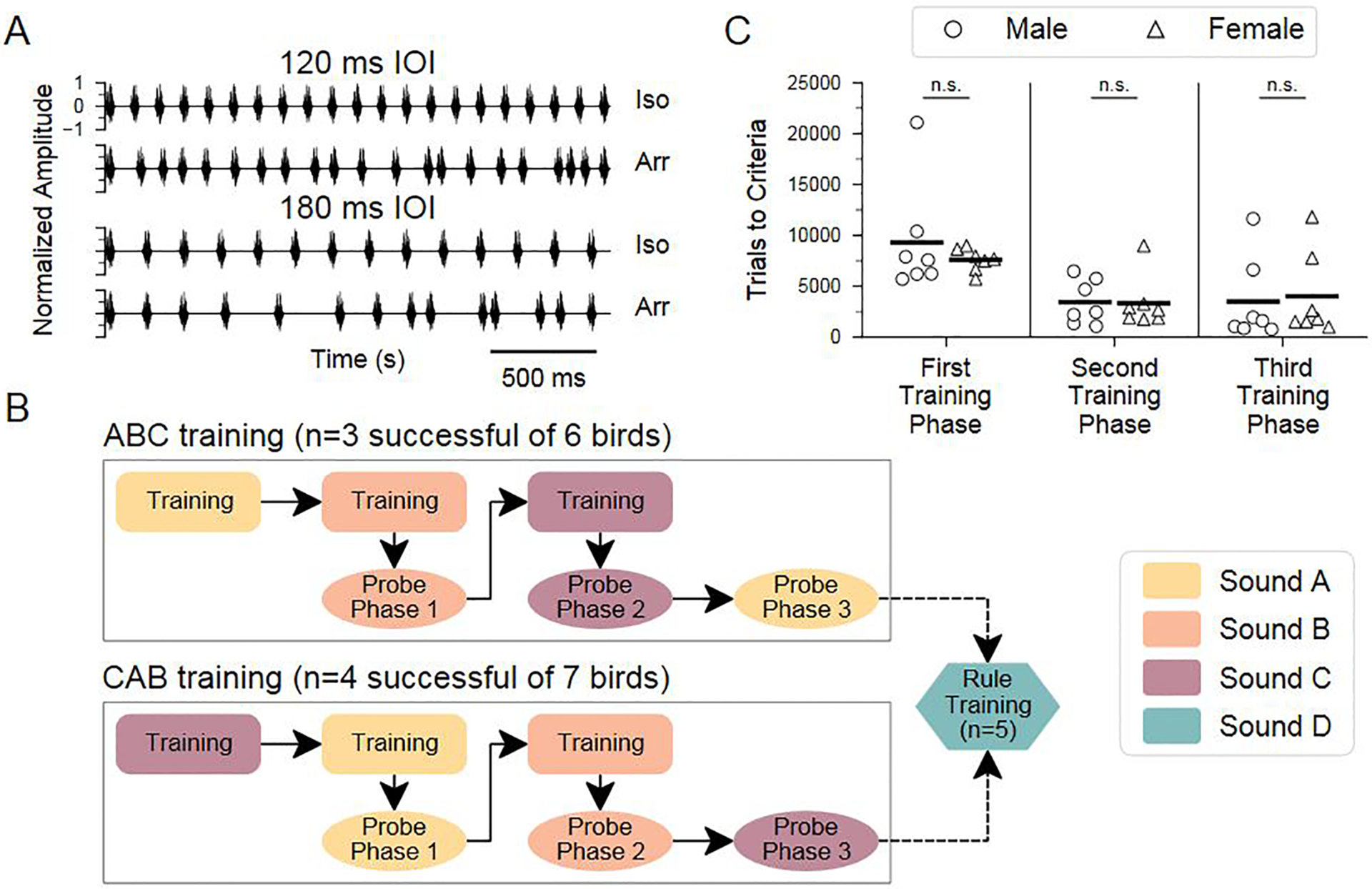

Figure 1.

Experimental design for testing the ability to flexibly perceive rhythmic patterns. (a) Normalized amplitude waveforms of isochronous (Iso) and arrhythmic (Arr) sequences of a repeated song element (sound B; see Appendix, Fig. A1) with 120 ms and 180 ms mean inter-onset interval (IOI). (b) Schematic of the protocol. After a pretraining procedure in which birds learned to use the apparatus (not shown), birds learned to discriminate between isochronous and arrhythmic sound sequences at 120 and 180 ms IOI, for sounds A, B and C (‘ABC training’, N = 6 females) or starting with sound C followed by sounds A and B (‘CAB training’, N = 7 females) (colour indicates sound type; see Appendix, Fig. A1). To test for the ability to generalize the discrimination to new tempi, probe stimuli (144 ms IOI) were introduced after birds had successfully completed two training phases. A subset of birds was then tested with a broader stimulus set using a novel sound element (sound D) and every integer rate between 75 and 275 ms IOI (‘rule training’; see Methods for details). (c) Comparison of training time (‘trials to criteria’) for male and female birds that completed rhythm discrimination training for all three phases. Data from male birds collected by Rouse et al. (2021) are shown for comparison.

Arrhythmic stimuli were generated in an iterative process using MATLAB (Mathworks, Natick, MA, U.S.A.). Each isochronous stimulus had a known number of intervals, and an equal number of IOIs were randomly drawn from a uniform distribution ranging between a 0 ms gap between sound elements (i.e. an IOI equal to the sound element duration) and an IOI 1.5 times the IOI of the corresponding isochronous stimulus (e.g. maximum IOI of 180 ms and 270 ms for arrhythmic sequences whose mean tempi were 120 and 180 ms IOI, respectively). This process was repeated until it generated a set of intervals that had the same number of intervals, average IOI and overall duration as the paired isochronous stimulus. To ensure that the arrhythmic pattern was significantly different from isochrony, we also required a minimum SD for interval durations (SD ≥ 10 ms). An additional pair of probe stimuli (one isochronous, one arrhythmic) was generated at a tempo (144 ms IOI) 20% faster/slower than the training stimuli, using the same minimum SD for interval durations and same minimum and maximum IOI constraints (Fig. 1b).
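The iterative generation procedure described above can be illustrated with a short rejection-sampling sketch. This is not the original MATLAB code: the function name, the tolerance on the mean IOI (`tol_ms`), the use of the sample SD and the example element duration are all assumptions made for illustration.

```python
import random
import statistics


def make_arrhythmic_iois(n_intervals, mean_ioi_ms, element_ms,
                         min_sd_ms=10.0, tol_ms=0.5,
                         max_tries=100_000, seed=None):
    """Draw IOIs for one arrhythmic sequence by rejection sampling.

    Hypothetical sketch: `tol_ms` (how closely the mean IOI must match the
    isochronous sequence) is an assumption; the text only states that the
    mean IOI and overall duration were matched.
    """
    rng = random.Random(seed)
    lo = element_ms          # 0 ms gap: IOI equals the sound element duration
    hi = 1.5 * mean_ioi_ms   # upper bound on any single IOI
    for _ in range(max_tries):
        iois = [rng.uniform(lo, hi) for _ in range(n_intervals)]
        # Matching the mean IOI with an equal number of intervals also
        # matches the overall duration (within the same tolerance).
        if abs(statistics.fmean(iois) - mean_ioi_ms) > tol_ms:
            continue
        # Require enough variability to differ clearly from isochrony.
        if statistics.stdev(iois) < min_sd_ms:
            continue
        return iois
    raise RuntimeError("no acceptable interval set found")


# Example with an assumed 60 ms sound element at the 120 ms base tempo.
example = make_arrhythmic_iois(19, 120.0, 60.0, seed=1)
```

Repeating the draw until all constraints hold mirrors the "repeated until" logic in the text; a different tolerance or SD definition would change only the acceptance rate, not the overall scheme.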

For ‘rule training’ (see below), an isochronous/arrhythmic pair was generated with a novel harmonic stack (sound D; see Appendix, Fig. A1a) at tempi ranging from 75 ms to 275 ms IOI in 1 ms steps (i.e. at 201 rates). Each arrhythmic stimulus had a temporally unique pattern. For IOIs < 90 ms, the minimum SD of IOI required for arrhythmic sequences was reduced from 10 ms to 1 ms because sound element D was ~75 ms long (Appendix, Fig. A1a), making the silent gaps very short and reducing variability in the IOIs (Appendix, Fig. A1b). Variability in the IOIs of these 201 arrhythmic sequences was calculated using two measures: (1) the coefficient of variation (CV = the standard deviation of IOIs divided by the mean IOI); (2) the normalized pairwise variability index (nPVI), which measures the difference in duration between each IOI and its neighbour, normalized by the average duration of the pair. Unlike the CV, the nPVI is a measure of relative timing because it is sensitive to duration ratios between successive intervals in a sequence. The nPVI has been used in research on rhythmic patterning in speech, music and animal communication (Burchardt & Knörnschild, 2020; Low et al., 2000; Patel et al., 2006) and is calculated using the following equation:

$$\mathrm{nPVI} = \frac{100}{m-1} \sum_{k=1}^{m-1} \left| \frac{d_k - d_{k+1}}{(d_k + d_{k+1})/2} \right|$$

where $d_k$ indicates the duration of the $k$th IOI and $m$ is the total number of IOIs. nPVI ranges between 0 for isochronous sequences and a theoretical upper limit of 200. The higher the nPVI, the more adjacent IOIs tend to differ in duration. Two sequences with the same IOIs but in different orders (e.g. 10 ms→50 ms→10 ms→50 ms versus 10 ms→10 ms→50 ms→50 ms) would have the same CV but very different nPVI values given the difference in duration contrast between adjacent elements.
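The contrast between the two variability measures can be checked numerically. The sketch below assumes the standard nPVI definition (mean absolute difference between adjacent IOIs, each normalized by the pair mean, scaled by 100) and uses the population SD for the CV; the choice of SD estimator is an assumption, and the function names are illustrative.

```python
import statistics


def cv(iois):
    """Coefficient of variation: SD of IOIs divided by the mean IOI."""
    return statistics.pstdev(iois) / statistics.fmean(iois)


def npvi(iois):
    """Normalized pairwise variability index over adjacent IOI pairs."""
    if len(iois) < 2:
        raise ValueError("nPVI needs at least two intervals")
    terms = [abs(a - b) / ((a + b) / 2) for a, b in zip(iois, iois[1:])]
    return 100 * sum(terms) / len(terms)


alternating = [10, 50, 10, 50]   # every adjacent pair contrasts
grouped = [10, 10, 50, 50]       # only the middle pair contrasts

print(round(cv(alternating), 3), round(cv(grouped), 3))      # identical CVs
print(round(npvi(alternating), 1), round(npvi(grouped), 1))  # 133.3 vs 44.4
```

Because the CV ignores order, it is identical for the two sequences, whereas the nPVI is three times larger for the alternating sequence.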

For all stimuli, the amplitude of each element was constant within a sequence, but across sound types, amplitude was not normalized. Received levels of stimuli were calibrated to ~77 dBA at 3 cm from the floor of the cage with sound A. For a subset of stimuli using sound D (N = 15/201), the amplitude was inadvertently higher (see Figure S3B in Rouse et al., 2021).

Auditory Operant Training Procedure

Training and probe testing used a go/interrupt paradigm as described in Rouse et al. (2021). Briefly, female birds were housed singly in a cage that had a trial switch to activate playback of an auditory stimulus and a response switch with a waterspout. Both switches had adjacent LED lights that were only lit when the switches were active and could be pecked. Birds were mildly water-restricted and worked for water rewards, routinely performing ~520 trials/day. Pecking the trial switch initiated a trial and triggered playback of a stimulus (~2.3 s): either rewarded (S+, 50% probability) or unrewarded (S−, 50% probability). For rhythm discrimination experiments, the isochronous patterns were rewarded (S+ stimuli). Trial and response switches were activated 500 ms after stimulus onset, and pecking either switch immediately stopped playback. ‘Hits’ were correct pecks of the response switch during S+ trials. ‘False alarms’ were pecks of the response switch on S− trials and resulted in lights out (up to 25 s) (Gess et al., 2011). If neither switch was pecked within 5 s after stimulus offset, the trial would end (‘no response’). No response to the S− stimulus was counted as a ‘correct rejection’, and no response to the S+ stimulus was considered a ‘miss’. During the response window, a bird could also peck the trial switch again to ‘interrupt’ and immediately stop the current trial (and any playback), which was counted as a ‘miss’ or ‘correct rejection’ depending on whether a S+ or S− stimulus had been presented. The use of the trial switch to interrupt trials varied widely among birds and was not analysed further. Regardless of response, birds had to wait 100 ms after the stimulus stopped playing before a new trial could be initiated.
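The scoring rules of the go/interrupt paradigm reduce to a small decision table, sketched below. The string labels for stimuli and responses are hypothetical names chosen for illustration and are not taken from the original control software.

```python
def classify_trial(stimulus, response):
    """Score one trial.

    stimulus: "S+" (rewarded) or "S-" (unrewarded).
    response: "response_switch", "trial_switch" (an interrupt),
              or None (no peck within the response window).
    """
    if stimulus not in ("S+", "S-"):
        raise ValueError(f"unknown stimulus: {stimulus!r}")
    pecked_response_switch = response == "response_switch"
    if stimulus == "S+":
        # Interrupts and non-responses on S+ trials both count as misses.
        return "hit" if pecked_response_switch else "miss"
    # Interrupts and non-responses on S- trials count as correct rejections.
    return "false_alarm" if pecked_response_switch else "correct_rejection"
```

Only a peck on the response switch distinguishes a 'go' from a withheld response, so interrupts and timeouts are scored identically.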

Shaping and performance criteria

To learn the go/interrupt procedure, birds were first trained to distinguish between two unfamiliar conspecific songs (~2.4 s long, ‘shaping’ phase), one acting as the S+ (rewarded or ‘go’) stimulus and the other as the S− (unrewarded or ‘no-go’) stimulus. Lights-out punishment was not implemented until a bird initiated ≥ 100 trials. Throughout the experiment, the criterion for advancing to the next phase was ≥ 60% hits, ≥ 60% correct rejections and ≥ 75% overall correct for two of three consecutive days (Nagel et al., 2010; van der Aa et al., 2015). In each phase, birds were required to reach the performance criterion within 30 days. If a bird failed to reach performance criterion within 30 days in any phase, it was removed from further training/testing. The mean (± SD) number of trials to criterion for the shaping stimuli was 1734 ± 727. Three females did not regularly trigger playback of auditory stimuli and were removed from the study without completing shaping.
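One way to make the advancement criterion concrete is the sketch below. It assumes that 'two of three consecutive days' means at least two passing days within any window of up to three consecutive days; that reading, and the function names, are assumptions rather than details given in the text.

```python
def day_meets_criterion(hits, misses, correct_rejections, false_alarms):
    """Check one day's counts against the three performance thresholds."""
    n_plus = hits + misses
    n_minus = correct_rejections + false_alarms
    if n_plus == 0 or n_minus == 0:
        return False
    hit_rate = hits / n_plus
    cr_rate = correct_rejections / n_minus
    overall = (hits + correct_rejections) / (n_plus + n_minus)
    return hit_rate >= 0.60 and cr_rate >= 0.60 and overall >= 0.75


def reached_criterion(daily_counts):
    """True if any window of up to 3 consecutive days has >= 2 passing days."""
    passed = [day_meets_criterion(*day) for day in daily_counts]
    return any(sum(passed[i:i + 3]) >= 2 for i in range(len(passed)))
```

Each element of `daily_counts` is a tuple of (hits, misses, correct rejections, false alarms) for one day.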

Training: rhythm discrimination

Once a bird reached criterion performance on the shaping stimuli, she was trained to discriminate isochronous versus arrhythmic stimuli (N = 13 birds). As described previously, each bird was trained using multiple sound types and multiple stimulus rates. In the first training phase, each bird learned to discriminate two isochronous stimuli (120 ms and 180 ms IOI) from two arrhythmic stimuli (matched for each mean IOI). Once performance criterion was reached, the bird was presented with a new set of stimuli at the same tempi but with a novel sound element (and a novel irregular temporal pattern at each tempo; see Fig. 1b). One group of females (‘ABC’; N = 6) was trained to discriminate sound A stimuli, followed by sound B and then sound C; a second group (‘CAB’; N = 7) was trained with sound C, followed by sounds A and B. One female ‘ABC’ bird was presented with stimuli at three additional tempi, but those trials were not reinforced and were excluded from analysis.

Probe testing/generalization

To test whether birds could generalize the isochronous versus arrhythmic classification at a novel tempo, females were tested with probe stimuli at 144 ms IOI, 20% slower/faster than the training stimuli (120 ms and 180 ms IOI). Male zebra finches have been previously shown to discriminate between two stimuli that differ in tempo by 20% (Rouse et al., 2021), and we confirmed this in female zebra finches (see below and Appendix, Fig. A2b). Probe sounds were introduced after a bird had successfully completed two phases of training (see Fig. 1b). Prior to probe testing, the reinforcement rate for training stimuli was reduced to 80% for at least 2 days. During probe testing, training stimuli (90% of trials) and probe stimuli (10% of trials) were interleaved randomly. Probe trials and 10% of the interleaved training stimuli were not reinforced or punished (Rouse et al., 2021; van der Aa et al., 2015).
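The trial mix during probe testing can be sketched as a simple random draw. This is an illustrative reconstruction only: the dictionary fields, the equal split between the two training tempi and the independent per-trial draws are assumptions, not details of the original software.

```python
import random


def draw_probe_phase_trial(rng):
    """Draw one trial for a probe-testing session.

    10% of trials are probes (144 ms IOI), never reinforced or punished;
    90% are training trials, of which 10% are also left unreinforced.
    """
    if rng.random() < 0.10:
        return {"kind": "probe", "ioi_ms": 144, "reinforced": False}
    return {
        "kind": "training",
        "ioi_ms": rng.choice([120, 180]),     # assumed equal odds per tempo
        "reinforced": rng.random() >= 0.10,   # 90% of training trials
    }


rng = random.Random(0)
session = [draw_probe_phase_trial(rng) for _ in range(10_000)]
```

Leaving a fraction of training trials unreinforced means that the absence of feedback alone does not identify a trial as a probe.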

Rule training

Following probe testing with all three sound types, subjects (N = 5 birds) were presented with a new set of isochronous and arrhythmic stimuli using a novel sound (sound D). In this phase, stimulus tempi included every integer rate from 75 ms to 275 ms IOI (201 total rates). Arrhythmic stimuli were again generated independently so that each rate had a novel irregular pattern, with the same mean IOI as its corresponding isochronous pattern. Trials were randomly drawn with replacement from this set of 402 stimuli, and all responses were rewarded or punished as during training. This large stimulus set made it unlikely that subjects could memorize individual temporal patterns.

Discrimination of other acoustic features

To determine whether the 20% difference between the training and probe stimuli could be detected by female zebra finches, we tested a separate cohort of females on tempo discrimination (N = 6; mean ± SD age = 71 ± 9.1 days posthatch at the start of shaping). Following shaping, these birds were trained to discriminate isochronous sequences of sound A based on rate: 120 ms versus 144 ms IOI. The rewarded (S+) stimulus was 120 ms IOI for four females and 144 ms IOI for two females. To assess the perceptual abilities of female zebra finches more generally, these females were also tested on their ability to discriminate spectral features using frequency-shifted isochronous sequences of sound B: one shifted three semitones up and the other shifted six semitones up (‘change pitch’ in Audacity version 2.1.2). Four females were tested with the six-semitone shift as the S+ stimulus and two females were tested with the three-semitone shift as the S+ stimulus.

Data Analysis

All statistical tests were performed in R (version 3.6.2) within RStudio (version 1.2.5033) except for the binomial tests for training and generalization, the nonparametric tests for comparisons across perceptual discrimination tasks and linear regressions. Binomial logistic regressions were performed with the ‘glmer’ function of the ‘lme4’ package for R, and binomial and nonparametric tests and linear regressions were performed using Python (version 3.7.6).

Training and generalization testing

To quantify performance, the proportion of correct responses ((hits + correct rejections)/total number of trials) was computed for each stimulus pair (isochronous and arrhythmic patterns of a given sound at a particular tempo). For training phases, proportion correct was always computed based on the last 500 trials. For probe testing, performance was computed for 80 probe trials, except for one female that was inadvertently presented with only 56 probe trials for sound A due to a procedural error. Data from this female are included as her performance was comparable to that of other females that performed 80 probes for each sound type. The proportion of correct responses was compared to chance performance (P = 0.5) with a binomial test using α = 0.05/2 in the training conditions (2 tempi) and α = 0.05/3 for probe trials (3 tempi). A linear least-squares regression was used to examine the correlation between each subject’s average performance on interleaved training stimuli and probe stimuli.
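The comparison against chance can be illustrated with an exact binomial test written with the standard library only. Whether the original tests were one- or two-sided is not stated; the sketch assumes a two-sided test, and the counts in the example are hypothetical.

```python
from math import comb


def binomial_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial P value.

    Sums the probabilities of all outcomes that are no more likely than
    the observed count k (a small relative tolerance absorbs rounding).
    """
    probs = [comb(n, i) * p**i * (1 - p)**(n - i) for i in range(n + 1)]
    observed = probs[k]
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))


alpha_training = 0.05 / 2   # Bonferroni correction for 2 training tempi
alpha_probe = 0.05 / 3      # Bonferroni correction for 3 tempi

# Hypothetical example: 56 correct responses in 80 probe trials.
p_value = binomial_two_sided_p(56, 80)
above_chance = p_value < alpha_probe
```

With chance at 0.5 the distribution is symmetric, so the two-sided P value is twice the one-sided tail probability.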

To account for incorrect responses to the S– stimuli, we also calculated the d′ measure for both training stimuli and probe stimuli:

$$d' = z(H) - z(F)$$

where H is the proportion of correct responses to the S+ stimulus (hits), F is the proportion of incorrect responses to the S− stimulus (false alarm rate) and z(·) is the inverse of the standard normal cumulative distribution function. A d′ value of 0 is equivalent to chance performance, and d′ values greater than 1 are considered ‘good’ discrimination (Green & Swets, 1966).
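As a worked illustration, d′ can be computed from a hit rate and a false alarm rate, assuming the standard signal-detection definition d′ = z(H) − z(F). The rates in the example are hypothetical, and the sketch does not show how rates of exactly 0 or 1 were handled, since the text does not specify this.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index d' = z(H) - z(F).

    z is the inverse standard normal CDF; inv_cdf raises an error for
    rates of exactly 0 or 1, which would need a correction not shown here.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


print(round(d_prime(0.9, 0.3), 2))   # 1.81
print(d_prime(0.5, 0.5))             # 0.0, i.e. chance performance
```

Unlike proportion correct, d′ separates sensitivity from an overall bias towards pecking the response switch.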

Performance of females (N = 7) during probe trials was compared to that of male zebra finches (N = 7) collected in a prior study (Rouse et al., 2021). We analysed performance across all probe trials for these birds with a binomial logistic regression using a generalized linear mixed model with sex, probe phase number and sex*probe phase number interaction as fixed effects and subject as a random effect. All birds performed 240 probe trials each (80 probe trials per song element × 3 song elements) except for one female that performed 216 probe trials (see Results, Appendix, Fig. A3).

Rule training

As described previously in Rouse et al. (2021), we analysed the first 1000 trials of rule training for each bird (N = 5 females) to minimize any potential effect of memorization. Trials were binned by IOI in 10 ms increments, and the number of correct responses was analysed with a binomial logistic regression using a generalized linear mixed model (tempo bin as a fixed effect and subject as a random effect). Performance in each bin was compared to performance in the 75–85 ms IOI bin, where performance fell to chance since the degree of temporal variation in interelement intervals was severely limited by the duration of the sound element (Appendix, Fig. A1b). To identify possible sex differences, we performed an additional analysis using data from a range of tempi (95–215 ms) in which both males and females performed significantly above chance (binomial test: P < 0.005). The number of correct and incorrect trials was calculated per sex and analysed for significant group differences with a chi-square test.
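The binning and the group comparison can be sketched as follows. The bin edges (75–85, 85–95, ... with 275 ms placed in the last bin), the absence of a continuity correction and the example counts are all assumptions made for illustration.

```python
from math import erfc, sqrt


def ioi_bin(ioi_ms, start=75, width=10, stop=275):
    """Return the (lower, upper) edges of the 10 ms bin containing an IOI."""
    last_index = (stop - start) // width - 1
    index = min((ioi_ms - start) // width, last_index)
    lower = start + index * width
    return (lower, lower + width)


def chi_square_2x2(a_correct, a_incorrect, b_correct, b_incorrect):
    """Pearson chi-square test (1 df, no continuity correction) for 2 groups."""
    n = a_correct + a_incorrect + b_correct + b_incorrect
    row_a, row_b = a_correct + a_incorrect, b_correct + b_incorrect
    col_c, col_i = a_correct + b_correct, a_incorrect + b_incorrect
    cross = a_correct * b_incorrect - a_incorrect * b_correct
    chi2 = n * cross**2 / (row_a * row_b * col_c * col_i)
    # Survival function of the chi-square distribution with 1 df.
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: group A 80/100 correct, group B 60/100 correct.
chi2, p_value = chi_square_2x2(80, 20, 60, 40)
```

Pooling trials within each sex treats every trial as independent, which is the assumption built into a chi-square test on counts of correct and incorrect responses.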

Discrimination of other acoustic features

Performance on tempo or frequency discrimination was analysed in the same manner as for rhythm discrimination: the proportion of correct responses in the last 500 trials was compared to chance performance using a binomial test with α = 0.05.

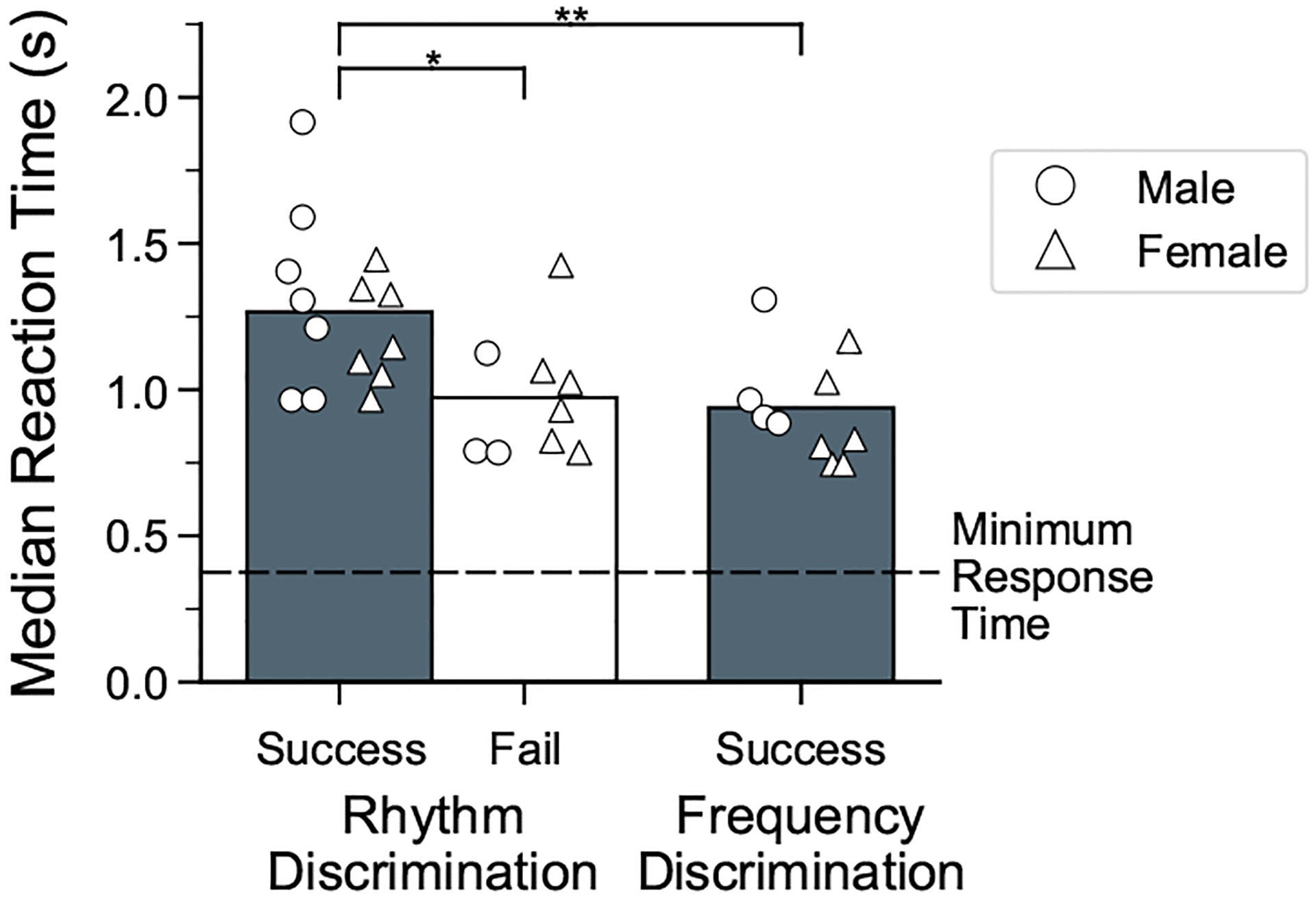

Reaction time

Given that our task involved perception of temporal patterns, we quantified how long birds listened to the stimuli before responding. To estimate the duration of the stimulus that a bird heard prior to responding, we computed the median time between trial initiation and response selection (when the bird pecked a switch) for the last 500 trials in a training phase for both males and females. For the rhythm discrimination birds, only the first training phase (prior to probe testing) was used. Median reaction time values were compared between three groups: 14 birds that completed all rhythmic training phases (‘successful’ birds), 9 birds that failed to complete rhythm discrimination training and 10 birds that learned the frequency discrimination. No sex differences were observed when pooling reaction time data across rhythm discrimination and frequency discrimination tasks (Mann–Whitney U test: U = 0.69, N1 = N2 = 7, P = 0.49). We used a Kruskal–Wallis test to compare differences across groups, followed by a Dunn’s post hoc test with Bonferroni correction for multiple comparisons.

RESULTS

Rhythmic Pattern Training and Generalization Testing in Female Zebra Finches

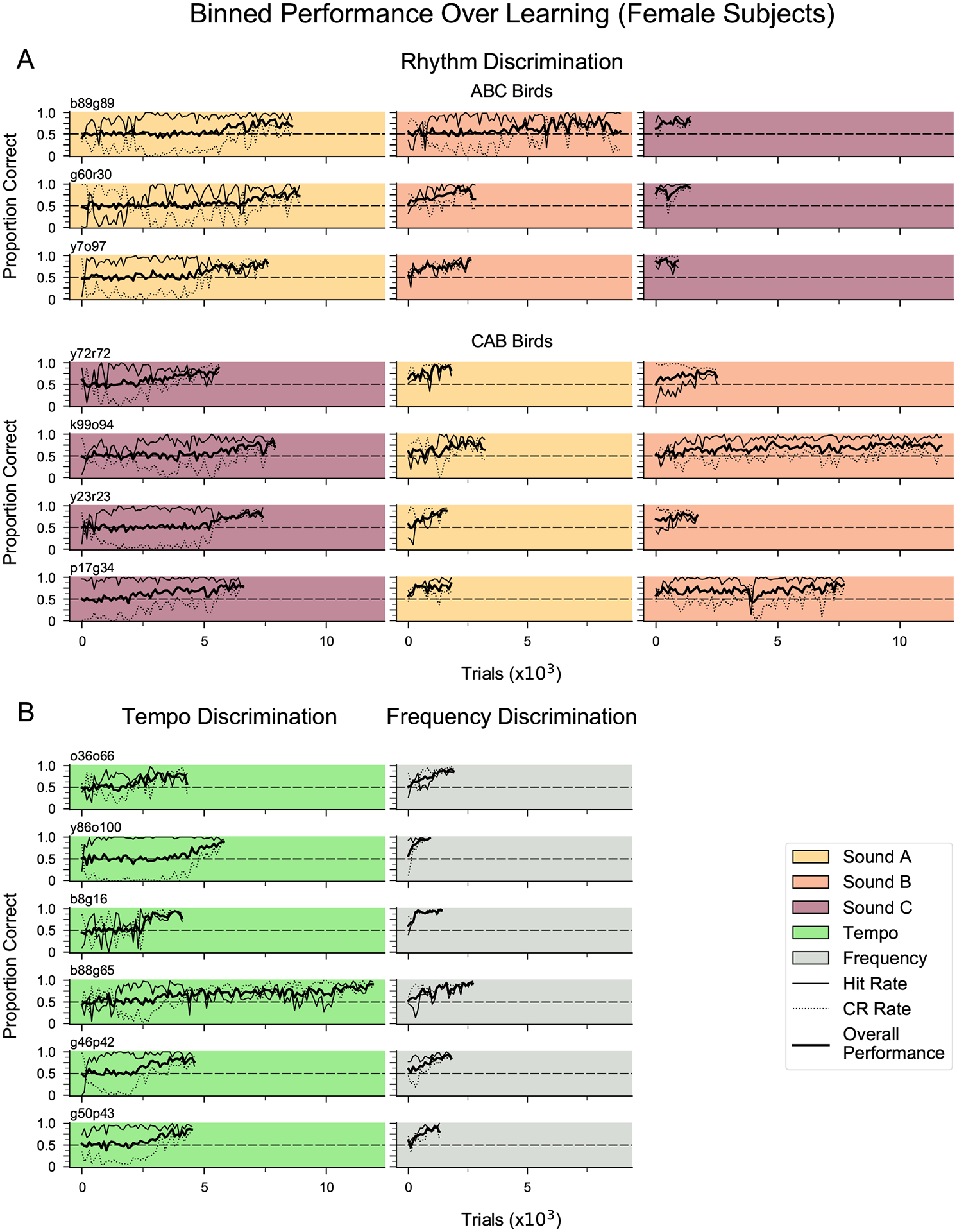

To test the ability of female songbirds to recognize a rhythmic pattern based on the relative timing of events, 13 female zebra finches were first trained to discriminate isochronous from arrhythmic sequences using a go/no-go paradigm with three training phases (Fig. 1a, b). Seven out of 13 females learned to discriminate these sequences in all three phases, each of which used a different zebra finch song element (the remaining six females did not reach our performance criterion within 30 days per phase and therefore did not complete training, so their potential performance on subsequent tests is not known). The number of trials for the seven females to reach criteria for rhythmic discrimination (see Methods) was comparable to that of male zebra finches tested previously (Fig. 1c; data from male birds in Rouse et al., 2021). There were no sex-based differences in training time for any phase (Mann–Whitney U test: U = −0.1917, P > 0.05). Figure 2a shows the time course of learning for a representative female zebra finch (y7o97), which gradually learned to withhold her response to the arrhythmic stimulus. Across the seven successful females, rhythm discrimination performance was ~81% accurate (mean d′ = 1.9; Appendix, Fig. A3) at the end of the first training phase (Fig. 2b), and a comparable accuracy level was attained by the last 500 trials of each training phase (median = 80% proportion correct; mean d′ = 1.7; see Appendix, Fig. A2a for learning curves for each bird on the rhythm discrimination task).

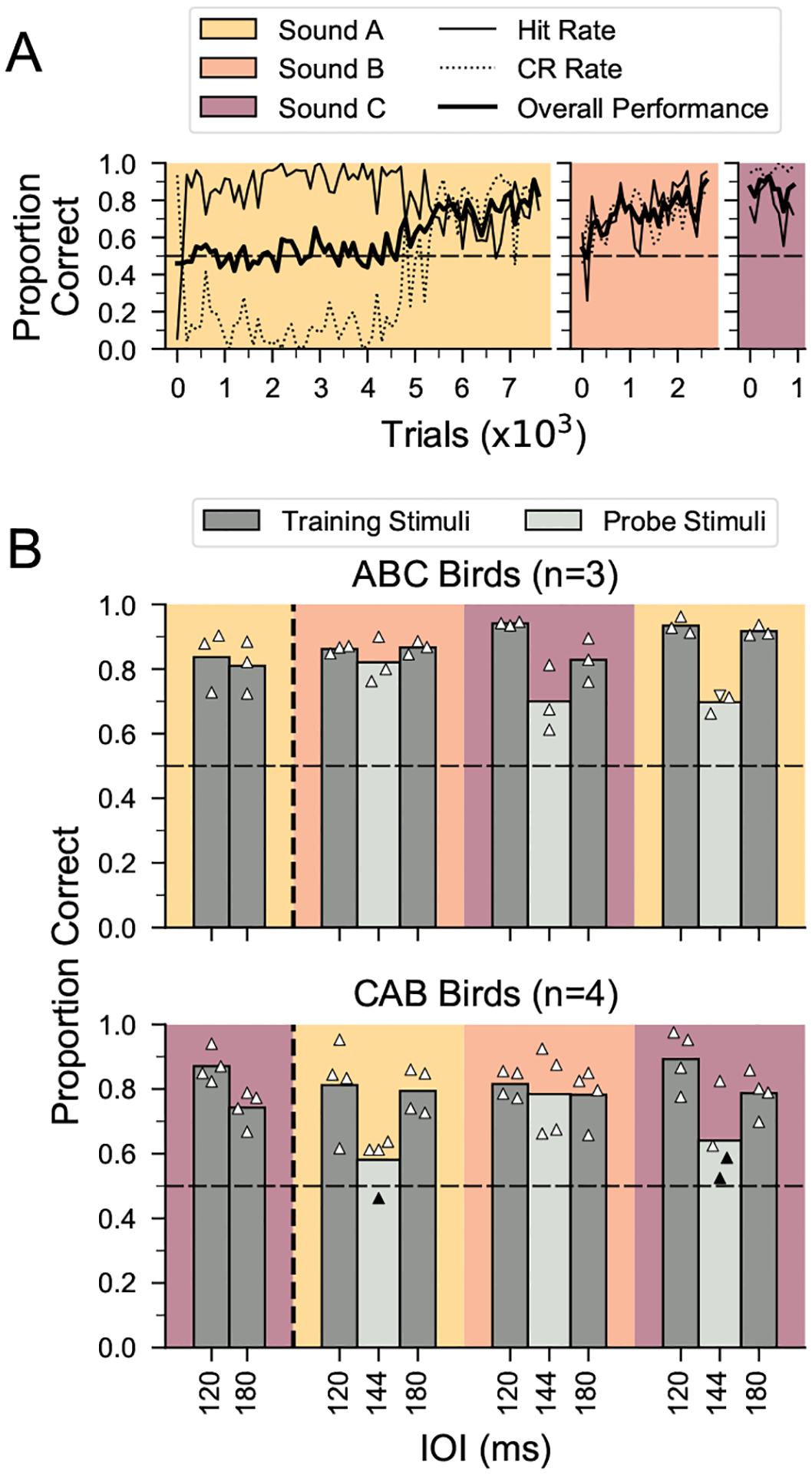

Figure 2.

Learning of rhythmic pattern discrimination and generalization to new tempi for female birds. (a) Learning curves for rhythm discrimination for a representative female bird (y7o97) across three training phases (ABC training) in 100-trial bins. Data are plotted until criterion performance was reached. Chance performance is indicated by the dashed horizontal line. Hit rate: proportion correct responses for isochronous stimuli (S+); CR: proportion correct rejections for arrhythmic stimuli (S−). (b) Results for rhythm discrimination training and probe testing for successful female birds (N = 7). Data to the left of the vertical dashed line show performance in the final 500 trials of the first rhythm discrimination training phase (no probe testing, see Fig. 1b). Data to the right of the vertical dashed line show performance during probe testing with stimuli at an untrained tempo of 144 ms IOI (light grey) and for interleaved training stimuli (dark grey). Symbols denote performance for each female (N = 3 probe tests/bird; 6 females were tested with 240 probe stimuli (triangles) and 1 female was tested with 80 probe stimuli for two sound types and 56 probe stimuli for the third sound type (inverted triangle)). Average performance across birds in each group is indicated by vertical bars. Filled symbols denote performance not significantly different from chance.

After successful completion of two phases of rhythm discrimination, we tested whether females could generalize the discrimination of isochronous versus arrhythmic stimuli at a novel tempo (Fig. 1b, ‘probe testing’). Figure 2b shows the performance on randomly interleaved training stimuli (90% of the trials) and probe stimuli at a novel tempo (10% of the trials) for the seven females that successfully completed all three phases of rhythm discrimination training. Performance on probe stimuli was significantly above chance for 18 of 21 probe tests (binomial test with Bonferroni correction: N = 3 probe tests/bird × 7 birds, P < 0.0167), indicating that female zebra finches robustly generalized the discrimination of isochronous versus arrhythmic stimuli to a novel tempo distant from the training tempi (Fig. 2b). Female performance on probe trials was not significantly different from performance on interleaved training trials for sound B (χ²₁ = 0.1, P = 0.75) but was significantly lower for sound A (χ²₁ = 96.2, P < 0.0001) and sound C (χ²₁ = 29.4, P < 0.0001). Notably, this same result was seen in previously tested males (sound B: χ²₁ = 0.9, P = 0.36; sound A: χ²₁ = 183.2, P < 0.0001; sound C: χ²₁ = 55.7, P < 0.0001; Rouse et al., 2021).

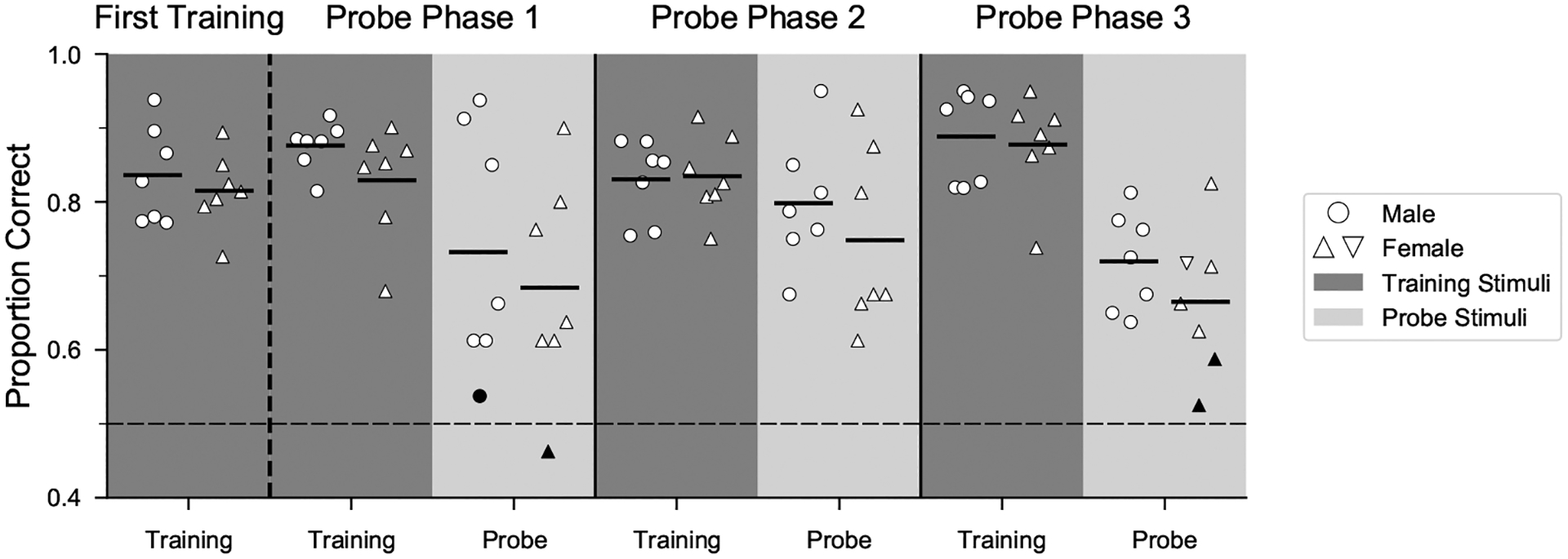

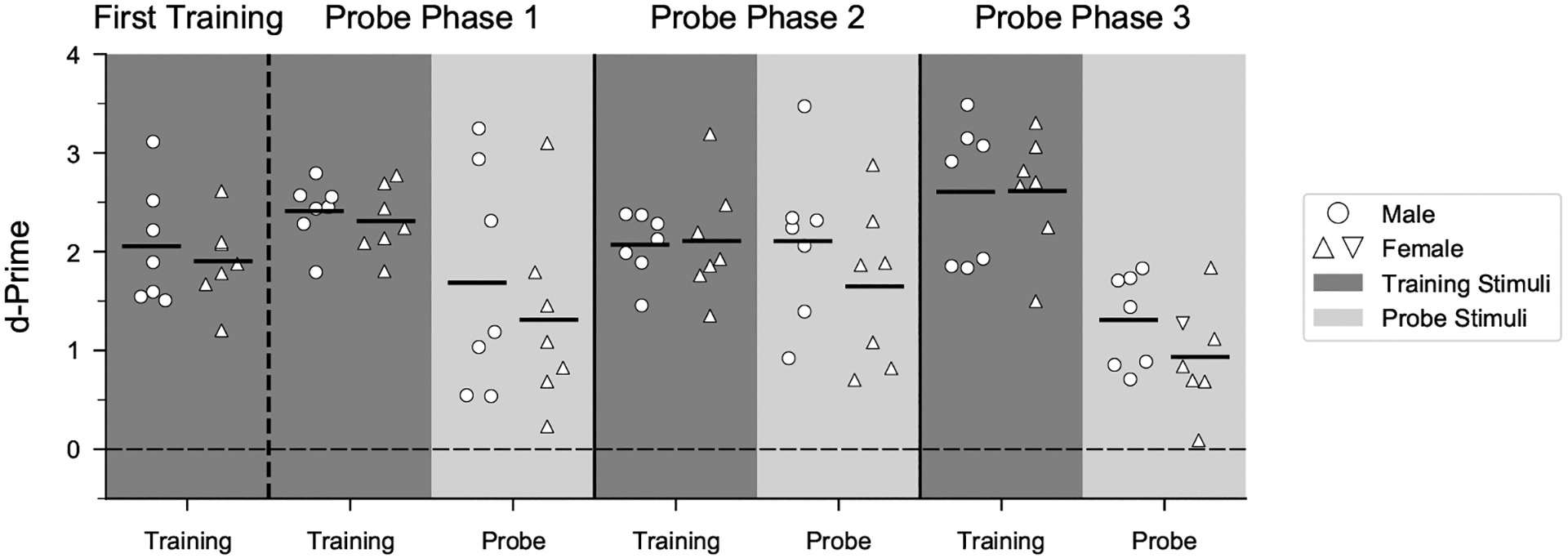

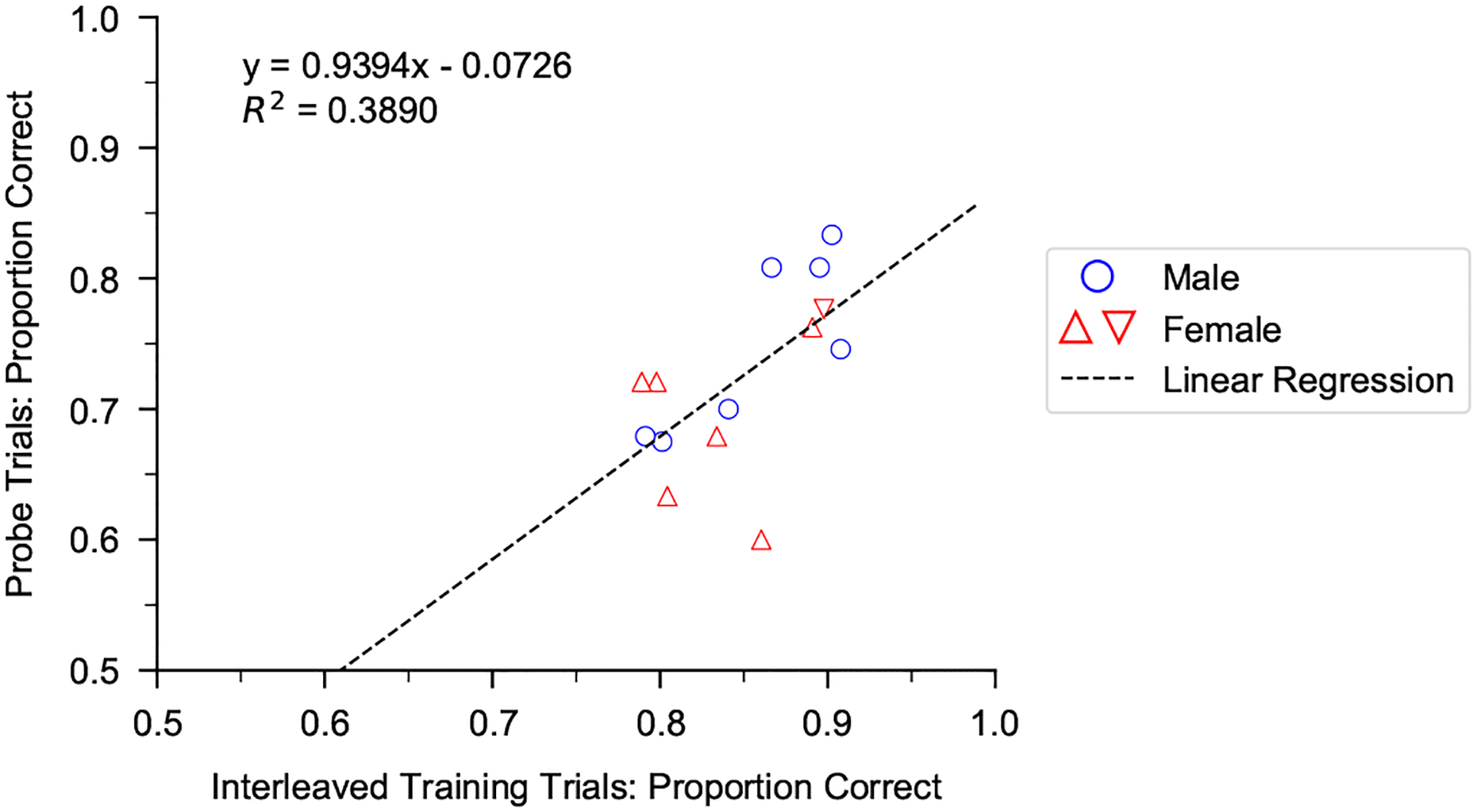

Sex Differences in Rhythm Pattern Perception

To test the hypothesis that the ability to flexibly perceive rhythmic patterns is linked to vocal learning, we first compared performance of female zebra finches, which do not learn to imitate song, with that of vocal learning males (Fig. 3, Appendix, Fig. A3). Male and female zebra finches appeared equally motivated to perform the task: on average, females performed ~520 trials/day compared to ~530 trials/day for males in a prior study. Discrimination of isochronous versus arrhythmic stimuli at a novel tempo (probe stimuli) appeared lower for female zebra finches compared to males on average (by ~5%) but was not significantly different (mixed-effects logistic regression: P = 0.338). Across all female and male subjects, performance on interleaved training stimuli (learned discrimination) was positively correlated with performance on probe stimuli (measure of generalization) (least squares regression fit: R² = 0.39, P = 0.0172; Appendix, Fig. A4).

Figure 3.

Comparison of generalization of rhythm discrimination by sex. Mean performance of all successful males (N = 7) and females (N = 7) during the last 500 trials of the first training phase and for probe and interleaved training stimuli in all phases. Data are collapsed across training order, and interleaved training trials are combined across tempi. Dashed horizontal line indicates chance performance. Filled symbols denote performance not significantly different from chance.

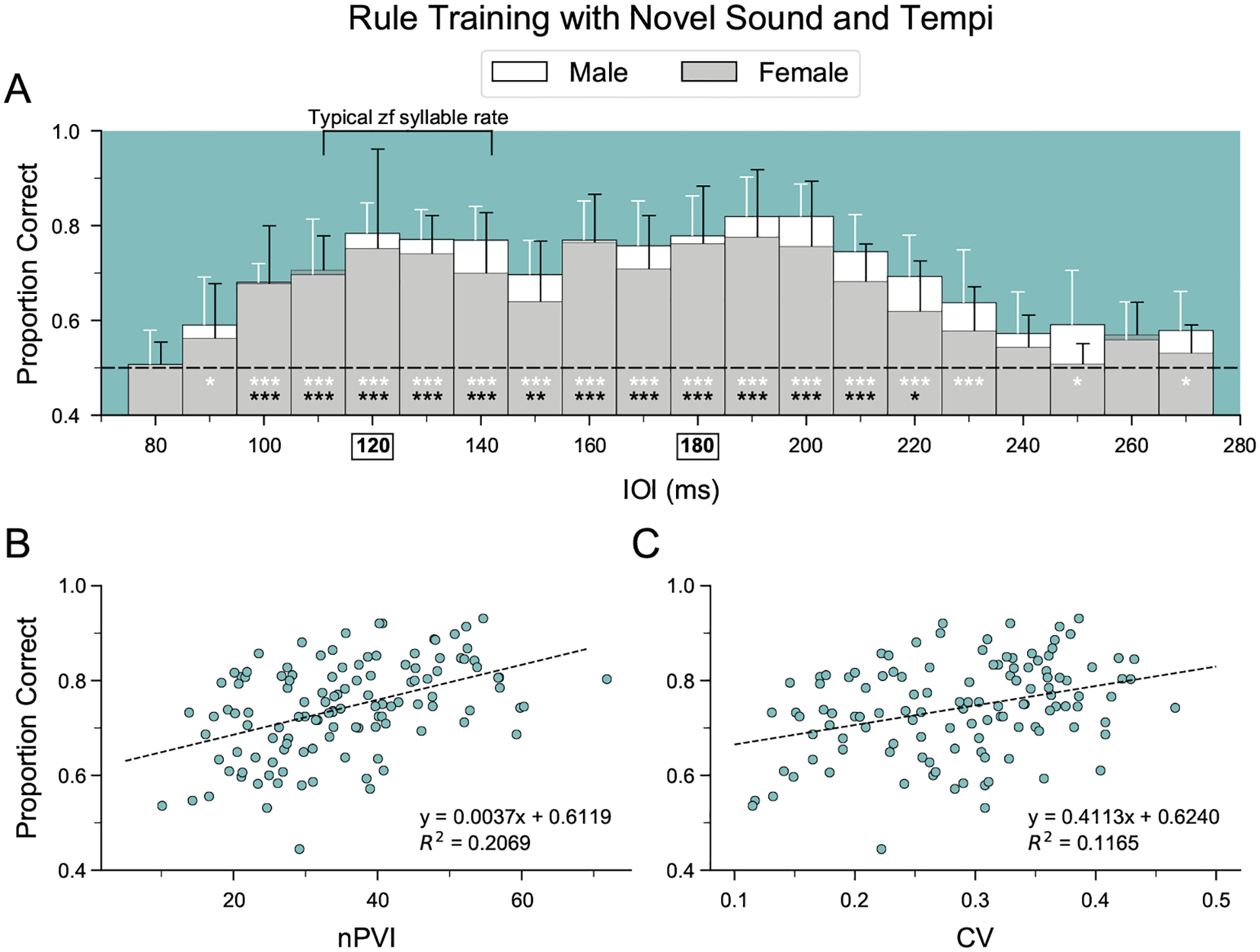

As a second test of the ability to recognize temporal regularity across tempi, five females that completed rhythm discrimination training were tested with sequences of a new sound element (sound D; see Fig. 1, Appendix, Fig. A1) across a wide range of tempi (75–275 ms IOI; ‘rule training’, i.e. 201 different tempi). For each trial, one of 402 stimuli was played (with equal odds of an isochronous or arrhythmic sequence), reducing the likelihood that correct discrimination was based on memorization. Figure 4a shows the average performance of all birds over the first 1000 trials, broken into 10 ms IOI bins (~50 trials per bin). For female birds, performance was best between 95 and 215 ms IOI (tempi ~20% slower to ~20% faster than the original training range; mixed-effects logistic regression: P < 0.005; Fig. 4a). Performance fell to chance at faster tempi (75–85 ms IOI), when temporal variability in IOIs was limited by the length of the sound element (see Appendix, Fig. A1b) and at slower tempi (225–275 ms IOI). To directly compare performance to that of male zebra finches, the numbers of correct and incorrect trials within the 95–215 ms IOI tempo range (where both sexes performed well above chance) were grouped by sex. In this range, female performance was significantly worse than male performance (76% for males versus 72% for females; χ²₁ = 13.919, P < 0.001, average d′ = 1.5 versus 1.2).
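The two summary statistics used in this comparison can be sketched as follows, assuming the standard signal detection definition d′ = z(hit rate) − z(false alarm rate) (Green & Swets, 1966) and a Pearson χ² test on a 2 × 2 table of sex by outcome without continuity correction. The function names are illustrative, and the trial counts are hypothetical, chosen only to give roughly 76% and 72% correct; the actual counts are in the raw data.

```python
from math import erfc, sqrt
from statistics import NormalDist


def d_prime(hit_rate: float, correct_rejection_rate: float) -> float:
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    Rates of exactly 0 or 1 are not handled here (inv_cdf raises an error).
    """
    z = NormalDist().inv_cdf
    false_alarm_rate = 1.0 - correct_rejection_rate
    return z(hit_rate) - z(false_alarm_rate)


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (df = 1, no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = erfc(sqrt(chi2 / 2))  # survival function of chi-square with df = 1
    return chi2, p


# Hypothetical counts (correct, incorrect) pooled by sex; illustrative only.
male_correct, male_incorrect = 3203, 1011      # ~76% correct
female_correct, female_incorrect = 2167, 843   # ~72% correct

chi2, p = chi_square_2x2(male_correct, male_incorrect, female_correct, female_incorrect)
print(f"chi-square(1) = {chi2:.2f}, P = {p:.2g}")
print(f"d' at 76% hits and 76% correct rejections: {d_prime(0.76, 0.76):.2f}")
```

Because d′ combines hit and correct rejection rates, two birds with the same overall proportion correct can differ in d′ if their response biases differ, which is one reason to report both measures.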

Figure 4.

Accuracy of the learned discrimination across a wide range of tempi in male and female zebra finches. (a) Average performance of five female birds during the initial 1000 trials of ‘rule training’ with a novel sound element (sound D). Mean performance ± SD is plotted for 10 ms inter-onset interval (IOI) bins. Chance performance is indicated by the horizontal dashed line. Black asterisks indicate female performance significantly above chance (mixed-effect logistic regression: *P < 0.05; **P < 0.005; ***P < 0.001). Data from seven males from Rouse et al. (2021) are plotted for comparison (white asterisks denote performance significantly above chance). IOIs used in rhythm discrimination training phases before rule training are shown in bold and boxed on the X axis. (b–c) Average performance across all male and female birds in relation to the normalized pairwise variability index (nPVI) and the coefficient of variation (CV) of the IOI durations for tempi within the 95–215 ms IOI tempo range (ANOVA: P < 0.001).

To examine whether variation in performance across tempi in rule training could be explained by the temporal properties of the arrhythmic stimuli, we measured the normalized pairwise variability index (nPVI) and the coefficient of variation (CV) of the IOIs for each of the 121 arrhythmic stimuli within the 95–215 ms IOI tempo range. Across all birds, the average accuracy of discriminating an isochronous from an arrhythmic stimulus at a given tempo was positively correlated with the nPVI (R² = 0.21, P < 0.0001) and the CV (R² = 0.12, P < 0.001) of the arrhythmic stimulus at that tempo, with nPVI explaining more variance in accuracy than CV (Fig. 4b, c).
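To make the distinction between these two measures concrete, here is a minimal Python sketch using the standard definitions: nPVI averages the normalized difference between successive intervals, whereas CV is the standard deviation of the intervals divided by their mean. The function names, the use of the population standard deviation and the example IOI sequences are assumptions for illustration, not the stimuli or code used in the study.

```python
from statistics import mean, pstdev


def npvi(iois: list[float]) -> float:
    """Normalized pairwise variability index of a sequence of intervals."""
    terms = [abs(a - b) / ((a + b) / 2) for a, b in zip(iois[:-1], iois[1:])]
    return 100 * sum(terms) / len(terms)


def cv(iois: list[float]) -> float:
    """Coefficient of variation (population SD divided by the mean)."""
    return pstdev(iois) / mean(iois)


# Hypothetical IOI sequences in ms (illustrative only).
isochronous = [150.0] * 8
alternating = [100.0, 200.0, 100.0, 200.0]  # large changes between neighbours
grouped = [100.0, 100.0, 200.0, 200.0]      # same intervals, different order

for name, seq in [("isochronous", isochronous),
                  ("alternating", alternating),
                  ("grouped", grouped)]:
    print(f"{name:12s} nPVI = {npvi(seq):6.2f}  CV = {cv(seq):.3f}")
```

The alternating and grouped sequences contain the same intervals and therefore have the same CV, but their nPVI differs because nPVI depends on the ratios of successive intervals. This is the sense in which nPVI serves as a measure of relative timing.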

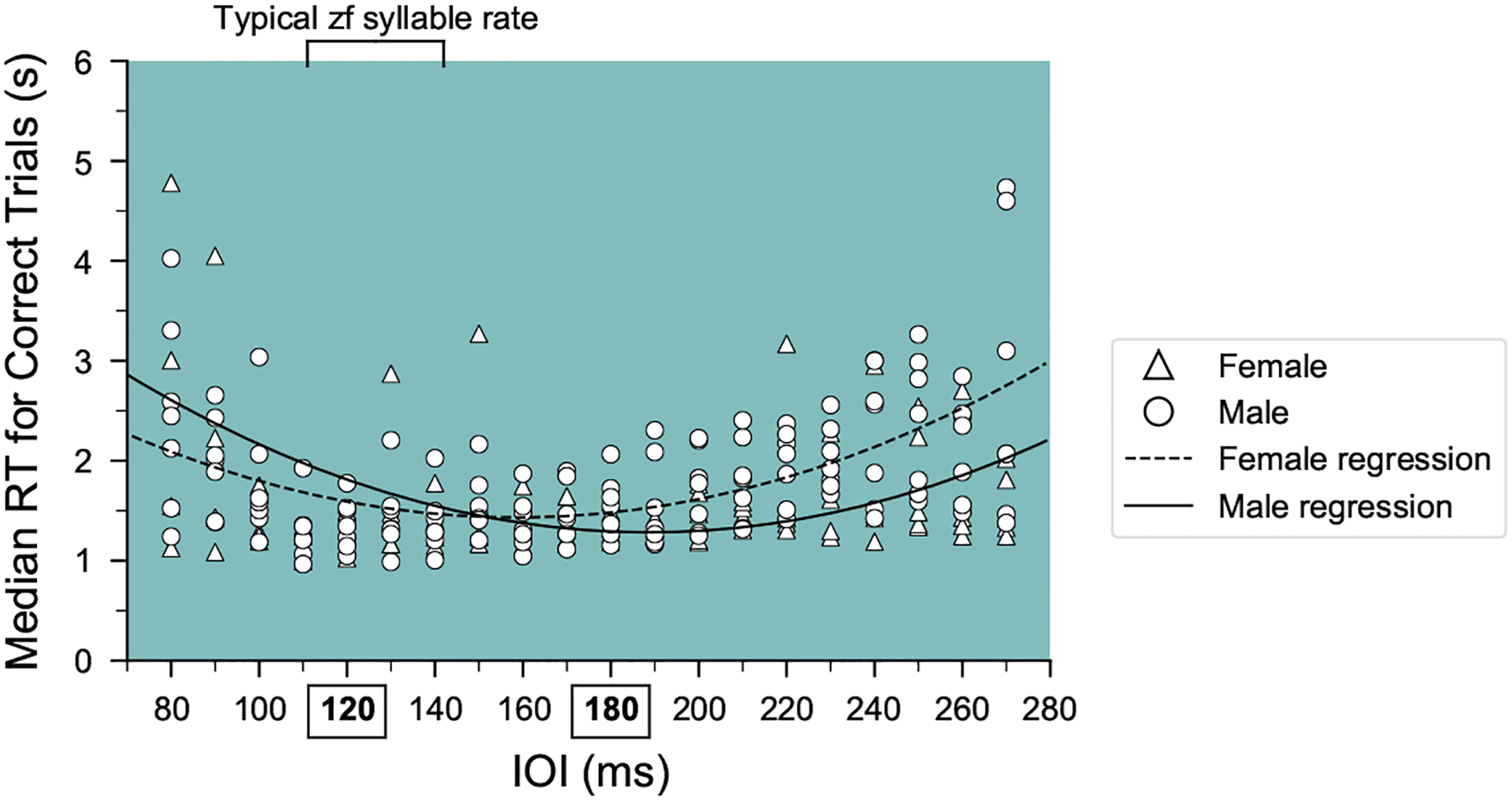

Reaction Times for Discrimination of Acoustic Features

Across female birds that succeeded in rhythm discrimination training (N = 7), the median reaction time at the end of the first training phase of rhythm discrimination averaged ~1.20 s after stimulus onset, or ~50% of the stimulus duration (Fig. 5, Appendix, Table A1). Data from previously tested males that succeeded in rhythm discrimination training (N = 7) showed similar median reaction times (average = 1.30 s), indicating that both males and females heard ~7–11 intervals on average before responding. In contrast, the median reaction time for the birds that did not reach the criterion for rhythm discrimination during training averaged ~1.0 s for females (N = 6) and 0.9 s for males (N = 3).
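The estimate of ~7–11 intervals follows from dividing the reaction time by the inter-onset interval. The short Python sketch below works through that arithmetic, assuming training tempi of 120 and 180 ms IOI (the centres of the training-tempo bins referred to elsewhere in the text); the function name is illustrative.

```python
def intervals_heard(reaction_time_s: float, ioi_ms: float) -> float:
    """Number of inter-onset intervals elapsed before the response."""
    return reaction_time_s / (ioi_ms / 1000)


# Average median reaction times from the text (females, males) and the
# assumed training tempi in ms IOI.
reaction_times_s = (1.20, 1.30)
training_iois_ms = (120, 180)

counts = [intervals_heard(rt, ioi)
          for rt in reaction_times_s
          for ioi in training_iois_ms]
print(f"intervals heard: {min(counts):.1f} to {max(counts):.1f}")
```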

Figure 5.

Reaction times during rhythmic pattern discrimination and frequency discrimination tasks. Median reaction times for the final 500 responses in the first training phase are shown for three groups of birds. For rhythm discrimination, data are shown for birds that completed all three training phases (success: N = 7 males, 7 females) and birds that did not complete rhythm discrimination training (fail: N = 3 males, 6 females). A separate cohort of birds (N = 4 males, 6 females) succeeded at discriminating isochronous sequences that differed in frequency. The 500 ms period between trial start and activation of the response switch is indicated by the horizontal dashed line. Symbols indicate the median reaction time for each bird; bars indicate means of these values collapsed across sex. Asterisks indicate significant differences between groups (post hoc Dunn’s test with Bonferroni correction: *P < 0.05; **P < 0.01).

Shorter reaction times, however, do not necessarily indicate poor perceptual discrimination. In a separate cohort of birds that learned to discriminate isochronous sequences that differed in frequency by three semitones (i.e. a quarter of an octave; N = 4 previously tested males and N = 6 females; average proportion correct responses in last 500 trials = 91%; mean d′ = 2.8; see Appendix, Fig. A2b, Table A1), the median reaction times for successful discrimination averaged ~0.9 s. This suggests that unlike rhythm discrimination, discrimination based on spectral features does not require hearing long stretches of the sequence.

Comparison of reaction times between successful rhythm discrimination birds, unsuccessful rhythm discrimination birds and successful frequency discrimination birds showed a significant effect of group (Kruskal–Wallis test: H₂ = 11.33, P = 0.003; Fig. 5, Appendix, Table A1). Dunn’s post hoc tests showed that the median reaction times of the successful rhythm birds were significantly higher (slower) than both the unsuccessful rhythm birds and the successful frequency birds. Taken together, these results suggest that success at rhythm discrimination may be related to how long birds listened to the stimuli before responding. Indeed, even among the birds that successfully recognized temporal regularity across a wide range of tempi (Fig. 4a), median reaction time was longer for very fast and slow tempi where the discrimination was more difficult (i.e. when the proportion of correct responses was closer to chance performance: 75–85 ms IOI and >235 ms IOI; Appendix, Fig. A5).
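The group comparison above can be sketched in Python using the per-bird median reaction times listed in Table A1. This is a minimal implementation of the Kruskal–Wallis H statistic with the usual tie correction and a χ² approximation for the P value; the function names are illustrative and this is not the authors' analysis code. Because the tabulated values are rounded, the result should be close to, but need not exactly match, the reported statistic. Dunn's post hoc tests are not included.

```python
from collections import Counter
from math import exp


def midranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_three_groups(g1, g2, g3):
    """Tie-corrected H and its chi-square (df = 2) approximate P value."""
    groups = [g1, g2, g3]
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    h /= 1 - tie_term / (n ** 3 - n)
    return h, exp(-h / 2)  # exp(-h/2) is the chi-square survival function for df = 2


# Median reaction times (s) from Table A1, males then females in each group.
rhythm_success = [0.965, 1.404, 0.965, 1.915, 1.305, 1.210, 1.590,
                  1.095, 1.344, 0.965, 1.445, 1.325, 1.050, 1.145]
rhythm_fail = [0.790, 0.785, 1.124,
               1.065, 0.825, 0.930, 1.425, 1.024, 0.785]
frequency_success = [0.965, 1.308, 0.905, 0.885,
                     0.805, 0.746, 1.025, 0.745, 1.166, 0.830]

h_stat, p_value = kruskal_wallis_three_groups(rhythm_success, rhythm_fail,
                                              frequency_success)
print(f"H(2) = {h_stat:.2f}, P = {p_value:.4f}")
```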

DISCUSSION

To test the hypothesis that differences in the capacity for flexible rhythm pattern perception are related to differences in vocal learning ability, we investigated the ability of a sexually dimorphic songbird to recognize a fundamental rhythmic pattern common in music and animal communication – isochrony (Norton & Scharff, 2016; Ravignani & Madison, 2017; Roeske et al., 2020; Savage et al., 2015). In zebra finches and many other songbirds, only the males learn to sing and possess pronounced forebrain motor regions for vocal production learning (Nottebohm & Arnold, 1976). Male zebra finches have been shown to discriminate isochronous versus arrhythmic patterns in a tempo-flexible manner (Rouse et al., 2021), and we predicted that female zebra finches would perform less well when tested with the same rhythm discrimination and generalization tasks. Using a sequential training paradigm with multiple sound types and tempi, we found that about half of females tested (N = 7/13) could learn to discriminate isochronous from arrhythmic patterns in all three phases of our training paradigm (Fig. 1b), and the learning rates for these females were similar to those of males (Fig. 1c). In each training phase, birds were required to reach a performance criterion of >75% correct responses within 30 days, so it is possible that more birds may have learned the discrimination with additional training. Females that completed all three phases of training showed generalization of the learned discrimination for a novel tempo 20% different from either training tempo (Fig. 2b). Importantly, zebra finches can detect a 20% tempo difference (Appendix, Fig. A2b; also see Rouse et al., 2021). Birds that succeeded in our rhythm training paradigm listened for longer to stimulus sequences than birds who failed (Fig. 5), consistent with attention to the global temporal pattern of the sequence.

We also found that zebra finches that completed training were able to discriminate isochronous from arrhythmic stimuli across a range of tempi far beyond the training tempi and beyond the natural tempo range of zebra finch vocalizations (Fig. 4). In the ‘rule training’ experiment, birds heard an isochronous or arrhythmic pattern randomly drawn from 201 different tempi, with each tempo having a unique arrhythmic pattern. Responses to all stimuli were reinforced but only the first 1000 trials were analysed (i.e. ~5 trials/tempo), allowing little time to learn this discrimination based on reinforcement. At very fast tempi, failure to discriminate isochronous from arrhythmic patterns was expected, since the short inter-onset intervals allowed for minimal temporal variation (Rouse et al., 2021; Appendix, Fig. A1b). The failure to discriminate at slower tempi suggests an inability to accurately predict the timing of the next event in isochronous sequences, as happens with humans listening to very slow metronomes (Bååth et al., 2016).

Prior work suggested that zebra finches tend to recognize auditory rhythms based on absolute durations rather than relative timing patterns (ten Cate et al., 2016; van der Aa et al., 2015). Successful discrimination in our rule training experiment may have resulted, in part, from detection of absolute durations and learning to respond to stimuli close to the training stimuli. Indeed, discrimination of isochronous versus arrhythmic stimuli at tempi midway between the training tempi (145–155 ms IOI bin) was significantly worse than discrimination of stimuli at the training tempi (175–185 ms IOI bin and 115–125 ms IOI bin) for both males and females (χ²₁ = 18.461, P < 0.001).

On the other hand, the ability to recognize isochrony at novel tempi may not derive simply from the physical similarity between the training stimuli and the probe stimuli (i.e. generalization around a familiar training stimulus). Zebra finches can readily detect a 20% tempo difference between isochronous stimuli. In addition, each arrhythmic stimulus had a unique temporal pattern that differed from those used for training. To investigate whether birds might also rely on relative timing, we examined whether performance on rhythmic discrimination correlates with a measure that is sensitive to duration ratios of successive intervals (nPVI). We found that discrimination in the rule training experiment was significantly correlated with the nPVI of the arrhythmic stimuli. In the tempo range for which both sexes performed above chance, this measure of relative timing better predicted rhythmic discrimination accuracy than did a measure of average temporal variability within a sequence (i.e. CV; see Fig. 4b, c). This suggests that zebra finches, like humans, may use both absolute and relative timing of events when perceiving rhythmic patterns.

Several factors in our study may have encouraged birds to recognize isochrony across a broad range of tempi. First, unlike prior studies, we used multiple training phases, each with long sequences of a different conspecific vocalization (Fig. 1a, Appendix, Fig. A1) and novel arrhythmic temporal patterns. The use of stimuli with task-irrelevant spectral and amplitude differences across training phases as well as multiple training tempi may have promoted attention to the global temporal pattern of relative timing, which was the only relevant dimension on which rewards were based. Second, while prior studies of rhythm discrimination tested adult zebra finches that had completed sensorimotor learning, we trained and tested younger birds still in the sensorimotor learning phase (mean ± SD age = 72 ± 8 days posthatch at the start of training). We believe differences in training methods, stimuli (e.g. conspecific vocalizations versus human musical sounds) and age may influence the extent to which zebra finches rely on absolute versus relative timing for recognizing rhythmic patterns. In future work, it would be interesting to explore this further, e.g. by repeating our experiment with adult zebra finches to see if age is an important factor.

While female zebra finches can learn to recognize isochrony across a broad range of tempi, we found small but significant differences in performance between males and females, consistent with our hypothesis that differences in vocal learning abilities correlate with differences in flexible rhythm pattern perception. First, males consistently slightly outperformed females when tested with stimuli across a broad range of tempi at which both sexes performed well above chance (95–215 ms IOI; Fig. 4). In addition, males were able to recognize isochrony across a broader range than females (30% faster to 25% slower than the original training stimuli for males; ~20% faster to 20% slower for females; Fig. 4). These differences did not reflect differences in motivation to perform the task; on average, males and females performed comparable numbers of trials/day. Finally, the proportion of females that successfully recognized isochrony based on global temporal patterns (N = 7/13) was lower than that of males tested previously (N = 7/10), although more data would be needed to determine whether this difference is reliable.

Note that the sex difference we observed, while consistent with our hypothesis, is an average difference. Modest but consistent sex differences are well known in biology (e.g. on average, men are taller than women, due, in part, to sex-biased gene expression; Naqvi et al., 2019), but these differences typically pertain to anatomy, not cognition (Zentner, 2021). Prior work on sex differences in the auditory domain has focused largely on neural mechanisms in the periphery (Berninger, 2007; Gall et al., 2013; Krizman et al., 2020, 2021), although more recent work has demonstrated hormone-mediated differences in forebrain auditory responses to conspecific songs in birds (Brenowitz & Remage-Healey, 2016; Krentzel & Remage-Healey, 2015). Here, we show a small, but consistent, sex difference in an auditory cognitive task (flexible rhythm pattern recognition) in a sexually dimorphic bird. While our sample sizes are in line with other studies of sex differences in auditory processing in birds (Benichov et al., 2016; Kriengwatana et al., 2016), additional studies are needed to confirm this difference.

Just as some women are taller than some men, we find that some individual female zebra finches can outperform individual males in our tasks (e.g. probe tests in Fig. 3). How can this be reconciled with our hypothesis of a link between the neural circuitry for vocal learning and flexible rhythm pattern perception? Recent neuroanatomical work found that although female zebra finches possess smaller vocal motor regions, the overall network connectivity of vocal premotor regions is similar in male and female zebra finches (Shaughnessy et al., 2019). Similarities in ascending auditory inputs to premotor regions, in particular, suggest that auditory processing mechanisms may be conserved across sexes. This raises the question of whether individual variation in auditory–motor circuitry correlates with individual differences in flexibility of rhythmic pattern perception, irrespective of sex. Indeed, in humans, the strength of cortical auditory–motor connections predicts individual differences in rhythmic abilities (Blecher et al., 2016; Vaquero et al., 2018).

Our finding that female zebra finches perform slightly worse than males in flexibly recognizing isochrony contrasts with a prior finding that female zebra finches outperform males in a task involving temporal processing of rhythmic signals (Benichov et al., 2016). In that study, birds (6 males and 6 females) called antiphonally with a robotic partner that emitted calls at a rate of 1 call/s. Birds quickly learned to adjust the timing of their calls in order to avoid a jamming signal introduced at a fixed latency after the robot call, with females showing more pronounced adjustments of call timing than males. While both our study and that study highlight the importance of prediction for rhythm perception, the underlying mechanisms for predicting upcoming events may differ between the two studies. In principle, avoidance of the jamming signal could have resulted from learning a single temporal interval – the time between the robot call and the jamming signal. In contrast, in our task, birds had to learn to recognize the relative timing of successive events and to respond to the same pattern even when absolute interval durations changed. Prior work suggests distinct mechanisms for single-interval timing versus relative timing (Breska & Ivry, 2018; Grube et al., 2010; Teki et al., 2011), so a male advantage on a task that depends on relative timing is not necessarily inconsistent with a female advantage in single-interval timing.

Our work adds to a growing body of research suggesting that sensitivity to rhythmic patterns is widespread across animals. For example, Asokan et al. (2021) found that the responses of neurons in the primary auditory cortex of mice are modulated by the rhythmic structure of a sound sequence. While neurons in the midbrain and thalamus encode local temporal intervals with a short latency, cortical neurons integrate inputs over a longer timescale (~1 s), and the timing of cortical spikes differs depending on whether consecutive sounds are arranged in a repeating rhythmic pattern or are randomly timed. In Mongolian gerbils, Meriones unguiculatus, midbrain neurons have also been shown to exhibit context-dependent responses: on average, neural responses were greater for noise bursts that occurred on the beat of a complex rhythm compared to when the same noise bursts occurred off-beat (Rajendran et al., 2017).

Similarly, in rhesus monkeys, Macaca mulatta, occasional deviant sounds in auditory sequences elicited a larger auditory mismatch negativity in electroencephalogram recordings when those sequences had isochronous versus arrhythmic event timing (Honing et al., 2018). While these studies found context-dependent modulation of auditory responses, they did not test the ability of the animals to recognize a learned rhythm independently of tempo. Demonstrating this ability requires behavioural methods, and an important lesson from prior research is that training methods can strongly influence to what extent such abilities are revealed (e.g. compare van der Aa et al. (2015) with Rouse et al. (2021) and Hulse et al. (1984) with Samuels et al. (2021); see also Bouwer et al., 2021).

Our study investigated rhythm perception in a sexually dimorphic songbird, and a natural question is to what extent similar findings would be obtained in mammals with differing vocal learning capacities, ranging from animals that can modify innate vocalizations in response to sensory cues, such as rodents, bats and nonhuman primates, to animals that learn to imitate vocalizations, such as seals and cetaceans (Hage et al., 2016; Janik, 2014; Stansbury & Janik, 2019; Takahashi et al., 2017; Vernes & Wilkinson, 2019; Wirthlin et al., 2019). Rats can be trained to discriminate isochronous from arrhythmic sequences but show limited generalization when tested at novel tempi (Celma-Miralles & Toro, 2020). Macaques can also be trained to discriminate isochronous from arrhythmic sequences, but their ability to do this flexibly at novel tempi distant from training tempi has not been tested (Espinoza-Monroy & de Lafuente, 2021). Interestingly, macaques can be trained to flexibly entrain their saccades or tapping movements to isochronous sequences at different tempi (Gámez et al., 2018; Takeya et al., 2017), and population level neural activity consistent with a relative timing clock has been reported in premotor regions (presupplementary motor area and supplementary motor area) during the tapping task (Gámez et al., 2019; Merchant et al., 2015). Thus, they would be an interesting animal model for investigating flexible rhythm pattern perception in the absence of movement. More recently, vocal learning harbour seals, Phoca vitulina, have been shown to discriminate isochronous from arrhythmic sequences (Verga et al., 2022), although their ability to do this flexibly across a wide range of tempi remains unknown. Thus, comparative study of flexibility of rhythmic pattern perception is an area ripe for further behavioural and neural investigation. 
More generally, the ability to relate neural activity to perception and behaviour is critical for understanding the contributions of motor regions to detecting temporal periodicity and predicting the timing of upcoming events, two hallmarks of human rhythm processing that are central to music’s positive effect on a variety of neurological disorders.

Highlights:

Female zebra finches can recognize temporal regularity across a wide range of rates.

Regularity detection extends to tempi far outside conspecific male song rates.

Females were slightly worse than males at flexibly detecting temporal regularity.

Acknowledgments.

We thank T. Gardner and his laboratory for equipment and technical assistance with the operant chambers and T. Gentner for sharing the Pyoperant code. We thank members of the Kao lab for useful discussions and comments on earlier versions of this manuscript. We also thank C. ten Cate for his thoughtful input on this work. This work was supported by a Tufts University Collaborates grant (to M.H.K. and A.D.P.), U.S. National Institutes of Health (NIH) grants R21NS114682 and R01NS129695 (to M.H.K. and A.D.P.) and a Canadian Institute for Advanced Research catalyst grant (to A.D.P.).

Appendix

Table A1.

Reaction times (RTs) from the last 500 trials of the first training block for each subject

| Group | Discrimination | Sex | RT median (s) | RT IQR (s) |
|---|---|---|---|---|
| Rhythm | Success | Male | 0.965 | 0.440 |
| Rhythm | Success | Male | 1.404 | 1.454 |
| Rhythm | Success | Male | 0.965 | 0.360 |
| Rhythm | Success | Male | 1.915 | 1.288 |
| Rhythm | Success | Male | 1.305 | 0.974 |
| Rhythm | Success | Male | 1.210 | 0.692 |
| Rhythm | Success | Male | 1.590 | 1.473 |
| Rhythm | Success | Female | 1.095 | 0.565 |
| Rhythm | Success | Female | 1.344 | 0.370 |
| Rhythm | Success | Female | 0.965 | 0.660 |
| Rhythm | Success | Female | 1.445 | 0.520 |
| Rhythm | Success | Female | 1.325 | 2.584 |
| Rhythm | Success | Female | 1.050 | 0.376 |
| Rhythm | Success | Female | 1.145 | 0.416 |
| Rhythm | Fail | Male | 0.790 | 0.247 |
| Rhythm | Fail | Male | 0.785 | 0.229 |
| Rhythm | Fail | Male | 1.124 | 0.360 |
| Rhythm | Fail | Female | 1.065 | 0.789 |
| Rhythm | Fail | Female | 0.825 | 0.425 |
| Rhythm | Fail | Female | 0.930 | 0.425 |
| Rhythm | Fail | Female | 1.425 | 0.400 |
| Rhythm | Fail | Female | 1.024 | 0.379 |
| Rhythm | Fail | Female | 0.785 | 0.171 |
| Frequency | Success | Male | 0.965 | 0.314 |
| Frequency | Success | Male | 1.308 | 0.760 |
| Frequency | Success | Male | 0.905 | 0.530 |
| Frequency | Success | Male | 0.885 | 0.607 |
| Frequency | Success | Female | 0.805 | 0.469 |
| Frequency | Success | Female | 0.746 | 0.181 |
| Frequency | Success | Female | 1.025 | 0.450 |
| Frequency | Success | Female | 0.745 | 0.260 |
| Frequency | Success | Female | 1.166 | 0.545 |
| Frequency | Success | Female | 0.830 | 0.255 |

IQR: interquartile range. Only trials in which the bird pecked a switch are included.

Figure A1.

Stimulus waveforms, spectrograms and coefficients of variation (CVs). (a) Normalized amplitude waveforms and spectrograms of the four song elements used in stimulus creation (sound A: 79.9 ms; sound B: 51.5 ms; sound C: 63.1 ms; sound D: 74.7 ms). (b) CV of inter-onset interval (IOI) for all arrhythmic stimuli against mean stimulus IOI. Note that as the average IOI approaches the length of the source sound element, IOI variability approaches 0. Sound files are available at https://dx.doi.org/10.17632/fw5f2vrf4k.2

Figure A2.

Learning curves for discrimination of stimuli based on rhythmic pattern, tempo or frequency. (a) Learning curves for seven female birds that successfully discriminated isochronous versus arrhythmic stimuli within 30 days for each sound type. (b) Learning curves for a separate cohort of females (N = 6) trained to discriminate isochronous sequences of sound A that differed in tempo (120 ms IOI versus 144 ms IOI) or frequency-shifted isochronous sequences of sound B (shifted up 3 semitones versus 6 semitones). All females tested met the criterion for discriminating stimuli based on tempo or frequency.

Figure A3.

Comparison of generalization of rhythm discrimination by sex. Mean d′ values for all successful males (N = 7) and females (N = 7) during the last 500 trials of the first training phase and for probe and interleaved training stimuli in all phases. Data are collapsed across training order, and interleaved training trials are combined across tempi. Dashed horizontal line (d′ = 0) indicates chance performance; d′ = 3 is close to perfect performance.

Figure A4.

Relationship between performance on probe trials (generalization) and interleaved training trials (learned discrimination) across birds that successfully completed all three training phases (N = 14). For each bird, data were combined across all three sound elements; each data point is the average performance across 240 probe stimuli (except for 216 probe trials for one female (inverted triangle), as noted in main text) and ~2000 interleaved training stimuli.

Figure A5.

Reaction time (RT) across a broad range of tempi. Median reaction times for correct trials versus tempo during rule training for male (circles) and female (triangles) birds. Fitted quadratic curves for male (solid line) and female (dashed line) birds are plotted to illustrate the observed relationships.

Footnotes

Declaration of Interest

None.

Data Availability

The raw data are available from the Mendeley Repository (https://doi.org/10.17632/2r29x6gr7w.2).

References

- Asokan MM, Williamson RS, Hancock KE, & Polley DB (2021). Inverted central auditory hierarchies for encoding local intervals and global temporal patterns. Current Biology, 31(8), 1762–1770. 10.1016/j.cub.2021.01.076 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bååth R, Tjøstheim TA, & Lingonblad M (2016). The role of executive control in rhythmic timing at different tempi. Psychonomic Bulletin & Review, 23(6), 1954–1960. 10.3758/s13423-016-1070-1 [DOI] [PubMed] [Google Scholar]

- Benichov JI, Benezra SE, Vallentin D, Globerson E, Long MA, & Tchernichovski O (2016). The forebrain song system mediates predictive call timing in female and male zebra finches. Current Biology, 26(3), 309–318. 10.1016/j.cub.2015.12.037 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berninger E (2007). Characteristics of normal newborn transient-evoked otoacoustic emissions: Ear asymmetries and sex effects. International Journal of Audiology, 46(11), 661–669. 10.1080/14992020701438797 [DOI] [PubMed] [Google Scholar]

- Blecher T, Tal I, & Ben-Shachar M (2016). White matter microstructural properties correlate with sensorimotor synchronization abilities. NeuroImage, 138, 1–12. 10.1016/j.neuroimage.2016.05.022 [DOI] [PubMed] [Google Scholar]

- Bouwer FL, Nityananda V, Rouse AA, & ten Cate C (2021). Rhythmic abilities in humans and nonhuman animals: A review and recommendations from a methodological perspective. Philosophical Transactions of the Royal Society B: Biological Sciences, 376(1835), Article 20200335. 10.1098/rstb.2020.0335 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brenowitz EA, & Remage-Healey L (2016). It takes a seasoned bird to be a good listener: Communication between the sexes. Current Opinion in Neurobiology, 38(1), 12–17. 10.1016/j.conb.2016.01.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Breska A, & Ivry RB (2018). Double dissociation of single-interval and rhythmic temporal prediction in cerebellar degeneration and Parkinson’s disease. Proceedings of the National Academy of Sciences of the United States of America, 115(48), 12283–12288. 10.1073/pnas.1810596115 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burchardt LS, & Knörnschild M (2020). Comparison of methods for rhythm analysis of complex animals’ acoustic signals. PLoS Computational Biology, 16(4), Article e1007755. 10.1371/journal.pcbi.1007755 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cannon JJ, & Patel AD (2021). How beat perception co-opts motor neurophysiology. Trends in Cognitive Sciences, 25(2), 137–150. 10.1016/j.tics.2020.11.002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Celma-Miralles A, & Toro JM (2020). Discrimination of temporal regularity in rats (Rattus norvegicus) and humans (Homo sapiens). Journal of Comparative Psychology, 134(1), 3–10. 10.1037/com0000202 [DOI] [PubMed] [Google Scholar]

- Chen JL, Penhune VB, & Zatorre RJ (2008). Listening to musical rhythms recruits motor regions of the brain. Cerebral Cortex, 18(12), 2844–2854. 10.1093/cercor/bhn042 [DOI] [PubMed] [Google Scholar]

- Clemens J, Schöneich S, Kostarakos K, Hennig RM, & Hedwig B (2021). A small, computationally flexible network produces the phenotypic diversity of song recognition in crickets. ELife, 10, Article 61475. 10.7554/eLife.61475 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dalla Bella S, Benoit CE, Farrugia N, Keller PE, Obrig H, Mainka S, & Kotz SA (2017). Gait improvement via rhythmic stimulation in Parkinson’s disease is linked to rhythmic skills. Scientific Reports, 7, Article 42005. 10.1038/srep42005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Espinoza-Monroy M, & de Lafuente V (2021). Discrimination of regular and irregular rhythms explained by a time difference accumulation model. Neuroscience, 459, 16–26. 10.1016/j.neuroscience.2021.01.035

- Gall MD, Salameh TS, & Lucas JR (2013). Songbird frequency selectivity and temporal resolution vary with sex and season. Proceedings of the Royal Society B: Biological Sciences, 280(1751), Article 20122296. 10.1098/rspb.2012.2296

- Gámez J, Mendoza G, Prado L, Betancourt A, & Merchant H (2019). The amplitude in periodic neural state trajectories underlies the tempo of rhythmic tapping. PLoS Biology, 17(4), Article e3000054. 10.1371/journal.pbio.3000054

- Gámez J, Yc K, Ayala YA, Dotov D, Prado L, & Merchant H (2018). Predictive rhythmic tapping to isochronous and tempo changing metronomes in the nonhuman primate. Annals of the New York Academy of Sciences, 1423(1), 396–414. 10.1111/nyas.13671

- Garcia M, Theunissen F, Sèbe F, Clavel J, Ravignani A, Marin-Cudraz T, Fuchs J, & Mathevon N (2020). Evolution of communication signals and information during species radiation. Nature Communications, 11(1), Article 4970. 10.1038/s41467-020-18772-3

- Gess A, Schneider DM, Vyas A, & Woolley SMN (2011). Automated auditory recognition training and testing. Animal Behaviour, 82(2), 285–293. 10.1016/j.anbehav.2011.05.003

- Grahn JA, & Brett M (2007). Rhythm and beat perception in motor areas of the brain. Journal of Cognitive Neuroscience, 19(5), 893–906. 10.1162/jocn.2007.19.5.893

- Green DM, & Swets JA (1966). Signal detection theory and psychophysics. J. Wiley.

- Grube M, Cooper FE, Chinnery PF, & Griffiths TD (2010). Dissociation of duration-based and beat-based auditory timing in cerebellar degeneration. Proceedings of the National Academy of Sciences of the United States of America, 107(25), 11597–11601. 10.1073/pnas.0910473107

- Hage SR, Gavrilov N, & Nieder A (2016). Developmental changes of cognitive vocal control in monkeys. Journal of Experimental Biology, 219(11), 1744–1749. 10.1242/jeb.137653

- Hagmann CE, & Cook RG (2010). Testing meter, rhythm, and tempo discriminations in pigeons. Behavioural Processes, 85(2), 99–110. 10.1016/j.beproc.2010.06.015

- Honing H, Bouwer FL, Prado L, & Merchant H (2018). Rhesus monkeys (Macaca mulatta) sense isochrony in rhythm, but not the beat: Additional support for the gradual audiomotor evolution hypothesis. Frontiers in Neuroscience, 12, Article 475. 10.3389/fnins.2018.00475

- Hulse SH, Humpal J, & Cynx J (1984). Discrimination and generalization of rhythmic and arrhythmic sound patterns by European starlings (Sturnus vulgaris). Music Perception, 1(4), 442–464. 10.2307/40285272

- Janik VM (2014). Cetacean vocal learning and communication. Current Opinion in Neurobiology, 28, 60–65. 10.1016/j.conb.2014.06.010

- Krentzel AA, & Remage-Healey L (2015). Sex differences and rapid estrogen signaling: A look at songbird audition. Frontiers in Neuroendocrinology, 38, 37–49. 10.1016/j.yfrne.2015.01.001

- Kriengwatana B, Spierings MJ, & ten Cate C (2016). Auditory discrimination learning in zebra finches: Effects of sex, early life conditions and stimulus characteristics. Animal Behaviour, 116, 99–112. 10.1016/j.anbehav.2016.03.028

- Krizman J, Bonacina S, & Kraus N (2020). Sex differences in subcortical auditory processing only partially explain higher prevalence of language disorders in males. Hearing Research, 398, Article 108075. 10.1016/j.heares.2020.108075

- Krizman J, Rotondo EK, Nicol T, Kraus N, & Bieszczad K (2021). Sex differences in auditory processing vary across estrous cycle. Scientific Reports, 11(1), 1–7. 10.1038/s41598-021-02272-5

- Krotinger A, & Loui P (2021). Rhythm and groove as cognitive mechanisms of dance intervention in Parkinson’s disease. PLoS One, 16(5), Article e0249933. 10.1371/journal.pone.0249933

- Levitin DJ, & Cook PR (1996). Memory for musical tempo: Additional evidence that auditory memory is absolute. Perception and Psychophysics, 58(6), 927–935. 10.3758/BF03205494

- Low LE, Grabe E, & Nolan F (2000). Quantitative characterizations of speech rhythm: Syllable-timing in Singapore English. Language and Speech, 43(4), 377–401. 10.1177/00238309000430040301

- Mathevon N, Casey C, Reichmuth C, & Charrier I (2017). Northern elephant seals memorize the rhythm and timbre of their rivals’ voices. Current Biology, 27(15), 2352–2356. 10.1016/j.cub.2017.06.035

- Merchant H, Pérez O, Bartolo R, Méndez JC, Mendoza G, Gámez J, Yc K, & Prado L (2015). Sensorimotor neural dynamics during isochronous tapping in the medial premotor cortex of the macaque. European Journal of Neuroscience, 41(5), 586–602. 10.1111/ejn.12811

- Merker BH, Madison GS, & Eckerdal P (2009). On the role and origin of isochrony in human rhythmic entrainment. Cortex, 45(1), 4–17. 10.1016/j.cortex.2008.06.011

- Nagel KI, McLendon HM, & Doupe AJ (2010). Differential influence of frequency, timing, and intensity cues in a complex acoustic categorization task. Journal of Neurophysiology, 104(3), 1426–1437. 10.1152/jn.00028.2010

- Naqvi S, Godfrey AK, Hughes JF, Goodheart ML, Mitchell RN, & Page DC (2019). Conservation, acquisition, and functional impact of sex-biased gene expression in mammals. Science, 365(6450), Article eaaw7317. 10.1126/science.aaw7317

- Norton P, & Scharff C (2016). ‘Bird song metronomics’: Isochronous organization of zebra finch song rhythm. Frontiers in Neuroscience, 10, Article 309. 10.3389/fnins.2016.00309

- Nottebohm F, & Arnold AP (1976). Sexual dimorphism in vocal control areas of the songbird brain. Science, 194(4261), 211–213. 10.1126/science.959852

- Patel AD, & Iversen JR (2014). The evolutionary neuroscience of musical beat perception: The action simulation for auditory prediction (ASAP) hypothesis. Frontiers in Systems Neuroscience, 8, Article 57. 10.3389/fnsys.2014.00057

- Patel AD, Iversen JR, & Rosenberg JC (2006). Comparing the rhythm and melody of speech and music: The case of British English and French. Journal of the Acoustical Society of America, 119(5), 3034–3047. 10.1121/1.2179657

- Rajendran VG, Harper NS, Garcia-Lazaro JA, Lesica NA, & Schnupp JWH (2017). Midbrain adaptation may set the stage for the perception of musical beat. Proceedings of the Royal Society B: Biological Sciences, 284(1866), Article 20171455. 10.1098/rspb.2017.1455

- Ravignani A, & Madison G (2017). The paradox of isochrony in the evolution of human rhythm. Frontiers in Psychology, 8, Article 1820. 10.3389/fpsyg.2017.01820

- Roeske TC, Tchernichovski O, Poeppel D, & Jacoby N (2020). Categorical rhythms are shared between songbirds and humans. Current Biology, 30(18), 3544–3555. 10.1016/j.cub.2020.06.072

- Ross JM, Iversen JR, & Balasubramaniam R (2018). The role of posterior parietal cortex in beat-based timing perception: A continuous theta burst stimulation study. Journal of Cognitive Neuroscience, 30(5), 634–643. 10.1162/jocn_a_01237

- Rouse AA, Patel AD, & Kao MH (2021). Vocal learning and flexible rhythm pattern perception are linked: Evidence from songbirds. Proceedings of the National Academy of Sciences of the United States of America, 118(29), Article e2026130118. 10.1073/pnas.2026130118

- Samuels B, Grahn J, Henry MJ, & Macdougall-Shackleton SA (2021). European starlings (Sturnus vulgaris) discriminate rhythms by rate, not temporal patterns. Journal of the Acoustical Society of America, 149, 2546–2558. 10.1121/10.0004215

- Savage PE, Brown S, Sakai E, & Currie TE (2015). Statistical universals reveal the structures and functions of human music. Proceedings of the National Academy of Sciences of the United States of America, 112(29), 8987–8992. 10.1073/pnas.1414495112

- Schiavo JK, Valtcheva S, Bair-Marshall CJ, Song SC, Martin KA, & Froemke RC (2020). Innate and plastic mechanisms for maternal behaviour in auditory cortex. Nature, 587(7834), 426–431. 10.1038/s41586-020-2807-6

- Schöneich S, Kostarakos K, & Hedwig B (2015). An auditory feature detection circuit for sound pattern recognition. Science Advances, 1(8), Article e1500325. 10.1126/sciadv.1500325

- Shaughnessy DW, Hyson RL, Bertram R, Wu W, & Johnson F (2019). Female zebra finches do not sing yet share neural pathways necessary for singing in males. Journal of Comparative Neurology, 527(4), 843–855. 10.1002/cne.24569

- Stansbury AL, & Janik VM (2019). Formant modification through vocal production learning in gray seals. Current Biology, 29(13), 2244–2249. 10.1016/j.cub.2019.05.071

- Takahashi DY, Liao DA, & Ghazanfar AA (2017). Vocal learning via social reinforcement by infant marmoset monkeys. Current Biology, 27(12), 1844–1852. 10.1016/j.cub.2017.05.004

- Takeya R, Kameda M, Patel AD, & Tanaka M (2017). Predictive and tempo-flexible synchronization to a visual metronome in monkeys. Scientific Reports, 7(1), Article 6127. 10.1038/s41598-017-06417-3

- Teki S, Grube M, Kumar S, & Griffiths TD (2011). Distinct neural substrates of duration-based and beat-based auditory timing. Journal of Neuroscience, 31(10), 3805–3812. 10.1523/JNEUROSCI.5561-10.2011

- ten Cate C, Spierings M, Hubert J, & Honing H (2016). Can birds perceive rhythmic patterns? A review and experiments on a songbird and a parrot species. Frontiers in Psychology, 7, Article 730. 10.3389/fpsyg.2016.00730

- Trehub SE, & Thorpe LA (1989). Infants’ perception of rhythm: Categorization of auditory sequences by temporal structure. Canadian Journal of Psychology, 43(2), 217–229. 10.1037/h0084223

- van der Aa J, Honing H, & ten Cate C (2015). The perception of regularity in an isochronous stimulus in zebra finches (Taeniopygia guttata) and humans. Behavioural Processes, 115, 37–45. 10.1016/j.beproc.2015.02.018

- Vaquero L, Ramos-Escobar N, François C, Penhune V, & Rodríguez-Fornells A (2018). White-matter structural connectivity predicts short-term melody and rhythm learning in non-musicians. NeuroImage, 181, 252–262. 10.1016/j.neuroimage.2018.06.054

- Verga L, Sroka MGU, Varola M, Villanueva S, & Ravignani A (2022). Spontaneous rhythm discrimination in a mammalian vocal learner. Biology Letters, 18(10), Article 20220316. 10.1098/rsbl.2022.0316

- Vernes SC, & Wilkinson GS (2019). Behaviour, biology and evolution of vocal learning in bats. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1789), Article 20190061. 10.1098/rstb.2019.0061

- Wirthlin M, Chang EF, Knörnschild M, Krubitzer LA, Mello CV, Miller CT, Pfenning AR, Vernes SC, Tchernichovski O, & Yartsev MM (2019). A modular approach to vocal learning: Disentangling the diversity of a complex behavioral trait. Neuron, 104(1), 87–99. 10.1016/j.neuron.2019.09.036

- Yeh Y-T, Rivera M, & Woolley SMN (2022). Auditory sensitivity and vocal acoustics in five species of estrildid songbirds. Animal Behaviour, 195, 107–116. 10.1016/j.anbehav.2022.11.002

- Zann RA (1996). The zebra finch: A synthesis of field and laboratory studies. Oxford University Press.

- Zentner M (2021). Social bonding and credible signaling hypotheses largely disregard the gap between animal vocalizations and human music. Behavioral and Brain Sciences, 44, Article e120. 10.1017/S0140525X2000165X

Data Availability Statement

The raw data are available from the Mendeley Repository (https://doi.org/10.17632/2r29x6gr7w.2).