Abstract

Background

Funders and scientific journals use peer review to decide which projects to fund or articles to publish. Reviewer training is an intervention to improve the quality of peer review. However, studies on the effects of such training yield inconsistent results, and there are no up‐to‐date systematic reviews addressing this question.

Objectives

To evaluate the effect of peer reviewer training on the quality of grant and journal peer review.

Search methods

We used standard, extensive Cochrane search methods. The latest search date was 27 April 2022.

Selection criteria

We included randomized controlled trials (RCTs; including cluster‐RCTs) that evaluated training interventions for peer reviewers versus usual processes, no training interventions, or other interventions to improve the quality of peer review.

Data collection and analysis

We used standard Cochrane methods. Our primary outcomes were 1. completeness of reporting and 2. peer review detection of errors. Our secondary outcomes were 1. bibliometric scores, 2. stakeholders' assessment of peer review quality, 3. inter‐reviewer agreement, 4. process‐centred outcomes, 5. peer reviewer satisfaction, and 6. completion rate and speed of funded projects. We used the first version of the Cochrane risk of bias tool to assess the risk of bias, and we used GRADE to assess the certainty of evidence.

Main results

We included 10 RCTs with a total of 1213 units of analysis. The unit of analysis was the individual reviewer in seven studies (722 reviewers in total), and the reviewed manuscript in three studies (491 manuscripts in total).

In eight RCTs, participants were journal peer reviewers. In two studies, the participants were grant peer reviewers. The training interventions can be broadly divided into dialogue‐based interventions (interactive workshop, face‐to‐face training, mentoring) and one‐way communication (written information, video course, checklist, written feedback). Most studies were small.

We found moderate‐certainty evidence that emails reminding peer reviewers to check items of reporting checklists, compared with standard journal practice, have little or no effect on the completeness of reporting, measured as the proportion of items (from 0.00 to 1.00) that were adequately reported (mean difference (MD) 0.02, 95% confidence interval (CI) −0.02 to 0.06; 2 RCTs, 421 manuscripts).

There was low‐certainty evidence that reviewer training, compared with standard journal practice, slightly improves peer reviewer ability to detect errors (MD 0.55, 95% CI 0.20 to 0.90; 1 RCT, 418 reviewers).

We found low‐certainty evidence that reviewer training, compared with standard journal practice, has little or no effect on stakeholders' assessment of review quality in journal peer review (standardized mean difference (SMD) 0.13 standard deviations (SDs), 95% CI −0.07 to 0.33; 1 RCT, 418 reviewers), or change in stakeholders' assessment of review quality in journal peer review (SMD −0.15 SDs, 95% CI −0.39 to 0.10; 5 RCTs, 258 reviewers).

We found very low‐certainty evidence that a video course, compared with no video course, has little or no effect on inter‐reviewer agreement in grant peer review (MD 0.14 points, 95% CI −0.07 to 0.35; 1 RCT, 75 reviewers).

There was low‐certainty evidence that structured individual feedback on scoring, compared with general information on scoring, has little or no effect on the change in inter‐reviewer agreement in grant peer review (MD 0.18 points, 95% CI −0.14 to 0.50; 1 RCT, 41 reviewers).

Authors' conclusions

Evidence from 10 RCTs suggests that training peer reviewers may lead to little or no improvement in the quality of peer review. There is a need for studies with more participants and a broader spectrum of valid and reliable outcome measures. Studies evaluating stakeholders' assessments of the quality of peer review should ensure that these instruments have sufficient levels of validity and reliability.

Keywords: Humans; Bias; Checklist; Peer Review; Peer Review, Research; Publishing; Reproducibility of Results

Plain language summary

What are the benefits of training peer reviewers?

Key messages

• Training peer reviewers may have little or no effect on the quality of peer review.

• Larger, well‐designed studies are needed to give better estimates of the effect.

What is a peer reviewer?

A peer reviewer is a person who evaluates the research work done by another person. The peer reviewer is usually a researcher with skills similar to those needed to conduct the research they evaluate.

What is peer review used for?

Both funders and publishers of research can be uncertain whether a research project or report is of good quality. Many use peer reviewers to evaluate the quality of a project or report.

How could peer review quality be improved by training reviewers?

Training peer reviewers might make them better at identifying strengths and weaknesses in the research they assess.

What did we want to find out?

We wanted to find out if training peer reviewers increased the quality of their work.

What did we do?

We searched for studies that looked at training for peer reviewers compared with no training, different types of training, or standard journal or funder practice. We extracted information and summarized the results of all the relevant studies. We rated our confidence in the evidence based on factors such as study methods and sizes.

What did we find?

We found 10 studies that involved a total of 1213 units of analysis (722 reviewers and 491 manuscripts). Eight studies included only journal peer reviewers. The remaining two studies included grant peer reviewers.

Main results

Emails reminding peer reviewers to check items of reporting checklists, compared with standard journal practice, probably have little or no effect on the completeness of reporting (evidence from 2 studies with 421 manuscripts).

Reviewer training, compared with standard journal practice, may slightly improve peer reviewer ability to detect errors (evidence from 1 study with 418 reviewers). The reviewers who received training identified 3.25 out of 9 errors on average, whereas the reviewers who received no training identified 2.7 out of 9 errors on average.

Reviewer training, compared with standard journal practice, may have little or no effect on stakeholders' assessment of review quality (evidence from 6 studies with 616 reviewers and 60 manuscripts).

We are unsure about the effect of a video course, compared with no video course, on agreement between reviewers (evidence from 1 study with 75 reviewers).

Structured individual feedback on scoring, compared with general information on scoring, may have little or no effect on the change in agreement between reviewers (evidence from 1 study with 41 reviewers).

What are the limitations of the evidence?

We have little confidence in most of the evidence because most studies lacked important information and included too few reviewers. Additionally, it is unclear whether the studies measured peer review quality in a valid and reliable way.

How up to date is this evidence?

The evidence is up to date to April 2022.

Summary of findings

Summary of findings 1. Summary of findings. Peer reviewer training versus standard journal practice or no training.

Population: peer reviewers

Settings: journal or grant peer review

Intervention: training

Comparison: standard journal practice or no training

| Outcomes | Anticipated absolute effects* (95% CI): no training | Anticipated absolute effects* (95% CI): training | SMD† (95% CI) | No. of reviewers/manuscripts (studies) | Certainty of evidence (GRADE) | Comments |
|---|---|---|---|---|---|---|
| Completeness of reporting: proportion of items adequately reported, from 0.00 to 1.00; higher numbers indicate more complete reporting | The mean proportion of adequately reported items was 0.58 | The MD was 0.02 more (0.02 less to 0.06 more) | — | 421 manuscripts (2 RCTs) | ⊕⊕⊕⊝ Moderate^a | Reminding peer reviewers to check items of reporting checklists, compared with standard journal practice, probably has little or no effect on completeness of reporting. |
| Peer reviewer detection of errors: number of major errors identified, assessed by 2 researchers independently, from 0 to 9; higher numbers indicate better detection | The mean number of errors identified was 2.7 | The MD was 0.55 errors more (0.20 more to 0.90 more) | — | 418 reviewers (1 RCT) | ⊕⊕⊝⊝ Low^a,b | Peer reviewer training, compared with standard journal practice, may slightly improve the number of major errors identified. |
| Stakeholders' assessment of review quality (final score): assessed by 2 editors independently using the RQI, from 1 to 5 (average of the 2 ratings); higher scores indicate higher quality | The mean final review quality score was 2.74 | — | SMD 0.13 SDs (−0.07 to 0.33) | 418 reviewers (1 RCT) | ⊕⊕⊝⊝ Low^a,b | Peer reviewer training, compared with standard journal practice, may have little or no effect on stakeholders' assessment of review quality. |
| Stakeholders' assessment of review quality (change score): assessed using either A) a 5‐point scale, with change scores from −4 to 4 (3 studies); B) a 5‐point scale, expressed as the "slope of quality score change" (1 study); or C) the MQAI, from −144 to 144 (1 study). In all cases, higher scores indicate increased review quality | The mean change in review quality was A) 0.09 points; B) −0.23 points; C) 4.87 points | — | SMD −0.15 SDs (−0.39 to 0.10) | 258 reviewers (5 RCTs) | ⊕⊕⊝⊝ Low^a,c | Peer reviewer training, compared with standard journal practice, may have little or no effect on the change in stakeholders' assessment of review quality. |
| Inter‐reviewer agreement (final score): assessed using the average deviation between reviewers, from −4 to 0; higher scores indicate higher agreement | The mean average deviation was −1.03 points | The MD was 0.14 points higher (0.07 lower to 0.35 higher) | — | 75 reviewers (1 RCT) | ⊕⊝⊝⊝ Very low^d,e | We are uncertain about the effect of a video course, compared with no video course, on agreement between reviewers, assessed using the average deviation between reviewers. |
| Inter‐reviewer agreement (change score): assessed using the average score difference between reviewers; possible change scores range from −9 to 9; higher scores indicate increased agreement | The mean change in average score difference was 0.16 points | The MD was 0.18 points higher (0.14 lower to 0.50 higher) | — | 41 reviewers (1 RCT) | ⊕⊕⊝⊝ Low^d | Structured individual feedback on scoring, compared with general information on scoring, may have little or no effect on the change in agreement between reviewers. |
| Bibliometric scores | — | — | — | — | — | No studies reported this outcome. |

* The effect in the intervention group (and its 95% CI) is based on the assumed effect in the comparison group and the relative effect of the intervention (and its 95% CI).

† SMD is used as a summary statistic in meta‐analysis when the studies all assess the same outcome but measure it in a variety of ways. For interpretation of the SMD, 0.2 is considered a small effect, 0.5 a moderate effect, and 0.8 a large effect.

CI: confidence interval; MD: mean difference; MQAI: Manuscript Quality Assessment Instrument; RQI: Review Quality Instrument; SD: standard deviation; SMD: standardized mean difference.

GRADE Working Group grades of evidence

High certainty: we are very confident that the true effect lies close to that of the estimate of the effect.

Moderate certainty: we are moderately confident in the effect estimate; the true effect is likely to be close to the estimate of the effect, but there is a possibility that it is substantially different.

Low certainty: our confidence in the effect estimate is limited; the true effect may be substantially different from the estimate of the effect.

Very low certainty: we have very little confidence in the effect estimate; the true effect is likely to be substantially different from the estimate of effect.

a Downgraded one level for imprecision: wide CI exceeds (or likely exceeds) the threshold for both no effect and an important effect.

b Downgraded one level for risk of bias: high risk of bias due to lack of blinding of participants and personnel, incomplete outcome data, and unclear allocation concealment.

c Downgraded one level for risk of bias: unclear risk of bias due to selective outcome reporting in all five studies, unclear or high risk of bias due to incomplete outcome data in three studies, unclear allocation concealment in three studies, and unclear blinding in two studies.

d Downgraded two levels for imprecision: limited number of participants, and wide CI exceeds (or likely exceeds) the threshold for both no effect and an important effect.

e Downgraded one level for indirectness: participants were not only reviewers, and the intervention was limited to interpreting the review scale; and one level for risk of bias: unclear allocation concealment, sequence generation, blinding, completeness of outcome data, and selective outcome reporting.
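For rows reported as an MD, the training-group value in the summary of findings table is the comparison-group mean plus the MD, applied to the point estimate and to both CI limits. The short Python sketch below illustrates that arithmetic and labels an SMD using the 0.2/0.5/0.8 thresholds given in the † footnote. The function names are hypothetical; the input values are taken from the table.

```python
def anticipated_effect(comparison_mean, md, ci_low, ci_high):
    """Anticipated intervention-group value for an MD outcome.

    Adds the mean difference (and each CI limit) to the comparison-group
    mean. Returns (estimate, lower limit, upper limit).
    """
    return (comparison_mean + md,
            comparison_mean + ci_low,
            comparison_mean + ci_high)


def smd_label(smd):
    """Label |SMD| with the conventional 0.2 / 0.5 / 0.8 thresholds."""
    size = abs(smd)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "small"
    return "below small"


# Error detection row: comparison mean 2.7, MD 0.55 (0.20 to 0.90).
errors = anticipated_effect(2.7, 0.55, 0.20, 0.90)
print("Errors detected with training: %.2f (%.2f to %.2f)" % errors)
print("Final review quality SMD 0.13 is", smd_label(0.13))
```

For the error-detection row this gives 3.25 (2.90 to 3.60) errors, which matches the "3.25 out of 9 errors" reported in the plain language summary.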

Background

Both research funders and scientific journals use peer review to decide which projects to fund or articles to publish, and to review methods and improve reporting of journal articles. Most funders and journals have a set of criteria and specific instructions for reviewing project proposals or articles. Some funders and journals train their reviewers to increase compliance with the defined review criteria. Training can involve various interventions and methods, including (but not limited to) training sessions, courses, handbooks, written instructions, feedback, and guidance. The primary goal of training is to improve the quality of peer review.

There are different outcome measures for evaluating the quality of peer review. These include how well the research project or article performs in terms of different bibliometrics, stakeholders' evaluation of peer reviews, agreement between reviewers, and authors' adherence to guidelines.

Several studies have shown that both grant and journal peer reviewers perform suboptimally on several important outcome measures (Bornmann 2011; Guthrie 2017). Reviewer training is aimed at improving these outcomes, but studies on the effects of training have yielded inconsistent results (Bruce 2016).

Description of the methods being investigated

According to Elsevier, one of the world's largest scholarly publishers, "Reviewers evaluate article submissions to journals based on the requirements of that journal, predefined criteria, and the quality, completeness and accuracy of the research presented. They provide feedback on the paper, suggest improvements and make a recommendation to the editor about whether to accept, reject or request changes to the article" (Elsevier 2023).

Both funders and journals usually have a set of formal documents describing the aims and the scope of their work (e.g. a mission statement), the specific criteria that funding applications and articles should meet (e.g. guidelines, call for proposals), and details of the scales used for scoring and how the criteria should be scored (e.g. peer reviewer scoring instructions). Peer reviewer training should address the aims of the funder or journal, the peer review criteria, and the scoring instructions, with the goal of improving adherence to these measures and thus peer review quality.

Surveys of peer reviewers show that they receive little formal training in peer review, that they would like more guidance, and that they think it would improve the quality of the peer review (Mulligan 2013; Sense About Science 2009; Warne 2016). Training can be delivered in various ways. Some funders and journals use passive training strategies, such as guidelines, written instructions, handbooks, or videos, while others use more active strategies, such as live training sessions, online courses, or mentoring. Most strategies are implemented before the peer review, but there are also post‐review training strategies, such as feedback or evaluations to train peer reviewers for their next peer review session.

How these methods might work

Peer reviewer training might improve the peer review process through several mechanisms.

Research has shown that peer reviewers' interpretation and weighting of criteria can vary (Abdoul 2012), and the tasks considered critical by peer reviewers (e.g. evaluating risk of bias) are often incongruent with the tasks requested by the funding programme officers and journal editors (e.g. providing recommendations for publication; Chauvin 2015). Training could help to reduce these discrepancies, which otherwise might lower the inter‐rater and intra‐rater reliability of peer review.

Human judgement and decision‐making processes are susceptible to cognitive biases, and research suggests this might also be the case in grant and journal peer review (Bornmann 2006; Gallo 2018; Langfeldt 2006; Lee 2013; Walker 2015). Studies have found that the following factors influence reviews or similar assessments.

Social influence: the influence of one reviewer's assessment on another (Lane 2020; Teplitskiy 2019)

Matthew effect or status bias: the tendency for signals of status in a manuscript or grant proposal (such as prestigious affiliations or well‐known author names) to affect the overall assessment of the manuscript or proposal (Bol 2018; Huber 2022)

Similarity bias or cognitive distance: when the degree of similarity between authors and reviewers affects the outcome of the review (Wang 2015)

Anchor effects: the tendency for assessments to be influenced too heavily by the first piece of information presented, such as the review score that happens to be presented at the start of a group discussion (Roumbanis 2017)

Sequence effects: the influence of the order in which items are reviewed on how they are assessed, observed across different assessment settings (Bhargava 2014; Danziger 2011; Olbrecht 2010; Sattler 2015)

Educating peer reviewers about the risk of biases and how to avoid them might reduce these effects.

Furthermore, in some instances, peer review is conducted in groups, and social psychological phenomena such as groupthink, group polarization, or bandwagon effects might influence decisions, particularly in panel discussions and applicant interviews associated with funding decisions (Olbrecht 2010). Showing peer reviewers how to avoid these pitfalls might increase the reliability and validity of peer review.

Why it is important to do this review

Scholarly peer review is an undertaking of massive proportions. A total of 1,714,780 new articles, originating from 5282 journal titles, were indexed in 2022 in MEDLINE alone (National Library of Medicine 2022). One 2015 publication estimated that there were about 34,550 scholarly peer‐reviewed journals in the world, with a combined annual production of approximately 2.5 million articles (Ware 2015). The editorial decision to publish or reject manuscripts submitted to these journals relies heavily on peer review and has significant consequences for both researchers and research output. Researchers are likely to be evaluated on how often and in which journals they publish when they apply for jobs or grants. Additionally, research output is affected not only by the peer reviewers' recommendations regarding publication, but also their suggestions regarding revisions to the manuscript, including the methodology of the study.

Worldwide, large funds are distributed through grant application processes in which experts and peers consider whether a project is worthy of support. According to one review, "peer review decisions award an estimated >95% of academic medical research funding" (Guthrie 2017). In the USA, the National Institutes of Health alone invests around USD 45 billion a year in medical research, and more than 84% of this is "awarded for extramural research, largely through almost 50,000 competitive grants" (National Institutes of Health 2022). In the EU, the Horizon Europe programme will grant nearly EUR 96 billion, funding thousands of projects and researchers, and involving thousands of peer reviewers (European Commission 2021). More than 20,000 peer reviewers were involved in the first three years of Horizon Europe's predecessor, Horizon 2020 (European Commission 2018). As with journal peer review, the consequences are significant. Not only does peer review affect the distribution of research funds, but success in grant applications may also affect researchers' future chances of success (Bol 2018).

The literature is inconclusive on the effect of peer reviewer training on the quality of peer review. No systematic reviews have studied the effects of training in grant peer review (Guthrie 2017; Sattler 2015), and the most recent review of studies on reviewer training in journal peer review is current to June 2015 (Bruce 2016). One realist synthesis on what works for peer review in research funding concluded that "Evidence of the broader impact of [...] interventions on the research ecosystem is still needed, and future research should aim to identify processes that consistently work to improve peer review across funders and research contexts" (Recio‐Saucedo 2022).

Grant and journal peer review are very similar processes, often using overlapping criteria (such as methodological quality, impact, and originality). We decided to include both types of peer review in this systematic review to increase power. One Cochrane Methodology Review addresses journal peer review (Jefferson 2007), and another addresses grant peer review (Demicheli 2007). Both focus on peer review as a measure for improving the quality of funded research and study reports, and they both highlight important challenges. As our review focuses on training as a measure to address some of these challenges, we believe it will complement the two existing reviews.

Objectives

To evaluate the effect of peer reviewer training on the quality of grant and journal peer review.

Methods

Criteria for considering studies for this review

Types of studies

We included randomized controlled trials (RCTs; including cluster‐RCTs) that evaluated training interventions for peer reviewers versus usual processes, no training interventions, or other interventions to improve the quality of peer review.

Types of data

We included quantitative data on estimated intervention effects, using both continuous and dichotomous variables.

Types of methods

Any intervention aimed at training peer reviewers was eligible. Based on surveys of peer reviewers and previous research on the effects of training, we expected the following interventions to be the most prevalent.

Guidelines, instructions, checklists, and templates

Guidance or mentoring

Feedback and evaluation

Workshops, seminars, and webinars

Self‐administered online/video courses

Interventions could be specific to journals or funders, or could be aimed at any type of peer review. Workshops, seminars, and webinars are usually held in groups. Some of the interventions are more time‐consuming than others. Feedback and evaluation are typically given after or during the peer review process.

Types of outcome measures

Our main outcome was the quality of the review, however measured. Based mainly on one systematic review of interventions to improve journal peer review, we expected to find the outcome measures listed below (Bruce 2016). Reporting of our prespecified outcome measures was not an inclusion criterion of this review. We expected to find both objective and subjective outcome reporting, and we analysed both.

Primary outcomes

Completeness of reporting in articles, based on relevant guidelines such as the Consolidated Standards of Reporting Trials (CONSORT; Schulz 2010), the Template for Intervention Description and Replication (TIDieR; Hoffmann 2014), and Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT; Chan 2013)

Peer reviewer detection of errors: identification of flaws in manuscripts and proposals

Secondary outcomes

Bibliometric scores, such as citation rates, Altmetrics score, and save rate

Stakeholders' assessment of peer review quality: evaluations of peer reviewers or their reviews by stakeholders, such as grant administration or editors (e.g. scored with instruments like the Review Quality Instrument (RQI; van Rooyen 1999) or the Manuscript Quality Assessment Instrument (MQAI; Goodman 1994))

Inter‐reviewer agreement: degree of agreement between peer reviewers (changes in inter‐rater reliability measures like weighted kappa and intraclass correlation)

Process‐centred outcomes, such as speed or cost of reviewing

Peer reviewer satisfaction with the review process

Completion rate and speed of funded projects (applicable only to grant peer review)
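The inter‐reviewer agreement outcome above mentions weighted kappa. To illustrate what such a measure captures, the Python sketch below computes Cohen's weighted kappa for two reviewers who score the same items on an ordinal scale. It assumes linear or quadratic disagreement weights; the function name and inputs are hypothetical, and it is not meant to imply that any included study used this exact computation.

```python
def weighted_kappa(scores_a, scores_b, categories, weighting="quadratic"):
    """Cohen's weighted kappa for two raters on an ordinal scale (sketch).

    categories: ordered list of possible scores, e.g. [1, 2, 3, 4, 5].
    weighting: "linear" or "quadratic" disagreement weights.
    """
    k = len(categories)
    position = {c: i for i, c in enumerate(categories)}
    n = len(scores_a)

    # Observed joint proportions of (rater A score, rater B score).
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(scores_a, scores_b):
        observed[position[a]][position[b]] += 1 / n

    # Marginal proportions for each rater.
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def disagreement(i, j):
        # 0 on the diagonal, 1 for the most distant pair of categories.
        d = abs(i - j) / (k - 1)
        return d ** 2 if weighting == "quadratic" else d

    observed_dis = sum(disagreement(i, j) * observed[i][j]
                       for i in range(k) for j in range(k))
    expected_dis = sum(disagreement(i, j) * row[i] * col[j]
                       for i in range(k) for j in range(k))
    if expected_dis == 0:
        raise ValueError("kappa is undefined when both raters use one category")
    return 1 - observed_dis / expected_dis


# Hypothetical scores from two reviewers on a 5-point scale.
print(weighted_kappa([1, 2, 3, 4, 5, 3], [1, 3, 3, 4, 4, 2], [1, 2, 3, 4, 5]))
```

Quadratic weights penalize large disagreements (for example 1 versus 5) much more than adjacent ones, which suits ordinal review scores.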

Search methods for identification of studies

We searched for all published and unpublished studies of the effect of peer reviewer training on the quality of peer review, without restrictions on language or publication status. One Information Specialist (HS) developed the search strategy, and another Information Specialist peer reviewed it.

Electronic searches

We searched the following databases.

Cochrane Central Register of Controlled Trials (Wiley; searched 27 April 2022)

MEDLINE (Ovid; 1946 to 26 April 2022)

Embase (Ovid; 1947 to 26 April 2022)

PsycINFO (Ovid; 1806 to April Week 3 2022)

ERIC (Ovid; 1965 to March 2022)

ProQuest Dissertations & Theses Global (ProQuest; searched 27 April 2022)

CINAHL (EBSCO; 1982 to 7 February 2021)

Web of Science Indexes (SCI‐EXPANDED, SSCI, A&HCI, CPCI‐S, CPCI‐SSH, ESCI; Clarivate; searched 27 April 2022)

OpenGrey (searched 7 February 2021)

We performed all searches on 27 April 2022, except OpenGrey and CINAHL, which we searched in February 2021. Appendix 1 shows the full search strategies.

We also searched the following trials registries for planned and ongoing trials.

U.S. National Institutes of Health Ongoing Trials Registry ClinicalTrials.gov (clinicaltrials.gov/)

World Health Organization (WHO) International Clinical Trials Registry Platform (ICTRP; trialsearch.who.int/)

Open Science Framework (OSF; osf.io/search)

Searching other resources

We checked the reference lists of included studies and any relevant systematic reviews for references to relevant trials (Horsley 2011).

We contacted major research funding agencies and researchers who were known or expected to have conducted relevant research to ask them for information on relevant trials.

Data collection and analysis

Selection of studies

An Information Specialist (HS) conducted the searches and removed duplicates using EndNote X9. Three review authors (JOH, IS, TD) were involved in assessing the studies in three steps. First, two review authors independently screened the titles and abstracts of the records and eliminated those that were clearly irrelevant. Second, two review authors independently read the full‐text articles and study protocols of all potentially eligible records to decide which trials to include. Third, we compared the results from the two independent screenings; in case of disagreements, another review author (AF) assessed the studies. The three authors involved in the discussion made the final decision of whether to include or exclude a study through electronic communication. We recorded the selection process in sufficient detail to create a PRISMA flow diagram (Page 2021).

Two of the review authors (JOH, IS) are authors of one of the included studies (Hesselberg 2021). In this case, two other review authors (AF, TD) screened the article for inclusion.

We used Covidence for the screening process (Covidence 2021).

Data extraction and management

One review author (JOH) extracted general information from the studies. Two of three review authors (JOH, TD, IS) independently extracted the remaining data from each study using the template provided in Covidence (Covidence 2021). Two review authors (JOH, TD) piloted the data extraction form on two studies. In case of disagreements, a review author who was blinded to the details of the disagreement (AF) assessed the studies. The three authors involved in the discussion made the final decision through electronic communication.

We extracted the following study characteristics.

General information: author details, year and language of publication

Methods: study design, study setting, withdrawals, total duration of the study, date of study

Participants: numbers and proportions in each group, peer reviewer experience, field of expertise, gender, language, country of residence

Interventions: detailed description of the intervention, comparison, how the intervention was designed and by whom, delivery format, temporal length of intervention, who delivered the intervention

Outcomes: prespecified primary and secondary outcomes and any other relevant outcomes, time points reported

Notes: study funding, conflicts of interest

For studies that required translation, we would have asked for help from colleagues or from Cochrane Crowd. If this did not resolve the issue, we would have contacted the authors and asked for help to retrieve the relevant information. If all else failed, we would have hired professional translators.

Two of the review authors (JOH, IS) are authors of one of the included studies (Hesselberg 2021). In this case, two other review authors (AF, TD) performed data extraction.

We used the TIDieR checklist to describe the components of the intervention (Hoffmann 2014).

Assessment of risk of bias in included studies

Two of three review authors (JOH, IS, TD) independently assessed the risk of bias of each study using the original Cochrane risk of bias tool (RoB 1; Higgins 2011). We performed the risk of bias assessments in Covidence and transferred the results to Review Manager Web (RevMan Web 2022). When the published report provided insufficient information, we contacted the study authors for clarification. In case of disagreements, a third review author (AF) assessed the risk of bias. The three authors involved in the discussion made the final decision through electronic communication.

Two review authors (JOH, IS) are authors of one of the included studies (Hesselberg 2021). For this study, another two review authors (AF, TD) assessed risk of bias.

We assessed the risk of bias across the following domains.

Random sequence generation and allocation concealment (selection bias)

Blinding of participants and personnel (performance bias) and outcome assessors (detection bias)

Incomplete outcome data (attrition bias)

Selective reporting (reporting bias)

Other sources of bias

We judged each study to be at high, unclear, or low risk of bias in each domain.

Measures of the effect of the methods

We collected all outcomes reported in the studies along with how they were measured (self‐report, chart‐abstraction, other objective primary or secondary outcomes). For included outcomes, we extracted the intervention effect estimate reported by the study authors, along with its confidence interval (CI) and the method of statistical analysis used to calculate it.

When a study reported more than one measure for the same outcome, we used the primary outcome as defined by the study authors. If the primary outcome was not clearly defined, we would have chosen the outcome most similar to approaches used across included trials.

We requested additional information from the study authors if reports did not contain sufficient data (Young 2011).

Continuous outcomes

For continuous outcomes, we analysed data based on the mean, standard deviation (SD), and the number of people assessed in both the intervention and comparison groups to calculate the mean difference (MD) and 95% CI. When different studies reported the same outcome using different scales, we used the standardized mean difference (SMD) with its 95% CI.
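For a single study, the MD and its 95% CI follow directly from each group's mean, SD, and number assessed. For the SMD, the Python sketch below assumes Hedges' adjusted g, the small‐sample‐corrected SMD that RevMan reports, together with a commonly used large‐sample approximation for its standard error. The function names and example values are hypothetical, and pooling across studies is not shown.

```python
import math

Z = 1.96  # standard normal quantile for a two-sided 95% CI


def mean_difference(m1, sd1, n1, m2, sd2, n2):
    """MD (intervention minus comparison) with its 95% CI."""
    md = m1 - m2
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return md, md - Z * se, md + Z * se


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """SMD as Hedges' adjusted g with an approximate 95% CI."""
    n = n1 + n2
    # Pooled within-group SD.
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n - 2))
    d = (m1 - m2) / sd_pooled
    g = d * (1 - 3 / (4 * n - 9))  # small-sample correction factor
    se = math.sqrt(n / (n1 * n2) + g ** 2 / (2 * (n - 3.94)))
    return g, g - Z * se, g + Z * se


# Hypothetical study: training 3.0 (SD 1.0, n = 50), control 2.5 (SD 1.0, n = 50).
print(mean_difference(3.0, 1.0, 50, 2.5, 1.0, 50))
print(hedges_g(3.0, 1.0, 50, 2.5, 1.0, 50))
```

The MD keeps the outcome's original units, so it is only meaningful when studies share a scale; the SMD expresses the difference in SD units, which is why it was used when studies measured the same outcome on different scales.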

Dichotomous outcomes

For dichotomous outcomes, we planned to analyse data based on the number of events and the number of people assessed in the intervention and comparison groups. We would have used these to calculate the risk ratio (RR) and 95% CI.
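No included study reported a dichotomous outcome, so this planned calculation was never used. The sketch below only illustrates the standard log-scale 95% CI for a single study's RR. The function name and numbers are hypothetical. Zero-event cells, which RevMan handles with a continuity correction, are simply rejected here.

```python
import math


def risk_ratio(events_int, n_int, events_ctl, n_ctl, z=1.96):
    """Risk ratio (intervention vs comparison) with a 95% CI on the log scale."""
    if min(events_int, events_ctl) == 0:
        # A continuity correction would be needed; out of scope for this sketch
        raise ValueError("zero events in one arm")
    rr = (events_int / n_int) / (events_ctl / n_ctl)
    # Standard error of log(RR)
    se_log = math.sqrt(1 / events_int - 1 / n_int + 1 / events_ctl - 1 / n_ctl)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper
```

For instance, 20 of 100 events versus 10 of 100 gives an RR of 2.0 whose 95% CI (about 0.99 to 4.05) still includes 1.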

Unit of analysis issues

Cluster‐randomized trials

We planned to analyse cluster‐randomized trials using the average cluster size and the value of the intraclass correlation coefficient (ICC). We would have followed the methods described in the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011). If the trials had not reported ICC estimates, we would have requested them from the trial authors, and if these attempts were unsuccessful, we would have used an estimate from a similar trial. We planned to perform a sensitivity analysis, omitting trials that had not accounted for clustering.
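The Handbook approach to cluster-randomized trials shrinks each trial to its effective sample size using a design effect. The sketch below assumes the usual formula, design effect = 1 + (M − 1) × ICC, where M is the average cluster size. The names and numbers are illustrative. All included trials used individual randomization, so this step was never applied.

```python
def design_effect(mean_cluster_size, icc):
    """Inflation of variance caused by clustering."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n, mean_cluster_size, icc):
    """Sample size after dividing by the design effect.

    For continuous outcomes, means and SDs are left unchanged and only n is
    reduced; for dichotomous outcomes, events and n would both be divided.
    """
    return n / design_effect(mean_cluster_size, icc)
```

With a hypothetical average cluster size of 10 and an ICC of 0.05, the design effect is 1.45, so 290 randomized participants count as about 200.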

Studies with multiple intervention groups

For studies that reported multiple intervention groups, we included only the groups that were relevant to our review. We excluded or combined arms when conducting meta‐analyses with pairwise comparisons.

Dealing with missing data

We assessed each study for missing data and attrition, and we contacted study authors to request missing data and information on the reasons for and characteristics of dropouts (Young 2011).

Assessment of heterogeneity

We assessed statistical heterogeneity by inspecting the forest plot visually for outliers and CI overlap. We considered the presence of outliers and lack of CI overlap as indicators of possible heterogeneity. We used the Chi² test to assess statistical heterogeneity, with the significance level set at P < 0.10 (Deeks 2017). We also used the I² statistic and would have interpreted results above 30% as possible heterogeneity (Higgins 2003). We also planned to use subgroup and sensitivity analyses to assess heterogeneity.

Assessment of reporting biases

If any of the meta‐analyses had included 10 or more studies, we would have investigated publication (or other small‐study) bias by visually inspecting funnel plots for skewness. We would have further investigated skewness/asymmetry using Egger's test for continuous outcomes and Harbord's test for dichotomous outcomes (Egger 1997; Harbord 2006). If we detected asymmetry, we would have discussed possible explanations and considered performing sensitivity analyses.
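Egger's test regresses each study's standardized effect (estimate divided by its standard error) on its precision (1 divided by the standard error). An intercept far from zero suggests funnel plot asymmetry. The pure-Python sketch below follows that common formulation and returns the intercept, its standard error, the t statistic, and its k − 2 degrees of freedom. It leaves the P value to a t distribution. Harbord's test for dichotomous outcomes is not shown. No meta-analysis in this review reached 10 studies, so the sketch is illustrative only.

```python
import math


def egger_test(effects, ses):
    """Egger's regression: standardized effect ~ precision (ordinary least squares)."""
    x = [1 / se for se in ses]                      # precision
    y = [e / se for e, se in zip(effects, ses)]     # standardized effect
    k = len(x)
    if k < 3:
        raise ValueError("need at least 3 studies")
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance and standard error of the intercept
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in resid) / (k - 2)
    se_intercept = math.sqrt(s2 * (1 / k + x_bar ** 2 / sxx))
    if se_intercept == 0:
        raise ValueError("perfect fit; t statistic undefined")
    return intercept, se_intercept, intercept / se_intercept, k - 2
```

In hypothetical data where smaller studies (larger standard errors) report larger effects, the intercept is positive.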

Data synthesis

We meta‐analysed data to provide an overall effect estimate. To the best of our knowledge, there was no evidence to suggest that different training interventions would have markedly different effects. We therefore presumed similar effects for all training interventions, across both grant and journal peer review, and meta‐analysed the data collectively. We used Review Manager Web for data analysis (RevMan Web 2022).

Based on the expected variability in samples and interventions, we used a random‐effects model to incorporate this heterogeneity. For continuous variables, we used the inverse variance method. For dichotomous variables, we used the Mantel‐Haenszel method (Mantel 1959).
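An inverse-variance random-effects analysis can be sketched with the classic DerSimonian and Laird estimator of the between-study variance (tau²). The text does not state which tau² estimator RevMan Web applied, so treat this as an illustration of the general method rather than a reproduction of the review's analyses. The function name is hypothetical.

```python
import math


def random_effects_dl(effects, ses, z=1.96):
    """DerSimonian-Laird random-effects pooled estimate, 95% CI, and tau²."""
    w = [1 / se ** 2 for se in ses]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    # Method-of-moments estimate of between-study variance, truncated at zero
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add tau² to each within-study variance
    w_star = [1 / (se ** 2 + tau2) for se in ses]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se_pooled = math.sqrt(1 / sum(w_star))
    return pooled, pooled - z * se_pooled, pooled + z * se_pooled, tau2
```

When the studies agree, tau² is zero and the result equals the fixed-effect estimate. Heterogeneity widens the CI because every study's weight shrinks.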

When several studies measured the same outcomes but used different tools, we calculated the SMD and 95% CI using the inverse variance method in Review Manager Web (RevMan Web 2022).

GRADE and summary of findings table

We created a summary of findings table for the following outcomes.

Completeness of reporting

Peer reviewer detection of errors

Bibliometric scores

Stakeholders' assessment of review quality

Inter‐reviewer agreement

We used the five GRADE considerations (study limitations, consistency of effect, imprecision, indirectness, and publication bias) to assess the certainty of the evidence as it related to the studies that contributed data to the meta‐analyses for the prespecified outcomes (Guyatt 2008). We used methods and recommendations described in section 8.5 and Chapter 12 of the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2017). We justified all decisions to downgrade the certainty of the evidence in footnotes to the summary of findings table.

Subgroup analysis and investigation of heterogeneity

If heterogeneity had been above the thresholds described in Assessment of heterogeneity, we would have conducted the following subgroup analyses to investigate possible sources of heterogeneity.

Type of peer review: although several peer review criteria overlap between grants and journals (e.g. methodological quality, originality, and impact), there are also important differences. For example, in grant peer review, the expected feasibility of the study and the merits of the research environment for the proposed research are essential criteria that are not a part of journal peer review. This might result in increased heterogeneity.

Degree of interaction: training interventions that demand more interaction from the peer reviewers are expected to yield better outcomes than those that demand little input. Ideally, time spent in training should be the basis of a subgroup analysis. However, we were doubtful that a sufficient number of studies would have precise measures of this. As an alternative, we would have analysed types of training that are likely to demand different degrees of interaction from the peer reviewers. Training interventions expected to demand a higher degree of interaction are personal mentoring/guidance/feedback/evaluation and seminars/workshops/webinars, whereas training interventions expected to demand a lower degree of interaction are guidelines/written or verbal instructions and checklists/templates.

Sensitivity analysis

We would have conducted sensitivity analyses under the following circumstances.

If cluster‐randomized trials had not adjusted for clustering (i.e. we would have excluded data from non‐adjusted cluster‐randomized trials).

If we detected significant heterogeneity (we would have inspected forest plots to determine possible sources).

If some studies were at high risk of selection bias (we would have excluded the studies deemed high risk).

Results

Description of studies

Results of the search

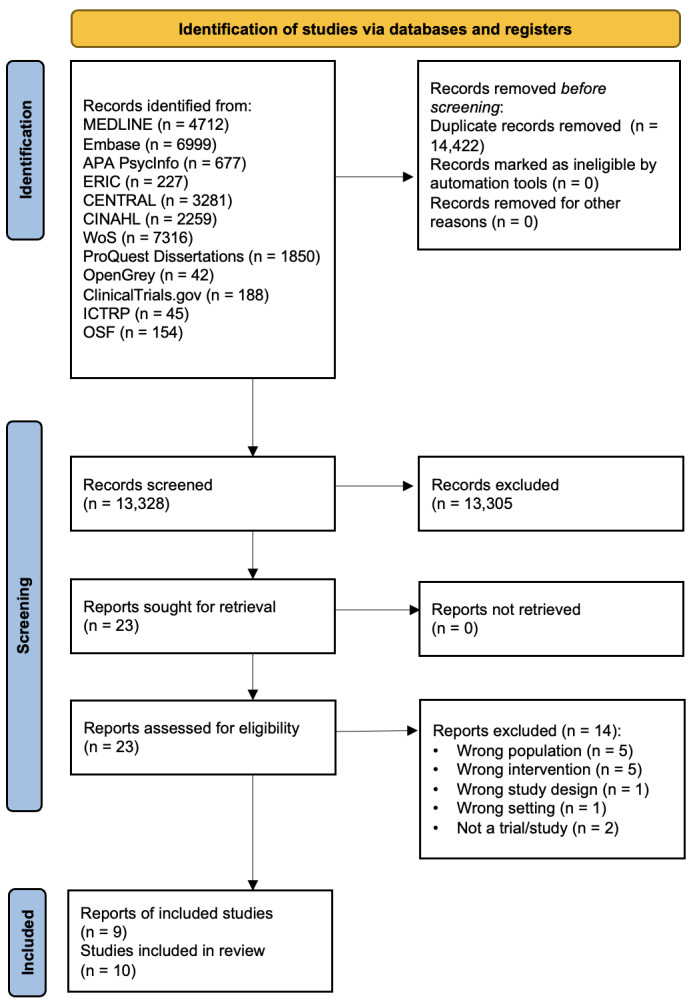

The searches yielded 27,750 records, all identified through searches via databases and registries and none through other sources. After removing 14,422 duplicates, we were left with 13,328 unique records.

After screening titles and abstracts, we excluded 13,305 records and assessed 23 full‐text reports for eligibility. We excluded 14 full‐text reports. Two of the remaining reports each described two studies (Callaham 2002a Study 1 and Callaham 2002a Study 2; Callaham 2002b), but only one of the studies described in Callaham 2002b was eligible for inclusion in this review. The total number of included studies was 10. Figure 1 illustrates the study selection process in a PRISMA flow chart.

Figure 1. Study selection process (PRISMA flow chart).

Included studies

The Characteristics of included studies table provides the details of each study.

We included 10 studies (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Hesselberg 2021; Houry 2012; Sattler 2015; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2). All the studies measured the effects of peer reviewer training compared to no training or standard journal or funder practice. Results of eight included studies were published between 2002 and 2022, and an author of the two remaining studies provided results through personal communication in 2023 (Speich 2023 Study 1; Speich 2023 Study 2).

Design

All studies were parallel‐group RCTs with individual randomization. The number of randomized participants varied from 22 (Callaham 2002b) to 418 (Schroter 2004). The unit of analysis was the individual reviewer in seven studies (722 reviewers in total; Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Hesselberg 2021; Houry 2012; Sattler 2015; Schroter 2004), and the reviewed manuscript in three studies (491 manuscripts in total; Cobo 2007; Speich 2023 Study 1; Speich 2023 Study 2). The total number of units randomized was 1213, with 660 allocated to a training intervention and 553 to a control group.

Participants

In eight studies, the participants were journal peer reviewers (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Houry 2012; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2). These studies accounted for most participants (1097 of 1213). In three of them, the unit of randomization was the submitted manuscript (Cobo 2007; Speich 2023 Study 1; Speich 2023 Study 2).

In two studies, the participants were grant peer reviewers (Hesselberg 2021; Sattler 2015).

One study was conducted at five different journals based in the USA or the UK (Speich 2023 Study 2). Four studies were conducted at journals based in the USA (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Houry 2012), two studies at journals based in the UK (Schroter 2004; Speich 2023 Study 1), and one study at a journal based in Spain (Cobo 2007). One study was conducted at a funder in Norway (Hesselberg 2021), and one was conducted at a university in the USA (Sattler 2015).

The country where a study was conducted most likely does not match all participants' countries of residence. However, in the two studies that provided this information, the participants' country of residence coincided with the country where the study was conducted (Hesselberg 2021; Sattler 2015).

Interventions

The interventions can be broadly divided into dialogue‐based interventions and one‐way communication. One study had two intervention arms, with interventions from both categories: face‐to‐face training and self‐teaching (Schroter 2004).

Two studies used only dialogue‐based interventions: an interactive workshop in Callaham 2002b and mentoring in Houry 2012.

Seven studies used interventions based on one‐way communication: an email reminder to check items of guidelines (Speich 2023 Study 1; Speich 2023 Study 2), written feedback or written information on how to do reviews (Callaham 2002a Study 1; Callaham 2002a Study 2; Hesselberg 2021), a checklist (Cobo 2007), and an 11‐minute video course (Sattler 2015). See Table 2 for a summary of study interventions.

1. Summary of study interventions.

| Study | Setting | Intervention | Control |
| --- | --- | --- | --- |
| Callaham 2002a Study 1 | Journal peer review | Summary of goals for quality review + editor's numerical rating | Standard journal practice |
| Callaham 2002a Study 2 | Journal peer review | Study 1 + copy of excellent review | Standard journal practice |
| Callaham 2002b | Journal peer review | 4‐hour, highly interactive workshop | Standard journal practice |
| Cobo 2007 | Journal peer review | Checklist (clinical reviewer + checklist) | Standard review + a standard letter |
| Hesselberg 2021 | Grant peer review | 1‐page, structured individual feedback report on scoring | 1‐page, non‐specific, general information on scoring |
| Houry 2012 | Journal peer review | Mentoring | Standard journal practice |
| Sattler 2015 | Grant peer review | 11‐minute video course | Written information only |
| Schroter 2004 | Journal peer review | Face‐to‐face training or self‐teaching | Standard journal practice |
| Speich 2023 Study 1 | Journal peer review | Email reminder to check items of SPIRIT guidelines | Standard journal practice |
| Speich 2023 Study 2 | Journal peer review | Email reminder to check items of CONSORT guidelines | Standard journal practice |

CONSORT: Consolidated Standards of Reporting Trials; SPIRIT: Standard Protocol Items: Recommendations for Interventional Trials.

Outcome measures

Three studies evaluated one of our predefined primary outcomes (completeness of reporting or peer reviewer detection of errors; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2). Two studies measured completeness of reporting as the proportion of adequately reported items from the SPIRIT guidelines (Speich 2023 Study 1) or the CONSORT guidelines (Speich 2023 Study 2). Schroter 2004 measured peer reviewer detection of errors by registering the number of major errors detected in a manuscript with deliberately inserted flaws.

Eight studies reported at least one of our secondary outcome measures. Six journal peer review studies evaluated stakeholders' assessment of review quality, or change in stakeholders' assessment of review quality, as the main outcome, using a variety of instruments and measures (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Houry 2012; Schroter 2004). Schroter 2004 used the average of two editors' assessments of review quality based on the Review Quality Instrument (RQI; van Rooyen 1999). Callaham 2002a Study 1, Callaham 2002a Study 2, and Callaham 2002b reported change in review quality assessed by editors on a five‐point scale used by the Annals of Emergency Medicine (Callaham 1998). Houry 2012 reported the slope of change in quality, assessed independently by two researchers on the same five‐point scale. Cobo 2007 reported the change in the average of two editors' assessments of review quality using the Manuscript Quality Assessment Instrument (MQAI; Goodman 1994).

Both grant peer review studies used inter‐reviewer agreement as the main outcome (Hesselberg 2021; Sattler 2015). Sattler 2015 used the intraclass correlation coefficient to measure agreement between participants. For ease of analysis and interpretation, we accessed the original data and used the participants' average deviation from the mean as the outcome. Greater deviation represents greater disagreement.
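The text does not spell out how the average deviation from the mean was computed. The sketch below is one plausible reading, stated as an assumption. Every participant scores the same items. Each item's mean is taken across participants. A participant's outcome is the average absolute deviation of their scores from those item means. The function name and data layout are hypothetical.

```python
def average_deviation_from_mean(scores):
    """Per-participant mean absolute deviation from the item means.

    scores: one row per participant, each row holding that participant's
    score for every item (all rows assumed to have the same length).
    """
    n_participants = len(scores)
    n_items = len(scores[0])
    # Mean score for each item across all participants
    item_means = [sum(row[j] for row in scores) / n_participants for j in range(n_items)]
    # Larger values mean the participant disagrees more with the group
    return [
        sum(abs(row[j] - item_means[j]) for j in range(n_items)) / n_items
        for row in scores
    ]
```

These per-participant values could then be compared between the intervention and control groups as a mean difference. That matches how a larger value is read as greater disagreement.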

Excluded studies

See the Characteristics of excluded studies table.

We excluded 14 studies at the full‐text review stage for the following reasons.

Ineligible population (not reviewers): Blanco 2020; Ghannad 2021; MacAuley 1998; Strowd 2014; Wong 2017

Ineligible intervention (not training): NCT03751878; Cobo 2011; Green 1989; Jones 2019; Pitman 2019

Ineligible study design (not an RCT): Chauvin 2017

Ineligible setting (not journal or grant peer review): Crowe 2011

Not a trial/study: DiDomenico 2017; Gardner 1986

Ongoing studies

At the time of the search, two records were protocols of ongoing studies (Speich 2023 Study 1; Speich 2023 Study 2). Results from these studies were presented at a conference in September 2022. On 11 March 2023, we contacted the lead author, who sent us a manuscript draft with all the details we needed to include the two studies.

Risk of bias in included studies

We contacted the authors of all studies for clarification on unclear risk of bias items; authors of five studies replied (Hesselberg 2021; Sattler 2015; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2).

Figure 2 shows the review authors' judgements about each risk of bias item for each study, and Figure 3 shows the review authors' judgements about each risk of bias item as percentages across all studies.

Figure 2. 'Risk of bias' summary: review authors' judgements about each risk of bias item for each included study.

Figure 3. 'Risk of bias' graph: review authors' judgements about each risk of bias item presented as percentages across all included trials.

Allocation

Random sequence generation

Nine studies randomized participants using computer‐generated random sequences, so were at low risk of bias in this domain (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Hesselberg 2021; Houry 2012; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2). Sattler 2015 provided no information on the method of randomization (unclear risk of bias).

Allocation concealment

We rated five studies at low risk of bias for allocation concealment (Callaham 2002b; Hesselberg 2021; Houry 2012; Speich 2023 Study 1; Speich 2023 Study 2). The remaining five studies provided insufficient details on allocation concealment so were at unclear risk (Callaham 2002a Study 1; Callaham 2002a Study 2; Cobo 2007; Sattler 2015; Schroter 2004).

Blinding

Blinding of participants and personnel

Six studies reported adequate blinding of participants and personnel (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Hesselberg 2021; Speich 2023 Study 1; Speich 2023 Study 2).

In Cobo 2007, it was unclear if both the participants and the editorial team engaging with them were blinded. In two studies, the personnel were blinded, but it was unclear if the participants were blinded (Houry 2012; Sattler 2015). We considered Schroter 2004 at high risk of performance bias, as no participants in either group were blinded.

Blinding of outcome assessors

Outcome assessors were blinded in seven studies (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Hesselberg 2021; Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2). In Cobo 2007, the outcome assessors were able to identify the manuscripts that had been reviewed by a reviewer using a checklist. However, the report states that "after stratifying by response to [the] blinding questions, the overall conclusion on the main effects remain the same". Based on this clarification, we considered the risk of detection bias to be unclear. The two remaining studies provided no information on blinding of assessors so were also at unclear risk (Houry 2012; Sattler 2015).

Incomplete outcome data

We judged five studies at low risk of attrition bias (Cobo 2007; Hesselberg 2021; Houry 2012; Speich 2023 Study 1; Speich 2023 Study 2). Two studies reported very low levels of attrition (Cobo 2007; Hesselberg 2021), and one study reported an intention‐to‐treat analysis that yielded similar results to the main analysis (Houry 2012). Two studies reported high rates of exclusions, but the exclusions were similar in the intervention and control groups (Speich 2023 Study 1; Speich 2023 Study 2).

We judged three studies at unclear risk of attrition bias: they reported low attrition rates but did not provide reasons for missing data (Callaham 2002a Study 1; Callaham 2002a Study 2; Sattler 2015).

We judged two studies at high risk of attrition bias: they reported high rates of attrition with no description of the difference between responders and non‐responders (Callaham 2002b; Schroter 2004).

Selective reporting

Three studies were preregistered, so we judged them at low risk of reporting bias (Hesselberg 2021; Speich 2023 Study 1; Speich 2023 Study 2). We contacted the authors of the remaining studies to request information on any preplanned analyses (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Houry 2012; Sattler 2015; Schroter 2004). We received no such information, so considered these studies at unclear risk of reporting bias.

Other potential sources of bias

We identified no other potential sources of bias.

Effect of methods

Primary outcomes

Three studies reported one of our two predefined primary outcomes (Schroter 2004; Speich 2023 Study 1; Speich 2023 Study 2).

Completeness of reporting

Two studies measured the proportion of adequately reported items from the SPIRIT checklist (Speich 2023 Study 1) or the CONSORT checklist (Speich 2023 Study 2). These studies included 421 manuscripts randomized to journal peer reviewers receiving either an email reminder to check items of SPIRIT or CONSORT guidelines or standard journal practice. Reminding peer reviewers to use reporting guidelines probably had little or no effect on completeness of reporting compared to standard journal practice (MD 0.02, 95% CI −0.02 to 0.06; moderate‐certainty evidence; Analysis 1.1). We downgraded the certainty of the evidence by one level for imprecision.

1.1. Analysis.

Comparison 1: Training versus no training or standard journal practice, Outcome 1: Completeness of reporting (proportion of items adequately reported, from 0.00 to 1.00)

Peer reviewer detection of errors

Schroter 2004 reported the number of major errors identified in a manuscript with deliberately inserted flaws. This study included 418 journal peer reviewers. Training compared with standard journal practice may slightly improve peer reviewer detection of errors (MD 0.55, 95% CI 0.20 to 0.90; low‐certainty evidence; Analysis 1.2). We downgraded the certainty of the evidence by two levels for risk of bias and imprecision.

1.2. Analysis.

Comparison 1: Training versus no training or standard journal practice, Outcome 2: Peer reviewer detection of errors (number of errors identified, from 0 to 9)

Secondary outcomes

Eight studies evaluated one of our predefined secondary outcomes (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Hesselberg 2021; Houry 2012; Sattler 2015; Schroter 2004).

Bibliometric scores

No studies reported bibliometric scores.

Stakeholders' assessment of review quality

Six studies, including a total of 676 participants, reported stakeholders' assessment of review quality (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Houry 2012; Schroter 2004). All the studies indicated little or no effect of the training interventions.

One study, including 418 reviewers, reported review quality as a final score (Schroter 2004). Reviewer training, compared with standard journal practice, may have little or no effect on stakeholders' assessment of review quality (SMD 0.13, 95% CI −0.07 to 0.33; low‐certainty evidence; Analysis 1.3). We downgraded the certainty of the evidence by two levels for risk of bias and imprecision.

1.3. Analysis.

Comparison 1: Training versus no training or standard journal practice, Outcome 3: Stakeholders' assessment of review quality

The five remaining studies, including 258 reviewers, reported change in review quality (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Cobo 2007; Houry 2012). Reviewer training compared with standard journal practice may have little or no effect on change in stakeholders' assessment of review quality (SMD −0.15, 95% CI −0.39 to 0.10; low‐certainty evidence; Analysis 1.3). We downgraded the certainty of the evidence by two levels for risk of bias and imprecision.

Inter‐reviewer agreement

Two studies reported levels of agreement between reviewers (Hesselberg 2021; Sattler 2015).

Sattler 2015, which included 75 reviewers, reported inter‐reviewer agreement as a final score. We are unsure about the effect of a peer reviewer training video, compared with no video, on inter‐reviewer agreement (MD 0.14, 95% CI −0.07 to 0.35; very low‐certainty evidence; Analysis 1.4). We downgraded the certainty of the evidence by three levels for risk of bias, imprecision, and indirectness.

1.4. Analysis.

Comparison 1: Training versus no training or standard journal practice, Outcome 4: Inter‐reviewer agreement

Hesselberg 2021, which included 41 reviewers, reported change in agreement. Structured individual feedback on scoring, compared with general information on scoring, may have little or no effect on change in inter‐reviewer agreement (MD 0.18, 95% CI −0.14 to 0.50; low‐certainty evidence; Analysis 1.4). We downgraded the certainty of the evidence by two levels for imprecision.

Process‐centred outcomes

No studies reported process‐centred outcomes.

Peer reviewer satisfaction

No studies reported peer reviewer satisfaction.

Completion rate and speed of funded projects

No studies reported completion rate and speed of funded projects.

Discussion

Summary of main results

We found moderate‐certainty evidence from two RCTs that reminding peer reviewers to check important reporting guideline items probably has little or no effect on completeness of reporting. We found low‐certainty evidence from one RCT that peer reviewer training may slightly increase peer reviewer detection of errors, but the improvement was not meaningful. There was low‐certainty evidence that different types of peer reviewer training may have little or no effect on stakeholders' assessment of review quality (final score and change score) and on change in inter‐reviewer agreement. Finally, we are uncertain about the effect of a video course on inter‐reviewer agreement (final score), as the evidence was of very low certainty.

In summary, the evidence from 10 RCTs suggests that training peer reviewers may lead to little or no improvement in the quality of peer review. New research may change our conclusions.

Overall completeness and applicability of evidence

In general, the included studies had relevant settings, interventions, participants, and comparators. One exception is Sattler 2015, where the participants included people other than peer reviewers, and the outcome measure was agreement in the interpretation of descriptions of different review scores, not agreement in the review of research proposals.

When this review was submitted (5 April 2023), the mean number of years since publication of the included studies was 13.1 (median 16.0 years, range 0.6 to 20.9). However, we do not believe the relevance of the interventions or the typical review setting changed significantly over these years.

There are some concerns regarding the methods of measuring the outcomes, particularly stakeholders' assessment of peer review quality. One systematic review of the tools used to assess the quality of peer review concluded that the "development and validation process [of these tools] is questionable and the concepts evaluated by these tools vary widely" (Superchi 2019). In addition, inter‐rater reliability for the tools used in the included studies is generally low, no studies assessed criterion validity, and no studies provided a definition of overall quality. Hence, it is debatable whether stakeholders can assess peer review quality in a meaningful way and whether the tools used to guide the assessments are valid and reliable.

We considered grant and journal peer review as equal processes because they share many important traits. However, there are some differences that might limit the generalizability of results from one to the other. For example, it is reasonable to assume that grant peer reviewers are older and more experienced, are more often paid, and discuss more with other peer reviewers. However, we are unaware of any studies comparing grant to journal peer review, and we suspect that the variance is greater within each process than between the two.

We have a similar concern related to the selection of interventions. Although our assessments did not reveal problematic levels of heterogeneity, it could be argued that the interventions are not sufficiently similar. For example, a workshop is likely to have both more impact and higher compliance rates than an email reminder to check items of reporting guidelines, and it may have been better not to mix the different interventions in one analysis.

Furthermore, we consider the homogeneity of the outcome measures to be a disadvantage. The included studies reported only four of our prespecified outcomes: completeness of reporting, peer reviewer detection of errors, stakeholders' assessment of peer review quality, and inter‐reviewer agreement. While these are important measures, they are far from the only outcomes of interest. Editors, funders, researchers, and other end‐users like policymakers and patients are not primarily interested in whether reviewers detect errors, whether they agree, or how the editors assess the review quality; they are likely more interested in whether training peer reviewers can improve the scientific output, resulting in a greater impact on end‐users' lives. Impact‐related outcomes such as new patents or products, contributions to policy change, or changes in treatment guidelines seem more relevant, albeit difficult to measure.

Quality of the evidence

Seven of the 10 included studies had unclear or high risk of bias in two or more of the seven domains. Several studies lacked information on allocation concealment and outcome data. Seven studies did not preregister any hypotheses or outcome measures. However, as no studies reported any important effect of the intervention, we consider it unlikely that the hypotheses or outcomes were altered in a way that would have affected the overall results.

The main reasons for downgrading the certainty of the evidence were risk of bias and imprecision (wide CIs exceeding or likely exceeding the threshold for both no effect and an important effect, and limited number of participants).

We downgraded one outcome (inter‐reviewer agreement; final score) for indirectness due to the questionable relevance of methods of measuring peer review quality (see Overall completeness and applicability of evidence).

Potential biases in the review process

Two review authors independently extracted all data and assessed the risk of bias. We resolved any disagreements by discussion or by involving a third review author. The use of RoB 1 is a possible weakness, as there is now a second version of the risk of bias tool (RoB 2).

In the search for unpublished trials, we contacted a selection of journals, funders, and researchers. Our choice of whom to contact was based on prior knowledge, scanning references, and superficial web searches; we could have made a more systematic effort to determine the most relevant sources of information.

Two review authors (IS and JOH) were involved in one of the included studies (Hesselberg 2021). For that study, two other review authors (AF and TD) performed data extraction and risk of bias assessment. We believe this was the best way of overcoming any potential biases. However, as the authors of the included study (IS and JOH) and the review authors assessing the study for inclusion (AF and TD) are all members of the same Cochrane author group, this might still be considered a limitation. In future updates, this could be subject to alteration if Cochrane's conflict of interest guidelines change.

The results of our review may be affected by publication bias. Although we conducted a thorough search and contacted large journals and funders, we identified no unpublished studies. However, there is a vast number of journals and funders, and peer review processes are well suited to controlled experiments due to easy access to participants and data. Additionally, many funders and journals are familiar with the benefits of doing controlled research. For these reasons, we believe there may be unpublished research, but we consider this concern insufficient to downgrade the certainty of the evidence.

Agreements and disagreements with other studies or reviews

We are aware of two previous reviews that have addressed reviewer training. They both found similar results to ours, based on one or more of the same studies included in our review. The most recent review analysed several interventions, including reviewer training, for improving journal peer review (Bruce 2016). The meta‐analysis of the training interventions included data from five studies that were also included in this review (Callaham 2002a Study 1; Callaham 2002a Study 2; Callaham 2002b; Houry 2012; Schroter 2004). Bruce 2016 concluded that "Training had no impact on the quality of the peer review report".

A Cochrane Methodology Review of editorial peer review concluded that "There is no evidence that referees' training has any effect on the quality of the outcome" (Jefferson 2007). However, the searches for that review were performed in 2004, and the results are likely outdated.

Authors' conclusions

Implication for systematic reviews and evaluations of healthcare.

Evidence from 10 RCTs suggests that training peer reviewers may lead to little or no improvement in the quality of peer review. There is a need for studies with more participants and a broader spectrum of valid and reliable outcome measures. Future studies should pay particular attention to the possible heterogeneity between interventions aimed at grant reviewers versus journal reviewers, and between different types of training.

We believe journals and funders are in an ideal position to initiate such research. Their staff often have research experience, they organize huge quantities of reviews, and data on the review process are often collected systematically through editorial and grant manager systems. Settings like these lend themselves to testing interventions in randomized controlled trials.

Implications for methodological research.

The small number of included studies, the low number of participants in most studies, and the questionable validity and reliability of measures of peer review quality highlight the need for additional randomized trials. These trials should evaluate outcome measures of higher relevance and quality.

To increase relevance and generalizability, future studies should ideally involve multiple journals or funders, as the review settings and standard practice can vary greatly between them.

Studies that evaluate stakeholders' assessments of the quality of peer review should clearly define what constitutes good quality in that specific setting, and ensure that the instruments have sufficient validity and reliability.

History

Protocol first published: Issue 11, 2020

Acknowledgements

We wish to thank information specialist Marit Johansen at the Norwegian Institute of Public Health for reviewing the search strategy and offering important suggestions.

We also wish to thank secretary‐general Hans Christian Lillehagen at the Norwegian Foundation Dam for providing feedback on and supporting the project in its initial stages. His feedback was of particular importance to the selection of criteria for considering studies for the review.

Editorial contributions

Cochrane Methodology Review Group supported the authors in the development of this systematic review. The following people conducted the editorial process for this article:

Sign‐off Editor (final editorial decision): Mike Clarke, Co‐ordinating Editor of the Cochrane Methodology Review Group, Queen's University Belfast, UK.

Managing Editor (selected peer reviewers, collated peer reviewer comments, provided editorial guidance to authors, edited the article): Marwah Anas El‐Wegoud, Cochrane Central Editorial Service.

Editorial Assistant (conducted editorial policy checks and supported editorial team): Leticia Rodrigues, Cochrane Central Editorial Service.

Copy Editor (copy editing and production): Julia Turner, Cochrane Central Production Service.

Peer reviewers (provided comments and recommended an editorial decision): Camilla Hansen Nejstgaard, Centre for Evidence‐Based Medicine Odense (CEBMO) & Cochrane Denmark; Dawid Pieper, Brandenburg Medical School, Germany; Professor Philippa Middleton, SAHMRI, University of Adelaide; Valerie Wells, Research Associate, Information Scientist, MRC/CSO Social and Public Health Sciences Unit, School of Health and Wellbeing, University of Glasgow (search review).

Appendices

Appendix 1. Search strategy

Ovid MEDLINE(R) ALL

<1946 to April 26, 2022>

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| 1 | Peer Review/ or Peer Review, Research/ | 15162 |
| 2 | peer review*.tw,kf. | 43906 |
| 3 | 1 or 2 | 53557 |
| 4 | Education, Professional/ or exp Inservice Training/ or Mentors/ or Mentoring/ | 46344 |
| 5 | (train* or workshop* or school* or feedback or mentor* or coach* or teach* or taught or educat* or exercis* or guid* or instruct* or practice or handbook* or manual* or course* or seminar* or webinar* or procedure* or program* or assessment* or evaluation* or checklist* or check‐list* or correspond* or template*).tw,kf. | 7824904 |
| 6 | 4 or 5 | 7837640 |
| 7 | 3 and 6 | 30592 |
| 8 | ((randomized controlled trial or controlled clinical trial).pt. or randomized.ab. or placebo.ab. or clinical trials as topic.sh. or randomly.ab. or trial.ti.) not (exp animals/ not humans.sh.) | 1326893 |
| 9 | 7 and 8 | 4712 |
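Each numbered line in these search histories refers back to earlier result sets: "or" takes the union, "and" the intersection, and "not" the difference. The Python sketch below illustrates that logic on hypothetical toy records; the IDs and tags are invented for illustration and are not database output, and real databases match subject headings and text words rather than simple tags. It shows in particular why the animal filter on line 8, "not (exp animals/ not humans.sh.)", removes only animal‐only records while keeping records indexed as both animal and human studies.

```python
# Hypothetical toy records: record ID -> set of simplified index tags.
# These are illustrative only, not real MEDLINE records.
records = {
    1: {"peer review", "training", "rct", "humans"},
    2: {"peer review", "training", "rct", "animals"},
    3: {"peer review", "training", "rct", "animals", "humans"},
    4: {"peer review", "rct", "humans"},
    5: {"training", "rct", "humans"},
}


def hits(tag):
    """Return the IDs of all records carrying the given tag."""
    return {rid for rid, tags in records.items() if tag in tags}


line3 = hits("peer review")   # stands in for "1 or 2" (union of peer review lines)
line6 = hits("training")      # stands in for "4 or 5" (union of training lines)
line7 = line3 & line6         # "3 and 6" is an intersection

# Line 8 applies "not (animals not humans)": first find animal-only records,
# then subtract them, so records tagged with both animals and humans survive.
animal_only = hits("animals") - hits("humans")
line8 = hits("rct") - animal_only

line9 = line7 & line8         # "7 and 8"
print(sorted(line9))          # prints [1, 3]
```

Record 2 is dropped because it is indexed only as an animal study, whereas record 3 is kept because it is also indexed as a human study.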

Embase Classic+Embase

<1947 to 2022 April 26>

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| 1 | "peer review"/ | 35193 |
| 2 | peer review*.tw,kw. | 50891 |
| 3 | 1 or 2 | 72623 |
| 4 | education/ or continuing education/ or course content/ or education program/ or in service training/ or mentoring/ or mentor/ or postgraduate education/ or refresher course/ or teaching/ or workshop/ | 685025 |
| 5 | (train* or workshop* or school* or feedback or mentor* or coach* or teach* or taught or educat* or exercis* or guid* or instruct* or practice or handbook* or manual* or course* or seminar* or webinar* or procedure* or program* or assessment* or evaluation* or checklist* or check‐list* or correspond* or template*).tw,kw. | 10636114 |
| 6 | 4 or 5 | 10800658 |
| 7 | 3 and 6 | 38563 |
| 8 | (randomized controlled trial/ or randomization/ or (random*.tw,kw. or trial.ti.)) not (Animal experiment/ not (human experiment/ or human/)) | 1927927 |
| 9 | 7 and 8 | 6999 |

APA PsycInfo

<1806 to April Week 3 2022>

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| 1 | Peer Evaluation/ | 3232 |
| 2 | peer review*.tw. | 10570 |
| 3 | 1 or 2 | 12616 |
| 4 | exp Teaching/ or Training/ or Personnel Training/ or Inservice Training/ or Education/ or Continuing Education/ or Professional Development/ or Mentor/ | 219319 |
| 5 | (train* or workshop* or school* or feedback or mentor* or coach* or teach* or taught or educat* or exercis* or guid* or instruct* or practice or handbook* or manual* or course* or seminar* or webinar* or procedure* or program* or assessment* or evaluation* or checklist* or check‐list* or correspond* or template*).tw. | 2427361 |
| 6 | 4 or 5 | 2437073 |
| 7 | 3 and 6 | 8817 |
| 8 | (Randomized Controlled Trials/ or (random* or double‐blind).tw. or trial.ti.) not exp animals/ | 232886 |
| 9 | 7 and 8 | 677 |

ERIC

<1965 to March 2022>

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| 1 | Peer Evaluation/ | 5619 |
| 2 | peer review*.tw. | 3870 |
| 3 | 1 or 2 | 8064 |
| 4 | Training/ or Professional Training/ or Research Training/ or Education/ or Inservice Education/ or Professional Development/ or Workshops/ or Mentors/ or Facilitators/ or Teaching Guides/ | 85439 |
| 5 | (train* or workshop* or school* or feedback or mentor* or coach* or teach* or taught or educat* or exercis* or guid* or instruct* or practice or handbook* or manual* or course* or procedure* or program* or assessment* or evaluation* or checklist* or check‐list* or correspond* or template*).tw. | 1683834 |
| 6 | 4 or 5 | 1684097 |
| 7 | 3 and 6 | 7938 |
| 8 | Randomized Controlled Trials/ or (random* or double‐blind).tw. or trial.ti. | 40068 |
| 9 | 7 and 8 | 227 |

Cochrane Central Register of Controlled Trials

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| #1 | ([mh ^"Peer Review"] OR [mh ^"Peer Review, Research"]) | 83 |
| #2 | peer NEXT review*:ti,ab,kw | 3875 |
| #3 | #1 OR #2 | 3875 |
| #4 | ([mh ^"Education, Professional"] OR [mh "Inservice Training"] OR [mh ^Mentors] OR [mh ^Mentoring]) | 1330 |
| #5 | (train* OR workshop* OR school* OR feedback OR mentor* OR coach* OR teach* OR taught OR educat* OR exercis* OR guid* OR instruct* OR practice OR handbook* OR manual* OR course* OR seminar* OR webinar* OR procedure* OR program* OR assessment* OR evaluation* OR checklist* OR (check NEXT list*) OR correspond* OR template*):ti,ab,kw | 926815 |
| #6 | #4 OR #5 | 926815 |
| #7 | #3 AND #6 | 3348 |
| #8 | #3 AND #6 in Trials | 3281 |

Web of Science

Indexes=SCI‐EXPANDED, SSCI, A&HCI, CPCI‐S, CPCI‐SSH, ESCI; Timespan=All years

Date searched: 27 April 2022

| # | Search | Results |
|---|---|---|
| #1 | TS="peer review*" | 93,585 |
| #2 | TS=(train* OR workshop* OR school* OR feedback OR mentor* OR coach* OR teach* OR taught OR educat* OR exercis* OR guid* OR instruct* OR practice OR handbook* OR manual* OR course* OR seminar* OR webinar* OR procedure* OR program* OR assessment* OR evaluation* OR checklist* OR check‐list* OR correspond* OR template*) | 15,736,076 |
| #3 | TS=random* OR TI=trial | 2,415,547 |
| #4 | #1 AND #2 AND #3 | 7,316 |

CINAHL (EBSCO)

Date searched: 7 February 2021

| # | Search | Results |
|---|---|---|
| S1 | MH Peer Review | 7,948 |
| S2 | "peer review*" | 24,035 |
| S3 | S1 OR S2 | 24,035 |
| S4 | (MH Education OR MH Teaching OR MH Teaching Materials+ OR MH Staff Development OR MH Seminars and Workshops OR MH Mentorship) | 188,444 |
| S5 | (train* OR workshop* OR school* OR feedback OR mentor* OR coach* OR teach* OR taught OR educat* OR exercis* OR guid* OR instruct* OR practice OR handbook* OR manual* OR course* OR seminar* OR webinar* OR procedure* OR program* OR assessment* OR evaluation* OR checklist* OR check‐list* OR correspond* OR template*) | 3,517,085 |
| S6 | S4 OR S5 | 3,560,721 |
| S7 | S3 AND S6 | 15,425 |
| S8 | (((MH randomized controlled trials OR MH double‐blind studies OR MH single‐blind studies OR MH triple‐blind Studies OR MH random assignment) OR (random* OR double‐blind) OR TI trial) NOT (MH animals+ NOT (MH animals+ AND MH humans))) | 531,957 |
| S9 | S7 AND S8 | 2,259 |

OpenGrey

Date searched: 7 February 2021 (database not updated since)

"peer review*" AND (train* OR workshop* OR school* OR feedback OR mentor* OR coach* OR teach* OR taught OR educat* OR exercis* OR guid* OR instruct* OR practice OR handbook* OR manual* OR course* OR procedure* OR program* OR assessment* OR evaluation* OR checklist* OR "check‐list*" OR correspond* OR template*)

42 hits

ProQuest Dissertations & Theses Global

Date searched: 27 April 2022

[STRICT] noft("peer review*") AND noft((train* OR workshop* OR school* OR feedback OR mentor* OR coach* OR teach* OR taught OR educat* OR exercis* OR guid* OR instruct* OR practice OR handbook* OR manual* OR course* OR seminar* OR webinar* OR procedure* OR program* OR assessment* OR evaluation* OR checklist* OR check‐list* OR correspond* OR template*)) AND (ft(trial OR random* OR double‐blind))

1850 hits

Data and analyses

Comparison 1. Training versus no training or standard journal practice.

| Outcome or subgroup title | No. of studies | No. of participants | Statistical method | Effect size |
|---|---|---|---|---|
| 1.1 Completeness of reporting (proportion of items adequately reported, from 0.00 to 1.00) | 2 | 421 | Mean Difference (IV, Fixed, 95% CI) | 0.02 [‐0.02, 0.06] |
| 1.2 Peer reviewer detection of errors (number of errors identified, from 0 to 9) | 1 | 418 | Mean Difference (IV, Random, 95% CI) | 0.55 [0.20, 0.90] |
| 1.3 Stakeholders' assessment of review quality | 6 | | Std. Mean Difference (IV, Random, 95% CI) | Subtotals only |
| 1.3.1 Final score | 1 | 418 | Std. Mean Difference (IV, Random, 95% CI) | 0.13 [‐0.07, 0.33] |
| 1.3.2 Change score | 5 | 258 | Std. Mean Difference (IV, Random, 95% CI) | ‐0.15 [‐0.39, 0.10] |
| 1.4 Inter‐reviewer agreement | 2 | | Mean Difference (IV, Random, 95% CI) | Subtotals only |
| 1.4.1 Final score | 1 | 75 | Mean Difference (IV, Random, 95% CI) | 0.14 [‐0.07, 0.35] |
| 1.4.2 Change score | 1 | 41 | Mean Difference (IV, Random, 95% CI) | 0.18 [‐0.14, 0.50] |
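The Statistical method column refers to inverse‐variance (IV) pooling under a fixed‐effect or random‐effects model. The Python sketch below illustrates that technique only. It assumes each study's effect estimate and standard error are available (the hypothetical helper `se_from_ci` recovers a standard error from a symmetric 95% CI), and for the random‐effects model it assumes the DerSimonian–Laird estimator of between‐study variance, which this section does not specify. The function names and study inputs are hypothetical and are not data from the included studies.

```python
import math

Z95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def se_from_ci(lower, upper, z=Z95):
    """Recover a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)


def pool_fixed(effects, ses):
    """Fixed-effect inverse-variance pooling: each study weighted by 1/SE^2.

    Returns (pooled estimate, lower 95% limit, upper 95% limit).
    """
    weights = [1 / se ** 2 for se in ses]
    est = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return est, est - Z95 * se, est + Z95 * se


def pool_random(effects, ses):
    """Random-effects inverse-variance pooling with DerSimonian-Laird tau^2.

    Returns (pooled estimate, lower 95% limit, upper 95% limit, tau^2).
    """
    w = [1 / se ** 2 for se in ses]
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum(w)
    df = len(effects) - 1
    if df == 0:
        tau2 = 0.0  # a single study carries no information on heterogeneity
    else:
        q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))  # Cochran's Q
        c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
        tau2 = max(0.0, (q - df) / c)  # truncated at zero
    # Add the between-study variance to each study's within-study variance.
    w_star = [1 / (se ** 2 + tau2) for se in ses]
    est = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return est, est - Z95 * se, est + Z95 * se, tau2


if __name__ == "__main__":
    # Hypothetical study-level inputs (mean differences and standard errors).
    effects, ses = [0.0, 0.3], [0.05, 0.05]
    print(pool_fixed(effects, ses))
    print(pool_random(effects, ses))
    # Standard error implied by the reported interval for outcome 1.1.
    print(round(se_from_ci(-0.02, 0.06), 4))  # 0.0204
```

The same weighting applies to the standardized mean differences in outcome 1.3 once each study's SMD and its standard error have been computed. With a single study, as in outcomes 1.2, 1.3.1, 1.4.1, and 1.4.2, the fixed‐effect and random‐effects models give the same estimate.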

Characteristics of studies

Characteristics of included studies [ordered by study ID]

Callaham 2002a Study 1.

| Study characteristics | |
|---|---|
| Methods | Study design: RCT. Study grouping: parallel group. Duration and date: September 1998–September 2000. Setting: reviewers and reviews at the Annals of Emergency Medicine. Withdrawals: 16 of 51 (10 control subjects and 6 intervention subjects). |
| Data | Inclusion criteria: reviewers were selected from the entire pool according to their performance in the previous 2 years. Reviewers were eligible for analysis only if they completed ≥ 2 rated reviews during the study. The study targeted low‐volume, low‐quality reviewers. Exclusion criteria: reviewers who completed < 2 rated reviews during the study. Number of participants: 35 (20 in intervention group, 15 in control group). Group differences: minor differences at baseline in the number of reviews in the past 2 years; however, no SDs or CIs are provided for most outcomes. Country of residence: not stated. Field of expertise: not stated, but likely emergency medicine. Gender: not specified. Language: not stated. Peer reviewer experience: the average number of manuscripts reviewed in the previous 2 years was 7.3 in the intervention group and 7.5 in the control group. |
| Comparisons | Standard journal practice |
| Outcomes | Stakeholders' assessment of review quality (change score), assessed by editors using a 5‐point scale |
| Identification | Sponsorship source: not stated. Country: USA. Setting: University/Annals of Emergency Medicine. Authors: Michael L Callaham, Robert K Knopp, E John Gallagher. Institution: Department of Emergency Medicine, University of California, San Francisco, CA. Email: mlc@medicine.ucsf.edu. Address: Michael L Callaham, MD, Department of Emergency Medicine, University of California, Box 0208, San Francisco, CA. Conflicts of interest: Callaham is the Editor‐in‐Chief of Annals of Emergency Medicine (as of 3 June 2021); it is unclear whether he held this position when conducting the study. |
| Interventions | Summary of goals for quality review and editor's numerical rating |
| Notes | |

Risk of bias

| Item | Authors' judgement | Support for judgement |
|---|---|---|
| Random sequence generation | Yes | StatView 5.0 was used for randomization, but additional information is sparse. |
| Allocation concealment | Unclear | It is unclear who allocated reviewers and how allocation was organized. However, the intervention was delivered after the review, so the review process up to the written feedback is unlikely to have been affected by the allocation. |
| Blinding of participants and personnel (all outcomes) | Yes | Quote: "(reviewers were blinded to author identity and the editor's rating of their review, and authors were blinded to reviewer identity)." Comment: reviewers were blinded to author identity and the editor's rating, but not to the intervention. The editor (evaluator) was blinded to reviewer identity, reviewer participation, enrolment, and study purpose. |