Abstract

Reconstructing the incidence of SARS-CoV-2 infection is central to understanding the state of the pandemic. Seroprevalence studies are often used to assess cumulative infections as they can identify asymptomatic infection. Since July 2020, commercial laboratories have conducted nationwide serosurveys for the U.S. CDC. They employed three assays, with different sensitivities and specificities, potentially introducing biases in seroprevalence estimates. Using models, we show that accounting for assays explains some of the observed state-to-state variation in seroprevalence, and when integrating case and death surveillance data, we show that when using the Abbott assay, estimates of proportions infected can differ substantially from seroprevalence estimates. We also found that states with higher proportions infected (before or after vaccination) had lower vaccination coverages, a pattern corroborated using a separate dataset. Finally, to understand vaccination rates relative to the increase in cases, we estimated the proportions of the population that received a vaccine prior to infection.

Subject terms: SARS-CoV-2, Viral infection, Epidemiology, Computational models

SARS-CoV-2 seroprevalence surveys aim to estimate the proportion of the population that has been infected, but their accuracy depends on the characteristics of the test assay used. Here, the authors use statistical models to assess the impact of the use of different assays on estimates of seroprevalence in the United States.

Introduction

Estimating the cumulative proportion of the population infected with SARS-CoV-2 is central to understanding the current state of the pandemic, assessing the susceptibility of the population, and to planning and targeting public health responses. Epidemiological models and other statistical approaches can be used to estimate cumulative infections using reported positive SARS-CoV-2 PCR tests, COVID-19 deaths, and other surveillance data1–7. Such studies revealed large underreporting of cases detected through case surveillance due to asymptomatic infections and limited laboratory testing. Seroprevalence studies based on a random sample of the population may be the gold standard for assessing the proportion infected but are expensive and logistically complicated to perform.

Since July 2020, commercial laboratories have conducted regular nationwide serosurveys for the CDC8,9. These surveys and other convenience and representative seroprevalence studies (refs. 8,10–21; also see https://covid19serohub.nih.gov) have provided estimates of the cumulative proportion of the population with a history of at least one infection with SARS-CoV-2 in the United States at the national and local level. Modeling approaches have also used seroprevalence studies to improve estimates of critical parameters (e.g., the infection fatality rate) or to compare to model outputs3,4,22.

However, serosurveys can produce biased estimates of the proportion infected based on the samples and methods used. Convenience samples, samples collected from individuals in the provision of healthcare for testing unrelated to SARS-CoV-2, may not be representative of the general population. Seroprevalence studies focusing on individuals seeking care for reasons unrelated to COVID-19, such as those conducted by the CDC, can underestimate the extent of mild infections due to tests being evaluated and calibrated mostly on patients with symptoms23,24. Moreover, waning of antibodies to undetectable levels following infection has been observed25,26. Estimated waning varies substantially between assays due to differences in their formats (e.g., whether the assays use direct or indirect detection formats27) and resulting variation in their sensitivities and specificities28–32. For example, when using manufacturer-recommended cutoff points to determine seropositivity, Peluso et al.28 and Stone et al.32 found lower sensitivities using ARCHITECT SARS-CoV-2 IgG immunoassay targeting the nucleocapsid protein (“Abbott”) than with Ortho-Clinical Diagnostics VITROS SARS-CoV-2 Total Ig and IgG (the latter only in Peluso et al.) immunoassay targeting the spike protein (“Ortho”) or Roche Elecsys Anti-SARS-CoV-2 pan-immunoglobulin immunoassay that targets the nucleocapsid protein (“Roche”). However, sensitivities to recent infections in Peluso et al.28 were similar across all three assays. Both studies also estimated systematically faster waning using the Abbott assay while they found no evidence of waning for the Roche assay. As a result, all else remaining equal, antibody waning means that seroprevalence estimates will constitute an underestimate of the proportion infected. That the Abbott assay exhibited faster waning may also imply that the assay immunoglobulin type (IgG in the Abbott, pan-Ig in the Roche) is also important.

In this study, we use CDC’s commercial laboratory nationwide serosurvey data and multiple other data sources to explain the observed spatio-temporal patterns in seroprevalence in the United States, with a particular focus on the role played by the different assays used, waning of antibodies, and the implications for estimating the proportion infected. We explore the impact of waning antibodies using a simple model, where we adjust seroprevalence to reconstruct the proportion infected across the United States. Finally, to gain insight into the composition of sources of immunity, we compare the spatial patterns in estimated proportion infected with vaccination coverage across states over time.

Results

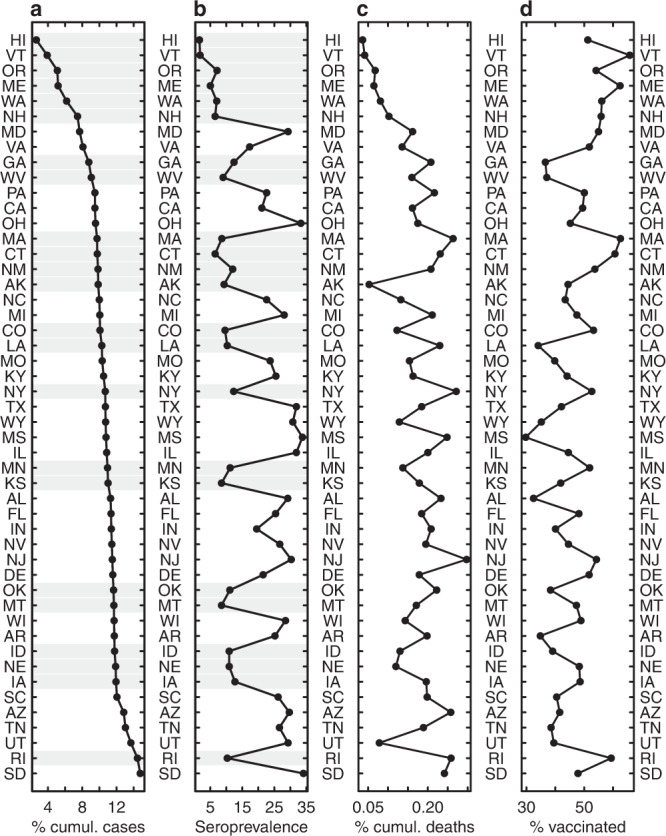

We used data from the CDC’s nationwide antibody serosurveys from commercial laboratories, which measure infection-induced seroprevalence. This study included both anti-nucleocapsid (anti-N) and anti-spike (anti-S) antibody assays prior to widespread vaccination campaigns, after which it included only anti-N assays. Anti-N assay seropositivity is reflective of prior infection with SARS-CoV-2 and not of vaccination with vaccines available in the United States, which contain only the spike protein; seroprevalence is also not a quantitative measure of current immunity status. We will henceforth refer to infection-induced seroprevalence as “seroprevalence”. Serosurveys started in July 2020 (round 1), and as of January 2022 (round 29), seroprevalence ranged from 18% in Vermont to 56% in Wisconsin (Supplementary Fig. 1). By then, the proportions of state populations reported as confirmed COVID-19 cases ranged from 10% in Hawaii to 25% in Rhode Island, and the proportions for confirmed deaths ranged from 0.1% in Vermont to 0.4% in Mississippi, with marked heterogeneity across states by round. Rank order of states by seroprevalence at a point in time differed quite markedly from that by proportion of the population reported as a case (Fig. 1). To explain spatio-temporal variation in seroprevalence across states, we fit two sets of logistic regression models. The first model (“reference model”) includes cumulative proportions of populations reported as cases and deaths as explanatory variables while accounting for a range of other factors including the assays used in each survey. The second set of models (“waning models”) explicitly incorporates the temporal effect of different waning rates (depending on the assay being used) on seroprevalence estimates (see “Methods”). Comparison of the waning models with the reference model enables assessment of the proposed model of waning in measured antibodies and its relative ability to explain observed patterns.

Fig. 1. States ranked by different metrics.

Ranked (a) cumulative percentage of the population reported as a COVID-19 case, (b) seroprevalence from the CDC nationwide serosurveys, (c) cumulative percentage of the population reported as a COVID-19 death, and (d) vaccination (with a full series) coverage, for July 2021 (round 24, the last round before the Roche assay started being used exclusively). Gray shading in (a) and (b) shows serosurveys that at that point in time exclusively used the Abbott assay.

Variation in infection-induced seroprevalence associated with the use of different assays

Seroprevalence varied systematically as a function of the specific assays used in the surveys (Abbott, Ortho, or Roche). In the reference model, higher proportions of use of the Abbott assay were associated with lower seroprevalence while use of the Roche assay was associated with higher seroprevalence (Supplementary Table 1 and Supplementary Fig. 2; the Ortho assay was included in the model as the comparison group). As a result, some of the spatial variation observed in the nationwide serosurveys was attributable to the spatially heterogeneous use of assays (Supplementary Figs. 3–6). Using the reference model, we estimated the seroprevalence that would have resulted had all states exclusively used one of the three assays alone. We estimated that if the serosurveys had exclusively used the Roche assay (highest seroprevalence), estimated seroprevalence country-wide could have been 20 percentage points higher in January 2022 than if only the Abbott assay (lowest seroprevalence) had been used (Supplementary Fig. 7). There was substantial state-to-state variation in the change in expected seroprevalence had the Roche assay been exclusively used; for example, seroprevalence would have been over 27 percentage points higher in Iowa in May 2021 (round 21) had they exclusively used the Roche assay, relative to the actual survey estimates (Supplementary Fig. 7).
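To illustrate how such a counterfactual can be read off a logistic regression, the following minimal sketch predicts seroprevalence under different assay mixes. It is not the fitted reference model: the function names, the single intercept, and all coefficient values are hypothetical, and the actual model includes further covariates (see “Methods”). The only structural feature taken from the text is that the Ortho assay is the comparison group and therefore has no coefficient of its own.

```python
import math


def inv_logit(x: float) -> float:
    """Map a value on the logit scale back to a proportion."""
    return 1.0 / (1.0 + math.exp(-x))


def predicted_seroprevalence(baseline_logit, beta_abbott, beta_roche,
                             prop_abbott, prop_roche):
    """Predicted seroprevalence for a given assay mix.

    prop_abbott and prop_roche are the proportions of specimens tested
    with each assay; the remainder is Ortho (the comparison group).
    """
    return inv_logit(baseline_logit
                     + beta_abbott * prop_abbott
                     + beta_roche * prop_roche)


# Hypothetical values chosen only to show the direction of the effects
# described in the text (negative for Abbott, positive for Roche).
baseline_logit = -1.0
beta_abbott = -0.5
beta_roche = 0.4

observed_mix = predicted_seroprevalence(baseline_logit, beta_abbott,
                                        beta_roche, 0.7, 0.3)
all_abbott = predicted_seroprevalence(baseline_logit, beta_abbott,
                                      beta_roche, 1.0, 0.0)
all_roche = predicted_seroprevalence(baseline_logit, beta_abbott,
                                     beta_roche, 0.0, 1.0)

# Difference, in percentage points, between the two exclusive-use scenarios.
difference_pp = 100.0 * (all_roche - all_abbott)
```

Holding everything else fixed and changing only the assay proportions is what allows the difference between scenarios to be attributed to assay use alone.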

Accounting for waning helps explain variation in infection-induced seroprevalence

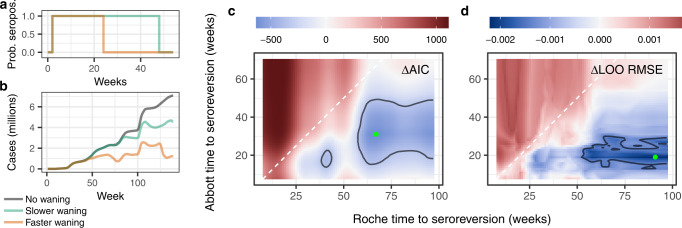

Accounting for waning detection of antibodies over time improved model fit over the reference model across various metrics (Fig. 2, Supplementary Fig. 8 and Table 1). Results support a faster waning rate for the Abbott assay, while there was no clear evidence of waning in the Roche assay (the best five percentile models by every metric included the maximum 97 weeks considered here; Fig. 2, Supplementary Fig. 8). Because the Ortho assay was only used up to January 2021 (except in Puerto Rico, not included in this study), we could only explore up to a maximum of 49 weeks of time to seroreversion. Time to seroreversion (the time for antibodies to fall below a detection threshold) in the Ortho assay depended on the metric used (no evidence of waning up to 49 weeks by Akaike information criterion (AIC) and root-mean-square error (RMSE), but only 10 weeks for LOO median RMSE), but its use was limited (Supplementary Fig. 1). The best fitting models by RMSE and median leave-one-out (LOO) RMSE had RMSEs and median LOO RMSEs ~0.91 and 0.90 times that of the reference model without waning, respectively. The best model by LOO median RMSE had a mean time of seroreversion of 19 weeks for the Abbott assay, 10 weeks for the Ortho assay, and 91 weeks for the Roche assay (although here, there was no statistical evidence of seroreversion over the time period studied of 97 weeks), with cases seroconverting 1 week prior to being reported as a case (−1 week detection delay; Table 1). Note, however, that there was uncertainty around these parameter estimates (Fig. 2). The best models by AIC and RMSE were similar, with estimated Abbott assay time to seroreversion of 31 weeks, and no clear evidence of waning in the Ortho assay (with a time to seroreversion ≥49 weeks; Table 1). 
After accounting for waning, the negative and positive associations between proportion of Abbott and Roche assays with seroprevalence remained, albeit with smaller effect sizes (Supplementary Table 1), potentially implying lower sensitivity of the Abbott assay for detecting recent infections relative to the other two.
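The mechanism can be sketched in a few lines. The example below assumes the simplest possible form, in which every reported case is detectable for a fixed window after seroconversion and undetectable afterwards. This fixed window, the function name, and the example numbers are assumptions made for illustration; the exact formulation used in the models is given in “Methods”.

```python
def detectable_cumulative_cases(weekly_cases, weeks_to_seroreversion,
                                detection_shift=-1):
    """Cases expected to still test seropositive in each week.

    A case reported in week r is assumed to seroconvert in week
    r + detection_shift (a shift of -1 means one week before reporting)
    and to remain detectable for weeks_to_seroreversion weeks.
    """
    detectable = []
    for t in range(len(weekly_cases)):
        total = 0.0
        for r, cases in enumerate(weekly_cases):
            start = r + detection_shift
            if start <= t < start + weeks_to_seroreversion:
                total += cases
        detectable.append(total)
    return detectable


# Hypothetical series: a single pulse of 10 cases reported in week 0.
weekly_cases = [10, 0, 0, 0, 0, 0]

# With a 3-week window and no shift, the pulse is visible for weeks 0-2 only.
short_window = detectable_cumulative_cases(weekly_cases, 3, 0)
# short_window == [10.0, 10.0, 10.0, 0.0, 0.0, 0.0]

# With a window longer than the series, nothing seroreverts and the result
# equals the ordinary cumulative case count.
no_waning = detectable_cumulative_cases([1, 2, 3], 100, 0)
# no_waning == [1.0, 3.0, 6.0]
```

Using a separate window per assay, and weighting by the proportion of specimens tested with each assay, gives one adjusted case series per candidate combination of times to seroreversion.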

Fig. 2. Rationale behind the models, and comparison of waning models with the reference model across two metrics.

Inclusion of waning helps explain patterns in seroprevalence better. Left panels (a, b) explain how we mechanistically incorporate waning into the models. In (a), a case is expected to test positive in a survey for a limited amount of time before seroreverting, leading to (b) different waning patterns that depend on the time to seroreversion (see Supplementary Fig. 15). In our models we use three different times to seroreversion, one for each assay. Within each tile panel (c, d), each pixel corresponds to a single model, where cases have been adjusted assuming three different times to seroreversion, one for each assay. The best models had a case seroconvert 1 week before being reported (Table 1). Tile plots assume the best model’s time to seroreversion for the Ortho assay (49 weeks by AIC, 10 weeks by LOO median RMSE), and show model performance for the remaining two variables, the times to seroreversion for Roche (x-axes) and Abbott (y-axes) assays. c, d show results for two different model metrics: AIC, and LOO median RMSE. Metrics are expressed relative to the metric for the reference model (that does not account for waning); blues (respectively reds) indicate waning models that are better (respectively worse), per that metric, relative to the model without waning. Green points in c, d indicate the best model by each metric, and contour lines enclose the best five percentile models as per each metric. See Table 1 for the corresponding best waning model by each metric, and Supplementary Fig. 8 for more complete results.

Table 1.

Best waning models across three metrics, compared to the reference model

| | Best fitting waning model by AIC | Best fitting waning model by RMSE | Best fitting waning model by LOO median RMSE | Reference model |
|---|---|---|---|---|
| Detection lead or lag (weeks) | −1 | −1 | −1 | – |
| Abbott time to seroreversion (weeks) | 31 | 31 | 19 | – |
| Ortho time to seroreversion (weeks) | 49a | 49a | 10 | – |
| Roche time to seroreversion (weeks) | 67 | 96 | 91 | – |
| ΔAIC | 0 | 42 | 553 | 634 |
| RMSE | 0.0201 | 0.0200 | 0.0210 | 0.0221 |
| LOO median RMSE | 0.0209 | 0.0213 | 0.0205 | 0.0227 |
| Observations | 1398 | 1398 | 1398 | 1398 |
| Residual degrees of freedom | 1285 | 1285 | 1285 | 1285 |

Metrics for the best models by Akaike information criterion (AIC), root-mean-square error (RMSE), and leave-one-out (LOO) median RMSE, compared to metrics for the reference model (with no waning). For example, the column “AIC” indicates the best waning model chosen by AIC (see Fig. 2). AIC values for waning models account for the added parameters being selected (times to seroreversion and detection lead or lag). ΔAIC values in the table are relative to the lowest AIC in the models shown (the best fitting waning model by AIC).

aThese are lower bounds because they are the maximum number of weeks for which these assays could be evaluated. The Ortho assay was only used, to a limited extent, in the first 13 rounds of the nationwide serosurveys (until January 2021), while the Abbott assay was used in the first 24 rounds (until July 2021; see “Methods” and Supplementary Figs. 1 and 3).

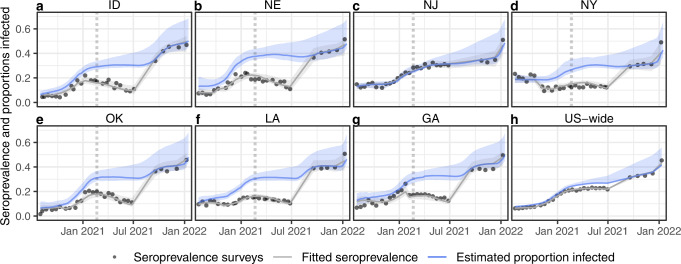

Next, we used the best waning model to estimate the proportion infected by correcting the seroprevalence for assay use and seroreversion (see Methods) and compared it to reported seroprevalence. The difference between estimated proportions infected and seroprevalence is greatest in states and time points in which the Abbott assay was predominantly used (e.g., Fig. 3 and Supplementary Figs. 4–6). The estimated proportion infected was at least 10 percentage points higher than the seroprevalence in six states in January 2021, and in 17 states in July 2021, although when averaged across the country (using state populations as weights), the difference was at most five percentage points (Fig. 3). Our results also show that the decreasing seroprevalence over time observed in some states was at least in part attributable to the assays used and corresponding waning rates (e.g., most of the states shown in Fig. 3). The resultant time series of proportion infected differed not only quantitatively but in some cases also qualitatively from the seroprevalence estimates (Fig. 3 and Supplementary Fig. 9).
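The country-wide average mentioned above is a population-weighted mean of the state values. A minimal sketch follows; the function name and the example numbers are hypothetical.

```python
def population_weighted_mean(values, populations):
    """Mean of state-level values, weighted by state population."""
    total_population = sum(populations)
    weighted_sum = sum(v * p for v, p in zip(values, populations))
    return weighted_sum / total_population


# Hypothetical example: two states with differences of 0.2 and 0.4
# (as proportions) and populations in a 1:3 ratio.
us_wide = population_weighted_mean([0.2, 0.4], [1_000_000, 3_000_000])
```

Because populous states dominate the weights, a large difference in a small state can coexist with a small country-wide difference.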

Fig. 3. Time series of survey seroprevalence and estimated proportions infected for seven example states and U.S.-wide.

Example time series of survey seroprevalence, fitted seroprevalence and estimated proportion infected for (a) Idaho, (b) Nebraska, (c) New Jersey, (d) New York, (e) Oklahoma, (f) Louisiana, (g) Georgia, and (h) the United States. For all of these states, seroprevalence was estimated primarily using the Abbott assay prior to September 2021 (see Supplementary Figs. 1 and 3), except for (c) New Jersey, for which the Roche assay was exclusively used. The proportion infected was estimated using the best waning logistic regression by LOO median RMSE (Fig. 2 and Table 1). U.S.-wide estimates were obtained by taking mean values per round weighted by state populations, and each round was plotted taking the mean week for that round across all states. Uncertainty envelopes around fits and estimated proportions infected include model uncertainty and uncertainty around the selection of times to seroreversion and lead or lag between seroprevalence and reported cases (see Methods). The U.S.-wide ribbon does not include model uncertainty. Vertical dotted lines indicate the start of the vaccination campaigns. See Supplementary Fig. 9 for time series for all states included in the model.

To highlight the influence of choice of assays on seroprevalence estimates, we compare the seroprevalence estimated in New York and New Jersey. Both states experienced qualitatively similar outbreak dynamics according to reported cases and deaths, yet the surveys produced very different seroprevalence estimates (both in their absolute values, and in particular their evolution over time; see Fig. 3). The maximum difference in their seroprevalence was 19 percentage points (13% in New York, 32% in New Jersey in May 2021). Seroprevalence in New York exhibited a conspicuous drop between October and November, a drop that was not observed in neighboring New Jersey. Our results show that the drop in New York can at least in part be explained by changes in the assays used to produce the New York seroprevalence estimates: a switch from the Roche assay to a mix of the Ortho and Abbott assays in October 2020, followed by exclusive use of the Abbott assay by January 2021. However, sampling for the study was also changed in November 2020 to include a larger proportion of specimens from outside the New York City metropolitan area, which had experienced the largest spike in early cases. Estimates for New Jersey were obtained exclusively using the Roche assay. Accounting for this difference produces estimates of proportion infected that are more similar in magnitude and in trend across the two states. For instance, the maximum difference between the two states after adjusting for assay use is less than six percentage points, in line with the maximum difference reported in seroprevalence after July 2021 (when both used the Roche assay) of just under four percentage points.

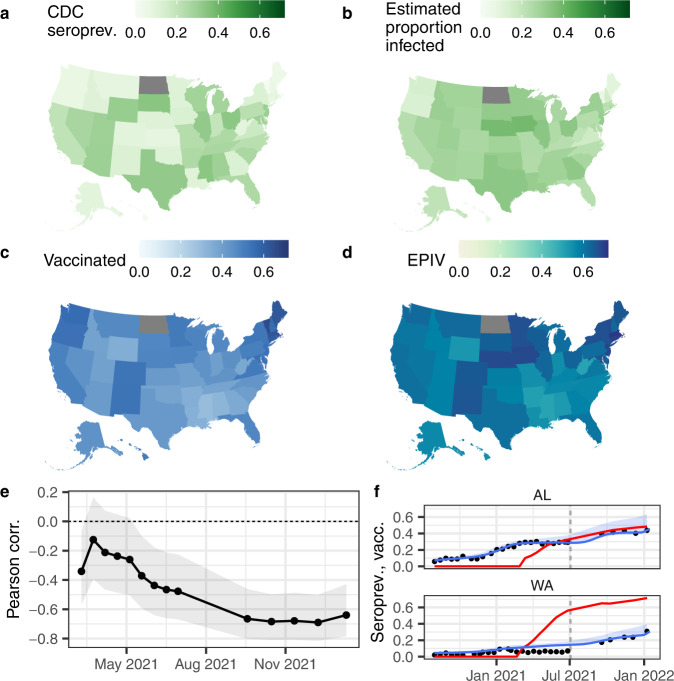

Spatial heterogeneity in infection-induced seroprevalence, estimated proportion infected, and vaccination coverage

Some of the observed spatial heterogeneity in seroprevalence (Fig. 4a) was a result of the use of different assays and their associated waning rates (Supplementary Figs. 3–6). For example, by July 2021 (round 24, the last round prior to the Roche assay being used exclusively), the standard deviation in percentage seroprevalence across states was 10% (Fig. 4a), while after correcting for assay use and waning (Fig. 4b), it was 7.2% (also see Supplementary Fig. 10). We also found that as vaccine distribution increased in 2021, states with higher vaccine coverage were associated with lower estimates of the proportion infected (Fig. 4e). We combined the estimates of the proportion infected from our best waning model with the vaccination coverage (thus including seropositives from both natural infection and/or vaccination, which we henceforth refer to as the estimated proportion infected and/or vaccinated, or EPIV), by assuming that the probabilities of being infected and vaccinated with a complete series are independent. The differences between states were further reduced when considering EPIV; in July 2021, the standard deviation in the EPIV was 5.6% (Fig. 4, comparing maps b and d; Supplementary Fig. 10). A comparison of time series in individual states (Fig. 4f) illustrates the relationship between vaccine coverage and the estimated proportion infected over time. Of note, Washington, a state with low estimated proportion infected pre-vaccination and higher vaccination coverage maintained a low proportion infected post-vaccination, while Alabama had high estimated proportion infected pre-vaccination and achieved lower vaccine coverage.
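Under the independence assumption stated above, the proportion infected and/or vaccinated is the complement of the probability of being neither infected nor vaccinated. The sketch below shows this standard calculation; the function name and example values are hypothetical, and the expression used in the paper is the one given in “Methods”.

```python
def epiv_independent(prop_infected, prop_vaccinated):
    """Proportion infected and/or vaccinated, assuming independence.

    P(infected or vaccinated) = 1 - P(not infected) * P(not vaccinated),
    which equals I + V - I * V.
    """
    return 1.0 - (1.0 - prop_infected) * (1.0 - prop_vaccinated)


# Hypothetical state: 30% estimated infected, 50% fully vaccinated.
epiv = epiv_independent(0.30, 0.50)  # 0.65 up to floating-point rounding
```

Because the overlap term grows with both inputs, states with a high proportion infected and low coverage can end up with an EPIV close to that of states with the opposite profile, which is consistent with the reduced between-state spread reported above.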

Fig. 4. Spatial patterns and correlations.

Spatial variation in July 2021 (round 24) in (a) CDC nationwide serosurvey seroprevalence, (b) estimated proportion infected, (c) proportion of the population with a complete series of a vaccine, (d) estimated proportion infected and/or vaccinated (EPIV; assuming independent probabilities of having had a natural infection and being vaccinated), (e) round-by-round Pearson correlation between the proportion of the population vaccinated and the estimated proportion infected (shaded areas show the 95% uncertainty intervals for a two-sided test), and (f) example time series of seroprevalence estimates (black points), estimated proportion infected (blue lines; shaded areas show the uncertainty envelopes due to the uncertainty in the model fit and in the selection of times to seroreversion and lead or lag between seroprevalence and reported cases), and proportion of the population vaccinated with a full series (red lines) for two states. a–d show maps for July 2021 (round 24); its point in time is shown in (f) as vertical gray dashed lines. In (e), a negative correlation means that states with a higher vaccination coverage tended to be those with lower proportion infected. See Supplementary Fig. 9 for time series like those in (f) for all states.

Pre-infection vaccine coverage

The greatest public health benefit of vaccines is likely achieved when administered to individuals prior to infection. Maximizing the vaccine coverage of individuals pre-infection would have required both limiting, to the extent possible, transmission (e.g., by implementing non-pharmaceutical interventions), and an effective vaccination campaign. Our reconstructions of the proportion infected show that the degree to which transmission was constrained in the United States varied across states and over time (Fig. 3 and Supplementary Fig. 9), by more accurately showing when the cumulative proportion infected remained flat. Furthermore, although vaccination campaigns started almost simultaneously across the country, differences across states in vaccination rates and coverage quickly emerged (Supplementary Figs. 5 and 6). To give insight on the coverage and speed of the vaccination campaign relative to the speed at which cases increased, we estimated the proportion of the total population that was vaccinated with a complete series of doses before being infected, assuming that vaccinations were distributed independently of prior infection status. The proportion of the whole population who were vaccinated and not previously infected ranged from 6% in Utah to 15% in Alaska in mid-March 2021, before widespread availability of vaccination to individuals over ages 65 years, and from 21% in Idaho to 42% in Vermont by mid-January 2022 (Supplementary Fig. 11).
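One way to compute such a quantity, sketched below, is to multiply each period's newly vaccinated proportion by the proportion of the population not yet infected at that time, and then sum over periods. This is an illustrative reading of the independence assumption stated above, not necessarily the exact procedure used; the function name and the example series are hypothetical.

```python
def vaccinated_before_infection(cum_vaccinated, cum_infected):
    """Proportion of the population vaccinated while still uninfected.

    cum_vaccinated and cum_infected are equally spaced time series of
    cumulative proportions of the total population. Vaccination is assumed
    to be independent of prior infection status, so a fraction
    (1 - cum_infected[t]) of each period's new vaccinees is uninfected.
    """
    total = 0.0
    previous = 0.0
    for vaccinated, infected in zip(cum_vaccinated, cum_infected):
        newly_vaccinated = vaccinated - previous
        total += newly_vaccinated * (1.0 - infected)
        previous = vaccinated
    return total


# Hypothetical three-period example.
cum_vaccinated = [0.0, 0.1, 0.3]
cum_infected = [0.1, 0.2, 0.25]
pre_infection_coverage = vaccinated_before_infection(cum_vaccinated,
                                                     cum_infected)
# 0.1 * 0.8 + 0.2 * 0.75 = 0.23 (up to floating-point rounding)
```

The result is always at most the final vaccination coverage, and it is larger when vaccination proceeds quickly relative to the accumulation of infections.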

Comparison with an independent dataset

Finally, we compared estimates produced by our models with an independent dataset, the nationwide blood donor serosurvey19. Infection-induced seroprevalence estimates from the two sets of surveys are clearly correlated (Pearson and Spearman correlations of 0.85), albeit with substantial variation (Supplementary Fig. 12), while our estimated proportion infected was also highly correlated to the blood donor serosurvey estimates (Pearson and Spearman correlations of 0.94 and 0.93, respectively), although our estimates tended to be higher.
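For readers who wish to reproduce this kind of comparison on their own paired estimates, the sketch below computes both coefficients with the Python standard library only. Spearman correlation is computed as the Pearson correlation of average ranks. The data are hypothetical and only illustrate the difference between the two measures.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while (end + 1 < len(order)
               and values[order[end + 1]] == values[order[pos]]):
            end += 1
        shared_rank = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            ranks[k] = shared_rank
        pos = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation (Pearson correlation of the ranks)."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paired estimates with a monotonic but nonlinear relationship.
survey_a = [1.0, 2.0, 3.0, 4.0]
survey_b = [1.0, 4.0, 9.0, 16.0]
r_pearson = pearson(survey_a, survey_b)
r_spearman = spearman(survey_a, survey_b)
```

In this example the rank correlation is perfect while the Pearson correlation is slightly below one, because the relationship is monotonic but not linear.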

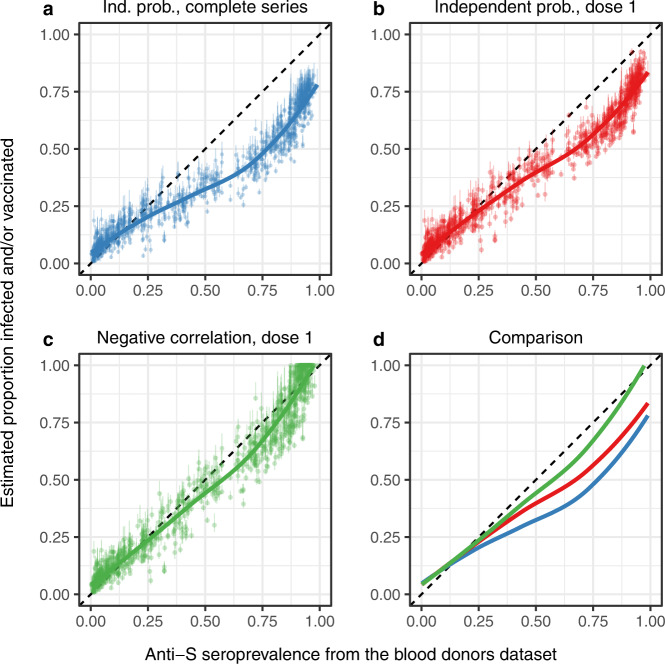

The blood donor surveys included both anti-N and anti-S assays, allowing estimates of seropositivity from both natural infection and vaccination, respectively19. Our EPIV values were substantially lower than the anti-S seroprevalence estimated in the blood donor survey (Fig. 5), especially after vaccinations started. Including individuals with at least one vaccine dose in the EPIV brought our values closer to the blood donor survey estimates. When assuming a perfect negative correlation between having one or more doses of a vaccine and having ever tested positive due to an infection (adding the two proportions, thus constituting an upper limit), our EPIV were comparable to those of the blood donor surveys. It is, however, also important to note that differences between our EPIV estimates and the blood donor surveys could also be attributable in part to the uncertainty around the time to seroreversion of, particularly, the Roche assay (Figs. 2 and 5).
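The two bounding assumptions can be compared directly. In the sketch below, the independent case is the complement of being neither infected nor vaccinated, and the perfectly negatively correlated case simply adds the two proportions. Capping the sum at 1 is an assumption added here so that the result remains a valid proportion; function names and example values are hypothetical.

```python
def epiv_independent(prop_infected, prop_vaccinated):
    """EPIV when infection and vaccination are independent."""
    return 1.0 - (1.0 - prop_infected) * (1.0 - prop_vaccinated)


def epiv_upper_bound(prop_infected, prop_vaccinated):
    """EPIV when the infected and vaccinated groups do not overlap.

    The sum is capped at 1 (an assumption of this sketch).
    """
    return min(1.0, prop_infected + prop_vaccinated)


# Hypothetical state: 30% estimated infected, 50% with at least one dose.
independent = epiv_independent(0.30, 0.50)
upper = epiv_upper_bound(0.30, 0.50)
gap_pp = 100.0 * (upper - independent)  # percentage points between the two
```

The gap between the two values equals the overlap term (the product of the two proportions) whenever the cap is not reached, so it is largest when both the proportion infected and the coverage are high.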

Fig. 5. Comparison of our estimates of proportions infected and/or vaccinated with the blood donors anti-S seroprevalence estimates.

Comparing estimated proportions infected and/or vaccinated (EPIV) using the best waning logistic regression (Table 1) with estimated anti-S seroprevalence from blood donor samples. The seroprevalence from the blood donor samples includes both infected and vaccinated individuals, and our EPIV combines estimated proportion infected (adjusting for assay use and waning) with the proportion vaccinated. Thick lines are LOESS fits to the data points shown, and thin vertical lines show uncertainty in EPIV values. That uncertainty comes from our estimated proportions infected, due to the uncertainty in the model fit and in the selection of times to seroreversion and lead or lag between seroprevalence and reported cases (see “Methods”). The blue line and points (a) assume that the probability of infection and being vaccinated (with two doses) are independent (Eq. (1)), red lines and points (b) use the proportion vaccinated with at least one dose (Eq. (1)), while the green line and points use the proportion vaccinated with at least one dose, and assume a perfect negative correlation between vaccination coverage and estimated proportion infected (c; Eq. (2)). d compares the LOESS fits for (a–c). See Supplementary Fig. 13 for comparisons of time series of these quantities per state.

Discussion

Our results show that heterogeneous spatio-temporal patterns in seroprevalence are in part explained by which assays were used in the surveys: seroprevalence was lower in states that made greater use of the Abbott assay, underlining variation in the ability to detect infection and waning rates across assays, and possibly indicative of the different detection thresholds that define seropositivity used by each assay. The times to seroreversion were likely distinctly shorter for the Abbott assay than for the Ortho or Roche assays, a difference supported by other literature (e.g. ref. 28) and likely related to characteristics of the assay target. Accounting for the assays used reduces differences across states in seroprevalence and suggests a more homogeneous impact of the pandemic across the United States than would otherwise be surmised based on the surveys alone. The estimated proportions infected were negatively correlated with vaccination rates across states.

Longitudinal studies quantifying within-individual antibody kinetics have also previously shown (albeit with relatively small sample sizes) how antibody levels and waning rates can markedly vary depending on the assay used and on disease severity (refs. 28, 32, but also see ref. 33). Peluso et al.28 found similar mean times to seroreversion for the Abbott assay (23 and 33 weeks for non-hospitalized and hospitalized individuals, respectively), compared to 19 weeks in our best waning model, and 39 and 79 weeks for the Ortho assay compared to 10 weeks in our results (although by AIC and RMSE there was no evidence of waning; note that the Ortho assay was phased out by January 2021), while they found no evidence of waning for the Roche assay, and neither did we (although our point estimate was 91 weeks), albeit with significant uncertainty around our estimate. Stone et al.32 also reported distinctly faster waning rates in the Abbott assay. Moreover, even after accounting for differential waning between assays, Abbott assay use was associated with lower seroprevalence, possibly suggesting lower sensitivity to recent infections, although this finding was not consistent with Peluso et al.28, who found similar sensitivities to recent infections across all three assays.

Previous studies have also leveraged individual-level immune dynamics to produce corrected seroprevalence estimates. For instance, using time series of reported cases, deaths, or hospitalizations, Takahashi et al.31 used time-varying assay sensitivities (and their variation with disease severity, estimated in individual-level data) to produce adjusted estimates of seroprevalence across five locations. Where they incorporated individual-level data into their methodology, we recovered individual-level patterns across a large population.

The spatial heterogeneity observed in the nationwide serosurveys was to an extent attributable to assays used and waning; variation across states in the estimated proportion infected was distinctly lower. Nevertheless, the vaccination campaign started at a point in time when the estimated proportion infected still differed by >36 percentage points across states, and this maximum range grew to >39 percentage points by January 2022. This means that vaccination campaigns started on a relatively heterogeneous landscape of immunity and that heterogeneity increased with vaccinations and subsequent infections. Uptake of vaccines also varied across states, with the proportions of the population vaccinated differing across states by as much as 30 percentage points by January 2022. Vaccination coverage was negatively correlated with the estimated proportion infected, a finding corroborated in a comparison with an independent dataset, the nationwide blood donor serosurvey. This negative correlation implies that differences among states in the proportions of state populations that have experienced an immune response (whether by infection or vaccination) are lower than expected based on vaccination coverage or the proportion infected alone. However, the negative correlation also suggests that the composition of the source of immunity (from either infection or vaccination) is likely heterogeneous across states. Consequently, were immune protection from infections and vaccines found to differ systematically (and there are indications that this may indeed be the case, e.g. refs. 34, 35), the result of future waves of the pandemic may also be expected to be spatially heterogeneous. We also presented a metric meant to capture the rates at which states delivered vaccines in relation to the rate at which cases accrued.
Reconstruction of the dynamics of cumulative infections allows for greater investigation of heterogeneity between locales that might be used to guide future public health responses.

The differences in our estimates of the proportion infected and estimated proportion infected and/or vaccinated (estimates without and with vaccination, while accounting for assay use and waning) with the blood donors surveys could in part be attributed to likely differences in the biases in the sampling inherent to the two surveys. The nationwide serosurveys that form the basis of our estimates use samples from individuals seeking medical care for reasons unrelated to COVID-19. On the other hand, people who donate blood may differ from the overall population in important ways; for instance, blood donors are more likely to be healthy, non-pregnant adults, certain groups (e.g., younger age categories) may be systematically underrepresented, and for example, their vaccination uptake might be systematically higher (e.g. ref. 36). The assays used were also not the same across the two sets of serosurveys. Nonetheless, the comparison supports the negative correlation between proportion infected and vaccination coverage.

A number of caveats should be taken into consideration when interpreting our results. As noted above, our results are based on serosurveys using a convenience sample of individuals who sought health care for reasons other than COVID-19; this sample could deviate from the wider population in important ways and not be representative. For example, this group may have experienced different rates of severe illness upon infection with SARS-CoV-2, an important determinant of immune response, than the general population (refs. 24, 28, 31) and may have systematically different healthcare seeking behavior. Furthermore, biases in the sampling could also vary over time and across states, for instance as a function of the numbers of cases and underlying demographics. Rates of seroconversion and reversion might also be different pre- and post-vaccination (e.g. refs. 34, 35, 37). We use numbers of tests that were positive and negative in the models, meaning that we do not make explicit adjustments for race, ethnicity, age, or sex, although these factors are, to an extent, captured in the model with state-specific intercepts. Finally, to calculate the estimated proportion infected and/or vaccinated, we assumed that the probabilities of being infected and vaccinated were independent. However, vaccination may be associated with prior infection and the comparison to blood donor seroprevalence suggests that vaccination may be negatively correlated with the probability of prior infection.

The lags between seroconversion and a case being reported were, a priori, an important parameter to consider in accounting for potential systematic shifts in the time series of seroprevalence and reporting of cases and deaths. However, this parameter should be interpreted with caution. Serosurveys were conducted roughly every 2–4 weeks, and they reported time windows (that can be 2–4 weeks long) over which the surveys were performed; we here use the midpoints of the windows. Reporting delays might also be expected to vary over time. Nevertheless, our results do not provide strong evidence for a specific lead or lag, and this is reflected in the uncertainty estimates we provide.

Our model assumes a constant relationship between infections and reported cases over time. This assumption will be increasingly challenged as the pandemic progresses, particularly beyond the time-frame considered here. The rising probability of reinfections and breakthrough cases, as well as the increasing reliance on at-home testing, would likely introduce biases into our estimates of numbers of infections. For example, if increasing numbers of cases reported were to be reinfections, then our approach would overestimate the estimated numbers of infections. Conversely, if fewer cases were to be reported due to at-home testing, then our model would produce underestimates. Furthermore, waning rates, which in our model are assumed to be constant over time, might vary as a result of prior infections and/or vaccinations. All these factors act concurrently, and understanding what the overall bias introduced would be and disentangling their effect on our estimates is a challenge that would require a change to our approach.

Serosurveys will continue to be critical tools to understand determinants and predictors of infection, reinfection, duration of protection, antigen-specific protection to SARS-CoV-2 variants, and the underlying determinants of burden (e.g., the infection fatality ratio). Given the changing relationship between reported cases and infections due to reinfections and breakthrough cases and the increasing availability of at-home testing, statistical and mechanistic approaches to analyzing serosurvey data will become more important. Our results identifying signals of waning and the correlation between vaccination and prior infection suggest that large scale, aggregate datasets like the U.S. serosurveys may yield useful inferences on the relationships between serological responses, protection, and reinfection. However, further work will be needed to interpret serology as seropositivity saturates in the population and more individuals experience multiple immunizing events (i.e., re-infection, vaccine boosts).

Methods

In this study we aimed to explain spatio-temporal variation in seroprevalence using logistic regressions. We included as covariates the variable use of different assays across time and space and assessed the evidence to support differential waning of seropositivity across assays. The correlations between the covariates used in the models are shown in Supplementary Fig. 14.

Data

The serosurveys, conducted by the CDC and commercial laboratories, included samples obtained for reasons unrelated to COVID-19. Nationwide seroprevalence studies using available serum specimens (henceforth referred to as “nationwide serosurveys”) were conducted from July 2020, with the aim of estimating seroprevalence from infection per state approximately every 2–4 weeks (ref. 8). The surveys are ongoing, but we here analyze surveys up to January 2022 (round 29). Three immunoassays were used: the Roche Elecsys Anti-SARS-CoV-2 pan-immunoglobulin immunoassay that targets the nucleocapsid protein (henceforth referred to as “Roche”), the Abbott ARCHITECT SARS-CoV-2 IgG immunoassay targeting the nucleocapsid protein (henceforth referred to as “Abbott”), and the Ortho-Clinical Diagnostics VITROS SARS-CoV-2 IgG immunoassay targeting the spike protein (henceforth referred to as “Ortho”). Further details about the laboratory methods, including assay sensitivity and specificity, can be found in section “Laboratory methods” in SI. As all vaccines available in the United States generate antibodies to the spike protein only (anti-S), serosurveys conducted following the widespread availability of vaccines used exclusively anti-N assays. The Ortho assay measured anti-S antibodies, but its use was phased out in the states analyzed here by the end of January 2021, prior to the start of widespread vaccination campaigns. The nationwide serosurvey data were downloaded from the CDC (https://data.cdc.gov/Laboratory-Surveillance/Nationwide-Commercial-Laboratory-Seroprevalence-Su/d2tw-32xv). We assumed the surveys for each round took place on the middle date of the range given. We then used the number of positive and negative tests produced in each survey as our outcome variable. Because there were few completed survey rounds for North Dakota, it was excluded from analyses.

County-level daily laboratory-confirmed COVID-19 cases and deaths in the counties of the United States were downloaded from USAFacts (https://usafacts.org/visualizations/coronavirus-covid-19-spread-map/) on March 2, 2022. After aggregating the numbers of cases and deaths per state, and differencing the cumulative curves to obtain numbers of cases and deaths per day, we found negative values of both reported deaths and cases. If the negative value was immediately followed by the same (positive) value, those counts were canceled out. Otherwise, the negative total was discounted from previous days’ totals. We then aggregated numbers by week, recalculated cumulative numbers, and divided them by the respective state populations to produce cumulative percentages of the population that were reported as COVID-19 cases and deaths, for each nationwide serosurvey round. These data did not include Puerto Rico, so Puerto Rico is not included in our analyses.
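The handling of negative daily counts described above can be illustrated with a minimal sketch. The function names are hypothetical, and the order in which a negative total is discounted from previous days (most recent day first) is an assumption, since the text does not specify it.

```python
from typing import List


def daily_from_cumulative(cumulative: List[int]) -> List[int]:
    """Difference a cumulative series into daily counts (first day kept as-is)."""
    return [cumulative[0]] + [b - a for a, b in zip(cumulative, cumulative[1:])]


def remove_negative_counts(daily: List[int]) -> List[int]:
    """Return a copy of `daily` in which negative corrections have been absorbed."""
    cleaned = list(daily)
    for i in range(len(cleaned)):
        value = cleaned[i]
        if value >= 0:
            continue
        # Rule 1: a negative value immediately followed by the same positive
        # value is treated as a reporting artifact and both are canceled out.
        if i + 1 < len(cleaned) and cleaned[i + 1] == -value:
            cleaned[i] = 0
            cleaned[i + 1] = 0
            continue
        # Rule 2 (assumed order): discount the deficit from previous days,
        # starting with the most recent one. Any deficit that exceeds all
        # earlier counts is dropped.
        deficit = -value
        cleaned[i] = 0
        j = i - 1
        while deficit > 0 and j >= 0:
            taken = min(cleaned[j], deficit)
            cleaned[j] -= taken
            deficit -= taken
            j -= 1
    return cleaned


if __name__ == "__main__":
    daily = daily_from_cumulative([5, 8, 6, 8, 12])
    print(daily)                          # raw differences, including a negative
    print(remove_negative_counts(daily))  # negative canceled by the next day
```

Under the second rule the total number of counts is preserved whenever earlier days hold enough counts to absorb the correction.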

Excess deaths data for each state were downloaded from the CDC (https://www.cdc.gov/nchs/nvss/vsrr/covid19/excess_deaths.htm). We kept the weighted data only (which attempts to correct for reporting delays). To estimate the number of (excess) deaths not attributable to COVID-19, we took the difference between excess deaths and reported deaths. We then calculated this number as a percentage of the state population.

Laboratory testing (PCR) time series per state were downloaded from HealthData.gov (https://healthdata.gov/dataset/COVID-19-Diagnostic-Laboratory-Testing-PCR-Testing/j8mb-icvb), and from these we estimated the cumulative number of tests performed relative to each state’s population up to each serosurvey round.

We used data on the distribution of assays used in each serosurvey round (ref. 38). The information provided included the number of tests, for each survey round, that were performed with each of three different assays (Abbott ARCHITECT IgG anti-N, Ortho VITROS IgG anti-S, and Roche Elecsys Total Ig anti-N). From September 2021 (round 25) onwards, states switched to exclusively using the Roche Elecsys assay.

COVID-19 vaccination data were downloaded from the CDC (https://data.cdc.gov/Vaccinations/COVID-19-Vaccinations-in-the-United-States-Jurisdi/unsk-b7fc). We produced percentages of the populations that had been vaccinated with at least one dose of a vaccine, or with a complete series of the vaccine (individuals with a second dose of a two-dose vaccine or one dose of a single-dose vaccine) at each point in time per state. For a couple of states (e.g., Kentucky and West Virginia), vaccination coverage is not a monotonically increasing function of time. However, it is unclear from the data documentation what the reason for this pattern may be.

We downloaded data on COVID-19 hospitalizations from HealthData.gov (https://healthdata.gov/Hospital/COVID-19-Reported-Patient-Impact-and-Hospital-Capa/g62h-syeh). We calculated the cumulative total number of confirmed adult hospital admissions, and then obtained the percentage of the cumulative number of cases that had been hospitalized, per state.

The proportion of COVID-19 cases reported in different age categories was estimated using the CDC restricted access case surveillance line-list data (https://data.cdc.gov/Case-Surveillance/COVID-19-Case-Surveillance-Restricted-Access-Detai/mbd7-r32t). The reporting times in the CDC line list data are not expected to match those from USAFacts.gov. We assumed that the proportions of total cases being reported in each of the age categories was unlikely to undergo very rapid changes over time, so we estimated these proportions based on 5-week rolling means of the cumulative number of total cases and cases reported in each age category.

We compared our estimates of proportions infected with a separate serosurvey conducted by the CDC: the nationwide blood donor serosurvey (https://covid.cdc.gov/covid-data-tracker/#nationwide-blood-donor-seroprevalence). The survey estimates the proportion of the population with antibodies against SARS-CoV-2 (both anti-N and anti-S Ig), for which they used the Roche Elecsys Total Ig and Ortho VITROS Total Ig assays. Multiple estimates were provided for different parts of some states; we took the mean seroprevalence weighted by the number of tests to get a single estimate by state. Surveys were not necessarily performed in the same weeks as the nationwide serosurveys. To maximize the data used when comparing the two datasets, if surveys in the two datasets were performed 1 week before or after the other, the two values were still matched.
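A minimal sketch of the two steps described above (a test-weighted state mean and matching of surveys within one week) follows. The function names and data layout are hypothetical, and the preference for an exact-week match, then the week before, then the week after, is an assumption.

```python
from typing import Dict, List, Optional, Tuple


def weighted_seroprevalence(estimates: List[Tuple[float, int]]) -> float:
    """Mean seroprevalence across sub-state regions, weighted by number of tests.

    `estimates` holds (seroprevalence, number_of_tests) pairs for one state.
    """
    total_tests = sum(n for _, n in estimates)
    return sum(p * n for p, n in estimates) / total_tests


def match_week(target_week: int, other: Dict[int, float]) -> Optional[float]:
    """Return the value in `other` for the same week, else one week before or after."""
    for offset in (0, -1, 1):  # assumed order of preference
        if target_week + offset in other:
            return other[target_week + offset]
    return None


if __name__ == "__main__":
    # Two regions of one state: 10% from 100 tests and 20% from 300 tests.
    print(weighted_seroprevalence([(10.0, 100), (20.0, 300)]))
    # Blood donor estimate available only for week 11, nationwide survey in week 10.
    print(match_week(10, {11: 18.2}))
```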

We square-root-transformed the cumulative percentages of the populations reported as cases and deaths, and the percentages of the populations hospitalized, because their distributions were heavily skewed and are expected to be the result of a multiplicative process. For similar reasons, we natural-log-transformed the percentage of state populations that were PCR tested. Models with these transformations performed better across model metrics used in this study than models without transformations.

Models

We fit logistic regressions using function “svyglm” in package “survey” v4.1.1. In all models, the number of positives out of the total number of tests was the response variable. The “reference model” included an interaction between week and state (which aimed to capture changes in the percentages of COVID-19 infections reported as cases over time and across states); the square-root-transformed cumulative percentages of the state populations reported as a case and as a death; the percentage of the population reported as excess (unaccounted for) deaths; the natural-log-transformed percentage of the population that had been tested (PCR); the cumulative percentage of the population that had been vaccinated; the square-root-transformed cumulative percentage of cases that had been hospitalized; the percentage of survey tests that utilized the Abbott and Roche assays (as two separate variables; we did not include a covariate for Ortho use because its inclusion would have been redundant, given that percentages across the three assays always add to 100); and the percentage of cases being reported for different age categories (we did not include the >70 years category, as its inclusion would have been redundant given that percentages across age categories add to 100). Of a priori primary concern were the cumulative numbers of reported cases and deaths (as they would likely play an important part in explaining patterns in seroprevalence), but we added the other variables as we assumed they might be important to control for. We weighted the model to account for the different proportions of the state populations that were tested in the nationwide serosurveys by using the inverse of the sampling proportion.
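As a reading aid, the reference model can be written schematically as below. The notation is an assumption introduced here and does not come from the original text: $y_{s,r}$ and $n_{s,r}$ are the positive and total tests in state $s$ and round $r$, $w_r$ is the week of round $r$, $x_{k,s,r}$ are the transformed covariates listed above, and the state-specific intercepts $\alpha_s$ and slopes $\beta_s$ stand for the week-by-state interaction. The binomial form is a simplification; the exact family passed to “svyglm” is not stated.

```latex
y_{s,r} \sim \mathrm{Binomial}\left(n_{s,r},\, p_{s,r}\right),
\qquad
\mathrm{logit}\left(p_{s,r}\right) = \alpha_s + \beta_s\, w_r + \sum_{k} \gamma_k\, x_{k,s,r}
```

In this sketch each observation would carry a weight equal to the inverse of the sampling proportion, as described above.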

While accounting for the seroprevalence associated with the use of different assays, the reference model above does not explicitly account for the waning in antibodies over time as quantified by each of the assays. As a point of comparison, we separately fit a suite of logistic regressions (henceforth, “waning models”) based on the reference model above, but which assumed a range of different antibody waning rates per assay. We proceed with the following strategy. The time series of the numbers of cases in a location is a (monotonically) increasing function of time, while the time series for seroprevalence need not be (if, for instance, antibodies did wane below detectability). We therefore “adjust” the reported cases to incorporate waning following a series of assumptions.

To adjust cases, we multiply each reported case by a step function produced using two parameters: (1) a lead or lag between a case seroconverting and it being reported; and (2) a limited time during which that case would test positive before seroreverting (Fig. 2 and Supplementary Fig. 15). This produces an alternative “adjusted” time series of reported cases. Furthermore, we could hypothesize that different assays have potentially different times to seroreversion, and thus use multiple step functions to produce these adjusted time series of cases, one for each assay. We produce adjusted numbers of reported cases based on the proportions of each assay used to produce each seroprevalence estimate. We then fit different logistic regressions for all combinations of the three step functions (or waning rates). An improvement in model fit over the reference model provides evidence for the input waning rates. In this way, the impact assays have on seroprevalence is split into two components: the temporal waning rate, and the average seroprevalence associated with each assay, after accounting for waning, which could be interpreted as a proxy for assay sensitivity for recent infections. Note that while the Roche assay has been used through all survey rounds, the Abbott assay was used until July 2021 (round 24), and the Ortho assay was used until January 2021 (round 13). This means the maximum times to seroreversion we can explore for the Abbott and Ortho assays are 70 and 49 weeks, respectively, relative to the start of the pandemic.
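The adjustment can be sketched as follows, under stated assumptions: time is measured in whole weeks, a case reported in week u is seropositive from week u + shift for a fixed number of weeks, and assay use is expressed as proportions between 0 and 1. All function and parameter names are hypothetical.

```python
from typing import Dict, List


def adjusted_cases(new_cases: List[float], shift: int, duration: int) -> List[float]:
    """Reported cases expected to be seropositive in each week (step-function waning).

    A case reported in week u counts from week u + shift (a negative shift
    means seroconversion precedes reporting) for `duration` weeks, after
    which it is assumed to have seroreverted.
    """
    n_weeks = len(new_cases)
    adjusted = [0.0] * n_weeks
    for u, count in enumerate(new_cases):
        start = u + shift
        for t in range(max(start, 0), min(start + duration, n_weeks)):
            adjusted[t] += count
    return adjusted


def assay_weighted_cases(
    new_cases: List[float],
    shift: int,
    durations: Dict[str, int],
    assay_share: List[Dict[str, float]],
) -> List[float]:
    """Combine per-assay adjusted series using the share of each assay per week."""
    per_assay = {a: adjusted_cases(new_cases, shift, d) for a, d in durations.items()}
    return [
        sum(share.get(a, 0.0) * per_assay[a][t] for a in durations)
        for t, share in enumerate(assay_share)
    ]


if __name__ == "__main__":
    weekly = [1.0, 2.0, 3.0, 4.0]
    print(adjusted_cases(weekly, shift=0, duration=2))  # cases wane after 2 weeks
    print(adjusted_cases(weekly, shift=0, duration=4))  # no waning within the window
    shares = [{"Roche": 0.5, "Abbott": 0.5}] * 4
    print(assay_weighted_cases(weekly, 0, {"Roche": 4, "Abbott": 2}, shares))
```

When the duration exceeds the length of the series, the adjusted series equals the ordinary cumulative case count, which corresponds to no detectable waning.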

We evaluated models using the Akaike Information Criterion (AIC), root-mean-squared-error (RMSE), and a leave-one-out (LOO) median RMSE. RMSE values were estimated by comparing model predictions on the response scale with nationwide serosurvey estimates. For the LOO RMSE, each round of the surveys was left out in turn, the model fit to the remaining rounds and used to predict the round left out. We then estimated the median RMSE from the predictions of the rounds left out. We estimate the proportion infected by taking the best waning model, and replacing the adjusted numbers of cases (with which the model was originally fit) with the original cumulative numbers of cases, and assuming only the Roche Elecsys assay (associated with the highest seroprevalence estimates) is used.
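The leave-one-out procedure can be sketched generically. In the sketch below, `fit_ratio` is a hypothetical stand-in for the weighted logistic regression (it fits a line through the origin), used only so that the example is self-contained; the data layout is likewise an assumption.

```python
import math
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

Observation = Tuple[float, float]  # (predictor, observed seroprevalence)
Model = Callable[[float], float]


def rmse(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-squared error on the response scale."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


def fit_ratio(train: List[Observation]) -> Model:
    """Stand-in model: least-squares line through the origin."""
    slope = sum(x * y for x, y in train) / sum(x * x for x, _ in train)
    return lambda x: slope * x


def loo_median_rmse(
    rounds: Dict[int, List[Observation]],
    fit: Callable[[List[Observation]], Model],
) -> float:
    """Leave each survey round out in turn, refit, and take the median RMSE."""
    errors = []
    for held_out, test in rounds.items():
        train = [obs for r, data in rounds.items() if r != held_out for obs in data]
        predict = fit(train)
        errors.append(rmse([predict(x) for x, _ in test], [y for _, y in test]))
    return statistics.median(errors)


if __name__ == "__main__":
    rounds = {1: [(1.0, 2.0), (2.0, 4.0)], 2: [(3.0, 6.0)], 3: [(4.0, 9.0)]}
    print(loo_median_rmse(rounds, fit_ratio))
```

Taking the median rather than the mean across held-out rounds limits the influence of any single round that is predicted poorly.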

Uncertainty around our estimated proportions infected can come from both the individual model fit, and from the search for times to seroreversion and lead or lag between seroprevalence and reported cases. To characterize the uncertainty, we used an ad-hoc approach in which we took the best (bottom) five percentile LOO median RMSEs across parameter combinations (times to seroreversion and lead or lag), estimated the proportion infected for the corresponding subset of models to include the 95% uncertainty intervals (UIs) around each model fit, and extracted the range of estimates for each point in time and state (including the 95% UIs). U.S.-wide uncertainty estimates do not include model uncertainty (which in any case was significantly smaller than that from selection of times to seroreversion and lead or lag), and were estimated by producing a mean seroprevalence and estimated proportion infected weighted by state populations, for each of the models in the best five percentile models, and then taking the range of values at each point in time.
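A minimal sketch of this ad-hoc procedure for a single state and point in time is given below. The names are hypothetical, and the rank-based cutoff used to select the best five percent of parameter combinations is an assumption, since the exact percentile definition is not given.

```python
from typing import Dict, List, Tuple


def best_models(scores: Dict[str, float], percent: int = 5) -> List[str]:
    """Names of the models in the lowest `percent` of LOO median RMSE values."""
    ranked = sorted(scores, key=lambda name: scores[name])
    keep = max(1, (len(ranked) * percent) // 100)  # always keep at least one model
    return ranked[:keep]


def uncertainty_range(intervals: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Envelope of the 95% uncertainty intervals of the selected models."""
    return min(lo for lo, _ in intervals), max(hi for _, hi in intervals)


if __name__ == "__main__":
    # 40 hypothetical parameter combinations scored by LOO median RMSE.
    scores = {f"m{i}": float(i) for i in range(40)}
    selected = best_models(scores)
    print(selected)
    # Hypothetical 95% UIs (in %) for the selected models at one state and week.
    intervals = {"m0": (10.0, 14.0), "m1": (11.0, 16.0)}
    print(uncertainty_range([intervals[name] for name in selected]))
```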

We combine the estimated proportion infected with vaccination coverage (what we refer to in the text as the “estimated proportion infected and/or vaccinated”, or EPIV) by making assumptions on the correlation between the two. We show results assuming an independent probability, such that the probability of being vaccinated has no bearing on the probability of having been infected, i.e.:

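$$\mathrm{EPIV} = P(\mathrm{inf}) + P(\mathrm{vacc}) - P(\mathrm{inf})\,P(\mathrm{vacc}) \tag{1}$$

where “inf” represents individuals estimated to have been infected, and “vacc” can either represent individuals with at least a single dose of a vaccine, or individuals with a complete series of the vaccine. We also show results assuming a perfect negative correlation, i.e.:

$$\mathrm{EPIV} = \min\left(1,\; P(\mathrm{inf}) + P(\mathrm{vacc})\right) \tag{2}$$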
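The two assumptions can be compared numerically with a short sketch. The function names are hypothetical and proportions are expressed between 0 and 1; the formulas are the standard probability of a union of two events under independence and under minimal overlap.

```python
def epiv_independent(infected: float, vaccinated: float) -> float:
    """Proportion infected and/or vaccinated if the two events are independent."""
    return infected + vaccinated - infected * vaccinated


def epiv_negative_correlation(infected: float, vaccinated: float) -> float:
    """Proportion infected and/or vaccinated if overlap is as small as possible."""
    return min(1.0, infected + vaccinated)


if __name__ == "__main__":
    infected, vaccinated = 0.4, 0.5
    print(epiv_independent(infected, vaccinated))
    print(epiv_negative_correlation(infected, vaccinated))
```

The perfect negative correlation case always gives a value at least as large as the independent case, so the two can be read as bracketing a plausible range when vaccination and prior infection are negatively associated.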

To understand variation in the extent to which naive (not yet infected or vaccinated) individuals had been prioritized by vaccination campaigns, we defined the following metric:

| 3 |

where s(t) is the EPIV (combined proportion infected and vaccination; see above) at time t, and v(t) is the vaccination coverage at time t. This metric estimates the proportion of the population that was vaccinated before being infected, assuming vaccinations were distributed independently of prior infection status.
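One way to write a metric matching this description is sketched below. It is an assumption offered for illustration, not necessarily the authors' exact expression. New vaccinations in a short interval, $\mathrm{d}v(t)$, go to the unvaccinated fraction $1 - v(t)$; if they are distributed independently of prior infection status, the share reaching naive individuals is $(1 - s(t))/(1 - v(t))$. Here $t_0$ (start of vaccination) and $T$ (end of the study period) are symbols introduced for this sketch.

```latex
\int_{t_0}^{T} \frac{1 - s(t)}{1 - v(t)}\; \frac{\mathrm{d}v(t)}{\mathrm{d}t}\; \mathrm{d}t
```

If the EPIV is computed under the independence assumption, $1 - s(t) = \left(1 - i(t)\right)\left(1 - v(t)\right)$ with $i(t)$ the estimated proportion infected, so the integrand reduces to $\left(1 - i(t)\right)\,\mathrm{d}v(t)/\mathrm{d}t$: vaccinations weighted by the proportion not yet infected at the time of delivery.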

We also repeated analyses allowing covariates to have non-parametrically non-linear relationships with seroprevalence by using splines. Predicted seroprevalence values from the logistic regressions using splines were very similar to those predicted without the splines (see section “Accounting for non-linear relationships” in SI).

R v4.2 (ref. 39) was used in all analyses.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Acknowledgements

We would like to thank Harrell Chesson, Kristie Clarke, Jefferson Jones, Augustine Rajakumar, Heather Scobie, Jerome Tokars, Ryan Wiegand, and three anonymous reviewers for helping improve the manuscript. B.G.-C., M.D.T.H., and D.A.T.C. were supported by the National Science Foundation (NSF) RAPID award 2223843. The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention. Use of trade names or commercial sources is for identification purposes only and does not imply endorsement by the U.S. Centers for Disease Control and Prevention.

Author contributions

B.G.-C., M.D.T.H., M.A.J., M.B., and D.A.T.C. designed the research; B.G.-C. analyzed data; B.G.-C., M.D.T.H., and D.A.T.C. wrote the first draft of the manuscript, and B.G.-C., M.D.T.H., M.A.J., M.B., R.B.S., J.M.H., J.L., T.Q., H.S., A.T.H., and D.A.T.C. reviewed and edited the manuscript.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Data availability

Data used to fit the logistic regressions and estimates of proportions of state populations infected by week are available online (ref. 40). Estimates are produced using the best waning model by leave-one-out median RMSE (see Table 1 and Fig. 2 and Supplementary Fig. 8). See section “Models” in “Methods” in the main text for how the lower and upper bounds we provide for these estimates are calculated. Data were obtained from various sources and subsequently merged to fit the models. The nationwide serosurvey data were downloaded from the CDC (https://data.cdc.gov/Laboratory-Surveillance/Nationwide-Commercial-Laboratory-Seroprevalence-Su/d2tw-32xv). County-level daily laboratory-confirmed COVID-19 cases and deaths in the counties of the United States were downloaded from USAFacts (https://usafacts.org/visualizations/coronavirus-covid-19-spread-map/). Excess deaths data for each state were downloaded from the CDC (https://www.cdc.gov/nchs/nvss/vsrr/covid19/excess_deaths.htm). Laboratory testing (PCR) time series per state were downloaded from HealthData.gov (https://healthdata.gov/dataset/COVID-19-Diagnostic-Laboratory-Testing-PCR-Testing/j8mb-icvb). Data on the distribution of assays used in each serosurvey round were taken from Wiegand et al. (ref. 38). COVID-19 vaccination data were downloaded from the CDC (https://data.cdc.gov/Vaccinations/COVID-19-Vaccinations-in-the-United-States-Jurisdi/unsk-b7fc). We downloaded data on COVID-19 hospitalizations from HealthData.gov (https://healthdata.gov/Hospital/COVID-19-Reported-Patient-Impact-and-Hospital-Capa/g62h-syeh). The proportion of COVID-19 cases reported in different age categories was estimated using the CDC restricted access case surveillance line-list data (https://data.cdc.gov/Case-Surveillance/COVID-19-Case-Surveillance-Restricted-Access-Detai/mbd7-r32t). The nationwide blood donor serosurvey dataset was downloaded from the CDC (https://covid.cdc.gov/covid-data-tracker/#nationwide-blood-donor-seroprevalence).

Code availability

The code used to run the models and produce the figures in the main text and the Supplementary Information, and the list of R packages used and their versions, are available online (ref. 40).

Competing interests

B.G.-C., M.D.T.H., and D.A.T.C. report a contract from Merck (to the University of Florida) for research unrelated to this manuscript. The remaining authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-023-37944-5.

References

- 1.Wu SL, et al. Substantial underestimation of SARS-CoV-2 infection in the United States. Nat. Commun. 2020;11:4507. doi: 10.1038/s41467-020-18272-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Chiu WA, Ndeffo-Mbah ML. Using test positivity and reported case rates to estimate state-level COVID-19 prevalence and seroprevalence in the United States. PLoS Comput. Biol. 2021;17:e1009374. doi: 10.1371/journal.pcbi.1009374. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Irons NJ, Raftery AE. Estimating SARS-CoV-2 infections from deaths, confirmed cases, tests, and random surveys. Proc. Natl Acad. Sci. USA. 2021;118:e2103272118. doi: 10.1073/pnas.2103272118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Lu FS, et al. Estimating the cumulative incidence of COVID-19 in the United States using influenza surveillance, virologic testing, and mortality data: four complementary approaches. PLoS Comput. Biol. 2021;17:e1008994. doi: 10.1371/journal.pcbi.1008994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Noh J, Danuser G. Estimation of the fraction of COVID-19 infected people in U.S. states and countries worldwide. PLoS ONE. 2021;16:e0246772. doi: 10.1371/journal.pone.0246772. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Pei S, Yamana TK, Kandula S, Galanti M, Shaman J. Burden and characteristics of COVID-19 in the United States during 2020. Nature. 2021;598:338–341. doi: 10.1038/s41586-021-03914-4. [DOI] [PubMed] [Google Scholar]

- 7.Sánchez-Romero M, di Lego V, Prskawetz A, Queiroz BL. An indirect method to monitor the fraction of people ever infected with COVID-19: an application to the United States. PLoS ONE. 2021;16:e0245845. doi: 10.1371/journal.pone.0245845. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bajema KL, et al. Estimated SARS-CoV-2 seroprevalence in the US as of September 2020. JAMA Intern. Med. 2021;181:450–460. doi: 10.1001/jamainternmed.2020.7976. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Clarke KE, et al. Seroprevalence of infection-induced SARS-CoV-2 antibodies—United States, September 2021–February 2022. Morb. Mortal. Wkly. Rep. 2022;71:606–608. doi: 10.15585/mmwr.mm7117e3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Havers FP, et al. Seroprevalence of antibodies to SARS-CoV-2 in 10 sites in the United States, March 23–May 12, 2020. JAMA Intern. Med. 2020;180:1576–1586. doi: 10.1001/jamainternmed.2020.4130. [DOI] [PubMed] [Google Scholar]

- 11.Naranbhai V, et al. High seroprevalence of anti-SARS-CoV-2 antibodies in Chelsea, Massachusetts. J. Infect. Dis. 2020;222:1955–1959. doi: 10.1093/infdis/jiaa579. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Anand S, et al. Prevalence of SARS-CoV-2 antibodies in a large nationwide sample of patients on dialysis in the USA: a cross-sectional study. Lancet. 2020;396:1335–1344. doi: 10.1016/S0140-6736(20)32009-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Menachemi N, et al. Population point prevalence of SARS-CoV-2 infection based on a statewide random sample—Indiana, April 25–29, 2020. Morb. Mortal. Wkly. Rep. 2020;69:960–964. doi: 10.15585/mmwr.mm6929e1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Venugopal U, et al. SARS-CoV-2 seroprevalence among health care workers in a New York City hospital: a cross-sectional analysis during the COVID-19 pandemic. Int. J. Infect. Dis. 2021;102:63–69. doi: 10.1016/j.ijid.2020.10.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Lamba K, et al. SARS-CoV-2 cumulative incidence and period seroprevalence: results from a statewide population-based serosurvey in California. Open Forum Infect. Dis. 2021;8:ofab379. doi: 10.1093/ofid/ofab379. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bruckner TA, et al. Estimated seroprevalence of SARS-CoV-2 antibodies among adults in Orange County, California. Sci. Rep. 2021;11:3081. doi: 10.1038/s41598-021-82662-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Kline D, et al. Estimating seroprevalence of SARS-CoV-2 in Ohio: a Bayesian multilevel poststratification approach with multiple diagnostic tests. Proc. Natl Acad. Sci. USA. 2021;118:e2023947118. doi: 10.1073/pnas.2023947118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Kalish H, et al. Undiagnosed SARS-CoV-2 seropositivity during the first 6 months of the COVID-19 pandemic in the United States. Sci. Transl. Med. 2021;13:eabh3826. doi: 10.1126/scitranslmed.abh3826. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Jones JM, et al. Estimated US infection- and vaccine-induced SARS-CoV-2 seroprevalence based on blood donations, July 2020–May 2021. JAMA. 2021;326:1400–1409. doi: 10.1001/jama.2021.15161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Sullivan PS, et al. Severe acute respiratory syndrome coronavirus 2 cumulative incidence, United States, August 2020–December 2020. Clin. Infect. Dis. 2022;74:1141–1150. doi: 10.1093/cid/ciab626. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Routledge I, et al. Using sero-epidemiology to monitor disparities in vaccination and infection with SARS-CoV-2. Nat. Commun. 2022;13:2451. doi: 10.1038/s41467-022-30051-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Chitwood MH, et al. Reconstructing the course of the COVID-19 epidemic over 2020 for US states and counties: results of a Bayesian evidence synthesis model. PLoS Comput. Biol. 2022;18:e1010465. doi: 10.1371/journal.pcbi.1010465. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Burgess S, Ponsford MJ, Gill D. Are we underestimating seroprevalence of SARS-CoV-2? BMJ. 2020;370:m3364. doi: 10.1136/bmj.m3364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Takahashi S, Greenhouse B, Rodríguez-Barraquer I. Are seroprevalence estimates for severe acute respiratory syndrome coronavirus 2 biased? J. Infect. Dis. 2020;222:1772–1775. doi: 10.1093/infdis/jiaa523. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Patel MM, et al. Change in antibodies to SARS-CoV-2 over 60 days among health care personnel in Nashville, Tennessee. JAMA. 2020;324:1781–1782. doi: 10.1001/jama.2020.18796. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Ibarrondo FJ, et al. Rapid decay of anti-SARS-CoV-2 antibodies in persons with mild Covid-19. N. Engl. J. Med. 2020;383:1085–1087. doi: 10.1056/NEJMc2025179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Macdonald, P. J. et al. Affinity of anti-spike antibodies in SARS-CoV-2 patient plasma and its effect on COVID-19 antibody assays. eBioMedicine75, 10.1016/j.ebiom.2021.103796 (2022). [DOI] [PMC free article] [PubMed]

- 28.Peluso MJ, et al. SARS-CoV-2 antibody magnitude and detectability are driven by disease severity, timing, and assay. Sci. Adv. 2021;7:eabh3409. doi: 10.1126/sciadv.abh3409. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Bond KA, et al. Longitudinal evaluation of laboratory-based serological assays for SARS-CoV-2 antibody detection. Pathology. 2021;53:773–779. doi: 10.1016/j.pathol.2021.05.093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Montesinos I, et al. Neutralizing antibody responses following natural SARS-CoV-2 infection: dynamics and correlation with commercial serologic tests. J. Clin. Virol. 2021;144:104988. doi: 10.1016/j.jcv.2021.104988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Takahashi, S. et al. SARS-CoV-2 serology across scales: a framework for unbiased seroprevalence estimation incorporating antibody kinetics and epidemic recency. medRxiv10.1101/2021.09.09.21263139 (2021). [DOI] [PMC free article] [PubMed]

- 32.Stone, M. et al. Evaluation of commercially available high-throughput SARS-CoV-2 serologic assays for serosurveillance and related applications. Emerg. Infect. Dis.28, 10.3201/eid2803.211885 (2022). [DOI] [PMC free article] [PubMed]

- 33.Arkhipova-Jenkins I, et al. Antibody response after SARS-CoV-2 infection and implications for immunity. Ann. Intern. Med. 2021;174:811–821. doi: 10.7326/M20-7547. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Goldberg Y, et al. Protection and waning of natural and hybrid immunity to SARS-CoV-2. N. Engl. J. Med. 2022;386:2201–2212. doi: 10.1056/NEJMoa2118946. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Carazo, S. et al. Protection against Omicron re-infection conferred by prior heterologous SARS-CoV-2 infection, with and without mRNA vaccination. medRxiv 10.1101/2022.04.29.22274455 (2022).

- 36.Busch, M. P. et al. Population-weighted seroprevalence from SARS-CoV-2 infection, vaccination, and hybrid immunity among U.S. blood donations from January–December 2021. Clin. Infect. Dis.75, ciac470 (2022). [DOI] [PMC free article] [PubMed]

- 37.Follmann, D. et al. Anti-nucleocapsid antibodies after SARS-CoV-2 infection in the blinded phase of the randomized, placebo-controlled mRNA-1273 COVID-19 vaccine efficacy clinical trial. Ann. Intern. Med.175, 1258–1265 (2022). [DOI] [PMC free article] [PubMed]

- 38. Wiegand, R. et al. Estimated SARS-CoV-2 Antibody Seroprevalence and Infection to Case Ratio Trends in 50 States and District of Columbia, United States–October 25, 2020, to February 26, 2022, SSRN Scholarly Paper 4094826 (Social Science Research Network, Rochester, NY, 2022).

- 39. R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2022).

- 40. García-Carreras, B. et al. Accounting for assay performance when estimating the temporal dynamics in SARS-CoV-2 seroprevalence in the U.S. https://github.com/UF-IDD/US_seroprevalence/releases/tag/v1.0.0, 10.5281/zenodo.7794239 (2023).

Associated Data

Data Availability Statement

Data used to fit the logistic regressions and estimates of proportions of state populations infected by week are available online40. Estimates are produced using the waning model with the lowest leave-one-out median RMSE (see Table 1, Fig. 2, and Supplementary Fig. 8). The calculation of the lower and upper bounds provided for these estimates is described in the “Models” section of “Methods” in the main text. Data were obtained from various sources and subsequently merged to fit the models. The nationwide serosurvey data were downloaded from the CDC (https://data.cdc.gov/Laboratory-Surveillance/Nationwide-Commercial-Laboratory-Seroprevalence-Su/d2tw-32xv). County-level daily laboratory-confirmed COVID-19 cases and deaths in the United States were downloaded from USAFacts (https://usafacts.org/visualizations/coronavirus-covid-19-spread-map/). Excess deaths data for each state were downloaded from the CDC (https://www.cdc.gov/nchs/nvss/vsrr/covid19/excess_deaths.htm). Laboratory testing (PCR) time series per state were downloaded from HealthData.gov (https://healthdata.gov/dataset/COVID-19-Diagnostic-Laboratory-Testing-PCR-Testing/j8mb-icvb). Data on the distribution of assays used in each serosurvey round were taken from Wiegand et al.38. COVID-19 vaccination data were downloaded from the CDC (https://data.cdc.gov/Vaccinations/COVID-19-Vaccinations-in-the-United-States-Jurisdi/unsk-b7fc). COVID-19 hospitalization data were downloaded from HealthData.gov (https://healthdata.gov/Hospital/COVID-19-Reported-Patient-Impact-and-Hospital-Capa/g62h-syeh). The proportion of COVID-19 cases reported in different age categories was estimated using the CDC restricted access case surveillance line-list data (https://data.cdc.gov/Case-Surveillance/COVID-19-Case-Surveillance-Restricted-Access-Detai/mbd7-r32t). The nationwide blood donor serosurvey dataset was downloaded from the CDC (https://covid.cdc.gov/covid-data-tracker/#nationwide-blood-donor-seroprevalence).

The code used to run the models and produce the figures in the main text and the Supplementary Information, along with the list of R packages used and their versions, is available online40.