Abstract

Here the Human Pangenome Reference Consortium presents a first draft of the human pangenome reference. The pangenome contains 47 phased, diploid assemblies from a cohort of genetically diverse individuals1. These assemblies cover more than 99% of the expected sequence in each genome and are more than 99% accurate at the structural and base pair levels. Based on alignments of the assemblies, we generate a draft pangenome that captures known variants and haplotypes and reveals new alleles at structurally complex loci. We also add 119 million base pairs of euchromatic polymorphic sequences and 1,115 gene duplications relative to the existing reference GRCh38. Roughly 90 million of the additional base pairs are derived from structural variation. Using our draft pangenome to analyse short-read data reduced small variant discovery errors by 34% and increased the number of structural variants detected per haplotype by 104% compared with GRCh38-based workflows, which enabled the typing of the vast majority of structural variant alleles per sample.

Subject terms: Genome informatics, Genomics, Genome assembly algorithms, Haplotypes

An initial draft of the human pangenome is presented and made publicly available by the Human Pangenome Reference Consortium; the draft contains 94 de novo haplotype assemblies from 47 ancestrally diverse individuals.

Main

The human reference genome has formed the backbone of human genomics since its initial draft release more than 20 years ago2. The primary sequences are a mosaic representation of individual haplotypes containing one representative scaffold sequence for each chromosome. There are 210 Mb of gap or unknown (151 Mb) or computationally simulated sequences (59 Mb) within the current GRCh38 release, constituting 6.7% of the primary chromosome scaffolds. Missing reference sequences create an observational bias, or streetlamp effect, which limits studies to be within the boundaries of the reference. Recently, the Telomere-to-Telomere (T2T) consortium finished the first complete sequence of a haploid human genome, T2T-CHM13, which provides a contiguous representation of each autosome and of chromosome X, with the exception of some ribosomal DNA arrays that remain to be fully resolved3. Using T2T-CHM13 directly improves genomic analyses; for example, discovering 3.7 million additional single-nucleotide polymorphisms (SNPs) in regions non-syntenic to GRCh38 and better representing the true copy number variants (CNVs) of samples from the 1000 Genomes Project (1KG) compared with GRCh38 (refs. 1,4).

Although T2T-CHM13 represents a major achievement, no single genome can represent the genetic diversity of our species. Previous studies have identified tens of megabases of sequence contained within structural variants (SVs) that are polymorphic within the population5. Owing to the absence of these alternative alleles from the reference genome, more than two-thirds of SVs have been missed in studies that used short-read data and the human reference assembly6–8, despite individual SVs being more likely to affect gene function than either individual SNPs or short insertions and deletions (indels)9,10.

To overcome reference bias, a transition to a pangenomic reference has been envisioned11,12. Pangenomic methods have rapidly progressed over the past few years13–15 such that it is now practical to propose that common genomic analyses use a pangenome. Here we sequence and assemble a set of diverse individual genomes and present a draft human pangenome, the first release from the Human Pangenome Reference Consortium (HPRC)15. These genomes represent a subset of the planned HPRC panel, which aims to better capture global genomic diversity across the 700 haplotypes of 350 individuals.

Assembling 47 diverse human genomes

We assembled 47 fully phased diploid assemblies from genomes selected to represent global genetic diversity (Fig. 1a) and for which consent had been given for unrestricted access. All assemblies have been made publicly available, along with all data and analyses. These assemblies include 29 samples with long and linked read sequencing data generated entirely by the HPRC and 18 samples sequenced by other efforts16–18. In some cases, we supplemented the 18 additional samples with further sequencing. We selected the 29 HPRC samples from the 1KG lymphoblastoid cell lines, limiting selection to those lines classified as karyotypically normal and with low passage (to avoid artefacts from cell culture). We also ensured that the cell lines were derived from participants for whom whole-genome sequencing (WGS) data were available for both parents (for haplotype phasing). Cell lines meeting these criteria were prioritized by genetic and biogeographic diversity (Methods).

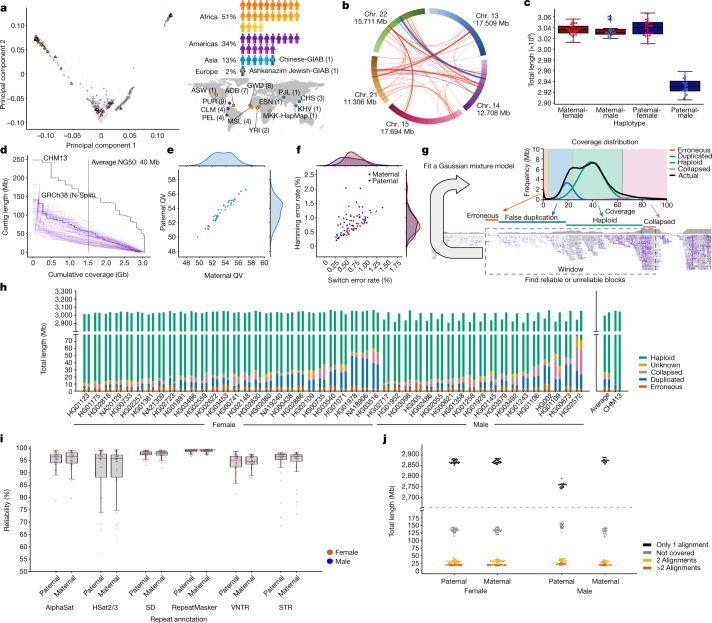

Fig. 1. Presenting 47 accurate and near-complete diverse diploid human genome assemblies.

a, Selecting the HPRC samples. Left, the first two principal components of 1KG samples showing HPRC (triangles) samples, excluding HG002, HG005 and NA21309. Right, summary of the HPRC sample subpopulations (three letter abbreviations) on a map of Earth as defined by the 1KG. ACB, African Caribbean in Barbados; ASW, African Ancestry in Southwest US; CHS, Han Chinese South; CLM, Colombian in Medellin, Colombia; ESN, Esan in Nigeria; GWD, Gambian in Western Division; KHV, Kinh in Ho Chi Minh City, Vietnam; MKK, Maasai in Kinyawa, Kenya; MSL, Mende in Sierra Leone; PEL, Peruvian in Lima, Peru; PJL, Punjabi in Lahore, Pakistan; PUR, Puerto Rican in Puerto Rico; YRI, Yoruba in Ibadan, Nigeria. b, Interchromosomal joins between acrocentric chromosome short arms. Red, the join is on the same strand; blue, otherwise. c, Total assembled sequence per haploid phased assembly. d, Assembly contiguity shown as a NGx plot. T2T-CHM13 and GRCh38 contigs are included for comparison. e, Assembly QVs showing the base-level accuracy of the maternal and paternal assembly for each sample. f, Yak-reported phasing accuracy showing the switch error percentage versus Hamming error percentage. g, Flagger read-based assembly evaluation pipeline. Coverage is calculated across the genome and a mixture model is fit to account for reliably assembled haploid sequence and various classes of unreliably assembled sequence. For each coverage block, a label is assigned according to the most probable mixture component to which it belongs: erroneous, falsely duplicated, (reliable) haploid, collapsed, and unknown. h, Reliability of the 47 HPRC assemblies using read mapping. For each sample, the left bar is the paternal and the right bar is the maternal haplotype. Regions flagged as haploid are reliable (green), constituting more than 99% on average of each assembly. The y axis is broken to show the dominance of the reliable haploid component and the stratification of the unreliable blocks. i, Assembly reliability of six types of repeats. AlphaSat, alpha satellites; HSat2/3, human satellites 2 and 3. j, Completeness of the HPRC assemblies relative to T2T-CHM13. The number of reference bases covered by none, by one, by two or by more than two alignments are included.

We created a consistent set of deeply sequenced data types for every sample (Supplementary Table 1). The data included Pacific Biosciences (PacBio) high-fidelity (HiFi) and Oxford Nanopore Technologies (ONT) long-read sequencing, Bionano optical maps and high-coverage Hi-C Illumina short-read sequencing for all HPRC samples. We also gathered previously generated high-coverage Illumina sequencing data for both parents of each participant19. We generated on average 39.7× HiFi sequence depth of coverage for the 46 HPRC samples (excluding HG002, which had around 130× coverage). This depth of coverage is consistent with the requirements for high-quality, state-of-the-art assemblies20 and facilitates comprehensive variant discovery irrespective of allele frequency (AF). The N50 value, which represents the shortest read length at which 50% of the total sequenced bases are covered by considering only equal or longer reads, was 19.6 kb on average for the HiFi reads (Supplementary Table 1; excluding HG002 because it was sequenced using a different library preparation protocol).

For the core assembler, we chose Trio-Hifiasm20 after detailed benchmarking of several alternatives21. Trio-Hifiasm uses PacBio HiFi long-read sequences and parental Illumina short-read sequences to produce near fully phased contig assemblies. The complete assembly pipeline (Supplementary Fig. 1 and Methods) included steps to remove adaptor and nonhuman sequence contamination and to ensure a single mitochondrial assembly per maternal assembly.

Assembly assessment

We first searched for large-scale misassemblies, looking for gene duplication errors, phasing errors and interchromosomal misjoins (Methods). We manually fixed three large duplication errors and one large phasing error, but left smaller errors, which are difficult to definitively distinguish from SVs. We found 217 putative interchromosomal joins. Only one of these joins (in the paternal assembly of HG02080) was located in a euchromatic, non-acrocentric region and was manually confirmed to be a misassembly. The remaining joins involved the short arms of the acrocentric chromosomes (Fig. 1b and Supplementary Table 2). This may be the result of misalignment, nonallelic gene conversion or other biological mechanisms that maintain large-scale homology between the short arms of the acrocentrics—a phenomenon that we have studied in an associated paper22.

To evaluate the resulting assemblies after manual correction of errors, we developed an automated assembly quality control pipeline that combined methods to assess the completeness, contiguity, base level quality and phasing accuracy of each assembly (Supplementary Table 3 and Methods). Haploid assemblies containing an X chromosome averaged a total length of 3.04 Gb, 99.3% the length of T2T-CHM13 (3.06 Gb), which also contains an X chromosome. Haploid assemblies containing a Y chromosome averaged a total length of 2.93 Gb, which reflects the size difference between the sex chromosomes (Fig. 1c). The average NG50 value, a widely used measure of contiguity, was comparable with the contig NG50 of GRCh38 (40 Mb compared with 56 Mb, respectively; Fig. 1d). Using short substrings (k-mer values of 31) derived from Illumina data, the Yak k-mer analyser20 estimated an average quality value (QV) of 53.57 for the assemblies, which corresponded to an average of 1 base error per 227,509 bases (Fig. 1e). To validate these QV estimates, we benchmarked the HG002 and HG005 assembly-based variant calls against the small variants called using Genome in a Bottle (GIAB; v.4.2.1). We estimated QVs of 54 for HG002 and 55 for HG005, which were highly similar to the k-mer QVs estimated using Yak. Consistent with our manual observation that most errors were primarily small indels in low-complexity regions, we found that approximately 32% of the indel errors were in homopolymers longer than 5 bp and an additional 48% were in tandem repeats and low-complexity regions. Moreover, about 42% of the indel errors were genotype errors, mostly heterozygous variants incorrectly called as homozygous variants due to collapsed haplotypes in the two assemblies of an individual (Supplementary Table 4). We next used Yak to analyse the phasing accuracy between the maternal and paternal assemblies using k-mer values derived from Illumina sequencing of the parents. An average haplotype switch error rate of 0.67% was observed and a Hamming error rate of 0.79% (Fig. 1f). We also calculated phase accuracy using Pstools23,24, which uses Hi-C sequence data of the sample not used to create the assembly. Pstools reported slightly lower switch error rates than Yak but comparable Hamming error rates (Supplementary Fig. 2). Taken together, the above results indicate that the assemblies are highly contiguous and accurate.

Regional assembly reliability

To determine which portions of the assemblies are reliable, we developed a read-based pipeline, Flagger, that detects different types of misassemblies within a phased diploid assembly (Fig. 1g and Methods). The pipeline works by mapping the HiFi reads to the combined maternal and paternal assembly in a haplotype-aware manner. It then identifies coverage inconsistencies within these read mappings that are likely to be due to assembly errors. This process is similar to likelihood-based approaches, which assess the assembly given the reads25, but is adapted to work with long reads and diploid assemblies. Using Flagger, we identified only 0.88% (26.4 Mb) of each assembly as unreliable (Fig. 1h and Supplementary Table 5). Using T2T-CHM13, we estimated that 0.09% of reliably assembled blocks were falsely labelled as unreliable (Methods). Compared with the distribution of contig sizes, the unreliable blocks were short (54.6 kb N50 average). We intersected the unreliable blocks in the assemblies from Flagger with different repeat annotations (Fig. 1i and Supplementary Table 6). We estimated that the following percentage of elements were correctly assembled: 95.4% of alpha satellites; 91.5% of human satellites 2 and 3; 97.7% of segmental duplications (SDs); 94.3% of variable number tandem repeats (VNTRs); 94.2% of short tandem repeats (STRs); and 98.8% of all human repeats26.

Completeness and CNV

To assess the completeness and copy number polymorphism of the assemblies, we aligned them to T2T-CHM13 (Methods). The paternal assemblies of male samples covered about 92.8% of T2T-CHM13 (excluding chromosome X) on average with exactly one alignment. For all other assemblies (excluding chromosome Y), about 94.1% on average was single-copy covered (Fig. 1j and Supplementary Table 7). On average, around 136 Mb (4.4%) of T2T-CHM13 was not covered by any alignment, which indicates that some parts of the genome are either systematically unassembled or cannot be reliably aligned. About 90% of these regions were centromeric or pericentromeric27 (Extended Data Fig. 1). Despite the majority of unaligned bases occurring within and around centromeres, on average, 90% of divergent and monomeric alpha satellites, gamma satellites and centromeric transition regions were covered by at least one alignment. Excluding the T2T-CHM13 centromere and satellites3 and including only the expected sex chromosome for each haploid assembly, on average, around 99.12% of the remaining reference was covered by exactly one alignment (Supplementary Table 7).

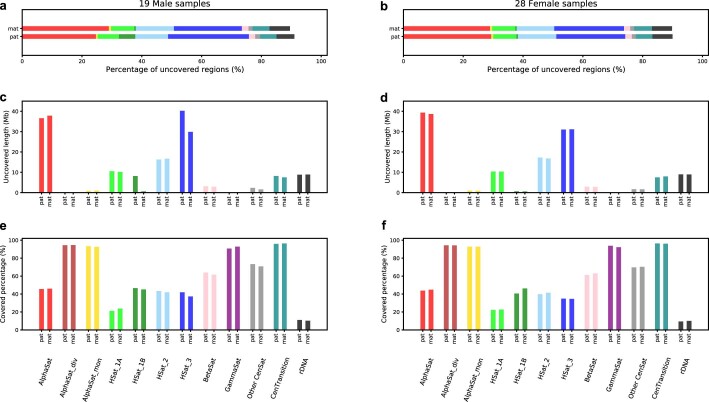

Extended Data Fig. 1. Characterizing uncovered reference bases using peri/centromeric annotation and evaluating the completeness of different satellite families.

We characterized the regions not covered by the assembly alignments to the T2T-CHM13 (v.2.0) reference and also investigated the completeness of the peri/centromeric satellites across all HPRC assemblies. We characterized these regions using the peri/centromeric annotation available for the T2T-CHM13 (v.2.0) reference. We made separate bar plots for male and female samples to exclude chromosome X for the paternal assemblies of male samples and exclude chromosome Y for all other assemblies. Panels a and b indicate that on average ~90% of the uncovered bases are located in peri/centromeric regions with the active/inactive alpha satellites and human satellite 3 comprising ~50% of these bases, mainly due to their highly repetitive composition and also higher frequency compared to other satellites. Other centromeric satellites, centromeric transition regions, and rDNA arrays accounted for another ~40% of the uncovered bases on average. Panels c and d display the average lengths of uncovered regions located within each satellite family. Panels e and f show what percentage of each satellite family was covered by at least one assembly alignment. The most complete centromeric regions (~90% coverage) are divergent/monomeric alpha satellites, gamma satellites and centromeric transition regions. The rDNA arrays have been covered by ~8% on average, which made them the least completely assembled repeat arrays.

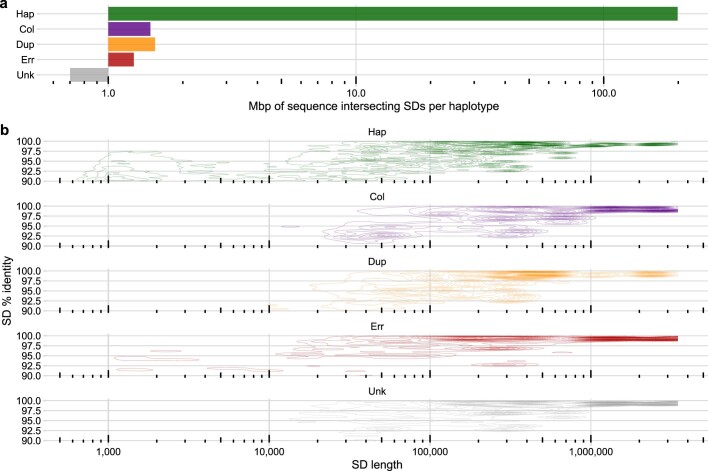

The average number of T2T-CHM13 bases with two or with more than two alignments was about 32.4 Mb (around 1.0%) and about 20.0 Mb (around 0.6%), respectively. On average, per haploid assembly, these duplicated regions had about 82.20% and 39.82% overlap with the pericentomeric or centromeric satellites and SDs, respectively, and around 94.62% had overlap with either of them. We characterized the accuracy of regions aligned to SDs in T2T-CHM13 (excluding chromosome Y) using a liftover of the assembly read-depth-based evaluation (Extended Data Fig. 2). On average, we estimated that only 2.5% (4.99 out of 199 Mb) of the SD sequence that could be lifted onto T2T-CHM13 was in error according to the read depth. To identify SDs associated with these errors, we took all 5 kb windows across the unreliable regions and intersected them with the longest and most identical overlapping SD. The median length of SDs overlapping sequences in error was 3.0 times longer (288 kb compared with 96.3 kb) than those in correctly assembled SDs and 1.8% more identical (98.9 compared with 97.1). This result reinforces earlier findings that the length and identity of SDs play an important part in assembly accuracy28.

Extended Data Fig. 2. Segmental duplication reliability.

a, Average number of Mbp per haplotype of correctly or incorrectly assembled SDs lifted from T2T-CHM13 (v.2.0). b, The features of the most identical and longest overlapping SDs for each type of assembly error calculated in 5 kbp windows.

Annotating 47 diverse genomes

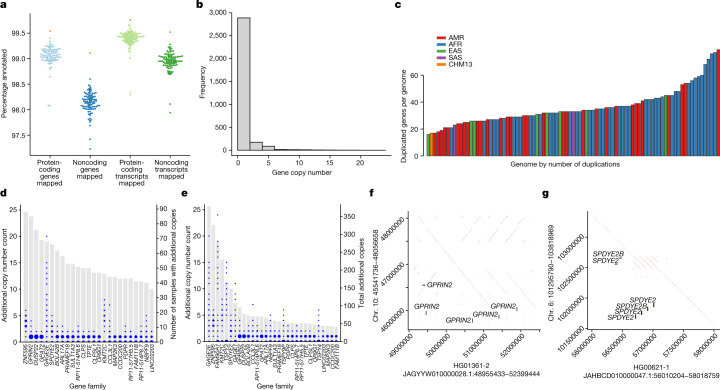

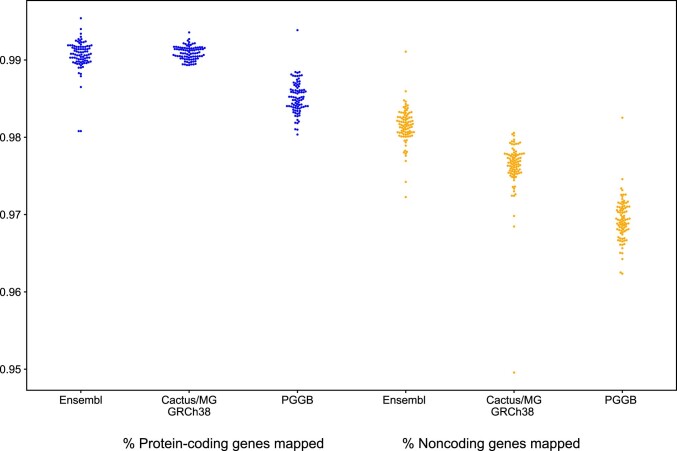

We developed a new Ensembl mapping pipeline to annotate GENCODE29 genes and transcripts within each new haploid assembly (Methods). A median of 99.07% of protein-coding genes (range = 98.08–99.40%) and 99.42% of protein-coding transcripts (range = 98.29–99.66%) were identified in each of the HPRC assemblies (Fig. 2a and Supplementary Table 8). Similarly, a median of 98.16% of noncoding genes (range = 97.23–98.60%) and 98.96% of noncoding transcripts (range = 97.94–99.28%) were similarly annotated. Running this pipeline on T2T-CHM13 produced similar, slightly higher, results. Intersecting the HPRC annotations with the assembly reliability predictions, a median of 99.53% of gene and 99.79% of transcript annotations occurred wholly within reliable regions, which indicated that most of the annotated transcript haplotypes were structurally correct. To examine transcriptome base accuracy, we looked for nonsense and frameshift mutations in the set of canonical transcripts (one representative transcript per gene; Supplementary Fig. 3, Supplementary Table 8 and Methods). We found a median of 25 nonsense mutations and 72 frameshifts per assembly. A median of 21 (84%) and 58 (80%) of these nonsense mutations and frameshifts per assembly, respectively, were supported by the independently generated Illumina variant call sets. These numbers were within the range of previously reported numbers of loss-of-function mutations (between 10 and 150 per person, depending on the level of conservation of the mutation)1,30. Conservatively, if all the non-confirmed frameshifts and nonsense mutations are assembly errors, this would predict 18 such transcript-altering errors per transcriptome (1 per 1.7 million assembled transcriptome bases).

Fig. 2. Transcriptome annotation of the assemblies.

a, Ensembl mapping pipeline results. Percentages of protein-coding and noncoding genes and transcripts annotated from the reference set in each of the HPRC assemblies. Orange points represent T2T-CHM13 for comparison. b, Frequency of gene copy number. Individual genes may have separate copy number states among genomes, and the frequency reflects 3,210 observed copy number changes among the HPRC genomes. c, Number of distinct duplicated genes or gene families per phased assembly relative to the number of duplicated genes annotated in GRCh38 (n = 152). The GRCh38 gene duplications reflect families of duplicated genes, whereas the counts in other genomes reflect gene duplication polymorphisms. The assemblies are colour coded according to their population of origin. d, The top 25 most commonly CNV genes or gene families in the HPRC assemblies out of all 1,115 duplicated genes, ordered by the number of samples with additional copies relative to GRCh38. Grey bars, the number of samples with additional copies. Blue circles, the number of additional copies per sample, with the size of the circle proportional to the number of samples. e, The top 30 most individually copied CNV genes or gene families in the HPRC assemblies, ordered by total number of additional copies observed. Blue circles, the number of additional copies per sample. Grey bars, the total number of additional copies summed over the samples. f, Dotplot illustrating haplotype-resolved GPRIN2 gains in the HG01361 assembly relative to GRCh38. g, Dotplot illustrating SPDYE2–SPDYE2B haplotype resolved gains within a tandem duplication cluster of the HG00621 assembly relative to GRCh38.

There were 1,115 protein-coding gene families within the Flagger-predicted reliable regions of the full set of assemblies that had a gain in copy number in at least one genome (Fig. 2b). Each assembly had an average of 36 genes with a gain in copy number relative to GRCh38 within its predicted reliable regions, with a bias towards rare, low-copy CNVs (Fig. 2c). In detail, 71% of CNV genes appeared in a single haplotype. Previous studies using read depth found that rare CNVs generally occur outside regions annotated as being enriched in SDs31. The genome assemblies confirmed this observation in sequence-resolved CNVs. When stratifying duplicated genes on the basis of AF into singleton (present in one haplotype), low frequency (<10%) and high frequency, 15% (118 out of 771) of the singleton CNVs mapped to SDs as annotated in GRCh38. Duplicated genes with a higher population frequency had a greater fraction in SDs: 59% (83 out of 140) of low frequency and 81% (44 out of 54) of high frequency. Overall, 58 genes were CNVs in 10% or more of haploid assemblies, and 16 genes were amplified in the majority of individuals relative to GRCh38 (Fig. 2d and Supplementary Table 9). Many of these genes were individually highly copy-number polymorphic and part of complex tandem duplications (Fig. 2e). For example, GPRIN2 is a copy-number polymorphic32 based on read depth and has a sequence resolution of one to three additional copies duplicated in tandem in the pangenome (Fig. 2f). SPDYE2 is similarly resolved as one to four additional copies duplicated in tandem (Fig. 2g). Other CNV genes were not contiguously resolved and reflect limitations of the current assemblies (see the associated article33). For example, the defensin gene DEFB107A has three to seven additional copies assembled across all samples; however, this gene was assembled into three to seven separate contigs that do not reflect the global organization of this gene.

Constructing a draft pangenome

We used a sequence graph representation for pangenomes12,14 in which nodes correspond to segments of DNA. Each node has two possible orientations, forward and reverse, and there are four possible edges between any pair of nodes to reflect all combinations of orientations (bidirected graph). The underlying haplotype sequences can be represented as walks in the graph. The model represents a generalized multiple alignment of the genome assemblies from which we built it, whereby haplotypes are aligned where they co-occur on a given node (Fig. 3a).

Fig. 3. Pangenome graphs represent diverse variation.

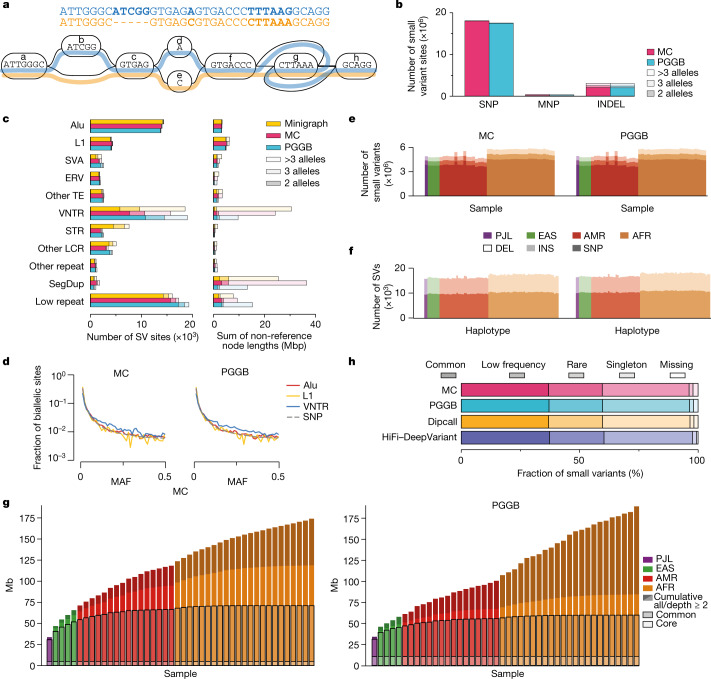

a, A pangenome variation graph comprising two elements: a sequence graph, the nodes of which represent oriented DNA strings and bidirected edges represent the connectivity relationships; and embedded haplotype paths (coloured lines) that represent the individual assemblies. b, Small variant sites in pangenome graphs stratified by the variant type and by the number of alleles at each site. MNP, multinucleotide polymorphism. c, SV sites in the pangenome graphs stratified by repeat class and by the number of alleles at each site. Other TE, a site involving mixed classes of transposable elements (TEs). VNTR, variable-number tandem repeat, a tandem repeat with the unit motif length ≥7 bp. STR, short tandem repeat, a tandem repeat with the unit motif length ≤6 bp. Other LCR, low-complexity regions with mixed VNTR and STR and low-complexity regions without a clear VNTR or STR pattern. Other repeat, a site involving mixed classes of repeats. SegDup, segmental duplication. Low repeat, a small fraction of the longest allele in a site involving repeats. d, Pangenome minor AF (MAF) spectrum for biallelic SNP, VNTR, L1 and Alu variants in the MC and PGGB graphs. e,f, Number of autosomal small variants per sample (e) and SVs per haplotype (f) in the pangenome. Variants were restricted to the Dipcall-confident regions. Samples are organized by 1KG populations. g, Pangenome growth curves for MC (left) and PGGB (right). Depth measures how often a segment is contained in any haplotype sequence, whereby core is present in ≥95% of haplotypes, common is ≥5%. h, Small variants in the GIAB (v.3.0) ‘easy’ regions annotated with AFs from gnomAD (v.3.1.2).

The process of generating a combined pangenome representation is an active research area. The problem is nontrivial both because of computational challenges (there are hundreds of billions of bases of sequence to align) and because determining which alignments to include is not always obvious, particularly for recently duplicated and repetitive sequences. We applied three different graph construction methods that have been under active development for this project: Minigraph34, Minigraph-Cactus (MC)35 and PanGenome Graph Builder (PGGB)36 (Extended Data Fig. 3 and Methods). The availability of these three models provided us with multiple views into homology relationships in the pangenome while supporting validation of discovered variation by independent methods. We included GRCh38 and T2T-CHM13 references within the pangenomes, and three samples (HG002, HG005 and NA19240) were held out to permit their use in benchmarking (hence 90 haplotypes total). In brief, Minigraph builds a pangenome by starting from a reference assembly, here GRCh38, and iteratively and progressively adds in additional assemblies, recording only SVs ≥ 50 bases. It admits complex variants, including duplications and inversions. MC extends the Minigraph pangenome with a base level alignment of the homology relationships between the assemblies using the Cactus genome aligner37 while retaining the structure of the Minigraph pangenome. PGGB constructs a pangenome from an all-to-all alignment of the assemblies. Although both T2T-CHM13 and GRCh38 are used to partition contigs into chromosomes, the PGGB graph is otherwise reference free (that is, it does not base itself on a chosen reference assembly).

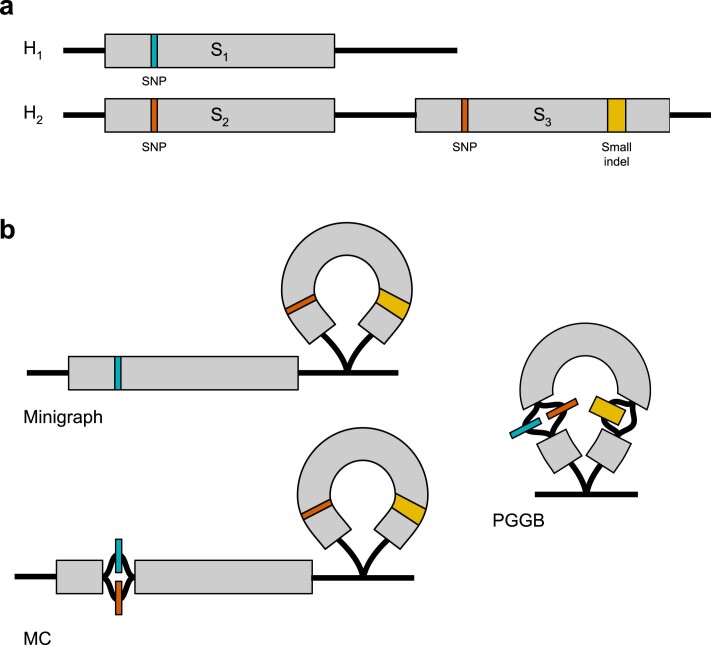

Extended Data Fig. 3. The differences in pangenome graph construction methods for Minigraph, MC, and PGGB.

a, Two haplotypes (H1 and H2) vary in copy number of a chromosomal segment S. The S1, S2, and S3 segments are highly similar with only a SNP or a small indel. b, Pangenome graph structures for Minigraph, MC, and PGGB. Minigraph used H1 as an initial backbone and then augmented with SVs (≥50 bp) from H2, such that the SNP in S2 is not represented in the pangenome graph. MC added small variants (<50 bp) to the pangenome graph constructed by Minigraph. PGGB used a symmetric, all-by-all alignment of haplotypes to build a pangenome graph whose structure is not affected by the order of inputs (unlike Minigraph and MC). The critical difference in graph construction is that, due to ambiguous pairwise relationships of paralogs, PGGB tends to collapse copy-number polymorphic loci like segmental duplications and VNTRs into a single copy through which haplotypes loop, while Minigraph and MC do not.

Measuring pangenome variation

The different algorithmic approaches used to construct a pangenome graph influence graph properties while representing the same underlying sequences. The basic properties of the three graphs produced with the different pangenome methods are shown in Supplementary Table 10. The Minigraph chart, by virtue of being limited to structural variation, is the smallest, with more than two orders of magnitude fewer nodes and edges than the base level graphs. Its length (3.24 Gb), measured as the total bases of all nodes, is similar to the MC graph (3.29 Gb) despite the latter adding many small variants. This difference is due to the MC graph also aligning a significant number of sequences left unaligned by Minigraph. The PGGB graph contains roughly 5 Gb more sequence because it includes highly structurally divergent satellite regions omitted from the other approaches (Methods) and does not implement any trimming or filtering of the input assembly contigs.

To characterize variants in the pangenome graphs, we used graph decomposition to identify ‘bubble’ subgraphs that correspond to non-overlapping variant sites. We then classified variant sites into small variants (<50 bp) and SVs (≥50 bp) of different types (Methods). We found similar numbers of each variant type in each pangenome, with 22 million small variants in the MC graph (21 million in PGGB) (Fig. 3b), and 67,000 SVs in the MC graph (73,000 in PGGB, 75,000 in Minigraph) (Fig. 3c). We assessed variation in each individual assembly by tracing their paths through the graphs and found similar numbers of small variants and SVs within confident genomic regions defined by Dipcall38. Specifically, there were 5.34 million small variants per sample and 16,800 SVs per haplotype on average in the MC graph (5.35 million and 17,400, respectively in PGGB) (Fig. 3e,f). Differences in variant counts among samples from different ancestry groups recapitulated previous observations1. There was a total of 90 Mb of non-reference sequence in the SV sites, excluding difficult-to-align centromeric repeats, in the MC graph (55 Mb for PGGB, 86 Mb for Minigraph). Alu, L1 and ERV SVs appeared largely biallelic, whereas VNTRs frequently had three or more distinct alleles per site. The minor AF in the pangenomes of biallelic variants was similar for SNPs and for L1, Alu and VNTR variants, although VNTRs showed a slight shift towards more common alleles (Fig. 3d).

We quantified the amount of euchromatic autosomal non-reference (GRCh38) sequence that each of the 44 diploid genomes incrementally contributes to the pangenome (Fig. 3g and Methods) for both MC and PGGB graphs. We limited the analysis to the euchromatic sequence because we were generally confident in its assembly and alignment, and much of the heterochromatic sequence was omitted from the MC graph (Methods). Overall, the euchromatic autosomal non-reference sequence added up to about 175 Mb in the MC graph (around 190 Mb in PGGB), out of which about 55 Mb (around 105 Mb in PGGB) was observed only on a single haplotype. Our analysis further suggested that about 5 Mb and 70 Mb in the MC graph (around 10 Mb and 60 Mb in PCGB) could be attributed to core (present in ≥95% of all haplotypes) and common genomes (present in ≥5% of all haplotypes), respectively (Supplementary Table 11). We also estimated the growth of the euchromatic autosomal pangenome independent of the order of genomes by sampling 200 permutations (Supplementary Fig. 4) and recording the median pangenome size across all samples in the MC graph. Our results indicated that the second genome added around 23 Mb of euchromatic autosomal sequence to the pangenome, whereas the last genome tended to add only about 0.64 Mb. These numbers are conservative owing to additional highly polymorphic sequence residing in assembly gaps. Extrapolating under Heaps’ Law39 (Methods), we anticipate that at least an additional 150 Mb of euchromatic autosomal sequence will be added in the pangenome graph when HPRC produces 700 haplotypes in the future.

We annotated the small variants overlapping the GIAB (v.3.0) ‘easy’ regions (covering 74.35% of GRCh38) with AFs from gnomAD (v.3.1.2) (Fig. 3h and Supplementary Table 12). In the MC graph, about 60.2% (around 9.7 million variants) had an AF of 1% or greater. About 35.7% were rare, having an AF less than 1% but above zero. About 1.7% were singleton. The remaining 2.4% were missing from gnomAD. Similar results were obtained using the PGGB graph by repeating this exercise with small variant calls detected by pairwise alignment of assemblies to GRCh38 using Dipcall38 and by calling small variants from the HiFi sequencing data using DeepVariant40. Given that 1KG samples are included in gnomAD, these missing variants are expected to be a mixture of false negatives in gnomAD and false positives in the pangenome.

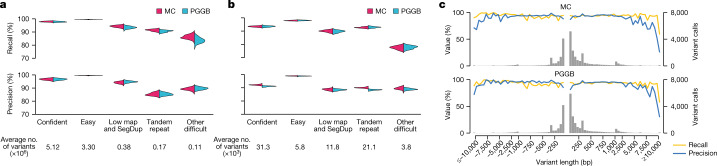

To further explore the quality of variant calls captured by assembly and graph construction, we compared pangenome-decoded variants against GRCh38 to variant sets identified by conventional reference-based genotyping methods (Supplementary Fig. 5 and Methods). These reference-based call sets were generated from the PacBio HiFi reads and haplotype-resolved assemblies using the following different discovery methods: DeepVariant40, PBSV41, Sniffles42 with Iris43, SVIM44, SVIM-asm45, PAV5 and the Hall-lab pipeline (Methods). For benchmarking small variants, we excluded regions that contained SVs detected or implied by the alignment of the haploid assemblies of that sample to GRCh38, as current benchmarking tools do not account for different representations of small variants inside or near SVs (Methods). Comparing small variants (Fig. 4a) and SVs (Fig. 4b) from the pangenomes to the reference-based sets, we observed a high level of concordance that varied, as expected, by the relative repeat content of the surrounding genome. Overall, variant calling performance was high in both the MC and PGGB graphs. For example, in relatively unique easy genomic regions constituting 75.42% of the autosomal genome, samples showed a mean of 99.64% recall and 99.64% precision for small variants in the MC graph. Meanwhile, in high-confidence regions (around 90% of autosomal genome), samples showed 97.91% recall and 96.66% precision (Fig. 4a). Performance was lower for SVs than for small variants (Fig. 4b), as expected, but was still strong. Variant calling performance was lower in highly repetitive genome regions (3.87% of autosomal genome; Fig. 4a,b), for which more work will be required to achieve high-quality variant maps. These values are likely to be significant underestimates of variant calling quality, considering known errors in the truth set owing to the inherent limitations of reference-based variant callers (see below). Stratifying the insertion and deletion SVs within the pangenome, we observed relatively high levels of agreement with the reference-based methods regardless of length (Fig. 4c).

Fig. 4. Pangenome graph evaluation.

a,b, Precision and recall of autosomal small variants (a) and SVs (b) in the pangenomes relative to consensus variant sets. Small variants are compared to HiFi–DeepVariant calls. SVs are compared to the consensus of six reference-based SV callers (Methods). Comparisons are restricted to the Dipcall-confident regions and then stratified by the GIAB (v.3.0) genomic context. c, Average SV precision, recall and frequency in the Dipcall-confident regions stratified by length in the MC (top) and PGGB (bottom) graphs relative to consensus SV sets. The histogram bin size is 50 bp for SVs <1 kb and 500 bp for SVs ≥1 kb.

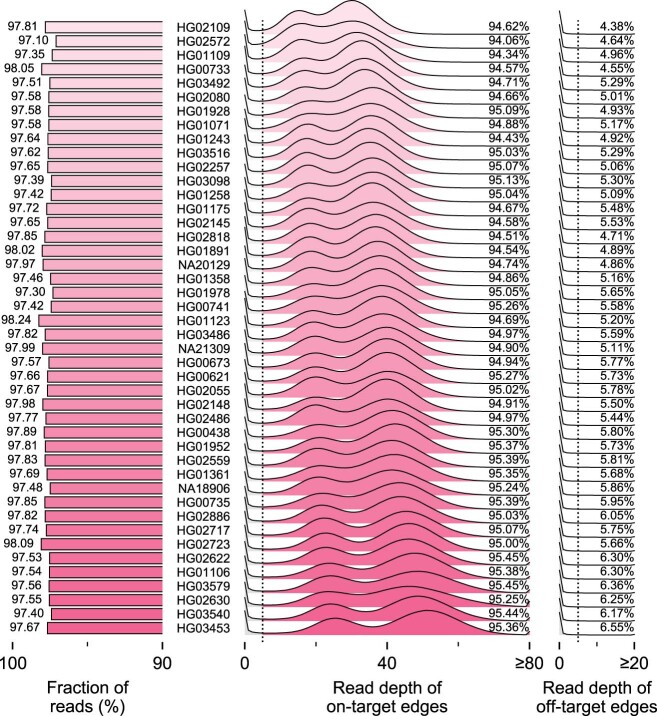

An independent measure of the quality of the pangenome graphs is the extent to which sample haplotype paths through the graph are well supported by the raw sequencing data. When we calculated the number of supporting reads by aligning them to the MC graph using GraphAligner (Methods), more than 97% of HiFi reads were aligned to the MC graph after filtering (Extended Data Fig. 4, left). We further calculated the read depth of on-target and off-target edges based on the sample paths in the graph. On average, more than 94% of on-target edges were supported by at least 5 reads, and we observed 2 peaks in the read depth distribution of on-target edges (Extended Data Fig. 4, middle): a minor peak corresponding to the edges in heterozygous regions, and a major peak at twice the minor peak corresponding to the edges in homozygous regions. By contrast, only 7% or fewer off-target edges were supported by at least 5 reads (Extended Data Fig. 4, right). In addition to HiFi reads, we used ONT reads from 29 out of the 44 samples to perform the same analysis. Even though the data were lower in coverage, similar results were obtained (Supplementary Figs. 6 and 7).

Extended Data Fig. 4. HiFi read depth of on- and off-target edges in the MC graph.

Left: fraction of reads aligned to the pangenome graph after filtering low-quality alignments. Middle: read depth distribution of on-target edges. Right: read depth distribution of off-target edges. Samples are sorted by sequencing coverage (Supplementary Table 1).

These data also show that the pangenome graphs performed better at capturing genome variation than the above benchmarking results imply. For example, a mean of 89.3% of putative false-positive small variant calls were supported by ≥5 HiFi reads, and 75.3% by ≥10 reads (85.9% and 73.8%, respectively, for SVs). This result suggests that most putative errors are in fact real variants that were missed by the reference-based callers used to create the truth set (Supplementary Fig. 8 and Supplementary Table 13).

To assess gene alignments in the pangenome, we used the Comparative Annotation Toolkit (CAT)46 to liftover GENCODE (v.38) annotations using the MC pangenome alignment onto the individual haplotype assemblies. CAT lifted and annotated a median of 99.1% of 86,757 protein-coding transcripts per assembly (Extended Data Fig. 5, Supplementary Fig. 9, Supplementary Tables 14 and 15 and Methods), making it comparable to the Ensembl-mapping-based pipeline (median of 99.4% per assembly). This result supports the idea that the MC pangenome captures most transcript homologies. When comparing the CAT and Ensembl annotations per assembly, median Jaccard similarities of 0.99 for both genes and transcripts were obtained (Methods). A median of 360 (0.4%) protein-coding transcripts per assembly mapped at different loci between the Ensembl and CAT annotations.

Extended Data Fig. 5. Gene mapping in the pangenome graphs.

The first three show the percentage of protein-coding genes from GENCODE (v.38) able to be mapped in the gene annotation sets from Ensembl, CAT run on the MC graph based on GRCh38, and CAT run on the PGGB graph. The second three show the percentage of noncoding genes from GENCODE (v.38) able to be mapped on the same annotation sets.

Pangenomes represent complex loci

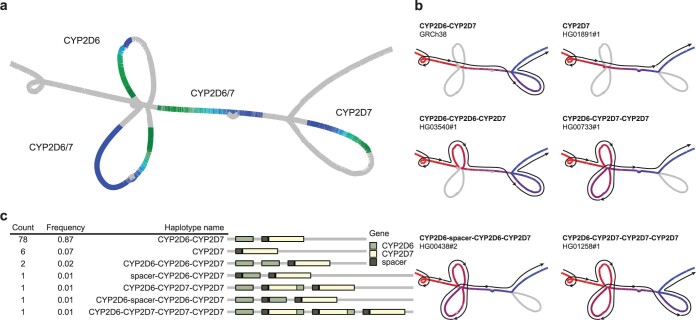

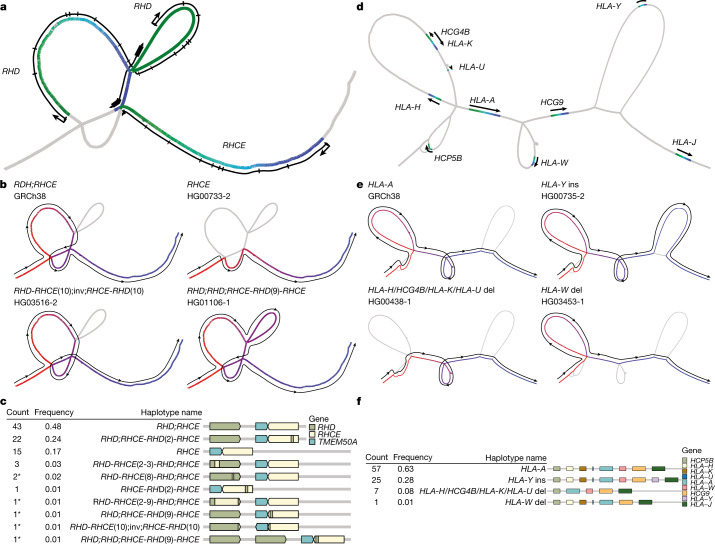

We next turned our attention to complex multiallelic SVs, which have historically been difficult to map using reference-based methods. To screen for complex SVs, we identified bubbles >10 kb from Minigraph that exhibited at least five structural alleles among the assembled haplotypes (Methods). We found that 620 out of 76,506 total sites (0.81%) were complex, and 44 of these overlapped with medically relevant protein-coding genes47 (Supplementary Table 16). Some are well-known complex SV loci, and all are known to be structurally variable based on previous short-read SV mapping studies10,19,32. However, whereas previous short-read SV calls at these loci are typically imprecise owing to alignment issues and low-resolution read-depth analysis methods, here we resolved their structure at single-base resolution. We selected five clinically relevant complex SV loci for detailed structural analysis: RHD–RHCE, HLA-A, CYP2D6–CYP2D7, C4 and LPA (Methods). For each locus and graph, we identified their locations within the graph and then annotated paths within this subgraph with known genes. We traced the individual haplotypes through the subgraph to reveal the structure of each assembly. In CYP2D6–CYP2D7 (Extended Data Fig. 6), C4 (Supplementary Fig. 10) and LPA (Supplementary Fig. 11), we recapitulated previously described haplotypes. For CYP2D6–CYP2D7, our calls matched 96% of haplotypes of 76 assemblies called by Cyrius using Illumina short-read data48. Two discrepancies appeared to be caused by errors from Cyrius, and the third was a false duplication in the HG01071-2 pangenome assembly revealed by Flagger. This comparison suggests that the pangenomes faithfully agree with existing knowledge of this complex locus. In RHD–RHCE (Fig. 5a–c), in addition to previously described haplotypes, we inferred the presence of five new haplotypes, which included one duplication allele of RHD and one inversion allele between RHD and RHCE that swaps the last exon of both genes. Around HLA-A (Fig. 5d–f and Supplementary Fig. 12), two deletion alleles have been previously described—albeit with imprecise breakpoints10—but an insertion allele carrying a HLA-Y pseudogene was previously unreported. The long sequence (65 kb) inserted with HLA-Y occurred at high frequency (28%) but has little homology to GRCh38.

Extended Data Fig. 6. Structural haplotypes of CYP2D6 and CYP2D7 from the MC graph.

a, Locations of CYP2D6 and CYP2D7 within the graph. The colour gradient is based on the precise relative position of each gene; green, head of a gene; blue, end of a gene. b, Different structural haplotypes take different paths through the graph. The colour gradient and lines show the path of each allele; red, start of a path; blue, end of a path. c, Frequency and linear structural visualization of all structural haplotypes called by the graph among 90 haploid assemblies.

Fig. 5. Visualizing complex pangenome loci.

a–c, Structural haplotypes of RHD and RHCE from the MC graph. Locations of RHD and RHCE within the graph (a). The colour gradient is based on the precise relative position of each gene; green, head of a gene; blue, end of a gene. The lines alongside the graph are based on the approximate position of gene bodies, including exons and transcription start sites. Different structural haplotypes take different paths through the graph (b). The colour gradient and lines show the path of each allele; red, start of a path; blue, end of a path. Frequency and linear structural visualization of all structural haplotypes called by the graph among 90 haploid assemblies (c). Asterisks indicate newly discovered haplotypes. d–f, Structural haplotypes of HLA-A from the PGGB graph, visualized using the same conventions as a–c. del, deletion; ins, insertion; inv, inversion.

We also compared the representation of these five loci in the MC and PGGB graphs (Supplementary Fig. 13). Each graph independently recapitulated the same haplotype structures. In general, in the PGGB graph, many SV hotspots, including the centromeres, were transitively collapsed into loops through a subgraph representing a single repeat copy. This feature tends to reduce the size of variants found in repetitive sequences. Assemblies that contained multiple copies of the homologous sequence traversed these nodes a corresponding number of times. By contrast, the MC graph maintained separate copies of these homologous sequences.

Applications of the pangenome

Pangenome-based short variant discovery

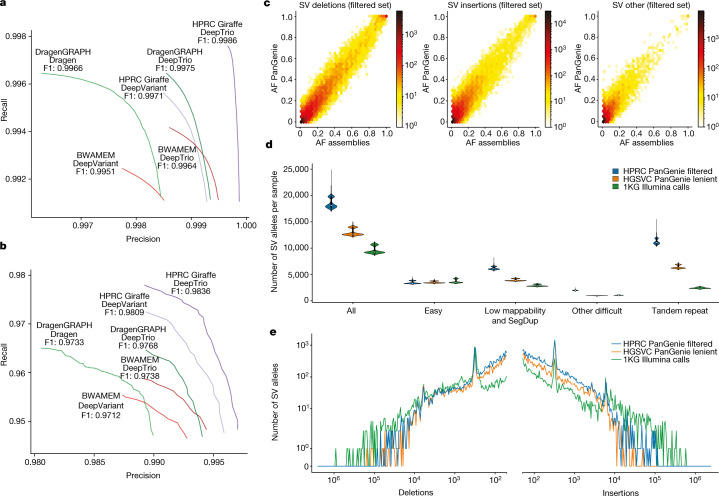

Our pangenome reference aims to broadly improve downstream analysis workflows by removing mapping biases that are inherent in the use of a single linear reference genome such as GRCh38 or CHM13. As an initial test case, we studied whether mapping against our pangenomes could improve the accuracy of calling small variants from short reads. We used Giraffe49 to align short reads from the GIAB benchmark samples18 to the MC pangenome graph. For comparison, we aligned reads to GRCh38 using BWA-MEM50 and to Dragen Graph51, which uses GRCh38 augmented with alternative haplotypes at variant sites. We called SNPs and indels with DeepVariant40 and the Dragen variant caller51 (Methods). Our pangenomic approach (Giraffe plus DeepVariant) outperformed the other approaches for calling small variants (Fig. 6a), with gains for both SNPs and indels (Supplementary Fig. 14 and Supplementary Table 17). For example, it made 21,700 errors (false positives or false negatives) in the confident regions of the GIAB truth set using 30× reads from HG005. By contrast, 36,144 errors were made when DeepVariant used the reads aligned to GRCh38, and 26,852 errors when using the Dragen pipeline. In challenging medically relevant genes47, the increase in performance was even larger for both SNPs (F1 score, defined as the harmonic mean of precision and recall, of 0.985 for Giraffe plus DeepVariant compared with <0.976 for the other methods) and indels (F1 score of 0.961 for Giraffe plus DeepVariant compared with <0.958 for the other methods) (Fig. 6b). Many regions benefitted from using pangenome mapping, but regions with errors in GRCh38 and large L1HS sequences benefitted the most from the pangenomic approach (Supplementary Figs. 15 and 16).

Fig. 6. Performance gains for pangenome-aided analysis of short-read WGS data.

a,b, Precision–recall curves showing the performance of different combinations of linear reference and various mappers and variant callers evaluated against the GIAB (v.4.2.1) HG005 benchmark (a) and the challenging medically relevant genes (CMRG; v.1.0) benchmark (b). Giraffe uses the MC pangenome graph, BWA-MEM uses GRCh38 and Dragen Graph uses GRCh38 with additional alternative haplotype sequences. c, Comparison of AFs observed from the PanGenie genotypes for all 2,504 unrelated 1KG samples and the AFs observed across 44 of the HPRC assembly samples in the MC graph. The PanGenie genotypes include all variants contained in the filtered set (28,433 deletions, 84,755 insertions, 32,431 other alleles). d, Number of SVs present (genotype 0/1 or 1/1) in each of the 3,202 1KG samples in the filtered HPRC genotypes (PanGenie) after merging similar alleles (n = 100,442 SVs), the HGSVC lenient set (n = 52,659 SVs) and the 1KG Illumina calls (n = 172,968 SVs) in GIAB regions. In the box plots, lower and upper limits represent the first and third quartiles of the data, the white dots represent the median and the black lines mark minima and maxima of the data points. e, Length distribution of SV insertions and SV deletions contained in the filtered HPRC genotypes (PanGenie), the HGSVC lenient set and the 1KG Illumina calls. Only variants with a common AF > 5% across the 3,202 samples were considered.

We next benchmarked variant calling using parent–child trios. Using DeepTrio52 resulted in better performance compared with DeepVariant across all samples of the GIAB (Fig. 6a) and the challenging medically relevant gene benchmarks (Fig. 6b and Supplementary Fig. 14). Moreover, improvements appeared to be additive to those from the pangenome. For example, DeepTrio using Giraffe alignments gave the highest calling accuracy, with the number of errors decreasing from 21,700 (single sample calling) to 10,098 (trio calling) for HG005.

A pangenome variant resource

To create a community resource to aid the development of methods and the analyses of pangenome-based population genetics, we used Giraffe to align high-coverage short-read data from 3,202 samples of the 1KG19 to our pangenome graph and DeepVariant to call small variants (Methods). The Mendelian consistency computed across 100 trios from those samples was comparable to the one computed across samples from the GIAB truth set, which indicated that comparable call set quality was obtained (Supplementary Figs. 17 and 18). The number of small variants called was consistently higher across different ancestries, with on average 64,000 more variants per sample compared with the 1KG catalogue (Extended Data Fig. 7a). Given that our pangenome-based calls showed improved performance in challenging regions (Fig. 6b), this call set across the 1KG cohort now provides the genetics and genomics communities with AF estimates for complex but medically relevant loci. For example, our approach was able to detect the gene conversion event covering the second exon RHCE, which was observed in about 25% of assembled haplotypes (Fig. 5c and Extended Data Fig. 8). Moreover, for KCNE1, we provide calls and frequencies in a 40 kb region, spanning 3 exons, that could not be previously assessed owing to the presence of a false duplication in GRCh38 (Supplementary Fig. 19; see also an associated article53 for genome-wide analysis of interlocus gene conversion).

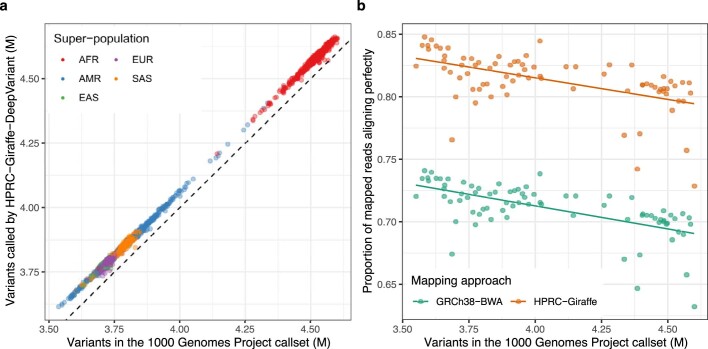

Extended Data Fig. 7. Performance comparison of pangenome-based variant calling and read mapping across populations.

a, Number of variants with at least one alternate allele (i.e. excluding homozygous for the reference allele) for each in the 1KG samples. The number of variants in the 1KG callset (x-axis) are compared to the variants found when aligning reads to the HPRC pangenome and calling variants with DeepVariant (y-axis). Points (samples) are coloured by their super-population label from the 1KG. b, The proportion of mapped reads that align perfectly (y-axis) is shown for a subset of samples from the 1KG, ordered by the number of variants called (x-axis). Two mapping approaches are compared: mapping short reads to GRCh38 with BWA (green); mapping to the HPRC pangenome with Giraffe (orange). The samples were selected to span the x-axis.

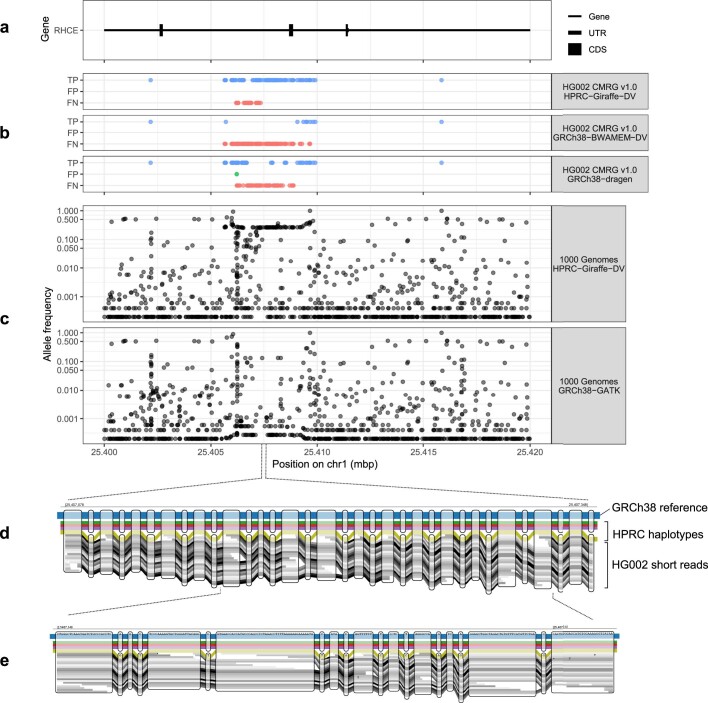

Extended Data Fig. 8. Improved genotyping in the challenging medically-relevant gene RHCE.

a, Gene annotation of part of the RHCE gene. b, Genotyping performance in this region for three approaches (horizontal panels). The top panel, using the HPRC pangenome, shows the best performance with most variants being true positives (TP, blue points) based on the CMRG (v.1.0) truth set while more other methods have a higher number of false negatives (FN, red points). c, Allele frequency across 2,504 unrelated individuals of the 1KG. The HPRC-Giraffe-DeepVariant calls show higher frequencies. In particular, the gene-converted alleles, at about 25.406-25.410 Mbp, are observed at ~25% frequency, similar to estimates from the HPRC haplotypes (Fig. 5a–c). d,e, A pangenomic view of the gene-converted region showing 1 of 4 haplotypes in the HPRC pangenome supporting the non-reference alleles. The inclusion of this haplotype in the HPRC pangenome enables short sequencing reads, here from HG002, to map along this gene-converted haplotype.

SV genotyping

The ability to represent polymorphic SVs is a key advantage of a graph-based pangenome reference. To demonstrate the utility of the sequence-resolved SVs inherent to our pangenome, we used PanGenie54 to genotype the bubbles in the MC graph. We decomposed bubbles into their constituent variant alleles (Supplementary Figs. 20 and 21) and found that 22,133,782 bubbles represented 20,194,117 SNP alleles, 6,848,115 indel alleles and 413,809 SV alleles (Supplementary Fig. 22 and Methods). Of these SV alleles that were non-reference (neither GRCh38 nor T2T-CHM13), 17,720 were observed in biallelic contexts and 396,089 at multiallelic loci with more than 1 non-reference allele, including extreme cases in which all 88 haplotypes showed distinct alleles (Supplementary Fig. 22). To analyse the genotyping performance of PanGenie, we conducted a leave-one-out experiment in which we repeatedly removed one sample from the graph and re-genotyped it using the remaining haplotype paths in the graph and short-read data for the left-out sample (Methods). In line with previous results5,54, we obtained high genotype concordance across all variant types and genomic contexts (Extended Data Fig. 9). Furthermore, we used PanGenie to genotype HG002 and evaluated genotypes based on SVs at challenging medically relevant loci47. This analysis resulted in a precision of 0.74 and an adjusted recall of 0.81 (Methods).

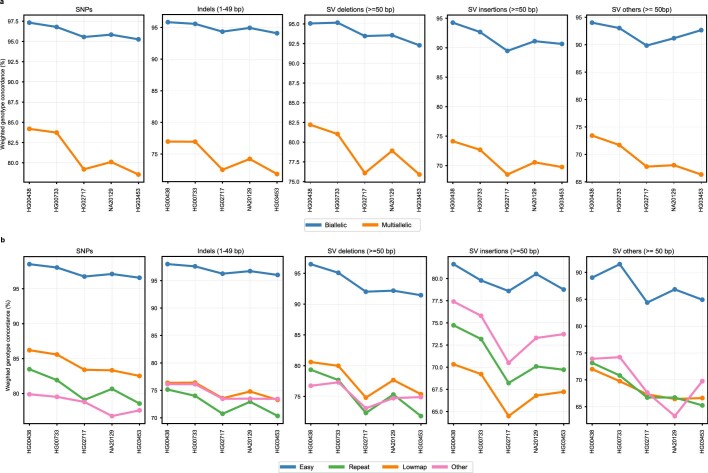

Extended Data Fig. 9. Leave-one-out experiment.

A leave-one-out experiment was conducted by repeatedly removing one of the assembly-samples from the panel VCF and genotyping it based on the remaining samples. Plots show the resulting weighted genotype concordances for different variant allele classes. a, weighted genotype concordances are stratified by graph complexity: biallelic regions of the MC graph include only bubbles with two branches, and multiallelic regions include all bubbles with > 2 different alternative paths defined by the 88 haplotypes. b, results of the same experiment stratified by different genomic regions defined by the GIAB.

Next we genotyped the 3,202 samples from the 1KG19 (Methods). We filtered the resulting SV genotypes using a machine-learning approach5,54 that assessed different statistics, including Mendelian consistency and concordance, to assembly based calls. As a result, we produced a filtered, high-quality subset of SV genotypes containing 28,434 deletion alleles, 84,752 insertion alleles and 26,439 other SV alleles (Supplementary Table 18 and Methods). Many of the alleles not included in the filtered set stemmed from complex, multiallelic loci and were enriched for rare alleles. As independent quality control measures for genotypes in the filtered set, we assessed the Hardy–Weinberg equilibrium values (Supplementary Figs. 23–25) and compared AFs observed across the genotypes of all 2,504 unrelated samples to the respective AFs of the 44 assembly samples (88 haplotypes) contained in the graph. Pearson correlation values of 0.96, 0.93 and 0.90 for the deletion, insertion and other SV alleles, respectively, were observed (Fig. 6c), which indicated the high quality of the genotypes. To quantify our ability to detect additional SVs, we compared our filtered set of genotypes to the HGSVC PanGenie genotypes (v.2.0 ‘lenient’ set)5 and the Illumina-based 1KG SV call set19. We analysed the number of detected SV alleles in each sample (homozygous or heterozygous) and stratified them by genome annotations from GIAB (Fig. 6d and Methods) as well as using our own more detailed annotations (Supplementary Fig. 26). Both of the PanGenie-based call sets detected more SVs (HPRC, 18,483 SVs per sample; HGSVC, 12,997 SVs per sample) than the short-read-based 1KG call set (9,596 SVs per sample), with a particularly substantial advance for deletions <300 bp and insertions (Fig. 6e). The respective average numbers of SVs per haplotype were 12,439 for HPRC, 9,227 for HGSVC and 6,099 for the 1KG calls (Supplementary Fig. 27); that is, a gain of 104.0% for HPRC over 1KG and of 34.8% over HGSVC. This result confirms that short-read-based SV discovery relative to a linear reference genome misses a large proportion of SVs5,6,8. As anticipated, the number of SVs per sample within ‘easy’ genomic regions was consistent across all three call sets, particularly in low-mappability and tandem repeat regions, and the use of our pangenome reference led to substantial gains (Fig. 6d), including for common variants (Fig. 6e and Supplementary Fig. 28). Although the newly identified SVs were harder to genotype because they are primarily located in repetitive regions, genotype concordances were high and close to the ones for known SVs (Supplementary Fig. 29).

Improved tandem repeat representation

VNTRs are particularly variable among individuals and are challenging to access with short reads. The gains in the number of genotyped SVs in VNTRs (Fig. 6d and Supplementary Fig. 28) prompted us to investigate whether our pangenome reference could also improve read mapping in VNTR regions. We first established orthology mapping between haplotypes in our pangenome reference using danbing-tk55. The orthology can be established for 94,452 out of the 98,021 VNTR loci (96.4%) discovered by TRF56. When mapping simulated short reads to GRCh38 with BWA-MEM, the rate of unmapped reads was 6.6–8.5 times greater compared with mapping to the MC graph with Giraffe (Extended Data Fig. 10a, Supplementary Fig. 30 and Supplementary Table 19). The true negatives were on average 1.9% higher than the GRCh38 approach, and the true positives were on average 0.087% higher. The graph approach also reduced false negatives by 2.1-fold. Read depth over a locus is correlated with the copy number of a duplication and we evaluated how well length variants in VNTR regions can be estimated using either the MC graph or GRCh38. The graph approach performed better for 80% of the loci (48,085 out of 60,386) and increased the median r2 from 0.58 to 0.70 (Supplementary Fig. 31).

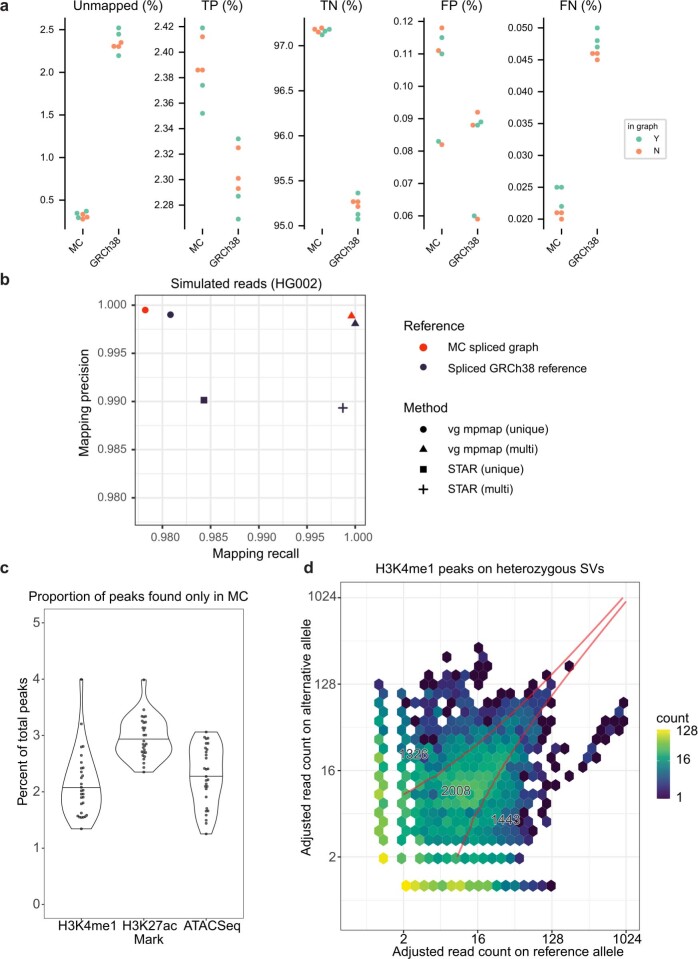

Extended Data Fig. 10. Additional applications supported by the pangenome reference.

a, Performance of read alignment in VNTR regions using the MC graph versus GRCh38. All statistics are expressed relative to the total number of reads simulated from each genome. b, Performance of RNA-seq read alignment. Mapping rate and false discovery rate are stratified by mapping quality producing the curves shown. The MC graph is compared to a graph derived from the 1KG variant calls and to GRCh38. Each reference is augmented with splice junctions. vg mpmap was used to map to the graphs, and STAR was used to map to the linear reference. c, Proportion of all ChIP-seq peaks that are called only in the MC graph. Each data point represents samples that were assayed for H3K4me1, H3K27ac histone marks or chromatin accessibility using ATAC-seq. d, H3K4me1 peaks that overlap an SV for which the sample is heterozygous. The reads within the peak are partitioned between the SV or reference allele. The red boundary represents regions where a binomial test assigns a peak to the SV allele, both alleles, or the reference allele.

Improved RNA sequencing mapping

To evaluate the benefit of our pangenome reference on transcriptomics, we simulated RNA sequencing (RNA-seq) reads and mapped them to a pangenome and to a standard reference genome (Methods). The pangenome-based pipeline using vg mpmap57 achieved significantly lower false mapping rates than a linear reference pipeline using either vg mpmap or STAR58 (Extended Data Fig. 10b). Compared with the linear reference pipelines, the pangenome pipeline also showed reduced allelic bias and increased mapped coverage on heterozygous variants, which could benefit studies of allele-specific expression (Supplementary Fig. 32). With real sequencing data, mapping rates were more difficult to interpret in the absence of a ground truth (Supplementary Fig. 33). Instead, we focused on the correlation in exon coverage to independent PacBio long-read Iso-Seq data. The analysis showed that the correlation was highest when mapping to a spliced pangenome graph derived from the MC graph (Supplementary Fig. 34). The pangenome pipeline showed a modest increase in correlation over the linear reference pipelines (0.006–0.011). In addition, mapping the simulated reads to the MC graph led to improved gene expression estimates relative to the linear GRCh38, regardless of whether alternative contigs were included in GRCh38 (Supplementary Fig. 35).

Improved chromatin immunoprecipitation and sequencing analysis

We used the pangenome to re-analyse H3K4me1 and H3K27ac data from chromatin immunoprecipitation and sequencing (ChIP-seq) and assay for transposase-accessible chromatin with high-throughput sequencing (ATAC-seq) of monocyte-derived macrophages from 30 individuals with African ancestry or European ancestry59. Overall, we observed a net increase in the number of peak calls, whereby, on average, 2–3% of peaks were found only when using the MC pangenome (Extended Data Fig. 10c). Moreover, the newly found peaks were replicated in more samples than expected by chance (Supplementary Fig. 36). In addition, we recovered epigenomic features that were specific to SV alleles not present in GRCh38 (termed non-reference). For example, across all H3K4me1 datasets, we assigned 1,326 events to the non-reference SV allele, 1,443 to the reference allele and 2,008 to both alleles within heterozygous SVs (Extended Data Fig. 10d), with some replicated multiple times across samples (Supplementary Fig. 37). Of these, there were 194 SVs with peaks that were observed only in African ancestry genomes, 150 that were observed only in European ancestry genomes and 216 that were observed in both African and European ancestry genomes. As expected, rare alleles were enriched for ancestry-specific events (Supplementary Fig. 37).

Discussion

We have publicly released 94 de novo haplotype assemblies from a diverse group of 47 individuals. This provides a large set of fully phased human genome assemblies and outperforms earlier efforts on many levels of assembly quality5,16,60. For example, compared with Ebert et al.5, the average median base level accuracy is nearly an order of magnitude higher, the N50 contiguity is nearly double and the structural accuracy is higher33. These improvements are the result of recent improvements in de novo assembly driven both by better sequencing technology and coordinated innovations in assembly algorithms20,21. To validate assembly structural accuracy, we developed a new pipeline that maps low error, long reads to each diploid assembly to support the predicted haplotypes. This pipeline indicated that more than 99% of each assembly, and greater than 90% of the assembled sequence representing highly repetitive arrays, was structurally correct. Some challenges around loci that harbour copy number polymorphisms and/or inversions remained33. Although the focus of this effort was to build a reference resource, highly accurate haplotype-resolved assemblies enabled us to access previously inaccessible regions, highlighting new forms of genetic variation and providing new insights into mutational processes such as interlocus gene conversion53.

Accompanying these assemblies are 94 sets of Ensembl gene annotations, representing a large collection of de novo assembled human transcriptome annotations. Each transcriptome annotation is highly complete, particularly for protein-coding transcripts. These putative transcriptome annotations enabled us to analyse sequence-resolved CNVs. In detail, we assembled genic CNVs (mostly singletons) for 1,115 different protein-coding genes, confirming earlier mapping-based analyses that predicted that the majority of rare genic CNVs occur outside known SDs31. These CNV genes accounted for 0.6–4.4 Mb of additional genic sequences per haplotype compared with GRCh38. These contained genes known to have CNVs associated with human health, including amylase61 (four to ten copies), β-defensin62 (three to seven copies, DEFB107A) and NOTCH2NLC–NOTCH2NLB63 (one additional copy).

The pangenomes presented here are both a set of individual haploid genome assemblies and an alignment of these assemblies. The combination can be efficiently described as a variation graph14,64. A new set of exchange formats for pangenomics, including extensions of the graphical fragment format (GFA) that encode variation graphs, are emerging34. An associated article65 to this work demonstrated that the pangenomes presented here can be losslessly stored using a compressed, binary representation of GFA in just 3–6 GB despite representing more than 282 billion bases of individual sequence, with strongly sublinear scaling as new genomes are added. Creating pangenome graphs is an active research topic, so we developed multiple pipelines, and details of these methods are further explored in companion papers35,36. We found concordance between the different construction approaches used here, whereby the MC and PGGB pangenomes contained nearly the same number of small variants and SVs of various types. Furthermore, these encoded pangenome variants showed high levels of agreement with existing linear reference-based methods for variant discovery, particularly within the non-repetitive fraction of the genome. Our study of complex and medically relevant loci showed that the pangenomes faithfully recapitulated existing knowledge and will enable future efforts to study the role of complex variation in human disease. Further work will be required to more comprehensively identify medically relevant complex SVs and to ensure the accuracy of each allele represented in the pangenome.

Where the pangenome graphs differ is principally in how they handle CNV sequences. The PGGB method will frequently merge CNVs, whereas the MC graphs represent CNV copies as independent subgraphs. Both approaches have merits, and which approach to favour will take further experimentation and community input, and may vary by the specific application. The PGGB method retained all centromeric and satellite sequences, whereas the MC graph pruned much of this sequence. This made it practical with current methods to use the MC graphs for read alignment applications. However, pruning these sequences is not a satisfactory solution. Longer term, more work is needed to determine how best to align and represent these large repeat arrays within pangenomes, particularly as T2T assembly becomes commonplace and these arrays therefore completed. Furthermore, although the PGGB graph retained centromeric and satellite sequences, in principle, by enabling analysis of previously inaccessible parts of the pangenome, our initial population-genetic analysis of these regions (Methods) leaves open questions about assembly accuracy and alignment, especially in areas of the genome where mutation rates are thought to be an order of magnitude greater66. This suggests that significant care must be taken when studying them, and new methods may need to be developed to fully understand and characterize this component of the human pangenome.

A near-term application of pangenome references will be to improve reference-based sequence mapping workflows. In these workflows, the pangenome can act as a drop-in replacement for existing references, with the read mappings projected from the pangenome space back onto an existing linear reference for downstream processing. This is how the Giraffe–DeepVariant workflow functions: DeepVariant, the variant caller, never needs to consider the complexity of the pangenome, but the workflow benefits from a mapping step that accounts for sequences that are missing from the linear reference. Making the switch to using pangenome mapping is not significantly more computationally expensive49 and resulted in an average 34% reduction in false-positive and false-negative errors compared with using the standard reference methods (Supplementary Fig. 38). These benefits were also greatest at complex loci47. Pangenomes not only improve variant calling but also improve transcript mapping accuracy57 and detection of ChIP-seq peaks67.

SVs have been mostly excluded from short-read studies because methods to genotype them using a linear reference have limited accuracy and sensitivity. Previous short-read, linear reference studies have discovered 7,500–9,500 SVs per sample19,68, whereas long-read sequencing efforts have routinely discovered around 25,000. Ebert et al.5 showed that using PanGenie, a pangenomic approach, with 32 samples, a subset of these variants could be genotyped in short-read genomes (about 13,000 genotyped on average, ranging from 12,000 to 15,000 per sample). Using the same PanGenie method, the HPRC pangenome increases this to around 18,500 (ranging from 16,900 to 24,900) per sample, enabling the genotyping of the substantial majority of SVs discovered using long-reads per sample. The draft pangenome therefore delivers better SV calling than previous approaches, extracting latent information from short-read samples that are already available. So, in the future, the pangenome will enable the inclusion of tens of thousands of additional SV alleles into genome-wide association studies. Looking beyond short reads, in the future, the combination of the pangenome and low-cost long-read sequencing should prove to be a potent combination for comprehensive SV genotyping.

These new pangenomic workflows could benefit individuals of different ancestries differently. For read mapping and small variant calling, we observed a consistent improvement across individuals (Extended Data Fig. 7). Moreover, the pangenome might improve SV genotyping differently across individuals owing to the stronger divergence of the alleles from the reference. In the 1KG cohort, we observed that the genotyped samples clustered by super-population labels (Extended Data Fig. 11), which would suggest different levels of detection bias that are mitigated with the pangenome. However, we caution that the composition of the samples underlying the pangenome relative to the composition of the set of samples genotyped could potentially influence these results; an analysis with more samples is warranted.

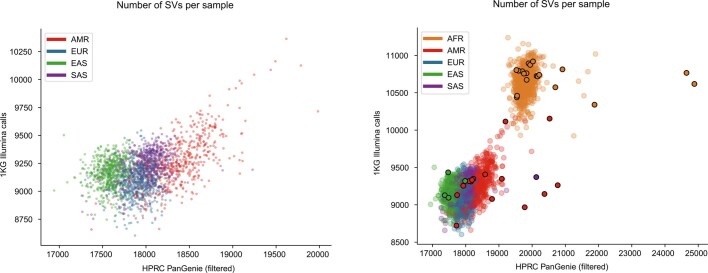

Extended Data Fig. 11. Number of SVs per sample in the HPRC PanGenie filtered set as well as the 1KG Illumina calls for all 3,202 1KG samples.

Samples are coloured by superpopulation. The left plot excludes the african superpopulation, while the right plot shows the same results including african samples and including the assembly samples present in the graph (marked by a black circle).

The openly accessible, diverse assemblies and pangenome graphs we present here form a draft of a pangenome reference. There are many remaining challenges to growing and refining this reference. For example, assembly reliability analysis revealed roughly an order of magnitude more erroneously assembled sequences in the HPRC assemblies than in the T2T-CHM13 complete assembly. Similarly, in a companion analysis, Strand-seq data from a subset of assemblies revealed 6–7 Mb of incorrectly oriented sequence per haplotype33, which indicates that there is room to structurally improve the assemblies. Furthermore, despite being predicted to have less than 1 base error per around 200,000 assembled bases, base level sequencing errors are still an issue. For example, we identified more than a dozen apparent frameshifts and nonsense mutations per genome annotation that are probably the result of sequencing errors. The cohort we present is also relatively small notwithstanding the significant effort to generate the underlying long-read sequencing resource. Our near-term goal is to expand the pangenome to a diverse cohort of 350 individuals (which should capture most common variants), to push towards T2T genomes for this cohort (to properly represent the entire genome in almost all individuals) and to refine the pangenome alignment methods (so that telomere-to-telomere alignment is possible, capturing more complex regions of the genome). This will give us a more comprehensive representation of all types of human variation.

We acknowledge that references generated from the 1KG samples alone are insufficient to capture the extent of sequence diversity in the human population. To ensure that we are able to maximize our surveys of sample diversity while abiding by principles of community engagement and avoiding extractive practices14,15, we will broaden our efforts to recruit new participants to improve the representation of human genetic diversity. A richer human reference map promises to improve our understanding of genomics and our ability to predict, diagnose and treat disease. A more diverse human reference map should also help ensure that the eventual applications of genomic research and precision medicine are effective for all populations. We recognize that the value of this project will partly be in the future establishment of new standards for how we capture variant diversity, the opportunity to disseminate science into diverse communities and continued efforts to engage with diverse voices in this ambitious goal to build a common global reference resource. The methods we are developing should prove valuable for other species. Indeed, other groups are pioneering such efforts69,70. In parallel with our efforts to obtain a more comprehensive collection of diverse and highly accurate human reference genomes, we anticipate further optimization and rapid improvement of the pangenome reference, enabling an increasingly broad set of applications and use cases for both the research and clinical communities.

Methods

Sample selection

We identified parent–child trios from the 1KG in which the child cell line banked within the NHGRI Sample Repository for Human Genetic Research at the Coriell Institute for Medical Research was listed as having zero expansions and two or fewer passages, and rank-ordered representative individuals as follows. Loci with MAFs less than 0.05 were removed. MAFs were measured in the full cohort (that is, 2,504 individuals, 26 subpopulations) regardless of each individual’s subpopulation labelling. For each chromosome, principal component analysis (PCA) was performed for dimension reduction. This resulted in a matrix with 2,200 features, which was then centred and scaled using smartPCA normalization. The matrix was further reduced to 100 features through another round of PCA.

We defined the representative individuals of a subpopulation as those who are similar to the other members in the group (which, in this scenario, is the subpopulation they belong to), as well as different from individuals outside the group. Group is defined by previous 1KG population labels (for example, ‘Gambian in Western Division’). We did this as follows. For each sample, we first calculated the intragroup distance dintra, which is the average of L2-norms between the sample and samples of the same subpopulation. The intergroup distance, dinter, was similarly defined as the average of L2-norms between the sample and samples from all other subpopulations. The L2-norms were derived in the feature space of the PCA. The score of this sample was then defined as 10 × dintra + dinter/(n – 1), where n is the number of subpopulations. For each subpopulation, if fewer than three trios were available, all were selected. Otherwise, trios were sorted by ranking children with max(paternalrank, maternalrank), where paternalrank and maternalrank are the respective ranks of each parent’s score, selecting the three trios with a maximum value. We ranked by parent scores because during the year 1 effort, the child samples did not have sequencing data and therefore had to be represented by the parents.

Ideally, we would have selected the same number of candidates from each subpopulation and have an equal number of candidates from both sexes. To correct for imbalances, we applied the following criteria for each subpopulation’s candidate set: (1) when the sex was unbalanced (that is, off by more than one sample), we tried to swap in the next-best candidate of the less represented sex or did nothing if this was not possible; (2) if a subpopulation had fewer individuals than the desired sample selection size (that is, all candidates were selected), their unused slots were distributed to other unsaturated subpopulations. The latter choice is arbitrary but should have little impact on the overall results.

The genetic information used in this study was derived from publicly available cell lines from the NHGRI Sample Repository for Human Genetic Research and the NIGMS Human Genetic Cell Repository at the Coriell Institute for Medical Research. Therefore, this study is exempt from human research approval as the proposed work involved the collection or study of data or specimens that are already publicly available.

Sequencing

Cell line expansion and banking

Lymphoblastoid cell lines (LCLs) used for sequencing from the 1KG collection (Supplementary Table 1) were obtained from the NHGRI Sample Repository for Human Genetic Research at the Coriell Institute for Medical Research. HG002 (GM24385) and HG005 (GM24631) LCLs were obtained from the NIGMS Human Genetic Cell Repository at the Coriell Institute for Medical Research. All expansions for sequencing were derived from the original expansion culture lot to ensure the lowest possible number of passages and to reduce overall culturing time. Cells used for HiFi, Nanopore, Omni-C, Strand-seq, 10x Genomics and Bionano production and for g-banded karyotyping and Illumina Omni2.5 microarray were expanded to a total culture size of 4 × 108 cells, which resulted in a total of five passages after cell line establishment. Cells were split into production-specific sized vials as follows: HiFi, 2 × 107 cells; Nanopore, 5 × 107 cells; Omni-C, 5 × 106 cells; Strand-seq, 1 × 107 cells; 10x Genomics, 4 × 106 cells; and Bionano, 4 × 106 cells. Cells for Strand-seq were stored in 65% RPMI-1640, 30% FBS and 5% DMSO and frozen as viable cultures. All other cells were washed in PBS and flash-frozen as dry cell pellets. Cells used for ONT-UL production were separately expanded from the original expansion culture lot to a bank of five vials of 5 × 106 cells. A single vial was subsequently expanded to a total culture size of 4 × 108 cells, which resulted in a total of eight passages. Cells were also reserved for g-banded karyotyping and Illumina Omni2.5 microarray.

Karyotyping and microarray

G-banded karyotype analysis was performed on 5 × 106 cells collected at passage five (for HiFi, Nanopore and Omni-C) and passage eight (for ONT-UL). For all cell lines, 20 metaphase cells were counted, and a minimum of 5 metaphase cells were analysed and karyotyped. Chromosome analysis was performed at a resolution of 400 bands or greater. A pass/fail criterion was used before cell lines proceeded to sequencing. Cell lines with normal karyotypes (46,XX or 46,XY) or lines with benign polymorphisms that are frequently seen in apparently healthy individuals were classified as passes. Cell lines were classified as failures if two or more cells harboured the same chromosomal abnormality. DNA used for microarray was isolated from frozen cell pellets (3 × 106 to 7 × 106 cells) using a Maxwell RSC Cultured Cells DNA kit on a Maxwell RSC 48 instrument (Promega). DNA was genotyped at the Children’s Hospital of Philadelphia’s Center for Applied Genomics using an Infinium Omni2.5-8 v.1.3 BeadChip (Illumina) on an iScan System instrument (Illumina).

HiFi sequencing

PacBio HiFi sequencing was distributed between two centres: Washington University in St. Louis and the University of Washington. We describe the protocols used at each centre separately.

Washington University in St. Louis