Abstract

Identifying informative predictors in a high dimensional regression model is a critical step for association analysis and predictive modeling. Signal detection in the high dimensional setting often fails due to the limited sample size. One approach to improving power is through meta-analyzing multiple studies which address the same scientific question. However, integrative analysis of high dimensional data from multiple studies is challenging in the presence of between-study heterogeneity. The challenge is even more pronounced with additional data sharing constraints under which only summary data can be shared across different sites. In this paper, we propose a novel data shielding integrative large–scale testing (DSILT) approach to signal detection allowing between-study heterogeneity and not requiring the sharing of individual level data. Assuming the underlying high dimensional regression models of the data differ across studies yet share similar support, the proposed method incorporates proper integrative estimation and debiasing procedures to construct test statistics for the overall effects of specific covariates. We also develop a multiple testing procedure to identify significant effects while controlling the false discovery rate (FDR) and false discovery proportion (FDP). Theoretical comparisons of the new testing procedure with the ideal individual–level meta–analysis (ILMA) approach and other distributed inference methods are investigated. Simulation studies demonstrate that the proposed testing procedure performs well in both controlling false discovery and attaining power. The new method is applied to a real example detecting interaction effects of the genetic variants for statins and obesity on the risk for type II diabetes.

Keywords: Debiasing, Distributed learning, False discovery rate, High dimensional inference, Integrative analysis, Multiple testing

1. Introduction

High throughput technologies such as genetic sequencing and natural language processing have greatly increased the number and types of predictors available for predictive modeling. A critical step in developing accurate and robust prediction models is to differentiate true signals from noise. A wide range of high dimensional inference procedures have been developed in recent years to achieve variable selection, hypothesis testing and interval estimation (Van de Geer et al., 2014; Javanmard and Montanari, 2014; Zhang and Zhang, 2014; Chernozhukov et al., 2018, e.g.). However, regardless of the procedure, precise high dimensional inference is often infeasible in practical settings where the available sample size is too small relative to the number of predictors. One approach to improving precision and boosting power is to meta-analyze multiple studies that address the same underlying scientific problem. This approach has been widely adopted in many scientific fields, including clinical trials, education, policy evaluation, ecology, and genomics (DerSimonian, 1996; Allen et al., 2002; Card et al., 2010; Stewart, 2010; Panagiotou et al., 2013, e.g.), as a tool for evidence-based decision making. Meta-analysis is particularly valuable in the high dimensional setting. For example, meta-analysis of high dimensional genomic data from multiple studies has uncovered new disease susceptibility loci for a broad range of diseases including Crohn’s disease, colorectal cancer, childhood obesity and type II diabetes (Houlston et al., 2008; Bradfield et al., 2012; Franke et al., 2010; Zeggini et al., 2008, e.g.).

Integrative analysis of high dimensional data, however, is highly challenging, especially for biomedical studies, for several reasons. First, between-study heterogeneity arises frequently due to differences in patient populations and data acquisition. Second, due to privacy and legal constraints, individual level data often cannot be shared across study sites; instead, only summary statistics can be passed between researchers. For example, patient level genetic data linked with clinical variables extracted from the electronic health records (EHR) of several hospitals are not allowed to leave the firewall of each hospital. Hence, in addition to high dimensionality, both heterogeneity and data sharing constraints must be addressed to perform meta-analysis of multiple EHR–linked genomic studies.

The aforementioned data sharing mechanism is referred to as DataSHIELD (Data aggregation through anonymous Summary–statistics from Harmonised Individual levEL Databases) in Wolfson et al. (2010), and has been widely accepted as a useful strategy to protect patient privacy (Jones et al., 2012; Doiron et al., 2013). Several statistical approaches to integrative analysis under the DataSHIELD framework have been developed for low dimensional settings (Gaye et al., 2014; Zöller et al., 2018; Tong et al., 2020, e.g.). In the absence of cross-site heterogeneity, distributed high dimensional estimation and inference procedures that can accommodate DataSHIELD constraints have also been developed (Lee et al., 2017; Battey et al., 2018; Jordan et al., 2019, e.g.). Recently, Cai et al. (2019a) proposed an integrative high dimensional sparse regression approach that accounts for heterogeneity. However, their method is limited to parameter estimation and variable selection. To the best of our knowledge, no hypothesis testing procedure currently exists that identifies significant predictors with false discovery error control under the setting of interest. In this paper, we propose a data shielding integrative large–scale testing (DSILT) procedure to fill this gap.

1.1. Problem statement

Suppose there are independent studies and the study contains observations on an outcome and a -dimensional covariate vector , where can be binary or continuous, and without loss of generality we assume that contains 1 as its first element. Specifically, data from the study consist of independent and identically distributed random vectors, . Let and . We assume a conditional mean model and that the true model parameter is the minimizer of the population loss function:

where . When , this corresponds to a logistic model if is binary and a quasi-binomial model if is a continuous probability score sometimes generated from an EHR probabilistic phenotyping algorithm. One may take for some non-negative such as the count (or log-count) of a diagnostic code in EHR studies. As detailed in Assumptions 2-3 of Section 3.1, our procedure allows for a broad range of models provided that is smooth and the residuals are sub-Gaussian, although not all generalized linear models satisfy these assumptions.
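For the logistic case mentioned above, a minimal sketch of the empirical loss and its gradient is given below. It assumes the standard quasi-binomial form ℓ(y, η) = −yη + log(1 + e^η) with η = xᵀβ; this form is our assumption, since the displayed loss is not reproduced here. The same expression is used unchanged when y is a continuous probability score in [0, 1]. The function names are illustrative only.

```python
import numpy as np


def logistic_loss(beta, X, y):
    """Empirical loss (1/n) * sum_i [-y_i * eta_i + log(1 + exp(eta_i))], eta = X @ beta.

    y may be binary (logistic model) or a probability score in [0, 1]
    (quasi-binomial model); the formula is the same in both cases.
    """
    eta = X @ beta
    # np.logaddexp(0, eta) evaluates log(1 + exp(eta)) without overflow
    return np.mean(-y * eta + np.logaddexp(0.0, eta))


def logistic_grad(beta, X, y):
    """Gradient of logistic_loss: -(1/n) * X^T (y - g(X beta)), with g the expit link."""
    g = 1.0 / (1.0 + np.exp(-(X @ beta)))
    return -X.T @ (y - g) / X.shape[0]


rng = np.random.default_rng(0)
n = 2000
# The first column is the constant 1, matching the convention that X contains 1.
X = np.column_stack([np.ones(n), rng.standard_normal((n, 2))])
beta_true = np.array([0.2, 1.0, -0.5])
# A continuous "probability score" outcome equal to the true conditional mean.
y_score = 1.0 / (1.0 + np.exp(-(X @ beta_true)))
```

Because `y_score` equals the conditional mean exactly, the gradient vanishes at `beta_true`, so `beta_true` minimizes this convex empirical loss; with a binary outcome the minimizer is only close to the true coefficient.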

Under the DataSHIELD constraints, individual–level data are stored at each data computer (DC), and only summary statistics are allowed to be transferred from the distributed DCs to the analysis computer (AC) at the central node. Our goal is to develop procedures under the DataSHIELD constraints for testing

| (1) |

simultaneously for to identify , while controlling the false discovery rate (FDR) and false discovery proportion (FDP), where is a user-specified subset with and denotes the size of any set . Here indicates that is independent of given all remaining covariates. To ensure effective integrative analysis, we assume that are sparse and share similar support. Specifically, we assume that and, for , where , , , and .

1.2. Our contribution and the related work

We propose in this paper a novel DSILT procedure with FDR and FDP control for the simultaneous inference problem (1). The proposed testing procedure consists of three major steps: (I) derive an integrative estimator on the AC using locally obtained summary statistics from the DCs and send the estimator back to the DCs; (II) construct a group effect test statistic for each covariate through an integrative debiasing method; and (III) develop an error rate controlled multiple testing procedure based on the group effect statistics.

The integrative estimation approach in the first step is closely related to the group inference methods in the literature. Denote by , ,

The literature on group LASSO and multi-task learning (Huang and Zhang, 2010; Lounici et al., 2011, e.g.) has established that, under the setting introduced in Section 1.1, the group LASSO estimator with tuning parameter , benefits from the group structure and attains the optimal rate of convergence. In this paper, we adopt the same structured group LASSO penalty for integrative estimation, but under data sharing constraints. Recently, Mitra et al. (2016) proposed a group structured debiasing approach for the integrative analysis setting, but restricted their analysis to linear models and required the precision matrices of the covariates to be group-sparse across the distributed datasets. In contrast, our method accommodates non-linear models and imposes no sparsity or homogeneity structure on the covariate distributions of the different local sites (see Assumption 1 in Section 3.1).

The second step of our method, i.e., the construction of the test statistics for each of the hypotheses, relies on group debiasing of the above integrative estimator. For debiasing of M–estimation, nodewise LASSO regression was employed in earlier work (Van de Geer et al., 2014; Janková and Van De Geer, 2016, e.g.), while Dantzig selector type approaches were proposed more recently (Belloni et al., 2018; Caner and Kock, 2018, e.g.). We develop in this article a cross–fitted group Dantzig selector type debiasing method, which requires weaker inverse Hessian assumptions (see Assumption 1 in Section 3.1) than the aforementioned approaches. In addition, the proposed debiasing step achieves a proper bias rate under the same model sparsity assumptions as the ideal individual–level meta–analysis (ILMA) method. Compared with One–shot distributed inference approaches (Tang et al., 2016; Lee et al., 2017; Battey et al., 2018), the proposed method additionally accounts for model heterogeneity and group inference; it further reduces the bias rate by sending the integrative estimator back to the DCs to derive updated summary statistics, which in turn benefits the subsequent multiple testing procedure. See Section 3.4 for detailed comparisons.

As the last step, simultaneous inference with theoretical error rate control is performed based on the group effect statistics. The test statistics are shown to be asymptotically chi-square distributed under the null, and the proposed multiple testing procedure asymptotically controls both the FDR and FDP at the pre-specified level. Multiple testing for high dimensional regression models has recently been studied in the literature (Liu and Luo, 2014; Xia et al., 2018b,a; Javanmard et al., 2019, e.g.). Our testing step for FDR control as a whole differs considerably from these existing procedures in the following aspects. First, the proposed test statistics, the key input to the FDR control procedure, are new, and the resulting estimation of the false discovery proportion differs fundamentally from that in the literature. Second, we consider a more general M–estimation setting that can accommodate different types of outcomes. Third, we allow heterogeneity in both the covariates and the coefficients. Fourth, the existing testing approaches developed for individual-level data are not suitable for the DataSHIELD framework. Last, because of the complicated dependence structures among the integrative chi-squared statistics under the DataSHIELD constraints, the theoretical derivations are technically much more involved. Hence, our proposal makes a useful addition to the general toolbox of simultaneous regression inference.

We demonstrate via numerical experiments that the proposed DSILT procedure attains good power while maintaining error rate control. In addition, we show that our new approach outperforms existing distributed inference methods and performs similarly to the ideal ILMA approach.

1.3. Outline of the paper

The rest of this paper is organized as follows. We detail the DSILT approach in Section 2. In Section 3, we present an asymptotic analysis of the false discovery control of our method and compare it with the ILMA and One–shot approaches. In Section 4, we summarize the finite sample performance of our approach and other methods in simulation studies. In Section 5, we apply our proposed method to a real example. Proofs of the theoretical results, additional technical lemmas and additional simulation results are collected in the Supplementary Material.

2. Data shielding integrative large–scale testing procedure

In this section, we describe the proposed method in detail. We start with some notation that will be used throughout the paper.

2.1. Notation

For any integer , any vector , and any set , denote by , the vector with its entry removed from , the norm of and . For any -dimensional vectors and , let , , , and . Let be the unit vector with element being 1 and remaining elements being 0 and . Denote by and the and norm of respectively. For any –fold partition of , denoted by , let , , . For any index set , and . Let . Denote by and the true values of and respectively. For any and , define the sample measure operators and , and the population measure operator , for all integrable functions parameterized by or .

For any given , we define , , and the residual . Similar to Cai et al. (2019b) and Ma et al. (2020), given coefficient , we can express in an approximately linear form:

where is the remainder term and . For a given observation set and coefficient , we let , , . Note that for the logistic model, we have , and and can be viewed as the covariates and responses adjusted for the heteroscedasticity of the residuals.
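To make the approximately linear representation concrete for the logistic model, the sketch below forms heteroscedasticity-adjusted covariates and responses at a given coefficient. It assumes the usual working-response construction from iteratively reweighted least squares, X̃ = √ġ · X and Ỹ = √ġ · Xβ + (Y − g(Xβ))/√ġ with ġ = g(1 − g); the exact expressions in the text are not reproduced here, so treat this as an illustration rather than the paper's definition. Least squares of Ỹ on X̃ then reproduces one Newton step from β, which is why these quantities behave like covariates and responses of an approximately linear model.

```python
import numpy as np


def expit(t):
    """Logistic link g(t) = 1 / (1 + exp(-t))."""
    return 1.0 / (1.0 + np.exp(-t))


def adjusted_design(X, y, beta):
    """Heteroscedasticity-adjusted covariates and responses at coefficient beta.

    With eta = X beta, g = expit(eta) and w = g (1 - g) (the logistic g'):
        X_tilde = sqrt(w) * X
        y_tilde = sqrt(w) * eta + (y - g) / sqrt(w)
    """
    eta = X @ beta
    g = expit(eta)
    w = g * (1.0 - g)
    sw = np.sqrt(w)
    X_tilde = sw[:, None] * X
    y_tilde = sw * eta + (y - g) / sw
    return X_tilde, y_tilde


rng = np.random.default_rng(1)
X = np.column_stack([np.ones(500), rng.standard_normal((500, 2))])
y = (rng.random(500) < expit(X @ np.array([0.1, 0.8, -0.4]))).astype(float)
b0 = np.zeros(3)
X_t, y_t = adjusted_design(X, y, b0)
ls = np.linalg.lstsq(X_t, y_t, rcond=None)[0]  # least squares on the adjusted data
g0 = expit(X @ b0)
# One Newton step for the logistic log-likelihood, started at b0.
newton = b0 + np.linalg.solve(X.T @ ((g0 * (1 - g0))[:, None] * X), X.T @ (y - g0))
```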

2.2. Outline of the proposed testing procedure

We first outline in this section the DSILT procedure in Algorithm 1 and then study the details of each key step later in Sections 2.3 to 2.5. The procedure involves partitioning of into folds for , where without loss of generality we let be an even number. With a slight abuse of notation, we write , , , and .

Algorithm 1.

DSILT Algorithm.

Input: at the DC for .

1. For each , fit integrative sparse regression under DataSHIELD with :
   (a) At the DC, construct cross-fitted summary statistics based on the local LASSO estimator, and send them to the AC;
   (b) Obtain the integrative estimator at the AC and send it back to each DC.
2. Obtain debiased group test statistics:
   (a) For each , at the DC, obtain the updated summary statistics based on and , and send them to the AC;
   (b) At the AC, construct cross-fitted debiased group estimators .
3. Construct a multiple testing procedure based on the test statistics from Step 2.

2.3. Step 1: Integrative sparse regression

As a first step, we fit integrative sparse regression under DataSHIELD with following a strategy similar to that of Cai et al. (2019a). To carry out Step 1(a) of Algorithm 1, we split the index set into folds . For and , we construct a local LASSO estimator with tuning parameter . With , we then derive summary data , where

| (2) |
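A DC-side sketch of Step 1(a) follows. It assumes that the summary data in (2) for fold k consist of the weighted Gram matrix A_k = X̃ᵀX̃/n_k and the cross-product b_k = X̃ᵀỸ/n_k, evaluated on fold k at a local estimate fitted on the remaining folds. The helper `ridge_logistic` is a hypothetical stand-in (ridge-penalized Newton iterations), not the local LASSO used by the method; it is there only to keep the example self-contained. Only the p × p and p × 1 arrays would leave the DC.

```python
import numpy as np


def expit(t):
    return 1.0 / (1.0 + np.exp(-t))


def ridge_logistic(X, y, lam=1e-2, iters=25):
    """Stand-in local estimator (ridge-penalized Newton), NOT the local LASSO.

    For simplicity the intercept is penalized as well.
    """
    n, p = X.shape
    beta = np.zeros(p)
    for _ in range(iters):
        g = expit(X @ beta)
        H = X.T @ ((g * (1 - g))[:, None] * X) / n + lam * np.eye(p)
        grad = -X.T @ (y - g) / n + lam * beta
        beta -= np.linalg.solve(H, grad)
    return beta


def cross_fitted_summaries(X, y, K=2, seed=0, fit=ridge_logistic):
    """Hypothetical DC-side routine returning [(A_k, b_k)] for k = 1..K.

    beta_k is fitted on all folds except k; A_k and b_k are evaluated on fold k:
        A_k = X~^T X~ / n_k,  b_k = X~^T y~ / n_k = A_k beta_k + X^T (y - g) / n_k.
    """
    n = X.shape[0]
    folds = np.array_split(np.random.default_rng(seed).permutation(n), K)
    out = []
    for k in range(K):
        test = folds[k]
        train = np.concatenate([folds[j] for j in range(K) if j != k])
        beta_k = fit(X[train], y[train])
        g = expit(X[test] @ beta_k)
        w = g * (1 - g)
        A = X[test].T @ (w[:, None] * X[test]) / len(test)
        b = A @ beta_k + X[test].T @ (y[test] - g) / len(test)
        out.append((A, b))
    return out  # only these summary arrays are sent to the AC
```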

In Step 1(b) of Algorithm 1, for , we aggregate the sets of summary data at the central AC and solve a regularized quasi–likelihood problem to obtain the integrative estimator with tuning parameter :

| (3) |

These sets of estimators, , are then sent back to the DCs. The summary statistics introduced in (2) can be viewed as the covariance terms of with the local LASSO estimator plugged in to adjust for the heteroscedasticity of the residuals. Cross–fitting is used to remove the dependence between the observed data and the fitted outcomes, a strategy frequently employed in the high dimensional inference literature (Chernozhukov et al., 2016, 2018). As in Cai et al. (2019a), the integrative procedure can also be viewed as a second order one–step approximation to the individual–level data loss function, initialized at the local LASSO estimators. In contrast to Cai et al. (2019a), we introduce a cross–fitting procedure at each local DC to reduce fitting bias, which in turn relaxes their uniform boundedness assumption on for each and , i.e., Condition 4(i) of Cai et al. (2019a).
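On the AC side, Step 1(b) combines the summaries into a penalized quadratic problem. The sketch below assumes that (3) takes the form Σ_m [½ β^(m)ᵀ A_m β^(m) − β^(m)ᵀ b_m] + λ Σ_j ‖(β_j^(1), …, β_j^(M))‖₂, i.e., a second order approximation for each study plus a group LASSO penalty that ties covariate j together across the M studies; study-size weights and the exact scaling in (3) are omitted. It is solved here by proximal gradient descent, which is our generic choice rather than a solver prescribed by the text. The intercept row is left unpenalized.

```python
import numpy as np


def integrative_group_lasso(A_list, b_list, lam, iters=500):
    """Hypothetical AC-side solver for
        sum_m [0.5 * B[:, m]^T A_m B[:, m] - B[:, m]^T b_m] + lam * sum_{j>=1} ||B[j, :]||_2.

    Row j of B holds covariate j's coefficients across the M studies; row 0 is
    the intercept and is not penalized.
    """
    M = len(A_list)
    p = A_list[0].shape[0]
    B = np.zeros((p, M))
    # The smooth part is block separable, so its Lipschitz constant is the
    # largest eigenvalue over all A_m; use the step size 1 / L.
    step = 1.0 / max(np.linalg.eigvalsh(A).max() for A in A_list)
    for _ in range(iters):
        G = np.column_stack([A_list[m] @ B[:, m] - b_list[m] for m in range(M)])
        Z = B - step * G
        norms = np.linalg.norm(Z[1:], axis=1, keepdims=True)
        shrink = np.maximum(0.0, 1.0 - step * lam / np.maximum(norms, 1e-12))
        Z[1:] *= shrink  # group soft-thresholding: a covariate is zeroed in all studies at once
        B = Z
    return B
```

With λ = 0 each column reduces to the study-specific solution A_m⁻¹ b_m, while a large λ removes every non-intercept covariate jointly from all studies, which is how the penalty exploits the shared support.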

2.4. Step 2: Debiased group test statistics

We next derive group effect test statistics in Step 2 by constructing debiased estimators for and estimating their variances. In Step 2(a), we construct updated summary statistics

at the DC, for . These sets of summary statistics are then sent to the AC in Step 2(b) to be aggregated and debiased. Specifically, for each and , we solve the group Dantzig selector type optimization problem:

| (4) |

to obtain a vector of projection directions for some tuning parameter , where . Combining across the splits, we construct the cross–fitted group debiased estimator for by .
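The role of the projection directions can be illustrated with the generic one-step correction for a quadratic surrogate ½βᵀAβ − βᵀb evaluated at an initial estimate β̄: the debiased j-th coordinate is β̄_j + uᵀ(b − Aβ̄), where u approximately inverts A in the j-th direction. The sketch assumes this form and a single-study Dantzig-type constraint ‖Au − e_j‖_∞ ≤ τ; the grouped constraint in (4) is not reproduced, and averaging over folds is our assumed combination rule. When p is small and A is invertible, the exact choice u = A⁻¹e_j (the τ = 0 case) removes the shrinkage bias entirely.

```python
import numpy as np


def debias_coordinate(beta_bar, A, b, u, j):
    """One-step debiased value of coordinate j: beta_bar[j] + u^T (b - A beta_bar).

    b - A beta_bar is the negative gradient of 0.5 beta^T A beta - beta^T b at beta_bar.
    """
    return beta_bar[j] + u @ (b - A @ beta_bar)


def dantzig_feasible(A, u, j, tau):
    """Check the Dantzig-type constraint ||A u - e_j||_inf <= tau (single-study version)."""
    e_j = np.zeros(A.shape[0])
    e_j[j] = 1.0
    return bool(np.max(np.abs(A @ u - e_j)) <= tau)


def cross_fitted_debiased(beta_bars, As, bs, us, j):
    """Average the fold-specific debiased values of coordinate j (assumed combination rule)."""
    vals = [debias_coordinate(bb, A, b, u, j) for bb, A, b, u in zip(beta_bars, As, bs, us)]
    return float(np.mean(vals))
```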

In Section 3.2, we show that the distribution of ) is approximately normal with mean 0 and variance , estimated by . Finally, we test for the group effect of the -th covariate across studies based on the standardized sum of square type statistics

We show in Section 3.2 that, under mild regularity assumptions, is asymptotically chi-square distributed with degrees of freedom under the null. This result is crucial for ensuring error rate control in the downstream multiple testing procedure.
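A minimal sketch of the group statistic, assuming it is the sum over the M studies of squared debiased estimates standardized by their estimated standard errors, so that it has M degrees of freedom under the null. The simulated null draws and standard errors below are hypothetical; they only check that the statistic has mean close to M and variance close to 2M, as a χ²_M variable should.

```python
import numpy as np


def group_statistic(beta_debiased, se):
    """S_j = sum_m (beta_debiased[j, m] / se[j, m])**2 for each covariate j.

    Both inputs have shape (p, M): rows are covariates, columns are studies.
    """
    return np.sum((beta_debiased / se) ** 2, axis=1)


rng = np.random.default_rng(4)
p, M = 20000, 5
se = rng.uniform(0.05, 0.2, size=(p, M))      # hypothetical standard errors
beta_null = se * rng.standard_normal((p, M))  # null: mean-zero normal in every study
S = group_statistic(beta_null, se)
```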

2.5. Step 3: Multiple testing

To construct an error rate controlled multiple testing procedure for

we first take a normal quantile transformation of , namely , where is the standard normal cumulative distribution function, , and is the survival function of . Based on the asymptotic distribution of established in Theorem 1, we show in the proof of Theorem 2 that asymptotically has the same distribution as the absolute value of a standard normal random variable. Thus, to test a single hypothesis of , we reject the null at nominal level whenever , where .
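The normal quantile transformation can be computed with the Python standard library alone. The sketch below assumes that G is the survival function of a χ² distribution with an integer number of degrees of freedom (M in the group setting) and uses the closed forms of that survival function for even and odd degrees of freedom. It evaluates Φ⁻¹(1 − G(S)/2) as −Φ⁻¹(G(S)/2), which is the same quantity but avoids rounding 1 − G(S)/2 to 1 when G(S) is tiny.

```python
import math
from statistics import NormalDist


def chi2_sf(x, df):
    """Survival function P(chi^2_df > x) for a positive integer df (closed forms)."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-y) * sum_{k=0}^{df/2 - 1} y^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= y / k
            total += term
        return math.exp(-y) * total
    # Odd df: start from df = 1, where P = erfc(sqrt(y)), and add
    # y^(k - 1/2) * exp(-y) / Gamma(k + 1/2) for k = 1, ..., (df - 1) / 2.
    total = math.erfc(math.sqrt(y))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp((k - 0.5) * math.log(y) - y - math.lgamma(k + 0.5))
    return total


def normal_quantile_transform(S, df):
    """N = Phi^{-1}(1 - G(S)/2), computed as -Phi^{-1}(G(S)/2) for numerical accuracy."""
    tail = max(chi2_sf(S, df) / 2.0, 1e-300)
    return -NormalDist().inv_cdf(tail)
```

With one degree of freedom the transform reduces to √S, and rejecting when N > Φ⁻¹(1 − α/2) is the same as rejecting when the χ² p-value G(S) is below α.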

However, for simultaneous inference across hypotheses , we must further adjust for the multiplicity of the tests as follows. For any threshold level , let and respectively denote the total number of false positives and the total number of rejections associated with , where . Then the FDP and FDR for a given are respectively defined as

The smallest such that , namely would be a desirable threshold since it maximizes the power under the FDP control. However, since the null set is unknown, we estimate by and conservatively estimate by because of the model sparsity. We next calculate

| (5) |

to approximate the ideal threshold . If the threshold in (5) does not exist, we set . Finally, we obtain the rejection set as the output of Algorithm 1. The asymptotic error rate control of the proposed multiple testing procedure is studied in Section 3.3.
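A sketch of the threshold search follows. Because the bounds in (5) are not reproduced in this text, it assumes the form commonly used in this line of work: search t over [0, √(2 log q − 2 log log q)] with q the number of hypotheses tested, estimate the false discovery proportion by 2q(1 − Φ(t))/max(R(t), 1) with R(t) = #{j : N_j ≥ t}, and fall back to t = √(2 log q) when no t in the range qualifies. The infimum is approximated on a grid, and q ≥ 2 is assumed.

```python
import bisect
import math
from statistics import NormalDist


def fdr_threshold(N, alpha, grid_size=4000):
    """Grid approximation of
        t_hat = inf{0 <= t <= b_q : 2 q (1 - Phi(t)) / max(R(t), 1) <= alpha},
    with b_q = sqrt(2 log q - 2 log log q) and fallback sqrt(2 log q) (assumed forms)."""
    q = len(N)
    Phi = NormalDist().cdf
    srt = sorted(N)
    b_q = math.sqrt(2 * math.log(q) - 2 * math.log(math.log(q)))
    for i in range(grid_size + 1):
        t = b_q * i / grid_size
        R = q - bisect.bisect_left(srt, t)  # R(t) = #{j : N_j >= t}
        if 2 * q * (1 - Phi(t)) / max(R, 1) <= alpha:
            return t
    return math.sqrt(2 * math.log(q))


def reject(N, alpha):
    """Indices j with N_j >= t_hat."""
    t_hat = fdr_threshold(N, alpha)
    return [j for j, v in enumerate(N) if v >= t_hat]
```

For example, with 20 statistics equal to 10 and 980 equal to 0.5 at α = 0.1, the search stops near t ≈ 3.09 and rejects exactly the 20 large statistics; if all 1000 statistics equal 0.5, no t in the range qualifies and the fallback √(2 log 1000) ≈ 3.72 yields no rejections.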

Remark 1.

Our testing approach differs from the BH procedure (Benjamini and Hochberg, 1995) in that the latter obtains the rejection set with . Note that, first, the range in our procedure is critical, because when , is no longer consistently estimated by . As a result, the BH procedure may fail to control the FDP with positive probability. Second, in the proposed approach, if is not attained in the range, it is crucial to threshold it at , instead of , because the latter causes too many false rejections, and as a result the FDR cannot be properly controlled.
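For comparison, the BH step-up rule applied to the p-values G(S_j) = 2(1 − Φ(N_j)) can be sketched as follows. Unlike the procedure above, it places no upper limit on the search range and has no fallback threshold. This is the textbook BH procedure, included only to make the contrast in Remark 1 concrete.

```python
def benjamini_hochberg(pvals, alpha):
    """BH step-up: reject the k smallest p-values, k = max{i : p_(i) <= i * alpha / q}."""
    q = len(pvals)
    order = sorted(range(q), key=lambda j: pvals[j])
    k = 0
    for i, j in enumerate(order, start=1):
        if pvals[j] <= i * alpha / q:
            k = i
    return sorted(order[:k])
```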

2.6. Tuning parameter selection

In this section, we detail data-driven procedures for selecting the tuning parameters . Since our primary goal is to perform simultaneous testing, we follow a strategy similar to that of Xia et al. (2018a) and select tuning parameters to minimize the distance between and its expected value of 1, where is an estimate of from the testing procedure. However, unlike Xia et al. (2018a), it is not feasible to tune simultaneously due to the DataSHIELD constraints. We instead tune , and sequentially, as detailed below. Furthermore, based on the theoretical analyses of the optimal rates for given in Section 3, we select within a set of candidate values that are of the same order as their respective optimal rates.

First for in Algorithm 1, we tune via cross validation within the DC. Second, to select for the integrative estimation in (3), we minimize an approximated generalized information criterion that only involves derived data from studies. Specifically, we choose as the minimizer of , where is some pre-specified scaling parameter, is the estimator obtained with ,

are respectively the approximated deviance and degree of freedom measures, is the set of non-zero elements in and the operator denotes the second order partial derivative with respect to . Common choices of include , , (Wang et al., 2009, modified BIC) and (Foster and George, 1994, RIC). For the numerical studies in Sections 4 and 5, we use BIC, which appears to perform well across settings.
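Once the approximated deviance and degrees of freedom are available on the AC for each candidate λ, the criterion-based choice is a one-line minimization. The sketch below assumes the criterion is deviance + κ · df on the total-sample scale and uses the textbook penalties κ = 2 (AIC), κ = log N (BIC) and κ = 2 log p (RIC); the modified BIC and the paper's exact scaling are not reproduced, and the numbers in the usage lines are made up.

```python
import math


def select_by_gic(lams, deviances, dfs, n_total, p, criterion="BIC"):
    """Return the lambda minimizing GIC(lambda) = deviance + kappa * df, plus all scores."""
    kappa = {
        "AIC": 2.0,
        "BIC": math.log(n_total),
        "RIC": 2.0 * math.log(p),
    }[criterion]
    scores = [dev + kappa * df for dev, df in zip(deviances, dfs)]
    best = min(range(len(lams)), key=scores.__getitem__)
    return lams[best], scores


# Hypothetical path of candidate lambdas with made-up deviance / df values.
lams = [0.01, 0.1, 1.0]
deviances = [100.0, 105.0, 150.0]
dfs = [40, 10, 2]
```

With N = 1000, the heavier BIC penalty favours the sparsest fit (λ = 1.0) in this toy path, while AIC keeps more covariates (λ = 0.1).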

At the last step, we tune by minimizing an distance between and 1, where is an estimate of with a given tuning parameter , and we replace by as in Xia et al. (2018a). Our construction of differs from that of Xia et al. (2018a) in that we estimate under the complete null to better approximate the denominator of . As detailed in Algorithm 2, we construct as the difference between the estimator obtained with the first folds of data and the corresponding estimator obtained using the second folds of data, which is always centered around 0 rather than . Since the accuracy of for large is most relevant to the error control, we construct the distance measure in Algorithm 2 focusing on around for some values of .

Algorithm 2.

Selection of for multiple testing.

1. For any given and each , calculate with
   where is the debiasing projection direction obtained at tuning value .
2. Define and a modified measure
   where and is some specified constant.
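The idea behind Algorithm 2 can be sketched as a calibration check. For each candidate τ, take null-centred statistics on the |N(0,1)| scale (for instance, obtained from the fold-difference estimator and transformed as in Section 2.5), and measure how far the observed exceedance frequencies at several tail levels are from their nominal values. The tail levels, the squared-deviation form and the function names are assumptions for illustration; the exact measure in Algorithm 2 is not reproduced here.

```python
import numpy as np
from statistics import NormalDist


def calibration_distance(N0, levels):
    """Sum over tail levels a of (#{j : N0_j >= Phi^{-1}(1 - a/2)} / (a q) - 1)^2.

    Under exact calibration N0_j behaves like |N(0,1)|, so each ratio is close to 1.
    """
    N0 = np.asarray(N0, dtype=float)
    q = N0.size
    dist = 0.0
    for a in levels:
        t = NormalDist().inv_cdf(1 - a / 2)
        dist += (np.count_nonzero(N0 >= t) / (a * q) - 1.0) ** 2
    return dist


def select_tau(null_stats_by_tau, levels):
    """Pick the candidate tau whose null-centred statistics are best calibrated."""
    return min(null_stats_by_tau,
               key=lambda tau: calibration_distance(null_stats_by_tau[tau], levels))


rng = np.random.default_rng(6)
z = np.abs(rng.standard_normal(20000))
levels = [0.01 * k for k in range(1, 11)]
# Hypothetical candidates: tau = 0.1 gives well-calibrated statistics, the others are mis-scaled.
candidates = {0.05: 1.5 * z, 0.1: z, 0.2: 0.7 * z}
```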

3. Theoretical Results

In this section, we present the asymptotic analysis results of the proposed method and compare it with alternative approaches.

3.1. Notation and assumptions

For any positive semi–definite matrix and , denote by the element of and its row, and the smallest and largest eigenvalues of . Define the sub-Gaussian norms of a random variable and a -dimensional random vector , respectively by and , where is the unit sphere in . For and a scalar or vector , define as its neighborhood with radius . Denote by , and . For simplicity, let , and denote by the row of . In our following analysis, we assume that the cross–fitting folds , for all . Here and in the sequel we use and denote order 1. Next, we introduce assumptions for our theoretical results. For Assumption 4, we only require either 4(a) or 4(b) to hold.

Assumption 1

(Regular covariance). (i) There exists an absolute constant such that for all , , and . (ii) There exist and such that for all and , the norm of each row of is bounded by .

Assumption 2

(Smooth link function). There exists a constant such that for all , .

Assumption 3

(Sub-Gaussian residual). For any , is conditionally sub-Gaussian, i.e., there exists such that given . In addition, there exists some absolute constant such that, almost surely for , and .

Assumption 4(a)

(Sub-Gaussian design). is sub-Gaussian, i.e., there exists some constant such that .

Assumption 4(b)

(Bounded design). is almost surely bounded by some absolute constant.

Remark 2.

Assumptions 1 (i) and 4(a) (or 4(b)) are commonly used technical conditions in high dimensional inference that guarantee rate optimality of the regularized regression and debiasing approach (Negahban et al., 2012; Javanmard and Montanari, 2014). Assumptions 4(a) and 4(b) are typically unified by the sub-Gaussian design assumption (Negahban et al., 2012). In our analysis, they are studied separately, since affects the bias rate, which leads to different sparsity assumptions under different design types. Conditions similar to our Assumption 1 (ii) were used in the context of high dimensional precision matrix estimation (Cai et al., 2011) and debiased inference (Chernozhukov et al., 2018; Caner and Kock, 2018; Belloni et al., 2018). Compared with the exact or approximate sparsity assumptions that these works impose on the inverse Hessian, this boundedness assumption is less restrictive. As an important example in our analysis, the logistic model satisfies the smoothness condition on stated in Assumption 2. As in Lounici et al. (2011) and Huang and Zhang (2010), Assumption 3 regularizes the tail behavior of the residuals and is satisfied in many common settings such as the logistic model.

3.2. Asymptotic properties of the debiased estimator

We next study the asymptotic properties of the group effect statistics , . We shall begin with some important prerequisite results on the convergence properties of and the debiased estimators as detailed in Lemmas 1 and 2.

Lemma 1.

Under Assumptions 1-3 and 4(a) or 4(b), and assuming that , there exists a sequence of tuning parameters

with under Assumption 4(a) and under Assumption 4(b), such that, for each , the integrative estimator satisfies

Remark 3.

Lemma 1 provides the estimation rates of the integrative estimator . In contrast to the ILMA method, the second term in the expression of quantifies the additional noise incurred by using summary data under the DataSHIELD constraint. Similar results can be observed through debiasing truncation in distributed learning (Lee et al., 2017; Battey et al., 2018) or integrative estimation under DataSHIELD (Cai et al., 2019a). When as assumed in Lemma 2, the above mentioned error term becomes negligible. The DSILT method allows for any degree of heterogeneity across sites with respect to both the magnitude and support of . However, the cross-site similarity in the support determines the estimation rates as shown in Lemma 1 above. Specifically, the DSILT estimator for attains a rate-M improvement over the local methods (Lounici et al., 2011; Huang and Zhang, 2010, e.g.) if and has the same rate as that of the local estimators if .

We next present the theoretical properties of the group debiased estimators.

Lemma 2.

Under the assumptions of Lemma 1, and assuming further that

we have with converging to a normal random variable with mean 0 and variance , where . In addition, there exists such that, simultaneously for all , the bias term and the variance estimator satisfy that

Remark 4.

The sparsity assumption in Lemma 2 is weaker than those of existing debiased estimators for M–estimation, where s is only allowed to diverge at a rate dominated by (Janková and Van De Geer, 2016; Belloni et al., 2018; Caner and Kock, 2018). This improvement stems from the cross–fitting technique, which removes the dependence on the convergence rate of .

Finally, we establish in Theorem 1 the main result of this section regarding the asymptotic distribution of the group test statistic under the null.

Theorem 1.

Under all assumptions in Lemma 2, simultaneously for all , we have , where . Furthermore, if and for some constants and , we have

The above theorem shows that the group effect test statistic is asymptotically chi-squared distributed under the null and that its bias is uniformly negligible for .

3.3. False discovery control

We establish theoretical guarantees for the error rate control of the multiple testing procedure described in Section 2.5 in the following two theorems.

Theorem 2.

Assume that . Then under all assumptions in Lemma 2 with and , we have

Remark 5.

Assumption 1 (i) ensures that most of the group estimates are not highly correlated with each other. Thus the variance of can be appropriately controlled, which in turn guarantees control of the FDP. It is possible to further relax the condition to for some ; see, for example, Liu and Shao (2014) and Belloni et al. (2018), who used moderate deviation techniques to obtain tighter truncations and normal approximations for t-statistics. Because we use chi-squared type test statistics with growing M, the technical details of moderate deviation are much more involved and warrant future research.

As described in Section 2.5, if in equation (5) is not attained in the range , then it is thresholded at . The following theorem states a weak condition to ensure the existence of in such range. As a result, the FDP and FDR will converge to the pre-specified level asymptotically.

Theorem 3.

Let . Suppose for some and some , . Then under the same conditions as in Theorem 2, we have, as ,

In the above theorem, the condition on only requires very few covariates to have the signal sum of squares across the studies exceeding the rate for some , and is thus a very mild assumption.

3.4. Comparison with alternative approaches

To study the advantages of our testing approach and the impact of the DataSHIELD constraint, we next compare the proposed DSILT method with a One–shot approach and the ILMA approach, described in Algorithms 3 and 4, from a theoretical perspective. The One–shot approach in Algorithm 3 is inspired by the existing literature on distributed learning (Lee et al., 2017; Battey et al., 2018, e.g.), and is a natural extension of existing methods to multiple testing under the DataSHIELD constraint. As in the existing literature, the debiasing step of the One–shot approach is performed locally.

Algorithm 3.

One–shot approach.

1. At each DC, obtain the cross–fitted debiased estimator by solving a Dantzig selector problem locally, where is estimated by local LASSO.
2. Send the debiased estimators to the AC and obtain the group statistics.
3. Perform the multiple testing procedure as described in Section 2.5.

Algorithm 4.

Individual–level meta–analysis (ILMA).

1. Integrate all individual–level data at the AC.
2. Construct the cross–fitted debiased estimator by (4) using the individual–level integrative estimator analogous to (3), and then obtain the overall effect statistics.
3. Perform the multiple testing procedure in Section 2.5.

Following proofs similar to those of Lemma 2 and Theorems 2 and 3, the One–shot, ILMA, and DSILT methods attain the same error rate control results under the sparsity assumptions of

where under the high dimensional regime of and the assumptions of and as required in Theorems 2 and 3,

and for sub-Gaussian design and for bounded design as in Lemma 1. If additionally , which directly implies , then the respective sparsity conditions for One–shot and ILMA/DSILT reduce to and . Hence, when grows with and at a rate slower than , we have , which implies that the ILMA and DSILT methods require a strictly weaker sparsity assumption than the One–shot approach. On the other hand, if is not satisfied, then the rate dominates the rate of and the three methods share the same sparsity condition . Beyond these sparsity comparisons, which concern the validity of the tests, we know from Cai et al. (2019a) that the estimation error rate of our integrative sparse regression in Step 1 is equivalent to that of the idealized method using all raw data and is smaller than that of the local estimator. Hence, we anticipate a power gain of DSILT over the One–shot approach in finite samples, as the former uses a more accurate estimator than the latter to derive the statistics for debiasing. This advantage is also observed in our simulation studies in Section 4. Moreover, it is possible to follow the debiasing strategies of Zhu et al. (2018) and Dukes and Vansteelandt (2019), which adapt to model sparsity, to construct a corresponding DSILT procedure with an additional theoretical power gain over the One–shot method.

Remark 6.

Our DSILT approach involves transferring data twice from the DCs to the AC and once from the AC to the DCs, which requires more communication effort than the One–shot approach. The additional communication yields a lower bias rate than the One–shot approach, while requiring only the same sparsity assumption as the ILMA method, as discussed above. Under its respective sparsity condition, each method draws inference that is asymptotically valid and has the same power as in the ideal case where the true parameters are used to construct the group test statistics. This further implies that, to construct a powerful and valid multiple testing procedure, there is no need for further rounds of sequential communication between the DCs and the AC as in the distributed methods of Li et al. (2016) and Wang et al. (2017).

4. Simulation Study

We evaluate the empirical performance of the DSILT procedure and compare it with the One–shot and the ILMA methods. Throughout, we let , , and vary from 500 to 1000. For each setting, we perform 200 replications and set the number of sample splitting folds , and false discovery level . The tuning strategies described in Section 2.6 are employed with .

The covariate of each study is generated from either the (i) Gaussian auto–regressive (AR) model of order 1 and correlation coefficient 0.5; or (ii) Hidden Markov model (HMM) with binary hidden variables and binary observed variables with the transition probability and the emission probability both set as 0.2. We choose to be heterogeneous in magnitude across studies but to share the same support with

where the sparsity level is set to be 10 or 50, and are independently drawn from with equal probability and are shared across studies, while the local signal strength ’s vary across studies and are drawn independently from . To ensure the procedures have reasonable power magnitudes for comparison, we set the overall signal strength to be in the range of [0.21,0.42] for , mimicking a sparse and strong signal setting; and [0.14,0.35] for , mimicking a dense and weak signal setting. We then generate binary responses from .
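The data-generating mechanism above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' simulation code: the function names are hypothetical; the support is taken to be the first s coordinates; the shared signs are drawn from {−1, +1} with equal probability; the study-specific magnitudes are drawn uniformly from a user-supplied range, since the exact distributions are not reproduced here; the HMM starts from a uniform hidden state, reading "transition probability" as the chance of switching hidden states and "emission probability" as the chance that an observed bit differs from its hidden state; and responses follow a logistic model without intercept.

```python
import numpy as np


def ar1_design(n, p, rho, rng):
    """Gaussian AR(1) covariates with unit variance and Corr(X_j, X_k) = rho**|j - k|."""
    x = np.empty((n, p))
    x[:, 0] = rng.standard_normal(n)
    innovation_scale = np.sqrt(1.0 - rho ** 2)  # keeps every column at variance 1
    for j in range(1, p):
        x[:, j] = rho * x[:, j - 1] + innovation_scale * rng.standard_normal(n)
    return x


def hmm_design(n, p, switch_prob, emission_err, rng):
    """Binary HMM covariates (assumed reading of the transition/emission probabilities):
    the hidden chain flips state w.p. switch_prob between adjacent coordinates, and each
    observed bit disagrees with its hidden state w.p. emission_err."""
    hidden = np.empty((n, p), dtype=int)
    hidden[:, 0] = rng.integers(0, 2, size=n)  # assumption: uniform initial state
    for j in range(1, p):
        flip = rng.random(n) < switch_prob
        hidden[:, j] = np.where(flip, 1 - hidden[:, j - 1], hidden[:, j - 1])
    noise = rng.random((n, p)) < emission_err
    return np.where(noise, 1 - hidden, hidden).astype(float)


def shared_support_coefs(p, s, n_studies, magnitude_range, rng):
    """Study-specific coefficients sharing one support and one sign pattern."""
    support = np.arange(s)  # assumption: the first s covariates carry signal
    signs = rng.choice([-1.0, 1.0], size=s)  # assumption: shared signs, equal probability
    low, high = magnitude_range  # hypothetical range for the local signal strengths
    beta = np.zeros((n_studies, p))
    beta[:, support] = signs * rng.uniform(low, high, size=(n_studies, s))
    return beta, support


def logistic_response(x, beta_m, rng):
    """Binary responses from a logistic model without intercept (assumption)."""
    prob = 1.0 / (1.0 + np.exp(-(x @ beta_m)))
    return rng.binomial(1, prob)
```

Calling `ar1_design` (or `hmm_design`) once per study and pairing it with the matching row of `beta` yields one heterogeneous multi-study dataset; sharing `support` and `signs` across rows is what encodes the common-support assumption, while the row-specific magnitudes encode the between-study heterogeneity.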

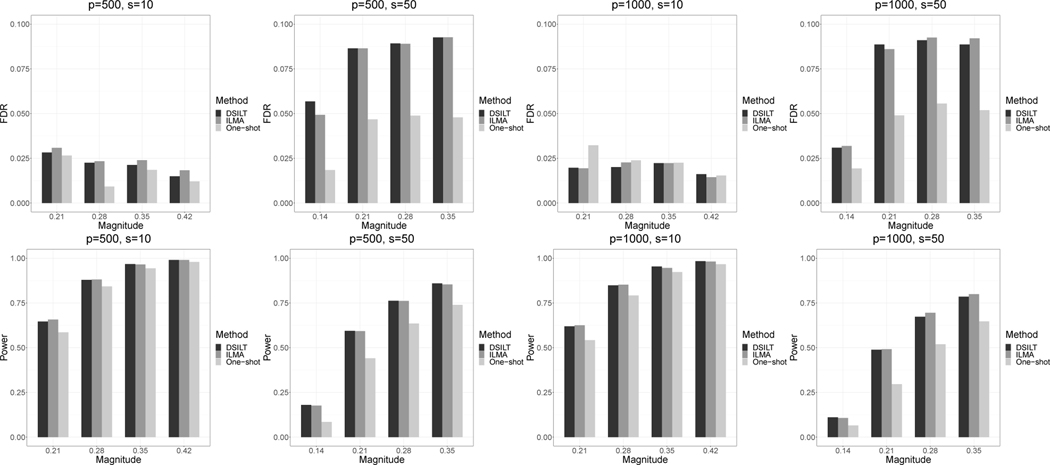

In Figure 1, we report the empirical FDR and power of the three methods with varying , , and under the Gaussian design. Results for the HMM design follow almost the same pattern and are included in the Supplementary Material. Across all settings, DSILT performs almost identically to the ideal ILMA in both error rate control and power. All methods successfully control the FDR at the desired level . When or the signal strength is weak, all methods have conservative error rates relative to the nominal level. In contrast, for with relatively strong signals, our method and the ideal ILMA empirically come close to exact error rate control. This is consistent with Theorem 3: if the number of relatively strong signals is large enough, our method tends to achieve exact FDR control. The One–shot method, however, fails to borrow information across the studies and hence requires stronger signals to achieve exact FDR control. As a result, we observe consistently conservative empirical error rates for the One–shot approach.
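The empirical FDR and power summarized in Figure 1 are Monte Carlo averages over the replications. The sketch below shows this bookkeeping under the usual definitions, which we assume here: the false discovery proportion is the number of false discoveries divided by max(number of discoveries, 1), and power is the fraction of true signals that are selected. Each replication is assumed to return a list of selected covariate indices; the function names are hypothetical.

```python
import numpy as np


def fdp_and_power(selected, true_support):
    """False discovery proportion and power for a single replication."""
    selected = set(selected)
    true_support = set(true_support)
    n_false = len(selected - true_support)
    fdp = n_false / max(len(selected), 1)  # FDP is 0 when nothing is selected
    power = len(selected & true_support) / max(len(true_support), 1)
    return fdp, power


def empirical_fdr_power(selections, true_support):
    """Average FDP (empirical FDR) and power over a list of replications."""
    if not selections:
        raise ValueError("need at least one replication")
    results = np.array([fdp_and_power(s, true_support) for s in selections])
    return results[:, 0].mean(), results[:, 1].mean()
```

For example, with `selections = [[0, 1, 2, 7], [0, 1], []]` and `true_support = {0, 1, 2, 3}`, the per-replication FDPs are 0.25, 0 and 0 and the powers are 0.75, 0.5 and 0, so the function returns their means, about 0.083 and 0.417.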

Figure 1:

The empirical FDR and power of our DSILT method, the One–shot approach and the ILMA method under the Gaussian design, with . The horizontal axis represents the overall signal magnitude .

In terms of empirical power, the difference between DSILT and ILMA is less than 1% in all cases. This indicates that the proposed DSILT accommodates the DataSHIELD constraint at almost no cost in power compared with the ideal method, which is consistent with our theoretical result in Section 3.4 that the two methods require the same sparsity assumption for simultaneous inference. Furthermore, the DSILT and ILMA methods dominate the One–shot strategy in statistical power. In every scenario, the power of the former two methods is around 15% higher than that of the One–shot approach in the dense case, i.e., , and 6% higher in the sparse case, i.e., . Because their tests are built on integrative analysis rather than local estimation, both DSILT and ILMA exploit the group sparsity structure of the model parameters more effectively than the One–shot approach, which leads to their superior power. The power advantage is more pronounced as the sparsity level s grows from 10 to 50. This is because the One–shot approach requires a stronger sparsity assumption than the other two methods to achieve the same result, and is thus more easily affected by the growth of s. In comparison, the performance of our method and the ILMA method is less sensitive to sparsity growth because the integrative estimator they employ is more stable than the local estimator in the dense scenario.

5. Real Example

Statins are the most widely prescribed drugs for lowering low–density lipoprotein (LDL) and the risk of cardiovascular disease (CVD); over a quarter of adults aged 45 years or older in the United States receive them. Statins lower LDL by inhibiting 3-hydroxy-3-methylglutaryl-coenzyme A reductase (HMGCR) (Nissen et al., 2005). The treatment effect of statins can also be inferred causally from the effect of the HMGCR variant rs17238484: patients carrying the rs17238484-G allele have profiles similar to individuals receiving statins, with lower LDL and lower risk of CVD (Swerdlow et al., 2015). Although the benefits of statins have been consistently observed, they are not without risk. There is increasing evidence that statins increase the risk of type II diabetes (T2D) (Rajpathak et al., 2009; Carter et al., 2013). Swerdlow et al. (2015) demonstrated, via both a meta-analysis of clinical trials and a genetic analysis of the rs17238484 variant, that statins are associated with a slight increase in T2D risk. However, the adverse effect of statins on T2D risk appears to differ substantially depending on the number of T2D risk factors patients have prior to receiving the statin, with higher adverse risk among patients with more risk factors (Waters et al., 2013).

To investigate potential genetic determinants of statin treatment effect heterogeneity, we studied interactive effects of the rs17238484 variant and 256 SNPs associated with T2D, LDL, high–density lipoprotein (HDL) cholesterol, and the coronary artery disease (CAD) gene, which plays a central role in obesity and insulin sensitivity (Kozak and Anunciado-Koza, 2009; Rodrigues et al., 2013). A significant interaction between a SNP and the statin variant rs17238484 would indicate that the SNP modifies the effect of statins. Since the LDL, CAD and T2D risk profiles differ greatly between racial groups and between males and females, we focus the analysis on the black sub-population and fit separate models for the female and male subgroups.

To efficiently identify genetic risk factors that significantly interact with rs17238484, we performed an integrative analysis of data from 3 different studies: the Million Veteran Program (MVP) from the Veterans Health Administration (Gaziano et al., 2016), the Partners Healthcare Biobank (PHB) and the UK Biobank (UKB). Within each study, we have both a male subgroup indexed by subscript , and a female subgroup indexed by subscript , leading to datasets denoted by , , , , and . Since T2D prevalence varies greatly across the datasets, from 0.05% to 0.15%, we performed case-control sampling with 1:1 matching so that each dataset has equal numbers of T2D cases and controls. Since MVP has a substantially larger number of male T2D cases than all other studies, we down-sampled its male cases to match the number of female cases in MVP so that the signals are not dominated by the male population. This leads to sample sizes of 216, 392, 606, 822, 3120 and 3120 at , , , , and , respectively. The covariate vector is of dimension , where consists of the main effects of rs17238484, age and the aforementioned 256 SNPs, and consists of the interactions between rs17238484 and age, as well as each of the 256 SNPs. All SNPs are encoded such that a higher value is associated with a higher risk of T2D. We implemented the proposed testing method, along with the One–shot approach as a benchmark, to perform multiple testing of the coefficients corresponding to the interaction terms in at a nominal level of , with the model chosen as logistic regression and the sample splitting folds and .
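The sampling and design construction described above can be sketched as follows. This is a hypothetical illustration, not the analysis code: controls are drawn at random without replacement, ignoring any matching covariates the actual 1:1 matching may have used; the optional `n_cases` argument mimics the down-sampling of the MVP male cases; and the column order (main effects of rs17238484, age and the SNPs, followed by the interactions of rs17238484 with age and with each SNP) is an assumption made for illustration.

```python
import numpy as np


def one_to_one_case_control(y, rng, n_cases=None):
    """Row indices of a 1:1 case-control subsample (random, unmatched controls).

    If n_cases is given, cases are first down-sampled to that number."""
    cases = np.flatnonzero(y == 1)
    controls = np.flatnonzero(y == 0)
    if n_cases is not None and n_cases < len(cases):
        cases = rng.choice(cases, size=n_cases, replace=False)
    # Requires at least as many controls as cases, which holds at low prevalence.
    controls = rng.choice(controls, size=len(cases), replace=False)
    return np.concatenate([cases, controls])


def interaction_design(g_statin, age, snps):
    """Main effects (statin variant, age, SNPs) followed by statin-variant interactions."""
    main = np.column_stack([g_statin, age, snps])
    interactions = g_statin[:, None] * np.column_stack([age, snps])
    return np.hstack([main, interactions])
```

With 256 SNPs, this layout gives 1 + 1 + 256 = 258 main-effect columns and 1 + 256 = 257 interaction columns, i.e. 515 columns before any intercept; in this sketch, the last 257 coefficients are the ones targeted by the multiple testing.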

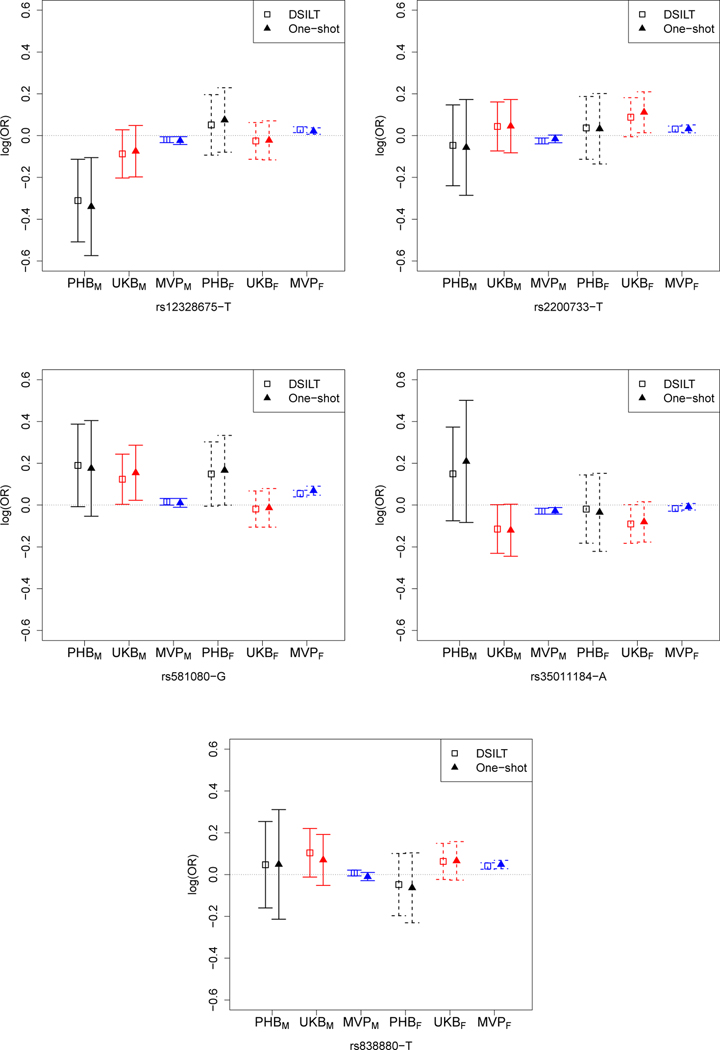

As shown in Table 1, our method identifies 5 SNPs significantly interacting with the statin SNP, while the One–shot approach detects only 3 SNPs, all of which are among those identified by our method. The presence of non-zero interactive effects demonstrates that the adverse effect of the statin SNP rs17238484-G on the risk of T2D can differ significantly among patients with different levels of genetic predisposition to T2D. In Figure 2, we also present 90% confidence intervals obtained within each dataset for the interactive effects between rs17238484-G and each of these 5 detected SNPs. The SNP rs581080-G in the TTC39B gene has the strongest interactive effect with the statin SNP, and its interactive effects are estimated as positive in most studies, suggesting that the adverse effect of statins is generally higher for patients with this mutation than for those without it. Interestingly, a previous report found that a SNP in the TTC39B gene is associated with statin-induced changes in LDL particle number (Chu et al., 2015), suggesting that the effect of statins can be modulated by the rs581080-G SNP.

Table 1:

SNPs identified by DSILT as interacting with the statin genetic variant rs17238484-G on the risk of T2D. The second column gives the gene in which the SNP is located. The third column gives the minor allele frequency (MAF) of each SNP averaged over the three sites. The last three columns present the p-values obtained, respectively, using the One–shot approach with all the studies, the One–shot approach with only the MVP datasets, and the proposed method with all the studies. The p-values shown in black font represent the SNPs selected by each method.

| SNP | Gene | MAF | One–shot | MVP–only | DSILT |
|---|---|---|---|---|---|
| rs12328675-T | COBLL1 | 0.13 | 1.1 × 10⁻³ | 2.3 × 10⁻³ | 6.0 × 10⁻⁴ |
| rs2200733-T | LOC729065 | 0.18 | 3.7 × 10⁻² | 5.7 × 10⁻³ | 6.2 × 10⁻⁴ |
| rs581080-G | TTC39B | 0.22 | 3.6 × 10⁻⁶ | 1.1 × 10⁻⁶ | 2.6 × 10⁻⁶ |
| rs35011184-A | TCF7L2 | 0.22 | 1.9 × 10⁻² | 5.2 × 10⁻² | 8.6 × 10⁻⁴ |
| rs838880-T | SCARB1 | 0.36 | 6.7 × 10⁻⁴ | 6.0 × 10⁻⁵ | 6.2 × 10⁻⁴ |

Figure 2:

Debiased estimates of the log odds ratios and their 90% confidence intervals in each local site for the interaction effects between rs17238484-G and the 5 SNPs detected by DSILT, obtained respectively based on the One–shot and the DSILT approaches.

Results shown in Figure 2 also suggest some gender differences in the interactive effects. For example, the adverse effect of the statin is lower for female patients carrying the rs12328675-T allele than for female patients without the allele. On the other hand, the effect of the statin appears to be higher for male patients with the rs12328675-T allele compared with those without genetic variants associated with a variety of phenotypes related to T2D. The variation in effect sizes across data sources illustrates that it is necessary to properly account for the heterogeneity of the coefficients across sites in the modeling procedure. Comparing the lengths of the confidence intervals obtained from the One–shot approach with those from the proposed method, we find that the DSILT approach generally yields shorter confidence intervals, which translates into higher power in signal detection. It is important to note that since MVP has much larger sample sizes, the widths of the confidence intervals from MVP are much smaller than those from UKB and PHB. However, the effect sizes obtained from MVP also tend to be much smaller in magnitude; consequently, using MVP alone would detect only 2 of the 5 SNPs by multiple testing at level 0.1. This demonstrates the utility of integrative testing across multiple data sources.
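To make the link between interval length and power concrete, assume the intervals in Figure 2 are normal-approximation (Wald) intervals around the debiased estimates, the usual construction for debiased estimators. A 90% interval is then the estimate plus or minus the 0.95 standard normal quantile (about 1.645) times the standard error, so its width is proportional to the standard error. The sketch below uses a hypothetical helper name and only the Python standard library; it does not reproduce the paper's standard-error formulas.

```python
from statistics import NormalDist


def wald_interval(estimate, std_error, level=0.90):
    """Two-sided normal-approximation interval: estimate +/- z * std_error."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.645 when level = 0.90
    half_width = z * std_error
    return estimate - half_width, estimate + half_width
```

Halving the standard error halves the interval width; at a fixed effect size, a narrower interval is more likely to exclude zero, which is the sense in which shorter intervals translate into higher detection power.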

6. Discussion

In this paper, we propose a DSILT method for simultaneous inference of high dimensional covariate effects in the presence of between-study heterogeneity under the DataSHIELD framework. The proposed method is shown in theory to control the FDR and FDP asymptotically, and is shown to perform similarly to the ideal ILMA method and to outperform the One–shot approach in terms of both the required assumptions and the statistical power for multiple testing. Our method allows most distributional properties of the data to differ across the sites, such as the marginal distribution of , the conditional variance of given , and the magnitude of each . The support is allowed to vary across the sites as well, but the DSILT method is more powerful when are more similar to each other. We demonstrate that the sparsity assumptions of the proposed method are equivalent to those of the ideal method but strictly weaker than those of the One–shot approach. As the price to pay, our method requires one more round of data transfer between the AC and the DCs than the One–shot approach. Meanwhile, the equivalence of the sparsity conditions of the proposed method and the ILMA method implies that there is no need for further rounds of communication or iterative procedures as in Li et al. (2016) and Wang et al. (2017), which saves a great deal of human effort in practice.

The proposed approach also makes technical contributions to the existing literature in several respects. First, our debiasing formulation removes the need for the group structure assumption on the covariates at different distributed sites. Such an assumption is not satisfied in our real data setting, but is unavoidable if one uses the node-wise group LASSO (Mitra et al., 2016) or group structured inverse regression (Xia et al., 2018a) for debiasing. Second, compared with existing work on joint testing of high dimensional linear models (Xia et al., 2018a), our method accommodates model heterogeneity and allows the number of studies to diverge under the data sharing constraint, which creates substantial technical difficulties in characterizing the asymptotic distribution of the proposed test statistics and their correlation structures for simultaneous inference.

We next discuss limitations and possible extensions of the current work. First, the proposed procedure requires transferring a Hessian matrix of complexity from each DC to the AC. To the best of our knowledge, there is no natural way to reduce the order of complexity for the group debiasing step, i.e., Step 2, as introduced in Section 2.4. Nevertheless, for the integrative estimation step, i.e., Step 1, the communication complexity can be reduced to only, by first transferring the locally debiased LASSO estimators from each DC to the AC and then integrating them with a group structured truncation procedure (Lee et al., 2017; Battey et al., 2018, e.g.) to obtain an integrative estimator with the same error rate as . However, such a procedure requires more effort in deriving the summary data at each DC, which is not easily accomplished in some situations, such as our real example. Second, we assume in the current paper, as holds in the real example of Section 5. Our results can be further extended to cases where grows more slowly than . In such scenarios, the error rate control results in Theorems 2 and 3 still hold. Meanwhile, the model sparsity assumptions and the conditions on and can be further relaxed, because there are fewer hypotheses to test in total and, as a result, the error rate tolerance for an individual test can be relaxed. Third, for the limiting null distribution of the test statistics and the subsequent simultaneous error rate control, we require and . Such assumptions are naturally satisfied in many situations, as in our real example. However, when the collaboration is of a larger scale, say or , developing an adaptive and powerful overall effect testing procedure (such as the –type test statistics), particularly under DataSHIELD constraints, warrants future research.
Fourth, the sub-Gaussian residual Assumption 3 in our theoretical analysis does not hold for Poisson or negative binomial responses. Inspired by existing work (Jia et al., 2019; Xie and Xiao, 2020, e.g.), our framework can potentially be generalized to accommodate more types of outcome models. Last, our method may be modified by perturbing the weighted covariates and response , and transferring the summary statistics derived from the perturbed data. Designing such a method with stronger privacy guarantees, while retaining estimation and testing performance similar to that of our current framework, warrants future research.

Supplementary Material

Acknowledgments

Part of this research is based on data from the Million Veteran Program (Gaziano et al., 2016), Office of Research and Development, Veterans Health Administration, and was supported by awards #MVP000 and #MVP001. This publication does not represent the views of the Department of Veterans Affairs or the United States Government. The research of Yin Xia was supported in part by NSFC Grants 12022103, 11771094 and 11690013. The research of Tianxi Cai and Molei Liu was partially supported by the Translational Data Science Center for a Learning Health System at Harvard Medical School and Harvard T.H. Chan School of Public Health. We thank Zeling He and Nick Link for their help in running the real data analysis, and Amanda King for polishing the writing of the paper.

Footnotes

Though a Poisson distribution does not satisfy the required sub-Gaussian residual Assumption 3, counts of EHR diagnostic codes are usually less heavy-tailed than Poisson and are accommodated by our analysis.

Contributor Information

Molei Liu, Department of Biostatistics, Harvard T.H. Chan School of Public Health, USA.

Yin Xia, Department of Statistics, School of Management, Fudan University, China.

Kelly Cho, Massachusetts Veterans Epidemiology Research and Information Center, US Department of Veterans Affairs, Brigham and Women’s Hospital, Harvard Medical School, USA.

Tianxi Cai, Department of Biostatistics, Harvard T.H. Chan School of Public Health, USA.

References

- Allen Mike, Bourhis John, Burrell Nancy, and Mabry Edward. Comparing student satisfaction with distance education to traditional classrooms in higher education: A meta-analysis. The American Journal of Distance Education, 16(2):83–97, 2002.

- Battey Heather, Fan Jianqing, Liu Han, Lu Junwei, Zhu Ziwei, et al. Distributed testing and estimation under sparse high dimensional models. The Annals of Statistics, 46(3):1352–1382, 2018.

- Belloni Alexandre, Chernozhukov Victor, Chetverikov Denis, Hansen Christian, and Kato Kengo. High-dimensional econometrics and regularized GMM. arXiv preprint arXiv:1806.01888, 2018.

- Benjamini Y. and Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Statist. Soc. B, 57:289–300, 1995.

- Bradfield Jonathan P, Taal H Rob, Timpson Nicholas J, Scherag André, Lecoeur Cecile, Warrington Nicole M, Hypponen Elina, Holst Claus, Valcarcel Beatriz, Thiering Elisabeth, et al. A genome-wide association meta-analysis identifies new childhood obesity loci. Nature Genetics, 44(5):526, 2012.

- Cai Tianxi, Liu Molei, and Xia Yin. Individual data protected integrative regression analysis of high-dimensional heterogeneous data. arXiv preprint arXiv:1902.06115, 2019a.

- Cai Tony, Liu Weidong, and Luo Xi. A constrained ℓ1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association, 106(494):594–607, 2011.

- Cai TT, Li H, Ma J, and Xia Y. Differential Markov random field analysis with an application to detecting differential microbial community networks. Biometrika, 106(2):401–416, 2019b.

- Caner Mehmet and Kock Anders Bredahl. High dimensional linear GMM. arXiv preprint arXiv:1811.08779, 2018.

- Card David, Kluve Jochen, and Weber Andrea. Active labour market policy evaluations: A meta-analysis. The Economic Journal, 120(548):F452–F477, 2010.

- Carter Aleesa A, Gomes Tara, Camacho Ximena, Juurlink David N, Shah Baiju R, and Mamdani Muhammad M. Risk of incident diabetes among patients treated with statins: population based study. BMJ, 346:f2610, 2013.

- Chernozhukov Victor, Chetverikov Denis, Demirer Mert, Duflo Esther, Hansen Christian, and Newey Whitney K. Double machine learning for treatment and causal parameters. Technical report, cemmap working paper, 2016.

- Chernozhukov Victor, Newey Whitney, and Robins James. Double/de-biased machine learning using regularized Riesz representers. arXiv preprint arXiv:1802.08667, 2018.

- Chu Audrey Y, Giulianini Franco, Barratt Bryan J, Ding Bo, Nyberg Fredrik, Mora Samia, Ridker Paul M, and Chasman Daniel I. Differential genetic effects on statin-induced changes across low-density lipoprotein–related measures. Circulation: Cardiovascular Genetics, 8(5):688–695, 2015.

- DerSimonian Rebecca. Meta-analysis in the design and monitoring of clinical trials. Statistics in Medicine, 15(12):1237–1248, 1996.

- Doiron Dany, Burton Paul, Marcon Yannick, Gaye Amadou, Bruce HR, Perola Markus, Stolk Ronald P, Foco Luisa, Minelli Cosetta, Waldenberger Melanie, et al. Data harmonization and federated analysis of population-based studies: the BioSHaRE project. Emerging Themes in Epidemiology, 10(1):12, 2013.

- Dukes Oliver and Vansteelandt Stijn. Uniformly valid confidence intervals for conditional treatment effects in misspecified high-dimensional models. arXiv preprint arXiv:1903.10199, 2019.

- Foster Dean P and George Edward I. The risk inflation criterion for multiple regression. The Annals of Statistics, pages 1947–1975, 1994.

- Franke Andre, McGovern Dermot PB, Barrett Jeffrey C, Wang Kai, Radford-Smith Graham L, Ahmad Tariq, Lees Charlie W, Balschun Tobias, Lee James, Roberts Rebecca, et al. Genome-wide meta-analysis increases to 71 the number of confirmed Crohn’s disease susceptibility loci. Nature Genetics, 42(12):1118, 2010.

- Gaye Amadou, Marcon Yannick, Isaeva Julia, LaFlamme Philippe, Turner Andrew, Jones Elinor M, Minion Joel, Boyd Andrew W, Newby Christopher J, Nuotio Marja-Liisa, et al. DataSHIELD: taking the analysis to the data, not the data to the analysis. International Journal of Epidemiology, 43(6):1929–1944, 2014.

- Gaziano John Michael, Concato John, Brophy Mary, Fiore Louis, Pyarajan Saiju, Breeling James, Whitbourne Stacey, Deen Jennifer, Shannon Colleen, Humphries Donald, et al. Million Veteran Program: A mega-biobank to study genetic influences on health and disease. Journal of Clinical Epidemiology, 70:214–223, 2016.

- Houlston Richard S, Webb Emily, Broderick Peter, Pittman Alan M, Di Bernardo Maria Chiara, Lubbe Steven, Chandler Ian, Vijayakrishnan Jayaram, Sullivan Kate, Penegar Steven, et al. Meta-analysis of genome-wide association data identifies four new susceptibility loci for colorectal cancer. Nature Genetics, 40(12):1426–1435, 2008.

- Huang Junzhou and Zhang Tong. The benefit of group sparsity. The Annals of Statistics, 38(4):1978–2004, 2010.

- Janková Jana and Van De Geer Sara. Confidence regions for high-dimensional generalized linear models under sparsity. arXiv preprint arXiv:1610.01353, 2016.

- Javanmard Adel and Montanari Andrea. Confidence intervals and hypothesis testing for high-dimensional regression. The Journal of Machine Learning Research, 15(1):2869–2909, 2014.

- Javanmard Adel, Javadi Hamid, et al. False discovery rate control via debiased lasso. Electronic Journal of Statistics, 13(1):1212–1253, 2019.

- Jia Jinzhu, Xie Fang, Xu Lihu, et al. Sparse Poisson regression with penalized weighted score function. Electronic Journal of Statistics, 13(2):2898–2920, 2019.

- Jones EM, Sheehan NA, Masca N, Wallace SE, Murtagh MJ, and Burton PR. DataSHIELD–shared individual-level analysis without sharing the data: a biostatistical perspective. Norsk Epidemiologi, 21(2), 2012.

- Jordan Michael I, Lee Jason D, and Yang Yun. Communication-efficient distributed statistical inference. Journal of the American Statistical Association, 114(526):668–681, 2019.

- Kozak LP and Anunciado-Koza R. UCP1: its involvement and utility in obesity. International Journal of Obesity, 32(S7):S32, 2009.

- Lee Jason D, Liu Qiang, Sun Yuekai, and Taylor Jonathan E. Communication-efficient sparse regression. Journal of Machine Learning Research, 18(5):1–30, 2017.

- Li Wenfa, Liu Hongzhe, Yang Peng, and Xie Wei. Supporting regularized logistic regression privately and efficiently. PLoS ONE, 11(6):e0156479, 2016.

- Liu WD and Luo S. Hypothesis testing for high-dimensional regression models. Technical report, 2014.

- Liu Weidong and Shao Qi-Man. Phase transition and regularized bootstrap in large-scale t-tests with false discovery rate control. The Annals of Statistics, 42(5):2003–2025, 2014.

- Lounici Karim, Pontil Massimiliano, Van De Geer Sara, Tsybakov Alexandre B, et al. Oracle inequalities and optimal inference under group sparsity. The Annals of Statistics, 39(4):2164–2204, 2011.

- Ma Rong, Cai T Tony, and Li Hongzhe. Global and simultaneous hypothesis testing for high-dimensional logistic regression models. Journal of the American Statistical Association, pages 1–15, 2020.

- Mitra Ritwik, Zhang Cun-Hui, et al. The benefit of group sparsity in group inference with de-biased scaled group lasso. Electronic Journal of Statistics, 10(2):1829–1873, 2016.

- Negahban Sahand N, Ravikumar Pradeep, Wainwright Martin J, Yu Bin, et al. A unified framework for high-dimensional analysis of M-estimators with decomposable regularizers. Statistical Science, 27(4):538–557, 2012.

- Nissen Steven E, Tuzcu E Murat, Schoenhagen Paul, Crowe Tim, Sasiela William J, Tsai John, Orazem John, Magorien Raymond D, O’Shaughnessy Charles, and Ganz Peter. Statin therapy, LDL cholesterol, C-reactive protein, and coronary artery disease. New England Journal of Medicine, 352(1):29–38, 2005.

- Panagiotou Orestis A, Willer Cristen J, Hirschhorn Joel N, and Ioannidis John PA. The power of meta-analysis in genome-wide association studies. Annual Review of Genomics and Human Genetics, 14:441–465, 2013.

- Rajpathak Swapnil N, Kumbhani Dharam J, Crandall Jill, Barzilai Nir, Alderman Michael, and Ridker Paul M. Statin therapy and risk of developing type 2 diabetes: a meta-analysis. Diabetes Care, 32(10):1924–1929, 2009.

- Rodrigues Alice Cristina, Sobrino B, Dalla Vecchia Genvigir Fabiana, Willrich Maria Alice Vieira, Arazi Simone Sorkin, Dorea Egidio Lima, Bernik Marcia Martins Silveira, Bertolami Marcelo, Faludi André Arpad, Brion MJ, et al. Genetic variants in genes related to lipid metabolism and atherosclerosis, dyslipidemia and atorvastatin response. Clinica Chimica Acta, 417:8–11, 2013.

- Stewart Gavin. Meta-analysis in applied ecology. Biology Letters, 6(1):78–81, 2010.

- Swerdlow Daniel I, Preiss David, Kuchenbaecker Karoline B, Holmes Michael V, Engmann Jorgen EL, Shah Tina, Sofat Reecha, Stender Stefan, Johnson Paul CD, Scott Robert A, et al. HMG-coenzyme A reductase inhibition, type 2 diabetes, and bodyweight: evidence from genetic analysis and randomised trials. The Lancet, 385(9965):351–361, 2015.

- Tang Lu, Zhou Ling, and Song Peter X-K. Method of divide-and-combine in regularized generalized linear models for big data. arXiv preprint arXiv:1611.06208, 2016.

- Tong Jiayi, Duan Rui, Li Ruowang, Schuemie Martijn J, Moore Jason H, and Chen Yong. Robust-ODAL: Learning from heterogeneous health systems without sharing patient-level data. In Pacific Symposium on Biocomputing, volume 25, page 695. World Scientific, 2020.

- Van de Geer Sara, Bühlmann Peter, Ritov Ya’acov, Dezeure Ruben, et al. On asymptotically optimal confidence regions and tests for high-dimensional models. The Annals of Statistics, 42(3):1166–1202, 2014.

- Wang Hansheng, Li Bo, and Leng Chenlei. Shrinkage tuning parameter selection with a diverging number of parameters. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 71(3):671–683, 2009.

- Wang Jialei, Kolar Mladen, Srebro Nathan, and Zhang Tong. Efficient distributed learning with sparsity. In Proceedings of the 34th International Conference on Machine Learning, Volume 70, pages 3636–3645. JMLR.org, 2017.

- Waters David D, Ho Jennifer E, Boekholdt S Matthijs, DeMicco David A, Kastelein John JP, Messig Michael, Breazna Andrei, and Pedersen Terje R. Cardiovascular event reduction versus new-onset diabetes during atorvastatin therapy: effect of baseline risk factors for diabetes. Journal of the American College of Cardiology, 61(2):148–152, 2013.

- Wolfson Michael, Wallace Susan E, Masca Nicholas, Rowe Geoff, Sheehan Nuala A, Ferretti Vincent, LaFlamme Philippe, Tobin Martin D, Macleod John, Little Julian, et al. DataSHIELD: resolving a conflict in contemporary bioscience–performing a pooled analysis of individual-level data without sharing the data. International Journal of Epidemiology, 39(5):1372–1382, 2010.

- Xia Yin, Cai T Tony, and Li Hongzhe. Joint testing and false discovery rate control in high-dimensional multivariate regression. Biometrika, 105(2):249–269, 2018a.

- Xia Yin, Cai Tianxi, and Cai T Tony. Two-sample tests for high-dimensional linear regression with an application to detecting interactions. Statistica Sinica, 28:63, 2018b.

- Xie Fang and Xiao Zhijie. Consistency of ℓ1 penalized negative binomial regressions. Statistics & Probability Letters, page 108816, 2020.

- Zeggini Eleftheria, Scott Laura J, Saxena Richa, Voight Benjamin F, Marchini Jonathan L, Hu Tianle, de Bakker Paul IW, Abecasis Gonçalo R, Almgren Peter, Andersen Gitte, et al. Meta-analysis of genome-wide association data and large-scale replication identifies additional susceptibility loci for type 2 diabetes. Nature Genetics, 40(5):638, 2008.

- Zhang Cun-Hui and Zhang Stephanie S. Confidence intervals for low dimensional parameters in high dimensional linear models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76(1):217–242, 2014.

- Zhu Yinchu, Bradic Jelena, et al. Significance testing in non-sparse high-dimensional linear models. Electronic Journal of Statistics, 12(2):3312–3364, 2018.

- Zöller Daniela, Lenz Stefan, and Binder Harald. Distributed multivariable modeling for signature development under data protection constraints. arXiv preprint arXiv:1803.00422, 2018.
