Summary

Spiking neural networks (SNNs) serve as a promising computational framework for integrating insights from the brain into artificial intelligence (AI). Existing SNN-based software infrastructures support either brain simulation or brain-inspired AI, but not both. To decode the nature of biological intelligence and create AI, we present the brain-inspired cognitive intelligence engine (BrainCog). This SNN-based platform provides essential infrastructure support for developing brain-inspired AI and brain simulation. BrainCog integrates different biological neurons, encoding strategies, learning rules, brain areas, and hardware-software co-design as essential components. Leveraging these user-friendly components, BrainCog incorporates various cognitive functions, including perception and learning, decision-making, knowledge representation and reasoning, motor control, social cognition, and brain structure and function simulations across multiple scales. BORN is an AI engine developed on BrainCog, showcasing seamless integration of BrainCog's components and cognitive functions to build advanced AI models and applications.

Graphical abstract

Highlights

• BrainCog is an integrated platform for brain-inspired AI and brain simulation
• BrainCog offers a variety of universal foundational components
• BrainCog supports diverse cognitive functions in various domains
• BORN integrates BrainCog's cognitive functions to create advanced AI

The bigger picture

To integrate the scientific exploration of the nature of biological intelligence and develop artificial intelligence (AI), the research community urgently requires an open-source platform that can simultaneously support brain-inspired AI and brain simulation across multiple scales. To meet this need, we release a spiking neural network (SNN)-based, brain-inspired cognitive intelligence engine (BrainCog) that provides a variety of universal essential components, including spiking neurons, encoding strategies, learning rules, brain areas, and hardware-software co-design. With these easy-to-use components, BrainCog incorporates numerous brain-inspired AI models that cover five categories of brain-inspired cognitive functions. It also supports multi-scale brain structure and function simulation. We also provide BORN, an SNN-driven, brain-inspired AI engine that integrates multiple BrainCog components and cognitive functions to build advanced AI models and robotics applications.

In this study, we introduce BrainCog, a comprehensive platform that bridges the gap between brain-inspired AI and brain simulation. By incorporating numerous foundational components, BrainCog supports a diverse set of cognitive functions and advances the integration of neuroscience and AI. The toolkit gives researchers a shared platform for exploring the intersection of these domains as we work toward understanding and emulating the complexity of cognitive processes.

Introduction

The human brain can self-organize and coordinate different cognitive functions to flexibly adapt to changing environments. A major challenge for artificial intelligence (AI) and computational neuroscience is integrating multi-scale biological principles to build brain-inspired intelligent models. As the third generation of neural networks,1 spiking neural networks (SNNs) are more biologically plausible at multiple scales, including membrane potential, neuronal firing, synaptic transmission, synaptic plasticity, and coordination of multiple brain areas. More importantly, SNNs are more biologically interpretable, more energy efficient, and naturally more suitable for modeling various cognitive functions of the brain and creating brain-inspired AI.
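To make the membrane-potential and firing dynamics mentioned above concrete, the following is a minimal sketch of a discrete-time leaky integrate-and-fire (LIF) neuron in plain Python. The update rule, the time constant `tau`, and the hard reset are illustrative assumptions, and `simulate_lif` is a hypothetical helper, not a BrainCog API.

```python
def simulate_lif(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one LIF neuron over discrete time steps.

    inputs: sequence of input currents, one per time step (arbitrary units).
    Returns a list of 0/1 spikes, one per step.
    """
    v = v_reset
    spikes = []
    for x in inputs:
        # Leaky integration: v decays toward v_reset while being driven by x.
        v = v + (x - (v - v_reset)) / tau
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    print(simulate_lif([1.5] * 10))  # [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
    print(simulate_lif([0.9] * 10))  # v converges to 0.9 < threshold: all zeros
```

A suprathreshold constant input produces regular firing, while an input whose steady-state potential stays below threshold never fires; this threshold nonlinearity, together with the leak, is what distinguishes spiking units from conventional artificial neurons.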

Existing neural simulators attempt to simulate elaborate biological neuron models, implement large-scale neural network simulations, and build neural dynamics models and deep SNN models. NEURON2 focuses on simulating elaborate biological neuron models. The Neural Simulation Tool (NEST)3 implements large-scale neural network simulations. Brian/Brian24,5 provides an efficient and convenient tool for modeling SNNs. Shallow SNNs implemented by Brian2 can realize unsupervised visual classification.6 Further, BindsNET7 builds SNNs by coordinating various neurons and connections and incorporates multiple biological learning rules for training SNNs. SNNs implemented by these frameworks can realize machine learning tasks, including supervised, unsupervised, and reinforcement learning. However, supporting more complex tasks remains a challenge for current SNN frameworks, and their performance still lags behind that of traditional deep neural networks (DNNs).

Deep SNNs trained by surrogate gradients or converted from well-trained DNNs have achieved remarkable progress in speech recognition,8 computer vision,9 and reinforcement learning.10 Motivated by this, the SNN conversion toolbox (SNN-TB)11 provides an artificial neural network (ANN)-to-SNN framework that can transform DNN models built with different deep learning libraries (such as Keras, TensorFlow, and PyTorch) into SNN models, and it provides interfaces to simulation platforms (such as PyNN12 and Brian2) as well as deployment to hardware (SpiNNaker13 and Loihi14). SINABS15 implements spiking convolutional neural networks (SCNNs) based on PyTorch. It integrates different types of neurons and various SCNN training algorithms (such as ANN-to-SNN conversion and backpropagation through time [BPTT]) and supports deploying models to neuromorphic hardware. SpikingJelly (SJ)16 is a deep learning SNN framework (trained by surrogate gradients or by converting well-trained DNNs to SNNs) that provides convenient basic components for deep supervised learning and reinforcement learning. These platforms are inspired mainly by deep learning and focus on improving performance on different tasks. They currently draw little on the brain's information processing mechanisms and hence fall short at simulating large-scale functional brains.
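ANN-to-SNN conversion rests on the observation that a non-leaky integrate-and-fire (IF) neuron with reset by subtraction fires at a rate that approximates a clipped ReLU of its constant input. The sketch below, with hypothetical helpers `if_firing_rate` and `clipped_relu` and an illustrative threshold, shows this relationship; it is not the algorithm of any of the toolboxes named above.

```python
def if_firing_rate(x, steps=1000, v_threshold=1.0):
    """Firing rate (spikes per step) of a non-leaky IF neuron driven by constant input x."""
    v = 0.0
    n_spikes = 0
    for _ in range(steps):
        v += x  # integrate without leak
        if v >= v_threshold:
            n_spikes += 1
            v -= v_threshold  # reset by subtraction keeps the residual charge
    return n_spikes / steps


def clipped_relu(x, v_threshold=1.0):
    """The activation the IF rate approximates: clip(x, 0, threshold) / threshold."""
    return min(max(x, 0.0), v_threshold) / v_threshold


if __name__ == "__main__":
    for x in (-0.5, 0.0, 0.25, 0.7, 1.0, 1.5):
        print(x, if_firing_rate(x), clipped_relu(x))
```

Because the rate saturates at one spike per step, conversion pipelines typically normalize weights or thresholds so that ANN activations fall into the non-saturated range.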

BrainPy17 excels at modeling, simulating, and analyzing the dynamics of brain-inspired neural networks from multiple perspectives, including neurons, synapses, and networks. While it focuses on computational neuroscience research, it fails to consider the learning and optimization of deep SNNs or the implementation of brain-inspired functions. Semantic pointer architecture unified network (SPAUN)18 is a large-scale brain function model consisting of 2.5 million simulated neurons and is implemented by Nengo.19 It integrates multiple brain areas and can perform various brain cognitive functions, including image recognition, working memory, question answering, reinforcement learning, and fluid reasoning. However, SPAUN is not suitable for solving challenging and complex AI tasks that deep learning models can handle. In summary, the infrastructures for brain simulation and brain-inspired intelligence do not seem to have the same goal. Thus, the platforms for brain simulation and brain-inspired intelligence have been developed separately in the past. However, with a design that organizes biological plausibility and computational complexity at different levels, the two can be integrated and unified at the infrastructure level, eliminating the need for separate development. This integration is beneficial from the perspective of revealing the computational nature of intelligence and developing intelligent applications.

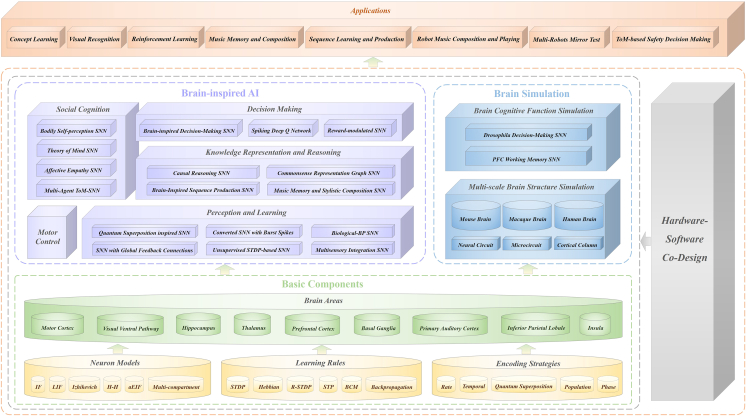

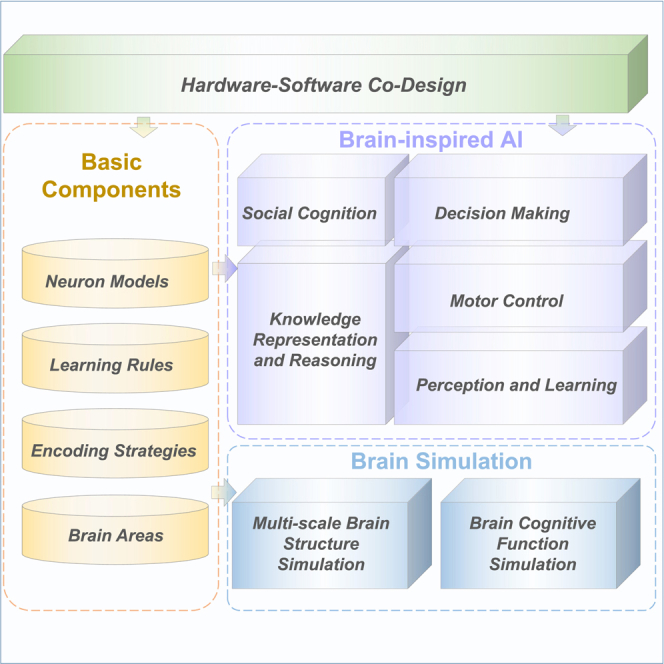

Considering the various limitations of existing frameworks mentioned above, in this paper, we present the brain-inspired cognitive intelligence engine (BrainCog), an SNN-based open-source platform for brain-inspired AI and brain simulation at multiple scales. As shown in Figure 1, BrainCog provides basic components such as different types of neuron models, learning rules, encoding strategies, etc., as building blocks to construct various brain areas and neural circuits to implement brain-inspired cognitive functions. Based on these essential components, BrainCog can perform a wide variety of brain-inspired AI modeling and simulate brain cognitive functions and structures, showing considerable scalability and flexibility. BrainCog also supports hardware-software co-design to facilitate the deployment of different SNN-based computational models. The platform includes several brain-inspired cognitive SNN models divided into five categories of cognitive functions: perception and learning, decision-making, motor control, knowledge representation and reasoning, and social cognition. For brain simulation, BrainCog provides simulations of brain structures and functions at different scales, from microcircuits and cortical columns to whole-brain structure simulations (covering the mouse brain, macaque brain, and human brain). We compare BrainCog with other platforms in terms of brain structure, learning mechanisms, and cognitive functions in Table 1.

Figure 1.

The architecture of the brain-inspired cognitive intelligence engine (BrainCog)

Table 1.

Comparison of brain-inspired SNN and brain simulation platforms

| Category | Feature | SNN-TB (Rueckauer et al.11) | BindsNET (Hazan et al.7) | SINABS (SynSense SNN Library15) | SJ (Fang et al.16) | BrainPy (Wang et al.17) | SPAIC (Hong et al.20) | BrainCog |
|---|---|---|---|---|---|---|---|---|
| Brain structure | neuron | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| | connection | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| | brain area | × | × | × | × | × | × | ✓ |
| Learning mechanisms | biologically plausible | × | ✓ | × | × | × | ✓ | ✓ |
| | conversion | ✓ | ✓ | × | ✓ | × | × | ✓ |
| | BP | × | × | ✓ | ✓ | ✓ | ✓ | ✓ |
| | RL | × | ✓ | × | ✓ | × | × | ✓ |
| Functions | brain-inspired AI | ✓ | ✓ | ✓ | ✓ | × | ✓ | ✓ |
| | brain simulation | × | × | × | × | ✓ | ✓ | ✓ |
| | types | little | little | little | little | much | much | rich |

BrainCog is built on a deep learning framework (currently PyTorch, and it is designed to be easy to migrate to other frameworks such as PaddlePaddle and TensorFlow). The online repository of BrainCog can be accessed at http://www.brain-cog.network. With comprehensive, easy-to-use essential components and a considerable number of use cases (covering brain-inspired AI models as well as brain function and structure simulation), BrainCog enables researchers to learn the platform quickly and implement their own algorithms. In summary, BrainCog provides a powerful infrastructure for SNN-based AI development and computational neuroscience research.

Results

BrainCog provides an SNN-based open-source platform for brain-inspired AI modeling and brain simulation at multiple scales, unifying at the infrastructure level the efforts to reveal the nature of intelligence and to develop brain-inspired intelligent models. This section presents current applications and results of the brain-inspired AI models and brain simulations integrated in BrainCog.

Brain-inspired AI

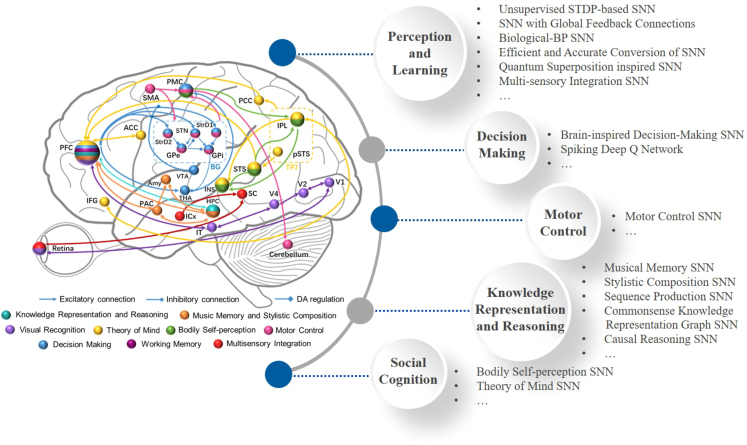

Computational units (different neuron models, learning rules, encoding strategies, brain area models, etc.) at multiple scales provided by BrainCog serve as a foundation to develop functional networks. To enable BrainCog to provide infrastructure support for brain-inspired AI, cognitive function-centric networks need to be built and provided as reusable functional building blocks. BrainCog aims to achieve the vision “the structure and mechanism are inspired by the brain, and the cognitive behaviors are similar to humans” for brain-inspired AI. As a result, BrainCog provides cognitive function components that collectively form neural circuits corresponding to 28 brain areas in mammalian brains, as shown in Figure 2. Drawing on the neural structure and learning mechanism from the brain, BrainCog implements a variety of brain-inspired AI models that can be classified into five categories: perception and learning, decision-making, motor control, knowledge representation and reasoning, and social cognition. The source code of the brain-inspired AI models implemented by BrainCog is available at https://github.com/BrainCog-X/Brain-Cog/tree/main/examples.

Figure 2.

Multiple cognitive functions and brain-inspired AI models integrated in BrainCog, along with their related brain areas and neural circuits

Perception and learning

For sensory information processing, BrainCog implements SNN-based image classification, detection, and concept learning. BrainCog provides a variety of supervised and unsupervised methods for training SNNs, including: biologically plausible spike-timing-dependent plasticity (STDP) combined with short-term synaptic plasticity (STP), an adaptive synaptic filter, and adaptive threshold balance21 to improve SNN performance in unsupervised scenarios (Supplemental experimental procedures S1); global feedback connections combined with a local plasticity rule22 (Supplemental experimental procedures S2); a more biologically plausible backpropagation method based on surrogate gradients23 (Supplemental experimental procedures S3); conversion-based algorithms built on burst spikes and a lateral inhibition mechanism24 (Supplemental experimental procedures S4); the leaky integrate-and-fire or burst neuron with a dynamic burst pattern;25 cooperation between excitatory and inhibitory neurons with self-feedback connections;26 and capsule structures routed by the STDP mechanism.27 Inspired by quantum information theory, BrainCog provides a quantum superposition-inspired SNN model that encodes complementary information into neural spike trains with different frequencies and phases.28 By introducing multi-compartment spiking neurons, this SNN model also achieves robust performance in noisy scenarios28 (Supplemental experimental procedures S5). Based on the BrainCog engine, we also present a human-like multisensory integration concept learning framework that generates representations with five types of perceptual strength information.29
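As a reference point for the STDP-based methods listed above, here is a minimal sketch of the classic pair-based STDP rule with exponential timing windows. The amplitudes, time constants, weight bounds, and the helper names `stdp_dw` and `apply_stdp` are illustrative assumptions; BrainCog's STDP variants (for example, those combined with STP) are described in the cited works and supplemental procedures.

```python
import math


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (spike times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation that decays with the delay.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0, **params):
    """Sum pairwise updates over all spike pairs, then clip to [w_min, w_max]."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_dw(t_pre, t_post, **params)
    return min(max(w, w_min), w_max)
```

Summing over all spike pairs is the simplest (all-to-all) pairing scheme; nearest-neighbor pairing or trace-based online updates are common alternatives with different behavior at high firing rates.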

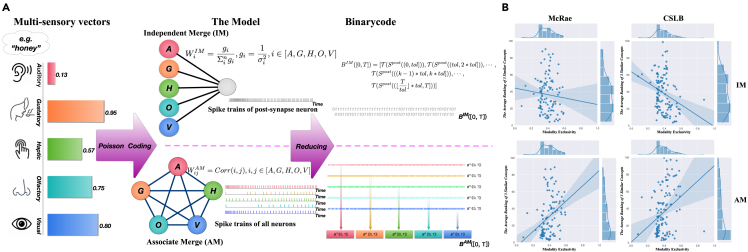

Case study 1: Multisensory integration

Combining information from multiple senses improves perception and recognition and shortens response times. BrainCog provides a concept learning framework that generates integrated representations with five types of perceptual strength information.29 The framework is developed with two distinct paradigms, associate merge (AM) and independent merge (IM), as shown in Figure 3.

Figure 3.

Multisensory integration framework based on BrainCog

(A) The framework of multisensory concept learning based on SNNs.

(B) The correlation between modality exclusivity (ME) and the average neighbor rank.

IM is a cognitive model that assumes that each type of sensory information for a concept is processed independently before being integrated. It uses a two-layer SNN with five neurons in the first layer, representing the five types of perceptual strength (visual, auditory, haptic, olfactory, and gustatory), and one neuron in the second layer for integration. The model uses Poisson-encoded presynaptic neurons and leaky integrate-and-fire (LIF) or Izhikevich postsynaptic neuron models, with the weights between the neurons computed as a function of the variance of perceptual strength. IM converts the postsynaptic neuron's spike trains into integrated representations for each concept.
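The Poisson encoding used by IM can be sketched as follows: each perceptual strength, normalized to [0, 1], sets a per-step firing probability (a Bernoulli approximation of a Poisson process). The 0-5 rating scale used for normalization, the example ratings, and the helper names `poisson_encode` and `encode_concept` are assumptions for illustration.

```python
import random

MODALITIES = ("visual", "auditory", "haptic", "olfactory", "gustatory")


def poisson_encode(strength, n_steps=100, scale_max=5.0, seed=None):
    """Encode one perceptual strength as a Bernoulli/Poisson spike train.

    strength: rating on a 0..scale_max scale (0-5 is an assumption here).
    At each step the neuron fires with probability strength / scale_max.
    """
    rng = random.Random(seed)
    p = min(max(strength / scale_max, 0.0), 1.0)
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def encode_concept(strengths, n_steps=100, seed=0):
    """Return one spike train per modality for a concept (hypothetical helper)."""
    return {m: poisson_encode(strengths[m], n_steps, seed=seed + i)
            for i, m in enumerate(MODALITIES)}


if __name__ == "__main__":
    # Hypothetical ratings for one concept, not values from any dataset.
    lemon = {"visual": 4.5, "auditory": 0.3, "haptic": 3.0,
             "olfactory": 4.0, "gustatory": 4.8}
    trains = encode_concept(lemon)
    print({m: sum(t) for m, t in trains.items()})
```

The resulting spike counts scale with the ratings, so modalities that dominate a concept drive the integrating neuron more strongly.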

The AM paradigm assumes that each type of modality associated with a concept is processed together before integration. It consists of five neurons representing the concept’s distinct modal information sources, and they are connected to each other without self-connections. The input spike trains for AM are generated through Poisson coding based on perceptual strength. The weights are defined by the correlation between each pair of modalities. The AM model converts spike trains of all neurons into binary code and combines them as the integrated representation.
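The two AM ingredients described above can be sketched as follows, assuming that the pairwise modality correlation is the Pearson correlation of perceptual strengths computed across all concepts in a dataset, and that the binary code is a simple concatenation of the five neurons' spike trains. `am_weights` and `am_representation` are hypothetical names.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def am_weights(strength_table):
    """Modality-to-modality weights for AM.

    strength_table[c][m] is the perceptual strength of concept c in modality m.
    Each weight is the correlation of two modality columns across concepts;
    the diagonal is zero because AM has no self-connections.
    """
    n_mod = len(strength_table[0])
    columns = [[row[m] for row in strength_table] for m in range(n_mod)]
    return [[0.0 if i == j else pearson(columns[i], columns[j])
             for j in range(n_mod)] for i in range(n_mod)]


def am_representation(spike_trains):
    """Concatenate the binary spike trains of all AM neurons into one code."""
    return [bit for train in spike_trains for bit in train]
```

The weight matrix is symmetric, which matches the description of mutually connected modality neurons without self-connections.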

The multisensory integration framework is evaluated on concept similarity datasets.30,31 Three multisensory datasets are investigated: LC823,32,33 brain-based componential semantic representation (BBSR),34 and Lancaster40k.35 The results (Figure 3B) show that the representations generated by the framework are closer to human performance than the original ones. The two paradigms, IM and AM, are evaluated and compared using concept feature norm datasets (McRae36 and Centre for Speech, Language and the Brain (CSLB)37); the findings reveal that IM performs better at multisensory integration for concepts with higher modality exclusivity, while AM benefits concepts with a uniform perceptual strength distribution. Furthermore, both paradigms generalize well to perceptual strength-free metrics.

Decision-making

For decision-making, BrainCog provides a multi-brain area coordinated decision-making SNN,38 which achieves human-like learning ability on the Flappy Bird game. The platform also includes a reward-modulated brain-inspired SNN that enables self-organizing obstacle avoidance for a drone swarm.39 In addition, BrainCog combines SNNs with deep reinforcement learning, providing a brain-inspired spiking deep Q network (spike-DQN) model40 that outperforms the vanilla ANN-based DQN in Atari game experiments.

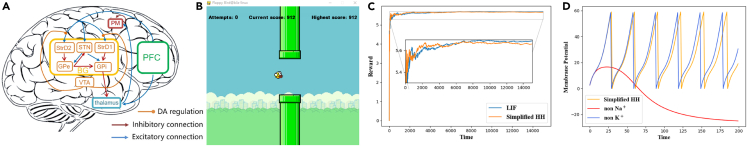

Case study 2: Brain-inspired decision-making SNN

BrainCog has developed a brain-inspired decision-making SNN (BDM-SNN) model38 that simulates the prefrontal cortex (PFC)-basal ganglia (BG)-thalamus (THA)-premotor cortex (PMC) neural circuit, as shown in Figure 4A. The BDM-SNN model incorporates the excitatory and inhibitory connections within the basal ganglia nuclei and the direct, indirect, and hyperdirect pathways from the PFC to the BG.41 This BDM-SNN model uses biological neuron models (LIF and simplified Hodgkin-Huxley [H-H] models), synaptic plasticity learning rules, and interactive connections among multi-brain areas. The learning process combines global dopamine modulation and local synaptic plasticity for online reinforcement learning.
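Combining global dopamine modulation with local synaptic plasticity is commonly modeled as a three-factor rule: local STDP updates accumulate in a decaying eligibility trace, and a global dopamine signal converts the trace into an actual weight change. The sketch below illustrates that generic rule with assumed constants; `RewardModulatedSynapse` is a hypothetical class, and this is not the exact BDM-SNN learning rule from ref 38.

```python
import math


class RewardModulatedSynapse:
    """Three-factor plasticity: local STDP -> eligibility trace -> dopamine-gated change."""

    def __init__(self, w=0.5, tau_e=50.0, lr=0.1, w_min=0.0, w_max=1.0):
        self.w = w
        self.tau_e = tau_e  # eligibility-trace time constant (ms), assumed value
        self.lr = lr
        self.w_min, self.w_max = w_min, w_max
        self.e = 0.0  # eligibility trace

    def step(self, stdp_update, dopamine, dt=1.0):
        """Advance one time step and return the new weight.

        stdp_update: local STDP term for this step (e.g. from pre/post spike pairs).
        dopamine: global reward signal (positive for reward, negative for punishment).
        """
        # The trace remembers recent pre/post coincidences and decays exponentially.
        self.e = self.e * math.exp(-dt / self.tau_e) + stdp_update
        # Only a nonzero dopamine signal turns the trace into a weight change.
        self.w += self.lr * dopamine * self.e
        self.w = min(max(self.w, self.w_min), self.w_max)
        return self.w
```

The trace lets a reward that arrives some steps after the causal spike pairing still credit the responsible synapse, which is the point of separating local plasticity from global modulation.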

Figure 4.

The architecture and experimental results of the BDM-SNN implemented by BrainCog

(A) The overall architecture of the BDM-SNN.

(B) Flappy Bird game.

(C) Experimental result of the BrainCog-based BDM-SNN on the Flappy Bird game. The y axis shows the mean of cumulative rewards.

(D) Effects of different ion channels on membrane potential for the simplified H-H model.

The BDM-SNN model implemented by BrainCog can perform different tasks, such as playing the Flappy Bird game (Figure 4B) and supporting online decision-making for unmanned aerial vehicles (UAVs). For the Flappy Bird game, our method achieves human-like performance, passing the pipes stably from the first trial. Figure 4C illustrates the changes in the mean cumulative reward for LIF and simplified H-H neurons while playing the game. The simplified H-H neuron performs similarly to the LIF neuron, and the BDM-SNN with either neuron type quickly learns the correct rules and keeps obtaining rewards. We also analyze the role of different ion channels in the simplified H-H model. Figure 4D shows that sodium and potassium ion channels have opposite effects on the neuronal membrane potential: removing sodium ion channels causes the membrane potential to decay, while removing potassium ion channels causes the membrane potential to rise faster and the neuron to fire earlier. These results indicate that sodium ion channels help increase the membrane potential, whereas potassium ion channels have the opposite effect. The experimental results also indicate that a BDM-SNN whose simplified H-H model lacks sodium ion channels fails to learn the Flappy Bird game.
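The sodium-channel ablation described above can be illustrated with the classic Hodgkin-Huxley model rather than BrainCog's simplified H-H variant, whose exact equations are not given here. The sketch uses the standard squid-axon parameters (voltages in mV with a -65 mV rest, conductances in mS/cm², currents in µA/cm²) and forward-Euler integration; setting `g_na=0.0` removes the sodium channel. The function names are hypothetical.

```python
import math


def _vtrap(x, y):
    """x / (exp(x / y) - 1), using its series limit near x = 0 to avoid 0/0."""
    if abs(x / y) < 1e-6:
        return y * (1.0 - x / y / 2.0)
    return x / (math.exp(x / y) - 1.0)


def _rates(v):
    """Classic HH opening/closing rates (1/ms) for gates m, h, n at voltage v (mV)."""
    a_m = 0.1 * _vtrap(-(v + 40.0), 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _vtrap(-(v + 55.0), 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext=10.0, t_max=50.0, dt=0.01, g_na=120.0, g_k=36.0, g_l=0.3):
    """Forward-Euler HH simulation; returns the membrane potential trace (mV)."""
    e_na, e_k, e_l, c_m = 50.0, -77.0, -54.387, 1.0
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = _rates(v)
    # Start the gates at their resting steady-state values.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    trace = []
    for _ in range(int(t_max / dt)):
        i_na = g_na * m ** 3 * h * (v - e_na)  # sodium current
        i_k = g_k * n ** 4 * (v - e_k)         # potassium current
        i_l = g_l * (v - e_l)                  # leak current
        a_m, b_m, a_h, b_h, a_n, b_n = _rates(v)
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        v += dt * (i_ext - i_na - i_k - i_l) / c_m
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the threshold."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)


if __name__ == "__main__":
    full, no_na = simulate_hh(), simulate_hh(g_na=0.0)
    print("all channels:", count_spikes(full), "spikes, peak", round(max(full), 1), "mV")
    print("no sodium:", count_spikes(no_na), "spikes, peak", round(max(no_na), 1), "mV")
```

With all channels present, the constant input drives repetitive spikes; without the sodium conductance, the outward potassium and leak currents hold the membrane at a subthreshold level, which mirrors the qualitative role of sodium channels discussed above.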

Motor control

Embodied cognition is crucial to realizing biologically plausible AI. Inspired by the brain's motor control mechanism and embodied cognition, BrainCog provides a multi-brain area coordinated robot motion SNN model that incorporates PMC, supplementary motor area (SMA), BG, and cerebellum functions. The model implements cooperation among multiple brain areas and population coding of neurons and can control various robots.

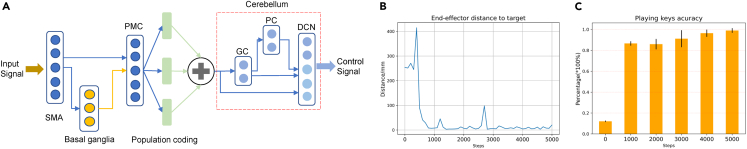

Case study 3: Motor control

Inspired by the brain's motor circuit, we construct a brain-inspired motor control model with the LIF neuron provided by BrainCog and implement a robot piano-playing task. The model architecture is shown in Figure 5A. The SMA and PMC modules produce high-level motion information; the SMA processes internal movement stimuli and plans advanced actions. The cerebellum coordinates and fine-tunes movements. We build an SNN-based cerebellum model to process the high-level motor control population embedding. The outputs of the populations are fused to encode the motor control information generated by the high-level cortical areas and are then fed into a three-layer cerebellum SNN comprising granule cell (GC), Purkinje cell (PC), and deep cerebellar nuclei (DCN) modules. The DCN layer generates the final joint control outputs. We use BrainCog's cross-layer connection and population-coding modules to construct the motor control SNN according to the connection mechanism of the motor cortex in the biological brain. The entire motor control model is feedforward and can be trained using the spatiotemporal backpropagation (STBP) method provided by BrainCog.

Figure 5.

Motor control implemented by BrainCog

(A) The motor control SNN based on BrainCog.

(B) End-effector distance to target.

(C) Playing key accuracy during training.

Table 2 shows the brain areas and the number of neurons used in the motor control SNN. The SMA receives music note information as input, and the PMC encodes this information with 16 groups of population-coding neurons for action selection. The cerebellum's GC, PC, and DCN parts form a residual connection and receive the output of the movement population neurons. The DCN outputs joint and end-effector coordinate control signals. The loss function is the Euclidean distance between the end-effector position output by the model and the target end-effector position required to play a specific note. Figures 5B and 5C show the distance between the end effector and the target and the key-playing accuracy during training.
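The population coding and the loss described above can be sketched with Gaussian tuning curves: each of 16 neurons (matching the 16 PMC groups, although Gaussian receptive fields and a center-of-mass readout are our assumptions) responds most strongly near its preferred value, and the training loss is the Euclidean distance between predicted and target end-effector positions. All helper names are hypothetical.

```python
import math


def population_encode(x, n_neurons=16, x_min=0.0, x_max=1.0):
    """Gaussian population code: returns (activities, preferred values)."""
    step = (x_max - x_min) / (n_neurons - 1)
    centers = [x_min + i * step for i in range(n_neurons)]
    sigma = step  # tuning width equal to the spacing (an assumption)
    activity = [math.exp(-((x - c) ** 2) / (2.0 * sigma ** 2)) for c in centers]
    return activity, centers


def population_decode(activity, centers):
    """Center-of-mass readout of a population code."""
    return sum(a * c for a, c in zip(activity, centers)) / sum(activity)


def euclidean_loss(predicted, target):
    """Distance between predicted and target end-effector positions."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(predicted, target)))
```

Spreading a value over several overlapping tuning curves makes the code tolerant to noise in individual neurons, while the Euclidean loss directly penalizes how far the end effector lands from the key to be played.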

Table 2.

Motor control brain area and number of neurons

| Brain area | SMA | Basal ganglia | PMC | Cerebellum |
|---|---|---|---|---|
| Neuron number | 512 | 128 | 128 | GC, 512; PC, 512; DCN, 7 |

Knowledge representation and reasoning

BrainCog incorporates multiple neuroplasticity- and population-coding mechanisms for knowledge representation and reasoning. We develop a brain-inspired music memory and stylistic composition model that can represent and memorize note sequences and generate music in different styles.42,43 We also develop a sequence production SNN that can memorize and reconstruct symbol sequences according to different rules44 (Supplemental experimental procedures S6). We build a commonsense knowledge representation graph SNN that uses multi-scale neural plasticity and population coding to represent commonsense knowledge in a graph SNN model45 (Supplemental experimental procedures S7). We design a causal reasoning SNN that encodes a causal graph into an SNN and performs deductive reasoning tasks46 (Supplemental experimental procedures S8).

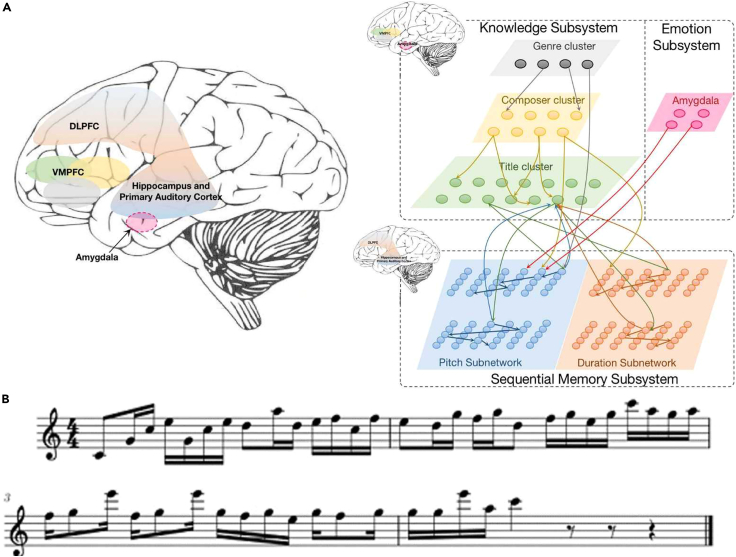

Case study 4: Stylistic composition SNN musical learning

BrainCog provides an example of SNN-based musical knowledge learning and the creation of melodies in different styles. We develop a stylistic composition SNN that consists of a knowledge network and a sequence memory network.43 As shown in Figure 6A, the knowledge network is a hierarchical structure that encodes and learns musical knowledge; its layers store the genre (such as baroque, classical, and romantic), the names of famous composers, and the titles of musical pieces. The sequence memory network stores the ordered notes. The example uses the LIF model supported by the BrainCog platform to simulate neural dynamics. During learning, synaptic connections projecting from the knowledge network to the sequence memory network are updated dynamically by the STDP learning rule.

Figure 6.

Stylistic composition SNN implemented by BrainCog

(A) Stylistic composition SNN model.

(B) A sample of a generated melody with Bach’s characteristics.

Musical composition

Given the beginning notes and the length of the melody to be generated, genre-based composition produces a single-part melody in a specific genre style. This task is achieved by the genre cluster and sequential memory system circuits. Similarly, composer-based composition produces melodies with a given composer's characteristics, using the composer cluster and sequential memory system circuits. We train the model on a classical piano dataset of 331 musical works recorded in the musical instrument digital interface (MIDI) format.47 Figure 6B shows a sample of a generated melody in Bach's style.
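The role of the sequential memory circuit can be illustrated with a deliberately simplified, non-spiking sketch: the connection from note a to note b is strengthened whenever a is immediately followed by b (a crude stand-in for STDP's pre-before-post potentiation), and composition starts from the given beginning notes and repeatedly follows the strongest outgoing connection until the requested length is reached. This is a first-order transition memory, not the stylistic composition SNN; the note names and helpers are hypothetical.

```python
from collections import defaultdict


def learn_sequences(melodies, lr=1.0):
    """Strengthen the connection pre -> post whenever note pre is immediately followed by post."""
    w = defaultdict(lambda: defaultdict(float))
    for melody in melodies:
        for pre, post in zip(melody, melody[1:]):
            w[pre][post] += lr
    return w


def compose(w, beginning, length):
    """Extend the beginning notes by repeatedly following the strongest outgoing connection."""
    melody = list(beginning)
    while len(melody) < length:
        successors = w.get(melody[-1])  # .get avoids creating empty entries
        if not successors:
            break  # no learned continuation from this note
        melody.append(max(successors, key=successors.get))
    return melody


if __name__ == "__main__":
    memory = learn_sequences([["C4", "D4", "E4", "F4", "G4"]])
    print(compose(memory, ["C4"], 5))
```

A genre- or composer-conditioned variant could keep one weight table per genre or composer cluster, which loosely mirrors how the knowledge network shapes the sequence memory in Figure 6A.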

Social cognition

For social cognition, BrainCog provides a brain-inspired bodily self-perception and theory of mind (ToM) model that enables an agent to perceive and understand itself and others, helping robots pass the multi-robot mirror self-recognition test48 and the AI safety risks experiment.49 In addition, we construct a brain-inspired robot pain SNN based on BrainCog, which simulates the neural mechanism underlying the emergence of painful emotion and realizes two tasks with real robots: alerting to actual injury and preventing potential injury.50 We also construct a brain-inspired affective empathy SNN based on BrainCog, which simulates the mirroring mechanism in the brain to achieve pain empathy and an altruistic rescue task among intelligent agents.51 Based on the BrainCog platform, we build a multi-agent theory of mind decision-making model to improve multi-agent cooperation and competition52 and a brain-inspired intention prediction model that enables a robot to act according to the user's intention.53

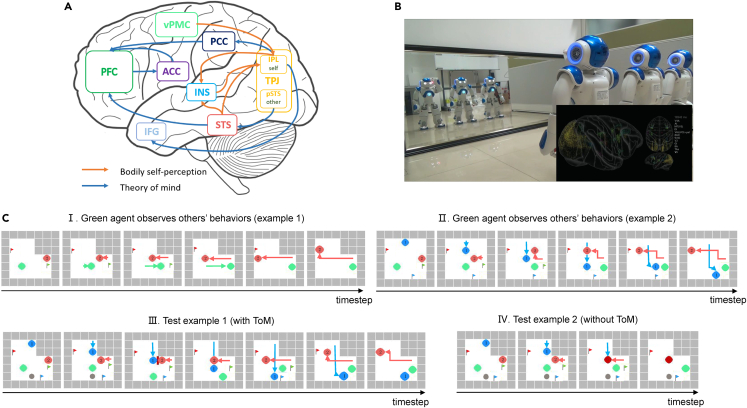

Case study 5: Brain-inspired bodily self-perception and theory of mind model

The nature and neural correlates of social cognition are advanced topics in cognitive neuroscience. In AI and robotics, few in-depth studies seriously consider the neural correlates and brain mechanisms of biological social cognition. Although the scientific understanding of biological social cognition is still at a preliminary stage, we integrate biological findings on bodily self-perception54 and theory of mind55 into a brain-inspired bodily self-perception and theory of mind model to extend the functions of BrainCog. This model uses the neuron models, STDP learning rule, and interactive connections among multiple brain areas provided by BrainCog, as shown in Figure 7A. It enables the robots and agents to pass the multi-robot mirror self-recognition test and the AI safety risks experiment, as shown in Figures 7B and 7C. The former is a classic experiment of self-perception in social cognition, and the latter is a variation and application of the theory of mind experiment in social cognition.
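The core intuition behind the mirror test, that a robot's own mirror image is the one whose motion is most consistent with its own motor commands, can be sketched with a correlation test. This is a simplified, non-spiking illustration under our own assumptions (one-dimensional arm motion signals and Pearson correlation as the consistency measure); the BrainCog model instead relies on spiking neurons, STDP, and multi-area connections as described above. `identify_own_image` is a hypothetical helper.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def identify_own_image(motor_commands, observed_motions):
    """Index of the mirror image whose motion best correlates with the robot's own commands."""
    scores = [pearson(motor_commands, motion) for motion in observed_motions]
    return max(range(len(scores)), key=lambda i: scores[i])


if __name__ == "__main__":
    rng = random.Random(0)
    own = [rng.uniform(-1, 1) for _ in range(200)]  # this robot's random arm commands
    other_a = [rng.uniform(-1, 1) for _ in range(200)]  # identical-looking robot A
    other_b = [rng.uniform(-1, 1) for _ in range(200)]  # identical-looking robot B
    # The robot's own reflection follows its commands up to observation noise.
    mirror = [other_a, [c + rng.gauss(0, 0.1) for c in own], other_b]
    print(identify_own_image(own, mirror))
```

Because all three robots look identical, appearance cannot solve the task; only the contingency between self-generated movement and observed movement distinguishes the robot's own reflection.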

Figure 7.

The brain-inspired bodily self-perception and theory of mind model and experiments

(A) Brain-inspired bodily self-perception and theory of mind model.

(B) Multi-robot mirror self-recognition test. Three robots with identical appearances move their arms randomly in front of the mirror at the same time, and each robot needs to determine which mirror image belongs to it.

(C) AI safety risks experiment. After observing the behavior of the other two agents, the green agent can infer their actions by using its ToM ability when environmental changes may pose safety risks (e.g., the intersection may block the agents’ view and cause collisions). (I) Example 1. The green agent observes others’ behaviors. (II) Example 2. The green agent observes others’ behaviors. (III) The green agent with ToM can help other agents avoid risks. (IV) The green agent without ToM is unable to help other agents avoid risks.

Brain simulation

In addition to brain-inspired AI models, BrainCog also shows capabilities regarding brain cognitive function simulation and multi-scale brain structure simulation based on SNNs. BrainCog incorporates as much published anatomical data as possible to simulate cognitive functions such as decision-making and working memory. Anatomical and imaging multi-scale connectivity data are used to make whole-brain simulations from mouse and macaque to human more biologically plausible.

Brain cognitive function simulation

To demonstrate the capability of BrainCog for cognitive function simulation, we provide simulations of Drosophila decision-making and PFC working memory.56,57 For the Drosophila nonlinear and linear decision-making simulations, BrainCog verifies the winner-takes-all behavior of the nonlinear dopaminergic neuron-GABAergic neuron-mushroom body (DA-GABA-MB) circuit under a dilemma and reaches conclusions consistent with Drosophila biological experiments56 (for more details, see Supplemental experimental procedures S9). For the PFC working memory network implemented by BrainCog, we find that replacing rodent neurons with human neurons, without changing the network structure, significantly improves the accuracy and completeness of an image memory task,57 suggesting that brain evolution affects not only structures but also single neurons.

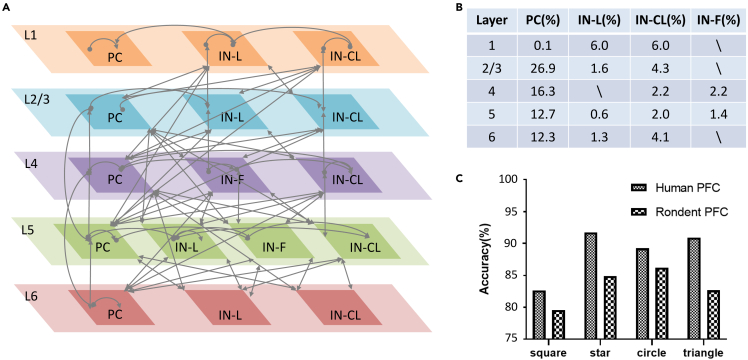

Case study 6: PFC working memory

Understanding the detailed differences between the brains of humans and other species on multiple scales will help illuminate what makes us unique as a species.57 We extract the key membrane parameters of human neurons from the human brain neuron database of the Allen Institute for Brain Science.58 Different types of neuron models are established based on the adaptive exponential integrate-and-fire (aEIF) model, supported by BrainCog. As shown in Figure 8A, we build a 6-layer PFC column model based on biometric parameters,59 following the model of a single PFC proposed by Hass et al.60 The pyramidal cells and interneurons are proportionally distributed from the literature,61,62 and connection probabilities for different types of neurons are based on previous studies60,63,64 (Figure 8B). We test the accuracy of information maintenance on the rodent neuron PFC network model. In Figure 8C, we can see that keeping the network structure and other parameters unchanged, only using human neurons instead of rodent neurons, can significantly improve the accuracy and integrity of image output. This is consistent with biological experiments65 showing that human neurons have a lower membrane capacitance and fire more quickly, thus improving the efficiency of information transmission. This data-driven PFC column model in BrainCog provides an effective simulation-validation platform to study other high-level cognitive functions.66
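The capacitance effect mentioned above can be explored with the aEIF (AdEx) model. The sketch below integrates the standard AdEx equations with forward Euler, using the regular-spiking parameters commonly cited from Brette and Gerstner (2005), and compares the default capacitance with a halved value. The halved capacitance is a hypothetical stand-in for a lower, human-like capacitance, not a parameter extracted from the Allen database, and `adex_spike_times` is a hypothetical helper.

```python
import math


def adex_spike_times(i_ext=800.0, c_m=281.0, t_max=500.0, dt=0.05,
                     g_l=30.0, e_l=-70.6, v_t=-50.4, delta_t=2.0,
                     tau_w=144.0, a=4.0, b=80.5, v_reset=-70.6, v_peak=20.0):
    """Spike times (ms) of an AdEx neuron under constant current.

    Units: mV, ms, pF, nS, pA (so pF * mV / ms = pA).
    """
    v, w = e_l, 0.0
    spikes = []
    for k in range(int(t_max / dt)):
        # Membrane: leak + exponential spike initiation - adaptation + input.
        dv = (-g_l * (v - e_l) + g_l * delta_t * math.exp((v - v_t) / delta_t)
              - w + i_ext) / c_m
        # Adaptation current: subthreshold coupling a, time constant tau_w.
        dw = (a * (v - e_l) - w) / tau_w
        v += dt * dv
        w += dt * dw
        if v >= v_peak:
            spikes.append(k * dt)
            v = v_reset  # reset the membrane
            w += b       # spike-triggered adaptation
    return spikes


if __name__ == "__main__":
    default_c = adex_spike_times(c_m=281.0)
    low_c = adex_spike_times(c_m=140.0)
    print(len(default_c), default_c[0])
    print(len(low_c), low_c[0])
```

With the same input, the lower-capacitance neuron charges faster, so it reaches threshold earlier and fires more often, which is the mechanism the paragraph above links to more efficient information transmission.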

Figure 8.

Anatomical and network simulation diagram

(A) The connection of a single PFC column.

(B) The distribution proportion of different types of neurons in each column layer.

(C) Network persistent activity performance.

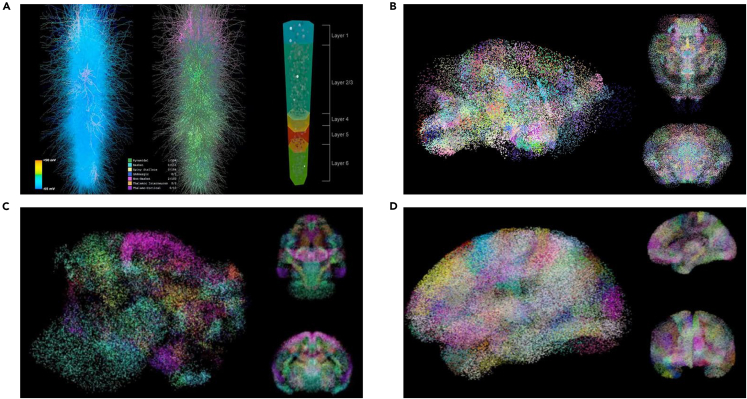

Multi-scale brain structure simulation

BrainCog simulates the brains of several species at different scales, from microcircuits and cortical columns to whole-brain structures.

(1) Neural microcircuit. BrainCog simulates the PFC-BG-THA-PMC decision-making circuit of the mammalian brain (as shown in Figure 4A). Following the anatomical architecture, the microcircuit model includes excitatory and inhibitory connections between nucleus clusters within the basal ganglia and between cortical (PFC and PMC) and subcortical (BG and THA) areas, as well as the direct, indirect, and hyperdirect pathways from the PFC to the BG. BrainCog builds this multi-brain-area coordinated decision-making circuit from its LIF neuron and CustomLinear connectivity modules. Combined with dopamine-regulated learning rules, the circuit enables human-like decision-making.

(2) Cortical column. BrainCog builds a mammalian thalamocortical column based on realistic anatomical data.67 The column has a six-layered cortical structure with eight types of excitatory and nine types of inhibitory neurons. The thalamic neurons comprise two types of excitatory neurons, inhibitory neurons, and GABAergic thalamic reticular neurons (TRNs). Neurons are simulated with the Izhikevich model, which BrainCog configures so that each neuron type exhibits the spiking pattern associated with its morphology. Each neuron has multiple dendritic branches carrying many synapses. The synaptic distribution and the microcircuits are reconstructed in BrainCog based on previous studies.67 Figure 9A shows the details of the minicolumn. The column contains 1,000 neurons and over 4,200,000 synapses.

(3) Mouse brain. The BrainCog mouse brain simulator is an SNN model covering 213 mouse brain areas based on the Allen Mouse Brain Connectivity Atlas.68 Each neuron is modeled with the aEIF neuron model and simulated with a time step of $\Delta t = 1$ ms. The model includes six types of neurons: excitatory (E) neurons, interneuron basket cells (I-BCs), interneuron Martinotti cells (I-MCs), thalamocortical relay neurons, thalamic interneurons (TIs), and TRNs. The connections between brain areas follow the quantitative anatomical dataset from the Allen Mouse Brain Connectivity Atlas.68 Figure 9B shows the spontaneous activity of the model without external stimulation.

(4) Macaque brain. The BrainCog macaque brain simulator is a large-scale SNN model covering 383 macaque brain areas,69 with 1.21 billion spiking neurons and 1.3 trillion synapses, which is 1/5 the scale of a real macaque brain. The cortical areas contain excitatory neurons (80% of the simulated neurons) and inhibitory neurons (20% of the simulated neurons). The spiking neurons follow the H-H model supported by BrainCog. Figure 9C shows the running demo of the model. The platform allows flexible settings for the number of neurons, the connections, and the excitatory-inhibitory ratio in each region.

(5) Human brain. The BrainCog human brain simulator follows an approach similar to that of the macaque brain simulator. It uses the Human Brainnetome Atlas70,71 to build 246 brain areas and also models the details of the microcircuit, including the excitatory and inhibitory neurons. The final model (Figure 9D) includes 0.86 billion spiking neurons and 2.5 trillion synapses, which is 1/100 the scale of a real human brain. These simulations demonstrate that the framework can be deployed on computer clusters of multiple scales.
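The per-region settings mentioned above (neuron number, inter-area connections, and excitatory-inhibitory ratio) can be illustrated with a small sketch. This is not BrainCog's API: the helper names, the rule that a connectivity matrix scales a base connection probability, the choice to keep inhibitory projections local, and the toy three-area matrix are all hypothetical, chosen only to show how an E/I split and an area-to-area matrix turn into individual synapses.

```python
import random
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    n_exc: int
    n_inh: int
    offset: int  # global index of this area's first neuron

    @property
    def size(self) -> int:
        return self.n_exc + self.n_inh


def allocate_areas(sizes, exc_ratio=0.8):
    """Split each area into excitatory and inhibitory neurons (hypothetical helper)."""
    areas, offset = [], 0
    for name, n in sizes.items():
        n_exc = round(n * exc_ratio)
        areas.append(Area(name, n_exc, n - n_exc, offset))
        offset += n
    return areas


def sample_synapses(areas, conn, p_base=0.1, w_exc=0.5, w_inh=-2.0, seed=0):
    """Sample (pre, post, weight) synapses.

    conn[i][j] scales the connection probability from area i to area j and
    stands in for an atlas-derived connectivity matrix. Inhibitory neurons
    project only within their own area (an assumption of this sketch).
    """
    rng = random.Random(seed)
    synapses = []
    for i, src in enumerate(areas):
        for j, dst in enumerate(areas):
            p = min(1.0, p_base * conn[i][j])
            if p <= 0.0:
                continue
            for a in range(src.size):
                is_exc = a < src.n_exc
                if i != j and not is_exc:
                    continue  # no long-range inhibitory projections
                w = w_exc if is_exc else w_inh
                for b in range(dst.size):
                    if rng.random() < p:
                        synapses.append((src.offset + a, dst.offset + b, w))
    return synapses


# Toy three-area model; sizes and connectivity values are made up.
sizes = {"A": 50, "B": 40, "C": 30}
conn = [[1.0, 0.5, 0.0],
        [0.2, 1.0, 0.3],
        [0.0, 0.4, 1.0]]
areas = allocate_areas(sizes)
syn = sample_synapses(areas, conn)
for area in areas:
    print(area.name, area.n_exc, area.n_inh)  # A 40 10 / B 32 8 / C 24 6
```

Changing `exc_ratio` or a row of `conn` is enough to re-balance a region or rewire a pathway, which mirrors the kind of flexibility the simulators expose, although the real models operate at vastly larger scale and on clusters.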

Figure 9.

Illustration of multi-scale brain structure simulation

(A) The structure of the thalamocortical column.

(B–D) Running of the BrainCog mouse brain (B), macaque brain (C), and human brain (D) simulators. Each bright point is a neuron spiking at time t, and its color indicates the brain area to which the neuron belongs.

Discussion

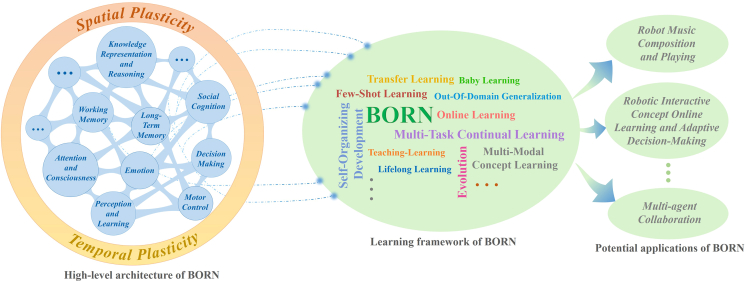

BORN: An SNN-driven AI engine based on BrainCog

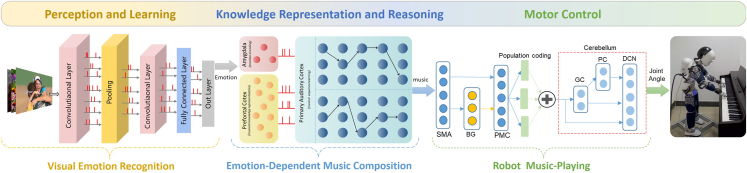

BrainCog is an open-source platform that enables the community to build SNN-based, brain-inspired AI models and brain simulators. Here we discuss future research and potential applications of the BrainCog platform. Based on the essential components developed for BrainCog, one can develop domain-specific or general-purpose AI engines. To further demonstrate how BrainCog can support the development of a brain-inspired AI engine, we introduce BORN, an SNN-driven, brain-inspired AI engine under active development that aims to build a general-purpose living AI system. As shown in Figure 10, the high-level architecture of BORN integrates spatial and temporal plasticity to implement various cognitive functions, such as perception and learning, decision-making, motor control, working memory, long-term memory, attention and consciousness, emotion, knowledge representation and reasoning, and social cognition. Spatial plasticity incorporates multi-scale neuroplasticity principles at the micro, meso, and macro scales. Temporal plasticity covers learning, developmental, and evolutionary plasticity at different timescales. How the human brain selects and coordinates various learning methods to solve complex tasks is crucial for understanding human intelligence and inspiring future AI, and BORN is dedicated to addressing critical research questions such as this one. The learning framework of BORN includes multi-task continual learning, few-shot learning, multi-modal concept learning, online learning, lifelong learning, teaching learning, transfer learning, and other paradigms.

To demonstrate the capabilities and principles of BORN, we present a relatively complex application: emotion-dependent robotic music composition and playing, in which a humanoid robot composes and plays music based on visual emotion recognition. This application covers the whole process, from perception and learning to knowledge representation and reasoning and motor control. It consists of three modules built with BrainCog: the visual emotion recognition module, the emotion-dependent music composition module, and the robot music-playing module. As shown in Figure 11, the visual emotion recognition module enables the robot to recognize the emotions (such as joy or sadness) expressed in images captured by its eyes. The emotion-dependent music composition module then generates music pieces that correspond to the emotion in the image. Finally, the robot music-playing module controls the robot's arms and fingers to perform the music on the piano. We describe these modules below.

(1) Visual emotion recognition. Inspired by the ventral visual pathway, we construct a deep convolutional SNN with the LIF neuron model and the surrogate gradient provided by BrainCog. The network structure is 32C3-32C3-MP-32C3-32C3-300-7, where 32C3 denotes a convolutional layer with 32 output channels and a kernel size of 3, MP denotes max pooling, and 300 and 7 are the sizes of the fully connected layers, the last matching the seven emotion categories. We train and test the model on the Emotion6 dataset,72 which contains six emotions (anger, disgust, fear, joy, sadness, and surprise) with 330 samples each. We extend the original Emotion6 dataset with an additional "excitement" category collected online. We use 80% of the images for training and the remaining 20% for testing.

(2) Emotion-dependent music composition. We construct an SNN composed of multiple subnetworks that collaborate to simulate the brain areas involved in representing, learning, and generating music melodies with different emotions. The model uses LIF neurons provided by BrainCog and updates its synaptic connections with the STDP learning rule. We train the model on a dataset of 331 MIDI files of classical piano works.47 As shown in Figure 11, the amygdala network receives the output of visual emotion recognition as its input. The PFC and primary auditory cortex (PAC) networks then generate musical melodies that match the emotional category. More details of the model are given in Supplemental experimental procedures S10.

(3) Robot music-playing. We build a multi-brain-area coordinated robot motor control SNN model based on the brain's motor control circuit. The model uses LIF neurons and incorporates SMA, PMC, BG, and cerebellum functions. The music notes are first processed by the SMA, PMC, and BG networks to generate high-level target movement directions, and the PMC output is encoded by neuron populations that represent these directions. The cerebellum model then processes this population code for low-level motor control: the cerebellum SNN module implements a three-level residual architecture that turns motor intentions into joint control outputs for the robot arms. The robot plays the music by moving its hand according to the generated sequence of notes and pressing the keys with the corresponding fingers. We use the humanoid robot iCub to validate robotic music composition and playing driven by the result of visual emotion recognition.

BrainCog aims to provide a community-based, open-source platform for developing SNN-based AI models and cognitive brain simulators. It integrates multi-scale, biologically plausible computational units and plasticity principles. Unlike existing platforms, BrainCog provides task-ready SNN models for AI and supports brain function and structure simulations at multiple scales. With the basic and functional components provided in the current version of BrainCog, we have shown how a variety of models and applications can be implemented for brain-inspired AI and brain simulation. Building on BrainCog, we are also committed to developing BORN into a powerful SNN-based AI engine that incorporates multi-scale plasticity principles to realize human-level brain-inspired cognitive functions. Drawing on 9 years of developing BrainCog modules, components, and applications, and inspired by biological mechanisms and natural evolution, continued work on BORN will enable it to become a general-purpose AI engine. We have already begun extending BrainCog and BORN to support high-level cognition, such as theory of mind,49 consciousness,48 and morality,49 which are essential for building true and general-purpose AI for human and ecological good. We invite you to join us in this exploration to create a future for a human-AI symbiotic society.
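The compact notation used for the visual emotion recognition network (32C3-32C3-MP-32C3-32C3-300-7) can be unpacked into per-layer output shapes and parameter counts. The sketch below is illustrative only: the 3 × 64 × 64 input size, stride-1 "same" padding, biased layers, and 2 × 2 max pooling with stride 2 are assumptions not stated in the text, and `layer_table` is a hypothetical helper rather than part of BrainCog. The LIF layers that follow each stage change neither the shapes nor the weight count, so they are omitted.

```python
def layer_table(spec, in_shape=(3, 64, 64)):
    """Expand a spec like '32C3-MP-300-7' into (token, output shape, #params) rows.

    Assumptions: stride-1 'same' convolutions with biases, 2x2 max pooling
    with stride 2, and an implicit flatten before the first fully connected layer.
    """
    c, h, w = in_shape
    features = None  # becomes an int once the fully connected part starts
    rows = []
    for token in spec.split("-"):
        if token == "MP":
            if features is not None:
                raise ValueError("pooling after a fully connected layer")
            h, w = h // 2, w // 2
            rows.append((token, (c, h, w), 0))
        elif "C" in token:
            if features is not None:
                raise ValueError("convolution after a fully connected layer")
            out_ch, k = (int(v) for v in token.split("C"))
            rows.append((token, (out_ch, h, w), c * out_ch * k * k + out_ch))
            c = out_ch
        else:
            n = int(token)
            n_in = c * h * w if features is None else features
            rows.append((token, (n,), n_in * n + n))
            features = n
    return rows


rows = layer_table("32C3-32C3-MP-32C3-32C3-300-7")
for token, shape, params in rows:
    print(f"{token:>5} {str(shape):>14} {params:>10,}")
print("total parameters:", sum(p for _, _, p in rows))
```

Under these assumed settings, almost all of the weights sit in the first fully connected layer (32 × 32 × 32 inputs to 300 units), so the input resolution chosen for the real model would dominate its size.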

Figure 10.

The functional framework and vision of BORN

Figure 11.

The procedure of multi-cognitive function coordinated emotion-dependent music composition and playing by a humanoid robot based on BORN

Limitations of study

This paper introduces BrainCog, a brain-inspired cognitive intelligence engine that supports both brain-inspired AI and brain simulation research. This integrated design enables researchers from different domains to collaborate more effectively on a common platform. However, achieving deep coordination between the two remains challenging. Although we strive to combine the precise simulation of brain functions with the computational efficiency of deep learning, the current brain-inspired AI module cannot yet fully simulate the functions and structures of the real brain. Moreover, although our brain simulation tools perform well on various tasks, they still struggle with the high-complexity tasks that deep learning typically handles. These challenges may affect the performance of our platform in scenarios that require precise brain simulation. In the future, we will continue to improve the BrainCog platform to promote deep coordination between brain simulation and brain-inspired AI and to further enhance its applications in neuroscience and AI research. BrainCog will play a key role in interdisciplinary collaboration and research, and we will actively address its current limitations.

Experimental procedures

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Yi Zeng (yi.zeng@braincog.ai).

Materials availability

This study did not generate new unique materials.

Essential and fundamental components

BrainCog provides essential and fundamental components, including various biological neuron models, learning rules, encoding strategies, and models of different brain areas. One can build brain-inspired SNN models by reusing and refining these building blocks. Expanding and refining the components and cognitive functions included in BrainCog is an ongoing effort. We believe this should be a continuous community effort, and we invite researchers and practitioners to join us in enriching and improving the work in a synergistic way. Here we list some of the basic components incorporated in BrainCog.

Neuron models

BrainCog supports various models for spiking neurons, such as the following. (1) Integrate-and-Fire (IF) spiking neurons:73

$$C\,\frac{dV_j(t)}{dt} = I(t) \tag{1}$$

I denotes the input current from the pre-synaptic neurons, and C denotes the membrane capacitance. When the membrane potential $V_j$ reaches the threshold $V_{th}$, neuron j fires a spike.73

(2) LIF spiking neurons:74

$$\tau_m \frac{dV(t)}{dt} = -V(t) + R\,I(t) \tag{2}$$

$\tau_m = RC$ denotes the membrane time constant, and R and C denote the membrane resistance and capacitance, respectively.74
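The IF and LIF dynamics of Equations 1 and 2 can be compared with a minimal forward-Euler sketch. It is not BrainCog's neuron class: the dimensionless units, $\tau_m = 10$, $R = 1$, threshold 1, and reset to 0 are illustrative assumptions, and the IF variant uses $C = \tau_m / R$ so both models share one parameter set.

```python
def simulate_lif(current, dt=1.0, tau_m=10.0, R=1.0, v_th=1.0, v_reset=0.0, leak=True):
    """Forward-Euler integration of the LIF neuron (Eq. 2) or, with leak=False,
    the IF neuron (Eq. 1). C = tau_m / R so both variants share parameters.

    Returns the spike time steps and the membrane-potential trace.
    """
    C = tau_m / R
    v = v_reset
    spikes, trace = [], []
    for t, i_t in enumerate(current):
        if leak:
            dv = (-v + R * i_t) / tau_m   # tau_m dV/dt = -V + R I
        else:
            dv = i_t / C                  # C dV/dt = I
        v += dt * dv
        if v >= v_th:                     # threshold crossing: spike and reset
            spikes.append(t)
            v = v_reset
        trace.append(v)
    return spikes, trace


weak = [0.9] * 200   # LIF steady state R * I = 0.9 stays below v_th
print(simulate_lif(weak)[0][:3])               # LIF: []
print(simulate_lif(weak, leak=False)[0][:3])   # IF: [11, 23, 35]
```

The contrast shows the role of the leak: a weak constant input never drives the LIF neuron past threshold, whereas the IF neuron keeps integrating and eventually fires.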

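(3) aEIF spiking neurons:

$$\begin{aligned} C\frac{dV}{dt} &= -g_L\,(V - E_L) + g_L \Delta_T \exp\!\left(\frac{V - V_T}{\Delta_T}\right) - w + I \\ \tau_w \frac{dw}{dt} &= a\,(V - E_L) - w \end{aligned} \tag{3}$$

$g_L$ is the leak conductance, $E_L$ is the leak reversal potential, $V_r$ is the reset potential, $\Delta_T$ is the slope factor, $V_T$ is the threshold of the exponential term, I is the background current, w is the adaptation current, and $\tau_w$ is the adaptation time constant. When the membrane potential exceeds the threshold $V_{th}$, $V \leftarrow V_r$ and $w \leftarrow w + b$. Here a is the subthreshold adaptation, and b is the spike-triggered adaptation.75,76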
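A forward-Euler sketch of Equation 3 shows how the adaptation current w builds up with each spike. The parameter values are the widely used regular-spiking set from Brette and Gerstner (2005), with units of mV, ms, pF, nS, and pA; they are an assumption here, BrainCog's defaults may differ, and `simulate_aeif` is a hypothetical helper. A 0.1 ms step is used because the exponential term makes the upswing stiff.

```python
import math


def simulate_aeif(current_pA, dt=0.1, C=281.0, g_L=30.0, E_L=-70.6, V_T=-50.4,
                  delta_T=2.0, tau_w=144.0, a=4.0, b=80.5, V_r=-70.6, V_th=20.0):
    """Forward-Euler integration of the aEIF model (Eq. 3).

    Units: mV, ms, pF, nS, pA. Returns spike times (ms) and the final w.
    """
    V, w = E_L, 0.0
    spike_times = []
    for step, I in enumerate(current_pA):
        # V < V_th here, so the exponent stays bounded and cannot overflow.
        dV = (-g_L * (V - E_L)
              + g_L * delta_T * math.exp((V - V_T) / delta_T)
              - w + I) / C
        dw = (a * (V - E_L) - w) / tau_w
        V += dt * dV
        w += dt * dw
        if V >= V_th:          # spike: reset V, add spike-triggered adaptation b
            spike_times.append(step * dt)
            V = V_r
            w += b
    return spike_times, w


spikes, w_end = simulate_aeif([800.0] * 5000)   # 500 ms step current of 800 pA
quiet, _ = simulate_aeif([0.0] * 5000)          # no input: stays near E_L
print(len(spikes), quiet)
print([round(t2 - t1, 1) for t1, t2 in zip(spikes, spikes[1:])])  # inter-spike intervals
```

Printing the inter-spike intervals is a quick way to see spike-frequency adaptation: each spike adds b to w, which lengthens the following intervals until firing settles.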

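(4) Izhikevich spiking neurons:77

$$\begin{aligned} \frac{dv}{dt} &= 0.04v^2 + 5v + 140 - u + I \\ \frac{du}{dt} &= a\,(bv - u) \end{aligned} \tag{4}$$

When the membrane potential v reaches the threshold (30 mV in Izhikevich's formulation):

$$v \leftarrow c, \qquad u \leftarrow u + d \tag{5}$$

u represents the membrane recovery variable, and a, b, c, and d are dimensionless parameters.77

(5) H-H spiking neurons:78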

$$C\frac{dV}{dt} = I - \bar g_{Na}\, m^3 h\,(V - E_{Na}) - \bar g_{K}\, n^4 (V - E_K) - \bar g_L\,(V - E_L) \tag{6}$$

$$\frac{dx}{dt} = \alpha_x(V)\,(1 - x) - \beta_x(V)\,x, \qquad x \in \{n, m, h\} \tag{7}$$

$\alpha_x$ and $\beta_x$ are voltage-dependent rate functions that control the opening and closing of the ion channels. n, m, and h are dimensionless gating probabilities between 0 and 1. $\bar g$ is the maximal value of each conductance, and $E_{Na}$, $E_K$, and $E_L$ are the corresponding reversal potentials.78 The H-H model describes biological neurons in elaborate detail. To apply it more efficiently to AI tasks, BrainCog incorporates a simplified H-H model, as described in Wang et al.79

(6) Multi-compartment spiking neurons.80 The multi-compartment neuron (MCN) model treats the dendrites, soma, and other parts of a neuron as independent computing units. BrainCog provides a multi-compartment spiking neuron model with basal dendrite, apical dendrite, and soma compartments. The basal and apical dendrites receive signals from different sources:

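$$\tau_B \frac{dV_B}{dt} = -V_B + \sum_j w^B_j\, s^B_j(t) \tag{8}$$

$$\tau_A \frac{dV_A}{dt} = -V_A + \sum_j w^A_j\, s^A_j(t) \tag{9}$$

$$\tau_S \frac{dV_S}{dt} = -V_S + \frac{g_B}{g_L}\,(V_B - V_S) + \frac{g_A}{g_L}\,(V_A - V_S) \tag{10}$$

$V_B$ is the basal dendrite potential, and $V_A$ is the apical dendrite potential; $s^B_j(t)$ and $s^A_j(t)$ are the input spikes arriving at each dendrite through the weights $w^B_j$ and $w^A_j$. $\tau_B$, $\tau_A$, and $\tau_S$ are the decay time constants of the dendrites and the soma compartment, while $g_B$, $g_A$, and $g_L$ are conductance hyperparameters. The somatic potential $V_S$ integrates the basal and apical dendritic potentials, and when it exceeds the threshold, the neuron fires an output spike.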
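A forward-Euler sketch of Equations 8 to 10 illustrates how the soma combines the two dendritic compartments. The time constants, the conductance values ($g_B = 0.6$, $g_A = 0.8$, $g_L = 1$), the threshold, and the helper name `simulate_mcn` are hypothetical choices, not BrainCog's defaults. With these values, basal input alone settles the soma at 0.75, below threshold, so the neuron fires only when both compartments are driven.

```python
def simulate_mcn(basal_in, apical_in, w_basal, w_apical, dt=1.0,
                 tau_B=5.0, tau_A=5.0, tau_S=5.0,
                 g_B=0.6, g_A=0.8, g_L=1.0, v_th=1.0, v_reset=0.0):
    """Forward-Euler integration of Eqs. 8-10 for one three-compartment neuron.

    basal_in[t] and apical_in[t] are lists of 0/1 input spikes at step t.
    Returns the time steps at which the soma fires.
    """
    V_B = V_A = V_S = 0.0
    spikes = []
    for t, (s_b, s_a) in enumerate(zip(basal_in, apical_in)):
        I_B = sum(w * s for w, s in zip(w_basal, s_b))
        I_A = sum(w * s for w, s in zip(w_apical, s_a))
        V_B += dt * (-V_B + I_B) / tau_B                    # Eq. 8
        V_A += dt * (-V_A + I_A) / tau_A                    # Eq. 9
        V_S += dt * (-V_S + g_B / g_L * (V_B - V_S)
                     + g_A / g_L * (V_A - V_S)) / tau_S     # Eq. 10
        if V_S >= v_th:                                     # somatic spike and reset
            spikes.append(t)
            V_S = v_reset
    return spikes


T = 100
active = [[1, 1, 1]] * T   # three inputs spiking at every step
silent = [[0, 0, 0]] * T
w = [1.0, 1.0, 1.0]
both = simulate_mcn(active, active, w, w)
basal_only = simulate_mcn(active, silent, w, w)
print(len(both) > 0, basal_only)  # True []
```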

Learning rules

BrainCog provides various plasticity principles and rules to support biologically plausible learning and inference, such as (1) Hebbian learning theory:81

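$$w_{ij}(t+1) = w_{ij}(t) + \eta\, x_i(t)\, y_j(t) \tag{11}$$

$w_{ij}(t)$ is the weight of the synapse from input i to neuron j at time t, $x_i(t)$ is the output of synapse i at time t, $y_j(t)$ is the output of neuron j at time t, and η is the learning rate.81

(2) STDP:82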

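$$\Delta w_j = \sum_{f}\sum_{n} W\!\left(t_i^{n} - t_j^{f}\right), \qquad W(\Delta t) = \begin{cases} A_+ \exp(-\Delta t/\tau_+), & \Delta t > 0 \\ -A_- \exp(\Delta t/\tau_-), & \Delta t < 0 \end{cases} \tag{12}$$

$\Delta w_j$ is the modification of synapse j, and W is the STDP function. $t_j^f$ is the time of the f-th spike of pre-synaptic neuron j, and $t_i^n$ is the time of the n-th spike of post-synaptic neuron i. $A_+$ and $A_-$ set the magnitude of the STDP modification, and $\tau_+$ and $\tau_-$ are the corresponding time constants.82

(3) Bienenstock-Cooper-Munro (BCM) theory:83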

$$\frac{dw}{dt} = \eta\, x\, y\,(y - \theta), \qquad \theta = \langle y \rangle \tag{13}$$

x and y denote the firing rates of the pre-synaptic and post-synaptic neurons, respectively, η is the learning rate, and the threshold θ is the average of the historical activity of the post-synaptic neuron.83

(4) STP.84 Short-term plasticity models changes in synaptic efficacy over time.

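$$\frac{du}{dt} = \frac{U - u}{\tau_f} + U\,(1 - u^-)\,\delta(t - t_{sp}) \tag{14}$$

$$\frac{dx}{dt} = \frac{1 - x}{\tau_d} - u^+ x^-\,\delta(t - t_{sp}) \tag{15}$$

$$I_{syn}(t) = w\, u^+ x^-\,\delta(t - t_{sp}) \tag{16}$$

w denotes the synaptic weight, and U denotes the baseline fraction of synaptic resources used by a spike. $\tau_f$ and $\tau_d$ denote the time constants for recovery from facilitation and depression, respectively. The variable u models the fraction of available synaptic resources used by each spike, and x models the fraction of synaptic resources that remain available; the superscripts − and + denote values just before and just after a spike at time $t_{sp}$.84

(5) Reward-modulated STDP (R-STDP):85 R-STDP uses a synaptic eligibility trace e to store temporary STDP information. The eligibility trace accumulates STDP updates and decays with a time constant $\tau_e$.85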

$$\frac{de}{dt} = -\frac{e}{\tau_e} + W(\Delta t)\,\delta(t - t_{pre/post}) \tag{17}$$

Synaptic weights are then updated when a delayed reward r is received, as shown in Equation 18.85

$$\frac{dw}{dt} = r(t)\, e(t) \tag{18}$$
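Equations 12, 17, and 18 can be combined in a single-synapse sketch. It uses exponentially decaying pre- and post-synaptic traces, a standard online way to evaluate the pair-based STDP window W(Δt), and accumulates the result into the eligibility trace e, which only changes the weight when a reward arrives. All parameter values and the `r_stdp` helper are hypothetical, and the weight is left unbounded for simplicity.

```python
import math


def r_stdp(pre_spikes, post_spikes, rewards, T, dt=1.0, A_plus=0.01, A_minus=0.012,
           tau_plus=20.0, tau_minus=20.0, tau_e=200.0, w0=0.5):
    """Single-synapse R-STDP sketch (Eqs. 12, 17, 18).

    pre_spikes / post_spikes: sets of time steps with spikes.
    rewards: dict mapping time step -> reward r.
    Returns the final weight and eligibility trace.
    """
    x_pre = x_post = 0.0   # decaying spike traces for the STDP window
    e, w = 0.0, w0
    for t in range(T):
        x_pre *= math.exp(-dt / tau_plus)
        x_post *= math.exp(-dt / tau_minus)
        e *= math.exp(-dt / tau_e)             # Eq. 17: eligibility decays with tau_e
        if t in pre_spikes:
            x_pre += 1.0
            e -= A_minus * x_post              # post before pre: depression
        if t in post_spikes:
            x_post += 1.0
            e += A_plus * x_pre                # pre before post: potentiation
        w += rewards.get(t, 0.0) * e * dt      # Eq. 18: weight moves only with reward
    return w, e


w_ltp, _ = r_stdp({10}, {15}, {100: 1.0}, T=150)   # pre -> post, later reward
w_ltd, _ = r_stdp({15}, {10}, {100: 1.0}, T=150)   # post -> pre, later reward
w_none, _ = r_stdp({10}, {15}, {}, T=150)          # no reward: weight unchanged
print(round(w_ltp, 4), round(w_ltd, 4), w_none)
```

Because e decays with $\tau_e$, the same spike pairing has less effect the longer the reward is delayed, which is how R-STDP bridges the gap between spike timing and delayed feedback.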

(6) Backpropagation based on surrogate gradients.23 Because the spiking function is non-differentiable, researchers use the gradient of a smooth function (the surrogate gradient) in place of the true gradient. Surrogate gradients allow the backpropagation algorithm to be applied to the training of SNNs, which in turn allows SNNs to be applied to more complex network structures and tasks.

$$\frac{\partial L}{\partial W^l} = \sum_{t} \frac{\partial L}{\partial S^l(t)}\,\frac{\partial S^l(t)}{\partial U^l(t)}\,\frac{\partial U^l(t)}{\partial W^l} \tag{19}$$

L is the model's loss function. The gradient with respect to the synaptic weights $W^l$ of layer l is computed with the chain rule from the derivatives of the neuron spikes $S^l$ and membrane potentials $U^l$, where the non-differentiable term $\partial S^l/\partial U^l$ is replaced by the derivative of the surrogate function.
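Equation 19 can be traced by hand for a single LIF neuron. In this minimal sketch the forward pass uses a hard threshold, while the backward pass replaces the step's derivative with that of a steep sigmoid, one common surrogate choice; BrainCog offers its own surrogate functions, which may differ. The spike-count loss, the decay of 0.5, the reset term treated as a constant, and the helper names are assumptions made for illustration.

```python
import math


def heaviside(x):
    return 1.0 if x >= 0.0 else 0.0


def surrogate_grad(x, alpha=4.0):
    """Derivative of sigmoid(alpha * x), used in place of dH/dx."""
    z = alpha * x
    s = 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))
    return alpha * s * (1.0 - s)


def loss_and_grad(w, inputs, target, decay=0.5, v_th=1.0):
    """One LIF neuron: U_t = decay * U_{t-1} * (1 - S_{t-1}) + w * x_t.

    Loss L = 0.5 * (spike count - target)^2. The gradient follows Eq. 19,
    summing dL/dS_t * dS_t/dU_t * dU_t/dw over time, with the reset path
    treated as a constant.
    """
    u, s_prev, du_dw = 0.0, 0.0, 0.0
    count, dS_dw_sum = 0.0, 0.0
    for x in inputs:
        u = decay * u * (1.0 - s_prev) + w * x
        du_dw = decay * (1.0 - s_prev) * du_dw + x
        s = heaviside(u - v_th)
        dS_dw_sum += surrogate_grad(u - v_th) * du_dw
        count += s
        s_prev = s
    dL_dS = count - target          # the same for every time step with this loss
    return 0.5 * (count - target) ** 2, dL_dS * dS_dw_sum


inputs, target, w, lr = [1.0] * 10, 5, 0.3, 0.01
initial_loss, _ = loss_and_grad(w, inputs, target)
for _ in range(20):
    loss, grad = loss_and_grad(w, inputs, target)
    w -= lr * grad
final_loss, _ = loss_and_grad(w, inputs, target)
print(initial_loss, final_loss, round(w, 3))
```

Without the surrogate, $\partial S/\partial U$ would be zero almost everywhere and the silent neuron at w = 0.3 would receive no learning signal; the smooth stand-in yields a negative gradient that pushes w up until the neuron emits the target number of spikes.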

Encoding strategies

BrainCog supports a number of encoding strategies for SNN inputs. (1) Rate coding.86 Rate coding relies on spike counts: the number of spikes emitted within a time window represents the real-valued input. The Poisson distribution describes the number of random events occurring per unit of time, which corresponds to the firing rate.86 Setting the per-step firing probability equal to the normalized input $x \in [0, 1]$, the input can be encoded as follows:

$$s(t) = \begin{cases} 1, & r_t < x \\ 0, & \text{otherwise} \end{cases}, \qquad r_t \sim \mathcal{U}(0, 1) \tag{20}$$
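A minimal sketch of this Bernoulli-per-step approximation to Poisson rate coding follows. The helper `rate_encode` and the fixed seed are illustrative and not BrainCog's encoder API; inputs are assumed to be normalized to [0, 1].

```python
import random


def rate_encode(values, T, seed=None):
    """Rate coding as in Eq. 20: at every step, input i spikes with
    probability values[i] in [0, 1]. Returns a T x len(values) 0/1 list."""
    rng = random.Random(seed)
    return [[1 if rng.random() < x else 0 for x in values] for _ in range(T)]


spikes = rate_encode([0.0, 0.5, 1.0], T=1000, seed=0)
rates = [sum(col) / len(spikes) for col in zip(*spikes)]
print(rates)  # close to [0.0, 0.5, 1.0]
```

The estimated rate converges to x as T grows, which is the trade-off of rate coding: precision costs simulation time.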

(2) Phase coding.87 Phase coding can encode an analog quantity that changes over time. The value of the quantity within one period is represented by spike timing, and its change over the whole process is represented by the spike train obtained by concatenating all of the periods. Under phase coding, each spike carries a phase-dependent weight, and the pixel intensity is generally encoded as a 0/1 input sequence, similar to binary encoding. Here $\gg$ denotes the right-shift operation, and K is the phase period.87 Pixel x is scaled to $x' = \lfloor x\,(2^K - 1) \rfloor$ and shifted right by $K - 1 - (t \bmod K)$ bits, where mod is the remainder operation. If the lowest bit of the result is one, s is one at time t, and the spike carries the phase weight $2^{-(1 + (t \bmod K))}$. & denotes the bit-wise AND operation.

$$s(t) = \Big(x' \gg \big(K - 1 - (t \bmod K)\big)\Big)\ \&\ 1 \tag{21}$$
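Equation 21 amounts to streaming the bits of a quantized intensity, most significant bit first, and repeating every K steps. The sketch below assumes inputs normalized to [0, 1] and K = 8; `phase_encode` and `phase_decode` are hypothetical helpers. Decoding with the phase weights recovers the quantized value.

```python
def phase_encode(x, K=8, T=16):
    """Phase coding (Eq. 21) for a normalized intensity x in [0, 1].

    x is quantized to K bits; at step t the neuron emits bit K-1-(t mod K),
    most significant bit first, so the pattern repeats every K steps.
    """
    x_q = int(x * (2 ** K - 1))          # x' = floor(x * (2^K - 1))
    return [(x_q >> (K - 1 - (t % K))) & 1 for t in range(T)]


def phase_decode(spikes, K=8):
    """Sum the phase weights 2^-(1 + t mod K) of the spikes in one period."""
    return sum(s * 2.0 ** -(1 + (t % K)) for t, s in enumerate(spikes[:K]))


train = phase_encode(0.5)
print(train[:8])             # [0, 1, 1, 1, 1, 1, 1, 1]  (127 = 0b01111111)
print(phase_decode(train))   # 0.49609375 = 127 / 256
```

Compared with rate coding, one period of K steps carries K bits of precision, at the cost of needing a phase-aware weighting downstream.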

(3) Temporal coding.88 Spikes have a fixed shape and differ only in their number and timing. A common way to implement temporal coding is therefore to carry information in the timing of individual spikes: the stronger the stimulus, the earlier the spike.89 Let the total simulation time be T; the input $x \in [0, 1]$ of the neuron can then be encoded as a spike at time $t_f$:

$$t_f = T\,(1 - x) \tag{22}$$
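A time-to-first-spike sketch of Equation 22 on a discrete grid is shown below. Flooring $t_f$ to an integer step, clamping inputs to [0, 1], and leaving an input of 0 silent (its spike would fall at step T, outside the window) are assumptions of this example, and `ttfs_encode` is a hypothetical helper.

```python
def ttfs_encode(values, T):
    """Temporal coding (Eq. 22): one spike per input at step floor(T * (1 - x)).

    Inputs are clamped to [0, 1]; x = 0 maps to step T, outside the window,
    so that input stays silent.
    """
    trains = []
    for x in values:
        x = min(max(x, 0.0), 1.0)
        train = [0] * T
        t_f = int(T * (1.0 - x))   # floor for non-negative arguments
        if t_f < T:
            train[t_f] = 1
        trains.append(train)
    return trains


trains = ttfs_encode([1.0, 0.5, 0.0], T=10)
print([tr.index(1) if 1 in tr else None for tr in trains])  # [0, 5, None]
```

Each input uses at most one spike, which makes temporal coding far sparser than rate coding for the same window.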

(4) Quantum superposition coding.28 Quantum superposition-inspired spike coding processes different characteristics of information with spatiotemporal spike trains. The original information and its complementary information are encoded into a superposition state. The spiking phase is generated from the mixing parameter θ, and the superposition state is converted into a spiking rate. The final spike trains are generated by a Poisson spike process with the corresponding rate and phase arguments. This spatiotemporal coding method has been shown to be robust when processing noisy information.28

| (Equation 23) |

| (Equation 24) |

(5) Population coding:90 The intuitive idea of population coding is to make different neurons sensitive to different types of inputs. A classical population coding method is based on Gaussian tuning curves, as in Equation 25. Suppose that m (m > 2) neurons are used to encode a variable x with a value range of $[I_{min}, I_{max}]$. The response $F_i(x)$ of the i-th neuron can be interpreted as a firing time or a membrane potential.

$$F_i(x) = \exp\!\left(-\frac{(x - \mu_i)^2}{2\sigma^2}\right) \tag{25}$$

The mean $\mu_i$ of the i-th ($i = 1, 2, \dots, m$) neuron and the width σ, with adjustable parameter β, are as follows:

$$\mu_i = I_{min} + \frac{2i - 3}{2}\cdot\frac{I_{max} - I_{min}}{m - 2} \tag{26}$$

$$\sigma = \frac{1}{\beta}\cdot\frac{I_{max} - I_{min}}{m - 2} \tag{27}$$

$\mu_i$ represents the preferred input of the neuron, while σ controls the size of its receptive field.
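Equations 25 to 27 can be evaluated directly. In the sketch below, m = 8, the range [0, 1], and β = 1.5 are illustrative defaults, and mapping responses to spike times as $T(1 - F_i)$ is only one possible reading of "$F_i$ can be a firing time"; both helpers are hypothetical.

```python
import math


def gaussian_population(x, m=8, I_min=0.0, I_max=1.0, beta=1.5):
    """Gaussian receptive-field population coding (Eqs. 25-27).

    Returns the responses F_i(x), the centers mu_i, and the shared width sigma.
    """
    if m <= 2:
        raise ValueError("Eqs. 26-27 require m > 2")
    span = (I_max - I_min) / (m - 2)
    sigma = span / beta                                               # Eq. 27
    mus = [I_min + (2 * i - 3) / 2 * span for i in range(1, m + 1)]  # Eq. 26
    responses = [math.exp(-(x - mu) ** 2 / (2 * sigma ** 2)) for mu in mus]  # Eq. 25
    return responses, mus, sigma


def to_spike_times(responses, T=10):
    """Hypothetical mapping: stronger response -> earlier spike."""
    return [round(T * (1.0 - f)) for f in responses]


responses, mus, sigma = gaussian_population(0.42)
best = max(range(len(responses)), key=responses.__getitem__)
print(best, round(mus[best], 3))   # 3 0.417
print(to_spike_times(responses))
```

The two outermost centers fall just outside $[I_{min}, I_{max}]$, so values at the edges of the range are still covered by a neuron whose center lies near them.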

Brain area models

Brain-inspired models of several functional brain areas are constructed for BrainCog at different levels of abstraction.

(1) PFC. The PFC plays a crucial role in high-level human cognitive behavior. In BrainCog, many SNN-based cognitive tasks are inspired by the mechanisms of the PFC,91 such as decision-making, working memory,92 knowledge representation,93 theory of mind, and music processing.94 Different circuits are involved in completing these cognitive tasks. In BrainCog, the data-driven PFC column model contains 6 layers and 16 types of neurons. The distribution of neurons, the membrane parameters, and the connections between neuron types are all derived from existing biological experimental data.58,59 The PFC brain area component mainly employs the LIF neuron model to simulate neural dynamics, and the STDP and R-STDP learning rules are used to compute the weights between different neural circuits.

(2) Basal ganglia. The basal ganglia facilitate desired action selection and inhibit competing behaviors (making winner-takes-all decisions).95 They cooperate with the PFC and THA to realize the decision-making process in the brain.96 BrainCog models the basal ganglia, including the excitatory and inhibitory connections among the striatum, globus pallidus internus (GPi), globus pallidus externus (GPe), and subthalamic nucleus (STN).97 The BG brain area component uses the LIF or simplified H-H neuron model in BrainCog, together with the STDP learning rule and CustomLinear, to build the internal connections of the BG. The BG component can then be used to build BDM-SNNs.

(3) PAC. The PAC is responsible for analyzing sound features, memorizing them, and extracting the relationships between sounds.98 This area exhibits a topographical map, meaning that neurons respond to their preferred sounds. In BrainCog, neurons in this area are simulated with the LIF model and organized into minicolumns that represent different sound frequencies. To store ordered note sequences, the excitatory and inhibitory connections are updated with the STDP learning rule.

(4) Inferior parietal lobule (IPL). The function of the IPL is to realize motor-visual associative learning.99 The IPL consists of two subareas: IPLM (motor perception neurons in the IPL) and IPLV (visual perception neurons in the IPL). The IPLM receives information generated by self-motion from the ventral PMC (vPMC), and the IPLV receives information detected by vision from the superior temporal sulcus (STS). Motor-visual associations are learned through the STDP mechanism from the spike-timing differences between neurons in the IPLM and IPLV. The IPL brain area component of BrainCog uses CustomLinear to build the internal connections of the IPL with Izhikevich neurons and the STDP learning rule.

(5) Hippocampus (HPC). The HPC is part of the limbic system and plays an essential role in learning and memory in the human brain, including the key process of converting short-term memory into long-term memory.100 In BrainCog, we draw on the population-coding mechanism of the HPC to implement knowledge representation and reasoning, music memory, and stylistic composition models.

(6) Insula. The role of the insula is to realize self-representation:54 when the agent detects that the movement in its visual field is generated by itself, the insula is activated. The insula receives information from the IPLV and STS. The IPLV outputs the visual feedback predicted from the agent's own motion, and the STS outputs the motion information detected by vision. When the two are consistent, the insula is activated. In BrainCog, the insula brain area component integrates Izhikevich neurons and the STDP mechanism.

(7) THA. Research has shown that the THA is composed of a series of nuclei connected to different brain areas and plays a crucial role in many brain processes. In BrainCog, this area is modeled from both anatomical and cognitive perspectives. Understanding the anatomical structure of the THA helps researchers grasp its mechanisms. Based on detailed anatomical thalamocortical data,67 BrainCog reconstructs the thalamic structure with five types of neurons (including excitatory and inhibitory neurons) to simulate neuronal dynamics and builds the complex synaptic architecture according to the anatomical results. Inspired by the structure and function of the THA, the BDM model implemented in BrainCog incorporates the information-transfer function of the THA, which cooperates with the PFC and BG to realize multi-brain-area coordinated decision-making.

(8) Ventral visual pathway. Cognitive neuroscience research has shown that the brain can receive external input and quickly recognize objects because of the hierarchical information processing of the ventral visual pathway. The ventral visual pathway is mainly composed of the primary visual cortex (V1), visual area 2 (V2), visual area 4 (V4), the inferior temporal cortex (IT), and other areas, which mainly process information such as object shape and color.101 These visual areas are connected through feedforward, feedback, and intra-area projections, and their interaction enables humans to recognize visual objects. V1 is selective for simple edge features. As information flows to higher-level regions, lower-level features are integrated into larger and more complex receptive fields that can recognize more abstract objects.102 Inspired by the structure and function of the ventral visual pathway, BrainCog builds a deep feedforward SNN with layer-wise information abstraction and a deep SNN with feedforward and feedback interactions. Their performance is verified on several visual classification tasks.

(9) Motor cortex. Biological motor function requires the coordination of multiple brain areas. The external circuits, consisting of the PMC, cerebellum, and BA6 motor cortex area, are primarily associated with motor control elicited by external stimuli, such as visual, auditory, and tactile inputs. The internal motor circuits, which include the basal ganglia and the SMA, dominate in self-initiated, learned movements.103 The population activity of motor cortical neurons encodes the direction of movement: each neuron has a preferred direction and fires more strongly when the target movement direction matches it.104 Inspired by the organization of the brain's motor cortex, we use BrainCog's LIF neuron to construct a motor control model. The cerebellum, which is important for fine movement execution, balance, and movement coordination, receives input from motor-related cortical areas such as the PMC, SMA, and PFC.105 We train this model with the surrogate gradient backpropagation method implemented in BrainCog and use it to control the iCub robot, which plays the piano according to musical pieces.
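The directional population code described for the motor cortex can be illustrated with the classic population-vector readout. The text states only that neurons fire more strongly near their preferred direction; the cosine tuning curve, the 16 evenly spaced preferred directions, the rate values, and the helper names below are assumptions, and BrainCog's motor model may encode and decode directions differently.

```python
import math


def tuning_rates(theta, n=16, r0=10.0, gain=10.0):
    """Cosine-tuned firing rates of n neurons with evenly spaced preferred directions."""
    prefs = [2 * math.pi * k / n for k in range(n)]
    rates = [max(0.0, r0 + gain * math.cos(theta - p)) for p in prefs]
    return prefs, rates


def population_vector(prefs, rates):
    """Decode a direction as the angle of the rate-weighted sum of preferred-direction vectors."""
    x = sum(r * math.cos(p) for p, r in zip(prefs, rates))
    y = sum(r * math.sin(p) for p, r in zip(prefs, rates))
    return math.atan2(y, x) % (2 * math.pi)


prefs, rates = tuning_rates(2.0)
decoded = population_vector(prefs, rates)
print(round(decoded, 6))  # 2.0
```

With r0 equal to the gain, no rate is clipped at zero and the preferred directions are evenly spaced, so the readout recovers the target angle exactly; noisy or unevenly tuned populations would only approximate it.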

Hardware-software co-design

Although BrainCog already integrates a complete infrastructure for brain-inspired SNN algorithm design, existing neuromorphic hardware imposes strict constraints on algorithms in terms of neuron models, encoding strategies, learning rules, and connection topologies. There is a large gap between rapidly evolving algorithms and neuromorphic hardware that is difficult to use. We use field-programmable gate array (FPGA)-based hardware-software co-design to facilitate the deployment of BrainCog. Reconfigurable FPGA platforms allow us to support different kinds of SNN algorithms with minimal hardware limitations, and FPGA edge devices allow us to deploy brain-inspired models on unmanned vehicles or drones in real-world applications. Our exploration of hardware design is ongoing. FireFly106 is our initial attempt at hardware-software co-design for the BrainCog project: a lightweight accelerator for high-performance SNN inference. We propose a method to improve arithmetic and memory efficiency for SNN inference on Xilinx FPGA edge devices. We plan to apply more hardware optimizations and hardware-software co-design methodologies to the BrainCog project soon.

Implementation details

BrainCog is developed using Python as the main programming language. It incorporates PyTorch,107 a widely used open-source machine learning framework, to implement deep learning components, and it employs Tonic,108 a dedicated library, to preprocess neuromorphic data. For detailed software version information, including specific package versions and dependencies, please refer to the GitHub repository at https://github.com/BrainCog-X/Brain-Cog. The repository provides comprehensive documentation and instructions for setting up and running BrainCog. BrainCog is designed to be a cross-platform framework that runs on various operating systems, such as Windows, macOS, and Linux. It supports Python 3.8 or later. Additionally, BrainCog supports graphics processing unit (GPU) acceleration, which significantly speeds up deep learning computations. Furthermore, BrainCog includes implementations of specific network models that can be deployed on Xilinx FPGAs. This FPGA deployment enables efficient, low-power network inference, making it particularly suitable for resource-constrained environments.

Acknowledgments

We thank Hui Feng, Xiang He, Jihang Wang, Bing Han, Jindong Li, and Aorigele Bao for contributing to project analysis and development. This work is supported by the National Key Research and Development Program (2020AAA0104305) and the Strategic Priority Research Program of the Chinese Academy of Sciences (XDB32070100).

Author contributions

Y. Zeng designed, administered, and supervised the project. Y. Zeng, D.Z., G.S., and Y.D. implemented the basic components of BrainCog. Y. Zeng, D.Z., F.Z., G.S., Y.D., Y.S., Q.L., Y. Zhao, Z.Z., H.F., Y.W., and Y.L. built brain-inspired AI models. Y. Zeng, F.Z., Q.Z., Q.L., X.L., and C.D. contributed to brain simulations. D.Z., E.L., Y.S., and Q.L. carried out application on emotion-dependent robotic music composition and playing. Y. Zeng, D.Z., F.Z., G.S., Y.D., E.L., Q.Z., Y.S., Q.L., Y. Zhao, Z.Z., H.F., Y.W., Y.L., X.L., C.D., and Q.K. wrote and checked the paper. Z.R. and W.B. provided visualizations.

Declaration of interests

The authors declare no competing interests.

Published: July 6, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.patter.2023.100789.

Supplemental information

Data and code availability

Human brain neuron parameters are extracted directly from the Allen Brain Atlas Cell Types Database: https://celltypes.brain-map.org/. The online repository of BrainCog can be found at https://github.com/BrainCog-X/Brain-Cog and Zenodo109 (https://doi.org/10.5281/zenodo.7955594). Demo videos related to applications of BrainCog can be found at https://www.youtube.com/watch?v=xActrzjamOE.

References

- 1.Maass W. Networks of spiking neurons: the third generation of neural network models. Neural Netw. 1997;10:1659–1671. doi: 10.1016/S0893-6080(97)00011-7. [DOI] [Google Scholar]

- 2.Carnevale N.T., Hines M.L. Cambridge University Press; 2006. The NEURON Book. [Google Scholar]

- 3.Gewaltig M.-O., Diesmann M. NEST (neural simulation tool) Scholarpedia. 2007;2:1430. doi: 10.4249/scholarpedia.1430. [DOI] [Google Scholar]

- 4.Stimberg M., Brette R., Goodman D.F. Brian 2, an intuitive and efficient neural simulator. Elife. 2019;8 doi: 10.7554/eLife.47314. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Goodman D.F.M., Brette R. The brian simulator. Front. Neurosci. 2009;3:192–197. doi: 10.3389/neuro.01.026.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Diehl P.U., Cook M. Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 2015;9:99. doi: 10.3389/fncom.2015.00099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Hazan H., Saunders D.J., Khan H., Patel D., Sanghavi D.T., Siegelmann H.T., Kozma R. Bindsnet: a machine learning-oriented spiking neural networks library in python. Front. Neuroinform. 2018;12:89. doi: 10.3389/fninf.2018.00089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Dominguez-Morales J.P., Liu Q., James R., Gutierrez-Galan D., Jimenez-Fernandez A., Davidson S., Furber S. 2018 International Joint Conference on Neural Networks (IJCNN) IEEE; 2018. Deep spiking neural network model for time-variant signals classification: a real-time speech recognition approach; pp. 1–8. [DOI] [Google Scholar]

- 9.Kim S., Park S., Na B., Yoon S. Vol. 34. 2020. Spiking-yolo: Spiking neural network for energy-efficient object detection; pp. 11270–11277. (Proceedings of the AAAI Conference on Artificial Intelligence). [DOI] [Google Scholar]

- 10.Tan W., Patel D., Kozma R. Vol. 35. 2021. Strategy and benchmark for converting deep q-networks to event-driven spiking neural networks; pp. 9816–9824. (Proceedings of the AAAI Conference on Artificial Intelligence). [DOI] [Google Scholar]

- 11.Rueckauer B., Lungu I.-A., Hu Y., Pfeiffer M., Liu S.-C. Conversion of continuous-valued deep networks to efficient event-driven networks for image classification. Front. Neurosci. 2017;11:682. doi: 10.3389/fnins.2017.00682. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Davison A.P., Brüderle D., Eppler J., Kremkow J., Muller E., Pecevski D., Perrinet L., Yger P. PyNN: a common interface for neuronal network simulators. Front. Neuroinform. 2008;2:11. doi: 10.3389/neuro.11.011.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Khan M.M., Lester D.R., Plana L.A., Rast A., Jin X., Painkras E., Furber S.B. 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence) IEEE; 2008. SpiNNaker: mapping neural networks onto a massively-parallel chip multiprocessor; pp. 2849–2856. [DOI] [Google Scholar]

- 14.Davies M., Srinivasa N., Lin T.-H., Chinya G., Cao Y., Choday S.H., Dimou G., Joshi P., Imam N., Jain S., et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro. 2018;38:82–99. doi: 10.1109/MM.2018.112130359.

- 15.SynSense SNN Library. 2020. https://synsense.gitlab.io/sinabs/

- 16.Fang W., Chen Y., Ding J., Chen D., Yu Z., Zhou H., Tian Y., and other contributors. SpikingJelly. 2020. https://github.com/fangwei123456/spikingjelly

- 17.Wang C., Jiang Y., Liu X., Lin X., Zou X., Ji Z., Wu S. A just-in-time compilation approach for neural dynamics simulation. In: Neural Information Processing: 28th International Conference, ICONIP. Springer; 2021. pp. 15–26.

- 18.Eliasmith C., Stewart T.C., Choo X., Bekolay T., DeWolf T., Tang Y., Rasmussen D. A large-scale model of the functioning brain. Science. 2012;338:1202–1205. doi: 10.1126/science.1225266.

- 19.Bekolay T., Bergstra J., Hunsberger E., DeWolf T., Stewart T.C., Rasmussen D., Choo X., Voelker A.R., Eliasmith C. Nengo: a Python tool for building large-scale functional brain models. Front. Neuroinform. 2014;7:48. doi: 10.3389/fninf.2013.00048.

- 20.Hong C., Yuan M., Zhang M., Wang X., Zhang C., Wang J., Pan G., Wu Z., Tang H. SPAIC: a spike-based artificial intelligence computing framework. Preprint at arXiv. 2022. doi: 10.48550/arXiv.2207.12750.

- 21.Dong Y., Zhao D., Li Y., Zeng Y. An unsupervised spiking neural network inspired by biologically plausible learning rules and connections. Preprint. 2022.

- 22.Zhao D., Zeng Y., Zhang T., Shi M., Zhao F. GLSNN: a multi-layer spiking neural network based on global feedback alignment and local STDP plasticity. Front. Comput. Neurosci. 2020;14:576841. doi: 10.3389/fncom.2020.576841.

- 23.Shen G., Zhao D., Zeng Y. Backpropagation with biologically plausible spatiotemporal adjustment for training deep spiking neural networks. Patterns. 2022;3. doi: 10.1016/j.patter.2022.100522.

- 24.Li Y., Zeng Y. Efficient and accurate conversion of spiking neural network with burst spikes. In: Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22. 2022. pp. 2485–2491.

- 25.Shen G., Zhao D., Zeng Y. Exploiting high performance spiking neural networks with efficient spiking patterns. Preprint at arXiv. 2023. doi: 10.48550/arXiv.2301.12356.

- 26.Zhao D., Zeng Y., Li Y. BackEISNN: a deep spiking neural network with adaptive self-feedback and balanced excitatory–inhibitory neurons. Neural Netw. 2022;154:68–77. doi: 10.1016/j.neunet.2022.06.036.

- 27.Zhao D., Li Y., Zeng Y., Wang J., Zhang Q. Spiking CapsNet: a spiking neural network with a biologically plausible routing rule between capsules. Inf. Sci. 2022;610:1–13. doi: 10.1016/j.ins.2022.07.152.

- 28.Sun Y., Zeng Y., Zhang T. Quantum superposition inspired spiking neural network. iScience. 2021;24. doi: 10.1016/j.isci.2021.102880.

- 29.Wang Y., Zeng Y. Multisensory concept learning framework based on spiking neural networks. Front. Syst. Neurosci. 2022;16:845177. doi: 10.3389/fnsys.2022.845177.

- 30.Agirre E., Alfonseca E., Hall K., Kravalova J., Pasca M., Soroa A. A study on similarity and relatedness using distributional and WordNet-based approaches. In: Proceedings of Human Language Technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL). 2009. pp. 19–27.

- 31.Huang E.H., Socher R., Manning C.D., Ng A.Y. Improving word representations via global context and multiple word prototypes. In: Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2012. pp. 873–882.

- 32.Lynott D., Connell L. Modality exclusivity norms for 423 object properties. Behav. Res. Methods. 2009;41:558–564. doi: 10.3758/brm.41.2.558.

- 33.Lynott D., Connell L. Modality exclusivity norms for 400 nouns: the relationship between perceptual experience and surface word form. Behav. Res. Methods. 2013;45:516–526. doi: 10.3758/s13428-012-0267-0.

- 34.Binder J.R., Conant L.L., Humphries C.J., Fernandino L., Simons S.B., Aguilar M., Desai R.H. Toward a brain-based componential semantic representation. Cogn. Neuropsychol. 2016;33:130–174. doi: 10.1080/02643294.2016.1147426.

- 35.Lynott D., Connell L., Brysbaert M., Brand J., Carney J. The Lancaster Sensorimotor Norms: multidimensional measures of perceptual and action strength for 40,000 English words. Behav. Res. Methods. 2020;52:1271–1291. doi: 10.3758/s13428-019-01316-z.

- 36.McRae K., Cree G.S., Seidenberg M.S., McNorgan C. Semantic feature production norms for a large set of living and nonliving things. Behav. Res. Methods. 2005;37:547–559. doi: 10.3758/BF03192726.

- 37.Devereux B.J., Tyler L.K., Geertzen J., Randall B. The Centre for Speech, Language and the Brain (CSLB) concept property norms. Behav. Res. Methods. 2014;46:1119–1127. doi: 10.3758/s13428-013-0420-4.

- 38.Zhao F., Zeng Y., Wang G., Bai J., Xu B. A brain-inspired decision making model based on top-down biasing of prefrontal cortex to basal ganglia and its application in autonomous UAV explorations. Cognit. Comput. 2018;10:296–306. doi: 10.1007/s12559-017-9511-3.

- 39.Zhao F., Zeng Y., Han B., Fang H., Zhao Z. Nature-inspired self-organizing collision avoidance for drone swarm based on reward-modulated spiking neural network. Patterns. 2022;3. doi: 10.1016/j.patter.2022.100611.

- 40.Sun Y., Zeng Y., Li Y. Solving the spike feature information vanishing problem in spiking deep Q network with potential based normalization. Front. Neurosci. 2022;16:953368. doi: 10.3389/fnins.2022.953368.

- 41.Frank M.J., Claus E.D. Anatomy of a decision: striato-orbitofrontal interactions in reinforcement learning, decision making, and reversal. Psychol. Rev. 2006;113:300–326. doi: 10.1037/0033-295X.113.2.300.

- 42.Liang Q., Zeng Y., Xu B. Temporal-sequential learning with a brain-inspired spiking neural network and its application to musical memory. Front. Comput. Neurosci. 2020;14:51. doi: 10.3389/fncom.2020.00051.

- 43.Liang Q., Zeng Y. Stylistic composition of melodies based on a brain-inspired spiking neural network. Front. Syst. Neurosci. 2021;15. doi: 10.3389/fnsys.2021.639484.

- 44.Fang H., Zeng Y., Zhao F. Brain inspired sequences production by spiking neural networks with reward-modulated STDP. Front. Comput. Neurosci. 2021;15. doi: 10.3389/fncom.2021.612041.

- 45.Fang H., Zeng Y., Tang J., Wang Y., Liang Y., Liu X. Brain-inspired graph spiking neural networks for commonsense knowledge representation and reasoning. Preprint at arXiv. 2022. doi: 10.48550/arXiv.2207.05561.

- 46.Fang H., Zeng Y. A brain-inspired causal reasoning model based on spiking neural networks. In: 2021 International Joint Conference on Neural Networks (IJCNN). IEEE; 2021. pp. 1–5.

- 47.Krueger B. Classical piano midi page. 2018. http://piano-midi.de/

- 48.Zeng Y., Zhao Y., Bai J., Xu B. Toward robot self-consciousness (II): brain-inspired robot bodily self model for self-recognition. Cognit. Comput. 2018;10:307–320. doi: 10.1007/s12559-017-9505-1.

- 49.Zhao Z., Lu E., Zhao F., Zeng Y., Zhao Y. A brain-inspired theory of mind spiking neural network for reducing safety risks of other agents. Front. Neurosci. 2022;16:753900. doi: 10.3389/fnins.2022.753900.

- 50.Feng H., Zeng Y. A brain-inspired robot pain model based on a spiking neural network. Front. Neurorobot. 2022;16:1025338. doi: 10.3389/fnbot.2022.1025338.

- 51.Feng H., Zeng Y., Lu E. Brain-inspired affective empathy computational model and its application on altruistic rescue task. Front. Comput. Neurosci. 2022;16:784967. doi: 10.3389/fncom.2022.784967.

- 52.Zhao Z., Zhao F., Zhao Y., Zeng Y., Sun Y. Brain-inspired theory of mind spiking neural network elevates multi-agent cooperation and competition. SSRN 4271099. 2022.

- 53.Zhao Y., Zeng Y. A brain-inspired intention prediction model and its applications to humanoid robot. Front. Neurosci. 2022;16:1009237. doi: 10.3389/fnins.2022.1009237.

- 54.Craig A.D.B. How do you feel—now? The anterior insula and human awareness. Nat. Rev. Neurosci. 2009;10:59–70. doi: 10.1038/nrn2555.

- 55.Koster-Hale J., Saxe R. Theory of mind: a neural prediction problem. Neuron. 2013;79:836–848. doi: 10.1016/j.neuron.2013.08.020.

- 56.Zhao F., Zeng Y., Guo A., Su H., Xu B. A neural algorithm for Drosophila linear and nonlinear decision-making. Sci. Rep. 2020;10:18660. doi: 10.1038/s41598-020-75628-y.

- 57.Zhang Q., Zeng Y., Zhang T., Yang T. Comparison between human and rodent neurons for persistent activity performance: a biologically plausible computational investigation. Front. Syst. Neurosci. 2021;15. doi: 10.3389/fnsys.2021.628839.

- 58.Allen Institute for Brain Science. Allen Software Development Kit. 2017. http://alleninstitute.github.io/AllenSDK/cell_types.html

- 59.Shapson-Coe A., Januszewski M., Berger D.R., Pope A., Wu Y., Blakely T., Schalek R.L., Li P.H., Wang S., Maitin-Shepard J., et al. A connectomic study of a petascale fragment of human cerebral cortex. Preprint at bioRxiv. 2021. doi: 10.1101/2021.05.29.446289.

- 60.Hass J., Hertäg L., Durstewitz D. A detailed data-driven network model of prefrontal cortex reproduces key features of in vivo activity. PLoS Comput. Biol. 2016;12. doi: 10.1371/journal.pcbi.1004930.

- 61.Beaulieu C. Numerical data on neocortical neurons in adult rat, with special reference to the GABA population. Brain Res. 1993;609:284–292. doi: 10.1016/0006-8993(93)90884-.

- 62.DeFelipe J. The evolution of the brain, the human nature of cortical circuits, and intellectual creativity. Front. Neuroanat. 2011;5:29. doi: 10.3389/fnana.2011.00029.

- 63.Gibson J.R., Beierlein M., Connors B.W. Two networks of electrically coupled inhibitory neurons in neocortex. Nature. 1999;402:75–79. doi: 10.1038/47035.

- 64.Gao W.-J., Wang Y., Goldman-Rakic P.S. Dopamine modulation of perisomatic and peridendritic inhibition in prefrontal cortex. J. Neurosci. 2003;23:1622–1630. doi: 10.1523/JNEUROSCI.23-05-01622.2003.

- 65.Eyal G., Verhoog M.B., Testa-Silva G., Deitcher Y., Lodder J.C., Benavides-Piccione R., Morales J., DeFelipe J., de Kock C.P., Mansvelder H.D., et al. Unique membrane properties and enhanced signal processing in human neocortical neurons. eLife. 2016;5. doi: 10.7554/eLife.16553.

- 66.Zhang Q., Zeng Y., Yang T. Computational investigation of contributions from different subtypes of interneurons in prefrontal cortex for information maintenance. Sci. Rep. 2020;10:4671. doi: 10.1038/s41598-020-61647-2.

- 67.Izhikevich E.M., Edelman G.M. Large-scale model of mammalian thalamocortical systems. Proc. Natl. Acad. Sci. USA. 2008;105:3593–3598. doi: 10.1073/pnas.0712231105.

- 68.Oh S.W., Harris J.A., Ng L., Winslow B., Cain N., Mihalas S., Wang Q., Lau C., Kuan L., Henry A.M., et al. A mesoscale connectome of the mouse brain. Nature. 2014;508:207–214. doi: 10.1038/nature13186.