Abstract

Neural computation in biological and artificial networks relies on the nonlinear summation of many inputs. The structural connectivity matrix of synaptic weights between neurons is a critical determinant of overall network function, but quantitative links between neural network structure and function are complex and subtle. For example, many networks can give rise to similar functional responses, and the same network can function differently depending on context. Whether certain patterns of synaptic connectivity are required to generate specific network-level computations is largely unknown. Here we introduce a geometric framework for identifying synaptic connections required by steady-state responses in recurrent networks of threshold-linear neurons. Assuming that the number of specified response patterns does not exceed the number of input synapses, we analytically calculate the solution space of all feedforward and recurrent connectivity matrices that can generate the specified responses from the network inputs. A generalization accounting for noise further reveals that the solution space geometry can undergo topological transitions as the allowed error increases, which could provide insight into both neuroscience and machine learning. We ultimately use this geometric characterization to derive certainty conditions guaranteeing a nonzero synapse between neurons. Our theoretical framework could thus be applied to neural activity data to make rigorous anatomical predictions that follow generally from the model architecture.

I. INTRODUCTION

Structure-function relationships are fundamental to biology [1–3]. In neural networks, the structure of synaptic connectivity critically shapes the functional responses of neurons [4,5], and large-scale techniques for measuring neural network structure and function provide exciting opportunities for examining this link quantitatively [6–15]. The ellipsoid body in the central complex of Drosophila is a beautiful example where modeling showed how the structural pattern of excitatory and inhibitory connections enables a persistent representation of heading direction [16–19]. Lucid structure-function links have also been found in several other neural networks [20–23]. However, it is generally hard to predict either neural network structure or function from the other [5,24]. For example, functionally inferred connectivity can capture neuronal response correlations without matching structural connectivity [25–28], and network simulations with structural constraints do not automatically reproduce function [29–31]. Two broad modeling difficulties hinder the establishment of robust structure-function links. First, models with too much detail are difficult to adequately constrain and analyze. Second, models with too little detail may poorly match biological mechanisms, the model mismatch problem. Here we propose a rigorous theoretical framework that attempts to balance these competing factors to predict components of network structure required for function.

Neural network function probably does not depend on the exact strength of every synapse. Indeed, multiple network connectivity structures can generate the same functional responses [32,33], as illustrated by structural variability across individual animals [24,34] and artificial neural networks [29,35–37]. Such redundancy may be a general feature of emergent phenomena in physics, biology, and neuroscience [38–40]. Nevertheless, some important details may be consistent despite this variability, and here we find well-constrained structure-function links by characterizing all connectivity structures that are consistent with the desired functional responses [24]. We also account for ambiguities caused by measurement noise. Our goal is not to find degenerate networks that perform equivalently in all possible scenarios. We instead seek a framework that finds connectivity required for specific functional responses, independently of whatever else the network might do.

The model mismatch problem has at least two facets. First, neurons and synapses are incredibly complex [41–44], but which complexities are needed to elucidate specific structure-function relationships is unclear [5,45,46]. This issue is very hard to address in full generality, and here we seek a theoretical framework that makes clear experimental predictions that can adjudicate candidate models empirically. In particular, we predict neural network structure only when it occurs in all networks generating the functional responses. This high bar precludes the analysis of biophysically detailed network models, which require numerical exploration of the connectivity space that is typically incomplete [24,32,47–49]. We instead focus on recurrent firing rate networks of threshold-linear neurons, which are growing in popularity because they strike an appealing balance between biological realism, computational power, and mathematical tractability [12,16,18,20,22,23,29,30,37,50–55].

The second facet of the model mismatch problem is hidden variables, such as missing neurons, neuromodulator levels, and physiological states [5,56–58]. Here we take inspiration from whole-brain imaging in small organisms [15], such as Caenorhabditis elegans [9], larval zebrafish [8,12,57], and larval Drosophila [11], and assume access to all relevant neurons. Our model neglects neuromodulators and other state variables, which would be interesting to consider in the future. Furthermore, many experiments indirectly assess neuronal spiking activity, such as by calcium fluorescence [58–61] or hemodynamic responses [25,62–64]. We restrict our analysis to steady-state responses to mitigate mismatch between fast firing rate changes and these inherently slow measurement techniques.

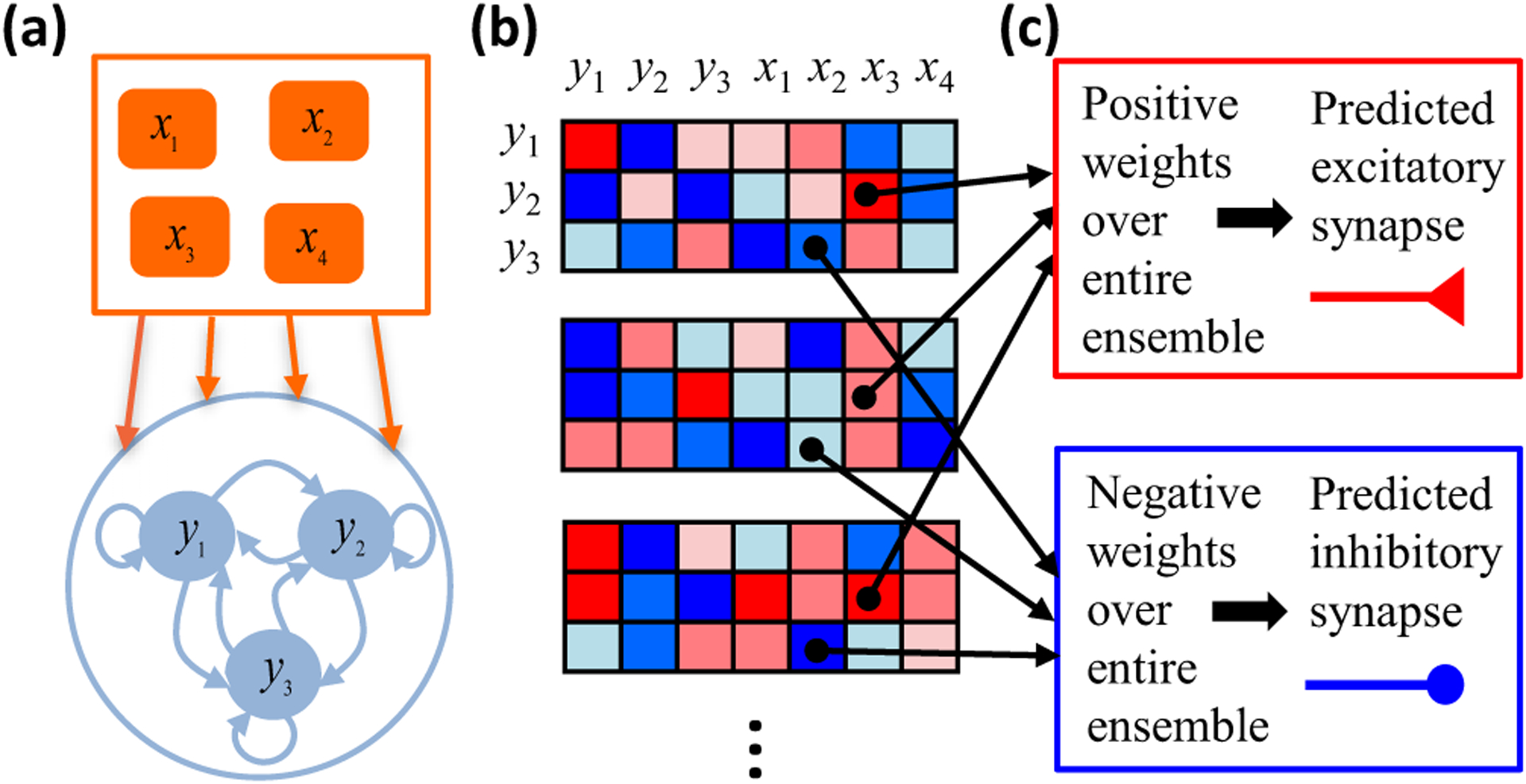

Our analysis begins with an analytical characterization of synaptic weight matrices that realize specified steady-state responses as fixed points of neural network dynamics [Figs. 1(a) and 1(b)]. A key insight is that asymmetrically constrained dimensions appear as a consequence of the threshold nonlinearity. Synaptic weight components in these semiconstrained dimensions are completely uncertain in one half of the dimension but well-constrained in the other. We then compute error surfaces by finding weight matrices with fixed points near the desired ones. This error landscape has a continuum of local and global minima, and constant-error surfaces exhibit topological transitions that add semiconstrained dimensions as the error increases. This may help explain the importance of weight initialization in machine learning, as poorly initialized models can get stuck in semiconstrained dimensions that abruptly vanish at nonzero error. By studying the geometric structure of the neural network ensemble that can approximate the functional responses, we derive analytical formulas that pinpoint a subset of connections, which we term certain synapses, that must exist for the model to work [Fig. 1(c)]. These analytical results are especially useful for studying high-dimensional synaptic weight spaces that are otherwise intractable. Since the presence of a synapse is readily measurable, our theory generates accessible experimental predictions [Fig. 1(c)]. Tests of these predictions assess the utility of the modeling framework itself, as the predictions hold across model parameters. Their successes and failures can thus move us forward toward identifying the mechanistic principles governing how neural networks implement brain computations.

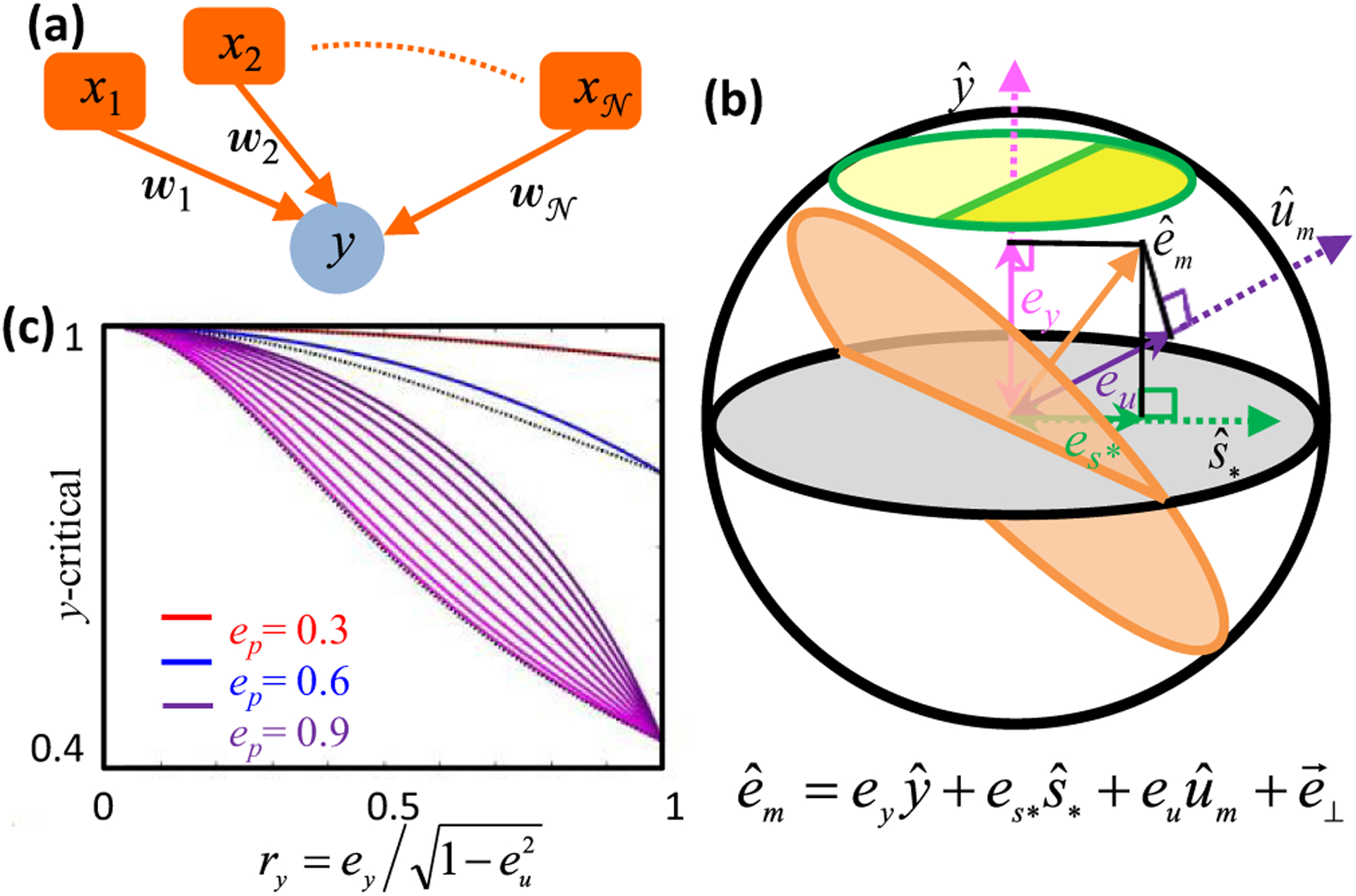

FIG. 1.

Cartoon of theoretical framework. (a) We first specify some steady-state responses of a recurrent threshold-linear neural network receiving feedforward input. (b) We then find all synaptic weight matrices that have fixed points at the specified responses. Red (blue) matrix elements are positive (negative) synaptic weights. (c) When a weight is consistently positive (or consistently negative) across all possibilities, then the model needs a nonzero synaptic connection to generate the responses. We therefore make the experimental prediction that this synapse must exist. We also predict whether the synapse is excitatory or inhibitory.

The rest of the paper begins in Sec. II with a toy problem that concretely demonstrates the approach illustrated in Fig. 1 and relates the geometry of the solution space (all synaptic weight matrices that realize a given set of response patterns) to the concept of a certain synapse. In Sec. III, we explain how the solution space for a limited number of response patterns can be calculated for an arbitrarily large threshold-linear recurrent neural network. Section IV is devoted to three simple toy problems that provide additional insights into how the geometry of the solution space can help us to identify certain synapses. This is followed by Sec. V, where we explain and numerically test the precise algebraic relation that must be satisfied for a synapse to be certain when the response patterns are orthonormal. Section VI generalizes our analyses to include noise and tests them numerically via simulation. Finally, Sec. VII concludes the paper by summarizing our main results and discussing important future directions.

II. AN ILLUSTRATIVE TOY PROBLEM

To gain intuition on how robust structure-function links can be established, including the effects of nonlinearity, we begin by analyzing the structural implications of functional responses in a very simple threshold-linear feedforward network [Fig. 2(a)]. We assume that two input neurons, x1 and x2, provide signals to a single driven neuron, y, via synaptic weights, w1 and w2. The weights are unknown, and we constrain their possible values using two neuronal response patterns, labeled μ = + and μ = −. We suppose that steady-state activities of the input neurons and driven neuron are nonlinearly related according to

$$y^\mu = \Phi\left(w_1 x_1^\mu + w_2 x_2^\mu\right), \qquad \mu = \pm, \tag{1}$$

where x1, x2, and y denote firing rates of the corresponding neurons, and

$$\Phi(a) = \max(a, 0) = \begin{cases} a, & a > 0, \\ 0, & a \le 0, \end{cases} \tag{2}$$

is the threshold-linear transfer function. The driven neuron responds (y = 1) when x1 = x2 = 1 in the μ = + pattern. In contrast, the driven neuron does not respond (y = 0) when x1 = −x2 = 1 in the μ = − pattern. If the transfer function were linear, then the two conditions w1 + w2 = 1 and w1 − w2 = 0 would have a unique solution, (w1, w2) = (1/2, 1/2), the brown dot in Fig. 2(b).

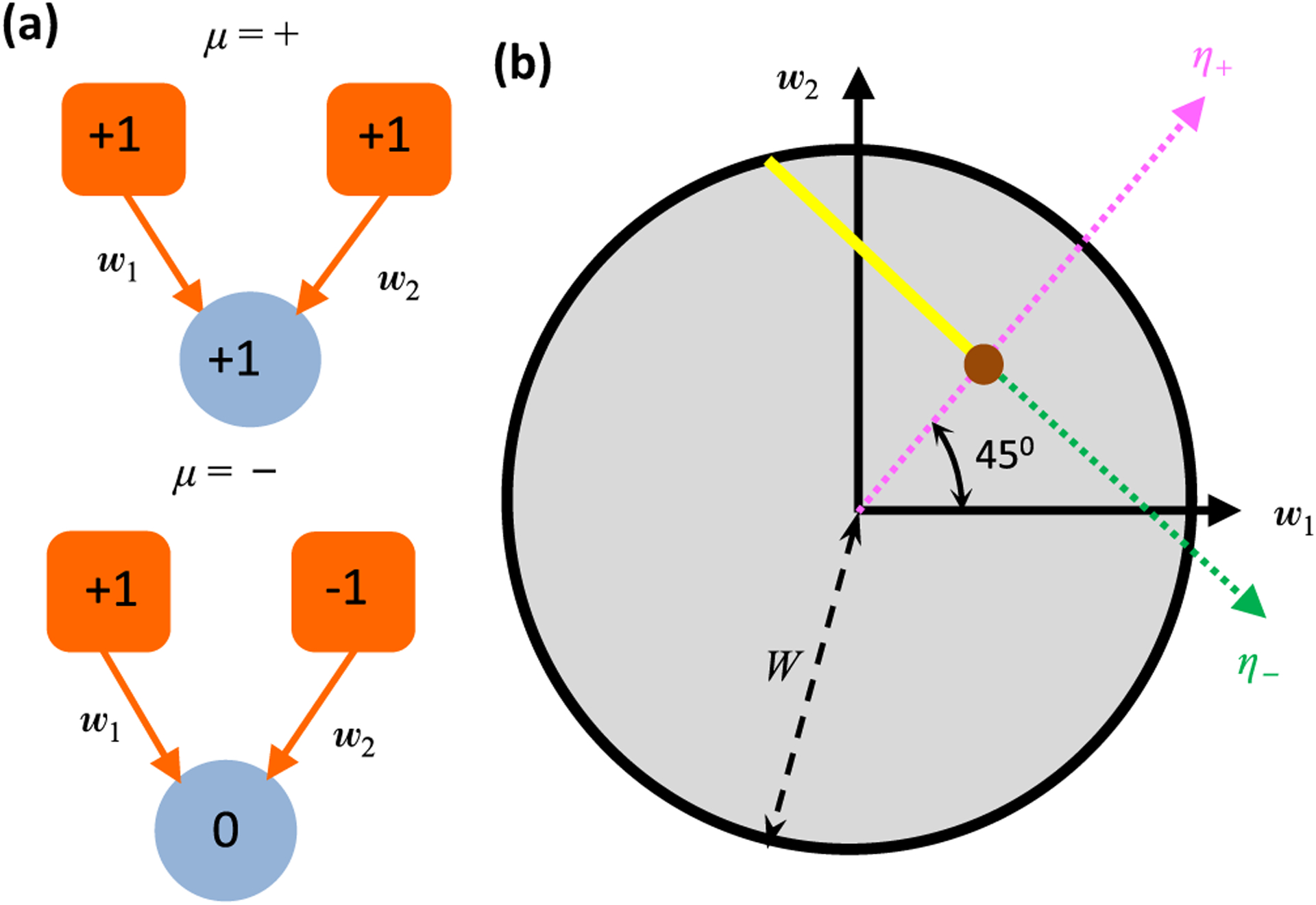

FIG. 2.

An illustrative two-dimensional problem. (a) Cartoon depicting two stimulus response patterns in a simple feedforward network with two input neurons and one driven neuron. (b) Since the driven neuron in panel (a) responds in one condition but not the other, we have one constrained dimension (magenta axis) and one semiconstrained dimension (green axis). The yellow ray depicts the space of weights, (w1, w2), that generate the stimulus transformation. The weight vector (w1, w2) = (1/2, 1/2) (brown dot) would uniquely generate the neural responses in a linear network. We assume that the magnitude of the weight vector is bounded by W, such that all candidate weight vectors lie within a circle of that radius. A nonzero synapse x2 → y exists in all solutions, but the x1 → y synapse can be zero because the yellow ray intersects the w1 = 0 axis.

How does the nonlinearity change the solution space of weights that reproduce the driven neuron responses? To answer this question, we define two linear combinations of weights,

$$\eta_\pm \equiv w_1 x_1^\pm + w_2 x_2^\pm = w_1 \pm w_2, \tag{3}$$

which correspond to the driven neuron’s input drive in patterns μ = ±. Equation (1) now yields rather simple algebraic constraints for the two patterns:

$$y^+ = \Phi(\eta_+) = 1 \;\Longrightarrow\; \eta_+ = 1, \tag{4}$$

$$y^- = \Phi(\eta_-) = 0 \;\Longrightarrow\; \eta_- \le 0. \tag{5}$$

Note that η− would have had to be zero if Φ were linear, but because the threshold-linear transfer function turns every negative input into a null response, η− can now also be any negative number. However, sufficiently negative values of η− correspond to implausibly large weight vectors, and hence we focus on solutions with norm bounded above by some value, W. The nonlinearity thus turns the unique linear solution [brown dot in Fig. 2(b)] into a continuum of solutions [yellow line segment in Fig. 2(b)]. This continuum lies along what we will refer to as a semiconstrained dimension. Indeed, this will turn out to be a generic feature of threshold-linear neural networks: every time there is a null response, a semiconstrained dimension emerges in the solution space.1

Although we found infinitely many weight vectors that solve the problem, all solutions have a synaptic connection x2 → y, and this connection is always excitatory, because w2 = (1 − η−)/2 ⩾ 1/2 along the solution ray [Fig. 2(b)]. Positive, negative, or zero connection weights are all possible for x1 → y, since w1 = (1 + η−)/2 changes sign at η− = −1. This reveals why the value of the synaptic weight bound, W, has important implications for the solution space. For example, all solutions in Fig. 2(b) with ‖w‖ < 1 have w1 > 0, whereas larger magnitude weight vectors have w1 ≤ 0. Therefore, one would be certain that an excitatory x1 → y synapse exists if the weight bound were biologically known to be less than Wcr = 1. We refer to this weight bound as W-critical. Looser weight bounds raise the possibility that the synapse is absent or inhibitory. Note that too tight weight bounds, here less than 1/√2 (the norm of the brown dot), can exclude all solutions.
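As a numerical check of this toy problem, the sketch below (assuming NumPy; the grid over η− is an illustrative choice) sweeps the solution ray w1 = (1 + η−)/2, w2 = (1 − η−)/2 for η− ⩽ 0, confirms that every point reproduces both responses, and approximately recovers the smallest admissible bound 1/√2 and the critical bound Wcr = 1.

```python
import numpy as np

def relu(a):
    """Threshold-linear transfer function, Phi(a) = max(a, 0)."""
    return np.maximum(a, 0.0)

# Input patterns (rows: mu = +, mu = -; columns: x1, x2) and target responses.
x = np.array([[1.0, 1.0],
              [1.0, -1.0]])
y = np.array([1.0, 0.0])

# Parametrize the solution ray by eta_minus = w1 - w2 <= 0, with eta_plus = w1 + w2 = 1.
eta_minus = np.linspace(-3.0, 0.0, 3001)
w1 = (1.0 + eta_minus) / 2.0
w2 = (1.0 - eta_minus) / 2.0
W_ray = np.stack([w1, w2], axis=1)

# Every point on the ray reproduces both responses.
assert np.allclose(relu(W_ray @ x.T), y)

norms = np.linalg.norm(W_ray, axis=1)
min_norm = norms.min()          # smallest bound that admits any solution (1/sqrt(2))
W_cr = norms[w1 > 0].max()      # largest grid norm with w1 > 0; approaches 1 from below
print(min_norm, W_cr, bool(np.all(w2 > 0)))
```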

The example of Fig. 2 concretely illustrates the general procedure diagramed in Fig. 1. First, we specified a network architecture and steady-state response patterns [Figs. 1(a) and 2(a)]. Second, we found all synaptic weight vectors that can implement the nonlinear transformation [Figs. 1(b) and 2(b)]. Finally, we determined whether individual synaptic weights varied in sign across the solution space [Figs. 1(c) and 2(b)]. Section III will generalize the first two parts of this procedure to characterize the solution space of any threshold-linear recurrent neural network, assuming that the number of response patterns is at most the dimensionality of the weight vectors. Sections IV and V will then generalize the final part of this procedure to pinpoint synaptic connections that are critical for generating any specified set of orthonormal responses.

III. SOLUTION SPACE GEOMETRY

A. Neural network structure and dynamics

Consider a neural network of ℐ input neurons that send signals to a recurrently connected population of 𝒟 driven neurons [Fig. 3(a)]. We compactly represent the network connectivity with a matrix of synaptic weights, wim, where i = 1, …, 𝒟 indexes the driven neurons, and m = 1, …, 𝒟 + ℐ indexes presynaptic neurons from both the driven and input populations. We suppose that activity in the population of driven neurons dynamically evolves according to

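$$\tau_i \frac{dy_i}{dt} = -y_i + \Phi\!\left(\sum_{j=1}^{\mathcal D} w_{ij}\, y_j + \sum_{m=1}^{\mathcal I} w_{i,\mathcal D+m}\, x_m\right), \tag{6}$$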

where yi is the firing rate of the ith driven neuron, xm is the firing rate of the mth input neuron, and τi is the time constant that determines how long the ith driven neuron integrates its presynaptic signals. It is possible that prior biological knowledge dictates that certain synapses appearing in Eq. (6) are absent. For notational convenience, in this paper we will assume that the number of synapses onto each driven neuron remains the same,2 and we will denote this number of the incoming synapses as 𝒩. Note that 𝒩 = ℐ + 𝒟 for a general recurrent network, 𝒩 = ℐ + 𝒟 − 1 for recurrent networks without self-synapses, and 𝒩 = ℐ for feedforward networks. We suppose that the network functionally maps input patterns, xμm, to steady-state driven signals, yμi ⩾ 0, where μ = 1, …, 𝒫 labels the patterns [Fig. 3(b)]. We assume throughout that 𝒫 ⩽ 𝒩, as the number of known response patterns is typically small, and the number of possible synaptic inputs is large. Experimentally, different response patterns often correspond to different stimulus conditions, so we will often refer to μ as a stimulus index and xμm → yμi as a stimulus transformation.
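To make Eq. (6) concrete, here is a minimal forward-Euler sketch, assuming NumPy. The network size, time constants, step size, and random weights are hypothetical; the recurrent block is rescaled to spectral norm 0.5 so that, with equal time constants, the dynamics contract to a unique fixed point, which then satisfies the steady-state condition.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def simulate(W, x, tau, y0, dt=0.01, steps=5000):
    """Forward-Euler integration of tau_i dy_i/dt = -y_i + Phi(sum_m w_im z_m),
    where z = [y, x] stacks driven-neuron rates before input rates."""
    y = y0.copy()
    for _ in range(steps):
        z = np.concatenate([y, x])
        y = y + dt / tau * (-y + relu(W @ z))
    return y

rng = np.random.default_rng(0)
D, I = 4, 3
W = rng.normal(size=(D, D + I))
# Rescale the recurrent block to spectral norm 0.5. Phi is 1-Lipschitz, so with
# equal time constants the dynamics contract to a unique, globally attracting fixed point.
W[:, :D] *= 0.5 / np.linalg.norm(W[:, :D], 2)
x = rng.uniform(0.0, 1.0, size=I)
tau = np.full(D, 0.1)                      # hypothetical, equal time constants
y_ss = simulate(W, x, tau, y0=np.zeros(D))

# Steady-state condition: y = Phi(W [y, x]).
residual = y_ss - relu(W @ np.concatenate([y_ss, x]))
print(np.abs(residual).max())
```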

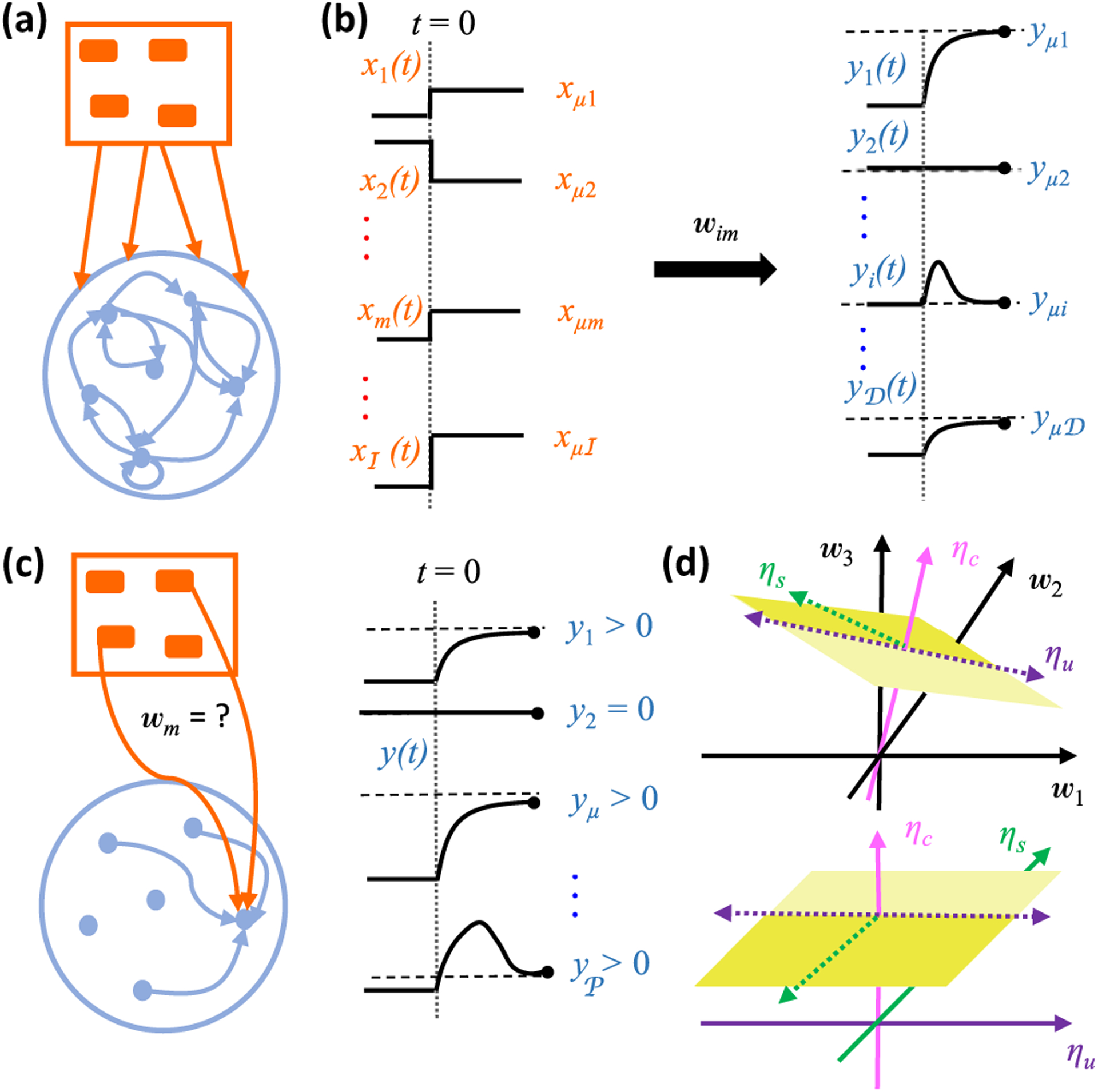

FIG. 3.

Finding network structure that implements functional responses. (a) Cartoon depicting a recurrent network of driven neurons (blue) receiving feedforward input from a population of input neurons (orange). (b) The μth pattern of input neuron activity (xμm) appears at t = 0 and drives the recurrent neurons to approach the steady-state response pattern (yμi) via feedforward and recurrent network connectivity (wim). (c) (Left) We focus on one driven neuron at a time, referred to henceforth as the target neuron, to determine its possible incoming synaptic weights, wm. (Right) These weights must reproduce the target neuron’s 𝒫 steady-state responses from the steady-state activity patterns of all 𝒩 presynaptic neurons. (d) The yellow planes depict the subspace of incoming weights that can exactly reproduce all nonzero responses of the target neuron, and the subregion shaded dark yellow indicates weights that also reproduce the target neuron’s zero responses. The top graph depicts the weight space parametrized by physically meaningful w coordinates, but the solution space is more simply parametrized by abstract η coordinates (bottom). The η coordinates depend on the specified stimulus transformation (xμm → yμi), and ηc, ηs, and ηu are coordinates in 𝒞-dimensional constrained, 𝒮-dimensional semiconstrained, and 𝒰-dimensional unconstrained subspaces, respectively.

B. Decomposing a recurrent network into 𝒟 feedforward networks

Our goal is to find features of the synaptic weight matrix that are required for the stimulus transformation discussed above. For notational simplicity, let us consider the case where we potentially have all-to-all connectivity, so that 𝒩 = 𝒟 + ℐ, but we will later explain how our arguments generalize. Since all time-derivatives are zero at steady-state, the response properties provide 𝒟 × 𝒫 nonlinear equations for 𝒟 × 𝒩 unknown parameters3:

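$$y^\mu_i = \Phi\!\left(\sum_{j=1}^{\mathcal D} w_{ij}\, y^\mu_j + \sum_{m=1}^{\mathcal I} w_{i,\mathcal D+m}\, x^\mu_m\right), \qquad i = 1, \dots, \mathcal D, \quad \mu = 1, \dots, \mathcal P. \tag{7}$$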

Inspection of the above equation, however, reveals that each neuron’s steady-state activity depends only on a single row of the connectivity matrix [Fig. 3(c)]; the responses of the ith driven neuron, {yμi, μ = 1, …, 𝒫}, are only affected by its incoming synaptic weights, {wim, m = 1, …, 𝒩}. Thus, the above equations separate into 𝒟 independent sets of equations, one for each driven neuron. In other words, we now have to solve 𝒟 feedforward problems, each of which will characterize the incoming synaptic weights of a particular driven neuron, which we term the target neuron. Note that since a generic target neuron receives signals from both the input and the driven populations, the activities of both input and driven neurons serve to produce the presynaptic input patterns that drive the responses of the target neuron in the reduced feedforward problem.
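The row-wise decoupling can be checked numerically. In this sketch (assuming NumPy, with hypothetical random activities and weights), the residuals of the steady-state equations form a 𝒟 × 𝒫 array, and changing only neuron 0's incoming weights changes only row 0.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def steady_state_residuals(W, Y, X):
    """Residuals of the steady-state equations for every driven neuron i and pattern mu.

    W: (D, D+I) weights; Y: (P, D) driven steady states; X: (P, I) input rates.
    Row i of the result depends only on row i of W."""
    Z = np.hstack([Y, X])          # (P, N) presynaptic activity, N = D + I
    return Y.T - relu(W @ Z.T)     # (D, P)

rng = np.random.default_rng(1)
D, I, P = 3, 4, 2
W = rng.normal(size=(D, D + I))
Y = rng.uniform(size=(P, D))
X = rng.uniform(size=(P, I))

R = steady_state_residuals(W, Y, X)
W_perturbed = W.copy()
# Shift only neuron 0's incoming weights; presynaptic rates are positive,
# so neuron 0's drive grows and its residuals must change.
W_perturbed[0] += 10.0
R_perturbed = steady_state_residuals(W_perturbed, Y, X)
changed_rows = np.flatnonzero(np.any(~np.isclose(R, R_perturbed), axis=1))
print(changed_rows)
```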

C. Solution space for feedforward networks

We have just seen how we can solve the problem of finding synaptic weights consistent with steady-state responses of a recurrent population of neurons, provided we know how to solve the equivalent problem for feedforward networks. Accordingly, we will now focus on a feedforward network, where a single target neuron, y, receives inputs from 𝒩 neurons {xm; m = 1, …, 𝒩}, to find the ensemble of synaptic weights that reproduce this target neuron’s observed responses. The constraint equations are

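$$y^\mu = \Phi\!\left(\sum_{m=1}^{\mathcal N} x^\mu_m w_m\right), \qquad \mu = 1, \dots, \mathcal P, \tag{8}$$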

where yμ now stands for the activity of the target neuron driven by the μth input pattern, and w = (w1, …, w𝒩)ᵀ is the 𝒩-vector of synaptic weights onto the target neuron. Assuming that the 𝒫 × 𝒩 matrix x has rank 𝒫, we let the 𝒩 × 𝒩 matrix X be rank 𝒩 with Xμm = xμm for μ = 1, …, 𝒫. A convenient choice is to let the last 𝒩 − 𝒫 rows of X span the null space of x, which guarantees full rank. X then defines a basis transformation on the weight space,

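$$\eta_\mu = \sum_{m=1}^{\mathcal N} X_{\mu m}\, w_m, \quad \text{i.e.,} \quad \boldsymbol\eta = X\mathbf w. \tag{9}$$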

The 𝒩 linearly independent columns of X−1 define the basis vectors corresponding to the η coordinates,

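$$X^{-1} = \begin{pmatrix} \mathbf f_1 & \mathbf f_2 & \cdots & \mathbf f_{\mathcal N} \end{pmatrix}. \tag{10}$$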

In other words,

$$\mathbf f_\mu = \sum_{m=1}^{\mathcal N} \left(X^{-1}\right)_{m\mu} \hat{\mathbf e}_m, \tag{11}$$

where {ê_m} is the physical orthonormal basis whose coordinates, {wm}, correspond to the material substrates of network connectivity. These basis vectors can be obtained from the η basis vectors {f_μ} by an inverse basis transformation:

$$\hat{\mathbf e}_m = \sum_{\mu=1}^{\mathcal N} X_{\mu m}\, \mathbf f_\mu. \tag{12}$$

We can thus write any vector of incoming weights as

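$$\mathbf w = \sum_{m=1}^{\mathcal N} w_m\, \hat{\mathbf e}_m = \sum_{\mu=1}^{\mathcal N} \eta_\mu\, \mathbf f_\mu. \tag{13}$$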
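One way to build X and the η basis is sketched below, assuming NumPy. The helper name `complete_basis` is hypothetical; it completes x with an orthonormal basis of its null space taken from the SVD (one choice among many), writes f_μ for the columns of X⁻¹, and checks that w = Σ_μ η_μ f_μ.

```python
import numpy as np

def complete_basis(x, rtol=1e-12):
    """Extend a (P, N) pattern matrix x of rank P to an invertible (N, N) matrix X.

    The first P rows of X are x; the last N - P rows are an orthonormal basis of
    the null space of x, taken from the SVD (one convenient choice among many)."""
    P, N = x.shape
    _, s, Vt = np.linalg.svd(x)             # Vt is (N, N) because full_matrices=True
    if np.sum(s > rtol * s.max()) != P:
        raise ValueError("x must have full row rank P")
    return np.vstack([x, Vt[P:]])

rng = np.random.default_rng(2)
P, N = 2, 4
x = rng.normal(size=(P, N))
X = complete_basis(x)
F = np.linalg.inv(X)                        # column mu is the eta basis vector f_mu

w = rng.normal(size=N)
eta = X @ w                                 # eta = X w
w_rebuilt = F @ eta                         # w = sum_mu eta_mu f_mu
```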

In terms of η coordinates, the nonlinear constraint equations take a rather simple form:

$$y^\mu = \Phi(\eta_\mu), \qquad \mu = 1, \dots, \mathcal P. \tag{14}$$

Accordingly, η coordinates succinctly parametrize the solution space of all weight matrices that support the specified fixed points [Fig. 3(d)]. Each η dimension can be neatly categorized into one of three types. First, for each stimulus condition μ where yμ > 0, we must have ημ > 0. This in turn implies that Φ(ημ) = ημ = yμ. Because the coordinate ημ must adopt a specific value to generate the transformation, we say that μ defines a constrained dimension. We denote the number of constrained dimensions as 𝒞 ⩽ 𝒫. Second, note that the threshold in the transfer function implies that Φ(a) = 0 for all a ⩽ 0. Therefore, for any stimulus condition such that yμ = 0, we have a solution whenever ημ ⩽ 0. Because positive values of ημ are excluded but all nonpositive values are equally consistent with the transformation, we say that μ defines a semiconstrained dimension. We denote the number of semiconstrained dimensions as 𝒮 = 𝒫 − 𝒞. Finally, we have no constraint equations for ημ if μ = 𝒫 + 1, …, 𝒩. Because all real values of ημ are equally consistent with the stimulus transformation, we say that μ defines an unconstrained dimension. We denote the number of unconstrained dimensions as 𝒰 = 𝒩 − 𝒫. Altogether, the stimulus transformation is consistent with every incoming weight vector that satisfies

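$$\begin{cases} \eta_\mu = y^\mu & \text{if } y^\mu > 0 \quad \text{(constrained)}, \\ \eta_\mu \le 0 & \text{if } y^\mu = 0 \quad \text{(semiconstrained)}, \\ \eta_\mu \in \mathbb R & \text{if } \mu = \mathcal P + 1, \dots, \mathcal N \quad \text{(unconstrained)}. \end{cases} \tag{15}$$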

Note that one can enumerate the solutions in the physically meaningful w coordinates by simply applying the inverse basis transformation in Eq. (9) to any solution found in η coordinates.
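The classification of η dimensions and the membership test of Eq. (15) can be sketched as follows, assuming NumPy; the helper names are hypothetical, and a generic random X stands in for a completed pattern matrix. A weight vector built from admissible η coordinates reproduces the responses, while making a semiconstrained coordinate positive breaks the zero response.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def classify_dimensions(y, N):
    """Type of each eta dimension: y > 0 -> constrained,
    y == 0 -> semiconstrained, mu > P -> unconstrained."""
    labels = ["constrained" if y_mu > 0 else "semiconstrained" for y_mu in y]
    return labels + ["unconstrained"] * (N - len(y))

def in_solution_space(w, X, y, tol=1e-9):
    """Check the constraints on eta = X w for the target responses y."""
    eta = X @ w
    for y_mu, eta_mu in zip(y, eta):        # zip stops after the first P coordinates
        if y_mu > 0 and abs(eta_mu - y_mu) > tol:
            return False
        if y_mu == 0 and eta_mu > tol:
            return False
    return True

rng = np.random.default_rng(3)
N = 4
y = np.array([0.7, 0.0, 1.2])               # hypothetical responses, P = 3
X = rng.normal(size=(N, N))                 # generic, hence (almost surely) invertible
x = X[: len(y)]                             # the first P rows are the input patterns

eta = np.array([0.7, -0.4, 1.2, 2.5])       # admissible: the semiconstrained entry is negative
w = np.linalg.solve(X, eta)                 # inverse basis transformation, w = X^{-1} eta
labels = classify_dimensions(y, N)
ok = in_solution_space(w, X, y)
reproduces = np.allclose(relu(x @ w), y)

eta_bad = eta.copy()
eta_bad[1] = 0.3                            # positive value in a semiconstrained dimension
bad = in_solution_space(np.linalg.solve(X, eta_bad), X, y)
```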

Going forward, it will be convenient to extend the 𝒫-dimensional vector of target neuron activity to an 𝒩-dimensional vector whose components along the unconstrained dimensions are equal to zero, because this will allow us to compactly write equations in terms of dot products between the activity vector and vectors in the 𝒩-dimensional weight space. Rather than introducing a new notation for this extended 𝒩-dimensional vector, we simply write y = (y1, …, y𝒩)ᵀ with yμ = 0 for μ = 𝒫 + 1, …, 𝒩. It is critical to remember that this is merely a notational convenience, and the solution space distinguishes between semiconstrained dimensions and unconstrained dimensions according to Eq. (15). In particular, yμ = 0 is a constraint equation for semiconstrained dimensions, but yμ = 0 is a notational convenience for unconstrained dimensions.

D. Back to the recurrent network

To understand how the solution space geometry of the feedforward network can be translated back to the recurrent network, it is useful to group together the steady-state activities of all input and driven neurons that are presynaptic to the ith driven neuron as a 𝒫 × 𝒩 input pattern matrix, z(i).4 The entries of the matrix, z(i)μm, correspond to the responses of the mth presynaptic neuron to the μth stimulus. At this point it is easy to see that when biological constraints dictate that some of the synapses are absent, then one should just exclude those presynaptic neurons when constructing z(i), such that the m index excludes those presynaptic neurons. Similarly, by a suitable reordering, which will depend on the driven neuron, we can always ensure that m = 1, …, 𝒩 runs only over the neurons that are presynaptic to the given driven neuron.

Once the input patterns feeding into the ith neuron are known, we can follow the steps outlined in the previous subsection to define the 𝒩 × 𝒩 full rank extension of z(i), Z(i), and the η(i) coordinates via

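$$\boldsymbol\eta^{(i)} = Z^{(i)}\mathbf w^{(i)}, \qquad \mathbf w^{(i)} = \left(w_{i1}, \dots, w_{i\mathcal N}\right)^T. \tag{16}$$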

The nature of the coordinates, that is whether they are constrained, semiconstrained, or unconstrained, is determined by how the ith neuron responded to the stimulus conditions, as in Eq. (15). Repeating this process for all driven neurons provides a geometric characterization of the entire recurrent network solution space, which involves all elements of the synaptic weight matrix, wim.

An important special case is all-to-all network connectivity. In this case, the Z(i) matrices are the same for all driven neurons, and therefore the directions corresponding to the η coordinates are also preserved.5 In particular, the orientation of the unconstrained subspace with respect to the physical basis does not change from one driven neuron to another. However, how a given driven neuron responds to a particular stimulus determines whether the corresponding η direction is going to be constrained or semiconstrained for the feedforward network associated with that driven neuron.
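A small sketch of this bookkeeping, assuming NumPy and hypothetical helper names: `presynaptic_patterns` builds each z(i) from a boolean mask of permitted synapses, and `dimension_types` labels each pattern dimension per neuron. With all-to-all connectivity every z(i) is identical, yet the constrained ("c") and semiconstrained ("s") labels differ across neurons.

```python
import numpy as np

def presynaptic_patterns(Y, X_in, allowed):
    """Return z^(i) for every driven neuron i.

    Y: (P, D) driven steady states; X_in: (P, I) input rates;
    allowed: (D, D + I) boolean mask of synapses permitted by prior knowledge."""
    Z_all = np.hstack([Y, X_in])                 # driven neurons first, then inputs
    return [Z_all[:, allowed[i]] for i in range(Y.shape[1])]

def dimension_types(Y):
    """For each driven neuron, mark each pattern 'c' (y > 0) or 's' (y == 0)."""
    return [["c" if v > 0 else "s" for v in Y[:, i]] for i in range(Y.shape[1])]

Y = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [0.5, 0.5]])                       # P = 3 patterns, D = 2 driven neurons
X_in = np.eye(3)                                 # I = 3 input neurons
D, I = Y.shape[1], X_in.shape[1]

all_to_all = np.ones((D, D + I), dtype=bool)
z_full = presynaptic_patterns(Y, X_in, all_to_all)

no_self = all_to_all.copy()
no_self[np.arange(D), np.arange(D)] = False      # remove self-synapses
z_no_self = presynaptic_patterns(Y, X_in, no_self)

types = dimension_types(Y)
```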

IV. CERTAIN SYNAPSES IN ILLUSTRATIVE 3D EXAMPLES

Although we have found infinitely many weight matrices that produce a given stimulus transformation, it is nevertheless possible that the solutions imply firm anatomical constraints (e.g., Sec. II). In this paper we focus on finding synapses that must be nonzero in order for the response patterns to be fixed points of the neural network dynamics. We refer to such synapses as certain, because the synapse must exist in the model, and its sign is identifiable from the response patterns. It is clear from the geometry of the solution space that the relative orientations between the η coordinates and the physical w coordinates are significant determinants of synapse certainty. To build quantitative intuition for how the solution space geometry precisely determines synapse certainty, we begin by first analyzing a few illustrative toy problems. In the next section we will describe the more general treatment of high-dimensional networks. Importantly, we select and parametrize each toy problem to introduce concepts and notations that will reappear in the general solution.

More specifically, we first consider three feedforward examples with 𝒩 = 3 [Fig. 4(a)]. The first two examples have 𝒫 = 3, and the third has 𝒫 = 2. In the first example, we will assume that the driven neuron does not respond to the first two stimulus patterns, but responds positively to the third pattern. So we have two semiconstrained and one constrained dimension,

$$\eta_1 \le 0, \qquad \eta_2 \le 0, \qquad \eta_3 = y^3 > 0. \tag{17}$$

In contrast, in the second example we will have two constrained and one semiconstrained dimension,

$$\eta_1 = y^1 > 0, \qquad \eta_2 = y^2 > 0, \qquad \eta_3 \le 0. \tag{18}$$

The final example will feature one unconstrained, one semiconstrained, and one constrained dimension,

$$\eta_1 = y^1 > 0, \qquad \eta_2 \le 0, \qquad \eta_3 \in \mathbb R. \tag{19}$$

For technical simplicity we will consider orthonormal input patterns, X−1 = XT, which implies that

$$\sum_{m=1}^{\mathcal N} X_{\mu m} X_{\nu m} = \delta_{\mu\nu}, \tag{20}$$

where δμν is the Kronecker δ function, which equals 1 if μ = ν and 0 if μ ≠ ν, so the η basis vectors (the columns of X−1 = XT) are orthonormal. This trivially implies that the η coordinates are related to the synaptic coordinates via a rotation, so the spherical biological bound on the physical coordinates transforms to an identical spherical bound on the η coordinates:

$$\sum_{m=1}^{\mathcal N} w_m^2 \le W^2 \iff \sum_{\mu=1}^{\mathcal N} \eta_\mu^2 \le W^2. \tag{21}$$
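For orthonormal patterns the change of coordinates is a rotation. The following NumPy sketch checks this on a random orthogonal matrix obtained from a QR decomposition (an illustrative stand-in for real response patterns).

```python
import numpy as np

rng = np.random.default_rng(4)
N = 5
X, _ = np.linalg.qr(rng.normal(size=(N, N)))   # orthogonal: rows are orthonormal patterns
w = rng.normal(size=N)                         # arbitrary incoming weights
eta = X @ w                                    # eta = X w

gram = X @ X.T                                 # orthonormality: the identity matrix
# Norms agree, so the bound ||w|| <= W is the same bound ||eta|| <= W.
print(np.linalg.norm(w), np.linalg.norm(eta))
```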

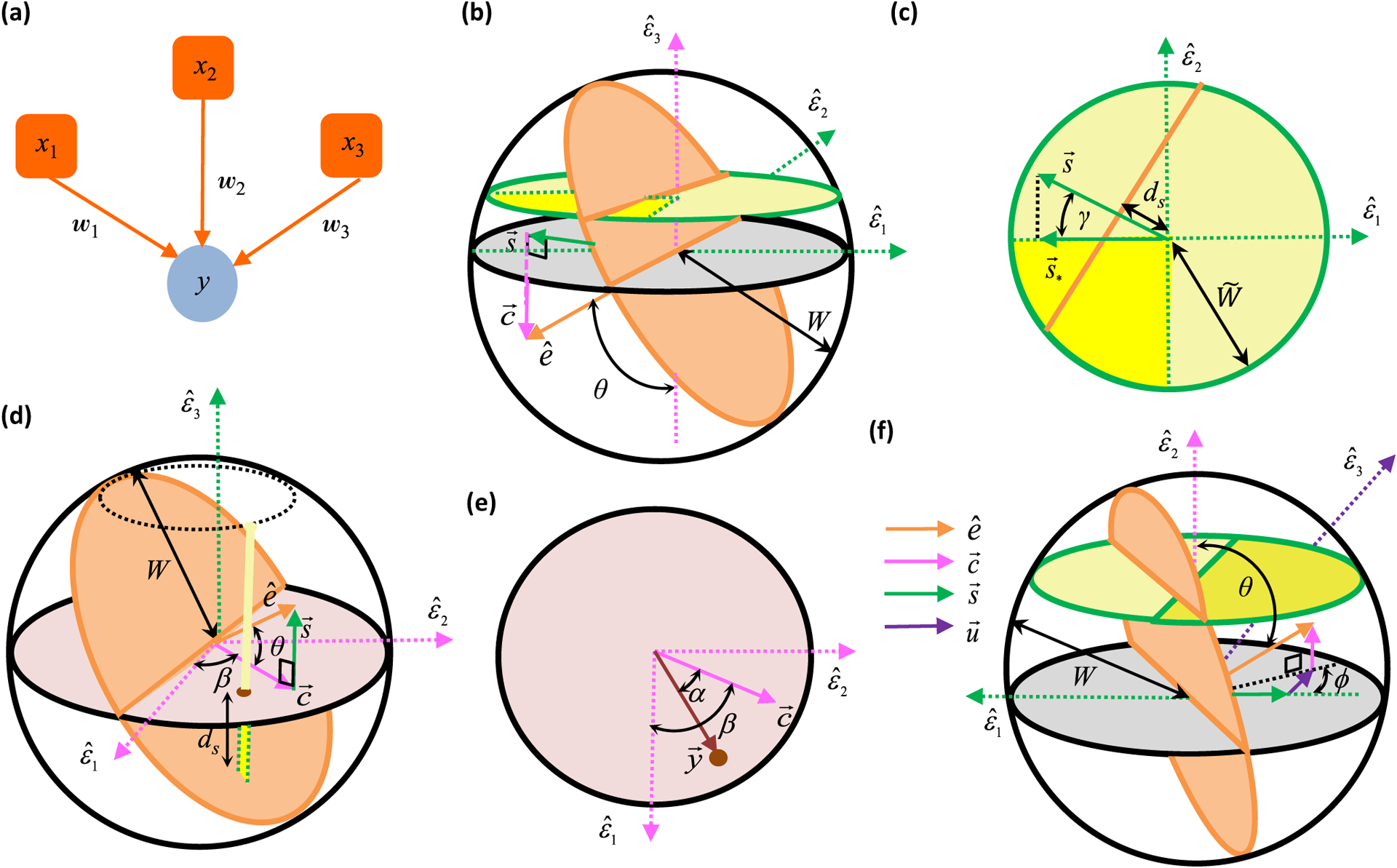

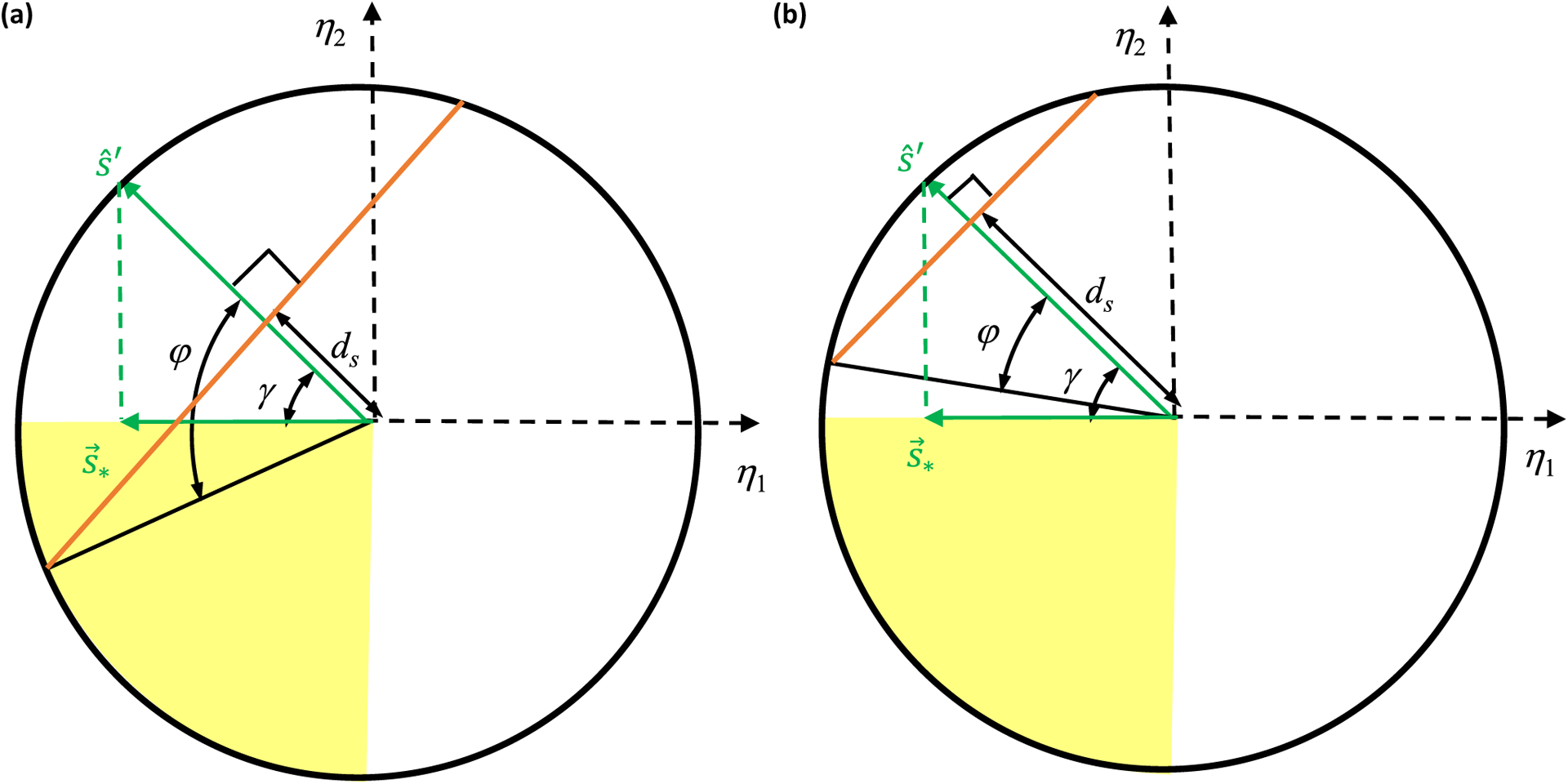

FIG. 4.

Geometric quantities determining whether neurons must be synaptically connected in several three-dimensional toy problems. (a) Cartoon depicting the 𝒩 = 3 feedforward network corresponding to the toy problems. (b), (c) Geometrically determining whether a synapse is nonzero when the target neuron responds to one input pattern but not to two other patterns. A synapse can only vanish if the w1 = 0 plane (orange circle) intersects the solution space (dark yellow wedge) within the weight bounds (bounding sphere). For example, this intersection occurs in panel (b), so the synapse is not required for the responses. For every synapse one can associate a direction in synaptic weight space (orange arrow) that is normal to the planes with constant synaptic weight. This synapse vector can be decomposed into its projections into the semiconstrained subspace (green arrow, $\sin\theta\,\hat{\mathbf e}_s$) and along the constrained dimension (pink arrow, $\cos\theta\,\hat{\mathbf e}_c$). In this example, whether the synapse is certain is determined by the size of the bounding synapse space, W [see panel (b)], the angle θ between the synapse direction (orange arrow) and the closest axis of the constrained dimension [see panel (b)], and the angle γ between $-\hat{\mathbf e}_s$ and its closest vector in the solution space [see panel (c)]. In panel (c), ds depicts the perpendicular distance from the origin of the yellow semiconstrained plane in panel (b) to its intersection line with the w1 = 0 orange plane. If this distance is sufficiently large, then the orange line will not intersect the solution space within the yellow plane’s circular bound of radius $\sqrt{W^2 - (y^3)^2}$. (d), (e) Geometrically determining whether a synapse is nonzero when the target neuron responds to two input patterns but not the third pattern. In panel (d), the orange w1 = 0 plane intersects the solution space (deep yellow line) within the bounding sphere, so the synapse is not certain.
In this example, the factors that determine synapse certainty are W [see panel (d)], the angle θ that the synapse vector (orange arrow) makes with its projection along the constrained subspace (pink arrow) [see panel (d)], and the angle α between the target response vector (brown arrow) and the pink arrow [see panel (e)]. The angle β does not ultimately matter, but it is included in the diagrams to aid the derivation. Here ds is the distance from the brown dot to the point of intersection between the yellow line and the orange plane. Again this point will lie outside the bounding sphere if ds is large enough, and this signals a certain synapse. (f) Geometrically determining whether a synapse is nonzero when the target neuron responds to one input pattern but not to a second pattern. In the figure shown, the w1 = 0 orange plane intersects the solution space (deep yellow semicircle) within the bounding sphere, so the synapse is not certain. In this example, apart from W, what determines synapse certainty are the angles θ and φ, which encode how the synapse vector (orange arrow) can be decomposed into its projections along the constrained direction (pink arrow), semiconstrained direction (green arrow), and unconstrained direction (purple arrow).

A. Problem 1

Let us first focus on the example with two semiconstrained and one constrained dimension, whose solution space is depicted in deep yellow in Fig. 4(b). Suppose we are interested in assessing whether the w1 synapse is certain. Since the w1 = 0 plane divides the weight space into the positive and the negative halves, the synapse will be certain if this plane does not intersect with the solution space, which clearly depends on the orientation of the plane relative to the various η directions [Fig. 4(b)]. It is thus useful to consider how the w1 = 0 plane’s unit normal vector pointing toward positive weights, $\hat{\mathbf e}$, is oriented relative to the η directions. For ease of graphical illustration, here we assume the specific orientation diagramed in Figs. 4(b) and 4(c). Using Eq. (12) and the orthogonality of X, we can parametrize $\hat{\mathbf e}$ as

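$$\hat{\mathbf e} = \cos\theta\,\hat{\mathbf e}_c + \sin\theta\,\hat{\mathbf e}_s, \tag{22}$$

where, in η coordinates (η1, η2, η3),

$$\hat{\mathbf e}_c = (0,\,0,\,1), \qquad \hat{\mathbf e}_s = (\cos\gamma,\,-\sin\gamma,\,0) \tag{23}$$

[Figs. 4(b) and 4(c)]. Geometrically, $\hat{\mathbf e}_c$ and $\hat{\mathbf e}_s$ are unit vectors along the projections of $\hat{\mathbf e}$ onto the constrained and semiconstrained subspaces [Fig. 4(b)]. Thus, cos θ ⩾ 0 and sin θ ⩾ 0, making θ an acute angle. In this example, γ, the angle between $-\hat{\mathbf e}_s$ and the nearest edge of the all-negative solution quadrant, is also an acute angle, as depicted in Fig. 4(c).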

Note that all solutions lie within the two-dimensional semiconstrained subspace having η3 = y3. The w1 = 0 plane intersects this semiconstrained subspace as a line [Figs. 4(b) and 4(c)], and its equation in η coordinates is

$$\sin\theta\,\left(\eta_1\cos\gamma - \eta_2\sin\gamma\right) + y^3\cos\theta = 0. \tag{24}$$

From the geometry of the problem [Fig. 4(c)], it is clear that if the perpendicular distance, ds, from the origin to this line is large enough, then it will not intersect the all-negative quadrant of the semiconstrained subspace within the weight bound. According to simple trigonometry, this occurs when

$$d_s > W_s\cos\gamma, \tag{25}$$

where $W_s \equiv \sqrt{W^2 - (y^3)^2}$ is the radius of the semiconstrained subspace containing the solutions. The perpendicular distance can be identified from Eq. (24) as

$$d_s = y^3\cot\theta. \tag{26}$$

Substituting this expression for ds into Eq. (25) and squaring both sides, one finds through simple algebra that the w1 = 0 hyperplane does not intersect the solution space, and hence the synapse is certain, if the response magnitude exceeds a critical value,

$$y^3 > y_{\rm cr} \equiv \frac{W\sin\theta\cos\gamma}{\sqrt{\cos^2\theta + \sin^2\theta\cos^2\gamma}}, \tag{27}$$

which we generally refer to as y-critical.

Notice that if θ increases in Fig. 4(b), then the orange line in Fig. 4(c) comes closer to the origin, so it intersects the solution space for a wider range of γ. The synapse is therefore more difficult to identify, and indeed Eq. (27) shows that ycr increases. However, if γ increases, then the orange line in Fig. 4(c) rotates away from the solution space, so the synapse can be identified even when ds is small. Accordingly, ycr decreases.
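The argument above can be checked with a brute-force scan, in the spirit of the exhaustive simulations of Sec. V D. This is a sketch under an explicit assumption: we take ê = cos θ η̂3 + sin θ(−sin γ η̂1 + cos γ η̂2), one orientation consistent with Figs. 4(b) and 4(c), but our reconstruction rather than a quotation of Eqs. (22) and (23). With it, Eq. (26) gives ds = y3 cot θ, the condition of Eq. (25) reads ds ⩾ √(W² − y3²) cos γ, and solving for y3 gives the candidate ycr = W sin θ cos γ/√(cos²θ + sin²θ cos²γ). All function names are hypothetical.

```python
import numpy as np


def ycr_toy1(theta, gamma, W=1.0):
    """Candidate y-critical for toy problem 1 (assumed parametrization of e-hat)."""
    s, c = np.sin(theta), np.cos(theta)
    return W * s * np.cos(gamma) / np.sqrt(c**2 + (s * np.cos(gamma)) ** 2)


def min_w1(y3, theta, gamma, W=1.0, n=201):
    """Smallest w1 = e_hat . eta over a polar grid covering the solution space.

    Solutions satisfy eta3 = y3, eta1 <= 0, eta2 <= 0 and |eta| <= W.
    """
    radius = np.sqrt(max(W**2 - y3**2, 0.0))
    r, phi = np.meshgrid(np.linspace(0.0, radius, n),
                         np.linspace(np.pi, 1.5 * np.pi, n))  # all-negative quadrant
    eta1, eta2 = r * np.cos(phi), r * np.sin(phi)
    e1 = -np.sin(theta) * np.sin(gamma)
    e2 = np.sin(theta) * np.cos(gamma)
    e3 = np.cos(theta)
    return np.min(e1 * eta1 + e2 * eta2 + e3 * y3)


def ycr_scan(theta, gamma, W=1.0, tol=1e-7):
    """Bisect for the smallest y3 at which every scanned solution has w1 > 0."""
    lo, hi = 0.0, W
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if min_w1(mid, theta, gamma, W) > 0:
            hi = mid
        else:
            lo = mid
    return hi


for theta, gamma in [(np.pi / 4, 0.0), (np.pi / 3, np.pi / 6)]:
    print(f"theta={theta:.3f}, gamma={gamma:.3f}: "
          f"formula {ycr_toy1(theta, gamma):.4f}, scan {ycr_scan(theta, gamma):.4f}")
```

Because w1 = ê·η for orthogonal X, the scan only needs the sign of this dot product. The polar grid includes the corner (η1, η2) = (0, −√(W² − y3²)), where the minimum is attained for acute γ, so the bisection converges to the closed-form value.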

It will turn out that the concept of y-critical is general, and ycr can always be expressed in terms of projections of ê along several specific directions. In this example, if we define es* and ey to be the projections of ê along the closest boundary direction of the solution space in the semiconstrained subspace and along the response direction, respectively, then it is easy to check that one can re-express ycr as

| (28) |

We will later discover that these projections are closely related to correlations between presynaptic and postsynaptic neuronal activity patterns. Thus, the expressions in Eq. (28) will provide a deeper understanding of the determinants of synapse certainty.

B. Problem 2

Having identified two key angles, θ and γ, that play a role in synapse certainty, let us look at the example with two constrained dimensions and one semiconstrained dimension to uncover other important geometric quantities. In this case, the solution space is a ray defined by η1 = y1, η2 = y2, and −∞ < η3 ⩽ 0, and the magnitude of η3 is at most

| (29) |

for solutions within the weight bound [Fig. 4(d)]. Figure 4(d) shows a geometry where the w1 = 0 plane intersects the solution space at the point

| (30) |

Now we must have

| (31) |

since the intersection point lies on the w1 = 0 plane by definition, with the notation following the previous toy problem. The projection directions of ê onto the constrained and semiconstrained subspaces are given by

| (32) |

[Fig. 4(d)]. Then combining Eqs. (22) and (32), we can find an equation to determine η3 at the intersection point

| (33) |

We next introduce α to represent the angle between the constrained component of ê and the response vector [Fig. 4(e)], such that

| (34) |

where y denotes the magnitude of the response vector (y1, y2). The first two terms in Eq. (33) can then be combined using the difference-of-angles identity to arrive at

| (35) |

To be able to identify the sign of w1, this intersection point must lie beyond the weight bound of the solution line segment, so |η3| at the intersection must exceed the maximal magnitude given in Eq. (29). After some straightforward algebra we obtain the certainty condition as

| (36) |

From the geometry of the problem in Figs. 4(d) and 4(e), one sees that as θ or α increases, the point where the orange hyperplane intersects the yellow line moves closer to the origin. Indeed, ycr increases, making it more difficult to identify the synapse sign. Again, one can re-express ycr as in Eq. (28) in terms of projections, with the single semiconstrained direction playing the role of the closest boundary vector.
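For concreteness, the steps from Eq. (29) to Eq. (36) can be written out under an explicit parametrization that we assume here (a reconstruction, not a quotation of the missing equations): the constrained component of ê makes the angle α with the response vector, whose magnitude is y = (y1² + y2²)^{1/2}, and the semiconstrained component of ê points along +η3. A sketch of the algebra:

```latex
\begin{aligned}
\hat e\cdot\boldsymbol\eta &= y\cos\theta\cos\alpha + \eta_3\sin\theta = 0
  \quad\Longrightarrow\quad \eta_3^{\times} = -\,y\cot\theta\cos\alpha,\\[2pt]
|\eta_3^{\times}| &> \sqrt{W^2-y^2}
  \quad\Longleftrightarrow\quad
  y > y_{\mathrm{cr}} = \frac{W\sin\theta}{\sqrt{\sin^2\theta+\cos^2\theta\cos^2\alpha}} .
\end{aligned}
```

With ey = cos θ cos α and es* = sin θ under this parametrization, the last expression equals W es*/(ey² + es*²)^{1/2}, and it grows with both θ and α, in line with the geometric argument above.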

C. Problem 3

Through the two examples above, we found three angles, θ, α, and γ, that determine how large the response of the driven neuron has to be for a given synapse to be certain. However, in both examples the number of patterns was equal to the number of synapses, 𝒫 = 𝒩. When 𝒫 < 𝒩, there are unconstrained dimensions, and the projection of ê into the unconstrained subspace also matters, because it relates to how much we do not know about the response properties of the driven neuron.

Here we consider an 𝒩 = 3 example with one constrained, one semiconstrained, and one unconstrained dimension [Fig. 4(f)]. In this case, we can express the synaptic direction as a linear combination of its projections along the constrained, semiconstrained, and unconstrained dimensions as

| (37) |

where we can always choose the directions of the unit vectors to make θ and φ acute angles. For the example shown in Fig. 4(f), this is achieved by choosing

| (38) |

Obtaining the certainty condition again involves ascertaining whether the w1 = 0 hyperplane intersects the deep yellow solution space [Fig. 4(f)]. In the example of Fig. 4(f), one can see that increasing the driven neuron response moves the yellow plane up, and there will come a critical point when the orange w1 = 0 plane just touches the solution space at the corner (η1 = 0, η2 = ycr, η3). Thus,

| (39) |

Since this corner point has a negative η3 component and lies on the bounding sphere, we must also have

| (40) |

[Fig. 4(f)]. Substituting these coordinates into the w1 = 0 plane equation,

| (41) |

we can then determine ycr through simple algebra as

| (42) |

The final result now depends on the two acute orientation angles, θ and φ. By inspection of Fig. 4(f) or Eq. (42), it is clear that ycr increases if either θ or φ increases toward π/2, so a larger driven-neuron response is needed to make the synapse certain. We can again express ycr in terms of projections

| (43) |

where eu is the projection of ê along the unconstrained direction, and es* does not appear because the intersection occurred at the origin of the semiconstrained subspace.
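The corner argument of Eqs. (39)–(42) can likewise be written out. Here we assume (our choice of signs, consistent with the description of Fig. 4(f) but not a quotation of Eq. (38)) that η1 is the semiconstrained coordinate (η1 ⩽ 0), η2 the constrained one, η3 the unconstrained one, and ê = cos θ η̂2 + sin θ(−cos φ η̂1 + sin φ η̂3). Then:

```latex
\begin{aligned}
\boldsymbol\eta_{\mathrm{corner}} &= \Bigl(0,\; y_{\mathrm{cr}},\; -\sqrt{W^2-y_{\mathrm{cr}}^2}\Bigr),\\
\hat e\cdot\boldsymbol\eta_{\mathrm{corner}} &= y_{\mathrm{cr}}\cos\theta
  - \sqrt{W^2-y_{\mathrm{cr}}^2}\,\sin\theta\sin\varphi = 0,\\
y_{\mathrm{cr}} &= \frac{W\sin\theta\sin\varphi}{\sqrt{\cos^2\theta+\sin^2\theta\sin^2\varphi}}
  = \frac{W e_u}{\sqrt{e_y^2+e_u^2}},
\end{aligned}
```

with ey = cos θ and eu = sin θ sin φ. The semiconstrained term drops out because −cos φ η1 ⩾ 0 for every solution, so the worst case sits at η1 = 0.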

V. CERTAIN SYNAPSES, THE GENERAL TREATMENT

A. High-dimensional feedforward networks

We have seen in the previous section how geometric considerations can identify synapses that must be present to generate observed response patterns in small networks. One can similarly ask when a synapse is required in high-dimensional networks [Fig. 5(a)].

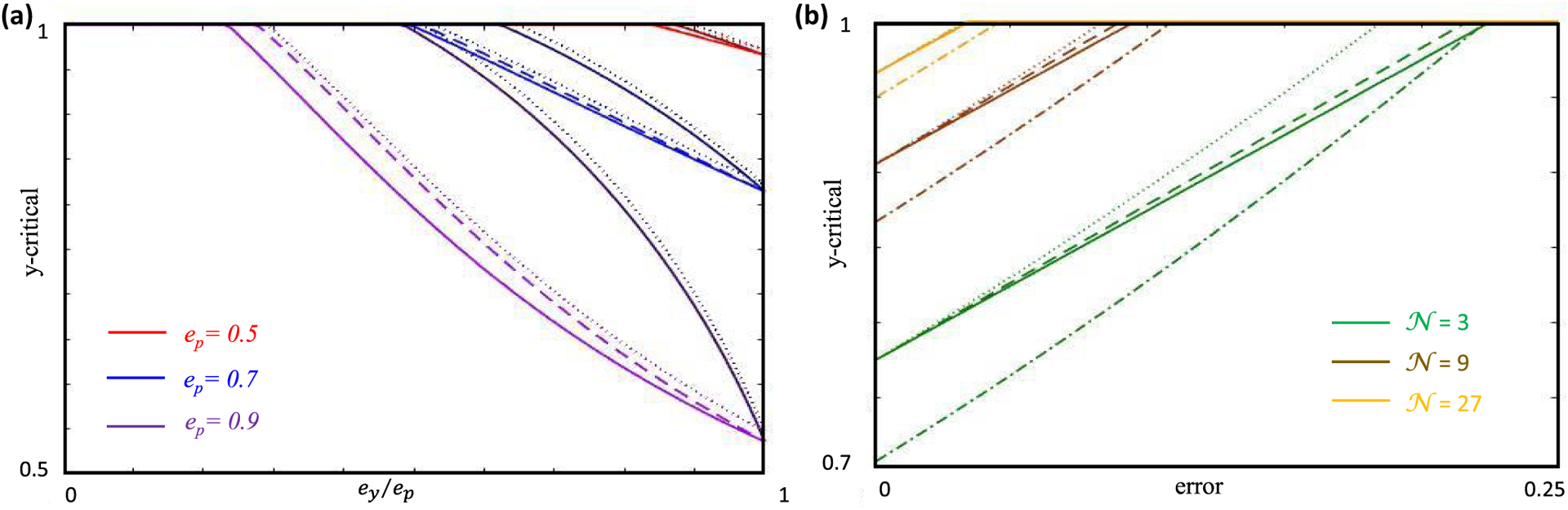

FIG. 5.

Identifying certain synapses in high-dimensional networks. (a) Cartoon depicting the high-dimensional feedforward network under consideration. (b) Geometrically determining whether a synapse is nonzero throughout a high-dimensional solution space. A synapse can only vanish if the wm = 0 hyperplane (orange circle) intersects the solution space (dark yellow wedge) within the weight bounds (bounding sphere). In the example shown, this intersection does not occur, so the synapse must be present. For orthonormal neural responses, only a few parameters determine whether this intersection occurs (Appendix A). First, the magnitude of the weight bound, W, controls the extent of the solution space. Second, there are three projections of the synapse direction (orange arrow) whose lengths are important determinants of the certainty condition: ey, the length of projection along the target response vector (pink arrow); es*, the length of projection along the closest boundary vector in the semiconstrained solution subspace [green arrow; see also Fig. 4(c)]; and eu, the length of projection into the unconstrained subspace (purple arrow). Note that the shown example would have had an intersection if the solution space (dark yellow wedge) were moved down (along the target response vector) to lie below the hyperplane (orange circle). The solution space’s height is proportional to the magnitude of the postsynaptic responses, y. Thus, the solution space does not intersect the hyperplane only if y exceeds a critical value, ycr. (c) Plots of the certainty condition, Eq. (57), for W = 1. The red, blue, and purple curves plot ycr as a function of ry = ey/ep for ep = 0.3, 0.6, and 0.9, respectively. Different purple shades correspond to different values of rs*. As this ratio increases, nonlinear effects increase ycr and make the sign harder to determine. The red and blue curves are for the maximally nonlinear case, rs* = 1. The dashed black curves represent ycr in a linear model, which cannot exceed the nonlinear ycr.

Although the rigorous derivation is intricate, the certainty condition is remarkably simple for orthonormal X (Appendix A). Quantitatively, orthonormal X implies that only a few parameters matter for the certainty condition, each illustrated in the previous section and abstractly summarized in Fig. 5(b).

For any given synapse, its physical basis vector, ê, can always be written as a sum of components in the constrained, semiconstrained, and unconstrained subspaces,

| (44) |

where the three terms denote the partial sums over μ in the constrained, semiconstrained, and unconstrained subspaces, respectively. Note that the η basis vectors are orthogonal unit vectors if and only if X is an orthogonal matrix. In this case, the decomposition of ê is a sum of three orthogonal vectors that can be parameterized by two angles,

| (45) |

where the three unit vectors lie in the constrained, semiconstrained, and unconstrained subspaces, respectively, and (θ, φ) are spherical coordinates⁶ specifying the orientation of ê with respect to these subspaces [e.g., Fig. 4(f)]. In particular,

| (46) |

As we have seen in the toy examples, these two orientation angles heavily influence whether the synapse is certain.

Additionally, the height of the solution space along the response direction [e.g., Fig. 4(b)] is controlled by the response magnitude, so the equation for the wm = 0 hyperplane that divides the positive and negative synaptic regions in the solution space depends on

| (47) |

where y is the length of the response vector and α is the angle between the constrained component of ê and the response vector [Fig. 4(e)]. Finally, there is another critical angle, which we call γ, that encodes how the semiconstrained component of ê is oriented with respect to the solution space within the semiconstrained subspace. Using a more convenient direction, which points either along or opposite to this semiconstrained component, we define γ to be the minimal angle between that direction and the solution space [e.g., Fig. 4(b)]. It is generally given by

| (48) |

(Appendix A), which depends on the μth components of ê in the semiconstrained subspace, where we have suppressed m to avoid cluttered notation. Although this definition and equation for γ may initially appear opaque, we soon clarify its meaning in terms of interpretable projections of the synapse vector.

Putting all the pieces together, we find that the mth synapse must be present, and its sign is unambiguous, if and only if y exceeds the critical value

| (49) |

(Appendix A). Intuitively, W bounds the magnitude of weight vectors, and a large W increases ycr by admitting more solutions. Note that a synapse is certain, for a given y, when the weight bound is less than a critical value,

| (50) |

Finally, we note that we must have W ⩾ y for any solutions to exist. One can straightforwardly obtain the special cases Eqs. (27), (36), and (42) by substituting α = φ = 0, γ = φ = 0, and α = cos γ = 0, respectively, into the general expression given by Eq. (49).

The geometric description of Eq. (49) can be written more intuitively as

| (51) |

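(Appendix A), where the starred direction is the unit vector in the solution space, within the semiconstrained subspace, that is most aligned with ê [e.g., Fig. 4(b)], and ey, es*, and eu are the projections of ê onto the response direction, the starred direction, and the unconstrained subspace, respectively [Fig. 5(b)]. Indeed, Eqs. (28) and (43) can be readily recognized as special cases of the above general expression.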

Each of these projections is interpretable in light of the fact that xμm represents the activity level of the mth presynaptic neuron in the μth response pattern. Most simply,

| (52) |

is a normalized correlation of the pre- and postsynaptic activity (note that …). As expected, synapse certainty is aided by large magnitudes of ey. Moreover, the sign of a certain synapse is the sign of this correlation, or equivalently the sign of ey. Synapse sign identifiability is hindered by large values of

| (53) |

which effectively measures the weakness of the presynaptic neuron’s activity, as it is the amount of presynaptic drive for which we do not have any information on the target neuron’s response. The more subtle quantity is

| (54) |

The condition in Eq. (54) selects patterns in which the presynaptic activity has sign Sgn(cos α) = Sgn(ey) but the postsynaptic neuron does not respond. In other words, presynaptic activity should have promoted a response in the target neuron according to the observed activity correlation. That the target neuron does not respond generates uncertainty in the sign of the synapse. See Appendix A for a heuristic derivation of ycr based on this argument.
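The projections in Eqs. (52)–(54) and the critical values in Eqs. (50) and (51) are easy to evaluate numerically. The sketch below rests on assumptions that we state explicitly because the corresponding equations are not reproduced here: η = Xw for an 𝒩 × 𝒩 orthogonal X whose first 𝒫 rows are the observed input patterns (so the μth component of ê is xμm); ey = Σμ xμm yμ/|y|; eu is the norm of xμm over the unconstrained rows μ > 𝒫; es* is the norm of xμm over patterns with yμ = 0 and Sgn(xμm) = Sgn(ey), following the selection rule just described; and ycr = W[(eu² + es*²)/(ey² + eu² + es*²)]^{1/2}, which is our reading of Eq. (51). As an independent check, SciPy's SLSQP solver minimizes Sgn(ey) wm over all exact solutions; that minimum should change sign at y = ycr. Function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def projections(X, y, m):
    """(e_y, e_s*, e_u) for synapse m, assuming eta = X @ w with X orthogonal."""
    P = len(y)
    x = X[:, m]                                   # mu-th component of e_hat
    e_y = x[:P] @ y / np.linalg.norm(y)           # normalized pre/post correlation
    same_sign_silent = (y == 0) & (np.sign(x[:P]) == np.sign(e_y))
    e_s = np.linalg.norm(x[:P][same_sign_silent])
    e_u = np.linalg.norm(x[P:])                   # drive with no response data
    return e_y, e_s, e_u


def y_critical(e_y, e_s, e_u, W=1.0):
    hidden = e_u**2 + e_s**2
    return W * np.sqrt(hidden / (e_y**2 + hidden))


def w_critical(e_y, e_s, e_u, y):
    """Same condition solved for the weight bound at fixed response magnitude y."""
    hidden = e_u**2 + e_s**2
    return y * np.sqrt((e_y**2 + hidden) / hidden)


def min_signed_weight(X, y, m, W=1.0):
    """Minimum of sign(e_y) * w_m over eta_c = y_c, eta_s <= 0, |eta| <= W."""
    P, N = len(y), X.shape[0]
    x = X[:, m]
    s = np.sign(x[:P] @ y)
    semi = np.flatnonzero(y == 0)
    free = np.concatenate([semi, np.arange(P, N)])  # semiconstrained, then unconstrained
    c = s * x[free]
    room = W**2 - y @ y                             # squared radius left for free dims
    res = minimize(lambda z: c @ z, np.zeros(len(free)), jac=lambda z: c,
                   method="SLSQP",
                   bounds=[(None, 0.0)] * len(semi) + [(None, None)] * (N - P),
                   constraints=[{"type": "ineq", "fun": lambda z: room - z @ z,
                                 "jac": lambda z: -2.0 * z}],
                   options={"ftol": 1e-12, "maxiter": 500})
    return s * (x[:P] @ y) + res.fun


# Example: N = 6 inputs, P = 4 patterns, synapse m = 0 (all values illustrative).
a = np.array([0.6, -0.3, 0.4, 0.2, 0.3, 0.2])
rng = np.random.default_rng(0)
Q, R = np.linalg.qr(np.column_stack([a, rng.standard_normal((6, 5))]))
X = Q * np.sign(np.diag(R))                       # orthogonal, with X[:, 0] = a / |a|
y_hat = np.array([0.5, 0.0, 0.3, 0.0]) / np.linalg.norm([0.5, 0.0, 0.3, 0.0])
e_y, e_s, e_u = projections(X, y_hat, 0)
yc = y_critical(e_y, e_s, e_u)
for factor in (0.95, 1.05):
    print(factor, min_signed_weight(X, factor * yc * y_hat, 0))
```

In this example the second pattern is silent but its xμm has the opposite sign to ey, so it is excluded from es*, while the silent fourth pattern is included.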

We can gain more useful intuition by interpreting our result in relation to what we would obtain in a linear neural network. In the linear problem, there are only constrained and unconstrained dimensions; every dimension that was semiconstrained in the nonlinear problem becomes constrained, with all solutions having ημ = yμ for μ = 1, …, 𝒫. This implies that

| (55) |

Returning to the nonlinear problem, recall that the certainty condition finds the largest y for which the solution space and wm = 0 hyperplane intersect within the weight bound, and this intersection is simply a point when y = ycr. Importantly, each semiconstrained dimension can behave either like a linear constrained dimension with ημ = yμ = 0 at this intersection point (toy problems 1 and 3), or like an unconstrained dimension with ημ < 0 at the intersection point (toy problems 1 and 2).⁷ The first case occurs when yμ = 0 and xμm has the opposite sign to ey; the second case occurs when yμ = 0 and xμm has the same sign as ey. This means that one could compute the nonlinear theory’s y-critical from ycr,lin by appending the second class of semiconstrained dimensions onto the unconstrained dimensions. Mathematically, this corresponds to the replacement

| (56) |

which indeed transforms Eq. (55) to Eq. (51). The role of es* is to quantify the uncertainty introduced by the subset of semiconstrained dimensions that do not behave as constrained at the intersection point.

Since the parameters ey, es*, and eu cannot be set independently, it is convenient to reparameterize Eq. (51) as

| (57) |

where ry = ey/ep, and all three composite parameters, ry, rs*, and ep, can be independently set between 0 and 1. Conceptually, ry and rs* merely normalize ey and es* by their maximal values, and ep is the projection of ê into the activity-constrained subspace spanned by both constrained and semiconstrained dimensions. One could also interpret rs* as quantifying the effect of the threshold nonlinearity. For instance, rs* = 0 describes the case where all semiconstrained dimensions are effectively constrained, but rs* increases as some of the semiconstrained dimensions start to behave like unconstrained dimensions. As expected, ycr is a decreasing function of ry and ep and an increasing function of rs* [Fig. 5(c)].
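The reparameterization can be explored numerically. Because Eq. (57) itself is not reproduced here, the sketch assumes the definitions implied by the text: ey = ry ep, es* = rs*(ep² − ey²)^{1/2} (normalization by the maximal value), and eu = (1 − ep²)^{1/2}, which holds because ê is a unit vector and X is orthonormal. These are substituted into our reading of Eq. (51), ycr = W[(eu² + es*²)/(ey² + eu² + es*²)]^{1/2}. The function name is hypothetical.

```python
import numpy as np


def ycr_reparam(r_y, r_s, e_p, W=1.0):
    """y-critical from (r_y, r_s*, e_p) under the assumed definitions above."""
    e_y = r_y * e_p
    e_s = r_s * np.sqrt(e_p**2 - e_y**2)
    e_u = np.sqrt(1.0 - e_p**2)
    hidden = e_u**2 + e_s**2
    return W * np.sqrt(hidden / (e_y**2 + hidden))


r_y = np.linspace(0.05, 0.95, 19)
for e_p in (0.3, 0.6, 0.9):                      # the values used in Fig. 5(c)
    linear = ycr_reparam(r_y, 0.0, e_p)          # r_s* = 0: linear-network limit
    nonlinear = ycr_reparam(r_y, 1.0, e_p)       # r_s* = 1: maximally nonlinear
    print(e_p, linear[[0, 9, 18]].round(3), nonlinear[[0, 9, 18]].round(3))
```

Under these assumptions, setting rs* = 1 collapses the expression to ycr = W(1 − ry² ep²)^{1/2}, while rs* = 0 recovers the linear result, which never exceeds the nonlinear one.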

B. Regarding nonorthogonal input patterns

While a complete treatment of the certainty condition for generally correlated input patterns is beyond the scope of this paper, we could find a conservative bound for y-critical that may be useful when patterns are close to being orthogonal. The details of the derivation are discussed in the final subsection of Appendix A.

The major challenge caused by nonorthogonal patterns is that the spherical weight space becomes ellipsoidal in terms of the η coordinates. Thus, the main idea behind the bound is that one can always find the sphere that just encompasses this ellipsoid. We can then use our formalism to obtain a conservative y-critical, such that if the norm of the response vector is larger than this value, then all solutions within the encompassing sphere have a consistent sign for the synapse under consideration. An interesting insight that emerges from our analysis is that the relative orientations between

| (58) |

and the various important η directions play the role of θ, φ, α, and γ (Appendix A). Note that the vector in Eq. (58) reduces to the physical basis vector ê when X is an orthogonal matrix. We anticipate that this vector will also be an important player in a more comprehensive treatment of nonorthogonal patterns.
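The encompassing-sphere step can be made concrete. Assuming, as in the orthogonal case, that η = Xw for a square, full-rank X, the weight ball |w| ⩽ W maps to an ellipsoid in η coordinates whose semi-axes are W times the singular values of X, so the smallest origin-centered sphere containing it has radius W σmax(X). The sketch below only illustrates this geometric step; how the enlarged radius and the vector in Eq. (58) enter the conservative y-critical is worked out in Appendix A. The function name is hypothetical.

```python
import numpy as np


def encompassing_radius(X, W=1.0):
    """Radius of the smallest origin-centered sphere containing {X @ w : |w| <= W}."""
    return W * np.linalg.norm(X, 2)              # largest singular value of X


rng = np.random.default_rng(1)
X = np.eye(4) + 0.1 * rng.standard_normal((4, 4))    # nearly orthogonal patterns
w = rng.standard_normal((4, 10000))
w /= np.linalg.norm(w, axis=0)                       # random points with |w| = 1
print(encompassing_radius(X), np.linalg.norm(X @ w, axis=0).max())
```

For an orthogonal X the radius equals W, recovering the spherical weight space of the orthonormal treatment.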

C. Application to recurrent networks

As we explained in Sec. III, to find the ensemble of all incoming weight vectors onto the ith driven neuron, one can use the results obtained for the feedforward network and simply substitute the Z(i) matrix for X. Consequently, identifying certain synapses onto the ith neuron can follow the route outlined for the feedforward scenario as long as Z(i) is orthogonal. For example, to ascertain whether any incoming synapse to the ith neuron is certain, we replace X → Z(i) and yμ → yμi in Eqs. (51)–(54) to compute ycr.

D. Numerical illustration of the certainty condition

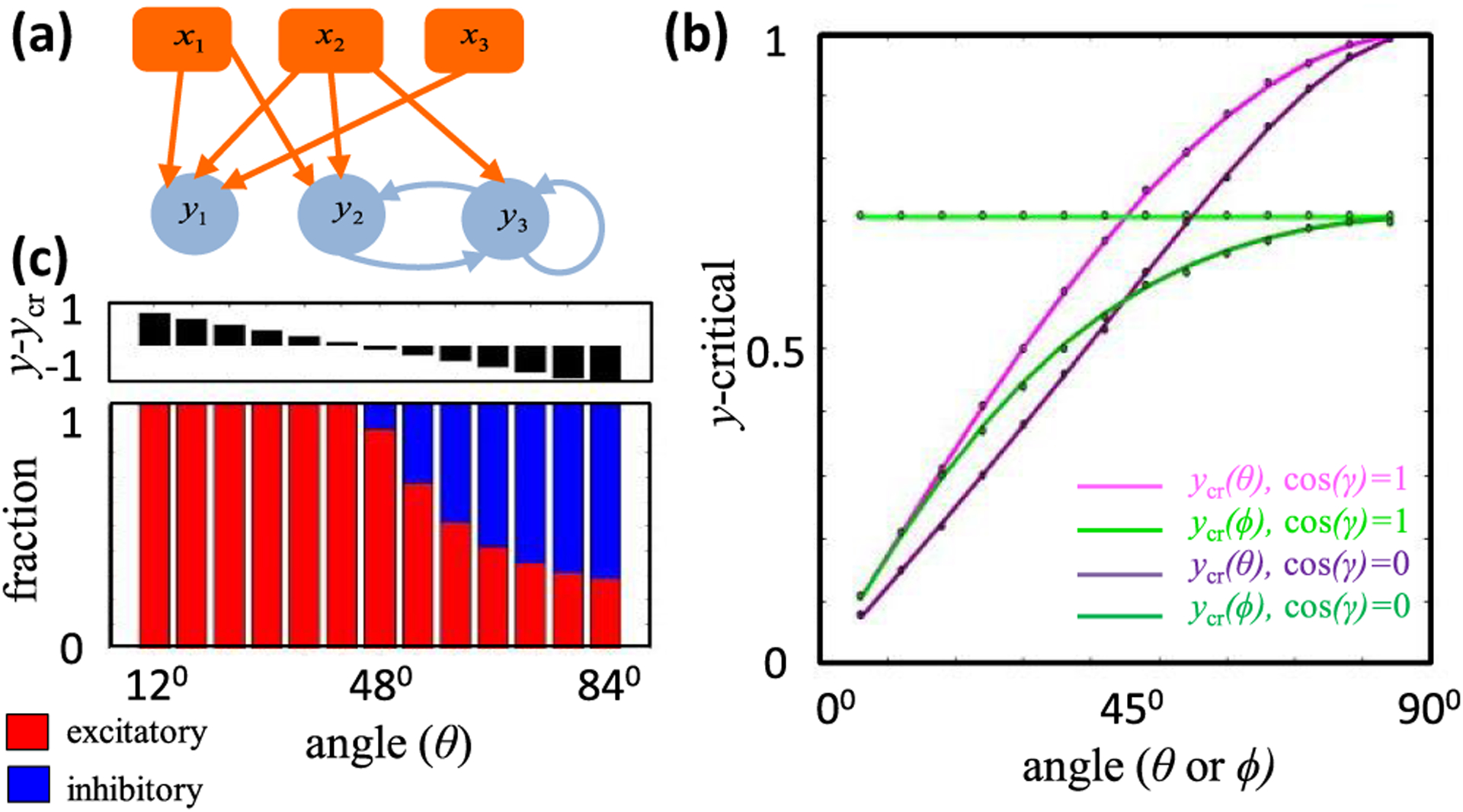

To illustrate and test the theory numerically, we first considered a small neural network of three input neurons and three driven neurons [Fig. 6(a)]. This small number of synapses meant that we could comprehensively scan the entire spherical weight space without relying on a numerical algorithm to find solutions.⁸ This is important because numerical techniques, such as gradient descent learning, potentially find a biased set of solutions that incompletely test the theory. We supposed that each driven neuron has three inputs, and we constrained weights with two orthonormal stimulus responses. We set W = 1 for all simulations and numerically screened weights randomly. See Appendix F for complete simulation details.

FIG. 6.

Testing the certainty condition with exhaustive low-dimensional simulations. (a) A simple recurrent network with three input neurons and three driven neurons (Appendix E). (b) We plot the theoretically derived ycr for feedforward synapses to y1 as we vary θ (green curves) or φ (magenta curves), keeping the other angle fixed at 45°. The lighter shades correspond to cosγ = 1 ⇒ rs* = 1. The darker shades correspond to cosγ = 0 ⇒ rs* = 0, where the predictions from the nonlinear network match those of a linear network. The dots represent ycr estimated through simulations, and they agree well with the theory. (c) (Bottom) Bar graph of the fraction of solutions with positive (red) and negative (blue) self-couplings (y3 → y3) as a function of θ. (Top) As predicted, all solutions have positive wy3,y3 when y − ycr > 0.

The first driven neuron in Fig. 6(a), y1, receives only feedforward drive, and we suppose that it responds to one stimulus condition with response y (μ = 2), but it does not respond to the other (μ = 1). Its synapses thus have one constrained, one semiconstrained, and one unconstrained dimension, and all of the terms in Eq. (49) contribute to y-critical. We could thus use y1 to verify Eq. (49). Moreover, this scenario includes the illustrative example of Fig. 4(f) as a special case, so we could also use y1 to verify Eq. (42).

To these ends, we decided to focus on a two-parameter family of input patterns,

| (59) |

where rows correspond to different input patterns and columns correspond to different input neurons, as usual, and we extend x to the full-rank orthogonal matrix

| (60) |

By Eq. (44), the physical basis vector corresponding to the synapse from the first input neuron is thus

| (61) |

and it has the same general form as Eqs. (37) and (45). If ψ and χ are both acute, then one can identify them with θ and φ in Fig. 4(f), with the η unit vectors of Eq. (61) taking the roles of the corresponding unit vectors in Eq. (37). In this case α = 0, cos γ = 0, and the theoretical dependencies of ycr on θ and φ are given by Eq. (42). Figure 6(b) illustrates these dependencies as the purple and dark green curves. If ψ is acute but χ is obtuse, then according to our conventions θ = ψ and φ = π − χ, with the signs of the corresponding unit vectors chosen to keep both angles acute. Now α = 0 and cos γ = 1, and our general formula, Eq. (49), implies

| (62) |

These dependencies are plotted as the pink and the light green curves in Fig. 6(b). We do not plot cases where ψ is obtuse, because obtuse and acute ψ result in equivalent ycr formulas. Whether ψ is acute or obtuse nevertheless matters because it determines the sign of the w1 synapse when it is certain.

The black dots in Fig. 6(b) show the largest response magnitude, y, for which we numerically found solutions with both positive and negative w1 (see Appendix F for numerical methods), thereby providing a numerical estimate of ycr. The theoretical curves and numerical points precisely aligned in all cases. The differences between the light and dark theoretical curves illustrate the effect of nonlinearity. When χ is obtuse, the semiconstrained dimension effectively behaves as unconstrained, and the mixing angle between the semiconstrained and unconstrained dimensions is irrelevant to y-critical. When χ is acute, the semiconstrained dimension effectively behaves as constrained, as if its coordinate were set to zero. Moreover, these results confirmed that stronger responses were needed to fix the synapse sign when the synaptic direction was less aligned with the constrained dimension [Fig. 6(b), purple and pink]. Furthermore, smaller y-critical values occurred when the synaptic direction was anti-aligned with the semiconstrained dimension [Fig. 6(b), purple versus pink, dark green versus light green].

We next wanted to check the validity of our results for the recurrently connected neurons in Fig. 6(a). We therefore needed to tailor the steady-state activity levels of the recurrent network to result in orthogonal presynaptic input patterns for each driven neuron. In mathematical terms, Z(i) must be an orthogonal matrix for i = 1, 2, 3. We achieved this by considering a two-parameter family of driven neuronal responses in which the activity patterns of y2 and y3 were matched to those of x1 and x3, respectively. This construction means that all three driven neurons receive the same input patterns. To ensure positivity of driven neuronal responses, we set χ as an acute angle and ψ as the negative of an acute angle.

Although y2 has both feedforward and recurrent inputs, we can analyze its connectivity in exactly the same way as y1. Recurrence only complicates the analysis for neurons that synapse onto themselves, like y3, since changing the output activity also changes the input drive. So the response magnitude and ycr are not independent. Here we focused on the certainty condition for the self-synapse, wy3,y3, for which ycr = cos χ. Therefore, the synapse should be certain if 45° < χ ⩽ 90°. Since θ = π/2 − χ according to our conventions,⁹ this is equivalent to 0 ⩽ θ < 45° [Fig. 6(c), top]. Our numerical results precisely recapitulated these theoretical expectations [Fig. 6(c), bottom], as the self-connection was consistently positive across all simulations whenever this condition on θ was met. See Appendix E for certainty condition analyses for other synapses onto y3 and Appendix F for complete simulation details.

VI. ACCOUNTING FOR NOISE

A. Finding the solution space in the presence of noise

So far we have only considered exact solutions to the fixed point equations. However, it is also important to determine weights that lead to fixed points near the specified ones. For example, biological variability and measurement noise generally make it infeasible to specify exact biological responses. Furthermore, numerical optimization typically produces model networks that only approximate the specified computation. We therefore define the ℰ-error surface as those weights that generate fixed points a distance ℰ from the specified ones,

| (63) |

where yμi is the specified activity of the ith driven neuron in the μth fixed point, and the model’s corresponding activity is measured at the fixed point approached by the model network when it is initialized as yi(t = 0) = yμi. If the network dynamics do not approach a fixed point, perhaps oscillating or diverging instead [54], we set ℰ = ∞.
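For a feedforward network the fixed point is reached immediately, so ℰ can be evaluated directly from the weights. The sketch assumes that Eq. (63) is the Euclidean distance between the specified and model responses (consistent with the spherical error surfaces described below) and that a driven neuron's response to pattern μ is the rectified drive max(ημ, 0) with η = Xw. The function name is hypothetical.

```python
import numpy as np


def feedforward_error(X, w, y):
    """Euclidean distance between specified responses y and rectified model responses."""
    P = len(y)
    model = np.maximum(X[:P] @ w, 0.0)    # threshold-linear response to each pattern
    return np.linalg.norm(y - model)


X = np.eye(3)                             # orthonormal patterns, so eta = w
y = np.array([0.2, 0.5, 0.9])
for eta1 in (0.1, 0.0, -1.0, -10.0):
    print(eta1, feedforward_error(X, np.array([eta1, 0.5, 0.9]), y))
```

Every η1 ⩽ 0 yields the same error, y1, because the rectification discards how negative the drive is; this is the semi-infinite line of solutions that appears at ℰ = y1 in the discussion that follows.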

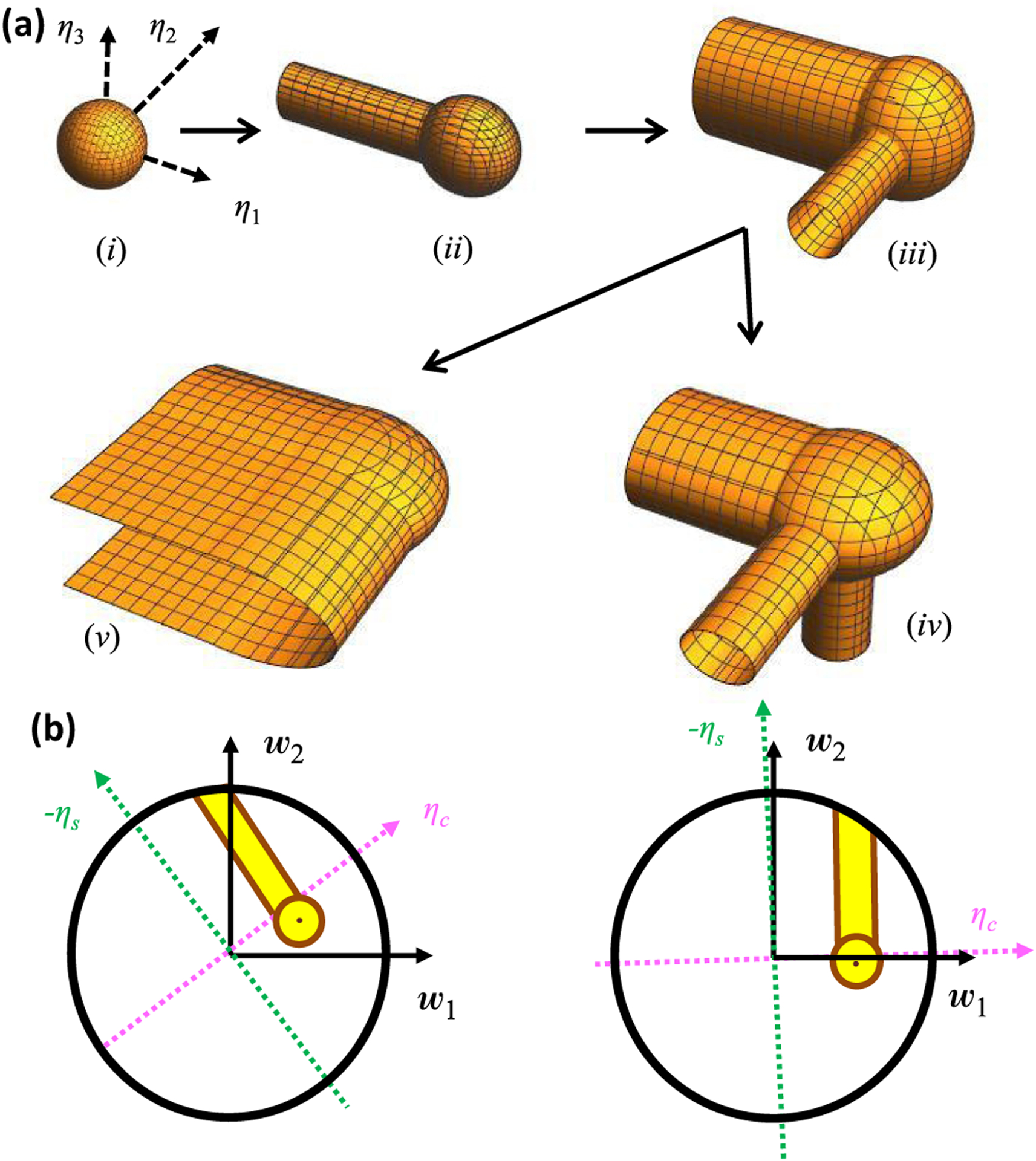

Each ℰ-error surface can be found exactly for feedforward networks. For illustrative purposes, let us first consider the 𝒟 = 1 feedforward scenario in which the driven neuron is active in every response pattern. This means that yμ > 0 for all μ = 1, …, 𝒫, and we can reorder the μ indices to sort the driven neuron responses in ascending order, 0 < y1 < y2 < ⋯ < y𝒫. Here we assume that no two response levels are exactly equal, as is typical of noisy responses. Since all responses are positive, the zero-error solution space has no semiconstrained dimensions, and the only freedom for choosing w is in the 𝒰 = 𝒩 − 𝒫 unconstrained dimensions. Therefore, the zero-error surface of exact solutions, 𝒱0, is a 𝒰-dimensional affine subspace, and its projection onto the 𝒫-dimensional activity-constrained subspace is a single point.

How does this geometry change as we allow error? For 0 < ℰ < y1, every model response must remain positive. Therefore, the nonlinearity is irrelevant, and ℰ-error surfaces are spherical in the activity-constrained η coordinates [Eq. (63), Fig. 7(a(i))]. However, once ℰ = y1 it becomes possible for the model response to the first pattern to vanish, and suddenly a semi-infinite line of solutions appears with η1 ⩽ 0. As ℰ further increases, this line dilates to a high-dimensional cylinder [Fig. 7(a(ii))]. A similar transition happens at ℰ = y2, after which two cylinders cap the sphere [Fig. 7(a(iii))]. Things get more interesting as ℰ increases further because two transitions are possible. A third cylinder appears at ℰ′ = y3. However, at ℰ″ = (y1² + y2²)^{1/2} it becomes possible for the model responses to the first two patterns to vanish simultaneously, and the two cylindrical axes merge into a semi-infinite hyperplane defined by η1 ⩽ 0, η2 ⩽ 0. Thus, when ℰ′ < ℰ″ the error surface first grows to attach a third cylinder [Fig. 7(a(iv))], and when ℰ″ < ℰ′ the two cylindrical surfaces first merge to also include planar surfaces in between [Fig. 7(a(v))]. These topological transitions continue by adding new cylinders and merging existing ones, and the sequence is easily calculable from {yμ}. Note that we use the terminology “topological transition” to emphasize that the structure of the error surface changes discontinuously at these values of error. The geometric transitions we observe here also correspond to topological changes in a formal mathematical sense. For instance, while there are no noncontractible loops in Fig. 7(a(ii)), one develops as we transition to Fig. 7(a(iii)).
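The sequence of transitions can be enumerated directly from {yμ}. A small sketch, assuming that ℰ in Eq. (63) is the Euclidean distance and that all yμ > 0: silencing the patterns in a subset S costs (Σμ∈S yμ²)^{1/2}, so each subset of patterns opens its semiconstrained face at that error. Singletons correspond to new cylinders, and larger subsets to merges. The function name is hypothetical.

```python
import math
from itertools import combinations


def transition_errors(y):
    """Error levels at which each subset of positive responses can be silenced.

    Silencing the patterns in a subset S costs sqrt(sum of y_mu**2 over S), assuming
    Euclidean error. Enumerates all 2**P - 1 subsets, so it suits small P only.
    """
    events = []
    for k in range(1, len(y) + 1):
        for subset in combinations(range(len(y)), k):
            events.append((math.sqrt(sum(y[i] ** 2 for i in subset)), subset))
    return sorted(events)


for err, subset in transition_errors([1.0, 2.0, 2.5]):
    print(f"E = {err:.3f}: patterns {[i + 1 for i in subset]} can vanish together")
```

With y = (1, 2, 2.5), ℰ″ = √5 ≈ 2.236 is smaller than ℰ′ = 2.5, so the first two cylinders merge before the third cylinder appears.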

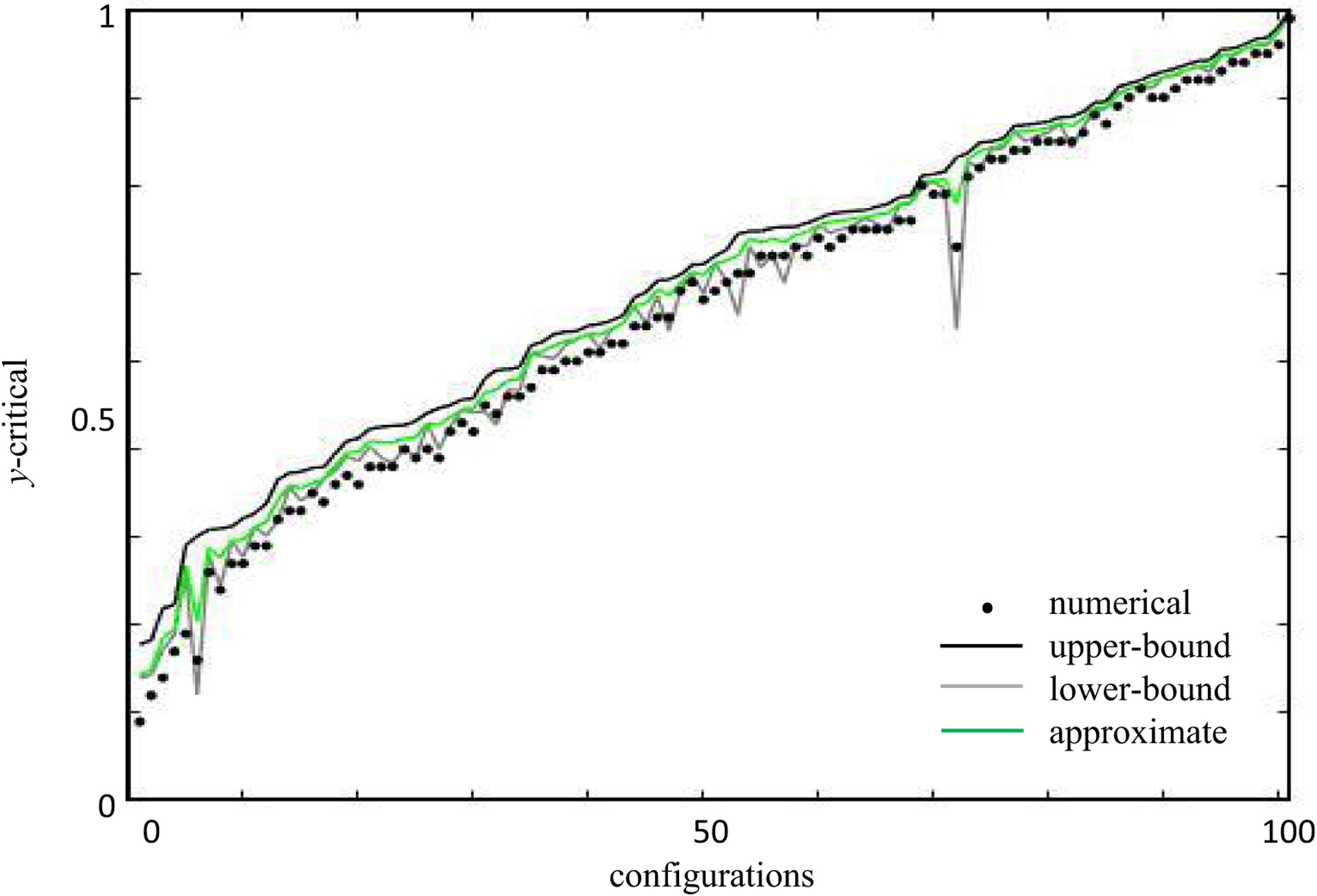

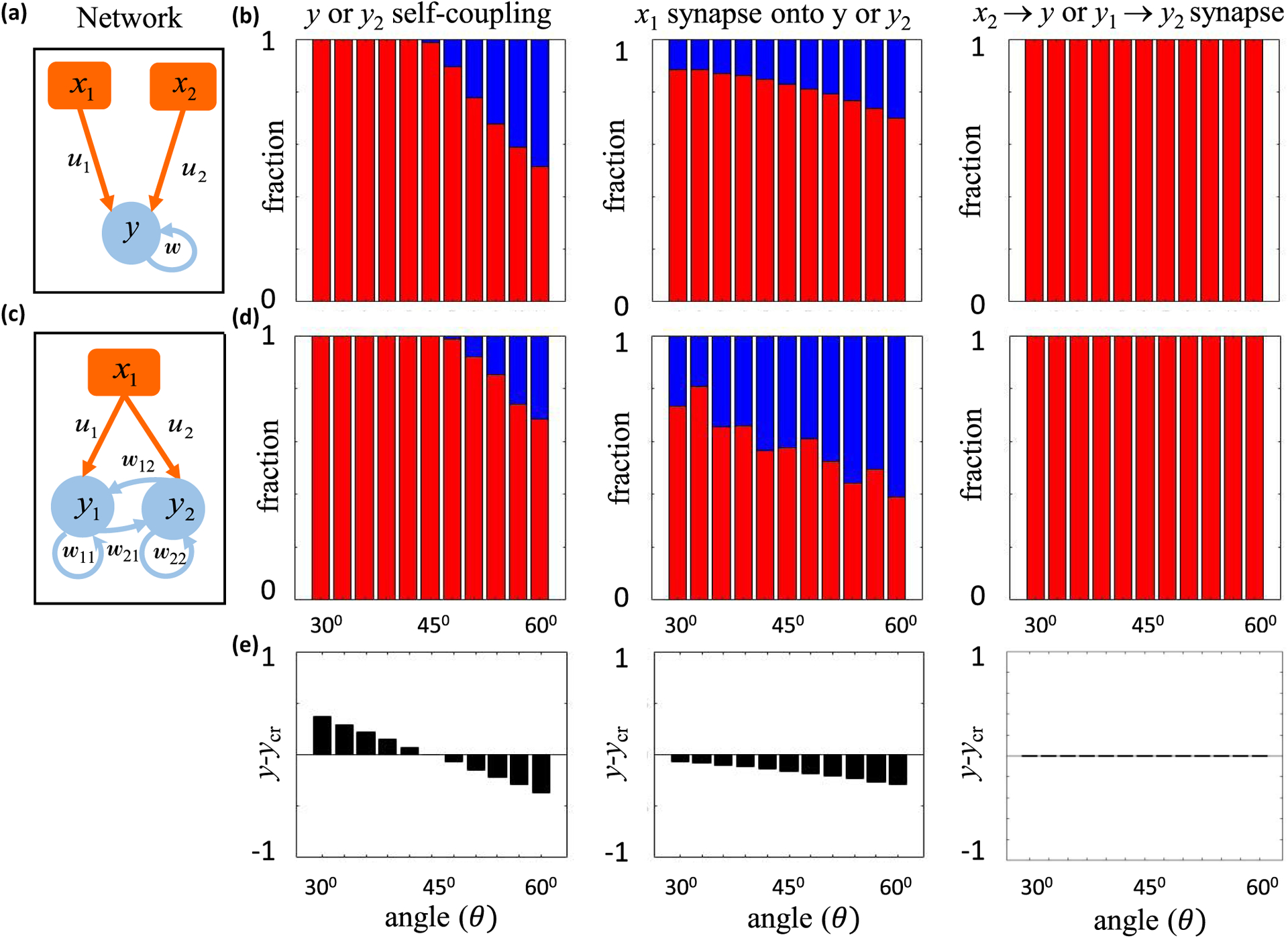

FIG. 7.

The solution space geometry changes as the allowed error increases. (a) Error surface contours in a three-dimensional subspace corresponding to η1, η2, and η3. Several topological transitions occur as the error increases. (i) We consider the case where all responses are positive, so the contours are spherical for small errors, just like in a linear neural network. (ii), (iii) Two cylindrical dimensions sequentially open up when the error is large enough for some η coordinates to become negative. (iv), (v) After that, either a third cylindrical dimension can open up, or the two cylindrical axes can join to form a plane. Which transition occurs at lower error depends on the pattern of neural responses. (b) (Left) We illustrate a case where there is a unique exact solution to the problem (brown dot). Allowing error but neglecting topological transitions would expand the solution space to an ellipse (here, brown circle), but the signs of w1 and w2 remain positive. Including topological transitions in the error surface can cap the ellipse with a cylinder (full yellow solution space). Now we can say with certainty that the sign of w2 is positive, but negative values of w1 become possible. (Right) Graphical conventions are the same. However, in this case all solutions inside the cylinder have w2 > 0. Therefore, the topological transition breaks a near symmetry between positive and negative weights.

In general, yμ may also be zero or negative in the presence of noise. Whenever yμ = 0, the μth response pattern generates a semiconstrained dimension in 𝒱0. If some response levels are negative, however, there are no exact solutions at all. Solutions become possible once ℰ reaches the norm of the negative responses, (Σμ: yμ<0 yμ²)^{1/2}, and each response pattern associated with a negative yμ then acts as a semiconstrained dimension in 𝒱ℰ. As illustrated above, more semiconstrained dimensions open up as more error is allowed in each of these cases.

This geometry only approximates ℰ-error surfaces for recurrent networks (Appendix C). For instance, displacing yμi from its specified value changes the input patterns that define the η directions for downstream driven neurons, but this effect is neglected here. We will nevertheless find that this feedforward approximation to ℰ-error surfaces is practically useful for predicting synaptic connectivity in recurrent networks as well.

B. Predicting connectivity in the presence of noise

The threshold nonlinearity and error-induced topological transitions can have a major impact on synapse certainty [Fig. 7(b)]. For example, one might model a neuronal dataset with a linear neural network and find that models with acceptably low error consistently have positive signs for some synapses. However, if measured neural activity was sometimes comparable to the noise level, then semiconstrained dimensions could open up that suddenly make some of these synapse signs ambiguous [Fig. 7(b), left]. Although semiconstrained dimensions can never make an ambiguous synapse fully unambiguous, semiconstrained dimensions can heavily affect the distribution of synapse signs across the model ensemble by providing a large number of solutions that have consistent anatomical features [Fig. 7(b), right].

We therefore generalized the certainty condition to include the effects of error, including topological transitions in the error surface (Appendix C). As before, finding the certainty condition amounts to determining when the wm = 0 hyperplane intersects the solution space within the weight bound, but to account for noise of magnitude ε, we must now check whether an intersection occurs with any ℰ-error surface with ℰ ⩽ ε. No intersections occur if and only if every nonnegative 𝒫-vector within ε of the provided vector of noisy target neuron activity [Fig. 3(c)], each of which is a possible denoised version of the data [Eq. (63)], satisfies its zero-error certainty condition. We thus define y-critical in the presence of noise as the maximal ycr [Eq. (51)] among this set of candidate denoised response vectors.
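The definition above suggests a simple Monte Carlo estimate: sample candidate denoised vectors y′ ⩾ 0 within ε of the data, evaluate the zero-error ycr for each, and keep the largest. The sketch reuses conventions we assume for orthonormal X (the μth component of ê is xμm, and es* collects silent patterns whose xμm shares the sign of ey) together with our reading of Eq. (51). Clipping samples onto y′ ⩾ 0 is our own choice, made so that exact zeros, and hence newly semiconstrained dimensions, are actually visited; for nonnegative data the clipped point stays within ε. Because it samples rather than maximizes, this can only underestimate the noisy y-critical, unlike the rigorous upper bound ycr,max discussed next. Function names are hypothetical.

```python
import numpy as np


def ycr_from_pattern(x, y, W=1.0):
    """Zero-error y-critical for synapse column x (length N) and responses y (length P)."""
    P = len(y)
    e_y = x[:P] @ y / np.linalg.norm(y)
    same_sign_silent = (y == 0) & (np.sign(x[:P]) == np.sign(e_y))
    hidden = np.sum(x[P:] ** 2) + np.sum(x[:P][same_sign_silent] ** 2)
    return W * np.sqrt(hidden / (e_y**2 + hidden))


def noisy_ycr_estimate(x, y, eps, W=1.0, n_samples=5000, seed=0):
    """Monte Carlo lower estimate of max ycr over y' >= 0 with |y' - y| <= eps."""
    rng = np.random.default_rng(seed)
    P = len(y)
    d = rng.standard_normal((n_samples, P))
    # Rescale to points distributed uniformly inside the ball of radius eps.
    d *= (eps * rng.random(n_samples) ** (1.0 / P) / np.linalg.norm(d, axis=1))[:, None]
    best = -np.inf
    for y_prime in np.maximum(y + d, 0.0):        # clip onto y' >= 0
        if np.linalg.norm(y_prime - y) <= eps and np.any(y_prime > 0):
            best = max(best, ycr_from_pattern(x, y_prime, W))
    return best


x = np.array([0.6, -0.3, 0.4, 0.2, 0.3, 0.2])
x /= np.linalg.norm(x)                            # one column of an orthogonal X
y = np.array([0.5, 0.0, 0.3, 0.05])               # the fourth response is near the noise
print(ycr_from_pattern(x, y), noisy_ycr_estimate(x, y, eps=0.1))
```

In this example the small fourth response can be silenced within the noise, which turns its pattern into a semiconstrained dimension aligned with ey and raises the estimated y-critical above the zero-error value.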

Although we lack an exact expression for y-critical in the presence of noise, we derived several useful bounds and approximations (Appendix C). We usually focus on a theoretical upper bound for y-critical, ycr,max. Note that this upper bound suffices for making rigorous predictions for certain synapses, because y > ycr,max ⇒ y > y-critical. In the absence of topological transitions, this formula is

| (64) |

We also computed a lower bound, ycr,min, to assess the tightness of the upper bound. This bound is

| (65) |

without topological transitions. Both bounds increase with error and should be considered to be bounded above by W. As expected, both expressions reduce to Eq. (51) as ε/W → 0. We also note that the two bounds coincide, to leading order in ε/W, if ey ≪ max(es*, eu) and ep/max(es*, eu) = 𝒪(1), and we argue in Appendix B that this is typical when the network size is large.

The effect of topological transitions is that ycr,max and ycr,min become the maxima of several terms, each corresponding to a way that constrained dimensions could behave as semiconstrained within the error bound (Appendix C). We compute each term from generalizations of Eqs. (64) and (65) that account for the amount of error needed to open up semiconstrained dimensions.

C. Testing the theory with simulations

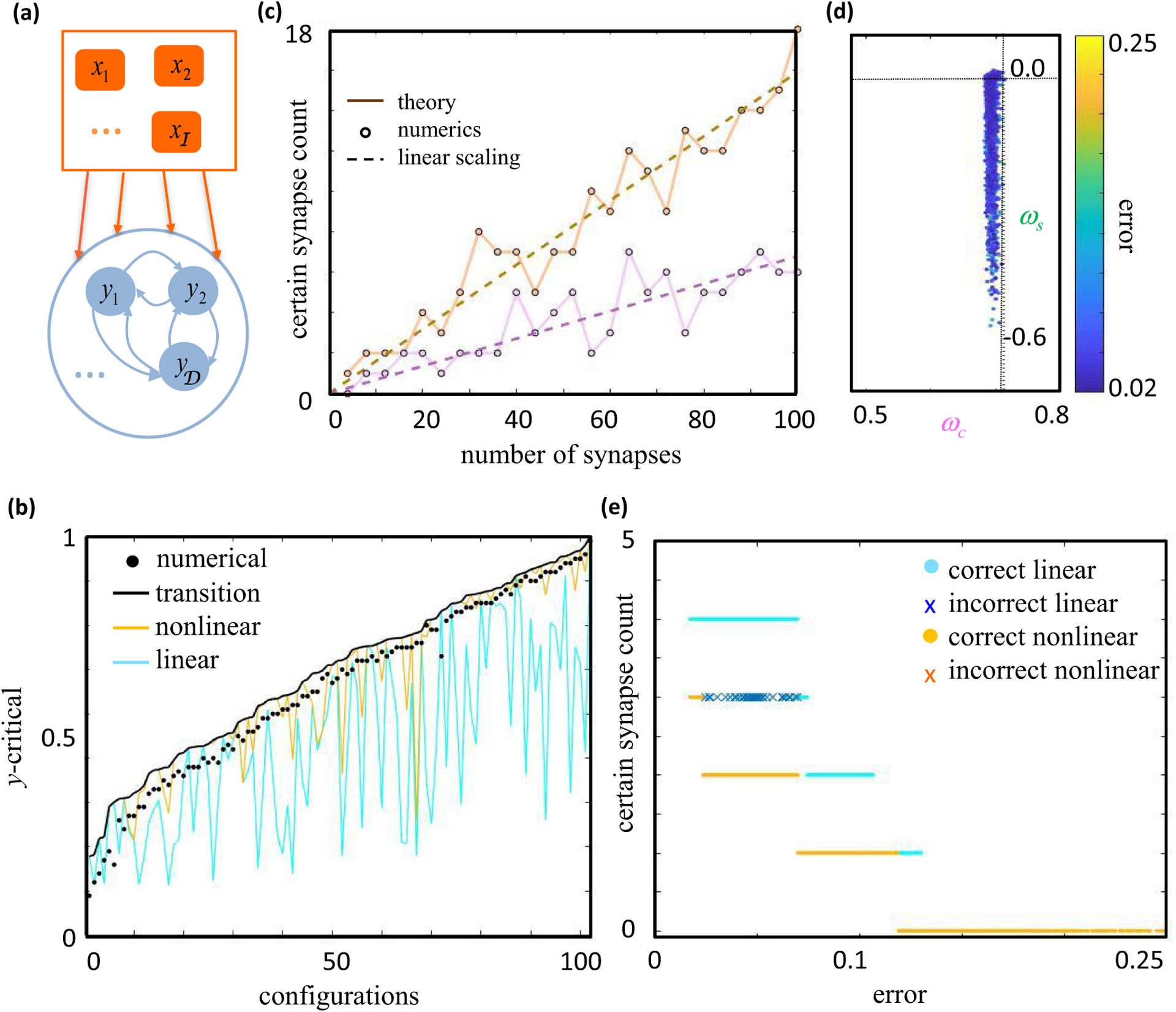

To examine our theory’s validity, we assessed its predictions with numerical simulations of feedforward and recurrent networks [Fig. 8(a)]. Each assessment used gradient descent learning to find neural networks whose late-time activity approximated some specified orthogonal configuration of input neuron activity and driven neuron activity (Appendix F). We then used our analytically derived certainty condition with noise to identify a subset of synapses that were predicted not to vary in sign across the model ensemble (W = 1), and we checked these predictions using the numerical ensemble. We similarly checked predictions from simpler certainty conditions that ignored the nonlinearity or neglected topological transitions in the error surface (Appendix C). Note that we expected gradient descent learning to often fail at finding good solutions in high dimensions, as our theory predicts that each semiconstrained dimension induces local minima in the error surface [Fig. 7(a)]. Since we did not want the theory to bias our numerical verification of it, we focused our simulations on small to moderately sized networks, where we could reasonably sample the initial weight distribution randomly. Future work will consider more realistic neural network applications.

FIG. 8.

The theory accounting for error explains numerical ensembles of feedforward and recurrent networks. (a) Cartoon of a recurrent neural network. We disallow recurrent connectivity of neurons onto themselves throughout this figure. 𝒟 = 1 corresponds to the feedforward case, and W = 1 for all panels. (b) Comparison of numerical and theoretical y-critical values for 10² random configurations of input-output activity (Appendix F). We considered a feedforward network with ℐ = 6, 𝒫 = 5, 𝒞 = 2. For each configuration and postsynaptic activity level y, we used gradient descent learning to numerically find many solutions to the problem with ℰ ≈ 0.1. The black dots correspond to the maximal value of y in our simulations that resulted in an inconsistent sign for the synaptic weight under consideration. The continuous curves show theoretical values for y-critical that upper bound the true y-critical (ycr,max, black), that neglect topological transitions in the error surface (yellow), or that neglect the threshold nonlinearity (cyan). Only the black curve successfully upper bounded the numerical points. Configurations were sorted by the ycr,max value predicted by the black curve. (c) The number of certain synapses increased with the total number of synapses in feedforward networks. Purple and brown correspond to 𝒩 = 2𝒫 = 4𝒞 and 𝒩 = 𝒫 = 4𝒞, respectively. The solid lines plot the predicted number of certain synapses. The circles represent the number of correctly predicted synapse signs in the simulations. The dashed brown and purple lines are best-fit linear curves with slopes 0.16(±0.01) and 0.07(±0.01) at 95% confidence level, significantly less than the zero error theoretical estimates of 0.28 and 0.18 (Appendix B). (d), (e) Testing the theory in a recurrent neural network with 𝒩 = 10, ℐ = 7, 𝒟 = 4, 𝒫 = 8, and 𝒞 = 3. Each dot shows a model found with gradient descent learning.
(d) x and y axes show two η coordinates predicted to be constrained and semiconstrained, respectively, and the color axis shows the model’s root-mean-square error over neurons. Although our theory for error surfaces is approximate for recurrent networks, the solution space was well explained by the constrained and semiconstrained dimensions. Note that the numerical solutions tend to have constrained coordinates smaller than the theoretical value (vertical line) because the learning procedure is initialized with small weights and stops at nonzero error. (e) The x axis shows the model’s error, and the y axis shows the number of synapse signs correctly predicted by the nonlinear theory (yellow dots or red crosses) or linear theory (cyan dots or blue crosses). Dots denote models for which every model prediction was accurate, and crosses denote models for which some predictions failed.

We first considered feedforward network architectures, for which our analytical treatment of noise is exact. To illustrate how nonlinearity and noise affect synapse certainty, we calculated the magnitude of postsynaptic activity needed to make a particular synapse sign certain [Fig. 8(b)]. We specifically considered 10² random input-output configurations of a small feedforward network with 6 input neurons (𝒫 = 5, 𝒞 = 2), which were tailored to have orthonormal input patterns and to generate one topological error surface transition at small errors. In particular, we generated random orthogonal matrices by exponentiating random antisymmetric matrices, we set one element of to a small random value to encourage the topological transition, and we ensured that the other nonzero random element of was large enough to preclude additional transitions (Appendices C and F). For each input-output configuration, we then systematically varied the magnitude of driven neuron activity, y, finding 10⁵ synaptic weight matrices with moderate error, ℰ² ≈ ε², for each magnitude y. Since randomly screening a six-dimensional synaptic weight space is not numerically efficient, we applied gradient descent learning. Nevertheless, the small network size meant that we could comprehensively sample the solution space and numerically probe the distinct predictions made by each bound or approximation used to estimate y-critical.
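The construction of orthonormal input patterns by exponentiating antisymmetric matrices can be sketched as follows. The function name, the Gaussian entries, the `scale` parameter, and the choice to take the first 𝒫 rows as input patterns are our assumptions; the step that sets individual elements to tune the topological transition is not reproduced.

```python
import numpy as np
from scipy.linalg import expm

def random_orthogonal(n, rng, scale=1.0):
    """Orthogonal matrix obtained by exponentiating a random antisymmetric matrix.

    If S.T == -S, then expm(S).T == expm(-S) == inv(expm(S)), so expm(S) is
    orthogonal, with determinant exp(trace(S)) = 1. The resulting distribution
    depends on `scale` and is not, in general, uniform (Haar) over rotations.
    """
    A = scale * rng.standard_normal((n, n))
    S = A - A.T                 # antisymmetric by construction
    return expm(S)

rng = np.random.default_rng(0)
Q = random_orthogonal(6, rng)   # I = 6 input neurons, as in Fig. 8(b)
X = Q[:5]                       # P = 5 orthonormal input patterns (rows)
print(np.allclose(X @ X.T, np.eye(5)))
```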

As expected, the maximum value of y that produced numerical solutions with mixed synapse signs [Fig. 8(b), black dots] was always below the theoretical upper bound for y-critical [Fig. 8(b), black line]. In contrast, mixed-sign numerical ensembles were often found above theoretical y-critical values that neglected topological transitions in the error surface [Fig. 8(b), yellow line] or that neglected the nonlinearity entirely [Fig. 8(b), cyan line]. These simplified estimates of y-critical therefore make erroneous predictions, because, by definition, the synapse sign must be exclusively positive or exclusively negative whenever y exceeds y-critical. We could thus assess synapse certainty accurately, but doing so generally required including both the nonlinearity and the noise-induced topological transitions in the error surface.
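The comparison in Fig. 8(b) rests on a simple definition: the empirical y-critical for a synapse is the largest y at which the low-error ensemble still contains both signs, and any valid upper bound must lie at or above it. A minimal sketch of that bookkeeping follows; the helper names and array layout are hypothetical, and the small arrays are placeholders rather than simulation data.

```python
import numpy as np

def empirical_y_critical(y_values, ensembles, j):
    """Largest y whose ensemble contains both signs for synapse j (None if none).

    ensembles[k] is an array of shape (models, synapses) found at y_values[k].
    """
    mixed = [y for y, W in zip(y_values, ensembles)
             if np.any(W[:, j] > 0) and np.any(W[:, j] < 0)]
    return max(mixed) if mixed else None

def bound_holds(y_empirical, y_theory):
    """A valid upper bound must sit at or above the empirical mixed-sign maximum."""
    return y_empirical is None or y_empirical <= y_theory

# Placeholder ensembles for two synapses at three activity levels.
y_values = [0.5, 1.0, 1.5]
ensembles = [np.array([[0.2, 1.0], [-0.1, 0.9]]),   # synapse 0 has mixed signs
             np.array([[0.1, 1.1], [-0.3, 1.2]]),   # still mixed
             np.array([[0.4, 1.3], [0.2, 1.4]])]    # consistently positive
y_emp = empirical_y_critical(y_values, ensembles, j=0)
print(y_emp, bound_holds(y_emp, y_theory=1.2))     # 1.0 True
```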

We next asked how often we could identify certain synapses in larger networks. For this purpose, we generated 25 random input-output configurations in the feedforward setting (Appendix F), again with orthonormal input patterns, but this time we increased the number of input neurons from 4 to 100 across the configurations [Fig. 8(c)]. As we increased the size of the network, we kept 𝒞/𝒩 fixed at 0.25 and 𝒫/𝒩 fixed at 1 [Fig. 8(c), brown] or 0.5 [Fig. 8(c), purple]. These scaling relationships put our simulations in the setting of high-dimensional statistics [65], where both the number of parameters and the number of constraints increase with the size of the network. In this high-dimensional regime, a simple heuristic argument suggests that the number of zero-error certain synapses should scale linearly with the number of synapses (Appendix B), because y_cr and the typical magnitude of y scale equivalently with 𝒩. Here we tested this prediction by setting randomly, setting y = 1 − ln 2/𝒞 to approximate the median norm of vectors in the unit 𝒞-ball (for uniformly distributed vectors the exact median is 2^(−1/𝒞), which is ≈ 1 − ln 2/𝒞 at large 𝒞; Appendix B), and numerically finding a small-error solution for each configuration (ℰ²/𝒫 ≈ 10⁻⁶).
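The choice of y can be checked numerically. For vectors distributed uniformly in the unit 𝒞-ball, the norm r has cumulative distribution r^𝒞, so the exact median is 2^(−1/𝒞) = exp(−ln 2/𝒞), whose first-order expansion is 1 − ln 2/𝒞. The sketch below, with hypothetical function names and the uniform-ball assumption made explicit, compares the exact value, the approximation, and a Monte Carlo estimate.

```python
import numpy as np

def median_norm_exact(C):
    """Median of |v| for v uniform in the unit C-ball.

    The radial CDF is P(|v| <= r) = r**C, so the median solves r**C = 1/2.
    """
    return 2.0 ** (-1.0 / C)

def median_norm_approx(C):
    """First-order expansion: 2**(-1/C) = exp(-ln 2 / C) ≈ 1 - ln 2 / C."""
    return 1.0 - np.log(2.0) / C

def median_norm_monte_carlo(C, n, rng):
    """Monte Carlo check: uniform random direction times radius U**(1/C)."""
    g = rng.standard_normal((n, C))
    directions = g / np.linalg.norm(g, axis=1, keepdims=True)
    points = directions * rng.random((n, 1)) ** (1.0 / C)
    return np.median(np.linalg.norm(points, axis=1))

rng = np.random.default_rng(0)
for C in (1, 5, 25):  # C = 25 corresponds to N = 100 with C/N = 0.25
    print(C, median_norm_exact(C), median_norm_approx(C),
          median_norm_monte_carlo(C, 100_000, rng))
```

The approximation is poor for very small 𝒞 (for 𝒞 = 1 it gives 1 − ln 2 ≈ 0.31 instead of 0.5) but is accurate to better than 10⁻³ at 𝒞 = 25, the largest value in this scan.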

As expected, we empirically found that the number of certain synapses predicted by the theory [Fig. 8(c), solid lines] scaled linearly with network size [Fig. 8(c), dashed lines]. The jaggedness of the solid curves reflects the fact that each point is specific to the random input-output configuration constructed for that value of 𝒩. The purple curve corresponds to the case 𝒩 = 2𝒫 = 4𝒞, and the brown curve to 𝒩 = 𝒫 = 4𝒞. Furthermore, for every certain synapse predicted, we verified that its predicted sign was realized in the numerical solution we found [Fig. 8(c), circles]. These results suggest that the theory will predict many synapses to be certain in realistically large neural systems.
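The best-fit slopes and 95% confidence intervals quoted for the dashed lines in Fig. 8(c) could be obtained along the following lines. This is an assumed procedure (ordinary least squares with a Student-t interval on the slope, using SciPy's `stats.linregress` and `stats.t.ppf`); the fitting method is not specified here, the helper name is hypothetical, and the data are synthetic placeholders, not the counts behind Fig. 8(c).

```python
import numpy as np
from scipy import stats

def slope_with_ci(x, y, level=0.95):
    """Least-squares slope and the half-width of its two-sided confidence interval."""
    fit = stats.linregress(x, y)
    t_crit = stats.t.ppf(0.5 + level / 2.0, df=len(x) - 2)
    return fit.slope, t_crit * fit.stderr

# Synthetic placeholder data: a noisy linear trend over 25 network sizes.
rng = np.random.default_rng(0)
n_synapses = np.arange(4, 101, 4)
n_certain = 0.1 * n_synapses + rng.normal(0.0, 1.0, n_synapses.size)
slope, half_width = slope_with_ci(n_synapses, n_certain)
print(f"slope = {slope:.3f} ± {half_width:.3f} (95% CI)")
```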

Finally, we empirically tested our theory for a recurrent network [Figs. 8(d) and 8(e)], where our treatment of noise is only approximate. For this purpose, we considered networks without self-coupling terms, so that each driven neuron has 𝒩 = ℐ + 𝒟 − 1 candidate presynaptic partners. We constructed a single random configuration with nonnegative driven neuron responses and orthogonal presynaptic patterns for one of the driven neurons¹⁰ (Appendix F). This driven neuron could thus serve as the target neuron for our analyses. It is sometimes possible to orthogonalize the input patterns for more than one driven neuron, but this is unnecessary for our analysis and is not pursued here. We then used gradient descent learning to find around 4500 networks that approximated the desired fixed points with variable accuracy. For technical simplicity, we first found connectivity matrices using a proxy cost function that treated the network as if it were feedforward. We then simulated the neural network dynamics with these weights and evaluated the model’s true error as prescribed by Eq. (63).
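The distinction between the feedforward proxy cost and the error of the simulated recurrent network can be sketched as follows. We assume rate dynamics dr/dt = −r + [W r + U u]₊ with unit time constant, forward-Euler integration from r = 0, and a root-mean-square error over driven neurons; Eq. (63) itself is not reproduced, and all function names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def proxy_error(W, U, u, r_target):
    """Feedforward proxy: feed the target driven responses back in as presynaptic input."""
    return np.sqrt(np.mean((relu(W @ r_target + U @ u) - r_target) ** 2))

def steady_state(W, U, u, dt=0.1, steps=5000):
    """Euler-integrate dr/dt = -r + relu(W r + U u) from r = 0; return the late-time state."""
    r = np.zeros(W.shape[0])
    for _ in range(steps):
        r = r + dt * (-r + relu(W @ r + U @ u))
    return r

def simulated_error(W, U, u, r_target):
    """RMS error over driven neurons of the simulated late-time activity."""
    return np.sqrt(np.mean((steady_state(W, U, u) - r_target) ** 2))

rng = np.random.default_rng(0)
D, I = 4, 7                                   # driven and input neurons, as in Fig. 8(d)
W = 0.1 * rng.standard_normal((D, D))
np.fill_diagonal(W, 0.0)                      # no self-coupling
U = rng.standard_normal((D, I))
u = rng.standard_normal(I)
r_star = steady_state(W, U, u)                # a fixed point of this network
W_perturbed = W + 0.05 * rng.standard_normal((D, D))
np.fill_diagonal(W_perturbed, 0.0)
print(proxy_error(W_perturbed, U, u, r_star), simulated_error(W_perturbed, U, u, r_star))
```

At an exact fixed point both errors vanish. After the weights are perturbed, the proxy error measures how far the target is from being self-consistent, whereas the simulated error measures where the dynamics actually settle, and the two generally differ.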

This network ensemble revealed that constrained and semiconstrained dimensions accurately explained the structure of the solution space for recurrent networks with nonzero error. Figure 8(d) shows the projection of the corresponding solution space along two η directions, one predicted to be constrained by the feedforward theory and the other predicted to be semiconstrained. As predicted, the extension of the solution space along the negative semiconstrained direction was clearly discernible. However, recurrence implies that the exact solution space is not perfectly cylindrical around the semiconstrained axes (Appendix C), because the driven neuron inputs to the target neuron can themselves vary due to noise. Here this effect was empirically insignificant, and the geometric structure of the solution space conformed well to our feedforward prediction. One might expect the error [color in Fig. 8(d)] to increase monotonically with distance from the center of the semiconstrained cylinder, but this expectation is incorrect for two reasons. First, we are visualizing the error surface as a projection along two dimensions, yet variations in other η coordinates add variation to the error.¹¹ Second, we are visualizing the solution space for one target neuron, but other driven neurons in the recurrent network contribute to the summed error represented by the color.

Moreover, the theory correctly predicted how the number of certain synapses would decrease as a function of ε [Fig. 8(e)], and we never found a numerical violation of the theoretical certainty condition that included nonlinearity and noise. In Fig. 8(e), the yellow dots represent the number of certain synapses that were predicted by the theory and verified to have synapse signs that agreed with the theoretical prediction. Here accurate predictions did not require us to account for topological error surface transitions. In contrast, although our simulations usually agreed with the predictions of the linear theory [Fig. 8(e), cyan dots], they sometimes disagreed: the blue crosses in Fig. 8(e) indicate models for which the linear theory incorrectly predicted some synapse signs. The absence of red crosses confirms that the nonlinear theory made no incorrect sign predictions in this ensemble.

VII. DISCUSSION

In summary, we enumerated all threshold-linear recurrent neural networks that generate specified sets of fixed points, under the assumption that the number of candidate synapses onto a neuron is at least the specified number of fixed points. We found that the geometry of the solution space was elegantly simple, and we described a coordinate transformation that permits easy classification of weight-space dimensions into constrained, semiconstrained, and unconstrained varieties. This geometric approach also generalized to approximate error surfaces of model parameters that imprecisely generate the fixed points. We used this geometric description of the error surface to analyze structure-function links in neural networks. In particular, we found that it is often possible to identify synapses that must be present for the network to perform its task, and we verified the theory with simulations of feedforward and recurrent neural networks.

Rectified-linear units are also popular in state-of-the-art machine learning models [29,66–68], so the fundamental insights we provide into the effects of neuronal thresholds on neural network error landscapes may have practical significance. For example, machine learning often works by taking a model that initially has high error and gradually improving it by modifying its parameters in the gradient direction [69]. However, error surfaces with high error can have semiconstrained dimensions that abruptly vanish at lower errors (Fig. 7). Local parameter changes typically cannot move the model through these topological transitions, because models that wander deeply into semiconstrained dimensions are far from where they must be to move down the error surface. The model has continua of local and global minima, and the network needs to be initialized correctly to reach its lowest possible errors. This could provide insight into deep learning theories that attribute the success of deep learning to weight subspaces that happen to be initialized well [70,71].
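A one-weight toy model, our own illustration rather than the setup of Fig. 7, shows this mechanism in miniature. For a unit with response [w x]₊ and target y > 0, the sublevel set {w : ℰ² ≤ ε²} is a bounded interval around w = y/x when ε < y, but for ε ≥ y it also contains the entire half-line w x ≤ 0, where the error is flat and the gradient is exactly zero. Gradient descent started on that half-line therefore never moves. The values x = 1, y = 1, the grid, and the learning rate are arbitrary choices.

```python
import numpy as np

def error(w, x=1.0, y=1.0):
    """Squared error of a single threshold-linear unit with one weight."""
    return (max(w * x, 0.0) - y) ** 2

def gradient(w, x=1.0, y=1.0):
    """Derivative of `error`; it vanishes wherever the unit is silent (w * x <= 0)."""
    return 2.0 * (w * x - y) * x if w * x > 0 else 0.0

def descend(w, lr=0.1, steps=200):
    """Plain gradient descent from the initial weight w."""
    for _ in range(steps):
        w -= lr * gradient(w)
    return w

# Sublevel set {w : error(w) <= eps**2} on a grid, for eps below and at y = 1.
grid = np.linspace(-5.0, 3.0, 8001)
for eps in (0.5, 1.0):
    inside = grid[np.array([error(w) <= eps ** 2 for w in grid])]
    print(eps, inside.min(), inside.max())   # eps = 1.0 reaches the grid edge at -5
print(descend(-1.0), descend(0.5))           # stuck on the flat half-line vs. converged
```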

The geometric simplicity of the zero-error solution space provides several insights into neural network computation. Every time a neuron has a vanishing response, half of a dimension remains part of the solution space, which the network could explore to perform other tasks. In other words, by replacing an equality constraint with an inequality constraint, simple thresholding nonlinearities effectively increase the computational capacity of the network [72,73]. The flexibility afforded by vanishing neuronal responses thereby provides an intuitive way to understand the impressive computational power of sparse neural representations [50,74–76]. Furthermore, the brain could potentially use this flexibility to set some synaptic strengths to zero, thereby improving wiring efficiency. This would link sparse connectivity to sparse response patterns, both of which are observed ubiquitously in neural systems.
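The half-dimension argument can be seen in a two-synapse example with two orthonormal input patterns, a toy choice of ours. When both target responses are positive, the zero-error solution is a single point; when one response vanishes, its equality constraint becomes the inequality x·w ≤ 0, and the solutions form a half-line.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

# Two synapses and two orthonormal input patterns (rows of X).
X = np.eye(2)
y_active = np.array([1.0, 0.5])   # both responses positive
y_sparse = np.array([1.0, 0.0])   # second response vanishes

def zero_error(w, y):
    """True if the threshold-linear responses to both patterns match the targets."""
    return np.allclose(relu(X @ w), y)

ts = np.linspace(-2.0, 2.0, 9)
# Moving along the second axis away from (1, 0.5) breaks the active solution at once.
print([zero_error(np.array([1.0, 0.5 + t]), y_active) for t in ts])
# For the sparse target, every w = (1, -t) with t >= 0 solves the problem.
print([zero_error(np.array([1.0, -t]), y_sparse) for t in ts])
```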