Abstract

Background

Workplace violence (WPV) is a complex global challenge in healthcare that can only be addressed through a quality improvement initiative built around a complex intervention. Effectively monitoring WPV and demonstrating such an intervention's impact require multiple WPV-specific quality indicators. This study aims to determine a set of quality indicators capable of effectively monitoring WPV in healthcare.

Methods

This study used a modified Delphi process to systematically arrive at an expert consensus on relevant WPV quality indicators at a large, multisite academic health science centre in Toronto, Canada. The expert panel consisted of 30 stakeholders from the University Health Network (UHN) and its affiliates. Relevant literature-based quality indicators, identified through a rapid review, were categorised according to the Donabedian model and presented to the experts over two consecutive Delphi rounds.

Results

Our expert panel assessed 87 distinct quality indicators identified through the rapid review process. The surveys received an average response rate of 83.1% in the first round and 96.7% in the second round. From this initial set of 87 quality indicators, our expert panel reached consensus on 17 indicators: 7 structure, 6 process and 4 outcome indicators. A WPV dashboard was created to provide real-time data on each of these indicators.

Conclusions

Using a modified Delphi methodology, we identified a set of expert-validated quality indicators for measuring WPV at UHN. The indicators were found to be operationalisable at UHN and will support longitudinal quality monitoring. They will inform data visualisation and dissemination tools that will support organisational decision-making in real time.

Keywords: Healthcare quality improvement, Emergency department, Safety culture, Crisis management, Performance measures

WHAT IS ALREADY KNOWN ON THIS TOPIC

Workplace violence (WPV) in healthcare is an urgent and increasing concern for healthcare institutions globally and requires complex intervention.

Numerous WPV quality indicators exist that can be used to monitor quality improvement initiatives; however, a method to identify metrics specific to an organisation's needs is required.

WHAT THIS STUDY ADDS

An example of the identification of quality indicators tailored to our organisation’s needs as determined by experts from our organisation via a modified Delphi process.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

This study provides a set of quality indicators that can be used to monitor and benchmark WPV and WPV prevention interventions in healthcare organisations.

Introduction

Workplace violence (WPV) has been a global challenge in the healthcare sector and has been linked to unsatisfactory patient care, decreased employee well-being and staff retention concerns.1 2 Recent data have shown that the frequency and severity of violence increased significantly across care settings during the COVID-19 pandemic, heightening the urgency to address the issue.2–4 Acute care settings and emergency departments (EDs) have been particularly affected; healthcare organisations have reported up to twofold increases in violent incidents in EDs compared with prepandemic levels.3 5 The EDs at the University Health Network (UHN) in Toronto, Canada, have observed the number of WPV incidents increase from 0.43 to 1.15 incidents per 1000 visits, an increase of 169% (p<0.0001).6 The stressors of violent incidents, particularly in acute care settings, are known to negatively affect the quality of patient care and the quality of life of healthcare providers (HCPs).2 As a result, organisations need to find strategies to reduce the incidence and mitigate the impact of WPV in care settings.

Quality improvement (QI) initiatives in healthcare aim to create reliable and sustained changes in response to identified needs, gaps or optimisation opportunities; however, the success of these projects requires methodological planning.7 An important step of QI project planning includes identifying measurements to demonstrate change over time.6 8 Quality measures or indicators help organisations identify areas for improvement and are integral in measuring the impact of QI interventions, including positive changes and unintended consequences.9 10 Quality indicators tailored to the organisation have been demonstrated to further increase the likelihood of successful QI project completion.11

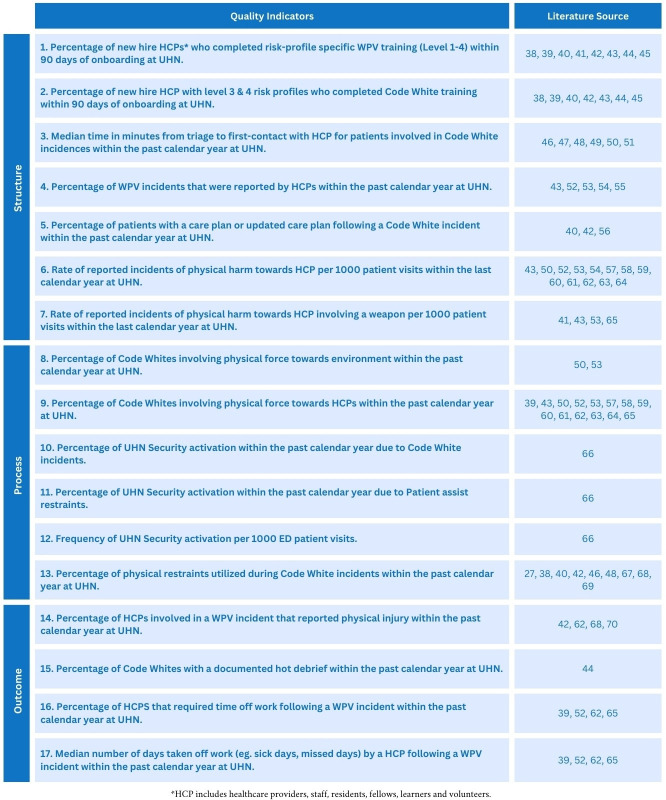

However, selecting quality indicators to measure WPV in healthcare is difficult because of the complexity of the issue. First, the definition of WPV can vary across institutions, as can the formal response to a WPV incident (figure 1). At our healthcare institution, the Occupational Health and Safety Act provides the governing definition of WPV, while the emergency response protocol for a WPV incident is denoted a 'code white', as defined by UHN (figure 1).

Figure 1.

Comparing and defining workplace violence and code whites.6

Additionally, numerous contextual and risk factors contribute to WPV. For example, Keith and Brophy12 have suggested organising WPV risk factors into the following categories: (1) clinical, (2) environmental, (3) organisational, (4) societal and (5) economic risk factors.12 Current WPV metrics tracked in healthcare institutions often place a narrow emphasis on global outcome indicators, such as rates of documented WPV incidents. This is problematic and can be misleading because of the well-documented under-reporting of WPV events in healthcare.13 The literature identifies several reasons for under-reporting, ranging from resistance or hesitancy towards reporting rooted in organisational culture to more pragmatic barriers, such as lack of time and resources due to the clinical care burden.12 For these reasons, indicators and reports that rely solely on incident tracking by staff cannot reliably depict WPV within healthcare settings, because clinical and organisational risk factors may influence these metrics. Overcoming this challenge requires a more differentiated approach and a larger set of quality indicators. Studies investigating quality criteria for quality indicators suggest that effective indicator sets consider content validity by assessing their breadth and depth.14 High-quality indicators allow more valid conclusions to be drawn about organisational performance, supporting decision-making and enabling intervention performance tracking across and within various domains.14 It is equally important to exclude less robust indicators from such sets.

The utility of individual quality indicators may vary with the specific healthcare setting, suggesting the need for nuanced approaches to quality indicator selection.15 The Delphi method is well suited to this challenge because it facilitates an iterative and systematic process for arriving at an expert consensus on issues that are not well characterised.16 The Delphi method collates evidence-based knowledge, practical knowledge and expert opinion to arrive at conclusions that support decision-making in organisations.17 Therefore, a modified Delphi process was used in this study with the goal of developing a set of WPV quality indicators specific to our large multisite academic health science centre in Toronto, Canada.

Methods

Study design and registration

This modified Delphi study followed the Accurate Consensus Reporting Document guidelines for Delphi techniques in health science as proposed by Gattrell et al.16 Our modified Delphi process involved five components: (1) selection of panellists, (2) preparation phase, (3) survey rounds, (4) data analysis and (5) implementation and dissemination.

This Delphi technique project was part of a larger organisational approach to addressing WPV within our institution.6 The project was managed by a focused UHN Security QI Team, consisting of staff physicians (n=2), a security director (n=1), a data project manager (n=1) and a research analyst (n=1). The team provided study oversight from design to knowledge dissemination and also participated in inter-round indicator data analysis.

Selection of panellists

The UHN Security QI team identified 48 experts who were involved in some aspect of WPV within the Toronto Academic Health Science Network. These experts had been identified in a previous process that used a People, Environment, Tools, Tasks Scan as outlined by the Systems Engineering Initiative for Patient Safety 101 toolbox.6 18 In this scan, all functional units and people involved in addressing WPV at UHN were identified, including individuals involved in clinical responses to WPV, WPV training and leadership roles. The majority of experts were identified across 17 distinct functional units at UHN. These included safety services, security operations, emergency preparedness, emergency medicine, quality of care committee, diversity and mediation services, clinical education, code white governance committee, workplace violence prevention advisory committee, centre of mental health, patient relations, general internal medicine, rehabilitation medicine, people, culture and community, legal affairs and the workplace violence education collaboration. Additional experts from the Centre for Addiction and Mental Health, Toronto, Canada, were identified. All experts were contacted to increase the diversity of perspectives and mitigate the impact of selection bias. In addition, this QI project was governed by our organisational senior leadership committee on WPV prevention (Workplace Violence Prevention Advisory Committee), which includes representation from patient partners, the Patient Relations Office and our Inclusion, Diversity, Equity, Accessibility, Anti-Racism office.

Panellists were invited to participate in the study via email. The email provided information regarding the study, a proposed timeline for the Delphi process and a link to the first survey (online supplemental appendix A). Panellists were not asked to suggest other members to the panel. At the beginning of the survey, panellists were requested to provide their informed consent to partake in the research. Those who did not initially provide consent were sent a single follow-up email to encourage their participation. All panellists who provided informed consent to participate and completed the first survey within 2 weeks would be asked to complete three additional surveys. While no financial incentives or reimbursements were provided to panellists, follow-up emails were used to encourage participation. All communication with panellists was supervised by the QI team, ensuring centralised oversight throughout the process.


Preparatory phase

Prior to initiation of the Delphi method, our team conducted a rapid review aimed at compiling a comprehensive list of literature-based quality indicators used to measure WPV in healthcare settings.19 The rapid review began with a literature search of the Ovid databases Medline, Embase and Emcare from inception to February 2023. The search was supported by UHN Library Services, and the study methodology and search strategy are described in detail in our rapid review.19 Two staff physicians and a research analyst served as reviewers. The three reviewers independently completed an abstract screening for articles related to measuring WPV using Covidence. Two 'include' votes were required for an abstract to be included in the full-text review. The three reviewers independently performed a full-text review, and only articles containing quality indicators were included in the extraction phase. Any discrepancies related to the selection of studies were resolved through consensus during discussions among the reviewers. An additional article from the Ontario Public Services Health and Safety Association was also included in the extraction phase. The three reviewers then extracted all quality indicators from the literature and organised the indicators into structure, process and outcome quality indicators as outlined by the Donabedian model.19 20
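The abstract-screening rule described above (an abstract advances when at least two of the three reviewers vote 'include') amounts to a simple vote count. The Python sketch below is a hypothetical illustration of that rule only; the constant and function names are ours, and it does not describe how Covidence itself implements screening.

```python
from typing import Sequence

# Hypothetical constant: minimum number of 'include' votes required
# (the text specifies two votes among three reviewers)
REQUIRED_INCLUDE_VOTES = 2


def advances_to_full_text(votes: Sequence[bool]) -> bool:
    """Return True if an abstract proceeds to full-text review.

    `votes` holds one boolean per reviewer, where True means 'include'.
    Booleans sum as 0/1, so sum() counts the 'include' votes.
    """
    return sum(votes) >= REQUIRED_INCLUDE_VOTES


print(advances_to_full_text([True, True, False]))   # True
print(advances_to_full_text([True, False, False]))  # False
```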

These indicators were screened for duplicates, evaluated and operationalised for UHN by the three reviewers. Initially, all duplicate indicators were either removed or amalgamated. Subsequently, the remaining indicators were assessed for their relevance to WPV and their potential to be operationalised within UHN. The final stage involved operationalising the selected indicators for UHN, which entailed clearly defining each indicator in measurable terms. This process specified the exact procedures, tools and methods that would be employed to observe and quantify the indicators within the context of UHN. Operationalisation ensured that the indicators were concrete and practical for quantitative data collection and analysis. The remaining quality indicators from this review formed the basis of the surveys carried out during our Delphi study.

Delphi rounds

Our modified Delphi process contained two Delphi rounds; each round involved online survey distribution using an online survey platform. Each survey was open for 2–3 weeks. Emails containing survey links were sent to experts followed by a reminder email to experts who had not finished surveys within a certain time frame. Although all surveys requested the participant’s name, this information was solely used for tracking survey completion and stored separately from their responses to ensure anonymity. Participants were explicitly informed of this practice at the beginning of each survey. All surveys were in English and included information about the study, guiding definitions and ethics. In our modified Delphi process, our first round began with quality indicators identified in a rapid review as opposed to beginning with open-ended questions regarding quality indicators. This modification was made to ensure that only indicators that had previously been used in literature to measure WPV in healthcare were included and to improve engagement in the first round of surveys.

Round 1

The first-round surveys used a matrix scale question format to evaluate the experts' opinions on WPV quality indicators (online supplemental appendices A–C). Experts were asked to rate their agreement with the validity, feasibility and importance of each indicator using a five-point Likert scale (strongly disagree, disagree, neutral, agree and strongly agree). For each indicator, experts were given an open comment field to provide additional comments. After the survey was developed, four members of the QI team piloted it; their responses were included in determining expert agreement. Experts were also given the option to suggest new quality indicators and provide feedback at the end of each survey. Based on the experts' level of agreement, we dichotomised each indicator as high rated or low rated.


Round 2

The second round of surveys used a matrix scale question format to evaluate the experts' level of satisfaction with the high-rated WPV quality indicators determined in round 1 (online supplemental appendix D). This was evaluated using a five-point Likert scale (strongly disagree, disagree, neutral, agree and strongly agree), and experts were given an open comment field to add comments about each indicator. After the survey was developed, four members of the QI team piloted it; their responses were included in determining expert agreement. Based on the experts' level of agreement, we determined whether an indicator was an expert consensus recommendation. Experts were also given the option to suggest new quality indicators and provide feedback at the end of this survey.


Data analysis

Following each round of surveys, Likert scale ratings were converted to numerical values (strongly disagree=1, disagree=2, neutral=3, agree=4 and strongly agree=5). In round 1, ratings for validity, feasibility and importance were averaged separately for each indicator. If an indicator had a mean score of ≥4.0 in at least one category, it was accepted as high rated and included in the next round. In round 2, ratings for satisfaction were averaged, and indicators were accepted as expert consensus if their mean rating was ≥4.0. In both rounds, low-rated indicators were removed unless validated comments provided by experts warranted retaining the indicator, as judged by the UHN Security QI Team. The rationale and decision process were documented. Following each round of analysis, the UHN Security QI team assessed the list of quality indicators to determine their operationalisability within UHN.
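The scoring rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: it uses an unweighted arithmetic mean (the Results refer to a "weighted average", but no weighting scheme is described), the function names are ours, the panel responses are hypothetical, and the QI team's discretionary retention of low-rated indicators is deliberately not modelled.

```python
from statistics import mean

# Likert labels mapped to numerical values, as described in the text
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}
THRESHOLD = 4.0  # minimum mean score for acceptance in either round


def mean_score(labels):
    """Average the numerical values of a list of Likert labels (unweighted)."""
    return mean(LIKERT[label] for label in labels)


def high_rated_round1(ratings):
    """Round 1: high rated if the mean score is >= 4.0 in at least one
    category (validity, feasibility or importance).

    `ratings` maps each category name to the panel's Likert labels.
    """
    return any(mean_score(labels) >= THRESHOLD for labels in ratings.values())


def consensus_round2(satisfaction):
    """Round 2: expert consensus if mean satisfaction is >= 4.0."""
    return mean_score(satisfaction) >= THRESHOLD


# Hypothetical panel responses for a single indicator
example = {
    "validity": ["agree", "agree", "neutral"],           # mean 3.67
    "feasibility": ["neutral", "disagree", "agree"],     # mean 3.00
    "importance": ["strongly agree", "agree", "agree"],  # mean 4.33
}
print(high_rated_round1(example))  # True: importance clears 4.0
print(consensus_round2(["agree", "neutral", "agree", "strongly agree"]))  # True: mean is exactly 4.0
```

Because round 1 uses "at least one category", a single strong category (here, importance) is enough to carry an indicator forward even when the others fall short.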

Following completion of the round 2 data analysis, the 17 indicators were implemented in a newly developed WPV dashboard built with data visualisation software, offering real-time data representation for these indicators. A report summarising the qualitative and quantitative findings behind the consensus was produced and disseminated to the expert panel (online supplemental appendix E). The report explained the rationale behind the study and its methodology and summarised the results of the Delphi process, with the aim of providing transparency for knowledge translation. This report, along with the WPV dashboard, was disseminated to other key stakeholders at the organisational level to inform decision-making and governance surrounding WPV.


Results

Rapid review

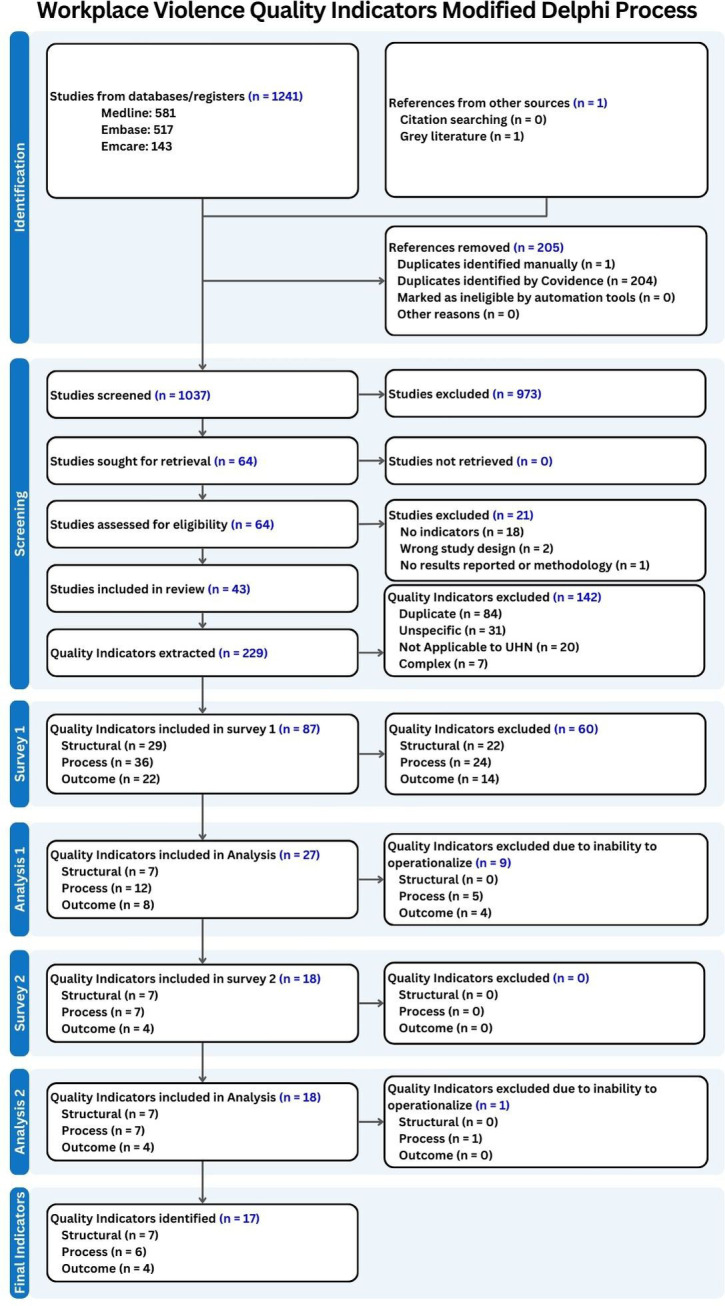

A total of 1241 studies were identified in the literature search and 1 additional article was included from grey literature. After removing 205 duplicates, 1037 studies were screened, and 64 underwent full-text reviews. Following full-text review, 43 studies were included from which 229 quality indicators were extracted. Finally, these 229 evidence-based quality indicators were screened for similarity, evaluated and operationalised by the UHN Security QI team, resulting in 87 evidence-based quality indicators. Afterwards, the indicators were organised into one of three categories: structure, process and outcomes, as outlined by the Donabedian model.20 Refer to figure 2 for the results of the rapid review.

Figure 2.

The rapid review and modified Delphi process.

Delphi rounds

Of the 48 experts contacted, 30 individuals from multiple functional units at UHN and its affiliates provided their consent to participate in the Delphi process (response rate: 62.5%). These experts represented one or more of the following functional units at UHN: safety services (n=1), security operations (n=5), emergency preparedness (n=2), emergency medicine (n=3), quality of care committee (n=2), clinical education (n=2), code white governance committee (n=10), workplace violence prevention advisory committee (n=4), centre of mental health (n=10), patient relations (n=1), general internal medicine (n=1), rehabilitation medicine (n=1), people, culture and community (n=1), legal affairs (n=1), workplace violence education collaboration (n=6) and external topic expert project partners (n=3).

Data were collected from the two rounds of surveys between 17 March 2023 and 17 August 2023. Because of the large number of quality indicators, we divided the first round into three separate surveys covering structure (survey 1a: 29 indicators), process (survey 1b: 36 indicators) and outcome (survey 1c: 22 indicators) quality indicators. Survey 1a was open from 17 March 2023 to 31 March 2023, survey 1b from 5 April 2023 to 19 April 2023 and survey 1c from 20 April 2023 to 12 May 2023. Survey 1a initially received a response rate of 62.5%; surveys 1b and 1c received higher response rates of 90% and 96.7%, respectively. After collecting responses from all three surveys, our team analysed the data for consensus, defined as a minimum weighted average of 4.0 out of 5.0 points in at least one category. Round 1 yielded 27 indicators (7 structure, 12 process and 8 outcome). These indicators were further analysed to ensure that the data needed to operationalise them were available at UHN. The 18 remaining indicators (7 structure, 7 process and 4 outcome) were evaluated in a second round of surveys. This process is summarised in figure 2.
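The response rates reported above can be checked with simple arithmetic. The sketch below shows that the 62.5% consent rate is 30 of 48, and that the 83.1% average first-round response rate given in the abstract appears to correspond to the unweighted mean of the three round 1 survey rates. The round 2 figure assumes, as an inference not stated in the text, that the denominator is the 30 consenting panellists, which would make 96.7% equal to 29 respondents.

```python
from statistics import mean

# Figures reported in the text
invited = 48
consented = 30
round1_rates = {"1a": 62.5, "1b": 90.0, "1c": 96.7}  # per-survey response rates, %

consent_rate = 100 * consented / invited
round1_mean = mean(round1_rates.values())  # unweighted mean of the three surveys

# Assumption: round 2 denominator is the 30 consenting panellists;
# the count of 29 respondents is inferred, not reported.
round2_rate = 100 * 29 / consented

print(f"consent rate: {consent_rate:.1f}%")    # consent rate: 62.5%
print(f"round 1 mean: {round1_mean:.1f}%")     # round 1 mean: 83.1%
print(f"round 2 (29/30): {round2_rate:.1f}%")  # round 2 (29/30): 96.7%
```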

The second round of surveys was sent on 26 June 2023, closed on 21 July 2023 and received a response rate of 96.7%. The survey remained open beyond the originally intended time frame of 2–3 weeks because a number of panellists had scheduled vacations during this period. After our expert panel completed the survey, our team collected and analysed the data. Of the 18 quality indicators evaluated (7 structure, 7 process and 4 outcome), 17 were rated satisfactory, receiving a minimum weighted average of 4.0 out of 5.0 points. The one indicator that fell below this threshold measured the median time for a patient involved in a code white to be seen by an HCP. Despite its average score of 3.83, this indicator was retained on the basis of expert comments, the literature review and security QI team analysis. Furthermore, operationalisability analysis found that two of the indicators were redundant given the available dataset; therefore, one of them was removed. Refer to figure 2 for the results of the second round of surveys and analysis.

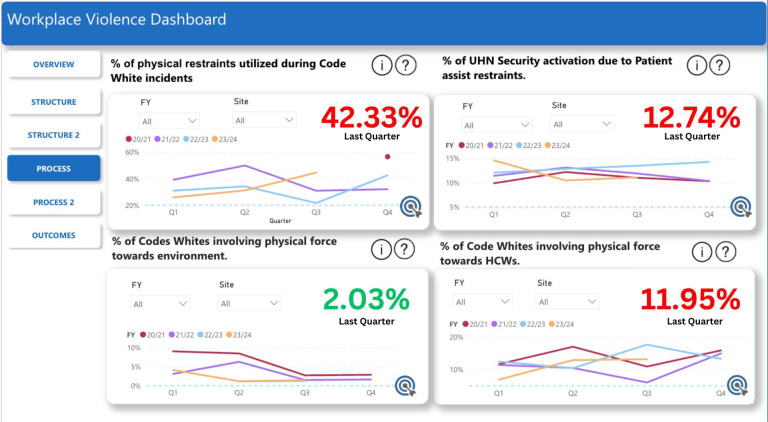

Following round 2, we identified 17 indicators across 3 categories (7 structure, 6 process and 4 outcome). Refer to figure 3 for the final set of indicators that reached expert consensus.
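Because indicators were filtered at several stages, a small tally helps reconcile the counts reported above (87 surveyed, 27 high rated, 18 carried into round 2 and 17 in the final set). The category counts are taken from the text; the stage labels and variable names are ours. The round 2 reconciliation reflects the reported steps: 17 indicators met the threshold, 1 was retained despite scoring 3.83, and 1 of 2 redundant indicators was removed.

```python
# Indicator counts per Donabedian category at each stage, as reported in the text.
# Stage labels are illustrative, not the authors' terminology.
stages = {
    "round 1 surveys (from rapid review)": {"structure": 29, "process": 36, "outcome": 22},
    "high rated in round 1": {"structure": 7, "process": 12, "outcome": 8},
    "operationalisable, sent to round 2": {"structure": 7, "process": 7, "outcome": 4},
    "final consensus set": {"structure": 7, "process": 6, "outcome": 4},
}

for stage, counts in stages.items():
    print(f"{stage}: {sum(counts.values())} {counts}")

# Round 2 reconciliation
met_threshold = 17             # mean satisfaction >= 4.0
retained_below_threshold = 1   # median time to be seen by an HCP (mean 3.83)
removed_as_redundant = 1       # one of two indicators redundant with available data

round2_total = met_threshold + retained_below_threshold
final_total = round2_total - removed_as_redundant

assert round2_total == sum(stages["operationalisable, sent to round 2"].values())  # 18
assert final_total == sum(stages["final consensus set"].values())                 # 17
```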

Figure 3.

Top WPV quality indicators identified through the modified Delphi process. References: 27, 38–70. *HCP includes healthcare providers, staff, residents, fellows, learners and volunteers. UHN, University Health Network; WPV, workplace violence.

Category 1: structure

Category 1 focused on structure quality indicators, which involve the organisation and infrastructure, including attributes of staffing or the healthcare institution. A total of seven structure indicators were high rated in round 1 and subsequently deemed appropriate in the round 2 selection. The final seven structure indicators that reached expert consensus focused on staff education across risk levels, wait times and WPV incident reporting. The structure indicators are listed in figure 3.

Category 2: process

Category 2 focused on process quality indicators, which involve the efficiency and effectiveness of the steps taken during a WPV incident. A total of 12 process indicators were high rated in round 1, and 7 of these were evaluated and deemed satisfactory in round 2. However, one was then removed because it was redundant with another indicator given the available datasets. The final six process indicators that reached expert consensus focused on measuring acts of aggression by patients and the frequency, intensity and response to WPV incidents. The process indicators are listed in figure 3.

Category 3: outcome

Category 3 focused on outcome quality indicators, which involve the impact of care on the patient, worker and population, such as injury and postincident support. A total of eight outcome indicators were high rated in round 1; four of these were operationalisable at UHN and were subsequently deemed satisfactory in round 2. The final four outcome indicators that reached expert consensus focused on tracking lost time from work, the number of HCPs involved in WPV incidents and the usage of postincident reporting. The outcome indicators are listed in figure 3.

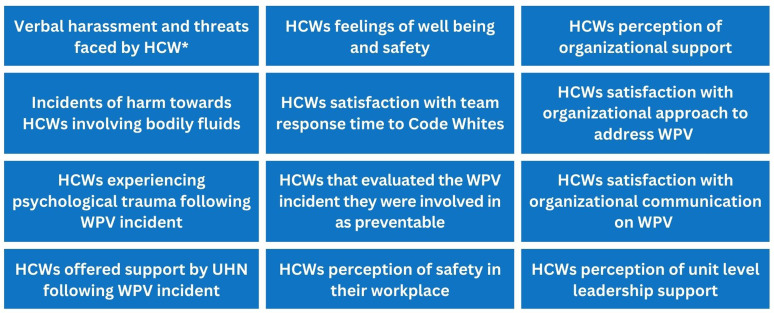

The 17 quality indicators selected by expert consensus through the modified Delphi process were operationalised and implemented in a newly developed WPV dashboard (figure 4), built using data visualisation software. The dashboard provided automated, real-time data for each quality indicator, drawing on nine distinct databases from three separate functional units within our organisation. It was designed to be accessible to, and readily understood by, all team members within our organisation.

Figure 4.

The UHN workplace violence dashboard. This is an example of a WPV dashboard; all numbers and figures are hypothetical, are intended solely to demonstrate how a dashboard is used and do not represent real-world data from UHN. Numbers in green reflect values that meet the organisation's target, while numbers in red indicate values that fall short of the organisation's target. UHN, University Health Network; WPV, workplace violence.

Discussion

This study used expert consensus, obtained through a modified Delphi process, to identify the top quality indicators for our healthcare institution. These comprised 17 quality indicators categorised according to the Donabedian model as structural (n=7), process (n=6) and outcome (n=4) indicators.19 20 These quality indicators will be instrumental in our healthcare organisation's initiative to address WPV. WPV in healthcare is a multifaceted issue that requires a complex intervention approach.7 21 Consequently, a comprehensive set of quality indicators was required to provide adequate monitoring and benchmarking. The data collected from these quality indicators will contribute towards monitoring trends in WPV in our healthcare institution, measuring the impact of novel WPV initiatives and informing leadership decisions. Additionally, there is potential for benchmarking with other hospitals that may adopt similar quality indicators to measure WPV. By comparing our WPV practices and outcomes with those of other healthcare institutions, benchmarking these indicators will enable our institution to improve performance, set realistic goals, adopt best practices, enhance decision-making and foster a culture of continuous improvement.22 Overall, establishing these quality indicators is a crucial step towards enhancing our understanding and management of WPV.

A modified Delphi process provides a pragmatic and effective method of selecting quality indicators tailored to the needs of our healthcare organisation. There exists an abundance of quality indicators that have previously been used to measure WPV in healthcare settings in the literature.19 The Delphi process was selected as a method to acquire expert consensus on the most relevant quality indicators, given its precedent in literature pertaining to WPV in healthcare.23–25 The Delphi methodology has many strengths including its anonymity provided through a survey approach.25 26 Furthermore, it presents a more feasible and accessible alternative compared with frequent in-person or online group or one-on-one meetings. This approach avoids the potential for an individual to dominate discussions or meetings, as well as minimises the occurrence of disagreements or undue influence from colleagues.24 27 However, the Delphi process can be subject to attrition as the numerous rounds run the risk of participants losing interest or not having the time.26 Fortunately, we mitigated this potential issue by maintaining active participant engagement through direct interactions, reminder emails and ongoing project updates.

The quality indicators identified through the modified Delphi process enable us to investigate and monitor several areas of interest emphasised in the literature on WPV in healthcare. For example, an indicator measuring the percentage of WPV events reported by staff was highly endorsed by our expert panel. This aligns with the literature, in which under-reporting of WPV is a frequently highlighted issue with many contributing factors that need to be addressed.6 12 28–30 Similarly, our expert panel demonstrated strong support for indicators related to WPV education and training. Numerous articles advocate education and training that incorporate concepts such as trauma-informed approaches, de-escalation skills and agitation management to enhance safety and promote effective conflict resolution strategies.31 32 Lastly, the expert panel also endorsed indicators focused on HCPs who required time off work. These indicators provide insight into the severity of WPV incidents at the individual level and into the cost of WPV at the organisational level, two consequences highlighted in the literature.33 Quality indicators such as these, which offer insights at multiple levels, contribute to the comprehensive understanding of WPV necessary for informed leadership decision-making.

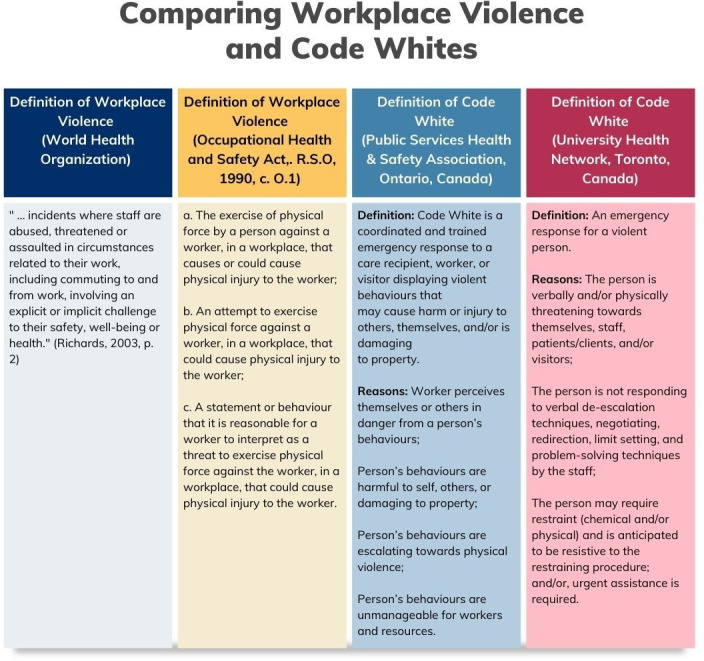

Interestingly, several indicators regarding staff well-being, satisfaction with WPV-related processes and psychological trauma gained support from our expert panel. Despite this, the security QI team removed these indicators after the first round because of operationalisation issues, as these topics are best captured qualitatively. This is important because, although the information provided by quality indicators is invaluable for monitoring WPV initiatives, decision-making cannot rely solely on quantitative metrics. WPV in healthcare has contributed to decreased morale, job satisfaction and feelings of safety among HCPs, as well as to reduced quality of care.12 33 Consequently, it is necessary to collect qualitative feedback from staff through methods such as qualitative interviews or pulse surveys.34 Qualitative data are needed to convey to leadership the experiences of people in their workplace and, with them, the severity of this issue's impact. Our WPV expert panel recognised this and recommended survey topics (figure 5). Next steps will include developing and implementing a pulse survey based on these topics to provide leadership with the qualitative data necessary for a comprehensive perspective on WPV within our organisation.

Figure 5.

WPV-related topics identified by experts in the Delphi process as topics to investigate qualitatively. *HCP includes healthcare providers, staff, residents, fellows, learners and volunteers. HCW, healthcare worker; UHN, University Health Network; WPV, workplace violence.

An additional next step involves the assessment of the quality indicators. For any quality indicator project, it is crucial to monitor the impact and relevance of the indicators over time. The workplace violence prevention advisory committee that endorsed the WPV dashboard will conduct annual evaluations to assess the effectiveness, validity and relevance of the quality indicators. This may include comparing WPV reporting and prevalence before and after the new quality indicators were implemented, investigating changes in reporting patterns and performing a statistical analysis to assess the significance of observed changes.35 This recurring assessment will ensure that the quality indicators continue to provide the necessary information and address any potential gaps in WPV monitoring.

Limitations

Our modified Delphi process had several limitations. Although we contacted WPV experts in many different fields, the panel lacked diversity, as the experts who responded were primarily from the centre for mental health (n=10) and the code white governance committee (n=10).36 As a result, the study's findings are influenced by the over-representation of specific groups. Furthermore, no patients were directly involved in the project; however, as a mitigation strategy, the project and its results were presented to our organisational governance bodies for WPV prevention, which include patient partners. As an additional mitigation, the higher-level organisational QI project addressing the prevention of WPV contains a dedicated subproject on community outreach and patient partner involvement.6 In addition, the methodology carries inherent biases, such as bias in the interpretation of findings.36 37 The identified quality indicators are also specific to the needs of our organisation and may not be widely applicable to other organisations, although defining organisation-specific quality indicators was the very aim of this study. Lastly, although anonymity is a significant aspect of the Delphi process, experts may find it challenging to evaluate indicators thoroughly without the opportunity for clarification.36 Such clarification, which could typically be obtained through in-person interviews or focus groups, is limited in an anonymous survey format.

Conclusion

Addressing the significant and complex challenge of WPV in healthcare requires effective means of monitoring and benchmarking WPV, using context-specific data sources grounded in evidence-based quality measurement. Effective WPV dashboards can provide healthcare organisation leaders with the metrics and insights needed to make data-driven decisions, monitor trends in WPV, measure the impact of WPV initiatives and compare data with other healthcare institutions. However, numbers and dashboards need to be balanced with qualitative accounts that capture the circumstances, perspectives, lived experiences and very real distress central to any WPV event.

Acknowledgments

We would like to thank our colleagues for their contribution to the content of this article: Charlene Reynolds, John Shannon, Yasemin Sarraf, Melanie Anderson, Sam Sabbah, Lucas Chartier, Marnie Escaf, Diana Elder, the members of the workplace violence prevention advisory committee, the members of the code white governance committee and the members of the workplace violence education collaboration. In addition, we would like to thank all of those who dedicated their time and efforts to completing our surveys: Jane Ballantyne, Nathan Balzer, Sarah Beneteau, Sabrina Benett, Paul Beverly, Edna Bonsu, Aideen Carol, Lucas Chartier, Lisa Crawley, Sahand Ensafi, Erin Ledrew, Linda Liu, Mandy Lowe, Asha Maharaj, Nerissa Maxwell, Erin O’Connor, Martin Phung, Laura Pozzobon, Charlene Reynolds, Sam Sabbah, John Shannon, Kathleen Sheehan, Cheryl Simpson, Sanjeev Sockalingham, Yehudis Stokes and Marc Toppings.

Footnotes

Contributors: RS made substantial contributions to the conception and design of the Delphi process, the acquisition, analysis and interpretation of the data for the Delphi process and made substantial contributions to drafting the manuscript. RS reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. RS is the first author. BMRL made substantial contributions to the design of the Delphi process, the acquisition, analysis and interpretation of the data for the Delphi process and made substantial contributions to drafting the manuscript. BMRL provided final approval of the version to be published and agreed to be accountable for all aspects of the work. BMRL is the corresponding author. JG made substantial contributions to the interpretation of the Delphi process data and to drafting the manuscript, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. JG shares first authorship. BS provided substantial contributions to the conception of the Delphi process, reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. TH provided substantial contributions to the conception of the Delphi process, reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. JH provided substantial contributions to the conception of the Delphi process, reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. MT made substantial contributions to the design of the Delphi process. MT reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. CS-Q made substantial contributions to the conception and design of the Delphi process, the acquisition, analysis and interpretation of the data for the Delphi process. CS-Q reviewed the Delphi process manuscript critically for important intellectual content, provided final approval of the version to be published and agreed to be accountable for all aspects of the work. CS-Q is responsible for the overall content as the guarantor. All authors have read and approved the final manuscript.

Funding: This work was supported by the UHN Quality Improvement Physicians Grant.

Competing interests: RS has received support from the Quality Improvement Physicians Grant, University Health Network. BMRL has received support from the Quality Improvement Physicians Grant, University Health Network. JG has received support from the Quality Improvement Physicians Grant, University Health Network. BS has received support from the Quality Improvement Physicians Grant, University Health Network. TH has nothing to declare. JH has nothing to declare. MT is on the board of Health Insurance Reciprocal of Canada. CS-Q has received education scholarship support from the Academic Scholars Award, Department of Psychiatry, University of Toronto; the Young Leaders Program and Cancer Experience Program, Princess Margaret Cancer Centre, University Health Network; and the Quality Improvement Physicians Grant, University Health Network. He is on the board of directors for the Canadian Academy for Consultation and Liaison Psychiatry. He is the Provincial Quality Lead Psychiatry–Psychosocial Oncology at Ontario Health, Ontario, Canada.

Patient and public involvement: Patients and/or the public were involved in the design, or conduct, or reporting, or dissemination plans of this research. Refer to the Methods section for further details.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

All data relevant to the study are included in the article or uploaded as online supplemental information.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

The study was reviewed and approved by the UHN Quality Improvement Review Committee, which granted the study a formal exemption from Research Ethics Board review (QI ID: 22-0499).

References

- 1. U.S. Bureau of Labor Statistics. Workplace violence in healthcare, 2018. 2020. Available: https://www.bls.gov/iif/factsheets/workplace-violence-healthcare-2018.htm

- 2. Liu R, Li Y, An Y, et al. Workplace violence against frontline clinicians in emergency departments during the COVID-19 pandemic. PeerJ 2021;9:e12459. 10.7717/peerj.12459

- 3. McGuire SS, Gazley B, Majerus AC, et al. Impact of the COVID-19 pandemic on workplace violence at an academic emergency department. Am J Emerg Med 2022;53:285. 10.1016/j.ajem.2021.09.045

- 4. Dopelt K, Davidovitch N, Stupak A, et al. Workplace violence against hospital workers during the COVID-19 pandemic in Israel: implications for public health. Int J Environ Res Public Health 2022;19:4659. 10.3390/ijerph19084659

- 5. Brigo F, Zaboli A, Rella E, et al. The impact of COVID-19 pandemic on temporal trends of workplace violence against healthcare workers in the emergency department. Health Policy 2022;126:1110–6. 10.1016/j.healthpol.2022.09.010

- 6. Schulz-Quach C, Lyver B, Reynolds C, et al. Understanding, measuring, and ameliorating workplace violence in healthcare: a Canadian systematic framework to address a global healthcare phenomenon. In Review [Preprint]. 10.21203/rs.3.rs-4034167/v1

- 7. van Bokhoven MA, Kok G, van der Weijden T. Designing a quality improvement intervention: a systematic approach. Qual Saf Health Care 2003;12:215–20. 10.1136/qhc.12.3.215

- 8. Chung PJ, Baum RA, Soares NS, et al. Introduction to quality improvement part one. J Dev Behav Pediatr 2014;35:460–6. 10.1097/DBP.0000000000000078

- 9. Gupta M, Kaplan HC. Measurement for quality improvement: using data to drive change. J Perinatol 2020;40:962–71. 10.1038/s41372-019-0572-x

- 10. Varkey P, Reller MK, Resar RK. Basics of quality improvement in health care. Mayo Clin Proc 2007;82:735–9. 10.4065/82.6.735

- 11. McLees AW, Nawaz S, Thomas C, et al. Defining and assessing quality improvement outcomes: a framework for public health. Am J Public Health 2015;105 Suppl 2:S167–73. 10.2105/AJPH.2014.302533

- 12. Keith MM, Brophy JT. Code white: sounding the alarm on violence against health care workers. Toronto, Ontario: Between the Lines; 2021.

- 13. Byon HD, Sagherian K, Kim Y, et al. Nurses’ experience with type II workplace violence and underreporting during the COVID-19 pandemic. Workplace Health Saf 2022;70:412–20. 10.1177/21650799211031233

- 14. Schang L, Blotenberg I, Boywitt D. What makes a good quality indicator set? A systematic review of criteria. Int J Qual Health Care 2021;33:mzab107. 10.1093/intqhc/mzab107

- 15. Quentin W, Partanen V-M, Brownwood I, et al. Characteristics, effectiveness and implementation of different strategies. In: Busse R, Klazinga N, Panteli D, eds. Improving Healthcare Quality in Europe. Copenhagen, Denmark: WHO Regional Office for Europe, 2019.

- 16. Gattrell WT, Logullo P, van Zuuren EJ, et al. ACCORD (accurate consensus reporting document): a reporting guideline for consensus methods in Biomedicine developed via a modified Delphi. PLoS Med 2024;21:e1004326. 10.1371/journal.pmed.1004326

- 17. Franklin KK, Hart JK. Idea generation and exploration: benefits and limitations of the policy Delphi research method. Innov High Educ 2006;31:237–46. 10.1007/s10755-006-9022-8

- 18. Holden RJ, Carayon P. SEIPS 101 and seven simple SEIPS tools. BMJ Qual Saf 2021;30:901–10. 10.1136/bmjqs-2020-012538

- 19. Lyver B, Gorla J, Schulz-Quach C, et al. Identifying evidence-based quality indicators to measure workplace violence in healthcare settings: a rapid review. In Review [Preprint] 2023. 10.21203/rs.3.rs-3516781/v1

- 20. Donabedian A. The quality of care: how can it be assessed? JAMA 1988;260:1743–8. 10.1001/jama.260.12.1743

- 21. Somani R, Muntaner C, Hillan E, et al. A systematic review: effectiveness of interventions to de-escalate workplace violence against nurses in healthcare settings. Saf Health Work 2021;12:289–95. 10.1016/j.shaw.2021.04.004

- 22. Pantall J. Benchmarking in healthcare. NT Research 2001;6:568–80. 10.1177/136140960100600203

- 23. D’Ettorre G, Caroli A, Pellicani V, et al. Preliminary risk assessment of workplace violence in hospital emergency departments. Ann Ig 2020;32:99–108. 10.7416/ai.2020.2334

- 24. Berezdivin J. Experts' consensus of OSHA’s guidelines for workplace violence prevention program effectiveness: a Delphi study. Union Institute and University; 2008.

- 25. Abregú-Tueros LF, Bravo-Esquivel CJ, Abregú-Arroyo SK, et al. Consensus on relevant psychosocial interventions applied in health institutions to prevent psychological violence at work: Delphi method. BMC Res Notes 2024;17:19. 10.1186/s13104-023-06680-w

- 26. Donohoe H, Stellefson M, Tennant B. Advantages and limitations of the E-Delphi technique: implications for health education researchers. Am J Health Educ 2012;43:38–46. 10.1080/19325037.2012.10599216

- 27. Yakov S, Birur B, Bearden MF, et al. Sensory reduction on the general milieu of a high-acuity inpatient psychiatric unit to prevent use of physical restraints: a successful open quality improvement trial. J Am Psychiatr Nurses Assoc 2018;24:133–44. 10.1177/1078390317736136

- 28. Kim S, Lynn MR, Baernholdt M, et al. How does workplace violence–reporting culture affect workplace violence, nurse burnout, and patient safety? J Nurs Care Qual 2023;38:11–8. 10.1097/NCQ.0000000000000641

- 29. García-Pérez MD, Rivera-Sequeiros A, Sánchez-Elías TM, et al. Workplace violence on healthcare professionals and underreporting: characterization and knowledge gaps for prevention. Enferm Clin (Engl Ed) 2021;31:390–5. 10.1016/j.enfcle.2021.05.001

- 30. Spencer C, Sitarz J, Fouse J, et al. Nurses’ rationale for underreporting of patient and visitor perpetrated workplace violence: a systematic review. BMC Nurs 2023;22:134. 10.1186/s12912-023-01226-8

- 31. Beech B, Leather P. Workplace violence in the health care sector: a review of staff training and integration of training evaluation models. Aggress Violent Behav 2006;11:27–43. 10.1016/j.avb.2005.05.004

- 32. Martinez AJS. Implementing a workplace violence simulation for undergraduate nursing students: a pilot study. J Psychosoc Nurs Ment Health Serv 2017;55:39–44. 10.3928/02793695-20170818-04

- 33. Hassankhani H, Parizad N, Gacki-Smith J, et al. The consequences of violence against nurses working in the emergency department: a qualitative study. Int Emerg Nurs 2018;39:20–5. 10.1016/j.ienj.2017.07.007

- 34. Zhang J, Zheng J, Cai Y, et al. Nurses' experiences and support needs following workplace violence: a qualitative systematic review. J Clin Nurs 2021;30:28–43. 10.1111/jocn.15492

- 35. Veronesi G, Ferrario MM, Giusti EM, et al. Systematic violence monitoring to reduce underreporting and to better inform workplace violence prevention among health care workers: before-and-after prospective study. JMIR Public Health Surveill 2023;9:e47377. 10.2196/47377

- 36. Walker AM, Selfe J. The Delphi method: a useful tool for the allied health researcher. Br J Ther Rehabil 1996;3:677–81. 10.12968/bjtr.1996.3.12.14731

- 37. Hsu CC, Sandford BA. The Delphi technique: making sense of consensus. Pract Assess Res Eval 2007. 10.7275/pdz9-th90

- 38. Downey LVA, Zun LS, Gonzales SJ. Frequency of alternative to restraints and seclusion and uses of agitation reduction techniques in the emergency department. Gen Hosp Psychiatry 2007;29:470–4. 10.1016/j.genhosppsych.2007.07.006

- 39. Hills D. Relationships between aggression management training, perceived self-efficacy and rural general hospital nurses’ experiences of patient aggression. Contemp Nurse 2008;31:20–31. 10.5172/conu.673.31.1.20

- 40. Hoffmann JA, Johnson JK, Pergjika A, et al. Development of quality measures for pediatric agitation management in the emergency department. J Healthc Qual 2022;44:218–29. 10.1097/JHQ.0000000000000339

- 41. Howard PK, Gilboy N. Workplace violence. Adv Emerg Nurs J 2009;31:94–100. 10.1097/TME.0b013e3181a34a14

- 42. Im DD, Scott KW, Venkatesh AK, et al. A quality measurement framework for emergency department care of psychiatric emergencies. Ann Emerg Med 2023;81:592–605. 10.1016/j.annemergmed.2022.09.007

- 43. Lyneham J. Violence in New South Wales emergency departments. Aust J Adv Nurs 2000;18:8–17.

- 44. Public Services Health and Safety Association. Emergency response to workplace violence (code white) in the healthcare sector toolkit. 2021.

- 45. Virani S, Bona A, Bodic M, et al. Management of severe agitation in the emergency department of a community hospital in Brooklyn. Prim Care Companion CNS Disord 2019;21:27452. 10.4088/PCC.18l02348

- 46. Balfour ME, Tanner K, Jurica PJ, et al. Crisis reliability indicators supporting emergency services (CRISES): a framework for developing performance measures for behavioral health crisis and psychiatric emergency programs. Community Ment Health J 2016;52:1–9. 10.1007/s10597-015-9954-5

- 47. Efrat-Treister D, Moriah H, Rafaeli A. The effect of waiting on aggressive tendencies toward emergency department staff: providing information can help but may also backfire. PLoS One 2020;15:e0227729. 10.1371/journal.pone.0227729

- 48. Gustafson DH, Sainfort F, Johnson SW, et al. Measuring quality of care in psychiatric emergencies: construction and evaluation of a Bayesian index. Health Serv Res 1993;28:131–58.

- 49. Hart C, Colley R, Harrison A. Using a risk assessment matrix with mental health patients in emergency departments. Emerg Nurse 2005;12:21–8. 10.7748/en2005.02.12.9.21.c1181

- 50. Morphet J, Griffiths D, Plummer V, et al. At the crossroads of violence and aggression in the emergency department: perspectives of Australian emergency nurses. Aust Health Rev 2014;38:194–201. 10.1071/AH13189

- 51. Saillant S, Della Santa V, Golay P, et al. The code white protocol: a mixed somatic-psychiatric protocol for managing psychomotor agitation in the ED. Archives Suisses de Neurologie et de Psychiatrie/Schweizer Archiv für Neurologie und Psychiatrie/Swiss Archives of Neurology and Psychiatry 2018;169:121–6.

- 52. Hankin CS, Norris MM, Bronstone A. PMH36 estimated United States incidence and cost of emergency department staff assaults perpetrated by agitated adult patients with schizophrenia or bipolar disorder. Value Health 2010;13:A110–1. 10.1016/S1098-3015(10)72531-6

- 53. Sarnese PM. Continuous quality improvement to manage emergency department violence and security-related incidents. J Healthc Prot Manage 1995;12:54–60.

- 54. Stene J, Larson E, Levy M, et al. Workplace violence in the emergency department: giving staff the tools and support to report. Perm J 2015;19:e113–7. 10.7812/TPP/14-187

- 55. Tavares G, Ribeiro O. Effectiveness of programs to prevent violence to health professionals in the emergency department: a systematic review. Aten Primaria 2016;48:149. PMID: 26388468

- 56. Hallett N, Huber JW, Sixsmith J, et al. Care planning for aggression management in a specialist secure mental health service: an audit of user involvement. Int J Ment Health Nurs 2016;25:507–15. 10.1111/inm.12238

- 57. Alexander DA, Gray NM, Klein S, et al. Personal safety and the abuse of staff in a Scottish NHS trust. Health Bull 2000;58:442–9. Available: https://pubmed.ncbi.nlm.nih.gov/12813775/

- 58. Florisse EJR, Delespaul PAEG. Monitoring risk assessment on an acute psychiatric ward: effects on aggression, seclusion and nurse behaviour. PLoS One 2020;15:e0240163. 10.1371/journal.pone.0240163

- 59. Gillespie GL, Leming-Lee T. Chart it to stop it: failure modes and effect analysis for the reporting of workplace aggression. Nurs Clin North Am 2019;54:21–32. 10.1016/j.cnur.2018.10.004

- 60. Hvidhjelm J, Sestoft D, Skovgaard LT, et al. Aggression in psychiatric wards: effect of the use of a structured risk assessment. Issues Ment Health Nurs 2016;37:960–7. 10.1080/01612840.2016.1241842

- 61. Ma Y, Wang Y, Shi Y, et al. Mediating role of coping styles on anxiety in healthcare workers victim of violence: a cross-sectional survey in China hospitals. BMJ Open 2021;11:e048493. 10.1136/bmjopen-2020-048493

- 62. Martini A, Fantini S, D’Ovidio MC, et al. Risk assessment of aggression toward emergency health care workers. Occup Med (Lond) 2012;62:223–5. 10.1093/occmed/kqr199

- 63. Okundolor SI, Ahenkorah F, Sarff L, et al. Zero staff assaults in the psychiatric emergency room: impact of a multifaceted performance improvement project. J Am Psychiatr Nurses Assoc 2021;27:64–71. 10.1177/1078390319900243

- 64. Sharifi S, Shahoei R, Nouri B, et al. Effect of an education program, risk assessment checklist and prevention protocol on violence against emergency department nurses: a single center before and after study. Int Emerg Nurs 2020;50:100813. 10.1016/j.ienj.2019.100813

- 65. Alshahrani M, Alfaisal R, Alshahrani K, et al. Incidence and prevalence of violence toward health care workers in emergency departments: a multicenter cross-sectional survey. Int J Emerg Med 2021;14:71. 10.1186/s12245-021-00394-1

- 66. Gupta S, Williams K, Matile J, et al. Trends in the role of security services in the delivery of emergency department care. CJEM 2023;25:43–7. 10.1007/s43678-022-00406-w

- 67. Campbell E, Jessee D, Whitney J, et al. Development and implementation of an emergent documentation aggression rating tool: quality improvement. J Emerg Nurs 2021;47:696–706. 10.1016/j.jen.2021.04.011

- 68. Bisconer SW, Green M, Mallon-Czajka J, et al. Managing aggression in a psychiatric hospital using a behaviour plan: a case study. J Psychiatr Ment Health Nurs 2006;13:515–21. 10.1111/j.1365-2850.2006.00973.x

- 69. Legambi TF, Doede M, Michael K, et al. A quality improvement project on agitation management in the emergency department. J Emerg Nurs 2021;47:390–9. 10.1016/j.jen.2021.01.005

- 70. Love CC, Hunter ME. Violence in public sector psychiatric hospitals: benchmarking nursing staff injury rates. J Psychosoc Nurs Ment Health Serv 1996;34:30–4. 10.3928/0279-3695-19960501-15

Supplementary Materials

bmjoq-2024-002855supp001.pdf (381.7KB, pdf)

bmjoq-2024-002855supp002.pdf (334.3KB, pdf)

bmjoq-2024-002855supp003.pdf (199.5KB, pdf)

bmjoq-2024-002855supp004.pdf (2.1MB, pdf)

bmjoq-2024-002855supp005.pdf (663.7KB, pdf)