Abstract

Affine image registration is a cornerstone of medical-image analysis. While classical algorithms can achieve excellent accuracy, they solve a time-consuming optimization for every image pair. Deep-learning (DL) methods learn a function that maps an image pair to an output transform. Evaluating the function is fast, but capturing large transforms can be challenging, and networks tend to struggle if a test-image characteristic shifts from the training domain, such as the resolution. Most affine methods are agnostic to the anatomy the user wishes to align, meaning the registration will be inaccurate if algorithms consider all structures in the image. We address these shortcomings with SynthMorph, a fast, symmetric, diffeomorphic, and easy-to-use DL tool for joint affine-deformable registration of any brain image without preprocessing. First, we leverage a strategy that trains networks with widely varying images synthesized from label maps, yielding robust performance across acquisition specifics unseen at training. Second, we optimize the spatial overlap of select anatomical labels. This enables networks to distinguish anatomy of interest from irrelevant structures, removing the need for preprocessing that excludes content which would impinge on anatomy-specific registration. Third, we combine the affine model with a deformable hypernetwork that lets users choose the optimal deformation-field regularity for their specific data, at registration time, in a fraction of the time required by classical methods. This framework is applicable to learning anatomy-aware, acquisition-agnostic registration of any anatomy with any architecture, as long as label maps are available for training. We analyze how competing architectures learn affine transforms and compare state-of-the-art registration tools across an extremely diverse set of neuroimaging data, aiming to truly capture the behavior of methods in the real world. 
SynthMorph demonstrates high accuracy and is available at https://w3id.org/synthmorph, as a single complete end-to-end solution for registration of brain magnetic resonance imaging (MRI) data.

Keywords: affine registration, deformable registration, deep learning, hypernetwork, domain shift, neuroimaging

1. Introduction

Image registration is an essential component of medical image processing and analysis that estimates a mapping from the space of the anatomy in one image to the space of another image (Cox, 1996; Fischl et al., 2002, 2004; Jenkinson et al., 2012; Tustison et al., 2013). Such transforms generally include an affine component accounting for global orientation such as different head positions, which are typically not of clinical interest. Transforms often include a deformable component that may represent anatomically meaningful differences in geometry (Hajnal & Hill, 2001). Many techniques analyze these further, for example voxel-based morphometry (Ashburner & Friston, 2000; Whitwell, 2009).

Iterative registration has been extensively studied, and the available methods can achieve excellent accuracy both within and across magnetic resonance imaging (MRI) contrasts (Ashburner, 2007; Cox & Jesmanowicz, 1999; Friston et al., 1995; Jiang et al., 1995; Lorenzi et al., 2013; Rohr et al., 2001; Rueckert et al., 1999). Approaches differ in how they measure image similarity and the strategy chosen to optimize it, but the fundamental algorithm is the same: fit a set of parameters modeling the spatial transformation between an image pair by iteratively minimizing a dissimilarity metric. While classical deformable registration can take tens of minutes to several hours, affine registration optimizes only a handful of parameters and is generally faster (Hoffmann et al., 2015; Jenkinson & Smith, 2001; Modat et al., 2014; Reuter et al., 2010). However, these approaches solve an optimization problem for every new image pair, which is inefficient: depending on the algorithm, affine registration of higher-resolution structural MRI, for example, can easily take 5–10 minutes (Table 2). Further, iterative pipelines can be laborious to use. The user typically has to tailor the optimization strategy and choose a similarity metric appropriate for the image appearance (Pustina & Cook, 2017). Often, images require preprocessing, including intensity normalization or removal of structures that the registration should exclude. These shortcomings have motivated work on deep-learning (DL) based registration.

Table 2.

Single-threaded runtimes on a 2.2-GHz Intel Xeon Silver 4114 CPU, averaged over runs.

| Method | Affine (seconds) | Deformable (seconds) |
|---|---|---|
| ANTs | 777.8 ± 36.0 | 17189.5 ± 52.7 |
| NiftyReg | 293.7 ± 0.5 | 7021.0 ± 21.3 |
| Deeds | 142.8 ± 0.3 | 383.1 ± 0.6 |
| Robust | 1598.9 ± 0.8 | – |
| FSL | 151.7 ± 0.4 | 8141.5 ± 195.7 |
| C2FViT<sup>a</sup> | 43.7 ± 0.3 | – |
| KeyMorph | 36.2 ± 2.6 | – |
| VTN<sup>b</sup> | – | 63.5 ± 0.3 |
| SynthMorph | 72.4 ± 0.8 | 887.4 ± 2.5 |

Errors indicate standard deviations. On an NVIDIA V100 GPU, all affine and deformable DL runtimes (bottom) are ~1 minute, including setup.

<sup>a</sup> Timed on the GPU as the device is hard-coded.

<sup>b</sup> Implementation performs joint registration only.

Recent advances in DL have enabled registration with unprecedented efficiency and accuracy (Balakrishnan et al., 2019; Dalca et al., 2018; Eppenhof & Pluim, 2018; Krebs et al., 2017; Li & Fan, 2017; Rohé et al., 2017; Sokooti et al., 2017; Yang et al., 2016, 2017). In contrast to classical approaches, DL models learn a function that maps an input registration pair to an output transform. While evaluating this function on a new pair of images is fast, most existing DL methods focus on the deformable component. Affine registration of the input images is often assumed (Balakrishnan et al., 2019; De Vos et al., 2017) or incorporated ad hoc, and thus given less attention than deformable registration (De Vos et al., 2019; Hu et al., 2018; Mok & Chung, 2022; S. Zhao, Dong, et al., 2019; S. Zhao, Lau, et al., 2019). Although state-of-the-art deformable algorithms can compensate for sub-optimal affine alignment to some extent, they cannot always fully recover the lost accuracy, as the experiment of Section 4.5 will show.

The learning-based models encompassing both affine and deformable components usually do not consider network generalization to modality variation (De Vos et al., 2019; Shen et al., 2019; S. Zhao, Dong, et al., 2019; S. Zhao, Lau, et al., 2019; Zhu et al., 2021). That is, networks trained on one type of data, such as T1-weighted (T1w) MRI, tend to inaccurately register other types of data, such as T2-weighted (T2w) scans. Even for similar MRI contrast, the domain shift caused by different noise or smoothness levels alone has the potential to reduce accuracy at test time. In contrast, learning frameworks capitalizing on generalization techniques and domain adaptation often do not incorporate the fundamental affine transform (M. Chen et al., 2017; Hoffmann et al., 2022; Iglesias et al., 2013; Qin et al., 2019; Tanner et al., 2018).

A separate challenge for affine registration consists of accurately aligning specific anatomy of interest in the image while ignoring irrelevant content. Any undesired structure that moves independently or deforms non-linearly will reduce the accuracy of the anatomy-specific transform unless an algorithm has the ability to ignore it. For example, neck and tongue tissue can confuse rigid brain registration when it deforms non-rigidly (Andrade et al., 2018; Fein et al., 2006; Fischmeister et al., 2013; Hoffmann et al., 2020).

1.1. Contribution

In this work, we present a single, easy-to-use DL tool for fast, symmetric, diffeomorphic—and thus invertible—end-to-end affine and deformable brain registration without preprocessing (Fig. 1). The tool performs robustly across MRI contrasts, intensity scales, and resolutions. We address the domain dependency and anatomical non-specificity of affine registration: while invariance to acquisition specifics will enable networks to generalize to new image types without retraining, our anatomy-specific training strategy alleviates the need for pre-processing segmentation steps that remove image content that would distract most registration methods—as Section 4.4 will show for the example of skull-stripping (Eskildsen et al., 2012; Hoopes, Mora, et al., 2022; Iglesias et al., 2011; Smith, 2002).

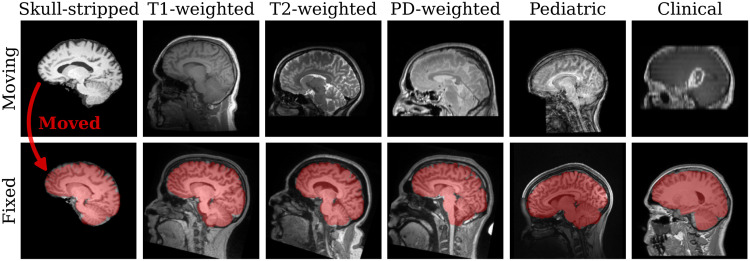

Fig. 1.

Examples of anatomy-aware SynthMorph affine 3D registration showing the moving brain transformed onto the fixed brain (red overlay). Trained with highly variable synthetic data, SynthMorph generalizes across a diverse array of real-world contrasts, resolutions, and subject populations without any preprocessing.

Our work builds on ideas from DL-based registration, affine registration, and a recent synthesis-based training strategy that promotes data independence by exposing networks to arbitrary image contrasts (Billot, Greve, et al., 2023; Billot et al., 2020; Hoffmann et al., 2022, 2023; Hoopes, Mora, et al., 2022; Kelley et al., 2024). First, we analyze three fundamental network architectures, to provide insight into how DL models learn and best represent the affine component (Appendix A). Second, we select an optimized affine architecture and train it with synthetic data only, making it robust across a landscape of acquired image types without exposing it to any real images during training. Third, we combine the affine model with a deformable hypernetwork to create an end-to-end registration tool, enabling users to choose a regularization strength that is optimal for their own data without retraining and in a fraction of the time required by classical methods. Fourth, we test our models across an extremely diverse set of images, aiming to truly capture the variability of real-world data. We compare their performance against popular affine and deformable toolboxes in Sections 4.4 and 4.5, respectively, to assess the accuracy users can achieve with off-the-shelf implementations for image types unseen at training.

We freely distribute our source code and tool, SynthMorph, at https://w3id.org/synthmorph. SynthMorph will ship with the upcoming FreeSurfer release (Fischl, 2012). For users who wish to use SynthMorph without downloading FreeSurfer, we maintain a standalone container with a wrapper script for easy setup and use supporting any of the following container tools: Docker, Podman, Apptainer, or Singularity.

2. Related Work

While this section provides an overview of widely adopted strategies for medical image registration, in-depth review articles are available (Fu et al., 2020; Oliveira & Tavares, 2014; Wyawahare et al., 2009).

2.1. Classical registration

Classical registration is driven by an objective function, which measures similarity in appearance between the moving and the fixed image. A simple and effective choice for images of the same contrast is the mean squared error (MSE). Normalized cross-correlation (NCC) is also widely used, because it provides excellent accuracy independent of the intensity scale (Avants et al., 2008). Registration of images across contrasts or modalities generally employs objective functions such as normalized mutual information (NMI) (Maes et al., 1997; Wells et al., 1996) or correlation ratio (Roche et al., 1998), as these do not assume similar appearance of the input images. Another class of classical methods uses metrics based on patch similarity (Glocker et al., 2008, 2011; Ou et al., 2011), which can outperform simpler metrics across modalities (Hoffmann et al., 2022).
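The difference between MSE and NCC can be illustrated with a minimal NumPy sketch. It assumes a global NCC computed over the whole image (toolboxes often use a local, windowed variant), and the function names `mse` and `ncc` are illustrative rather than taken from any registration package.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two images of equal shape."""
    return float(np.mean((a - b) ** 2))

def ncc(a, b, eps=1e-12):
    """Global normalized cross-correlation, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.sqrt(np.sum(a ** 2) * np.sum(b ** 2)) + eps))

rng = np.random.default_rng(0)
fixed = rng.random((16, 16))
moving = 3.0 * fixed + 10.0  # identical structure, different intensity scale

mse_value = mse(moving, fixed)  # large, although the images are aligned
ncc_value = ncc(moving, fixed)  # close to 1, unaffected by the rescaling
```

In this toy case the images are perfectly aligned, yet MSE reports a large dissimilarity because of the linear intensity change, whereas NCC does not.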

To improve computational efficiency and avoid local minima, many classical techniques perform multi-resolution searches (Hellier et al., 2001; Nestares & Heeger, 2000). First, this strategy coarsely aligns smoothed downsampled versions of the input images. This initial solution is subsequently refined at higher resolutions until the original images align precisely (Avants et al., 2011; Modat et al., 2014; Reuter et al., 2010). Additionally, an initial grid search over a set of rotation parameters can help constrain this scale-space approach to a neighborhood around the global optimum (Jenkinson & Smith, 2001; Jenkinson et al., 2012).

Instead of optimizing image similarity, another registration paradigm detects landmarks and matches these across the images (Myronenko & Song, 2010). Early work relied on user assistance to identify fiducials (Besl & McKay, 1992; Meyer et al., 1995). More recent computer-vision approaches automatically extract features (Machado et al., 2018; Toews & Wells, 2013), for example from entropy (Wachinger & Navab, 2010, 2012) or difference-of-Gaussians images (Lowe, 2004; Rister et al., 2017; Wachinger et al., 2018). The performance of this strategy depends on the invariance of landmarks across viewpoints and intensity scales (Matas et al., 2004).

2.2. Deep-learning registration

Analogous to classical registration, unsupervised deformable DL methods fit the parameters of a deep neural network by optimizing a loss function that measures image similarity—but across many image pairs (Balakrishnan et al., 2019; Dalca et al., 2019; De Vos et al., 2019; Guo, 2019; Hoffmann et al., 2022; Krebs et al., 2019). In contrast, supervised DL strategies (Eppenhof & Pluim, 2018; Gopinath et al., 2024; Krebs et al., 2017; Rohé et al., 2017; Sokooti et al., 2017; Yang et al., 2016, 2017) train a network to reproduce ground-truth transforms, for example obtained with classical tools, and tend to underperform relative to their unsupervised counterparts (Hoffmann et al., 2022; Young et al., 2022), although warping features at the end of each U-Net (Ronneberger et al., 2015) level can close the performance gap (Young et al., 2022).

2.2.1. Affine deep-learning registration

A straightforward option for an affine-registration network architecture is combining a convolutional encoder with a fully connected (FC) layer to predict the parameters of an affine transform in one shot (Shen et al., 2019; S. Zhao, Dong, et al., 2019; S. Zhao, Lau, et al., 2019; Zhu et al., 2021). A series of convolutional blocks successively halve the image dimension, such that the output of the final convolution has substantially fewer voxels than the input images. This facilitates the use of the FC layer with the desired number of output units, preventing the number of network parameters from becoming intractably large. Networks typically concatenate the input images before passing them through the encoder. To benefit from weight sharing, twin networks pass the fixed and moving images separately and connect their outputs at the end (X. Chen et al., 2021; De Vos et al., 2019).

As affine transforms have a global effect on the image, some architectures replace the locally operating convolutional layers with vision transformers (Dosovitskiy et al., 2020; Mok & Chung, 2022). These models subdivide their inputs into patch embeddings and pass them through the transformer, before a multi-layer perceptron (MLP) outputs a transformation matrix. Multiple such modules in series can successively refine the affine transform if each module applies its output transform to the moving image before passing it onto the next stage (Mok & Chung, 2022). Composition of the transforms from each step produces the final output matrix.

Another affine DL strategy (Moyer et al., 2021; Yu et al., 2021) derives an affine transform without requiring MLP or FC layers, similar to the classical feature extraction and matching approach (Section 2.1). This method separately passes the moving and the fixed image through a convolutional encoder to detect two corresponding sets of feature maps. Computing the barycenter of each feature map yields moving and fixed point clouds, and a least-squares (LS) fit provides a transform aligning them. The approach is robust across large transforms (Yu et al., 2021), while removing the FC layer alleviates the dependency of the architecture on a specific image size.
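As a rough sketch of the barycenter step, the following NumPy function computes one center of mass per feature map. The array layout `(k, *spatial)` and the name `barycenters` are assumptions made for illustration. In a trained network the feature maps would come from the convolutional encoder rather than being set by hand.

```python
import numpy as np

def barycenters(features):
    """Center of mass of each non-negative feature map.

    features: array of shape (k, *spatial).
    Returns an array of shape (k, ndim) in voxel-index coordinates.
    """
    k = features.shape[0]
    spatial = features.shape[1:]
    # Coordinate grid flattened in the same (C) order as the feature maps.
    grid = np.stack(np.meshgrid(*[np.arange(n) for n in spatial], indexing="ij"), axis=-1)
    flat_grid = grid.reshape(-1, len(spatial)).astype(float)
    flat_feat = features.reshape(k, -1)
    mass = flat_feat.sum(axis=1, keepdims=True)
    return flat_feat @ flat_grid / mass

features = np.zeros((2, 8, 8))
features[0, 2, 5] = 1.0   # single activation
features[1, 6, 1] = 3.0   # two equal activations, barycenter halfway between
features[1, 6, 3] = 3.0
points = barycenters(features)
```

Because each point is a weighted average over the whole grid, the output does not depend on a fixed image size, which is consistent with the size independence noted above.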

In this work, we will test these fundamental DL architectures and extend them to build an end-to-end solution for joint affine and deformable registration that is aware of the anatomy of interest.

2.3. Robustness and anatomical specificity

Indiscriminate registration of images as a whole can limit the accurate alignment of specific substructures, such as the brain in whole-head MRI. One group of classical methods avoids this problem by down-weighting image regions that cannot be mapped accurately with the chosen transformation model, for example using an iteratively re-weighted LS algorithm (Billings et al., 2015; Gelfand et al., 2005; Modat et al., 2014; Nestares & Heeger, 2000; Puglisi & Battiato, 2011; Reuter et al., 2010). Few approaches focus on specific anatomical features, for example by restricting the registration to regions of an atlas with high prior probability for belonging to a particular tissue class (Fischl et al., 2002). The affine registration tools commonly used in neuroimage analysis (Cox, 1996; Friston et al., 1995; Jenkinson & Smith, 2001; Modat et al., 2014) instead expect—and require—that distracting image content be removed from the input data as a preprocessing step for optimal performance (Eskildsen et al., 2012; Iglesias et al., 2011; Klein et al., 2009; Smith, 2002). Similarly, many DL algorithms assume intensity-normalized and skull-stripped input images (Balakrishnan et al., 2019; Yu et al., 2021; S. Zhao, Lau, et al., 2019), preventing their applicability to diverse unprocessed data.

2.4. Domain generalizability

The adaptability of neural networks to out-of-distribution data generally presents a challenge to their deployment (Sun et al., 2016; M. Wang & Deng, 2018). Mitigation strategies include augmenting the variability of the training distribution, for example by adding random noise or applying geometric transforms (Chaitanya et al., 2019; Perez & Wang, 2017; Shorten & Khoshgoftaar, 2019; A. Zhao, Balakrishnan, et al., 2019). Transfer learning adapts a trained network to a new domain by fine-tuning deeper layers on the target distribution (Kamnitsas et al., 2017; Zhuang et al., 2020). These methods require training data from the target domain. By contrast, within medical imaging, a recent strategy synthesizes widely variable training images to promote data independence. The resulting networks generalize beyond dataset specifics and perform with high accuracy on tasks including segmentation (Billot, Greve, et al., 2023; Billot et al., 2020), deformable registration (Hoffmann et al., 2022), and skull-stripping (Hoopes, Mora, et al., 2022; Kelley et al., 2024). We build on this technology to achieve end-to-end registration.

3. Method

3.1. Background

3.1.1. Affine registration

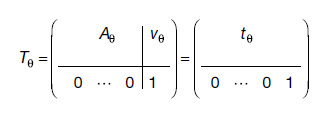

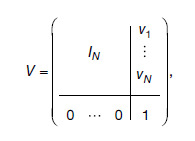

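Let $m$ and $f$ be a moving and a fixed image in $N$-dimensional ($N$D) space. We train a deep neural network $h_\theta$ with learnable weights $\theta$ to predict a global transform $T$ that maps the spatial domain $\Omega$ of $f$ onto $m$, given images $\{m, f\}$. The transform is a matrix

$$T = \begin{pmatrix} A & t \\ 0 & 1 \end{pmatrix}, \tag{1}$$

where $N \times N$ matrix $A$ represents rotation, scaling, and shear, and $t$ is an $N \times 1$ vector of translational shifts, such that $T \in \mathbb{R}^{(N+1) \times (N+1)}$. We fit the network weights $\theta$ to training set $\mathcal{D}$ subject to

$$\hat{\theta} = \arg\min_{\theta} \; \mathbb{E}_{(m, f) \in \mathcal{D}} \left[ \mathcal{L}(m \circ T, f) \right], \quad T = h_\theta(m, f), \tag{2}$$

where the loss $\mathcal{L}$ measures the similarity of two input images, and $m \circ T$ means $m$ transformed by $T$.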
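A small NumPy sketch of the homogeneous-coordinate convention may help here. It assumes a linear part `A`, a translation `t`, and an assembled matrix `T` whose last row is `(0, ..., 0, 1)`. The helper names `make_affine` and `apply_affine` are hypothetical.

```python
import numpy as np

def make_affine(A, t):
    """Assemble the (N+1) x (N+1) homogeneous matrix from A (N x N) and t (N,)."""
    n = A.shape[0]
    T = np.eye(n + 1)
    T[:n, :n] = A
    T[:n, n] = t
    return T

def apply_affine(T, points):
    """Map points of shape (P, N) through the homogeneous matrix T."""
    n = T.shape[0] - 1
    homogeneous = np.concatenate([points, np.ones((len(points), 1))], axis=1)
    return (homogeneous @ T.T)[:, :n]

angle = np.deg2rad(90.0)
A = np.array([[np.cos(angle), -np.sin(angle)],
              [np.sin(angle), np.cos(angle)]])
T = make_affine(A, t=np.array([1.0, 2.0]))

# Rotating (1, 0) by 90 degrees gives (0, 1); the shift then yields (1, 3).
mapped = apply_affine(T, np.array([[1.0, 0.0]]))
```

Resampling the moving image at the mapped coordinates of every fixed-image voxel would then produce the moved image.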

3.1.2. Synthesis-based training

A recent strategy (Billot, Greve, et al., 2023; Billot et al., 2020; Hoffmann et al., 2022, 2023; Hoopes, Mora, et al., 2022) achieves robustness to preprocessing and acquisition specifics by training networks exclusively with synthetic images generated from label maps. From a set of label maps $\{s_m, s_f\}$, a generative model synthesizes corresponding widely variable images $\{m, f\}$ as network inputs. Instead of image similarity, the strategy optimizes spatial label overlap with a (soft) Dice-based loss (Milletari et al., 2016), strictly independent of image appearance:

$$\mathcal{L}_o(\theta, s_m, s_f) = -\frac{2}{|J|} \sum_{j \in J} \frac{\sum_{x \in \Omega} (s_m^j \circ T)(x) \, s_f^j(x)}{\sum_{x \in \Omega} \left[ (s_m^j \circ T)(x) + s_f^j(x) \right]}, \tag{3}$$

where $s^j$ represents the one-hot encoded label $j \in J$ of label map $s$ defined at the voxel locations $x \in \Omega$ in the discrete spatial domain $\Omega$ of $f$. The generative model requires only a few label maps to produce a stream of diverse training images that help the network accurately generalize to real medical images of any contrast at test time, which it can register without needing label maps.
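A soft Dice loss of this kind can be sketched in a few lines of NumPy. The normalization used here (negative mean Dice over labels, with a small `eps` for numerical safety) is one common convention and an assumption of this example. The helper names are illustrative.

```python
import numpy as np

def one_hot(label_map, labels):
    """Stack one binary mask per label along a new leading axis."""
    return np.stack([(label_map == j).astype(float) for j in labels])

def soft_dice_loss(moved, fixed, eps=1e-6):
    """Negative mean soft Dice over labels; inputs have shape (J, *spatial)."""
    axes = tuple(range(1, moved.ndim))
    top = 2.0 * np.sum(moved * fixed, axis=axes)
    bottom = np.sum(moved + fixed, axis=axes)
    return float(-np.mean(top / (bottom + eps)))

labels = [1, 2]
fixed_map = np.array([[1, 1, 2, 2]] * 4)
swapped_map = np.array([[2, 2, 1, 1]] * 4)

# Perfect overlap gives a loss near -1; disjoint labels give 0.
loss_same = soft_dice_loss(one_hot(fixed_map, labels), one_hot(fixed_map, labels))
loss_swapped = soft_dice_loss(one_hot(swapped_map, labels), one_hot(fixed_map, labels))
```

The loss only sees label maps, so it takes the same value regardless of the contrast of the images synthesized from them.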

3.2. Anatomy-aware registration

As we build on our recent work on deformable registration, SynthMorph (Hoffmann et al., 2022), here we only provide a high-level overview and focus on what is new for affine and joint affine-deformable registration. Figure 2 illustrates our setup for affine registration.

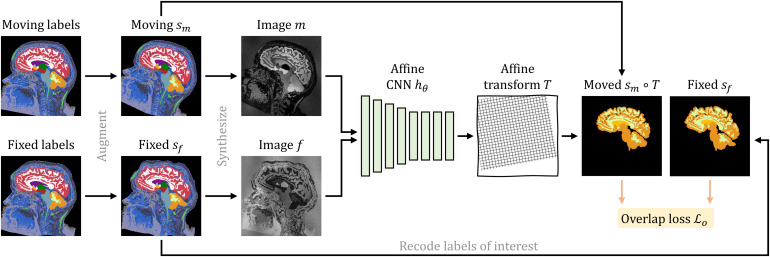

Fig. 2.

Training strategy for affine registration. At each iteration, we augment a pair of moving and fixed label maps $\{s_m, s_f\}$ and synthesize images $\{m, f\}$ from them. The network $h_\theta$ predicts an affine transform $T$, from which we compute the moved label map $s_m \circ T$. Loss $\mathcal{L}_o$ recodes the labels in $\{s_m \circ T, s_f\}$ to optimize the overlap of select anatomy of interest only, such as white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF).

3.2.1. Label maps

Every training iteration, we draw a pair of moving and fixed brain segmentations. We apply random spatial transformations to each of them to augment the range of head orientations and anatomical variability in the training set. Specifically, we construct an affine matrix from random translation, rotation, scaling, and shear as detailed in Appendix B.

We compose the affine transform with a randomly sampled and randomly smoothed deformation field (Hoffmann et al., 2022) and apply the composite transform in a single interpolation step. Finally, we simulate acquisitions with a partial field of view (FOV) by randomly cropping the label map, yielding $\{s_m, s_f\}$.

3.2.2. Anatomical specificity

Let $K$ be the complete set of labels in $\{s_m, s_f\}$. To encourage networks to register specific anatomy while ignoring irrelevant image content, we propose to recode $\{s_m, s_f\}$ such that the label maps include only a subset of labels $J \subset K$. For brain-specific registration, $J$ consists of individual brain structures in the deformable case or larger tissue classes in the affine case. At training, the loss $\mathcal{L}_o$ optimizes only the overlap of $J$, whereas we synthesize images from the complete set of labels $K$, providing rich image content outside the brain as illustrated in Figure 2.
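The recoding step can be sketched as a simple lookup. The label values and the grouping into two tissue classes below are purely illustrative and not the label set used in the paper. The function name `recode` is hypothetical.

```python
import numpy as np

def recode(label_map, mapping):
    """Map each label through `mapping`; labels not listed become 0 (ignored)."""
    out = np.zeros_like(label_map)
    for old, new in mapping.items():
        out[label_map == old] = new
    return out

# Illustrative labels: 2 and 41 merge into class 1, 3 and 42 into class 2,
# and 500 stands in for a non-brain structure that the loss should ignore.
label_map = np.array([[0, 2, 41],
                      [3, 42, 500]])
tissue = recode(label_map, {2: 1, 41: 1, 3: 2, 42: 2})
```

Images would still be synthesized from the original `label_map`, so the network sees non-brain content at its input while the loss evaluates only the recoded classes.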

3.2.3. Image synthesis

Given label map $s_m$, we generate image $m$ with random contrast, noise, and artifact corruption (and similarly $f$ from $s_f$). Following SynthMorph, we first sample a mean intensity for each label $j \in K$ in $s_m$ and assign this value to all voxels associated with label $j$. Second, we corrupt $m$ by randomly applying additive Gaussian noise, anisotropic Gaussian blurring, a multiplicative spatial intensity bias field, intensity exponentiation with a global parameter, and downsampling along randomized axes. In aggregate, these steps produce widely varying intensity distributions within each anatomical label (Fig. 3).
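The following NumPy sketch mimics a reduced version of this synthesis. It covers the per-label intensity sampling, additive noise, a smooth multiplicative bias, and global exponentiation, but omits blurring and downsampling for brevity. All sampling ranges, the linear form of the bias field, and the name `synthesize` are assumptions of this example, not the published hyperparameters.

```python
import numpy as np

def synthesize(label_map, rng, noise_sd=0.05, bias_sd=0.3, gamma_sd=0.25):
    """Generate a random-contrast image from an integer label map."""
    labels = np.unique(label_map)
    lookup = np.zeros(labels.max() + 1)
    lookup[labels] = rng.uniform(0.0, 1.0, size=labels.size)
    image = lookup[label_map]                               # one mean per label
    image = image + rng.normal(0.0, noise_sd, image.shape)  # additive noise
    # Toy bias field: exponential of a random linear ramp along the first axis.
    ramp = np.linspace(-1.0, 1.0, image.shape[0])
    ramp = ramp.reshape((-1,) + (1,) * (image.ndim - 1))
    image = image * np.exp(rng.normal(0.0, bias_sd) * ramp)
    # Min-max normalize, then apply a random global exponent.
    image = (image - image.min()) / (image.max() - image.min())
    return image ** np.exp(rng.normal(0.0, gamma_sd))

rng = np.random.default_rng(0)
label_map = np.zeros((16, 16), dtype=int)
label_map[4:12, 4:12] = 1
label_map[6:10, 6:10] = 2
image_a = synthesize(label_map, rng)
image_b = synthesize(label_map, rng)  # same anatomy, different appearance
```

Calling the function repeatedly on one label map yields images that share geometry but differ in contrast and artifacts, which is the property the training strategy relies on.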

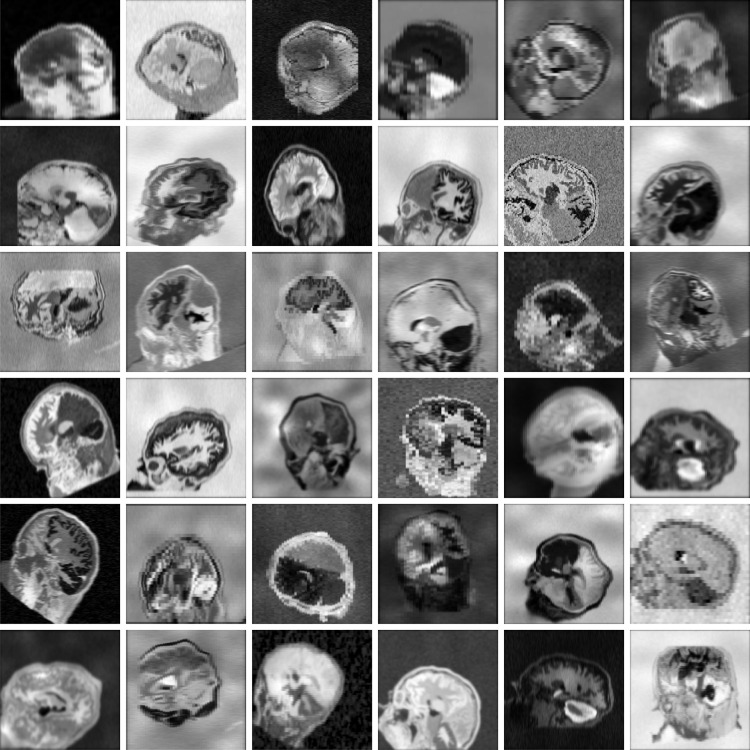

Fig. 3.

Synthetic 3D training data with arbitrary contrasts, resolutions, and artifact levels, generated from brain label maps. The image characteristics exceed the realistic range to promote network generalization across acquisition protocols. All examples are based on the same label map. In practice, we use several different subjects.

3.2.4. Generation hyperparameters

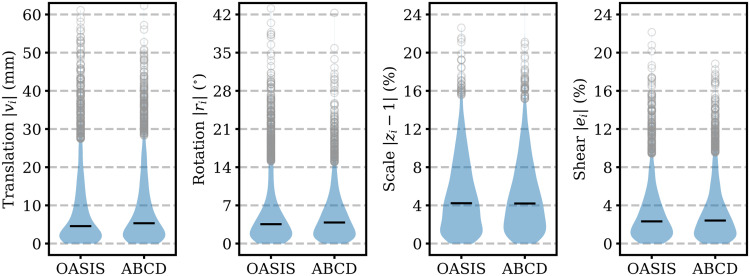

We choose the affine augmentation range such that it encompasses real-world transforms. Appendix Figure A4 (Appendix D) shows the distribution of affine transformation parameters measured across public datasets. We adapt all other values from prior work, which thoroughly analyzed their impact on accuracy (Hoffmann et al., 2022): Appendix Table A2 (Appendix C) lists hyperparameters for label-map augmentation and image synthesis.

3.3. Learning

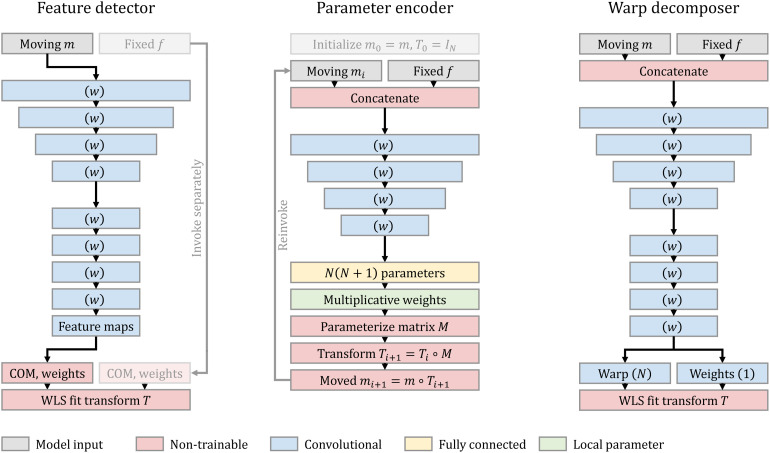

3.3.1. Symmetric affine network

Estimating an affine transform from a pair of medical images in $N$D requires reducing a large input space of the order of 100 k–10 M voxels to only $N(N+1)$ output parameters. We extend a recent architecture (Hoffmann et al., 2023; Moyer et al., 2021; A. Q. Wang et al., 2023; Yu et al., 2021), “Detector” in Appendix Figure A1 (Appendix A), that takes a single image as input and predicts a set of $k$ non-negative spatial feature maps $F_i$ with $i \in \{1, \dots, k\}$, to support full affine transforms (Yu et al., 2021) and weighted least-squares (WLS) (Moyer et al., 2021). Following a series of convolutions, we obtain the center of mass $a_i$ and channel power $p_i$ for each feature map $F_i^m$ of the moving image,

$$a_i = \frac{\sum_{x \in \Omega} x \, F_i^m(x)}{\sum_{x \in \Omega} F_i^m(x)}, \qquad p_i = \sum_{x \in \Omega} F_i^m(x), \tag{4}$$

and separately center of mass $b_i$ with channel power $q_i$ for each $F_i^f$ of the fixed image. We interpret the sets $\{a_i\}$ and $\{b_i\}$ as corresponding moving and fixed point clouds. Detector refers to a network $h_\theta$ that predicts the affine transform $T$ aligning these point clouds subject to

$$\hat{T} = \arg\min_{T} \sum_{i=1}^{k} w_i \left\| a_i - \tilde{T} \begin{pmatrix} b_i \\ 1 \end{pmatrix} \right\|^2, \tag{5}$$

where $\tilde{T} = (A \;\; t)$ is the $N \times (N+1)$ submatrix of $T$ from Equation (1) that excludes the last row, and we define the normalized scalar weight $w_i$ as

| (6) |

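Let $X$ and $Y$ be matrices whose $i$th rows are $(b_i^\top \;\; 1)$ and $a_i^\top$, respectively. Denoting $W = \mathrm{diag}(w_1, \dots, w_k)$, the closed-form WLS solution of Equation (5) is $\tilde{T}$ such that

$$\tilde{T}^\top = \left( X^\top W X \right)^{-1} X^\top W Y. \tag{7}$$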
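The closed-form fit can be sketched with NumPy as follows. The sketch assumes that the transform maps fixed points onto moving points and that the weights are simply normalized to sum to one. The specific weighting of Equation (6) is not reproduced here, and the name `fit_affine_wls` is hypothetical.

```python
import numpy as np

def fit_affine_wls(moving_pts, fixed_pts, weights):
    """Weighted least-squares affine fit mapping fixed points onto moving points.

    moving_pts, fixed_pts: arrays of shape (k, N); weights: shape (k,).
    Returns the (N+1) x (N+1) homogeneous matrix.
    """
    k, n = fixed_pts.shape
    X = np.concatenate([fixed_pts, np.ones((k, 1))], axis=1)  # k x (N+1)
    Y = moving_pts                                            # k x N
    W = np.diag(weights / weights.sum())
    # Solve the normal equations (X^T W X) B = X^T W Y for B = top rows, transposed.
    B = np.linalg.solve(X.T @ W @ X, X.T @ W @ Y)
    T = np.eye(n + 1)
    T[:n] = B.T
    return T

rng = np.random.default_rng(0)
fixed_pts = rng.normal(size=(10, 3))
T_true = np.eye(4)
T_true[:3, :3] = np.eye(3) + 0.1 * rng.normal(size=(3, 3))
T_true[:3, 3] = [1.0, -2.0, 0.5]
moving_pts = fixed_pts @ T_true[:3, :3].T + T_true[:3, 3]
weights = rng.uniform(0.5, 1.5, size=10)

T_fit = fit_affine_wls(moving_pts, fixed_pts, weights)
T_swapped = fit_affine_wls(fixed_pts, moving_pts, weights)
```

In this noise-free example, swapping the two point clouds returns the inverse transform. With noisy points this holds only approximately.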

3.3.2. Symmetric joint registration

For joint registration, we combine the affine model $h_\theta$ with a deformable SynthMorph architecture (Hoffmann et al., 2022). Let $g_\eta$ be a convolutional neural network with parameters $\eta$ that predicts a stationary velocity field (SVF) $v$ from concatenated images $\{m, f\}$. While $h_\theta$ predicts symmetric affine transforms by construction, we explicitly symmetrize the SVF:

$$v = \frac{1}{2} \left[ g_\eta(m, f) - g_\eta(f, m) \right], \tag{8}$$

from which we obtain the diffeomorphic warp field $\phi$ via vector-field integration (Ashburner, 2007; Dalca et al., 2018), and integrating $-v$ yields the inverse warp $\phi^{-1}$, up to the numerical precision of the algorithm used. Usually, approaches to learning deformable registration directly fit weights $\eta$ by optimizing a loss of the form

$$\mathcal{L}(\eta, s_m, s_f) = (1 - \lambda) \, \mathcal{L}_o(\eta, s_m, s_f) + \lambda \, \mathcal{L}_r(\phi), \tag{9}$$

where $\mathcal{L}_o$ quantifies label overlap as before, $\mathcal{L}_r$ is a regularization term that encourages smooth warps, and the parameter $\lambda$ controls the weighting of both terms.
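To illustrate why integrating a velocity field and its negative gives approximately inverse warps, the sketch below integrates a one-dimensional SVF by scaling and squaring. The 1D setting, linear interpolation, the number of steps, and the antisymmetric averaging in `symmetrize` are simplifying assumptions for illustration only.

```python
import numpy as np

def integrate_svf_1d(v, steps=7):
    """Integrate a 1D stationary velocity field by scaling and squaring.

    v: velocities in voxel units on the grid 0..len(v)-1.
    Returns the displacement u such that phi(x) = x + u(x).
    """
    x = np.arange(v.size, dtype=float)
    u = v / 2 ** steps
    for _ in range(steps):
        # Self-composition: phi(phi(x)) = x + u(x) + u(x + u(x)).
        u = u + np.interp(x + u, x, u)
    return u

def symmetrize(v_forward, v_backward):
    """Antisymmetric average of the two network outputs (assumed form)."""
    return 0.5 * (v_forward - v_backward)

x = np.arange(64, dtype=float)
wave = np.sin(2 * np.pi * x / 63)          # zero at both ends of the grid
v = symmetrize(2.0 * wave, -1.0 * wave)    # stand-ins for two network outputs

u_forward = integrate_svf_1d(v)
u_inverse = integrate_svf_1d(-v)
# Residual of applying the forward warp followed by the inverse warp.
round_trip = u_forward + np.interp(x + u_forward, x, u_inverse)
```

The residual `round_trip` stays well below one voxel, and the forward map `x + u_forward` remains strictly increasing, which is the 1D analogue of a diffeomorphism.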

Because directly fitting $\eta$ subject to Equation (9) yields an inflexible network predicting warps of fixed regularity, we parameterize $\eta$ using a hypernetwork. Let $\Gamma_\xi$ be a neural network with trainable parameters $\xi$. Following our prior work (Hoopes, Hoffmann, et al., 2022; Hoopes et al., 2021), hypernetwork $\Gamma_\xi$ takes the regularization weight $\lambda$ as input and outputs the weights $\eta = \Gamma_\xi(\lambda)$ of the deformable task network $g_\eta$. Consequently, $g_\eta$ has no learnable parameters in our setup: its convolutional kernels can flexibly adapt in response to the value $\lambda$ takes at test time.
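The idea of a hypernetwork can be sketched with a tiny untrained multi-layer perceptron that maps the regularization weight to the weights of a single convolution kernel. The random initialization, the 3×3×3 kernel shape, and all function names are assumptions for illustration. A real hypernetwork would output the weights of every layer of the task network and be trained end to end.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_hypernetwork(hidden=32, layers=4, n_out=27):
    """Random parameters for an MLP with `layers` hidden layers of `hidden` units."""
    sizes = [1] + [hidden] * layers + [n_out]
    return [(rng.normal(0.0, np.sqrt(2.0 / a), (a, b)), rng.normal(0.0, 0.1, b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def hypernetwork(params, lam):
    """Map the regularization weight to a flat vector of task-network weights."""
    h = np.array([lam], dtype=float)
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers only
    return h

params = init_hypernetwork()
kernel_smooth = hypernetwork(params, 0.9).reshape(3, 3, 3)
kernel_sharp = hypernetwork(params, 0.1).reshape(3, 3, 3)
```

The two kernels differ although no parameter changed between the calls. Only the input value did, which is what allows the regularity of the warp to be chosen at registration time.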

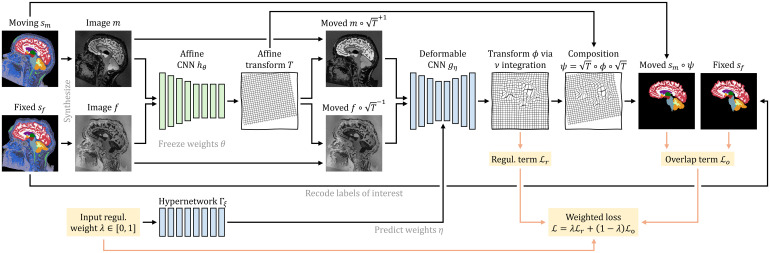

As shown in Figure 4, for symmetric joint registration, we move images into an affine mid-space using the matrix square roots of $T$ and $T^{-1}$ and have $g_\eta$ predict $v$ between images $m \circ T^{1/2}$ and $f \circ T^{-1/2}$ using kernels specific to input $\lambda$.

Fig. 4.

Training strategy for joint registration. As in Figure 2, network $h_\theta$ predicts an affine transform $T$ between moving and fixed images $\{m, f\}$ synthesized from label maps $\{s_m, s_f\}$. Hypernetwork $\Gamma_\xi$ takes the regularization weight $\lambda$ as input and outputs the parameters $\eta$ of network $g_\eta$. The moved images $m \circ T^{1/2}$ and $f \circ T^{-1/2}$ are inputs to $g_\eta$, which predicts a diffeomorphic warp field $\phi$. We form the symmetric joint transform by composition and compute the moved label map. Loss $\mathcal{L}_o$ recodes the labels of the moved and fixed label maps to optimize the overlap of select anatomy of interest, in this case brain labels only.
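The affine mid-space relies on a matrix square root. As a minimal sketch, the Denman-Beavers iteration below computes the principal square root with NumPy only. It is a swapped-in textbook algorithm chosen to keep the example self-contained, not necessarily what the tool uses, and the example transform is arbitrary.

```python
import numpy as np

def sqrtm_db(M, iterations=30):
    """Principal matrix square root via the Denman-Beavers iteration."""
    Y = M.astype(float)
    Z = np.eye(M.shape[0])
    for _ in range(iterations):
        # Both updates use the previous Y and Z (tuple assignment).
        Y, Z = 0.5 * (Y + np.linalg.inv(Z)), 0.5 * (Z + np.linalg.inv(Y))
    return Y

# Arbitrary 2D example: 40-degree rotation, 10% scaling, and a shift.
angle = np.deg2rad(40.0)
T = np.array([[1.1 * np.cos(angle), -1.1 * np.sin(angle), 5.0],
              [1.1 * np.sin(angle), 1.1 * np.cos(angle), -3.0],
              [0.0, 0.0, 1.0]])

half = sqrtm_db(T)                 # applying it twice reproduces T
inverse_half = np.linalg.inv(half)  # square root of the inverse transform
half_angle = np.arctan2(half[1, 0], half[0, 0])
```

Applying `half` to one image and `inverse_half` to the other moves both halfway, so neither image is privileged as the reference space.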

While users of SynthMorph can choose between running the deformable step in the affine mid-space or after applying the full transform to , only the former yields symmetric joint transforms. At training, the total forward transform is , and the loss of Equation (9) becomes

| (10) |

We choose , where is the displacement of the deformation , and is the identity field.

3.3.3. Overlap loss

In this work, we replace the Dice-based overlap loss term of Equation (3) with a simpler term (Heinrich, 2019; Y. Wang et al., 2021) that measures MSE between one-hot encoded labels ,

| (11) |

where we replace weights with and transform with for joint registration. MSE is sensitive to the proportionate contribution of each label to overall alignment, whereas Equation (3) normalizes the contribution of each label by its respective size.

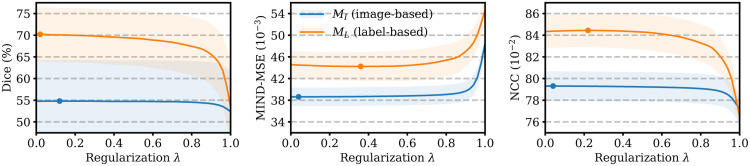

As a result, the MSE loss term discourages the optimization from focusing disproportionately on aligning smaller structures, which we find favorable for warp regularity at structure boundaries. In Appendix E, we analyze how optimizing on label maps compares to an image-similarity loss term.
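A toy 1D example can make the difference between the two overlap measures concrete. It compares a mean Dice score with an MSE over one-hot labels when either a small or a large structure is displaced by the same number of voxels. The label layout and helper names are assumptions made for illustration.

```python
import numpy as np

def one_hot(label_map, labels):
    """Stack one binary mask per label along a new leading axis."""
    return np.stack([(label_map == j).astype(float) for j in labels])

def mse_overlap(moved, fixed):
    """MSE between one-hot label stacks of shape (J, *spatial)."""
    return float(np.mean((moved - fixed) ** 2))

def mean_dice(moved, fixed):
    """Mean Dice score over labels."""
    axes = tuple(range(1, moved.ndim))
    return float(np.mean(2.0 * np.sum(moved * fixed, axis=axes)
                         / np.sum(moved + fixed, axis=axes)))

def make_map(large_start, small_start):
    """1D label map with a 40-voxel structure (1) and a 4-voxel structure (2)."""
    s = np.zeros(100, dtype=int)
    s[large_start:large_start + 40] = 1
    s[small_start:small_start + 4] = 2
    return s

labels = [1, 2]
fixed = one_hot(make_map(10, 60), labels)
small_off = one_hot(make_map(10, 70), labels)  # small structure misses entirely
large_off = one_hot(make_map(14, 60), labels)  # large structure shifted by 4 voxels

mse_small, mse_large = mse_overlap(small_off, fixed), mse_overlap(large_off, fixed)
dice_small, dice_large = mean_dice(small_off, fixed), mean_dice(large_off, fixed)
```

Both displacements mislabel eight voxels, so the MSE values are equal, whereas the mean Dice score drops far more when the small structure is misaligned.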

3.3.4. Implementation

Affine SynthMorph implements Detector (Appendix Fig. A1) with convolutional filters and output feature maps. The network width does not vary within the model. We activate the output of each internal block with LeakyReLU (parameter ) and downsample encoder blocks by a factor of 2 using max pooling.

As in our prior work, the deformable model implements a U-Net (Ronneberger et al., 2015) architecture of width , and we integrate the SVF via scaling and squaring (Ashburner, 2007; Dalca et al., 2018). Hypermodel is a simple feed-forward network with 4 ReLU-activated hidden FC layers of 32 output units each.

All kernels are of size . For computational efficiency, our 3D models linearly downsample the network inputs and loss inputs by a factor of 2. We min-max normalize input images such that their intensities fall in the interval . Affine coordinate transforms operate in a zero-centered index space. Appendix B includes further details.

3.3.5. Optimization

We fit model parameters with stochastic gradient descent using Adam (Kingma & Ba, 2014) over consecutive training strips () of batches each. At the beginning of each strip or in the event of divergence, we choose successively smaller learning rates from . For fast convergence, the first strip of affine training optimizes the overlap of larger label groups than indicated in Section 4.1.3: left hemisphere, right hemisphere, and cerebellum.

Because SynthMorph training is generally not prone to overfitting, it uses a simple stopping criterion measuring progress over batches in terms of validation Dice overlap (Section 4.3). The 3D models train with a batch size of 1 until the mean overlap across exceeds of the maximum, that is,

| (12) |

For joint registration, we uniformly sample hyperparameter values . For efficiency, we freeze parameters of the trained affine submodel to fit only the weights of hypernetwork , optimizing the loss of Equation (10).

However, unfreezing the affine weights within the setup of Figure 4 has no substantial impact on accuracy. Specifically, after one additional strip of joint training, deformable large-21 Dice scores change by depending on the dataset, while affine accuracy decreases by only points relative to affine-only training.

4. Experiments

In a first experiment, we train the Detector architecture with synthetic data only. This experiment focuses on building a readily usable tool, and we assess its accuracy in various affine registration tasks. In contrast, Appendix A analyzes the performance of the different architectures across a broad range of variants and transformations, to understand how networks learn and best represent the affine component. In a second experiment, we complete the affine model with a deformable hypernetwork to produce a joint registration solution and compare its performance to readily usable baseline tools.

4.1. Data

The training-data synthesis and analyses use 3D brain MRI scans from a broad collection of public data, aiming to truly capture the behavior of the methods facing the diversity of real-world images. While users of SynthMorph do not need to preprocess their data, our experiments use images conformed to the same isotropic 1-mm voxel space using trilinear interpolation, and by cropping and zero-padding symmetrically. We rearrange the voxel data to produce gross left-inferior-anterior (LIA) orientation with respect to the volume axes.
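Symmetric cropping and zero-padding to a common grid can be sketched as follows. The target shape in the example is arbitrary and the function name `crop_or_pad` is hypothetical. Resampling to 1-mm voxels and reorientation are separate steps not shown here.

```python
import numpy as np

def crop_or_pad(volume, shape):
    """Symmetrically crop or zero-pad each axis of `volume` to `shape`."""
    out = volume
    for axis, target in enumerate(shape):
        size = out.shape[axis]
        if size > target:
            start = (size - target) // 2
            out = np.take(out, np.arange(start, start + target), axis=axis)
        elif size < target:
            before = (target - size) // 2
            pad = [(0, 0)] * out.ndim
            pad[axis] = (before, target - size - before)
            out = np.pad(out, pad)  # constant zero padding by default
    return out

volume = np.ones((5, 10, 7))
conformed = crop_or_pad(volume, (8, 8, 8))
```

In the example, the first and third axes are padded and the second is cropped, so 5 × 8 × 7 of the original voxels survive.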

4.1.1. Generation label maps

For training-data synthesis, we compose a set of 100 whole-head tissue segmentations, each derived from T1w acquisitions with isotropic ~1-mm resolution. We do not use these T1w images in our experiments. The training segmentations include 30 locally scanned adult FSM subjects (Greve et al., 2021), 30 participants of the cross-sectional Open Access Series of Imaging Studies (OASIS, Marcus et al., 2007), 30 teenagers from the Adolescent Brain Cognitive Development (ABCD) study (Casey et al., 2018), and 10 infants scanned at Boston Children’s Hospital at age 0–18 months (de Macedo Rodrigues et al., 2015; Hoopes, Mora, et al., 2022).

We derive brain label maps from the conformed T1w scans using SynthSeg (Billot, Greve, et al., 2023; Billot et al., 2020). We emphasize that inaccuracies in the segmentations have little impact on our strategy, as the images synthesized from the segmentations will be in perfect voxel-wise registration with the labels by construction.

To facilitate the synthesis of spatially complex image signals outside the brain, we use a simple thresholding procedure to add non-brain labels to each label map. The procedure sorts non-zero image voxels outside the brain into one of six intensity bins, equalizing bin sizes on a per-image basis.
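One way to read this thresholding procedure is as per-image quantile binning. The sketch below is an assumption-laden illustration rather than the published implementation: the function name, the offset `first_label=100` for the new non-brain labels, and the use of quantiles to equalize bin sizes are all choices made here.

```python
import numpy as np


def add_nonbrain_labels(image, brain_labels, num_bins=6, first_label=100):
    """Sort non-zero voxels outside the brain into equal-sized intensity bins.

    `first_label` is a hypothetical offset that keeps the new labels
    distinct from the brain labels.
    """
    image = np.asarray(image, dtype=float)
    labels = np.array(brain_labels, copy=True)

    # Candidate voxels: no brain label, but non-zero image intensity.
    outside = (labels == 0) & (image != 0)
    values = image[outside]
    if values.size == 0:
        return labels

    # Interior quantiles split the voxels into bins of (nearly) equal size,
    # computed separately for each image.
    edges = np.quantile(values, np.linspace(0, 1, num_bins + 1)[1:-1])
    bins = np.digitize(values, edges)  # integers in 0 .. num_bins - 1
    labels[outside] = first_label + bins
    return labels
```

With many tied intensities, the bins are equal in size only approximately.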

4.1.2. Evaluation images

For baseline comparisons, we pool adult and pediatric T1w images from the Brain Genomics Superstruct Project (GSP, Holmes et al., 2015), the Lifespan Human Connectome Project Development (HCP-D, Harms et al., 2018; Somerville et al., 2018), MASiVar (MASi, Cai et al., 2021), and IXI (Imperial College London, 2015).

The evaluation set also includes IXI scans with T2w and PDw contrast. As all these images are near-isotropic ~1-mm acquisitions, we complement the dataset with contrast-enhanced clinical T1w stacks of axial 6-mm slices from subjects with newly diagnosed glioblastoma (QIN, Clark et al., 2013; Mamonov and Kalpathy-Cramer 2016; Prah et al., 2015).

Our experiments use the held-out test images listed in Table 1. For monitoring and model validation, we use a handful of images pooled from the same datasets, which do not overlap with the test subjects. We do not consider QIN at validation and validate performance in pediatric data with held-out ABCD subjects. To measure registration accuracy, we compute anatomical brain label maps individually for each conformed image volume using SynthSeg (Billot, Greve, et al., 2023; Billot et al., 2020). Although SynthMorph does not require skull-stripping, we skull-strip all images with SynthStrip (Hoopes, Mora, et al., 2022) for a fair comparison across images that have undergone the preprocessing steps expected by the baseline methods—unless explicitly noted.

Table 1.

Acquired test data for baseline comparisons spanning a range of MRI contrasts, resolutions (res.), and subject populations.

| Dataset | Type | Res. (mm³) | Subjects |
|---|---|---|---|
| GSP | T1w, age 18–35 a | | 100 |
| IXI | T1w | | 100 |
| IXI | T2w | | 100 |
| IXI | PDw | | 100 |
| HCP-D | T1w, age 5–21 a | | 80 |
| MASi | T1w, age 5–8 a | | 80 |
| QIN | post-contrast T1w | | 50 |

QIN includes contrast-enhanced clinical stacks of thick slices from patients with glioblastoma, whereas the other acquisitions use 3D sequences. While HCP-D and MASi include pediatric data, the remaining datasets consist of adult populations.

4.1.3. Labels

The training segmentations encompass a set of 38 different labels, 32 of which are standard (lateralized) FreeSurfer labels (Fischl et al., 2002). Parenthesizing their average size over FSM subjects relative to the total brain volume and combining the left and right hemispheres, these structures are: cerebral cortex (43.4%) and white matter (36.8%), cerebellar cortex (9.2%) and white matter (2.2%), brainstem (1.8%), lateral ventricle (1.7%), thalamus (1.2%), putamen (0.8%), ventral DC (0.6%), hippocampus (0.6%), caudate (0.6%), amygdala (0.3%), pallidum (0.3%), 4th ventricle (0.1%), accumbens (0.1%), inferior lateral ventricle (0.1%), 3rd ventricle (0.1%), and background.

The remaining labels map to variable image features outside the brain (Section 4.1.1). These added labels do not necessarily represent distinct or meaningful anatomical structures but expose the networks to non-brain image content at training. We use all labels to synthesize training images but optimize the overlap of brain-specific labels based on Equation (3).

For affine training and evaluation, we merge brain structures such that the set of optimized labels consists of larger tissue classes: left and right cerebral cortex, left and right subcortex, and cerebellum. These classes ensure that small labels like the caudate do not have a disproportionate influence on global brain alignment, although different groupings may work equally well. In contrast, deformable registration redefines this set to include the 21 largest brain structures up to and including caudate. We use these labels for deformable training and evaluation, as prior analyses report that “only overlap scores of localized anatomical regions reliably distinguish reasonable from inaccurate registrations” (Rohlfing, 2011).
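Merging fine-grained structures into larger classes amounts to a lookup-table relabeling. The following sketch uses made-up label IDs purely for illustration; they are not FreeSurfer identifiers, and the assignment of structures to classes is an assumption.

```python
import numpy as np

# Hypothetical source IDs mapped to hypothetical merged class IDs.
# Labels absent from the lookup map to 0 (ignored).
EXAMPLE_LOOKUP = {
    1: 1,  # e.g. left cerebral cortex  -> class 1
    2: 2,  # e.g. right cerebral cortex -> class 2
    3: 3,  # e.g. left thalamus         -> class 3 (left subcortex)
    4: 3,  # e.g. left putamen          -> class 3 (left subcortex)
    5: 4,  # e.g. right thalamus        -> class 4 (right subcortex)
    6: 5,  # e.g. cerebellar cortex     -> class 5 (cerebellum)
}


def merge_labels(label_map, lookup):
    """Relabel a non-negative integer label map using a lookup table."""
    labels = np.asarray(label_map)
    size = max(int(labels.max()), max(lookup)) + 1
    lut = np.zeros(size, dtype=np.int32)
    for source, target in lookup.items():
        lut[source] = target
    return lut[labels]
```

Because unlisted labels fall back to 0, non-brain labels drop out of the merged map and do not contribute to an overlap measure computed on it.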

For a less circular assessment of deformable registration accuracy, we separately consider the set of the 10 finest-grained structures above whose overlap we do not optimize at training, including the labels from amygdala through 3rd ventricle.

4.2. Baselines

We test 3D affine and deformable classical registration with ANTs (Avants et al., 2011) version 2.3.5 using recommended parameters (Pustina & Cook, 2017) for the NCC metric within and MI across MRI contrasts. We test NiftyReg (Modat et al., 2014) version 1.5.58 with the NMI metric and enable SVF integration for joint registration, as in our approach. We also run the patch-similarity method Deeds (Heinrich et al., 2012), 2022-04-12 version. For a rigorous baseline assessment, we reduce the default grid spacing from to . This setting effectively trades a shorter runtime for increased accuracy as recommended by the author, since it optimizes the parametric B-spline model on a finer control point grid (Heinrich et al., 2013). The modification results in a 1–2% accuracy boost for most datasets as in prior work (Hoffmann et al., 2022). We test affine-only registration with mri_robust_register (“Robust”) from FreeSurfer 7.3 (Fischl, 2012) using its robust cost functions (Reuter et al., 2010), as only the robust cost functions can down-weight the contribution of regions that deform non-linearly. However, we highlight that the robust-entropy metric for cross-modal registration is experimental. We use Robust with up to 100 iterations and initialize the affine registration with a rigid run. Finally, we also test affine and deformable registration with the FSL (Jenkinson et al., 2012) tools FLIRT (Jenkinson & Smith, 2001) version 6.0 and FNIRT (Andersson et al., 2007) build 507. While the recommended cost function of FLIRT, correlation ratio, is suitable within and across modalities, we emphasize that users cannot change FNIRT’s MSE objective, which specifically targets within-contrast registration.

We compare DL model variants covering popular registration architectures in Section A.3. This analysis uses the same capacity and training set for each model. For our final synthesis-based tool in Sections 4.4 and 4.5, we consider readily available machine-learning baselines trained by their respective authors, to assess their generalization capabilities to the diverse data we have gathered. This strategy evaluates what level of accuracy a user can expect from off-the-shelf methods without retraining, as retraining is generally challenging for users (see Section 5.3). We test: KeyMorph (Yu et al., 2021) and C2FViT (Mok & Chung, 2022) models trained for pair-wise affine, and the 10-cascade Volume Tweening Network (VTN) (S. Zhao, Dong, et al., 2019; S. Zhao, Lau, et al., 2019) trained for joint affine-deformable registration. Each network receives inputs with the expected image orientation, resolution, and intensity normalization.

In contrast to the baselines, SynthMorph is the only method optimizing spatial label overlap. While this likely provides an advantage when measuring accuracy with a label-based metric, optimizing an image-based objective may be advantageous when measuring image similarity at test. For a balanced comparison, we assess registration accuracy in terms of label overlap and image similarity.

4.3. Evaluation metrics

To measure registration accuracy, we propagate the moving label map using the predicted transform to obtain the moved label map and compute its (hard) Dice overlap (Dice, 1945) with the fixed label map. In addition, we evaluate MSE of the modality-independent neighborhood descriptor (MIND, Heinrich et al., 2012) between the moved image and the fixed image as well as NCC for same-contrast registration. As we seek to measure brain-specific registration accuracy, we remove any image content external to the brain labels prior to evaluating the image-based metrics. We use paired two-sided t-tests to determine whether differences in mean scores between methods are significant.
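As an illustration of the label-based metric, hard Dice overlap between a moved and a fixed label map can be computed per structure as below. This is a generic sketch; the function name and the handling of labels absent from both maps (returning NaN) are choices made here, not details from the text.

```python
import numpy as np


def dice_scores(moved, fixed, labels):
    """Hard Dice overlap 2|A ∩ B| / (|A| + |B|) for each label."""
    moved = np.asarray(moved)
    fixed = np.asarray(fixed)
    scores = {}
    for label in labels:
        a = moved == label
        b = fixed == label
        total = a.sum() + b.sum()
        # A label missing from both maps has no defined overlap.
        scores[label] = 2.0 * np.logical_and(a, b).sum() / total if total else float('nan')
    return scores
```

Averaging the per-label values, for example with `np.nanmean(list(scores.values()))`, gives a single score per image pair.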

We analyze the regularity of the deformation field in terms of the mean absolute value of the logarithm of the Jacobian determinant over brain voxels. This quantity is sensitive to the deviation of the Jacobian determinant from the ideal value 1 and thus measures the width of the distribution of log-Jacobian determinants, the “log-Jacobian spread”:

| (13) |

where . We also determine the proportion of folding voxels, that is, locations where . We compare the inverse consistency of registration methods by means of the average displacement that voxels undergo upon subsequent application of transforms ,

| (14) |

Specifically, we evaluate the mean symmetric inverse consistency of method with and for any pair of input images :

| (15) |
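The regularity measures described in this section can be sketched numerically. The example assumes a dense displacement field in voxel units with shape `(X, Y, Z, 3)`, approximates spatial derivatives with finite differences, counts voxels with a non-positive Jacobian determinant as folding, and excludes those voxels from the log-Jacobian spread because the logarithm is undefined there. These conventions and all names are assumptions, not the authors' implementation.

```python
import numpy as np


def jacobian_determinant(disp):
    """Per-voxel determinant of the Jacobian of phi(x) = x + disp(x)."""
    disp = np.asarray(disp, dtype=float)
    jac = np.empty(disp.shape[:3] + (3, 3))
    for i in range(3):
        # Finite-difference derivatives of component i along each axis.
        grads = np.gradient(disp[..., i])
        for j in range(3):
            jac[..., i, j] = grads[j] + float(i == j)
    return np.linalg.det(jac)


def regularity(disp, mask):
    """Return (log-Jacobian spread, proportion of folding voxels) in a mask."""
    det = jacobian_determinant(disp)[np.asarray(mask, dtype=bool)]
    valid = det > 0
    # Mean absolute log-determinant over non-folding voxels.
    spread = float(np.mean(np.abs(np.log(det[valid])))) if valid.any() else float('nan')
    folding = float(np.mean(~valid))
    return spread, folding
```

A zero displacement field yields a spread of 0 and no folding, whereas a uniform 10% expansion along each axis yields a spread of 3 ln 1.1 ≈ 0.29.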

4.4. Experiment 1: affine registration

In this experiment, we focus on “affine SynthMorph,” an anatomy-aware affine registration tool that generalizes across acquisition protocols while enabling brain registration without preprocessing. In contrast, Appendix A compares competing network architectures and analyzes how they learn and best represent affine transforms.

4.4.1. Setup

First, to give the reader an idea of the accuracy achievable with off-the-shelf algorithms for data unseen at training, we compare affine SynthMorph to classical and DL baselines trained by the respective authors. We test affine registration of skull-stripped images across MRI contrasts, for a variety of different imaging resolutions and populations, including adults, children, and patients with glioblastoma. We also compare the symmetry of each method with regard to reversing the order of input images. Each test involves held-out image pairs from separate subjects, summarized in Table 1.

Second, we analyze the effect of thick-slice acquisitions on affine SynthMorph accuracy compared to classical baselines. This experiment retrospectively reduces the through-plane resolution of the moving image of each GSP–IXI T1 pair to produce stacks of axial slices of thickness mm. At each , we simulate partial voluming (Kneeland et al., 1986; Simmons et al., 1994) by smoothing all moving images in the slice-normal direction with a 1D Gaussian kernel of full-width at half-maximum (FWHM) and by extracting slices apart using linear interpolation. Finally, we restore the initial volume size by linearly upsampling through-plane.
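The three steps of this simulation (through-plane Gaussian smoothing, extraction of thick slices, linear upsampling) might be sketched as follows. The example assumes 1-mm voxels along the slice axis, so that thickness in mm equals thickness in voxels, and it leaves both the slice thickness and the kernel FWHM as free parameters; the function name, the edge padding, and the kernel truncation at three standard deviations are choices made here.

```python
import numpy as np


def simulate_thick_slices(vol, thickness, fwhm, axis=2):
    """Smooth along `axis`, sample slices `thickness` apart, then upsample."""
    vol = np.moveaxis(np.asarray(vol, dtype=float), axis, -1)
    n = vol.shape[-1]

    # 1D Gaussian kernel; FWHM = 2 * sqrt(2 * ln 2) * sigma.
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()

    # Smooth through-plane, replicating edge values at the borders.
    pad = [(0, 0)] * (vol.ndim - 1) + [(radius, radius)]
    padded = np.pad(vol, pad, mode='edge')
    smooth = np.apply_along_axis(np.convolve, -1, padded, kernel, mode='valid')

    fine = np.arange(n, dtype=float)                    # original positions
    coarse = np.arange(0, n, thickness, dtype=float)    # thick-slice positions

    def resample(line):
        thick = np.interp(coarse, fine, line)           # extract thick slices
        return np.interp(fine, coarse, thick)           # restore original size

    out = np.apply_along_axis(resample, -1, smooth)
    return np.moveaxis(out, -1, axis)
```

For instance, `simulate_thick_slices(vol, thickness=4, fwhm=4)` uses arbitrary illustrative values; beyond the last extracted slice, `np.interp` holds the final value constant.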

Third, we evaluate the importance of skull-stripping the input images for accurate registration. With the exception of skull-stripping, we preprocess full-head GSP–IXI T1 pairs as expected by each method and assess brain-specific registration accuracy by evaluating image-based metrics within the brain only.

4.4.2. Results

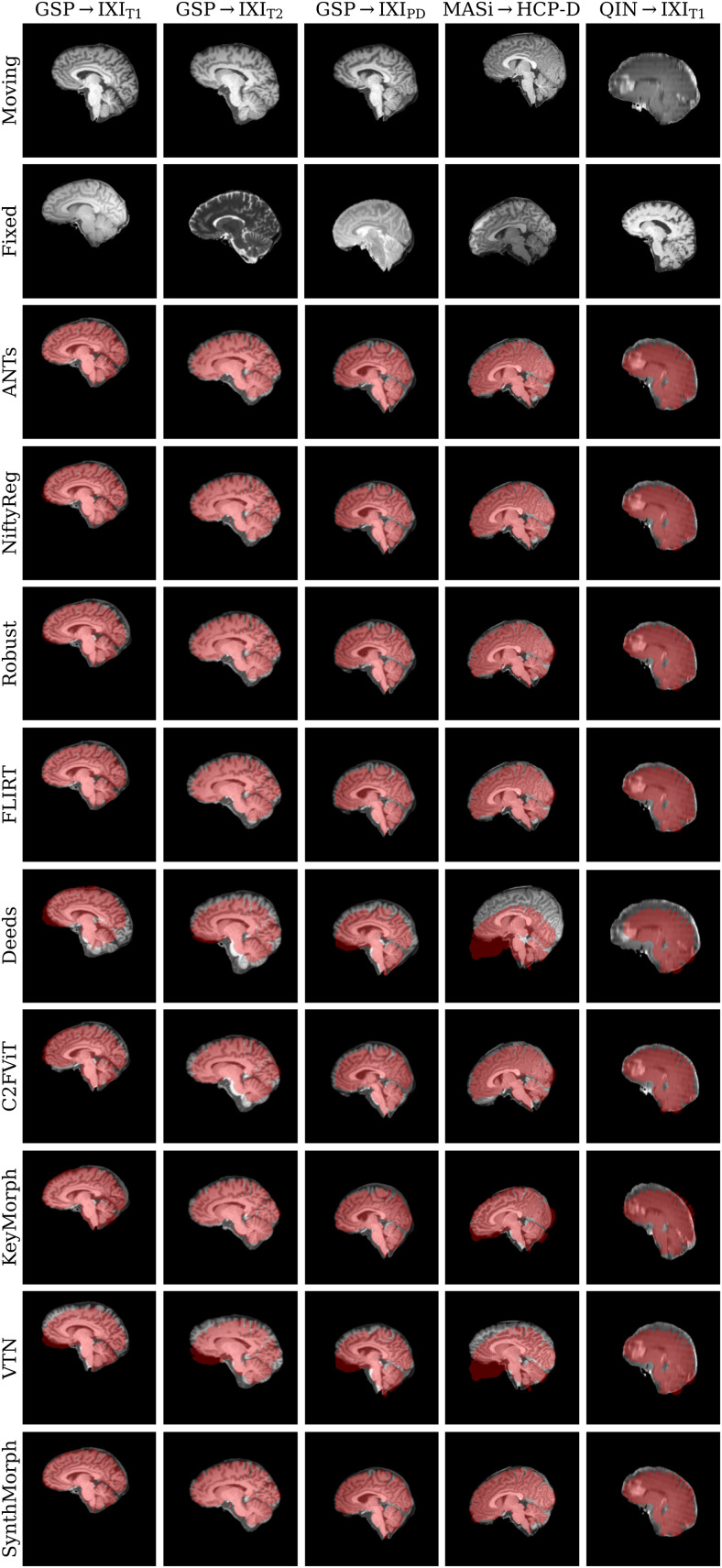

Figure 6 shows representative registration examples for the tested dataset combinations, while Figure 5 quantitatively compares affine registration accuracy across skull-stripped image pairings. Although affine SynthMorph has not seen any real MRI data at training, it achieves the highest Dice score for every dataset tested.

Fig. 6.

Representative affine 3D registration examples showing the image moved by each method overlaid with the fixed brain mask (red). Each row is an example from a different dataset. Subscripts indicate MRI contrast.

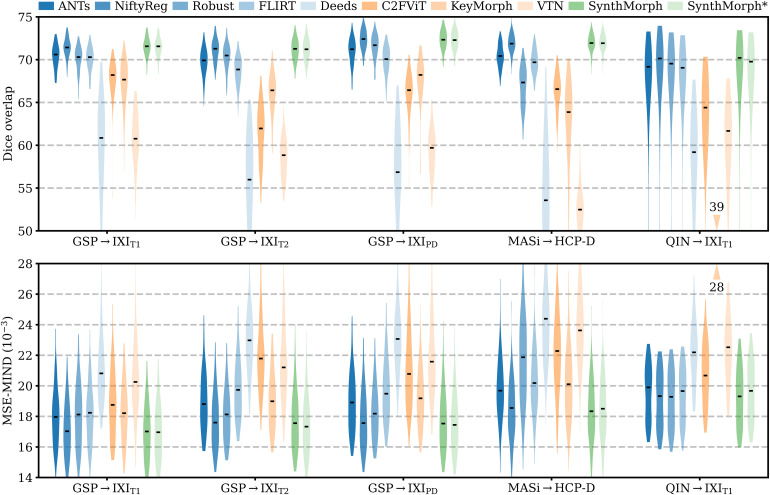

Fig. 5.

Affine 3D registration accuracy as mean Dice scores and in terms of image similarity. Each violin shows the distribution across the skull-stripped cross-subject pairs from Table 1. For comparison, the asterisk indicates SynthMorph performance without skull-stripping. Downward arrows indicate median scores outside the plotted range. Higher Dice and lower MSE-MIND are better.

For the GSP–IXI T1 and MASi–HCP-D pairs that most baselines are optimized for, SynthMorph exceeds the best-performing baseline NiftyReg by points ( and for paired two-sided t-tests). Across all other pairings, SynthMorph matches the Dice score achieved by the most accurate affine baseline, which is NiftyReg in every case. Deeds performs least accurately, lagging behind the second-to-last classical baseline by or more. The other classical methods perform robustly across all testsets, generally within 1–2 Dice points of each other.

On the MASi–HCP-D testset, FLIRT’s performance exceeds that of Robust by ( ) and matches it across GSP–IXI T1 pairs ( ). Across the remaining testsets, FLIRT ranks fourth among classical baselines.

In contrast, the DL baselines do not reach the same accuracy. Even for the T1w pairs they were trained with, SynthMorph leads by or more, likely due to domain shift between the test and baseline training data. As expected, DL-baseline performance continues to decrease as the test-image characteristics deviate further from those at training. Interestingly, VTN consistently ranks among the least accurate baselines, although its preprocessing effectively initializes the translation and scaling parameters by separately adjusting the moving and fixed images such that the brain fills the whole FOV.

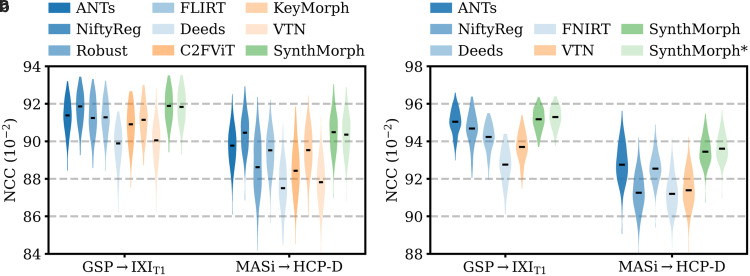

Even though affine SynthMorph does not directly optimize image similarity at training, it surpasses NiftyReg for GSP–IXI T1 ( ) and MASi–HCP-D ( ) pairs in terms of the image-based MSE-MIND metric. Generally, MSE-MIND ranks the methods similarly to Dice overlap, as does NCC across the T1w registration pairs (Fig. 8a).

Fig. 8.

Within-contrast 3D (a) affine and (b) deformable registration accuracy across skull-stripped cross-subject pairs in terms of brain-only NCC. We initialize all deformable tools with affine transforms estimated by NiftyReg. The asterisk indicates SynthMorph without skull-stripping. Higher is better.

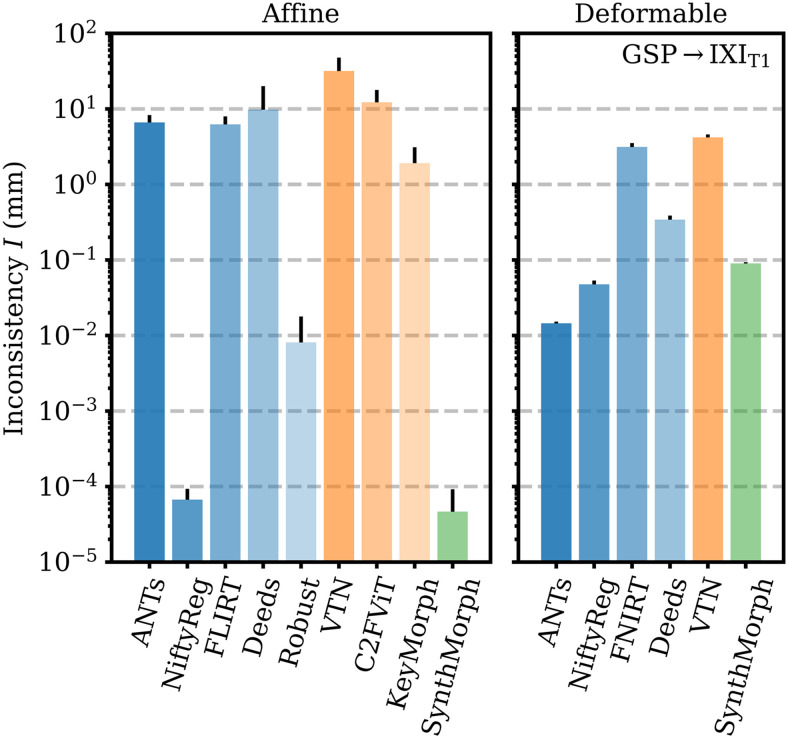

Figure 9 shows that SynthMorph’s affine transforms across GSP–IXI T1 are more symmetric than those of all baselines tested. When we reverse the order of the input images, the mean inconsistency between forward and backward transforms is mm per brain voxel, closely followed by NiftyReg. Robust also uses an inverse-consistent algorithm, leading to mm. The remaining baselines are substantially less symmetric, with inconsistencies of mm for KeyMorph or more.

Fig. 9.

Forward-backward inconsistency between transforms when reversing the order of input images. We compare the mean displacement per brain voxel upon subsequent application of both transforms. Lower values are better.
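For affine transforms, this forward-backward inconsistency can be illustrated with 4×4 matrices acting on brain-voxel coordinates in mm. The sketch below assumes that the symmetric measure averages the two orders of application with equal weight; this averaging and the function names are assumptions made for illustration.

```python
import numpy as np


def mean_displacement(first, second, points):
    """Mean distance that points move when applying `first`, then `second`.

    `first` and `second` are 4x4 affine matrices; `points` has shape (N, 3).
    """
    points = np.asarray(points, dtype=float)
    homog = np.c_[points, np.ones(len(points))]
    moved = homog @ (second @ first).T
    return float(np.linalg.norm(moved[:, :3] - points, axis=1).mean())


def symmetric_inconsistency(forward, backward, points):
    """Average the displacement over both orders of application."""
    return 0.5 * (mean_displacement(forward, backward, points)
                  + mean_displacement(backward, forward, points))
```

If the backward transform is the exact inverse of the forward transform, every point returns to its starting position and the measure is zero.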

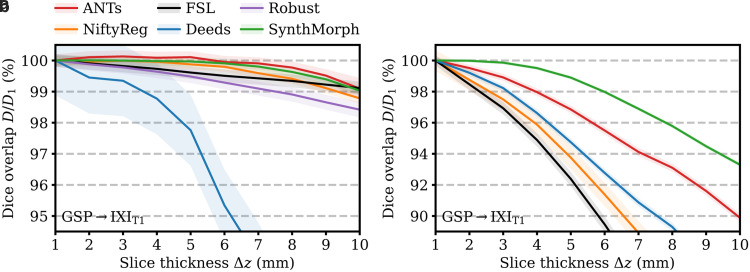

Figure 7a shows how registration accuracy evolves with increasing moving-image slice thickness . SynthMorph and ANTs remain the most robust for mm, reducing only to 99% at mm. For mm, ANTs accuracy even improves slightly, likely benefiting from the smoothing effect on the images. The classical baselines FLIRT and Robust are only mildly affected by thicker slices. While their Dice scores decrease more rapidly for , their accuracy reduces to 99% and about 98.5% at mm. Deeds is noticeably more susceptible to resolution changes, decreasing to less than 95% at mm.

Fig. 7.

Dependency of 3D (a) affine and (b) deformable registration accuracy on slice thickness. For comparability, we initialize all deformable tools with affine transforms estimated by NiftyReg. Each value indicates the mean over 100 skull-stripped pairs. Higher is better. Shaded areas indicate the standard error of the mean.

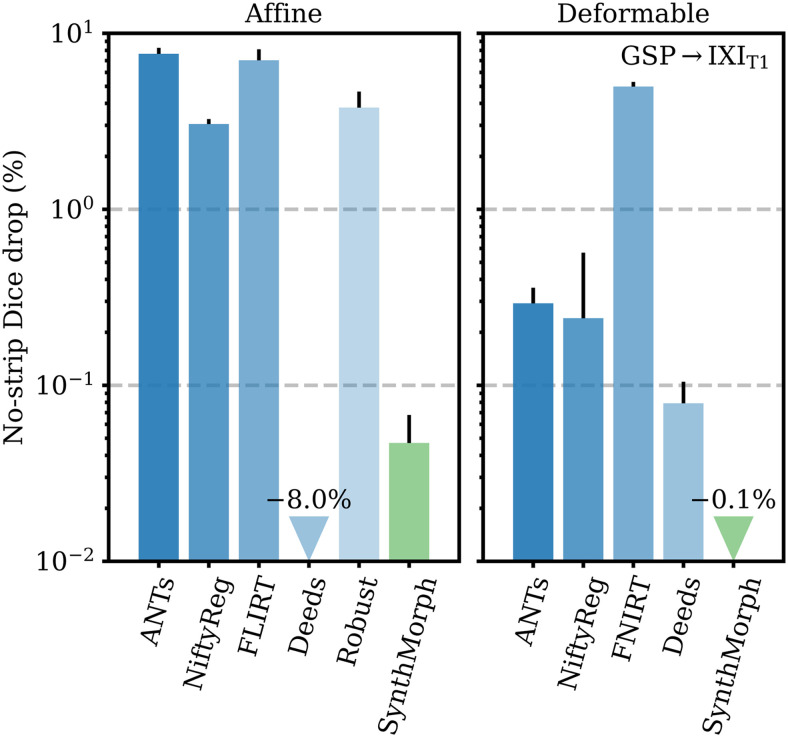

Figure 10 compares the drop in median Dice overlap the affine methods undergo when presented with full-head as opposed to skull-stripped GSP–IXI T1 images. Except for Deeds, brain-specific accuracy reduces substantially, by 3% in the case of NiftyReg and up to 8% for ANTs. Affine SynthMorph remains most robust: its Dice overlap changes by less than 0.05%. Deeds’ accuracy increases, but it still yields the lowest score for the testset.

Fig. 10.

Relative reduction in brain-specific accuracy when registering full-head as opposed to skull-stripped images. Lower values are better. Although affine Deeds is the only method whose Dice overlap increases, it ranks as the least accurate on the GSP–IXI T1 testset. Error bars show the standard error of the mean.

Table 2 lists the registration time required by each affine method on a 2.2-GHz Intel Xeon Silver 4114 CPU using a single computational thread. The values shown reflect averages over uni-modal runs. Classical runtimes range between 2 and 27 minutes, with Deeds being the fastest and Robust being the slowest, although we highlight that we substantially increased the number of Robust iterations. Complete single-threaded DL runtimes are about 1 minute, including model setup. However, inference only takes a few seconds and reduces to well under a second on an NVIDIA V100 GPU.

4.5. Experiment 2: joint registration

Motivated by the affine performance of SynthMorph, we complete the model with a hypernetwork-powered deformable module to achieve 3D joint affine-deformable registration (Fig. 4). Our focus is on building a complete and readily usable tool that generalizes across scan protocols without requiring preprocessing.

4.5.1. Setup

First, we compare deformable registration using the held-out image pairs from separate subjects for each of the datasets of Table 1. The comparison employs skull-stripped images initialized with affine transforms estimated from skull-stripped data by NiftyReg, the most accurate baseline in Figure 5. We compare deformable SynthMorph performance to classical baselines and VTN, a joint DL baseline trained by the original authors—we seek to gauge the accuracy achievable with off-the-shelf algorithms for data unseen at training.

Second, we analyze the robustness of each tool to sub-optimal affine initialization. In order to cover realistic affine inaccuracies and assess the most likely and intended use case, we repeat the previous experiment, this time initializing each method with the affine transform obtained with the same method—that is, we test end-to-end joint registration with each tool. Similarly, we evaluate the importance of removing non-brain voxels from the input images. In this experiment, we initialize each method with affine transforms estimated by NiftyReg from skull-stripped data, and test deformable registration on a full-head version of the images.

Third, we analyze the effect of reducing the through-plane resolution on SynthMorph performance compared to classical baselines, following the steps outlined in Section 4.4. In this experiment, we initialize each method with affine transforms estimated by NiftyReg from skull-stripped images, such that the comparison solely reflects deformable registration accuracy.

Fourth, we analyze warp-field regularity and registration accuracy over dataset GSP–IXI T1 as a function of the regularization weight . We also compare the symmetry of each method with regard to reversing the order of the input images.

4.5.2. Results

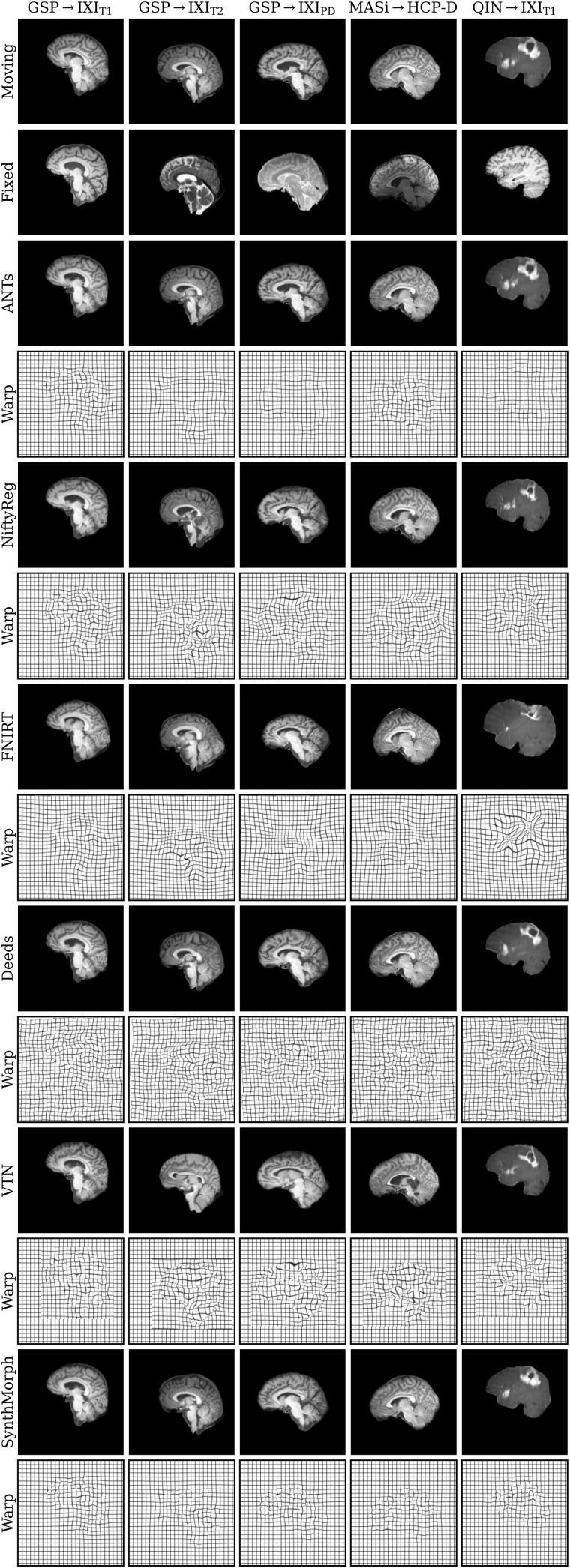

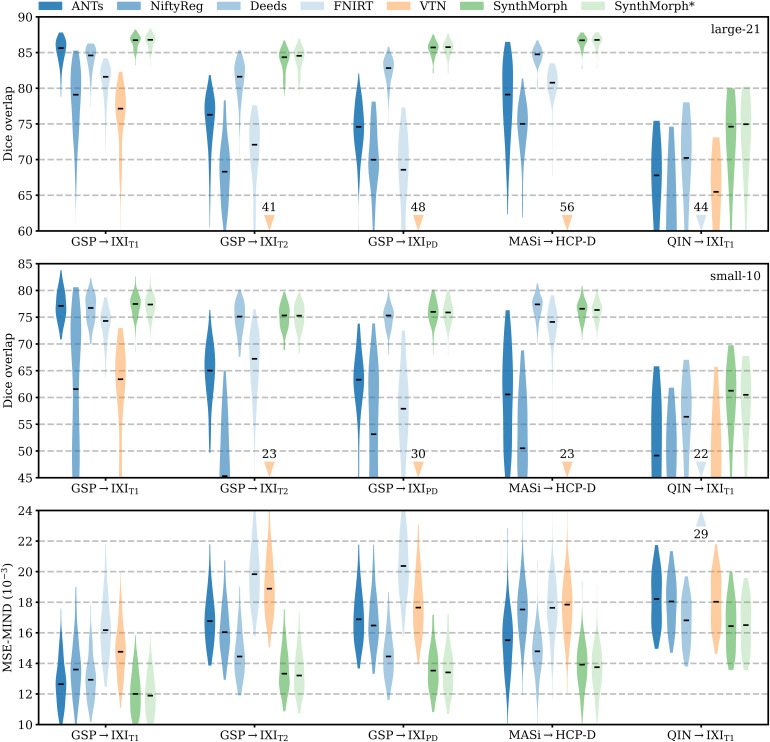

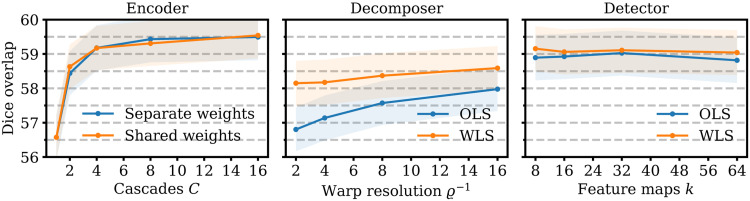

Figure 11 shows typical deformable registration examples for each method, and Figure 12 compares registration accuracy across testsets in terms of mean Dice overlap over the 21 largest anatomical structures (large-21), 10 fine-grained structures (small-10) not optimized at training, and image similarity measured with MSE-MIND. Supplementary Figures S1–S5 show deformable registration accuracy across individual brain structures.

Fig. 11.

Deformable 3D registration examples comparing the moved image and the deformation field across methods. Each row is an example from a different dataset. For comparability, we initialize all methods with NiftyReg’s affine registration.

Fig. 12.

Deformable 3D registration accuracy as mean Dice scores over the 21 largest brain regions (large-21), 10 fine-grained structures not optimized at SynthMorph training (small-10), and image similarity. Each violin shows the distribution across the skull-stripped cross-subject pairs from Table 1. For comparability, we initialize all deformable tools with affine transforms estimated by NiftyReg. The asterisk indicates SynthMorph performance without skull-stripping. Downward arrows show median scores outside the plotted range. Higher Dice and lower MSE-MIND are better.

Although SynthMorph trains with synthetic images only, it achieves the highest large-21 score for every skull-stripped testset. For all cross-contrast pairings and the pediatric testset, SynthMorph leads by at least 2 Dice points compared to the highest baseline score (MASi–HCP-D, for paired two-sided t-test) and often much more. Across these testsets, SynthMorph performance remains largely invariant, whereas the other methods except Deeds struggle. Crucially, the distribution of SynthMorph scores for isotropic data is substantially narrower than the baseline scores, indicating the absence of gross inaccuracies such as pairs with that several baselines yield across all isotropic contrast pairings. On the clinical testset QIN–IXI T1, SynthMorph surpasses the baselines by at least . For GSP–IXI T1, it outperforms the best classical baseline ANTs by 1 Dice point ().

Across the T1w testsets, FNIRT outperforms NiftyReg by several Dice points and also ANTs for MASi–HCP-D pairs. Surprisingly, FNIRT beats NiftyReg’s NMI implementation for GSP–IXI T2, even though FNIRT’s cost function targets within-contrast registration. The most robust baseline is Deeds, which ranks third at adult T1w registration. Its performance reduces the least for the cross-contrast and clinical testsets, where it achieves the highest Dice overlap after SynthMorph.

The joint DL baseline VTN yields relatively low accuracy across all testsets. This was expected for the cross-contrast pairings, since the model was trained with T1w data, confirming the data dependency introduced with standard training. However, VTN also lags behind the worst-performing classical baseline for GSP–IXI T1 data, NiftyReg ( , ), likely due to domain shift as in the affine case.

Considering the fine-grained small-10 brain structures held out at training, SynthMorph consistently matches or exceeds the best-performing method, except for MASi–HCP-D, where Deeds leads by ( ). On the clinical testset, SynthMorph leads by at least ( ). Interestingly, SynthMorph outperforms all baselines across testsets in terms of MSE-MIND ( ) and NCC for same-contrast registration (Fig. 8b, ), although it is the only method not optimizing or trained with an image-based loss.

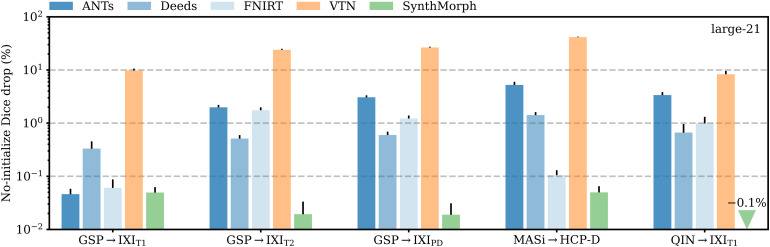

Figure 13 shows the relative change in large-21 Dice for each tool when run end-to-end compared to affine initialization with NiftyReg. SynthMorph’s drop in performance is 0.05% or less across all datasets. For GSP–IXI T1, classical-baseline accuracy decreases by no more than . Across the other datasets, the classical methods generally cannot make up for the discrepancy between their own and NiftyReg’s affine transform: accuracy drops by up to , whereas SynthMorph remains robust. The performance of VTN reduces by at least across testsets and often much more, highlighting the detrimental effect an inaccurate affine transform can have on the subsequent deformable step.

Fig. 13.

Mean decrease in Dice scores for end-to-end joint registration relative to affine initialization with NiftyReg. Except for adult T1w registration pairs, the classical tools in blue generally cannot compensate for the discrepancy between their own and NiftyReg’s affine transform, indicating that inaccurate affine initialization can have a detrimental effect on subsequent deformable registration.

Figure 10 shows the importance of skull-stripping for deformable registration accuracy. Generally, deformable accuracy suffers less than affine registration when switching to full-head images, as the algorithms deform image regions independently. SynthMorph remains most robust to the change in preprocessing; its large-21 Dice overlap increases by 0.1%. With a drop of 0.08%, Deeds is similarly robust. In contrast, FNIRT’s performance is most affected, reducing by 5%—a decline of the same order as for most affine methods.

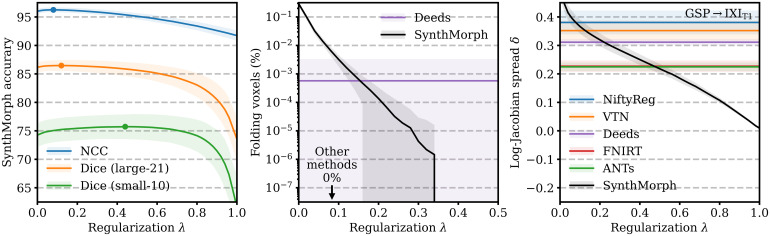

Figure 14 analyzes SynthMorph warp smoothness. As expected, image-based NCC and large-21 Dice accuracy peak for weak regularization of . In contrast, overlap of the small-10 regions not optimized at training benefits from smoother warps, with an optimum at . The fields predicted by SynthMorph achieve the lowest log-Jacobian spread across all baselines for . Similarly, the proportion of folding brain voxels decreases with higher and drops to for (10 integration steps). Deeds yields folding brain voxels, whereas the other baselines achieve . For realistic warp fields with characteristics that match or exceed the tested baselines, we conduct all comparisons in this study with a default weight . We highlight that is an input to SynthMorph, enabling users to choose the optimal regularization strength for their specific data without retraining.

Fig. 14.

Regularization analysis of SynthMorph registration accuracy, the proportion of folding voxels with a negative Jacobian determinant, and the spread of the distribution of absolute log-Jacobian determinants as a function of the regularization weight . The dots indicate maximum accuracy. For the other metrics, lower is better.

Deformable registration with SynthMorph is highly symmetric (Fig. 9), with a mean forward-backward inconsistency of only mm per brain voxel that closely follows ANTs (0.01 mm) and NiftyReg (0.05 mm). In contrast, the remaining methods are substantially more inconsistent, with mm for Deeds or more.

Figure 7b assesses the dependency of registration performance on slice thickness . Similar to the affine case, deformable accuracy decreases for thicker slices, albeit faster. SynthMorph performs most robustly. Its accuracy remains unchanged up to mm and reduces only to 95% at mm. ANTs is the most robust classical method, but its accuracy drops considerably faster than that of SynthMorph. FNIRT and NiftyReg are most affected at reduced resolution, performing at less than 95% accuracy for mm and mm, respectively.

Deformable registration often requires substantially more time than affine registration (Table 2). On the GPU, SynthMorph takes less than 8 seconds per image pair for registration, IO, and resampling. One-time model setup requires about 1 minute, after which the user could register any number of image pairs without reinitializing the model. SynthMorph requires about 16 GB of GPU memory for affine and 24 GB for deformable registration. On the CPU, the fastest classical method Deeds requires only about 6 minutes in single-threaded mode, whereas ANTs takes almost 5 hours. While VTN’s joint runtime is 1 minute, SynthMorph needs about 15 minutes for deformable registration on a single thread.

5. Discussion

We present an easy-to-use DL tool for end-to-end affine and deformable brain registration. SynthMorph achieves robust performance across acquisition characteristics such as imaging contrast, resolution, and pathology, enabling accurate registration for brain scans without preprocessing. The SynthMorph strategy alleviates the dependency on acquired training data by generating widely variable images from anatomical label maps—and there is no need for label maps at registration time.

5.1. Anatomy-specific registration

Accurate registration of the specific anatomy of interest requires ignoring or down-weighting the contribution of irrelevant image content to the optimization metric. SynthMorph learns what anatomy is pertinent to the task, as we optimize the overlap of select labels of interest only. It is likely that the model learns an implicit segmentation of the image, in the sense that it focuses on deforming the anatomy of interest, warping the remainder of the image only to satisfy regularization constraints. In contrast, many existing classical and DL methods cannot distinguish between relevant and irrelevant image features, and thus have to rely on explicit segmentation to remove distracting content prior to registration, such as skull-stripping (Eskildsen et al., 2012; Hoopes, Mora, et al., 2022; Iglesias et al., 2011; Smith, 2002).

Pathology missing from the training labels does not necessarily hamper overall registration accuracy, as the experiments with scans from patients with glioblastoma show. In fact, SynthMorph outperforms all deformable baselines tested on these data. However, we do not expect these missing structures to be mapped with high accuracy, in particular if the structure is absent in one of the test images—this is no different from the behavior of methods optimizing image similarity.

5.2. Baseline performance

Networks trained with the SynthMorph strategy do not have access to the MRI contrasts of the testsets nor, in fact, to any MRI data at all. Yet SynthMorph matches or outperforms classical and DL-baseline performance across the real-world datasets tested, while being substantially faster than the classical methods. For deformable registration, the fastest classical method Deeds requires 6 minutes, while SynthMorph takes about 1 minute for one-time model setup and just under 8 seconds for each subsequent registration. This speed-up may be particularly useful for processing large datasets like ABCD, enabling end-to-end registration of hundreds of image pairs per hour—the time that some established tools like ANTs require for a single registration.
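The throughput estimate follows from simple arithmetic on the runtimes quoted above. The snippet treats the one-time setup as 60 seconds and each registration as exactly 8 seconds, which is an upper bound given that the text reports "just under 8 seconds"; the function name is hypothetical.

```python
def pairs_registered(total_seconds, setup_seconds=60.0, seconds_per_pair=8.0):
    """Number of image pairs completed within a time budget after model setup."""
    remaining = total_seconds - setup_seconds
    return max(0, int(remaining // seconds_per_pair))


# One hour including setup: (3600 - 60) / 8 = 442.5, so 442 complete pairs.
print(pairs_registered(3600))  # → 442
```

Under these assumptions, a single hour covers several hundred registrations, which matches the order of magnitude stated in the text.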

The DL baselines tested have runtimes comparable to SynthMorph. Combining them with skull-stripping would generally be a viable option for fast brain-specific registration: brain extraction with a tool like SynthStrip only takes about 30 seconds. However, we are not aware of any existing DL tool that would enable deformable registration of unseen data with adjustable regularization strength without retraining. While the DL baselines break down for contrast pairings unobserved at training, they also cannot match the accuracy of classical tools for the T1w contrast they were trained with, likely due to domain shift.

In contrast, SynthMorph performance is relatively unaffected by changes in imaging contrast, resolution, or subject population. These results demonstrate that the SynthMorph strategy produces powerful networks that can register new image types unseen at training. We emphasize that our focus is on leveraging the training strategy to build a robust and accurate registration tool. It is possible that other architectures, such as the trained DL baselines tested in this work, perform equally well when trained using our strategy. Specifically, novel Bayesian similarity learning methods (Grzech et al., 2022; Su & Yang, 2023) and frameworks that jointly optimize the affine and deformable components have emerged since the initial submission of this work (Chang et al., 2023; Meng et al., 2023; Qiu et al., 2023; L. Zhao et al., 2023).

Although Robust down-weights the contribution of image regions that cannot be mapped with the linear transformation model of choice, its accuracy dropped by several points for data without skull-stripping. The poor performance in cross-contrast registration may be due to the experimental nature of its robust-entropy cost function. We initially experimented with the recommended NMI metric, but registration failed for a number of cases as Robust produced non-invertible matrix transforms, and we hoped that the robust metrics would deliver accurate results in the presence of non-brain image content—which the NMI metric cannot ignore during optimization.

5.3. Challenges with retraining baselines

Retraining DL baselines to improve performance for specific user data involves substantial practical challenges. For example, users have to reimplement the architecture and training setup from scratch if code is not available. If code is available, the user may be unfamiliar with the specific programming language or machine-learning library, and building on the original authors’ implementation typically requires setting up an often complex development environment with matching package versions. In our experience, not all authors make this version information readily available, such that users may have to resort to trial and error. Additionally, the user’s hardware might not be on par with the authors’. If a network exhausts the memory of the user’s GPU, avoiding prohibitively long training times on the CPU necessitates reducing model capacity, which can affect performance. We emphasize that because SynthMorph registers new images without retraining, it does not require a GPU. On the CPU, SynthMorph runtimes still compare favorably to classical methods (Table 2).

In principle, users could retrain DL methods despite the above-mentioned challenges. However, in practice the burden is usually sufficiently large that users of these technologies will turn to methods that distribute pre-trained models. For this reason, we specifically compare DL baselines trained by the respective authors, to gauge the performance attainable without retraining. While our previous work (Hoffmann, Billot, et al., 2021) demonstrated the feasibility of training registration networks within the synthesis strategy and, in fact, without any acquired data at all, the original model predicted implausibly under-regularized warps, and changing the regularization strength required retraining. In contrast, the toolbox version provides fast, domain-robust, symmetric, invertible, general-purpose DL registration, enabling users to choose the optimal regularization strength for their specific data—without retraining. We hope that the broad applicability of SynthMorph may help alleviate the historically limited reusability of DL methods.

EasyReg (Iglesias, 2023) is a recent DL registration method developed concurrently with SynthMorph. Both methods leverage the same synthesis strategy (Hoffmann et al., 2022) and thus do not require retraining. They differ in that EasyReg fits an affine transform to hard segmentation maps and estimates transforms to MNI space internally (Fonov et al., 2009), whereas SynthMorph includes an affine registration network and estimates pair-wise transforms directly. In addition, SynthMorph enables the user to control the warp smoothness at test time.

5.4. Joint registration

The joint baseline comparison highlights that deformable algorithms cannot always fully compensate for real-world inaccuracies in affine initialization. Generally, the median Dice overlap drops by a few percent when we initialize each tool with affine transforms estimated by the same package instead of NiftyReg, the most accurate affine baseline we tested. This experiment demonstrates the importance of affine registration for joint accuracy—choosing affine and deformable algorithms from the same package is likely the most common use case.

In Section 4.4, the affine subnetwork of the 10-cascade VTN model consistently ranks among the least accurate methods even for the T1w image type it trained with. We highlight that the authors of VTN do not independently tune or compare the affine component to baselines and instead focus on joint affine-deformable accuracy (S. Zhao, Dong, et al., 2019; S. Zhao, Lau, et al., 2019). While the VTN publication presents the affine cascade as an Encoder architecture (Section A.1) terminating with an FC layer (S. Zhao, Lau, et al., 2019), the public implementation omits the FC layer. Some of our experiments with this architecture indicated that the FC layer is critical to competitive performance.

5.5. Limitations

While SynthMorph often achieves state-of-the-art performance, we also discuss several limitations. First, the large-21 evaluation of registration accuracy uses the same anatomical labels whose overlap SynthMorph training optimizes. Although the analyses also compare the small-10 labels not optimized at training, MSE-MIND, and NCC, we consider only one label for the left and another for the right cortex, limiting the evaluation predominantly to subcortical alignment.

Second, some applications require fewer DOF than the full affine matrix that SynthMorph estimates. For example, the bulk motion in brain MRI and its mitigation through pulse-sequence adjustments are constrained to 6 DOF accounting for translation and rotation (Gallichan et al., 2016; Singh et al., 2024; Tisdall et al., 2012; White et al., 2010). Although the SynthMorph utility includes a model for rigid alignment trained with scaling and shear (Appendix B) removed from the matrix of Equation (7), the evaluation focuses on affine registration.

Third, considering voxel data alone, the SynthMorph rotational range is limited as the model only sees registration pairs rotated by angles below about any axis , resulting from the rotational offset between any two input label maps combined with spatial augmentation (Appendix Table A2), because the affine model did not converge with augmentation across the full range . However, the registration problem reduces to an effective 90º range when considering the orientation information stored in medical image headers. Ignoring headers, the rotational ranges measured across OASIS and ABCD do not exceed (Appendix Fig. A4).

Fourth, we train SynthMorph as a general tool for cross-subject registration, and the evaluation on clinical data is limited to 50 glioblastoma patients.

In addition, accuracy for specialized applications such as tumor tracking will likely trail behind dedicated models. However, for tumor-specific training, our learning framework could add synthesized pathology to label maps from healthy subjects. For example, an extended synthesis may simulate the mass effect by applying deformations measured in healthy-pathologic image pairs (Hogea et al., 2007) and overlaying the deformed label map with a synthetic tumor label (Zhou et al., 2023) to subsequently generate a distinct image intensity.

5.6. Future work

We plan to expand our work in several ways. First, we will provide a trained 6-DOF model for rigid registration, as many applications require translations and rotations only, and the most accurate rigid transform does not necessarily correspond to the translation and rotation encoded in the most accurate affine transform.

Second, we will employ the proposed strategy and affine architecture to train specialized models for within-subject registration for navigator-based motion correction of neuroimaging with MRI (Gallichan et al., 2016; Hoffmann et al., 2016; Tisdall et al., 2012; White et al., 2010). These models need to be efficient for real-time use but do not have to be invariant to MRI contrast or resolution when employed to track head-pose changes between navigators acquired with a fixed protocol. However, the brain-specific registration made possible by SynthMorph will improve motion-tracking and thus correction accuracy in the presence of jaw movement (Hoffmann et al., 2020).

Third, another application that can dramatically benefit from anatomy-specific registration is fetal neuroimaging, where the fetal brain is surrounded by amniotic fluid and maternal tissue. We plan to tackle registration of the fetal brain, which is challenging, partly due to its small size, and which currently relies on brain extraction prior to registration to remove confounding image content (Billot, Moyer, et al., 2023; Gaudfernau et al., 2021; Hoffmann, Abaci Turk, et al., 2021; Puonti et al., 2016).

6. Conclusion

We present an easy-to-use DL tool for fast, symmetric, diffeomorphic—and thus invertible—end-to-end registration of images without preprocessing. Our study demonstrates the feasibility of training accurate affine and joint registration networks that generalize to image types unseen at training, outperforming established baselines across a landscape of image contrasts and resolutions. In a rigorous analysis approximating the diversity of real-world data, we find that our networks achieve invariance to protocol-specific image characteristics by leveraging a strategy that synthesizes widely variable training images from label maps.

Optimizing the spatial overlap of select anatomical labels enables anatomy-specific registration without the need for segmentation that removes distracting content from the input images. We believe this independence from complex preprocessing has great promise for time-critical applications, such as real-time motion correction of MRI. Importantly, SynthMorph is a widely applicable learning strategy for anatomy-aware and acquisition-agnostic registration of any anatomy with any network architecture, as long as label maps are available for training—there is no need for these at registration time.

Supplementary Material

Acknowledgements

The authors thank Lilla Zöllei and OASIS Cross-Sectional for sharing data (PIs D. Marcus, R. Buckner, J. Csernansky, J. Morris, NIH awards P50 AG05681, P01 AG03991, P01 AG026276, R01 AG021910, P20 MH071616, U24 R021382). We are grateful for funding from the National Institute of Biomedical Imaging and Bioengineering (P41 EB015896, P41 EB030006, R01 EB019956, R01 EB023281, R01 EB033773, R21 EB018907), the National Institute of Child Health and Human Development (R00 HD101553), the National Institute on Aging (R01 AG016495, R01 AG070988, R21 AG082082, R56 AG064027), the National Institute of Mental Health (RF1 MH121885, RF1 MH123195), the National Institute of Neurological Disorders and Stroke (R01 NS070963, R01 NS083534, R01 NS105820, U01 NS132181, UM1 NS132358), and the National Center for Research Resources (S10 RR019307, S10 RR023043, S10 RR023401). We acknowledge additional support from the BRAIN Initiative Cell Census Network (U01 MH117023) and the NIH Blueprint for Neuroscience Research (U01 MH093765), part of the multi-institutional Human Connectome Project. The study also benefited from computational hardware provided by the Massachusetts Life Sciences Center.

A. Affine Network Analysis

A.1. Affine architectures

We analyze and compare three competing network architectures (Appendix Fig. A1) that represent state-of-the-art methods (Balakrishnan et al., 2019; De Vos et al., 2019; Moyer et al., 2021; Shen et al., 2019; Yu et al., 2021; Zhu et al., 2021): Detector from Section 3.3.1 and the following Encoder and Decomposer architectures.

Appendix Fig. A1.

Affine architectures. Detector outputs ReLU-activated feature maps for a single image. We compute their centers of mass (COM) and weights separately for the moving and the fixed image, to fit a transform that aligns these point sets. A recurrent Encoder estimates refinements to the current transform from the moved image and the fixed image. Decomposer predicts a one-shot displacement field (no activation) with corresponding voxel weights (ReLU), which we decompose in a weighted least-squares (WLS) sense to estimate the affine transform. Parentheses specify filter numbers. We LeakyReLU-activate the output of unnamed convolutional blocks (param. ). Stacked convolutional blocks of decreasing size indicate subsampling by a factor of 2 via max pooling following each activation.

A.1.1. Parameter encoder

We build on networks combining a convolutional encoder with an FC layer (Shen et al., 2019; Zhu et al., 2021) whose output units we interpret as parameters for translation, rotation, scale, and shear. We refer to a cascade of such subnetworks , with , as “Encoder”. Each outputs a matrix constructed from the affine parameters as shown in Appendix B, to incrementally update the total transform. We obtain transform by matrix multiplication after invoking subnetwork ,

| (A 1) |

where is the moving image transformed by , and is the identity matrix. As the subnetworks are architecturally identical, weight sharing is possible, and we evaluate versions of the model with and without weights shared across cascades.

For balanced gradient steps, we complete each subnetwork with a layer applying a learnable rescaling weight to each affine parameter before matrix construction.
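The cascade update described above can be illustrated with a minimal NumPy sketch. It is not the implementation used in this work: `toy_subnetwork` is a hypothetical stand-in for a trained subnetwork that only estimates a damped translation from centers of mass, the images are 2D, and resampling uses nearest-neighbor lookup. The sketch also assumes a pull-back convention, in which the moved image is the moving image sampled at transformed grid coordinates, so that each refinement multiplies the running transform from the right; under a different convention, the order of multiplication would change.

```python
import numpy as np


def center_of_mass(image):
    """Intensity-weighted mean (row, col) coordinate of a 2D image."""
    grid = np.indices(image.shape).reshape(2, -1)
    weights = image.ravel()
    return grid @ weights / weights.sum()


def resample(moving, transform):
    """Moved image m(T x) on the fixed grid, using nearest-neighbor lookup."""
    shape = np.array(moving.shape)
    grid = np.indices(moving.shape).reshape(2, -1)
    homog = np.vstack([grid, np.ones((1, grid.shape[1]))])
    source = np.rint(transform[:2] @ homog).astype(int)
    inside = np.all((source >= 0) & (source < shape[:, None]), axis=0)
    moved = np.zeros(grid.shape[1], dtype=moving.dtype)
    moved[inside] = moving[source[0, inside], source[1, inside]]
    return moved.reshape(moving.shape)


def toy_subnetwork(moved, fixed, step=0.5):
    """Hypothetical stand-in for a trained subnetwork.

    Returns a 3x3 refinement matrix that translates by a fraction of the
    center-of-mass offset between the moved and the fixed image.
    """
    refinement = np.eye(3)
    refinement[:2, 2] = step * (center_of_mass(moved) - center_of_mass(fixed))
    return refinement


def run_cascade(moving, fixed, subnetworks):
    """Accumulate the total transform over a cascade of subnetworks."""
    total = np.eye(3)  # start from the identity matrix
    for subnetwork in subnetworks:
        # Always resample the original moving image with the total transform.
        moved = resample(moving, total)
        # Assumed pull-back convention: refinements multiply from the right.
        total = total @ subnetwork(moved, fixed)
    return total


# Toy images: the same square at two different positions.
moving = np.zeros((64, 64))
moving[20:25, 30:35] = 1.0
fixed = np.zeros((64, 64))
fixed[28:33, 22:27] = 1.0

# Reusing one function for every cascade mimics weight sharing.
total = run_cascade(moving, fixed, [toy_subnetwork] * 5)
moved = resample(moving, total)
```

Passing the same function for every cascade corresponds to the shared-weights variant, whereas a list of distinct subnetworks would correspond to the variant without weight sharing.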

A.1.2. Warp decomposer

We propose another architecture building on deformable registration models (Balakrishnan et al., 2019; De Vos et al., 2019). “Decomposer” estimates a dense deformation field with corresponding non-negative voxel weights that we decompose into the affine output transform and a (discarded) residual component , that is, . The voxel weights enable the network to focus the decomposition on the anatomy of interest. Both are outputs of a single fully convolutional network and thus benefit from weight sharing. We decompose in a WLS sense over the spatial domain of , using the definition of from Equation (1) as the submatrix of that excludes the last row:

| (A 2) |

where is the matrix transpose of . Denoting , and by and the matrices whose corresponding rows are and for each , respectively, Equation (5) yields the closed-form WLS solution as in Section 3.3.1.
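As an illustration of the weighted least-squares decomposition, the following NumPy sketch fits an affine matrix to a displacement field with non-negative voxel weights. It is a generic WLS fit under stated assumptions rather than the code used in this work: the function name `fit_affine_wls`, the variable names, and the 2D toy data are hypothetical, and the fitted matrix is the affine submatrix without the last homogeneous row.

```python
import numpy as np


def fit_affine_wls(coords, displacement, weights):
    """Weighted least-squares affine fit to a displacement field.

    coords:       (N, D) voxel coordinates x.
    displacement: (N, D) displacements u(x), so each voxel maps to x + u(x).
    weights:      (N,) non-negative voxel weights w(x).

    Returns the D x (D + 1) matrix A that minimizes
    sum_x w(x) * ||A [x; 1] - (x + u(x))||^2.
    """
    n = coords.shape[0]
    X = np.hstack([coords, np.ones((n, 1))])  # homogeneous coordinates
    Y = coords + displacement                 # target locations
    XtW = X.T * weights                       # X^T W without forming diag(w)
    solution = np.linalg.solve(XtW @ X, XtW @ Y)  # normal equations
    return solution.T


# Toy 2D example: an affine field corrupted in a region of zero weight.
rng = np.random.default_rng(0)
rows, cols = np.meshgrid(np.arange(16), np.arange(16), indexing="ij")
coords = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
homog = np.hstack([coords, np.ones((len(coords), 1))])

A_true = np.array([[1.05, 0.10, -2.0],
                   [-0.08, 0.95, 3.0]])
displacement = homog @ A_true.T - coords

# Voxels outside the structure of interest carry arbitrary displacements.
outside = coords[:, 0] < 4
displacement[outside] += rng.normal(scale=5.0, size=(outside.sum(), 2))
weights = np.where(outside, 0.0, 1.0)

A_est = fit_affine_wls(coords, displacement, weights)

# Residual component that the decomposition discards.
residual = displacement - (homog @ A_est.T - coords)
```

Because the corrupted voxels receive zero weight, they do not influence the fit, which mirrors how voxel weights can focus the decomposition on the anatomy of interest.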

A.1.3. Implementation and training

Encoder predicts rotation parameters in degrees. This parameterization ensures that varying a rotation angle has an effect of similar magnitude to varying a translation in millimeters, at the scale of the brain, which helps networks converge faster in our experiments. We initialize the rescaling weights of Encoder to 1 for translations and rotations, and to 0.05 for scaling and shear, which we find favorable to faster convergence. Appendix B includes details.
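A possible way to rescale raw network outputs and construct a matrix from them is sketched below for the 2D case. Only the initial rescaling values (1 for translation and rotation, 0.05 for scaling and shear) and the use of degrees come from the text. The function name `params_to_matrix`, the parameter order, the exponential scale parameterization, and the composition order of the elementary matrices are assumptions made for illustration and may differ from the definitions in Appendix B.

```python
import numpy as np

# Initial rescaling weights from the text: 1 for translation and rotation,
# 0.05 for scaling and shear. In a network, these would be learnable.
RESCALE_INIT = np.array([1.0, 1.0, 1.0, 0.05, 0.05, 0.05])


def params_to_matrix(raw, rescale=RESCALE_INIT):
    """Build a 3x3 homogeneous 2D affine matrix from six raw outputs.

    raw: (tx, ty, angle, sx, sy, shear), with translations in millimeters
    and the rotation angle in degrees.

    Assumptions (hypothetical, for illustration only): scale factors are
    exp(s), so that zero outputs yield the identity, and the matrix is
    composed as translate @ rotate @ scale @ shear.
    """
    tx, ty, angle, sx, sy, shear = np.asarray(raw, dtype=float) * rescale
    theta = np.deg2rad(angle)

    translate = np.array([[1.0, 0.0, tx],
                          [0.0, 1.0, ty],
                          [0.0, 0.0, 1.0]])
    rotate = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                       [np.sin(theta), np.cos(theta), 0.0],
                       [0.0, 0.0, 1.0]])
    scale = np.diag([np.exp(sx), np.exp(sy), 1.0])
    shear_matrix = np.array([[1.0, shear, 0.0],
                             [0.0, 1.0, 0.0],
                             [0.0, 0.0, 1.0]])
    return translate @ rotate @ scale @ shear_matrix


identity = params_to_matrix(np.zeros(6))
quarter_turn = params_to_matrix([0, 0, 90, 0, 0, 0])
shifted = params_to_matrix([3, -2, 0, 0, 0, 0])
scaled = params_to_matrix([0, 0, 0, 1, 0, 0])
```

With these initial weights, a unit change in a raw scaling or shear output changes the matrix far less than a unit change in a translation or rotation output, which is one way to read the balancing of gradient steps described above.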

Training optimizes an unsupervised NCC loss between the moved image and the fixed image . All models train for a single strip with a batch size of 2 (Section 3.3.5). To avoid non-invertible matrices at the start of training, we pretrain Decomposer for 500 iterations, temporarily replacing the output transform with the field , where are the voxel weights predicted by the network (Section A.1.2), and denotes voxel-wise multiplication.
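For reference, a global normalized cross-correlation between a moved and a fixed image can be computed as in the following NumPy sketch. This is a generic, unsquared, whole-image variant chosen for brevity; the exact NCC formulation used for training (for example, local windows or squaring) is not specified in this section, and the names `ncc`, `ncc_loss`, and `eps` are hypothetical.

```python
import numpy as np


def ncc(moved, fixed, eps=1e-8):
    """Global normalized cross-correlation between two images, in [-1, 1]."""
    a = moved - moved.mean()
    b = fixed - fixed.mean()
    return (a * b).sum() / (np.sqrt((a ** 2).sum() * (b ** 2).sum()) + eps)


def ncc_loss(moved, fixed):
    """Loss to minimize: negated NCC (assumed sign convention)."""
    return -ncc(moved, fixed)


rng = np.random.default_rng(0)
image = rng.random((32, 32))

same_up_to_gain = ncc(image, 2.0 * image + 3.0)  # linear intensity change
inverted = ncc(image, -image)                    # inverted contrast
unrelated = ncc(image, rng.random((32, 32)))     # independent noise
```

The measure is invariant to linear intensity changes, which explains why it is a common choice when the moved and fixed images share a contrast, as is the case for the T1w data used in this analysis.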

A.2. Data

For architecture analysis, we use T1w images with isotropic 1-mm resolution from adult participants aged 40–75 years from the UK Biobank (UKBB) study (Alfaro-Almagro et al., 2018; Miller et al., 2016; Sudlow et al., 2015). We conform images and derive label maps as in Section 4.1, extracting mid-sagittal slices from corresponding 3D images and label maps.

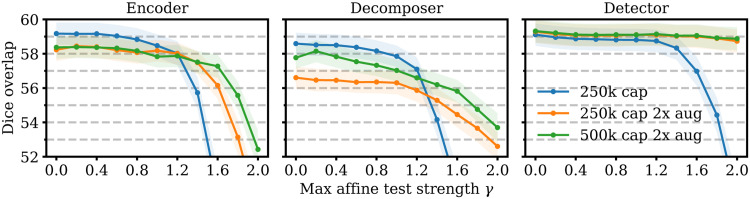

A.3. Experiment