Abstract

Biological neural circuits demonstrate exceptional adaptability to diverse tasks by dynamically adjusting neural connections to process information efficiently. However, current neuromorphic hardware based on two-dimensional (2D) materials mainly relies on specific devices that individually mimic an artificial synapse, heterosynapse, or soma, encoding only the inner neural states to realize the function of the corresponding biological counterpart. Recent advances suggest that integrating multiple 2D material devices to realize brain-like functions, including engineering of their mutual connections, has become a new research trend. In this work, we demonstrate a 2D MoS2-based reconfigurable analog hardware that emulates synaptic, heterosynaptic, and somatic functionalities. The inner states and inter-connections of all modules co-encode versatile functions such as analog-to-digital/digital-to-analog conversion and linear/nonlinear computations, including integration, vector-matrix multiplication, and convolution. By assembling these functions to fit different environment-interactive tasks, the hardware experimentally achieves reconstruction and sharpening of medical images for diagnosis as well as circuit-level imitation of attention-switching and visual residual mechanisms for smart perception. This hardware promotes the development of future general-purpose computing machines with high adaptability and flexibility across multiple tasks.

Subject terms: Two-dimensional materials, Electronic devices

This study introduces a reconfigurable MoS2-based neuromorphic hardware that integrates synaptic, heterosynaptic, and somatic functionalities. It adapts to diverse tasks like medical image enhancement and smart perception, advancing flexible, general-purpose computing solutions.

Introduction

General-purpose hardware is engineered to mirror human-like advanced problem-solving abilities and cognitive thinking. The fundamental challenge in developing general-purpose hardware lies in replicating the adaptability and flexibility of human brain intelligence1. The construction of general-purpose hardware can be decomposed into developing machine learning modules that simulate various units of the brain, such as neuron aggregates, and then integrating them based on a brain-like cognitive architecture, which enables the system to emulate the operational principles and structural intricacies of human brain intelligence2. In particular, the highly reconfigurable network of brain neural circuits is an important source of brain intelligence, giving the brain an exceptional capacity for flexible adaptation to dynamic environments. Brain neural circuits can maintain flexible, reconfigurable synapse/heterosynapse/soma inter-connections while frequently adjusting neuronal states3. This distinctive mode orchestrates the connections of neural circuits across various interactive environmental tasks to ensure an appropriate match between task complexity and computational resources4. In neural circuits, neural signals are transmitted to the receiving neuron via synapses and subsequently relayed, under the control of heterosynaptic connections, to various somas, where the signals are integrated and processed (Fig. 1a)5–7. Therefore, neuromorphic computing hardware with this capability needs to be developed to imitate the principles and structures of brain neural circuits. Such hardware should simultaneously implement precise engineering of individual devices’ inner states and inter-module connections, thereby boosting adaptability and plasticity and providing strategies for implementing adaptability and flexibility in general-purpose hardware8–10.

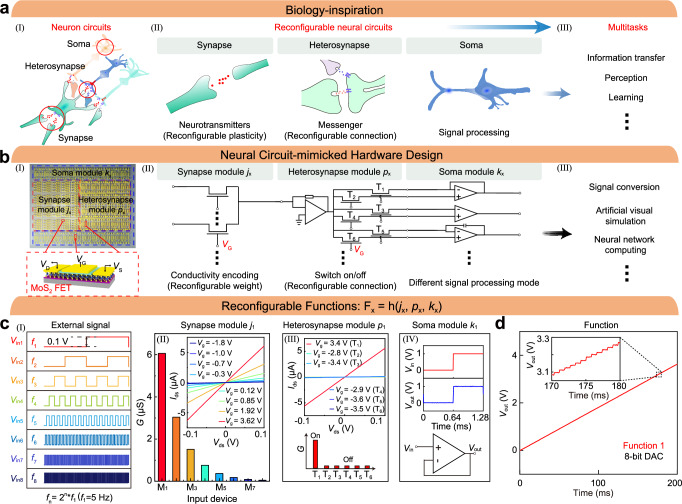

Fig. 1. Concept of RAH and reconfigurable functionalities.

a Schematic of the complex structures and functions of biological neural circuits formed by synapses, heterosynapses, soma, and their inter-connections. In the synaptic component, the release and reception of neurotransmitters accomplish signal transmission, and their dynamic modulation (concentration and action time) simulates the reconfigurable weights, reflecting the plasticity inherent in biological synapses. Heterosynapses are particularly notable for their ability to facilitate inter-neural circuit communication through state transitions and to transmit signals across various types of neural cells. The soma component underscores the integrative capacity of neural networks in processing complex signals. The multifunctionality of biological neural circuits is demonstrated in the capability of these circuits to perform a multitude of tasks, including perception and learning. b Schematic of the biology-inspired reconfigurable hardware (I) that encompasses three principal modules: the synaptic module (cascaded MoS2 FET arrays), the heterosynapse module (MoS2 FET-based OPA and MoS2 FET connections), and the soma module (MoS2 FET-based OPA and feedback component integration) (II). These modules employ adjustable states, such as conductance encoding, switchability, and reconfigurable feedback mechanisms, to mimic the components of biological neural circuits, such as synapses, heterosynapses, and soma. This configuration facilitates multitask processing, including signal conversion, artificial visual simulation, and neural network computing (III). c Demonstration of 8-bit DAC. Square-wave input signals (Vin1–Vin8) with an input amplitude of 0.1 V and an input frequency of $f_n = 2^{n-1} \times f_1$, where $f_1$ = 5 Hz is the input frequency of signal Vin1 (I). Conductance encoding in the synapse module following j1 ($G_n = 2^{n-1} \times G_1$, $G_1$ = 6.06 μS) (II), a sustained on-state T1 as p1 in the heterosynapse module (III), and a voltage follower as k1 in the soma module (IV). The inset in the synapse module shows the output curves of the eight FETs. d Output characteristics of the 8-bit DAC. The inset (black box) provides a magnified view of the output signal between 170 and 180 ms.

2D materials, which possess superior physical properties11, can support neuromorphic computing hardware12–14. Neuromorphic hardware based on 2D materials typically adopts transistors, logic gates, and memory devices to construct synapse, heterosynapse, and soma components, each of which is optimized for a specific individual function15–17. Recent advancements have demonstrated the integration of multiple 2D material devices and multiple sensor modalities to achieve brain-like functionalities18–22. Despite these advancements, limitations remain in fully emulating the computational flexibility of brain neural circuits, especially when efficient multitasking is required in dynamic environments23,24. This limitation wastes resources in lightweight settings, specifically through excessive driving signals and device redundancy, because computational tasks of varying information content require different numbers of devices25–27. Moreover, this limited adaptability may yield suboptimal outcomes because different tasks require different linear and nonlinear computational processes, which must be matched through circuit assembly28–31. Therefore, the development of 2D material-based reconfigurable analog hardware is key to truly emulating the computational flexibility of brain neural circuits for multitasking demands in dynamic environments.

In this work, motivated by biological principles, we developed a 2D MoS2-based reconfigurable analog hardware (RAH) that includes synapse, heterosynapse, and soma modules (Fig. 1b, (I)) and demonstrated its multiple reconfigurable functions and its potential as a solution for general-purpose machines with rich dynamics. At the device level, the synapse, heterosynapse, and soma modules were fabricated with MoS2 FETs (including cascaded MoS2 FETs and MoS2 FET-based operational amplifier (OPA) units32). At the circuit level, the wiring assembly of the synapse, heterosynapse, and soma modules was adjusted according to the task requirements to route signal transmission. By co-encoding the inner states and inter-connections of all modules, the high adaptability and plasticity of RAH allowed the realization of diverse linear and nonlinear computing functions and effective handling of varying task requirements. The proposed RAH realized the functions of an analog-to-digital converter (ADC) and a digital-to-analog converter (DAC), with reconfigurable resolutions to match different tasks, a bandwidth of 50 kHz, and a maximum power consumption (8-bit ADC and DAC) of ~750 μW in a converting period. Given its adaptability and flexibility, RAH can also support multiple computing functions. For instance, it was employed for sparse coding and convolutional computing, which were then used to reconstruct and sharpen computed tomography (CT) images containing pathological regions to facilitate identification and assessment by medical professionals. In addition, it imitated attention switching and visual persistence in visual systems through designed circuit configurations, which enabled the detection of distance and velocity in autonomous driving applications. This 2D MoS2-based RAH implemented the key degrees of freedom of the inner states and inter-connections of devices and modules. It can pave the way for the development of future brain-like general-purpose machines with high adaptability and plasticity for multitasking.

Biology-inspired reconfigurable hardware

Figure 1a illustrates the complex structures and functions of biological neural circuits formed by synapse, heterosynapse, soma, and their inter-connections. Motivated by biological principles, we developed an RAH by employing 2D MoS2 FETs to translate the biological concepts in Fig. 1a into a concrete electronic hardware design (Fig. 1b). RAH has synapse, heterosynapse, and soma modules, for which diverse computing functions can be built by adapting the connections within or between these modules. As shown in Fig. 1b, (II), the synapse module, constructed with multiple cascaded MoS2 FETs, achieves synaptic-like plasticity by modulating the gate voltage to set the FET conductance state (Gn). Each transistor Mn follows a customizable discrete equation, denoted as subfunction jx. The heterosynapse module, fabricated with MoS2 FET-based OPA units and six MoS2 FETs (T1–T6), controls heterosynaptic-like inter-connections among different neural circuits by encoding the on/off states of the MoS2 FETs; this is denoted as subfunction px. The connection encoding table of the on/off states of the six MoS2 FETs programs the heterosynaptic-like inter-connections under different environment-interactive requirements, as shown in Table S1. The soma module, composed of a MoS2 FET-based OPA and diverse feedback loops, integrates the front-end transmitted signals and generates a soma-like response, thus forming subfunction kx33–35. The manufacturing details are given in the Methods section and Note S1. The optical images, SEM image, STEM image, and schematic of the hardware, which incorporates over 600 MoS2-based FETs with a yield exceeding 95%, are shown in Figs. S1 and S2. The performance of the MoS2 FET arrays in the synapse module was measured and is presented in Figs. S3 and S4. The design details of the MoS2 FET-based OPA are given in Note S3, and the corresponding feedback circuits formed with diverse feedback loops are shown in Figs. S5–S12. Considering the wiring-assembly degree of freedom that commonly exists in the brain, the flexible combination of jx, px, and kx realizes diverse functions for the hardware (Table 1).

Table 1. Multiple functions with reconfigurable hardware configuration

| Hardware function | Synapse module (subfunction jx) | Heterosynapse module (subfunction px) | Soma module (subfunction kx) |
|---|---|---|---|
| Function 1: DAC | Geometric sequence equation j1: $G_n = 2^{n-1} \times G_1$ (n = 1–8) | Sustained on-state p1: on state T1 | Operational state k1: Voltage follower |
| Function 1: ADC | Geometric sequence equation j1 (shared with DAC) | Sustained on-state p2: on state T3 | Operational state k2: Voltage comparator |
| Function 2: activation function (step function) | Non-linear sequence equation j2: (j = 2–8) | Sustained on-state p2: on state T3 | Operational state k2: Voltage comparator |
| Function 3: Convolution kernel | Sharpness convolutional kernel j3: (j = 1–4, 6–9) | Sustained on-state p1: on state T1 | Operational state k1: Voltage follower |
| Function 4: attention switching | Gaussian distribution j4 (activation); universal off states j5 (inactivation) | Sustained on-state p1: on state T1 | Operational state k1: Voltage follower |
| Function 5: visual persistence | Gaussian distribution j4 (activation); universal off states j5 (inactivation) (shared with Function 4) | Sustained on-state p2: on state T3 | Operational state k2: Voltage comparator |

To demonstrate the functionalities experimentally, we encoded eight square-wave signals in eight input channels, with an amplitude of 0.1 V and frequencies of $f_n = 2^{n-1} \times f_1$, where $f_1$ = 5 Hz and n indexes the input channels (Fig. 1c, (I)). The gate voltages applied to each device in the synapse module fixed the conductance of M1–M8 at the desired values to satisfy geometric sequence equation j1: $G_n = 2^{n-1} \times G_1$ ($G_1$ is the conductance state of the first transistor, M1). Here, the conductance of M1 was G1 = 6.06 μS at Vg = 3.62 V (Fig. 1c, (II)). Subfunction p1 was realized by a transimpedance amplifier with a feedback resistor (Rf = 300 kΩ) and an on-state MoS2 FET and was connected to the voltage follower of subfunction k1 (Fig. 1c, (III) and (IV)). Figure 1d shows the performance of the 8-bit DAC, in which adjacent analog voltage levels are clearly distinguishable. Versatile functions were achieved in RAH by programming different combinations of subfunctions jx, px, and kx, which correspond to the synapse, heterosynapse, and soma modules, respectively, giving RAH the ability to mimic the adaptive behavior of organisms in different environments.
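As a rough illustration of how the geometric conductance sequence j1 turns eight digital inputs into one analog level, the following Python sketch models an idealized binary-weighted summing stage. It assumes that each active channel contributes a current proportional to its conductance and that the output is expressed in units of the smallest step; the function names and the `lsb` parameter are hypothetical and are not taken from the authors' circuit model, so the sketch is not expected to reproduce the measured voltages in Fig. 1d.

```python
def conductance_weights(n_bits=8):
    """Relative conductances G_n / G_1 = 2**(n-1) for n = 1..n_bits."""
    return [2 ** (n - 1) for n in range(1, n_bits + 1)]


def code_to_bits(code, n_bits=8):
    """Split an integer code into bits, bits[0] driving M1 (smallest weight)."""
    return [(code >> k) & 1 for k in range(n_bits)]


def dac_level(bits, lsb=1.0):
    """Ideal output magnitude: weighted sum of active channels times one step.

    lsb is a hypothetical scale factor (volts per unit step); the default
    of 1.0 returns the level in units of the smallest step.
    """
    weights = conductance_weights(len(bits))
    return lsb * sum(b * w for b, w in zip(bits, weights))


if __name__ == "__main__":
    for code in (0, 1, 128, 255):
        print(code, dac_level(code_to_bits(code)))
```

Because every weight is a distinct power of two, the 256 input codes map to 256 distinct levels, which is the property the measured staircase in Fig. 1d relies on.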

Eight-bit DAC/ADC

RAH implemented the functionalities of 8-bit ADC and DAC to show its signal conversion capabilities. The related simplified circuit is presented in Fig. 2a. The detailed circuit design and operation mechanism of ADC and DAC are given in Notes S3 and S4. In a differential mode configuration, low-frequency sine waves (1 V peak to peak) were applied to the MoS2 FET-constructed OPA in the soma module to characterize the frequency responses. The gain and phase responses as functions of the input frequency are depicted in Fig. 2b, c. The maximum gain was 6 dB because the reference resistor was set to twice the input resistor, and the 0 dB gain cutoff frequency (fT) was 50 kHz. The high-frequency operation can be improved by reducing the MoS2 FET sizes36–40.
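The 6 dB figure follows from the stated resistor ratio. A minimal check in Python, assuming the closed-loop voltage gain equals the ratio of the reference resistor to the input resistor (the helper name `gain_db` is hypothetical):

```python
import math


def gain_db(voltage_ratio):
    """Convert a voltage gain ratio to decibels."""
    return 20.0 * math.log10(voltage_ratio)


if __name__ == "__main__":
    # Reference resistor set to twice the input resistor, per the text.
    print(round(gain_db(2.0), 2))  # 6.02
```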

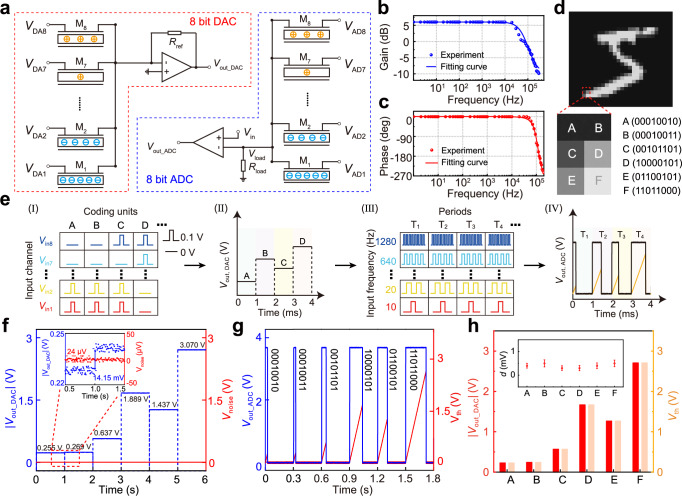

Fig. 2. RAH adapted as 8-bit DAC and 8-bit ADC.

a Diagram of an 8-bit DAC and ADC simplified circuit structure. Frequency responses of OPA, including gain bandwidth (b) and phase bandwidth (c). d Grayscale image of the digit 5 with 256 levels, with 6 selected pixel points as the input signals for the 8-bit DAC. e Entire data conversion process, including digital signal input Vin1–Vin8 (1), DAC output signal Vout, DAC (2), reference threshold voltage Vth for ADC (3), and ADC output signal Vout, ADC (4). f |Vout, DAC| of the 8-bit DAC corresponding to the signal input in (d) and the noise level of the circuit. The inset compares the unit quantization voltage (UQV) with noise. g Vout, ADC of the 8-bit ADC corresponding to the signal input in (f) and the scanning Vth. h Error analysis by comparing |Vout, DAC| and Vth when ADC finishes conversion.

Furthermore, to characterize the performance of RAH working as an 8-bit ADC and DAC, we chose six specific pixels from a grayscale image of the digit 5 containing 256 different levels as inputs (Fig. 2d). Digital pixels were converted into analog signals by DAC and converted back to digital outputs by ADC (the procedure is in Fig. 2e, and the conversion details are in Note S6). The analog output voltages of DAC were measured (Fig. 2f); the inset magnifies the signal and noise curves within the dashed red box. The unit quantization voltage (UQV) reached 14.15 mV, and the noise level remained at 24 μV. The UQV-to-noise ratio of the 8-bit DAC was 55.4 dB, indicating that the converted results could be distinguished clearly. Subsequently, the six analog voltage signals were sent to the 8-bit ADC, and the converted digital number was determined by the corresponding conversion time width t, as shown in Fig. 2g. Simultaneously, we recorded the Vload value when the output voltage switched to a low voltage level. Given that the load resistor of ADC and the reference resistor of DAC shared the same value, Vload and |Vout, DAC| had nearly identical values, with a difference below 1 mV, which is much lower than UQV. This result confirms that the hardware has excellent fidelity in signal conversion (Fig. 2h). The DAC/ADC resolution is also reconfigurable: the 4- and 6-bit ADC/DAC functions are presented in Notes S4 and S5 and Figs. S13–S18, which indicate that RAH can match the resolution requirements to avoid resource wastage.
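The reported UQV-to-noise ratio can be checked directly from the two quoted values, and the time-width readout can be illustrated with a simple behavioral model. The sketch below assumes a staircase threshold that rises by one UQV per step and a comparator that flips at the nearest level; both the staircase and the half-step decision rule are assumptions made for illustration, and `ramp_adc` is a hypothetical name, not the circuit described in the supplementary notes.

```python
import math

UQV = 14.15e-3   # unit quantization voltage in volts (from the text)
NOISE = 24e-6    # noise level in volts (from the text)


def ratio_db(signal, noise):
    """Voltage ratio expressed in decibels."""
    return 20.0 * math.log10(signal / noise)


def ramp_adc(v_in, uqv=UQV, n_bits=8):
    """Count threshold steps until the scanning threshold reaches the input.

    The returned step count plays the role of the conversion time width
    (in units of one step period) and is taken as the digital code.
    """
    for code in range(2 ** n_bits):
        v_th = code * uqv                 # assumed staircase threshold
        if v_th >= v_in - uqv / 2:        # comparator flips at nearest level
            return code
    return 2 ** n_bits - 1                # input above full scale


if __name__ == "__main__":
    print(round(ratio_db(UQV, NOISE), 1))  # 55.4
    print(ramp_adc(100 * UQV))             # 100
```

In this idealized model a DAC output of c steps is read back as code c for every 8-bit code, which mirrors the sub-millivolt agreement between the two stages reported above.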

Reconstruction and feature extraction of medical images

The adaptability of RAH makes it applicable to various practical situations. Here, it was implemented to perform the reconstruction and feature extraction of medical computed tomography (CT) images, with the aim of improving the identification of pathological regions and enhancing the accuracy of medical diagnosis. To achieve the image restoration process, the hardware was used to construct Function 1 (DAC/ADC) and Function 3 (convolutional kernels) for signal conversion and convolution calculation, as shown in Fig. 3a. A 256 × 256 pixel original CT image containing bleeding spots in the brain was segmented into 8 × 8 pixel patches for image processing, as shown in the red dotted box in Fig. 3a (I). First, the hardware working under ADC and DAC functions was employed for a sparse coding algorithm, in which the original image was reconstructed to reduce the noise via data transfer and conversion within ADC and DAC (Fig. 3a, (II)). Second, the hardware adapted as convolutional kernels was used to sharpen the reconstructed image (Fig. 3a, (III)). The sparse coding process, which involves forward and backward DA/AD conversion (the details are in Note S7), is shown in Fig. 3b. Signal 1 was mapped to 0.1 V, and Signal 0 was mapped to 0 V. Initially, 256 random coefficients were constructed, and the forward and backward calculation results of the first iteration are shown in Fig. 3c. In the sparse coding experiment, the choice of the regularization parameter λ was pivotal because it balances sparsity against image quality. Various λ values were applied experimentally to determine their effects. Figure S20 shows the corresponding activation level at λ = 2.2, in which only a few dictionary vectors contributed considerably to image reconstruction and captured the signal’s sparsity characteristics effectively. After 100 iterations, the optimized sparse coefficients were obtained and are shown in Fig. 3d. Small λ values resulted in low sparsity and could cause overfitting in image reconstruction (Fig. S21 for λ = 0.1), whereas large λ values yielded sparse encoding but resulted in underfitting (Fig. S22 for λ = 3.5). In the experiment, λ = 2.2 achieved a good balance between sparsity and image quality.
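To make the role of λ concrete, the following Python sketch runs a generic L1-regularized sparse coding loop (iterative shrinkage-thresholding). It is a textbook stand-in chosen for illustration and is not claimed to be the authors' algorithm, whose details are in Note S7; the function names, the zero initialization, and the `step` parameter are assumptions. The forward step (reconstruction from coefficients) and the backward step (projecting the residual onto the dictionary) loosely correspond to the forward and backward conversions described above.

```python
def soft_threshold(x, t):
    """Shrink x toward zero by t (proximal step of the L1 penalty)."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def ista(D, y, lam, step, n_iter=100):
    """Minimise 0.5*||y - D a||^2 + lam*||a||_1 by iterative shrinkage.

    D is a list of rows (len(y) rows, n_atoms columns); returns a.
    """
    n_atoms = len(D[0])
    a = [0.0] * n_atoms
    for _ in range(n_iter):
        # Forward pass: residual of the current reconstruction D a.
        r = [sum(D[i][j] * a[j] for j in range(n_atoms)) - y[i]
             for i in range(len(y))]
        # Backward pass: gradient D^T r.
        g = [sum(D[i][j] * r[i] for i in range(len(y)))
             for j in range(n_atoms)]
        a = [soft_threshold(a[j] - step * g[j], step * lam)
             for j in range(n_atoms)]
    return a


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    for lam in (0.1, 1.0, 4.0):
        print(lam, ista(identity, [3.0, 0.5], lam, step=1.0, n_iter=10))
```

With this toy dictionary, a small λ keeps both coefficients, an intermediate λ keeps only the dominant one, and a large λ suppresses everything, which is the same sparsity versus fidelity trade-off described for λ = 0.1, 2.2, and 3.5.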

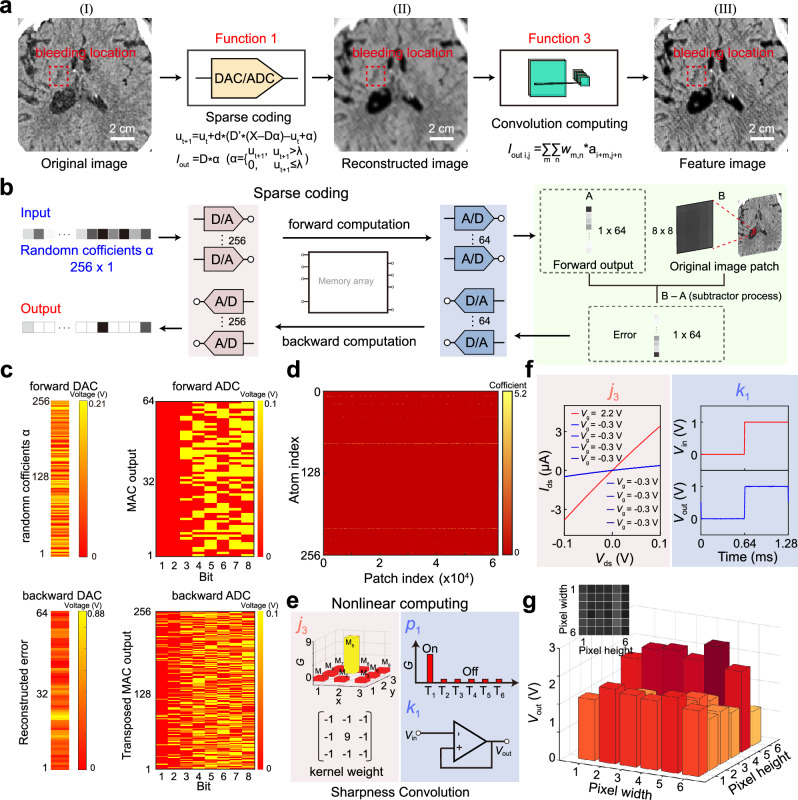

Fig. 3. RAH configured for reconstruction and feature extraction of medical images.

a Image reconstruction by sparse coding and feature extraction by convolution realized with the ADC/DAC function and nonlinear convolution function of RAH, respectively. b Process of sparse coding. c Output data of forward and backward DA/AD conversion for the first iteration in the experiments. d Sparse coding coefficients for all patches in the original image. e Image sharpening (Function 3) in RAH, in which the synapse, heterosynapse, and soma modules were configured as subfunction j3 (convolutional kernel), subfunction p1 (sustained on-state), and subfunction k1 (voltage follower), respectively. f Electronic performance to evaluate subfunctions j3 and k1. g Output current of a 6 × 6 patch from the convolution operation. The 2D grayscale image of the 6 × 6 patch is given in the inset.

After image reconstruction, a convolution operation for image sharpening was applied by configuring RAH as convolutional kernels (Function 3, Fig. 3a (III)). Configuring the hardware as convolutional kernels avoids additional accelerators, which supports efficient large-scale convolution computations41. Such a configuration enhances the speed of image sharpening, particularly when dealing with extensive datasets. Initially, a 3 × 3 sharpening convolution kernel was designed in which the weight of the central element was nine times the weight of the surrounding elements. Accordingly, the weights of the convolution kernel were represented by the conductance of the transistors in the synapse module to encode subfunction j3 (Fig. 3e, left). The conductance of M5 was 9 G0 (3.4 μS) under Vg = −0.7 V, and the conductance of the remaining transistors was G0 (~0.37 μS) under Vg = −2.4 V (Fig. 3f, left). The right panel of Fig. 3e shows a sustained on-state T1 in the heterosynapse module (subfunction p1). The soma module worked as a voltage follower (subfunction k1), and its output characteristics are shown in the right panel of Fig. 3f. The image patch from the reconstructed image was encoded as 8-bit binary digital signals, which were then converted into analog voltage signals by the hardware working as an 8-bit DAC. These analog voltage signals were fed into the reconfigured hardware (Function 3) for convolution operations. The convolution operation involved sliding the convolution kernel over each patch and calculating the convolution result in the corresponding region. Afterward, the output voltages were obtained to represent the extracted features from the respective regions. The experimental results are shown in Fig. 3g, and the features extracted from the 256 × 256 grayscale CT image are presented in Fig. 3a (III). The blood vessels and tissue structures were displayed more clearly than those in the original image. Thus, RAH can provide robust support for future medical research and clinical applications. Additionally, image reconstruction with additional noise was implemented using RAH (Figs. S23 and S24). The peak signal-to-noise ratios of the noisy and reconstructed images are 20.1026 and 27.1774 dB, respectively, in Fig. S25a (20.2940 and 28.5906 dB in Fig. S25b), confirming the high-quality reconstruction under additional noise. Notably, the number and conductance states of the cascaded transistors can be configured to form multifunctional convolution kernels for flexible convolution operations.
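The kernel and the quality metric in this passage can be sketched in a few lines of Python. The code assumes the nine conductances act as non-negative weights in units of G0 (centre 9, neighbours 1) and that the kernel slides over a patch without padding, so an 8 × 8 patch yields a 6 × 6 output as in Fig. 3g; the PSNR helper uses the standard definition with a peak value of 255. Function names are hypothetical, and the sketch ignores all analog non-idealities.

```python
import math


def sharpen_kernel(center=9.0, surround=1.0):
    """3x3 kernel in units of G0: centre weight 9, eight neighbours 1."""
    k = [[surround] * 3 for _ in range(3)]
    k[1][1] = center
    return k


def convolve_valid(patch, kernel):
    """Slide the kernel over the patch without padding (weighted sums).

    The kernel here is symmetric, so correlation and convolution coincide.
    """
    kh, kw = len(kernel), len(kernel[0])
    rows, cols = len(patch) - kh + 1, len(patch[0]) - kw + 1
    return [[sum(kernel[i][j] * patch[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(cols)] for r in range(rows)]


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    n = len(reference) * len(reference[0])
    mse = sum((a - b) ** 2 for ra, rb in zip(reference, test)
              for a, b in zip(ra, rb)) / n
    return float("inf") if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    patch = [[1.0] * 8 for _ in range(8)]
    out = convolve_valid(patch, sharpen_kernel())
    print(len(out), len(out[0]), out[0][0])  # 6 6 17.0
```

A uniform patch gives the same value (the sum of all nine weights) everywhere, while a pixel brighter than its neighbours is amplified by the centre weight, which is how the kernel emphasizes local detail.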

Visual processing in bionic receptive fields

RAH also allows for brain-like multitasking to realize attention switching and visual persistence in biological visual systems, so it has potential for applications in autonomous driving (Fig. 4a)42–45. Attention switching allows visual systems to focus on information only within the active receptive field (RF). The output signal changes immediately when RF shifts. RF selection enhances data sparsity, reduces redundancy, and effectively captures critical features45–47. Meanwhile, visual persistence can utilize previous data acquired outside the present RF to predict future scenarios and infer the actions of objects. The output signal that responded to the previous RF persists, and its disappearance is delayed after RF shifts. The biological mechanism is discussed in Note S7.

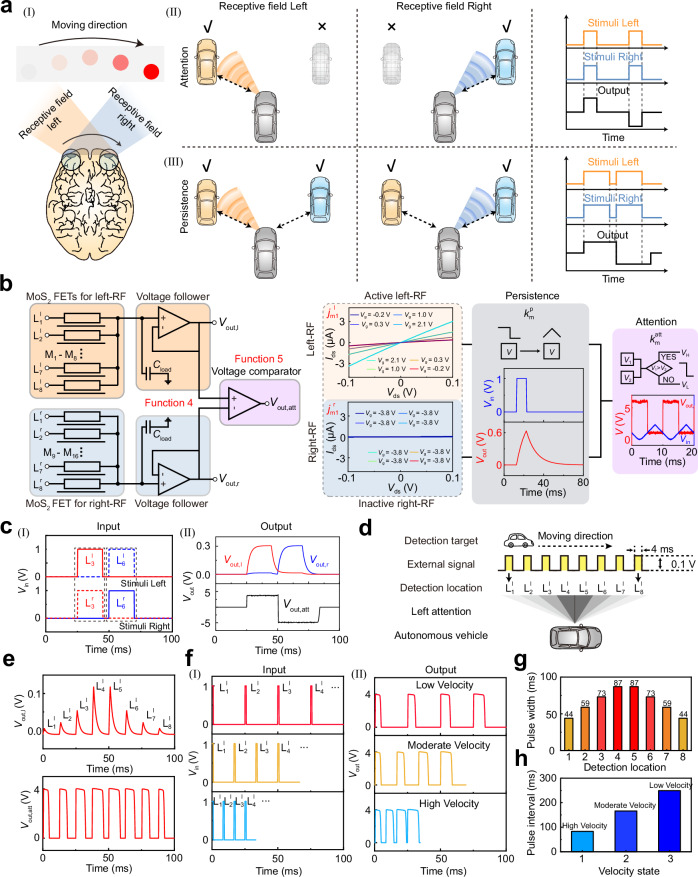

Fig. 4. RAH configured for visual processing in bionic receptive fields (RFs).

a Diagram of RF attention. Without visual residual, only signals within the active RF (left or right RF) are received. With visual residual, signals from the previously active RF persist for a short time and can be processed after the active RF is switched. b The left panel shows the simplified circuit connection for both RFs. The right panel presents the basic functional characteristics. The MoS2 FET conductance of the active RF satisfies the Gaussian distribution with G1 = G8 = G2/2 = G7/2 = G3/4 = G6/4 = G4/8 = G5/8 = 3.76 μS, and the output characteristics of each FET are shown in an orange background. The FET conductance of the inactive RF is in the off state with Goff = G1/14 = 0.271 μS, and the output characteristics of each FET are shown in a cyan background. The first-stage OPA constructed from MoS2 FETs acts as a voltage follower, and its output characteristics are shown in a gray background. The second-stage OPA constructed from MoS2 FETs acts as a comparator, and its output characteristics are shown in a pink background. The abovementioned modules were connected through subfunctions p1 and p2. c The left panel shows the input signals under left RF activation (red) and under right RF activation (blue). The right panel presents the output signals of the first-stage OPA, where the red curve represents the left RF and the blue curve represents the right RF. d Schematic of object detection in autonomous driving via RAH. Within the active left RF, the conductance distribution is programmed to compute distance and speed information. e, g For distance detection, the upper panel shows the output signals Vout,l of the first-stage OPA obtained from each programmed location. The lower panel shows the output signals Vout,att of the second-stage OPA obtained from each programmed location, whose width determines the distance (g). f, h For speed detection, the left panel presents the input signals with different time intervals representing different speed scenarios, and the right panel presents the output signals of the second-stage OPA obtained from each programmed location. The output time interval indicates the speed (h).

The functionality (Functions 4 and 5) of RAH was adjusted to realize the abovementioned biological mechanism, as shown in Fig. 4b. The simplified hardware system used two synapse modules to represent the left and right RFs (marked with orange and blue backgrounds, respectively). A soma module with three OPAs (the electrical properties of the individual FETs are shown in Figs. S4, S26, and S27) was employed to achieve RF switching and visual persistence, and a heterosynapse module was used to realize the inter-connection of the synapse and soma modules by encoding the on/off switching of MoS2 FETs. The active RF (synapse module 1) encoded a Gaussian distribution with G1 = G8 = G2/2 = G7/2 = G3/4 = G6/4 = G4/8 = G5/8 = 3.76 μS, marked as subfunction j4, and the inactive RF (synapse module 2) represented universal off-states with Goff = G1/14 = 0.271 μS, marked as subfunction j5. Signal persistence was realized with a voltage follower circuit by connecting the first-stage OPA (soma modules 1 and 2) circuit with a load capacitor (Cload, 33 μF; subfunction k1). The second-stage OPA (soma module 3) served as a voltage comparator to generate the output (subfunction k2).

After circuit configuration, the left RF was activated in 0–50 ms, and the right RF was activated in 50–100 ms. A 20 ms, 0.1 V voltage pulse was applied to one pair of input ports at 25 ms and to a second pair of input ports at 50 ms (Fig. 4c, (I)). When the attention was on the left RF, the left RF’s soma module 1 generated a voltage output (Vout,l) that was higher than that of the right RF’s soma module 2 (Vout,r), leading to a positive output voltage Vout,att for soma module 3 (Fig. 4c, (II)). Similarly, a negative output voltage Vout,att was generated for soma module 3 when the attention was on the right RF (Fig. 4c, (II)). The output signal at soma module 3 persisted for longer than the input pulse width, indicating the visual persistence effect.

The distance and relative speed between the driving and target vehicles in autonomous driving can be determined using the abovementioned working principles. To simplify the testing, we focused on detection within a single RF. The diagram is shown in Fig. 4d. M1–M8 represent eight activation state encoders that program eight different locations (marked #1–#8) centered around the driving vehicle. These locations include four different distances to the driving vehicle that are distributed symmetrically at the front and back sides of the driving vehicle, and the corresponding conductance distribution of M1–M8 follows the Gaussian distribution of the active RF given in Fig. 4b. Notably, the conductance programming can be configured based on actual road conditions to expand the detection range and improve accuracy, which highlights the advantages of the adaptive hardware. Each encoder can receive an activation voltage pulse with a width of 4 ms and an amplitude of 0.1 V when a target vehicle is detected at its corresponding programmed location. By processing the output signals of the hardware, we can obtain the distance and speed of the driving vehicle relative to the target vehicle.

During distance detection and processing, the current accumulation in soma module 1 is determined by the encoded transistor conductance, with high conductance resulting in a high output voltage, as shown in Fig. 4e (I). The high output voltage of soma module 1 can lead to a long pulse persistence time width for the output signal of soma module 3, which also indicates a close distance to the driving vehicle, as shown in Fig. 4e (II). Under the assumption that the target vehicle is passing from locations #1 to #8 sequentially in the experiment setup, the measured time widths of the output signals at #1–#8 are 44, 59, 73, 87, 87, 73, 59, and 44 ms, respectively (Fig. 4g), indicating that the target vehicle approaches the driving vehicle first then leaves the driving vehicle afterward. The distance information of the driving vehicle relative to the target vehicle can be distinguished directly.
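The reported widths grow by roughly 14 to 15 ms each time the encoded conductance doubles. One possible reading, which is an assumption and is not stated in the source, is that the held voltage decays approximately exponentially, so that the time spent above a fixed comparator threshold scales as τ·ln(V0/Vth) with V0 proportional to the conductance. Under that assumption, the Python sketch below estimates an effective time constant from the four distinct widths and the conductance ratios in the Fig. 4b caption; `fit_tau` is a hypothetical helper.

```python
import math

widths_ms = [44, 59, 73, 87]     # measured widths at #1-#4 (from the text)
rel_conductance = [1, 2, 4, 8]   # G1 : G2 : G3 : G4 (Fig. 4b caption)


def fit_tau(widths, ratios):
    """Least-squares slope of width versus ln(ratio).

    Under the assumed first-order decay, the slope is the effective
    time constant in the same units as the widths.
    """
    x = [math.log(r) for r in ratios]
    mx = sum(x) / len(x)
    my = sum(widths) / len(widths)
    num = sum((xi - mx) * (wi - my) for xi, wi in zip(x, widths))
    den = sum((xi - mx) ** 2 for xi in x)
    return num / den


if __name__ == "__main__":
    print(round(fit_tau(widths_ms, rel_conductance), 2))  # 20.63
```

The estimate (about 21 ms) is only as good as the decay assumption; its value here is to show that the width readout is roughly logarithmic in the encoded conductance, so equal conductance ratios map to equal width increments.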

In speed detection and processing, different speeds of the target vehicle result in different time intervals when the vehicle moves from one programmed location to an adjacent one. Thus, the time intervals between soma module 3’s output voltage pulses can be used to determine the speed, with a short time interval indicating a high speed. As shown in Fig. 4f, to simplify the testing, we defined three scenarios with high, medium, and low speeds. Pulse signals with three different frequencies representing the three speeds were applied to the input channels in sequence, as shown in Fig. 4f (I). The speed information could be distinguished directly by analyzing the time interval of the output signals (Fig. 4f, (II)). The output frequencies for the high, medium, and low speeds were ~12.04, ~6.02, and ~4.02 Hz, respectively (Fig. 4h).
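The relation between output frequency, pulse interval, and speed can be written out explicitly. In the sketch below, the three frequencies are taken from the text, whereas the spacing between adjacent programmed locations (`spacing_m`) is a hypothetical parameter that the source does not specify, so only the speed ratios are meaningful.

```python
freqs_hz = {"high": 12.04, "medium": 6.02, "low": 4.02}  # from the text


def interval_ms(freq_hz):
    """Time between consecutive output pulses, in milliseconds."""
    return 1000.0 / freq_hz


def relative_speed(freq_hz, spacing_m=1.0):
    """Speed in m/s if adjacent locations are spacing_m apart (assumed)."""
    return spacing_m * freq_hz


if __name__ == "__main__":
    for name, f in freqs_hz.items():
        print(name, round(interval_ms(f), 1), "ms")
```

The three scenarios correspond to pulse intervals of roughly 83, 166, and 249 ms, that is, speed ratios of about 3 : 1.5 : 1 regardless of the assumed spacing.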

Discussion

In summary, the 2D MoS2-based RAH implemented the connection-adaptable degree of freedom inspired by neural circuits in the brain. The devices’ inner states and inter-connections were co-encoded to enhance adaptability, thus endowing RAH with multiple functions and potential as a solution for general-purpose machines. Notably, the MoS2 FETs used in this work could be replaced with floating-gate transistors, charge-trapping transistors, or memristors to achieve a non-volatile hardware structure and reduce reliance on gate control strategies41,48. RAH working under ADC and DAC functions exhibited reconfigurable resolutions (maximum of 8 bits), which can be matched to different tasks to avoid resource wastage. ADC and DAC also realized a bandwidth of 50 kHz and a maximum power consumption (8-bit ADC and DAC) of ~750 μW during a converting period. In addition, wide-ranging computing applications, such as AI-assisted diagnosis and autonomous driving, were demonstrated to show that the high-level adaptability and flexibility of RAH can meet the linear and nonlinear computing requirements of different tasks. As one potential scheme for future brain-like general-purpose machines, this hardware is expected to be used in many other practical applications, such as speech recognition and decision making. This design strategy opens an avenue to create other intelligent, efficient, and flexible general-purpose hardware solutions for complex problems.

Methods

This study complied with all relevant ethical regulations. The clinical CT image samples used in this research were obtained from Huangshi Central Hospital after written informed consent was obtained from the patients. This research was approved by the Ethics Committee of Huangshi Central Hospital (K(2023)-46). All methods were implemented following the relevant guidelines and regulations stipulated in the Declaration of Helsinki.

Fabrication details of RAH

CVD-MoS2 thin films supplied by Six Carbon Shenzhen were used as the channel material. All lithographic steps in the fabrication process were performed by UV lithography using an MA8 system. The gate, source, and drain metal contacts (Au and Pt) were deposited with a Leybold electron-beam evaporation system at a pressure of <3 × 10⁻⁵ mbar. The gate dielectric, 30 nm of Al2O3, was deposited via atomic layer deposition from trimethylaluminium and water at 250 °C. CVD MoS2 was lifted off the growth substrate by spin-coating a thick polymethyl methacrylate (PMMA) film. The polymer/MoS2 stack was then rinsed in deionized water. After drying at a slightly elevated temperature, the CVD MoS2 was transferred in a dry-air glovebox to the prebaked target substrate, which was heated slowly from room temperature to ~150 °C to soften the PMMA and facilitate proper contact between the film and the substrate. The PMMA was subsequently dissolved in acetone. MoS2 and Al2O3 were etched by Ar/SF6 plasma in an Oxford Cobra reactive ion etching system.

Electrical measurements

The electrical characteristics of the MoS2 FETs were measured in a cryogenic probe station at room temperature. An Agilent B1500 source measurement unit (SMU) was used to apply gate and drain voltages and to measure the drain current as a function of gate voltage and drain voltage. The tests evaluated key parameters such as the transfer and output characteristics and the threshold voltage. For the MoS2 FET-based operational amplifier (OPA) circuits, individual FETs were measured using the B1500 semiconductor analyzer in conjunction with the cryogenic probe station. For integrated device measurements, bias voltages were supplied by the B1500 semiconductor analyzer and a source meter. Input and output signals were generated and measured using an Agilent 33220A function generator and a Keysight InfiniiVision oscilloscope, respectively. This setup allows precise control of the device conditions and accurate characterization of the amplifier’s performance.

For the digital-to-analog conversion (DAC) functionality testing, encoded digital signals (square waves with varying frequencies) were applied across multiple input channels. The conductance values of these channels were programmed by the NI PXI equipment according to the required formula. The output analog signals were then measured using a Keysight InfiniiVision oscilloscope to verify the accuracy of the digital-to-analog conversion. For the analog-to-digital conversion (ADC) functionality testing, an Agilent 33220A function generator provided an analog signal to one input of the operational amplifier, while the reference conductance channels received periodic square waves. The analog signal was compared with the reference voltages, and the digital output was monitored with a Keysight InfiniiVision oscilloscope. A diagram of the experimental setup is shown in Fig. S29.
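As an idealized illustration of reconfigurable-resolution conversion, the sketch below models a DAC as a binary-weighted sum over input channels and an ADC as a sequence of comparisons against DAC-generated reference levels. The binary weighting, the successive-comparison scheme, and the names `dac`, `adc`, and `v_ref` are assumptions made for illustration; the text above does not specify the conductance formula or the comparison order used in the hardware.

```python
def dac(bits, v_ref=1.0):
    """Ideal binary-weighted DAC.

    bits is an MSB-first list of 0/1 values; the output lies in [0, v_ref).
    Channel i carries a relative weight of 2**(n-1-i) (assumed weighting).
    """
    n = len(bits)
    weights = [2 ** (n - 1 - i) for i in range(n)]
    code = sum(b * w for b, w in zip(bits, weights))
    return v_ref * code / (2 ** n)


def adc(v_in, n_bits, v_ref=1.0):
    """Ideal ADC by successive comparison with DAC-generated references."""
    bits = []
    for _ in range(n_bits):
        trial = bits + [1]
        padded = trial + [0] * (n_bits - len(trial))
        # Keep the trial bit only if the input reaches the reference level.
        bits.append(1 if v_in >= dac(padded, v_ref) else 0)
    return bits


# The same input converted at two resolutions (4 and 8 bits).
for n in (4, 8):
    code = adc(0.3, n)
    print(n, code, dac(code))
```

Changing `n_bits` changes the number of comparison steps and channels involved, which is the sense in which resolution can be matched to the task in this simplified model.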

For convolution kernel testing, a convolution kernel was programmed by adjusting the conductance values of the transistors using an external controller (NI PXI system). The central FET was set to a conductance of 9 × G0, while the surrounding FETs were set to G0. The resulting output was recorded using a Keysight oscilloscope. The convolution was applied to extract key features from the reconstructed CT image, sharpening the image and enhancing the clarity of important details. The clinical CT image samples used in this research were obtained from Huangshi Central Hospital, and their use was approved by the Ethics Committee of Huangshi Central Hospital (K(2023)-46).
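The effect of such a kernel can be sketched in software. The example below assumes that the eight surrounding weights enter with negative sign, as in a standard 3 × 3 sharpening kernel; the text gives only the conductance magnitudes (9 × G0 and G0), so the sign convention and the helper names are assumptions.

```python
def sharpen_kernel(g0=1.0):
    """3x3 kernel: centre 9*g0, neighbours -g0 (the sign is an assumption)."""
    kernel = [[-g0] * 3 for _ in range(3)]
    kernel[1][1] = 9 * g0
    return kernel


def convolve2d_valid(image, kernel):
    """'Valid' 2D filtering of a list-of-lists image with a 3x3 kernel.

    The kernel is symmetric, so correlation and convolution coincide.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 2):
        row = []
        for c in range(cols - 2):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Hypothetical 4x4 patch with a vertical edge between columns 1 and 2.
patch = [[1, 1, 5, 5] for _ in range(4)]
print(convolve2d_valid(patch, sharpen_kernel()))
```

Because the weights sum to G0, flat regions pass through unchanged while intensity differences across an edge are amplified, which is the sharpening behaviour described above.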

Sparse coding

The purpose of sparse coding is to find a representation of the input X that reproduces the features of the input as closely as possible while keeping the coefficients sparse. In optimal sparse approximation, the coefficients with the fewest nonzero entries are determined by solving the minimization problem

$$\min_{a}\ \|a\|_0 \quad \text{subject to} \quad X = Da,$$

where $\|a\|_0$ denotes the number of nonzero elements of $a$ and D represents the feature dictionary. To solve this NP-hard problem, we used the locally competitive algorithm to obtain the sparse coefficients.

The locally competitive algorithm (LCA) draws inspiration from observed properties of neural systems: the inputs cause the membrane potential to accumulate like a leaky integrator, membrane potentials that exceed the threshold generate action potentials for extracellular signaling, and positive responses inhibit neighboring units through horizontal connections. The internal state of unit m is denoted by $u_m(t)$. When the internal state of a node is sufficiently large, the node becomes active and produces an output signal $a_m(t)$ that represents the stimulus and inhibits other nodes. This output coefficient results from applying an activation function to the membrane potential, $a_m(t) = T_\lambda(u_m(t))$, with the system threshold λ as a parameter. Combining these components, the dynamics of the LCA nodes can be expressed by the nonlinear ordinary differential equation

$$\tau \frac{du_m(t)}{dt} = -u_m(t) + \phi_m^{\mathsf{T}} X - \sum_{n \neq m} \left(\phi_m^{\mathsf{T}} \phi_n\right) a_n(t),$$

where $u_m$ is the membrane potential of neuron m, τ is a time constant, $\phi_m$ is the m-th column of D, and $a_n(t) = T_\lambda(u_n(t))$.
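A minimal software sketch of LCA dynamics is given below, assuming the widely used form in which each node's potential leaks, is driven by the projection of the input onto its dictionary column, and is inhibited by active nodes in proportion to the overlap between their columns. The soft-threshold activation, the forward-Euler integration, and all parameter values (`lam`, `tau`, `dt`, `steps`) are illustrative assumptions rather than the settings used in this work.

```python
def soft_threshold(u, lam):
    """Activation: zero inside [-lam, lam], shrunk by lam outside (assumed)."""
    if u > lam:
        return u - lam
    if u < -lam:
        return u + lam
    return 0.0


def dot(p, q):
    """Inner product of two equal-length lists."""
    return sum(pi * qi for pi, qi in zip(p, q))


def lca(x, dictionary, lam=0.1, tau=10.0, dt=1.0, steps=500):
    """Forward-Euler LCA; dictionary is a list of columns (atoms)."""
    m = len(dictionary)
    drive = [dot(phi, x) for phi in dictionary]          # feed-forward input
    gram = [[dot(dictionary[i], dictionary[j]) for j in range(m)]
            for i in range(m)]                           # column overlaps
    u = [0.0] * m                                        # membrane potentials
    for _ in range(steps):
        a = [soft_threshold(ui, lam) for ui in u]
        new_u = []
        for i in range(m):
            # Lateral inhibition from every other active node.
            inhibition = sum(gram[i][j] * a[j] for j in range(m) if j != i)
            du = (-u[i] + drive[i] - inhibition) / tau
            new_u.append(u[i] + dt * du)
        u = new_u
    return [soft_threshold(ui, lam) for ui in u]


# Hypothetical overcomplete dictionary with three unit-norm atoms in 2D.
atoms = [[1.0, 0.0], [0.0, 1.0], [0.7071, 0.7071]]
print(lca([1.0, 0.0], atoms))
```

In this example only the atom aligned with the input remains active at steady state, while the overlapping diagonal atom is suppressed by lateral inhibition, which illustrates how competition produces a sparse code.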

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grants 62222404, T2450054, 52027806, 52435005, 62304084 and 92248304), National Key Research and Development Plan of China (Grants 2021YFB3601200), Research Grants Council of Hong Kong (Grant No. PDFS2223-4S06), Major Program of Hubei Province (Grant No. 2023BAA009), Innovation Fund of the Wuhan National Laboratory for Optoelectronics, and Open Fund of the State Key Laboratory of Infrared Physics.

Author contributions

L.Y., X.M., Y.Y., and C.X. conceived the ideas and designed the experiment. X.H., Z.P., Z.L., and X.Y. developed the wet-transfer technique. X.H. and L.T. investigated the characteristics of MoS2 field-effect transistors. X.H., L.T., L.X., and Y.W. conducted the circuit design and theoretical analysis of self-organizing functions. X.H. and H.W. analyzed the neuronal function. X.H. and L.X. examined sparse coding algorithms. X.H., L.T., and W.S. analyzed the experimental data on self-organizing functions. X.H., X.G., X.Q., J.W., and X.B.H. proposed revisions to the figures. X.H., L.X., and Y.Y. provided the medical CT images and reviewed the ethics documentation. X.H., L.T., and L.Y. wrote the manuscript. Special thanks are given to Y.Y. for providing medical CT images and to W.L., X.Z., C.X., and J.X. for the discussions and suggestions regarding the research and manuscript.

Peer review

Peer review information

Nature Communications thanks Saptarshi Das and Sebastian Pazos for their contribution to the peer review of this work. A peer review file is available.

Data availability

All data that support the findings of this study are available in the Figshare database at https://doi.org/10.6084/m9.figshare.27642318.

Code availability

All code used in this study is available from the corresponding author (L. Y.) upon request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Xinyu Huang, Lei Tong, Langlang Xu.

Contributor Information

Caihua Xiong, Email: chxiong@hust.edu.cn.

Yu Ye, Email: yeyuyeyu2@163.com.

Xiangshui Miao, Email: miaoxs@hust.edu.cn.

Lei Ye, Email: leiye@hust.edu.cn.

Supplementary information

The online version contains supplementary material available at https://doi.org/10.1038/s41467-024-55395-4.

References

1. Goertzel, B. Artificial general intelligence: concept, state of the art, and future prospects. J. Artif. Gen. Intell. 5, 1–48 (2014).

2. Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

3. Ballard, D. H. Cortical connections and parallel processing: structure and function. Behav. Brain Sci. 9, 67–90 (2010).

4. Luo, L. Architectures of neuronal circuits. Science 373, eabg7285 (2021).

5. Pessoa, L. & Adolphs, R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–782 (2010).

6. Braun, U. et al. Dynamic reconfiguration of frontal brain networks during executive cognition in humans. Proc. Natl Acad. Sci. USA 112, 11678–11683 (2015).

7. Vinogradova, O. S. Hippocampus as comparator: Role of the two input and two output systems of the hippocampus in selection and registration of information. Hippocampus 11, 578–598 (2001).

8. Mehonic, A. & Kenyon, A. J. Brain-inspired computing needs a master plan. Nature 604, 255–260 (2022).

9. Qiao, H., Li, Y., Li, F., Xi, X. & Wu, W. Biologically inspired model for visual cognition achieving unsupervised episodic and semantic feature learning. IEEE Trans. Cybern. 46, 2335–2347 (2016).

10. Muliukov, A. R. et al. A unified software/hardware scalable architecture for brain-inspired computing based on self-organizing neural models. Front. Neurosci. 16, 825879 (2022).

11. Zhu, X., Li, D., Liang, X. & Lu, W. D. Ionic modulation and ionic coupling effects in MoS2 devices for neuromorphic computing. Nat. Mater. 18, 141–148 (2018).

12. Shi, Y. et al. Electronic synapses made of layered two-dimensional materials. Nat. Electron. 1, 458–465 (2018).

13. Seo, S. et al. Artificial optic-neural synapse for colored and color-mixed pattern recognition. Nat. Commun. 9, 5106 (2018).

14. Seo, S. et al. Recent progress in artificial synapses based on two-dimensional van der Waals materials for brain-inspired computing. ACS Appl. Electron. Mater. 2, 371–388 (2020).

15. Bessonov, A. A. et al. Layered memristive and memcapacitive switches for printable electronics. Nat. Mater. 14, 199–204 (2014).

16. Migliato Marega, G. et al. Logic-in-memory based on an atomically thin semiconductor. Nature 587, 72–77 (2020).

17. Wang, Y. et al. An in-memory computing architecture based on two-dimensional semiconductors for multiply-accumulate operations. Nat. Commun. 12, 3347 (2021).

18. Ghosh, S. et al. An all 2D bio-inspired gustatory circuit for mimicking physiology and psychology of feeding behavior. Nat. Commun. 14, 6021 (2023).

19. Sadaf, M. U. K., Sakib, N. U., Pannone, A., Ravichandran, H. & Das, S. A bio-inspired visuotactile neuron for multisensory integration. Nat. Commun. 14, 5729 (2023).

20. Subbulakshmi Radhakrishnan, S. et al. A sparse and spike-timing-based adaptive photoencoder for augmenting machine vision for spiking neural networks. Adv. Mater. 34, e2202535 (2022).

21. Zheng, Y., Ghosh, S. & Das, S. A butterfly-inspired multisensory neuromorphic platform for integration of visual and chemical cues. Adv. Mater. 36, e2307380 (2024).

22. Zheng, Y. et al. Hardware implementation of Bayesian network based on two-dimensional memtransistors. Nat. Commun. 13, 5578 (2022).

23. Buzsáki, G. Large-scale recording of neuronal ensembles. Nat. Neurosci. 7, 446–451 (2004).

24. Yu, L. & Yu, Y. Energy-efficient neural information processing in individual neurons and neuronal networks. J. Neurosci. Res. 95, 2253–2266 (2017).

25. Grübl, A., Billaudelle, S., Cramer, B., Karasenko, V. & Schemmel, J. Verification and design methods for the BrainScaleS neuromorphic hardware system. J. Signal Process. Syst. 92, 1277–1292 (2020).

26. Forbes, N. Evolution on a chip: evolvable hardware aims to optimize circuit design. Comput. Sci. Eng. 3, 6–10 (2001).

27. Stomeo, E., Kalganova, T. & Lambert, C. Generalized disjunction decomposition for evolvable hardware. IEEE Trans. Syst., Man Cybern. Part B (Cybern.) 36, 1024–1043 (2006).

28. Zhou, T. et al. Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit. Nat. Photonics 15, 367–373 (2021).

29. Tran, D. & Teuscher, C. Multi-tasking memcapacitive networks. IEEE J. Emerg. Sel. Top. Circuits Syst. 13, 323–331 (2023).

30. Pagkalos, M., Makarov, R. & Poirazi, P. Leveraging dendritic properties to advance machine learning and neuro-inspired computing. Curr. Opin. Neurobiol. 85, 102853 (2024).

31. Sun, X., Yao, F. & Ding, C. Modeling high-order relationships: brain-inspired hypergraph-induced multimodal-multitask framework for semantic comprehension. IEEE Transactions on Neural Networks and Learning Systems, 12142–12156 (2023).

32. Polyushkin, D. K. et al. Analogue two-dimensional semiconductor electronics. Nat. Electron. 3, 486–491 (2020).

33. Gray, P. R. & Meyer, R. G. MOS operational amplifier design-a tutorial overview. IEEE J. Solid-State Circ. 17, 969–982 (1982).

34. Chen, J. J., Tsao, H. W. & Chen, C. C. Operational transresistance amplifier using CMOS technology. Electron. Lett. 28, 2087 (1992).

35. Babanezhad, J. N. & Temes, G. C. A linear NMOS depletion resistor and its application in an integrated amplifier. IEEE J. Solid-State Circ. 19, 932–938 (1984).

36. Krasnozhon, D., Dutta, S., Nyffeler, C., Leblebici, Y. & Kis, A. 27.24. 21-27.24. 24 (IEEE).

37. Gao, Q. et al. Scalable high performance radio frequency electronics based on large domain bilayer MoS2. Nat. Commun. 9, 4778 (2018).

38. Krasnozhon, D., Lembke, D., Nyffeler, C., Leblebici, Y. & Kis, A. MoS2 transistors operating at gigahertz frequencies. Nano Lett. 14, 5905–5911 (2014).

39. Kim, M. et al. Zero-static power radio-frequency switches based on MoS2 atomristors. Nat. Commun. 9, 2524 (2018).

40. Baek, S. et al. Low-temperature carrier transport mechanism of wafer-scale grown polycrystalline molybdenum disulfide thin-film transistor based on radio frequency sputtering and sulfurization. Adv. Mater. Interfaces 9, 2102360 (2022).

41. Migliato Marega, G. et al. A large-scale integrated vector–matrix multiplication processor based on monolayer molybdenum disulfide memories. Nat. Electron. 6, 991–998 (2023).

42. Makrigiorgos, A., Shafti, A., Harston, A., Gerard, J. & Faisal, A. A. Human visual attention prediction boosts learning & performance of autonomous driving agents. arXiv https://doi.org/10.48550/arXiv.1909.05003 (2019).

43. Unterholzner, A. & Wuensche, H.-J. Selective attention for detection and tracking of road-networks in autonomous driving. IEEE Intelligent Vehicles Symposium, 277–284 (2013).

44. Cheng, R., Agia, C., Shkurti, F., Meger, D. & Dudek, G. Latent attention augmentation for robust autonomous driving policies. 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 130–136 (2021).

45. Morrison, T. N., Jagacinski, R. J. & Petrov, J. Drivers’ attention to preview and its momentary persistence. IEEE Trans. Hum. Mach. Syst. 53, 610–618 (2023).

46. Rolfs, M., Jonikaitis, D., Deubel, H. & Cavanagh, P. Predictive remapping of attention across eye movements. Nat. Neurosci. 14, 252–256 (2010).

47. Itoh, T. D., Takeya, R. & Tanaka, M. Spatial and temporal adaptation of predictive saccades based on motion inference. Sci. Rep. 10, 5280 (2020).

48. Zhu, K. et al. Hybrid 2D–CMOS microchips for memristive applications. Nature 618, 57–62 (2023).
