Abstract

Objective: Authors evaluated whether displaying context-sensitive links to infrequently accessed educational materials and patient information via the user interface of an inpatient computerized care provider order entry (CPOE) system would affect access rates to the materials.

Design: The CPOE system of Vanderbilt University Hospital (VUH) included “baseline” clinical decision support advice for safety and quality. The authors augmented this with seven new, primarily educational decision support features. A prospective, randomized, controlled trial compared clinicians' utilization rates for the new materials via two interfaces. Control subjects could access study-related decision support from a menu in the standard CPOE interface. Intervention subjects received active notification when study-related decision support was available through context-sensitive, visibly highlighted, selectable hyperlinks.

Measurements: Rates of opportunities to access study-related decision support materials, and rates at which those materials were used, from April 1999 through March 2000 on seven VUH Internal Medicine wards.

Results: During 4,466 intervention subject-days, there were 240,504 (53.9/subject-day) opportunities for study-related decision support, while during 3,397 control subject-days, there were 178,235 (52.5/subject-day) such opportunities (p = 0.11). Individual intervention subjects accessed the decision support features at least once on 3.8% of subject-days logged on (278 responses); controls accessed them at least once on 0.6% of subject-days (18 responses), with a response rate ratio, adjusted for decision support frequency, of 9.17 (95% confidence interval 4.6–18, p < 0.0005). On average, intervention subjects accessed study-related decision support materials once every 16 days individually and once every 1.26 days in aggregate.

Conclusion: Highlighting availability of context-sensitive educational materials and patient information through visible hyperlinks significantly increased utilization rates for study-related decision support when compared to “standard” VUH CPOE methods, although absolute response rates were low.

Care provider order entry (CPOE), also called computerized physician order entry, has been evaluated as a means of addressing issues of patient safety and quality of care.1,2,3,4,5,6,7,8,9 The role of CPOE systems in delivering patient-related, predominantly educational information has been less well studied. One classic study by McDonald and colleagues10 in 1980 suggested that CPOE-based delivery of educational materials, including citations to journal literature, was ineffective in increasing physicians' responsiveness to clinical reminders. Providing context-sensitive educational materials is difficult because CPOE system users tolerate only interruptions that are brief and minimally obtrusive to clinical workflows.11 More traditional types of medical education, including seminars or conferences held in locations removed from patient care, have been shown to have transient effects at best.12,13,14,15 The current study therefore took a first step toward evaluating whether CPOE systems that deliver focused, patient-specific educational materials in real time can be effective.

The authors conducted the Patient Care Provider Order Entry with Integrated Tactical Support (PC-POETS) study, a randomized, controlled trial comparing two interfaces within a CPOE system for accessing decision support content, including educational materials and patient information. The PC-POETS study evaluated (1) rates at which the CPOE system identified opportunities for users to access broadly defined (as explained below) study-related decision support materials, including educational items and patient information; (2) whether a new user interface design for delivering decision support would affect access rates for the study-related decision support features when compared to the local system's historical decision support delivery method; and (3) whether access rates for the study-related educational materials and patient information would be sustained over time. The PC-POETS study also measured utilization of preexisting CPOE-based safety- and quality-related clinical decision support features (such as allergy alerts and drug interaction warnings) to put the utilization rates for study-related educational decision support in perspective.

Background

Studies of clinical information needs have demonstrated that health care providers frequently require new information and/or knowledge to address questions resulting from patient care.16,17,18,19,20 Health care providers, however, are rarely able to take the time to pursue the answers to clinical questions.21,22,23,24,25 Rather, providers are too busy delivering patient care to address all relevant clinical information needs.26,27,28 Health care providers' lack of time is compounded by the nearly exponential growth in the already massive body of biomedical knowledge.29,30,31 Conversely, any health care provider taking the time to review all the evidence-based literature for every clinical action would likely be too enmeshed in reading the information to have adequate time for patient care. Novel methods for delivering relevant educational materials into clinical workflows may improve health care providers' assimilation of new information into practice.2 One potential approach may be to leverage successful clinical decision support and CPOE systems already in use to supply pertinent “just in time”32,33 clinical education.34

Clinical decision support systems in general, whether freestanding or as components of electronic health record systems, have the potential to assist health care providers' decision making by delivering relevant patient-, disease-, clinician-, or institution-specific factual information, alerts, warnings, and evidence-based suggestions.35,36,37,38,39,40,41 Various individual clinical decision support systems have been demonstrated to reduce medical errors,42,43,44 improve the quality of patient care delivery,5,45,46,47,48 and deliver effective safety- and quality-related decision support.49,50,51,52 Numerous clinical decision support tools embedded in CPOE systems have reduced resource utilization,6,39,53,54,55,56,57,58,59,60,61,62,63,64,65,66 improved disease detection,36,67,68,69,70,71,72,73,74,75,76,77 reduced rates of medication errors and adverse drug events,42,43,46 and enhanced the quality of care provided to patients.8,48,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95

Previous studies evaluating the delivery of educational materials through electronic health record systems are uncommon,68,96 and some have suggested that such materials will not be used during clinical care delivery.10,41 The question of how best to deliver educational decision support to busy health care providers remains unanswered.11,97,98,99,100 Shortliffe101 has provided one explanation for poor adoption: “Effective decision-support systems are dependent on the development of integrated environments for communication and computing that allow merging of knowledge-based tools with other patient data-management and information-retrieval applications.” Successful incorporation of educational materials into routine clinical care requires that three conditions be met: (1) existence of relevant, useful, validated delivery tools; (2) ability to integrate such tools into clinical workflow (accessibility of hardware, adequate training on software, convenient user interface, adequate speed of response); and (3) availability in a single system of a comprehensive, “critical mass” of functionality (computer programs and clinical data) that can be brought to bear as decisions are being made.102 Bates et al.11 echoed these points in 2003 in describing the “Ten Commandments of Effective Clinical Decision Support.” Among the ten points, Bates et al. identified the importance of decision support fitting into the users' standard workflow, including relevant and up-to-date clinical knowledge, and having a simple, intuitive design.

The medium may be as important as the message in determining the effectiveness of decision support. Studies have documented that delivery modalities influence success rates for clinical decision support acceptance and usability.102,103 Others have suggested that the amount of alert-related “noise” in patient care information systems and clinical workflows may also attenuate users' acceptance of decision support systems.11,32,104 Characteristics of the user interface may also affect the adoption of decision support systems.105,106,107

Methods

Setting

At the time of the study, from April 1999 through March 2000, Vanderbilt University Hospital (VUH) at Vanderbilt University Medical Center (VUMC) was an inpatient tertiary care facility with local and regional primary referral bases and had 609 beds with 31,000 admissions per year. The study wards encompassed the primary VUH internal medicine inpatient services, including two combined general medicine and oncology units (together containing 60 beds), a medical intensive care unit (14 beds), a bone marrow transplantation unit (27 beds), a coronary care unit (12 beds), and a cardiac step-down unit (30 beds).

Vanderbilt University Medical Center has developed and implemented comprehensive, computer-based patient care information systems since 1994 through collaboration among faculty in the Department of Biomedical Informatics, the Vanderbilt Informatics Center technical staff, and clinical faculty and staff members.50,108,109,110 Deployed applications include an inpatient-centered CPOE system in use since 1995109 and a clinical data repository and electronic health record system110 in use since 1996. During the study period, the VUMC CPOE system was functional on all inpatient wards except for the pediatric and neonatal intensive care units (collectively containing 75 beds, but not part of the Internal Medicine service). On active CPOE units, 100% of orders were entered into the CPOE system, totaling more than 12,000 orders entered per day. On CPOE units, taken as a whole, physicians directly entered 75% of all CPOE orders; the remaining 25% were entered via clerical or nursing transcription of physicians' verbal or handwritten orders.

Before the study, the VUH CPOE system included safety-related clinical decision support features (e.g., drug allergy alerts, drug dose range checking, drug interaction checking, and pharmacy-related patient-specific medication advice).50,109 In addition, several rarely used educational materials had been available from the main patient-specific order entry menu. Since the CPOE system's inception, this menu item had been labeled “Bells and Whistles” (Figure 1). Selecting “Bells and Whistles” generated a menu from which the user could access educational materials, such as intravenous drug compatibility tables (listing which drugs should not be admixed with other drugs in the same intravenous line), infusion rate calculators, the results of medication-related literature searches in the PubMed database,111 outpatient formulary information, and other resources.

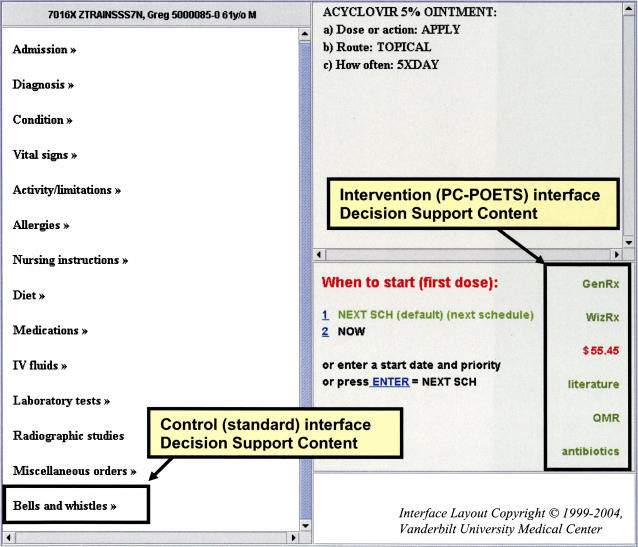

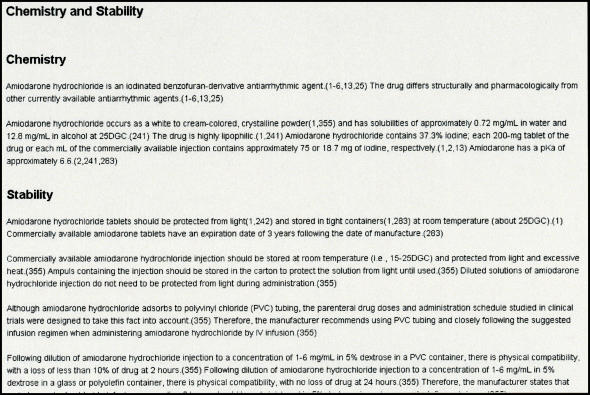

Figure 1.

Care provider order entry system interface, modified for Patient Care Provider Order Entry with Integrated Tactical Support (PC-POETS) intervention subjects. All subjects (control and intervention) could access decision support content through the “Bells and Whistles” link under the list of active orders. PC-POETS intervention subjects also saw a “Dashboard Indicator” containing active links to relevant information directly in the user interface. The links were displayed in color when they identified relevant opportunities for decision support. In this case, six links are displayed, and all are artificially colored to illustrate how they appeared.

Subjects

The VUMC Institutional Review Board approved the study before its inception. Study subjects included all house officers assigned to work monthly rotations on the seven study wards at VUH during the study period. House officer subjects included interns in their first year of postgraduate training (PGY-1) and second- (PGY-2) and third- (PGY-3) year residents. House officers generally worked together in care teams composed of one resident and one or, rarely, two interns. During the 1999–2000 academic year, the Department of Medicine trained 116 house officers, roughly half of whom had rotations on VUH study units during any given month. All study units had used the VUMC CPOE system regularly for at least two years before the study's initiation.

Intervention

System users could access study-related decision support features through one of two study interfaces. The control PC-POETS CPOE interface design, which was available to both intervention and control subjects, required subjects to request the new study-related educational materials and patient information by selecting the standard “Bells and Whistles” menu item (Figure 1). Control users received no context-sensitive active notification that potentially relevant decision support was available. By contrast, whenever the potential for decision support was triggered by aspects of the patient's orders (Table 1), the intervention PC-POETS interface displayed brightly colored hyperlinks to the study-related decision support features. The study-related intervention hyperlinks were clustered together in a single location on the main patient order entry screen and were highlighted only when the corresponding decision support features were available (Figure 1). The intervention display area was designed to be analogous to an automobile dashboard, whose warning lights are normally dimmed but light up for low fuel, high engine temperature, electrical problems, and similar conditions. In this way, the intervention indicated to users when opportunities to access relevant educational decision support were available, without forcing an interruption in the user's clinical workflow.
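The dashboard behavior described above can be sketched as a mapping from triggering events to feature codes. This is a minimal illustration, not the VUH implementation: the event labels (`medication_order`, `diagnosis_order`, and so on) and the functions `lit_features` and `render_dashboard` are hypothetical, the triggers are paraphrased from Table 1, and treating a feature as lit when any one of its listed triggers is present is an assumption.

```python
from typing import Dict, FrozenSet, List, Set

# Hypothetical event labels; triggers paraphrased from Table 1.
TRIGGERS: Dict[str, FrozenSet[str]] = {
    "ABA": frozenset({"s_aureus_culture_without_antibiotic"}),
    "MSH": frozenset({"diagnosis_order"}),
    "WRX": frozenset({"medication_order"}),
    "MSB": frozenset({"medication_order"}),
    "QMR": frozenset({"diagnosis_order", "medication_order"}),
    "TRD": frozenset({"lab_result"}),
    "LMR": frozenset({"lab_order", "medication_order", "radiology_order"}),
}

# Fixed left-to-right order of the links in the indicator strip.
DASHBOARD_ORDER: List[str] = ["ABA", "MSH", "WRX", "MSB", "QMR", "TRD", "LMR"]


def lit_features(session_events: Set[str]) -> List[str]:
    """Return the codes whose triggers (any one suffices, by assumption) occur in the session."""
    return [code for code in DASHBOARD_ORDER if TRIGGERS[code] & session_events]


def render_dashboard(session_events: Set[str], intervention: bool) -> str:
    """Render lit links as [CODE] and dimmed links in lowercase.

    Control users get no indicator; they must open "Bells and Whistles" themselves.
    """
    if not intervention:
        return ""
    lit = set(lit_features(session_events))
    return " ".join(f"[{c}]" if c in lit else c.lower() for c in DASHBOARD_ORDER)
```

For a session containing only a new medication order, an intervention user would see `aba msh [WRX] [MSB] [QMR] trd [LMR]`, while a control user would see nothing. Keeping the links in a fixed position, dimmed rather than hidden, mirrors the dashboard metaphor of warning lights that are always present but only sometimes lit.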

Table 1.

Decision Support Features Available

| Code | Triggering Event | Decision Support Content | Opportunities |
|---|---|---|---|
| Baseline CPOE Decision Support Types | | | |
| PHM | New medication order | Drug-allergy, dose range, drug interaction, and medication-specific alerts | 11,879\* |
| GEN | Improperly formed orders | Specific feedback relating to error | 43,318\* |
| PC-POETS Study-related Decision Support Types | | | |
| ABA | Blood culture growing S. aureus without appropriate antibiotic order | Antibiotic recommendations based on organism-specific and institutional drug sensitivity patterns | 1,590 |
| MSH | Active diagnosis orders | Matches from the PubMed database | 50,873 |
| WRX | New medication order | Drug monograph from American Hospital Formulary Service database | 148,446 |
| MSB | New medication order | Drug monograph from Mosby's GenRx | 148,153 |
| QMR | Active diagnosis and medication orders | Matches from an expert internal medicine decision support engine | 50,892 |
| TRD | Laboratory results | Result trends that have been stable for 24 hr or that suggest an abnormal result within 72 hr | 93,973 |
| LMR | Laboratory, medication, and radiology orders | Summary of costs associated with the current order entry session | 78,352 |

CPOE = care provider order entry; PC-POETS = Patient Care Provider Order Entry with Integrated Tactical Support.

\* Includes all data from April 1999 through March 2000.

For the PC-POETS study, the authors supplemented the standard CPOE system clinical decision support by providing subjects with seven new, predominantly educational decision support features. These decision support features included: (1) an antibiotic advisor addressing therapy for Staphylococcus aureus bacteremia; (2) case-specific linkages to the biomedical literature using the specific diagnosis orders for the patient to generate relevant searches in the PubMed database111; both (3) the American Hospital Formulary Service (AHFS) Drug Information reference112 and (4) Mosby's GenRx® drug information reference,113 two sources of pharmacotherapy-related information that include mechanisms of action, usage indications, and dosing ranges; (5) access to an internal medicine diagnostic knowledge base74,114 listing the findings that have been reported to occur in 600 diseases in internal medicine, and the differential diagnosis of more than 4,500 individual clinical findings; (6) an alerting program that examined the results of 21 individual laboratory tests for those that were “clinically abnormal” or those having a trend suggesting that an abnormal value would occur within the next 72 hours; and (7) a function that tallied ongoing “estimated expenditures” for the medications, laboratory tests, and radiological procedures ordered during the current order entry session. The decision support features were available when triggered by relevant active orders and laboratory findings; PC-POETS decision support features and their triggers are summarized in Table 1. Figures of the screens for each of these decision support features are included in Appendix 1.
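Feature (6) flags a laboratory test whose latest value is abnormal, or whose trend suggests an abnormal value within 72 hours. The paper does not state how the trend was projected, so the sketch below assumes an ordinary least-squares line extrapolated 72 hours past the latest result. The functions `linear_fit` and `trend_alert` and the reference range in the usage example are illustrative assumptions, not the study's alerting program.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (hours since first result, value), in time order


def linear_fit(points: List[Point]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept for (hour, value) pairs."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_v = sum(v for _, v in points) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in points)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in points)
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def trend_alert(points: List[Point], low: float, high: float,
                horizon_hr: float = 72.0) -> Optional[str]:
    """Classify one test's results as 'abnormal', 'trend', or None (no alert)."""
    latest_t, latest_v = points[-1]
    if not low <= latest_v <= high:
        return "abnormal"  # latest result is already outside the reference range
    if len({t for t, _ in points}) < 2:
        return None  # a trend needs results at two or more distinct times
    slope, intercept = linear_fit(points)
    projected = slope * (latest_t + horizon_hr) + intercept
    if not low <= projected <= high:
        return "trend"  # assumed linear extrapolation leaves the range
    return None
```

For example, potassium values of 4.6, 4.7, and 4.8 mmol/L drawn 12 hours apart stay within an illustrative 3.5 to 5.0 range but extrapolate to about 5.4 at 72 hours, so `trend_alert` returns `"trend"`.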

To ensure that study-related educational features were available whenever needed by any subject, the authors designed triggers (Table 1) to be both objective and inclusive. Triggering events were counted separately for each participating control and intervention subject. Furthermore, a single order entered into the CPOE system would generate multiple opportunities for study-related decision support. Single drug orders, for example, generally created at least two opportunities to access decision support features, one to use the AHFS reference and one to use Mosby's GenRx® product. Likewise, single “diagnosis” orders entered on any study patient often triggered separate opportunities to access both the internal medicine diagnostic reference knowledge base and disease-specific literature via PubMed. Once triggered, the decision support features remained active until a study subject accessed them, a time limit expired, or the triggering event was no longer present. As a result, opportunities for decision support were often counted many times and across multiple order entry sessions. The presence of S. aureus bacteremia or abnormal laboratory trends counted as an opportunity every time a study subject selected the relevant patient. Most other opportunity types were available only during the single order entry session in which the study subject first encountered the relevant triggering event.
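The counting rules in this paragraph can be made concrete with a small counter. In this hypothetical sketch, each caller-supplied `(code, trigger_id)` pair represents a trigger that is still present and unexpired; S. aureus (ABA) and trend (TRD) opportunities are counted at every patient selection, while the other types are counted only the first time a given subject encounters a given trigger. The class name, method name, and identifiers are assumptions for illustration.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

# Per the text, these two types count as an opportunity every time a subject
# selects the patient; all other types count once per trigger per subject.
PERSISTENT_CODES = {"ABA", "TRD"}


class OpportunityCounter:
    """Hypothetical tally of decision support opportunities per subject."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()  # (subject, code) -> opportunities
        # (subject, patient, code, trigger_id) already encountered
        self._seen: Set[Tuple[str, str, str, str]] = set()

    def open_session(self, subject: str, patient: str,
                     active: Iterable[Tuple[str, str]]) -> None:
        """Record one session; `active` lists (code, trigger_id) pairs still in effect."""
        for code, trigger_id in active:
            key = (subject, patient, code, trigger_id)
            if code in PERSISTENT_CODES or key not in self._seen:
                self.counts[(subject, code)] += 1
            self._seen.add(key)
```

For instance, one medication order seen in two sessions by the same subject yields one WRX and one MSB opportunity, an active laboratory trend seen in both sessions yields two TRD opportunities, and a second subject who sees the same medication order is counted separately.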

Randomization

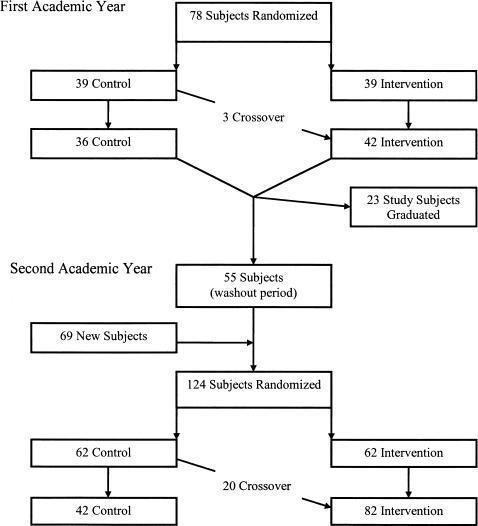

Study statisticians randomized house officers in clustered blocks115 by care teams into control or intervention groups (i.e., all members of a given team had the same study status, control or intervention, during their month together). Control or intervention group assignments were maintained for individual subjects through the entire study period whenever possible. To minimize each subject's crossover between control and intervention status, the Internal Medicine Chief Resident notified the PC-POETS statisticians of proposed study unit and care team assignments for each subject at least two weeks before the team's monthly hospital service began. At that point, the PC-POETS statisticians could request care team modifications to maintain subjects' previous intervention or control group assignments. A subject's assignment could be changed from control to intervention status when clinical scheduling requirements and the status of other team members made this unavoidable; once subjects transitioned to the intervention group, they could not transition back to control status in later rotations within the same year of training. This randomization process allowed all members of a given team to be assigned to the same intervention or control group status. The randomization scheme is summarized in Figure 2.
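Cluster randomization in blocks, combined with the one-way crossover rule, might be sketched as follows. This is an illustration under stated assumptions rather than the statisticians' actual procedure: teams are the unit of randomization; a team containing anyone previously in the intervention arm goes to intervention (return to control was not allowed); a team containing prior control subjects stays in control; and the remaining teams are assigned in shuffled blocks with equal numbers of each arm. The functions `assign_teams` and `member_arms` and the default block size are hypothetical.

```python
import random
from typing import Dict, List


def assign_teams(teams: Dict[str, List[str]], prior: Dict[str, str],
                 rng: random.Random, block_size: int = 2) -> Dict[str, str]:
    """Assign each care team (and therefore all its members) to one study arm.

    prior maps a house officer to an earlier arm ("control" or "intervention").
    """
    if block_size < 2 or block_size % 2:
        raise ValueError("block_size must be an even number of at least 2")
    arms: Dict[str, str] = {}
    unconstrained: List[str] = []
    for team, members in sorted(teams.items()):
        history = {prior.get(m) for m in members}
        if "intervention" in history:
            arms[team] = "intervention"  # no return to control within a year
        elif "control" in history:
            arms[team] = "control"  # preserve earlier control status
        else:
            unconstrained.append(team)
    # Permuted blocks: each full block contains equal numbers of each arm.
    for start in range(0, len(unconstrained), block_size):
        block = ["control", "intervention"] * (block_size // 2)
        rng.shuffle(block)
        for team, arm in zip(unconstrained[start:start + block_size], block):
            arms[team] = arm
    return arms


def member_arms(teams: Dict[str, List[str]], arms: Dict[str, str]) -> Dict[str, str]:
    """Expand team-level assignments to individual house officers."""
    return {m: arms[t] for t, members in teams.items() for m in members}
```

Because every member inherits the team's arm, the analysis unit (opportunities) is nested within the randomization unit (teams), which is one reason the analyses described below had to allow for extra variability.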

Figure 2.

Randomization scheme for study subjects. Study subjects were randomized at the start of the study period during the first academic year and were rerandomized in the second academic year after new postgraduate training year (PGY) 1 house officers arrived and the PGY levels increased by one for existing house officers. To permit time for new house officers to learn to use the care provider order entry system, a two-month “washout” period (July and August 1999) was implemented, during which all subjects were assigned to the “control” interface. In September 1999, house officers were rerandomized for assignment to study teams for the new year, independently of their statuses during the previous year.

Sources of Data

The institutional CPOE system log files record all users' keystrokes during order construction and all resulting completed orders. For the PC-POETS study, the authors modified the system to record each opportunity for study-related decision support that was triggered during any study subject's (control or intervention) CPOE sessions. The CPOE system recorded a positive response whenever a subject accessed a decision support feature, according to the criteria in ▶. The system indicated in the log files whether the subject used the control or intervention interface. These files also contained character-delimited fields that store all information related to the user's construction of all CPOE orders, including system-generated, pharmacy-related alerts and warnings in context with the medication orders that triggered them. A separate program processed the log files and extracted the appropriate study data for the primary analysis. For the subanalysis of pharmacy alerts, two authors (STR, RAM) reviewed a representative, voluminous (360 megabyte) set of log files covering January 2000, using automated tools to find pharmacy alerts from study hospital wards and manual review to categorize the alerts. The two authors discussed any categorization discrepancies to reach a final category assignment. Pharmacy alerts were categorized as clinically critical, cost or formulary related, or educational (examples and definitions are summarized in Table 2). Only clinically critical alerts were included in the final analysis reported here.
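A log-extraction step of the kind described above might look like the following. The record layout is entirely hypothetical (five `|`-delimited fields: timestamp, subject, arm, event type `OPP` or `RESP`, and feature code); the paper does not describe the real VUH log format. The sketch uses Python's standard `csv` module and skips any line that does not match the assumed study-record layout.

```python
import csv
import io
from collections import Counter
from typing import Dict, Tuple


def tally(log_text: str, delimiter: str = "|") -> Dict[Tuple[str, str, str], int]:
    """Count opportunity (OPP) and response (RESP) records by (arm, code, event)."""
    counts: Counter = Counter()
    for row in csv.reader(io.StringIO(log_text), delimiter=delimiter):
        if len(row) != 5:
            continue  # not a study record under the assumed layout (e.g., keystrokes)
        _timestamp, _subject, arm, event, code = row
        if event in ("OPP", "RESP"):
            counts[(arm, code, event)] += 1
    return dict(counts)
```

Response rates per arm and feature then follow by dividing each `RESP` count by the matching `OPP` count.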

Table 2.

Sample Pharmacy Decision Support Alerts during Study Period

| CPOE Order | Context | Alert Triggered |
|---|---|---|
| Clinically critical pharmacy alerts | | |
| Tylenol #2 | Active acetaminophen order | Multiple orders for acetaminophen |
| Acetazolamide | Allergy order for diamox | Probably allergy to this medication |
| Amiodarone infusion\* | Active digoxin order that preceded amiodarone order | Amiodarone may double serum digoxin concentration/effects |
| Cost or formulary-related pharmacy alerts | | |
| Enoxaparin | Active heparin order | Do not give both heparin and enoxaparin |
| Verapamil SR | Active order for intravenous Pepcid | If patient can take SR tabs, then why not an oral H2 blocker? |
| Multivitamin infusion | N/A | There is a nationwide shortage, consider oral dosing |
| Educational pharmacy alerts | | |
| Digoxin† | Active amiodarone order that preceded digoxin order | Amiodarone may double serum digoxin concentration/effects |
| Celecoxib/Celebrex | N/A | Celecoxib is for arthritis; citalopram is for depression |
| Abciximab infusion | N/A | For Reopro dosing information, click on this message |

CPOE = care provider order entry; SR = sustained release; N/A = not available.

\* Initiating amiodarone in a patient receiving a previously stable digoxin dose can substantially increase digoxin levels and therefore requires an action, such as monitoring digoxin levels or altering the dose of one of the medications. This was categorized as a clinically critical alert.

† Initiating digoxin in a patient already taking amiodarone will still require a digoxin loading dose and subsequent titration of digoxin until its desired effect is achieved; in this context, the warning about lowering the ultimate digoxin dose is classified as educational since no immediate action is required on starting digoxin.

Statistical Analysis

Using individual opportunities to access decision support as the unit of analysis, study statisticians performed exploratory analyses with scatterplots, chi-square contingency tables, and Poisson regression to assess how response rates were affected by the intervention and by other covariates. These analyses indicated substantial overdispersion, a phenomenon in which the variance of a data set is greater than its mean (a Poisson model assumes the two are equal). As a result, negative binomial regression, which effectively models overdispersed data,116 was used for the primary analyses. Deviance residual plots showed that these models provided a good fit to the data, which had a gamma distribution and included a small number of outliers. Ninety-five percent confidence intervals (CIs) for response rate estimates were derived from the models using Wald statistics.117 To evaluate whether subjects' responses represented a novelty effect that diminished with time, the authors modeled response rates in the intervention group by the duration that responding subjects had spent in the intervention group. Wilcoxon rank sum tests compared the distributions of “estimated expenditures” for medications, laboratory tests, and radiology procedures ordered for patients treated by control and by intervention subjects. Because some subjects crossed over between control and intervention status, three different exploratory analyses were performed: an “intention-to-treat” analysis, in which a subject's behavior was always ascribed to the intervention group after assignment to intervention; a “dropout” analysis, in which intervention subjects' responses were excluded for any subsequent month when they were in the control group; and an “as-treated” analysis, in which subjects' responses were attributed to the group to which they were currently assigned. All analysis methods produced similar results, so the most conservative, the intention-to-treat analysis, is reported.
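Two ideas from this paragraph can be illustrated with the standard library alone: the variance-to-mean ratio used to detect overdispersion, and a Wald confidence interval computed on the log scale. The sketch below computes a crude, unadjusted rate ratio with a Poisson standard error from the overall counts in Table 3. It ignores overdispersion and team clustering, so it is not the negative binomial estimate the study reports and should not be read as a re-analysis; the function names are illustrative.

```python
import math
from statistics import mean, pvariance
from typing import Sequence, Tuple


def dispersion_index(counts: Sequence[int]) -> float:
    """Variance-to-mean ratio: about 1 under a Poisson model, above 1 if overdispersed."""
    return pvariance(counts) / mean(counts)


def crude_rate_ratio(events_1: int, exposure_1: float, events_0: int,
                     exposure_0: float, z: float = 1.96) -> Tuple[float, float, float]:
    """Unadjusted rate ratio (group 1 over group 0) with a log-scale Wald CI.

    Uses the Poisson standard error sqrt(1/events_1 + 1/events_0), so it does
    not account for overdispersion or for clustering of subjects within teams.
    """
    rr = (events_1 / exposure_1) / (events_0 / exposure_0)
    se_log = math.sqrt(1 / events_1 + 1 / events_0)
    return rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)
```

With the Table 3 totals, `crude_rate_ratio(278, 240504, 18, 178235)` gives roughly 11.4 (7.1 to 18.4). That interval is narrower on the log scale than the model-based interval reported in Table 3, as expected when a Poisson standard error is applied to data that are overdispersed.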

The total “estimated expenditure” was calculated for all study-related order entry sessions as the sum of all estimated expenditures for laboratory tests, medications, and radiology procedures ordered. Expenditures were consistently based on cost data from some departments and on charge data from others; no single department mixed cost with charge data for calculating estimated expenditures in the study. To calculate the total estimated expenditures for open-ended recurring orders, order duration was set to 5 days, which was the then-current average inpatient length of stay at VUH.
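The expenditure rule can be sketched as follows. Only the 5-day duration for open-ended recurring orders comes from the text; the `Order` fields, the per-administration pricing model, and the example amounts are hypothetical. `unit_amount` stands for whichever cost or charge figure the relevant department supplied.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

ASSUMED_STAY_DAYS = 5.0  # duration the study assigned to open-ended recurring orders


@dataclass
class Order:
    unit_amount: float                     # cost or charge per administration or test (hypothetical)
    times_per_day: float = 0.0             # 0 marks a one-time order (hypothetical convention)
    duration_days: Optional[float] = None  # None marks an open-ended recurring order


def estimated_expenditure(orders: Iterable[Order]) -> float:
    """Sum estimated expenditures for the orders placed in one order entry session."""
    total = 0.0
    for order in orders:
        if order.times_per_day == 0:
            total += order.unit_amount
        else:
            days = ASSUMED_STAY_DAYS if order.duration_days is None else order.duration_days
            total += order.unit_amount * order.times_per_day * days
    return total
```

For example, a one-time $80 radiograph, an open-ended daily $20 laboratory test, and a $3 medication given every 6 hours for 2 days total $80 + $100 + $24 = $204.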

Results

Characteristics of Care Provider Order Entry Sessions during the Study Period

From April 1999 through March 2000, there were 263,100 order entry sessions initiated on study wards by all system users, including nurses, clerical unit staff, and ancillary services, as well as study subjects and nonstudy physicians. The following baseline data on CPOE-related decision support provide context for the current study. During the 263,100 order entry sessions and independent of the current study, the CPOE system generated 11,879 pharmacy warnings (range of 713 to 1,190 per month). These occurred predominantly during physician order entry sessions, since physicians at VUH entered more than 90% of pharmacy orders on study units at that time. Pharmacy warnings occurred on study units at an average rate of 29.3 per day, or one for every 22.1 order entry sessions. Manual review of pharmacy alerts from January 2000 revealed that users on study wards responded to critical pharmacy alerts by changing their orders 24% of the time, or an average of 5.0 times per day. Examples of pharmacy warnings from the study period, and the categories assigned to them during manual review, are shown in Table 2. During the study period, the CPOE system also generated 43,318 warnings about technically incorrect orders (e.g., “duration of narcotic analgesic orders cannot exceed 72 hours before renewal”), at a rate of 119 per day for all CPOE system users.

PC-POETS Decision Support Opportunities

The PC-POETS study period included two and a half months from one academic year (mid-April 1999 through June 1999) and nine months from the following academic year (July 1999 through March 2000). The study enrolled a total of 147 house officers as subjects (see randomization scheme, Figure 2). In the first year, 78 subjects were evenly randomized to the control and intervention groups; three of the control residents in this year crossed over to the intervention group. At the beginning of the second academic year, there were 124 available residents. After a two-month washout period, these physicians were rerandomized into equal groups of control and intervention subjects; 20 of the control residents subsequently crossed over to the intervention group. Subjects included 90 PGY-1 level house officers and 88 PGY-2 or PGY-3 level house officers, with 31 contributing data during both PGY-1 and PGY-2. Subjects spent an average of 5.6 months (standard deviation 3.7) in the study: 5.2 months for control subjects and 6.1 months for intervention subjects (p = 0.12). As noted previously, control subjects were allowed to become intervention subjects over time, but not vice versa.

Overall, intervention subjects contributed 4,466 study days, while control subjects contributed 3,397 study days. Together, all subjects cared for 4,550 patients and initiated 100,881 order entry sessions. During these order entry sessions, 418,739 opportunities for study-related decision support features were triggered, occurring at a mean rate of 1,261 per day. There were 240,504 opportunities to access decision support features for intervention subjects (53.9 per subject-day) and 178,235 (52.5 per subject-day) for control subjects (p = 0.11). Decision support opportunities were triggered at a mean rate of 4.12 and 4.17 per order entry session for control and intervention subjects, respectively (p = 0.21). A total of 241,843 (57.8%) opportunities to access decision support were triggered during order entry sessions initiated by PGY-1 level house officers, while 176,896 (42.2%) were triggered during order entry sessions initiated by PGY-2 and PGY-3 level house officers. The distribution of available opportunities for educational materials by category is presented in Table 3.

Subjects accessed 296 (0.07%) of the 418,739 study-related decision support features triggered during the study period, at a mean rate of 2.9 per 1000 order entry sessions. Intervention subjects accessed decision support features at least once on 3.8% of subject-days (278 responses); controls accessed features on 0.6% of subject-days (18 responses). The access rate ratio for intervention subjects, adjusted for number of decision support opportunities, was 9.17 (95% CI 4.6–18, p < 0.0005). On average, intervention subjects accessed study-related decision support materials once every 16 days individually and once every 1.26 days in aggregate, while control subjects accessed materials once every 189 days individually and once every 19 days in aggregate. Intervention subjects accessed five of the seven categories of decision support features more often than control subjects (Table 3). Access rates varied by subjects' PGY level; for first-year house officers, the rate ratio was 8.13 (95% CI 3.8–17); for PGY-2 and PGY-3, it was 21.7 (95% CI 7.6–62). There was no evidence of a trend of either increasing or decreasing access rates with continued exposure to the intervention; when compared to intervention subjects in their first month of the study, intervention subjects in the second month accessed the decision support features with a rate ratio of 1.4 (95% CI 0.7–2.9); subjects in the third month and beyond accessed the features with a rate ratio of 0.6 (95% CI 0.2–2.9).
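Several of the reported rates follow directly from the counts in the two paragraphs above. The short check below recomputes them using only figures stated in this section; the function and variable names are illustrative.

```python
def reported_rates() -> dict:
    """Recompute summary rates from the counts reported in the Results."""
    days = {"intervention": 4466, "control": 3397}
    opportunities = {"intervention": 240504, "control": 178235}
    responses = {"intervention": 278, "control": 18}
    sessions = 100881
    total_opps = sum(opportunities.values())  # 418,739
    total_resp = sum(responses.values())      # 296
    return {
        "opps_per_subject_day": {k: opportunities[k] / days[k] for k in days},
        "days_between_responses": {k: days[k] / responses[k] for k in days},
        "percent_accessed": 100 * total_resp / total_opps,
        "responses_per_1000_sessions": 1000 * total_resp / sessions,
    }


rates = reported_rates()
print(round(rates["opps_per_subject_day"]["intervention"], 1))  # 53.9
```

The per-subject intervals (about 16 days for intervention and 189 days for control subjects) match the text. The aggregate intervals also depend on the exact number of calendar days in the study period, which is not stated, so they are not recomputed here.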

Table 3.

Opportunities and Response Rate Ratios during the Clinical Trial

| Opportunity Code | Opportunities (Control) | Opportunities (Intervention) | Responses (Control) | Responses (Intervention) | Adjusted Response Rate Ratio* | 95% CI |
|---|---|---|---|---|---|---|
| ABA | 396 | 448 | 1 | 2 | 2.90† | 0.059–140 |
| MSH | 15,116 | 20,886 | 2 | 57 | 13.4 | 3.0–59 |
| WRX | 45,596 | 61,311 | 3 | 43 | 9.67 | 2.2–43 |
| MSB | 45,421 | 61,359 | 2 | 57 | 21.2 | 4.3–100 |
| QMR | 15,115 | 20,908 | 1 | 51 | 32.7 | 3.3–320 |
| TRD | 32,234 | 42,631 | 0 | 50 | ‡ | |
| LMR | 24,357 | 32,961 | 9 | 39 | 3.11 | 1.1–8.7 |
| Overall | 178,235 | 240,504 | 18 | 278 | 9.72 | 4.7–20 |

CI = confidence interval.

*Ratio of decision support opportunity response rates for intervention subjects over control subjects.

†Not significant.

‡Could not calculate a ratio.
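For readers who want the raw rates behind Table 3, the Python sketch below computes crude (unadjusted) responses per 1,000 opportunities for each arm from the table's rows. These are not the adjusted ratios shown in the table: the adjustment method is not described in this section, so the crude figures should not be expected to match them.

```python
# Rows of Table 3: code -> (control opportunities, intervention opportunities,
#                           control responses, intervention responses)
TABLE3 = {
    "ABA": (396, 448, 1, 2),
    "MSH": (15_116, 20_886, 2, 57),
    "WRX": (45_596, 61_311, 3, 43),
    "MSB": (45_421, 61_359, 2, 57),
    "QMR": (15_115, 20_908, 1, 51),
    "TRD": (32_234, 42_631, 0, 50),
    "LMR": (24_357, 32_961, 9, 39),
}


def crude_rates_per_1000(code: str) -> tuple[float, float]:
    """Unadjusted responses per 1000 opportunities as (control, intervention)."""
    c_opp, i_opp, c_resp, i_resp = TABLE3[code]
    return 1000 * c_resp / c_opp, 1000 * i_resp / i_opp


for code in TABLE3:
    control, intervention = crude_rates_per_1000(code)
    print(f"{code}: control {control:.2f}, intervention {intervention:.2f}")
```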

A total of 57,743 order entry sessions (57.2% of all study sessions) included medication, laboratory testing, or radiology procedure orders and therefore permitted calculation of an estimated expenditure. Of these, 24,557 were control subjects' sessions and 33,186 were intervention subjects' sessions, representing 56.6% of all control sessions versus 57.4% of all intervention sessions (p = 0.006). The overall mean estimated expenditure per order entry session was $405.44 (standard deviation $753). Mean estimated expenditures did not differ significantly between study groups ($403.10 per order entry session for intervention subjects versus $408.60 per order entry session for control subjects; p = 0.72, rank sum test).

Subjects could respond to the estimated expenditure opportunity by actively requesting a breakdown of the total expenditure into its individual component orders. Among order entry sessions initiated by intervention subjects, sessions in which subjects requested the expenditure details had higher mean estimated expenditures than sessions in which they did not ($778.62 versus $402.75 per order entry session; p = 0.026, rank sum test).
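The expenditure comparisons above rely on a rank sum test, a natural choice for data this skewed (a standard deviation of $753 against a mean of $405.44). The Python sketch below shows the Wilcoxon rank-sum statistic with a normal approximation; it is an illustration, not the authors' analysis code. It assumes independent sessions, omits the tie correction to the variance and the continuity correction that statistical packages usually apply, and the example expenditures are hypothetical.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test using a normal approximation.

    Returns (W, z, p), where W is the sum of ranks of sample x in the pooled
    data. Tied values share their average rank; no tie correction is applied
    to the variance and no continuity correction is used.
    """
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, z, p


# Hypothetical per-session estimated expenditures, in dollars.
requested = [1200.0, 850.0, 640.0, 2300.0, 410.0]
not_requested = [150.0, 320.0, 95.0, 410.0, 275.0, 500.0]
w, z, p = rank_sum_test(requested, not_requested)
print(f"W = {w}, z = {z:.2f}, two-sided p = {p:.3f}")
```

A rank-based test compares the ordering of values rather than their magnitudes, so a few very expensive sessions cannot dominate the result the way they would in a comparison of means.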

Discussion

In designing the PC-POETS study, the authors reasoned that an optimal CPOE-based method for delivering decision support should meet the following criteria: (1) the information is unknown to, or has not been considered by, the user; (2) the information is relevant to the order currently being entered; (3) the information is delivered using the method least likely to introduce workflow inefficiency; and (4) the clinical importance and urgency of the information determine how obtrusive the resulting alert is. The authors do not believe that current CPOE systems can algorithmically identify specific gaps in a user's knowledge or consistently determine when educational decision support content is relevant to a given patient case. The PC-POETS study was consequently designed to trigger the study-related decision support features generously, whenever decision support might potentially be relevant. In this way, the authors favored sensitivity over specificity in the triggers for potential educational decision support. This approach to identifying and counting relevant decision support resulted in the study recording disproportionately large numbers of opportunities for subjects to access study-related decision support features (i.e., 418,739 opportunities during 100,881 study order entry sessions). The authors believe that the overwhelming majority of these 418,739 decision support opportunities did not meet all of the criteria for optimal decision support delivery listed above, primarily because they offered information already known to the user and because accessing the information would have introduced a workflow inefficiency. Based on the observed response rates, and recognizing that the optimal frequency of passive links to relevant information resources is difficult to define, the authors speculate that the triggers may have fired two to three orders of magnitude more often than optimal.

The measured absolute response rates to the study-related decision support opportunities were low (i.e., subjects accessed 296 of 418,739 opportunities). However, this low rate is likely misleading because of the counting method. In addition to counting every “potential” opportunity for decision support as an actual opportunity, as described above, the PC-POETS study also counted opportunities for study-related decision support redundantly (e.g., a single diagnosis order on a patient would lead to two opportunities being counted every time any subject entered orders on that patient). While eliminating the redundant opportunities would have raised the absolute response rate, the authors do not believe that doing so would have affected the rate ratio. In addition, the absolute response rate observed in this study is greater than that observed by McDonald et al.10 in their 1980 study, in which subjects accessed none of the educational materials made available during the 1,260 opportunities to do so.
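The argument that removing redundant counts would raise the absolute response rate without changing the rate ratio can be checked with simple arithmetic. The Python sketch below uses the overall counts and a hypothetical duplication factor `k`; it assumes that redundancy inflated opportunities by the same factor in both arms. The ratio it prints is the crude (unadjusted) one, about 11.4, not the adjusted 9.17 reported in the Results.

```python
def rate_ratio(resp_i: float, opp_i: float, resp_c: float, opp_c: float) -> float:
    """Ratio of per-opportunity response rates, intervention over control."""
    return (resp_i / opp_i) / (resp_c / opp_c)


# Overall counts from the Results (crude, unadjusted).
RESP_I, OPP_I = 278, 240_504
RESP_C, OPP_C = 18, 178_235

# Hypothetical: every true opportunity was counted k times in BOTH arms.
for k in (1, 2, 4):
    deduplicated_i, deduplicated_c = OPP_I / k, OPP_C / k
    absolute = (RESP_I + RESP_C) / (deduplicated_i + deduplicated_c)
    ratio = rate_ratio(RESP_I, deduplicated_i, RESP_C, deduplicated_c)
    print(f"k={k}: absolute response rate {absolute:.2%}, crude rate ratio {ratio:.2f}")
```

The factor `k` cancels in the ratio, so the invariance holds only if duplication is equally common in both arms; if one arm's opportunities were duplicated more heavily, the ratio would shift.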

Decision support information is commonly delivered via CPOE systems using one of three user interface methods: system-generated workflow interruptions, active user-generated requests, and direct display in the user interface.9 Each method has a different degree of invasiveness and may elicit a correspondingly different degree of user responsiveness. Pop-up alerts, for example, require users to respond before continuing with their order entry workflow. By mandating an action from the user, this method of delivering decision support advice is highly invasive but ensures that the user knows about the triggering event. It may be especially useful for critical alerts, such as pharmacy, allergy, or formulary warnings arising during order entry sessions. By contrast, delivering decision support advice through direct display in the user interface allows users to acknowledge or use the information only when they believe it is necessary. This method is especially useful for providing information relevant to the user's current task without stopping the workflow or introducing unnecessary inefficiency. For example, direct display can show users the recommended or available dose forms of a medication; users who already know the dosing are not stopped, while those who need the information have ready access to it.
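One way to express the idea of matching delivery method to acuity is a simple dispatch rule. The Python sketch below is hypothetical: the three-level acuity score, its thresholds, and the example content in the comments are assumptions made for illustration, not part of the VUH CPOE system. In PC-POETS terms, control subjects reached study materials by user request (a menu), whereas intervention subjects saw highlighted links displayed directly in the interface.

```python
from enum import Enum


class Delivery(Enum):
    """The three CPOE delivery methods named in the text."""
    INTERRUPT = "system-generated workflow interruption"
    ON_REQUEST = "active user-generated request"
    DIRECT_DISPLAY = "direct display in the user interface"


def choose_delivery(acuity: int) -> Delivery:
    """Map a hypothetical acuity score (0 = low, 1 = moderate, 2 = critical)."""
    if acuity not in (0, 1, 2):
        raise ValueError("acuity must be 0, 1, or 2")
    if acuity == 2:
        return Delivery.INTERRUPT       # e.g., allergy or drug-interaction warning
    if acuity == 1:
        return Delivery.DIRECT_DISPLAY  # e.g., highlighted link beside the order
    return Delivery.ON_REQUEST          # e.g., reference material under a menu


print(choose_delivery(2).value)
```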

Excessive exposure to obtrusive decision support alerting has been suggested to cause user “information overload”32 and reduced user satisfaction.104 The PC-POETS intervention displayed unobtrusive hyperlinks to the study-related decision support features rather than using a more invasive alerting method. This approach ensured that subjects had access to the information when they needed it but did not interrupt their workflow every time new decision support became available. The more obtrusive method that the VUMC CPOE system used to deliver clinically significant pharmacy alerts was associated with a 24% response rate, more than two orders of magnitude higher than the response rate for the less clinically urgent, educational PC-POETS decision support opportunities. Had the PC-POETS study alerted users to available decision support features via a more obtrusive method, the absolute response rates might have been higher. However, using such an alerting method for all 418,739 potential decision support opportunities would likely also have increased the time that users spent on the order entry system, might have led users to access decision support information that they did not need, and would likely have reduced user satisfaction with the CPOE system. In addition, pharmacy alerts would be expected to elicit higher response rates than relatively nonurgent educational decision support features.

Advocates of CPOE promote it on the basis that such systems will improve patient safety and reduce adverse events by alerting health care providers to potential errors (including drug interactions and patient allergies).2,7,43 In the current study, intervention subjects responded to critical pharmacy alerts (as one might expect) 346 times more frequently per opportunity than they did to educational decision support opportunities. However, because the two types of alerts and their respective alerting mechanisms differ so much, it is more appropriate to compare them using a normalized measure, such as subjects' daily response rates. Measured as a daily rate, intervention subjects responded to critical pharmacy alerts about four times more frequently than to study-related educational decision support features (i.e., 2.9 responses to pharmacy alerts versus 0.8 responses to study-related alerts per study day). The authors consider a fourfold difference between users' absolute daily response rates to clinically critical alerts and to opportunity-triggered educational alerts surprisingly small, given recent literature emphasizing the frequency of prescribing errors.2,7,43 Varying the delivery methods for decision support content based on its acuity may be appropriate for balancing workflow and patient safety considerations.
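The figure of 0.8 responses per study day for study-related features corresponds to the aggregate interval reported in the Results (one access every 1.26 days). The Python sketch below makes that conversion and the resulting ratio explicit; the pharmacy figure of 2.9 responses per day is taken from the text as given.

```python
# Intervention subjects in aggregate accessed study-related materials once
# every 1.26 study days (Results), i.e. about 0.8 responses per study day.
educational_per_day = 1 / 1.26
pharmacy_per_day = 2.9  # daily responses to pharmacy alerts, as reported

ratio = pharmacy_per_day / educational_per_day
print(f"study-related: {educational_per_day:.2f} responses/day")
print(f"pharmacy vs study-related: {ratio:.1f}-fold")
```

The resulting ratio of roughly 3.7 is consistent with the "about four times" stated above.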

The PC-POETS subanalysis of estimated expenditures did not reproduce the magnitude of effect seen in the previous work of Tierney et al.,6 in which displaying the estimated cost of laboratory tests was associated with a 14% reduction in test ordering during outpatient clinical encounters. It is not clear whether the observed trend toward lower estimated expenditures per order entry session in the intervention group (compared with the control group) failed to reach significance because the intervention had no effect or because the study lacked the power to detect a difference, given the large standard deviations in each group. However, the current study did demonstrate an association between high estimated expenditures during an order entry session and the likelihood that the user would request a detailed expenditure breakdown. It is likely that subjects responded to this opportunity at greater rates because they saw unexpectedly high estimated expenditure values in the PC-POETS end user interface.

Limitations

This study has limitations that merit discussion. First, it directly compared only two delivery techniques for educational materials in a CPOE system user interface. A more formal comparison of other delivery methods might not show the substantial differences in response rates reported in this study. Second, the results may not generalize to other health care institutions. Computerized patient care systems are not yet ubiquitous in inpatient settings, and fewer than 10% of inpatient facilities currently have implemented CPOE systems that were adopted into the standard clinical workflow.118 Additionally, the current study was performed in a teaching hospital where subjects were Internal Medicine house officers, and the results may not be reproducible for other clinical specialties or in nonacademic settings. Third, the study did not evaluate whether any clinical outcomes changed as a result of the intervention. Fourth, PC-POETS subjects may have been cross-contaminated through exposure to subjects in the opposite experimental group. In highly collaborative environments such as hospitals, unblinded randomization schemes inherently risk unmeasured crossover between experimental groups.115 Clustered block randomization, as used in this study, attempts to minimize crossover risk but does not eliminate it. Any such crossover would have reduced the observed impact of the intervention. Fifth, the current study is reported several years after its completion because the primary author of the order entry system code (AJG) left Vanderbilt shortly after the study began, delaying the subsequent analysis and reporting of the results. Had the study been conducted more recently, subject behaviors might have differed from those observed. However, the order entry system characteristics studied have not changed substantially since the time of the intervention, and the authors know of no reason why current system users would respond differently from those during the study period. Sixth, while the current study demonstrated that the user interface can affect the rates at which knowledge resources are accessed, it did not evaluate the impact of such information on physicians' information needs.
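Clustered block randomization assigns whole groups, rather than individuals, to study arms, so that people who work together share an assignment. The Python sketch below shows one common form, assuming hypothetical cluster identifiers (such as ward teams) and 1:1 allocation in randomly permuted blocks; this section does not specify the study's actual clusters or block size, so these are illustrative choices.

```python
import random


def cluster_block_randomize(clusters, block_size=2, seed=None):
    """Assign whole clusters to arms using randomly permuted, balanced blocks.

    Every member of a cluster receives that cluster's arm. A trailing partial
    block draws from a shuffled balanced block, so it may be unbalanced.
    """
    if block_size < 2 or block_size % 2:
        raise ValueError("block_size must be a positive even number")
    rng = random.Random(seed)
    assignment = {}
    for start in range(0, len(clusters), block_size):
        block = clusters[start:start + block_size]
        arms = ["intervention", "control"] * (block_size // 2)
        rng.shuffle(arms)
        for cluster, arm in zip(block, arms):
            assignment[cluster] = arm
    return assignment


teams = ["team-A", "team-B", "team-C", "team-D", "team-E", "team-F"]  # hypothetical
print(cluster_block_randomize(teams, seed=7))
```

Blocking keeps the arms balanced as clusters are enrolled, while clustering keeps close colleagues in the same arm. As the text notes, this reduces but cannot eliminate contact between arms.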

Implications for Future Work

As it becomes increasingly feasible to link electronic information interventions into clinical workflows, clinical informatics as a discipline will need to determine how to select the proper “information dose” for each type of “alerting” situation. As with medications, no beneficial effect occurs if too little of an intervention is given, while too high a dose may cause toxic side effects. As Bates et al.,11 Barnett et al.,32 and Ash et al.104 have pointed out, too many information alerts may also cause “adverse information effects” by burying an important alert in a sea of less important interruptions, thus forcing users to bypass (ignore) alerts to carry out their job duties.

Conclusion

Highlighting availability of context-sensitive educational materials and patient information through visible hyperlinks significantly increased utilization rates for related forms of rarely used decision support when compared to “standard” VUH CPOE methods, although absolute response rates were low. Active integration of decision support into existing clinical workflows can increase providers' responsiveness to educational and patient care–related opportunities. Varying the delivery methods for decision support content based on its acuity can alter user responsiveness and may be appropriate for balancing workflow and patient safety considerations.

Appendix 1. User Interface Images for Each Study-related Educational Decision Support Feature

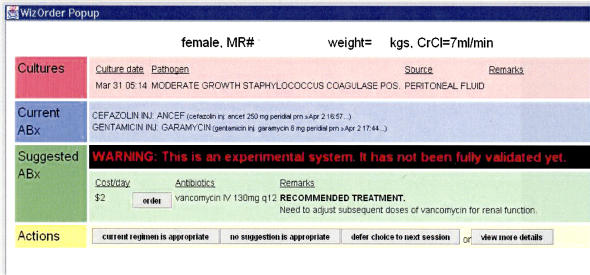

Figure 3.

ABA, Antibiotic Advisor, triggered from positive blood cultures. Provided antibiotic recommendations based on organism-specific and institutional drug sensitivity patterns.

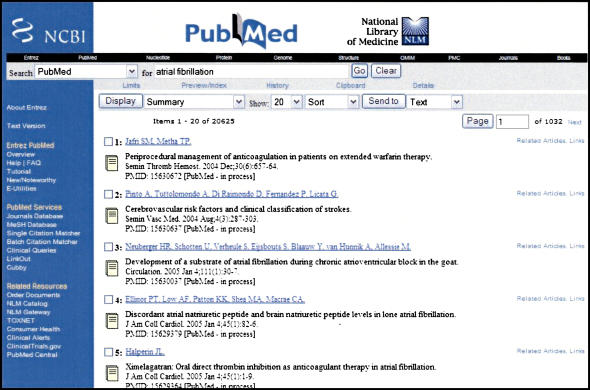

Figure 4.

MSH, triggered from an active diagnosis order. Performed a real-time keyword search in the online interface to the PubMed database.
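The MSH feature's diagnosis-triggered literature search can be approximated today with NCBI's E-utilities. The Python sketch below only builds an `esearch` query URL for PubMed and makes no network request. It assumes that a diagnosis name is used directly as the search term, and it does not reproduce the interface the study used in 1999 and 2000.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint (current service, not the study's interface).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def pubmed_search_url(diagnosis: str, max_results: int = 20) -> str:
    """Build an esearch URL that searches PubMed for a diagnosis keyword."""
    params = {"db": "pubmed", "term": diagnosis, "retmax": max_results}
    return f"{ESEARCH_URL}?{urlencode(params)}"


print(pubmed_search_url("community-acquired pneumonia"))
```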

Figure 5.

WRX, triggered from new medication orders. Displayed drug monographs from the American Hospital Formulary Service (AHFS) Drug Information reference database.

Figure 6.

MSB, triggered from new medication orders. Displayed drug monographs from Mosby's GenRx® drug information reference.

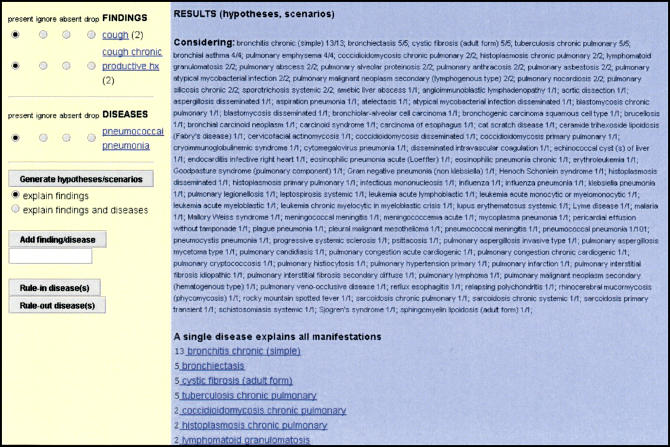

Figure 7.

QMR® knowledgebase, triggered from active diagnosis and medication orders. Provided access to an internal medicine diagnostic knowledge base and diagnostic expert system listing the findings reported to occur in 600 diseases in internal medicine and the differential diagnoses of more than 4,500 individual clinical findings.

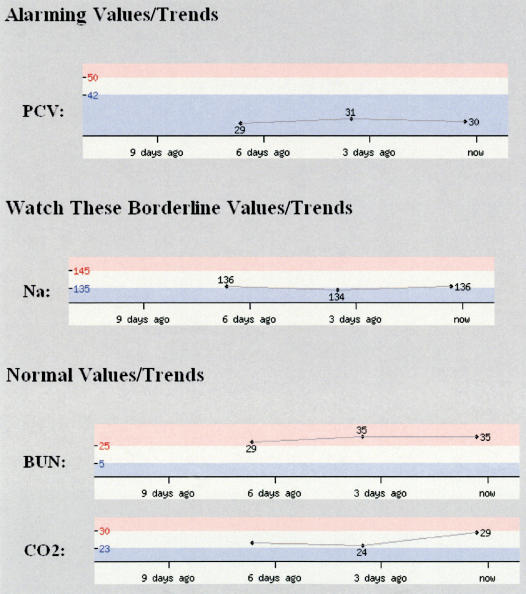

Figure 8.

TRD, triggered from the results of 21 individual laboratory tests that were “clinically abnormal” or whose trend suggested that an abnormal value would occur within the next 72 hours. Provided access to a graphical display of pertinent laboratory trends.
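The appendix does not say how TRD judged that a trend would produce an abnormal value within 72 hours. One simple possibility, shown here only as a hypothetical Python sketch, is to fit a least-squares line to recent results and check whether the projected value at the end of the horizon falls outside the reference range. The reference range and sample values below are illustrative, not taken from the study.

```python
def predicts_abnormal(times_h, values, lower, upper, horizon_h=72.0):
    """Return True if the latest result is outside [lower, upper], or if a
    least-squares line through the series projects a value outside that range
    horizon_h hours after the latest result."""
    latest_t, latest_v = times_h[-1], values[-1]
    if not lower <= latest_v <= upper:
        return True  # already abnormal
    n = len(times_h)
    if n < 2:
        return False  # a single value has no trend
    mean_t = sum(times_h) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times_h)
    if sxx == 0:
        return False  # all results at the same time; slope undefined
    slope = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_h, values)) / sxx
    intercept = mean_v - slope * mean_t
    projected = intercept + slope * (latest_t + horizon_h)
    return not lower <= projected <= upper


# Hypothetical serum potassium results (mmol/L) at 0, 24, and 48 hours.
print(predicts_abnormal([0.0, 24.0, 48.0], [4.2, 4.4, 4.6], lower=3.5, upper=5.0))
```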

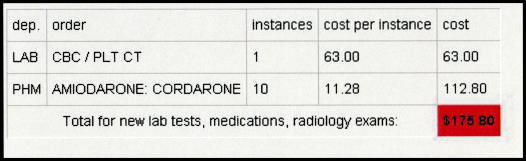

Figure 9.

LMR, triggered from orders for laboratory tests, medications, and radiology procedures. Provided access to a display that gave a detailed tally of “estimated expenditures” for the current order entry session.
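The LMR display itemized the estimated expenditures for the current session. A minimal Python sketch of such a tally follows; the `Order` record, the category names, and the dollar figures are hypothetical, since the appendix does not describe how VUH derived its per-order estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    description: str
    category: str          # "medication", "laboratory", or "radiology"
    estimated_cost: float  # hypothetical per-order estimate, in dollars


def expenditure_breakdown(orders):
    """Return the session total, per-category subtotals, and items by cost."""
    subtotals = {}
    for order in orders:
        subtotals[order.category] = subtotals.get(order.category, 0.0) + order.estimated_cost
    itemized = sorted(orders, key=lambda o: o.estimated_cost, reverse=True)
    total = sum(subtotals.values())
    return total, subtotals, [(o.description, o.estimated_cost) for o in itemized]


session = [
    Order("Complete blood count", "laboratory", 25.0),
    Order("Chest radiograph", "radiology", 120.0),
    Order("Ceftriaxone", "medication", 60.0),
]
total, subtotals, itemized = expenditure_breakdown(session)
print(f"Estimated session expenditure: ${total:.2f}")
for description, cost in itemized:
    print(f"  {description}: ${cost:.2f}")
```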

Supplementary Material

Supported by U.S. National Library of Medicine Grants (R01 LM06226-01 and 5 T15 LM007450-02), by the Vanderbilt Physician Scientist Development program, and by Vanderbilt University Medical Center funds.

Note: The Quick Medical Reference (QMR)® knowledgebase is ©1994 by the University of Pittsburgh and is proprietary to that institution. It has been supplied to Vanderbilt University for purposes of research.

Disclosure: The WizOrder Care Provider Order Entry system described in this article was developed by Vanderbilt University Medical Center faculty and staff within the School of Medicine and Informatics Center beginning in 1994. In May 2001, Vanderbilt University licensed the product to a commercial vendor, who is modifying the software. All study data were collected before the commercialization agreement, and the system as described represents the Vanderbilt noncommercialized software code. Dr. S. Trent Rosenbloom, the lead author, has been paid by the commercial vendor in the past to demonstrate the noncommercialized Vanderbilt product to potential clients. Drs. Geissbuhler, Giuse, Miller, Stead, and Talbert have been recognized by Vanderbilt as contributing to the authorship of the WizOrder software and have received and will continue to receive royalties from Vanderbilt under the University's intellectual property policies. While these involvements could potentially be viewed as a conflict of interest with respect to the submitted article, the authors have taken a number of concerted steps to avoid an actual conflict (including conduct of study design and statistical analysis by individuals not involved in software development or commercialization), and those steps have been disclosed to the Journal during editorial review.

The authors thank Jonathan Grande, Douglas Kernodle, and Matt Steidel, VUMC Internal Medicine Chief Residents, and the VUH Medical House Officers for their significant contributions to the project and Elizabeth Madsen for her assistance in proofreading the manuscript.

References

1. The Leapfrog Group. Available from: http://www.leapfroggroup.org/. Accessed February 10, 2005.

2. Committee on Quality of Health Care in America, Using Information Technology. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: IOM, 2001, p 175–92.

3. Berger RG, Kichak JP. Computerized physician order entry: helpful or harmful? J Am Med Inform Assoc. 2004;11:100–3.

4. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295:1351–5.

5. Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001;345:965–70.

6. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. N Engl J Med. 1990;322:1499–504.

7. Bates DW, Evans RS, Murff H, Stetson PD, Pizziferri L, Hripcsak G. Detecting adverse events using information technology. J Am Med Inform Assoc. 2003;10:115–28.

8. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280:1339–46.

9. Kuperman GJ, Gibson RF. Computer physician order entry: benefits, costs, and issues. Ann Intern Med. 2003;139:31–9.

10. McDonald CJ, Wilson GA, McCabe GP Jr. Physician response to computer reminders. JAMA. 1980;244:1579–81.

11. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10:523–30.

12. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance. A systematic review of the effect of continuing medical education strategies. JAMA. 1995;274:700–5.

13. Davis NL, Willis CE. A new metric for continuing medical education credit. J Contin Educ Health Prof. 2004;24:139–44.

14. Bennett NL, Davis DA, Easterling WE Jr, et al. Continuing medical education: a new vision of the professional development of physicians. Acad Med. 2000;75:1167–72.

15. Moore DE Jr, Pennington FC. Practice-based learning and improvement. J Contin Educ Health Prof. 2003;23(Suppl1):S73–80.

16. Chambliss ML, Conley J. Answering clinical questions. J Fam Pract. 1996;43:140–4.

17. Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985;103:596–9.

18. Forsythe DE, Buchanan BG, Osheroff JA, Miller RA. Expanding the concept of medical information: an observational study of physicians' information needs. Comput Biomed Res. 1992;25:181–200.

19. Giuse NB, Huber JT, Giuse DA, Brown CW Jr, Bankowitz RA, Hunt S. Information needs of health care professionals in an AIDS outpatient clinic as determined by chart review. J Am Med Inform Assoc. 1994;1:395–403.

20. Osheroff JA, Forsythe DE, Buchanan BG, Bankowitz RA, Blumenfeld BH, Miller RA. Physicians' information needs: analysis of questions posed during clinical teaching. Ann Intern Med. 1991;114:576–81.

21. Green ML, Ciampi MA, Ellis PJ. Residents' medical information needs in clinic: are they being met? Am J Med. 2000;109:218–23.

22. Ely JW, Osheroff JA, Ebell MH, et al. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319:358–61.

23. Gorman PN, Ash J, Wykoff L. Can primary care physicians' questions be answered using the medical journal literature? Bull Med Libr Assoc. 1994;82:140–6.

24. Gorman PN, Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995;15:113–9.

25. Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians' clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005;12:217–24.

26. Rosenbloom ST, Giuse NB, Jerome RN, Blackford JU. Providing evidence-based answers to complex clinical questions: evaluating the consistency of article selection. Acad Med. 2005;80:109–14.

27. Davidoff F, Florance V. The informationist: a new health profession? Ann Intern Med. 2000;132:996–8.

28. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors' questions about patient care with evidence: qualitative study. BMJ. 2002;324:710.

29. Williamson JW, German PS, Weiss R, Skinner EA, Bowes F 3rd. Health science information management and continuing education of physicians. A survey of U.S. primary care practitioners and their opinion leaders. Ann Intern Med. 1989;110:151–60.

30. MEDLINE to PubMed and beyond. Available from: http://www.nlm.nih.gov/bsd/historypresentation.html/. Accessed February 10, 2005.

31. Wyatt J. Use and sources of medical knowledge. Lancet. 1991;338:1368–73.

32. Barnett GO, Barry MJ, Robb-Nicholson C, Morgan M. Overcoming information overload: an information system for the primary care physician. Medinfo. 2004;2004:273–6.

33. Chueh H, Barnett GO. “Just-in-time” clinical information. Acad Med. 1997;72:512–7.

34. McDonald CJ, Tierney WM. Computer-stored medical records. Their future role in medical practice. JAMA. 1988;259:3433–40.

35. Gardner RM, Pryor TA, Warner HR. The HELP hospital information system: update 1998. Int J Med Inf. 1999;54:169–82.

36. Gardner RM, Golubjatnikov OK, Laub RM, Jacobson JT, Evans RS. Computer-critiqued blood ordering using the HELP system. Comput Biomed Res. 1990;23:514–28.

37. Tierney WM, Hui SL, McDonald CJ. Delayed feedback of physician performance versus immediate reminders to perform preventive care. Effects on physician compliance. Med Care. 1986;24:659–66.

38. Tierney WM, McDonald CJ, Hui SL, Martin DK. Computer predictions of abnormal test results. Effects on outpatient testing. JAMA. 1988;259:1194–8.

39. Tierney WM, McDonald CJ, Martin DK, Rogers MP. Computerized display of past test results. Effect on outpatient testing. Ann Intern Med. 1987;107:569–74.

40. Tierney WM, Overhage JM, Takesue BY, et al. Computerizing guidelines to improve care and patient outcomes: the example of heart failure. J Am Med Inform Assoc. 1995;2:316–22.

41. Osheroff JA, Bankowitz RA. Physicians' use of computer software in answering clinical questions. Bull Med Libr Assoc. 1993;81:11–9.

42. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280:1311–6.

43. Potts AL, Barr FE, Gregory DF, Wright L, Patel NR. Computerized physician order entry and medication errors in a pediatric critical care unit. Pediatrics. 2004;113:59–63.

44. Gardner RM, Evans RS. Using computer technology to detect, measure, and prevent adverse drug events. J Am Med Inform Assoc. 2004;11:535–6.

45. McDonald CJ, Hui SL, Smith DM, et al. Reminders to physicians from an introspective computer medical record. A two-year randomized trial. Ann Intern Med. 1984;100:130–8.

46. Evans RS, Pestotnik SL, Classen DC, et al. Development of a computerized adverse drug event monitor. Proc Annu Symp Comput Appl Med Care. 1991:23–7.

47. Tierney WM. Improving clinical decisions and outcomes with information: a review. Int J Med Inf. 2001;62:1–9.

48. Lobach DF, Hammond WE. Computerized decision support based on a clinical practice guideline improves compliance with care standards. Am J Med. 1997;102:89–98.

49. Shiffman RN, Karras BT, Agrawal A, Chen R, Marenco L, Nath S. GEM: a proposal for a more comprehensive guideline document model using XML. J Am Med Inform Assoc. 2000;7:488–98.

50. Stead WW, Miller RA, Musen MA, Hersh WR. Integration and beyond: linking information from disparate sources and into workflow. J Am Med Inform Assoc. 2000;7:135–45.

51. Ash JS, Gorman PN, Lavelle M, et al. A cross-site qualitative study of physician order entry. J Am Med Inform Assoc. 2003;10:188–200.

52. Ash JS, Sittig DF, Seshadri V, Dykstra RH, Carpenter JD, Stavri PZ. Adding insight: a qualitative cross-site study of physician order entry. Medinfo. 2004;2004:1013–7.

53. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106:144–50.

54. Cummings KM, Frisof KB, Long MJ, Hrynkiewich G. The effects of price information on physicians' test-ordering behavior. Ordering of diagnostic tests. Med Care. 1982;20:293–301.

55. Gortmaker SL, Bickford AF, Mathewson HO, Dumbaugh K, Tirrell PC. A successful experiment to reduce unnecessary laboratory use in a community hospital. Med Care. 1988;26:631–42.

56. Hagland MM. Physicians using computers show lower resource utilization. Hospitals. 1993;67:17.

57. Hampers LC, Cha S, Gutglass DJ, Krug SE, Binns HJ. The effect of price information on test-ordering behavior and patient outcomes in a pediatric emergency department. Pediatrics. 1999;103:877–82.

58. Hawkins HH, Hankins RW, Johnson E. A computerized physician order entry system for the promotion of ordering compliance and appropriate test utilization. J Healthc Inf Manage. 1999;13:63–72.

59. Klein MS, Ross FV, Adams DL, Gilbert CM. Effect of online literature searching on length of stay and patient care costs. Acad Med. 1994;69:489–95.

60. Pugh JA, Frazier LM, DeLong E, Wallace AG, Ellenbogen P, Linfors E. Effect of daily charge feedback on inpatient charges and physician knowledge and behavior. Arch Intern Med. 1989;149:426–9.

61. Shojania KG, Yokoe D, Platt R, Fiskio J, Ma'luf N, Bates DW. Reducing vancomycin use utilizing a computer guideline: results of a randomized controlled trial. J Am Med Inform Assoc. 1998;5:554–62.

62. Solomon DH, Shmerling RH, Schur PH, Lew R, Fiskio J, Bates DW. A computer based intervention to reduce unnecessary serologic testing. J Rheumatol. 1999;26:2578–84.

63. Studnicki J, Bradham DD, Marshburn J, Foulis PR, Straumfjord JV. A feedback system for reducing excessive laboratory tests. Arch Pathol Lab Med. 1993;117:35–9.

64. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized physician order entry on prescribing practices. Arch Intern Med. 2000;160:2741–7.

65. Sanders DL, Miller RA. The effects on clinician ordering patterns of a computerized decision support system for neuroradiology imaging studies. Proc AMIA Symp. 2001:583–7.

66. Neilson EG, Johnson KB, Rosenbloom ST, et al. The impact of peer management on test ordering behavior. Ann Intern Med. 2004:196–204.

67. Aronsky D, Haug PJ. An integrated decision support system for diagnosing and managing patients with community-acquired pneumonia. Proc AMIA Symp. 1999:197–201.

68. Bankowitz RA, McNeil MA, Challinor SM, Parker RC, Kapoor WN, Miller RA. A computer-assisted medical diagnostic consultation service. Implementation and prospective evaluation of a prototype. Ann Intern Med. 1989;110:824–32.

69. Breitfeld PP, Weisburd M, Overhage JM, Sledge G Jr, Tierney WM. Pilot study of a point-of-use decision support tool for cancer clinical trials eligibility. J Am Med Inform Assoc. 1999;6:466–77.

70. Dean NC, Suchyta MR, Bateman KA, Aronsky D, Hadlock CJ. Implementation of admission decision support for community-acquired pneumonia. Chest. 2000;117:1368–77.

71. Evans RS, Larsen RA, Burke JP, et al. Computer surveillance of hospital-acquired infections and antibiotic use. JAMA. 1986;256:1007–11.

- 72. Feldman MJ, Barnett GO, Morgan MM. The sensitivity of medical diagnostic decision-support knowledge bases in delineating appropriate terms to document in the medical record. Proc Annu Symp Comput Appl Med Care. 1991:258–62.

- 73. Kahn MG, Steib SA, Fraser VJ, Dunagan WC. An expert system for culture-based infection control surveillance. Proc Annu Symp Comput Appl Med Care. 1993:171–5.

- 74. Miller RA, Pople HE Jr, Myers JD. Internist-1, an experimental computer-based diagnostic consultant for general internal medicine. N Engl J Med. 1982;307:468–76.

- 75. Miller RA, Gieszczykiewicz FM, Vries JK, Cooper GF. CHARTLINE: providing bibliographic references relevant to patient charts using the UMLS Metathesaurus Knowledge Sources. Proc Annu Symp Comput Appl Med Care. 1992:86–90.

- 76. Rocha BH, Christenson JC, Pavia A, Evans RS, Gardner RM. Computerized detection of nosocomial infections in newborns. Proc Annu Symp Comput Appl Med Care. 1994:684–8.

- 77. White KS, Lindsay A, Pryor TA, Brown WF, Walsh K. Application of a computerized medical decision-making process to the problem of digoxin intoxication. J Am Coll Cardiol. 1984;4:571–6.

- 78. Balas EA, Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving preventive care by prompting physicians. Arch Intern Med. 2000;160:301–8.

- 79. Balcezak TJ, Krumholz HM, Getnick GS, Vaccarino V, Lin ZQ, Cadman EC. Utilization and effectiveness of a weight-based heparin nomogram at a large academic medical center. Am J Manag Care. 2000;6:329–38.

- 80. Dexter PR, Wolinsky FD, Gramelspacher GP, et al. Effectiveness of computer-generated reminders for increasing discussions about advance directives and completion of advance directive forms. A randomized, controlled trial. Ann Intern Med. 1998;128:102–10.

- 81. Eccles M, Grimshaw J, Steen N, et al. The design and analysis of a randomized controlled trial to evaluate computerized decision support in primary care: the COGENT study. Fam Pract. 2000;17:180–6.

- 82. Greenes RA, Tarabar DB, Krauss M, et al. Knowledge management as a decision support method: a diagnostic workup strategy application. Comput Biomed Res. 1989;22:113–35.

- 83. Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome. A critical appraisal of research. Ann Intern Med. 1994;120:135–42.

- 84. Kuperman GJ, Teich JM, Tanasijevic MJ, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc. 1999;6:512–22.

- 85. Larsen RA, Evans RS, Burke JP, Pestotnik SL, Gardner RM, Classen DC. Improved perioperative antibiotic use and reduced surgical wound infections through use of computer decision analysis. Infect Control Hosp Epidemiol. 1989;10:316–20.

- 86. Margolis A, Bray BE, Gilbert EM, Warner HR. Computerized practice guidelines for heart failure management: the HeartMan system. Proc Annu Symp Comput Appl Med Care. 1995:228–32.

- 87. McDonald CJ, Overhage JM, Tierney WM, Abernathy GR, Dexter PR. The promise of computerized feedback systems for diabetes care. Ann Intern Med. 1996;124:170–4.

- 88. Montgomery AA, Fahey T, Peters TJ, MacIntosh C, Sharp DJ. Evaluation of computer based clinical decision support system and risk chart for management of hypertension in primary care: randomised controlled trial. BMJ. 2000;320:686–90.

- 89. Persson M, Bohlin J, Eklund P. Development and maintenance of guideline-based decision support for pharmacological treatment of hypertension. Comput Methods Programs Biomed. 2000;61:209–19.

- 90. Raschke RA, Gollihare B, Wunderlich TA, et al. A computer alert system to prevent injury from adverse drug events: development and evaluation in a community teaching hospital. JAMA. 1998;280:1317–20.

- 91. Schriger DL, Baraff LJ, Rogers WH, Cretin S. Implementation of clinical guidelines using a computer charting system. Effect on the initial care of health care workers exposed to body fluids. JAMA. 1997;278:1585–90.

- 92. Schriger DL, Baraff LJ, Buller K, et al. Implementation of clinical guidelines via a computer charting system: effect on the care of febrile children less than three years of age. J Am Med Inform Assoc. 2000;7:186–95.

- 93. Selker HP, Beshansky JR, Griffith JL. Use of the electrocardiograph-based thrombolytic predictive instrument to assist thrombolytic and reperfusion therapy for acute myocardial infarction. A multicenter, randomized, controlled, clinical effectiveness trial. Ann Intern Med. 2002;137:87–95.

- 94. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc. 1996;3:399–409.

- 95. Smith SA, Murphy ME, Huschka TR, et al. Impact of a diabetes electronic management system on the care of patients seen in a subspecialty diabetes clinic. Diabetes Care. 1998;21:972–6.

- 96. Cimino JJ, Johnson SB, Aguirre A, Roderer N, Clayton PD. The MEDLINE Button. Proc Annu Symp Comput Appl Med Care. 1992:81–5.

- 97. Miller RA. INTERNIST-1/CADUCEUS: problems facing expert consultant programs. Methods Inf Med. 1984;23:9–14.

- 98. Miller RA. Why the standard view is standard: people, not machines, understand patients' problems. J Med Philos. 1990;15:581–91.

- 99. Mongerson P. A patient's perspective of medical informatics. J Am Med Inform Assoc. 1995;2:79–84.

- 100. Ash JS, Fournier L, Stavri PZ, Dykstra R. Principles for a successful computerized physician order entry implementation. AMIA Annu Symp Proc. 2003:36–40.

- 101. Shortliffe EH. The adolescence of AI in medicine: will the field come of age in the '90s? Artif Intell Med. 1993;5:93–106.

- 102. Overhage JM, Middleton B, Miller RA, Zielstorff RD, Hersh WR. Does national regulatory mandate of provider order entry portend greater benefit than risk for health care delivery? The 2001 ACMI debate. The American College of Medical Informatics. J Am Med Inform Assoc. 2002;9:199–208.

- 103. Ash JS, Stavri PZ, Kuperman GJ. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc. 2003;10:229–34.

- 104. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–12.

- 105. Wachter SB, Agutter J, Syroid N, Drews F, Weinger MB, Westenskow D. The employment of an iterative design process to develop a pulmonary graphical display. J Am Med Inform Assoc. 2003;10:363–72.

- 106. Staggers N, Kobus D. Comparing response time, errors, and satisfaction between text-based and graphical user interfaces during nursing order tasks. J Am Med Inform Assoc. 2000;7:164–76.

- 107. Hripcsak G. IAIMS architecture. J Am Med Inform Assoc. 1997;4:S20–30.

- 108. Stead WW, Bates RA, Byrd J, Giuse DA, Miller RA, Shultz EK. Case study: the Vanderbilt University Medical Center information management architecture. In: Van De Velde R, Degoulet P, editors. Clinical information systems: a component-based approach. New York: Springer-Verlag, 2003, Appendix C.

- 109. Geissbuhler A, Miller RA. A new approach to the implementation of direct care-provider order entry. Proc AMIA Annu Fall Symp. 1996:689–93.

- 110. Giuse DA, Mickish A. Increasing the availability of the computerized patient record. Proc AMIA Annu Fall Symp. 1996:633–7.

- 111. National Library of Medicine PubMed database. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi. Accessed December 29, 2004.

- 112. AHFS Drug Information Database. Available from: http://www.ashp.org/ahfs. Accessed November 15, 2004.

- 113. Mosby's GenRx. Available from: www.mosby.com/mosby/genrx/web. Accessed November 1, 2004.

- 114. Miller RA, McNeil MA, Challinor SM, Masarie FE Jr, Myers JD. The INTERNIST-1/QUICK MEDICAL REFERENCE project—status report. West J Med. 1986;145:816–22.

- 115. Grimshaw J, Campbell M, Eccles M, Steen N. Experimental and quasi-experimental designs for evaluating guideline implementation strategies. Fam Pract. 2000;17(Suppl 1):S11–6.

- 116. Diggle P, Liang K-Y, Zeger SL. Analysis of longitudinal data. Oxford Statistical Science Series, 13. New York: Oxford University Press, 1994.

- 117. Rothman KJ, Greenland S. Modern epidemiology. Philadelphia: Lippincott-Raven, 1998 (xiii + 737 pp.).

- 118. Ash JS, Gorman PN, Seshadri V, Hersh WR. Computerized physician order entry in U.S. hospitals: results of a 2002 survey. J Am Med Inform Assoc. 2004;11:95–9.
