Abstract

Recent studies have demonstrated that context can dramatically influence the recognition of basic facial expressions, yet the nature of this phenomenon is largely unknown. In the present paper we begin to characterize the process underlying face-context integration. Specifically, we examine whether it is a relatively controlled or a relatively automatic process. In Experiment 1, participants were either motivated and instructed to avoid using the context while categorizing contextualized facial expressions, or led to believe that the context was irrelevant. Nevertheless, they were unable to disregard the context, which exerted a strong effect on their emotion recognition. In Experiment 2, participants categorized contextualized facial expressions while engaged in a concurrent working memory task. Despite the load, the context exerted a strong influence on their recognition of facial expressions. These results suggest that facial expressions and their body contexts are integrated in an unintentional, uncontrollable, and relatively effortless manner.

Keywords: facial expression recognition, context, automaticity

Humans are tuned to recognize facial expressions of emotion in a fast and effortless manner (Adolphs, 2002, 2003; Darwin, 1872; Ekman, 1992; Hassin & Trope, 2000; Ohman, 2000; Peleg et al., 2006; Russell, 1997; Tracy & Robins, 2008; Waller, Cray Jr., & Burrows, 2008). When viewed in isolation, specific muscular configurations in the face act as signals that accurately¹ and rapidly convey discrete, basic categories of emotion (Ekman, 1992, 1993; Ekman & O’Sullivan, 1988; Smith, Cottrell, Gosselin, & Schyns, 2005; Young et al., 1997). Yet in real life, facial expressions are typically embedded in a rich context that may be emotionally congruent or incongruent with the face (de Gelder et al., 2006). Nevertheless, most studies have focused on isolated facial expressions and have overlooked the mechanisms of face-context integration.

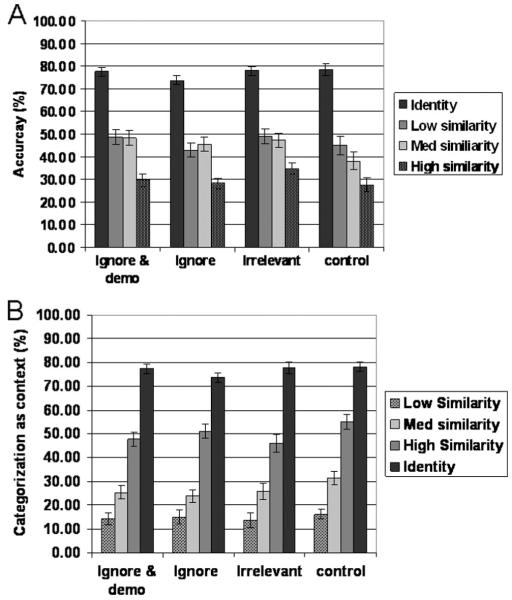

Recent investigations have indeed shown that the recognition of facial expressions is systematically influenced by context (Aviezer et al., 2009; Aviezer, Hassin, Bentin, & Trope, 2008; Aviezer, Hassin, Ryan, et al., 2008; Meeren, van Heijnsbergen, & de Gelder, 2005; Van den Stock, Righart, & de Gelder, 2007). For example, Aviezer, Hassin, Ryan, et al. (2008) demonstrated that emotional body context (and related paraphernalia) can dramatically alter the recognition of emotions from prototypical facial expressions. Consider the facial expression in Figure 1, which was recognized as fearful by over 70% of participants (Aviezer, Hassin, Bentin, et al., 2008). In fact, the face is a prototypical facial expression of sadness, recognized as sad by 74% of participants when presented in isolation (Ekman & Friesen, 1976). Yet when it was embedded in a fearful context, fewer than 20% of participants recognized it as sad.

Figure 1.

An example of a prototypical facial expression embedded on a fearful body context. Image from Pictures of Facial Affect, by P. Ekman and W. V. Friesen, 1976, Palo Alto, CA: Consulting Psychologists Press. Copyright 1976 by P. Ekman and W. V. Friesen. Reproduced with permission from the Paul Ekman Group.

The magnitude of face-context integration was strongly correlated with the degree of similarity between the target facial expression (i.e., the face being presented) and the facial expression prototypically associated with the emotional context (Aviezer et al., 2008b). The similarity between facial expressions can be objectively assessed using data-driven computer models (Susskind, Littlewort, Bartlett, Movellan, & Anderson, 2007) and by analyzing perceptual confusability patterns in human participants (Young et al., 1997). The more similar the target facial expression was to the face prototypically associated with the emotional context, the stronger the contextual influence, a pattern we termed the “similarity effect” (Aviezer, Hassin, Bentin, et al., 2008). Of course, real-life emotional face-body interactions and incongruities are probably far more subtle and complex. Nevertheless, systematically manipulating and combining basic face and body emotions is a powerful tool for exploring face-context integration and for revealing the malleability of expressive face perception.

Although the influence of emotional body context on facial expression recognition is robust, the underlying process is unclear. Specifically, it is unknown whether integration is a controlled, deliberate process (Carroll & Russell, 1996; Trope, 1986) or whether it proceeds in a more automatic fashion (Bargh, 1994; Buck, 1994; McArthur & Baron, 1983). Recently we provided tentative support for the latter view by showing that the pattern of eye movements to contextualized faces was determined by the expression conveyed by the context rather than by the face (Aviezer, Hassin, Ryan, et al., 2008). Yet, although suggestive, changes in eye movements may reflect cognitive categorization strategies and decisional processes rather than automatic perceptual processes (Henderson, 2007). Furthermore, changes in eye movements may be decoupled from changes in categorization. For example, observers scan isolated facial expressions of anger and fear with highly similar patterns of eye movements, yet they easily differentiate the faces as conveying separate emotions (Wong, Cronin-Golomb, & Neargarder, 2005). Hence, previous evidence is insufficient for determining whether face-context integration is automatic.

Understanding whether face-context integration proceeds automatically is central to assessing the importance of the process. From a practical standpoint, automaticity in emotion perception seems crucial for efficiency (Bargh, 1994). Daily social interactions impose huge processing demands, resulting from the simultaneous flow of rapidly changing affective information from multiple sensory channels, often under suboptimal conditions of noise and distraction (Halberstadt, Winkielman, Niedenthal, & Dalle, 2009). If one were to process each source of information separately and then formulate a slow and effortful integration, the output would become available only long after a response was required. Therefore, finding that this process displays features of automaticity would mean that the face-context integration effect may be functional in real-life conditions outside the lab.

The feasibility of rapid face-context integration is supported by previous work showing early cross-modal integration effects of facial expressions on auditory-evoked potentials to emotional voices (Dolan, Morris, & de Gelder, 2001; Pourtois, de Gelder, Vroomen, Rossion, & Crommelinck, 2000). Additionally, studies using event-related potentials revealed that face-body Stroop effects enhance an occipital component, peaking around 100 ms, for incongruent combinations (Meeren et al., 2005). These studies suggest that, in principle, the visual system is well equipped to perform rapid integration of affective visual information, yet it is unknown whether face-context integration actually proceeds in such an automatic fashion.

In the current investigation we begin to explore the characteristics of the face-context integration process. One possibility is that, upon encountering a contextualized facial expression, viewers consider the emotions expressed by the face and the context and then formulate an intentional, deliberate, and effortful judgment about the face. For example, Carroll and Russell (1996) explicitly proposed that attributing specific emotions to others on the basis of facial and contextual information proceeds in a slow and effortful manner. Although possible, such a controlled process might prove inefficient in the dynamic social and emotional realms, where rapid emotion recognition is crucial and there is often no time for extended conscious deliberation.

Alternatively, face-context integration may take place in a relatively automatic fashion. Automatic integration may occur rapidly and efficiently, with little effort or conscious deliberation, making it well suited to the typical constraints of emotion recognition (Bargh, 1994; Buck, 1994; McArthur & Baron, 1983). If this is indeed the case, then face-context integration could play an integral part in everyday emotion recognition.

It should be noted that, traditionally, psychological processes have been considered automatic only if they met a full checklist of criteria, such as being nonconscious, ballistic (i.e., once started they cannot be stopped), effortless (i.e., they do not require conscious mental resources), and unintentional (e.g., Bargh, 1994; Posner & Snyder, 1975; Schneider, Dumais, & Shiffrin, 1984; Wegner & Bargh, 1998). However, recent accounts of automaticity have cast doubt on the need for all of these criteria to be fulfilled (Tzelgov, 1997a, 1997b). Rather, it seems likely that some constellation of these features is required for the rapid and highly efficient processing of stimuli. Following this logic, the current set of experiments examined two key features of automaticity with regard to face-context integration: (a) intentionality, that is, whether face-context integration proceeds regardless of intention (Bargh, Chen, & Burrows, 1996), and (b) effortfulness, that is, whether face-context integration requires the exertion of mental resources or proceeds effortlessly.

Experiment 1

The main goal of this experiment was to examine whether the process of integrating the face and context is intentional, that is, whether intention is a prerequisite for the process. Participants in all conditions were instructed to focus on the faces and to categorize the emotions they portrayed. In the first two experimental conditions, participants were told about the face-context manipulation, warned that the contexts might affect their judgments, and explicitly encouraged to ignore the contexts. In the first of these (henceforth the “Ignore” condition), participants received only these instructions. In the second (henceforth the “Ignore & Demo” condition), we added to the same instructions a vivid visual demonstration of an isolated facial expression being placed on, and then removed from, an emotional body context.

In a third experimental condition, participants were not warned against using the context; instead, they were led to believe that the context was irrelevant to the face recognition task because the faces and bodies were randomly paired (henceforth the “Irrelevant” condition). This condition was introduced to control for potential ironic rebound effects in the two Ignore conditions, which may be triggered when individuals actively attempt to avoid processing information (Wegner, 1994). Participants in a fourth, control group were simply asked to categorize the facial expressions, with no special instructions concerning the context (henceforth the “Control” condition). To ensure that all participants were motivated to follow the instructions, we offered a $35 prize to the most accurate participant.

Participants in the three experimental conditions should have had no intention to integrate the contexts. Thus, if integration requires intention, participants in these conditions should be relatively unaffected by the contexts. In contrast, if integration does not require intention, context effects should emerge in these conditions as well, and the contexts should exert similar effects in all four conditions. Moreover, if the process is unintentional but still stoppable, participants in the two Ignore conditions and in the Irrelevant condition should be able to stop it and should therefore show diminished context effects.

Method

Participants

One hundred sixty-six undergraduate students (44 male, 122 female) from the Hebrew University (18–24 years, M = 22.3) participated for course credit or payment. Past work has shown that face-context effects are robust and reliably detected with n = 10 participants, with an alpha level of .01 (using a within-subject design). In the current study we used a much larger sample of participants in order to increase our power to detect any influence of the instructions on the similarity effect (Control: N = 45, Ignore: N = 47, Ignore & Demo: N = 44, Irrelevant: N = 30).

Stimuli

Portraits of 10 individuals (5 female) posing with the basic facial expressions of disgust, anger, sadness, and fear were selected from the Ekman and Friesen (1976) set. These faces were combined with context images which included bodies of models placed in emotional situations handling various paraphernalia. The emotion of the context was conveyed by a combination of body language, gestures, and paraphernalia: all contributing to a highly recognizable manifestation of a specific emotion.

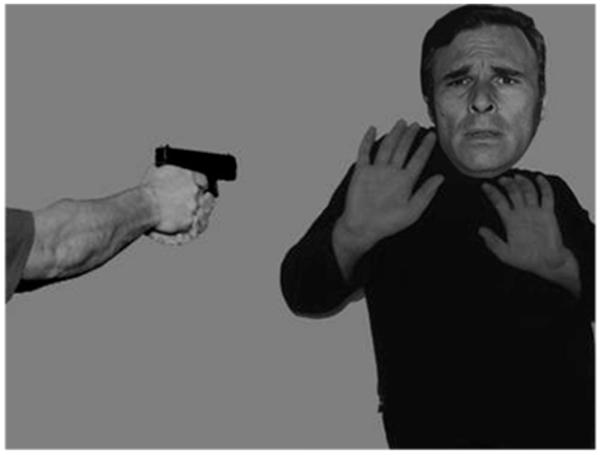

Four levels of similarity were created between the face and context: (a) High Similarity, disgust face on the anger body and anger on disgust; (b) Medium Similarity, disgust on sadness and sadness on disgust; (c) Low Similarity, disgust on fear and fear on disgust; and (d) Identity, in which each facial expression appeared in a congruent emotional context, for example, disgust on disgust (see examples in Figure 2). Previous work with computerized image analysis and similarity ratings of participants has shown that facial expressions of disgust bear declining degrees of similarity (and confusability) to faces of anger, sadness, and fear, respectively (Susskind et al., 2007; Young et al., 1997).

Figure 2.

Examples of the face-context similarity conditions presented in Experiment 1 for the disgust faces. (A) Disgust face in a disgust context (Identity). (B) Disgust face in a fearful context (Low Similarity). (C) Disgust face in a sad context (Medium Similarity). (D) Disgust face in an anger context (High Similarity). Images from Pictures of Facial Affect, by P. Ekman and W. V. Friesen, 1976, Palo Alto, CA: Consulting Psychologists Press. Copyright 1976 by P. Ekman and W. V. Friesen. Reproduced with permission from the Paul Ekman Group.

Isolated facial expressions and the faceless emotional contexts were also presented in separate counterbalanced blocks following the experimental task, serving as baseline stimuli.
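To make the similarity structure described above explicit, the following minimal sketch encodes each face-context pairing of Experiment 1 with its similarity level. The names (`Similarity`, `DISGUST_PARTNERS`, `build_conditions`) are hypothetical and not part of the original materials; the sketch assumes only the pairings listed in the text, namely disgust combined in both directions with anger, sadness, and fear, plus each emotion on its congruent context.

```python
from enum import Enum


class Similarity(Enum):
    IDENTITY = "Identity"
    HIGH = "High Similarity"
    MEDIUM = "Medium Similarity"
    LOW = "Low Similarity"


# Context paired with disgust at each non-identity level, following the
# declining disgust-anger > disgust-sadness > disgust-fear similarity order.
DISGUST_PARTNERS = {
    Similarity.HIGH: "anger",
    Similarity.MEDIUM: "sadness",
    Similarity.LOW: "fear",
}


def build_conditions():
    """Map each (face, context) pair used in the task to its similarity level."""
    conditions = {}
    for level, other in DISGUST_PARTNERS.items():
        conditions[("disgust", other)] = level  # disgust face on the other body
        conditions[(other, "disgust")] = level  # other face on the disgust body
    for emotion in ("disgust", "anger", "sadness", "fear"):
        conditions[(emotion, emotion)] = Similarity.IDENTITY  # congruent pairing
    return conditions


CONDITIONS = build_conditions()

if __name__ == "__main__":
    for face, context in sorted(CONDITIONS):
        print(f"{face} face on {context} context -> {CONDITIONS[(face, context)].value}")
```

Because the similarity ordering is defined relative to disgust, each non-identity level is realized twice (disgust face on the other body and the other face on the disgust body), giving ten face-context combinations in total.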

Design

The 4 × 4 mixed experimental design included a within-participant factor of Similarity (Identity, Low Similarity, Medium Similarity, and High Similarity), reflecting the varying degrees of similarity between the face associated with the context and the face actually presented to participants. Instructions (Control, Ignore, Ignore & Demo, and Irrelevant) served as a between-participants factor. The dependent variable was the accuracy of facial expression recognition, defined as the percentage of trials on which the face was categorized as its originally intended emotion. An additional dependent variable was the tendency to miscategorize the face as conveying the emotion of the context, that is, the degree of contextual bias.
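The two dependent variables can be illustrated with a short scoring sketch. It assumes a hypothetical trial record holding the face emotion, the context emotion, the similarity level, and the chosen label; the field names and the example responses are invented for illustration. Accuracy counts responses that match the face, and contextual bias counts responses that match the context, so the two coincide in the Identity condition.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    face: str      # intended emotion of the face
    context: str   # emotion conveyed by the body context
    level: str     # similarity condition, e.g. "High Similarity"
    response: str  # label chosen by the participant


def score(trials):
    """Return per-level % accuracy and % contextual bias.

    accuracy     = % of trials on which the response matches the face emotion
    context_bias = % of trials on which the response matches the context emotion
    """
    counts = defaultdict(lambda: [0, 0, 0])  # level -> [n, n_face, n_context]
    for t in trials:
        c = counts[t.level]
        c[0] += 1
        c[1] += t.response == t.face
        c[2] += t.response == t.context
    return {
        level: {"accuracy": 100 * nf / n, "context_bias": 100 * nc / n}
        for level, (n, nf, nc) in counts.items()
    }


if __name__ == "__main__":
    demo = [  # hypothetical responses, for illustration only
        Trial("disgust", "anger", "High Similarity", "anger"),
        Trial("disgust", "anger", "High Similarity", "disgust"),
        Trial("disgust", "disgust", "Identity", "disgust"),
    ]
    print(score(demo))
```

A response that matches neither the face nor the context (e.g., "surprise") lowers both scores, which is why accuracy and contextual bias are reported as separate measures rather than as complements.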

Procedure

Face-context composites were presented in random order on a computer monitor, one at a time and with no time limit. Participants were asked to choose the emotion that best described the facial expression from a standard list of six basic-emotion labels (sadness, anger, fear, disgust, happiness, and surprise) presented under the image.

The four groups of participants differed in the instructions and information that they received prior to the facial expression recognition task. In the Ignore condition, participants were instructed, “In this experiment we combined facial expressions with emotional contexts. Sometimes the facial expression fits the context and sometimes it does not, and hence the context might interfere with the recognition of the emotional expression of the face. Your task is to indicate the category that best describes the facial expression. Ignore the context and base your answers on the face alone.”

In the Ignore & Demo condition, participants received the exact same instructions, followed by a PowerPoint demonstration of face-context integration. In the demo, a prototypical isolated facial expression of disgust first appeared on a gray background. After 5 s, a context image (the torso of a bodybuilder) emerged from the bottom of the screen and seamlessly formed an integrated image with the head portraying the facial expression (previous work has shown that this face-body combination results in the disgust face being strongly misrecognized as proud and boasting; Aviezer, Hassin, Ryan, et al., 2008). After 5 s, the torso disappeared from the image. The isolated disgusted facial expression remained on the screen for an additional 5 s and then disappeared. The demo purposefully did not use any of the critical face-context combinations from the actual experiment, so as to minimize experimental demand.

In the Irrelevant condition, participants were told that “this experiment is part of a validation study of stimuli which will be used in the lab in the future. In order to increase the number of stimuli, we used StimGen, a computer program which randomly selects and combines facial expressions with body expressions. You will see images of people generated by the software and your task is to indicate the category that best describes the facial expression.”

Finally, in the Control condition, participants were instructed that on each trial they should “press the button indicating the category that best describes the facial expression”.

Results

Isolated faces and contexts

The means and SDs for recognizing the isolated faces and contexts are presented in Table 1. The groups did not differ in their baseline recognition of the facial expressions, F(3, 162) = 1.02, p = .38, or in their recognition of the faceless contexts, F(3, 162) = .86, p = .46. As seen in Table 1, disgust and fear faces were recognized more poorly than anger and sad faces (p < .001). This finding recurred in other studies with Israeli (Aviezer, Hassin, & Bentin, in press) and United States populations (Aviezer, Trope, & Todorov, 2011) and may reflect a confusability carryover from the contextualized conditions.

Table 1. Experiment 1: Baseline Means (%) and Standard Deviations (SD) for Recognizing Faceless Emotional Bodies and Bodiless Emotional Faces.

| Emotional stimuli | Ignore & Demo Mean | Ignore & Demo SD | Ignore Mean | Ignore SD | Irrelevant Mean | Irrelevant SD | Control Mean | Control SD |
|---|---|---|---|---|---|---|---|---|
| Sad body | 93.2 | 22.3 | 91.5 | 22.5 | 90.0 | 25.0 | 90.4 | 27.2 |
| Anger body | 90.3 | 22.4 | 94.7 | 18.7 | 96.7 | 10.9 | 90.6 | 25.2 |
| Disgust body | 89.8 | 21.1 | 88.3 | 26.0 | 93.3 | 14.6 | 94.4 | 12.9 |
| Fear body | 83.0 | 31.8 | 76.6 | 31.5 | 93.3 | 20.7 | 78.3 | 30.9 |
| Sad face | 85.9 | 22.4 | 84.3 | 19.5 | 90.3 | 9.6 | 80.0 | 22.5 |
| Anger face | 100.0 | 0.0 | 100.0 | 0.0 | 100.0 | 0.0 | 100.0 | 0.0 |
| Disgust face | 44.3 | 25.6 | 43.0 | 23.9 | 52.0 | 23.3 | 41.8 | 26.5 |
| Fear face | 51.8 | 28.2 | 42.8 | 27.5 | 41.7 | 24.4 | 46.4 | 26.6 |

Facial expressions in context

The recognition of facial expressions was strongly influenced by the body context, as evidenced by a significant effect of Similarity. Participants were most accurate at recognizing the intended facial expression in the Identity condition, less accurate in the Low and Medium Similarity conditions, and least accurate in the High Similarity condition, F(3, 486) = 289.09, p < .0001.

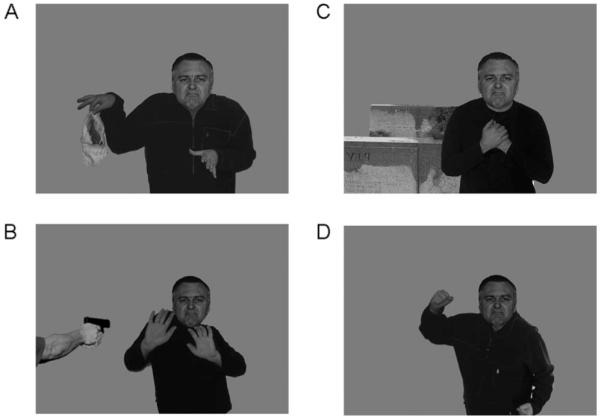

Crucially, despite the instructions, information, and incentives not to use the context, participants in all four groups were influenced by the context to similar degrees (Figure 3A). Neither the main effect of Instructions, F(3, 162) = 1.2, p = .28, nor the Similarity × Instructions interaction, F(9, 486) = 1.32, p = .22, was significant.

Figure 3.

(A) Accuracy (% correct) of recognizing contextualized facial expressions as a function of the experimental condition and context similarity. (B) Categorization of the faces (%) as conveying the context emotion.

To further examine whether the magnitude and effect size of the similarity effect changed following the instructions, we ran separate ANOVAs for each group. The Similarity effect had nearly identical significance levels and effect sizes for the Control group, F(3, 132) = 99.7, p < .0001, the Ignore group, F(3, 138) = 78.3, p < .0001, the Ignore & Demo group, F(3, 129) = 71.4, p < .0001, and the Irrelevant group, F(3, 87) = 58.7, p < .0001.
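Assuming the effect sizes compared here are partial eta-squared values, each can be recovered from its F ratio and degrees of freedom, because F = (SS_effect / df_effect) / (SS_error / df_error) implies η²p = F·df_effect / (F·df_effect + df_error). The sketch below applies that identity to the per-group F ratios reported above; the function and dictionary names are our own, and the computed values are derived from the reported statistics rather than taken from the original analysis output.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta-squared from an F ratio: F*df1 / (F*df1 + df2)."""
    num = f * df_effect
    return num / (num + df_error)


# F ratios and degrees of freedom for the Similarity effect, as reported per group.
GROUPS = {
    "Control": (99.7, 3, 132),
    "Ignore": (78.3, 3, 138),
    "Ignore & Demo": (71.4, 3, 129),
    "Irrelevant": (58.7, 3, 87),
}

if __name__ == "__main__":
    for name, (f, df1, df2) in GROUPS.items():
        print(f"{name}: partial eta^2 = {partial_eta_squared(f, df1, df2):.2f}")
```

The error degrees of freedom also follow from the design: with n participants and four similarity levels, a within-group ANOVA has (n − 1) × 3 error degrees of freedom, for example 44 × 3 = 132 for the 45 Control participants.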

An additional way to examine face-context integration is to focus on the mirror image of accuracy, that is, the miscategorization of the face as conveying the context emotion rather than the face emotion (Aviezer, Hassin, Ryan, et al., 2008). A 4 × 4 (Similarity × Instructions) mixed-design ANOVA revealed a main effect of Similarity: participants were most likely to categorize the face as conveying the context emotion in the Identity condition, followed by the High Similarity, Medium Similarity, and Low Similarity conditions (in that order), F(3, 486) = 687.5, p < .0001 (Figure 3B). Planned t tests confirmed that all similarity levels differed significantly from one another (all ps < .001). By contrast, the four instruction groups did not differ, F(3, 162) = 1.1, p = .347, and the interaction was not significant, F(3, 162) = .143, p = .934.

Hypothetically, the absence of instruction effects alongside robust similarity effects could arise because the former is a between-participants comparison and the latter a within-participant comparison. We ruled out this possibility by examining whether the similarity effect would still hold between participants, for example, by comparing the accuracy scores for the Identity context in the Control group, the Low Similarity context in the Ignore group, and the High Similarity context in the Ignore & Demo group. In all combinations the similarity effect held up (all Fs > 30, all ps < .0001), suggesting that the design had sufficient power.

Overall, these data suggest that all groups were similarly influenced by the contexts in which the facial expressions were embedded, indicating that participants had little control over the outcome of integration.

Discussion

The findings from Experiment 1 show that integration still occurs when participants are instructed and motivated not to use the context. This pattern held even when participants were not instructed to avoid the context but were instead led to believe that it was irrelevant to the face recognition task, which implies that our findings did not result from rebound effects caused by ironic processes. Thus, similar patterns of contextual influence were observed regardless of the instructions participants received prior to the task. This result strongly implies that differential and selective face-context integration occurs unintentionally, and further suggests that it cannot be stopped.

As always, null effects must be interpreted with caution. Nevertheless, our analysis showed that the contextual similarity effect was maintained in all three experimental groups with sufficient power and sample size. Given the explicit information, instructions, demonstration, and motivation participants had for not using the context, one wonders what additional help might have been offered that would have assisted in releasing participants from the relentless grip of the context. Next, we turn to explore a second key feature of automaticity: effortfulness.

Experiment 2

The results of Experiment 1 suggest that the emotional context we used cannot be easily controlled, and hence its integration with the face does not require intention and it cannot be deliberately disregarded or ignored. Nevertheless, the categorization of facial expressions in context might still be sensitive to the amount of available cognitive resources. In Experiment 2 we used a dual-task paradigm in order to examine if face-context integrations reveal another feature of automaticity—effortlessness (Gilbert, Krull, & Pelham, 1988; Trope & Alfieri, 1997). If the process is effortful, the decrease in available mental resources should result in diminished contextual influences. However, if the process is relatively effortless, then the amount of available mental resources should not matter.

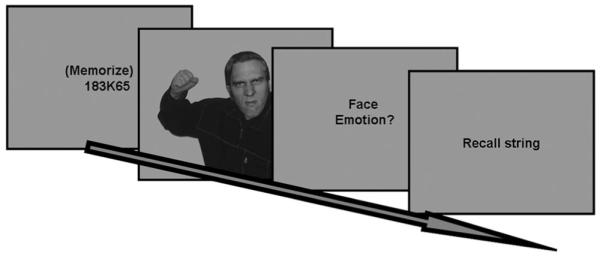

Participants were instructed to categorize contextualized facial expressions while memorizing a letter-number string (Tracy & Robins, 2008). Following the categorization of the contextualized facial expression, participants were required to recall the memorized string (see Figure 4). One concern in dual-task memory paradigms is that the recall itself might prove to be a frustrating emotional experience. This problem is especially pertinent to studies in social cognition, as experiencing a given emotion may bias one’s recognition of emotions in others (Richards et al., 2002). To address this concern, we opted for a string length of six items, which would tax cognitive resources without inducing extreme frustration in participants. Strings of this length have been used effectively in a wide range of dual-task studies (Bargh & Tota, 1988; Karatekin, 2004; Ransdell, Arecco, & Levy, 2001).

Figure 4.

Outline of an experimental trial in Experiment 2. Image from Pictures of Facial Affect, by P. Ekman and W. V. Friesen, 1976, Palo Alto, CA: Consulting Psychologists Press. Copyright 1976 by P. Ekman and W. V. Friesen. Reproduced with permission from the Paul Ekman Group.

As in Experiment 1, we exploited the similarity effect; that is, the finding that the magnitude of contextual influence is strongly correlated with the degree of similarity between the target facial expression (i.e., the face being presented) and the facial expression that is prototypically associated with the emotional context. If contextual influence occurs effortlessly, it should occur irrespective of the cognitive load.

Method

Participants

Ninety-four undergraduate students (39 male, 55 female) from the Hebrew University (mean age = 21.2) participated for course credit or payment.

Stimuli

Portraits of 10 individuals (five female) posing with the basic facial expressions of disgust (10 images) and sadness (10 images) were selected from the Ekman and Friesen (1976) set. Pilot studies found that these two emotions show a strong and linear contextual influence as a function of face-context similarity. Faces from each emotional category were planted on images of models in emotional contexts that were tailored to produce three levels of similarity: Identity, High Similarity, and Low Similarity.

For the current experiment, three levels of face-context similarity were introduced for each facial expression category. In the High Similarity contextual condition, disgust faces appeared in an anger context and sadness faces appeared in a fearful context (for the similarity structure between facial expressions of emotions see Susskind et al., 2007). In the Low Similarity contextual condition, disgust faces appeared in a fearful context and sadness faces appeared in an anger context. In the Identity condition, facial expressions of disgust and sadness appeared in a congruent emotional context. Isolated facial expressions and faceless emotional contexts were also presented in separate counterbalanced blocks following the experimental task, serving as baseline stimuli.
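The Experiment 2 pairings can be summarized as a small lookup table. The sketch below is a hypothetical encoding, not the original stimulus-generation code, and it additionally assumes that every one of the 10 portraits of each expression was paired with all three of its contexts; the text does not state the exact number of composites.

```python
# Hypothetical encoding of the Experiment 2 design: face -> {similarity level: context}.
EXP2_DESIGN = {
    "disgust": {"Identity": "disgust", "High Similarity": "anger", "Low Similarity": "fear"},
    "sadness": {"Identity": "sadness", "High Similarity": "fear", "Low Similarity": "anger"},
}


def composites(n_identities=10):
    """List (identity, face, level, context) for every assumed face-context composite."""
    return [
        (identity, face, level, context)
        for identity in range(1, n_identities + 1)
        for face, levels in EXP2_DESIGN.items()
        for level, context in levels.items()
    ]


if __name__ == "__main__":
    for row in composites(n_identities=1):
        print(row)
```

Note that the fear and anger contexts each serve as High Similarity for one expression and as Low Similarity for the other, so context emotion alone cannot account for a similarity effect.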

Design

A 3 × 2 mixed experimental design was employed, with Similarity (Identity, High Similarity, Low Similarity) as a within-participant factor and Load (High, Low) as a between-participants factor. Face-context integration was measured using recognition accuracy, defined as the percentage of times a face was categorized as its “original” intended emotional category (see footnote 1).

Procedure

Each trial started with the presentation of a random six-item string comprising five digits and one letter (e.g., 274K81). Participants in the High Load group were instructed to memorize the full string, whereas participants in the Low Load group were instructed to memorize only the letter. After 4 s, the string disappeared and participants were presented with a contextualized facial expression, which they were asked to categorize as conveying one of six basic emotions (see Experiment 1). Immediately after the emotional categorization, participants were required to type the string in a box that appeared on the screen. Trial-by-trial feedback was delivered on memory task accuracy (correct or incorrect), along with the cumulative average accuracy on the memory task. We opted to supply ongoing feedback because we expected it to maintain engagement in the task and because we assumed (based on previous work) that overall accuracy would not be low enough to induce a negative mood. An accurate recall in the memory task was defined as correct recall of all six characters in the correct sequence.
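The memory manipulation can be sketched as string generation plus a strict recall check. This is an illustrative reconstruction under stated assumptions, not the original experiment script: the function names are hypothetical, digits are assumed to be drawn with replacement, the letter is assumed to be uppercase and placed at a random position, and typed responses are assumed to be compared case-insensitively.

```python
import random
import string


def make_memory_string(rng):
    """Six characters: five digits plus one uppercase letter at a random position."""
    chars = [rng.choice(string.digits) for _ in range(5)]
    chars.insert(rng.randrange(6), rng.choice(string.ascii_uppercase))
    return "".join(chars)


def recall_target(load, memory_string):
    """High Load recalls the full string; Low Load recalls only the letter."""
    if load == "High":
        return memory_string
    return next(ch for ch in memory_string if ch.isalpha())


def recall_correct(load, memory_string, typed):
    """Correct only if every target character is typed, in the correct order."""
    return typed.strip().upper() == recall_target(load, memory_string)


if __name__ == "__main__":
    rng = random.Random(1)
    print(make_memory_string(rng))
    print(recall_correct("High", "274K81", "274K81"))  # exact full recall
    print(recall_correct("High", "274K81", "274K18"))  # order error counts as incorrect
    print(recall_correct("Low", "274K81", "K"))        # Low Load: letter only
```

Scoring order as strictly as content matches the criterion stated above: a response containing all six characters in the wrong sequence is counted as an error.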

Results

To ensure baseline recognition of the isolated emotional stimuli, we excluded participants who displayed a zero recognition rate for any of the isolated facial expressions or any of the faceless body contexts. The remaining 62 participants (33 in the High Load group, 29 in the Low Load control group) were included in the following analyses.

Memory performance in the load task

Responses in the memory task were defined as “correct” only if the full string was recalled in the correct order. As expected, memorizing the full string in the load task was more difficult than memorizing the single letter in the control task: accuracy was significantly higher in the Control group (M = 93.4%, SD = 11.6) than in the Load group (M = 70.2%, SD = 16.2), t(60) = 6.3, p < .0001. Subsequent analyses were conducted only on correct trials, in which participants successfully recalled the memory string (i.e., all items in the correct sequence).
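The two data-cleaning steps described above, participant exclusion on baseline recognition and restriction to correct-recall trials, can be sketched as follows. The participant record layout (a `baseline` mapping of stimulus type to percent recognized and a list of `trials` carrying a boolean `recall_correct`) is a hypothetical structure assumed for illustration.

```python
def passes_baseline(participant):
    """False if any isolated face or faceless body was recognized on 0% of trials."""
    return all(rate > 0 for rate in participant["baseline"].values())


def analysis_sample(participants):
    """Drop excluded participants and keep only trials with correct string recall."""
    kept = []
    for p in participants:
        if not passes_baseline(p):
            continue
        correct = [t for t in p["trials"] if t["recall_correct"]]
        kept.append({**p, "trials": correct})
    return kept


if __name__ == "__main__":
    demo = [  # hypothetical participants, for illustration only
        {"id": "p1", "baseline": {"disgust face": 40.0, "sad face": 80.0},
         "trials": [{"recall_correct": True}, {"recall_correct": False}]},
        {"id": "p2", "baseline": {"disgust face": 0.0, "sad face": 90.0},
         "trials": [{"recall_correct": True}]},
    ]
    for p in analysis_sample(demo):
        print(p["id"], len(p["trials"]))
```

Excluding at the participant level first and filtering trials second keeps the two criteria independent: a participant's memory performance never affects whether they are retained.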

Isolated contexts and faces

The average recognition of the isolated contexts and faces by group is shown in Table 2. The faceless contexts did not differ in terms of their recognition rates, and the groups did not differ significantly in their recognition of the isolated faces (both the group and interaction p values > .45). As in Experiment 1, sad faces were better recognized than disgust expressions, a finding which may reflect a confusability carryover from the contextualized conditions.

Table 2. Experiment 2: Baseline Means (%) and Standard Deviations (SD) for Recognizing Faceless Emotional Bodies and Bodiless Emotional Faces.

| Emotional stimuli | Load Mean | Load SD | Control Mean | Control SD |
|---|---|---|---|---|
| Disgust body | 98.4 | 8.7 | 99.1 | 4.6 |
| Sad body | 98.4 | 8.7 | 96.2 | 11.6 |
| Anger body | 91.9 | 20.7 | 94.8 | 13.9 |
| Disgust face | 48.6 | 24.9 | 43.1 | 22.3 |
| Sad face | 76.7 | 18.8 | 75.9 | 18.8 |

Recognition of facial expressions in context

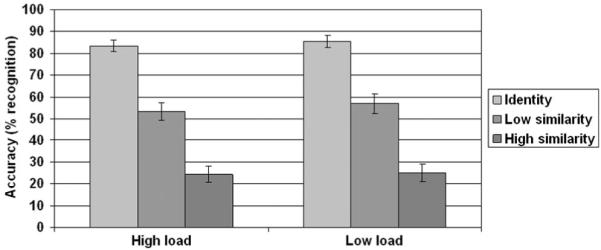

Replicating our findings from Experiment 1, facial expression recognition was strongly influenced by face-context similarity. Across both facial expressions, accuracy was highest in the Identity context, intermediate in the Low Similarity context, and lowest in the High Similarity context, F(2, 120) = 144, p < .0001. Pairwise comparisons between all context conditions confirmed that the decline in accuracy was significant across all levels (all ps < .001). Crucially, despite the cognitive effort required by the memorization task, the Load group did not differ from the Control group in its pattern of emotion recognition, F(1, 60) = .43, p = .51, and the Group × Context interaction was not significant, F < 1. Hence, face-context integration did not break down under the load manipulation (see Figure 5).

Figure 5. Overall accuracy (% correct) of recognizing contextualized facial expressions as a function of the experimental condition (High Load vs. Low Load) and context similarity.

Because the isolated disgust facial expressions were recognized more poorly than the isolated sad facial expressions, we ran additional separate ANOVAs for each facial expression type. This analysis allowed us to examine whether the automaticity of face-context integration was modulated by face ambiguity. Face-context integration (as evidenced by the similarity effect) occurred strongly for both disgust, F(2, 120) = 131.4, p < .0001, and sad facial expressions, F(2, 120) = 93.3, p < .0001. Critically, there was no effect of group for either disgust, F(1, 60) = .228, p > .6, or sad expressions, F(1, 60) = .28, p > .59.
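Effect sizes for F tests like these are commonly reported as partial η². When only F and its degrees of freedom are at hand, partial η² follows from a standard identity, sketched here as a hypothetical helper; it is a general illustration rather than the procedure used in this paper.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Since F = (SS_effect / df_effect) / (SS_error / df_error), the ratio
    SS_effect / SS_error equals F * df_effect / df_error, which gives
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = F * df_effect / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be >= 0 and degrees of freedom positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


print(partial_eta_squared(1.0, 1, 1))  # 0.5
```

Because the identity uses only the reported F and degrees of freedom, it gives the same value regardless of how the underlying sums of squares were scaled.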

We also examined whether participants forgot the memory string more often when the face was paired with an incongruent context than when it was paired with a similar one. The memory task thus serves as a second indicator of whether perceiving incongruent face-context combinations requires greater cognitive resources: if it does, memory accuracy should be lower in the Low and High Similarity conditions than in the Identity condition. Focusing on the High Load group, we used a repeated-measures ANOVA to compare accuracy on the memory task as a function of face-context similarity. Memory accuracy, and hence the probability of memory errors, was virtually the same in the Identity (M = 71%, SD = 17.2), Low Similarity (M = 69.85%, SD = 19.6), and High Similarity (M = 69.09%, SD = 18.09) conditions, F(2, 64) = .53, p = .59. Hence, face-context integration occurs with minimal resources irrespective of the incongruity between face and context.
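Because only the Load group enters this analysis, each participant contributes one memory-accuracy score per similarity condition, and a one-way repeated-measures ANOVA removes stable between-participant differences from the error term before testing the condition effect. A minimal sketch follows; the function name and the toy input are hypothetical, and no sphericity correction (e.g., Greenhouse–Geisser) is applied, which may or may not match the original analysis.

```python
import numpy as np


def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA on a (n_subjects, k_conditions) array,
    e.g. per-participant memory accuracy in the Identity, Low Similarity,
    and High Similarity conditions. No sphericity correction is applied.
    Returns (F, df_conditions, df_error)."""
    y = np.asarray(scores, dtype=float)
    n, k = y.shape
    if n < 2 or k < 2:
        raise ValueError("need at least 2 subjects and 2 conditions")
    grand = y.mean()
    ss_total = ((y - grand) ** 2).sum()
    ss_conditions = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subjects = k * ((y.mean(axis=1) - grand) ** 2).sum()   # removed from error
    ss_error = ss_total - ss_conditions - ss_subjects          # condition x subject
    df_conditions, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_conditions / df_conditions) / (ss_error / df_error)
    return f_value, df_conditions, df_error


# Toy input: 4 hypothetical participants x 2 conditions.
print(rm_anova_oneway([[1, 2], [3, 5], [2, 2], [4, 7]]))
```

An F near or below 1, as reported above, means the differences between similarity conditions are no larger than the participant-by-condition noise.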

Discussion

The findings from Experiment 2 suggest that face-context integration is effortless, in that it is not impaired by a concurrent cognitive load. Despite the different levels of load in the experimental and control conditions, participants in both groups showed similar face-context integration. As in Experiment 1, participants in both groups displayed a differential effect of context that was determined by the degree of similarity between the observed faces and the facial expressions typically associated with each emotional context. The fact that this fine-tuned process was highly similar in both groups suggests that it draws very little on a common pool of cognitive resources. Interestingly, our analysis suggests that the recognizability of the facial expressions played little, if any, role in the automaticity of face-context integration: although disgust faces were more ambiguous than sad faces, both expressions showed comparable similarity effects irrespective of load.

General Discussion

A large body of work has shown that isolated prototypical facial expressions directly signal the specific emotional states of individuals (Adolphs, 2003; Buck, 1994; Ekman, 1993; Ekman & O’Sullivan, 1988; Frith, 2008; Ohman, 2000; Peleg et al., 2006; Tracy & Robins, 2008; Waller et al., 2008). Recent work in the field, however, has highlighted the importance of contextual information by demonstrating the malleability and flexibility of contextualized facial expression recognition (Barrett, Lindquist, & Gendron, 2007). In this paper, we aimed to characterize the process by which facial expressions and body context are integrated. Specifically, we asked if viewers formulate an intentional and effortful decision after considering the emotion expressed by the face and context, or if the face and context are integrated in a highly automatic, unintentional, and noneffortful manner.

Unintentionality

In Experiment 1 participants were explicitly instructed, and monetarily motivated, to ignore the context. To reinforce this motivation, they were also informed about the manipulation and shown a visual demonstration of it. An additional group of participants was led to believe that the body contexts were irrelevant because they had been randomly paired with the faces, but this group received no instruction to ignore the body. Nevertheless, participants in all groups proved incapable of ignoring the context, as evidenced by the highly similar patterns of contextual influence across groups. These results strongly suggest that face-context integration occurs in an unintentional manner.

The current design encouraged participants to prevent face-context integration from the first moment they encountered the visual scene. Given our results, it also seems safe to assume that the outcome would be similar if participants tried to stop a process of face-context integration that had already been launched. Hence, although the automaticity feature of stoppability (Bargh, 1994) was not explicitly assessed in Experiment 1, the results suggest that face-context integration is ballistic by nature; that is, once instigated, the process cannot be stopped at will. Otherwise, participants would have stopped the integration to increase their likelihood of receiving the monetary prize.

Effortlessness

In Experiment 2 participants categorized contextualized facial expressions while engaged in an unrelated concurrent working memory task. The experimental and control groups performed an identical face categorization task and differed only in the degree of concurrent cognitive load. Nevertheless, a similar degree of face-context integration occurred irrespective of load, suggesting that face-context integration proceeds in an effortless manner. Previous work has suggested that the processing of facial stimuli (Landau & Bentin, 2008), and of facial expressions in particular (Tracy & Robins, 2008), proceeds in an automatic manner that is relatively uninfluenced by central executive resources. Our results extend this claim, suggesting that facial expressions are not only processed automatically but are also integrated automatically with their surroundings.

The Issue of Automaticity Revisited

The current set of experiments suggests that face-context integration proceeds in an automatic fashion on at least two dimensions: intentionality and effort. Admittedly, the central findings of this study rest on a failure to demonstrate differences between conditions, and failing to reject H0 does not establish that H0 is true. Nevertheless, the effect sizes in the control and experimental conditions are highly similar. If the groups we examined did in fact differ, our failure to find even the slightest evidence of this difference would be surprising.

Furthermore, the results of our experimental conditions can be considered on their own (i.e., without comparison to the control conditions). Contrary to the predictions of a controlled account, these conditions show dramatic effects of context even when participants are motivated to avoid them (Experiment 1) or are under cognitive load (Experiment 2). These data suggest that face-context integration, at least when the context is a body, is automatic with respect to both intentionality and effort.

Why is it that participants do not attempt to correct their initial impression of the contextualized face? One possibility is that the context changes the actual perceptual appearance of the face from an early stage (Aviezer, Hassin, Ryan, et al., 2008; Barrett et al., 2007; Halberstadt, 2005; Halberstadt & Niedenthal, 2001). For example, we have shown that an emotional context alters the pattern of eye scanning over facial expressions from an early stage (Aviezer, Hassin, Ryan, et al., 2008). Hence, participants might be unable to correct their perception simply because the faces look different to them (Wilson & Brekke, 1994).

The current work provides evidence for automaticity in emotional face-context integration, yet it leaves open the intriguing question of how this mode of processing develops. One way to explore this matter further would be to examine the developmental timeline of face-body integration. One possibility is that young children are initially poor at face-context integration, just as their visual context integration is reduced, as evidenced by their weaker susceptibility to visual illusions (Káldy & Kovács, 2003). After repeated exposure to certain combinations of affective information (both within and between modalities), however, they may become increasingly proficient at integrating these sources until the process is automatized. According to this account, one may see a gradual shift from effortful and deliberate face-body processing to automatic and more efficient integration during the early childhood years.

Recent work has shown that for young children (ages 4–10), contextual verbal story scripts were more important than facial information in determining the emotion felt by targets (Widen & Russell, 2010a). Interestingly, visual (and hence more ecological) context may have a different effect. An anecdotal observation by the first author (H.A.) suggests that toddlers may infer the affective states of others from the face while ignoring the obvious visual context of the expression. For example, when viewing a picture of a man lifting a heavy box, a toddler may misinterpret his strained facial expression as “Mad”. Finally, face-context integration may develop at different rates for different expressions, perhaps in parallel with the developmental time course of facial expression recognition (Widen & Russell, 2010b, 2010c). Clearly, additional work will be required to clarify these matters.

In sum, our findings suggest that facial expressions and context are integrated in a highly efficient manner, which makes this process well suited to the everyday demands of social cognition. Although previous accounts have focused on the relation between isolated facial expression recognition and social adeptness (Marsh, Kozak, & Ambady, 2007), an even richer picture may emerge when facial expression perception is considered within an emotional context.

Acknowledgments

This work was funded by an NIMH Grant R01 MH 64458 to Shlomo Bentin and an ISF Grant 846/03 to Ran R. Hassin. We thank Shira Agasi for her skilful help in running and analyzing Experiment 1.

Footnotes

1 We use the term “accuracy” in the limited sense of classifying posed facial expressions into the categories they were originally intended to convey in the Ekman and Friesen (1976) set.

Contributor Information

Hillel Aviezer, Department of Psychology, Hebrew University of Jerusalem, Jerusalem, Israel; Department of Psychology, Princeton University.

Veronica Dudarev, Department of Psychology, Hebrew University of Jerusalem, Jerusalem, Israel.

Shlomo Bentin, Department of Psychology, Hebrew University of Jerusalem & Center for Neural Computation, Jerusalem, Israel.

Ran R. Hassin, Department of Psychology, Hebrew University of Jerusalem & Center for the Study of Rationality, Jerusalem, Israel.

References

- Adolphs R. Neural systems for recognizing emotion. Current Opinion in Neurobiology. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- Adolphs R. Cognitive neuroscience of human social behaviour. Nature Reviews Neuroscience. 2003;4:165–178. doi: 10.1038/nrn1056.

- Aviezer H, Bentin S, Hassin RR, Meschino WS, Kennedy J, Grewal S, Moscovitch M. Not on the face alone: Perception of contextualized face expressions in Huntington’s disease. Brain. 2009;132:1633–1644. doi: 10.1093/brain/awp067.

- Aviezer H, Hassin R, Bentin S, Trope Y. Putting facial expressions into context. In: Ambady N, Skowronski J, editors. First Impressions. Guilford Press; New York, NY: 2008.

- Aviezer H, Hassin RR, Bentin S. Impaired integration of emotional faces and bodies in a rare case of developmental visual agnosia with severe prosopagnosia. Cortex. doi: 10.1016/j.cortex.2011.03.005. in press.

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008;19:724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Aviezer H, Trope Y, Todorov A. Holistic person processing: Faces with bodies tell the whole story. Manuscript submitted for publication; 2011.

- Bargh JA. The four horsemen of automaticity: Awareness, intention, efficiency, and control in social cognition. In: Wyer RJ, Srull TK, editors. Handbook of social cognition. Erlbaum, Inc; Hillsdale, NJ: 1994. pp. 1–40.

- Bargh JA, Chen M, Burrows L. Automaticity of social behavior: Direct effects of trait construct and stereotype activation on action. Journal of Personality and Social Psychology. 1996;71:230–244. doi: 10.1037//0022-3514.71.2.230.

- Bargh JA, Tota ME. Context-dependent automatic processing in depression: Accessibility of negative constructs with regard to self but not others. Journal of Personality and Social Psychology. 1988;54:925–939. doi: 10.1037//0022-3514.54.6.925.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Buck R. Social and emotional functions in facial expression and communication: The readout hypothesis. Biological Psychology. 1994:95–115. doi: 10.1016/0301-0511(94)90032-9.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205–218. doi: 10.1037//0022-3514.70.2.205.

- Darwin C. The Expression of the Emotions in Man and Animals. Oxford University Press; New York, NY: 1872.

- de Gelder B, Meeren HKM, Righart R, Stock J, van de Riet WAC, Tamietto M. Beyond the face: Exploring rapid influences of context on face processing. Progress in Brain Research. 2006;155:37–48. doi: 10.1016/S0079-6123(06)55003-4.

- Dolan RJ, Morris JS, de Gelder B. Crossmodal binding of fear in voice and face. Proceedings of the National Academy of Sciences, USA. 2001;98:10006–10010. doi: 10.1073/pnas.171288598.

- Ekman P. Facial Expressions of Emotion: New Findings, New Questions. Psychological Science. 1992;3:34–38. doi: 10.1111/j.1467-9280.1992.tb00253.x.

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48:384–392. doi: 10.1037//0003-066x.48.4.384.

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto, CA: 1976.

- Ekman P, O’Sullivan M. The role of context in interpreting facial expression: Comment on Russell and Fehr (1987). Journal of Experimental Psychology: General. 1988;117:86–88. doi: 10.1037//0096-3445.117.1.86.

- Frith CD. Social cognition. Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363:2033–2039. doi: 10.1098/rstb.2008.0005.

- Gilbert DT, Krull DS, Pelham BW. Of thoughts unspoken: Social inference and the self-regulation of behavior. Journal of Personality and Social Psychology. 1988;55:685–694.

- Halberstadt J. Featural shift in explanation-biased memory for emotional faces. Journal of Personality and Social Psychology. 2005;88:38–49. doi: 10.1037/0022-3514.88.1.38.

- Halberstadt J, Winkielman P, Niedenthal PM, Dalle N. Emotional conception. Psychological Science. 2009;20:1254–1261. doi: 10.1111/j.1467-9280.2009.02432.x.

- Halberstadt JB, Niedenthal PM. Effects of emotion concepts on perceptual memory for emotional expressions. Journal of Personality and Social Psychology. 2001;81:587–598.

- Hassin R, Trope Y. Facing faces: Studies on the cognitive aspects of physiognomy. Journal of Personality and Social Psychology. 2000;78:837–852. doi: 10.1037//0022-3514.78.5.837.

- Henderson JM. Regarding scenes. Current Directions in Psychological Science. 2007;16:219–222. doi: 10.1111/j.1467-8721.2007.00507.x.

- Karatekin C. Development of attentional allocation in the dual task paradigm. International Journal of Psychophysiology. 2004;52:7–21. doi: 10.1016/j.ijpsycho.2003.12.002.

- Káldy Z, Kovács I. Visual context integration is not fully developed in 4-year-old children. Perception. 2003;32:657–666. doi: 10.1068/p3473.

- Landau AN, Bentin S. Attentional and perceptual factors affecting the attentional blink for faces and objects. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:818–830. doi: 10.1037/0096-1523.34.4.818.

- Marsh AA, Kozak MN, Ambady N. Accurate identification of fear facial expressions predicts prosocial behavior. Emotion. 2007;7:239–251. doi: 10.1037/1528-3542.7.2.239.

- McArthur LZ, Baron RM. Toward an ecological theory of social perception. Psychological Review. 1983;90:215–238. doi: 10.1037/0033-295x.90.3.215.

- Meeren HKM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences, USA. 2005;102:16518–16523. doi: 10.1073/pnas.0507650102.

- Ohman A. Fear and anxiety: Evolutionary, cognitive, and clinical perspectives. In: Lewis M, Haviland-Jones JM, editors. Handbook of emotions. 2nd ed. Guilford Press; New York, NY: 2000. pp. 573–691.

- Peleg G, Katzir G, Peleg O, Kamara M, Brodsky L, Hel-Or H, Nevo E. Hereditary family signature of facial expression. Proceedings of the National Academy of Sciences, USA. 2006;103:15921–15926. doi: 10.1073/pnas.0607551103.

- Posner MI, Snyder RR. Attention and cognitive control. In: Solso RL, editor. Information processing and cognition: The Loyola symposium. Erlbaum; Hillsdale, NJ: 1975. pp. 55–86.

- Pourtois G, de Gelder B, Vroomen J, Rossion B, Crommelinck M. The time-course of intermodal binding between seeing and hearing affective information. NeuroReport. 2000;11:1329–1333. doi: 10.1097/00001756-200004270-00036.

- Ransdell S, Arecco MR, Levy CM. Bilingual long-term working memory: The effects of working memory loads on writing quality and fluency. Applied Psycholinguistics. 2001;22:113–128.

- Richards A, French CC, Calder AJ, Webb B, Fox R, Young AW. Anxiety-related bias in the classification of emotionally ambiguous facial expressions. Emotion. 2002;2:273–287. doi: 10.1037/1528-3542.2.3.273.

- Russell JA. Reading emotions from and into faces: Resurrecting a dimensional contextual perspective. In: Russell AJ, Fernandez-Dols JM, editors. The psychology of facial expressions. Cambridge University Press; New York, NY: 1997. pp. 295–320.

- Schneider W, Dumais ST, Shiffrin RM. Automatic and controlled processing and attention. In: Parasuraman R, Davies D, editors. Varieties of attention. Academic Press; New York, NY: 1984. pp. 1–17.

- Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychological Science. 2005;16:184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Susskind JM, Littlewort G, Bartlett MS, Movellan J, Anderson AK. Human and computer recognition of facial expressions of emotion. Neuropsychologia. 2007;45:152–162. doi: 10.1016/j.neuropsychologia.2006.05.001.

- Tracy JL, Robins RW. The automaticity of emotion recognition. Emotion. 2008;8:81–95. doi: 10.1037/1528-3542.8.1.81.

- Trope Y. Identification and inferential processes in dispositional attribution. Psychological Review. 1986;93:239–257. doi: 10.1037/0033-295x.93.3.239.

- Trope Y, Alfieri T. Effortfulness and flexibility of dispositional judgment processes. Journal of Personality and Social Psychology. 1997;73:662–674.

- Tzelgov J. Automatic but conscious: That is how we act most of the time. In: Wyer JRS, editor. Advances in social cognition. Vol. 10. Erlbaum; Mahwah, NJ: 1997a. pp. 217–230.

- Tzelgov J. Specifying the relations between automaticity and consciousness: A theoretical note. Consciousness and Cognition. 1997b;6:441–451. doi: 10.1006/ccog.1997.0303.

- Van den Stock J, Righart R, de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7:487–494. doi: 10.1037/1528-3542.7.3.487.

- Waller BM, Cray JJ, Jr., Burrows AM. Selection for universal facial emotion. Emotion. 2008;8:435–439. doi: 10.1037/1528-3542.8.3.435.

- Wegner DM. Ironic processes of mental control. Psychological Review. 1994;101:34–52. doi: 10.1037/0033-295x.101.1.34.

- Wegner DM, Bargh JA. Control and automaticity in social life. In: Gilbert D, Fiske S, editors. The handbook of social psychology. Vol. 1. McGraw-Hill; New York, NY: 1998. pp. 446–496.

- Widen SC, Russell JA. Children’s scripts for social emotions: Causes and consequences are more central than are facial expressions. British Journal of Developmental Psychology. 2010a;28:565–581. doi: 10.1348/026151009x457550d.

- Widen SC, Russell JA. Differentiation in preschooler’s categories of emotion. Emotion. 2010b;10:651–661. doi: 10.1037/a0019005.

- Widen SC, Russell JA. The “disgust face” conveys anger to children. Emotion. 2010c;10:455–466. doi: 10.1037/a0019151.

- Wilson TD, Brekke N. Mental contamination and mental correction: Unwanted influences on judgments and evaluations. Psychological Bulletin. 1994;116:117–142. doi: 10.1037/0033-2909.116.1.117.

- Wong B, Cronin-Golomb A, Neargarder S. Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology. 2005;19:739–749. doi: 10.1037/0894-4105.19.6.739.

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, Perrett DI. Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition. 1997;63:271–313. doi: 10.1016/s0010-0277(97)00003-6.