Summary

The rapid development of new biotechnologies allows us to understand biomedical dynamic systems in greater depth and at the cellular level. Many subject-specific biomedical systems can be described by a set of differential or difference equations similar to those used for engineering dynamic systems. In this paper, motivated by HIV dynamic studies, we propose a class of mixed-effects state space models based on the longitudinal feature of dynamic systems. State space models with mixed-effects components are very flexible in modelling both the serial correlation of within-subject observations and the between-subject variation. The Bayesian approach and the maximum likelihood method for standard mixed-effects models and state space models are modified and investigated for estimating unknown parameters in the proposed models. In the Bayesian approach, full conditional distributions are derived and a Gibbs sampler is constructed to explore the posterior distributions. For the maximum likelihood method, we develop a Monte Carlo EM algorithm with a Gibbs sampler step to approximate the conditional expectations in the E-step. Simulation studies are conducted to compare the two proposed methods. We apply the mixed-effects state space model to a data set from an AIDS clinical trial to illustrate the proposed methodologies. The proposed models and methods may also have potential applications in other biomedical system analyses, such as tumor dynamics in cancer research and genetic regulatory network modeling.

Keywords: EM algorithm, Gibbs sampler, Kalman filter, Mixed-effects models, Parameter estimation, State space models

1. Introduction

State space modelling has been used mainly in time series data analysis and has found applications in many areas, such as economics, engineering and biology. A state space model consists of a state equation and an observation equation. The state equation models the evolution of the (usually unobserved) states, while the observation equation links the observations to these underlying states. A standard linear state space model can be written as

$$x_t = F_t\, x_{t-1} + v_t, \qquad (1)$$

$$y_t = G_t\, x_t + w_t, \qquad (2)$$

where xt is the state vector, yt is the observation vector, Ft is the state transition matrix, and Gt is the observation matrix. The disturbances {vt} and {wt} are assumed to be mutually independent sequences of independent and identically distributed vectors, with vt ~ N(0, Q) and wt ~ N(0, R). The system matrices (Ft, Gt) and the covariance matrices (Q, R) may contain unknown parameters θ and ω, respectively.
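As a minimal illustration, the following Python sketch simulates model (1)–(2) in the scalar case (p = q = 1) with time-invariant Ft = F and Gt = G. The function name `simulate_lgss` and its arguments are illustrative only; the values in the usage line are arbitrary.

```python
import random


def simulate_lgss(n, F, G, Q, R, x0, seed=0):
    """Simulate a scalar linear Gaussian state space model.

    State equation:       x_t = F * x_{t-1} + v_t,  v_t ~ N(0, Q)
    Observation equation: y_t = G * x_t + w_t,      w_t ~ N(0, R)
    Returns the states x_1..x_n and observations y_1..y_n as two lists.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    x = x0
    for _ in range(n):
        x = F * x + rng.gauss(0.0, Q ** 0.5)  # random.gauss expects a standard deviation
        y = G * x + rng.gauss(0.0, R ** 0.5)
        xs.append(x)
        ys.append(y)
    return xs, ys


xs, ys = simulate_lgss(n=50, F=0.9, G=1.0, Q=0.1, R=1.0, x0=5.0)
```

Only the states x are propagated through the state equation; the observations are generated independently from the current state, which mirrors the separation of the two equations described above.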

In this paper, motivated by HIV dynamic studies, we propose a class of mixed-effects state space models (MESSM) based on the longitudinal feature of the dynamic systems. In the models, the system matrices contain random effects, and the dimension and structure of the system matrices are specified a priori and may be based on a system of differential/difference equations, which is often used in engineering applications. Two estimation methods for standard mixed-effects models and state space models, the Bayesian approach and the maximum likelihood method, are modified and investigated for estimating unknown parameters in the proposed MESSM. Simulation studies are carried out to assess the finite-sample performance of the two estimation methods, and the results indicate that both methods provide quite reasonable estimates for the parameters. The mixed-effects state space models are applied to a data set from an AIDS clinical trial to illustrate the proposed methodologies.

The primary interest of state space modelling is to estimate the state variables based upon the observations. If the parameters are known, the well-known Kalman filter (Kalman, 1960) yields, under the condition of linearity and normality, the optimal estimates of the states. For unknown parameters the maximum likelihood method provides efficient estimates under certain conditions (Schweppe, 1965; Pagan, 1980; Jensen and Petersen, 1999).

The state space model provides rich covariance structures for the observations yt. In particular, it covers the covariance structures of ARMA (autoregressive moving average) models, since any ARMA model can be written in state space form. Another attractive feature of the state space model is its flexibility in modeling the underlying mechanism (state equation) and the observations (observation equation) separately.
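To make the ARMA remark concrete, here is a small sketch for an AR(2) process yt = φ1 yt−1 + φ2 yt−2 + εt, a hypothetical example chosen only for illustration. With state xt = (yt, yt−1)′, F = [[φ1, φ2], [1, 0]], G = [1, 0], vt = (εt, 0)′ and no observation noise, the state space recursion reproduces the AR(2) series exactly.

```python
def ar2_direct(phi1, phi2, eps, y_init=(0.0, 0.0)):
    """AR(2): y_t = phi1*y_{t-1} + phi2*y_{t-2} + eps_t; y_init = (y_{-1}, y_0)."""
    y_prev2, y_prev1 = y_init
    out = []
    for e in eps:
        y = phi1 * y_prev1 + phi2 * y_prev2 + e
        out.append(y)
        y_prev2, y_prev1 = y_prev1, y
    return out


def ar2_state_space(phi1, phi2, eps, y_init=(0.0, 0.0)):
    """The same AR(2) in state space form:
    x_t = F x_{t-1} + v_t with x_t = (y_t, y_{t-1})', F = [[phi1, phi2], [1, 0]],
    v_t = (eps_t, 0)'; y_t = G x_t with G = [1, 0] (no observation noise)."""
    F = [[phi1, phi2], [1.0, 0.0]]
    G = [1.0, 0.0]
    x = [y_init[1], y_init[0]]  # x_0 = (y_0, y_{-1})'
    out = []
    for e in eps:
        v = [e, 0.0]
        x = [F[r][0] * x[0] + F[r][1] * x[1] + v[r] for r in range(2)]
        out.append(G[0] * x[0] + G[1] * x[1])
    return out


eps = [0.5, -1.0, 0.25, 2.0, 0.0, -0.75]
print(ar2_direct(0.5, -0.3, eps, (1.0, 2.0)) == ar2_state_space(0.5, -0.3, eps, (1.0, 2.0)))  # prints True
```

Note that the state disturbance covariance here is singular (its second component is always zero); this is typical of state space representations of ARMA models.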

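A longitudinal study usually involves a large number of individuals, with observations for each individual taken over a period of time. Variation among the observations consists of between-subject variation and within-subject variation. In the very popular linear random-effects model (Laird and Ware, 1982)

$$y_i = X_i \beta + Z_i b_i + e_i, \qquad b_i \sim N(0, D), \quad e_i \sim N(0, \Omega_i),$$

where Xi and Zi are known design matrices and β is the vector of fixed effects,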

the random effects bi and the errors ei = [ei1, …, eini]′ account for the between-subject and within-subject variation, respectively. The covariance matrix Ωi may be diagonal or have a more complicated structure (Jones, 1993; Diggle, Liang and Zeger, 1994). In particular, Jones (1993) considered an ARMA covariance structure for Ωi; in that work, ARMA models were transformed into state space form, and the Kalman filter was used to compute the likelihood.

Stochastic models have been used to model longitudinal data. For example, Rahiala (1999) proposed a random-effects autoregressive (AR) model for a growth curve study in which the AR coefficients were treated as random effects. The state space approach was briefly discussed in Rahiala’s paper for the computation of the likelihood with missing data.

Instead of using the state space approach as a computational tool, in this paper we use state space modeling to directly model longitudinal dynamic systems. We introduce random effects into the system matrices Ft and Gt in (1)–(2) to account for between-individual variation in longitudinal studies. This is a stochastic modeling approach, but it is more flexible than the random-effects AR model and can easily handle irregularly spaced and missing data. Such a modeling approach inherits the modeling power of state space models for dynamic systems as well as the idea of random effects for longitudinal studies. It is therefore especially useful for mechanism-based longitudinal dynamic systems (Huang, Liu and Wu, 2006).

This paper is organized as follows. In Section 2, we introduce the MESSM, state some basic assumptions on the system matrices, and give an illustrative example based on an HIV dynamic model. Section 3 presents estimation methods for both the individual and the population state variables. In Section 4, the Bayesian approach and the maximum likelihood (ML) method are proposed for estimating the unknown parameters in the proposed models. In Section 5 we present results from simulation studies and apply the MESSM to data from an AIDS clinical trial. In Section 6 we summarize the results and discuss possible extensions of the MESSM and other related issues.

2. Model Specification

A linear MESSM can be written as

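$$x_{it} = F(\theta_i)\, x_{i,t-1} + v_{it}, \qquad v_{it} \sim N(0, Q), \qquad (3)$$

$$y_{it} = G(\theta_i)\, x_{it} + w_{it}, \qquad w_{it} \sim N(0, R), \qquad (4)$$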

where yit is the q × 1 vector of observations for the ith subject (i = 1, …, m) at time t (t = 1, …, ni), xit is the p × 1 state vector, vit is the p × 1 dynamic disturbance vector, and wit is the q × 1 vector of observation errors. The vectors {vit} are assumed to be mutually independent, as are the vectors {wit}, and the two sets are independent of each other. We assume that the initial values xi0 are independent with xi0 ~ N(τ, A). This assumption is critical for deriving the population state in Section 3.2, but not important for formulating the parameter estimation methods in Section 4. The p × p transition matrix F(θi) and the q × p observation-system matrix G(θi) are parameterized by the r × 1 parameter vector θi, and the p × p matrix Q and the q × q matrix R by the d × 1 parameter vector ω. Note that F and G need not depend on the same parameters: they may share some common parameters or have entirely different ones. For convenience of presentation, however, we use θi to denote all the unknown parameters in both F and G, and ω is handled similarly. As a vector of individual parameters, θi can be modelled as

$$\theta_i = \theta + b_i, \qquad b_i \sim N(0, D), \qquad (5)$$

where θ is the r × 1 fixed-effect (population) parameter vector and the random effects bi are assumed to be i.i.d. random vectors with mean zero and covariance matrix D. Model (5) can be generalized without much difficulty to more complicated linear or nonlinear models with covariates (Davidian and Giltinan, 1995; Vonesh and Chinchilli, 1996).
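A minimal simulation sketch of (3)–(5), assuming the simplest scalar case p = q = 1 with F(θi) = θi and G(θi) = 1. This parameterization, the function name and the numeric values below are hypothetical choices for illustration, not a model used in this paper.

```python
import random


def simulate_messm(m, n, theta, D, Q, R, tau, A, seed=0):
    """Simulate a scalar MESSM with the hypothetical choice F(theta_i) = theta_i, G = 1:
        theta_i = theta + b_i,             b_i  ~ N(0, D)   (cf. (5))
        x_it = theta_i * x_{i,t-1} + v_it, v_it ~ N(0, Q)   (cf. (3))
        y_it = x_it + w_it,                w_it ~ N(0, R)   (cf. (4))
    with x_i0 ~ N(tau, A). Returns one dict per subject."""
    rng = random.Random(seed)
    subjects = []
    for _ in range(m):
        theta_i = theta + rng.gauss(0.0, D ** 0.5)  # subject-specific parameter
        x = rng.gauss(tau, A ** 0.5)                # initial state x_i0
        xs, ys = [], []
        for _ in range(n):
            x = theta_i * x + rng.gauss(0.0, Q ** 0.5)
            xs.append(x)
            ys.append(x + rng.gauss(0.0, R ** 0.5))
        subjects.append({"theta_i": theta_i, "x": xs, "y": ys})
    return subjects


data = simulate_messm(m=20, n=20, theta=0.9, D=0.001, Q=0.1, R=1.0, tau=20.0, A=1.0)
```

The only difference from a standard state space simulation is the outer loop that draws a new θi for each subject; setting D = 0 recovers m independent copies of the same state space model.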

We give an illustrative example of the proposed MESSM based on an HIV dynamic model proposed by Perelson et al. (1997). This model consists of three differential equations describing the interaction between target cells and HIV. It was assumed that the antiviral therapy completely blocked the production of infectious HIV virions, so that all newly produced virions were noninfectious. The model was written as

| (6) |

where VI, VNI, T and T* represent the plasma concentrations of infectious virus, noninfectious virus, uninfected T-cells and productively infected T-cells, respectively. Wu, Ding, and DeGruttola (1998) and Wu and Ding (1999) introduced a mixed-effects modeling approach for HIV dynamics that was shown to improve the estimation of both individual and population parameters. Because HIV dynamic data are collected at discrete times (days) in practice, the differential equations (6) may be replaced by difference equations. If the total plasma HIV concentration (V = VNI + VI) and T* were observed, a mixed-effects state space model for system (6) could be formulated as

| (7) |

| (8) |

where yit = (T*, V)′ is the observation vector for the ith patient at time t,

The mixed effects are assumed to follow

where are population parameters.

The proposed MESSM is a hybrid of mixed-effects models and state space models and inherits the strengths of both. The mixed effects link all subjects through the population structure, which establishes the concept of population dynamics. The estimation of unknown parameters for individual subjects, especially those with insufficient observations, can be improved by borrowing information from other subjects. State space modelling is especially valuable when the system dynamics can be described by mathematical models. Irregularly spaced data, common in longitudinal studies, can be handled easily by state space models. Moreover, state space models can flexibly incorporate various correlation structures for serially correlated longitudinal data.

3. Estimation of State Variables

In this section we consider the estimation of both individual state variables and population state variables in a mixed-effects state space model for given parameters θ and ω.

3.1 The Kalman filter and the estimation of individual states

The estimation of an individual state xit can be classified into three types according to the information available. The estimation of xit is called a one-step-ahead prediction if {yi1, …, yi,t−1} are observed, a filtered estimation if {yi1, …, yit} are observed, and a smoothed estimation if {yi1, …, yini} with ni > t are observed. If the parameters θi and ω in the linear MESSM (3)–(4) are known, the Kalman filter (Kalman, 1960) provides the basic solutions to all three estimation problems. The Kalman filter is a recursive algorithm that produces linear estimators of the state variables. It is well known that, under the normality assumption, the Kalman filter estimators are unbiased and minimize the mean squared error. We review the Kalman recursions below; details can be found in Harvey (1989), Brockwell and Davis (1991), and West and Harrison (1997).

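Let xit|k denote the estimate of the individual state xit given the observations {yi1, …, yik}, and let Pit|k be the covariance matrix of the estimation error xit|k − xit. Write Fi and Gi for F(θi) and G(θi), respectively. Starting from xi0|0 = τ and Pi0|0 = A, the Kalman recursion proceeds as follows:

$$x_{it|t-1} = F_i\, x_{i,t-1|t-1}, \qquad P_{it|t-1} = F_i P_{i,t-1|t-1} F_i' + Q,$$

$$f_{it} = y_{it} - G_i\, x_{it|t-1}, \qquad O_{it} = G_i P_{it|t-1} G_i' + R,$$

$$K_{it} = P_{it|t-1} G_i' O_{it}^{-1},$$

$$x_{it|t} = x_{it|t-1} + K_{it} f_{it}, \qquad P_{it|t} = (I - K_{it} G_i) P_{it|t-1},$$

where Kit is the Kalman gain matrix, fit is the innovation with covariance matrix Oit, and xit|t−1 and xit|t are the one-step-ahead prediction and the filtered estimate of xit, respectively.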
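The prediction/update cycle of the Kalman filter can be sketched in a few lines of Python for the scalar case (p = q = 1), assuming time-invariant F and G and an initial mean and variance supplied by the caller (for example τ and A). A missing observation is represented by `None` and simply skips the update step, which is one common way of handling irregularly spaced data. The function name and output layout are hypothetical.

```python
def kalman_filter(y, F, G, Q, R, x0, P0):
    """Scalar Kalman filter for x_t = F x_{t-1} + v_t, y_t = G x_t + w_t.

    y: list of observations, with None marking a missing value.
    x0, P0: mean and variance of the initial state (e.g. tau and A).
    Returns a dict of lists indexed by t = 1..n.
    """
    keys = ("x_pred", "P_pred", "x_filt", "P_filt", "f", "O")
    out = {k: [] for k in keys}
    x, P = x0, P0
    for yt in y:
        x_pred = F * x                  # one-step-ahead prediction x_{t|t-1}
        P_pred = F * P * F + Q          # its error variance P_{t|t-1}
        if yt is None:                  # missing observation: no update
            f = O = None
            x, P = x_pred, P_pred
        else:
            f = yt - G * x_pred         # innovation f_t
            O = G * P_pred * G + R      # innovation variance O_t
            K = P_pred * G / O          # Kalman gain
            x = x_pred + K * f          # filtered estimate x_{t|t}
            P = (1.0 - K * G) * P_pred  # filtered variance P_{t|t}
        for k, val in zip(keys, (x_pred, P_pred, x, P, f, O)):
            out[k].append(val)
    return out


res = kalman_filter([2.0, 4.0], F=1.0, G=1.0, Q=0.0, R=1.0, x0=0.0, P0=1.0)
# res["x_filt"] is approximately [1.0, 2.0]; res["P_filt"] approximately [0.5, 1/3]
```

With F = 1 and Q = 0 the state is a constant, so the filter reduces to sequential Bayesian updating of a normal mean, which makes the output easy to check by hand.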

Let , and . The fixed-point smoothing algorithm proceeds as follows:

| (9) |

| (10) |

with the initial condition, Pit,t = Pit|t−1.
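Because equations (9)–(10) are not reproduced in this text, the sketch below uses the standard textbook form of the fixed-point smoother (Anderson and Moore, 1979), which agrees with the initial condition above but may differ in notation. For k ≥ t, with cross-covariance Pt,k initialized to Pt|t−1, it updates xt|k = xt|k−1 + Pt,k G′Ok⁻¹fk, Pt|k = Pt|k−1 − Pt,k G′Ok⁻¹G Pt,k′ and Pt,k+1 = Pt,k(I − KkG)′F′. This is a scalar sketch that assumes no missing observations and t ≥ 1; the function name is hypothetical.

```python
def fixed_point_smoother(y, t, F, G, Q, R, x0, P0):
    """Scalar fixed-point smoother for the state at time t (1-based, t >= 1).

    Runs the Kalman filter over y_1..y_n and, for k = t, ..., n, returns
    x_{t|k} and P_{t|k}. Assumes no missing observations.
    """
    x, P = x0, P0
    xs_t, Ps_t = [], []
    for k, yk in enumerate(y, start=1):
        x_pred, P_pred = F * x, F * P * F + Q      # x_{k|k-1}, P_{k|k-1}
        if k == t:
            # start of the fixed-point recursion: x_{t|t-1}, P_{t|t-1}, P_{t,t} = P_{t|t-1}
            xt, Pt, Pa = x_pred, P_pred, P_pred
        O = G * P_pred * G + R                     # innovation variance O_k
        f = yk - G * x_pred                        # innovation f_k
        K = P_pred * G / O                         # filter gain K_k
        if k >= t:
            xt = xt + Pa * G / O * f               # x_{t|k}
            Pt = Pt - Pa * G / O * G * Pa          # P_{t|k}
            xs_t.append(xt)
            Ps_t.append(Pt)
            Pa = Pa * (1.0 - K * G) * F            # cross-covariance P_{t,k+1}
        x, P = x_pred + K * f, (1.0 - K * G) * P_pred   # filtered x_{k|k}, P_{k|k}
    return xs_t, Ps_t


xs_t, Ps_t = fixed_point_smoother([2.0, 4.0], t=1, F=1.0, G=1.0, Q=0.0, R=1.0, x0=0.0, P0=1.0)
```

The first returned pair is the filtered estimate at time t; each later observation can only reduce the error variance Pt|k, which is why smoothed estimates are used for the population state in Section 3.2.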

Under the normality assumption, the Kalman estimator xit|k is the mean of xit conditional on the data {yi1, …, yik}. If the normality assumption does not hold, the Kalman filter still yields the best linear estimators, but these need not be the conditional means of the states. The Bayesian approach provides an alternative method for estimating the state vectors (Section 4.1).

3.2 Population state variable and the EM algorithm enhanced smoothing

From (3), we can write xit in terms of xi0 and {vis, s ≤ t}:

$$x_{it} = F_i^{\,t}\, x_{i0} + \sum_{s=1}^{t} F_i^{\,t-s}\, v_{is}. \qquad (11)$$

Here Fi = F(θi) is a function of θi. Therefore, under the assumptions that the initial values xi0 are i.i.d., the θi are i.i.d., and the disturbances vit are independent across subjects, Equation (11) implies that the xit are i.i.d. across subjects at any given time point t. Thus the individual state variables xit (i = 1, …, m) follow a common mean process xt plus a mean-zero process, and can be modelled as

$$x_{it} = x_t + z_{it}, \qquad E(z_{it}) = 0, \quad \mathrm{Cov}(z_{it}) = B_t. \qquad (12)$$

We call xt the population state variable at time t, which represents the mean response of all individuals at time t. Vector zit represents the deviation of the individual state xit from the population variable xt with Bt being the covariance matrix.
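A quick numerical check of the unrolled form of (3), xit = Fi^t xi0 + Σs=1..t Fi^(t−s) vis, and of the population-state idea: averaging simulated xit over many subjects approximates xt = E(xit). The sketch assumes the scalar case with the hypothetical choice F(θi) = θi; in the usage line there is no random effect (D = 0), so that E(xit) = θ^t τ exactly.

```python
import random


def unrolled_state(F, x0, v):
    """x_t = F^t x_0 + sum_{s=1}^t F^(t-s) v_s for scalar F, with v = [v_1, ..., v_t]."""
    t = len(v)
    return F ** t * x0 + sum(F ** (t - s) * v[s - 1] for s in range(1, t + 1))


def recursive_state(F, x0, v):
    """The same state computed by iterating x_t = F x_{t-1} + v_t."""
    x = x0
    for vs in v:
        x = F * x + vs
    return x


def population_state_mc(theta, D, Q, tau, A, t, m, seed=0):
    """Monte Carlo estimate of x_t = E(x_it): the sample mean of x_it over m
    simulated subjects, with hypothetical F(theta_i) = theta_i, theta_i ~ N(theta, D)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(m):
        theta_i = theta + rng.gauss(0.0, D ** 0.5)
        x0 = rng.gauss(tau, A ** 0.5)
        v = [rng.gauss(0.0, Q ** 0.5) for _ in range(t)]
        total += unrolled_state(theta_i, x0, v)
    return total / m


est = population_state_mc(theta=0.9, D=0.0, Q=0.1, tau=1.0, A=1.0, t=10, m=20000)
```

With D > 0 the population state is E(θi^t)τ rather than θ^t τ, which illustrates why xt is defined as a mean over subjects rather than as the state evaluated at the population parameter θ.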

If the xit were observable, the maximum pseudo-likelihood estimate of xt would simply be the sample mean $\bar x_t = m^{-1}\sum_{i=1}^{m} x_{it}$. In practice, however, the xit are usually not directly observable, so we instead use the Kalman smoothing estimate xit|ni (t < ni), denoted by x̃it, in place of xit in (12). Let ςit = x̃it − xit be the estimation error of x̃it; then ςit has mean 0 and covariance matrix Σit = Pit|ni as defined in Section 3.1. One can then establish a variance-components model for x̃it:

$$\tilde x_{it} = x_t + z_{it} + \varsigma_{it}, \qquad i = 1, \ldots, m. \qquad (13)$$

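Treating the x̃it as “data”, we estimate the population state xt and the covariance matrix Bt by maximizing the pseudo-likelihood function, that is, by maximizing

$$\prod_{i=1}^{m} |B_t + \Sigma_{it}|^{-1/2} \exp\Big\{-\tfrac{1}{2}(\tilde x_{it} - x_t)'(B_t + \Sigma_{it})^{-1}(\tilde x_{it} - x_t)\Big\}.$$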

Note that if we assume normality in model (13), the resulting likelihood function is equivalent to the pseudo-likelihood function. Thus the EM algorithm developed by Steimer, Mallet, Golmard and Boisvieux (1984) for maximizing the likelihood under normality can be adapted as follows to maximize the pseudo-likelihood and estimate xt and Bt:

- Step 1 (Initialization): choose initial values for xt and Bt.
- Step 2 (E-step): update the individual estimates of the state variables.
- Step 3 (M-step): update the population estimate of the state variable and the covariance matrix Bt.

Repeat Steps 2 and 3 until convergence is reached. Estimates of both the individual states and the population states are collected at the final step.
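A scalar sketch of this type of EM, assuming that under normality xit ~ N(xt, Bt) and x̃it | xit ~ N(xit, Σit) with Σit treated as known; this is the usual normal-normal EM and is not meant to reproduce the exact update formulas of the original algorithm. The E-step computes the conditional mean and variance of each xit, and the M-step averages them. Function names are hypothetical.

```python
import math


def pseudo_loglik(xt, Bt, x_tilde, Sigma):
    """Gaussian log-likelihood of x~_it ~ N(x_t, B_t + Sigma_it), i = 1..m (scalar case)."""
    return -0.5 * sum(math.log(2.0 * math.pi * (Bt + s)) + (xi - xt) ** 2 / (Bt + s)
                      for xi, s in zip(x_tilde, Sigma))


def em_population_state(x_tilde, Sigma, n_iter=500, tol=1e-12):
    """EM estimates of the population state x_t and variance B_t (scalar case).

    x_tilde: smoothed individual estimates x~_it; Sigma: their error variances
    Sigma_it, treated as known. Returns (x_t, B_t, posterior means of the x_it).
    """
    m = len(x_tilde)
    xt = sum(x_tilde) / m                                      # start at the sample mean
    Bt = max(sum((xi - xt) ** 2 for xi in x_tilde) / m, 1e-8)  # and the sample variance
    post_mean = list(x_tilde)
    for _ in range(n_iter):
        # E-step: x_it | x~_it ~ N(post_mean_i, post_var_i) under the current (x_t, B_t)
        post_var = [1.0 / (1.0 / Bt + 1.0 / s) for s in Sigma]
        post_mean = [v * (xi / s + xt / Bt) for v, xi, s in zip(post_var, x_tilde, Sigma)]
        # M-step: maximize the expected complete-data log-likelihood
        new_xt = sum(post_mean) / m
        new_Bt = sum((mu - new_xt) ** 2 + v for mu, v in zip(post_mean, post_var)) / m
        done = abs(new_xt - xt) < tol and abs(new_Bt - Bt) < tol
        xt, Bt = new_xt, new_Bt
        if done:
            break
    return xt, Bt, post_mean


xt, Bt, _ = em_population_state([1.0, 2.0, 3.0, 4.0, 5.0], [0.5] * 5)
```

When all Σit are equal, the maximizer has a closed form (the sample mean, and the sample variance minus Σ, truncated at zero), which gives a convenient check of the iteration; in this example the EM converges to xt = 3 and Bt = 1.5.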

We have proposed estimating the population state variable from the smoothed estimates of the individual state variables. Similarly, the filtered or predicted individual state variables can be used to estimate the population state variable if the time point of interest t lies beyond the last observation time. Note that if the assumption that the xi0 are i.i.d. does not hold, the population state variable xt is no longer the mean of xit. In this case, the estimate x̂t from the EM algorithm may be regarded as an average of the estimated individual state variables, adjusted for their estimation errors.

4. Parameter Estimation

In this section we discuss parameter estimation in MESSM from both the Bayesian and the likelihood perspectives. The Bayesian approach and the ML method for mixed-effects models and the state space models have been well established (Harvey, 1989; Davidian and Giltinan, 1995; Vonesh and Chinchilli, 1996; West and Harrison, 1997). The proposed estimation methods for MESSM are combinations of these estimation methods.

4.1 Bayesian Approach

The Bayesian approach for state space models has been studied by many authors in recent years. Linear state space models are treated in West and Harrison (1997), and nonlinear non-Gaussian state space models have been studied by, for example, Kitagawa (1987, 1996), Carlin, Polson, and Stoffer (1992), De Jong and Shephard (1995), and Chen and Liu (2000). Most of these studies focus on the estimation of state variables, while the estimation of unknown parameters has received less attention. For the MESSM we adopt the method proposed by Carlin, Polson and Stoffer (1992), in which the Gibbs sampler is used to draw samples from the full conditional distributions of the state variables xt and the parameters given the observations yt. This approach is straightforward and easy to implement, and it produced good results in our simulation studies. However, our method differs from that of Carlin et al. (1992) in that (1) the MESSM involves multiple independent subjects; (2) we include an additional hierarchical level for the individual parameters; and (3) we emphasize both parameter estimation and state variable estimation. In the Bayesian approach, prior distributions are assigned to the parameters, and inference is based on the posterior distributions. Note that if only one subject i is considered and the prior distribution N(θ, D) is assigned to θi, then conceptually θ and D are simply hyperparameters of a standard state space model. When all the subjects are considered, however, these hyperparameters represent the population parameters and thus have their own interpretation at the population level.

When the conjugate prior distributions are carefully chosen, the full conditional distributions have closed forms and are easy to sample from. The Gibbs sampler can then be used to explore the posterior distributions of the unknown parameters.

Let W(ϒ, ν) denote a Wishart distribution with the scale matrix ϒ and the degrees of freedom parameter ν. We use the following prior distributions:

| (14) |

As one of the referees pointed out, for dynamic systems, vague improper priors may lead to improper posteriors. To avoid improper posterior densities, Bates and Watts (1988) suggested placing a locally uniform prior on the expectation surface, rather than on the parameter space, to represent a noninformative prior. Under our model setting, vague priors on the parameter space may translate into informative priors on the state space, and improper ones may lead to improper posteriors.

The full conditional distributions can be derived as follows:

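- The conditional distributions of the state vectors are

$$p(x_{it} \mid y, x_{i,k \ne t}, \cdots) = N\big(H_{it}^{-1}\lambda_{it},\; H_{it}^{-1}\big), \qquad (15)$$

where, for 0 < t < ni,

$$H_{it} = F_i' Q^{-1} F_i + Q^{-1} + G_i' R^{-1} G_i, \qquad (16)$$

$$\lambda_{it} = F_i' Q^{-1} x_{i,t+1} + Q^{-1} F_i\, x_{i,t-1} + G_i' R^{-1} y_{it}; \qquad (17)$$

for t = 0, Hi0 = Fi′Q−1Fi + A−1 and λi0 = Fi′Q−1xi1 + A−1τ; and for t = ni, Hini = Q−1 + Gi′R−1Gi and λini = Q−1Fixi,ni−1 + Gi′R−1yini.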

- The conditional distributions of θi, θ, and D are

| (18) |

| (19) |

where ⊗ stands for the Kronecker product, and U1 and U2 are p² × r and pq × r matrices, respectively (r is the length of θ), defined as follows. Each element of Fi or Gi is assumed to be a linear combination of the elements of θi. Stacking the columns of Fi (or Gi) gives a vector vec(Fi) of length p² (or vec(Gi) of length pq), which can therefore be written as vec(Fi) = U1θi (or vec(Gi) = U2θi).

- The conditional distributions of the covariance matrices Q and R are

(20) (21) where .
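The representation vec(Fi) = U1θi is useful because of the identity Fi x = (x′ ⊗ Ip) vec(Fi): the state equation becomes xit = (x′i,t−1 ⊗ Ip) U1 θi + vit, which is linear in θi, so that together with the normal prior N(θ, D) the full conditional of θi is normal. The pure-Python sketch below checks the identity for a hypothetical 2 × 2 parameterization F(θ) = [[θ1, 0], [θ2, θ1]] (p = r = 2), chosen only because it is linear in θ; the helper names are illustrative.

```python
def matmul(A, B):
    """Product of matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def kron(A, B):
    """Kronecker product A (x) B for matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def vec(M):
    """Stack the columns of M into a column vector (list of one-element rows)."""
    return [[M[r][c]] for c in range(len(M[0])) for r in range(len(M))]


def F_of(theta):
    """Hypothetical linear parameterization F(theta) = [[t1, 0], [t2, t1]] (p = r = 2)."""
    t1, t2 = theta
    return [[t1, 0.0], [t2, t1]]


# vec(F) = (t1, t2, 0, t1)' = U1 theta, so U1 is p^2 x r = 4 x 2
U1 = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 0.0]]


def design_matrix(x_prev, U):
    """Z = (x_prev' (x) I_p) U, so that F(theta) x_prev = Z theta."""
    p = len(x_prev)
    I_p = [[1.0 if i == j else 0.0 for j in range(p)] for i in range(p)]
    return matmul(kron([list(x_prev)], I_p), U)


theta, x_prev = (0.3, 0.6), [2.0, -1.0]
Z = design_matrix(x_prev, U1)
lhs = matmul(Z, [[v] for v in theta])            # Z theta
rhs = matmul(F_of(theta), [[v] for v in x_prev])  # F(theta) x_prev, approximately (0.6, 0.9)'
```

The same construction with (x′it ⊗ Iq) and U2 expresses the observation equation linearly in θi, which is why both U1 and U2 appear in the full conditional of θi.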

Note that irregularly spaced data can be treated as data with missing observations, and the full conditional distributions need only slight adjustments at the time points with missing data. For example, if yit is missing, the conditional distribution p(xit|y, xi,k≠t, · · ·) in (15) depends only on xi,t−1, xi,t+1 and the parameters, and not on yit, so one simply drops the observation terms Gi′R−1Gi in (16) and Gi′R−1yit in (17). Even with a large amount of missing data, the Bayesian approach ran reasonably fast. A large amount of missing data can, however, slow down the EM algorithm. It is well known that the EM algorithm converges linearly, at a rate determined by the fraction of missing information, i.e., the ratio of the missing-data information to the complete-data information. For sparse data, a large amount of information, including the random effects, the state vectors and many observations, is missing, and the convergence of the EM algorithm can therefore be quite slow.
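A scalar sketch of the single-site state update in (15)–(17), including the missing-data adjustment just described. Assumptions: p = q = 1, time-invariant Fi and Gi, x = [xi0, …, xini] with ni ≥ 1, and y = [None, yi1, …, yini] with `None` marking a missing observation. The helper names are hypothetical; a complete sampler would also update θi, θ, D, Q and R from their own full conditionals.

```python
import math
import random


def state_conditional(t, x, y, F, G, Q, R, tau, A):
    """Mean H^{-1} lambda and variance H^{-1} of p(x_t | x_{-t}, y, params), scalar case.
    x = [x_0, ..., x_n]; y = [None, y_1, ..., y_n] with None for missing values."""
    n = len(x) - 1
    if t == 0:
        H = F * F / Q + 1.0 / A
        lam = F * x[1] / Q + tau / A
    else:
        H = 1.0 / Q                     # term from x_t | x_{t-1}
        lam = F * x[t - 1] / Q
        if t < n:                       # term from x_{t+1} | x_t
            H += F * F / Q
            lam += F * x[t + 1] / Q
        if y[t] is not None:            # observation terms are dropped when y_t is missing
            H += G * G / R
            lam += G * y[t] / R
    return lam / H, 1.0 / H


def gibbs_sweep_states(x, y, F, G, Q, R, tau, A, rng):
    """One single-site Gibbs sweep over x_0, ..., x_n (updates x in place)."""
    for t in range(len(x)):
        mean, var = state_conditional(t, x, y, F, G, Q, R, tau, A)
        x[t] = rng.gauss(mean, math.sqrt(var))
    return x


x = [0.0, 2.0, 4.0]
y = [None, 3.0, 5.0]
print(state_conditional(1, x, y, F=0.5, G=1.0, Q=1.0, R=1.0, tau=0.0, A=1.0))
gibbs_sweep_states(x, y, F=0.5, G=1.0, Q=1.0, R=1.0, tau=0.0, A=1.0, rng=random.Random(1))
```

Single-site updates are simple but can mix slowly when successive states are strongly correlated; block samplers that draw all states of a subject jointly are a common alternative.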

The construction of the Gibbs sampler is based on the full conditional distributions. Starting from an arbitrary set of initial values, samples are drawn from the conditional distributions sequentially. Let Φ be the collection of all the components involved in the full conditional distributions, i.e., Φ = (x10, · · ·, x1n1, · · ·, xm0, · · ·, xmnm, θ1, · · ·, θm, θ, D, Q, R), and let Φ − {u} be the set of all components except {u}, where {u} can be any component or set of components in Φ. Starting from the initial value Φ(0), the Gibbs sampler draws x10(1) from p(x10| {Φ − {x10}}(0), y), then draws x11(1) from the full conditional of x11 given the most recent values of all other components, and so on, up to R(1) from p(R| {Φ − {R}}(1), y). Suppose that the Jth iteration produces a sample Φ(J). When J is large, Φ(J) is approximately a sample from the posterior distribution p(Φ|y) (Geman and Geman, 1984). The posterior distribution of the kth component of Φ can be approximated by (Carlin, Polson and Stoffer, 1992)

| (22) |

The posterior mean can be taken as the point estimate of a parameter if the Bayesian loss function is chosen to be the squared-error loss.
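When a full conditional is normal, as for θ1 in the simulation study, a posterior density estimate can be formed by averaging the full conditional densities over retained Gibbs iterations (a Rao–Blackwellized mixture estimator). The sketch below assumes that (22) takes this usual mixture form, with each conditional supplied as a (mean, variance) pair; the point estimate under squared-error loss is the average of the retained draws after an optional burn-in. Function names are hypothetical.

```python
import math


def normal_pdf(z, mean, var):
    """Density of N(mean, var) at z."""
    return math.exp(-0.5 * (z - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def rao_blackwell_density(grid, cond_params):
    """Estimate p(phi | y) on a grid by averaging normal full conditionals.

    cond_params: list of (mean_j, var_j), the parameters of
    p(phi | Phi^(j) - {phi}, y) recorded at each retained Gibbs iteration j.
    """
    J = len(cond_params)
    return [sum(normal_pdf(z, mu, v) for mu, v in cond_params) / J for z in grid]


def posterior_mean(draws, burn_in=0):
    """Point estimate under squared-error loss: mean of the draws after burn-in."""
    kept = draws[burn_in:]
    return sum(kept) / len(kept)


grid = [-10.0 + 0.01 * i for i in range(2001)]
dens = rao_blackwell_density(grid, [(0.0, 1.0), (1.0, 0.5)])
```

Averaging conditional densities is typically smoother than a histogram of the same draws, which is the contrast shown between panels (a) and (b) of Figure 1.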

Assuming that the observations are available up to time ni, the Gibbs sampler constructed above can produce samples from the posterior distribution p(xit|y) for t ≤ ni. For t < ni this is a solution to the smoothing problem defined in Section 3.1. For t = ni the posterior distribution p(xini|y) provides a solution for the filtering problem at time ni. To solve the prediction problem, i.e. for t > ni, one can treat {yi,ni+1, yi,ni+2, · · ·, yit} as missing and derive the conditional distributions for the state vectors {xi,ni+1, xi,ni+2, · · ·, xit}, then include these state vectors in the Gibbs sampler. Compared with the Kalman filter, the Gibbs sampler approach is more flexible and can be extended to nonlinear and non-Gaussian cases (Carlin, Polson and Stoffer, 1992), which also provides a unified framework for the state estimation and parameter estimation. However, the Gibbs sampler approach is computationally expensive, and does not provide online solutions to the estimation problems.

4.2 Estimation by the EM algorithm

For standard Gaussian linear state space models, ML estimation of the parameters has been well studied (Harvey, 1989). The idea is to calculate the likelihood function via the prediction error decomposition (Schweppe, 1965), in which the Kalman filter is applied to obtain the innovations and their covariances. Because the likelihood function of the standard state space model is complex, numerical methods such as the EM algorithm (Watson and Engle, 1983) and the Newton–Raphson algorithm (Engle and Watson, 1981) are often applied. We extend the EM algorithm proposed by Watson and Engle (1983) to find the MLE of the unknown parameters in the MESSM (3)–(5). The variance estimate can be obtained by inverting the observed Fisher information matrix, and it can be used to construct interval estimates for the unknown parameters under a normality assumption. To save space, details of the implementation of the EM algorithm are given in the Web Supplementary Appendix.
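For a single subject with θi and ω held fixed, the prediction error decomposition can be sketched as follows (scalar case, hypothetical function names). This gives the likelihood conditional on the random effects; the marginal likelihood of the MESSM integrates over the bi and has no closed form, which is why a Monte Carlo EM is used here. The grid search is only a toy illustration of maximizing this likelihood, not the estimation procedure of this paper.

```python
import math


def kalman_loglik(y, F, G, Q, R, x0, P0):
    """Gaussian log-likelihood of y_1..y_n for one subject via the prediction
    error decomposition: log L = -1/2 * sum_t [log(2*pi*O_t) + f_t**2 / O_t],
    where f_t and O_t are the Kalman innovations and their variances.
    Missing observations (None) contribute no term."""
    x, P, ll = x0, P0, 0.0
    for yt in y:
        x, P = F * x, F * P * F + Q              # prediction step
        if yt is None:
            continue
        O = G * P * G + R                        # innovation variance O_t
        f = yt - G * x                           # innovation f_t
        ll -= 0.5 * (math.log(2.0 * math.pi * O) + f * f / O)
        K = P * G / O                            # Kalman gain
        x, P = x + K * f, (1.0 - K * G) * P      # update step
    return ll


def grid_mle_F(y, grid, G, Q, R, x0, P0):
    """Toy ML estimate of a scalar transition parameter F by grid search."""
    return max(grid, key=lambda F: kalman_loglik(y, F, G, Q, R, x0, P0))


y = [10.0 * 0.8 ** t for t in range(1, 21)]
F_hat = grid_mle_F(y, [0.5, 0.6, 0.7, 0.8, 0.9, 1.0], G=1.0, Q=0.01, R=0.01, x0=10.0, P0=0.01)
```

In practice a gradient-based optimizer or the EM algorithm replaces the grid, but the likelihood evaluation itself is exactly this innovation sum.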

The EM algorithm proposed here is closely related to the Bayesian approach, as both contain a Gibbs sampling scheme and the full conditional distributions used by the Gibbs sampler are very similar. The differences are also clear. The EM algorithm is developed for the likelihood approach, so the parameters (θ, Q, R, D) are not treated as random and no prior distributions are needed. Computationally, in the Bayesian approach the parameters (θ, Q, R, D) are constantly updated by sampling from their conditional distributions, while in the EM algorithm they remain fixed during the sampling step and are updated only in the M-step. Therefore, within one iteration of the EM algorithm, the sampling step is computationally less expensive than in the Bayesian approach, but extra computational cost is incurred in the M-step. In our experience, one iteration of the EM algorithm takes more time than the Gibbs sampler in the Bayesian approach with the same number of Gibbs iterations. The EM algorithm also converges slowly, usually requiring more than 50 iterations. Overall, the computational cost of the EM algorithm is much higher. However, an alternative, the stochastic approximation EM (SAEM) algorithm (Delyon, Lavielle and Moulines, 1999), is very efficient and generally applicable for obtaining maximum likelihood estimates of the unknown parameters in nonlinear mixed-effects models from longitudinal data, and it is worth further exploration for potential online estimation.

5. Numerical Examples

To illustrate the usefulness of the proposed MESSM and estimation methods, we carried out two simulation studies: one with a simple univariate MESSM and the other with a more complicated bivariate MESSM. To save space, we report only the simulation results for the bivariate model in this section; the results for the univariate MESSM are given in the Web Supplementary Appendix. We also apply the proposed MESSM and estimation methods to an AIDS clinical trial data set in this section.

Simulation Study

Consider a MESSM model (3)–(4) with p = 2, q = 1, r = 2, and . Let . Set , and R = 1. The variances Q and R were selected to make the Filter Input Signal to Noise Ratio (FISNR) (Anderson and Moore, 1979) close to 3:2. We assumed the following priors for the Bayesian approach

| (23) |

Here G(., .) stands for the Gamma distribution. We specified the priors by setting β0 = 0.5, β1 = 0.0001, ν0 = 0.5, ν1 = 0.5, ω0 = 0.5, ω1 = 0.5, τ = 20, and A = 100. Note that the true value of is very small (close to zero). If a prior for is selected to be skewed toward zero such as the one we used in our simulation, it is easier to identify this variance parameter as suggested by one of the referees. Otherwise, the estimate of is poor. We assumed a normal prior distribution N(η, Δ) for θ with η = (0.5, 0.5)′ and . For this bivariate MESSM, contained the known parameters in the system matrix Fi. A simulation study of 50 replicates was carried out for m = 20 and n = 20. The Gibbs sampler was run for 20000 iterations for each replicate. The estimation results are shown in Table 1. In this table represents the relative error. For both the Bayesian approach and the EM algorithm the estimates for θ1 and θ2 were close to the true values. The Bayesian estimates of and R were better than the EM estimates. However the EM algorithm provided better estimation for .

Table 1.

Parameter estimation for the bivariate MESSM model with 50 replicates, m = 20 and n = 20 (B: Bayesian approach; EM: EM algorithm; RE: relative error).

| | θ̂1 B | θ̂1 EM | θ̂2 B | θ̂2 EM | B | EM | B | EM | R̂ B | R̂ EM |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 0.305 | 0.293 | 0.594 | 0.606 | 0.009 | 0.006 | 1.357 | 1.122 | 1.04 | 1.164 |
| Bias | 0.005 | −0.007 | −0.006 | 0.006 | 0.0026 | −0.0004 | −0.083 | −0.318 | 0.04 | 0.164 |
| MSE | 0.0014 | 0.0012 | 0.0018 | 0.0014 | 7 × 10⁻⁶ | 3 × 10⁻⁶ | 0.142 | 0.345 | 0.09 | 0.215 |
| RE | 0.125 | 0.115 | 0.071 | 0.062 | 0.413 | 0.271 | 0.262 | 0.401 | 0.300 | 0.463 |

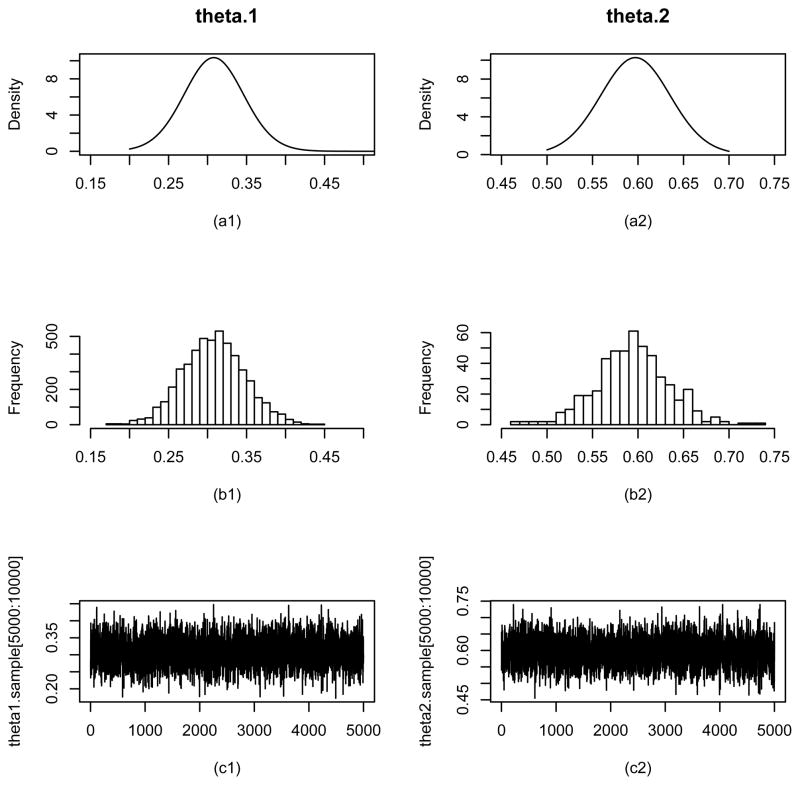

Figure 1 shows the histogram of the Gibbs samples for θ1 and θ2 and the estimated marginal posterior densities for both parameters. Considering θ1, one notices that with Φ being the collection of all the parameters and the state variables. Using the formula (22) the estimated posterior density can be derived as

Figure 1.

Parameter estimation results for θ1 and θ2 from the Bayesian method in the simulation study (bivariate MESSM). (a1) and (a2) are the estimated posterior densities, (b1) and (b2) are histograms of the Gibbs samples, and (c1) and (c2) are trace plots of the last 5000 samples for θ1 and θ2, respectively.

where η1 is the first element of η, and N(θ1|a, b) denotes the normal density with mean a and variance b. The histogram shows that the Gibbs samples for θ1 are tightly centered around 0.3, the true value of θ1, and agree very well with the estimated posterior density. Similar plots for θ2 are also displayed in Figure 1: the Gibbs samples for θ2 are concentrated around 0.6, the true value of θ2. To monitor the convergence of the Gibbs sampler, trace plots for both parameters are also shown in Figure 1. The trace plots show no visible trends or patterns, suggesting convergence to the stationary distribution. Similarly, the Gibbs samples for the other parameters D, Q and R (not shown) also suggest convergence of the Gibbs sampler.

HIV Dynamic Application

We applied the MESSM to a data set from the AIDS Clinical Trials Group (ACTG) Study 315 (Wu and Ding, 1999). In this trial, 53 HIV-infected patients were treated with potent antiviral drugs (ritonavir, 3TC and AZT), and 5 of them dropped out of the study. Treatment started on day 0, and the plasma concentration of HIV-1 RNA was repeatedly measured on days 0, 2, 7, 10, 14, 21 and 28 and at weeks 8, 12, 24 and 48 after the initiation of treatment. Perelson et al. (1997) suggested a two-phase clearance of viral load. The first phase featured a rapid decline in viral load due to the exponential death of productively infected cells during the first four weeks of treatment. The decline of viral load was slower in the second phase, due to the slow clearance of long-lived or latently infected T-cells. Here we consider a time-varying MESSM for the ACTG 315 data, assuming that the rate of clearance changed at the mid-point of week 4 (i.e., day 24). This model is closely related to the simple HIV dynamic model with a constant rate (Ho et al., 1995). The MESSM is written as

where yit is the base 10 logarithm of the measured viral load from patient i at time t, and xit represents the base 10 logarithm of the actual viral load. θit indicates the viral clearance rate for patient i at day . Here for k = 1, 2, and are independent. Note that a log-transformation may be used to guarantee that θit is positive. For more complicated parameter constraints there is no general method, and such constraints may need to be handled case by case. Although this is a simplified HIV dynamic model, it serves to illustrate the proposed methodologies.

Prior distributions similar to those in the simulation study were used for the Bayesian approach (see the Web Supplementary Appendix). Table 2 gives the Bayesian estimates of both the population parameters and the individual parameters. The EM estimates were similar and are omitted.

Table 2.

Parameter estimation and forecasts of viral load for the HIV dynamic model. Confidence intervals for the population estimates are given in parentheses.

Estimation of population parameters

| θ̂1 | θ̂2 | D̂1 | D̂2 | Q̂ | R̂ |
|---|---|---|---|---|---|
| 0.976 (0.974, 0.979) | 0.994 (0.991, 0.997) | 0.0052 (0.004, 0.0075) | 0.0046 (0.003, 0.0065) | 0.119 (0.104, 0.134) | 0.204 (0.174, 0.238) |

Estimation of the individual parameters for 48 patients

| Parameter | Individual estimates | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| θ̂i1 | 0.975753 | 0.972227 | 0.976278 | 0.975794 | 0.97177 | 0.976373 | 0.98232 | 0.976072 |
| θ̂i1 | 0.975554 | 0.979314 | 0.976282 | 0.978256 | 0.973686 | 0.98045 | 0.976808 | 0.976119 |
| θ̂i1 | 0.972679 | 0.976762 | 0.97706 | 0.974961 | 0.975959 | 0.974782 | 0.97605 | 0.977631 |
| θ̂i1 | 0.976737 | 0.977898 | 0.979602 | 0.978431 | 0.974501 | 0.975863 | 0.977751 | 0.976239 |
| θ̂i1 | 0.977426 | 0.977808 | 0.978213 | 0.976879 | 0.973311 | 0.979874 | 0.97188 | 0.978342 |
| θ̂i1 | 0.974063 | 0.972705 | 0.974047 | 0.975449 | 0.975593 | 0.976236 | 0.979845 | 0.9746 |
| θ̂i2 | 0.994389 | - | 0.994317 | 0.993837 | 0.993597 | 0.996628 | 0.993837 | - |
| θ̂i2 | - | 0.994033 | 0.993657 | 0.994675 | 0.992734 | 0.994795 | 0.994546 | 0.994494 |
| θ̂i2 | 0.994527 | 0.994921 | 0.994156 | 0.993475 | 0.9951 | 0.994282 | - | 0.992501 |
| θ̂i2 | 0.994217 | 0.994472 | 0.991974 | - | - | 0.994325 | 0.993253 | 0.99328 |
| θ̂i2 | - | 0.993176 | 0.993242 | 0.993993 | 0.993556 | 0.994226 | 0.993714 | 0.9946 |
| θ̂i2 | 0.994275 | 0.993367 | 0.99472 | 0.994184 | 0.993569 | 0.996103 | 0.993211 | 0.994178 |

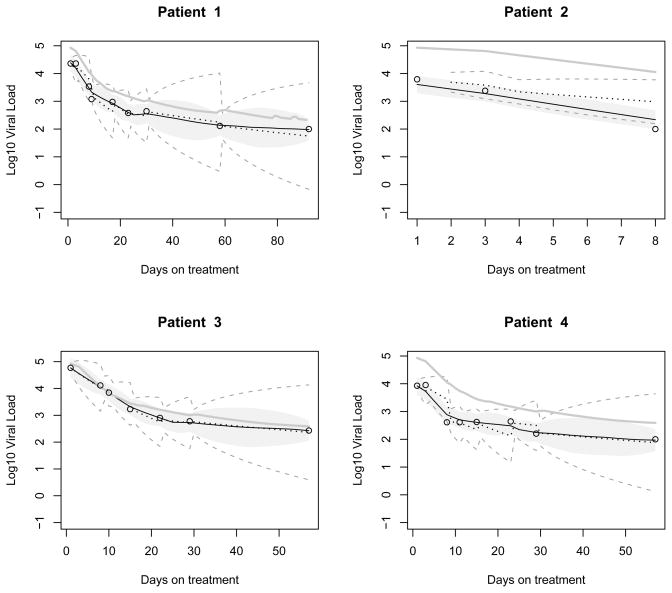

Figure 2 shows the one-step-ahead Kalman forecasts (dotted curves) of the individual states for four patients, computed with the estimated individual parameters. The posterior means of the individual states estimated by the Gibbs sampler are shown as solid curves. As expected, the posterior mean curves are smooth and close to the observed values. Each Kalman forecast curve jumps one day after an observation time, reflecting the update of the state estimate by that observation. Model-checking results are shown in Figure S1 of the Web Supplementary Appendix. The fitted viral loads from both methods match the data well, QQ plots support the normality assumption for the measurement errors, and density estimates of the individual parameters indicate that the normality assumption for the random effects is reasonable for both methods.
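The one-day lag of the forecast jumps follows directly from the filter recursion. The Python sketch below computes one-step-ahead Kalman forecasts for a scalar model on a daily grid with missing days, under the assumed form x_t = θ_t x_{t-1} + w_t (variance Q), y_t = x_t + e_t (variance R); this form and the function name `kalman_forecast` are our illustrative assumptions, not the paper's code. Days without a measurement are coded as NaN, and (m0, P0) is the prior mean and variance of the state on the day before y[0].

```python
import numpy as np

def kalman_forecast(y, theta, Q, R, m0, P0):
    """One-step-ahead Kalman forecasts for an ASSUMED scalar model
    x_t = theta_t x_{t-1} + w_t (var Q), y_t = x_t + e_t (var R).

    y holds one entry per day, np.nan on days without a measurement.
    Returns predicted moments (a, P) = mean/var of x_t given y before day t,
    and filtered moments (m, C) = mean/var of x_t given y up to day t.
    """
    n = len(y)
    a, P, m, C = (np.empty(n) for _ in range(4))
    m_prev, C_prev = m0, P0          # prior for the state before y[0]
    for t in range(n):
        # prediction step: propagate the previous filtered estimate
        a[t] = theta[t] * m_prev
        P[t] = theta[t] ** 2 * C_prev + Q
        if np.isnan(y[t]):
            # no visit today: nothing to update, the forecast carries forward
            m[t], C[t] = a[t], P[t]
        else:
            # update step with Kalman gain K
            K = P[t] / (P[t] + R)
            m[t] = a[t] + K * (y[t] - a[t])
            C[t] = (1.0 - K) * P[t]
        m_prev, C_prev = m[t], C[t]
    return a, P, m, C
```

Because a[t + 1] is computed from m[t], an observation on day t first affects the forecast on day t + 1. Approximate 95% bounds for the forecast state are a ± 1.96·√P; replacing P by P + R gives bounds for a new measurement.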

Figure 2.

Estimation and prediction of viral load for four patients in the HIV dynamic study (four subjects with different numbers of measurements and different response patterns are selected for illustration). Base-10 logarithms of the viral loads are shown as circles. The smoothing estimates from the Gibbs sampler approach are shown as black solid curves, with the 95% error bounds shown as the shaded grey region. The one-step-ahead Kalman forecasts are shown as black dotted curves, with the prediction error bounds as grey dashed curves. The estimate of the population state variable is shown as the grey solid curve.
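The Gibbs-sampler smoothing curves and their 95% bounds are summaries of sampled state paths. One common way to draw a state path given the parameters is forward filtering, backward sampling (Carter and Kohn, 1994); the Python sketch below shows that step for the assumed scalar model x_t = θ_t x_{t-1} + w_t, y_t = x_t + e_t. It is an illustrative stand-in: we do not claim it is the exact state-sampling step used in this paper, and the function name `ffbs` is hypothetical.

```python
import numpy as np

def ffbs(y, theta, Q, R, m0, P0, rng):
    """Forward filtering, backward sampling for an ASSUMED scalar model
    x_t = theta_t x_{t-1} + w_t (var Q), y_t = x_t + e_t (var R).
    Draws one path x from p(x | y, theta, Q, R); np.nan marks missing days.
    (m0, P0) is the prior for the state before y[0]."""
    n = len(y)
    m = np.empty(n); C = np.empty(n)
    m_prev, C_prev = m0, P0
    for t in range(n):                      # forward Kalman filter
        a = theta[t] * m_prev
        P = theta[t] ** 2 * C_prev + Q
        if np.isnan(y[t]):
            m[t], C[t] = a, P
        else:
            K = P / (P + R)
            m[t], C[t] = a + K * (y[t] - a), (1.0 - K) * P
        m_prev, C_prev = m[t], C[t]
    x = np.empty(n)
    x[-1] = rng.normal(m[-1], np.sqrt(C[-1]))  # last state from its filtered law
    for t in range(n - 2, -1, -1):          # backward sampling
        # x_t | x_{t+1}, y_1..y_t is Gaussian with these moments
        P_next = theta[t + 1] ** 2 * C[t] + Q
        J = C[t] * theta[t + 1] / P_next
        mean = m[t] + J * (x[t + 1] - theta[t + 1] * m[t])
        var = C[t] * Q / P_next
        x[t] = rng.normal(mean, np.sqrt(var))
    return x
```

Stacking many draws, e.g. `draws = np.array([ffbs(y, theta, Q, R, m0, P0, rng) for _ in range(2000)])`, gives the pointwise posterior mean `draws.mean(axis=0)` and 95% bounds `np.percentile(draws, [2.5, 97.5], axis=0)`. Here the parameters are held fixed; in a full Gibbs sampler they would be redrawn at each sweep, so the bounds would also reflect parameter uncertainty.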

6. Discussion

In many biomedical longitudinal studies, the underlying biological mechanisms are well studied and mathematical representations, usually a set of differential or difference equations, are available. In this paper, we have proposed a class of mixed-effects state space models for the analysis of longitudinal dynamic systems that may arise from biomedical studies. To give a comprehensive treatment of this subject, we investigated two methods for estimating the unknown parameters in the proposed models, borrowing ideas from standard mixed-effects models. We introduced the concept of a population state and proposed EM-enhanced smoothing. Kalman-filter-based filtering, smoothing and forecasting algorithms for estimating the individual states were also discussed.
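For the smoothing part of the Kalman-filter toolkit, a standard choice is the fixed-interval Rauch-Tung-Striebel (RTS) smoother (see Anderson and Moore, 1979). The Python sketch below implements it for the assumed scalar model x_t = θ_t x_{t-1} + w_t (variance Q), y_t = x_t + e_t (variance R), with NaN marking days without a measurement. The model form and the function name `rts_smoother` are our assumptions for illustration; the EM-enhanced smoothing proposed in the paper is not reproduced here.

```python
import numpy as np

def rts_smoother(y, theta, Q, R, m0, P0):
    """Fixed-interval RTS smoother for an ASSUMED scalar model
    x_t = theta_t x_{t-1} + w_t (var Q), y_t = x_t + e_t (var R).
    Returns smoothed means s_t = E[x_t | all y] and variances S_t."""
    n = len(y)
    a, P, m, C = (np.empty(n) for _ in range(4))
    m_prev, C_prev = m0, P0
    for t in range(n):                      # forward Kalman filter
        a[t] = theta[t] * m_prev            # predicted mean
        P[t] = theta[t] ** 2 * C_prev + Q   # predicted variance
        if np.isnan(y[t]):
            m[t], C[t] = a[t], P[t]
        else:
            K = P[t] / (P[t] + R)
            m[t], C[t] = a[t] + K * (y[t] - a[t]), (1.0 - K) * P[t]
        m_prev, C_prev = m[t], C[t]
    s, S = m.copy(), C.copy()               # the last day is already smoothed
    for t in range(n - 2, -1, -1):          # backward pass
        J = C[t] * theta[t + 1] / P[t + 1]  # smoother gain
        s[t] = m[t] + J * (s[t + 1] - a[t + 1])
        S[t] = C[t] + J ** 2 * (S[t + 1] - P[t + 1])
    return s, S
```

Unlike the one-step-ahead forecast, the smoothed estimate on a given day also uses later measurements, which is why smoothed curves are continuous through observation times rather than jumping after them.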

The MESSM may be further extended to nonlinear and non-Gaussian cases. It is well known that, for nonlinear and non-Gaussian state space models, the Kalman estimates of the states are not optimal in terms of unbiasedness and mean squared error. Other filters, such as the extended Kalman filter (Anderson and Moore, 1979), usually rely on linear approximations of the nonlinear functions. Although they lack optimality and are often awkward for complicated models, these filters have been applied extensively simply because no alternative methods were available. In recent decades, the Bayesian approach and Gibbs sampling techniques have provided good solutions to smoothing problems in nonlinear non-Gaussian state space models (Carlin, Polson and Stoffer, 1992; Carter and Kohn, 1994 and 1996), and such an approach could be adapted to a nonlinear non-Gaussian MESSM. A major limitation of the Gibbs sampler approach is that it cannot perform online filtering. More recently, sequential Monte Carlo methods (Liu and Chen, 1998), such as the particle filter (Gordon, Salmond and Smith, 1993; Kitagawa, 1996), have provided a general framework for online filtering in nonlinear and non-Gaussian state space models. However, when the particle filter approach is extended to the MESSM, the extra layer of mixed effects in the system matrices adds significant computational difficulties that need to be overcome in practice.
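To make the particle filter idea concrete, the Python sketch below implements the bootstrap filter of Gordon, Salmond and Smith (1993) for a single subject with fixed parameters. For checkability it uses the assumed linear Gaussian scalar model x_t = θ_t x_{t-1} + w_t (variance Q), y_t = x_t + e_t (variance R), where the exact answer is available from the Kalman filter; the model form and the name `bootstrap_filter` are illustrative assumptions. The mixed-effects layer discussed above (sampling patient-level parameters) is not included.

```python
import numpy as np

def bootstrap_filter(y, theta, Q, R, m0, P0, n_particles, rng):
    """Bootstrap particle filter for an ASSUMED scalar model
    x_t = theta_t x_{t-1} + w_t (var Q), y_t = x_t + e_t (var R).
    np.nan marks days without a measurement. Returns filtered means."""
    x = rng.normal(m0, np.sqrt(P0), size=n_particles)  # prior particles
    means = np.empty(len(y))
    for t in range(len(y)):
        # propagate every particle through the state equation
        x = theta[t] * x + rng.normal(0.0, np.sqrt(Q), size=n_particles)
        if np.isnan(y[t]):
            means[t] = x.mean()
            continue
        # weight by the Gaussian observation density, on the log scale for stability
        logw = -0.5 * (y[t] - x) ** 2 / R
        w = np.exp(logw - logw.max())
        w /= w.sum()
        means[t] = np.sum(w * x)            # weighted filtered mean
        # multinomial resampling to equalize the weights
        x = x[rng.choice(n_particles, size=n_particles, p=w)]
    return means
```

Each new observation is processed once as it arrives, which is what makes the method suitable for online filtering. For a non-Gaussian measurement model only the log-weight line would change, and a nonlinear state equation only changes the propagation line.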

In summary, we have introduced the MESSM, which brings together techniques from mathematical modelling, time series analysis and longitudinal data analysis to deal with longitudinal dynamic systems. It has many potential applications in biomedical research, such as hepatitis virus dynamics (Nowak et al., 1996), tumor dynamics in cancer research (Swan, 1984; Martin and Teo, 1994) and genetic regulatory network modeling (Chen, He and Church, 1999; Holter et al., 2001). However, many theoretical and methodological problems for the MESSM remain unsolved. We hope that this paper will draw more attention to, and stimulate further research in, this promising area.


Acknowledgments

This research was partially supported by NIAID/NIH research grants AI078842, AI078498, AI50020 and AI087135. The authors thank the two referees for their constructive and insightful comments and suggestions.

Footnotes

Web Appendix 1 is available under the Paper Information link at the Biometrics website http://www.tibs.org/biometrics.

References

- Anderson BDO, Moore JB. Optimal Filtering. Prentice-Hall; 1979.

- Bates DM, Watts DG. Nonlinear Regression Analysis and Its Applications. New York: Wiley; 1988.

- Brockwell PJ, Davis RA. Time Series: Theory and Methods. Springer-Verlag; 1991.

- Carlin BP, Polson NG, Stoffer DS. A Monte Carlo Approach to Nonnormal and Nonlinear State-space Modeling. Journal of the American Statistical Association. 1992;87:493–500.

- Carter CK, Kohn R. On Gibbs Sampling for State Space Models. Biometrika. 1994;81:541–553.

- Carter CK, Kohn R. Markov Chain Monte Carlo in Conditionally Gaussian State Space Models. Biometrika. 1996;83:589–601.

- Chen R, Liu J. Mixture Kalman Filters. Journal of the Royal Statistical Society, Series B. 2000;62:493–508.

- Chen T, He HL, Church GM. Modeling Gene Expression with Differential Equations. Pacific Symposium on Biocomputing. 1999;1999:29–40.

- Davidian M, Giltinan DM. Nonlinear Models for Repeated Measurement Data. Chapman & Hall; 1995.

- De Jong P, Shephard N. The Simulation Smoother for Time Series Models. Biometrika. 1995;82:339–350.

- Delyon B, Lavielle M, Moulines E. Convergence of a stochastic approximation version of the EM algorithm. Annals of Statistics. 1999;27(1):94–128.

- Diggle P, Liang KY, Zeger S. Analysis of Longitudinal Data. Oxford Science; 1994.

- Engle RF, Watson MW. A one factor multivariate time series model of metropolitan wage rates. Journal of the American Statistical Association. 1981;76:774–781.

- Geman S, Geman D. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1984;(6):721–741. doi: 10.1109/tpami.1984.4767596.

- Gordon NJ, Salmond DJ, Smith AFM. Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proceedings-F. 1993;140:107–113.

- Harvey AC. Forecasting, Structural Time Series Models and the Kalman Filter. Cambridge University Press; 1989.

- Holter NS, Maritan A, Cieplak M, et al. Dynamic Modeling of Gene Expression Data. PNAS. 2001;98:1693–1698. doi: 10.1073/pnas.98.4.1693.

- Ho DD, Neumann AU, Perelson AS, Chen W, Leonard JM, Markowitz M. Rapid turnover of plasma virions and CD4 lymphocytes in HIV-1 infection. Nature. 1995;373:123–126. doi: 10.1038/373123a0.

- Huang Y, Liu D, Wu H. Hierarchical Bayesian methods for estimation of parameters in a longitudinal HIV dynamic system. Biometrics. 2006;62:413–423. doi: 10.1111/j.1541-0420.2005.00447.x.

- Jensen JL, Petersen NV. Asymptotic normality of the maximum likelihood estimator in state space models. The Annals of Statistics. 1999;27:514–535.

- Jones RH. Longitudinal Data with Serial Correlation: A State-space Approach. New York: Chapman & Hall; 1993.

- Kalman RE. A new approach to linear filtering and prediction theory. Journal of Basic Engineering, Transactions ASME, Series D. 1960;82:35–45.

- Kitagawa G. Non-Gaussian state space modelling of nonstationary time series (with discussion). Journal of the American Statistical Association. 1987;82:1032–1063.

- Kitagawa G. Monte Carlo filter and smoother for nonlinear non-Gaussian state space models. Journal of Computational and Graphical Statistics. 1996;5:1–25.

- Laird NM, Ware JH. Random effects models for longitudinal data. Biometrics. 1982;38:963–974.

- Liu JS, Chen R. Sequential Monte Carlo methods for dynamic systems. Journal of the American Statistical Association. 1998;93:1032–1044.

- Martin R, Teo KL. Optimal Control of Drug Administration in Cancer Chemotherapy. Singapore: World Scientific; 1994.

- Nowak MA, Bonhoeffer S, Hill AM, Boehme R, Thomas H, McDade H. Viral dynamics in hepatitis B virus infection. Proc Natl Acad Sci USA. 1996;93:4398–4402. doi: 10.1073/pnas.93.9.4398.

- Pagan A. Some Identification and Estimation Results for Regression Models with Stochastically Varying Parameters. Journal of Econometrics. 1980;13:341–363.

- Perelson AS, Essunger P, Cao Y. Decay Characteristics of HIV-1 Infected Compartments during Combination Therapy. Nature. 1997;387:188–191. doi: 10.1038/387188a0.

- Rahiala M. Random Coefficient Autoregressive Models for Longitudinal Data. Biometrika. 1999;86:718–722.

- Schweppe F. Evaluation of likelihood functions for Gaussian signals. IEEE Transactions on Information Theory. 1965;11:61–70.

- Steimer JL, Mallet A, Golmard J, Boisvieux J. Alternative Approaches to Estimation of Population Pharmacokinetic Parameters: Comparison with the Nonlinear Mixed Effects Model. Drug Metabolism Reviews. 1984;15:265–292. doi: 10.3109/03602538409015066.

- Swan GW. Applications of Optimal Control Theory in Biomedicine. New York: Marcel Dekker, Inc; 1984.

- Vonesh EF, Chinchilli VM. Linear and Nonlinear Models for the Analysis of Repeated Measurements. New York: Marcel Dekker; 1996.

- Watson MW, Engle RF. Alternative Algorithms for the Estimation of Dynamic Factor, Mimic and Varying Coefficient Regression Models. Journal of Econometrics. 1983;23:385–400.

- West M, Harrison J. Bayesian Forecasting and Dynamic Models. New York: Springer; 1997.

- Wu H, Ding A. Population HIV-1 Dynamics in Vivo: Applicable Models and Inferential Tools for Virological Data from AIDS Clinical Trials. Biometrics. 1999;55:410–418. doi: 10.1111/j.0006-341x.1999.00410.x. The data are available online at http://stat.tamu.edu/biometrics/datasets/980622.txt.

- Wu H, Ding A, DeGruttola V. Estimation of HIV Dynamic Parameters. Statistics in Medicine. 1998;17:2463–2485. doi: 10.1002/(sici)1097-0258(19981115)17:21<2463::aid-sim939>3.0.co;2-a.
