Abstract

This article examines the challenges in and progress of behavioral intervention research, the trajectory followed for introducing new interventions, and key considerations in protocol development. Developing and testing health-related behavioral interventions involve an incremental and iterative process to build a robust body of evidence that initially supports feasibility and safety, then proves efficacy and effectiveness, and subsequently involves translation, implementation, and sustainability in a real-world context. This process can take close to two decades, and less than 14% of the resulting evidence is ever integrated into practice. New hybrid models that blend test phases and involve stakeholders and end users up front in developing and testing interventions may shorten this time frame and enhance adoption of a proven intervention. Knowledge of setting exigencies and implementation challenges may also inform intervention protocol development and facilitate rapid and efficient translation into practice. Although interventions needed to improve the public’s health are complex and funding lags behind, introducing new interventions remains a critical and most worthy pursuit.

MeSH TERMS: behavioral research, diffusion of innovation, randomized controlled trials as topic, translational medical research

Behavior, lifestyle, and the social and physical environment are the primary contributors to longevity, health, well-being, and quality of life (Buetner, 2008). Additionally, the most important public health challenges of today—obesity, caregiving, chronic illness, dementia care, autism—are not amenable to pharmacological and medical solutions, approaches that have mostly been ineffective in addressing the behavioral, cognitive, and environmental components of these health conditions. Thus, developing behavioral, nonpharmacological interventions that tackle our most serious public health challenges has become an imperative (Lovasi, Hutson, Guerra, & Neckerman, 2009). New behavioral interventions are needed that improve health behaviors, promote healthy lifestyles, prevent disease, reduce symptoms, promote self-management of chronic diseases and functional disability, and reduce health disparities (Grady, 2011; Jackson, Knight, & Rafferty, 2010; Milstein, Homer, Briss, Burton, & Pechacek, 2011). Also of importance is the development of effective behavioral interventions that are tailored to the cultural nuances of an increasingly diverse population (Institute of Medicine [IOM], 2003; U.S. Department of Health and Human Services, 2011). This article examines the key challenges in and progress of behavioral intervention research, the trajectory of introducing a new behavioral intervention with potential for implementation, and key considerations in developing an intervention protocol.

Challenges of and Progress in Behavioral Intervention Research

One of the most critical challenges in introducing a new intervention is that development and testing transpire over a long period of time, estimated at 17 yr or more. Even then, most interventions are not integrated into practice. Only 14% of new scientific discoveries ever enter real-world contexts; Americans receive only about 50% of recommended evidence-based preventive, acute, and long-term health care services; and minority populations are at the greatest disadvantage, receiving recommended, proven evidence-based care only 35% or less of the time (IOM, 2001, 2008; McGlynn et al., 2003).

The need to shorten the time span from idea inception to translation has increasingly become a concern of funders, health systems, researchers, and the public (Glasgow, Lichtenstein, & Marcus, 2003). In response to this research-to-practice crisis, the National Institutes of Health (NIH) launched its Roadmap for Medical Research in 2004 and the Common Fund in 2006 to develop and support Roadmap initiatives. These opportunities, however, have favored knowledge transfer from laboratory understandings of disease mechanisms to the development of new diagnostics and therapies (T1 research; Bear-Lehman, 2011). The translation of results from clinical studies into everyday practice and decision making (T2 research) has accounted for less than 5% of the NIH budget, and dissemination and implementation of interventions (T3 research) is rarely funded (Westfall, Mold, & Fagnan, 2007; Woolf, 2008).

Another challenge in introducing a new intervention concerns the complexity of practice environments (Burke & Gitlin, 2012). Embedding scientific knowledge into practice requires its own set of implementation steps and evaluative processes (Fixen, Naoom, Blasé, Friedman, & Wallace, 2005; Wilson, Brady, & Lesesne, 2011). Unfortunately, most researchers lack a clear understanding of the contexts in which their interventions may eventually be located. Understanding organizational factors affecting knowledge uptake and potential barriers to intervention adoption up front in the intervention development process may better inform trial designs that in turn advance integration of the intervention into practice if it is proven effective (Cochrane et al., 2007; Gitlin, Jacobs, & Earland, 2010; Glasgow, 2002; Lavis, Robertson, Woodside, McLeod, & Abelson, 2003).

Another important challenge concerns how responsive tested interventions are to the needs of diverse populations. Because efficacy trials typically depend on study volunteers and prioritize internal over external validity, their inclusion criteria usually yield a homogeneous sample. Generalizing results to nonvolunteers may therefore be difficult, and further testing of an intervention’s acceptability and effectiveness with a broader group of potential users may be needed. Tailoring intervention protocols to the needs and preferences of diverse populations and testing cultural modifications may require additional research steps before a particular intervention is rolled out and scaled up.

Thus, length of time from discovery to integration in a practice setting, complexity of developing evidence for diverse populations, and insufficient funding for developing and introducing behavioral interventions in real-world contexts continue to fuel the knowledge-to-application gap. New strategies are needed to address these challenges, and researchers are called on to more fully understand the trajectory of intervention development and implementation hurdles.

Despite challenges, great strides have been made in introducing new interventions (Campbell et al., 2000). Single and multisite interventions such as the NIH-supported Resources for Enhancing Alzheimer’s Caregiver Health studies (REACH I, Gitlin et al., 2003; REACH II, Belle et al., 2006); ACTIVE to improve cognitive well-being (Jobe et al., 2001); primary care practice–based interventions to improve a variety of conditions such as dementia care (Callahan et al., 2006) or geriatric syndromes (Counsell et al., 2007); and well-constructed occupational therapy–based interventions to enhance older adult well-being (Clark et al., 1997), reduce functional disability (Gitlin et al., 2006), and increase quality of life in people with dementia and their caregivers (Gitlin, Winter, Dennis, Hodgson, & Hauck, 2010a, 2010b) are only a few of the recent studies exemplifying progress in efficacy trials that address multifaceted and complex public health challenges. Nevertheless, although these interventions have been shown to be effective, they remain mostly out of reach of the public, primarily because of the complex workforce preparation they require and the fiscal constraints of care settings.

Advances in principles and practices of community-based participatory research (Clinical and Translational Science Awards Consortium, Community Engagement Key Function Committee, 2011; Viswanathan et al., 2004) and use of embedded trial designs (Cooper, Hill, & Powe, 2002) provide researchers the theory base and tools to systematically integrate stakeholders and end users early on in developing an intervention to enhance relevance and the potential for generalizability. Similarly, advances in implementation science (Brownson, Colditz, & Proctor, 2012) and conceptual frameworks for guiding translational efforts (see, e.g., Glasgow, 2002; Murray et al., 2010) have contributed to more nuanced understandings of practice contexts and strategies for moving interventions into practice. Concomitantly, new pipelines are evolving, such as hybrid designs that combine developmental steps to more efficiently and quickly move interventions from test phases to implementation phases (Curran, Bauer, Mittman, Pyne, & Stetler, 2012). These developments are positively changing the conduct of behavioral intervention research.

Intervention Development Phases

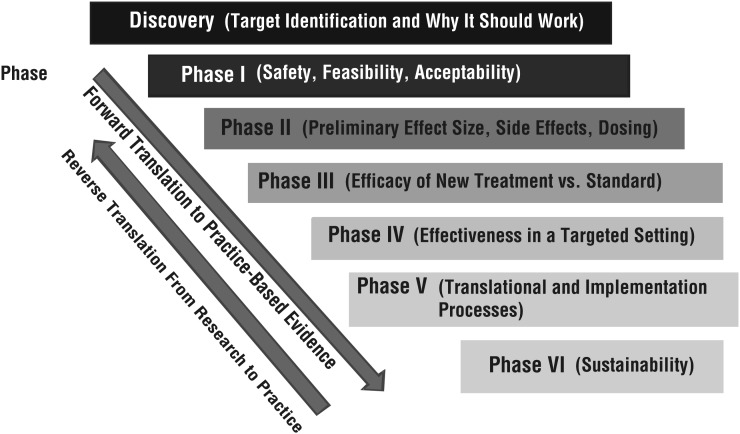

Figure 1 presents an adaptation of the traditional four-phase sequence for developing an intervention: discovery; Phase 1, feasibility; Phase 2, exploratory; Phase 3, efficacy; and Phase 4, dissemination and implementation (Kleinman & Mold, 2009). The figure suggests that developing and testing health-related behavioral interventions involve an incremental, iterative process of building a robust body of evidence (discovery, proof of concept, feasibility, safety, and efficacy, similar to the traditional phased approach) followed by three additional phases—translation, implementation, and maintenance and sustainability—to embed a proven intervention and normalize it within a practice setting.

Figure 1.

The randomized trial-to-translation continuum.

Regardless of the pipeline followed, intervention development must begin with discovery (preclinical phase). Discovery is guided by theory and involves identifying a clinical problem, target population, and existing evidence (e.g., epidemiological record, previous trials) that supports the potential benefit of the intervention being developed. Identifying a base in theory (or theories) is critical to inform an understanding and evaluation of the mechanisms by which an intervention may have its desired effect. An essential question in this phase is, Why would the intervention work?

Several challenges are encountered at the discovery stage. First, the epidemiological record may be incomplete or inadequate to substantiate the scope of a health problem. Second, theories often lack a strong empirical foundation, and applying them to an intervention can be difficult. Theory may suggest what needs to be changed and why but not how to induce change. Behavior change and health behavior theories tend to explain behavioral intentions or motivation but do not explain or predict actual behavior or behavior change, which is typically the intent of an intervention. Multicomponent interventions may require combining complementary theories. Similarly, an intervention targeting multiple systems (individual, family, community) may require a broad ecological model supplemented with theories specific to the planned intervention activities at each level. A third challenge is identifying a potential practice or service context and payment mechanism, which may not yet exist for the proposed intervention. Still, considering where and how an intervention will be embedded if it proves effective is important even at this early stage and helps establish feasibility.

Funding for the discovery stage is scarce. Intramural funds and support from a professional association may be available. If this stage is combined with Phase 1, an NIH planning grant (R34), offered by some NIH institutes, is a potential funding source. Also, investigators located in research-intensive universities may find pilot funds for discovery through large initiatives such as Clinical and Translational Science Award grant activities or NIH P30 grant initiatives, all of which support pilot funding, particularly for developmental projects and new investigators.

Phase 1 testing involves identifying and evaluating intervention components and determining acceptability, feasibility, and safety. In this phase, case studies, pre–post study designs, focus groups, or a combination of these methodologies can help define and refine intervention delivery characteristics (e.g., dose, intensity, treatment elements). Qualitative research can help investigators evaluate the acceptability and utility of intervention components and potential barriers to adherence and behavioral change. Knowledge generated in this phase can advance a working intervention prototype and treatment manual. If a clear implementation site exists for the intervention should it prove effective, involving stakeholders at this early stage is important because they can help identify potential facilitators and barriers that inform development of the intervention protocol. As in discovery, funding for Phase 1 depends primarily on intramural support, on planning grant initiatives occasionally sponsored by foundations, or on NIH mechanisms such as the R34 or, for new investigators, K awards.

Phase 2 involves an initial test of the intervention in comparison with an appropriate alternative. A small randomized trial (e.g., 30 to 60 participants) can be used to identify outcomes, evaluate whether measures are sensitive to the expected change, and generate effect sizes. Effect sizes are particularly important because they inform sample size calculations and other design elements of a Phase 3 efficacy trial. In Phase 2, monitoring of feasibility, acceptability, and safety continues, and investigators examine the theoretical basis for observed changes. Another important task is evaluating fidelity and refining a monitoring plan and measures for ensuring that intervention groups are implemented as intended. This phase should also yield preliminary evidence of efficacy and well-defined treatment manuals. In addition to the funding sources discussed above, activities for this phase can be supported through the NIH R21 mechanism; intramural pilot study mechanisms and foundation support are also feasible alternatives.
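To make the link between Phase 2 effect sizes and Phase 3 sample size concrete, the sketch below computes Cohen's d from pilot data (pooled standard deviation) and a per-arm sample size using the common normal approximation for a two-sided, two-group comparison of means, inflated for expected attrition. The function names and the tiny pilot data set are hypothetical illustrations, not the author's procedure; pilot effect sizes are imprecise, so a real calculation would usually be checked against a clinically meaningful difference and done with dedicated power software.

```python
import math
from statistics import NormalDist, mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference (Cohen's d) using the pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


def n_per_group(d, alpha=0.05, power=0.80, attrition=0.0):
    """Per-arm sample size for a two-sided comparison of two means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2,
    then inflated by 1 / (1 - attrition) for expected loss to follow-up.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 / d ** 2
    return math.ceil(n / (1 - attrition))


# Hypothetical pilot outcome scores (higher = better); far too small for real use
pilot_treatment = [2, 4, 6]
pilot_control = [1, 3, 5]
d = cohens_d(pilot_treatment, pilot_control)  # 0.5 for these made-up data
print(n_per_group(d), n_per_group(d, attrition=0.20))
```

With d = 0.5, alpha = .05, and 80% power, this sketch gives 63 participants per arm, rising to 79 per arm if 20% attrition is assumed.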

Phase 3 represents the definitive randomized controlled trial that compares a fully developed intervention with an appropriate alternative. The most robust efficacy trials are double-blinded: Research team members as well as study participants remain unaware of group allocation. Double-blinding is difficult to achieve in behavioral intervention research, so a single-blind approach is typically used in which only assessors remain masked to participants’ group assignment. Intention-to-treat (ITT) analysis, in which all randomized participants are analyzed in the groups to which they were assigned regardless of study completion, is considered the most definitive approach. In contrast, a per-protocol analysis includes only participants who complete the entire clinical trial. Because behavioral trials almost always experience attrition, ITT may require imputation methods for missing data. Alternatively, a modified ITT can be used in which only data from participants available at follow-up are analyzed, regardless of their level of intervention participation.
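The difference between the analysis populations can be sketched with a handful of made-up records. In this hypothetical example, ITT keeps every randomized participant and fills a missing follow-up score with the baseline value (baseline observation carried forward, chosen only for brevity; multiple imputation or likelihood-based models are generally preferred), modified ITT keeps everyone with follow-up data, and per-protocol keeps only completers.

```python
from statistics import mean

# Hypothetical trial records (illustrative only): assigned arm, whether the
# participant completed the full protocol, and baseline and follow-up scores
# on an outcome where lower is better. followup=None means lost to follow-up.
PARTICIPANTS = [
    {"arm": "treatment", "completed": True,  "baseline": 20, "followup": 12},
    {"arm": "treatment", "completed": False, "baseline": 22, "followup": 18},
    {"arm": "treatment", "completed": False, "baseline": 19, "followup": None},
    {"arm": "control",   "completed": True,  "baseline": 21, "followup": 19},
    {"arm": "control",   "completed": True,  "baseline": 18, "followup": 17},
    {"arm": "control",   "completed": False, "baseline": 20, "followup": None},
]


def analysis_sets(records):
    """Build ITT, modified ITT, and per-protocol analysis populations."""
    # ITT: every randomized participant, analyzed in the assigned arm; missing
    # follow-up replaced by baseline (a deliberately simple placeholder).
    itt = [
        dict(r, followup=r["baseline"] if r["followup"] is None else r["followup"])
        for r in records
    ]
    # Modified ITT: anyone with follow-up data, regardless of participation level.
    mitt = [r for r in records if r["followup"] is not None]
    # Per protocol: only participants who completed the full protocol.
    pp = [r for r in records if r["completed"] and r["followup"] is not None]
    return {"ITT": itt, "modified ITT": mitt, "per protocol": pp}


def treatment_effect(records):
    """Difference in mean change from baseline (treatment minus control)."""
    def mean_change(arm):
        return mean(r["followup"] - r["baseline"] for r in records if r["arm"] == arm)
    return mean_change("treatment") - mean_change("control")


for name, subset in analysis_sets(PARTICIPANTS).items():
    print(f"{name}: n={len(subset)}, effect={treatment_effect(subset):.1f}")
```

In this contrived data set the estimated benefit grows from -3.0 (ITT) to -4.5 (modified ITT) to -6.5 (per protocol), illustrating how dropping noncompleters can make an intervention look more effective than it is.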

Determining an appropriate alternative for comparison with the intervention is an important challenge that needs to be addressed in Phase 3. Standard care, usual care, attention control, or active alternative interventions are potential comparison groups, each with its own pros and cons. No consensus exists, and researchers need to justify whichever type of control group they choose. An active control group is often favored for comparative purposes because it is designed to control for attention, time, and empathy, factors that are afforded the treatment group and that may account for or confound treatment benefits. An active control condition, however, should not include any of the active ingredients being tested in the treatment group. Active ingredients must be identified a priori and be grounded in the theoretical basis of the proposed intervention. Another consideration in this phase is monitoring fidelity in both the intervention and control groups.

Also of importance in designing an efficacy trial is attention to potential mediator and moderator factors, again according to the theory guiding the trial. Mediational analyses seek to examine the underlying mechanisms or latent variables responsible for treatment benefit, whereas moderator analyses seek to evaluate whether differential treatment effects are obtained on the basis of factors of interest (e.g., participant characteristics such as age, gender, health, cognitive status). Funding for Phase 3 trials is typically through the NIH R01 mechanism. A competitive application for an efficacy trial must be supported by pilot test results, demonstration of feasibility, expected effect size, treatment manuals detailing treatment and control group protocols, tested recruitment and enrollment procedures, and a strong theory base and hypotheses. Before conducting an efficacy trial, formal registration is required at ClinicalTrials.gov, a registry of federally and privately supported clinical trials conducted in the United States and internationally (http://clinicaltrials.gov/). Also, in addition to institutional review board approval, a Phase 3 trial requires a data and safety monitoring board that provides oversight of recruitment and accrual progress, adverse events, interim analyses if part of the trial design, and stopping rules.
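A moderator question asks whether the treatment effect differs across levels of a participant characteristic. The descriptive sketch below, using hypothetical records and a hypothetical binary moderator (cognitive status), computes the treatment-minus-control difference within each subgroup and the difference between those subgroup effects. For a binary moderator this contrast equals the interaction coefficient in a linear model containing treatment, moderator, and their product; testing it formally, and estimating mediated (indirect) effects, requires regression models beyond this sketch.

```python
from statistics import mean

# Hypothetical follow-up outcomes (higher = better) with a binary moderator.
RECORDS = [
    {"arm": "treatment", "cognitive_status": "intact",   "outcome": 10},
    {"arm": "treatment", "cognitive_status": "intact",   "outcome": 12},
    {"arm": "control",   "cognitive_status": "intact",   "outcome": 6},
    {"arm": "control",   "cognitive_status": "intact",   "outcome": 8},
    {"arm": "treatment", "cognitive_status": "impaired", "outcome": 7},
    {"arm": "treatment", "cognitive_status": "impaired", "outcome": 9},
    {"arm": "control",   "cognitive_status": "impaired", "outcome": 6},
    {"arm": "control",   "cognitive_status": "impaired", "outcome": 8},
]


def subgroup_effects(records, moderator):
    """Treatment-minus-control difference in mean outcome within each moderator level."""
    effects = {}
    for level in sorted({r[moderator] for r in records}):
        subgroup = [r for r in records if r[moderator] == level]
        treated = mean(r["outcome"] for r in subgroup if r["arm"] == "treatment")
        control = mean(r["outcome"] for r in subgroup if r["arm"] == "control")
        effects[level] = treated - control
    return effects


effects = subgroup_effects(RECORDS, "cognitive_status")
# Difference between subgroup effects (the treatment x moderator interaction contrast)
interaction = effects["impaired"] - effects["intact"]
print(effects, interaction)
```

Here the made-up intervention helps cognitively intact participants by 4 points but impaired participants by only 1 point, an interaction contrast of -3 that theory would need to explain.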

In reporting efficacy trial results, investigators must address the design elements detailed by the Consolidated Standards of Reporting Trials (CONSORT) group (www.consort-statement.org/home/). Many journals require submission of a standard study flowchart and CONSORT checklist indicating that listed design elements (e.g., randomization scheme, sample size calculations) are included in the manuscript. It is helpful to become familiar with the CONSORT checklist before designing an efficacy trial and writing a grant application because it reflects a comprehensive list of necessary considerations.
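One CONSORT item is the randomization scheme, including any restriction such as blocking and block size. As an illustration only, the sketch below generates a permuted-block allocation list with a fixed seed so it can be reproduced; the function name, seed, and block size are hypothetical. In practice the list is usually generated and held by someone independent of recruitment so that allocation remains concealed, and varying block sizes are often used because fixed blocks can make upcoming assignments predictable.

```python
import random


def permuted_block_schedule(n_participants, block_size=4,
                            arms=("treatment", "control"), seed=2012):
    """Allocation list built from shuffled blocks with equal numbers per arm."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # fixed seed so the schedule can be reproduced and audited
    schedule = []
    while len(schedule) < n_participants:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)  # random order within the block, balance preserved
        schedule.extend(block)
    return schedule[:n_participants]


print(permuted_block_schedule(10))
```

Within each complete block of four, exactly two participants are assigned to each arm, so group sizes never drift far apart during enrollment.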

Trials are increasingly costly to conduct because of the need for diverse and large samples, repeated measures, skilled personnel intervening in both treatment and control groups, and extensive monitoring. Thus, balancing costs with funding levels and necessary design elements is an ongoing challenge. Because of the budgetary constraints of funders, it is virtually impossible to design a trial involving long-term follow-up (>12 mo); thus, long-term treatment effects are difficult to evaluate, even though they are important to understand.

Demonstrating efficacy is only the first requirement on the long road to introducing an intervention into standard care. Although scientific reporting of trial results is critical, publications alone do not lead to adoption of the evidence. Hence, Phases 4, 5, and 6 reflect processes in moving from evaluating efficacy to normalizing an intervention and sustaining it in practice. Phase 4 can be either an effectiveness or a replication trial in which the intervention is evaluated within the practice or service context and with a broader group of study participants. Phase 5 consists of a wide range of translational processes, including identifying facilitators of and barriers to implementation, evaluating fidelity, fully manualizing the intervention (Gitlin, Jacobs, & Earland, 2010), developing training programs for instruction in its delivery, and scaling up for full implementation (Glasgow, 2010). Critical to this phase is ensuring that the active ingredients that make the intervention work, or its immutable components (e.g., use of a client-directed approach), are not modified. Phase 6 concerns maintenance and sustainability: of interest is whether and how the intervention becomes embedded in a setting such that it is “normal” practice.

Determining ways to monitor quality of and fidelity in delivery, receipt, and enactment of the intervention remains the primary focus of these three latter phases. Also, new evidence may emerge from practice or additional research testing the intervention, and this new evidence may lead to enhancements of the original tested intervention. Thus, mechanisms for infusing new evidence to enhance the existing intervention need consideration.
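Fidelity of delivery is often tracked with session checklists completed by interventionists or raters. The sketch below assumes a hypothetical checklist format (each protocol element marked delivered or not) and an assumed 80% adherence threshold for flagging sessions for supervision; neither comes from this article. It covers delivery only; receipt and enactment would need participant-level measures such as comprehension checks or observed use of skills.

```python
def fidelity_scores(session_checklists):
    """Proportion of checklist elements delivered in each session (True = delivered)."""
    return {
        session: sum(items.values()) / len(items)
        for session, items in session_checklists.items()
    }


def flag_for_supervision(scores, threshold=0.80):
    """Sessions scoring below an assumed adherence threshold, in sorted order."""
    return sorted(session for session, score in scores.items() if score < threshold)


# Hypothetical interventionist checklists for two treatment sessions
checklists = {
    "S1": {"review goals": True, "role-play": True, "assign practice": True, "summarize": False},
    "S2": {"review goals": True, "role-play": True, "assign practice": True, "summarize": True},
}
scores = fidelity_scores(checklists)
print(scores, flag_for_supervision(scores))
```

Session S1 delivers three of four elements (0.75) and is flagged; S2 delivers all four (1.0). The same tallies can be applied to control group sessions.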

Key Considerations in Designing an Intervention

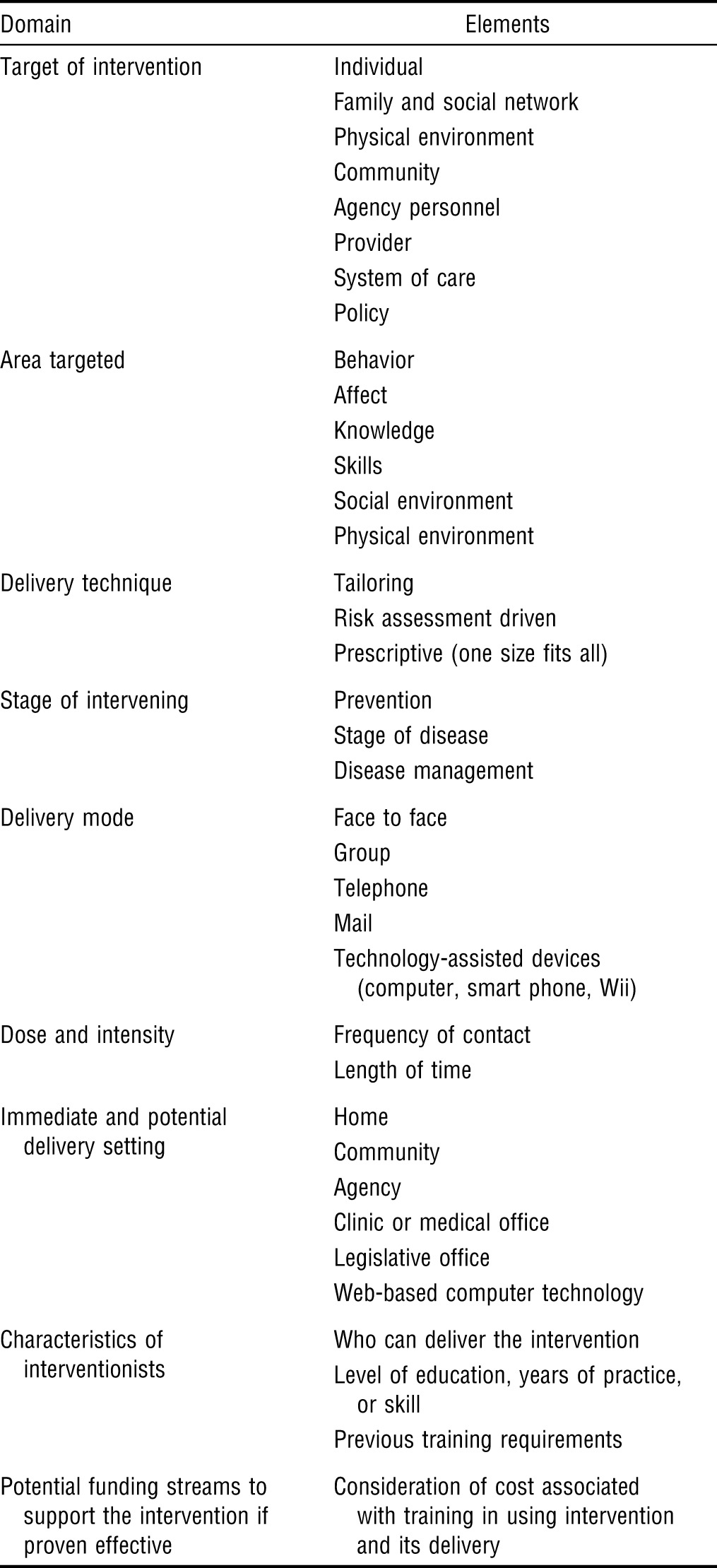

Table 1 outlines domains and specific elements to consider in constructing an intervention. Each of these considerations should be informed by theory, best evidence, practice guidelines, and clinical know-how and knowledge of the implementation goal and site. For example, evidence shows that education can enhance knowledge of a content area but not skill or behavior change. If skill enhancement is the desired intervention goal, a more interactive approach in intervention delivery would be necessary, such as using structured role-play or simulation. Similarly, evidence suggests that tailoring an intervention to specific needs or characteristics is more effective than taking a one-size-fits-all approach if the desired outcome is behavior change (Richards et al., 2007).

Table 1.

Considerations for Designing Behavioral Interventions

| Domain | Elements |
| --- | --- |
| Target of intervention | Individual; Family and social network; Physical environment; Community; Agency personnel; Provider; System of care; Policy |
| Area targeted | Behavior; Affect; Knowledge; Skills; Social environment; Physical environment |
| Delivery technique | Tailoring; Risk assessment driven; Prescriptive (one size fits all) |
| Stage of intervening | Prevention; Stage of disease; Disease management |
| Delivery mode | Face to face; Group; Telephone; Technology-assisted devices (computer, smart phone, Wii) |
| Dose and intensity | Frequency of contact; Length of time |
| Immediate and potential delivery setting | Home; Community; Agency; Clinic or medical office; Legislative office; Web-based computer technology |
| Characteristics of interventionists | Who can deliver the intervention; Level of education, years of practice, or skill; Previous training requirements |
| Potential funding streams to support the intervention if proven effective | Consideration of cost associated with training in using intervention and its delivery |

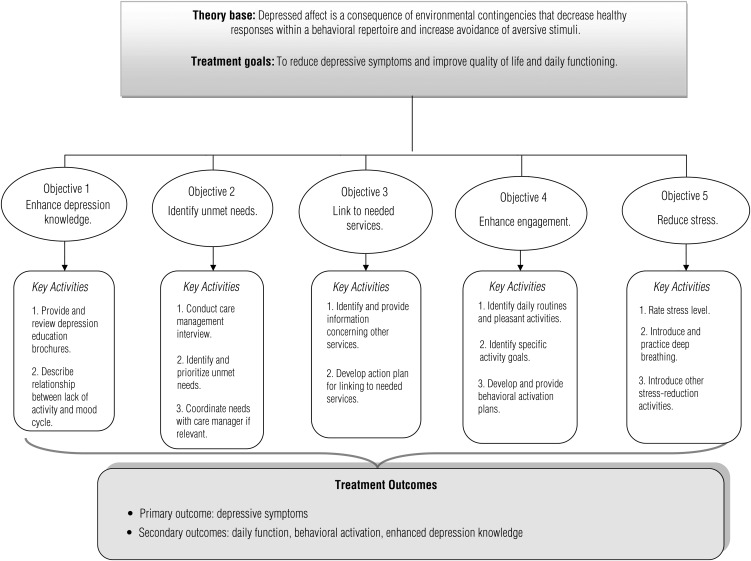

In addition to these considerations, conducting a task analysis of an intervention is a useful exercise. This analysis entails breaking down the intervention by detailing its theory base, treatment goals, objectives and specific activities, and hypothesized primary (proximal) and secondary (distal) outcomes to ensure alignment of elements. Figure 2 graphically displays a task analysis for a depression intervention protocol as an example (Gitlin et al., 2012). On the basis of this analysis, a detailed description of the specific content and logical sequence of activities in each intervention session can be constructed in a treatment manual.
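The alignment check at the heart of a task analysis can also be expressed as a small data structure. The sketch below is hypothetical: the class names, fields, and the behavioral-activation-style entries are invented for illustration and are not the content of Figure 2. It links each objective to its activities and proximal outcomes and reports any element left unconnected, which is the kind of gap a task analysis is meant to expose before a treatment manual is written.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Objective:
    description: str
    activities: list[str] = field(default_factory=list)         # session activities serving this objective
    proximal_outcomes: list[str] = field(default_factory=list)  # primary outcomes it should change


@dataclass
class TaskAnalysis:
    theory: str
    goal: str
    objectives: list[Objective]
    distal_outcomes: list[str]  # secondary outcomes expected to follow

    def alignment_gaps(self) -> list[str]:
        """List elements that are not linked to the rest of the chain."""
        gaps = []
        for obj in self.objectives:
            if not obj.activities:
                gaps.append(f"objective has no activities: {obj.description}")
            if not obj.proximal_outcomes:
                gaps.append(f"objective has no proximal outcome: {obj.description}")
        if not self.distal_outcomes:
            gaps.append("no distal outcome specified")
        return gaps


# Hypothetical protocol fragment, for illustration only
plan = TaskAnalysis(
    theory="behavioral activation (hypothetical example)",
    goal="reduce depressive symptoms",
    objectives=[
        Objective("increase engagement in valued activities",
                  activities=["identify valued activities", "schedule one activity per week"],
                  proximal_outcomes=["weekly activity frequency"]),
        Objective("improve problem solving",
                  activities=["practice a problem-solving worksheet"]),
    ],
    distal_outcomes=["depressive symptom score"],
)
print(plan.alignment_gaps())
```

The second objective has activities but no outcome that would show whether it was achieved, so it is reported as a gap.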

Figure 2.

Task analysis for a depression intervention protocol.

Emerging Models

Given the lengthy process of building and testing a behavioral intervention across the six test phases, recent efforts have been directed at developing hybrid models that accelerate this process (Curran et al., 2012). One approach is to include economic analyses in Phase 2, Phase 3, or both to evaluate an intervention’s implementation potential from a cost perspective. Economic evaluations inform the investment necessary at the individual, agency, and societal levels.
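One widely used summary in such economic evaluations is the incremental cost-effectiveness ratio (ICER), the additional cost of the intervention divided by its additional effect relative to the comparison condition: ICER = (C1 - C0) / (E1 - E0). The article does not specify which economic metric to use; the sketch below simply shows the arithmetic with hypothetical per-participant figures.

```python
def icer(cost_new, cost_comparator, effect_new, effect_comparator):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        raise ValueError("equal effects: ICER is undefined")
    return (cost_new - cost_comparator) / delta_effect


# Hypothetical per-participant figures: the intervention costs $1,200 versus $400
# for the comparison condition and yields 45 versus 25 symptom-free days.
print(icer(1200, 400, 45, 25))  # 40.0 dollars per additional symptom-free day
```

Whether $40 per additional symptom-free day is acceptable is a judgment for agencies and payers; the ratio only makes the trade-off explicit.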

In addition, “practical” or embedded trials that combine efficacy and effectiveness testing, or effectiveness and implementation testing, and that use the intended practice setting as the test site may shorten the developmental trajectory. Other hybrid models involve conducting implementation trials that secondarily evaluate treatment benefits (Curran et al., 2012) or testing dissemination strategies early in the development process.

Yet another approach is involvement of stakeholders and end users as research team members in early phases. Using principles of community-based participatory research, involvement of agency personnel, clinicians, or community members may facilitate development of interventions that are more responsive and implementation ready. Nevertheless, strategies to shorten the developmental trajectory and enhance implementation need to be evaluated to establish evidence that they indeed improve knowledge transfer.

Conclusion

Introducing a new behavioral intervention is an important and exciting endeavor but is not without challenges. It takes many years, from discovery to efficacy testing and evaluation of implementation, for interventions to be fully developed and subsequently integrated into practice. Introducing a new behavioral intervention has typically followed a traditional medical and pharmacological linear pipeline. This pathway must, however, be tailored to the behavioral intervention context and accelerated. New hybrid models that blend test phases and involve a team of stakeholders and end users up front in developing and testing interventions may shorten the time frame and enhance adoption. Knowledge of setting exigencies and implementation challenges may inform intervention protocol development and facilitate more rapid and efficient translation into practice. Integrating cost analyses early on and using hybrid models also hold promise for closing the research–practice gap.

An iterative developmental model for building evidence for novel behavioral interventions and training the next generation in such approaches is critical. Although interventions to improve the public’s health are complex and funding lags, introducing new interventions remains a critical and most worthy pursuit.

Acknowledgments

A version of this article was presented as part of the Advancing Clinical Trials and Outcomes Research (ACTOR) Conference, Fairfax, VA, December 2, 2011. The author was supported in part by grant funds from the National Institute of Mental Health (NIMH R01MH079814; NIMH RC1MH090770) and a Non-Pharmacological Strategies to Ameliorate Symptoms of Alzheimer’s Disease grant (NPSASA-10-174265).

References

- Bear-Lehman J. The NIH Roadmap: An opportunity for occupational therapy. OTJR: Occupation, Participation and Health. 2011;31:106–107. doi: 10.3928/15394492-20110428-01. http://dx.doi.org/10.3928/15394492-20110428-01 . [DOI] [PubMed] [Google Scholar]

- Belle S. H., Burgio L., Burns R., Coon D., Czaja S. J., Gallagher-Thompson D., Zhang S. Resources for Enhancing Alzheimer’s Caregiver Health (REACH) II Investigators. Enhancing the quality of life of dementia caregivers from different ethnic or racial groups: A randomized, controlled trial. Annals of Internal Medicine. 2006;145:727–738. doi: 10.7326/0003-4819-145-10-200611210-00005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brownson R. C., Colditz G. A., Proctor E. K. Dissemination and implementation research in health: Translating science to practice. New York: Oxford University Press; 2012. [Google Scholar]

- Buetner D. The blue zones: Lessons for living longer from the people who’ve lived the longest. Washington, DC: National Geographic Press; 2008. [Google Scholar]

- Burke J. P., Gitlin L. N. The Issue Is—How do we change practice when we have the evidence? American Journal of Occupational Therapy. 2012;66:e85–e88. doi: 10.5014/ajot.2012.004432. http://dx.doi.org/10.5014/ajot.2012.004432 . [DOI] [PubMed] [Google Scholar]

- Callahan C. M., Boustani M. A., Unverzagt F. W., Austrom M. G., Damush T. M., Perkins A. J., Hendrie H. C. Effectiveness of collaborative care for older adults with Alzheimer disease in primary care: A randomized controlled trial. JAMA. 2006;295:2148–2157. doi: 10.1001/jama.295.18.2148. http://dx.doi.org/10.1001/jama.295.18.2148 . [DOI] [PubMed] [Google Scholar]

- Campbell M., Fitzpatrick R., Haines A., Kinmonth A. L., Sandercock P., Spiegelhalter D., Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ. 2000;321:694–696. doi: 10.1136/bmj.321.7262.694. http://dx.doi.org/10.1136/bmj.321.7262.694 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark F., Azen S. P., Zemke R., Jackson J., Carlson M., Mandel D., Lipson L. Occupational therapy for independent-living older adults: A randomized controlled trial. JAMA. 1997;278:1321–1326. http://dx.doi.org/10.1001/jama.1997.03550160041036 . [PubMed] [Google Scholar]

- Clinical and Translational Science Awards Consortium, Community Engagement Key Function Committee. Principles of community engagement. 2nd ed., Publication No. 11–7782. Washington, DC: National Institutes of Health; 2011. Publication No. 11–7782. [Google Scholar]

- Cochrane L. J., Olson C. A., Murray S., Dupuis M., Tooman T., Hayes S. Gaps between knowing and doing: Understanding and assessing the barriers to optimal health care. Journal of Continuing Education in the Health Professions. 2007;27:94–102. doi: 10.1002/chp.106. http://dx.doi.org/10.1002/chp.106 . [DOI] [PubMed] [Google Scholar]

- Cooper L. A., Hill M. N., Powe N. R. Designing and evaluating interventions to eliminate racial and ethnic disparities in health care. Journal of General Internal Medicine. 2002;17:477–486. doi: 10.1046/j.1525-1497.2002.10633.x. http://dx.doi.org/10.1046/j.1525-1497.2002.10633.x . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Counsell S. R., Callahan C. M., Clark D. O., Tu W., Buttar A. B., Stump T. E., Ricketts G. D. Geriatric care management for low-income seniors: A randomized controlled trial. JAMA. 2007;298:2623–2633. doi: 10.1001/jama.298.22.2623. http://dx.doi.org/10.1001/jama.298.22.2623 . [DOI] [PubMed] [Google Scholar]

- Curran G. M., Bauer M., Mittman B., Pyne J. M., Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care. 2012;50:217–226. doi: 10.1097/MLR.0b013e3182408812. http://dx.doi.org/10.1097/MLR.0b013e3182408812 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fixen D. L., Naoom S. F., Blasé K. A., Friedman R. M., Wallace F. Implementation research: A synthesis of the literature. Tampa: Louis de la Parte Florida Mental Health Institute, Department of Child & Family Studies, University of South Florida; 2005. [Google Scholar]

- Gitlin L. N., Belle S. H., Burgio L. D., Czaja S. J., Mahoney D., Gallagher-Thompson D., Ory M. G. REACH Investigators. Effect of multicomponent interventions on caregiver burden and depression: The REACH multisite initiative at 6-month follow-up. Psychology and Aging. 2003;18:361–374. doi: 10.1037/0882-7974.18.3.361. http://dx.doi.org/10.1037/0882-7974.18.3.361 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gitlin L. N., Harris L. F., McCoy M., Chernett N. L., Jutkowitz E., Pizzi L. T. Beat the Blues Team. A community-integrated home based depression intervention for older African Americans: Description of the Beat the Blues randomized trial and intervention costs. BMC Geriatrics. 2012;12:4. doi: 10.1186/1471-2318-12-4. http://dx.doi.org/10.1186/1471-2318-12-4 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gitlin L. N., Jacobs M., Earland T. V. Translation of a dementia caregiver intervention for delivery in homecare as a reimbursable Medicare service: Outcomes and lessons learned. Gerontologist. 2010;50:847–854. doi: 10.1093/geront/gnq057. http://dx.doi.org/10.1093/geront/gnq057 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gitlin L. N., Winter L., Dennis M. P., Corcoran M., Schinfeld S., Hauck W. W. A randomized trial of a multicomponent home intervention to reduce functional difficulties in older adults. Journal of the American Geriatrics Society. 2006;54:809–816. doi: 10.1111/j.1532-5415.2006.00703.x. http://dx.doi.org/10.1111/j.1532-5415.2006.00703.x . [DOI] [PubMed] [Google Scholar]

- Gitlin L. N., Winter L., Dennis M. P., Hodgson N., Hauck W. W. A biobehavioral home-based intervention and the well-being of patients with dementia and their caregivers: The COPE randomized trial. JAMA. 2010a;304:983–991. doi: 10.1001/jama.2010.1253.

- Gitlin L. N., Winter L., Dennis M. P., Hodgson N., Hauck W. W. Targeting and managing behavioral symptoms in individuals with dementia: A randomized trial of a nonpharmacological intervention. Journal of the American Geriatrics Society. 2010b;58:1465–1474. doi: 10.1111/j.1532-5415.2010.02971.x.

- Glasgow R. E. Evaluation models for theory-based interventions: The RE-AIM model. In: Glanz K., Rimer B. K., Lewis F. M., editors. Health behavior and health education: Theory, research, and practice. 3rd ed. New York: Jossey-Bass; 2002. pp. 531–544.

- Glasgow R. E. HMC research translation: Speculations about making it real and going to scale. American Journal of Health Behavior. 2010;34:833–840. doi: 10.5993/AJHB.34.6.17.

- Glasgow R. E., Lichtenstein E., Marcus A. C. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health. 2003;93:1261–1267. doi: 10.2105/AJPH.93.8.1261.

- Grady P. A. Advancing the health of our aging population: A lead role for nursing science. Nursing Outlook. 2011;59:207–209. doi: 10.1016/j.outlook.2011.05.017.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academies Press; 2001.

- Institute of Medicine. Priority areas for national action: Transforming health care quality. Washington, DC: National Academies Press; 2003.

- Institute of Medicine. Retooling for an aging America: Building the health care workforce. Washington, DC: National Academies Press; 2008.

- Jackson J. S., Knight K. M., Rafferty J. A. Race and unhealthy behaviors: Chronic stress, the HPA axis, and physical and mental health disparities over the life course. American Journal of Public Health. 2010;100:933–939. doi: 10.2105/AJPH.2008.143446.

- Jobe J. B., Smith D. M., Ball K., Tennstedt S. L., Marsiske M., Willis S. L., Kleinman K. ACTIVE: A cognitive intervention trial to promote independence in older adults. Controlled Clinical Trials. 2001;22:453–479. doi: 10.1016/S0197-2456(01)00139-8.

- Kleinman M. S., Mold J. W. Defining the components of the research pipeline. Clinical and Translational Science. 2009;2:312–314. doi: 10.1111/j.1752-8062.2009.00119.x.

- Lavis J. N., Robertson D., Woodside J. M., McLeod C. B., Abelson J.; Knowledge Transfer Study Group. How can research organizations more effectively transfer research knowledge to decision makers? Milbank Quarterly. 2003;81:221–248. doi: 10.1111/1468-0009.t01-1-00052. 171–172.

- Lovasi G. S., Hutson M. A., Guerra M., Neckerman K. M. Built environments and obesity in disadvantaged populations. Epidemiologic Reviews. 2009;31:7–20. doi: 10.1093/epirev/mxp005.

- McGlynn E. A., Asch S. M., Adams J., Keesey J., Hicks J., DeCristofaro A., Kerr E. A. The quality of health care delivered to adults in the United States. New England Journal of Medicine. 2003;348:2635–2645. doi: 10.1056/NEJMsa022615.

- Milstein B., Homer J., Briss P., Burton D., Pechacek T. Why behavioral and environmental interventions are needed to improve health at lower cost. Health Affairs. 2011;30:823–832. doi: 10.1377/hlthaff.2010.1116.

- Murray E., Treweek S., Pope C., MacFarlane A., Ballini L., Dowrick C., May C. Normalisation process theory: A framework for developing, evaluating and implementing complex interventions. BMC Medicine. 2010;8:63. doi: 10.1186/1741-7015-8-63.

- Richards K. C., Enderlin C. A., Beck C., McSweeney J. C., Jones T. C., Roberson P. K. Tailored biobehavioral interventions: A literature review and synthesis. Research and Theory for Nursing Practice: An International Journal. 2007;21:271–285. doi: 10.1891/088971807782428029.

- U.S. Department of Health and Human Services. HHS action plan to reduce racial and ethnic health disparities: A nation free of disparities in health and health care. Washington, DC: Author; 2011. Retrieved from http://minorityhealth.hhs.gov/npa/files/Plans/HHS/HHS_Plan_complete.pdf.

- Viswanathan M., Ammerman A., Eng E., Gartlehner G., Lohr K. N., Griffith D., Whitener L. Community-based participatory research: Assessing the evidence. Evidence Report/Technology Assessment (Summary). 2004;99:1–8.

- Westfall J. M., Mold J., Fagnan L. Practice-based research: “Blue Highways” on the NIH roadmap. JAMA. 2007;297:403–406. doi: 10.1001/jama.297.4.403.

- Wilson K. M., Brady T. J., Lesesne C.; NCCDPHP Work Group on Translation. An organizing framework for translation in public health: The Knowledge to Action framework. Preventing Chronic Disease. 2011;8:A46. Retrieved from http://www.cdc.gov/pcd/issues/2011/mar/10_0012.htm.

- Woolf S. H. The meaning of translational research and why it matters. JAMA. 2008;299:211–213. doi: 10.1001/jama.2007.26.