Abstract

Context

To favor the adoption of clinical decision support systems by physicians, it is important to consider how the interfaces of these systems present information. Interface design can focus on decision processes (guided navigation) or usability principles.

Objective

The aim of this study was to compare these two approaches in terms of perceived usability, accuracy rate, and confidence in the system.

Materials and methods

We displayed clinical practice guidelines for antibiotic treatment via two types of interface, which we compared in a crossover design. General practitioners were asked to provide responses for 10 clinical cases and the System Usability Scale (SUS) for each interface. We assessed SUS scores, the number of correct responses, and the confidence level for each interface.

Results

SUS score and the percentage of confident responses were significantly higher for the interface designed according to usability principles (81 vs 51, p=0.00004, and 88.8% vs 80.7%, p=0.004). The percentage of correct responses was similar for the two interfaces.

Discussion/conclusion

The interface designed according to usability principles was perceived to be more usable and inspired greater confidence among physicians than the guided navigation interface. Consideration of usability principles in the construction of an interface—in particular ‘effective information presentation’, ‘consistency’, ‘efficient interactions’, ‘effective use of language’, and ‘minimizing cognitive load’—seemed to improve perceived usability and confidence in the system.

Keywords: User-computer interface; Usability; Practice guideline; Physicians, Primary care; Antibiotic prescription

Introduction

Medical knowledge is voluminous and changes rapidly. If physicians are unaware of these changes, they may make incorrect medical decisions, resulting in inappropriate treatment. This may have consequences for both patients (morbidity, mortality)1 and the healthcare system (cost).1

Health authorities provide clinical practice guidelines (CPGs) to inform physicians of recent advances in medical knowledge and best practice for patient care. However, physicians do not always consult CPGs, which they find complex and difficult to use in clinical practice.2 3 Clinical decision support systems (CDSSs) that implement guidelines have been developed to facilitate the use of CPGs.4–6 However, physicians do not always use such systems7 because of barriers relating to CDSSs.8

If we are to promote the use of CDSSs by physicians, we need to determine the best way to present the information via interfaces.8 User interfaces can be designed in several ways. In one approach, designers try to present the decision process so as to facilitate physician navigation. Various kinds of ‘guided navigation’ can be designed for CDSSs.

Decision tree diagrams are used by health authorities9 10 to represent the decision process. They consist of static presentations with node and link diagrams.11 Tree diagrams provide physicians with an overview of the decision process. However, they require large amounts of available display space,11 and the information displayed is limited to a simple text label at each node.11

Hypertext links are used in many CDSSs.12–14 These textual links correspond to the nodes of the decision process. Physicians move through the hierarchy manually by clicking on textual links. This preserves the active reasoning of physicians.15 However, this approach is subject to several limitations: (i) the number of clicks depends on the depth of the hierarchy and may be large; (ii) there is no overview of the decision process; (iii) physicians may not remember where they are in the decision process (the ‘lost in hyperspace’ phenomenon),16 potentially leading to navigation errors.

Data entries and check boxes may also be included.17–20 Physicians input data (as free text or boxes to check) and press buttons to go through the decision process. Presentations of this type preserve the autonomy of the physician. However, (i) it takes time to input the necessary data,15 (ii) all fields must be completed even if only a fraction of the data is required for the decision process, and (iii) there is no overview of the decision tree.

Expand/contract interfaces are also used.21–23 The user has a global hierarchical view of the decision process, because the information content is concealed within individual nodes.24 Users may gradually reduce the search field by dynamic filtration25; this involves clicking on the node, so that items in the level immediately below appear,24 and adapting navigation across the hierarchy according to the result obtained after each mouse click.25 This approach also facilitates reading, because elements are read from top to bottom. However, ‘expand/contract’ interfaces have limitations: (i) the number of clicks depends on the depth of the hierarchy; (ii) there is no complete view of the overall tree structure25; (iii) the user must have a priori knowledge about navigation of the system.

Alternatively, designers may focus on usability principles.26 27 Human factors and ergonomics were developed and expanded in the domains of computer software and medicine after World War II.28 In 2009, the Healthcare Information and Management Systems Society (HIMSS)29 stressed the advantages of designs based on usability principles: simplicity, naturalness, consistency, minimization of the cognitive load, efficient interactions, forgiveness and feedback, effective use of language, effective information presentation and preservation of context.

Various techniques have been proposed for designing interfaces according to usability principles. Some of these techniques relate to CDSS content: (i) the use of concise and unambiguous language,30 based on a consistent terminology,30–33 to promote simplicity, consistency, efficient interactions, and effective use of language; (ii) the presentation of explanations and justifications to increase physician confidence30; (iii) the provision of advice, suggestions and alternatives, rather than orders,30 to increase compliance and to respect the autonomy of the physician.

Other techniques relate to CDSS display: (a) reduction of the number of screens to facilitate navigation31 32 and to promote efficient interactions30; (b) use of appropriate font sizes,29 acceptable contrast between text and background,29 and meaningful colors29 30 to improve readability; (c) organization of information (eg, grouping similar pieces of information together)30–32 to facilitate on-screen searches; (d) display of important information in more prominent positions to ensure that it is seen31 32; (e) use of tables,21 graphs,21 buttons,29 scroll bars,29 and iconic languages34–36 to ensure that the density of information is appropriate. Space-filling approaches, such as those based on Treemap principles, may also help to maximize the amount of information that can be displayed in the available display space.11 37

According to a recent systematic review,31 no quantitative evaluation has ever compared the impact of these two approaches on the usability of the resulting interface.

Objective

The aim of this study was to compare these two approaches—that is, ‘guided navigation’ versus an ‘interface based on usability principles’—in terms of perceived usability, accuracy rate, and user confidence, in the context of a CDSS for empiric antibiotic prescription, Antibiocarte. We will first describe the two interfaces and the evaluation protocol. We will then compare them in terms of perceived usability, accuracy rate, and the confidence of physicians in the two interfaces and discuss the results obtained.

Background

Antibiocarte is a website that was initially developed to display the natural susceptibility and level of acquired resistance of bacteria38 based on information from a knowledge base constructed from the summary of product characteristics of antibiotics and bacterial ontology data.39

The clinical use of Antibiocarte by physicians requires the addition of an entry by ‘disease’, corresponding to various clinical situations for empiric antibiotic prescription listed in CPGs.

CPGs can be structured into a decision tree, in which each node leads to a specific etiology and to a treatment adapted to the patient's profile. Thus, each leaf corresponds to a ‘fully described patient condition’ (eg, pharyngitis in a child under the age of 2 years with penicillin allergy), and leads to one of the following treatment recommendations: ‘hospitalization’, ‘antibiotic prescription’, ‘laboratory testing’, ‘monitoring’, or ‘no treatment’. ‘Guided navigation’ interfaces are appropriate for the display of information structured in this way, because the CPGs are structured as a decision tree with a specific pathway for each clinical situation.
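As an illustration, the decision-tree structuring described above can be sketched as follows. This is a minimal sketch, not the Antibiocarte implementation: the `Node` class, the `find_leaf` helper, and the depth of the tree are assumptions made for the example. Only the leaf ‘with asthma’ leading to ‘antibiotic prescription’ follows the sinusitis example used in this article; the other branches are placeholders and are not clinical recommendations.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# The five recommendation types named in the text.
ACTIONS = {
    "hospitalization",
    "antibiotic prescription",
    "laboratory testing",
    "monitoring",
    "no treatment",
}


@dataclass
class Node:
    """One node of a CPG decision tree (hypothetical structure)."""
    label: str
    action: Optional[str] = None          # set only on leaves
    children: List["Node"] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children


def find_leaf(root: Node, criteria: List[str]) -> Node:
    """Follow the given criteria from the root and return the leaf reached."""
    node = root
    for label in criteria:
        matches = [child for child in node.children if child.label == label]
        if not matches:
            raise KeyError(f"no branch {label!r} under {node.label!r}")
        node = matches[0]
    if not node.is_leaf() or node.action not in ACTIONS:
        raise ValueError("criteria do not describe a full patient condition")
    return node


# Illustrative tree. Branches other than 'with asthma' are placeholders.
tree = Node("sinusitis", children=[
    Node("frontal", children=[
        Node("uncomplicated", children=[
            Node("child", children=[
                Node("with asthma", action="antibiotic prescription"),
                Node("without asthma", action="monitoring"),   # placeholder
            ]),
        ]),
        Node("complicated", action="hospitalization"),          # placeholder
    ]),
])

path = ["frontal", "uncomplicated", "child", "with asthma"]
leaf = find_leaf(tree, path)
print(leaf.action)   # the recommended action at the leaf
print(len(path))     # one navigation step per level of the hierarchy
```

In a guided navigation interface, each element of `path` corresponds to one manual step, which is why the number of clicks grows with the depth of the hierarchy.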

Alternatively, CPGs can be structured by considering the general decision-making process for empiric antibiotic prescription from the physician's viewpoint, allowing a common representation for all clinical situations.40 The information contained in CPGs is structured by its nature (patient conditions, scores, risk factors, hospitalization criteria, etc) and use in the decision-making process (discriminating, explaining, alerting, documenting, etc), and is displayed in a specific space within the interface.40

The interfaces developed by these two approaches are referred to here as the ‘expand/contract’ and ‘at-a-glance’ interfaces.

The ‘expand/contract’ interface

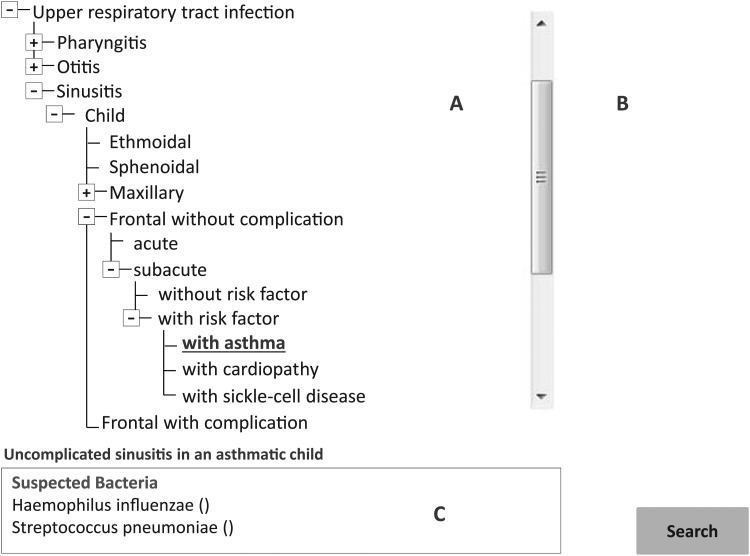

The first interface of Antibiocarte, the ‘expand/contract’ interface, is a guided navigation interface divided into three areas (figure 1).

Area A contains etiologic and therapeutic orientation criteria. These criteria are concealed within individual nodes, and can be viewed by clicking on the node concerned.24 Each node is a specialization of the previous patient condition.

Area B, on the right, is completed only if the action chosen is ‘hospitalization’, ‘laboratory testing’, ‘monitoring’, or ‘no treatment’.

Area C, at the bottom, is completed only if the action chosen is ‘antibiotic prescription’.

Figure 1.

The ‘expand/contract’ version of AntibioCarte. Example: Uncomplicated frontal sinusitis in an asthmatic child. The physician navigates through the decision process manually: he or she has to click on six crosses to arrive at the leaf level of the hierarchy. An additional click is then required on the leaf (with asthma). At this point, area C is highlighted: the names of the causal bacteria—Haemophilus influenzae and Streptococcus pneumoniae—are displayed, and the physician has to click on the button ‘search’ to reach the third page, which shows antibiotic spectra.

The ‘expand/contract’ interface does not display knowledge that physicians are supposed to master, such as hospitalization criteria or definitions.

Physicians navigate through the decision process manually. They click on each node of area A, until they arrive at the leaf level of the hierarchy, corresponding to the clinical situation sought. At this point, area B or C is highlighted, depending on the type of action. If the action is ‘hospitalization’, ‘laboratory testing’, ‘monitoring’, or ‘no treatment’, then the action is displayed in area B. If the action is ‘antibiotic prescription’, then the names of bacteria causing the infection are displayed in area C, and physicians have to click on a button, taking them to a third page. The third page displays antibiotic spectra with the recommended antibiotics flagged.

The ‘at-a-glance’ interface

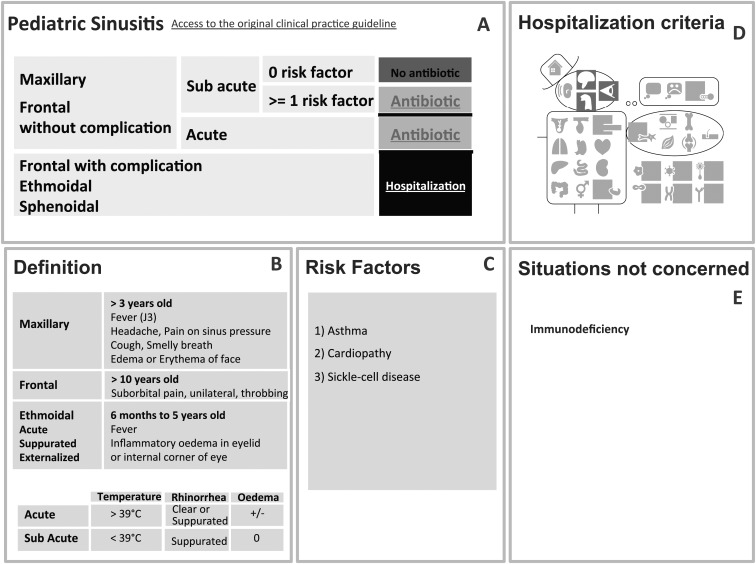

The second interface of Antibiocarte, the ‘at-a-glance’ interface, was constructed according to usability principles (table 1) and is divided into five areas (figure 2).40

Area A is a kind of Treemap representation, presenting the alternatives for the decision-making process in concise language. The last column displays the recommended action in intuitive colors (red: no prescription; green: antibiotic prescription; orange: monitoring or laboratory testing; black: hospitalization). Green boxes lead to a third page, which displays antibiotic spectra. A hyperlink to the original CPG is also provided. Area A is the first to be read because it is located in the upper left part of the screen.

Areas B and C, located below A, contain justifications of the decision process: definitions, scores, and risk factors are recalled. These areas clarify the terms used in the table in area A by explaining the decision variables and rendering them unambiguous.

Area D, on the right side of the screen, displays the criteria for hospitalization, with a graphical summary: Mister VCM, developed in our laboratory by Lamy et al34–36 (eg, a highlighted brain means that the patient should be hospitalized if he or she has neurological problems).

Area E, on the right side of the screen, shows situations not covered by the CPG.

Table 1.

Link between the design techniques29 30 and usability principles26 29 used to build the ‘at-a-glance’ interface

| Design techniques used | Effect on visualization of the decision process | Simplicity | Naturalness | Consistency | Minimizing cognitive load | Efficient interactions | Forgiveness and feedback | Effective use of language | Effective information presentation | Preservation of context |
|---|---|---|---|---|---|---|---|---|---|---|
| Space-filling approach | The amount of information in the available display space is maximized | x | ||||||||
| Organization into five fixed areas. Each area contains the same kind of information, whatever the clinical situation | On-screen searches are facilitated, because physicians know where to find information they need | x | x | x | x | x | ||||
| Localization of information. The most important information is prominent and located at the upper left part of the screen (area A) | The most important information is sure to be read | x | ||||||||
| Use of concise language to describe decision and action variables (area A) | Readability is improved | x | x | |||||||
| Decision process read from left to right | Readability is improved | x | x | |||||||
| Display of reminders of definitions and risk factors (areas B and C) | The decision process is rendered unambiguous. The physician's confidence is increased | x | x | |||||||
| Display of the criteria for hospitalization with Mr VCM | Density of information is reduced | x | x | |||||||
| Use of acceptable contrast between text and background | Readability is improved | x | ||||||||
| Use of meaningful colors | Readability is improved | x | x | |||||||
| Visualization of no more than three screens | Navigation is facilitated | x | x | |||||||
| Alternatives for the decision process are presented | Physician has an overview of the decision process | x | x | |||||||
| Hyperlink to CPGs is displayed | Physician's confidence is increased | x | ||||||||

CPG, clinical practice guideline.

Figure 2.

The ‘at-a-glance’ version of AntibioCarte. All clinical practice guidelines (CPGs) can be implemented in an ‘at-a-glance’ interface. Example: Uncomplicated frontal sinusitis in an asthmatic child. The physician first looks at area A, which is located in the upper left part of the screen. He or she scans across the decision table until the leaf of the hierarchy is reached. The last column displays the recommended action in intuitive colors; in this clinical situation, it shows a green button, meaning ‘antibiotic prescription’. The physician has to click on this button to reach the third page, which displays antibiotic spectra. Areas B, C and D are optional: the physician consults them only if he or she needs a reminder of definitions (areas B and C) or hospitalization criteria (area D). The information in area B is displayed in a table to facilitate comparison. Hospitalization criteria are displayed with a graphical summary, Mister VCM, which makes it possible to view a summarized version of the information before going into greater detail. For instance, the physician may see a highlighted eye, indicating that the patient should be hospitalized if he or she has ophthalmological problems. If the physician requires more detail, he or she can move the mouse over the ‘red eye’ of Mister VCM to reveal the corresponding tooltip. Area E displays situations not covered by the CPGs.

The interface was built according to a ‘space-filling approach’, because it displays all the knowledge required for antibiotic prescription, even that supposedly familiar to physicians, such as hospitalization criteria and definitions (eg, signs and symptoms).

The physician first selects the infectious disease and the patient profile. He or she then goes on to the second page, with the five areas. On this page, the physician navigates visually through the decision process, crossing the decision table from left to right, until arrival at the leaf level of the hierarchy. If the action is ‘hospitalization’, ‘laboratory testing’, ‘monitoring’, or ‘no treatment’, then the last column displays the action in intuitive colors. If the action is ‘antibiotic prescription’, the physician clicks on the green box to gain access to the third page. This third page displays a list of antibiotics and their activity spectra with the recommended antibiotics flagged. This system thus includes no more than three screens.

Both interfaces are generated automatically by PHP scripts from a structured knowledge base.

Materials and methods

Interface evaluation methods

Study design

We compared the perceived usability of the two interfaces by carrying out a two-period experiment in which each evaluator served as his or her own control and the order of interface evaluation was randomized (two-period crossover design). The evaluation was web-based and used the Firefox browser.

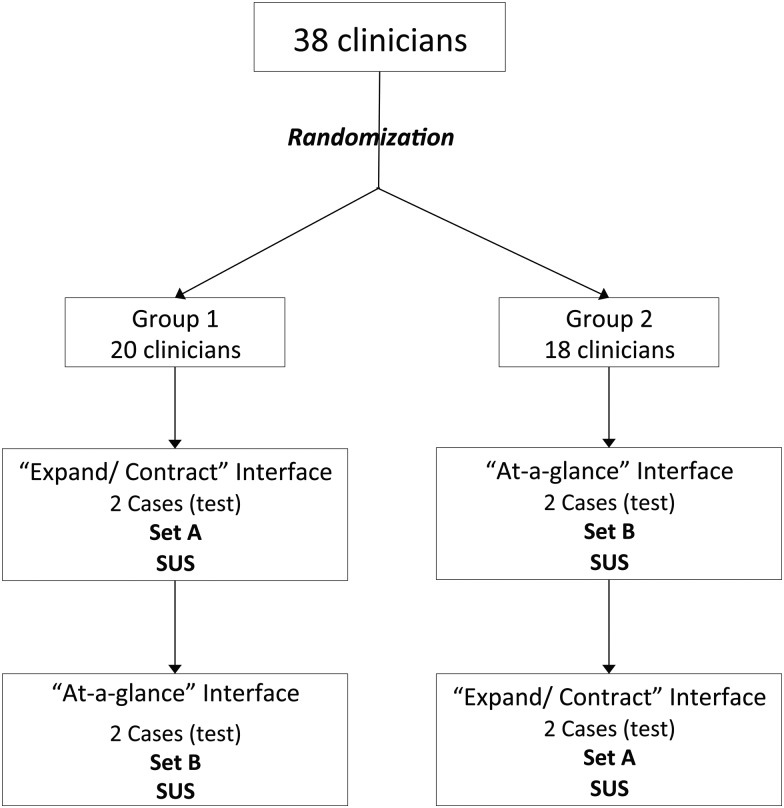

Physicians were contacted by an email sent to various general practitioners’ associations. Thirty-eight physicians were randomized to two groups (figure 3). In each group, the evaluation was carried out online and involved:

Reading a short manual describing the two interfaces and explaining the evaluation;

Filling in a form about demographic characteristics;

Connecting online with a user name and password;

Providing responses for two test clinical cases and 10 clinical cases, and completing the System Usability Scale (SUS),41 42 for each interface;

Writing optional ‘free comments’ for each interface.

Figure 3.

Crossover study. Sets A and B were considered to be similar because they were randomly selected. For each physician, the order of clinical cases was randomized in each set.

Constitution of two sets of clinical cases

We extracted 150 clinical situations from five CPGs. These 150 clinical situations were turned into 150 clinical cases, relating to urinary, upper or lower respiratory tract infections, and potentially leading to different types of action (‘antibiotic treatment’, ‘no treatment’, ‘hospitalization’, or ‘laboratory test/monitoring’). For instance, the clinical situation ‘Pneumonia in a child less than 3 years old with no signs of severity’ was converted into the clinical case: ‘Théo, 2 years old, has been coughing for 2 days. His temperature is 40°C. Auscultation reveals crepitant rales in the lower right lung. There are no signs of severity. We conclude that he has pneumonia in the lower right lung. What treatment would you prescribe?’

Three randomizations of these 150 clinical cases were carried out. The first randomization yielded two clinical cases, which were used to test the interface at the start of the evaluation, for both interfaces. These test cases allowed the physicians to get used to the two interfaces.

The second and third randomizations yielded two similar sets of clinical cases, which were used for the evaluation. These randomizations were stratified for (i) the type of action (eg, ‘antibiotic treatment’), (ii) the type of disease (eg, ‘cystitis’) and (iii) the presence of allergy (eg, ‘penicillin allergy’). The second randomization generated ‘set A’ of 10 clinical cases, which was used to evaluate the ‘expand/contract’ interface. The third randomization generated ‘set B’ of 10 clinical cases, which was used to evaluate the ‘at-a-glance’ interface. The randomization of clinical cases ensured that ‘set A’ and ‘set B’ were similar.

Distribution of clinical cases by group of physicians

The physicians in group 1 first evaluated the ‘expand/contract’ interface, with ‘set A’, and completed the SUS for this interface. They then evaluated the ‘at-a-glance’ interface, with ‘set B’, and completed the SUS (figure 3).

The physicians in group 2 first evaluated the ‘at-a-glance’ interface, with ‘set B’, and completed the SUS for this interface. They then evaluated the ‘expand/contract’ interface, with ‘set A’, and completed the SUS (figure 3).

For each evaluator, and for each set of clinical cases, we randomized the order of clinical cases. In both groups, the physicians were allowed to familiarize themselves with the two interfaces, using the two test clinical cases, before the start of the evaluation.
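The allocation described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the procedure actually used in the study: the function name `allocate_crossover`, the equal split into two groups, and the fixed random seed are all hypothetical. What is taken from the text is the pairing of ‘set A’ with the ‘expand/contract’ interface and ‘set B’ with the ‘at-a-glance’ interface, the reversed order of interfaces in group 2, and the per-physician randomization of case order.

```python
import random


def allocate_crossover(physician_ids, set_a, set_b, seed=0):
    """Randomize physicians to two groups and shuffle case order per physician.

    Group 1: 'expand/contract' with set A, then 'at-a-glance' with set B.
    Group 2: 'at-a-glance' with set B, then 'expand/contract' with set A.
    The equal split between groups is an assumption of this sketch.
    """
    rng = random.Random(seed)
    ids = list(physician_ids)
    rng.shuffle(ids)
    half = (len(ids) + 1) // 2

    plan = {}
    for position, pid in enumerate(ids):
        group = 1 if position < half else 2
        sequence = [("expand/contract", set_a), ("at-a-glance", set_b)]
        if group == 2:
            sequence.reverse()
        plan[pid] = {
            "group": group,
            # Each period: (interface, cases in a physician-specific order).
            "periods": [
                (interface, rng.sample(cases, len(cases)))
                for interface, cases in sequence
            ],
        }
    return plan


# Hypothetical identifiers for the 10 cases of each set.
set_a = [f"A{i}" for i in range(1, 11)]
set_b = [f"B{i}" for i in range(1, 11)]
plan = allocate_crossover(range(38), set_a, set_b)
print(plan[0]["group"], plan[0]["periods"][0][0])
```

Because each interface is always evaluated with its own set of cases, a physician never sees the same clinical case twice.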

Statistical analysis

Perceived usability was measured by obtaining an SUS score.41 SUS is a 10-item scale, in which items are assessed with a Likert scale. Physicians indicate the degree of agreement on a five-point scale for each item, making it possible to calculate a total SUS score, which should lie between 0 and 100.41
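For readers unfamiliar with the scale, the standard SUS scoring rule can be sketched as follows. Each odd-numbered (positively worded) item contributes its rating minus 1, each even-numbered (negatively worded) item contributes 5 minus its rating, and the sum is multiplied by 2.5. The function name `sus_score` is an assumption of this sketch; the scoring rule is the standard one for the SUS rather than anything specific to this study.

```python
def sus_score(responses):
    """Return the SUS score (0-100) from ten ratings on a 1-5 scale.

    `responses` lists the ratings for items 1 to 10, in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each rating must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1      # odd items are positively worded
        else:
            total += 5 - rating      # even items are negatively worded
    return total * 2.5


print(sus_score([3] * 10))  # neutral answers on every item
```

A physician who answers ‘3’ to every item therefore obtains a score of 50, the midpoint of the scale.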

Accuracy rate was measured by determining the number of correct responses for each clinical case.29 A response was considered ‘correct’ if the action chosen by the physician corresponded to the action recommended in the CPGs, whatever the level of recommendation. The first author determined the exact matches by comparing each response with the CPGs, which were taken as the gold standard.

Confidence level was measured with a four-point Likert scale for each clinical case. A response was considered ‘confident’ if the physician affirmed that he/she had confidence in the system (levels 3 and 4 on the Likert scale).
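The dichotomization of the confidence scale can be sketched as follows. This is an illustrative sketch: the function name `percent_confident` and the example ratings are hypothetical, and only the rule (levels 3 and 4 count as ‘confident’) comes from the text.

```python
def percent_confident(levels):
    """Percentage of responses rated 3 or 4 on the four-point scale."""
    if not levels:
        raise ValueError("at least one response is required")
    if any(level not in (1, 2, 3, 4) for level in levels):
        raise ValueError("each level must be an integer from 1 to 4")
    confident = sum(1 for level in levels if level >= 3)
    return 100.0 * confident / len(levels)


# Hypothetical ratings given by one physician for 10 clinical cases.
print(percent_confident([4, 3, 3, 2, 4, 4, 3, 4, 1, 3]))
```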

Each question of the SUS and free comments were also studied.

We compared the SUS score, the number of correct responses, and the number of confident responses between the two interfaces with R statistical software, version 2.15.1.

SUS scores were compared by analysis of variance for repeated measures.

The numbers of correct responses and confident responses were compared in a generalized logistic regression model for dependent data. Subgroup analyses were also carried out by type of action.

Results

Characteristics of the physicians

There were no significant differences in the characteristics of the physicians in groups 1 and 2 (table 2). None were color blind and none had had any training on antibiotic treatment within the preceding 12 months. All worked in computerized offices. Three of the 38 randomized physicians were excluded because they evaluated only one interface, leaving 35 physicians for analysis.

Table 2.

Characteristics of the physicians

| Characteristic | Group 1 (n=18) | Group 2 (n=17) | p Value |
|---|---|---|---|
| Female | 67% | 65% | 1 |
| Mean age (years) | 31 (SD=3) | 30 (SD=2) | 0.29 |
| Mean time in practice (years) | 3 (SD=2) | 1.9 (SD=1) | 0.096 |
| Mean number of patients seen per week | 69 (SD=35) | 60 (SD=24) | 0.36 |
| Mean number of antibiotics prescribed per week | 11.7 (SD=11) | 7.3 (SD=5) | 0.15 |

Perceived usability

SUS score was significantly higher for the ‘at-a-glance’ interface than for the ‘expand/contract’ interface (76 vs 62; p=0.002) over the entire study period.

However, we also analyzed the SUS score for each period separately because there was a significant interface–period interaction (p=0.001) (table 3). In period 1, physicians evaluated the ‘expand/contract’ interface in group 1 and the ‘at-a-glance’ interface in group 2. In each group, the first interface was effectively compared with ‘nothing’ because the physicians had not yet seen the other interface. There was no significant difference between the two interfaces in period 1 (72 vs 70, p=0.732).

Table 3.

SUS score, accuracy rate, and confidence for each interface: analysis for each period separately

| | Expand/contract | At-a-glance | δ (‘At-a-glance’ − ‘Expand/contract’) |
|---|---|---|---|
| SUS | | | |
| Period 1 | 72 | 70 | −2 (p=0.732) |
| Period 2 | 51 | 81 | +30 (p=0.00004) |
| Accuracy rate | | | |
| Period 1 | 62.4% | 68.2% | +5.8 (p=0.234) |
| Period 2 | 66.3% | 69.5% | +3.2 (p=0.551) |
| Confidence | | | |
| Period 1 | 85.4% | 86.5% | +1.1 (p=0.812) |
| Period 2 | 75.7% | 91.5% | +15.8 (p=0.001) |

SUS, System Usability Scale.

In period 2, physicians evaluated the other interface —that is, the ‘at-a-glance’ interface in group 1 and the ‘expand/contract’ interface in group 2. In each group, the opinion that the physicians had of the interface may have been conditioned by their experience with the first interface seen in period 1 (results in period 2 may be influenced by the intervention in period 1 because of a carry-over effect43). The physicians in group 1 perceived the second interface (ie, the ‘at-a-glance’ interface) to be more usable than the first interface, and the physicians in group 2 perceived the second interface (ie, the ‘expand/contract’) to be less usable than the first interface. A highly significant difference (p=0.00004) was detected in period 2: the SUS score was 51 for the ‘expand/contract’ interface and 81 for the ‘at-a-glance’ interface. According to the scale proposed by Bangor et al44 45 (score of 0–25: worst imaginable; score of 25–39: poor; score of 39–52: OK; score of 52–73: good; score of 73–85: excellent; score of 85–100: best imaginable), perceived usability was ‘OK’ for the ‘expand/contract’ interface and ‘excellent’ for the ‘at-a-glance’ interface in period 2.
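The adjective ratings quoted above can be applied to any SUS score with a simple lookup, sketched below. The function name `sus_adjective` is hypothetical. The ranges as quoted share their boundary values (for example, 25 ends one range and starts the next), so this sketch assumes that each range includes its lower bound and excludes its upper bound, except that 100 is included in the last range.

```python
# Upper bounds of the ranges quoted in the text, in increasing order.
# Assumption: lower bound inclusive, upper bound exclusive (100 inclusive).
SUS_BANDS = [
    (25, "worst imaginable"),
    (39, "poor"),
    (52, "OK"),
    (73, "good"),
    (85, "excellent"),
    (100, "best imaginable"),
]


def sus_adjective(score):
    """Map a SUS score (0-100) to the adjective rating of its range."""
    if not 0 <= score <= 100:
        raise ValueError("a SUS score lies between 0 and 100")
    for upper_bound, adjective in SUS_BANDS:
        if score < upper_bound:
            return adjective
    return SUS_BANDS[-1][1]   # score == 100


# Period 2 scores reported in table 3.
print(sus_adjective(51))  # 'expand/contract' interface
print(sus_adjective(81))  # 'at-a-glance' interface
```

With these assumptions, the period 2 scores of 51 and 81 fall in the ‘OK’ and ‘excellent’ ranges, respectively, in agreement with the ratings reported in the text.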

Accuracy rate

For the entire study period, 64.3% of responses were correct with the ‘expand/contract’ interface versus 68.9% with the ‘at-a-glance’ interface. This difference was not significant (p=0.179). There was no interface–period interaction, but we nevertheless analyzed the number of correct responses in each period separately (table 3) and found that there was no significant difference between the two interfaces (p=0.234 in period 1, p=0.551 in period 2).

For the action ‘laboratory test/monitoring’, the percentage of correct responses was significantly higher for the ‘at-a-glance’ interface (76.5% vs 29.4%; p=0.0002) for the entire study period.

No significant difference between interfaces was observed for the percentages of correct responses for the actions ‘antibiotic’ (p=0.142), ‘no treatment’ (p=0.571) and ‘hospitalization’ (p=0.484).

Confidence

Over the entire study period, the percentage of confident responses was significantly higher for the ‘at-a-glance’ interface than for the ‘expand/contract’ interface (88.8% vs 80.7%; p=0.004). There was no interface–period interaction, but we nevertheless analyzed the number of confident responses for each period separately (table 3). In period 1, there was no significant difference between the two interfaces (85.4% vs 86.5%, p=0.812).

In period 2, physicians had seen the two interfaces. In group 1, the second interface (ie, the ‘at-a-glance’ interface) inspired more confidence than the first, whereas in group 2, the second interface (ie, the ‘expand/contract’ interface) inspired less confidence than the first. A highly significant difference was detected in period 2 (75.7% vs 91.5%; p=0.001).

For the action ‘antibiotic’, the percentage of confident responses was significantly higher for the ‘at-a-glance’ interface than for the ‘expand/contract’ interface (88.9% vs 78.6%; p=0.007). However, we had to analyze each period separately because there was a significant interface–period interaction (p=0.007). In period 1, there was no significant difference between the two interfaces (p=0.966). In period 2, the percentage of confident responses was significantly higher for the ‘at-a-glance’ interface than for the ‘expand/contract’ interface (93.4% vs 72.9%; p=0.0003), possibly because of a carry-over effect.

No significant differences in the percentages of confident responses were found for the actions ‘no treatment’ (p=0.480), ‘hospitalization’ (p=0.061), and ‘laboratory test’ (p=0.101).

Qualitative analysis

Qualitative analysis of each SUS question separately

The ‘at-a-glance’ interface appeared to be more usable than the ‘expand/contract’ interface in terms of frequency of use, ease of use, well-integrated functions, learning speed, and confidence in the system (see online supplementary appendix 1).

Qualitative analysis of free comments

The reminders of definitions for risk factors, scores, and criteria for diagnosis, severity, and hospitalization in the ‘at-a-glance’ interface seemed to be appreciated by the physicians (table 4). The lack of these reminders in the ‘expand/contract’ interface seemed to make the decision process ambiguous.

Table 4.

Free comments: extraction of terms used by physicians

| | Expand/contract interface (n) | At-a-glance interface (n) |
|---|---|---|
| Amount of information | ▸ Reminders of definitions of risk factors, scores, and criteria for diagnosis, severity, and hospitalization are missing (n=9) | ▸ Reminders of definitions of risk factors, scores, and criteria for diagnosis, severity, and hospitalization are helpful (n=8) ▸ The source of the CPGs is present and it is important to know the source (n=1) ▸ Names of etiologic bacteria are missing (n=1) ▸ Overload of information (n=1) |
| Organization of the area | ▸ The area on the right does not stand out enough (n=3) | ▸ Well-organized, well-structured (n=3) ▸ Hospitalization criteria should be located below and on the left (n=1) |
| Hierarchy of the decision process | ▸ Errors in hierarchy (n=7) ▸ Overview is missing (n=1) ▸ In case of doubt, it is necessary to explore manually the different routes in the decision-making process (n=1) ▸ In case of error, it is necessary to start again from the beginning (n=1) | ▸ Overview of all clinical situations (n=6) |
| Clicks | ▸ Many clicks (n=3) ▸ It is difficult to click on the cross (n=2) | ▸ Few clicks (n=1) |
| Visuals | ▸ Hospitalization criteria unclear (n=2) ▸ Useful visuals (n=1) ▸ Useful colors (n=1) | |
| Confidence | ▸ Low confidence (n=2) | |
| Positive descriptive | ▸ Useful, practical (n=4) ▸ Pleasant (n=3) ▸ Clear (n=2) ▸ Easy (n=2) ▸ Rapid (n=1) ▸ Intuitive (n=1) | ▸ Easy, simple (n=7) ▸ Pleasant, user-friendly (n=6) ▸ Useful, practical (n=4) ▸ Excellent (n=3) ▸ Clear (n=2) ▸ Ergonomic (n=2) ▸ Rapid (n=3) ▸ Intuitive (n=1) ▸ Natural (n=1) ▸ Facilitates learning (n=1) ▸ Facilitates medical encoding (n=1) |
| Negative descriptive | ▸ Not practical (n=5) ▸ Not intuitive (n=4) ▸ Long, tedious, time-consuming (n=3) ▸ Not very pleasant or user-friendly (n=2) ▸ Complicated (n=2) ▸ Not ergonomic (n=1) ▸ Needed help (manual consultation) (n=1) | ▸ Long (n=2) ▸ Not very practical (n=1) ▸ Not very pleasant (n=1) ▸ Need for time to adapt (n=1) ▸ Worried would prescribe more antibiotics with this system (n=1) |

n, number of physicians writing similar comments.

CPG, clinical practice guideline.

The overview of all clinical situations in the ‘at-a-glance’ interface was appreciated by the physicians. The lack of this overview in the ‘expand/contract’ interface limited the utility of this interface: (i) in the case of doubt, the physician had to navigate manually through the different decision-making routes; (ii) in the case of error, the physician had to start again from the beginning; (iii) physicians felt that there were errors in the hierarchy; (iv) they also felt that a large number of clicks were required.

“Before carrying out the evaluation, I thought I would prefer the “expand/contract” interface. However, after carrying out the evaluation, I preferred the “at-a-glance” interface, for several reasons: First, it provided the best overview. With the “expand/contract” interface, you are not sure whether you are making the right decision, and so you are forced to expand all the possibilities… Second, you can see a large amount of information at a glance, and as it is well organized, it is useful and usable. Third, there are 10 times fewer mouse clicks…”.

Discussion

The interface designed on the basis of usability principles was found to be more usable and inspired more confidence than that based on guided navigation. Accuracy rate was similar for the two interfaces. To our knowledge, this is the first study to provide evidence supporting the consideration of usability principles in CDSS design.

This study has several strengths. First, we carried out a controlled trial: two-period crossover design, randomization of physicians, clinical cases, and the order of clinical cases for each physician. We used two different sets of clinical cases for each interface to prevent a memory effect (physicians being reminded of their previous responses to clinical cases when evaluating the second interface). We used a two-period crossover design to show a significant difference in perceived usability between the two interfaces (we assume that the carry-over effect43 present in period 2 led to the detection of a significant difference).
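The allocation scheme described above can be sketched in a few lines of Python. This is only an illustration of a two-period crossover with disjoint case sets, not the procedure actually used in the study: the function name `allocate_crossover`, the physician and case identifiers, and the even split of the case pool are all assumptions.

```python
import random

def allocate_crossover(physician_ids, case_ids, seed=0):
    """Give each physician a random interface order and two disjoint case sets.

    Hypothetical helper: the even split of cases between periods is an assumption.
    """
    rng = random.Random(seed)
    interfaces = ["expand/contract", "at-a-glance"]
    plan = {}
    for pid in physician_ids:
        order = interfaces[:]
        rng.shuffle(order)              # which interface is seen in period 1
        cases = list(case_ids)
        rng.shuffle(cases)              # random split and random order of cases
        half = len(cases) // 2
        plan[pid] = [
            {"period": 1, "interface": order[0], "cases": cases[:half]},
            {"period": 2, "interface": order[1], "cases": cases[half:]},
        ]
    return plan

# Example with made-up identifiers: 3 physicians, a pool of 20 cases.
plan = allocate_crossover(["gp1", "gp2", "gp3"], range(1, 21), seed=42)
```

Because the two case sets never overlap for a given physician, answers given in period 1 cannot be recalled in period 2, which is the memory effect the design is meant to prevent.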

Second, we obtained results that were largely in favor of the ‘at-a-glance’ interface. The perceived usability was ‘OK’ for the ‘expand/contract’ and ‘excellent’ for the ‘at-a-glance’ interface. A qualitative analysis of the free comments identified four usability principles that seemed to be appreciated by the physicians:

‘Simplicity’: physicians seemed to find the ‘at-a-glance’ interface ‘easy’ to use.

‘Effective use of language’: explanations, justifications, and reminders for decision variables were popular with physicians because they rendered the decision process unambiguous. This was confirmed by subgroup analyses by type of action: for the action ‘laboratory test’, the accuracy rate was higher (76.5% vs 29.4%) for the ‘at-a-glance’ interface, probably because of the explanations and reminders provided, which were not present in the ‘expand/contract’ interface.

‘Effective information presentation’: the overview of the decision process in the ‘at-a-glance’ interface was also appreciated. This increased physician confidence because it was possible to see all the possible alternatives before making a decision.

‘Efficient interactions’: the decrease in the number of clicks required seemed to be important to the physicians.
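The adjective ratings quoted above (‘OK’ and ‘excellent’) are derived from SUS scores. As a reminder of how such scores are obtained, the sketch below applies the standard SUS scoring rule to ten responses coded 1 to 5. The mapping to an adjective is an assumption made for illustration: it picks the adjective whose mean score, as reported by Bangor et al. (reference 45), is closest to the observed score, and the listed means should be checked against that paper before reuse.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for ten item responses, each coded 1 to 5."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten responses coded 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5

# Mean SUS score per adjective (assumed values, to be verified in reference 45).
ADJECTIVE_MEANS = {
    "Worst imaginable": 12.5, "Awful": 20.3, "Poor": 35.7, "OK": 50.9,
    "Good": 71.4, "Excellent": 85.5, "Best imaginable": 90.9,
}

def nearest_adjective(score):
    """Illustrative rule: adjective whose mean score is closest to `score`."""
    return min(ADJECTIVE_MEANS, key=lambda adj: abs(ADJECTIVE_MEANS[adj] - score))
```

Under this nearest-mean rule, the two mean scores reported in this study (51 and 81) fall closest to ‘OK’ and ‘Excellent’, respectively.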

It would be interesting to confirm the results of the qualitative analysis of free comments by carrying out an evaluation with qualitative methods, such as observations, interviews, and focus groups.46 47

Confidence in the system was 88.8% for the ‘at-a-glance’ interface versus 80.7% for the ‘expand/contract’ interface. We can assume that this significantly higher confidence rate is due to the design and not the scientific content of the guideline, because we controlled this potential bias by comparing two interfaces presenting the same scientific content taken from the same CPGs. This confirmed the potential effect of interface design on the degree of confidence felt by the physicians.48 Confidence in the system should be considered in CDSS design because it is an important factor favoring the use of these systems.49

Third, we proposed an ‘at-a-glance’ interface derived from a general model of the decision process for antibiotic prescription.40 Thus, all CPGs were automatically implemented from a common knowledge base constructed from this general model. The cost of custom design for each clinical situation is thereby considerably reduced. Designing interfaces according to a general model of the decision process in a particular medical domain may make it possible to decrease the cost of design, and this factor should systematically be considered in the development and maintenance of CDSSs.50 51

This study also has several limitations. The evaluators were all rather young because of the mode of recruitment: volunteering, contact by email, physicians’ associations. However, all these physicians are potential future users of these systems.

Pre-evaluation training was limited to the reading of a short manual, practice with two test clinical cases, and the possibility of contacting the first author if necessary. This may explain why some of the new concepts used in the ‘at-a-glance’ interface were ambiguous for a few physicians. For instance, VCM language, used to display hospitalization criteria, would be easily understandable after a short training session.34 35

We did not measure three of the five metrics described by the HIMSS for evaluating usability.29

The first of these metrics is ‘efficiency’, corresponding to the speed with which a user is able to complete a task, generally measured as the time taken to complete a task.29 52 Our study design made it impossible to evaluate this metric: time could not be measured reliably with the web interface used for the evaluation, because we could not determine whether physicians carried out the entire evaluation in one go or in several sessions. Data analysis suggested that a few physicians interrupted the evaluation, because they logged on to the website more than once. However, efficiency can also be measured by determining the number of mouse clicks29 or the number of back-button uses.29 A qualitative analysis of free comments showed that too many clicks were required in the ‘expand/contract’ interface. No such comments were made for the ‘at-a-glance’ interface, with which it was possible to complete the decision process in one to three clicks,40 corresponding to 30 s to 2 min of interaction time (our estimate).40 Short interaction times are a major factor in searches for information53 and are essential for the adoption of CDSSs.8

‘Ease of learning’ was another metric that we did not evaluate. It can be measured by determining the time spent using a manual or help function or performance time.29 Our results suggested that the ‘at-a-glance’ interface was easier to learn than the ‘expand/contract’ interface. Separate analyses of SUS questions revealed that 91.5% of physicians felt that they did not need technical support to be able to use the ‘at-a-glance’ interface compared with only 77.1% with the ‘expand/contract’ interface. Moreover, the analysis of free comments revealed that one physician had had to read the manual during the evaluation of the ‘expand/contract’ interface.

‘Cognitive load’ is the third metric that we did not evaluate. However, the features of the ‘at-a-glance’ interface may minimize cognitive load, because information is organized in a similar and consistent way for all clinical situations.
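The click-based efficiency measures mentioned above, and the difficulty raised by physicians completing the evaluation over several sessions, could be addressed in a future evaluation by logging interface events. The sketch below is a minimal illustration, not something that was implemented in this study. The log format (a time-sorted list of `(timestamp, kind)` pairs with `kind` equal to `"click"` or `"back"`) and the 30 min threshold used to detect an interruption are assumptions.

```python
from datetime import datetime, timedelta

def efficiency_metrics(events, session_gap=timedelta(minutes=30)):
    """Summarize clicks, back-button uses, sessions and active time for one task.

    `events` is a time-sorted list of (timestamp, kind) tuples, where kind is
    "click" or "back" (hypothetical log format).
    """
    clicks = sum(1 for _, kind in events if kind == "click")
    backs = sum(1 for _, kind in events if kind == "back")
    sessions = 1 if events else 0
    active = timedelta(0)
    for (prev, _), (curr, _) in zip(events, events[1:]):
        gap = curr - prev
        if gap > session_gap:
            sessions += 1       # long pause: treat as a new session
        else:
            active += gap       # only time spent within a session is counted
    return {
        "clicks": clicks,
        "back_uses": backs,
        "sessions": sessions,
        "active_seconds": active.total_seconds(),
    }

# Example with made-up events: the physician pauses for two hours mid-task.
t0 = datetime(2013, 5, 26, 9, 0, 0)
log = [
    (t0, "click"),
    (t0 + timedelta(seconds=10), "click"),
    (t0 + timedelta(seconds=20), "back"),
    (t0 + timedelta(hours=2), "click"),
    (t0 + timedelta(hours=2, seconds=5), "click"),
]
summary = efficiency_metrics(log)
```

Excluding gaps longer than the threshold keeps an interrupted evaluation from inflating the measured task time, at the cost of an arbitrary cut-off that would need to be justified.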

Another limitation of this study is that our evaluation of usability focused on the user's perception and ability to interact with the system correctly.46 We evaluated the three-component ‘system–user–task’ interaction with fictional clinical situations, and we did not take into account the ‘environment’ factor.46 This factor would have to be taken into account to confirm our results in clinical practice, because implementation in a real environment may generate results different from those obtained in a laboratory setting.46

Finally, this evaluation of the two interfaces related specifically to antibiotic treatment. The possibility of generalization to other fields of medicine should also be evaluated.

Conclusion

The interface designed according to usability principles was more usable and inspired more confidence among clinicians than the guided navigation interface. Thus, consideration of usability principles in the construction of an interface, in particular ‘effective information presentation’, ‘consistency’, ‘efficient interactions’, ‘effective use of language’, and ‘minimizing cognitive load’, seemed to improve perceived usability and confidence in the system.

Acknowledgments

We would like to thank all physicians for freely agreeing to carry out this evaluation.

Footnotes

Contributors: RT and CD designed the ‘at-a-glance’ interface. CD designed the ‘expand/contract’ interface. RT, CD, and AV designed the evaluation protocol. RT recruited physicians for evaluation. RT and J-PJ carried out the statistical analysis of data. RT wrote the article. CD and AV revised it critically for important intellectual content. RT, CD, AV and J-PJ approved the final version to be published.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Davey PG, Marwick C. Appropriate vs. inappropriate antimicrobial therapy. Clin Microbiol Infect 2008;14(Suppl 3):15–21.

- 2. Lugtenberg M, Zegers-van Schaick JM, Westert GP, et al. Why don't physicians adhere to guideline recommendations in practice? An analysis of barriers among Dutch general practitioners. Implement Sci 2009;4:54.

- 3. Cabana MD, Rand CS, Powe NR, et al. Why don't physicians follow clinical practice guidelines? A framework for improvement. JAMA 1999;282:1458–65.

- 4. Shiffman RN, Liaw Y, Brandt CA, et al. Computer-based Guideline Implementation Systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc 1999;6:104–14.

- 5. Boxwala AA, Rocha BH, Maviglia S, et al. A multi-layered framework for disseminating knowledge for computer-based decision support. J Am Med Inform Assoc 2011;18(Suppl 1):i132–9.

- 6. Maviglia SM, Zielstorff RD, Paterno M, et al. Automating complex guidelines for chronic disease: lessons learned. J Am Med Inform Assoc 2003;10:154–65.

- 7. Linder JA, Schnipper JL, Tsurikova R, et al. Documentation-based clinical decision support to improve antibiotic prescribing for acute respiratory infections in primary care: a cluster randomised controlled trial. Inform Prim Care 2009;17:231–40.

- 8. Moxey A, Robertson J, Newby D, et al. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Inform Assoc 2010;17:25–33.

- 9. National Institute for Health and Clinical Excellence. NHS Clinical Knowledge Summaries. (26 May 2013). http://www.nice.org.uk/

- 10. Haute Autorité de Santé. (26 May 2013). http://www.has-sante.fr

- 11. Johnson B, Shneiderman B. Tree-Maps: a space-filling approach to the visualization of hierarchical information structures. Proceedings of the 2nd conference on Visualization ‘91; Los Alamitos, CA, USA: IEEE Computer Society Press, 1991:284–91.

- 12. Litvin CB, Ornstein SM, Wessell AM, et al. Adoption of a clinical decision support system to promote judicious use of antibiotics for acute respiratory infections in primary care. Int J Med Inform 2012;81:521–6.

- 13. Litvin CB, Ornstein SM, Wessell AM, et al. Use of an electronic health record clinical decision support tool to improve antibiotic prescribing for acute respiratory infections: the ABX-TRIP study. J Gen Intern Med 2013;28:810–16.

- 14. Séroussi B, Bouaud J, Antoine EC. ONCODOC: a successful experiment of computer-supported guideline development and implementation in the treatment of breast cancer. Artif Intell Med 2001;22:43–64.

- 15. Bouaud J, Séroussi B, Antoine EC, et al. Hypertextual navigation operationalizing generic clinical practice guidelines for patient-specific therapeutic decisions. Proceedings of AMIA Symposium; 1998, 488–92.

- 16. Theng YL, Thimbleby H, Jones M. Lost in hyperspace: psychological problem or bad design? Asia-Pacific Computer-Human Interaction 1996:387–96.

- 17. Rubin MA, Bateman K, Donnelly S, et al. Use of a personal digital assistant for managing antibiotic prescribing for outpatient respiratory tract infections in rural communities. J Am Med Inform Assoc 2006;13:627–34.

- 18. Linder JA, Schnipper JL, Volk LA, et al. Clinical decision support to improve antibiotic prescribing for acute respiratory infections: results of a Pilot study. AMIA Annu Symp Proc 2007;11:468–72.

- 19. Lee N-J, Bakken S. Development of a prototype personal digital assistant-decision support system for the management of adult obesity. Int J Med Inform 2007;76(S2):281–92.

- 20. Hoeksema LJ, Bazzy-Asaad A, Lomotan EA, et al. Accuracy of a computerized clinical decision-support system for asthma assessment and management. J Am Med Inform Assoc 2011;18:243–50.

- 21. Starren J, Johnson SB. An object-oriented taxonomy of medical data presentations. J Am Med Inform Assoc 2000;7:1–20.

- 22. Bell DS, Greenes RA, Doubilet P. Form-based clinical input from a structured vocabulary: initial application in ultrasound reporting. Proceedings of Annual Symposium on Computer Application in Medical Care; 1992, 789–90.

- 23. Bell DS, Greenes RA. Evaluation of UltraSTAR: performance of a collaborative structured data entry system. Proceedings of Annual Symposium on Computer Application in Medical Care; 1994, 216–22.

- 24. Chimera R, Shneiderman B. An exploratory evaluation of three interfaces for browsing large hierarchical tables of contents. ACM Trans Inform Syst 1994;12:383–406.

- 25. Hascoët M, Beaudouin-Lafon M. Visualisation interactive d'information. I3: Inform Interact Intell 2001;1:77–108.

- 26. Shneiderman B, Plaisant C. Designing the user interface: strategies for effective human-computer interaction. 4th edn. Pearson Addison Wesley, 2004.

- 27. Saleem JJ, Flanagan ME, Wilck NR, et al. The next-generation electronic health record: perspectives of key leaders from the US Department of Veterans Affairs. J Am Med Inform Assoc 2013;20(e1):e175–7.

- 28. Human Factors and Ergonomics Society (26 May 2013). http://www.hfes.org

- 29. Belden JL, Grayson R, Barnes J. Defining and Testing EMR Usability: Principles and Proposed Methods of EMR Usability Evaluation and Rating. Healthcare Information and Management Systems Society (HIMSS); 2009.

- 30. Horsky J, Schiff GD, Johnston D, et al. Interface design principles for usable decision support: a targeted review of best practices for clinical prescribing interventions. J Biomed Inform 2012;45:1202–16.

- 31. Khajouei R, Jaspers MWM. The impact of CPOE medication systems’ design aspects on usability, workflow and medication orders: a systematic review. Methods Inf Med 2010;49:3–19.

- 32. Khajouei R, Jaspers MWM. CPOE system design aspects and their qualitative effect on usability. Stud Health Technol Inform 2008;136:309–14.

- 33. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc 2013;20(e1):e2–8.

- 34. Lamy J-B, Duclos C, Bar-Hen A, et al. An iconic language for the graphical representation of medical concepts. BMC Med Inform Decis Mak 2008;8:16.

- 35. Lamy J-B, Venot A, Bar-Hen A, et al. Design of a graphical and interactive interface for facilitating access to drug contraindications, cautions for use, interactions and adverse effects. BMC Med Inform Decis Mak 2008;8:21.

- 36. Lamy J-B, Soualmia LF, Kerdelhué G, et al. Validating the semantics of a medical iconic language using ontological reasoning. J Biomed Inform 2013;46:56–67.

- 37. Chazard E, Puech P, Gregoire M, et al. Using Treemaps to represent medical data. Stud Health Technol Inform 2006;124:522–7.

- 38. Duclos C, Cartolano GL, Ghez M, et al. Structured representation of the pharmacodynamics section of the summary of product characteristics for antibiotics: application for automated extraction and visualization of their antimicrobial activity spectra. J Am Med Inform Assoc 2004;11:285–93.

- 39. Duclos C, Nobécourt J, Cartolano GL, et al. An ontology of bacteria to help physicians to compare antibacterial spectra. AMIA Annu Symp Proc 2007;11:196–200.

- 40. Tsopra R, Lamy J-B, Venot A, et al. Design of an original interface that facilitates the use of clinical practice guidelines of infection by physicians in primary care. Stud Health Technol Inform 2012;180:93–7.

- 41. Brooke J. SUS-A quick and dirty usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL, eds. Usability Evaluation in Industry. London: Taylor and Francis, 1996:189–94.

- 42. Brooke J. SUS: A Retrospective. J Usability Stud 2013;8:29–40.

- 43. Hills M, Armitage P. The two-period cross-over clinical trial. Br J Clin Pharmacol 1979;8:7–20.

- 44. Bangor A, Kortum PT, Miller JT. An empirical evaluation of the system usability scale. Int J Hum Comput Interact 2008;24:574–94.

- 45. Bangor A, Kortum P, Miller J. Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Stud 2009;4:114–23.

- 46. Yen P-Y, Bakken S. Review of health information technology usability study methodologies. J Am Med Inform Assoc 2012;19:413–22.

- 47. Beuscart-Zéphir MC, Pelayo S, Anceaux F, et al. Impact of CPOE on doctor-nurse cooperation for the medication ordering and administration process. Int J Med Inform 2005;74:629–41.

- 48. Alexander GL. Issues of trust and ethics in computerized clinical decision support systems. Nurs Adm Q 2006;30:21–9.

- 49. Goddard BL. Termination of a contract to implement an enterprise electronic medical record system. J Am Med Inform Assoc 2000;7:564–8.

- 50. Field TS, Rochon P, Lee M, et al. Costs associated with developing and implementing a computerized clinical decision support system for medication dosing for patients with renal insufficiency in the long-term care setting. J Am Med Inform Assoc 2008;15:466–72.

- 51. Ash JS, Sittig DF, Campbell EM, et al. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc 2007;11:26–30.

- 52. Chan J, Shojania KG, Easty AC, et al. Does user-centred design affect the efficiency, usability and safety of CPOE order sets? J Am Med Inform Assoc 2011;18:276–81.

- 53. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ 2002;324:710.
