Abstract

Training or exposure to a visual feature leads to a long-term improvement in performance on visual tasks that employ this feature. Such performance improvements and the processes that govern them are called visual perceptual learning (VPL). As an ever greater volume of research accumulates in the field, we have reached a point where a unifying model of VPL should be sought. A new wave of research findings has exposed diverging results along three major directions in VPL: specificity versus generalization of VPL, lower versus higher brain locus of VPL, and task-relevant versus task-irrelevant VPL. In this review, we propose a new theoretical model that suggests the involvement of two different stages in VPL: a low-level, stimulus-driven stage, and a higher-level stage dominated by task demands. If experimentally verified, this model would not only constructively unify the current divergent results in the VPL field, but would also lead to a significantly better understanding of visual plasticity, which may, in turn, lead to interventions to ameliorate diseases affecting vision and other pathological or age-related visual and nonvisual declines.

Keywords: perceptual learning, vision, two-stage model, plasticity

Introduction

Visual perceptual learning (VPL) is defined as a long-term improvement in visual performance on a task resulting from visual experience.1–10 An expert in X-ray analysis, for example, can identify a tumor from the pattern of gray and black spots on an X-ray scan without much difficulty, whereas an untrained person cannot perform the task at all. Such feats are possible because experts have been trained through extensive experience.

VPL indicates that, long after many aspects of sensory development have ceased early in life, repeated exposure or training can improve our visual abilities and reorganize neural processing in the brain. VPL is therefore regarded as an important tool for clarifying the mechanisms of adult visual and brain plasticity.11–14 In addition, an increasing number of studies have found that training on a visual task can significantly strengthen the visual abilities of adult patients with amblyopia and other forms of abnormal vision15–20 and ameliorate age-related visual declines.21–23 These recent clinical successes are the result of translating basic VPL research findings into practical and powerful real-world applications.24–28 Thus, a clearer understanding of the mechanisms of VPL in adults could lead to innovations in clinical intervention as well as advances in basic science.

Three major directions in VPL research

In light of the steadily increasing body of evidence in the VPL field, a unified model is much needed. However, a new wave of research findings has caused the field to diverge along three major directions. First, the mechanisms that govern the specificity and generalization of VPL remain controversial. Seminal psychophysical studies reported that VPL is highly specific to the stimulus features (e.g., retinal location, orientation, contrast, spatial frequency, motion direction, background texture, and eye) that are trained or exposed during training.29–44 However, recent studies have indicated that under some conditions VPL can generalize to untrained features.45–52 Second, the locus of VPL, that is, the brain area or information-processing stage at which it occurs, has been hotly debated. One line of studies has suggested that VPL is associated with changes primarily in visual areas (visual model),53–67 while another has proposed that VPL arises from changes in higher-level cognitive areas involved in decision making, or from changes in the weighting between visual and cognitive areas (cognitive model).68–76 Third, it remains controversial whether task-relevant and task-irrelevant VPL (TR-VPL and TI-VPL, respectively) share a common mechanism or reflect distinct mechanisms.8 TR-VPL of a feature results from training on a task involving that feature and is, in principle, gated by focused attention to the feature.33,77–80 TI-VPL, on the other hand, is defined as VPL of a feature that is irrelevant to a given task.81 TI-VPL has been found not to necessarily require attention to, or awareness of, the trained feature.81–83 Some studies have suggested that the same or similar mechanisms underlie TI-VPL and TR-VPL,84–86 while others have suggested distinct mechanisms.1,45,87,88

Toward a unifying model of VPL

The findings described above reveal a substantially divergent picture of the current VPL field. Existing models each account for only part of the evidence. Instead, we propose a constructive approach that builds a unified VPL model from a global perspective, with the aim of comprehensively explaining the existing divergent findings in the field.

Here, we propose a new theoretical framework, termed a two-stage model, built on the following two simple assumptions. First, there are two different types of learning: stimulus-driven learning (feature-based plasticity) and task-dependent learning (task-based plasticity). Second, feature-based plasticity occurs within visual areas, whereas task-based plasticity occurs in higher-level cognitive areas, where information from visual and nonvisual areas is integrated, or in the connections between the visual and cognitive areas.

The goal of this review is to summarize the aforementioned three major directions in the VPL field and to describe how the two-stage model accounts for the divergent findings and thereby leads to a comprehensive picture of VPL. The validity of the two-stage model will need to be examined systematically in future studies.

Specificity versus generalization of VPL

Specificity and generalization have been a point of divergence in the VPL field. A well-established hallmark of VPL is its specificity: improvement in visual performance is largely specific to the stimulus features used during training, such as retinal location,33,35,38,42 contrast,29,44 orientation,34,36,37,40 spatial frequency,32,36,40,42 motion direction,30,31,41,43 or background texture.38 In other words, VPL does not generalize to other features. In addition, performance improvement has been shown to be specific to the eye to which the trained stimulus was presented during training.32,33,39,40 Such high specificity of VPL has often been regarded as evidence for refinement of the neural representation of the trained visual feature.

The early general view that VPL is specific to a trained feature has been challenged by recent studies that reported generalization of VPL to untrained features under certain conditions.45–52,89 These results support the view that VPL is mostly caused by improved task processing, and that the specificity of VPL is explained not by changes in low-level visual representations but by higher-level cognitive factors, such as enduring attentional inhibition of the untrained features,48,50–52,90 selective reweighting of the readout process toward the visual representations most useful for the trained task,46,69,75,76,91 or overfitting of the trained neural network to accidental features of the specific stimulus used during training.6,92

Lower versus higher brain locus of VPL

One of the controversies in the VPL field concerns the brain locus of VPL, that is, the neural processing stage that changes in association with VPL. The accumulated findings can generally be framed in terms of two opposing models: the visual model and the cognitive model. Each model is supported by substantial psychophysical, physiological, and computational findings.

As mentioned, a number of psychophysical studies have shown that VPL is often specific to a trained feature29–44 and eye.32,33,39,40 These findings suggest that VPL involves changes in visual areas in which feature- and eye-specific information is processed.93–95 In accordance with this visual model, physiological studies have reported changes in the responses of neurons in visual areas to a trained feature,54,96,97 even when subjects were merely exposed to the feature without performing any task on it.53,56–58,60,65 Neuroimaging studies have also supported this view.55,59,61,62,64,67

On the other hand, recent psychophysical and computational studies have proposed the cognitive model, or connectivity model, in which VPL arises from changes in higher-level cognitive areas involved in decision making (e.g., the intraparietal sulcus, frontal eye fields, and anterior cingulate cortex) or from changes in connection weights between the visual and cognitive areas.50,51,69,74–76 This argument is supported by studies showing that VPL is associated with neural changes in cognitive areas or in connectivity between visual and cognitive areas,68,70,71,74 rather than only in visual areas.72

TR-VPL versus TI-VPL

Another open problem concerns whether TR-VPL and TI-VPL are subserved by common or separate mechanisms. Since the initial discovery of TI-VPL,81 a number of studies have investigated it.82,83,88,98–110 However, the mechanism of TI-VPL remains unclear. Some studies have developed models that account for TR-VPL but not for TI-VPL.1,45,88 In contrast, other studies have proposed models that assume common mechanisms for TR-VPL and TI-VPL.84–86 Despite this controversy, there have been scarcely any attempts to examine empirically the relationship between the neural mechanisms of TR-VPL and TI-VPL from a global perspective.

Multistage mechanisms of VPL

A potentially useful framework for understanding the aforementioned divergent results is one based on multistage learning mechanisms. It has generally been assumed that a single mechanism governs VPL of a given task throughout the entire training period. However, if VPL involves changes in mechanisms at multiple stages, the seemingly contradictory findings described above could reflect changes at different stages. As discussed below, several studies have shown the involvement of at least two qualitatively different stages in VPL.

A pioneering study by Karni and Sagi39 revealed two different stages in TR-VPL of a texture-discrimination task. In an early phase of training, performance improved rapidly and then saturated; no further improvement was found during the remainder of training. However, a prominent improvement appeared when subjects’ performance was tested 8–20 h after the training period. Importantly, while the initial improvement in the early phase transferred from the trained eye to the untrained eye, the improvement found several hours after training was monocular (i.e., it did not transfer to the untrained eye). These results suggest that the transferable learning component reflected the setting up of task-related processing, whereas the eye-specific learning component arose at an earlier stage of visual processing after training was terminated. Thus, at least two qualitatively different stages of learning may be involved in TR-VPL of the texture-discrimination task.

Two different stages of learning were also reported in a study of TR-VPL of a global motion–discrimination task using a random dot–motion stimulus.109 The stimulus in this study was designed so that the directions of the local dots composing it did not overlap with the global-motion direction, defined as the mean direction of the local dots. During relatively early phases of training on the global motion–discrimination task, motion sensitivity increased for the directions in which the local dots moved. However, as training proceeded further, motion sensitivity increased only at or around the trained global-motion direction. Importantly, the motion directions of the local dots did not include the global-motion direction, which was the target in the discrimination task. In a different condition, in which the random dot–motion stimulus was merely presented as irrelevant to a given task, motion sensitivity increased only for the directions of the local dots, and not for the global-motion direction, even after an extended training period. These results indicate that learning gain for the directions of the local dots is obtained irrespective of whether the motion stimulus is TR or TI, whereas learning gain for the global-motion direction occurs only when that direction is TR. That is, learning of the local directions is driven purely by the stimulus, while learning of the global direction arises only in response to task demands, since the global-motion direction was never physically presented during training. These results are also in accord with the hypothesis that the two different stages of learning occur at different temporal phases or at different speeds.
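The stimulus design described above, in which the global direction is the mean of the local directions but is never itself displayed, can be illustrated with a short Python sketch. It is a minimal illustration under assumed parameters, not the stimulus used in the study: the helper names `local_directions` and `circular_mean_deg` are hypothetical, and the global direction (90°) and the symmetric offsets (±15°, ±30°, ±45°) are chosen only for illustration.

```python
import math


def local_directions(global_deg, offsets_deg):
    """Hypothetical helper: local dot directions placed symmetrically around global_deg.

    Each offset o adds the pair (global_deg + o, global_deg - o). With every offset
    strictly between 0 and 90 degrees, the pairs average to global_deg, yet no dot
    ever moves in the global direction itself.
    """
    if not all(0 < o < 90 for o in offsets_deg):
        raise ValueError("offsets must lie strictly between 0 and 90 degrees")
    directions = []
    for o in offsets_deg:
        directions.append((global_deg + o) % 360)
        directions.append((global_deg - o) % 360)
    return directions


def circular_mean_deg(directions_deg):
    """Direction of the vector sum of unit vectors, in degrees within [0, 360)."""
    x = sum(math.cos(math.radians(d)) for d in directions_deg)
    y = sum(math.sin(math.radians(d)) for d in directions_deg)
    return math.degrees(math.atan2(y, x)) % 360


dirs = local_directions(90, [15, 30, 45])
print(sorted(dirs))                       # [45, 60, 75, 105, 120, 135]
print(90 in dirs)                         # False: the global direction is never displayed
print(round(circular_mean_deg(dirs), 6))  # 90.0
```

The mean is taken over unit vectors rather than raw angles so that directions straddling 0°/360° average correctly; symmetric pairs are one simple way, assumed here, to guarantee that the mean falls exactly on an undisplayed direction.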

A recent neuroimaging study67 gave important hints about the multistage mechanisms of VPL in the brain. In an early phase of training on the texture-discrimination task, performance increases were accompanied by enhanced activation in the trained region of the primary visual cortex (V1). However, in a later phase, after performance had reached a plateau, V1 activation returned to baseline while performance remained high. This time course of V1 activation might reflect synaptic potentiation during the early phase of training and synaptic downscaling in the later phase, after performance saturates.67 The finding suggests a multistage mechanism of VPL in which V1 is involved in encoding learning but not in retaining the already improved performance, which might instead be supported by a mechanism at a different stage. This could explain why a number of single-cell recording studies have found very little evidence of changes in receptive-field properties in V1.

A recent psychophysical study reported that the location specificity of VPL is related to long-term adaptation in early visual areas.89 The results showed that location-specific VPL occurs under conditions that involve sensory adaptation, whereas VPL at one location generalizes completely to a new, untrained location when sensory adaptation is removed during training. Sensory adaptation develops within the visual system,111 can occur passively without any task involvement,112 can persist for a long time,113 and selectively reduces performance on an exposed feature.114–116 Given these properties of sensory adaptation, the results are in accord with the hypothesis that location specificity results from long-term sensory adaptation that may occur passively in visual areas. The generalization of VPL to untrained locations after removal of the adaptation89 also suggests that task-related plasticity occurs at a higher stage but is constrained by location-specific signals arising from sensory adaptation. This view is also consistent with results showing that VPL becomes highly specific to a trained feature with a larger number of trials, whereas shorter training with relatively few trials yields more generalization,117 since shorter training leads to less sensory adaptation.118

The findings of these four studies suggest that TR-VPL derives from multistage neural mechanisms and that improved performance can be mediated by refinement of the representation of a trained feature and/or by improvement in task-related processing. These results also suggest that one type of learning can precede the other, depending on the phase of training and on the stimulus and task used during training.

A unified two-stage model

Here, we propose a new theoretical framework, which we call a two-stage model. The two-stage model is inspired by the findings about multistage mechanisms of VPL described above and may account for the aforementioned divergent results in the current VPL field.

The two-stage model

According to the two-stage model, there are two different types of plasticity—feature-based plasticity and task-based plasticity—that occur in different stages in visual information processing. Feature-based plasticity refers to refinement of the processing/representation of visual features (e.g., improved information transmission,119 gain, and bandwidth) that are used during training. This type of plasticity results from simple exposure to the features, irrespective of whether the feature is TR or TI, and results in specificity of VPL to the features (feature constraints). Task-based plasticity refers to improvement in task-related processing and results from active involvement in a trained task during training. Feature-based plasticity occurs within visual areas in which feature-specific visual signals are processed and/or feature representations are made, whereas task-based plasticity occurs in higher level cognitive areas that govern task-related processing.

How does the two-stage model account for the divergent results in the current VPL field?

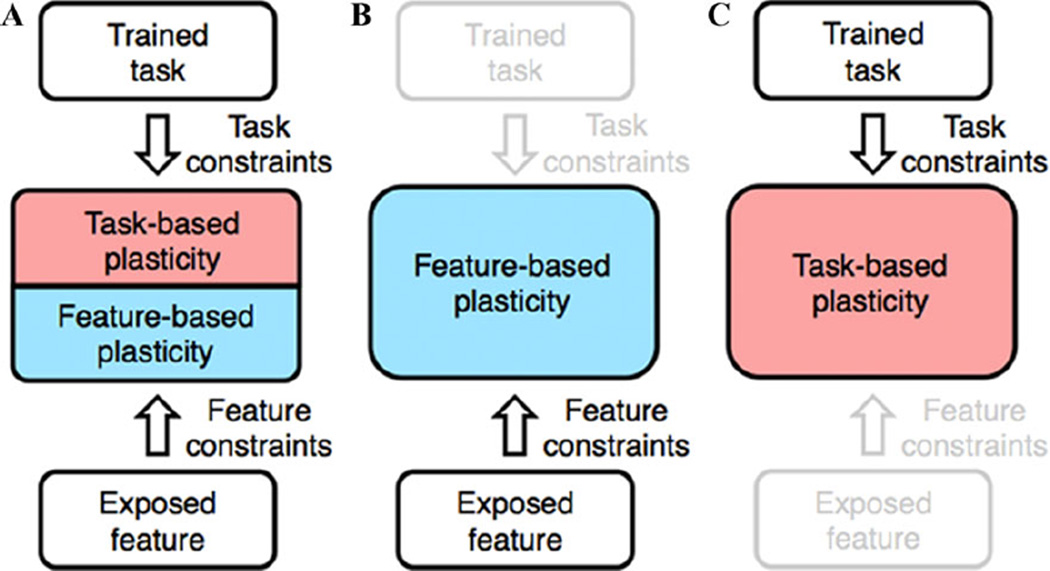

The divergent findings and controversial issues described above could be understood within the framework of the two-stage model. First, the two-stage model would clarify the relationship between TR-VPL and TI-VPL. TR-VPL involves both refinement of the processing/representation of the visual features used during training (feature-based plasticity) and improvements in task processing (task-based plasticity) (Fig. 1A). TI-VPL, on the other hand, involves only feature-based plasticity (Fig. 1B). In other words, TI-VPL is subserved by the lower-level component of TR-VPL. This view is consistent with both the common and the divergent aspects of TR-VPL and TI-VPL found in previous studies.84–86,88

Figure 1.

The two-stage model in visual perceptual learning (VPL). (A) Task-relevant VPL (TR-VPL) reflects two different types of plasticity: feature-based and task-based plasticity. Contributions of feature- and task-based plasticity to overall performance improvement vary according to the phase of training and the task and stimulus used during training. (B) Task-irrelevant VPL (TI-VPL) is subserved only by feature-based plasticity. (C) TR-VPL can be generalized to untrained features if feature constraints are removed.

Second, the two-stage model would account for the current divergent findings about the brain locus of VPL. As described before, the visual model posits changes within visual areas in association with VPL,53–67 whereas the cognitive model argues that VPL arises from changes in higher-level cognitive areas or in connections between the visual and cognitive areas.68–76 In particular, it is controversial whether TR-VPL involves changes in visual areas, cognitive areas, or both.8 From the viewpoint of the two-stage model for TR-VPL (Fig. 1A), this lack of clarity derives from the existence of two different types of plasticity that contribute differently to improved performance. For a certain task, stimulus, and training phase, improved performance in TR-VPL depends mainly on feature-based plasticity, and the resulting changes are observed in visual areas. Under a different condition and in a different phase, improved performance in TR-VPL is based mainly on task-based plasticity, which appears as changes in higher-level cognitive areas or in connectivity between the visual and cognitive areas, but not in visual areas. This view rejects a simple dichotomy between the visual and cognitive models and instead supports multifaceted mechanisms for the loci of VPL in the brain. For example, the two-stage model may explain why changes in visual areas are observed in some studies of TR-VPL but not in others: feature-based plasticity does not necessarily operate throughout the entire training period, and its neural signature may be observable only during part of that period. This view can account for both the involvement of visual areas in human studies55,59,61,62,64,67 and the scarcity of evidence for changes in neural activity in visual areas in monkey single-unit recordings.72,120 Animal studies have typically employed much longer training periods (months) than human studies (days) before neurophysiological measurements of the neural changes caused by VPL, so transient changes in visual areas, like the V1 activation that returned to baseline after performance plateaued in the neuroimaging study described above,67 may no longer be detectable at the time of recording.

Third, the specificity of VPL29–44 can be accounted for by the feature constraints assumed in feature-based plasticity. The two-stage model (Fig. 1C) predicts that TR-VPL generalizes to untrained features if the feature constraints are removed or not involved during training. As mentioned previously, removal of sensory adaptation leads to generalization of TR-VPL.89 This generalization can be regarded as a result of the absence of feature constraints during training. In addition, previous studies showed that generalization of VPL is obtained after training with easy task trials.1,45 It has been suggested that, under this condition, refinement of the processing/representation of trained visual features does not occur, and performance increases are mediated mainly by improvement in task-related processing.1,45 Furthermore, recent studies have reported that performance improvement from training on a task involving one feature generalizes to an untrained location when the training is followed by additional training on a different, irrelevant task at the untrained location (the so-called double-training paradigm).50 Although the precise nature of the effects of double training is still under debate,105,121 the reported generalization may be attributed to removal of feature constraints as a result of the additional training.
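The three accounts above (TR-VPL versus TI-VPL, and generalization when feature constraints are removed) can be summarized in a deliberately simplified Python toy. Everything in it is a hypothetical illustration rather than a model proposed in this review: the class name `TwoStageToy`, the linear growth of each gain with training sessions, the multiplicative combination of the two gains, and the treatment of feature constraints as an on/off switch are all assumptions.

```python
class TwoStageToy:
    """Hypothetical toy of the two-stage account (illustration only)."""

    def __init__(self, features, rate=0.1):
        self.rate = rate                          # assumed gain added per session
        self.exposure = {f: 0 for f in features}  # lower stage: sessions per feature
        self.task_sessions = 0                    # higher stage: active task sessions
        self.task_trained = set()                 # features trained with the task
        self.constrained = True                   # are feature constraints present?

    def train(self, feature, task_relevant, feature_constraints=True, sessions=10):
        # Feature-based plasticity: exposure alone, whether the feature is TR or TI.
        self.exposure[feature] += sessions
        if task_relevant:
            # Task-based plasticity: only with active involvement in the task.
            self.task_sessions += sessions
            self.task_trained.add(feature)
            self.constrained = feature_constraints

    def feature_gain(self, feature):
        return 1.0 + self.rate * self.exposure[feature]

    def task_gain(self):
        return 1.0 + self.rate * self.task_sessions

    def performance(self, feature):
        # With feature constraints, the task gain reaches only task-trained features;
        # without them it applies to every feature (generalization, Fig. 1C).
        reaches = (not self.constrained) or feature in self.task_trained
        return self.feature_gain(feature) * (self.task_gain() if reaches else 1.0)


tr = TwoStageToy(["A", "B"])
tr.train("A", task_relevant=True)                    # TR-VPL with feature constraints
ti = TwoStageToy(["A", "B"])
ti.train("A", task_relevant=False)                   # TI-VPL: mere exposure to A
gen = TwoStageToy(["A", "B"])
gen.train("A", task_relevant=True, feature_constraints=False)
print(tr.performance("A"), tr.performance("B"))      # 4.0 1.0
print(ti.performance("A"), ti.performance("B"))      # 2.0 1.0
print(gen.performance("A"), gen.performance("B"))    # 4.0 2.0
```

In this toy, task training on A under constraints improves A only (specificity); exposure without the task improves A less, because only the feature stage changes (Fig. 1B); and removing the constraints lets the task-stage gain reach the untrained feature B (Fig. 1C). The numbers carry no empirical meaning.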

Relationships with existing models

What is the key difference between the two-stage model and existing models in the field? As described, the visual, cognitive, and connectivity models assume that TR-VPL involves changes in visual areas, in higher cognitive areas, and in connection weights between the visual and cognitive areas, respectively. In contrast, the two-stage model holds that TR-VPL results from at least two different types of plasticity (feature- and task-based plasticity) at different cortical stages. The reverse-hierarchy theory1,87 also proposed that TR-VPL derives from changes at higher and lower cortical stages. There are, however, two important differences between the reverse-hierarchy theory and the two-stage model. First, the reverse-hierarchy theory considers only TR-VPL, whereas the two-stage model covers both TR-VPL and TI-VPL within an integrated framework. Second, whereas the reverse-hierarchy theory assumes that learning at a lower cortical stage follows learning at a higher cortical stage, the two-stage model allows flexibility in the temporal order of the two types of plasticity, which can occur sequentially, in either order, or simultaneously, depending on the task and stimulus. Indeed, task-based, transferable learning may occur first in some cases,39 while learning based on an exposed TI feature occurs first in others.109

Conclusions and future directions

As described above, the two-stage model would constructively resolve incompatibilities in the VPL field and synthesize the current divergent findings into a comprehensive picture.

The assumptions of the two-stage model need to be empirically validated in future studies. The first touchstone is to test whether the two different types of plasticity (feature-based and task-based plasticity) involved in TR-VPL are experimentally dissociable. Feature-based plasticity refers to changes in the visual representation of a trained feature in visual areas, while task-based plasticity refers to changes in task processing in higher level cognitive areas. Thus, neural changes based on feature-based plasticity may be observed as changes in neural responses to the trained feature in the corresponding visual areas in association with TR-VPL, irrespective of whether subjects engage in a trained task on a feature or are merely presented with a feature that is irrelevant to a given task. On the other hand, neural changes due to task-based plasticity may be observed in higher cognitive areas or in connectivity between the visual and cognitive areas only when subjects engage in the trained task on the trained feature.

The second touchstone is to test whether the two different phases of TR-VPL are experimentally dissociable. The two-stage model predicts that the contributions of feature- and task-based plasticity to overall performance improvement vary with the temporal phase of training and with the task and stimulus used during training. Previous psychophysical studies suggested that performance improvement can rely on visual and task-related processing in different phases of training.39,109 Thus, it is possible that in one phase of training, neural correlates of improved performance are found in visual areas, while in another phase, neural changes are observed in cognitive areas or in the connectivity between the visual and cognitive areas.

The framework of the two-stage model raises important questions and predictions that could connect findings that have been investigated separately and clarify the relationships between them. For example, it would be beneficial to reconsider the roles of response feedback and reward from the multistage perspective. Response feedback tells subjects whether their response to a stimulus was correct.122–124 Some types of TR-VPL require response feedback125 but others do not.122,126 Reward, on the other hand, can be given to subjects irrespective of whether they are engaged in a task on a trained feature.82 We propose that task-based plasticity follows both supervised and reinforcement learning rules,40,73,76 whereas feature-based plasticity, which does not involve task-related improvement, is driven by unsupervised learning modulated by reinforcement signals.63,82,83,85,106,126,127 Thus, it is likely that both response feedback and reward facilitate task-based plasticity, whereas only reward influences feature-based plasticity.
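The proposed division of learning rules can be made concrete with two single-weight update functions in Python. This is a sketch under stated assumptions, not the rules used in the cited studies: the function names are hypothetical, a standard delta rule stands in for supervised learning from response feedback, and a reward-gated Hebbian (three-factor) term stands in for reinforcement learning. The sketch reproduces only the qualitative prediction above: feedback and reward both move the task-stage weight, while only reward changes the feature-stage update.

```python
def task_update(w, x, target=None, reward=0.0, lr=0.1):
    """Hypothetical task-stage update for readout weight w given visual signal x."""
    y = w * x                               # current readout
    dw = 0.0
    if target is not None:                  # response feedback is available
        dw += lr * (target - y) * x         # supervised delta rule
    dw += lr * reward * x * y               # reward-gated Hebbian (three-factor) term
    return w + dw


def feature_update(w, x, reward=0.0, lr=0.1):
    """Hypothetical feature-stage update: Hebbian, scaled up by reward.

    Response feedback is deliberately not a parameter, reflecting the proposal
    that only reward reaches feature-based plasticity.
    """
    y = w * x                               # response of the feature representation
    return w + lr * (1.0 + reward) * x * y  # unsupervised term, amplified by reward


w0, x = 0.5, 1.0
print(task_update(w0, x))                   # no feedback, no reward: unchanged
print(task_update(w0, x, target=1.0))       # feedback alone moves the task weight
print(feature_update(w0, x), feature_update(w0, x, reward=1.0))  # reward enlarges the change
```

The `1.0 + reward` factor is an assumed way to express "unsupervised learning modulated by reinforcement signals": exposure alone still produces some change, and reward scales it.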

Another example is the relationship between TI-VPL and perceptual-deterioration effects on a visual feature that occur as a result of intensive training or overexposure to the feature.114–116,118,128,129 It has been suggested that the deterioration effect is the result of saturation of synaptic strength and the resultant breakdown of visual information processing in early and local neuronal networks when too many task trials/stimuli are given.6,118 Importantly, it has been reported that TI-VPL often occurs when an exposed feature is not suppressed by attention.108,130 In addition, a fast version of TI-VPL has been found to occur with as little as a single trial of exposure to a feature.131 These characteristics of TI-VPL and the perceptual-deterioration effect suggest that feature-based plasticity is sensitive to the strength and frequency of visual signals that are exposed during training.

In this review, we focused on VPL of primitive features, such as motion direction, orientation, and background texture. The learning of more complex features that may involve affective or semantic processing, categorization learning, and other types of learning and memory each have distinctive characteristics of their own, but may also share fundamental mechanisms with the learning of primitive features. Thus, the unified two-stage model may lead to a better understanding both of the mechanisms unique to the learning of primitive features and of general mechanisms of learning and memory. Indeed, previous studies have shown that VPL of primitive visual features is closely related to the learning of changes of objects,132,133 categorization learning,134 word learning,135,136 speech learning,137 learning in general,138 sleep,115,139 memory,140 psychiatric diseases,141 and attention.142

A fuller understanding of VPL also has implications for clinical applications. The research history of VPL has shown that understanding its mechanisms is vital to developing and applying clinical interventions for patients with weak or degraded vision.15–23 Thus, the proposed two-stage model, if experimentally verified, would not only resolve major controversies in the current VPL field but also clarify the mechanisms of VPL comprehensively, which would help in developing more practical and powerful clinical applications.

Acknowledgments

This work is supported by the Japan Society for the Promotion of Science (JSPS), the Basic Research Foundation administered by the Israel Academy of Sciences and Humanities, NIH R01 EY015980, and NIH R01 MH091801.

Footnotes

Conflicts of interest

The authors declare no conflicts of interest.

References

- 1.Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends Cogn. Sci. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011. [DOI] [PubMed] [Google Scholar]

- 2.Fahle M. Perceptual learning: gain without pain? Nat. Neurosci. 2002;5:923–924. doi: 10.1038/nn1002-923. [DOI] [PubMed] [Google Scholar]

- 3.Fahle M, Poggio T. Perceptual Learning. Cambridge: The MIT Press; 2002. [Google Scholar]

- 4.Gold JI, Watanabe T. Perceptual learning. Curr. Biol. 2010;20:R46–R48. doi: 10.1016/j.cub.2009.10.066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Lu ZL, et al. Visual perceptual learning. Neurobiol. Learn. Mem. 2011;95:145–151. doi: 10.1016/j.nlm.2010.09.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Sagi D. Perceptual learning in vision research. Vision Res. 2011;51:1552–1566. doi: 10.1016/j.visres.2010.10.019.

- 7. Sagi D, Tanne D. Perceptual learning: learning to see. Curr. Opin. Neurobiol. 1994;4:195–199. doi: 10.1016/0959-4388(94)90072-8.

- 8. Sasaki Y, Nanez JE, Watanabe T. Advances in visual perceptual learning and plasticity. Nat. Rev. Neurosci. 2010;11:53–60. doi: 10.1038/nrn2737.

- 9. Sasaki Y, Nanez JE, Watanabe T. Recent progress in perceptual learning research. Wiley Interdiscip. Rev. Cogn. Sci. 2012;3:293–299. doi: 10.1002/wcs.1175.

- 10. Sasaki Y, Watanabe T. Perceptual learning. In: Werner JS, Chalupa LM, editors. The Visual Neurosciences. Vol. 2. Cambridge: MIT Press; 2012.

- 11. Espinosa JS, Stryker MP. Development and plasticity of the primary visual cortex. Neuron. 2012;75:230–249. doi: 10.1016/j.neuron.2012.06.009.

- 12. Gilbert CD, Li W. Adult visual cortical plasticity. Neuron. 2012;75:250–264. doi: 10.1016/j.neuron.2012.06.030.

- 13. Karmarkar UR, Dan Y. Experience-dependent plasticity in adult visual cortex. Neuron. 2006;52:577–585. doi: 10.1016/j.neuron.2006.11.001.

- 14. Wandell BA, Smirnakis SM. Plasticity and stability of visual field maps in adult primary visual cortex. Nat. Rev. Neurosci. 2009;10:873–884. doi: 10.1038/nrn2741.

- 15. Levi DM. Perceptual learning in adults with amblyopia: a reevaluation of critical periods in human vision. Dev. Psychobiol. 2005;46:222–232. doi: 10.1002/dev.20050.

- 16. Levi DM, Li RW. Improving the performance of the amblyopic visual system. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2009;364:399–407. doi: 10.1098/rstb.2008.0203.

- 17. Levi DM, Polat U. Neural plasticity in adults with amblyopia. Proc. Natl. Acad. Sci. U S A. 1996;93:6830–6834. doi: 10.1073/pnas.93.13.6830.

- 18. Li RW, et al. Perceptual learning improves visual performance in juvenile amblyopia. Invest. Ophthalmol. Vis. Sci. 2005;46:3161–3168. doi: 10.1167/iovs.05-0286.

- 19. Polat U, et al. Improving vision in adult amblyopia by perceptual learning. Proc. Natl. Acad. Sci. U S A. 2004;101:6692–6697. doi: 10.1073/pnas.0401200101.

- 20. Xu JP, He ZJ, Ooi TL. Effectively reducing sensory eye dominance with a push-pull perceptual learning protocol. Curr. Biol. 2010;20:1864–1868. doi: 10.1016/j.cub.2010.09.043.

- 21. Andersen GJ, et al. Perceptual learning, aging, and improved visual performance in early stages of visual processing. J. Vis. 2010;10:4. doi: 10.1167/10.13.4.

- 22. Bower JD, Andersen GJ. Aging, perceptual learning, and changes in efficiency of motion processing. Vision Res. 2012;61:144–156. doi: 10.1016/j.visres.2011.07.016.

- 23. Bower JD, Watanabe T, Andersen GJ. Perceptual learning and aging: improved performance for low-contrast motion discrimination. Front. Psychol. 2013;4:66. doi: 10.3389/fpsyg.2013.00066.

- 24. Green CS, Bavelier D. Learning, attentional control, and action video games. Curr. Biol. 2012;22:R197–R206. doi: 10.1016/j.cub.2012.02.012.

- 25. Li J, et al. Dichoptic training enables the adult amblyopic brain to learn. Curr. Biol. 2013;23:R308–R309. doi: 10.1016/j.cub.2013.01.059.

- 26. Li RW, et al. Video-game play induces plasticity in the visual system of adults with amblyopia. PLoS Biol. 2011;9:e1001135. doi: 10.1371/journal.pbio.1001135.

- 27. Ooi TL, et al. A push-pull treatment for strengthening the “lazy eye” in amblyopia. Curr. Biol. 2013;23:R309–R310. doi: 10.1016/j.cub.2013.03.004.

- 28. Shibata K, et al. Monocular deprivation boosts long-term visual plasticity. Curr. Biol. 2012;22:R291–R292. doi: 10.1016/j.cub.2012.03.010.

- 29. Adini Y, Sagi D, Tsodyks M. Context-enabled learning in the human visual system. Nature. 2002;415:790–793. doi: 10.1038/415790a.

- 30. Ball K, Sekuler R. A specific and enduring improvement in visual motion discrimination. Science. 1982;218:697–698. doi: 10.1126/science.7134968.

- 31. Ball K, Sekuler R. Direction-specific improvement in motion discrimination. Vision Res. 1987;27:953–965. doi: 10.1016/0042-6989(87)90011-3.

- 32. Fahle M. Human pattern recognition: parallel processing and perceptual learning. Perception. 1994;23:411–427. doi: 10.1068/p230411.

- 33. Fahle M. Perceptual learning: a case for early selection. J. Vis. 2004;4:879–890. doi: 10.1167/4.10.4.

- 34. Fahle M, Edelman S. Long-term learning in vernier acuity: effects of stimulus orientation, range and of feedback. Vision Res. 1993;33:397–412. doi: 10.1016/0042-6989(93)90094-d.

- 35. Fahle M, Morgan M. No transfer of perceptual learning between similar stimuli in the same retinal position. Curr. Biol. 1996;6:292–297. doi: 10.1016/s0960-9822(02)00479-7.

- 36. Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287:43–44. doi: 10.1038/287043a0.

- 37. Fiorentini A, Berardi N. Learning in grating wave form discrimination: specificity for orientation and spatial frequency. Vision Res. 1981;21:1149–1158. doi: 10.1016/0042-6989(81)90017-1.

- 38. Karni A, Sagi D. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proc. Natl. Acad. Sci. U S A. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- 39. Karni A, Sagi D. The time course of learning a visual skill. Nature. 1993;365:250–252. doi: 10.1038/365250a0.

- 40. Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256:1018–1021. doi: 10.1126/science.1589770.

- 41. Saffell T, Matthews N. Task-specific perceptual learning on speed and direction discrimination. Vision Res. 2003;43:1365–1374. doi: 10.1016/s0042-6989(03)00137-8.

- 42. Sowden PT, Rose D, Davies IR. Perceptual learning of luminance contrast detection: specific for spatial frequency and retinal location but not orientation. Vision Res. 2002;42:1249–1258. doi: 10.1016/s0042-6989(02)00019-6.

- 43. Vaina LM, et al. Neural systems underlying learning and representation of global motion. Proc. Natl. Acad. Sci. U S A. 1998;95:12657–12662. doi: 10.1073/pnas.95.21.12657.

- 44. Yu C, Klein SA, Levi DM. Perceptual learning in contrast discrimination and the (minimal) role of context. J. Vis. 2004;4:169–182. doi: 10.1167/4.3.4.

- 45. Ahissar M, Hochstein S. Task difficulty and the specificity of perceptual learning. Nature. 1997;387:401–406. doi: 10.1038/387401a0.

- 46. Jeter PE, et al. Task precision at transfer determines specificity of perceptual learning. J. Vis. 2009;9:1.1–13. doi: 10.1167/9.3.1.

- 47. Liu Z, Weinshall D. Mechanisms of generalization in perceptual learning. Vision Res. 2000;40:97–109. doi: 10.1016/s0042-6989(99)00140-6.

- 48. Wang R, et al. Task relevancy and demand modulate double-training enabled transfer of perceptual learning. Vision Res. 2012;61:33–38. doi: 10.1016/j.visres.2011.07.019.

- 49. Wang X, Zhou Y, Liu Z. Transfer in motion perceptual learning depends on the difficulty of the training task. J. Vis. 2013;13:1–9. doi: 10.1167/13.7.5.

- 50. Xiao LQ, et al. Complete transfer of perceptual learning across retinal locations enabled by double training. Curr. Biol. 2008;18:1922–1926. doi: 10.1016/j.cub.2008.10.030.

- 51. Zhang JY, et al. Rule-based learning explains visual perceptual learning and its specificity and transfer. J. Neurosci. 2010;30:12323–12328. doi: 10.1523/JNEUROSCI.0704-10.2010.

- 52. Zhang T, et al. Decoupling location specificity from perceptual learning of orientation discrimination. Vision Res. 2010;50:368–374. doi: 10.1016/j.visres.2009.08.024.

- 53. Adab HZ, Vogels R. Practicing coarse orientation discrimination improves orientation signals in macaque cortical area V4. Curr. Biol. 2011;21:1661–1666. doi: 10.1016/j.cub.2011.08.037.

- 54. Crist RE, Li W, Gilbert CD. Learning to see: experience and attention in primary visual cortex. Nat. Neurosci. 2001;4:519–525. doi: 10.1038/87470.

- 55. Furmanski CS, Schluppeck D, Engel SA. Learning strengthens the response of primary visual cortex to simple patterns. Curr. Biol. 2004;14:573–578. doi: 10.1016/j.cub.2004.03.032.

- 56. Hua T, et al. Perceptual learning improves contrast sensitivity of V1 neurons in cats. Curr. Biol. 2010;20:887–894. doi: 10.1016/j.cub.2010.03.066.

- 57. Lee TS, et al. Neural activity in early visual cortex reflects behavioral experience and higher-order perceptual saliency. Nat. Neurosci. 2002;5:589–597. doi: 10.1038/nn0602-860.

- 58. Raiguel S, et al. Learning to see the difference specifically alters the most informative V4 neurons. J. Neurosci. 2006;26:6589–6602. doi: 10.1523/JNEUROSCI.0457-06.2006.

- 59. Schiltz C, et al. Neuronal mechanisms of perceptual learning: changes in human brain activity with training in orientation discrimination. NeuroImage. 1999;9:46–62. doi: 10.1006/nimg.1998.0394.

- 60. Schoups A, et al. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- 61. Schwartz S, Maquet P, Frith C. Neural correlates of perceptual learning: a functional MRI study of visual texture discrimination. Proc. Natl. Acad. Sci. U S A. 2002;99:17137–17142. doi: 10.1073/pnas.242414599.

- 62. Shibata K, et al. Decoding reveals plasticity in V3A as a result of motion perceptual learning. PLoS One. 2012;7:e44003. doi: 10.1371/journal.pone.0044003.

- 63. Shibata K, et al. Perceptual learning incepted by decoded fMRI neurofeedback without stimulus presentation. Science. 2011;334:1413–1415. doi: 10.1126/science.1212003.

- 64. Walker MP, et al. The functional anatomy of sleep-dependent visual skill learning. Cereb. Cortex. 2005;15:1666–1675. doi: 10.1093/cercor/bhi043.

- 65. Yang T, Maunsell JH. The effect of perceptual learning on neuronal responses in monkey visual area V4. J. Neurosci. 2004;24:1617–1626. doi: 10.1523/JNEUROSCI.4442-03.2004.

- 66. Yotsumoto Y, et al. Location-specific cortical activation changes during sleep after training for perceptual learning. Curr. Biol. 2009;19:1278–1282. doi: 10.1016/j.cub.2009.06.011.

- 67. Yotsumoto Y, Watanabe T, Sasaki Y. Different dynamics of performance and brain activation in the time course of perceptual learning. Neuron. 2008;57:827–833. doi: 10.1016/j.neuron.2008.02.034.

- 68. Chowdhury SA, DeAngelis GC. Fine discrimination training alters the causal contribution of macaque area MT to depth perception. Neuron. 2008;60:367–377. doi: 10.1016/j.neuron.2008.08.023.

- 69. Dosher BA, Lu ZL. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proc. Natl. Acad. Sci. U S A. 1998;95:13988–13993. doi: 10.1073/pnas.95.23.13988.

- 70. Gu Y, et al. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron. 2010;66:596–609. doi: 10.1016/j.neuron.2010.04.026.

- 71. Kahnt T, et al. Perceptual learning and decision-making in human medial frontal cortex. Neuron. 2011;70:549–559. doi: 10.1016/j.neuron.2011.02.054.

- 72. Law CT, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat. Neurosci. 2008;11:505–513. doi: 10.1038/nn2070.

- 73. Law CT, Gold JI. Reinforcement learning can account for associative and perceptual learning on a visual-decision task. Nat. Neurosci. 2009;12:655–663. doi: 10.1038/nn.2304.

- 74. Lewis CM, et al. Learning sculpts the spontaneous activity of the resting human brain. Proc. Natl. Acad. Sci. U S A. 2009;106:17558–17563. doi: 10.1073/pnas.0902455106.

- 75. Liu J, Lu ZL, Dosher BA. Augmented Hebbian reweighting: interactions between feedback and training accuracy in perceptual learning. J. Vis. 2010;10:29. doi: 10.1167/10.10.29.

- 76. Petrov AA, Dosher BA, Lu ZL. The dynamics of perceptual learning: an incremental reweighting model. Psychol. Rev. 2005;112:715–743. doi: 10.1037/0033-295X.112.4.715.

- 77. Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proc. Natl. Acad. Sci. U S A. 1993;90:5718–5722. doi: 10.1073/pnas.90.12.5718.

- 78. Fahle M. Specificity of learning curvature, orientation, and vernier discriminations. Vision Res. 1997;37:1885–1895. doi: 10.1016/s0042-6989(96)00308-2.

- 79. Fahle M. Perceptual learning and sensomotor flexibility: cortical plasticity under attentional control? Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2009;364:313–319. doi: 10.1098/rstb.2008.0267.

- 80. Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Percept. Psychophys. 1992;52:582–588. doi: 10.3758/bf03206720.

- 81. Watanabe T, Nanez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413:844–848. doi: 10.1038/35101601.

- 82. Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61:700–707. doi: 10.1016/j.neuron.2009.01.016.

- 83. Seitz AR, Watanabe T. Psychophysics: is subliminal learning really passive? Nature. 2003;422:36. doi: 10.1038/422036a.

- 84. Choi H, Watanabe T. Selectiveness of the exposure-based perceptual learning: what to learn and what not to learn. Learn. Percept. 2009;1:89–98. doi: 10.1556/LP.1.2009.1.7.

- 85. Seitz A, Watanabe T. A unified model for perceptual learning. Trends Cogn. Sci. 2005;9:329–334. doi: 10.1016/j.tics.2005.05.010.

- 86. Seitz AR, Watanabe T. The phenomenon of task-irrelevant perceptual learning. Vision Res. 2009;49:2604–2610. doi: 10.1016/j.visres.2009.08.003.

- 87. Hochstein S, Ahissar M. View from the top: hierarchies and reverse hierarchies in the visual system. Neuron. 2002;36:791–804. doi: 10.1016/s0896-6273(02)01091-7.

- 88. Zhang J, Kourtzi Z. Learning-dependent plasticity with and without training in the human brain. Proc. Natl. Acad. Sci. U S A. 2010;107:13503–13508. doi: 10.1073/pnas.1002506107.

- 89. Harris H, Gliksberg M, Sagi D. Generalized perceptual learning in the absence of sensory adaptation. Curr. Biol. 2012;22:1813–1817. doi: 10.1016/j.cub.2012.07.059.

- 90. Zhang GL, et al. ERP P1-N1 changes associated with Vernier perceptual learning and its location specificity and transfer. J. Vis. 2013;13:19. doi: 10.1167/13.4.19.

- 91. Dosher BA, Lu ZL. Mechanisms of perceptual learning. Vision Res. 1999;39:3197–3221. doi: 10.1016/s0042-6989(99)00059-0.

- 92. Mollon JD, Danilova MV. Three remarks on perceptual learning. Spat. Vis. 1996;10:51–58. doi: 10.1163/156856896x00051.

- 93. Hubel DH, Wiesel TN. Receptive fields of single neurons in the cat’s striate cortex. J. Physiol. 1959;148:574–591. doi: 10.1113/jphysiol.1959.sp006308.

- 94. Priebe NJ, Ferster D. Mechanisms of neuronal computation in mammalian visual cortex. Neuron. 2012;75:194–208. doi: 10.1016/j.neuron.2012.06.011.

- 95. Wandell BA, Dumoulin SO, Brewer AA. Visual field maps in human cortex. Neuron. 2007;56:366–383. doi: 10.1016/j.neuron.2007.10.012.

- 96. Li W, Piech V, Gilbert CD. Perceptual learning and top-down influences in primary visual cortex. Nat. Neurosci. 2004;7:651–657. doi: 10.1038/nn1255.

- 97. Li W, Piech V, Gilbert CD. Learning to link visual contours. Neuron. 2008;57:442–451. doi: 10.1016/j.neuron.2007.12.011.

- 98. Beste C, et al. Improvement and impairment of visually guided behavior through LTP- and LTD-like exposure-based visual learning. Curr. Biol. 2011;21:876–882. doi: 10.1016/j.cub.2011.03.065.

- 99. Choi H, Seitz AR, Watanabe T. When attention interrupts learning: inhibitory effects of attention on TIPL. Vision Res. 2009;49:2586–2590. doi: 10.1016/j.visres.2009.07.004.

- 100. Gutnisky DA, et al. Attention alters visual plasticity during exposure-based learning. Curr. Biol. 2009;19:555–560. doi: 10.1016/j.cub.2009.01.063.

- 101. Huang TR, Watanabe T. Task attention facilitates learning of task-irrelevant stimuli. PLoS One. 2012;7:e35946. doi: 10.1371/journal.pone.0035946.

- 102. Ludwig L, Skrandies W. Human perceptual learning in the peripheral visual field: sensory thresholds and neurophysiological correlates. Biol. Psychol. 2002;59:187–206. doi: 10.1016/s0301-0511(02)00009-1.

- 103. Nishina S, et al. Effect of spatial distance to the task stimulus on task-irrelevant perceptual learning of static Gabors. J. Vis. 2007;7:2.1–10. doi: 10.1167/7.13.2.

- 104. Paffen CL, Verstraten FA, Vidnyanszky Z. Attention-based perceptual learning increases binocular rivalry suppression of irrelevant visual features. J. Vis. 2008;8:1–11. doi: 10.1167/8.4.25.

- 105. Pilly PK, Grossberg S, Seitz AR. Low-level sensory plasticity during task-irrelevant perceptual learning: evidence from conventional and double training procedures. Vision Res. 2010;50:424–432. doi: 10.1016/j.visres.2009.09.022.

- 106. Seitz A, et al. Requirement for high-level processing in subliminal learning. Curr. Biol. 2005;15:R753–R755. doi: 10.1016/j.cub.2005.09.009.

- 107. Seitz AR, et al. Seeing what is not there shows the costs of perceptual learning. Proc. Natl. Acad. Sci. U S A. 2005;102:9080–9085. doi: 10.1073/pnas.0501026102.

- 108. Tsushima Y, Seitz AR, Watanabe T. Task-irrelevant learning occurs only when the irrelevant feature is weak. Curr. Biol. 2008;18:R516–R517. doi: 10.1016/j.cub.2008.04.029.

- 109. Watanabe T, et al. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nat. Neurosci. 2002;5:1003–1009. doi: 10.1038/nn915.

- 110. Xu JP, He ZJ, Ooi TL. Perceptual learning to reduce sensory eye dominance beyond the focus of top-down visual attention. Vision Res. 2012;61:39–47. doi: 10.1016/j.visres.2011.05.013.

- 111. Webster MA. Evolving concepts of sensory adaptation. F1000 Biol. Rep. 2012;4:21. doi: 10.3410/B4-21.

- 112. Wark B, Lundstrom BN, Fairhall A. Sensory adaptation. Curr. Opin. Neurobiol. 2007;17:423–429. doi: 10.1016/j.conb.2007.07.001.

- 113. Webster MA. Adaptation and visual coding. J. Vis. 2011;11:1–23. doi: 10.1167/11.5.3.

- 114. Censor N, Karni A, Sagi D. A link between perceptual learning, adaptation and sleep. Vision Res. 2006;46:4071–4074. doi: 10.1016/j.visres.2006.07.022.

- 115. Mednick SC, et al. The restorative effect of naps on perceptual deterioration. Nat. Neurosci. 2002;5:677–681. doi: 10.1038/nn864.

- 116. Ofen N, Moran A, Sagi D. Effects of trial repetition in texture discrimination. Vision Res. 2007;47:1094–1102. doi: 10.1016/j.visres.2007.01.023.

- 117. Jeter PE, et al. Specificity of perceptual learning increases with increased training. Vision Res. 2010;50:1928–1940. doi: 10.1016/j.visres.2010.06.016.

- 118. Censor N, Sagi D. Global resistance to local perceptual adaptation in texture discrimination. Vision Res. 2009;49:2550–2556. doi: 10.1016/j.visres.2009.03.018.

- 119. Benucci A, Saleem AB, Carandini M. Adaptation maintains population homeostasis in primary visual cortex. Nat. Neurosci. 2013;16:724–729. doi: 10.1038/nn.3382.

- 120. Ghose GM, Yang T, Maunsell JH. Physiological correlates of perceptual learning in monkey V1 and V2. J. Neurophysiol. 2002;87:1867–1888. doi: 10.1152/jn.00690.2001.

- 121. Le Dantec CC, Seitz AR. High resolution, high capacity, spatial specificity in perceptual learning. Front. Psychol. 2012;3:222. doi: 10.3389/fpsyg.2012.00222.

- 122. Herzog MH, Fahle M. The role of feedback in learning a vernier discrimination task. Vision Res. 1997;37:2133–2141. doi: 10.1016/s0042-6989(97)00043-6.

- 123. Herzog MH, Fahle M. Modeling perceptual learning: difficulties and how they can be overcome. Biol. Cybern. 1998;78:107–117. doi: 10.1007/s004220050418.

- 124. Herzog MH, Fahle M. Effects of biased feedback on learning and deciding in a vernier discrimination task. Vision Res. 1999;39:4232–4243. doi: 10.1016/s0042-6989(99)00138-8.

- 125. Seitz AR, et al. Two cases requiring external reinforcement in perceptual learning. J. Vis. 2006;6:966–973. doi: 10.1167/6.9.9.

- 126. Shibata K, et al. Boosting perceptual learning by fake feedback. Vision Res. 2009;49:2574–2585. doi: 10.1016/j.visres.2009.06.009.

- 127. Seitz AR, Dinse HR. A common framework for perceptual learning. Curr. Opin. Neurobiol. 2007;17:148–153. doi: 10.1016/j.conb.2007.02.004.

- 128. Censor N, Sagi D. Benefits of efficient consolidation: short training enables long-term resistance to perceptual adaptation induced by intensive testing. Vision Res. 2008;48:970–977. doi: 10.1016/j.visres.2008.01.016.

- 129. Mednick SC, Arman AC, Boynton GM. The time course and specificity of perceptual deterioration. Proc. Natl. Acad. Sci. U S A. 2005;102:3881–3885. doi: 10.1073/pnas.0407866102.

- 130. Leclercq V, Seitz AR. The impact of orienting attention in fast task-irrelevant perceptual learning. Atten. Percept. Psychophys. 2012;74:648–660. doi: 10.3758/s13414-012-0270-7.

- 131. Leclercq V, Seitz AR. Fast task-irrelevant perceptual learning is disrupted by sudden onset of central task elements. Vision Res. 2012;61:70–76. doi: 10.1016/j.visres.2011.07.017.

- 132. Li S, Mayhew SD, Kourtzi Z. Learning shapes the representation of behavioral choice in the human brain. Neuron. 2009;62:441–452. doi: 10.1016/j.neuron.2009.03.016.

- 133. Op de Beeck HP, et al. Discrimination training alters object representations in human extrastriate cortex. J. Neurosci. 2006;26:13025–13036. doi: 10.1523/JNEUROSCI.2481-06.2006.

- 134. Folstein JR, Gauthier I, Palmeri TJ. Mere exposure alters category learning of novel objects. Front. Psychol. 2010;1:40. doi: 10.3389/fpsyg.2010.00040.

- 135. Dewald AD, Sinnett S, Doumas LA. Conditions of directed attention inhibit recognition performance for explicitly presented target-aligned irrelevant stimuli. Acta Psychol. 2011;138:60–67. doi: 10.1016/j.actpsy.2011.05.006.

- 136. Dewald AD, Sinnett S, Doumas LA. A window of perception when diverting attention? Enhancing recognition for explicitly presented, unattended, and irrelevant stimuli by target alignment. J. Exp. Psychol. Hum. Percept. Perform. 2012;39:1304–1312. doi: 10.1037/a0031210.

- 137. Vlahou EL, Protopapas A, Seitz AR. Implicit training of nonnative speech stimuli. J. Exp. Psychol. Gen. 2012;141:363–381. doi: 10.1037/a0025014.

- 138. Reed A, et al. Cortical map plasticity improves learning but is not necessary for improved performance. Neuron. 2011;70:121–131. doi: 10.1016/j.neuron.2011.02.038.

- 139. Mednick S, Nakayama K, Stickgold R. Sleep-dependent learning: a nap is as good as a night. Nat. Neurosci. 2003;6:697–698. doi: 10.1038/nn1078.

- 140. Lin JY, et al. Enhanced memory for scenes presented at behaviorally relevant points in time. PLoS Biol. 2010;8:e1000337. doi: 10.1371/journal.pbio.1000337.

- 141. Ehlers MD. Hijacking Hebb: noninvasive methods to probe plasticity in psychiatric disease. Biol. Psychiatry. 2012;71:484–486. doi: 10.1016/j.biopsych.2012.01.001.

- 142. Swallow KM, Jiang YV. The attentional boost effect: transient increases in attention to one task enhance performance in a second task. Cognition. 2010;115:118–132. doi: 10.1016/j.cognition.2009.12.003.