Abstract

It has recently been shown that networks of spiking neurons with noise can emulate simple forms of probabilistic inference through “neural sampling”, i.e., by treating spikes as samples from a probability distribution of network states that is encoded in the network. Deficiencies of the existing model are its reliance on single neurons for sampling from each random variable, and the resulting limitation in representing quickly varying probabilistic information. We show that both deficiencies can be overcome by moving to a biologically more realistic encoding of each salient random variable through the stochastic firing activity of an ensemble of neurons. The resulting model demonstrates that networks of spiking neurons with noise can easily track and carry out basic computational operations on rapidly varying probability distributions, such as the odds of getting rewarded for a specific behavior. We demonstrate the viability of this new approach towards neural coding and computation, which makes use of the inherent parallelism of generic neural circuits, by showing that this model can explain experimentally observed firing activity of cortical neurons for a variety of tasks that require rapid temporal integration of sensory information.

Author Summary

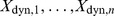

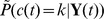

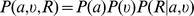

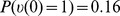

The Markov Chain Monte Carlo (MCMC) approach to probabilistic inference for a distribution is to draw a sequence of samples from that distribution and to carry out computational operations via simple online computations on such a sequence. But such a sequential computational process takes time, and therefore this simple version of the MCMC approach runs into problems when one needs to carry out probabilistic inference for rapidly varying distributions. This difficulty also affects all currently existing models for emulating MCMC sampling by networks of stochastically firing neurons. We show here that this problem can be solved by moving to a space-rate approach where salient probabilities are encoded through the spiking activity of ensembles of neurons, rather than by single neurons. In this way even theoretically optimal models for dealing with time-varying distributions through sequential Monte Carlo sampling, so-called particle filters, can be emulated by networks of spiking neurons. In this approach, each spike of a neuron in an ensemble represents a “particle” (or vote) for a particular value of a time-varying random variable. In other words, neural circuits can speed up computations based on Monte Carlo sampling through their inherent parallelism.

Introduction

Humans and animals are confronted with various situations where the state of some behaviorally relevant time-varying random variable is only accessible through noisy observations. It is then essential to estimate the current value of that random variable, and to update this belief on the basis of further evidence. Over the last three decades we have learned from various experiments that monkeys are able to perform such operations. In the classical random-dot motion task, monkeys are confronted with dots on a screen moving in random directions, where a random subset of dots moves coherently. Monkeys are able to determine the direction of coherent motion even for low coherence levels [1]. A more recent study has shown that the firing rates of neurons in parietal cortex are proportional to the momentary log-likelihood ratio of a rewarded action for the given sensory evidence [2], suggesting that cortical circuits perform some form of probabilistic inference to determine the value of the hidden variable that represents rewarded actions. In yet another experiment, Cisek and Kalaska [3] studied macaque monkeys in an ambiguous target task. A visual spatial cue and a color cue, which were separated by a memory epoch, determined the rewarded direction of an arm movement, see Figure 1A. Ambiguity about the rewarded action after the first cue was reflected in the firing activity of dorsal premotor cortex (PMd) neurons, see Figure 1B. When the second cue determined the single rewarded action, only neurons tuned to the rewarded movement direction remained active. This finding suggests that estimates for the value of a salient time-varying random variable (getting rewarded for carrying out a specific action) are represented and updated through the current firing activity of different ensembles of neurons, one for each possible value of the random variable.

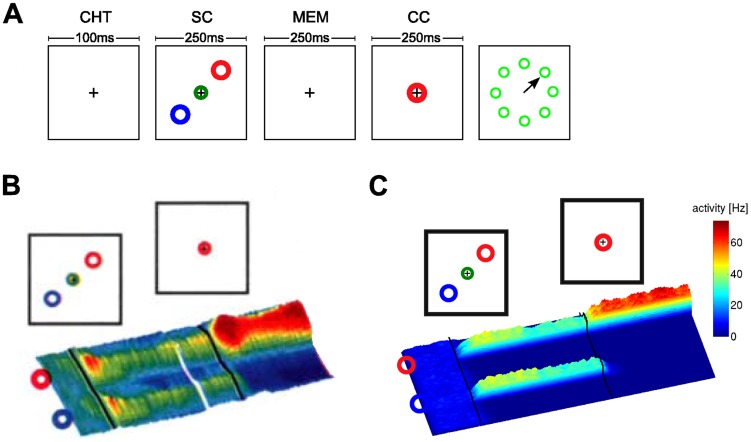

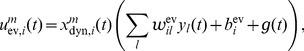

Figure 1. Representation of a belief in dorsal premotor cortex (PMd) in the ambiguous target task.

A) Task structure. After an initial fixation (center-hold time; CHT), the spatial cue (SC) is shown in the form of two color markers at one of eight possible locations and displaced from each other by 180 degrees. They mark two potentially rewarded movement directions. After a memory epoch (MEM), the color cue (CC) is shown at the fixation cross. The rewarded movement direction is defined by the direction of matching color in the color cue (time periods of simulation indicated). B) Firing activity of neurons in dorsal premotor cortex during the task. Before the spatial cue is shown, neurons are diffusely active. As the spatial cue is shown, neurons with preferred directions consistent with the spatial cue increase the firing rate and others are silenced. This circuit behavior is retained during the memory epoch. As the color cue is presented, neurons with consistent preferred directions increase their firing rates. C) Simulation result for a circuit that performs evidence integration in ENS coding (activity smoothed; horizontal axis: time). Neurons are ordered by their preferred direction. Panel B modified with permission from [52].

We show that, despite their diversity, all these tasks can be viewed as probabilistic inference tasks, where some internal belief about the current value of a hidden random variable (e.g., which action is most likely to be rewarded at the end of a trial) needs to be updated based on often ambiguous sensory evidence (moving dots, visual cues, etc.). We will distinguish 5 different classes of such tasks (labeled A - E in Results) that differ, for example, with regard to the time scale on which the hidden variable changes, or prior knowledge about its expected change. These tasks cannot be solved adequately through a Hidden Markov Model (HMM). The reason is that an HMM generates at each moment in time just a single guess for the current value of an unknown variable. It is therefore not able to work with more complex temporary guesses, say that an unknown variable probably has value 1 or 2, but definitely not value 3. Such richer representations are necessary in order to make decisions that depend on the integration of numerous temporally dispersed cues.

For all these classes of computational tasks there exist theoretically optimal solutions that can be derived within a probabilistic inference framework. If one assumes that the hidden random variable is static (i.e., it takes the same value at all times), evidence provided by the temporal stream of observations has to be integrated in order to infer the internal belief about the value of the random variable. In such evidence integration, an initial prior belief, formalized as a probability distribution over the values of the random variable, is updated over time in order to infer the time-varying posterior distribution given all evidence received up to the current time. For example, an observation that is likely under a particular state will increase the probability of that state at the time of the observation, while the probability of values under which the observation is unlikely will be decreased. Bayesian filtering generalizes evidence integration to time-varying random variables. It is often assumed that the dynamics of the random variable is time-independent. Bayesian filtering then infers the posterior by taking the assumed dynamics of the time-varying random variable into account. For example, if one state is currently likely, and this state is likely to transition to a second state, then the probability of the second state will gradually increase over time. For many important tasks, the dynamics of the random variable is not identical at all times but rather depends on context. For example, the change of body position in space (formalized as a hidden random variable) depends on motor actions. We refer to Bayesian filtering with context-dependent dynamics as context-dependent Bayesian filtering. It infers the posterior distribution of the hidden variable while additionally conditioning on all context information received up to the current time.
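The three inference schemes described above can be sketched for a discrete hidden variable as follows. This is a minimal illustration rather than the model of this paper: the function name `bayes_filter_step`, the list-based representation, and the example numbers are assumptions made for the sketch. Passing no transition matrix corresponds to evidence integration for a static variable, a fixed matrix to Bayesian filtering, and a matrix selected by the current context to context-dependent Bayesian filtering.

```python
def bayes_filter_step(belief, likelihood, transition=None):
    """One update of a discrete belief (hypothetical helper, for illustration).

    belief:     current posterior over K states (list of K probabilities)
    likelihood: probability of the new observation under each of the K states
    transition: K x K nested list with transition[i][j] = P(next = j | current = i),
                or None if the hidden variable is assumed to be static
    """
    k = len(belief)
    if transition is not None:
        # Prediction step: propagate the belief through the assumed dynamics.
        belief = [sum(belief[i] * transition[i][j] for i in range(k)) for j in range(k)]
    # Correction step: weight each state by how well it explains the observation.
    unnormalized = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Evidence integration: a uniform prior and an observation favoring state 0.
static = bayes_filter_step([0.5, 0.5], [0.8, 0.2])

# Context-dependent filtering: the context selects the dynamics (assumed example).
dynamics_by_context = {
    "stay": [[1.0, 0.0], [0.0, 1.0]],
    "switch": [[0.0, 1.0], [1.0, 0.0]],
}
moved = bayes_filter_step(static, [0.5, 0.5], dynamics_by_context["switch"])
```

In this example the first observation shifts the belief towards state 0, and the uninformative second observation under the "switch" context moves that probability mass to state 1.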

In previous work, it was shown that networks of spiking neurons can embody a probability distribution through their stochastic spiking activity. This enables a neural system to carry out probabilistic inference through sampling (e.g., estimating a marginal probability by observing the firing rate of a corresponding neuron) [4]. This model for probabilistic inference in networks of spiking neurons was termed neural sampling. However, neural sampling does not provide a suitable model for the representation and updating of quickly varying distributions as needed for the tasks discussed above, since a good estimate of the current state of such a distribution can only be read out after several samples have been observed. Another deficit of this simple form of the neural sampling model is that each salient random variable is represented through the firing activity of just a single neuron. This is unsatisfactory because it does not provide a network computation that is robust against the failures of single neurons. In fact, the representation of random variables through single neurons is not consistent with experimental data, see Figure 1B. In addition, it requires unbiologically strong synaptic connections in order to ensure that the random variable represented by such a single neuron has an impact on other random variables, or on downstream readouts. Furthermore, downstream readout neurons are required to integrate (count) spikes of such a neuron over intervals of several hundred ms or longer in order to get a reasonable estimate of the probability that is represented through the firing rate of the neuron (i.e., in order to estimate a posterior marginal, which is an important form of probabilistic inference).

In this article we therefore examine an extension of the neural sampling model, where random variables (e.g. internal beliefs) are represented through a space-rate code of neuronal ensembles. In other words, we are making stronger use of the inherent parallelism of neural systems. In this ensemble-based neural sampling (ENS) code, the percentage of neurons in an ensemble that fire within some short (e.g. 20 ms) time interval encodes the internal belief (or estimated probability) that a random variable currently has the specific value associated with that ensemble.

This variation of the neural sampling model is nontrivial, since one tends to lose the link to the theory of sampling/probabilistic inference if one simply replaces a single neuron by an ensemble of neurons. We show, however, that ensemble-based neural sampling is nevertheless possible, and is supported by a rigorous theory. In this new framework downstream neurons can read out current internal estimates in the ENS code just through their standard integration of postsynaptic potentials. We prove rigorously that this generates unbiased estimates, and we also show on what parameters the variance of this estimate depends. Furthermore, we explore first steps of a theory of neural computation with the ENS code. We show that nonlinear computation steps that are needed for optimal integration of time-varying evidence can be carried out within this spike-based setting through disinhibition of neurons. Hence networks of spiking neurons with noise are in principle able to approximate theoretically optimal filtering operations, such as evidence integration and context-dependent Bayesian filtering, for updating internal estimates for possible causes of external stimuli. In particular, we show that networks of spiking neurons with noise are able to emulate state-of-the-art probabilistic methods that enable robots to estimate their current position on the basis of multiple ambiguous sensory cues and path integration. This provides a first paradigm for the organization of brain computations that are able to solve generic self-localization tasks. The resulting model is especially suited as a “computational engine” for an intention-based neural coding framework, as proposed in [5]. Intention-based neural coding is commonly observed in lateral intraparietal cortex (area LIP) of monkeys, where neurons encode a preference for a particular target of a saccade within the visual field [1].

The remainder of this paper is structured as follows. First we introduce ENS coding. We then discuss basic properties of the ENS code. In Computational operations through ensemble-based neural sampling, we show how basic computations on time-varying internal beliefs can be realized by neural circuits in ENS coding. This section is structured along 5 classes of computational tasks of increasing complexity (Task class A to Task class E). Within these task classes, we present computer simulations where the characteristics of these neural circuits are analyzed and compared to experimental results. A discussion of the main findings of this paper and related work can be found in Discussion. Detailed derivations and descriptions of computer simulations are provided in Methods.

Results

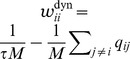

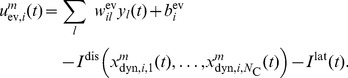

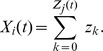

Ensemble-based neural sampling

In neural sampling [4], each neuron in a network represents a binary random variable. Spike generation is stochastic, with a probability that depends on the current membrane potential. At each time, the activity of each neuron is mapped to a sample for the value of the corresponding variable by setting the value to 1 if the neuron has spiked within a short preceding time window (e.g. 20 ms), and to 0 otherwise. It was shown that under certain conditions on the membrane potentials of the neurons, the network converges to a stationary distribution that corresponds to the posterior distribution of the represented random variables for the given evidence. Evidence is provided to the circuit by clamping the activities of a subset of neurons during inference. The marginal distribution for a given variable can be read out by observing the firing activity of the corresponding neuron in the stationary distribution.

Neural sampling is an implementation of the Markov Chain Monte Carlo (MCMC) sampling approach (see e.g. [6]) in networks of spiking neurons. By definition, it does not provide a suitable model for the representation of time-varying distributions, since samples are generated in a sequential manner. Convergence to the stationary distribution in MCMC sampling can take substantial time, the readout of marginal distributions demands spike counts of neurons over extended periods of time, and MCMC sampling is only defined for the fictional case of stationary external inputs. But time-varying distributions can, in theory, also be handled through Monte Carlo sampling if one has a sufficiently parallelized stochastic system that can generate several samples from the time-varying distribution simultaneously at each time point, and that carries out simple computational operations on this batch of samples in parallel. The resulting computational model is usually referred to as a particle filter, a special case of sequential Monte Carlo sampling [6], [7]. Here each sample from the batch that is generated at a given time is referred to as a particle.
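As a point of reference for the particle filter idea, the following sketch implements one step of a generic bootstrap particle filter for a discrete hidden state. It is a textbook-style illustration under stated assumptions, not the spiking-network implementation developed in this paper: the function names, the two-state example, and the use of multinomial resampling are choices made here for brevity.

```python
import random


def particle_filter_step(particles, likelihood, transition, rng):
    """Advance a batch of particles (state indices) by one observation."""
    states = range(len(transition))
    # Prediction: move every particle according to the assumed dynamics.
    moved = [rng.choices(states, weights=transition[s])[0] for s in particles]
    # Correction: weight each particle by the likelihood of the observation.
    weights = [likelihood[s] for s in moved]
    # Resampling: draw a new, equally weighted batch of the same size.
    return rng.choices(moved, weights=weights, k=len(moved))


def particle_estimate(particles, num_states):
    """Fraction of particles voting for each state."""
    return [particles.count(s) / len(particles) for s in range(num_states)]


rng = random.Random(0)
particles = [rng.randrange(2) for _ in range(5000)]  # uniform prior over 2 states
transition = [[0.9, 0.1], [0.1, 0.9]]                # assumed "sticky" dynamics
particles = particle_filter_step(particles, [0.8, 0.2], transition, rng)
estimate = particle_estimate(particles, 2)
```

With a uniform prior and these symmetric dynamics, the exact posterior after the observation is (0.8, 0.2); the particle estimate approaches it as the number of particles grows, which is the sense in which parallel samples replace a long sequential chain.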

To port the idea of neural sampling to the representation of time-varying distributions through neuronal ensembles, we therefore consider a set of ensembles, one for each value in the range of a random variable, that collectively encode the belief about the value of this variable in terms of a probability distribution. We refer to the value of a random variable also as the hidden state, or simply the state of the variable. We will in the following omit the time subscript of the random variable for notational convenience (formally, we consider a family of variables, indexed by time, that defines a random process, see [8]; the represented distribution at a given time is then the distribution over the corresponding member of this family). Each ensemble consists of the same number of neurons, which we refer to as the ensemble size. We interpret a spike in the circuit as one sample from the represented distribution, i.e., one concrete value for the represented variable drawn according to the distribution [4], [9]. In particular, if a spike is elicited by some neuron in the ensemble for a given state, then the sampled value is that state. See Figure 2A for an illustration of sample-based representations.

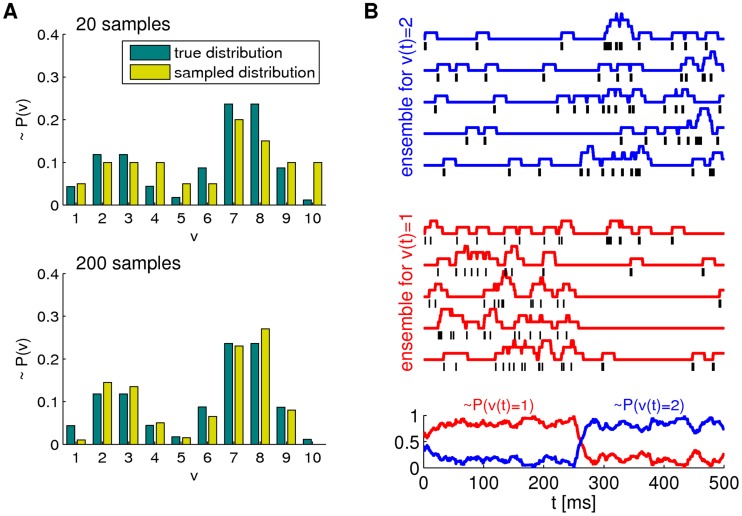

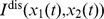

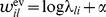

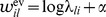

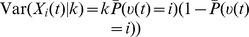

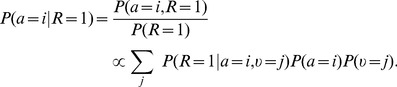

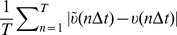

Figure 2. Spikes as samples from probability distributions.

A) Sample-based representations of probability distributions. True distribution of a random variable (green) and approximated distribution (yellow) based on 20 samples (top) and 200 samples (bottom). B) Interpretation of the spiking activity of two neuronal ensembles as samples from a probability distribution over a temporally changing random variable. Shown is an example for a random variable with two possible states. Black lines in the top traces indicate action potentials in two ensembles (5 neurons per state shown). Traces above spikes show EPSP-filtered versions of these spikes (red: state 1; blue: state 2). Bottom plot: Estimated probabilities for state 1 (red) and state 2 (blue) according to eq. (1) based on the spiking activity of 10 neurons per state.

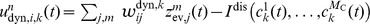

A downstream neuron can evaluate how many spikes it received from each ensemble within its membrane time constant through summation of excitatory postsynaptic potentials (EPSPs) caused by spikes from ensemble neurons. We consider the EPSP-filtered spike train of each ensemble neuron (see Methods for a precise definition) and adopt rectangular EPSP shapes of fixed length in the following, similar to those recorded at the soma of pyramidal neurons for dendrite-targeting synaptic inputs (see Figure 1 in [10]). The sum of all EPSPs from the ensemble for a hidden state is then the number of samples for that state in the time window of one EPSP length that ends at the current time. This number of samples is thus directly accessible to downstream neurons. We refer to it as the (non-normalized) probability mass for the hidden state. The use of plateau-like EPSP shapes is motivated by the need to count spikes in some predefined time interval. An alternative motivation, based on the idea that the EPSP weights a spike by the probability that it belongs to the most recent samples, is given in Text S1.

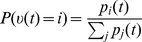

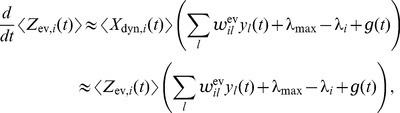

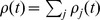

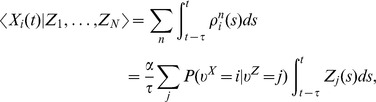

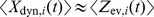

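The represented distribution can be estimated by the relative portion of spikes from the ensembles: the estimated probability of a state is the probability mass of its ensemble divided by the summed probability masses of all ensembles, (1)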

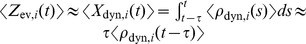

where we assume that at least one sample is available. See Figure 2B for an intuitive illustration of ENS coding. In this representation, probabilities are temporally filtered by the EPSPs. Hence, the ability of the code to capture fast dynamics of distributions depends on the length of EPSPs, where shorter time constants give rise to faster tracking. Due to the stochasticity of the sampling process, the estimate is a random variable that assumes different values each time the distribution is represented. We demand in ENS coding that the expected value of the estimate is equal to the temporally filtered represented probability for all states, see Methods for details.
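The readout of eq. (1) can be illustrated with a small simulation. The sketch below is an illustration under simplifying assumptions, not the simulation code of this paper: spikes are drawn as independent Bernoulli events per neuron and time bin (a discrete-time approximation of Poisson firing), the EPSP is a rectangular window of `window_bins` bins, and all names and parameter values are hypothetical.

```python
import random


def simulate_counts(prob_fn, num_states, ensemble_size, max_rate, duration, dt, rng):
    """Spike counts per time bin; counts[b][i] is the count of ensemble i in bin b."""
    bins = int(round(duration / dt))
    counts = []
    for b in range(bins):
        probs = prob_fn(b * dt)
        counts.append([
            # Each neuron spikes in this bin with probability rate * dt.
            sum(rng.random() < max_rate * probs[i] * dt for _ in range(ensemble_size))
            for i in range(num_states)
        ])
    return counts


def ens_readout(counts, bin_index, window_bins):
    """Relative portion of spikes per ensemble within a rectangular window of past bins."""
    start = max(0, bin_index - window_bins + 1)
    window = counts[start:bin_index + 1]
    masses = [sum(row[i] for row in window) for i in range(len(counts[0]))]
    total = sum(masses)
    # The estimate is undefined if no sample is available in the window.
    return [m / total for m in masses] if total > 0 else None


def switching_distribution(t):
    """Assumed example: the represented distribution flips at t = 0.5 s."""
    return [0.9, 0.1] if t < 0.5 else [0.1, 0.9]


rng = random.Random(0)
counts = simulate_counts(switching_distribution, 2, 50, 100.0, 1.0, 0.001, rng)
before = ens_readout(counts, 400, 20)  # readout at t = 0.4 s with a 20 ms window
after = ens_readout(counts, 900, 20)   # readout at t = 0.9 s
```

Shortening `window_bins` makes the readout follow the switch more quickly, at the price of fewer samples per estimate.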

We will see in the construction of computational operations in ENS coding that downstream neurons do not have to carry out the division of eq. (1). Instead, for these operations, they can compute with the non-normalized probability masses that they obtain through summation of EPSPs from ensemble neurons. The reason is that normalization is not necessary in the representation of a distribution in ENS coding. It is rather the relative portion of spikes for each value of the random variable that defines the represented distribution. Of course, activity needs to be kept in some reasonable range, but this can be done in a rather relaxed manner.

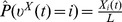

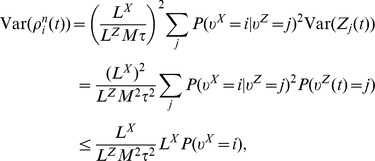

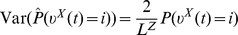

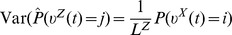

Basic properties of the ENS code

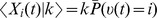

Assume that samples (spikes) for each state are produced by a Poisson process with rate proportional to the represented probability, i.e., the rate of each neuron in the ensemble for a state is given by the represented probability of that state multiplied by a constant that defines the maximal instantaneous rate of each neuron. The probability mass of the state is then a random variable distributed according to a Poisson distribution whose intensity is the temporally filtered probability of the state multiplied by the estimation sample size, where the estimation sample size is the average number of spikes produced by all ensembles within a time span of one EPSP length.

We show in Methods that in this case, the resulting estimate is an unbiased estimator of the probability of each state at a given time, filtered by the EPSPs; hence, an ENS code is established. An important question is how parameters of a circuit influence the fidelity of the encoding. To answer this question, we investigated the variance of the estimator. It is inversely proportional to the ensemble size, the maximal firing rate, and the membrane time constant if the number of samples within this time span is large, see Methods. Hence, the accuracy can be increased by increasing the ensemble size, the firing rate of neurons, and the time constant of neuronal integration. Note however that an increase of the latter will lead to more temporal filtering of the distribution.
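The scaling of the estimator variance can be illustrated with a minimal numerical sketch. It is not the analysis from Methods: it assumes rectangular EPSPs, so that the mass of a state is simply the spike count of its ensemble within one window, and all names and parameter values (`ens_estimates`, `ensemble_size`, `max_rate`, `window`) are hypothetical choices made for illustration.

```python
import numpy as np

def ens_estimates(p, ensemble_size, max_rate, window, n_trials, rng):
    """Draw windowed spike counts per state and return the resulting estimates.

    Assumption: rectangular EPSPs of length `window`, so the mass of a state
    is the spike count of its ensemble within the window.
    """
    p = np.asarray(p, dtype=float)
    # Expected total number of spikes of all ensembles within the window.
    sample_size = ensemble_size * max_rate * window
    # Each ensemble fires as a Poisson process with rate proportional to p.
    counts = rng.poisson(sample_size * p, size=(n_trials, p.size))
    return counts / sample_size

rng = np.random.default_rng(0)
p_true = np.array([0.2, 0.8])
small = ens_estimates(p_true, ensemble_size=50, max_rate=100.0,
                      window=0.02, n_trials=20000, rng=rng)
large = ens_estimates(p_true, ensemble_size=500, max_rate=100.0,
                      window=0.02, n_trials=20000, rng=rng)

print("mean estimate:", small.mean(axis=0))
print("variance ratio (small / large ensemble):",
      small.var(axis=0) / large.var(axis=0))
```

Under these assumptions the mean estimate stays close to the represented probabilities, and a tenfold larger ensemble reduces the variance roughly tenfold. Increasing `max_rate` or `window` by the same factor has the same effect in this sketch.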

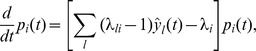

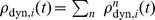

We close this discussion by considering the relation between the instantaneous firing rates of ensemble neurons and the mean represented probability mass. The probability mass is not an instantaneous function of the ensemble firing rate, since at any given time there are still past samples that influence it through their EPSPs. A past sample becomes invalid once the associated EPSP vanishes. The instantaneous firing rates change the mass through the production of novel samples. Consider given continuous firing rates. Under mild assumptions on the firing rates of ensemble neurons (see Methods), the change of the expected probability mass is then given by

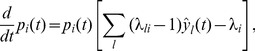

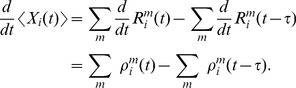

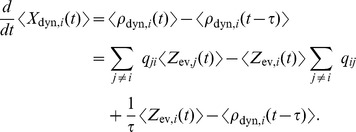

(2)

where the expectation is taken over realizations of spike trains for the given instantaneous rates. Here, the first term is due to the production of novel samples and the second term due to old samples that become invalid. In summary, the membrane potentials of the neurons determine – through the firing rate – the rate of change of the represented probability mass in ENS-coding.
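The two contributions described here can be checked numerically in a small sketch. It assumes rectangular EPSPs of a fixed duration `tau`, so that the expected mass equals the expected number of spikes within the last `tau` seconds; the rate profile and all parameter values are hypothetical.

```python
import numpy as np

tau = 0.02          # assumed EPSP duration in seconds (hypothetical value)
dt = 1e-4           # integration step in seconds
t = np.arange(0.0, 0.5, dt)
# Hypothetical summed firing rate of one ensemble (spikes per second).
rate = 200.0 + 150.0 * np.sin(2.0 * np.pi * 4.0 * t)

k = int(round(tau / dt))                        # window length in steps
cum = np.concatenate(([0.0], np.cumsum(rate) * dt))
# Expected mass: expected number of spikes within the last k steps.
mass = cum[k:len(t)] - cum[:len(t) - k]

numeric = np.diff(mass) / dt                    # finite-difference change of the mass
predicted = rate[k:-1] - rate[:-k - 1]          # novel samples minus expiring samples
print(np.max(np.abs(numeric - predicted)))
```

In this discretization the change of the expected mass equals the current rate minus the rate one EPSP duration earlier, which mirrors the production term and the invalidation term named in the text.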

Computational operations through ensemble-based neural sampling

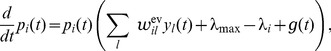

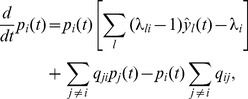

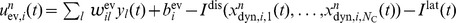

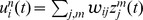

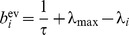

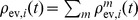

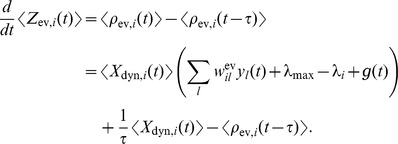

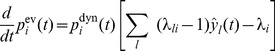

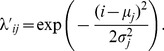

We now address the question of how basic computations on time-varying internal beliefs can be realized by neural circuits in ENS coding. Spiking activity of excitatory neurons is modeled according to the stochastic Spike Response model [11], [12]. In this model, each neuron emits a Poisson spike train with instantaneous firing rate

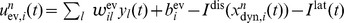

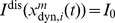

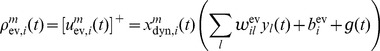

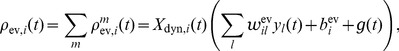

(3)

Here, a link function links the somatic membrane potential to the instantaneous firing rate. Typically, the link function is either an exponential function or a non-negative linear function. We consider in this article a non-negative linear link function [12], based on a rectification that returns its argument if the argument is non-negative and zero otherwise.
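A minimal sketch of such a neuron model is given below. It assumes a unit gain between membrane potential and rate and approximates the Poisson process by independent Bernoulli draws per time bin; the function names `link` and `poisson_spikes` and the potential trace are hypothetical.

```python
import numpy as np

def link(u):
    """Non-negative linear link: returns u for non-negative u and 0 otherwise."""
    return np.maximum(u, 0.0)

def poisson_spikes(u, dt, rng):
    """Bernoulli-per-bin approximation of a Poisson spike train driven by u."""
    rate = link(u)                        # instantaneous rate in Hz (unit gain assumed)
    return rng.random(u.shape) < rate * dt

rng = np.random.default_rng(2)
dt = 1e-3
# Hypothetical membrane potential trace: 5 s below zero, then 5 s at 40.
u = np.concatenate([np.full(5000, -10.0), np.full(5000, 40.0)])
spikes = poisson_spikes(u, dt, rng)
print(spikes[:5000].sum(), spikes[5000:].sum())
```

With a negative membrane potential the rate is clipped to zero and no spikes are produced, whereas a positive potential yields spikes at a rate proportional to it.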

We discuss five classes of computational tasks.

Task class A

In these tasks, the state of one random variable has to be inferred in ENS coding given the belief about a second variable, also in ENS coding, where the distribution over the first variable depends solely on the current state of the second. The computational operation needed to solve such problems is simpler than the other ones considered here in the sense that the distribution over the inferred variable can be directly obtained from recent samples for the given variable. We will use this operation several times to read out the belief about a rewarded motor action from the internal belief about some random variable.

Task class B

This class consists of tasks that can be solved through evidence integration. In other words, the state of a random variable has to be estimated based on evidence, where the random variable is assumed to be static during each trial. Examples of such tasks are the ambiguous target task, the random-dot motion task, and the probabilistic inference task from [2]. We will exhibit a spiking neural network architecture that approximates optimal solutions for these tasks in ENS coding and compare its behavior to experimental results.

Task class C

In these tasks, too, evidence has to be integrated to estimate the state of a random variable. However, the state of this variable may change over time according to known time-independent stochastic dynamics. Bayesian filtering provides an optimal solution for such tasks. We will extend the circuit architecture from task class B to approximate Bayesian filtering in ENS coding and test its performance in a generic task setup.

Tasks class D

In this class of tasks, the dynamics of the random variable  may change during the task, and changes are indicated by some context-variable

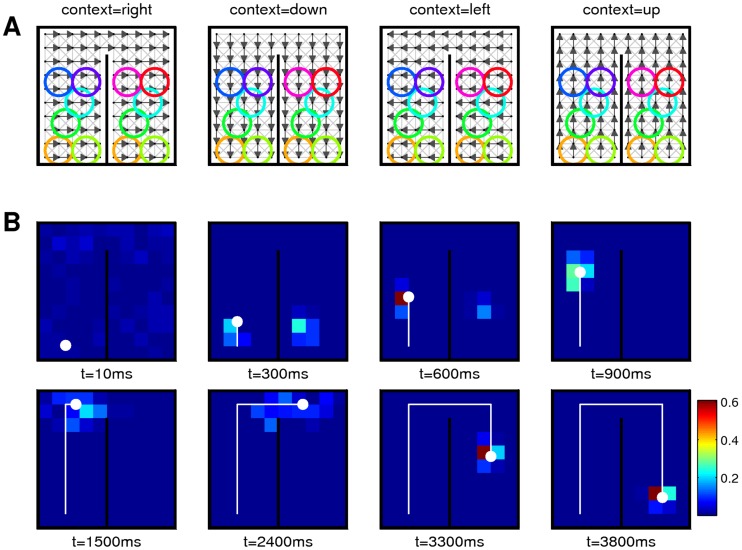

may change during the task, and changes are indicated by some context-variable  . Note that task classes B and C are special cases of this task class. We refer to the optimal solution as context-dependent Bayesian filtering. An approximation based on sequential Monte Carlo sampling is particle filtering. We will extend our circuit architecture to perform full particle filtering in ENS coding. We will show how the important problem of self-localization can be solved by this architecture.

. Note that task classes B and C are special cases of this task class. We refer to the optimal solution as context-dependent Bayesian filtering. An approximation based on sequential Monte Carlo sampling is particle filtering. We will extend our circuit architecture to perform full particle filtering in ENS coding. We will show how the important problem of self-localization can be solved by this architecture.

Task class E

Finally, we will discuss tasks where the context variable is not explicitly given but has to be estimated from noisy evidence. Hence, the context variable, which determines the dynamics of the hidden random variable, also has to be considered a random variable. We will treat such tasks by combining two particle filters. One particle filter estimates the context variable and provides context for another particle filter that estimates the hidden random variable. As an example, we will reconsider the ambiguous target task. We show that a belief about the current stage within a series of trials can be generated in order to decide whether evidence should be further integrated for the belief about the rewarded action or whether a new trial has started and the belief should be reset to some prior distribution.

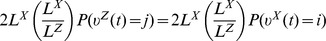

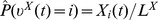

Task class A: Simple probabilistic dependencies

We first discuss how the belief for one random variable can be inferred in ENS coding given the belief over another variable. Consider a random variable for which the distribution depends solely on the current state of a second random variable. The task is to infer the distribution over the first variable for the current distribution over the second. This operation is needed, for example, in typical decision making tasks where inference about a rewarded action has to be performed according to the belief about some hidden variable that is based on sensory information. For example, the rewarded movement direction has to be guessed, based on the belief about the perceived cue combination in the ambiguous target task.

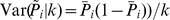

Assume that the distribution over the given variable is constant and represented by a layer of neurons through ENS coding with a given estimation sample size. We are looking for a neural circuit that represents the posterior belief

(4)

in ENS coding. Here, the known conditional probabilities determine the dependencies between the two variables.

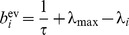

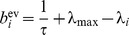

We consider a layer of ensembles that receive feed-forward synaptic input from the ensembles representing the given variable, see Figure 3. The membrane potentials of the neurons in this layer are given by

(5)

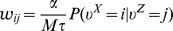

where each weight denotes the efficacy of the synapse connecting a presynaptic neuron to a postsynaptic neuron (we assume for simplicity of notation that all weights between two ensembles are identical). Consider the estimator of the posterior obtained for synaptic efficacies

(6)

where the efficacies involve the ensemble size and some constant. Due to the stochastic nature of neurons, this estimator is a random variable. Its expected value (with respect to realizations of spike trains in all ensembles) is equal to the posterior probability; the resulting estimation sample size is derived in Methods. Thus, the layer represents the posterior distribution in ENS coding. The estimate at some specific time is however variable due to variability in spike counts of both the representation of the given variable and the representation of the inferred variable. An analysis of the variance of the posterior representation is given in Methods.
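The feed-forward readout can be illustrated with a small simulation. This is a sketch under stated assumptions, not the construction from Methods: spike counts within one window stand in for EPSP-filtered masses, the feed-forward drive is taken to be proportional to the conditional probabilities, and all values (`p_x`, `cond`, `sample_size`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
p_x = np.array([0.3, 0.7])                 # belief over the given variable
cond = np.array([[0.9, 0.2],               # cond[j, i] = p(y = j | x = i), hypothetical
                 [0.1, 0.8]])
exact = cond @ p_x                         # posterior by marginalization over x

sample_size = 400.0                        # expected spikes of the input layer per window
n_trials = 5000
x_counts = rng.poisson(sample_size * p_x, size=(n_trials, 2))
# Feed-forward drive: each input spike for state i adds cond[j, i]
# to the expected spike count of output ensemble j.
drive = x_counts @ cond.T
y_counts = rng.poisson(drive)
# Relative share of spikes per output ensemble.
y_est = y_counts / y_counts.sum(axis=1, keepdims=True)

print("exact posterior:", exact)
print("mean ENS estimate:", y_est.mean(axis=0))
```

Single-trial estimates fluctuate because both the input counts and the output counts are random, but their average lies close to the exact posterior.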

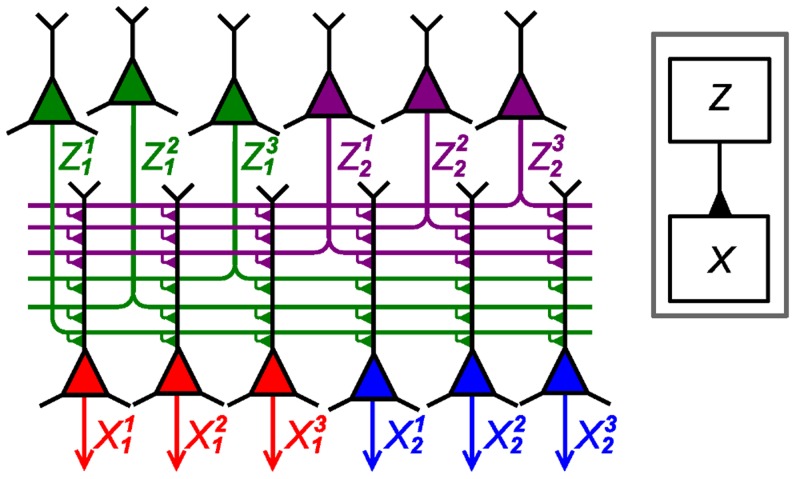

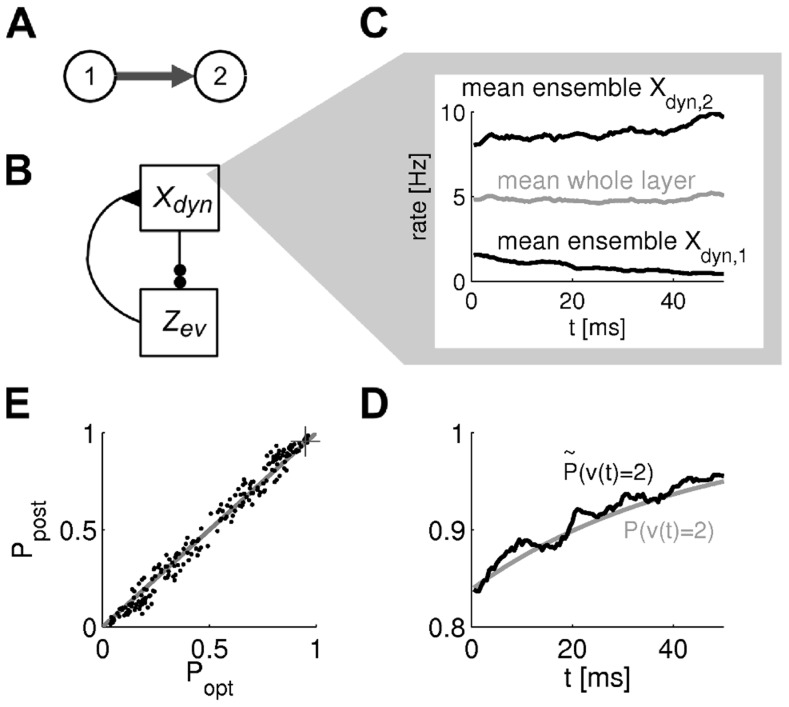

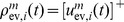

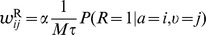

Figure 3. Computations in ENS coding in a feed forward circuit architecture.

A binary random variable is represented in ENS coding through a first layer of neurons. The posterior for a second binary variable is represented by a second layer of neurons. Each variable is represented by two ensembles, one for each possible state (indicated by neuron color), with multiple neurons per ensemble. The two layers are connected in an all-to-all manner. Arrows indicate efferent connections (i.e., outputs in ENS coding). The architecture is summarized in the inset.

We will use such a layer with feed-forward input several times in our simulations to infer a belief about rewarded actions for a given belief about the state of a random variable and thus refer to it as an action readout layer.

We will also need a special case of this operation where the distribution is simply copied, i.e., the state of the inferred random variable is assumed to be identical to the state of the given one. In other words, the conditional probability is 1 for identical states and 0 for differing states. The copy operation is thus performed with nonzero weights between ensembles that code for the same state and zero weights between ensembles that code for different states.

Task class B: Evidence integration

In pure evidence integration, the value of the random variable is assumed to be constant and only indirectly observable via stochastic point-event observations. Point-event observations are assumed to arise according to Poisson processes with instantaneous rates that depend on the current hidden state. In the context of neuronal circuits, observations are reported through spikes of afferent neurons. In particular, each afferent neuron is assumed to spike in a Poissonian manner with a rate that depends on the state assumed by the hidden variable. For a prior distribution over states, the task is to infer the posterior, that is, the distribution over states at a given time, given the spike trains of all afferent neurons up to that time. Many laboratory tasks can be formalized as evidence integration tasks, including the random-dot motion task, the probabilistic inference task considered in [2], and the ambiguous target task discussed in the introduction. We construct in the following a circuit of spiking neurons that approximates optimal evidence integration by performing particle filtering in ENS coding under the assumption that the hidden variable is constant. We will then evaluate its behavior against experimental data in computer simulations. This circuit will be extended in the subsequent sections to perform particle filtering for task classes C and D.

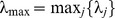

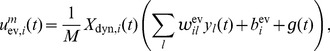

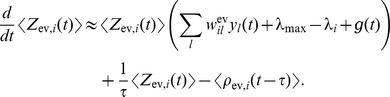

It is well-known that the evidence integration problem can be solved efficiently through a set of coupled differential equations

(7)

where the spike train of each afferent neuron is formalized as a sum of Dirac delta pulses at spike times (see Methods) [13], [14]. The inferred probabilities can be obtained by normalizing the variables of eq. (7). One term of eq. (7) induces a constant decrease of these variables such that hidden states that give rise to many observations are punished if no observations are encountered. Are the dynamics (7) compatible with ENS coding, assuming that each variable is estimated from the spiking activity of an ensemble? Four potential difficulties arise. First, the afferent neurons impact eq. (7) via point events and not via EPSPs. Second, the deterministic dynamics (7) need to be implemented via particles in the ENS code. Third, the summed evidence needs to be multiplied with the current value of the variable that is updated. And finally, to avoid an exponential blow-up of the variables, their values need to be normalized. We discuss these four issues in the following.
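The ideal computation that the circuit is meant to approximate can be sketched in discrete time. The block below is not eq. (7) itself but the standard Bayesian update for Poisson observations of a static hidden state, carried out on non-normalized log-weights; the rate matrix `lam`, the flat prior, and the bin size are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
lam = np.array([[40.0, 10.0],      # lam[k, i]: rate (Hz) of afferent k if the hidden state is i
                [10.0, 40.0]])     # hypothetical values
true_state = 0
dt, T = 1e-3, 2.0
log_w = np.log(np.array([0.5, 0.5]))   # log of the non-normalized weights, flat prior

for _ in range(int(round(T / dt))):
    # Afferent spikes in this time bin, generated under the true hidden state.
    spikes = (rng.random(2) < lam[:, true_state] * dt).astype(float)
    # A spike of afferent k multiplies weight i by lam[k, i];
    # silence decreases weight i at rate sum_k lam[k, i].
    log_w += spikes @ np.log(lam) - lam.sum(axis=0) * dt

# Normalization is applied only at readout.
posterior = np.exp(log_w - log_w.max())
posterior /= posterior.sum()
print(posterior)
```

The weights are kept non-normalized during integration, and states that predict many observations lose weight whenever the afferents stay silent, which corresponds to the decrease term discussed above.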

Evidence can be provided through EPSPs

It turns out that the first difficulty can be resolved in a convenient manner. The set of differential equations (7) can be transformed to

(8)

with weights that are defined up to an arbitrary constant, and with an additional arbitrary function. Integration of EPSPs with these weights leads to exactly the same result as integration of eq. (7) after the EPSPs have fully been integrated, even if they are temporally overlapping, see Methods. The arbitrary constant can be used to shift the weights to positive values. This constant and the arbitrary function are integrated by all state variables, giving rise to a scaling that cancels in the normalization.

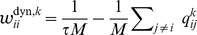

Particle-based implementation of the filtering equations

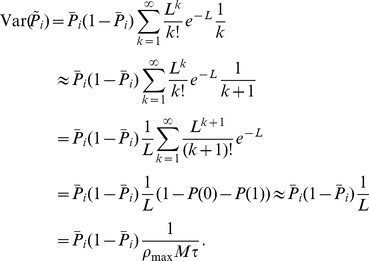

We now discuss how eq. (8) is approximated in ENS coding. While eq. (8) is a deterministic differential equation, the dynamics of the circuit is stochastic due to sampling noise. We construct a circuit that approximates the desired changes in its expected probability masses. The validity of this approach will later be ascertained through various computer simulations. The belief about the hidden state of the random variable is in general shaped by two components: first, the assumed internal dynamics of the random variable, and second, novel evidence about the state. Consider a circuit that consists of two layers, the dynamics layer and the evidence layer, each with its own neural ensembles, see Figure 4A. The dynamics layer implements changes of the represented distribution due to the internal dynamics of the random variable. The evidence layer implements changes due to incoming evidence. Since in task class B the random variable is assumed to be static, no temporal changes of the random variable are expected, and hence the excitatory weights from the evidence layer to the dynamics layer are set such that the dynamics layer copies the distribution represented by the evidence layer, as discussed in Task class A. For task classes C and D, different weights will be used such that the dynamics layer predicts dynamic changes of the hidden variable.

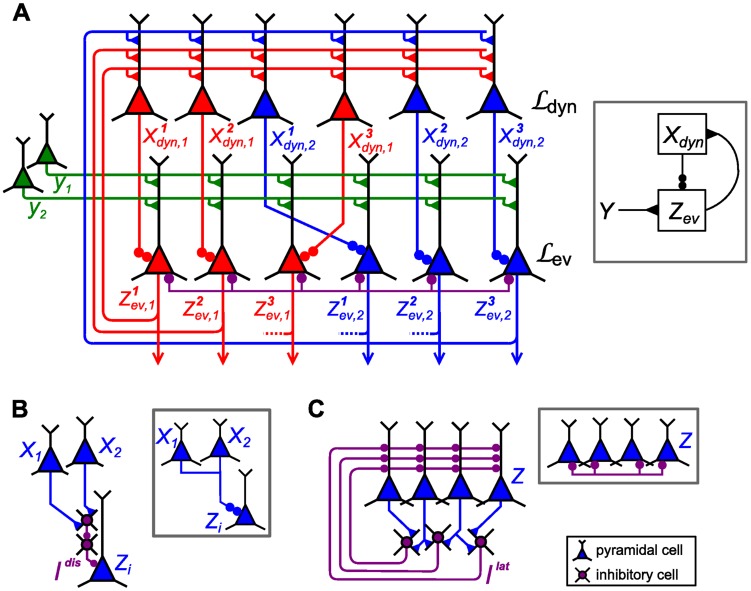

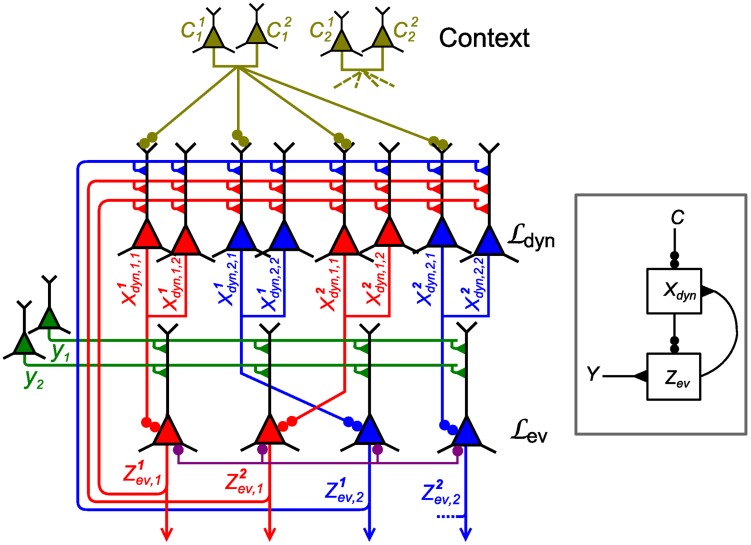

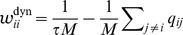

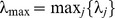

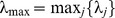

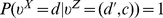

Figure 4. Particle filter circuit architecture for task classes B and C.

A) Circuit with two ensembles (indicated by red and blue neurons, respectively) and multiple neurons per ensemble. Neurons in the evidence layer receive synaptic connections from neurons in the dynamics layer and update the represented distribution according to evidence input from afferent neurons (green). Lateral inhibition (magenta; see panel C) stabilizes activity in this layer. Neurons project back to the dynamics layer. For task class B (evidence integration; static random variable), only connections between neurons that code for the same hidden state are necessary and the dynamics layer simply copies the distribution represented by the evidence layer, see Task class A and Figure 3 (in contrast to Figure 3, the copying ensembles are plotted above the ensembles they copy in order to avoid a cluttered diagram). For task class C (Bayesian filtering; random variable with time-independent dynamics), the dynamics layer implements changes of the represented distribution due to the dynamics of the random variable and the evidence layer is potentially fully connected to the dynamics layer. Neurons in the dynamics layer disinhibit neurons in the evidence layer (double-dot connections; see panel B). Disinhibition and lateral inhibition are indicated by shortcuts as defined in B and C. Arrows indicate efferent connections. A schematic overview of the circuit is shown in the inset. B) Disinhibition: presynaptic neurons excite an interneuron (purple) which inhibits the inhibitory drive to some target neuron. As a graphical shortcut, we draw such disinhibitory influence as a connection with two circles (inset). C) Lateral inhibition: Pyramidal cells (blue) excite a pool of inhibitory neurons (magenta) which feed back common inhibition. The graphical shortcut for lateral inhibition is shown in the inset.

The evidence layer receives input from the dynamics layer and evidence from afferent neurons. Our goal is that its probability masses integrate evidence into the distribution represented in the dynamics layer, such that

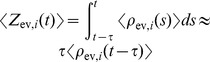

(9)

where the latter approximation applies due to the copy operation of the dynamics layer. This equation resembles eq. (8), with the probability masses of the evidence layer taking the role of the variables of eq. (8). We show in Methods that such changes are obtained if the membrane potentials of the neurons in the evidence layer are set to

(10)

where the bias terms are positive.

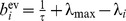

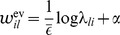

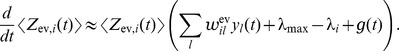

Multiplication through gating of activity

We see that neurons need to compute a multiplication between the current probability mass for a state and the summed evidence. In order to implement similar equations in a neuronal-like manner, logarithmic dendritic nonlinearities or multiplicative synaptic interactions have been postulated in a number of studies, see e.g. [14], [15]. In ENS coding however, the population response in the evidence layer is the sum of the responses of individual neurons. This linearity allows us to base the membrane potential of an individual neuron on a small number of particles rather than on the whole set of particles summarized in the probability mass, as long as each particle is used exactly once in the computation. Hence, the same behavior is obtained on the population level if instead of membrane potentials (10), membrane potentials are given by

(11)

see Methods for a detailed derivation. If spiking of individual neurons is sparse, i.e., if the ensemble size is large compared to the estimation sample size, the EPSP-filtered spike train of an individual neuron nearly always takes on the values 0 or 1. In this case, it suffices that the activity of a neuron in the evidence layer is gated by a neuron in the dynamics layer, i.e., the gated neuron is able to produce spikes only if the gating neuron was recently active. In summary, under the assumption of sparse activity (which can always be accomplished by a suitable choice of parameters), one can replace the multiplication of two analog variables by gating of activity in ENS coding. This multiplication strategy generalizes the one proposed in the context of stochastic computation to ensemble representations [16], [17].
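The effect of gating on the population level can be illustrated with a short sketch. It assumes that every neuron of an evidence-layer ensemble receives the same summed evidence drive and is gated by one sparse binary signal; the numbers `n_neurons`, `p_recent`, and `evidence` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n_neurons = 20000        # neurons in one evidence-layer ensemble (hypothetical)
p_recent = 0.05          # probability that a gating neuron spiked recently (sparse)
evidence = 30.0          # summed evidence drive in Hz, shared by the ensemble (hypothetical)

gate = rng.random(n_neurons) < p_recent          # 0/1 gating signal per neuron
rates = np.where(gate, evidence, 0.0)            # a neuron can fire only if its gate is open
population_rate = rates.sum()

mass = gate.sum()                                # number of recent particles
print(population_rate, mass * evidence)
```

No single neuron multiplies two analog quantities, yet the summed rate of the ensemble equals the number of recent particles times the evidence drive, which is the product required on the population level.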

Such gating could be accomplished in cortical networks in various ways. One possibility is synaptic gating [18], [19] where inputs can be gated by either suppression or facilitation of specific synaptic activity. Another possibility is disinhibition. Disinhibitory circuits provide pyramidal cells with the ability to release other neurons from strong inhibitory currents [20]. We choose in this article disinhibition as the gating mechanism, although no specific mechanism can be favored on the basis of the experimental literature. A small circuit with disinhibition is shown in Figure 4B. Here, two pyramidal cells excite an interneuron which inhibits the inhibitory drive to some target neuron. Functionally, the target neuron is released from strong baseline inhibition if one of the gating neurons was recently active (see Methods for a formal definition). Using disinhibition, the membrane potential of the neuron can be written as

| (12) |

where  ensures that the firing rate  is nonzero only if neuron  did spike within the last 20 ms.
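The gating condition described here can be sketched as a simple window test on the spike times of the gating neuron. This is a hedged illustration, not the formal definition given in Methods: the function names, the half-open window (t - 20 ms, t], and the use of a fixed ungated rate are assumptions made for the example.

```python
def gate_open(t_ms, gating_spike_times_ms, window_ms=20.0):
    """Return True if any gating spike fell in the window (t - window, t]."""
    return any(t_ms - window_ms < s <= t_ms for s in gating_spike_times_ms)

def gated_rate(t_ms, ungated_rate_hz, gating_spike_times_ms, window_ms=20.0):
    """Firing rate that is nonzero only while the gate is open (assumed form)."""
    if gate_open(t_ms, gating_spike_times_ms, window_ms):
        return ungated_rate_hz
    return 0.0

# A gating spike at 15 ms opens the gate until just before 35 ms.
print(gated_rate(30.0, 50.0, [15.0]))  # 50.0
print(gated_rate(40.0, 50.0, [15.0]))  # 0.0
```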

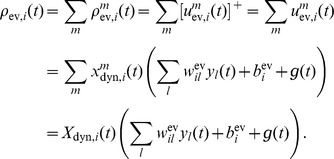

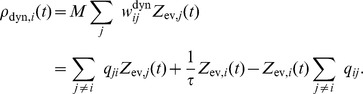

Stabilization of firing rates through lateral inhibition

Lateral inhibition is generally assumed to stabilize the activity of excitatory populations [21]–[23]. A group of pyramidal cells inhibit each other laterally by projecting to a group of inhibitory neurons which in turn inhibit that ensemble, see Figure 4C. The key observation that enables us to use lateral inhibition to stabilize circuit activity is that one has freedom to choose  in eq. (12) as long as it is identical in all ensembles. We model lateral inhibition  that depends on the recent firing activity in  such that inhibitory activity increases if the estimation sample size is above the desired value  and choose  , see Methods for details and a brief discussion. This concludes the construction of a particle filtering circuit in ENS coding for task class B. The circuit architecture is depicted in Figure 4A. A summary of circuit equations can be found in Table 1.
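Why a term that is identical in all ensembles leaves the encoded distribution untouched can be seen in a small numerical sketch. It assumes, for illustration only, that each ensemble rate is an exponential function of its membrane potential, so that a common additive term scales all rates by the same factor. The proportional update rule, its gain, and all names below are hypothetical and do not reproduce the inhibition model defined in Methods.

```python
import math

def encoded_distribution(potentials, offset=0.0):
    """Normalized ensemble rates, assuming rate_i = exp(u_i + offset)."""
    rates = [math.exp(u + offset) for u in potentials]
    total = sum(rates)
    return [r / total for r in rates]

def update_offset(offset, recent_spike_count, target_count, gain=0.01):
    """Hypothetical rule: more recent spikes than desired means stronger inhibition."""
    return offset - gain * (recent_spike_count - target_count)

u = [0.3, -1.2, 0.8]
p_free = encoded_distribution(u)
# 520 recent spikes against a desired sample size of 400 lowers the common offset.
p_inhibited = encoded_distribution(u, offset=update_offset(0.0, 520, 400))
print(max(abs(a - b) for a, b in zip(p_free, p_inhibited)))  # numerically zero
```

Under this assumption the common term only changes the total rate, which is what allows it to be used for stabilizing the overall activity without distorting the represented probabilities.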

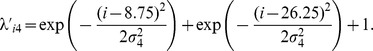

Table 1. Particle filter circuit equations for task classes B and C.

| Layer | Ensembles | Neurons | Membrane voltage and parameters |

Here we have defined  and  .  denotes lateral inhibition and  disinhibition.  is an arbitrary constant. In task class B (evidence integration),  for  , leading to  for  and  .

We tested how well a circuit consisting of 2000 neurons per state and an estimation sample size of 400 can approximate the true posterior in a simple evidence integration setup. The task was to compute the posterior distribution for a random variable  with two hidden states and two observable variables  , see Figure 5A. The schematic circuit diagram is shown in panel B. The two evidence neurons  spiked at times 20 ms and 25 ms respectively, see panel C. Figure 5D depicts the rate dynamics in layer  for one example trial. Novel evidence transiently increases the firing rate in the layer, which is in turn restored by lateral inhibition. The response of ensemble  that represents state 1 undergoes a transient increase that is counteracted by inhibition until it stabilizes at an enhanced sustained level. This behavior is reminiscent of the typical response of cortical pyramidal cells to sensory input. Figure 5E shows the temporal evolution of the encoded posterior probability  in comparison to the true posterior  . The true posterior is approximated very well after a delay of about 20 ms, which is the time needed to integrate the EPSPs from evidence neurons. We simulated 100 trials where in each trial, prior probabilities for the states and observation likelihoods were drawn randomly such that the posterior  at time t = 45 ms assumed values between 0 and 1 (see Methods). The estimate of the circuit at the end of the second EPSP (i.e., at time t = 45 ms) is shown in comparison to the true posterior in Figure 5F.
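For orientation, the reference quantity in such a test, the true posterior after two pieces of evidence, follows from Bayes' rule. The sketch below treats each evidence spike as one discrete observation with a given likelihood per state, which is a simplifying assumption, and then mimics an estimate based on 400 "votes" by drawing independent samples from that posterior. The numerical values, function names, and the independent-sampling idealization are illustrative and are not the procedure used in the simulations.

```python
import random

def posterior(prior, likelihoods_per_observation):
    """Bayes' rule for a discrete hidden state and conditionally independent observations."""
    unnormalized = list(prior)
    for likelihood in likelihoods_per_observation:
        unnormalized = [w * l for w, l in zip(unnormalized, likelihood)]
    total = sum(unnormalized)
    return [w / total for w in unnormalized]

def particle_estimate(distribution, n_particles=400, seed=1):
    """Fraction of votes per state from independent samples (idealized particles)."""
    rng = random.Random(seed)
    states = range(len(distribution))
    votes = rng.choices(states, weights=distribution, k=n_particles)
    return [votes.count(s) / n_particles for s in states]

prior = [0.5, 0.5]
likelihoods = [[0.8, 0.2], [0.6, 0.3]]  # both observations favor state 1 (index 0)
true_post = posterior(prior, likelihoods)
print(true_post)                     # approximately [0.889, 0.111]
print(particle_estimate(true_post))  # noisy estimate from 400 votes
```

With 400 votes the standard deviation of the estimate is on the order of a few percent, which gives a feeling for the accuracy that a finite estimation sample size can support.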

Figure 5. Evidence integration through particle filtering in ENS coding.

A) The state of a binary random variable  that gives rise to two possible observations  is estimated. Both observations occur more frequently in state 1 (indicated by sharpness of arrows). B) Estimation is performed by a particle filtering circuit with evidence input  ( : dynamics layer ensembles;  : evidence layer ensembles). C) An evidence spike is observed at times 20 ms and 25 ms in evidence neuron  and  respectively. D) Example for the rate dynamics in layer  . Ensemble rate for ensemble  (black) and whole layer  (gray). The input leads to a transient increase in the ensemble rate. Inhibition recovers baseline activity. The ensemble rate for state 1 undergoes a transient and a sustained activity increase. E) Temporal evolution of estimated posterior probability  for state 1 (black) in comparison to true posterior  (gray) for this example run. F) Posterior probability at t = 45 ms ( ) for state 1 of the circuit in comparison to true posterior at this time ( ). Each dot represents one out of 100 runs with prior probabilities and observation likelihoods drawn independently in each run (see Methods). The result of the example run from panels A–E is indicated by a cross.

Gating of activity for multiplication can be used for many types of multiplicative operations on probability distributions. In Text S2, we discuss its application to cue combination, an operation that has been considered for example in [24].

Comparison to experimental results

We performed computer simulations in order to compare the behavior of the model to various experimental studies on tasks that are examples of task class B.

The ambiguous target task

The ambiguous target task studied in [3] was already discussed above, see also Figure 1A. In our model of the decision making process, the hidden state of a random variable  was estimated through evidence integration. Each of the 16 hidden states corresponded to a tuple  , where  denotes one of eight possible directions of movement, and  denotes the color of the color cue. In other words, such a state represented the color of the color cue and the movement direction that leads to reward, see Figure 6Aa. Possible observations were the fixation cross, the spatial cues at 8 positions in two colors, and the two color cues. Each of the 19 possible stimuli was coded by 20 afferent neurons that fired at a baseline rate of 0.1 Hz. When a stimulus was present, the corresponding neurons spiked in a Poissonian manner with a rate of 5 Hz.
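The afferent input described above can be sketched as follows. The counts and rates (19 stimuli, 20 neurons each, 0.1 Hz baseline, 5 Hz when the stimulus is present) are taken from the text; the 1 ms time discretization with one Bernoulli draw per bin, the ordering of stimuli (index 0 standing for the fixation cross), and all names are assumptions made for this illustration.

```python
import random

N_STIMULI = 19             # 1 fixation cross + 8 positions x 2 colors + 2 color cues
NEURONS_PER_STIMULUS = 20
BASELINE_HZ = 0.1
ACTIVE_HZ = 5.0

def afferent_spikes(active_stimuli, duration_ms, dt_ms=1.0, seed=0):
    """Return a list of spike-time lists (ms), one per afferent neuron."""
    rng = random.Random(seed)
    n_steps = int(duration_ms / dt_ms)
    trains = []
    for stimulus in range(N_STIMULI):
        rate_hz = ACTIVE_HZ if stimulus in active_stimuli else BASELINE_HZ
        p_spike = rate_hz * dt_ms / 1000.0  # Bernoulli approximation of a Poisson process
        for _ in range(NEURONS_PER_STIMULUS):
            train = [step * dt_ms for step in range(n_steps) if rng.random() < p_spike]
            trains.append(train)
    return trains

# Two seconds with only the (hypothetical) stimulus 0 present.
trains = afferent_spikes({0}, duration_ms=2000.0)
active_count = sum(len(t) for t in trains[:NEURONS_PER_STIMULUS])
baseline_count = sum(len(t) for t in trains[NEURONS_PER_STIMULUS:])
print(len(trains), active_count, baseline_count)
```

On average one would expect about 200 spikes from the 20 active neurons and about 72 spikes from the remaining 360 neurons over these two seconds.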

Figure 6. Particle filtering in ENS coding for the ambiguous target task.

A) Represented random variables. Aa) Evidence integration is performed for a random variable with 16 hidden states corresponding to direction-color pairs. Values of the random variable are depicted as circles. Observations accessible to the monkey in one example state are shown as boxes. Ab) The action readout layer infers a color-independent random variable by marginalization over color in each direction. B) Circuit structure. The circuit on the top approximates evidence integration through particle filtering (top gray box;  : dynamics layer ensembles;  : evidence layer ensembles) on the random variable indicated in panel (Aa). An action readout layer (bottom gray box; ensembles X) receives feed-forward projections from the particle filter circuit. C) Spike rasters from simulations for afferent neurons (Ca) and neurons in the action readout layer (Cb). Each line corresponds to the output of one neuron. Afferent neurons are ordered by feature selectivity (e.g., top neurons code the presence of the fixation cross). Action readout neurons are ordered by preferred movement direction. See also Figure 1.

We simulated a particle filter circuit to compute the belief about the state of the random variable with 1000 neurons per hidden state and an estimation sample size of 400. An action readout layer as described in Task class A was added that received connections from  in a feedforward manner, see Figure 6B. This layer computed the current belief over rewarded actions independently from the color of the color cue (i.e., it marginalized over color), see Methods for details.
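The marginalization performed by the readout layer amounts to summing the belief over both colors for each movement direction. A minimal sketch of this operation on an explicit probability table is given below; the color labels, the direction indices, and the example beliefs are placeholders chosen for illustration, and the code operates on probabilities directly rather than on spiking ensembles.

```python
DIRECTIONS = range(8)
COLORS = ("color_a", "color_b")  # placeholder labels for the two cue colors

def marginalize_over_color(belief):
    """belief maps (direction, color) to a probability; returns direction -> probability."""
    return {d: sum(belief[(d, c)] for c in COLORS) for d in DIRECTIONS}

def point_belief(weights):
    """Build a belief over all 16 direction-color states from a sparse dict of weights."""
    return {(d, c): weights.get((d, c), 0.0) for d in DIRECTIONS for c in COLORS}

# After the spatial cue: two direction-color pairs remain equally likely.
after_spatial = point_belief({(2, "color_a"): 0.5, (6, "color_b"): 0.5})
# After the color cue: only one pair remains.
after_color = point_belief({(2, "color_a"): 1.0})

print(marginalize_over_color(after_spatial))
print(marginalize_over_color(after_color))
```

In this toy example the marginal belief is split evenly between two directions after the spatial cue and collapses onto a single direction after the color cue.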

The spiking activity of afferent neurons that provided evidence for one example simulation run is shown in Figure 6Ca. Simulated neural activities from the readout layer are shown in Figure 6Cb, see also Figure 1C. After the spatial cue was presented, the two consistent ensembles increased their activity. Due to competition between these ensembles, neurons fired at a medium rate. After the color cue was shown, only the ensemble consistent with both the spatial and the color cue remained active. These neurons increased their firing rate since the competing action became improbable and the winning ensemble no longer faced competition. This behavior has been observed in PMd [3], see Figure 1B. The action readout layer is not needed to reproduce this behavior, since neurons in the particle filter circuit exhibit similar behavior. However, it was reported that most neurons in PMd were not color selective [3]. In our model, neurons of the particle filter circuit are color selective since states are defined according to direction-color pairs. It is clear that color-related information has to be integrated with movement-related information and memorized during the memory epoch in order to solve the task. The experimental results suggest that this integration is not implemented in PMd but rather in upstream circuits. PMd could then act as a motor readout.

Applications of the model to various other experimental tasks can be found in supporting texts. Action-predictive activity in macaque motor cortex is also modulated by the expected value of the action. This was demonstrated for example in [25]. An application of our model to this scenario is described in Text S3. Furthermore, we show in Text S4 that the model is consistent with features of neuronal activity during random-dot motion tasks [1], [26], [27]. In Text S5 it is shown that the model can also explain neuronal activity in area LIP during a probabilistic reasoning task [2].

Task class C: Bayesian filtering

Evidence integration cannot take temporal changes of the hidden variable into account. Knowledge about temporal changes can be exploited by Bayesian filtering, which is an extension of evidence integration. Here, we assume that the dynamics of  are constant during the filtering process. The more general case when the dynamics may change is discussed below in Task class D. Formally, the Bayesian filtering problem considered here is to estimate the posterior distribution

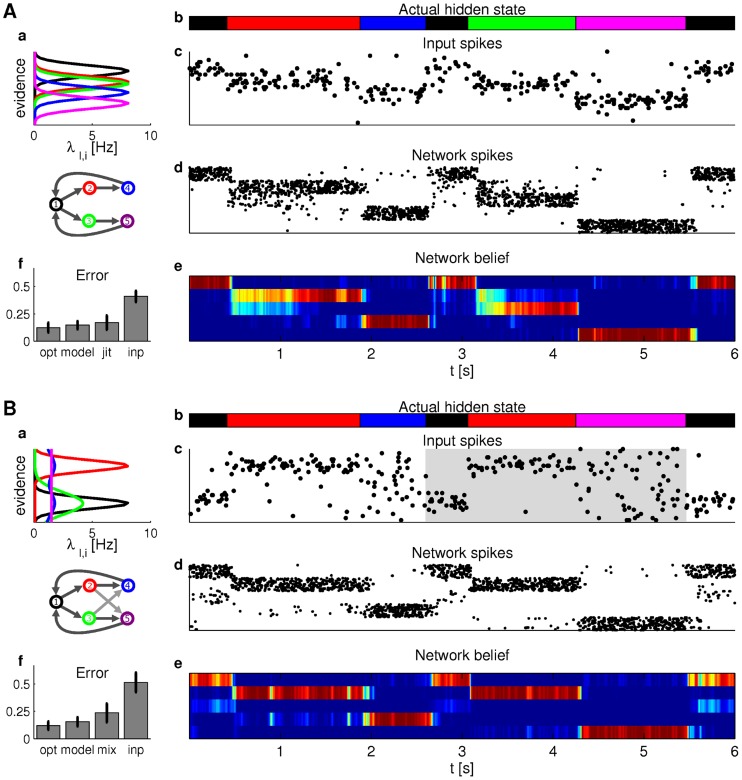

over the states of a random variable  that represents the hidden state of a random process which is only indirectly observable via stochastic point-event observations