Abstract

Among the host of numerical schemes devised to calculate free-energy differences by way of geometric transformations, the adaptive biasing force algorithm has emerged as a promising route to map complex free-energy landscapes. It relies upon the simple concept that, as a simulation progresses, a continuously updated biasing force is added to the equations of motion, such that in the long-time limit it yields a Hamiltonian devoid of an average force acting along the transition coordinate of interest. As a result, sampling proceeds uniformly on a flat free-energy surface, thus providing reliable free-energy estimates. Much of the appeal of the algorithm to the practitioner is in its physically intuitive underlying ideas and the absence of any requirements for prior knowledge about free-energy landscapes. Since its inception in 2001, the adaptive biasing force scheme has been the subject of considerable attention, from in-depth mathematical analysis of convergence properties to novel developments and extensions. The method has also been successfully applied to many challenging problems in chemistry and biology. In this contribution, the method is presented in a comprehensive, self-contained fashion, discussing with a critical eye its properties, applicability, and inherent limitations, as well as introducing novel extensions. Through free-energy calculations of prototypical molecular systems, many methodological aspects are examined, from stratification strategies to overcoming the so-called hidden barriers in orthogonal space, relevant not only to the adaptive biasing force algorithm but also to other importance-sampling schemes. On the basis of the discussions in this paper, a number of good practices for improving the efficiency and reliability of the computed free-energy differences are proposed.

Introduction

Although the statistical-mechanical foundations of free-energy calculations were laid a long time ago,1−3 their practical applications became possible only with the advent of modern computers. From the inception of computer-based free-energy calculations4,5 it has been clear to theorists that direct Boltzmann sampling of rugged energy landscapes is inefficient. The subsequent development of the field is a history of efforts to remedy this problem.

In free-energy calculations, the quantity of interest is almost always the free-energy difference between physical states of the system rather than the absolute free energy of a given state. From this standpoint, calculations can be categorized on the basis of variables used to transform the system between states of interest. Then, two main classes can be distinguished, namely alchemical and geometrical transformations.6 They rely, respectively, on changes of a parameter in the Hamiltonian or a function of atomic coordinates. The first class encompasses structural modifications of chemical species that rest upon the remarkable malleability of the potential energy function in molecular-mechanics-based simulations,7,8 reminiscent of the fabled ability of alchemists to transmute base metals into noble ones. Alchemical transformations are often associated with the free-energy perturbation method2,3 on account of the progressive and perturbative nature of the change incurred by the system of interest, although, strictly speaking, alchemical free-energy calculations can be carried out by way of alternate approaches, such as thermodynamic integration.1 The very first application of alchemical transformations to a nontrivial chemical problem was published nearly 30 years ago by William Jorgensen, to whom the present contribution is dedicated.9 In noteworthy agreement with experiment, this pioneering simulation reproduced the relative hydration free energy of methanol with respect to ethane.

The second class of transformations embraces virtually any geometric modification in a molecule or a collection of molecules by means of selected collective variables tailored to address the problem at hand, which could vary from changes in the internal degrees of freedom in a molecule to intricate recognition and association phenomena.7 Such collective variables form the transition coordinate, a low-dimensional representation of a multidimensional mathematical object.

The distinction between the two types of transformations is theoretically important. For geometric transformations, the transition coordinate is, in effect, a generalized coordinate, the evolution of which is usually described by Hamilton’s equations of motion. In contrast, for a parameter in the Hamiltonian, no equations of motion naturally exist, although it is possible to extend the formalism of dynamics to include such a parameter.10 As a consequence, a number of methods for calculating free energy by way of geometric transformations cannot be applied to alchemical transformations without such extension. The adaptive biasing force method can serve as an example.7

A considerable number of ingenious techniques have been developed to improve the efficiency of mapping free-energy landscapes associated with geometrical transformations along a transition coordinate.11−25 A common feature of these methods is their reliance on importance-sampling techniques. The central idea of these techniques is to depart from sampling from the Boltzmann distribution defined by the original Hamiltonian and, instead, sample from another distribution that favors regions of phase space that would be visited only infrequently but are important to achieving reliable free-energy estimates. Because this procedure is clearly biased, it is essential to know how to correct, or unbias, it to recover the true underlying distribution. Importance sampling is commonly used not only in statistical mechanics of condensed phases but also in other fields of science, usually as a variance reduction technique most frequently combined with the Monte Carlo method.

Probably the most popular, and also the oldest, importance-sampling technique used in free-energy calculations is umbrella sampling.11 It relies on introducing a bias in simulations that favors states corresponding to large values of the free energy along the transition coordinate. Local elevation,15 conformational flooding,16 metadynamics,20,24 and the Wang–Landau algorithm26,27 are examples of more recent importance-sampling algorithms, united by the common denominator that a memory-dependent potential disfavors regions of conformational space that have already been frequently visited.

In a sense, the adaptive biasing force method19 rewove the fabric of free-energy calculations of geometrical transformations, as it is characterized by both conceptual and practical simplicity and requires, at least in principle, little intervention from the end user. In spite of apparent similarities with the local-elevation and conformational-flooding strategies in its aim to sample efficiently all values of the transition coordinate, its theoretical underpinnings are quite different, as we will argue further in this paper. Furthermore, in contrast with seemingly similar strategies, the adaptive biasing force algorithm requires no prior knowledge of the free-energy landscape at hand.

At its core, the adaptive biasing force method is an adaptive importance-sampling strategy in which the quantity being adjusted is the average force acting along the transition coordinate. It helps the system under study escape from kinetic traps in which it would otherwise have remained for a very long time. The method constitutes a highly efficient route to estimating free energies, which, since its inception, has been used to tackle a number of challenging problems of chemical and biological interest, such as mechanical proteins,28 transport phenomena,29−31 or protein–ligand and protein–protein recognition and association.32 More generally, it is a versatile, adaptive, importance-sampling strategy that can be utilized in many fields, whenever sampling of a probability measure is thwarted by metastability of the sampling dynamics.

In this self-contained contribution, the multiple facets of the adaptive biasing force algorithm are discussed in an exhaustive manner, tackling a number of issues that have not been addressed so far, or only rarely so. In the following section, we present the theoretical foundations of the method, discussed in the context of other free-energy approaches. Next, we briefly address a number of practical issues related to a proper choice of the transition coordinate. Then, an analysis of the convergence properties of the method and approaches to calculating and controlling statistical errors associated with the calculated free-energy values are presented. The discussion of convergence and errors continues with the focus on nonergodicity scenarios, and ways to identify and circumvent them. Subsequently, we examine for the first time how the adaptive biasing force algorithm is used in conjunction with geometrical restraints, which have to be enforced in many problems of interest. Finally, we discuss some new strategies for combining thermodynamics and kinetics in importance-sampling simulations, before closing with recommendations for “good practices” in applying the adaptive biasing force method and an outlook toward further promising statistical mechanical and algorithmic developments.

The adaptive biasing force algorithm

In this section, the essential idea behind the adaptive biasing force algorithm is first explained in terms appealing to physical intuition, followed by the theoretical underpinnings of the method presented in a more formal language. Then, the reader is guided through the common expressions for the mean force and the adaptive algorithm, both of which are at the core of the method. Finally, the method is compared with related importance-sampling schemes.

The Adaptive Biasing Force in Plain Language

The adaptive biasing force method is aimed at improving the efficiency of molecular dynamics simulations in which the potential energy surface is sampled ineffectively due to free-energy barriers. In practice, these barriers appear as bottlenecks in the dynamics of certain privileged coordinates that describe the transitions between physically important states (transition coordinates). They also cause the system to become trapped in some states for durations exceeding the time scale of the simulation, resulting in incomplete sampling.

The free energy along a transition coordinate can be seen as a potential resulting from the average force acting along the coordinate (i.e., the negative of the gradient of this potential), hence the name potential of mean force. In the formalism of thermodynamic integration, on which the adaptive biasing force is based, the average force is the quantity that is calculated directly. Subsequently, this force is integrated to yield the potential. The instantaneous force acting along the coordinate may be decomposed into the sum of the average force (which depends only on the value of the transition coordinate) and a random force with zero average, reflecting fluctuations of all other degrees of freedom. Hence, in a low-dimensional view of the process, the transition coordinate evolves dynamically in its time-independent potential of mean force, and this evolution is driven by the random force. In many instances, the random force can be satisfactorily approximated as diffusive, leading to a simple physical picture in which the system diffuses along the transition coordinate in the potential of mean force.

The idea behind the adaptive biasing force algorithm is to preserve most characteristics of this dynamics, including the random fluctuating force, while flattening the potential of mean force to remove free-energy barriers, and thus accelerate transitions between states. This is done adaptively, without any prior information about the potential of mean force. To accomplish this, the instantaneous force acting along the coordinate is calculated, and its running time average is recorded, thus providing an on-the-fly estimate of the derivative of the free energy at each point along the pathway. At the same time, an external biasing force is applied, exactly canceling the current estimate of the average force. Over time, as the estimate converges to the average force at equilibrium, the total, biased average force stabilizes at values very close to zero. Then, the system experiences a nearly flat potential of mean force and displays accelerated dynamics along the transition coordinate. Whether the biasing force is exactly equal to the mean force is actually not crucial. What matters is that the biasing force yields sufficiently uniform sampling of the transition coordinate for the remaining barriers to be easily traversed through thermal fluctuations.

Theoretical Backdrop

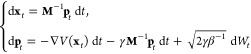

Let us now define the adaptive biasing force algorithm in a formal way. The adaptive biasing force algorithm is not inherently tied to any specific type of dynamics but does rely on sampling of the canonical ensemble. In explicit-solvent simulations, Langevin dynamics with sufficiently soft damping and small stochastic forces becomes a mere perturbation of Hamiltonian dynamics and may be used as one simple way to achieve canonical sampling. For convenience, but without loss of generality, we will base our description below on Langevin dynamics. Langevin dynamics can be written as

$$
\begin{aligned}
dx_t &= M^{-1} p_t\, dt \\
dp_t &= -\nabla V(x_t)\, dt - \gamma M^{-1} p_t\, dt + \sqrt{2\gamma\beta^{-1}}\, dW_t
\end{aligned}
\tag{1}
$$

where $(x_t, p_t)$ denotes the positions and momenta of the particles at time $t$, $M$ is the mass tensor, $V$ is the potential energy function, $\gamma$ is the friction coefficient, $W_t$ is the Wiener process that underlies the random force (white noise), and $\beta^{-1} = k_B T$, where $k_B$ is the Boltzmann constant and $T$ is temperature. The dynamics of eq 1 is ergodic (under mild conditions on $V$) with respect to the canonical measure $Z_p^{-1} \exp(-\beta p^T M^{-1} p/2)\, dp\, \mu(dx)$, where $\mu(dx) = Z_x^{-1} \exp[-\beta V(x)]\, dx$. Ergodicity means that long-time averages converge (almost surely) to canonical averages, for any observable $\varphi$:

$$\lim_{T\to\infty} \frac{1}{T}\int_0^T \varphi(x_t, p_t)\, dt = \int \varphi(x, p)\, Z_p^{-1} e^{-\beta p^T M^{-1} p/2}\, dp\, \mu(dx) \tag{2}$$

and that the law at time $t$ of the stochastic process converges to the canonical measure in the long-time limit:

$$\lim_{t\to\infty} \mathbb{E}[\varphi(x_t, p_t)] = \int \varphi(x, p)\, Z_p^{-1} e^{-\beta p^T M^{-1} p/2}\, dp\, \mu(dx) \tag{3}$$

where $\mathbb{E}$ denotes the expected value. The first of these limits, eq 2, is of particular interest for practical applications because it allows for computing canonical averages from trajectory averages.
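Equation 2 is what makes trajectory averages usable in practice. The sketch below is a minimal illustration. It integrates eq 1 for a single degree of freedom and returns the time average of an observable. It uses the BAOAB splitting, which is one common discretization; the text does not prescribe an integrator. The function name and its defaults are hypothetical. For the harmonic potential $V(x) = x^2/2$, the canonical average is $\langle x^2\rangle = \beta^{-1}$, and the time average should approach this value.

```python
import math
import random


def langevin_time_average(grad_v, observable, x0=0.0, p0=0.0, mass=1.0,
                          gamma=1.0, beta=1.0, dt=0.05, n_steps=100_000, seed=0):
    """Time average of observable(x) along a 1D Langevin trajectory (eq 1).

    Integrated with the BAOAB splitting: half kick (B), half drift (A),
    exact friction-plus-noise update of the momentum (O), half drift, half kick.
    """
    rng = random.Random(seed)
    c1 = math.exp(-gamma * dt / mass)               # decay of p over dt from -gamma p / m
    c2 = math.sqrt((1.0 - c1 * c1) * mass / beta)   # noise that keeps p ~ N(0, m / beta)
    x, p = x0, p0
    force = -grad_v(x)
    total = 0.0
    for _ in range(n_steps):
        p += 0.5 * dt * force                       # B
        x += 0.5 * dt * p / mass                    # A
        p = c1 * p + c2 * rng.gauss(0.0, 1.0)       # O
        x += 0.5 * dt * p / mass                    # A
        force = -grad_v(x)
        p += 0.5 * dt * force                       # B
        total += observable(x)
    return total / n_steps


# Harmonic check: V(x) = x^2 / 2, so the canonical average of x^2 is 1 / beta.
avg = langevin_time_average(lambda x: x, lambda x: x * x, beta=1.0)
print(f"time average of x^2: {avg:.3f} (canonical value: 1.000)")
```

In a metastable potential, the same time average would converge only after many barrier crossings. That is the practical obstacle discussed next.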

For a typical potential V, the associated Boltzmann measure μ is multimodal: high-probability regions are separated by low-probability regions. The former correspond, for instance, to the most likely conformations of a biological object, which are typically separated by transition regions of very low probability. For these reasons, estimating averages with respect to the probability measure μ is, in general, a difficult task. In particular, the ergodic properties of the dynamics of eq 1 are not sufficient to devise reliable numerical methods: the stochastic process remains trapped in high-probability regions, and, as a consequence, the long-time regime in which the limits of eqs 2 and 3 are attained is very difficult to reach. The fact that the system remains for a very long time in some region of phase space before hopping to another region is called metastability, and the corresponding states of the system are called metastable. The inability to reach the ergodic limit is often called quasi-nonergodicity; the system appears nonergodic on the time scales of the simulations. A typical example of a metastable state is a local free-energy minimum in the conformational space of a protein.

The adaptive biasing force method relies on modifying the potential $V$ in such a way that the energy landscape is flattened along a given transition coordinate $\xi$. Here, we restrict ourselves to a one-dimensional transition coordinate, leaving generalization to high-dimensional transition coordinates for the section Expressions for the Mean Force. More precisely, the potential $V$ is changed to $x \mapsto V(x) - A_t[\xi(x)]$, and $A_t$ is updated in such a way that it converges to the free energy $A$, defined (up to an additive constant) by

$$A(z) = -\beta^{-1} \ln \int e^{-\beta V(x)}\, \delta_{\xi(x)-z}(dx) \tag{4}$$

where the measure $\delta_{\xi(x)-z}(dx)$ is supported by the subset $\{x,\ \xi(x) = z\}$ and is such that $\delta_{\xi(x)-z}(dx)\, dz = dx$.

In practice, the bias is only applied in a window $[z_{\min}, z_{\max}]$, as explained in the section Justification of a Stratification Strategy. Notice that, by the definition of $A$, the canonical measure associated with the biased potential $V(x) - A[\xi(x)]$ is such that $\int \exp(-\beta\{V(x) - A[\xi(x)]\})\, \delta_{\xi(x)-z}(dx) = C$, where $C$ is a constant independent of $z$. Therefore, if the biased potential $V(x) - A[\xi(x)]\,\mathbb{1}_{\xi(x)\in[z_{\min}, z_{\max}]}$ is used, the marginal along $\xi$ is uniform on $[z_{\min}, z_{\max}]$. Here, $\mathbb{1}_{\xi(x)\in[z_{\min}, z_{\max}]}$ is an indicator function, which is one when $\xi(x) \in [z_{\min}, z_{\max}]$ and zero otherwise. Let us recall that the marginal law is defined as follows: if $x$ is distributed according to a probability distribution $\mu$, then the law of $\xi(x)$ is called the marginal of $\mu$ along $\xi$. If we knew the free energy $A$, it would therefore be a good idea to use $-A\circ\xi$ as a biasing potential. The biased marginal along $\xi$ would then be flat, so sampling along $\xi$ would no longer be hindered by free-energy barriers. This is illustrated with simple two-dimensional examples in Figures 1 and 2. Notice in particular that the free energy appears to be a good biasing function for efficient sampling of both energetic barriers (Figure 1) and entropic barriers (Figure 2).
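The definition in eq 4, and the flatness of the marginal biased by $-A\circ\xi$, can be checked numerically on a two-dimensional toy model. The sketch assumes a hypothetical potential $V(x, y) = h(x^2-1)^2 + \tfrac{1}{2}(1+x^2)y^2$ with $\xi(x, y) = x$. For this model, eq 4 has a closed form up to a constant: $A(z) = h(z^2-1)^2 + (2\beta)^{-1}\ln(1+z^2)$, where the second term is a purely entropic contribution. The integral over the level set (a line in $y$) is approximated by a Riemann sum on a grid. This is our own choice for illustration and not part of the method.

```python
import numpy as np

# Hypothetical toy model: V(x, y) = h (x^2 - 1)^2 + (1 + x^2) y^2 / 2, xi(x, y) = x.
h, beta = 2.0, 1.0
z = np.linspace(-1.5, 1.5, 61)            # values of the transition coordinate
y = np.linspace(-8.0, 8.0, 1601)          # orthogonal coordinate, wide enough for the Gaussian in y
dy = y[1] - y[0]
X, Y = np.meshgrid(z, y, indexing="ij")   # X[i, j] = z[i], Y[i, j] = y[j]
V = h * (X**2 - 1) ** 2 + 0.5 * (1 + X**2) * Y**2

# Eq 4: integrate exp(-beta V) over each level set xi = z (row i of the grid).
A_num = -np.log(np.exp(-beta * V).sum(axis=1) * dy) / beta
A_exact = h * (z**2 - 1) ** 2 + np.log(1 + z**2) / (2 * beta)

# Marginal along xi of the density biased by -A o xi, normalized to mean 1.
biased = np.exp(-beta * (V - A_num[:, None])).sum(axis=1) * dy
biased /= biased.mean()

mid = len(z) // 2  # index of z = 0, used to remove the arbitrary additive constant
print("max deviation from closed form:",
      np.max(np.abs((A_num - A_num[mid]) - (A_exact - A_exact[mid]))))
print("spread of the biased marginal:", biased.max() - biased.min())
```

The flat marginal is exact here by construction. In a simulation, $A$ is unknown, which is what the adaptive update of $A_t$ addresses.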

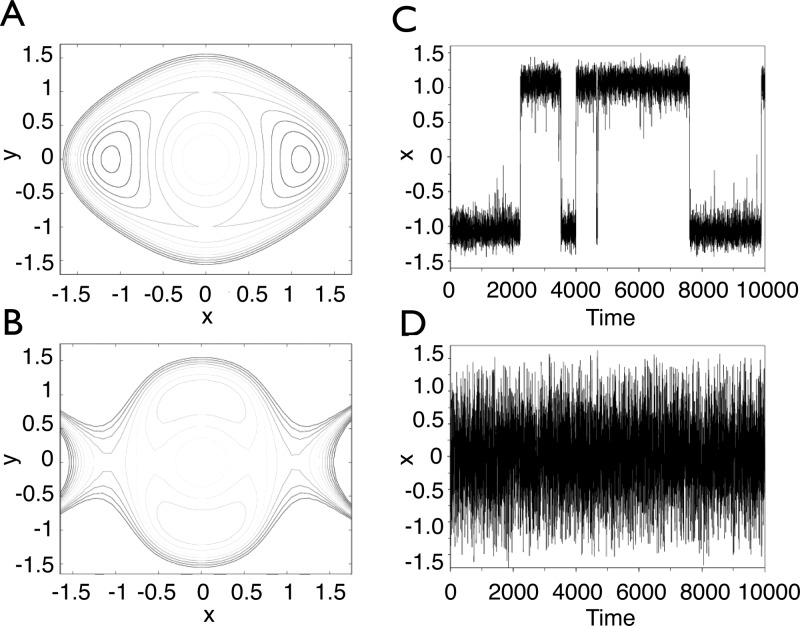

Figure 1.

Upper row: (A) original two-dimensional, double-well potential displayed as level sets; (C) time trajectory of the first coordinate x in the stochastic process, showing oscillations between the two metastable wells. Lower row: (B) level sets of the same potential biased by the free energy associated with the transition coordinate ξ(x,y) = x; (D) time trajectory of the transition coordinate x in the adaptively biased dynamics, showing no metastability.

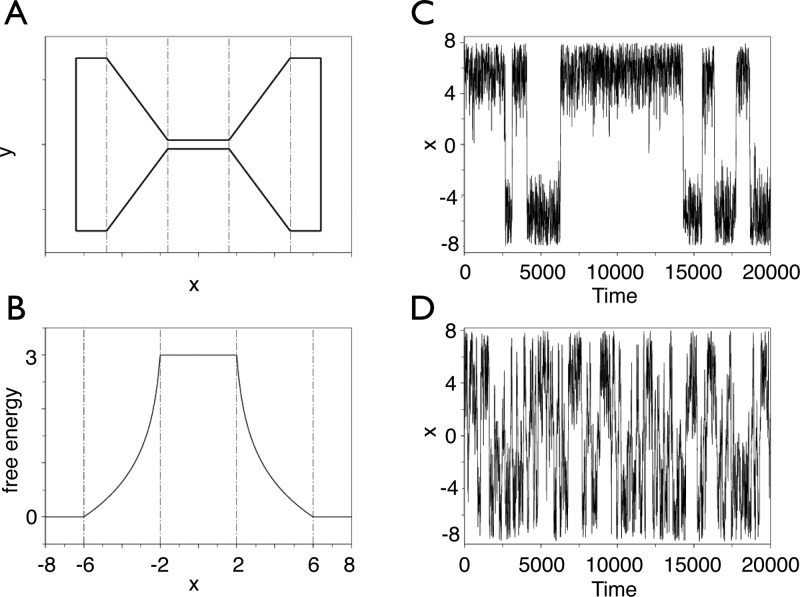

Figure 2.

Upper row: (A) the original two-dimensional potential is zero inside the hourglass shape, and +∞ outside; (C) time trajectory of the first coordinate x in the stochastic process, showing oscillations between the two metastable wells. Lower row: (B) free energy along the transition coordinate ξ(x,y) = x, featuring a purely entropic barrier; (D) time trajectory of the transition coordinate x in the free-energy-biased dynamics, showing no metastability.

The main ingredient that we now need is an update rule for $A_t$ such that $\lim_{t\to\infty} A_t = A$. This is based on the following formula,33−35 which defines the mean force, namely, the negative of the derivative of the free energy. More general formulas can be derived, which give rise to many variants of the adaptive biasing force method (see section Expressions for the Mean Force):

$$A'(z) = \frac{\int F_\xi(x)\, e^{-\beta V(x)}\, \delta_{\xi(x)-z}(dx)}{\int e^{-\beta V(x)}\, \delta_{\xi(x)-z}(dx)} \tag{5}$$

where the instantaneous force is defined by

$$F_\xi = \frac{\nabla V \cdot \nabla \xi}{|\nabla \xi|^2} - \beta^{-1}\, \nabla \cdot \left(\frac{\nabla \xi}{|\nabla \xi|^2}\right) \tag{6}$$

Equation 5 is a consequence of the definition of the free energy in eq 4 and of the co-area formula (which is a generalization of the Fubini theorem); see, for instance, ref (8). From eq 5 it follows that $A'$ is the conditional average of the instantaneous force, $F_\xi$, with respect to the canonical measure conditioned to a fixed value of the transition coordinate: $A'(z) = \mathbb{E}_\mu[F_\xi(x) \mid \xi(x) = z]$. An important observation is that eq 5 remains true if the potential $V$ is changed to $V - A_t\circ\xi$, for any function $A_t$:

$$A'(z) = \frac{\int F_\xi(x)\, e^{-\beta (V - A_t\circ\xi)(x)}\, \delta_{\xi(x)-z}(dx)}{\int e^{-\beta (V - A_t\circ\xi)(x)}\, \delta_{\xi(x)-z}(dx)} \tag{7}$$
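Equations 5 and 7 can be checked by quadrature on a two-dimensional toy model. The sketch assumes the hypothetical potential $V(x, y) = h(x^2-1)^2 + \tfrac{1}{2}(1+x^2)y^2$ with $\xi(x, y) = x$. For this model, $A'(z) = 4hz(z^2-1) + z/[\beta(1+z^2)]$ in closed form. The code computes the conditional average of $F_\xi$ on each level set twice: once without a bias, and once with an arbitrary bias $A_t$ that depends only on $\xi$. The form $3\sin 2x$ is an arbitrary choice for illustration.

```python
import numpy as np

# Hypothetical toy model: V(x, y) = h (x^2 - 1)^2 + (1 + x^2) y^2 / 2 with xi = x.
h, beta = 2.0, 1.0
z = np.linspace(-1.5, 1.5, 61)
y = np.linspace(-8.0, 8.0, 1601)
X, Y = np.meshgrid(z, y, indexing="ij")   # each row is a level set xi = z[i]
V = h * (X**2 - 1) ** 2 + 0.5 * (1 + X**2) * Y**2

# Eq 6 with grad xi = (1, 0): the divergence term vanishes and F_xi = dV/dx.
F_xi = 4 * h * X * (X**2 - 1) + X * Y**2


def conditional_mean(values, weights):
    """Weighted average of values over each level set xi = z (rows of the grid)."""
    return (values * weights).sum(axis=1) / weights.sum(axis=1)


A_t = 3.0 * np.sin(2.0 * X)                                        # bias depending only on xi
mf_unbiased = conditional_mean(F_xi, np.exp(-beta * V))            # eq 5
mf_biased = conditional_mean(F_xi, np.exp(-beta * (V - A_t)))      # eq 7
dA_exact = 4 * h * z * (z**2 - 1) + z / (beta * (1 + z**2))       # closed-form A'(z)
print("eq 5 vs closed form:", np.max(np.abs(mf_unbiased - dA_exact)))
print("eq 7 vs eq 5:", np.max(np.abs(mf_biased - mf_unbiased)))
```

The bias cancels because $e^{\beta A_t(z)}$ is constant on each level set and factors out of both numerator and denominator.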

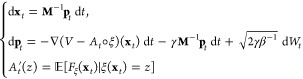

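In other words, a biasing potential $A_t$ depending solely on $\xi$ leaves averages conditioned by $\xi$ unchanged. This is the intuition behind the adaptive biasing force dynamics, which can be written as

$$
\begin{aligned}
dx_t &= M^{-1} p_t\, dt \\
dp_t &= -\nabla (V - A_t\circ\xi)(x_t)\, dt - \gamma M^{-1} p_t\, dt + \sqrt{2\gamma\beta^{-1}}\, dW_t \\
A_t'(z) &= \mathbb{E}[F_\xi(x_t) \mid \xi(x_t) = z]
\end{aligned}
\tag{8}
$$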

Indeed, if $(x_t, p_t)$ were at equilibrium with respect to the biased canonical measure $Z_p^{-1}\exp(-\beta p^T M^{-1} p/2)\, dp\; Z_t^{-1}\exp[-\beta(V - A_t\circ\xi)(x)]\, dx$, then, by eq 7, $A_t'$ would be equal to $A'$. In practice, of course, the sampling is not sufficiently fast for the process to be instantaneously at equilibrium with respect to the time-varying biased potential $V - A_t\circ\xi$. For this reason, this heuristic is not sufficient to fully understand the convergence of the adaptive biasing force dynamics (see the section Convergence and Error Analysis). However, this simple reasoning is sufficient to show that if $A_t$ converges to some limit $A_\infty$, then necessarily $A_\infty' = A'$, that is, $A_\infty = A$ up to an additive constant.

From a practical viewpoint, the conditional expectation in eq 8 can be computed using two procedures: either time averages over a single long trajectory or averages over many replicas run in parallel. These procedures will be discussed in ample detail in the section Multiple-Walker Strategies below.

Intuitively, the adaptive biasing force dynamics thus consists of adding a force $A_t'[\xi(x)]\nabla\xi(x)$ that exactly compensates the average of the original force, $-\nabla V(x)$, along a given transition coordinate. If $\xi$ is well chosen, the hope is to observe fast convergence of $A_t'$ to $A'$, at least compared with the equilibration of the original dynamics of eq 1.
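The dynamics of eq 8 can be illustrated with a deliberately small toy model. The sketch below is our own illustration and not a production implementation. It makes the following assumptions:

- a hypothetical two-dimensional potential $V(x, y) = h(x^2-1)^2 + \tfrac{1}{2}(y - cx)^2$, with unit masses and $\xi(x, y) = x$;
- since $\nabla\xi = (1, 0)$, eq 6 reduces to $F_\xi = \partial V/\partial x$, and $A(z) = h(z^2-1)^2$ up to a constant;
- the conditional expectation in eq 8 is replaced by a running average of $F_\xi$ in bins, with a linear ramp on the applied bias (one of the options described in the section Adaptive Algorithm);
- the Langevin equations are integrated with the BAOAB splitting, one common choice among many.

All function and parameter names are hypothetical.

```python
import math
import random


def abf_double_well(h=5.0, c=1.0, beta=1.0, gamma=1.0, dt=0.01,
                    n_steps=200_000, zmin=-1.5, zmax=1.5, n_bins=30,
                    n_full=200, seed=1):
    """Toy ABF run on V(x, y) = h (x^2 - 1)^2 + (y - c x)^2 / 2 with xi = x.

    Returns the bin width, per-bin sums of F_xi, and per-bin sample counts;
    f_sum[k] / counts[k] estimates A'(z) in bin k (convention of eq 5).
    """
    rng = random.Random(seed)
    width = (zmax - zmin) / n_bins
    f_sum = [0.0] * n_bins
    counts = [0] * n_bins
    c1 = math.exp(-gamma * dt)                # momentum decay over one step (unit mass)
    c2 = math.sqrt((1.0 - c1 * c1) / beta)    # matching thermal noise amplitude

    def forces(x, y):
        # Instantaneous force of eq 6: grad xi = (1, 0), so F_xi = dV/dx.
        f_xi = 4.0 * h * x * (x * x - 1.0) - c * (y - c * x)
        bias = 0.0
        k = math.floor((x - zmin) / width)
        if 0 <= k < n_bins:                   # statistics and bias only inside the window
            f_sum[k] += f_xi
            counts[k] += 1
            ramp = min(1.0, counts[k] / n_full)
            bias = ramp * f_sum[k] / counts[k]   # ramped running estimate of A_t'(x)
        # Total force on x: -dV/dx plus the biasing force A_t'(xi) * dxi/dx.
        return -f_xi + bias, -(y - c * x)

    x, y, px, py = -1.0, -c, 0.0, 0.0         # start in the left well
    fx, fy = forces(x, y)
    for _ in range(n_steps):
        px += 0.5 * dt * fx                   # B: half kick
        py += 0.5 * dt * fy
        x += 0.5 * dt * px                    # A: half drift
        y += 0.5 * dt * py
        px = c1 * px + c2 * rng.gauss(0.0, 1.0)   # O: friction and noise
        py = c1 * py + c2 * rng.gauss(0.0, 1.0)
        x += 0.5 * dt * px                    # A: half drift
        y += 0.5 * dt * py
        fx, fy = forces(x, y)
        px += 0.5 * dt * fx                   # B: half kick
        py += 0.5 * dt * fy
    return width, f_sum, counts


width, f_sum, counts = abf_double_well()
# Bins 5..14 cover [-1.0, 0.0]; summing A' times the bin width gives A(0) - A(-1) = h.
barrier = sum(f_sum[k] / counts[k] for k in range(5, 15)) * width
print(f"estimated barrier: {barrier:.2f} kT (exact: 5.00 kT)")
```

The force samples are always taken from the unbiased $F_\xi$, as eq 7 permits. The bias affects only where samples are collected, not what they estimate.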

Expressions for the Mean Force

Given a transition coordinate ξ(x), the mean force is a well-defined quantity, yet its expression as an ensemble average of an instantaneous force Fξ is not unique, as we will see below. In adaptive biasing force simulations, the choice of a convenient expression for Fξ is driven by practical considerations, notably ease of implementation and numerical behavior, such as variance.

The classic expression for the instantaneous force involves an explicit coordinate transformation $\Xi$ from Cartesian to generalized coordinates, which include the transition coordinate $\xi$. That is, $\Xi$ maps the Cartesian coordinates $x$ to generalized coordinates $q = \Xi(x)$, with $\Xi_1 = \xi$. The components $\Xi_i$ for $i > 1$ are generalized coordinates of no particular physical significance, but they are necessary to the mathematical framework. A valid expression for the instantaneous force is then12

$$F_\xi = \left[\partial_1 (V\circ\Xi^{-1}) - \beta^{-1}\, \partial_1 \ln|J|\right]\circ\Xi \tag{9}$$

which in the physics literature is more commonly written as

$$F_\xi = \nabla V \cdot \partial_1\Xi^{-1} - \beta^{-1}\, \partial_1 \ln|J| \tag{10}$$

where $|J|$ is the determinant of the Jacobian matrix $(\partial_i \Xi^{-1}_j)_{i,j}$. From the arbitrary choice of $\Xi_{i>1}$ is derived a (somewhat arbitrary) function $F_\xi$, whose $\xi$-restricted ensemble average nevertheless yields the uniquely defined mean force (eq 5). Equation 10 provides an intuitive view that different choices of $\Xi$ and $F_\xi$ correspond to different ways of projecting the Cartesian forces, $-\nabla V$, onto the transition coordinate. The direction along which the forces are projected in this expression is the vector $\partial_1\Xi^{-1}$, which we call the “inverse gradient”.36 The gradient of $\xi$ can be seen as the changes in $\xi$ corresponding to infinitesimal changes in $x$. Conversely, the inverse gradient is the vector along which a change in $\xi$ is propagated in Cartesian coordinates while the other generalized coordinates ($\Xi_{i>1}$) are held constant. Hence, the inverse gradient depends on the explicit coordinate transformation. The alternate notation for the inverse gradient, $\partial x/\partial\xi$, has the drawback of hiding this dependence on the choice of $\Xi$.

Numerically, eq 10 is impractical for two reasons. One is that defining $\Xi_{i>1}$ explicitly can be exceedingly difficult, especially if $\xi$ is a collective coordinate (e.g., the radius of gyration of a set of particles). Even if this step is accomplished, the second difficulty lies in the numerical computation of the Jacobian derivative. It involves second derivatives of $\Xi^{-1}$, whose analytical derivation and numerical implementation may be cumbersome, again depending on the nature of the transition coordinate.

To circumvent this issue, the original adaptive biasing force method was introduced with an instantaneous force estimator based on a constraint force that is calculated iteratively, but never applied.19 In the initial implementation of the adaptive biasing force algorithm37 in the popular molecular dynamics program NAMD,38 eq 10 was used because the small set of implemented coordinates made it practical. As this set was greatly extended in the framework of the collective variables module,39 more versatile expressions of the instantaneous force were required.36

Den Otter put forward the idea that the inverse gradient can be replaced with an arbitrary vector field (satisfying certain requirements).40 In other words, changes in $\xi$ may be propagated along an arbitrary direction in Cartesian coordinates, without explicitly defining a complete set of generalized coordinates. That idea was extended to a multidimensional case by Ciccotti et al.41 Consider a vector transition coordinate $(\xi_i)$, in the presence of a set of constraints of the form $\sigma_k(x) = 0$. For each coordinate $\xi_i$, let $v_i$ be a vector field satisfying, for all $j$ and $k$:

$$v_i \cdot \nabla\xi_j = \delta_{ij} \tag{11}$$

$$v_i \cdot \nabla\sigma_k = 0 \tag{12}$$

The $i$th partial derivative of the free energy can then be calculated as the conditional average of the following instantaneous force:

$$F_i = v_i \cdot \nabla V - \beta^{-1}\, \nabla\cdot v_i \tag{13}$$

of which eq 6 is a special case, limited to a single coordinate and choosing $v = \nabla\xi/|\nabla\xi|^2$, which satisfies the condition of eq 11 above. Note that this estimator still requires the calculation of second derivatives, in the form of the divergence of the vector field $v$, although the relative freedom in choosing $v$ can be taken advantage of to make the divergence calculation practical. The choice $v = \nabla\xi/|\nabla\xi|^2$ is always valid, and as such convenient for theoretical purposes, but it is certainly not always optimal when implementing specific generalized coordinates. Expressions that were chosen in practice for those coordinates implemented in the collective variables module are listed in ref (39).
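As a check of eq 13, consider a single particle in three dimensions and the hypothetical coordinate $\xi(x) = |x|$, its distance from the origin. With $v = \nabla\xi/|\nabla\xi|^2 = x/|x|$, eq 13 gives $F = \partial V/\partial r - 2\beta^{-1}/r$. This coincides with eq 10 in spherical coordinates, where $|J| = r^2\sin\theta$. The sketch evaluates eq 13 generically and approximates the divergence by central finite differences, an illustrative shortcut, since implementations typically use analytical expressions. It then compares the result with the closed form for an assumed harmonic potential $V = \tfrac{k}{2}(|x| - r_0)^2$. All names are hypothetical.

```python
import numpy as np


def instantaneous_force(x, grad_v, v, beta=1.0, step=1e-5):
    """Eq 13 for a single coordinate: F = v(x) . grad V(x) - div v(x) / beta.

    The divergence of the vector field v is estimated by central differences.
    """
    x = np.asarray(x, dtype=float)
    div_v = 0.0
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = step
        div_v += (v(x + e)[i] - v(x - e)[i]) / (2.0 * step)
    return float(v(x) @ grad_v(x) - div_v / beta)


# Hypothetical example: one particle, xi(x) = |x|, V(x) = k (|x| - r0)^2 / 2.
k, r0, beta = 1.0, 2.5, 1.0


def grad_V(x):
    r = np.linalg.norm(x)
    return k * (r - r0) * x / r


def v_radial(x):
    # grad(xi) / |grad(xi)|^2 = x / |x|, because |grad(xi)| = 1 for xi = |x|
    return x / np.linalg.norm(x)


x = np.array([1.0, 2.0, 2.0])                    # r = 3
r = np.linalg.norm(x)
F_eq13 = instantaneous_force(x, grad_V, v_radial, beta)
F_eq10 = k * (r - r0) - 2.0 / (beta * r)         # dV/dr - beta^-1 d ln(r^2 sin(theta))/dr
print(F_eq13, F_eq10)
```

The $-2\beta^{-1}/r$ term is the entropic (Jacobian) contribution. Omitting it would bias the free energy by $2\beta^{-1}\ln r$.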

Darve et al. described an estimator that does not require second derivatives, but rather first derivatives with respect to time and space, and is valid for multidimensional adaptive biasing force calculations.42 This estimator resembles a statistical form of Newton’s equation of motion: instead of relating acceleration to the potential energy gradient, it relates the mean acceleration to the gradient of the free energy. In a considerable simplification, only the first derivatives of the transition coordinate with respect to the Cartesian coordinates need to be derived. The time derivative is calculated numerically in the same fashion and at the same level of accuracy as time derivatives of other quantities in molecular dynamics. Other ways of simplifying calculations of instantaneous forces will be discussed in the context of extended adaptive biasing force simulations, or eABF, in the section The Extended Adaptive Biasing Force Method.

Adaptive Algorithm

The final, essential ingredient of the theory underlying the adaptive biasing force method is an algorithm for updating the current estimate of the average force as the simulation progresses. In its generic, one-dimensional implementation, the transition coordinate, ξ, connecting two end points, is divided into M equally sized bins of width δξ, in which forces are accrued in the course of the simulation. In a naïve approach, the approximation to the average force, $\bar F_\xi(N_{\rm step}, k)$, in bin k after $N_{\rm step}$ molecular dynamics steps is just the simple, unweighted average of all force samples in this bin

$$\bar F_\xi(N_{\rm step}, k) = \frac{1}{N_{\rm step}^k} \sum_{\mu=1}^{N_{\rm step}^k} F_\mu \tag{14}$$

provided that the bin has already been visited at least once. $N_{\rm step}^k$ is the number of samples accrued in bin $k$ after $N_{\rm step}$ steps, and $F_\mu$ denotes the $\mu$th force sample in this bin. This approach would work well for large $N_{\rm step}^k$. However, when only a few samples are available in a given bin $k$, the running average might be a poor estimate of the actual average force in this bin. Moreover, adding further samples might markedly change $\bar F_\xi(N_{\rm step}, k)$. Large fluctuations in the running estimate of the average force are undesirable, as they may drive the system away from equilibrium, thus slowing the convergence of the algorithm and reducing the efficiency of the method. To control these effects, a procedure is needed to damp variations in early estimates of $\bar F_\xi(N_{\rm step}, k)$. A number of schemes can be applied for this purpose. In current implementations, the biasing force in bin $k$ at time $t$ is applied in full only if the number of samples $N_t^k$ is above a threshold, $N_{\rm full}$. It is ramped up smoothly as $N_t^k$ varies from 0 to $N_{\rm full}$. In one implementation,42 the ramp is linear and the force is proportional to $N_t^k/N_{\rm full}$. In another,36,37 the biasing force is zero for $N_t^k < N_{\rm full}/2$ and is ramped linearly above that value, proportionally to $2N_t^k/N_{\rm full} - 1$. Both implementations have proven to be efficient in a number of applications, but other, more advanced schemes are possible. So far, there have been no systematic studies on the efficiency of different adaptive algorithms, but the best choice may well be strongly system-dependent.
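The bookkeeping described above can be sketched as follows: per-bin accumulation of force samples, the running average of eq 14, and a ramped biasing force. The class and function names are hypothetical. Force samples are assumed to be the force acting along $\xi$, whose equilibrium average is $-A'(z)$. The biasing force that cancels it is therefore $-R(N^k)\,\bar F_\xi$, where $R$ is either of the two ramps described above.

```python
import math


def ramp_linear(n, n_full):
    """Ramp proportional to N/N_full, reaching 1 at N_full samples."""
    return min(1.0, n / n_full)


def ramp_half(n, n_full):
    """Zero below N_full/2, then 2N/N_full - 1, reaching 1 at N_full samples."""
    if n < n_full / 2:
        return 0.0
    return min(1.0, 2.0 * n / n_full - 1.0)


class ForceBins:
    """Per-bin running averages of the force along xi on [zmin, zmax)."""

    def __init__(self, zmin, zmax, n_bins, n_full=200, ramp=ramp_linear):
        self.zmin = zmin
        self.width = (zmax - zmin) / n_bins
        self.n_full = n_full
        self.ramp = ramp
        self.sums = [0.0] * n_bins
        self.counts = [0] * n_bins

    def bin_index(self, z):
        """Index of the bin containing z, or None outside the window."""
        k = math.floor((z - self.zmin) / self.width)
        return k if 0 <= k < len(self.counts) else None

    def add_sample(self, z, force):
        """Accumulate one instantaneous force sample taken at xi = z."""
        k = self.bin_index(z)
        if k is not None:
            self.sums[k] += force
            self.counts[k] += 1

    def mean(self, k):
        """Running average of eq 14 (None if the bin was never visited)."""
        return self.sums[k] / self.counts[k] if self.counts[k] else None

    def biasing_force(self, z):
        """Ramped force canceling the current average force in the bin of z."""
        k = self.bin_index(z)
        if k is None or self.counts[k] == 0:
            return 0.0
        return -self.ramp(self.counts[k], self.n_full) * self.mean(k)


bins = ForceBins(0.0, 1.0, 10, n_full=4)
bins.add_sample(0.05, 1.0)
bins.add_sample(0.05, 3.0)
print(bins.mean(0), bins.biasing_force(0.05))   # prints 2.0 -1.0 (ramp 2/4 = 0.5)
```

Passing `ramp=ramp_half` reproduces the second scheme. With it, the same two samples would give no bias yet, because the ramp is still at zero.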

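For a sufficiently large $N_{\rm step}$, $\bar F_\xi(N_{\rm step}, k)$ approaches the correct average force in each bin. Then, the free-energy difference, $\Delta A_\xi$, between the end-point states can be estimated simply by summing the force estimates in individual bins, weighted by the bin width:

$$\Delta A_\xi = -\sum_{k=1}^{M} \bar F_\xi(N_{\rm step}, k)\, \delta\xi \tag{15}$$

where the minus sign reflects that the average force is the negative of the free-energy gradient.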
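Summing per-bin force estimates weighted by the bin width, as described for eq 15, amounts to a midpoint (rectangle) rule. The sketch below accumulates this sum bin by bin to give the free-energy profile at the bin edges. It assumes that each input estimates the mean force $-A'(z)$ in its bin. It is checked on an assumed profile $A(z) = (z^2-1)^2$, with forces evaluated at bin centers; in a real calculation, the inputs would be the converged $\bar F_\xi(N_{\rm step}, k)$. The function name is hypothetical.

```python
def integrate_mean_force(mean_forces, width, a0=0.0):
    """Free energy at bin edges from per-bin average forces (cf. eq 15).

    mean_forces[k] is assumed to estimate the mean force -A'(z) in bin k.
    Returns [A(z_0), A(z_1), ..., A(z_M)] with A(z_0) = a0.
    """
    profile = [a0]
    for f in mean_forces:
        profile.append(profile[-1] - f * width)
    return profile


# Hypothetical check on A(z) = (z^2 - 1)^2 over [-1.5, 0], with 150 bins.
zmin, zmax, n_bins = -1.5, 0.0, 150
width = (zmax - zmin) / n_bins
centers = [zmin + (k + 0.5) * width for k in range(n_bins)]
forces = [-4.0 * z * (z * z - 1.0) for z in centers]    # -A'(z) at bin centers
profile = integrate_mean_force(forces, width)
delta_a = profile[-1] - profile[0]
exact = (0.0 - 1.0) ** 2 - (zmin**2 - 1.0) ** 2           # A(0) - A(-1.5) = -0.5625
print(delta_a, exact)
```

The midpoint error scales as $\delta\xi^2$ times the curvature of the mean force. This is why rapidly varying forces call for narrower bins or a higher-order rule.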

If the average force is a rapidly changing function of ξ, a more sophisticated integration algorithm may be required. It might be also possible to develop binless integration algorithms, similar to those proposed for some other free-energy calculation methods.43,44

A common trait of importance-sampling algorithms is the discretization of the transition coordinate, ξ, in bins of width δξ, in which statistical information is accrued in the course of the simulation.7 In the umbrella-sampling scheme,11 for instance, a histogram is constructed, corresponding to the biased probability of occurrence of the molecular assembly of interest at the different values of the transition coordinate. In the adaptive biasing force algorithm,19 bins are utilized to store instantaneous values of the thermodynamic force that acts along the transition coordinate. As has been observed in practice for diffusive dynamics, the instantaneous force in any given bin follows a normal distribution.37 At thermodynamic equilibrium, by definition, its average is exactly equal to −A′(z), that is, the negative of the gradient of the free energy along the transition coordinate. Insisting upon thermodynamic equilibrium is pivotal here: a poorly estimated, time-dependent bias, derived from a distribution out of equilibrium, will not yield a Hamiltonian bereft of a mean force acting along the transition coordinate.

The adaptive algorithms described above contain two adjustable parameters. The first is the bin width, δξ. The second is the number of samples, Ninit (the threshold Nfull introduced above), below which the ramp factor applied to the average force is smaller than 1; equivalently, below this threshold the biasing force is not the full average of eq 14. These parameters should be chosen with some care.

At constant δξ, large values of Ninit yield better estimates of the average force once the number of samples collected in a given bin reaches this threshold value and conventional averaging begins. This comes at the price of delayed application of the full averaging and slow initial progress along the transition coordinate. Small values of Ninit, in turn, tend to drive the system out of equilibrium. Typically, setting Ninit in the range between 200 and 500 appears to be a good compromise that allows for avoiding both types of problems.

At constant Ninit, large values of δξ prevent capturing variations of the average force on short length scales. This may have adverse effects on the accuracy of integration in eq 15. On the other hand, small values of δξ require longer simulation times to collect sufficient force statistics in every bin. If the transition coordinate is a distance in an atomistic system, the choice of δξ as 0.1 or 0.2 Å usually represents a satisfactory trade-off.

Differences with Other Importance-Sampling Algorithms

Over the past four decades, a number of strategies have been developed for computing free-energy changes as a function of geometrical transformations, each with its own advantages and limitations.11−25,45 To a large extent, umbrella sampling, whether in its original formulation or variants thereof,11,18,46 remains one of the most widely utilized routes to address rare events in molecular simulations. In its original form, umbrella sampling referred to incorporating an external biasing potential in the simulation, i.e., an umbrella,11 ideally the negative of the free energy, which would yield a broad, if not uniform, exploration of the transition coordinate. In other words, in ideal circumstances, the system would evolve in the collective-variable space on a flat free-energy hyperplane, as is also the case for the adaptive biasing force method. Under most circumstances, however, the form of the optimal umbrella is unknown. Thus, for any qualitatively new problem, the end-user must resort to an educated guess regarding the shape of the biasing umbrella potential, usually on the basis of prior knowledge of this and related problems. This may constitute a daunting task.47 Poorly predicted biasing potentials yield nonuniform probability distributions across the transition coordinate. This decreases the efficiency of umbrella sampling and, in extreme cases, may make it less efficient than unbiased simulations. This common shortcoming led to the development of an adaptive variant of the umbrella-sampling algorithm,18 wherein the initial guess of the biasing potential is progressively refined in light of a series of short simulations.

Adaptive umbrella sampling is one member of a broader family of techniques called adaptive biasing potential methods.48 Local elevation,15 conformational flooding,16 or its more recent avatar, metadynamics,20,49 adaptive biasing molecular dynamics,23 and the Wang–Landau algorithm26,27 belong to the same family. In the former methods, the idea is to penalize the already visited states by changing the potential V to V – At◦ξ, At(z) being related to the occupation time of the value z of the transition coordinate up to time t. The longer the time spent in a bin {x, ξ(x) ∈ [z0, z1]}, the larger the biasing potential At(z), z ∈ [z0, z1]. The local elevation technique and metadynamics are based on a similar idea. The biasing potential At is built as a sum of Gaussian kernels that are periodically added to the Hamiltonian along the ξ variable. This pushes the system away from states that have already been visited and, by doing so, improves sampling. It ought to be noted that the potential At, rather than its derivative, is computed in these two cases, hence the name adaptive biasing potential methods. One important downside of the adaptive biasing force algorithm, compared to the class of adaptive biasing potential methods, lies in its inability to handle discrete transition coordinates, for instance, coordination numbers. This drawback can be understood by considering that the free energy is then a map from integers to reals and, thus, has no derivative and, hence, no mean force.
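For contrast with the force-based approach, the sketch below builds a biasing potential, in the spirit of local elevation and metadynamics, as a sum of Gaussian kernels centered at previously visited values of ξ. The kernel height and width are hypothetical parameters, and how the deposited sum maps onto At, including its sign convention, depends on the specific method.

```python
import numpy as np

def gaussian_bias(z, centers, height, sigma):
    """Sum of Gaussian kernels deposited at previously visited values of xi.

    z       : point(s) at which to evaluate the bias
    centers : values of xi at which kernels were deposited
    height  : kernel height (energy units); sigma : kernel width (units of xi)
    """
    centers = np.asarray(centers, dtype=float)
    z = np.atleast_1d(np.asarray(z, dtype=float))
    d = z[:, None] - centers[None, :]          # pairwise distances, shape (len(z), n_kernels)
    return height * np.exp(-0.5 * (d / sigma) ** 2).sum(axis=1)

# Kernels accumulate where the walker has lingered (here, near xi = 0)
visited = [0.0, 0.1, -0.1]
bias = gaussian_bias([0.0, 1.0], visited, height=0.2, sigma=0.3)
```

The quantity returned is a potential: obtaining a force along ξ would require differentiating it, which is one of the technical differences discussed below.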

From a mathematical viewpoint, the adaptive biasing force method, just like adaptive biasing potential methods, is an adaptive importance-sampling procedure. There is, however, a salient difference between these two techniques. In the latter, the potential of mean force or, equivalently, the corresponding probability distribution along the transition coordinate is being adapted. In contrast, the former relies on biasing the force, i.e., the gradient of the potential. This difference is more important than it might appear at first sight, as potentials and probability distributions are global properties whereas gradients are defined locally. In terms of probability distributions, it means that the count of samples in the neighborhood of a given value of the transition coordinate is insufficient to estimate probability. Knowledge of the underlying probability distribution over a much broader range of ξ is required. This may considerably impede efficient adaptation. In contrast, all that is needed to estimate the gradient is the knowledge of local behavior of the potential of mean force. Other regions along the transition coordinate do not have to be visited. Thus, in many instances, adaptation proceeds markedly faster. Using a common metaphor, the difference between the adaptive biasing potential and adaptive biasing force methods can be compared to inundating the valleys of the free-energy landscape as opposed to plowing over its barriers to yield an approximately flat terrain, conducive to unhampered diffusion.

There are also a number of important technical differences between these two methods. For example, in metadynamics and its ancestors, the widths and weights of Gaussian functions and the frequency with which the biasing potential is updated have to be carefully chosen, which often requires considerable experience. In the adaptive biasing force method, estimating the biasing gradient happens automatically by way of a simple algorithm, described in the previous subsection. Another technical concern about adaptive methods is to ensure that adaptation vanishes once At approaches the converged free energy.50 There are a number of ways to fulfill this condition more or less automatically,51,52 but adaptive biasing force and adaptive biasing potential techniques remain intrinsically different from this point of view. In the adaptive biasing force algorithm, if the correct free energy is given as an initial guess (namely, if V is replaced by V – A◦ξ in eq 8), then the biasing force will not be updated (At′ in eq 8 will be constant over time). This is not the case for an adaptive biasing potential strategy. Moreover, if the derivative of the biasing potential is needed (for example, to bias the Langevin dynamics as in eq 8), the advantage of the adaptive biasing force algorithm is that At′ is directly computed, whereas in adaptive biasing potential algorithms, one needs to differentiate the evaluated biasing potential At, which may lead to very noisy results because At is estimated along a stochastic trajectory.
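The noise amplification caused by differentiating a stochastic estimate of At can be illustrated under a deliberately simple assumption: the error on the potential is uncorrelated from one grid point to the next, with standard deviation s. A central difference with spacing h then carries noise of standard deviation s/(h√2), which grows as the grid is refined. Real biasing potentials built from smooth kernels have correlated errors, so this is only a qualitative sketch with hypothetical parameter values.

```python
import numpy as np

def derivative_noise(noise_std, h, n_points=100_000, seed=0):
    """Empirical std of the central-difference derivative of pure, uncorrelated noise."""
    rng = np.random.default_rng(seed)
    noisy_potential = rng.normal(0.0, noise_std, n_points)
    deriv = np.gradient(noisy_potential, h)   # central differences in the interior
    return deriv[1:-1].std()                  # drop the one-sided end points

s, h = 0.05, 0.1                              # e.g., kcal/mol and angstrom (hypothetical)
empirical = derivative_noise(s, h)
predicted = s / (h * np.sqrt(2.0))            # about 0.35 for these values
print(f"empirical {empirical:.3f}, predicted {predicted:.3f}")
```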

Because the basic quantity calculated, and subsequently integrated, in the adaptive biasing force method is the force, this approach belongs to the thermodynamic integration class of methods. However, in contrast to conventional implementations of thermodynamic integration and its generalizations, such as the blue-moon ensemble approach,12 the adaptive biasing force algorithm does not rely on constrained molecular dynamics, but instead is based on unconstrained simulations; i.e., the free-energy difference is not determined at discrete values of the transition coordinate through solving constrained equations of motion. Sampling of the transition pathway proceeds in a continuous, unhampered fashion, guided by the diffusion properties of the system of interest, obviating the need for re-equilibration at fixed, predefined values of the transition coordinate, even in stratified simulations. As will be discussed further in this paper, this may improve ergodic behavior of the system.

It is also of interest to compare the adaptive biasing force method with reconstructions of free-energy landscapes from nonequilibrium trajectories that represent repeated pulling experiments.53−55 The latter are based on the groundbreaking Jarzynski identity,56 or its extension to bidirectional transformations,57 combined with steered molecular dynamics. Even though both approaches involve molecular dynamics trajectories that are initially away from equilibrium, there is a fundamental distinction between them. In the adaptive biasing force method, only the average, or systematic, force is removed to erase the ruggedness of the free-energy landscape, preserving the random force responsible for diffusion. Once a good estimate of the average force becomes available, the equilibrium behavior of the system is restored. In pulling experiments, the transformation always proceeds away from equilibrium at constant velocity and, therefore, the random force acting along the transition coordinate is nil. The random force actually appears in the formalism after averaging over the ensemble of pulling experiments. Moreover, achieving convergence in calculations based on Jarzynski’s identity usually requires large numbers of independent realizations,21 which comes at a significant computational cost. Taken together, when a geometrical transformation can be undertaken at equilibrium, it is not clear whether there is any practical advantage in handling the problem at hand by means of nonequilibrium work experiments rather than the adaptive biasing force method.
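For reference, the Jarzynski estimator mentioned above computes ΔA = −kT ln⟨exp(−W/kT)⟩ from the work W of independent pulling realizations. A minimal sketch, using a log-sum-exp shift for numerical stability (the function name is hypothetical):

```python
import numpy as np

def jarzynski_free_energy(work, kT):
    """Delta A = -kT ln < exp(-W / kT) >, evaluated with a log-sum-exp shift."""
    w = np.asarray(work, dtype=float) / kT
    w_min = w.min()
    # -ln mean(exp(-w)) = w_min - ln mean(exp(-(w - w_min)))
    return kT * (w_min - np.log(np.mean(np.exp(-(w - w_min)))))

# Gaussian work with mean 5 and variance 1 (kT = 1): exact Delta A = 5 - 1/2 = 4.5
rng = np.random.default_rng(0)
w = rng.normal(5.0, 1.0, 1_000_000)
estimate = jarzynski_free_energy(w, 1.0)
```

Because the exponential average is dominated by rare low-work realizations, the number of realizations required grows rapidly with the width of the work distribution. For Gaussian-distributed work of variance σW², the exact result is ΔA = ⟨W⟩ − σW²/(2kT).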

An important advantage of gradient-based methods7,8 is the possibility of formally decomposing the free-energy change into physically meaningful contributions,58,59 thereby helping to dissect qualitatively the nature of the intermolecular interactions at play. It is worth noting that different energy terms contribute to the mean force both explicitly through force terms and implicitly through the Boltzmann weights in the canonical average; contributions can only be separated numerically at the former level, not at the latter. Decomposition of the free energy is generally handled a posteriori through computing the thermodynamic force between, for instance, groups of atoms of interest. This force is then projected onto the transition coordinate determined for each stored configuration, prior to the construction of a histogram from which the average force is inferred. Integration of the latter yields the desired contribution to the total free-energy change across the entire transition pathway.
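The a posteriori decomposition described above can be sketched as follows, under simplifying assumptions that go beyond the text: ξ is a distance between two groups, the contributions are pairwise forces between them, and each contribution's share of the mean force is taken as the bin average of the force on one group projected onto the unit vector u along ∇ξ. General coordinates would require the full projection of eq 13. The function name and inputs are hypothetical.

```python
import numpy as np

def decompose_mean_force(xi, u, forces_by_term, edges):
    """Bin-averaged projection of each force contribution onto the coordinate direction.

    xi             : (n_frames,) transition-coordinate values
    u              : (n_frames, 3) unit vectors along grad(xi) for each frame
    forces_by_term : dict name -> (n_frames, 3) force on the moving group from that term
    edges          : bin edges along xi
    """
    xi = np.asarray(xi, dtype=float)
    u = np.asarray(u, dtype=float)
    idx = np.digitize(xi, edges) - 1
    n_bins = len(edges) - 1
    inside = (idx >= 0) & (idx < n_bins)
    counts = np.bincount(idx[inside], minlength=n_bins)
    result = {}
    for name, f in forces_by_term.items():
        proj = np.einsum("ij,ij->i", np.asarray(f, dtype=float), u)  # F . u per frame
        sums = np.bincount(idx[inside], weights=proj[inside], minlength=n_bins)
        result[name] = np.where(counts > 0, sums / np.maximum(counts, 1), np.nan)
    return result, counts

xi = np.array([0.1, 0.2, 0.6, 0.7])
u = np.tile([1.0, 0.0, 0.0], (4, 1))
forces = {
    "elec": np.array([[1.0, 0, 0], [3.0, 0, 0], [0, 5.0, 0], [2.0, 0, 0]]),
    "vdw": np.tile([-1.0, 0.0, 0.0], (4, 1)),
}
res, counts = decompose_mean_force(xi, u, forces, np.array([0.0, 0.5, 1.0]))
```

As noted above, this separates only the explicit force terms; the coupling through the Boltzmann weights of the sampled configurations remains.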

Among many options for reconstructing free-energy landscapes along a transition coordinate, which one is the best? Considering that the efficiency of different methods strongly depends on their implementation in software packages and, very likely, on a system of interest, attempts to answer this question appear somewhat misguided and unproductive. That said, one aspect of the adaptive biasing force algorithm pleading in its favor is its simplicity.36,37 How the algorithm operates is physically intuitive,7,42 requiring, in principle, very little prior knowledge of the free-energy landscape, or input from the end-user, even for qualitatively new problems.

TRANSITION COORDINATE

Central to geometric transformations is the concept of a transition coordinate. In this section, this concept is illuminated in the context of free-energy calculations aimed at tackling rare events. Specifically, we will discuss how the transition coordinate is explored with the adaptive biasing force algorithm and delve into the practical aspects of defining this coordinate. Stratification,60 a common technique for improving the efficiency of free-energy calculations by partitioning the reaction pathway into ranges of the transition coordinate, will be discussed with a focus on the justification for and limitations of this strategy.

Transition Coordinates and Rare Events

For any transition from a macrostate, A, to another macrostate, B, of the same system there exists an exact one-dimensional transition coordinate: the committor probability.61−64 In most cases, this coordinate is difficult to calculate and offers very limited insight into the nature of the process of interest. For these reasons, it is often more useful to employ a transition coordinate that only approximates the committor probability but is physically more meaningful and easier to handle. Sometimes it may be helpful to use a transition coordinate with more than one dimension. Beyond physical significance, the sampling efficiency that a given transition coordinate affords is also of concern, and these two factors are often closely related.

As in any enhanced sampling method based on a reduced representation, adaptive biasing force sampling relies on stochastic exploration along the transition coordinate, ξ, enhanced by the adaptive bias, combined with equilibration of other, orthogonal degrees of freedom. Equilibration in the orthogonal space is critical in two respects: It affects the mobility along ξ (see Figure 1B for a diffusive example), and it determines the rate of convergence of A′(z) = ⟨−Fξ(x)⟩ξ, which is an average over the orthogonal degrees of freedom. In the ideal situation of time scale separation, all slow degrees of freedom are captured by the transition coordinates, and relaxation in the orthogonal space is comparatively fast. In other words, the adaptive biasing force algorithm removes metastability along the transition coordinates, provided that other degrees of freedom are not metastable. Complex systems such as biological macromolecules, however, possess many slow, coupled degrees of freedom, making time scale separation difficult or impossible to achieve.

Fortunately, empirical results suggest that time scale separation is not an absolute requirement of adaptive biasing force sampling. One possible reason is that enhanced diffusion in the transition coordinate space reduces metastability in the orthogonal space by letting the dynamics sidestep orthogonal barriers rather than cross them, making some “multichannel” cases (see the section Hidden Barriers and Other Challenges to Obtaining Accurate Results) tractable with standard adaptive biasing force simulations. More encouraging still, convergence in such multichannel cases can be markedly accelerated by multiple-walker formulations of the adaptive biasing force algorithm (see the section Multiple-Walker Strategies).

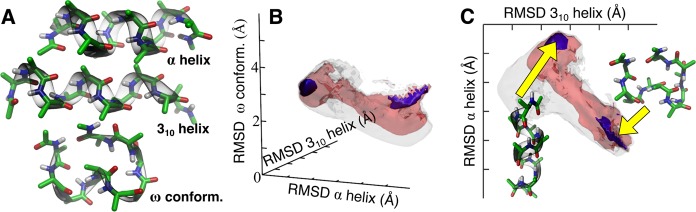

Yet, not all intuitive choices of the transition coordinate are appropriate. As will be extensively discussed further in this paper, the end-to-end distance of the α-helical deca-alanine peptide provides a good example of an inadequate transition coordinate. Depending on the range of values, the coordinate exhibits completely different behavior. For values corresponding to the stable α-helix (14 Å) and larger, separation of time scales is obeyed and the adaptive biasing force algorithm converges well.37 In contrast, smaller values of the end-to-end distance correspond to a rich set of metastable, compact states that are not resolved by the transition coordinate.36 As a result, adaptive biasing force dynamics becomes trapped in these states, and the free-energy estimator does not converge in accessible simulation times.65 Attempts to resolve these metastable states by two- and three-dimensional coordinates give improved results, allowing the exploration of all metastable basins, yet those basins are not all resolved even in three dimensions. Furthermore, the adaptive biasing force dynamics retains some metastability.36 These difficulties are not due to specific deficiencies of the adaptive biasing force method, but rather to shortcomings of the reduced representation, which would constitute an obstacle to any sampling method.

Practical Design of a Transition Coordinate

In practice, finding an effective reduced representation is still largely guided by physical intuition about the process of interest, as well as by trial and error. More systematic and robust approaches for this dimension reduction step are an area of active research.66 A limiting factor is often the availability of usable numerical implementations of the generalized coordinates of choice. The collective variables module39 is an attempt to overcome this limitation by providing a rich and flexible toolbox to define many types of coordinates, in particular those useful for the description of biological macromolecules. In this module, the adaptive biasing force algorithm is implemented, among other algorithms.

Once an intuitive understanding of the relevant generalized coordinate has been obtained, some technical choices remain to be made to express this coordinate as a function ξ of atomic Cartesian coordinates. Though these decisions may seem ancillary, they have a strong influence on the accuracy, convergence, and computational performance of the adaptive biasing force algorithm.

When objects of interest are composed of many atoms, there are often several nearly equivalent ways to define the transition coordinate. In sufficiently long simulations, different definitions produce nearly identical potentials of mean force, but the efficiency might vary considerably. For example, the distance between two proteins could be defined by selecting one central atom in each protein, or by selecting the centers of mass of large groups of atoms. The largest contribution to the instantaneous force on each atom in a molecule is due to rapidly oscillating bonded interactions; more generally, in all applications, forces on the particles will contain some background noise. If many atoms contribute to the projected force (e.g., the first right-hand-side term in eq 13), contributions from those noisy terms average out, which lowers the variance of the instantaneous force estimator.
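One mechanism behind the variance reduction described above can be shown with a toy model, under assumptions of our own: a chain of atoms joined by stiff harmonic bonds (standing in for the rapidly oscillating bonded terms) plus weak random forces standing in for the surroundings. For a center-of-mass coordinate, the projected force involves the sum of atomic forces over the group, in which internal bond forces cancel pairwise by Newton's third law, whereas the force on any single atom is typically dominated by them. All parameter values are hypothetical.

```python
import numpy as np

def bond_forces(pos, k=500.0, r0=1.5):
    """Harmonic bond forces along a linear chain; pos has shape (n_atoms, 3).

    k and r0 are hypothetical stiff-bond parameters (arbitrary units).
    """
    f = np.zeros_like(pos)
    d = pos[1:] - pos[:-1]                     # bond vectors i -> i+1
    r = np.linalg.norm(d, axis=1)
    # Force on atom i+1 is -k (r - r0) d/r; atom i receives the opposite force
    fb = -k * (r - r0)[:, None] * d / r[:, None]
    f[1:] += fb
    f[:-1] -= fb
    return f

rng = np.random.default_rng(0)
n_atoms = 20
pos = np.column_stack([1.5 * np.arange(n_atoms), np.zeros(n_atoms), np.zeros(n_atoms)])
pos += rng.normal(0.0, 0.05, pos.shape)        # bond-length fluctuations
f_ext = rng.normal(0.0, 0.1, pos.shape)        # weak forces from the surroundings
f_total = bond_forces(pos) + f_ext

single_atom_fx = f_total[n_atoms // 2, 0]      # typically dominated by the stiff bonds
group_fx = f_total.sum(axis=0)[0]              # bond terms cancel in the group sum
print(f"single atom: {single_atom_fx:+.3f}, group sum: {group_fx:+.3f}")
```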

In the initial stage of an adaptive biasing force simulation, nonequilibrium effects occur if the biasing force applied to some degrees of freedom varies faster than coupled degrees of freedom can relax. This can be mitigated by defining “smooth” collective variables that involve many Cartesian coordinates with smoothly varying contributions to the gradient (hence, to the biasing force vector). Moreover, even at equilibrium, describing a smooth motion with a nonsmooth variable may make the convergence of the adaptive bias more difficult. In the deca-alanine stretching toy example, the geometric process of interest involves all atoms in the peptide, yet our classic approach uses the end-to-end distance as a biasing coordinate, with the implicit assumption that biasing forces exerted on the terminal atoms propagate, and that the entire peptide relaxes rapidly, so that the biased trajectory remains close to equilibrium. A more robust approach is to replace that coordinate with the radius of gyration of the peptide. The gradient of the radius of gyration has components on each atom proportional to its distance from the center of the group, so that atoms close to the center also experience moderate biasing forces and do not lag behind the terminal atoms when the biasing force varies rapidly over time. A more elaborate discussion of this problem can be found in ref (42).
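The contrast between the two coordinates can be made explicit through their gradients, which set the direction of the biasing force on each atom. For equal masses, ∂Rg/∂ri = (ri − rc)/(N Rg), where rc is the geometric center, whereas the end-to-end distance has a nonzero gradient only on the two terminal atoms. A minimal sketch, assuming equal atomic masses (function names are hypothetical):

```python
import numpy as np

def radius_of_gyration(pos):
    """Rg for equal masses; pos has shape (n_atoms, 3)."""
    c = pos.mean(axis=0)
    return np.sqrt(((pos - c) ** 2).sum(axis=1).mean())

def rg_gradient(pos):
    """d Rg / d r_i = (r_i - r_c) / (N Rg): every atom receives a share of the bias."""
    c = pos.mean(axis=0)
    return (pos - c) / (len(pos) * radius_of_gyration(pos))

def end_to_end_gradient(pos):
    """Gradient of |r_last - r_first|: nonzero only on the two terminal atoms."""
    g = np.zeros_like(pos)
    d = pos[-1] - pos[0]
    u = d / np.linalg.norm(d)
    g[-1] = u
    g[0] = -u
    return g

rng = np.random.default_rng(0)
pos = rng.normal(size=(10, 3))
g_rg = rg_gradient(pos)
g_e2e = end_to_end_gradient(pos)
```

A finite-difference comparison of `rg_gradient` against `radius_of_gyration` is a quick way to validate such an analytical gradient before using it to apply biasing forces.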

In some cases, however, coordinates involving large collections of atoms will have less resolution than more local ones. A biophysical example is permeation through an interface, such as a lipid bilayer. The common choice of transition coordinate is the distance from the permeant molecule to the bilayer center, projected onto the bilayer normal. In such a case, the physically relevant phenomenon is interaction of the permeant with the membrane surface, on a local scale. If the bilayer patch is large enough, it will experience fluctuations away from planarity; thus, the local position of the interface will fluctuate with respect to the bilayer center. In turn, this will cause spurious fluctuations in the transition coordinate. A comparable situation arose in a study of glycerol permeation through the water channel protein GlpF.30 Interaction of the permeant with the protein depended on distances to neighboring pore-lining residues, which fluctuated with respect to the bulk of the protein. Therefore, the global coordinate measured between the protein and glycerol molecule had insufficient resolution on a local scale, and a more local coordinate had to be defined to resolve the structure of the free-energy profile in the constricted region of the selectivity filter.

Performance

Depending on system size and implementation details, choices of coordinates may impact performance noticeably. A common application case is a biomolecular simulation performed with the NAMD package,38 with the adaptive biasing force algorithm implemented36 in the collective variables module.39 NAMD is highly parallelized and can simulate large systems on supercomputers with nearly linear scaling. The current implementation of the adaptive biasing force algorithm is not parallelized and runs on one node, leading to two potential bottlenecks: (1) Poor serial performance on the master node: for the most expensive variables (e.g., those involving sums on atom pairs), the bias calculation on the master node might take longer than the force calculation on other nodes. (2) Scaling may suffer even for computationally simple coordinates, if too many atom coordinates and forces have to be communicated across nodes, increasing latency. This second case may affect any highly parallel application with coordinates defined on many atoms. In practice, one often has to find an acceptable trade-off between variance and performance by selecting a reasonable number of Cartesian coordinates that are most representative of the quantity of interest. To describe conformational fluctuations of a protein, for example, the root-mean-square deviation of α carbon coordinates is often a good compromise.

Justification of a Stratification Strategy

To increase the efficiency of exploring the transition coordinate in adaptive biasing force19 or umbrella sampling,11 it is common to break down the transition path into a series of sequential strata or windows. This idea60 arises from the intuition that the time to convergence grows as the square of the range of the transition coordinate. Simple considerations provide the rationale for this strategy.

Consider a transition path of length L. Convergence of the free energy over the entire range L is achieved after t0. Let us now divide the transition path into N nonoverlapping windows of lengths L1, ..., LN, with L1 + ... + LN = L, for which convergence is attained after t1′, ..., tN′. As shown in the Supporting Information, t0 > Σi ti′.
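Under the idealized assumption that the convergence time in a window scales like the diffusive time needed to cross it, with diffusivity D (a heuristic, not the argument of the Supporting Information), splitting a range of length L into N equal windows gives

$$
t_0 \propto \frac{L^2}{D}, \qquad \sum_{i=1}^{N} t_i' \propto \sum_{i=1}^{N} \frac{(L/N)^2}{D} = \frac{L^2}{N\,D}, \qquad \text{so} \qquad \sum_{i=1}^{N} t_i' \approx \frac{t_0}{N}.
$$

This estimate ignores the equilibration cost incurred in each window and any loss of ergodicity caused by confining the system to narrow windows.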

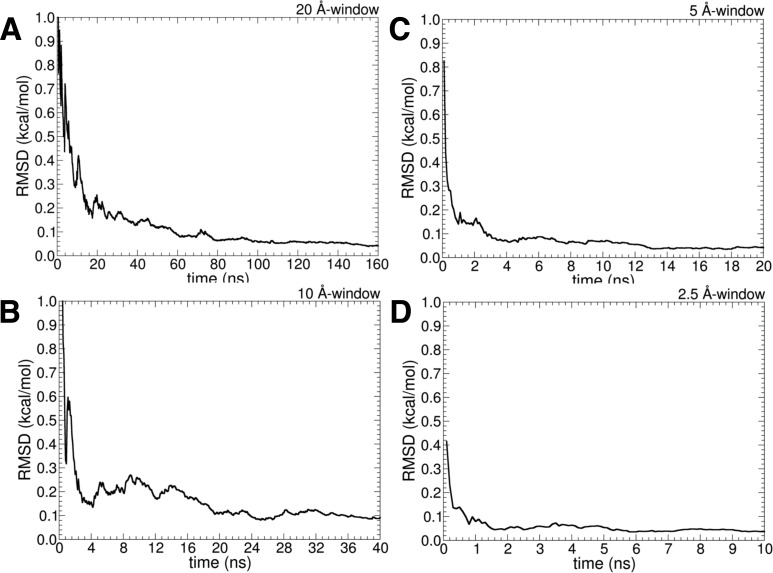

We illustrate this result in a simple example of a tagged water molecule diffusing in a bulk environment over a stretch of 20 Å. The transition coordinate is the projection of the distance separating the centers of mass of the tagged water molecule and the simulation cell along a given direction of Cartesian space. Translational invariance due to the isotropic nature of the liquid imposes the condition that the free-energy change along the transition coordinate be zero. For this system, the potential of mean force was determined in a single, 20 Å long stratum, two 10 Å strata, four 5 Å strata, and eight 2.5 Å strata. The simulations continued until the root-mean-square deviation between the computed potential of mean force and the reference zero free-energy profile was less than 0.1 kcal/mol.

As can be observed in Figure 3, t0, the time necessary to attain convergence within this preset tolerance without stratification, is on the order of 100 ns. When the transition coordinate is divided into two strata, convergence in each 10 Å stratum is reached in approximately 40 ns, i.e., in 80 ns over the full 20 Å range. The benefit of stratification grows further as the transition coordinate is decomposed into four and eight windows. In these cases, convergence is achieved in approximately 6 and 2 ns per window, respectively, which corresponds to 24 and 16 ns for the complete 20 Å range.

Figure 3.

Stratification strategies for the translationally invariant toy model of a tagged water molecule diffusing in a bulk aqueous medium. The transition path spans 20 Å and is handled in a single window (A), in two 10 Å windows (B), in four 5 Å windows (C), and in eight 2.5 Å windows (D). For each stratification strategy, a potential of mean force calculation is carried out until the root-mean-square deviation with respect to the accurate zero free-energy profile is less than 0.1 kcal/mol.

Both theoretical considerations and the simple example given above appear to suggest that extensive stratification should always be preferred. This, however, is not necessarily the case. First, simulations in each window require initial equilibration, which may erase the benefits gained from stratification into many windows. Perhaps more importantly, extensive stratification may impede ergodic sampling of the phase space. This behavior, shared with standard thermodynamic integration, will be discussed in the section Addressing Nonergodicity Scenarios.

In contrast to umbrella sampling and its adaptive variants, the adaptive biasing force algorithm does not require that consecutive windows of a dissected transition coordinate overlap. Provided that convergence has been achieved in each window, the gradient, ∇A(ξ), can be reconstructed merely by joining the gradients from individual windows at the boundaries. This improves efficiency, as the requirement for overlap between windows may frequently add as much as 50% to the total simulation time. If the gradient between consecutive windows is not continuous to within statistical error, this is usually a sign of difficulties with ergodic sampling. Again, this problem will be considered in the section Addressing Nonergodicity Scenarios.
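Joining non-overlapping windows reduces to concatenating the bin-wise gradient estimates. The sketch below also flags possible discontinuities by extrapolating each window's gradient linearly to the shared boundary and expressing the mismatch in units of the combined standard error. This check is a heuristic of our own, assuming at least two bins per window and independent errors; the helper names are hypothetical.

```python
import numpy as np

def _edge_value(z, g, z_b, at_end):
    """Linearly extrapolate a window's gradient to the boundary z_b."""
    i, j = (-2, -1) if at_end else (1, 0)
    slope = (g[i] - g[j]) / (z[i] - z[j])
    return g[j] + slope * (z_b - z[j])

def join_windows(windows):
    """windows: list of (z_centers, grad, grad_err) tuples, ordered along xi.

    Returns the concatenated arrays and, for each interface, the gradient
    mismatch at the boundary in units of the combined standard error.
    """
    z = np.concatenate([w[0] for w in windows])
    g = np.concatenate([w[1] for w in windows])
    e = np.concatenate([w[2] for w in windows])
    jumps = []
    for (z1, g1, e1), (z2, g2, e2) in zip(windows[:-1], windows[1:]):
        z_b = 0.5 * (z1[-1] + z2[0])            # shared boundary between windows
        left = _edge_value(z1, g1, z_b, at_end=True)
        right = _edge_value(z2, g2, z_b, at_end=False)
        jumps.append(abs(right - left) / np.hypot(e1[-1], e2[0]))
    return z, g, e, np.array(jumps)

# Two 1-unit windows of a smooth gradient A'(z) = 2z: no discontinuity expected
za = np.linspace(0.05, 0.95, 10)
zb = za + 1.0
err = np.full(10, 0.1)
z_all, g_all, e_all, jumps = join_windows([(za, 2 * za, err), (zb, 2 * zb, err)])
```

A mismatch of several standard errors at a boundary would point to the sampling difficulties mentioned above rather than to statistical noise.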

Convergence and Error Analysis

In this section, we examine the convergence properties of the adaptive biasing force algorithm and the reliability of the computed free-energy estimates. First, the reader is invited to follow a demonstration that the numerical scheme formally converges. Then, we discuss how statistical errors associated with the reconstructed free-energy landscapes can be measured and managed.

Formal Convergence of the Adaptive Biasing Force Algorithm

The aim of this section is to explain convergence properties of the adaptive biasing force algorithm. For more details, the reader is referred to the Supporting Information.

We restrict ourselves to the following simple setting. We consider the overdamped Langevin dynamics,

$$
dx_t = -\nabla V(x_t)\, dt + \sqrt{2\beta^{-1}}\, dW_t \tag{16}
$$

where xt is defined in the N-dimensional torus $\mathbb{T}^N$ (namely, in $[0, 1]^N$ with periodic boundary conditions), Wt is a standard N-dimensional Brownian motion, and ξ(x1,...,xN) = x1. We direct the reader to refs (67) and (68) for extensions of the results presented below to more general situations.

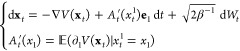

Starting from eq 16 and using the above choice of the transition coordinate ξ, the adaptive biasing force dynamics can be represented as

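$$
\begin{cases}
dx_t = -\nabla V(x_t)\, dt + A_t'(x_t^1)\, e_1\, dt + \sqrt{2\beta^{-1}}\, dW_t, \\[4pt]
A_t'(x_1) = \mathbb{E}\left[ \partial_1 V(x_t) \mid x_t^1 = x_1 \right],
\end{cases}
\tag{17}
$$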

where xt1 denotes the first coordinate of the vector xt, e1 is the vector with coordinates (1, 0, ..., 0), and ∂1V denotes the partial derivative of V(x1,...,xN) with respect to x1.
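The adaptive biasing force dynamics above can be sketched with an Euler–Maruyama discretization. The assumptions are ours: a hypothetical potential V(x1, x2) = h cos(2πx1) + c cos[2π(x2 − x1)] on the unit torus, chosen because the integral over x2 does not depend on x1, so that A(x1) = h cos(2πx1) up to a constant and A′ is known exactly; and many walkers sharing one set of bins, with At′ estimated by a running average of ∂1V rather than by the exact conditional expectation.

```python
import numpy as np

TWO_PI = 2.0 * np.pi

def grad_V(x, h, c):
    """Gradient of the hypothetical V(x1, x2) = h cos(2 pi x1) + c cos(2 pi (x2 - x1))."""
    x1, x2 = x[:, 0], x[:, 1]
    s = np.sin(TWO_PI * (x2 - x1))
    d1 = -TWO_PI * h * np.sin(TWO_PI * x1) + TWO_PI * c * s
    d2 = -TWO_PI * c * s
    return np.stack([d1, d2], axis=1)

def abf_overdamped(h=2.0, c=1.0, n_walkers=200, n_steps=2000, dt=1e-3,
                   beta=1.0, n_bins=20, seed=1):
    rng = np.random.default_rng(seed)
    x = rng.random((n_walkers, 2))              # uniform start on the unit torus
    sums = np.zeros(n_bins)                     # running sums of d1V per bin of x1
    counts = np.zeros(n_bins, dtype=int)
    for _ in range(n_steps):
        g = grad_V(x, h, c)
        k = np.minimum((x[:, 0] * n_bins).astype(int), n_bins - 1)
        # Current estimate of A_t'(x1): running average of d1V in each bin
        a_prime = np.where(counts > 0, sums / np.maximum(counts, 1), 0.0)
        np.add.at(sums, k, g[:, 0])
        np.add.at(counts, k, 1)
        drift = -g
        drift[:, 0] += a_prime[k]               # biasing force A_t'(x1) e_1
        x = x + drift * dt + np.sqrt(2.0 * dt / beta) * rng.standard_normal(x.shape)
        x %= 1.0                                # periodic boundary conditions
    centers = (np.arange(n_bins) + 0.5) / n_bins
    estimate = np.where(counts > 0, sums / np.maximum(counts, 1), 0.0)
    return centers, estimate, counts

centers, est, counts = abf_overdamped()
exact = -TWO_PI * 2.0 * np.sin(TWO_PI * centers)   # A'(x1) = -2 pi h sin(2 pi x1)
```

Because the bias depends only on x1, it leaves the conditional distribution of x2 unchanged, so the bin averages should approach A′(x1) as sampling proceeds.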

As explained in the section Theoretical Backdrop, it is clear, at least formally, that the only possible stationary state for At is the free energy (up to an additive constant). Indeed, if At converges to some stationary state A∞, then the law of xt converges to Z∞⁻¹ exp(−β{V(x) − A∞[ξ(x)]}) dx. Because the bias depends on x only through ξ(x) = x1, it leaves the conditional distribution at fixed x1 unchanged, which implies that E(∂1V(xt) | xt1 = x1) is A′(x1) and, hence, that A∞′ = A′. Yet, this does not prove that At converges to A, and it does not explain why the adaptive biasing force dynamics of eq 17 converges to equilibrium faster than the original, unbiased dynamics of eq 16.

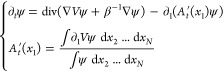

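One way to understand this convergence is to look at the way the law of xt evolves. Let us denote by ψ(t,x) the density of xt. For the original dynamics of eq 16, the density ψ satisfies the Fokker–Planck equation:

$$
\partial_t \psi = \nabla \cdot \left( \nabla V \, \psi + \beta^{-1} \nabla \psi \right) \tag{18}
$$

For the adaptive biasing force dynamics, the density ψ satisfies

$$
\begin{cases}
\partial_t \psi = \nabla \cdot \left( \nabla \left( V - A_t \circ \xi \right) \psi + \beta^{-1} \nabla \psi \right), \\[4pt]
A_t'(x_1) = \dfrac{\int_{\mathbb{T}^{N-1}} \partial_1 V(x)\, \psi(t,x)\, dx_2 \cdots dx_N}{\int_{\mathbb{T}^{N-1}} \psi(t,x)\, dx_2 \cdots dx_N}.
\end{cases}
\tag{19}
$$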

It ought to be noted that eq 19 is a nonlinear partial differential equation (PDE), which makes the study of its long-time behavior much more complicated than for the linear Fokker–Planck PDE (18).

Using appropriate mathematical tools (namely, entropy techniques, see the Supporting Information), one can show that if the transition coordinate is well chosen, the convergence to equilibrium for the adaptive biasing force dynamics of eq 19 is much faster than for the original unbiased dynamics of eq 18. Roughly speaking, the assumption on the transition coordinate is that the canonical measure Z–1 exp[−βV(x)] dx is “more multimodal” than the conditional measures at a fixed value x1 of the transition coordinate, namely,

$$
\frac{\exp[-\beta V(x)]\, dx_2 \cdots dx_N}{\int_{\mathbb{T}^{N-1}} \exp[-\beta V(x)]\, dx_2 \cdots dx_N}.
$$

This is typically the case for the simple illustrative two-dimensional potentials in Figures 1 and 2 if ξ(x1,x2) = x1. This can be fully quantified, and it actually gives a way to measure the quality of the transition coordinate.

In the analysis outlined above, it is assumed that the conditional expectation appearing in the adaptive biasing force dynamics is computed exactly. This analysis is therefore well adapted to discretizations that involve many replicas in parallel, which indeed converge to the adaptive biasing force dynamics with the exact conditional expectation; see ref (69). Analysis of the adaptive algorithms (adaptive biasing force or adaptive biasing potential) with estimates of the conditional expectations based on trajectory averages along a single path is much more complicated. See refs (70) and (71) for preliminary results for the Wang–Landau algorithm.

Distinguishing Sources of Error

Just like any experimental measurement, free-energy calculations, of either alchemical or geometrical nature, ought to be reported with the associated error bars. In the absence of an error estimate, a free-energy difference is generally of limited utility, making direct comparison with experiment difficult and speculative. Although much effort has been devoted in recent years to the characterization of errors that are associated with free-energy calculations,7,8,72−81 estimating the reliability of such calculations remains an intricate task. This explains why it is not unusual that calculated free energies are still being published without error estimates.

Ideally,

any free-energy difference should be determined from a series of N independent simulations. If this were, indeed, the case,

the best possible estimate of the target free-energy difference would

be the expected value over the N simulations, i.e.,  , where ΔÂ denotes the estimate of

the exact quantity, ΔA, inferred from one individual

free-energy calculation. Then, the

associated mean-square error can be written as

, where ΔÂ denotes the estimate of

the exact quantity, ΔA, inferred from one individual

free-energy calculation. Then, the

associated mean-square error can be written as

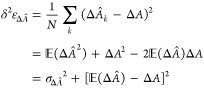

|

20 |

The first term

of eq 20, σΔÂ2 =  , is the variance,

or the precision82 of the free-energy calculation.

In other words,

it is a measure of its statistical error. The second term,

, is the variance,

or the precision82 of the free-energy calculation.

In other words,

it is a measure of its statistical error. The second term,  , is the square

of the bias of the free-energy

estimator, i.e., the square of the difference between the expected

value of the estimator and the actual free-energy change, ΔA. The bias, also referred to as the accuracy82 of the free-energy calculation, is a measure

of systematic error of the latter.

, is the square

of the bias of the free-energy

estimator, i.e., the square of the difference between the expected

value of the estimator and the actual free-energy change, ΔA. The bias, also referred to as the accuracy82 of the free-energy calculation, is a measure

of systematic error of the latter.
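The decomposition in eq 20 can be checked numerically on a set of repeated estimates. The sketch below is illustrative only: the five ΔÂ values and the "exact" ΔA are hypothetical numbers, and the expectations are replaced by sample means. With the population variance (ddof=0), the identity holds exactly for any sample.

```python
import numpy as np

def mse_decomposition(estimates, exact):
    """Split the mean-square error of free-energy estimates into variance and squared bias (eq 20)."""
    est = np.asarray(estimates, dtype=float)
    mse = np.mean((est - exact) ** 2)       # left-hand side of eq 20
    variance = np.var(est)                  # statistical error (population variance, ddof=0)
    bias_sq = (np.mean(est) - exact) ** 2   # squared systematic error
    return mse, variance, bias_sq

# Hypothetical ΔÂ values (kcal/mol) from N = 5 independent runs and an assumed exact ΔA.
estimates = [3.1, 3.9, 3.4, 4.2, 3.6]
mse, var, bias_sq = mse_decomposition(estimates, exact=3.0)
print(f"MSE = {mse:.3f}, variance = {var:.3f}, bias^2 = {bias_sq:.3f}")
```

In this made-up example, the bias dominates the error. Adding more runs would shrink the variance term but would leave the bias unchanged.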

How to estimate the statistical error for the adaptive biasing force method will be discussed in the next section. Unfortunately, a similar formal treatment does not exist for systematic error. One important source of bias arises from incomplete sampling in finite-length simulations due to quasi-nonergodic behavior of the system. Although this behavior cannot be quantified, in many instances it can be detected, and a number of remedial tools are then at our disposal. How to recognize and remedy problems with quasi-nonergodicity will be discussed in section Addressing Nonergodicity Scenarios. Other common sources of bias are inaccurate treatment of intermolecular interactions and algorithmic artifacts arising primarily from imprecise numerical integration of the equations of motion. These contributions to bias, which are common to all simulation methods, will not be discussed here.

Measure of the Statistical Error

The adaptive biasing force method is typically applied to nanoscale molecular processes under physiological conditions, a realm dominated by thermal noise. Thus, estimating free-energy differences using adaptive biasing force requires careful consideration of statistical error.83 Within the adaptive biasing force framework, free-energy differences are determined by integrating the estimated mean force of the system exerted along the transition coordinate. Namely, a free-energy difference on the interval za ≤ ξ ≤ zb can be expressed as83

$$
\Delta A = A(z_b) - A(z_a) = -\int_{z_a}^{z_b} \langle F_\xi \rangle_z \,\mathrm{d}z \tag{21}
$$

where ⟨Fξ⟩z is the average of the instantaneous force on the transition coordinate ξ at the position ξ(x) = z.

Thus, to determine the statistical error of the free-energy differences, we must delve into the statistics of the mean system force. We assume that the transition coordinate, ξ, is discretized and instantaneous forces calculated during the course of the simulation are collected in appropriate bins along ξ. As derived in the Supporting Information, one way to estimate the error of the mean force in bin i is given by

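$$
\sigma_i^2 \approx \frac{\langle \Delta F_\xi^2 \rangle_i}{n_i}\left(1 + \frac{2\tau_i}{\Delta t}\right) \tag{22}
$$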

where ΔFξ(xt) = Fξ(xt) – ⟨Fξ⟩i is the random component of the instantaneous force, ni is the number of samples accrued in bin i, Δt is the time step of the simulation, and τi and $\langle \Delta F_\xi^2 \rangle_i$ are the autocorrelation time and variance of ΔFξ(xt) in bin i.
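Eq 22 translates directly into a small helper. In the sketch below, the function name and argument names are illustrative choices, not part of any existing package. The factor 1 + 2τi/Δt counts how many correlated samples are worth one independent sample.

```python
import numpy as np

def mean_force_error(force_var, n_samples, tau, dt):
    """Standard error σ_i of the mean force in one bin, following eq 22.

    force_var : variance <ΔF_ξ^2>_i of the instantaneous force in the bin
    n_samples : number of samples n_i accrued in the bin
    tau       : autocorrelation time τ_i of the random force (same units as dt)
    dt        : simulation time step Δt
    """
    # Statistical inefficiency: correlated samples carry less information than independent ones.
    inefficiency = 1.0 + 2.0 * tau / dt
    return np.sqrt(force_var * inefficiency / n_samples)

# Hypothetical bin: standard deviation of 20 force units, 20 000 samples, τ_i = 50 Δt.
print(mean_force_error(force_var=20.0**2, n_samples=20_000, tau=50.0, dt=1.0))
```

When τi = 0, the expression reduces to the familiar standard error of the mean, $\langle \Delta F_\xi^2 \rangle_i^{1/2}/\sqrt{n_i}$.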

Given a reliable estimate of the error of the mean force in each bin, we are prepared to analyze how these estimates are propagated to yield free-energy differences. On a discrete grid along the transition coordinate, the integral in eq 21 becomes

$$
\Delta A \approx -\,\delta\xi \sum_{i=i_a}^{i_b} \langle F_\xi \rangle_i \tag{23}
$$

where ia and ib are the bin indices delimiting the ξ-interval [za, zb], and δξ is the bin width. Assuming that the mean-force estimates in different bins are statistically independent, the error of a sum of mean forces is approximated from the Bienaymé formula as the square root of the sum of the squares of the errors of these mean forces,84

$$
\sigma_{\Delta A} \approx \delta\xi \left( \sum_{i=i_a}^{i_b} \sigma_i^2 \right)^{1/2} \tag{24}
$$

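A notable property of this formula is that the error increases with the size of the interval over which the free-energy difference is calculated.83 When the per-bin errors are comparable, it grows roughly as the square root of the number of bins spanned.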
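Eqs 23 and 24 amount to running sums over bins: one sum for the mean forces and one for their variances. The minimal sketch below assumes that free energies are reported at bin edges, so that the reference point za coincides with an edge. The function name and the constant-force input are hypothetical.

```python
import numpy as np

def free_energy_profile(mean_force, force_err, dxi, ref_edge=0):
    """Free energy at bin edges relative to a reference edge, with errors (eqs 23 and 24).

    mean_force : estimated mean force <F_ξ>_i in each bin
    force_err  : error σ_i of the mean force in each bin (e.g., from eq 22)
    dxi        : bin width δξ
    ref_edge   : index of the bin edge taken as z_a (0 .. number of bins)
    """
    f = np.asarray(mean_force, dtype=float)
    s2 = np.asarray(force_err, dtype=float) ** 2
    # eq 23: A(edge k) - A(edge 0) = -δξ * (sum of mean forces in bins 0..k-1)
    a_edges = -dxi * np.concatenate(([0.0], np.cumsum(f)))
    # eq 24: variances of independent bins add (Bienaymé), scaled by δξ^2
    var_edges = dxi**2 * np.concatenate(([0.0], np.cumsum(s2)))
    d_a = a_edges - a_edges[ref_edge]
    err = np.sqrt(np.abs(var_edges - var_edges[ref_edge]))
    return d_a, err

# Hypothetical input: 10 bins of width 0.5 with a constant mean force of -1 and σ_i = 2.
d_a, err = free_energy_profile(np.full(10, -1.0), np.full(10, 2.0), dxi=0.5, ref_edge=4)
print(d_a)
print(err)
```

By construction, the error vanishes at the reference edge and grows with the number of bins separating a given edge from it. This matches the behavior described for eq 24.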

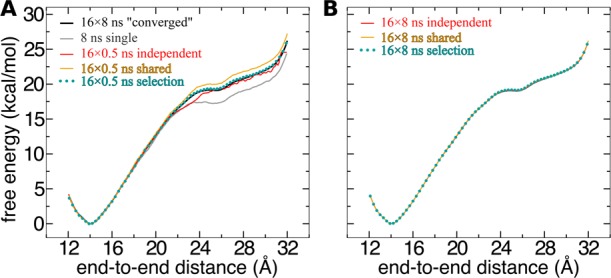

Application to the Toy-Model Deca-alanine

For concreteness, we now consider the error in free-energy differences for the reversible folding of deca-alanine in vacuum. We define the transition coordinate of interest, ξ, as the end-to-end distance of the peptide, specifically the distance between the carbonyl carbon atoms of the first and the tenth residues. Below, we discuss the behavior of the three quantities that enter eq 22, namely, the number of samples in each bin, ni, the standard deviation of the random force, $\langle \Delta F_\xi^2 \rangle_i^{1/2}$, and the autocorrelation time of this force, τi. See the Supporting Information for further comments on calculating these quantities.

The black curve in Figure 4A shows the number of samples in each bin of width δξ = 0.1 Å for the adaptive biasing force calculation in the range from 12 to 32 Å (the red curve will be discussed later in the paper). The number of samples, ni, in this range varies from 17 000 to 36 000. Thus, as expected from the adaptive biasing force algorithm, sampling across bins approaches uniformity. In this case, sampling is nonuniform by only slightly more than a factor of 2, which corresponds to variations of the biased free energy not exceeding 0.5 kcal/mol. For comparison, the unbiased free energy changes in the same range by approximately 30 kcal/mol.
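The link between the sample counts and the 0.5 kcal/mol bound can be checked with a one-line calculation. The biased free energy is −kT ln p, so a ratio of bin populations corresponds to a free-energy spread of kT ln(n_max/n_min). The temperature is not stated in this section, so 300 K is an assumption here.

```python
import math

K_B = 0.0019872041  # Boltzmann constant, kcal mol^-1 K^-1
T = 300.0           # assumed temperature in K (not stated in the text)

def biased_free_energy_spread(n_min, n_max, temperature=T):
    """Spread of the biased free energy, kT ln(n_max / n_min), implied by a ratio of bin counts."""
    return K_B * temperature * math.log(n_max / n_min)

spread = biased_free_energy_spread(17_000, 36_000)
print(f"{spread:.2f} kcal/mol")  # prints 0.45 kcal/mol
```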

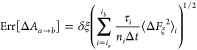

Figure 4.

Coordinate dependence of sampling and the system force distribution in reversible folding of deca-alanine. (A) Samples in each bin for a 10 ns adaptive biasing force calculation on the domain ξ ∈ [12, 32] Å (black curve) and a 100 ns calculation on the domain ξ ∈ [4, 32] Å (red curve). Note the logarithmic scale on the vertical axis. (B) Distribution of the ξ-component of the instantaneous system force for different ranges of ξ. (C) Standard deviation of the ξ-component of the instantaneous system force as a function of ξ.

In Figure 4B, we show the distribution of instantaneous forces in four different bins. The forces in each bin are approximately normally distributed, with similar standard deviations of about 20 kcal mol⁻¹ Å⁻¹. This point is underscored in Figure 4C, which illustrates that, for deca-alanine in vacuum with Langevin dynamics emulating the buffeting of the molecule by solvent, the standard deviation of the force acting along the transition coordinate changes very modestly, even though the peptide explores structures that are as disparate as can be imagined. The peptide courses through different compact forms for ξ < 10 Å, remains mostly α-helical for 10 < ξ < 16 Å, and forms extended structures with diminishing helical fractions as ξ increases beyond 16 Å.85 The approximate uniformity of $\langle \Delta F_\xi^2 \rangle_i^{1/2}$ seen here is also characteristic of many other systems. For example, it has been previously found86 that the standard deviation of the instantaneous force on the center of mass of a water molecule is about 2 kcal mol⁻¹ Å⁻¹, irrespective of whether the molecule lies in the bulk aqueous phase or in the hydrophobic core of a lipid bilayer. On the other hand, the transfer of a solute across a liquid–vapor interface is an example of a transition for which $\langle \Delta F_\xi^2 \rangle_i^{1/2}$ is expected to vary considerably with ξ.

Note that $\langle \Delta F_\xi^2 \rangle_i^{1/2}$ is large. Because this term enters prominently into the expression for the statistical error in eq 22, there is considerable merit in reducing the dispersion of instantaneous forces. We have already pointed out two potential paths toward this goal. First, because the expression for the ensemble average of the instantaneous force is not unique, we can, in principle, choose one that reduces $\langle \Delta F_\xi^2 \rangle_i^{1/2}$. Second, the variation of forces can also be reduced by a thoughtful choice of the transition coordinate.

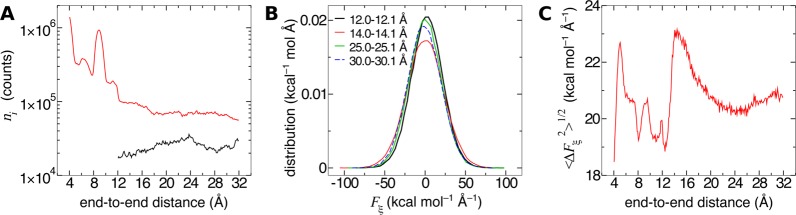

The third variable in eq 22, the correlation time of the instantaneous system force, τi, is the most difficult to calculate. The sampling in a single bin is rarely sufficient to obtain a converged autocorrelation function of the system force; thus, in Figure 5A we plot the autocorrelation function averaged over 40 bins in different regions of ξ. The correlation time for each region, τ, is determined by fitting an unscaled exponential function, $e^{-t/\tau}$, to the positive values of the autocorrelation function. Figure 5B reveals only modest variations in correlation time for different regions.
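The fitting procedure can be sketched as follows. The code computes a normalized autocorrelation function of a force series and fits $e^{-t/\tau}$ to its leading run of positive values. Several choices here are simplifications, not the authors' code: the least-squares fit is done by a brute-force scan over τ rather than with a nonlinear optimizer, a single series is used instead of an average over 40 bins, and the force series is a synthetic AR(1) process whose exact correlation time is 10 steps.

```python
import numpy as np

def normalized_acf(x, max_lag):
    """Autocorrelation function of the random force component, equal to 1 at zero lag."""
    dx = np.asarray(x, dtype=float)
    dx = dx - dx.mean()                 # random component ΔF_ξ = F_ξ - <F_ξ>
    n = dx.size
    var = np.dot(dx, dx) / n
    return np.array([np.dot(dx[: n - k], dx[k:]) / ((n - k) * var) for k in range(max_lag)])

def fit_correlation_time(acf, dt):
    """Least-squares fit of an unscaled exponential exp(-t/τ) to the positive part of the ACF.

    A brute-force scan over τ replaces a nonlinear optimizer to keep the sketch dependency-free.
    """
    nonpositive = np.nonzero(acf <= 0.0)[0]
    stop = nonpositive[0] if nonpositive.size else acf.size   # leading run of positive values
    t = dt * np.arange(stop)
    c = acf[:stop]
    taus = np.geomspace(0.1 * dt, 10.0 * (t[-1] + dt), 5000)
    sse = ((c[None, :] - np.exp(-t[None, :] / taus[:, None])) ** 2).sum(axis=1)
    return taus[np.argmin(sse)]

# Synthetic force series: an AR(1) process whose exact correlation time is 10 steps.
rng = np.random.default_rng(0)
phi = np.exp(-1.0 / 10.0)
noise = rng.standard_normal(200_000) * np.sqrt(1.0 - phi**2)
force = np.empty(noise.size)
force[0] = rng.standard_normal()
for k in range(1, force.size):
    force[k] = phi * force[k - 1] + noise[k]

tau = fit_correlation_time(normalized_acf(force, max_lag=200), dt=1.0)
print(f"fitted tau = {tau:.1f} steps")
```

Fitting in linear rather than logarithmic space keeps the noisy, near-zero tail of the autocorrelation function from dominating the estimate.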

Figure 5.

Coordinate dependence of correlations in the system force in reversible folding of deca-alanine. (A) Autocorrelation functions of the random component of the instantaneous system force for different ranges of ξ. The functions are normalized by the variance $\langle \Delta F_\xi^2 \rangle_i$ so that correlation at t = 0 is unity. (B) Correlation time for ξ-ranges in panel A.

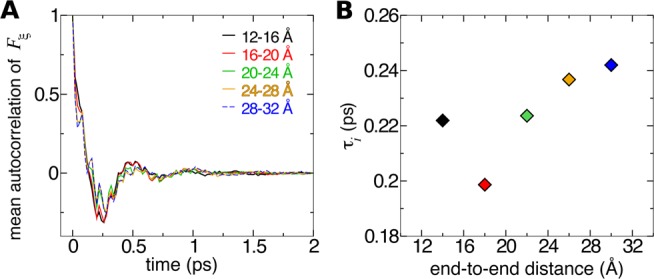

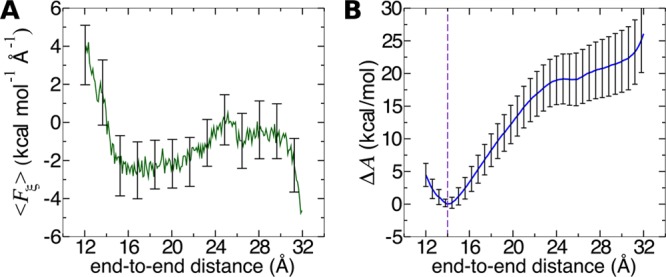

Using the calculated values of ni, $\langle \Delta F_\xi^2 \rangle_i$, and τi, we now estimate the uncertainties of free-energy differences between deca-alanine structures with different end-to-end distances. The mean system force, with uncertainties calculated by eq 22, is shown in Figure 6A. Because only free-energy differences between two points along ξ, rather than free energies at single points, have a clear physical meaning, we focus on errors associated with these differences. To compute the error in the free-energy difference between points za and zb, we must accumulate the uncertainties of the mean force between these two points, in accord with eq 24. If stratification is used and points za and zb lie in different windows, then the Bienaymé formula must be applied across all windows separating these points.

Figure 6.

Propagation of error in the mean force to the error of free-energy differences. (A) Mean system force on ξ for a 10 ns adaptive biasing force calculation, with error bars determined according to eq 22. (B) Error of free-energy differences A(ξ) – A(za) with the reference position za = 14 Å. This position is denoted by a violet dashed line.

In Figure 6B, we show the estimated error of ΔA between the α-helical minimum free-energy state (za = 14 Å) and other states within the interval zb ∈ [12, 32] Å. If we want to calculate, for instance, the free-energy difference between the minimum and ξ = 16 Å, we obtain 3.6 ± 1.8 kcal/mol. Larger separations in ξ yield larger errors: the free-energy difference between the minimum and the plateau at 25.5 Å is 19 ± 4 kcal/mol.
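The two reported errors are consistent with the square-root growth implied by eq 24. The interval from 14 to 16 Å spans 20 bins of width 0.1 Å, and the interval from 14 to 25.5 Å spans 115 bins. If the per-bin errors were all equal (an assumption, since Figure 6A shows them varying somewhat), 1.8 kcal/mol over 20 bins would correspond to about 4 kcal mol⁻¹ Å⁻¹ per bin. The sketch below rescales the first error to the longer interval.

```python
import math

DXI = 0.1  # bin width δξ in Å, from the deca-alanine example

def scaled_error(err_ref, span_ref, span_new, dxi=DXI):
    """Rescale a free-energy error to a new interval, assuming equal per-bin errors (eq 24)."""
    n_ref = round(span_ref / dxi)
    n_new = round(span_new / dxi)
    return err_ref * math.sqrt(n_new / n_ref)

# 1.8 kcal/mol over 14 -> 16 Å (20 bins), extrapolated to 14 -> 25.5 Å (115 bins)
estimate = scaled_error(1.8, 16.0 - 14.0, 25.5 - 14.0)
print(f"{estimate:.1f} kcal/mol")
```

The result, about 4.3 kcal/mol, is close to the 4 kcal/mol obtained from the full per-bin propagation.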

It is expected that, for sufficiently long total simulation time, t, statistical errors of both the average forces and free-energy differences will decay as $t^{-1/2}$.42 The same dependence on t should apply to deviations from uniform sampling. If force statistics are collected in M bins, then the root-mean-square deviation from the uniform distribution can be defined as the square root of the variance, Var(t),

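$$
\mathrm{Var}(t) = \frac{1}{M} \sum_{k=1}^{M} \left( \frac{N_{\mathrm{step}}^{k}}{N_{\mathrm{step}}} - \frac{1}{M} \right)^2 \tag{25}
$$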

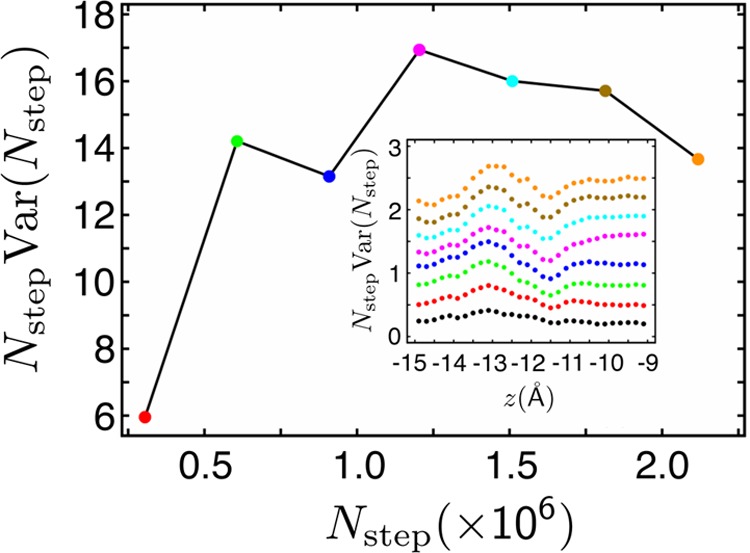

where $N_{\mathrm{step}}^{k}$ and Nstep are the number of samples in bin k and the total number of samples at time t, respectively. For large t, the variance is expected to be proportional to 1/t or, equivalently, to 1/Nstep. In other words, Nstep × Var(t) should be constant. An example of how Var(t) behaves in a typical simulation is shown in Figure 7, which displays the behavior expected for diffusive motion. Errors that clearly deviate from such behavior are a sign of insufficient sampling. If the problem persists with increasing t, and especially if errors exhibit large fluctuations, then quasi-nonergodic behavior has most likely been encountered.
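Eq 25 can be evaluated directly from a histogram of sample counts. The sketch below uses independent, uniformly distributed samples as a hypothetical reference case. Real adaptive biasing force samples are time-correlated, so their plateau value differs, but the expectation that Nstep × Var(t) levels off carries over. For independent samples, Nstep × Var(t) should stay near (1/M)(1 − 1/M).

```python
import numpy as np

def sampling_variance(counts):
    """Var(t) of eq 25: mean squared deviation of bin fractions from uniform sampling."""
    counts = np.asarray(counts, dtype=float)
    m = counts.size
    frac = counts / counts.sum()
    return np.mean((frac - 1.0 / m) ** 2)

# Hypothetical check with independent, uniformly distributed samples in M = 200 bins.
rng = np.random.default_rng(1)
m = 200
products = []
for n_step in (10_000, 100_000, 1_000_000):
    counts = np.bincount(rng.integers(0, m, n_step), minlength=m)
    products.append(n_step * sampling_variance(counts))
print(products, (1.0 / m) * (1.0 - 1.0 / m))
```

In a production run, the same function can be applied to the bin counts at successive checkpoints. A product that drifts steadily, or that fluctuates strongly, flags the sampling problems described above.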

Figure 7.

Nstep × Var(t) as a function of the number of molecular dynamics steps, Nstep. This quantity was calculated for the permeation of K⁺ through a transmembrane hexameric channel of the peptaibol trichotoxin, in a window along the membrane normal spanning the range from z = −15 to −9 Å, where z = 0 is located in the middle of the membrane.87 Note that, for Nstep between 0.6 × 10⁶ and 2.3 × 10⁶, Nstep × Var(t) differs from its average value in this range by no more than 10%. Inset: the number of force samples along z in the window as a function of Nstep.

ADDRESSING NONERGODICITY SCENARIOS

A common manifestation of pathological free-energy calculations, in particular those of geometrical nature, is quasi-nonergodicity, wherein sampling along the selected transition coordinate appears to be hampered. Here, we closely inspect the effects that prevent free-energy methods, including the adaptive biasing force scheme, from yielding accurate results (notably hidden barriers in the slow manifolds). We then discuss how to identify these effects and outline possible remedies: increasing the dimensionality of the transition coordinate, improving stratification, or aiding sampling with multiple-replica strategies.

Hidden Barriers and Other Challenges to Obtaining Accurate Results

The primary objective of importance-sampling schemes is to facilitate exploration of the transition pathway with a uniform probability.7,8 Among these schemes, as has been previously emphasized, the adaptive biasing force algorithm uses a local estimate of the free-energy gradient along the transition coordinate, A′, to progressively erase the original ruggedness of the free-energy landscape. As has already been discussed in section Formal Convergence of the Adaptive Biasing Force Algorithm, this property holds from a theoretical standpoint. How true is this in practice? In most instances, satisfactorily uniform sampling is achieved quite efficiently. Occasionally, however, the adaptive biasing force algorithm does not perform as expected. The remainder of this section is devoted to explaining and identifying these special, yet important, cases.

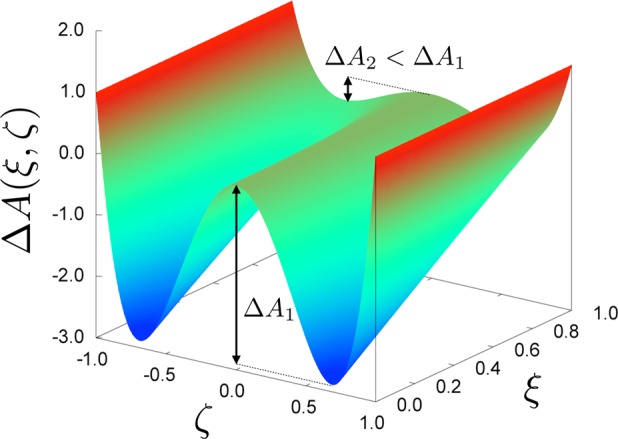

Potential difficulties in applying the adaptive biasing force algorithm are intimately related to the choice of transition coordinate. A basic yet seldom verified assumption underlying this choice is the separation between the time scales of motions along the transition coordinate and those of the orthogonal degrees of freedom (see section Transition Coordinates and Rare Events). For complex, rugged free-energy landscapes, notably those formed by parallel valleys separated by considerable barriers in the direction orthogonal to the transition coordinate (Figure 8),88 the assumption of time-scale separation may turn out to be unwarranted. Because the adaptive biasing force algorithm exerts no direct action in the orthogonal space, it will not improve sampling at constant ξ. In terms of the foundational expression for the adaptive biasing force method, eq 8, which relates the gradient of the free energy to an ensemble average at a constant value of the transition coordinate, the inability to cross hidden barriers in the orthogonal space translates into incomplete ensemble averages and, hence, poor estimates of free-energy changes.
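A two-dimensional toy model makes this point concrete. The sketch below uses a hypothetical potential in reduced units (kT = 1). It has two parallel valleys at y = ±1, separated by a barrier of 5 kT along the orthogonal coordinate y and tilted in opposite directions along the transition coordinate x. Because x is Cartesian here, no Jacobian term enters, and the mean force is simply the Boltzmann-weighted conditional average of −∂U/∂x at fixed x. The code compares this average with the one obtained when only one valley is visited.

```python
import numpy as np

# Hypothetical toy potential (reduced units, kT = 1): valleys at y = ±1 separated by a
# barrier of height A_BAR along y, and tilted in opposite directions along x.
A_BAR, C = 5.0, 1.0

def potential(x, y):
    return A_BAR * (y**2 - 1.0) ** 2 + C * x * y

def force_x(x, y):
    return -C * y  # instantaneous force along x, -dU/dx

def mean_force(x, y_grid):
    """Conditional average <F_x> at fixed x, Boltzmann-weighted over the given y values."""
    w = np.exp(-potential(x, y_grid))
    return np.sum(w * force_x(x, y_grid)) / np.sum(w)

y = np.linspace(-3.0, 3.0, 6001)
full = mean_force(0.0, y)            # both valleys sampled: correct conditional average
trapped = mean_force(0.0, y[y > 0])  # only the y > 0 valley visited, as behind a hidden barrier
print(f"full: {full:+.3f}, trapped: {trapped:+.3f}")
```

At x = 0, the exact mean force vanishes by symmetry, whereas the trapped average is close to −C. Biasing along x cannot remove this discrepancy, because the barrier lies along y. Integrating such a systematic error in the mean force over an interval produces a free-energy error that grows with the interval length.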

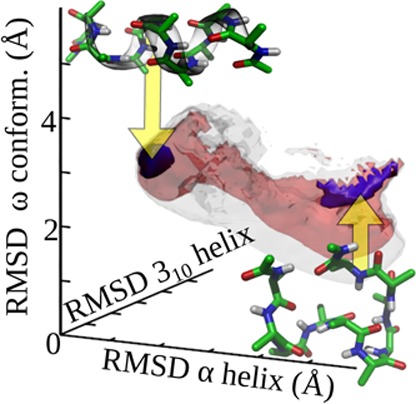

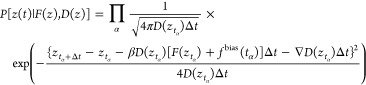

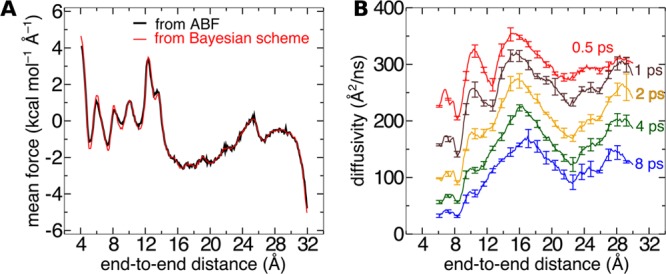

Figure 8.