Abstract

Background

E-learning and blended learning approaches are gaining popularity in emergency medicine curricula. So far, few data are available on the impact of such approaches on procedural learning and skill acquisition or on how they compare with traditional approaches.

Objective

This study investigated the impact of a blended learning approach, including Web-based virtual patients (VPs) and standard pediatric basic life support (PBLS) training, on procedural knowledge, objective performance, and self-assessment.

Methods

A total of 57 medical students were randomly assigned to an intervention group (n=27) and a control group (n=30). Both groups received paper handouts in preparation for simulation-based PBLS training. The intervention group additionally completed two Web-based VPs with embedded video clips. Measurements were taken at randomization (t0), after the preparation period (t1), and after hands-on training (t2). Clinical decision-making skills and procedural knowledge were assessed at t0 and t1. PBLS performance was scored regarding adherence to the correct algorithm, conformance to temporal demands, and the quality of procedural steps at t1 and t2. Participants’ self-assessments were recorded at all three measurement points.

Results

Procedural knowledge of the intervention group was significantly superior to that of the control group at t1. At t2, the intervention group showed significantly better adherence to the algorithm and temporal demands, and better procedural quality of PBLS in objective measures than did the control group. These aspects differed between the groups even at t1 (after VPs, prior to practical training). Self-assessments differed significantly only at t1 in favor of the intervention group.

Conclusions

Training with VPs combined with hands-on training improves PBLS performance as judged by objective measures.

Keywords: virtual patients, blended learning, simulation, pediatric basic life support, performance

Introduction

Basic life support training, such as pediatric basic life support (PBLS) training, is usually simulation-based and requires evaluation of learners’ performances [1-4]. Although there is evidence that simulator training is effective in improving basic life support performance, literature comparing various methods of training is scarce [5]. In particular, the instructional design of life support training is increasingly being investigated. Carrero et al compared the improvement in procedural knowledge achieved by the typically used tutor-led, case-based discussions with that achieved by noninteractive multimedia presentations (video plus PowerPoint presentation). Both were shown to have equal impact on the level of cognitive skills [6]. Some reports have shown advantages for learning basic life support when using instructional videos [7-9]. Such approaches provide individual preparation and can be easily distributed, save instructors’ resources, and allow for more training time in face-to-face sessions.

Virtual patients (VPs) are known to be effective in promoting clinical reasoning and decision making [10]. In the context of acquiring life support skills, VPs integrate features that have been shown to foster both the development of clinical decision making (eg, through interactivity and feedback [11]) and procedural skills (eg, by integration of media [12]). E-learning and blended learning approaches are gaining popularity in emergency medicine curricula [13-16]. Lehmann et al reported recently that VPs combined with skills laboratory training are perceived by both trainees and trainers as an effective approach to train undergraduates in PBLS, leading to an efficient use of training time [17]. A few other reports have already suggested positive effects of VPs and comparable simulators on knowledge and procedural skill acquisition in different kinds of life support courses [18-20].

In this study, we investigated the effect of VPs combined with standard simulation-based PBLS training on the acquisition of clinical decision-making skills and procedural knowledge, objective skill performance, and self-assessment. Our hypotheses were that preparation with VPs would yield (1) superior clinical decision making and procedural knowledge, (2) an objectively better performance of PBLS after the training, and (3) better self-assessment after working with VPs and after exposure to standard training.

Methods

Study Design

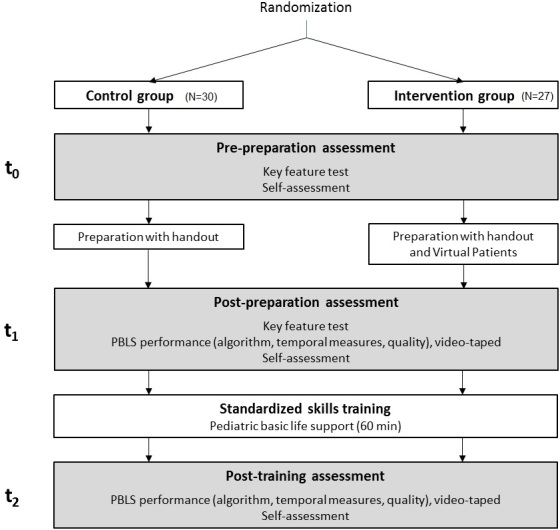

We used a two-group randomized trial design (see Figure 1). All participants were assessed regarding their self-assessment, clinical decision-making skills, and procedural knowledge (key-feature test) about PBLS after randomization to ensure comparability (prepreparation assessment, t0). PBLS training sessions were conducted 1 to 2 weeks after the prepreparation assessment. Both groups were requested to prepare themselves a day ahead of the appointed training using handouts we had distributed. In addition, the intervention group (IG) was granted access to VPs as mandatory preparation. After the preparation, on the day of the practical training, self-assessment and procedural knowledge were assessed again to compare the participants’ progress (postpreparation assessment, t1). Subsequently, we videotaped PBLS sequences undertaken by each participant for later scoring of their performances. Both groups then attended standard training on PBLS. Later that day, we again recorded PBLS demonstrations and reevaluated participants’ self-assessments after the practical training (posttraining assessment, t2). The study was conducted in September 2014.

Figure 1.

Study design.

Instruments

Overview

All instruments were pilot-tested on video recordings of PBLS demonstrations by student tutors and faculty before implementation, and revisions were made to ensure clarity and content validity. We particularly tested the estimated and calculated temporal scores adapted from international recommendations [21] by recording and analyzing best-practice examples of our faculty.

Basic Data

Participants were asked about their age, sex, and level of qualification in emergency medicine. For subgroup analysis we identified participants who were qualified as paramedics or had some similar training—qualifications that include PBLS training.

Clinical Decision-Making Skills and Procedural Knowledge

We developed a key-feature test according to published guidelines [22] to evaluate the students’ procedural knowledge and clinical decision making. This kind of testing was introduced by Page and Bordage specifically to assess clinical decision-making skills [23]. The test contained seven cases with three key features each (see Multimedia Appendix 1). Answers were to be given in “write in” format, which was suggested for decisions regarding the differential diagnosis, therapy, and further management [22]. Questions concerned both clinical decision making (proposed next steps) and procedural knowledge (eg, head positioning or compression depth). Each correct answer was given 1 point, with a maximum of 21 points. The test was reviewed for correctness and clinical relevance by group-blinded senior pediatricians with expertise in PBLS.

Performance: Adherence to Algorithm

Two raters scored the performed algorithm for its correct order. Each step of the sequence was given 2 points if it was done in the correct algorithmic order. It was given 1 point if it had been performed in an incorrect algorithmic order. No points were assigned if the step had not been undertaken at all (see Multimedia Appendix 2). The maximum score was 18.
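The scoring rule above can be illustrated with a short sketch. It assumes that a rater has already classified each step of the sequence; the labels `in_order`, `out_of_order`, and `omitted` and the function name are hypothetical and are not taken from the scoring form in Multimedia Appendix 2. The 18-point maximum implies nine scored steps, which the example uses.

```python
# Illustrative sketch of the adherence-to-algorithm score (not the authors' tool).
# Assumption: a rater has labeled each step; the label names are hypothetical.
POINTS = {"in_order": 2, "out_of_order": 1, "omitted": 0}
MAX_SCORE = 18  # maximum stated in the text (nine steps x 2 points)


def adherence_score(step_labels):
    """Return (raw points, percent of maximum) for one rated performance."""
    raw = sum(POINTS[label] for label in step_labels)
    return raw, 100.0 * raw / MAX_SCORE


# Hypothetical performance: seven steps in order, one out of order, one omitted.
example = ["in_order"] * 7 + ["out_of_order", "omitted"]
raw, percent = adherence_score(example)
print(raw, round(percent, 1))  # 15 83.3
```

Expressing the result as a percent of the maximum matches the way scores are reported in the Results section.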

Performance: Temporal Demands

Concrete temporal recommendations for three procedural steps of the PBLS algorithm are as follows [21].

1. Every rescue breath should take 1.0 to 1.5 s for inspiration plus time for expiration.

2. Assessment of the signs of life and circulation may not take longer than 10 s.

3. Chest compressions should be given at a frequency of at least 100/min, not exceeding 120/min.

Based on these recommendations, we calculated the optimal times for the initial five rescue breaths, the circulation check, and the four cardiopulmonary resuscitation (CPR) cycles (see Multimedia Appendix 3). The optimal total time was also estimated for the whole sequence, from safety check to emergency call. We scored 2 points for each procedural step if it was performed within ±10% of the calculated optimal time and 1 point if within ±20%. If the participant's time deviated by more than 20% in either direction, no points were scored for that step. Two raters measured these times on video recordings. A total of 8 points could be achieved.
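The tolerance bands described above can be sketched as follows. This is a minimal illustration, assuming four timed items (rescue breaths, circulation check, CPR cycles, and total sequence, consistent with the 8-point maximum) and assuming that the band limits are inclusive. The optimal and measured times are hypothetical placeholders; the actual optimal values are given in Multimedia Appendix 3 and are not reproduced here.

```python
# Illustrative sketch of the temporal-demands score (not the authors' tool).
def temporal_points(measured_s, optimal_s):
    """2 points within +/-10% of the optimal time, 1 point within +/-20%, else 0."""
    deviation = abs(measured_s - optimal_s) / optimal_s
    if deviation <= 0.10:  # assumption: band limits are inclusive
        return 2
    if deviation <= 0.20:
        return 1
    return 0


# Hypothetical optimal and measured times in seconds (placeholders only).
optimal = {"rescue_breaths": 12.0, "circulation_check": 10.0,
           "cpr_cycles": 60.0, "total_sequence": 90.0}
measured = {"rescue_breaths": 12.5, "circulation_check": 11.5,
            "cpr_cycles": 75.0, "total_sequence": 95.0}

points = {item: temporal_points(measured[item], optimal[item]) for item in optimal}
total = sum(points.values())
print(points, total, 100.0 * total / 8)  # total is 5, ie, 62.5% of the maximum
```

Because the deviation is taken as an absolute value, performing a step too quickly is penalized in the same way as performing it too slowly, as stated in the text.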

Performance: Procedural Quality

Two group-blinded video raters with expertise in PBLS scored the procedural quality of the participants’ PBLS skills. The scores were averaged for further analysis. We used a scoring form in trichotomous fashion, with 2 points for correct performance, 1 point for minor deficits, and no points for major deficits (see Multimedia Appendix 4). A maximum of 22 points could be achieved; items were not weighted. Such kinds of scoring systems with comparable checklists are established to assess clinical performances in simulated emergency scenarios [24-27]. In contrast to published rating modalities, we rated the aspects of the algorithm and time measures separately as described above to achieve more objective scoring. In addition, skills performance levels were rated globally: competent, borderline, not competent. Only the performances that were rated “competent” concordantly by both raters were counted and used in the analyses.

Self-Assessments

We developed a self-assessment instrument consisting of seven items on procedural knowledge and seven items on procedural skills (see Multimedia Appendix 5). Two senior pediatricians with expertise in both PBLS and questionnaire design had reviewed these items. Answers were given on 100 mm visual analog scales from 0 (very little confidence) to 100 (highly confident).

Preparation Material and Pediatric Basic Life Support Training

For individual preparation of the training, we developed and distributed to both groups a paper handout on PBLS. Such handouts are commonly used as preparation for undergraduate skills laboratories [28]. The handout contained all relevant information, explaining the procedural steps of PBLS, including the algorithm, temporal demands, and a flowchart. Additionally, the intervention group was given Web-based access to two VPs dealing with PBLS in infants and toddlers. The VPs were designed with CAMPUS-Software [29] according to published design criteria [11] and enriched by video clips and interactive graphics (see Figure 2). For more detailed characterization of the VP cases used for this study, see Lehmann et al (VP3 and VP4) [17]. Both VPs had to be worked up twice, which was checked electronically but without the ability to identify any participant. The required overall workup time was estimated at 30 to 60 min based on previously measured log data.

Figure 2.

Screenshot of CAMPUS-Software showing a virtual patient.

Participants were trained in a single-rescuer scenario: from finding an unresponsive child, to the emergency call after 1 min (about four cycles) of CPR according to current guidelines [21]. The hands-on training was divided into two sessions—infant and toddler phases—of 30 min each. The sessions were structured with a commonly used four-step approach [1,28,30-32]. Steps three and four—tutor guided by learner and demonstration by the learner, respectively—were performed once per session by each participant so there was a standardized and comparable amount of individual training time. Two senior tutors provided close feedback on the participants’ performance as suggested by Issenberg et al for effective learning during simulations [33]. We used manikins by Laerdal Medical GmbH, Puchheim, Germany ("Baby Anne") and Simulaids Inc, Saugerties, New York ("Kyle").

Participants and Data Collection

The Ethics Committee of the Medical Faculty Heidelberg granted ethical approval for this study (EK No. S-282/2014). All collected data were pseudonymized. We affirmed with participants by written informed consent that their participation was voluntary, that they could not be identified from the collected data, and that no plausible harm could arise from participation in the study.

We offered participation in this study to a total of about 480 third- and fourth-year medical students at Heidelberg Medical School by group emails and bulletin boards. Invited students had already completed basic life support (BLS) training but had had no PBLS training yet. Announcements were worded as invitations to a special PBLS course and educational study without mentioning e-learning in particular. At an orientation meeting, prospective students enrolled themselves onto a numbered list, unaware of group allocation, which was randomly distributed by numbers.

Rater Selection and Training

We selected and trained two raters to score videotaped performances with the help of best-practice videos of senior faculty. Rater training included reviewing the case content and objectives, and an introduction to the rating schemes. Videotaped examples with different levels of procedural quality were discussed for calibration of the intended use of the schemes. We chose a senior pediatric consultant and a pediatric intensive care nurse practitioner, each of whom was an experienced facilitator for pediatric emergency simulations. Raters were blinded to group classification of all video records.

Data Analysis

Results are presented as the mean ± standard deviation per group and given as the percent of the maximum achievable scores. Data were checked for normal distribution using the Kolmogorov-Smirnov test. If a normal distribution could be assumed, statistical differences were evaluated using the unpaired t test for between-group comparisons and the paired t test for within-group comparisons. Otherwise, we used the Mann-Whitney U test. We assumed that a group difference of 1 SD or more was a relevant effect size. For a group of 30 subjects, we estimated a 95% power to detect this effect, assuming a two-sided significance level of .05. The interrater reliability was estimated using the case 2 intraclass correlation coefficient (ICC2) measured on 100% of the sample size [34]. Global competence-level ratings were compared using Fisher’s exact test. As a higher level of qualification in PBLS appeared to be a possible confounder, we confirmed all statistics with exclusion of participants with PBLS qualifications who were identified from the basic data. We used SPSS Statistics version 21 (IBM Corporation, Armonk, NY, USA) for all statistical analyses and an alpha level of .05.
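For readers unfamiliar with the case 2 intraclass correlation coefficient, the following sketch shows how it can be computed from a table of ratings. It assumes the single-measure, two-way random effects form commonly written ICC(2,1); the text does not state whether single or average measures were used, and the analyses in this study were run in SPSS, not with this code. The function name and the demonstration scores are hypothetical.

```python
# Illustrative sketch of ICC(2,1): two-way random effects, absolute agreement,
# single rater, computed from the mean squares of a performances-by-raters table.
def icc2_1(ratings):
    """ratings: list of rows, one row per rated performance, one column per rater."""
    n = len(ratings)       # number of rated performances
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for performances (rows), raters (columns), and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical quality scores (0-22 scale) from two raters for five performances.
demo = [[18, 17], [12, 14], [20, 20], [9, 11], [15, 14]]
print(round(icc2_1(demo), 2))
```

Unlike a simple correlation between the two raters, this coefficient also penalizes a systematic offset between raters, because the rater mean square enters the denominator.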

Results

Overview

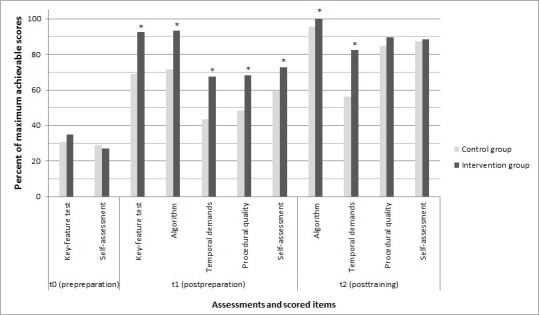

Scoring results are depicted in Table 1 and Figure 3.

Table 1.

Key-feature test, performance, and self-assessment scores.

| Scored items | Control group (CG), mean (SD) or n (%) | Intervention group (IG), mean (SD) or n (%) | P (CG vs IG) |
| --- | --- | --- | --- |
| Procedural knowledge: Key-feature test (%), mean (SD) | | | |
| t0ᵃ | 31.0 (12.9) | 34.8 (17.1) | .34 |
| t1ᵇ | 68.8 (16.3) | 92.2 (4.7) | <.001ᶜ |
| P (t0 vs t1) | <.001 | <.001 | |
| Performance: Adherence to algorithm (%), mean (SD) | | | |
| t1 | 72.0 (17.7) | 93.4 (7.1) | <.001 |
| t2ᵈ | 95.7 (7.2) | 99.8 (1.1) | .008 |
| P (t1 vs t2) | <.001 | <.001 | |
| Performance: Temporal demands (%), mean (SD) | | | |
| t1 | 43.3 (23.4) | 67.6 (21.4) | <.001 |
| t2 | 55.8 (27.8) | 82.4 (17.8) | <.001 |
| P (t1 vs t2) | .03 | .004 | |
| Total time of PBLSᵉ sequence in seconds, mean (SD) | | | |
| t1 | 107.7 (35.2) | 88.1 (12.6) | .008 |
| t2 | 95.2 (16.2) | 78.1 (10.2) | <.001 |
| P (t1 vs t2) | .05 | <.001 | |
| Performance: Procedural quality (%), mean (SD) | | | |
| t1 | 48.8 (20.2) | 68.2 (15.0) | <.001 |
| t2 | 84.4 (11.2) | 89.4 (9.2) | .07 |
| P (t1 vs t2) | <.001 | <.001 | |
| Global ratings (rated "competent"), n (%) | | | |
| t1 | 0/30 (0) | 5/27 (19) | .02 |
| t2 | 17/30 (57) | 23/27 (85) | .02 |
| Self-assessment (%), mean (SD) | | | |
| t0 | 29.1 (16.3) | 27.2 (18.8) | .68 |
| t1 | 59.6 (15.8) | 72.3 (11.7) | .001 |
| t2 | 87.4 (8.6) | 88.4 (7.8) | .63 |

ᵃ Prepreparation assessment (t0).

ᵇ Postpreparation assessment (t1).

ᶜ Italicized P values represent significant results.

ᵈ Posttraining assessment (t2).

ᵉ Pediatric basic life support (PBLS).

Figure 3.

Key-feature test, performance, and self-assessment scores. Scores are given as the percent of the maximum achievable scores (*P<.05).

Basic Data

A total of 57 participants who completed the training and all surveys were included in this study: 30 (53%) in the control group (CG) and 27 (47%) in the intervention group (IG), corresponding to approximately 11.9% (57/480) of all eligible students. Out of 60 initial participants, 3 (5%) were excluded due to nonappearance at the training session; all participants of the intervention group completed the VPs as requested. Participants’ mean age was 24.2 years (SD 2.6) in the control group (16/30, 53% female) and 24.1 years (SD 3.1) in the intervention group (17/27, 63% female). There were 5 out of 30 (17%) PBLS-qualified participants (paramedics) in the control group and 4 out of 27 (15%) in the intervention group.

Clinical Decision-Making Skills and Procedural Knowledge

There was no significant difference in the key-feature test results between the control group and intervention group at t0 (31.0%, SD 12.9 vs 34.8%, SD 17.1; P=.34). The intervention group showed a significantly superior increase in procedural knowledge at t1 compared with the control group (92.2%, SD 4.7 vs 68.8%, SD 16.3; P<.001). There were significant improvements in both groups between t0 and t1 (both P<.001).

Performance: Adherence to Algorithm

Regarding adherence to the algorithm, the intervention group was already better than the control group at t1 (93.4%, SD 7.1 vs 72.0%, SD 17.7; P<.001), and this difference persisted at t2 (99.8%, SD 1.1 vs 95.7%, SD 7.2; P=.008). Both groups improved significantly between t1 and t2 (both P<.001).

Performance: Temporal Demands

The intervention group already showed significantly better adherence to temporal specifications than the control group at t1 (67.6%, SD 21.4 vs 43.3%, SD 23.4; P<.001), which continued at t2 (82.4%, SD 17.8 vs 55.8%, SD 27.8; P<.001). Both groups showed significant improvements in temporal measures between t1 and t2 (P=.03 and P=.004, respectively). Table 1 also shows the measured mean times for the total sequence.

Performance: Procedural Quality

The interrater reliability coefficient was .71 indicating a sufficient level of interrater agreement [35].

The performance quality score of the intervention group was significantly superior to that of the control group at t1 (68.2%, SD 15.0 vs 48.8%, SD 20.2; P<.001). After practical training, at t2, they did not differ significantly (89.4%, SD 9.2 vs 84.4%, SD 11.2; P=.07). Both groups showed significantly increased quality scores between t1 and t2 (both P<.001).

The global ratings of competence showed significant differences in favor of the intervention group, again already at t1 and continuing at t2 (0/30 CG vs 5/27 IG rated “competent”, P=.02; 17/30 CG vs 23/27 IG, P=.02, respectively). In all, 85% (23/27) of the intervention group participants performed PBLS “competently” after having practiced with VPs and undergoing PBLS training, compared with only 57% (17/30) of the control group participants.
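The two comparisons of global ratings can be checked from the reported counts alone. The sketch below is an independent recomputation using only the Python standard library, not the SPSS procedure used in this study. It assumes the common two-sided definition of Fisher's exact test, which sums the probabilities of all tables that are no more likely than the observed one; the function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same margins
    that are no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= observed * (1 + 1e-9))


# Counts from Table 1: rows are CG and IG, columns are "competent" and "not competent".
p_t1 = fisher_exact_two_sided(0, 30, 5, 22)
p_t2 = fisher_exact_two_sided(17, 13, 23, 4)
print(round(p_t1, 2), round(p_t2, 2))  # 0.02 0.02
```

Under these assumptions, both values round to the P=.02 reported for t1 and t2.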

Self-Assessments

There was no significant difference in the self-assessment means of the two groups at t0 (29.1%, SD 16.3 CG vs 27.2%, SD 18.8 IG; P=.69). After different preparations, the intervention group showed a significant increase in its self-assessment compared with that in the control group at t1 (72.3%, SD 11.7 vs 59.6%, SD 15.8; P=.001). At t2, there was no significant difference between the groups (87.4%, SD 8.6 CG vs 88.4%, SD 7.8 IG; P=.62).

Subgroup Analyses

After identifying and excluding PBLS-qualified participants, none of the results changed in statistical significance, and no P value changed by an order of magnitude.

Discussion

Principal Findings

In this randomized controlled trial, we investigated the impact of an additional preparation with VPs on the improvement of objective and subjective learning outcomes of skill acquisition when combined with standard PBLS training. The control group and intervention group were comparable in terms of their self-assessment and procedural knowledge during the prepreparation assessment. However, after addition of practical training, the intervention group demonstrated significantly better performance in key aspects of PBLS than did the control group, although self-assessment ratings were similar. Also, after practicing with VPs, the intervention group had already demonstrated superior skills, even before the hands-on training in terms of objective skill performance, procedural knowledge, and self-assessment.

Objective Learning Outcomes

After using VPs as an interactive preparation, the intervention group showed significantly improved clinical decision-making skills and procedural knowledge. Also, their PBLS skill performance was superior to that of the control group after the preparation period in regard to objective performance measures, including adherence to the algorithm, temporal demands, and procedural quality. This is in line with existing reports that such electronic learning activities improve both knowledge and skills [18,19]. De Vries et al also showed that a comparable computer simulator improves procedural skills [18]. Although they reported that some of the skill outcomes were suboptimal, the training was not blended with hands-on training as presented here, which led to increased improvements compared with using the computer simulator alone. Furthermore, as reported by Ventre et al, such approaches might fill a gap in continuing medical education [20]. Procedural skill performance was rated as objectively as possible to discriminate procedural learning effects. In contrast, typically used checklists often subsume adherence to the algorithm, temporal aspects, and performance quality—for example, “CPR continued—2 points for initiated immediately after pulse check and rhythm identification (<30 s) and good CPR technique and checks pulse with CPR” (taken from The Clinical Performance Tool [26]). At t2, when both groups had had equal practical training, the procedural steps of PBLS were still performed qualitatively more competently by the intervention group in some aspects. Such differences will probably not be found when using global rating scales, but may be when using automated skill reporting devices as used by Kononowicz et al [19], or when using discriminating rating schemes as presented here when such devices are not available.

We assume that VPs facilitated application of acquired clinical decision-making skills and procedural knowledge. Interactivity and feedback in VPs, which included interactive graphics and video clips, might have enhanced the learning process beyond the use of media, as in other approaches. It is well known that educational feedback, such as that given in the VPs, is the most important feature of simulation-based education [33]. Interacting with clinical case scenarios might also provide an emotionally activating stimulus to get trainees involved as it supports the acquisition and retention of skills [36]. For complex procedures, current learning theories support a reasonable simple-to-complex learning process that facilitates learning [37,38]. VPs may bridge this gap between knowledge and practice.

The presented results support the subjective perceptions of students and tutors [17] that such a blended learning approach is effective and efficient for procedural learning. In this study, self-directed learning with paper handouts seems to have had little effect on facilitating the acquisition of practical skills, although it did have an effect on improving procedural knowledge. In contrast, the blended approach that included interactive VPs for preparation led to improved learning of both procedural knowledge and procedural skills. Implications for CPR and other emergency training might be a more efficient and effective use of resource-intensive training time.

Subjective Learning Outcomes

In their self-assessments, the participants of the intervention group judged themselves superior to those in the control group after the preparation period. Objective findings in their scored performances support these ratings. After their practical training, however, the self-assessments of the two groups were similar. In contrast, the intervention group still had superior objective scores regarding skill performance. Self-assessments are not necessarily correlated with performance; for example, postgraduate practitioners have limited ability to self-assess accurately, as shown by Davis et al [39].

Study Strengths

The assessments of clinical decision-making skills and procedural knowledge, practical performance, and self-assessment combine relevant and detailed objective and subjective measures for elucidating the learning effects of this approach. This is one of the first studies that provides objective data that support how effectively VPs can foster the acquisition of PBLS skills.

Study Limitations

Participants’ VP case completions were monitored to validate their workup, but the validation was not done in a controlled environment that allowed evaluation of participants’ efforts. Likewise, the effort participants put into working through the handouts was not assessed. Workup of VPs might have led to more motivation for learning, even though the study was not announced as an e-learning study, which might have attracted mainly tech-savvy students. Because both groups had significantly increased procedural knowledge after the preparation period, we assumed that motivation for preparation might not have been very different. Result details, for example, of the temporal scoring, suggest that VPs address skills not affected by paper-based learning materials. However, group differences might also have been influenced by different durations of learning effort in addition to different modalities. Additionally, neither group was restricted from using learning resources other than those provided. Also, most of the instruments used in this study have not been validated formally, although all were developed based on current literature and were pilot-tested. Finally, the sample size is rather limited, thereby providing limited power to investigate differences between groups.

Conclusions

The blended learning approach described herein leads to improved outcomes of practical skill acquisition compared with a standard approach. Even before having practical training, preparation with VPs leads to improved practical performances as well as better clinical decision-making skills and procedural knowledge. Further studies are necessary to understand the specific benefit of using VPs regarding clinical skill acquisition and its sustainability.

Acknowledgments

This study was funded by general departmental funds without involving third party funds.

Abbreviations

- BLS: basic life support

- CG: control group

- CPR: cardiopulmonary resuscitation

- ICC2: case 2 intraclass correlation coefficient

- IG: intervention group

- PBLS: pediatric basic life support

- t0: prepreparation assessment

- t1: postpreparation assessment

- t2: posttraining assessment

- VP: virtual patient

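Multimedia Appendix 1: Key-feature test.

Multimedia Appendix 2: Adherence to algorithm scoring form.

Multimedia Appendix 3: Temporal measures scoring form.

Multimedia Appendix 4: Performance quality scoring form.

Multimedia Appendix 5: Self-assessment instrument.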

Footnotes

Conflicts of Interest: None declared.

References

- 1. Bullock I. Skill acquisition in resuscitation. Resuscitation. 2000 Jul;45(2):139–143. doi: 10.1016/s0300-9572(00)00171-4.

- 2. Adler MD, Vozenilek JA, Trainor JL, Eppich WJ, Wang EE, Beaumont JL, Aitchison PR, Erickson T, Edison M, McGaghie WC. Development and evaluation of a simulation-based pediatric emergency medicine curriculum. Acad Med. 2009 Jul;84(7):935–941. doi: 10.1097/ACM.0b013e3181a813ca.

- 3. Cheng A, Goldman RD, Aish MA, Kissoon N. A simulation-based acute care curriculum for pediatric emergency medicine fellowship training programs. Pediatr Emerg Care. 2010 Jul;26(7):475–480. doi: 10.1097/PEC.0b013e3181e5841b.

- 4. Cheng A, Hunt EA, Donoghue A, Nelson K, Leflore J, Anderson J, Eppich W, Simon R, Rudolph J, Nadkarni V, EXPRESS Pediatric Simulation Research Investigators. EXPRESS--Examining Pediatric Resuscitation Education Using Simulation and Scripting. The birth of an international pediatric simulation research collaborative--from concept to reality. Simul Healthc. 2011 Feb;6(1):34–41. doi: 10.1097/SIH.0b013e3181f6a887.

- 5. Lynagh M, Burton R, Sanson-Fisher R. A systematic review of medical skills laboratory training: where to from here? Med Educ. 2007 Sep;41(9):879–887. doi: 10.1111/j.1365-2923.2007.02821.x.

- 6. Carrero E, Gomar C, Penzo W, Fábregas N, Valero R, Sánchez-Etayo G. Teaching basic life support algorithms by either multimedia presentations or case based discussion equally improves the level of cognitive skills of undergraduate medical students. Med Teach. 2009 May;31(5):e189–e195. doi: 10.1080/01421590802512896.

- 7. Braslow A, Brennan RT, Newman MM, Bircher NG, Batcheller AM, Kaye W. CPR training without an instructor: development and evaluation of a video self-instructional system for effective performance of cardiopulmonary resuscitation. Resuscitation. 1997 Jun;34(3):207–220. doi: 10.1016/s0300-9572(97)01096-4.

- 8. Sopka S, Biermann H, Rossaint R, Knott S, Skorning M, Brokmann JC, Heussen N, Beckers SK. Evaluation of a newly developed media-supported 4-step approach for basic life support training. Scand J Trauma Resusc Emerg Med. 2012;20:37. doi: 10.1186/1757-7241-20-37. http://www.sjtrem.com/content/20//37

- 9. Todd KH, Braslow A, Brennan RT, Lowery DW, Cox RJ, Lipscomb LE, Kellermann AL. Randomized, controlled trial of video self-instruction versus traditional CPR training. Ann Emerg Med. 1998 Mar;31(3):364–369. doi: 10.1016/s0196-0644(98)70348-8.

- 10. Cook DA, Triola MM. Virtual patients: a critical literature review and proposed next steps. Med Educ. 2009 Apr;43(4):303–311. doi: 10.1111/j.1365-2923.2008.03286.x.

- 11. Huwendiek S, Reichert F, Bosse H, de Leng BA, van der Vleuten CP, Haag M, Hoffmann GF, Tönshoff B. Design principles for virtual patients: a focus group study among students. Med Educ. 2009 Jun;43(6):580–588. doi: 10.1111/j.1365-2923.2009.03369.x.

- 12. Iserbyt P, Charlier N, Mols L. Learning basic life support (BLS) with tablet PCs in reciprocal learning at school: are videos superior to pictures? A randomized controlled trial. Resuscitation. 2014 Jun;85(6):809–813. doi: 10.1016/j.resuscitation.2014.01.018.

- 13. Burnette K, Ramundo M, Stevenson M, Beeson MS. Evaluation of a web-based asynchronous pediatric emergency medicine learning tool for residents and medical students. Acad Emerg Med. 2009 Dec;16 Suppl 2:S46–S50. doi: 10.1111/j.1553-2712.2009.00598.x.

- 14. Roe D, Carley S, Sherratt C. Potential and limitations of e-learning in emergency medicine. Emerg Med J. 2010 Feb;27(2):100–104. doi: 10.1136/emj.2008.064915.

- 15. Shah IM, Walters MR, McKillop JH. Acute medicine teaching in an undergraduate medical curriculum: a blended learning approach. Emerg Med J. 2008 Jun;25(6):354–357. doi: 10.1136/emj.2007.053082.

- 16. Spedding R, Jenner R, Potier K, Mackway-Jones K, Carley S. Blended learning in paediatric emergency medicine: preliminary analysis of a virtual learning environment. Eur J Emerg Med. 2013 Apr;20(2):98–102. doi: 10.1097/MEJ.0b013e3283514cdf.

- 17. Lehmann R, Bosse HM, Simon A, Nikendei C, Huwendiek S. An innovative blended learning approach using virtual patients as preparation for skills laboratory training: perceptions of students and tutors. BMC Med Educ. 2013;13:23. doi: 10.1186/1472-6920-13-23. http://www.biomedcentral.com/1472-6920/13/23

- 18. de Vries W, Handley AJ. A web-based micro-simulation program for self-learning BLS skills and the use of an AED. Can laypeople train themselves without a manikin? Resuscitation. 2007 Dec;75(3):491–498. doi: 10.1016/j.resuscitation.2007.05.014.

- 19. Kononowicz AA, Krawczyk P, Cebula G, Dembkowska M, Drab E, Frączek B, Stachoń AJ, Andres J. Effects of introducing a voluntary virtual patient module to a basic life support with an automated external defibrillator course: a randomised trial. BMC Med Educ. 2012;12:41. doi: 10.1186/1472-6920-12-41. http://www.biomedcentral.com/1472-6920/12/41

- 20. Ventre KM, Collingridge DS, DeCarlo D. End-user evaluations of a personal computer-based pediatric advanced life support simulator. Simul Healthc. 2011 Jun;6(3):134–142. doi: 10.1097/SIH.0b013e318207241e.

- 21.Biarent D, Bingham R, Eich C, López-Herce J, Maconochie I, Rodríguez-Núñez A, Rajka T, Zideman D. European Resuscitation Council Guidelines for Resuscitation 2010 Section 6. Paediatric life support. Resuscitation. 2010 Oct;81(10):1364–1388. doi: 10.1016/j.resuscitation.2010.08.012.S0300-9572(10)00438-7 [DOI] [PubMed] [Google Scholar]

- 22.Kopp V, Möltner A, Fischer M. Key feature problems for the assessment of procedural knowledge: a practical guide. GMS Z Med Ausbild. 2006;23(3):Doc50. http://www.egms.de/de/journals/zma/2006-23/zma000269.shtml . [Google Scholar]

- 23.Page G, Bordage G, Allen T. Developing key-feature problems and examinations to assess clinical decision-making skills. Acad Med. 1995 Mar;70(3):194–201. doi: 10.1097/00001888-199503000-00009. [DOI] [PubMed] [Google Scholar]

- 24.Adler MD, Vozenilek JA, Trainor JL, Eppich WJ, Wang EE, Beaumont JL, Aitchison PR, Pribaz PJ, Erickson T, Edison M, McGaghie WC. Comparison of checklist and anchored global rating instruments for performance rating of simulated pediatric emergencies. Simul Healthc. 2011 Feb;6(1):18–24. doi: 10.1097/SIH.0b013e318201aa90.01266021-201102000-00006 [DOI] [PubMed] [Google Scholar]

- 25.Donoghue A, Nishisaki A, Sutton R, Hales R, Boulet J. Reliability and validity of a scoring instrument for clinical performance during Pediatric Advanced Life Support simulation scenarios. Resuscitation. 2010 Mar;81(3):331–336. doi: 10.1016/j.resuscitation.2009.11.011.S0300-9572(09)00592-9 [DOI] [PubMed] [Google Scholar]

- 26.Donoghue A, Ventre K, Boulet J, Brett-Fleegler M, Nishisaki A, Overly F, Cheng A, EXPRESS Pediatric Simulation Research Investigators Design, implementation, and psychometric analysis of a scoring instrument for simulated pediatric resuscitation: a report from the EXPRESS pediatric investigators. Simul Healthc. 2011 Apr;6(2):71–77. doi: 10.1097/SIH.0b013e31820c44da. [DOI] [PubMed] [Google Scholar]

- 27.Lockyer J, Singhal N, Fidler H, Weiner G, Aziz K, Curran V. The development and testing of a performance checklist to assess neonatal resuscitation megacode skill. Pediatrics. 2006 Dec;118(6):e1739–e1744. doi: 10.1542/peds.2006-0537.peds.2006-0537 [DOI] [PubMed] [Google Scholar]

- 28.Krautter M, Weyrich P, Schultz J, Buss SJ, Maatouk I, Jünger J, Nikendei C. Effects of Peyton's four-step approach on objective performance measures in technical skills training: a controlled trial. Teach Learn Med. 2011;23(3):244–250. doi: 10.1080/10401334.2011.586917. [DOI] [PubMed] [Google Scholar]

- 29.Centre for Virtual Patients. [2015-06-25]. CAMPUS-Software http://www.medizinische-fakultaet-hd.uni-heidelberg.de/CAMPUS-Software.109992.0.html?&FS=0&L=en .

- 30.Cummins RO. Advanced Cardiovascular Life Support, Instructor's Manual (American Heart Association) Dallas, TX: American Heart Association; 2001. [Google Scholar]

- 31.Nolan J, Baskett P, Gabbott D, Gwinnutt C, Latorre F, Lockey A. In: Advanced Life Support Course: Provider Manual. 4th edition. Nolan J, editor. London, UK: Resuscitation Council; 2001. [Google Scholar]

- 32.Peyton JWR, editor. Teaching & Learning in Medical Practice. Rickmansworth, UK: Manticore Publishers; 1998. [Google Scholar]

- 33.Issenberg SB, McGaghie WC, Petrusa ER, Lee GD, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005 Jan;27(1):10–28. doi: 10.1080/01421590500046924.R3P0QDKQ9XBG5AJ9 [DOI] [PubMed] [Google Scholar]

- 34.Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing rater reliability. Psychol Bull. 1979 Mar;86(2):420–428. doi: 10.1037//0033-2909.86.2.420. [DOI] [PubMed] [Google Scholar]

- 35.Fleiss JL. The Design and Analysis of Clinical Experiments. New York, NY: Wiley-Interscience; 1986. [Google Scholar]

- 36.Beckers SK, Biermann H, Sopka S, Skorning M, Brokmann JC, Heussen N, Rossaint R, Younker J. Influence of pre-course assessment using an emotionally activating stimulus with feedback: a pilot study in teaching Basic Life Support. Resuscitation. 2012 Feb;83(2):219–226. doi: 10.1016/j.resuscitation.2011.08.024.S0300-9572(11)00526-0 [DOI] [PubMed] [Google Scholar]

- 37.Sweller J, van Merrienboer JJG, Paas FGWC. Cognitive architecture and instructional design. Educ Psychol Rev. 1998 Sep;10(3):251–296. doi: 10.1023/A:1022193728205. Print ISSN 1040-726X. [DOI] [Google Scholar]

- 38.van Merriënboer JJ, Sweller J. Cognitive load theory in health professional education: design principles and strategies. Med Educ. 2010 Jan;44(1):85–93. doi: 10.1111/j.1365-2923.2009.03498.x.MED3498 [DOI] [PubMed] [Google Scholar]

- 39.Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006 Sep 6;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.296/9/1094 [DOI] [PubMed] [Google Scholar]

Associated Data

Supplementary Materials

Key-feature test.

Adherence to algorithm scoring form.

Temporal measures scoring form.

Performance quality scoring form.

Self-assessment instrument.