Abstract

We present a new approach to facilitate the application of the optimal transport metric to pattern recognition on image databases. The method is based on a linearized version of the optimal transport metric, which provides a linear embedding for the images. Hence, it enables shape and appearance modeling using linear geometric analysis techniques in the embedded space. In contrast to previous work, we use Monge's formulation of the optimal transport problem, which allows for reasonably fast computation of the linearized optimal transport embedding for large images. We demonstrate the application of the method to recover and visualize meaningful variations in a supervised-learning setting on several image datasets, including chromatin distribution in the nuclei of cells, galaxy morphologies, facial expressions, and bird species identification. We show that the new approach allows for high-resolution construction of modes of variations and discrimination and can enhance classification accuracy in a variety of image discrimination problems.

Keywords: Optimal transport, Linear embedding, Generative image modeling, Pattern visualization

1. Introduction

Automated pattern learning from image databases is important for numerous applications in science and technology. In the health sciences arena, biomedical scientists wish to understand patterns of variation in white and gray matter distributions in the brains of normal versus diseased volunteers from magnetic resonance images (MRI) [26,27]. Pathologists wish to understand patterns of morphological variations between benign versus malignant cancer cell populations through a variety of microscopy techniques [28,30]. Other applications include morphometrics [31], the analysis of facial images [32,17], and the analysis of mass distributions in telescopic images of galaxies [14]. The goal is usually to discover and understand discriminant patterns among different classes. More specifically, the objective in many morphometry-related applications is to determine whether statistically significant discriminating information between two or more classes (e.g. benign vs. malignant cancer cells) exists. If so, it is also often desirable to determine through visualization the nature (e.g. biological interpretation) of the found differences.

Numerous image analysis methods have been described to facilitate discrimination and understanding from image databases. A critical step in this process is the choice of an image similarity (or distance) criterion, based on which image clustering, classification and related analysis can be performed. While the Euclidean distance between pixel intensities (after images are appropriately normalized for rotation and other uninteresting variations) remains a viable alternative in many cases [45], typically employed distances involve the extraction of numerical descriptors or features for the images at hand. In many applications [14,33], feature-based distances lead to high performance and accurate image classification. Feature-based methods commonly incorporate shape-related features [34], texture-related features [44], such as Haralick and Gabor features, derived based on the adaptations of Fourier and Wavelet transforms [46,35], and others [47]. Most feature-based approaches, however, are not ‘generative’ and thus any statistical modeling performed in feature space is not easily visualized. This can be readily understood by noting that the transformation (from the ambient image space to feature space) is not invertible: one can extract features from images, though it is not generally possible to obtain an image from an arbitrary point in feature space.

In analyzing shape distributions obtained from image data, numerous approaches based on differential geometry have been employed. Grenander et al. [37], for example, have described methods for comparing the shape of two brains (or brain structures) by minimizing the amount of incremental ‘effort’ required to deform one structure onto another. Likewise, Joshi et al. [38], and Klassen et al. [39] have described similar approaches for determining the distance between two shapes as encoded by medial axis models and contours, respectively. Such approaches are not only capable of measuring differences between shapes without loss of information, but are also generative and thus allow for interpolating between shape exemplars, thus giving researchers access to geometrical properties of shape distributions from image databases. It is worthwhile noting that the approaches described in these references, however, do not allow for one to encode information regarding intensity variations, or texture. Moreover, such approaches can be computationally expensive to employ in pattern recognition tasks on large datasets.

Active appearance models [7,8] and Morphable Models [48] are another class of approaches that can be used for modeling shape and intensity variations in a set of images. Such methods are parametric, nonlinear, and generative. However, these methods require the definition of characteristic landmarks, as well as the correspondence between the landmarks of all images in the set.

Finally, we mention that several graph-based techniques have also been developed for interpreting variations in image databases. The idea in such methods is to obtain an understanding of the geometric structure of such variations by applying manifold and graph connectivity learning algorithms [40,41]. These methods find a linear embedding for the images, which preserves the local structure of the data in the image space. Such approaches have often been employed in studying variations in image databases [40]. We note that, generally speaking, such methods are not generative. As with the numerical feature approach above, it is generally not possible to reconstruct an image corresponding to any arbitrary point in feature space (other than images present in the same dataset).

1.1. Previous work on linear optimal transport (LOT)

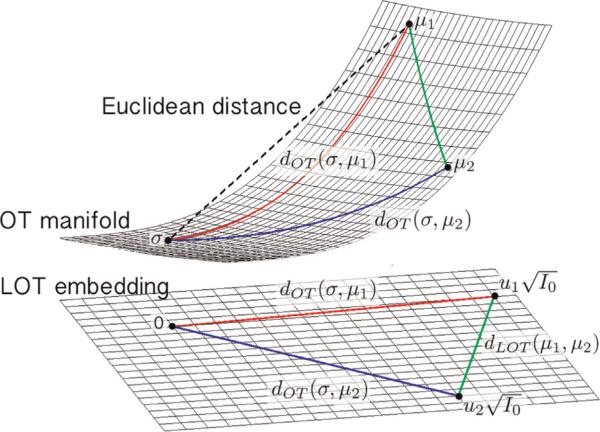

In [21,22] we have recently introduced a new approach based on the mathematics of optimal transport (OT) for performing discrimination and visualizing meaningful variations in image databases. Interpreting images as nonnegative measures one can employ the Kantorovich–Wasserstein distance to measure the similarity between pairs of images. The metric space is formally a Riemannian manifold [42] where at each point one can define a tangent space endowed with an inner product. The OT distance is then the length of the shortest curve (geodesic) connecting two images (see Fig. 1). The linear optimal transport (LOT) idea presented in [21] involves computing a modified version of the OT metric between images by first computing the necessary optimal transport plans between each image and a reference point, and then quantifying the similarity between the two images as a functional of computed transport plans. The idea is depicted in Fig. 1.

Fig. 1.

The geometry of the OT manifold and the LOT embedding.

In short, the LOT framework initially proposed in [21], and refined in [22] for the analysis of cell images can be viewed as a new nonlinear image transformation method. The ‘analysis’ portion of the transform consists of computing a linear embedding for given images by finding the transport plans from images to a reference image. In this manner, each point in the embedding corresponds to a transport plan that maps an image to the reference image. The ‘synthesis’ operation relates to computing the image corresponding to a point in the embedding.

One of the major benefits of this signal transformation framework is that it facilitates the application of the OT metric to pattern recognition on image databases. If a given pattern recognition task (e.g. clustering or classification) requires all pairwise distances for a given database of N images to be computed, one can compute the LOT distance between them with only N optimal transport problems, as opposed to the usual N(N − 1)/2 computations that would be required in the standard approach [20]. Beyond just an improvement in computation time, the LOT image transformation framework also allows for the unification of the discrimination and visualization tasks. Discrimination using Euclidean distances in the LOT space is akin to a modified (linearized) version of the transport metric, described as a generalized geodesic by Ambrosio et al. [43], and has been shown to be very sensitive in capturing the necessary information in a variety of discrimination tasks [21,22]. In addition, given that the new transformation framework is invertible, the framework also allows for the direct visualization of any statistical modeling (e.g. principal component analysis and linear discriminant analysis) in the embedding. This enables direct visualization of important variations in a given database.
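The saving in the number of OT problems can be illustrated with a minimal sketch. It assumes the N embeddings have already been computed and flattened into the rows of an array; the function names `pairwise_lot_distances` and `ot_problem_counts` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def pairwise_lot_distances(embeddings):
    """Euclidean distances between all rows of an (N, d) embedding matrix."""
    sq_norms = np.sum(embeddings ** 2, axis=1)
    # |a - b|^2 = |a|^2 + |b|^2 - 2 a.b, evaluated for all pairs at once
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * embeddings @ embeddings.T
    return np.sqrt(np.maximum(d2, 0.0))  # clip tiny negatives caused by round-off

def ot_problem_counts(n_images):
    """Number of OT problems: (LOT with one reference, standard pairwise approach)."""
    return n_images, n_images * (n_images - 1) // 2
```

For N = 100 images, the pairwise approach needs 4950 OT solves while the reference-based embedding needs 100; every subsequent distance is a plain Euclidean computation.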

1.2. Contribution highlights

The work described in [21,22] utilizes Kantorovich's formulation of the OT problem in a discrete setting (particle-based). That is, images are viewed as mass distributions and modeled as sums of discrete delta ‘functions’ placed throughout the image domain. The underlying OT problem then simplifies to a linear programming problem and is solved using existing approaches. The method in [21,22] is, however, computationally expensive as the computational complexity of linear programming solvers is generally of polynomial order (w.r.t. number of particles) and in addition it requires an initial particle approximation step.

Here we improve the work in [21,22] by utilizing Monge's formulation of the underlying OT problem. In particular, we highlight the following contributions:

- We describe a continuous version of the LOT framework that bypasses many of the difficulties associated with the discrete formulation. In this respect, we define a forward and inverse transform operation based on continuous transport maps, define an improved reference estimation algorithm, and describe the range within which points in LOT space are invertible according to the continuous formulation.
- We show experimentally that the new formulation significantly speeds up the computation of the LOT embedding for a set of images.
- We demonstrate that, in contrast to the previous method, the new method allows for reliable information extraction from high-resolution, non-sparse images.
- We demonstrate that the method performs well in comparison to other methods in several discrimination tasks, while at the same time allowing for meaningful generative modeling and visualization.

We start by reviewing the LOT framework, and then describe the nonlinear minimization technique we use, in Sections 2 and 3. Section 4 describes our approach for computing the LOT embedding using the continuous OT maps between each image and a template in a given dataset. In Section 5 we describe how to combine the LOT embedded images together with PCA and penalized LDA to visualize meaningful information in different datasets. We describe the datasets used in this paper and demonstrate the output of our LOT framework by showing modes of variations, discrimination modes, and classification results for all the datasets, in Section 6. Finally, the paper is summarized and our contributions are highlighted in Section 7.

2. Linearized optimal transport

Optimal transport methods have long been used to mine information in digital image data (see for example [36]). The idea is to minimize the total amount of mass times the distance that it must be transported to match one exemplar to another. Let μ1 and μ2 be two nonnegative probability measures defined over a 2D domain Ω such that μ1(Ω) = μ2(Ω) = 1. Let Π(μ1, μ2) be the set of all couplings between the two measures. That is, let A and B be measurable subsets of Ω, and let π ∈ Π(μ1, μ2). Then π(A × B) describes how ‘mass’ originally in set A in measure μ1 is transported to set B in measure μ2 so that all mass in μ1 can be transported to the same configuration as the mass in μ2. The optimal transport plan, with quadratic cost, between μ1 and μ2 is given as the minimizer of

$$\min_{\pi \in \Pi(\mu_1,\mu_2)} \int_{\Omega\times\Omega} |x - y|^2 \, d\pi(x,y) \tag{1}$$

where x and y are coordinates in domains of μ1 and μ2, respectively, and | · | denotes the Euclidean norm. In Wang et al. [21] a linearized version of the OT metric is described, which is denoted as the linear optimal transportation (LOT), based on the idea of utilizing a reference measure σ. Let πμi denote a transport plan between σ and μi. In addition, let Π(σ,μ1,μ2) be the set of measures such that if π ∈ Π(σ,μ1,μ2), π(A × B × Ω) = πμ1 (A × B), and π(A × Ω × C) = πμ2 (A × C). Then, in the most general setting, the LOT can be defined as

$$d_{LOT,\sigma}^2(\mu_1,\mu_2) = \inf_{\pi \in \Pi(\sigma,\mu_1,\mu_2)} \int_{\Omega\times\Omega\times\Omega} |x - y|^2 \, d\pi(z,x,y) \tag{2}$$

In [21] a ‘particle’-based approach is utilized for computing (2). The idea is to first approximate the measures μ1, μ2 and σ using a weighted linear combination of masses and compute (2) by solving the associated linear program [21]. Because linear programs are expensive to solve (they generally scale as O(n^3), where n is the number of particles used to approximate the image), Wang et al. proposed a particle approximation algorithm to reduce the number of pixels in each image prior to computation.

Instead, here we describe an extension of the LOT computation to the set of smooth densities, together with a few additional modifications required by the continuous formulation, that can significantly reduce the computational complexity while at the same time allowing transport maps to be computed at full image resolution. Let μ1, μ2, and σ be smooth measures on Ω with $d\sigma(x) = I_0(x)\,dx$, $d\mu_1(x) = I_1(x)\,dx$, and $d\mu_2(x) = I_2(x)\,dx$, where I0, I1 and I2 are the corresponding densities. Monge's formulation of the OT problem between σ and μ1, for example, is to find a spatial transformation (a map) $f_1:\Omega\to\Omega$ that minimizes

$$\int_\Omega |f_1(x) - x|^2\, I_0(x)\,dx \tag{3}$$

subject to the constraint that the map f1 pushes measure μ1 into measure σ. When the mapping is smooth, this requirement can be written in differential form as

$$\det(Df_1(x))\, I_1(f_1(x)) = I_0(x) \tag{4}$$

where $Df_1$ is the Jacobian matrix of $f_1$. Eq. (4) specifies the mass preserving property that the mapping must have. In addition, we note that $f_1$ is a 2D map: $f_1(x) = [f_1^x(x), f_1^y(x)]^T$, with $f_1^x$ and $f_1^y$ denoting the x and y components of the map, respectively. Similarly, we note that $f_1(x) = x + u_1(x)$ with $u_1 = [u_1^x, u_1^y]^T$, where $u_1^x$ and $u_1^y$ are displacement fields in the x and y directions, respectively. Now let us similarly define $f_2$ as the continuous OT mapping between σ and μ2.

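Using $f_1$ and $f_2$ in Eq. (2), the LOT distance between measures μ1 and μ2 with respect to the reference measure σ can be written as

$$d_{LOT,\sigma}^2(\mu_1,\mu_2) = \int_\Omega |f_1(x) - f_2(x)|^2\, I_0(x)\,dx = \int_\Omega |u_1(x) - u_2(x)|^2\, I_0(x)\,dx \tag{5}$$

where $u_i(x) = f_i(x) - x$. Thus the functions $u_1\sqrt{I_0}$ and $u_2\sqrt{I_0}$ are natural isometric linear embeddings (with respect to the LOT) for I1 and I2, respectively.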
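As an illustration of how the embedding turns the LOT distance into an ordinary Euclidean distance, the following minimal sketch assumes that the transport maps are already available as arrays sampled on the pixel grid of the reference, and that the embedding is the displacement field weighted by the square root of the reference density. The names `lot_embedding` and `lot_distance` are hypothetical.

```python
import numpy as np

def lot_embedding(fx, fy, I0):
    """Embed a map f = (fx, fy), sampled on the grid of the reference I0.

    Assumes the embedding is (f(x) - x) * sqrt(I0(x)); arrays are indexed [y, x].
    """
    ny, nx = I0.shape
    X, Y = np.meshgrid(np.arange(nx, dtype=float), np.arange(ny, dtype=float))
    weight = np.sqrt(I0)
    return np.stack([(fx - X) * weight, (fy - Y) * weight])

def lot_distance(e1, e2):
    """Euclidean (L2) distance between two embeddings on a unit-spaced grid."""
    return float(np.sqrt(np.sum((e1 - e2) ** 2)))
```

For a uniform reference with unit total mass, a map that shifts every pixel by (1, 0) and a map that shifts every pixel by (0, 2) are separated by a LOT distance of sqrt(5), as expected from the difference of the two displacements.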

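From the geometric point of view, the LOT transform can be viewed as the identification (projection), P, of the OT manifold on the tangent space at σ. Hence, given a measure μi with the optimal transport map fi (with respect to σ) we have

$$P(\mu_i) = u_i \tag{6}$$

where $u_i(x) = f_i(x) - x$. See Fig. 1 for a visualization. From (6) we have $P(\sigma) = 0$ and we can write

$$d_{LOT,\sigma}^2(\mu_i,\sigma) = \int_\Omega |u_i(x)|^2\, I_0(x)\,dx = \int_\Omega |f_i(x) - x|^2\, I_0(x)\,dx = d_{OT}^2(\mu_i,\sigma) \tag{7}$$

therefore, P preserves the OT distance between μi and σ.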

3. Optimal transport minimization

There exist several algorithms for finding an estimate of the solution to the OT problem. Haker et al. [11] utilized a variational approach for computing a transport map by minimizing the problem in (3), while Wang et al. [20,21], for example, made use of Kantorovich's formulation of the problem in a discrete setting (2) to achieve a solution based on linear programming. Benamou et al. [3] solved the OT problem by recasting it in a continuum mechanics framework. More recently, Chartrand et al. [5] introduced a gradient descent solution to the Monge–Kantorovich problem, which solves the dual problem. In another interesting approach toward solving the OT problem, Haber et al. [10] reformulated the problem as a penalized projection of an arbitrary mapping onto the set of mass preserving mappings. In this paper, we show that the LOT approach, introduced in [21], can be extended to use the continuous formulation in (3), thus allowing for the use of full resolution images, which was hitherto impossible with state-of-the-art methods. We employ the generic approach as described in [11]. Given densities I0 and I1, the method works by first finding an initial measure preserving ‘guess’, that is, a map $f_0$ satisfying $\det(Df_0(x))\, I_1(f_0(x)) = I_0(x)$, and then minimizing (3) based on a partial differential equation approach.

3.1. Finding an initial measure preserving (MP) map

We follow the approach described in [11], originally proposed in [13], for finding an initial measure preserving map. Briefly, the initial measure preserving map can be found by solving a series of 1D problems. In 1D, the OT solution can be found by simply computing the points along the line where the integrals of the two functions coincide. In 2D, the initial mapping can be found by solving a series of 1D problems as described below.

Let Ω = [0,1]^2 and I0 and I1 be the two density maps (images) one wishes to compare through the OT approach. In order to find an initial MP map, we first find the transportation map parallel to the x-axis, a(x):

$$\int_0^{a(x)}\!\int_0^1 I_1(\eta,y)\,dy\,d\eta = \int_0^{x}\!\int_0^1 I_0(\eta,y)\,dy\,d\eta \tag{8}$$

Then the transport map along the y-axis, b(x,y), is calculated by differentiating the equation above with respect to x and solving for b(x,y):

$$a'(x)\int_0^{b(x,y)} I_1(a(x),\rho)\,d\rho = \int_0^{y} I_0(x,\rho)\,d\rho \tag{9}$$

and hence $f_0(x,y) = [a(x),\, b(x,y)]^T$. In practice, a and b can be found with simple numerical integration techniques.
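The series of 1D problems can be sketched numerically as follows. This is a minimal illustration rather than the implementation used in the experiments: it assumes strictly positive images on a unit-spaced pixel grid, uses cumulative sums as the numerical integration rule, and interpolates linearly between columns. The name `initial_mp_map` is hypothetical.

```python
import numpy as np

def initial_mp_map(I0, I1):
    """Return (a, b): a[i] is the x-target of column i, b[j, i] the y-target of pixel (j, i).

    Assumes strictly positive images indexed [y, x]; coordinates are in pixel
    units with cell centers at i + 0.5.
    """
    ny, nx = I0.shape
    x_edges, y_edges = np.arange(nx + 1.0), np.arange(ny + 1.0)
    x_mid, y_mid = x_edges[:-1] + 0.5, y_edges[:-1] + 0.5

    def cdf(p):
        # Cumulative integral evaluated at the cell edges, normalized to end at 1.
        c = np.concatenate([[0.0], np.cumsum(p)])
        return c / c[-1]

    # Step 1: match the cumulative x-marginals, F1(a(x)) = F0(x).
    F0, F1 = cdf(I0.sum(axis=0)), cdf(I1.sum(axis=0))
    a = np.interp(np.interp(x_mid, x_edges, F0), F1, x_edges)

    # Step 2: for each column, match the normalized cumulative integrals along y.
    b = np.empty((ny, nx))
    for i in range(nx):
        # Column of I1 at the continuous position a[i], by linear interpolation.
        pos = np.clip(a[i] - 0.5, 0.0, nx - 1.0)
        lo = int(np.floor(pos))
        hi = min(lo + 1, nx - 1)
        t = pos - lo
        col1 = (1.0 - t) * I1[:, lo] + t * I1[:, hi]
        G0, G1 = cdf(I0[:, i]), cdf(col1)
        b[:, i] = np.interp(np.interp(y_mid, y_edges, G0), G1, y_edges)
    return a, b
```

When both inputs are the same image the sketch returns the identity map (pixel centers), and for two different positive images both components are monotonically increasing, which is what keeps the initial map invertible.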

3.2. Optimal transport minimization

We employ a fundamental theoretical result [4,9] which states that there is a unique optimal MP mapping f* that minimizes Eq. (3), and it can be written as the gradient of a convex function, $f^* = \nabla\psi$, and hence it is curl free. Once an initial mapping f0 is found, we follow a gradient descent process [11] to make f0 curl free. We use the closure under composition and closure under inversion properties of the MP mappings [11] and define $f = f_0 \circ s^{-1}$. Note that f is a MP mapping from I0 to I1, if and only if s is a MP mapping from I0 to itself, meaning that

$$\det(Ds(x))\, I_0(s(x)) = I_0(x) \tag{10}$$

Now we update $s(x,t)$, starting from $s(x,0) = x$, such that $f(x,t) = f_0(s^{-1}(x,t))$ converges to a curl free mapping from I0 to I1. For $s(\cdot,t)$ to be a MP mapping from I0 to itself, $\partial s/\partial t$ should have the following form:

$$\frac{\partial s}{\partial t} = \left(\frac{1}{I_0}\,\xi\right)\circ s \tag{11}$$

for some vector field ξ on Ω with div(ξ) = 0 and $\langle \xi, n\rangle = 0$ on $\partial\Omega$, where $n$ is normal to the boundary of Ω. From (11), it follows that for $f = f_0\circ s^{-1}$ to be a MP mapping from I0 to I1 we need

$$\frac{\partial f}{\partial t} = -\frac{1}{I_0}\, Df\, \xi \tag{12}$$

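Differentiating the OT objective function,

$$M = \int_\Omega |f(x,t) - x|^2\, I_0(x)\,dx \tag{13}$$

with respect to t and after rearranging we have

$$-\frac{1}{2}\frac{\partial M}{\partial t} = \int_\Omega \left\langle f(x,t) - x,\; I_0(x)\,\frac{\partial s}{\partial t}\big(s^{-1}(x,t),t\big)\right\rangle dx \tag{14}$$

Substituting (11) into the above equation we get

$$-\frac{1}{2}\frac{\partial M}{\partial t} = \int_\Omega \big\langle f(x,t) - x,\; \xi(x)\big\rangle\, dx \tag{15}$$

Writing the Helmholtz decomposition of f as $f = \nabla\phi + \chi$ and using the divergence theorem we get

$$-\frac{1}{2}\frac{\partial M}{\partial t} = \int_\Omega \big\langle \chi(x),\; \xi(x)\big\rangle\, dx \tag{16}$$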

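thus, ξ = χ decreases M the most. But to find χ we first need to find ϕ. Given that div(χ) = 0 and $\langle \chi, n\rangle = 0$ on $\partial\Omega$, we take the divergence of the Helmholtz decomposition of f and we find ϕ from

$$\Delta\phi = \mathrm{div}(f)\ \ \text{in } \Omega, \qquad \langle \nabla\phi, n\rangle = \langle f, n\rangle\ \ \text{on } \partial\Omega \tag{17}$$

Hence, $\xi = \chi = f - \nabla\big(\Delta^{-1}\mathrm{div}(f)\big)$, where $\Delta^{-1}\mathrm{div}(f)$ is the solution to (17). Substituting ξ back into (12) we have

$$\frac{\partial f}{\partial t} = -\frac{1}{I_0}\, Df\,\Big(f - \nabla\big(\Delta^{-1}\mathrm{div}(f)\big)\Big) \tag{18}$$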

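which for $f \in \mathbb{R}^2$ can be simplified even further:

$$\frac{\partial f}{\partial t} = \frac{1}{I_0}\, Df\, \nabla^{\perp}\Delta^{-1}\mathrm{div}(f^{\perp}) \tag{19}$$

where $\perp$ indicates rotation by 90° (the same rotation applied to the vector field and to the gradient). Finally, the MP mapping is updated using the gradient descent approach as follows:

$$f(x,t+1) = f(x,t) + \alpha\,\frac{\partial f}{\partial t}(x,t) \tag{20}$$

where α is the gradient descent step. In our implementation α is found based on a backtracking line search approach. The gradient descent method described above is shown to converge to the global optimum [1]. This method can be thought of as a gradient descent approach on the manifold of the MP mappings from I0 to I1.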
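The key numerical step in each iteration is removing the gradient part of f to obtain its divergence-free part χ, which requires one Poisson solve. The sketch below is an illustration under a simplifying assumption: it uses periodic boundary conditions and an FFT-based solver instead of the boundary condition in (17), which would call for a different transform. It is not the implementation used in the experiments, and the name `divergence_free_part` is hypothetical.

```python
import numpy as np

def divergence_free_part(fx, fy):
    """Return chi = f - grad(inv_laplacian(div f)) on a periodic, unit-spaced grid.

    Arrays are indexed [y, x]. Periodic boundaries are an assumption of this
    sketch; derivatives are taken spectrally with the FFT.
    """
    ny, nx = fx.shape
    ikx = 2j * np.pi * np.fft.fftfreq(nx)   # i * wavenumber along x (axis 1)
    iky = 2j * np.pi * np.fft.fftfreq(ny)   # i * wavenumber along y (axis 0)
    KX, KY = np.meshgrid(ikx, iky)          # both of shape (ny, nx)

    Fx, Fy = np.fft.fft2(fx), np.fft.fft2(fy)
    div_hat = KX * Fx + KY * Fy             # Fourier transform of div(f)
    lap = KX ** 2 + KY ** 2                 # symbol of the Laplacian, -(kx^2 + ky^2)
    lap[0, 0] = 1.0                         # avoid 0/0; the mean of phi is arbitrary
    phi_hat = div_hat / lap
    phi_hat[0, 0] = 0.0

    chi_x = fx - np.real(np.fft.ifft2(KX * phi_hat))
    chi_y = fy - np.real(np.fft.ifft2(KY * phi_hat))
    return chi_x, chi_y
```

A pure gradient field is mapped to zero and a divergence-free field is returned unchanged. Since the cost is dominated by a handful of FFTs, one such projection scales as O(n log n) in the number of pixels.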

4. Calculating LOT embedding from optimal transport maps

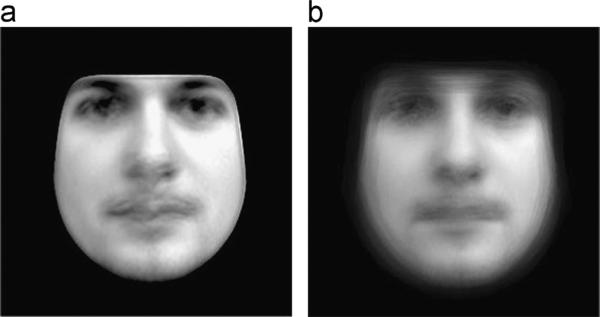

The linear embedding, as explained in Section 2, is calculated with respect to the template image I0. Given a set of images I1,...,IN, I0 is initially set to the average image via $I_0 = \frac{1}{N}\sum_{k=1}^{N} I_k$. The set of OT maps that transform each image Ik into I0 is computed via the OT minimization procedure described above. Having the mappings $f_k$ for k = 1, ..., N, the reference (template) image is updated iteratively using the mean optimal transport map. This iterative approach helps ‘sharpen’ the estimated template and makes it a more representative intrinsic ‘mean’ image for the given dataset. Fig. 2 shows the mean calculated using this approach as well as the average image for a set of 40 faces with neutral expressions. The increase in sharpness is clearly evident.

Fig. 2.

Calculated mean image (a) and the average image (b) for the 40 neutral faces.

The outcome of this procedure is used as the ‘reference’ template I0, and the corresponding mappings fk, k = 1, ..., N such that $\det(Df_k(x))\, I_k(f_k(x)) = I_0(x)$ are calculated for this I0. The LOT embedding for an image Ik, as described in Section 2, is given by $u_k\sqrt{I_0}$ with $u_k(x) = f_k(x) - x$. This linear embedding can now be used for a variety of purposes, including clustering and classification, as well as visualization of main modes of variation, as we show below.

We emphasize that in contrast to other ‘data driven’ methods [16,2] for computing a linear embedding of a nonlinear dataset, the LOT embedding is generative. That is, one is not only able to visualize any original image, but also able to visualize any point in the LOT space (subject to the constraint described in the next section) to obtain a synthetic image, which lies on the OT manifold in the image space. Let $v$ correspond to the displacement field of an arbitrary point in LOT space. Its corresponding image is computed by setting $f_v(x) = x + v(x)$, and then computing the corresponding image Iv by

$$I_v(x) = \det\!\big(Df_v^{-1}(x)\big)\, I_0\big(f_v^{-1}(x)\big) \tag{21}$$

where $f_v^{-1}$ refers to the inverse transformation of fv, such that $f_v^{-1}(f_v(x)) = x$. Eq. (21) shows that any arbitrary point in LOT space, with corresponding displacement field $v$, is visualizable if and only if Dfv is not singular, and hence $f_v^{-1}$ exists. Next, we devise an approach which gives us the visualizable range in the LOT space.

4.1. Visualizable range in the LOT space

We utilize an orthogonal expansion in order to describe a visualizable range for the LOT embedding computed with respect to a chosen reference. Let the mean displacement field for the embedded images be $\bar{u} = \frac{1}{N}\sum_{k=1}^{N} u_k$. Now let [v1,...,vM] be an orthonormal basis for the zero mean displacement fields, $\{u_k - \bar{u}\}_{k=1}^{N}$. Such a basis can be obtained using PCA or a Gram–Schmidt orthogonalization process, for example. Hence, the displacement field of an arbitrary point in LOT space can always be written as follows:

$$v = \bar{u} + \beta \sum_{k=1}^{M} c_k v_k \tag{22}$$

where the $c_k$ are coefficients of linear combination with $\sum_k c_k^2 = 1$, and β is a constant which corresponds to the range of change in the direction of $\sum_k c_k v_k$. Therefore we can rewrite the mapping as below:

$$f_v(x) = \bar{f}(x) + \beta\, w(x) \tag{23}$$

where $\bar{f}(x) = x + \bar{u}(x)$ and $w(x) = \sum_k c_k v_k(x)$. The goal is to find the maximum β for which $Df_v$ remains nonsingular, or in other words, $\det(Df_v(x)) > 0$ for all x ∈ Ω. Expanding det(Dfv) and after rearranging one can write

$$\det(Df_v) = \det(D\bar{f}) + \beta\,\big(\partial_x \bar{f}^x\, \partial_y w^y + \partial_x w^x\, \partial_y \bar{f}^y - \partial_y \bar{f}^x\, \partial_x w^y - \partial_y w^x\, \partial_x \bar{f}^y\big) + \beta^2 \det(Dw) \tag{24}$$

where $\bar{f} = [\bar{f}^x, \bar{f}^y]^T$ and $w = [w^x, w^y]^T$. In order to find the visualizable range, the above equation is set to zero and is solved for β for each x ∈ Ω. Finally we choose $\beta^*$, the smallest |β| among these roots over all x, to denote the range in the $w$ direction. Although the process above leads to a very conservative bound for β, |β| <β*, it enables one to find the visualizable range for any linear combination of the orthonormal basis displacement fields. In all of the computational experiments shown in the following sections , where σ is the standard deviation of the projected images on the given direction in LOT space.
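The range computation can be sketched as a per-pixel root-finding problem, since the determinant of a 2 × 2 Jacobian that depends linearly on β is a quadratic polynomial in β. The sketch below is an illustration under stated assumptions: the mean map and the direction field are given as arrays on a unit-spaced grid, derivatives are approximated by finite differences, and the name `visualizable_range` is hypothetical.

```python
import numpy as np

def visualizable_range(fbar_x, fbar_y, w_x, w_y, tol=1e-12):
    """Smallest |beta| at which det(D(fbar + beta * w)) vanishes at some pixel.

    Arrays are indexed [y, x]; returns inf if the determinant never vanishes.
    """
    # np.gradient returns the derivative along axis 0 (y) first, then axis 1 (x).
    dfx_dy, dfx_dx = np.gradient(fbar_x)
    dfy_dy, dfy_dx = np.gradient(fbar_y)
    dwx_dy, dwx_dx = np.gradient(w_x)
    dwy_dy, dwy_dx = np.gradient(w_y)

    # det(D fbar + beta * D w) = c + b * beta + a * beta^2 at every pixel
    a = dwx_dx * dwy_dy - dwx_dy * dwy_dx
    c = dfx_dx * dfy_dy - dfx_dy * dfy_dx
    b = dfx_dx * dwy_dy + dwx_dx * dfy_dy - dfx_dy * dwy_dx - dwx_dy * dfy_dx

    beta = np.full(a.shape, np.inf)
    linear = (np.abs(a) <= tol) & (np.abs(b) > tol)    # degenerate (linear) case
    beta[linear] = np.abs(c[linear] / b[linear])

    disc = b ** 2 - 4.0 * a * c
    quad = (np.abs(a) > tol) & (disc >= 0.0)           # quadratic with real roots
    root = np.sqrt(disc[quad])
    r1 = (-b[quad] + root) / (2.0 * a[quad])
    r2 = (-b[quad] - root) / (2.0 * a[quad])
    beta[quad] = np.minimum(np.abs(r1), np.abs(r2))
    return float(beta.min())
```

For the identity mean map and the direction w(x, y) = (−x/2, 0), the determinant is 1 − β/2, so the sketch returns a range of 2.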

5. Modeling shape and appearance

Having the linearly embedded images with respect to the reference image, I0, enables one to visualize any point in the embedded space (subject to the restrictions described above). Hence, linear data analysis techniques such as principal component analysis (PCA) and linear discriminant analysis (LDA) provide visualizable modes of variations in the embedded space.

5.1. Visualizing variations within a single class

We utilize PCA (in kernel space) to visualize the main modes of variation in texture and shape within the dataset. Briefly, let S be the inner product matrix of the LOT embeddings of the images:

$$S_{ij} = \int_\Omega \big\langle u_i(x) - \bar{u}(x),\; u_j(x) - \bar{u}(x)\big\rangle\, I_0(x)\,dx, \qquad i,j = 1,\dots,N \tag{25}$$

where $\bar{u}$ is the mean displacement field of Section 4.1. Let the corresponding eigenvalues γi be

$$S\, e_i = \gamma_i\, e_i \tag{26}$$

where i = 1...N and the $e_i$ are the eigenvectors. The PCA directions are then given by

$$v_i = \frac{1}{\sqrt{\gamma_i}} \sum_{k=1}^{N} e_i[k]\,\big(u_k - \bar{u}\big) \tag{27}$$

with corresponding eigenvalues γi. The variations in the dataset along the ith prominent variation direction can then be computed about the mean:

$$u_{\beta,i} = \bar{u} + \beta\, v_i \tag{28}$$

with the corresponding image computed by Eq. (21), as mentioned earlier, and with β being a coefficient computed in units of standard deviations σi computed over the projection of data on vi. Note that for each direction β is chosen such that |β| <β*.
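The kernel-space PCA step can be sketched as follows. The sketch assumes that each LOT embedding has been flattened into one row of a matrix (with the reference-density weighting already applied) and that the embeddings are centered before the inner product matrix is formed. The names `dual_pca` and `mode_of_variation` are hypothetical.

```python
import numpy as np

def dual_pca(E):
    """PCA through the N x N inner product matrix of the rows of E (shape (N, d)).

    Returns the mean embedding, unit-norm directions (one per row), and eigenvalues.
    """
    mean = E.mean(axis=0)
    Ec = E - mean
    S = Ec @ Ec.T                               # inner product (Gram) matrix
    gam, e = np.linalg.eigh(S)                  # eigenvalues in ascending order
    order = np.argsort(gam)[::-1]
    gam, e = gam[order], e[:, order]
    keep = gam > 1e-10 * gam[0]                 # drop numerically null directions
    gam, e = gam[keep], e[:, keep]
    V = (Ec.T @ e / np.sqrt(gam)).T             # map eigenvectors to embedding space
    return mean, V, gam

def mode_of_variation(mean, V, gam, i, n_std, n_samples):
    """Point in embedding space n_std standard deviations along direction i."""
    sigma = np.sqrt(gam[i] / n_samples)         # std of the data projected on V[i]
    return mean + n_std * sigma * V[i]
```

Working with the N × N matrix rather than the d × d covariance is what keeps the computation tractable when the number of pixels far exceeds the number of images.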

5.2. Visualizing discriminant information between different classes

In order to find the most discriminant variation in a dataset containing two classes we apply the methodology we described in [19], which is a penalized version of the well-known Fisher linear discriminant analysis (FLDA), to the PCA-space of the LOT embeddings. The reason behind applying FLDA in the PCA-space is that the number of data samples is usually much smaller than the dimensionality of the embedded space. Therefore, the data can be represented in a lower dimensional space of size equal to the number of sample points. In the PCA-space of the LOT embeddings, the kth image is represented by

$$x_k[i] = \int_\Omega \big\langle u_k(x) - \bar{u}(x),\; v_i(x)\big\rangle\, I_0(x)\,dx \tag{29}$$

with i = 1...N. The discriminant directions are then calculated in the PCA-space using

$$w^* = \arg\max_{w} \frac{w^T S_T\, w}{w^T (S_W + \varepsilon I)\, w} \tag{30}$$

where $S_T = \sum_k (x_k - \bar{x})(x_k - \bar{x})^T$ is the total scatter matrix (with $\bar{x}$ the mean of all $x_k$), $S_W = \sum_c \sum_{k \in c} (x_k - \bar{x}_c)(x_k - \bar{x}_c)^T$ represents the ‘within class’ scatter matrix, and $\bar{x}_c$ is the center of class c. Note that ε is a constant and we use the approach described in [22] to compute the appropriate value for ε in each case. Finally, the discriminant directions in the LOT embedding space are given by

$$v_{pLDA} = \sum_{i=1}^{N} w^*[i]\, v_i \tag{31}$$

The variations along vpLDA and around the mean are then visualized using the same scheme explained in the previous section.
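A minimal sketch of the penalized discriminant computation is given below. It assumes the objective is the ratio of total scatter to penalized within-class scatter and solves it as a symmetric eigenvalue problem after a Cholesky change of variables; the procedure of [22] for selecting ε is not reproduced, so ε is simply an input. The name `penalized_lda_direction` is hypothetical.

```python
import numpy as np

def penalized_lda_direction(X, labels, eps):
    """Unit vector maximizing w^T S_T w / w^T (S_W + eps * I) w.

    X: (N, d) PCA coefficients, labels: (N,) class ids, eps: penalty weight > 0.
    """
    Xc = X - X.mean(axis=0)
    S_T = Xc.T @ Xc                                  # total scatter
    S_W = np.zeros_like(S_T)
    for c in np.unique(labels):
        Z = X[labels == c] - X[labels == c].mean(axis=0)
        S_W += Z.T @ Z                               # within-class scatter
    # With S_W + eps*I = L L^T and y = L^T w, the ratio becomes a Rayleigh quotient in y.
    L = np.linalg.cholesky(S_W + eps * np.eye(X.shape[1]))
    L_inv = np.linalg.inv(L)
    vals, vecs = np.linalg.eigh(L_inv @ S_T @ L_inv.T)
    w = L_inv.T @ vecs[:, -1]                        # eigenvector of the largest eigenvalue
    return w / np.linalg.norm(w)
```

The penalty keeps the within-class term invertible when there are fewer samples than dimensions, which is the usual situation in the PCA-space described above.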

6. Computational experiments

Here we describe results obtained in analyzing 4 different datasets using the proposed method. These datasets contain images of chromatin distribution in two types of cancerous thyroid cells, spiral and elliptical galaxies, human facial expressions, and part of the Caltech-UCSD Birds 200 dataset. These datasets are chosen to cover a broad spectrum of applications, in order to demonstrate versatility and the limitations of the proposed approach.

6.1. Datasets

The human facial expression dataset used below is part of the Carnegie Mellon University Face Images database, and is described in detail in [15]. Each image contains 276 × 276 grayscale pixels, and the dataset covers 40 subjects under two classes of expressions, namely neutral and smiling. The light variation in these images is relatively minor, and facial area, gender, and expression differences are the major variations in the dataset. The second dataset we used consists of segmented thyroid nuclei from patients with two types of follicular lesions, namely follicular adenoma (FA) of the thyroid and follicular carcinoma (FTC) of the thyroid. Tissue blocks, belonging to 27 patients with FA and 20 patients with FTC, were obtained from the archives of the University of Pittsburgh Medical Center. The study was approved as an exempt protocol by the Institutional Review Board of the University of Pittsburgh.

All images were acquired using an Olympus BX51 microscope equipped with a 100× UIS2 UPlanFl oil immersion objective (numerical aperture 1.30; Olympus America, Central Valley, PA) and 2 megapixel SPOT Insight camera (Diagnostic Instruments, Sterling Heights, MI). Image specifications were 24 bit RGB channels and 0.074 μm/pixel, 118 × 89 μm field of view. Slides were chosen that contained both lesion and adjacent normal appearing thyroid tissue (NL) where possible. The pathologist (J.A.O.) took 10–30 random images from non-overlapping fields of view of lesion and/or normal thyroid to guarantee that the data for each patient contained numerous nuclei for subsequent analysis. Nuclei were segmented semi-automatically utilizing the approach described in [6]. The segmented cells were visually inspected by the pathologist (J.A.O.) and on average 27 cells were taken from each patient, leading to 756 nuclei for FA and 509 for FTC cases. The third dataset we employed contains two classes of galaxy images, including 225 elliptical galaxies and 223 spiral galaxies [14]. The last dataset is part of the Caltech-UCSD Birds 200 dataset, and it contains 30 images of two similar bird species, namely Gadwall and Mallard. Finally, we note that prior to the analysis shown below, each dataset was pre-processed so as to remove translations, rotations, and image flips as described in [21,22]. In addition, each grayscale image containing one segmented morphological exemplar was normalized so that the sum of its pixels was set to 1.

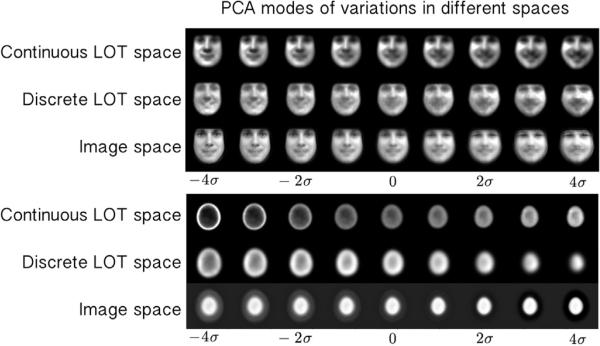

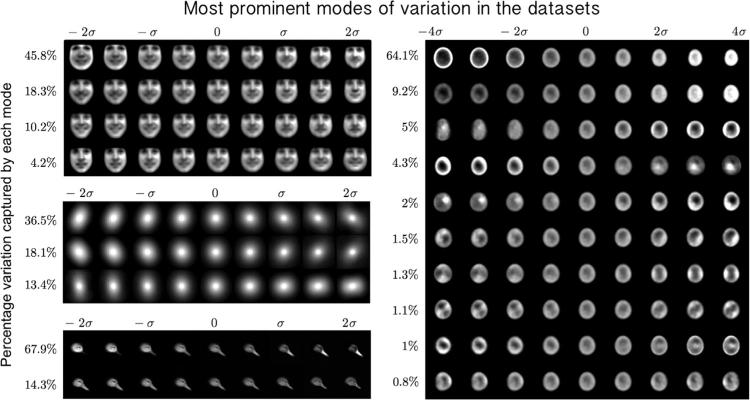

6.2. Visualizing modes of variation

One of the most important contributions of the LOT framework is that it provides a visual image exploration tool which automatically captures and visualizes the modes of variation and discrimination in a set of images. We applied the proposed continuous LOT framework to model and visualize modes of variation in the datasets described above. Fig. 3 shows a comparison between the PCA modes obtained using image space (utilizing the L2 metric) [18], the discrete LOT-space as proposed by [21], and the continuous LOT-space obtained from the approach presented in this work. The photo-realistic improvement is self-evident. Fig. 4 (a), (b), (c), and (d) also show the top 4 PCA modes for the human facial expression dataset, 3 PCA modes for the galaxy image dataset, 2 PCA modes for the bird image dataset, and the first 10 PCA modes for the nuclear chromatin distribution dataset, respectively. Several variations are visible: illumination changes, large vs. small, hairy vs. smooth, elongated vs. round, neutral vs. smiling, etc.

Fig. 3.

PCA modes in the continuous LOT space, discrete LOT space, and image space for the facial expression and the nuclei datasets.

Fig. 4.

The first 4 PCA modes for the facial expression dataset, capturing 78.5% of the variations in the dataset (a), the first 3 modes of variations for the galaxy dataset, capturing 68.1% of variations in the dataset (b), the first 2 modes of variations for the bird image dataset capturing 82.2% of the variations in the dataset (c), and the first 10 PCA modes for nuclei dataset, capturing 90.39% of the variations in the dataset (d). For visualization purposes, the images are contrast stretched.

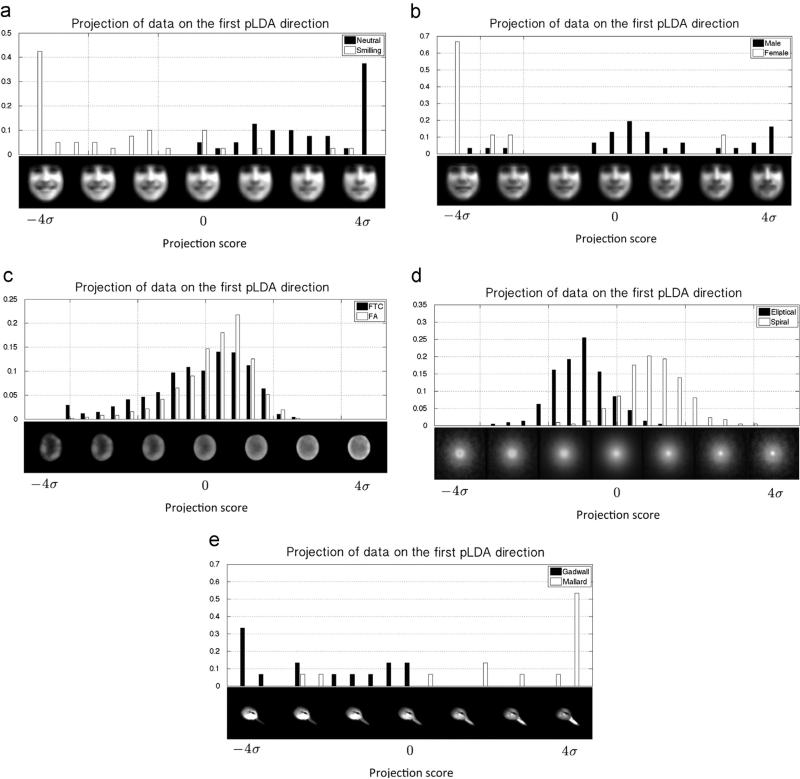

Fig. 5 shows discriminant directions between smiling vs. neutral faces, women vs. men, cancerous vs. normal nuclei images, elliptical vs. spiral galaxy images, and Mallard vs. Gadwall bird images. The histograms in Fig. 5 show the distribution of the LOT embeddings projected onto the corresponding vpLDA direction. The variations along vpLDA are computed about the mean as described above. It can be seen that the LOT framework presented in this paper is capable of capturing the visual differences between the image classes. We note that, in order to avoid overfitting, 20% of each dataset is held out as test data and the vpLDA direction is calculated from the remaining 80% (the training data) in each experiment. Fig. 5 shows the projection of the test data onto the vpLDA direction calculated from the training data. The p-value for all experiments in Fig. 5, calculated from the Kolmogorov–Smirnov test using only the held-out test data, is zero to numerical precision. The visualizations on the face image datasets confirm that the LOT approach captures the expected morphology changes between smiling and neutral faces, as well as female vs. male faces (e.g. appearance of facial hair). Differences between the FA and FTC nuclei can be summarized by the amount of relative chromatin that is present at the center of the nucleus, as opposed to its periphery. A similar statement can be made for summarizing differences in mass distributions between elliptical versus spiral galaxies. Spiral galaxies tend to have more mass, in relative terms, concentrated further away from the center of the galaxy. Finally, differences between the Gadwall and Mallard bird species can be summarized by the fact that the Gadwall species, on average, have thinner beaks, rounder heads, and brighter feather coloration in the head region.
These examples are meant to highlight the type of information that the LOT and penalized LDA approaches, when combined, can capture, as well as to demonstrate some of their limitations (described below).
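The held-out evaluation described above can be sketched in a few lines. The following is a minimal illustration, not the authors' code: it assumes a discriminant direction has already been estimated from the training split, uses hypothetical toy vectors in place of LOT embeddings, and computes only the two-sample Kolmogorov–Smirnov statistic. Turning the statistic into a p-value would additionally require the KS distribution, for example via SciPy's `ks_2samp`.

```python
import bisect


def project(embeddings, direction):
    """Project each embedding onto the (unit-normalized) discriminant direction."""
    norm = sum(w * w for w in direction) ** 0.5
    return [sum(x * w for x, w in zip(vec, direction)) / norm for vec in embeddings]


def ks_statistic(a, b):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for v in a + b:
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Hypothetical held-out embeddings for two classes (toy 2-D vectors).
test_class_a = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
test_class_b = [[3.0, 4.0], [4.0, 3.0], [5.0, 5.0]]
direction = [1.0, 1.0]  # assumed to come from the training split only

proj_a = project(test_class_a, direction)
proj_b = project(test_class_b, direction)
separation = ks_statistic(proj_a, proj_b)
print("KS statistic on held-out projections:", separation)
```

A statistic close to 1 means the two held-out classes barely overlap along the chosen direction, which is the situation in which the corresponding p-value becomes vanishingly small.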

Fig. 5.

The histogram of the distribution of the projected LOT embeddings on the corresponding discriminant direction, and the visualized −4σ to 4σ variation of points around this direction about the mean for smiling and neutral faces: (a) male versus female faces (just neutral faces); (b) normal and cancerous cells; and (c) elliptical and spiral galaxies. The x-axis of the histograms is in the units of standard deviation.

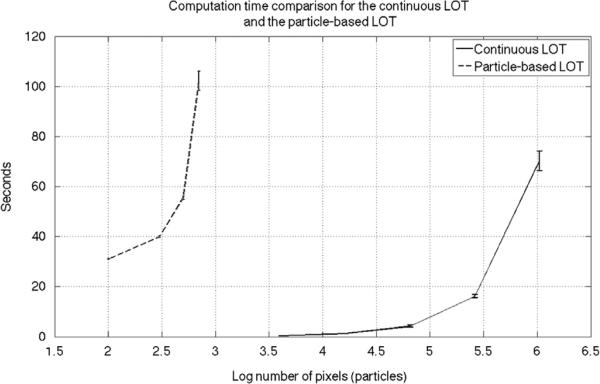

6.3. Computation time analysis

In order to compare the computation time for calculating the LOT embedding with the particle-based and continuous LOT implementations, we devised the following experiment. First, we ran the particle-based code on the facial expression dataset images with 100, 300, 500, and 700 particles. The average computation time is reported in Fig. 6 (note that the x-axis is in log scale). The reported computation time includes the particle approximation step we described in [21,22]. For the continuous LOT code, on the other hand, we resized the images in the dataset to 64 × 64, 128 × 128, 256 × 256, 512 × 512 and 1024 × 1024 and ran the code. The average run time for the continuous LOT is also reported in Fig. 6. To make a fair comparison, one can think of the total number of pixels as the number of particles and compare the results on that basis. It is clear that the continuous LOT code is significantly faster than the particle-based LOT code. We note that the computational complexity of each iteration of the continuous LOT code is of the order of O(n log n), where n is the total number of pixels in the image. The algorithm usually converges in fewer than 100 iterations. All code was executed on a MacBook Pro with a 2.9 GHz Intel Core i7 processor and 8 GB of 1600 MHz DDR3 memory.
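To give a feel for what the O(n log n) per-iteration cost implies across the image sizes used in this experiment, the sketch below evaluates the growth of n log n relative to the 64 × 64 case. It is a back-of-the-envelope model only: constant factors, the number of iterations, and memory effects are ignored, and the function name `relative_cost` is introduced here purely for illustration. The values are not measured run times.

```python
import math


def relative_cost(side, base_side=64):
    """Ratio of n*log2(n) for a side x side image to that of the base image."""
    n = side * side
    n0 = base_side * base_side
    return (n * math.log2(n)) / (n0 * math.log2(n0))


# Image sizes used in the timing experiment.
for side in (64, 128, 256, 512, 1024):
    print(f"{side} x {side}: {relative_cost(side):.2f}x the 64 x 64 per-iteration cost")
```

Under this model, each doubling of the image side multiplies the per-iteration cost by slightly more than four.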

Fig. 6.

Computation time comparison for the continuous LOT and the particle-based LOT. The x-axis shows the number of pixels in log scale for the continuous LOT and the number of particles in log scale for the particle-based LOT.

6.4. Classification results

Here we have tested the ability of the continuous LOT approach described above to perform classification tasks on the aforementioned image datasets. We have also compared the approach to our previous, particle-based method [21]. In doing so, we have limited the number of particles used in this method to 300. This is so that the average computation times of the continuous and particle-based versions are comparable while the visualizations are still acceptable. For 300 particles and images of size 256 × 256, the particle-based method is on average 10 times slower than the proposed method. In addition, we also compare the method described above to the tangent distance method described in [45], also used in face classification [12], and to the truncated PCA coefficients (capturing 90% of variations). In all but one of the classification tasks, we have used a 10-fold cross-validation technique, where a linear support vector machine classifier is computed on the training set and tested on the held-out test images. All classification results are shown in Table 1.
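The 10-fold protocol described above can be sketched as follows. This is an illustrative outline under stated assumptions, not the pipeline used for Table 1: a simple nearest-centroid rule stands in for the linear SVM so that the example needs no external libraries, the feature vectors are hypothetical toy data rather than LOT embeddings, and reporting the mean and standard deviation over folds is one plausible way to summarize the results (whether the ± values in Table 1 were obtained this way is an assumption).

```python
import random
import statistics


def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices and deal them into k disjoint folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def nearest_centroid_predict(train_x, train_y, test_x):
    """Stand-in classifier (NOT a linear SVM): assign the closest class mean."""
    centroids = {}
    for label in set(train_y):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def closest(x):
        return min(centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return [closest(x) for x in test_x]


def cross_validate(features, labels, predict=nearest_centroid_predict, k=10):
    """Return (mean, standard deviation) of the per-fold accuracy in percent."""
    accuracies = []
    for fold in k_fold_indices(len(features), k):
        held_out = set(fold)
        train = [i for i in range(len(features)) if i not in held_out]
        preds = predict([features[i] for i in train],
                        [labels[i] for i in train],
                        [features[i] for i in fold])
        correct = sum(p == labels[i] for p, i in zip(preds, fold))
        accuracies.append(100.0 * correct / len(fold))
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# Hypothetical, well-separated toy features for two classes.
features = [[0.1 * i, 0.0] for i in range(20)] + [[10.0 + 0.1 * i, 10.0] for i in range(20)]
labels = [0] * 20 + [1] * 20
mean_acc, std_acc = cross_validate(features, labels)
print(f"accuracy: {mean_acc:.2f} ± {std_acc:.2f}")
```

Any classifier with the same `predict(train_x, train_y, test_x)` signature can be passed in place of the stand-in, so that the same folds are used for every embedding being compared.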

Table 1.

Accuracy table for classification of facial expressions, gender in facial expression dataset, galaxy dataset, and bird type (Mallard vs. Gadwall) using a linear SVM classifier with 10-fold cross-validation in image space, truncated PCA coefficients, particle-based LOT-space, and continuous LOT-space.

All entries are accuracy (%).

| Embedded space | Neutral vs. smiling | Spiral vs. elliptical | Male vs. female | Mallard vs. Gadwall |
|---|---|---|---|---|
| Tangent metric | 77.35 ± 0.74 | 77.64 ± 0.51 | 81.04 ± 1.43 | 77.71 ± 2.3 |
| PCA coefficients | 69.05 ± 1.14 | 78.4 ± 0.54 | 79.42 ± 1.57 | 76.59 ± 1.6 |
| Particle-based LOT | 74.27 ± 1.28 | 72.5 ± 0.6 | 77.7 ± 1.4 | 81.76 ± 1.74 |
| Continuous LOT | 83.04 ± 0.8 | 77.82 ± 0.35 | 82.45 ± 0.8 | 85.26 ± 1.35 |

The classification strategy used for the nuclear dataset is different given the slightly different nature of the problem. Here labels are available only for sets of nuclei (not individual nuclei) and the task is to test whether a patient (not an individual nucleus) can be classified as FA or FTC. This leads to a need for estimating a low dimensional density for each class in the training set. To that end, we designed a classifier based on computing the K-nearest neighbors (K-NN) method on the LDA direction together with a majority voting step. A ‘leave one patient out’ scheme is used for cross validation, in which all nuclei corresponding to one patient are held out for testing and the nuclei from rest of the patients are used as the training data (to find the LDA direction as well as the optimum K in K-NN). The results are summarized in Table 2.
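The patient-level scheme described above (K-NN on a one-dimensional projection, followed by a majority vote over each patient's nuclei, evaluated by leaving one patient out) can be sketched as follows. This is a simplified illustration with hypothetical data: the projected values are taken as given, whereas in the procedure described above both the LDA direction and the value of K are re-estimated from the training patients in every round. All function names are introduced here for illustration.

```python
from collections import Counter


def knn_label(value, train_values, train_labels, k):
    """Label one projected nucleus by majority among its k nearest training nuclei."""
    nearest = sorted(range(len(train_values)),
                     key=lambda i: abs(train_values[i] - value))[:k]
    return Counter(train_labels[i] for i in nearest).most_common(1)[0][0]


def classify_patient(nuclei_values, train_values, train_labels, k=3):
    """Majority vote over the K-NN labels of all nuclei of one patient."""
    votes = Counter(knn_label(v, train_values, train_labels, k) for v in nuclei_values)
    return votes.most_common(1)[0][0]


def leave_one_patient_out(patients, k=3):
    """patients maps patient id -> (label, list of projected nucleus values)."""
    correct = 0
    for held_id, (held_label, held_values) in patients.items():
        train_values, train_labels = [], []
        for pid, (label, values) in patients.items():
            if pid != held_id:  # every nucleus of the held-out patient is excluded
                train_values.extend(values)
                train_labels.extend([label] * len(values))
        if classify_patient(held_values, train_values, train_labels, k) == held_label:
            correct += 1
    return correct / len(patients)


# Hypothetical projections of nuclei onto a discriminant direction.
patients = {
    "p1": ("FA", [0.1, 0.2, 0.3]),
    "p2": ("FA", [0.0, 0.25, 0.15]),
    "p3": ("FTC", [2.0, 2.1, 1.9]),
    "p4": ("FTC", [2.2, 1.8, 2.05]),
}
patient_accuracy = leave_one_patient_out(patients, k=3)
print("patient-level accuracy:", patient_accuracy)
```

Holding out all nuclei of a patient at once, rather than individual nuclei, prevents nuclei from the same patient from appearing in both the training and test sets.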

Table 2.

Accuracy table for patient classification in the thyroid nuclei dataset using K-NN on the first LDA direction in (a) image space, (b) truncated PCA coefficients, (c) particle-based LOT-space, and (d) continuous LOT-space.

| Panel | Embedded space | Test | Label: FA | Label: FTC | Accuracy |
|---|---|---|---|---|---|
| (a) | Tangent metric | FA | 21 | 6 | 82.98% |
| | | FTC | 2 | 18 | |
| (b) | PCA coefficients | FA | 21 | 6 | 61.70% |
| | | FTC | 12 | 8 | |
| (c) | Particle-based LOT | FA | 27 | 0 | 100% |
| | | FTC | 0 | 20 | |
| (d) | Continuous LOT | FA | 27 | 0 | 100% |
| | | FTC | 0 | 20 | |
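The accuracies reported in Table 2 follow directly from the confusion-matrix counts: the correctly classified patients (the diagonal entries) divided by the 47 patients in total. The short check below reproduces that arithmetic from the counts in the table; the helper name `accuracy` is introduced here for illustration.

```python
def accuracy(matrix):
    """Percentage of patients on the diagonal of a square confusion matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


# Counts copied from Table 2 (rows: FA, FTC; columns: FA, FTC).
tangent_metric = [[21, 6], [2, 18]]
pca_coefficients = [[21, 6], [12, 8]]
lot = [[27, 0], [0, 20]]  # identical for particle-based and continuous LOT

for name, m in [("Tangent metric", tangent_metric),
                ("PCA coefficients", pca_coefficients),
                ("LOT (both versions)", lot)]:
    print(f"{name}: {accuracy(m):.2f}%")
```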

It can be seen that in all the experiments in Table 1, the continuous LOT approach described above outperforms our earlier particle-based version of the LOT, while both versions classify every patient correctly in Table 2. We also note that in all attempted classification tasks, the proposed LOT approach provides better or comparable accuracies in comparison to the tangent metric and the truncated PCA coefficients. The p-values calculated from Welch's t-test between different methods on each dataset show that the improvement in accuracy for the proposed method compared to the particle-based LOT is statistically significant (p-value < 0.01) for all datasets in Table 1.
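For reference, Welch's t-test compares two sample means without assuming equal variances. The sketch below computes the test statistic and the Welch–Satterthwaite degrees of freedom from two lists of accuracies. The numbers are hypothetical placeholders, not the per-fold results behind Table 1, and the p-value itself would require the Student t distribution (available, for instance, through SciPy's `ttest_ind` with `equal_var=False`).

```python
import statistics


def welch_t(a, b):
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    # Sample variances (n - 1 denominator), each divided by its sample size.
    sa = statistics.variance(a) / len(a)
    sb = statistics.variance(b) / len(b)
    t = (mean_a - mean_b) / (sa + sb) ** 0.5
    df = (sa + sb) ** 2 / (sa ** 2 / (len(a) - 1) + sb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical accuracies (%) of two methods over repeated runs.
method_a = [83.5, 82.1, 84.0, 82.9, 83.3]
method_b = [74.8, 73.1, 75.9, 74.0, 73.6]
t_stat, dof = welch_t(method_a, method_b)
print(f"t = {t_stat:.2f}, degrees of freedom = {dof:.2f}")
```

Because the degrees of freedom are estimated from both variances, the test remains valid when the two methods have different spreads.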

7. Summary and discussion

We described a new method for information extraction from image databases utilizing the mathematics of optimal transport. The approach can be viewed as a new invertible transformation method that computes the placement of intensities in each image of a dataset relative to a ‘reference’ point. Distances computed in the so-called linear optimal transport (LOT) space are akin to a linearized version of the well-known optimal transport (OT) metric, also known as the earth mover's distance. Moreover, because the transformation is invertible, any statistical model constructed in LOT space can be visualized directly. In short, the framework enables exploratory visual interpretation of image databases, as well as supervised learning (classification), in a unified manner.

In practice, we envision this approach to be most useful in information discovery settings where optimal feature sets have yet to be discovered, as well as in tasks where a visual interpretation of any information is necessary. One such well-suited application is the detection of cancer from cell morphology. We have recently shown that the discrete LOT technique can be used to classify certain types of thyroid cancers with near-perfect sensitivity and specificity [25] on a cohort of 94 patients. At the same time, the technique can be utilized to provide biological information regarding chromatin reorganization differences in different cancer types. In contrast, the classification accuracy utilizing state-of-the-art feature sets [33] is significantly lower. Similarly, we have recently shown that the discrete LOT approach also allows one to detect mesothelioma, a type of lung cancer usually associated with exposure to asbestos, directly from effusion cytology specimens [24], thus obviating the need for the invasive biopsies that are often necessary for diagnosis. The advances discussed in this paper could be used to improve accuracy while at the same time significantly reducing the computation time involved in such diagnostic tests, from a few hours on a Beowulf-type cluster [25] to minutes on a modern standard desktop workstation. We have shown that the approach can also produce visualizations of significantly higher resolution.

The work presented here builds on our own earlier work on this topic [21,22]. Relative to that work, the main innovation described here is an adaptation of the LOT framework to use a continuous formulation for the underlying optimal transport problem, rather than a discrete formulation as done in [21,22]. In the process, we described a modification of the reference estimation algorithm based on estimating the intrinsic mean in the dataset, and described how to utilize deformation fields in order to compute the necessary LOT embedding. In this paper we utilized the algorithm described in [11] to solve the underlying OT problem, though other approaches can be used as well [23]. We have also described a method for calculating regions in LOT space where the transform, computed using deformation fields as described here, is invertible. Finally, we have described how to utilize the continuous formulation of the LOT for tasks related to visualization using PCA and LDA.

We compared the performance of the ‘continuous’ LOT approach described here to the ‘discrete’ version we described earlier [21,22] in visualization and discrimination tasks using several image databases. The approach described here is much faster to compute than our previously described version, given that it relies on a gradient descent estimate of transport maps, rather than discrete transport plans obtained via linear programming minimization. As a result, it is now possible to utilize images at their full resolution for visualization and discrimination tasks. Consequently, visualizations computed using PCA and penalized LDA look significantly more photo-realistic, classification accuracies are improved, and the computation time is decreased. In addition, we compared the LOT distances computed using both continuous and discrete approaches to other distances often employed in visualization and discrimination tasks. We have shown that, using the same classifier (linear SVM), the classification accuracy of the proposed LOT metric computed utilizing a continuous formulation for the underlying OT problem is better than or comparable to that of the tangent metric for images and the PCA subspace of the image space, and better than that of our earlier particle-based LOT approach.

While the approach is effective in mining information from image databases, it is worthwhile to point out a few of its limitations. We note that the convergence speed of the optimal transport solver depends on the smoothness of the input images. This effect is also demonstrated by Chartrand et al. [5], for example. Therefore, in order to ensure the smoothness of the input images, the images are filtered with a Gaussian filter of small standard deviation (1–2 pixels) as a preprocessing step. This process leads to the loss of high-frequency content in the images being analyzed. The results reported in this paper, however, indicate that this effect is not significant for the datasets we tested. In addition, we note that the ‘linearization’ afforded by the LOT approach we described here, while seemingly effective for many applications, does not provide a perfect representation model for certain image datasets. We note, for example, that the models produced by our method in regions near the mouth (see Fig. 3), though visually superior to the other alternatives we tested, are not visually sharp. We postulate that this is due to the large intensity variations that are possible near this region (e.g. open versus closed mouth and visibility of teeth). Techniques for overcoming these and other issues will be the subject of future work.
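The smoothing step mentioned above can be illustrated with a small separable Gaussian filter. This is a minimal sketch and not the preprocessing code used in the experiments: the kernel truncation at about three standard deviations and the edge handling (replicating border pixels) are assumptions made here, and the image is represented as a plain list of rows.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at roughly 3 standard deviations."""
    radius = int(3 * sigma + 0.5)
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_rows(image, kernel):
    """Convolve every row with the kernel, replicating the border pixels."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        out.append([
            sum(k * row[min(max(j + o, 0), n - 1)]
                for o, k in zip(range(-radius, radius + 1), kernel))
            for j in range(n)
        ])
    return out


def gaussian_smooth(image, sigma=1.0):
    """Separable 2-D Gaussian smoothing: filter the rows, then the columns."""
    kernel = gaussian_kernel(sigma)
    rows_done = smooth_rows(image, kernel)
    transposed = [list(col) for col in zip(*rows_done)]
    cols_done = smooth_rows(transposed, kernel)
    return [list(col) for col in zip(*cols_done)]


# A single bright pixel is spread over its neighbourhood; total intensity is kept.
impulse = [[0.0] * 7 for _ in range(7)]
impulse[3][3] = 1.0
smoothed = gaussian_smooth(impulse, sigma=1.0)
print("peak after smoothing:", round(smoothed[3][3], 3))
```

A larger standard deviation yields smoother inputs for the solver at the price of removing more fine detail, which is the trade-off noted in the paragraph above.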

Acknowledgments

The authors would like to thank Dejan Slepčev for stimulating discussions. This work was financially supported by NSF Grant CCF 1421502, NIH Grants CA188938, GM090033, GM103712 and the John and Claire Bertucci Graduate Fellowship.

Biography

Soheil Kolouri received his B.S. degree in Electrical Engineering from Sharif University of Technology, Tehran, Iran, in 2010, and his M.S. degree in Electrical Engineering, in 2012, from Colorado State University, Fort Collins, Colorado. He received his doctorate degree in biomedical engineering from Carnegie Mellon University, in 2015, where his research was focused on applications of optimal transport in image modeling, computer vision, and pattern recognition. His thesis, titled “Transport-based pattern recognition and image modeling”, won the best thesis award from the Biomedical Engineering Department at Carnegie Mellon University.

Akif B. Tosun received his B.S. degree in computer engineering from Hacettepe University, Turkey, in 2006, and his Ph.D. degree in computer engineering at Bilkent University, Turkey, in 2012. He is currently a Post-Doctoral Research Scholar and his work includes the development of novel approaches for cancer diagnosis using image analysis techniques and machine learning algorithms at Carnegie Mellon University, USA.

John A. Ozolek graduated from the University of Pittsburgh School of Medicine with the M.D. degree, in 1989. He completed residency in Pediatrics and Fellowship in Neonatal-Perinatal Medicine at the University of Pittsburgh before practicing in the United States Air Force and private practice. He returned to train in Anatomic Pathology and completed a Fellowship in Pediatric Pathology at Children's Hospital of Pittsburgh where he remains on staff. He has extensive collaborations with the Center for Bioimage Informatics and Department of Mathematics at Carnegie Mellon University to develop algorithms and computational approaches for automating tissue recognition in research and clinical applications.

Gustavo K. Rohde earned the B.S. degree in Physics and Mathematics, in 1999, and the M.S. degree in Electrical Engineering, in 2001, from Vanderbilt University. He received a doctorate in Applied Mathematics and Scientific Computation, in 2005, from the University of Maryland. He is currently an Associate Professor of Biomedical Engineering, and Electrical and Computer Engineering at Carnegie Mellon University.

Footnotes

Conflict of interest

None declared.

Appendix A. Supplementary data

Supplementary data associated with this paper can be found in the online version at http://dx.doi.org/10.1016/j.patcog.2015.09.019.

References

- 1. Angenent S, Haker S, Tannenbaum A. Minimizing flows for the Monge–Kantorovich problem. SIAM J. Math. Anal. 2003;35:61–97.

- 2. Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003;15:1373–1396.

- 3. Benamou J-D, Brenier Y. A computational fluid mechanics solution to the Monge–Kantorovich mass transfer problem. Numer. Math. 2000;84:375–393.

- 4. Brenier Y. Polar factorization and monotone rearrangement of vector-valued functions. Commun. Pure Appl. Math. 1991;44:375–417.

- 5. Chartrand R, Vixie K, Wohlberg B, Bollt E. A gradient descent solution to the Monge–Kantorovich problem. Appl. Math. Sci. 2009;3:1071–1080.

- 6. Chen C, Wang W, Ozolek J, Lages N, Altschuler S, Wu L, Rohde GK. A template matching approach for segmenting microscopy images. IEEE Int. Symp. Biomed. 2012:768–771.

- 7. Cootes TF, Beeston C, Edwards GJ, Taylor CJ. Information Processing in Medical Imaging. Springer; Heidelberg, Germany: 1999. A unified framework for atlas matching using active appearance models; pp. 322–333.

- 8. Cootes TF, Edwards GJ, Taylor CJ. Active appearance models. IEEE Trans. Pattern Anal. Mach. Intell. 2001;23(6):682–685.

- 9. Gangbo W, McCann RJ. The geometry of optimal transportation. Acta Math. 1996;177:113–161.

- 10. Haber E, Rehman T, Tannenbaum A. An efficient numerical method for the solution of the l_2 optimal mass transfer problem. SIAM J. Sci. Comput. 2010;32:197–211. doi: 10.1137/080730238.

- 11. Haker S, Zhu L, Tannenbaum A, Angenent S. Optimal mass transport for registration and warping. Int. J. Comput. Vis. 2004;60:225–240.

- 12. Lu J, Plataniotis KN, Venetsanopoulos AN. Face recognition using LDA-based algorithms. IEEE Trans. Neural Netw. 2003;14:195–200. doi: 10.1109/TNN.2002.806647.

- 13. Moser J. On the volume elements on a manifold. Trans. Am. Math. Soc. 1965;120:286–294.

- 14. Shamir L. Automatic morphological classification of galaxy images. Mon. Not. R. Astron. Soc. 2009;399:1367–1372. doi: 10.1111/j.1365-2966.2009.15366.x.

- 15. Stegmann M, Ersboll B, Larsen R. Fame—a flexible appearance modeling environment. IEEE Trans. Med. Imaging. 2003;22:1319–1331. doi: 10.1109/tmi.2003.817780.

- 16. Tenenbaum JB, de Silva V, Langford JC. A global geometric framework for nonlinear dimensionality reduction. Science. 2000;290:2319–2323. doi: 10.1126/science.290.5500.2319.

- 17. Tan X, Chen S, Zhou ZH, Zhang F. Face recognition from a single image per person: a survey. Pattern Recognit. 2006;39:1725–1745.

- 18. Turk M, Pentland A. Face recognition using eigenfaces. CVPR. 1991:586–591.

- 19. Wang W, Mo Y, Ozolek JA, Rohde GK. Penalized fisher discriminant analysis and its application to image-based morphometry. Pattern Recognit. Lett. 2011;32:2128–2135. doi: 10.1016/j.patrec.2011.08.010.

- 20. Wang W, Ozolek JA, Slepcev D, Lee AB, Chen C, Rohde GK. An optimal transportation approach for nuclear structure-based pathology. IEEE Trans. Med. Imaging. 2011;30:621–631. doi: 10.1109/TMI.2010.2089693.

- 21. Wang W, Slepcev D, Basu S, Ozolek JA, Rohde GK. A linear optimal transportation framework for quantifying and visualizing variations in sets of images. Int. J. Comput. Vis. 2013;101:254–269. doi: 10.1007/s11263-012-0566-z.

- 22. Basu S, Kolouri S, Rohde GK. Detecting and visualizing cell phenotype differences from microscopy images using transport-based morphometry. Proc. Natl. Acad. Sci. USA. 2014;111:3448–3453. doi: 10.1073/pnas.1319779111.

- 23. Kolouri S, Rohde GK. Quantifying and visualizing variations in sets of images using continuous linear optimal transport. Proc. SPIE Med. Imaging. 2014:903438.

- 24. Tosun AB, Yergiyev O, Kolouri S, Silverman JF, Rohde GK. Detection of malignant mesothelioma using nuclear structure of mesothelial cells in effusion cytology specimens. Cytometry Part A. 2015;87(4):326–333. doi: 10.1002/cyto.a.22602.

- 25. Ozolek JA, Tosun AB, Wang W, Chen C, Kolouri S, Basu S, Huang H, Rohde GK. Accurate diagnosis of thyroid follicular lesions from nuclear morphology using supervised learning. J. Med. Imaging Anal. 2014;18:772–780. doi: 10.1016/j.media.2014.04.004.

- 26. Ferrarini L, Palm WM, Olofsen H, van Buchem MA, Reiber JH, Admiraal-Behloul F. Shape differences of the brain ventricles in Alzheimer's disease. Neuroimage. 2006;32:1060–1069. doi: 10.1016/j.neuroimage.2006.05.048.

- 27. Bodini B, Khaleeli Z, Cercignani M, Miller DH, Thompson AJ, Ciccarelli O. Exploring the relationship between white matter and gray matter damage in early primary progressive multiple sclerosis: an in vivo study with TBSS and VBM. Hum. Brain Mapp. 2009;30:2852–2861. doi: 10.1002/hbm.20713.

- 28. Chen X, Zhou X, Wong ST. Automated segmentation, classification, and tracking of cancer cell nuclei in time-lapse microscopy. IEEE Trans. Biomed. Eng. 2006;53:762–766. doi: 10.1109/TBME.2006.870201.

- 30. Demir CG, Yener B. Automated Cancer Diagnosis Based on Histopathological Images: A Systematic Survey. Technical Report, Rensselaer Polytechnic Institute. 2005.

- 31. Boyer DM, Lipman Y, Clair ES, Puente J, Patel BA, Funkhouser T, Daubechies I. Algorithms to automatically quantify the geometric similarity of anatomical surfaces. Proc. Natl. Acad. Sci. USA. 2011;108:18221–18226. doi: 10.1073/pnas.1112822108.

- 32. Fasel B, Luettin J. Automatic facial expression analysis: a survey. Pattern Recognit. 2003;36:259–275.

- 33. Wang W, Ozolek JA, Rohde GK. Detection and classification of thyroid follicular lesions based on nuclear structure from histopathology images. Cytometry A. 2010;77:485–494. doi: 10.1002/cyto.a.20853.

- 34. Yang M, Kpalma K, Ronsin J. A survey of shape feature extraction technique. Pattern Recognit. 2008:43–90.

- 35. Do MN, Vetterli M. Wavelet-based texture retrieval using generalized Gaussian density and Kullback–Leibler distance. IEEE Trans. Image Process. 2002;11:146–158. doi: 10.1109/83.982822.

- 36. Rubner Y, Tomasi C, Guibas LJ. The earth mover's distance as a metric for image retrieval. Int. J. Comput. Vis. 2000;40:99–121.

- 37. Grenander U, Miller MI. Computational anatomy: an emerging discipline. Q. Appl. Math. 1998;56:617–694.

- 38. Joshi SC, Miller MI. Landmark matching via large deformation diffeomorphisms. IEEE Trans. Image Process. 2000;9:1357–1370. doi: 10.1109/83.855431.

- 39. Klassen E, Srivastava A, Mio W, Joshi SH. Analysis of planar shapes using geodesic paths on shape spaces. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:372–383. doi: 10.1109/TPAMI.2004.1262333.

- 40. Tenenbaum JB, de Silva V, Langford JC. A global geometric framework for nonlinear dimensionality reduction. Science. 2000;290:2319–2323. doi: 10.1126/science.290.5500.2319.

- 41. Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003;15:1373–1396.

- 42. do Carmo MP. Riemannian Geometry. Springer; Birkhäuser, Boston: 1992.

- 43. Ambrosio L. Calculus of Variations and Nonlinear Partial Differential Equations. Springer; Berlin, Heidelberg: 2008. Transport equation and Cauchy problem for non-smooth vector fields; pp. 1–41.

- 44. He DC, Wang L. Texture features based on texture spectrum. Pattern Recognit. 2008;24:391–399.

- 45. Simard P, LeCun Y, Denker JS. Efficient pattern recognition using a new transformation distance. Adv. Neural Inf. Process. 1992;5:50–58.

- 46. Chen GY, Bui TD, Krzyźak A. Rotation invariant pattern recognition using ridgelets, wavelet cycle-spinning and Fourier features. Pattern Recognit. 2005;38:2314–2322.

- 47. Guo Z, Zhang L, Zhang D. Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognit. 2010;43:706–719.

- 48. Volker B, Vetter T. Face recognition based on fitting a 3D morphable model. IEEE Trans. Pattern Anal. Mach. Intell. 2003;25:1063–1074.
