Abstract

Motivation: Technological advances that allow routine identification of high-dimensional risk factors have led to high demand for statistical techniques that enable full utilization of these rich sources of information for genetics studies. Variable selection for censored outcome data as well as control of false discoveries (i.e. inclusion of irrelevant variables) in the presence of high-dimensional predictors present serious challenges. This article develops a computationally feasible method based on boosting and stability selection. Specifically, we modified the component-wise gradient boosting to improve the computational feasibility and introduced random permutation in stability selection for controlling false discoveries.

Results: We have proposed a high-dimensional variable selection method by incorporating stability selection to control false discovery. Comparisons between the proposed method and the commonly used univariate and Lasso approaches for variable selection reveal that the proposed method yields fewer false discoveries. The proposed method is applied to study the associations of 2339 common single-nucleotide polymorphisms (SNPs) with overall survival among cutaneous melanoma (CM) patients. The results have confirmed that BRCA2 pathway SNPs are likely to be associated with overall survival, as reported by previous literature. Moreover, we have identified several new Fanconi anemia (FA) pathway SNPs that are likely to modulate survival of CM patients.

Availability and implementation: The related source code and documents are freely available at https://sites.google.com/site/bestumich/issues.

Contact: yili@umich.edu

1 Introduction

Rapid advances in technology that have generated vast amounts of data from genetic or genome studies have led to a high demand for developing powerful statistical learning methods for extracting information effectively. For instance, understanding clinical and pathophysiologic heterogeneities among subjects at risk and designing effective treatment for appropriate subgroups is one of the most active areas in genetic studies. Wide heterogeneities are present in patients’ responses to treatments or therapies. Understanding such heterogeneities is crucial in personalized medicine, and discovery of genetic variants offers a feasible approach. However, serious statistical challenges arise when identifying real predictors among hundreds of thousands of candidates, and an urgent need has emerged for the development of effective algorithms for model building and variable selection.

The last three decades have given rise to many new statistical learning methods, including CART (Breiman et al., 1984), random forest (Breiman, 2001), neural networks (Bishop, 1995), SVMs (Boser et al., 1992) and high dimensional regression (Fan and Li, 2001, 2002; Gui and Li, 2005; Tibshirani, 1996, 1997). Boosting has emerged as a powerful framework for statistical learning. It was originally introduced in the field of machine learning for classifying binary outcomes (Freund and Schapire, 1996), and later its connection with statistical estimation was established by Friedman et al. (2000). Friedman (2001) proposed a gradient boosting framework for regression settings. Bühlmann and Yu (2003) proposed a component-wise boosting procedure based on cubic smoothing splines for L2 loss functions. Bühlmann (2006) demonstrated that the boosting procedure works well in high-dimensional settings. For censored outcome data, Ridgeway (1999) applied boosting to fit proportional hazards models, and Li and Luan (2005) developed a boosting procedure for modeling potentially non-linear functional forms in proportional hazards models.

Despite the popularity of the aforementioned methods, issues such as false discovery (e.g. selection of irrelevant SNPs) and difficulty in identifying weak signals present further barriers. Simultaneous inference procedures, including the Bonferroni correction, have been widely used in the large-scale testing literature. However, in many high-dimensional settings, such as in genetic studies, variable selection serves as a screening tool to identify a set of genetic variants for further investigation. Hence, a small number of false discoveries would be tolerable and simultaneous inference would be too conservative. In contrast, the false discovery rate (FDR), defined as the expected proportion of false positives among significant tests (Benjamini and Hochberg, 1995), is a more relevant metric for false discovery control under the framework of variable selection. However, few existing variable selection algorithms control false discoveries. This has created an urgent need to develop computationally feasible methods that tackle both variable selection and false discovery control.

We propose a novel high-dimensional variable selection method for survival analysis by improving the existing variable selection methods in several aspects. First, we have developed a computationally feasible variable selection approach for high-dimensional survival analysis. Second, we have designed a random sampling scheme to improve the control of the false discovery rate. Finally, the proposed framework is flexible enough to accommodate complex data structures.

The rest of the article is organized as follows. In Section 2 we introduce notation and briefly review the L1 penalized estimation and gradient boosting method that are of direct relevance to our proposal. In Section 3 we develop the proposed approach, and in Section 4 we evaluate the practical utility of the proposal via intensive simulation studies. In Section 5 we apply the proposal to analyze a genome-wide association study of cutaneous melanoma. We conclude the article with a brief discussion in Section 6.

2 Model

2.1 Notation

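Let $D_i$ denote the time from onset of cutaneous melanoma to death and $C_i$ be the potential censoring time for patient $i$, $i = 1, \ldots, n$. The observed survival time is $T_i = \min(D_i, C_i)$, and the death indicator is given by $\delta_i = I(D_i \le C_i)$. Let $\mathbf{X}_i = (X_{i1}, \ldots, X_{ip})^T$ be a p-dimensional covariate vector (containing all the SNP information) for the ith patient. We assume that, conditional on $\mathbf{X}_i$, $D_i$ is independently censored by $C_i$. To model the death hazard, consider

$$\lambda(t \mid \mathbf{X}_i) = \lambda_0(t) \exp(\mathbf{X}_i^T \boldsymbol{\beta}),$$

where $\lambda_0(t)$ is the baseline hazard function and $\boldsymbol{\beta} = (\beta_1, \ldots, \beta_p)^T$ is a vector of parameters. The corresponding log-partial likelihood is given by

$$\ell(\boldsymbol{\beta}) = \sum_{i=1}^{n} \delta_i \left[ \mathbf{X}_i^T \boldsymbol{\beta} - \log\left\{ \sum_{l \in R_i} \exp(\mathbf{X}_l^T \boldsymbol{\beta}) \right\} \right],$$

where $R_i = \{l : T_l \ge T_i\}$ is the at-risk set. The goal of variable selection is to identify $S = \{j : \beta_j \ne 0\}$, which contains all the variables that are associated with the risk of death.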
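As a concrete illustration of the log-partial likelihood described above, the following minimal sketch evaluates it for a small dataset. The function name `cox_log_partial_likelihood` and the list-based data layout are assumptions made for this example, and ties are handled in the simplest (Breslow-type) way; it is not the authors' implementation.

```python
import math

def cox_log_partial_likelihood(times, events, X, beta):
    """Cox log-partial likelihood; X is a list of covariate rows (hypothetical layout)."""
    n = len(times)
    # Linear predictors eta_i = X_i^T beta
    eta = [sum(x * b for x, b in zip(X[i], beta)) for i in range(n)]
    loglik = 0.0
    for i in range(n):
        if events[i] == 1:
            # At-risk set: subjects whose observed time is at least times[i]
            risk_sum = sum(math.exp(eta[l]) for l in range(n) if times[l] >= times[i])
            loglik += eta[i] - math.log(risk_sum)
    return loglik
```

Only subjects with an observed death contribute a term, while censored subjects enter through the at-risk sets of earlier events.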

2.2 L1 penalized estimation

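Tibshirani (1997) proposed a Lasso procedure in the Cox model, i.e. estimate $\boldsymbol{\beta}$ via the penalized partial likelihood optimization

$$\hat{\boldsymbol{\beta}} = \arg\min_{\boldsymbol{\beta}} \left\{ -\ell(\boldsymbol{\beta}) + \lambda \|\boldsymbol{\beta}\|_1 \right\}, \tag{1}$$

where $\|\boldsymbol{\beta}\|_1 = \sum_{j=1}^{p} |\beta_j|$ is the L1 norm and $\lambda \ge 0$ is a tuning parameter. To solve (1), Tibshirani (1997) considered a penalized reweighted least squares approach. Let $\mathbf{X} = (\mathbf{X}_1, \ldots, \mathbf{X}_n)$ be the p × n covariate matrix and define $\boldsymbol{\eta} = \mathbf{X}^T \boldsymbol{\beta}$. Let $\ell'(\boldsymbol{\eta})$ and $\ell''(\boldsymbol{\eta})$ be the gradient and Hessian of the log-partial likelihood with respect to $\boldsymbol{\eta}$, respectively. Given the current estimator $\tilde{\boldsymbol{\eta}} = \mathbf{X}^T \tilde{\boldsymbol{\beta}}$, a two-term Taylor expansion of the log-partial likelihood leads, up to a constant that does not depend on $\boldsymbol{\beta}$, to

$$-\ell(\boldsymbol{\beta}) \approx \frac{1}{2} \{ z(\tilde{\boldsymbol{\eta}}) - \mathbf{X}^T \boldsymbol{\beta} \}^T \{ -\ell''(\tilde{\boldsymbol{\eta}}) \} \{ z(\tilde{\boldsymbol{\eta}}) - \mathbf{X}^T \boldsymbol{\beta} \},$$

where $z(\tilde{\boldsymbol{\eta}}) = \tilde{\boldsymbol{\eta}} - \ell''(\tilde{\boldsymbol{\eta}})^{-1} \ell'(\tilde{\boldsymbol{\eta}})$. Similar to the problem of conditional likelihood (Hastie and Tibshirani, 1990), the matrix $\ell''(\tilde{\boldsymbol{\eta}})$ is non-diagonal, and solving (1) may require $O(n^3)$ computations. To avoid this difficulty, Tibshirani (1997) used some heuristic arguments to approximate the Hessian matrix with a diagonal one, i.e. treated off-diagonal elements as zero. An iterative procedure is then conducted based on the penalized reweighted least squares

$$\hat{\boldsymbol{\beta}} = \arg\min_{\boldsymbol{\beta}} \left\{ \frac{1}{2} \sum_{i=1}^{n} w(\tilde{\boldsymbol{\eta}})_i \{ z(\tilde{\boldsymbol{\eta}})_i - \mathbf{X}_i^T \boldsymbol{\beta} \}^2 + \lambda \|\boldsymbol{\beta}\|_1 \right\}, \tag{2}$$

where the weight $w(\tilde{\boldsymbol{\eta}})_i$ for subject i is the ith diagonal entry of $-\ell''(\tilde{\boldsymbol{\eta}})$.

To obtain a more accurate estimation, Gui and Li (2005) used a Cholesky decomposition to obtain $\mathbf{A}$ such that $\mathbf{A}^T \mathbf{A} = -\ell''(\tilde{\boldsymbol{\eta}})$. The iterative procedure in (2) is then revised as

$$\hat{\boldsymbol{\beta}} = \arg\min_{\boldsymbol{\beta}} \left\{ \frac{1}{2} (\tilde{\mathbf{y}} - \tilde{\mathbf{X}} \boldsymbol{\beta})^T (\tilde{\mathbf{y}} - \tilde{\mathbf{X}} \boldsymbol{\beta}) + \lambda \|\boldsymbol{\beta}\|_1 \right\},$$

where $\tilde{\mathbf{y}} = \mathbf{A} z(\tilde{\boldsymbol{\eta}})$ and $\tilde{\mathbf{X}} = \mathbf{A} \mathbf{X}^T$. Alternatively, Goeman (2010) combined gradient descent with Newton’s method and implemented his algorithm in the R package penalized.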

2.3 Gradient boosting

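Gradient boosting has emerged as a powerful tool for building predictive models; its application in the Cox proportional hazards models can be found in Ridgeway (1999) and Li and Luan (2005). The idea is to pursue iterative steepest ascent of the log likelihood function. At each step, given the current estimate of $\boldsymbol{\beta}$, say $\hat{\boldsymbol{\beta}}$, let $\hat{\eta}_i = \mathbf{X}_i^T \hat{\boldsymbol{\beta}}$. The algorithm computes the gradient of the log-partial likelihood with respect to $\eta_i$, the ith component of $\boldsymbol{\eta} = (\eta_1, \ldots, \eta_n)^T$,

$$U_i = \frac{\partial \ell}{\partial \eta_i} \bigg|_{\boldsymbol{\eta} = \hat{\boldsymbol{\eta}}} = \delta_i - \sum_{l=1}^{n} \delta_l \frac{I(T_i \ge T_l) \exp(\hat{\eta}_i)}{\sum_{k=1}^{n} I(T_k \ge T_l) \exp(\hat{\eta}_k)}$$

for $i = 1, \ldots, n$, and then fits this gradient (also called working response or pseudo response) to the covariates $\mathbf{X}_1, \ldots, \mathbf{X}_n$ by a so-called base procedure (e.g. least squares estimation). Specifically, to facilitate variable selection, a component-wise algorithm can be implemented by restricting the search direction to be component-wise (Bühlmann and Yu, 2003; Li and Luan, 2005). For instance, fit the component-wise model

$$\hat{b}_j = \arg\min_{b} \sum_{i=1}^{n} (U_i - X_{ij} b)^2$$

for $j = 1, \ldots, p$. Compute

$$j^* = \arg\min_{1 \le j \le p} \sum_{i=1}^{n} (U_i - X_{ij} \hat{b}_j)^2$$

and update $\hat{\beta}_{j^*} \leftarrow \hat{\beta}_{j^*} + v \hat{b}_{j^*}$, where v is a small positive constant (say 0.01) controlling the learning rate (Friedman, 2001). For least squares estimation, gradient boosting is exactly the usual forward stagewise procedure (termed the linear-regression version of forward-stagewise boosting in Algorithm 16.1 of Hastie et al., 2009). Bühlmann and Hothorn (2007) refer to the same procedure as “L2boost”.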

This approach detects a component-wise direction along which the partial likelihood ascends most rapidly. At each boosting iteration only one component of $\boldsymbol{\beta}$ is selected and updated. Variable selection is achieved if boosting stops at an optimal number of iterations. This optimal number works as the regularization parameter and it can be determined by cross-validation (Simon et al., 2011). However, as we will show in simulation, the cross-validated choice still includes a certain number of false positive selections. A computationally feasible method is needed to control false discoveries.
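The component-wise step described in this section can be sketched as follows. This is a minimal illustration under stated assumptions: the function names `working_response` and `boosting_step`, the list-based data layout and the default learning rate are hypothetical choices for the example, and the base procedure is plain least squares without an intercept.

```python
import math

def working_response(times, events, eta):
    """Gradient of the Cox log-partial likelihood with respect to each eta_i."""
    n = len(times)
    # Risk-set denominators: sum of exp(eta_k) over subjects still at risk at times[l]
    denom = [sum(math.exp(eta[k]) for k in range(n) if times[k] >= times[l])
             for l in range(n)]
    U = []
    for i in range(n):
        s = sum(events[l] * math.exp(eta[i]) / denom[l]
                for l in range(n) if times[i] >= times[l])
        U.append(events[i] - s)
    return U

def boosting_step(times, events, X, beta, v=0.01):
    """One component-wise least-squares boosting update; modifies beta in place."""
    n, p = len(X), len(beta)
    eta = [sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    U = working_response(times, events, eta)
    best_j, best_rss, best_b = None, float("inf"), 0.0
    for j in range(p):
        sxx = sum(X[i][j] ** 2 for i in range(n))
        if sxx == 0.0:
            continue  # a constant-zero covariate cannot be fitted
        b = sum(U[i] * X[i][j] for i in range(n)) / sxx
        rss = sum((U[i] - X[i][j] * b) ** 2 for i in range(n))
        if rss < best_rss:
            best_j, best_rss, best_b = j, rss, b
    if best_j is not None:
        beta[best_j] += v * best_b
    return best_j
```

Calling `boosting_step` repeatedly and stopping early leaves most coefficients at exactly zero, which is how the number of iterations acts as the regularization parameter.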

2.4 Control of the false discovery rate (FDR)

Benjamini and Hochberg’s FDR-controlling procedure (Benjamini and Hochberg, 1995), or BH’s procedure for short, is a recent innovation for controlling the FDR. Consider a setting where we perform a large number of tests simultaneously. Let R be the number of total discoveries (selection of SNPs) and let V be the number of false discoveries (selection of irrelevant SNPs). If we denote the False Discovery Proportion by

$$\mathrm{FDP} = \frac{V}{\max(R, 1)},$$

then FDR is simply the expectation of the false discovery proportion (FDP). In the simplest setting (i.e. the P-values associated with all component tests are independent), BH’s procedure is able to control the FDR at any preselected level (called the FDR-control parameter).
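For readers less familiar with BH’s procedure, a minimal sketch of the step-up rule is given below. The function name `benjamini_hochberg` and its default level are assumptions for this example; the rule itself is the standard one: sort the m P-values, find the largest rank k with $p_{(k)} \le kq/m$, and reject the k smallest.

```python
def benjamini_hochberg(pvalues, q=0.2):
    """Indices of hypotheses rejected by the BH step-up procedure at level q."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    k = 0
    for rank, idx in enumerate(order, start=1):
        # Track the largest rank whose P-value lies under the BH line rank * q / m
        if pvalues[idx] <= rank * q / m:
            k = rank
    return sorted(order[:k])
```

Because the rule looks for the largest qualifying rank, a P-value can be rejected even when it exceeds its own cutoff, provided a larger P-value falls under the line.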

In the past 20 years, BH’s procedure has inspired a great deal of research: many variants of the procedure have been proposed, and many insights and connections have been discovered. For instance, Efron (2008, 2012) and Storey (2003) have pointed out an interesting connection between BH’s procedure and the popular Empirical Bayes method. In particular, they proposed a Bayesian version of the FDR, which they call the Local FDR (Lfdr), and showed that the two versions of FDR are intimately connected to each other. Another useful variant of BH’s procedure is the Significance Analysis of Microarrays (SAM; Tusher et al., 2001), a method that was originally designed to identify genes in microarray experiments. While the success of BH’s procedure hinges on an accurate approximation of the P-values associated with individual tests, SAM is comparatively more flexible because it is able to handle more general experimental layouts and summary statistics, where the P-values may be hard to obtain or to approximate. See Efron (2012) for a review of FDR-controlling methods, Lfdr and SAM.

3 Proposed methods

3.1 Component-wise gradient boosting procedure

To introduce the proposed method, we first consider a variant of component-wise gradient boosting method that is computationally efficient in high-dimensional settings.

Algorithm 1 (Componentwise Gradient Boosting)

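Initialize $\hat{\boldsymbol{\beta}}^{(0)} = \mathbf{0}$. For $m = 1, 2, \ldots$, iterate the following steps:

- For $j = 1, \ldots, p$, compute the componentwise gradient

$$G_j = \frac{\partial \ell(\boldsymbol{\beta})}{\partial \beta_j} \bigg|_{\boldsymbol{\beta} = \hat{\boldsymbol{\beta}}^{(m-1)}}. \tag{3}$$

- Compute $j^* = \arg\max_{1 \le j \le p} |G_j|$.

- Update $\hat{\beta}^{(m)}_{j^*} = \hat{\beta}^{(m-1)}_{j^*} + v \hat{\gamma}_{j^*}$ and keep $\hat{\beta}^{(m)}_{j} = \hat{\beta}^{(m-1)}_{j}$ for $j \ne j^*$, where $\hat{\gamma}_{j^*}$ can be estimated by one-step Newton’s update

$$\hat{\gamma}_{j^*} = -G_{j^*} \bigg/ \frac{\partial^2 \ell(\boldsymbol{\beta})}{\partial \beta_{j^*}^2} \bigg|_{\boldsymbol{\beta} = \hat{\boldsymbol{\beta}}^{(m-1)}}.$$

Iterate until $m = M_{stop}$ for some stopping iteration $M_{stop}$.

Algorithm 1 is closely connected to the traditional boosting procedure we described in Section 2.3, which first computes the working response, $U_i$, and then fits the working response to each covariate by least squares. For instance, under the chain rule of differentiation,

$$\sum_{i=1}^{n} U_i X_{ij} = \sum_{i=1}^{n} \frac{\partial \ell}{\partial \eta_i} \frac{\partial \eta_i}{\partial \beta_j} = G_j,$$

where $G_j$ was defined in (3).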
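Algorithm 1 can be sketched in a few lines. The sketch below rests on assumptions that are not confirmed by the text: the component with the largest absolute gradient is selected, the Newton step uses the second derivative of the log-partial likelihood with respect to the selected coefficient, and the function names and defaults (`m_stop`, `v`) are hypothetical.

```python
import math

def gradient_and_curvature(times, events, X, beta, j):
    """First and second derivatives of the Cox log-partial likelihood in beta_j."""
    n, p = len(X), len(beta)
    w = [math.exp(sum(X[i][k] * beta[k] for k in range(p))) for i in range(n)]
    G, H = 0.0, 0.0
    for i in range(n):
        if events[i] != 1:
            continue
        risk = [l for l in range(n) if times[l] >= times[i]]
        s0 = sum(w[l] for l in risk)
        s1 = sum(w[l] * X[l][j] for l in risk)
        s2 = sum(w[l] * X[l][j] ** 2 for l in risk)
        G += X[i][j] - s1 / s0                 # observed minus risk-set weighted mean
        H -= s2 / s0 - (s1 / s0) ** 2          # minus risk-set weighted variance
    return G, H

def componentwise_boosting(times, events, X, m_stop=100, v=0.05):
    """Component-wise gradient boosting with a damped one-step Newton update."""
    p = len(X[0])
    beta = [0.0] * p
    for _ in range(m_stop):
        derivs = [gradient_and_curvature(times, events, X, beta, j) for j in range(p)]
        j_star = max(range(p), key=lambda j: abs(derivs[j][0]))
        G, H = derivs[j_star]
        if H == 0.0:
            break  # no curvature information along the selected component
        beta[j_star] += v * (-G / H)
    return beta
```

No working response is formed here: each iteration only needs the p componentwise gradients and one scalar second derivative, which is the source of the computational saving.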

In contrast, Algorithm 1 is based on the gradient with respect to $\beta_j$ and it avoids the calculation of the working response. Such a component-wise update is connected with a minorization-maximization (MM) algorithm (Hunter and Lange, 2004; Lange, 2013). For instance, in a minorization step, given the mth step estimate $\hat{\boldsymbol{\beta}}^{(m)}$, an application of Jensen’s inequality leads to the following minorizing surrogate function

where is defined implicitly, all and whenever . In the maximization step, we maximize (or monotonically increase) the selected component of the surrogate function to produce the next iteration estimators, e.g. consider and update . The boosting algorithm then monotonically increases the original log-partial likelihood by increasing the surrogate functions. Note that as long as the ascent property is achieved, the choice of αj is not crucial, e.g. it can be considered as part of a control for step size. Moreover, as one only needs to increase the surrogate function instead of maximizing it, one-step Newton iterations (with step-size control) should provide sufficient and rapid updates at each boosting step. The parameter v can be regarded as controlling the step size of the one-step Newton procedure. This may explain why, in practice, the best strategy for the learning rate of a boosting procedure is to set v to be very small (v < 0.1).

Instead of using the one-step Newton update, an alternative approach is to use a normalized update whose norm equals 1, e.g. the sign of the selected gradient component. Its main disadvantage is that its performance is sensitive to the choice of learning rate: although the normalized update provides an ascent direction, a sufficiently small step length may be needed. Empirically, we found that the procedure with the fitted Newton update provides better performance.

3.2 Boosting with stability selection for false discovery control

Stability selection was recently introduced by Meinshausen and Bühlmann (2010) as a general technique designed to improve the performance of a variable selection algorithm. The idea is to identify variables that are included in the model with high probabilities when a variable selection procedure is performed on random subsamples of the observations. For completeness of exposition, we summarize the procedure of stability selection as follows. Let $I$ be a random subsample of $\{1, \ldots, n\}$ of size $\lfloor n/2 \rfloor$, drawn without replacement. Here $\lfloor x \rfloor$ is defined as the largest integer not greater than $x$. For variable $j \in \{1, \ldots, p\}$, the random sampling probability that the jth variable is selected by the stability selection is

$$\Pi_j = P^*\{ j \in \hat{S}(I) \},$$

where $\hat{S}(I)$ denotes the set of variables selected by the variable selection procedure based on the subsample $I$, and the empirical probability $P^*$ is with respect to the random sampling. For a threshold $\Pi_{thres} \in (0, 1)$, the set of variables selected by stability selection is then defined as

$$\hat{S}_{stable} = \{ j : \Pi_j \ge \Pi_{thres} \}.$$

A particularly attractive feature of stability selection is that it is relatively insensitive to the tuning parameter (e.g. $M_{stop}$ for boosting) and hence cross-validation can be avoided. However, a new regularization parameter, the threshold $\Pi_{thres}$, needs to be determined. To address this question, an error control was provided by an upper bound on the expected number of falsely selected variables (Meinshausen and Bühlmann, 2010; Theorem 1). More formally, let $\bar{q} = E\{|\hat{S}(I)|\}$ be the expected number of selected variables and define V to be the number of falsely selected variables. Under an exchangeability condition, the expected number of falsely selected variables is bounded for $\Pi_{thres} \in (1/2, 1)$ by

$$E(V) \le \frac{1}{2\Pi_{thres} - 1} \frac{\bar{q}^2}{p}.$$

Based on such a bound, the tuning parameter $\Pi_{thres}$ can be chosen such that $E(V)$ is controlled at the desired level, e.g. for $E(V) \le 1$, if $\bar{q}^2 < p$,

$$\Pi_{thres} = \frac{1}{2} \left( 1 + \frac{\bar{q}^2}{p} \right). \tag{4}$$

The property of the above procedure relies on restrictive assumptions such as the exchangeability condition (e.g. the joint distribution of outcomes and covariates is invariant under permutations of non-informative variables), which, as noted by Bühlmann and van de Geer (2011), are not likely to hold for real data. In genetic studies with extensive correlation structure among SNP markers, the exchangeability condition fails and using the threshold in (4) has been shown to suffer a loss of power (Alexander and Lange, 2011). Moreover, in computing the threshold in (4), we face a tradeoff. Commonly used variable selection procedures will select a certain number of false positives. On one hand, we want $\bar{q}$ to be large to select the true informative predictors, but on the other hand, a large $\bar{q}$ also can render $\Pi_{thres}$ large (which leads to a too conservative threshold). If $\bar{q}^2 \ge p$, we cannot control the error with the formula in (4).
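The tradeoff in choosing the threshold can be made concrete with a short calculation. The sketch below assumes the bound of Meinshausen and Bühlmann (2010, Theorem 1), $E(V) \le \bar{q}^2 / \{(2\Pi_{thres} - 1)p\}$, and solves it for the smallest threshold that keeps the bound below a target; the function name `stability_threshold` and the argument names are hypothetical.

```python
def stability_threshold(q_bar, p, ev_max=1.0):
    """Smallest threshold whose error bound is at most ev_max, or None if none exists.

    q_bar: expected number of variables selected per subsample (assumed known)
    p:     total number of candidate variables
    """
    pi_thres = 0.5 * (1.0 + q_bar ** 2 / (p * ev_max))
    # A selection frequency cannot exceed 1, so larger values are unusable
    return pi_thres if pi_thres < 1.0 else None
```

With p = 2000, an average of 20 selected variables per subsample gives a threshold of about 0.6, whereas an average of 50 gives no usable threshold, which illustrates why a base procedure that selects many variables makes this bound uninformative.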

To improve the performance of stability selection and determine a data-driven threshold for the selection frequency, we adopt the idea of SAM (Tusher et al., 2001) and propose a random permutation based stability selection boosting procedure.

Algorithm 2 (Boosting with Stability Selection and Permutation)

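(a) For $s = 1, \ldots, S$, draw a random subsample of the data of size $\lfloor n/2 \rfloor$. On the sth subsample, implement the proposed boosting approach (e.g. Algorithm 1). Record the set of selected predictors at the sth subsampling, $\hat{S}_s$, and compute $\Pi_j = S^{-1} \sum_{s=1}^{S} I(j \in \hat{S}_s)$ for $j = 1, \ldots, p$, where I(A) is an indicator function taking the value 1 when condition A holds and 0 otherwise.

(b) For $b = 1, \ldots, B$, randomly permute the outcomes so that the relation between covariates and outcomes is decoupled. Repeat the stability-based boosting described in step (a) on the permuted sample, record the sets of selected predictors $\hat{S}^{(b)}_s$, and compute $\Pi^{(b)}_j = S^{-1} \sum_{s=1}^{S} I(j \in \hat{S}^{(b)}_s)$.

(c) Order the values of $\Pi_j$ for $j = 1, \ldots, p$, and let $\Pi_{(j)}$ be the jth largest value. Likewise let $\Pi^{(b)}_{(j)}$ be the jth largest value of $\Pi^{(b)}_1, \ldots, \Pi^{(b)}_p$.

(d) Define the estimated empirical Bayes false discovery rate (Efron, 2012) corresponding to the jth largest $\Pi_{(j)}$ as

$$\widehat{\mathrm{Fdr}}(\Pi_{(j)}) = \frac{B^{-1} \sum_{b=1}^{B} \sum_{k=1}^{p} I(\Pi^{(b)}_k \ge \Pi_{(j)})}{\sum_{k=1}^{p} I(\Pi_k \ge \Pi_{(j)})}.$$

(e) For a pre-specified value $q \in (0, 1)$, calculate a data-driven threshold

$$\Pi_{thres} = \min\{ \Pi_{(j)} : \widehat{\mathrm{Fdr}}(\Pi_{(j)}) \le q \}.$$

This $\Pi_{thres}$ can then be used to determine the selected variables. If q = 0.2 and 5 variables are selected with selection frequency at least $\Pi_{thres}$, then about 1 of these 5 variables would be expected to be a false positive.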
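The bookkeeping in Algorithm 2 can be sketched as below. Everything named here is an assumption for illustration: `select` stands for any variable selection routine (e.g. a boosting fit) that maps a list of subject indices to a set of selected variable indices, and the false discovery estimate is taken to be the average number of permutation frequencies at or above a cut divided by the number of observed frequencies at or above it.

```python
import random

def selection_frequencies(select, n, p, S=100, seed=0):
    """Fraction of S half-size subsamples in which each variable is selected."""
    rng = random.Random(seed)
    counts = [0] * p
    for _ in range(S):
        subsample = rng.sample(range(n), n // 2)  # drawn without replacement
        for j in select(subsample):
            counts[j] += 1
    return [c / S for c in counts]

def fdr_threshold(freq, perm_freqs, q=0.2):
    """Smallest observed frequency whose estimated Fdr is at most q (None if none)."""
    B = len(perm_freqs)
    best = None
    for cut in sorted(set(freq), reverse=True):
        observed = sum(1 for f in freq if f >= cut)
        null_mean = sum(sum(1 for f in pf if f >= cut) for pf in perm_freqs) / B
        if null_mean / observed <= q:
            best = cut  # cuts are visited in decreasing order, so the last hit is the smallest
    return best
```

In use, `freq` would come from `selection_frequencies` on the original data and each entry of `perm_freqs` from the same call after permuting the outcomes; variables with frequency at least the returned value are retained.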

4 Simulations

Finite-sample properties of the proposed method were evaluated through a series of simulation studies. Death times were generated from the exponential model for $i = 1, \ldots, n$, where n = 1000 and the covariate vectors $\mathbf{X}_i$ came from multivariate normal distributions. The 2000 predictors were arranged in 10 blocks with equal numbers of predictors within each block. We considered three simulation schemes with within-block correlation coefficients varying between 0.2, 0.5 and 0.8. For all three schemes, the between-block correlation coefficients were 0 (i.e. independent between blocks). We chose 10 true signals, one from each block, with nonzero true coefficients. All other covariate effects are zero. Censoring times were generated from uniform distributions, with the percentage of censored subjects then being approximately 20–30%. Each data configuration was replicated 100 times.
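A data-generating scheme of this kind can be sketched as follows. The baseline rate, the censoring range, the coefficient values and the use of a shared within-block factor to induce equal correlation are all assumptions made for illustration, since the exact settings are not recoverable from the text; the function name is hypothetical.

```python
import math
import random

def simulate_block_survival(n, n_blocks, block_size, rho, beta,
                            base_rate, cens_max, seed=0):
    """Block-correlated normal covariates with exponential death times."""
    rng = random.Random(seed)
    X, times, events = [], [], []
    for _ in range(n):
        row = []
        for _ in range(n_blocks):
            shared = rng.gauss(0.0, 1.0)  # factor shared by all covariates in a block
            # Each covariate has unit variance and within-block correlation rho
            row.extend(math.sqrt(rho) * shared
                       + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
                       for _ in range(block_size))
        eta = sum(x * b for x, b in zip(row, beta))
        death = rng.expovariate(base_rate * math.exp(eta))  # hazard = base_rate * exp(eta)
        cens = rng.uniform(0.0, cens_max)                   # uniform censoring time
        X.append(row)
        times.append(min(death, cens))
        events.append(1 if death <= cens else 0)
    return X, times, events
```

The censoring percentage is governed by `cens_max` relative to `base_rate`, so in practice one would tune `cens_max` until the observed censoring fraction falls in the desired range.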

We first assess the speed of our algorithm. Table 1 compares the computation time for the proposed approach with Lasso for proportional hazards models (implemented with R package penalized). These timings were taken on a Dell laptop (model XPS 15) with a quad-core 2.1-GHz Intel Core i7-3612QM processor and 8 GB RAM. Numerically, we find the proposed approach is faster than R package penalized (4.16 versus 7.49 min in Table 1). As a gradient-based method, each iteration of the proposed approach is faster than those of approaches that require inverting the Hessian matrix. It is known that finding the proper regularization parameter is difficult for the Lasso procedure, especially in survival settings, for which a piece-wise linear solution path (LARS; Efron et al., 2004) is not available and a grid search (Simon et al., 2011) or bisection method (Goeman, 2010) is required (i.e. multiple Lasso fits are needed for a series of tuning parameters). In contrast, in the boosting procedure, the number of iterations works as the tuning parameter and the selection of the optimal tuning parameter can be implemented in a single boosting run. Moreover, the optimal choice is less critical as boosting is more robust to overfitting (Hastie et al., 2009).

Table 1.

Comparisons of computation time: one simulation loop; n = 1000 and p = 2000

| Lasso | Boosting |
|---|---|
| 7.49 min | 4.16 min |

The boosting procedure is described in Section 3.1; the Lasso is implemented using R package penalized; 10-fold cross-validation was implemented to determine the optimal tuning parameters.

We compared the proposed methods with Lasso for proportional hazards models and with univariate approaches using either Bonferroni correction (termed Univariate Bonferroni in Table 2) or Benjamini and Hochberg’s (1995) procedure for FDR control (below a threshold 0.2; termed Univariate FDR in Table 2). For Lasso and the boosting approach without stability control (Algorithm 1), 10-fold cross-validation was implemented to determine the optimal tuning parameters (e.g. Simon et al., 2011). For the boosting approach with stability selection, we repeatedly drew 100 random subsamples of the data of size $\lfloor n/2 \rfloor$. Both the threshold defined in formula (4) and the threshold from Algorithm 2 with q = 0.2 (termed S-Boosting-1 and S-Boosting-2, respectively) were used for variable selection. Table 2 shows that boosting without stability selection (termed Boosting in Table 2) outperforms the univariate approaches in the average number of false negatives (FN) and the empirical probability of identifying the true signals (Power) and, when covariates are correlated, in the average number of false positives (FP) and the average FDP. Though the Lasso has comparable performance in terms of FN and Power, the boosting methods yield substantially fewer FPs than the Lasso. Finally, the proposed boosting method with stability selection and permutation (S-Boosting-2) further reduces the FPs and it outperforms S-Boosting-1.

Table 2.

Summary of simulation results

| Correlation | Methods | FP | FDP | FN | Power |
|---|---|---|---|---|---|
| 0 | Univariate Bonferroni | 0.01 | 0 | 2.28 | 0.77 |
| | Univariate FDR | 1.94 | 0.18 | 1.49 | 0.85 |
| | Lasso | 185.22 | 0.95 | 0 | 1 |
| | Boosting | 15.76 | 0.61 | 0 | 1 |
| | S-Boosting-1 | 0.01 | 0 | 0 | 1 |
| | S-Boosting-2 | 0 | 0 | 0 | 1 |
| 0.5 | Univariate Bonferroni | 85.29 | 0.92 | 2.32 | 0.77 |
| | Univariate FDR | 172.32 | 0.95 | 0.81 | 0.92 |
| | Lasso | 186.17 | 0.95 | 0 | 1 |
| | Boosting | 22.31 | 0.69 | 0 | 1 |
| | S-Boosting-1 | 0.08 | 0.01 | 0 | 1 |
| | S-Boosting-2 | 0.01 | 0 | 0 | 1 |
| 0.8 | Univariate Bonferroni | 131.42 | 0.94 | 2.17 | 0.78 |
| | Univariate FDR | 207.52 | 0.96 | 0.68 | 0.93 |
| | Lasso | 185.14 | 0.95 | 0 | 1 |
| | Boosting | 29.25 | 0.75 | 0 | 1 |
| | S-Boosting-1 | 0.36 | 0.03 | 0.1 | 0.99 |
| | S-Boosting-2 | 0.03 | 0.01 | 0 | 1 |

FP: the average number of false positives; FDP: the average false discovery proportion; FN: the average number of false negatives; Power: the empirical probability of identifying the true signals.
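The summary measures in Table 2 can be computed per replicate as in the sketch below and then averaged over replicates. The function name `selection_metrics` and the per-replicate definition of power (the fraction of true signals recovered) are assumptions for this example.

```python
def selection_metrics(selected, truth):
    """False positives, false discovery proportion, false negatives and power."""
    selected, truth = set(selected), set(truth)
    fp = len(selected - truth)
    fn = len(truth - selected)
    fdp = fp / max(len(selected), 1)  # defined as 0 when nothing is selected
    power = (len(truth) - fn) / len(truth)
    return {"FP": fp, "FDP": fdp, "FN": fn, "Power": power}
```

For example, selecting variables {0, 1, 5} when the true signals are {0, 1, 2, 3} gives one false positive, two false negatives, an FDP of one third and a power of one half.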

5 Application to cutaneous melanoma data

Cutaneous melanoma (CM) is one of the most aggressive skin cancers, causing the greatest number of skin cancer related deaths worldwide. Among CM patients, wide heterogeneities are present. The commonly used clinicopathological variables, such as tumor stage and Breslow thickness (Balch et al., 2009), may have insufficient discriminative ability (Schramm and Mann, 2011). Discovery of genetic variants would offer a feasible approach to understanding mechanisms that may affect clinical outcomes and the sensitivity of individual cancers to therapy (Liu et al., 2012, 2013; Rendleman et al., 2013). We applied our proposed procedures to a genome-wide association study reported by Yin et al. (2015) to analyze the association of 2339 common single-nucleotide polymorphisms (SNPs) with overall survival in CM patients. Our goal was to identify SNPs that are relevant to overall survival among the patients.

The dataset contains a total of 858 CM patients, with 133 deaths observed during the follow-up, where the median follow-up time was 81.1 months. The overall survival time was calculated from the date of diagnosis to the date of death or the date of the last follow-up. Genotyped or imputed common SNPs (minor allele frequency , genotyping rate , Hardy-Weinberg equilibrium P-value and imputation r) within 14 autosomal FA genes or their -kb flanking regions were selected for association analysis (Yin et al., 2015). As a result, 321 genotyped SNPs and 2018 imputed SNPs in the FA pathway were selected for further analysis. Other covariates to adjust for included age at diagnosis, Clark level, tumor stage, Breslow thickness, sentinel lymph node biopsy and the mitotic rate.

The proposed boosting procedure with stability selection was implemented to select informative SNPs (coded as 0, 1; without or with minor alleles). The importance of a predictor is evaluated by the proportion of the 100 subsamples in which the predictor is selected in the model. We also compared the proposed methods with the Lasso, the boosting procedure without stability selection and univariate approaches. The results are summarized in Table 3. The Lasso procedure selected 25 SNPs. Among them, 12 SNPs with absolute coefficients larger than 0.01 are listed in Table 3. None of these predictors pass the univariate approaches with Bonferroni correction or Benjamini and Hochberg’s (1995) procedure for FDR control (with a threshold 0.2). As we found in Section 4, these results suggest that the univariate approaches may have more false negatives than other methods. In contrast, the boosting procedure selected 7 predictors, which were a subset of the top 12 SNPs selected by the Lasso. To further control the false selections, the estimated false discovery rates were also calculated to determine a data-driven threshold for the selection frequency. Three of the SNPs selected by both Lasso and boosting pass this threshold. The remaining variables received insufficient support from stability selection. Table 4 summarizes the numbers of selected variables from the Lasso and the boosting without or with stability selection. These results are consistent with those from the simulation section: the Lasso tends to select too many variables, the boosting selects substantially fewer variables than the Lasso, and the boosting procedure with stability selection provides a control for false positives.

Table 3.

Summary of selected SNPs by Lasso (sorted by the magnitude of coefficients; only predictors with absolute coefficients larger than 0.01 are included), their estimated coefficients by boosting without stability selection, P-values based on univariate approach, selection frequencies based on stability selection

| SNPs | Chromosome | Gene | Lasso coefficient | Boosting coefficient | P-value | Frequency (%) |
|---|---|---|---|---|---|---|
| rs74189161 | 13 | BRCA2 | −0.11 | −0.10 | 0.002 | 72* |
| rs356665 | 9 | FANCC | −0.09 | −0.04 | 0.03 | 88* |
| rs11649642 | 16 | FANCA | −0.08 | −0.05 | 0.01 | 27 |
| rs9567670 | 13 | BRCA2 | −0.07 | −0.03 | 0.01 | 51 |
| rs8081200 | 17 | BRIP1 | −0.06 | −0.02 | 0.05 | 38 |
| rs3087374 | 15 | FANCI | −0.06 | −0.01 | 0.02 | 73* |
| rs35322368 | 9 | FANCC | 0.06 | 0 | 0.03 | 65 |
| rs57119673 | 16 | FANCA | −0.04 | −0.01 | 0.03 | 54 |
| rs8061528 | 16 | BTBD12 | −0.03 | 0 | 0.12 | 36 |
| rs2247233 | 15 | FANCI | 0.02 | 0 | 0.15 | 39 |
| rs848286 | 2 | FANCL | 0.02 | 0 | 0.02 | 23 |
| rs62032982 | 16 | PALB2 | 0.01 | 0 | 0.04 | 34 |

Lasso coefficient: coefficients from Lasso; Boosting coefficient: coefficients from boosting without stability selection; P-value: calculated from the univariate approach; Frequency (%): selection frequencies across 100 subsamples; an asterisk (*) indicates that the selection frequency passes the threshold determined from the estimated empirical Bayes false discovery rate (based on 500 permuted samples).

Table 4.

Numbers of selected variables

| Lasso | Boosting | Stability selection |

|---|---|---|

| 25 | 7 | 3 |

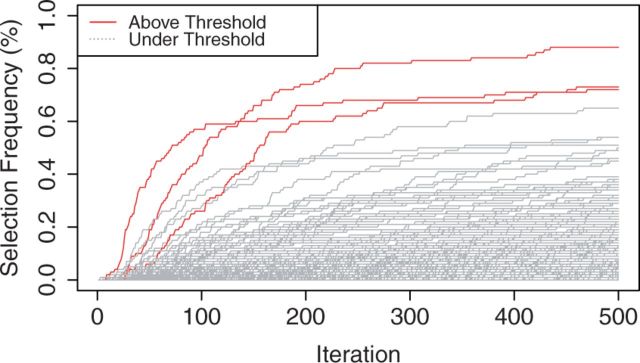

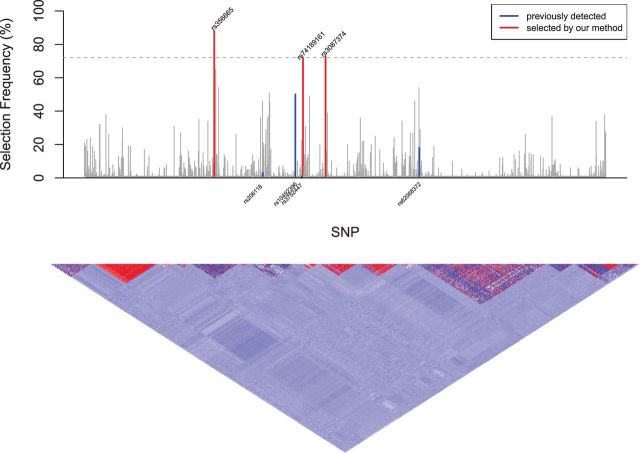

Figure 1 shows the stability path (selection frequencies across boosting iterations). The variables with selection frequencies larger than the threshold (determined from the estimated empirical Bayes false discovery rate, based on 500 permuted samples) are plotted as solid lines, while the paths of the remaining variables are shown as broken lines. The top 3 variables stand out clearly and the number of boosting iterations is less critical. A Manhattan plot is given in Figure 2, with the dashed horizontal line corresponding to the estimated threshold. Three variables have selection frequencies above this dashed horizontal line. The vertical blue lines highlight the selection frequencies of the four previously detected SNPs that are associated with overall survival of CM patients (Yin et al., 2015). The red vertical lines highlight the SNPs whose selection frequencies pass the estimated threshold. The lower panel of Figure 2 illustrates pairwise correlations across the 2339 SNPs with the strength of the correlation, from positive to negative, indicated by the color spectrum from red to dark blue. One of the top SNPs in our finding, rs74189161 (with selection frequency = 72%), is strongly correlated with rs3752447 identified by Yin et al. (2015) (correlation calculated with plink v1.07; Purcell et al., 2007). Besides confirming the previously reported SNP, we also found some novel signals. For example, we identified a cluster of signals around SNP rs356665 in gene FANCC and a SNP rs3087374 in gene FANCI. Both genes have previously been reported to have regulatory effects in the FA pathway (Thompson et al., 2012; Jenkins et al., 2012; Kao et al., 2011). Mutations in the FA pathway are identified in diverse cancer types (Hucl and Gallmeier, 2011) and therefore are likely to modulate the survival of CM patients.

Fig. 1.

Selection path: selection frequencies across 500 boosting iterations; Threshold: determined from the estimated empirical Bayes false discovery rate (based on 500 permuted samples)

Fig. 2.

Manhattan plot for selection frequency (%); dashed horizontal line: estimated threshold; vertical blue lines: selection frequencies of the four previously detected SNPs that are associated with overall survival of CM patients (Yin et al., 2015); red vertical lines: the SNPs whose selection frequencies pass the estimated threshold; lower panel: pairwise correlations across the 2339 SNPs with the strength of the correlation, from positive to negative, indicated by the color spectrum from red to dark blue

6 Discussion

Reducing the number of false discoveries is often very desirable in biological applications since follow-up experiments can be costly and laborious. We have proposed a boosting method with stability selection to analyze high-dimensional data. We demonstrated and compared performances of the proposed method and the commonly used univariate approaches or Lasso for variable selection. The proposed method outperformed other methods in terms of substantially reduced false positives and low false negatives.

Finally, it is worth mentioning that the traditional gradient boosting approach described in Section 2.3 cannot accommodate some important models, including survival models with time-varying effects, wherein the generic function $\eta$ depends not only on the covariates but also on time. In contrast, the proposed modification of gradient boosting works in flexible parameter spaces, even including infinite-dimensional functional spaces. In the latter case, as the search space is typically a functional space, one needs to calculate the Gâteaux derivative of the functional in order to determine the optimal search direction. We will report this work elsewhere.

Funding

Drs Li and Lin’s research is partly supported by the Chinese Natural Science Foundation (11528102). Dr Wei’s research is partly supported by NIH grants R01CA100264 and R01CA133996. Dr Hyslop’s research is partly supported by a NIH grant P30CA014236. Dr Lee’s research is partly supported by NCI SPORE P50 CA093459, and philanthropic contributions to The University of Texas M.D. Anderson Cancer Center Moon Shots Program, the Miriam and Jim Mulva Research Fund, the Patrick M. McCarthy Foundation and the Marit Peterson Fund for Melanoma Research.

Conflict of Interest: none declared.

References

- Alexander D.H., Lange K. (2011) Stability selection for genome-wide association. Genet. Epidemiol., 35, 722–728.

- Balch C.M., et al. (2009) Final version of 2009 AJCC melanoma staging and classification. J. Clin. Oncol., 27, 6199–6206.

- Benjamini Y., Hochberg Y. (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B, 57, 289–300.

- Bishop C. (1995) Neural Networks for Pattern Recognition. Clarendon Press, Oxford.

- Boser B.E., et al. (1992) A training algorithm for optimal margin classifiers. In: Proceedings of the Fifth Annual ACM Workshop on Computational Learning Theory, pp. 144–152.

- Breiman L., et al. (1984) Classification and Regression Trees. Wadsworth, New York.

- Breiman L. (2001) Random forests. Mach. Learn., 45, 5–32.

- Bühlmann P., van de Geer S. (2011) Statistics for High-Dimensional Data: Methods, Theory and Applications. Springer-Verlag, Berlin Heidelberg.

- Bühlmann P., Yu B. (2003) Boosting with the L2 loss: regression and classification. J. Am. Stat. Assoc., 98, 324–339.

- Bühlmann P., Yu B. (2006) Boosting for high-dimensional linear models. Ann. Stat., 34, 559–583.

- Bühlmann P., Hothorn T. (2007) Boosting algorithms: regularization, prediction and model fitting. Stat. Sci., 22, 477–505.

- Efron B., et al. (2004) Least angle regression. Ann. Stat., 32, 407–499.

- Efron B. (2008) Microarrays, empirical Bayes and the two groups model. Stat. Sci., 23, 1–22.

- Efron B. (2012) Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction. Institute of Mathematical Statistics Monographs, Cambridge University Press, Cambridge, United Kingdom.

- Fan J., Li R. (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc., 96, 1348–1360.

- Fan J., Li R. (2002) Variable selection for Cox’s proportional hazards model and frailty model. Ann. Stat., 30, 74–99.

- Freund Y., Schapire R. (1996) Experiments with a new boosting algorithm. In: Machine Learning: Proceedings of the Thirteenth International Conference, Morgan Kaufmann, San Francisco, pp. 148–156.

- Friedman J.H., et al. (2000) Additive logistic regression: a statistical view of boosting (with discussion). Ann. Stat., 28, 337–407.

- Friedman J.H. (2001) Greedy function approximation: a gradient boosting machine. Ann. Stat., 29, 1189–1232.

- Goeman J.J. (2010) L1 penalized estimation in the Cox proportional hazards model. Biom. J., 52, 70–84.

- Gui J., Li H. (2005) Penalized Cox regression analysis in the high-dimensional and low-sample size settings with application to microarray gene expression data. Bioinformatics, 21, 3001–3008.

- Hastie T.J., Tibshirani R.J. (1990) Generalized Additive Models. Chapman and Hall/CRC.

- Hastie T., et al. (2009) The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer, New York.

- Hucl T., Callmeier E. (2010) DNA repair: exploiting the Fanconi Anemia Pathway as a potential therapeutic target. Physiol. Res., 60, 453–465.

- Hunter D.R., Lange K. (2004) A tutorial on MM algorithms. Am. Stat., 58, 30–37.

- Jenkins C., et al. (2012) Targeting the Fanconi Anemia Pathway to identify tailored anticancer therapeutics. Anemia, Article ID 481583.

- Kao W.H., et al. (2011) Upregulation of Fanconi anemia DNA repair genes in melanoma compared with non-melanoma skin cancer. J. Investig. Dermatol., 131, 2139–2142.

- Lange K. (2013) Optimization, 2nd edn. In: Casella G., et al. (eds), Springer Texts in Statistics. Springer, New York.

- Li H., Luan Y. (2005) Boosting proportional hazards models using smoothing splines, with applications to high-dimensional microarray data. Bioinformatics, 21, 2403–2409.

- Liu H., et al. (2012) Influence of single nucleotide polymorphisms in the MMP1 promoter region on cutaneous melanoma progression. Melanoma Res., 22, 169–175.

- Meinshausen N., Bühlmann P. (2010) Stability selection (with discussion). J. R. Stat. Soc. Ser. B, 72, 417–473.

- Purcell S., et al. (2007) PLINK: a toolset for whole-genome association and population-based linkage analysis. Am. J. Hum. Genet., 81, 559–575.

- Rendleman J., et al. (2013) Melanoma risk loci as determinants of melanoma recurrence and survival. J. Transl. Med., 11, 1–14. doi: 10.1186/1479-5876-11-279.

- Ridgeway G. (1999) The state of boosting. Comput. Sci. Stat., 31, 172–181.

- Schramm S.J., Mann G.J. (2011) Melanoma prognosis: a REMARK-based systematic review and bioinformatic analysis of immunohistochemical and gene microarray studies. Mol. Cancer Therap., 10, 1520–1528.

- Simon N., et al. (2011) Regularization paths for Cox’s proportional hazards model via coordinate descent. J. Stat. Softw., 39, 1–13.

- Storey J.D. (2003) The positive false discovery rate: a Bayesian interpretation and the q-value. Ann. Stat., 31, 2013–2035.

- Thompson E.R., et al. (2012) Exome sequencing identifies rare deleterious mutations in DNA repair genes FANCC and BLM as potential breast cancer susceptibility alleles. PLOS Genet., 8, e1002894.

- Tibshirani R. (1996) Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodological), 58, 267–288.

- Tibshirani R. (1997) The lasso method for variable selection in the Cox model. Stat. Med., 16, 385–395.

- Tusher V.G., et al. (2001) Significance analysis of microarrays applied to the ionizing radiation response. Proc. Natl. Acad. Sci. USA, 98, 5116–5121.

- Yin J., et al. (2015) Genetic variants in Fanconi Anemia pathway genes BRCA2 and FANCA predict Melanoma survival. J. Investig. Dermatol., 135, 542–550.

- Zhao D.S., Li Y. (2010) Principled sure independence screening for Cox models with ultra-high-dimensional covariates. Manuscript, Harvard University.