Abstract

Standards are specifications to which the elements of a technology must conform. Here, we apply this notion to the biochemical ‘technologies’ of nature, where objects like DNA and proteins, as well as processes like the regulation of gene activity, are highly standardized. We introduce the concept of standards with multiple examples, ranging from the ancient genetic material RNA to Palaeolithic stone axes and digital electronics, and we discuss common ways in which standards emerge in nature and technology. We then focus on the question of how standards can facilitate technological and biological innovation. Innovation-enhancing standards include those of proteins and digital electronics. They share common features, such as a small number of standardized building blocks that can be combined through standard interfaces to create myriad useful objects or processes. We argue that such features will also characterize the most innovation-enhancing standards of future technologies.

Keywords: innovation, evolution, technology

1. Introduction

Anybody who has travelled to a foreign country without the right electric outlet adaptor has made frustrating contact with the world of technology standards. After more than a century of public electric power, 14 incompatible outlet standards persist, as do similarly incompatible standards—of battery sizes, audio data formats, espresso capsules, and so on—in many other technologies.

A technology is a ‘means to fulfil a human purpose’ [1], and a technology standard is a set of specifications to which all elements of a product, process or format conform [2]. These definitions do not apply only to human technology; they have analogues throughout the living world. The reason is that one can view adaptive traits of organisms as technologies: means to fulfil the ‘purpose’ of any organism—to survive and reproduce. And because many parts of organisms and many of life's processes—such as DNA and its replication—recur in highly stereotypical ways across many species, one can think of them as being standardized.

It is important to distinguish standards, be they in nature or technology, from the processes that create them. In the human realm, many technologies, such as Adobe's PDF standard for formatting documents, can become de facto standards through their success in the marketplace. This process is a technological analogue to natural selection, which has established many of nature's standards. However, many human technology standards become established through a social decision process that has no known counterpart in nature. Such standards are de jure standards. They are either imposed unilaterally by a regulatory body, a government or the military, or offered for adoption by mutual agreement between manufacturers or other stakeholders—this is how standards organizations like the International Organization for Standardization arrive at thousands of standards [3]. Our primary focus will not be on the processes creating standards, but on standards themselves, and on their role in innovation.

In human technology, innovations are successful inventions that have achieved widespread diffusion by fulfilling a human purpose [4]. In nature, innovations are qualitatively novel traits that help organisms survive and reproduce [5]. Our focus is on qualitatively new technologies and traits, such as the transistor and the insect wing, but we are well aware that the line between merely quantitative and qualitative change is not clear-cut and that many innovations arise in a series of small steps.

In the next sections, we first provide several examples of standards in nature and technology, beginning from the most ancient standards that date to life's earliest days, proceeding to technological standardization in prehistoric cultures and concluding with industrial and post-industrial technologies. With these examples in mind, we will then briefly return to the question of how standards originate. More importantly, we will ask what role standards play in innovation. Central to this role is the extent to which standards render different technologies interoperable.

2. From the primordial soup to nervous systems

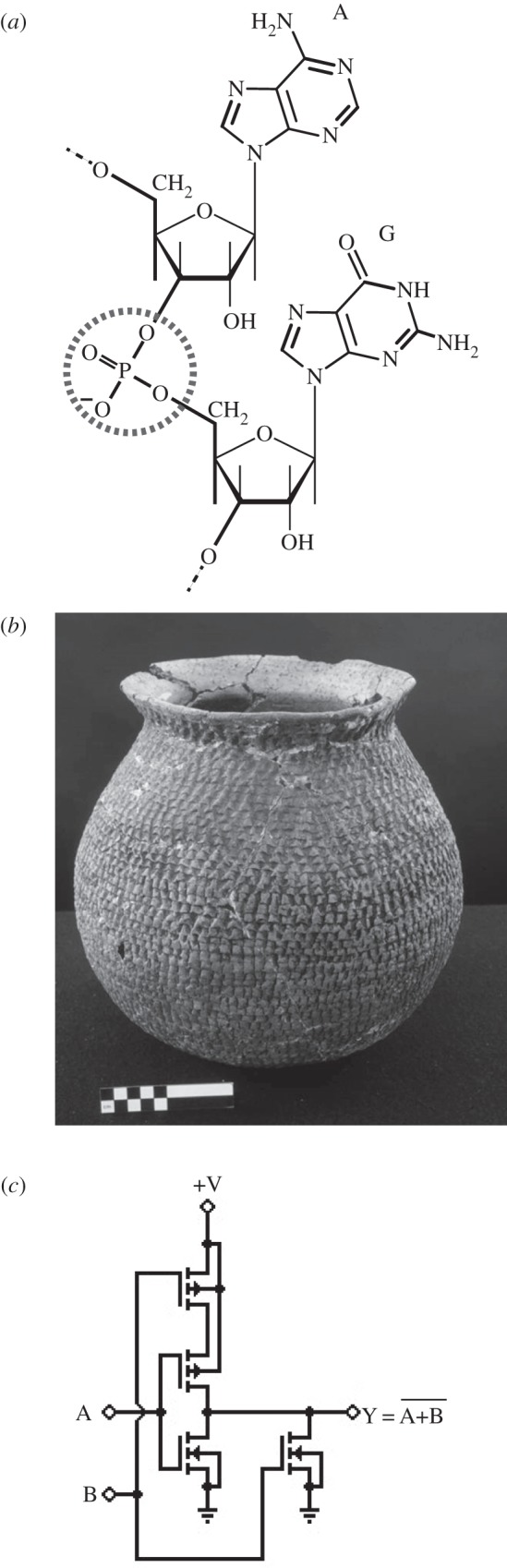

Early life was an RNA world [6–10], and RNA was one of life's first ‘technology standards’, serving to store and transmit information [11]. RNA is a biochemical ‘technology’ where both the parts and their ‘interface’ are standardized. The parts are four different kinds of RNA building blocks—nucleotides—that are distinguished by the four bases adenine, cytosine, guanine and uracil. Their interface is the phosphodiester bond, which links an oxygen atom on the 3′ carbon of one nucleotide's ribose sugar to an oxygen atom on the 5′ carbon of the next nucleotide's ribose, using a phosphate as a bridge (figure 1a). This interface is repeated stereotypically at each of the thousands of nucleotides that can make up a single RNA string.

Figure 1.

Three examples of standards in different domains. (a) The phosphodiester bond (dashed circle), a standard interface linking the nucleotide building blocks of RNA and DNA, such as the nucleotides containing adenine (‘A’) and guanine (‘G’) of this RNA example. (b) An ancestral Pueblo cooking pot. The exterior texture was created by combining the raw materials of pottery with techniques from basket weaving. ‘Corrugated’ vessels like this improve cooking control relative to older, plain-surfaced vessels. Courtesy of Crow Canyon Archaeological Center. (c) A standard NOR (‘not OR’) logic gate composed of four transistors. Millions of identical copies of this and a few other gate types are interconnected on a single integrated circuit to perform logical and arithmetic functions.

Because of small differences between the chemical structures of RNA and DNA, RNA is a better catalyst of biochemical reactions, but it also has the disadvantage of being chemically less stable than DNA. It is not surprising, then, that sometime early in life's evolution, the major tasks needed to perpetuate life—information storage and catalysis—became divided between two different classes of molecules. DNA became the primary information repository, and proteins—more versatile catalysts than RNA—became the dominant catalysts.

As in RNA, the parts and their interface are also highly standardized in DNA and proteins. DNA's parts are four nucleotides akin to those of RNA, and their interface is the same phosphodiester bond as in RNA. The parts of proteins are the 20 different kinds of amino acids, which are connected by their own standard interface, the peptide bond linking an amino group at the end of one amino acid to the carboxyl group at the end of another amino acid. Again, this interface is stereotypically repeated thousands of times in proteins comprising thousands of amino acids.
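To make the combinatorial payoff of a few standard parts and one standard interface concrete, the short Python sketch below counts how many distinct chains of a given length can be built from 4 nucleotides or 20 amino acids, and how many copies of the single linking bond each chain contains. It is a toy calculation under simplifying assumptions: every sequence is treated as chemically possible, folding and function are ignored, and the function names are our own.

```python
def count_sequences(alphabet_size: int, length: int) -> int:
    """Number of distinct linear chains of `length` parts drawn from the alphabet."""
    return alphabet_size ** length


def count_bonds(length: int) -> int:
    """A linear chain of n parts uses n - 1 copies of the same standard bond."""
    return max(length - 1, 0)


# Toy comparison: nucleic acids (4 parts) versus proteins (20 parts).
n = 10
for name, parts in [("nucleotides", 4), ("amino acids", 20)]:
    print(f"{name}: {count_sequences(parts, n):,} chains of length {n}, "
          f"each with {count_bonds(n)} identical bonds")
```

For chains of only ten units, this already gives over a million nucleotide sequences and about 10¹³ amino acid sequences, each assembled by repeating the same bond nine times.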

The astounding universality of these standards becomes clear if one considers that more than 10³⁰ organisms are alive today [12], and every single one ever examined is built around DNA, RNA and proteins. What is more, the nucleotide string of DNA is translated into the amino acid string of proteins through a nearly universal genetic code, where each of the 64 possible nucleotide triplets—codons—stands either for one of 20 amino acids, or for a translation start or stop signal [13]. Standards as universal as these are also behind the enormous success of genetic engineering and synthetic biology, which rely on them to modify one organism with components of another.
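The nearly universal code can be written down as a lookup table of 64 entries. The sketch below, a minimal illustration rather than a bioinformatics tool, builds the standard table from the compact 64-letter string that NCBI uses for its translation table 1 (codons ordered by the bases T, C, A, G) and translates a reading frame until the first stop codon. It ignores the minor variant codes used by some organisms and organelles, and it does not search for a start codon; translation simply begins at the first base.

```python
from itertools import product

BASES = "TCAG"
# Standard genetic code (NCBI translation table 1); '*' marks stop codons.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

# product() varies the last base fastest: TTT, TTC, TTA, TTG, TCT, ...
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(seq: str) -> str:
    """Translate a DNA or RNA reading frame until the first stop codon."""
    seq = seq.upper().replace("U", "T")  # RNA uses U where DNA uses T
    protein = []
    for i in range(0, len(seq) - 2, 3):
        aa = CODON_TABLE[seq[i:i + 3]]
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)


print(translate("AUGGCCUAA"))
```

For example, `translate("AUGGCCUAA")` returns `"MA"` (methionine, alanine, then stop). The same 64-entry table serves every gene, which is what makes moving a gene between organisms feasible.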

Although the architectures of RNA, DNA and proteins are life's most universal standards, others are not far behind. To build organs and tissues—from a plant's leaf to an insect's wing or a fish's fin—multitudes of genes that encode different proteins need to be expressed at just the right time and place. Such gene expression—the production of the RNA and protein encoded in a gene's DNA—requires, first, that an RNA polymerase enzyme transcribes a gene's DNA into an RNA copy, and second, that this transcript is translated into the amino acid sequence of a protein. The rate of transcription is regulated by transcription factors, proteins that bind specific, usually short DNA words near the gene and that interact with the polymerase to activate or repress the initiation of transcription, thereby helping to turn the gene on or off. This standardized process is repeated millions of times in different genes.
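Scanning a sequence for a short DNA word is a simple way to picture what a transcription factor's binding specificity means. The Python sketch below, a deliberate simplification, searches both strands of a DNA sequence for a consensus motif written in IUPAC ambiguity codes (for example, W stands for A or T). Real binding sites are usually described by weighted matrices rather than strict consensus strings, and the example sequences and motifs are hypothetical.

```python
import re

# IUPAC nucleotide codes commonly used to write consensus binding sites.
IUPAC = {
    "A": "A", "C": "C", "G": "G", "T": "T",
    "R": "AG", "Y": "CT", "S": "CG", "W": "AT", "K": "GT", "M": "AC",
    "B": "CGT", "D": "AGT", "H": "ACT", "V": "ACG", "N": "ACGT",
}
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def motif_to_regex(motif: str) -> re.Pattern:
    # A lookahead lets overlapping matches be reported as well.
    body = "".join(f"[{IUPAC[c]}]" for c in motif.upper())
    return re.compile(f"(?=({body}))")


def find_sites(seq: str, motif: str) -> list[tuple[int, str]]:
    """Return (0-based start on the forward strand, strand) for every match."""
    seq = seq.upper()
    pattern = motif_to_regex(motif)
    hits = [(m.start(), "+") for m in pattern.finditer(seq)]
    reverse_complement = seq.translate(COMPLEMENT)[::-1]
    n, k = len(seq), len(motif)
    # Map reverse-strand coordinates back onto the forward strand.
    hits += [(n - m.start() - k, "-") for m in pattern.finditer(reverse_complement)]
    return sorted(hits)


print(find_sites("GCTATAAATGC", "TATAWAW"))
```

For example, `find_sites("GCTATAAATGC", "TATAWAW")` reports a single forward-strand match starting at position 2. Checking both strands matters because a double-stranded binding site can be read in either orientation.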

The activity of proteins is also regulated by standardized mechanisms. One of the most widespread revolves around protein kinases, proteins that recognize short amino acid sequences on other proteins (or on themselves) and attach a phosphate group to one of these amino acids. This chemical modification can alter a protein's function by changing its three-dimensional shape [14,15]. Since its establishment as a process standard, phosphorylation has come to be used in many different ways. For example, phosphorylation activates some proteins, whereas it inactivates others; it causes proteins to dissociate from some molecules, whereas it helps them bind tightly to others. Our genomes encode hundreds of protein kinases, many of them with unique recognition sequences and protein targets. They are involved in almost every process important to life, such as the regulation of metabolism, cell division and intercellular communication [16, ch. 2]. Some kinases, such as that encoded by the yeast cell-cycle regulator cdc2, have conserved their function since the common ancestor of yeast and humans more than a billion years ago [17].
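Kinase recognition sequences can likewise be expressed as short patterns over the 20-letter amino acid alphabet. The sketch below assumes the consensus commonly cited for protein kinase A, Arg-Arg-X-Ser/Thr (RRxS/T, where x is any residue), and lists candidate phosphorylation sites in a protein sequence. A consensus match says nothing about whether the kinase actually phosphorylates the site in a cell; the pattern and function name are illustrative only.

```python
import re

# Assumed consensus for protein kinase A: R-R-x-(S or T), one-letter code.
# The lookahead finds overlapping sites; group 1 captures the Ser/Thr.
PKA_CONSENSUS = re.compile(r"(?=RR.([ST]))")


def candidate_sites(protein: str) -> list[int]:
    """1-based positions of Ser/Thr residues lying in an RRxS/T context."""
    return [m.start(1) + 1 for m in PKA_CONSENSUS.finditer(protein.upper())]


print(candidate_sites("LRRASLG"))
```

On the synthetic PKA substrate peptide kemptide (LRRASLG), `candidate_sites` returns `[5]`, the position of its serine.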

Another widespread class of standardized processes helps the cells and tissues of an organism communicate. We exemplify it with a molecular interface standard known as a G protein, which helps cells process information about the outside world [18]. The name derives from the ability of G proteins to bind guanosine triphosphate, or GTP, an energy storage standard similar to the more widely known ATP. G proteins are composed of three subunits (three different amino acid strings) referred to as the α, β and γ subunits, and they bind receptor proteins that extend through the cell membrane into the extracellular space. When such a receptor becomes activated, usually when specific molecules bind to its extracellular surface, the receptor changes its three-dimensional structure such that a bound G protein can bind GTP. This event causes the G protein's subunits to dissociate from one another and to bind other effector proteins that communicate this activated state to the cell's interior [18]. G proteins are ubiquitous from slime moulds to humans, and wherever they occur, they relay information. Other communication systems rely on different standardized processes and molecules, such as receptors for steroid hormones like oestrogen [16, ch. 3, 19] and for peptide hormones such as insulin, which occur in organisms as different as humans and fruit flies.
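The key property of the G protein as an interface standard is that receptors differ in what they detect but share the same downstream step. The toy Python model below captures only the steps described above (ligand binding, GTP binding, subunit dissociation); it omits GDP release, GTP hydrolysis and the identities of real effectors, and the receptor names and ligand labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GProtein:
    """Toy heterotrimeric G protein (alpha, beta and gamma subunits)."""
    gtp_bound: bool = False
    subunits_dissociated: bool = False

    def bind_gtp(self) -> None:
        # Binding GTP makes the subunits separate, freeing them to reach effectors.
        self.gtp_bound = True
        self.subunits_dissociated = True


@dataclass
class Receptor:
    """Toy membrane receptor that responds to one specific extracellular ligand."""
    name: str
    ligand: str
    g_protein: GProtein = field(default_factory=GProtein)

    def sense(self, molecule: str) -> bool:
        # Receptors differ in which molecule activates them.
        if molecule != self.ligand:
            return False
        # Every receptor hands the signal on through the same G-protein step.
        self.g_protein.bind_gtp()
        return True


# Hypothetical receptors that detect different signals but share one interface.
receptors = [Receptor("odorant receptor", "odorant"),
             Receptor("hormone receptor", "hormone")]
for r in receptors:
    r.sense(r.ligand)
    status = "signal relayed" if r.g_protein.subunits_dissociated else "silent"
    print(r.name, "->", status)
```

Adding a new kind of receptor requires no change to `GProtein`, which is the sense in which a shared interface makes components interoperable.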

This smattering of examples does not do justice to the myriad standards that exist on all levels of biological organization, from protein and DNA motifs—parts of molecules that have similar functions in many organisms—to whole molecules and the circuits they form inside cells, to cell types, tissues and organs. Organisms and their cells import nutrients, excrete waste products, transport materials, propel themselves and communicate using processes that originated, in many cases, more than a billion years ago and have since spread to become standardized across many species. Among all those standards, we will mention only one more, because it is especially consequential. It is involved in the electrochemical communication of neurons, which are highly diverse in architecture and encode information in a variety of ways. Nonetheless, their communication shares a process standard: a change in membrane voltage, the action potential, that travels rapidly along a neuron's surface and whose signal can be transmitted to other neurons through chemical or electrical synapses. This process permits neural computation, which has become ever more sophisticated as neural evolution has created increasingly complex nervous systems. They range from diffuse nerve nets in lower metazoans to progressively concentrated nerve cords and ganglia, and the central nervous systems of vertebrates and humans with up to a trillion neurons [20].

3. From Palaeolithic to pre-industrial cultures

Organizing neurons into brains that use symbols and create tools enabled the emergence of human culture and its most important information technology: language [21]. Humans are able to parse vocalizations into phonemes—perceptually distinct sound units that can be strung together in innumerable ways to create the words and sentences we use in communicating information. This ability emerged during the Palaeolithic and allowed humans to create ‘infinite utterances from finite means’ [22]. Phonemes are standardized units of speech, and the system that creates words and sentences from them rivals that of DNA in its combinatorial power. Human languages maintain inventories of between 15 and 60 phonemes [23], and historical studies show that these inventories tend to evolve in ways that balance the needs of efficiency (energy expenditure in speaking) and expressiveness (ability to convey meaning) [22]. As a result, distinct but phonetically similar phonemes can merge into one (Spanish /ll/ and /y/ have merged into a single phoneme /y/ in recent centuries), but following such changes, variants of a single phoneme often split into two. For example, English /ŋ/ was initially a phonetic variant of /n/ that occurred before /k/ or /g/, but it became a distinct phoneme when the final /g/ was dropped from words ending in /-ŋg/. This split was necessary for speakers to distinguish ‘king’ from ‘kin’, ‘sing’ from ‘sin’, etc. [24]. This pattern of ‘splits following mergers’ is common in language history. It suggests that languages require a minimum number of phonemic standards to fulfil their role of conveying information via sound. We also note that phonemes, as the fundamental building blocks of language, are far more standardized across languages than are words and sentences.
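The combinatorial power of a small phoneme inventory can be illustrated with a simple count. Assuming, unrealistically, that any phoneme may follow any other (real languages restrict phoneme sequences through phonotactic rules), the Python function below counts all strings of up to a given length that inventories of 15 and 60 phonemes could form.

```python
def possible_strings(inventory_size: int, max_length: int) -> int:
    """Count all phoneme strings of length 1..max_length, ignoring phonotactics."""
    return sum(inventory_size ** n for n in range(1, max_length + 1))


# The two ends of the inventory range quoted in the text.
for size in (15, 60):
    print(size, "phonemes:", f"{possible_strings(size, 3):,}", "strings of up to 3 phonemes")
```

Even for strings of at most three phonemes, the 15-phoneme inventory yields 3,615 possibilities and the 60-phoneme inventory 219,660.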

Standards have also played an important role in creating and maintaining social groups and boundaries. A central element of human social organization is cooperation among individuals who are not close relatives [25]. To enable such cooperation, people must recognize those who belong to their own ethnic group, clan, community or nation, often at a distance and at a glance. To this end, human groups develop distinctive styles of clothing, hair, tattooing, arts and crafts [26–30]. These cultural norms are a form of standard that structures social interaction in heterogeneous environments. Although the specific content of material culture styles varies dramatically across cultures, the use of such standards to signal group affiliation is universal [27,31]. Such styles play a role analogous to that of allorecognition systems in non-human biology, such as pheromones that enable insects to recognize nest-mates [32], and the adaptive immune system of vertebrates, which recognizes foreign molecules using antibodies with a standardized architecture.

The earliest physical traces of standardization in human technologies date from the Palaeolithic. During this era, stone tools such as handaxes, scrapers, projectile points or awls became increasingly standardized in their manufacture and form. Examples include handaxes from three Lower Palaeolithic sites in Israel whose shapes increased in regularity and symmetry over time [33]. Multiple factors have been invoked to account for tool standardization, including the evolution of increased cognitive abilities, the progressive tailoring of form to intended function through trial-and-error learning, the cultural transmission of tool designs and tool-making skills by imitative learning, and even the fracture properties of stone that constrain potential tool forms [34–39]. But perhaps the most compelling rationale for standardization lies in the increased use of composite tools that have more than one part, such as spears with wooden shafts and stone points. Standardized parts, such as spear tips that fit a shaft with standard diameter, make it easier to maintain, repair and copy such tools [40,41].

The emergence of standardized parts is clearly evident in the evolution of technology in small-scale societies. The Pueblo area of the US Southwest provides especially well-studied examples. In this area, pottery technology first emerged in the sixth century CE as cooks experimented with dried and fire-hardened clay as a means of toasting and popping maize kernels [42,43]. In subsequent centuries, functional vessels emerged from the development of standardized ingredients, techniques and recipes [44]. For example, pots suitable for cooking cornmeal needed to hold together when placed on an open fire and needed to cook at a simmer instead of a boil. Pueblo potters solved the first problem, thermal shock resistance, by developing recipes that involved specific ingredients, mixtures and processing steps that produced pottery fabrics with the needed properties. The second problem, cooking temperature, was initially solved by forming vessels with wide mouths, but such vessels were prone to spillage and contamination of the contents. A better solution was discovered when a creative potter applied a decorative technique from coiled basketry to the exterior surface of a clay pot. This technique involved laying a long ribbon of clay in a spiral pattern and pinching the outermost coil onto the growing vessel wall. The result was a radiator-like exterior that dissipated heat from the vessel contents (figure 1b). Increased cooking control made it feasible for cooking pots to be designed with smaller mouths, thus reducing spillage and contamination [45]. The obvious advantages of such vessels led to their rapid adoption—within a mere 40 years—across the entire ancestral Pueblo area [46]. Because of their superior properties, such cooking pots became a de facto technology standard between 900 CE and 1300 CE.

Pueblo housing illustrates a similar evolutionary pattern, where the emergence of standardized parts and techniques resulted in sturdier and more functional buildings. The earliest Pueblo shelters, dating to 400 CE and earlier, were shallow circular pits with internal posts supporting a superstructure of wood and adobe. Because these post-and-adobe buildings lasted only about 15 years before needing to be rebuilt [48], they became a limitation as Pueblo families invested more time and energy in family farms [47]. The solution was to create above-ground load-bearing walls. Initially, these walls were made of stacked sandstone slabs with flaked edges. Because the natural dimensions of the sandstone varied, these walls were somewhat unstable and short-lived. Increased stability required standardized building stones that could be laid in regular patterns using less mortar. A technique for producing such stones was invented around 1000 CE, and as in the case of pottery it involved applying an existing technique from another technology—in this case, the ‘sharpening’ of milling tools with a pecking-stone—to the shaping of building stones. The resulting control over building stone shape led to the emergence of distinct stone shapes for various stone masonry components [49,50].

Finally, evidence of standardization related to increased efficiency in production is abundant in the archaeological record of early civilizations. A good example is the emergence of standardized weights and measures [51]. Standards of measurement enabled better coordination in production and construction, and resulted in greater functionality of the built environment. The remarkable population density of the ancient Mesoamerican city of Teotihuacan, for example, became possible in part through a strong regularity in the city's spatial organization, which was facilitated by standardized measurement units that were used in designing major public buildings and apartment compounds [52]. Similar standard units of measure were used in designing early cities in other ancient cultures [53]. In China, measurement standards were already used by about 2000 BCE to produce standardized jade figurines for ritual purposes [54], and by about 1200 BCE to manufacture standardized bronze bells for military music and communication [51]. In the same way, standards of value, from shell beads to coins, have played an important role in economic development by enabling intermediate exchanges that facilitate flows of goods through social networks [55]; and standards for representing speech in visual form lay behind the emergence of writing systems, which dramatically increased the rate and scale of information transfer in human societies [56].

4. From the industrial revolution to the information revolution

The industrial revolution saw a dramatic increase in the rate of technological innovation. Countless mechanical product innovations revolutionized everyday life: sewing machines, reapers, locks, clocks, bicycles, typewriters and calculators. These contained familiar building blocks (wheels, springs, gears, pulleys, axles, bearings, screws, hinges, cams and levers) connected by fasteners (screws, bolts, rivets, pins and straps) that had been used for centuries—in Roman chariots, mediaeval windmills, watermills and clocks. Prior to the industrial revolution, skilled artisans hand-crafted such components from wood and metal with manual tools, in limited volume [57]. During the nineteenth century, the advent of water- and steam-powered machine tools, advances in precision measuring tools, and Whitworth's standardization of screw threads enabled high-volume production of identical parts to tolerances precise enough to permit interchangeability in complex multi-component products [58, ch. 14].
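Interchangeability rests on a simple worst-case rule: if the smallest hole allowed by the specification is still at least as large as the largest allowed shaft, any conforming shaft fits any conforming hole, regardless of which workshop made either part. The sketch below expresses that rule; the `Dimension` type and the 10 mm tolerances are hypothetical values chosen for illustration, not taken from any particular standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """A toleranced size: nominal value plus lower and upper deviations (mm)."""
    nominal: float
    lower_dev: float
    upper_dev: float

    @property
    def smallest(self) -> float:
        return self.nominal + self.lower_dev

    @property
    def largest(self) -> float:
        return self.nominal + self.upper_dev


def worst_case_clearance(hole: Dimension, shaft: Dimension) -> float:
    """Clearance left when the smallest in-spec hole meets the largest in-spec shaft."""
    return hole.smallest - shaft.largest


def interchangeable(hole: Dimension, shaft: Dimension) -> bool:
    """True if every in-spec shaft fits every in-spec hole."""
    return worst_case_clearance(hole, shaft) >= 0


# Hypothetical 10 mm bore and axle with illustrative tolerances.
hole = Dimension(10.0, 0.000, 0.015)
shaft = Dimension(10.0, -0.028, -0.013)
print(f"worst-case clearance: {worst_case_clearance(hole, shaft):.3f} mm")
```

The check involves only the two specifications, never the individual parts, which is why mass-produced components from different sources can be mixed freely.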

The American military became an enthusiastic early adopter of specialized machine tools to manufacture firearms that could be repaired in the field with interchangeable parts. This ‘armory practice’ spread to other American manufacturers via interactions with the machine tool makers and migration of skilled machinists between companies. It became known in Europe as the American System of Manufacture. Products from this system—sewing machines, typewriters, adding machines and bicycles—sold in the millions by the end of the century [59, ch. 1].

In parallel to nineteenth century mechanical innovations, new technological opportunities were created by the scientific understanding of electromagnetic fields and electric currents, and the development of new measurement tools and standard units. By the early twentieth century, new kinds of devices exploited this knowledge—batteries, resistors, capacitors, inductors, relays, electromagnets, solenoids, transformers, electric generators and motors, and vacuum tubes. These could be combined in myriad ways to provide new functionality for motive force, lighting, communications and calculation. There emerged standardized families of devices—mass produced by dozens of manufacturers—whose critical properties, electrical and physical, conformed to standardized sets of values within specified tolerances, enabling interchangeability and interoperability. These building blocks were the foundation of the electric power grid, which in turn enabled mass markets for many product innovations, including electric lighting; the telephone system; radio and television; punched card tabulating machines and calculators; vacuum cleaners, dishwashers, refrigerators, air conditioners and shavers [60, ch. 5–6, 61,62].
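Standardized sets of values are still visible in electronics today, for example in the preferred-number series of IEC 60063, whose E12 series offers 12 nominal values per decade for components such as resistors with ±10% tolerance. The sketch below picks the E12 value nearest to a requested resistance; rounding on a logarithmic scale is our simplifying choice.

```python
import math

# E12 preferred numbers (IEC 60063): 12 nominal values per decade,
# spaced roughly evenly on a logarithmic scale.
E12 = (1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2)


def nearest_e12(ohms: float) -> float:
    """Return the E12 value closest to `ohms` on a logarithmic scale."""
    if ohms <= 0:
        raise ValueError("resistance must be positive")
    decade = math.floor(math.log10(ohms))
    # Include neighbouring decades so values near a decade boundary round correctly.
    candidates = [m * 10 ** d for d in (decade - 1, decade, decade + 1) for m in E12]
    return min(candidates, key=lambda value: abs(math.log10(value / ohms)))


for requested in (250, 1000, 5000):
    print(requested, "->", round(nearest_e12(requested)))
```

For example, a calculated 5000 Ω resistor would be built with a standard 4.7 kΩ part, and a 250 Ω resistor with a 270 Ω part.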

Midway into the twentieth century, three novel technology domains emerged and co-evolved—digital computers, solid-state semiconductor devices and computer programming. Each produced hierarchies of functional and interoperability standards.

Computers with different versions of an architecture first proposed by John von Neumann and built by several organizations, including IBM, became the basis of the ‘mainframe’ commercial computer industry in the early 1950s [63]. The earliest computers used vacuum tubes as logic devices, but these were soon replaced by a new building block—the semiconductor transistor—which was superior in nearly all respects [64, ch. 2, 65]. To cope with the design, packaging, testing and servicing of these complex machines, modular designs based on standardized and interoperable ‘hardware’ building blocks were essential. The first layer was a set of electronic circuit modules for elementary logic functions like ‘AND’, ‘OR’ and ‘NOT’. These modules, interconnected, formed the higher-level logic elements of a central processing unit (CPU), such as registers and adders, and interfaces to memory and I/O devices [64, ch. 6]. The problem of unreliable solder connections between the parts of these increasingly complex devices was solved with the invention of the planar integrated circuit (IC) semiconductor process in 1959, which permitted the fabrication of complete circuits on a single silicon chip [66, p. 74].
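The idea that a few standardized logic modules can be interconnected into higher-level elements such as adders can be demonstrated in software. The Python sketch below uses a NOR function as its only primitive (NOR is functionally complete), derives NOT, OR, AND and XOR from it, and chains identical full adders into an 8-bit ripple-carry adder. It models logic values, not transistors or signal timing, and the 8-bit width is an arbitrary choice.

```python
def nor(a: int, b: int) -> int:
    """The only primitive gate: outputs 1 when neither input is 1."""
    return 1 - (a | b)


# Every other gate is assembled from copies of the same NOR gate.
def not_(a: int) -> int:
    return nor(a, a)


def or_(a: int, b: int) -> int:
    return not_(nor(a, b))


def and_(a: int, b: int) -> int:
    return nor(not_(a), not_(b))  # De Morgan: NOT(NOT a OR NOT b)


def xor(a: int, b: int) -> int:
    return and_(or_(a, b), not_(and_(a, b)))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits; return (sum bit, carry bit)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry adder: one identical full adder per bit position."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # a carry out of the top bit is discarded (overflow)


print(add(100, 55))
```

Here `add(100, 55)` returns 155, while `add(200, 100)` returns 44 because the carry out of the eighth bit is discarded.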

Waves of further innovations in semiconductor manufacturing led to an exponential increase—known as Moore's law—in the number of devices that could be economically fabricated on a single IC, from tens of transistors to billions of transistors per chip [67], while decreasing the cost and power dissipation per function and increasing performance. This enabled many generations of new standardized, interoperable IC functional building blocks, from subsystems like registers and arithmetic units to entire CPUs, such as the Intel 8088 in the first IBM PC. In addition, standard memory chips with billions of bits of transistor memory cells supplanted older magnetic technologies. Semiconductor manufacturers must cooperate in defining interoperable standards for new IC building blocks, because such standards maximize production volumes that repay the huge capital investments in plant and chip design, and ensure multiple IC sources that help users reduce supply risk [64, ch. 8].
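The scale of this exponential growth is easier to grasp as a count of doublings. Assuming, for illustration, a start of 10 transistors, an end of 10⁹ and a doubling period of two years (a commonly quoted figure for Moore's law, although the actual rate varied over time), the sketch below computes how many doublings and years such growth requires.

```python
import math


def doublings(start: float, end: float) -> float:
    """Number of doublings needed to grow from `start` to `end`."""
    return math.log2(end / start)


def years_needed(start: float, end: float, doubling_period: float = 2.0) -> float:
    """Elapsed years, assuming one doubling every `doubling_period` years."""
    return doublings(start, end) * doubling_period


# Illustrative assumption: from about 10 transistors to about 10**9 per chip.
print(f"{doublings(10, 1e9):.1f} doublings, {years_needed(10, 1e9):.0f} years")
```

The result is about 26.6 doublings, or roughly 53 years at one doubling every two years.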

Exponential increases in computer performance and memory size required that computer programming evolve from an ‘art’ in the 1960s to an engineering discipline based on architectural approaches with hierarchies of standardized interoperable program modules. Programming ‘languages’ with English-like grammar, like FORTRAN, COBOL and BASIC, were followed by object-oriented languages with large libraries of standardized functional building blocks [4, ch. 6, 68]. Operating systems today rely on hierarchies of standardized services, including those that control input–output devices and memory allocation. Increasingly pervasive communication among computers required new communication standards, such as TCP/IP, constructed from many layers of standardized software modules, culminating in today's Internet, which hosts many other standard protocols: electronic mail, instant messaging and other social media, and the World Wide Web format standard [68,69, ch. 6].
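Layered protocol standards work by encapsulation: each layer adds its own agreed-upon header on the way down and removes it on the way up, without needing to understand the layers above or below. The toy stack below illustrates that discipline with four hypothetical layers loosely named after the TCP/IP model; its text tags stand in for the binary headers, with addresses, ports and checksums, that real protocols define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of a toy protocol stack, identified by a fixed header tag."""
    name: str

    @property
    def header(self) -> bytes:
        return f"[{self.name}]".encode()

    def wrap(self, payload: bytes) -> bytes:
        # Prepend this layer's header; the payload is treated as opaque bytes.
        return self.header + payload

    def unwrap(self, frame: bytes) -> bytes:
        # Check and strip this layer's header before passing the rest upward.
        if not frame.startswith(self.header):
            raise ValueError(f"expected a {self.name} header")
        return frame[len(self.header):]


# Hypothetical stack, application layer first, loosely following TCP/IP naming.
STACK = [Layer("app"), Layer("transport"), Layer("internet"), Layer("link")]


def send(message: str) -> bytes:
    data = message.encode()
    for layer in STACK:  # each lower layer wraps everything above it
        data = layer.wrap(data)
    return data


def receive(frame: bytes) -> str:
    for layer in reversed(STACK):  # the outermost (link) header comes off first
        frame = layer.unwrap(frame)
    return frame.decode()


print(send("hi"))
```

Here `send("hi")` produces `b"[link][internet][transport][app]hi"`, and `receive` reverses the process. Any layer could be replaced by another that honours the same header convention without touching the rest of the stack.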

5. How standards emerge

Most standards are not the only means to solve a problem or perform a task. In the biological realm, for example, DNA is clearly not the only conceivable information transmission standard. Not only do natural alternatives like RNA exist, but chemists have also successfully replicated DNA with synthetic bases [70,71], and synthetic molecules like peptide nucleic acid can store information and replicate [72,73]. Likewise, transcriptional regulation is not the only mechanism to regulate gene expression—others regulate transcript stability or translation rate—and protein phosphorylation is only one among multiple ways to regulate protein activity. In the human realm, languages work equally well regardless of whether speakers use tone, nasalization or vowel length as a basis for distinguishing phonemes; railway gauges different from today's standard gauge of 4 feet 8.5 inches (1435 mm) fulfil the same purpose [74,75]; and even though the vacuum tube became the standard for early radio transmission, technologies based on a high-frequency alternator or an oscillating arc could have served as well [76].

Any successful technology can become standardized by spreading ‘vertically’, ‘horizontally’ or both. In biology, vertical transmission means genetic inheritance from parents to offspring, whereas horizontal transmission corresponds to mechanisms like lateral gene transfer, which organisms—especially bacteria—use to exchange DNA [77]. (The sexual recombination of higher organisms, where parents shuffle their genomes to produce offspring, combines horizontal and vertical transmission.) Analogues to both modes of spreading also exist in human technology. The rapid spreading of cooking pots with high heat dissipation through Pueblo culture surely involved horizontal information transmission through imitative learning, and its subsequent persistence must have been supported by vertical transmission through cultural inheritance.

Whether a technology spreads vertically or horizontally, it can do so for different reasons. First, it may be superior to others, and natural selection or its analogue in technology may cause its spreading and standardization. For example, the IBM System/360, introduced in 1964, was selected by market forces as a de facto standard for mainframe computer architecture, forcing competitors to build software-compatible products or exit the market [64, ch. 2, 78]. Henry Ford's manufacturing methods, which relied on standardized parts, could produce millions of identical products at far lower cost than previous production methods—the Model T's price of $1000 in 1908 had fallen to $300 by 1924 [59, ch. 6, 60, p. 442]. The transistor performed the same functions as the triode vacuum tube, but it had no fragile glass envelope, dissipated far less power, was much smaller, performed faster and needed no warm-up time. Unfortunately, such comparisons between a current standard and its inferior predecessors are not as straightforward in biology, because life's current standards have emerged over eons, and their inferior alternatives are usually lost in time. Among the few exceptions is DNA itself, whose greater chemical stability makes it superior to the more ancient RNA for storing information.

But not all standards become established because they are superior. Some may be ‘frozen accidents’ and have succeeded to some extent by chance [79]. One example is the standard railroad gauge, which derives from the gauge used by the nineteenth century engineer George Stephenson for an experimental horse-drawn locomotive [74,75]. Others include the British Imperial system of measurement units (which has been increasingly replaced by the metric system over the last century), as well as the convention of driving on the left side of the road. The very existence of such historical standards testifies to the importance of standards in and of themselves. It also shows that the details of a standard may matter less than the fact that a standard has been established. A simple ‘first mover’ advantage has in fact been decisive in the emergence of many standards, for example the QWERTY computer keyboard, the HTML markup language, as well as the COBOL and Java programming languages [64,80,81].

Some standards may emerge through a mix of selection and chance. Consider the genetic code that organisms use to translate triplets of nucleotides into amino acids [82] and that, minor variations aside, is nearly universal [13]. On the one hand, the fact that myriad alternative codes are possible suggests that the code's present-day structure has been influenced by chance historical events. For example, the code's structure partly reflects the order in which evolution added novel amino acids to the chemical ‘alphabet’ of proteins. On the other hand, among many alternative codes, the present code shows an especially high tolerance for translation errors, suggesting that selection for robust translation has contributed to its emergence [82–84].
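One crude way to see the error tolerance of the standard code is to ask what fraction of single-base substitutions in sense codons leave the encoded amino acid unchanged, and to compare that fraction with randomly shuffled codes that keep the same number of codons per amino acid. The sketch below does this under stated simplifications: more careful analyses typically weight substitutions by how chemically similar the swapped amino acids are, and full random shuffling ignores the block structure of the code. The function names are our own.

```python
import random
from itertools import product

BASES = "TCAG"
# Standard genetic code (NCBI translation table 1); '*' marks stop codons.
STANDARD = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = ["".join(c) for c in product(BASES, repeat=3)]


def synonymous_fraction(code: str) -> float:
    """Share of single-base substitutions in sense codons that keep the amino acid."""
    table = dict(zip(CODONS, code))
    same = total = 0
    for codon, aa in table.items():
        if aa == "*":
            continue  # substitutions are counted only from sense codons
        for pos in range(3):
            for base in BASES:
                if base == codon[pos]:
                    continue
                mutant = codon[:pos] + base + codon[pos + 1:]
                total += 1
                if table[mutant] == aa:
                    same += 1
    return same / total


def shuffled_codes(n: int, seed: int = 0) -> list[str]:
    """Random codes with the same amino acid counts, assigned to codons at random."""
    rng = random.Random(seed)
    codes = []
    for _ in range(n):
        letters = list(STANDARD)
        rng.shuffle(letters)
        codes.append("".join(letters))
    return codes


random_scores = [synonymous_fraction(c) for c in shuffled_codes(100)]
print(f"standard code: {synonymous_fraction(STANDARD):.3f}")
print(f"shuffled codes (mean): {sum(random_scores) / len(random_scores):.3f}")
```

For the standard code, 134 of the 549 possible substitutions are synonymous (about 24%), whereas shuffled codes typically score far lower.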

6. Standards and innovation

Among many deep similarities between biological evolution and technological change [85–89], two are especially important for the role of standards in innovation. The first is that trial-and-error experimentation is important both for biological evolution, where it takes the form of random DNA mutations, and for technological change, where successful innovations are often preceded by multiple failures. The connection to standardization is straightforward: a widely adopted standard can be used in more trials, which increases the chances that one of these trials will lead to an innovation. An example from biology involves lateral gene transfer, through which bacteria effectively experiment with novel and potentially useful gene combinations. This mechanism for diffusing genetic information is made possible by the universality of nucleic acids as information storage standards, and it facilitates the creation and evaluation of new gene combinations among widely different bacteria.
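The link between adoption and innovation can be illustrated with an elementary probability calculation. Assume, purely for illustration, that each trial built on a standard succeeds independently with some small probability p; then the chance that at least one of n trials succeeds is 1 - (1 - p)^n, which rises toward 1 as n grows. The value of p and the trial counts below are hypothetical.

```python
def p_at_least_one_success(p: float, n: int) -> float:
    """Probability that at least one of n independent trials succeeds,
    each with success probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-trial success probability of one in ten thousand
p = 1e-4
for n in (100, 10_000, 1_000_000):
    print(n, round(p_at_least_one_success(p, n), 4))
```

The independence assumption is a simplification; in both evolution and technology, trials are often correlated, but the qualitative point (more trials, higher odds of at least one success) survives.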

However, a standard's widespread adoption is not sufficient for innovation to occur—some standards may simply not be conducive to innovation. To identify those that are, it is helpful to consider a second parallel between innovation in biology and technology: the combinatorial nature of innovation, which combines elements of existing technologies into new forms [1,90]. In the words of economist Brian Arthur, technologies ‘consist of parts organized into component subsystems or modules…the modules of technology over time become standardized parts’, and entire technologies ‘come into being as fresh combinations of what already exists’ [1]. This combinatorial aspect is evident even in the relatively simple technologies of small-scale societies, as we have seen in the application of coiled basketry techniques to cooking pot design, and of milling-stone sharpening techniques to stone masonry.
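To illustrate why combination is such a productive source of novelty, the sketch below counts how many distinct combinations of two or more parts can be drawn from a set of n standardized parts, ignoring order and repetition; the total is 2^n - n - 1. This is a deliberately crude model: it treats every combination as a distinct candidate and says nothing about which ones are useful, and the part counts are arbitrary.

```python
from math import comb


def combinations_of_two_or_more(n: int) -> int:
    """Number of unordered selections of at least two distinct parts from n."""
    return sum(comb(n, k) for k in range(2, n + 1))


for n in (5, 10, 20, 40):
    print(n, combinations_of_two_or_more(n))
```

Doubling the number of parts roughly squares the number of candidate combinations, which is why even a small standardized parts list can support a great deal of experimentation.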

The combinatorial nature of innovation also permeates biology, and standards play an important role in it. A case in point is the G-protein interface standard mentioned earlier, which renders receptors and effectors interoperable. Through their ability to interact with multiple receptors [91], G-proteins have become involved in myriad information transfer processes. For example, they help detect odourants, perceive light, release hormones like cortisol and enable the kidney to retain water [18]. There is no clearer evidence of their innovative prowess than the more than 600 different receptors encoded in the human genome that pass information to G-proteins [92].
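The value of a shared interface can be seen in a toy model. In the sketch below, a hypothetical abstraction rather than a model of real G-protein coupling specificity, every receptor emits a signal through one common interface (the `Signal` class) and every effector accepts that signal, so any receptor can be wired to any effector. With r receptors and e effectors, each component implements only one interface, yet r × e receptor-effector pathways become possible; without a shared interface, each pathway would need its own adapter. The class names and the receptor and effector labels are illustrative placeholders.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Signal:
    """The shared interface: one standardized message type (hypothetical)."""
    source: str


@dataclass(frozen=True)
class Receptor:
    name: str

    def activate(self) -> Signal:
        # Every receptor emits the same kind of Signal, whatever its stimulus
        return Signal(source=self.name)


@dataclass(frozen=True)
class Effector:
    name: str

    def respond(self, signal: Signal) -> str:
        # Every effector accepts any Signal, whichever receptor produced it
        return f"{signal.source} -> {self.name}"


receptors = [Receptor(n) for n in ("odourant receptor", "light receptor", "hormone receptor")]
effectors = [Effector(n) for n in ("effector A", "effector B")]

# Every receptor-effector pairing works because both sides share one interface
pathways = [e.respond(r.activate()) for r, e in product(receptors, effectors)]
print(len(pathways), "pathways from", len(receptors) + len(effectors), "components")
```

Adding one new receptor to this model adds as many new pathways as there are effectors, without any change to the effectors themselves.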

Other biological innovations emerge when genes change their expression, and the process standard of transcriptional regulation allows DNA mutations to modulate gene expression easily. The reason is that individual transcription factors typically bind short DNA ‘words’ of 5–15 nucleotides, in which alterations of single ‘letters’ can readily alter transcription factor binding and thus gene regulation. In addition, short regulatory DNA words can easily arise in genomic DNA by chance alone, and thus lead to new gene regulation [93]. The evolution of flowering plants with their intricate architecture of floral organs, and the origin and diversification of vertebrates with body plans as different as those of fish, birds or mammals, were driven by changes in gene regulation [94,95]. More generally, changes in transcriptional regulation have been instrumental in the origin and diversification of all animal body plans [95].
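The claim that short regulatory words can arise by chance follows from a simple expectation. Assuming, as a simplification, a random sequence of length L in which each of the four nucleotides occurs independently with probability 1/4, a specific word of length k is expected to occur (L - k + 1) / 4^k times on one strand. The sketch computes this expectation and compares it with a count in a simulated random sequence; the sequence length, the word and the random seed are arbitrary choices.

```python
import random


def expected_occurrences(seq_len: int, k: int) -> float:
    """Expected count of one specific k-letter word on one strand of a
    uniformly random DNA sequence of length seq_len."""
    return max(seq_len - k + 1, 0) / 4 ** k


def count_occurrences(seq: str, word: str) -> int:
    """Count possibly overlapping occurrences of word in seq."""
    count, start = 0, seq.find(word)
    while start != -1:
        count += 1
        start = seq.find(word, start + 1)
    return count


rng = random.Random(1)
L = 1_000_000
seq = "".join(rng.choices("ACGT", k=L))  # uniformly random bases (an idealization)
word = "ACGTTG"  # arbitrary 6-letter word
print(expected_occurrences(L, len(word)), count_occurrences(seq, word))
```

For words of five or six letters, a sequence of a million nucleotides is thus expected to contain hundreds of copies by chance alone, although real genomes are not uniformly random.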

Relevant examples from human technologies include the creation of buildings with different functions from different combinations of standardized material components, and the creation of new materials such as pottery or metal alloys by combining different raw materials according to standardized recipes. The mechanical inventions of the Industrial Revolution derive from combinations of a modest number of standard elements such as screws, wedges and levers, yet they led to a dramatic increase in the overall innovation rate. The power of such combinatorial innovation was recognized at least as early as the eighteenth century, when the Swedish industrialist Christopher Polhem introduced his ‘mechanical alphabet’ of machine elements like levers and screws, and posited that one could build any mechanical device by combining them [96]. The same principle was at work when, early in the twentieth century, mechanical devices were combined with electrical devices such as capacitors, relays and electric motors to create innovations such as the dial telephone system, vacuum cleaners and air conditioning. In addition to creating combinatorial possibilities, the standardization of relatively few parts stimulates innovation through the well-known learning curve effect [63,66], in which manufacturing unit costs decrease with the cumulative number of identical units produced.
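The learning curve effect is often summarized by a power law, sometimes called Wright's law: the cost of the n-th unit is C(n) = C(1) · n^(-b), so that every doubling of cumulative output multiplies unit cost by a constant progress ratio r = 2^(-b). The sketch below assumes this functional form and a hypothetical 80% progress ratio; neither is taken from the sources cited here.

```python
import math


def unit_cost(n: int, first_unit_cost: float, progress_ratio: float) -> float:
    """Cost of the n-th unit under a power-law learning curve in which each
    doubling of cumulative output multiplies unit cost by progress_ratio."""
    if n < 1:
        raise ValueError("n must be at least 1")
    b = -math.log2(progress_ratio)  # learning exponent
    return first_unit_cost * n ** (-b)


# Hypothetical: first unit costs 100 (arbitrary currency), 80% progress ratio
for n in (1, 2, 4, 8, 1024):
    print(n, round(unit_cost(n, 100.0, 0.8), 2))
```

Because the effect depends on the cumulative number of identical units, it rewards exactly the kind of repetition that a small set of standardized parts makes possible.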

Combining old parts to make new things does not necessarily mean that innovation is easy. It took ingenuity to combine three old technologies—a compressor, a combustion engine and a rotating turbine—into the internal combustion air-breathing turbofan engine, better known as the jet engine, that revolutionized air travel [1]. To see what makes a standard especially powerful for combinatorial innovation, it is useful to examine the most innovative standards known to date—the biopolymers DNA, RNA and protein.

These technologies have three characteristic features. The first is a small number of elements—four nucleotides and 20 amino acids. The second is a standard interface for these elements—the phosphodiester bond in nucleic acids and the peptide bond in proteins—that allows different elements to connect via the same interface. The third is that combinations of these elements can form a huge number of objects with diverse and useful properties. Strings of 100 amino acids give rise to some 10¹³⁰ proteins—more than life could have explored since its origins. Those that evolution has discovered to date perform most of life's tasks. They propel cells and organisms, create their shape, mediate communication between them and help catalyse thousands of different chemical reactions. They are second only to DNA and RNA, which encode not only proteins, but also the phenotype of every single organism in the more than a million different species existing today. Not only do the three features of these standards facilitate innovation, they form the very basis of all innovation in nature.
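The size of these sequence spaces is simple arithmetic: with an alphabet of a letters, there are a^n distinct strings of length n. The sketch computes this for chains of 100 residues with Python's exact integers, confirming that 20^100 is roughly 1.3 × 10^130, and gives 4^100 for nucleic acids of the same length for comparison.

```python
import math


def sequence_space(alphabet_size: int, length: int) -> int:
    """Number of distinct linear polymers of a given length (exact integer)."""
    return alphabet_size ** length


for name, a in (("protein (20 amino acids)", 20), ("nucleic acid (4 nucleotides)", 4)):
    size = sequence_space(a, 100)
    print(f"{name}: {a}^100 is about 10^{math.log10(size):.1f}")
```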

An example with comparable scope in human technology comes from digital electronics, which uses a small number of standardized components, such as transistors, resistors and capacitors, to build a modest number of computational modules, the ‘gates’ that compute elementary Boolean logic functions, such as the AND, OR and NOR functions (figure 1c). These modules can be combined in arbitrary ways, because the output of any one gate can serve as the input to any other gate. Moreover, different combinations of these gates permit computation of a huge number of Boolean logic functions, and modern chips can contain hundreds of millions of gates. Combined with memory, such circuits can in principle compute anything that is computable [97].
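The claim that gates with a standard interface can be freely composed can be checked directly. The sketch below, an illustration rather than a circuit-design method, builds NOT, OR, AND and XOR entirely from two-input NOR gates, each gate's output feeding other gates' inputs, and verifies the results against Python's own bitwise operators for every input combination. It also counts the 2^(2^n) distinct Boolean functions of n inputs, a number that grows very quickly with n.

```python
from itertools import product


def NOR(a: int, b: int) -> int:
    """Two-input NOR on bits 0/1."""
    return 1 - (a | b)


# Every gate below is wired exclusively from NOR gates.
def NOT(a: int) -> int:
    return NOR(a, a)


def OR(a: int, b: int) -> int:
    return NOT(NOR(a, b))


def AND(a: int, b: int) -> int:
    return NOR(NOT(a), NOT(b))  # De Morgan: not (not a or not b)


def XOR(a: int, b: int) -> int:
    return AND(OR(a, b), NOT(AND(a, b)))


def n_boolean_functions(n_inputs: int) -> int:
    """Number of distinct truth tables on n inputs: 2 ** (2 ** n)."""
    return 2 ** (2 ** n_inputs)


# Check every composite gate against Python's bitwise operators.
for a, b in product((0, 1), repeat=2):
    assert NOT(a) == 1 - a
    assert OR(a, b) == a | b
    assert AND(a, b) == a & b
    assert XOR(a, b) == a ^ b

print([n_boolean_functions(n) for n in range(1, 6)])
```

Because NOR alone suffices to build every other gate, a single standardized module with a uniform input/output interface is already enough, in principle, to assemble any Boolean function.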

Among the three features that turn a standard into a platform of innovation—few components, standard interfaces and myriad useful combinations—standard interfaces are perhaps the most consequential. The reason is that they eliminate ingenuity as an essential ingredient of innovation. They have allowed nature to innovate through the blind processes of mutation, recombination and natural selection; and they can do the same in technology, where evolutionary computation and genetic algorithms can evolve not only computer programs but also devices such as electronic circuits. Such algorithms are already able to create patentable inventions, and they may revolutionize the practice of innovation itself [98].
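A toy version of such evolutionary search shows how a standard interface lets blind variation do the work. In the sketch below, a hypothetical encoding and not a method described in [98], a circuit is a list of two-input NAND gates, each of which may take its inputs from the circuit's two inputs or from the output of any earlier gate. A randomly rewired circuit (the mutation) is kept whenever it reproduces at least as many rows of the XOR truth table as its parent, a simple (1+1) evolutionary strategy. Because every output can connect to every input, any mutation yields a valid circuit. The target function, circuit size, number of generations and seed are arbitrary.

```python
import random

Gate = tuple[int, int]  # indices of the two nodes feeding a NAND gate

# Target behaviour: two-input XOR, as {(x0, x1): output}
TARGET = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def nand(x: int, y: int) -> int:
    return 1 - (x & y)


def run(genome: list[Gate], x0: int, x1: int) -> int:
    """Evaluate the circuit; nodes 0 and 1 are inputs, the last gate is the output."""
    values = [x0, x1]
    for a, b in genome:
        values.append(nand(values[a], values[b]))
    return values[-1]


def fitness(genome: list[Gate]) -> int:
    """Number of truth-table rows the circuit gets right (0 to 4)."""
    return sum(run(genome, x0, x1) == out for (x0, x1), out in TARGET.items())


def random_gate(position: int, rng: random.Random) -> Gate:
    # A gate may read either input or any earlier gate: 2 + position choices
    n_sources = 2 + position
    return (rng.randrange(n_sources), rng.randrange(n_sources))


def mutate(genome: list[Gate], rng: random.Random) -> list[Gate]:
    """Rewire one randomly chosen gate."""
    child = list(genome)
    i = rng.randrange(len(child))
    child[i] = random_gate(i, rng)
    return child


def evolve(n_gates: int = 6, generations: int = 5000, seed: int = 0):
    """(1+1) evolutionary search; returns (genome, fitness, fitness history)."""
    rng = random.Random(seed)
    parent = [random_gate(i, rng) for i in range(n_gates)]
    best = fitness(parent)
    history = [best]
    for _ in range(generations):
        child = mutate(parent, rng)
        score = fitness(child)
        if score >= best:  # keep neutral or beneficial mutations
            parent, best = child, score
        history.append(best)
        if best == len(TARGET):
            break
    return parent, best, history


if __name__ == "__main__":
    genome, score, history = evolve()
    print(f"fitness {score}/4 after {len(history) - 1} generations: {genome}")
```

For reference, the hand-wired four-gate circuit `[(0, 1), (0, 2), (1, 2), (3, 4)]` computes XOR and scores 4 under `fitness`. Whether a given run reaches a perfect score depends on the seed and the generation budget; accepting neutral mutations lets the search drift among circuits of equal fitness, which can help it escape dead ends.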

7. Summary and outlook

Standards constrain change by enforcing uniformity of objects and processes, but the right kinds of standards can leverage these constraints to facilitate innovation through combinatorial processes. The power of such processes is well recognized in the innovation literature [1,85,99], and best expressed for technological innovation by Brynjolfsson & McAfee: ‘Google self-driving cars, Waze, Web, Facebook, Instagram are simple combinations of existing technology … digital innovation is recombinant innovation in its purest form’ [100, p. 81].

Even though human culture has existed for only a sliver of the time since life's origin, it has already given rise to myriad standards. They range from the basic standards of stone tools and language to sophisticated standards in digital electronics that are powerful drivers of innovation. Some of the innovations these standards have helped create are already superior to biology in some respects. They include logic gates that switch tens of millions of times faster than neurons [101] and that can operate in the vacuum of outer space. Biology's most widespread standards, with their billion-year history, suggest that the most successful future human technologies will share some key properties: a modest number of standardized building blocks that can be combined through standard interfaces to create an astronomical number of useful objects. Like DNA itself, such technologies can become flexible platforms of innovation. More than that, they permit innovation through trial and error, and the more widely adopted they are, the more innovative they become. Life's history also shows that with enough time—millions of years—even mindless processes can create universal standards. There is hope for those of us who keep forgetting to pack the right electric outlet adaptor.

Acknowledgements

We thank Rob Boyd and Henry Wright for helpful discussions, as well as three anonymous reviewers and Geerat Vermeij for helpful comments on the manuscript.

Author contributions

All authors contributed to the synthesis of information from the literature and to the writing of the manuscript.

Competing interests

We declare that we have no competing interests.

Funding

We thank the Santa Fe Institute for its continued support. A.W. acknowledges Swiss National Science Foundation grant no. 31003A_146137.

References

- 1. Arthur WB. 2009. The nature of technology. What it is and how it evolves. New York, NY: Free Press.

- 2. Tassey G. 2000. Standardization in technology-based markets. Res. Policy 29, 587–602. (doi:10.1016/s0048-7333(99)00091-8)

- 3. International Organization for Standardization. 2012. Annual report. See http://www.iso.org/iso/home/about/annual_report-2012/.

- 4. Mowery DC, Rosenberg N. 1998. Paths of innovation: technological change in 20th century America. Cambridge, UK: Cambridge University Press.

- 5. Wagner A. 2011. The molecular origins of evolutionary innovations. Trends Genet. 27, 397–410. (doi:10.1016/j.tig.2011.06.002)

- 6. Benner SA, Ellington AD, Tauer A. 1989. Modern metabolism as a palimpsest of the RNA world. Proc. Natl Acad. Sci. USA 86, 7054–7058. (doi:10.1073/pnas.86.18.7054)

- 7. Gilbert W. 1986. The RNA world. Nature 319, 618. (doi:10.1038/319618a0)

- 8. Chen X, Li N, Ellington AD. 2007. Ribozyme catalysis of metabolism in the RNA world. Chem. Biodivers. 4, 633–655. (doi:10.1002/cbdv.200790055)

- 9. Doherty EA, Doudna JA. 2000. Ribozyme structures and mechanisms. Annu. Rev. Biochem. 69, 597–615. (doi:10.1146/annurev.biochem.69.1.597)

- 10. Steitz TA, Moore PB. 2003. RNA, the first macromolecular catalyst: the ribosome is a ribozyme. Trends Biochem. Sci. 28, 411–418. (doi:10.1016/s0968-0004(03)00169-5)

- 11. Lewontin RC. 1970. The units of selection. Annu. Rev. Ecol. Syst. 1, 1–18. (doi:10.1146/annurev.es.01.110170.000245)

- 12. Whitman WB, Coleman DC, Wiebe WJ. 1998. Prokaryotes: the unseen majority. Proc. Natl Acad. Sci. USA 95, 6578–6583. (doi:10.1073/pnas.95.12.6578)

- 13. Knight RD, Freeland SJ, Landweber LF. 2001. Rewiring the keyboard: evolvability of the genetic code. Nat. Rev. Genet. 2, 49–58. (doi:10.1038/35047500)

- 14. Manning G, Whyte DB, Martinez R, Hunter T, Sudarsanam S. 2002. The protein kinase complement of the human genome. Science 298, 1912–1934. (doi:10.1126/science.1075762)

- 15. Cohen P. 2002. Protein kinases—the major drug targets of the twenty-first century? Nat. Rev. Drug Discov. 1, 309–315. (doi:10.1038/nrd773)

- 16. Gerhart J, Kirschner M. 1998. Cells, embryos, and evolution. Boston, MA: Blackwell.

- 17. Osborn MJ, Miller JR. 2007. Rescuing yeast mutants with human genes. Brief. Funct. Genomic. Proteomic. 6, 104–111. (doi:10.1093/bfgp/elm017)

- 18. Neves SR, Ram PT, Iyengar R. 2002. G protein pathways. Science 296, 1636–1639. (doi:10.1126/science.1071550)

- 19. Thornton JW. 2001. Evolution of vertebrate steroid receptors from an ancestral estrogen receptor by ligand exploitation and serial genome expansions. Proc. Natl Acad. Sci. USA 98, 5671–5676. (doi:10.1073/pnas.091553298)

- 20. Williams RW, Herrup K. 1988. The control of neuron number. Annu. Rev. Neurosci. 11, 423–453. (doi:10.1146/annurev.neuro.11.1.423)

- 21. Deutscher G. 2005. The unfolding of language: an evolutionary tour of mankind's greatest invention. New York, NY: Henry Holt and Company.

- 22. Pinker S. 1994. The language instinct: how the mind creates language. New York, NY: William Morrow and Company.

- 23. Hay J, Bauer L. 2007. Phoneme inventory size and population size. Language 83, 388–400. (doi:10.1353/lan.2007.0071)

- 24. Campbell L. 2013. Historical linguistics: an introduction, 3rd edn. Cambridge, MA: The MIT Press.

- 25. Kaplan H, Hooper PL, Gurven M. 2009. The evolutionary and ecological roots of human social organization. Phil. Trans. R. Soc. B 364, 3289–3299. (doi:10.1098/rstb.2009.0115)

- 26. Jones S. 1997. The archaeology of ethnicity: constructing identities in the past and present. London, UK: Routledge.

- 27. Wobst HM. 1977. Stylistic behavior and information exchange. In Papers for the director: research essays in honor of James B. Griffin (ed. Cleland CE), pp. 317–342. Ann Arbor, MI: Museum of Anthropology, University of Michigan.

- 28. Conkey MW, Hastorf CA. 1990. Uses of style in archaeology. Cambridge, UK: Cambridge University Press.

- 29. Barth F. 1969. Introduction. In Ethnic groups and boundaries: the social organization of culture difference (ed. Barth F), pp. 9–38. Prospect Heights, IL: Waveland Press, Inc.

- 30. Hebdige D. 2002. Subculture: the meaning of style. London, UK: Routledge.

- 31. Brown DE. 1991. Human universals. New York, NY: McGraw-Hill, Inc.

- 32. Gherardi F, Aquiloni L, Tricarico E. 2012. Revisiting social recognition systems in invertebrates. Anim. Cogn. 15, 745–762. (doi:10.1007/s10071-012-0513-y)

- 33. Saragusti I, Sharon I, Katzenelson O, Avnir D. 1998. Quantitative analysis of the symmetry of artefacts: lower Paleolithic handaxes. J. Archaeol. Sci. 25, 817–825. (doi:10.1006/jasc.1997.0265)

- 34. Mellars P. 1989. Major issues in the emergence of modern humans. Curr. Anthropol. 30, 349–385. (doi:10.1086/203755)

- 35. Mellars P, Stringer C. 1989. The human revolution: behavioral and biological perspectives on the origins of modern humans. Edinburgh, UK: Edinburgh University Press.

- 36. Marks AE, Hietala HJ, Williams JK. 2001. Tool standardization in the Middle and Upper Palaeolithic: a closer look. Camb. Archaeol. J. 11, 17–44. (doi:10.1017/S0959774301000026)

- 37. Dibble HL. 1989. The implications of stone tool types for the presence of language during the middle Paleolithic. In The origins and dispersal of modern humans: behavioral and biological perspectives (eds Mellars P, Stringer CS), pp. 415–432. Edinburgh, UK: University of Edinburgh Press.

- 38. Chase PG. 1991. Symbols and Paleolithic artifacts—style, standardization, and the imposition of arbitrary form. J. Anthropol. Archaeol. 10, 193–214. (doi:10.1016/0278-4165(91)90013-n)

- 39. Boesch C, Boesch H. 1990. Tool use and tool making in wild chimpanzees. Folia Primatol. 54, 86–99. (doi:10.1159/000156428)

- 40. Bar-Yosef O, Kuhn SL. 1999. The big deal about blades: laminar technologies and human evolution. Am. Anthropol. 101, 322–338. (doi:10.1525/aa.1999.101.2.322)

- 41. Monnier G. 2006. Testing retouched flake tool standardization during the middle Paleolithic. In Transitions before the transition: evolution and stability in the middle Paleolithic and middle stone age (eds Hovers E, Kuhn SL), pp. 57–83. New York, NY: Springer.

- 42. Morris EH. 1927. The beginnings of pottery making in the San Juan area: unfired prototypes and wares of the earliest ceramic period. New York, NY: American Museum of Natural History.

- 43. Morris EA. 1980. Basketmaker caves in the Prayer Rock District, Northeastern Arizona. Tucson, AZ: University of Arizona Press.

- 44. Ortman SG. 2006. Ancient pottery of the Mesa Verde country: how ancestral Pueblo people made it, used it, and thought about it. In The Mesa Verde World (ed. Noble DG), pp. 101–110. Santa Fe, NM: School of American Research Press.

- 45. Ortman SG. 2000. Artifacts. In The archaeology of Castle Rock pueblo: a thirteenth-century village in southwestern Colorado (ed. Kuckelman KA). See http://www.crowcanyon.org/castlerock.

- 46. Pierce C. 2005. Reverse engineering the ceramic cooking pot: cost and performance properties of plain and textured vessels. J. Archaeol. Method Theory 12, 117–157. (doi:10.1007/s10816-005-5665-5)

- 47. Wilshusen RH. 1988. Architectural trends in prehistoric Anasazi sites during A.D. 600 to 1200. In Dolores archaeological program: supporting studies: additive and reductive technologies (eds Blinman E, Phagan CJ, Wilshusen RH), pp. 599–633. Denver, CO: Bureau of Reclamation, Engineering and Research Center.

- 48. Cameron CM. 1990. The effect of varying estimates of pit structure use-life on prehistoric population estimates in the American Southwest. Kiva 55, 155–166.

- 49. Varien MD. 2012. Occupation span and the organization of residential activities: a cross-cultural model and case study from the Mesa Verde region. In Ancient households of the Americas: conceptualizing what households do (eds Douglas JG, Gonlin N), pp. 47–78. Boulder, CO: University Press of Colorado.

- 50. Thompson I. 1993. The towers of Hovenweep. Mesa Verde, CO: Mesa Verde Museum Association.

- 51. Crease RP. 2011. World in the balance. The historic quest for an absolute system of measurement. New York, NY: Norton.

- 52. Sugiyama S. 1993. Worldview materialized in Teotihuacan, Mexico. Latin Am. Antiquity 4, 103–129. (doi:10.2307/971798)

- 53. Arnold D. 1991. Building in Egypt: pharaonic stone masonry. Oxford, UK: Oxford University Press.

- 54. Keightley DN. 1995. A measure of man in early China: in search of the neolithic inch. Chin. Sci. 12, 18–40.

- 55. Plattner S. 1989. Economic anthropology. Stanford, CA: Stanford University Press.

- 56. Robinson A. 2007. The story of writing, 2nd edn. New York, NY: Thames and Hudson.

- 57. Gies F, Gies J. 1994. Cathedral, forge, and waterwheel: technology and invention in the Middle Ages. New York, NY: HarperCollins Publishers.

- 58. Singer C, Holmyard EJ, Hall AR, Williams TI. 1958. A history of technology: the industrial revolution 1750–1850. New York, NY: Oxford University Press.

- 59. Hounshell DA. 1984. From the American system to mass production, 1800–1932: the development of manufacturing technology in the United States. Baltimore, MD: Johns Hopkins University Press.

- 60. Landes DS. 2003. The unbound Prometheus. Cambridge, UK: Cambridge University Press.

- 61. Campbell-Kelly M, Aspray W. 2014. Computer: a history of the information machine, 3rd edn. Boulder, CO: Westview Press.

- 62. Schurr SH. 1990. Electricity in the American economy: agent of technological progress. New York, NY: Greenwood Press.

- 63. Aspray W. 1990. John von Neumann and the origins of modern computing. Cambridge, MA: MIT Press.

- 64. Ceruzzi PE. 2003. A history of modern computing, 2nd edn. Cambridge, MA: MIT Press.

- 65. The Computer History Museum. 2015. 1953—transistorized computers emerge. See http://www.computerhistory.org/semiconductor/timeline/1953-transistorized-computers-emerge.html.

- 66. Braun E, Macdonald S. 1982. Revolution in miniature: the history and impact of semiconductor electronics re-explored in an updated and revised second edition, 2nd edn. Cambridge, UK: Cambridge University Press.

- 67. Friedman TL. 2015. Moore's law turns 50. The New York Times, 13 May 2015.

- 68. Jones C. 2014. The technical and social history of software engineering. Upper Saddle River, NJ: Addison-Wesley.

- 69. Ceruzzi PE. 2012. Computing: a concise history. Cambridge, MA: MIT Press.

- 70. Kool ET. 2001. Hydrogen bonding, base stacking, and steric effects in DNA replication. Annu. Rev. Biophys. Biomol. Struct. 30, 1–22. (doi:10.1146/annurev.biophys.30.1.1)

- 71. Kool ET. 1998. Replication of non-hydrogen bonded bases by DNA polymerases: a mechanism for steric matching. Biopolymers 48, 3–17.

- 72. Nelson KE, Levy M, Miller SL. 2000. Peptide nucleic acids rather than RNA may have been the first genetic molecule. Proc. Natl Acad. Sci. USA 97, 3868–3871. (doi:10.1073/pnas.97.8.3868)

- 73. Wittung P, Nielsen PE, Buchardt O, Egholm M, Norden B. 1994. DNA-like double helix formed by peptide nucleic acid. Nature 368, 561–563. (doi:10.1038/368561a0)

- 74. Rosen W. 2010. The most powerful idea in the world. Chicago, IL: The University of Chicago Press.

- 75. Puffert D. 2009. Tracks across continents, paths through history: the economic dynamics of standardization in railway gauge. Chicago, IL: University of Chicago Press.

- 76. Mokyr J. 2000. Natural history and economic history: is technological change an evolutionary process? Evanston, IL: Northwestern University. See http://faculty.wcas.northwestern.edu/~jmokyr/jerusalem1.PDF.

- 77. Bushman F. 2002. Lateral DNA transfer: mechanisms and consequences. Cold Spring Harbor, NY: Cold Spring Harbor Laboratory Press.

- 78. IBM's Mainframes: old dog, new tricks. The Economist, 12 September 2012.

- 79. Crick FHC. 1968. Origin of the genetic code. J. Mol. Biol. 38, 367–379. (doi:10.1016/0022-2836(68)90392-6)

- 80. Mayo AJ, Nohria N, Singleton LG. 2007. Paths to power: how insiders and outsiders shaped American business leadership. Cambridge, MA: Harvard Business Review Press.

- 81. Liebowitz S, Margolis SE. 1990. The fable of the keys. J. Law Econ. 33, 1–26. (doi:10.1086/467198)

- 82. Freeland S, Hurst L. 1998. The genetic code is one in a million. J. Mol. Evol. 47, 238–248. (doi:10.1007/PL00006381)

- 83. Wong J. 1975. A co-evolution theory of the genetic code. Proc. Natl Acad. Sci. USA 72, 1909–1912. (doi:10.1073/pnas.72.5.1909)

- 84. Ronneberg T, Landweber L, Freeland S. 2000. Testing a biosynthetic theory of the genetic code: fact or artifact? Proc. Natl Acad. Sci. USA 97, 13 690–13 695. (doi:10.1073/pnas.250403097)

- 85. Wagner A, Rosen W. 2014. Spaces of the possible: universal Darwinism and the wall between technological and biological innovation. J. R. Soc. Interface 11, 20131190. (doi:10.1098/rsif.2013.1190)

- 86. Johnson S. 2010. Where good ideas come from: the natural history of innovation. New York, NY: Riverhead.

- 87. Ziman J. 2003. Technological innovation as an evolutionary process. Cambridge, UK: Cambridge University Press.

- 88. Basalla G. 1988. The evolution of technology. Cambridge, UK: Cambridge University Press.

- 89. Lewens T. 2005. Organisms and artifacts. Design in nature and elsewhere. Cambridge, MA: MIT Press.

- 90. Wagner A. 2011. The origins of evolutionary innovations. A theory of transformative change in living systems. Oxford, UK: Oxford University Press.

- 91. Perez DM. 2003. The evolutionarily triumphant G-protein-coupled receptor. Mol. Pharmacol. 63, 1202–1205. (doi:10.1124/mol.63.6.1202)

- 92. Venter JC, et al. 2001. The sequence of the human genome. Science 291, 1304–1351. (doi:10.1126/science.1058040)

- 93. Stone J, Wray G. 2001. Rapid evolution of cis-regulatory sequences via local point mutations. Mol. Biol. Evol. 18, 1764–1770. (doi:10.1093/oxfordjournals.molbev.a003964)

- 94. Gilbert SF. 2010. Developmental biology. Sunderland, MA: Sinauer.

- 95. Carroll SB, Grenier JK, Weatherbee SD. 2001. From DNA to diversity. Molecular genetics and the evolution of animal design. Malden, MA: Blackwell.

- 96. Strandh S. 1987. Christopher Polhem and his mechanical alphabet. Tech. Cult. 10, 143–168.

- 97. Davis M. 2001. Engines of logic: mathematicians and the origin of the computer. New York, NY: Norton.

- 98. Plotkin R. 2009. The genie in the machine. Stanford, CA: Stanford University Press.

- 99. Kell DB, Lurie-Luke E. 2015. The virtue of innovation: innovation through the lenses of biological evolution. J. R. Soc. Interface 12, 20141183. (doi:10.1098/rsif.2014.1183)

- 100. Brynjolfsson E, McAfee A. 2014. The second machine age: work, progress, and prosperity in a time of brilliant technologies, 1st edn. New York, NY: Norton.

- 101. Amit DJ. 1989. Modeling brain function. The world of attractor neural networks. Cambridge, UK: Cambridge University Press.