Abstract

This study examined the timing of spontaneous self-monitoring in the naming responses of people with aphasia. Twelve people with aphasia completed a 615-item naming test twice, in separate sessions. Naming attempts were scored for accuracy and error type, and verbalizations indicating detection were coded as negation (e.g., “no, not that”) or repair attempts (i.e., a changed naming attempt). Focusing on phonological and semantic errors, we measured the timing of the errors and of the utterances that provided evidence of detection. The effects of error type and detection response type on error-to-detection latencies were analyzed using mixed-effects regression modeling. We first asked whether phonological errors and semantic errors differed in the timing of the detection process or repair planning. Results suggested that the two error types primarily differed with respect to repair planning. Specifically, repair attempts for phonological errors were initiated more quickly than repair attempts for semantic errors. We next asked whether this difference between the error types could be attributed to the tendency for phonological errors to have a high degree of phonological similarity with the subsequent repair attempts, thereby speeding the programming of the repairs. Results showed that greater phonological similarity between the error and the repair was associated with faster repair times for both error types, providing evidence of error-to-repair priming in spontaneous self-monitoring. When controlling for phonological overlap, significant effects of error type and repair accuracy on repair times were also found. These effects indicated that correct repairs of phonological errors were initiated particularly quickly, whereas repairs of semantic errors were initiated relatively slowly, regardless of their accuracy. We discuss the implications of these findings for theoretical accounts of self-monitoring and the role of speech error repair in learning.

Keywords: speech self-monitoring, aphasia, naming, error detection, repair

1. Introduction

Monitoring one’s own speech is critical for successful communication. Theories of speech monitoring postulate multiple processes in language production and comprehension systems that may be involved in this important behavior. One method of testing these proposed monitoring processes is to examine differences in the rate and/or timing of different monitoring behaviors. In the present study, we analyzed the timing of self-monitoring in the naming performance of individuals with aphasia to elucidate detection and repair processes that are associated with semantic and phonological naming errors.

1.1. Temporal characteristics of speech monitoring models

Theories of speech monitoring differ with regard to the proposed stages of speech production at which errors are detected, resulting in different predictions regarding the timing of monitoring processes. Speech production involves a sequence of complex cognitive and motoric computations beginning with conceptualization of a preverbal message, followed by the formulation of an internal representation of speech that is then articulated and finally perceived as audible speech (see Levelt, 1989; Postma, 2000). According to Levelt’s (1983, 1989) influential theory of speech monitoring, the auditory feedback from one’s own speech is parsed and monitored using the same comprehension system employed to understand others’ speech. The self-monitoring of audible speech is supported by evidence that rates of error detection and repair decrease when auditory feedback is masked with white noise (Lackner & Tuller, 1979; Oomen, Postma, & Kolk, 2001; Postma & Kolk, 1992). However, auditory comprehension of one’s own speech is a relatively slow process that does not explain the detection of errors prior to articulation (Motley, Camden, & Baars, 1982; Postma & Noordanus, 1996; Trewartha & Phillips, 2013) or rapid error detection in overt speech, such as the interruption of the incorrect word “yellow” observed in the utterance “to the ye…, to the orange node” (Levelt, 1983). To account for instances of fast error detection, the comprehension-based theory also postulates monitoring of inner speech by the comprehension system, which occurs after phonological or phonetic encoding of speech but prior to articulation (Levelt, 1983; 1989; Wheeldon & Levelt, 1995). In this framework, monitoring can occur prior to the formulation of inner speech only when detecting inappropriateness (e.g., ambiguity or incoherence) arising in the preverbal conceptualization stage (Levelt, 1983, 1989).

Other theories of monitoring propose that errors can be detected relatively early in speech production by relying on processes that occur during the formulation of speech. Production-based accounts postulate that error detection can utilize information generated by the production system directly, without involvement of comprehension systems. Proposed mechanisms include: specialized monitors capable of detecting erroneous output at multiple levels of speech production (De Smedt & Kempen, 1987; Laver, 1980; van Wijk & Kempen, 1987); an attention-summoning error signal that arises from unusual co-activation patterns among nodes in a connectionist network when units are erroneously selected (MacKay, 1987); and a domain-general monitoring system that is sensitive to conflict arising from competing representations at multiple levels of speech formulation (Nozari, Dell, & Schwartz, 2011). In addition, Pickering and Garrod (2013, 2014) propose a prediction-based account of monitoring that features internal models like those in motor control theory. This account assumes that the speaker constructs internal models of the intended utterance at multiple levels of production, each model specifying a production operation along with its predicted consequences. Such predictions are compared against the utterance prior to or after articulation, and the error signal resulting from this comparison forms the basis for speech monitoring.

Theories of monitoring also make different predictions with regard to how the timing of detection may differ for specific types of speech errors. During speech production, semantic errors arising from the selection of incorrect lexical items originate at an earlier stage than phonological errors arising from the selection of incorrect speech sounds. Hence, in Pickering and Garrod’s (2014) prediction-based model and in production-based models that propose that errors can be detected as soon as they arise, semantic errors should be detectable earlier than phonological errors. Levelt’s (1983, 1989) comprehension-based theory does not specify the timing of detection for semantic errors as compared to phonological errors, but other researchers have made suggestions within this framework. Hartsuiker et al. (2005) argue that postarticulatory monitoring, which uses the acoustical information in overt speech, is particularly important for detecting phonological errors. This would seem to predict relatively slow detection of phonological errors compared to other errors that are more likely to be monitored prior to articulation. In contrast, Pickering and Garrod (2014) suggest that comprehension-based monitoring predicts earlier detection of phonological errors compared to semantic errors because the input to the monitor must be a phonological or phonetic representation of inner or overt speech. Consistent with a phonological basis for the comprehension-based monitor, a series of experiments showed that speakers’ ability to halt their own word production as they monitored other auditory or visual words was sensitive to phonological similarity but not semantic similarity with the intended word (Slevc & Ferreira, 2006). 
Although few studies have tested differences in the timing of monitoring for specific types of errors, there is some evidence that phonological errors are detected more quickly than semantic- or syntactic-based lexical errors in one’s own spontaneous speech (Nooteboom, 2005) and when monitoring others’ speech (Oomen & Postma, 2002). Distinguishing between different types of speech errors may be particularly important when examining the monitoring abilities of people with aphasia, which is discussed in the following section.

1.2. Self-monitoring in aphasia

Research involving participants with aphasia provides important evidence regarding the relationships between language processes and self-monitoring. Particularly relevant for the present study, aphasic speech provides opportunities to distinguish between processes involved in error detection and those involved in error repair. Neurologically intact speakers commonly indicate detection of having produced a speech error by initiating a self-correction, sometimes preceded by an editing term, filled pause, or other disfluency (Blackmer & Mitton, 1991; Levelt, 1983; Nooteboom, 2005). These same monitoring behaviors are observed in people with aphasia, but in some types of aphasia their error detection takes the form of explicit response rejection (e.g., “no”; “that’s not right”), and detected errors may be followed by a correct repair, incorrect repair, or no repair attempt at all (Oomen et al., 2001; Sampson & Faroqi-Shah, 2011; Schlenck, Huber, & Willmes, 1987; Schwartz, Middleton, Brecher, Gagliardi, & Garvey, 2016). Our study took advantage of this variability in repair behaviors to investigate the timing of error detection with and without overt repair. Aphasic speech has also been examined for evidence of covert repair, i.e., pre-articulatory self-correction, as inferred from pauses, repeats, and other speech disfluencies (e.g., Oomen et al., 2001; Oomen, Postma, & Kolk, 2005; Schlenck et al., 1987). Given the known difficulty of distinguishing covert repair-related disfluencies from the pauses, repeats, etc. that signal word-retrieval and speech-planning difficulties in aphasia (Nickels & Howard, 1995; Postma, 2000), we made no attempt to analyze covert repair in this study.

It has been known for some time that individuals with aphasia may exhibit monitoring impairments for a specific error type, for example ignoring semantic errors but regularly attempting repairs of phonological errors (Marshall, Rappaport, & Garcia-Bunuel, 1985; Stark, 1988), or the reverse. An experimental study of a patient with Broca’s aphasia revealed an informative pattern. The patient’s speech contained significantly more phonological than semantic errors, and he was less likely to repair his own phonological, compared to semantic, errors; yet he had little difficulty detecting and repairing phonological errors spoken by someone else (Oomen et al., 2005). This pattern points to a locus for the self-monitoring deficit within the production system itself.

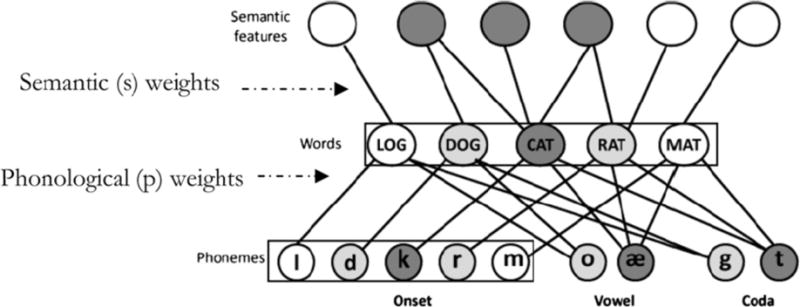

Nozari et al. (2011) developed a production-based account of speech error monitoring based on the interactive two-step model of lexical access (see Figure 1). Within this framework, the first step (word retrieval) involves mapping from semantic features to an intermediary lexical representation (hereafter, word), and the second step (phonological retrieval) involves mapping from the word to its constituent phonemes (Foygel & Dell, 2000; Schwartz, Dell, Martin, Gahl, & Sobel, 2006). At each step, activation of related units creates conflict and sets the stage for errors: semantic errors at step one and phonological errors at step two. Lesions in aphasia weaken connections between levels (s- and p-weights in Figure 1), resulting in higher levels of conflict and increased rates of error.

Figure 1.

Interactive two-step model of lexical access.

Building on this account of lexical access, Nozari et al. (2011) propose that high conflict within the normal system constitutes the signal of error that the external monitor uses to initiate detection/repair behaviors, without relying on speech perception. In aphasia, abnormally high levels of conflict at step one or step two render the error signal unreliable, resulting in impaired error monitoring at that step. Thus, an individual with weakened connections between semantic features and words (s-weights) would exhibit particular difficulty monitoring semantic errors, whereas an individual with weakened connections between words and phonemes (p-weights) would exhibit particular difficulty monitoring phonological errors. Nozari et al. (2011) tested this prediction in 29 speakers with aphasia. With each individual participant, they estimated the strength of s- and p-weights by fitting the two-step model of lexical access to performance on the Philadelphia Naming Test (PNT; Roach, Schwartz, Martin, Grewal, & Brecher, 1996), and they coded each PNT response with regard to whether the participant produced evidence of error detection. As expected, results showed that weaker weights (more errors) at a particular level of word production were associated with reduced detection rates only for errors arising from that level.

In addition to shedding light on how aspects of the language system influence monitoring, evidence from people with aphasia suggests that monitoring may in turn affect the language system and play a role in learning. Marshall et al. (1994) found positive correlations between aphasic individuals’ rates of error detection in a naming test and their change in performance on measures of language abilities following three months of aphasia treatment. However, this study does not necessarily indicate a causal relationship between self-monitoring and language recovery because, as the authors noted, higher rates of error detection may have reflected a more intact language system that was more likely to show positive change.

A recent study tested more directly whether spontaneous self-monitoring in aphasic participants’ naming performance reflects the representational strength of lexical items (the Strength hypothesis) and/or causally impacts future performance (the Learning hypothesis) (Schwartz et al., 2016). Participants in this study completed a naming test twice (Time 1 and Time 2) without receiving feedback from the experimenter, and three types of errors were selected for analysis: semantic errors, phonological errors, and self-interrupted naming attempts termed fragments. Statistical analyses were designed to test how self-monitoring behaviors for items eliciting an error (“errorful items”) are associated with later or earlier naming success with those same items. The forward analysis measured how monitoring at time 1 predicted performance at time 2; the backward analysis measured how monitoring at time 2 predicted performance at time 1. The Strength hypothesis predicts effects in both directions (forward and backward) on the following assumptions: 1) errorful items can vary in representational strength; 2) when errorful items are tested twice (without feedback), the stronger items are more likely to be named correctly at least once; and 3) errors on stronger items are more likely to be spontaneously detected and/or corrected. The Learning hypothesis predicts greater effects in the forward direction on the assumption that learning has a causal, and hence future-directed, impact on behavior. Results from all three error types (semantic, phonological, and fragment) supported the Strength hypothesis. Results from semantic errors alone additionally supported the Learning hypothesis. For both hypotheses, the supporting evidence derived from instances in which an error was both detected and corrected; detection without correction yielded results comparable to the control condition (undetected errors). 
In addition to providing novel evidence of a learning effect of monitoring, this study highlights differences in the monitoring of phonological and semantic errors and raises the possibility that key differences localize to processes involved in repair.

1.3. The present study

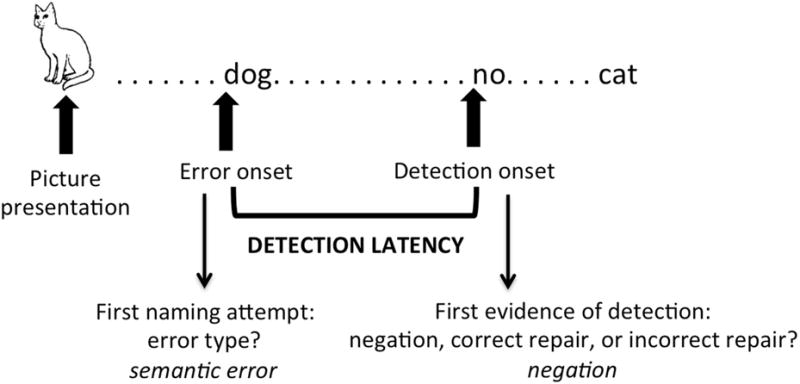

In the present study, we further examined self-monitoring processes by analyzing detection latencies for the monitoring responses collected in Schwartz et al. (2016). We focused on monitoring associated with semantic and phonological errors because 1) previous evidence from aphasia suggests that monitoring processes for semantic and phonological errors can undergo selective disruption (Nozari et al., 2011) and affect future behavior differently (Schwartz et al., 2016), and 2) some theories of monitoring lead to specific predictions regarding the timing of detection for these error types. For semantic and phonological errors that were detected in Schwartz et al. (2016), we recorded the timing of the errors and of the utterances that provided evidence of detection to determine error-to-detection latencies (hereafter, detection latencies). We then tested differences in detection latencies for semantic errors compared to phonological errors. Latency differences could result from different processes of error detection preceding a repair and/or from the repair process itself. To distinguish between these processes, we identified instances in which the first evidence of detection was a rejection of the naming attempt (e.g., “no, that’s not it”) and instances in which the first evidence of detection was an attempt to repair the error. If the timing of the detection process for semantic errors differs from phonological errors, we would expect a difference in detection latencies regardless of the type of detection. If the timing of repair planning for semantic errors differs from phonological errors, we would expect the difference in detection latencies to be greater for detection events of the repair type compared to those of the rejection type. Thus, by examining differences in detection latencies we hoped to identify monitoring processes in which differences between semantic and phonological errors arise.

2. Methods

2.1. Participants

Participants were 12 individuals with mild-to-moderate aphasia resulting from left hemisphere stroke. Selection criteria included good comprehension abilities and impaired naming. Individual participant characteristics and selected language test scores are reported in Table 1. Data were collected under research protocols approved by the Einstein Healthcare Network Institutional Review Board. Participants gave written consent and were paid for their participation.

Table 1.

Participant Characteristics

| Participant & Subtype | Gender | Age | Years of Education | Months Post-onset |
|---|---|---|---|---|
| Anomic1 | F | 63 | 12 | 20 |
| Anomic2 | F | 60 | 10 | 5 |
| Anomic3 | M | 62 | 19 | 6 |
| Anomic4 | M | 47 | 14 | 23 |
| Anomic5 | M | 55 | 16 | 10 |
| Broca1 | M | 48 | 14 | 11 |
| Broca2 | M | 59 | 16 | 81 |
| Broca3 | M | 58 | 18 | 109 |
| Conduction1 | F | 73 | 14 | 15 |
| Conduction2 | M | 53 | 12 | 61 |
| TCM1 | M | 54 | 16 | 4 |
| TCM2 | F | 73 | 12 | 24 |
| Mean | | 58.8 | 14.4 | 30.8 |
| Min; Max | | 47; 73 | 10; 19 | 4; 109 |

| Participant & Subtype | Aphasia Severity | Naming | Auditory Comprehension | Word Comprehension |
|---|---|---|---|---|
| Anomic1 | 93 | 78 | 9.6 | 98 |
| Anomic2 | 89 | 79 | 9.2 | 86 |
| Anomic3 | 85 | 83 | 9.8 | 95 |
| Anomic4 | 74 | 83 | 7.2 | 95 |
| Anomic5 | 77 | 64 | 8.8 | 92 |
| Broca1 | 65 | 55 | 8.8 | 98 |
| Broca2 | 57 | 59 | 7.0 | 81 |
| Broca3 | 56 | 78 | 6.4 | 96 |
| Conduction1 | 83 | 65 | 9.1 | 90 |
| Conduction2 | 71 | 70 | 8.2 | 98 |
| TCM1 | 76 | 59 | 8.7 | 90 |
| TCM2 | 73 | 77 | 8.7 | 94 |
| Mean | 74.8 | 70.8 | 8.5 | 92.8 |
| Min; Max | 56; 93 | 55; 83 | 6.4; 9.8 | 81; 98 |

Note. TCM = transcortical motor. Aphasia Severity = Aphasia Quotient from the Western Aphasia Battery (Kertesz, 2007). Naming = percent correct on the Philadelphia Naming Test (Roach et al., 1996). Auditory comprehension = comprehension subtest score from the Western Aphasia Battery (max score = 10). Word comprehension = score on the Philadelphia Name Verification Test (Mirman et al., 2010), reflecting the percentage of items for which the correct name was verified and semantic and phonological foils were rejected.

2.2. Data collection and response coding

Details regarding data collection, response coding, and reliability are reported in Schwartz et al. (2016). In this section we provide information that is particularly relevant to the present study.

2.2.1. Data collection

Each participant completed a naming test consisting of 615 pictures of common objects twice within a two-week period, with the two administrations occurring in separate weeks. All pictures were presented in a random order on a computer, and participants were instructed to name each picture. To avoid any kind of experimenter-provided feedback, the experimenter advanced the trial when the participant pointed to a “thumbs up” picture, indicating that they had given their final answer. If the participant did not provide a final answer within 20 seconds, the trial was advanced. All sessions were digitally recorded.

2.2.2. Response coding

Trained experts transcribed participants’ responses and coded the first naming attempt in each trial. The following were considered non-naming responses, rather than naming attempts: omissions (no responses and irrelevant comments, e.g., “I like that”), picture-part names (e.g., “stem” for “pumpkin”), and visual errors arising from misperception of the picture (e.g., “string” for “necklace”). Following established conventions (Roach et al., 1996), descriptions (e.g., “from Japan” for “sushi”), which often reflect a compensatory strategy, were also classified as non-naming responses. All other word or nonword responses were coded as naming attempts. If a naming attempt followed a non-naming response, the non-naming response was ignored and the naming attempt was coded as the response for the trial. Following these procedures, a change from a non-naming response to a naming attempt was not coded as evidence of error detection (see 2.2.3. Detection coding).

Each naming attempt was coded as correct or as one of 12 types of incorrect responses. A correct response was defined as a noun that shared all the correct phonemes with the target in the correct order, allowing for incorrect number marking (e.g., “cherry” for “cherries”). The present study focuses on two types of incorrect responses, semantic errors and phonological errors, that together constituted 56% of participants’ naming errors. Semantic errors were defined as nouns that represented a conceptual mismatch (e.g., “airplane” for “blimp”), including near-synonyms (e.g., “sleigh” for “sled”) but excluding correct superordinate responses (e.g., “insect” for “mosquito”). Phonological errors were defined as complete naming attempts (i.e., excluding self-interrupted fragments) that did not meet the criteria for a semantic error and whose phonological overlap with the target was greater than .50 according to the formula below (from Lecours & Lhermitte, 1969). This formula provides a continuous measure of phonological similarity that is standardized across different word lengths. Shared phonemes (counting both consonants and vowels, with diphthongs as single constituents) were identified independent of position. Credit was assigned only once if a response had two instances of a single target phoneme. Phonological errors included words (e.g., “flower” for “feather”) as well as nonwords (e.g., “/blun/” for “balloon”).
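The overlap formula is not reproduced in this text. As a hedged illustration only, the sketch below assumes the commonly cited form of the index: twice the number of shared phonemes, divided by the total number of phonemes in the target plus the response. The function name, the list-of-symbols input format, and the phoneme transcriptions are assumptions made for this example; they are not the authors' coding scripts.

```python
from collections import Counter


def phonological_overlap(target, response):
    """Assumed index: 2 * shared phonemes / (len(target) + len(response)).

    `target` and `response` are lists of phoneme symbols, with each
    diphthong entered as a single symbol.
    """
    total = len(target) + len(response)
    if total == 0:
        raise ValueError("target and response are both empty")
    # Multiset intersection ignores position, and a target phoneme is
    # credited only as often as it occurs in the target, so a response
    # with two instances of a single target phoneme gets one credit.
    shared = sum((Counter(target) & Counter(response)).values())
    return 2 * shared / total


# Illustrative transcriptions (assumed) for the nonword error /blun/
# produced for the target "balloon".
balloon = ["b", "ə", "l", "u", "n"]
blun = ["b", "l", "u", "n"]
overlap = phonological_overlap(balloon, blun)  # 2 * 4 / (5 + 4)
is_phonological_candidate = overlap > 0.50
```

Under these assumed transcriptions, four phonemes are shared, so the response clears the .50 criterion. Because the denominator sums both word lengths, the index stays between 0 and 1 regardless of word length.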

2.2.3. Detection coding

For each naming attempt, trained experts also coded the presence or absence of utterances within the trial that indicated detection. Evidence of detection was defined as a negation of the naming attempt (e.g., “no,” “that’s not it,” etc.) or a changed naming attempt (e.g., for the target “weed”: “rake” changed to “weed”), including the affixation of a morpheme other than the plural morpheme (e.g., for the target “cheerleader”: “cheer” changed to “cheering”). In the present study, detected semantic and phonological errors were coded with respect to whether the first evidence of detection following the error was 1) a negation, 2) a correct repair (i.e., a changed naming attempt that matched the target), or 3) an incorrect repair (i.e., a changed naming attempt that did not match the target).

2.3. Latency coding

For each detected semantic or phonological error, trained research staff coded the timing of the trial onset (signaled by an auditory beep that coincided with picture presentation), error onset, error offset, and detection utterance onset. The audio recordings of the participants’ responses were viewed using Praat software (Boersma & Weenink, 2016) to display the formants and pulses of the recorded speech, and the timing of the appropriate onsets and offsets was recorded. The onset (or offset) of a voiced segment was defined as the first (or last) glottal pulse that extended through at least two formants. The onset (or offset) of an unvoiced segment was defined as the first (or last) visible increase in energy due to sound.

2.4. Detection latencies

For the purposes of this study, the detection latency was defined as the length of time from the onset of the error to the onset of the first utterance that indicated detection (see Figure 2). Considering that detection can begin before production of the error is completed, we timed from error onset rather than error offset to take into account the greater time provided for detection for longer error responses. By excluding the interval from stimulus onset to error onset, we avoided having the detection latency unduly influenced by earlier processing stages (e.g., the time required to process the visual stimulus and retrieve the word). On the other hand, monitoring processes may be initiated at some point before articulation of the error response, and such pre-articulatory monitoring time is not accounted for in our measurements. To address this issue, all analyses detailed below were also performed with log-transformed error latencies (i.e., trial start to error onset) included in the statistical models. All significant effects reported in the Results section were also significant in these secondary analyses (see Appendix A).

Figure 2.

Example of coding the error type, detection type, and detection latency for a response to the target “cat.”

It is also important to note that the classification of detection responses as negations or repair attempts in this study applies only to the first utterance providing evidence of error detection in each trial. In some cases, an initial detection response was followed by additional detection responses, including correct repairs (as depicted in Figure 2). Hence, the detection response rates reported in the results section do not include the total number of negations and repairs produced. Because our analyses focus on the speed, not the frequency, of detection responses, we selected the first detection response following each error as the measure of error detection. The presence and timing of later utterances are not included in the latency analyses or discussed in this paper.
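The latency definitions in this section can be sketched as follows. This is a minimal illustration under stated assumptions: the `CodedTrial` record, its field names, and the example time stamps are hypothetical, and the natural logarithm is assumed because the text does not state the base of the log transformation.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class CodedTrial:
    """Hypothetical record of the time stamps (in seconds) coded for one trial."""
    trial_onset: float             # beep coinciding with picture presentation
    error_onset: float
    error_offset: float
    detection_onsets: List[float]  # onsets of all detection utterances


def detection_latency(trial):
    # Error onset to the onset of the FIRST utterance indicating detection;
    # later detection utterances in the trial are ignored.
    return min(trial.detection_onsets) - trial.error_onset


def error_latency(trial):
    # Trial start to error onset, the covariate used in the secondary analyses.
    return trial.error_onset - trial.trial_onset


example = CodedTrial(trial_onset=0.0, error_onset=1.8, error_offset=2.3,
                     detection_onsets=[3.1, 4.6])
latency = detection_latency(example)   # about 1.3 s
log_latency = math.log(latency)        # natural log (assumed base)
log_error_latency = math.log(error_latency(example))
```

Timing from error onset rather than error offset means that `error_offset` plays no role in the latency itself; it is kept in the record only because it was one of the coded time points.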

2.5. Data analyses

Latencies were analyzed using linear mixed effects regression models (see Baayen, Davidson, & Bates, 2008) carried out in R version 3.1.2 using the lme4 package (R Core Team, 2014). Detection latencies were log-transformed prior to analyses because the distributions of the latency data were highly positively skewed, and the residuals of models using the raw latency data were similarly skewed. All models described below included participant random effects on the intercept to account for individual differences in the context of models of overall group performance. Individual items were not included as random effects because 76% of the items included in this study occurred no more than twice in the dataset. In addition, models failed to converge when using the maximal random effects structure (see Barr, Levy, Scheepers, & Tily, 2013), i.e., when including the effects of both error type and detection type. Because the focus of the analyses was to test differences between phonological errors and semantic errors, we chose to include the effect of error type in the random effects structures, which makes the significance test for the effect of error type more conservative.

Data analyses used a model comparisons approach. After fitting a base model of detection latencies with the intercept and random effects, fixed effects were added one at a time. Improvements in model fit were evaluated using the change in the deviance statistic (−2 times the log-likelihood), which is distributed as chi-square with degrees of freedom equal to the number of parameters added. Parameter estimates are reported with their standard errors and p-values estimated using the normal approximation for the t-values with alpha=0.05. To test whether the timing of error detection and/or repair differs for phonological errors compared to semantic errors, we fit a base model of detection latencies and sequentially added the effects of a two-level detection type factor (negation; repair attempt), a two-level error type factor (phonological; semantic), and the interaction between these two factors (Model Comparison A).
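The model comparison step can be illustrated with a short sketch. It is not the authors' R code: the function names are hypothetical, and the closed-form p-value applies only to comparisons that add a single parameter (one degree of freedom), which is the case for every comparison reported in Table 4. The log-likelihoods in the example are taken from the second and third models of Model Comparison A.

```python
import math


def deviance(log_likelihood):
    # Deviance statistic: -2 times the log-likelihood.
    return -2.0 * log_likelihood


def lrt_one_df(loglik_reduced, loglik_full):
    """Likelihood ratio test for nested models differing by ONE parameter.

    Returns the change in deviance and its p-value. For one degree of
    freedom, the chi-square survival function is erfc(sqrt(x / 2)).
    """
    chi_square = deviance(loglik_reduced) - deviance(loglik_full)
    if chi_square < 0:
        raise ValueError("the fuller model should not fit worse")
    p_value = math.erfc(math.sqrt(chi_square / 2.0))
    return chi_square, p_value


# Adding error type to the model that already contains detection type.
chi_square, p_value = lrt_one_df(loglik_reduced=-1008.5, loglik_full=-1003.4)
significant = p_value < 0.05
```

With these rounded log-likelihoods the change in deviance is about 10.2, and the p-value falls between .001 and .01, in line with the corresponding row of Table 4.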

To further investigate factors relevant to error repair, we next performed a series of analyses for trials in which the first evidence of detection was a repair attempt. Based on the hypothesis that the production of an error may prime the production of a repair (see Hartsuiker & Kolk, 2001), we examined whether phonological similarity between the error and the subsequent repair predicted the speed of repair. Specifically, we tested the correlation between error-repair phonological overlap scores (using the formula reported in section 2.2.2. Response coding) and detection latencies for phonological errors and for semantic errors separately. To test whether repair accuracy and/or error type have significant effects on detection latencies when controlling for phonological overlap, we fit a base model of detection latencies and sequentially added the effects of phonological overlap, a two-level repair accuracy factor (correct repair; incorrect repair), a two-level error type factor (phonological; semantic), and the interaction between these two factors (Model Comparison B).

3. Results

3.1. Detection rates

Table 2 reports the total number of phonological errors and semantic errors in the analyzed corpus, broken down by presence and type of detection event (negation, correct repair attempt, incorrect repair attempt). The detection rate for phonological errors (38%) was higher than for semantic errors (28%). This was due to the relatively high frequency of repair attempts for phonological errors. By contrast, negations constituted only 8% of phonological error detection responses, compared to 38% of semantic error detection responses.

Table 2.

Phonological and Semantic Error Counts

| Error Type | Undetected | Detected: Negation | Detected: Correct Repair | Detected: Incorrect Repair | Total |
|---|---|---|---|---|---|
| Phonological | 563 (62%) | 28 (3%) | 131 (14%) | 189 (21%) | 911 (100%) |
| Semantic | 1335 (72%) | 194 (10%) | 123 (7%) | 199 (11%) | 1851 (100%) |

Note. Values in parentheses represent the percentage of total phonological errors (top row) or semantic errors (bottom row).
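The detection rates and negation proportions reported in section 3.1 can be recomputed from the counts in Table 2. The sketch below is a simple arithmetic check; the dictionary layout and function name are assumptions made for illustration.

```python
# Counts copied from Table 2.
counts = {
    "phonological": {"undetected": 563, "negation": 28,
                     "correct_repair": 131, "incorrect_repair": 189},
    "semantic": {"undetected": 1335, "negation": 194,
                 "correct_repair": 123, "incorrect_repair": 199},
}


def detection_summary(row):
    total = sum(row.values())
    detected = total - row["undetected"]
    return {
        "total": total,
        "detected": detected,
        # Share of all errors of this type that were detected.
        "detection_rate": detected / total,
        # Share of detection responses that were negations.
        "negation_share": row["negation"] / detected,
    }


summaries = {error_type: detection_summary(row)
             for error_type, row in counts.items()}
for error_type, s in summaries.items():
    print(f"{error_type}: detected {s['detection_rate']:.0%}, "
          f"negations {s['negation_share']:.0%} of detections")
```

Note that the two percentages use different denominators: the detection rate is relative to all errors of a given type, whereas the negation share is relative to detected errors only.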

3.2. Detection latencies

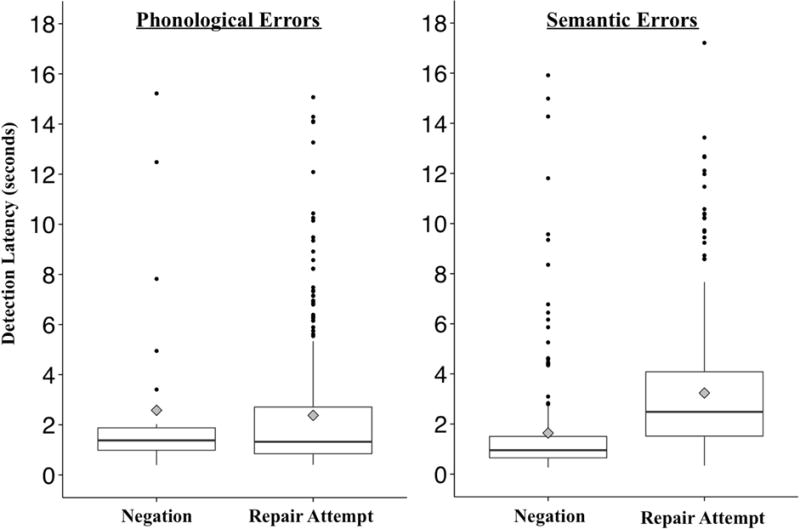

Table 3 displays mean detection latencies for phonological errors and for semantic errors, broken down by the type of detection response. Table 4 reports Model Comparison A, testing the effects of detection type and error type on detection latencies. There was a significant effect of detection type on detection latencies, χ²(1) = 85.65, p < .001, indicating faster detection latencies for negations compared to repair attempts, Estimate = −0.623, SE = 0.064, p < .001. The effect of error type further improved model fit, χ²(1) = 10.20, p < .01, indicating faster detection latencies for phonological errors compared to semantic errors, Estimate = −0.381, SE = 0.096, p < .001. Finally, the interaction between detection type and error type improved model fit, χ²(1) = 25.35, p < .001. This interaction effect reflects that only detection responses of the repair type were initiated more quickly for phonological errors compared to semantic errors. Detection responses of the negation type showed a trend in the opposite direction, i.e., slower for phonological errors. Follow-up analyses tested the effect of error type on detection latencies separately for repair trials and for negation trials. There was a significant effect of error type for repair trials, χ²(1) = 11.64, p < .001, but not for negation trials, χ²(1) = 2.75, p = .10. The relatively fast repair attempts for phonological errors are apparent in the distributions of detection latencies for negations and repair attempts of each error type (see Figure 3).
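The p-values attached to the parameter estimates follow from treating each t-value as a standard normal deviate, as described in section 2.5. The sketch below illustrates that calculation for the two main-effect estimates reported above. The function name is hypothetical, and the back-transformed ratio additionally assumes that latencies were transformed with the natural logarithm, which the text does not state.

```python
import math


def wald_test(estimate, standard_error):
    """Two-sided p-value from the normal approximation to the t-value."""
    z = estimate / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Estimates and standard errors as reported for Model Comparison A.
z_detection, p_detection = wald_test(-0.623, 0.064)  # negation vs. repair
z_error, p_error = wald_test(-0.381, 0.096)          # phonological vs. semantic

# ASSUMPTION: natural-log latencies. Under that assumption, exponentiating
# an estimate gives a multiplicative ratio of latencies on the raw scale.
detection_ratio = math.exp(-0.623)
```

Under the natural-log assumption, a coefficient of −0.623 corresponds to negation latencies that are roughly half as long as repair latencies, which is a ratio of typical (geometric mean) latencies rather than a difference in seconds.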

Table 3.

Detection Latencies by Type of Detection Response

| Error Type | Negation | Correct Repair | Incorrect Repair | Overall |
|---|---|---|---|---|
| Phonological | 2.58 (3.5) | 1.39 (1.3) | 3.06 (3.0) | 2.39 (2.6) |
| Semantic | 1.64 (2.4) | 3.25 (2.7) | 3.23 (2.5) | 2.64 (2.6) |
| Overall | 1.76 (2.6) | 2.29 (2.3) | 3.15 (2.7) | 2.54 (2.6) |

Note. Values represent mean time in seconds from the onset of the error to the onset of the first evidence of detection. Standard deviations are shown in parentheses.

Table 4.

Model comparisons

Model Comparison A

| Model | logLik | deviance | Chisq | df | p-value |
|---|---|---|---|---|---|
| Detection Latency ~ 1 + (Error Type \| Subject) | −1051.3 | 2102.6 | | | |
| Detection Latency ~ Detection Type + (Error Type \| Subject) | −1008.5 | 2017.0 | 85.65 | 1 | <.001* |
| Detection Latency ~ Detection Type + Error Type + (Error Type \| Subject) | −1003.4 | 2006.8 | 10.20 | 1 | <.01* |
| Detection Latency ~ Detection Type*Error Type + (Error Type \| Subject) | −990.7 | 1981.4 | 25.35 | 1 | <.001* |

Model Comparison B

| Model | logLik | deviance | Chisq | df | p-value |
|---|---|---|---|---|---|
| Detection Latency ~ 1 + (Error Type \| Subject) | −737.5 | 1475.0 | | | |
| Detection Latency ~ Phonological Overlap + (Error Type \| Subject) | −718.1 | 1436.3 | 38.75 | 1 | <.001* |
| Detection Latency ~ Phonological Overlap + Repair Accuracy + (Error Type \| Subject) | −713.6 | 1427.2 | 9.03 | 1 | <.01* |
| Detection Latency ~ Phonological Overlap + Repair Accuracy + Error Type + (Error Type \| Subject) | −710.4 | 1420.7 | 6.53 | 1 | .01* |
| Detection Latency ~ Phonological Overlap + Repair Accuracy*Error Type + (Error Type \| Subject) | −703.7 | 1407.5 | 13.22 | 1 | <.001* |

Note. The base model includes the intercept and random effects represented as (Error Type | Subject). The subsequent comparison models show the individually added fixed effects in bold, with “*” representing the complete set of main effects and interactions. Improvements in model fit were evaluated using the change in the deviance statistic, which is distributed as chi-squared with degrees of freedom equal to the number of parameters added.
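The deviance-based comparison described in this note can be illustrated with a short calculation. The sketch below assumes one added parameter per step (df = 1, as listed in Table 4) and uses the closed-form survival function of the chi-squared distribution for that case. The function name `lrt_df1` is hypothetical, and the inputs are the rounded deviances from Model Comparison A, so the statistics differ slightly from the reported values in the second decimal place.

```python
import math


def lrt_df1(deviance_reduced, deviance_full):
    """Likelihood ratio test for a single added parameter (df = 1).

    Returns the chi-squared statistic (the drop in deviance) and its
    p-value. For df = 1, P(X > x) = erfc(sqrt(x / 2)).
    """
    chisq = deviance_reduced - deviance_full
    p_value = math.erfc(math.sqrt(chisq / 2.0))
    return chisq, p_value


# Rounded deviances taken from Model Comparison A in Table 4.
steps = [
    ("+ Detection Type", 2102.6, 2017.0),
    ("+ Error Type", 2017.0, 2006.8),
    ("+ Detection Type x Error Type", 2006.8, 1981.4),
]

for label, dev_reduced, dev_full in steps:
    chisq, p = lrt_df1(dev_reduced, dev_full)
    print(f"{label}: chisq(1) = {chisq:.2f}, p = {p:.2g}")
```

The closed form holds only for df = 1; comparisons that add several parameters at once would need a general chi-squared survival function from a statistics library.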

Figure 3.

Distributions of detection latencies. The bottom, middle, and top of each box represent the 25th, 50th, and 75th percentiles, respectively. Points represent outliers greater than 1.5 times the interquartile range. The diamond overlaid on each plot represents the mean.
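The quantities named in this caption can be computed directly. The following is a minimal sketch, assuming the conventional rule that a point is an outlier when it lies more than 1.5 times the interquartile range beyond the box edges. The latencies are invented for illustration and are not study data, and plotting packages differ in their quantile conventions, so box edges may not match a given figure exactly.

```python
import statistics


def boxplot_summary(latencies):
    """Quartiles, mean, and 1.5 x IQR outliers for one group of latencies."""
    q1, median, q3 = statistics.quantiles(latencies, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [x for x in latencies if x < lower or x > upper]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "mean": statistics.mean(latencies),
        "outliers": outliers,
    }


# Hypothetical latencies in seconds, invented for illustration only.
example = [0.8, 1.1, 1.3, 1.4, 1.6, 2.0, 2.3, 9.5]
print(boxplot_summary(example))
```

In this invented sample a single slow response pulls the mean well above the median, which is why a plot that marks both the median line and the mean diamond is informative for right-skewed latency data.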

Because detection latencies were determined by how quickly participants initiated an utterance indicating error detection, they may have been affected by individual differences in the speed of word production, particularly when the participants produced repair attempts rather than simple “no” responses. Indeed, examination of mean detection latencies for each participant revealed substantial variability in the speed of error repair (see Table 5 for individual detection rates and latencies). Importantly, however, the effect of error type on detection latencies was largely consistent across individuals, with 10 out of 12 participants exhibiting a faster mean detection latency for phonological error repairs compared to semantic error repairs. Given the difference in repair times between the two error types, we next examined additional factors that may affect the time required to repair an error.

Table 5.

Individual Detection Rates and Latencies

| Participant & Subtype | Phonological errors (proportion detected) | Semantic errors (proportion detected) | Detection latency: Negation | Detection latency: Phonological error repair | Detection latency: Semantic error repair |
|---|---|---|---|---|---|
| Anomic1 | 62 (0.53) | 168 (0.49) | 2.034 | 1.099 | 3.077 |
| Anomic2 | 24 (0.13) | 147 (0.06) | 1.241 | 2.302 | 3.182 |
| Anomic3 | 28 (0.79) | 129 (0.16) | 1.628 | 1.091 | 1.727 |
| Anomic4 | 54 (0.17) | 149 (0.17) | 2.541 | 1.481 | 4.386 |
| Anomic5 | 100 (0.33) | 169 (0.34) | 2.038 | 2.531 | 4.548 |
| Broca1 | 105 (0.26) | 85 (0.13) | 4.496 | 1.949 | 2.610 |
| Broca2 | 69 (0.35) | 263 (0.38) | 0.891 | 2.439 | 2.847 |
| Broca3 | 74 (0.43) | 132 (0.24) | 1.476 | 1.007 | 3.729 |
| Conduction1 | 177 (0.74) | 111 (0.38) | 1.739 | 3.040 | 3.413 |
| Conduction2 | 87 (0.08) | 114 (0.06) | 0.870 | 1.028 | 1.547 |
| TCM1 | 50 (0.44) | 236 (0.41) | 2.137 | 4.188 | 2.918 |
| TCM2 | 81 (0.06) | 148 (0.23) | 3.381 | 4.274 | 3.422 |
| Mean | 76 (0.36) | 154 (0.25) | 1.759 | 2.377 | 3.238 |

Note. TCM = transcortical motor. Detection latencies represent the mean time in seconds from the onset of the error to the onset of the detection response.
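The participant-level consistency reported in the text (10 of 12 participants with faster phonological error repairs) can be checked against the values in Table 5. The sketch below simply copies the two repair-latency columns from the table; the variable names are introduced here for illustration.

```python
# Mean repair latencies in seconds from Table 5:
# (phonological error repair, semantic error repair)
repair_latency = {
    "Anomic1": (1.099, 3.077),
    "Anomic2": (2.302, 3.182),
    "Anomic3": (1.091, 1.727),
    "Anomic4": (1.481, 4.386),
    "Anomic5": (2.531, 4.548),
    "Broca1": (1.949, 2.610),
    "Broca2": (2.439, 2.847),
    "Broca3": (1.007, 3.729),
    "Conduction1": (3.040, 3.413),
    "Conduction2": (1.028, 1.547),
    "TCM1": (4.188, 2.918),
    "TCM2": (4.274, 3.422),
}

# Participants whose phonological error repairs were faster on average.
faster_phonological = [
    name for name, (phon, sem) in repair_latency.items() if phon < sem
]
exceptions = [name for name in repair_latency if name not in faster_phonological]

print(f"{len(faster_phonological)} of {len(repair_latency)} participants")
print("Exceptions:", exceptions)
```

In these data the two exceptions are the two participants with transcortical motor aphasia, although with only two such participants no conclusion about that subtype should be drawn from this observation.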

3.3. Repair attempts

The analyses of repair attempts tested whether differences between phonological and semantic error repairs could be explained by differences in similarity between the errors and their respective repair attempts. In the present study, phonological errors tended to have a high phonological overlap with the subsequent repair attempts (M=0.59). Phonological errors had a particularly high overlap with correct repair attempts (M=0.71) because our classification of phonological errors required an overlap of greater than 0.5 with the correct response. In contrast, semantic errors tended to have less phonological overlap with their subsequent repair attempts (M=0.29). To examine whether this factor was associated with detection latencies, we first tested the relationship between error-repair phonological overlap and detection latencies for trials in which the first evidence of detection was a repair attempt. A significant correlation between phonological overlap and detection latencies was found for phonological errors, r = −0.36, p<.001, and for semantic errors, r = −0.13, p=.02, with greater phonological overlap associated with faster detection latencies.
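To make the notion of error-repair phonological overlap concrete, the sketch below implements one common formulation: twice the number of shared phonemes divided by the total number of phonemes in the two forms, which ranges from 0 to 1. This formula is an assumption made for illustration. The study's own definition is given in its Methods section and may differ in detail, and the phoneme transcriptions in the example are hypothetical.

```python
from collections import Counter


def phonological_overlap(phonemes_a, phonemes_b):
    """Proportion of shared phonemes between two transcriptions (0 to 1).

    Assumed formula: 2 * shared / (len(a) + len(b)), where shared phonemes
    are counted as a multiset intersection regardless of position.
    """
    shared = sum((Counter(phonemes_a) & Counter(phonemes_b)).values())
    total = len(phonemes_a) + len(phonemes_b)
    return 2 * shared / total if total else 0.0


# Hypothetical error and repair, transcribed as lists of phoneme labels.
error = ["h", "ae", "t"]   # "hat"
repair = ["k", "ae", "t"]  # "cat"
print(round(phonological_overlap(error, repair), 2))
```

Under this assumed formula, an error that shares two of three phonemes with its repair scores about 0.67, whereas an unrelated word pair scores near 0, which mirrors the contrast between the phonological and semantic error means reported above.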

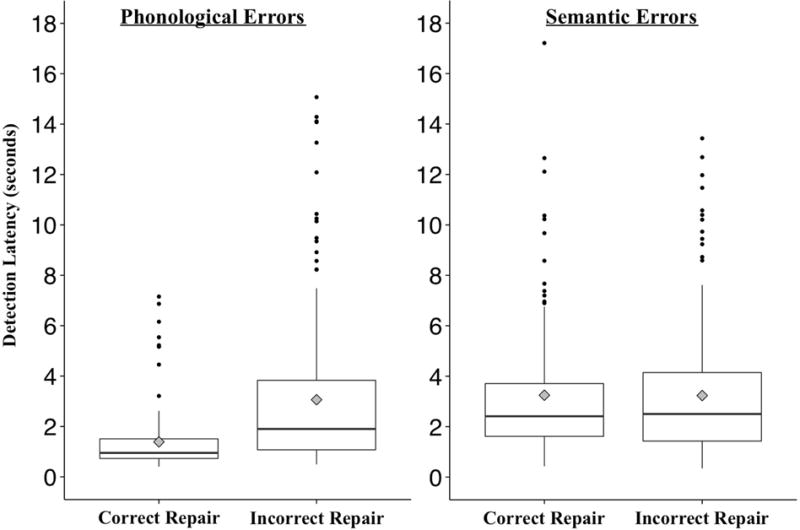

In Model Comparison B, we tested the effects of repair accuracy and error type in addition to the effect of phonological overlap (see Table 4). As expected, phonological overlap had a significant effect on detection latencies, χ²(1) = 38.75, p<.001, with greater phonological overlap associated with faster detection latencies, Estimate = −0.728, SE = 0.112, p<.001. The effect of repair accuracy further improved model fit, χ²(1) = 9.03, p<.01, indicating faster detection latencies for correct repairs than for incorrect repairs, Estimate = −0.187, SE = 0.062, p<.01. The addition of error type further improved model fit, χ²(1) = 6.53, p=.01, indicating faster detection latencies for phonological errors than for semantic errors, Estimate = −0.316, SE = 0.110, p<.01. Finally, the interaction between repair accuracy and error type improved model fit, χ²(1) = 13.22, p<.001. This interaction reflects the fact that, when controlling for phonological overlap, the accuracy of the repair attempt had a larger effect on detection latencies for phonological errors than for semantic errors, with correct repairs of phonological errors occurring particularly quickly. Follow-up analyses tested the effect of repair accuracy on detection latencies separately for phonological errors and for semantic errors, again controlling for phonological overlap in the models. There was a significant effect of repair accuracy for phonological errors, χ²(1) = 17.64, p<.001, but not for semantic errors, χ²(1) = 0.02, p=.89. This pattern of results is apparent in the distributions of detection latencies for correct and incorrect repairs of each error type (see Figure 4).

Figure 4.

Distributions of detection latencies for repair attempts. The bottom, middle, and top of each box represent the 25th, 50th, and 75th percentiles, respectively. Points represent outliers greater than 1.5 times the interquartile range. The diamond overlaid on each plot represents the mean.

4. Discussion

In this study, we analyzed the timing of spontaneous self-monitoring in the naming performance of individuals with aphasia to examine detection and repair processes that are associated with phonological and semantic errors. We first asked whether phonological errors and semantic errors differ in the timing of the detection process or the timing of repair planning. Results suggest that these two error types primarily differ with respect to repair planning. Specifically, repair attempts for phonological errors were initiated more quickly than repair attempts for semantic errors. We next asked whether this difference between the two error types could be attributed to the tendency for phonological errors to have a high degree of phonological similarity with the subsequent repair attempts, thereby speeding the programming of the repairs. Results showed that greater phonological similarity between the error and the repair was associated with faster repair times for both error types, supporting an error-to-repair priming effect (see Hartsuiker & Kolk, 2001). When controlling for phonological overlap, significant effects of error type and repair accuracy on repair times were also found. The results suggest that the type of error that is produced affects the speed of the subsequent repair, and in the following sections we discuss processes that may drive this effect.

4.1. Error detection

The results of the present study do not provide evidence for a difference in the timing of detection for phonological errors compared to semantic errors. Collapsing across different types of detection responses, faster detection latencies were observed for phonological errors compared to semantic errors. However, the significant interaction between error type and detection type indicated that this difference was attributable to trials in which the response indicating detection was a repair attempt, suggesting faster repair planning for phonological errors rather than faster detection.

For trials in which the participant produced a simple rejection of the error, there was a trend in the opposite direction, i.e., detection responses occurred more slowly for phonological errors compared to semantic errors. The low frequency of negations for phonological errors (see Table 2) may have resulted in insufficient power to detect significantly slower negations for phonological errors compared to semantic errors. However, the more frequent correct repair responses demonstrated fast detection as well as fast repair of phonological errors (see Table 3). Such fast repair planning for phonological errors may be an underlying cause of the rarity of negations for this error type. When a repair can be programmed rapidly, there is little pragmatic reason for the speaker to indicate rejection of the error prior to attempting a repair. Alternatively, editing terms like “no” may serve as a signal to the listener that an error is being rejected (see Levelt, 1989), and this signal may be more necessary when the wrong word has been selected than when a phonological error on the correct response has been produced.

These results suggest that the difference in detection latencies for phonological errors compared to semantic errors primarily resulted from different processes related to programming repairs for these two error types. Hence, the findings do not support the prediction that semantic errors are available for detection earlier than phonological errors, which is suggested by some production-based models (Nozari et al., 2011; van Wijk & Kempen, 1987) and Pickering and Garrod’s (2014) prediction-based model. The findings also do not support earlier detection of phonological errors, which might be expected if speakers monitor their errors by perceiving their own internal or overt speech (Levelt, 1983, 1989). However, it is important to note that the measure of detection latency in the present study collapses together the time required to detect the error and the time required to plan and initiate the detection utterance. More precise measurements of the timing of speech monitoring processes can be recorded with eyetracking and EEG paradigms (Acheson & Hagoort, 2014; Huettig & Hartsuiker, 2010; Severens, Janssens, Kühn, Brass, & Hartsuiker, 2011; Trewartha & Phillips, 2013), and further research using these methodologies may reveal differences in the timing of detection for different types of speech errors. In addition, more sensitive methodologies may be useful for measuring covert error detection and correction. These pre-articulatory processes are difficult to infer from overt speech alone, and so were outside the scope of the present study.

4.2. Error repair

A primary finding in this study was the observation of faster repair attempts for phonological errors compared to semantic errors. Contrary to this finding, there is evidence from previous studies that the production of words that are phonologically related to the target slows production of the subsequent repair, whereas the production of words that are semantically related speeds the subsequent repair (Hartsuiker, Pickering, & de Jong, 2005; Tydgat, Diependaele, Hartsuiker, & Pickering, 2012). These studies did not involve spontaneous naming errors, but rather used a paradigm designed to approximate speech repair situations by quickly replacing one target picture with another and thereby inducing repairs of naming attempts that were initially correct. Hence, the results of previous studies may differ from those of the present study due to differences in the definition of a repair (i.e., restarting speech after initially correct naming attempts vs. restarting speech after detection of erroneous naming attempts) and/or the population studied (i.e., neurologically intact adults vs. aphasic adults). Nevertheless, the evidence appears strong from previous work as well as the present study that residual activation from the production of a speech error may influence the production of a repair, and the effect of this activation can differ according to the type of error.

The effect of error type on the speed of subsequent repairs could be explained by processes at the word retrieval stage and/or the phonological retrieval stage of word production. Within the framework of the two-step model of lexical access (Foygel & Dell, 2000; Schwartz et al., 2006), a phonological error typically arises in the second step (phonological retrieval) after the correct lexical item has been selected in the first step. Hence, during the repair of a phonological error, the first step could be quickly reinstated or skipped entirely. In contrast, the repair of a semantic error would require reformulation of the first step as well as the second step. Processes occurring in the second step of lexical access would also contribute to faster repair times for phonological errors, for the following reason: By definition a phonological error shares phonemes with the correct response. Hence, the production of the error activates some of the correct phonemes during phonological retrieval. This activation could facilitate fast production of a repair attempt for a phonological error, particularly when the repair attempt is the correct response. This interpretation is consistent with the finding that greater phonological overlap between the error and the repair attempt is associated with faster repairs. Although error-to-repair priming has been assumed in models of monitoring (Hartsuiker & Kolk, 2001) and supported by the results of experimental monitoring paradigms (Hartsuiker et al., 2005; Tydgat et al., 2012), the present study provides the first evidence of this type of priming in spontaneous self-monitoring.

Alternatively, if one assumes that the correct word remains highly activated after any type of error is produced, then the first step of lexical access could be quickly reinstated for both semantic and phonological errors.¹ By this account, the second step of lexical access would be the source of the observed timing differences, with the second step completed more quickly following a phonological error due to the priming effect discussed above. However, the results of the present study indicated an effect of error type with phonological overlap controlled, suggesting that processes at the second step do not completely account for the differences in repair times. In addition, evidence from training naming in aphasia suggests that effortful, semantically-driven lexical retrieval promotes learning (Middleton, Schwartz, Rawson, & Garvey, 2015; Middleton, Schwartz, Rawson, Traut, & Verkuilen, 2016). Hence, the former account positing reformulation of both steps of lexical access during the repair of semantic errors may help explain the finding that self-correction of semantic, but not phonological, errors promoted learning in aphasic individuals (Schwartz et al., 2016).

In addition to overall faster repair times for phonological errors compared to semantic errors, results from this study showed that correct repairs occurred more quickly than incorrect repair attempts for phonological errors, whereas the accuracy of the repair did not affect repair times for semantic errors. This finding is consistent with previous evidence that phonological errors are typically corrected immediately or soon after their overt articulation during sentence production (Nooteboom, 2005; Postma & Kolk, 1992). As discussed previously, one factor contributing to this effect may be the high degree of phonological similarity between phonological errors and correct repairs of those errors. However, the results from this study indicate that error type and repair accuracy have significant effects on detection latencies even with phonological overlap controlled. The effect of repair accuracy on phonological errors could be the result of the typically fast repair process slowing down when the repair does not converge on the correct response. The effect could also arise if speakers occasionally repair phonological errors by a relatively slow process typically used for semantic errors, and this slow process is less likely to result in successful repairs of phonological errors.

4.3. Future directions and conclusions

An important topic for future research is self-monitoring behavior associated with other error types, including fragments and mixed errors. Fragmented responses (e.g., “/klo-/” for “pocket”) were not included in the present study because in most cases the intended word and the stage of production in which the error originated cannot be determined confidently. However, the study of fragmented responses may provide important evidence regarding relatively rapid error detection in aphasia, assuming that speech interruption quickly follows the moment of error detection (Levelt, 1983). Mixed errors, which are semantically and phonologically related to the target, were classified as semantic errors in the present study under the assumption that they typically originate from incorrect word retrieval in the first step of lexical access. This type of error did not occur frequently enough to be analyzed separately from other semantic errors. In future monitoring studies, classifying mixed errors separately from other semantic errors may help delineate the effects of error origin (step 1 of lexical access for semantic and mixed errors vs. step 2 for phonological errors) as opposed to the effects of phonological overlap with the target (low for semantic errors vs. high for mixed and phonological errors).

In addition, participants in the present study were selected based on good comprehension and impaired naming, irrespective of clinical subtype. Variability in self-monitoring behaviors across different subtypes of aphasia remains an important question that may shed light on relationships among specific linguistic and monitoring processes. Similarly, participant samples with greater variability in comprehension abilities could help determine whether good comprehension is necessary or sufficient for monitoring, possibly qualifying previous work that has shown dissociations between comprehension and monitoring abilities in aphasia (Maher, Gonzalez Rothi, & Heilman, 1994; Marshall et al., 1985; Marshall, Robson, Pring, & Chiat, 1998; Nozari et al., 2011; Sampson & Faroqi-Shah, 2011).

This line of research has important implications for theories of monitoring and for the potential roles of error detection and repair in aphasia recovery. The results of the present study suggest that the type of error that is produced affects the speed of the subsequent repair. Faster repairs of phonological errors relative to semantic errors may be explained by fast reinstatement of an initial word retrieval stage and/or error-to-repair priming of activated phonemes. A better understanding of these processes underlying error detection and repair may further the understanding of how self-monitoring relates to learning in aphasia.

Highlights.

- We analyzed the timing of speech error detection and repair in aphasia.

- Repair attempts occurred more quickly for phonological errors than semantic errors.

- Correct repairs of phonological errors were initiated particularly quickly.

- Results provide evidence of error-to-repair priming in spontaneous self-monitoring.

- The type of error that is produced affects the speed of the subsequent repair.

Acknowledgments

This work was supported by National Institutes of Health research grants R01 DC000191, R03 DC012426, and T32 HD071844. We thank Dan Mirman and members of the Language and Cognitive Dynamics Laboratory for assistance in preparing data for analysis. We also thank Mackenzie Stabile, Adelyn Brecher, Maureen Gagliardi, and Kelly Garvey for assistance in coding data for this study and Roxana Botezatu for discussions relevant to preliminary analyses of the data.

Appendix A

Model Comparison A

| Model | logLik | deviance | Chisq | df | p-value |
|---|---|---|---|---|---|
| Detection Latency ~ 1 + (Error Type \| Subject) | −1051.3 | 2102.6 | | | |
| Detection Latency ~ Error Latency + (Error Type \| Subject) | −1051.2 | 2102.4 | 0.19 | 1 | .66 |
| Detection Latency ~ Error Latency + Detection Type + (Error Type \| Subject) | −1007.0 | 2014.1 | 88.37 | 1 | <.001* |
| Detection Latency ~ Error Latency + Detection Type + Error Type + (Error Type \| Subject) | −1001.9 | 2003.8 | 10.32 | 1 | <.01* |
| Detection Latency ~ Error Latency + Detection Type*Error Type + (Error Type \| Subject) | −989.7 | 1979.4 | 24.36 | 1 | <.001* |

Model Comparison B

| Model | logLik | deviance | Chisq | df | p-value |
|---|---|---|---|---|---|
| Detection Latency ~ 1 + (Error Type \| Subject) | −737.5 | 1475.0 | | | |
| Detection Latency ~ Error Latency + (Error Type \| Subject) | −735.2 | 1470.3 | 4.72 | 1 | .03* |
| Detection Latency ~ Error Latency + Phonological Overlap + (Error Type \| Subject) | −716.8 | 1433.5 | 36.79 | 1 | <.001* |
| Detection Latency ~ Error Latency + Phonological Overlap + Repair Accuracy + (Error Type \| Subject) | −712.9 | 1425.7 | 7.77 | 1 | <.01* |
| Detection Latency ~ Error Latency + Phonological Overlap + Repair Accuracy + Error Type + (Error Type \| Subject) | −709.5 | 1419.1 | 6.67 | 1 | <.01* |
| Detection Latency ~ Error Latency + Phonological Overlap + Repair Accuracy*Error Type + (Error Type \| Subject) | −702.6 | 1405.2 | 13.92 | 1 | <.001* |

Note. The base model includes the intercept and random effects represented as (Error Type | Subject). The subsequent comparison models show the individually added fixed effects in bold, with “*” representing the complete set of main effects and interactions. Improvements in model fit were evaluated using the change in the deviance statistic, which is distributed as chi-squared with degrees of freedom equal to the number of parameters added.

Footnotes

Conflict of interest

The authors declare no competing financial interests.

¹ We thank an anonymous reviewer for this suggestion.

Contributor Information

Julia Schuchard, Moss Rehabilitation Research Institute, 50 Township Line Road, Elkins Park, PA, USA 19027, 215-663-6292.

Erica L. Middleton, Moss Rehabilitation Research Institute, 50 Township Line Road, Elkins Park, PA, USA 19027, 215-663-6967

Myrna F. Schwartz, Moss Rehabilitation Research Institute, 50 Township Line Road, Elkins Park, PA, USA 19027

References

- Acheson DJ, Hagoort P. Twisting tongues to test for conflict-monitoring in speech production. Frontiers in Human Neuroscience. 2014;8(206):1–16. doi: 10.3389/fnhum.2014.00206.

- Baayen RH, Davidson DJ, Bates DM. Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language. 2008;59(4):390–412.

- Barr DJ, Levy R, Scheepers C, Tily HJ. Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language. 2013;68(3):255–278. doi: 10.1016/j.jml.2012.11.001.

- Blackmer ER, Mitton JL. Theories of monitoring and the timing of repairs in spontaneous speech. Cognition. 1991;39(3):173–194. doi: 10.1016/0010-0277(91)90052-6.

- Boersma P, Weenink D. Praat: doing phonetics by computer. 2016. URL: http://www.praat.org/

- De Smedt K, Kempen G. Incremental sentence production, self-correction, and coordination. In: Kempen G, editor. Natural language generation: recent advances in artificial intelligence, psychology, and linguistics. Dordrecht: Kluwer; 1987.

- Dell GS, Martin N, Schwartz MF. A case-series test of the interactive two-step model of lexical access: Predicting word repetition from picture naming. Journal of Memory and Language. 2007;56(4):490–520. doi: 10.1016/j.jml.2006.05.007.

- Foygel D, Dell GS. Models of impaired lexical access in speech production. Journal of Memory and Language. 2000;43(2):182–216.

- Hartsuiker RJ, Kolk HHJ. Error monitoring in speech production: A computational test of the perceptual loop theory. Cognitive Psychology. 2001;42(2):113–157. doi: 10.1006/cogp.2000.0744.

- Hartsuiker RJ, Kolk HHJ, Martensen H. The division of labor between internal and external speech monitoring. In: Hartsuiker RJ, Bastiaanse R, Postma A, Wijnen F, editors. Phonological encoding and monitoring in normal and pathological speech. New York, NY: Psychology Press; 2005. pp. 167–186.

- Hartsuiker RJ, Pickering MJ, de Jong NH. Semantic and phonological context effects in speech error repair. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2005;31(5):921–932. doi: 10.1037/0278-7393.31.5.921.

- Huettig F, Hartsuiker RJ. Listening to yourself is like listening to others: External, but not internal, verbal self-monitoring is based on speech perception. Language and Cognitive Processes. 2010;25(3):347–374.

- Kertesz A. Western Aphasia Battery-Revised. San Antonio, TX: PsychCorp; 2007.

- Lackner JR, Tuller BH. Role of efference monitoring in the detection of self-produced speech errors. In: Cooper WE, Walker ECT, editors. Sentence processing: Studies dedicated to Merrill Garrett. Hillsdale, NJ: Erlbaum; 1979. pp. 281–294.

- Laver JDM. Monitoring systems in the neurolinguistic control of speech production. In: Fromkin VA, editor. Errors in linguistic performance: Slips of the tongue, ear, pen, and hand. New York: Academic Press; 1980. pp. 287–305.

- Lecours A, Lhermitte F. Phonemic paraphasias: Linguistic structures and tentative hypotheses. Cortex. 1969;5:193–228. doi: 10.1016/s0010-9452(69)80031-6.

- Levelt WJM. Monitoring and self-repair in speech. Cognition. 1983;14:41–104. doi: 10.1016/0010-0277(83)90026-4.

- Levelt WJM. Speaking: From intention to articulation. Cambridge, MA: MIT Press; 1989.

- MacKay DG. The organization of perception and action: A theory for language and other cognitive skills. New York: Springer-Verlag; 1987.

- Maher LM, Gonzalez Rothi LJ, Heilman KM. Lack of error awareness in an aphasic patient with relatively preserved auditory comprehension. Brain and Language. 1994;46(3):402–418. doi: 10.1006/brln.1994.1022.

- Marshall J, Robson J, Pring T, Chiat S. Why does monitoring fail in jargon aphasia? Comprehension, judgment, and therapy evidence. Brain and Language. 1998;63:79–107. doi: 10.1006/brln.1997.1936.

- Marshall RC, Neuburger SI, Phillips DS. Verbal self-correction and improvement in treated aphasic clients. Aphasiology. 1994;8(6):535–547.

- Marshall RC, Rappaport BZ, Garcia-Bunuel L. Self-monitoring behavior in a case of severe auditory agnosia with aphasia. Brain and Language. 1985;24:297–313. doi: 10.1016/0093-934x(85)90137-3.

- Middleton EL, Schwartz MF, Rawson KA, Garvey K. Test-enhanced learning versus errorless learning in aphasia rehabilitation: Testing competing psychological principles. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2015;41(4):1253–1261. doi: 10.1037/xlm0000091.

- Middleton EL, Schwartz MF, Rawson KA, Traut H, Verkuilen J. Towards a theory of learning for naming rehabilitation: Retrieval practice and spacing effects. Journal of Speech, Language, and Hearing Research. 2016;59(5):1111–1122. doi: 10.1044/2016_JSLHR-L-15-0303.

- Mirman D, Strauss TJ, Brecher A, Walker GM, Sobel P, Dell GS, Schwartz MF. A large, searchable, web-based database of aphasic performance on picture naming and other tests of cognitive function. Cognitive Neuropsychology. 2010;27(6):495–504. doi: 10.1080/02643294.2011.574112.

- Motley MT, Camden CT, Baars BJ. Covert formulation and editing of anomalies in speech production: Evidence from experimentally elicited slips of the tongue. Journal of Verbal Learning and Verbal Behavior. 1982;21(5):578–594.

- Nickels L, Howard D. Phonological errors in aphasic naming: Comprehension, monitoring, and lexicality. Cortex. 1995;31:209–237. doi: 10.1016/s0010-9452(13)80360-7.

- Nooteboom SG. Listening to oneself: Monitoring speech production. In: Hartsuiker RJ, Bastiaanse R, Postma A, Wijnen F, editors. Phonological encoding and monitoring in normal and pathological speech. New York, NY: Psychology Press; 2005. pp. 167–186.

- Nozari N, Dell GS, Schwartz MF. Is comprehension necessary for error detection? A conflict-based account of monitoring in speech production. Cognitive Psychology. 2011;63(1):1–33. doi: 10.1016/j.cogpsych.2011.05.001.

- Oomen CCE, Postma A. Limitations in processing resources and speech monitoring. Language and Cognitive Processes. 2002;17(2):163–184.

- Oomen CCE, Postma A, Kolk HH. Prearticulatory and postarticulatory self-monitoring in Broca’s aphasia. Cortex. 2001;37(5):627–641. doi: 10.1016/s0010-9452(08)70610-5.

- Oomen CCE, Postma A, Kolk HHJ. Speech monitoring in aphasia: Error detection and repair behaviour in a patient with Broca’s aphasia. In: Hartsuiker RJ, Bastiaanse R, Postma A, Wijnen F, editors. Phonological encoding and monitoring in normal and pathological speech. New York, NY: Psychology Press; 2005. pp. 209–225.

- Pickering MJ, Garrod S. An integrated theory of language production and comprehension. Behavioral and Brain Sciences. 2013;36(4):329–392. doi: 10.1017/S0140525X12001495.

- Pickering MJ, Garrod S. Self-, other-, and joint monitoring using forward models. Frontiers in Human Neuroscience. 2014;8(132):1–11. doi: 10.3389/fnhum.2014.00132.

- Postma A. Detection of errors during speech production: A review of speech monitoring models. Cognition. 2000;77(2):97–131. doi: 10.1016/s0010-0277(00)00090-1.

- Postma A, Kolk H. The effects of noise masking and required accuracy on speech errors, disfluencies, and self-repairs. Journal of Speech and Hearing Research. 1992;35(3):537–544. doi: 10.1044/jshr.3503.537.

- Postma A, Noordanus C. Production and detection of speech errors in silent, mouthed, noise-masked, and normal auditory feedback speech. Language and Speech. 1996;39(4):375–392.

- R Core Team. R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2014. URL: http://www.r-project.org/

- Roach A, Schwartz MF, Martin N, Grewal RS, Brecher A. The Philadelphia naming test: Scoring and rationale. Clinical Aphasiology. 1996;24:121–133.

- Sampson M, Faroqi-Shah Y. Investigation of self-monitoring in fluent aphasia with jargon. Aphasiology. 2011;25(4):505–528.

- Schlenck KJ, Huber W, Willmes K. “Prepairs” and repairs: Different monitoring functions in aphasic language production. Brain and Language. 1987;30(2):226–244. doi: 10.1016/0093-934x(87)90100-3.

- Schwartz MF, Dell GS, Martin N, Gahl S, Sobel P. A case-series test of the interactive two-step model of lexical access: Evidence from picture naming. Journal of Memory and Language. 2006;54(2):228–264. doi: 10.1016/j.jml.2006.05.007.

- Schwartz MF, Middleton EL, Brecher A, Gagliardi M, Garvey K. Does naming accuracy improve through self-monitoring of errors? Neuropsychologia. 2016;84:272–281. doi: 10.1016/j.neuropsychologia.2016.01.027.

- Severens E, Janssens I, Kühn S, Brass M, Hartsuiker RJ. When the brain tames the tongue: Covert editing of inappropriate language. Psychophysiology. 2011;48:1252–1257. doi: 10.1111/j.1469-8986.2011.01190.x.

- Slevc LR, Ferreira VS. Halting in single word production: A test of the perceptual loop theory of speech monitoring. Journal of Memory and Language. 2006;54(4):515–540. doi: 10.1016/j.jml.2005.11.002.

- Stark J. Aspects of automatic versus controlled processing, monitoring, metalinguistic tasks, and related phenomena in aphasia. In: Dressler W, Stark J, editors. Linguistic analyses of aphasic language. New York: Springer-Verlag; 1988.

- Trewartha KM, Phillips NA. Detecting self-produced speech errors before and after articulation: An ERP investigation. Frontiers in Human Neuroscience. 2013;7(763):1–12. doi: 10.3389/fnhum.2013.00763.

- Tydgat I, Diependaele K, Hartsuiker RJ, Pickering MJ. How lingering representations of abandoned context words affect speech production. Acta Psychologica. 2012;140:218–229. doi: 10.1016/j.actpsy.2012.02.004.

- van Wijk C, Kempen G. A dual system for producing self-repairs in spontaneous speech: Evidence from experimentally elicited corrections. Cognitive Psychology. 1987;19:403–440.

- Wheeldon LR, Levelt WJM. Monitoring the time course of phonological encoding. Journal of Memory and Language. 1995;34(3):311–334.