Abstract

Research has revealed a special class of mechanoreceptors, called C-tactile (CT) afferents, that are situated in hairy skin and that seem relevant for the processing of social touch. We pursued a possible role of these receptors in the perception of other social signals, such as a person’s voice. Participants completed three sessions in which they heard surprised and neutral vocal and nonvocal sounds and detected rare sound repetitions. In a given session, participants received no touch or soft brushstrokes to the arm (CT innervated) or palm (CT free). Event-related potentials elicited by the sounds revealed that stroking to the arm facilitated the integration of vocal and emotional information. The late positive potential was greater for surprised vocal relative to neutral vocal and nonvocal sounds, and this effect was greater for arm touch relative to both palm touch and no touch. Together, these results indicate that stroking to the arm facilitates the allocation of processing resources to emotional voices, thus supporting the possibility that CT stimulation benefits social perception cross-modally.

Electronic supplementary material

The online version of this article (10.3758/s13415-017-0537-5) contains supplementary material, which is available to authorized users.

Keywords: EEG, ERP, Nonverbal, Emotion recognition, Somatosensory

We, like many other animals, engage in friendly physical contact. Initially, this phenomenon was attributed to the hygienic needs of our furry ancestors and considered of little current value. However, the discovery that nonhuman primates groom more than is hygienically necessary changed this view (Dunbar, 2010). It highlighted that, besides keeping clean, touch has additional functions that could be preserved in humans. Here, we pursued this possibility by exploring the effect of touch on the neural correlates of voice perception.

Behavioral work has fostered the idea that touch promotes positive affect and facilitates social bonding (Dunbar, 2010; Gallace & Spence, 2010; Suvilehto, Glerean, Dunbar, Hari, & Nummenmaa, 2015). Neuroscience, in turn, has revealed a tactile system that could underpin such effects (Iggo, 1960; Nordin, 1990). This system comprises C-tactile (CT) afferents, which differ from Aβ mechanoreceptors in a number of ways. For example, they are unmyelinated, occur in nonglabrous skin only, and seem tuned to the physical characteristics of human touch. Specifically, they are maximally excited by low-pressure physical contact at typical body temperature (Ackerley et al., 2014) and a stroking speed of 1 to 10 cm per second (Löken, Wessberg, Morrison, McGlone, & Olausson, 2009). Moreover, their firing rates linearly predict subjective pleasure (Ackerley et al., 2014; Löken et al., 2009; Olausson et al., 2002; Olausson, Wessberg, Morrison, McGlone, & Vallbo, 2010). In terms of their projections, CT afferents behave similarly to other C fibers relevant for interoception and pain (Björnsdotter, Morrison, & Olausson, 2010). They have a slow conduction velocity and project to the insula, bypassing primary somatosensory cortex (Kaiser et al., 2015; Olausson et al., 2002). Thus, patients without Aβ afferents but with intact CT afferents may experience a vague sense of pleasure from the stroking of CT-innervated skin despite being unable to properly discriminate the tactile sensation (Olausson et al., 2002).

Based on these findings, CT afferents have been proposed to underpin the affective and rewarding qualities of touch in social interactions (McGlone, Wessberg, & Olausson, 2014; Olausson et al., 2008; Olausson et al., 2002). Additionally, and this possibility was tested here, they may promote the perception of other social signals (e.g., voices). This possibility derives from the fact that CT projections reach the posterior superior temporal sulcus (Bennett, Bolling, Anderson, Pelphrey, & Kaiser, 2014; Kaiser et al., 2015), a known social processing hub that prefers social over nonsocial information and that integrates this information across modalities (Schirmer & Adolphs, 2017; Watson, Latinus, Charest, Crabbe, & Belin, 2014).

To explore CT effects on social processing, we recorded the electroencephalogram (EEG) while participants listened to emotional and neutral vocal and nonvocal sounds against the backdrop of CT-appropriate stroking on the arm, comparable stroking on the palm, or no stroking. We expected CT touch to modulate two event-related potential (ERP) components previously associated with emotion recognition and social perception. One component was an early positive deflection peaking around 200 ms after sound onset (P2), which shows greater amplitudes for emotional as compared to neutral (Jiang & Pell, 2015; Paulmann & Pell, 2010; Schirmer, Chen, Ching, Tan, & Hong, 2013; Schirmer & Gunter, 2017) and for social as compared to nonsocial sounds (Charest et al., 2009; Schirmer & Gunter, 2017). The other component was a late positive potential (LPP) known for its sensitivity to a range of modality-unspecific stimulus (e.g., salience) and task (e.g., relevance) characteristics. For example, emotionality (Amrhein, Mühlberger, Pauli, & Wiedemann, 2004) and humanness (Schindler & Kissler, 2016) robustly increase the LPP. Additionally, the integration of different stimulus dimensions, and of stimulus with contextual information, is linked to this component (Diéguez-Risco, Aguado, Albert, & Hinojosa, 2013; Schirmer & Gunter, 2017).

In line with established evidence, we predicted larger P2 and LPP amplitudes for vocal as compared to nonvocal and for emotional as compared to neutral stimuli. Moreover, we hypothesized that emotion effects would be larger for vocal relative to nonvocal sounds, especially for later integrative processing in the LPP (Schirmer & Gunter, 2017). Critically, enhanced responses to vocal-emotional sounds should be amplified further by touch to CT-innervated skin if such touch interfaces with and promotes social perception.

Method

Participants

Eighteen women were recruited for this study. We focused on female participants because of established sex differences in nonverbal sensitivity (e.g., Schirmer & Gunter, 2017) and touch (Fisher, Rytting, & Heslin, 1976), whose exploration would have required a more complex design crossing participant and experimenter sex (18 participants in each of 4 groups [ff, fm, mf, mm] with 3 recording sessions each = 216 recording sessions). No participants were excluded from data analysis. The number of participants was set a priori based on session counterbalancing and had to be a multiple of 6, the number of possible orders of the three touch conditions. All participants reported normal hearing and an absence of neurological impairments. All were right-handed as assessed with the Edinburgh Handedness Inventory and were on average 25 (SD = 2.6) years old. This research was conducted in accordance with the Declaration of Helsinki. All participants signed informed consent at the beginning of the experiment.
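Full counterbalancing of three touch conditions yields 3! = 6 session orders, which is why the sample size had to be a multiple of 6. The following Python sketch shows one way such an assignment could be generated; the condition labels and the cyclic assignment rule are illustrative assumptions, not the authors' documented procedure.

```python
from collections import Counter
from itertools import permutations

# Touch conditions as labeled in the text; the cyclic assignment below is a hypothetical scheme.
CONDITIONS = ("arm", "palm", "none")
ORDERS = list(permutations(CONDITIONS))  # all 3! = 6 session orders


def assign_orders(n_participants):
    """Give each participant one session order so that every order is used equally often."""
    if n_participants % len(ORDERS) != 0:
        raise ValueError(f"sample size must be a multiple of {len(ORDERS)} for full counterbalancing")
    return [ORDERS[i % len(ORDERS)] for i in range(n_participants)]


if __name__ == "__main__":
    schedule = assign_orders(18)
    print(Counter(schedule))  # each of the 6 orders appears 3 times
```

With 18 participants, each order is used by exactly three participants; a sample of 17 or 19 could not be balanced in this way.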

Stimulus materials

Vocalizations were taken from a previous study for which they were selected and normed (Schirmer & Gunter, 2017). In short, 33 speakers pronounced “Ah” neutrally as well as with anger, disgust, fear, happiness, sadness, and surprise. Recordings were made by the present investigators as well as Belin, Fillion-Bilodeau, and Gosselin (2008) in a soundproof chamber and digitized at 16 bits/44.1 kHz.

Thirty participants (12 male, 18 female, mean age = 22.07 years), not contributing to the main experiment, classified each vocalization as angry, disgusted, fearful, happy, sad, surprised, neutral or “other” in case none of the aforementioned options seemed adequate. For sounds not categorized as neutral, the participants were prompted to rate emotion intensity and arousal on two 5-point scales ranging from 1 (very weak) to 5 (very strong).

For this project, we selected the 27 best-recognized surprise expressions (mean accuracy = 73.70%, SD = 20.47; mean intensity = 3.29, SD = 0.459; mean arousal = 3.26, SD = 0.401) as well as 27 matching neutral sounds (mean accuracy = 76.91%, SD = 16.77). We used one rather than multiple emotions because we wanted to control stimulus variation, homogeneity, and probability between the neutral and the emotion condition. Moreover, we selected surprise because of its good recognition and high arousal value. All selected sounds were normalized to the same root-mean-square value and subjected to spectral rotation (http://www.phon.ucl.ac.uk/resource/software.php), resulting in a comparable set of nonvocal sounds (Warren et al., 2006). As such, these sounds retained some affective content but were perceived as distinctly nonhuman. (For a more detailed acoustic and perceptual analysis, see Schirmer & Gunter, 2017.) Example sounds can be downloaded from https://osf.io/5n8md/.
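Root-mean-square (RMS) normalization rescales each waveform so that all sounds share the same average power. A minimal NumPy sketch follows; the target RMS level is a free parameter because the level used in the study is not reported, and the spectral rotation step is not reproduced here.

```python
import numpy as np


def rms(signal):
    """Root-mean-square amplitude of a 1-D waveform."""
    signal = np.asarray(signal, dtype=float)
    return float(np.sqrt(np.mean(signal ** 2)))


def normalize_rms(signals, target_rms):
    """Rescale each waveform so that all share the same RMS value.

    `target_rms` is an assumed parameter. No clipping protection is applied,
    so quiet sounds scaled up strongly could exceed full scale.
    """
    return [np.asarray(s, dtype=float) * (target_rms / rms(s)) for s in signals]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Stand-in noise bursts of different loudness, not real vocalizations.
    sounds = [rng.normal(scale=sd, size=22050) for sd in (0.05, 0.1, 0.2)]
    for s in normalize_rms(sounds, target_rms=0.05):
        print(round(rms(s), 6))  # 0.05 for every sound
```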

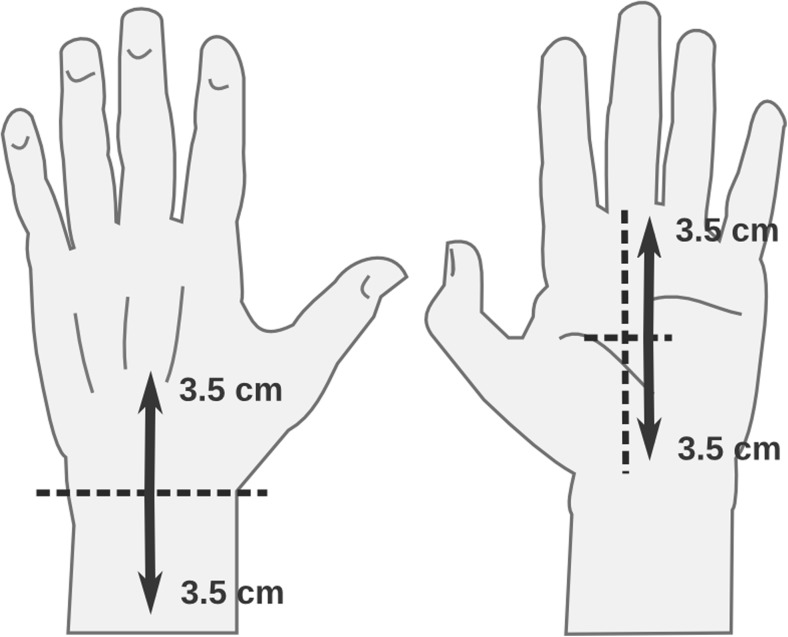

The touch stimulation was applied via a hand-held soft cosmetic brush, 3 cm in diameter, on short sections of the arm and palm. For the arm section, the experimenter first identified the transition between hand and wrist and then used a makeup pen to mark boundaries 3.5 cm above and below this landmark. For the palm section, the experimenter identified the palm midpoint and likewise marked boundaries 3.5 cm above and below it (see Fig. 1). Stroking was done by the experimenter at a speed of 3.5 cm per second. To facilitate accurate and constant stroke timing, a repetitive fading tone was delivered to the experimenter over headphones every 2 seconds.
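If each marked section spans 3.5 cm on either side of the landmark (7 cm in total, an interpretation of the marking procedure rather than an explicit statement), a stroke at 3.5 cm per second takes exactly 2 seconds, which fits the 2-second pacing tone. The short Python check below makes this arithmetic explicit; all names are illustrative.

```python
# Assumed reading of the marked sections: boundaries 3.5 cm on either side of the landmark.
SECTION_LENGTH_CM = 2 * 3.5
STROKE_SPEED_CM_PER_S = 3.5
CT_OPTIMAL_RANGE_CM_PER_S = (1.0, 10.0)  # range cited in the introduction (Löken et al., 2009)


def stroke_duration_s(length_cm, speed_cm_per_s):
    """Time needed to traverse one section at constant speed."""
    return length_cm / speed_cm_per_s


duration = stroke_duration_s(SECTION_LENGTH_CM, STROKE_SPEED_CM_PER_S)
low, high = CT_OPTIMAL_RANGE_CM_PER_S
print(f"one stroke lasts {duration:.1f} s")  # 2.0 s, matching the 2-s pacing tone
print("speed within CT-optimal range:", low <= STROKE_SPEED_CM_PER_S <= high)  # True
```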

Fig. 1.

Touch preparation. Stroking areas for arm (left) and palm (right) are indicated by dark arrows

Procedure

Participants were tested individually on 3 days, separated by a week or longer. On these days, they interacted with the same female experimenter, who prepared them for the task and subsequently administered the touch condition. On each testing day, participants first underwent a standard setup procedure for the EEG recording. The EEG was recorded using 59 Ag/AgCl electrodes, which were located according to sites defined in the extended 10–20 system of the American Clinical Neurophysiology Society (2006). Individual electrodes were attached above and below the right eye and at the outer canthus of each eye to measure eye movements. One electrode was attached to the nose for data referencing. The nose was chosen so as to facilitate comparisons with related research (Schirmer & Gunter, 2017) and the exploration of a possible auditory cortex involvement (Näätänen, Paavilainen, Rinne, & Alho, 2007). Electrode impedance was below 5 kΩ. The data were recorded at 500 Hz with a BrainAmp EEG system. Only an anti-aliasing filter was applied during data acquisition (i.e., a sinc filter with a half-power cutoff at half the sampling rate).
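The data were recorded against the nose, and the Method later notes that an average-reference analysis is provided in the Supplementary Materials. A minimal NumPy sketch of converting nose-referenced data to the common average follows; reinstating the nose as a zero-voltage channel, and the choice of channels entering the average, are assumptions not specified in the text.

```python
import numpy as np


def rereference_to_average(data, include_original_ref=True):
    """Convert nose-referenced data (n_channels, n_times) to the common average reference.

    With include_original_ref=True, the nose is appended as an extra channel with zero
    voltage (it is zero relative to itself) before the average is computed.
    """
    data = np.asarray(data, dtype=float)
    if include_original_ref:
        data = np.vstack([data, np.zeros((1, data.shape[1]))])
    # Subtract the instantaneous mean across channels from every channel.
    return data - data.mean(axis=0, keepdims=True)
```

Because the same reference signal is subtracted from every channel, the average-referenced result does not depend on which site served as the original reference, provided that site is included in the average.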

Following the EEG setup, participants were prepared for both arm and palm touch, irrespective of whether they actually received this touch on that day. The experimenter used an alcohol swab to clean an ~8-cm area on both the arm and the palm and proceeded to mark the touch sections as described under “Stimulus Materials.” Subsequently, participants moved into the experimental chamber and sat down in front of a computer screen that was framed by two speakers. The female experimenter sat next to the participant during all three sessions, irrespective of whether she applied strokes. A curtain separated the two and allowed the participant to place her left arm out of sight, on a board accessible to the experimenter.

Instructions presented on the screen informed participants that they would hear a sequence of sounds and that their task was to press a button any time a sound was the same as the one before (one-back task). The button box (16 × 8 × 1.5 cm; C&K digitast SERU switch with a gray SER button positioned at the lower middle of the box, 8:2.5 cm) was placed at the end of the right armrest and operated with the right hand. The task comprised three blocks in which sounds (i.e., 27 neutral/vocal, 27 surprised/vocal, 27 neutral/nonvocal, and 27 surprised/nonvocal) were played once in random order, with the constraint that a vocal stimulus and its spectral rotation (i.e., its nonvocal counterpart) appeared in separate block halves so as to avoid the emergence of potential acoustic associations. Additionally, 22 sounds were randomly selected for repetition in each block. These repetitions served to engage participants with the auditory material without highlighting the nature of the sounds and without necessitating a confounding motor response on nonrepeated, experimental trials. As such, each of the three blocks comprised 108 nonrepeated and 22 repeated trials. Across the three blocks of each session, a total of 324 sounds not requiring a motor response were designated for the EEG analysis, whereas 66 sounds requiring a motor response were designated for the behavioral analysis.
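One way to build a block that satisfies these constraints is sketched below in Python. The stimulus labels, the coin-flip assignment of each vocal/nonvocal pair to the two block halves, and the placement of each repetition immediately after its original are assumptions; the text does not describe how the randomization was implemented.

```python
import random

EMOTIONS = ("neutral", "surprised")
N_ITEMS = 27      # items per emotion category
N_REPEATS = 22    # one-back targets per block


def make_block(rng):
    """Return one block as a list of (stimulus, is_repeat) tuples.

    A stimulus is a hypothetical (voiceness, emotion, item) tuple. Each vocal sound and its
    spectrally rotated counterpart are placed in different halves of the block.
    """
    first_half, second_half = [], []
    for emotion in EMOTIONS:
        for item in range(N_ITEMS):
            vocal = ("vocal", emotion, item)
            nonvocal = ("nonvocal", emotion, item)
            # Randomly decide which member of the pair goes into the first half.
            if rng.random() < 0.5:
                first_half.append(vocal)
                second_half.append(nonvocal)
            else:
                first_half.append(nonvocal)
                second_half.append(vocal)
    rng.shuffle(first_half)
    rng.shuffle(second_half)
    sequence = first_half + second_half  # 108 nonrepeated sounds, 54 per half

    # Pick 22 sounds at random and play each one a second time immediately afterwards.
    repeat_positions = set(rng.sample(range(len(sequence)), N_REPEATS))
    trials = []
    for position, stimulus in enumerate(sequence):
        trials.append((stimulus, False))
        if position in repeat_positions:
            trials.append((stimulus, True))
    return trials


if __name__ == "__main__":
    rng = random.Random(2017)
    blocks = [make_block(rng) for _ in range(3)]
    n_eeg = sum(not rep for block in blocks for _, rep in block)
    n_targets = sum(rep for block in blocks for _, rep in block)
    print(n_eeg, n_targets)  # 324 66
```

Over three blocks, this yields 324 nonrepeated trials for the EEG analysis and 66 repetitions for the behavioral analysis, matching the counts above.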

Each task trial started with a white fixation cross centered on a black background. After 500 ms, a sound played (average duration = 506 ms; SD = 25 ms) and the fixation cross remained for 1,000 ms after which it disappeared. An empty intertrial interval had a random duration between 2,000 and 4,000 ms.

In a given session, participants completed the task either with continuous stroking to the arm, the palm, or no stroking. Although CT afferents fatigue after repeated stroking, they do continue to fire (Nordin, 1990), and subjective experiences even after 50 minutes of stimulation are not negative (Triscoli, Ackerley, & Sailer, 2014). The order of touch conditions across the three sessions was fully counterbalanced. A session lasted about 30 minutes and included short breaks between blocks.
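As a rough consistency check of the trial timing against the reported session length of about 30 minutes, the expected task duration can be computed from the trial parameters. This Python sketch assumes that the 1,000-ms fixation period is timed from sound onset and that the intertrial interval is uniformly distributed; breaks and setup are ignored.

```python
N_TRIALS_PER_SESSION = 3 * (108 + 22)   # three blocks of nonrepeated plus repeated trials
FIXATION_S = 0.5                         # fixation before sound onset
POST_ONSET_S = 1.0                       # cross remains after onset (assumed timed from onset)
ITI_RANGE_S = (2.0, 4.0)                 # random intertrial interval


def expected_session_minutes(n_trials, fixation_s, post_onset_s, iti_range_s):
    """Expected task duration, assuming a uniform ITI and ignoring breaks."""
    mean_iti = sum(iti_range_s) / 2
    return n_trials * (fixation_s + post_onset_s + mean_iti) / 60


print(expected_session_minutes(N_TRIALS_PER_SESSION, FIXATION_S, POST_ONSET_S, ITI_RANGE_S))  # 29.25
```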

Before and after completing the task, participants indicated their current mood on a 5-point scale ranging from −2 (very negative) to +2 (very positive). Then they indicated how aroused they felt on a 5-point scale ranging from 0 to 4. Last, and only at the end of the task, they rated touch pleasantness on a 5-point scale ranging from −2 (very unpleasant) to +2 (very pleasant) if they had been stroked by the experimenter.

Data analysis

EEG data were processed with EEGLAB (Delorme & Makeig, 2004). The recordings were subjected to low-pass and high-pass filtering with half-power cutoffs at 30 and 0.1 Hz, respectively. The transition band was 7.5 Hz for the low-pass filter (−6 dB/octave; 221 pts) and 0.1 Hz for the high-pass filter (−6 dB/octave; 16,501 pts). The continuous data were epoched using a 1,000-ms prestimulus window and a 1,000-ms poststimulus window. The resulting epochs were visually scanned for nontypical artifacts caused by drifts or muscle movements, and epochs containing such artifacts were removed. After the application of a 1-Hz high-pass filter, the data were subjected to an independent component analysis (Onton, Westerfield, Townsend, & Makeig, 2006), and the component structure resulting from this analysis was applied to the original epoched data set with the 0.1–30 Hz filter setting (Winkler, Debener, Müller, & Tangermann, 2015). Components reflecting typical artifacts (i.e., horizontal and vertical eye movements and eye blinks) were removed, and the data were back-projected from component space into channel space, re-epoched, and baseline-corrected using a 200-ms prestimulus window and a 1,000-ms poststimulus window. The resulting epochs were again scanned visually for residual artifacts, and affected epochs were dropped from further analysis. ERPs were derived by averaging the remaining epochs for each condition and participant. A minimum of 58 trials and an average of 75 trials per condition entered statistical analysis.
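The filter lengths in this paragraph can be reproduced from the transition bands if one assumes a Hamming-windowed sinc FIR design of the kind produced by EEGLAB's `pop_eegfiltnew`, in which the filter order is roughly 3.3 divided by the transition band expressed as a fraction of the sampling rate. The Python sketch below encodes that assumed heuristic; exact fractions are used so that floating-point rounding cannot push the result across an even-number boundary.

```python
import math
from fractions import Fraction

HAMMING_FACTOR = Fraction("3.3")  # transition-width factor for a Hamming window (assumed design)


def fir_length(transition_hz, srate_hz):
    """Number of taps of a Hamming-windowed sinc FIR filter for a given transition band.

    Order = 3.3 / (transition band / sampling rate), rounded up to an even number;
    length = order + 1.
    """
    order = HAMMING_FACTOR / (Fraction(str(transition_hz)) / srate_hz)
    order = math.ceil(order / 2) * 2
    return order + 1


print(fir_length(7.5, 500))  # 221 (low-pass, 7.5-Hz transition band)
print(fir_length(0.1, 500))  # 16501 (high-pass, 0.1-Hz transition band)
```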

The analysis windows for the two target ERP components were identified based on visual inspection of the average ERP waveforms as well as previous evidence (i.e., P2: 150–350 ms; LPP: 400–950 ms; Schirmer & Gunter, 2017). Mean voltages from within both windows were subjected to separate ANOVAs, with Voiceness (vocal, nonvocal), Emotion (surprised, neutral), Touch (arm, palm, none), Hemisphere (left, right), and Region (anterior, central, posterior) as repeated-measures factors. The factors Hemisphere and Region comprised average voltages computed across the following subgroups of electrodes: anterior left, Fp1, AF7, AF3, F5, F3, F1; anterior right, Fp2, AF8, AF4, F6, F4, F2; central left, FC3, FC1, C3, C1, CP3, CP1; central right, FC4, FC2, C4, C2, CP4, CP2; posterior left, P5, P3, P1, PO7, PO3, O1; posterior right, P6, P4, P2, PO8, PO4, O2. This selection of electrodes was based on previous research on emotional voice perception (Schirmer & Gunter, 2017) and ensured that the tested subgroups contained an equal number of electrodes while providing broad scalp coverage that allowed the assessment of topographical effects. Our significance threshold was p = .05. We report only main effects and interactions involving the three experimental factors, and only if the simple effects in follow-up analyses were at least marginally significant (p < .1). We report the generalized eta squared (ηG²) as an effect-size measure for all effects and, if applicable, Greenhouse–Geisser corrected p values (pGG). An analysis of data referenced to the average of all electrodes is provided in the Supplementary Materials.
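A minimal NumPy sketch of how the Region × Hemisphere mean voltages could be extracted from one averaged ERP follows. The electrode lists and time windows are taken from the text; the array layout and the inclusive window edges are assumptions. Each participant and condition yields 12 values (2 windows × 6 subgroups) that would then enter the repeated-measures ANOVAs.

```python
import numpy as np

# Electrode subgroups listed in the text, keyed by (region, hemisphere).
ROIS = {
    ("anterior", "left"): ["Fp1", "AF7", "AF3", "F5", "F3", "F1"],
    ("anterior", "right"): ["Fp2", "AF8", "AF4", "F6", "F4", "F2"],
    ("central", "left"): ["FC3", "FC1", "C3", "C1", "CP3", "CP1"],
    ("central", "right"): ["FC4", "FC2", "C4", "C2", "CP4", "CP2"],
    ("posterior", "left"): ["P5", "P3", "P1", "PO7", "PO3", "O1"],
    ("posterior", "right"): ["P6", "P4", "P2", "PO8", "PO4", "O2"],
}
WINDOWS_MS = {"P2": (150, 350), "LPP": (400, 950)}


def roi_window_means(erp, ch_names, times_ms):
    """Mean voltage per (component, region, hemisphere) for one participant and condition.

    erp: array of shape (n_channels, n_times); times_ms: sample times relative to sound onset.
    Window edges are treated as inclusive (an assumption).
    """
    erp = np.asarray(erp, dtype=float)
    times_ms = np.asarray(times_ms)
    row = {name: i for i, name in enumerate(ch_names)}
    means = {}
    for component, (start, stop) in WINDOWS_MS.items():
        in_window = (times_ms >= start) & (times_ms <= stop)
        for (region, hemisphere), channels in ROIS.items():
            rows = [row[ch] for ch in channels]
            # Average over the 6 electrodes and all samples inside the window.
            means[(component, region, hemisphere)] = float(erp[rows][:, in_window].mean())
    return means
```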

Results

Behavior

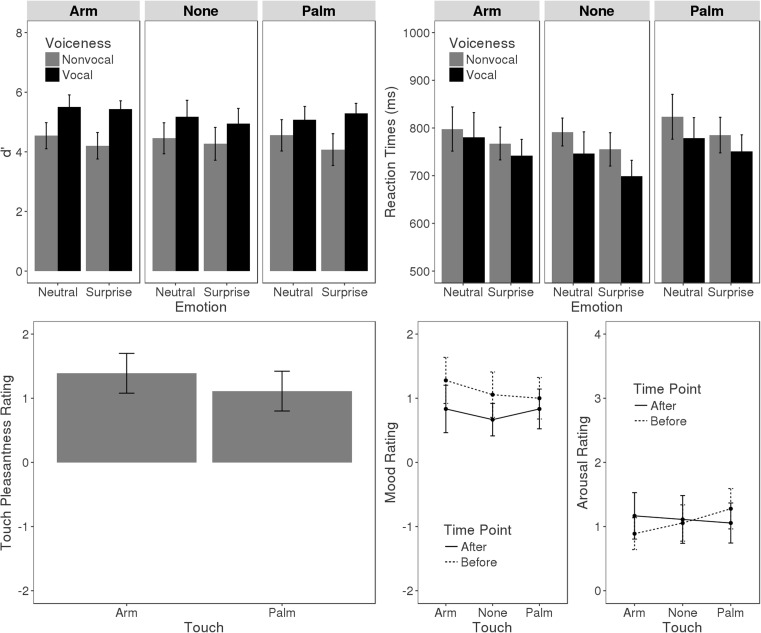

We analyzed performance in the target detection task to determine possible effects of touch. To this end, reaction times to correctly detected targets as well as d-prime scores, computed by subtracting the z-transformed false-alarm rate from the z-transformed hit rate, were subjected to separate ANOVAs, with Voiceness (vocal, nonvocal), Emotion (surprised, neutral), and Touch (arm, palm, none) as repeated-measures factors. Results are illustrated in Fig. 2.
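The d-prime computation can be sketched with Python's standard library. In this one-back task, hits would be button presses to repeated sounds and false alarms presses to nonrepeated sounds. The optional log-linear correction for hit or false-alarm rates of 0 or 1 is an assumption; the text does not say whether or how extreme rates were handled.

```python
from statistics import NormalDist


def d_prime(hits, n_targets, false_alarms, n_nontargets, correction=True):
    """d' = z(hit rate) - z(false-alarm rate).

    With correction=True, a log-linear adjustment (add 0.5 to each count and 1 to each total)
    keeps rates of 0 or 1 finite. Whether the authors applied such a correction is not reported.
    """
    if correction:
        hit_rate = (hits + 0.5) / (n_targets + 1)
        fa_rate = (false_alarms + 0.5) / (n_nontargets + 1)
    else:
        hit_rate = hits / n_targets
        fa_rate = false_alarms / n_nontargets
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


print(round(d_prime(8, 10, 2, 10, correction=False), 3))  # 1.683
```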

Fig. 2.

Behavioral results. The upper row presents d-primes and reaction times for the target detection task as a function of Touch, Voiceness, and Emotion. The lower row presents the rating scores from the preexperimental and postexperimental rating. Error bars indicate the 95% confidence interval

Correct response times showed main effects of Voiceness, F(1, 17) = 18.45, p < .001, ηG² = .018, and Emotion, F(1, 17) = 20.67, p < .001, ηG² = .017. Response times were shorter for vocal relative to nonvocal and for surprised relative to neutral sounds. D-prime analysis revealed a Voiceness main effect, F(1, 17) = 40.94, p < .001, ηG² = .164, indicating that responses were more accurate to vocal than to nonvocal sounds. The interaction of Emotion and Voiceness emerged as a trend, F(1, 17) = 3.51, p = .078, ηG² = .005, but follow-up tests for vocal (p > .250) and nonvocal sounds (p = .102) were nonsignificant. The effect of Touch on response times and d-primes was nonsignificant (ps > .1).

A one-way ANOVA conducted on touch pleasantness ratings revealed no effect (p > .25). A two-way ANOVA on mood scores from before and after each session was conducted, with Touch and Rating Time Point (before, after) as repeated-measures factors. This revealed a main effect of Rating Time Point, F(1, 17) = 4.78, p = .043, ηG² = .038, indicating that participants felt more positive before than after the experiment. All other effects were nonsignificant (ps > .25). An ANOVA on arousal scores revealed no significant effects (ps > .213).
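The generalized eta squared reported throughout can be illustrated with the simplest case, a one-way repeated-measures ANOVA such as the one on pleasantness ratings. With a single within-subject factor, ηG² divides the effect sum of squares by the sum of the effect, subject, and residual (subject × condition) sums of squares. The NumPy sketch below uses toy data and applies no sphericity correction; it is not the authors' analysis code.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA with generalized eta squared.

    data: array of shape (n_subjects, n_conditions).
    Returns (F, df_effect, df_error, eta_g2). No Greenhouse-Geisser correction is applied.
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand_mean = data.mean()
    ss_total = ((data - grand_mean) ** 2).sum()
    ss_effect = n * ((data.mean(axis=0) - grand_mean) ** 2).sum()
    ss_subjects = k * ((data.mean(axis=1) - grand_mean) ** 2).sum()
    ss_error = ss_total - ss_effect - ss_subjects  # subject x condition residual
    df_effect, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_effect / df_effect) / (ss_error / df_error)
    eta_g2 = ss_effect / (ss_effect + ss_subjects + ss_error)
    return float(f_value), df_effect, df_error, float(eta_g2)


ratings = [[1, 2, 4], [2, 2, 5], [3, 5, 6]]  # toy scores: 3 participants x 3 conditions
print(rm_anova_oneway(ratings))
```

Unlike partial eta squared, this measure keeps the subject variance in the denominator, which makes values comparable across designs that differ in which factors are manipulated within subjects.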

ERP

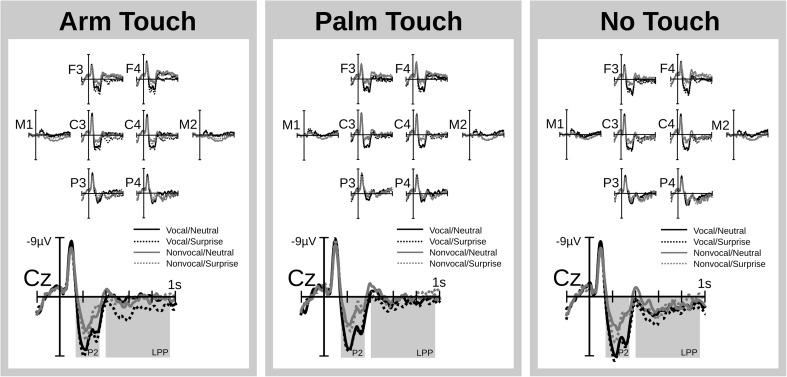

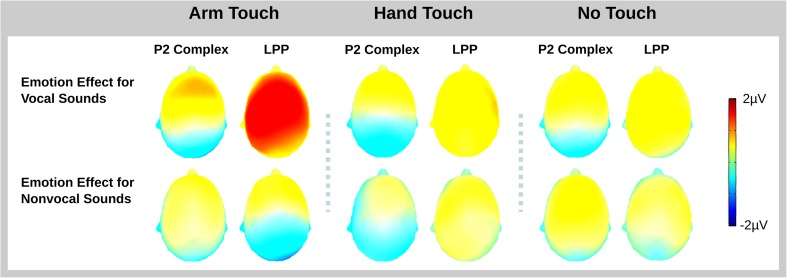

ERP time courses are illustrated in Fig. 3. Figure 4 presents a bar graph of mean voltages for the two analysis windows and the different experimental conditions. The topography of the interaction of Emotion, Voiceness, and Touch is illustrated in Fig. 5.

Fig. 3.

ERP traces. ERPs time-locked to sound onset show voiceness and emotion effects that are independent of each other in the P2 and that interact with each other in the LPP. Shown is a subset of frontal (F), central (C), and parietal (P) recordings as well as mastoid recordings (M) from the left (odd digit) and right (even digit) hemisphere. A vertex recording (Cz) is enlarged for better visibility. The interaction of voiceness and emotion in the LPP is stronger for the arm-touch than for the palm-touch and no-touch conditions. The two statistical windows (P2, LPP) are shaded in gray

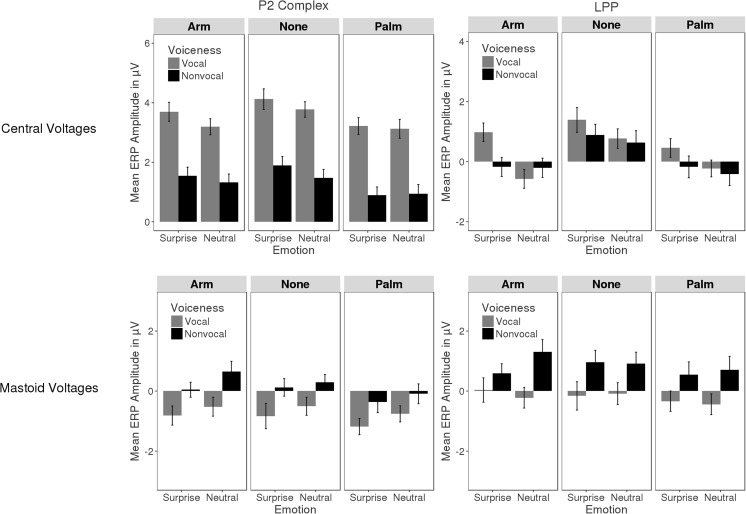

Fig. 4.

Bar graph of P2 (left) and LPP (right) mean voltages for the different experimental conditions computed across the left and right central regions (top row) and the mastoids (bottom row). Voiceness effects are most evident in the P2, with greater amplitudes for vocal as compared with nonvocal sounds over central electrodes and the opposite over mastoid electrodes. Touch effects are most evident in the LPP, where they interact with voiceness and emotion. Error bars reflect the 95% confidence interval

Fig. 5.

Topography of the voiceness by emotion interaction in the ERP. We plotted mean voltage differences computed by subtracting neutral from surprised sounds for vocal (top row) and nonvocal (bottom row) conditions within the two statistical windows. An interaction between voiceness and emotion was significantly stronger for the LPP in the arm-touch compared to the palm-touch and no-touch conditions. (Color figure online)

P2 complex

Visual inspection revealed a positive complex between 150 and 350 ms that was characterized by a large positive deflection with a small negative deflection superimposed. This pattern points to the presence of multiple components apart from a P2, including an N2, a P3, and/or a sound offset potential. For ease of reference, these patterns will be subsumed under the term P2 complex. Mean voltages of the P2 complex were subjected to an ANOVA, as described in the Method section. The results revealed an effect of Touch, F(2, 34) = 3.91, p = .03, ηG² = .014, reflecting greater P2 amplitudes for no touch relative to palm touch, F(1, 17) = 8.84, p = .008, ηG² = .022. Arm touch and palm touch (p = .149) as well as arm touch and no touch (p > .250) did not differ. All other effects involving Touch were nonsignificant (ps > .1).

The Voiceness main effect, F(1, 17) = 4.85, p < .001, ηG² = .074, was significant, as were the interactions of Voiceness and Region, F(2, 34) = 6.14, pGG < .001, ηG² = .026, and of Voiceness, Region, and Hemisphere, F(2, 34) = 7.93, pGG = .007, ηG² = .0005. The latter effect was pursued for each level of Region. At anterior electrodes, the Voiceness × Hemisphere interaction, F(1, 17) = 6.45, p = .021, ηG² = .003, indicated that the increase in P2 amplitudes for vocal as compared to nonvocal sounds was larger over the right, F(1, 17) = 160.16, p < .0001, ηG² = .268, than over the left hemisphere, F(1, 17) = 99.42, p < .001, ηG² = .258. At central electrodes, Voiceness enhanced P2 amplitudes irrespective of laterality, F(1, 17) = 77.64, p < .0001, ηG² = .141. At posterior electrodes, Voiceness again interacted with Hemisphere, F(1, 17) = 4.91, p = .04, ηG² = .0003, but follow-up analyses were nonsignificant (ps > .216).

Emotion became relevant in interaction with Region, F(2, 34) = 36.9, pGG < .0001, ηG² = .0041, with Region and Hemisphere, F(2, 34) = 8.25, pGG = .002, ηG² = .00007, as well as with Voiceness and Region, F(2, 34) = 4.62, pGG = .039, ηG² = .0006. Follow-up analyses for each region revealed that the Emotion × Voiceness interaction merely approached significance anteriorly, F(1, 17) = 3.59, p = .075, ηG² = .002, and was nonsignificant centrally and posteriorly (ps > .250). Similarly, the Emotion × Hemisphere interaction merely approached significance posteriorly, F(1, 17) = 3.38, p = .083, ηG² = .00005, and was nonsignificant centrally and anteriorly (ps > .139). Therefore, these effects were not pursued further. Instead, we focused on the Emotion main effect for each level of Region, which indicated that, anteriorly, P2 voltages were more positive for surprised than for neutral sounds, F(1, 17) = 28.62, p < .0001, ηG² = .0162. Centrally, a similar effect was only marginally significant, F(1, 17) = 4.26, p = .055, ηG² = .0023, and posteriorly the effect reversed polarity, F(1, 17) = 6.11, p = .024, ηG² = .0031.

Late positive potential

Mean voltages between 400 and 950 ms were subjected to an ANOVA as described in the Method section. Whereas Emotion produced a significant main effect, F(1, 17) = 18.85, p < .001, ηG² = .005, the main effects of Touch, F(2, 34) = 2.66, p = .085, ηG² = .019, and Voiceness (p > .25) were nonsignificant. However, all three factors participated in a number of interactions, including Emotion and Voiceness, F(1, 17) = 5.17, p = .036, ηG² = .004; Emotion and Region, F(2, 34) = 5.67, pGG = .024, ηG² = .0009; Voiceness and Region, F(2, 34) = 48.66, pGG < .001, ηG² = .017; Emotion, Voiceness, and Touch, F(2, 34) = 3.33, p = .047, ηG² = .001; as well as Emotion, Voiceness, Touch, and Region, F(4, 68) = 2.61, p = .043, ηG² = .0004.

We explored the latter effect for each level of Region. At anterior electrodes, the interaction of Emotion, Voiceness, and Touch was nonsignificant (p > .250). Instead, the Emotion × Voiceness interaction, F(1, 17) = 4.9, p = .041, ηG² = .002, indicated that across touch conditions, surprised sounds increased the LPP relative to neutral sounds when they were vocal, F(1, 17) = 28.4, p < .001, ηG² = .027, and to a smaller degree when they were nonvocal, F(1, 17) = 6.83, p = .018, ηG² = .005. At central and posterior electrodes, the interaction of Emotion, Voiceness, and Touch was significant: central, F(2, 34) = 3.4, p = .045, ηG² = .002; posterior, F(2, 34) = 4.49, p = .018, ηG² = .002. To probe differences between the two touch conditions specifically, we repeated these analyses excluding the no-touch condition. In other words, we conducted an Emotion × Voiceness × Touch ANOVA in which Touch had only two levels (arm, palm). A significant three-way interaction at posterior electrodes, F(1, 17) = 5.82, p = .027, ηG² = .0036, pointed to a differentiation between arm touch and palm touch. The three-way interaction was nonsignificant at central, F(1, 17) = 3.62, p = .074, ηG² = .0032, and anterior electrodes (p > .25).

We pursued the interaction of Emotion and Voiceness for arm touch, palm touch, and no touch over central and posterior sites. For arm touch, the interaction was significant: central, F(1, 17) = 11.9, p = .003, ηG² = .024; posterior, F(1, 17) = 11.64, p = .003, ηG² = .028, indicating that the LPP was larger for surprised as compared to neutral vocal but not nonvocal sounds: central, F(1, 17) = 29.87, p < .001, ηG² = .091; posterior, F(1, 17) = 6.95, p = .017, ηG² = .033. Moreover, for nonvocal sounds, this Emotion effect was absent over central (p > .25) and reversed polarity over posterior regions, F(1, 17) = 5.81, p = .027, ηG² = .023. During palm touch and no touch, the interaction of Emotion and Voiceness was nonsignificant (ps > .193), as was the Emotion main effect: central palm, F(1, 17) = 4.27, p = .054, ηG² = .009; central none, F(1, 17) = 3.91, p = .065, ηG² = .005; posterior palm/none (ps > .250).

Mastoid analysis

A polarity inversion of the aforementioned auditory processing effects over the mastoid electrodes would be compatible with a source in auditory cortex and in line with prior evidence for a role of sensory regions in both early (Schirmer & Escoffier, 2010) and late emotion ERPs (Liu, Huang, McGinnis, Keil, & Ding, 2012). To probe this possibility, we subjected mastoid recordings of the P2 and LPP time windows to separate analyses with Touch, Emotion, Voiceness, and Hemisphere as repeated-measures factors.

Analysis in the P2 window revealed main effects of Emotion, F(1, 17) = 24.87, p < .001, ηG² = .016, and Voiceness, F(1, 17) = 45.59, p < .001, ηG² = .092, that were opposite to those reported above. The ERP was less positive to surprised and vocal sounds than to neutral and nonvocal sounds (see Fig. 4). Additionally, a Touch × Hemisphere interaction, F(2, 34) = 3.76, pGG = .05, ηG² = .003, indicated that touch modulated the ERP over the left, F(2, 34) = 3.92, p = .03, ηG² = .032, but not the right hemisphere (p > .250). Over the left hemisphere, we found effects comparable to those described above. The ERP was more positive for arm touch, F(1, 17) = 7.1, p = .016, ηG² = .038, and no touch, F(1, 17) = 4.89, p = .041, ηG² = .033, as compared with palm touch. Arm touch and no touch did not differ (p > .250).

Analysis of the LPP window revealed a Voiceness effect, F(1, 17) = 96.24, p < .001, ηG² = .101, and an interaction of Emotion, Voiceness, and Touch, F(2, 34) = 4.07, p = .026, ηG² = .005, both pointing to a partial polarity reversal of frontocentral effects. Follow-up analyses showed that the Emotion × Voiceness interaction was significant for arm touch, F(1, 17) = 11.05, p = .004, ηG² = .024, but not for palm touch and no touch (ps > .250). For arm touch only, the ERP was less positive for surprised as compared to neutral nonvocal sounds, F(1, 17) = 9.22, p = .007, ηG² = .05. A similar effect was nonsignificant for vocal sounds (p > .250). For palm touch and no touch, the Voiceness main effect indicated that the ERP was less positive for vocal as compared to nonvocal sounds: palm, F(1, 17) = 21.21, p < .001, ηG² = .098; none, F(1, 17) = 30.89, p < .001, ηG² = .104. The Emotion effect was nonsignificant (ps > .250).

Discussion

Here we explored whether and how touch modulates vocal-emotional processing. We found that touch to both the arm and the palm was perceived as pleasant without changing the participants’ affective state. Moreover, touch did not interfere with the participants’ ability to detect sound repetitions in the experimental task. Yet it modulated how sounds were represented in the brain, and this modulation differed as a function of whether and where participants were touched. What follows is a more detailed discussion of these findings focusing on the nature of vocal-emotional ERP effects, the role of touch for voice perception, and the relevance of our findings for the social brain.

It is well established that the human brain prioritizes emotional over mundane and social over nonsocial information (Schirmer & Adolphs, 2017). In fMRI studies, affective stimuli activate parts of the sensory system more strongly than do neutral stimuli (e.g., Grandjean et al., 2005). Similar effects emerge for social as compared to nonsocial stimuli, as in the contrast of faces with nonface objects (e.g., houses; Kanwisher, McDermott, & Chun, 1997), voices with nonvocal sounds (e.g., nature sounds; Belin, Zatorre, Lafaille, Ahad, & Pike, 2000), or touch with human as compared to nonhuman characteristics (e.g., skin temperature; Ackerley et al., 2014; for a review, see Schirmer & Adolphs, 2017). In the ERP, these results are mirrored by greater amplitudes for emotional and social events, implying that more neurons are recruited or that neurons respond more synchronously or vigorously (e.g., Bentin, Allison, Puce, Perez, & McCarthy, 1996; Schirmer & Gunter, 2017).

The present results agree with this when considering both the P2 complex and the LPP. In line with existing evidence (Jiang & Pell, 2015; Sauter & Eimer, 2010; Schirmer, Chen, et al., 2013; Schirmer & Gunter, 2017), the P2 complex was larger for emotional relative to neutral sounds over anterior regions, and marginally so over central regions. Likewise, the P2 was more positive for vocal than for nonvocal sounds over frontocentral regions. The P2 emotion and voiceness effects were largely independent of each other and reversed polarity over the mastoids, pointing to possible sources in auditory cortex (Näätänen et al., 2007). Looking at the LPP, we found that emotion and voiceness interacted. A greater LPP emotion effect for vocal as compared to nonvocal sounds implied that affective and social information now became integrated. In other words, listeners, instead of representing emotional significance and humanness separately, now perceived the sound as originating from a surprised human and prioritized the sound in awareness (Schirmer & Gunter, 2017). The frontocentral topography of this process accords with a possible contribution of prefrontal cortex and insula, both brain regions where the different senses as well as higher order perceptual and cognitive processes merge (Klasen, Chen, & Mathiak, 2012; Nieuwenhuys, 2012; Schirmer, Meck, & Penney, 2016) and where emotion effects in the LPP are at least partially regulated (Hajcak et al., 2010; Liu et al., 2012; Moratti, Saugar, & Strange, 2011; Schindler & Kissler, 2016). The LPP mastoid effects, furthermore, raise the possibility that these higher order integrative mechanisms may partially modulate processing in auditory cortex in a top-down manner.

Although the present results inform about vocal-emotional processing, their primary contribution is a first insight into the role of touch. This insight emerged from comparing auditory perception in the context of CT-targeted touch to the arm, touch to the palm, and no touch. As expected, we found novel evidence for online touch effects that dissociate from simple changes in pleasure or mood. Arm-touch and palm-touch conditions were perceived as equally pleasant, and neither produced subjective mood differences relative to the no-touch control. Yet the two touch conditions modulated the auditory ERP in distinct ways.

In the P2 time range, palm touch but not arm touch significantly reduced the ERP relative to no touch, suggesting that palm touch in particular interfered with early perceptual representations. This may reflect the fact that, compared to the arm, the palm has a denser distribution of Aβ mechanoreceptors and is hence more sensitive and discriminative (Weinstein, 1968). In the LPP time range, both palm touch and arm touch reduced component amplitudes. However, the effects differed as a function of emotion and voiceness. Specifically, the interaction between emotion and voiceness emerged only over anterior sites when participants were stroked on the palm, whereas it was present across the scalp when participants were stroked on the arm. In other words, the larger LPP emotion response to vocal as compared with nonvocal sounds was enhanced for arm touch relative to palm touch. Moreover, LPP effects in the no-touch condition differed from those in the arm-touch but not the palm-touch condition. Thus, one may speculate that arm touch facilitated the integration of emotional and vocal information and enhanced attentional engagement with emotional voices relative to neutral voices and nonvocal sounds.
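The pattern described above, in which an emotion × voiceness interaction is itself larger for arm touch than for palm touch, can be made concrete as a difference of differences. The Python sketch below is purely illustrative: the cell means are invented placeholder values, not data from this study, and the function names (`emotion_effect`, `interaction`) are hypothetical. It computes the LPP emotion effect (surprised minus neutral) separately for vocal and nonvocal sounds, takes their difference as the emotion × voiceness interaction, and then compares that interaction between touch conditions. It is a descriptive contrast only and does not reproduce the statistical analysis reported for the study.

```python
"""Hypothetical sketch: emotion x voiceness interaction contrasts per touch condition.

All amplitude values below are invented placeholders, NOT data from the study.
"""
from typing import Dict, Tuple

# A design cell is keyed by (emotion, voiceness).
Cell = Tuple[str, str]


def emotion_effect(means: Dict[Cell, float], voiceness: str) -> float:
    """LPP emotion effect: surprised minus neutral, within one voiceness level."""
    return means[("surprised", voiceness)] - means[("neutral", voiceness)]


def interaction(means: Dict[Cell, float]) -> float:
    """Emotion x voiceness interaction as a difference of differences:
    (emotion effect for vocal sounds) - (emotion effect for nonvocal sounds)."""
    return emotion_effect(means, "vocal") - emotion_effect(means, "nonvocal")


# Hypothetical cell means in microvolts for two touch locations (placeholders).
conditions: Dict[str, Dict[Cell, float]] = {
    "arm": {("surprised", "vocal"): 4.0, ("neutral", "vocal"): 2.0,
            ("surprised", "nonvocal"): 2.5, ("neutral", "nonvocal"): 2.0},
    "palm": {("surprised", "vocal"): 3.0, ("neutral", "vocal"): 2.0,
             ("surprised", "nonvocal"): 2.5, ("neutral", "nonvocal"): 2.0},
}

for touch, means in conditions.items():
    print(f"{touch}: interaction = {interaction(means):.2f} uV")

# Three-way contrast: how much larger is the interaction for arm than for palm touch?
arm_minus_palm = interaction(conditions["arm"]) - interaction(conditions["palm"])
print(f"arm - palm: {arm_minus_palm:.2f} uV")
```

With these placeholder numbers the sketch prints an interaction of 1.50 µV for arm touch and 0.50 µV for palm touch. A positive arm-minus-palm value corresponds to the pattern described in the text, in which the emotion effect for voices exceeds that for nonvocal sounds more strongly under arm touch.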

The fact that CT afferents are present in the arm but not the palm marks them as a possible mechanism underlying the present results and suggests that CT stimulation could benefit social interactions by emphasizing socioemotional information. This possibility agrees with existing work on the processing characteristics of CT afferents (for reviews, see Björnsdotter et al., 2010; Schirmer & Adolphs, 2017). Additionally, it accords with research on touch that did not specifically explore CT effects but provides more general behavioral and brain evidence for touch effects in adults and children. In adults, a number of field and laboratory studies have demonstrated that casual touch increases goodwill and compliance. In the 1970s, Fisher and colleagues instructed library clerks to either briefly touch or not touch customers while returning their library cards (Fisher et al., 1976). Compared to customers who were not touched, those who were touched rated the library and its staff more positively. Follow-up studies replicated and extended these results, showing, for example, that touch increases how much people tip, how honest they are, and whether they take prescribed medication (for a review, see Schirmer, Wijaya, & Liu, 2016). More recent neuroscientific evidence complements these findings. In an ERP study, gentle pressure to the arm, irrespective of whether it was provided by and attributed to a friend or a machine, enhanced LPP differences between emotional and neutral images that contained social elements (i.e., a human or nonhuman animal), implying better emotion differentiation (Schirmer et al., 2011).

Also relevant to the present effects is evidence on the role of parental touch in early social development. A large body of literature documents benefits of skin-to-skin contact during infancy and early childhood (Field, Diego, Hernandez-Reif, Deeds, & Figuereido, 2006; Vickers, Ohlsson, Lacy, & Horsley, 2004), including aspects of social functioning such as the management of negative emotions and responsiveness to caregivers (for a review, see Field, Diego, & Hernandez-Reif, 2010). Children receiving more maternal touch reach out to their mothers more and show accelerated development of the adult face bias, whereby attention shifts to faces rather than nonface objects (Reece, Ebstein, Cheng, Ng, & Schirmer, 2016). Furthermore, high-touch children differ from low-touch children in how they activate the “social brain.” During wakeful rest, they engage the right posterior superior temporal sulcus more strongly and show greater functional connectivity between this region and the medial prefrontal cortex (Brauer, Xiao, Poulain, Friederici, & Schirmer, 2016), both areas implicated in understanding others’ emotions (Escoffier, Zhong, Schirmer, & Qiu, 2013; Schirmer & Adolphs, 2017). Converging evidence from nonhuman animals implies that tactile stimulation of hairy skin is causally relevant for improved socioemotional functioning in offspring (Zhang & Meaney, 2010).

Although the present study provides novel insights into the role of touch for neural responses to vocal emotions, it raises a number of questions for future research. First, one may ask why touch effects were absent from the behavioral measures and relatively small in the ERP. Several aspects of experimental design and touch context may be relevant here. For example, an influence on n-back performance may become apparent with a larger proportion of response trials. In the present study, this proportion was kept small (16.5 response vs. 81 nonresponse trials per cell in the design) so as to maximize the number of nonresponse trials for the ERP (Luck, 2005). In subsequent studies, the proportion of response trials could be increased in combination with an analysis of response-locked ERP components, such as the readiness potential (e.g., Eder, Leuthold, Rothermund, & Schweinberger, 2012). Additional factors that may be relevant for the present touch effects concern the nature of the task and the tactile stimulus. The task directed attention to stimulus acoustics rather than to socioemotional features, thus emphasizing stimulus-driven, implicit processing. Future studies may wish to contrast this with explicit processing by engaging participants at a socioemotional level, a condition known to enhance the kind of integrative mechanisms that were of interest here (e.g., Schirmer et al., 2006). Like the task, the tactile stimulus was nonsocial (i.e., brush rather than hand strokes). It was also applied in a rhythmic and continuous, perhaps artificial, manner, thereby fatiguing CT afferents to some degree (Nordin, 1990) and potentially producing autonomic or hormonal effects (Ditzen et al., 2007; Morhenn, Beavin, & Zak, 2012; Okabe, Yoshida, Takayanagi, & Onaka, 2015). These issues represent the flip side of our attempt to standardize the physical properties of touch (e.g., touch temperature) and to prevent carryover effects between touch conditions. Future research should use actual human touch so as to explore a possible facilitation of touch effects through attributional mechanisms (but see Schirmer et al., 2011). Additionally, a random presentation of touch conditions, together with a long intertrial interval, may enhance touch effects, as short, occasional touches may be expected to be more powerful (Triscoli et al., 2014).
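The rationale for keeping response trials rare rests on a standard property of signal averaging discussed in the ERP literature (e.g., Luck, 2005): if single-trial noise is independent across trials and the evoked signal is constant, the noise remaining in an average of N trials shrinks in proportion to 1/√N. The Python sketch below applies this assumption to the trial counts per design cell given in the text. The single-trial noise level `sd` is a hypothetical placeholder, and the helper `residual_noise` is illustrative, not part of the analysis pipeline used in the study.

```python
import math


def residual_noise(single_trial_sd: float, n_trials: float) -> float:
    """SD of the noise left in an average of n_trials epochs, assuming noise is
    independent across trials and the evoked signal is identical on every trial."""
    return single_trial_sd / math.sqrt(n_trials)


response_trials = 16.5     # per design cell, as reported in the text
nonresponse_trials = 81.0  # per design cell, as reported in the text

# Share of trials per cell that required a response.
share = response_trials / (response_trials + nonresponse_trials)
print(f"response trials: {share:.1%} of trials per cell")

sd = 10.0  # hypothetical single-trial noise SD in microvolts (placeholder only)
ratio = residual_noise(sd, response_trials) / residual_noise(sd, nonresponse_trials)
print(f"residual noise, response vs. nonresponse average: {ratio:.2f}x")
```

Under these assumptions, response trials make up about 16.9% of trials per cell, and an average over them carries roughly 2.2 times the residual noise of an average over the nonresponse trials. This ratio equals √(81/16.5) and does not depend on the placeholder `sd`.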

A second line of inquiry arising from our results concerns the differential effects of arm and palm touch. On the one hand, one may wish to better specify the role of CT afferents. Other receptors also differ between nonglabrous and glabrous skin (e.g., hair follicle afferents), offering an alternative explanation for arm/palm differences, one that could be ruled out by, for example, studying certain somatosensory patients (Olausson et al., 2002). On the other hand, one should explore potential commonalities in the effects of touch on arm and palm. The fact that palm effects fell somewhere between arm-touch and no-touch effects suggests that touch, irrespective of location, might shape socioemotional responding. This agrees with human evidence that short touches to glabrous skin influence affect and attitudes (Fisher et al., 1976). It is also in line with evidence from nonhuman animals that have no CT afferents but nevertheless experience stress relief from touch (Schirmer, Jesuthasan, & Mathuru, 2013). Possibly, touch provides a very basic signal of being connected with others.

Last, we examined only women, leaving open the question of whether touch effects differ between the sexes and between same-sex and opposite-sex interactions. Extant research points to such differences. Touch studies that compared female and male touchees found greater responses in women than in men (Fisher et al., 1976; Patterson, Powell, & Lenihan, 1986). In line with this, there is evidence for greater female sensitivity to facial (e.g., Schirmer, Seow, & Penney, 2013) and vocal expressions (e.g., Schirmer & Gunter, 2017), especially when expressions are subtle or task-irrelevant. Additionally, how men and women direct their attention is modulated by the sex of interaction partners in complex ways (Alexander & Charles, 2009; Amon, 2015). In the context of touch, biases have been identified (e.g., young men touch young women more than vice versa; Hall, 1996; Hall & Veccia, 1990) whose perceptual consequences remain to be explored. In fact, the interplay of sensory (i.e., tactile stimulus) and attributional (i.e., who is touching whom) effects in how the sexes experience touch constitutes an interesting avenue for further research, with potential applications to clinical disorders marked by sex-specific prevalence rates (e.g., autism and depression).

To conclude, this study revealed distinct influences of arm touch and palm touch on ongoing mental functioning. Palm touch interfered with cross-modal perceptual and attentional processing, as indicated by a reduction of the auditory ERP in both the P2 and the LPP time ranges. In contrast, arm touch affected only the LPP and did so in a more differentiated manner. Specifically, it facilitated the integration of vocal and emotional information and dampened responses to all sounds except emotional voices. Together, these results point to the activation of CT afferents in hairy skin as a possible mechanism for the facilitation of neural responses to social signals with affective relevance. Such stimulus-driven effects may then interact with contextual factors (e.g., social goals, toucher–touchee relationship) to shape social attitudes and behavior. Thus, in interactional settings as diverse as a mother caring for her child or business colleagues negotiating a contract, social touch may facilitate emotional exchange.

Electronic supplementary material

(DOC 133 kb)

Acknowledgements

Open access funding provided by Max Planck Society.

Author contributions

A.S. and T.G. conceptualized and conducted the study. A.S. analyzed the data. A.S. and T.G. prepared the manuscript for submission.


References

- Ackerley R, Wasling HB, Liljencrantz J, Olausson H, Johnson RD, Wessberg J. Human C-tactile afferents are tuned to the temperature of a skin-stroking caress. The Journal of Neuroscience. 2014;34(8):2879–2883. doi: 10.1523/JNEUROSCI.2847-13.2014.

- Alexander GM, Charles N. Sex differences in adults’ relative visual interest in female and male faces, toys, and play styles. Archives of Sexual Behavior. 2009;38(3):434–441. doi: 10.1007/s10508-008-9429-7.

- American Clinical Neurophysiology Society Guideline 5: Guidelines for standard electrode position nomenclature. Journal of Clinical Neurophysiology: Official Publication of the American Electroencephalographic Society. 2006;23(2):107–110. doi: 10.1097/00004691-200604000-00006.

- Amon MJ. Visual attention in mixed-gender groups. Frontiers in Psychology. 2015;5. doi: 10.3389/fpsyg.2014.01569.

- Amrhein C, Mühlberger A, Pauli P, Wiedemann G. Modulation of event-related brain potentials during affective picture processing: A complement to startle reflex and skin conductance response? International Journal of Psychophysiology: Official Journal of the International Organization of Psychophysiology. 2004;54(3):231–240. doi: 10.1016/j.ijpsycho.2004.05.009.

- Belin P, Fillion-Bilodeau S, Gosselin F. The Montreal Affective Voices: A validated set of nonverbal affect bursts for research on auditory affective processing. Behavior Research Methods. 2008;40(2):531–539. doi: 10.3758/BRM.40.2.531.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–312. doi: 10.1038/35002078.

- Bennett RH, Bolling DZ, Anderson LC, Pelphrey KA, Kaiser MD. fNIRS detects temporal lobe response to affective touch. Social Cognitive and Affective Neuroscience. 2014;9(4):470–476. doi: 10.1093/scan/nst008.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- Björnsdotter M, Morrison I, Olausson H. Feeling good: On the role of C fiber mediated touch in interoception. Experimental Brain Research. 2010;207(3/4):149–155. doi: 10.1007/s00221-010-2408-y.

- Brauer J, Xiao Y, Poulain T, Friederici AD, Schirmer A. Frequency of maternal touch predicts resting activity and connectivity of the developing social brain. Cerebral Cortex. 2016. doi: 10.1093/cercor/bhw137.

- Charest I, Pernet CR, Rousselet GA, Quiñones I, Latinus M, Fillion-Bilodeau S, et al. Electrophysiological evidence for an early processing of human voices. BMC Neuroscience. 2009;10(1):127. doi: 10.1186/1471-2202-10-127.

- Delorme A, Makeig S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Diéguez-Risco T, Aguado L, Albert J, Hinojosa JA. Faces in context: Modulation of expression processing by situational information. Social Neuroscience. 2013;8(6):601–620. doi: 10.1080/17470919.2013.834842.

- Ditzen B, Neumann ID, Bodenmann G, von Dawans B, Turner RA, Ehlert U, Heinrichs M. Effects of different kinds of couple interaction on cortisol and heart rate responses to stress in women. Psychoneuroendocrinology. 2007;32(5):565–574. doi: 10.1016/j.psyneuen.2007.03.011.

- Dunbar RIM. The social role of touch in humans and primates: Behavioural function and neurobiological mechanisms. Neuroscience & Biobehavioral Reviews. 2010;34(2):260–268. doi: 10.1016/j.neubiorev.2008.07.001.

- Eder AB, Leuthold H, Rothermund K, Schweinberger SR. Automatic response activation in sequential affective priming: An ERP study. Social Cognitive and Affective Neuroscience. 2012;7(4):436–445. doi: 10.1093/scan/nsr033.

- Escoffier N, Zhong J, Schirmer A, Qiu A. Emotional expressions in voice and music: Same code, same effect? Human Brain Mapping. 2013;34(8):1796–1810. doi: 10.1002/hbm.22029.

- Field T, Diego M, Hernandez-Reif M. Preterm infant massage therapy research: A review. Infant Behavior & Development. 2010;33(2):115–124. doi: 10.1016/j.infbeh.2009.12.004.

- Field T, Diego MA, Hernandez-Reif M, Deeds O, Figuereido B. Moderate versus light pressure massage therapy leads to greater weight gain in preterm infants. Infant Behavior & Development. 2006;29(4):574–578. doi: 10.1016/j.infbeh.2006.07.011.

- Fisher JD, Rytting M, Heslin R. Hands touching hands: Affective and evaluative effects of an interpersonal touch. Sociometry. 1976;39(4):416–421. doi: 10.2307/3033506.

- Gallace A, Spence C. The science of interpersonal touch: An overview. Neuroscience & Biobehavioral Reviews. 2010;34(2):246–259. doi: 10.1016/j.neubiorev.2008.10.004.

- Grandjean D, Sander D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, Vuilleumier P. The voices of wrath: Brain responses to angry prosody in meaningless speech. Nature Neuroscience. 2005;8(2):145–146. doi: 10.1038/nn1392.

- Hajcak G, Anderson BS, Arana A, Borckardt J, Takacs I, George MS, Nahas Z. Dorsolateral prefrontal cortex stimulation modulates electrocortical measures of visual attention: Evidence from direct bilateral epidural cortical stimulation in treatment-resistant mood disorder. Neuroscience. 2010;170(1):281–288. doi: 10.1016/j.neuroscience.2010.04.069.

- Hall JA. Touch, status, and gender at professional meetings. Journal of Nonverbal Behavior. 1996;20(1):23–44. doi: 10.1007/BF02248713.

- Hall JA, Veccia EM. More “touching” observations: New insights on men, women, and interpersonal touch. Journal of Personality and Social Psychology. 1990;59(6):1155–1162. doi: 10.1037/0022-3514.59.6.1155.

- Iggo A. Cutaneous mechanoreceptors with afferent C fibres. The Journal of Physiology. 1960;152(2):337–353. doi: 10.1113/jphysiol.1960.sp006491.

- Jiang X, Pell MD. On how the brain decodes vocal cues about speaker confidence. Cortex. 2015;66:9–34. doi: 10.1016/j.cortex.2015.02.002.

- Kaiser MD, Yang DY-J, Voos AC, Bennett RH, Gordon I, Pretzsch C, et al. Brain mechanisms for processing affective (and nonaffective) touch are atypical in autism. Cerebral Cortex. 2015. doi: 10.1093/cercor/bhv125.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Klasen M, Chen Y-H, Mathiak K. Multisensory emotions: Perception, combination and underlying neural processes. Reviews in the Neurosciences. 2012;23(4):381–392. doi: 10.1515/revneuro-2012-0040.

- Liu Y, Huang H, McGinnis M, Keil A, Ding M. Neural substrate of the late positive potential in emotional processing. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2012;32(42):14563–14572. doi: 10.1523/JNEUROSCI.3109-12.2012.

- Löken LS, Wessberg J, Morrison I, McGlone F, Olausson H. Coding of pleasant touch by unmyelinated afferents in humans. Nature Neuroscience. 2009;12(5):547–548. doi: 10.1038/nn.2312.

- Luck S. An introduction to the event-related potential technique. Cambridge, MA: MIT Press; 2005. Available from: https://mitpress.mit.edu/books/introduction-event-related-potential-technique

- McGlone F, Wessberg J, Olausson H. Discriminative and affective touch: Sensing and feeling. Neuron. 2014;82(4):737–755. doi: 10.1016/j.neuron.2014.05.001.

- Moratti S, Saugar C, Strange BA. Prefrontal-occipitoparietal coupling underlies late latency human neuronal responses to emotion. Journal of Neuroscience. 2011;31(47):17278–17286. doi: 10.1523/JNEUROSCI.2917-11.2011.

- Morhenn V, Beavin LE, Zak PJ. Massage increases oxytocin and reduces adrenocorticotropin hormone in humans. Alternative Therapies in Health and Medicine. 2012;18(6):11–18.

- Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: A review. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology. 2007;118(12):2544–2590. doi: 10.1016/j.clinph.2007.04.026.

- Nieuwenhuys R. The insular cortex: A review. Progress in Brain Research. 2012;195:123–163. doi: 10.1016/B978-0-444-53860-4.00007-6.

- Nordin M. Low-threshold mechanoreceptive and nociceptive units with unmyelinated (C) fibres in the human supraorbital nerve. The Journal of Physiology. 1990;426:229–240. doi: 10.1113/jphysiol.1990.sp018135.

- Okabe S, Yoshida M, Takayanagi Y, Onaka T. Activation of hypothalamic oxytocin neurons following tactile stimuli in rats. Neuroscience Letters. 2015;600:22–27. doi: 10.1016/j.neulet.2015.05.055.

- Olausson H, Cole J, Rylander K, McGlone F, Lamarre Y, Wallin BG, et al. Functional role of unmyelinated tactile afferents in human hairy skin: Sympathetic response and perceptual localization. Experimental Brain Research. 2008;184(1):135–140. doi: 10.1007/s00221-007-1175-x.

- Olausson H, Lamarre Y, Backlund H, Morin C, Wallin BG, Starck G, et al. Unmyelinated tactile afferents signal touch and project to insular cortex. Nature Neuroscience. 2002;5(9):900–904. doi: 10.1038/nn896.

- Olausson H, Wessberg J, Morrison I, McGlone F, Vallbo A. The neurophysiology of unmyelinated tactile afferents. Neuroscience & Biobehavioral Reviews. 2010;34(2):185–191. doi: 10.1016/j.neubiorev.2008.09.011.

- Onton J, Westerfield M, Townsend J, Makeig S. Imaging human EEG dynamics using independent component analysis. Neuroscience and Biobehavioral Reviews. 2006;30(6):808–822. doi: 10.1016/j.neubiorev.2006.06.007.

- Patterson ML, Powell JL, Lenihan MG. Touch, compliance, and interpersonal affect. Journal of Nonverbal Behavior. 1986;10(1):41–50. doi: 10.1007/BF00987204.

- Paulmann S, Pell MD. Contextual influences of emotional speech prosody on face processing: How much is enough? Cognitive, Affective, & Behavioral Neuroscience. 2010;10(2):230–242. doi: 10.3758/CABN.10.2.230.

- Reece C, Ebstein R, Cheng X, Ng T, Schirmer A. Maternal touch predicts social orienting in young children. Cognitive Development. 2016;39:128–140. doi: 10.1016/j.cogdev.2016.05.001.

- Sauter DA, Eimer M. Rapid detection of emotion from human vocalizations. Journal of Cognitive Neuroscience. 2010;22(3):474–481. doi: 10.1162/jocn.2009.21215.

- Schindler S, Kissler J. People matter: Perceived sender identity modulates cerebral processing of socio-emotional language feedback. NeuroImage. 2016;134:160–169. doi: 10.1016/j.neuroimage.2016.03.052.

- Schirmer A, Adolphs R. Emotion perception from face, voice, and touch: Comparisons and convergence. Trends in Cognitive Sciences. 2017;21(3):216–228. doi: 10.1016/j.tics.2017.01.001.

- Schirmer A, Chen C-B, Ching A, Tan L, Hong RY. Vocal emotions influence verbal memory: Neural correlates and interindividual differences. Cognitive, Affective, & Behavioral Neuroscience. 2013;13(1):80–93. doi: 10.3758/s13415-012-0132-8.

- Schirmer A, Escoffier N. Emotional MMN: Anxiety and heart rate correlate with the ERP signature for auditory change detection. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology. 2010;121(1):53–59. doi: 10.1016/j.clinph.2009.09.029.

- Schirmer A, Gunter TC. Temporal signatures of processing voiceness and emotion in sound. Social Cognitive and Affective Neuroscience. 2017;12(6):902–909. doi: 10.1093/scan/nsx020.

- Schirmer A, Jesuthasan S, Mathuru AS. Tactile stimulation reduces fear in fish. Frontiers in Behavioral Neuroscience. 2013;7:167. doi: 10.3389/fnbeh.2013.00167.

- Schirmer A, Lui M, Maess B, Escoffier N, Chan M, Penney TB. Task and sex modulate the brain response to emotional incongruity in Asian listeners. Emotion. 2006;6(3):406–417. doi: 10.1037/1528-3542.6.3.406.

- Schirmer A, Meck WH, Penney TB. The socio-temporal brain: Connecting people in time. Trends in Cognitive Sciences. 2016;20(10):760–772. doi: 10.1016/j.tics.2016.08.002.

- Schirmer A, Seow CS, Penney TB. Humans process dog and human facial affect in similar ways. PLoS ONE. 2013;8(9):e74591. doi: 10.1371/journal.pone.0074591.

- Schirmer A, Teh KS, Wang S, Vijayakumar R, Ching A, Nithianantham D, et al. Squeeze me, but don’t tease me: Human and mechanical touch enhance visual attention and emotion discrimination. Social Neuroscience. 2011;6(3):219–230. doi: 10.1080/17470919.2010.507958.

- Schirmer A, Wijaya MT, Liu S. The Midas effect: How somatosensory impressions shape affect and other-concern. In: Olausson H, Wessberg J, Morrison I, McGlone F, editors. Affective touch and the neurophysiology of CT afferents. New York: Springer; 2016. pp. 283–299.

- Suvilehto JT, Glerean E, Dunbar RIM, Hari R, Nummenmaa L. Topography of social touching depends on emotional bonds between humans. Proceedings of the National Academy of Sciences. 2015;112(45):13811–13816. doi: 10.1073/pnas.1519231112.

- Triscoli C, Ackerley R, Sailer U. Touch satiety: Differential effects of stroking velocity on liking and wanting touch over repetitions. PLoS ONE. 2014;9(11):e113425. doi: 10.1371/journal.pone.0113425.

- Vickers A, Ohlsson A, Lacy JB, Horsley A. Massage for promoting growth and development of preterm and/or low birth-weight infants. Cochrane Database of Systematic Reviews. 2004. doi: 10.1002/14651858.CD000390.pub2.

- Warren JE, Sauter DA, Eisner F, Wiland J, Dresner MA, Wise RJS, et al. Positive emotions preferentially engage an auditory–motor “mirror” system. The Journal of Neuroscience. 2006;26(50):13067–13075. doi: 10.1523/JNEUROSCI.3907-06.2006.

- Watson R, Latinus M, Charest I, Crabbe F, Belin P. People-selectivity, audiovisual integration and heteromodality in the superior temporal sulcus. Cortex: A Journal Devoted to the Study of the Nervous System and Behavior. 2014;50(100):125–136. doi: 10.1016/j.cortex.2013.07.011.

- Weinstein S. Intensive and extensive aspects of tactile sensitivity as a function of body part, sex and laterality. In: Kenshalo DR, editor. The skin senses. Springfield: Charles C. Thomas; 1968. pp. 195–218.

- Winkler I, Debener S, Müller K-R, Tangermann M. On the influence of high-pass filtering on ICA-based artifact reduction in EEG-ERP. Paper presented at: 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 2015. doi: 10.1109/EMBC.2015.7319296.

- Zhang TY, Meaney MJ. Epigenetics and the environmental regulation of the genome and its function. Annual Review of Psychology. 2010;61:439–466. doi: 10.1146/annurev.psych.60.110707.163625.
