Abstract

Gene regulatory networks (GRNs) control cellular function and decision making during tissue development and homeostasis. Mathematical tools based on dynamical systems theory are often used to model these networks, but the size and complexity of these models mean that their behaviour is not always intuitive and the underlying mechanisms can be difficult to decipher. For this reason, methods that simplify and aid exploration of complex networks are necessary. To this end we develop a broadly applicable form of the Zwanzig-Mori projection. By first converting a thermodynamic state ensemble model of gene regulation into mass action reactions we derive a general method that produces a set of time evolution equations for a subset of components of a network. The influence of the rest of the network, the bulk, is captured by memory functions that describe how the subnetwork reacts to its own past state via components in the bulk. These memory functions provide probes of near-steady state dynamics, revealing information not easily accessible otherwise. We illustrate the method on a simple cross-repressive transcriptional motif to show that memory functions not only simplify the analysis of the subnetwork but also have a natural interpretation. We then apply the approach to a GRN from the vertebrate neural tube, a well characterised developmental transcriptional network composed of four interacting transcription factors. The memory functions reveal the function of specific links within the neural tube network and identify features of the regulatory structure that specifically increase the robustness of the network to initial conditions. Taken together, the study provides evidence that Zwanzig-Mori projections offer powerful and effective tools for simplifying and exploring the behaviour of GRNs.

Author summary

Gene regulatory networks are essential for cell fate specification and function. But the recursive links that comprise these networks often make determining their properties and behaviour complicated. Computational models of these networks can also be difficult to decipher. To reduce the complexity of such models we employ a Zwanzig-Mori projection approach. This allows a system of ordinary differential equations, representing a network, to be reduced to an arbitrary subnetwork consisting of part of the initial network, with the rest of the network (bulk) captured by memory functions. These memory functions account for the bulk by describing signals that return to the subnetwork after some time, having passed through the bulk. We show how this approach can be used to simplify analysis and to probe the behaviour of a gene regulatory network. Applying the method to a transcriptional network in the vertebrate neural tube reveals previously unappreciated properties of the network. By taking advantage of the structure of the memory functions we identify interactions within the network that are unnecessary for sustaining correct patterning. Upon further investigation we find that these interactions are important for conferring robustness to variation in initial conditions. Taken together we demonstrate the validity and applicability of the Zwanzig-Mori projection approach to gene regulatory networks.

Introduction

Biological systems are complex, comprising multiple interacting components. In many cases this complexity makes it difficult to identify underlying mechanisms and to understand the function of a system. Gene regulatory networks (GRNs) are an example of this problem [1]. A GRN comprises the set of interacting genes responsible for the development, differentiation or homeostasis of a tissue and provides a formal system-level, causative explanation for gene regulation. In physical terms, a GRN consists of modular DNA sequences (cis regulatory elements) that bind to specific sets of transcriptional activators and repressors, which control the expression of associated genes. Some of the regulated genes are themselves transcriptional regulators. Thus, at the core of a GRN is a recursive set of regulatory links that forms a transcriptional network, the dynamics of which is responsible for the spatial and temporal patterns of gene expression.

Attempts have been made to map large transcriptional networks, yet even for relatively small networks, the number of links and the feedback within the system make intuitive understanding difficult to obtain. Various computational models have been developed to address this. Logical models, which describe regulatory interactions qualitatively, provide a flexible and simplifying formalism to explore and understand the behaviour of a network [2]. However, these approaches are unable to capture subtler features of a network that depend on specific aspects of the timing or concentration of components of the network. For this, continuous models based on, for example, ordinary differential equations (ODEs) are often employed [3, 4]. These models describe gene regulation in much greater detail, and there are well-developed mathematical theories that provide powerful tools to distill the dynamical details of such systems. These have been used successfully to gain insight into the operation of GRNs and suggest explanations for otherwise difficult to understand behaviours, including emergent phenomena such as self-organisation, oscillations, spatial patterning, and scaling of pattern size [5–8]. A disadvantage of this approach, however, is that ODE models often require a large number of parameters, including binding affinities, degradation rates, production rates, etc. While it is often possible to numerically analyse large systems, the power of analytical tools is in many cases lost due to lack of knowledge of parameters or the complexity of the network.

Various techniques have been developed to reduce the complexity of models yet preserve specific features of the behaviour of the system. For Boolean networks it is possible to remove specific components while restructuring other parts of the network to conserve the logical structure, but this requires quite specific network topologies [9]. In the case of mass action reactions, one approach to simplification is to generate intermediate species in a systematic way such as to make different systems comparable. This then allows the identification of motifs that can be simplified. This approach comes with the natural limitation that the species involved cannot be chosen a priori and must conform to a particular network structure [10]. Other methods to reduce complexity involve removing nodes or perturbing the system and analysing a posteriori the effect that this has on the dynamics of the network. This can be helpful to develop intuition, but generally requires the investigation of a large number of combinations of perturbations [11, 12]. A further approach, although applicable only in rather specific cases, is to enforce a timescale separation between network nodes that is chosen not to perturb the dynamics significantly [13]. One can find “morphisms” that relate network structure to function in order to then simplify a set of nodes into potentially simpler motifs; this can reveal an interpretation of how certain motifs work but does not provide a direct conversion from the original network [14]. Less formal methods for replacing well understood network motifs with simpler motifs producing similar behaviour have also been extensively explored [15]. Along similar lines, entire parts of a network can be substituted with effective nodes with more complicated dynamics that produce similar output for a set of chosen species, although the feasibility of this depends on network structure and the complexity of the original dynamics [16].

We focus in this paper on reducing model complexity by tracking a subnetwork that is embedded within the remainder, or “bulk”, of a larger system. Analysing the behaviour of the subnetwork and its interaction with the bulk can help reveal and rationalise the properties of the entire network. With any coarse graining approach, including those discussed above, a compromise must be reached between the precision of the method, i.e. how well it captures the dynamics of the full network, and the simplicity and interpretability of the resulting description. For example, the introduction of intermediate species can produce model reductions with high precision but these may not be interpretable [10], while morphism approaches provide interpretable insights into the structure but capture the full model dynamics only for specific initial conditions and system parameters [14]. We consider the ability to choose a subnetwork, guided by biological relevance or the availability of data, to be important for interpretability; none of the methods described above allows this, and each instead identifies a subnetwork based on its own internal criteria.

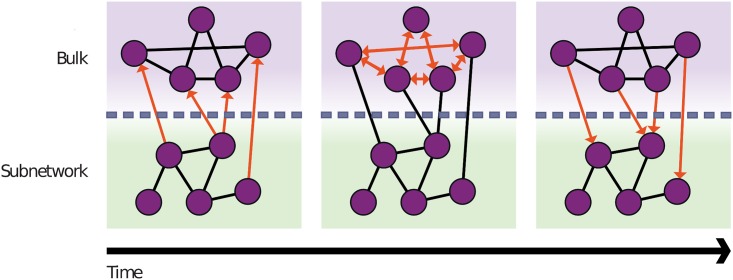

One class of methods that offers the potential to balance accuracy and interpretability is the Zwanzig-Mori projection [17, 18]. Originally developed to allow the extraction of macroscopic equations from a microscopic description of the dynamics (a brief overview can be found in e.g. [19]), these methods have since been used to separate a network into an arbitrarily chosen subnetwork and bulk in ways that preserve substantial features of the original temporal dynamics [20, 21]. Used in this way, the approach describes the concentration of components in the subnetwork in detail, while the activities of the species in the bulk are replaced with so-called ‘memory functions’. These memory functions are derived from the detailed kinetic description of the remainder of the network and summarise how, by acting via the bulk, the past behaviour of the subnetwork influences its current state (Fig 1). The resulting memory functions are functions of time difference, describing the amplitude of a signal returning from the bulk some specified time after the original signal left the subnetwork. Additionally, it is possible to separate each memory function in order to analyse through which bulk species the signal is flowing, thus providing a means to gain a more systematic understanding of a system.

Fig 1. Sketch describing the concept of memory within a reaction network.

A network is divided into bulk and subnetwork, x axis represents time. Concentration changes in the subnetwork act as signals that leave the subnetwork and travel into the bulk. There they interact with other bulk species and return at a later timepoint via bulk-to-subnetwork interactions. The net effect of such interactions is thus that the subnetwork reacts to its own past. The precise influence of past subnetwork states is governed by memory functions that depend on the time difference, i.e. on how long ago the relevant signal has left the subnetwork.

Evolving from its original applications to critical dynamics and supercooled liquids near the glass transition [22], this approach has been applied to biochemical networks [20], where it has been used to analyse the dynamics of signaling in the EGFR (epidermal growth factor receptor) network. It has since been developed further to include also enzymatic Michaelis-Menten reactions [21]. Here we apply the Zwanzig-Mori approach to the analysis of GRNs. We derive a general method that is applicable to any thermodynamic state ensemble representation of protein dynamics including transcriptional networks. These thermodynamic equations mechanistically represent interactions between proteins via transcriptional regulation [23] and can be expanded into mass action reactions to which the Zwanzig-Mori projection formalism can be applied. This approach not only justifies a heuristic method where the thermodynamic equations are expanded to quadratic order around a steady state to obtain a set of effective unary and binary reactions, but also opens up the possibility of developing more advanced projection approximations. We test the method on a cross repressive motif, where we show that we are able to qualitatively reproduce the dynamics of the system and obtain a simple intuitive interpretation of the memory functions. Finally, we use the approach on a GRN from the vertebrate neural tube, a well characterised developmental GRN composed of four interacting transcription factors [24]. This reveals the importance of specific links within the network and identifies features that appear to be present primarily to increase the robustness of the network to initial conditions, rather than maintain the steady states. Taken together, the study provides evidence that Zwanzig-Mori projections are a powerful and efficient method to simplify and explore the behaviour of gene regulatory networks, with memory functions providing new tools for probing the dynamics near steady state.

Methods

Thermodynamic state ensemble models for gene regulation dynamics

Several classes of model have been developed to describe gene regulation. One such class consists of thermodynamic state ensemble models in which equations are constructed that relate interactions between key components of the regulatory mechanism to gene expression [23, 25, 26]. In these models, all possible states of a regulated gene are enumerated. Each state consists of a specific set of transcription factors bound to a DNA cis-regulatory element, weighted by the affinity of interactions. The rate at which a protein is produced is then represented as a ratio of the weight of the subset of states that promote gene expression, to the total weight of all states. We define the vector x such that it contains the protein concentrations for each gene. We can then generically define the time evolution for any protein concentration, indexed by j, as

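$$\frac{\partial x_j}{\partial t} = \sum_{n} \alpha_{(j,n)}\, x_{(j,n)} - \beta_j x_j \tag{1}$$

$$x_{(j,n)} = \frac{w_{(j,n)} \prod_i x_i^{n_i}}{\sum_{n'} w_{(j,n')} \prod_i x_i^{n'_i}} \tag{2}$$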

Here βj is the decay rate of protein j. We use the summation over n to represent all possible binding conformations for the DNA that produces protein j. The term α(j,n) represents the production rate of protein j from a particular DNA conformation n. The factor w(j,n) is the specific affinity for individual proteins to the DNA that produces species xj. Finally x(j,n) is the concentration of DNA producing protein j and in conformation n. This is assigned in (2) as the weight of the conformation, dependent on the protein concentrations in a mass-action form, normalised by the total weight of all conformations. This ensures that x(j,n) lies in the range [0, 1], so it is not an absolute concentration; any overall DNA concentration scale is therefore to be understood as incorporated into the protein production rates α(j,n). Accordingly one can also think of x(j,n) as the probability of finding a certain conformation n of the DNA producing protein j. As made explicit in (2), the DNA conformation label n is a collection of integers ni counting how many copies of protein i are bound to the DNA.
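The construction of (1) and (2) can be illustrated with a minimal Python sketch. It assumes that each conformation weight is the affinity multiplied by a mass-action product of protein concentrations, as described above. The function names, the zero-based protein index and the example gene (a hypothetical gene 1 repressed by protein 2, with made-up affinities) are illustrative assumptions and are not taken from the models analysed in this paper.

```python
from math import prod

def conformation_probabilities(weights, x):
    """Return the normalised weight of each DNA conformation of one gene.

    weights: dict mapping an occupancy tuple n = (n_1, ..., n_N) to its affinity w.
    x:       sequence of protein concentrations (x_1, ..., x_N).
    """
    raw = {n: w * prod(xi ** ni for xi, ni in zip(x, n)) for n, w in weights.items()}
    total = sum(raw.values())
    return {n: r / total for n, r in raw.items()}

def protein_rate(j, x, weights, alpha, beta):
    """Net rate of change of protein j (zero-based): weighted production minus decay."""
    probs = conformation_probabilities(weights, x)
    production = sum(alpha.get(n, 0.0) * p for n, p in probs.items())
    return production - beta * x[j]

# Hypothetical gene 1 repressed by protein 2 (all numbers are illustrative only).
weights_gene1 = {(0, 0): 1.0, (0, 1): 2.0}   # empty DNA; one copy of protein 2 bound
alpha_gene1 = {(0, 0): 1.0}                  # only the empty conformation produces protein
x = (1.0, 0.5)
probs = conformation_probabilities(weights_gene1, x)
rate = protein_rate(0, x, weights_gene1, alpha_gene1, beta=0.5)
```

Because the weights are normalised, the values in `probs` sum to one, which is the probabilistic reading of x(j,n) given above.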

Setup of projection method

The Zwanzig-Mori method in general starts from the choice of a set of observables for which dynamical equations are to be obtained. Initially we select, as the simplest possible observables, the deviations from steady state of the concentrations of the chosen subnetwork species. For these observables the Zwanzig-Mori projection leads to a set of dynamical equations of the form

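$$\frac{\partial}{\partial t}\delta x_i(t) = \sum_{j=1}^{N_s} \delta x_j(t)\,\Omega_{ji} + \int_0^t dt' \sum_{j=1}^{N_s} \delta x_j(t')\, M_{ji}(t-t') + r_i(t) \tag{3}$$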

Here δxi are the subnetwork concentration deviations as defined explicitly below and Ns indicates the number of subnetwork species. The Ωji define a rate matrix Ω that represents subnetwork interactions. The ri(t) are so-called random forces that arise from the uncertainty about the initial (time t = 0) state of the bulk concentrations. (For the linear dynamics they can be expressed in closed form [20] but this is not useful in our context as discussed in Sec. Nonlinear projected equations below.)

The key quantities in eq (3) are the memory functions Mji(Δt), which describe how past concentration fluctuations δxj(t′) influence the current time evolution. However, while the projected equations are formally exact, it is in general impossible to evaluate these memory functions explicitly. One scenario where this can be done is a network of unary reactions, where the full reaction equations are linear in the concentrations [20]. This suggests a heuristic approach to calculating memory functions in the current context, which is to linearise the thermodynamic equations. One can think of this as generating a set of effective unary reactions (at least loosely; see Sec. Justifying the heuristics: Network expansion). Explicitly, one linearly expands the time evolution eqs (1) with (2) inserted. The expansion is performed around a steady state with concentrations yi, i.e. in terms of the deviations δxi = xi − yi. The r.h.s. of (1) then contains only linear terms in the δxi since constant terms cancel out because of the steady state condition. One can therefore write the time evolution equations in matrix form [20]

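$$\frac{\partial}{\partial t}\,\delta\boldsymbol{x}^{\mathrm{T}} = \delta\boldsymbol{x}^{\mathrm{T}}\boldsymbol{L} \tag{4}$$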

for an appropriately defined matrix L with entries Lji.
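As a hedged illustration of this linearisation step, the sketch below estimates L by central finite differences for a toy cross-repressive motif. The model form, the parameter values and the function names are assumptions made for the example and do not reproduce the parametrisation of Fig 2. The index convention L[j][i] = ∂f_i/∂x_j is also an assumption, chosen so that entry Lji multiplies δxj in the equation for δxi.

```python
from math import sqrt

ALPHA, W, BETA = 1.0, 2.0, 0.5   # hypothetical toy parameters

def rhs(x):
    """Toy cross-repressive motif: each protein represses production of the other."""
    x1, x2 = x
    return [ALPHA / (1.0 + W * x2) - BETA * x1,
            ALPHA / (1.0 + W * x1) - BETA * x2]

def linearise(f, y, h=1e-6):
    """Central-difference estimate of L with L[j][i] = d f_i / d x_j at the point y."""
    n = len(y)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        up = list(y)
        up[j] += h
        down = list(y)
        down[j] -= h
        f_up, f_down = f(up), f(down)
        for i in range(n):
            L[j][i] = (f_up[i] - f_down[i]) / (2.0 * h)
    return L

# Symmetric steady state: ALPHA / (1 + W*y) = BETA*y, which is a quadratic in y.
y_star = (-BETA + sqrt(BETA**2 + 4.0 * ALPHA * BETA * W)) / (2.0 * BETA * W)
L = linearise(rhs, [y_star, y_star])
```

In this toy case the diagonal entries equal minus the decay rate and the off-diagonal entries are negative, reflecting mutual repression.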

Projected equations for linearised dynamics

From the matrix L one can calculate the terms in the (linear) projected dynamics of the subnetwork concentrations from (3) as shown in [20]. We summarize the method here. One separates the set of all (linear in concentration) observables into subnetwork (s) and bulk (b), and assumes as above that these concentration deviations δxi are numbered so that the first Ns are the subnetwork observables while the rest are the bulk observables. The matrix L for the linearised dynamics then separates into blocks according to the different observables:

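$$\boldsymbol{L} = \begin{pmatrix} \boldsymbol{L}^{S,S} & \boldsymbol{L}^{S,B} \\ \boldsymbol{L}^{B,S} & \boldsymbol{L}^{B,B} \end{pmatrix} \tag{5}$$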

where labels S and B represent respectively the set of species in the subnetwork and in the bulk.

This labelling will be useful once more subnetwork and bulk observables arise (see Sec. Mathematical results). The rate matrix Ω with entries Ωji in the projected eq (3) is then simply the subnetwork block of L,

$$\boldsymbol{\Omega} = \boldsymbol{L}^{S,S} \tag{6}$$

The memory functions similarly form the elements of a memory matrix, which can be expressed in terms of the blocks of L as [20]

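$$\boldsymbol{M}(\Delta t) = \boldsymbol{L}^{S,B}\, e^{\boldsymbol{L}^{B,B}\Delta t}\, \boldsymbol{L}^{B,S} \tag{7}$$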

This form allows straightforward evaluation of the memory functions starting from any given matrix L describing the linearised dynamics.
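A small numerical check can make the role of the memory function concrete. The sketch below assumes a hypothetical two-species linear system with one subnetwork species and one bulk species, so that the bulk block is a scalar and the matrix exponential reduces to an ordinary exponential. The matrix entries, step size and function names are illustrative assumptions. It integrates the full linear dynamics and the projected equation with the same Euler scheme, with the bulk starting at steady state so that the random force vanishes.

```python
from math import exp

# Hypothetical linearised two-species motif: species s is the subnetwork,
# species b the bulk. Entries follow the convention L[j][i] = d f_i / d x_j.
L_ss, L_sb = -0.5, -0.3
L_bs, L_bb = -0.3, -0.5

def memory(lag):
    """Memory function M(lag) = L_sb * exp(L_bb * lag) * L_bs for a scalar bulk."""
    return L_sb * exp(L_bb * lag) * L_bs

def simulate(dt=0.005, steps=1000, s0=0.1):
    """Euler integration of the full linear system and of the projected equation."""
    kernel = [memory(k * dt) for k in range(steps + 1)]
    s_full, b_full = s0, 0.0          # bulk starts at its steady state
    s_proj = [s0]
    max_gap = 0.0
    for n in range(steps):
        # Full dynamics: subnetwork and bulk are evolved together.
        ds = s_full * L_ss + b_full * L_bs
        db = s_full * L_sb + b_full * L_bb
        s_full, b_full = s_full + dt * ds, b_full + dt * db
        # Projected dynamics: the bulk is replaced by a convolution over the past.
        conv = dt * sum(s_proj[m] * kernel[n - m] for m in range(n + 1))
        s_proj.append(s_proj[n] + dt * (s_proj[n] * L_ss + conv))
        max_gap = max(max_gap, abs(s_proj[-1] - s_full))
    return s_full, s_proj[-1], max_gap

s_full, s_projected, max_gap = simulate()
```

Here the memory function is positive and decays with the time difference, as expected for two mutually repressing species, whose net effect on each other is a positive feedback loop. The remaining gap between the two trajectories is discretisation error, since for linear dynamics the projection is exact.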

Nonlinear projected equations

To capture the leading nonlinear corrections to the subnetwork dynamics, it is natural to enlarge the set of subnetwork observables by adding quadratic observables, i.e. products of concentration deviations. The projected equations then become

| (8) |

This form is analogous to (3) but now includes the quadratic observables that we have retained, both in the subnetwork interactions (rate matrix terms) and in the memory terms. Once again ri(t) represents the random forces, but in this nonlinear case further characterization would require knowledge of the statistics of the initial bulk fluctuations, which is not generally available. We therefore disregard these terms, making (8) a closed system of equations for the time evolution of the subnetwork concentrations. Note that neglecting the random forces is equivalent to assuming that the bulk concentrations are at their steady state values at the initial time. Formally these initial bulk concentrations should be random rather than deterministic to make the Zwanzig-Mori projection well defined; we follow the strategy of [20] here and use initial Poisson distributions in the limit of vanishing variance. This limit has already been taken in the results above [20]. How large protein copy numbers have to be to allow stochastic effects to be safely neglected is something that could be studied in future work; the answer will depend, among other things, on the degree of nonlinearity of the GRN time evolution equations [27].

Moving on to the nonlinear memory functions, we are again faced with the difficulty that these cannot in general be evaluated explicitly. However, previous work [20] has developed a systematic approximation technique, which is applicable to the case of reaction networks with unary and binary reactions described by mass action kinetics. In such networks the time evolution equations for linear observables have linear and quadratic terms on the r.h.s. Inserting these into the equations for quadratic observables using the product rule gives e.g.

| (9) |

and one sees that cubic terms arise. The approximation in [20] neglects these terms in the spirit of an expansion to second order in the changes from steady state δx. These changes are therefore implicitly assumed to be small. Defining a vector z that collects all δx variables and their products, such as δx1 δx2, one can now again write a matrix form of the time evolution equations:

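$$\frac{\partial}{\partial t}\,\boldsymbol{z}^{\mathrm{T}} = \boldsymbol{z}^{\mathrm{T}}\boldsymbol{L} \tag{10}$$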

The rate matrix entries and memory functions can then be calculated [20] from the general formulae (6 and 7), applied to the expanded L matrix constructed as explained above. Because the subnetwork block S now contains linear {s} and quadratic observables {ss}, the matrices have the corresponding block structures. The coefficients Ωji are collected in the block Ωs,s, for example, and the Ω(jk)i in the block Ωss,s of the overall rate matrix Ω. The memory functions Mji(Δt) and M(jk)i(Δt) are similarly contained in blocks Ms,s and Mss,s of the memory matrix M.
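The effect of retaining quadratic observables and dropping cubic terms can be seen in a one-species toy problem. The sketch below assumes a hypothetical deviation δ obeying d(δ)/dt = Aδ + Cδ², for which a closed-form solution exists. The coefficients, the initial deviation and the function names are illustrative assumptions. With z = (δ, δ²) and the cubic term neglected, the dynamics become linear in z, with matrix entries L[δ][δ] = A, L[δ²][δ] = C and L[δ²][δ²] = 2A in the convention L[j][i] used for (4).

```python
from math import exp

A, C = -1.0, -0.5      # hypothetical linear and quadratic rate coefficients
DELTA0 = 0.2           # hypothetical initial deviation from steady state

def exact(t):
    """Closed-form solution of d(delta)/dt = A*delta + C*delta**2."""
    e = exp(A * t)
    return A * DELTA0 * e / (A + C * DELTA0 * (1.0 - e))

def linear(t):
    """Solution when only the linear observable delta is kept."""
    return DELTA0 * exp(A * t)

def quadratic_closure(t):
    """Solution of the linear system for z = (delta, delta**2) with cubic terms dropped.

    d(delta**2)/dt = 2*A*delta**2 + 2*C*delta**3 is truncated to 2*A*delta**2,
    so z2(t) = DELTA0**2 * exp(2*A*t) feeds into d(z1)/dt = A*z1 + C*z2.
    """
    e = exp(A * t)
    return e * (DELTA0 + C * DELTA0**2 * (e - 1.0) / A)

t = 1.0
err_linear = abs(linear(t) - exact(t))
err_quadratic = abs(quadratic_closure(t) - exact(t))
```

For small initial deviations the quadratic closure is markedly closer to the exact solution than the purely linear description, which is the motivation for enlarging the set of observables.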

To apply the above technique in order to derive nonlinear projected subnetwork equations for GRN dynamics, the obvious heuristic route is again to expand the dynamical eq (1), this time to second order in the δxi, to obtain an effective set of unary and binary reactions. This set defines the matrix L, from which the rate matrix and memory functions can then be found as explained above.

Justifying the heuristics: Network expansion

The heuristic method set out above for deriving linear or nonlinear subnetwork equations for GRN dynamics is a useful practical recipe, but its mathematical basis is not obvious. One difficulty is that the thermodynamic GRN eqs (1) involve rational functions of the concentrations such as 1/(1 + x1), and while these can be Taylor expanded they have only a finite radius of convergence. Not only does the heuristic method then have to throw away an infinite number of higher order terms when truncating the Taylor expansion after linear or quadratic order, but it may implicitly be using the resulting approximation outside the regime where the expansion is even convergent. In addition, while we have loosely talked about the expanded time evolution equations as representing networks of effective reactions, such an interpretation is in no way assured as there is no underlying mechanistic model and hence no constraints that would ensure an appropriate stoichiometry or even positivity of rate constants.
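The convergence issue can be illustrated with the rational function 1/(1 + x) mentioned above. The sketch below uses the fact that its Taylor series about a point y is a geometric series, which converges only for deviations |δ| < 1 + y. The expansion point, the deviations tested and the function names are illustrative assumptions.

```python
def taylor_partial_sum(delta, y, order):
    """Partial sum of the Taylor series of 1/(1 + x) about x = y, evaluated at x = y + delta."""
    return sum((-delta) ** k / (1.0 + y) ** (k + 1) for k in range(order))

def truncation_error(delta, y, order):
    """Absolute gap between the partial sum and the exact value 1/(1 + y + delta)."""
    exact = 1.0 / (1.0 + y + delta)
    return abs(taylor_partial_sum(delta, y, order) - exact)

y = 1.0                                   # hypothetical steady-state concentration
inside = truncation_error(1.0, y, 20)     # |delta| < 1 + y: the series converges
outside = truncation_error(3.0, y, 20)    # |delta| > 1 + y: the series diverges
```

Inside the radius of convergence, adding terms improves the approximation; outside it, the partial sums grow without bound even though the function itself remains perfectly well behaved.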

Our mathematical contribution in this paper is to demonstrate that the above limitations can be resolved, and the heuristic approach justified, by initially expanding the thermodynamic eqs (1 and 2) into a network of well-defined unary and binary reactions. The way to achieve this is already suggested by (2): we represent each possible existing DNA conformation as an additional species whose concentration has its own dynamical evolution, such that in an appropriate limit of fast DNA binding and unbinding, it reaches the quasi-steady state (QSS) values of the DNA conformation concentrations (2). The prefix “quasi” refers to the fact that this steady state is for given protein concentrations, which themselves change slowly in time. We can then apply the Zwanzig-Mori method to this network to extract memory functions for the chosen subnetwork. In doing this we can justify removing higher order terms as in [20] as the system is composed of only first and second order reactions. Finally the limit of fast DNA binding and unbinding has to be taken. Our result is that this procedure results in exactly the same projected equations as the heuristic approach described above, thus putting the method on a firm mathematical footing. The conclusion applies not only in the context of GRNs but in all networks with the appropriate timescale separation, provided that all fast observables are treated as part of the bulk. More sophisticated projection methods can then be derived by retaining some fast observables (in our case, subnetwork DNA) in the subnetwork. For the case of Michaelis-Menten dynamics, previous work has shown that such an approach allows one to derive projected equations that retain all subnetwork nonlinearities [21]. 
More generally, by embedding GRN models based on thermodynamic state ensembles into the broad class of mass action reaction networks, our network expansion method opens up the possibility of incorporating stochastic effects and of applying a broad range of other approximation and model reduction techniques [28].

Results

In this section we first provide our mathematical results, where we use a network expansion technique to provide a justification for the heuristic approach to finding memory functions for GRNs that was described in Secs. Projected equations for linearised dynamics and Nonlinear projected equations above. We then demonstrate applications of the approach with case studies. We first illustrate the memory function method on a simple example system. Finally, the results from the biological application to neural tube patterning are given in Sec. Application to neural tube network onwards.

Mathematical results

Network expansion applied to a protein-DNA binding mechanism

Our network expansion approach necessitates defining new reaction rates for binding and unbinding of protein to/from DNA that are consistent with the affinities w from the thermodynamic description. To be explicit, we define a binding rate constant k (indexed by j, n and p) as the rate constant for protein p to bind to DNA coding for protein j and in binding conformation n, with a similar meaning for the corresponding unbinding rate constant. These rates will thus describe the interaction each DNA species has with the protein species that bind to it. With these rate constants we write down an equation for the time evolution of each DNA concentration x(j,n):

| (11) |

The first term on the r.h.s. of (11) represents binding of protein p to DNA conformation (j, n − ep) to make DNA conformation (j, n). Here ep is a unit vector whose i-th entry is δip, i.e. a 1 at position p and zeros elsewhere. Similarly the third term on the right of (11) describes unbinding of protein p. The other two terms capture how DNA in conformation (j, n) can be lost by these two processes.

We next establish the relationship between the rate constants k in the mass action representation (11) and the affinities w of the thermodynamic form. The rate constants need to be chosen to ensure the QSS (2) of the DNA concentrations. The simplest assumption is detailed balance, which requires that in this steady state the net change in DNA concentration from any individual pair of binding and unbinding reactions between two conformations vanishes. Detailed balance means, in particular, that where there are multiple reaction paths from one DNA conformation to another, which might be distinguished by the order in which the various proteins bind, each path carries zero reaction flux rather than having a preferred forward or backward reaction direction. Balancing in this way the net change from binding of protein p to DNA in conformation (j, n) with the reverse unbinding transition, for the QSS concentrations (2), gives

| (12) |

The protein concentrations cancel from this as they should and we are left with a constraint on the rate constants:

| (13) |
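The detailed balance argument can be sketched for a single binding step. The notation here is an assumption made for illustration: $k^{+}_{(j,n),p}$ denotes the rate constant for protein $p$ binding to DNA in conformation $(j,n)$, and $k^{-}_{(j,n+e_p),p}$ the rate constant for the reverse unbinding from conformation $(j,n+e_p)$. The QSS weights are assumed to take the mass-action form described for (2), i.e. the affinity multiplied by a product of protein concentrations raised to the occupancy numbers.

```latex
% Detailed balance: binding flux equals unbinding flux for each pair of conformations
k^{+}_{(j,n),p}\, x_p\, x_{(j,n)} \;=\; k^{-}_{(j,n+e_p),p}\, x_{(j,n+e_p)}

% QSS ratio of neighbouring conformations (the normalisation cancels)
\frac{x_{(j,n+e_p)}}{x_{(j,n)}} \;=\; \frac{w_{(j,n+e_p)}}{w_{(j,n)}}\, x_p

% Inserting the ratio, the protein concentration x_p drops out
\frac{k^{+}_{(j,n),p}}{k^{-}_{(j,n+e_p),p}} \;=\; \frac{w_{(j,n+e_p)}}{w_{(j,n)}}
```

Under these assumptions only the ratio of binding to unbinding rate constants is fixed by the affinities, which leaves the overall speed of the binding kinetics free to be chosen.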

To finish our construction, we need to ensure that the DNA concentrations reach the QSS values that we have imposed above with the detailed balance constraint (13). This requires that we make all DNA binding and unbinding reactions fast. Formally we introduce a fast rate factor γ and write all binding and unbinding rate constants in the form k = γk̃, with the understanding that we will take the limit γ → ∞ at fixed k̃. This construction is analogous to the one previously used for Michaelis-Menten reactions [21]. As there, we also need to ensure that the changes to protein concentrations from binding to DNA are negligible, as such effects are not described by the original thermodynamic eq (1). (We do, however, include them in the finite-γ numerics of Fig 2B.) This is achieved by scaling all DNA concentrations as x(j,n) = x̃(j,n)/γ′. The intuition for this construction is that the amount of protein bound to DNA is, at most, of the order of the total concentration of DNA, which vanishes for γ′ → ∞. Finally, to compensate for the DNA concentration scaling, protein production rates have to be scaled as α(j,n) = γ′α̃(j,n).

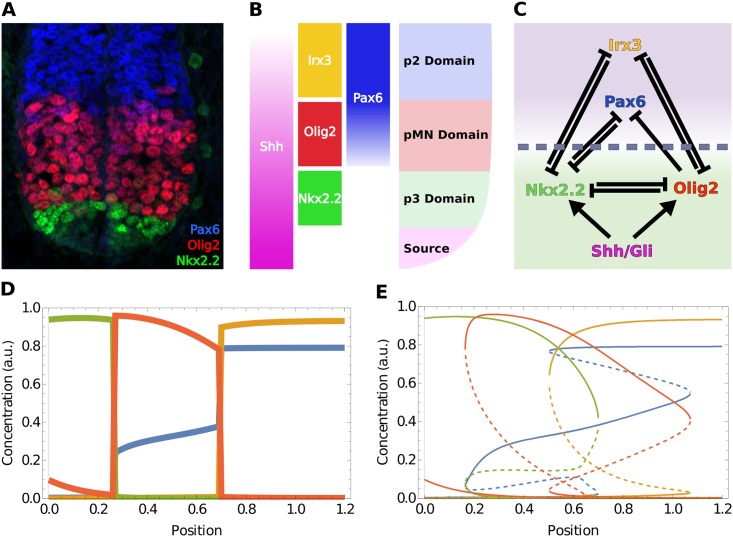

Fig 2. Example of application of Zwanzig-Mori projection.

(A) Illustration of the methodology, for the example of a cross repressive motif. First the nonlinear thermodynamic reactions (left) are expanded into mass action reactions with an appropriate timescale separation (centre). This generates additional nodes that represent the possible DNA conformations for both proteins, with e.g. g1DNA/g2Prot indicating DNA for gene 1 with protein 2 bound to it. To the expanded network we can apply the projection approach, retaining only the concentration of protein 1 in the subnetwork. The effect of the rest of the network (the bulk) is captured via memory terms (right). (B) Comparison of the cross repressive motif described via the original thermodynamic equations and the expanded mass action equations with and without timescale separation. Already for a moderate fast rate factor of γ = 10 the mass action and thermodynamic time evolutions are visually indistinguishable. Two time courses, for g1Prot and g2Prot, are plotted in panels (B-D). (C-D) Demonstration of the projection approach with g1Prot in subnetwork and g2Prot in bulk. The projected equations track the dynamics of the original thermodynamic equation with a reduced system that contains memory functions. However, one can observe in (C) that accuracy can be lost in the transient if the system is initiated too far away from the fixed steady state. (D) Starting nearer to the steady state (at t = 1) substantially increases the accuracy of the projected description. (E) Example of memory functions of protein 1 to itself, decaying with time difference. The linear memory function is positive as expected from the network (positive feedback loop), while the nonlinear term is negative to correct for range-limiting nonlinearities that the linear terms cannot capture. Parameters used are α1 = 1, α2 = 1, wp1 = 1, wp2 = 2, w1 = 2, w2 = 2, β1 = 1/2, β2 = 1/2.

With the above rescalings, the limit γ → ∞ ensures that the “fast” species, i.e. the DNA concentrations, are always in QSS with respect to the “slow” protein concentrations. The limit γ′ → ∞, on the other hand, means that the effects of binding to, and unbinding from, DNA can be neglected in the equations for the protein concentrations. These statements can be shown mathematically along the lines of the arguments in [21]. After dropping all tildes on rates and concentrations again (this is the notational convention we adopt for the rest of the paper), the time evolution of the concentrations in our expanded network is given by (1) for the protein equations, while for the DNA species one has

| (14) |

Note that in the above argument we do not require a specific relation between γ and γ′. In reality the rate for producing a single protein from the appropriate DNA, which is of order γ′, is lower than the DNA binding/unbinding rates, which are O(γ). However, quantifying this would be non-trivial as the model described here simplifies many biological steps into each of the two elementary processes of protein production and binding/unbinding. In any event, for our argument to hold we only need both γ and γ′ to be large, and for simplicity we then take γ = γ′ as was done in [21].
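The approach to the thermodynamic description as the binding kinetics become fast can be checked numerically on a minimal example. The sketch below assumes a hypothetical self-repressing gene with a single binding site, so that the DNA is either free or bound, and assumes that the limit γ′ → ∞ has already been taken so that the amount of protein sequestered on DNA is negligible. The parameter values, the choice of unbinding rate constant and the function names are illustrative assumptions, and a plain Euler scheme is used for simplicity.

```python
ALPHA, W, BETA = 1.0, 2.0, 0.5     # hypothetical parameters
K_OFF = 1.0                        # assumed unbinding rate constant (before scaling by gamma)
K_ON = W * K_OFF                   # detailed balance: K_ON / K_OFF equals the affinity W

def max_deviation(gamma, dt=1e-4, t_end=5.0):
    """Largest gap between the thermodynamic and expanded protein time courses."""
    x_thermo = 0.0                 # protein concentration, thermodynamic model
    x_mass, bound = 0.0, 0.0       # protein and bound-DNA fraction, expanded model
    gap = 0.0
    for _ in range(int(t_end / dt)):
        # Thermodynamic model: DNA occupancy is slaved to the protein level.
        x_thermo += dt * (ALPHA / (1.0 + W * x_thermo) - BETA * x_thermo)
        # Expanded model: binding and unbinding are explicit reactions sped up by gamma.
        d_bound = gamma * (K_ON * x_mass * (1.0 - bound) - K_OFF * bound)
        d_x = ALPHA * (1.0 - bound) - BETA * x_mass
        x_mass, bound = x_mass + dt * d_x, bound + dt * d_bound
        gap = max(gap, abs(x_mass - x_thermo))
    return gap

gap_slow = max_deviation(gamma=1.0)
gap_fast = max_deviation(gamma=100.0)
```

Increasing the fast rate factor shrinks the gap between the two descriptions, in line with the qualitative behaviour reported for the cross repressive motif in Fig 2B.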

Obtaining linearised projected equations

We now proceed to use the expanded network to derive linearised projected equations. After the expansion, this requires us to keep track of deviations from steady state of all protein and DNA concentrations. We label these observables by “s” and “b” for subnetwork and bulk proteins as before, and “a” for DNA. Below we will refer to the DNA species in the system as “fast”, as expressed mathematically by the factor γ on the r.h.s. of (14), while protein species are “slow”. Note that the rate constants for protein production are also fast, of order γ′, but that the resulting changes to protein concentrations are slow because of the low concentration of (protein-producing) DNA.

We construct our L matrix by placing the DNA in the bulk, such that S = {s} and B = {b, a}, giving the block form

| (15) |

The expression (7) for the resulting memory function now explicitly involves the fast rate factor γ and the rate constants used in the construction of our expanded network. These parameters appear in the third column in the second expression for L in (15), which encodes the time evolution equations for the DNA species. Our remaining task is to take the limit γ → ∞, with the aim of obtaining an expression for the memory function that only involves parameters of the original thermodynamic eqs (1 and 2). To take this limit, one notes that all blocks in the third column of (15) are proportional to γ. As the memory function contains an exponential of L, it will then have contributions decaying for time differences of order 1/γ, arising from the DNA dynamics, as well as slow contributions decaying on timescales of order unity.

For γ → ∞ the fast memory function contributions become effectively instantaneous and add to the rate matrix, and only the slow contributions remain in the memory function. This separation into fast and slow pieces of the memory can be performed using the method in [21] because L has exactly the same division into fast and slow blocks as there. It leads to the simple result that the final rate matrix and (slow) memory function for γ → ∞ can be calculated from an effective L-matrix no longer involving the fast degrees of freedom. To state the expression for the effective L, we first isolate the slow piece of our original L:

| (16) |

(The zero blocks here arise from the fact that subnetwork and bulk proteins do not interact directly in our GRN setting, but only via the DNA species.) The effective L-matrix that gives us the rate matrix and memory function for γ → ∞ is then of the form Leff = L∖a + ΔL∖a, which written out reads:

| (17) |

Here the second term including the minus is the additional contribution ΔL∖a from the fast degrees of freedom. The matrix Leff can be inserted directly into (6 and 7) to obtain the projected equations for linearised dynamics, with the fast rate limit already taken.
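The statement that Leff reproduces the slow dynamics for γ → ∞ can be checked numerically. The following is a minimal sketch rather than the implementation used for the results here: the matrix entries are arbitrary illustrative numbers, and the helper names `effective_L`, `full_L` and `slow_eigenvalues` are hypothetical. It assumes that the fast (DNA) variables occupy the last rows and columns of L and that only the fast columns scale with γ, as described for (15).

```python
import numpy as np

def effective_L(L, n_slow):
    """Eliminate the fast block (last rows/columns of L) by a Schur complement."""
    L_slow = L[:n_slow, :n_slow]   # protein-protein block (L without "a")
    L_sa = L[:n_slow, n_slow:]     # protein rows, DNA columns (proportional to gamma)
    L_as = L[n_slow:, :n_slow]     # DNA rows, protein columns
    L_aa = L[n_slow:, n_slow:]     # DNA-DNA block (proportional to gamma)
    # L_slow - L_sa (L_aa)^{-1} L_as; the factors of gamma cancel
    return L_slow - L_sa @ np.linalg.solve(L_aa, L_as)

def full_L(gamma):
    """Toy matrix with two slow and two fast variables (illustrative numbers only)."""
    L_slow = np.array([[-1.0, 0.0], [0.0, -2.0]])   # zero off-diagonal blocks, as in (16)
    L_sa = np.array([[0.5, 0.3], [0.2, -0.4]])
    L_as = np.array([[0.6, -0.3], [-0.5, 0.4]])
    L_aa = np.array([[-1.0, 0.2], [0.1, -1.5]])
    return np.block([[L_slow, gamma * L_sa], [L_as, gamma * L_aa]])

def slow_eigenvalues(L, n_slow):
    """Return the n_slow eigenvalues of smallest magnitude, sorted."""
    ev = np.linalg.eigvals(L)
    return np.sort_complex(ev[np.argsort(np.abs(ev))[:n_slow]])

L_eff = effective_L(full_L(1.0), 2)                  # does not depend on gamma
target = np.sort_complex(np.linalg.eigvals(L_eff))

# Largest mismatch between the slow eigenvalues of the full L and those of L_eff
errors = {g: np.max(np.abs(slow_eigenvalues(full_L(g), 2) - target))
          for g in (1e2, 1e4)}
print(errors)
```

In this toy setting the mismatch shrinks as γ grows, which is the behaviour expected if the fast block only contributes through the correction term in (17).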

Fast binding limit as quasi-steady state elimination

As in [21], the result (17) has a simple interpretation: Leff can be obtained by eliminating the fast degrees of freedom from the linearised time evolution equations using a QSS condition. Using the block form (5), these equations can be written as

| (18a) |

| (18b) |

| (18c) |

where δxsT, δxbT and δxaT are vectors collecting the concentration deviations of subnetwork proteins, bulk proteins and DNA species, respectively. All blocks of L appearing in (18c) are proportional to γ. This justifies elimination of the fast variables using a QSS condition for these fast (DNA) degrees of freedom, effectively setting the r.h.s. of (18c) to zero. The fast species concentrations are then expressed as:

| (19) |

If we now substitute this back into the time evolution eqs (18a and 18b) we obtain:

| (20) |

Writing these time evolution equations in matrix form gives exactly the effective L-matrix Leff defined in (17), as claimed. Two terms have been highlighted here by brackets for later comparison.
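The elimination steps just described can be written out compactly. What follows is a sketch under two assumptions: that the linearised dynamics are written in a row-vector convention, with the DNA equations given by the third block column of L, and that the direct protein-protein blocks vanish, as stated below (16). The notation is chosen for illustration and need not match the display equations term by term.

```latex
% Sketch only. Assumes \partial_t \delta x^T = \delta x^T L (row-vector convention)
% and L^{s,b} = L^{b,s} = 0 (proteins interact only via the DNA species).
\begin{align*}
0 &\approx \delta\mathbf{x}_s^{\mathrm T} L^{s,a}
         + \delta\mathbf{x}_b^{\mathrm T} L^{b,a}
         + \delta\mathbf{x}_a^{\mathrm T} L^{a,a}
  && \text{(QSS condition for the DNA species)} \\
\delta\mathbf{x}_a^{\mathrm T}
  &= -\left(\delta\mathbf{x}_s^{\mathrm T} L^{s,a}
          + \delta\mathbf{x}_b^{\mathrm T} L^{b,a}\right)\left(L^{a,a}\right)^{-1} \\
\partial_t\,\delta\mathbf{x}_s^{\mathrm T}
  &= \delta\mathbf{x}_s^{\mathrm T}\left[L^{s,s} - L^{s,a}\left(L^{a,a}\right)^{-1}L^{a,s}\right]
   - \delta\mathbf{x}_b^{\mathrm T}\,L^{b,a}\left(L^{a,a}\right)^{-1}L^{a,s} \\
\partial_t\,\delta\mathbf{x}_b^{\mathrm T}
  &= \delta\mathbf{x}_b^{\mathrm T}\left[L^{b,b} - L^{b,a}\left(L^{a,a}\right)^{-1}L^{a,b}\right]
   - \delta\mathbf{x}_s^{\mathrm T}\,L^{s,a}\left(L^{a,a}\right)^{-1}L^{a,b} \\
L_{\mathrm{eff}}
  &= \begin{pmatrix} L^{s,s} & 0 \\ 0 & L^{b,b} \end{pmatrix}
   - \begin{pmatrix} L^{s,a} \\ L^{b,a} \end{pmatrix}
     \left(L^{a,a}\right)^{-1}
     \begin{pmatrix} L^{a,s} & L^{a,b} \end{pmatrix}
\end{align*}
```

Because every block with a second index "a" carries a factor γ, the products of the form L^{s,a}(L^{a,a})^{-1} are independent of γ, so the effective matrix stays finite in the fast rate limit.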

Equivalence to heuristic linearisation

So far we have shown that the rate matrix and memory functions for the linearised dynamics can be found by applying standard projection results to a set of time evolution equations for only the slow degrees of freedom. This reduced set of equations is obtained by first expanding the thermodynamic equations into a set of mass-action equations, linearising, and finally eliminating the fast variables using a QSS assumption. We next show that this procedure is equivalent to directly linearising the original thermodynamic equations, for any case where there is a timescale separation. This generalises previous work [21] that only considered separate (non-interacting) fast species. To verify the equivalence, it is useful to write the expanded mass-action equations in the generic form

| (21) |

where xs, xb and xa denote generic components of the vectors of subnetwork protein, bulk protein and DNA concentrations, respectively. This expanded description is constructed so that for γ → ∞, when the xa can be replaced by their QSS values, one recovers the original thermodynamic equations. These can therefore be written as

| (22) |

| (23) |

where the dependence of the QSS values of the fast variables on xs and xb is defined implicitly by

| (24) |

Expanding now the thermodynamic equations to linear order around a steady state one has

| (25) |

Here all derivatives are evaluated at the steady state, and the terms in the second line arise from the variation of the QSS values of the fast variables. The required coefficients, namely the derivatives of these QSS values with respect to the slow variables, can be found by differentiating (24) with respect to the relevant slow variable, here xs:

| (26) |

We can now show that (25) is identical to (20) derived above. To see this, we note that by linearising the expanded mass action eq (21) one can identify the entries of L: for example, Lb,s has entries ∂Rs/∂xb, Ls,a has entries γ ∂Ra/∂xs etc. The QSS coefficients arising from (26) can then be written in matrix form as −Ls,a(La,a)⁻¹, with a similar expression, −Lb,a(La,a)⁻¹, for the derivatives with respect to the bulk variables. Inserting these expressions into (25) does indeed lead directly to (20), with matching terms indicated by the brackets. An exactly analogous argument demonstrates the equivalence for ∂t δxb.

With the above arguments we have justified the heuristic method described in the Sec. Projected equations for linearised dynamics, which yields linearised projected equations for the dynamics of a subnetwork within a larger GRN written in thermodynamic form. The method is as follows: expand the GRN equations to linear order in protein concentrations around a steady state. Then construct from this the matrix Leff and partition it into “s” and “b” blocks according to the chosen subnetwork–bulk split. Finally determine the rate matrix and memory function matrix from (6 and 7). We invoked an expanded network of binary reactions involving DNA conformations to show that this is the correct method, but note that its application only requires the original thermodynamic equations as input. Therefore the resulting projected equations are, as they should be, independent of the details of the rates used in the construction of the expanded network.
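The equivalence between direct linearisation and QSS elimination in the expanded network can be illustrated on a toy cross-repressive pair. This is a sketch under stated assumptions, not the model used in the case studies: the repression function b/(1 + x/K), all parameter values, and the expanded equations (an unbound DNA fraction for each gene, with protein consumption by binding neglected) are hypothetical choices made for illustration.

```python
import numpy as np

# Hypothetical parameters (illustrative, not taken from the paper)
b1, b2 = 2.0, 1.0        # maximal production rates
d1, d2 = 1.0, 0.5        # protein degradation rates
K1, K2 = 1.0, 0.5        # K1: protein 1 on gene 2; K2: protein 2 on gene 1
ku = 1.0                 # DNA unbinding rate; binding rates follow from K = ku / kb
kb1, kb2 = ku / K2, ku / K1
gamma = 50.0             # fast rate factor for the DNA dynamics

def thermo(x):
    """Thermodynamic (QSS) equations for the two proteins."""
    x1, x2 = x
    return np.array([b1 / (1 + x2 / K2) - d1 * x1,
                     b2 / (1 + x1 / K1) - d2 * x2])

def expanded(y):
    """Assumed mass-action expansion; a1, a2 are unbound fractions of genes 1 and 2."""
    x1, x2, a1, a2 = y
    return np.array([b1 * a1 - d1 * x1,
                     b2 * a2 - d2 * x2,
                     gamma * (ku * (1 - a1) - kb1 * x2 * a1),
                     gamma * (ku * (1 - a2) - kb2 * x1 * a2)])

def L_matrix(f, x, eps=1e-6):
    """Central-difference matrix with L[i, j] = d f_j / d x_i (row-vector convention)."""
    x = np.asarray(x, dtype=float)
    L = np.zeros((x.size, x.size))
    for i in range(x.size):
        dx = np.zeros(x.size)
        dx[i] = eps
        L[i] = (f(x + dx) - f(x - dx)) / (2 * eps)
    return L

# Steady state of the thermodynamic equations by fixed-point iteration
x_ss = np.array([1.0, 1.0])
for _ in range(500):
    x_ss = np.array([b1 / (d1 * (1 + x_ss[1] / K2)),
                     b2 / (d2 * (1 + x_ss[0] / K1))])
y_ss = np.array([x_ss[0], x_ss[1], 1 / (1 + x_ss[1] / K2), 1 / (1 + x_ss[0] / K1)])

# Route 1: linearise the thermodynamic equations directly
L_heuristic = L_matrix(thermo, x_ss)

# Route 2: linearise the expanded network, then eliminate the DNA block
L_full = L_matrix(expanded, y_ss)
L_eff = L_full[:2, :2] - L_full[:2, 2:] @ np.linalg.solve(L_full[2:, 2:], L_full[2:, :2])

print(np.max(np.abs(L_heuristic - L_eff)))
```

The two matrices agree up to finite-difference error, and changing `gamma` or `ku` (at fixed K1, K2) leaves `L_eff` unchanged, in line with the statement that the projected equations do not depend on the details of the expanded rates.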

Derivation of nonlinear projected equations

To capture more of the nonlinearity inherent in GRNs one can extend the approach described in the previous sections, to include terms that are quadratic in the deviations from a steady state. The memory function then contains fast and slow contributions, with the former being transformed into additional rate matrix terms when γ → ∞. The resulting rate matrix and slow memory functions can be obtained from an effective L-matrix involving only slow (linear and quadratic) observables. As before, this Leff can be viewed as arising from a QSS elimination of the fast observables. Finally, one can show that this explicit elimination of the fast observables is equivalent to directly expanding the original thermodynamic equations to quadratic order in concentration deviations from the steady state: this justifies the heuristic method given in the Sec. Nonlinear projected equations.

Except for the last step, the above chain of argument is analogous to the linearised dynamics case, being based entirely on the identical structure of the partition—compare (5) and (S1 Text, Eq. S.1)—of L into fast and slow blocks. The final reduction to a quadratic expansion of the thermodynamic equations is more subtle because the projection approach treats quadratic observables as distinct and not directly tied to products of linear observables. We defer the details to S1 Text and note only that our derivation there both simplifies and generalises that in [21], from enzyme species that do not interact with each other to arbitrary interactions of the fast degrees of freedom.

Case studies

Zwanzig-Mori projection of a cross-repression motif

We applied the Zwanzig-Mori projection method to a cross-repressive motif to illustrate our approach. Fig 2A shows the expansion procedure for this very simple regulatory network: each of two genes is repressed through one binding site for the other factor. This system can be expanded to represent all possible DNA conformations, bound and unbound, as shown graphically by the four outer nodes in the network in the centre of Fig 2A. As the arrows indicate, in the expanded network unbound DNA can bind protein to form bound DNA; the reverse process is also possible. In this way one creates a set of reaction equations that has been extended and brought into mass action form by including the concentration of DNA conformations. The equations used for Fig 2 are of the form of (1) & (2):

| (27) |

and conversely for g2Prot. In numerical simulations of the expanded network we first checked the fast rate limit, which we control by setting the ratio, γ, of typical DNA reaction rates to protein production rates. Fig 2B shows that already with a moderate value of γ = 10 the predicted time courses of protein concentrations are almost identical to those predicted by the thermodynamic equations. As γ → ∞ the trajectories from the mass action equations and the thermodynamic equations become identical.
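The convergence of the expanded network to the thermodynamic description with increasing γ can be reproduced qualitatively in a few lines. The sketch below is not the simulation behind Fig 2B: the repression function, the parameter values and the form of the expanded equations are assumptions made for illustration, and a plain fixed-step Runge-Kutta integrator stands in for whatever solver was actually used.

```python
import numpy as np

# Hypothetical parameters (illustrative, not taken from the paper)
b1, b2, d1, d2 = 2.0, 1.0, 1.0, 0.5
K1, K2, ku = 1.0, 0.5, 1.0
kb1, kb2 = ku / K2, ku / K1      # binding rates chosen so that K = ku / kb

def thermo(x):
    """Thermodynamic equations for the two cross-repressing proteins."""
    return np.array([b1 / (1 + x[1] / K2) - d1 * x[0],
                     b2 / (1 + x[0] / K1) - d2 * x[1]])

def make_expanded(gamma):
    """Assumed mass-action expansion with unbound DNA fractions a1, a2."""
    def rhs(y):
        x1, x2, a1, a2 = y
        return np.array([b1 * a1 - d1 * x1,
                         b2 * a2 - d2 * x2,
                         gamma * (ku * (1 - a1) - kb1 * x2 * a1),
                         gamma * (ku * (1 - a2) - kb2 * x1 * a2)])
    return rhs

def rk4(f, y0, t_end, dt):
    """Classical fourth-order Runge-Kutta with a fixed step; returns the trajectory."""
    y = np.array(y0, dtype=float)
    traj = [y.copy()]
    for _ in range(int(round(t_end / dt))):
        k1 = f(y)
        k2 = f(y + 0.5 * dt * k1)
        k3 = f(y + 0.5 * dt * k2)
        k4 = f(y + dt * k3)
        y = y + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        traj.append(y.copy())
    return np.array(traj)

t_end, dt = 10.0, 1e-3
# Proteins start at zero; DNA starts fully unbound, which is the QSS value at zero protein
reference = rk4(thermo, [0.0, 0.0], t_end, dt)

max_dev = {}
for gamma in (10.0, 100.0):
    traj = rk4(make_expanded(gamma), [0.0, 0.0, 1.0, 1.0], t_end, dt)
    # Largest protein-concentration difference to the thermodynamic trajectory
    max_dev[gamma] = np.max(np.abs(traj[:, :2] - reference))
print(max_dev)
```

With these assumed parameters the maximal deviation from the thermodynamic trajectory decreases as γ is raised, as expected when the DNA species relax increasingly quickly to their QSS values.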

We chose to separate the system so that one protein species, g1Prot, is in the subnetwork and the other, g2Prot, is in the bulk. After expanding into protein and DNA species we proceeded to place all DNA species along with g2Prot in the bulk. Because we have performed the expansion around a steady state, the method is more precise when close to the steady state as expected (Fig 2D). Note that the cross-repressive motif with one binding site is not bistable. It could be made bistable by adding binding sites; with the current approach the behaviour around each steady state would then have to be analysed separately, as we also do in the neural tube application in the Sec. Application to neural tube network. More general projection methods can overcome this limitation as detailed in the Discussion, although it is clear that there are limits of principle here. For example, where the dynamical choice of steady state depends crucially on the initial concentration of a bulk species, this effect cannot be captured by a subnetwork description that by definition does not track this species.

Next we analyse the memory functions in our cross-repressive example, which can be thought of as describing the strength of a signal returning from the bulk a specific time after the original signal entered the bulk from the subnetwork (Fig 2E). The amplitude of the leading linear memory function, where the signal is δx1(t′), is positive. This makes sense as, in the cross-repressive motif, a protein is inhibiting its inhibitor, thus promoting its own production. The second order memory function governs the effects of the squared signal δx1(t′)². It is, in this case, negative and acts as a correction that captures nonlinearities beyond the linear memory. We observe that the decay in time of the memory functions is determined by the decay rate of the bulk protein. This is consistent with the memory function describing a protein repressing its own repressor, hence the effect only lasts as long as the repressor protein is present. Overall, the memory functions provide compact and readable descriptions of how the bulk modifies and influences the activity of the subnetwork.
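The sign and decay time of the linear memory function can be made concrete with a small calculation. The sketch below assumes the standard linear projection form M(Δt) = L^{s,b} exp(L^{b,b} Δt) L^{b,s} for the memory function, a row-vector convention with L[i, j] = ∂R_j/∂x_i, and a hypothetical repression function with illustrative parameters; the helper `linear_memory` is not part of any published code and further assumes that the bulk block is diagonalisable.

```python
import numpy as np

# Hypothetical parameters (illustrative, not taken from the paper)
b1, b2, d1, d2 = 2.0, 1.0, 1.0, 0.5
K1, K2 = 1.0, 0.5

# Steady state of dx1/dt = b1/(1+x2/K2) - d1*x1, dx2/dt = b2/(1+x1/K1) - d2*x2
x = np.array([1.0, 1.0])
for _ in range(500):
    x = np.array([b1 / (d1 * (1 + x[1] / K2)), b2 / (d2 * (1 + x[0] / K1))])

# Linearisation with L[i, j] = dR_j / dx_i; index 0 is g1Prot (subnetwork), 1 is g2Prot (bulk)
dR2_dx1 = -b2 / (K1 * (1 + x[0] / K1) ** 2)   # protein 1 represses gene 2
dR1_dx2 = -b1 / (K2 * (1 + x[1] / K2) ** 2)   # protein 2 represses gene 1
L = np.array([[-d1, dR2_dx1],
              [dR1_dx2, -d2]])

def linear_memory(L, sub, bulk, dt):
    """Assumed form M(dt) = L_sb exp(L_bb dt) L_bs via an eigendecomposition of L_bb."""
    L_sb = L[np.ix_(sub, bulk)]
    L_bs = L[np.ix_(bulk, sub)]
    w, V = np.linalg.eig(L[np.ix_(bulk, bulk)])
    expm = (V * np.exp(w * dt)) @ np.linalg.inv(V)   # V diag(exp(w dt)) V^{-1}
    return np.real(L_sb @ expm @ L_bs)

amplitude = linear_memory(L, [0], [1], 0.0)[0, 0]   # memory at zero time difference
later = linear_memory(L, [0], [1], 2.0)[0, 0]       # memory at time difference 2
print(amplitude, later / amplitude, np.exp(-2.0 * d2))
```

In this toy calculation the amplitude is the product of two negative (repressive) sensitivities and is therefore positive, and the ratio of the memory at two time differences is set by the degradation rate of the bulk protein alone.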

Application to neural tube network

Reducing part of a network into memory functions offers a way to simplify and analyse the effect of the factors in this bulk part on the remaining subnetwork. To illustrate this approach we chose to analyse a four gene network involved in the embryonic patterning of the vertebrate neural tube [29, 30]. The developing neural tube is a well characterised example of developmental pattern formation. In this tissue, the secreted molecule, Sonic-Hedgehog (Shh) forms a ventral to dorsal gradient [31]. This in turn generates discrete domains of gene expression that define the progenitors of the distinct neuronal subtypes that comprise spinal cord circuitry (Fig 3A and 3B). A transcriptional network has been identified that is controlled by graded Shh signaling and is responsible for specifying ventral progenitor domains [30, 32]. Genetic and molecular experiments have determined the activity of the components of this network (Fig 3C) and documented the temporal dynamics of pattern formation in vitro and in vivo [24, 30, 33]. For the three most ventral domains of progenitors, four transcription factors are essential, Nkx2.2, Olig2, Pax6 and Irx3. The most ventral domain (p3 domain) is characterised by high Nkx2.2 and low levels of the other three proteins; the domain adjacent to this (pMN domain) has high levels of Olig2, medium levels of Pax6 and low levels of the other two proteins. Dorsal to this, the p2 domain expresses high levels of Pax6 and Irx3 and low levels of the other two proteins.

Fig 3. Patterning of the vertebrate neural tube.

(A) Antibody staining of a wild type (WT) mouse for three of the main bands in dorso-ventral patterning. (Image provided by Katherine Exelby). (B) Illustration of neural tube patterning: ventral Shh secreted from the notochord and floor plate (termed “Source”) generates patterned domains along the dorso-ventral axis. Each domain is defined by the expression of a characteristic set of genes. (C) GRN that patterns the three most ventral domains of the neural tube. The chosen separation of bulk (purple) and subnetwork (green) in the application of the Zwanzig-Mori projection is also shown. (D) Simulations of steady state pattern along the dorsoventral axis using a set of thermodynamic equations of the form of eqs (1 and 2). These equations were taken, along with appropriate initial conditions of 0 for all species, from [24]. For all plots where the x axis represents neural tube position, zero corresponds to the most ventral point. (E) Full bifurcation diagram illustrating the multistable nature of the network. Shown are steady state concentrations of the four molecular species against neural tube position, with unstable steady states marked dashed. Colours in (D, E) identify genes/proteins in the same way as in the labelling of the illustration (B) and of the network nodes in (C). This colour code is used throughout the paper unless otherwise noted.

Mathematical models have been formulated based on the experimental data and these are able to replicate key aspects of cell patterning [24, 33] (Fig 3D). We take advantage of these to develop a Zwanzig-Mori projection of the system (using equations as described in [24] & S3 Text). To this end, we chose Nkx2.2 and Olig2 to be the subnetwork species, given that they are receiving direct input from Shh (Fig 3C), and replaced Irx3 and Pax6 with memory functions.

Linear memory analysis

We first examined the properties of the linear memory functions. These are the most substantial contributions, close to the specific steady states, of the species in the bulk (Pax6 and Irx3) to the temporal changes in the activity of Nkx2.2 and Olig2. We note that the system is multistable (Fig 3E) and different combinations of steady states are available at different positions along the dorsal-ventral axis (Shh gradient). We therefore analysed each possible steady state along the neural tube. In this case, as the bulk species are mutual repressors with the subnetwork species, they form a positive feedback loop with the factors in the subnetwork. As a consequence, each of the linear memory functions is positive and exponentially decaying on the time-scale of protein degradation of the bulk species. Thus the relative contribution of each of the memory functions can be assessed by comparing their amplitude, defined as the memory function value at zero time difference.

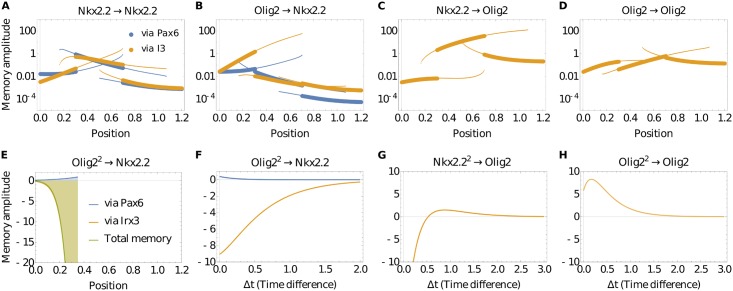

We calculated the amplitudes of the memory functions and determined the memory effects that Nkx2.2 and Olig2 receive over time. Furthermore, we used the method developed in [20] to decompose the memory into contributions from memory signals passing through the two different bulk species (Fig 4A–4D). This allowed us to determine the importance of specific regulatory interactions between transcription factors (TFs) at every position of the neural tube model, and assess their respective contributions. The results indicate that, for the most part, there is only a small memory amplitude of Nkx2.2 to the past of Olig2 passing through Pax6 (Fig 4B; note the logarithmic y-axis): the memory of (past) Olig2 on Nkx2.2 is largely dominated by memory through Irx3. The only exception is for steady states that are not reached during normal neural tube patterning (lower pair of curves in the neural tube position range ≈ [0.2 … 0.45] in Fig 4B). These steady states exist because the system is multistable, but the initial conditions that lead to them are incompatible with physiological conditions. We additionally observe in Fig 4A that the memory of Nkx2.2 to itself is for the most part shared between Irx3 and Pax6, with no particular TF being dominant. There are again exceptions to this, but only in steady states that are biologically unreasonable. In the case of the memory of Olig2, it can only be influenced by Irx3 as is clear from the structure of the network in Fig 3C. Channel decomposition is therefore unnecessary. We observe that the memory effects of Olig2 to itself are strongest in the p3 domain, and the opposite is true (memory of Nkx2.2 on Olig2 is stronger) in the pMN domain.
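The idea of splitting a memory function into bulk channels can be illustrated in the simplest setting, where the bulk species do not regulate each other and the bulk block of L is therefore diagonal. The sketch below covers only this special case and is not a reproduction of the general method of [20]. The pattern of links follows the description in the text (no direct effect of Pax6 on Olig2), but all numerical values are made up for illustration and are not the fitted parameters of [24]; the function names are hypothetical.

```python
import numpy as np

species_sub = ["Nkx2.2", "Olig2"]
species_bulk = ["Irx3", "Pax6"]

# Illustrative sensitivities only (row-vector convention, L[i, j] = dR_j / dx_i)
L_sb = np.array([[-0.8, -0.6],    # Nkx2.2 -> Irx3, Nkx2.2 -> Pax6
                 [-0.5, -0.1]])   # Olig2  -> Irx3, Olig2  -> Pax6
L_bs = np.array([[-0.7, -0.9],    # Irx3 -> Nkx2.2, Irx3 -> Olig2
                 [-0.4,  0.0]])   # Pax6 -> Nkx2.2, no Pax6 -> Olig2 link
decay = np.array([1.0, 1.0])      # assumed bulk degradation rates; L_bb = -diag(decay)

def channel_memory(k, dt):
    """Memory passing through bulk species k; entry [j, i] is past of j acting on i."""
    return np.outer(L_sb[:, k], L_bs[k, :]) * np.exp(-decay[k] * dt)

def total_memory(dt):
    """Total linear memory L_sb exp(L_bb dt) L_bs for a diagonal bulk block."""
    return L_sb @ np.diag(np.exp(-decay * dt)) @ L_bs

for k, name in enumerate(species_bulk):
    print(name, "channel amplitudes:")
    print(channel_memory(k, 0.0))
```

With a diagonal bulk block the channels simply add up to the total memory, and the column of the Pax6 channel that acts on Olig2 vanishes, which mirrors the observation that Olig2 receives memory only via Irx3.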

Fig 4. Memory amplitude and temporal dynamics.

(A) Amplitude of memory (memory function at Δt = 0) of Nkx2.2 to itself along the neural tube. There are multiple lines as the analysis was performed at all possible stable steady states. The vertical axis is logarithmic to make the range of amplitudes easier to appreciate. Colours identify the memory amplitude contribution from each of the two possible bulk channels, via Irx3 and Pax6, respectively. Thick lines indicate physiological states, while thin lines indicate states that are not usually observed in vivo. (B) Linear memory amplitude of (past) Olig2 on Nkx2.2 along the neural tube. The memory via Pax6 is for the most part below the memory via Irx3 in each pair of corresponding curves. (C,D) Memory amplitudes of Olig2 to Nkx2.2 (C) and to itself (D). No channel decomposition is performed as Olig2 receives memory only via the Irx3 channel. (E) Nonlinear memory of (past) Olig2 squared on Nkx2.2 in the p3 domain, where dynamics are dominated by Irx3; memory via Pax6 is negligible by comparison. (F) Nonlinear memory function of (past) Olig2 squared on Nkx2.2 from position 0.2 in (E), plotted to show that the relative contribution of the Pax6 channel is small also for all time differences. (G,H) Nonlinear memory functions of Olig2 to Nkx2.2 (G) and itself (H) in the p2 domain at position 0.7, exemplifying the potential for nontrivial time dependences (including non-monotonicity and sign changes) in the nonlinear memory functions.

We observe in Fig 4 that the order of magnitude of the memory amplitudes changes substantially with neural tube position. This is a consequence of the system becoming more or less sensitive to concentration fluctuations of a given species within the network. An example of this is Nkx2.2 memory to itself via Pax6 in the pMN domain (Fig 4A), where dorsally the lower levels of Shh mean that the binding sites of Nkx2.2 are less occupied by the active form of Gli (Gli is the transcriptional effector downstream of Shh that binds to Nkx2.2 & Olig2, see [24] for details on how this is implemented). Active Gli sets the rate of production of Nkx2.2 and thus has a direct effect on the amplitude of the memory functions. In addition to this, as one moves dorsally in the pMN domain, the steady state concentration of Pax6 is increasing (Fig 3E, also observed experimentally). This increase causes the system to become insensitive to fluctuations of Pax6 proteins as Pax6 binding sites are typically already saturated. This phenomenon is at work also in the other memory function amplitudes that change across neural tube position as seen in Fig 4A–4D.

Nonlinear memory analysis

We next examined the second order memory functions. These provide corrections to the linear memory terms and encode information about how the bulk nodes drive the system towards a steady state. Quantitative accuracy in capturing the dynamics of the full nonlinear network will also be improved to the extent possible within an expansion around a steady state, but such accuracy is not our main focus—it is the additional insights gained from the memory functions that we are after.

It is apparent that once memory terms are included, different trajectories in the subnetwork plane cross. This is visually most obvious with the full nonlinear memory terms (S1C and S1G Fig) and consistent with the full dynamics (S1D and S1H Fig). The crossings arise from the fact that, in the presence of memory, systems in the same state can follow different trajectories towards a steady state depending on their past. In the full dynamics, the additional information as to which path a system will take is contained in the bulk species, which effectively hold the information on the past of the system. In the case of the memory functions, the memory is not contained in network nodes outside of the subnetwork, but is represented explicitly via the memory terms, with the time difference dependence of the memory functions indicating, for example, the timescale of the memory. This offers a useful abstraction: while it is not easy to visualize the four-dimensional concentration space for the full dynamics, the projection approach reduces the system to two components with memory of their own past.

In the nonlinear memory functions, we can assess the importance of the link from Olig2 to Pax6 by considering the squared deviations of Olig2: the Pax6 channel contribution to this memory necessarily involves signals being propagated via this Olig2→Pax6 link. We find that the Pax6 channel makes a very small contribution to the nonlinear memory amplitude in comparison to the Irx3 channel. This is shown in Fig 4E for the p3 domain but is true also for all other states. We additionally checked the time-dependence of the memory function: from Fig 4F one sees that the relative contribution of the Pax6 channel to the nonlinear memory of (past) Olig2 squared on Nkx2.2 remains small at all time-differences. Together with our results above for linear memory functions, we thus conclude that the repression of Pax6 by Olig2 is dispensable for maintaining an established dorsal-ventral pattern. We plot an example of nonlinear memory functions (Fig 4G and 4H) to illustrate that their dependence on time difference can include non-monotonicities and sign changes that mean memory amplitudes do not tell the full story of the effect of nonlinear memory. The effects of including the memory terms can be confirmed and further visualised by considering the effective drift vector, which contains the rates of change of the concentrations of Nkx2.2 and Olig2. We describe this approach in S2 Text, where we also discuss further how specific contributions of the nonlinear memory affect the dynamics, for example in the approach to the state in the p2 domain.

Exploration of network properties

The analysis of both linear and nonlinear memory functions in the previous section suggested that the repression of Pax6 by Olig2 is not critical for the dynamics of the steady states observed in neural tube patterning. We based this on the observation that the amplitude of memory that is transmitted through this link is relatively small compared to the other memory functions, for all biologically relevant steady states.

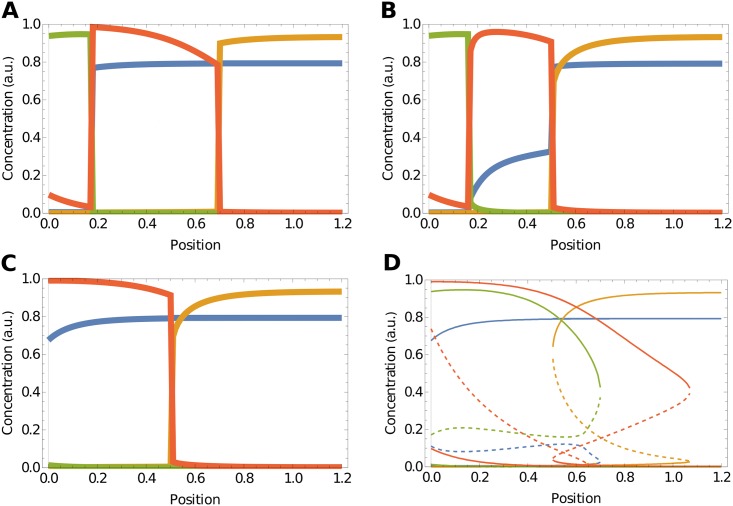

To test this prediction of the memory function analysis, we removed the repressive link from Olig2 to Pax6 in the full model. Consistent with our prediction of the relative insignificance of the link from Olig2 to Pax6, the resulting steady state concentrations of the simulations are similar to those in the original system (Fig 5A). The only qualitative change is the increased level of Pax6 between neural tube position 0.2 and 0.7, which results from the loss of the repressive link. But the spatial aspects, such as the positional sequence of genes and qualitative concentrations, of the domains are otherwise unaltered.

Fig 5. Olig2 repression of Pax6 increases robustness to initial conditions.

(A) Patterning without the repressive link from Olig2 to Pax6, showing steady states reached from standard initial conditions. Compared to the full network, the qualitative domain structure is conserved. (B) Patterning for the full network for initial conditions with high levels of Pax6 and Irx3 is qualitatively identical to low initial levels of Pax6 and Irx3. Initial conditions [Pax6] = [Irx3] = 1. (C) Patterning without the repressive link from Olig2 to Pax6 for initial conditions with high levels of Pax6 and Irx3. The p3 domain is lost as a consequence of the different initial conditions. (D) Bifurcation diagram of the network without Olig2-Pax6 repression. The region in which the pMN state (Olig2 high) is stable has expanded ventrally, thus making the ventralmost region bistable.

Since the repressive link between Olig2 and Pax6 has been experimentally documented [33–35] but from our analysis is not required for the dynamics around the steady state, we sought to understand what purpose it might serve during development. We performed simulations from varying initial conditions for both WT (Fig 5B) and the system lacking the link (Fig 5C). These show that the p3 domain is reached from only a small range of initial conditions following the removal of the repressive link between Olig2 and Pax6. To rationalise this, we performed a bifurcation analysis (Fig 5D). This indicated that the removal of the Olig2 inhibition of Pax6 markedly increased the range of positions at which the Olig2 steady state is present, thus facilitating its invasion into the Nkx2.2 domain. In particular, under initial conditions of high Pax6 and Irx3, the pMN domain is induced ventrally. In the unperturbed network it is not possible to reach this steady state in the ventralmost part because the system is monostable, allowing only the p3 domain to arise. On the other hand, in the absence of Olig2 inhibition of Pax6, the ventral region is bistable. Hence, depending on the initial conditions a pMN fate can be reached (Fig 5D). Taken together, this analysis suggests that the repressive link from Olig2 to Pax6 contributes to the robustness of the system for patterning the neural tube.

A potential explanation for the seemingly dispensable regulatory interaction between Olig2 and Pax6 arises from considering the normal development of the neural tube. During embryonic neural tube development, neural progenitors are generated by the process of neural induction and this initiates the expression of neural genes including Pax6 and Irx3. At the same time, cells of the notochord (which underlies the ventral midline of the neural tube) begin to secrete Shh. Shh spreads into the neural tube and neural progenitors respond to the signal. The consequence is that in the ventral neural tube neural progenitors begin responding to Shh at intermediate levels of Pax6 and Irx3. A more appropriate initial condition, representative of the in vivo situation, is for Pax6 and Irx3 to be somewhere between 0 and maximum. Moreover, heterogeneity between cells, as well as variation in the exact timing of developmental events along the rostral-caudal axis of the embryo, means that the precise initial conditions—the levels of Pax6 and Irx3 when cells respond to Shh signalling—will vary. Hence the robustness provided by the Olig2-Pax6 link may play an important role in ensuring reliable pattern formation in the developing neural tube.

Discussion

We have developed a generally applicable method for applying Zwanzig-Mori projections to transcriptional networks that allows the analysis of subnetworks extracted from a larger network. We demonstrated the approach on a simple genetic cross repressive motif, to illustrate the minimal example of a memory function, and on a more complicated, but well-characterised, transcriptional network operating in the ventral part of the vertebrate neural tube. This showed how the method allows the function and importance of specific links within a network to be defined in an intuitive manner. We used these insights to identify structural features of the neural tube network that appear primarily to increase the robustness of the network to initial conditions, rather than maintain its steady state.

The projection technique is straightforward to implement using the methodology described, and can be applied to any GRN following the thermodynamic formalism (including activation, repression, competitive and cooperative binding). Further protein-protein interactions could readily be incorporated as these would be represented by simple first order (unary) or second order (binary) interactions. Thus, in addition to gene regulation, nonlinear protein-protein interactions mediated by enzymes such as those found in signal transduction pathways could be included in the analysis [21]. It would also be possible to include Hill functions as long as these possess integer exponents [27]. Note that our treatment relies on mass action kinetics, which implicitly assumes that the different molecular species are well mixed by diffusion processes that are faster than any of the reaction kinetics [36]. The size of the matrices involved in constructing the memory functions scales as the square of the number of bulk species, which in our experience means that networks with up to 200 nodes can be investigated without difficulty. Thus, the generality of the method and the ability to implement it algorithmically provide a comprehensive mathematical toolkit to simplify and analyse dynamical systems describing a range of cellular and molecular processes.

In our mathematical treatment we took advantage of an argument of timescale separation for molecular mechanisms operating in transcriptional networks, in which fast processes (DNA-protein interactions) are assumed to be in QSS compared to the slow processes (changes in protein concentration). We also assume the effective concentration of DNA species to be small in comparison to that of protein species. We consider these to be reasonable assumptions because DNA-protein interactions occur at a much faster rate than the changes in protein concentrations produced by transcription and translation [37]. Moreover, there are usually at most four DNA copies of each gene per cell, whereas the number of individual protein molecules is normally considerably higher. The production of individual proteins per DNA copy number, i.e. the relevant rate constant, can then be large while the resulting relative changes to protein concentration are slow. These assumptions allowed us to construct an extended reaction network involving fast molecular (DNA) species and at most binary reactions. This construction is sufficiently general for it to be applicable to other systems with a timescale separation, as long as they satisfy the above conditions for the relevant concentrations and rate constants.

An important component of this Zwanzig-Mori projection method is that the memory functions describe all the behaviours of a system in the vicinity of a steady state. Even though this comes at the cost of predictions becoming increasingly inaccurate the further the system is from the fixed point, the information around the steady state is sufficient to distil relevant properties of the network. We therefore employ the method as a tool for probing the dynamics near a chosen steady state, rather than for tracking dynamics. The benefit is that the resulting memory functions are substantially simpler than the original system. There is no need to perform simulations to analyse memory functions or to test different positions in phase space as a steady state is approached. Moreover, the method can also be applied to an arbitrarily chosen subnetwork of a generic network whereas other model reduction approaches—as discussed in the Introduction—do not allow this, and are also more restrictive as they require specific network motifs or functional forms of the dynamics.

The memory functions produced by the Zwanzig-Mori approach allow exploration of the amplitudes and timescales of the interactions between the subnetwork and bulk. This can reveal information that is not otherwise easy to discern from the parameters of the full dynamics or from the functional form of the equations. Each memory function represents the total of the contributions from a given subnetwork component that feeds back to the subnetwork over time after passing through the bulk. This total memory can be decomposed into channels that describe the flow of signals through specific components in the bulk. In this way, the channels that dominate a memory effect can be identified, providing insight into the dynamical mechanisms responsible for achieving and maintaining a steady state. The memory functions thus help to extract features of the dynamics that would not be readily detectable in direct simulations of the time evolution equations.
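A channel decomposition of this kind can be sketched numerically at the linear level. The example assumes that the linear memory function takes the form M(tau) = J_sb exp(J_bb tau) J_bs, where J_sb, J_bb and J_bs are blocks of the Jacobian at the steady state, and it defines a channel by the bulk species through which the signal enters the bulk. The matrices hold hypothetical values rather than the fitted neural tube parameters, and the definition of channels used in the paper may differ in detail.

```python
import numpy as np


def memory_channels(J_sb, J_bb, J_bs, tau):
    """Return c[l, i, j]: memory of subnetwork species j on species i at delay
    tau, for signals that enter the bulk through bulk species l.

    Assumes J_bb is diagonalisable, so exp(J_bb * tau) can be built from its
    eigendecomposition.
    """
    evals, V = np.linalg.eig(J_bb)
    propagator = (V @ np.diag(np.exp(evals * tau)) @ np.linalg.inv(V)).real
    returned = J_sb @ propagator          # bulk -> subnetwork after delay tau
    return np.einsum("il,lj->lij", returned, J_bs)


# Hypothetical Jacobian blocks: 2 subnetwork species, 2 bulk species.
J_sb = np.array([[-0.4, 0.0], [-0.6, -0.5]])   # effect of bulk on subnetwork
J_bs = np.array([[-0.7, -0.1], [-0.9, -0.3]])  # effect of subnetwork on bulk
J_bb = np.array([[-1.0, -0.3], [-0.2, -1.5]])  # bulk-internal dynamics (stable)

channels0 = memory_channels(J_sb, J_bb, J_bs, 0.0)
total0 = channels0.sum(axis=0)                 # total memory amplitude at tau = 0
for tau in (0.0, 0.5, 2.0):
    c = memory_channels(J_sb, J_bb, J_bs, tau)
    print(tau, c[0, 1, 0], c[1, 1, 0], c.sum(axis=0)[1, 0])
```

Summing the channels over the bulk index recovers the total memory function, so comparing the per-channel entries shows directly which bulk species carries most of a given memory effect at each delay.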

An example of the type of insight provided by the projection method came from our analysis of the neural tube network. We focused on a subnetwork comprising Nkx2.2 and Olig2. Decomposition of the memory functions suggested that an experimentally documented repression of Pax6 by Olig2 [34] plays only a minor role in sustaining the steady state pattern. Consistent with this prediction, simulations in which this regulatory link had been eliminated in the full model showed qualitatively unchanged steady states. We note that removal of the repressive link between Olig2 and Pax6 is distinct from the removal of Olig2 itself [24, 30, 33]. In the Olig2 mutant, the repressive effects of Olig2 on both Nkx2.2 and Pax6 are eliminated, whereas the removal of the Olig2-Pax6 link leaves the repressive effect of Olig2 on Nkx2.2 intact. The memory function analysis thus raised the question of what purpose the Olig2-Pax6 regulation might serve and prompted us to explore the transient system dynamics, specifically the effect of the initial conditions, which are unknown in the in vivo system. Simulations in the absence of the Olig2-Pax6 repression, compared to the full model, revealed a marked increase in sensitivity to these initial conditions. Comparison of the bifurcation diagrams of the systems, with and without the Olig2-Pax6 link, indicated that the well-defined Nkx2.2 monostable region (p3 domain), which appears at high levels of Shh signalling in the full system, was replaced by a region of bistability for Nkx2.2 and Olig2 in the absence of the Olig2-Pax6 regulation. In this latter case the steady state induced by high levels of signal therefore depends on the initial concentrations of Pax6 and Irx3. This suggests that, while not necessary to maintain steady states, the Olig2-Pax6 regulation ensures access to the appropriate steady states irrespective of the initial levels of Pax6 and Irx3. As these may differ between cells and at different locations along the rostral-caudal axis of the neural tube, the Olig2-Pax6 regulatory link might make the system less sensitive to these variations. This implies that the primary purpose of Olig2 repression of Pax6 is to increase the robustness of pattern formation.
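The link between bistability and sensitivity to initial conditions can be illustrated with a generic toy model. The sketch below assumes a symmetric two-gene cross-repressive switch with Hill-type repression; it is not the neural tube model, and the production strength `a`, the Hill exponent `n` and the starting points are hypothetical. In the bistable regime the final state depends on which gene starts ahead, whereas in the monostable regime all starting points converge to the same state.

```python
def final_state(a, x0, y0, n=2, t_end=50.0, dt=0.01):
    """Euler-integrate a toy toggle switch and return the final (x, y)."""
    x, y = x0, y0
    for _ in range(int(round(t_end / dt))):
        dx = a / (1.0 + y ** n) - x   # x is repressed by y and decays
        dy = a / (1.0 + x ** n) - y   # y is repressed by x and decays
        x, y = x + dt * dx, y + dt * dy
    return x, y


starts = [(1.0, 0.5), (0.5, 1.0)]     # hypothetical initial concentrations

# Strong production: the symmetric state is unstable, two stable states exist.
bistable = [final_state(3.0, x0, y0) for x0, y0 in starts]
# Weak production: a single stable state, reached from either start.
monostable = [final_state(1.0, x0, y0) for x0, y0 in starts]

print("bistable regime:  ", bistable)
print("monostable regime:", monostable)
```

In this toy setting, a regulatory change that moves the system from the bistable to the monostable regime removes the dependence of the outcome on the starting point, which is the sense of robustness discussed above.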

Alternative implementations of the Zwanzig-Mori projection [38–40] have been developed that do not rely on expanding around a fixed steady state and we are currently adapting these for transcriptional networks. While these alternative methods may overcome the specific limitations of our current Zwanzig-Mori projection method, the nonlinear memory functions they produce are much richer. Further work will be needed to fully understand the more complex information they encode. Projection methods might also provide a way to analyse systems for which only partial information is available. Given a known subnetwork that is not able to fully reproduce experimental observations, the incorporation of memory functions provides a means to explore the possible effects of unknown factors. This could then be used to identify plausible network structures that generate these memory functions. Taken together, the projection approach provides tools to simplify, visualise and explore the behaviour of large networks that would otherwise be difficult to analyse in their entirety.

Supporting information

In this Appendix we give the details of the projection method with linear and quadratic observables, applied to an expanded network as outlined in Sec. Nonlinear projected equations.

(PDF)

In this Appendix we give additional detail of the wide variety of effects that memory functions contribute to the behaviour of a system. Specifically, we explore the effect of the contributions from nonlinear memory terms and provide an alternative visualisation using a first order time expansion of the effective drift of the system.

(PDF)

Equations and parameters used in model of neural tube patterning network.

(PDF)

Trajectories approaching a high Olig2 state in the pMN domain from a range of initial conditions, with no memory (A), with only linear memory (B), with linear and nonlinear memory (C), and the full dynamics (D). Trajectories approaching a low Olig2, low Nkx2.2 state in the p2 domain from different initial conditions, with no memory (E), with only linear memory (F), with linear and nonlinear memory (G), and the full dynamics (H). All figures are parametric plots, showing Nkx2.2 and Olig2 concentrations on the x- and y-axis, respectively, with time as curve parameter. The trajectories are coloured to represent the norm of the drift vector of the system as indicated by the colour scale; high values indicate the system is evolving quickly while the opposite is true for low values. Scalebars on the right apply to the corresponding row. Path crossing can be seen in (C–D) & (G–H), illustrating the importance of nonlinear memory terms to reproduce qualitative features of the full thermodynamic equations.

(TIF)

(A-D) Nonlinear memory amplitudes across neural tube positions within the pMN domain. The plot titles indicate the type of memory, e.g. (C) shows memory of (past) Nkx2.2 squared fluctuation on Olig2. Memory effects of past Olig2-fluctuations in the pMN domain are negligible. (E-H) Nonlinear memory amplitudes across neural tube positions within the p2 domain. Nkx2.2 receives very little quadratic memory influence in this domain. The x-axis represents neural tube position in all plots. Blue and yellow lines indicate the decomposition into Pax6 and Irx3 channels, while green lines indicate the total memory.

(TIF)

Colour map and contour plots of the norm of the effective drift, in conditions identical to those in S1A–S1C Fig for the first row (pMN domain) and S1E–S1G Fig (p2 domain) for the second row. (A,D) Memoryless drift, (B,E) drift with linear memory, (C,F) drift with linear and nonlinear memory. The scalebar on the right applies to each entire row. Effective drift norms have been calculated for time t = 0.8.

(TIF)

Acknowledgments

We are grateful to Katherine Exelby for providing microscopy images.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

EHD and PS acknowledge the stimulating research environment provided by the EPSRC Centre for Doctoral Training in Cross-Disciplinary Approaches to Non-Equilibrium Systems (CANES, EP/L015854/1) (https://www.epsrc.ac.uk/skills/students/centres/profiles/crossdisciplinaryapproachestononequilibriumsystems/). RPC was supported by the Wellcome Trust (WT098325MA). EHD and JB are supported by the Francis Crick Institute, which receives its core funding from Cancer Research UK (FC001051) (https://www.cancerresearchuk.org/), the UK Medical Research Council (FC001051) (https://www.mrc.ac.uk/) and the Wellcome Trust (FC001051 and WT098326MA) (https://wellcome.ac.uk/). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Levine M, Davidson EH. Gene regulatory networks for development. Proceedings of the National Academy of Sciences. 2005;102(14):4936–4942. doi: 10.1073/pnas.0408031102

- 2. Glass L, Kauffman SA. The logical analysis of continuous, non-linear biochemical control networks. Journal of Theoretical Biology. 1973;39(1):103–129. doi: 10.1016/0022-5193(73)90208-7

- 3. Mogilner A, Wollman R, Marshall WF. Quantitative Modeling in Cell Biology: What Is It Good for? Developmental Cell. 2006;11(3):279–287.

- 4. Craciun G, Tang Y, Feinberg M. Understanding bistability in complex enzyme-driven reaction networks. Proceedings of the National Academy of Sciences. 2006;103(23):8697–8702. doi: 10.1073/pnas.0602767103

- 5. Kondo S, Miura T. Reaction-Diffusion Model as a Framework for Understanding Biological Pattern Formation. Science. 2010;329(5999):1616–1620. doi: 10.1126/science.1179047

- 6. Novák B, Tyson JJ. Design principles of biochemical oscillators. Nature Reviews Molecular Cell Biology. 2008;9(12):981–991. doi: 10.1038/nrm2530

- 7. Perkins TJ, Jaeger J, Reinitz J, Glass L. Reverse engineering the gap gene network of Drosophila melanogaster. PLoS Computational Biology. 2006;2(5):417–428. doi: 10.1371/journal.pcbi.0020051

- 8. Umulis DM, Shimmi O, O’Connor MB, Othmer HG. Organism-Scale Modeling of Early Drosophila Patterning via Bone Morphogenetic Proteins. Developmental Cell. 2010;18(2):260–274. doi: 10.1016/j.devcel.2010.01.006

- 9. Naldi A, Remy E, Thieffry D, Chaouiya C. Dynamically consistent reduction of logical regulatory graphs. Theoretical Computer Science. 2011;412(21):2207–2218. doi: 10.1016/j.tcs.2010.10.021

- 10. Feliu E, Wiuf C. Simplifying biochemical models with intermediate species. Journal of The Royal Society Interface. 2013;10(87):20130484–20130484. doi: 10.1098/rsif.2013.0484

- 11. Okino MS, Mavrovouniotis ML. Simplification of Mathematical Models of Chemical Reaction Systems. Chemical Reviews. 1998;98(2):391–408. doi: 10.1021/cr950223l

- 12. Gay S, Soliman S, Fages F. A graphical method for reducing and relating models in systems biology. Bioinformatics. 2010;26(18). doi: 10.1093/bioinformatics/btq388

- 13. Sunnaker M, Cedersund G, Jirstrand M. A method for zooming of nonlinear models of biochemical systems. BMC Systems Biology. 2011;5(1):140. doi: 10.1186/1752-0509-5-140

- 14. Cardelli L. Morphisms of reaction networks that couple structure to function. BMC Systems Biology. 2014;8(1):84. doi: 10.1186/1752-0509-8-84

- 15. Alon U. Network motifs: Theory and experimental approaches. Nature Reviews Genetics. 2007;8(6):450–461. doi: 10.1038/nrg2102

- 16. Apri M, de Gee M, Molenaar J. Complexity reduction preserving dynamical behavior of biochemical networks. Journal of Theoretical Biology. 2012;304:16–26. doi: 10.1016/j.jtbi.2012.03.019

- 17. Mori H. Transport, Collective Motion, and Brownian Motion. Progress of Theoretical Physics. 1965;33(3):423–455. doi: 10.1143/PTP.33.423

- 18. Zwanzig R. Memory effects in irreversible thermodynamics. Physical Review. 1961;124(4):983–992. doi: 10.1103/PhysRev.124.983

- 19. Ritort F, Sollich P. Glassy dynamics of kinetically constrained models. Advances in Physics. 2003;52(4):219–342. doi: 10.1080/0001873031000093582

- 20. Rubin KJ, Lawler K, Sollich P, Ng T. Memory effects in biochemical networks as the natural counterpart of extrinsic noise. Journal of Theoretical Biology. 2014;357:245–267. doi: 10.1016/j.jtbi.2014.06.002

- 21. Rubin KJ, Sollich P. Michaelis-Menten dynamics in protein subnetworks. Journal of Chemical Physics. 2016;144(17):174114. doi: 10.1063/1.4947478

- 22. Gotze W, Sjogren L. Relaxation processes in supercooled liquids. Reports on Progress in Physics. 1992;55(3):241–376. doi: 10.1088/0034-4885/55/3/001

- 23. Bintu L, Buchler NE, Garcia HG, Gerland U, Hwa T, Kondev J, et al. Transcriptional regulation by the numbers: Models. Current Opinion in Genetics and Development. 2005;15(2):116–124. doi: 10.1016/j.gde.2005.02.007

- 24. Cohen M, Page KM, Perez-Carrasco R, Barnes CP, Briscoe J. A theoretical framework for the regulation of Shh morphogen-controlled gene expression. Development. 2014;141(20):3868–3878. doi: 10.1242/dev.112573

- 25. Shea MA, Ackers GK. The OR control system of bacteriophage lambda. Journal of Molecular Biology. 1985;181(2):211–230. doi: 10.1016/0022-2836(85)90086-5

- 26. Sherman MS, Cohen BA. Thermodynamic state ensemble models of cis-regulation. PLoS Computational Biology. 2012;8(3):e1002407. doi: 10.1371/journal.pcbi.1002407

- 27. Thomas P, Straube AV, Grima R. The slow-scale linear noise approximation: an accurate, reduced stochastic description of biochemical networks under timescale separation conditions. BMC Systems Biology. 2012;6(ii):39. doi: 10.1186/1752-0509-6-39

- 28. Schnoerr D, Sanguinetti G, Grima R. Approximation and inference methods for stochastic biochemical kinetics: A tutorial review. Journal of Physics A: Mathematical and Theoretical. 2017;50(9):093001. doi: 10.1088/1751-8121/aa54d9