Significance

Probing functional interactions among the nodes in a network is crucial to understanding how complex systems work. Existing methodologies widely assume static network structures or Gaussian statistics or do not take account of likely sparse interactions. They are therefore not well-suited to neuronal spiking data with rapid task-dependent dynamics, binary statistics, and sparse functional dependencies. We develop an inference framework for extracting functional network dynamics from neuronal data by integrating techniques from adaptive filtering, compressed sensing, point processes, and high-dimensional statistics. We derive efficient estimation algorithms and precise statistical inference procedures. We apply our proposed techniques to experimentally recorded neuronal data to probe the neuronal functional networks underlying attentive behavior. Our techniques provide substantial gains in computation, resolution, and statistical robustness.

Keywords: Granger causality, adaptive filtering, functional network dynamics, point processes, sparsity

Abstract

Quantifying the functional relations between the nodes in a network based on local observations is a key challenge in studying complex systems. Most existing time series analysis techniques for this purpose provide static estimates of the network properties, pertain to stationary Gaussian data, or do not take into account the ubiquitous sparsity in the underlying functional networks. When applied to spike recordings from neuronal ensembles undergoing rapid task-dependent dynamics, they thus hinder a precise statistical characterization of the dynamic neuronal functional networks underlying adaptive behavior. We develop a dynamic estimation and inference paradigm for extracting functional neuronal network dynamics in the sense of Granger, by integrating techniques from adaptive filtering, compressed sensing, point process theory, and high-dimensional statistics. We demonstrate the utility of our proposed paradigm through theoretical analysis, algorithm development, and application to synthetic and real data. Application of our techniques to two-photon Ca2+ imaging experiments from the mouse auditory cortex reveals unique features of the functional neuronal network structures underlying spontaneous activity at unprecedented spatiotemporal resolution. Our analysis of simultaneous recordings from the ferret auditory and prefrontal cortical areas suggests evidence for the role of rapid top-down and bottom-up functional dynamics across these areas involved in robust attentive behavior.

Converging lines of evidence in neuroscience, from neuronal network models and neurophysiology (1–8) to resting-state imaging (9–11), suggest that sophisticated brain function results from the emergence of distributed, dynamic, and sparse functional networks underlying the brain activity. These networks are highly dynamic and task-dependent, which allows the brain to rapidly adapt to abrupt changes in the environment, resulting in robust function. To exploit modern-day neuronal recordings to gain insight into the mechanisms of these complex dynamic functional networks, computationally efficient time series analysis techniques capable of simultaneously capturing their dynamicity, sparsity, and statistical characteristics are required.

Historically, various techniques such as cross-correlogram (12) and joint peristimulus time histogram (13) analyses have been used for inferring the statistical relationship between pairs of spike trains (12–14). Despite being widely used, these methods are unable to provide reliable estimates of the underlying directional patterns of causal interactions among an ensemble of interacting neurons due to the intrinsic deficiencies in identification of directionality, low sensitivity to inhibitory interactions (15), and susceptibility to the indirect interactions and latent common inputs.

Methods based on Granger causality (GC) analysis have shown promise in addressing these shortcomings and have thus been used for inferring functional interactions from neural data of different modalities (16–19). The rationale behind GC analysis is based on two principles: the temporal precedence of cause over effect and the unique information of cause about the effect. Given two time series $\{x_t\}$ and $\{y_t\}$, if including the history of $x_t$ can improve the prediction of $y_t$, it is implied that the history of $x_t$ contains unique information about $y_t$, not captured by other covariates. In this case, we say that $x_t$ has a G-causal link to $y_t$.

Numerous efforts have been dedicated to extending the bivariate GC measure to more general settings, such as the conditional form of GC in ref. 20 for multivariate setting, and several frequency-domain variants of GC (21–23). Despite significant advances in time series analysis using GC and its variants, when applied to neuronal data, the existing methods exhibit several drawbacks.

First, most existing methods for causality inference provide static estimates of the causal influences associated with the entire data duration. Although suitable for the analysis of stationary neural data, they are not able to capture the rapid task-dependent changes in the underlying neural dynamics. To address this challenge, several time-varying measures of causality have been proposed in the literature based on Bayesian filtering and wavelets (24–30). Second, very few causal inference approaches take into account the sparsity of the functional networks (31–33). As an example, the authors of ref. 31 introduced a method for sparse identification of functional connectivity patterns from large-scale functional imaging data. Despite their success in inferring sparse connectivity patterns, these techniques assume static connectivity structures.

Third, most existing approaches are tailored for continuous-time data, such as electroencephalography (EEG) and local field potential recordings, which limits their utility when applied to binary neuronal spike recordings. These methods are generally based on multivariate autoregressive (MVAR) modeling, with a few nonparametric exceptions (30, 34). Some efforts have been made to adapt the MVAR modeling to neuronal spike trains (17, 35, 36). For instance, the binary spikes were preprocessed in refs. 17 and 35 via a smoothing kernel, which significantly distorts the temporal details of the neuronal dynamics. In addition, the frequency-domain GC analysis techniques implicitly assume that the data have rich oscillatory dynamics. Although this assumption is valid for steady-state EEG responses or resting-state recordings, spike trains recorded from cortical neuronal ensembles often do not exhibit any oscillatory behavior.

To address the third challenge, point process modeling and estimation have been successfully used in capturing the stochastic dynamics of binary neuronal spiking data (37, 38). This framework has been particularly used for inferring functional interactions in neuronal ensembles from spike recordings (32, 38–42). A maximum likelihood (ML)-based approach was introduced in ref. 38 based on a network likelihood formulation of the point process model; a model-based Bayesian approach based on point process likelihood models with sparse priors on the connectivity pattern was introduced in ref. 32. Among the more recent results, an information-theoretic measure of causality is proposed in ref. 41; a static GC measure based on point process likelihoods is proposed in ref. 40. However, a modeling and estimation framework to simultaneously take into account the dynamicity and sparsity of the G-causal influences as well as the statistical properties of binary neuronal spiking data is lacking.

In this paper, we close this gap by developing a dynamic measure of GC by integrating the forgetting-factor mechanism of recursive least squares (RLS), point process modeling, and sparse estimation. To this end, we first exploit the prevalent parsimony of neurophysiological time constants manifested in neuronal spiking dynamics, such as those in sensory neurons with sharp tunings, as well as the potential low-dimensional structure of the underlying functional networks. These features can be captured by point process models in which the cross-history dependence of the neurons is described by sparse vectors. We then use an exponentially weighted log-likelihood framework (43) to recursively estimate the model parameters via sparse adaptive filtering, thereby defining a dynamic measure of GC, which we call the adaptive GC (AGC) measure.

The significance of sparsity in our approach is twofold. First, while the functional networks may not be truly sparse, they can often be parsimoniously described by a sparse set of significant functional links. Our models can indeed capture these significant links through sparse cross-history dependence. Second, sparsity enables stable estimation in the face of limited data. This is particularly important for adaptive estimation, where the goal is to reliably estimate a large number of cross-history parameters using short, effective observation windows.

We next develop a statistical inference framework for the proposed AGC measure by extending classical results on the analysis of deviance to our sparse dynamic point process setting. We provide simulation studies to evaluate the identification and tracking capabilities of our proposed methodology, which reveal remarkable performance gains compared with existing techniques, in both detecting the existing G-causal links and avoiding false alarms, while capturing the dynamics of the G-causal interactions in a neuronal ensemble. We finally apply our techniques to two experimentally recorded datasets: two-photon imaging data from the mouse auditory cortex under spontaneous activity and simultaneous single-unit recordings from the ferret primary auditory (A1) and prefrontal cortices (PFC) under a tone-detection task. Our analyses reveal the temporal details of the functional interactions between A1 and PFC under attentive behavior as well as among the auditory neurons under spontaneous activity at unprecedented spatiotemporal resolutions. In addition to their utility in analyzing neuronal data, our techniques have potential application in extracting functional network dynamics in other domains beyond neuroscience, such as social networks or gene regulatory networks, thanks to the plug-and-play nature of the algorithms used in our inference framework.

Theory and Algorithms

Preliminaries and Notations.

We use point process modeling to capture neuronal spiking statistics. A point process is a stochastic sequence of discrete events occurring at random points in continuous time. When adapted to the discrete time domain, point process models have proven to be successful in capturing the statistics of neuronal spiking (37, 44–46). Our analysis in this paper is based on discrete point process models, in which the observation interval is discretized to bins of length . By choosing small enough, the resulting neuronal data transform into a binary sequence . The statistics of this sequence can be fully characterized by its conditional intensity function (CIF) denoted by , representing the neuron’s instantaneous firing rate at time bin conditional on all of the data and covariates up to time . The binary spiking sequence can be modeled by a conditionally independent Bernoulli process with success probability of .

Suppose that at time bin the effective neural covariates are collected in a vector . Such covariates include the neuron’s spiking history, the history of the activity of other neurons, and extrinsic stimuli. A dynamic generalized linear model (GLM) with logistic link function for this neuron’s CIF (43) is given by

| [1] |

where , for , is the logistic function, and denotes the time-varying parameter vector of length at time , characterizing the dynamics of the underlying neuronal encoding process. We consider a multiscale window-based model for with piecewise constant dynamics within windows of length samples. To this end, we segment the spiking activity to windows of length bins and assume that for all , where . For notational convenience, we denote the spiking activity associated with time window by a vector , the CIF vector by , and the matrix of covariates by with rows corresponding to the covariate vectors. We assume that the parameter vectors are sparse.
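The logistic-link Bernoulli model described above can be illustrated with a minimal sketch. The names `simulate_spikes`, `X`, and `omega` are hypothetical, the covariates are random placeholders rather than actual spiking histories, and the parameter vector is held constant over the whole record for simplicity.

```python
import numpy as np

def logistic(z):
    """Elementwise logistic function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def simulate_spikes(X, omega, rng):
    """Draw conditionally independent Bernoulli spikes from a logistic-link GLM.

    X     : (T, M) array whose rows are the covariate vectors (assumed layout)
    omega : (M,) parameter vector, held constant here for simplicity
    Returns the per-bin spiking probabilities and the binary spike train.
    """
    p = logistic(X @ omega)                      # per-bin success probability
    spikes = (rng.random(p.shape) < p).astype(int)
    return p, spikes

rng = np.random.default_rng(0)
T, M = 1000, 5
# First column is a constant (baseline); the rest are placeholder covariates.
X = np.column_stack([np.ones(T), rng.standard_normal((T, M - 1))])
omega = np.array([-3.0, 1.0, 0.0, 0.0, -0.5])    # sparse: two zero entries
p, spikes = simulate_spikes(X, omega, rng)
```

In an ensemble setting the columns of `X` would instead hold lagged spike counts and stimulus values, which this sketch does not construct.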

To capture the adaptivity manifested in the spiking dynamics, we use the forgetting factor mechanism of RLS algorithms (47) and combine the data log-likelihoods up to time using an exponential weighting scheme (43):

| [2] |

where denotes a generic parameter vector, is the forgetting factor parameter, and is the vector of all-ones of length . When applied to vectors, the functions and are understood to act in an elementwise fashion.
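As a rough illustration of the exponential weighting, the sketch below evaluates a weighted sum of per-window Bernoulli log-likelihoods at a fixed parameter vector, both directly and through the one-step recursion that the forgetting factor allows. The `(1 - beta)` normalization and all function names are assumptions made for this example and may differ from the exact form of Eq. 2.

```python
import numpy as np

def window_loglik(n, X, omega):
    """Bernoulli log-likelihood of one window under a logistic-link GLM."""
    eta = X @ omega
    # log(1 + exp(eta)) computed stably and summed over the bins of the window
    return float(n @ eta - np.sum(np.logaddexp(0.0, eta)))

def weighted_loglik(ns, Xs, omega, beta):
    """Direct evaluation: window i (0-indexed) gets weight beta**(k - 1 - i)."""
    k = len(ns)
    total = sum(beta ** (k - 1 - i) * window_loglik(ns[i], Xs[i], omega)
                for i in range(k))
    return (1.0 - beta) * total

def weighted_loglik_recursive(ns, Xs, omega, beta):
    """Same quantity via the update  l_k = beta * l_{k-1} + (1 - beta) * L_k."""
    total = 0.0
    for n, X in zip(ns, Xs):
        total = beta * total + (1.0 - beta) * window_loglik(n, X, omega)
    return total
```

The recursion is exact only when the parameter vector is held fixed across windows; an adaptive filter that updates its estimate at every window needs additional bookkeeping that is not shown here.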

The parameter vectors for can be efficiently estimated from the data using a sparse adaptive point process filter, referred to as (43). The algorithm estimates the sparse time-varying parameter vectors from point process observations in an online fashion by recursively maximizing a sequence of $\ell_1$-regularized exponentially weighted log-likelihoods via a proximal algorithm:

| [3] |

where is a regularization parameter and can be selected analytically or through cross-validation (43). Statistical confidence regions for the estimates can be computed using a recursive nodewise regression procedure (43). Throughout the rest of the paper, we use the algorithm for adaptive parameter estimation.
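To make the idea of a proximal algorithm concrete, here is a minimal sketch of proximal gradient ascent with soft thresholding on an $\ell_1$-penalized logistic log-likelihood. It is a generic batch routine on a single window, not the recursive adaptive filter of ref. 43; the function names, step-size rule, and test data are all assumptions made for illustration.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||v||_1: shrink each entry toward zero by tau."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def penalized_loglik(n, X, omega, gamma):
    """Logistic log-likelihood minus the l1 penalty gamma * ||omega||_1."""
    eta = X @ omega
    return float(n @ eta - np.sum(np.logaddexp(0.0, eta))
                 - gamma * np.sum(np.abs(omega)))

def fit_sparse_glm(n, X, gamma, n_iter=500):
    """Proximal gradient ascent starting from the all-zero vector."""
    # The log-likelihood curvature is bounded by 0.25 * ||X||_2^2, so 1/L is:
    step = 4.0 / np.linalg.norm(X, 2) ** 2
    omega = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (n - 1.0 / (1.0 + np.exp(-(X @ omega))))
        omega = soft_threshold(omega + step * grad, step * gamma)
    return omega

rng = np.random.default_rng(2)
X = np.column_stack([np.ones(400), rng.standard_normal((400, 7))])
omega_true = np.array([-1.0, 1.5, 0.0, 0.0, -1.0, 0.0, 0.0, 0.0])
n = (rng.random(400) < 1.0 / (1.0 + np.exp(-(X @ omega_true)))).astype(float)
omega_hat = fit_sparse_glm(n, X, gamma=5.0)
```

With the step size set to the reciprocal of the curvature bound, each iteration cannot decrease the penalized objective, which is why the routine needs no line search.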

The AGC Measure.

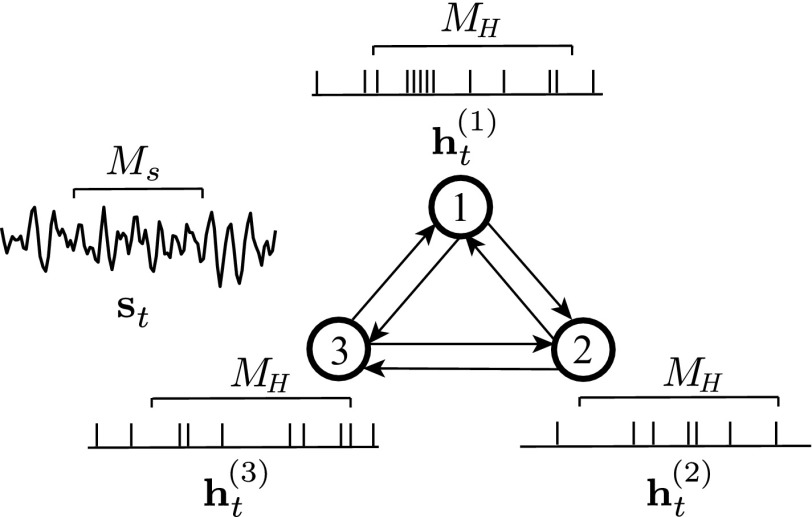

Consider simultaneous spike recordings from an ensemble of neurons indexed by , denoted by over the time bins . At time , the spiking statistics of each neuron are modeled via the CIF formulation of Eq. 1 using a sparse modulation parameter vector consisting of a scalar baseline firing parameter , a collection of sparse history dependence parameter vectors of size , in which represents the contribution of the spiking history of neuron to the CIF of neuron , and accounts for the stimulus modulation vector (e.g., receptive field). Let be the spike count of neuron within the -th spike counting window of length , where for and . The covariates associated with the ensemble activity are given by , where denotes the history of spike counts of neuron within nonoverlapping windows of up to a lag of , and is the vector of neural stimuli in effect at bin . We refer to this model, where the history of all of the neurons in the ensemble is taken into account, as the full model. Fig. 1 shows an example of the neuronal ensemble and the corresponding covariates for .

Fig. 1.

An example of the neuronal ensemble model for neurons and . The CIF of neuron (2) can be expressed as .

To assess the G-causal influences, a likelihood-based GC measure has been proposed in ref. 40 for point process models. Consider neuron as the target neuron with an observation vector . Let denote the history of the covariates of neuron . The parameter vector and covariate history of neuron after excluding the effect of neuron are denoted by and , respectively, and compose the so-called reduced model. The log-likelihood ratio statistic associated with the G-causal influence of neuron on neuron can be defined as

| [4] |

where denotes the likelihood of estimated parameter vector given the observation sequence and the history of the covariates , and . Based on this formulation, the GC effect from neuron to neuron can be measured as the reduction in the point process log-likelihood of neuron in the reduced model as compared with the full model. Note that the signum function determines the effective excitatory or inhibitory nature of this influence.
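The signed log-likelihood ratio can be sketched as follows for already-fitted full and reduced models. The function names and argument layout are hypothetical, and taking the sign from the sum of the fitted cross-history coefficients is an assumption made here for illustration.

```python
import numpy as np

def bernoulli_loglik(n, X, omega):
    """Log-likelihood of binary spikes n under a logistic-link GLM."""
    eta = X @ omega
    return float(n @ eta - np.sum(np.logaddexp(0.0, eta)))

def gc_statistic(n, X_full, omega_full, X_red, omega_red, cross_cols):
    """Signed log-likelihood ratio between the full and the reduced model.

    cross_cols indexes the columns of X_full that hold the history of the
    candidate source neuron (these columns are absent from X_red).
    """
    # Assumed sign convention: aggregate cross-history effect in the full model.
    s = np.sign(np.sum(omega_full[cross_cols]))
    gain = bernoulli_loglik(n, X_full, omega_full) - bernoulli_loglik(n, X_red, omega_red)
    return float(s * gain)

# Tiny hand-checkable example: two bins, baseline plus one cross-history column.
n = np.array([1.0, 0.0])
X_full = np.array([[1.0, 1.0], [1.0, 0.0]])
X_red = X_full[:, :1]
value = gc_statistic(n, X_full, np.array([0.0, 1.0]), X_red, np.array([0.0]), [1])
```

When both parameter vectors are maximum likelihood fits of nested models, the unsigned gain is nonnegative, so the sign carries only the excitatory or inhibitory label.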

Most existing formulations of GC leverage the MVAR modeling framework (20–29, 31, 35), which pertains to data with linear Gaussian statistics. The GC measure in Eq. 4, however, benefits from the likelihood-based inference methodology and covers a wide range of complex statistical models. Both the MVAR-based GC measure and its log-likelihood-based point process variant of ref. 40 assume that the underlying time series are stationary (i.e., the modulation parameters are all static). In many scenarios of interest, however, the underlying dynamics exhibit nonstationarity. An example of such a scenario is the task-dependent receptive field plasticity phenomenon (43, 48, 49). In addition, ML estimation used by these techniques does not capture the underlying sparsity of the parameters and often exhibits poor performance, when the data length is short or the number of neurons is large.

To account for possible changes in the ensemble parameters and their underlying sparsity, we introduce the AGC measure, which is capable of capturing the dynamics of G-causal influences in the ensemble. To this end, we make two major modifications to the classical GC measure. First, we leverage the exponentially weighted log-likelihood formulation of Eq. 2 to induce adaptivity into the GC measure. Second, we exploit the possible sparsity of the ensemble parameters. Replacing the standard data log-likelihoods in Eq. 4 by their sparse adaptive counterparts given in Eqs. 2 and 3, we define the AGC measure from neuron to neuron at time window as

| [5] |

Although these modifications bring about crucial advantages in capturing the functional network dynamics in a robust fashion, they require construction of a statistical inference framework in order for the proposed AGC measure to be useful. We address these issues in the forthcoming section.

Statistical Inference of the AGC Measure.

Due to the stochastic and often biased nature of GC estimates, nonzero values of GC do not necessarily imply existence of G-causal influences. Hence, a statistical inference framework is required to assess the significance of the extracted G-causal interactions.

Consider two nested GLM models, referred to as full and reduced models, with parameters and , respectively, in which the latter is a special case of the former. To assess the significance of a GC link, one can test for the null hypothesis against the alternative . The commonly used test statistic is referred to as the deviance difference of the two models and is defined as , where is the log-likelihood and and denote the parameter estimates under the full and reduced models, respectively. The deviance difference for the likelihood-based GC is twice the right-hand side of Eq. 4, modulo the signum function.

To perform the foregoing hypothesis test, the distributions of the deviance difference under the two hypotheses need to be characterized. Although these distributions are known for the classical GC measure (50–52), they cannot be readily extended to our AGC measure for two main reasons. First, the log-likelihoods are replaced by their exponentially weighted counterparts, which suppresses their dependence on the data length due to the forgetting factor mechanism. Second, unlike ML estimates, which are asymptotically unbiased, the $\ell_1$-regularized ML estimates are biased and hence violate the common asymptotic normality assumptions.

To address these challenges, inspired by recent results in high-dimensional regression (53, 54), we define the adaptive de-biased deviance as

| [6] |

where and are the gradient vector and Hessian matrix of the exponentially weighted log-likelihood function , and and denote the true and estimated parameter vector at time window , respectively. The adaptive de-biased deviance is composed of two main terms: The first term is twice the exponentially weighted log-likelihood ratio statistic, which is analogous to the standard deviance difference, whereas the second is a bias correction term. The bias correction term compensates for the effect of the $\ell_1$-regularization bias imposed in favor of enforcing sparsity in the estimate . The effect of the forgetting factor mechanism appears in the form of the scaling . Finally, we define a test statistic referred to as adaptive de-biased deviance difference:

| [7] |

In what follows, we will mainly work with , as opposed to its biased version given by in Eq. 5. Note that , where is the difference of the bias terms of the full and reduced models.

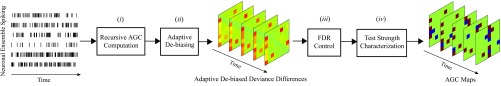

There are four major challenges in inferring a GC influence from : (i) efficient computation of from the data, (ii) determining the distribution of under the absence and presence of a GC link, (iii) controlling the false discovery rate (FDR), and (iv) assessing the significance of the detected GC links. We will address these challenges in the remainder of this section. Fig. 2 shows a schematic depiction of the overall inference procedure, which we will discuss next.

Fig. 2.

Schematic depiction of the inference procedure for the AGC measure.

(i) Recursive computation of the AGC.

The computation of the adaptive de-biased deviance differences for all of the possible links and at all times is required for our statistical analysis. Therefore, in order for the analysis to scale favorably with the network size and the data length , it is crucial to develop an efficient framework for the computation of the AGC measure. The RLS-inspired exponential weighting of the log-likelihoods in Eq. 2 indeed paves the way for the recursive computation of the AGC measure. The recursive procedure for computing for a generic estimate is given in Algorithm S3 in SI Appendix, section 3, from which the AGC measure of Eq. 5 can be computed. This step comprises the recursive AGC computation block in Fig. 2.

(ii) Asymptotic distributional analysis of the AGC.

Let and denote the dimensions of and , respectively. In Theorem S1 in SI Appendix, section 1 we establish the following result under mild technical conditions: As ,

i) in the absence of a GC link from to , , and

ii) in the presence of a GC link from to , if the corresponding cross-history coefficients scale at least as , then ,

where is the dimensionality difference of the two nested models, and is the corresponding noncentrality parameter at time window .
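The two limiting cases can be summarized in symbols. The notation here is assumed for illustration only: $D_k$ stands for the adaptive de-biased deviance difference at time window $k$, $M_d$ for the dimensionality difference of the two nested models, and $\nu_k$ for the noncentrality parameter; the precise limiting regime and technical conditions are those of Theorem S1.

```latex
\begin{align*}
\text{(no GC link)} \quad & D_k \xrightarrow{\;d\;} \chi^2\!\left(M_d\right), \\
\text{(GC link present)} \quad & D_k \xrightarrow{\;d\;} \chi^2\!\left(M_d,\ \nu_k\right).
\end{align*}
```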

Theorem S1 has two main implications. First, it establishes that our proposed adaptive de-biased deviance difference statistic admits simple asymptotic distributional characterization. Given that these asymptotic distributions form the main ingredients of the forthcoming inference procedure, the second block in Fig. 2 serves to highlight the significance of adaptive de-biasing. As shown in SI Appendix, section 3, the bias can also be computed in a recursive fashion.

Second, given that for a zero noncentrality parameter the noncentral chi-squared distribution coincides with the chi-squared distribution, the noncentrality parameter plays a key role in separating the distributions under the null and alternative hypotheses: When the deviance difference is close to zero, the null hypothesis is likely to be true (i.e., no GC link). When the deviance difference is large, the alternative is likely to be true (i.e., a GC link exists). (See Remark 2 in SI Appendix, section 1, for further discussion.) The noncentrality parameter, however, is a complicated function of the true values of the parameters and cannot be directly observed. In the next two subsections, we initially assume that an estimate of it is at hand, and later on derive an algorithm for its estimation.

The output of the second block in Fig. 2 is the de-biased deviance differences corresponding to all pairs of neurons (shown in 2D as deviance difference maps). In the next two subsections we will show how to translate the deviance differences to statistically interpretable AGC links.

(iii) FDR control.

First, we use part i of the result of Theorem S1 to control the FDR in a multiple hypothesis testing framework. To this end, we use the Benjamini–Yekutieli (BY) procedure (55). The BY procedure aims at controlling the FDR, which is the expected proportion of rejected null hypotheses that are rejected incorrectly (namely, “false discoveries”), at a desired significance level .

To identify significant GC interactions while avoiding spurious false positives, we conduct multiple hypothesis tests on the set of pairwise possible GC interactions among the neurons at each time step . The null hypothesis corresponds to lack of a GC link from neuron to at time step . Thus, rejection of the null hypothesis amounts to discovering a GC link at time step . We first compute for all possible links in . Based on Theorem S1, under the null hypothesis we have as . Hence, by virtue of convergence in distribution, for close to , thresholding the test statistic results in a consistent approximation to limiting the false positive rate: is rejected at a confidence level of , if , where is the inverse CDF of a chi-squared distribution with degrees of freedom. Using the BY procedure, we can thus control the mean FDR at a rate of for all tests (Fig. 2, third block). Algorithm S1 in SI Appendix, section 2, summarizes this procedure.
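The BY step-up rule itself is standard and can be sketched in a few lines. The function below takes one p-value per candidate link (for instance, upper-tail chi-squared probabilities of the deviance differences) and returns a rejection mask; the function name and the example p-values are hypothetical, and this is not a transcription of Algorithm S1.

```python
import numpy as np

def benjamini_yekutieli(pvals, alpha):
    """Benjamini-Yekutieli step-up procedure.

    Returns a boolean array marking the rejected null hypotheses while
    controlling the FDR at level alpha under arbitrary dependence.
    """
    p = np.asarray(pvals, dtype=float)
    m = p.size
    c_m = np.sum(1.0 / np.arange(1, m + 1))           # harmonic correction factor
    order = np.argsort(p)                              # indices of ascending p-values
    thresholds = np.arange(1, m + 1) * alpha / (m * c_m)
    passed = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        last = np.max(np.nonzero(passed)[0])           # largest rank meeting its threshold
        reject[order[: last + 1]] = True               # reject everything up to that rank
    return reject

example = benjamini_yekutieli([0.001, 0.8, 0.02, 0.0005], alpha=0.1)
```

The harmonic factor makes BY more conservative than the Benjamini-Hochberg rule, which is the price paid for validity when the test statistics of different links are dependent.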

(iv) Test strength characterization via noncentral filtering and smoothing algorithm.

Next, we use part ii of the result of Theorem S1 to assess the significance of the tests for the detected GC links. Under the alternative hypothesis, Theorem S1 implies that as . Hence, by virtue of convergence in distribution, the false negative rate can be estimated by , at a confidence level of , where represents the CDF of a noncentral chi-squared distribution with degrees of freedom and the estimate of the noncentrality parameter . To quantify the significance of an estimated GC link, we use Youden’s J-statistic, which is an effective measure often used for summarizing the overall performance of a diagnostic test. The J-statistic in our setting is given by

| [8] |

for a fixed significance level . Note that the J-statistic can take values in . The case of being close to one represents high sensitivity and specificity of the test statistic, which coincides with large values of noncentrality. One advantage of the J-statistic over the conventional P value is that it accounts for both type I and type II errors. In the context of GC analysis, the J-statistic for each possible link can serve as a normalized indicator of how reliable the detected link is. For consistency, we assign a value of , when the null hypothesis is not rejected.
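A minimal numerical sketch of this quantity, assuming the J-statistic equals sensitivity plus specificity minus one, with specificity taken as one minus the significance level and the false negative rate read off the noncentral chi-squared CDF at the central chi-squared threshold. The argument names `alpha`, `df`, and `nu_hat` are illustrative, and the exact expression of Eq. 8 may differ in notation.

```python
from scipy.stats import chi2, ncx2

def youden_j(alpha, df, nu_hat):
    """Youden's J for a chi-squared test at level alpha.

    alpha  : significance level of the test
    df     : degrees of freedom (dimensionality difference of the nested models)
    nu_hat : estimated noncentrality parameter (must be positive)
    """
    threshold = chi2.ppf(1.0 - alpha, df)              # rejection threshold under the null
    false_negative = ncx2.cdf(threshold, df, nu_hat)   # mass of the alternative below it
    return 1.0 - alpha - false_negative

j_weak = youden_j(alpha=0.05, df=3, nu_hat=0.01)       # barely separated hypotheses
j_strong = youden_j(alpha=0.05, df=3, nu_hat=50.0)     # well separated hypotheses
```

Under these assumptions the value grows with the noncentrality estimate and approaches one minus the significance level when the two distributions barely overlap.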

It remains to estimate the unknown noncentrality parameters given the observed deviance differences . Under the assumption that changes smoothly in time, this can be carried out efficiently by a noncentral filtering and smoothing algorithm, which is given by Algorithm S2 in SI Appendix, section 2. Given these estimates, the J-statistics for the rejected nulls can be computed at (Fig. 2, fourth block), as summarized in Algorithm S1 in SI Appendix, section 2.

Algorithm 1: AGC Inference from Ensemble Neuronal Spiking

Input: Spike trains and parameters .

1. for , do
2. Recursively estimate the sparse time-varying modulation parameter vectors and corresponding to full and reduced GLMs using (43),
3. Recursively compute the adaptive de-biased deviance differences (Algorithm S3),
4. Perform noncentral chi-squared filtering and smoothing to estimate the noncentrality parameters from (Algorithm S2),
5. for k = 1, …, K, do
6. Apply BY rejection rule to the ensemble set of GC tests to control FDR at rate (Algorithm S1),
7. Compute AGC maps based on the J-statistics as (Algorithm S1).

Output: AGC maps.

Summary of Advantages over Existing Work.

Algorithm 1 summarizes the overall AGC inference procedure. Choices of the parameters involved in Algorithm 1 and its computational complexity are discussed in SI Appendix, sections 4–6. Before presenting applications to synthetic and real data, we summarize the advantages of our methodology over existing work:

i) Sparse dynamic GLM modeling provides more accurate estimates of the parameters (43), and hence more reliable detection of the GC links, as compared with existing static methods based on ML. We examine this aspect of our methodology in SI Appendix, section 8, using an illustrative simulation study;

ii) Existing techniques do not relate the noncentrality parameters to the test strengths of the detected GC links. In light of Theorem S1 and the need for estimating the noncentrality parameters, we devised a noncentral filtering and smoothing algorithm to exploit the entire observed data for obtaining reliable estimates;

iii) Exponential weighting of the log-likelihoods admits construction of adaptive filters for estimating the network parameters in a recursive fashion, which significantly reduces the computational complexity of our inference procedure; and

iv) Characterization of AGC via the J-statistic as a normalized measure of hypothesis test strength for each detected GC link can be further used for graph-theoretic analysis of the inferred functional networks. By viewing the J-statistic as a surrogate for link strength, the AGC networks can be refined by thresholding the J-statistics, and access to the distribution of the J-statistics in a network allows one to perform further hypothesis tests regarding the network function (56).

In the next section, we illustrate these advantages by comparing our methodology with two representative techniques for inferring functional network dynamics.

Applications

A Simulated Example.

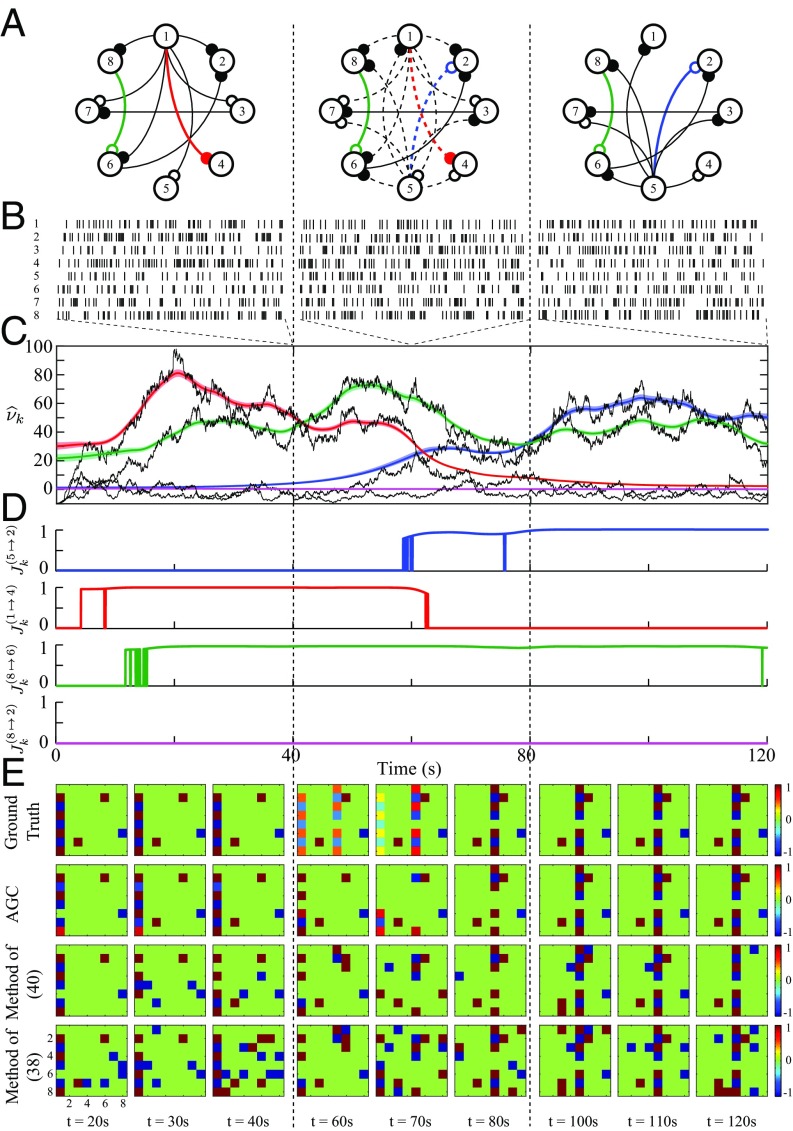

We consider a network of functionally interconnected neurons whose connectivity pattern evolves in time. As illustrated in Fig. 3A, the network dynamics undergo three main states, each covering one-third ( s) of the simulation period: (i) the first static state, where neuron plays a dominant role, causally influencing all other neurons; (ii) the intermediate dynamic state, where neuron loses the dominant role to neuron , as its causal influences smoothly decay, while a new set of causal interactions from neuron to all of the other neurons emerges; and (iii) the final static state, where all of the causal links from neuron have completely vanished and the links from neuron have stabilized. The network also comprises three static causal links, for example, , which remain constant throughout.

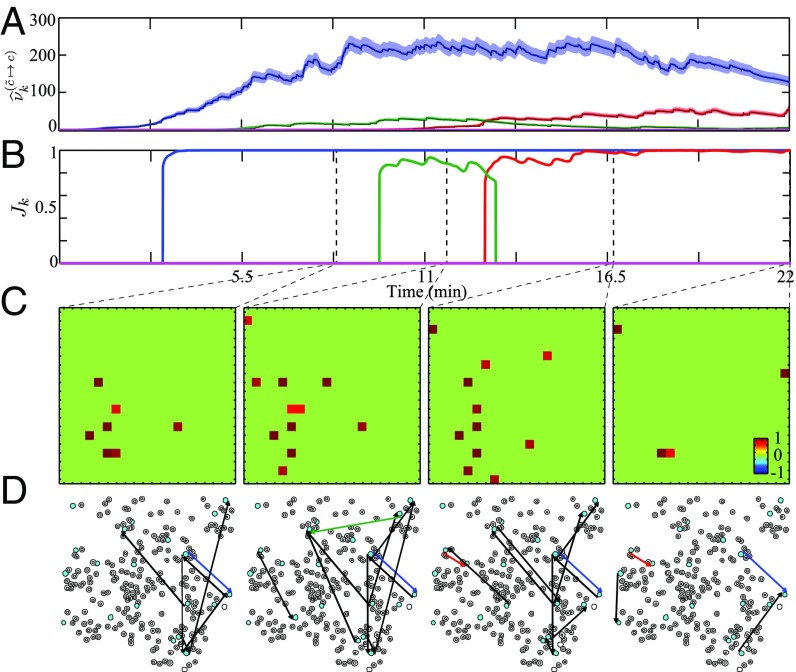

Fig. 3.

Functional network dynamics inference from simulated spikes. (A) Three states of the functional network evolution, where neurons (vertices) are interacting through static (solid edges) or dynamic (dashed edges) causal links of inhibitory (open circles) or excitatory (filled circles) nature. (B) One realization of simulated spikes within windows of s selected at s. (C) Estimated noncentrality across time corresponding to four selected GC links (color-coded in network map), along with the shifted deviance differences (black traces) and the confidence regions for each estimated trace (colored traces). (D) Four panels of estimated J-statistics corresponding to the selected GC links. (E) Performance comparison of the causal inference methods: (i) the proposed AGC method (second row), (ii) the static GC method in ref. 40 (third row), and (iii) the functional connectivity method in ref. 38 (last row), along with the true causality maps (first row). Each panel represents the estimated causality map at a specific time.

An observation period of is discretized to bins of length . We use a point process model with Bernoulli spiking statistics to generate the binary spike trains for all neurons, where the CIF is modeled by the dynamic GLM of Eq. 1. The details of the parameter selection and estimation are given in SI Appendix, section 5. Fig. 3B shows a realization of the simulated spike trains indicated by black vertical lines for all eight neurons within three sample windows of length s, with end points at , selected from the three segments of the simulation. Note that the G-causal pattern of Fig. 3A is unknown to the estimator, and is to be inferred from the simulated spike trains. Fig. 3C shows the time course of the estimated noncentrality parameters and their confidence intervals obtained by the noncentral filtering and smoothing algorithm associated with four selected GC links: (i) a dynamic weakening GC link (red), (ii) a dynamic strengthening GC link (blue), (iii) a static link (green), and (iv) a nonexisting GC link (magenta). Black traces show the shifted observed deviances . Fig. 3D shows the time course of the estimated J-statistics plotted in four separate panels, where the FDR is controlled at a rate .

In Fig. 3C, the estimates of corresponding to the three existing GC links take significant values, correctly identifying the G-causal interactions, while takes values close to zero for the nonexisting link, implying no significant G-causal interaction. The time course of changes for both dynamic links and the static link is closely tracked by the noncentrality parameters, albeit with an apparent delay. This delay is due to the choice of the effective window length and highlights the trade-off between estimation accuracy and delay. While it is possible to reduce this delay by choosing smaller effective windows, for the sake of accuracy of parameter estimation, and thereby robust detection of the AGC links, we have chosen the effective window length to be s (a fraction of the -s transition period) to incur a tolerable delay. The aforementioned performance is echoed in the test strengths quantified by the J-statistics shown in Fig. 3D. Even though the noncentrality parameters in Fig. 3C track the changes of the network parameters much faster, the J-statistics may lag behind due to the conservative statistical thresholds set by the FDR control procedure. With a higher FDR level, the J-statistics capture the changes faster, but at the expense of possibly more false discoveries. It is noteworthy that our proposed method distinguishes the direct GC links from the indirect ones, as it correctly detects the direct GC links and but rejects the existence of the corresponding indirect link .
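The trade-off between effective window length and tracking delay can be illustrated with a toy exponentially weighted estimator. The sketch below is not the paper's estimator: it assumes a scalar running mean with a forgetting factor `beta` and uses the common adaptive-filtering convention that the effective window length is 1/(1 - beta) bins. The function names are hypothetical.

```python
def exp_weighted_mean(x, beta):
    """Recursive exponentially weighted mean with forgetting factor beta in (0, 1)."""
    est, out = x[0], []
    for value in x:
        est = beta * est + (1.0 - beta) * value
        out.append(est)
    return out


def half_rise_delay(beta, n=5000, step_at=1000):
    """Bins needed for the estimate to cover half of a unit step change."""
    x = [0.0] * step_at + [1.0] * (n - step_at)
    est = exp_weighted_mean(x, beta)
    for t in range(step_at, n):
        if est[t] >= 0.5:
            return t - step_at
    return None


for beta in (0.9, 0.99, 0.995):
    n_eff = 1.0 / (1.0 - beta)  # effective window length in bins (assumed convention)
    print(beta, round(n_eff), half_rise_delay(beta))
```

Longer effective windows average over more bins, which stabilizes the estimate, but the response to an abrupt change is correspondingly slower.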

The top row in Fig. 3E shows the ground truth G-causal maps plotted at nine time instances (three per segment). Each map represents an color-coded array showing the excitatory, inhibitory, and no-GC links in red, blue, and green, respectively. The AGC maps estimated by our method are shown in the second row, where each entry represents the J-statistic of the estimated GC link . The excitatory or inhibitory nature of each link is determined by the sign of the AGC measure, which accounts for the aggregate cross-history contribution, and is not indicative of the morphological identity of the connections.

We compare the AGC maps with two other methods: the static GC method of ref. 40 (third row), and the functional connectivity analysis of ref. 38 (final row). To adapt these methods to the time-varying setting, we used nonoverlapping window segments whose length is chosen to match the effective window length of our method. Within each window, the signed binary functional connectivity is estimated using the methods outlined in refs. 38 and 40. The true model order and the same significance level are used for all methods (see SI Appendix, section 5, for more details). Fig. 3E (last two rows) shows the connectivity maps obtained by refs. 38 and 40. On a qualitative level, Fig. 3E reveals the favorable performance of our proposed framework in terms of both identification and tracking of the GC influences. The method of ref. 40 results in both high false positive and false negative errors and fails to track the GC dynamics due to highly variable parameter estimates. Similarly, the method of ref. 38 shows poor false positive rejection and tracking performance.

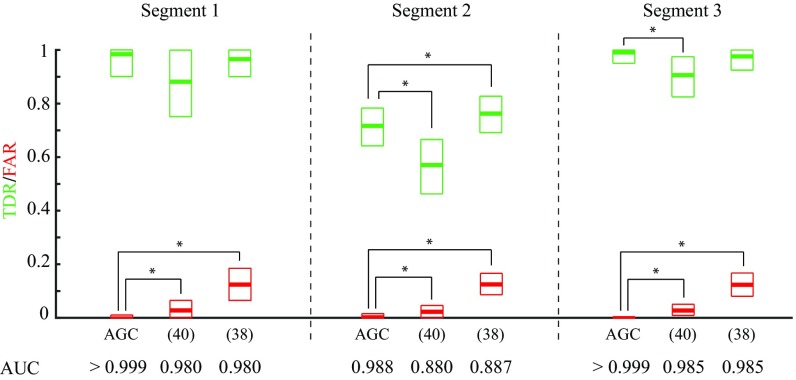

To quantify the foregoing performance comparison, we repeated the simulation for realizations of spike trains randomly generated based on the network dynamics in Fig. 3A. Fig. 4 represents the performance results in terms of true detection rate (TDR) and false alarm rate (FAR), which are shown in green and red, respectively. Boxes represent the mean and confidence intervals. Based on the Wilcoxon signed-rank test with , our method has a significantly lower FAR compared with both ref. 38 (effect sizes of for all segments) and ref. 40 ( and for the three segments, respectively). Our achieved TDRs are also significantly higher than those of ref. 40 ( and for the three segments, respectively) and are only outperformed by ref. 38 in the middle segment ( and for the three segments, respectively). By varying the significance levels for all of the algorithms, we also evaluated their receiver operating characteristic (ROC) performance, whose area under the curve (AUC) values for the three segments are indicated in Fig. 4. Further details on the comparison procedure as well as assessing the robustness of our method to the choice of parameters are given in SI Appendix, section 7. It is noteworthy that our method is the only one with consistently low FAR (), while maintaining high TDR. Finally, both methods in refs. 38 and 40 output binary connectivity maps, as opposed to AGC, which provides normalized continuous-valued test strengths of the detected GC links. In the spirit of easing reproducibility, we have archived a MATLAB implementation that fully generates Fig. 3 on GitHub (https://github.com/Arsha89/AGC_Analysis).

Fig. 4.

Performance comparison of AGC inference with the methods of refs. 40 and 38 in terms of TDR (green) and FAR (red) for the three segments of the simulation period. Boxes represent the mean and confidence intervals. Asterisks indicate significant difference with effect size of (Wilcoxon signed-rank test, ). AUC values corresponding to the ROC performance of the three algorithms are reported at the bottom (see SI Appendix, section 7 for more details).
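For concreteness, the two summary metrics can be computed from a ground truth map and an estimated map as sketched below. This is an illustrative definition only: it assumes square maps in which a nonzero entry marks a link, it ignores the sign of the link, and it excludes the diagonal. The exact evaluation procedure is the one described in SI Appendix, section 7, and `tdr_far` is a hypothetical helper name.

```python
def tdr_far(true_map, est_map):
    """True detection rate and false alarm rate over off-diagonal entries.

    Both maps are square lists of lists; a nonzero entry (i, j) marks a link
    between neurons i and j. Entries on the diagonal are ignored.
    """
    tp = fp = pos = neg = 0
    n = len(true_map)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            truth = true_map[i][j] != 0
            detected = est_map[i][j] != 0
            pos += truth
            neg += not truth
            tp += truth and detected
            fp += (not truth) and detected
    tdr = tp / pos if pos else float("nan")
    far = fp / neg if neg else float("nan")
    return tdr, far


# Made-up 3-neuron example: one of two true links is found, plus one false alarm.
true_map = [[0, 1, 0], [0, 0, -1], [0, 0, 0]]
est_map = [[0, 1, 0], [0, 0, 0], [1, 0, 0]]
tdr, far = tdr_far(true_map, est_map)
```

Averaging these two quantities over repeated realizations gives per-segment summaries of the kind plotted in Fig. 4.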

Application to Real Data: Spontaneous Activity in the Mouse Auditory Cortex.

In this section, we apply our proposed method to experimentally recorded neuronal population data from the mouse auditory cortex. We imaged the spontaneous activity in the auditory cortex of an awake mouse with in vivo two-photon calcium imaging (see SI Appendix, section 13 for details of the experimental procedures). Within an imaged field of view, the activity of neurons is recorded at a sampling rate of for a total duration of . Spike trains are inferred from the fluorescence traces using the constrained-foopsi technique (57). For GC inference, a subset of neurons exhibiting high spiking activity was selected, as many of the neurons in the ensemble are relatively silent. The FDR is controlled at a rate of for testing the possible GC links. Fig. 5 A and B show the time course of the noncentrality estimates and J-statistics for four selected candidate GC links, respectively. These representative GC links consist of a persistent link (blue), two transient links (red) and (green), and an insignificant link (magenta). Fig. 5 C and D show four snapshots of the AGC map estimates, respectively in the matrix form and as a network overlaid on the slice, at time-stamps . Other than the three color-coded significant links, the rest of the detected G-causal links are indicated by black arrows.

Fig. 5.

Adaptive G-causal interactions among an ensemble of neurons in mouse auditory cortex under spontaneous activity. The time course of estimated GC changes for four selected GC links obtained through (A) noncentrality parameter and (B) J-statistics . (C) AGC map estimates at four selected points in time, marked by the dashed vertical lines in the top panel. (D) Network maps overlaid on the slice, showing cells with black circles and the selected cells highlighted in cyan. The detected GC links are depicted in black directed arrows and colored for the selected links.

The detected G-causal maps are considerably sparse (maximum out of possible links), with a few persistent GC links and a multitude of transient links emerging and vanishing over time (Movies S1 and S2). The sparsity of the AGC maps is consistent with sparse activity in auditory cortex (58). A careful inspection of the spatial pattern of the AGC links reveals that the detected links correspond to distances in the range of . These distances are consistent with in vitro measurements of the spatial patterns of intralaminar connectivity within the mouse auditory cortex (59), showing a significant peak in the connection probability within the mean radial range of . These results indicate that the proposed AGC method is able to detect underlying connectivity patterns among neurons.

Application to Real Data: Ferret Cortical Activity During Active Behavior.

Studies of the PFC have revealed its association with high-level executive functions such as decision making and attention (60–62). In particular, recent findings suggest that PFC is engaged in cognitive control of auditory behavior (62), through a top-down feedback to sensory cortical areas, resulting in enhancement of goal-directed behavior. It is conjectured in ref. 63 that the top-down feedback from PFC triggers adaptive changes in the receptive field properties of A1 neurons during active attentive behavior, to facilitate the processing of task-specific stimulus features. This conjecture has been examined in the context of visual processing, where top-down influences exerted on the visual cortical pathways have been shown to alter the functional properties of cortical neurons (64, 65).

To examine this conjecture at a single-unit level, we apply our proposed AGC inference method to single-unit spiking activities from an ensemble of neurons simultaneously recorded from two cortical regions of A1 and PFC in ferrets during a series of passive listening and active auditory task conditions. In this application, we sought to reveal the significant task-specific changes in the G-causal interactions within or between PFC and A1 regions at the single-unit level during active behavior. We used the spike data recordings from a large set of experiments (more than ) conducted on three ferrets for GC inference analysis (data from the Neural Systems Laboratory, Institute for Systems Research, University of Maryland, College Park, MD). During each trial in an auditory discrimination task, the ferrets were presented with a random sequence of broadband noise-like acoustic stimuli known as temporally orthogonal ripple combinations (TORCs) along with randomized presentations of the target tone. Ferrets were trained to attend to the spectrotemporal features of the presented sounds and discriminate the tonal target from the background reference stimuli (see ref. 63 for details of the experimental procedures). Due to their broadband noise-like features, the TORCs and the corresponding neural responses admit efficient estimation of the spectrotemporal tuning of the primary auditory neurons via sparse regression (43, 66).

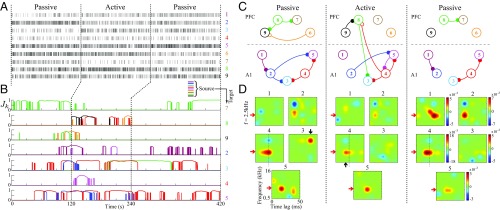

Fig. 6 shows our results on a selected experiment in which a total of nine single units were detected through spike sorting (five units in A1 and four units in PFC). The selected experiment consists of three main blocks: passive listening pretask, active task, and passive listening post-task, composed of and repetitions, respectively. Within each repetition, a complete set of randomly permuted TORCs were presented along with a randomized presentation of the target tone at . Fig. 6A shows the activity of all of the units during the first repetition of each block, separated by vertical dashed lines. Fig. 6B shows the time courses of the inferred J-statistics, where each row represents the significant incoming GC links from all of the other units. Each unit and its significant outgoing GC links are color-coded uniquely as labeled on the right side of each panel. For brevity, only the significant GC links that show a degree of persistence during at least one block of the experiment are plotted. Fig. 6C depicts the representative network maps of the GC links among the nine units during the three main blocks, where each significant GC link from Fig. 6B is indicated by a directional link. Finally, Fig. 6D exhibits snapshots of the STRFs of all five A1 units, taken at the end point of each block. The red arrow marks the tonal target.

Fig. 6.

Dynamic inference of G-causal influences between single units in ferret PFC and A1 during auditory task. (A) Spike trains corresponding to the first repetition of each block. (B) Time course of significant GC changes through J-statistics for selected single units (FDR controlled at ). (C) Detected patterns of network AGC maps during three main blocks of experiment. (D) Spectrotemporal receptive field (STRF) snapshots of A1 units at the end points of three blocks of experiment.

Three major task-specific dynamic effects can be inferred from Fig. 6: (i) a significant bottom-up GC link from the target-tuned A1 unit during active behavior, (ii) a persistent task-relevant top-down GC link, and (iii) task-relevant plasticity and rapid tuning changes within A1. First, unit in A1 shows strong frequency selectivity to the target around kHz during the whole experiment (vertical dashed lines, Fig. 6D). Moreover, its STRF dynamics reveal a plastic shift of the target-tuned facilitative regions to shorter latencies following the active attentive behavior (upward arrow, middle panel; see also Movie S3). Strikingly, a bottom-up GC link from the very same strongly target-tuned unit to PFC (red link, ) emerges during the active task (second row, Fig. 6B), temporally preceding any top-down significant GC link.

The second effect appears as a strong top-down GC link (green link, ) which builds up during the active auditory behavior and even persists during a few repetitions of the post-active condition (fifth row, Fig. 6B). The onset of this top-down GC link coincides with a dramatic and rapid change in the STRF of the A1 unit , which was initially tuned to kHz (downward arrow, Fig. 6D, Left) but eventually gets suppressed at this nontarget frequency (Fig. 6D, Middle) by getting G-causally influenced by the PFC unit . This effect reveals the relationship between the top-down network dynamics and the changes in the tuning of the A1 units. We examine the dynamics of the parameters of the foregoing bottom-up and top-down links in detail in SI Appendix, section 10, for further clarification. The third effect concerns the emergence and strengthening of frequency selectivity in some of the A1 units (e.g., units and , Fig. 6D, Right) to the target tone, which alludes to a salient synaptic reinforcement effect within A1 during and after the active task.

In addition to these interregion GC links, multiple instances of GC links within A1 (e.g., ) or within PFC (e.g., ) emerge or vanish during the active block, which accounts for the task-specific network-level changes within the cortical regions that are involved in active listening. A salient instance of this phenomenon can be observed in the dynamics of unit , whose GC links within PFC significantly change during the active behavior: As it gets G-causally linked to the lower-level A1 region, its GC links to the other PFC units fade away (rows 1, 3 and 5, Fig. 6B). It is noteworthy that the fluctuating instances of the J-statistics (e.g., Fig. 6B, fourth row, third segment) are due to the FDR control procedure, and there is no evidence that they have a neurophysiological basis. To reduce these fluctuations, one can choose a higher FDR level. If these effects persist at high FDR levels, further inspection of the cross-history coefficients is needed to assess their possible neurophysiological basis (see SI Appendix, section 10 for further discussion).
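The sensitivity of borderline links to the chosen FDR level can be seen in a small example. The sketch below uses the Benjamini-Hochberg step-up rule purely as a stand-in for illustration; the FDR control procedure actually used in this work is specified in the paper's methods and may differ. The p-values are made up.

```python
def benjamini_hochberg(p_values, fdr_level):
    """Return booleans marking the hypotheses rejected at the given FDR level."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose sorted p-value is below k * level / m.
    largest_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * fdr_level / m:
            largest_k = rank
    # Reject every hypothesis ranked at or below that k.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= largest_k:
            rejected[idx] = True
    return rejected


p_values = [0.001, 0.012, 0.03, 0.04, 0.2, 0.6]  # made-up p-values for six candidate links
strict = benjamini_hochberg(p_values, 0.05)
lenient = benjamini_hochberg(p_values, 0.10)
```

With these numbers, the two links with intermediate p-values are rejected at the stricter level and retained at the more lenient one. A link whose evidence hovers near the threshold can therefore switch between detected and undetected over time without any change in the underlying interaction.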

To validate our results in the absence of ground truth, we assess their reliability using surrogate data obtained by random shuffling and network subsampling in SI Appendix, section 11 and verify the robustness of the inferred task-dependent functional network dynamics against the aforementioned adversarial perturbations. In conclusion, our methodology enabled the extraction of the top-down and bottom-up network-level dynamics that were previously conjectured in ref. 63 to be involved in active attentive behavior, at the neuronal scale with high spatiotemporal resolution. In SI Appendix, section 12 we present our analysis of another experiment, which further corroborates our findings.
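One common way to build shuffled surrogate data is sketched below. It is an assumption for illustration, not necessarily the procedure of SI Appendix, section 11: each spike train is circularly shifted by an independent random offset, which preserves each unit's spike count and internal timing structure while disrupting the relative timing across units. The function name and the `min_shift` parameter are hypothetical.

```python
import random


def circular_shift_surrogate(spikes, min_shift, seed=0):
    """Shift each spike train by an independent random offset, wrapping around.

    spikes    : list of binary spike trains (lists of 0/1), one per unit
    min_shift : smallest allowed offset in bins; each train must be longer
                than 2 * min_shift
    """
    rng = random.Random(seed)
    surrogate = []
    for train in spikes:
        n = len(train)
        shift = rng.randrange(min_shift, n - min_shift)
        surrogate.append(train[-shift:] + train[:-shift])
    return surrogate


# Made-up example with two short spike trains.
spikes = [[1, 0, 0, 1, 0, 0, 0, 0], [0, 1, 0, 0, 0, 1, 0, 0]]
surrogate = circular_shift_surrogate(spikes, 1, seed=3)
```

Links that remain significant on such surrogates would point to a spurious detection, whereas links that vanish are consistent with a dependence on the relative spike timing across units.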

Discussion and Concluding Remarks

Summary and Extensions of Our Contributions.

Most widely adopted time series analysis techniques for quantifying functional causal relations among the nodes in a network assume static functional structures or otherwise enforce dynamics using sliding windows. While they have proven successful in analyzing stationary Gaussian time series, when applied to spike recordings from neuronal ensembles undergoing rapid task-dependent dynamics they hinder a precise statistical characterization of the sparse dynamic neuronal functional networks underlying adaptive behavior.

To address these shortcomings, we developed a dynamic inference paradigm for extracting functional neuronal network dynamics in the sense of Granger, by integrating techniques from adaptive filtering, compressed sensing, point process theory, and high-dimensional statistics. We proposed a measure of time-varying GC, namely AGC, and demonstrated its utility through theoretical analysis, algorithm development, and application to synthetic and real data. Our analysis of the mouse auditory cortical data revealed unique features of the functional neuronal network structures underlying spontaneous activity at unprecedented spatiotemporal resolution. Application of our techniques to simultaneous recordings from the ferret auditory and prefrontal cortical areas suggested evidence for the role of rapid top-down and bottom-up functional dynamics across these areas involved in robust attentive behavior.

The plug-and-play nature of the algorithms used in our framework enables it to be generalized for application to various other domains beyond neuroscience, such as the analysis of social networks or gene regulatory networks. As an example, the GLMs can be generalized to account for -ary data, the forgetting factor mechanism for inducing adaptivity can be extended to state-space models governing the coefficient dynamics, and the FDR correction can be replaced by more recent techniques such as knockoff filters (67). To ease reproducibility and aid the adoption of our method, we have archived a MATLAB implementation on GitHub (https://github.com/Arsha89/AGC_Analysis).

Limitations of Our Approach.

In closing, it is worth discussing two potential limitations of our proposed paradigm.

Confounding effects due to network subsampling.

A common criticism of statistical causality measures, such as the GC, directed information, or transfer entropy, is susceptibility to latent confounding causal effects arising from network subsampling. In practice, these methods are typically applied to a small subnetwork of the circuits involved in neuronal processing. Given that each neuron may receive thousands of synaptic inputs, lack of access to a large number of latent confounding inputs can affect the validity of the causal inference results obtained by these methods.

We have evaluated the robustness of our method against such confounding effects using comprehensive numerical studies in SI Appendix, section 9. These studies involve scenarios with deterministic and stochastic latent common inputs as well as confounding effects due to network subsampling and suggest that our techniques indeed exhibit a degree of immunity to such confounding effects. We argue that this performance is due to explicit modeling of the dynamics of the Granger causal effects in the GLM framework, invoking the sparsity hypothesis, and using sharp statistical inference procedures (see SI Appendix, section 9 for further discussion).

Biological interpretation.

The functional network characterization provided by our framework should not be readily interpreted as direct or synaptic connections that result in causal effects. Our analysis results in a sparse set of GC interactions between neurons that can appear and vanish over time in a task-specific fashion. While it is possible that these connections reflect synaptic contacts between neurons, as changes in synaptic strengths can be induced rapidly within minutes (68), the observed GC dynamics could also be due to other underlying mechanisms such as desynchronization of inputs, altered shunting, or dendritic filtering. Thus, these plasticity effects remain to be tested with ground truth experiments. An alternative and inclusive view is that these links reflect a measure of information transferred from one neuron to another.

The relatively rapid switching of these links, however, must be interpreted with caution: While some of the rapid fluctuations are due to the use of the FDR control procedure (as discussed in the Applications), sudden emergence or disappearance of a link does not necessarily imply sudden changes in the causal structure or information transfer in the network. A sudden disappearance of a steady link most likely reflects the fact that given the amount of currently available data, there is not enough evidence to maintain the existence of the link at the group level with the desired statistical confidence; similarly, a sudden emergence of a link most likely implies that enough evidence has just been accumulated to justify its presence with statistical confidence. The gradual effects of these interactions are indeed reflected in the dynamics of the noncentrality parameters estimated by our methods.

As demonstrated by the applications of our inference procedures, our framework provides a robust characterization of the dynamic statistical dependencies in the network in the sense of Granger at high temporal resolution. This characterization can be readily used at a phenomenological level to describe the dynamic network-level functional correlates of behavior, as demonstrated by our real data applications. More importantly, this characterization can serve as a guideline in forming hypotheses for further testing of the direct causal effects using experimental procedures such as lesion studies, microstimulation, or optogenetics in animal models.

Acknowledgments

This work was supported in part by National Science Foundation Grant 1552946 and National Institutes of Health Grants R01-DC009607 and U01-NS090569.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The experimental data used in this paper have been deposited on the Digital Repository at the University of Maryland at hdl.handle.net/1903/20546, and the MATLAB implementation of the algorithms is archived on GitHub at https://github.com/Arsha89/AGC_Analysis.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1718154115/-/DCSupplemental.

References

- 1. Olshausen BA, Field DJ. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996;381:607–609. doi: 10.1038/381607a0.

- 2. Olshausen BA, Field DJ. Sparse coding of sensory inputs. Curr Opin Neurobiol. 2004;14:481–487. doi: 10.1016/j.conb.2004.07.007.

- 3. Sporns O, Zwi JD. The small world of the cerebral cortex. Neuroinformatics. 2004;2:145–162. doi: 10.1385/NI:2:2:145.

- 4. Song S, Sjöström PJ, Reigl M, Nelson S, Chklovskii DB. Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 2005;3:e68. doi: 10.1371/journal.pbio.0030068.

- 5. Rehn M, Sommer FT. A network that uses few active neurons to code visual input predicts the diverse shapes of cortical receptive fields. J Comput Neurosci. 2007;22:135–146. doi: 10.1007/s10827-006-0003-9.

- 6. Druckmann S, Hu T, Chklovskii DB. A mechanistic model of early sensory processing based on subtracting sparse representations. In: Bartlett P, Pereira F, Burges CJC, Bottou L, Weinberger KZ, editors. Advances in Neural Information Processing Systems 25. Curran Associates; Red Hook, NY: 2012. pp. 1988–1996.

- 7. Ganguli S, Sompolinsky H. Compressed sensing, sparsity, and dimensionality in neuronal information processing and data analysis. Annu Rev Neurosci. 2012;35:485–508. doi: 10.1146/annurev-neuro-062111-150410.

- 8. Babadi B, Sompolinsky H. Sparseness and expansion in sensory representations. Neuron. 2014;83:1213–1226. doi: 10.1016/j.neuron.2014.07.035.

- 9. Greicius MD, Krasnow B, Reiss AL, Menon V. Functional connectivity in the resting brain: A network analysis of the default mode hypothesis. Proc Natl Acad Sci USA. 2003;100:253–258. doi: 10.1073/pnas.0135058100.

- 10. Damoiseaux J, et al. Consistent resting-state networks across healthy subjects. Proc Natl Acad Sci USA. 2006;103:13848–13853. doi: 10.1073/pnas.0601417103.

- 11. Hagmann P, et al. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008;6:e159. doi: 10.1371/journal.pbio.0060159.

- 12. Perkel DH, Gerstein GL, Moore GP. Neuronal spike trains and stochastic point processes: II. Simultaneous spike trains. Biophys J. 1967;7:419–440. doi: 10.1016/S0006-3495(67)86597-4.

- 13. Gerstein GL, Perkel DH. Simultaneously recorded trains of action potentials: Analysis and functional interpretation. Science. 1969;164:828–830. doi: 10.1126/science.164.3881.828.

- 14. Brody CD. Correlations without synchrony. Neural Comput. 1999;11:1537–1551. doi: 10.1162/089976699300016133.

- 15. Aertsen AM, Gerstein GL. Evaluation of neuronal connectivity: Sensitivity of cross-correlation. Brain Res. 1985;340:341–354. doi: 10.1016/0006-8993(85)90931-x.

- 16. Bernasconi C, König P. On the directionality of cortical interactions studied by structural analysis of electrophysiological recordings. Biol Cybern. 1999;81:199–210. doi: 10.1007/s004220050556.

- 17. Kamiński M, Ding M, Truccolo WA, Bressler SL. Evaluating causal relations in neural systems: Granger causality, directed transfer function and statistical assessment of significance. Biol Cybern. 2001;85:145–157. doi: 10.1007/s004220000235.

- 18. Goebel R, Roebroeck A, Kim DS, Formisano E. Investigating directed cortical interactions in time-resolved fMRI data using vector autoregressive modeling and Granger causality mapping. Magn Reson Imaging. 2003;21:1251–1261. doi: 10.1016/j.mri.2003.08.026.

- 19. Brovelli A, et al. Beta oscillations in a large-scale sensorimotor cortical network: Directional influences revealed by Granger causality. Proc Natl Acad Sci USA. 2004;101:9849–9854. doi: 10.1073/pnas.0308538101.

- 20. Geweke JF. Measures of conditional linear dependence and feedback between time series. J Am Stat Assoc. 1984;79:907–915.

- 21. Geweke J. Measurement of linear dependence and feedback between multiple time series. J Am Stat Assoc. 1982;77:304–313.

- 22. Kaminski M, Blinowska KJ. A new method of the description of the information flow in the brain structures. Biol Cybern. 1991;65:203–210. doi: 10.1007/BF00198091.

- 23. Baccalá LA, Sameshima K. Partial directed coherence: A new concept in neural structure determination. Biol Cybern. 2001;84:463–474. doi: 10.1007/PL00007990.

- 24. Sommerlade L, et al. Inference of Granger causal time-dependent influences in noisy multivariate time series. J Neurosci Methods. 2012;203:173–185. doi: 10.1016/j.jneumeth.2011.08.042.

- 25. Milde T, et al. A new Kalman filter approach for the estimation of high-dimensional time-variant multivariate AR models and its application in analysis of laser-evoked brain potentials. Neuroimage. 2010;50:960–969. doi: 10.1016/j.neuroimage.2009.12.110.

- 26. Havlicek M, Jan J, Brazdil M, Calhoun VD. Dynamic Granger causality based on Kalman filter for evaluation of functional network connectivity in fMRI data. Neuroimage. 2010;53:65–77. doi: 10.1016/j.neuroimage.2010.05.063.

- 27. Möller E, Schack B, Arnold M, Witte H. Instantaneous multivariate EEG coherence analysis by means of adaptive high-dimensional autoregressive models. J Neurosci Methods. 2001;105:143–158. doi: 10.1016/s0165-0270(00)00350-2.

- 28. Hesse W, Möller E, Arnold M, Schack B. The use of time-variant EEG Granger causality for inspecting directed interdependencies of neural assemblies. J Neurosci Methods. 2003;124:27–44. doi: 10.1016/s0165-0270(02)00366-7.

- 29. Astolfi L, et al. Tracking the time-varying cortical connectivity patterns by adaptive multivariate estimators. IEEE Trans Biomed Eng. 2008;55:902–913. doi: 10.1109/TBME.2007.905419.

- 30. Sato JR, et al. A method to produce evolving functional connectivity maps during the course of an fMRI experiment using wavelet-based time-varying Granger causality. Neuroimage. 2006;31:187–196. doi: 10.1016/j.neuroimage.2005.11.039.

- 31. Valdés-Sosa PA, et al. Estimating brain functional connectivity with sparse multivariate autoregression. Philos Trans R Soc Lond B Biol Sci. 2005;360:969–981. doi: 10.1098/rstb.2005.1654.

- 32. Stevenson IH, et al. Bayesian inference of functional connectivity and network structure from spikes. IEEE Trans Neural Syst Rehabil Eng. 2009;17:203–213. doi: 10.1109/TNSRE.2008.2010471.

- 33. Zhou Z, et al. Detecting directional influence in fMRI connectivity analysis using PCA based Granger causality. Brain Res. 2009;1289:22–29. doi: 10.1016/j.brainres.2009.06.096.

- 34. Dhamala M, Rangarajan G, Ding M. Analyzing information flow in brain networks with nonparametric Granger causality. Neuroimage. 2008;41:354–362. doi: 10.1016/j.neuroimage.2008.02.020.

- 35. Sameshima K, Baccalá LA. Using partial directed coherence to describe neuronal ensemble interactions. J Neurosci Methods. 1999;94:93–103. doi: 10.1016/s0165-0270(99)00128-4.

- 36. Krumin M, Shoham S. Multivariate autoregressive modeling and Granger causality analysis of multiple spike trains. Comput Intell Neurosci. 2010;2010:10. doi: 10.1155/2010/752428.

- 37. Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol. 2005;93:1074–1089. doi: 10.1152/jn.00697.2004.

- 38. Okatan M, Wilson MA, Brown EN. Analyzing functional connectivity using a network likelihood model of ensemble neural spiking activity. Neural Comput. 2005;17:1927–1961. doi: 10.1162/0899766054322973.

- 39. Nedungadi AG, Rangarajan G, Jain N, Ding M. Analyzing multiple spike trains with nonparametric Granger causality. J Comput Neurosci. 2009;27:55–64. doi: 10.1007/s10827-008-0126-2.

- 40. Kim S, Putrino D, Ghosh S, Brown EN. A Granger causality measure for point process models of ensemble neural spiking activity. PLoS Comput Biol. 2011;7:e1001110. doi: 10.1371/journal.pcbi.1001110.

- 41. Quinn CJ, Coleman TP, Kiyavash N, Hatsopoulos NG. Estimating the directed information to infer causal relationships in ensemble neural spike train recordings. J Comput Neurosci. 2011;30:17–44. doi: 10.1007/s10827-010-0247-2.

- 42. Kim S, Quinn CJ, Kiyavash N, Coleman TP. Dynamic and succinct statistical analysis of neuroscience data. Proc IEEE. 2014;102:683–698.

- 43. Sheikhattar A, Fritz JB, Shamma SA, Babadi B. Recursive sparse point process regression with application to spectrotemporal receptive field plasticity analysis. IEEE Trans Signal Process. 2016;64:2026–2039.

- 44. Smith A, Brown EN. Estimating a state-space model from point process observations. Neural Comput. 2003;15:965–991. doi: 10.1162/089976603765202622.

- 45. Paninski L. Maximum likelihood estimation of cascade point-process neural encoding models. Netw Comput Neural Syst. 2004;15:243–262.

- 46. Paninski L, Pillow J, Lewi J. Statistical models for neural encoding, decoding, and optimal stimulus design. Prog Brain Res. 2007;165:493–507. doi: 10.1016/S0079-6123(06)65031-0.

- 47. Haykin SS. Adaptive Filter Theory. Prentice Hall; Upper Saddle River, NJ: 1996.

- 48. Brown EN, Nguyen DP, Frank LM, Wilson MA, Solo V. An analysis of neural receptive field plasticity by point process adaptive filtering. Proc Natl Acad Sci USA. 2001;98:12261–12266. doi: 10.1073/pnas.201409398.

- 49. Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6:1216–1223. doi: 10.1038/nn1141.

- 50. Wilks SS. The large-sample distribution of the likelihood ratio for testing composite hypotheses. Ann Math Stat. 1938;9:60–62.

- 51. Davidson RR, Lever WE. The limiting distribution of the likelihood ratio statistic under a class of local alternatives. Sankhyā: Indian J Stat Ser A. 1970;32:209–224.

- 52. Peers H. Likelihood ratio and associated test criteria. Biometrika. 1971;58:577–587.

- 53. Van de Geer S, Bühlmann P, Ritov Y, Dezeure R. On asymptotically optimal confidence regions and tests for high-dimensional models. Ann Stat. 2014;42:1166–1202.

- 54.Javanmard A, Montanari A. Confidence intervals and hypothesis testing for high-dimensional regression. The J Machine Learn Res. 2014;15:2869–2909. [Google Scholar]

- 55.Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29:1165–1188. [Google Scholar]

- 56.Francis etal. Small networks encode decision-making in primary auditory cortex. Neuron. 2018;97:885–897. doi: 10.1016/j.neuron.2018.01.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Pnevmatikakis EA, et al. Simultaneous denoising, deconvolution, and demixing of calcium imaging data. Neuron. 2016;89:285–299. doi: 10.1016/j.neuron.2015.11.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Hromádka T, Zador AM. Representations in auditory cortex. Curr Opin Neurobiol. 2009;19:430–433. doi: 10.1016/j.conb.2009.07.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Watkins PV, Kao JP, Kanold PO. Spatial pattern of intra-laminar connectivity in supragranular mouse auditory cortex. Front Neural circuits. 2014;8:15. doi: 10.3389/fncir.2014.00015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167. [DOI] [PubMed] [Google Scholar]

- 61.Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038. [DOI] [PubMed] [Google Scholar]

- 62.Buschman TJ, Miller EK. Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science. 2007;315:1860–1862. doi: 10.1126/science.1138071. [DOI] [PubMed] [Google Scholar]

- 63.Fritz JB, David SV, Radtke-Schuller S, Yin P, Shamma SA. Adaptive, behaviorally gated, persistent encoding of task-relevant auditory information in ferret frontal cortex. Nat Neurosci. 2010;13:1011–1019. doi: 10.1038/nn.2598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Gilbert CD, Li W. Top-down influences on visual processing. Nat Rev Neurosci. 2013;14:350–363. doi: 10.1038/nrn3476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Piëch V, Li W, Reeke GN, Gilbert CD. Network model of top-down influences on local gain and contextual interactions in visual cortex. Proc Natl Acad Sci USA. 2013;110:E4108–E4117. doi: 10.1073/pnas.1317019110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Klein DJ, Simon JZ, Depireux DA, Shamma SA. Stimulus-invariant processing and spectrotemporal reverse correlation in primary auditory cortex. J Comput Neurosci. 2006;20:111–136. doi: 10.1007/s10827-005-3589-4. [DOI] [PubMed] [Google Scholar]

- 67.Barber RF, Candès EJ. Controlling the false discovery rate via knockoffs. Ann Stat. 2015;43:2055–2085. [Google Scholar]

- 68.Cooke SF, Bear MF. Visual experience induces long-term potentiation in the primary visual cortex. J Neurosci. 2010;30:16304–16313. doi: 10.1523/JNEUROSCI.4333-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]
