Abstract

This paper studies hypothesis testing and parameter estimation in the context of the divide-and-conquer algorithm. In a unified likelihood based framework, we propose new test statistics and point estimators obtained by aggregating various statistics from k subsamples of size n/k, where n is the sample size. In both low dimensional and sparse high dimensional settings, we address the important question of how large k can be, as n grows large, such that the loss of efficiency due to the divide-and-conquer algorithm is negligible. In other words, the resulting estimators have the same inferential efficiencies and estimation rates as an oracle with access to the full sample. Thorough numerical results are provided to back up the theory.

Keywords and phrases: Divide and conquer, debiasing, massive data, thresholding

MSC 2010 subject classifications: Primary 62F05, 62F10, secondary 62F12

1. Introduction

In recent years, the field of statistics has developed apace in response to the opportunities and challenges spawned by the ‘data revolution’, which marked the dawn of an era characterized by the availability of enormous datasets. An extensive toolkit of methodology is now in place for addressing a wide range of high dimensional problems, in which the number of unknown parameters, d, is much larger than the number of observations, n. However, many modern datasets are instead characterized by n and d both large. This regime presents intimidating practical challenges resulting from storage and computational limitations, as well as numerous statistical challenges (Fan et al., 2014). It is important that statistical methodology targeting modern application areas does not lose sight of the practical burdens associated with manipulating such large scale datasets. In this vein, incisive new algorithms have been developed for exploiting modern computing architectures and recent advances in distributed computing. These algorithms enjoy computational or communication efficiency and facilitate data handling and storage, but come with a statistical overhead if inappropriately tuned.

With increased mindfulness of the algorithmic difficulties associated with large datasets, the statistical community has witnessed a surge of recent activity in the statistical analysis of divide and conquer (DC) algorithms, which randomly partition the n observations into k subsamples of size nₖ = n/k, construct statistics based on each subsample, and aggregate them in a suitable way. Splitting the dataset transforms a single, very large scale estimation or testing problem with computational complexity O(γ(n)), for a given function γ(·) that depends on the underlying problem, into k smaller problems with computational complexity O(γ(n/k)) on each machine. What is lost in this process is the interaction between subsamples held on different machines, which cannot be recovered without additional rounds of communication between the machines. Since every additional split of the dataset incurs some efficiency loss, it is of significant practical interest to derive a theoretical upper bound on the number of subsamples k that delivers the same asymptotic statistical performance as the practically unavailable “oracle” procedure based on the full sample.
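As a worked illustration of the complexity reduction just described, suppose, purely hypothetically, that γ(n) = n^a for some a ≥ 1 (the actual γ depends on the problem and on the solver). The per-machine and total costs are then:

```latex
\begin{aligned}
\text{per machine:}\quad & \gamma(n/k) = (n/k)^{a} = n^{a}\,k^{-a},\\
\text{all } k \text{ machines:}\quad & k\,\gamma(n/k) = n^{a}\,k^{1-a}.
\end{aligned}
```

For a = 1 the total work is unchanged and the gain is purely in parallel wall-clock time (a factor of k); for a > 1 the total work also falls, by a factor of k^{a−1}. Either saving has to be weighed against the statistical efficiency lost by splitting, which is what limits how large k can be.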

We develop communication efficient generalizations of the Wald and Rao’s score tests for the sparse high dimensional setting, as well as communication efficient estimators for the parameters of sparse high dimensional and low dimensional linear and generalized linear models. In all cases we give an upper bound on k that preserves the statistical error of the analogous full sample procedure. While hypothesis testing in a low dimensional context is straightforward, in the sparse high dimensional setting nuisance parameters introduce a non-negligible bias, causing classical low dimensional theory to break down. In our high dimensional Wald construction, this bias is removed by debiasing the estimator, which gives rise to a test statistic with a tractable limiting distribution, as documented in the k = 1 (no sample split) setting in Zhang and Zhang (2014) and van de Geer et al. (2014). For the high dimensional analogue of Rao’s score statistic, the incorporation of a correction factor increases the convergence rate of higher order terms, thereby vanquishing the effect of the nuisance parameters. The approach is introduced in the k = 1 setting in Ning and Liu (2014), where the test statistic is shown to possess a tractable limiting distribution. However, computing the debiased estimators is an order of magnitude more expensive, since it requires solving d high dimensional regularized problems. This motivates us to appeal to the divide and conquer strategy.

We develop the theory and methodology for DC versions of these tests. In the case of k = 1, each of the above test statistics can be decomposed into a dominant term with tractable limit distribution and a negligible remainder term. The DC extension requires delicate control of these remainder terms to ensure the error accumulation remains sufficiently small so as not to materially contaminate the leading term. We obtain an upper bound on the number of permitted subsamples, k, subject to a statistical guarantee. More specifically, we find that the theoretical upper bound on the number of subsamples guaranteeing the same inferential or estimation efficiency as the whole-sample procedure is in the linear model, where s is the sparsity of the parameter vector. In the generalized linear model the scaling is , where s1 is the sparsity of the inverse information matrix.

For sparse high dimensional estimation problems, we use the same debiasing technique introduced for the high dimensional testing problems to obtain a thresholded divide and conquer estimator that achieves the full sample minimax rate. The appropriate scaling is found to be for the estimation of the sparse parameter vector in the high dimensional linear model and for the high dimensional generalized linear model. Moreover, we find that the loss incurred by the divide and conquer strategy, as quantified by the distance between the DC estimator and the full sample estimator, is negligible in comparison to the statistical error of the full sample estimator provided that k is not too large. In the context of estimation, the optimal scaling of k with n and d is also developed for the low dimensional linear and generalized linear models. This theory is of independent interest. It also allows us to study a refitted estimation procedure under a minimal signal strength assumption.

1.1. Related Literature

A partial list of references covering DC algorithms from a statistical perspective is Chen and Xie (2012), Zhang et al. (2013), Kleiner et al. (2014), Liu and Ihler (2014) and Zhao et al. (2014a). The closest works to ours are Zhang et al. (2013), Lee et al. (2015) and Rosenblatt and Nadler (2016). Zhang et al. (2013) consider a distributed estimator for kernel ridge regression. In the context of d < n, they propose averaging the kernel ridge regression estimators computed on each data split, and obtain an explicit upper bound on the number of splits yielding the minimax optimal rates for the mean squared error. However, it is not straightforward to generalize their estimator to the high dimensional setting. In independent work, Lee et al. (2015) use the debiasing approach of van de Geer et al. (2014) to aggregate local estimates computed on distributed data splits in sparse high dimensional linear and generalized linear models. Although their proof techniques differ from ours, their conclusions regarding the optimal scaling of the tuning parameter and the upper bound on the permissible number of sample splits are of the same order. Our work differs from theirs in two respects: (1) we also develop distributed testing procedures for sparse high dimensional models and (2) on the estimation side, we propose an additional refitting step yielding a distributed estimator that attains the oracle rate. Our results on hypothesis testing reveal a different phenomenon from that found in estimation, as reflected in the different requirements on the scaling of k. Rosenblatt and Nadler (2016) consider distributed empirical risk minimization for M-estimators. They require the dimension of the parameter of interest to satisfy the scaling condition d/n → κ ∈ (0, 1), which rules out the d ≫ n case, and they quantify the accuracy loss relative to the full sample estimator in terms of the number of splits.

1.2. Organization of the paper

The rest of the paper is organized as follows. Section 2 collects notation and details of a generic likelihood based framework. Section 3 covers testing, providing high dimensional DC analogues of the Wald test (Section 3.1) and Rao’s score test (Section 3.2), in each case deriving a tractable limit distribution for the corresponding test statistic under standard assumptions. Section 4 covers distributed estimation, proposing an aggregated estimator of the unknown parameters of linear and generalized linear models in low dimensional and sparse high dimensional scenarios, as well as a refitting procedure that improves the estimation rate, with the same scaling, under a minimal signal strength assumption. Section 5 provides numerical experiments to back up the developed theory. In Section 6 we discuss our results together with remaining future challenges. Proofs of our main results are collected in Section 7, while the statement and proofs of a number of technical lemmas are deferred to the Supplementary Material.

2. Background and Notation

We first collect the general notation, before providing a formal statement of our statistical problems. More specialized notation is introduced in context.

2.1. Generic Notation

We adopt the common convention of using bold-face letters for vectors only, while regular font is used for both matrices and scalars. |·| denotes both absolute value and cardinality of a set, with the context ensuring no ambiguity. For x = (x1, …, xd)T ∈ ℝd and 1 ≤ q < ∞, we define ||x||q = (Σ_{j=1}^d |xj|^q)^{1/q}, and ||x||0 = |supp(x)|, where supp(x) = {j : xj ≠ 0}. Write ||x||∞ = max1≤j≤d |xj|, while for a matrix M = [Mjk], let ||M||max = maxj,k |Mjk| and ||M||1 = Σj,k |Mjk|. For any matrix M we use Mℓ to index the transposed ℓth row of M and [M]ℓ to index the ℓth column. The sub-Gaussian norm of a scalar random variable X is defined as ||X||ψ2 = sup_{q≥1} q^{−1/2}(𝔼|X|^q)^{1/q}. For a random vector X ∈ ℝd, its sub-Gaussian norm is defined as ||X||ψ2 = sup_{x∈𝕊^{d−1}} ||〈X, x〉||ψ2, where 𝕊^{d−1} denotes the unit sphere in ℝd. Let Id denote the d × d identity matrix; when the dimension is clear from the context, we omit the subscript. We denote the Hadamard product of two matrices A and B by A ∘ B, where (A ∘ B)jk = AjkBjk for all j, k. {e1, …, ed} denotes the canonical basis for ℝd. For a vector v ∈ ℝd and a set of indices 𝒮 ⊆ {1, …, d}, v𝒮 is the vector of length |𝒮| whose components are {vj : j ∈ 𝒮}. Additionally, for a vector v with jth element vj, we use the notation v−j to denote the remaining vector when the jth element is removed. With slight abuse of notation, we write v = (vj, v−j) when we wish to emphasize the dependence of v on vj and v−j individually. The gradient of a function f(x) is denoted by ∇f(x), while ∇xf(x, y) denotes the gradient of f(x, y) with respect to x, and ∇xyf(x, y) denotes the matrix of cross partial derivatives with respect to the elements of x and y. For a scalar η, we simply write f′(η) := ∇ηf(η) and f″(η) := ∇ηηf(η). For a random variable X and a sequence of random variables {Xn}, we write Xn ⇝ X when {Xn} converges weakly to X. If X is a random variable with a standard distribution, say FX, we simply write Xn ⇝ FX. Given a, b ∈ ℝ, let a ∨ b and a ∧ b denote the maximum and minimum of a and b, respectively.
We also write an ≲ bn (an ≳ bn) if an is less than (greater than) bn up to a constant, and an ≍ bn if an is of the same order as bn.

2.2. General Likelihood based Framework

Let {(Xi, Yi)}_{i=1}^n be n i.i.d. copies of the random vector (XT, Y)T, whose realizations take values in ℝd × 𝒴. Write the collection of these n i.i.d. random couples as 𝒟 = {(Xi, Yi)}_{i=1}^n, with Y = (Y1, …, Yn)T and X = (X1, …, Xn)T ∈ ℝn×d. Conditional on Xi, we assume Yi is distributed as Fβ* for all i ∈ {1, …, n}, where Fβ* is a known distribution parameterized by a sparse d-dimensional vector β* and has a density or mass function fβ*. We thus define the negative log-likelihood function, ℓn(β), as

$$\ell_n(\beta) = -\frac{1}{n}\sum_{i=1}^{n} \log f_{\beta}(Y_i \mid X_i). \qquad (2.1)$$

We use J* = J(β*) to denote the information matrix and Θ* to denote (J*)^{−1}, where J(β) = 𝔼[∇ββℓn(β)].

For testing problems, our goal is to test a hypothesis on a specific fixed index v ∈ {1, …, d}. We partition β* as β* = (β*_v, β*_{−v}), where β*_{−v} ∈ ℝ^{d−1} is a vector of nuisance parameters and β*_v ∈ ℝ is the parameter of interest. To handle the curse of dimensionality, we exploit a penalized M-estimator defined as,

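$$\hat{\beta}_{\lambda} = \operatorname*{argmin}_{\beta \in \mathbb{R}^{d}} \big\{ \ell_n(\beta) + P_{\lambda}(\beta) \big\}, \qquad (2.2)$$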

with Pλ(β) a sparsity inducing penalty function with a regularization parameter λ. Examples of Pλ(β) include the convex ℓ1 penalty, which, in the context of the linear model, gives rise to the Lasso estimator (Tibshirani, 1996),

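$$\hat{\beta}_{\lambda} = \operatorname*{argmin}_{\beta \in \mathbb{R}^{d}} \Big\{ \frac{1}{2n}\|Y - X\beta\|_2^2 + \lambda\|\beta\|_1 \Big\}. \qquad (2.3)$$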

Other penalties include folded concave penalties such as the smoothly clipped absolute deviation (SCAD) penalty (Fan and Li, 2001) and the minimax concave penalty (MCP) (Zhang, 2010), which eliminate the estimation bias and attain the oracle rates of convergence (Loh and Wainwright, 2013; Wang et al., 2014a). The SCAD penalty is defined as

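$$P_{\lambda}(\beta) = \sum_{j=1}^{d} p_{\lambda}(|\beta_j|), \qquad p_{\lambda}(t) = \begin{cases} \lambda t, & 0 \le t \le \lambda, \\ \dfrac{2a\lambda t - t^{2} - \lambda^{2}}{2(a-1)}, & \lambda < t \le a\lambda, \\ \dfrac{(a+1)\lambda^{2}}{2}, & t > a\lambda, \end{cases} \qquad (2.4)$$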

for a given parameter a > 2, and the MCP penalty is given by

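$$P_{\lambda}(\beta) = \sum_{j=1}^{d} p_{\lambda}(|\beta_j|), \qquad p_{\lambda}(t) = \begin{cases} \lambda t - \dfrac{t^{2}}{2b}, & 0 \le t \le b\lambda, \\ \dfrac{b\lambda^{2}}{2}, & t > b\lambda, \end{cases} \qquad (2.5)$$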

where b > 0 is a fixed parameter. The only requirement we have on Pλ(β) is that it induces an estimator satisfying the following condition.

Condition 2.1

For any δ ∈ (0, 1), if ,

| (2.6) |

where s is the sparsity of β*, i.e., s = ||β*||0.

Condition 2.1 is crucial for the theory developed in Sections 3 and 4. Under suitable conditions on the design matrix X, it holds for the Lasso, SCAD and MCP; see Bühlmann and van de Geer (2011), Fan and Li (2001) and Zhang (2010), respectively, as well as Zhang and Zhang (2012).
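The SCAD and MCP penalties in (2.4) and (2.5) are separable, so they can be evaluated coordinate-wise. The following is a minimal plain-Python sketch; the function names are hypothetical, the default a = 3.7 is the value recommended by Fan and Li (2001), and the default b = 3.0 is an arbitrary illustrative choice.

```python
def scad_penalty(t: float, lam: float, a: float = 3.7) -> float:
    """SCAD penalty p_lambda(|t|) as in (2.4); requires a > 2."""
    if a <= 2:
        raise ValueError("SCAD requires a > 2")
    t = abs(t)
    if t <= lam:
        return lam * t  # linear (Lasso-like) region near zero
    if t <= a * lam:
        return (2 * a * lam * t - t * t - lam * lam) / (2 * (a - 1))  # quadratic transition
    return (a + 1) * lam * lam / 2  # constant: large coefficients are not shrunk further


def mcp_penalty(t: float, lam: float, b: float = 3.0) -> float:
    """MCP penalty p_lambda(|t|) as in (2.5); requires b > 0."""
    if b <= 0:
        raise ValueError("MCP requires b > 0")
    t = abs(t)
    if t <= b * lam:
        return lam * t - t * t / (2 * b)
    return b * lam * lam / 2


def total_penalty(beta, penalty, **kwargs) -> float:
    """Separable penalty P_lambda(beta) = sum_j p_lambda(|beta_j|)."""
    return sum(penalty(bj, **kwargs) for bj in beta)


if __name__ == "__main__":
    beta = [0.2, -1.0, 5.0, 0.0]
    print(total_penalty(beta, scad_penalty, lam=0.5))
    print(total_penalty(beta, mcp_penalty, lam=0.5))
```

Both penalties agree with the ℓ1 penalty λ|t| near zero and flatten out for large |t|, which is the source of their reduced bias on large coefficients.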

The DC algorithm randomly and evenly partitions 𝒟 into k disjoint subsets 𝒟1, …, 𝒟k, so that ∪_{j=1}^k 𝒟j = 𝒟, 𝒟j ∩ 𝒟ℓ = ∅ for all j ≠ ℓ, and |𝒟1| = |𝒟2| = ··· = |𝒟k| = nₖ = n/k, where n is implicitly assumed to be divisible by k. Let ℐj ⊂ {1, …, n} be the index set corresponding to the elements of 𝒟j. Then for an arbitrary n × d matrix A, A(j) = [Aiℓ]i∈ℐj,1≤ℓ≤d. For an arbitrary estimator τ̂, we write τ̂(𝒟j) when the estimator is constructed based only on 𝒟j. Finally, we write to denote the negative log-likelihood function based on 𝒟j.
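A minimal plain-Python sketch of the random even partition and of a generic "estimate locally, average centrally" step follows. The names `random_even_partition` and `dc_average` are hypothetical, and the sample mean is used only as a toy estimator for which averaging over equal-sized subsamples reproduces the full sample answer exactly.

```python
import random
from typing import Callable, List, Optional, Sequence


def random_even_partition(n: int, k: int, seed: Optional[int] = None) -> List[List[int]]:
    """Randomly split {0, ..., n-1} into k disjoint index sets I_j of size n_k = n // k."""
    if k <= 0 or n % k != 0:
        raise ValueError("k must be positive and divide n evenly")
    rng = random.Random(seed)
    idx = list(range(n))
    rng.shuffle(idx)
    n_k = n // k
    return [sorted(idx[j * n_k:(j + 1) * n_k]) for j in range(k)]


def dc_average(data: Sequence, parts: List[List[int]],
               estimator: Callable[[list], List[float]]) -> List[float]:
    """Apply `estimator` to each subsample D_j and average the k local results coordinate-wise."""
    local = [estimator([data[i] for i in part]) for part in parts]
    k = len(local)
    return [sum(est[c] for est in local) / k for c in range(len(local[0]))]


if __name__ == "__main__":
    parts = random_even_partition(12, 3, seed=0)
    data = [float(i) for i in range(12)]
    print(parts, dc_average(data, parts, lambda xs: [sum(xs) / len(xs)]))
```

Only the k local estimates travel to the central machine; for nonlinear estimators the average generally differs from the full sample estimate, and quantifying that gap is the subject of the paper.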

While the results of this paper hold in a general likelihood based framework, for simplicity we state conditions at the population level for the generalized linear model (GLM) with canonical link. A much more general set of statements appears in the auxiliary lemmas on which our main results are based. Under the GLM with canonical link, the response follows the distribution,

$$f_{\beta}(y \mid x) = \exp\Big\{ \frac{y\,\eta - b(\eta)}{\phi} + c(y, \phi) \Big\}, \qquad (2.7)$$

where η = xTβ is the linear predictor, b(·) is the cumulant function and ϕ is the dispersion parameter. The negative log-likelihood corresponding to (2.7) is given, up to an affine transformation, by

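$$\ell_n(\beta) = \frac{1}{n}\sum_{i=1}^{n} \big\{ b(X_i^{\top}\beta) - Y_i X_i^{\top}\beta \big\}, \qquad (2.8)$$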

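and the gradient and Hessian of ℓn(β) are respectively

$$\nabla\ell_n(\beta) = \frac{1}{n}X^{\top}\{\mu(\beta) - Y\}, \qquad \nabla^{2}\ell_n(\beta) = \frac{1}{n}X^{\top}D(\beta)X,$$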

where μ(β) = (b′(η1), …, b′(ηn))T and D(β) = diag{b″(η1), …, b″(ηn)}. In this setting, and .
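For a concrete instance of (2.8) and its derivatives, the sketch below takes logistic regression, where b(η) = log(1 + e^η), so that b′ is the logistic function and b″(η) = b′(η){1 − b′(η)}; the dispersion is ϕ = 1. The function names are hypothetical, and the 1/n normalization follows the displays above.

```python
import numpy as np


def logistic_nll(beta: np.ndarray, X: np.ndarray, y: np.ndarray) -> float:
    """(2.8) with b(eta) = log(1 + e^eta): mean of b(X_i^T beta) - Y_i X_i^T beta."""
    eta = X @ beta
    return float(np.mean(np.logaddexp(0.0, eta) - y * eta))  # logaddexp(0, eta) = log(1 + e^eta)


def logistic_grad(beta: np.ndarray, X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Gradient X^T {mu(beta) - Y} / n, with mu_i = b'(eta_i) the logistic function."""
    eta = X @ beta
    mu = 1.0 / (1.0 + np.exp(-eta))
    return X.T @ (mu - y) / X.shape[0]


def logistic_hessian(beta: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Hessian X^T D(beta) X / n, with D = diag{b''(eta_i)} = diag{mu_i (1 - mu_i)}."""
    eta = X @ beta
    mu = 1.0 / (1.0 + np.exp(-eta))
    weights = mu * (1.0 - mu)
    return (X * weights[:, None]).T @ X / X.shape[0]
```

A central finite-difference comparison against `logistic_nll` is a cheap way to confirm that the gradient and Hessian formulas match the loss.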

3. Divide and Conquer Hypothesis Tests

In the context of the two classical testing frameworks, the Wald and Rao’s score tests, our objective is to construct a test statistic S̄n with low communication cost and a tractable limiting distribution F. From this statistic we define a test of size α of the null hypothesis, , against the alternative, , as a partition of the sample space described by

| (3.1) |

for a two sided test.
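When the limiting distribution F is standard normal, as in the results below, a two-sided test of size α rejects H0 when |S̄n| exceeds the (1 − α/2) quantile of N(0, 1). The following is a minimal sketch using only the Python standard library; the function names are hypothetical.

```python
from statistics import NormalDist


def two_sided_pvalue(stat: float) -> float:
    """p-value 2{1 - Phi(|S|)} for a statistic with N(0, 1) limit."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(stat)))


def reject_two_sided(stat: float, alpha: float = 0.05) -> bool:
    """Reject H0 when |S| > Phi^{-1}(1 - alpha / 2)."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return abs(stat) > z
```

The two functions describe the same rejection region: the test rejects exactly when the p-value falls below α.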

3.1. Two Divide and Conquer Wald Type Constructions

For the high dimensional linear model, Zhang and Zhang (2014), van de Geer et al. (2014) and Javanmard and Montanari (2014) propose methods for debiasing the Lasso estimator with a view to constructing high dimensional analogues of Wald statistics and confidence intervals for low-dimensional coordinates. As pointed out by Zhang and Zhang (2014), the debiased estimator does not require the minimum signal condition used in establishing oracle properties of regularized estimators (Fan and Li, 2001; Fan and Lv, 2011; Loh and Wainwright, 2015; Wang et al., 2014b; Zhang and Zhang, 2012) and hence has wider applicability than inference based on oracle properties. The method of van de Geer et al. (2014) is appealing in that it accommodates a general penalized likelihood based framework, while the approach of Javanmard and Montanari (2014) is appealing in that it optimizes the asymptotic variance and requires a weaker condition than van de Geer et al. (2014) in the specific case of the linear model. We consider the DC analogues of Javanmard and Montanari (2014) and van de Geer et al. (2014) in Sections 3.1.1 and 3.1.2, respectively.

3.1.1. Lasso based Wald Test for the Linear Model

The linear model assumes

$$Y = X\beta^{*} + \varepsilon, \qquad (3.2)$$

where ε = (ε1, …, εn)T and ε1, …, εn are i.i.d. with 𝔼(εi) = 0 and variance σ². For concreteness, we focus on a Lasso based method, but our procedure is also valid when other pilot estimators are used. We describe a modification of the bias correction method introduced in Javanmard and Montanari (2014) as a means of testing hypotheses on low dimensional coordinates of β* via pivotal test statistics.

On each subset 𝒟j, we compute the debiased estimator of β* as in Javanmard and Montanari (2014) as

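$$\hat{\beta}^{d}(\mathcal{D}_j) = \hat{\beta}_{\lambda}(\mathcal{D}_j) + \frac{1}{n_k} M^{(j)} (X^{(j)})^{\top} \big\{ Y^{(j)} - X^{(j)}\hat{\beta}_{\lambda}(\mathcal{D}_j) \big\}, \qquad (3.3)$$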

where the superscript d is used to indicate the debiased version of the estimator, and mv is the solution of

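$$m_v = \operatorname*{argmin}_{m \in \mathbb{R}^{d}} \; m^{\top}\hat{\Sigma}^{(j)} m \quad \text{subject to} \quad \|\hat{\Sigma}^{(j)} m - e_v\|_{\infty} \le \vartheta_1, \quad \|X^{(j)} m\|_{\infty} \le \vartheta_2, \qquad M^{(j)} = (m_1, \ldots, m_d)^{\top}. \qquad (3.4)$$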

The choice of tuning parameters ϑ1 and ϑ2 is discussed in Javanmard and Montanari (2014) and Zhao et al. (2014a), who suggest choosing , ϑ2n−1/2 = o(1). In the context of our DC procedure, ϑ1 and ϑ2 depend on k and should be chosen as , ϑ2n−1/2 = o(1), as quantified in Theorem 3.3. Above, Σ̂(j) = (X(j))TX(j)/nₖ is the sample covariance based on 𝒟j, whose population counterpart is Σ = 𝔼(XiXiT), and M(j) is its regularized inverse. The second term in (3.3) is a bias correction term, while is shown in Javanmard and Montanari (2014) to be the variance of the vth component of β̂d(𝒟j). The parameter ϑ1, which tends to zero, controls the bias of the debiased estimator (3.3), and the optimization in (3.4) minimizes the variance of the resulting estimator.

Solving the d optimization problems in (3.4) increases the computational complexity by an order of magnitude, even for k = 1. It is therefore natural to appeal to the divide and conquer strategy to reduce the computational burden, which raises the question of how large k can be while maintaining the statistical properties of the full sample procedure (k = 1).

Because our DC procedure operates on smaller samples, Σ̂(j) is singular whenever nₖ < d. This singularity does not pose a statistical problem, but it does make the optimization problem ill-posed. To overcome the singularity of Σ̂(j) and the resulting instability of the algorithm, we propose a change of variables. More specifically, noting that M(j) is not required explicitly, but rather only the product M(j)(X(j))T, we propose

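$$b_v = \operatorname*{argmin}_{b \in \mathbb{R}^{n_k}} \; \frac{1}{n_k}\|b\|_2^2 \quad \text{subject to} \quad \Big\|\frac{1}{n_k}(X^{(j)})^{\top} b - e_v\Big\|_{\infty} \le \vartheta_1, \quad \|b\|_{\infty} \le \vartheta_2, \qquad v = 1, \ldots, d, \qquad (3.5)$$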

from which we construct M(j)(X(j))T = BT, where B = (b1, …, bd). The reformulation (3.5) is crucial to the success of our procedure in practice.
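The sketch below illustrates the aggregation pattern around (3.3): a Lasso pilot fit on each subsample, a one-step bias correction, and averaging across the k subsamples. It is a hedged illustration, not the authors' implementation. The Lasso is solved by plain cyclic coordinate descent, and the quadratic program (3.4)/(3.5) is not solved; instead `inverse_fn` is a hypothetical plug-in that returns some regularized inverse M(j). When nₖ > d and M(j) is the exact inverse of Σ̂(j), the correction reproduces the subsample OLS estimator regardless of the pilot, which gives a simple sanity check.

```python
import numpy as np


def soft_threshold(z: float, t: float) -> float:
    """Soft-thresholding operator S(z, t) = sign(z) * max(|z| - t, 0)."""
    return float(np.sign(z) * max(abs(z) - t, 0.0))


def lasso_cd(X: np.ndarray, y: np.ndarray, lam: float, n_sweeps: int = 200) -> np.ndarray:
    """Cyclic coordinate descent for (1/(2n)) ||y - X b||_2^2 + lam ||b||_1, cf. (2.3)."""
    n, d = X.shape
    beta = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0) / n
    resid = y - X @ beta
    for _ in range(n_sweeps):
        for j in range(d):
            if col_sq[j] == 0.0:
                continue
            resid += X[:, j] * beta[j]  # add back coordinate j's contribution
            rho = X[:, j] @ resid / n
            beta[j] = soft_threshold(rho, lam) / col_sq[j]
            resid -= X[:, j] * beta[j]  # subtract the updated contribution
    return beta


def debias(beta_hat: np.ndarray, X: np.ndarray, y: np.ndarray, M: np.ndarray) -> np.ndarray:
    """One-step correction as in (3.3): beta_hat + M X^T (y - X beta_hat) / n_k."""
    n_k = X.shape[0]
    return beta_hat + M @ X.T @ (y - X @ beta_hat) / n_k


def dc_debiased_lasso(X, y, k, lam, inverse_fn, seed=0):
    """Average of the k subsample debiased Lasso estimators over a random even partition."""
    n = X.shape[0]
    if n % k != 0:
        raise ValueError("k must divide n")
    parts = np.random.default_rng(seed).permutation(n).reshape(k, n // k)
    local = []
    for idx in parts:
        X_j, y_j = X[idx], y[idx]
        beta_j = lasso_cd(X_j, y_j, lam)  # pilot estimator on D_j
        M_j = inverse_fn(X_j)  # hypothetical stand-in for solving (3.4)/(3.5)
        local.append(debias(beta_j, X_j, y_j, M_j))
    return np.mean(local, axis=0)
```

In the high dimensional case nₖ < d, `inverse_fn` would have to come from a solver for (3.5) (for example a generic quadratic programming package), which is deliberately left out of this sketch.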

The following conditions on the data generating process and the tail behavior of the design vectors are imposed in Javanmard and Montanari (2014). Both conditions are used to derive the theoretical properties of the DC Wald test statistic based on the aggregated debiased estimator, β̄d = k^{−1} Σ_{j=1}^k β̂d(𝒟j).

Condition 3.1

are i.i.d. and Σ satisfies 0 < Cmin ≤ λmin(Σ) ≤ λmax(Σ) ≤ Cmax.

Condition 3.2

The rows of X are sub-Gaussian with ||Xi||ψ2 ≤ κ, i = 1, …, n.

Note that under the two conditions above, there exists a constant κ1 > 0 such that . Without loss of generality, we set κ1 = κ. Our first main theorem provides the relative scaling of the various tuning parameters involved in the construction of β̄d.

Theorem 3.3

Suppose Conditions 2.1, 3.1 and 3.2 are fulfilled. Suppose and choose ϑ1, ϑ2 and k such that , ϑ2n−1/2 = o(1) and . For any v ∈ {1, …, d}, we have

| (3.6) |

where .

Theorem 3.3 suggests a divide and conquer Wald statistic of the form

| (3.7) |

for , where σ̄ is an estimator for σ based on the k subsamples. On the left hand side of equation (3.7) we suppress the dependence on v to simplify notation. As an estimator for σ, a simple suggestion with the same computational complexity is σ̄ where

| (3.8) |

One can use the refitted cross-validation procedure of Fan et al. (2012) to reduce the bias of the estimate. In Lemma 3.4 we show that with the scaling of k and λ required for the weak convergence results of Theorem 3.3, consistency of σ̄² is also achieved.

Lemma 3.4

Suppose 𝔼[εi|Xi] = 0 for all i ∈ {1, …, n}. Then with and , |σ̄² − σ²| = oℙ(1).

With Lemma 3.4 and Theorem 3.3 at hand, we establish in Corollary 3.5 the asymptotic distribution of S̄n under the null hypothesis . This holds for each component v ∈ {1, …, d}.

Corollary 3.5

Suppose Conditions 3.1 and 3.2 are fulfilled, , and λ, ϑ1 and ϑ2 are chosen as and ϑ2n−1/2 = o(1). Then provided , under , we have

| (3.9) |

where Φ(·) is the cdf of a standard normal distribution.

3.1.2. Wald Test in the Likelihood Based Framework

An alternative route to debiasing the Lasso estimator of β* is the one proposed in van de Geer et al. (2014). Their so-called desparsified estimator of β* is more general than the debiased estimator of Javanmard and Montanari (2014) in that it accommodates generic estimators of the form (2.2) as pilot estimators, whereas the latter optimizes the variance of the resulting estimator. The desparsified estimator for subsample 𝒟j is

| (3.10) |

where Θ̂(j) is a regularized inverse of the Hessian matrix of second order derivatives of at β̂λ(𝒟j), denoted by . We will make this explicit in due course. The estimator resembles the classical one-step estimator (Bickel, 1975), adapted to the high dimensional setting through a regularized inverse of the Hessian matrix Ĵ(j), which reduces to the sample covariance of the design matrix in the case of the linear model. From equation (3.10), the aggregated debiased estimator over the k subsamples is defined as β̄d = k^{−1} Σ_{j=1}^k β̂d(𝒟j).

We now use the nodewise Lasso (Meinshausen and Bühlmann, 2006) to approximately invert Ĵ(j) via ℓ1-regularization. The basic idea is to compute the regularized inverse row by row via an ℓ1-penalized regression, which is equivalent to regressing the variable Xv on X−v, but expressed in sample covariance form. For each row v ∈ {1, …, d}, consider the optimization

| (3.11) |

where Ĵ(j)v,−v denotes the vth row of Ĵ(j) without the (v, v)th diagonal element, and Ĵ(j)−v,−v is the principal submatrix without the vth row and vth column. Introduce

| (3.12) |

and Ξ̂(j) = diag(τ̂1(𝒟j), …, τ̂d(𝒟j)), where . Θ̂(j) in equation (3.10) is given by

| (3.13) |

and we define as the transposed vth row of Θ̂(j).
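The nodewise construction can be sketched as follows, in the spirit of van de Geer et al. (2014). Because the displays (3.11) to (3.13) are not reproduced here, the sketch assumes the sample covariance form γ̂v = argmin_γ {Ĵvv − 2Ĵv,−vγ + γTĴ−v,−vγ + 2λ||γ||1}, τ̂v² = Ĵvv − Ĵv,−vγ̂v, and Θ̂ = (Ξ̂)^{−2}Ĉ, where row v of Ĉ has 1 in position v and −γ̂v elsewhere. The function names and the coordinate descent solver are hypothetical choices. With λ = 0 and an invertible Ĵ, block inversion shows that this returns Ĵ^{−1} exactly, which the check below exploits.

```python
import numpy as np


def _soft(z: float, t: float) -> float:
    return float(np.sign(z) * max(abs(z) - t, 0.0))


def nodewise_row(J: np.ndarray, v: int, lam: float, n_sweeps: int = 500) -> np.ndarray:
    """Row v of a nodewise-Lasso regularized inverse of J (sample covariance form).

    Minimizes gamma^T J_{-v,-v} gamma - 2 J_{-v,v}^T gamma + 2 lam ||gamma||_1
    by cyclic coordinate descent (assumed form of (3.11)).
    """
    d = J.shape[0]
    others = [l for l in range(d) if l != v]
    A = J[np.ix_(others, others)]  # J_{-v,-v}
    c = J[others, v]  # J_{-v,v}
    gamma = np.zeros(d - 1)
    for _ in range(n_sweeps):
        for l in range(d - 1):
            partial = c[l] - A[l] @ gamma + A[l, l] * gamma[l]
            gamma[l] = _soft(partial, lam) / A[l, l]
    tau2 = J[v, v] - J[v, others] @ gamma  # tau_v^2
    row = np.zeros(d)
    row[v] = 1.0
    row[others] = -gamma  # row v of C-hat
    return row / tau2  # row v of Xi^{-2} C-hat


def nodewise_inverse(J: np.ndarray, lam: float, n_sweeps: int = 500) -> np.ndarray:
    """Stack the d rows; each row is an independent problem and could run in parallel."""
    return np.vstack([nodewise_row(J, v, lam, n_sweeps) for v in range(J.shape[0])])
```

Given Θ̂(j) and the subsample gradient evaluated at β̂λ(𝒟j), the one-step update of van de Geer et al. (2014) subtracts Θ̂(j) times that gradient from β̂λ(𝒟j). Note that Θ̂(j) is generally not symmetric.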

Theorem 3.8 establishes the limit distribution of the term,

| (3.14) |

for any v ∈ {1, …, d} under the null hypothesis . This provides the basis for divide and conquer hypothesis testing. We need the following condition. Recall that J* = 𝔼[∇ββℓn(β*)] and consider the generalized linear model (2.7).

Condition 3.6

(i) are i.i.d., 0 < Cmin ≤ λmin(Σ) ≤ λmax(Σ) ≤ Cmax, λmin(J*) ≥ Lmin > 0, ||J*||max < U1 < ∞. (ii) For some constant M < ∞, and max1≤i≤n ||Xi||∞ ≤ M. (iii) There exist finite constants U2, U3 > 0 such that b″(η) < U2 and b‴(η) < U3 for all η ∈ ℝ.

The same assumptions appear in van de Geer et al. (2014). In the case of the Gaussian GLM, the condition on λmin(J*) reduces to the requirement that the covariance of the design has minimal eigenvalue bounded away from zero, which is a standard assumption. We require ||J*||max < ∞ to control the estimation error of different functionals of J*. The restrictions in (ii) on the covariates and the projection of the covariates are imposed for technical simplicity; they can be extended to the case of exponential tails (see Fan and Song, 2010). Note that Var(Yi | Xi) = ϕb″(ηi), where ϕ is the dispersion parameter in (2.7), so b″(η) < U2 essentially implies an upper bound on the variance of the response. In fact, Lemma E.2 shows that b″(η) < U2 guarantees that the response is sub-Gaussian. The bound b‴(η) < U3 is used to derive the Lipschitz property of with respect to β, as shown in Lemma E.5. We emphasize that no requirement in Condition 3.6 is specific to the divide and conquer framework.

The assumption of bounded design in (ii) can be relaxed to sub-Gaussian design. However, the price to pay is that the allowable number of subsets k is smaller than in the bounded case, which means that a larger subsample size is needed. To be more precise, the maximal order of k for the sub-Gaussian design has an extra factor, which is a polynomial in , compared to the order for the bounded design. This logarithmic factor comes from the different Lipschitz properties of in the two designs, which are fully explained in Lemma E.5 in the Supplementary Material. In the following theorems, we only present results for the case of bounded design for technical simplicity.

In addition, recalling that Θ* = (J*)^{−1}, where J* = 𝔼[∇ββℓn(β*)], we impose Condition 3.7 on Θ* and its estimator Θ̂.

Condition 3.7

(i) . (ii) . (iii) For v = 1, …, d, whenever in (3.11), we have

where C is a constant and s1 is such that for all v ∈ {1, …, d}.

Part (i) of Condition 3.7 ensures that the variance of each component of the debiased estimator exists, guaranteeing that the Wald statistic is well defined. Parts (ii) and (iii) are imposed directly for technical simplicity. Results of this nature have been established under a similar set of assumptions in van de Geer et al. (2014) and Negahban et al. (2009) for convex penalties and in Wang et al. (2014a) and Loh and Wainwright (2015) for folded concave penalties.

As a step towards deriving the limit distribution of the proposed divide and conquer Wald statistic in the GLM framework, we establish the asymptotic behavior of the aggregated debiased estimator for every given v ∈ {1, …, d}.

Theorem 3.8

Under Conditions 2.1, 3.6 and 3.7, with , we have

| (3.15) |

for any k ≪ d satisfying , where is the transposed vth row of Θ̂(j).

The proof of Theorem 3.8 shows that for the Wald test procedure, the divide and conquer estimator is asymptotically as efficient as the full sample estimator β̂v, i.e.,

A corollary of Theorem 3.8 provides the asymptotic distribution of the Wald statistic in equation (3.14) under the null hypothesis.

Corollary 3.9

Let S̄n be as in equation (3.14), with replaced with an estimator Θ̃vv. Then under the conditions of Theorem 3.8 and , provided |Θ̃vv − Θvv| = oℙ(1) under the scaling , we have

Remark 3.10

Although Theorem 3.8 and Corollary 3.9 are stated only for the GLM, their proofs are in fact an application of two more general results. Further details are available in Lemmas E.7 and E.8 in the Supplementary Material.

We return to the issue of estimating in Section 4, where we introduce a consistent estimator of that preserves the scaling of Theorem 3.8 and Corollary 3.9.

3.2. Divide and Conquer Score Test

In this section, we use ∇vf(β) and ∇−vf(β) to denote, respectively, the partial derivative of f with respect to βv and the partial derivative vector of f with respect to β−v. and are analogously defined.

In the low dimensional setting (where d is fixed), Rao’s score test of against is based on , where β̃−v is a constrained maximum likelihood estimator of , constructed as . If H0 is false, imposing the constraint postulated by H0 significantly violates the first order conditions from M-estimation with high probability; this is the principle underpinning the classical score test. Under regularity conditions, it can be shown (e.g. Cox and Hinkley, 1974) that

where is given by , with and the partitions of the information matrix J* = J(β*),

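$$J^{*} = \begin{pmatrix} J^{*}_{vv} & J^{*}_{v,-v} \\ J^{*}_{-v,v} & J^{*}_{-v,-v} \end{pmatrix}. \qquad (3.16)$$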

The problems associated with the use of the classical score statistic in the presence of a high dimensional nuisance parameter are brought to light by Ning and Liu (2014), who propose a remedy via the decorrelated score. The problem stems from the inversion of the matrix J*−v,−v in high dimensions. The decorrelated score is defined as

$$S(\beta) = \nabla_{v}\ell_n(\beta) - (w^{*})^{\top}\nabla_{-v}\ell_n(\beta), \qquad (3.17)$$

where w* = (J*−v,−v)^{−1}J*−v,v. For a regularized estimator ŵ of w*, to be defined below, we consider the score estimator

| (3.18) |

Provided w* is sufficiently sparse to avoid excessive noise accumulation, ŵ is consistent in the high dimensional setting, which ultimately gives rise to a tractable limiting distribution of a suitable rescaling of . Since is restricted under the null hypothesis, , the statistic in (3.18) is accessible once H0 is imposed. As Ning and Liu (2014) point out, w* is the solution to

under .
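The role of w* can be checked numerically. For a quadratic loss, ∇ℓn(β + h) − ∇ℓn(β) = Jh, so a perturbation h that moves only the nuisance coordinates (hv = 0) changes ∇vℓn but leaves the decorrelated score unchanged; the conditional information Jv|−v = Jvv − Jv,−v(J−v,−v)^{−1}J−v,v equals 1/(J^{−1})vv by block inversion of (3.16). The sketch below is hypothetical: it replaces the Dantzig selector with a direct linear solve, which is only valid when J−v,−v is invertible (the low dimensional case), and it does not reproduce the DC combination in (3.19).

```python
import numpy as np


def decorrelation_weights(J: np.ndarray, v: int):
    """w = (J_{-v,-v})^{-1} J_{-v,v} via a direct solve (low dimensional stand-in)."""
    others = [l for l in range(J.shape[0]) if l != v]
    w = np.linalg.solve(J[np.ix_(others, others)], J[others, v])
    return w, others


def decorrelated_score(grad: np.ndarray, w: np.ndarray, v: int, others) -> float:
    """S = grad_v - w^T grad_{-v}, cf. (3.17)."""
    return float(grad[v] - w @ grad[others])


def conditional_information(J: np.ndarray, v: int) -> float:
    """J_{v|-v} = J_vv - J_{v,-v} (J_{-v,-v})^{-1} J_{-v,v} (a Schur complement)."""
    w, others = decorrelation_weights(J, v)
    return float(J[v, v] - J[v, others] @ w)
```

The first-order insensitivity to the nuisance direction is what prevents the estimation error of the high dimensional nuisance parameter from contaminating the limiting distribution.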

Our divide and conquer score statistic under is

| (3.19) |

and we estimate w* using the Dantzig selector of Candès and Tao (2007)

Theorem 3.11

Let Ĵv|−v be a consistent estimator of and

Suppose ||w*||1 ≲ s1 and Conditions 2.1 and 3.6 are fulfilled. Then under with ,

for any k ≪ d satisfying , where is defined in equation (3.19).

Remark 3.12

By the definition of w* and the block matrix inversion formula for Θ* = (J*)−1, sparsity of w* is implied by sparsity of Θ* as assumed in van de Geer et al. (2014) and Condition 3.7 of Section 3.1.2. In turn, ||w*||0 ≲ s1 implies ||w*||1 ≲ s1 provided that the elements of w* are bounded.

Remark 3.13

Although Theorem 3.11 is stated in the penalized GLM setting, the result holds more generally; further details are available in Lemma E.13 in the Supplementary Material.

To maintain the same computational complexity, an estimator of the conditional information needs to be constructed using a DC procedure. For this, we propose to use

where the divide and conquer estimator and . Note that, for a given v, the communication cost of computing J̄v|−v is low, since all the involved quantities and are scalars, d-dimensional vectors and d-dimensional vectors, respectively. The communication cost is thus of order O(kd); the full d × d Hessian matrix is never communicated.
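The communication pattern can be sketched as follows. Since the exact formula for J̄v|−v is not reproduced here, the central combination below (average each local piece, then form J̄vv − w̄TJ̄−v,v) is a hypothetical choice consistent with the description above, not necessarily the estimator used in the paper. The point it illustrates is the payload: each machine sends one scalar and two vectors of length d − 1, so the total is O(kd) numbers rather than O(kd²).

```python
import numpy as np


def local_summary(J_local: np.ndarray, w_local: np.ndarray, v: int):
    """What one machine sends for coordinate v: a scalar and two (d-1)-vectors."""
    others = [l for l in range(J_local.shape[0]) if l != v]
    return J_local[v, v], J_local[others, v], w_local


def combine_conditional_information(summaries) -> float:
    """Hypothetical central step: average each piece, then form J_vv - w^T J_{-v,v}."""
    j_vv = np.mean([s[0] for s in summaries])
    j_cross = np.mean([s[1] for s in summaries], axis=0)
    w_bar = np.mean([s[2] for s in summaries], axis=0)
    return float(j_vv - w_bar @ j_cross)


def payload_size(d: int, k: int) -> int:
    """Total numbers communicated: k * (1 + 2(d - 1)), which is O(kd)."""
    return k * (1 + 2 * (d - 1))
```

If every machine held the population J and the exact weights, the combination would return the Schur complement Jv|−v, which is a convenient consistency check.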

Lemma 3.14

Suppose ||w*||1 = O(s1) and Conditions 2.1 and 3.6 are fulfilled. Then for any k ≪ d satisfying .

Lemma 3.14 shows that J̄v|−v is consistent, so it can be used as the estimator Ĵv|−v in Theorem 3.11.

4. Accuracy of Distributed Estimation

This section focuses on high-dimensional (d ≫ n) divide-and-conquer estimators for linear and generalized linear models. As explained below Theorem 3.8 in Section 3, the efficiency loss from the divide-and-conquer process is asymptotically negligible. This motivates us to consider ||β̄d − β̂d||, the loss incurred by the divide and conquer strategy in comparison with the practically unavailable full sample debiased estimator β̂d, where ||·|| is a suitable norm. Indeed, it turns out that, for k not too large, β̄d − β̂d appears only as a higher order term in the decomposition of β̄d − β*, and thus ||β̄d − β̂d|| is negligible compared to the statistical error, ||β̂d − β*||. In other words, the divide-and-conquer errors are statistically negligible.

Compared with computing the full sample debiased Lasso estimator, our proposed DC strategy enjoys computational advantages, since it is highly parallel and, for suitably large k, each subsample problem is much smaller than the full sample problem. However, relative to the full sample penalized M-estimator alone (e.g., the Lasso), distributed point estimation does not yield a computational gain in the way distributed testing does, since our distributed algorithm requires debiasing every component of the Lasso estimator, which is computationally expensive. The computational bottleneck of our DC procedure is the d additional debiasing steps. To mitigate this problem, the components of β̂ can be debiased in parallel. According to the optimization procedures (3.4) and (3.11), debiasing one component of the Lasso estimator is entirely independent of debiasing another. Therefore, as long as each branch computer in the cluster shares the sub-dataset 𝒟j and the Lasso estimator β̂(j), they can work in parallel and collectively return all the components of the debiased Lasso estimator to a central server. This parallelization reduces the running time significantly.
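Because each component is debiased independently, the d tasks map directly onto a pool of workers. The sketch below uses Python threads within a single process purely for illustration; in a cluster, each worker would instead hold 𝒟j and β̂(j). The per-component direction mv here solves (Σ̂ + ridge·I)m = ev, a hypothetical stand-in for (3.4) or (3.11) chosen only to keep the example self-contained. With ridge = 0 and nₖ > d, the debiased components coincide with OLS, which the check uses.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def debias_component(v: int, beta_hat: np.ndarray, X: np.ndarray, y: np.ndarray, ridge: float) -> float:
    """Debias coordinate v only: beta_hat_v + m_v^T X^T (y - X beta_hat) / n.

    m_v solves (Sigma_hat + ridge * I) m = e_v, a hypothetical stand-in for (3.4)/(3.11).
    """
    n, d = X.shape
    sigma_hat = X.T @ X / n  # recomputed per task so that tasks share no state
    e_v = np.zeros(d)
    e_v[v] = 1.0
    m_v = np.linalg.solve(sigma_hat + ridge * np.eye(d), e_v)
    return float(beta_hat[v] + m_v @ X.T @ (y - X @ beta_hat) / n)


def debias_all(beta_hat, X, y, ridge=0.0, workers=4) -> np.ndarray:
    """Run the d independent debiasing tasks concurrently and collect them in order."""
    d = X.shape[1]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        comps = pool.map(lambda v: debias_component(v, beta_hat, X, y, ridge), range(d))
        return np.array(list(comps))
```

`ThreadPoolExecutor.map` returns results in input order, so the assembled vector is identical to the sequential computation.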

When the minimum signal strength is sufficiently strong, thresholding β̄d achieves exact support recovery, motivating a refitting procedure based on the low dimensional set of selected variables. To understand the theoretical properties of this refitting procedure, and for independent interest, we develop new theory and methodology for low dimensional (d < n) linear and generalized linear models in Appendixes A and B, respectively, of the Supplementary Material. We show that simple averaging of low dimensional OLS or GLM estimators (denoted uniformly as β̂(j), without superscript d, as debiasing is not necessary) suffices to preserve the statistical error, i.e., to achieve the same statistical accuracy as the estimator based on the full sample. This is because, in contrast to the high dimensional setting, parameters are not penalized in the low dimensional case. With β̄ the average of β̂(j) over the k machines and β̂ the full sample counterpart (k = 1), we derive the rate of convergence of ||β̄ − β̂||2. Refitting using only the selected covariates allows us to eliminate the log d term in the statistical rate of convergence of the estimator in high-dimensional settings. We present theoretical results on the refitted estimator as corollaries to the low-dimensional regression results in Appendixes A and B of the Supplementary Material.
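The low dimensional averaging claim can be checked with a small simulation. The sketch below assumes a Gaussian design, unit noise and an arbitrary β* (all illustrative choices, not the settings used in the Supplementary Material); it splits the sample into k contiguous blocks, fits OLS on each, and compares the divide-and-conquer error ||β̄ − β̂||2 with the statistical error ||β̂ − β*||2.

```python
import numpy as np


def ols(X, y):
    """Ordinary least squares; no penalty, so no debiasing is needed."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def averaged_ols(X, y, k):
    """beta-bar: the average of the OLS estimates from k subsamples."""
    fits = [ols(Xj, yj) for Xj, yj in zip(np.array_split(X, k), np.array_split(y, k))]
    return np.mean(fits, axis=0)


rng = np.random.default_rng(1)
n, d, k = 20000, 5, 10                   # illustrative sizes
beta_star = np.arange(1.0, d + 1.0)      # illustrative true coefficients
X = rng.standard_normal((n, d))
y = X @ beta_star + rng.standard_normal(n)

beta_full = ols(X, y)                    # full sample estimator (k = 1)
beta_bar = averaged_ols(X, y, k)
dc_error = np.linalg.norm(beta_bar - beta_full)
stat_error = np.linalg.norm(beta_full - beta_star)
print(f"DC error {dc_error:.5f} vs statistical error {stat_error:.5f}")
```

With k = 1 the two estimators coincide; for moderate k the divide-and-conquer error should be much smaller than the statistical error, in line with the claim above.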

4.1. The High-Dimensional Linear Model

Recall that the high dimensional DC estimator is $\bar\beta^d = k^{-1}\sum_{j=1}^{k}\hat\beta^d(\mathcal{D}_j)$, where β̂d(𝒟j) for 1 ≤ j ≤ k is the debiased estimator defined in (3.3). We also denote by β̂d the debiased Lasso estimator computed from the entire dataset. The following lemma shows that β̄d is not only asymptotically normal but also approximates the full sample estimator β̂d so well that it has the same statistical error as β̂d, provided the number of subsamples k is not too large.

Lemma 4.1

Consider the linear model (3.2). Under Conditions 3.1 and 3.2, if λ, ϑ1 and ϑ2 are chosen as and ϑ2n−1/2 = o(1), then, with probability 1 − c/d,

| (4.1) |

Remark 4.2

In (4.1), the term other than (sk log d)/n is the estimation error of ||β̂d − β*||∞, while (sk log d)/n is the rate of the distance between the divide and conquer estimator and the full sample estimator. Lemma 4.1 does not rely on any specific choice of k. However, for the aggregated estimator β̄d to attain the same ||·||∞ estimation error as the full sample Lasso estimator β̂Lasso, the required scaling is . This scaling requirement is weaker than that of Theorem 3.3, because the latter guarantees asymptotic normality, which is a stronger result. For the same reason, our estimation results require only O(·) scaling, whereas those for testing require o(·) scaling.
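The trade-off behind the required scaling can be made concrete with a little arithmetic. Assuming, as is standard for the debiased Lasso, that the ℓ∞ estimation term in (4.1) is of order √(log d / n), and setting all constants to one (an assumption; the constants of Lemma 4.1 are not reproduced here), the divide and conquer term sk log d / n stays below the statistical term exactly when k ≤ √(n / log d) / s.

```python
import math


def error_terms(n, d, s, k):
    """(statistical term, divide and conquer term) with all constants set to 1.
    The statistical term sqrt(log d / n) is an assumed form, not read off (4.1)."""
    statistical = math.sqrt(math.log(d) / n)
    divide_and_conquer = s * k * math.log(d) / n
    return statistical, divide_and_conquer


def largest_negligible_k(n, d, s):
    """Largest integer k with s * k * log(d) / n <= sqrt(log(d) / n)."""
    return math.floor(math.sqrt(n / math.log(d)) / s)


# Simulation sizes of Section 5: n = 5000, d = 5000, s = 3.
print(largest_negligible_k(5000, 5000, 3))   # prints 8
```

Because the constants are dropped, the resulting number indicates only an order of magnitude, not a sharp cutoff.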

Rosenblatt and Nadler (2016) show that in the high-dimensional regime where d/nk → κ ∈ (0, 1), the divide and conquer procedure suffers from first-order accuracy loss. This may seem to contradict our result: our dimension is even higher than in their setting, yet averaging debiased estimators based on subsamples incurs no first-order accuracy loss, provided the number of data splits is appropriate. In fact, in high-dimensional sparse linear regression, the intrinsic dimension is the sparsity s rather than d, which is instead regarded as the ambient dimension. The sparsity assumption turns the original high-dimensional problem into an intrinsically low-dimensional one and thus allows us to escape any first-order accuracy loss of the divide and conquer procedure. Given s = o(nk), we can treat high-dimensional sparse linear regression approximately as the classical linear regression setting with d = o(nk). Hence we expect no first-order accuracy loss from the divide and conquer procedure here.
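A compact end-to-end sketch of averaging debiased subsample estimators may help fix ideas. It assumes the Javanmard–Montanari form β̂ + n⁻¹ M Xᵀ(Y − Xβ̂) for each subsample debiased estimator, fits the Lasso by plain cyclic coordinate descent, and replaces the program defining M by a ridge-regularized inverse; the tuning values `lam` and `tau` are hypothetical and are not the choices prescribed by Lemma 4.1.

```python
import numpy as np


def soft_threshold(z, t):
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_cd(X, y, lam, n_iter=100):
    """Lasso for (1/2n)||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, d = X.shape
    b = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0) / n
    r = np.array(y, dtype=float)        # running residual y - X b (b starts at 0)
    for _ in range(n_iter):
        for j in range(d):
            r += X[:, j] * b[j]         # drop coordinate j from the fit
            b[j] = soft_threshold(X[:, j] @ r / n, lam) / col_sq[j]
            r -= X[:, j] * b[j]         # put it back with its new value
    return b


def debias(X, y, b, tau=0.1):
    """b + M X^T (y - X b) / n, with M = (Sigma_hat + tau I)^{-1} as a stand-in."""
    n, d = X.shape
    M = np.linalg.inv(X.T @ X / n + tau * np.eye(d))
    return b + M @ (X.T @ (y - X @ b)) / n


def dc_debiased_lasso(X, y, k, lam):
    """Average over k subsamples of the debiased Lasso estimates."""
    pieces = zip(np.array_split(X, k), np.array_split(y, k))
    return np.mean([debias(Xj, yj, lasso_cd(Xj, yj, lam)) for Xj, yj in pieces], axis=0)
```

Note that the output is generally dense, which is why a thresholding step is needed before ℓ2 rates can be discussed.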

Although β̄d achieves the same rate as the Lasso estimator under the infinity norm, it cannot achieve the minimax rate in ℓ2 norm since it is not a sparse estimator. To obtain an estimator with the ℓ2 minimax rate, we sparsify β̄d by hard thresholding. For any β ∈ ℝd, define the hard thresholding operator 𝒯ν such that the jth entry of 𝒯ν(β) is

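$$[\mathcal{T}_\nu(\beta)]_j = \begin{cases} \beta_j, & |\beta_j| > \nu, \\ 0, & |\beta_j| \le \nu, \end{cases} \qquad j = 1, \ldots, d. \tag{4.2}$$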
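In code, the operator 𝒯ν is a one-line vectorized mask. The sketch assumes NumPy and the convention that entries with |βj| > ν are kept while entries with |βj| ≤ ν are set to zero; the treatment of ties is an assumption.

```python
import numpy as np


def hard_threshold(beta, nu):
    """T_nu(beta): keep coordinates with |beta_j| > nu and zero out the rest."""
    beta = np.asarray(beta, dtype=float)
    return np.where(np.abs(beta) > nu, beta, 0.0)


print(hard_threshold([0.5, -0.05, 0.3, -1.0], 0.2))
```

Applied to β̄d, this removes the many small but nonzero coordinates produced by debiasing.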

According to (4.1), if , we have with high probability. The following theorem characterizes the estimation error, ||𝒯ν(β̄d) − β*||2, and divide and conquer error, ||𝒯ν(β̄d) − 𝒯ν(β̂d)||2, of the thresholded estimator 𝒯ν(β̄d).

Theorem 4.3

Under the linear model (3.2), suppose Conditions 3.1 and 3.2 are fulfilled and choose and ϑ2n−1/2 = o(1). Take the parameter of the hard threshold operator in (4.2) as for some sufficiently large constant C0. If the number of subsamples satisfies , for large enough d and n, we have with probability 1 − c/d,

Remark 4.4

In fact, in the proof of Theorem 4.3, we show that if the thresholding parameter ν satisfies ν ≥ ||β̄d − β*||∞, we have ; it is for this reason that we choose . Unfortunately, the constant is difficult to choose in practice. In the following paragraphs we propose a practical method to select the tuning parameter ν.

Let (M(j)X(j)T)ℓ denote the transposed ℓth row of M(j)X(j)T. Inspection of the proof of Theorem 3.3 reveals that the leading term of satisfies

Chernozhukov et al. (2013) propose the Gaussian multiplier bootstrap to estimate the quantile of T0. Let be i.i.d. standard normal random variables independent of . Consider the statistic

where ε̂(j) ∈ ℝnk is an estimator of ε(j) such that for any i ∈ ℐj, , and ξ(j) is the subvector of with indices in ℐj. Recall that “∘” denotes the Hadamard product. The α-quantile of W0 conditional on (Y, X) is defined as cW0(α) = inf{t | ℙ(W0 ≤ t | Y, X) ≥ α}. We estimate cW0(α) by Monte Carlo and thus choose . This choice ensures

which coincides with the ℓ2 convergence rate of the Lasso.
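The Monte Carlo step can be sketched as follows. This is a generic Gaussian multiplier bootstrap in the sense of Chernozhukov et al. (2013) rather than a transcription of W0: we assume the per-observation contributions have already been assembled into an n × d array `scores` (in the notation above, entry (i, ℓ) would be the ith entry of (M(j)X(j)T)ℓ times the residual ε̂i), and the 1/√n scaling is an illustrative assumption. The quantile is the smallest bootstrap replicate t with empirical ℙ(W0 ≤ t) ≥ α, mirroring the definition of cW0(α).

```python
import numpy as np


def multiplier_bootstrap_quantile(scores, alpha=0.95, n_boot=2000, seed=0):
    """Monte Carlo alpha-quantile of W = max_l |n^{-1/2} sum_i scores[i, l] * xi_i|,
    where xi_1, ..., xi_n are i.i.d. N(0, 1) and independent of the data.

    scores : (n, d) array of per-observation contributions (hypothetical layout).
    """
    scores = np.asarray(scores, dtype=float)
    n = scores.shape[0]
    rng = np.random.default_rng(seed)
    xi = rng.standard_normal((n_boot, n))              # one multiplier vector per draw
    w = np.abs(xi @ scores).max(axis=1) / np.sqrt(n)   # bootstrap replicates of W
    w_sorted = np.sort(w)
    # inf{t : empirical P(W <= t) >= alpha} over the n_boot replicates
    index = max(int(np.ceil(alpha * n_boot)) - 1, 0)
    return float(w_sorted[index])
```

The choice of ν then rescales this quantile as described above; that final step is omitted from the sketch.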

Remark 4.5

Lemma 4.1 and Theorem 4.3 show that if the number of subsamples satisfies and , and thus the error incurred by the divide and conquer procedure is negligible compared to the statistical minimax rate. The reason for this contraction phenomenon is that β̄d and β̂d share the same leading term in their Taylor expansions around β*. Their difference is only the difference of two remainder terms, which is of smaller order than the leading term. We uncover a similar phenomenon in the low dimensional case covered in Appendix A in the Supplementary Material. However, in the low dimensional case ℓ2 norm consistency is automatic, whereas the high dimensional case requires an additional thresholding step to guarantee sparsity and, consequently, ℓ2 norm consistency.

4.2. The High-Dimensional Generalized Linear Model

We generalize the DC estimation of the linear model to the GLM. Recall that β̂d(𝒟j) is the debiased estimator defined in (3.10) and that the aggregated estimator is $\bar\beta^d = k^{-1}\sum_{j=1}^{k}\hat\beta^d(\mathcal{D}_j)$. As before, β̂d denotes the debiased estimator computed from the full sample. The next lemma bounds the error incurred by splitting the sample and the statistical rate of convergence of β̄d in terms of the infinity norm.

Lemma 4.6

Consider the generalized linear model (2.7) with canonical link. Under Conditions 2.1, 3.6 and 3.7, for β̂λ with , there exists a constant C > 0 such that, with probability 1 − c/d,

Remark 4.7

In the bound above, the term other than (s ∨ s1)k log d/n is the estimation error of ||β̂d − β*||∞, while (s ∨ s1)k log d/n is attributable to the distance between β̄d and β̂d.
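For the GLM, an analogous sketch uses logistic regression (canonical link) and a one-step debiasing in the spirit of van de Geer et al. (2014), which may differ in detail from (3.10). To stay short and self-contained it is low dimensional: a ridge-penalized Newton fit with a hypothetical penalty `tau` stands in for the penalized estimator β̂λ, and the inverse Hessian stands in for the estimated inverse information; in the high dimensional setting these would be replaced by the ℓ1-penalized fit and the debiasing program of Section 3.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def ridge_logistic(X, y, tau=1e-2, n_iter=25):
    """Hypothetical initial estimator: ridge-penalized logistic regression
    fitted by Newton's method (stands in for the penalized beta_lambda)."""
    n, d = X.shape
    b = np.zeros(d)
    for _ in range(n_iter):
        p = sigmoid(X @ b)
        grad = X.T @ (y - p) / n - tau * b                 # gradient of penalized log-likelihood
        neg_hess = (X * (p * (1 - p))[:, None]).T @ X / n + tau * np.eye(d)
        b = b + np.linalg.solve(neg_hess, grad)
    return b


def one_step_debias(X, y, b):
    """b + Theta_hat X^T (y - p(b)) / n, with Theta_hat the inverse of the
    (unpenalized) negative Hessian at b: a low dimensional stand-in."""
    n = X.shape[0]
    p = sigmoid(X @ b)
    neg_hess = (X * (p * (1 - p))[:, None]).T @ X / n
    return b + np.linalg.solve(neg_hess, X.T @ (y - p) / n)


def dc_debiased_logistic(X, y, k):
    """Average over k subsamples of the one-step debiased estimates."""
    parts = zip(np.array_split(X, k), np.array_split(y, k))
    return np.mean([one_step_debias(Xj, yj, ridge_logistic(Xj, yj))
                    for Xj, yj in parts], axis=0)
```

The one-step correction removes the first-order shrinkage introduced by the penalty before the subsample estimates are averaged.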

Applying the same thresholding step as in the linear model, we quantify the ℓ2-norm estimation error, ||𝒯ν(β̄d) − β*||2, and the distance between the divide and conquer estimator and the full sample estimator, ||𝒯ν(β̄d) − 𝒯ν(β̂d)||2.

Theorem 4.8

For the GLM (2.7), under Conditions 2.1–3.7, choose and . Take the parameter of the hard threshold operator in (4.2) as for some sufficiently large constant C0. If the number of subsamples satisfies , for large enough d and n, we have with probability 1 − c/d,

| (4.3) |

Remark 4.9

As in the case of the linear model, Theorem 4.8 reveals that the loss incurred by the divide and conquer procedure is negligible compared to the statistical minimax estimation error provided .

A similar proof strategy to that of Theorem 4.8 allows us to construct an estimator of that achieves the required consistency with the scaling of Corollary 3.9. Our estimator is Θ̃vv := [𝒯ζ(Θ̄)]vv, where and 𝒯ζ(·) is the thresholding operator defined in equation (4.2) with for some sufficiently large constant C1.

Corollary 4.10

Under the conditions and scaling of Theorem 3.8, .

Substituting this estimator in Corollary 3.9 delivers a practically implementable test statistic based on subsamples.

Remark 4.11

Notice that point estimation requires a less stringent scaling of k than hypothesis testing in both the linear and generalized linear models. This is because testing and estimation require different rates for the higher order term Δ in the decomposition

where Z is the leading term contributing to the asymptotic normality of . For hypothesis testing, we need to guarantee asymptotic normality. For estimation, we need ||β̄d − β*||∞ to match the minimax rate. Therefore, the requirement on the number of splits k is more stringent for testing than for estimation, by a factor of .

5. Simulations

In this section, we illustrate and validate our theoretical findings through simulations. For hypothesis testing, we use QQ plots to compare the distribution of p-values of the divide and conquer test statistics with their theoretical uniform distribution. We also investigate the estimated type I error and power of the divide and conquer tests. For estimation, we validate our claim at the beginning of Section 4 that, in the high dimensional case, the loss incurred by the divide and conquer strategy is negligible compared with the statistical error of the corresponding full sample estimator. Specifically, we compare the performance of the divide and conquer thresholding estimator of Section 4.1 with the full sample Lasso and with the average of the Lasso estimators over subsamples. An analogous empirical verification of the theory for the low dimensional case is reported in Appendixes C and D of the Supplementary Material.

5.1. Results on Hypothesis Testing

We explore the probability of rejection of a null hypothesis of the form when data are generated according to the linear model,

where and

where d = 5000 and s = 3. In each Monte Carlo replication, we split the initial sample of size n into k subsamples of size n/k. In particular, we choose n = 5000 and k ∈ {1, 2, 5, 10, 20, 25, 40, 50, 100, 200, 500}. The number of Monte Carlo replications is 500. Using β̂Lasso as a preliminary estimator of β*, we construct the Wald and Rao score test statistics as described in Sections 3.1.2 and 3.2, respectively.

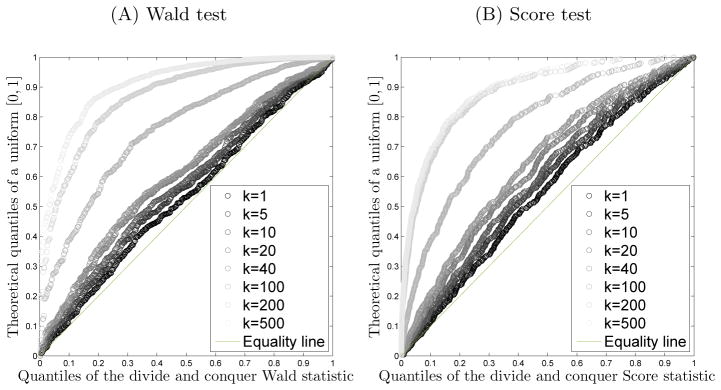

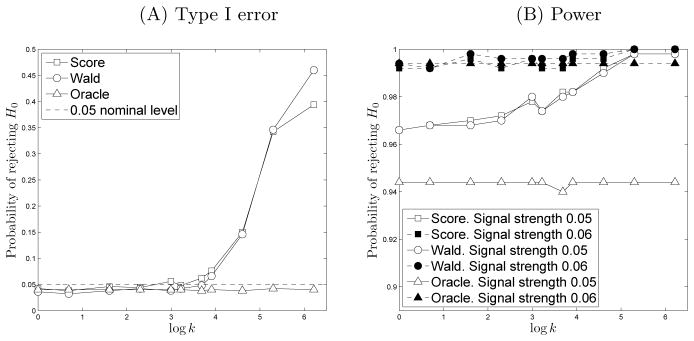

Panels (A) and (B) of Figure 1 are QQ plots of the p-values of the divide and conquer Wald and score test statistics under the null hypothesis against the theoretical quantiles of the uniform [0,1] distribution for eight different values of k. For both test constructions, the distributions of the p-values are close to uniform and remain so as we split the data set. When k ≥ 100, the distribution of the corresponding p-values deviates visibly from the uniform distribution, as expected from the theory developed in Sections 3.1.2 and 3.2. Panel (A) of Figure 2 shows that, for both test constructions, when the number of splits k ≤ 50, the empirical level of the test is close to both the nominal level α = 0.05 and the level of the full sample oracle OLS estimator, which knows the true support of β*. On the other hand, the type I error increases dramatically when k exceeds 50. This is consistent with the asymptotic normality of the test statistics that we established when k is controlled appropriately. Panel (B) of Figure 2 displays the power of the tests for two signal strengths, 0.05 and 0.06. The power of the score and Wald tests improves when the signal strength increases from 0.05 to 0.06. In addition, the power is high regardless of how large k is. However, Figure 2(A) shows that the type I error is large when k is large, which makes the tests invalid. Therefore, these results illustrate that the type I and type II errors are controllable when the number of splits k is relatively small. We also record the wall time of the computation for these values of k in Table 1. The wall time is computed by taking the maximum time over the splits and averaging over replications.
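Two small computations underlie the reading of Figures 1 and 2: the empirical rejection rate at level α with its binomial Monte Carlo standard error, and a Kolmogorov–Smirnov distance from uniformity, which summarizes a QQ plot of p-values in a single number. The helper names are illustrative; the p-values themselves would come from the divide and conquer Wald or score statistics.

```python
import math


def rejection_rate(p_values, alpha=0.05):
    """Fraction of Monte Carlo replications whose p-value is below alpha."""
    return sum(p < alpha for p in p_values) / len(p_values)


def monte_carlo_se(rate, n_rep):
    """Binomial standard error of an estimated rejection probability."""
    return math.sqrt(rate * (1.0 - rate) / n_rep)


def ks_distance_to_uniform(p_values):
    """sup_t |F_m(t) - t| for the empirical CDF F_m of the p-values."""
    p = sorted(p_values)
    m = len(p)
    return max(max((i + 1) / m - x, x - i / m) for i, x in enumerate(p))


# 500 replications at a true level of 0.05
print(round(monte_carlo_se(0.05, 500), 4))   # prints 0.0097
```

So, over 500 replications, an empirical level between roughly 0.03 and 0.07 (two standard errors) is compatible with the nominal α = 0.05.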

Fig 1.

QQ plots of the p-values of the Wald (A) and score (B) divide and conquer test statistics against the theoretical quantiles of the uniform [0,1] distribution under the null hypothesis.

Fig 2.

(A) Estimated probabilities of type I error for the Wald and score tests as a function of k. (B) Estimated power with signal strength 0.05 and 0.06 for the Wald and score tests as a function of k.

Table 1.

Computation time for the divide and conquer testing and estimation, where k = 1 corresponds to the non-splitting case and k > 1 corresponds to the distributed case.

| k | 1 | 2 | 5 | 10 | 20 | 25 | 50 | 100 | 200 |
|---|---|---|---|---|---|---|---|---|---|
| Score test (s) | 364.39 | 73.22 | 35.09 | 23.61 | 23.56 | 20.78 | 24.13 | 37.53 | 64.67 |
| Wald test (s) | 426.23 | 68.95 | 19.66 | 10.09 | 6.70 | 5.71 | 3.88 | 2.60 | 1.91 |
| 𝒯ν(β̄d) (10³ s) | 61.50 | 30.00 | 7.92 | 6.58 | 4.48 | 2.94 | 2.64 | 2.11 | 1.66 |
| Split Lasso (s) | 89.18 | 32.02 | 34.57 | 6.47 | 4.87 | 4.16 | 2.56 | 1.92 | 2.64 |
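The timing pattern in Table 1 can be summarized with a few lines of arithmetic; the numbers below are copied from the table, and the helper functions are illustrative.

```python
ks = [1, 2, 5, 10, 20, 25, 50, 100, 200]
score_s = [364.39, 73.22, 35.09, 23.61, 23.56, 20.78, 24.13, 37.53, 64.67]
wald_s = [426.23, 68.95, 19.66, 10.09, 6.70, 5.71, 3.88, 2.60, 1.91]


def speedups(times):
    """Wall time without splitting (k = 1) divided by the wall time for each k."""
    return [times[0] / t for t in times]


def fastest_k(times):
    """Number of splits k with the smallest recorded wall time."""
    best = min(range(len(times)), key=lambda i: times[i])
    return ks[best]


print(fastest_k(score_s), fastest_k(wald_s))   # prints: 25 200
print(round(speedups(wald_s)[-1], 1))          # prints: 223.2
```

The score test is fastest at k = 25 and slows down beyond that, whereas the Wald test keeps speeding up through k = 200, roughly a 223-fold reduction relative to k = 1.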

5.2. Results on Estimation

In this section, we turn our attention to experimental validation of our divide and conquer estimation theory in the high dimensional case; the low dimensional case is treated in Appendixes C and D of the Supplementary Material.

5.2.1. The High Dimensional Linear Model

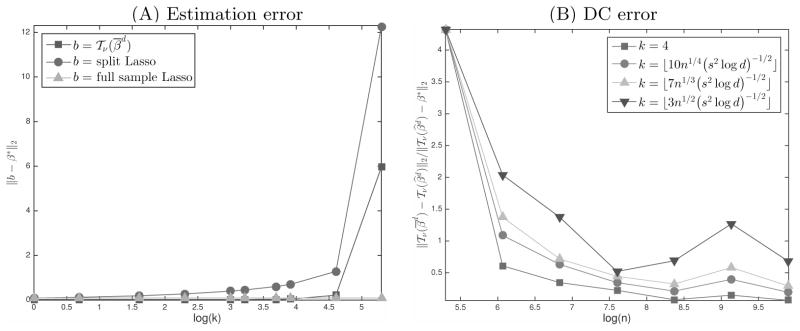

We now consider the same setting as in Section 5.1 with n = 5000, d = 5000 and for all j in the support of β*. In this context, we analyze the performance of the thresholded averaged debiased estimator of Section 4.1. Figure 3(A) depicts the average over 100 Monte Carlo replications of ||b − β*||2 for three different estimators: the debiased divide-and-conquer estimator b = 𝒯ν(β̄d), the Lasso estimator based on the whole sample b = β̂Lasso, and the estimator obtained by naïvely averaging the Lasso estimators from the k subsamples b = β̄Lasso. The parameter ν is taken as in the specification of 𝒯ν(β̄d). As expected, the performance of β̄Lasso deteriorates sharply as k increases. 𝒯ν(β̄d) outperforms β̂Lasso as long as k is not too large. This is expected because, for sufficiently large signal strength, both β̂Lasso and 𝒯ν(β̄d) recover the correct support, but 𝒯ν(β̄d) is approximately unbiased for the coefficients in the support of β*, whereas β̂Lasso is biased. Figure 3(B) shows the error incurred by the divide and conquer procedure, ||𝒯ν(β̄d) − 𝒯ν(β̂d)||2, relative to the statistical error of the full sample estimator, ||𝒯ν(β̄d) − β*||2, for four different scalings of k. We observe that, with , the relative error incurred by the divide and conquer procedure does not appear to converge. This is consistent with Theorem 4.3. Given the lower bound on the statistical error of the full sample Lasso estimator β̂, we derive from Theorem 4.3 that

Fig 3.

(A): Statistical error of the DC estimator, split Lasso and the full sample Lasso for k ∈ {1, 2, 5, 10, 20, 25, 40, 50, 100, 200} when n = 5000, d = 5000. (B): Euclidean norm difference between the DC thresholded debiased estimator and its full sample analogue.

When , the right-hand side is an O(1) term. Therefore, the line with inverted triangles in Figure 3(B) indicates that the rate we developed in Theorem 4.3 is tight. Table 1 also reports the wall time of the estimation for these values of k, computed in the same way as for testing. The computation time at first decreases with k owing to parallelization. However, for the score test and the split Lasso, the time increases again for large k, because the cost of aggregating the results from the different splits is no longer negligible.

6. Discussion

With the advent of the data revolution comes the need to modernize the classical statistical tool kit. For very large scale datasets, distribution of data across multiple machines is the only practical way to overcome storage and computational limitations. It is thus essential to build aggregation procedures for conducting inference based on the combined output of multiple machines. We successfully achieve this objective, deriving divide and conquer analogues of the Wald and score statistics and providing statistical guarantees on their performance as the number of sample splits grows to infinity with the full sample size. Tractable limit distributions of each DC test statistic are derived. These distributions are valid as long as the number of subsamples, k, does not grow too quickly. In particular, is required in a general likelihood based framework. If k grows faster than , remainder terms become nonnegligible and contaminate the tractable limit distribution of the leading term. When attention is restricted to the linear model, a faster growth rate of is allowed.

The divide and conquer strategy is also successfully applied to estimation of regression parameters. We obtain the rate of the loss incurred by the divide and conquer strategy. Based on this result, we derive an upper bound on the number of subsamples for preserving the statistical error. For low-dimensional models, simple averaging is shown to be effective in preserving the statistical error, so long as k = O(n/d) for the linear model and for the generalized linear model. For high-dimensional models, the debiased estimator used in the Wald construction is also successfully employed, achieving the same statistical error as the Lasso based on the full sample, so long as .

Our contribution advances the understanding of distributed inference in the presence of large scale and distributed data, but there is still a great deal of work to be done in the area. We focus here on the fundamentals of hypothesis testing and estimation in the divide and conquer setting. Beyond this, there is a whole tool kit of statistical methodology designed for the single sample setting, whose split sample asymptotic properties are yet to be understood.

7. Proofs

In this section, we present the proofs of the main theorems appearing in Sections 3 and 4. The statements and proofs of several auxiliary lemmas appear in the Supplementary Material. To simplify notation, we take without loss of generality.

7.1. Proofs for Section 3.1

The proof of Theorem 3.3 relies on the following lemma, which bounds the probability that the optimization problems in (3.4) are feasible.

Lemma 7.1

Assume satisfies Cmin < λmin(Σ) ≤ λmax(Σ) ≤ Cmax as well as ||Σ−1/2X1||ψ2 = κ, then we have

where .

Proof

The proof follows by applying a union bound to Lemma 6.2 of Javanmard and Montanari (2014).
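Feasibility can also be probed numerically. Assuming (3.4) has the constraint form ||Σ̂m − eℓ||∞ ≤ ϑ1 used by Javanmard and Montanari (2014), the population precision matrix Ω = Σ⁻¹ is feasible for every ℓ simultaneously exactly when ||Σ̂Ω − I||max ≤ ϑ1. The sketch takes ϑ1 = a√(log d / n) with a hypothetical constant a and an identity covariance, both illustrative choices.

```python
import numpy as np


def population_precision_is_feasible(X, omega, a=4.0):
    """Check max_l ||Sigma_hat omega e_l - e_l||_inf <= a * sqrt(log d / n),
    i.e. whether every column of omega satisfies the (assumed) constraint."""
    n, d = X.shape
    sigma_hat = X.T @ X / n
    deviation = np.abs(sigma_hat @ omega - np.eye(d)).max()
    return bool(deviation <= a * np.sqrt(np.log(d) / n)), float(deviation)


rng = np.random.default_rng(5)
X = rng.standard_normal((2000, 50))      # Sigma = I, so Omega = I
print(population_precision_is_feasible(X, np.eye(50)))
```

The union bound in the lemma controls exactly this maximum over the d² entries of Σ̂Ω − I.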

Proof of Theorem 3.3

For 1 ≤ j ≤ k, let , where . From Theorem F.1, we know that as long as holds uniformly for j = 1, …, d,

Then we define

We now establish the asymptotic normality of V̄n by verifying the requirements of the Lindeberg–Feller central limit theorem (e.g., Kallenberg, 1997, Theorem 4.12). Since εi is independent of X for all i and 𝔼[εi] = 0,

By independence of and the definition of Σ̂(j), we also have

Therefore we have

It only remains to verify the Lindeberg condition, i.e.,

| (7.1) |

whose verification is relegated to Appendix E of the Supplementary Material. Finally, we reach the conclusion by Slutsky’s theorem.

Proof of Corollary 3.5

Let , where n is the total sample size. According to Theorem 3.3, when ℱn holds, we have

From the proof of Lemma 13 in Javanmard and Montanari (2014), limn→∞ ℙ(ℱn) = 1. For any t ∈ ℝ and δ > 0, by applying the dominated convergence theorem to 𝟙{|ℙ(S̄n ≤ t | X) − Φ(t)| > δ and ℱn holds}, we have

Since ℙ(S̄n ≤ t | X) ∈ [0, 1], the dominated convergence theorem gives

This completes the proof of the corollary.

The proofs of Theorem 3.8 and Corollary 3.9 follow as applications of Lemmas E.7 and E.8 in the Supplementary Material, which hold under a more general set of requirements. We present the proof of Theorem 3.8 below and defer that of Corollary 3.9 to Appendix E in the Supplementary Material.

Proof of Theorem 3.8

We verify (A1)–(A4) of Lemma E.7. For (A1), decompose the object of interest as

where Δ1 can be further decomposed and bounded by

We have

and by Condition 3.7, ψ = o(d−1) = o(k−1) for any , a fortiori for q a constant. Since Xi is sub-Gaussian, a matching probability bound can easily be obtained for Δ2, thus we obtain

for ψ = o(k−1). (A2) and (A3) of Lemma E.7 are applications of Lemmas E.3 and E.4 respectively. To establish (A4), observe that

where and . We thus consider |Δℓ(β̂λ(𝒟j) − β*)| for ℓ = 1, 2, 3.

by Lemma E.4, thus ℙ(|Δ2 · (β̂λ(𝒟j) − β*)| > t) < δ for t ≍ MU3n−1sk log(d/δ). Invoking Hölder’s inequality, Hoeffding’s inequality and Condition 2.1, we also obtain, for t ≍ n−1sk log(d/δ),

Therefore ℙ(|Δ2(β̂λ(𝒟j) − β*)| > t) < 2δ. Finally, with t ≍ n−1(s ∨ s1)k log(d/δ),

hence ℙ(|Δ1(β̂λ(𝒟j) − β*)| > t) < 2δ. This follows because by Lemma E.4,

and by Lemma C.4 of Ning and Liu (2014),

7.2. Proofs for Theorems in Section 3.2

The proof of Theorem 3.11 relies on several preliminary lemmas, collected in Appendix E in the Supplementary Material. Without loss of generality we set to ease notation.

Proof of Theorem 3.11

Since , and (B1)–(B4) of Condition E.9 in the Supplementary Material are fulfilled under Conditions 3.6 and 2.1 by Lemma E.10 (see Appendix E in the Supplementary Material). The proof is now simply an application of Lemma E.13 in the Supplementary Material with under the restriction of the null hypothesis.

Proof of Lemma 3.14

The proof is an application of Lemma E.16 in the Supplementary Material, noting that (B1)–(B5) of Condition E.9 in the Supplementary Material are fulfilled under Conditions 3.6 and 2.1 by Lemmas E.10 and E.11 in the Supplementary Material.

7.3. Proofs for Theorems in Section 4

Recall from Section 2 that for an arbitrary matrix M, Mℓ denotes the transposed ℓth row of M and [M]ℓ denotes the ℓth column of M.

Proof of Theorem 4.3

By Lemma 4.1 and , there exists a sufficiently large C0 such that for the event , we have ℙ(ℰ) ≥ 1 − c/d. We choose , which implies that, under ℰ, we have ν ≥ ||β̄d − β*||∞.

Let 𝒮 be the support of β*. The derivations in the remainder of the proof hold on the event ℰ. Observe as . For j ∈ 𝒮, if , we have and thus . While if . Therefore, on the event ℰ,

The statement of the theorem follows because and ℙ(ℰ) ≥ 1 − c/d. Following the same reasoning, on the event , we have

As Lemma 4.1 also gives ℙ(ℰ′) ≥ 1 − c/d, the proof is complete.
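The deterministic step of this argument is easy to check numerically: whenever ν ≥ ||β̄d − β*||∞, hard thresholding (here with the convention that entries with |βj| > ν are kept, an assumption about (4.2)) removes every coordinate outside 𝒮 and leaves each coordinate error at most 2ν, so the ℓ2 error is at most 2ν√s. The sketch draws arbitrary perturbations of a sparse β* within the sup-norm ball of radius ν; the dimensions and signal values are illustrative.

```python
import numpy as np


def hard_threshold(beta, nu):
    """Keep coordinates with |beta_j| > nu, zero out the rest."""
    return np.where(np.abs(beta) > nu, beta, 0.0)


def check_threshold_bound(d=200, s=5, nu=0.1, n_trials=1000, seed=6):
    """Return True if every trial satisfies the support and 2*nu error bounds."""
    rng = np.random.default_rng(seed)
    beta_star = np.zeros(d)
    beta_star[:s] = rng.uniform(0.05, 1.0, size=s)   # support {0, ..., s-1}, some weak signals
    for _ in range(n_trials):
        # any estimate with ||beta_bar - beta_star||_inf <= nu
        beta_bar = beta_star + rng.uniform(-nu, nu, size=d)
        thresholded = hard_threshold(beta_bar, nu)
        error = thresholded - beta_star
        if np.any(thresholded[s:] != 0.0):           # an off-support entry survived
            return False
        if np.abs(error).max() > 2 * nu or np.linalg.norm(error) > 2 * nu * np.sqrt(s):
            return False
    return True


print(check_threshold_bound())
```

Weak signals with |β*j| ≤ 2ν may be removed, but the bound accounts for that loss, which is why the argument does not need a minimum signal strength condition.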

Proof of Corollary 4.10

By an analogous proof strategy to that of Theorem 4.8, under the conditions of the Corollary provided .

Supplementary Material

Acknowledgments

The authors thank Weichen Wang, Jason Lee and Yuekai Sun for helpful comments.

References

- Bickel PJ. One-step Huber estimates in the linear model. Journal of the American Statistical Association. 1975;70:428–434.

- Bühlmann P, van de Geer S. Statistics for high-dimensional data: methods, theory and applications. Springer; 2011.

- Candes E, Tao T. The Dantzig selector: statistical estimation when p is much larger than n. Ann Statist. 2007;35:2313–2351.

- Chen X, Xie M. A split and conquer approach for analysis of extraordinarily large data. Tech Rep 2012-01. Department of Statistics, Rutgers University; 2012.

- Chernozhukov V, Chetverikov D, Kato K. Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. Ann Statist. 2013;41:2786–2819.

- Cox DR, Hinkley DV. Theoretical statistics. Chapman and Hall; London: 1974.

- Fan J, Guo S, Hao N. Variance estimation using refitted cross-validation in ultrahigh dimensional regression. J R Stat Soc Ser B Stat Methodol. 2012;74:37–65. doi: 10.1111/j.1467-9868.2011.01005.x.

- Fan J, Han F, Liu H. Challenges of big data analysis. National Sci Rev. 2014;1:293–314. doi: 10.1093/nsr/nwt032.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Statist Assoc. 2001;96:1348–1360.

- Fan J, Lv J. Nonconcave penalized likelihood with NP-dimensionality. IEEE Transactions on Information Theory. 2011;57:5467–5484. doi: 10.1109/TIT.2011.2158486.

- Fan J, Song R. Sure independence screening in generalized linear models with NP-dimensionality. Ann Statist. 2010;38:3567–3604.

- Javanmard A, Montanari A. Confidence intervals and hypothesis testing for high-dimensional regression. Journal of Machine Learning Research. 2014;15:2869–2909.

- Kallenberg O. Foundations of modern probability. Probability and its Applications (New York). Springer-Verlag; New York: 1997.

- Kleiner A, Talwalkar A, Sarkar P, Jordan MI. A scalable bootstrap for massive data. J R Stat Soc Ser B Stat Methodol. 2014;76:795–816.

- Lee JD, Sun Y, Liu Q, Taylor JE. Communication-efficient sparse regression: a one-shot approach. 2015 ArXiv 1503.04337.

- Liu Q, Ihler AT. Distributed estimation, information loss and exponential families. Advances in Neural Information Processing Systems 2014.

- Loh P-L, Wainwright MJ. Regularized m-estimators with nonconvexity: Statistical and algorithmic theory for local optima. In: Burges C, Bottou L, Welling M, Ghahramani Z, Weinberger K, editors. Advances in Neural Information Processing Systems 26. 2013. pp. 476–484.

- Loh P-L, Wainwright MJ. Regularized M-estimators with nonconvexity: statistical and algorithmic theory for local optima. J Mach Learn Res. 2015;16:559–616.

- Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the lasso. Ann Statist. 2006;34:1436–1462.

- Negahban S, Yu B, Wainwright MJ, Ravikumar PK. A unified framework for high-dimensional analysis of m-estimators with decomposable regularizers. Advances in Neural Information Processing Systems 2009.

- Ning Y, Liu H. A General Theory of Hypothesis Tests and Confidence Regions for Sparse High Dimensional Models. 2014 ArXiv 1412.8765.

- Rosenblatt JD, Nadler B. On the optimality of averaging in distributed statistical learning. Information and Inference. 2016:iaw013.

- Tibshirani R. Regression shrinkage and selection via the lasso. J Roy Statist Soc Ser B. 1996;58:267–288.

- van de Geer S, Bühlmann P, Ritov Y, Dezeure R. On asymptotically optimal confidence regions and tests for high-dimensional models. Ann Statist. 2014;42:1166–1202.

- Vershynin R. Introduction to the non-asymptotic analysis of random matrices. 2010 arXiv preprint arXiv:1011.3027.

- Wang Z, Liu H, Zhang T. Optimal computational and statistical rates of convergence for sparse nonconvex learning problems. Ann Statist. 2014a;42:2164–2201. doi: 10.1214/14-AOS1238.

- Wang Z, Liu H, Zhang T. Optimal computational and statistical rates of convergence for sparse nonconvex learning problems. Ann Statist. 2014b;42:2164–2201. doi: 10.1214/14-AOS1238.

- Zhang C-H. Nearly unbiased variable selection under minimax concave penalty. Ann Statist. 2010;38:894–942.

- Zhang C-H, Zhang SS. Confidence intervals for low dimensional parameters in high dimensional linear models. J R Stat Soc Ser B Stat Methodol. 2014;76:217–242.

- Zhang C-H, Zhang T. A general theory of concave regularization for high-dimensional sparse estimation problems. Statistical Science. 2012;27:576–593.

- Zhang Y, Duchi JC, Wainwright MJ. Divide and Conquer Kernel Ridge Regression: A Distributed Algorithm with Minimax Optimal Rates. 2013 ArXiv e-prints.

- Zhao T, Cheng G, Liu H. A Partially Linear Framework for Massive Heterogeneous Data. 2014a doi: 10.1214/15-AOS1410. ArXiv 1410.8570.

- Zhao T, Kolar M, Liu H. A General Framework for Robust Testing and Confidence Regions in High-Dimensional Quantile Regression. 2014b ArXiv 1412.8724.
