Abstract

Individual people differ in their ability to reason, solve problems, think abstractly, plan and learn. A reliable measure of this general ability, also known as intelligence, can be derived from scores across a diverse set of cognitive tasks. There is great interest in understanding the neural underpinnings of individual differences in intelligence, because it is the single best predictor of long-term life success. The most replicated neural correlate of human intelligence to date is total brain volume; however, this coarse morphometric correlate says little about function. Here, we ask whether measurements of the activity of the resting brain (resting-state fMRI) might also carry information about intelligence. We used the final release of the Young Adult Human Connectome Project (N = 884 subjects after exclusions), providing a full hour of resting-state fMRI per subject; controlled for gender, age and brain volume; and derived a reliable estimate of general intelligence from scores on multiple cognitive tasks. Using a cross-validated predictive framework, we predicted 20% of the variance in general intelligence in the sampled population from their resting-state connectivity matrices. Interestingly, no single anatomical structure or network was responsible or necessary for this prediction, which instead relied on redundant information distributed across the brain.

This article is part of the theme issue ‘Causes and consequences of individual differences in cognitive abilities’.

Keywords: resting-state fMRI, general intelligence, individual differences, prediction, functional connectivity, brain–behaviour relationship

1. Introduction

Most psychologists agree that there is, in addition to specific cognitive abilities, a very general mental capability to reason, think abstractly, solve problems, plan and learn across domains [1]. This ability, intelligence, does not refer to a person's sheer amount of knowledge but rather to their ability to recognize, acquire, organize, update, select and apply this knowledge [2], and to reason and make comparisons [3]. There are large and reliable individual differences in intelligence across species: some people are smarter than others, and some rats are smarter than others [4]. What is more, these differences matter. Intelligence is one of the most robust predictors of conventional measures of educational achievement [5], job performance [6], socio-economic success [7], social mobility [8], health [9] and longevity [10,11]; and as life becomes increasingly complex, intelligence may play an ever-increasing role in life outcomes [2]. Despite this overwhelming convergence of evidence for the construct of ‘intelligence’, there is considerable debate about what it really is, how best to measure it and, in particular, which properties of the human brain predict its variability across individuals, and through which mechanisms.

(a). Measuring intelligence: the structure of cognitive abilities

Intelligence tests are among the most reliable, and valid, of all psychological tests and assessments [1]. Psychologists are so confident in the psychometric properties of intelligence tests that, almost 100 years ago, Edwin G. Boring famously wrote: ‘Intelligence is what the tests test’ [12]. A comprehensive modern intelligence assessment (such as the Wechsler Adult Intelligence Scale, Fourth Edition or WAIS-IV [13]) comprises tasks that assess several aspects of intelligence: some assess verbal comprehension (e.g. word definition, general knowledge and verbal reasoning), some assess visuo-spatial reasoning (e.g. puzzle construction, matrix reasoning and visual perception), some assess working memory (e.g. digit span, mental arithmetic and mental manipulation) and some assess mental-processing speed (e.g. reaction time for detection). The scores on all of these tasks are tallied and compared to a normative, age-matched sample to calculate a standardized Full Scale Intelligence Quotient (FSIQ) score.

One of the most important findings in intelligence research is that performances on all these seemingly disparate tasks—and many other cognitive tasks—are positively correlated: individuals who perform above average on, say, visual perception also tend to perform above average on, say, word definition. Spearman [14] described this phenomenon as the ‘positive manifold’, and since then it has been described in a number of non-human animal species [15]. To account for this empirical observation, he posited the existence of a general factor of intelligence, the ‘g-factor’, or simply ‘g’, which no single task can perfectly measure, but which can be derived from performance on several cognitive tasks through factor analysis. The g-factor captures around half of people's intellectual differences [16] and shows good reliability across sets of cognitive tasks [17]. The empirical observation of the positive manifold is well established [18,19]. The descriptive value of Spearman's g is beyond doubt; however, its interpretation—that psychometric g reflects a general aspect of brain functioning—was challenged early on [20] and remains a topic of debate to this day among intelligence researchers [21–23]. The leading alternative theory posits that each cognitive test involves several mental processes, and that the sampled mental processes overlap across tests; in this situation, performance on all tests appears to be positively correlated (‘Process Overlap Theory’ [19]). The common factor, in this framework, is a consequence of the positive manifold, rather than its cause. There is unfortunately no statistical means of distinguishing between alternative theories on the basis of the psychometric data alone [22].

(b). The search for biological substrates of intelligence

Differential psychology, the psychological discipline that studies individual differences between people and, increasingly, between individuals in non-human animal species (as illustrated in this journal issue [24]), has three main aims: to describe the trait of interest accurately, to establish its impact in real life [25,26] and to understand its aetiology, including its biological basis [27–30]. Much headway has been made with respect to the first two aims for intelligence in humans, as described above. The third aim, despite much effort, has remained elusive.

Individual differences in intelligence are relatively stable: one of the best predictors of intelligence in old age is, perhaps unsurprisingly, intelligence in childhood [31]. Intelligence has a strong genetic component [5,32,33]; genome-wide association studies (GWAS) suggest that intelligence is highly polygenic (no single gene accounts for a large fraction of the variance) [34,35]. While high heritability points to genes as a biological substrate of intelligence differences, individual differences in cognitive ability are instantiated in brain function. Arguably, studying brain function, the most proximal substrate of intelligent behaviour, may yield more direct insight into the actual mechanisms of intelligence than genetic studies have so far. Since intelligence is a relatively stable trait, its aetiology should be found in stable aspects of brain function, and hence also in aspects of brain structure.

Most of the data on the neural basis of intelligence in humans come from neuroimaging and from lesion studies. Kievit et al. [36] recently described the current state of the neuroscience of intelligence as ‘an embarrassment of riches’: a plethora of neuroimaging-derived properties of the brain, structural and functional, have been linked to intelligence over the years, albeit with largely dubious reliability and reproducibility (see below). It is worth noting that the naive search for a simple neurobiological correlate of intelligence faces a major theoretical hurdle, which is best understood through an analogy [36]. Imagine a researcher trying to find the biological basis of the construct of ‘physical fitness’. If they searched for a single physical property, they would probably fall short of their goal. Indeed, physical fitness is a composite of several physical properties (such as cardiorespiratory endurance, muscular strength, muscular endurance, body composition and flexibility), and cannot be equated with any single one, or even any specific combination, of these factors. It is very likely that intelligence is of a similar composite nature, as suggested by genetic data [34]. Furthermore, individuals may score identically on an IQ test by using different cognitive strategies, or different brain structures [30]. This picture is important to hold in mind when searching for neural correlates of intelligence.

There are several in-depth reviews of the neurobiological substrates of intelligence, to which the interested reader is referred for a complete treatment, including structural studies [30,37–39]. Extant functional neuroimaging studies (using EEG, PET, rCBF and fMRI) have been summarized as supporting the notion that intelligence is a network property of the brain, related to neural efficiency [37,40,41]. Foundational studies had very low sample sizes; more recent, better-powered studies have found correlations between intelligence and the global connectivity of a small region in lateral prefrontal cortex (N = 78) [42]; the nodal efficiency of hub regions in the salience network (N = 54) [43]; the modularity of frontal and parietal networks (N = 309) [44]; and several other somewhat disparate reports.

(c). The current study

Extant literature on functional MRI-based correlates of intelligence suffers from the same caveats as most fMRI-based individual-differences research to date [45]: small sample sizes and lack of out-of-sample generalization. A predictive framework was first applied to this question in a recent study [46], which found that fluid intelligence, as estimated from a short Progressive Matrices test, could be predicted from functional connectivity matrices in an early, relatively small-sample release (N = 118) of the Human Connectome Project (HCP) dataset [47], with a correlation r_LOSO = 0.50 between observed and predicted scores (the subscript ‘LOSO’ denotes the use of a leave-one-subject-out cross-validation framework). Since this early report, the authors have revised the effect size down to r_LOSO = 0.22 using later data releases (N = 606) [48]. Recent work in our group, in which we further controlled for confounding effects of age, gender, brain size and motion, and used a leave-one-family-out (LOFO) cross-validation framework instead of the original LOSO framework (thus accounting for the family structure of the HCP dataset, see Methods), further revised the effect size down to about r_LOFO = 0.09 using methods matched as closely as possible to the original study [46]; yet, with improvements including better inter-subject alignment and multivariate modelling, we found r_LOFO = 0.263 (N = 884) [49]. Note that this effect size is comparable to recent estimates of the relationship between brain size and intelligence [50]. Though the explained variance is small, this effect size would in fact fall around the 65th percentile of correlations observed in individual-differences research [51] (with the caveat that r_LOFO is derived from a cross-validation procedure, which breaks the assumption of independence between individual data points).

According to recent guidelines [52], the assessment of general intelligence with HCP's 24-item Progressive Matrices would be considered of ‘fair’ quality (one test, one cognitive dimension, testing time less than 19 min), and would be expected to correlate with general intelligence in the range 0.50–0.71. This rather low measurement quality is itself likely to attenuate the magnitude of the relationship between neural data and general intelligence. Fortunately, the HCP includes several other measures of cognition, which we leverage here to derive a better estimate of general intelligence: one that meets the criteria for an ‘excellent’ quality measurement (more than nine tests, more than three dimensions, testing time more than 40 min), and thus would be expected to correlate with the general factor of intelligence above 0.95.

Our main aims in this study were to: (i) predict an excellent estimate of general intelligence from resting-state functional connectivity in a large sample of subjects from the HCP; and (ii) depending on the success of (i), gain some anatomical insight into which functional connections matter for these predictions. The current study paves the way for a reliable neuroimaging-based science of intelligence differences (large sample size; predictive framework; valid, reliable psychometric construct).

2. Methods

Many of the methods, in particular the preprocessing of fMRI data and the predictive analyses, were developed and described in more detail in our recent publication on personality [49].

(a). Dataset

We used data from a public repository, the 1200-subject release of the Human Connectome Project (HCP) [47]. The HCP provides MRI data and extensive behavioural assessment from almost 1200 subjects. Acquisition parameters and ‘minimal’ preprocessing of the resting-state fMRI data are described in the original publication [53]. Briefly, each subject underwent two sessions of resting-state fMRI on separate days, each session comprising two separate 14 min 24 s acquisitions of 1200 volumes each (customized Siemens Skyra 3 Tesla MRI scanner, TR = 720 ms, TE = 33 ms, flip angle = 52°, voxel size = 2 mm isotropic, 72 slices, matrix = 104 × 90, FOV = 208 × 180 mm, multiband acceleration factor = 8). The two runs acquired on the same day differed in the phase-encoding direction, left-right and right-left (which leads to differential signal intensity especially in ventral temporal and frontal structures). The HCP data were downloaded in their minimally preprocessed form, i.e. after motion correction, B0 distortion correction, coregistration to T1-weighted images and normalization to a surface template.
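A quick arithmetic check of the acquisition figures above: 1200 volumes at TR = 720 ms give the stated run length, and four such runs give just under the "full hour" of resting-state data per subject mentioned in the Abstract.

```python
TR_S = 0.720            # repetition time, seconds
VOLUMES_PER_RUN = 1200
RUNS_PER_SUBJECT = 4    # 2 sessions x 2 phase-encoding directions

run_seconds = round(VOLUMES_PER_RUN * TR_S)   # round() absorbs floating-point error
minutes, seconds = divmod(run_seconds, 60)
total_minutes = RUNS_PER_SUBJECT * run_seconds / 60

print(f"{minutes} min {seconds} s per run")       # 14 min 24 s per run
print(f"{total_minutes:.1f} min per subject")     # 57.6 min per subject
```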

(b). Cognitive-ability tasks

Previous studies of the neural correlates of intelligence in the HCP [46,48] have relied on the number of correct responses on form A of the 24 (+3 bonus)–item Penn Matrix Reasoning Test (PMAT), a test of non-verbal reasoning ability that can be administered in under 10 min (mean = 4.6, s.d. = 3 min; [54]), and is included in the University of Pennsylvania Computerized Neurocognitive Battery (Penn CNB, [55–57]). The PMAT [58,59] is designed to parallel many of the psychometric properties of the Raven's Standard Progressive Matrices test (RSPM, originally published in 1938, which comprises 60 items [60]), while limiting learning effects and expanding the representation of the abstract reasoning construct (Ruben Gur 2017, personal communication).

Assessment of cognitive ability in the HCP [61] also includes several tasks from the Blueprint for Neuroscience Research–funded NIH Toolbox for Assessment of Neurological and Behavioral function (http://www.nihtoolbox.org), as well as other tasks from the Penn computerized neurocognitive battery [56]. These other tasks can be leveraged to derive a better measure of the general intelligence factor [52]. We included all cognitive tasks listed in the HCP Data Dictionary, except for: (i) the delay discounting task, which is not a measure of ability (i.e. there is not a correct response), and (ii) the Short Penn Continuous Performance Test, which is about sustained attention rather than cognitive ability, and whose distribution departed too much from normality (data not shown). Our initial selection thus consisted of 10 tasks (NIH Toolbox: dimensional change card sort; flanker inhibitory control and attention; list sorting working memory; picture sequence memory; picture vocabulary; pattern comparison processing speed; oral reading recognition; Penn CNB: Penn progressive matrices; Penn word memory test; variable short Penn line orientation), which are also listed in the electronic supplementary material, table S1 along with a brief description (the descriptions are copied almost word for word from the HCP Data Dictionary). When several outcome measures were available for a given task, we selected the one that best captured ability; when both age-adjusted and unadjusted scores were available, we included the unadjusted scores. Though some of the NIH toolbox scores combine accuracy and reaction time, we only considered accuracies for the Penn CNB tasks (to avoid confounding ability and processing speed; but see [57]).

(c). Subject selection

The total number of subjects in the 1200-subject release of the HCP dataset is N = 1206. We applied the following criteria to include/exclude subjects from our analyses (HCP database field codes are given in parentheses). (i) Complete neuropsychological datasets. Subjects must have completed all relevant neuropsychological testing (PMAT_Compl = True, NEO-FFI_Compl = True, Non-TB_Compl = True, VisProc_Compl = True, SCPT_Compl = True, IWRD_Compl = True, VSPLOT_Compl = True) and the Mini Mental Status Exam (MMSE_Compl = True). Any subjects with missing values in any of the tests or test items were discarded. This left us with N = 1183 subjects. (ii) Cognitive compromise. We excluded subjects with a score of 26 or below on the MMSE, which could indicate marked cognitive impairment in this highly educated sample of adults under age 40 [62]. This left us with N = 1181 subjects (638 females, 28.8 ± 3.7 years old, range 22–37 years old). This is the sample of subjects available for factor analyses. Furthermore, (iii) subjects must have completed all resting-state fMRI scans (3T_RS-fMRI_PctCompl = 100), which left us with N = 988 subjects. Finally, (iv) we further excluded subjects with a root-mean-squared frame-to-frame head motion estimate (Movement_Relative_RMS.txt) exceeding 0.15 mm in any of the four resting-state runs (threshold similar to [46]). This left us with the final sample of N = 884 subjects (475 females, 28.6 ± 3.7 years old, range 22–36 years old) for predictive analyses based on resting-state data.
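The four exclusion steps can be expressed as simple predicates over per-subject records. This is a hypothetical sketch. It assumes each subject is a dict keyed by the HCP field codes listed above, plus two assumed keys. `MMSE_Score` holds the MMSE total. `rest_relative_rms_mm` is a list with one summary value of relative RMS motion per resting-state run, for instance summarized from each run's Movement_Relative_RMS.txt. The check for missing individual test items is not reproduced.

```python
COMPLETION_FIELDS = (
    "PMAT_Compl", "NEO-FFI_Compl", "Non-TB_Compl", "VisProc_Compl",
    "SCPT_Compl", "IWRD_Compl", "VSPLOT_Compl", "MMSE_Compl",
)
MMSE_CUTOFF = 26            # scores of 26 or below are excluded
MOTION_THRESHOLD_MM = 0.15  # runs exceeding this value exclude the subject


def passes_behavioural(subject):
    """Criteria (i) and (ii): complete testing and MMSE above the cutoff."""
    if not all(subject.get(field) is True for field in COMPLETION_FIELDS):
        return False
    return subject["MMSE_Score"] > MMSE_CUTOFF  # assumed key name


def passes_imaging(subject):
    """Criteria (iii) and (iv): all resting-state runs complete, low motion in every run."""
    if subject.get("3T_RS-fMRI_PctCompl") != 100:
        return False
    runs = subject["rest_relative_rms_mm"]  # assumed key, one value per run
    return len(runs) == 4 and all(m <= MOTION_THRESHOLD_MM for m in runs)


def select_subjects(subjects):
    """Return (factor-analysis sample, predictive-analysis sample)."""
    behavioural = [s for s in subjects if passes_behavioural(s)]
    imaging = [s for s in behavioural if passes_imaging(s)]
    return behavioural, imaging
```

Applying the imaging criteria only to subjects who passed the behavioural criteria mirrors the nested samples described above (N = 1181 for factor analysis, N = 884 for prediction).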

(d). Deriving the general factor of intelligence, g

There are several methods in the literature to derive a general factor of intelligence from scores on a set of cognitive tasks. The simplest consists of using a standardized sum score composite; this is the conventional approach when all scores come from a well-validated battery. However, because we are here including scores from two different cognitive batteries (NIH toolbox and Penn CNB), we sought to characterize the structure of cognitive abilities in our sample using factor analysis, and then derive scores for the general factor. We conducted an exploratory factor analysis (EFA), specifying the bifactor model of intelligence (a common factor g which loads on all test scores, and several group factors that each load on subsets of the test scores; all latent factors are orthogonal to one another), using the psych (v. 1.7.8) package [63] in R (v. 3.4.2). We specifically used the omega function, which conducts a factor analysis (with maximum-likelihood estimation) of the dataset, rotates the factors obliquely (using ‘oblimin’ rotation), factors the resulting correlation matrix of the factors, then applies a Schmid–Leiman transformation [64] to find general factor loadings. Model fit was assessed using several commonly used statistics in factor analysis [65]: the comparative fit index (CFI; should be as close to 1 as possible; values greater than 0.95 are considered a good fit); the root mean squared error of approximation (RMSEA; should be as close to 0 as possible; values less than 0.06 are considered a good fit); the standardized root mean squared residual (SRMR; should be as close to 0 as possible; values less than 0.08 are considered a good fit); and the Bayesian information criterion (BIC; better models have lower values, which can be negative). Factor scores can be derived using different methods [66], for example, the regression method. This approach mimics the one taken by Gläscher et al. [67].
It is, however, usually preferable to derive scores from a confirmatory factor analysis (CFA). The main difference between EFA and CFA is that in EFA, observed task scores are allowed to cross-load freely on several group factors, while in CFA such cross-loadings can be forbidden. For the purpose of deriving the general factor of intelligence, there is little difference between using CFA and EFA in practice; we conducted a CFA using the lavaan (v. 0.5-23.1097) package [68] in R to verify this (see electronic supplementary material, figure S1).

(e). Assessment and removal of potential confounds

We computed the correlation of the general factor of intelligence g with Gender (HCP variable: Gender), Handedness and Age (restricted HCP variables: Handedness, Age_in_Yrs). In our subject sample, we also examined the relationship between g and variables that are likely to affect functional connectivity (FC) matrices, such as brain size (we used FS_BrainSeg_Vol), motion (we computed the sum of framewise displacement in each run) and the multiband reconstruction algorithm, which changed in the third quarter of HCP data collection (fMRI_3T_ReconVrs). We then used multiple linear regression to regress these variables out of the g scores and remove their confounding effects.
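Regressing confounds out of g amounts to keeping the residuals of an ordinary least-squares fit of g on an intercept plus the confound variables. The dependency-free sketch below solves the normal equations directly; a numerical library would normally be used instead. Coding binary confounds (gender, reconstruction version) as 0/1 is an assumption of the sketch, and the toy data are simulated, not HCP values.

```python
import random


def _solve(a, b):
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def regress_out(y, confounds):
    """Residuals of y after OLS on an intercept plus each confound column.

    y: g scores, one per subject
    confounds: list of columns (e.g. gender, age, brain size, motion,
               reconstruction version), each with one value per subject
    """
    n = len(y)
    x = [[1.0] + [col[i] for col in confounds] for i in range(n)]
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(p)]
    beta = _solve(xtx, xty)
    return [yi - sum(b * v for b, v in zip(beta, row)) for yi, row in zip(y, x)]


# Toy usage with simulated data.
rng = random.Random(3)
age = [rng.uniform(22, 36) for _ in range(200)]
gender = [float(rng.random() < 0.5) for _ in range(200)]  # 0/1 coding (assumption)
g = [0.1 * a - 0.5 * s + rng.gauss(0.0, 1.0) for a, s in zip(age, gender)]
g_clean = regress_out(g, [age, gender])
```

By construction, the residuals sum to zero and are orthogonal to every confound column, so they are uncorrelated with each confound in this sample.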

Note that we did not control for differences in cortical thickness and other morphometric features, which have been reported to be correlated with intelligence (e.g. [69]). These probably interact with FC measures and should eventually be accounted for in a full model, yet this was deemed outside the scope of the present study.

Note also that we did not consider Educational Achievement (EA) and Socio-Economic Status (SES) as confounds in this analysis, as they have been described as consequences of intelligence and thus controlling for them would remove meaningful variance. A full model considering these variables is a future direction for this work.

(f). Data preprocessing

We recently explored the effects of several preprocessing pipelines on the prediction of personality factors and PMAT scores in the HCP dataset [49]. Here we adopted the preprocessing pipeline which was found to produce the highest prediction scores in that study. The pipeline reproduces as closely as possible the strategy described in [46] and consists of seven consecutive steps: (1) the signal at each voxel is z-score normalized; (2) using tissue masks, temporal drifts from cerebrospinal fluid (CSF) and white matter (WM) are removed with third-degree Legendre polynomial regressors; (3) the mean signals of CSF and WM are computed and regressed from gray matter voxels; (4) translational and rotational realignment parameters and their temporal derivatives are used as explanatory variables in motion regression; (5) signals are low-pass filtered with a Gaussian kernel with a standard deviation of 1 TR, i.e. 720 ms in the HCP dataset; (6) the temporal drift from gray matter signal is removed using a third-degree Legendre polynomial regressor; (7) global signal regression is performed. These operations were performed using an in-house, Python (v. 2.7.14)-based pipeline (mostly based on open source libraries and frameworks for scientific computing, including SciPy (v. 0.19.0), Numpy (v. 1.11.3), NiLearn (v. 0.2.6), NiBabel (v. 2.1.0), Scikit-learn (v. 0.18.1) [70–74]).
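Three of the seven steps are simple enough to sketch without neuroimaging libraries: (1) z-scoring, (5) Gaussian low-pass filtering with a kernel s.d. of 1 TR, and (7) global signal regression. The sketch operates on plain Python lists rather than on HCP surface files, and it is not the authors' in-house pipeline. Two choices in it are assumptions: the filter's kernel is truncated and renormalized at the series edges, and the "global signal" is taken as the mean across all supplied series.

```python
import math
import statistics


def zscore(ts):
    """Step (1): z-score a single time series."""
    mu = statistics.fmean(ts)
    sd = statistics.pstdev(ts)
    return [(v - mu) / sd for v in ts]


def gaussian_lowpass(ts, sigma=1.0, truncate=4.0):
    """Step (5): convolve with a Gaussian kernel whose s.d. is `sigma` samples
    (sigma=1.0 corresponds to 1 TR). The kernel is cut at `truncate` s.d. and
    renormalized where it overhangs the ends of the series (an assumption)."""
    radius = int(truncate * sigma + 0.5)
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    n = len(ts)
    out = []
    for t in range(n):
        acc = wsum = 0.0
        for k, w in zip(offsets, weights):
            if 0 <= t + k < n:
                acc += w * ts[t + k]
                wsum += w
        out.append(acc / wsum)
    return out


def regress_global_signal(series):
    """Step (7): remove the mean time course across all series from each series
    by simple least squares (each series is also demeaned)."""
    n_t = len(series[0])
    gs = [statistics.fmean(s[t] for s in series) for t in range(n_t)]
    gs_mean = statistics.fmean(gs)
    gs_c = [v - gs_mean for v in gs]
    denom = sum(v * v for v in gs_c)
    cleaned = []
    for s in series:
        s_mean = statistics.fmean(s)
        beta = sum((v - s_mean) * gc for v, gc in zip(s, gs_c)) / denom
        cleaned.append([v - s_mean - beta * gc for v, gc in zip(s, gs_c)])
    return cleaned
```

Steps (2)–(4) and (6) are further regressions (tissue-specific Legendre drifts, CSF/WM mean signals, motion parameters and their derivatives) and would follow the same residualization pattern as step (7).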

(g). Inter-subject alignment, parcellation and functional connectivity matrix generation

We used surface-based multi-modally aligned cortical data (MSM-All [75]), together with a parcellation that was derived from these data using an objective semi-automated neuroanatomical approach [76]. The parcellation has 360 nodes, 180 for each hemisphere. These nodes can be attributed to the major resting-state networks [77] (figure 3a). Time-series extraction simply consisted of averaging data from vertices within each parcel, and matrix generation consisted of computing the Pearson correlation coefficient between each pair of parcel time series. We concatenated time series across runs to derive average FC matrices (REST1: from concatenated REST1_LR and REST1_RL time series; REST2: from concatenated REST2_LR and REST2_RL time series; REST12: from concatenated REST1_LR, REST1_RL, REST2_LR and REST2_RL time series). There are (360 × 359)/2 = 64 620 undirected edges in a network of 360 nodes. This is the dimensionality of the feature space for prediction.

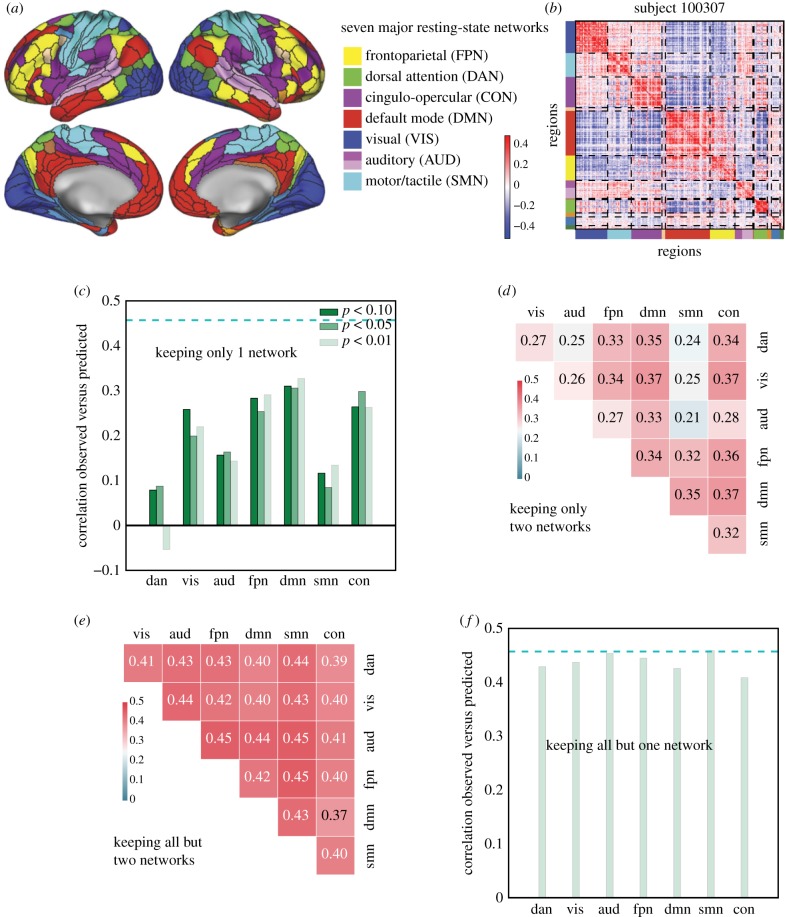

Figure 3.

A distributed neural basis for g. (a) Assignment of parcels to major resting-state networks, reproduced from Ito et al. [77]. (b) Example REST12 functional connectivity matrix ordered by network, for an individual subject (id = 100 307). (c) Prediction performance for REST12 matrices (as Pearson correlation between observed and predicted scores) using only within-network edges, for the seven main resting-state networks listed in (a). DMN, FPN, CON and VIS carry the most information about g. The three shades of green correspond to three univariate thresholds for initial feature selection (we used p < 0.01 as in the main analysis, as well as p < 0.05 and p < 0.1 to make sure that the results were not limited by the inclusion of too few edges). The dashed cyan line shows, for comparison, the prediction performance for the whole-brain connectivity matrix (same data as in figure 2). (d) Prediction performance with two networks only (univariate feature selection with p < 0.01). (e) Prediction performance for REST12 matrices after ‘lesioning’ two networks (univariate feature selection with p < 0.01). (f) Prediction performance after lesioning one network (univariate feature selection with p < 0.01).

(h). Prediction model

We used a univariate feature filtering approach to reduce the number of features, discarding edges whose correlation with the behavioural score had a p-value above a set threshold (e.g. keeping only edges with p < 0.01, as in [46]). We then used Elastic Net regression to learn the relationship with behaviour. Based on our previous work [49], and on the fact that it is unlikely that just a few edges contribute to prediction, we fixed the L1 ratio (which weights the L1- and L2-regularizations) to 0.01, which amounts to almost pure ridge regression. We used threefold nested cross-validation (with balanced ‘classes’, based on a partitioning of the training data into quartiles) to choose the alpha parameter (among 50 possible values) that weighs the penalty term.

(i). Cross-validation scheme

In the HCP dataset, several subjects are genetically related (in our final subject sample there were 410 unique families). To avoid biasing the results due to this family structure (e.g. having a sibling in the training set could facilitate prediction for a test subject if both intelligence and functional connectivity are heritable), we implemented a leave-one-family-out cross-validation scheme for all predictive analyses.
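Leave-one-family-out splitting can be sketched by grouping subject indices by family and holding out one whole group per fold, so that no family contributes to both training and test sets. The family labels below are hypothetical. scikit-learn's `LeaveOneGroupOut`, given family IDs as `groups`, yields equivalent splits. With 410 families, this produces 410 folds.

```python
from collections import defaultdict


def leave_one_family_out(family_ids):
    """Yield (train_indices, test_indices) with one whole family held out per fold."""
    members = defaultdict(list)
    for idx, family in enumerate(family_ids):
        members[family].append(idx)
    for test_idx in members.values():
        held_out = set(test_idx)
        train_idx = [i for i in range(len(family_ids)) if i not in held_out]
        yield train_idx, test_idx


families = ["F1", "F1", "F2", "F3", "F3", "F3"]  # hypothetical family labels
for train, test in leave_one_family_out(families):
    print("test:", test, "train:", train)
```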

(j). Statistical assessment of predictions

Several measures can be used to assess the quality of predictions, which we described in more detail in our previous publication [49]. Here we report the Pearson correlation coefficient between observed scores and predicted scores, the coefficient of determination R², and the related normalized root mean square deviation (nRMSD).

In a cross-validation scheme, the folds are not independent of each other. This means that statistical assessment of the cross-validated performance using parametric statistical tests is problematic [49,78,79]. Proper statistical assessment should thus be done using permutation testing on the actual data. To establish the empirical distribution of chance, we ran our predictive analysis using 1000 random permutations of the scores (shuffling scores randomly between subjects, keeping everything else exactly the same, including the family structure).

3. Results

(a). A general factor, g, accounts for 58% of the covariance structure of cognitive tasks in the HCP sample

All selected cognitive task scores (electronic supplementary material, table S1) correlated positively with one another, as expected from the well-known positive manifold (figure 1a). A parallel analysis suggested an underlying 4-factor structure (figure 1b). An exploratory bifactor analysis with a general factor g and 4 group factors fit the data very well (CFI = 0.990; RMSEA = 0.0311; SRMR = 0.0201; BIC = −0.519), much better than a single factor model (CFI = 0.719; RMSEA = 0.1398; SRMR = 0.0887; BIC = 591.172). The solution is depicted in figure 1c. The four factors can naturally be interpreted as: (1) Crystallized Ability [cry] (PicVocab_Unadj + ReadEng_Unadj); (2) Processing Speed [spd] (CardSort_Unadj + Flanker_Unadj + ProcSpeed_Unadj); (3) Visuospatial Ability [vis] (PMAT24_A_CR + VSPLOT_TC) and (4) Memory [mem] (IWRD_TOT + PicSeq_Unadj + ListSort_Unadj).
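Applying the fit cutoffs listed in the Methods to the statistics just reported is mechanical, and the sketch below encodes it. The cutoffs are conventions rather than formal tests. BIC comparisons are only meaningful between models fit to the same data.

```python
# Cutoffs as stated in the Methods: CFI > 0.95, RMSEA < 0.06, SRMR < 0.08.
CUTOFFS = {"CFI": (">", 0.95), "RMSEA": ("<", 0.06), "SRMR": ("<", 0.08)}

# Fit statistics as reported in the text.
BIFACTOR = {"CFI": 0.990, "RMSEA": 0.0311, "SRMR": 0.0201, "BIC": -0.519}
SINGLE_FACTOR = {"CFI": 0.719, "RMSEA": 0.1398, "SRMR": 0.0887, "BIC": 591.172}


def good_fit(fit):
    """Map each fit index to True when it meets its conventional cutoff."""
    return {
        name: fit[name] > cutoff if direction == ">" else fit[name] < cutoff
        for name, (direction, cutoff) in CUTOFFS.items()
    }


def preferred_by_bic(models):
    """Name of the model with the lowest BIC."""
    return min(models, key=lambda name: models[name]["BIC"])


print(good_fit(BIFACTOR))       # every index meets its cutoff
print(good_fit(SINGLE_FACTOR))  # no index meets its cutoff
print(preferred_by_bic({"bifactor": BIFACTOR, "single factor": SINGLE_FACTOR}))  # bifactor
```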

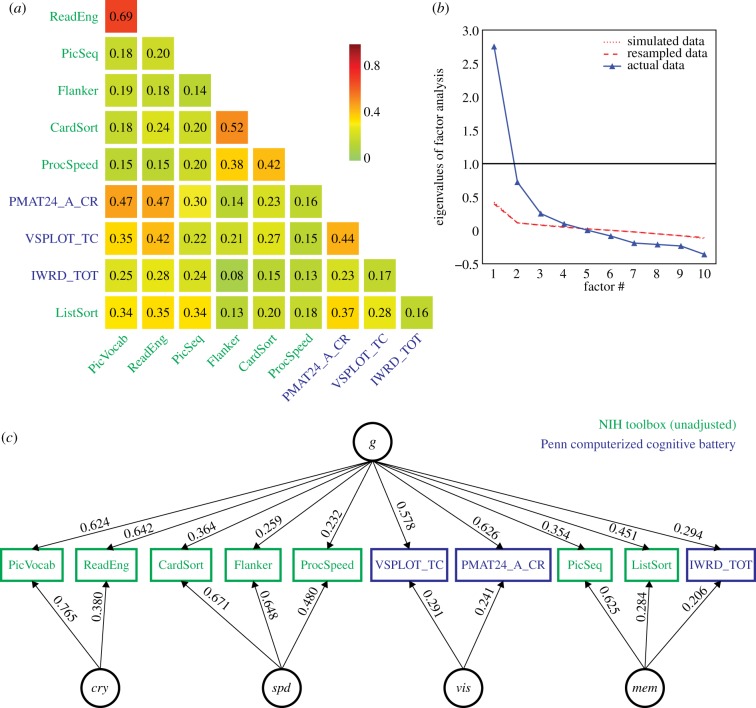

Figure 1.

Exploratory factor analysis of select cognitive tasks (electronic supplementary material, table S1) in the HCP dataset, using N = 1181 subjects. (a) All cognitive task scores correlated positively with one another, reflecting the well-established positive manifold (see also the electronic supplementary material, figure S2 for scatter plots). (b) A parallel analysis suggested the presence of four latent factors from the covariance structure of cognitive task scores. Note that the simulated and resampled data lines are nearly indistinguishable. (c) A bifactor analysis fit the data well (see fit statistics in text), and yielded a theoretically plausible solution with a general factor (g) and four group factors which can be interpreted as crystallized ability (cry), processing speed (spd), visuospatial ability (vis) and memory (mem). Loadings less than 0.2 are not displayed.

Across all cognitive task scores, this general intelligence factor accounted for 58.5% of the variance (coefficient omega hierarchical, ω_h [80–82]), while group factors accounted for 18.2% of the variance (with 15.5% of the variance unaccounted for). Another important metric is coefficient omega subscale, ω_s [83], which quantifies the reliable variance across the tasks accounted for by each subscale, beyond that accounted for by the general factor; we computed ω_s for each of the four group factors (cry, spd, vis and mem). While some of these subscale factors account for a substantial proportion of the variance across their respective tasks, their measurement quality is at most fair [52] due to the limited number of constituent tasks; thus we chose to focus on the general factor g only in the present study.
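Coefficients ω_h and ω_s can be computed from the loadings of a bifactor solution. This sketch uses the model-implied variance of the unit-weighted sum (general, group and unique contributions) as the denominator. psych's `omega` may compute the total variance differently (e.g. from the observed correlation matrix), so its values can differ slightly. The loadings in the usage example are hypothetical, not the HCP estimates. Each test is assumed to load on a single group factor.

```python
def omega_coefficients(general, group, uniqueness, clusters):
    """Model-implied omega hierarchical and omega subscale from a bifactor solution.

    general: general-factor loading per test
    group: loading of each test on its own group factor
    uniqueness: unique variance per test
    clusters: dict mapping group-factor name -> list of test indices
    """
    sum_g = sum(general)
    group_sq = sum(sum(group[i] for i in idx) ** 2 for idx in clusters.values())
    total = sum_g ** 2 + group_sq + sum(uniqueness)  # variance of the unit-weighted sum
    omega_h = sum_g ** 2 / total
    omega_s = {}
    for name, idx in clusters.items():
        g_part = sum(general[i] for i in idx) ** 2
        s_part = sum(group[i] for i in idx) ** 2
        u_part = sum(uniqueness[i] for i in idx)
        omega_s[name] = s_part / (g_part + s_part + u_part)
    return omega_h, omega_s


# Hypothetical standardized loadings: four tests, two group factors.
gen = [0.6] * 4
grp = [0.4] * 4
uniq = [1 - 0.6**2 - 0.4**2] * 4
omega_h, omega_s = omega_coefficients(gen, grp, uniq, {"a": [0, 1], "b": [2, 3]})
print(round(omega_h, 3), {k: round(v, 3) for k, v in omega_s.items()})
```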

Factor scores are indeterminate, and several alternate methods exist to derive them from a structural model [66]. To avoid this issue, most researchers prefer to remain in latent space for further analyses [36]. However, for subsequent analyses we required factor scores for g, which we derived using regression-based weights (‘Thurstone’ method). We compared the general factor scores derived from this exploratory factor analysis (EFA) with a simple composite score consisting of the sum of standardized observed test scores. As expected, we found that the simple composite score correlates highly with the EFA-derived g (r = 0.91).
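The simple composite compared against the EFA-derived g can be sketched as a unit-weighted sum of z-scored task scores. Task names and scores in the example are placeholders. Correlating the composite with factor scores, for example with `statistics.correlation`, gives the kind of comparison reported above.

```python
import statistics


def standardized_sum(task_scores):
    """Unit-weighted composite: z-score each task across subjects, then sum per subject.

    task_scores: dict mapping a task name to its scores, all lists in the same subject order.
    """
    z_columns = []
    for values in task_scores.values():
        mu = statistics.fmean(values)
        sd = statistics.stdev(values)
        z_columns.append([(v - mu) / sd for v in values])
    return [sum(per_subject) for per_subject in zip(*z_columns)]


# Hypothetical task names and scores for three subjects.
tasks = {"task_a": [1.0, 2.0, 3.0], "task_b": [2.0, 4.0, 6.0]}
print(standardized_sum(tasks))  # [-2.0, 0.0, 2.0]
```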

(b). Brain size, gender and motion are correlated with g

There are known effects of gender [84,85], age [86,87], in-scanner motion [88–90] and brain size [91] on the functional connectivity patterns measured with resting-state fMRI. It is thus necessary to control for these variables [92]: indeed, if intelligence is correlated with gender, one would be able to predict some of the variance in intelligence solely from functional connections that are related to gender. The easiest way to control for these confounds is to remove any relationship between the confounding variables and the score of interest in our sample of subjects, which can be done using multiple regression. Note that this approach may be too conservative, and that more work remains to be done on dealing with such confounds (see Discussion).

We characterized the relationship between intelligence and each of the confounding variables listed above in our subject sample (electronic supplementary material, figure S3). Intelligence was correlated with gender (men scored higher in our sample), age (younger subjects scored higher in our sample; note the limited age range of 22–36 years), and brain size (larger brains scored higher) [50,52]. There was no relationship between handedness and intelligence in our sample (r = 2 × 10⁻⁶). Motion, quantified as the sum of frame-to-frame displacement over the course of a run (and averaged separately for REST1 and REST2), was correlated with intelligence: subjects scoring lower on intelligence moved more during the resting-state scans. Note that motion in REST1 was highly correlated (r = 0.72) with motion in REST2, indicating that motion itself may be a stable trait that is correlated with other traits. While interpreting these complex relationships would require further work outside the scope of this study (using partial correlations and mediation models to disentangle effects), it is critical to remove shared variance between intelligence and the primary confounding variables before proceeding further. This ensures that our model is trained specifically to predict intelligence, rather than confounds that covary with it in our subject sample [92]. However, there are several other variables that we do not explicitly account for here, for example, the Openness personality trait, which we previously found to be correlated with intelligence [49].
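The text defines motion as the sum of frame-to-frame displacement over a run, averaged over the runs of a session, but does not give the displacement formula. The sketch below assumes the widely used framewise displacement of Power and colleagues: absolute backward differences of the six rigid-body realignment parameters, with rotations (assumed to be in radians) converted to millimetres of arc on a 50 mm sphere. The column layout and the 50 mm radius are assumptions, not details taken from the study.

```python
import numpy as np

def framewise_displacement(params, radius_mm=50.0):
    """Frame-to-frame displacement (mm) from rigid-body realignment parameters.

    params: (n_volumes, 6) array; columns 0-2 are translations in mm,
    columns 3-5 are rotations in radians (assumed layout).
    """
    deltas = np.abs(np.diff(np.asarray(params, dtype=float), axis=0))
    deltas[:, 3:] *= radius_mm  # radians -> arc length (mm) on a sphere
    return deltas.sum(axis=1)   # one value per volume-to-volume transition

def session_motion(runs):
    """Sum displacement within each run, then average across a session's runs."""
    return float(np.mean([framewise_displacement(p).sum() for p in runs]))

# Toy run: steady 0.1 mm drift along x plus a 0.001 rad rotation step per volume.
run = np.zeros((5, 6))
run[:, 0] = 0.1 * np.arange(5)
run[:, 3] = 0.001 * np.arange(5)
print(framewise_displacement(run))    # four transitions of 0.1 + 0.05 mm each
print(session_motion([run, 2 * run]))
```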

Another possible confound, specific to the HCP dataset, is a difference in the image reconstruction algorithm between subjects scanned before and after April 2013. The reconstruction version leaves a notable signature on the data that can make a large difference in the final analyses [93]. We found a small but significant correlation between reconstruction version and intelligence in our sample (subjects imaged with the old reconstruction version were, on average, less intelligent than those imaged with the newer version). This correlation is, of course, a sampling artefact with no meaning. Nevertheless, it must be removed, which we did by including the reconstruction version as a confound variable.

Importantly, the multiple linear regression used for removing the variance shared with confounds was fitted on the training data (in each cross-validation fold during the prediction analysis), and then the fitted weights were applied to remove the effects of confounds in both the training and test data. This is critical to avoid any leakage of information, however negligible, from the test data into the training data.
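A minimal sketch of this leakage-free confound removal, assuming ordinary least squares with an intercept and a confound matrix whose columns stand in for gender, age, brain size, motion and reconstruction version. The data and column set here are simulated and hypothetical. The weights are estimated on the training subjects only and then applied unchanged to the held-out subjects.

```python
import numpy as np

def fit_confound_weights(C_train, y_train):
    """OLS weights (intercept first) predicting the score from the confounds."""
    A = np.column_stack([np.ones(len(C_train)), C_train])
    beta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return beta

def remove_confounds(C, y, beta):
    """Subtract the confound-explained part of y using pre-fitted weights."""
    A = np.column_stack([np.ones(len(C)), C])
    return y - A @ beta

rng = np.random.default_rng(1)
n, n_confounds = 200, 6
C = rng.standard_normal((n, n_confounds))  # hypothetical confound matrix
g = C @ rng.standard_normal(n_confounds) + rng.standard_normal(n)
train, test = np.arange(150), np.arange(150, n)  # one illustrative fold

beta = fit_confound_weights(C[train], g[train])       # fit on training data only
g_train_clean = remove_confounds(C[train], g[train], beta)
g_test_clean = remove_confounds(C[test], g[test], beta)  # no refit on test data
```

Because the test-set residuals use weights that the test subjects never influenced, they are not guaranteed to be exactly uncorrelated with the confounds; that is the intended behaviour, since refitting on the test data would leak information.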

(c). Resting-state FC predicts 20% of the variance in g across subjects

We computed a resting-state functional connectivity matrix for each subject from close to 1 h of resting-state data (REST12), yielding a very reliable estimate of the stable functional network of each individual—with 1 h of scan time, a recent study found the test–retest reliability of an individual's FC matrix to be above r = 0.96 (see fig. 4c in [94]).
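For readers less familiar with this representation, a connectivity matrix is typically computed as the Pearson correlation between every pair of parcel time series, and only its upper triangle is kept because the matrix is symmetric. The sketch below assumes a 360-region parcellation, which yields exactly 360 × 359 / 2 = 64 620 undirected edges, matching the feature count given in the next paragraph. The Fisher z-transform and the simulated time series are assumptions, and how the separate runs are combined into one matrix is not shown.

```python
import numpy as np

def connectivity_edges(timeseries, fisher_z=True):
    """Vectorize a functional connectivity matrix.

    timeseries: (n_timepoints, n_parcels) array for one subject.
    Returns the upper-triangle (undirected, off-diagonal) correlations.
    """
    fc = np.corrcoef(timeseries, rowvar=False)  # (n_parcels, n_parcels) Pearson r
    rows, cols = np.triu_indices(fc.shape[0], k=1)
    edges = fc[rows, cols]
    return np.arctanh(edges) if fisher_z else edges

rng = np.random.default_rng(0)
ts = rng.standard_normal((1200, 360))  # simulated: 1200 volumes x 360 parcels
edges = connectivity_edges(ts)
print(edges.shape)  # (64620,)
```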

We used a leave-one-family-out cross-validation scheme to train a regularized linear model and predict general intelligence from functional connectivity matrices (features are the 64 620 undirected edges), in our sample of 884 subjects. We found a significant correlation between observed and predicted g scores (r = 0.457, P₁₀₀₀ < 0.001, based on 1000 permutations), a coefficient of determination that differs significantly from chance (R² = 0.206; P₁₀₀₀ < 0.001), and an nRMSD that is significantly lower than its null distribution (nRMSD = 0.892; P₁₀₀₀ < 0.001; figure 2).
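Leave-one-family-out cross-validation keeps all members of a family (siblings and twins) in the same fold, so that similarity between relatives cannot leak from the training set into the test set. The scikit-learn sketch below illustrates the idea on simulated data. The family labels, the `SelectKBest`/`f_regression` univariate filter and the ridge penalty are stand-ins chosen for illustration; the study specifies only a univariate feature selection step followed by a regularized linear model. Placing the filter inside the pipeline ensures that it is refit on every training fold.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import LeaveOneGroupOut, cross_val_predict
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
n_families, family_size, n_edges = 40, 3, 500
n_subjects = n_families * family_size
family_id = np.repeat(np.arange(n_families), family_size)  # hypothetical families

X = rng.standard_normal((n_subjects, n_edges))  # simulated edge features
g = X[:, :10].sum(axis=1) + 2.0 * rng.standard_normal(n_subjects)

# Feature selection and the regularized model are both refit inside every fold.
model = make_pipeline(SelectKBest(f_regression, k=50), Ridge(alpha=1.0))
g_pred = cross_val_predict(model, X, g, groups=family_id, cv=LeaveOneGroupOut())
r = np.corrcoef(g, g_pred)[0, 1]
print(f"out-of-sample r = {r:.2f}")
```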

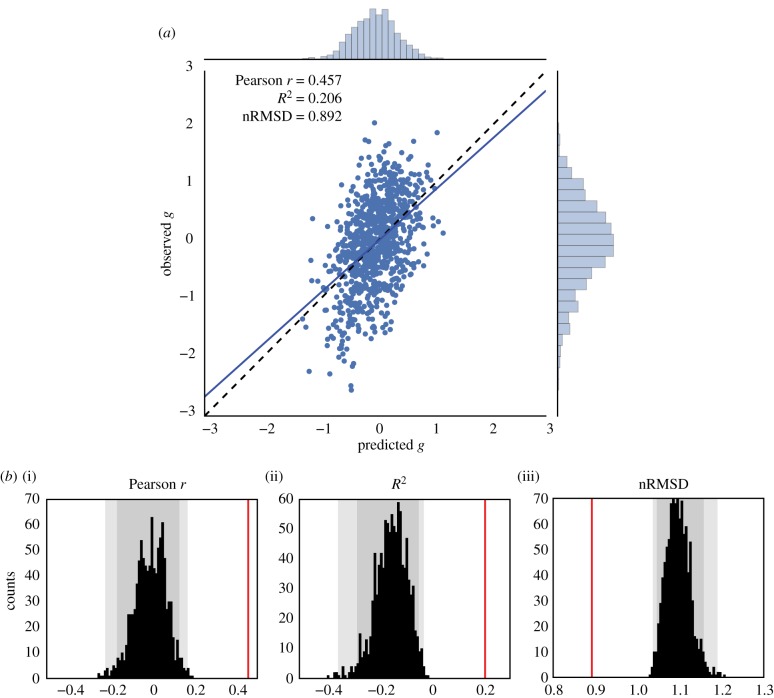

Figure 2.

Prediction of the general factor of intelligence g from resting-state functional connectivity, averaging all resting-state runs for each subject (REST12, totalling almost 1 h of fMRI data). (a) Observed versus predicted values of the general factor of intelligence. The regression line had a slope close to 1, as expected theoretically [95]. The correlation coefficient was r = 0.457 (REST1 only, r = 0.419; REST2 only, r = 0.312). (b) Evaluation of prediction performance according to several statistics and their distributions under the null hypothesis, as simulated through permutation testing (with 1000 surrogate datasets). All fit statistics (red lines) fell far outside the confidence intervals under the null hypothesis. (i) The correlation between observed and predicted values; (ii) the coefficient of determination R², interpretable as the proportion of explained variance; (iii) the normalized root-mean-square deviation (nRMSD), which indicates the average difference between observed and predicted scores. Faint grey shading: p < 0.05; darker grey shading: p < 0.01 (permutation tests).
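The three fit statistics in panel (b) can be written compactly. The sketch below assumes that nRMSD is the root-mean-square deviation divided by the (population) standard deviation of the observed scores, a definition under which nRMSD = √(1 − R²) holds exactly; the caption does not state the normalization, so treat it as an assumption. The permutation p-value uses the common (1 + count)/(1 + n_perm) form, so 1000 permutations cannot produce p below 1/1001. For speed, this null shuffles observed scores against fixed predictions; a faithful test would rerun the full cross-validated pipeline on each surrogate dataset.

```python
import numpy as np

def fit_stats(y, y_pred):
    """Correlation, coefficient of determination and normalized RMSD."""
    y, y_pred = np.asarray(y, float), np.asarray(y_pred, float)
    r = np.corrcoef(y, y_pred)[0, 1]
    ss_res = np.sum((y - y_pred) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    nrmsd = np.sqrt(np.mean((y - y_pred) ** 2)) / np.std(y)  # assumed normalization
    return r, r2, nrmsd

def permutation_pvalue(observed, null, greater=True):
    """One-sided permutation p-value that counts the observed statistic itself."""
    null = np.asarray(null)
    extreme = (null >= observed) if greater else (null <= observed)
    return (1 + extreme.sum()) / (1 + null.size)

rng = np.random.default_rng(0)
y = rng.standard_normal(884)
y_pred = 0.5 * y + 0.5 * rng.standard_normal(884)  # simulated predictions
r, r2, nrmsd = fit_stats(y, y_pred)

# Cheap stand-in null: shuffle observed scores against fixed predictions.
null = np.array([fit_stats(rng.permutation(y), y_pred) for _ in range(1000)])
p_r = permutation_pvalue(r, null[:, 0])                         # larger r is better
p_nrmsd = permutation_pvalue(nrmsd, null[:, 2], greater=False)  # smaller is better
```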

For comparison, we previously found that prediction of intelligence as estimated by PMAT24_A_CR scores [49] captured less variance (r = 0.263, P₁₀₀₀ < 0.001; R² = 0.047, P₁₀₀₀ < 0.001; nRMSD = 0.977, P₁₀₀₀ < 0.001). The lower measurement quality of PMAT24_A_CR, compared with our factor-derived g, likely limited prediction performance [52].

Similarly, using only 30 min of resting-state data (one session, two runs) to derive functional connectivity matrices reduced prediction performance. With 30 min of scan time, the test–retest reliability of FC matrices falls to about r = 0.92 (according to fig. 4c in [94]). Predicting g using REST1, we found r = 0.419, R² = 0.170, nRMSD = 0.912; using REST2, we found r = 0.312, R² = 0.067, nRMSD = 0.966.

(d). Predictive edges are distributed in FPN, CON, DMN, and VIS networks

We have demonstrated that a substantial and statistically highly significant amount of variance (about 20%) in general intelligence g across our sample of subjects can be predicted from resting-state functional connectivity. Is there a specific set of edges (connectivity between specific anatomical parcels) in the brain that carries most of the information? The Parieto-Frontal Integration Theory (P-FIT) of intelligence [37] postulates roles for cortical regions in the prefrontal (Brodmann areas (BA) 6, 9–10, 45–47), parietal (BA 7, 39–40), occipital (BA 18–19), and temporal association cortex (BA 21, 37).

To address this question, we used a descriptive network selection/elimination approach. We focused on the 7 major resting-state networks [96]; Ito and colleagues recently assigned each region of the parcellation used here [76] to these functional networks using the Generalized Louvain method for community detection with resting-state fMRI data [77] (figure 3a). We verified that this published network assignment indeed clustered regions that have similar connectivity patterns at the level of single subjects (figure 3b). We then asked how well we could predict g keeping edges within only one network ($\binom{7}{1} = 7$ combinations, figure 3c), or within and between two networks ($\binom{7}{2} = 21$ combinations, figure 3d). For all these analyses we used exactly the same methods as described above (including the univariate feature selection step), but trained and tested on a reduced feature space (selecting edges according to networks). Prediction performance within a single network, or with two networks, was much lower than performance with the full set of edges (one network, maximum r = 0.327; two networks, maximum r = 0.373); however, some networks carried more information than others: the most informative networks were CON, DMN, FPN and VIS, while DAN, AUD and SMN carried very little information. These results are in good agreement with the P-FIT [37]; in particular, in addition to the eponymous frontal and parietal regions, which have already been reported in other studies [46,67], we found information in the VIS network, as postulated by P-FIT. We next explored how the removal of networks affected prediction: we ‘lesioned’ a single network ($\binom{7}{1} = 7$ combinations, figure 3f) or two networks ($\binom{7}{2} = 21$ combinations, figure 3e). We found that lesioning one or two networks had a very small effect on the prediction of g (lesion one network, minimum r = 0.409; lesion two networks, minimum r = 0.373), indicating that information about g is distributed redundantly in functional connectivity patterns across several brain networks.
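The selection and ‘lesion’ analyses reduce to boolean masks over the edge vector. The sketch below assumes that each parcel carries one of the seven network labels (the toy label vector is hypothetical, not the published assignment [77]) and that edges are ordered as the upper triangle of the connectivity matrix. Keeping a set of networks retains edges whose two endpoints both lie in that set, which covers within-network edges and, for two networks, the between-network edges; a lesion keeps only edges whose endpoints both lie outside the lesioned networks, so every edge touching a lesioned node is dropped.

```python
from itertools import combinations

import numpy as np

NETWORKS = ("VIS", "SMN", "CON", "DAN", "FPN", "AUD", "DMN")

def edge_mask(labels, keep):
    """True for upper-triangle edges whose endpoints both belong to `keep`."""
    in_keep = np.isin(np.asarray(labels), list(keep))
    rows, cols = np.triu_indices(len(labels), k=1)
    return in_keep[rows] & in_keep[cols]

def lesion_mask(labels, lesioned):
    """True for edges that do not touch any node of the lesioned networks."""
    return edge_mask(labels, set(NETWORKS) - set(lesioned))

selections_1 = list(combinations(NETWORKS, 1))  # 7 single-network analyses
selections_2 = list(combinations(NETWORKS, 2))  # 21 network pairs

# Toy example with four parcels (hypothetical labels).
labels = ["VIS", "VIS", "DMN", "FPN"]
print(edge_mask(labels, {"VIS"}).sum())         # 1: the VIS-VIS edge
print(edge_mask(labels, {"VIS", "DMN"}).sum())  # 3: VIS-VIS plus two VIS-DMN
print(lesion_mask(labels, {"FPN"}).sum())       # 3: all edges not touching FPN
```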

4. Discussion

Deary et al. recently wrote [97]: ‘the effort to understand the psychobiology of intelligence has a resemblance with digging the tunnel between England and France: We hope, with workers on both sides having a good sense of direction, that we can meet and marry brain biology and cognitive differences’. The present study is a step in this direction, offering the most robust investigation to date specifically focused on predicting intelligence from resting-state fMRI data. Here we used factor analysis of the scores on 10 cognitive tasks to derive a bi-factor model of intelligence, including a common g-factor and broad ability factors, which is the standard in the field of intelligence research [52,98]. We used reliable estimates of functional connectivity in a large sample of subjects, from close to one hour of high-quality resting-state fMRI data per subject. We used the best available inter-subject alignment algorithm (MSM-All), a stringent control for confounding variables, and out-of-sample prediction. With these state-of-the-art methods on both ends of the tunnel, we demonstrated a strong relationship between general intelligence g and resting-state functional connectivity (at least as strong as the well-established relationship of intelligence with brain size [50,52]).

We further established that predictive network edges were fairly distributed throughout the brain, though they mostly fell within four of the seven major resting-state networks: the fronto-parietal network, the default mode network, the cingulo-opercular network, and the visual network. These findings are in general agreement with the parieto-frontal integration theory (P-FIT) of intelligence. This neuroanatomical description of informative edges should be considered preliminary, as we have yet to explore how it is affected by analytical choices, such as the brain parcellation scheme and the predictive model. Removing the nodes from one or two networks had little effect on prediction scores (figure 3e,f), supporting the conclusion that information is quite distributed. This latter finding might seem at odds with prior reports that focal lesions in frontal or parietal lobe are correlated with reduced intelligence [67]. However, there is a fundamental difference: actual neurological lesions [99] abolish the function of the lesioned regions, and thus remove information not only from edges directly linked to the lesioned parcels but also, in complex ways, from connections all across the brain. Our virtual ‘lesions’ do not do this; instead, they retain information from the ‘lesioned’ regions that is broadcast across other brain regions.

Our findings considerably extend a previous report [46] which had hinted at a relationship between resting-state functional connectivity and intelligence using a much smaller subject sample (N = 117), no account of potential confounding variables, a cross-validation scheme that did not respect family structure, a less functionally accurate inter-subject alignment, and a lower-quality measure of intelligence (the short modified version of Raven's Progressive Matrices). We indeed found evidence that measurement quality, on both the behavioural and the neural side, moderated the effect size of the relationship between brain and behaviour [52]. We achieved better prediction performance using g rather than the number of correct responses on the PMAT24_A test, and better performance using REST12 matrices (approx. 1 h of data per subject) rather than REST1 or REST2 matrices (approx. 30 min of data per subject). Though this is of course expected statistically (the noise ceiling on an observed correlation drops as measurement noise in either of the two variables increases), it is an important observation for future explorations in other datasets. In many respects the neural and behavioural data in the HCP are of higher quality than those of most other large-scale neuroimaging projects; conducting similar analyses in other datasets may yield smaller effect sizes solely because of lower data quality and, just as importantly, quantity. Despite this caveat, and despite the care that we took to use cross-validated predictions to assess out-of-sample generalizability, it will be important to replicate our finding in an independent dataset, if only to establish the bounds of its generalizability.
Though beyond the scope of this study, we are already exploring three candidate publicly available datasets with suitable imaging and behavioural assessment in large cohorts of subjects: the Cambridge Centre for Ageing and Neuroscience (Cam-CAN, [100]); the Nathan Kline Institute Rockland sample [101]; and the UK Biobank [102].
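The noise-ceiling argument can be made concrete with Spearman's classical correction for attenuation: if two measures have reliabilities ρ_xx and ρ_yy, an underlying correlation r_true is expected to be observed as r_true·√(ρ_xx·ρ_yy). The reliabilities in the example are hypothetical and are not estimates for the HCP data.

```python
import math

def attenuated_r(r_true, rel_x, rel_y):
    """Expected observed correlation given the reliabilities of both measures."""
    return r_true * math.sqrt(rel_x * rel_y)

def disattenuated_r(r_obs, rel_x, rel_y):
    """Spearman's correction: estimated correlation between true scores."""
    return r_obs / math.sqrt(rel_x * rel_y)

# Hypothetical reliabilities: lowering either one lowers the ceiling.
print(round(attenuated_r(0.5, 0.9, 0.8), 3))  # 0.424
print(round(attenuated_r(0.5, 0.9, 0.6), 3))  # 0.367
```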

The general factor of intelligence g that we derived from 10 cognitive task scores is as reliable as it can be in this dataset, and would be unlikely to improve substantially even if additional measures were available. An interesting question for future studies will be to look at the predictability of the subscales: crystallized ability, processing speed, visuospatial reasoning, and memory. Adding tasks in each of these subdomains would increase the reliability of these specific ability factors and allow for a more precise exploration of their neural bases, but this would require a much longer assessment and many more ability tests. Rather than building an entirely new dataset from scratch, the possibility of testing all HCP subjects again on a lengthier, diverse cognitive ability battery should be considered [103].
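How much longer a subscale would need to be can be estimated with the Spearman–Brown prophecy formula, ρ_k = kρ / (1 + (k − 1)ρ), where k is the factor by which the number of tasks is multiplied. The formula assumes the added tasks are parallel to the existing ones, and the reliability values below are hypothetical, not estimates from the HCP battery.

```python
def spearman_brown(rho, k):
    """Reliability after lengthening a test by factor k (parallel tasks assumed)."""
    return k * rho / (1 + (k - 1) * rho)

def lengthening_factor(rho, target):
    """Factor k needed to raise reliability from rho to target."""
    return target * (1 - rho) / (rho * (1 - target))

# Hypothetical subscale with reliability 0.6.
print(spearman_brown(0.6, 2))        # reliability after doubling the subscale
print(lengthening_factor(0.6, 0.9))  # lengthening needed to reach 0.9
```

The returns diminish steeply: in this hypothetical case, raising a subscale from 0.6 to 0.9 requires about six times as many parallel tasks, which illustrates why a much longer assessment would be needed.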

Another factor that can moderate the relationship between variables is range restriction of variables [104]. Here, there is some concern that the range of intelligence scores in the HCP subject sample may be restricted to the higher end of the distribution. While published normative data is currently unavailable for the Penn matrix reasoning test and other Penn CNB tests in the age range of our subjects, the NIH toolbox tests provide age-normed scores. Inspection of these scores indicates that the HCP subject sample is indeed biased towards higher scores (in particular for crystallized abilities; see electronic supplementary material, figure S4). This sampling bias is a well-known, systemic issue in experimental psychology [105], and one that is difficult to avoid despite efforts to recruit from the entire population. A natural question to ask is whether the neural bases of mental retardation, and of genius at the other end of the spectrum, lie in the same continuum as what we describe in this study. For instance, it is well known that macrocephaly (unusually large brains) can also be associated with mental retardation, so that the association of intelligence with larger brain size only holds within the normal range. Future studies in samples with a larger range of intelligence should explore this important question.
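The moderating effect of range restriction can be illustrated with Thorndike's Case II correction for direct restriction on one variable, where u is the ratio of the unrestricted to the restricted standard deviation of that variable. The values below are hypothetical, since the degree of restriction in the HCP sample is not quantified here. The correction also assumes a linear, homoscedastic relationship, which the macrocephaly example above suggests may not hold outside the normal range.

```python
import math

def correct_range_restriction(r, u):
    """Thorndike Case II: estimated correlation in the unrestricted population.

    r: correlation observed in the restricted sample.
    u: SD(unrestricted) / SD(restricted) of the selection variable.
    """
    return r * u / math.sqrt(1 + r**2 * (u**2 - 1))

# Hypothetical: the sample SD of scores is two-thirds of the population SD.
print(round(correct_range_restriction(0.30, 1.5), 3))  # 0.427
```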

Though we qualified our approach as state of the art on the behavioural side, intelligence researchers would probably object that deriving factor scores is a thing of the past, and that analyses should be conducted in latent space (in part because of factor indeterminacy). This objection can mostly be ignored in our situation, where we only looked at the general factor g, given that it can be so precisely estimated in our dataset. However, to study specific factors we should consider casting the brain–behaviour relationship problem within the framework of structural equation modelling (e.g. [36]).

We were very careful to regress out several potential confounds [92], such as brain size, gender and age, before performing predictions. While this gives us some comfort that the results reported here are indeed specific to general intelligence, the confound regression approach could certainly be improved further. There are two main concerns. On the one hand, we may be throwing out relevant variance and injecting noise into the g scores by bluntly regressing out confounding variables; a more careful cleanup should be attempted, for example, using a well-specified structural equation model [106]. However, the approach we took is superior to ignoring the issue of potential confounds altogether (as prior studies have done), which is likely to inflate predictability and compromise interpretation. On the other hand, the list of confounding variables that we considered was not exhaustive: for example, we did not regress out variance in the Openness factor of personality, which we have previously found to be correlated with intelligence [49]; we also did not consider effects of educational achievement or socio-economic status; and there are certain to be other confounds that were not measured at all. Furthermore, it is very likely that regressing out the mean framewise displacement does not properly account for nonlinear effects of motion. Cleaning resting-state fMRI data of the effects of motion remains an active topic of research for studies of brain–behaviour relationships [107–109]. While we are confident that our current results are not solely explained by motion in the scanner, a full quantification of this issue remains warranted.

It is worth mentioning a related, entirely data-driven study that was recently conducted by Smith and colleagues on an earlier release of the same HCP dataset (N = 461) [110]. Using canonical correlation analysis (CCA), the authors demonstrated that a network of brain regions that closely resembled the default mode network was highly related to a linear combination of behavioural scores that they label a ‘positive-negative mode of population covariation’. In essence, this combination is a neurally derived general factor, encompassing cognitive and other behavioural tasks. Our study is in general agreement with these results, as we found high predictability of general intelligence g from connections within the default mode network.

Where do we go from here? Since task functional MRI [37,40] and structural MRI data (brain size [50], as well as morphometric features [69]) are known to also hold information that is predictive of cognitive ability, a natural question is whether combining functional and structural data will allow us to account for more variance in the general intelligence factor g. The more variance we can account for, the more trustworthy and thus interpretable our models become, and we can hope to further refine our understanding of the neural bases of general intelligence.

Of course, mere prediction does not yet illuminate mechanisms, and we would ultimately wish to have a much more detailed causal model that explains how genetic factors, brain structure, brain function and individual differences in variables such as g and personality relate to real-life outcomes. Given that g is already known to predict outcomes such as lifespan and salary, a structural model incorporating all of these variables should provide us with the most comprehensive understanding of the mechanisms, and the most effective information for targeted interventions.

Finally, we would like to situate this paper in the broader context of this special issue. Intelligence can be quantified across species and is highly heritable. Are similar brain networks the most predictive of variability in intelligence across mammals? Are there measures of heritability or brain structure, as compared to brain function, that might be better predictors in some species than others? It would be intriguing to find that humans share with other species a core set of genetically specified constraints on intelligence, but that humans are unique in the extent to which education and learning can modify intelligence through the incorporation of additional variability in brain function.

Supplementary Material

Acknowledgements

We thank Stuart Ritchie, Gilles Gignac, William Revelle and Ruben Gur for invaluable advice on the behavioural side of the analyses—though the final analytical choices rest solely with the authors.

Data accessibility

The Young Adult HCP dataset is publicly available at https://www.humanconnectome.org/study/hcp-young-adult. Analysis scripts are available in the following public repository: https://github.com/adolphslab/HCP_MRI-behavior.

Authors' contributions

J.D. and P.G. developed the general analysis framework and conducted some of the initial analyses for the paper. J.D. conducted all final analyses and produced all figures. L.P. helped with literature search, analysis of behavioural data and interpretation of the results. J.D. and R.A. wrote the initial manuscript and all authors contributed to the final manuscript. All authors contributed to planning and discussion on this project.

Competing interest

The authors declare no conflict of interest.

Funding

This work was supported by NIMH grant no. 2P50MH094258 (PI: R.A.), the Chen Neuroscience Institute, the Carver Mead Seed Fund, and a NARSAD Young Investigator Grant from the Brain and Behavior Research Foundation (PI: J.D.).

References

- 1. Gottfredson LS. 1997. Mainstream science on intelligence: An editorial with 52 signatories, history, and bibliography. Intelligence 24, 13–23. (doi:10.1016/S0160-2896(97)90011-8)

- 2. Gottfredson LS. 1997. Why g matters: the complexity of everyday life. Intelligence 24, 79–132. (doi:10.1016/S0160-2896(97)90014-3)

- 3. Jensen AR. 1981. Straight talk about mental tests. New York, NY, USA: The Free Press.

- 4. Burkart JM, Schubiger MN, van Schaik CP. 2016. The evolution of general intelligence. Behav. Brain Sci. 40, 1–65.

- 5. Plomin R, Deary IJ. 2015. Genetics and intelligence differences: five special findings. Mol. Psychiatry 20, 98–108. (doi:10.1038/mp.2014.105)

- 6. Kuncel NR, Hezlett SA. 2010. Fact and fiction in cognitive ability testing for admissions and hiring decisions. Curr. Dir. Psychol. Sci. 19, 339–345. (doi:10.1177/0963721410389459)

- 7. Strenze T. 2007. Intelligence and socioeconomic success: a meta-analytic review of longitudinal research. Intelligence 35, 401–426. (doi:10.1016/j.intell.2006.09.004)

- 8. Deary IJ, Taylor MD, Hart CL, Wilson V, Smith GD, Blane D, Starr JM. 2005. Intergenerational social mobility and mid-life status attainment: influences of childhood intelligence, childhood social factors, and education. Intelligence 33, 455–472. (doi:10.1016/j.intell.2005.06.003)

- 9. Gottfredson LS. 2004. Intelligence: is it the epidemiologists' elusive ‘fundamental cause’ of social class inequalities in health? J. Pers. Soc. Psychol. 86, 174. (doi:10.1037/0022-3514.86.1.174)

- 10. Deary I. 2008. Why do intelligent people live longer? Nature 456, 175–176. (doi:10.1038/456175a)

- 11. Calvin CM, Deary IJ, Fenton C, Roberts BA, Der G, Leckenby N, Batty GD. 2011. Intelligence in youth and all-cause-mortality: systematic review with meta-analysis. Int. J. Epidemiol. 40, 626–644. (doi:10.1093/ije/dyq190)

- 12. Boring EG. 1923. Intelligence as the tests test it. New Republic 36, 35–37.

- 13. Wechsler D. 2008. WAIS-IV: Wechsler adult intelligence scale. New York, NY: Pearson.

- 14. Spearman C. 1904. ‘General intelligence,’ objectively determined and measured. Am. J. Psychol. 15, 201–292. (doi:10.2307/1412107)

- 15. Kolata S, Light K, Matzel LD. 2008. Domain-specific and domain-general learning factors are expressed in genetically heterogeneous CD-1 mice. Intelligence 36, 619–629. (doi:10.1016/j.intell.2007.12.001)

- 16. Jensen AR. 1998. The g factor: the science of mental ability. Westport, CT: Praeger.

- 17. Johnson W, te Nijenhuis J, Bouchard TJ. 2008. Still just 1 g: consistent results from five test batteries. Intelligence 36, 81–95. (doi:10.1016/j.intell.2007.06.001)

- 18. Carroll JB. 1993. Human cognitive abilities: a survey of factor-analytic studies. Cambridge, UK: Cambridge University Press.

- 19. Kovacs K, Conway ARA. 2016. Process overlap theory: a unified account of the general factor of intelligence. Psychol. Inq. 27, 151–177. (doi:10.1080/1047840X.2016.1153946)

- 20. Thomson GH. 1916. A hierarchy without a general factor. Br. J. Psychol. 8, 271–281. (doi:10.1111/j.2044-8295.1916.tb00133.x)

- 21. van der Maas HLJ, Dolan CV, Grasman RPPP, Wicherts JM, Huizenga HM, Raijmakers MEJ. 2006. A dynamical model of general intelligence: the positive manifold of intelligence by mutualism. Psychol. Rev. 113, 842–861. (doi:10.1037/0033-295X.113.4.842)

- 22. Bartholomew DJ, Deary IJ, Lawn M. 2009. A new lease of life for Thomson's bonds model of intelligence. Psychol. Rev. 116, 567–579. (doi:10.1037/a0016262)

- 23. McFarland DJ. 2012. A single g factor is not necessary to simulate positive correlations between cognitive tests. J. Clin. Exp. Neuropsychol. 34, 378–384. (doi:10.1080/13803395.2011.645018)

- 24. Cauchoix M, et al. 2018. The repeatability of cognitive performance: a meta-analysis. Phil. Trans. R. Soc. B 373, 20170281. (doi:10.1098/rstb.2017.0281)

- 25. Huebner F, Fichtel C, Kappeler PM. 2018. Linking cognition with fitness in a wild primate: fitness correlates of problem-solving performance and spatial learning ability. Phil. Trans. R. Soc. B 373, 20170295. (doi:10.1098/rstb.2017.0295)

- 26. Madden JR, Langley EJG, Whiteside MA, Beardsworth CE, van Horik JO. 2018. The quick are the dead: pheasants that are slow to reverse a learned association survive for longer in the wild. Phil. Trans. R. Soc. B 373, 20170297. (doi:10.1098/rstb.2017.0297)

- 27. Pike TW, Ramsey M, Wilkinson A. 2018. Environmentally induced changes to brain morphology predict cognitive performance. Phil. Trans. R. Soc. B 373, 20170287. (doi:10.1098/rstb.2017.0287)

- 28. Sauce B, Bendrath S, Herzfeld M, Siegel D, Style C, Rab S, Korabelnikov J, Matzel LD. 2018. The impact of environmental interventions among mouse siblings on the heritability and malleability of general cognitive ability. Phil. Trans. R. Soc. B 373, 20170289. (doi:10.1098/rstb.2017.0289)

- 29. Sorato E, Zidar J, Garnham L, Wilson A, Løvlie H. 2018. Heritabilities and co-variation among cognitive traits in red junglefowl. Phil. Trans. R. Soc. B 373, 20170285. (doi:10.1098/rstb.2017.0285)

- 30. Deary IJ, Penke L, Johnson W. 2010. The neuroscience of human intelligence differences. Nat. Rev. Neurosci. 11, 201–211. (doi:10.1038/nrn2793)

- 31. Deary IJ, Pattie A, Starr JM. 2013. The stability of intelligence from age 11 to age 90 years: the Lothian birth cohort of 1921. Psychol. Sci. 24, 2361–2368. (doi:10.1177/0956797613486487)

- 32. Plomin R, Fulker DW, Corley R, DeFries JC. 1997. Nature, nurture, and cognitive development from 1 to 16 years: a parent-offspring adoption study. Psychol. Sci. 8, 442–447. (doi:10.1111/j.1467-9280.1997.tb00458.x)

- 33. Davies G, et al. 2011. Genome-wide association studies establish that human intelligence is highly heritable and polygenic. Mol. Psychiatry 16, 996–1005. (doi:10.1038/mp.2011.85)

- 34. Davies G, et al. 2015. Genetic contributions to variation in general cognitive function: a meta-analysis of genome-wide association studies in the CHARGE consortium (N = 53 949). Mol. Psychiatry 20, 183. (doi:10.1038/mp.2014.188)

- 35. Arslan RC, Penke L. 2015. Zeroing in on the genetics of intelligence. J. Intell. 3, 41–45. (doi:10.3390/jintelligence3020041)

- 36. Kievit RA, van Rooijen H, Wicherts JM, Waldorp LJ, Kan K-J, Scholte HS, Borsboom D. 2012. Intelligence and the brain: a model-based approach. Cogn. Neurosci. 3, 89–97. (doi:10.1080/17588928.2011.628383)

- 37.Jung RE, Haier RJ. 2007. The parieto-frontal integration theory (P-FIT) of intelligence: converging neuroimaging evidence. Behav. Brain Sci. 30, 135–154; discussion 154–87 ( 10.1017/S0140525X07001185) [DOI] [PubMed] [Google Scholar]

- 38.Colom R, Karama S, Jung RE, Haier RJ. 2010. Human intelligence and brain networks. Dialogues Clin. Neurosci. 12, 489–501. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Haier RJ. 2016. The neuroscience of intelligence. Cambridge, UK: Cambridge University Press. [Google Scholar]

- 40.Neubauer AC, Fink A. 2009. Intelligence and neural efficiency. Neurosci. Biobehav. Rev. 33, 1004–1023. ( 10.1016/j.neubiorev.2009.04.001) [DOI] [PubMed] [Google Scholar]

- 41.van den Heuvel MP, Stam CJ, Kahn RS, Hulshoff Pol HE. 2009. Efficiency of functional brain networks and intellectual performance. J. Neurosci. 29, 7619–7624. ( 10.1523/JNEUROSCI.1443-09.2009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Cole MW, Yarkoni T, Repovs G, Anticevic A, Braver TS. 2012. Global connectivity of prefrontal cortex predicts cognitive control and intelligence. J. Neurosci. 32, 8988–8999. ( 10.1523/JNEUROSCI.0536-12.2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Hilger K, Ekman M, Fiebach CJ, Basten U. 2017. Efficient hubs in the intelligent brain: nodal efficiency of hub regions in the salience network is associated with general intelligence. Intelligence 60, 10–25. ( 10.1016/j.intell.2016.11.001) [DOI] [Google Scholar]

- 44.Hilger K, Ekman M, Fiebach CJ, Basten U. 2017. Intelligence is associated with the modular structure of intrinsic brain networks. Sci. Rep. 7, 16088 ( 10.1038/s41598-017-15795-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Dubois J, Adolphs R. 2016. Building a science of individual differences from fMRI. Trends Cogn. Sci. 20, 425–443. ( 10.1016/j.tics.2016.03.014) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Finn ES, Shen X, Scheinost D, Rosenberg MD, Huang J, Chun MM, Papademetris X, Constable RT. 2015. Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nat. Neurosci. 18, 1664–1671. ( 10.1038/nn.4135) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Van Essen DC, Smith SM, Barch DM, Behrens TEJ, Yacoub E, Ugurbil K, WU-Minn HCP Consortium. 2013. The WU-Minn Human Connectome Project: an overview. Neuroimage 80, 62–79. (doi:10.1016/j.neuroimage.2013.05.041)

- 48. Noble S, Spann MN, Tokoglu F, Shen X, Constable RT, Scheinost D. 2017. Influences on the test-retest reliability of functional connectivity MRI and its relationship with behavioral utility. Cereb. Cortex 27, 5415–5429. (doi:10.1093/cercor/bhx230)

- 49. Dubois J, Galdi P, Han Y, Paul LK, Adolphs R. 2018. Resting-state functional brain connectivity best predicts the personality dimension of openness to experience. Personality Neuroscience 1. (doi:10.1017/pen.2018.8)

- 50. Pietschnig J, Penke L, Wicherts JM, Zeiler M, Voracek M. 2015. Meta-analysis of associations between human brain volume and intelligence differences: how strong are they and what do they mean? Neurosci. Biobehav. Rev. 57, 411–432. (doi:10.1016/j.neubiorev.2015.09.017)

- 51. Gignac GE, Szodorai ET. 2016. Effect size guidelines for individual differences researchers. Pers. Individ. Dif. 102, 74–78. (doi:10.1016/j.paid.2016.06.069)

- 52. Gignac GE, Bates TC. 2017. Brain volume and intelligence: the moderating role of intelligence measurement quality. Intelligence 64, 18–29. (doi:10.1016/j.intell.2017.06.004)

- 53. Glasser MF, et al. 2013. The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage 80, 105–124. (doi:10.1016/j.neuroimage.2013.04.127)

- 54. Gur RC, et al. 2012. Age group and sex differences in performance on a computerized neurocognitive battery in children age 8–21. Neuropsychology 26, 251–265. (doi:10.1037/a0026712)

- 55. Gur RC, Ragland JD, Moberg PJ, Turner TH, Bilker WB, Kohler C, Siegel SJ, Gur RE. 2001. Computerized neurocognitive scanning: I. Methodology and validation in healthy people. Neuropsychopharmacology 25, 766–776. (doi:10.1016/S0893-133X(01)00278-0)

- 56. Gur RC, Richard J, Hughett P, Calkins ME, Macy L, Bilker WB, Brensinger C, Gur RE. 2010. A cognitive neuroscience-based computerized battery for efficient measurement of individual differences: standardization and initial construct validation. J. Neurosci. Methods 187, 254–262. (doi:10.1016/j.jneumeth.2009.11.017)

- 57. Moore TM, Reise SP, Gur RE, Hakonarson H, Gur RC. 2015. Psychometric properties of the Penn Computerized Neurocognitive Battery. Neuropsychology 29, 235–246. (doi:10.1037/neu0000093)

- 58. Bilker WB, Hansen JA, Brensinger CM, Richard J, Gur RE, Gur RC. 2012. Development of abbreviated nine-item forms of the Raven's Standard Progressive Matrices test. Assessment 19, 354–369. (doi:10.1177/1073191112446655)

- 59. Williams JE, McCord DM. 2006. Equivalence of standard and computerized versions of the Raven Progressive Matrices test. Comput. Human Behav. 22, 791–800. (doi:10.1016/j.chb.2004.03.005)

- 60. Raven JC. 1938. Raven's Progressive Matrices (1938): sets A, B, C, D, E. Melbourne, Australia: Australian Council for Educational Research.

- 61. Barch DM, et al. 2013. Function in the human connectome: task-fMRI and individual differences in behavior. Neuroimage 80, 169–189. (doi:10.1016/j.neuroimage.2013.05.033)

- 62. Crum RM, Anthony JC, Bassett SS, Folstein MF. 1993. Population-based norms for the mini-mental state examination by age and educational level. JAMA 269, 2386–2391. (doi:10.1001/jama.1993.03500180078038)

- 63. Revelle W. 2016. psych: Procedures for Personality and Psychological Research, Northwestern University, Evanston, Illinois, USA. https://CRAN.R-project.org/package=psych (Version 1.7.8)

- 64. Schmid J, Leiman JM. 1957. The development of hierarchical factor solutions. Psychometrika 22, 53–61. (doi:10.1007/BF02289209)

- 65. Hu L, Bentler PM. 1999. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Modeling 6, 1–55. (doi:10.1080/10705519909540118)

- 66. Grice JW. 2001. Computing and evaluating factor scores. Psychol. Methods 6, 430–450. (doi:10.1037/1082-989X.6.4.430)

- 67. Gläscher J, Rudrauf D, Colom R, Paul LK, Tranel D, Damasio H, Adolphs R. 2010. Distributed neural system for general intelligence revealed by lesion mapping. Proc. Natl Acad. Sci. USA 107, 4705–4709. (doi:10.1073/pnas.0910397107)

- 68. Rosseel Y. 2012. lavaan: An R package for structural equation modeling. J. Stat. Softw. 48, 1–36. (doi:10.18637/jss.v048.i02)

- 69. Seidlitz J, et al. 2018. Morphometric similarity networks detect microscale cortical organization and predict inter-individual cognitive variation. Neuron 97, 231–247.e7. (doi:10.1016/j.neuron.2017.11.039)

- 70. van der Walt S, Colbert SC, Varoquaux G. 2011. The NumPy Array: a structure for efficient numerical computation. Comput. Sci. Eng. 13, 22–30. (doi:10.1109/MCSE.2011.37)

- 71. Pedregosa F, et al. 2011. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830.

- 72. Gorgolewski K, Burns CD, Madison C, Clark D, Halchenko YO, Waskom ML, Ghosh SS. 2011. Nipype: a flexible, lightweight and extensible neuroimaging data processing framework in Python. Front. Neuroinform. 5, 13. (doi:10.3389/fninf.2011.00013)

- 73. Abraham A, Pedregosa F, Eickenberg M, Gervais P, Mueller A, Kossaifi J, Gramfort A, Thirion B, Varoquaux G. 2014. Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 8, 14. (doi:10.3389/fninf.2014.00014)

- 74. Gorgolewski KJ, et al. 2017. nipy/nipype: Release 0.13.1. (https://github.com/nipy/nipype/blob/master/doc/about.rst)

- 75. Robinson EC, et al. 2018. Multimodal surface matching with higher-order smoothness constraints. Neuroimage 167, 453–465. (doi:10.1016/j.neuroimage.2017.10.037)

- 76. Glasser MF, et al. 2016. A multi-modal parcellation of human cerebral cortex. Nature 536, 171–178. (doi:10.1038/nature18933)

- 77. Ito T, Kulkarni KR, Schultz DH, Mill RD, Chen RH, Solomyak LI, Cole MW. 2017. Cognitive task information is transferred between brain regions via resting-state network topology. Nat. Commun. 8, 1027. (doi:10.1038/s41467-017-01000-w)

- 78. Noirhomme Q, Lesenfants D, Gomez F, Soddu A, Schrouff J, Garraux G, Luxen A, Phillips C, Laureys S. 2014. Biased binomial assessment of cross-validated estimation of classification accuracies illustrated in diagnosis predictions. Neuroimage Clin. 4, 687–694. (doi:10.1016/j.nicl.2014.04.004)

- 79. Combrisson E, Jerbi K. 2015. Exceeding chance level by chance: the caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J. Neurosci. Methods 250, 126–136. (doi:10.1016/j.jneumeth.2015.01.010)

- 80. McDonald RP. 1970. The theoretical foundations of principal factor analysis, canonical factor analysis, and alpha factor analysis. Br. J. Math. Stat. Psychol. 23, 1–21. (doi:10.1111/j.2044-8317.1970.tb00432.x)

- 81. Zinbarg RE, Revelle W, Yovel I, Li W. 2005. Cronbach's α, Revelle's β, and McDonald's ωH: their relations with each other and two alternative conceptualizations of reliability. Psychometrika 70, 123–133. (doi:10.1007/s11336-003-0974-7)

- 82. Zinbarg RE, Yovel I, Revelle W, McDonald RP. 2006. Estimating generalizability to a latent variable common to all of a scale's indicators: a comparison of estimators for ωh. Appl. Psychol. Meas. 30, 121–144. (doi:10.1177/0146621605278814)

- 83. Reise SP. 2012. Invited paper: the rediscovery of bifactor measurement models. Multivariate Behav. Res. 47, 667–696. (doi:10.1080/00273171.2012.715555)

- 84. Ruigrok ANV, Salimi-Khorshidi G, Lai M-C, Baron-Cohen S, Lombardo MV, Tait RJ, Suckling J. 2014. A meta-analysis of sex differences in human brain structure. Neurosci. Biobehav. Rev. 39, 34–50. (doi:10.1016/j.neubiorev.2013.12.004)

- 85. Trabzuni D, Ramasamy A, Imran S, Walker R, Smith C, Weale ME, Hardy J, Ryten M, North American Brain Expression Consortium. 2013. Widespread sex differences in gene expression and splicing in the adult human brain. Nat. Commun. 4, 2771. (doi:10.1038/ncomms3771)

- 86. Dosenbach NUF, et al. 2010. Prediction of individual brain maturity using fMRI. Science 329, 1358–1361. (doi:10.1126/science.1194144)

- 87. Geerligs L, Renken RJ, Saliasi E, Maurits NM, Lorist MM. 2015. A brain-wide study of age-related changes in functional connectivity. Cereb. Cortex 25, 1987–1999. (doi:10.1093/cercor/bhu012)

- 88. Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE. 2012. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59, 2142–2154. (doi:10.1016/j.neuroimage.2011.10.018)

- 89. Satterthwaite TD, et al. 2013. An improved framework for confound regression and filtering for control of motion artifact in the preprocessing of resting-state functional connectivity data. Neuroimage 64, 240–256. (doi:10.1016/j.neuroimage.2012.08.052)

- 90. Tyszka JM, Kennedy DP, Paul LK, Adolphs R. 2014. Largely typical patterns of resting-state functional connectivity in high-functioning adults with autism. Cereb. Cortex 24, 1894–1905. (doi:10.1093/cercor/bht040)

- 91. Hänggi J, Fövenyi L, Liem F, Meyer M, Jäncke L. 2014. The hypothesis of neuronal interconnectivity as a function of brain size – a general organization principle of the human connectome. Front. Hum. Neurosci. 8, 915. (doi:10.3389/fnhum.2014.00915)

- 92. Smith SM, Nichols TE. 2018. Statistical challenges in ‘big data’ human neuroimaging. Neuron 97, 263–268. (doi:10.1016/j.neuron.2017.12.018)

- 93. Elam J. 2015. Ramifications of Image Reconstruction Version Differences. HCP Data Release Updates: Known Issues and Planned fixes. See https://wiki.humanconnectome.org/display/PublicData/Ramifications+of+Image+Reconstruction+Version+Differences.

- 94. Laumann TO, et al. 2015. Functional system and areal organization of a highly sampled individual human brain. Neuron 87, 657–670. (doi:10.1016/j.neuron.2015.06.037)

- 95. Piñeiro G, Perelman S, Guerschman JP, Paruelo JM. 2008. How to evaluate models: observed vs. predicted or predicted vs. observed? Ecol. Modell. 216, 316–322. (doi:10.1016/j.ecolmodel.2008.05.006)

- 96. Yeo BTT, et al. 2011. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 106, 1125–1165. (doi:10.1152/jn.00338.2011)

- 97. Deary IJ, Cox SR, Ritchie SJ. 2016. Getting Spearman off the skyhook: one more in a century (since Thomson, 1916) of attempts to vanquish g. Psychol. Inq. 27, 192–199. (doi:10.1080/1047840X.2016.1186525)

- 98. Major JT, Johnson W, Deary IJ. 2012. Comparing models of intelligence in Project TALENT: the VPR model fits better than the CHC and extended Gf–Gc models. Intelligence 40, 543–559. (doi:10.1016/j.intell.2012.07.006)

- 99. Adolphs R. 2016. Human lesion studies in the 21st century. Neuron 90, 1151–1153. (doi:10.1016/j.neuron.2016.05.014)

- 100. Taylor JR, Williams N, Cusack R, Auer T, Shafto MA, Dixon M, Tyler LK, Cam-CAN, Henson RN. 2015. The Cambridge Centre for Ageing and Neuroscience (Cam-CAN) data repository: structural and functional MRI, MEG, and cognitive data from a cross-sectional adult lifespan sample. Neuroimage 144, 262–269. (doi:10.1016/j.neuroimage.2015.09.018)

- 101. Nooner KB, et al. 2012. The NKI-Rockland sample: a model for accelerating the pace of discovery science in psychiatry. Front. Neurosci. 6, 152. (doi:10.3389/fnins.2012.00152)

- 102. Sudlow C, et al. 2015. UK Biobank: an open access resource for identifying the causes of a wide range of complex diseases of middle and old age. PLoS Med. 12, e1001779. (doi:10.1371/journal.pmed.1001779)

- 103. Mar RA, Spreng RN, DeYoung CG. 2013. How to produce personality neuroscience research with high statistical power and low additional cost. Cogn. Affect. Behav. Neurosci. 13, 674–685. (doi:10.3758/s13415-013-0202-6)

- 104. Le H, Schmidt FL. 2006. Correcting for indirect range restriction in meta-analysis: testing a new meta-analytic procedure. Psychol. Methods 11, 416–438. (doi:10.1037/1082-989X.11.4.416)

- 105. Henrich J, Heine SJ, Norenzayan A. 2010. Most people are not WEIRD. Nature 466, 29. (doi:10.1038/466029a)