ABSTRACT

Predicting a phenotype and understanding which variables improve that prediction are two very challenging and overlapping problems in the analysis of high‐dimensional (HD) data such as those arising from genomic and brain imaging studies. It is often believed that the number of truly important predictors is small relative to the total number of variables, making computational approaches to variable selection and dimension reduction extremely important. To reduce dimensionality, commonly used two‐step methods first cluster the data in some way, and build models using cluster summaries to predict the phenotype. It is known that important exposure variables can alter correlation patterns between clusters of HD variables, that is, alter network properties of the variables. However, it is not well understood whether such altered clustering is informative in prediction. Here, assuming there is a binary exposure with such network‐altering effects, we explore whether the use of exposure‐dependent clustering relationships in dimension reduction can improve predictive modeling in a two‐step framework. Hence, we propose a modeling framework called ECLUST to test this hypothesis, and evaluate its performance through extensive simulations. With ECLUST, we found improved prediction and variable selection performance compared to methods that do not consider the environment in the clustering step, or to methods that use the original data as features. We further illustrate this modeling framework through the analysis of three data sets from very different fields, each with HD data, a binary exposure, and a phenotype of interest. Our method is available in the eclust CRAN package.

Keywords: gene‐environment interaction, high‐dimensional clustering, prediction models, topological overlap matrix, penalized regression

1. INTRODUCTION

In this paper, we consider the prediction of an outcome variable y observed on n individuals from p variables, where p is much larger than n. Challenges in this high‐dimensional (HD) context include not only building a good predictor which will perform well in an independent data set, but also being able to interpret the factors that contribute to the predictions. This latter issue can be very challenging in ultra‐HD predictor sets. For example, multiple different sets of covariates may provide equivalent measures of goodness of fit (Fan, Han, & Liu, 2014), and therefore how does one decide which are important? If many variables are highly correlated, interpretation may be improved by acknowledging the existence of an underlying or latent factor generating these patterns. In consequence, many authors have suggested a two‐step procedure where the first step is to cluster or group variables in the design matrix in an interpretable way, and then to perform model fitting in the second step using a summary measure of each group of variables.

There are several advantages to these two‐step methods. Through the reduction of the dimension of the model, the results are often more stable with smaller prediction variance, and through identification of sets of correlated variables, the resulting clusters can provide an easier route to interpretation. From a practical point of view, two‐step approaches are both flexible and easy to implement because efficient algorithms exist for both clustering (e.g., Müllner, 2013) and model fitting (e.g., Friedman, Hastie, & Tibshirani, 2010; Kuhn, 2008; Yang & Zou, 2014), particularly in the case when the outcome variable is continuous.

This two‐step idea dates back to 1957 when Kendall first proposed using principal components in regression (Kendall, 1957). Hierarchical clustering based on the correlation of the design matrix has also been used to create groups of genes in microarray studies. For example, at each level of a hierarchy, cluster averages have been used as new sets of potential predictors in both forward–backward selection (Hastie, Tibshirani, Botstein, & Brown, 2001) or the lasso (Park, Hastie, & Tibshirani, 2007). Bühlmann et al. proposed a bottom‐up agglomerative clustering algorithm based on canonical correlations and used the group lasso on the derived clusters (Bühlmann, Rütimann, van de Geer, & Zhang, 2013). A more recent proposal performs sparse regression on cluster prototypes (Reid & Tibshirani, 2016), that is, extracting the most representative gene in a cluster instead of averaging them.

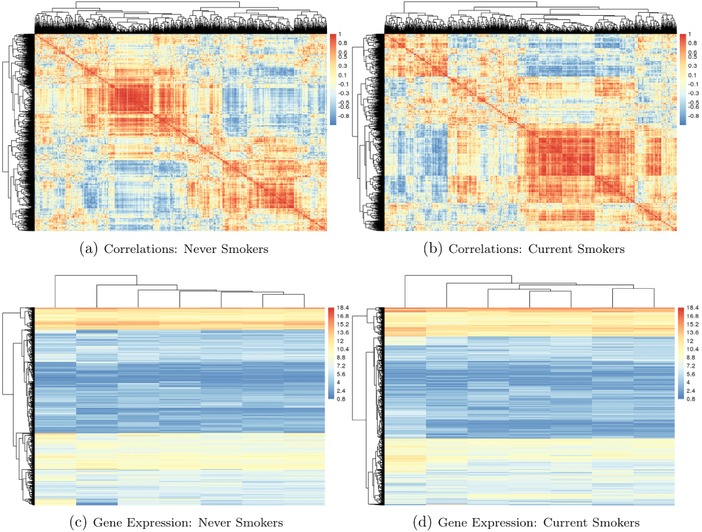

These two‐step approaches usually group variables based on a matrix of correlations or some transformation of the correlations. However, when there are external factors, such as exposures, that can alter correlation patterns, a dimension reduction step that ignores this information may be suboptimal. Many of the HD genomic data sets currently being generated capture a possibly dynamic view of how a tissue is functioning, and demonstrate differential patterns of coregulation or correlation under different conditions. We illustrate this critical point with an example of a microarray gene expression data set available in the COPDSexualDimorphism.data package (Sathirapongsasuti, 2013) from Bioconductor. This study measured gene expression in chronic obstructive pulmonary disease (COPD) patients and controls in addition to their age, gender, and smoking status. To see if there was any effect of smoking status on gene expression, we plotted the expression profiles separately for current and never smokers. To balance the covariate profiles, we matched subjects from each group on age, gender and COPD case status, resulting in a sample size of 7 in each group. Heatmaps in Figure 1 show gene expression levels and the corresponding gene–gene correlation matrices as a function of dichotomized smoking status for 2,900 genes with large variability. Evidently, there are substantial differences in correlation patterns between the smoking groups (Figures 1a and 1b). However, it is difficult to discern any patterns or major differences between the groups when examining the gene expression levels directly (Figures 1c and 1d). This example highlights two key points: (1) environmental exposures can have a widespread effect on regulatory networks and (2) this effect may be more easily discerned by looking at a measure for gene similarity, relative to analyzing raw expression data.

Figure 1.

Heatmaps of correlations between genes (top) and gene expression data (bottom: rows are genes and columns are subjects) stratified by smoking status from a microarray study of COPD (Sathirapongsasuti, 2013). The 20% most variable genes are displayed (2,900 genes)

Note: There are seven subjects in each group, matched on COPD case status, gender, and age. Data are available at Bioconductor in the COPDSexualDimorphism.data package.

Many other examples of altered coregulation and phenotype associations can be found. For instance, in a pediatric brain development study, very different correlation patterns of cortical thickness within brain regions were observed across age groups, consistent with a process of fine‐tuning an immature brain system into a mature one (Khundrakpam et al., 2013). A comparison of gene expression levels in bone marrow from 327 children with acute leukemia found several differentially coexpressed genes in Philadelphia‐positive leukemias compared to the cytogenetically normal group (Kostka & Spang, 2004). To give the third example, an analysis of RNA‐sequencing data from The Cancer Genome Atlas (TCGA) revealed very different correlation patterns among sets of genes in tumors grouped according to their missense or null mutations in the TP53 tumor suppressor gene (Oros Klein et al., 2016).

Therefore, in this paper, we pose the question whether clustering or dimension reduction that incorporates known covariate or exposure information can improve prediction models in HD genomic data settings. Substantial evidence of dysregulation of genomic coregulation has been observed in a variety of contexts, however we are not aware of any work that carefully examines how this might impact the performance of prediction models. We propose a conceptual analytic strategy called ECLUST, for prediction of a continuous or binary outcome in HD contexts while exploiting exposure‐sensitive data clusters. We restrict our attention to two‐step algorithms in order to implement a covariate‐driven clustering.

Specifically, we hypothesize that within two‐step methods, variable grouping that considers exposure information can lead to improved predictive accuracy and interpretability. We use simulations to compare our proposed method to comparable approaches that combine data reduction with predictive modeling. We are focusing our attention primarily on the performance of alternative dimension reduction strategies within the first step of a two‐step method. Therefore, performance of each strategy is compared for several appropriate Step 2 predictive models. We then illustrate these concepts more concretely by analyzing three data sets. Our method and the functions used to conduct the simulation studies have been implemented in the R package eclust (Bhatnagar, 2017), available on CRAN. Extensive documentation of the package is available at http://sahirbhatnagar.com/eclust/.

2. METHODS

Assume that there is a single binary environmental factor E of importance, and an HD data set X (n observations, p features) of relevance. This could be genome‐wide epigenetic data, gene expression data, or brain imaging data, for example. Assume that there is a continuous or binary phenotype of interest Y and that the environment has a widespread effect on the HD data, that is, affects many elements of the HD data. The primary goal is to improve the prediction of Y by identifying interactions between E and X through a carefully constructed data reduction strategy that exploits E‐dependent correlation patterns. The secondary goal is to improve identification of the elements of X that are involved; we denote this subset by S 0. We hypothesize that a systems‐based perspective will be informative when exploring the factors that are associated with a phenotype of interest, and in particular we hypothesize that incorporation of environmental factors into predictive models in a way that retains a HD perspective will improve results and interpretation.

2.1. Potential impacts of covariate‐dependent coregulation

Motivated by real‐world examples of differential coexpression, we first demonstrate that environment‐dependent correlations in can induce an interaction model. Without loss of generality, let and the relationship between X 1 and X 2 depend on the environment such that

| (1) |

where is an error term and ψ is a slope parameter, that is,

Consider the 3‐predictor regression model

| (2) |

where is another error term which is independent of . At first glance, (2) does not contain any interaction terms. However, substituting (1) for in (2) we get

| (3) |

The third term in (3) resembles an interaction model, with being the interaction parameter. We present the second illustration showing how nonlinearity can induce interactions. Suppose

| (4) |

Substituting (1) for in (4), we obtain a nonlinear interaction term. Equation (4) provided partial motivation for the model used in our third simulation scenario. Some motivation for this model and a graphical representation are presented in the Section 3.

2.2. Proposed framework and algorithm

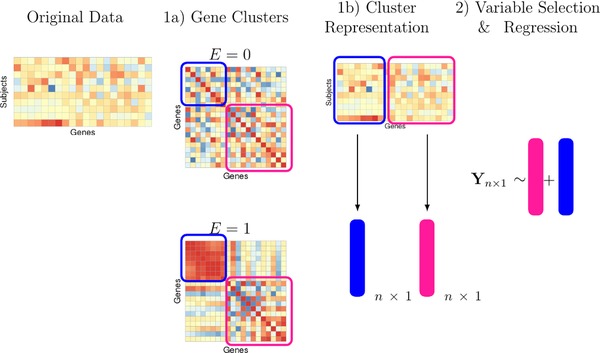

We restrict attention to methods containing two phases as illustrated in Figure 2(1a), a clustering stage where variables are clustered based on some measure of similarity, Figure 2(1b), a dimension reduction stage where a summary measure is created for each of the clusters, and Figure 2(2), a simultaneous variable selection and regression stage on the summarized cluster measures. Although this framework appears very similar to any two‐step approach, our hypothesis is that allowing the clustering in Step 1a to depend on the environment variable can lead to improvements in prediction after Step 2. Hence, methods in Step 1a are adapted to this end, as described in the following sections. Our focus in this manuscript is on the clustering and cluster representation steps. Therefore, we compare several well‐known methods for variable selection and regression that are best adapted to our simulation designs and data sets.

Figure 2.

An overview of our proposed method

Note: (1a) A measure of similarity is calculated separately for both groups and clustering is performed on a linear combination of these two matrices. (1b) We reduce the dimension of each cluster by taking a summary measure. (2) Variable selection and regression is performed on the cluster representatives, E, and their interaction with E

2.2.1. Step 1a: Clustering using coexpression networks that are influenced by the environment

In agglomerative clustering, a measure of similarity between sets of observations is required in order to decide which clusters should be combined. Common choices include Euclidean, maximum, and absolute distance. A more natural choice in genomic or brain imaging data is to use the Pearson correlation (or its absolute value) because the derived clusters are biologically interpretable. Indeed, genes that cluster together are correlated and thus likely to be involved in the same cellular process. Similarly, cortical thickness measures of the brain tend to be correlated within predefined regions such as the left and right hemisphere, or frontal and temporal regions (Sato et al., 2013). However, the information on the connection between two variables, as measured by the Pearson correlation, for example, may be noisy or incomplete. Thus it is of interest to consider alternative measures of pairwise interconnectedness. Gene coexpression networks are being used to explore the system‐level function of genes, where nodes represent genes and are connected if they are significantly coexpressed (Zhang & Horvath, 2005), and here we use their overlap measure (Ravasz, Somera, Mongru, Oltvai, & Barabási, 2002) to capture connectnedness between two X variables within each environmental condition. As was discussed earlier, genes can exhibit very different patterns of correlation in one environment versus the other (e.g. Figure 1). Furthermore, measures of similarity that go beyond pairwise correlations and consider the shared connectedness between nodes can be useful in elucidating networks that are biologically meaningful. Therefore, we propose to first look at the topological overlap matrix (TOM) separately for exposed () and unexposed () individuals (see supplementary Section A for details on the TOM). We then seek to identify nodes that are very different between environments. We determine differential coexpression using the absolute difference (Oros Klein et al., 2016). We then use hierarchical clustering with average linkage on the derived difference matrix to identify these differentially coexpressed variables. Clusters are automatically chosen using the dynamicTreeCut (Langfelder, Zhang, & Horvath, 2008) algorithm. Of course, there could be other clusters which are not sensitive to the environment. For this reason, we also create a set of clusters based on the TOM for all subjects denoted . This will lead to each covariate appearing in two clusters. In the sequel, we denote the clusters derived from as the set , and those derived from as the set , where .

2.2.2. Step 1b: Dimension reduction via cluster representative

Once the clusters have been identified in phase 1, we proceed to reduce the dimensionality of the overall problem by creating a summary measure for each cluster. A low‐dimensional structure, that is, grouping when captured in a regression model, improves predictive performance and facilitates a model's interpretability. We propose to summarize a cluster by a single representative number. Specifically, we chose the average values across all measures (Bühlmann et al., 2013; Park et al., 2007), and the first principal component (Langfelder & Horvath, 2007). These representative measures are indexed by their cluster, that is, the variables to be used in our predictive models are for clusters that do not consider E, as well as for E‐derived clusters. The tilde notation on the X is to emphasize that these variables are different from the separate variables in the original data.

2.2.3. Step 2: Variable selection and regression

Because the clustering in phase 1 is unsupervised, it is possible that the derived latent representations from phase 2 will not be associated with the response. We therefore use penalized methods for supervised variable selection, including the lasso (Tibshirani, 1996) and elasticnet (Zou & Hastie, 2005) for linear models, and multivariate adaptive regression splines (MARS; Friedman, 1991) for nonlinear models. We argue that the selected nonzero predictors in this model will represent clusters of genes that interact with the environment and are associated with the phenotype. Such an additive model might be insufficient for predicting the outcome. In this case we may directly include the environment variable, the summary measures, and their interaction. In the light of our goals to improve prediction and interpretability, we consider the following model:

| (5) |

where is a known link function, and are linear combinations of (from Step 1b). The primary comparison is models with only versus models with and . Given the context of either the simulation or the data set, we use either linear models or nonlinear models. Our general approach, ECLUST, can therefore be summarized by the algorithm in Table 1.

Table 1.

Details of ECLUST algorithm

| Step | Description, Softwarea and Reference |

|---|---|

| 1a) |

|

| 1b) |

|

| 2) |

|

All functions are implemented in R (R Core Team, 2016). The naming convention is as follows: package_name::package_function. Default settings used for all functions unless indicated otherwise.

3. SIMULATION STUDIES

We have evaluated the performance of our ECLUST method in a variety of simulated scenarios. For each simulation scenario, we compared ECLUST to the following analytic approaches: (1) regression and variable selection is performed on the model which consists of the original variables, E and their interaction with E (SEPARATE), and (2) clustering is performed without considering the environmental exposure followed by regression and variable selection on the cluster representations, E, and their interaction with E (CLUST). A detailed description of the methods being compared is summarized in Table 2. We have designed six simulation scenarios that illustrate different kinds of relationships between the variables and the response. For all scenarios, we have created HD data sets with p predictors (), and sample sizes of . We also assume that we have two data sets for each simulation—a training data set where the parameters are estimated, and a testing data set where prediction performance is evaluated, each of size . The number of subjects who were exposed () and unexposed () and the number of truly associated parameters () remain fixed across the 6 simulation scenarios. Let

| (6) |

where is the linear predictor, the error term ε is generated from a standard normal distribution, and k is chosen such that the signal‐to‐noise ratio is 0.2, 1 and 2 (e.g. the variance of the response variable Y due to ε is of the variance of Y due to ).

Table 2.

Summary of methods used in simulation study

| General approach | Summary Measure of feature clusters | Descriptiona, b |

|---|---|---|

| SEPARATE | NA | Regression of the original predictors on the response, i.e., no transformation of the predictors is being done here |

| CLUST | 1st PC, average | Create clusters of predictors without using the environment variable . Use the summary measure of each cluster as inputs of the regression model. |

| ECLUST | 1st PC, average | Create clusters of predictors using the environment variable where , as well as clusters without the environment variable . Use summary measures of as inputs of the regression model. |

Simulations 1 and 2 used lasso and elasticnet for the linear models, and Simulation 3 used MARS for estimating nonlinear effects.

Simulations 4–6 convert the continuous response generated in simulations 1–3, respectively, into a binary response.

PC: principal component.

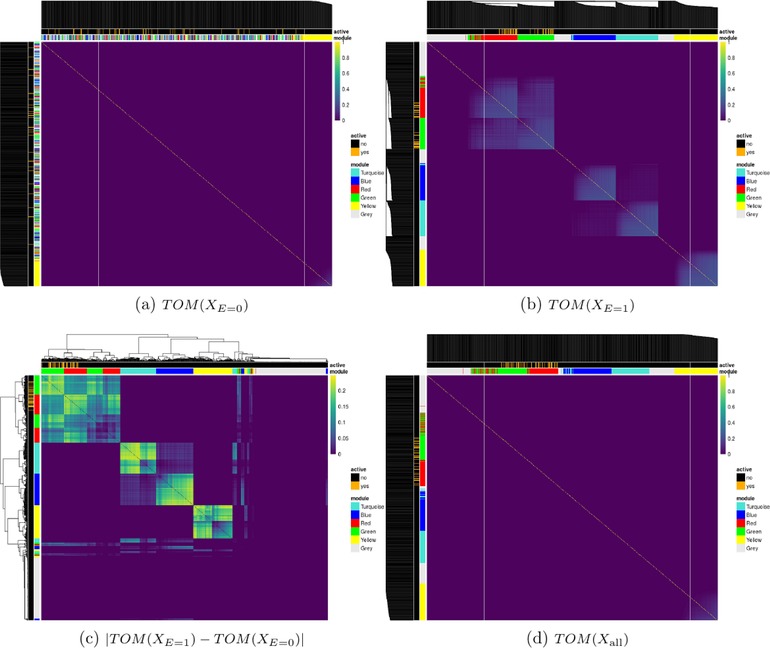

3.1. The design matrix

We generated covariate data in blocks using the simulateDatExpr function from the WGCNA package in R (version 1.51). This generates data from a latent vector: first a seed vector is simulated, then covariates are generated with varying degree of correlation with the seed vector in a given block. We simulated five clusters (blocks), each of size 750 variables, and labeled them by color (turquoise, blue, red, green, and yellow), while the remaining 1,250 variables were simulated as independent standard normal vectors (gray) (Figure 3). For the unexposed observations (), only the predictors in the yellow block were simulated with correlation, while all other covariates were independent within and between blocks. The TOM values are very small for the yellow cluster because it is not correlated with any of its neighbors. For the exposed observations (), all five blocks contained predictors that are correlated. The blue and turquoise blocks are set to have an average correlation of 0.6. The average correlation was varied for both green and red clusters and the active set S 0, that are directly associated with y, was distributed evenly between these two blocks. Heatmaps of the TOM for this environment‐dependent correlation structure are shown in Figure 3 with annotations for the true clusters and active variables. This design matrix shows widespread changes in gene networks in the exposed environment, and this subsequently affects the phenotype through the two associated clusters. There are also pathways that respond to changes in the environment but are not associated with the response (blue and turquoise), while others that are neither active in the disease nor affected by the environment (yellow).

Figure 3.

Topological overlap matrices (TOM) of simulated predictors based on subjects with (a) , (b) , (c) their absolute difference, and (d) all subjects

Note: Dendrograms are from hierarchical clustering (average linkage) of one minus the TOM for (a), (b), and (d) and the Euclidean distance for (c). Some variables in the red and green clusters are associated with the outcome variable. The module annotation represents the true cluster membership for each predictor, and the active annotation represents the truly associated predictors with the response.

3.2. The response

The first three simulation scenarios differ in how the linear predictor in (6) is defined, and also in the choice of regression model used to fit the data. In simulations 1 and 2, we use lasso (Tibshirani, 1996) and elasticnet (Zou & Hastie, 2005) to fit linear models; then we use MARS (Friedman, 1991) in Simulation 3 to estimate nonlinear effects. In simulations 4–6, we use the GLM version of these models, respectively, because the responses are binary.

3.2.1. Simulation 1

Simulation 1 was designed to evaluate performance when there are no explicit interactions between X and E (see Equation (3)). We generated the linear predictor from

| (7) |

where and . That is, only the first 250 predictors of both red and green blocks are active. In this setting, only the main effects model is being fit to the simulated data.

3.2.2. Simulation 2

In the second scenario, we explicitly simulated interactions. All nonzero main effects also had a corresponding nonzero interaction effect with E. We generated the linear predictor from

| (8) |

where , or , and . In this setting, both main effects and their interactions with E are being fit to the simulated data.

3.2.3. Simulation 3

In the third simulation, we investigated the performance of the ECLUST approach in the presence of nonlinear effects of the predictors on the phenotype:

| (9) |

where

| (10) |

| (11) |

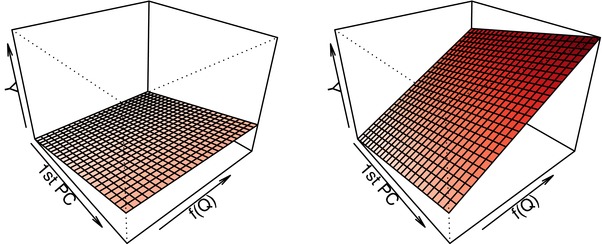

The design of this simulation was partially motivated by considering the idea of canalization, where systems operate within appropriate parameters until sufficient perturbations accumulate (e.g., Gibson, 2009). In this third simulation, we set , and . We assume that the data have been appropriately normalized, and that the correlation between any two features is greater than or equal to 0. In Simulation 3, we tried to capture the idea that an exposure could lead to coregulation or disregulation of a cluster of Xs, which in itself directly impacts Y. Hence, we defined coregulation as the Xs being similar in magnitude and disregulation, as the Xs being very different. The term in (10) is defined such that the higher values would correspond to strong coregulation, whereas the lower values correspond to disregulation. For example, suppose ranges from −5 to 0. It will be −5 when there are lots of variability (disregulation), and 0 when there is none (strong coregulation). The function in (11) simply maps to the [0,1] range. In order to get an idea of the relationship in (9), Figure 4 displays the response Y as a function of the first principal component of (denoted by 1st PC) and . We see that the lower values of (which implies disregulation of the features) lead to a lower Y. In this setting, although the clusters do not explicitly include interactions between the X variables, the MARS algorithm allows the possibility of two‐way interactions between any of the variables.

Figure 4.

Visualization of the relationship between the response, the first principal component of the main effects and in (9) for (left) and (right) in simulation scenario 3

Note: This graphic also depicts the intuition behind model (4).

3.2.4. Simulations 4–6

We used the same simulation setup as above, except that we took the continuous outcome Y, defined and used this to generate a two‐class outcome z with and . The true parameters were simulated as , , or . Simulations 4–6 are the binary response versions of simulations 1–3, respectively. The larger odds ratio for E compared to the odds ratio for X is motivated by certain environmental factors that are well known to have substantial impacts on disease risks and phenotypes. For example, body mass index (BMI) has been estimated to explain a large proportion of variation in bone mineral density (BMD) in women (10–20%; Felson, Zhang, Hannan, & Anderson, 1993). This can be converted to a slope of 0.31–0.44 assuming variables are standardized, that is, changes of 0.3–0.4 standard deviations in BMD per standard deviation change in weight. In contrast, the majority of single nucleotide polymorphisms (SNPs) and rare variants have effect sizes under 0.10 standard deviations on BMD (Kemp et al., 2017).

3.3. Measures of performance

Simulation performance was assessed with measures of model fit, prediction accuracy, and feature stability. Several measures for each of these categories, and the specific formulae used are provided in Table 3. We simulated both a training data set and a test data set for each simulation: all tuning parameters for model selection were selected using the training sets only. Although most of the measures of model fit were calculated on the test data sets, true‐positive rate, false‐positive rate, and correct sparsity were calculated on the training set only. The root mean squared error is determined by predicting the response for the test set using the fitted model on the training set. The area under the curve is determined using the trapezoidal rule (Robin et al., 2011). The stability of feature importance is defined as the variability of feature weights under perturbations of the training set, that is, small modifications in the training set should not lead to considerable changes in the set of important covariates (Toloşi & Lengauer, 2011). A feature selection algorithm produces a weight (e.g., ), a ranking (e.g., ) and a subset of features (e.g., , where is the indicator function). In the CLUST and ECLUST methods, we defined a predictor to be nonzero if its corresponding cluster representative weight was nonzero. Using 10‐fold cross‐validation (CV), we evaluated the similarity between two features and their rankings using Pearson and Spearman correlation, respectively. For each CV fold we reran the models and took the average Pearson/Spearman correlations of the combinations of estimated coefficients vectors. To measure the similarity between two subsets of features, we took the average of the Jaccard distance in each fold. A Jaccard distance of 1 indicates perfect agreement between two sets while no agreement will result in a distance of 0. For MARS models we do not report the Pearson/Spearman stability rankings due to the adaptive and functional nature of the model (there are many possible combinations of predictors, each of which are linear basis functions).

Table 3.

Measures of Performance

| Measure | Formula | ||

|---|---|---|---|

| Model fit | |||

| True‐positive rate (TPR) |

|

||

| False‐positive rate (TPR) |

|

||

| Correct sparsity (Witten, Shojaie, & Zhang, 2014) |

|

||

| Prediction accuracy | |||

| Root mean squared error (RMSE) |

|

||

| Area under the curve (AUC) | Trapezoidal rule | ||

| Hosmer–Lemeshow test () | χ2 test statistic | ||

| Feature stability using K‐fold cross‐validation on training set (Kalousis, Prados, & Hilario, 2007) | |||

| Pearson Correlation (ρ) (Pearson, 1895) |

|

||

| Spearman Correlation (r) (Spearman, 1904) |

|

||

| Jaccard Distance (Jaccard, 1912) |

|

||

: fitting procedure on the training set.

S 0: index of active set .

: index of the set of nonzero estimated coefficients .

: is the cardinality of set A.

3.4. Results

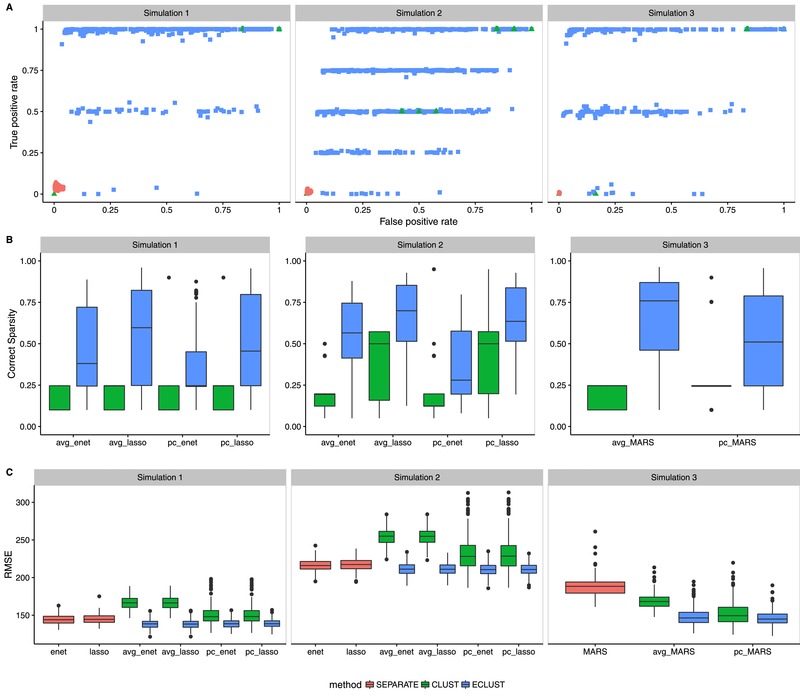

All reported results are based on 200 simulation runs. We graphically summarized the results across simulations 1–3 for model fit (Figure 5) and feature stability (Figure 6). The results for simulations 4–6 are shown in supplementary Section B, Figures S1– S6. We restrict our attention to , , and . The model names are labeled as summary measure_model (e.g., avg_lasso corresponds using the average of the features in a cluster as inputs into a lasso regression model). When there is no summary measure appearing in the model name that indicates that the original variables were used (e.g., enet means all separate features were used in the elasticnet model). In Figure 5, panel A, we plot the true‐positive rate against the false‐positive rate for each of the 200 simulations. We see that across all simulation scenarios, the SEPARATE method has extremely poor sensitivity compared to both CLUST and ECLUST, which do much better at identifying the active variables, though the resulting models are not always sparse. The relatively few number of green points in panel A is due to the small number of estimated clusters (supplementary Section C, Figure S7) leading to very little variability in performance across simulations. The better performance of ECLUST over CLUST is noticeable as more points lie in the top left part of the plot. The horizontal banding in panel A reflects the stability of the TOM‐based clustering approach. ECLUST also does better than CLUST in correctly determining whether a feature is zero or nonzero (Figure 5, panel B). Importantly, across all three simulation scenarios, ECLUST outperforms the competing methods in terms of RMSE (Figure 5, panel C), regardless of the summary measure and modeling procedure. We present the distribution for the effective number of variables selected in the supplementary material (Figures S8 and S9). We see that the median number of variables selected from ECLUST is less than the median number of variables selected from CLUST, though ECLUST has more variability.

Figure 5.

Model fit results from simulations 1–3 with , , and

Note: SEPARATE results are in pink, CLUST in green and ECLUST in blue.

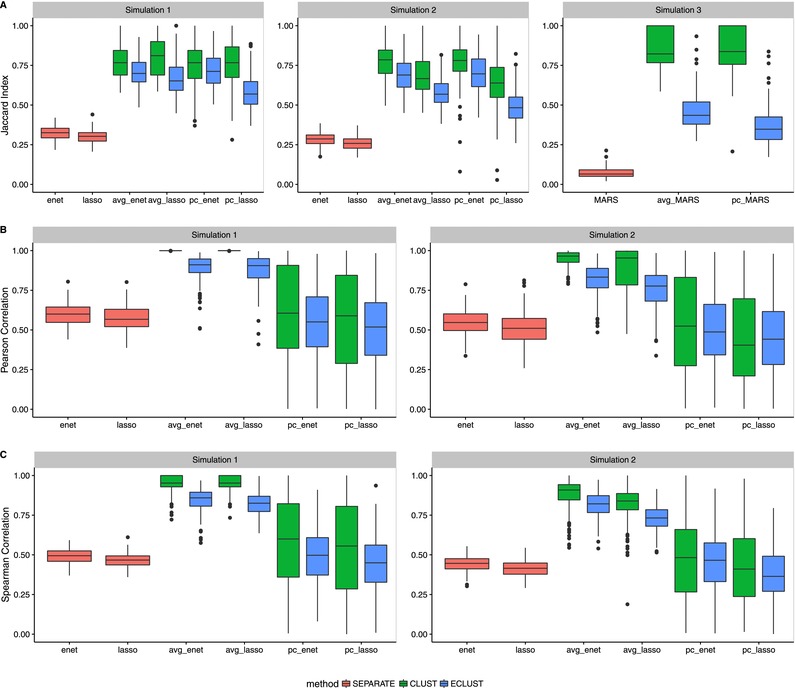

Figure 6.

Stability results from simulations 1–3 for , , and

Note: SEPARATE results are in pink, CLUST in green and ECLUST in blue.

Although the approach using all separate original variables (SEPARATE) produce sparse models, they are sensitive to small perturbations of the data across all stability measures (Figure 6), that is, similar data sets produce very different models. Although the median for the CLUST approach is always slightly better than the median for ECLUST across all stability measures, CLUST results can be much more variable, particularly when stability is measured by the agreement between the value and the ranking of the estimated coefficients across CV folds (Figure 6, panels B and C). The number of estimated clusters, and therefore the number of features in the regression model, tends to be much smaller in CLUST compared to ECLUST, and this explains its poorer performance using the stability measures in Figure 6, because there are more coefficients to estimate. Overall, we observe that the relative performance of ECLUST versus CLUST in terms of stability is consistent across the two summary measures (average or principal component) and across the penalization procedures. The complete results for different values of ρ, and (when applicable) are available in the supplementary Section D, Figures S10– S15 for Simulation 1, Figures S16– S21 for Simulation 2, and Figures S22– S25 for Simulation 3. They show that these conclusions are not sensitive to the , ρ, or . Similar conclusions are made for a binary outcome using logistic regression versions of the lasso, elasticnet, and MARS. ECLUST and CLUST also have better calibration than the SEPARATE method for both linear and nonlinear models (supplementary Section B, Figures S3– S6). The distributions of Hosmer–Lemeshow (HL) P‐values do not follow uniformity. This is in part due to the fact that the HL test has low power in the presence of continuous‐dichotomous variable interactions (Hosmer, Hosmer, Le Cessie, & Lemeshow, 1997). Upon inspection of the Q–Q plots, we see that the models have difficulty predicting risks at the boundaries which is a known issue in most models. We also have a small sample size of 200, which means there are on average only 20 subjects in each of the 10 bins. Furthermore, the HL test is sensitive to the choice of bins and method of computing quantiles. Nevertheless, the improved fit relative to the SEPARATE analysis is quite clear.

We also ran all our simulations using the Pearson correlation matrix as a measure of similarity in order to compare its performance against the TOM. The complete results are in supplementary Section E, Figures S26– S31 for Simulation 1, Figures S32– S37 for Simulation 2, and Figures S38– S41 for Simulation 3. In general, we see slightly better performance of CLUST over ECLUST when using Pearson correlations. This result is probably due to the imprecision in the estimated correlations. The exposure‐dependent similarity matrices are quite noisy, and the variability is even larger when we examine the differences between two correlation matrices. Such large levels of variability have a negative impact on the clustering algorithm's ability to detecting the true clusters.

4. ANALYSIS OF THREE DATA SETS

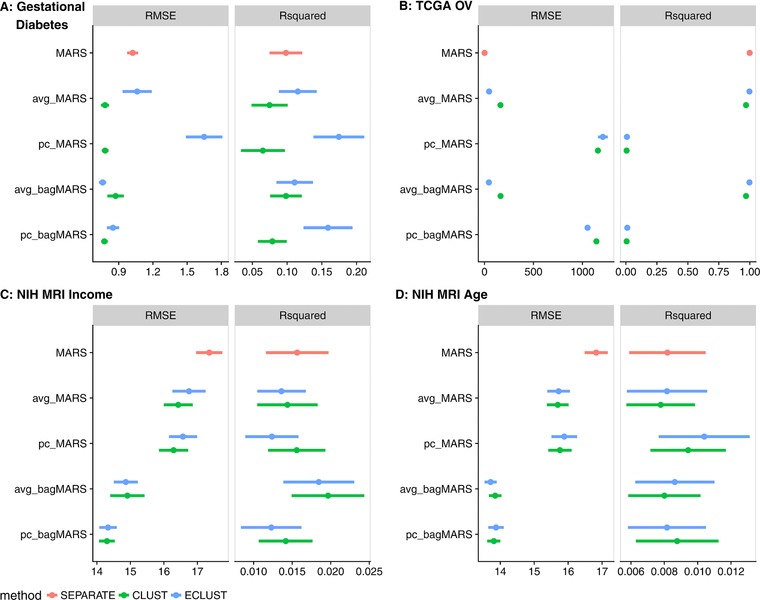

In this section we demonstrate the performance of ECLUST on three HD data sets with contrasting motivations and features. In the first data set, normal brain development is examined in conjunction with intelligence scores. In the second data set, we aim to identify molecular subtypes of ovarian cancer using gene expression data. The investigators' goal in the third data set is to examine the impact of gestational diabetes mellitus (GDM) on childhood obesity in a sample of mother–child pairs from a prospective birth cohort. The data sets comprise a range of sample sizes, and both amount of clustering in the HD data and strength of the effects of the designated exposure variables vary substantially. Due to the complex nature of these data sets, we decided to use MARS models for Step 2 of our algorithm for all three data sets, as outlined in Table 1. In order to assess performance in these data sets, we have computed the 0.632 estimator (Efron, 1983) and the 95% confidence interval of the R 2 and RMSE from 100 bootstrap samples. The R 2 reported here is defined as the squared Pearson correlation coefficient between the observed and predicted response (Kvålseth, 1985), and the RMSE is defined as in Table 3. Because MARS models can result in unstable predictors (Kuhn, 2008), we also report the results of bagged MARS from bootstrap samples, where bagging (Breiman, 1996) refers to averaging the predictions from each of the MARS models fit on the B bootstrap samples.

4.1. NIH MRI study of normal brain development

The NIH MRI Study of Normal Brain Development, started in 2001, was a 7‐year longitudinal multisite project that used magnetic resonance technologies to characterize brain maturation in 433 medically healthy, psychiatrically normal children aged 4.5–18 (Evans, A. C., & Brain Development Cooperative Group, 2006). The goal of this study was to provide researchers with a representative and reliable source of healthy control subject data as a basis for understanding atypical brain development associated with a variety of developmental, neurological, and neuropsychiatric disorders affecting children and adults. Brain imaging data (e.g., cortical surface thickness, intracranial volume), behavioral measures (e.g., IQ scores, psychiatric interviews, behavioral ratings), and demographics (e.g., socioeconomic status) were collected at two year intervals for three time points and are publicly available upon request. Previous research using these data found that level of intelligence and age correlate with cortical thickness (Khundrakpam et al., 2013; Shaw et al., 2006), but to our knowledge no such relation between income and cortical thickness has been observed. We therefore used these data to see the performance of ECLUST in the presence (age) and absence (income) of an effect on the correlations in the HD data. We analyzed the 10,000 most variable regions on the cortical surface from brain scans corresponding to the first sampled time point only. We used binary age (166 ⩽11.3 and 172 >11.3) and binary income (142 high and 133 low income) indicator as the environment variables and standardized IQ scores as the response. We identified 22 clusters from and 57 clusters from when using age as the environment, and 86 clusters from and 49 clusters from when using income as the environment. Results are shown in Figure 7, panels C and D. The method that uses all individual variables as predictors (pink) has better R 2 but also worse RMSE compared to CLUST and ECLUST, likely due to overfitting. There is a slight benefit in performance for ECLUST over CLUST when using age as the environment (panel D). Importantly, we observe very similar performance between CLUST and ECLUST across all models (panel C), suggesting very little impact on the prediction performance when including features derived both with and without the E variable, in a situation where they are unlikely to be relevant.

Figure 7.

Model fit measures from analysis of three data sets: (A) gestational diabetes birth‐cohort, (B) TCGA Ovarian Cancer study, (C) NIH MRI Study with income as the environment variable, and (D) NIH MRI Study with age as the environment variable

4.2. Gene expression study of ovarian cancer

Differences in gene expression profiles have led to the identification of robust molecular subtypes of ovarian cancer; these are of biological and clinical importance because they have been shown to correlate with overall survival (Tothill et al., 2008). Improving prediction of survival time based on gene expression signatures can lead to targeted therapeutic interventions (Helland et al., 2011). The proposed ECLUST algorithm was applied to gene expression data from 511 ovarian cancer patients profiled by the Affymetrix Human Genome U133A 2.0 Array. The data were obtained from the TCGA Research Network: http://cancergenome.nih.gov/ and downloaded via the TCGA2STAT R library (Wan, Allen, Anderson, & Liu, 2015). Using the 881 signature genes from Helland et al. (2011), we grouped subjects into two groups based on the results in this paper, to create a “positive control” environmental variable expected to have a strong effect. Specifically, we defined an environment variable in our framework as: for subtypes C1 and C2 (), and for subtypes C4 and C5 (). Overall survival time (log‐transformed) was used as the response variable. Because these genes were ascertained on survival time, we expected the method using all genes without clustering to have the best performance, and hence one goal of this analysis was to see if ECLUST performed significantly worse as a result of summarizing the data into a lower dimension. We found three clusters from and three clusters from ; results are shown in Figure 7, panel C. Across all models, ECLUST performs slightly better than CLUST. Furthermore, it performs almost as well as the separate variable method, with the added advantage of dealing with a much smaller number of predictors (881 with SEPARATE compared to 6 with ECLUST).

4.3. Gestational diabetes, epigenetics, and metabolic disease

Events during pregnancy are suspected to play a role in childhood obesity development but only little is known about the mechanisms involved. Indeed, children born to women who had GDM in pregnancy are more likely to be overweight and obese (Wendland et al., 2012), and evidence suggests epigenetic factors are important piece of the puzzle (Bouchard et al., 2010, 2012). Recently, methylation changes in placenta and cord blood were associated with GDM (Ruchat et al., 2013), and here we explore how these changes are associated with obesity in the children at the age of about 5. DNA methylation in placenta was measured with the Infinium HumanMethylation450 BeadChip (Illumina, Inc.; Bibikova et al., 2011) microarray in a sample of 28 women, 20 of whom had a GDM‐affected pregnancy, and here we used GDM status as our E variable, assuming that this has widespread effects on DNA methylation and on its correlation patterns. Our response, Y, is the standardized BMI in the offspring at the age of 5. In contrast to the previous two examples, here we had no particular expectation of how ECLUST would perform. Using the 10,000 most variable probes, we found 2 clusters from and 75 clusters from . The predictive model results from a MARS analysis are shown in Figure 7, panel A. When using R 2 as the measure of performance, ECLUST outperforms both SEPARATE and CLUST methods. When using RMSE as the measure of model performance, performance tended to be better with CLUST rather than ECLUST perhaps in part due to the small number of clusters derived from relative to . Overall, the ECLUST algorithm with bagged MARS and the first PC of each cluster performed best, that is, it had a better R 2 than CLUST with comparable RMSE. The sample size here is very small, and therefore the stability of the model fits is limited. The probes in these clusters mapped to 164 genes and these genes were selected to conduct pathway analyses using the Ingenuity Pathway Analysis (IPA) software (Ingenuity System). IPA compares the selected genes to a reference list of genes included in many biological pathways using a hypergeometric test. Smaller P values are evidence for overrepresented gene ontology categories in the input gene list. The results are summarized in Table 4 and provide some biological validation of our ECLUST method. For example, the hepatic system is involved with the metabolism of glucose and lipids (Saltiel & Kahn, 2001), and behavior and neurodevelopment are associated with obesity (Epstein, Paluch, Kilanowski, & Raynor, 2004). Furthermore, it is interesting that embryonic and organ development pathways are involved because GDM is associated with macrosomia (Ehrenberg, Mercer, & Catalano, 2004).

Table 4.

Ingenuity Pathway Analysis results—top‐ranked diseases and disorders, and physiological system development and function epigentically affected by gestational diabetes mellitus and associated with childhood body mass index

| Category | Name | P values | n a |

|---|---|---|---|

| Diseases and disorders | Hepatic system disease | [9.61e−7 to 5.17e−7] | 75 |

| Physiological system development and function | |||

| Behavior | [1.35e−2 to 7.82e−8] | 33 | |

| Embryonic development | [1.35e−2 to 2.63e−8] | 26 | |

| Nervous system development and function | [1.35e−2 to 2.63e−8] | 43 | |

| Organ development | [1.35e−2 to 2.63e−8] | 20 | |

| Organismal development | [1.35e−2 to 2.63e−8] | 34 | |

Number of genes involved in each pathway.

5. DISCUSSION

The challenge of precision medicine is to appropriately fit treatments or recommendations to each individual. Data such as gene expression, DNA methylation levels, or magnetic resonance imaging (MRI) signals are examples of HD measurements that capture multiple aspects of how a tissue is functioning. These data often show patterns associated with disease, and major investments are being made in the genomics research community to generate such HD data. Analytic tools increasing prediction accuracy are needed to maximize the productivity of these investments. However, the effects of exposures have usually been overlooked, but these are crucial because they can lead to ways to intervene. Hence, it is essential to have a clear understanding of how exposures modify HD measures, and how the combination leads to disease. Existing methods for prediction (of disease), which are based on HD data and interactions with exposures, fall far short of being able to obtain this clear understanding. Most methods have low power and poor interpretability, and furthermore, modeling and interpretation problems are exacerbated when there is interest in interactions. In general, power to estimate interactions is low, and the number of possible interactions could be enormous. Therefore, here we have proposed a strategy to leverage situations where a covariate (e.g., an exposure) has a widespread effect on one or more HD measures, for example, GDM on methylation levels. We have shown that this expected pattern can be used to construct dimension‐reduced predictor variables that inherently capture the systemic covariate effects. These dimension‐reduced variables, constructed without using the phenotype, can then be used in predictive models of any type. In contrast to some common analysis strategies that model the effects of individual predictors on outcome, our approach makes a step toward a systems‐based perspective that we believe will be more informative when exploring the factors that are associated with disease or a phenotype of interest. We have shown, through simulations and real data analysis, that incorporation of environmental factors into predictive models in a way that retains a HD perspective can improve results and interpretation for both linear and nonlinear effects.

We proposed two key methodological steps necessary to maximize predictive model interpretability when using HD data and a binary exposure: (1) dimension reduction of HD data built on exposure sensitivity and (2) implementation of penalized prediction models. In the first step, we proposed to identify exposure‐sensitive HD pairs by contrasting the TOM between exposed and unexposed individuals; then we cluster the elements in these HD pairs to find exposure‐sensitive coregulated sets. New dimension‐reduced variables that capture exposure‐sensitive features (e.g., the first principal component of each cluster) were then defined. In the second step we implemented linear and nonlinear variable selection methods using the dimension‐reduced variables to ensure stability of the predictive model. The ECLUST method has been implemented in the eclust (Bhatnagar, 2017) R package publicly available on CRAN. Our method along with computationally efficient algorithms, allows the analysis of up to 10,000 variables at a time on a laptop computer.

The methods that we have proposed here are currently only applicable when three data elements are available. Specifically a binary environmental exposure, an HD data set that can be affected by the exposure, and a single phenotype. When comparing the TOM and Pearson correlations as a measure of similarity, our simulations showed that the performance of ECLUST was worse with correlations. This speaks to the potential of developing a better measure than the difference of two matrices. For example, we are currently exploring ways in which to handle continuous exposures or multiple exposures. The best way to construct an exposure‐sensitive distance matrix that can be used for clustering is not obvious in these situations. One possible solution relies on a nonparametric smoothing based approach where weighted correlations are calculated. These weights can be derived from a kernel‐based summary of the exposure covariates (e.g., Qiu, Han, Liu, & Caffo, 2016). Then, contrasting unweighted and weighted matrices will allow construction of covariate‐sensitive clusters. The choice of summary measure for each cluster also warrants further study. Although principal components and averages are well understood and easy to implement, the main shortcoming is that they involve all original variables in the group. As the size of the groups increase, the interpretability of these measures decreases. Nonnegative matrix factorization (Lee & Seung, 2001) and sparse principal component analysis (SPCA; Witten, Tibshirani, & Hastie, 2009) are alternatives that find sparse and potentially interpretable factors. Furthermore, structured SPCA (Jenatton, Obozinski, & Bach, 2010) goes beyond restricting the cardinality of the contributing factors by imposing some a priori structural constraints deemed relevant to model the data at hand.

We are all aware that our exposures and environments impact our health and risks of disease, however detecting how the environment acts is extremely difficult. Furthermore, it is very challenging to develop reliable and understandable ways of predicting the risk of disease in individuals, based on HD data such as genomic or imaging measures, and this challenge is exacerbated when there are environmental exposures that lead to many subtle alterations in the genomic measurements. Hence, we have developed an algorithm and an easy‐to‐use software package to transform analysis of how environmental exposures impact human health, through an innovative signal‐extracting approach for HD measurements. Evidently, the model fitting here is performed using existing methods; our goal is to illustrate the potential of improved dimension reduction in two‐stage methods, in order to generate discussion and new perspectives. If such an approach can lead to more interpretable results that identify gene–environment interactions and their effects on diseases and traits, the resulting understanding of how exposures influence the high‐volume measurements now available in precision medicine will have important implications for health management and drug discovery.

AVAILABILITY OF DATA AND MATERIAL

NIH MRI Study of Normal Brain Development data are available in the Pediatric MRI Data Repository, https://pediatricmri.nih.gov/

Gene Expression Study of Ovarian Cancer data are available in the Genomic Data Commons repository, https://gdc.cancer.gov/, and were downloaded via the TCGA2STAT R library Wan et al. (2015)

Gestational diabetes, epigenetics, and metabolic disease: the clinical data, similarity matrices and cluster summaries are available at Zenodo [10.5281/zenodo.259222]. The raw analyzed during the current study are not publicly available due to reasons of confidentiality, although specific collaborations with LB can be requested.

CONFLICT OF INTEREST

The authors declare no conflicts of interest.

Supporting information

Supplementary material.

ACKNOWLEDGMENTS

SRB was supported by the Ludmer Centre for Neuroinformatics and Mental Health.

SRB, CMTG, YY, and MB contributed to the conceptualization of this research; SRB, LB, and BK contributed to the data curation; SRB contributed to the formal analysis, software, visualization; SRB and CMTG contributed to the methodology; SRB and CMTG contributed to writing the original draft; SRB, CMTG, YY, BK, ACE, MB, and LB contributed to writing, reviewing, and editing the draft.

Bhatnagar SR, Yang Y, Khundrakpam B, Evans AC, Blanchette M, Bouchard L, Greenwood CMT. An analytic approach for interpretable predictive models in high‐dimensional data in the presence of interactions with exposures. Genet Epidemiol. 2018;42:233–249. 10.1002/gepi.22112

The copyright line for this article was changed on 26th March after original online publication.

REFERENCES

- Bhatnagar, S. R. (2017). eclust: environment based clustering for interpretable predictive models in high dimensional data. R package version 0.1.0. Retrieved from https://github.com/sahirbhatnagar/eclust/

- Bibikova, M. , Barnes, B. , Tsan, C. , Ho, V. , Klotzle, B. , Le, J. M. , … Shen, R. (2011). High density dna methylation array with single CpG site resolution. Genomics, 98(4), 288–295. [DOI] [PubMed] [Google Scholar]

- Bouchard, L. , Hivert, M.‐F. , Guay, S.‐P. , St‐Pierre, J. , Perron, P. , & Brisson, D. (2012). Placental adiponectin gene dna methylation levels are associated with mothers' blood glucose concentration. Diabetes, 61(5), 1272–1280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouchard, L. , Thibault, S. , Guay, S.‐P. , Santure, M. , Monpetit, A. , St‐Pierre, J. , … Brisson, D. (2010). Leptin gene epigenetic adaptation to impaired glucose metabolism during pregnancy. Diabetes Care, 33(11), 2436–2441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Breiman, L. (1996). Bagging predictors. Machine Learning, 24(2), 123–140. [Google Scholar]

- Bühlmann, P. , Rütimann, P. , van de Geer, S. , & Zhang, C.‐H. (2013). Correlated variables in regression: clustering and sparse estimation. Journal of Statistical Planning and Inference, 143(11), 1835–1858. [Google Scholar]

- Efron, B. (1983). Estimating the error rate of a prediction rule: improvement on cross‐validation. Journal of the American Statistical Association, 78(382), 316–331. [Google Scholar]

- Ehrenberg, H. M. , Mercer, B. M. , & Catalano, P. M. (2004). The influence of obesity and diabetes on the prevalence of macrosomia. American Journal of Obstetrics and Gynecology, 191(3), 964–968. [DOI] [PubMed] [Google Scholar]

- Epstein, L. H. , Paluch, R. A. , Kilanowski, C. K. , & Raynor, H. A. (2004). The effect of reinforcement or stimulus control to reduce sedentary behavior in the treatment of pediatric obesity. Health Psychology, 23(4), 371. [DOI] [PubMed] [Google Scholar]

- Evans, A. C. , & Brain Development Cooperative Group., (2006). The NIH MRI study of normal brain development. Neuroimage, 30(1), 184–202. [DOI] [PubMed] [Google Scholar]

- Fan, J. , Han, F. , & Liu, H. (2014). Challenges of big data analysis. National Science Review, 1(2), 293–314. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Felson, D. T. , Zhang, Y. , Hannan, M. T. , & Anderson, J. J. (1993). Effects of weight and body mass index on bone mineral density in men and women: the Framingham study. Journal of Bone and Mineral Research, 8(5), 567–573. [DOI] [PubMed] [Google Scholar]

- Friedman, J. , Hastie, T. , & Tibshirani, R. (2010). Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1), 1. [PMC free article] [PubMed] [Google Scholar]

- Friedman, J. H. (1991). Multivariate adaptive regression splines. Annals of Statistics, 1–67. [Google Scholar]

- Gibson, G. (2009). Decanalization and the origin of complex disease. Nature Reviews Genetics, 10(2), 134. [DOI] [PubMed] [Google Scholar]

- Hastie, T. , Tibshirani, R. , Botstein, D. , & Brown, P. (2001). Supervised harvesting of expression trees. Genome Biology, 2(1), 1–0003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Helland, Å. , Anglesio, M. S. , George, J. , Cowin, P. A. , Johnstone, C. N. , House, C. M. , … Rustgi, A. K. (2011). Deregulation of MYCN, LIN28b and LET7 in a molecular subtype of aggressive high‐grade serous ovarian cancers. PloS One, 6(4), e18064. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hosmer, D. W. , Hosmer, T. , Le Cessie, S. , & Lemeshow, S. (1997). A comparison of goodness‐of‐fit tests for the logistic regression model. Statistics in Medicine, 16(9), 965–980. [DOI] [PubMed] [Google Scholar]

- Jaccard, P. (1912). The distribution of the flora in the alpine zone. New Phytologist, 11(2), 37–50. [Google Scholar]

- Jenatton, R. , Obozinski, G. , & Bach, F. (2010). Structured sparse principal component analysis In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (pp. 366–373). [Google Scholar]

- Kalousis, A. , Prados, J. , & Hilario, M. (2007). Stability of feature selection algorithms: a study on high‐dimensional spaces. Knowledge and Information Systems, 12(1), 95–116. [Google Scholar]

- Kemp, J. P. , Morris, J. A. , Medina‐Gomez, C. , Forgetta, V. , Warrington, N. M. , Youlten, S. E. , … Evans, D. M. (2017). Identification of 153 new loci associated with heel bone mineral density and functional involvement of gpc6 in osteoporosis. Nature Genetics, 49, 1468. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kendall, M. (1957). A course in multivariate analysis. London: Griffin. [Google Scholar]

- Khundrakpam, B. S. , Reid, A. , Brauer, J. , Carbonell, F. , Lewis, J. , Ameis, S. , … Brain Development Cooperative Group . (2013). Developmental changes in organization of structural brain networks. Cerebral Cortex, 23(9), 2072–2085. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kostka, D. , & Spang, R. (2004). Finding disease specific alterations in the co‐expression of genes. Bioinformatics, 20(Suppl. 1), i194–i199. [DOI] [PubMed] [Google Scholar]

- Kuhn, M. (2008). caret package. Journal of Statistical Software, 28(5). Retrieved from http://www.jstatsoft.org/index [Google Scholar]

- Kvålseth, T. O. (1985). Cautionary note about r 2. American Statistician, 39(4), 279–285. [Google Scholar]

- Langfelder, P. , & Horvath, S. (2007). Eigengene networks for studying the relationships between co‐expression modules. BMC Systems Biology, 1(1), 54. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Langfelder, P. , & Horvath, S. (2008). Wgcna: an R package for weighted correlation network analysis. BMC Bioinformatics, 9(1), 1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Langfelder, P. , Zhang, B. , & Horvath, S. (2008). Defining clusters from a hierarchical cluster tree: the dynamic tree cut package for r. Bioinformatics, 24(5), 719–720. [DOI] [PubMed] [Google Scholar]

- Langfelder, P. , Zhang, B. , & with contributions from Horvath, S . (2016). dynamicTreeCut: methods for detection of clusters in hierarchical clustering dendrograms . R package version 1.63‐1. Retrieved from http://www.genetics.ucla.edu/labs/horvath/CoexpressionNetwork/BranchCutting/

- Lee, D. D. , & Seung, H. S. (2001). Algorithms for non‐negative matrix factorization In Leen T. K., Dietterich T. G., & Tresp V. (Eds.), Advances in neural information processing systems (Vol. 13, pp. 556–562). Cambridge, MA: MIT Press. [Google Scholar]

- Milborrow, S. (2011). Derived from mda: MARS by Trevor Hastie and Rob Tibshirani. Earth: multivariate adaptive regression splines. R package version 3.2‐0. Retrieved from http://CRAN.R-project.org/package=earth.

- Müllner, D. (2013). fastcluster: fast hierarchical, agglomerative clustering routines for R and Python. Journal of Statistical Software, 53(9), 1–18. [Google Scholar]

- Oros Klein, K. , Oualkacha, K. , Lafond, M.‐H. , Bhatnagar, S. , Tonin, P. N. , & Greenwood, C. M. (2016). Gene coexpression analyses differentiate networks associated with diverse cancers harboring tp53 missense or null mutations. Frontiers in Genetics, 7, 137. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Park, M. Y. , Hastie, T. , & Tibshirani, R. (2007). Averaged gene expressions for regression. Biostatistics, 8(2), 212–227. [DOI] [PubMed] [Google Scholar]

- Pearson, K. (1895). Note on regression and inheritance in the case of two parents. Proceedings of the Royal Society of London, 240–242. [Google Scholar]

- Qiu, H. , Han, F. , Liu, H. , & Caffo, B. (2016). Joint estimation of multiple graphical models from high dimensional time series. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 78(2), 487–504. [DOI] [PMC free article] [PubMed] [Google Scholar]

- R Core Team . (2016). R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. [Google Scholar]

- Ravasz, E. , Somera, A. L. , Mongru, D. A. , Oltvai, Z. N. , & Barabási, A.‐L. (2002). Hierarchical organization of modularity in metabolic networks. Science, 297(5586), 1551–1555. [DOI] [PubMed] [Google Scholar]

- Reid, S. , & Tibshirani, R. (2016). Sparse regression and marginal testing using cluster prototypes. Biostatistics, 17(2), 364–376. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robin, X. , Turck, N. , Hainard, A. , Tiberti, N. , Lisacek, F. , Sanchez, J.‐C. , & MÃijller, M. (2011). proc: an open‐source package for r and s+ to analyze and compare roc curves. BMC Bioinformatics, 12, 77. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ruchat, S.‐M. , Houde, A.‐A. , Voisin, G. , St‐Pierre, J. , Perron, P. , Baillargeon, J.‐P. , … Bouchard, L. (2013). Gestational diabetes mellitus epigenetically affects genes predominantly involved in metabolic diseases. Epigenetics, 8(9), 935–943. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Saltiel, A. R. , & Kahn, C. R. (2001). Insulin signalling and the regulation of glucose and lipid metabolism. Nature, 414(6865), 799–806. [DOI] [PubMed] [Google Scholar]

- Sathirapongsasuti, J. F. (2013). COPDSexualDimorphism.data: data to support sexually dimorphic and COPD differential analysis for gene expression and methylation. R package version 1.4.0. Retrieved from https://git.bioconductor.org/packages/COPDSexualDimorphism.data

- Sato, J. R. , Hoexter, M. Q. , de Magalhães Oliveira, P. P. , Brammer, M. J. , Murphy, D. , … Ecker, C. (2013). Inter‐regional cortical thickness correlations are associated with autistic symptoms: a machine‐learning approach. Journal of Psychiatric Research, 47(4), 453–459. [DOI] [PubMed] [Google Scholar]

- Shaw, P. , Greenstein, D. , Lerch, J. , Clasen, L. , Lenroot, R. , Gogtay, N. e. a. , … Giedd, J. (2006). Intellectual ability and cortical development in children and adolescents. Nature, 440(7084), 676–679. [DOI] [PubMed] [Google Scholar]

- Spearman, C. (1904). The proof and measurement of association between two things. American Journal of Psychology, 15(1), 72–101. [PubMed] [Google Scholar]

- Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), 58(1), 267–288. [Google Scholar]

- Toloşi, L. , & Lengauer, T. (2011). Classification with correlated features: unreliability of feature ranking and solutions. Bioinformatics, 27(14), 1986–1994. [DOI] [PubMed] [Google Scholar]

- Tothill, R. W. , Tinker, A. V. , George, J. , Brown, R. , Fox, S. B. , Lade, S. , … Bowtell, D. D. (2008). Novel molecular subtypes of serous and endometrioid ovarian cancer linked to clinical outcome. Clinical Cancer Research, 14(16), 5198–5208. [DOI] [PubMed] [Google Scholar]

- Wan, Y.‐W. , Allen, G. I. , Anderson, M. L. , & Liu, Z. (2015). TCGA2STAT: simple TCGA data access for integrated statistical analysis in R . R package version 1.2. Retrieved from http://www.liuzlab.org/TCGA2STAT/ [DOI] [PubMed]

- Wendland, E. M. , Torloni, M. R. , Falavigna, M. , Trujillo, J. , Dode, M. A. , Campos, M. A. , … Schmidt, M. I. (2012). Gestational diabetes and pregnancy outcomes‐a systematic review of the World Health Organization (WHO) and the International Association of Diabetes in Pregnancy Study Groups (IADPSG) diagnostic criteria. BMC Pregnancy and Childbirth, 12(1), 1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Witten, D. M. , Shojaie, A. , & Zhang, F. (2014). The cluster elastic net for high‐dimensional regression with unknown variable grouping. Technometrics, 56(1), 112–122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Witten, D. M. , Tibshirani, R. , & Hastie, T. (2009). A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics, 10(3), 515–534. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yang, Y. , & Zou, H. (2014). gglasso: group lasso penalized learning using a unified bmd algorithm. R package version 1.3. Retrieved from https://github.com/emeryyi/gglasso

- Zhang, B. , & Horvath, S. (2005). A general framework for weighted gene co‐expression network analysis. Statistical Applications in Genetics and Molecular Biology, 4(1), Epub 2005. 10.2202/1544-6115.1128 [DOI] [PubMed] [Google Scholar]

- Zou, H. , & Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 67(2), 301–320. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplementary material.